Windows Azure and Cloud Computing Posts for 2/25/2011+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services


Azure Blob, Drive, Table and Queue Services

The Windows Azure Team (@WindowsAzure) reported Windows Azure Storage Client Library Reference Documentation Updated with New Sample Code and Remarks on 2/25/2011:

The Windows Azure Storage Client Library Reference now contains sample code and remarks for common tasks such as authenticating access to the storage services, creating and enumerating containers and blobs, copying and snapshotting blobs, reading from and writing to blobs, creating and enumerating queues, and processing messages in queues. As an example, you can look at CloudBlob.CopyFromBlob Method for sample code that checks an access condition on a source blob and copies it if the condition is met. The reference documentation and samples are available for both synchronous and asynchronous methods.
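The CopyFromBlob sample follows an optimistic-concurrency pattern: read the source blob's ETag, then copy only if the ETag still matches. Below is a minimal, language-neutral sketch of that idea in Python. `InMemoryContainer`, `put`, and `copy_if_match` are hypothetical stand-ins and are not the Storage Client Library API. The sketch assumes every write issues a fresh ETag, as the storage service does.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Blob:
    data: bytes
    etag: str


@dataclass
class InMemoryContainer:
    """Hypothetical in-memory stand-in for a blob container (not the real API)."""
    blobs: Dict[str, Blob] = field(default_factory=dict)
    _version: int = 0

    def _next_etag(self) -> str:
        # Every write gets a fresh ETag, mimicking the storage service.
        self._version += 1
        return f"0x{self._version:X}"

    def put(self, name: str, data: bytes) -> str:
        etag = self._next_etag()
        self.blobs[name] = Blob(data, etag)
        return etag

    def get_etag(self, name: str) -> Optional[str]:
        blob = self.blobs.get(name)
        return blob.etag if blob else None

    def copy_if_match(self, source: str, dest: str, expected_etag: str) -> bool:
        """Copy source to dest only if the source ETag still equals expected_etag."""
        src = self.blobs.get(source)
        if src is None or src.etag != expected_etag:
            return False  # Access condition not met; nothing is copied.
        self.blobs[dest] = Blob(src.data, self._next_etag())
        return True


container = InMemoryContainer()
etag = container.put("report.txt", b"v1")
print(container.copy_if_match("report.txt", "backup.txt", etag))   # True
container.put("report.txt", b"v2")                                  # source changes
print(container.copy_if_match("report.txt", "backup2.txt", etag))  # False
```

The second copy is refused because the source changed after its ETag was read. That is the same guarantee the documented access condition provides.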


SQL Azure Database and Reporting

Robin Shahan (@RobinDotNet) posted How about a bootstrapper package for SQLServer Express 2008 R2? on 2/6/2011 (missed when posted):

When publishing a ClickOnce application, you can open the Prerequisites dialog and select any of the packages to be installed prior to the ClickOnce application. You would probably select the .NET Framework that your application targets and Windows Installer 3.1. You would also select the SQLServer Express 2008 database software if you are using a SQLExpress database in your application.

Last summer, Microsoft released SP-1 for SQLServer 2008 and several people posted to the MSDN ClickOnce Forum and Stack Overflow asking where they could get a new, updated bootstrapper package. I decided to pursue it and see if I could track it down.

I’ll just ask Microsoft.

Saurabh Bhatia, who’s kind enough to help me answer the most difficult ClickOnce questions, routed me to the SQLServer Express program manager, Krzysztof Kozielczyk. Krzysztof said he totally agreed that Microsoft should release a new version of the bootstrapper package every time they updated SQLExpress, but they were busy working on the upcoming releases and he did not have the resources to work on it. It’s hard to know what to say when you tell someone “You should have this” and they say “You’re right, we should, I’m sorry we don’t.” (I’m going to have to remember to use that on my boss in the future.)

According to Krzysztof, the problem is that they can’t just create the package and release it. They have to create the bootstrapper package and test it in a bunch of combinations of different variables, such as operating system version, SQLServer Express version (to test that the upgrade works), .NET version, number of olives in a jar, and kinds of mayonnaise available on the west coast, among others. Then if it passes mustard (haha), they have to find somewhere to post it, make it available, and then keep it updated. So more people are involved than just his team, and at that time, everyone in DevDiv at Microsoft was working on the upcoming release of VS2010.

Persistence is one of my best qualities, so over the following months I continued to badger (er, try to convince) Krzysztof to at least help me create the package and provide it to the community, especially after R2 came out. Every time someone posted the request to one of the forums, I would take a screenshot and send it to him. He was unfailingly kind, but stuck to his guns. There’s even been a bug filed in Connect for this issue. (On the bright side, Krzysztof did answer the occasional SQLExpress question from the forums for me.)

Success! (and disclaimers)

Well, we’ve had quite a bit of back and forth lately, and I’m happy to report that I now have a bootstrapper package that I can use to install SQLServer Express 2008 R2 as a prerequisite to a ClickOnce application. (I skipped 2008 SP-1). Krzysztof did not provide the solution, but by peppering the guy with questions, I have finally created a working package. So big thanks to Krzysztof for continuing to answer my questions and put up with the badgering over the past few months. Now he doesn’t need to avoid me when I go to the MVP Summit at the end of this month!

Disclaimer: This did not come from Microsoft, I figured it out and am posting it for the benefit of the community. Microsoft has no responsibility or liability for this information. I tested it (more on that below), but you must try it out and make sure it works for you rather than assuming. Legally, I have to say this: Caveat emptor. There is no warranty expressed or implied. Habeas corpus. E pluribus unum. Quid pro quo. Veni vidi vici. Ad infinitum. Cogito ergo sum. That is all of the Latin-ish legalese I can come up with to cover my you-know-what. (I have more sympathy for Krzysztof now.)

I tested the package as the prerequisite for a ClickOnce application with both 32-bit and 64-bit Windows 7. I tested it with no SQLExpress installed (i.e. new installation) and with SQLExpress 2008 installed (to test that it updates it to R2). I did not test it with a setup & deployment package, but I’m certain it will work. Maybe one of you will try it out and let me know. I did not try all of the combinations listed above for Microsoft testing, although I did eat some olives out of a jar while I was testing it.

Enough talk, show me the goods

Here are the instructions on creating your own SQLServer 2008 R2 prerequisite package. You must have a SQLServer 2008 Prerequisite package in order to do this. If you don’t have one, and you don’t have VS2010, install the Express version (it’s free). I think you can also install the Windows 7 SDK and it will provide those prerequisite packages (I’m guessing, since they show up under the Windows folder under SDKs).

I didn’t post the whole package because there is a program included in the bootstrapper package called SqlExpressChk.exe that you need, and it is not legal for me to distribute it. (I don’t think my Latin would withstand the scrutiny of a Microsoft lawyer.)

First, locate your current bootstrapper packages. On my 64-bit Windows 7 machine, they are under C:\Program Files (x86)\Microsoft SDKs\Windows\v7.0A\Bootstrapper\Packages. If you don’t have the \en\ folder, presumably you have one for a different language; just wing it and substitute that folder in the following instructions.

- Copy the folder called “SQLExpress2008” to a new folder called “SQLExpress2008R2”.

- Download the zip file with the product.xml and package.xml you need from here and unzip it.

- In the new \SQLExpress2008R2\ folder, replace the product.xml file with my version.

- In the \SQLExpress2008R2\en\ folder, replace the package.xml file with my version.

- Delete the two SQLExpr*.exe files in the \SQLExpress2008R2\en\ folder.

- Download the 32-bit install package from here and put it in the \SQLExpress2008R2\en\ folder.

- Download the 64-bit install package from here and put it in the \SQLExpress2008R2\en\ folder.

- Close and re-open Visual Studio. You should be able to go to the Publish tab of your application and click on the Prerequisites button and see “SQLExpress2008R2” in the list.

Do NOT remove the SqlExpressChk.exe file from the \SQLExpress2008R2\ folder, or the eula.rtf from the \SQLExpress2008R2\en\ folder.
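The folder steps above can also be scripted. Here is a minimal Python sketch that assumes you have already downloaded the replacement product.xml and package.xml and the two R2 installers. The function name `make_r2_package` and every file name used when calling it are hypothetical. The sketch only automates the file shuffling, so you still have to close and reopen Visual Studio yourself. Writing under Program Files usually also needs an elevated prompt.

```python
import os
import shutil


def make_r2_package(packages_dir, product_xml, package_xml, installers, lang="en"):
    """Sketch of the manual steps: clone SQLExpress2008 into SQLExpress2008R2."""
    src = os.path.join(packages_dir, "SQLExpress2008")
    dst = os.path.join(packages_dir, "SQLExpress2008R2")
    shutil.copytree(src, dst)  # Raises if SQLExpress2008R2 already exists.

    # Replace the manifests with the R2 versions.
    shutil.copyfile(product_xml, os.path.join(dst, "product.xml"))
    lang_dir = os.path.join(dst, lang)
    shutil.copyfile(package_xml, os.path.join(lang_dir, "package.xml"))

    # Delete the old SQLExpr*.exe installers (case-insensitive match).
    for name in os.listdir(lang_dir):
        lower = name.lower()
        if lower.startswith("sqlexpr") and lower.endswith(".exe"):
            os.remove(os.path.join(lang_dir, name))

    # Add the downloaded 32-bit and 64-bit R2 installers.
    for installer in installers:
        shutil.copy(installer, lang_dir)

    # These must survive: SqlExpressChk.exe and the localized eula.rtf.
    for required in (os.path.join(dst, "SqlExpressChk.exe"),
                     os.path.join(lang_dir, "eula.rtf")):
        if not os.path.isfile(required):
            raise FileNotFoundError(required)
    return dst
```

The final check fails loudly if either file that must not be removed is missing, instead of leaving a package that Visual Studio lists but cannot install.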

If you’re using ClickOnce deployment, don’t forget that it does not install updates to the prerequisites automatically – it only updates the ClickOnce application. You will either have to have your customers install the prerequisite before they upgrade the ClickOnce application (just ask them to run the setup.exe again), or programmatically uninstall and reinstall the application for them, including the new prerequisite. (The code for that is in this MSDN article.)

Robin is Dir. of Engineering at GoldMail.com and a Microsoft MVP.

The Microsoft TechNet Wiki has added a SQL Azure Survival Guide article with links to a variety of SQL Azure-related content:

Developer Centers

Visit these sites for introductory information, technical overviews, and resources.

Documentation

Visit these sites to learn what SQL Azure is in detail.

- SQL Azure Documentation on MSDN

- Getting Started with SQL Azure

- An introduction article for Developers

- SQL Azure Frequently Asked Questions

- SQL Azure Data Sync Overview

SQL Azure Code Examples

Take a look at these to learn how to program with SQL Azure.

Blogs

These are blogs by members of the SQL Azure community.

- OakLeaf Systems Blog [Emphasis added.]

Videos

Watch these videos to learn more about SQL Azure and Windows Azure. They are quick and useful for beginners.

- What is SQL Azure

- TechEd 2010: What is new in SQL Azure

- SQL Azure Database: Present and Future

- The Future of Database Development with SQL Azure

- Building Web Applications with SQL Azure

- What is Windows Azure

- The Future is Windows Azure

Accounts and Pricing

You have decided to try SQL Azure. Now, it is time to review the pricing policy. Visit these web sites to learn more about the subscription and billing options.

- Azure Offers

- Microsoft Online Services Customer Portal

- Accounts and Billing in SQL Azure

- Account Setup

Connect

Here are ways to connect with the SQL Azure community and the Azure teams.

Other Resources

- Windows Azure Platform

- SQL Azure Migration Wizard at codeplex

- SQL Azure Developer Portal (You must sign in with your Windows Live ID to see all the projects you have created or for which you have been designated as a Service Administrator by your Account Administrator.)

See Mark Kromer (@mssqldude) ventured Onward with SQL Server Private Cloud, Virtualization, Optimization on 2/25/2011 in the Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds section below.


Marketplace DataMarket and OData

No significant articles today.


Windows Azure AppFabric: Access Control and Service Bus

Vittorio Bertocci (@vibronet) announced February 2011 Updates to the Identity Training Kit & Course: new AppFabric Portal, Windows 7 SP1 in a 2/25/2011 post:

As promised a few weeks ago, here is the update to the identity developer training kit and the identity developer training course on MSDN.

We revved the instructions for the two HOLs about the ACS Labs release, refactoring instructions to match the look & feel of the newest AppFabric portal.

As I am sure you heard, two days ago we released the first service pack for Windows 7; since we were at it, we updated the setup scripts for all HOLs to work with Windows 7 SP1 as well. Happy claims-crunching!

The Windows Azure AppFabric Team reported Windows Azure AppFabric CTP- Scheduled Maintenance Notification (February 28, 2011) on 2/25/2011:

The next maintenance to the Windows Azure AppFabric LABS environment is scheduled for February 28, 2011 (Monday). Users will have NO access to the AppFabric LABS portal and services during the scheduled maintenance down time.

We apologize in advance for any inconvenience.

When:

- START: February 28, 2011, 10 am PST

- END: February 28, 2011, 4 pm PST
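For readers outside the Pacific time zone, here is a small Python sketch that converts the window to UTC and checks whether a given moment falls inside it. It assumes PST is UTC-8. Feb 28, 2011 falls before the March 13, 2011 switch to daylight time, so PDT does not apply. `in_maintenance_window` is a hypothetical helper.

```python
from datetime import datetime, timedelta, timezone

# PST is UTC-8; Feb 28, 2011 is before the 2011 switch to PDT (March 13).
PST = timezone(timedelta(hours=-8), "PST")

START = datetime(2011, 2, 28, 10, 0, tzinfo=PST)
END = datetime(2011, 2, 28, 16, 0, tzinfo=PST)


def in_maintenance_window(moment: datetime) -> bool:
    """Return True if a timezone-aware datetime falls inside the LABS outage."""
    return START <= moment < END


print(START.astimezone(timezone.utc))  # 2011-02-28 18:00:00+00:00
print(END.astimezone(timezone.utc))    # 2011-03-01 00:00:00+00:00
print(END - START)                     # 6:00:00
```

In UTC, the six-hour outage runs from 18:00 on February 28 to midnight at the start of March 1.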

Impact Alert:

- The AppFabric LABS environment (Service Bus, Access Control, Caching, and Portal) will be unavailable during this period. Additional impacts are described below.

Action Required:

- None

It’s probably safe to assume that the AppFabric LABS environment will have new content by Monday afternoon.

The Microsoft Download Center offered Single Sign-On from Active Directory to a Windows Azure Application Whitepaper on 12/20/2010 (missed when posted):

Overview

This paper contains step-by-step instructions for using Windows® Identity Foundation, Windows Azure, and Active Directory Federation Services (AD FS) 2.0 for achieving SSO across web applications that are deployed both on premises and in the cloud. Previous knowledge of these products is not required for completing the proof of concept (POC) configuration. This document is meant to be an introductory document, and it ties together examples from each component into a single, end-to-end example.


Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

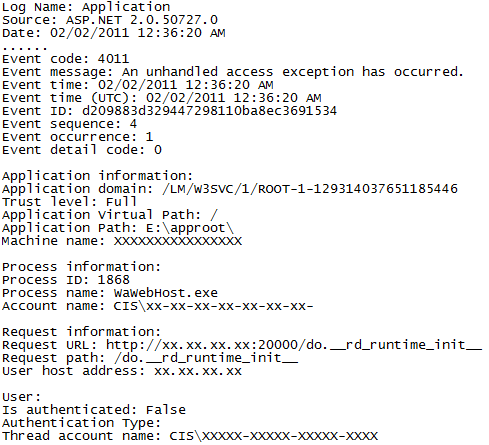

Avkash Chauhan explained the System.UnauthorizedAccessException with ASP.NET Web Role in Windows Azure in a 2/25/2011 post:

After working on a Windows Azure issue in which the ASP.NET Web Role was stuck cycling between "Busy" and "Starting", I decided to write up the details to save someone else some time.

After I logged in to the Windows Azure VM and checked the Application event log, I found the following two errors:

Error #1:

Error #2:

After a little more debugging, I found that the ASP.NET Web Role kept crashing because the code was trying to write to a file named "StreamLog.txt" in the RoleRoot folder:

- Exception type: System.UnauthorizedAccessException

- Message: Access to the path 'E:\approot\StreamLog.txt' is denied.

The Web Role doesn't have write access to the RoleRoot folder. If you need to write files on a Windows Azure VM, the solution is to create a local storage resource and write to it.
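In a .NET role, you would normally declare a `<LocalStorage>` element in ServiceDefinition.csdef and get its folder from `RoleEnvironment.GetLocalResource(name).RootPath`. The Python sketch below shows only the pattern: build the log path under that local storage root instead of under the application root. The function names are hypothetical. The fallback to the system temp folder when no root is supplied is an illustrative choice, not Azure guidance.

```python
import os
import tempfile
from typing import Optional


def writable_log_path(local_storage_root: Optional[str],
                      filename: str = "StreamLog.txt") -> str:
    """Build a log path under a local storage folder rather than the app root.

    local_storage_root is assumed to be the RootPath of a declared local
    storage resource; if it is None, this sketch falls back to the temp folder.
    """
    root = local_storage_root or tempfile.gettempdir()
    os.makedirs(root, exist_ok=True)
    return os.path.join(root, filename)


def append_log(path: str, line: str) -> None:
    # Append mode creates the file on the first write.
    with open(path, "a", encoding="utf-8") as log:
        log.write(line + "\n")
```

With this approach, the original E:\approot\StreamLog.txt write would instead go to a folder the role is allowed to modify.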

Avkash Chauhan explained a WebRole with WCF Service got in "Busy" state after adding StandardEndpoint in web.config problem in a 2/24/2011 post:

I recently worked on an incident in which the Windows Azure application had one web role and one WCF role. Initially, the basic application, with no specific changes, worked well in the compute emulator and on Windows Azure. After we added the following configuration to web.config, the application got stuck in “Busy” on Azure:

After a little more investigation inside the Windows Azure VM, I found that the cause was that the cloud VM's machine.config was missing the following section:

After I added the above section to machine.config, the role started without any further problems.
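The exact configuration from this post isn't reproduced here. The Python sketch below assumes the mismatch was a `standardEndpoints` element used under `<system.serviceModel>` in web.config without a matching `<section>` declaration in machine.config's `system.serviceModel` section group. It compares the two files and reports what is undeclared. The helper names are hypothetical, and the sketch ignores any `<configSections>` that web.config declares itself.

```python
import xml.etree.ElementTree as ET
from typing import Set


def declared_sections(machine_config: str, group: str = "system.serviceModel") -> Set[str]:
    """Names of <section> elements declared inside the given <sectionGroup>."""
    root = ET.fromstring(machine_config)
    names = set()
    for section_group in root.iter("sectionGroup"):
        if section_group.get("name") == group:
            names.update(s.get("name") for s in section_group.findall("section"))
    return names


def undeclared_sections(machine_config: str, web_config: str,
                        group: str = "system.serviceModel") -> Set[str]:
    """Child elements of <group> in web.config that machine.config never declares."""
    declared = declared_sections(machine_config, group)
    web_root = ET.fromstring(web_config)
    parent = next((child for child in web_root if child.tag == group), None)
    used = {child.tag for child in parent} if parent is not None else set()
    return used - declared
```

Running a check like this against the VM's machine.config before deploying could surface the problem without waiting for the role to hang in "Busy".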


Live Windows Azure Apps, APIs, Tools and Test Harnesses

John Moore (@john_chilmark) expressed doubts about the athenahealth-Microsoft partnership but considered the Microsoft-Dell announcement quite significant in his HIMSS’11: Setting Expectations post of 2/25/2011 to the Chilmark Research blog:

Over 1,000 exhibitors, some 30,000+ attendees, and I come away from HIMSS, again, thinking: is this all there is? Where is the innovation that the Obama administration, i.e., Sec. Sebelius and Dr. Blumenthal, both touted in their less than inspiring keynotes on Wednesday morning? Maybe I had my blinders on, maybe I was looking in the wrong places, but honestly, outside of the expected “we now have an iPad app for that” type of innovation, where nearly every EHR vendor has an iPad app for its EHR or will be releasing one this year, I just didn’t see anything that really caught my attention. But then again, looking over my posts from previous HIMSS conferences (this was my fourth), maybe my expectations need a serious reset, and it would be wise of me to read this post next year before I get on the plane to Las Vegas and HIMSS’12.

Prior to HIMSS I participated in a webinar put on by mobihealthnews (BTW, Brian at mobi has a good article on some of those mobile apps being rolled out at HIMSS this year). My role in this webinar was to give an overview of what one might expect at HIMSS’11. Having weathered the last two HIMSS and the major hype in ’09 about Meaningful Use and ’10 when HIEs were all the rage, this year I predicted that the big hype would be around ACOs. Much to my surprise such was not the case.

The reason was quite simple and twofold.

First, healthcare CIOs and their staff are going through numerous contortions to get their IT systems in order to meet Stage One Meaningful Use (MU) requirements. Looking ahead, their focus is naturally myopic: What do I need to do to meet Stage Two and finally Stage Three MU requirements, requirements that have yet to be published? Then there is this little transition to ICD-10 that some pundits claim is the HIT sector’s own Y2K nightmare (not sure if that means the hype and fear are far greater than reality or what). Either way, CIOs are having a tough enough time just keeping up with these demands and filling their ranks with knowledgeable staff (one CIO told me he has 53 open positions he’s trying to fill) to even begin thinking about ACOs.

Secondly, there are the vendors who today are not completely sure of what exactly healthcare organizations (HCOs) will need to succeed under the new ACO model of care and bundled payments. In countless meetings I had over the course of my three days at HIMSS I did not meet one vendor that had a clear picture of what they intended to offer the market to help HCOs become successful ACOs. There was unanimous agreement among the vendors I met with that analytics/BI would play a pivotal role, but what those analytics would look like, what types of reports would be produced and for whom, were less than clear. So it looks like we may have to wait another year before the ACO banter begins in earnest at HIMSS.

Some Miscellaneous HIMSS Snippets:

Much to the chagrin of virtually every EHR vendor at HIMSS (still far too many and I just can’t even begin to figure out how they all stay in business) Chuck Friedman of ONC announced in his presentation on Sunday that they are looking into usability testing of EHRs as part of the certification process. Spoke to someone from NIST who told me this is a very serious consideration and they are putting in place the necessary pieces to make it happen.

Defense contractor and beltway bandit of NHIN CONNECT fame, Harris Corp. acquired HIE/provider portal vendor Carefx (Carefx was profiled in our recent HIE Market Report) from Carlyle Grp for a relatively modest $155M. I say modest as this was some 2x sales and far less than the spectacular valuations that Axolotl and Medicity received. Could this be a reset of expectations for those other HIE vendors looking to be acquired? Reason for acquisition is likely two-fold: Carefx has a good presence in DoD and this may help Harris land some potentially very lucrative contracts as the DoD and VA look to bring their systems together. Secondly, for some bizarre, and likely highly political reason, Harris won the Florida State HIE contract and now has to go out and pull the pieces together to actually deliver a solution, which frankly they don’t have but Carefx will help them get there.

Kathleen Sebelius needs a new speech writer. David Blumenthal needs more coffee before he hits the stage.

The folks at HIStalk once again provided excellent, albeit slightly self-congratulatory coverage of HIMSS. They also threw one of the better parties that I attended. Thank you HIStalk team.

Had several people, mostly investor types, contact me for my opinion of the athenahealth-Microsoft partnership that was announced. Do not see much in the way of opportunities for either party in the near term. It will take a lot of work for anything truly meaningful and profitable to come from this relationship. That being said, did think that the Microsoft-Dell announcement was quite significant and should be watched closely, especially if Microsoft can truly get Amalga down to a productized, easily deployable version for community hospitals that Dell intends to target. [Emphasis added.]

HIMSS and most vendors are still giving lip-service to patient engagement. Rather than seeing a slow rise in discussing how to engage consumers via HIT, this issue is something that few vendors bother mentioning and when they do, it is still with the old message of how to market to consumers with these types of tools rather than engaging consumers/patients as part of the care team. Hell, not even part of the care team, but the damn center of the care team. Not sure when these vendors will get religion on this issue. Maybe they are just following the lead of their customers who have yet to fully realize that in the future, a future where payment will be bundled, that actively engaging consumers in managing their health will be critical. While I have not completely given up hope on this industry to address what is arguably the most challenging issue facing healthcare’s future, I do chide them for not having more vision and frankly guts to take a leadership role and help guide their customers forward.

Doug Finke (@dfinke) provided links to posts explaining How PowerShell can Automate Deployment to Multiple Windows Azure Environments on 2/25/2011:

The two blog posts below provide insight into how to create an automated delivery pipeline to the Cloud, Microsoft Azure. I believe the same fundamentals can and ought to be applied to the software development life cycle whether you have a hybrid or Enterprise model.

The first post covers the updated SDK and PowerShell cmdlets for MSBuild and Azure.

The second is a walk through showing how to automatically build and deploy your Windows Azure applications to multiple accounts and use configuration transforms to modify your Service Definition and Service Configuration files.

- Scott Densmore - Another Update for the Deployment scripts for Windows Azure [see below.]

- Tom Hollander – Using MSBuild to deploy to multiple Windows Azure environments
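Tom Hollander's post uses MSBuild configuration transforms. As a swapped-in illustration of the same idea, and not his method, the Python sketch below takes a base ServiceConfiguration (.cscfg) file and applies per-environment overrides for setting values and instance counts. The role and setting names are hypothetical. The namespace is the standard 2008/10 ServiceConfiguration schema namespace.

```python
import xml.etree.ElementTree as ET
from typing import Dict, Optional, Tuple

NS = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration"
ET.register_namespace("", NS)  # Keep the default namespace on output.


def transform_cscfg(cscfg_xml: str,
                    settings: Dict[Tuple[str, str], str],
                    instances: Optional[Dict[str, int]] = None) -> str:
    """Return a copy of a .cscfg with per-environment overrides applied.

    settings maps (role name, setting name) to a new value;
    instances maps role name to an instance count.
    """
    root = ET.fromstring(cscfg_xml)
    instances = instances or {}
    for role in root.findall(f"{{{NS}}}Role"):
        role_name = role.get("name")
        count = instances.get(role_name)
        node = role.find(f"{{{NS}}}Instances")
        if count is not None and node is not None:
            node.set("count", str(count))
        for setting in role.iter(f"{{{NS}}}Setting"):
            key = (role_name, setting.get("name"))
            if key in settings:
                setting.set("value", settings[key])
    return ET.tostring(root, encoding="unicode")
```

You would keep one override dictionary per target (test, staging, production) and generate each environment's .cscfg from the same base file during the build.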

Doug is a Microsoft Most Valuable Professional (MVP) for PowerShell.

Scott Densmore posted Another Update for the Deployment scripts for Windows Azure on 2/24/2011:

Tom Hollander called me the other day looking for some advice on deployment scripts for Windows Azure. Given that I have not got around to updating these for the new SDK and cmdlets, I decided it was about time. Tom did beat me to the punch and put out his own post (which is very good), yet I figured I would update the scripts and push them out to github. Now anytime you want, you can fork, send me pull requests etc.

The only major changes, besides the SDK and cmdlet updates, were the ideas incorporated from Tom's post. I now pass all the info to the PowerShell scripts from the msbuild file. I also fixed a problem in the deploy PowerShell script: if your hosted service and storage account had different names, the deployment failed. You can read more about it here.
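The fix comes down to treating the hosted service name and the storage account name as two separate parameters, rather than deriving one from the other. Below is a small Python sketch of that idea. `AzureEnvironment` and all of the names in `ENVIRONMENTS` are hypothetical. The blob URL follows the standard `https://<account>.blob.core.windows.net/<container>/<blob>` form, and hosted services live under cloudapp.net.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AzureEnvironment:
    """Hypothetical deployment target whose service and storage names differ."""
    name: str
    hosted_service: str
    storage_account: str

    def package_url(self, container: str, package: str) -> str:
        # The package is uploaded to the storage account, not the hosted service.
        return (f"https://{self.storage_account}.blob.core.windows.net/"
                f"{container}/{package}")

    def service_url(self) -> str:
        return f"http://{self.hosted_service}.cloudapp.net/"


ENVIRONMENTS = {
    "test": AzureEnvironment("test", "myapp-test", "myappteststore"),
    "prod": AzureEnvironment("prod", "myapp", "myappprodstore"),
}
```

Because each environment carries both names explicitly, a script never has to assume that they match.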

The Windows Azure Team announced New Channel 9 Video Interview with InterGrid Discusses Building on Windows Azure in a 2/25/2011 post:

If you're looking for a developer perspective about building on Windows Azure, then you should check out this new Channel 9 video interview with InterGrid's Senior Developer, Peter Soukalopoulos. In it, he describes how - and why - InterGrid uses the Windows Azure platform.

InterGrid uses Windows Azure to provide its customers with on-demand bursts of computing power for complex and intensive computing processes. A computing process that would typically take days to complete before the cloud can now be completed in a matter of minutes using Windows Azure. The common usage scenarios for InterGrid's solution entail the rendering of visually rich imagery such as automobile prototypes, genetic DNA strand sequencing or animated 3-D movie frames.

To help customers take advantage of this service, the company has created an API called GreenButton to embed in third party software applications. GreenButton uses a patented Job Prediction algorithm to present the user with time and cost alternatives for running their job. The GreenButton SDK enables ISVs and developers to embed the GreenButton API in their own applications in order to provide fast and easy access to cloud computing resources for their customers. You can learn more about GreenButton here.
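GreenButton's patented Job Prediction algorithm isn't described here. The Python sketch below is only a naive stand-in that shows the kind of time/cost trade-off such a tool presents. It assumes perfectly parallel work and billing per started node-hour. The 48 core-hour job and the 12-cents-per-node-hour price are hypothetical inputs, and prices are kept in integer cents to avoid floating-point rounding.

```python
import math
from typing import List, Tuple


def job_options(core_hours: float, node_counts: List[int],
                cents_per_node_hour: int,
                cores_per_node: int = 1) -> List[Tuple[int, float, int]]:
    """Naive time/cost table of (nodes, wall-clock hours, cost in cents).

    Assumes the work divides perfectly across nodes and that every node is
    billed for each started hour.
    """
    options = []
    for nodes in node_counts:
        hours = core_hours / (nodes * cores_per_node)
        billed_node_hours = math.ceil(hours) * nodes
        options.append((nodes, hours, billed_node_hours * cents_per_node_hour))
    return options


for nodes, hours, cents in job_options(48, [1, 8, 48, 100], cents_per_node_hour=12):
    print(f"{nodes:>3} nodes: {hours:6.2f} h, ${cents / 100:.2f}")
```

Under these assumptions, 1, 8, or 48 nodes all cost $5.76 and only the wait changes. The 100-node option finishes in under half an hour but costs $12.00, because each node is billed for a full hour.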

The New Zealand ISV Blog team posted a report about GreenButton in Intergrid Case Study–GreenButton of 9/30/2010.

Digital Signage Expo reported Ayuda Announces Media ERP Platform Shift to Microsoft’s Azure Cloud at DSE® 2011 on 2/25/2011:

Digital out-of-home (DOOH) solutions provider Ayuda Media Systems has announced at Digital Signage Expo® 2011 that it is moving its Enterprise Resource Planning (ERP) service to Microsoft’s Windows Azure cloud computing platform.

The move to Azure allows Ayuda and its global OOH and DOOH media clients to make on-demand changes in computer processing and data storage requirements using simple, web-based controls.

“We’ve run our own cloud service up until now and it has served us well, but moving to Azure gives us an incredible amount of flexibility in how we work with our customer,” said Andreas Soupliotis, president and CEO of Ayuda. “If we have a client contact us and say, ‘We need more CPU power,’ we can literally go into the Azure control console online and add more horsepower with just a couple of clicks.”

Azure gives Montreal-based Ayuda instant scalability to meet any client demands for short-term, large-volume computing or batch processing. Microsoft’s global server farm footprint also markedly strengthens Ayuda’s service level and disaster recovery position. “Prospective customers challenge us on issues like redundancy and recovery processes, and while we’ve always had a good scheme in place, Azure makes us bulletproof,” explained Soupliotis.

Ayuda has redesigned and fully tested its applications to call into and interact with the Azure platform.

Ayuda demonstrated its Splash™ ERP platform at DSE® 2011 in Las Vegas. Splash™ is a comprehensive media management system for DOOH networks that includes asset management, granular scheduling and targeting, dynamic and static loop templates, peer-to-peer content distribution and network monitoring. Splash™ ties in seamlessly with Ayuda’s BMS software suite, which includes modules for billing and invoicing, leasing, mapping, proof of performance, and reporting.

Ayuda also recently announced OpenSplash™, a free, multi-platform open source media player that can be driven by any digital signage/DOOH content management and scheduling system.

Jim Zimmerman (@jimzim?) updated the Facebook C# SDK release 5.0.3 on CodePlex for running Facebook IFrame apps on 2/25/2011:

Project Description

The Facebook C# SDK helps .Net developers build web, desktop, Silverlight, and Windows Phone 7 applications that integrate with Facebook.

Like us on Facebook at our official page!

Help and Support

Use stackoverflow for help and support. We answer questions there regularly. Use the tags 'facebook-c#-sdk' and 'facebook' plus any other tags that are relevant. If you have a feature request or bug, create an issue.

Get a FREE Windows Azure Trial Account

- Try out a free Windows Azure account for 30 days with your Facebook app.

- Go here, select "United States" and enter promocode FBPASS

Features

- NuGet Packages Available (Facebook, FacebookWeb, FacebookWebMvc)

- Compatible with all Graph API and REST API Calls

- Supports all forms of Facebook authentication: Cookies, OAuth 2.0, Signed Requests

- Sample applications to get started quickly

- Client authentication tool to get test access tokens
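For context on the "Signed Requests" feature, Facebook posts a `signed_request` parameter of the form `<signature>.<payload>`. Both parts are base64url-encoded without padding, and the signature is an HMAC-SHA256 of the encoded payload keyed with the app secret. The Python sketch below shows that verification scheme only. It is not the C# SDK's API, and `parse_signed_request` is a hypothetical name.

```python
import base64
import hashlib
import hmac
import json


def _b64url_decode(segment: str) -> bytes:
    # Facebook strips "=" padding; restore it before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def parse_signed_request(signed_request: str, app_secret: str) -> dict:
    """Verify and decode a signed_request; raise ValueError if it is invalid."""
    encoded_sig, payload = signed_request.split(".", 1)
    data = json.loads(_b64url_decode(payload))
    if str(data.get("algorithm", "")).upper() != "HMAC-SHA256":
        raise ValueError("unexpected signature algorithm")
    expected = hmac.new(app_secret.encode(), payload.encode(), hashlib.sha256).digest()
    # Constant-time comparison avoids leaking how many bytes matched.
    if not hmac.compare_digest(expected, _b64url_decode(encoded_sig)):
        raise ValueError("signature mismatch")
    return data
```

The signature is checked against the encoded payload string exactly as received, so re-serializing the JSON before hashing would break verification.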


Visual Studio LightSwitch


Windows Azure Infrastructure

Erik Von Ommeren and Martin van der Berg published an electronic copy of their latest book, Seize the Cloud – A Manager’s Guide to Success with Cloud Computing:

Seize the Cloud will serve as your guide through the business and enterprise architecture aspects of Cloud Computing. In a down-to-earth way, Cloud is woven into the reality of running an organization, managing IT and creating value with technology. This book has a positive note without turning evangelical or overly optimistic: it does not shy away from the barriers that could stand in the way of adoption. As a final dose of reality, eleven cases can be found in between the chapters in which leading organizations share insights gained from their experience with Cloud.

Download the book (pdf)

Download ePub (electronic book)

ISBN: 978-90-75414-32-5

Thanks to Brent Stineman (@brentcodemonkey) for the heads-up in his Cloud Computing Digest for February 28th, 2011.

The Windows Azure Dashboard reported [Windows Azure Compute] [North Central US] [Red] Windows Azure DNS issues on 2/24/2011:

Feb 24 2011 10:45PM We are experiencing an issue with Windows Azure. We are actively investigating this issue and working to resolve it as soon as possible. Further updates will be published to keep you apprised of the situation. We apologize for any inconvenience this causes our customers.

- Feb 24 2011 11:10PM DNS lookups were failing in the cloudapp.net zone. Full service functionality has been restored in all regions. We apologize for any inconvenience this caused our customers.

The Windows Azure Dashboard reported [Windows Azure Compute] [South Central US] [Red] Windows Azure DNS issues on 2/24/2011:

Feb 24 2011 10:45PM We are experiencing an issue with Windows Azure. We are actively investigating this issue and working to resolve it as soon as possible. Further updates will be published to keep you apprised of the situation. We apologize for any inconvenience this causes our customers.

- Feb 24 2011 11:10PM DNS lookups were failing in the cloudapp.net zone. Full service functionality has been restored in all regions. We apologize for any inconvenience this caused our customers.

Not good when both U.S. datacenters have simultaneous DNS outages.
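Client code can't fix a platform DNS outage like this one, which lasted about 25 minutes (10:45 PM to 11:10 PM). It can, however, ride out short resolution blips. Below is a minimal Python sketch of retrying `socket.getaddrinfo` with exponential backoff. The resolver and sleep functions are injectable so the logic can be tested without network access. `resolve_with_retry` and the cloudapp.net host names in the tests are hypothetical.

```python
import socket
import time
from typing import Callable


def resolve_with_retry(host: str, port: int = 80, attempts: int = 4,
                       base_delay: float = 0.5,
                       resolver: Callable = socket.getaddrinfo,
                       sleep: Callable[[float], None] = time.sleep):
    """Resolve host, retrying transient DNS failures with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for attempt in range(attempts):
        try:
            return resolver(host, port)
        except socket.gaierror as exc:
            last_error = exc
            if attempt < attempts - 1:
                sleep(base_delay * (2 ** attempt))  # 0.5 s, 1 s, 2 s, ...
    raise last_error
```

With the defaults, the function gives up after about 3.5 seconds of waiting, which is meant for momentary failures and not for an outage of this length.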

The Microsoft TechNet Wiki has added a Windows Azure Survival Guide article with links to a variety of Azure-related content:

Microsoft Content

Virtual Lab

Blogs

- Windows Azure Team Blog

- SQL Azure Team Blog

- Windows Azure Storage Team Blog

- Windows Azure AppFabric Team Blog

- Windows Azure Support Team Blog

- Windows Azure MarketPlace DataMarket Blog

- Smarx Blog

- Jim Nakashima's Blog - Cloudy in Seattle

- Matias Woloski’s Blog

- Zane Adam's Blog

- OakLeaf Systems Blog

Forums

- Windows Azure Platform forums

- Scenario based forums to discuss how to use these as well as other related Windows Azure technologies:

- Windows Azure

- SQL Azure

- Windows Azure Storage

- Windows Azure AppFabric

- Windows Azure MarketPlace forums

- DataMarket

Videos

- How Do I: What is Azure?

- What is the Windows Azure Platform?

- Windows Azure Platform | Channel 9

- Deploying Applications on Windows Azure | Ch. 9

- What is Microsoft Online Services?

- Cloud Trust at 10,000 feet

- TechNet Radio: Microsoft Cloud Services: Windows Azure in K-12 Education

- TechNet Radio: Windows Azure from a Non-Microsoft Perspective

- TechNet Radio: Business Intelligence in the Cloud

- TechNet Radio: Data Storage Solutions in the Cloud

- TechNet Radio: SQL Azure: Growing Opportunities for Data in the Cloud

- Windows Azure MMC v2

- Cloud Cover Episode 14 - Diagnostics

- New! Security Talk: Windows Azure Security: A Peek Under the Hood (Level 100)

- New! Security Talk: Security Best Practices for Design and Deployment on Windows Azure (Level 200)

- New! Security Talk: Delivering and Implementing a Secure Cloud Infrastructure (Level 200)

New! Cloud Computing & Windows Azure Learning "Snacks"

“What is Cloud Computing?” “How do I get started on the Azure platform?” Microsoft has launched a series of Cloud/Azure Learning Snacks to help satisfy your hunger for more knowledge. Time-strapped? You can learn something new in less than five minutes! Learn more about Cloud Computing and Azure; try a “snack” today at:

The article continues with a potpourri of “Other Resources.”

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

Mark Kromer (@mssqldude) ventured Onward with SQL Server Private Cloud, Virtualization, Optimization on 2/25/2011:

What to call it? Personally, I don’t care for calling virtualized data centers “Private Cloud”. I prefer “Data Center Optimization”. And when you go beyond simply virtualizing SQL Server instances into automation, provisioning templates, self-servicing, billing, etc. you’ve definitely implemented a more optimized, streamlined data center.

This is what is being called a “Private Cloud”. But that is a fluffy term that does not say anything about the optimization of the data center or of the database.

Anyway, I continued this conversation through a series of responses to the online editor at SearchSQLServer.com here [in an interview format.]

<Return to section navigation list>

Cloud Security and Governance

Brian Prince claimed “A recap of the RSA Conference touches everything from cloud security to cyber-war” in a preface to his RSA Conference: Security Issues from the Cloud to Advanced Persistent Threats post to eWeek’s IT Security & Network News blog:

The 20th annual RSA Conference in San Francisco came to a close Feb. 18, ending a week of product announcements, keynotes and educational sessions that produced their share of news. This year's hot topics: cloud computing and cyber-war.

The conference included a new session track about cloud computing, and the topic was the subject of the keynote by Art Coviello, executive vice president at EMC and executive chairman of the company's RSA security division. Virtualization and cloud computing have the power to change the evolution of security dramatically in the years to come, he said.

"At this point, the IT industry believes in the potential of virtualization and cloud computing," Coviello said. "IT organizations are transforming their infrastructures. ... But in any of these transformations, the goal is always the same for security—getting the right information to the right people over a trusted infrastructure in a system that can be governed and managed."

EMC's RSA security division kicked the week off by announcing the Cloud Trust Authority, a set of cloud-based services meant to facilitate secure and compliant relationships between organizations and cloud service providers by enabling visibility and control over identities and information. EMC also announced the new EMC Cloud Advisory Service with Cloud Optimizer.

In addition, the Cloud Security Alliance (CSA) held the CSA Summit Feb. 14, featuring keynotes from Salesforce.com Chairman and CEO Marc Benioff and U.S. Chief Information Officer Vivek Kundra.

But the cloud was just one of several items touched on during the conference. Cyber-war and efforts to protect critical infrastructure companies were also discussed repeatedly. In a panel conversation, former Department of Homeland Security Secretary Michael Chertoff, security guru Bruce Schneier, former National Security Agency Director John Michael McConnell and James Lewis, director and senior fellow of the Center for Strategic and International Studies' Technology and Public Policy Program, discussed the murkiness of cyber-warfare discussions.

"We had a Cold War that allowed us to build a deterrence policy and relationships with allies and so on, and we prevailed in that war," McConnell said. "But the idea is the nation debated the issue and made some policy decisions through its elected representatives, and we got to the right place. … I would like to think we are an informed society, [and] with the right debate, we can get to the right place, but if you look at our history, we wait for a catastrophic event."

Part of the solution is partnerships between the government and the private sector.

"One of the biggest issues you got—[and] unfortunately we haven't made enough progress—we need better coordination across the government agencies, and from the government agencies to the private sector," Symantec CEO Enrique Salem said. "I think we still work too much in silos inside the government [and] work too much in silos between the government and the private sector."

The purpose of such efforts is to target advanced persistent threats (APTs).

"Part of the problem of when you define [advanced persistent threats], it's not going to be like one single piece of software or platform; it's a whole methodology for how bad guys attack the system," Bret Hartman, CTO of EMC's RSA security division, told eWEEK.

"They're going to use every zero-day attack they can throw at you," he explained. "They are going to use insider attacks; they're going to use all kinds of things because they are motivated to take out whatever it is they want."

The answer, Hartman said, is a next-generation Security Operations Center (SOC) built on six core elements: risk planning; attack modeling; virtualized environments; automated, risk-based systems; self-learning, predictive analysis; and continual improvement through forensic analyses and community learning.

Preventing attacks also means building more secure applications. In a conversation with eWEEK, Brad Arkin, Adobe Systems' director of product security and privacy, discussed some of the ways Adobe has tried to improve its own development process, and offered advice for companies looking to do the same.

"The details of what you do with the product team are important, but if you can't convince the product team they should care about security, then they are not going to follow along with specifics," Arkin said. "So achieving that buy-in to me is one of the most critical steps."


<Return to section navigation list>

Cloud Computing Events

Bruce Kyle reminded developers on 2/24/2011 that Last Chance at TechEd Discount Ends Feb 28:

Save $200 on your Tech·Ed conference fee. Register by February 28.

The 19th annual Microsoft Tech·Ed North America conference provides developers and IT professionals the most comprehensive technical education across Microsoft's current and soon-to-be-released suite of products, solutions and services.

Our four-day event at the Georgia World Congress Center in Atlanta, Georgia offers hands-on learning, deep product exploration and opportunities to connect with industry and Microsoft experts one-to-one. If you are developing, deploying, managing, securing and mobilizing Microsoft solutions, Tech·Ed is the conference that will help you solve today's real-world IT challenges and prepare for tomorrow's innovations.

Session Catalog

For more details, see our session catalog.

Pre-Conference Seminars

Registration for the Pre-Conference Seminars, held on Sunday, May 15, at the Georgia World Congress Center, will be live this week. The day-long workshops are led by industry experts and focus on hot topics relevant to today’s technology. The additional investment is $400 for Tech·Ed attendees and $500 for non-attendees.

Submit Birds-of-a-Feather Session

The community-led Birds-of-a-Feather (BOF) sessions, organized and selected by volunteers from GITCA and INETA, are back for 2011. BOF sessions are open discussions on developer and IT topics of interest to attendees, so please encourage your customers and partners willing to lead a BOF discussion to submit a BOF abstract.

All abstracts must be received no later than March 15. Submitters will be notified no later than April 4 whether their session abstract was accepted. Please note that Microsoft employees are not eligible to submit topics but are encouraged to participate in BOF sessions.

See my Preliminary Session List for TechEd North America 2011’s Cloud Computing & Online Services Track post of 2/25/2011 for an early list of cloud-related sessions.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

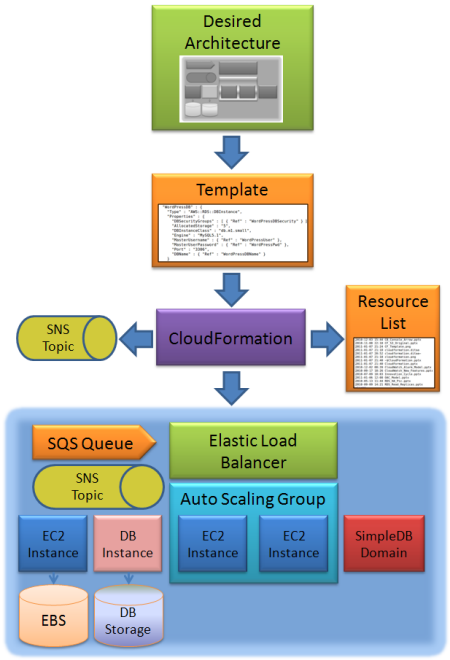

Ernest Mueller (@ernestmueller) asserted “Amazon implements Azure on Amazon” in his Amazon CloudFormation: Model Driven Automation For The Cloud post to The Agile Admin blog of 2/25/2011:

You may have heard about Amazon’s newest offering they announced today, CloudFormation. It’s the new hotness, but I see a lot of confusion in the Twitterverse about what it is and how it fits into the landscape of IaaS/PaaS/Elastic Beanstalk/etc. Read what Werner Vogels says about CloudFormation and its uses first, but then come back here! [See item below.]

Allow me to break it down for you and explain why this is such a huge leverage point for cloud developers.

What Has Come Before

Up till now on Amazon you could configure a single virtual image the way you wanted it, with an AMI. You could even kind of construct a scalable tier of similar systems using Auto Scaling, by defining Launch Configurations. But if you wanted to construct an entire multitier system, it was a lot harder. There are automated configuration management tools like Chef and Puppet out there, but their recipes/models tend to be oriented around getting a software loadout onto an existing system, not the actual system provisioning – in general they come from the older assumption that you have someone doing that on probably-physical systems using bcfg2 or Cobbler or Vagrant or something.

So what were you to do if you wanted to bring up a simple three tier system, with a Web tier, app server tier, and database tier? Either you had to set them up and start them manually, or you had to write code against the Amazon APIs to explicitly pull up what you wanted. Or you had to use a third party provisioning provider like RightScale or EngineYard that would let you define that kind of model in their Web consoles but not construct your own model programmatically and upload it. (I’d like my product functionality in my own source control and not your GUI, thanks.)

Now, recently Amazon launched Elastic Beanstalk, which is way over on the PaaS side of things, similar to Google App Engine. “Just upload your application and we’ll run it and scale it, you don’t have to worry about the plumbing.” Of course this sharply limits what you can do, and doesn’t address the question of “what if my overall system consists of more than just one Java app running in Beanstalk?”

If your goal is full model driven automation to achieve “infrastructure as code,” none of these solutions are entirely satisfactory. I understand CloudFormation deeply because we went down that same path and developed our own system model ourselves as a response!

I’ll also note that this is very similar to what Microsoft Azure does. Azure is a hybrid IaaS/PaaS solution – their marketing tries to say it’s more like Beanstalk or Google App Engine, but in reality it’s more like CloudFormation – you have an XML file that describes the different roles (tiers) in the system, defines what software should go on each, and lets you control the entire system as a unit.

So What Is CloudFormation?

Basically CloudFormation lets you model your Amazon cloud-based system in JSON and then provision and control it as a unit. So in our use case of a three tier system, you would model it up in their JSON markup and then CloudFormation would understand that the whole thing is a unit. See their sample template for a WordPress setup. (A mess more sample templates are here.)

Review the WordPress template; it lets you define the AMIs and instance types, what the security group and ELB setups should be, the RDS database back end, and feed in variables that’ll be used in the consuming software (like WordPress username/password in this case).

Once you have your template, you can tell Amazon to start your “stack” in the console! It’ll even let you hook it up to an SNS notification that’ll let you know when it’s done. You name the whole stack, so you can distinguish between your “dev” environment and your “prod” environment, for example, as opposed to the current state of the Amazon EC2 console where you get to see a big list of instance IDs – they added tagging that you can try to use for this, but it’s kinda wonky.

Why Do I Want This Again?

Because a system model lets you do a number of clever automation things.

Standard Definition

If you’ve been doing Amazon yourself, you’re used to there being a lot of stuff you have to do manually. From system build to system build, even you do it differently each time, and God forbid you have multiple techies working on the same Amazon system. The basic value proposition of “don’t do things manually” is huge. You configure the security groups ONCE and put them into the template, and then you’re not going to forget to open port 23 AGAIN next time you start a system. A core part of what DevOps is realizing as its value proposition is treating system configuration as code that you can source control, fix bugs in and have them stay fixed, etc.

And if you’ve been trying to automate your infrastructure with tools like Chef, Puppet, and ControlTier, you may have been frustrated in that they address single systems well, but do not really model “systems of systems” worth a damn. Via new cloud support in knife and stuff you can execute raw “start me a cloud server” commands but all that nice recipe stuff stops at the box level and doesn’t really extend up to provisioning and tracking parts of your system.

With the CloudFormation template, you have an actual asset that defines your overall system. This definition:

- Can be controlled in source control

- Can be reviewed by others

- Is authoritative, not documentation that could differ from the reality

- Can be automatically parsed/generated by your own tools (this is huge)
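Because the template is plain JSON, "parsed by your own tools" can be as simple as loading it with a standard JSON parser. The following is a minimal Python sketch. It assumes a template with a top-level `Resources` object whose entries each carry a `Type` field, as in the excerpts Jeff Barr quotes later in this post. The function name `summarize_resources` and the sample resource names are hypothetical.

```python
import json
from collections import defaultdict


def summarize_resources(template_text: str) -> dict[str, list[str]]:
    """Group a template's logical resource names by their AWS resource type."""
    template = json.loads(template_text)
    by_type: dict[str, list[str]] = defaultdict(list)
    for logical_name, resource in template.get("Resources", {}).items():
        by_type[resource["Type"]].append(logical_name)
    # Sort types and names so the summary is stable and diff-friendly in source control.
    return {rtype: sorted(names) for rtype, names in sorted(by_type.items())}


if __name__ == "__main__":
    sample = """
    {
      "Resources": {
        "WebELB": {"Type": "AWS::ElasticLoadBalancing::LoadBalancer"},
        "WebGroup": {"Type": "AWS::AutoScaling::AutoScalingGroup"},
        "AppGroup": {"Type": "AWS::AutoScaling::AutoScalingGroup"}
      }
    }
    """
    for rtype, names in summarize_resources(sample).items():
        print(rtype, names)
```

A report like this can run in a review or CI step, so a change to the system definition shows up as a readable diff.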

It’s also nicely transparent; when you go to the console and look at the stack it shows you the history of events, the template used to start it, the startup parameters it used… Moving away from the “mystery meat” style of system config.

Coordinated Control

With CloudFormation, you can start and stop an entire environment with one operation. You can say “this is the dev environment” and be able to control it as a unit. I assume at some point you’ll be able to visualize it as a unit; right now all the bits are still stashed in their own tabs (and I notice they don’t make any default use of their own tagging, which makes it annoying to pick out what parts are from that stack).

This is handy for not missing stuff on startup and teardown… A couple weeks ago I spent an hour deleting a couple hundred rogue EBSes we had left over after a load test.

And you get some status eventing – one of the most painful parts of trying to automate against Amazon is the whole “I started an instance, I guess I’ll sit around and poll and try to figure out when the damn thing has come up right.” In CloudFormation you get events that tell you when each part and then the whole are up and ready for use.
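Here is a small illustration of "each part and then the whole." The following Python sketch reduces a stream of stack events to a per-resource status plus an overall ready flag. It makes several assumptions. Events arrive in chronological order. Each one is a simplified record with a logical name, a resource type and a status string such as `CREATE_IN_PROGRESS` or `CREATE_COMPLETE` (the states the console shows later in this post). Events about the stack itself carry the type `AWS::CloudFormation::Stack`. The `StackEvent` record and the `readiness` function are hypothetical and do not reproduce the exact AWS event format.

```python
from dataclasses import dataclass


@dataclass
class StackEvent:
    # Simplified, hypothetical event record (not the exact AWS schema).
    logical_id: str
    resource_type: str
    status: str


STACK_TYPE = "AWS::CloudFormation::Stack"  # assumed type for stack-level events


def readiness(events: list[StackEvent]) -> tuple[dict[str, str], bool]:
    """Return the latest status per resource and whether the whole stack is ready.

    Events are assumed to be chronological, so later events for the same
    resource overwrite earlier ones.
    """
    latest: dict[str, str] = {}
    stack_ready = False
    for event in events:
        if event.resource_type == STACK_TYPE:
            stack_ready = event.status == "CREATE_COMPLETE"
        else:
            latest[event.logical_id] = event.status
    return latest, stack_ready
```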

What It Doesn’t Do

It’s not a config management tool like Chef or Puppet. Except for what you bake onto your AMI, it has zero software config capabilities or command dispatch capabilities like Rundeck or mcollective or Fabric, although it should be a good integration point with those tools.

It’s not a PaaS solution like Beanstalk or GAE; you use those when you just have an app you want to deploy to something that’ll run it. Now, it does erode some use cases – it makes a middle point between “run it all yourself and love the complexity” and “forget configurable system bits, just use PaaS.” It allows easy reusability, say having a systems guy develop the template and then a dev use it over and over again to host their app, but with more customization than the pure-play PaaSes provide.

It’s not quite like OVF, which is more fiddly and about virtually defining the guts of a single machine than defining a set of systems with roles and connections.

Competitive Analysis

It’s very similar to Microsoft Azure’s approach with their .cscfg and .csdef files which are an analogous XML model – you really could fairly call this feature “Amazon implements Azure on Amazon” (just as you could fairly call Elastic Beanstalk “Amazon implements Google App Engine on Amazon”.) In fact, the Azure Fabric has a lot more functionality than the primitive Amazon events in this first release. Of course, CloudFormation doesn’t just work on Windows, so that’s a pretty good width vs depth tradeoff. [Emphasis added.]

And it’s similar to something like a RightScale, and ideally will encourage them to let customers actually submit their own definition instead of the current clunky combo of ServerArrays and ServerTemplates (curl or Web console? Really? Why not a model like this?). RightScale must be in a tizzy right now, though really just integrating with this model should be easy enough.

Where To From Here?

As I alluded, we actually wrote our own tool like this internally called PIE that we’re looking to open source because we were feeling this whole problem space keenly. XML model of the whole system, Apache Zookeeper-based registry, kinda like CloudFormation and Azure. Does CloudFormation obsolete what we were doing? No – we built it because we wanted a model that could describe cloud systems on multiple clouds and even on premise systems. The Amazon model will only help you define Amazon bits, but if you are running cross-cloud or hybrid it is of limited value. And I’m sure model visualization tools will come, and a better registry/eventing system will come, but we’re way farther down that path at least at the moment. Also, the differentiation between “provisioning tools” that control and start systems like CloudFormation and bcfg2 and “configuration” tools that control and start software like Chef and Puppet (and some people even differentiate between those and “deploy” tools that control and start applications like Capistrano) is a false dichotomy. I’m all about the “toolchain” approach but at some point you need a toolbelt. This tool differentiation is one of the more harmful “Dev vs Ops” differentiations.

I hope that this move shows the value of system modeling and helps people understand we need an overarching model that can be used to define it all, not just “Amazon” vs “Azure” or “system packages” vs “developed applications” or “UNIX vs Windows…” True system automation will come from a UNIVERSAL model that can be used to reason about and program to your on premise systems, your Amazon systems, your Azure systems, your software, your apps, your data, your images and files…

Conclusion

You need to understand CloudFormation, because it is one of the most foundational, high-leverage changes AWS has come out with in some time. I don’t bother to blog about most of the cool new AWS features; they are cool and I enjoy them, but this is part of a more revolutionary change in the way systems are managed, the whole DevOps thing.

Werner Vogels (@werner) described Simplifying IT - Create Your Application with AWS CloudFormation in a 2/25/2011 post:

With the launch of AWS CloudFormation today another important step has been taken in making it easier for customers to deploy applications to the cloud. Often an application requires several infrastructure resources to be created, and AWS CloudFormation helps customers create and manage these collections of AWS resources in a simple and predictable way. Using declarative Templates, customers can create Stacks of resources, ensuring that all resources have been created in the right sequence and with the correct configuration.

Earlier this year I met with an ISV partner who transformed his on-premise ERP software into a software-as-a-service offering. They had taken the approach that they would not only be offering their software as a scalable multi-tenant product but also as a single-tenant environment for customers that want to have their own isolated environment. When a new customer is onboarded, the ISV has to spin up a collection of AWS resources to run their web-servers, app-servers and databases in a multi-AZ (availability zone) setting to achieve high availability. There are several resources required: Elastic Load Balancers, EC2 instances, EBS volumes, SimpleDB domains and an RDS instance. They also set up Auto Scaling, EC2 and RDS Security Groups, configure CloudWatch monitoring and alarms, and use SNS for notifications. They have a centralized control environment for managing all their customers, but creating and tearing down environments is a lot of work and it is challenging to manage the different failure scenarios during these procedures. In addition, they are often doing specialized development for these customers, meaning that for each production environment there may also be development and testing environments running. Creating and managing these environments was a pain that AWS CloudFormation set out to relieve.

AWS CloudFormation solves the complexity of managing the creation of collections of AWS resources in a predictable way. CloudFormation centers around two main abstractions. The first is the Template, in which the customer describes, in a simple text-based format (JSON), what resources need to be created, what their dependencies are, what configuration parameters are needed, etc. The Template can then be used to create a Stack, which is an instantiation of the collection of AWS resources described in the template, created in the right sequence with the right configuration of resources and applications. If anything goes wrong during the creation process, automatic rollback will be executed and resources created for this stack will be cleaned up. Once created, the resources in a Stack can be managed with the usual tools and controls.
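Creating resources "in the right sequence" from their declared dependencies amounts to a topological sort. The following Python sketch is a simplified model and not CloudFormation's actual algorithm. It assumes a dependency exists wherever a resource names another resource in `DependsOn` or points at it with `{ "Ref": ... }`, as in the WordPress excerpts later in this post. Refs to parameters are ignored because they are not resources. Real templates also create dependencies through `Fn::GetAtt`, which this sketch does not handle. The function names are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Any


def _refs(value: Any) -> set[str]:
    """Collect every {"Ref": name} target found anywhere inside a property value."""
    found: set[str] = set()
    if isinstance(value, dict):
        if set(value) == {"Ref"}:
            found.add(value["Ref"])
        else:
            for v in value.values():
                found |= _refs(v)
    elif isinstance(value, list):
        for v in value:
            found |= _refs(v)
    return found


def creation_order(resources: dict[str, dict[str, Any]]) -> list[str]:
    """Return logical names so that every resource comes after those it depends on."""
    graph: dict[str, set[str]] = {}
    for name, body in resources.items():
        depends_on = body.get("DependsOn", [])
        if isinstance(depends_on, str):
            depends_on = [depends_on]
        deps = set(depends_on) | _refs(body.get("Properties", {}))
        # Keep only references to other resources; Refs may also name parameters.
        graph[name] = {d for d in deps if d in resources}
    # static_order() raises graphlib.CycleError if the dependencies form a cycle.
    return list(TopologicalSorter(graph).static_order())
```

Tearing a stack down safely is the same ordering reversed.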

Customers also frequently asked us for the ability to assign unique names to the collections of resources such that during operations administrators know exactly which resources are assigned to which application and to which of their customers. A simple scenario is for example the ability to clearly identify production from staging and development environments. AWS CloudFormation tags resources and lets you view all the resources for a Stack in a single place allowing you to quickly identify which resources are production and which are test.
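Once resources carry a stack tag, telling "production" from "test" becomes a grouping problem. The following Python sketch assumes each resource is described by an ID and a dictionary of tags. It also assumes the stack name is stored under the tag key `aws:cloudformation:stack-name`, the key CloudFormation applies today; whether the 2011 release used the same key is an assumption. The function name, the `(none)` bucket and the sample IDs are hypothetical.

```python
from collections import defaultdict

STACK_TAG = "aws:cloudformation:stack-name"  # assumed tag key


def group_by_stack(resources: dict[str, dict[str, str]]) -> dict[str, list[str]]:
    """Map stack name -> sorted resource IDs; untagged resources go under '(none)'."""
    groups: dict[str, list[str]] = defaultdict(list)
    for resource_id, tags in resources.items():
        groups[tags.get(STACK_TAG, "(none)")].append(resource_id)
    return {stack: sorted(ids) for stack, ids in groups.items()}
```

The `(none)` bucket is where leftovers, such as the rogue EBS volumes Ernest Mueller mentions above, would surface.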

If you want to see an example of how a simple application such as the WordPress blogging platform can be configured and created using AWS CloudFormation, see the detailed posting by Jeff Barr at the AWS developer blog. WordPress is just one of the many ready-to-run Templates that are available and that demonstrate how easy it is to get infrastructure for your application up and running.

Just as with AWS Elastic Beanstalk, AWS CloudFormation comes at no additional cost; you only pay for those resources that you actually consume. CloudFormation makes it easier for customers to run their applications in the cloud: templates are already available for many popular applications, and many application vendors will be providing their templates along with their applications.

For more information on AWS CloudFormation see their detail page and read more on the AWS developer blog.

Jeff Barr (@jeffbarr) posted AWS CloudFormation - Create Your AWS Stack From a Recipe on 2/25/2011:

My family does a lot of cooking and baking. Sometimes we start out with a goal, a menu, and some recipes. Other times we get a bit more creative and do something interesting with whatever we have on hand. As the lucky recipient of all of this calorie-laden deliciousness, I like to stand back and watch the process. Over the years I have learned something very interesting -- even the most innovative and free-spirited cooks are far more precise when they begin to bake. Precise ratios between ingredients ensure repeatable, high-quality results every time.

To date, many people have used AWS in what we'll have to think of as cooking mode. They launch some instances, assign some Elastic IP addresses, create some message queues, and so forth. Sometimes this is semi-automated with scripts or templates, and sometimes it is a manual process. As overall system complexity grows, launching the right combination of AMIs, assigning them to roles, dealing with error conditions, and getting all the moving parts into the proper positions becomes more and more challenging.

This is doubly unfortunate. First, AWS is programmable, so it should be possible to build even complex systems (sometimes called "stacks") using repeatable processes. Second, the dynamic nature of AWS makes people want to create multiple precise copies of their operating environment. This could be to create extra stacks for development and testing, or to replicate them across multiple AWS Regions.

Today, all of you cooks get to become bakers!

Our newest creation is called AWS CloudFormation. Using CloudFormation, you can create an entire stack with one function call. The stack can be composed of multiple Amazon EC2 instances, each one fully decked out with security groups, EBS (Elastic Block Store) volumes, and an Elastic IP address (if needed). The stack can contain Load Balancers, Auto Scaling Groups, RDS (Relational Database Service) Database Instances and security groups, SNS (Simple Notification Service) topics and subscriptions, Amazon CloudWatch alarms, Amazon SQS (Simple Queue Service) message queues, and Amazon SimpleDB domains. Here's a diagram of the entire process:

AWS CloudFormation is really easy to use. You simply describe your stack using our template language, and then call the CreateStack function to kick things into motion. CloudFormation will create the AWS resources as specified in the template, taking care to do so in the proper order, and optionally issuing a notification to the SNS topic(s) of your choice when the work is complete. Each stack also accumulates events (accessible through the DescribeStackEvents function) and retains the identities of the resources that it creates (accessible through the DescribeStackResources function).

The stack retains its identity after it has been created, so you can easily shut it all down when you no longer need it. By default, CreateStack will operate in an atomic fashion -- if it cannot create all of the resources for some reason it will clean up after itself. You can disable this "rollback" behavior if you'd rather manage things yourself.
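The atomic, clean-up-after-itself behavior can be modeled in a few lines. The following Python sketch is a simplified illustration and not how CloudFormation implements rollback. `create` and `delete` are hypothetical callbacks standing in for the real provisioning calls, and resources are created in a caller-supplied order. If any creation fails, everything created so far is deleted newest-first and the error is re-raised. Passing `rollback=False` mirrors the option to manage the leftovers yourself.

```python
from typing import Callable


def create_with_rollback(
    order: list[str],
    create: Callable[[str], None],
    delete: Callable[[str], None],
    rollback: bool = True,
) -> list[str]:
    """Create resources in order; on failure, optionally delete what was created."""
    created: list[str] = []
    try:
        for name in order:
            create(name)
            created.append(name)
    except Exception:
        if rollback:
            # Undo newest-first so dependents are removed before their dependencies.
            for name in reversed(created):
                delete(name)
        raise
    return created
```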

The template language takes the form of a JSON string. It specifies the resources needed to make up the stack in a declarative, parameterized fashion. Because the templates are declarative, you need only specify what you want and CloudFormation will figure out the rest. Templates can include parameters and the parameters can have default values. You can use the parameter model to create a single template that will work across more than one AWS account, Availability Zone, or Region. You can also use the parameters to transmit changing or sensitive data (e.g. database passwords) into the templates.
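The parameter model combines defaults with masking of sensitive values, and a small Python sketch can illustrate both. It assumes a `Parameters` section shaped like the WordPress excerpt below, where `Default` is optional and `NoEcho` is the string `"TRUE"`. The function names and the `****` mask are hypothetical. The real service performs this resolution on its side.

```python
from typing import Any


def resolve_parameters(
    declared: dict[str, dict[str, Any]], supplied: dict[str, str]
) -> dict[str, str]:
    """Merge caller-supplied values with template defaults; fail on missing or unknown ones."""
    unknown = set(supplied) - set(declared)
    if unknown:
        raise ValueError(f"unknown parameters: {sorted(unknown)}")
    resolved: dict[str, str] = {}
    for name, spec in declared.items():
        if name in supplied:
            resolved[name] = supplied[name]
        elif "Default" in spec:
            resolved[name] = spec["Default"]
        else:
            raise ValueError(f"missing value for parameter {name!r}")
    return resolved


def display_values(
    declared: dict[str, dict[str, Any]], resolved: dict[str, str]
) -> dict[str, str]:
    """Return values safe to show in a console or log, masking NoEcho parameters."""
    return {
        name: "****" if str(declared[name].get("NoEcho", "")).upper() == "TRUE" else value
        for name, value in resolved.items()
    }
```

Supplying different values for the same declared parameters is what lets one template serve several accounts, Availability Zones or Regions.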

We've built a number of sample CloudFormation templates to get you started. Let's take a look at the sample WordPress template to get a better idea of how everything works. This template is just 104 lines long. It accepts the following parameters:

- InstanceType - EC2 instance type; default is m1.small.

- GroupSize - Number of EC2 instances in the Auto Scaling Group; default is 2.

- AvailabilityZones - Zone or zones (comma-delimited list) of locations to create the EC2 instances.

- WordPressUser - Name of the user account to create on WordPress. Default is "admin."

- WordPressPwd - Password for the user account. Default is "password," and the parameter is flagged as "NoEcho" so that it will be masked in appropriate places.

- WordPressDBName - Name of the MySQL database to be created on the stack's DB Instance.

Here's an excerpt from the template's parameter section:

```json
"WordPressPwd" : {
  "Default" : "password",
  "Type" : "String",
  "NoEcho" : "TRUE"
},
"WordPressDBName" : {
  "Default" : "wordpressdb",
  "Type" : "String"
}
```

The template specifies the following AWS resources:

- WordPressEC2Security - An EC2 security group.

- WordPressLaunchConfig - An Auto Scaling launch configuration.

- WordPressDBSecurity - A database security group.

- WordPressDB - An RDS DB Instance running on an m1.small, with 5 GB of storage, along with the user name, password, and database name taken from the parameters.

- WordPressELB - An Elastic Load Balancer for the specified Availability Zones.

- WordPressAutoScalingGroup - An Auto Scaling group for the specified Availability Zones, with the group size (desired capacity) taken from the GroupSize parameter.

Here's another excerpt from the template:

```json
"WordPressAutoScalingGroup" : {
  "Type" : "AWS::AutoScaling::AutoScalingGroup",
  "DependsOn" : "WordPressDB",
  "Properties" : {
    "AvailabilityZones" : { "Ref" : "AvailabilityZones" },
    "LaunchConfigurationName" : { "Ref" : "WordPressLaunchConfig" },
    "MinSize" : "0",
    "MaxSize" : "5",
    "DesiredCapacity" : { "Ref" : "GroupSize" },
    "LoadBalancerNames" : [ { "Ref" : "WordPressELB" } ]
  }
}
```

The template can also produce one or more outputs (accessible to your code via the DescribeStacks function). The WordPress template produces one output, the URL of the stack's Load Balancer. Here's one last excerpt from the template:

```json
"Outputs" : {
  "URL" : {
    "Value" : { "Fn::Join" : [ "", [ "http://", { "Fn::GetAtt" : [ "WordPressELB" , "DNSName" ] } ] ] }
  }
}
```

The templates are just plain old text files. You can edit them with a text editor, keep them under source code control, or even generate them from another program.
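Generating templates from another program can look like the following Python sketch. It assembles the Auto Scaling group and URL output from the excerpts above and serializes them with the standard `json` module. The function names and the `prefix` argument used to derive logical names are illustrative assumptions. A complete template would also declare the `Parameters` and the other resources listed earlier.

```python
import json
from typing import Any


def ref(name: str) -> dict[str, str]:
    """Build a {"Ref": name} reference to a parameter or resource."""
    return {"Ref": name}


def wordpress_fragment(prefix: str = "WordPress") -> dict[str, Any]:
    """Return the Auto Scaling group and URL output excerpts as a template fragment."""
    elb, db = f"{prefix}ELB", f"{prefix}DB"
    return {
        "Resources": {
            f"{prefix}AutoScalingGroup": {
                "Type": "AWS::AutoScaling::AutoScalingGroup",
                "DependsOn": db,
                "Properties": {
                    "AvailabilityZones": ref("AvailabilityZones"),
                    "LaunchConfigurationName": ref(f"{prefix}LaunchConfig"),
                    "MinSize": "0",
                    "MaxSize": "5",
                    "DesiredCapacity": ref("GroupSize"),
                    "LoadBalancerNames": [ref(elb)],
                },
            }
        },
        "Outputs": {
            "URL": {
                "Value": {"Fn::Join": ["", ["http://", {"Fn::GetAtt": [elb, "DNSName"]}]]}
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(wordpress_fragment(), indent=2))
```

Because the output is ordinary JSON text, generated templates can be checked into source control alongside hand-written ones.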

The AWS Management Console also includes complete support for CloudFormation. Read my other post to learn more about it.

Putting it all together, it is now very easy to create, manage, and destroy entire application stacks with CloudFormation. Once again, we've done our best to take care of the important lower-level details so that you can be focused on building a great application.

I'm really looking forward to hearing more about the unique ways that our customers put all of this new power to use. What do you think of AWS CloudFormation and what can you bake with it?

Read More: AWS Management Console support for AWS CloudFormation. [See below.]

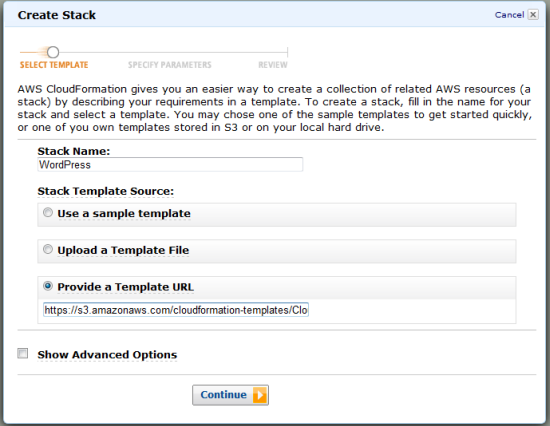

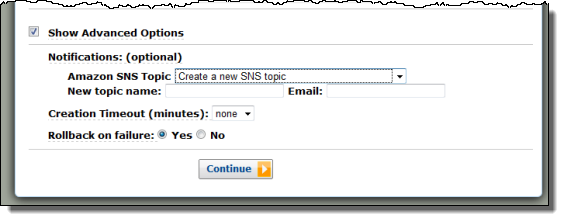

Jeff Barr (@jeffbarr) added an AWS CloudFormation in the AWS Management Console post on 2/25/2011:

The AWS Management Console now includes full support for AWS CloudFormation. You can create stacks from templates, monitor the creation process, access the parameters and the outputs, and terminate the stack when you are done with it. As is my custom, here's a tour!

Start out on the AWS CloudFormation tab. This tab displays all of your stacks and also contains the all-important Create New Stack button:

When you click that button you will be prompted for the information that AWS CloudFormation needs to create your stack. You can reference a template via a URL, upload a template from your desktop, or choose one of our sample templates. I happened to have the URL for the WordPress template handy and used that (I could also have chosen it from the list of sample templates):

You can also choose to set some advanced options, including notification via Amazon SNS, a creation timeout, and rollback in case of failures:

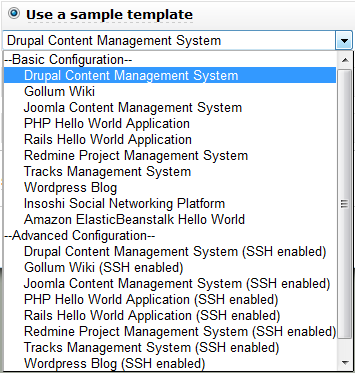
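The same advanced options are available when calling CreateStack from code. The following Python sketch uses the parameter names of the CloudFormation client in today's boto3 SDK, which postdates this post: `StackName`, `TemplateBody`, `Parameters`, `NotificationARNs`, `DisableRollback` and `TimeoutInMinutes`. The helpers `build_create_stack_request` and `create_stack` are hypothetical. The client is passed in so the request can be built and inspected without calling AWS.

```python
import json
from typing import Any, Optional


def build_create_stack_request(
    stack_name: str,
    template: dict[str, Any],
    parameters: dict[str, str],
    notification_arns: Optional[list[str]] = None,
    disable_rollback: bool = False,
    timeout_minutes: Optional[int] = None,
) -> dict[str, Any]:
    """Assemble keyword arguments for a CloudFormation create_stack call."""
    request: dict[str, Any] = {
        "StackName": stack_name,
        "TemplateBody": json.dumps(template),
        "Parameters": [
            {"ParameterKey": key, "ParameterValue": value}
            for key, value in sorted(parameters.items())
        ],
        # False keeps the default all-or-nothing behavior: clean up on failure.
        "DisableRollback": disable_rollback,
    }
    if notification_arns:
        request["NotificationARNs"] = notification_arns  # SNS topics to notify
    if timeout_minutes is not None:
        request["TimeoutInMinutes"] = timeout_minutes  # creation timeout
    return request


def create_stack(client: Any, **kwargs: Any) -> str:
    """Send the request through a boto3-style client and return the new stack ID."""
    return client.create_stack(**build_create_stack_request(**kwargs))["StackId"]
```

With boto3 installed and credentials configured, you would obtain the client from `boto3.client("cloudformation")` and pass it to `create_stack` along with the same keyword arguments the builder accepts.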

Here's what the list of sample templates looks like:

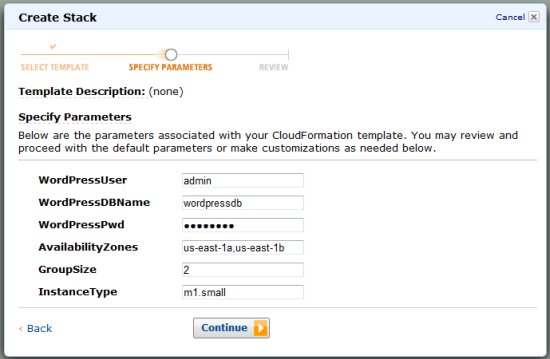

As I mentioned in my other blog post, each CloudFormation stack can include parameters. The console will prompt you for parameters based on the names and default values in your template:

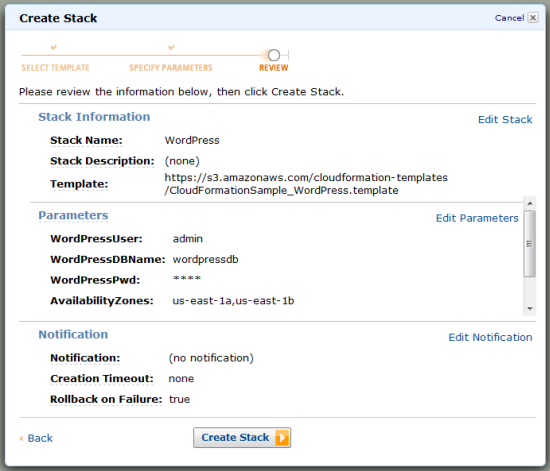

You can then verify your stack as the last step before you ask CloudFormation to create it for you:

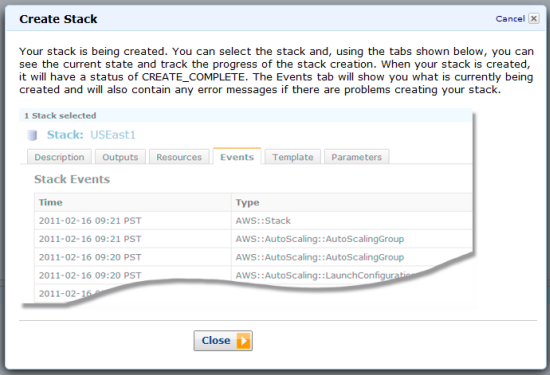

As soon as you click Create Stack, AWS CloudFormation will get right to work, creating all of the resources needed for the stack:

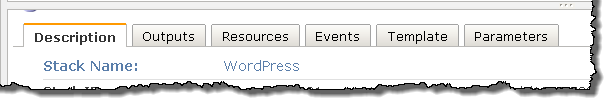

The CloudFormation tab will show your new stack, and will indicate that its state is currently CREATE_IN_PROGRESS. You can click on the stack to display additional information about it:

Here's what you get to see:

A click on the Events tab will reveal all of the actions that CloudFormation is taking to create the resources needed to realize your stack:

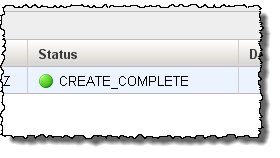
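Outside the console, the same event history is available from the DescribeStackEvents API, which returns events newest first. The sketch below is only an illustration. It assumes event records shaped like boto3's `describe_stack_events()["StackEvents"]` entries and prints them oldest first, so that the creation sequence reads top to bottom as it does on the Events tab. The sample events are hypothetical.

```python
from datetime import datetime


def format_events(stack_events: list) -> list:
    """Render DescribeStackEvents-style records oldest-first, one line each."""
    lines = []
    # The API lists newest events first; sort by timestamp to read them in order.
    for event in sorted(stack_events, key=lambda e: e["Timestamp"]):
        parts = [
            event["Timestamp"].strftime("%H:%M:%S"),
            event["ResourceStatus"],
            event["ResourceType"],
            event["LogicalResourceId"],
        ]
        reason = event.get("ResourceStatusReason")
        if reason:
            parts.append(f"({reason})")
        lines.append(" ".join(parts))
    return lines


if __name__ == "__main__":
    # Hypothetical events shaped like boto3's describe_stack_events()["StackEvents"].
    events = [
        {"Timestamp": datetime(2011, 2, 25, 10, 6, 0), "ResourceStatus": "CREATE_COMPLETE",
         "ResourceType": "AWS::EC2::Instance", "LogicalResourceId": "WebServer"},
        {"Timestamp": datetime(2011, 2, 25, 10, 0, 0), "ResourceStatus": "CREATE_IN_PROGRESS",
         "ResourceType": "AWS::EC2::Instance", "LogicalResourceId": "WebServer",
         "ResourceStatusReason": "creation started"},
    ]
    for line in format_events(events):
        print(line)
```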

As soon as all of the resources have been created, the state of the stack will change to CREATE_COMPLETE:

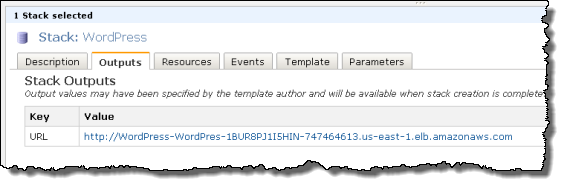
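A script that creates a stack usually has to wait for the same transition from CREATE_IN_PROGRESS to CREATE_COMPLETE that the console displays. The sketch below is a minimal polling loop. It assumes that a stack whose creation fails ends in CREATE_FAILED, ROLLBACK_COMPLETE or ROLLBACK_FAILED. The status lookup and the sleep function are passed in, so the loop can be exercised without AWS.

```python
import time
from typing import Callable

# Statuses from which a stack-creation attempt will not move on by itself.
TERMINAL_CREATE_STATES = {
    "CREATE_COMPLETE", "CREATE_FAILED", "ROLLBACK_COMPLETE", "ROLLBACK_FAILED",
}


def wait_for_create(
    get_status: Callable[[], str],
    poll_seconds: float = 15.0,
    max_polls: int = 120,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll a stack's status until creation finishes; return the final status."""
    for _ in range(max_polls):
        status = get_status()
        if status in TERMINAL_CREATE_STATES:
            return status
        # Still CREATE_IN_PROGRESS (or ROLLBACK_IN_PROGRESS): wait and ask again.
        sleep(poll_seconds)
    raise TimeoutError(f"stack still not finished after {max_polls} polls")
```

With boto3, `get_status` could be `lambda: cf.describe_stacks(StackName=name)["Stacks"][0]["StackStatus"]`, where `cf` is a CloudFormation client. boto3 also provides a built-in `stack_create_complete` waiter that covers the common case.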

At that point, the Outputs tab will include the values specified in the template. The WordPress template that I used contains a single output, the URL of the running copy of WordPress:

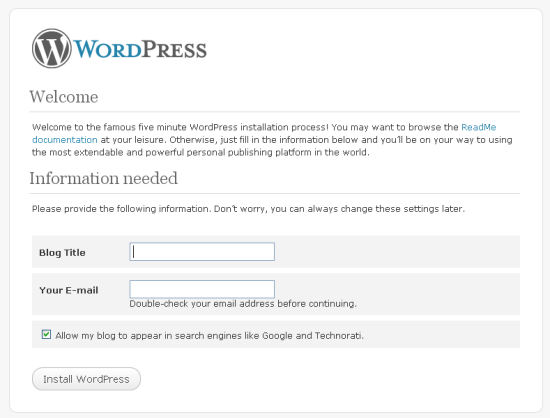
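The values on the Outputs tab are also returned by the DescribeStacks API as a list of OutputKey/OutputValue pairs. The sketch below flattens that list into a dictionary, assuming a response shaped like boto3's `describe_stacks()` result. The output key "WebsiteURL" and the URL in the example are hypothetical stand-ins for whatever the WordPress template actually declares.

```python
def stack_outputs(describe_stacks_response: dict) -> dict:
    """Turn the first stack's Outputs in a DescribeStacks response into a dict."""
    stacks = describe_stacks_response.get("Stacks", [])
    if not stacks:
        return {}
    # Outputs may be missing, for example while the stack is still being created.
    return {o["OutputKey"]: o["OutputValue"] for o in stacks[0].get("Outputs", [])}


if __name__ == "__main__":
    # Hypothetical response; "WebsiteURL" stands in for the template's real key.
    response = {
        "Stacks": [{
            "StackName": "my-wordpress-stack",
            "StackStatus": "CREATE_COMPLETE",
            "Outputs": [{"OutputKey": "WebsiteURL",
                         "OutputValue": "http://ec2-203-0-113-10.compute-1.amazonaws.com/wordpress",
                         "Description": "WordPress site"}],
        }]
    }
    print(stack_outputs(response)["WebsiteURL"])
```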

And here it is:

And there you have it. Are you ready to start making use of AWS CloudFormation now?

Maureen O’Gara prefaced her Ex-Microsoftie Barred from Working at Salesforce post of 2/25/2011 with “At least he can’t work for Salesforce in the job he was hired for”:

A Seattle Superior Court ruled Wednesday that Salesforce.com hireling Matthew Miszewski, plucked from inside Microsoft to run its government business, can't work there because it violates his non-compete with Microsoft.

At least he can't work for Salesforce in the job he was hired for, even after they tried to get around the temporary restraining order by narrowing his global charter to the US.

The court decided it was still the same kind of job he was doing at Microsoft and agreed to sign a preliminary injunction.

Miszewski claimed that the megabytes of Microsoft business plans found on his computer during discovery, documents he swore he didn't have, wouldn't be useful at Microsoft's direct competitor.

Chris Czarnecki described Cost Effective Hosting of Static Web Sites on Amazon S3 in a 2/24/2011 post to the Learning Tree blog:

Many organisations have simple Web sites built entirely from static content. Hosting providers do not readily cater for such sites: they typically provide packages for dynamic Web sites that run scripts written in languages such as Java, C# or PHP and use a database. Static sites do not require this functionality, yet often the only hosting option is a dynamic Web site package that is more expensive than necessary.

Amazon Web Services (AWS) has a solution for static Web sites that is both simple and cost effective. It is built around the Amazon Simple Storage Service (S3), a highly durable storage infrastructure designed for mission-critical and primary data storage. Storage is charged per GB per month and averages around $0.12 per GB per month, varying slightly by region. Read and write requests are charged at around $0.01 per 1,000 requests.

S3 storage can be configured as a Web site. The site's domain name is mapped with a DNS CNAME record to the URL of the root of the S3 storage, which then returns the site's home page. This is a very simple, cost-effective way of hosting a static Web site. Consider a site that requires 10GB of storage and receives on average 100,000 requests per month: the AWS hosting costs come to approximately $2.20 per month ($1.20 for storage plus $1.00 for requests). The other costs are bandwidth, at approximately $0.10 per GB in and $0.15 per GB out, although the first 1GB of data out each month is free of charge.
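The $2.20 estimate can be checked with a few lines of arithmetic. The sketch below uses the approximate early-2011 rates quoted in this post. Like the post, it applies a single request rate to both reads and writes, so it is a rough estimate rather than AWS's actual billing formula. Decimal arithmetic avoids floating-point rounding in the dollar amounts.

```python
from decimal import Decimal

# Approximate rates quoted in the post; real S3 prices vary by region and
# have changed since, so treat these as illustrative only.
STORAGE_PER_GB_MONTH = Decimal("0.12")
REQUESTS_PER_1000 = Decimal("0.01")
TRANSFER_IN_PER_GB = Decimal("0.10")
TRANSFER_OUT_PER_GB = Decimal("0.15")
FREE_OUT_GB = Decimal("1")


def _dec(x) -> Decimal:
    # Going through str() avoids binary floating-point artefacts such as 0.1.
    return Decimal(str(x))


def monthly_cost(storage_gb, requests, gb_in=0, gb_out=0) -> Decimal:
    """Estimate a static site's monthly S3 bill in dollars, rounded to cents."""
    storage = _dec(storage_gb) * STORAGE_PER_GB_MONTH
    request_charge = _dec(requests) / 1000 * REQUESTS_PER_1000
    transfer_in = _dec(gb_in) * TRANSFER_IN_PER_GB
    # The first 1GB out each month is free; only the remainder is billed.
    billable_out = max(_dec(gb_out) - FREE_OUT_GB, Decimal(0))
    transfer_out = billable_out * TRANSFER_OUT_PER_GB
    total = storage + request_charge + transfer_in + transfer_out
    return total.quantize(Decimal("0.01"))


if __name__ == "__main__":
    # The post's example: 10GB stored, 100,000 requests, bandwidth ignored.
    print(monthly_cost(10, 100_000))  # prints 2.20 (1.20 storage + 1.00 requests)
```

Adding, say, 21GB of outbound traffic to the same site would add (21 - 1) × $0.15 = $3.00, which shows how bandwidth quickly comes to dominate the bill for popular sites.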

To summarise, Amazon S3 is a highly attractive solution for static Web site hosting. In addition to the low cost, it scales transparently with variations in load, replicates storage for reliability and availability, and charges in proportion to usage. If you are interested in how you or your organisation could benefit from Cloud Computing and the products offered by major vendors such as Amazon, Google, Microsoft and Salesforce.com, why not consider attending Learning Tree's Cloud Computing course?

<Return to section navigation list>
