Windows Azure and Cloud Computing Posts for 2/21/2011+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure VM Roles, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

David Pallman (@davidpallman) reported on 2/20/2011 that his Complete Windows Azure Storage Samples (REST and .NET side-by-side) are available on CodePlex:

I’ve posted new samples for Windows Azure Storage on CodePlex at http://azurestoragesamples.codeplex.com/. These samples are complete—they show every single operation you can perform in the Windows Azure Storage API against blob, queue, and table storage. In addition, there is both a REST implementation and a .NET StorageClient implementation with identical functionality that you can compare side-by-side.

I decided to put these samples up for 3 reasons:

- First of all, they're part of the code samples for my upcoming book series, The Windows Azure Handbook. Putting them out early gives me an opportunity to get some feedback and refine them.

- Second, although there is a lot of good online information and blogging about Windows Azure Storage, there doesn't seem to be a single sample you can download that does "everything". The Windows Azure SDK used to have a sample like this but no longer does.

- Third, I wanted to give complete side-by-side implementations of the REST and .NET StorageClient library approaches. Often developers starting with Windows Azure Storage are undecided about whether to use REST or the .NET StorageClient library and being able to compare them should be helpful.

These initial samples are all in C# but I would like to add other languages over time (and would welcome assistance in porting). Please do speak up if you encounter bugs, flaws, omissions in the samples -- or if you have suggestions.

Here are some good online resources for Windows Azure Table Storage:

- Windows Azure Storage Documentation: http://msdn.microsoft.com/en-us/library/dd179355.aspx

- Steve Marx's Blog (Microsoft): http://blog.smarx.com/

- Neil Mackenzie's Blog (Windows Azure MVP): http://convective.wordpress.com/

- Other storage samples: http://azuresamples.com/

Bravo, David!

Avkash Chauhan explained System.ArgumentException: Waithandle array may not be empty when uploading blobs in parallel threads in a 2/20/2011 post:

When you are uploading blobs from a stream using multiple roles (web or worker) to a single Azure storage location, you may hit the following exception:

System.ArgumentException: Waithandle array may not be empty.

at Microsoft.WindowsAzure.StorageClient.Tasks.Task`1.get_Result()

at Microsoft.WindowsAzure.StorageClient.Tasks.Task`1.ExecuteAndWait()

at Microsoft.WindowsAzure.StorageClient.CloudBlob.UploadFromStream(Stream source, BlobRequestOptions options)

at Contoso.Cloud.Integration.Azure.Services.Framework.Storage.ReliableCloudBlobStorage.UploadBlob(CloudBlob blob, Stream data, Boolean overwrite, String eTag)

Reason:

The exception is caused by a known issue in the Storage API's parallel blob upload feature. It occurs only on rare occasions, when the specific conditions that trigger it are met.

Solution:

To solve this problem, disable the parallel upload feature with the following setting: CloudBlobClient.ParallelOperationThreadCount=1

<Return to section navigation list>

SQL Azure Database and Reporting

The Microsoft Case Studies team posted a 4-page IT Company [INFORM] Quickly Extends Inventory Sampling Solution to the Cloud, Reduces Costs case study about SQL Azure on 2/18/2011:

Headquartered in Aachen, Germany, INFORM develops software solutions that help companies optimize operations in various industries. The Microsoft Gold Certified Partner developed a web-based interface for its INVENT Xpert inventory sampling application and wanted to extend the application to the cloud to save deployment time and costs for customers. After evaluating Amazon Elastic Compute Cloud and Google App Engine, neither of which easily supports the Microsoft SQL Server database management software upon which its data-driven application is based, INFORM chose the Windows Azure platform, including Microsoft SQL Azure. INFORM quickly extended its application to the cloud, reduced deployment time for customers by 50 percent, and lowered capital and operational costs for customers by 85 percent. Plus, INFORM believes that having a cloud-based solution increases its competitive advantage.

Organization Profile

Established in 1969, INFORM develops software solutions that use Operations Research and Fuzzy Logic to help customers in the transportation and logistics industries optimize their operations.

Business Situation

After creating a web-based interface for its inventory sampling software, INFORM sought a cloud solution that it could deploy quickly and that would work with Java and Microsoft SQL Server.

Solution

In less than three weeks, INFORM migrated its existing database to Microsoft SQL Azure in a multitenant environment and deployed its Java-based inventory optimization application to Windows Azure.

Benefits

- Quick development

- Reduced deployment time by 50 percent

- Lowered customer costs by 85 percent

- Increased competitive advantage

Allen Kinsel asked SQL Azure track at the PASS summit: Is the cloud real or hype? in a 2/11/2011 post (missed when posted):

With SQL Azure (& the cloud in general) becoming more and more mainstream, I’m seriously considering creating a new Azure track for the 2011 Summit. I’m still pulling the attendance & session evaluation scores together from 2009 and 2010 Azure sessions to try and determine if it’s truly a good idea or not.

There’s always a tradeoff: we have a limited number of sessions available, so creating a track would mean shifting allocations from the other tracks to cover the sessions given, but considering the future it seems to be the right move.

Just thought I’d throw this quick post out looking for thoughts & feedback

This is the first minor change I’m considering for the 2011 Summit.

The three comments as of 2/21/2011 were in favor of adding a SQL Azure track.

<Return to section navigation list>

Marketplace DataMarket and OData

Alex James (@adjames) posted Vocabularies to the OData blog on 2/21/2011:

What are vocabularies?

Vocabularies are made up of a set of related 'terms' which when used can express some idea or concept. They allow producers to teach consumers richer ways to interpret and handle data.

Vocabularies can range in complexity from simple to complex. A simple vocabulary might tell a consumer which property to use as an entity's title when displaying it in a form, whereas a more complex vocabulary might tell someone how to convert an OData person entity into a vCard entry.

Here are some simple examples:

- This property should be used as the Title of this entity

- This property has a range of acceptable values (e.g. 1 to 100)

- This entity can be converted into a vCard

- This entity is a foaf:Person

- This navigation property is essentially a 'foaf:Knows [a person]' relationship

- This property is a georss:Point

- Etc

Vocabularies are not a new concept unique to OData. They are used extensively in the linked data and RDF worlds to great effect; in fact we should be able to re-use many of these existing vocabularies in OData.

Why does OData need vocabularies?

OData is being used in many different verticals now. Each vertical brings its own specific set of requirements and challenges. While some problems are general enough that solving them inside OData adds value to the OData eco-system as a whole, most don't meet that bar.

It seems clear then that we need a mechanism that allows Producers to share more information that 'smarter' Consumers MAY understand enough to enable a higher fidelity experience.

In fact some consumers are already trying to provide a higher fidelity experience, for example Sesame can render the results of OData queries on a map. Sesame does this by looking for specifically named properties, which it 'guesses' represent the entity's location. While this is powerful, it would be much better if it wasn't a 'guess', if the Producer used a well-known vocabulary to tell Consumers which property is the entity's location.
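As a rough illustration of the difference between guessing and being told, here is a minimal Python sketch. It is not Sesame's actual logic: the list of name hints, the `geo:Location` term and the annotation dictionary are all hypothetical stand-ins.

```python
# Hypothetical sketch: two ways a consumer might find an entity's location
# property. Neither function reflects Sesame's real implementation.

# Name fragments a "guessing" consumer might look for (an assumption).
LIKELY_LOCATION_NAMES = ("location", "geo", "position", "latlong")


def guess_location_property(property_names):
    """Return the first property whose name looks like a location, else None."""
    for name in property_names:
        if any(hint in name.lower() for hint in LIKELY_LOCATION_NAMES):
            return name
    return None


def annotated_location_property(annotations, term="geo:Location"):
    """Return the property the producer explicitly tagged with `term`.

    `annotations` maps a property name to the set of vocabulary terms
    the producer applied to it.
    """
    for name, terms in annotations.items():
        if term in terms:
            return name
    return None


properties = ["ID", "Name", "Headquarters"]
annotations = {"Headquarters": {"geo:Location"}}

guessed = guess_location_property(properties)        # the names give no hint
declared = annotated_location_property(annotations)  # the producer said so
```

With these inputs the guess finds nothing, because "Headquarters" does not look like a location by name, while the annotation lookup identifies it directly.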

Goals

As with any new feature, we need to agree on a set of goals before we can come up with the right design. To get us started I propose this set of goals:

- Ability to re-use or reference common micro-formats and vocabularies.

- Ability to annotate OData metadata using the terms from a particular vocabulary.

- Both internally (inside the CSDL file returned from $metadata)

- And externally (allowing for third-parties to 'enrich' existing OData services they don't own).

- No matter how the annotation is made, consumers should be able to consume the annotations in much the same way.

- Ability to annotate OData data too? Although this one is beyond the scope of this post.

- Consumers that don't understand a particular vocabulary should still be able to work with services that reference that vocabulary. The goal should be to enrich the eco-system for those who 'optionally' understand the vocabulary.

- We should be able to reference terms from a vocabulary in CSDL, OData Atom and OData JSON.

It is important to note that our goal stops short of specifying how to define the vocabulary itself, or how to capture the semantics of the vocabulary, or how to enforce the vocabulary. Those concerns lie solely with vocabulary writers, and the producers and consumers that profess to understand the vocabulary. Staying out of this business allows OData to reference many existing vocabularies and micro-formats, without being unnecessarily restrictive on how those vocabularies are defined or the types of semantics they might imply.

Exploration

Today if you ask for an OData service's metadata (~/service/$metadata) you get back an EDMX document that contains a CSDL schema. Here is an example.

CSDL already supports annotations, which we could use to refer to a vocabulary and its terms. For example this EntityType definition includes both a structural annotation (validation:Constraint) and a simple attribute annotation (display:Title):

<EntityType Name="Person" display:Title="Firstname Lastname">

<Key>

<PropertyRef Name="ID" />

</Key>

<Property Name="ID" Type="Edm.Int32" Nullable="false" />

<Property Name="Firstname" Type="Edm.String" Nullable="true" />

<Property Name="Lastname" Type="Edm.String" Nullable="true" />

<Property Name="Email" Type="Edm.String" Nullable="true">

<validation:Constraint>

<validation:Regex>^[A-Z0-9._%+-]+@[A-Z0-9.-]+\.[A-Z]{2,6}$</validation:Regex>

<validation:ErrorMessage>Please enter a valid EmailAddress</validation:ErrorMessage>

</validation:Constraint>

</Property>

<Property Name="Age" Type="Edm.Int32" />

</EntityType>

For this to be valid XML the display and validation namespaces would have to be introduced somewhere something like this:

<Schema

xmlns:display="http://odata.org/vocabularies/display"

xmlns:validation="http://odata.org/vocabularies/validation">

Here the URL of the xsd reference identifies the vocabulary globally.
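To show how a consumer might read these two kinds of annotation, here is a minimal Python sketch using the standard library's ElementTree. The CSDL namespace URI and the trimmed-down schema are assumptions chosen for the example; a consumer that does not know the vocabularies can simply ignore the namespaced attribute and elements.

```python
import xml.etree.ElementTree as ET

DISPLAY_NS = "http://odata.org/vocabularies/display"
VALIDATION_NS = "http://odata.org/vocabularies/validation"
# CSDL namespace picked for this sketch (an assumption; services may use another version).
EDM_NS = "http://schemas.microsoft.com/ado/2008/09/edm"

# A trimmed-down version of the EntityType shown above.
CSDL = f"""
<Schema xmlns="{EDM_NS}"
        xmlns:display="{DISPLAY_NS}"
        xmlns:validation="{VALIDATION_NS}">
  <EntityType Name="Person" display:Title="Firstname Lastname">
    <Property Name="ID" Type="Edm.Int32" Nullable="false" />
    <Property Name="Email" Type="Edm.String" Nullable="true">
      <validation:Constraint>
        <validation:ErrorMessage>Please enter a valid EmailAddress</validation:ErrorMessage>
      </validation:Constraint>
    </Property>
  </EntityType>
</Schema>
"""


def property_names(csdl_text):
    """What a 'naive' consumer sees: plain properties, annotations ignored."""
    entity = ET.fromstring(csdl_text).find(f"{{{EDM_NS}}}EntityType")
    return [p.get("Name") for p in entity.findall(f"{{{EDM_NS}}}Property")]


def read_annotations(csdl_text):
    """What a vocabulary-aware consumer can additionally extract."""
    entity = ET.fromstring(csdl_text).find(f"{{{EDM_NS}}}EntityType")
    # Attribute annotation: a namespaced attribute on the EntityType element.
    title = entity.get(f"{{{DISPLAY_NS}}}Title")
    # Structural annotation: vocabulary elements nested inside a Property.
    messages = {}
    path = f"{{{VALIDATION_NS}}}Constraint/{{{VALIDATION_NS}}}ErrorMessage"
    for prop in entity.findall(f"{{{EDM_NS}}}Property"):
        message = prop.find(path)
        if message is not None:
            messages[prop.get("Name")] = message.text
    return title, messages


title, messages = read_annotations(CSDL)
```

Both functions work against the same document, which matches the goal that consumers unaware of a vocabulary keep working unchanged.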

While this allows for completely arbitrary annotations and is extremely expressive, it has a number of down-sides:

- Structural annotations (i.e. XML elements) support the full power of XML. While power is good, it comes at a price, and here the price is figuring out how to represent the same thing in say JSON? We could come up with a proposal to make CSDL and OData Atom/JSON completely isomorphic, but is that worth the effort? Probably not.

- There is no way to refer to something, like say a property, so that you can annotate it externally, which is one of our goals.

- If we allow for annotations inline in the data (and let's not forget metadata would just be data in an addressable metadata service) it would change the shape of the resulting JSON structure. For example the javascript expression to access the age property of an entity would need to change from something like object.Age to something like object.Age.Value so that object.Age can hold onto all the 'inline annotations'. This is clearly unacceptable if we want existing 'naive' consumers to continue to work.
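The third point can be sketched in a few lines of Python. The "inline" payload shape below is hypothetical (the post does not define one); it only illustrates why moving a value under a `Value` key breaks consumers written against the plain shape.

```python
import json

# Shape a naive consumer expects today.
plain = json.loads('{"Name": "Ann", "Age": 42}')

# Hypothetical shape if annotations were carried inline with each value.
inline = json.loads(
    '{"Name": {"Value": "Ann"},'
    ' "Age": {"Value": 42, "Annotations": {"validation:Range": [1, 100]}}}'
)


def naive_is_adult(entity):
    """Existing consumer code: assumes Age is a plain number."""
    return entity["Age"] >= 18


def annotation_aware_is_adult(entity):
    """Code rewritten for the inline shape: must reach through 'Value'."""
    return entity["Age"]["Value"] >= 18
```

Calling `naive_is_adult(inline)` raises a `TypeError` because `Age` is now an object rather than a number, which is the breakage the bullet describes.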

OData values:

If we address these issues in turn, one solution for (1) is to restrict the XML available when using a vocabulary to the XML we already know how to convert into JSON, i.e. OData values. For example we take something like this:

<Property Name="Email" Type="Edm.String" Nullable="true">

<validation:Constraint>

<validation:Regex>^[A-Z0-9._%+-]+@[A-Z0-9.-]+\.[A-Z]{2,6}$</validation:Regex>

<validation:ErrorMessage>Please enter a valid EmailAddress</validation:ErrorMessage>

</validation:Constraint>

</Property>

The annotation is pretty simple, and could be modeled as a ComplexType pretty easily:

<ComplexType Name="Constraint">

<Property Name="Regex" Type="Edm.String" Nullable="false" />

<Property Name="ErrorMessage" Type="Edm.String" Nullable="true" />

</ComplexType>

In fact if you execute an OData request that just retrieves an instance of this complex type the response would look like this:

<Constraint

p1:type="Namespace.Constraint"

xmlns:p1="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata"

xmlns="http://schemas.microsoft.com/ado/2007/08/dataservices" >

<Regex>^[A-Z0-9._%+-]+@[A-Z0-9.-]+\.[A-Z]{2,6}$</Regex>

<ErrorMessage>Please enter a valid EmailAddress</ErrorMessage>

</Constraint>

And this is almost identical to our original annotation, the only differences being around the xml namespaces.

Which means it is not too much of a stretch to say that if your annotation can be modeled as a ComplexType (which of course allows nested complex-type properties and multi-value properties too), then the annotation is simply an OData value.

This is very nice because it means when we do addressable metadata you can in theory write a query like this to retrieve the annotations for a specific property:

~/$metadata.svc/Properties('Namespace.Type.PropertyName')/Annotations

ISSUE: Actually this introduces a problem. Since each annotation instance would have a different 'type', we would need to either support ComplexType inheritance (so we can define the annotation as an EntityType with a Value property of type AnnotationValue, but instances of Annotations would invariably have Values derived from the base AnnotationValue type), mark Annotation as an OpenType, or provide a way to specify a property without specifying the type.

Of course today annotations are allowed that can't be modeled as ComplexTypes, so we would need to be able to distinguish those. Perhaps the easiest way is like this:

<Property Name="Email" Type="Edm.String" Nullable="true">

<validation:Constraint m:Type="validation:Constraint" >

<validation:Regex>^[A-Z0-9._%+-]+@[A-Z0-9.-]+\.[A-Z]{2,6}$</validation:Regex>

<validation:ErrorMessage>Please enter a valid EmailAddress</validation:ErrorMessage>

</validation:Constraint>

</Property>

Here the m:Type attribute indicates that the annotation is an OData value. This tells servers and clients that they can if needed convert between CSDL, ATOM and JSON formats using the above rules.

By adopting the OData Atom format for annotations we can use a few more OData-isms to get clearer about the structure of the annotation:

- By default each element in the annotation represents a string but you can use m:Type="***" to change the type to something like Edm.Int32.

e.g. <validation:ErrorSeverity m:Type="Edm.Int32">1</validation:ErrorSeverity>

- We can use m:IsNull="true" to tell the difference between an empty string and null.

e.g. <validation:ErrorMessage m:IsNull="true" />

This looks good. It supports both constrained (OData values) and unconstrained annotations, and is consistent with the existing annotation support in OData.
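The conversion rules just described (strings by default, m:Type to change the type, m:IsNull for null) can be sketched in Python as follows. This is only an illustration of the proposal: the attribute spellings follow the post, only Edm.Int32 is handled, and the short Regex value is a simplified placeholder.

```python
import json
import xml.etree.ElementTree as ET

# Metadata namespace used by OData Atom payloads, bound to the "m" prefix here.
M_NS = "http://schemas.microsoft.com/ado/2007/08/dataservices/metadata"
VALIDATION_NS = "http://odata.org/vocabularies/validation"

ANNOTATION = f"""
<validation:Constraint xmlns:validation="{VALIDATION_NS}" xmlns:m="{M_NS}"
                       m:Type="validation:Constraint">
  <validation:ErrorSeverity m:Type="Edm.Int32">1</validation:ErrorSeverity>
  <validation:ErrorMessage m:IsNull="true" />
  <validation:Regex>^.+@.+$</validation:Regex>
</validation:Constraint>
"""


def local_name(tag):
    """Strip the '{namespace}' prefix ElementTree puts on tags."""
    return tag.rsplit("}", 1)[-1]


def to_odata_value(element):
    """Convert an annotation element into a JSON-ready dict of its properties."""
    value = {}
    for child in element:
        if child.get(f"{{{M_NS}}}IsNull") == "true":
            converted = None                   # explicit null, not an empty string
        elif child.get(f"{{{M_NS}}}Type") == "Edm.Int32":
            converted = int(child.text)        # typed primitive
        elif len(child):
            converted = to_odata_value(child)  # nested complex value
        else:
            converted = child.text or ""       # default: string
        value[local_name(child.tag)] = converted
    return value


constraint = to_odata_value(ET.fromstring(ANNOTATION))
as_json = json.dumps(constraint)
```

Because the result is an ordinary dictionary, serializing it as JSON needs no vocabulary-specific code, which is the appeal of restricting annotations to OData values.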

Out of line & External Annotations

Now if we turn our attention back to concern (2), this example implicitly refers to its parent; however we need to allow vocabularies to refer to something explicitly. For metadata the most obvious solution is to leverage addressable metadata, which allows you to refer to individual pieces of the metadata.

For example if this URL is the address of the metadata for the Email property: http://server/service/$metadata/Properties('Namespace.Person.Email')

Then this 'free floating' element is 'annotating' the Email property using the 'http://odata.org/vocabularies/constraints' vocabulary:

<Annotation AppliesTo="http://server/service/$metadata/Properties('Namespace.Person.Email')"

xmlns:validation="http://odata.org/vocabularies/constraints">

<validation:Constraint m:Type="validation:Constraint" >

<validation:Regex>^[A-Z0-9._%+-]+@[A-Z0-9.-]+\.[A-Z]{2,6}$</validation:Regex>

<validation:ErrorMessage>Please enter a valid EmailAddress</validation:ErrorMessage>

</validation:Constraint>

</Annotation>

Annotation by reference also neatly sidesteps issue (3), i.e. the object annotated is left structurally unchanged, which means we could use a similar approach to annotate data without breaking code (like a javascript path) that relies on a particular structure.

Another nice side-effect of this design is that you can use it 'inside' the CSDL too, simply by removing the address of the metadata service from the AppliesTo url - since we are in the CSDL we can use 'relative addressing':

<Annotation AppliesTo="Properties('Namespace.Person.Email')"

xmlns:validation="http://odata.org/vocabularies/constraints">

<validation:Constraint m:Type="validation:Constraint" >

<validation:Regex>^[A-Z0-9._%+-]+@[A-Z0-9.-]+\.[A-Z]{2,6}$</validation:Regex>

<validation:ErrorMessage>Please enter a valid EmailAddress</validation:ErrorMessage>

</validation:Constraint>

</Annotation>

Indeed if you have a separate file with many annotations for a particular model, you could group a series of annotations together like this:

<Annotations AppliesTo="http://server/service/$metadata/">

<Annotation AppliesTo="Properties('Namespace.Person.Email')"

xmlns:validation="http://odata.org/vocabularies/constraints">

<validation:Constraint m:Type="validation:Constraint" >

<validation:Regex>^[A-Z0-9._%+-]+@[A-Z0-9.-]+\.[A-Z]{2,6}$</validation:Regex>

<validation:ErrorMessage>Please enter a valid EmailAddress</validation:ErrorMessage>

</validation:Constraint>

</Annotation>

<Annotation AppliesTo="Properties('Namespace.Customer.Email')"

xmlns:validation="http://odata.org/vocabularies/constraints">

<validation:Constraint m:Type="validation:Constraint" >

<validation:Regex>^[A-Z0-9._%+-]+@[A-Z0-9.-]+\.[A-Z]{2,6}$</validation:Regex>

<validation:ErrorMessage>Please enter a valid EmailAddress</validation:ErrorMessage>

</validation:Constraint>

</Annotation>

</Annotations>

Here the Annotations/@AppliesTo attribute indicates the shared root url for all the annotations, and could be any url that points to a model, be that http, file or whatever.
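A consumer of such a file would need to combine the shared root with each relative AppliesTo value. Here is a minimal Python sketch of that step. The resolution rule (treat anything containing "://" as already absolute) and the third, absolute entry are assumptions for illustration, since the post does not spell out the exact rules.

```python
import xml.etree.ElementTree as ET

# Annotation bodies are omitted; only the addressing is of interest here.
ANNOTATIONS = """
<Annotations AppliesTo="http://server/service/$metadata/">
  <Annotation AppliesTo="Properties('Namespace.Person.Email')" />
  <Annotation AppliesTo="Properties('Namespace.Customer.Email')" />
  <Annotation AppliesTo="http://other/service/$metadata/Properties('Namespace.Order.Total')" />
</Annotations>
"""


def resolve_targets(xml_text):
    """Return the absolute target URL of every Annotation in the file."""
    root = ET.fromstring(xml_text)
    base = root.get("AppliesTo", "")
    targets = []
    for annotation in root.findall("Annotation"):
        target = annotation.get("AppliesTo")
        if "://" not in target:
            # Relative address: resolve against the shared root url.
            target = base.rstrip("/") + "/" + target
        targets.append(target)
    return targets


targets = resolve_targets(ANNOTATIONS)
```

After resolution every annotation carries a full address, so inline, relative and external annotations can be processed by the same code path.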

Vocabulary definitions and semantics

It is important to note, that while we are proposing how to 'bind' or 'apply' a vocabulary, we are *not* proposing how to:

- Define the terms in a vocabulary (e.g. Regex, ErrorMessage)

- Define the meaning or semantics associated with the terms (e.g. Regex should be applied to instance values, if the regex doesn't match an error/exception should be raised with the ErrorMessage).

Clearly however to interoperate two parties must agree on the vocabulary terms available and their meaning. We are however not dictating how that understanding develops. It could be done in many different ways - for example using a Hallway conversation, Word or PDF document, Diagram, or perhaps even an XSD or EDM model.

Who creates vocabularies?

The short answer is 'anyone'.

The more nuanced answer is that there are many candidate vocabularies, from georss to vCard to Display to Validations. Over time, people and companies from the OData ecosystem will start promoting vocabularies they have an interest in, and as is always the case the most useful will flourish.

Where are we?

I think that this proposal is a good place to gather feedback and think about the scenarios it enables. Using this approach you can imagine a world where:

- A Producer exposes a Data Service without using any terms from a useful vocabulary.

- Someone creates an 'annotation' file that attaches terms from the useful vocabulary to the service, which then enables 'smart consumers' to interact with the Data Service with higher-fidelity.

- The Producer learns of this 'annotation' file and embeds the annotations simply by converting all the 'appliesTo' urls (that are currently absolute) into relative urls.
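The last step, turning absolute 'appliesTo' urls into relative ones, is essentially a prefix strip. A minimal Python sketch follows; the function name is hypothetical and the rule of leaving foreign urls untouched is an assumption.

```python
def embed_applies_to(applies_to, service_metadata_root):
    """Make an absolute AppliesTo url relative to the producer's own $metadata.

    Urls that point at some other service are returned unchanged.
    """
    root = service_metadata_root.rstrip("/") + "/"
    if applies_to.startswith(root):
        return applies_to[len(root):]
    return applies_to


relative = embed_applies_to(
    "http://server/service/$metadata/Properties('Namespace.Person.Email')",
    "http://server/service/$metadata",
)
```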

You can also imagine a world where consumers like Tableau, PowerPivot and Sesame allow users to build up their own annotation files in response to gestures*.

*Gestures - you can think of the various mouse clicks, drags, key presses performed by a user as gestures. So for example right clicking on a column and picking from a list of well-known vocabularies could be interpreted as binding the selected vocabulary to the corresponding property definition. These 'interpretations' could easily be grouped and stored in an external annotation file.

Summary

I hope that you, like me, can see the potential power and expressiveness that vocabularies can bring to OData. Vocabularies will allow the OData eco-system to connect more deeply with the web and allow for ever richer and more immersive data consumption scenarios.

I really want to hear your feedback on the approach proposed above. The proposal is more exploratory than anything. It's definitely not set in stone, so tell us what you think.

Some specific questions:

- Do you agree that if we have a reference based annotation model, there is no need to support an inline model?

- What do you think of the idea of restricting annotation to the OData type system?

- Do you like the symmetry between in service (i.e. inside $metadata) and external annotations?

- Do we need to define how you attach vocabularies to data too? For example do you have scenarios where each instance has different annotations?

- Are there any particularly cool scenarios you think this enables?

- What vocabularies would you like to see pushed?

Sounds good to me. There’s an ongoing controversy in the OData mailing list about OData’s purported lack of RESTfulness as the result of use of OData-specific URI formats. I would expect similar objections to Vocabularies.

The Silverlight Show announced on 2/21/2011 a Free Telerik Webinar with Shawn Wildermuth - Consuming OData in Windows Phone 7 on 2/23/2011 at 7:00 to 8:00 PST:

Free Telerik Webinar: Consuming OData in Windows Phone 7

Date/Time: Wednesday, February 23, 2011, 10-11 a.m. EST (check your local time)

Guest Presenter: Shawn Wildermuth

The Windows Phone 7 represents a great application platform for the mobile user but being able to access data can take many forms. One particularly attractive choice for data access is OData (since it is a standard that many organizations are using to publish their data). In this talk, Shawn Wildermuth will show you how to build a Windows Phone 7 application that accesses an OData feed!

Space is limited. Reserve your webinar seat now!

Find more info on the other upcoming free Telerik WP7 webinars.

SilverlightShow is the Media Partner for Telerik's Windows Phone 7 webinars. Follow all webinar news @silverlightshow.

Marcelo Lopez Ruiz answered Chris Lamont Mankowski’s How can I read and write OData calls in a secure way? (not vulnerable to CSRF for example?) Stack Overflow question of 2/11/2011:

Q: What is the most secure way to open an OData read/GET endpoint without exposing it to CSRF attacks like this one?

I haven't looked at the source, but how does the MSFT ODATA library compare to jQuery in this regard?

A: OData was designed to prevent the JSON-hijacking attack described in the link by returning only objects as JSON results, which makes the payload an invalid JavaScript program and as such won't be executed by the browser.

This is really independent of whether you use datajs or jQuery. I haven't looked at the exact result you get from jQuery, but I know datajs will "unwrap" the results so you get a more natural-looking result, without any artificial top-level objects.

In particular, the WCF Data Services implementation for .NET doesn't support JSONP out of the box, although there are a couple of popular simple solutions to add it. At that point, though, you've basically opted into allowing the data to be seen from other domains, so this is something that shouldn't be done for user-sensitive data.
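The wrapping idea in the answer can be sketched in Python. As I understand the OData JSON format of this era, results are wrapped in a top-level object under a "d" property; the helper names below are hypothetical and this is not datajs or WCF Data Services code.

```python
import json


def wrap_for_odata(results):
    """Wrap results in a top-level object, the way OData JSON uses "d"."""
    return json.dumps({"d": results})


def is_top_level_object(payload):
    """True when the JSON text's top-level value is an object, not an array.

    A bare array literal is a valid JavaScript program, so it can be pulled
    in through a script tag; a bare object literal is not.
    """
    return isinstance(json.loads(payload), dict)


def unwrap(payload):
    """Roughly what a client library does to hide the wrapper from callers."""
    return json.loads(payload)["d"]


payload = wrap_for_odata([{"Name": "Ann"}, {"Name": "Bob"}])
rows = unwrap(payload)
```

The caller still sees a plain list after unwrapping, while the bytes on the wire begin with `{` rather than `[`.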

<Return to section navigation list>

Windows Azure AppFabric: Access Control and Service Bus

Rick Garibay posted links to his Building Composite Hybrid App Services with AppFabric presentations on 2/21/2011:

Below are links to my most recent talks on AppFabric including Service Bus (1/12) and bringing it all together with Server AppFabric (2/9).

In the final webcast in my series on AppFabric, I discuss how Windows Server AppFabric extends the core capabilities of IIS and WAS by providing a streamlined on-premise hosting experience for WCF 4 and WF 4 Workflow Services, including elastic scale via distributed caching. I also cover how Windows Server AppFabric can benefit your approach to building and supporting composite application services via enhanced lifetime management, tracking and persistence of long-running workflow services, all while providing a simple, IT Pro-friendly user interface.

The webcast includes a number of demos including the management of WF 4 Workflow Services on-prem with Server AppFabric as well as composing calls between a WCF service hosted in an Azure Web Role with an on-premise service via AppFabric Service Bus to deliver hybrid platform as a service capabilities today.

Click the ‘Register Here’ button for download details for a recording of each webcast.

- January 12: App Fabric + Service Bus (Register Here)

- February 9: Building Composite Application Services with AppFabric (Register Here)

Rick is the General Manager of the Connected Systems Practice at Neudesic

Dominick Baier updated his Thinktecture.IdentityModel CodePlex project on 1/30/2011 (missed when updated):

Over the last years I have worked with several incarnations of what is now called the Windows Identity Foundation (WIF). Throughout that time I continuously built a little helper library that made common tasks easier to accomplish. Now that WIF is near to its release, I put that library (now called Thinktecture.IdentityModel) on Codeplex.

The library includes:

- Extension methods for

  - IClaimsIdentity, IClaimsPrincipal, IPrincipal

  - XmlElement, XElement, XmlReader

  - RSA, GenericXmlSecurityToken

- Extensions to ClaimsAuthorizationManager (inspecting messages, custom principals)

- Logging extensions for SecurityTokenService

- Simple STS (e.g. for REST)

- Helpers for WS-Federation message handling (e.g. as a replacement for the STS WebControl)

- Sample security tokens and security token handlers

  - simple access token with expiration

  - compressed security token

- API and configuration section for easy certificate loading

- Diagnostics helpers

- ASP.NET WebControl for invoking an identity selector (e.g. CardSpace)

Feel free to contact me via the forum when you have questions or find a bug.

<Return to section navigation list>

Windows Azure VM Roles, Virtual Network, Connect, RDP and CDN

Avkash Chauhan provided a workaround for a Windows OS related Issue when you have VM Role and web/Worker Role in same Windows Azure Application in a 2/21/2011 post:

I was recently working with a partner on a Windows Azure project that combined a VM Role and an ASP.NET Web Role in one application, developed with Windows Azure SDK 1.3. The VM Role VHD was deployed successfully to the Azure Portal using CSUPLOAD, and the Windows Azure application was deployed to the cloud as well. After logging in to the ASP.NET Web Role VM over RDP, we found that the OS on the VM was Windows Server 2008 SP2, although the Windows Azure Portal showed Windows Server 2008 R2 Enterprise. Changing the OS setting in the Azure Portal did not help at all: the ASP.NET Web Role VM always ran Windows Server 2008 SP2.

After more investigation the following results were discovered and potential workaround concluded:

Issue:

Today, a service in Windows Azure can only support one Operating System selection for the entire service. When a partner selects his/her specific OS, it applies to all Web and Worker roles in the running service.

VM roles have slightly changed this model by allowing the partner to bring their own operating system and define this directly in the service model. Unfortunately, because of an issue in the way the OS is selected for Web and Worker roles, if a partner service has both a VM Role and a Web/Worker role, it is possible that the partner may NOT be able to change the operating system for the Web/Worker role and always be given the default OS for these specific role instances, which is the most recent version of the Windows Azure OS comparable to Server 2008 SP2. The partner will still be able to select the OS version and OS family through the portal; however, it will not be applied to the role instances. This does NOT impact the VM Role OS supplied by the partner.

This issue is due to the way the platform selects the OS using the service model passed by the partner. It will only occur when a VM Role is listed first in the internal service model manifestation. With this listing, the default OS version is applied to all web and worker roles, which becomes the latest version of the Windows Azure OS version comparable to Server 2008 SP2.

Workaround:

The workaround for this issue is fairly trivial. The internal service model manifestation orders the roles alphabetically by name. Therefore, if a partner has a VM Role and a Web/Worker role in the same service and wants to apply a specific OS, the partner can rename the Web/Worker role so that it sorts alphabetically before the VM Role. For example, the Web/Worker role could be named “AWebRole” and the VM Role could be named “VMRole”; the OS selection system would then work for this partner because AWebRole is listed ahead of VMRole.
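A quick way to check a set of role names against this rule is sketched below in Python. It assumes the platform's ordering behaves like a simple case-insensitive sort by name, which the post does not confirm; the function name is hypothetical.

```python
def os_selection_applies(role_names, vm_role_names):
    """Return True when a Web/Worker role, not a VM Role, sorts first.

    Assumption: the internal service model orders roles with a plain
    case-insensitive alphabetical sort of their names.
    """
    ordered = sorted(role_names, key=str.lower)
    return ordered[0] not in vm_role_names


# Renamed as suggested: the web role sorts ahead of the VM Role.
works = os_selection_applies(["VMRole", "AWebRole"], {"VMRole"})

# A default-style name sorts after "VMRole", so the issue would appear.
broken = os_selection_applies(["VMRole", "WebRole1"], {"VMRole"})
```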

What about the fix:

This issue is known to the Windows Azure team. Because VM Role is still in beta, we can hope for a fix by the time it reaches RTM, but I have nothing conclusive to add.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

BusinessWire reported Dell to Collaborate with Microsoft and Stellaris Health Network to Deliver Innovative Software-as-a-Service Analytics Solution for Community Hospitals in a 2/21/2011 press release:

Dell to deliver hosted, software-as-a-service solution using Microsoft Amalga health intelligence platform

- Dell’s hosted, subscription-based service will be designed to provide community hospitals with an affordable reporting program for operational metrics and add-on applications that target key business needs such as regulatory and accreditation compliance

- Solution will enable comparative and best practice data sharing with other participants

ROUND ROCK, Texas & REDMOND, Wash.--(BUSINESS WIRE)--Dell and Microsoft Corp. will collaborate to deliver an analytics, informatics, Business Intelligence (BI) and performance improvement solution designed specifically to meet the needs of community hospitals through an affordable, subscription-based model.


Delivered by Dell as a hosted online service, the solution combines Microsoft Amalga, an enterprise health intelligence platform, with Dell’s cloud infrastructure and expertise in informatics, analytics and consulting. [Link added.]

The new offering will be developed in collaboration with Stellaris Health Network, a community hospital system that consists of Lawrence Hospital Center, Northern Westchester Hospital, Phelps Memorial Hospital Center and White Plains Hospital. These hospitals will serve as the foundation members in the solution development process.

Community hospitals will have access to targeted, focused views of consolidated patient data from source systems across the hospital, enabling organizational leaders, clinicians and physicians to rapidly gain insights into the administrative, clinical and financial data needed to make ongoing operational decisions. This information will further enable hospitals to provide patients with advancing levels of high quality, evidence-based care. In addition, the solution will provide add-on, business-focused applications.

The initial application (The Quality Indicator System or QIS) also will play a key role in helping community hospitals manage quality indicator reporting requirements as mandated by CMS, the Joint Commission and the new meaningful-use measures now mandated under the American Recovery and Reinvestment Act of 2009. This application is unique to the market in that it will go beyond the CMS-required metrics to deliver advanced quality indicator alerts and prevent metrics from being missed. The QIS will capture data as a patient enters the hospital, determine which quality measures may apply to the patient, and then enable the hospital to track and measure compliance throughout the patient’s stay.

Dell is building upon its existing relationship with Stellaris Health Network by conducting a pilot of the solution at the network’s four hospitals in Westchester, N.Y. The pilot will begin in March and will provide Stellaris hospitals with integrated workflow design, documentation integrated within its information system, and advanced reporting of quality measures.

“As a network of community hospitals, we are excited about the ability to more easily and affordably create an interactive environment where our physicians and other clinicians can obtain quality metrics in real-time,” said Arthur Nizza, CEO, Stellaris Health Network. “Through our demonstration project with Dell and Microsoft, we look forward to leveraging the considerable investment our hospitals have made in improving clinical care and patient outcomes by sharing best practices that will further advance the care provided by community hospitals.”

As the collaborative expands to include new members, additional applications will be developed to solve other commonly identified business issues found in the community hospitals such as solutions for turn-around time delays, care coordination, managing avoidable readmissions, and population based healthcare management for chronic conditions.

“Uniting our companies’ complementary strengths in healthcare software, IT services and enterprise-class server systems, Dell and Microsoft are uniquely positioned to bring to market new modular healthcare solutions aimed squarely at the needs of small and mid-sized hospitals,” said Berk Smith, vice president, Dell Healthcare and Life Sciences Services. “We’re excited to collaborate with Microsoft and Stellaris Health to deliver a set of rich informatics, analytics and reporting applications that are not only easy and cost-effective to adopt, but also are designed to create more value out of existing IT systems.”

“In a highly dynamic healthcare landscape, hospitals of all sizes are challenged by a lack of timely access to health data stored in their enterprise technology systems, which has a direct impact on timely decision-making and ultimately the quality of care,” said Peter Neupert, Corporate Vice President, Microsoft Health Solutions Group. “With Dell and Stellaris, our goal is to offer a set of solutions that make it simple for small and mid-sized hospitals, which typically don’t have extensive IT departments, to readily access and analyze the data they need to identify gaps in care quality and take the right steps to make measurable improvements.”

About Dell

Dell (NASDAQ: DELL) listens to its customers and uses that insight to make technology simpler and create innovative solutions that deliver reliable, long-term value. Learn more at www.dell.com. Dell’s Informatics and Analytics Practice spans hospitals, community based healthcare providers, physician services and payer market segments. In each of these segments, the Dell solutions encompass the entire spectrum of healthcare informatics needs including data acquisition, storage, management, analytics, and reporting. Coupled with Dell's expertise in healthcare consulting is a broad depth of knowledge and experience in technology solutions which have made Dell an award winning Technology Services organization worldwide.

About Microsoft in Health

Microsoft is committed to improving health around the world through software innovation. Over the past 13 years, Microsoft has steadily increased its investments in health with a focus on addressing the challenges of health providers, health and social services organizations, payers, consumers and life sciences companies worldwide. Microsoft closely collaborates with a broad ecosystem of partners and delivers its own powerful health solutions, such as Amalga, HealthVault, and a portfolio of identity and access management technologies acquired from Sentillion Inc. in 2010. Together, Microsoft and its industry partners are working to deliver health solutions for the way people aspire to work and live.

About Microsoft

Founded in 1975, Microsoft (Nasdaq: MSFT) is the worldwide leader in software, services and solutions that help people and businesses realize their full potential.

About Stellaris Health Network

Based in Armonk, NY and founded in 1996, HealthStar Network, Inc. (dba Stellaris Health Network) is the corporate parent of Lawrence Hospital Center (Bronxville, NY), Northern Westchester Hospital (Mount Kisco, NY), Phelps Memorial Hospital Center (Sleepy Hollow, NY), and White Plains Hospital (White Plains, NY). In addition to the network of hospitals, Stellaris provides ambulance and municipal paramedic services to Westchester County, New York through Westchester Emergency Medical Services. With nearly $850 million in combined revenue and 1,100 in-patient beds, Stellaris Hospitals account for over a third of the acute care bed capacity in Westchester County. It is one of the largest area employers, with over 5,000 employees and approximately 1,000 voluntary physicians on staff. The Stellaris Hospitals provide multidisciplinary acute care services, as well as a range of community based services such as hospice, home health, behavioral health and physical rehabilitation. As Stellaris pursues new programs and joint ventures, it will continue to evolve as the region’s leading healthcare system, dedicated to preserving high quality, community-based care for residents of Westchester, Putnam, Rockland, Dutchess, Northern Bronx, and Fairfield Counties.

Dell continues to increase its presence in health information technology (HIT).

Vedaprakash Subramanian, Hongyi Ma, Liqiang Wang, En-Jui Lee and Po Chen posted Azure Use Case Highlights Challenges for HPC Applications in the Cloud on 2/21/2011 to the HPC in the Cloud blog:

HPC is currently making the transition to the cloud computing paradigm. Many HPC users are porting their applications to cloud platforms. The cloud provides some major benefits, including scalability, elasticity, the illusion of infinite resources, hardware virtualization, and a “pay-as-you-go” pricing model. These benefits seem very attractive not only for general business tasks, but also for HPC applications when compared with setting up and managing dedicated clusters. However, how far these benefits pay off in terms of the performance of HPC applications is still an open question.

We recently had the experience of porting an HPC application, Numerical Generation of Synthetic Seismograms, onto Microsoft’s Windows Azure cloud and have generated some opinions to share about some of the challenges ahead for HPC in the cloud.

Numerical generation of synthetic seismogram is an HPC application that generates seismic waves in three dimensional complex geological media by explicitly solving the seismic wave-equation using numerical techniques such as finite-difference, finite-element, and spectral-element methods. The computation of this application is loosely-coupled and the datasets require massive storage. Real-time processing is a critical feature for synthetic seismogram.

When executing such an application on the traditional supercomputers, the submitted jobs often wait for a few minutes or even hours to be scheduled. Although a dedicated computing cluster might be able to make a nearly real-time response, it is not elastic, which means that the response time may vary significantly when the number of service requests changes dramatically.

Given these challenges, and because of its elastic nature, cloud computing seems like an ideal solution for our application: it provides much faster response times and the ability to scale up and down according to the requests.

We have ported our synthetic seismogram application to Microsoft’s Windows Azure. As one of the top competing cloud service providers, Azure provides Platform as a service (PaaS) architecture, where users can manage their applications and the execution environments but do not need to control the underlying infrastructure such as networks, servers, operating system, and storage. This helps the developers focus on the applications rather than manage the cloud infrastructures.

Some useful features that Windows Azure provides for HPC applications include automatic load balancing and checkpointing. Azure divides its storage abstractions into partitions and provides automatic load balancing of partitions across its servers. Azure monitors the usage pattern of the partitions and servers and adjusts the grouping or splitting of workload among the servers.

Checkpointing is implemented using progress tables, which support restarting previously failed jobs. These store the intermediate persistent state of a long-running job and record the progress of each step. When there is a failure, we can look at the progress table and resume from the point of failure. The progress table is useful when a compute node fails and its job is taken over by another compute node.
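The progress-table idea can be sketched as follows. This is an illustration only, not the authors' implementation: the `ProgressTable` class and `run_job` function are hypothetical names, and an in-memory dictionary stands in for the persistent table storage that a real deployment would use.

```python
class ProgressTable:
    """In-memory stand-in for a persistent progress table."""

    def __init__(self):
        self._rows = {}  # job_id -> index of the last completed step

    def record(self, job_id, step_index):
        self._rows[job_id] = step_index

    def next_step(self, job_id):
        # A job with no row yet starts at step 0.
        return self._rows.get(job_id, -1) + 1


def run_job(job_id, steps, table):
    """Run `steps` in order, skipping steps already recorded as done."""
    for index in range(table.next_step(job_id), len(steps)):
        steps[index]()               # may raise; earlier progress stays recorded
        table.record(job_id, index)  # checkpoint after each completed step
```

If a step raises partway through, calling `run_job` again with the same table (for example from another compute node) resumes at the first step that was not recorded as complete.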

Challenges Ahead for HPC in the Cloud

The overall performance of our application on the Azure cloud is good when compared to the clusters in terms of execution time and storage cost. However, there are still many challenges for cloud computing and, specifically, for Windows Azure.

Dynamic scalability - The first and foremost problem with Azure is that its scalability does not meet expectations. In our application, dynamic scalability is a major feature. Dynamic scalability means that the application scales the compute nodes up and down dynamically according to the response time of the user queries. We set the threshold response time to 2 milliseconds for queries. If the response time of a query exceeds the threshold, the application requests an additional compute node to cope with the busy queries. But the allocation of a compute node may take more than 10 minutes. Because of this delay, the newly allocated compute node cannot handle the busy queries in time.
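The effect of the provisioning delay can be illustrated with a small simulation. This is a sketch under stated assumptions: the function name and the sample format are hypothetical, the 2 ms threshold comes from the text, and 600 seconds is used as a lower bound for "more than 10 minutes".

```python
THRESHOLD_SECONDS = 0.002      # 2 ms threshold from the text
PROVISION_DELAY_SECONDS = 600  # assumed lower bound for "more than 10 minutes"


def plan_scaling(samples, threshold=THRESHOLD_SECONDS,
                 delay=PROVISION_DELAY_SECONDS):
    """Return (request_time, ready_time) pairs.

    `samples` is a list of (timestamp_seconds, response_time_seconds).
    A node is requested when a sample exceeds the threshold and no
    earlier request is still pending.
    """
    requests = []
    pending_until = None
    for timestamp, response_time in samples:
        if pending_until is not None and timestamp >= pending_until:
            pending_until = None  # the requested node has come online
        if response_time > threshold and pending_until is None:
            pending_until = timestamp + delay
            requests.append((timestamp, pending_until))
    return requests
```

The gap between each request time and its ready time is the window during which slow queries keep arriving but the extra node is not yet able to serve them.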

- Vedaprakash Subramanian is a master's student in the Department of Computer Science at the University of Wyoming.

- Hongyi Ma is a PhD student in the Department of Computer Science at the University of Wyoming.

- Liqiang Wang is an Assistant Professor in the Department of Computer Science at the University of Wyoming.

- En-Jui Lee is a PhD student in the Department of Geology and Geophysics at the University of Wyoming.

- Po Chen is currently an Assistant Professor in the Department of Geology and Geophysics at the University of Wyoming.

ItPro.co.uk posted on 2/21/2011 a Dot Net Solutions & Windows Azure: Case Study sponsored by Microsoft:

Dot Net Solutions has leveraged the power, flexibility, security and reliability of Microsoft's Windows Azure platform to create cloud based infrastructure solutions for a variety of clients with a host of different needs.

Dot Net Solutions & Windows Azure

Dot Net Solutions is a technology-focussed systems integrator. It’s one of the UK leaders in helping organisations large and small architect and build business solutions for the Cloud. Customers range from venture backed start-ups to global multinationals.

As a boutique developer, Dot Net relies on Microsoft software to help it meet – and exceed – the needs of clients large and small, creating business solutions on time, to specification and to budget. The Windows Azure platform has become an integral part of its business, and the company's experience and expertise with Windows Azure is helping Dot Net win business and build on its success.

"There are real cost savings involved in using Windows Azure over either your internal infrastructure or a traditional hosting company. Push everything to the cloud. It doesn't need to fit within one of the classic cloud computing scenarios. With any application, you're going to be able to get massive cost savings."

Dan Scarfe, Chief Executive, Dot Net Solutions

Cory Fowler (@SyntaxC4) continued his series with Installing PHP on Windows Azure leveraging Full IIS Support: Part 2 on 2/20/2011:

In the last post of this Series, Installing PHP on Windows Azure leveraging Full IIS Support: Part 1, we created a script to launch the Web Platform Installer Command-line tool in Windows Azure in a Command-line Script.

In this post we’ll be looking at creating the Service Definition and Service Configuration files, which describe what our deployment is to consist of to the Fabric Controller running in Windows Azure.

Creating a Windows Azure Service Definition

Unfortunately, there isn’t a magical tool that will create a starting point for our Service Definition file; this is mostly because Microsoft doesn’t know what to provide as a default. Windows Azure is all about developer freedom: you create your application and let Microsoft worry about the infrastructure that it’s running on.

Luckily, Microsoft has documented the Service Definition (.csdef) file on MSDN, so we can use this documentation to guide us through the creation of our Service Definition. Let’s create a file called ‘ServiceDefinition.csdef’ outside of our Deployment folder. We’ll add the following content to the file, and I'll explain a few of the key elements below.

Defining a Windows Azure Service

    <?xml version="1.0" encoding="utf-8"?>
    <ServiceDefinition name="PHPonAzure" xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition">
      <WebRole name="Deployment" vmsize="ExtraSmall" enableNativeCodeExecution="true">
        <Startup>
          <Task commandLine="install-php.cmd" executionContext="elevated" taskType="background" />
        </Startup>
        <Endpoints>
          <InputEndpoint name="defaultHttpEndpoint" protocol="http" port="80"/>
        </Endpoints>
        <Imports>
          <Import moduleName="RemoteAccess"/>
          <Import moduleName="RemoteForwarder"/>
        </Imports>
        <Sites>
          <Site name="PHPApp" physicalDirectory=".\Deployment\Websites\MyPHPApp">
            <Bindings>
              <Binding name="HttpEndpoint" endpointName="defaultHttpEndpoint" />
            </Bindings>
          </Site>
        </Sites>
      </WebRole>
    </ServiceDefinition>

We will be using a Windows Azure WebRole to host our application [remember WebRoles are IIS enabled]; you’ll notice that the first element within our Service Definition is WebRole. Two important pieces of the WebRole element are the vmsize and enableNativeCodeExecution attributes. The vmsize attribute hands off the VM sizing requirements to the Fabric Controller so it can allocate our new WebRole. For those of you familiar with the .NET stack, the enableNativeCodeExecution attribute will allow for FullTrust if set to true, or MediumTrust if set to false [For those of you that aren’t familiar, Here’s a Description of the Trust Levels in ASP.NET]. The PHP Modules for IIS need elevated privileges to run, so we will need to set enableNativeCodeExecution to true.

In Part 1 of this series we created a command-line script that would initialize a PHP installation using WebPI. You’ll notice that under the Startup element we’ve added our script to a list of Task elements, which define the startup tasks to be run on the Role. These scripts will run in the order stated with either limited [Standard User Access] or elevated [Administrator Access] permissions. The taskType determines how the tasks are executed; there are three options: simple, background and foreground. Our script will run in the background, which will allow us to RDP into our instance and check the logs to ensure everything installed properly when we test our deployment.
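To see at a glance which startup tasks a definition declares, the file can be read with a short script. This is a minimal sketch using Python's standard library rather than any Azure tool; the function name is hypothetical, and the embedded XML is a trimmed copy of the Service Definition shown earlier.

```python
import xml.etree.ElementTree as ET

CSDEF_NS = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition"

# Trimmed copy of the Service Definition from this post.
SAMPLE_CSDEF = f"""
<ServiceDefinition name="PHPonAzure" xmlns="{CSDEF_NS}">
  <WebRole name="Deployment" vmsize="ExtraSmall" enableNativeCodeExecution="true">
    <Startup>
      <Task commandLine="install-php.cmd" executionContext="elevated" taskType="background" />
    </Startup>
  </WebRole>
</ServiceDefinition>
"""


def list_startup_tasks(csdef_text):
    """Return (role, commandLine, executionContext, taskType) tuples."""
    root = ET.fromstring(csdef_text.strip())
    ns = {"sd": CSDEF_NS}
    tasks = []
    for role in root.findall("sd:WebRole", ns):
        # Tasks are returned in document order, the order in which they are declared.
        for task in role.findall("sd:Startup/sd:Task", ns):
            tasks.append((role.get("name"), task.get("commandLine"),
                          task.get("executionContext"), task.get("taskType")))
    return tasks
```

Calling `list_startup_tasks(SAMPLE_CSDEF)` returns one tuple for the `install-php.cmd` task, which makes it easy to confirm the execution context and task type before packaging.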

In the Service Definition above we’ve added some additional folders to our deployment, this is where we will be placing our website [in our case, we’re simply going to add an index.php file]. Within the Deployment Folder, add a new folder called Websites, within the new Websites folder, create a folder called MyPHPApp [or whatever you would like it named, be sure to modify the physicalDirectory attribute with the folder name].

Now that our directories have been added, create a new file named index.php within the MyPHPApp folder and add the lines of code below.

    <?php phpinfo(); ?>

Creating a Windows Azure Service Configuration

Now that we have a Service Definition to define the hard-requirements of our Service, we need to create a Service Configuration file to define the soft-requirements of our Service.

Microsoft has provided a way of creating a Service Configuration from our Service Definition to ensure we don’t miss any required elements.

If you intend to work with the Windows Azure tools on a regular basis, I would suggest adding the path to the tools to your system path. You can do this by executing the following script in a console window.

    path=%path%;%ProgramFiles%\Windows Azure SDK\v1.3\bin;

We’re going to be using the CSPack tool to create our Service Configuration file. To generate the Service Configuration we’ll need to open a console window and navigate to our project folder. Then we’ll execute the following command to create our Service Configuration (.cscfg) file.

    cspack ServiceDefinition.csdef /generateConfigurationFile:ServiceConfiguration.cscfg

After you run this command take a look at your project folder, it should look relatively close to this:

You’ll notice that executing CSPack has generated two files. First, it has generated our Service Configuration file, which is what we’re interested in. However, the tool has also gone and compiled our project into a Cloud Service Package (.cspkg) file, which is ready for deployment to Windows Azure [we’ll get back to the Cloud Service Package in the next post in this series]. Let’s take a look at the Configuration file.

    <?xml version="1.0"?>
    <ServiceConfiguration xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:xsd="http://www.w3.org/2001/XMLSchema" serviceName="PHPonAzure" xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration">
      <Role name="Deployment">
        <ConfigurationSettings>
          <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.Enabled" value="" />
          <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.AccountUsername" value="" />
          <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.AccountEncryptedPassword" value="" />
          <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.AccountExpiration" value="" />
          <Setting name="Microsoft.WindowsAzure.Plugins.RemoteForwarder.Enabled" value="" />
        </ConfigurationSettings>
        <Instances count="1" />
        <Certificates>
          <Certificate name="Microsoft.WindowsAzure.Plugins.RemoteAccess.PasswordEncryption" thumbprint="0000000000000000000000000000000000000000" thumbprintAlgorithm="sha1" />
        </Certificates>
      </Role>
    </ServiceConfiguration>

Where did all of this come from? Let’s look at a simple table that matches up how these settings relate to our Service Definition.

For more information on the RemoteAccess and RemoteForwarder check out the series that I did on RDP in a Windows Azure Instance. These posts will also take you through the instructions on how to provide proper values for the RemoteAccess and RemoteForwarder Elements that were Generated by the Import statements in the ServiceDefinition.

- Upload a Certification to an Azure Hosted Service

- Setting up RDP to a Windows Azure Instance: Part 1

- Setting up RDP to a Windows Azure Instance: Part 2

- Connecting to a Windows Azure Instance via RDP
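Because the generated configuration leaves every RemoteAccess and RemoteForwarder value blank, it can help to list the settings that still need values before deploying. The following is a minimal sketch using Python's standard library, not an Azure SDK tool; the function name is hypothetical and the sample XML is a shortened, partly filled-in variant of the generated file.

```python
import xml.etree.ElementTree as ET

CSCFG_NS = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration"

# Shortened variant of the generated file; the "true" value is illustrative.
SAMPLE_CSCFG = f"""
<ServiceConfiguration serviceName="PHPonAzure" xmlns="{CSCFG_NS}">
  <Role name="Deployment">
    <ConfigurationSettings>
      <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.Enabled" value="true" />
      <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.AccountUsername" value="" />
    </ConfigurationSettings>
    <Instances count="1" />
  </Role>
</ServiceConfiguration>
"""


def empty_settings(cscfg_text):
    """Return (role name, setting name) pairs whose value is blank."""
    root = ET.fromstring(cscfg_text.strip())
    ns = {"sc": CSCFG_NS}
    missing = []
    for role in root.findall("sc:Role", ns):
        for setting in role.findall("sc:ConfigurationSettings/sc:Setting", ns):
            if not setting.get("value"):
                missing.append((role.get("name"), setting.get("name")))
    return missing
```

For the sample above, only the `AccountUsername` setting is reported, since it is the one left blank.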

There are two additional attributes that I would recommend adding to the ServiceConfiguration element: osFamily and osVersion.

    osFamily="2" osVersion="*"

These attributes will change the underlying Operating System Image that Windows Azure runs to Windows Server 2008 R2 and set your Role to automatically update to the new image when available.

You can control how many instances of your Roles are deployed by changing the value of the count attribute in the Instances element. For now we’ll leave this value at 1, but keep in mind that Microsoft’s SLA requires 2 instances of your role to be running in order to guarantee 99.95% uptime.
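Both adjustments can also be scripted. The sketch below uses Python's standard library and is an illustration only: the function names are hypothetical, the sample XML is a shortened version of the generated configuration, and the two-instance minimum reflects the SLA note above.

```python
import xml.etree.ElementTree as ET

CSCFG_NS = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration"
ET.register_namespace("", CSCFG_NS)  # keep the default namespace on output

# Shortened version of the generated Service Configuration.
SAMPLE_CSCFG = f"""
<ServiceConfiguration serviceName="PHPonAzure" xmlns="{CSCFG_NS}">
  <Role name="Deployment">
    <Instances count="1" />
  </Role>
</ServiceConfiguration>
"""


def apply_os_settings(cscfg_text, os_family="2", os_version="*"):
    """Return the configuration with osFamily and osVersion set on the root."""
    root = ET.fromstring(cscfg_text.strip())
    root.set("osFamily", os_family)
    root.set("osVersion", os_version)
    return ET.tostring(root, encoding="unicode")


def roles_below_sla(cscfg_text, minimum=2):
    """Return (role name, count) for roles with fewer than `minimum` instances."""
    root = ET.fromstring(cscfg_text.strip())
    ns = {"sc": CSCFG_NS}
    low = []
    for role in root.findall("sc:Role", ns):
        instances = role.find("sc:Instances", ns)
        count = int(instances.get("count")) if instances is not None else 0
        if count < minimum:
            low.append((role.get("name"), count))
    return low
```

Running `roles_below_sla(SAMPLE_CSCFG)` flags the Deployment role, a reminder to raise the count before relying on the SLA.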

Great Resources

- Cloud Cover Show, Episode 37: Multiple Websites in a Web Role

- Cloud Cover Show, Episode 34: Advanced Startup Tasks and Video Encoding

- Cloud Cover Show, Episode 31: Startup Tasks, Elevated Privileges and Classic ASP

Conclusion

In this entry we created both a Service Definition and a Service Configuration. Service Definitions provide information to the Fabric Controller to create non-changeable configurations to a Windows Azure Role. The Service Configuration file will provide additional information to the Fabric Controller to manage aspects of the environment that may change over time. In the next post we will be reviewing the Cloud Service Package and Deploying our Cloud Service into the Windows Azure Environment.

The Microsoft Case Studies team posted a 4-page Learning Content Provider Uses Cloud Platform to Enhance Content Delivery case study about Windows Azure on 2/18/2011:

Point8020 Limited created ShowMe for SharePoint 2010 to deliver embedded, context-sensitive video help and training for Microsoft SharePoint Server 2010. Point8020 created a new menu option and a context-sensitive tab for the SharePoint Server 2010 ribbon, and it built a rich user interface with Microsoft Silverlight browser technology for displaying videos. Using the Windows Azure platform, the company moved the program’s library of video files to the cloud, enabling much simpler deployment of content updates. Point8020 found that the tight interoperation of Microsoft development tools and technologies enabled rapid development and a fast time-to-market. By using a cloud-based solution, Point8020 has also opened the way for new business models.

Partner Profile

Point8020 Limited was founded in 2007 and has its headquarters in Birmingham, England. The company has 20 employees and additional offices in Oxford, England, and Seattle, Washington.

Business Situation

Point8020 wanted to develop context-sensitive video help within Microsoft SharePoint Server 2010 and enable delivery of video content from a cloud platform.

Solution

Point8020 used Microsoft Silverlight to build a rich user interface for viewing videos within SharePoint Server 2010, and it chose Windows Azure as its cloud platform for storing and delivering videos.

Benefits

- Familiar and powerful tools

- Simplified deployment and updates

- A trusted technology provider

Software and Services

- Windows Azure

- Microsoft SharePoint Server 2010

- Microsoft Visual Studio 2010

- Microsoft Silverlight

- Windows Azure Platform

- Microsoft SQL Azure

- Bing Technologies

- Microsoft ASP.NET

Steven Ramirez [pictured below] continued his interview of Mike Olsson with a Microsoft IT and the Road to Azure—Part 2 post to The Human Enterprise MSDN Blog on 2/18/2011:

This is the second of a two-part interview with Mike Olsson, Senior Director in Microsoft IT. In Part 1, we discuss how Microsoft IT went “all in,” what they learned along the way and how to decide which applications to migrate.

Steven Ramirez: What is the biggest challenge for developers when moving applications to the cloud?

Mike Olsson: The very biggest challenge to begin with was conceptual—what is it and how does it differ? In the Auction Tool scenario, that was the first hurdle. The architecture and the nature of what you’re doing is substantially different. The cloud is different from a server that I can touch and feel.

SR: And provisioning is different.

MO: Provisioning is different and the processes are different. How do we apply controls? Typically there are controls that are provided by the fact that you need to spend $100,000 on servers. Those controls go away. We need different controls in place.

In other areas, we’re using Visual Studio and .NET so, at the architecture and design level, it’s very different. But at the programmer level, it is substantially the same. So you can at least sit down and know that you’re dealing with tools that you understand and apply yourself to the architectural differences.

There are other differences. I can scale individual pieces of my application depending on demand. The user interface might need more processing power when my app is popular or at the end of the month and my backend jobs might need more processing power overnight. I can scale those independently, which means that there’s a tighter relationship between what the developer puts together and what the Operations guys have to control.

SR: What about training?

MO: Since April last year we have trained around fourteen hundred people in an organization of four thousand. The training for us has been absolutely critical. I think we are at a point now where a large group of people can write an Azure application. Our next step will be to get them to consistently write a good Azure application. The next level of training will depend on the processes and procedures that are put in place. Because we now need to think about how we do source control, how we do deployment, how we deal with permissions.

SR: What are some other challenges?

MO: One of the things that we’ve had to spend a little time on is, getting people to understand that there are no dev boxes in the corner. The environment is ubiquitous and the delineation between dev, test and production is not physical. That can be an interesting discussion. The flip side is, when you move from dev to test to production, you know that every single environment is absolutely identical. And for developers that’s a huge boon.

SR: How does using Azure affect the design of future applications in your opinion?

MO: Designing a new Azure application is much easier than moving an existing one. If you have the environment in mind—you’ve been trained on it—now you can design it with the scalability, the availability and with the bandwidth considerations. You can build a much better app.

SR: And those were the things we always wanted as developers, right? We always wanted the scale, the availability and the bandwidth. Now we’re in a place, it sounds like, where we can actually have those things.

MO: We can have it. Right now we are still thinking about new applications. We have examples of good design but they’re still relatively inside-the-box. I don’t think we’ve seen examples of what will happen when you get a smart developer who suddenly has infinite scalability and enormous flexibility in how he designs his application.

For new applications, the world is your oyster. You really have the flexibility to design the app the way you always wanted to.

SR: One of the things that IT is most concerned with—and has made huge investments in—is Security. Can you talk a little about what you’ve found in moving to the cloud?

MO: The first comment I’ll make is, from a physical security perspective, the folks who run the data centers know how to do that. The platform is secure.

Security is incredibly hard and best left to the professionals. We typically say to people in the class we run that, if you do it right, somewhere in the region of half your application should be dealing with security. The basic rule is, get the professionals involved and listen to them.

SR: And it sounds like this environment lends itself to security, right? In the past it was always, Well, I’ll write my app then we’ll put a layer of security on afterward. It sounds like with Azure, it’s security from the get-go.

MO: Yes. I think there is a perception that, It’s the cloud therefore it’s insecure. But it doesn’t have to be. As long as you go into this thinking about security first, the platform will be every bit as secure as what we have on-premises—maybe more so because it’s uniform, it’s patched, it’s monitored.

SR: In closing, what are three things that customers can do to get started?

MO: The first thing is, get to a definition of the cloud. Understand the Azure environment and what it can do—and it’s relatively easy to do. There’s a lot of information out there.

Second, do some simple segmentation of your application portfolio and understand where to start—the kind of segmentation we did. Business risk on one axis, technical complexity on the other.

And the third thing is, get your feet wet. It’s incredibly cheap and easy to start doing this. The barriers to entry are almost non-existent. And most of our customers already have the basic technical skills in place.

SR: Mike, thanks a lot. I really appreciate it.

MO: My pleasure.

Windows Azure Resources

- “Microsoft IT Developing Applications with Microsoft Azure” video

- “Microsoft IT’s Lessons Learned: Moving Applications to the Cloud” video

- “Introducing the Windows Azure Platform” white paper

- “Windows Azure Security Overview” white paper

- “Cloud Economics” white paper

The opinions and views expressed in this blog are those of the author and do not necessarily state or reflect those of Microsoft.

The Microsoft Case Studies team posted Marketing Firm [Definition 6] Quickly Meets Customer Needs with Content Management in the Cloud on 2/17/2011:

Definition 6 is an interactive marketing agency that focuses on creating content-rich, engaging websites. The company uses the Umbraco content management system and wanted to implement a cloud-based hosting option to complement its traditional hosting model, but struggled to find a solution that would integrate with the content management system. Umbraco also recognized the need for a cloud-based solution and developed an accelerator that enables companies to deploy websites that are managed in the Umbraco content management system to the Windows Azure platform. By using the accelerator, Definition 6 migrated Cox Enterprises’ Cox Conserves Heroes website to the Windows Azure platform in two weeks. Definition 6 enjoyed a simple development and deployment process; can now offer customers a cost-effective, cloud-based solution; and is prepared for future business demands.

Organization Size: 150 employees

Organization Profile: Definition 6 is an interactive marketing agency that, in addition to traditional marketing, creates interactive web experiences for several national brands, including Cox Communications.

Business Situation: The marketing agency wanted to offer a cloud-based hosting option for its customers’ websites, but needed one that would integrate with the Umbraco content management system.

Solution: By using an accelerator developed by Umbraco, Definition 6 migrated one of the Cox Enterprises websites to the Windows Azure platform in only two weeks.

Benefits:

- Simplified development and deployment

- Implemented cost-effective, quick hosting option

- Prepared for future business needs

Software and Services:

- Windows Azure

- Microsoft Visual Studio 2008 Professional Edition

- Microsoft SQL Azure

- Microsoft .NET Framework 3.5

- Microsoft .NET Framework 4

Visual Studio LightSwitch

Edu Lorenzo explained Creating a workflow with Visual Studio Lightswitch in a 2/21/2011 post:

No, not a SharePoint workflow. That is a totally different task and cannot be done with LS.

The workflow I am referring to is how certain data needs to go from one screen to another.

For all those who have tried VS-LS, it is really a pretty cool tool to create screens for data entry and searching. But what if I want to establish some form of workflow? Maybe creating a new patient and then going straight to creating an appointment for that new patient? Yes, that is what this installment of my LightSwitch blog will show.

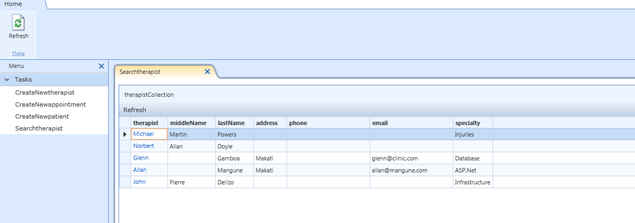

So I start off with a small application that I made for this demo. It is a simple contacts management for a small clinic (am planning to donate this app to my cousin Andrew in Canada who owns a physical therapy and rehabilitation clinic). Here is a screenshot of what I have:

It’s basically your off the shelf application that LightSwitch gives you once you add screens. I just basically have Therapist, Patient and Appointments tables set up, popped out a few screens and there it is.

Although Visual Studio Lightswitch can create a “CreateNewPatient” screen for me (which I have already done), I want to extend this application by adding a workflow. What I want is, after the secretary creates a new Patient, the system should redirect the secretary to create an appointment for that Patient.

Here is how it’s done:

First let’s take a look at the screen for adding a new Patient during design time..

I highlighted two areas; the one on the left is representative of the viewmodel that LightSwitch creates. It shows that we have a “patientProperty” object and a list of properties for this object. In the area on the right, I highlighted the controls that LightSwitch chose for you. What I want to highlight here is that LightSwitch will do its best to choose the proper control for the proper data type. With that in mind, users should be aware that for VS-LS to consistently provide functional UI, you must really design your tables correctly: choose the proper data types, define the correct relationships and all.

And to illustrate that further, here is a screenshot of the CreateNewAppointment screen that VS-LS created for me based on the datatypes that I chose and the relationships that I defined:

VS-LS chooses an appropriate control to hold/represent the data that you need. A textbox for data entry, Date Picker for the appointmentDate and DateTimePicker for startTime and endTime. Also, you might notice that for the patient and therapist entries, LS chose to use a Modal Window Picker because I have already defined the relationships for these tables.

Here is a screenshot of the relationship definition and see how clearly a relationship can be defined:

Okay. Time to get to the task at hand, adding a “workflow”.

With VS-LS, your screens will have the objects that it created for you as the datasource. It’s pretty much like binding the whole screen to a datasource object and binding each control to a property of your object.

So since what we want is for the application to go straight to the Create New Appointment screen right after a new Patient is created, we will need to do two things:

- Overload the constructor of the create new appointment screen so that it will accept a Patient object and load the properties immediately (so to speak)

- Save the patient data upon creation then call and open the Create New Appointment screen while passing the new patient object.

So let’s modify the Create New Appointment screen to accept an optional parameter on load. Let’s take a look at the default code that LightSwitch loads on a screen by clicking the “Write Code” button while designing the screen..

        public partial class CreateNewappointment
        {
            partial void CreateNewappointment_BeforeDataInitialize()
            {
                // Write your code here.
                this.appointmentProperty = new appointment();
            }

            partial void CreateNewappointment_Saved()
            {
                // Write your code here.
                this.Close(false);
                Application.Current.ShowDefaultScreen(this.appointmentProperty);
            }
        }
    }

There is something worth taking notice of here. There are two events that you will see, BeforeDataInitialize and Saved. What you will see here is that LightSwitch’s code acts on data, rather than the screen/form. The code we have is not UI related code. This further illustrates that VS-LS follows an MVVM pattern. I just want to highlight that although it looks like LightSwitch is just a big wizard, it still follows a tried and tested pattern.

So what do I do with this code? I comment out the code for the Saved() function so I can replace it.

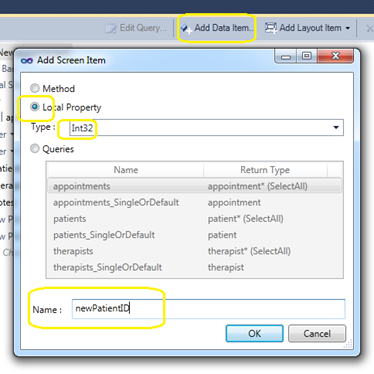

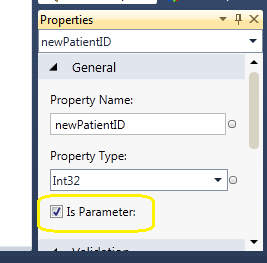

Next I add a new dataItem to my screen that will be my optional parameter to accept the PatientID. This is done by clicking “Add Data Item” and choosing the proper datatype for the parameter we will receive, give it a name and save.

Then I declare that as a Parameter on the properties explorer by checking the Is Parameter checkbox.

Okay, so now we have an optional parameter. Next thing I need to do is to go to the Loaded event of the screen then add some code.

    bool hasPatient = false;

    partial void CreateNewappointment_Loaded()
    {
        if (!hasPatient)
        {
            this.appointmentProperty.patient = this.DataWorkspace.ApplicationData.patients_Single(newPatientID);
            hasPatient = true;
        }
    }

Let us dissect these few lines of code:

bool hasPatient = false; ← this line of code declares a bool variable called hasPatient.. DUH!!!!

This is a much nicer line of code:

this.appointmentProperty.patient = this.DataWorkspace.ApplicationData.patients_Single(newPatientID);

here you will see a lot of things but the most interesting part (at least for me) is the DataWorkspace so I’ll try to expand on that.

The DataWorkspace is the place where LS maintains the “state” of your data. While running your LightSwitch app, you will notice that the tab displays an asterisk while you are either editing or adding a new row. This tells the user that the data displayed is currently in a “dirty” state and there have been changes. All this happens without you writing any code for that.

Finally, this line basically issues a “select.. where” statement to the database, pretty much like how EF (Entity Framework) does it. I haven’t tried it yet but I think there is a good chance that if one runs profilers, we will see a select statement issued somewhere :p

Now it’s time to tell the CreateNewPatient screen to get the newly saved PatientID and pass it to the CreateNewAppointment screen.

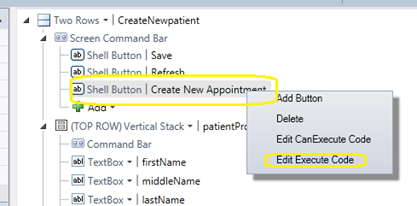

To do that, I’ll add a new button to the CreateNewPatient screen and add some code to open the CreateNewAppointment screen

And as I write the code..

Notice two things:

- I used the “Application” namespace, which is pretty familiar already and..

- The input parameter newPatientID is already showing in the intellisense, which tells us that what we did earlier was correct

So we now supply the newPatientID by getting it from the PatientProperty

Then I removed the code for the Saved method because it closes the form.

Then when I run this and add a new “familiar” patient and his health condition information:

We now see the button I added to open the CreateNewAppointment screen.. and when I click, the application opens the Create new Appointment screen with the Patient’s name loaded automagically!

I’ll still polish this up by adding validation and controlling the flow.

And that’s how you add a “workflow” in a Visual Studio Lightswitch application.

Windows Azure Infrastructure

TechNet has a new ITInsights blog that features Cloud Conversations posts with a Cloud Power theme, which replaces the original We’re All In slogan:

Microsoft commissioned David Linthicum (DavidLinthicum@Microsoft), a Forbes Magazine contributor, to write ROI of the Cloud, Part 1: PaaS as a ForbesAdVoice item:

Here’s the full text of David’s article:

This article is commissioned by Microsoft Corp. The views expressed are the author’s own.

Platform-as-a-Service, or PaaS, provides enterprises with the ability to build, test, and deploy applications out of the cloud without having to invest in a hardware and software infrastructure.

One of the latest arrivals in the world of cloud computing, PaaS is way behind IaaS and SaaS in terms of adoption, but has the largest potential to change the way we build and deploy applications going forward. Indeed, my colleague Eric Knorr recently wrote that “PaaS may hold the greatest promise of any aspect of the cloud.” This In-Stat report indicates that PaaS spending will increase 113 percent to about $460 million between 2010 and 2014.

The big guys in this space include Windows Azure (Microsoft), Google App Engine, and Force.com. More recently, Amazon joined the PaaS game with its new “Elastic Beanstalk” offering. The existing SaaS and IaaS players, such as Salesforce.com and Amazon, respectively, see PaaS as a growth area unto itself, as well as a way to upsell their existing SaaS and IaaS services.

However, the business case around leveraging PaaS is largely dependent upon who you are, and how you approach application development. The first rule of leveraging this kind of technology is to determine the true ROI, which in the world of development will be defined by cost savings and efficiencies gained over and above the traditional models.

There are three core things to consider around PaaS and ROI:

- Application development

- Application testing

- Application deployment and management

Application development costs, mostly hardware and software, are typically significant. Back in my CTO days these costs were 10 to 20 percent of my overall R&D costs. This has not changed much today. However, while the potential to reduce costs drives the movement to PaaS, first you need to determine if a PaaS provider actually has a drop-in replacement for your existing application development platform. In most cases there are compromises. You also need to consider the time it will take to migrate over to a PaaS provider, and the fact that you’ll still be supporting your traditional local platform for some time.

Thus the ROI is the ongoing cost trajectory required to support the traditional local application development platform, and the potential savings from the use of PaaS. You need to consider ROI using a 5-year horizon, and make sure to model in training and migration costs as well, which can be substantial if there are significant differences in programming language support or in supporting subsystems such as database processing.

While your mileage may vary, in my experience most are finding 30 to 40 percent savings, if indeed a PaaS provider is a true fit.
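To make the 5-year comparison concrete, here is a minimal sketch in Python. Every dollar figure is a hypothetical placeholder rather than a number from the article, the function names are invented for illustration, and the 35 percent run-rate saving is simply the midpoint of the 30 to 40 percent range quoted above. The sketch also assumes that migration and training are one-time costs paid up front and that the local platform keeps running in full for the first year, which stands in for "for some time".

```python
def five_year_costs(annual_local_cost, paas_saving_rate, migration_cost,
                    training_cost, overlap_years=1, horizon=5):
    """Return (local_total, paas_total) over the horizon.

    Assumptions (hypothetical, for illustration only):
    - the local platform costs the same every year;
    - PaaS costs annual_local_cost * (1 - paas_saving_rate) per year;
    - migration and training are one-time costs;
    - during overlap_years the local platform is still paid for in full.
    """
    paas_annual = annual_local_cost * (1 - paas_saving_rate)
    local_total = annual_local_cost * horizon
    paas_total = migration_cost + training_cost
    for year in range(1, horizon + 1):
        paas_total += paas_annual
        if year <= overlap_years:
            paas_total += annual_local_cost  # old platform still running
    return local_total, paas_total


def roi(local_total, paas_total):
    """Net saving as a fraction of what the PaaS route cost."""
    return (local_total - paas_total) / paas_total


local, paas = five_year_costs(
    annual_local_cost=200_000,   # placeholder
    paas_saving_rate=0.35,       # midpoint of the quoted 30 to 40 percent
    migration_cost=60_000,       # placeholder
    training_cost=20_000,        # placeholder
)
print(f"local: ${local:,.0f}  PaaS: ${paas:,.0f}  ROI: {roi(local, paas):.1%}")
```

With these placeholder inputs the local route totals $1,000,000 and the PaaS route $930,000, so a 35 percent run-rate saving nets out to roughly 7 percent over five years once the one-time costs and a year of overlap are counted. That gap is why the training, migration and parallel-support costs belong in the model.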

Application testing is really the killer application for PaaS, and it comes with the largest ROI. Why? There is a huge amount of cost associated with keeping extra hardware and software around just for testing purposes. The ability to leverage cloud-delivered platforms that you rent means your costs can drop significantly. Again, consider this using a 5-year horizon. Compare the cost of keeping and maintaining hardware and software for test platforms locally, and the cost of leveraging PaaS for testing. The savings can be as much as 70 to 90 percent in many cases, in my most recent experience. Again, this assumes that you’ll find a PaaS provider that’s close to a drop-in fit for your testing environment.

Finally, application deployment and management on a PaaS provider typically comes with handy features such as auto provisioning and auto scaling. This means the PaaS provider will auto-magically add server instances as needed to support application processing, database processing, and storage. No more waves of hardware and software procurement cycles, or nights bolting servers into racks in the data center. Thus the ROI is second highest after application testing, and can total as much as 50 to 60 percent reductions in costs.
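The three savings ranges quoted in this article can be lined up side by side. The Python sketch below applies each quoted range to a 5-year baseline spend to give a low and a high estimate per area. The percentage ranges come from the text above; the baseline budgets and all names are hypothetical placeholders, and the sketch assumes a provider that is a close fit in every area.

```python
# Savings ranges quoted in the article, as fractions of the traditional cost.
QUOTED_SAVINGS = {
    "development": (0.30, 0.40),
    "testing": (0.70, 0.90),
    "deployment and management": (0.50, 0.60),
}

# Hypothetical 5-year baseline spend per area (placeholder figures).
BASELINE = {
    "development": 1_000_000,
    "testing": 400_000,
    "deployment and management": 600_000,
}


def savings_range(costs, quoted):
    """Return {area: (low, high)} savings in whole currency units."""
    return {
        area: (round(cost * quoted[area][0]), round(cost * quoted[area][1]))
        for area, cost in costs.items()
    }


estimates = savings_range(BASELINE, QUOTED_SAVINGS)
for area, (low, high) in estimates.items():
    print(f"{area}: ${low:,} to ${high:,}")

total_low = sum(low for low, _ in estimates.values())
total_high = sum(high for _, high in estimates.values())
print(f"total: ${total_low:,} to ${total_high:,}")
```

Note that the area with the highest percentage saving is not necessarily the one with the largest absolute saving; that depends on how the baseline spend is distributed.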