Windows Azure and Cloud Computing Posts for 2/24/2011+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database and Reporting

No significant articles today.

<Return to section navigation list>

Marketplace DataMarket and OData

Elisa Flasko (@eflasko) described the DataMarket Service Update 1 & February Content Update in a 2/23/2011 post to the Windows Azure Marketplace DataMarket Blog:

Announcing the release of DataMarket Service Update 1 & the February Content Update. Service Update 1 introduces a number of improvements for our Content Providers, including reporting and offer wind-down features, while the February Content Update brings with it a number of exciting new public and commercial offerings.

Take a look at some of the great new data we have:

Boundary Solutions, Inc.

- ParcelAtlas BROWSE Parcel Image Tile Service - National Parcel Layer composed of 80 million parcel boundary polygons across nearly 1,000 counties, suitable for incorporating a national parcel layer into existing geospatial data models. Operations are restricted to display of parcel boundaries as graphic tiles.

- ParcelAtlas LOCATE - National Parcel Layer composed of parcel boundary polygons supported by situs address, property characteristics and owner information. Three different methods for finding an address expedite retrieving and displaying a desired parcel and its assigned attributes. Surrounding parcels are graphic tile display only.

- ParcelAtlas REPORTS - National Parcel Layer composed of parcel boundary polygons supported by situs address, property characteristics and owner information.

Government of the United Kingdom

- UK Foreign and Commonwealth Office Travel Advisory Service - Travel advice and tips for British travellers on staying safe abroad and what help the FCO can provide if something goes wrong.

Gregg London Consulting

- U.P.C. Data for Spirits - Point of Sale - U.P.C. Data for Spirits includes Basic Product Information for Items in the following Categories: Beer, Wine, Liquor, and Mixers.

- U.P.C. Data - Full Food - U.P.C. Data for Food includes Full Product Information and Attributes.

- U.P.C. Data for Tobacco - Extended - U.P.C. Data for Tobacco includes Basic plus Extended Product Information for the following Categories: Cigarettes, Cigars, Other Tobacco Products, and Roll Your Own.

- U.P.C. Data for Spirits - Extended - U.P.C. Data for Spirits includes Basic plus Extended Product Information for Items in the following Categories: Beer, Wine, Liquor, and Mixers.

- U.P.C. Data for Tobacco - Point of Sale - U.P.C. Data for Tobacco includes Basic Product Information for the following Categories: Cigarettes, Cigars, Other Tobacco Products, and Roll Your Own.

- U.P.C. Data for Vending Machines - U.P.C. Data for Food, for the Vending Machine Industry, to maintain compliance with Section 2572 of Health Care Bill H.R. 3962.

- U.P.C. Data - Ingredients for Food - U.P.C. Data for Food includes Full Ingredient Information.

- U.P.C. Data - Nutrition for Food - U.P.C. Data for Food includes Full Nutrition Information.

StockViz

- StockViz Capital Market Analytics for India - StockViz brings the power of data & technology to the individual investor. It delivers ground-breaking financial visualization, analysis and research for the Indian investor community. The StockViz dataset is being made available through Microsoft DataMarket to allow users to access the underlying data and analytics through multiple interfaces.

United Nations

- UNSD Demographic Statistics - United Nations Statistics Division - The United Nations Demographic Yearbook collects, compiles and disseminates official statistics on a wide range of topics such as population size and composition, births, deaths, marriage and divorce. Data have been collected annually from national statistical authorities since 1948.

As we post more datasets to the site we will be sure to update you here on our blog… and be sure to check the site regularly to learn more about the latest data offerings.

I complained earlier that Gregg London’s content had been advertised for several months as a coming attraction. I’m glad to see it arrive, although it’s a bit pricey for experimentation.

Wesy reported New Video: Windows Azure Market Place Data Market with Allan Naim in a 2/23/2011 post to the US ISV Evangelism blog:

Allan Naim is an Architect Evangelist focused on the Windows Azure platform in Silicon Valley. Here’s a great overview video that he created.

The Data Market is a service that provides a single consistent marketplace and delivery channel for high quality information as a cloud service. Content partners who collect data can publish it on Data Market to increase its discoverability and achieve global reach with high availability. Data from databases, image files, reports and real-time feeds is provided in a consistent manner through internet standards. Users can easily discover, explore, subscribe and consume data from both trusted public domains and from premium commercial providers.

Developers can use data feeds to create rich solutions that provide up-to-date, relevant information in the right context for end users. Developers can use built-in support for consuming Data Market feeds within Visual Studio or from any Web development tool that supports HTTP.
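
Because the feeds are reachable over plain HTTP, a client in any language can request them with an ordinary URL. The following is a minimal sketch of building such a request with the standard OData `$filter` and `$top` query options. The service root, the `Countries` entity set and the `build_query_url` helper are hypothetical names chosen for illustration, and authentication is left out entirely.

```python
from urllib.parse import quote

# Hypothetical service root and entity set, for illustration only.
SERVICE_ROOT = "https://example.invalid/datamarket/SampleFeed"


def build_query_url(entity_set, filter_expr=None, top=None):
    """Build an OData query URL with optional $filter and $top options."""
    options = []
    if filter_expr is not None:
        # Percent-encode the expression but keep quotes readable for OData literals.
        options.append("$filter=" + quote(filter_expr, safe="'"))
    if top is not None:
        options.append("$top=" + str(int(top)))
    url = SERVICE_ROOT + "/" + entity_set
    if options:
        url += "?" + "&".join(options)
    return url


url = build_query_url("Countries", filter_expr="Name eq 'India'", top=5)
print(url)
```

The resulting string can be handed to any HTTP client; a real feed would also require the subscriber's credentials.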

If you’re new to the Windows Azure platform – get a 30 day Free Pass by using this Promo Code DPWE01

<Return to section navigation list>

Windows Azure AppFabric: Access Control and Service Bus

Itai Raz published Introduction to Windows Azure AppFabric blog posts series – Part 3: The Middleware Services Continued on 2/23/2011:

In the first post we discussed the 3 main concepts that make up Windows Azure AppFabric: Middleware Services, Building Composite Applications, and Scale-out Application Infrastructure.

In the second post we started discussing the Middleware Services that are already available in production: Service Bus and Access Control service.

Focus on Caching

In this post we will discuss the Caching service. The Composite App service will be discussed in the next post as part of Building Composite Applications, and the Integration service will be discussed in a future post as well.

As noted in the previous post, the Caching service is available as a Community Technology Preview (CTP), and the Integration and Composite App services will also be released in CTPs later in 2011.

Caching

The Caching service is a distributed, in-memory, application cache that can be used to accelerate the performance of Windows Azure and SQL Azure applications. It provides applications with high-speed access and scalability to application data.

For instance, if you are developing a web application, you would use the Caching service to store frequently accessed data in memory, closer to the application, so you don't have to pay the penalty of fetching the data from the data store. Examples of such data are a list of countries, or a static image that is part of your webpage.
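
The idea of paying the fetch penalty only once can be sketched as a cache-aside lookup. This is an illustration of the general pattern rather than the Caching service API: `SlowDataStore` and `CacheAside` are hypothetical classes, and a plain dictionary stands in for the remote cache.

```python
class SlowDataStore:
    """Stand-in for a database; counts how often it is actually queried."""

    def __init__(self, rows):
        self._rows = dict(rows)
        self.reads = 0

    def fetch(self, key):
        self.reads += 1
        return self._rows[key]


class CacheAside:
    """Check the cache first and fall back to the data store on a miss."""

    def __init__(self, store):
        self._store = store
        self._cache = {}  # assumption: a dict stands in for the remote cache

    def get(self, key):
        if key in self._cache:
            return self._cache[key]
        value = self._store.fetch(key)  # cache miss: pay the fetch penalty once
        self._cache[key] = value
        return value


store = SlowDataStore({"countries": ["France", "India", "Japan"]})
lookup = CacheAside(store)
first = lookup.get("countries")
second = lookup.get("countries")
print(store.reads)  # the store was queried only once
```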

Another very useful scenario for the Caching service is ASP.NET session state. Session state is data that is kept during a user's specific session with your website, for example a customer's shopping cart. It is useful to keep this data in memory until the customer confirms the purchase. The ASP.NET application can be configured to store session state in the Caching service without changing a single line of code; you only need to make a few minor changes in the configuration file.

When running a web application using Windows Azure Web Roles, a single user might be served by different Web Role instances as a result of load balancing, or because one of the instances stops working. Because the user's session data is kept in a central cache, it remains available in such cases, which lets you provide a consistent experience and highly available session data.
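
A small model of that behavior, assuming two role instances that share one session store: whichever instance handles the next request sees the same shopping cart. `CentralSessionStore` and `WebRoleInstance` are hypothetical names, and the in-process dictionary only stands in for the central cache.

```python
class CentralSessionStore:
    """Stand-in for the shared cache that holds session state."""

    def __init__(self):
        self._sessions = {}

    def load(self, session_id):
        # Create an empty session on first use, then always return the same one.
        return self._sessions.setdefault(session_id, {"cart": []})


class WebRoleInstance:
    """One load-balanced instance; it keeps no session data of its own."""

    def __init__(self, name, sessions):
        self.name = name
        self._sessions = sessions

    def add_to_cart(self, session_id, item):
        self._sessions.load(session_id)["cart"].append(item)

    def view_cart(self, session_id):
        return list(self._sessions.load(session_id)["cart"])


shared = CentralSessionStore()
role_a = WebRoleInstance("web-role-0", shared)
role_b = WebRoleInstance("web-role-1", shared)

role_a.add_to_cart("session-42", "book")         # first request lands on one instance
cart_seen_by_b = role_b.view_cart("session-42")  # next request lands on another
print(cart_seen_by_b)
```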

Another great feature of AppFabric Caching is the "Local Cache". When using this feature you can also keep cached data in the available memory in your web or worker roles, in addition to the central cache. This way the cached data is even closer to your application.
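
The local cache can be pictured as a second, smaller lookup level in front of the central cache. The sketch below is only a model of that idea, with a hypothetical `TwoLevelCache` class and a dictionary in place of the central cache. It ignores expiry, so a local copy here could lag behind the central one.

```python
class TwoLevelCache:
    """Look in role-local memory first, then in the central cache."""

    def __init__(self, central):
        self._central = central  # assumption: a dict stands in for the central cache
        self._local = {}
        self.central_reads = 0

    def get(self, key):
        if key in self._local:
            return self._local[key]
        self.central_reads += 1  # only reached when the local level misses
        value = self._central[key]
        self._local[key] = value
        return value


central_cache = {"home-banner": "banner.png"}
cache = TwoLevelCache(central_cache)
values = [cache.get("home-banner") for _ in range(3)]
print(cache.central_reads)
```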

The image below illustrates how the Caching service keeps data in-memory closer to the application and accelerates the application's performance by saving the need to fetch data every time from the data store:

The Caching service delivers all these great capabilities while providing inherent elastic scale, low latency, and high throughput. In addition, it is very easy to provision, configure and administer.

As noted earlier, the Caching service is already available for you to explore for free as a CTP in our Labs/Preview environment. We recently released an update to the service that added more cache size options, and the ability to dynamically change the cache size. More details can be found here.

The service will be released as a production service with full SLA in a few months.

This service is based on the Windows Server AppFabric Caching technology, which is a proven, on-premises technology available on top of Windows Server. If you are already using the on-premises version of the cache as part of your application, you should be able to use the Caching service in Azure AppFabric with only minor changes to your application code, if at all. Most importantly, as in all other Windows Azure AppFabric services, in the cloud you don't have to install, update, and manage the service; all you need to do is provision, configure, and use. You should note that there are some on-premises features in Windows Server AppFabric Caching that are not available in Windows Azure AppFabric Caching, but we will gradually add these features in each release of the service. For more information, see the Caching Programming Guide on MSDN.

Using the same technology both on-premises and in the cloud is one of the basic promises in AppFabric that addresses the challenges faced by developers discussed in the first post. More on this will come in future posts...

For a more in-depth and technical overview of the Caching service, please use the following resources:

- Video: What is AppFabric Caching

- PDC 10 recorded session: Introduction to the AppFabric Cache Service | Speaker: Wade Wegner

- Video: Windows Azure AppFabric Caching - Interview with Karandeep Anand

- Blog post: Introduction to Windows Azure AppFabric Caching CTP

- Wade Wegner's blog

- Caching Programming Guide on MSDN

Basically, any content related to how to use Windows Server AppFabric Caching will be relevant as well, except for content on how to install, configure, or manage, which are already taken care of by the platform.

As you can see from this post, the Caching service, like the Service Bus and Access Control services, makes the developer's life a lot easier by providing an out-of-the-box solution to complicated problems that developers are faced with when building applications.

As a reminder, you can start using our CTP services in our LABS/Preview environment at: https://portal.appfabriclabs.com/. Just sign up and get started.

Other places to learn more on Windows Azure AppFabric are:

- Windows Azure AppFabric website and specifically the Popular Videos under the Featured Content section.

- Windows Azure AppFabric developers MSDN website

Be sure to start enjoying the benefits of Windows Azure AppFabric with our free trial offer. Just click on the image below and start using it already today!

Eugenio Pace (@eugenio_pace) continued his series with Web Single Sign Out–Part II on 2/24/2011:

Following up on the previous post, there were 2 questions:

Where do these green check images come from? They are nowhere in a-Order or in a-Expense… you could spend hours looking for the PNG, JPG or GIF and you would never find it, because it is very well concealed. Can you guess where it comes from?

I was referring to the green checks displayed here:

The src for these is a rather cryptic src=http://localhost/a-Order/?wa=wsignoutcleanup1.0

And the answer is: it’s coming from within WIF (the FAM, more specifically). If you explore the FAM with Reflector you will see a byte array embedded in the code. That byte array is the GIF for the green check. Exercise for the reader: is this the only behaviour? Can the FAM do something else? Under which circumstances?
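
The mechanism can be sketched as a request handler that clears the local session and answers with image bytes held in the code itself. This is not WIF's implementation: `handle_request` is a hypothetical function, the session is a plain dictionary, and the bytes are a minimal 1x1 placeholder GIF rather than the green check that WIF embeds. The `wa` value is the WS-Federation sign-out cleanup action.

```python
# Minimal 1x1 GIF used as a placeholder; it is NOT the image embedded in WIF.
PLACEHOLDER_GIF = bytes.fromhex(
    "47494638396101000100800000"  # "GIF89a" header and logical screen descriptor
    "000000ffffff"                # two-entry colour table
    "21f9040100000000"            # graphic control extension
    "2c000000000100010000"        # image descriptor for a 1x1 image
    "0202440100"                  # image data
    "3b"                          # trailer
)


def handle_request(query, session):
    """Clear the local session on a sign-out cleanup request and reply with an image."""
    if query.get("wa") == "wsignoutcleanup1.0":
        session.clear()
        return ("image/gif", PLACEHOLDER_GIF)
    return ("text/html", b"<p>regular page</p>")


session = {"user": "mary"}
content_type, body = handle_request({"wa": "wsignoutcleanup1.0"}, session)
print(content_type, len(body))
```

Because the response is an image, the identity provider's sign-out page can trigger the cleanup simply by pointing an image element at the application.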

The second question was:

Bonus question: how does the IdP know all the applications the user accessed?

No WIF magic here. The issuer has to keep a list of all the RPs. In our sample (that we expect to release really soon) we use exactly the technique described in Vittorio’s book. We have a small helper class “SingleSignonManager” that keeps track of RPs in cookies:

Then, when the signout request is received, we simply iterate over the list and return the right markup:

The SingleSignonManager class is mentioned in Vittorio’s book but not available there, so we included it in the sample.
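
The two steps, remembering each RP in a cookie and then emitting one image per RP at sign-out, might look roughly like the sketch below. It is not the sample's code: the `|` delimiter, the function names and the a-Expense URL are assumptions made for illustration.

```python
from html import escape

COOKIE_SEPARATOR = "|"  # assumed delimiter; the sample's cookie format is not shown


def register_rp(cookie_value, rp_url):
    """Return a new cookie value that also remembers rp_url (no duplicates)."""
    rps = [u for u in cookie_value.split(COOKIE_SEPARATOR) if u]
    if rp_url not in rps:
        rps.append(rp_url)
    return COOKIE_SEPARATOR.join(rps)


def sign_out_markup(cookie_value):
    """Emit one <img> per remembered RP, each pointing at its cleanup URL."""
    tags = []
    for rp_url in cookie_value.split(COOKIE_SEPARATOR):
        if rp_url:
            src = rp_url + "?wa=wsignoutcleanup1.0"
            tags.append('<img src="%s" alt="signed out" />' % escape(src, quote=True))
    return "\n".join(tags)


cookie = ""
cookie = register_rp(cookie, "http://localhost/a-Order/")
cookie = register_rp(cookie, "http://localhost/a-Expense/")
cookie = register_rp(cookie, "http://localhost/a-Order/")  # second visit, not re-added
markup = sign_out_markup(cookie)
print(markup)
```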

<Return to section navigation list>

Windows Azure Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

JC Cimetiere posted Improving experience for Java developers with Windows Azure to the Interoperability @ Microsoft blog on 2/23/2011:

From the early days, Windows Azure has offered choices to developers. It allows use of multiple languages (like .NET, PHP, Ruby or Java) and development tools (like Visual Studio, Eclipse) to build applications that run on Windows Azure or consume any of the Windows Azure platform services from any other cloud or on-premises platform. Java developers have had a few options to leverage Windows Azure, like the Windows Azure SDK for Java or the Tomcat Solution Accelerator.

At PDC10, we introduced our plan to improve the experience for Java developers with Windows Azure. Today, we’re excited to release a Community Technology Preview (CTP) of the Windows Azure Starter Kit for Java, which enables Java developers to simply configure, package and deploy their web applications to Windows Azure. The goal for this CTP is to get feedback from Java developers, and to nail down the correct experience for Java developers, particularly to make sure that configuring, packaging and deploying to Windows Azure integrates well with common practices.

What’s the Windows Azure Starter Kit for Java?

This Starter Kit was designed to work as a simple command line build tool or in the Eclipse integrated development environment (IDE). It uses Apache Ant as part of the build process, and includes an Ant extension that’s capable of understanding Windows Azure configuration options.

The Windows Azure Starter Kit for Java is an open source project released under the Apache 2.0 license, and it is available for download at: http://wastarterkit4java.codeplex.com/

What’s inside the Windows Azure Starter Kit for Java?

The Windows Azure Starter Kit for Java is a Zip file that contains a template project and the Ant extension. If you look inside this archive you will find the typical files that constitute a Java project, as well as several files we built that will help you test, package and deploy your application to Windows Azure.

The main elements of the template are:

- .cspack.jar: This contains the Java implementation of the windowsazurepackage Ant task.

- ServiceConfiguration.cscfg: This is the Windows Azure service configuration file.

- ServiceDefinition.csdef: This is the Windows Azure service definition file.

- Helloworld.zip: This Zip is a placeholder for your Java application.

- startup.cmd: This script is run each time your Windows Azure Worker Role starts.

Check the tutorial listed below for more details.

Using the Windows Azure Starter Kit for Java

As mentioned above, you can use the Starter Kit from a simple command line or within Eclipse. In both cases the steps are similar:

- Download and unzip the Starter Kit

- Copy your Java application into the approot folder

- Copy the Java Runtime Environment and server distribution (like Tomcat or Jetty) ZIPs into the approot folder

- Configure the Startup commands in startup.cmd (specific to the server distribution)

- Configure the Windows Azure configuration in ServiceDefinition.csdef

- Run the build and deploy commands

For detailed instructions, refer to the following tutorials, which show how to deploy a Java web application running with Tomcat and Jetty:

- Deploying a Java application to Windows Azure with Command-line Ant

- Deploying a Java application to Windows Azure with Eclipse

What’s next?

Yesterday, Microsoft announced an Introductory Special offer that includes 750 hours per month (which is one server 24x7) of the Windows Azure extra-small instance, plus one small SQL Azure database and other platform capabilities - all free until June 30, 2011. This is a great opportunity for all developers to see what the cloud can do - without any up-front investment!
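
The "one server 24x7" remark can be checked with simple arithmetic: even the longest month has fewer hours than the 750 included.

```python
HOURS_INCLUDED = 750

# A 31-day month is the longest possible billing month.
longest_month_hours = 24 * 31
spare_hours = HOURS_INCLUDED - longest_month_hours

print(longest_month_hours)  # 744
print(spare_hours)          # 6
```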

You can also expect continued updates to the development tools and SDK, but the experience of Java developers is critical. Now is the perfect time to provide your feedback, so join us on the forum at: http://wastarterkit4java.codeplex.com/

Jean-Christophe is a Senior Technical Evangelist, @openatmicrosoft

Jennifer Lawinsky (@lawinski) posted Microsoft and Athenahealth Partner on Patient Information Sharing Solution on 2/22/2011 to the Channel Insider blog:

Microsoft and cloud-based health information services vendor athenahealth are teaming up on a hosted solution that allows patient data to be shared between hospitals, doctors and patients.

Microsoft is teaming up with athenahealth, a web-based practice management, electronic health record and patient communication services vendor, on an electronic health solution intended to improve communication between hospitals, doctors and patients.

The cloud-based solution combines athenahealth’s hosted services – athenaClinicals and athenaCollector – with Microsoft’s Amalga enterprise health intelligence platform software. The combined solution enables the flow of information across health care delivery settings – from hospital to doctor’s office, both in-patient and out-patient.

“athenahealth and Microsoft share a common view on the importance of improving information sharing across the health system to address the ‘data gaps’ faced by healthcare organizations striving to deliver optimal care,” Peter Neupert, corporate vice president of Microsoft Health Solutions Group, said in a statement. “By connecting athenahealth and Microsoft’s complementary health IT assets, we can open new doors for health systems looking to reduce costs and improve care through the efficient, secure exchange of health information across ambulatory and inpatient settings.”

Two health care systems have been selected to try out the solution: Steward Health Care System in Massachusetts and Cook Children’s Health Care System in Texas. Both already use athenahealth’s cloud-based EHR platform and Amalga and are testing the connected solution for athenaNet to Amalga data sharing.

The hospitals will also work with Microsoft to push data from Amalga to its HealthVault online platform for collecting, sharing and storing personal health information. The projects should be completed in the second half of 2011.

“We are pleased to be an alpha site for the initial athenahealth-Microsoft collaboration that has proven the value of the alliance announced today. In the coming months, athenaNet and Amalga will provide Cook Children’s with true connectivity across our multiple IT systems, furthering the promise we made to this community to improve the health of every child in our region. Our patients and physicians will have access to an information platform that not only transcends physical location but also will evolve as knowledge grows to comply with changing healthcare requirements and rules,” said Rick Merrill, president and CEO of Cook Children’s Health Care System, in a statement.

“Outside of unique, advantaged organizations like Kaiser and the Cleveland Clinic, there are few examples of health systems with the connectivity to share data across the full enterprise. In most cases, the technology simply does not exist or is not cost efficient,” said Jonathan Bush, Chairman and CEO of athenahealth.

“With Microsoft we have created what we believe to be a low cost, rapidly deployable and infinitely scalable solution that gives healthcare professionals a single view of a patient’s activity—something that healthcare reform advocates have been striving to do for years. Our shared solution fittingly provides all sorts of opportunities for healthcare institutions to rise to the challenges of operating in a more complex environment than ever before, while at the same time providing the IT capabilities to deliver better, more coordinated care.”

<Return to section navigation list>

Visual Studio LightSwitch

Robert Green described Checking Permissions in a LightSwitch Application in a 2/24/2011 post:

My sample application is in pretty good shape. Now I want to control who can work with the data. This is accomplished in LightSwitch by setting up permissions and then checking them in code. Beth Massi wrote about security in Implementing Security in a LightSwitch Application, and I don’t want to rehash what she said, so I am just going to focus on permissions.

We’ll start with a review of the data in the application. The application includes both remote and local data. The remote data consists of the Courses, Customers and Orders tables in the TrainingCourses SQL Server database and the KB articles on a SharePoint site. The local data consists of the Visits and CustomerNotes tables in a local SQL Server Express database.

I want there to be two types of users: sales reps and support staff. Sales reps own the relationship with customers so they will have more access to data than support staff. I want to enforce the following rules for each user type:

Sales reps

- Can view, add, edit and delete visits and customer notes.

- Can view, add and edit but cannot delete customers and orders.

- Can view but cannot add, edit or delete courses.

- Can view, add, edit and delete KBs.

Support staff

- Can view and add, but cannot edit or delete, visits.

- Cannot view customer notes.

- Can view customers but cannot view the year to date revenue for customers.

- Cannot add or delete customers.

- Can modify only a customer’s phone and fax number.

- Can view but not add, edit or delete courses and orders.

- Can view, add and edit but cannot delete KBs.

LightSwitch controls access to screens and data via permissions. Once you define permissions, you can write code to check the user’s permissions and control what the user can and cannot do or see. For example, if the user does not have permission to view data, you may not let them open a screen. Or you may create a query that only includes the information they can see.

Setting Up Permissions

The first step is to create permissions. Actually, the first step is to write out the rules, which I did above. Then you can decide how many different permissions you need. How granular do you want to get? For example, can I just create CanView and CanModify? Or do I need CanView, CanAdd, CanUpdate and CanDelete? If the ability to modify data is always all or nothing, then I can create a CanModify permission and leave it at that. But if I then need to distinguish between adding, modifying and deleting, I will have to add the three more granular permissions.

You also need to decide on what verbs you are using. What are the actions you are permitting or prohibiting? Is it based on entities or screens? Do I create CanViewCustomers or CanOpenCustomersScreen?

To create permissions, go into the project properties and select the Access Control tab. You then decide whether to use Windows or Forms authentication. The recommendation is to use Windows authentication if your users are on the network. They have logged onto the network and therefore don’t need to be authenticated by the application again. If you use Forms authentication, the application doesn’t know who your users are and so they will need to login. You would then have to create users and roles, just like in an ASP.NET application. LightSwitch uses the ASP.NET Membership and Role providers to perform Forms authentication.

I will use Windows authentication for this post. I will use the following permissions:

- CanViewCustomers, CanAddCustomers, CanUpdateCustomers, CanDeleteCustomers

- CanViewOrders, CanAddAndUpdateOrders, CanDeleteOrders

- CanViewCourses, CanModifyCourses

- CanViewKBs, CanModifyKBs

- CanViewNotes, CanModifyNotes

- CanViewVisits, CanModifyVisits

- CanViewYTDRevenue

- CanOnlyUpdateCustomerPhoneAndFax

Permission names are one word. I made my display names multiple words. I chose to capitalize the display names not as the result of usability concerns, but because after copying and pasting, I didn’t want to take the time to lower case the non-first words! I made the descriptions more descriptive and more human readable.

I also checked Granted for debug for all of the permissions I want enabled when I test the application. If you leave the permissions unchecked you won’t have them when you press F5. For testing, I will play the role of Sales Rep, who can do anything except delete customers or orders and add, edit or delete a course.
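
As a plain model of that debug configuration, the Sales Rep set can be written as "every permission except the denied ones". The permission names come from the list above; the Python structure and the `has_permission` helper are only illustrative, and leaving CanOnlyUpdateCustomerPhoneAndFax out of the Sales Rep set is an assumption, since that permission describes a restriction.

```python
ALL_PERMISSIONS = {
    "CanViewCustomers", "CanAddCustomers", "CanUpdateCustomers", "CanDeleteCustomers",
    "CanViewOrders", "CanAddAndUpdateOrders", "CanDeleteOrders",
    "CanViewCourses", "CanModifyCourses",
    "CanViewKBs", "CanModifyKBs",
    "CanViewNotes", "CanModifyNotes",
    "CanViewVisits", "CanModifyVisits",
    "CanViewYTDRevenue",
    "CanOnlyUpdateCustomerPhoneAndFax",
}

# Sales reps cannot delete customers or orders, or add, edit or delete courses.
# Assumption: the phone-and-fax-only restriction is not granted to them either.
SALES_REP_DENIED = {
    "CanDeleteCustomers",
    "CanDeleteOrders",
    "CanModifyCourses",
    "CanOnlyUpdateCustomerPhoneAndFax",
}

granted_for_debug = ALL_PERMISSIONS - SALES_REP_DENIED


def has_permission(granted, name):
    """Return True if name is granted; reject names that were never defined."""
    if name not in ALL_PERMISSIONS:
        raise KeyError(name)
    return name in granted


print(len(granted_for_debug))
print(has_permission(granted_for_debug, "CanViewCourses"))
print(has_permission(granted_for_debug, "CanDeleteOrders"))
```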

Be aware that in Beta 1, if you click the grey space to the right of the authentication choices, you can select them by accident. Switching from Windows to Forms authentication does not clear out the Granted for debug check boxes, but if you accidentally select Do not enable authentication they are cleared out. Then you have to recheck Granted for debug all over again. This does not happen in later builds, so you shouldn’t see this in Beta 2.

Checking for Permissions at the Screen and Entity Level

Once you have defined permissions, you can now check them in code and control access to information. Let’s start with customers. When the application starts, users see a list of customers. That list includes the calculated year to date revenue for each customer. By clicking the Add button, the user can add a new customer.

When the user selects a customer, the customer detail screen appears, with a built-in Save button and a Delete button that I added.

I’m first going to check if the user can view customers. I open the CustomerList screen and select CanRunCustomerList from the Write Code button’s drop-down list.

This method runs right before the customers list screen appears. The screen appears if this method returns true. So I add code to check if the user has permission to view customers.

' VB
Public Class Application
    Private Sub CanRunCustomerList(ByRef result As Boolean)
        result = Me.User.HasPermission(Permissions.CanViewCustomers)
    End Sub
End Class

// C#
public partial class Application
{
    partial void CanRunCustomerList(ref bool result)
    {
        result = this.User.HasPermission(Permissions.CanViewCustomers);
    }
}

I can test this by turning that permission off. Back to the Access Control tab of the Project Designer and uncheck Granted for debug for CanViewCustomers. When I run the application, I do not see the customers, which is the default view. I also don’t see Customer List in the menu.

I want to add a CanView check to all of my screens, so I open the CustomerDetail screen and select CanRunCustomerDetail from the Write Code button’s drop-down list. CanRunCustomerList and CanRunCustomerDetail are both methods of the Application class so I can copy the code I just wrote, as long as I remember to add the CustomerId argument. This is required because I use the same screen for both adding and editing customers.

' VB
Private Sub CanRunCustomerDetail(ByRef result As Boolean, ByVal CustomerId As Int32?)
    result = Me.User.HasPermission(Permissions.CanViewCustomers)
End Sub

// C#
partial void CanRunCustomerDetail(ref bool result, int? CustomerId)
{
    result = this.User.HasPermission(Permissions.CanViewCustomers);
}

I can then create the appropriate methods for the remaining screens by copying, pasting and renaming. That is easier than opening each screen.

' VB
Public Class Application
    Private Sub CanRunCustomerList(ByRef result As Boolean)
        result = Me.User.HasPermission(Permissions.CanViewCustomers)
    End Sub

    Private Sub CanRunCustomerDetail(ByRef result As Boolean, ByVal CustomerId As Int32?)
        result = Me.User.HasPermission(Permissions.CanViewCustomers)
    End Sub

    Private Sub CanRunCustomerVisits(ByRef result As Boolean)
        result = Me.User.HasPermission(Permissions.CanViewVisits)
    End Sub

    Private Sub CanRunCourseList(ByRef result As Boolean)
        result = Me.User.HasPermission(Permissions.CanViewCourses)
    End Sub

    Private Sub CanRunCourseDetail(ByRef result As Boolean, ByVal CourseId As Int32?)
        result = Me.User.HasPermission(Permissions.CanViewCourses)
    End Sub

    Private Sub CanRunNewOrder(ByRef result As Boolean)
        result = Me.User.HasPermission(Permissions.CanAddAndUpdateOrders)
    End Sub

    Private Sub CanRunNewVisit(ByRef result As Boolean)
        result = Me.User.HasPermission(Permissions.CanModifyVisits)
    End Sub
End Class

// C#
public partial class Application
{
    partial void CanRunCustomerList(ref bool result)
    {
        result = this.User.HasPermission(Permissions.CanViewCustomers);
    }

    partial void CanRunCustomerDetail(ref bool result, int? CustomerId)
    {
        result = this.User.HasPermission(Permissions.CanViewCustomers);
    }

    partial void CanRunCustomerVisits(ref bool result)
    {
        result = this.User.HasPermission(Permissions.CanViewVisits);
    }

    partial void CanRunCourseList(ref bool result)
    {
        result = this.User.HasPermission(Permissions.CanViewCourses);
    }

    // The CourseId argument matches the VB version above.
    partial void CanRunCourseDetail(ref bool result, int? CourseId)
    {
        result = this.User.HasPermission(Permissions.CanViewCourses);
    }

    partial void CanRunNewOrder(ref bool result)
    {
        result = this.User.HasPermission(Permissions.CanAddAndUpdateOrders);
    }

    partial void CanRunNewVisit(ref bool result)
    {
        result = this.User.HasPermission(Permissions.CanModifyVisits);
    }
}

So we have now covered whether or not users can open screens. What about whether or not they can modify data? Those permissions are going to be at the entity level. I open the Customer entity and I can select the Security Methods from the Write Code button’s drop-down list. I add permissions checks to CanRead, CanInsert, CanUpdate and CanDelete.

' VB
Public Class TrainingCoursesDataService
    Private Sub Customers_CanRead(ByRef result As Boolean)
        result = Me.Application.User.HasPermission(Permissions.CanViewCustomers)
    End Sub

    Private Sub Customers_CanInsert(ByRef result As Boolean)
        result = Me.Application.User.HasPermission(Permissions.CanAddCustomers)
    End Sub

    Private Sub Customers_CanUpdate(ByRef result As Boolean)
        result = Me.Application.User.HasPermission(Permissions.CanUpdateCustomers)
    End Sub

    Private Sub Customers_CanDelete(ByRef result As Boolean)
        result = Me.Application.User.HasPermission(Permissions.CanDeleteCustomers)
    End Sub
End Class

// C#
public partial class TrainingCoursesDataService
{
    partial void Customers_CanRead(ref bool result)
    {
        result = this.Application.User.HasPermission(Permissions.CanViewCustomers);
    }

    partial void Customers_CanInsert(ref bool result)
    {
        result = this.Application.User.HasPermission(Permissions.CanAddCustomers);
    }

    partial void Customers_CanUpdate(ref bool result)
    {
        result = this.Application.User.HasPermission(Permissions.CanUpdateCustomers);
    }

    partial void Customers_CanDelete(ref bool result)
    {
        result = this.Application.User.HasPermission(Permissions.CanDeleteCustomers);
    }
}

I already check if the user can open the customer screens, so why do I need the CanRead method? This method runs before a customer record is read. That includes running queries on it, whether they are default queries or queries I build myself. So here I am saying if you can’t view customers in screens, you shouldn’t be able to query customers or access them in code. Also, checking permissions at the entity level safeguards your data. If you forget to check permissions at the screen level, the user may be able to edit data on the screen, but the application will still check permissions on the middle tier and prevent unauthorized actions.
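
The value of the second, entity-level check can be illustrated with a small model in which the screen check lets a user in but the data service still refuses the update. This is not LightSwitch code: `User`, `CustomerDataService` and `PermissionDenied` are hypothetical stand-ins, and only the permission names are taken from the post.

```python
class PermissionDenied(Exception):
    """Raised by the data service when a required permission is missing."""


class User:
    def __init__(self, permissions):
        self._permissions = frozenset(permissions)

    def has_permission(self, name):
        return name in self._permissions


class CustomerDataService:
    """Middle-tier stand-in: every update is checked here, whatever the screen did."""

    def __init__(self, user):
        self._user = user
        self.customers = {1: {"name": "Contoso", "phone": "555-0100"}}

    def update(self, customer_id, **changes):
        if not self._user.has_permission("CanUpdateCustomers"):
            raise PermissionDenied("CanUpdateCustomers is required")
        self.customers[customer_id].update(changes)


def can_run_customer_detail(user):
    """Screen-level gate: only decides whether the screen may open."""
    return user.has_permission("CanViewCustomers")


support = User({"CanViewCustomers"})
service = CustomerDataService(support)

screen_opens = can_run_customer_detail(support)  # the screen check alone lets the user in
try:
    service.update(1, name="Fabrikam")           # the screen forgot to disable editing
    update_blocked = False
except PermissionDenied:
    update_blocked = True

print(screen_opens, update_blocked)
```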

I then create the corresponding methods for the Orders and Courses entities.

I have three data sources in my application: local SQL Express, remote SQL Server and remote SharePoint. The TrainingCoursesDataService class works with the remote SQL Server data. I need to add similar methods to the ApplicationDataService class to handle permissions for CustomerNotes and Visits and to the SPTrainingCoursesDataService class to handle permissions for KBs.

So I wind up with the following. I have left out the contents of each method, because you have seen that code already.

    ' VB
    Public Class ApplicationDataService
        Private Sub CustomerNotes_CanRead(ByRef result As Boolean)
        Private Sub CustomerNotes_CanInsert(ByRef result As Boolean)
        Private Sub CustomerNotes_CanUpdate(ByRef result As Boolean)
        Private Sub CustomerNotes_CanDelete(ByRef result As Boolean)
        Private Sub Visits_CanRead(ByRef result As Boolean)
        Private Sub Visits_CanInsert(ByRef result As Boolean)
        Private Sub Visits_CanUpdate(ByRef result As Boolean)
        Private Sub Visits_CanDelete(ByRef result As Boolean)
    End Class

    Public Class TrainingCoursesDataService
        Private Sub Customers_CanRead(ByRef result As Boolean)
        Private Sub Customers_CanInsert(ByRef result As Boolean)
        Private Sub Customers_CanUpdate(ByRef result As Boolean)
        Private Sub Customers_CanDelete(ByRef result As Boolean)
        Private Sub Courses_CanRead(ByRef result As Boolean)
        Private Sub Courses_CanInsert(ByRef result As Boolean)
        Private Sub Courses_CanUpdate(ByRef result As Boolean)
        Private Sub Courses_CanDelete(ByRef result As Boolean)
            result = Me.Application.User.HasPermission(
                Permissions.CanModifyCourses)
        Private Sub Orders_CanRead(ByRef result As Boolean)
        Private Sub Orders_CanInsert(ByRef result As Boolean)
        Private Sub Orders_CanUpdate(ByRef result As Boolean)
        Private Sub Orders_CanDelete(ByRef result As Boolean)
    End Class

    Public Class SPTrainingCoursesDataService
        Private Sub KBs_CanRead(ByRef result As Boolean)
        Private Sub KBs_CanInsert(ByRef result As Boolean)
        Private Sub KBs_CanUpdate(ByRef result As Boolean)
        Private Sub KBs_CanDelete(ByRef result As Boolean)
    End Class

    // C#
    public partial class ApplicationDataService
    {
        partial void CustomerNotes_CanDelete(ref bool result)
        partial void CustomerNotes_CanInsert(ref bool result)
        partial void CustomerNotes_CanUpdate(ref bool result)
        partial void CustomerNotes_CanRead(ref bool result)
        partial void Visits_CanDelete(ref bool result)
        partial void Visits_CanInsert(ref bool result)
        partial void Visits_CanUpdate(ref bool result)
        partial void Visits_CanRead(ref bool result)
    }

    public partial class TrainingCoursesDataService
    {
        partial void Customers_CanDelete(ref bool result)
        partial void Customers_CanInsert(ref bool result)
        partial void Customers_CanUpdate(ref bool result)
        partial void Customers_CanRead(ref bool result)
        partial void Courses_CanDelete(ref bool result)
        partial void Courses_CanInsert(ref bool result)
        partial void Courses_CanUpdate(ref bool result)
        partial void Courses_CanRead(ref bool result)
        partial void Orders_CanDelete(ref bool result)
        partial void Orders_CanInsert(ref bool result)
        partial void Orders_CanUpdate(ref bool result)
        partial void Orders_CanRead(ref bool result)
    }

    public partial class SPTrainingCoursesDataService
    {
        partial void KBs_CanDelete(ref bool result)
        partial void KBs_CanInsert(ref bool result)
        partial void KBs_CanUpdate(ref bool result)
        partial void KBs_CanRead(ref bool result)
    }

Now I have a full set of permission checks for screens and entities.

Time to test the application. I have given myself the ability to view and update courses, but not the ability to add or delete them. When I view the course list, there is now no Add button. LightSwitch doesn’t include it on the screen because I don’t have add rights. When I select a course, the Save button is disabled because I don’t have update rights.

The course detail screen shows courses and related KBs from a SharePoint list. I covered how to do this in my Extending A LightSwitch Application with SharePoint Data post. What if I don’t have the ability to view KB items? To see what happens, I’ll go back to the Access Control tab and uncheck the KB related permissions. Now when I select a course, I do not see KBs. I’m also informed that the current user doesn’t have permission to view them.

Checking for Permissions at the Property Level

Let’s get more granular. You can control not only screens and entities, but also individual entity properties. Next, I want to enforce the following rules for support staff:

- Can view customers but cannot view the year to date revenue for customers.

- Can modify only a customer’s phone and fax number.

Support staff can view customers but can only modify the phone and fax number. To enforce this, I can make all of the customer information read only except these two properties. I double-click Customers to open it in the Entity Designer. I click on CompanyName and then select CompanyName_IsReadOnly from the Write Code button’s drop-down list.

If this method returns true then the property is read-only. So I add the following code:

    ' VB
    Public Class Customer

        Private Sub CompanyName_IsReadOnly(ByRef result As Boolean)
            result = Me.Application.User.HasPermission(
                Permissions.CanOnlyUpdateCustomerPhoneAndFax)
        End Sub

    End Class

    // C#
    public partial class Customer
    {
        partial void CompanyName_IsReadOnly(ref bool result)
        {
            result = this.Application.User.HasPermission(
                Permissions.CanOnlyUpdateCustomerPhoneAndFax);
        }
    }

If the user only has permission to update phone and fax, company name will be read-only. I add the same code to the corresponding methods for contact name, the various address properties and email. I don’t need to use the PhoneNumber_IsReadOnly and Fax_IsReadOnly methods, because those will never be read-only if the user can modify customers.

To test this, I will check both CanUpdateCustomers and CanOnlyUpdateCustomerPhoneAndFax. When I run the application, all of the customer information except phone and fax is read-only. Nice!
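The way the entity-level and property-level checks combine in this test can be sketched in plain C#. This is a self-contained model, not LightSwitch code: `RuleUser`, `CustomerRules`, `CanEdit` and the string permission names are hypothetical, and the rule that a property is editable only when the entity can be updated and the property is not read-only is an assumption made for illustration.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for the current user; only HasPermission is modeled.
class RuleUser
{
    private readonly HashSet<string> granted;

    public RuleUser(params string[] permissions)
    {
        granted = new HashSet<string>(permissions);
    }

    public bool HasPermission(string permission)
    {
        return granted.Contains(permission);
    }
}

static class CustomerRules
{
    // Properties that are never marked read-only, as in the post.
    private static readonly HashSet<string> AlwaysEditable =
        new HashSet<string> { "PhoneNumber", "Fax" };

    // Plays the role of Customers_CanUpdate.
    public static bool CanUpdate(RuleUser user)
    {
        return user.HasPermission("CanUpdateCustomers");
    }

    // Plays the role of the *_IsReadOnly methods: everything except phone
    // and fax is read-only when the restricting permission is present.
    public static bool IsReadOnly(RuleUser user, string property)
    {
        if (AlwaysEditable.Contains(property))
        {
            return false;
        }
        return user.HasPermission("CanOnlyUpdateCustomerPhoneAndFax");
    }

    // Assumed combination rule (hypothetical helper, not a LightSwitch API).
    public static bool CanEdit(RuleUser user, string property)
    {
        return CanUpdate(user) && !IsReadOnly(user, property);
    }
}

static class PropertyRulesDemo
{
    static void Main()
    {
        var support = new RuleUser(
            "CanUpdateCustomers", "CanOnlyUpdateCustomerPhoneAndFax");

        foreach (var property in new[] { "CompanyName", "PhoneNumber", "Fax" })
        {
            string state = CustomerRules.CanEdit(support, property)
                ? "editable" : "read-only";
            Console.WriteLine(property + ": " + state);
        }
    }
}
```

Under these assumptions the sketch reports CompanyName as read-only and PhoneNumber and Fax as editable, which matches what the test run in the post shows.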

Finally, I want to prohibit support staff from seeing the year to date revenue for a customer. I can think of three options for doing this:

- I can have two versions of each customer screen, one with year to date revenue and one without. When the user views the customer list or an individual customer, I can check the permission and run the appropriate screen.

- I can have two versions of each customer query, one with year to date revenue and one without. When the user views the customer list or an individual customer, I can check the permission and then run the appropriate query.

- I can continue to use one version of each screen and query. When the user views the customer list or an individual customer, I can check the permission and then hide the year to date revenue field if necessary.

The third option is the easier one, so I will go with that. I open the CustomerDetail screen in the Screen Designer and I see that the year to date revenue control has an IsVisible property. All I need to do is set this to false if the user doesn’t have permission to see the data.

To do that, I can simply add the following code to the screen’s Loaded event handler:

    ' VB
    Public Class CustomerDetail

        Private Sub CustomerDetail_Loaded()
            Me.FindControl("YearToDateRevenue").IsVisible =
                Me.Application.User.HasPermission(
                    Permissions.CanViewYTDRevenue)
        End Sub

    End Class

    // C#
    public partial class CustomerDetail
    {
        partial void CustomerDetail_Loaded()
        {
            this.FindControl("YearToDateRevenue1").IsVisible =
                this.Application.User.HasPermission(
                    Permissions.CanViewYTDRevenue);
        }
    }

Year to date revenue appears in two screens: CustomerDetail and CustomerList. Visual Basic lets me name it YearToDateRevenue in both screens, but C# doesn’t. So in C# I named it YearToDateRevenue1 in the CustomerDetail screen and YearToDateRevenue2 in the CustomerList screen.

In the CustomerList screen, the year to date revenue control is contained in a grid. So I need to use FindControlInCollection rather than FindControl. FindControlInCollection takes two arguments: the name of the control and the row of data it is in. I will need to loop through all of the rows since year to date revenue is a column in the grid.

    ' VB
    Public Class CustomerList

        Private Sub CustomerList_Loaded()
            For Each customerRow As IEntityObject In Me.CustomerCollection
                Me.FindControlInCollection(
                    "YearToDateRevenue", customerRow).IsVisible =
                    Me.Application.User.HasPermission(
                        Permissions.CanViewYTDRevenue)
            Next
        End Sub

    End Class

    // C#
    public partial class CustomerList
    {
        partial void CustomerList_Loaded()
        {
            foreach (IEntityObject customerRow in this.CustomerCollection)
            {
                this.FindControlInCollection(
                    "YearToDateRevenue2", customerRow).IsVisible =
                    this.Application.User.HasPermission(
                        Permissions.CanViewYTDRevenue);
            }
        }
    }

I then uncheck CanViewYTDRevenue in the Access Control screen and run the application. I do not see year to date revenue on either customer screen.

Summary

Access control is a big, and important, feature in LightSwitch. As a developer, you have several ways to control what users can and can’t do. You can check permissions at a relatively high level, for screens and entities, or at a more granular level, for individual properties and controls. Hopefully, this post has given you some good ideas on how to add access control to your LightSwitch applications.

<Return to section navigation list>

Windows Azure Infrastructure

Carl Brooks (@eekygeeky) reported Applications interfere with cloud computing adoption in a 2/22/2011 post to SearchCloudComputing.com:

As we make the cognitive leap from old ways of doing IT to new ones, a consistent theme in private cloud has emerged: it's all about the applications.

IT professionals are figuring out that, while data center consolidation and virtualization can be rewarding and even necessary elements of improving IT operations, application delivery remains the primary focus. It's sometimes worked around with great success, but often it’s the elephant in the room.

Virtualization is a great foundation for cloud, but it’s absolutely not a panacea.

Christian Reilly, IT professional

As part of the transition to cloud computing, companies are moving to a binary model where some IT needs are served by cloud platforms and others simply aren’t. Most client server apps can be virtualized to some degree, but others, like massive databases running financial systems, were designed before virtualization was mature. Due to performance issues, these cannot be virtualized.

"We have virtualized everywhere it is appropriate," said Dmitri Ilkaev, VP of enterprise architecture at Thermo Fisher Scientific (TFS). Ilkaev said his company had been opportunistic in its use of virtualization, deploying it where it made sense, but some of the company’s core business applications did not go into tidy, standardized and virtualized stacks.

Others did; testing and development, for example, are a big part of TFS’s business. A maker of largely digital and highly complex scientific instruments, the company has branched out into laboratory services and software that supports its equipment. Ilkaev said one of the initial pushes for cloud computing-style IT automation was to be able to sell internal applications as a service.

Ilkaev called private cloud a natural extension of the consolidation and virtualization TFS had already done. He noted the company already used Software as a Service (SaaS) products extensively, like Salesforce.com, and said that he'd eventually lose interest in infrastructure; it was going to be primarily about delivering applications and support in a hybrid model.

Banking giant lukewarm on private cloud

Other enterprises take a more conservative approach. Sherrie Littlejohn, who heads up the enterprise architecture and strategy group at Wells Fargo, said that the banking giant had looked at cloud infrastructure with interest but found no pressing need for it. Plus, the bank’s outlook on IT was dominated by security. "We have a gazillion servers, processes up the wazoo in how you get one, and security is definitely number one (or zero)," she said.

Littlejohn said Wells Fargo could see a point at which it might want to put some business critical applications in a cloud service, but it already operated layer upon layer of fairly advanced management systems and virtualization for its infrastructure. There wasn’t a competitive edge that she could see yet in simply turning to cloud computing.

Wells Fargo does use Salesforce.com, along with several outside services to operate its websites and online services, but Littlejohn said that use case and the security implications were pretty cut and dried. When Wells Fargo is ready to build a private cloud, which would essentially involve giving internal users more leeway in provisioning their own IT, Littlejohn said that the company will do it in a deliberate manner. She said delivering business services and security took precedence over new technology.

"I don’t see us jumping into this," she said. "We will test and learn as we go; for banks in general, we’re going to be very cautious."

Factoring applications into the cloud move

Of course, for any organization that has been around a while and wants to redo IT with an eye on cloud's benefits, applications are a big part of the problem. Honeywell International in the UK is retiring 12 terabytes of data in a mainframe it doesn’t need because the company is, almost literally, burning money operating its data center. "Can you imagine the server load? The backup requirements for that?" said Sai Gundavelli, CEO of Solix.

Gundavelli sells data management tools and manages the data move from ancient hardware to cloud storage for Honeywell. He says that application cruft is endemic; it’s often the result of numerous acquisitions or cowboy coding. It is often also the first thing an IT organization stumbles across when undertaking a large-scale reorganization, like shifting to virtualization or cloud.

"A lot of times when you run a data center across the globe, you end up with a surprising number of applications," he said. "It’s like when people buy a garage, they keep putting things in, putting things in. They never take them out." He noted that German manufacturer Bombardier Inc, another client, had identified nearly 2,000 applications it wanted to retire in the process of virtualizing and modernizing its infrastructure.

Even successful private clouds feel the application impact

And as often as not, even a successful implementation of private cloud can simply miss the mark when it comes to applications. Christian Reilly, an IT professional working for a large multi-national, told an audience during a closed presentation that five years ago it took 42 days to deploy an application. Even as his organization felt its way into the cloud very early, the monumental shift over to converged infrastructure, worldwide communication, workspace virtualization and even an iPhone app for one popular business application (a time sheet for agents in the field) didn’t touch business as usual. "Anyone want to guess how long it takes now? 42 days," he said.

What went wrong? Well, nothing. Infrastructure is solved; they’ve learned the lessons of massive online service delivery from the likes of Google, YouTube and Amazon. They’re doing quantitatively more with the same IT budget, but nobody can afford to rewrite the apps overnight for cloud.

That’s where the actual work takes place, Reilly said: “Virtualization is a great foundation for cloud, but it’s absolutely not a panacea.”

More than 90% of Reilly’s IT organization is virtualized, but it’s not automated in a way that matters to application delivery. What is missing is how to tie those application services together at a level where resource provisioning "just happens." He’s looking at server templates, preconfigured stacks, anything that can make cloud more useful than simply turning on a server.

Reilly said his application portfolio was the first thing he had to work around when trying to get consolidation moving. He went after client server applications first, standardized the virtualization platform on Xen and eventually brought everything under one central authority, which he called critical to taming security.

He noted that you either had to have control over the user or control over the back end, and it was clear where IT could be more effective.

"You can’t really have both in our experience; if you have the Wild West in the front end and the Wild West in the back end, you’re going to have the Wild West in the middle," he said. "That’s got to be avoided, or there won’t be any cloud in your enterprise."

The fact that users have access to all sorts of high-powered technology delivered as Web services from the likes of Google and Yahoo! meant that they were more than ready to see the same in the workplace. Reilly said most enterprise IT was woefully behind the times, and enterprise applications reflected that.

It’s unclear what has to change to make the enterprise application landscape catch up to the consumer experience. It may just take time and continued examples of businesses getting more done with less money. Most likely, we’ll see the same trends: SaaS feeding the shift in how users think about IT at their job, leading to small efforts and eventual transformations. Another decade might not be unreasonable to see the IT landscape transformed.

Carl Brooks is the Technology Writer at SearchCloudComputing.com.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

Penny Crossman asserted “As part of an IT transformation project, State Street is creating a private cloud that the bank's CIO says will be more secure than the existing IT infrastructure” in a deck for her Chris Perretta, State Street CIO, on Building A Secure Cloud interview of 2/15/2011 for the Bank Systems & Technology site (missed when posted):

We sat down with Chris Perretta, CIO of Boston-based State Street ($1.90 trillion in assets under management), last week to find out what is happening with the bank's IT transformation and private cloud project.

As part of an overall IT transformation, State Street is building a production-ready internal cloud that's due to go live in April. (The bank has already tested two private clouds.) "It's a new mechanism for operating applications that's more cost-effective to operate, runs on commodity hardware; and is infinitely expandable and quick to deploy to," Perretta says. "If I have an application I want to put in production, our tests have shown that I can deploy it in five minutes to bare metal." Often software developers will say it takes ten weeks or more to obtain a configured server from IT.

Perretta would not divulge specific technologies the bank is using in its cloud, other than to say it involves commodity hardware and open source and custom software.

One thing that differentiates State Street's cloud from other banks' is that it is building a security framework for it. "We do a lot of custom work, we build very specialized systems for worldwide custody and funds accounting type systems," Perretta explains. "Security, control, auditability, and transparency are always job one in the business we're in." The security framework will include federated identity management and role-based security. "The technology is hard, but it's even harder for the business to define this is your job, this is what you get to see, especially for systems that may not have been built at that level of specificity," he notes.

When this security work is completed, the cloud will have built-in provisioning rules; it will know who may see which data and access which applications. Perretta believes the bank's private cloud will be more secure than most traditional computing environments.

On the hardware utilization side, like many organizations, State Street struggles with low server utilization rates, especially with the way it provides high availability through redundancy and failovers. In some cases the bank has three extra servers for every production server -- one that sits next to the production server in the same data center for failover, and a primary and redundant backup server at the disaster recovery site. With applications running over a cloud that encompasses multiple data centers, utilization numbers could increase dramatically.

The first thing State Street will use its new cloud for is application testing. "When we bring clients into our system there's very extensive testing," Perretta says. "We test at very high volume in big environments. In the old model, you had to replicate the production environment. But now, with the flexibility of the cloud, you're going to be able to deploy test environments rapidly. For us, that's a big piece of it."

In another component of its IT transformation, State Street is reviewing all 1,000 of its major home-grown applications for efficiency and building a software framework that facilitates sharing among its developers. "We're really after speed on the development side and you get speed three ways -- you work the right projects; you share code, application services and people; and you get better utilization of hardware," he says. Eventually the applications will also share common data definitions.

Getting developers to share is not always easy; programmers tend to like to be autonomous. But Perretta says the bank has proven, by decomposing an application into building blocks that cover components such as laws in particular countries, that developers can develop more rapidly. "It's a cultural change that says if you as an engineer or a programmer have got code that a lot of people use, you're more valuable" than someone who does not generate such sharable resources.

An architecture group is in charge of creating the methods and toolsets needed to build applications in reusable pieces. "There's a different way of looking at how you design systems in this environment that's a key aspect of what we need to do," Perretta says. "Your philosophy about how to design things is different in an environment where you have infinite computing power and a lot of things going on in parallel. The old model was, do something, post it, get feedback, post it again. In this model, you have infinite computing power to play with so you may structure your program differently."

Creating applications in reusable chunks could help a bank switch vendors or share people among teams more easily, Perretta says.

"We can't rewrite our entire application set overnight," Perretta notes. "But we think this lays the groundwork for a good portion of our application set over time. We're also working hard on the data side, to be more structured as to how we share data among our applications. If we can do that, we know we can drive tremendous productivity in the business and IT."

Perretta recently promoted the bank's chief architect to be a direct report of his. The chief architect has also been working with a team that did lean work in the bank's operations group. "The lean operations folks go in and find inefficiencies and try to figure out what's causing them," he says. "Then our architects can get to the root cause of the inefficiency." Some programs will be rewritten, others will be "sunsetted." In all, about two-thirds of the bank's applications will be dramatically affected by this sweeping transformation.

<Return to section navigation list>

Cloud Computing Events

No significant articles today.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Klint Finley (@klintron) described how to Work with Hadoop and NoSQL Databases with Toad for Cloud in a 2/24/2011 post to the ReadWriteCloud blog:

Toad for Cloud Databases is a version of Quest's popular Toad tool specially designed for next-generation databases. So far it supports AWS SimpleDB, Microsoft Azure Table services, Apache HBase, Microsoft SQL Azure, Apache Cassandra and any ODBC-enabled relational database. It can be downloaded here.

Toad got its start as the Tool for Oracle Application Developers (TOAD). Quest bought the tool in 1998 and has continued its development. Toad competes with open source projects such as TOra and SQLTools++.

The existing flavors of Toad, such as Toad for MySQL, have long been popular with database professionals. Quest's move into NoSQL demonstrates how much interest there is in the area. According to RedMonk's Michael Coté, Toad for Cloud Databases already has over 2,000 users - not bad for a tool for managing relatively new and esoteric technologies. Coté writes that Hadoop is the most popular database among Toad for Cloud users.

Quest is positioning itself as a trusted source for NoSQL tools and information. In addition to Toad for Cloud, Quest is running a wiki dedicated to NoSQL. The entry "Survey Distributed Databases" is an extensive overview of the burgeoning field of NoSQL players. Quest staffers have been giving interviews on the subject of NoSQL and Hadoop as well.

Michael Coté (@cote) posted Building a Hybrid Cloud to his Redmonk blog on 2/24/2011:

Hybrid clouds (Slideshare presentation from Michael Coté)

What’s the deal with “hybrid clouds”? To use the NIST definition, it’s just mixing at least two different types of clouds together (public and private, two different public clouds, or a “community cloud”). For the most part, people tend to mean mixing public and private – keeping some of your stuff on-premise, and then using public cloud resources as needed. It’s early days in any type of cloud, esp. something like hybrid. Nonetheless, I got together with RightScale and Cloud.com recently to go over some practical advice and demos of building and managing hybrid clouds, all in webinar form.

The recording is available now for replay. I start out with a very quick definition and then some advice and planning based on what RedMonk has been seeing here. The RightScale and Cloud.com demos are nicely in-depth, and the Q&A that follows was primarily very specific, technical questions. Viewing the recording is free (once you fill out the registration form, of course). You can also check out my slides over in Slideshare (or above).

A question for you, dear readers: have you been doing any “hybrid cloud” work? What’s worked well, and what hasn’t worked so well?

Disclosure: Cloud.com is a [Redmonk] client.

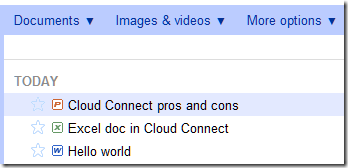

Tim Anderson went Hands on with Google Cloud Connect: Microsoft docs in Google’s cloud and reported the result in a 2/24/2011 post:

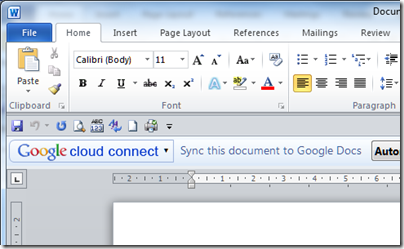

Google has released Cloud Connect for Microsoft Office, and I gave it a quick try.

Cloud Connect is a plug-in for Microsoft Office which installs a toolbar into Word, Excel and PowerPoint. There is no way that I can see to hide the toolbar. Every time you work in Office you will see Google’s logo.

From the toolbar, you sign into a Google Docs account, for which you must sign up if you have not done so already. The sign-in involves passing a rather bewildering dialog granting permission to Cloud Connect on your computer to access Google Docs and contacts on your behalf.

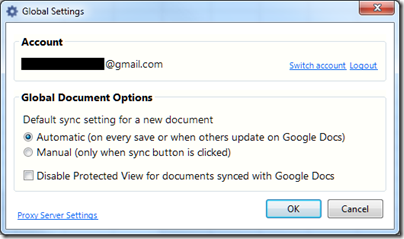

The Cloud Connect settings synchronise your document with Google Docs every time you save, or whenever the document is updated on Google’s servers.

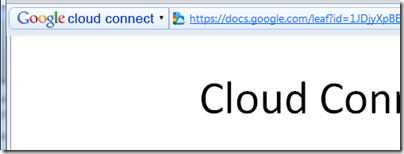

Once a document is synched, the Cloud Connect toolbar shows an URL to the document:

You get simultaneous editing if more than one person is working on the document. Google Docs will also keep a revision history.

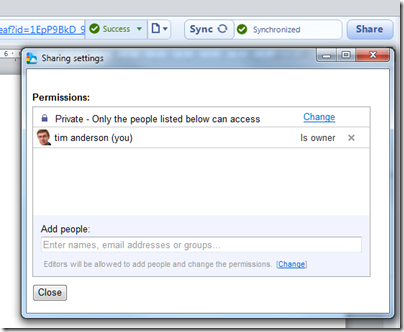

You can easily share a document by clicking the Share button in the toolbar:

I found it interesting that Google stores your document in its original Microsoft format, not as a Google document. If you go to Google Docs in a web browser, they are marked by Microsoft Office icons.

If you click on them in Google Docs online, they appear in a read-only viewer.

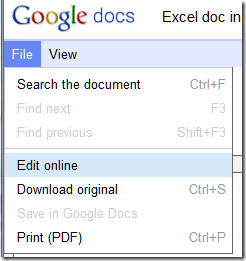

That said, in the case of Word and Excel documents the online viewer has an option to Edit Online.

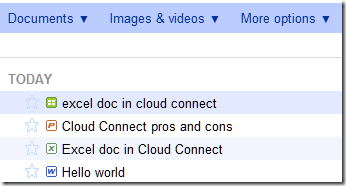

This is where it gets messy. If you choose Edit Online, Google Docs converts your Office document to a Google doc, with possible loss of formatting. Worse still, if you make changes these are not synched back to Microsoft Office because you are actually working on a second copy:

Note that I now have two versions of the same Excel document, distinguished only by the icon and that the title has been forced to lower case. One is a Google spreadsheet, the other an Excel spreadsheet.

Google says this is like SharePoint, but better.

Google Cloud Connect vastly improves Microsoft Office 2003, 2007 and 2010, so companies can start using web-enabled teamwork tools without upgrading Microsoft Office or implementing SharePoint 2010.

Google makes the point that Office 2010 lacks web-based collaboration unless you have SharePoint, and says its $50 per user Google Apps for Business is more affordable. I am sure that is less than typical SharePoint rollouts – though SharePoint has other features. The best current comparison would be with Business Productivity Online Standard Suite at $10 per user per month, which is more than Google but still relatively inexpensive. BPOS is out of date though and an even better comparison will be Office 365 including SharePoint 2010 online, though this is still in beta.

Like Google, Microsoft has a free offering, SkyDrive, which also lets you upload and share Office documents.

Microsoft’s Office Web Apps have an advantage over Cloud Connect, in that they allow in-browser editing without conversion to a different format, though the editing features on offer are very limited compared with what you can do in the desktop applications.

Despite a few reservations, I am impressed with Cloud Connect. Google has made setup and usage simple. Your document is always available offline, which is a significant benefit over SharePoint – and one day I intend to post on how poorly Microsoft’s SharePoint Workspace 2010 performs both in features and usability. Sharing a document with others is as easy as with other types of Google documents.

The main issue is the disconnect between Office documents and Google documents, and I can see this causing confusion.

Kenneth van Sarksum reported VMware has quietly phased out Lab Manager in a 2/23/2011 post to the Virtualization.info blog:

In September last year, virtualization.info reported that VMware had started developing a management solution for Infrastructure-as-a-Service (IaaS) cloud computing platforms called vCloud Director. vCloud Director was developed from the engine of Lab Manager, which VMware acquired from Akimbi in June 2006. Later we were informed by a member of the virtualization.info Vanguards community that VMware was planning to phase out Lab Manager within a year, embedding its capabilities in vCloud Director, which was released in September last year.

Last week VMware announced it will discontinue additional major releases of vCenter Lab Manager, making Lab Manager 4 (released in July 2009) the last Lab Manager product supported by VMware until May 1st, 2013.

VMware offers Support and Subscription (SnS) customers of existing Lab Manager licenses the ability to exchange their licenses for a vCloud Director license at no additional cost. For more information see the Lab Manager product lifecycle FAQ.

<Return to section navigation list>

![clip_image002[4] clip_image002[4]](http://blogs.msdn.com/cfs-file.ashx/__key/CommunityServer-Blogs-Components-WeblogFiles/00-00-01-15-67-metablogapi/3240.clip_5F00_image0024_5F00_thumb_5F00_063C4488.png)
