Windows Azure and Cloud Computing Posts for 2/18/2011+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

• Updated 2/20/2011 with new articles marked •.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database and Reporting

• See DreamFactory Software announced their updated DreamFactory Suite for Windows Azure and SQL Azure with a 2/2011 listing in Microsoft Pinpoint in the Live Windows Azure Apps, APIs, Tools and Test Harnesses section below. Note especially that DreamFactory’s CTO, Bill Appleton, offers more background on the Azure implementation in his Talking with Ray Ozzie post of 5/17/2010 to the firm’s Corporate Blog (scroll down).

• Ike Ellis (@ellisteam1) posted a Top 5 things to know about sql azure for developers slide deck on 2/16/2011 (missed when posted):

Slide #12

Check out all 31 slides here.

Programming4Us continued its series with SQL Azure : Tuning Techniques (part 5) - Provider Statistics & Application Design on 2/12/2011 (missed when posted):

7. Provider Statistics

Last but not least, let's look at the ADO.NET library's performance metrics to obtain the library's point of view from a performance standpoint. The library doesn't return CPU metrics or any other SQL Azure metric; however, it can provide additional insights when you're tuning applications, such as giving you the number of roundtrips performed to the database and the number of packets transferred.

As previously mentioned, the number of packets returned by a database call is becoming more important because it can affect the overall response time of your application. If you compare the number of packets returned by a SQL statement against a regular SQL Server installation to the number of packets returned when running the same statement against SQL Azure, chances are that you see more packets returned against SQL Azure because the data is encrypted using SSL. This may not be a big deal most of the time, but it can seriously affect your application's performance if you're returning large recordsets, or if each record contains large columns (such as a varbinary column storing a PDF document).
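To get a feel for the packet counts involved, the arithmetic can be sketched as follows. This is a rough lower bound only. The 8,000-byte packet size is an assumption (it matches the ADO.NET connection-string default for Packet Size, but your connection may use a different value), the function name `estimate_packets` is purely illustrative, and per-packet headers and SSL overhead are ignored.

```python
def estimate_packets(payload_bytes: int, packet_size: int = 8000) -> int:
    """Lower-bound estimate of the network packets needed to return a payload.

    packet_size defaults to 8000 bytes, an assumed value; protocol headers
    and SSL framing are ignored, so real counts will be somewhat higher.
    """
    if payload_bytes < 0 or packet_size <= 0:
        raise ValueError("payload_bytes must be >= 0 and packet_size > 0")
    # Ceiling division without floating point.
    return -(-payload_bytes // packet_size)


if __name__ == "__main__":
    small_result_set = 624            # bytes: fits in a single packet
    pdf_column = 2 * 1024 * 1024      # hypothetical 2 MB varbinary value
    print(estimate_packets(small_result_set))
    print(estimate_packets(pdf_column))
```

Under these assumptions a single 2 MB column value already needs a few hundred packets, which is why large columns deserve attention when tuning.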

Taking the performance metrics of the ADO.NET library is fairly simple, but it requires coding. The methods to use on the SqlConnection object are ResetStatistics() and RetrieveStatistics(). Also, keep in mind that the StatisticsEnabled property needs to be set to true. Some of the most interesting metrics to look for are BuffersReceived and BytesReceived; they indicate how much network traffic has been generated.

You can also download from CodePlex an open source project called Enzo SQL Baseline that provides both SQL Azure and provider statistics metrics (http://EnzoSQLBaseline.CodePlex.Com). This tool allows you to compare multiple executions side by side and review which run was the most efficient. Figure 10 shows that the latest execution returned 624 bytes over the network.

Figure 10. Viewing performance metrics in Enzo SQL Baseline

NOTE

If you'd like to see a code sample using the ADO.NET provider statistics, go to http://msdn.microsoft.com/en-us/library/7h2ahss8.aspx.

8. Application Design

Last, but certainly not least, design choices can have a significant impact on application response time. Certain coding techniques can negatively affect performance, such as excessive roundtrips. Although this may not be noticeable when you're running the application against a local database, it may turn out to be unacceptable when you're running against SQL Azure.

The following coding choices may impact your application's performance:

- Chatty design. As previously mentioned, a chatty application uses excessive roundtrips to the database and creates a significant slowdown. An example of a chatty design includes creating a programmatic loop that makes a call to a database to execute a SQL statement over and over again.

- Normalization. It's widely accepted that although a highly normalized database reduces data duplication, it also generally decreases performance due to the number of JOINs that must be included. As a result, excessive normalization can become a performance issue.

- Connection release. Generally speaking, you should open a database connection as late as possible and close it explicitly as soon as possible. Doing so improves your chances of reusing a database connection from the pool.

- Shared database account. Because SQL Azure requires a database login, you need to use a shared database account to retrieve data instead of using a per-user login. Using a per-user login prohibits the use of connection pooling and can degrade performance significantly, or even render your database unusable due to throttling.

There are many other application design considerations, but the ones listed here apply the most to programming against SQL Azure.
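The cost of a chatty design can be illustrated with a small, self-contained Python sketch. It uses an in-memory SQLite database as a stand-in for SQL Azure, so it measures nothing real: the `CountingConnection` wrapper, the `customers` table and the 30 ms per-roundtrip latency are all assumptions made for illustration. The point is only that the loop issues one statement per row, while the batched version issues one statement in total.

```python
import sqlite3
from contextlib import closing


class CountingConnection:
    """Wraps a DB-API connection and counts each execute() as one roundtrip."""

    def __init__(self, conn):
        self._conn = conn
        self.roundtrips = 0

    def execute(self, sql, params=()):
        self.roundtrips += 1
        return self._conn.execute(sql, params)


def fetch_chatty(db, ids):
    """One SELECT per id: the 'programmatic loop' pattern."""
    rows = []
    for customer_id in ids:
        cursor = db.execute(
            "SELECT id, name FROM customers WHERE id = ?", (customer_id,)
        )
        rows.extend(cursor.fetchall())
    return rows


def fetch_batched(db, ids):
    """A single SELECT that asks for every id at once."""
    placeholders = ", ".join("?" for _ in ids)
    cursor = db.execute(
        f"SELECT id, name FROM customers WHERE id IN ({placeholders}) ORDER BY id",
        tuple(ids),
    )
    return cursor.fetchall()


def compare(row_count=50, latency_ms=30.0):
    """Return roundtrip counts and modeled latency for both designs."""
    # Open late, close early: closing() releases the connection on exit.
    with closing(sqlite3.connect(":memory:")) as conn:
        conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
        conn.executemany(
            "INSERT INTO customers VALUES (?, ?)",
            [(i, f"customer-{i}") for i in range(row_count)],
        )
        ids = list(range(row_count))
        chatty, batched = CountingConnection(conn), CountingConnection(conn)
        same_rows = fetch_chatty(chatty, ids) == fetch_batched(batched, ids)
    return {
        "same_rows": same_rows,
        "chatty_roundtrips": chatty.roundtrips,
        "batched_roundtrips": batched.roundtrips,
        "chatty_latency_ms": chatty.roundtrips * latency_ms,
        "batched_latency_ms": batched.roundtrips * latency_ms,
    }


if __name__ == "__main__":
    print(compare())
```

Both functions return the same rows; only the number of roundtrips differs, and with any fixed network latency per call that difference scales linearly with the row count.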

Other members of this series:

- SQL Azure : Tuning Techniques (part 4) - Indexed Views & Stored Procedures

- SQL Azure : Tuning Techniques (part 3) - Indexing

- SQL Azure : Tuning Techniques (part 2) - Connection Pooling & Execution Plans

- SQL Azure : Tuning Techniques (part 1) - Dynamic Management Views


Marketplace DataMarket and OData

• See Paul Miller asserted Google Could Make Data Marketplaces Actually Useful in a 2/20/2010 post about Dataset Publishing Language (DSPL) to GigaOm’s Structure blog in the Other Cloud Computing Platforms and Services section below.

Zoiner Tejada offered “A look at building hybrid on-premises and Windows Azure-based application architectures in 2011” with his The Future of WCF Services and Windows Azure article for DevProConnections:

By now, you've probably figured out that the name of the game at Microsoft is cloud—they are all in. Teams at Microsoft that once focused exclusively on building products for on-premises use are now being asked to cloud-enable them or build new, symmetric cloud functionality. This begs several questions: Are you still looking at your Windows Communication Foundation (WCF) service-based applications as on-premises solutions? Have you built and hosted any services on Windows Azure? More to the point, have you considered hybrid architectures where you have both on-premises and cloud-hosted services? If not, this article will help you start thinking about hybrid solution architectures.

Hybrid Application Architecture

As Figure 1 shows, services can live in many places, such as on-premises and hosted in Microsoft IIS with Windows Server AppFabric, or in the cloud hosted within a web, worker, or VM role.

Figure 1: An overview of a hybrid application architecture

However, the clients, except for the most traditional corporate desktop applications, do not live exclusively on-premises or in the cloud. Some users may connect to your applications via laptops or smartphones across the Internet. Because of this connectivity from the Internet, you are less likely to offer solutions where such clients connect directly to your on-premises applications. There are many good reasons for this, but the typical reasons are to leverage cloud services for scale and minimize the traffic and demand against your on-premises components, as well as to decouple clients from hard-coded knowledge of the location of your on-premises components, so that you can move them around as needed.

There are also alternative considerations in a hybrid application design with respect to the placement of your on-premises services. For example, you might have regulations that require you to host the data within specific physical confines (for example, within your corporation or within your country). Additionally, cost or uptime may be factors. In some scenarios, it may be more cost effective to maintain your on-premises data center, or it may be the only way to deliver an SLA of 99.999 percent uptime.

We will return to Figure 1 many times throughout this article. However, at a high level, as we explore this form of hybrid architecture, we have three main concerns: how all clients, on-premises components, and cloud-hosted components communicate; how to provide access to the data that those components need; and how to secure access to the components and the data. Along the way, we will introduce the functionality of existing Azure services as well as recently announced ones.

Communication

When extending the reach of your application out into the cloud, there are two important questions to ask: How will your on-premises and Azure-hosted components communicate, and how do you expect clients to communicate with your Azure-hosted components and with your on-premises components?

Cloud communication. When connecting your on-premises services with services that are hosted in Azure, you have three options: Your on-premises solutions can communicate directly with the Azure-hosted services by making SOAP-style or REST-style calls, they can establish a link across the Azure AppFabric Service Bus, or they can leverage the VPN-like connectivity of Windows Azure Connect. Each approach has different value propositions and important considerations, so we will briefly review each.

Communicating via direct SOAP and REST calls. In this scenario, your on-premises services become clients of WCF services hosted in Azure. This scenario is not much different from how you would build two on-premises services that communicate with each other across the same LAN. The challenge with this approach surfaces when considering how the Azure-hosted services will call back to your on-premises services. This complication is introduced by firewalls and other forms of Network Address Translation (NAT) that likely already protect your on-premises network environment from external access. You could go about "poking holes" in your firewall configuration to allow direct external access to your on-premises services, but doing so greatly diminishes your network's security and introduces a new IT management burden every time you introduce a new or reconfigure an existing on-premises service. Fortunately, for service-to-service communication, Azure provides an elegant solution in the Service Bus. …

Nuno Lunhares described Setting up the Deployer and the oData Web Service - Part 4 Configuring the oData Web Service in a 2/2011 tutorial:

Summary

This tutorial explains how to configure a Tridion Content Delivery instance with the oData Web Service.

It is meant as a guide, and it may be applicable only to the "GA" release of SDL Tridion 2011.

Tutorial Sections

- Part 1 - Creating the Content Delivery Database

- Part 2 - Configuring an application server

- Part 3 - Configuring the Tridion Deployer using Eclipse IDE

- Part 4 - Configuring the oData Web Service

- Part 5 - Testing and publishing to the web service

Configuring the oData Web Service

In this chapter we will guide you through the configuration of the oData Web Service.

Configuring the Web Service Web Application

Locate the cd_webservice.war file (under [Tridion_Install]\Content Delivery\java\webservice) and rename it to cd_webservice.zip

Click Yes

Open the zip file (using Explorer) and navigate to WEB-INF\classes

Copy the configuration files you created for the deployer app from C:\Tomcat\Webapps\TridionUpload\WEB-INF\classes into this location (you don't need to copy the cd_deployer_conf.xml file)

Edit the logback.xml file and change the log location (note: you may have to copy this file to your desktop first, make the changes, then copy it back to the ZIP file)

Now, in the zip file, navigate back to WEB-INF\lib

On a different explorer window, navigate to [Tridion_Install]\Content Delivery\java\third-party-lib\1.5, and copy both jar files to the zip file's WEB-INF\lib folder

Rename the cd_webservice.zip file back to cd_webservice.war

Make sure you can set the extension correctly. By default, Windows hides the .zip extension. You can change this by selecting Organize -> Folder and search options from the Windows Explorer menu.

Deploy this war file in Tomcat (by copying it to C:\Tomcat\Webapps)

Test it in a browser by opening http://localhost:8080/cd_webservice/odata.svc

Tip: you may want to change your Internet Explorer settings to display the Atom+Pub xml returned by this webservice by going to Internet Explorer -> Options, Content Tab, and clicking on Settings under "Feeds and Web Slices" and unchecking the box "Turn on feed reading view". You need to restart IE for this change to be active

You now have an active oData WebService endpoint.
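Because a .war file is an ordinary ZIP archive, the rename-and-copy steps above can also be scripted. The following Python sketch is one possible way to do it, not part of the original tutorial: the function name `repack_war`, the output file name and the jar name used in the demo are hypothetical, and the demo works on a tiny stand-in archive rather than a real Tridion installation. It writes a new archive instead of editing in place, so a configuration file that already exists in the WAR (such as logback.xml) is replaced rather than duplicated.

```python
import tempfile
import zipfile
from pathlib import Path


def repack_war(src_war, dst_war, config_files, jar_files):
    """Copy src_war to dst_war, replacing or adding the given files.

    config_files land in WEB-INF/classes and jar_files in WEB-INF/lib.
    Returns the sorted archive names that were written from disk.
    """
    overrides = {f"WEB-INF/classes/{Path(p).name}": Path(p) for p in config_files}
    overrides.update({f"WEB-INF/lib/{Path(p).name}": Path(p) for p in jar_files})
    with zipfile.ZipFile(src_war) as src, \
            zipfile.ZipFile(dst_war, "w", zipfile.ZIP_DEFLATED) as dst:
        for item in src.infolist():
            if item.filename not in overrides:  # keep untouched entries as-is
                dst.writestr(item, src.read(item.filename))
        for arcname, path in overrides.items():
            dst.write(path, arcname)
    return sorted(overrides)


def demo():
    """Build a tiny stand-in WAR in a temp folder and repack it."""
    with tempfile.TemporaryDirectory() as tmp:
        tmp = Path(tmp)
        war = tmp / "cd_webservice.war"
        with zipfile.ZipFile(war, "w") as z:
            z.writestr("WEB-INF/web.xml", "<web-app/>")
            z.writestr("WEB-INF/classes/logback.xml",
                       "<configuration>old</configuration>")
        config = tmp / "logback.xml"
        config.write_text("<configuration>new</configuration>")
        jar = tmp / "example-driver.jar"  # hypothetical jar name
        jar.write_bytes(b"not a real jar")
        out = tmp / "cd_webservice_repacked.war"
        written = repack_war(war, out, [config], [jar])
        with zipfile.ZipFile(out) as z:
            names = sorted(z.namelist())
            logback = z.read("WEB-INF/classes/logback.xml").decode()
        return written, names, logback


if __name__ == "__main__":
    print(demo())
```

In a real run you would pass the configuration files from the deployer's WEB-INF\classes folder (leaving out cd_deployer_conf.xml, as noted above) and the two jar files from the third-party-lib folder, then copy the resulting archive to the Tomcat webapps folder under the name cd_webservice.war.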

SDL Tridion is a content management system for marketing.


Windows Azure AppFabric: Access Control and Service Bus

Uma Maheswari Anbazhagan of Microsoft’s Dynamics CRM Team explained Windows Azure AppFabric Integration with Microsoft Dynamics CRM - Step By Step in a 2/18/2011 tutorial:

Microsoft Dynamics CRM 2011 provides a cool new feature called “Windows Azure AppFabric Integration with Microsoft Dynamics CRM” that helps customers integrate CRM with their other line-of-business applications without having to deal with the typical issues of polling, opening up firewalls, etc. Please refer to the Azure Extensions for Microsoft Dynamics CRM topic in the Microsoft Dynamics CRM 2011 SDK for more details on this feature.

In this blog, I will guide you step by step to get you started with CRM integration with Azure AppFabric. At the end of this blog, you would know everything to get your first CRM-AppFabric sample up and running.

Pre-Requisite - Only for on-premises and IFD CRM servers:

If you are using Microsoft Dynamics CRM Online, you do not have to do this step. This has already been done for you.

If you are using an on-premises or IFD CRM server, you will need to do your one-time setup for configuring your CRM server for Azure integration. Please refer to the topic “Configure Windows Azure Integration with Microsoft Dynamics CRM” in CRM 2011 SDK to do this.

In this blog, we will start by creating an azure account, and then run the service that listens for messages from CRM, use the plugin registration tool to configure both Azure and CRM and then see it working end to end.

1) Signup for an Azure AppFabric account:

First, let’s start off by going to http://www.microsoft.com/en-us/appfabric/azure/default.aspx and sign up for an Azure account. (At the time of this article, there are trial memberships available which you can take advantage of).

2) Create a ServiceNamespace

Login to the AppFabric Developer Portal (https://appfabric.azure.com/Default.aspx) with your Azure AppFabric account.

In the AppFabric Developer Portal, go to “AppFabric” tab and create a new AppFabric project.

Click on the project and click “Add Service NameSpace”. Provide a string for your service namespace, pick your region, and choose the number of connections you need and click “Create”.

Now you will see an “Activating” icon next to your service namespace. It takes a couple of minutes to get your service namespace activated, and then the status of the service namespace becomes “Active”.

Click on the service namespace and you can see the “Management Key” which is a secret key to access your service.

3) Create a service that listens for messages from CRM

Now we need to create a service that listens for messages from CRM.

Wondering how to create a service?

CRM 2011 SDK has a set of four sample listeners, each one with a different service contract. Click here for Sample Code for Microsoft Dynamics CRM and AppFabric Integration.

Alternatively, you can download the complete CRM 2011 SDK (available at http://msdn.microsoft.com/en-us/dynamics/crm/default.aspx), and you can compile one of these listeners and use them. For example, compile the TwoWayListener project (under sdk\samplecode\cs\azure\twowaylistener) and create TwoWayListener.exe.

4) Expose your Service in AppFabric and start listening for messages from CRM:

Start the listening Service. (By doing this step, you are actually hosting this service in Azure AppFabric, so that it will start listening for the messages from CRM.).

If you are using the sample listener that ships in the sdk, start TwoWayListener.exe and provide the following details (Please note that the command line parameters are the same for all sample listeners in the sdk):

Service namespace: <name of your AppFabric Service Namespace>

Service namespace issue name: owner

Service namespace issue key: <Management Key of your AppFabric Service Namespace>

Endpoint path: <A subpath where you want to expose your listener. This can be any string. Separate child paths with a “/”>
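To see how the service namespace and endpoint path fit together, here is a small Python sketch that composes a listener address from the two values. It rests on assumptions that are not stated in the original post: the `<namespace>.servicebus.windows.net` host convention, the `https` default scheme, and the helper name `listener_address`; the namespace "contoso" is a made-up example. Check the address your own listener reports rather than relying on this.

```python
from urllib.parse import quote


def listener_address(service_namespace, endpoint_path, scheme="https"):
    """Compose the address a listener would be exposed at (assumed convention)."""
    if not service_namespace or "." in service_namespace or "/" in service_namespace:
        raise ValueError("service_namespace must be a bare name, e.g. 'contoso'")
    # Drop empty segments so '/crm//leads/' and 'crm/leads' normalize alike.
    segments = [quote(s, safe="") for s in endpoint_path.split("/") if s]
    host = f"{service_namespace}.servicebus.windows.net"  # assumed host suffix
    return f"{scheme}://{host}/" + "/".join(segments)


if __name__ == "__main__":
    print(listener_address("contoso", "crm/leads"))
```

The same namespace and path values are the ones you enter later when registering the service endpoint, so keeping them normalized in one place helps avoid mismatches between the listener and the registration.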

5) Connect to your organization using Plugin Registration Tool:

Download the CRM 2011 SDK (available at http://msdn.microsoft.com/en-us/dynamics/crm/default.aspx). Compile and launch the Plugin Registration Tool that ships in the SDK (under sdk\tools\pluginregistration).

In the tool, click “Create New Connection”. Provide the Discovery Url, UserName, and Password, then click “Connect”. Select the target organization in the list and click “Connect”.

6) Create a Service Endpoint:

Now you need to create a service endpoint, which contains all the details about the service that is listening for the messages from CRM plugins. The fields in a service endpoint are:

- Name: The name of your Azure AppFabric project.

- Description: A description of this endpoint registration.

- Solution Namespace: The solution namespace of your AppFabric project. Provide the name of the service namespace you created in Azure AppFabric Management Portal here.

- Path: The URL of your AppFabric project. Provide the subpath under the service namespace where your service is listening here.

- Contract: The endpoint contract. An AppFabric listener application must use this contract to read the posted message. You should choose the same contract that the listener is using, which in our example is TwoWay.

- Claim: The claims to send to AppFabric. Use None for the standard claim.

- Federated Mode: Check this box to use federated mode.

7) Configure ACS:

Next, you need to give permission for your CRM organization to post to your endpoint in Azure AppFabric using tools like acm.exe (this command line tool ships with the Azure AppFabric SDK).

How do I give permission? Configuring ACS? Hmm, what does that mean? Do I need to learn a brand new tool for doing all this???

Good news! Rather than using the command line tool acm.exe, the Plugin Registration Tool provides you with easy-to-use, one-click setup support!

To give permission for your CRM organization to post to your endpoint in Azure AppFabric, all you need is the following information:

- Management Key: This is the management key for your AppFabric solution namespace.

- Certificate File: This is the public certificate that identifies your CRM deployment.

- Issuer Name: This is the name used, along with the certificate file, to identify your CRM deployment.

You can get the Issuer Name and can download the certificate under “Windows Azure AppFabric Issuer Certificate” section in the Developer Resources page in your CRM Organization (see the screenshot below), which opens up when you click the “Go to the Developer Resources page…” link in the same “Configure ACS” screen.

You can grant permissions based on one of the many claims that CRM posts to AppFabric.

The various claims posted by CRM are:

- Organization: Name of your CRM organization

- User: User under whose context the plugin is executing

- InitiatingUser: User who triggered the plugin. This would be same as User claim in non-impersonation scenarios.

- PluginAssembly: Full name of the plugin assembly posting to AppFabric. The assembly name is fully qualified if the assembly is strongly signed, otherwise it is the short name.

The “Configure ACS” button in the Plugin Registration Tool creates issuers, rules (based on the organization claim only, since that would be the most widely used scenario), scope, token policy, etc. to allow CRM to post to your endpoint. After configuring, a summary of the configuration is displayed in the text box on the “Configure ACS” screen.

Note: To create rules based on other types of claims, you need to use the acm.exe tool that ships with Azure AppFabric SDK.

8) Verify Authentication:

This queues up a system job (Async job) which checks whether this CRM organization has access to post to your service in the Azure AppFabric. Since we granted access using “Configure ACS” in the above step, the “Verify Authentication” should succeed now. If there are any failures, System Job Message will display the details.

9) Register a SdkMessageProcessingStep:

Now that we have registered the service endpoint, go ahead and right click the service endpoint and choose “Register New Step” from the context menu. Provide the Message, PrimaryEntity, and any other fields you need and click “Register New Step”. You can also choose to register the pre/post images if applicable.

10) Trigger the plugin:

Now we are all set!

Let’s log in to the organization using the browser and trigger the plugin. (For example, if the plugin was registered on create of lead, let’s trigger the plugin by creating a lead).

When the plugin is triggered, it queues up a system job which posts the execution context of the message currently being processed by CRM to the AppFabric endpoint specified in the service namespace.

You can check out the status of the plugin execution in the system jobs grid. In case of external issues (like the listener is not up and running yet), these system jobs have a better reliability story through automatic retry mechanisms etc.

Congratulations! The context data from the plugin is now received at the listener. For the purpose of simplicity the listener in this scenario is a console application that outputs the data in the console. You can implement a more realistic listener that would fit your needs and will also integrate into your other Line of Business Applications.

Panagiotis Keflidis (@pkefal) posted Using Windows Azure AppFabric Cache (CTP) on 2/16/2011 (missed when posted):

Introduction

Highly scalable applications that have to serve millions of users every day need a highly scalable, highly available and durable caching platform and architecture. Distributed caching nodes are almost always the best solution to avoid unnecessary round-trips to the database, but they can also cause problems if implemented incorrectly. Possible problems include using out-of-date objects that hold an older version of the data, failing to retrieve the correct (not necessarily the latest) version of an object from the cache, or expiring an object from the cache too soon.

One of the most anticipated features has been significantly enhanced at the latest CTP version of Windows Azure AppFabric. Caching was introduced back in PDC '10 along with other features like durable messages on Service Bus, more identity providers for Identity Federation, VM Role, Extra small Instances, SQL Azure Reporting, Windows Azure Connect and a lot of others.

This article is based on Visual Studio 2010 using the latest version of the Windows Azure SDK v1.3 at the time it was published and also Windows Azure AppFabric SDK v2.0 CTP February release.

Background

Back in the day, Windows Azure AppFabric Cache was known by the codename "Velocity". It is part not only of Windows Azure AppFabric but also of Windows Server AppFabric, which also includes a project known by the codename "Dublin". "Dublin" was also demonstrated as a composite building service, where you select all the necessary components of your application and, along with WCF and WF hosting and orchestration, you get a lot of new things, like easy scaling and point-and-click addition of new components/services to your application. All of this is just a quick overview of what is included in the Windows Azure/Server AppFabric family. Be aware that the two are not aligned: if something exists in Windows Azure AppFabric, that doesn’t mean it also exists in Windows Server AppFabric.

What is it?

Windows Azure AppFabric Cache removes the hassle of setting up a new caching farm with nodes, configuring all the settings needed to keep those nodes in sync, making sure the nodes have enough resources, etc., by providing a Platform as a Service approach, just like the whole Windows Azure Platform does. All you have to do is go to http://portal.appfabriclabs.com, sign up and start using all the services Windows Azure AppFabric has to offer: ACS, Service Bus and Caching, among others.

How does it work?

Just like any other distributed caching solution, Windows Azure AppFabric Cache has two parts: the server part, where the service is running, and the client part, where the client uses some code and configuration to access it. To create the server part you have to go to the Windows Azure AppFabric Labs portal mentioned above and select "Cache".

After that you have to click on "Create Namespace".

There you have to select a subscription to activate the feature. As this is a CTP feature, you won’t have any subscription created at the moment, so you’ll be prompted by the portal to automatically create one. As soon as you click OK, you’ll get a new subscription called "LabsSubscription". Everything will be associated with this subscription, as you cannot create a new one on the CTP. When the subscription is ready (it takes 3-4 seconds), you have to select a name for your namespace and check that it’s available, then select the Region (only USA is available for the CTP) and finally choose the cache size, either 128 MB or 256 MB. You can change the cache size once every 24 hours if you want.

Now you can click OK and the namespace will be in an "Activating" state for a while.

When it’s ready, it will turn green and the indication will be "Activated" which means you can now use it in your application. As I said, the application has two parts; we just activated the server part and we’re going to create the client configuration now to access the service. This part is also being done automatically by the portal.

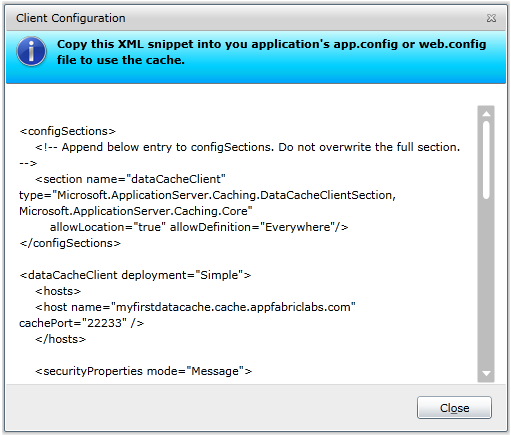

All you have to do is click on "View Client Configuration" and select the part of the configuration you need, either for a non-Web Application or for a Web Application where you can change the session state persistence storage to this cache. From the same menu, you can also change the cache size by clicking on "Change Cache Size". If you click on "View Client Configuration" you get a window containing all the necessary XML you need to put into your app.config or web.config.

After you select the part you need for your application, which in my case is a simple console application to do some very basic demonstration about how to use the cache, you have to place it inside your app.config.

    <configuration>
      <configSections>
        <section name="dataCacheClient"
                 type="Microsoft.ApplicationServer.Caching.DataCacheClientSection, Microsoft.ApplicationServer.Caching.Core"
                 allowLocation="true"
                 allowDefinition="Everywhere">
        </section>
      </configSections>
      <dataCacheClient deployment="Simple">
        <hosts>
          <host name="YOUR_NAMESPACE.cache.appfabriclabs.com" cachePort="22233">
          </host>
        </hosts>
        <securityProperties mode="Message">
          <messageSecurity authorizationInfo="YOUR_KEY_HERE">
          </messageSecurity>
        </securityProperties>
      </dataCacheClient>
    </configuration>

Using the code

When you’re done adding this XML to your app.config, you’ll have to reference two assemblies from the Windows Azure AppFabric SDK v2.0 which you can download here. The assemblies you need are located in \Program Files\Windows Azure AppFabric SDK\V2.0\Assemblies\Cache\ and you need to add references to Microsoft.ApplicationServer.Caching.Core.dll and Microsoft.ApplicationServer.Caching.Client.dll.

Adding those two references will allow all necessary methods and namespaces to be exposed and used by your code. We particularly need the DataCacheFactory and DataCache classes, which contain all the necessary methods to initialize the cache client and interact with the service.

    using (DataCacheFactory dataCacheFactory = new DataCacheFactory())
    {
        // Get a default cache client
        DataCache dataCache = dataCacheFactory.GetDefaultCache();
    }

As we don’t provide any parameters, the default values read from the configuration file will be used to initialize the DataCache client.

Using the DataCache client is fairly easy. Just like any other distributed cache, it has methods to Put() something in the cache, Get() something from the cache, etc. Whenever you Put or Get something from the cache, you have to specify a key that is unique to this object. In our case I’m using the FullName of our Person class, which is returned by a method of the class.

    // Put that object into the cache
    dataCache.Put(nPerson.GetFullName(), nPerson);

    // Get the cached object back from the cache
    Person cachedPerson = (Person)dataCache.Get(nPerson.GetFullName());

You might notice that the Person class is decorated with a DataContract attribute and all the properties are decorated with a DataMember attribute. That is needed so the object can be serialized and stored in the cache. Both attributes live in the System.Runtime.Serialization assembly, for which you also have to add a reference to your project. The assembly is located in the GAC, as it’s part of the .NET Framework.

    [DataContract]
    public class Person
    {
        [DataMember]
        public string FirstName { get; set; }

        [DataMember]
        public string Lastname { get; set; }

        [DataMember]
        private decimal Money { get; set; }

        public Person(string firstname, string lastname)
        {
            FirstName = firstname;
            Lastname = lastname;
            Money = 0;
        }

        public decimal WithdrawMoney(decimal amount)
        {
            return Money -= amount;
        }

        public void DepositMoney(decimal amount)
        {
            Money += amount;
        }

        public decimal GetBalance()
        {
            return Money;
        }

        public string GetFullName()
        {
            return string.Format("{0} {1}", FirstName, Lastname);
        }
    }

There are other aspects of the Windows Azure AppFabric Cache that I’ll explore in future articles, like how to get an object only if it’s newer than the version already in memory, how to handle versioning of multiple objects in the cache, and how to automatically remove an object from the cache after a specific period of time.
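One consequence of storing serialized objects is worth spelling out: the cache holds a copy taken at Put() time, so later changes to the local object are not visible through Get() until you Put() again. The following Python sketch models that behaviour with an in-memory dictionary. `SnapshotCache` and its methods are hypothetical stand-ins, not the AppFabric API, and `copy.deepcopy` stands in for serialization.

```python
import copy


class SnapshotCache:
    """In-memory stand-in for a remote cache: stores copies, not references."""

    def __init__(self):
        self._store = {}

    def put(self, key, value):
        # deepcopy stands in for serializing the object on its way to the cache.
        self._store[key] = copy.deepcopy(value)

    def get(self, key):
        value = self._store.get(key)
        return None if value is None else copy.deepcopy(value)


class Person:
    def __init__(self, first_name, last_name):
        self.first_name = first_name
        self.last_name = last_name
        self.money = 0

    def full_name(self):
        return f"{self.first_name} {self.last_name}"


def demo():
    cache = SnapshotCache()
    person = Person("Panagiotis", "Kefalidis")
    person.money += 100                          # deposit
    cache.put(person.full_name(), person)        # snapshot taken here
    person.money -= 50                           # withdraw after caching
    stale = cache.get(person.full_name()).money  # still the old balance
    cache.put(person.full_name(), person)        # refresh the cached copy
    fresh = cache.get(person.full_name()).money
    return stale, person.money, fresh


if __name__ == "__main__":
    print(demo())
```

The first read returns the balance as it was when the object was cached; only the second Put brings the cached copy back in line with the local object.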

All the code inside Program.cs:

    // DataCacheFactory will use settings from app.config
    using (DataCacheFactory dataCacheFactory = new DataCacheFactory())
    {
        // Get a default cache client
        DataCache dataCache = dataCacheFactory.GetDefaultCache();

        // Create a new person
        Person nPerson = new Person("Panagiotis", "Kefalidis");
        Console.WriteLine(string.Format("Currently in your account: {0}", nPerson.GetBalance()));

        // Deposit some money
        nPerson.DepositMoney(100);
        Console.WriteLine(string.Format("Currently in your account after deposit: {0}", nPerson.GetBalance()));

        // Put that object into the cache
        dataCache.Put(nPerson.GetFullName(), nPerson);

        // Remove some money
        nPerson.WithdrawMoney(50);

        // Get the cached object back from the cache
        Person cachedPerson = (Person)dataCache.Get(nPerson.GetFullName());

        // How much money do we have now?
        Console.WriteLine(string.Format("Currently in your cached account after withdraw: {0}", cachedPerson.GetBalance()));

        // How much money we REALLY have now?
        Console.WriteLine(string.Format("Currently in your account after withdraw: {0}", nPerson.GetBalance()));

        // Update the cache with latest information
        dataCache.Put(nPerson.GetFullName(), nPerson);
    }

    Console.ReadKey();

Points of Interest

You can learn more about Caching at this URL http://msdn.microsoft.com/en-us/library/ee790954.aspx. Be aware that this is referring to Windows Server AppFabric and not Windows Azure AppFabric but it is a very good source to learn more and get an overview of what the underlying infrastructure is.

You can also get more information about the Windows Azure Platform in general at my blog http://www.kefalidis.me.

History

- 16/02/2011: First version published

License

This article, along with any associated source code and files, is licensed under The Code Project Open License (CPOL)

Thanks to Pkefal for the heads up on his project.

<Return to section navigation list>

Windows Azure Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

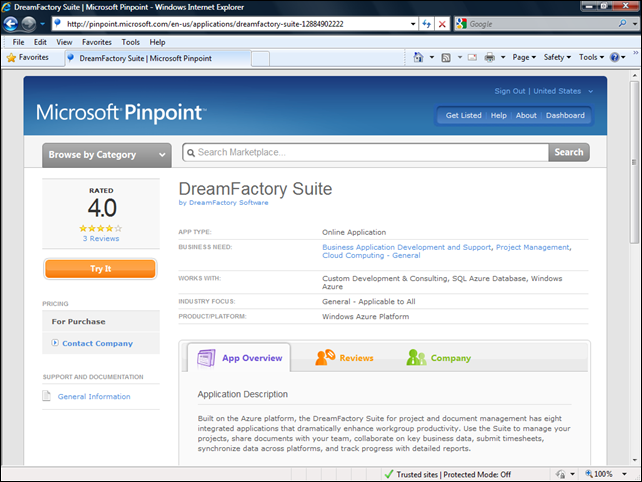

• DreamFactory Software announced their updated DreamFactory Suite for Windows Azure and SQL Azure with a 2/2011 listing in Microsoft Pinpoint:

Clicking the Try It button starts a 15-day trial subscription to the basic service.

According to the Application Description:

Built on the Azure platform, the DreamFactory Suite [DFS] for project and document management has eight integrated applications that dramatically enhance workgroup productivity. Use the Suite to manage your projects, share documents with your team, collaborate on key business data, submit timesheets, synchronize data across platforms, and track progress with detailed reports.

- Create, update, and manage projects, tasks, and resources. Visualize project status with detailed Gantt Charts.

- Conduct team activities with Collaborative Calendar, Activity Alerts, and Threaded Discussions. Visualize resource utilization.

- Organize hierarchical folders and versioned documents. Launch, edit, and upload files directly from your account.

- Log time and expenses for your projects and tasks.

- Browse offline and archived data sets and securely share selected data with colleagues, partners and customers.

- Generate advanced reports to visualize and monitor resource utilization and project profitability.

- Copy and merge data between any cloud platform and archive data sets for back up, reporting, or compliance.

- Create new team spaces with a click, add and administrate users easily with advanced permission features.

Here’s the DFS Home Page with an OakLeaf workspace, which I created with a trial subscription:

The More Apps list includes the applications available to the workspace:

- Projects

- Documents

- Database

- Calendar (Collaborative)

- Timesheets

- Project Reports

- Import & Export (Monarch)

- Application Builder

The Monarch Data Import and Export application enables data transfer to and from a variety of cloud and desktop data sources:

As shown above, Monarch supports custom interchange between the following source and destination data sources:

- Amazon Web Services

- Intuit Workspace

- Salesforce.com

- Cisco WebEx Connect

- Microsoft Windows Azure (tables and blobs)

- Microsoft SQL Azure

- Desktop Database (built-in)

- CSV Files

- Current Workspace

The Application Builder’s FormFactory provides sample invoice, packing list and quote forms that you can customize in this page:

DFS forms support programming with JavaScript and VBScript.

Inspection of HTTPS traffic with Fiddler2 indicates that basic operations store and retrieve data in dreamfactory.table.core.windows.net tables and dreamfactory.blob.core.windows.net blobs.

DreamFactory’s CTO, Bill Appleton, offers more background on the Azure implementation in his Talking with Ray Ozzie post of 5/17/2010 to the firm’s Corporate Blog (scroll down):

I had the pleasure of talking recently with Ray Ozzie about the Windows Azure platform at Microsoft's annual Professional Developers Conference. Ray had given the keynote earlier, and all of day one was focused on Windows Azure, especially the new product SQL Azure which they were rolling out at the conference. This is a self-healing, zero-maintenance, remotely administered SQL database hosted on an elastic grid. Customers can remotely change the size of their database up to 50 GB, and spawn any number of new databases with a click or two.

Azure is more than a software technology. Ray talked about how the software goes in the box, the box in the rack, the rack in the case, and then the cases are positioned around the world in co-located data centers. Every time Microsoft does this the cost is in the hundreds of millions of dollars. One week we noticed some speed problems on the Azure Blob service, the next week Microsoft dropped two new data centers and the problems were gone. You get the idea.

So our engineering teams are working on a direct interface to SQL Azure with no servers in between. This architecture couldn't be cleaner: the DreamFactory Player on one end and a SQL Azure database on the other. The results are quite impressive: fixed and variable response times for average transactions are up to three times faster than the other cloud platforms. There is never a lag or delay: this database is dedicated to my company. The DreamFactory Suite can load gigantic projects or very large data tables with awe-inspiring speed.

This product is a perfect fit for IT, because they can use all their existing tools for moving SQL data in and out of the database, or they can use tools from the DreamFactory Suite. Our TableTop product is great for browsing and reporting on all the data. The Monarch product can move data between SQL Azure and all other cloud platforms. They can use FormFactory for application or forms building. We have a great suite of tools for maximizing the potential of SQL Azure.

One problem is that customers must often administer their new cloud database with the same old tools they were using for their hosted database. It's three steps into the cloud and then two steps back towards the data center. To solve this problem we have created a fully functional Cloud Administrator for SQL Azure, so that end users can administer their SQL Azure database configuration from any browser with no software installation at all. This is an incredible breakthrough that makes SQL Azure easy to administer for less-technical business users and IT professionals alike. I think Mr. Ozzie would be proud.

All in all, DFS reminds me of Microsoft Access for the Cloud.

• Bruce Kyle (pictured below at right) posted ISV Video: Accumulus Makes Subscription Billing Easy for Windows Azure on 2/20/2011:

The Accumulus Subscription and Usage Billing System enables businesses to find more success with subscriptions and recurring payments. The system includes a suite of integrated tools designed to help a business grow its customer base by improving customer acquisition, fully automating the customer life-cycle and maximizing customer retention. The system handles promotion tracking, usage tracking, fee calculation, credit card processing, provisioning, billing statement emailing and customer support.

Video Link: Accumulus Makes Subscription Billing Easy for Windows Azure.

For businesses selling software, content or a service in Windows Azure, the Accumulus Subscription and Usage Billing System makes it possible to get to market quickly.

Gregory Kim, CEO of Accumulus, and Christian Dreke, President/COO of Accumulus talk with Greg Oliver, Windows Azure Architect Evangelist, about how the Accumulus Subscription and Usage Billing System makes it easy to monetize subscription services in Windows Azure. The video ends with a demonstration of how quick it is to get started with Accumulus.

About Accumulus

The Accumulus team has fifteen years of experience in the subscription billing industry, having designed systems that have supported more than 400 companies in over thirty countries, and processed more than a billion dollars of credit card transactions.

- See also Credit Card Processing for Windows Phone 7 on Windows Azure.

- Windows Azure Tips: For tips on developing for Windows Azure, see Greg Oliver's Azure Tips Blog.

- Free Trial for Windows Azure: Try out Windows Azure today. Windows Azure platform 30 day pass (US Developers) No Credit Card Required - Use Promo Code: DPWE01

- Free Support for Your Windows Azure Development Efforts: Join Microsoft Platform Ready today for free support (technical and marketing) for your Windows Azure development.

- Also see Microsoft Platform Ready to certify your Azure application for more Microsoft Platform Network benefits.

- Get Started on Windows Azure: See Start Developing Windows Azure Platform Applications.

Petri L. Salonen [pictured below] reported Former Nokia CEO Kalle Isokallio is wondering about the fuss in Finland concerning Nokia and Microsoft collaboration in a 2/19/2011 post:

In today’s Finnish Iltalehti, the former CEO of Nokia, Kalle Isokallio, criticizes both Finnish politicians and other critics who do not see the opportunity in the new partnership that Microsoft and Nokia have created. Isokallio wonders how all these skilled Symbian developers would be worse off when given modern software development tools that are more productive than Symbian’s. He uses a metaphor to compare Symbian and Microsoft development. Assume that you are building a house. Which tool is better: a Leatherman knife, or an axe and a chainsaw? With the first one you will end up with a house eventually, but with the latter you get results much quicker because your productivity is better.

Isokallio also wonders whether he is the only one in Finland that sees this as an opportunity and wonders why politicians and labor unions refuse to see the bright side of this. Nokia did not have a future the way they were going, and this new path gives all Symbian developers, the ones that want it, a new future.

I think Isokallio brings a valid perspective to the wild discussion on the Internet about the pros and cons of this new partnership. Isokallio concludes that the future is less about hardware and more about the ability to provide one consistent view of the application that is consumed from the web. Consumers are no longer willing to have a different user experience on different devices if they use the same application, and I have to agree with this. If a billion consumers use Windows as the operating system, doesn’t it make sense to have a consistent user experience with a mobile device that looks and feels the same? I think it does.

Also, what I did not think about before was that maybe Nokia will be the one providing the tablet experience and hardware, not Microsoft. I think we can expect to see a Windows tablet from Nokia, the only question remains whether it is the upcoming Windows 8 or Windows Phone 7-based tablet. My guess is the first one.

Isokallio also concludes that the relationship between Nokia and Microsoft has something that Apple does not have, and that is the cloud, as I also concluded in my prior blog entry. Nokia now has access to one of the largest cloud vendors (Windows Azure) in the world and all of the developers that build solutions for Windows Phone 7. That did not exist with the Symbian development environment. Visual Studio provides tight integration with Windows Azure and Windows Phone 7 (out-of-the-box) so developers can focus on building the solution, not supporting infrastructure. [Emphasis added.]

Microsoft has something that Google does not have and that is an operating system that everybody uses. Yes, Google is working on its own Android operating system, but if a billion consumers use Windows today, that is where the market is for the time being. Google has the cloud, but users will still want to use their productivity tools and have seamless integration between different devices. Apple has its operating system and iPhone and tablet (iPad), but the market share of iOS is still very low in enterprises.

Finally, Isokallio concludes that the ridiculous amount that Nokia paid for Navteq (5.7 billion euros) might not seem so ridiculous anymore when the technology is combined with Bing technology. Bing market share has increased considerably and Microsoft is eating into Google’s market share and profits. Recently, there have been reports that Bing search results are even more accurate than Google’s. I think that is irrelevant as of now. What is relevant is who provides a consistent user experience in business and personal lives, and Microsoft has a new chance of doing this with its Windows Phone 7, Zune, Xbox 360, Windows Live, Office Live and other similar services that will be integrated.

I really enjoyed Isokallio’s article (in Finnish) as he brings valid points to the discussion and his background as Nokia’s CEO gives him some perspective and validity to his opinions.

Do you agree with what Isokallio is saying?

Herman Mehling asked Can Microsoft Overtake Oracle and Salesforce in CRM? in a 2/18/2011 post to eCRMGuide.com:

Microsoft is finally a serious contender in the CRM space, thanks to its mid-January launch of Microsoft Dynamics CRM Online 2011 and yesterday's launch of the on-premise version.

Regardless of how customers get it, Microsoft Dynamics CRM 2011 signals that Microsoft has upped its game against archrivals Oracle and Salesforce.com, particularly the latter, which has posted big market share gains on the strength of its early lead in online CRM.

"Microsoft Dynamics CRM is one of the few CRM solutions that enables customers to get CRM how it best fits them, whether on-premises, on demand in the cloud, or a combination of both," said Brad Wilson, general manager of Microsoft Dynamics CRM Product Management Group.

Microsoft has said it intends to aggressively pursue Salesforce's and Oracle's customers, offering companies — through June 30 — $200 per user to apply toward services to migrate data or for customization. On top of that, the vendor is offering introductory online CRM pricing of $34 per user per month — a $10 discount available through June 30 for 12 months.

Microsoft gets serious about CRM

Microsoft's release of the online version first is a clear indication of Microsoft's desire to emphasize its position as a cloud applications vendor; so are its penetration-focused promotional efforts, wrote Boston-based Nucleus Research in a research note.

The firm noted that $34 per month is "a significant discount over both existing Microsoft and its competitors' per-user subscription pricing (Salesforce.com's lowest list price for complete CRM, the Professional Edition, is priced at $65 per user per month; most organizations going beyond the basics invest in the Enterprise Edition at $125)."

"Oracle and Salesforce have strong CRM solutions, but the aggressive pricing from Microsoft will cause buyers to give Dynamics CRM 2011 a serious look," said Forrester Research analyst Bill Band.

Besides low introductory pricing and other financial incentives, Microsoft hopes its flexibility will give customers another reason to switch from Salesforce.com. Microsoft gives companies a choice of online, hosted and on-premise, while Salesforce only offers an on-demand service.

Microsoft also claims that customers can integrate Microsoft Dynamics fairly easily into existing productivity software and line-of-business applications — capabilities not easily implemented by Salesforce.

Other enhancements to Dynamics CRM include a user interface that looks and feels like Microsoft's Outlook email client, an interface designed to lower training and support costs, and make it easier for users to get up to speed with the CRM applications. The product will also sport a ribbon taskbar similar to Office 2010 and 2007.

Other features include improved integration with Exchange, SharePoint and Office, as well as with the other components of Microsoft's business productivity online suite (BPOS).

Developers can take advantage of Windows Azure to develop and deploy custom code for Microsoft Dynamics CRM Online using tools such as Visual Studio, and they can also use Microsoft .NET Framework 4.0 to incorporate Microsoft Silverlight, Windows Communication Foundation and .NET Language Integrated Query (LINQ) into their cloud solutions.

The CRM offering also features an online marketplace that will let partners and customers find, download and implement custom and packaged extensions.

Microsoft's missing pieces

Still, Microsoft has some catching up to do, according to Nucleus Research: "From a functionality perspective, Microsoft is still missing a clear answer to the cloud-based crowdsourced lead generation and business contact validation capabilities Salesforce.com acquired with Jigsaw" (Microsoft provides integration with InsideView, Hoovers and ZoomData).

Nucleus noted that Microsoft also has some kinks to straighten out in billing online customers. Credit card billing would be a good first step. Streamlining access to self-service account management and account information would lower Microsoft's cost of supporting small customers while making it easier for business users to adopt, Nucleus wrote.

MsCerts.net continued its tutorial series with SOA with .NET and Windows Azure : WCF Discovery (part 3) - Discovery Proxies for Managed Discovery & Implicit Service Discovery on 2/16/2011:

Discovery Proxies for Managed Discovery

Ad hoc discovery is a suitable approach for static and smaller local service networks, where all services live on the same subnet and multicasting probes or announcements don’t add a lot of network chatter.

Larger environments, with services distributed across multiple subnets (or highly dynamic networks), need to consider a Discovery Proxy to overcome the limitations of ad hoc probing. A Discovery Proxy can listen to UDP announcements on the standard udpAnnouncementEndpoint for service registration and de-registration, but also expose DiscoveryEndpoint via a WCF SOAP binding.

The implementation requirements for a Discovery Proxy can vary from a simple implementation that keeps an in-memory cache of available services, to implementations that require databases or scale-out solutions, like caching extensions provided by Windows Server AppFabric. WCF provides a DiscoveryProxy base class that can be used for general proxy implementations.

Discovering from a Discovery Proxy

Discovering services registered with a Discovery Proxy follows the steps for ad hoc discovery discussed earlier. Instead of multicasting a UDP message, DiscoveryClient now needs to contact the Discovery Proxy. Details about the discovery contract are encapsulated in the DiscoveryEndpoint class. Its constructor only takes parameters for the communication protocol details, binding, and address.

Here we are configuring a discovery client to query the Discovery Proxy:

Example 9:

    DiscoveryEndpoint proxyEndpoint =
        new DiscoveryEndpoint(
            new NetTcpBinding(),
            new EndpointAddress(proxyAddressText.Text));

    this.discoveryClient = new DiscoveryClient(proxyEndpoint);

Implicit Service Discovery

Our coverage of WCF Discovery so far has focused on the explicit discovery of services. However, it is worth noting that WCF Discovery can also perform the same queries behind-the-scenes, when you configure endpoints as DynamicEndpoint programmatically or in the configuration file. This allows for highly dynamic, adaptive environments where virtually no location-specific details are maintained as part of code or configuration.

SOA Principles & Patterns

The consistent application of the Service Discoverability principle is vital for WCF Discovery features to be successfully applied across a service inventory, especially in regard to managed discovery. The application of Canonical Expression ties directly to the definition and expression of any published service metadata. And, of course, Metadata Centralization represents the effective incorporation of a service registry as a central discovery mechanism.

A client endpoint, for example, can be configured to locate a service that matches on scope and contract. In the following example, we configure DynamicEndpoint to locate a service with matching contract and metadata:

Example 10:

    <client>
      <endpoint kind="dynamicEndpoint"
                binding="basicHttpBinding"
                contract="ICustomerService"
                endpointConfiguration="dynamicEndpointConfiguration"
                name="dynamicCustomerEndpoint" />
    </client>
    <standardEndpoints>
      <dynamicEndpoint>
        <standardEndpoint name="dynamicEndpointConfiguration">
          <discoveryClientSettings>
            <findCriteria duration="00:00:05" maxResults="1">
              <types>
                <add name="ICustomerService"/>
              </types>
              <extensions>
                <MyCustomMetadata>
                  Highly Scalable
                </MyCustomMetadata>
              </extensions>
            </findCriteria>
          </discoveryClientSettings>
        </standardEndpoint>
      </dynamicEndpoint>
    </standardEndpoints>

With this configuration, the service consumer can create a proxy object to the server with the following code:

Example 11:

    ICustomerService svc =
        new ChannelFactory<ICustomerService>("dynamicCustomerEndpoint").CreateChannel();

Earlier series members:

- SOA with .NET and Windows Azure : WCF Discovery (part 3) - Discovery Proxies for Managed Discovery & Implicit Service Discovery

- SOA with .NET and Windows Azure : WCF Discovery (part 2) - Locating a Service Ad Hoc & Sending and Receiving Service Announcements

- SOA with .NET and Windows Azure : WCF Discovery (part 1) - Discovery Modes

<Return to section navigation list>

Visual Studio LightSwitch

Visual Studio Tutor posted Remote Debugging using Visual Studio on 2/19/2011:

To use Visual Studio remote debugging, both the remote machine and the local machine need to be in the same domain. Ensure that you are using the same account and password on both machines and that both have Administrator privileges. Then follow the steps below to set up remote debugging:

- Log on to the remote machine with the same account and password as on your local machine.

- Run the remote debugger monitor on the remote machine (this is located at C:\Program Files\Microsoft Visual Studio 9.0\Common7\IDE\Remote Debugger\x86). To ensure that you will be running the correct remote debugger monitor, it is a good idea to copy the local machine’s one to the remote machine.

- Run the application to be debugged on the remote machine

- Open Visual Studio on the local machine

- Go to Tools -> Attach to Process, which will open the Attach to Process dialog.

- Locate the Server name in the remote debugger monitor on the remote machine and enter the server’s name in the Qualifier box on the local machine

- Locate the application’s process in the Available process section, specifying the correct code type, and then click the Attach button

- Set your breakpoints and then run the app on the remote machine and a breakpoint will be hit.

<Return to section navigation list>

Windows Azure Infrastructure

• James Hamilton posted Dileep Bhandarkar on Datacenter Energy Efficiency on 2/20/2011:

Dileep Bhandarkar [pictured at right] presented the keynote at Server Design Summit last December. I can never find the time to attend trade shows so I often end up reading slides instead. This one had lots of interesting tidbits so I’m posting a pointer to the talk and my rough notes here.

Dileep’s talk: Watt Matters in Energy Efficiency

My notes [image sized to fit, see original for active links]:

Dileep is a Microsoft Distinguished Engineer responsible for Server Hardware Architecture and Standards for Global Foundation Services.

• Mustafa Kapadia asked Is Microsoft's "Cloud Power" campaign really driving results? on 2/16/2011 (missed when posted):

Based on the notion that cloud can fundamentally change the way people do business, Microsoft kicked off a brand new advertising campaign called “Cloud Power.” The campaign, launched in 2010, touts the benefits of cloud computing over those of traditional computing, offers prospective clients advice on how to get started, and introduces Microsoft’s cloud portfolio.

The ads, which include TV, Internet, print, and outdoor ads, feature the line “cloud power” with actors portraying different types of customers and offering various takes on what products like Windows Azure, Office 365 and Windows Server can offer. The Cloud Power campaign focuses on the public cloud, private cloud and cloud productivity.

Beyond the massive advertising campaign, which will run for at least the current fiscal year ending in July, Microsoft is also doing a series of events, including at least 200 separate gatherings with more than 50,000 customers. The company will also feature longer “Cloud Conversations” with real customers on its web site.

So with several hundred million dollars invested in the campaign, is it really working?

The (preliminary) results are…

While it’s too early to judge the campaign’s success, early indications are positive. According to the company’s website, Microsoft cloud solutions have been adopted by:

- Seven of the top 10 global energy companies

- 13 of the top 20 global telecoms

- 15 of the top 20 global banks, and

- 16 of the top 20 global pharmaceuticals

With over 10,000 corporate customers already using Windows Azure, the company is off to a good start in defining the marketplace. According to Gartner, Microsoft offers one of the most visionary and complete views of the cloud and is predicted to be one of the two leaders in the cloud computing market. The other company is VMware.

In other words, Microsoft just upped the ante. IBM, Google, Amazon, HP… how do you plan to respond?

Yaron Naveh delivered Bart Simpson's guide to Windows Azure on 2/19/2011:

The original name of this post was "a poor developer's guide to windows azure" but then I found the Bart Simpson's Chalkboard Generator:

My Azure story begins back at PDC08, where Microsoft announced a community preview of Windows Azure that included a free subscription for a limited period. I took advantage of this to develop the Wcf binding box (which btw got some good reviews). A few months later, the preview ended and my account became read-only. I was not too bothered by it as I had other things in mind. A few weeks ago I had a crazy idea to build a wsdl2-->wsdl1 converter. The most natural way to do it was to create an online service. But my Azure trial was already frozen, and I did not want to pay a hosting service just to host a free contribution I make for the community. What could I do? I then remembered that I (and my contest winners) have a special MSDN premium Azure offer. And this is how wsdl2wsdl came to life. Veni, vidi, vici? Oh my...

A few days after going on air I get this email from Microsoft:

Your Windows Azure Platform Usage Estimate - 75% of Base Units Consumed

This e-mail notification comes to you as a courtesy to update you on your Windows Azure platform usage. Our records indicate that your subscription has exceeded 75% of the compute hours amount included with your offer for your current billing period. Any hours in excess of the amount included with your offer will be charged at standard rates.

Total Consumed*: 592.000000 Compute Hours

Amount included with your offer: 750 Compute Hours

Amount over (under) your monthly average: -158.000000 Compute Hours

Let's see... I should get 750 compute hours / month, and a month has at most 31 days (a worst-case analysis). 750 / 31 > 24, which means I should have more than 24 complimentary compute hours per day. How could they run out so fast?

Bart Simpson's Azure Rule #1:

As a developer, it made too much sense to develop the binding box and wsdl2wsdl in separate Visual Studio solutions. This yields two separate Azure hosted services:

And this yields two separate bill items per day:

This means I was not paying for 24 compute hours per day, I was paying 24*2!

Now you may say RTFM. But nobody does it. Not when we download some open source library from the web, and not when we upload something in the other direction.

But why did the bill have 4 items and not two?

Bart Simpson's Azure Rule #2:

Not only did I have two hosted services online, but I also had two environments for each - staging and production. You pay for what you get:

2 services * 2 deployments = 4 deployments, and 4 * 24 hours a day = 96 hours a day!
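To put that figure in perspective, here is a small sketch of the same arithmetic. It assumes one instance per deployment (the post does not say how many instances each role ran) and takes the 750 included compute hours from the usage e-mail quoted earlier; the class and method names are made up for illustration.

```csharp
using System;
using System.Globalization;

public static class ComputeHoursSketch
{
    // Every deployed instance accrues 24 compute hours per day,
    // regardless of which environment it is deployed to.
    public static int HoursPerDay(int services, int deploymentsPerService, int instancesPerDeployment)
    {
        return services * deploymentsPerService * instancesPerDeployment * 24;
    }

    // Days until the included allowance runs out at a constant burn rate.
    public static double DaysUntilExhausted(int includedHours, int hoursPerDay)
    {
        return (double)includedHours / hoursPerDay;
    }

    public static void Main()
    {
        const int includedHours = 750; // from the usage e-mail

        int single = HoursPerDay(1, 1, 1); // one service, one deployment
        int actual = HoursPerDay(2, 2, 1); // two services, two deployments each

        Console.WriteLine(string.Format(CultureInfo.InvariantCulture,
            "{0} hours/day lasts {1:F1} days", single, DaysUntilExhausted(includedHours, single)));
        Console.WriteLine(string.Format(CultureInfo.InvariantCulture,
            "{0} hours/day lasts {1:F1} days", actual, DaysUntilExhausted(includedHours, actual)));
    }
}
```

At 24 hours a day the allowance covers a full month (750 / 24 = 31.25 days); at 96 hours a day it is gone in under eight days (750 / 96 ≈ 7.8), which is consistent with the 75% warning arriving only a few days after launch.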

Bart Simpson's Azure Rule #3:

I was on my way to a Chapter 11 when I decided to panically press the stop button on my unintended deployments:

However this does not reduce costs:

Suspending your deployment will still result in charges because the compute instances are allocated to you and cannot be allocated to another customer.

Remember: Always delete deployments you do not want to pay for. Suspending / stopping them still results in charges.

Bart Simpson's Azure Rule #4:

For applications with very small needs, an extra small instance should be enough. It can cut your costs by up to 60%! Configuring it is very easy:

<WebRole name="WebRole1" vmsize="ExtraSmall"> [fixed by --rj]

Conclusion

Homer Simpson once said "Trying is the first step towards failure." In my case it was the first step to this blog post and to my first two weeks' Azure bill:

I'll end the month at around $50, which is not too bad for a commercial site. But for a contribution to the community and my fun? Oh my...

Whether you are an independent developer or a Fortune 5000 company - know the Azure pricing model and how your account fits in.

Anne Grubb delivered “A comprehensive list of IT and developer resources for mobile development, cloud computing, and virtualization” in her Mobile and Cloud: Time to Ramp Up Your Learning post of 2/18/2011 to the DevProConnections blog:

It's a truism that technology change is ever accelerating. In the Windows IT/developer world, the most dramatic changes currently occurring may well be in the mobile and cloud areas. Last year, at DevProConnections, we were keeping a watchful eye on cloud and mobile. Later in 2010, we thought it was time to start covering Windows Azure and mobile topics in earnest—responding to the dev audience's growing interest in these topics by publishing several well-received articles on mobile development, cloud, and Windows Azure. Even so, we weren't seeing strong evidence of a groundswell of interest from devs in those areas or much activity from third-party vendors—yet.

But what a difference a few months make. Up until last fall, Microsoft had little significant presence in the smartphone area. But with the release of Windows Phone 7—and just in the last week, Windows Phone 7 announcements from Microsoft and several vendors in the .NET developer ecosystem, the situation is changing rapidly.

The same sort of acceleration is happening in the Microsoft platform world concerning the cloud. Azure is becoming a viable platform—for development as well as hosted services such as storage.

The challenge, as usual in our world, is to keep up with the changes—so, for example, when your CEO says, "we need a mobile app for our ordering system" or mandates putting core business apps and data in the cloud, you know how to respond to meet the request.

Mobile and Cloud Resources for Developers and IT Pros

DevProConnections, and its sister publications Windows IT Pro, SQL Server Magazine, and SharePointPro Connections, can help provide some of that knowledge. These sites offer dozens of timely how-to articles and commentaries on mobile and cloud topics for .NET developers, IT pros, and database professionals. (See the end of this article for a list of mobile and cloud articles.)

Another resource I'm excited about is the upcoming Mobile, Cloud and Virtualization Connections event, April 17–24 at the Bellagio in Las Vegas. Not just because it's only a two-hour flight for me to Vegas, or publication staff get to stay in a fancy hotel... I am especially looking forward to this conference because of the energy and excitement in these technology areas. I'm looking forward to the exchange of ideas on leading-edge topics with leaders in mobile development, carriers, and cloud security, to name a few. I'm impressed with the speaker/session lineup: a panel discussion "Which Platform Do You Bet On?" with Microsoft's Joe Belfiore, Big Nerd Ranch's Aaron Hillegass, and others; Cloud Security Alliance's Jim Reavis on "Building the Trusted Cloud" (I want to know if that's possible); Michele Leroux Bustamante on Identity Federation; and a panel discussion with Riverbed's Steve Riley, Cloud.com's Peder Ulander, and others on Virtualization in the Cloud.

Finally, you can look forward to continuing coverage of mobile and cloud topics in DevProConnections in 2011—with a cluster of features in the April and May issues on Windows Phone 7 and iOS application development, a review of mobile development tools, and how-to features on implementing Windows Azure and SQL Azure from a .NET developer perspective. With all these resources, you'll be equipped with knowledge to respond to the dramatic technology changes this year has already brought and promises to continue bringing.

Mobile Development Articles

Getting Started with Windows Phone 7 Development

Build a Windows Phone 7 Application

Building a Windows Phone 7 Application: Adding Features to the Album Viewer App

How to Build Mobile Websites with ASP.NET MVC 2 and Visual Studio 2010

Build a Silverlight Application for Windows Phone 7 Series

Building Your First Silverlight Game on Windows Phone 7

More mobile development articles

Windows Azure/Cloud Articles

Windows Azure Developer Tips and Tricks

A Service Level Agreement: Your Best Friend in the Cloud

The Future of WCF Services and Windows Azure

Access Control in the Cloud: Windows Azure AppFabric's ACS

Get to Know the Windows Azure Cloud Computing Platform

More Azure/cloud computing articles

SQL Azure Articles

7 Facts About SQL Azure

Getting Started with SQL Azure Database

SQL Azure Enhancements

SQL Server vs. SQL Azure: Where SQL Azure is Limited

Keep Your Eyes on Microsoft's SQL Azure

Brian Reinholz reported Microsoft Releases MCPD: Windows Azure Developer Certifications on 2/14/2011:

For those interested in learning more about Azure and what it means to develop on Microsoft's cloud platform, the wait is over: Microsoft has released three Microsoft Certified Professional Developer (MCPD) certifications for Windows Azure. The certifications are as follows:

Exam 70-583: Designing and Developing Windows Azure Applications - This exam was released yesterday. It is a PRO level exam, meaning that developers will be required to have one of the Technology Specialist (TS) exams (listed below) in order to earn this certification.

This advanced class focuses on designing and developing applications for Azure. The class is designed for a senior developer, architect, or team lead. It is expected that candidates will have at least six months of experience developing in Azure.

To take Exam 70-583, I believe that you'll need one of the two certifications below. (It would be a best practice either way to have a TS certification first, but I'm confirming with Microsoft whether it's mandatory, since the company's certification site doesn't say.)

Update: I heard back from Microsoft and it turns out that in order to earn the MCPD Windows Azure Developer certification, you must pass all three exams mentioned in this article: 70-583, 70-513, 70-516. 513 and 516 are not necessarily pre-requisites for 583, but they are all part of an overall track. Visit this page for more information.

Exam 70-513: Windows Communication Foundation Development with Microsoft .NET Framework 4 - For this certification, you must be able to develop applications using Windows Communication Foundation (WCF) and the .NET Framework 4. Recommended skills for this exam include: experience creating service model elements, experience configuring and deploying WCF applications, and experience using Visual Studio tools.

Exam 70-516: Accessing Data with Microsoft .NET Framework 4 - This certification tests your ability to access data sources using ADO.NET and the .NET Framework. Recommended skills for this exam include: ADO.NET 4 coding techniques, LINQ to SQL, stored procedures, and Entity Framework technologies.

For training resources, consider MCTS Self-Paced Training Kit (Exam 70-516): Accessing Data with Microsoft .NET Framework 4.

Coming Soon in Certifications

I also heard from Microsoft that we can expect the following updates coming soon:

- New cloud-related certifications

- Updated training resources for the courses mentioned above (classes, books, etc)

All developers who hold any of the above MCPD: Windows Azure Developer certifications will be required to recertify every two years to keep their skills relevant.


<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

• Chris Hoff (@Beaker) posted Video Of My CSA Presentation: “Commode Computing: Relevant Advances In Toiletry & I.T. – From Squat Pots to Cloud Bots – Waste Management Through Security Automation” on 2/19/2011:

This is probably my favorite of the presentations I have given. It was really fun. I got so much positive feedback on what amounts to a load of crap.

This video is from the Cloud Security Alliance Summit at the 2011 RSA Security Conference in San Francisco. I followed Marc Benioff from Salesforce and Vivek Kundra, CIO of the United States.

Part 1:

Part 2:

Related articles

- My Warm-Up Acts at the RSA/Cloud Security Alliance Summit Are Interesting… (rationalsurvivability.com)

- George Carlin, Lenny Bruce & The Unspeakable Seven Dirty Words of Cloud Security (rationalsurvivability.com)

- Incomplete Thought: Why Security Doesn’t Scale…Yet. (rationalsurvivability.com)

• Brian Prince asserted “A recap of the RSA Conference touches everything from cloud security to cyber-war” in a deck for his RSA Conference: Security Issues From the Cloud to Advanced Persistent Threats post of 2/20/2011 to eWeek’s IT Security & Network News blog:

The 20th annual RSA Conference in San Francisco came to a close Feb. 18. It was a week of product announcements, keynotes and educational sessions that produced their share of news. This year's hot topics: cloud computing and cyber-war.

The conference included a new session track about cloud computing, and the topic was the subject of the keynote by Art Coviello, executive vice president at EMC and executive chairman of the company’s RSA security division. Virtualization and cloud computing have the power to change the evolution of security dramatically in the years to come, he said.

“At this point, the IT industry believes in the potential of virtualization and cloud computing,” he said. “IT organizations are transforming their infrastructures. . . . But in any of these transformations, the goal is always the same for security–getting the right information to the right people over a trusted infrastructure in a system that can be governed and managed.”

EMC’s RSA security division kicked the week off by announcing the Cloud Trust Authority, a set of cloud-based services meant to facilitate secure and compliant relationships between organizations and cloud service providers by enabling visibility and control over identities and information. EMC also announced the new EMC Cloud Advisory Service with Cloud Optimizer.

In addition, the Cloud Security Alliance (CSA) held the CSA Summit Feb. 14, featuring keynotes from salesforce.com Chairman and CEO Marc Benioff and U.S. Chief Information Officer Vivek Kundra.

But the cloud was just one of several items touched on during the conference. Cyber-war and efforts to protect critical infrastructure companies were also discussed repeatedly. In a panel conversation Wednesday, former Department of Homeland Security Secretary Michael Chertoff, security guru Bruce Schneier, former National Security Agency Director John Michael McConnell, and James Lewis, director and senior fellow of the Center for Strategic and International Studies’ Technology and Public Policy Program, addressed the murkiness of cyber-warfare discussions.

“We had a Cold War that allowed us to build a deterrence policy and relationships with allies and so on, and we prevailed in that war,” McConnell said. “But the idea is the nation debated the issue and made some policy decisions through its elected representatives, and we got to the right place…I would like to think we are an informed society, [and] with the right debate, we can get to the right place, but if you look at our history, we wait for a catastrophic event.”

Part of the solution is partnerships between the government and private sector.

"One of the biggest issues you got—[and] unfortunately we haven't made enough progress—we need better coordination across the government agencies, and from the government agencies to the private sector," Symantec CEO Enrique Salem said. "I think we still work too much in silos inside the government [and] work too much in silos between the government and the private sector.”

The purpose of such efforts is to target advanced persistent threats (APTs).

“Part of the problem of when you define [advanced persistent threats], it's not going to be like one single piece of software or platform; it's a whole methodology for how bad guys attack the system,” Bret Hartman, CTO of EMC’s RSA security division, told eWEEK.

“They're going to use every zero-day attack they can throw at you,” he explained. “They are going to use insider attacks; they're going to use all kinds of things because they are motivated to take out whatever it is they want.”

The answer, Hartman said, is a next-generation Security Operations Center (SOC) built on six core elements: risk planning; attack modeling; virtualized environments; automated, risk-based systems; self-learning, predictive analysis; and continual improvement through forensic analyses and community learning.

Preventing attacks also means building more secure applications. In a conversation with eWEEK, Brad Arkin, Adobe Systems’ director of product security and privacy, discussed some of the ways Adobe has tried to improve its own development process, and offered advice for companies looking to do the same.

“The details of what you do with the product team are important, but if you can’t convince the product team they should care about security, then they are not going to follow along with specifics,” Arkin said. “So achieving that buy-in to me is one of the most critical steps.”

• Kiril Kirilov posted Information Security Professionals Need New Skills to Secure Cloud-based Technologies, Study Warns to the CloudTweaks blog on 2/18/2011:

More than 70 percent of information security professionals admit they need new skills to properly secure cloud-based technologies, a survey conducted by Frost & Sullivan and sponsored by (ISC)2 revealed. The 2011 (ISC)2 Global Information Security Workforce Study (GISWS) is based on a survey of more than 10,000 information security professionals worldwide (2,400 in EMEA). Some of its findings are alarming, including the fact that a growing number of technologies being widely adopted by businesses are challenging information security executives and their staffs.

The widespread use of technologies like cloud computing and the deployment of mobile devices will jeopardize the security of governments, agencies, corporations and consumers worldwide over the next several years, the survey said.

The survey also finds that most respondents believe they and their employees need new skills to meet the challenges of new technologies like cloud computing and the growing number of social networks and mobile device applications. These findings are not surprising, since information security professionals have always been a step behind the rapid stream of new technologies. One could not expect information security staff to be well prepared to answer every security threat related to the fast-paced market for cloud computing and mobile devices. Moreover, information security professionals are under pressure from end users who want new technologies deployed as soon as possible and who sometimes underestimate the related security risks.

“In the modern organization, end-users are dictating IT priorities by bringing technology to the enterprise rather than the other way around. Pressure to secure too much and the resulting skills gap are creating risk for organizations worldwide,” Robert Ayoub, global program director – network security for Frost & Sullivan, commented in a press release.

More alarming is that cloud computing illustrates a serious gap between technology implementation and the skills necessary to provide security. Over 50 percent of respondents (55 percent in EMEA) reported that they are using private clouds, while over 70 percent (75 percent in EMEA) recognize the need for new skills to properly secure cloud-based technologies.

However, respondents reported application vulnerabilities as the greatest threat to organizations, with 72 percent of those surveyed worldwide ranking application vulnerabilities as the No. 1 threat.