Windows Azure and Cloud Computing Posts for 7/23/2010+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

• Update 7/25/2010: Noted added content in Bruno Terkaly’s Leverage Cloud Computing with Windows Azure and Windows Phone 7 – Step 2 to Infinity – Under Construction series post in the Live Windows Azure Apps, APIs, Tools and Test Harnesses section.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Azure Blob, Drive, Table and Queue Services

Tobin Titus (@tobint) explains the CloudStorageAccount.SetConfigurationSettingPublisher and CloudStorageAccount.FromConfigurationSetting methods in this 7/23/2010 post:

Those venturing into Windows Azure development for the first time may find themselves in a bit of a quandary when they try to devise a strategy for getting their storage account credentials. You dive into the Windows Azure APIs and determine that you must first get a reference to a CloudStorageAccount. You are all aglow when you find the “FromConfigurationSetting” method that takes a single string parameter named “settingName”. You assume that you can store your connection information in a configuration file, and call “FromConfigurationSetting” from your code to dynamically load the connection information from the config file. However, like most of the Windows Azure documentation, it is very light on details and very heavy on profundity. The documentation for “FromConfigurationSetting” currently says:

“Create a new instance of a CloudStorageAccount from a configuration setting.”

That’s almost as helpful as “The Color property changes the color.” Notice that it isn’t specific about what configuration setting this comes from? The documentation is purposefully generic, but falls short of explaining why. So I’ve seen multiple questions in forums and blogs trying to figure out how this works. I’ve seen developers try to put the configuration setting in various places and make assumptions that simply providing the configuration path would get the desired result. Then they try to run the code and they get the following exception message:

“InvalidOperationException was caught

SetConfigurationSettingPublisher needs to be called before FromConfigurationSetting can be used”

Of course, this causes you to look up “SetConfigurationSettingPublisher” which also is a little light on details. It says:

“Sets the global configuration setting publisher.”

Which isn’t entirely clear. The remarks do add some details which are helpful, but don’t go as far as to help you understand what needs to be done – hence this blog post. So that was the long setup to a short answer.

The FromConfigurationSetting method makes no assumptions about where you are going to store your connection information. SetConfigurationSettingPublisher is your opportunity to determine how to acquire your Windows Azure connection information and from what source. The FromConfigurationSetting method executes the delegate passed to SetConfigurationSettingPublisher – which is why the error message says this method needs to be called first. The delegate that you configure should contain the logic specific to your application to retrieve the storage credentials. So essentially this is what happens:

- You call SetConfigurationSettingPublisher, passing in the logic to get connection string data from your custom source.

- You call FromConfigurationSetting, passing in a setting name that your delegate can use to differentiate from other configuration values.

- FromConfigurationSetting executes your delegate and sets up your environment to allow you to create valid CloudStorageAccount instances.
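The three steps above can be modeled in a few lines. This is a minimal sketch in Python rather than the .NET StorageClient API: `SettingRegistry`, `set_publisher`, `from_setting` and the `SETTINGS` dictionary are hypothetical names chosen for illustration, and the real methods are static members of CloudStorageAccount rather than instance methods. It shows only the control flow: a publisher delegate is registered once, and the lookup method invokes it with the setting name and a setter callback.

```python
from typing import Callable, List, Optional

# A publisher receives the setting name plus a setter callback it uses
# to hand the resolved value back to the caller.
Publisher = Callable[[str, Callable[[str], None]], None]


class SettingRegistry:
    """Hypothetical stand-in for the publisher mechanism described above."""

    def __init__(self) -> None:
        self._publisher: Optional[Publisher] = None

    def set_publisher(self, publisher: Publisher) -> None:
        # Step 1: register application-specific lookup logic at startup.
        self._publisher = publisher

    def from_setting(self, setting_name: str) -> str:
        # Steps 2 and 3: run the registered delegate for this setting name.
        if self._publisher is None:
            raise RuntimeError(
                "set_publisher needs to be called before from_setting can be used"
            )
        received: List[str] = []
        self._publisher(setting_name, received.append)
        if not received:
            raise KeyError(f"publisher supplied no value for {setting_name!r}")
        return received[0]


# Hypothetical settings store standing in for a configuration file.
SETTINGS = {"DataConnectionString": "UseDevelopmentStorage=true"}

registry = SettingRegistry()
registry.set_publisher(lambda name, setter: setter(SETTINGS[name]))
connection_string = registry.from_setting("DataConnectionString")
```

Calling `from_setting` on a registry with no publisher raises an error, which mirrors the InvalidOperationException quoted earlier.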

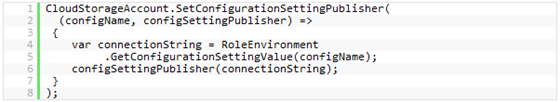

So, going back to a typical application, you want to store your configuration in a .config file and retrieve it at runtime when your Azure application runs. Here’s how you’d set that up. When your application starts up, you’ll want to configure your delegate using SetConfigurationSettingPublisher as follows:

If this is an ASP.NET application, you could call this in your Application_Start event in your global.asax.cs file. If this is a desktop application, you can set this in a static Constructor for your class or in your application startup code.

In this example, you are getting a configuration string from the Windows Azure configuration file, and using the delegate to assign that string to the configuration setting publisher. This is great if you are executing in Windows Azure context all the time. But if you aren’t running your code in the Windows Azure context (for instance, you are exercising your API from NUnit tests locally) this example will throw another exception:

“SEHException was caught

External component has thrown an exception.”

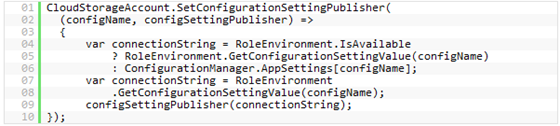

To solve this problem, you can set up the delegate to handle as many different environments as you wish. For example, the following code would handle two cases – inside a Windows Azure role instance, and in a local executable:
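The original listing is not reproduced here. As a rough, language-neutral illustration of the idea, the Python sketch below builds a publisher that reads from one source when running inside a role instance and from another otherwise. Every name in it (`make_publisher`, `role_settings`, `app_settings`, `in_role`) is hypothetical; in the .NET SDK the environment check would be something like `RoleEnvironment.IsAvailable`, and the local source would be the appSettings section.

```python
from typing import Callable, Mapping


def make_publisher(
    role_settings: Mapping[str, str],
    app_settings: Mapping[str, str],
    in_role: Callable[[], bool],
) -> Callable[[str, Callable[[str], None]], None]:
    """Return a publisher that picks its source based on the environment."""

    def publisher(name: str, setter: Callable[[str], None]) -> None:
        # Inside a role instance, read the service configuration;
        # otherwise fall back to the local application settings.
        source = role_settings if in_role() else app_settings
        setter(source[name])

    return publisher


# Hypothetical values for the two environments.
role_settings = {"DataConnectionString": "cloud-connection-string"}
app_settings = {"DataConnectionString": "UseDevelopmentStorage=true"}

resolved = []
make_publisher(role_settings, app_settings, lambda: False)(
    "DataConnectionString", resolved.append
)
make_publisher(role_settings, app_settings, lambda: True)(
    "DataConnectionString", resolved.append
)
```

After both calls, `resolved` holds the local value first and the role value second, so the same setting name works in either environment.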

For your unit tests to work, just add an appSettings key to your configuration file:

Note: Make sure to replace <accountName> and <accountKey> with your custom values.

In the context of your Windows Azure application, just make sure that you configure the same configuration key name there. In Visual Studio:

- Right click your Windows Azure role and choose Properties from the dropdown menu.

- Click on the Settings tab on the left.

- Click the Add Setting button in the button bar for the Settings tab.

- Type “DataConnectionString” in the Name column

- Change the Type drop down box to Connection String

- Click the “…” (ellipsis) button in the right hand side of the Value column

- Configure your storage credentials using the values from the Windows Azure portal

- Click OK to the dialog

- Use the File | Save menu option to save your settings then close the settings tab in visual studio.

With this code, you should now be able to retrieve your connection string from configuration settings in a Windows Azure role and in a local application and retrieve a valid CloudStorageAccount instance using the following code:

Of course, you aren’t limited to these scenarios alone. Your SetConfigurationSettingPublisher delegate may retrieve your storage credentials from anywhere you choose – a database, a user prompt, etc. The choice is yours.

For those of you who need a quick and dirty way to get a CloudStorageAccount instance without all the hoops, you can simply pass your connection string in to the Parse or TryParse method.
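To give a feel for what a TryParse-style call does with such a string, here is a minimal Python sketch. It assumes the semicolon-delimited `Key=Value` form (for example `DefaultEndpointsProtocol=https;AccountName=<accountName>;AccountKey=<accountKey>`) and handles only that form; the function name and the returned dictionary are hypothetical and are not part of the StorageClient library.

```python
from typing import Dict, Optional, Tuple


def try_parse_connection_string(text: str) -> Tuple[bool, Optional[Dict[str, str]]]:
    """Return (True, settings) on success or (False, None) on failure."""
    settings: Dict[str, str] = {}
    for part in text.split(";"):
        if not part.strip():
            continue  # tolerate a trailing semicolon
        # Split on the first "=" only: account keys are base64 and may end in "=".
        key, sep, value = part.partition("=")
        if not sep or not key.strip():
            return False, None
        settings[key.strip()] = value.strip()
    # This sketch requires an account name and key; other forms are rejected.
    if not {"AccountName", "AccountKey"} <= settings.keys():
        return False, None
    return True, settings


ok, parsed = try_parse_connection_string(
    "DefaultEndpointsProtocol=https;AccountName=myaccount;AccountKey=abc123=="
)
bad, nothing = try_parse_connection_string("not a connection string")
```

Like TryParse, the sketch reports failure through its return value instead of raising an exception, which suits input that comes from a user or an untrusted file.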

This should give you all the information you need to get a CloudStorageAccount instance and start accessing your Windows Azure storage.

Tobin is a member of Microsoft’s Internet Explorer team.

<Return to section navigation list>

SQL Azure Database, Codename “Dallas” and OData

Wayne Walter Berry (@WayneBerry) continues his PowerPivot for the DBA: Part 3 series on 7/23/2010:

In this article I am going to continue to tie some simple terminology and methodology in business intelligence with Transact-SQL – bring it down to earth for the DBA. This is a continuation in a series of blog posts (part 1, part 2) that equates Transact-SQL to PowerPivot.

Scope

As discussed in this previous blog post, a measure is passed the rows of the cell it is evaluating, the scope of the cell in the PowerPivot table. Which works really well if you are summing a single cell in that scope. However, what if you want to get a ratio between this scope and a larger scope, like that of the row the cell is in, or the whole PivotTable?

Measures have the ability to reach outside of their scope and draw in information from the bigger picture. If a cell in the PivotTable is a set of rows that are in the Bike category and that have an order date of 7/1/2001, the measure has access to all the rows that are in the Bike category and all the rows with an order date of 7/1/2001; it even has access to all the rows in the whole PivotTable.

The ability to access more than just the local scope is the power of the measure in PowerPivot. This feature gives the user insight into how the cell data compares to other tables in the PivotTable.

ALL

In Data Analysis Expressions (DAX), the language used in the measure formula, ALL returns all the rows in a table, or all the values in a column, ignoring any filters that might have been applied. Here is an example of using ALL to calculate the ratio of total line item sales in the cell to the total line item sales across all product categories:

=SUM(SalesOrderDetail[LineTotal])/CALCULATE(SUM(SalesOrderDetail[LineTotal]),ALL(ProductCategory))

Find the division in the formula, to the left is a formula that we already discussed in this previous blog post; it sums all the LineTotal columns in the scope of the cell. To the right of the division is the interesting part of the formula, it invokes the CALCULATE keyword to change the scope of the summation. CALCULATE evaluates an expression in a context that is modified by the specified filters. In this case those filters are all the rows returned from the result in this particular row in the PivotTable. In the example above this is the order date. Here is what the results look like:

Transact-SQL

Now let’s get the same results with Transact-SQL. This turns into a 200-level Transact-SQL statement because of the nested SELECT used as a table (T1) to get the summation of the LineTotal column per date.

SELECT ProductCategory.Name, SalesOrderHeader.OrderDate,
    SUM(LineTotal)/ MAX(T1.ProductCategoryTotal)
FROM Sales.SalesOrderHeader
INNER JOIN Sales.SalesOrderDetail
    ON SalesOrderHeader.SalesOrderID = SalesOrderDetail.SalesOrderID
INNER JOIN Production.Product
    ON Product.ProductID = SalesOrderDetail.ProductID
INNER JOIN Production.ProductSubcategory
    ON Product.ProductSubcategoryID = ProductSubcategory.ProductSubcategoryID
INNER JOIN Production.ProductCategory
    ON ProductSubcategory.ProductCategoryID = ProductCategory.ProductCategoryID
INNER JOIN (
    SELECT SUM(LineTotal) 'ProductCategoryTotal', SalesOrderHeader.OrderDate
    FROM Sales.SalesOrderHeader
    INNER JOIN Sales.SalesOrderDetail
        ON SalesOrderHeader.SalesOrderID = SalesOrderDetail.SalesOrderID
    GROUP BY SalesOrderHeader.OrderDate
) AS T1 ON SalesOrderHeader.OrderDate = T1.OrderDate
GROUP BY ProductCategory.Name, SalesOrderHeader.OrderDate
ORDER BY SalesOrderHeader.OrderDate

This returns all the right results, however it isn’t very pretty compared to the PivotTable in Excel. The percents are not formatted, the results are not pivoted, there are no grand totals and the data isn’t very easy to read.
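The arithmetic behind both the DAX measure and the query can be checked on a handful of rows. The Python sketch below uses made-up rows (not AdventureWorks data) and a hypothetical function name. It assumes, as the T1 subquery suggests by grouping on OrderDate only, that the denominator is the total for the cell's order date across every category.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical (category, order_date, line_total) rows for illustration only.
rows = [
    ("Bikes", "2001-07-01", 300.0),
    ("Bikes", "2001-07-01", 100.0),
    ("Clothing", "2001-07-01", 100.0),
    ("Bikes", "2001-07-02", 50.0),
    ("Accessories", "2001-07-02", 150.0),
]


def share_of_date_total(
    rows: Iterable[Tuple[str, str, float]]
) -> Dict[Tuple[str, str], float]:
    """Divide each (category, date) total by the total for that date."""
    cell_totals: Dict[Tuple[str, str], float] = defaultdict(float)
    date_totals: Dict[str, float] = defaultdict(float)
    for category, order_date, line_total in rows:
        cell_totals[(category, order_date)] += line_total  # numerator: cell scope
        date_totals[order_date] += line_total              # denominator: category filter removed
    return {
        (category, order_date): total / date_totals[order_date]
        for (category, order_date), total in cell_totals.items()
    }


shares = share_of_date_total(rows)
```

With these rows, Bikes accounts for 400 of the 500 sold on 2001-07-01, so its share for that date is 0.8, and the shares for each date sum to 1.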

Here is the Transact-SQL to pivot the table:

SELECT OrderDate, [1] AS Bikes, [2] AS Components, [3] AS Clothing, [4] AS Accessories
FROM (
    SELECT Sales.SalesOrderDetail.LineTotal/T1.ProductCategoryTotal 'LineTotal',
        ProductCategory.ProductCategoryID, SalesOrderHeader.OrderDate
    FROM Sales.SalesOrderHeader
    INNER JOIN Sales.SalesOrderDetail
        ON SalesOrderHeader.SalesOrderID = SalesOrderDetail.SalesOrderID
    INNER JOIN Production.Product
        ON Product.ProductID = SalesOrderDetail.ProductID
    INNER JOIN Production.ProductSubcategory
        ON Product.ProductSubcategoryID = ProductSubcategory.ProductSubcategoryID
    INNER JOIN Production.ProductCategory
        ON ProductSubcategory.ProductCategoryID = ProductCategory.ProductCategoryID
    INNER JOIN (
        SELECT SUM(LineTotal) 'ProductCategoryTotal', SalesOrderHeader.OrderDate
        FROM Sales.SalesOrderHeader
        INNER JOIN Sales.SalesOrderDetail
            ON SalesOrderHeader.SalesOrderID = SalesOrderDetail.SalesOrderID
        GROUP BY SalesOrderHeader.OrderDate
    ) AS T1 ON SalesOrderHeader.OrderDate = T1.OrderDate
) p
PIVOT (
    SUM(LineTotal) FOR ProductCategoryID IN ( [1], [2], [3], [4] )
) AS pvt
ORDER BY pvt.OrderDate;
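As a rough illustration of what PIVOT does to the result shape, the Python sketch below turns per-cell shares keyed by category and date into one row per order date with a column per category. The input values, the `pivot_by_date` name and the use of `None` for a missing combination (standing in for SQL NULL) are assumptions made for the example.

```python
from typing import Dict, List, Optional, Tuple

CATEGORIES = ["Bikes", "Components", "Clothing", "Accessories"]

# Hypothetical per-cell shares keyed by (category, order_date).
cell_shares = {
    ("Bikes", "2001-07-02"): 0.25,
    ("Accessories", "2001-07-02"): 0.75,
    ("Bikes", "2001-07-01"): 0.8,
    ("Clothing", "2001-07-01"): 0.2,
}


def pivot_by_date(
    shares: Dict[Tuple[str, str], float], categories: List[str]
) -> Dict[str, Dict[str, Optional[float]]]:
    """Build one row per order date with one column per listed category."""
    table: Dict[str, Dict[str, Optional[float]]] = {}
    for (category, order_date), share in shares.items():
        if category not in categories:
            continue  # like PIVOT, keep only the values named in the column list
        row = table.setdefault(order_date, dict.fromkeys(categories))
        row[category] = (row[category] or 0.0) + share  # SUM aggregate per cell
    return dict(sorted(table.items()))  # ORDER BY order date


pivoted = pivot_by_date(cell_shares, CATEGORIES)
```

Each row of `pivoted` corresponds to one line of the pivoted query result, with empty cells where a category had no sales on that date.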

Maarten Balliauw’s (@maartenballiauw) Manage your SQL Azure database from your browser post of 7/23/2010 is an abbreviated version of my Test Drive Project “Houston” CTP1 with SQL Azure updated on 7/23/2010:

Yesterday, I noticed on Twitter that the SQL Azure - Project “Houston” CTP 1 has been released online. For those who do not know Houston, this is a lightweight and easy to use database management tool for SQL Azure databases built in Silverlight. Translation: you can now easily manage your SQL Azure database using any browser. It’s not a replacement for SSMS, but it’s a viable, quick solution into connecting to your cloudy database.

A quick look around

After connecting to your SQL Azure database through http://manage.sqlazurelabs.com, you’ll see a quick overview of your database elements (tables, views, stored procedures) as well as a fancy, three-dimensional cube displaying your database details.

Let’s create a new table… After clicking the “New table” toolbar item on top, a simple table designer pops up:

You can now easily design a table (in a limited fashion), click the “Save” button and go enter some data:

Stored procedures? Those are also supported:

Even running stored procedures:

Conclusion

As you can probably see from the screenshots, project “Houston” is currently quite limited. Basic operations are supported, but for example dropping a table should be done using a custom, hand-crafted query instead of a simple box.

What I would love to see is that the tool gets a bit more of the basic database operations and a Windows Phone 7 port? That would allow me to quickly do some trivial SQL Azure tasks both from my browser as well as from my (future :-)) smartphone.

Maarten’s initial “It’s not a replacement for SSMS, but it’s a viable, quick solution into connecting to your cloudy database” comment is similar to my conclusion.

Richard Seroter’s Using “Houston” to Manage SQL Azure Databases post of 7/22/2010 contains yet another guided tour of SQL Houston which begins:

Up until now, your only option for managing SQL Azure cloud databases was using an on-premise SQL Server Management Console and pointing to your cloud database. The SQL Azure team has released a CTP of “Houston” which is a web-based, Silverlight environment for doing all sorts of stuff with your SQL Azure database. Instead of just telling you about it, I figured I’d show it.

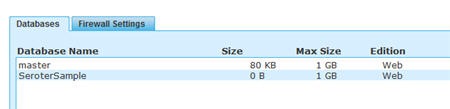

First, you need to create a SQL Azure database (assuming that you don’t already have one). Mine is named SeroterSample. I’m feeling very inspired this evening.

Next up, we make sure to have a firewall rule allowing Microsoft services to access the database.

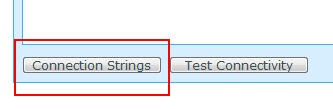

After this, we want to grab our database connection details via the button at the bottom of the Databases view.

Now go to the SQL Azure labs site and select the Project Houston CTP 1 tab.

We then see a futuristic console which either logs me into project Houston or launches a missile. …

Richard continues with a demo similar to Maarten’s and mine.

Pinal Dave’s SQLAuthority News – Guest Post – Walkthrough on Creating WCF Data Service (OData) and Consuming in Windows 7 Mobile application post of 7/23/2010 begins:

This is a guest post by one of my very good friends and .NET MVP, Dhananjay Kumar. The very first impression one gets when they meet him is his politeness. He is an extremely nice person, but has superlative knowledge in .NET and is truly helpful to all of us.

Objective

In this article, I will discuss:

- How to create WCF Data Service

- How to remove digital signature on System.Data.Service.Client and add in Windows7 phone application.

- Consume in Windows 7 phone application and display data.

You can see three videos here:

- Creating WCF Data Service: http://dhananjaykumar.net/2010/06/08/wcfdataservicevideo/

- Fixing the Bug of OData Client library for Windows7 Phone: http://dhananjaykumar.net/2010/07/01/videofixingodataclientlibraryissue/

- Consuming WCF Data Service in Windows7 Phone: http://dhananjaykumar.net/2010/07/06/videodatainw7phone/

Dhananjay continues with a detailed step-by-step tutorial for consuming OData information in a Windows Phone 7 application.

The OData team reported the availability of an OData Client for Objective-C for iPhone, iPad and MacOSX in this 7/22/2010 post:

We are happy to announce that today we released the OData Client for Objective-C library. With the library it is now possible to write iOS applications that connect to OData Services. The library includes a command line tool to generate proxy classes for the Entities exposed by the OData Service and static libraries for iOS 3.2 and 4.0. Source code for all the components is provided with the download.

The source code has been made available under the Apache 2.0 license and is available for download at http://odataobjc.codeplex.com

Liam Cavanagh (@liamca) announced that all registrants will be granted immediate access to SQL Server Data Sync in his Data Sync Service – Registration post of 7/22/2010:

Today we have opened up access to the Data Sync Service for SQL Azure CTP to all users. Previously, users who wanted to use the Data Sync Service to synchronize their SQL Azure databases were asked to register for access using a Live ID from which we slowly added users to the service in a controlled fashion. I am happy to announce that we have now approved all of these requests and now any users who register will be automatically approved.

To get started please visit: http://sqlazurelabs.com and click on "SQL Azure Data Sync"

<Return to section navigation list>

AppFabric: Access Control and Service Bus

Ron Jacobs reported the availability of this 00:30:05 endpoint.tv - Workflow and Custom Activities - Best Practices (Part 4) video segment on 7/23/2010:

In this episode, Windows Workflow Foundation team Program Manager Leon Welicki drops in to show us the team's guidelines for developing custom activities.

In Part 4, we cover more Activity Design guidelines including

- Body vs. Children

- Variables

- Overriding CacheMetadata

- Activity Design

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Bruno Terkaly continues his Leverage Cloud Computing with Windows Azure and Windows Phone 7 – Step 2 to Infinity – Under Construction series on 7/23/2010 with this brief post (updated 7/25/2010 for added content):

Our code will be composed of many projects

In order for a phone application to talk to the cloud to get data, it needs a few things setup. Here are the things that we are going to build:

- A web service that hosts a SL-enabled WCF Service

- A Silverlight client for both browser[s] and Windows Phone[s] 7

- A data tier to host our data

- A SQL Azure database and a SQL Server On-Premise database

Let’s start by creating a new "Blank Visual Studio Solution.” Remember that a solution can hold many projects.

Bruno continues with 10 similarly-illustrated steps and concludes:

More posts coming soon

Building the Windows Azure and Windows Phone 7

One step at a time is how I roll. So stay tuned for more detailed steps. Keep the emails flowing and let me know what works and what doesn’t.

“Soma” Somasegar’s MSDN: Double the Azure post of 7/23/2010 confirms yesterday’s report by Eric Golpe:

Today we are announcing that we are doubling the initial Windows Azure benefits to MSDN subscribers by extending the offer from eight months to 16 months.

Windows Azure is a flexible cloud-computing platform that provides developers with on-demand compute and storage to host, scale, and manage web applications on the internet through Microsoft datacenters.

This January we introduced Windows Azure benefits as part of the MSDN Premium, Ultimate and BizSpark subscriptions with an eight month introductory offer. This offer allows MSDN subscribers to take advantage of the benefits of the Windows Azure platform, including the ability to quickly scale up or down based on your business need without the hassle of dealing with operational hurdles such as server procurement, configuration, and maintenance. With Azure, you pay only for what you use.

I encourage MSDN subscribers and BizSpark members to sign up for their Azure benefits if you have not done so already.

More details on this can be found on the Windows Azure Platform Benefits for MSDN Subscribers page.

Apparently, the Windows Azure team jumped the gun by updating the MSDN Subscribers page yesterday.

Jim O’Neil continues his @home With Windows Azure series with Azure@home Part 2: WebRole Implementation of 7/23/2010:

This post is part of a series diving into the implementation of the @home With Windows Azure project, which formed the basis of a webcast series by Developer Evangelists Brian Hitney and Jim O’Neil. Be sure to read the introductory post for the context of this and subsequent articles in the series.

In my last post, I gave an overview of the @home With Windows Azure architecture, so at this point we’re ready to dive in to the details. I’m going to start with the internet-facing WebRole, the user interface for this application, and cover it in two blog posts, primarily because we’ll broach the subject of Azure storage, which really deserves a blog post (or more) in and of itself.

Getting to Where You Already Are

By now, you should have downloaded the relatively complete Azure@home application in either Visual Studio 2008 or 2010 format and magically had a bunch of code dropped in your lap – a cloud project, a web role, a worker role and a few other projects thrown in the mix. Before we tackle the WebRole code in this article, I wanted to quickly walk through how the solution was built-up from scratch. If you’ve built Azure applications before, even “Hello World”, feel free to skip this section (not that I’d really know if you did or didn’t!)

You start off just as you would to create any other type of project in Visual Studio: File->New->Project (or Ctrl+Shift+N for the keyboard junkies out there). Presuming you have the Azure Tools for Visual Studio installed, you should see a Cloud option under the Visual C# and Visual Basic categories. (It doesn’t show under Visual F# even though you can build worker roles with F#).

In the New Project dialog (above), there’s not much to choose from; however, this is where you’ll need to commit to running either .NET Framework 3.5 or .NET Framework 4 inside of Windows Azure. At the point when we started this project, there wasn’t any .NET 4 support in the cloud, so the project we’ll be working with is still built on the 3.5 framework. I generally elect to build a new solution as well when working with Cloud Service projects, but that’s not a requirement.

The next step is selecting the combination of web and worker roles that you want to encapsulate within the cloud service. There are a few flavors of the roles depending on your needs:

- ASP.NET Web Role – a default ASP.NET application, such as you’d use for Web Forms development

- ASP.NET MVC 2 Web Role – an ASP.NET project set up with the MVC (model-view-controller) paradigm, including the Models, Views, and Controllers folders and complementary IDE support

- WCF Service Web Role – a WCF project set up with a default service and service contract

- Worker Role – a basic worker role implementation (essentially a class library with a Run method in which you’ll put your code)

- CGI Web Role – a web role that runs in IIS under the Fast CGI module. Fast CGI enables the execution of native code interpreters, like PHP, to execute within IIS. If you’re looking for more information on this topic, check out Colinizer’s blog post.

For Azure@home we need only an ASP.NET web role and a worker role, which you can see I added above. Selecting OK in this dialog results in the creation of three projects: one for each of the roles and one for the Cloud Service itself. There are a few interesting things to note here.

- WebRole and WorkerRole each come with role-specific class files, providing access points to lifecycle events within the cloud. In the WebRole, you might not need to touch this file, but it’s a good place to put initialization and diagnostic code prior to the ASP.NET application’s start event firing. Beyond that though, the WebRole files look identical to what you’d get if you were building an on-premises application, and indeed a vast majority of constructs you’d use in on-premises applications translate well to Azure.

- There is a one-to-one correspondence between the role projects and an entry beneath the Roles folder within the Cloud Service project. Those entries correspond to the configuration options for each of the roles. All of the configuration is actually contained in the ServiceConfiguration.cscfg and ServiceDefinition.csdef files, but these Role nodes provide a convenient way to set properties via a property sheet versus poring over the XML. …

Jim continues with his illustrated tutorial.

Kyle McClellan reported “IntelliTrace is not supported for the RIA Services framework” in his RIA, Azure, and IntelliTrace of 6/9/2010 to which Jim Nakashima linked on 7/22/2010:

IntelliTrace is not supported for the RIA Services framework. There is currently a bug in Visual Studio 2010 IntelliTrace that leads to a runtime exception if RIA Services is instrumented to record call information. Since this is the default tracing option chosen by the Azure SDK tools, it is an easy trap to fall into. If you do enable IntelliTrace, your domain service will always throw the following exception.

System.Security.VerificationException: Operation could destabilize the runtime

System.ServiceModel.DomainServices.Server.DomainServiceDescription.GetQueryEntityReturnType

System.ServiceModel.DomainServices.Server.DomainServiceDescription.IsValidMethodSignature

System.ServiceModel.DomainServices.Server.DomainServiceDescription.ValidateMethodSignature

System.ServiceModel.DomainServices.Server.DomainServiceDescription.AddQueryMethod

System.ServiceModel.DomainServices.Server.DomainServiceDescription.Initialize

System.ServiceModel.DomainServices.Server.DomainServiceDescription.CreateDescription

<>c__DisplayClass8.AnonymousMethod

System.ServiceModel.DomainServices.Server.DomainServiceDescription.GetDescription

System.ServiceModel.DomainServices.Hosting.DomainServiceHost..ctor

System.ServiceModel.DomainServices.Hosting.DomainServiceHostFactory.CreateServiceHost

System.ServiceModel.Activation.ServiceHostFactory.CreateServiceHost

HostingManager.CreateService

HostingManager.ActivateService

HostingManager.EnsureServiceAvailable

System.ServiceModel.ServiceHostingEnvironment.EnsureServiceAvailableFast

System.ServiceModel.Activation.HostedHttpRequestAsyncResult.HandleRequest

System.ServiceModel.Activation.HostedHttpRequestAsyncResult.BeginRequest

System.ServiceModel.Activation.HostedHttpRequestAsyncResult.OnBeginRequest

System.ServiceModel.AspNetPartialTrustHelpers.PartialTrustInvoke

System.ServiceModel.Activation.HostedHttpRequestAsyncResult.OnBeginRequestWithFlow

ScheduledOverlapped.IOCallback

IOCompletionThunk.UnhandledExceptionFrame

System.Threading._IOCompletionCallback.PerformIOCompletionCallback

On the client, this will appear as the standard “Not Found” CommunicationException.

There are three workarounds for this problem. The first is to disable IntelliTrace altogether. This is the easiest option, but it has the broadest impact. You can disable IntelliTrace by unchecking the checkbox at the bottom of the Publish window.

The second workaround is to only collect Azure events without collecting call information. RIA Services raises some basic Azure events, so this option does have a small advantage over the final option. However, once again we’ve used a broad solution that prevents us from harnessing the full power of IntelliTrace. You can select the events only option in the IntelliTrace Settings window available on the Publish window.

The last solution is to exclude the RIA Services framework libraries from IntelliTrace call information collection. This solution enables full IntelliTrace support for the rest of your application. On the Modules tab of the IntelliTrace Settings window, you will need to add an exception for *System.ServiceModel.DomainServices.*.

I’ve tried to capture the significant points about this error so you won’t have to worry much about it yourself. The IntelliTrace bug has been reported to Visual Studio and I’m expecting a fix in their first SP. I’d assume we won’t see that for a while judging by past releases, but I’ll keep you updated if there are better fixes available later on. So for now, just pick a workaround and don’t look back.

<Return to section navigation list>

Windows Azure Infrastructure

Mike West, Robert McNeill and Bruce Guptil co-authored One-Stop Shopping – Major Vendors Acquire Assets for the Cloud, a Research Alert of 7/22/2010 for Saugatuck Technology (site registration required):

What is Happening? The IT industry is in a major transition, impacted by several trends concurrently that have led to increasing partnering and consolidation, as major vendors seek to become the sole source for offerings up and down the IT EcoStack™ targeting the Cloud (please see Strategic Perspective, “Gorillas In the Cloud: Applying Saugatuck’s “Master Brand” Model to Cloud IT,” MKT-732, published 5 May 2010). Dell, HP, IBM, Microsoft, Oracle, and EMC/VMware are among the many examples of IT vendors pursuing a vertically-integrated stack approach to Cloud IT (please see Note 1 for related Saugatuck research on the Cloud strategies of these vendors).

The latest is NTT Group’s proposed $3.2B cash offer to acquire Dimension Data, a global IT services and solutions provider. Together, NTT Group and Dimension Data hope to become a one-stop, sole source provider with offerings that complement NTT Group’s telecommunications technology. Geographic expansion is also in the mix. NTT Group’s business mainly focuses on Asia and covers Europe and the USA, while Dimension Data also covers Africa, the Middle East and Australia. Thus the coverage areas of the two businesses are highly complementary. …

The authors continue with the usual “Why is it Happening?” and “Market Impact” topics.

CloudTweaks posted Vertical Industry Use Of Cloud Computing – Who’s Getting Involved? on 7/23/2010:

Here are some useful cloud based chart illustrations on the future and direction of cloud computing.

Thanks to our friends at: MimeCast and Famapr for the research and insights.

Liz MacMillan claims “Cloud Computing has Alleviated Resource Pressures and Improved End-user Experience” in a preface to her Cloud Computing Delivering on its Promise but Doubts Still Hold Back Adoption post of 7/23/2010 about Mimecast’s research report:

Mimecast, a leading supplier of cloud-based email security, continuity, policy control and archiving, today announced the results of the second annual Mimecast Cloud Adoption Survey, an annual research report examining attitudes to cloud computing services amongst IT decision-makers in UK and US businesses. The survey, conducted by independent research firm, Loudhouse, reveals that a majority of organisations (51 percent) are now using some form of cloud computing service, and the levels of satisfaction amongst those companies is high across the board. Conversely, companies not yet using cloud services cite concerns around cost and security.

The survey shows that of those businesses using cloud services, 74 percent say that the cloud has alleviated internal resource pressures, and 72 percent report an improved end-user experience. 73 percent have managed to reduce their infrastructure costs, while 57 percent say that the cloud has resulted in improved security. However, not everyone is convinced. 74 percent of IT departments still believe that there is always a trade-off between cost and IT security and 62 percent say that storing data on servers outside of the business is always a risk.

Highlights from the research:

Cloud services are now the norm:

The majority of organisations now use cloud-based services. The report found 51 percent of organisations are now using at least one cloud-based application. Adoption rates amongst US businesses are slightly ahead of the UK with 56 percent of respondents using at least one cloud-based application, compared to 50 percent in the UK. This is a significant rise from the 2009 survey, when just 36 percent of US businesses were using cloud services.

Two thirds of businesses are considering adopting cloud computing. Encouragingly for vendors, 66 percent are now considering adopting cloud-based applications in the future. Again, US businesses are ahead of the UK in their attitudes to the cloud with 70 percent considering cloud services, compared to 60 percent in the UK.

Email, security and storage are the most popular cloud services. 62 percent of the organisations that use cloud computing are using a cloud-based email application. Security and storage are the next most popular, used by 52 percent and 50 percent of organisations with at least one cloud-based service respectively. Email services are most popular with mid-size businesses (250-1,000 employees) with 70 percent of these organisations using the cloud to support this function. Smaller businesses (under 250 employees) are most likely to use the cloud for security services, with larger enterprises (over 1,000 employees) most likely to make use of cloud storage services.

Cloud attitudes split between the 'haves' and 'have-nots':

Existing cloud users are satisfied. Security is not considered to be an issue by existing cloud users: 57 percent say that moving data to the cloud has resulted in better security, with 58 percent saying it has given them better control of their data. 73 percent of current cloud users say it has reduced the cost of their IT infrastructure and 74 percent say it has alleviated the internal resource pressures upon the department.

Security fears are still a barrier. Three quarters (74 percent) of IT departments agreed with the statement 'there is always a trade-off between cost and IT security', suggesting that many organisations feel cloud solutions are less secure than the more expensive, on-premise alternatives, simply due to their lesser cost. 62 percent believe that storing data on servers outside of the business is a significant security risk.

IT is concerned that adopting cloud will not initially result in cost savings. 58 percent of respondents thought that replacing legacy IT solutions will almost always cost more than the benefits of new IT.

Cloud concerns stem from a lack of clarity. One reason for the negative perceptions of cloud services among non-users seems to be a lack of clear communication from the industry itself. 54 percent of respondents said the potential benefits of cloud delivery models are overstated by the IT industry. …

<Return to section navigation list>

Windows Azure Platform Appliance

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

Lori MacVittie (@lmacvittie) asks “Web 2.0 and cloud computing have naturally pushed all things toward application-centric views, why not the VPN?” in a preface to her F5 Friday: Beyond the VPN to VAN post of 7/23/2010 to F5’s DevCentral blog:

When SSL VPNs were first introduced they were a welcome alternative to the traditional IPSEC VPN because they reduced the complexity involved with providing robust, secure remote access to corporate resources for externally located employees.

Early on SSL VPNs were fairly simple – allowing access to just about everything on the corporate network to authenticated users. It soon became apparent this was not acceptable for several reasons, most prominently the risk of infection by remote employees who might have been using personal technology to work from home. While most organizations have no issue with any employee working a few extra hours at home, those few extra hours of productivity can be easily offset by the need to clean up after a virus or bot entering the corporate network from an unsecured, non-validated remote source. This was especially true as one of the selling points for SSL VPN was (and still is) that it could be used from any endpoint. The “clientless” nature of SSL VPN made it possible to use a public kiosk to log-in to corporate resources via an SSL VPN without fear that the ability to do so would be “left behind.” I’m not really all that sure this option was ever widely used, but it was an option.

Then SSL VPNs got more intelligent. They were able to provide endpoint security and policies such that an “endpoint”, whether employee or corporate owned, had to meet certain criteria – including being “clean” – before it was allowed access to any corporate resource. This went hand in hand with the implementation of graded authentication, which determined access rights and authorization levels based on context: location, device, method of access, etc… That’s where we sat for a number of years. There were updates and upgrades and additions to functionality but nothing major about the solution changed.

Until recently. See, the advent of cloud computing and the increasing number of folks who would like to “work from home” if not as a matter of course then as a benefit occasionally has been driving all manner of solutions toward a more application-centric approach and a more normalized view of access to those applications. As more and more applications have become “webified” it’s made less sense over time to focus on securing remote access to the corporate network and more sense to focus on access to corporate applications – wherever they might be deployed.

THE NEXT GENERATION of ACCESS CONTROL

That change in focus has led to what should be the next step in the evolution of remote access – from SSL VPN to secure access management, to managing application access by policy across all users regardless of where they might be located.

Similarly, it shouldn’t matter whether corporate applications are “in the cloud” or “in the data center”. A consistent method of managing access to applications across all deployment locations and all users reduces the complexity inherent in managing both sides of the equation.

We might even call this a Virtual Application Network (VAN) instead of a Virtual Private Network (VPN) because what I’m suggesting is that we create a “network” of applications that is secured by a combination of transport layer security (SSL) and controlled by context-based access management at the application layer. Whether a user is on the corporate LAN or dialed-in from some remote location that has yet to see deployment of broadband access shouldn’t matter. The pre-access validation that the accessing system is “clean” is just as important today when the system is local as if it were remote; viruses and bots and malware don’t make the distinction between them, why should you?

By centralizing application access across users and locations, such secure access methodologies can be used to extend control over applications that may be deployed in a cloud computing environment as well. Part of F5’s position on cloud computing is that, to make cloud-deployed applications viable, the control that exists today over locally deployed applications must be extended somehow to those remote applications, both to normalize management and security and to control the costs of leveraging what is supposed to be a reduced-cost environment.

That’s part of the promise of F5’s BIG-IP Access Policy Manager (APM). It’s the next step in secure remote access that combines years of SSL VPN (FirePass) experience with our inherent application-aware delivery infrastructure. It provides the means by which access to corporate applications can be normalized across users and application environments without compromising on security and control. And it’s context-aware because it’s integrated into F5’s core enabling technology platform, TMOS, upon which almost all other application delivery functionality is based and deployed.

I highly encourage a quick read of George Watkins’ latest blog on the topic, Securing the Corporate Intranet with Access Policy Manager, in which he details the solution and some good reasons behind why you’d want to do such a thing (in case I’m not convincing enough for you). You may also enjoy a dive into a solution presented in a previous F5 Friday, “F5 Friday: Never Outsource Control”, that describes an architectural approach to extending normalized control of application access to the cloud.
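The context-based access management MacVittie describes (an endpoint must be “clean”, and access rights are graded by location and device rather than by network segment) can be sketched roughly as follows. This is an illustration only: the class, field names and access levels are hypothetical and are not F5's BIG-IP APM API or policy language.

```python
from dataclasses import dataclass


# Hypothetical model of a request's context; not taken from any F5 product.
@dataclass(frozen=True)
class AccessContext:
    user_authenticated: bool
    endpoint_clean: bool   # result of a pre-access endpoint health check
    location: str          # e.g. "corporate_lan", "home", "public_kiosk"
    device_managed: bool   # corporate-managed device vs. personal device


def access_level(ctx: AccessContext) -> str:
    """Return 'deny', 'limited' or 'full' for an application request."""
    # Unauthenticated users and endpoints that fail the health check are
    # refused regardless of whether they are local or remote.
    if not ctx.user_authenticated or not ctx.endpoint_clean:
        return "deny"
    # Graded access: kiosks and unmanaged devices get a reduced set of rights.
    if ctx.location == "public_kiosk" or not ctx.device_managed:
        return "limited"
    return "full"
```

Note that the LAN user and the remote user pass through the same checks, which is the point of the "VAN" argument: the decision depends on the context of the request, not on which side of the network perimeter it came from.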

Chris Czarnecki asserts Security is Virtually Different in the Cloud and offers a cloud-computing white paper in this 7/23/2010 post to Learning Tree’s Cloud Computing blog:

I have just taught a version of the Learning Tree Cloud Computing course and top of the agenda was security and enough debate to stimulate this posting. Security is important in the cloud but is it really that different to security in general application and data security stored on private networks? The answer is yes, most probably.

Security of data and application security principles applied to private networks and deployments should still be applied to the cloud of course. Doug Rehnstrom posted on this recently. Security in the cloud is probably different from a private network and one of the major reasons is virtualization.

Cloud technology is built upon virtualization – this raises a number of security concerns – not just for the cloud but for all organisations that use virtualisation technology. The security of a virtualised solution is highly dependent on the security of each of its independent components – this has been highlighted recently by NIST who have issued guidelines on security in virtualised environments.

Security in a virtualised environment depends on the security of the hypervisor, the host operating system, guest operating system, applications, storage devices and the networks connecting them. How many organisations that have deployed virtualised environments – and that’s a lot – have actually considered the security implications of their implementation? I am confident that many of these organisations are the ones who state security as a barrier to adopting the cloud. As private clouds become more prevalent then the security of the virtualization, its monitoring and compromise detection will need to be carefully considered and adopted. Should that not be the case for all virtualized deployments, cloud or not? Most definitely yes too. So if you are using a virtualized environment your security requirements are not so different from the cloud, you just may not have realised it.

If you are interested in the discussion further have a look at the white paper I recently put together. [Requires site registration.]

<Return to section navigation list>

Cloud Computing Events

Black Hat announced Black Hat USA Uplink 2010 on 7/23/2010. For US$395, you receive:

Streaming live from Black Hat USA • July 28-29

This year thousands of security professionals from around the world are making plans to be a part of Black Hat USA 2010. But not all of those people will actually be in Las Vegas. With Black Hat Uplink, you can experience essential content that shapes the security industry for the coming year - for only $395.

Now for the first time, you can get a taste of Black Hat USA from your desk - this year's live event will be streamed directly to your own machine with Black Hat Uplink:

- Access to two select tracks on each day of the Briefings and the keynote - a total of 20+ possible sessions to view.

- Post-conference access to Uplink content; go back and review the presentations that you missed or watch the presentations that interested you the most as many times as you want.

- Interact with fellow con-goers, Uplink attendees, and the security community at large via Twitter during the Briefings.

- Get show promotional pricing for the “Source of Knowledge” DVDs should you wish to purchase ALL of the recordings from Black Hat USA and/or DEF CON 18.

Presentations will be streamed live on July 28-29, but you will be able to view Uplink presentations for up to 90 days after the event.

Gartner listed cloud-focused events for 2010 in a 7/23/2010 e-mail message:

Gartner Outsourcing & Vendor Management Summit

September 14 – 16 | Orlando, FL

Learn how cloud computing fits into an effective sourcing strategy, what terms and conditions, delivery and pricing to expect, and how to select, manage and govern cloud services and service providers. View details on our Global Sourcing Invitational Program.

Dedicated cloud track

Gartner Symposium/ITxpo

October 17 – 21 | Orlando, FL

Learn to build or validate your cloud strategy, measure cloud investments, evaluate cloud and software-as-a-service platforms and providers, and manage security and risk related to both building a cloud-enabled IT organization and taking advantage of cloud business opportunities.

Dedicated track on cloud

Gartner Application Architecture, Development & Integration Summit

November 15 – 17 | Los Angeles, CA

Assess the latest cloud trends, adoption best practices, key benefits and risks, and get a grip on the tools and techniques to architect, develop and integrate applications and services in the cloud. Stay tuned for details on a dedicated cloud track.

Gartner Data Center Conference

December 6 – 9 | Las Vegas, NV

Understand the implications of cloud computing across the data center and the infrastructure and operations organization, and get an in-depth perspective on private clouds and other cloud-enabled infrastructure issues. Stay tuned for details on a dedicated cloud track.

Upcoming Gartner webinar focused on cloud:

Server Virtualization: From Virtual Machines to Clouds

Thomas J. Bittman, Vice President and Distinguished Analyst

Wednesday, July 28, 2010, 9 a.m. – 10 a.m. EDT

Wednesday, July 28, 2010, 12 p.m. – 1 p.m. EDT

View 2010 Gartner events: See our complete listing of 2010 Gartner events.

Stephen Forte’s Devopalooza at VS Live! Redmond post of 7/23/2010 announced his presentations at VS Live! Redmond:

In just over a week, I will be speaking at VS Live! at Microsoft’s campus in Redmond, Washington. In addition to my Scrum and OData talks, I will be helping host Devopalooza on Wednesday August 4th at 6:30-8:30pm in Building 33. We have a lot of cool stuff ready for the attendees, including a “Developer Jeopardy” contest. Brian Peek and I will be the hosts of the game show and we have been working hard coming up with good questions.

Yes, there will be prizes. Yes there will be lots of wise cracks. And yes I will be wearing my rugby jersey.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Alex Williams asserts that Opsource causes The Cloud Services Market [to Look] Like a Red Ocean in this 7/23/2010 post to the ReadWriteCloud blog:

Earlier this week, Opsource announced a partner program. The news came on Monday, the first day developers could download code from OpenStack, a separate initiative that has had considerable attention this week.

Both companies provide cloud infrastructure based upon open-source. They could easily be part of the same open cloud network such as OpenStack. But for now Opsource says they will not join the effort led by Rackspace. Our guess is Opsource competes to some extent with Rackspace and is looking at other alternatives.

Rackspace, Opsource and almost two dozen other companies are now offering some variety of cloud infrastructure. It's an increasingly crowded market that will be due for some consolidation. But for the moment, the market is a picture of how companies perceive the opportunities the cloud provides.

Rackspace, as we know, provides public cloud infrastructure. Opsource offers cloud infrastructure to the enterprise, service providers and systems integrators.

Opsource calls its program a partner ecosystem. These partners include integrators, developers, ISVs, cloud platform companies and telecom providers.

Opsource expects 50% of its revenue to come from these partners. Opsource points in particular to telecommunications companies. CEO Treb Ryan:

"The feedback we're getting from the telecoms is that a request for cloud is becoming an increasing part of the RFP's they are seeing from customers. Usually it comes as a request for bundled services (such as managed network, internet access, hosting and cloud.) One of our European telecoms stated they couldn't bid on $2 million a month worth of contracts because one of the requirements was cloud."

Ryan says customers want to work with one company:

"Most likely it's a combination of not wanting to go to separate vendors for separate services (i.e. a colo company for hosting, OpSource for cloud, a telecom for network, and a managed security company for VPN's) they want to get it all from one vendor completely integrated. Secondly, I think many customers have a trusted relationship with their telecom for IT infrastructure services already and they trust them more than a third-party company."

Opsource and Rackspace are two well-established companies in the cloud computing space. But the number of providers in the overall market is beginning to morph.

John Treadway of CloudBzz put together a comprehensive list. He says the market is looking more like a red ocean:

"I hope you'll pardon my dubious take, but I can't possibly understand how most of these will survive. Sure, some will because they are big and others because they are great leaps forward in technology (though I see only a bit of that now). There are three primary markets for stacks: enterprise private clouds, provider public clouds, and public sector clouds. In five years there will probably be at most 5 or 6 companies that matter in the cloud IaaS stack space, and the rest will have gone away or taken different routes to survive and (hopefully) thrive."

Lots of blood in the water. Who's going to get eaten first?

Maureen O’Gara prefaced her Unisys to Offer Price Fixe Cloud post of 7/23/2010 with “The environment can be used to integrate SOA technology:”

In what is believed to be a cloud first, Unisys is going to offer its upscale enterprise-class ClearPath users a fixed-priced PaaS cloud, a model that flies in the face of the nickel and dime'ing that goes on in commodity cloud land.

Unisys already has x86-based commodity clouds on offer but now it's drawn its proprietary mainframe-style ClearPath widgetry, based on its MCP and OS 2200 operating systems, into the new meme beginning with a managed development and testing solution that will go for $13,000 for three months use of a soup-to-nuts environment that includes 25MIPS, eight megs [gigs?] of memory and 75GB of storage. [Emphasis added.]

Unisys has a couple of other fancier configurations but it thinks this so-called basic configuration will suit 90% of its market's needs. And, to repeat, it includes everything: CPUs, memory, storage, networking, bandwidth, operating system, SDKs, maintenance, support and enhancements.

If a customer believes it needs more than three months use of the widgetry it has to buy another three-month block.

The company is starting with test & dev and means to branch out into production environments, managed SaaS solutions and data replication and disaster recovery. The production environment might suit smaller or specialized apps and large accounts that need an overflow environment.

The widgetry will be hosted in Unisys' five data centers where, it says, the infrastructure will be "over-provisioned."

Unisys has been using the test & dev stuff internally for the last couple of years to save capex costs but tidied-up the interfaces when it decided to commercialize it. That's what's taken it a while to come to market.

It says the environment can be used to develop or enhance native ClearPath apps, integrate SOA technology to create composite apps or add functions like business intelligence. The development environment can accommodate Unisys Business Information Server (BIS) and its Enterprise Application Environment (EAE)/AB Suite among other development environments.

The company already has some SaaS-style solutions running on ClearPath like its Logistics Management System where 35% of the world's airline cargo is tracked and traced.

Outside of Japan Unisys has 1,600 ClearPath customers and estimates that 5%-10% will immediately be interested in the ClearPath Cloud, which of course keeps the stuff relevant. In a recent survey Unisys found the test & dev cloud was attractive to 46% of the respondents.

That’s US$52,000 per year, folks, but I’m sure you get more than “eight megs of memory” with that.
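The annual figure is simply four three-month blocks at the quoted $13,000 each. A minimal sketch of that arithmetic follows; it assumes, per the article, that usage can only be bought in whole three-month blocks, and the function and constant names are illustrative.

```python
BLOCK_PRICE_USD = 13_000  # quoted price for one three-month block
BLOCK_MONTHS = 3          # blocks are sold in three-month units


def cost_for_months(months: int) -> int:
    """Cost in US$ of covering `months` of use with whole three-month blocks."""
    blocks = -(-months // BLOCK_MONTHS)  # ceiling division: partial blocks round up
    return blocks * BLOCK_PRICE_USD


print(cost_for_months(12))  # 52000
```

Under the same assumption, a four-month project would need two blocks, so `cost_for_months(4)` comes to 26000.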

Pat Romanski adds fuel to the ClearPath fire by claiming “Unisys-hosted solutions give ClearPath clients new, cost-efficient options” in a preface to her New Unisys ClearPath Solutions Propel Clients into the Cloud post of 7/23/2010:

Unisys Corporation on Thursday introduced the first in a planned series of managed cloud solutions for its ClearPath family of mainframe servers.

ClearPath Cloud Development and Test Solution is a "platform as a service" (PaaS) solution. It extends Unisys strategy for delivering secure IT services in the cloud and sets the stage for delivery of further ClearPath Cloud solutions in the near future.

"With the ClearPath Cloud Solutions, Unisys enables ClearPath clients to leverage a new, cost-efficient business model for use of IT resources," said Bill Maclean, vice president, ClearPath portfolio management, Unisys. "Clients can subscribe to and access Unisys-owned computing facilities incrementally to modernize existing ClearPath applications or develop new ones. They can avoid unscheduled capital equipment expenditures in this uncertain economy and make more efficient use of their existing ClearPath systems for applications critical to their business mission.

"Establishing this new cloud-based server environment for delivery of ClearPath development services is just the latest step Unisys has taken to give clients new ways to capitalize on what we believe is the most modern, secure, open mainframe platform on the market," Maclean added.

New Test and Development Solution Makes It Easy to Jump into the Cloud

In a recent Unisys online poll, 46 percent of respondents - the largest single percentage response to any question in the poll - cited development and test environments as the first workloads they would consider moving to the cloud. The Unisys ClearPath Cloud Development and Test Solution, hosted at Unisys outsourcing center in Eagan, Minnesota and available to clients around the world, simplifies the transition to the cloud.

The solution is designed to supplement clients' current development and test environments, providing access to additional virtual resources when needed for creation, modernization and functional testing of specific applications. It provides expanded infrastructure sourcing options; accelerates delivery of business functionality to help clients hold down costs and keep projects on schedule; and provides a new approach that helps minimize the risks of large, costly IT projects.

Clients who choose the ClearPath Cloud Development and Test Solution purchase a three-month development- and test-block license to use virtual resources in the Unisys hosted server cloud environment. This gives them access to a ready-to-go development environment that includes:

- A Software Developer's Kit (SDK) for the Unisys MCP or OS 2200 operating environment, including relevant database software and development tools;

- A virtual development server, with amounts of memory, storage and networking resources sized to the specific development and testing requirement; and

- Software enhancement releases and maintenance support services.

Clients can use this solution to develop or enhance native ClearPath applications, integrate SOA technology to create composite applications or add functions such as business intelligence. The development environment can accommodate Unisys Business Information Server (BIS), Enterprise Application Environment (EAE)/AB Suite and other development environments.

Unisys engineering organization has deployed this solution for its internal system software development and testing, cutting virtual server provisioning time from days to less than an hour while avoiding the cost of additional capital equipment.

Services Amplify Value of ClearPath Cloud Development and Test Solution

Unisys offers optional Development and Test Planning and Setup Services to help clients define, implement and tune the best development environment for their specific initiative.

Clients can also purchase Unisys Cloud Transformation Services, which help the client design and implement the right cloud strategy to support their evolving business needs. Plus, those using the solution for application modernization can opt for Unisys Application Advisory Solutions. These services help the client determine how best to streamline the environment for maximum return on investment - for example, consolidating applications, integrating SOA capabilities, or enabling wireless, web, or social-media access.

The ClearPath Cloud Development and Test Solution draws on the infrastructure behind Unisys Secure Cloud Solution for provisioning and resource management - including Unisys Converged Remote Infrastructure Management Suite solution offering, which provides a unified view of an entire IT infrastructure to facilitate integrated management.

Future ClearPath Cloud Solutions for Enhanced Production and Recovery

Over the next several months, Unisys plans to launch additional ClearPath Cloud Solutions that, when available, will give clients new options for realizing the operational and economic advantages of cloud services. The currently planned solutions include:

- A full cloud-based production environment for smaller, independent applications. This is intended to enable clients to offload specialized applications into the cloud and maximize use of their data-center resources for mission-critical applications;

- Data replication and disaster recovery for cost-effective, secure preservation of mission-critical business information; and

- Managed, "software as a service" application solutions for clients' specific industry requirements. These are intended to complement Unisys existing SaaS solutions, such as the Unisys Logistics Management System for air cargo management.

US$52,000 per year still sounds to me like a lot of money for what’s described above.

Redmonk’s Michael Coté posted IBM’s New zEnterprise – Quick Analysis about another mainframe entry in the cloud-computing sweepstakes on 7/22/2010:

IBM launched a new mainframe this morning here in Manhattan, the zEnterprise – a trio of towers bundling together mainframe (the new 196) along with stacks of Power/AIX and x86 platforms into one “cloud in a box,” as Steve Mills put it. As you can guess, the pitch from IBM was that this was a more cost effective way of doing computing than running a bunch of x86 commodity hardware.

(To be fair, there was actually little talk of cloud, perhaps 1-3 times in the general sessions. This wasn’t really a cloud announcement, per se. “Data-center in a box” was also used.) [Emphasis added.]

TCO with zEnterprise

The belief here is two-fold:

- Consolidating to “less parts” on the zEnterprise is more cost effective – there’s less to manage.

- As part of that, you get three platforms optimized for different types of computing in one “box” instead of using the one-size-fits-all approach of Intel-based commodity hardware.

Appropriately, this launch was in Manhattan, where many of IBM’s mainframe customers operate: in finance. The phrases “work-load” and “batch-management” evoke cases of banks, insurance companies (Swiss Re was an on-site customer), and other large businesses who need to process all sorts of data each night (or in whatever period, in batch), dotting all the i’s and crossing all the t’s in their daily data.

IBM presented much information on Total Cost of Ownership (TCO) for the new zEnterprise vs. x86 systems (or “distributed” for you non-mainframe types out there). The comparisons went over costs for servers, software, labor, network, and storage. Predictably, the zEnterprise came out on-top in IBM’s analysis, costing just over half as much in some scenarios.

Time and time again, having fewer parts was a source of lower costs. Also, more favorable costs for software based on per-core pricing came up. In several scenarios, hardware ended up being more expensive with zEnterprise, but software and labor were low enough to make the overall comparison cheaper.

Fit to purpose

Another background trend that comes into play with the zEnterprise platform is specialized hardware, for example in the areas of encryption, data analytics, and I/O intensive applications. The idea is that commodity hardware is, by definition, generalized for any type of application and, thus, misses the boat on optimizing per type of workload. If you’re really into this topic, look for the RedMonkTV interview I did with IBM’s Gururaj Rao, posted in the near future.

While mainframes might be an optimal fit for certain applications, Rao also mentions in the interview that there are many innovations coming out of the distributed (x86) space. Rather than pass up on them because they don’t run on the mainframe, part of the hope of zEnterprise is to provide a compatible platform that’s, well, more mainframe-y to run distributed systems on. What this means is that you’d still run on Unix or Linux as a platform, but those servers would be housed on the zEnterprise-hosted blades; they’d be able to take advantage of the controlled and managed resources, and be managed as one system.

On the distributed note, a couple analysts asked about Windows during the Q&A with Software and Systems Group head Steve Mills: would zEnterprise run Windows (Server)? The short answer is: no. The longer one is about lack of visibility into source code, not wanting to support an OS that “drag[s] in primitives from DOS,” and generally not being able to shape Windows to the management IBM would want. Mills said he “doesn’t really ever expect to manage Windows” on zEnterprise.

During that same Q&A, Mills also alluded to one of the perfect heterogeneity in one box scenarios that the zEnterprise seems a good technological fit for: many mainframe-based applications are served by client and middleware tiers for their user-facing layers, 3-tier applications, if you will, with the mainframe acting as the final tier, the system of record, to use the lingo. You could see where the web/UI tier ran on x86, the middleware & integration on AIX/Power, and the backend on z.

The Single System, for vendors, for buyers

“Everything around the server has become more expensive than the server itself.”

–Steve Mills, IBM

The word “unified” comes up in the zEnterprise context frequently. Indeed, the management software for zEnterprise is called “Unified Resource Manager.” As with other vendors – Cisco, Oracle, and private cloud platforms to some extent – the core idea here is having one system that acts like a homogeneous platform…all the while being heterogeneous underneath, smoothing it over with interfaces and such.

For vendors, the promise is capturing more of the IT budget, not just the components they specialize in (servers, database, middleware, networking, storage, applications, etc.). There’s also the chance to compete on more than just pricing, which is a nasty way to go about making money from IT.

For buyers, the idea is that by having a single-sourced system, there’s more control and integration on the box, and that increased control leads to more optimization in the form of speed, breadth of functionality, and cost savings. There’s also a reduced data center footprint, lower power consumption, and other TCO wing-dings.

Sorting out the competing scenarios of heterogeneous vs. unified systems isn’t a simple back-of-the-napkin affair: there are so many apples-to-oranges comparisons that it’s tough to balance anything but the final bill. Part of the success of non-mainframe infrastructure is that its dumb-simplicity makes evaluating it clear and straightforward: x86-based servers have standardized computer procurement. Layering in networking, software, storage, management, and all that quickly muddies it back up, but initially it’s a lot more clear-cut than evaluating a new type of system. And it certainly feels good to buy from more than one vendor rather than put all your eggs in one gold-plated basket.

Nonetheless, you can expect vendors to increasingly look to sell you a single, unified system. To evaluate these platforms, you need a good sense of the types of applications you’ll be running on them: what you’ll be using them for. There’s still a sense that a non-mainframe system will be more flexible, agile even…but only in the short term, after which all that flexibility has created a mess of systems to sort out. Expect much epistemological debate over that mess.

More

Patrick Thibodeau at Computerworld covers many of the details: “the zEnterprise 196…includes a 5.2-GHz quad processor and up to 3TB of memory. That’s double the memory of the preceding system, the z10, which had a 4.4-GHz quad processor.”

Timothy Prickett Morgan gets more detailed on the tech-specs and possible use-patterns: “there is no way, given the security paranoia of mainframe shops, that the network that interfaces the mainframe engines and their associated Power and x64 blades to the outside world will be used to allow Power and x64 blades to talk back to the mainframes.”

Richi Jennings [no relation] wraps up lots of coverage.

Disclosure: IBM, Microsoft, and other interested parties are clients. IBM paid for my hotel and some meat from a carving station that I ate last night.

David Worthington covered the same IBM press conference in his “A new onramp for the mainframe superhighway” article of 7/23/2010 for SDTimes.

<Return to section navigation list>