Windows Azure and Cloud Computing Posts for 7/12/2010+

| Windows Azure, SQL Azure Database and related cloud computing topics now appear in this daily series. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

The two chapters are also available as no-charge HTTP downloads from the book's Code Download page.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

SQL Azure Database, Codename “Dallas” and OData

MoeK’s Microsoft Codename “Dallas” at the World Wide Partner Conference: Announcing CTP3! post of 7/12/2010 announced:

Today in his keynote at Worldwide Partner Conference Bob Muglia announced that the “Dallas” CTP3 will be made available 30 days from now and that Dallas will officially RTW in Q4 CY2010! CTP3 introduces a number of exciting new highly-requested features and marks the introduction of the first live OData services as well as:

- Support for Add Service Reference in Visual Studio

- New content providers and data

- Flexible querying of content – beyond simple web services!

The keynote also announced a number of new content provider partners including the European Environmental Agency, Lexis Nexis, and Wolfram Alpha (full list coming soon!) The keynote also provided a preview of the brand new marketplace that will be available at V1 and the new opportunities the Dallas data marketplace opens for content providers, developers, and ISVs (as seen in the demo by Donald Farmer and Bob Muglia). In the new Dallas marketplace, ISVs will be able to plug into Dallas for both marketplace discovery as well as including Dallas content directly inside their applications!

Check back soon for more info and the availability of CTP3!

Zane Adam posted News on Microsoft codename “Dallas” at WWPC 2010 to his blog on 7/12/2010 for WPC10:

Today at the Worldwide Partner Conference, we announced some continued momentum around Microsoft codename “Dallas”.

Businesses can use “Dallas” to discover, manage, and purchase premium data subscriptions that can be integrated into applications. For ISVs, this eliminates the need to sign contracts with individual content providers and instead use “Dallas” for one stop data shopping that is easy to manage. For content providers, “Dallas” provides a simplified way to distribute data to a broad audience.

The current list of content providers for “Dallas” include NASA, National Geographic, Associated Press, Zillow.com, and Weather Central—just to name a few. And the list continues to grow with some impressive new content providers being announced today.

During his keynote speech at WWPC, Microsoft President Bob Muglia made the following announcements on Microsoft Codename “Dallas”:

- “Dallas” will reach commercial availability in the fourth quarter of 2010.

- Demonstrated an on-stage preview of the updated and impressive “Dallas” user experience.

- The third Community Technology Preview (CTP) will be available in the next 30 days.

- An impressive list of new content providers will offer public and premium content on “Dallas”. See below.

Watch the keynote at www.digitalwpc.com. For additional information on “Dallas” please visit www.microsoft.com/dallas.

K. Scott Allen’s OData and Ruby post of 7/11/2010 solves a problem with use of inheritance in Pluralsight’s OData feed:

The Open Data Protocol is gaining traction and is something to look at if you expose data over the web. .NET 4 has everything you need to build and consume OData with WCF Data Services. It's also easy to consume OData from outside of .NET - everything from JavaScript to Excel.
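Part of what makes OData easy to consume from any platform is that a query is an ordinary HTTP GET whose query options (such as `$filter` and `$top`) travel in the URL. The following is a minimal sketch, using only Ruby's standard library, of how such a URL can be assembled. The helper name `odata_url` is hypothetical and is not part of ruby_odata or any other library; the service root and filter expression are the ones quoted in this post, and the `top` value is an arbitrary illustration.

```ruby
require 'erb'
require 'uri'

# Hypothetical helper (not a library API): builds an OData query URL.
# Query option names are prefixed with "$" and values are percent-encoded.
def odata_url(service_root, entity_set, options = {})
  query = options.map do |name, value|
    '$' + name.to_s + '=' + ERB::Util.url_encode(value.to_s)
  end.join('&')
  url = service_root.chomp('/') + '/' + entity_set
  query.empty? ? url : url + '?' + query
end

filter = "substringof('Fundamentals', Title) eq true"
url = odata_url('http://www.pluralsight-training.net/Odata/', 'Courses',
                filter: filter, top: 5)
puts url
```

The sketch only builds the request URL; sending it and parsing the returned feed is what a client library such as ruby_odata handles.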

I've been working with Ruby against a couple OData services, and using Damien White's ruby_odata. Here is some ruby code to dump out the available courses in Pluralsight's OData feed.

    require 'lib/ruby_odata'

    svc = OData::Service.new "http://www.pluralsight-training.net/Odata/"
    svc.Courses
    courses = svc.execute
    courses.each do |c|
      puts "#{c.Title}"
    end

ruby_odata builds types to consume the feed, and also maps OData query options.

    svc.Courses.filter("substringof('Fundamentals', Title) eq true")
    fundamentals = svc.execute
    fundamentals.each do |c|
      puts "#{c.Title}"
    end

There is one catch - the Pluralsight model uses inheritance for a few of the entities:

    <EntityType Name="ModelItemBase">
      <Property Name="Title" Type="Edm.String" ... />
      <!-- ... -->
    </EntityType>
    <EntityType Name="Course" BaseType="ModelItemBase">
      <Property Name="Name" Type="Edm.String" Nullable="true" />
      <!-- ... -->
    </EntityType>

Out of the box, ruby_odata doesn't handle the BaseType attribute and misses inherited properties, so I had to hack around in service.rb with some of the Nokogiri APIs that parse the service metadata (this code only handles one level of inheritance, and gets invoked when building entity types):

    def collect_properties(edm_ns, element, doc)
      # Properties declared directly on this entity type
      props = element.xpath(".//edm:Property", "edm" => edm_ns)
      methods = props.collect { |p| p['Name'] }
      unless element["BaseType"].nil?
        # Strip the namespace prefix, then add the base type's properties
        base = element["BaseType"].split(".").last()
        baseType = doc.xpath("//edm:EntityType[@Name=\"#{base}\"]", "edm" => edm_ns).first()
        props = baseType.xpath(".//edm:Property", "edm" => edm_ns)
        methods = methods.concat(props.collect { |p| p['Name']})
      end
      return methods
    end

The overall Ruby <-> OData experience has been quite good.
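The quoted workaround covers a single level of inheritance. As an illustration only, and not a patch for ruby_odata, the same idea can be extended to an arbitrary-depth BaseType chain with a small recursive walk. To keep the sketch free of XML dependencies it works on a plain hash instead of the parsed metadata; the hash layout, the `Demo` namespace prefix, and the third-level `VideoCourse` type are all hypothetical.

```ruby
# Hypothetical in-memory stand-in for the service metadata:
# entity name => its BaseType (possibly namespace-qualified) and own properties.
ENTITY_TYPES = {
  'ModelItemBase' => { base: nil,                  properties: ['Title'] },
  'Course'        => { base: 'Demo.ModelItemBase', properties: ['Name'] },
  'VideoCourse'   => { base: 'Demo.Course',        properties: ['Duration'] } # invented for the example
}

# Collect an entity's own property names followed by those of every ancestor.
def collect_property_names(types, name, seen = [])
  raise ArgumentError, "cyclic BaseType chain at #{name}" if seen.include?(name)
  entity = types.fetch(name)
  own = entity[:properties].dup
  return own if entity[:base].nil?
  base = entity[:base].split('.').last # drop the namespace prefix
  own + collect_property_names(types, base, seen + [name])
end

puts collect_property_names(ENTITY_TYPES, 'VideoCourse').inspect
# → ["Duration", "Name", "Title"]
```

The `seen` list is a guard against malformed metadata in which a type ends up as its own ancestor.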

Donald Farmer posted The "Dallas" information marketplace to TechNet’s SQL Server Experts blog on 7/9/2010:

Today I'm here in Washington DC, demo-ing in Bob Muglia's keynote at our Worldwide Partner Conference. This time, I'm showing something quite new - not just a new product, but a new concept in data - Microsoft's premium information marketplace codenamed "Dallas."

"Dallas" may be better known as a village of 200 or so people in northern Scotland, but now it has a new claim to fame. "Dallas" is a place where developers on any platform as well as information workers can find data from commercial content providers as well as public domain data. They can consume this data and construct new BI scenarios and new apps…

To illustrate how easy it will be for ISVs to integrate this content into their apps and to showcase a BI scenario, in the keynote demo I'll be using United Nations data from "Dallas" integrated in an early build of Tableau Software's solution to create a visualization answering study abroad trends around the world. This took only a few minutes to create using "Dallas" and Tableau Public.

To break down the demo, here are the three key pillars behind this scenario:

Discover Data of All Types

Microsoft Codename "Dallas" makes data very easy to find and consume. The vision is to be able to post and access any data set, including rich metadata, using a common format.

Explore and Consume

Using Tableau and "Dallas" together means you can explore any data set simply by dragging and dropping fields to visualize it. This is a very powerful idea: anyone can easily explore and understand data without doing any programming.

Publish and Share

Once you've found a story in your data you want to share it... Using Tableau Public you can embed a live visualization in your blog, just like the one above.

"Dallas" creates a lot of opportunities for a company like Tableau. It makes it possible for bloggers, interested citizens and journalists to more easily find public data and tell important stories, creating a true information democracy. "Dallas" also makes it easier for Tableau's corporate customers to find relevant data to mash up with their own company data, making Tableau's corporate tools that much more compelling.

For more information on how you can be a provider or how an ISV can plug into the Dallas partnership opportunities, send mail to DallasBD@Microsoft.com.

AppFabric: Access Control and Service Bus

Hilton Giesenow presents a 00:25:12 Use Azure platform AppFabric to provide access control for a cloud application Screencam video:

Windows Azure platform AppFabric provides a foundation for rich cloud-based service and access control offerings. Join Hilton Giesenow, host of The Moss Show SharePoint Podcast, as he takes us through getting started with WCF services and the Windows Azure platform AppFabric ServiceBus component to extend our WCF services into the cloud.

Matias Woloski’s Consumer Identities for Business transactions post of 7/12/2010 describes his proof of concept (POC) for using Windows Identity Foundation and OpenID:

A year ago I wrote a blog post about how to use the Windows Identity Foundation with OpenID. Essentially the idea was writing an STS that can speak both protocols, WS-Federation and OpenID, so your apps can keep using WIF as the claims framework, no matter what your Identity Provider is. WS-Fed == enterprise, OpenID == consumer…

Fast forward to May this year, I’m happy to disclose the proof of concept we did with the Microsoft Federated Identity Interop group (represented by Mike Jones), Medtronic and PayPal. The official post from the Interoperability blog includes a video about it and Mike also did a great write up. I like how Kim Cameron summarized the challenges and lessons learnt of this PoC:

The change agent is the power of claims. The mashup Mike describes crosses boundaries in many dimensions at once:

- between industries (medical, financial, technical)

- between organizations (Medtronic, PayPal, Southworks, Microsoft)

- between protocols (OpenID and SAML)

- between computing platforms (Windows and Linux)

- between software products (Windows Identity Foundation, DotNetOpenAuth, SimpleSAMLphp)

- between identity requirements (ranging from strong identity verification to anonymous comment)

The business scenario brought by Medtronic is around an insulin pump trial. In order to register to this trial, users would login with PayPal, which represents a trusted authority for authentication and attributes like shipping address and age for them. Below are some screenshots of the actual proof of concept:

While there are different ways to solve a scenario like this, we chose to create an intermediary Security Token Service that understands the OpenID protocol (used by PayPal), WS-Federation protocol and SAML 1.1 tokens (used by Medtronic apps). This intermediary STS enables SSO between the web applications, avoiding re-authentication with the original identity provider (PayPal).
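The core idea of such an intermediary can be sketched in a few lines: whatever the inbound protocol delivers is normalized into one common list of claims that the relying applications understand. The Ruby below is a conceptual illustration only, not code from the project (which is a .NET/WIF implementation). The function and constant names are hypothetical, the sample user is invented, and the choice of OpenID attribute-exchange URIs as input is an assumption about what an identity provider might return.

```ruby
# Commonly used claim type URIs for name and e-mail address.
NAME_CLAIM  = 'http://schemas.xmlsoap.org/ws/2005/05/identity/claims/name'
EMAIL_CLAIM = 'http://schemas.xmlsoap.org/ws/2005/05/identity/claims/emailaddress'

# One normalizer per inbound protocol (hypothetical structure). Each turns
# protocol-specific attributes into a list of { type:, value: } claims.
NORMALIZERS = {
  openid: ->(attrs) {
    [
      { type: NAME_CLAIM,  value: attrs['http://axschema.org/namePerson'] },
      { type: EMAIL_CLAIM, value: attrs['http://axschema.org/contact/email'] }
    ]
  }
}

# Look up the normalizer for the protocol and drop claims with no value.
def issue_claims(protocol, attrs)
  normalizer = NORMALIZERS.fetch(protocol) do
    raise ArgumentError, "unsupported protocol: #{protocol}"
  end
  normalizer.call(attrs).reject { |claim| claim[:value].nil? }
end

claims = issue_claims(:openid, {
  'http://axschema.org/namePerson'    => 'Pat Example',
  'http://axschema.org/contact/email' => 'pat@example.com'
})
claims.each { |c| puts "#{c[:type]} = #{c[:value]}" }
```

Supporting another inbound protocol in this sketch means adding one more entry to `NORMALIZERS`; the applications consuming the claims do not change.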

Also, we had to integrate with a PHP web application and we chose the simpleSAMLphp library. We had to adjust here and there to make it compatible with ADFS/WIF implementation of the standards. No big changes though.

We decided together with the Microsoft Federated Identity Interop team to make the implementation of this STS available under open source using the Microsoft Public License.

And not only that but also we went a step further and added a multi-protocol capability to this claims provider. That is, it’s extensible to support not only OpenID but also OAuth and even a proprietary authentication method like Windows Live.

DISCLAIMER: This code is provided as-is under the Ms-PL license. It has not been tested in production environments and it has not gone through threats and countermeasures analysis. Use it at your own risk.

- Project Home page: http://github.com/southworks/protocol-bridge-claims-provider

- Download: http://github.com/southworks/protocol-bridge-claims-provider/downloads

- Docs: http://southworks.github.com/protocol-bridge-claims-provider

If you are interested and would like to contribute, ping us through the github page, twitter @woloski or email matias at southworks dot net

This endeavor could not have been possible without the professionalism of my colleagues: Juan Pablo Garcia who was the main developer behind this project, Tim Osborn for his support and focus on the customer, Johnny Halife who helped shaping out the demo in the early stages in HTML :), and Sebastian Iacomuzzi that helped us with the packaging. Finally, Madhu Lakshmikanthan who was key in the project management to align stakeholders and Mike who was crucial in making all this happen.

Happy federation!

Wade Wegner (@wadewegner) asks How would you describe the Windows Azure AppFabric? in this 7/11/2010 post:

I was reading through the FAQ document for the Windows Azure platform this evening (what else is there to do on a Sunday night?), and I came across the following:

What is the Windows Azure AppFabric?

With AppFabric, Microsoft is delivering services that enable developers to build and manage composite applications more easily for both server and cloud environments. Windows Azure AppFabric, formerly called “.NET Services”, provides cloud-based services that help developers connect applications and services across Windows Azure, Windows Server and a number of other platforms. Today, it includes Service Bus and Access Control capabilities. Windows Server AppFabric includes caching capabilities and workflow and service management capabilities for applications that run on-premises.

Windows Azure AppFabric is built on Windows Azure, and provides secure connectivity and access control for customers with the need to integrate cloud services with on-premises systems, to perform business-to-business integration or to connect to remote devices.

The Service Bus enables secure connectivity between services and applications across firewall or network boundaries, using a variety of communication patterns. The Access Control Service provides federated, claims-based access control for REST web services. Developers can use these services to build distributed or composite applications and services.

I’ve spent a lot of time with the AppFabric, and believe I understand the intent of the above description. But what about the rest of you? If you have, or even if you haven’t, spent time using the AppFabric, how does this description resonate? Does this help you understand the AppFabric, or are you left confused? Do you understand its place and value in the larger Windows Azure platform?

I implore you to leave some feedback and let me know what you think. Please, share your thoughts! How can this be improved?

Live Windows Azure Apps, APIs, Tools and Test Harnesses

David Aiken’s Getting Started with Windows Azure post of 7/12/2010 recounts the current process for creating cloud applications with the Microsoft Web Platform Installer:

A few folks today have been asking about how to get started with Windows Azure and/or SQL Azure. So here is my quick 3 steps to get you going.

- Make sure you have an OS that supports development! You can use Vista, Windows 7, Server 2008 or Server 2008 R2. My recommendation is Windows 7 64bit.

- Install the Microsoft Web Platform Installer from http://www.microsoft.com/web/downloads/platform.aspx .

- Once the installer loads – click Options:

- Click Developer Tools:

- Then click OK. You should now have the Developer Tools Tab:

- Under Visual Studio Tools, click Customize, then click Windows Azure Tools for Microsoft Visual Studio 2010 v1.2 and Visual Web Developer 2010 Express:

- Now click the Web Platform tab, and under database, click customize. Make sure SQL Server 2008 R2 Management Studio Express is clicked:

- Now click Install and confirm any options. Not only will the tools you selected be installed, the installer will also install any dependent bits too!

- While that is installing, navigate to here http://hmbl.me/1ITJKZ or here, download and install the latest version of the Windows Azure Platform Training Kit. There are some cool videos to watch to give you an overview.

- Once everything is installed, open the training kit and work through both the Introduction to Windows Azure and Introduction to SQL Azure lab.

Once you have that, the top resources to keep an eye on are http://www.microsoft.com/windowsazure, the awesome http://channel9.msdn.com/shows/Cloud+Cover/ and http://blogs.msdn.com/b/windowsazure/.

THIS POSTING IS PROVIDED “AS IS” WITH NO WARRANTIES, AND CONFERS NO RIGHTS

David Linthicum asks “The cloud is providing some great opportunities for health care, but will that industry take advantage?” in a preface to his For what ails health care, my prescription is the cloud post of 7/13/2010 to InfoWorld’s Cloud Computing blog:

There's a lot of talk about how companies can and should take advantage of cloud computing. One industry that could get a serious advantage has, so far, not come up much in that conversation: health care. The truth of the matter is that the health care industry can take a huge edge in the emerging movement to cloud computing, considering how it needs to provide care to patients through federation and analysis of clinical data at some central location.

Let's first consider patient care. Most of us live in very populated centers with well-staffed and well-equipped clinics, having access to medical imaging systems and other diagnostic tools that make diagnosis and treatment more effective. But in rural areas, clinicians typically don't have direct access to the same technology, and in many instances have to send patients miles away for diagnosis and treatment.

The cost of this technology relative to the low usage due to low population in rural areas is prohibitive. But by leveraging core diagnostic systems, such as medical imaging, that are delivered via the cloud, there is no need for such costly on-site system procurement or operations.

Now what about clinical data analysis? The value here is huge, considering that would let medical professionals more easily aggregate clinical outcome data and better determine which treatments are working and which are not.

Today, doctors have to rely on their own experience, conversations with colleagues, and publications to keep up with current treatments. But by rolling up treatment and outcome data into a central data repository, doctors can use petabytes of information in a centralized, cloud-based database to determine exactly what's working to treat a diagnosed ailment. In other words, doctors would know what worked most of the time for others with the same issues and is very likely to be the best course of treatment. Doctors would be less likely to be using outdated assumptions or study results.

Apropos the above, Robert Rowley, MD observes in his Final Meaningful Use criteria unveiled post of 7/13/2010:

The wait is over. After long deliberation and review of over 2,000 comments, the Office of the National Coordinator for Health IT (ONC), together with the Centers for Medicare and Medicaid Services (CMS) have announced the final rule for Meaningful Use, as well as final rules for Certification. …

SugarCRM claimed Users, Developers and Partners Now Have Even Greater Choice in How They Deploy, Access and Customize Sugar 6 in the Open Cloud when it announced Sugar 6 Now Available on Microsoft Windows Azure Platform on 7/13/2010:

SugarCRM, the world's leading provider of open source customer relationship management (CRM) software, today announced that Sugar 6 is now certified for deployment on the Microsoft Windows Azure platform. The announcement was made today at Microsoft's Worldwide Partner Conference here, and coincides with general availability of Sugar 6, the latest release of the most intuitive, flexible and open CRM system on the market today.

With the availability of Sugar 6 on Windows Azure, SugarCRM developers and end-users now have greater choice in deploying and developing Sugar 6 in the cloud. Users and developers can enjoy the ease of cloud-based deployments while having complete control over their data and application, unlike the limitations placed on users and developers in older multi-tenant Software-as-a-Service (SaaS) deployment options.

"The availability of Sugar 6 on Windows Azure continues the great advancements SugarCRM has made in offering organizations and developers the most intuitive, flexible and open CRM solutions on the market," says Clint Oram, vice president of products and co-founder of SugarCRM. "It is now easier than ever for users of SugarCRM on the Windows platform to make the move into the cloud."

SugarCRM will bring the power of Sugar 6 on Windows Azure to market through its expansive channel network, including leading regional value added resellers like Plus Consulting. "Deploying Sugar 6 on Windows Azure gives our customers the ability to deploy a flexible and robust CRM solution, without the headache of managing hardware and other components themselves," says Heath Flicker, managing partner at Plus Consulting. "Windows Azure and Sugar 6 represent the future of CRM in the cloud: defined by choice, control and flexibility."

With the global reach of Windows Azure in seven data centers around the world, SugarCRM value-added resellers have a best-in-class platform for delivering localized and industry-specific SaaS CRM offerings in all geographies.

"Sugar 6 on Windows Azure showcases the openness and flexibility of the Azure platform," says Prashant Ketkar, director of product marketing for Windows Azure at Microsoft Corp. "With great performance coupled with the ease of deployment offered by the cloud, Windows Azure is an ideal environment for Sugar developers, users and partners alike."

In addition to exceptional performance and service, Windows Azure is a true "platform-as-a-service" in that all aspects of the technology infrastructure below the application layer are managed by Microsoft as a service. Other cloud service providers provide solely "infrastructure-as-a-service" where users of the cloud service must manage the necessary infrastructure software including the OS, web server, database, etc. Windows Azure includes both data and other services layers to speed deployment and development times.

For more information on deploying Sugar 6 on Microsoft Windows Azure, visit http://www.microsoft.com/windowsazure/windowsazure/.

About SugarCRM

SugarCRM makes CRM Simple. As the world's leading provider of open source customer relationship management (CRM) software, SugarCRM applications have been downloaded more than seven million times and currently serve over 600,000 end users in 80 languages. Over 6,000 customers have chosen SugarCRM's On-Site and Cloud Computing services over proprietary alternatives. SugarCRM has been recognized for its customer success and product innovation by CRM Magazine, InfoWorld, Customer Interaction Solutions and Intelligent Enterprise.

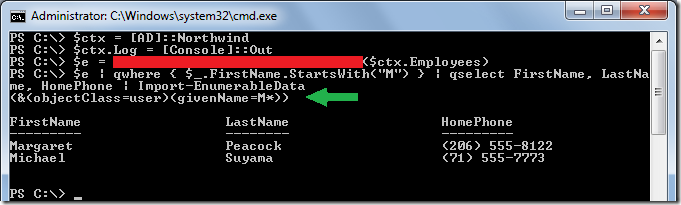

Bart De Smet tantalizes Azure developers with a brief preview of “LINQ to Anything” in his Getting Started with Active Directory Lightweight Directory Services post of 7/12/2010:

Introduction

In preparation for some upcoming posts related to LINQ (what else?), Windows PowerShell and Rx, I had to set up a local LDAP-capable directory service. (Hint: It will pay off to read till the very end of the post if you’re wondering what I’m up to...) In this post I’ll walk the reader through the installation, configuration and use of Active Directory Lightweight Directory Services (LDS), formerly known as Active Directory Application Mode (ADAM). Having used the technology several years ago, in relation to the LINQ to Active Directory project (which as an extension to this blog series will receive an update), it was a warm and welcome reencounter. …

A small preview of what’s coming up

To whet the reader’s appetite about next episodes on this blog, below is a single screenshot illustrating something – IMHO – rather cool (use of LINQ to Active Directory is just an implementation detail below):

Note: What’s shown here is the result of a very early experiment done as part of my current job on “LINQ to Anything” here in the “Cloud Data Programmability Team”. Please don’t fantasize about it as being a vNext feature of any product involved whatsoever. The core intent of those experiments is to emphasize the omnipresence of LINQ (and more widely, monads) in today’s (and tomorrow’s) world. While we’re not ready to reveal the “LINQ to Anything” mission in all its glory (rather think of it as “LINQ to the unimaginable”), we can drop some hints.

Stay tuned for more!

Toddy Mladenov asks Did You Forget Your Windows Azure Demo Deployment Running? Graybox can help you! on 7/12/2010:

Windows Azure, like any other cloud platform, is very convenient for doing demos or quick tests without the hassle of procuring hardware and installing a bunch of software. However, what happens quite often is that people forget their Windows Azure demo deployments [are] running, and this can cost them money (a lot sometimes).
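To put a rough number on "a lot sometimes", the arithmetic is simply instances × hours × hourly rate. The sketch below works in whole cents to avoid floating-point rounding. The rate of 12 cents per instance-hour is an illustrative assumption, not a quoted price, and the function name is hypothetical; substitute the rate from your own bill.

```ruby
# ASSUMPTION: illustrative rate in cents per instance-hour, not a quoted price.
HOURLY_RATE_CENTS = 12

# Cost in cents of leaving `instances` role instances deployed for `days` days.
def forgotten_cost_cents(instances, days, rate_cents = HOURLY_RATE_CENTS)
  instances * days * 24 * rate_cents
end

cents = forgotten_cost_cents(2, 30)
puts format('2 instances left deployed for 30 days: $%.2f', cents / 100.0)
# → 2 instances left deployed for 30 days: $172.80
```

Under these assumed numbers, a two-instance demo forgotten for a month costs more than many demos are worth.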

Recently I bumped into a handy tool called Graybox. What it does is regularly check your Windows Azure subscription and notify you about your deployments.

Installing Graybox

You can download Graybox from here. Just unzip it in a local folder and that is it. The tool has no installer and application icon.

Configuring Graybox

To use Graybox you need two things:

- Windows Azure Subscription ID

- and Windows Azure Management API Certificate Thumbprint – keep in mind that you need to have the certificate imported on the machine where Graybox will be running as well as uploaded to Windows Azure through the Windows Azure Developer Portal

The configuration is quite simple – you need to edit the Graybox.exe.config file, which is simple XML file, and add your subscription ID and management API thumbprint as follows:

<add key="SubscriptionId" value="[SUBSCRIPTION_ID]"/>

<add key="CertificateThumbprint" value="[API_THUMBPRINT]"/>

Optionally you can change the refresh rate using RefreshTimerIntervalInMinutes configuration setting:

<add key="RefreshTimerIntervalInMinutes" value="10"/>
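Since a mistyped ID or thumbprint is an easy way to end up with a tool that silently reports nothing, a quick sanity check of the edited values can help. The following sketch is not part of Graybox. It assumes the three keys sit in a standard .NET `appSettings` section, that the subscription ID is a GUID, and that the thumbprint is the 40-hex-digit SHA-1 form; the sample values and both function names are made up for illustration.

```ruby
# Sample configuration text with placeholder values (not real credentials).
CONFIG = <<~XML
  <appSettings>
    <add key="SubscriptionId" value="00000000-0000-0000-0000-000000000000"/>
    <add key="CertificateThumbprint" value="0123456789ABCDEF0123456789ABCDEF01234567"/>
    <add key="RefreshTimerIntervalInMinutes" value="10"/>
  </appSettings>
XML

# Extract key/value pairs from <add key="..." value="..."/> elements.
def app_settings(xml)
  xml.scan(/<add\s+key="([^"]+)"\s+value="([^"]*)"\s*\/>/).to_h
end

# Return a list of human-readable problems; an empty list means the values look plausible.
def config_problems(settings)
  problems = []
  unless settings['SubscriptionId'].to_s =~ /\A\h{8}-\h{4}-\h{4}-\h{4}-\h{12}\z/
    problems << 'SubscriptionId does not look like a GUID'
  end
  unless settings['CertificateThumbprint'].to_s =~ /\A\h{40}\z/
    problems << 'CertificateThumbprint is not 40 hex digits'
  end
  unless settings['RefreshTimerIntervalInMinutes'].to_s =~ /\A[1-9]\d*\z/
    problems << 'RefreshTimerIntervalInMinutes is not a positive integer'
  end
  problems
end

settings = app_settings(CONFIG)
problems = config_problems(settings)
puts problems.empty? ? 'Configuration looks plausible' : problems
```

A check like this only validates the shape of the values; it cannot tell whether the certificate is actually installed locally or uploaded to the subscription.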

Using Graybox to Receive Notifications About Forgotten Windows Azure Deployments

Once you have Graybox configured you can start it by double-clicking on Graybox.exe. I suggest you add the Graybox executable to All Programs –> Startup menu in order to have it started every time you start Windows. Once started it remains resident in memory, and you can access its functionality through the tray icon:

Right-clicking on Graybox’ tray icon shows you the actions menu:

As you can see on the picture above deployments are shown in the following format:

[hosted_service_name], [deployment_label] ([status] on [environment])

For example:

toddysm-playground, test (Suspended on Production)

Thus it is easy to differentiate between the different deployments. You have the option to “kill” a particular deployment (this means to suspend it if it is running AND delete it or just delete it if the deployment is suspended) or to “kill” all deployments.
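The display format and the "kill" rule described above are simple enough to express directly. The sketch below is an illustration, not Graybox's code: it parses one menu line into its four parts and returns the steps that "kill" implies. The status string `Running` and the step symbols `:suspend` and `:delete` are assumptions; only `Suspended` appears in the example above.

```ruby
# [hosted_service_name], [deployment_label] ([status] on [environment])
DEPLOYMENT_LINE =
  /\A(?<service>.+?), (?<label>.+) \((?<status>\w+) on (?<environment>\w+)\)\z/

def parse_deployment(line)
  m = DEPLOYMENT_LINE.match(line)
  raise ArgumentError, "unrecognized deployment line: #{line}" unless m
  { service: m[:service], label: m[:label],
    status: m[:status], environment: m[:environment] }
end

# "Kill" = suspend first if running, then delete; just delete if already suspended.
def kill_steps(deployment)
  case deployment[:status]
  when 'Suspended' then [:delete]
  when 'Running'   then [:suspend, :delete] # 'Running' is an assumed status name
  else raise ArgumentError, "unknown status: #{deployment[:status]}"
  end
end

deployment = parse_deployment('toddysm-playground, test (Suspended on Production)')
puts deployment.inspect
puts kill_steps(deployment).inspect
```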

If you have forgotten that you have deployments Graybox will pop a message above the system tray every couple of minutes to remind you that you have something running:

The refresh rate is the one you configured in Graybox.exe.config configuration file.

Graybox Limitations

There are two things you should keep in mind when planning to use Graybox:

- Graybox can track deployments in only one subscription; if you own more than one Windows Azure subscription you will not be able to see all your deployments

- In addition Graybox requires .NET 4.0, and will not work with earlier versions of .NET framework

More Information About Graybox

Using Graybox is free because you don’t pay for accessing the Windows Azure Management APIs.

You can get additional information on their Codeplex page.

Chris Kanaracus reported Infor to Embrace Microsoft's Azure Cloud on 9/12/2010 to Bloomberg Businessweek’s Business Exchange:

Infor on Monday will announce plans for applications running on Microsoft's Azure cloud platform, deepening the vendors' already close partnership and adding momentum to the industry's shift away from on-premises ERP (enterprise resource planning) software.

The strategy will see next-generation versions of Infor applications, including for expense management, start landing on Azure in 2011.

Azure applications will also employ Infor's recently announced ION framework for data sharing between applications and BPM (business process management), allowing them to work in concert with on-premises software.

Infor is also using Microsoft's SharePoint and Silverlight technologies to build out a new user interface called CompanyOn, which will give its many application lines a common look and feel. CompanyOn will be a central home for BI (business intelligence) reports and dashboards, and provide ties into third-party sources such as LinkedIn.

Monday's announcement comes a few weeks after Infor said it would essentially standardize product development on the Microsoft stack.

Overall, the partnership has benefits for both vendors, said Altimeter Group analyst Ray Wang.

"It was a huge coup for Microsoft because it shows large ISVs [independent software vendors] are still willing to bet on the Microsoft platform. It's also good for customers, in that now people are on a standardized platform, and a platform that has a future. "

Those users will benefit from the vast pool of system integrators, ISVs and developers already working with Microsoft technologies, Wang said.

But the tie-up also raises questions for other Microsoft partners, such as Epicor, which has also embraced Redmond's stack and was an early adopter of Azure, 451 Group analyst China Martens said via e-mail.

Moreover, there's the question of "how all this embracing of Microsoft's Azure and other infrastructure by third-party ERPs affects [Microsoft] Dynamics partners," Martens said. "Does it give them more options in terms of whose apps they can sell and link into, or does it put them under more pressure to create Azure versions of Dynamics, given that Microsoft doesn't appear to have plans to do so itself?"

For Infor, it made more sense to target Azure than other players in the market, said Soma Somasundaram, senior vice president of global product development at Infor.

Infor could have gone with Amazon Web Services if its only requirement was pure IaaS (infrastructure as a service) with which to deploy its applications, he said. "But the downside is, it's not a platform, it just provides [resource] elasticity."

The company also looked at Salesforce.com's Force.com, he said. "It's a very good platform to build multitenant applications, but the problem is you have to build everything from scratch." Infor already had richly featured applications, "and we want to be able to deploy those," he said.

In addition, Infor has Java applications as well as ones that leverage Microsoft .NET, and Azure supports Java deployments, he said.

However, he termed Azure "somewhat of a new entrant" to the market, and said Infor is working with the company on "certain aspects that will let us leverage it better than now."

Infor will sell the Azure applications in multitenant form, wherein multiple customers share the same application instance with their data kept separate. This approach saves on system resources and allows upgrades to be pushed out to many customers at once.

But Infor will also run dedicated instances for companies that desire them, he said.

Chris Kanaracus covers enterprise software and general technology breaking news for The IDG News Service.

Kevin Kell continues his Worker Role Communications in Windows Azure – Part 2 series for Knowledge Tree in this 7/11/2010 post:

In a previous post we explored exposing an endpoint in a worker role to the outside world. Here we will examine one way to implement communication between worker roles internally [link added].

Basically, the idea is this:

Figure 1 Direct Role to Role Communication in Windows Azure

We could implement an architecture where all worker roles communicate with each other directly. We could do this communication over TCP.
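As a rough, language-neutral illustration of the direct TCP idea (this is not Kevin's .NET code and does not use the Windows Azure SDK), the following Python sketch starts one hypothetical "worker" that listens on a local TCP endpoint and has a second worker connect to it directly. The names `start_worker` and `send_to_worker`, the loopback address, and the one-message protocol are all assumptions made for the example; in Azure, the peer's address would come from the role's internal endpoint configuration rather than from a local socket.

```python
import socket
import threading


def start_worker(name, host="127.0.0.1"):
    """Start a hypothetical 'worker' that answers one TCP message, then stops.

    Returns the (host, port) endpoint a peer would use to reach it.
    """
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind((host, 0))  # port 0 lets the OS pick a free port
    server.listen(1)
    endpoint = server.getsockname()

    def serve():
        # Accept a single peer connection, reply once, and shut down.
        conn, _ = server.accept()
        with conn:
            message = conn.recv(1024).decode("utf-8")
            reply = f"{name} received: {message}"
            conn.sendall(reply.encode("utf-8"))
        server.close()

    threading.Thread(target=serve, daemon=True).start()
    return endpoint


def send_to_worker(endpoint, message):
    """Connect directly to a peer worker's endpoint and return its reply."""
    with socket.create_connection(endpoint, timeout=5) as client:
        client.sendall(message.encode("utf-8"))
        return client.recv(1024).decode("utf-8")


if __name__ == "__main__":
    peer = start_worker("worker-B")
    print(send_to_worker(peer, "hello from worker-A"))
```

The sketch deliberately handles only one short message per worker; a real role would loop on `accept` and frame its messages.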

There are a lot of good examples of code and projects available showing how to do this (and other things!) on Microsoft sites and others. See, for example, the excellent hands-on labs in the latest Windows Azure Platform Training Kit. These sample applications and labs are a great way to get started using Windows Azure. I encourage you to check them out.

One issue I have with some of those examples, however, is that they often include other details that are not directly relevant to understanding the topic at hand. This can often be overwhelming to someone encountering the technology for the first time.

Let us here consider a very over-simplified scenario. We want all worker roles to be able to directly communicate with each other. What I have tried to do with this example is to eliminate all extraneous stuff. The goal here is to focus down to just the bare necessities of how to do worker role to worker role communication in Azure. Also we want to fit the demo into a 10 minute (maximum) YouTube clip!

http://www.youtube.com/watch?v=iwOOxQc8Rs0

Obviously this stuff can still be a little complicated!

It is worthwhile, I think, to mention that more complicated doesn’t necessarily mean better. If you are considering doing something like this, take a good hard look at your design. Is it possible that some other better established pattern (the “asynchronous web/worker role” for example) would be equally well or better suited to the task at hand?

Still, it is nice to know that, as developers, we do have the flexibility in Azure to do pretty much anything that we want and that can be done in .NET.

Return to section navigation list>

Windows Azure Infrastructure

David Linthicum posted The Cloud, Agility, and Reuse Part 1 to ebizQ’s Where SOA Meets Cloud blog on 7/13/2010:

Agility and reuse are part of the value of cloud computing, and each has its own benefit to enterprise architecture. In the past, as an industry, we've had issues around altering the core IT infrastructure to adapt to the changing needs of business. While cloud computing is no "cure all" for agility, it does provide a foundation to leverage IT resources that are more easily provisioned and thus adaptable.

Agility has a few dimensions here:

First is the ability to save development dollars through reuse of services and applications. It's helpful to note that many services make up an application instance. These services may have been built inside or outside of the company, and the more services that are reusable from system to system, the more ROI from our cloud computing.

Second is the ability to change the IT infrastructure faster to adapt to the changing needs of the business, such as market downturns, or the introduction of a key product to capture a changing market. This, of course, provides a strategic advantage and allows for the business to have a better chance of long-term survival. Many enterprises are plagued these days with having IT infrastructures that are so poorly planned and fragile that they hurt the business by not providing the required degree of agility.

Under the concept of reuse, we have a few things we need to determine to better define the value. These include:

- The number of services that are reusable.

- Complexity of the services.

- The degree of reuse from system to system.

The number of reusable services is the actual number of new services created, or, existing services abstracted, that are potentially reusable from system to system. The complexity of the services is the number of functions or object points that make up the service. We use traditional functions or object points as a common means of expressing complexity in terms of the types of behaviors the service offers. Finally, the degree of reuse from system to system is the number of times you actually reuse the services. We look at this number as a percentage.

Just because we abstract existing systems as services, or create services from scratch, that does not mean that they have value until they are reused by another system. In order to determine their value we must first determine the Number of Services that are available for Reuse (NSR), the Degree of Reuse (DR) from system to system, as well as the Complexity (C) of each service, as described above. The formula to determine value looks much like this:

Value = (NSR*DR) * C

Thus, if you have 100 services available for reuse (NSR=100), and the degree of reuse at 50 percent (DR=.50), and complexity of each service is, on average, at 300 function points, the value would look like this:

Value = (100*.5) * 300

Or

Value = 15,000, in terms of reuse.
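For readers who want to try their own numbers, the formula can be sketched as a small Python function. The function and parameter names are illustrative assumptions; only the arithmetic comes from the formula above.

```python
def reuse_value(nsr, dr, complexity):
    """Reuse value = (NSR * DR) * C.

    nsr:        number of services available for reuse
    dr:         degree of reuse, as a fraction (0.5 means 50 percent)
    complexity: average function (or object) points per service
    """
    return (nsr * dr) * complexity


if __name__ == "__main__":
    # The worked example from the text: 100 services, 50 percent reuse,
    # 300 function points per service.
    print(reuse_value(100, 0.5, 300))  # 15000.0
```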

If you apply the same formula across domains/data centers, with consistent measurements of NSR, DR, and C, the relative value should be the resulting metrics. In other words, the amount of reuse directly translates into dollars saved. Also keep in mind that we have to subtract the cost of implementing the services, or, creating the cloud computing solution, since that's the investment we made to obtain the value.

Moreover, the amount of money saved depends upon your development costs, which vary greatly from company to company, data center to data center. Typically you should know what you're paying for functions or object points, and thus it's just a matter of multiplication to determine the amount of money you can save by implementing a particular cloud computing solution.
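The "matter of multiplication" can be sketched as follows. The cost per function point and the implementation cost used here are made-up placeholder figures, not numbers from David's post; substitute your own organization's values.

```python
def net_savings(nsr, dr, complexity, cost_per_point, implementation_cost):
    """Dollars saved through reuse, less the cost of building the solution.

    Reuse value is (NSR * DR) * C function points; multiplying by what you
    pay per function point converts it to dollars.
    """
    value_in_points = (nsr * dr) * complexity
    return value_in_points * cost_per_point - implementation_cost


if __name__ == "__main__":
    # Hypothetical inputs: $500 per function point, $2,000,000 to implement.
    print(net_savings(100, 0.5, 300, 500, 2_000_000))  # 5500000.0
```

With these assumed figures, 15,000 function points of reuse are worth $7,500,000, so the net saving is $5,500,000 once the implementation cost is subtracted.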

John Treadway (@cloudbzz) claims IT Chargeback Planning [is] A Critical Success Factor for Enterprise Cloud in this 7/12/2010 post to his CloudBzz blog:

That little nugget from one of my colleagues concisely sums up the theme of this brief post. After having read a recent analyst note on IT chargeback, and knowing about some of the work going on in various IT organizations in this area, I was originally going to write a detailed post about some of the most interesting aspects of this domain. While I was thinking, the folks at TechTarget were doing, resulting in a nice article on this topic that I encourage you to read if you want to understand more about IT chargeback concepts.

As companies invest more and more in cloud computing, one of the areas that seems to be generally overlooked is the central role of IT chargeback. After all, one of the key benefits of cloud is metering – knowing when you are using resources, at what level, and for how long. For the first time, it is now a lot more feasible to directly allocate or allot costs for IT back to the business units that are consuming it.

One of the reasons business units are now going around IT and using the cloud is this transparency of costs and benefits. In most enterprises, the allocation of IT expenses can be very convoluted, resulting in mistrust and confusion about how and why charges are taken. If I go to Amazon, however, I know exactly what I’m paying for and why. I can also track that back to some business value or benefit of the usage of Amazon’s services. Now businesses are asking for the same “IT as a Service” approach from their IT organizations. Anecdotally, internal customers appear willing to pay more than the public cloud price in order to get the security and manageability of an internal cloud service – at least for now.

While many IT organizations are rushing to put up any kind of internal cloud, they are often ignoring this important aspect of their program. Negotiating in advance with your business customers on how you’re going to charge for cloud services, and why, is a good first step. Building the interfaces between the cloud and internal accounting systems can be pretty difficult. It’s important to take a flexible approach to this, given that chargeback models can change quickly based on business conditions.

Publishing a service catalog with pricing can make it a lot easier for internal customers to evaluate, track, and audit their internal cloud expenses. Accurate usage information, pre-defined billing “dispute” processes, and – above all – high levels of transparency regarding internal costs to provide your services are all critical to user acceptance. If possible, put your cloud IT chargeback plans in place before you build your cloud. Your negotiations with business units might prompt you to make changes to your services – such as different storage solutions or networking topologies to lower costs or improve SLAs. Making these changes at the start of a cloud project can be far less expensive than making them retroactively.
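To make the idea of a priced service catalog plus metered usage concrete, here is a minimal Python sketch. The catalog entries, rates, business-unit names, and the `chargeback` function are all hypothetical and are not taken from John's post; a real system would pull usage from the cloud's metering data and post the results to an accounting system.

```python
from collections import defaultdict
from decimal import Decimal

# Hypothetical published service catalog: price per metered unit.
SERVICE_CATALOG = {
    "vm_hour": Decimal("0.12"),
    "storage_gb_month": Decimal("0.15"),
}


def chargeback(usage_records, catalog=SERVICE_CATALOG):
    """Total the charges per business unit.

    usage_records is an iterable of (business_unit, service, quantity)
    tuples, where quantity is a whole number of metered units.
    """
    totals = defaultdict(Decimal)  # missing units start at Decimal("0")
    for unit, service, quantity in usage_records:
        totals[unit] += catalog[service] * Decimal(quantity)
    return dict(totals)


if __name__ == "__main__":
    usage = [
        ("marketing", "vm_hour", 100),
        ("marketing", "storage_gb_month", 50),
        ("finance", "vm_hour", 20),
    ]
    for unit, amount in chargeback(usage).items():
        print(unit, amount)
```

Keeping the rates in one published table means a change to the chargeback model touches the catalog rather than the billing logic.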

Bottom line – getting IT chargeback right is key to a successful cloud program.

Lori MacVittie (@lmacvittie) asserts “Web applications that count on the advantage of not having a bloated desktop footprint need to keep one eye on the scale…” in her Does This Application Make My Browser Look Fat? post of 7/12/2010:

A recent article on CloudAve that brought back the “browser versus native app” debate caught my eye last week. After reading it, I concluded that the author is really focusing on that piece of the debate which dismisses SaaS and browser-based applications in general based on the disparity in functionality between them and their “bloated desktop” cousins.

Why do I have to spend money on powerful devices when I can get an experience almost similar to what I get from native apps? More importantly, the rate of innovation on browser based apps is much higher than what we see in the traditional desktop software.

[…]

Yes, today's SaaS applications still can't do everything a desktop application can do for us. But there is a higher rate of innovation on the SaaS side and it is just a matter of time before they catch up with desktop applications on the user experience angle.

When You Can Innovate With browser, Why Do You Need Native Apps?, Krishnan Subramanian

I don’t disagree with this assessment and Krishnan’s article is a good one – a reminder that when you move from one paradigm to another it takes time to “catch up”. This is true with cloud computing in general. We’re only at the early stages of maturity, after all, so comparing the “infrastructure services” available from today’s cloud computing implementations with well-established datacenters is a bit unfair.

But while I don’t disagree with Krishnan, his discussion reminded me that there’s another piece of this debate that needs to be examined – especially in light of the impact on the entire application delivery infrastructure as browser (and browser-based applications) capabilities to reproduce a desktop experience mature. At what point do we stop looking at browser-based applications as “thin” clients and start viewing them for what they must certainly become to support the kind of user-experience we want from cloud and web applications: bloated desktop applications.

The core fallacy here is that SaaS (or any other “cloud” or web application) is not a desktop application. It is. Make no mistake. The technology that makes that interface interactive and integrated is almost all enabled on the desktop, via the use of a whole lot of client-side scripting. Just because it’s loaded and executing from within a browser doesn’t mean it isn’t executing on the desktop. It is. It’s using your desktop’s compute resources and your desktop’s network connection and it’s a whole lot more bloated than anything Tim Berners-Lee could have envisioned back in the early days of HTML and the “World Wide Web.” In fact, I’d argue that with the move to Web 2.0 and a heavy reliance on client-side scripting to implement what is presentation-layer logic that the term “web application” became a misnomer. Previously, when the interface and functionality relied solely on HTML and was assembled completely on the web-side of the equation, these were correctly called “web” applications. Today? Today they’re very nearly a perfected client-server, three-tiered architectural implementation. Presentation layer on the client, application and data on the server. That the network is the Internet instead of the LAN changes nothing; it simply introduces additional challenges into the delivery chain.

Lori continues with THE MANAGEMENT and MAINTENANCE DISTINCTION IS NO MORE topic and concludes:

The days of SaaS and “web applications” claiming an almost moral superiority over “bloated desktop applications” are over. They went the way of “Web 1.0” – lost to memory and saved only in the “wayback machine” to be called forth and viewed with awe and disbelief by digital millennials that anyone ever used applications so simple.

Today’s “web” applications are anything but simple, and the environment within which they execute is anything but thin.

Gregor Petri claims “Maybe five for the world is not so crazy after all” in a preface to his Might the Cloud Prove Thomas J. Watson Right After All? post of 7/11/2010:

In 1943 former IBM president Thomas J. Watson (pictured below) allegedly said: “I think there is a world market for maybe five computers". Will cloud computing prove Watson to be right after all?

Anyone who visited a computer-, internet- or mobile-conference in recent years, is likely to have been privy to someone quoting a statement former IBM president Thomas J. Watson allegedly *1 made in 1943: “I think there is a world market for maybe five computers". Most often it is used to show how predicting the future is a risky endeavor. But is it? Maybe cloud computing will prove Watson to be right after all, he was just a bit early?

Now don’t get me wrong, I am not suggesting there will be fewer digital devices in the future. In fact there will be more than we can imagine (phones, iPads, smart cars and likely several things implanted into our bodies). But the big data crunching machines that we - and I suspect Mr. Watson - traditionally think of as computers are likely to reduce radically in numbers as a result of cloud computing. One early sign of this may be that a leading analyst firm – who makes a living out of publishing predictions - now foresees that within 2 years, one fifth of businesses will own no IT assets*2.

Before we move on, let’s further define "computer" for this discussion. Is a rack with six blades one computer or six? I’d say it is one. Same as I feel a box (or block) hosting 30 or 30000 virtual machines, is still one computer. I would even go so far that a room with lots of boxes running lots of stuff could be seen as one computer. And let’s not forget that computers in the days of Mr. Watson were as big as rooms. So basically the proposed idea is: cloud computing may lead to “a world market with maybe five datacenters”. Whether these will be located at the bottom of the ocean (think we have about 5 of those*5), distributed into outer space to solve the cooling problem or located on top of nuclear plants to solve the power problem, I leave to the hardware engineers (typical implementation details).

Having five parties hosting datacenters (a.k.a. computers) to serve the world, how realistic is this? Not today, but in the long run, let’s say for our children’s children. It seems to be at odds with the idea of grids and the use of all this computing power doing little or nothing in all these distributed devices (phones, iPads). But does that matter? Current statistics already show that a processor in a datacenter with 100.000 CPUs is way cheaper to run than that same processor in a datacenter with 1000 CPUs. But if we take this “bigger is better” (Ouch, this hurts, at heart I am a Schumacher “small is beautiful” fan) and apply it to other industries, companies would logically try and have one factory. So Toyota would have one car factory and Intel one chip factory. Fact is they don’t, at least not today. Factors like transport cost and logistical complexity prevent this. Not to mention that nobody would want to work there or even live near these and that China may be the only country big enough to host these factories (uhm, guess China may be already trying this?).

But with IT we theoretically can reduce transport latency to light speed and logistical complexity in a digital setting is a very different problem. Sure managing 6000 or 600.000 different virtual machines needs some thought (well maybe a lot of thought), but it does not have the physical limitations of trying to cram 60 different car models, makes and colors through one assembly line. If instead of manufacturing we look at electricity as a role model for IT - as suggested by Nicholas Carr - then the answer might be something like ten plants per state/country (but reducing). Now we need to acknowledge that electricity suffers from the same annoying physical transport limitations as manufacturing. It does not travel well.

So I guess my question is: What is the optimal number?

How many datacenters will our children’s children need when this cloud thing really starts to fly?

- A. 5 (roughly one per continent/ocean)

- B. .5K (roughly the number of Nuclear power plants (439))*3

- C. 5K (roughly 25 per country)

- D. .5M (roughly/allegedly the current number of Google servers)*4

- E. 5M (roughly the current number of air-conditioned basements?)

- F. 5G (roughly the range of IPv4 (4.3B))

- *1 Note: Although the statement is quoted extensively around the world, there is little evidence Mr Watson ever made it http://en.wikipedia.org/wiki/Thomas_J._Watson#Famous_misquote

- *2 http://www.gartner.com/it/page.jsp?id=1278413

- *3 http://www.icjt.org/an/tech/jesvet/jesvet.htm

- *4 http://www.datacenterknowledge.com/archives/2009/05/14/whos-got-the-most-web-servers/

- *5 http://geography.about.com/library/faq/blqzoceans.htm

- *6 http://en.wikipedia.org/wiki/Small_Is_Beautiful

Steven Willmott’s MVC for the Cloud post of 7/10/2010 proposes the MVC model as an API for cloud computing:

While planning for a talk at Cloud Expo Europe a few weeks ago I was thinking about appropriate metaphors for the way APIs are changing the web. Although the title was APIs as glue for the Cloud, I think the core metaphor behind it deserves some explanation: MVC for the Cloud. I thought I'd add some notes here as to what this might mean.

MVC or Model View Controller is an architectural pattern for software that separates out three important things - Models (or Data), Views (visualisation of that data) and Controllers (operations on the data). Since its invention at Xerox PARC in the late 70's, MVC has had a huge impact on software engineering and nowhere more so than on Web Applications - there are MVC frameworks for almost every Web programming language and framework out there.

What I'd call "1st generation" MVC was applied to desktop applications and gave great new flexibility at design and build time by separating these three elements out and allowing independent work on all three pieces. When the software shipped everything got baked together and the end user had no knowledge of how the underlying system was stitched together.

Moving to Web Applications there is a "2nd generation" of MVC which takes this even further - M, V and C can even stay separate at deploy time for a web app - this means developers of SaaS apps can independently work on and update their data models, views and controllers without affecting the other components - again all without the user needing to know. We can even allow users to switch interfaces ("views") according to their needs - and keep exactly the same underlying logic and data models. Advanced testing frameworks and continuous deployment make it increasingly feasible to decouple almost everything.

Cloudscale MVC

However, arguably we're seeing the birth of a "3rd generation" (or is that a third wave?) of MVC which has the potential to have an ever broader and deeper impact - Cloud (or Web) scale MVC - all enabled by APIs. To explain this: MVC models have to date been thought of as applying to single software applications (desktop or web), but the metaphor extends much further. Namely:

- Models (Data): can mean any data anywhere, held by any company.

- Views (Interfaces): can mean any view onto some data or function - i.e. an iPhone app, a mashup with other data, produced by the owners of that data / function or by somebody else.

- Controllers (Logic): can mean any piece of business logic applied to data, again by the owners of the data or by somebody else.
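The three roles above can be sketched in a few lines of Python. This is only an illustration of the decoupling, not anything from Steven's talk: the weather data, the function names, and the use of plain function calls in place of real HTTP APIs are all assumptions. Each function stands in for a service that a different company could own.

```python
def weather_model():
    """Model: data held by a hypothetical data provider."""
    return [
        {"hour": 9, "temp_c": 18.0},
        {"hour": 12, "temp_c": 24.0},
    ]


def to_fahrenheit(records):
    """Controller: business logic a third party applies to the data."""
    return [
        {"hour": r["hour"], "temp_f": r["temp_c"] * 9 / 5 + 32}
        for r in records
    ]


def text_view(records):
    """View: one of many possible presentations of the transformed data."""
    return "\n".join(
        f"{r['hour']:02d}:00  {r['temp_f']:.1f} F" for r in records
    )


def compose(model, controller, view):
    """Wire any model, controller and view together through their interfaces."""
    return view(controller(model()))


if __name__ == "__main__":
    print(compose(weather_model, to_fahrenheit, text_view))
```

Because `compose` depends only on the call signatures, any of the three pieces can be swapped for one supplied by somebody else, which is the point of the metaphor.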

The implications of this are enormous - instead of a world in which individual applications/services are silos which have M+V+C locked inside of them, the MVC connections can perfectly well exist between applications and between companies. Better stated, a company with great data assets can enable access to this data to aggressively grow an ecosystem of partners who provide exciting views (often coupled to audiences) and transformations of this data.

Looking at some examples:

- Companies such as the New York Times, Bloomberg or Weatherbug have great data - it's often real time, and often broader, deeper and of unique value compared with that of their competitors.

- Companies such as Animoto, Shazam and Twitter provide amazing functionality which transforms data - Animoto turns images into video, Shazam turns audio into metadata, Twitter organises a huge global conversation.

- Companies such as Yahoo! and Tweetdeck provide views on data and structure it in clever ways. Further, in the case of Yahoo!, they bring it to a huge audience.

I'd also include Apple in the latter category - creating devices which end up in the hands of millions and aggregate/transform applications and content into convenient forms for Apple users. Some companies (potentially the New York Times falls into this category) have assets in more than one place - in the NYT's case great data, but also a loyal audience which trusts its brand.

APIs are the key strategic asset which is needed to make this happen. Using APIs companies are now no longer forced to provide all parts of the value chain of their service themselves - in fact, it has huge value to partner with others and focus on one's core strength. Today companies can likely still be victorious in their market segment without partnering up - but as the Internet evolves it seems likely that:

- The companies with the best data should partner with those who can reach the widest (relevant) audience and who can best transform / manipulate and integrate that data.

- The companies with the best algorithms and processes should seek the best data partners.

- The companies which provide smart interfaces - be they in software, hardware or in terms of audience should bring in the best data and functionality from wherever they can find it.

Such changes might require changes in business models and strategy, but increasingly they look like a natural evolution of the Web economy. As Data and Services move to the cloud, the opportunity to focus on and re-use core valuable assets is a potentially huge untapped benefit for many companies.

APIs and the Internet Operating System

APIs are the natural glue which can make Cloud scale MVC happen since they allow a complete decoupling of the different elements needed for meaningful applications. What's also interesting is that MVC generally doesn't exist without a fourth element - an MVC framework which provides the structure within which everything comes together.

At the software level, this 4th element is represented by frameworks such as Rails, Django, Cake, Spring's Java MVC framework or any of the many other frameworks. At the cloud scale the equivalent seems to be something akin to the Internet Operating System postulated by Tim O'Reilly and others, which argues that many core internet functions such as Search, Media Access, Location, Communication and others are morphing from standalone services into a commonly available infrastructure which others can rely on - again often through the use of APIs.

This metaphor seems spot on - common substrates such as Twitter messaging and Google search are clearly becoming the basis of many thousands of new applications. The idea extends further to services such as Facebook connect and Twitter connect which are quickly becoming a standard way to set up user authentication for new Web applications (just as Paypal has largely become for payments). (Of course in some cases there are concerns about how much power some of the players behind such services may ultimately have, but leaving this aside, they arguably provide the means for a whole new economy of applications.)

The Internet Operating System seems to be clearly emerging and taking the analogy further - is beginning to make it possible to build compound applications which string together data and services from many places to create user experiences.

Back to MVC

Although there are a ton of possible metaphors for the way the Web is evolving, the MVC metaphor seems to be a good fit for what is happening not only at the architectural level (and what might be happening "on top of" the Internet Operating System) but also at the business level.

It seems a fair bet that web companies over the next few years will need to establish what their core value and differentiator is (M, V or C?) and aggressively establish partnering channels to link themselves into strong partner ecosystems. This also creates opportunities for a wealth of new applications which combine data, functionality and views/audiences in new ways.

Fred O’Connor claims Cloud Computing Will Surpass the Internet in Importance in his 7/9/2010 post for IDG News Service carried by CIO.com:

Cloud computing will top the Internet in importance as development of the Web continues, according to a university professor who spoke Friday at the World Future Society conference in Boston.

While those who developed the Internet had a clear vision and the power to make choices about the road it would take -- factors that helped shape the Web -- Georgetown University professor Mike Nelson wondered during a panel discussion whether the current group of developers possesses the foresight to continue growing the Internet.

"In the mid-90s there was a clear conscience about what the Internet was going to be," he said. "We don't have as good a conscience as we did in the '90s, so we may not get there."

While a vision of the Internet's future may appear murky, Nelson said that cloud computing will be pivotal. "The cloud is even more important than the Web," he said.

Cloud computing will allow developing nations to access software once reserved for affluent countries. Small businesses will save money on capital expenditures by using services such as Amazon's Elastic Compute Cloud to store and compute their data instead of purchasing servers.

Sensors will start to appear in items such as lights, handheld devices and agriculture tools, transmitting data across the Web and into the cloud.

If survey results from the Pew Internet and American Life Project accurately reflect the U.S.' attitude toward the Internet, Nelson's cloud computing prediction could prove true.

In 2000, when the organization conducted its first survey and asked people if they used the cloud for computing, less than 10 percent of respondents replied yes. When asked the same question this May, that figure reached 66 percent, said Lee Rainie, the project's director, who also spoke on the panel.

Further emphasizing the role of cloud computing's future, the survey also revealed an increased use of mobile devices connecting to data stored at offsite servers.

However, cloud computing faces development and regulation challenges, Nelson cautioned.

"There are lots of forces that could push us away from the cloud of clouds," he said.

He advocated that companies develop cloud computing services that allow users to transfer data between systems and do not lock businesses into one provider. The possibility remains that cloud computing providers will use proprietary technology that forces users into their systems or that creates clouds that are only partially open.

"I think there is a chance that if we push hard ... we can get to this universal cloud," he said.

Return to section navigation list>

Windows Azure Platform Appliance

Jonathan Allen reported Microsoft is Planning to Allow Private Installations of Windows and SQL Azure in a 7/13/2010 post to InfoQ:

Along with partners HP, Dell, and Fujitsu, Microsoft is offering private installations of Windows Azure. The product will be offered in appliance format, meaning Microsoft will be selling the hardware and software as a bundle. While no pricing is set, the target audience is customers like eBay who can afford at least one thousand servers.

Steve Marx of Microsoft is stressing that companies will not be able to customize their Azure installations. The hardware and software stack will essentially be a black box, which will allow Microsoft’s partners to offer exactly the same features and APIs that the public cloud offers and to receive the same patches and updates. The goal is to offer a consistent development experience for programmers and make migrating from one installation to another seamless.

Customers needing fewer than a thousand servers are being directed to either Microsoft’s current Azure offering or, if they want an on-site data center, Microsoft’s line of Hyper-V virtual machine servers.

My Windows Azure Platform Appliance (WAPA) Announced at Microsoft Worldwide Partner Conference 2010 post of 7/12/2010 (updated 7/13/2010) provides a one-stop shop for 15+ feet of mostly full-text reports about Microsoft’s WAPA, as well as its implementations by Dell, Fujitsu, HP, and eBay.

Because of its length, here are links to its sections:

- Independent Reporters/Analysts Ring In

- Microsoft WPC10 WAPA Agitprop

- Press Releases from Dell, Fujitsu, HP and eBay

- From the Competitors’ Camps

Return to section navigation list>

Cloud Security and Governance

Beth Schultz claims “New thinking and new tools are needed to securely run application workloads and store data in the public cloud” in her four-page The challenges of cloud security article of 7/13/2010 for NetworkWorld’s Data Center blog:

Some IT execs dismiss public cloud services as being too insecure to trust with critical or sensitive application workloads and data. But not Doug Menefee, CIO of Schumacher Group, an emergency management firm in Lafayette, La.

"Of course there's risk associated with using cloud services – there's risk associated with everything you do, whether you're walking down the street or deploying an e-mail solution out there. You have to weigh business benefits against those risks," he says.

Menefee practices what he preaches. Today 85% of Schumacher Group's business processes live inside the public cloud, he says.

The company uses cloud services from providers such as Eloqua, for e-mail marketing; Google Apps for e-mail and calendaring; Salesforce.com, for CRM software; Skillsoft, for learning management systems; and Workday, for human resources management software. "The list continues to go on for us," he says.

Yet Menefee says he doesn't consider himself a cloud advocate. Rather, he says he's simply open to the idea of cloud services and willing to do the cost-benefit and risk analysis.

To be sure, the heavy reliance on cloud services hasn't come without a security rethink, Menefee says. For one, the company needed to revamp its identity management processes. "We needed to think about how to navigate identity management and security between one application and another living out in the cloud," he says.

Identity as a start

Indeed, rethinking identity management often is the starting point for enterprises assessing cloud security, says Charles Kolodgy, research vice president of security products at IDC. They've got to consider authentication, administrative controls, where the data resides and who might have access to it, for example.

"These are similar to what enterprises do now, of course, but the difference is that it no longer owns the infrastructure and doesn't have complete access to the backend so it needs strong assurances," he adds.

Start-ups ServiceMesh and Symplified have addressed the need for strong cloud security assurances with offerings aimed at unifying access management. ServiceMesh offers the Agility Access, for use with its Agility Platform, which comprises cloud management, governance and security tools and modules, as well as the services managed under the platform.

Symplified offers Trust Cloud. Built on the Amazon Elastic Compute Cloud (EC2), Trust Cloud is a unified access management and federation platform that integrates and secures software and infrastructure cloud services, EC2 and Web 2.0 applications.

In its case, Schumacher Group uses the Trust Cloud predecessor, Symplified's SinglePoint, an identity, access management and federation service that gives users single sign-on access to multiple cloud applications. In addition, SinglePoint lets IT rapidly provision and de-provision access to all applications in one pass. It's looking into moving from the appliance approach to Trust Cloud, but isn't committed to the idea yet, Menefee says.


<Return to section navigation list>

Cloud Computing Events

Bruce Kyle’s Microsoft Offers Support for Moving to the Cloud post of 7/13/2010 to the US ISV Evangelism blog reported:

Today at the annual Microsoft Worldwide Partner Conference, Jon Roskill, Microsoft’s new channel chief, unveiled business strategies and resources to help partners of all types seize new opportunities in the cloud.

Tools for Your Business

Roskill detailed new tools, resources and sales support that Microsoft is delivering to help partners plan and build profitable businesses in the cloud and advance their cloud knowledge, including:

- New Microsoft Cloud Essentials and Microsoft Cloud Accelerate

- Business Builder for Cloud Services

- Cloud Profitability Modeler

Roskill shared new Microsoft Partner Network sales and marketing resources to help partners attract customers and close deals. Some key resources to get you started:

- Value of Earning a Microsoft Competency Guide

- 5 for 1 WPC Exam Pass for WPC attendees

- List Your Company on Microsoft Pinpoint

- Microsoft Partner Network Sales Specialist Accreditation

More information on Roskill’s announcements can be found at http://www.microsoftpartnernetwork.com/RedmondView/Permalink/Crossing-the-Chasm-Turning-Cloud-Opportunity-Into-Reality-for-Partners.

Consumer Products Connect to the Cloud

In addition to Roskill’s keynote address, Andy Lees, senior vice president of the Mobile Communications Business, and Brad Brooks, corporate vice president of Windows Consumer Marketing and Product Management, teamed up to show partners how new consumer products and services from Microsoft work across all the screens in consumers’ lives.

Showcasing Windows 7, Windows Live, Windows Phone, Xbox and more, they demonstrated how Microsoft platforms combine the power of immersive software with smart services accessed instantly via the cloud to deliver experiences that make lives easier, more entertaining and more fun.

During the keynote, Lees announced that Windows Phone 7 will be complemented in the cloud by a new Windows Phone Live site. The site will also be home to the new Find My Phone feature that allows people to find and manage a missing phone with map, ring, lock and erase capabilities — all for free. More information can be found at http://windowsteamblog.com/windows_phone/b/windowsphone.

Other Announcements

Additional news from the Worldwide Partner Conference today includes the following:

- Microsoft Services Launches Practice Accelerator to Make Training and Offering IP More Accessible to Partners: http://www.microsoft.com/presspass/events/wpc/docs/ServicesPartnerStrategyFS.docx

- Intuit Partner Platform Delivers Windows Phone 7 SDK: http://windowsteamblog.com/windows_phone/b/wpdev

Find Out More

More information about the Microsoft Worldwide Partner Conference is available on the virtual event site at http://www.digitalwpc.com. You can watch select keynote addresses live or on demand, register to view sessions, and learn more about the news and events coming out of the conference.

On Wednesday, former U.S. President Bill Clinton will share his unique and motivational insights and observations with the audience in a presentation titled “Embracing Our Common Humanity.” Also on Wednesday, Microsoft Chief Operating Officer Kevin Turner will take the stage to outline strategies and opportunities for partners to successfully compete in the cloud.

The four Build Your Business in the Cloud presentations of WPC10’s Microsoft Cloud Services page (for small, medium, enterprise and public sector organizations) are now live. Here’s the table of contents for each presentation:

- The Cloud Opportunity Executive Summary

- The Cloud Opportunity and Customer Benefits

- Capitalizing on the Cloud

- Making the Transition to Cloud Services

- Understanding the Impact of Cloud Services

- Making the Transition to Selling Cloud Services

- Calls to Action

- Calls to Action by Partner Type

- Additional Resources

- Opportunities in Other Customer Segments

<Return to section navigation list>

Other Cloud Computing Platforms and Services

James Hamilton contributes his High Performance Computing Hits the Cloud analysis of Amazon Cluster Compute Instances on 7/13/2010:

High Performance Computing (HPC) is defined by Wikipedia as:

High-performance computing (HPC) uses supercomputers and computer clusters to solve advanced computation problems. Today, computer systems approaching the teraflops-region are counted as HPC-computers. The term is most commonly associated with computing used for scientific research or computational science. A related term, high-performance technical computing (HPTC), generally refers to the engineering applications of cluster-based computing (such as computational fluid dynamics and the building and testing of virtual prototypes). Recently, HPC has come to be applied to business uses of cluster-based supercomputers, such as data warehouses, line-of-business (LOB) applications, and transaction processing.

Predictably, I use the broadest definition of HPC including data intensive computing and all forms of computational science. It still includes the old stalwart applications of weather modeling and weapons research but the broader definition takes HPC from a niche market to being a big part of the future of server-side computing. Multi-thousand node clusters, operating at teraflop rates, running simulations over massive data sets is how petroleum exploration is done, it’s how advanced financial instruments are (partly) understood, it’s how brick and mortar retailers do shelf space layout and optimize their logistics chains, it’s how automobile manufacturers design safer cars through crash simulation, it’s how semiconductor designs are simulated, it’s how aircraft engines are engineered to be more fuel efficient, and it’s how credit card companies measure fraud risk. Today, at the core of any well run business, is a massive data store; they all have that. The measure of a truly advanced company is the depth of analysis, simulation, and modeling run against this data store. HPC workloads are incredibly important today and the market segment is growing very quickly, driven by the plunging cost of computing and the business value of understanding large data sets deeply.

High Performance Computing is one of those important workloads that many argue can’t move to the cloud. Interestingly, HPC has had a long history of supposedly not being able to make a transition and then, subsequently, making that transition faster than even the most optimistic would have guessed possible. In the early days of HPC, most of the workloads were run on supercomputers. These are purpose built, scale-up servers made famous by Control Data Corporation and later by Cray Research with the Cray 1 broadly covered in the popular press. At that time, many argued that slow processors and poor performing interconnects would prevent computational clusters from ever being relevant for these workloads. Today more than ¾ of the fastest HPC systems in the world are based upon commodity compute clusters.

The HPC community uses the Top-500 list as the tracking mechanism for the fastest systems in the world. The goal of the Top-500 is to provide a scale and performance metric for a given HPC system. Like all benchmarks, it is a good thing in that it removes some of the manufacturer hype but benchmarks always fail to fully characterize all workloads. They abstract performance to a single or small set of metrics which is useful but this summary data can’t faithfully represent all possible workloads. Nonetheless, in many communities including HPC and Relational Database Management Systems, benchmarks have become quite important. The HPC world uses the Top-500 list which depends upon LINPACK as the benchmark.

Looking at the most recent Top-500 list published in June 2010, we see that Intel processors now dominate the list with 81.6% of the entries. It is very clear that the HPC move to commodity clusters has happened. The move that “couldn’t happen” is near complete and the vast majority of very high scale HPC systems are now based upon commodity processors.

What about HPC in the cloud, the next “it can’t happen” for HPC? In many respects, HPC workloads are a natural for the cloud in that they are incredibly high scale and consume vast machine resources. Some HPC workloads are incredibly spiky with mammoth clusters being needed for only short periods of time. For example semiconductor design simulation workloads are incredibly computationally intensive and need to be run at high-scale but only during some phases of the design cycle. Having more resources to throw at the problem can get a design completed more quickly and possibly allow just one more verification run to potentially save millions by avoiding a design flaw. Using cloud resources, this massive fleet of servers can change size over the course of the project or be freed up when they are no longer productively needed. Cloud computing is ideal for these workloads.
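The utilization argument in the preceding paragraph can be made concrete with a little arithmetic. The following is a minimal sketch, not anything from Hamilton's post: the phase names, durations and server counts are hypothetical values chosen only to illustrate a spiky workload, and the function names are likewise invented for this example.

```python
# Hypothetical per-phase demand for a chip-design project:
# (phase name, duration in weeks, servers needed).
# These figures are illustrative assumptions, not data from the article.
PHASES = [
    ("architecture", 8, 50),
    ("verification", 4, 2000),
    ("layout", 6, 200),
    ("final sign-off", 2, 3000),
]

HOURS_PER_WEEK = 7 * 24


def fixed_fleet_server_hours(phases):
    """Server-hours consumed when the fleet is sized for the peak phase
    and kept at that size for the whole project."""
    peak = max(servers for _, _, servers in phases)
    total_weeks = sum(weeks for _, weeks, _ in phases)
    return peak * total_weeks * HOURS_PER_WEEK


def elastic_server_hours(phases):
    """Server-hours consumed when the fleet is resized to match each phase."""
    return sum(weeks * servers * HOURS_PER_WEEK for _, weeks, servers in phases)


fixed = fixed_fleet_server_hours(PHASES)
elastic = elastic_server_hours(PHASES)
utilization = elastic / fixed  # share of the fixed fleet that does useful work

print(f"fixed fleet:   {fixed:,} server-hours")
print(f"elastic fleet: {elastic:,} server-hours")
print(f"fixed-fleet utilization: {utilization:.0%}")
```

Under these assumed numbers the peak-sized fleet would sit mostly idle outside the two short simulation-heavy phases, which is the gap that resizable cloud capacity is meant to close.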

Other HPC uses tend to be more steady state and yet these workloads still gain real economic advantage from the economies of extreme scale available in the cloud. See Cloud Computing Economies of Scale (talk, video) for more detail.

When I dig deeper into “steady state HPC workloads”, I often learn they are steady state as an existing constraint rather than by the fundamental nature of the work. Is there ever value in running one more simulation or one more modeling run a day? If someone on the team got a good idea or had a new approach to the problem, would it be worth being able to test that theory on real data without interrupting the production runs? More resources, if not accompanied by additional capital expense or long term utilization commitment, are often valuable even for what we typically call steady state workloads. For example, I’m guessing BP, as it battles the Gulf of Mexico oil spill, is running more oil well simulations and tidal flow analysis jobs than originally called for in their 2010 server capacity plan.

No workload is flat and unchanging. It’s just a product of a highly constrained model that can’t adapt quickly to changing workload quantities. It’s a model from the past.

There is no question there is value to being able to run HPC workloads in the cloud. What makes many folks view HPC as non-cloud hostable is that these workloads need high performance, direct access to underlying server hardware without the overhead of the virtualization common in most cloud computing offerings, and that many of these applications need very high bandwidth, low latency networking. A big step towards this goal was made earlier today when Amazon Web Services announced the EC2 Cluster Compute Instance type.

The cc1.4xlarge instance specification:

- 23GB of 1333MHz DDR3 Registered ECC

- 64GB/s main memory bandwidth

- 2 x Intel Xeon X5570 (quad-core Nehalem)

- 2 x 845GB 7200RPM HDDs

- 10Gbps Ethernet Network Interface
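As a rough illustration of what the specification above adds up to, the per-instance figures can be totaled for a cluster. This is only a sketch: the `InstanceSpec` class and `cluster_totals` helper are hypothetical names, the 8-node cluster size is an arbitrary assumption, and the core count covers physical cores only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceSpec:
    """Per-instance hardware figures, taken from the list above."""
    memory_gb: int
    sockets: int
    cores_per_socket: int
    disks: int
    disk_gb: int
    network_gbps: int

    @property
    def cores(self) -> int:
        # Physical cores only; hardware threads are not counted.
        return self.sockets * self.cores_per_socket

    @property
    def local_storage_gb(self) -> int:
        return self.disks * self.disk_gb

    @property
    def memory_per_core_gb(self) -> float:
        return self.memory_gb / self.cores


# cc1.4xlarge: 23GB RAM, 2 x quad-core X5570, 2 x 845GB HDDs, 10Gbps Ethernet.
CC1_4XLARGE = InstanceSpec(
    memory_gb=23, sockets=2, cores_per_socket=4,
    disks=2, disk_gb=845, network_gbps=10,
)


def cluster_totals(spec: InstanceSpec, nodes: int) -> dict:
    """Aggregate cores, memory and local disk for a cluster of identical nodes."""
    return {
        "cores": spec.cores * nodes,
        "memory_gb": spec.memory_gb * nodes,
        "local_storage_gb": spec.local_storage_gb * nodes,
    }


# The node count is a hypothetical example, not a figure from the announcement.
print(CC1_4XLARGE.cores, CC1_4XLARGE.local_storage_gb, CC1_4XLARGE.memory_per_core_gb)
print(cluster_totals(CC1_4XLARGE, nodes=8))
```

Each instance therefore works out to 8 physical cores, 1,690GB of local disk and a little under 3GB of memory per core.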