Windows Azure and Cloud Computing Posts for 6/25/2010+

Windows Azure, SQL Azure Database and related cloud computing topics now appear in this daily series.

Updates for 6/27/2010 are marked •

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated in June 2010 for the January 4, 2010 commercial release.

Azure Blob, Drive, Table and Queue Services

• Matias Woloski’s Poor man’s memcached for Windows Azure post of 6/26/2010 offers code to manage cache invalidation for blobs uploaded to Windows Azure storage from on-premises middleware:

Working with the Windows Azure guidance team is not only about writing; it’s also about helping customers and understanding real-life problems. This helps us validate and enrich the content.

One of the customers we are helping has a hybrid solution in Windows Azure where there is a backend running on-premises that pushes information to a frontend running on Windows Azure in ASP.NET. This information is stored in blob storage and then served from the web role. To avoid going to blob storage every time, though, they want to cache the information. But whenever you cache, you have to handle the expiration of the item you are caching, otherwise it never gets updated. That’s one option: cache it for X minutes. But the ideal would be to control the caching and, whenever the information gets stale, update the cache. This is easy if you use an ORM like NHibernate, or if you are using SqlCommands and SqlCacheDependency, or if you use something like memcached or the AppFabric velocity. However, it gets more difficult if you have other kinds of resources to cache and if the web application runs in a farm.

Using Windows Azure queues to invalidate ASP.NET Cache

Maybe you need something smaller. This is what I implemented: I just posted on git two classes that can be used in a Windows Azure Web Role running ASP.NET as a very basic distributed caching mechanism. The following picture shows how it works at a high level.

Usage

Using it requires two things:

- Start the monitor (that listens to the queue). Write this code either in the WebRole entry point or in the Global.asax Application_Start.

- Use the regular ASP.NET Cache API, providing the CloudQueueCacheDependency with a key.

…

Matias provides an illustrated example and concludes:

By using this technique you can have a distributed system where the backend makes an update on-premises, pushes something to the cloud and invalidates the cache by posting a message to a queue. I didn’t worry too much about being fault tolerant in the monitor simply because in the worst case the item stays alive in the cache and you can repost a message.
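The idea can be illustrated with a minimal, single-process Python sketch. It is not Matias's code: the class name QueueInvalidatedCache and its methods are hypothetical, and a standard-library queue stands in for a Windows Azure queue. How every instance in a web farm gets to see each invalidation message is outside the scope of the sketch.

```python
import queue
import threading


class QueueInvalidatedCache:
    """In-process cache whose entries are evicted when their key arrives on a queue."""

    def __init__(self, invalidation_queue):
        self._items = {}
        self._lock = threading.Lock()
        self._queue = invalidation_queue  # stand-in for a cloud queue
        self._stop = threading.Event()

    def get_or_add(self, key, loader):
        """Return the cached value, calling loader(key) only on a miss."""
        with self._lock:
            if key in self._items:
                return self._items[key]
        value = loader(key)  # e.g. read the blob from storage
        with self._lock:
            self._items[key] = value
        return value

    def start_monitor(self):
        """Start the background thread that listens to the queue."""
        thread = threading.Thread(target=self._monitor, daemon=True)
        thread.start()
        return thread

    def stop(self):
        self._stop.set()

    def _monitor(self):
        while not self._stop.is_set():
            try:
                key = self._queue.get(timeout=0.1)
            except queue.Empty:
                continue
            with self._lock:
                self._items.pop(key, None)  # evict; the next read reloads
            self._queue.task_done()
```

A caller would create the cache once at startup, call start_monitor(), and have the backend post the key of any updated item to the queue.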

Download the code from here

Jai Haridas reported Nagle’s Algorithm is Not Friendly towards Small Requests in a 6/25/2010 post to the Windows Azure Storage blog:

We had recommended in a forum post that turning off Nagling can greatly improve throughput for Table Inserts and Updates. We have also been seeing a lot of improvement when an application deals with small sized messages (http message size < 1460 bytes). So what is Nagling? Nagling is a TCP optimization on the sender and it is designed to reduce network congestion by coalescing small send requests into larger TCP segments. This is achieved by holding back small segments either until TCP has enough data to transmit a full sized segment or until all outstanding data has been acknowledged by the receiver.

However Nagling interacts poorly with TCP Delayed ACKs, which is a TCP optimization on the receiver. It is designed to reduce the number of acknowledgement packets by delaying the ACK for a short time. RFC 1122 states that the delay should not be more than 500ms and there should be an ACK for every second segment. Since the receiver delays the ACK and the sender waits for the ACK before transmitting a small segment, the data transfer can get stalled until the delayed ACK arrives.

Since a picture speaks a thousand words, we have provided a graph of Queue PUT latencies with Nagling ON and OFF. The test was written by Shuitao, an engineer on the Storage team. The test is run as a worker role accessing our storage account in the same geo location. It inserts messages which are 480 bytes in length. The results show that the average latency improves by more than 600% with Nagling turned off.

Figure 1 – Nagling ON (Default). The x-axis shows the queue requests over time for the time period sampled. The y-axis shows the end to end time from the client in milliseconds to process the queue request.

Figure 2 - Nagling OFF. The x-axis shows the queue requests over time for the time period sampled. The y-axis shows the end to end time from the client in milliseconds to process the queue request.

Jai continues with a detailed “Compare Nagling on/off using Wireshark” section and concludes:

Since Nagling is on by default, the way to turn this off is by resetting the flag in ServicePointManager. The ServicePointManager is a .NET class that allows you to manage ServicePoint where each ServicePoint provides HTTP connection management. ServicePointManager also allows you to control settings like maximum connections, Expect 100, and Nagle for all ServicePoint instances. Therefore, if you want to turn Nagle off for just tables or just queues or just blobs in your application, you need to turn it off for the specific ServicePoint object in the ServicePointManager. Here is a code example for turning Nagle off for just the Queue and Table ServicePoints, but not Blob:

    // cxnString = "DefaultEndpointsProtocol=http;AccountName=myaccount;AccountKey=mykey"
    CloudStorageAccount account = CloudStorageAccount.Parse(cxnString);
    ServicePoint tableServicePoint = ServicePointManager.FindServicePoint(account.TableEndpoint);
    tableServicePoint.UseNagleAlgorithm = false;
    ServicePoint queueServicePoint = ServicePointManager.FindServicePoint(account.QueueEndpoint);
    queueServicePoint.UseNagleAlgorithm = false;

If you instead want to set it for all of the service points on a given role (all blob, table and queue requests) you can just reset it at the very start of your application process by executing the following:

    // This sets it globally for all new ServicePoints
    ServicePointManager.UseNagleAlgorithm = false;

Note, this has to be set for the role (process) before any calls to blob, table and queue are done for the setting to be applied. In addition, this setting will only be applied to the process that executes it (it does not affect other processes running inside the same VM instance).

Turning Nagle off should be considered for Table and Queue (and any protocol that deals with small sized messages). For large packet segments, Nagling does not have an impact since the segments will form a full packet and will not be withheld. But as always, we suggest that you test it for your data before turning Nagle off in production.
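Outside .NET, the same switch is usually exposed as the TCP_NODELAY socket option. The following Python sketch is not from Jai's post; the function name is hypothetical and it only shows how a client working directly with sockets would disable Nagle's algorithm on one connection before sending small requests.

```python
import socket


def make_low_latency_socket():
    """Create a TCP socket with Nagle's algorithm disabled (TCP_NODELAY set)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    # With TCP_NODELAY set, small writes are sent immediately instead of being
    # held back until outstanding data has been acknowledged.
    sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
    return sock
```

As with the ServicePoint setting, the option applies only to the socket it is set on, so it is worth measuring with representative message sizes before relying on it.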

Jason Gilmore presented a 00:05:09 Video: Storing Blobs in Microsoft Azure with the Zend Framework on 6/25/2010:

Cloud computing offers a scalable, fault-tolerant storage and computing solution, which promises to significantly reduce the bandwidth, maintenance and startup costs borne by businesses of all sizes. Microsoft's Windows Azure service provides not only .NET developers but users of other platforms the ability to take advantage of cloud computing solutions.

One increasingly common use of the cloud is storage for BLOBs (binary large objects) such as videos, applications, and even website images. Businesses use cloud storage to streamline operations. In his five-minute Internet.com video, Jason Gilmore uses the Zend Framework's Zend_Service_WindowsAzure component to demonstrate the fundamental concepts behind storing BLOBs within the Windows Azure cloud.

By the video's conclusion, you'll understand how to create BLOB storage containers, upload BLOBs to your Azure account, set access privileges, retrieve BLOBs from Azure, and delete BLOBs.

See Ryan Dunn and Steve Marx presented Cloud Cover Episode 16 - Big Compute with Full Monte, a 00:50:54 video segment about high-performance-computing (HPC) with Windows Azure, on 6/25/2010 in the Live Windows Azure Apps, APIs, Tools and Test Harnesses section below, which includes “how to effectively use queues in Windows Azure.”

See Bill Zack describes the pharmaceutical industry’s use of Azure and High Scale Compute in this 6/25/2010 post in the Live Windows Azure Apps, APIs, Tools and Test Harnesses section below, which describes the use of Windows Azure queues in HPC.

SQL Azure Database, Codename “Dallas” and OData

• Zane Adam is now GM Marketing and Product Management, Azure and Integration Services at Microsoft, responsible for management and marketing for MSFT public cloud services including SQL Azure, AppFabric, Azure AppFabric and codename "Dallas."

From June 2007 to May 2010, he was Product and Marketing Manager for Virtualization, Cloud and Systems Management, responsible for driving World Wide marketing and product management for Microsoft virtualization efforts from desktop to the datacenter including cloud computing and systems management.

Follow his new blog here and read his LinkedIn profile here. Thanks to Dave Robinson for the heads-up.

David Robinson (remember him?) reported SQL Azure SU3 is Now Live and Available in 6 Datacenters Worldwide three days early in his 6/25/2010 post to the SQL Azure Team blog:

We have just completed the rollout of SQL Azure Service Update 3, and some exciting new features we have been promising for the past couple of months are now available.

50 GB Database Support – You can now store even more data in a single SQL Azure database as the database size has been increased to 50 GB. This will provide your applications increased scalability. For detailed pricing information on SQL Azure and how to create or modify your database to take advantage of the new size, see this blog post.

Spatial Data Support - SQL Azure now offers support for the Geography and Geometry types as well as spatial query support via T-SQL. This is a significant feature and now opens the Windows Azure Platform to support spatial and location aware applications.

HierarchyID Data Type Support – The HierarchyID is a variable length system data type which provides you the ability to represent tree like structures in the database. We will be following up in the coming days with a blog post on ways to use this data type in your applications.

In addition to these new features, SQL Azure is now also available in two new data centers providing your applications even more flexibility and a wider global reach. These two new data centers are located in the East Asia and the West Europe Region.

As always, your feedback matters. Please keep providing us feedback and we will do our best to prioritize the features you want.

Haven’t heard much from Dave since Wayne Walter Berry began blogging for the SQL Azure team.

Dinakar Nethi and Niraj Nagrani listed Microsoft’s current data center locations in their updated Comparing SQL Server with SQL Azure wiki page of 6/18/2010:

Apparently, Microsoft has downgraded the Quincy, WA facility as the result of an argument with Washington legislators over sales tax payments on data center hardware.

See Robert Boyer speculates that Microsoft intends to build a new data center in his Sources: Computer data storage center may be headed to county story of 6/25/2010 for the Burlington, NC Times-News in the Windows Azure Infrastructure section below.

Wayne Walter Berry continues his blog series with SQL Azure Horizontal Partitioning: Part 2 of 6/25/2010:

SQL Azure currently supports 1 GB and 10 GB databases, and on June 28th, 2010 there will be 50 GB support. If you want to store larger amounts of data in SQL Azure you can divide your tables across multiple SQL Azure databases. This article will discuss how to use a data access layer to join two tables on different SQL Azure databases using LINQ. This technique horizontal[ly] partitions your data in SQL Azure.

In our version of horizontal partitioning, every table exists in all the databases in the partition set. We are using a hash-based partitioning scheme in this example, hashing on the primary key of the row. The middle layer determines which database to write each row to based on the primary key of the data being written. This allows us to evenly divide the data across all the databases, regardless of individual table growth. The data access layer knows how to find the data based on the primary key, and combines the results to return one result set to the caller.

This is considered hash-based partitioning. There is another style of horizontal partitioning that is range based. If you are using integers as primary keys you can implement your middle layer to fill databases in consecutive order, as the primary key grows. You can read more about the different types of partitioning here.
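The two routing styles can be contrasted in a short Python sketch. This is an illustration, not Wayne's C# code: the database names are hypothetical, and taking the key modulo the number of databases is an assumed hash function chosen for simplicity.

```python
import uuid

# Hypothetical partition set; the names are placeholders.
DATABASES = ["accounts_db0", "accounts_db1", "accounts_db2"]


def hash_partition(primary_key: uuid.UUID, databases=DATABASES) -> str:
    """Hash-based routing: spread uniqueidentifier keys evenly across databases."""
    return databases[primary_key.int % len(databases)]


def range_partition(primary_key: int, rows_per_database: int, databases=DATABASES) -> str:
    """Range-based routing: fill databases in consecutive order as integer keys grow."""
    if primary_key < 0:
        raise ValueError("primary key must be non-negative")
    index = primary_key // rows_per_database
    if index >= len(databases):
        raise ValueError("key falls beyond the last database in the partition set")
    return databases[index]
```

Because the same key always maps to the same database, a lookup by primary key needs to touch only one database, while a query on any other column has to consult all of them.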

Performance Gain

There is also a performance gain to be obtained from partitioning your database. Since SQL Azure spreads your databases across different physical machines, you can get more CPU and RAM resources by partitioning your workload. For example, if you partition your database across ten 1 GB SQL Azure databases you get 10X the CPU and memory resources. There is a case study (found here) by TicketDirect, who partitioned their workload across hundreds of SQL Azure databases during peak load.

Considerations

When horizontally partitioning your database you lose some of the features of having all the data in a single database. Some considerations when using this technique include:

- Foreign keys across databases are not supported. In other words, a primary key in a lookup table in one database cannot be referenced by a foreign key in a table on another database. This is a similar restriction to SQL Server’s cross database support for foreign keys.

- You cannot have transactions that span two databases, even if you are using Microsoft Distributed Transaction Manager on the client side. This means that you cannot roll back an insert on one database if an insert on another database fails. This restriction can be mitigated through client-side coding: you need to catch exceptions and execute “undo” scripts against the successfully completed statements.

- All the primary keys need to be uniqueidentifier. This allows us to guarantee the uniqueness of the primary key in the middle layer.

- The example code shown below doesn’t allow you to dynamically change the number of databases that are in the partition set. The number of databases is hard coded in the SqlAzureHelper class in the ConnectionStringNames property.

- Importing data from SQL Server to a horizontally partitioned database requires that you move each row one at a time emulating the hashing of the primary keys like the code below.
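The “undo” mitigation mentioned in the list above can be sketched as follows. This is a minimal Python illustration that uses two in-memory SQLite databases as stand-ins for two SQL Azure databases; the table names and the function name are hypothetical.

```python
import sqlite3


def insert_on_both(db_a, db_b, account_id, name):
    """Insert into two independent databases, undoing the first insert if the second fails."""
    db_a.execute("INSERT INTO accounts (id, name) VALUES (?, ?)", (account_id, name))
    db_a.commit()  # committed: a later rollback on db_a cannot undo this
    try:
        db_b.execute("INSERT INTO audit (account_id) VALUES (?)", (account_id,))
        db_b.commit()
    except sqlite3.Error:
        db_b.rollback()
        # Compensating "undo" statement against the database that already succeeded.
        db_a.execute("DELETE FROM accounts WHERE id = ?", (account_id,))
        db_a.commit()
        raise
```

Unlike a real distributed transaction, the undo statement can itself fail, and other readers may briefly see the first insert, so this pattern reduces the inconsistency window rather than eliminating it.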

The Code

The code will show you how to make multiple simultaneous requests to SQL Azure and combine the results to take advantage of those resources. Before you read this post you should familiarize yourself with our previous article about using Uniqueidentifier and Clustered Indexes and Connections and SQL Azure. In order to accomplish horizontal partitioning, we are using the same SQLAzureHelper class as we used in the vertical partitioning blog post.

The code has these goals:

- Use forward only cursors to maximize performance.

- Combine multiple responses into a complete response using Linq.

- Only access one database for primary key requests.

- Evenly divide row data across all the databases.
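The goal of combining multiple responses into one can be pictured with a small Python sketch. It is an assumption-laden stand-in for the LINQ-based approach: each partition is represented by an in-memory list of rows rather than a SQL Azure database, and the function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def query_partition(rows, predicate):
    """Stand-in for running the same query against one database in the partition set."""
    return [row for row in rows if predicate(row)]


def query_all_partitions(partitions, predicate, sort_key):
    """Query every partition concurrently and merge the responses into one result set."""
    with ThreadPoolExecutor(max_workers=max(1, len(partitions))) as pool:
        responses = list(pool.map(lambda rows: query_partition(rows, predicate), partitions))
    merged = [row for response in responses for row in response]
    return sorted(merged, key=sort_key)
```

Sorting after the merge matters because each partition returns its rows independently, so any ordering has to be re-established on the combined set.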

Wayne continues with source code for Accounts Table, Partitioning for Primary Key, Fetching a Single Row, and Inserting a Single Row. He concludes:

In part three, I will show how to fetch a result set that is merged from multiple responses and how to insert multiple rows into the partitioned tables, including some interesting multi-threaded aspects of calling many SQL Azure databases at the same time. Do you have questions, concerns, comments? Post them below and we will try to address them.

SQL Azure Helper code is here: SqlAzureHelper.zip

Wayne Walter Berry (who else?) explains Accessing SQL Azure from WinForms in this 6/25/2010 post:

There are a lot of articles and discussion about calling SQL Azure from Windows Azure; however, I am personally fascinated with calling SQL Azure from the Windows’ desktop. This article will talk about some of the considerations of calling SQL Azure from an application running on a user’s local computer, and best practices around security.

The Benefits

Clearly, the biggest benefit is that you can read and write relational data to a remote database using ADO.NET without having to open access to an on-premises database to the Internet. Any desktop computer can access SQL Azure as long as it has Internet access and port 1433 open for outbound connections. To deploy such a system in your datacenter you would need to deal with installing and maintaining redundant copies of SQL Server, opening up firewalls and VPN permissions (to secure the database), and installing VPN software on the client machine.

That said, it is up to the architecture of the desktop software to maintain a secure environment to the SQL Azure server and protect the access to the data on SQL Azure. Access to SQL Azure is controlled via two mechanisms: a login and password, and firewall settings.

Passwords

Best practices for dealing with logins and passwords on the desktop dictate:

- The login and password to SQL Azure should never be stored in the code running on the desktop.

- The login and password shouldn’t be stored on the user’s hard drive.

- Every desktop user should have their own login and password to SQL Azure.

It is very tempting to hard-code a global login and password in your code, giving every user of the desktop software the same access permissions to SQL Azure. This is not a good practice. Managed code is very easy to decompile and it is very easy to read the hard-coded logins and passwords. Even fully compiled code like C++ provides only a little more protection. In any language, do not hard-code your logins and passwords in your code where users have access to your .dlls and .exes.

Though it is possible to safely store your login and password on your hard drive by hashing it with the windows login token to encrypt it, the code and the security review of the code make it prohibitive. Instead, it is best not to store the login and password at all. The best practice would be to prompt the desktop user for the login and password every time they use your application.

Every desktop user should have their own login and password to SQL Azure. This blog post discusses how to add additional logins and passwords beyond the administrator account. This allows you to restrict access on a user-by-user basis at any time. You should not distribute the administrator login and password globally to all users.
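Prompting at run time instead of storing credentials might look like the following Python sketch. The function names are hypothetical, the connection string follows the common ADO.NET layout for SQL Azure (treat the exact keywords as an assumption to check against your driver), and passwords containing semicolons would need extra quoting that the sketch omits.

```python
import getpass


def build_connection_string(server, database, login, password):
    """Assemble an ADO.NET-style SQL Azure connection string in memory only."""
    return (
        f"Server=tcp:{server}.database.windows.net,1433;"
        f"Database={database};"
        f"User ID={login}@{server};"
        f"Password={password};"
        "Encrypt=True;"
    )


def prompt_for_connection_string(server, database,
                                 ask_login=input, ask_password=getpass.getpass):
    """Ask the desktop user for their own login and password on every run."""
    login = ask_login("SQL Azure login: ")
    password = ask_password("Password: ")  # getpass does not echo the input
    return build_connection_string(server, database, login, password)
```

Nothing is written to disk or embedded in the program, so decompiling the application reveals no credentials.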

Firewall Settings

SQL Azure maintains a firewall for the SQL Azure servers, preventing anyone from connecting to your server if you do not give their IP address permissions. Permissions are granted by client IP address. Any user’s desktop application that connects to SQL Azure would need to have the SQL Azure firewall open for them in order to connect. The client IP would be the IP address of the desktop machine as seen by the Internet. In order to determine the client IP address, the user could connect to http://www.whatsmyip.org/ with their web browser and report the IP shown to their SQL Azure administrator.
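An administrator deciding whether a reported address is already covered could use a check like the Python sketch below. It assumes firewall rules are kept as inclusive start/end IPv4 ranges; the function name and the sample addresses (taken from documentation-reserved ranges) are hypothetical.

```python
import ipaddress


def ip_allowed(client_ip, rules):
    """Return True if client_ip falls inside any inclusive (start, end) rule."""
    ip = ipaddress.ip_address(client_ip)
    return any(
        ipaddress.ip_address(start) <= ip <= ipaddress.ip_address(end)
        for start, end in rules
    )
```

For example, with a single rule of ("203.0.113.10", "203.0.113.20"), a user reporting 203.0.113.15 is already covered, while one reporting 198.51.100.1 needs a new rule.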

Dinakar Nethi authored a downloadable Troubleshooting and Optimizing Queries with SQL Azure seven-page white paper in .docx format on 6/25/2010:

Summary: SQL Azure Database is a cloud-based relational database service from Microsoft. SQL Azure provides Web-facing database functionality as a utility service. Cloud-based database solutions such as SQL Azure can provide many benefits, including rapid provisioning, cost-effective scalability, high availability, and reduced management overhead. This paper provides guidelines on the Dynamic Management Views that are available in SQL Azure, and how they can be used for troubleshooting purposes. [Emphasis added.]

ChrisW reported for LiveSide.net that the Windows Live Messenger Connect beta released on 6/25/2010 with support for OData and OAuthWRAP authentication:

Today, Microsoft announced the availability of the Windows Live Messenger Connect beta. Messenger Connect is a single API that enables developers to integrate social experiences into their web and rich-client applications using the Windows Live services. It consists of a REST API service along with a set of libraries for .NET, Silverlight, and JavaScript. Furthermore, Messenger Connect allows applications to provide RSS 2.0 or ATOM feeds to pull social updates from your site into Windows Live. Although participation in the Messenger Connect beta is currently only by invitation, you can apply for the beta here.

As Messenger Connect is a single API for the whole range of Windows Live services, it replaces previous APIs like the Windows Live ID SDKs (Client Authentication, Delegated Authentication, and Web Authentication), the Windows Live Contacts API, and the Windows Live Messenger Web Toolkit. Although Messenger Connect replaces the previous APIs and SDKs, it still offers all of their functionality.

Authentication

Applications previously had to use one of the different Windows Live ID SDKs to perform authentication, depending on the scenario. Instead of having different SDKs to perform authentication, Messenger Connect incorporates OAuth WRAP as a single authentication mechanism for all types of applications. While OAuth WRAP is, in concept, similar to Windows Live ID Delegated Authentication, it does not require any server-side cryptographic signing. Furthermore, it provides applications with a unique static identifier for a user, similar to Windows Live ID Web Authentication. This allows developers to incorporate authentication into their applications without having to implement the whole process of authentication and user profiles by themselves. Applications previously incorporating Windows Live ID Client Authentication have to show a web browser component that exposes the same authentication page as web applications.

User data

Applications can access the Windows Live user data via the REST API service in a consistent way and use it to retrieve the data in multiple formats including OData, AtomPub, JSON, RSS, and XML. This is similar to the REST service found previously in the Live Framework CTP. The major difference, however, is that the REST API service now provides access to Activities, Calendars, SkyDrive Office Documents, and SkyDrive Photos in addition to Contacts and Profiles. Although the Live Framework included a Mesh component and even an equivalent Sync component was shown at PDC 2009, unfortunately no Sync component is included in this beta version. [Emphasis added.]

The REST API service provides applications access to the following user data:

- Profiles – Provides access to a user’s profile data stored within Windows Live. Applications can access the profiles of the authenticated user, his friends, as well as of any other user that gave consent to the application before. While applications can read all of the user’s profiles, applications can only update a user’s personal status message.

- Activities – Provides access to a user’s Messenger social activity feed (previously known as the What’s New feed). Applications can not only read and write activities to a user’s activity feed, but also read activities performed by their contacts.

- Contacts – Provides access to a user’s social graph by allowing reading and writing to a user’s Windows Live address book. Furthermore, the address book is available in the Portable Contacts format as well. Like the Windows Live Contacts API, Messenger Connect provides access to contact categories as well.

- Calendars – Provides full read and write access to the user’s calendars, so that applications can help users manage their time or add application specific events to the user’s calendar.

- SkyDrive Office Documents – Provides access to read from and write to Office documents stored on a user’s Windows Live SkyDrive.

- SkyDrive Photos – Provides access to read from and write to albums, photos, videos, and people tags stored on a user’s Windows Live SkyDrive.

Instant-Messaging

Messenger Connect allows rich web applications to incorporate Windows Live Messenger functionality, just as the Windows Live Messenger Web Toolkit (MWT) did before. It provides a JavaScript interface for instant messaging, full access to contacts management, presence, and conversation management. Developers can either incorporate general UI Controls that can be adapted through CSS and JavaScript, or use the JavaScript API to integrate the Messenger functionality deeply within their applications.

In addition to the MWT features, Messenger Connect also provides a Chat control that allows web sites to incorporate (the often requested) chat room functionality. This control allows users to chat with other users currently visiting the same web application. Another great improvement is the introduction of the so-called Messenger Context, which provides a simpler interface for common Messenger functionality like detecting a contact’s online presence or a user modifying a personal status message.

Web Activity Feeds

Web Activity Feeds allow applications to host RSS 2.0 or ATOM feeds with social updates from users on that site. Windows Live automatically pulls down these feeds and incorporates them in the Messenger social feed.

Programming Libraries

Libraries for .NET, Silverlight, and JavaScript are provided. The .NET library leverages the WCF Data Services client library for handling the OData data. Any application using .NET version 3.5 or later can incorporate this library. The Silverlight library is built in a similar way as the .NET library but is only compatible with Silverlight version 4.0 or later.

The JavaScript library can be used by any in-browser application, but does not provide access to all of the services provided by the REST API service. In contrast, only the JavaScript library supports the Messenger functionality.

Conclusion

With this major overhaul of the Live Services APIs, we are thrilled with the options developers now have to incorporate Windows Live services into their applications. It is good to see that the set of available services finally expanded, but unfortunately, there is no Sync component and no support for groups. Many people requested an API for SkyDrive in the past, and it is good to see that developers can finally access photos and documents on SkyDrive.

In the next couple of weeks we are going to publish a series of posts on this topic, so stay tuned for more!

More information

Check out the revamped Windows Live Developer Center for more information on Messenger Connect. If you want to explore the capabilities of the Messenger Connect UI Controls or REST API service immediately, and without writing any code, you can check out the Windows Live Interactive SDK. You can download the SDK bits here and additional samples are found here (including PHP samples!). If you have any questions regarding Messenger Connect, you can ask them in the Windows Live Messenger Connect forum.

Jonathan Carter reported Netflix OData Service Updates in this 6/25/2010 post:

The Netflix OData Service (http://odata.netflix.com/Catalog) was updated this evening with some changes that might affect those of you that have written applications against it. All of the changes were additive (i.e. no properties were renamed or removed from the model), so it should be pretty simple to update your consumer code (famous last words).

There were three main changes made:

- The server-side paging for each of the collections has been increased to 500. The previous limit of 20 was put in place back in the prototype stage of the service and somehow it survived. We typically recommend 500 as a good number for balancing the needs of consumers while maintaining the performance/load of the server.

  - The only reason this might be an issue for consumers is if you were requesting a large collection (e.g. Titles), relying on the server-driven paging size of 20, and you’re now being handed 500 titles at once all of a sudden. If that much data is more than you need (or want) at once, then you can just do client-side paging (via $top and $skip) to control the amount of data you get back per request.

- The Title type has a new property called ShortSynopsis. As you can imagine, this property is a shortened version of the existing Synopsis property. The other useful thing about ShortSynopsis is that it doesn’t include any HTML (unlike Synopsis) in its contents, so if you’re developing a client UI that doesn’t want to parse HTML content (or can’t, as is the case with SL in-browser), ShortSynopsis is your new solution.

- Each delivery format of a Title (Instant, DVD, Blu-Ray) now has its own distinct Rating and Runtime properties (e.g. Dvd/Rating, Instant/Runtime, etc.). This allows each format to differ in its MPAA rating and its overall runtime, which will happen in practice, and so that extra flexibility was needed in the data.

  - In order to maintain backwards compatibility, and keep the ease-of-use of the data, we wanted to keep the Rating and Runtime properties directly off a Title. Since a title can have multiple formats, and each of those formats can have different ratings and runtimes, we obviously had to decide which of the three takes precedence in representing the “canonical” format. Basically, the Title’s “root” Rating and Runtime values will come from the first format that is available, in the following order: Blu-Ray, DVD, Instant. Therefore, if a Title is available in Blu-Ray, its Rating property will always be equal to its BluRay/Rating property (the same goes for the runtime). If a Title isn’t available in Blu-Ray but it is in DVD, the DVD values will be used, and so on. If you don’t like this behavior, you can use the individual specific rating and runtime values.
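Two points from the list above lend themselves to a short Python sketch: client-side paging with $top and $skip, and the Blu-Ray, DVD, Instant precedence for the root Rating. The function names are hypothetical, the code only builds request URLs rather than calling the service, and representing a Title as a nested dictionary with a missing or empty entry for an unavailable format is an assumption made for illustration.

```python
from urllib.parse import urlencode

SERVICE_ROOT = "http://odata.netflix.com/Catalog"  # service URL given in the post


def page_url(collection, page, page_size):
    """Build the URL for one zero-based client-side page using $top and $skip."""
    query = urlencode({"$top": page_size, "$skip": page * page_size}, safe="$")
    return f"{SERVICE_ROOT}/{collection}?{query}"


def canonical_rating(title):
    """Return the Rating of the first available format: Blu-Ray, then DVD, then Instant."""
    for delivery_format in ("BluRay", "Dvd", "Instant"):
        details = title.get(delivery_format)
        if details and details.get("Rating"):
            return details["Rating"]
    return None
```

Requesting pages of 20 this way reproduces the old server-driven page size regardless of the new 500-item limit.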

If you are consuming the service from a dynamic language (e.g. JavaScript, Ruby), you should have nothing to worry about here. But if you’re consuming the service from a static language (e.g. C#, Java), you will most likely need to regenerate your proxy classes. I say “most likely” because every client differs in how it treats missing properties. The .NET client for instance, by default, will throw an exception if the server returns a property that doesn’t exist on the client-side proxy type. You can get around this by setting the DataServiceContext.IgnoreMissingProperties property to true, or just re-generate the proxy. [Emphasis added.]
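The difference between the strict and tolerant behaviors can be mimicked in a few lines of Python. This sketch is not the WCF Data Services client; the Title proxy class, the from_payload function and its flag are hypothetical and only model the behavior Jonathan describes.

```python
from dataclasses import dataclass, fields


@dataclass
class Title:
    """Client-side proxy generated before ShortSynopsis was added to the service."""
    Name: str
    Synopsis: str


def from_payload(cls, payload, ignore_missing_properties=False):
    """Materialize a proxy object, optionally dropping properties it does not declare."""
    known = {f.name for f in fields(cls)}
    unknown = sorted(set(payload) - known)
    if unknown and not ignore_missing_properties:
        raise ValueError(f"proxy type {cls.__name__} has no properties {unknown}")
    return cls(**{name: value for name, value in payload.items() if name in known})
```

With the flag left at its default, a payload that carries the new ShortSynopsis property fails to materialize; with the flag set, the extra property is silently dropped, which keeps old clients working but hides the new data until the proxy is regenerated.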

Jeff Atwood announced Stack Exchange Data Explorer Open Sourced on 6/25/2010:

As promised, the Stack Exchange Data Explorer — a web-based tool for querying our creative commons data — is now open source!

This is, of course, the project that our newest Valued Associate, Sam Saffron, has been working so hard on over the last 6-8 weeks.

The project is hosted at Google Code in a Mercurial repository: http://code.google.com/p/stack-exchange-data-explorer/

The SEDE is built using the very same software stack we use on Stack Overflow:

- jQuery

- .NET 4.0 C#

- Visual Studio 2010

- SQL Server

- IIS7

You can get started using the completely free Visual Studio 2010 Express Edition.

Check out the readme.txt for additional details, or browse the source through Google Code’s web UI.

It was always our hope that the SEDE could be used as a freely embeddable web tool to teach SQL with a sample dataset — and now, the code itself is available to modify, improve, and learn from as well.

Miguel de Icaza tweeted on 6/26/2010:

Brilliant, StackOverflow can now be navigated/queried with OData: http://bit.ly/cZ6q3P

Megan Keller interviewed Quest Software’s Brent Ozar and Kevin Kline on the Future of Cloud Computing on 6/24/2010 for a SQL Server Magazine article:

Despite the fact that few people in the SQL Server community appear to be using, or even testing, cloud computing in their environments, it was a main focus of the TechEd 2010 keynote, and the topic seemed to pop up in many of the discussions I had with readers and authors at the conference. So I met up with Kevin Kline, strategy manager for SQL Server at Quest Software, and Brent Ozar, a SQL Server DBA expert for Quest Software, to hear what they had to say about current cloud adoption, how cloud computing will affect SQL Server DBAs and developers, and their predictions on the future of cloud computing. (To see what Kevin and Brent had to say about other topics, such as virtualization, SQL Server 2008 R2, and NoSQL, watch for the rest of this series on the Database Administration blog.)

Megan Keller: Another big trend that we are hearing a lot about at this conference is cloud computing. People aren’t necessarily adopting, but they’re talking about adopting. They’re talking about the requirements—what do they need—and their concerns. Do you know anyone who is using cloud computing?

Brent Ozar: It’s one of those deals where I think people saw how rapidly virtualization got adopted when the bugs were ironed out. It really caught everybody by surprise when originally the hard-core shops went in, adopted it, and went under extreme pain when they chose to adopt those things early, but now everybody’s doing it and they’re seeing extreme cost benefits improvements there. Cloud, I think people look at that and say, “I bet that this is the next virtualization and it’s not ready yet, but I want to be one of the people who is there when it is ready.

If it saves as much money as virtualization did, I want to be right there because I’m under so much cost pressure.” I don’t know anybody who’s moved stuff from on-premises to the cloud, but I know a lot of developers who say, “I want to build something, but I don’t have an enterprise behind me, what’s the easiest way to get started?” Zynga Games, the guys who do Farmville, just published a big white paper on how they were able to scale with the cloud. They did everything through Amazon EC2, and they did it in the very beginning of their deployment. They said “I bet we’re going to have 200,000 users a week. We should probably build something that can scale quickly.” Well as it turned out, they were adding 10 million customers a week and they said, “Thank god we made the decision to go with the cloud initially.” And it’s the developers that have no idea what they are going to build, who have no money behind them, who are able to make those kinds of decisions.

But the people that we usually talk to at shows like TechEd aren’t really the target market yet. I think Microsoft is making brilliant decisions with Visual Studio to say, “We’re going to put it in your hands. Slap out the credit card whenever you’re ready to scale, we’ve got the tools right here at your disposal if you want to go play with something like that.”

Keller: Do you think many people just don’t yet understand the potential use cases for the cloud?

Ozar: No, DBAs will never like it. It’s much like virtualization—DBAs never wanted virtualization. They got it shoved down their throats by CIOs. This is the same thing, except it’s going to be shoved down their throats by developers. The developers will say, “If you don’t want to do this, I’m going to take my credit card and just go build the thing on my own,” just like CIOs said, “I don’t care whether you want to do this, I’m going to start with my file and print servers, and by the time it’s home, we’re going to use it here whether you like it or not.” DBAs were kind of strong armed into it.

Kevin Kline: I think there is an element to it as well that it’s still not mature enough to assuage the fears of the DBA. In many situations the DBA is the risk mitigator; their job is to minimize risk. And there is an awful lot of risk with cloud right now if you were to put production data on the cloud. The cloud is not really that secure; it’s not secure enough. In addition, if you’re a publicly traded company, it’s very difficult to keep auditing requirements in alignment so that all of the auditors would be satisfied.

Right now the way the cloud is architected, at least from Microsoft, is that it’s really not tuned toward high levels of transaction processing. The disk space that you get has been limited, and it hasn’t been a factor of “Well, we just don’t have enough disks.” They’ve got lots of disks; it’s that the disks are laid out for volume, not for high transaction performance. So, if performance is part of your issue, too, of course you could scale out, but you would have to acquire and pay for that many more resources.

There are certain aspects of what the DBAs are risk-averse to that are on the radar of Microsoft, and they know that they are going to solve that soon, but not today. So once they have that solved, they will have an answer for those DBAs who are saying “Whoa, let’s put the brakes on this for a little bit.” So it’s coming.

And if a lot of DBAs are like “I’m not sure if I want to do that or not,” as Brent said, they will have to. You cannot get away from the ROI of this situation. The value proposition is unassailable. It’s on other issues that DBAs are currently able to push back on; security, transactional consistency. So once those are solved—and it will just be around the corner—then it’s going to be happening very rapidly. Virtualization has already proved that case for us, and as Brent said, people are lining up. They’re like, “We’ve learned our lessons from history. We’re ready to go with the next round as soon as it’s ready.” …

Megan continues the Q&A.

<Return to section navigation list>

AppFabric: Access Control and Service Bus, Workflow

• See Zane Adam is now GM Marketing and Product Management, Azure and Integration Services at Microsoft, responsible for management and marketing for MSFT public cloud services including SQL Azure, AppFabric, Azure AppFabric and codename "Dallas" in the SQL Azure Database, Codename “Dallas” and OData section above.

The Geneva Team Blog’s Using Federation Metadata to establish a Relying Party Trust in AD FS 2.0 post of 6/25/2010 is an extraordinarily detailed and profusely illustrated tutorial for enabling claims-based identity federation:

Trust relationships are of course the sine qua non of AD FS 2.0. Relying Party Trusts or Claims Provider Trusts are necessary before AD FS 2.0 can provide benefit to any organization. That said, the establishment and maintenance of these relationships can be a time consuming task. Fortunately there are methods available that make this job significantly easier. AD FS provides three methods for creating Relying Party Trusts and Claims Provider Trusts. Manual entry of the necessary information is the most familiar method, but also the most time consuming and difficult to maintain. Additionally a trust can be created by importing "federation metadata", that is, data that describes a Relying Party or Claims Provider and allows for easy creation of the corresponding trust. A federation metadata document is an XML document that conforms to the WS-Federation 1.2 schema. Federation metadata may be imported from a file, or the partner may make the data available via https. The latter method provides the most straightforward method for creating a partnership and greatly simplifies any ongoing maintenance that may be required.
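As a rough illustration of why metadata import saves effort, the sketch below pulls a relying party identifier, a WS-Federation passive endpoint and an encryption certificate out of a metadata document. The XML is a trimmed, hand-written sample rather than output from AD FS; the element names and namespaces follow the SAML 2.0 metadata and WS-Federation 1.2 schemas as I understand them, and the host name and certificate value are placeholders. Check the details against a real document before relying on them.

```python
import xml.etree.ElementTree as ET

NS = {
    "md": "urn:oasis:names:tc:SAML:2.0:metadata",
    "fed": "http://docs.oasis-open.org/wsfed/federation/200706",
    "wsa": "http://www.w3.org/2005/08/addressing",
    "ds": "http://www.w3.org/2000/09/xmldsig#",
}

# Hand-written sample; the host name and certificate text are placeholders.
SAMPLE = """
<EntityDescriptor xmlns="urn:oasis:names:tc:SAML:2.0:metadata"
    xmlns:fed="http://docs.oasis-open.org/wsfed/federation/200706"
    xmlns:wsa="http://www.w3.org/2005/08/addressing"
    xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xmlns:ds="http://www.w3.org/2000/09/xmldsig#"
    entityID="https://app.contoso.example/">
  <RoleDescriptor xsi:type="fed:ApplicationServiceType"
      protocolSupportEnumeration="http://docs.oasis-open.org/wsfed/federation/200706">
    <KeyDescriptor use="encryption">
      <ds:KeyInfo><ds:X509Data>
        <ds:X509Certificate>BASE64-CERT-PLACEHOLDER</ds:X509Certificate>
      </ds:X509Data></ds:KeyInfo>
    </KeyDescriptor>
    <fed:PassiveRequestorEndpoint>
      <wsa:EndpointReference>
        <wsa:Address>https://app.contoso.example/signin</wsa:Address>
      </wsa:EndpointReference>
    </fed:PassiveRequestorEndpoint>
  </RoleDescriptor>
</EntityDescriptor>
"""


def read_relying_party(xml_text: str) -> dict:
    """Extract the values an administrator would otherwise type by hand."""
    root = ET.fromstring(xml_text)
    address = root.find(
        ".//fed:PassiveRequestorEndpoint/wsa:EndpointReference/wsa:Address", NS)
    key = root.find(".//md:KeyDescriptor[@use='encryption']", NS)
    cert = key.find(".//ds:X509Certificate", NS) if key is not None else None
    return {
        "identifier": root.get("entityID"),
        "passive_endpoint": address.text.strip() if address is not None else None,
        "encryption_cert": cert.text.strip() if cert is not None else None,
    }


info = read_relying_party(SAMPLE)
```

Because the partner publishes these values in one document, a changed certificate or URL only requires re-reading the metadata instead of another out-of-band exchange.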

Manually creating a Relying Party Trust requires that the Administrator supply a fair amount of information that must be obtained from the partner organization through some out-of-band communication. This information includes the URLs for the WS-Federation Passive protocol and/or the SAML 2.0 Web SSO protocol, one or more relying party identifiers and, typically, the X.509 Certificate used to encrypt any claims sent to the relying party. Figure 1 below shows the various pages of the Add Relying Party Trust Wizard that must be navigated in order to create a relying party trust.

![clip_image002[4] clip_image002[4]](http://blogs.msdn.com/cfs-file.ashx/__key/CommunityServer-Blogs-Components-WeblogFiles/00-00-00-91-54-metablogapi/8765.clip_5F00_image0024_5F00_thumb_5F00_6B10C5D3.jpg)

The first screen capture of Figure 1 - Manually adding a relying party trust. Four more follow.

Once the relying party trust is established, it must also be maintained. It is possible that one or more of the URLs that identify the relying party may change, or the set of claims that the relying party will accept might change, but more likely, the X.509 Certificate used for encryption will have to be replaced, either because it has expired or because it has become compromised. Managing the updating of encryption certificates across an organization that might contain hundreds, or thousands, of relying parties presents a daunting challenge.

The post continues for several more feet and concludes:

Figure 13 - Notification that a relying party trust needs to be updated.

If you refer to figure 13, you will notice that one of the actions available for the Contoso relying party is Update from Federation Metadata... This command allows the Administrator to force an update from metadata at will.

Federation Metadata is a powerful tool for managing AD FS 2.0. In future posts we will explore other aspects and techniques for using this data.

For more information about how to create trusts via federation metadata, see the following topics in the AD FS 2.0 Deployment Guide:

Ron Jacobs delivers endpoint.tv - Workflow and Custom Activities - Best Practices (Part 2), a 00:26:56 video segment on 6/25/2010:

In this episode, Windows Workflow Foundation team Program Manager Leon Welicki drops in to show us the team's guidelines for developing custom activities.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Tom Simonite asserted “Users can share information, but the network only sees encrypted data” in his A Private Social Network for Cell Phones post about Microsoft Research’s new Contrail network on Azure in this 6/22/2010 article from the MIT Technology Review:

Picture sharing: This photo will be tagged and sent to the users’ friends—but it will be encrypted so that the company that handles the network won't be able to see it. Credit: Microsoft

Researchers at Microsoft have developed mobile social networking software that lets users share personal information with friends but not the network itself.

"When you share a photo or other information with a friend on [a site like] Flickr, their servers are also able to read that information," explains Iqbal Mohomed, a researcher at Microsoft Research Silicon Valley, who developed the new network, called Contrail, with several colleagues. "With Contrail, the central location doesn't ever know my information, or what particular users care about--it just sees encrypted stuff to pass on."

When a Contrail user updates his information on the network, by adding a new photo, for example, the image file is sent to a server operating within the network's cloud, just as with a conventional social network. But it is encrypted and appended with a list that specifies which other users are allowed to see the file. When those users' devices check in with the social network, they download the data and decrypt it to reveal the photo.

Contrail requires users to opt-in if they want to receive information from friends. When a person wants to receive a particular kind of update from a contact, a "filter" is sent to that friend's device. If, for example, a mother wants to see all the photos tagged with the word "family" by her son, she creates the filter on her phone. The filter is encrypted and sent via the cloud to her son's device.

Once decrypted, the filter ensures that every time he shares a photo tagged "family," an encrypted version is sent to the cloud with a header directing it to the cell phone belonging to his mother (as well as anyone else who has installed a similar filter on his device). Encryption hides the mother's preferences from the cloud, as well as the photos themselves. Each user has a cryptographic key on his or her device for every friend that is used to encrypt and decrypt shared information.
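The filter flow described above can be sketched as follows. This is not Contrail's code or protocol: the `Device` and `Envelope` classes are invented for illustration, and the "cipher" is a toy SHA-256 keystream XOR chosen only so the example runs on the standard library. It is not secure and should not be reused.

```python
import hashlib
import os
from dataclasses import dataclass


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    # Toy keystream: SHA-256 over key + nonce + counter. Illustration only.
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def toy_encrypt(key: bytes, plaintext: bytes) -> bytes:
    nonce = os.urandom(16)
    stream = _keystream(key, nonce, len(plaintext))
    return nonce + bytes(a ^ b for a, b in zip(plaintext, stream))


def toy_decrypt(key: bytes, blob: bytes) -> bytes:
    nonce, body = blob[:16], blob[16:]
    stream = _keystream(key, nonce, len(body))
    return bytes(a ^ b for a, b in zip(body, stream))


@dataclass
class Envelope:
    recipient: str      # routing header: all the cloud needs to read
    ciphertext: bytes   # opaque to the cloud


class Device:
    def __init__(self, owner: str):
        self.owner = owner
        self.keys = {}      # friend -> pairwise key held on this device
        self.filters = {}   # friend -> tag that friend asked to receive

    def install_filter(self, friend: str, encrypted_filter: bytes) -> None:
        # The filter arrives encrypted via the cloud and is decrypted locally.
        self.filters[friend] = toy_decrypt(self.keys[friend], encrypted_filter).decode()

    def share(self, photo: bytes, tags: set) -> list:
        # One envelope per friend whose filter matches one of the photo's tags.
        return [Envelope(friend, toy_encrypt(self.keys[friend], photo))
                for friend, tag in self.filters.items() if tag in tags]
```

A mother would create her filter with `toy_encrypt(shared_key, b"family")`, the son's device would call `install_filter("mom", ...)`, and each later `share(photo, {"family"})` would yield an envelope only she can decrypt.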

Contrail runs on Microsoft's cloud computing service, Windows Azure, and the team has developed three compatible applications running on HTC Windows Mobile cell phones. "This is an [application programming interface] on top of which you can build all kinds of social applications," explains Mohomed. "We just developed these applications to demonstrate what it can do."

As well as the picture-sharing app, the researchers created a tool for sharing location information with friends. Friends can receive a notification when a user enters an area drawn on a map (see [00:02:40] video of the app being demonstrated). But users restrict the amount of information shared by their phone. "It's my location, so I get control," says Mohomed. "If my boss wanted to track my location, I could allow them to do it only during the week, for example."

Mohomed thinks some people will be attracted by the idea of a more secure social network, although he admits that a provider might need to find a different business model--many networks, including Facebook, rely on being able to access user data in order to deliver tailored advertising.

"I may not care that Flickr can see my photos and messages, but people may feel differently about location sharing," says Mohomed. "Imagine you are using an application that allows you to track your kid's cell phone--what if their server is compromised?" …

Tom continues with comments from David Koll, a researcher at the University of Göttingen.

The encryption technique, described in Channel9’s Mobile-to-Mobile Networking in 3G Networks video, appears to me to be useful for managing third-party access to personal health records (PHRs) securely.

• The Geo2Web.com site’s Find-Near-Route for Bing Maps powered by SQL Azure (Spatial) post of 6/26/2010 combines Bing Maps with SQL Azure’s new Geography data type in this demonstration project:

Oh yes, SQL Azure goes spatial. Yesterday the SQL Azure team made several announcements, one of which was that SQL Azure has now received the same spatial treatment that SQL Server 2008 has had for quite a while. Obviously this announcement demands a quick sample showing how we could use the spatial data types, indexes and functions of SQL Azure for Bing Maps applications.

Sometimes people want to filter points of interest (POI) and display only those that are within a certain distance of a route. For example: when I calculate a route from Las Vegas to San Francisco and I want to find petrol stations along the route, it doesn’t help me at all when all 171 petrol stations in the map view are displayed. Some of them are 100 miles off the route and I certainly wouldn’t want to use them.

What I really would like to find are petrol stations which are no more than a certain distance off my route – let’s say 1 mile.

Here is how we can do it. In SQL Azure we have a table with our POI. One column holds data of type GEOGRAPHY (the GEOMETRY data type is supported as well). We also have a spatial index and a stored procedure that will actually do the work for us. …
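The post does the filtering inside SQL Azure with a stored procedure that is not reproduced here. For readers who want to see the idea itself, the following client-side sketch approximates "within N miles of a route" using a flat-plane projection around each POI. The function names and the sample coordinates are assumptions, and the approximation is only reasonable for short distances.

```python
import math

EARTH_RADIUS_MILES = 3958.8


def _to_xy(lat, lon, lat0, lon0):
    """Project (lat, lon) to miles on a plane centred on (lat0, lon0)."""
    x = math.radians(lon - lon0) * math.cos(math.radians(lat0)) * EARTH_RADIUS_MILES
    y = math.radians(lat - lat0) * EARTH_RADIUS_MILES
    return x, y


def distance_to_segment(p, a, b):
    """Approximate miles from point p to segment a-b; all are (lat, lon)."""
    ax, ay = _to_xy(a[0], a[1], p[0], p[1])
    bx, by = _to_xy(b[0], b[1], p[0], p[1])
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    # p sits at the origin of the projection, so project (0, 0) onto a-b
    # and clamp to the segment's end points.
    if seg_len_sq == 0:
        t = 0.0
    else:
        t = max(0.0, min(1.0, -(ax * dx + ay * dy) / seg_len_sq))
    return math.hypot(ax + t * dx, ay + t * dy)


def pois_near_route(pois, route, max_miles):
    """Return names of POIs within max_miles of a route with 2+ points."""
    near = []
    for name, lat, lon in pois:
        d = min(distance_to_segment((lat, lon), a, b)
                for a, b in zip(route, route[1:]))
        if d <= max_miles:
            near.append(name)
    return near


# Made-up coordinates: a short east-west route and two petrol stations.
route = [(36.0, -115.0), (36.0, -114.0)]
pois = [("near station", 36.005, -114.5), ("far station", 36.5, -114.5)]
```

Doing this in the database, as the post does, avoids shipping every POI to the client and lets the spatial index prune candidates before any distance is computed.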

You will find a live sample here. The source code and some sample data are available here.

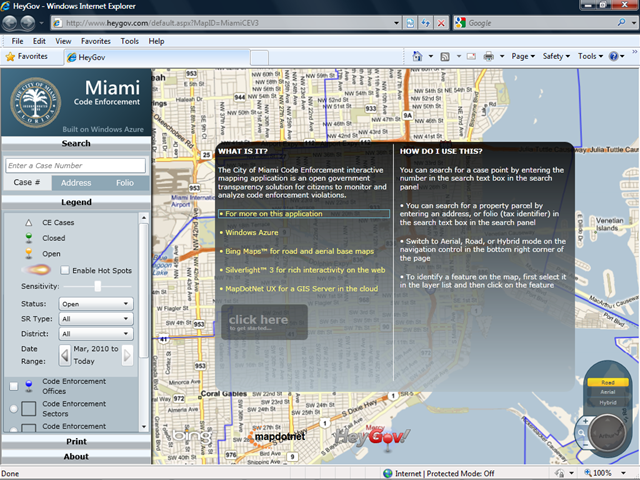

• The City of Miami’s HeyGov application (its second project built on Windows Azure with Bing Maps) tracks building code enforcement actions:

Hakan Onur offered a detailed Interrole Communication Example in Windows Azure using WCF on 6/26/2010:

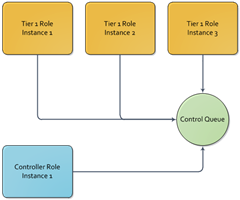

There are many scenarios where you will need to do interrole communication when creating a solution using Windows Azure platform such as multiple web roles that need to recycle when a controller triggers an event.

One way to achieve this goal is to create an event queue, modify the controller to enqueue a message that indicates a recycle is requested and make your web roles listen to this queue. Your controller can also enqueue 3 messages if you have 3 Web Roles to recycle. Below is the basic design for such an approach.
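The queue-based approach can be sketched with an in-process queue standing in for a Windows Azure queue. The class and function names are hypothetical and the sketch does not use the Azure storage client.

```python
import queue


def request_recycle(event_queue: queue.Queue, instance_count: int) -> None:
    """Controller side: enqueue one recycle message per web role instance."""
    for _ in range(instance_count):
        event_queue.put("recycle")


class WebRoleInstance:
    def __init__(self, name: str):
        self.name = name
        self.recycled = False

    def poll(self, event_queue: queue.Queue) -> bool:
        """Take at most one message; return True if one was consumed."""
        try:
            message = event_queue.get_nowait()
        except queue.Empty:
            return False
        if message == "recycle":
            self.recycled = True  # a real role would restart itself here
        return True


events = queue.Queue()
instances = [WebRoleInstance(f"web-{i}") for i in range(3)]
request_recycle(events, len(instances))
for instance in instances:
    instance.poll(events)
```

One caveat with this design: queue consumers compete, so nothing in the queue itself guarantees that each instance takes exactly one message. The sketch sidesteps that by having every instance poll once.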

While you can use a queue to mediate your messaging needs, you can also use WCF services to do interrole communication. In this approach, your roles would expose an internal endpoint and bind a WCF service that would listen on this endpoint. Your controller would use the RoleEnvironment to identify each role instance and invoke the service endpoints to notify each instance that a recycle is required. The design for this approach is below.

In this post I will try to provide a simple example of interrole communication using WCF – in other words, I will try to realize the design above. The code examples will not have much error handling, instead I will try to concentrate on the basics. Our Tier 1 Role Instances will expose a WCF Service that will use TCP transport. The service interface will be a simple one that will include a one-way function called RecycleYourself. Upon receipt of this message, our Tier 1 Role instances will recycle themselves.
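The shape of the direct-notification design can be shown without WCF. In the sketch below an abstract class plays the part of the service contract and in-process objects stand in for the internal endpoints; every name is an assumption, and the author's actual C# contract appears in his post, not here.

```python
from abc import ABC, abstractmethod


class NotificationService(ABC):
    """Stand-in for the service contract with a single one-way operation."""

    @abstractmethod
    def recycle_yourself(self) -> None:
        ...


class Tier1Instance(NotificationService):
    def __init__(self, instance_id: str):
        self.instance_id = instance_id
        self.recycle_requested = False

    def recycle_yourself(self) -> None:
        # One-way: the caller expects no reply, so only record the request.
        self.recycle_requested = True


def notify_all(instances) -> list:
    """Controller side: call every known instance, collecting the ids notified."""
    notified = []
    for instance in instances:
        instance.recycle_yourself()
        notified.append(instance.instance_id)
    return notified


tier1 = [Tier1Instance(f"tier1-{i}") for i in range(3)]
notified_ids = notify_all(tier1)
```

Unlike the queue design, the controller here addresses each instance explicitly, so every instance is told exactly once, but the controller must be able to enumerate the instances.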

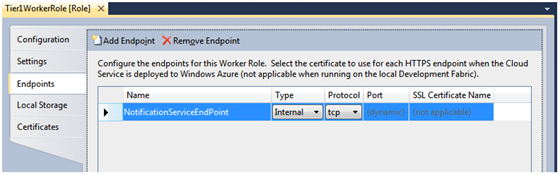

We will start with adding input endpoints for our Tier 1 Role instances. This is pretty easy to do using Visual Studio. We will start with right clicking on the Worker Role definition and selecting properties.

In the endpoints section of the Tier 1 Worker Role properties screen, we will click on the “Add Endpoint” button to add a new internal endpoint. Let’s name it “NotificationServiceEndPoint”, select “Internal” as the type (we are selecting the “Internal Endpoint Type” since we will not be exposing this endpoint to external world) and select Protocol to be TCP.

Next step is to create an interface for the service that will accept the recycle notification. This interface will expose a one-way endpoint. You can modify the contracts to suit your needs. I would suggest creating a class library project and putting the contracts in this project since we will be referencing it both for hosting and consumption purposes. The project that you create for your service and contracts should reference System.ServiceModel and System.Runtime.Serialization assemblies.

Hakan continues with source code for the sample project.

Maureen O’Gara reported “Zuora will be able to meter, price and bill for the apps and services” in her Now for the Fun Part of the Cloud: The Money post of 6/25/2010:

Zuora was admitted to Azure's chi-chi hand-picked TAP club as its charter cloud-based billing supplier when the two-year-old start-up, with some handholding by Microsoft, delivered the Zuora Toolkit for Windows Azure, which developers and ISVs can use to monetize their pay-as-you-go Azure cloudware.

They'll be able to meter, price and bill for the apps and services.

The widgetry, like all in the Technology Adoption Program (TAP), is supposed to help drive the success and adoption of the Azure ecosystem and abet the shift away from perpetual licenses and one-time fees.

Zuora's Toolkit is supposed to let developers create flexible price plans and packages; support usage and pay-as-you-go pricing models; initiate a subscription order online; accept credit cards with PCI Level 1 compliance; and manage customers, recurring subscriptions and invoicing.

Zuora president Shawn Price says, "The single most important service for ISVs in the cloud is the ability to monetize, and Zuora removes the friction of building a payment ecosystem and manages the constant changes that come with subscription management." He advises against trying to build a billing system from scratch if you're in a different line of work. It's a bitch.

Zuora also has a Z-Commerce for the Cloud code sample for developers to embed in their cloud solutions at http://developer.zuora.com/samplecode.html.

Zuora folks are out of salesforce.com, WebEx, eBay and Vitra and the company got its $21.5 million in funding from Benchmark Capital, Shasta Ventures, salesforce CEO Marc Benioff and PayPal president Scott Thompson. It handles nine million cloud transactions a day at EMC. Other cloud customers include Tata, Zetta and cloud providers in Holland, Australia and Mexico.

For more about Zuora, see Leena Rao’s Zuora Launches Billing Service For Cloud Providers post of 6/23/2010 to TechCrunchIT from the Structure 2010 conference:

We’ve written about Zuora, a SaaS startup that offers online services to manage and automate customer subscriptions and payments, and its impressive backing. And the startup just signed over $1 billion in contracted subscription revenue in the first quarter of its new fiscal year, which ended April 30. Today, Zuora is announcing a subscription billing model for cloud computing, called Z-Commerce for the Cloud.

Zuora’s cloud-based billings platform aims to alleviate the need for online businesses to develop their own billing systems, especially to handle recurring payments like those associated with subscriptions.

Z-Commerce for the Cloud enables cloud providers to automate metering, pricing and billing for products, bundles, and configurations. Cloud providers can charge users per terabyte stored, IP address, gigabyte transferred, CPU instance, application user, and more. The service also includes 20 pre-built cloud charge models, including on demand, reservation, location-based, and off-peak pricing, with the ability to configure these models. And Z-commerce includes an online, PCI-compliant storefront that allows customers to make purchases, to manage their accounts, and to monitor usage in a self-service manner.
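A usage-based charge model of the kind listed above reduces to multiplying metered quantities by per-unit rates. The sketch below shows that arithmetic only; the meter names and rates are invented for the example and have nothing to do with Zuora's API or pricing.

```python
from decimal import Decimal

# Hypothetical per-unit rates, in dollars.
RATES = {
    "tb_stored": Decimal("150.00"),
    "gb_transferred": Decimal("0.12"),
    "cpu_instance_hours": Decimal("0.10"),
}


def invoice(usage: dict):
    """Return (line items, total) for a dict of metered quantities."""
    lines = {
        meter: (Decimal(str(quantity)) * RATES[meter]).quantize(Decimal("0.01"))
        for meter, quantity in usage.items()
    }
    return lines, sum(lines.values(), Decimal("0"))


# One month of made-up usage for a single customer.
usage = {"tb_stored": 2, "gb_transferred": 500, "cpu_instance_hours": 720}
lines, total = invoice(usage)
```

Decimal is used instead of float so that amounts round predictably to cents, which matters once many small metered charges are added together.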

Z-Commerce for the Cloud is already being used by Cloud Central, EMC, IC&S, Nu-b, Sun, Tata, and Zetta. Zuora has seen fairly significant growth over the past year; so it’s safe to assume that this product should only help the company continue this path.

Bill Zack describes the pharmaceutical industry’s use of Azure and High Scale Compute in this 6/25/2010 post:

Azure High Scale Compute (HSC) is a Windows Azure implementation of the Map-Reduce parallel processing architecture.

In this architecture a controller task (which may be located on a customer’s premises, in the Azure cloud or distributed between them) is responsible for accepting a work stream, breaking it up into parallel processing units and deploying it to multiple worker nodes in the cloud. It is also responsible for collecting the results of each parallel work stream and putting it all back together again to achieve overall reduced processing time.

The implementation of this process in Windows Azure uses either an on-premises Windows based controller application or an in-cloud Azure Compute node (Web Role) that schedules many Worker nodes by uploading the work streams to Windows Azure Blob Storage and then using Windows Azure Queues to get the work to the multiple worker nodes that poll the queues looking for work to perform.
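The controller and worker pattern just described can be sketched in-process. A dict stands in for blob storage, a thread-safe queue for the Windows Azure queue and threads for worker nodes; the names and the sum-of-squares workload are assumptions chosen to keep the example small.

```python
import queue
import threading


def run_job(numbers, chunk_size=3, worker_count=4):
    """Split work, fan it out to workers through a queue, then aggregate."""
    blob_store = {}              # stand-in for blob storage
    work_queue = queue.Queue()   # stand-in for the work queue
    results = queue.Queue()

    # Controller: upload each chunk, then enqueue a pointer to it.
    chunk_names = []
    for start in range(0, len(numbers), chunk_size):
        name = f"chunk-{start // chunk_size}"
        blob_store[name] = numbers[start:start + chunk_size]
        work_queue.put(name)
        chunk_names.append(name)

    def worker():
        # A real worker polls indefinitely; here all work is queued up
        # front, so an empty queue means the job is finished.
        while True:
            try:
                name = work_queue.get_nowait()
            except queue.Empty:
                return
            results.put(sum(x * x for x in blob_store[name]))

    threads = [threading.Thread(target=worker) for _ in range(worker_count)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()

    # Controller: collect one partial result per chunk and combine them.
    return sum(results.get() for _ in chunk_names)
```

Passing blob names through the queue rather than the data itself keeps messages small, which is the usual reason for pairing a queue with separate storage in this pattern.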

Recently I had the privilege of helping to architect an Azure HSC application for a major pharmaceutical company. (See here.)

Traditional High Performance Compute (HPC) solutions can be very expensive and time consuming to provision and maintain. Azure HSC eliminated the need to purchase hardware and software for a computational process that has peak demands but does not occur on a continuous basis.

By allowing them to parallelize long running processes and to dynamically expand and contract the number of application instances required on demand they were able to reduce a process that normally takes hours or days to a matter of minutes.

This makes it possible to perform molecular analyses that previously were either impossible or too time-consuming to perform. The result of these analyses is the development of new compounds that can be used to develop new medications.

This is an outstanding use of Windows Azure, a highly scalable fault tolerant pay for what you use service that is changing the way companies think of solving their business problems.

Ryan Dunn and Steve Marx presented Cloud Cover Episode 16 - Big Compute with Full Monte, a 00:50:54 video segment about high-performance-computing (HPC) with Windows Azure, on 6/25/2010:

Join Ryan and Steve each week as they cover the Microsoft cloud. You can follow and interact with the show at @cloudcovershow

In this episode:

- Discuss the lessons learned in building the HPC-style sample called Full Monte.

- For 'big compute' apps, learn the gotchas around partitioning work, message overhead, aggregation, and sending results.

- Listen in on how to effectively use queues in Windows Azure.

- Discover a tip for keeping your hosted services stable during upgrades.

Show Links:

Using Affinity Groups in Windows Azure

Enzo SQL Shard (SQL Azure Sharding)

CloudCache (Memcached in Windows Azure)

Windows Azure Training Kit - June Release

Ben Flock describes HPC in the Cloud for Life Sciences Industry…. in this 6/22/2010 post:

Over the last 12 months, I’ve been doing a lot of incubation work in the Cloud Computing space throughout the Healthcare & Life Sciences Industry. The results from these early efforts have been interesting…and are relevant to any Healthcare or Life Sciences organization interested in using the cloud for business application purposes. To get the word out on these efforts, I’m sponsoring a series of local Cloud Computing info exchange events. On June 8th, I held a Life Sciences Cloud Computing event @ the Hilton in Iselin, NJ. Close to 50 people from the Life Sciences Industry attended the event…although the event did include a Microsoft Azure Cloud Platform Overview, the bulk of the presentations/demonstrations were dedicated to “real world” solutions examples…that I believe will manifest themselves as solution patterns that apply broadly across the entire Healthcare & Life Sciences Industry. The following solutions patterns were showcased at the event:

- “HPC in the Cloud”

- “Working Securely in the Cloud”

- “Giving the Cloud a visually appealing head”

For more information on the content from the event, please visit the Microsoft Pharmaceutical Deployment User Group website: http://MSPDUG.spaces.live.com

So let’s talk about this HPC in a cloud for Life Sciences thing…

“HPC in the Cloud”: High Performance Computing (HPC) is definitely a core requirement for the Life Sciences industry…they have lots of long running Scientific applications that require on-demand compute resources. Currently, these applications are run on dedicated hardware or on-premise managed Grid facilities. The current approach is suboptimal from a cost, scale, performance, and overall processing/results turnaround time perspective. On average, it takes several hours for many of these applications to run…in many cases they fail to complete due to memory and/or CPU availability. As a rule of thumb, it takes 10 years and $1 billion to bring a drug to market…finding ways to shorten research & development timeframes can add hundreds of millions to a drug’s overall revenue stream. Everyone is looking to cloud computing as a better way to do HPC from a cost, scale, performance, and availability perspective.

We just completed a 30 day Windows Azure HPC PoC with JNJ’s R&D IT organization. For the PoC, we selected a typical Scientific Computation application…2D Molecular structure inputs, algorithmic calculations, 3D Visual output. Intent was to move the existing application .exe into Windows Azure to test cost, availability, scale, and performance.

JNJ representatives presented results from the PoC @ the June 8th Life Sciences Cloud Computing event. Results from the PoC were very positive. They saw a 100X reduction in end to end processing time for a typical request. Cost, although not quantifiable at this stage, will certainly be appreciably less than the dedicated resource alternative. I want to offer a word of caution…this is a capabilities validation exercise…although initial results are very promising…we still have some work to do before I would consider this to be a turnkey cloud capability. Nevertheless, there is no denying that this is a killer solutions pattern for cloud computing…stay tuned for info on the subject.

The Microsoft Pharmaceutical Deployment Users Group (PDUG) posted the following description of their activities:

Microsoft Pharmaceutical Deployment Users Group (PDUG)

Pharmaceutical Organizations are constantly plagued by the complexities of validation as defined by country and state governing bodies. Within these organizations, IT is faced with adhering to strict regulations yet also having to provide the lines of business with an agile and productive set of tools that represent the lowest level of complexity possible. To date, all Pharmaceutical Organizations still struggle with deploying technology into validated environments.

To help our customers tackle these issues, Microsoft has formed the Microsoft Pharmaceutical Deployment Users Group, or PDUG. While we realize that customers have differing viewpoints of what “validation” means, we hope to help our customers by providing:

- A collaborative environment where members can share lessons learned and best practices for software deployment within the industry.

- A discussion focused on the deployment of the Microsoft Operating System, Microsoft Office, and Microsoft SharePoint.

- Process and methodologies of deployment (Not a technical forum)

The group meets on the first Tuesday of every month, between 10:30 and 12:30 ET. For those in NJ, we meet face to face at the Microsoft Offices in Iselin, NJ. We also meet via Live Meeting. PDUG also has an active online community for members to share documents and discuss current issues. PDUG is an invitation only group for Director Level individuals responsible for deployment.

For any further questions on the group, to nominate a member, or to register, please contact us at mspdug@microsoft.com or just sign the Guest Book on this site!

Important Sites

Sites with more information on Microsoft in Life Sciences.

- Microsoft Life Sciences: The main site for finding Microsoft based solutions for Life Science companies.

- Microsoft Life Sciences MSDN Site: A site for architects and developers. Includes whitepapers, free code, and examples of Microsoft solutions in Life Sciences

- Deployment Best Practices: Microsoft's IT department deploys all Microsoft products and has whitepapers and guidance for enterprise deployment on the Microsoft IT Showcase.

Here’s a SkyDrive link to the 2010-06-08 Cloud Computing Meeting.

Parse3 Solutions announced “Software Solutions Company and Windows Azure developer, Parse3, announces the launch of its own new comprehensive website; noteworthy for being hosted entirely on the Windows Azure Platform, the new cloud computing system from Microsoft” in its Microsoft Windows Azure Platform; Windows Azure Developer; Parse3 press release of 6/25/2010:

Windows Azure developer Parse3, the software solutions company based in Warwick, NY, is pleased to announce the launch of its own new comprehensive website hosted on the new cloud computing platform from Microsoft. Parse3, well-known as a custom web development company for many national brands with extensive content management needs, has entirely updated its own web presence. The new website is particularly noteworthy for being hosted on the new cloud computing Microsoft Windows Azure platform.

Today’s increased bandwidth has made the shift from company-owned hardware and software to pay-as-you-go cloud services and infrastructure possible. The Windows Azure cloud computing platform is an attractive alternative for improved website performance since it offers virtually unlimited flexibility and scalability. These benefits, already important to many of the industries Parse3 serves, including Retail, Publishing, Media and Entertainment and Financial clients, become immeasurable during times of high volume online usage.

The Parse3 website not only provides a platform for demonstrating the use of the latest Microsoft Windows Azure technology along with other back-end application design capabilities, but it also demonstrates a host of other front-end services now available from Parse3, such as design, web user experience and professional search engine optimization services.

When asked about the use of the Windows Azure environment for the website, Peter Ladka, CEO of Parse3 replies, “We have been working with the Microsoft Windows Azure platform for some leading edge technology companies since it first became available early this year and our comfort with Windows Azure and SQL Azure Database made the decision to cloud-host our own website an easy one.”

Joel Varty offers his third-party status report on Windows and SQL Azure in a Thoughts on Azure – Compute, Storage and SQL post of 6/22/2010:

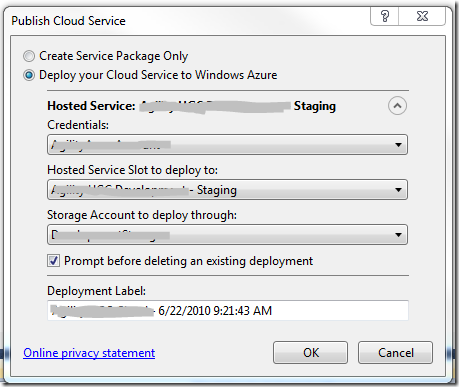

I think Azure is ready to go as a platform for Software as a Service. When we think of cloud-based infrastructures, it has many pieces that make it both viable and attractive from many points of view. For one, it’s hooked tightly into a great development tool in Visual Studio 2010, and the latest SDK and Tools make it a simple process to publish to the cloud directly from the IDE.

On top of this, you can also hook into your Compute and Storage account right from the Visual Studio Server Explorer window, which is nice.

So what is Azure, really?

I attended a technical briefing for Microsoft partners in Toronto last week, and this was the biggest question. I think there is a lot of misconception out there about what is offered and what is possible with Azure.

Windows Azure - Compute

To be quite blunt, this is primarily meant for Web Apps and Web Services, with the Compute platform tailored to what are called “Web Roles” and “Worker Roles”. These can ONLY be coded in managed code; however, they can invoke executables written in whatever language you like. Basically, a web role is a web app, and can do pretty much anything a web app can do. A worker role is akin to a Windows service, and if you’ve coded your Windows services with decent separation of concerns, you should be able to port one of those over with no difficulty.

The real power of the Compute platform is that it allows you to manually ramp up the number and size of Instances (read: Virtual Machines) that you want the app to be deployed to, both for Web and Worker roles. This means you can flip a config variable and ramp up the power without ever calling IT or touching any routers or load balancers or anything. If you have a multi-tenanted app, this is nice. If not, if you have an app that’s single-server, uses a ton of session, is coded like the tutorials, and isn’t meant to be publicly available… you might want to reconsider whether you need a cloud solution at all.

What’s really good here is that, as of June 2010, .Net 4.0 is supported in Compute.

Windows Azure – Storage

Table Storage – this is really “entity” storage (I know, I know, kind of the same thing), but really this is just a serialized object repository with the ability to be queried in OData style using the Data Services API. Basically, you can dump objects in and get them out again. It has automatic partitioning based on a partition key, meaning this is very scalable. What stinks about it right now is that it only supports a pretty small subset of the OData (and therefore Data Services) specification.
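
The partition-key idea can be illustrated with a minimal in-memory sketch. This is not the Windows Azure Table API; the class name `PartitionedEntityStore`, its methods, and the sample keys are hypothetical and only show why a query scoped to one partition key never has to touch the other partitions.

```python
from collections import defaultdict


class PartitionedEntityStore:
    """Toy in-memory stand-in for a partitioned entity table (not the Azure API)."""

    def __init__(self):
        # One dict per partition; a real service could place each partition
        # on a different storage node.
        self._partitions = defaultdict(dict)

    def insert(self, partition_key, row_key, entity):
        """Store a copy of the entity under (partition_key, row_key)."""
        if row_key in self._partitions[partition_key]:
            raise KeyError(f"entity already exists: {partition_key}/{row_key}")
        self._partitions[partition_key][row_key] = dict(entity)

    def get(self, partition_key, row_key):
        """Point lookup: reads from exactly one partition."""
        return self._partitions[partition_key][row_key]

    def query_partition(self, partition_key, predicate=lambda e: True):
        """Filter within one partition; other partitions are never scanned."""
        rows = self._partitions.get(partition_key, {})
        return [e for e in rows.values() if predicate(e)]


store = PartitionedEntityStore()
store.insert("customer-42", "order-001", {"total": 19.99, "status": "shipped"})
store.insert("customer-42", "order-002", {"total": 5.00, "status": "open"})
store.insert("customer-77", "order-001", {"total": 12.50, "status": "open"})

# Only the "customer-42" partition is examined here.
open_orders = store.query_partition("customer-42", lambda e: e["status"] == "open")
```

Choosing a partition key that matches the most common query (here, per customer) is the design decision that keeps lookups confined to a single partition.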

Blob Storage

Blobs equal files. You can do block (sequential, or streamed) blobs or “page blobs”. These are not good names, but who cares? The biggest annoyance is that it isn’t as good as Amazon S3 (yet), except that it’s stored in the same environment as your Compute instance(s), meaning that you don’t pay for data transfer between Compute and Storage. You only pay for data transfer in and out of Azure itself.

Queue Storage

A queue where items live for up to seven days, with an 8 KB string maximum per item. Useful for implementing a multi-instance worker role pattern where the web roles create items in the queue and they are processed by an offline worker role. These are not MSMQ, but they are still wicked useful.
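
The web-role/worker-role hand-off described above might look roughly like the following sketch. It uses a toy in-memory queue, not the Windows Azure Queue API; `ToyQueue`, `web_role_submit`, and `worker_role_drain` are hypothetical names, and the only figures taken from the post are the 8 KB size limit and the seven-day lifetime.

```python
import time
from collections import deque

MAX_MESSAGE_BYTES = 8 * 1024          # 8 KB limit quoted in the post
MAX_TTL_SECONDS = 7 * 24 * 60 * 60    # seven-day lifetime quoted in the post


class ToyQueue:
    """In-memory stand-in for a storage queue (not the Azure API)."""

    def __init__(self, clock=time.time):
        self._items = deque()
        self._clock = clock

    def put(self, text):
        """Enqueue a string, rejecting anything over the size limit."""
        if len(text.encode("utf-8")) > MAX_MESSAGE_BYTES:
            raise ValueError("message exceeds 8 KB")
        self._items.append((self._clock() + MAX_TTL_SECONDS, text))

    def get(self):
        """Return the oldest unexpired message, or None if nothing is left."""
        now = self._clock()
        while self._items:
            expires_at, text = self._items.popleft()
            if expires_at > now:
                return text
        return None


def web_role_submit(queue, order_id):
    # The web role only records the work to be done and returns immediately.
    queue.put(f"process-order:{order_id}")


def worker_role_drain(queue):
    # The worker role handles whatever is waiting; a real one would loop and sleep.
    processed = []
    while (message := queue.get()) is not None:
        processed.append(message.split(":", 1)[1])
    return processed


queue = ToyQueue()
for order_id in ("1001", "1002"):
    web_role_submit(queue, order_id)
processed = worker_role_drain(queue)
```

Because the web role and the worker role share nothing but the queue, either side can be scaled to more instances without changing the other.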

SQL Azure

Databases in the cloud. Great! Except that you can’t do table partitioning based on filegroup, which is a major pain. The only way to scale is to do what is called database sharding. I’m hoping I can hold out until we can do something less useless. I don’t want to rewrite app code and join data in my data access layer. That defeats the purpose of SQL Server altogether.
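
To make the sharding complaint concrete, here is a minimal sketch of what moves into the data access layer once rows are spread over several databases. Everything in it is an assumption for illustration: the shard count, the CRC32-based routing, and the dict-based "databases" stand in for real SQL Azure databases and are not a recommended design.

```python
import zlib

SHARD_COUNT = 3  # hypothetical number of databases


def shard_for(customer_id):
    """Map a key to a shard with a stable hash (Python's hash() varies per process)."""
    return zlib.crc32(customer_id.encode("utf-8")) % SHARD_COUNT


# Each shard is modelled as a dict holding two "tables".
shards = [{"customers": {}, "orders": []} for _ in range(SHARD_COUNT)]


def add_customer(customer_id, name):
    shards[shard_for(customer_id)]["customers"][customer_id] = name


def add_order(customer_id, total):
    # Orders live on the same shard as their customer, so that join stays local.
    shards[shard_for(customer_id)]["orders"].append((customer_id, total))


def totals_by_customer():
    """Cross-shard report: visit every shard, then merge in application code."""
    report = {}
    for shard in shards:
        for customer_id, total in shard["orders"]:
            name = shard["customers"][customer_id]
            report[name] = report.get(name, 0) + total
    return report


add_customer("c-alice", "Alice")
add_customer("c-bob", "Bob")
add_order("c-alice", 10)
add_order("c-alice", 20)
add_order("c-bob", 7)
report = totals_by_customer()
```

The loop in `totals_by_customer` is the part a single SQL Server database would express as one `JOIN` with `GROUP BY`; with shards, the application has to do that merge itself.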

To be continued…

Windows Azure AppFabric

The most important thing about Azure AppFabric is to know that it is NOTHING like Windows Server AppFabric. Which is too bad – because there isn’t yet a decent solution for distributed caching in Azure Compute… a real shame.

All in all, Azure is the real deal, and you’d do well to research this carefully – the naming of the technology has changed over the last 18 months and there is as much misinformation and rumor out there as anything else. If in doubt, stick with what MSDN tells us.

more later - joel

Windows Azure Infrastructure

Robert Boyer speculates that Microsoft intends to build a new data center in his Sources: Computer data storage center may be headed to county story of 6/25/2010 for the Burlington, NC Times-News:

A “well-known” company is considering investing at least $120 million and as much as “billions” of dollars in a computer data storage center at the N.C. Industrial Center in Mebane, sources with knowledge of the situation have told the Times-News.

Two sources, who shared information on condition of anonymity, said the project is code-named “Deacon” and confirmed that the unnamed company is looking at the Industrial Center north of Interstate 85/40 as a possible location.

“It’s a big deal, I can tell you that … not millions, but billions,” one source said.

The other source said the business in question is some type of computer server company. …

Speculation has arisen that Microsoft Corp., the computer software and services giant based in Redmond, Wash., is the company behind Deacon.

“Microsoft has no comments at this time,” a company representative said Friday. …

Closer to home, the “Keep North Carolina Competitive Act,” a bill recently passed by the state House that is now in the state Senate finance committee, will, among other things, “expand the types of data centers eligible for preferential tax treatment,” according to a portion of the bill’s text.

According to a Legislative Fiscal Note attached to the bill, the act “will exempt all eligible internet data center purchases from the sales tax.”

As noted in the following article, it was the State of Washington’s failure to maintain a sales tax exemption for data center hardware that caused Microsoft to erase the Quincy, WA (Northwest) data center from its list.

See Dinakar Nethi and Niraj Nagrani’s list of Microsoft’s current data center locations in their updated Comparing SQL Server with SQL Azure wiki page of 6/18/2010 in the SQL Azure Database, Codename “Dallas” and OData section above.

Mary Jo Foley asks Who is pushing the private cloud: Users or vendors? in this 6/25/2010 post about the “private vs. public cloud” controversy to her All About Microsoft blog on ZDNet:

No two pundits, partners or customers seem to be able to agree exactly what a “private cloud” is/isn’t. But that’s not the only cloudy part of the cloud. There’s also disagreement as to who wants private cloud computing.

Salesforce and Amazon execs have taken to calling the virtual private cloud — when that term is used to mean hosting data on-premises but making use of pay-as-you-go delivery — the “false cloud.” Their contention is Microsoft, IBM, HP and other traditional tech vendors are pushing customers to adopt private cloud solutions so they can keep selling lots of servers and software to them. Their highest-level message is everything can and should be in the cloud; there’s no need for any software to be installed locally.

Microsoft, for its part, is positioning itself as offering business customers a choice: Public cloud, private cloud or a mix of the two. Increasingly, especially in the small- and mid-size markets, however, Microsoft is leading with public cloud offerings, not with its on-premises offerings (something which even some of the company’s own product groups are still having trouble digesting). Microsoft is going to use its upcoming Worldwide Partner Conference in July to try to get its partners on the same page, so that they see the cloud as their friend and not their margin destroyer.

But all the focus on public cloud doesn’t mean Microsoft is de-emphasizing the private cloud. In fact, earlier this month, Microsoft officials said that its enterprise customers are the ones pushing the company to accelerate its private cloud strategy. A recent IDC study seemed to back Microsoft’s play: Enterprise IT customers say they want a mix of public and private cloud computing.

Vendor bickering and rhetoric aside, what do business customers want? Do they want a hybrid public/on-premises model or are they ready to be “all in” with the cloud?

At a half-day event in New York City this week sponsored by Amazon.com, a panel of four business users had their chance to present their stories as to why they decided to go with Amazon Web Services (AWS). It was interesting to hear some of these customers say they were committed to the public cloud, but then actually acknowledge that they wanted private cloud and hybrid models.

Marc Dispensa, Chief Enterprise Architect of IPG Mediabrands Global Technology Group, one of those customers, said he and his org spent three months evaluating the different cloud platforms out there. They looked at Amazon’s AWS, RackSpace and Microsoft’s Azure, among others, he said. While Mediabrands is/was primarily a Microsoft shop, meaning Azure would be “an easy fit for our developers,” Mediabrands opted against it because of the limited SQL Server storage available, as well as because of Microsoft’s “hybrid model” approach, Dispensa said.

But as he went on to describe Mediabrands’ evolving plan, Dispensa noted that the group is moving their SharePoint data into the AWS storage cloud, but is planning to keep SharePoint installed on-premises. (That sounds like a hybrid model to me.) Dispensa also said that Mediabrands still hasn’t ruled out entirely going with SharePoint Online, the Microsoft-hosted version of its SharePoint solution. …

My biggest take-aways from Amazon’s event were that Amazon and Microsoft are more similar than different, in terms of wanting to get enterprise customers into the cloud at a pace at which those users feel comfortable. To me, the talk of a “false cloud” seems to be a lot like Salesforce’s “end of software” argument — it’s more of a slogan than the real way that Salesforce’s products work and how customers (who still largely want offline data access) actually operate.

Frank Gens, senior vice president at IDC, claims Cloud computing isn't going anywhere but up in a recent report summarized by EDL Consulting on 6/24/2010:

A report recently released by market research firm IDC found cloud computing to be among the fastest growing trends in IT. From its current benchmark of $16 billion, IDC expects revenue drawn from cloud computing to reach more than $55 billion by 2014.

The annual growth rate for cloud services is more than 27 percent, which vastly outpaces the trend of on-premise IT services, which will grow at a rate of roughly 5 percent, according to IDC. SaaS applications have represented a substantial portion of cloud adoption, but IaaS and PaaS will see growth in the coming years as well.
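
As a rough sanity check on those figures, the compound annual growth rate implied by going from $16 billion to $55 billion can be computed directly. The summary does not say which year the $16 billion benchmark refers to, so the four-year and five-year horizons below are assumptions, and `implied_cagr` is just an illustrative helper.

```python
def implied_cagr(start, end, years):
    """Compound annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1


START_BILLIONS = 16   # "current benchmark" from the summary
END_BILLIONS = 55     # 2014 forecast from the summary

for years in (4, 5):  # assumed horizons; the summary does not state the base year
    rate = implied_cagr(START_BILLIONS, END_BILLIONS, years)
    print(f"{years} years: {rate:.1%}")
```

Under these assumptions the five-year horizon gives roughly 28 percent a year, which is in line with the quoted "more than 27 percent", while a four-year horizon would imply roughly 36 percent.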

"Additionally, our research with many CIOs about their plans for adopting cloud computing shows that IT customers are excited about the cost and agility advantages of cloud computing, but they also have serious concerns about the maturity of cloud computing offerings, specifically around security, availability, cost monitoring/management, integration and standards," Frank Gens, senior vice president at IDC, said.

Healthcare IT is partially responsible for the growth of cloud computing with more than 30 percent of hospitals currently using it and an additional 73 percent planning to implement it, according to Accenture.