Windows Azure and Cloud Computing Posts for 6/15/2010+

Windows Azure, SQL Azure Database and related cloud computing topics now appear in this daily series.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform was published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated in June 2010 for the January 4, 2010 commercial release.

Azure Blob, Drive, Table and Queue Services

Jai Haridas of the Windows Azure Storage Team reported a Stream Position Not Reset on Retries in PageBlob WritePages API bug on 6/14/2010:

We recently came across a bug in the StorageClient library in which WritePages fails on retries because the stream is not reset to the beginning before a retry is attempted. This results in the StorageClient reading from an incorrect position, which causes WritePages to fail on retries.

The workaround is to implement a custom retry in which we reset the stream to the start before we invoke WritePages. We have taken note of this bug and it will be fixed in the next release of the StorageClient library. Until then, Andrew Edwards, an architect in the Storage team, has provided a workaround which can be used to implement WritePages with retries. The workaround saves the selected retry option and sets the retry policy to “None”. It then implements its own retry mechanism using the saved policy. Before issuing each request, it rewinds the stream position to the beginning, which ensures that WritePages will read from the correct stream position.

This solution should also be used in the VHD upload code provided in blog “Using Windows Azure Page Blobs and How to Efficiently Upload and Download Page Blobs”. Please replace pageBlob.WritePages with the call to the below static method to get around the bug mentioned in this post.

Jai continues with the copyable C# code for the workaround.
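Jai's C# code is not reproduced here. As a language-neutral illustration of the same idea, the following Python sketch rewinds a stream before every attempt. It is not the StorageClient API: `write_pages` is a hypothetical callable standing in for the real upload call, and the attempt count and back-off are assumed values.

```python
import io
import time


def write_with_retries(write_pages, stream, max_attempts=3, backoff_seconds=0.0):
    """Call write_pages(stream), rewinding the stream before every attempt.

    write_pages is a hypothetical stand-in for the real upload call;
    it is not part of any Azure library.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    last_error = None
    for attempt in range(1, max_attempts + 1):
        # Rewind first, so a retry never resumes from the position
        # where a failed attempt stopped reading.
        stream.seek(0)
        try:
            return write_pages(stream)
        except OSError as error:
            last_error = error
            if attempt < max_attempts:
                time.sleep(backoff_seconds)
    raise last_error
```

The key design point is that the rewind happens inside the loop rather than once before it, so every attempt sees the full payload.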

See Fabrice Marguerie’s announcement of his Sesame Data Browser: filtering, sorting, selecting and linking post of 6/9/2010 in the Live Windows Azure Apps, APIs, Tools and Test Harnesses section below.

SQL Azure Database, Codename “Dallas” and OData

The SQL Azure Team announced the Windows Azure Platform - SQL Azure Development Accelerator Core offer by a 6/15/2010 e-mail message with a link to a popup page with the following details:

This promotional offer provides SQL Azure database at a deeply discounted monthly price. The offer is valid only for a six month term but can be renewed once at the same base unit rate. Customers may purchase multiple Base Units to match their development needs.

Base Unit Pricing:

$74.95 per Base Unit per month for the initial subscription term of six months, which can be renewed once at the same Base Unit price.

Monthly usage exceeding the amount included in the Base Units purchased will be charged at the rates indicated in the Overage Pricing section.

This is a one-time promotional offer and represents 25% off of our normal consumption rates.

Subscription term: 6 months

Base Unit Includes:

- SQL Azure

- 1 Business Edition database (10 GB relational database)

The remainder of the page provides details about overage pricing, which is the same as that for the initial release to the Web of SQL Azure Web and Business databases.
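The arithmetic behind the offer can be sketched as follows. This is a rough illustration that uses only the figures quoted above; it assumes the 25% discount applies to the flat monthly Base Unit price and ignores overage charges, which the excerpt does not itemize.

```python
from decimal import Decimal

PROMO_MONTHLY = Decimal("74.95")  # promotional price per Base Unit per month
DISCOUNT = Decimal("0.25")        # "25% off of our normal consumption rates"
TERM_MONTHS = 6                   # initial subscription term


def term_cost(base_units, months=TERM_MONTHS):
    """Base charge for a term, excluding any overage."""
    return PROMO_MONTHLY * base_units * months


def implied_normal_monthly():
    """Undiscounted monthly rate implied by the stated discount (assumption)."""
    return (PROMO_MONTHLY / (1 - DISCOUNT)).quantize(Decimal("0.01"))
```

With these figures, one Base Unit costs $449.70 for the six-month term, and the implied undiscounted rate is about $99.93 per month.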

Mary Jo Foley quotes me in her Microsoft tweaks its cloud database pricing post to ZDNet’s All About Microsoft blog of 6/15/2010:

… “The new SQL Azure pricing is very significant,” said Microsoft cloud expert Roger Jennings, even though “prices for basic 1 GB Web and 10 GB Business databases didn’t change.”

Jennings noted that a number of TechEd attendees considered 50 GB to be still too small for enterprises, “but I wonder if IT depts are ready to pay $9,950/month for a terabyte (assuming linear pricing),” he said.

“Bear in mind that Microsoft provides an additional two replicas in the same data center for high availability, so a 1 TB database consumes 3 TB of storage. Geo-replication to other data centers for disaster recovery still isn’t available as an option, but you can use the new SQL Server Data Sync feature announced at TechEd to synchronize replicas. However, there is considerable (many minutes of) latency in the Data Sync process.” …

Wayne Walter Berry explains how to Condition Database Drop on SQL Azure in this 6/14/2010 post:

SQL Azure’s Transact-SQL syntax requires the DROP DATABASE statement be the only statement executed in the batch. For more information, see the MSDN documentation. So how do you execute a conditional drop? Maybe you only want to drop the database when the database exists. Here is how:

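    DECLARE @DBName SYSNAME, @Command NVARCHAR(MAX)
    SELECT @DBName = 'db1'
    -- Only build and run the DROP when the database actually exists.
    IF EXISTS (SELECT * FROM sys.databases WHERE name = @DBName)
    BEGIN
        SELECT @Command = 'DROP DATABASE [' + @DBName + '];'
        -- Parentheses are required to execute a string; without them,
        -- EXEC treats @Command as a stored procedure name.
        EXEC (@Command)
    END

One batch contains the conditional statement, and it generates and executes (in another batch) the DROP DATABASE statement.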

See Fabrice Marguerie’s announcement of his Sesame Data Browser: filtering, sorting, selecting and linking post of 6/9/2010, which reports the capability to create hyperlinks to OData, in the Live Windows Azure Apps, APIs, Tools and Test Harnesses section below.

Shayne Burgess wrote a Building Rich Internet Apps with the Open Data Protocol article for MSDN Magazine’s June 2010 issue:

At PDC09 the Microsoft WCF Data Services team (formerly known as the ADO.NET Data Services team) first unveiled OData, the Open Data Protocol. The announcement was in a keynote on the second day of the conference, but that wasn’t where OData started. People familiar with ADO.NET Data Services have been using OData as the data transfer protocol for resource-based applications since ADO.NET Data Services became available in the Microsoft .NET Framework 3.5 SP1. In this article, I’ll explain how developers of Rich Internet Applications (RIAs) can use OData, and I’ll also show the benefits of doing so.

I’ll start by answering the No. 1 question I’ve been asked since the unveiling of OData in November: What is it? In very simple terms, OData is a resource-based Web protocol for querying and updating data. OData defines operations on resources using HTTP verbs (GET, PUT, POST and DELETE), and it identifies those resources using a standard URI syntax. Data is transferred over HTTP using the AtomPub or JSON standards. For AtomPub, the OData protocol defines some conventions on the standard to support the exchange of query and schema information. Visit odata.org for more information on OData.

The OData Ecosystem

In this article, I’ll introduce a few products, frameworks and Web Services that consume or produce OData feeds. The protocol defines the resources and methods that can be operated on and the operations (GET, POST, PUT, MERGE and DELETE, which correspond to read, create, replace, merge and delete) that can be performed on those resources.
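The pairing of operations and HTTP verbs can be written down as a small lookup table. The Python sketch below is only an illustration; the service root and resource path in the usage note are made-up placeholders, not a real feed.

```python
# How the data operations named in the article pair with HTTP verbs.
ODATA_VERBS = {
    "read": "GET",
    "create": "POST",
    "replace": "PUT",
    "merge": "MERGE",
    "delete": "DELETE",
}


def request_line(operation, service_root, resource_path):
    """Return the verb and resource URI for an operation on a resource."""
    verb = ODATA_VERBS[operation]
    return f"{verb} {service_root.rstrip('/')}/{resource_path.lstrip('/')}"
```

For example, `request_line("merge", "https://example.com/odata.svc/", "Products(1)")` yields a MERGE request against `https://example.com/odata.svc/Products(1)`.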

In practice this means any client that can consume the OData protocol can operate over any of the producers. It’s not necessary to learn the programming model of a service to program against the service; it’s only necessary to choose the target language to program in.

If, for example, you’re a Silverlight developer who learns the OData library for that platform, you can program against any OData feed. Beyond the OData library for Silverlight you’ll find libraries for the Microsoft .NET Framework client, AJAX, Java, PHP and Objective-C, with more on the way. Also, Microsoft PowerPivot for Excel supports an OData feed as one of the options for data import to its in-memory analysis engine.

And just as clients capable of consuming the OData protocol can operate over any of the producers, a service or application created using OData can be consumed by any OData-enabled client. After creating a Web service that exposes relational data as an OData endpoint (or exposes the data in a SharePoint site, tables in Windows Azure or what have you), you can easily build a rich desktop client in the .NET Framework or a rich AJAX-based Web site that consumes the same data.

The long-term goal for OData is to have an OData client library for every major technology, programming language and platform so that every client app can consume the wealth of OData feeds. Combined, the producers and consumers of OData create an OData “ecosystem.” …

AppFabric: Access Control and Service Bus

No significant articles today.

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Bob Familiar points to ARCast.TV - A.D.A.M migrates from Google Cloud to Windows Azure in this 6/14/2010 post to the Innovation Showcase blog:

A.D.A.M. (www.adam.com), a leading provider of consumer health information and benefits technology solutions to 500+ hospitals in the US, was looking to use cloud computing to revolutionize its business. In September 2009, they developed an H1N1 Swine Flu Assessment tool using Java/Google Cloud App Server.

We talked to A.D.A.M. about Microsoft’s “3 Screens and a Cloud” strategic vision and how we could leverage the initial Swine Flu Assessment tool work and create a dynamically configurable cloud-hosted medical assessment tool engine that includes web, PC, and mobile UX capabilities.

A.D.A.M. partnered with Microsoft and iLink Systems to rewrite the existing Swine flu assessment application as a reusable Medical Assessment tool solutions framework using the following Microsoft Platforms/Technologies:

- VS2010/.Net 4.0

- Silverlight 4.0

- Windows Azure

- SQL Azure

- W7 (Gadget)

- IE8 (Web Slice)

- Windows Phone 7.0 specification

This ARCast.TV episode gives you a full demonstration of the working production application and an interview with Ravi Mallikarjuniah, iLink Systems Healthcare Practice Lead.

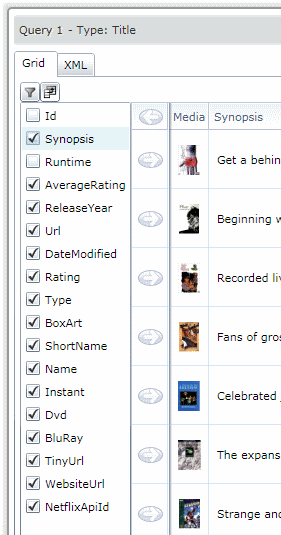

Fabrice Marguerie announced his Sesame Data Browser: filtering, sorting, selecting and linking post of 6/9/2010 in a 6/15/2010 e-mail message:

I have deferred the post about how Sesame is built in favor of publishing a new update. This new release offers major features such as the ability to quickly filter and sort data, select columns, and create hyperlinks to OData.

Filtering, sorting, selecting

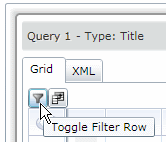

In order to filter data, you just have to use the filter row, which becomes available when you click on the funnel button:

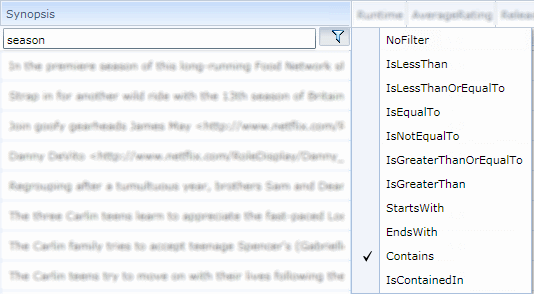

You can then type some text and select an operator:

The data grid will be refreshed immediately after you apply a filter.

It works in the same way for sorting. Clicking on a column will immediately update the query and refresh the grid.

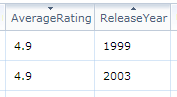

Note that multi-column sorting is possible by using SHIFT-click:

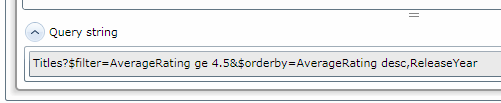

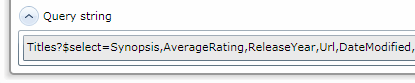

Viewing data is not enough. You can also view and copy the query string that returns that data:

One more thing you can do to shape data is to select which columns are displayed. Simply use the Column Chooser and you'll be done:

Again, this will update the data and query string in real time:
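Sesame's own query strings are not reproduced in this excerpt, but filtering, sorting and column selection correspond to the standard OData query options `$filter`, `$orderby` and `$select`. The Python sketch below shows how such a query string could be assembled; the feed URL and property names are hypothetical, and the exact encoding Sesame uses may differ.

```python
from urllib.parse import quote


def build_odata_query(feed_url, filter_expr=None, order_by=None, select=None):
    """Append $filter, $orderby and $select options to an OData feed URL."""
    options = []
    if filter_expr:
        # Keep quotes, parentheses and commas readable; encode spaces.
        options.append("$filter=" + quote(filter_expr, safe="'(),"))
    if order_by:
        options.append("$orderby=" + quote(",".join(order_by), safe=","))
    if select:
        options.append("$select=" + quote(",".join(select), safe=","))
    return feed_url if not options else feed_url + "?" + "&".join(options)
```

Passing several entries in `order_by` gives the multi-column sort described above, with the first entry taking precedence.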

Linking to Sesame, linking to OData

The other main feature of this release is the ability to create hyperlinks to Sesame. That's right, you can ask Sesame to give you a link you can display on a webpage, send in an email, or type in a chat session.

You can get a link to a connection:

or to a query:

You'll note that you can also decide to embed Sesame in a webpage...

Here are some sample links created via Sesame:

I'll give more examples in a post to follow.

There are many more minor improvements in this release, but I'll let you find out about them by yourself :-)

Please try Sesame Data Browser now and let me know what you think!

PS: If you use Sesame from the desktop, please use the "Remove this application" command in the context menu of the desktop app and then "Install on desktop" again in your web browser. I'll activate automatic updates with the next release.

Microsoft Research published the Microsoft Project Hawaii Notification Service SDK on 4/13/2010, which it describes as follows:

This is a software-development kit for creating cloud services in Azure and cloud-service-aware apps on Windows Mobile. It consists entirely of sample code showing how to do various things on these platforms.

Download the SDK here.

Windows Azure Infrastructure

My OakLeaf Systems #1 of 587,000 in Google Blog Search for “Windows Azure” post of 6/15/2010 crows:

Tuesday, 6/15/2010, 9:30 AM PDT:

Click here for current result.

For additional background, see OakLeaf Blog #1 of 1,260,000 for “Azure” in Google Real-Time Stats for Blogs and #2 of Top Updates of 4/16/2010.

Peter Bright wrote A Microsoft Windows Azure primer: the basics for Ars Technica on 6/14/2010:

Microsoft's Windows Azure cloud computing platform has been gaining steam since its launch to paying customers in February. Just last week it reached 10,000 customers; already, Azure is shaping up to be a strong contender in the nascent cloud computing market.

Though the cloud offerings available from companies like Google, Amazon, and Microsoft are broadly similar—they each offer the basic building blocks of "computation" (i.e. applications), and "storage"—the way in which these services are offered is quite different. There are other providers out there beyond these three, but these household names are broadly representative of the market, and arguably the most important in terms of market adoption and influence.

Cloud platform primer

At one end of the spectrum is Amazon's Elastic Compute Cloud product (EC2). EC2 gives users a range of operating system images that they can install into their virtual machine and then configure any which way they choose. Customers can even create and upload their own images. Software can be written in any environment that can run on the system image. Those images can be administered and configured using SSH, remote desktop, or whatever other mechanism is preferred. Want to install software onto the virtual machine? Just run the installer.

At the opposite end of the spectrum is Google's App Engine. App Engine software runs in a sandbox, providing only limited access to the underlying operating system. Applications are restricted to being written either in Java (or at least, languages targeting the JVM) or Python 2.5. The sandbox prevents basic operations like writing to disk or opening network sockets.

In the middle ground is Microsoft's Windows Azure. In Azure, there's no direct access to the operating system or the software running on top of it—it's some kind of Windows variant, optimized for scalability, running some kind of IIS-like Web server with a .NET runtime—but with far fewer restrictions on application development than in Google's environment. Though .NET is, unsurprisingly, the preferred development platform, applications can be written using PHP, Java, or native code if preferred. The only restriction is that software must be deployable without installation—it has to support simply being copied to a directory and run from there. …

Dr. Andreas Polze of the Operating Systems and Middleware group at the Hasso-Plattner-Institute for Software Engineering at the University of Potsdam, Germany, prepared A Comparative Analysis of Cloud Computing Environments, which Microsoft Research published to the Microsoft Faculty Connection on 5/26/2010:

Overview

This material was created by Professor Dr. Andreas Polze of the Operating Systems and Middleware group at the Hasso-Plattner-Institute for Software Engineering at the University of Potsdam, Germany. The material includes a PowerPoint file and a white paper.

Agenda for White Paper

Cloud is about a new business model for providing and obtaining IT services. Cloud computing promises to cut operational and capital cost. It lets IT departments focus on strategic projects rather than on managing their own datacenter. Cloud computing builds upon a number of ideas and principles that were established in the context of utility computing, grid computing, and autonomic computing a couple of years ago. However, in contrast to previous approaches, cloud computing no longer assumes that developers and users are aware of the provisioning and management infrastructure for cloud services.

Within this paper, we want to focus on technical aspects of cloud computing. We will not discuss business benefits from outsourcing certain IT operations to external providers nor discuss preconditions and implications of efficient datacenter operation. Instead we will outline options available to architects and developers when choosing one or the other cloud-computing environment.

The following material is covered in the white paper.

- How it all began - Related Work

- Grid Computing

- Utility Computing

- Autonomic Computing

- Dynamic Datacenter Alliance

- Hosting / Outsourcing

- A Classification of Cloud Implementations

- Amazon Web Services - IaaS

- The Elastic Compute Cloud (EC2)

- The Simple Storage Service (S3)

- The Simple Queuing Services (SQS)

- VMware vCloud - IaaS

- vCloud Express

- Google AppEngine - PaaS

- The Java Runtime Environment

- The Python Runtime Environment

- The Datastore

- Development Workflow

- Windows Azure Platform - PaaS

- Windows Azure

- SQL Azure

- Windows Azure AppFabric

- Additional Online Services

- Salesforce.com - SaaS / PaaS

- Force.com

- Force Database - the persistency layer

- Data Security

- Microsoft Office Live - SaaS

- LiveMesh.com

- Google Apps - SaaS

- A Comparison of Cloud Computing Platforms

- Common Building Blocks

- Which Cloud to choose

- Beyond Scope

Agenda for PowerPoint File

The following material is covered in this PowerPoint file.

- Historical Perspective

- Grid Computing

- Utility Computing

- Autonomic Computing

- A Classification of Cloud Implementations

- Infrastructure as a Service (IaaS)

- Amazon Web Services

- VMware vCloud

- Platform as a Service (PaaS)

- Google AppEngine

- Windows Azure Platform

- Software as a Service (SaaS)

- Salesforce.com

- Microsoft Office Live

- Google Apps

- Which Cloud to choose – Problems and Future Directions

Cloud Security and Governance

The IDG Online Staff offer a Seven deadly sins of cloud security slideshow:

Hewlett-Packard Co. and the Cloud Security Alliance list seven deadly sins you ought to be aware of before putting applications in the cloud. Have you or your provider committed these sins?

Lori MacVittie asserts Top-to-Bottom is the New End-to-End in this 6/15/2010 post to F5’s DevCentral blog:

End-to-end is a popular term in marketing circles to describe some feature that acts across an entire “something.” In the case of networking solutions this generally means the feature acts from client to server. For example, end-to-end protocol optimization means the solution optimizes the protocol from the client all the way to the server, using whatever industry standard and proprietary, if applicable, techniques are available. But end-to-end is not necessarily an optimal solution – not from a performance perspective, not from a CAPEX or OPEX perspective, and certainly not from a dynamism perspective.

The better option, the more optimal, cost-efficient, and context-aware solution, is a top-to-bottom option.

WHAT’S WRONG with END-to-END?

“End-to-end optimization” is generally focused on one or two specific facets of a connection between the client and the server. WAN optimization, for example, focuses on the network connection and the data, typically reducing it in size through the use of data de-duplication technologies so that it transfers (or at least appears to transfer) faster. Web application acceleration focuses on HTTP and web application data in much the same way, optimizing the protocol and trying to reduce the amount of data that must be transferred as a means to speed up page load times. Web application acceleration often employs techniques that leverage the client’s browser cache, which makes it an end-to-end solution. Similarly, end-to-end security for web-based applications is almost always implemented through the use of SSL, which encrypts the data traversing the network from the client to the server and back.

Now, the problem with this is that each of these “end-to-end” implementations is a separate solution, usually deployed as either a network device or a software solution or, more recently, as a virtual network appliance. Taking our examples from above that means this “end-to-end” optimization solution comprises three separate and distinct solutions. These are deployed individually, which means each one has to process the data and optimize the relevant protocols individually, as islands of functionality. Each of these is a “hop” in the network and incurs the expected penalty of latency due to the processing required. Each one is separately managed and, what’s worse, each one has no idea the other exists. They each execute in an isolated, non-context aware environment.

Also problematic is that you must be concerned with the order of operations when implementing such an architecture. SSL encryption should not be applied until after application acceleration has been applied, and WAN optimization (data de-duplication) should occur before compression or protocol optimization is employed. The wrong order can reduce the effectiveness of the optimization techniques and can, in some cases, render them inert.
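The effect of getting the order wrong is easy to demonstrate for the compression and encryption steps. The Python sketch below is an illustration under stated assumptions: `toy_encrypt` is a made-up XOR keystream that stands in for SSL and is not secure, and the payload is an invented, highly repetitive sample.

```python
import hashlib
import zlib


def toy_encrypt(data, key):
    """XOR data with a SHA-256 counter keystream. Illustration only, NOT secure."""
    stream = bytearray()
    counter = 0
    while len(stream) < len(data):
        stream.extend(hashlib.sha256(key + counter.to_bytes(8, "big")).digest())
        counter += 1
    # zip stops at the shorter input, so surplus keystream bytes are ignored.
    return bytes(a ^ b for a, b in zip(data, stream))


payload = b"<li class='item'>cloud</li>\n" * 500  # repetitive sample data
key = b"demo-key"

# Right order: compress first, then encrypt the smaller result.
compress_then_encrypt = toy_encrypt(zlib.compress(payload), key)

# Wrong order: encrypted bytes look random, so compression finds nothing to remove.
encrypt_then_compress = zlib.compress(toy_encrypt(payload, key))
```

Comparing `len(compress_then_encrypt)` with `len(encrypt_then_compress)` shows the first is a small fraction of the payload, while the second is roughly the size of the original data.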

THE TOP-to-BOTTOM OPTION

The top-to-bottom approach is still about taking in raw data from an application and spitting out optimized data. The difference is that data is optimized from top (the application layer) to the bottom (network layer) via a unified platform approach. A top-to-bottom approach respects the rules for application of security and optimization techniques based on the appropriate order of operations, but the data never leaves the platform. Rather than stringing together multiple security and optimization solutions in an end-to-end chain of intermediaries, the “chain” is internal via a high-speed interconnect that both eliminates the negative performance impact of chaining proxies and maintains context across each step.

In most end-to-end architectures only the solution closest to the user has the endpoint context: information about the user, the user’s connection, and the user’s environment. It does not share that information with other solutions as the data is passed along the vertical chain of solutions. Similarly, only the solution closest to the application has the application endpoint’s context: status, condition of the network, and capacity.

A top-to-bottom, unified approach maintains the context across all three components - end-user endpoint, application endpoint, and the network – and allows each optimization, acceleration, and security solution to leverage that context to apply the right policy at the right time based on that information.

This is particularly useful for “perimeter” deployed solutions, such as WAN optimization, that must be by design one of the last (or the last) solutions in the chain of intermediaries in order to perform data de-duplication. Such solutions rarely have visibility into the full context of a request and response, and are therefore limited in how optimization features can be applied to the data. A top-to-bottom approach mitigates this obstacle by ensuring the WAN optimization has complete visibility into the contextual metadata for the request and response and can therefore apply optimization policies in a dynamic way based on full transactional context.

Because the data never leaves a unified application delivery platform, the traditional performance penalties associated with chaining multiple solutions together – network time to transfer, TCP connection setup and teardown – are remediated. From an operational viewpoint a top-to-bottom approach leveraging a unified application delivery platform decreases operational costs associated with management of multiple solutions and controls the complexity associated with managing multiple configurations and policies across multiple solution deployments. A unified application delivery approach uses the same device, the same management mechanisms (GUI, CLI, and scripting) to configure and manage the solution. It reduces the physical components necessary, as well, as it eliminates the need for a one-to-one physical-solution relationship between solution and hardware, which eliminates complexity of architecture and removes multiple points of failure in the data path.

TOP-to-BOTTOM is END-to-END only BETTER

Both end-to-end and top-to-bottom ultimately perform the same task: securing, optimizing and accelerating the delivery of data between a client and an application. Top-to-bottom actually is an intelligent form of end-to-end; it simply consolidates and centralizes multiple components in the end-to-end “chain” and instead employs a top-to-bottom approach to performing the same tasks on a single, unified platform.

The Windows Partner Team announced Security in the Cloud and 2 more free t-shirts to give away on 6/15/2010:

With summer vacations and WPC right around the corner, the Windows Azure Partner Hub has been idle for the past couple of weeks. My apologies for that, but I wanted to make you aware of two interesting happenings.

First, something that I know many of you and IT organizations have been talking a lot about lately is security in the cloud. TechNet put together a three part track that outlines a background on the cloud, which contains content many of you are probably familiar with. However it goes a step further in the next two sections that go deeper into security across Microsoft software and services and concludes with a strategy section designed to help migrate to the cloud in a secure way.

Finally, I’m giving away two more t-shirts to the next two folks who provide an app profile on the forum of the Windows Azure Partner hub: http://windowsazurehub.com/forumpost/2010/05/19/First-5-App-Reports-get-a-free-t-shirt.aspx.

Cloud Computing Events

My Microsoft’s Worldwide Partner Conference (WPC) 2010 to Feature 25 Cloud Services Sessions and Bill Clinton of 6/15/2010 provides details of the 25 sessions:

WPC 2010’s Session Catalog includes 25 cloud-related sessions from the Cloud Services (23) and Hosting Infrastructure (2) tracks in the following categories:

- Breakout Sessions: 9

- Panel Sessions: 1

- Interactive Discussions (Small Group): 13

- Hands-On Labs (Instructor-Led): 2

Update 6/15/2010 1:30 PM PDT: Added one breakout and one interactive session from the Hosting Infrastructure track.

Update 6/15/2010 12:30 PM PDT: WPC 2010’s Main Web site has been generally unavailable since announcing (officially) that former President Bill Clinton will deliver the keynote address.

David Linthicum asserts “Even with the usual political hay-making, the House Oversight Committee's hearings could be a good thing” in his Congress to probe the feds' cloud computing strategy post of 6/15/2010 to InfoWorld’s Cloud Computing blog:

Vivek Kundra faces his first real political test after his push for cloud computing within the federal government: The House Oversight Committee will be holding hearings to discuss his IT reform efforts, including the use of cloud computing. Oversight Committee Chairman Edolphus Towns (D-N.Y.) and Government Management, Organization, and Procurement Subcommittee Chairwoman Diane Watson (D-Calif.) don't seem to be as pumped about cloud computing as Vivek is, simply put. But perhaps it's just a bit of political gaming.

The committee is taking a run at Kundra's cloud strategy, citing the fact that there are no clear published policies and procedures in place for the federal government to follow when using cloud computing. Thus, the committee fears, there are risks with security, interoperability, and data integration, but no solid plans in place to move this effort forward.

As the committee hearing notice stated: "There are a number of questions and concerns about the federal government's use of cloud computing. The committee is examining these issues and intends to hold a hearing on the potential benefits and risks of moving federal IT into the cloud."

To defend Kundra, there are indeed strategy development efforts taking place, led by agencies such as the National Institute of Standards and Technology. NIST, as you may recall, created the definition of cloud computing that most of the industry is using right now. Also, there are procurements hitting the street that include cloud computing provisions.

However, it would not be accurate to say that the federal government has a common approach to cloud computing just yet. The feds need to fill in some of the missing pieces, such as approaches to architecture, economic models, security, and governance. That's why -- although I'm clearly in the camp that technology and politics don't mix -- this committee hearing actually could be a good thing.

Living in Washington, D.C., I believe this is really about the fact that Congress was not really given a chance to make political hay out of cloud computing. Maybe a new federal cloud computing center in somebody's district awaits. Still, it's a Democrat-controlled Congress and administration, so I figure there won't be too much harsh treatment of Kundra's effort.

Moreover, the focus on the cloud could drive additional funding to finally complete the strategies and begin to make real progress. As taxpayers, we should keep an eye on this. It's all for the good, trust me.

The Public Sector DPE Team announced June 23rd Microsoft Developer Dinner for Partners: Microsoft Windows Identity Foundation: A New Age of Identity on 6/11/2010:

Click the link above, visit https://msevents.microsoft.com/CUI/EventDetail.aspx?EventID=1032453211&Culture=en-US OR call 1-877-673-8368 and reference event ID 1032453211

Event Date: June 23, 2010

Registration: 17:30

Event Time: 18:00 – 20:00

Event Location:

Microsoft Corporation

12012 Sunset Hills Road

Reston, VA 20190

(703) 673-7600

Speaker for tonight's presentation: Joel Reyes, Senior Developer Evangelist, Microsoft

Background: Windows Identity Foundation helps .NET developers build claims-aware applications that externalize user authentication from the application, improving developer productivity, enhancing application security, and enabling interoperability. Developers can enjoy greater productivity, using a single simplified identity model based on claims. They can create more secure applications with a single user access model, reducing custom implementations and enabling end users to securely access applications via on-premises software as well as cloud services. Finally, they can enjoy greater flexibility in application development through built-in interoperability that allows users, applications, systems and other resources to communicate via claims.

During this developers’ dinner we will explore the Claims-Based Identity capabilities offered by Windows, Microsoft Windows Identity Foundation and its integration into AD Federation Services v2 and the Azure platform.

What you will learn: Join Joel Reyes in this session over dinner for a comprehensive, developer focused overview of:

- Windows Identity Foundation for .NET developers

- The Claims-Based Identity for Windows and its integration into AD Federation Services v2 and the Azure platform

Who Should Attend: Developers, Architects, Web Designers and technical managers who wish to get an early look at the next advancement in Software Development.

Attend this event for your chance to win a Zune HD! A Zune HD will be raffled at the conclusion of the dinner.

Notice to all Public Sector Employees: Due to government gift and ethics laws and Microsoft policy, government employees (including military and employees of public education institutions) are not eligible to participate in the raffle.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Robert Mullins claims “Some deals are obvious, some controversial, but any of them would give CIOs a lot to think about” when asking Who's next on the acquisition block? Bloggers chime in for the IDG News Service’s four-page story:

Oracle buys Sun. HP buys 3Com. SAP buys Sybase. Just when it seemed like consolidation among the big data-center vendors had played itself out, we're off again at full tilt. Multiple trends are driving the latest acquisitions wave: The economy is recovering, customers are demanding systems that are easier to implement and vendors seem intent on collapsing the divide between servers, network and storage.

So who's next on the block? We asked some of IDG's expert bloggers to pull out their crystal balls and make some bold merger predictions for the near future. Some are obvious, some controversial, but any one of them would give corporate CIOs a lot to think about if they woke up tomorrow morning to find out it had happened.

IBM buys Amazon Web Services

By Alan Shimel, author of Network World's Open Source Fact and Fiction blog

The company that coined the phrase "on-demand computing" has been rather timid about the cloud. While Microsoft has Azure and Google its App Engine, IBM is not going to sit out the biggest computing migration in a generation. Jeff Bezos, on the other hand, is a Renaissance man. From his humble bookstore beginnings, he built Amazon.com into the biggest retailer on the Internet. He is bankrolling a project to put tourists in space. And unbeknown to many outside of the technology world, he has made AWS the dominant provider of public cloud services.

While cloud computing may turn out to be the biggest story in enterprise IT, Bezos' investors want Amazon to maximize their returns. The Amazon Empire is too far-flung. At the end of the day, space is far above the clouds and much sexier to Bezos. He will sell the cloud service to pursue the stars. The money AWS generates from a sale could fund a lot of rocket ships.

Who has the money, the desire and deserves to be hosting a good chunk of the public cloud? Big Blue, that's who. Who better to combine private and public clouds for true on-demand enterprise computing? IBM will make the hybrid cloud a reality. It has the software and services to offer both infrastructure as a service and platform as a service. IBM more than anyone has the resources, experience and business model to take AWS and fulfill the cloud destiny.

Who knows. When IBM reaches the cloud, it may find Jeff Bezos hovering above it in space.

Shimel, CEO of The CISO Group, can be reached at alan@thecisogroup.com and on the Web at www.securityexe.com.

Mullins continues on the following three pages with more guesses by bloggers: HP buys Teradata, Oracle buys EMC, Cisco Systems buys McAfee, Microsoft buys Red Hat and IBM buys CA Technologies.

Stephen Lawson asserts “The carrier will put storage facilities in large data centers around its global IP network as a draw for large enterprises to subscribe” in his Verizon to launch cloud storage service post of 6/15/2010 to InfoWorld’s Storage/News blog:

Verizon Business is set to announce a cloud-based storage service on Tuesday, leveraging the formidable Verizon Communications global data network as a draw for large enterprises to subscribe.

Starting in October, the company will launch storage facilities at Verizon data centers around the world. These facilities will be provided by cloud storage provider Nirvanix but will be located in the carrier's data centers, on its global IP network, said Patrick Verhoeven, manager of cloud services product marketing.

Verizon will get started on the offering in July by using Nirvanix's Storage Delivery Network, which is used for services sold wholesale by providers like Verizon. The five Nirvanix facilities will remain part of the offering, providing local access to storage in specific cities for customers that need it, Verizon said.

Many players are diving into the cloud-based storage business even as enterprises approach the concept warily because of worries about security, reliability and getting their data back. Storage vendors such as EMC have joined Amazon.com's S3 (Simple Storage Service) unit in building such offerings. Verizon said it can offer better value and faster access by combining network services with the storage capacity, all on a pay-as-you-go basis. The carrier already has a cloud backup service for transactional data, called Managed Data Vault. The new offering is designed more for unstructured data, according to Verizon.

Because the data centers are on Verizon's global IP network, customers will be able to get access to their data with fewer network "hops" and the security of Verizon's infrastructure, Verhoeven said. The cloud storage capacity will also be located in the same data centers with Verizon's cloud computing resources, with fast internal links between them, so the carrier can create a more complete cloud service for enterprises that want it.

Customers of the storage service will be able to reach their stored data using a variety of tools, including application programming interfaces, third-party applications and backup agents, and via standard CIFS (Common Internet File System) and NFS (Network File System), Verizon said. They will be able to manage the service, including moving data between Nirvanix and Verizon data centers, via a browser-based portal.

Alongside the cloud-based storage service, Verizon is introducing a suite of consulting services, Verizon Data Retention Services, to help customers develop storage policies and practices that fit their business objectives.

Prices for cloud storage, based on usage, will begin at $0.25 per gigabyte per month and go down with greater volume. The first nodes on Verizon's network, in San Jose, California, and Beltsville, Maryland, will go live in the U.S. in October. European facilities in London, Paris, Amsterdam and Stockholm will start up later in the fourth quarter, and as yet unnamed sites in the Asia-Pacific region will start early next year.
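As a rough guide to what the quoted starting price implies, the sketch below computes an upper bound on the monthly bill. Only the $0.25 per gigabyte per month figure comes from the article; the volume discounts are not published there, so the function name `max_monthly_cost` and the 10,000 GB workload are illustrative assumptions, and the real charge at volume should be lower.

```python
# Starting rate quoted in the article; volume tiers are not given there.
STARTING_RATE_USD_PER_GB_MONTH = 0.25


def max_monthly_cost(gigabytes, rate=STARTING_RATE_USD_PER_GB_MONTH):
    """Upper bound on the monthly charge, ignoring any volume discount."""
    if gigabytes < 0:
        raise ValueError("gigabytes must be non-negative")
    return gigabytes * rate


# Hypothetical workload: 10,000 GB of unstructured data.
print(max_monthly_cost(10_000))  # 2500.0
```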

China’s Xinhua News Agency reports Industry: cloud computing market is expected next 3 to 5 years into the "explosion of growth" as translated by Google Translate services:

Beijing, June 11 (Xinhua, Hua Ye Di): On the 10th, at the Fourth Congress of Chinese Software Operation Services in Beijing, software applications based on cloud computing once again became the focus of industry discussion.

The industry generally agrees that the cloud computing market is still being cultivated and that the next 3 to 5 years are expected to usher in an "explosive growth period."

"Cloud computing is in the best period of its development history," said Qu Xiaodong, General Manager of industry-leading research institution CCW Research. He said the gradual popularity of cloud computing will lead more and more users to recognize software operational services (SaaS).

According to CCW Research statistics, China's cloud computing market reached 40.35 billion yuan in 2009, an increase of 28% over 2008; of that, the software operating service (SaaS) market reached 35.42 billion yuan, accounting for 87.8% of the cloud computing market.

According to the industry's conservative estimates, cloud computing is expected to grow by about another 30% in 2010, bringing the market size to more than 50 billion yuan.
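The CCW Research figures can be cross-checked with a few lines of arithmetic. The sketch below uses only the numbers quoted above and assumes the 28% figure means growth of the 2009 market over 2008, which the machine translation leaves ambiguous; the variable names are illustrative.

```python
# Figures quoted from CCW Research, in billions of yuan.
market_2009 = 40.35
saas_2009 = 35.42
growth_2009 = 0.28  # assumed to mean growth over 2008
growth_2010 = 0.30  # the "conservative" industry estimate

saas_share = saas_2009 / market_2009
implied_2008 = market_2009 / (1 + growth_2009)
projected_2010 = market_2009 * (1 + growth_2010)

print(f"SaaS share of 2009 market: {saas_share:.1%}")           # 87.8%
print(f"Implied 2008 market: {implied_2008:.1f} billion yuan")  # 31.5
print(f"Projected 2010 market: {projected_2010:.1f} billion yuan")  # 52.5
```

The 87.8% share and the "more than 50 billion yuan" projection both follow from the quoted totals, so the reported numbers are at least internally consistent.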

Related News:

- Survey: the industry expects cloud computing to become the basis for enterprise IT by 2015

- Qi Lu Microsoft Online CEO: The world is entering cloud computing era

- International Telecommunications Union set up a new working group to develop standards for cloud computing

- Reveal the mysterious veil of cloud computing

<Return to section navigation list>
