Windows Azure and Cloud Computing Posts for 6/14/2010+

Windows Azure, SQL Azure Database and related cloud computing topics now appear in this daily series.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated in June 2010 for the January 4, 2010 commercial release.

Azure Blob, Drive, Table and Queue Services

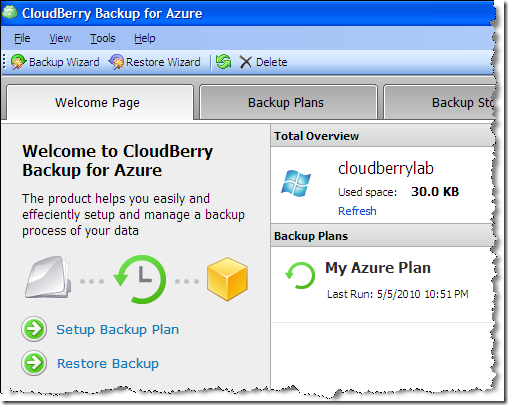

CloudBerry Lab announced on 6/14/2010 that it’s Introducing CloudBerry Backup for Windows Azure Blob Storage:

Note: this post applies to CloudBerry Backup for Azure 1.5.1 and later.

CloudBerry S3 Backup is a powerful Windows backup and restore program that automates backup and restore processes to Amazon S3 cloud storage. With release 1.5 we introduce support for Windows Azure Blob Storage accounts.

CloudBerry Backup for Windows Azure has exactly the same feature set as CloudBerry S3 Backup:

Features:

- Easy installation and configuration

- Scheduling capabilities

- Data encryption

- Data retention schedule

- Secure online storage

- Data versioning

- Differential backup

- The ability to restore to a particular date

- Backup verification

- Alerting notifications

- Backup Window

- Backup exclusively locked files using Volume Shadow Copy

- Parallel data transfer - speed up backup/restore process

- Command Line interface - integrate backup with your own routines

- C# API - the ability to get backup plan statistics programmatically

- Scheduling service. No dependency on Windows Task Scheduler

- The version specifically designed for Windows Home Server

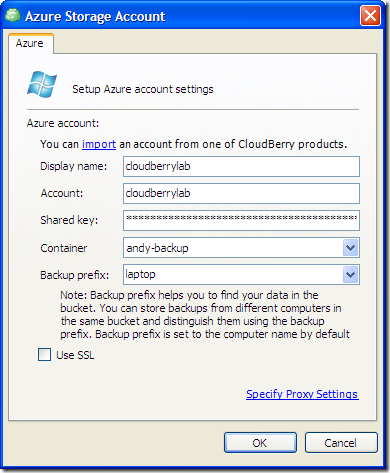

If you don’t have an account registered yet, you should register one as shown on the screen below. If you are an existing CloudBerry Explorer for Azure customer you will be able to import your existing accounts.

The rest works just as if you were using Amazon S3.

Special offer for beta testers

CloudBerry Backup for Azure is free while in beta. All beta users will get a free license when the product is released. To claim your free license in the future you will need to register your copy.

Why use Windows Azure?

The advantages and disadvantages of Azure Blob storage are well outside the scope of this article, but there is one thing we want to mention here. Azure storage comes with a built-in chunking mechanism. In other words, it will break down larger files into smaller chunks for you to make an upload more reliable, and then it will automatically recombine the file once all chunks reach the storage.
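The chunking idea described above can be illustrated with a small local sketch. It does not call any Azure API; the function names `split_into_chunks` and `reassemble` and the tiny chunk size are hypothetical and chosen only for illustration.

```python
from typing import Dict, Iterator, Tuple


def split_into_chunks(data: bytes, chunk_size: int) -> Iterator[Tuple[int, bytes]]:
    """Yield (index, chunk) pairs that cover `data` in order."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    for index, start in enumerate(range(0, len(data), chunk_size)):
        yield index, data[start:start + chunk_size]


def reassemble(chunks: Dict[int, bytes]) -> bytes:
    """Combine chunks by index once every expected index is present."""
    if set(chunks) != set(range(len(chunks))):
        raise ValueError("missing or unexpected chunk indexes")
    return b"".join(chunks[i] for i in sorted(chunks))


if __name__ == "__main__":
    payload = b"large backup file contents"
    received: Dict[int, bytes] = {}
    # Chunks may arrive in any order; simulate that by storing them in reverse.
    for index, chunk in reversed(list(split_into_chunks(payload, 4))):
        received[index] = chunk
    assert reassemble(received) == payload
```

Because each chunk carries its own index, a failed chunk can be retried on its own without resending the rest of the file, which is the reliability benefit the excerpt refers to.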

What’s next?

In the coming release of CloudBerry Backup for Azure we are planning to support page blobs. To make a long story short, I will just say that these types of blobs allow you to modify only a given portion of the blob. This will allow us to implement a block-level (or differential) backup relatively easily. Once this feature is in place you will be able to back up large files such as Outlook PST files, MS SQL Server backup files, MS Exchange data files and Intuit databases in a very efficient manner. You will not have to re-upload the whole file, only the modified portion, saving on upload time and transfer costs.
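A rough sketch of the block-level idea: compare two versions of a file page by page and report only the pages that differ, so that only those need to be uploaded again. This is not CloudBerry's implementation; the function name `changed_pages` is hypothetical and the 512-byte page size is just an illustrative default.

```python
from typing import List


def changed_pages(old: bytes, new: bytes, page_size: int = 512) -> List[int]:
    """Return the indexes of fixed-size pages that differ between old and new."""
    if page_size <= 0:
        raise ValueError("page_size must be positive")
    # Round up so a trailing partial page is still compared.
    page_count = (max(len(old), len(new)) + page_size - 1) // page_size
    changed = []
    for page in range(page_count):
        start = page * page_size
        if old[start:start + page_size] != new[start:start + page_size]:
            changed.append(page)
    return changed


if __name__ == "__main__":
    original = bytes(2048)          # four pages of zeros
    edited = bytearray(original)
    edited[600] = 1                 # touch one byte inside page 1
    print(changed_pages(original, bytes(edited)))
```

For a multi-gigabyte PST or database file in which only a few pages change between backups, a comparison like this is what lets the upload shrink from the whole file to a handful of pages.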

CloudBerry Backup for Azure is a Windows program that leverages Azure Blob storage. You can download it at http://cloudberrylab.com/default.aspx?page=backup-az . It comes with a one-time fee of $29.99 (US) per copy (currently free while in beta).

Bill Zack noted the existence of VS 2010’s New Windows Azure Storage Browser in his 6/14/2010 post to the Innovation Showcase blog:

The Windows Azure team has included a new Windows Azure Storage Browser in the Visual Studio 2010 Server Explorer.

This makes it even easier to develop applications that utilize Windows Azure storage.

Right now the tool is for read-only access, but then this is only the first version. :-)

<Return to section navigation list>

SQL Azure Database, Codename “Dallas” and OData

Nahuel Beni’s SQL Azure and Bing Maps - Spatial Queries post of 6/14/2010 to the Southworks blog describes the development of the Fabrikam Shipping sample application:

During the past few weeks we had the opportunity of working together with James Conard, Vittorio Bertocci and Doug Purdy on building Fabrikam Shipping; which was shown by the latter during TechEd North America keynote.

Fabrikam Shipping is a Real-World multi-tenant application running in Windows Azure which leverages all the power of .Net 4 and the new SQL Azure capabilities announced in the event.

Leveraging Bing Maps and SQL Azure Spatial Support.

One of the cool features shown during the demo, was the integration of SQL Azure’s spatial support with Bing maps. On this post, I will walk you through a possible implementation of this functionality.

- Retrieving the Coordinates of the location entered by the user.

- Getting the radius of the area that is being displayed in the map.

- Performing the spatial query against SQL Azure.
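As a rough illustration of the second step (not Nahuel's code, which is on the Southworks blog), the radius of the visible map area can be approximated as the great-circle distance from the center of the map's bounding box to one of its corners. The function names and the bounding-box parameters below are hypothetical, and the sketch assumes the box does not cross the 180° meridian.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius, adequate for a rough estimate


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in kilometers between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.atan2(math.sqrt(a), math.sqrt(1 - a))


def visible_radius_km(north: float, south: float, east: float, west: float) -> float:
    """Distance from the center of the bounding box to its north-east corner."""
    center_lat = (north + south) / 2
    center_lon = (east + west) / 2
    return haversine_km(center_lat, center_lon, north, east)


if __name__ == "__main__":
    # A hypothetical viewport of two degrees by two degrees around (0, 0).
    print(round(visible_radius_km(1.0, -1.0, 1.0, -1.0), 1))
```

A radius computed this way could then be passed as the distance argument of the spatial query in the third step.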

Nahuel continues with detailed code snippets for the preceding three operations.

Pablo Castro (remember him?) reposted HTTP PATCH support for OData in WCF Data Services on 6/14/2010:

NOTE: this post was originally published on 5/20/10 but somehow disappeared, probably during the transition to the new blogging infrastructure in blogs.msdn.com. This version should be close to the original although it may differ in details.

Back when we were working on the initial version of OData (Astoria back then) there was some discussion about introducing a new HTTP method called PATCH, which would be similar to PUT but instead of having to send a whole new version of the resource you wanted to update, it would let you send a patch document with incremental changes only. We needed exactly that, but since the proposal was still in flux we decided to use a different word (to avoid any future name clash) until PATCH was ready. So OData services use a custom method called "MERGE" for now.

Now HTTP PATCH is official (see http://tools.ietf.org/html/rfc5789). We'll queue it up and get it integrated into OData and our .NET implementation WCF Data Services when we have an opportunity. Of course those things take a while to rev, and we have to find a spot in the queue, etc. In the meantime, here is an extremely small WCF behavior that adds PATCH support to any service created with WCF Data Services. This example is built using .NET 4.0, but it should work in 3.5 SP1 as well.

http://code.msdn.microsoft.com/DataServicesPatch

To use it, just include the sample code in PatchBehavior.cs in your project and add [PatchSupportBehavior] as an attribute to your service class. For example:

    [PatchSupportBehavior]
    public class Northwind : DataService<NorthwindEntities>
    {
        // your data service code
    }

…
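The difference between PUT and the MERGE/PATCH style of update that Pablo describes can be sketched independently of WCF Data Services. The following Python is only an illustration of the semantics on a flat property bag; `apply_put` and `apply_merge` are hypothetical names, not part of any OData library.

```python
from typing import Any, Dict


def apply_put(current: Dict[str, Any], replacement: Dict[str, Any]) -> Dict[str, Any]:
    """PUT semantics: the request body replaces the whole resource."""
    return dict(replacement)


def apply_merge(current: Dict[str, Any], changes: Dict[str, Any]) -> Dict[str, Any]:
    """MERGE/PATCH semantics: only the properties that were sent are changed."""
    merged = dict(current)
    merged.update(changes)
    return merged


if __name__ == "__main__":
    customer = {"Name": "Alfreds", "City": "Berlin", "Phone": "030-0074321"}
    update = {"City": "Hamburg"}
    print(apply_put(customer, update))    # the other properties are gone
    print(apply_merge(customer, update))  # the other properties are preserved
```

With PUT the client has to resend every property to avoid losing data, while a merge-style request carries only the incremental change.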

Bill Zack reported the availability of a SQL Azure Best Practices video in this 6/14/2010 post to the Innovation Showcase blog:

The SQL Azure team has produced this video on best practices for using the SQL Azure cloud database.

It walks you through the creation of a database and how to build an application that consumes it step-by-step.

It also looks at importing and exporting data, and reporting. Time is also spent looking at strategies for migrating your existing applications to the cloud so that you are provided with high availability, fault tolerance and visibility to these often unseen data repositories.

Read more about it here.

Bruce Kyle claims SQL Azure Pricing Provides Flexibility in this 6/13/2010 post to the US ISV Evangelism blog:

New pricing for SQL Azure was announced at Tech-Ed.

SQL Azure today offers two editions with a ceiling of 1GB for web edition and 10GB for business edition. With our next service release, ceiling sizes for both web and business editions will increase 5x.

Even though both editions can now support larger ceiling sizes (web up to 5GB and business up to 50GB), you will be billed based on the peak db size in a day rolled up to the next billing increment.

- Web edition will support 1GB and 5GB as billing increments.

- Business edition will support 10, 20, 30, 40 and 50GB as billing increments.

Even though both editions can grow to larger ceiling sizes (such as 50GB), you can cap the data size per database and control your bill at the billing increments. The MAXSIZE option for CREATE/ALTER DATABASE will help set the cap on the size of the database. If the size of your database reaches the cap set by MAXSIZE, you will receive error code 40544. You will only be billed for the MAXSIZE amount for the day. When the database reaches the MAXSIZE limit, you cannot insert or update data, or create new objects, such as tables, stored procedures, views, and functions. However, you can still read and delete data, truncate tables, drop tables and indexes, and rebuild indexes.
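The roll-up rule described above (peak daily size rounded up to the next billing increment) can be worked through with a short sketch. The increments come from the excerpt; the function name `billing_increment_gb` is hypothetical, and treating a size exactly equal to an increment as falling in that increment is an assumption.

```python
from typing import Sequence

# Billing increments in GB, as listed in the excerpt above.
WEB_INCREMENTS_GB = (1, 5)
BUSINESS_INCREMENTS_GB = (10, 20, 30, 40, 50)


def billing_increment_gb(peak_size_gb: float, increments: Sequence[int]) -> int:
    """Round the day's peak database size up to the next billing increment."""
    for increment in sorted(increments):
        if peak_size_gb <= increment:
            return increment
    raise ValueError("peak size exceeds the edition's largest increment")


if __name__ == "__main__":
    print(billing_increment_gb(1.2, WEB_INCREMENTS_GB))        # billed as 5GB
    print(billing_increment_gb(23.5, BUSINESS_INCREMENTS_GB))  # billed as 30GB
```

Setting MAXSIZE to one of these increments keeps the peak size, and therefore the bill, from crossing into the next increment.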

For details about the pricing and an example how to set MAXSIZE, see Pricing for the New Large SQL Azure Databases Explained.

Dot Net Rocks’ Show #566, Microsoft Townhall, of 6/10/2010 (35 minutes) features Nick Schaper and Marc Mercuri:

Nick Schaper and Marc Mercuri talk about building America Speaking Out, a website where citizens can vote on political issues. ASO uses the Microsoft TownHall platform, a low-cost framework built on Azure and used by campaigns to build websites to help them keep in touch with their constituency.

Nick currently serves as director of new media for House Republican Leader John Boehner. As a part of one of the most active and innovative communications teams on Capitol Hill, Nick focuses on developing new ways to expand Boehner's messaging online while assisting other members of the GOP Conference and their staff in executing effective, forward-thinking media strategies. Nick previously served as director of congressional affairs for Adfero Group, where he helped over 100 members and candidates harness the power of contemporary new media tools. Nick is a graduate of the University of Central Florida where he studied Political Science and Computer Science.

Marc Mercuri is Director of the Platform Strategy Team. Marc has been in the industry for 17+ years, the last 7 in senior roles across Microsoft in consulting, incubation, and evangelism. Marc is the author of Beginning Information Cards and CardSpace: From Novice to Professional, and co-authored the books Windows Communication Foundation Unleashed! and Windows Communication Foundation: Hands On!

Michael Thomassy of the Microsoft SQL Server Development Customer Advisory Team presented SQL Azure Customer Best Practices on 5/28/2010 (and I missed it):

The SQLCAT team and the SQL Azure team have been working closely together with a number of customers even before we launched our CTP (Community Technology Preview) in November, 2009 at the PDC (Professional Developers Conference). After our production release in January, 2010, we’ve continued working with some interesting customers and have captured a number of great learnings in these best practice documents. We’ve posted 2 documents to date and have a few more planned over the next few weeks. These best practice documents are being posted to the SQL Azure download center and the links are here:

Please check back here for more updates!

<Return to section navigation list>

AppFabric: Access Control and Service Bus

Brian Loesgen’s TechEd Session Links post of 6/14/2010 supplements his “Real World SOA with .NET and Windows Azure” TechEd session:

For those of you that attended the “Real World SOA with .NET and Windows Azure” TechEd session that John deVadoss, Christoph Schittko and I did last week, I discussed the following reference architecture:

I didn’t have time to do a demo/walkthrough during the session, but as I mentioned, recordings are available:

- The first video, which focuses on the on-premise side in detail, is available here

- The second video, which focuses on the Windows Azure AppFabric relay aspect and how to implement it, is available here (note that this is #1 of 3; there are three videos and corresponding blog posts in this series that cover bridging between on-premise BizTalk and Windows Azure)

Thanks to all who attended; we had fun, and the feedback has been great.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Mike Kelly recommended that you Take Control of Logging and Tracing in Windows Azure in this article in MSDN Magazine’s June 2010 issue:

Like many programmers, when I first started writing code I used print statements for debugging. I didn’t know how to use a debugger and the print statements were a crude but effective way to see what my program was doing as it ran. Later, I learned to use a real debugger and dropped the print statements as a debugging tool.

Fast forward to when I started writing code that runs on servers. I found that those print statements now go under the more sophisticated heading of “logging and tracing,” essential techniques for any server application programmer.

Even if you could attach a debugger to a production server application—which often isn’t possible due to security restrictions on the machines hosting the application—the types of issues server applications run into aren’t easily revealed with traditional debuggers. Many server applications are distributed, running on multiple machines, and debugging what’s happening on one machine isn’t always enough to diagnose real-world problems.

Moreover, server applications often run under the control of an operations staff that wouldn’t know how to use a traditional debugger—and calling in a developer for every problem isn’t desirable or practical.

In this article I’ll explain some basic logging, tracing and debugging techniques used for server applications. Then I’ll dive into how these techniques can be employed for your Windows Azure projects. Along the way you’ll see how logging and tracing are used with some real-world applications, and I’ll show you how to use Windows PowerShell to manage diagnostics for a running service.

Edisonweb claims Web Signage is the first digital signage software available through the Windows Azure cloud computing platform in its 6/14/2010 Web Signage brings Digital Signage in the Windows Azure cloud press release:

Edisonweb, the Italian software house that develops the Web Signage platform for digital signage, becomes officially a "Front Runner" and announces the release and immediate availability of its solution for Windows Azure.

Web Signage is the first digital signage software platform in the world available for Microsoft's Cloud.

The solution consists of a web-based management application delivered as a service and player software that manages multimedia content playback on digital signage displays. Web Signage Player has successfully passed compatibility tests conducted by Microsoft for the 32 and 64-bit versions of Windows 7.

"We are particularly proud of having been the first in the digital signage software arena to achieve this important compatibility goal," said Riccardo D'Angelo, CEO of Edisonweb, "that will allow us to further shorten the release and development times and increase both scalability and performance, thanks to the great flexibility offered by the Windows Azure platform."

The compatibility with Microsoft's cloud services of the Windows Azure platform strengthens the offer towards the international markets: both software and infrastructure will be available as a service supplied through the Microsoft data centers spread throughout the world. Web Signage is always supplied by the nearest Windows Azure datacenter to ensure the highest performance.

Also, thanks to the evolution from SaaS to IaaS, a set of more flexible distribution and resale agreements, including OEM and co-brand formulas, will be available to partners.

Edisonweb is a software house specialized in developing innovative web-based solutions. For over fifteen years it has developed applications in the e-Government, healthcare, info-mobility, tourism and digital marketing fields, creating high-performance and simple-to-use solutions. Edisonweb is a Microsoft Gold Partner and has achieved the ISV/Software Solutions competence, which recognizes commitment, expertise and superiority using Microsoft products and services. All Edisonweb processes, to ensure the best software quality and customer service, are managed using an ISO 9001:2000 certified quality management system.

Natesh reported VUE Software Moves Its SaaS Solutions to the Microsoft Windows Azure platform in this 6/14/2010 post to the TMCnet.com blog:

VUE Software, a provider of industry-specific business technology solutions, has stated that they are moving software as a service (SaaS …) versions of the VUE Compensation Management and VUE IncentivePoint solutions to the Microsoft Windows Azure platform.

According to the company, VUE IncentivePoint, a Web-based incentive compensation solution with native integration to Microsoft … Dynamics CRM, streamlines all aspects of sales performance management for insurance organizations. VUE IncentivePoint is a highly valuable tool for insurance organizations to manage quotas, territories and incentives for complex distribution channels. Whereas VUE Compensation Management is a powerful, flexible and intuitive tool that makes it easy for insurance organizations to organize and streamline complex commission and incentive programs.

Senior leadership at VUE Software has identified the potential of this solution in promoting cutting-edge practices in health insurance organizations. As a result, VUE Software has been named a Front Runner on the Microsoft Windows Azure platform. The solution has reduced the upfront software licensing costs and hardware infrastructure, making it easier for carriers and agencies of all sizes to advance their internal processes. …

<Return to section navigation list>

Windows Azure Infrastructure

James Hamilton’s SeaMicro Releases Innovative Intel Atom Server post of 6/13/2010 continues the discussion about low-power, low-cost servers:

I’ve been talking about the application of low-power, low-cost processors to server workloads for years starting with The Case for Low-Cost, Low-Power Servers. Subsequent articles get into more detail: Microslice Servers, Low-Power Amdahl Blades for Data Intensive Computing, and Successfully Challenging the Server Tax.

Single dimensional measures of servers like “performance” without regard to server cost or power dissipation are seriously flawed. The right way to measure server performance is work done per dollar and work done per joule. If you adopt these measures of workload performance, we find that cold storage workloads and highly partitionable workloads run very well on low-cost, low-power servers. And we find the converse as well. Database workloads run poorly on these servers (see When Very Low-Power, Low-Cost Servers Don’t Make Sense).

The reasons why scale-up workloads in general and database workloads specifically run poorly on low-cost, low-powered servers are fairly obvious. Workloads that don’t scale out need bigger single servers to scale (duh). And workloads that are CPU bound tend to run more cost effectively on higher powered nodes. The latter isn’t strictly true. Even with scale-out losses, many CPU bound workloads still run efficiently on low-cost, low-powered servers because what is lost on scaling is sometimes more than gained by lower-cost and lower power consumption.

I find the bounds where a technology ceases to work efficiently to be the most interesting area to study for two reasons: 1) these boundaries teach us why current solutions don’t cross the boundary and often give us clues on how to make the technology apply more broadly, and most important, 2) you really need to know where not to apply a new technology. It is rare that a new technology is a uniform across-the-board win. For example, many of the current applications of flash memory make very little economic sense. It’s a wonderful solution for hot I/O-bound workloads where it is far superior to spinning media. But flash is a poor fit for many of the applications where it ends up being applied. You need to know where not to use a technology. …

<Return to section navigation list>

Cloud Security and Governance

Darrell K. Taft’s Microsoft Offers Security Advice for Windows Azure Developers post of 6/14/2010 to eWeek’s Application Development blog reports:

Microsoft has released [Revision 2 of] a new technical paper aimed at helping developers build more secure applications for the Microsoft Windows Azure cloud platform.

In the new paper, entitled "Security Best Practices for Developing Windows Azure Applications," Microsoft explains how to use the security defenses in Windows Azure as well as how to build more secure Windows Azure applications.

Microsoft's Windows Azure security paper is available as of June 14 and is targeted at application designers, architects, developers and testers. The paper is based on the proven practices of Microsoft’s Security Development Lifecycle (SDL). Moreover, with the release of this paper, Microsoft is working to share what it has learned and build on its commitment to create a more trusted computing experience for everyone.

In a blog post on the issue, Michael Howard, principal security program manager for Security Engineering at Microsoft, said: "Over the last few months, a small cross-group team within Microsoft, including the SDL team, has written a paper that explains how to use the security defenses in Windows Azure as well as how to apply practices from the SDL to build more secure Windows Azure solutions."

It is no surprise that issues related to the security of the cloud are becoming increasingly important for businesses and consumers. As a result, it is important that people delivering products to the cloud understand that they must build applications with security in mind from the start, Howard said.

Specifically, the paper details proven practices for secure design, development and deployment, including: Service-layer/application security considerations; protections provided by the Azure platform and underlying network infrastructure; and sample design patterns for hardened/reduced-privilege services.

A summary of the Microsoft paper reads:

"This paper focuses on the security challenges and recommended approaches to design and develop more secure applications for Microsoft’s Windows Azure platform. Microsoft Security Engineering Center (MSEC) and Microsoft’s Online Services Security & Compliance (OSSC) team have partnered with the Windows Azure team to build on the same security principles and processes that Microsoft has developed through years of experience managing security risks in traditional development and operating environments."

A video about the paper is available here, and the paper is available for download here.

The co-authors are:

- Andrew Marshall (Senior Security Program Manager, Security Engineering)

- Michael Howard (Principal Security Program Manager, Security Engineering)

- Grant Bugher (Lead Security Program Manager, OSSC)

- Brian Harden (Security Architect, OSSC)

and contributors are:

- Charlie Kaufman (Principal Architect)

- Martin Rues (Director, OSSC)

- Vittorio Bertocci (Senior Technical Evangelist, Developer and Platform Evangelism)

Lori MacVittie asserts Minimizing the impact of code changes on multi-tenant applications requires a little devops “magic” and a broader architectural strategy in her Devops: Controlling Application Release Cycles to Avoid the WordPress Effect post of 6/14/2010:

Ignoring the unavoidable “cloud outage” hysteria that accompanies any Web 2.0 application outage today, there’s been some very interesting analysis of how WordPress – and other multi-tenant Web 2.0 applications – can avoid a similar mistake. One such suggestion is the use of a “feathered release schedule”, which is really just a controlled roll-out of a new codebase as a means to minimize the impact of an error. We’d call this “fault isolation” in data center architecture 101. It turns out that such an architectural strategy is fairly easy to achieve, if you have the right components and the right perspective. But before we dive into how to implement such an architecture we need to understand what caused the outage.

WHAT WENT WRONG and WHY CONTROLLED RELEASE CYCLES MITIGATE the IMPACT

What happened was a code change that modified a database table and impacted a large number of blogs, including some very high-profile ones:

Matt Mullenweg [founding developer of WordPress] responded to our email.

"The cause of the outage was a very unfortunate code change that overwrote some key options in the options table for a number of blogs. We brought the site down to prevent damage and have been bringing blogs back after we've verified that they're 100% okay."

WordPress's hosted service, WordPress.com, was down completely for about an hour, taking blogs like TechCrunch, GigaOm and CNN with it.

WordPress.com Down for the Count, ReadWriteWeb, June 2010

Bob Warfield has since analyzed the “why” of the outage, blaming not multi-tenancy but the operational architecture enabling that multi-tenancy. In good form he also suggests solutions for preventing such a scenario from occurring again, including the notion of a feathered release cycle. This approach might also accurately be referred to as a staged, phased, or controlled release cycle. The key component is the ability to exercise control over which users are “upgraded” at what time/phase/stage of the cycle.

Don’t get me wrong, I’m all for multitenancy. In fact, it’s essential for many SaaS operations. But, companies need to have a plan to manage the risks inherent in multitenancy. The primary risk is the rapidity with which rolling out a change can affect your customer base. When operations are set up so that every tenant is in the same “hotel”, this problem is compounded, because it means everyone gets hit.

What to do?

[…]

Last step: use a feathered release cycle. When you roll out a code change, no matter how well-tested it is, don’t deploy to all the hotels. A feathered release cycle delivers the code change to one hotel at a time, and waits an appropriate length of time to see that nothing catastrophic has occurred.
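The feathered release cycle quoted above can be sketched as a loop that updates one hotel at a time and stops at the first sign of trouble. This is only an illustration: `feathered_rollout`, `deploy` and `is_healthy` are hypothetical names, and the waiting period between hotels is assumed to be folded into the health check.

```python
from typing import Callable, Iterable, List


def feathered_rollout(
    hotels: Iterable[str],
    deploy: Callable[[str], None],
    is_healthy: Callable[[str], bool],
) -> List[str]:
    """Deploy to one hotel at a time and halt at the first unhealthy one.

    Returns the hotels that were updated and passed the health check.
    """
    updated: List[str] = []
    for hotel in hotels:
        deploy(hotel)
        if not is_healthy(hotel):
            break  # the remaining hotels keep running the old code
        updated.append(hotel)
    return updated


if __name__ == "__main__":
    touched: List[str] = []
    result = feathered_rollout(
        ["hotel-a", "hotel-b", "hotel-c"],
        deploy=touched.append,
        is_healthy=lambda hotel: hotel != "hotel-b",  # simulate a bad release
    )
    print(result, touched)
```

In the simulated run only the first two hotels ever receive the new code, so a faulty change is contained to a fraction of the tenants.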

This approach makes a great deal of sense. It is a standing joke amongst the Internet digerati that no Web 2.0 application ever really comes out of “beta”, probably because most Web 2.0 applications developed today are done so using an agile development methodology that preaches small functional releases often rather than large, once in a while complete releases. What Bob is referring to as “feathered” is something Twitter appears to have implemented for some time, as it releases new functionality slowly – to a specific subset of users (the selection of which remains a mystery to the community at large) – and only when the new functionality is deemed stable will it be rolled out to the Twitter community at large.

This does not stop outages from occurring, as any dedicated Twitter user can attest, but it can mitigate the impact of an error hidden in that code release that could potentially take the entire site down if it were rolled out to every user.

From an architectural perspective, both Twitter and WordPress are Web 2.0 applications. They are multi-tenant in the sense that they use a database containing user-specific configuration metadata and content to enable personalization of blogs (and Twitter pages) and separation of content. The code-base is the same for every user, the appearance of personalization is achieved by applying user-specific configuration at the application and presentation layers. Using the same code-base, of course, means deploying a change to that code necessarily impacts every user.

Now, what Bob is suggesting WordPress do is what Twitter already does (somehow): further segment the code-base to isolate potential problems with code releases. This would allow operations to deploy a code change to segment X while segment Y remains running on the old code-base. The trick here is how do you do that transparently without impacting the entire site?

APPLICATION DELIVERY and CONTEXTUAL APPLICATION ROUTING

One way to accomplish this task is by leveraging the application delivery tier. That load balancer, if it isn’t just a load balancer and is, in fact, an application delivery controller, is more than capable of enabling this scenario to occur.

There are two prerequisites:

1. You need to decide how to identify users that will be directed to the new codebase. One suggestion: a simple boolean flag in the database that is served up to the user as a cookie. You could also identify users as beta testers based on location, or on a pre-determined list of accounts, or by programmatically asking users to participate. Any piece of data in the request or response is fair game; a robust network-side scripting implementation can extract information from any part of the application data, HTTP headers and network layers.

2. You need to have your application delivery controller (a.k.a. load balancer) configured with two separate pools (farms, clusters) – one for each “version” of the codebase.

So let’s assume you’re using a cookie called “beta” that holds either a 1 or a 0 and that your application delivery controller (ADC) is configured with two pools called “newCodePool” and “oldCodePool”. When the application delivery controller inspects the “beta” cookie and finds a value of 1 it will make sure the client is routed to one of the application instances in “newCodePool” which one assumes is running the new version of the code. Similarly if the cookie holds a value of 0, the ADC passes the request to one of the application instances in “oldCodePool”.
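The routing decision just described can be sketched in a few lines. Real application delivery controllers use their own scripting languages, so the Python below only illustrates the logic; `select_pool` is a hypothetical name, and sending requests without a “beta” cookie to the old pool is an assumption.

```python
from typing import Mapping

NEW_CODE_POOL = "newCodePool"
OLD_CODE_POOL = "oldCodePool"


def select_pool(cookies: Mapping[str, str]) -> str:
    """Pick the server pool for a request based on its "beta" cookie."""
    # Only an explicit "1" opts the client into the new code base;
    # a missing or unexpected value falls back to the old pool.
    if cookies.get("beta") == "1":
        return NEW_CODE_POOL
    return OLD_CODE_POOL


if __name__ == "__main__":
    print(select_pool({"beta": "1"}))  # newCodePool
    print(select_pool({"beta": "0"}))  # oldCodePool
    print(select_pool({}))             # oldCodePool
```

Defaulting to the old pool keeps the blast radius small: a client only reaches the new code when it has been deliberately flagged.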

At some point you’ll be satisfied that the new code is not going to take down your entire site or cause other undue harm to tenants (customers) and you can simply roll out the changes and start the cycle anew. …

Lori continues with a THIS is WHERE DEVOPS SHINES topic.

<Return to section navigation list>

Cloud Computing Events

Kevin Kell recited Observations from Microsoft Tech-Ed 2010 in his Microsoft and The Cloud post of 6/13/2010 to the Learning Tree blog:

I have spent the better part of this past weekend reviewing material from this year’s Tech-Ed conference which took place in New Orleans last week. In this post I offer my take on what seems to be Microsoft’s emerging position and strategy concerning cloud computing.

Certainly there is the Azure Platform as we have already discussed. To me, however, there seems to be much more to it both in their latest product offerings as well as in their own internal IT infrastructure. Since Microsoft tends to make and shake up markets it is usually worthwhile to pay attention to the directions they are taking.

In the opening keynote Bob Muglia, President of Microsoft Server and Tools Business, re-introduced the notion of “Dynamic IT”. According to Muglia, Dynamic IT, which is all about connecting developers and IT operations together with systems, processes and services, has been at the core of Microsoft’s long term (ten year) strategy since 2003. Now, seven years later, Microsoft sees the cloud as a key component in the Dynamic IT vision. At a very fundamental level Microsoft views the cloud as delivering “IT as a Service”.

Microsoft sees five “dimensions” to the cloud:

- Creates opportunities and responsibilities

- Learns and helps you learn, decide and take action

- Enhances social and professional interactions

- Wants smarter devices

- Drives server advances that, in turn, drive the cloud

It seems to me that, to a greater or lesser degree, they are now offering products and services that address all of these dimensions.

Among the leading cloud providers Microsoft is uniquely positioned to extend their existing product line (servers, operating systems, tools and applications) into the cloud. Microsoft is hoping to leverage its extensive installed base into the cloud. In my opinion Microsoft’s ability to enable hybrid cloud solutions, especially those involving on-premise Windows deployments, is second to none. Now, with the availability of AppFabric for Windows Server 2008, this is even more the case.

Will Microsoft succeed with this approach? Or will the market move away from the software giant in favor of open source solutions? What about Google, which, by comparison, is starting from a relatively clean slate in terms of an installed base? Obviously it is too early to tell.

One thing is for sure: cloud computing is real and it is here to stay. However it is likely that in the relatively near future the term “cloud computing” will fall into disuse. What we now know as cloud computing will probably just come to be what we will consider as best practices in IT. Competition in the industry will move the technology forward and consumers will have many choices. I expect that Microsoft technologies will continue to be a viable choice for corporations in the years to come.

Michael Coté’s Getting Cloud Crazy Microsoft TechEd 2010 post of 6/11/2010 comments on the cloud-centricity of Microsoft’s latest technical confab:

… Application Cloud Migration & Cloud App Development

As I outlined when talking with Microsoft’s Prashant Ketkar, I see two great scenarios for Azure usage:

Moving “under-the-desk data-centers” to the cloud – the Microsoft development stack plays well in the line of business, custom application space. Typically, small, in-house teams develop these applications and, because they’re not “mission critical,” they are run on machines under someone’s desk, or virtualized into the datacenter somewhere. Running these on-premise is annoying after a while and Azure should provide a nice, hopefully cheaper and even more robust, place for them to run. If one day Azure gets functionality to simply move Hyper-V guests up to an Azure instance, this scenario is a no-brainer.

Regular cloud-based application development – Azure provides an ever growing PaaS stack to deliver on the “classic” desires for cloud computing. The easy availability of the LAMP stack on the web was arguably one of the top reasons Microsoft “lost” so much of the web development world (though, it’d be interesting to see contemporary stats on this – IIS vs. Apache httpd, I guess). If Azure made using the Microsoft stack as easy as getting ahold of a LAMP stack on the web, Microsoft has a better chance of re-gaining lost web share and starting out on a better foot in the mobile-web space (aside from anything having to do with Windows 7 Phone).

In the discussions I had with various Microsoft folks, I didn’t see any reason to believe Azure was doing anything to preclude these two scenarios. Indeed, when it comes to moving under-the-desk data-centers, Microsoft keeps saying they’re working on it, but hasn’t yet given a date.

As a side-note, while Amazon is often seen as just “Infrastructure as a Service,” they actually offer most of the parts for a PaaS: AWS’s middleware stack is broad. The distinction between a PaaS and an IaaS gets a bit murky – though a cloud taxonomist can make it as clear as an angel’s feet on a pin-head. I bring this up because Microsoft always does a good job of blurring the easy comparison touch points, so it’s worth pondering the positioning of Azure as a PaaS vs. IaaS against other clouds.

Private Cloud

Windows Server and Hyper-V are very much structured to be able to build your own private cloud, absolutely. The area where we see probably the greatest set of evolution happening is in System Center, and let me give you some examples. We will evolve System Center to have capabilities like self-service so that departments, business units within an organization can provision their own instances of the cloud to really virtual machines within the cloud to run their own applications.

–Bob Muglia interview with Ina Fried

The question of when Microsoft will come out with a private cloud came up many times, and the question specifics were deftly evaded each time. The most detailed “answer” came from Server and Tools Business leader Bob Muglia. At an analyst “fireside chat” during the Server and Tools Business analyst summit, Peter Christy asked Muglia about private cloud offerings, suggesting that it was a requirement for many enterprises who wanted to avoid moving out of their data centers.

While there was no comment on private clouds, per se, there was much to say on how cloud computing innovations could be applied to existing IT and offerings. There were several areas to separate out.

Muglia responded that, first, you have to separate out enabling IT to deliver their offerings as a service to their internal customers from what we’d normally call cloud computing. That is, there’s plenty of learnings and technologies to take from cloud computing that make automation and self-service IT better for companies, regardless of if you want to call it “cloud” or not. This is where the immediate use of cloud-inspired technologies comes in, and where I’d expect to see offerings from Microsoft and others to simply further optimize the way good old fashioned IT is currently done. Really, it’s the continuation of virtualization (which is still far from “complete” for most IT) mixed with renewed interest and innovation in self-service and automation: runbook automation and service catalogs that don’t suck, to be blunt.

Then, Muglia said, Microsoft is “looking at” offering something to service providers who want to do cloud-like things with Microsoft technologies. Windows is everywhere, the strategy would go, and as partnerships with Amazon show, enabling the running of Windows and Windows Server on various clouds is helpful.

Finally, there was some discussion of delivering features vs. services vs. “private cloud.” Here, the segue was into discussing Azure AppFabric.

AppFabric is a service bus that spans firewalls into the cloud. It’s a cloud/on-prem hybrid messaging queue, more or less. Maggie Myslinska, PM for AppFabric, gave an excellent overview session.

There are other “features” and services delivered over the Internet that fit in here, such as the old battle-horse Windows Update and the newer offering Windows Intune.

But, when it came to a private cloud, there was almost a sense of “that’s not really what you want,” which, really, tends to align with my more long-term opinion. But, I wouldn’t write off Microsoft doing a private cloud.


Other Cloud Computing Platforms and Services

Audrey Watters claims TurnKey Linux Make Launching Open Source Appliances in the Cloud Easy in this 6/14/2010 post to the ReadWriteCloud blog:

The open source project TurnKey Linux has launched a private beta of the TurnKey Hub, a service that makes it easy to launch and manage the project's Ubuntu-based virtual appliances in the Amazon EC2 cloud.

There are currently about 40 software bundles in TurnKey Linux's virtual library, including Joomla, WordPress, and Moodle. According to TurnKey Linux, these virtual appliances are optimized for easy deployment and maintenance. And as the name implies, launching an instance with one of the virtual appliances is very simple. Custom passwords and authentication, as well as automatic setup for EBS devices and Elastic IPs, are part of the setup process.

According to their website, "Packaging a solution as a virtual appliance can be incredibly useful because it allows you to leverage guru integration skills to build ready to use systems (i.e., turn key solutions) that just work out of the box with little to no setup. Unlike with traditional software, you don't have to worry about complex OS compatibility issues, library dependencies or undesirable interactions with other applications because a virtual appliance is a self contained unit that runs directly on top of hardware or inside a virtual machine."

Currently, the TurnKey Hub launches on an instance volume in the EC2 Cloud. But according to the developers, support for additional cloud platforms, as well as automatic backup and migration functionality, is in the works.

TurnKey Linux is a project started by Alon Swartz and Liraz Siri. While there are other turnkey cloud offerings, including Dell's, TurnKey Linux is firmly grounded in the open source community and not only strives to make the move to the cloud easier, but contends that a well-integrated and thoroughly tested virtual appliance can help facilitate open source adoption.