Windows Azure and Cloud Computing Posts for 6/12/2010+

Windows Azure, SQL Azure Database and related cloud computing topics now appear in this daily series.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated in June 2010 for the January 4, 2010 commercial release.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database, Codename “Dallas” and OData

Adam Wilson’s Building Engaging Apps with Data Using Microsoft Codename “Dallas” TechEd presentation became available as a 00:47:44 Webcast on 6/12/2010:

Microsoft Codename “Dallas” enables developers to consume premium commercial and public domain data to power consumer and business apps on any platform or device. You can provide relevant data to inform business decisions, create visualizations to discover patterns, and increase user engagement with datasets from finance to demographics, and many more. A consistent REST-based API, simple terms of use, and per-transaction billing make consuming data easy. In this session, learn how "Dallas" removes the friction for accessing data by enabling you to visually discover, explore, and purchase data of any type, including images, real-time Web services and blobs, through a unified marketplace of trusted partners. We use a variety of "Dallas" datasets to develop Web and mobile applications using technologies such as Microsoft Silverlight 4, Microsoft ASP.NET MVC, and Windows Phone.

Speaker(s): Adam Wilson

Event: TechEd North America

Year: 2010

Track: Cloud Computing & Online Services

Download the slide deck here.

Colinizer’s Silverlight From The Client to The Cloud: Part 4 – OData post of 6/12/2010 is a brief tutorial:

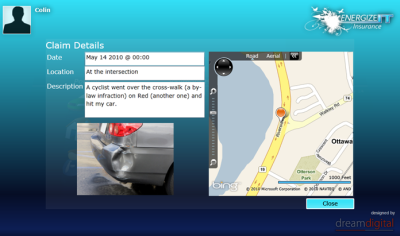

In this entry of the series I’ll show how data from an ADO.NET Data Services service (the OData predecessor) can be retrieved and displayed in a sample application I created, which was demonstrated across Canada in Microsoft’s EnergizeIT tour.

Silverlight 3 includes support for consuming ADO.NET Data Services which use a RESTful architecture to expose CRUD operations on data in the cloud.

OData is a Microsoft-published open protocol which builds on ADO.NET Data Services. Full OData support is not included in Silverlight 3, though a CTP add-on was made available. Silverlight 4 does include support for consuming OData.

WCF Data Services in .NET 4.0 includes the ability to publish OData-based data sets based on entity framework models, CLR objects or a custom-built publisher.

OData (and its predecessor) is typically used for publishing and consuming sets of data, but in the sample application, we published sample insurance policy, claim data and customer activities using ADO.NET Data Services, and allowed client software to query the data for a single customer as shown here…

In this case, not only was basic text data loaded into the UI, but binary image data was loaded into the application and geo-coordinates used with a Bing Maps Silverlight control to show a location.

In Silverlight 3 there are LINQ to ADO.NET Data Services classes and in Silverlight 4 (or the 3 add-on CTP) there are LINQ to OData client classes.

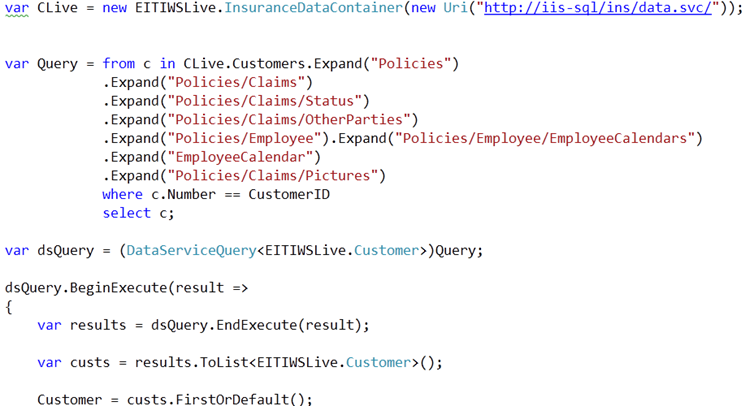

Code like the following allows Silverlight to easily connect to an OData service (after an earlier Add Service Reference) and query deeply into the datasets.

All such requests must be handled asynchronously in Silverlight, though inline anonymous delegates in C# (shown above) are very handy for processing the asynchronous results with inline code (though typically with more exception handling code than shown here).

Binding or imperative code (or MVVM) is then typically used to show the results in the UI.

This sample application represents a fairly basic case. Silverlight 4 + OData opens up some great possibilities and you can see more in my Dot Net Rocks TV episode on how to create an OData service in .NET 4 WCF Data Services and consume it in Silverlight 4.

You might also be interested in the last post in Colin’s series, Silverlight From The Client To The Cloud: Part 5 – Image Data Binding, of 6/12/2010:

In this series’ final blog entry I’ll show how to enhance Silverlight binding to show image data as shown in a sample application I created that was demonstrated across Canada in Microsoft’s EnergizeIT tour.

As I explained in Part 4 of this series, the data for the image above was downloaded from an ADO.NET Data Services service (or an OData service) and displayed in the UI.

Typically in Silverlight, an Image element has its Source set to the URL of an image and Silverlight takes care of downloading the image.

When the image needs to be dynamically set, you may not have a URL available (either different URLs or one that takes a query string parameter to dynamically return an image) to get the image you want. Such a URL capability may be worth thinking about in the long run (given suitable security) using something like Windows Azure Storage (and its associated Content Delivery Network).

What you may have is the bytes for the image returned to you through some data service request.

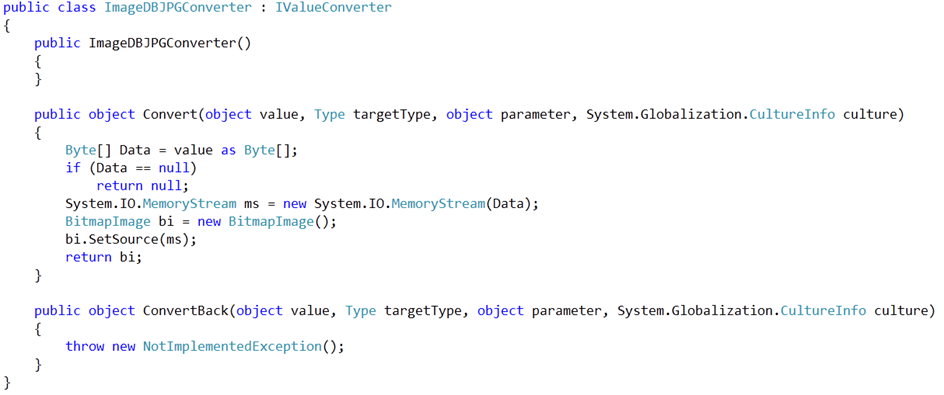

In that case you’ll need a binding converter if you still want to use binding.

We create a class based on IValueConverter which in this case can take the bytes and create the necessary BitmapImage as an acceptable source for the Image element.

We then need to use this in the XAML so we declare our class namespace in the XAML namespace…

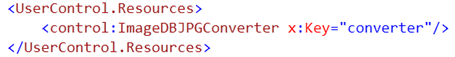

then declare a resource instance based on the class, here called “converter”

then when we bind our image to the binary data stream, we use our converter…

There are many uses for converters, typically converting between text and structured types, but this conversion between binary data and an image source is often useful and allows us to stay within a binding-based architecture.

This post concludes this series. [Emphasis added.]

Catherine Eibner’s Latest CRM 4.0 SDK now available post of 6/12/2010 notes Microsoft xRM’s capability to export OData:

Now available in the latest SDK is what I believe is one of the most exciting releases for CRM 4.0 since the launch of Accelerators!

Thanks to contributions from the ADX Studios Team, the SDK now includes the Advanced Developer Extensions.

Advanced Developer Extensions for Microsoft Dynamics CRM, also referred to as Microsoft xRM, is a new set of tools included in the Microsoft Dynamics CRM SDK that simplifies the development of Internet-enabled applications that interact with Microsoft Dynamics CRM 4.0. It uses well known ADO.NET technologies. This new toolkit makes it easy for you to build an agile, integrated Web solution!

Advanced Developer Extensions for Microsoft Dynamics CRM supports all Microsoft Dynamics CRM deployment models: On-Premises, Internet-facing deployments (IFDs), and Microsoft Dynamics CRM Online. The SDK download contains everything you need to get started: binaries, tools, samples and documentation.

Now for some cool word bingo on the features! Included are:

- An enhanced code generation tool called CrmSvcUtil.exe that generates .NET classes (i.e., it generates a WCF Data Services (Astoria/OData) compatible data context class for managing entities)

- LINQ to CRM

- Portal Integration toolkit

- Connectivity and caching management, which provides improved scalability and application efficiency

Download it here.

I am downloading it now. As soon as it extracts, I am going to start playing with these extensions! Hopefully you will see some more detailed posts soon on what’s included and some of the cool things you can build!

Alex James presented Best Practices: Creating OData Services Using Windows Communication Foundation (WCF) Data Services (01:13:16) at TechEd North America 2010:

The OData ecosystem is a vibrant growing community of data producers and consumers using the OData protocol to exchange data. The easiest way to become an OData producer and join the OData community is using WCF Data Services. In this session, learn WCF Data Services best practices so you can do it right. We cover security, performance, custom business logic, and how to expose your unique data source using OData.

Speaker(s): Alex James

Event: TechEd North America

Year: 2010

Track: Developer Tools, Languages & Frameworks

Download the slide deck here.

Kevin Hoffman’s Exposing Active Directory Users as an OData Feed tutorial of 5/27/2010 shows you how to create OData content based on domains, such as Customer, User, etc.:

In my last couple of blog posts about federated security, I've been talking about the importance of removing the user data backing store from individual applications. The goal is to take that information out, centralize and decouple it from the individual applications so that as you create new applications and add new lines of business or new "heads" for existing applications, you won't need to worry about creating multiple entry points to the same user store. This makes things like federation with external partners and single sign-on (SSO) much easier.

One of the odd little side effects of creating a properly federated suite of applications (internally, externally, or both) is that each application now loses the ability to inspect the inner guts of the user data backing store. Occasionally having that information is useful. For example, if you're writing an application that controls the mapping of roles (which will eventually come out of your ADFS/STS server as claims in tokens), you need to know the list of available users. If you are using ADFS to do the federation and SSO for all your web applications and services then the list of users is actually the list of users in an Active Directory domain.

There's a couple of ways you could go here. First, you could just write some AD code in each of the applications that needs to display/query the domain user list. This is tedious and will give the clients a mismatched, odd feeling. For example, if all of your apps are using WCF Data Services to get their data from databases and other stores, wouldn't it be awesome if the apps could consume WCF Data Services (OData feeds) to get at the domain users? Yes, awesome it would be.

As you may or may not know, WCF Data Services are not limited to providing OData feeds over top of Entity Framework data sources. You can actually expose anything, so long as you can expose it as a property of IQueryable<T> on a data context class. Basically all we need to do is create a shape for our domain users as we want them exposed over the wire: a simple POCO (Plain Old CLR Object) will do just fine, decorated with a few attributes to make the WCF Data Service work. So all we're really going to do here is create a data context class that has a property called DomainUsers that is of type IQueryable<DomainUser>. This will allow RESTful clients on any platform as well as .NET clients using WCF to run arbitrary LINQ queries against the Active Directory over the wire, using the same exact pattern the client is using to access all the other data in the app. In short, the client applications don't need to know the source of the user information, they just talk to the OData feed and remain in blissful ignorance. …
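The shape Kevin describes (a plain user object plus a data context that exposes a queryable collection of them) can be illustrated with a rough Python analogue. This is not WCF Data Services code and is not taken from his post: the class names, the field names and the in-memory `entries` list standing in for LDAP results are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, Mapping


@dataclass(frozen=True)
class DomainUser:
    """Wire shape for one directory user (field names are illustrative)."""
    account_name: str
    display_name: str
    email: str


class EmployeesContext:
    """Data context that exposes directory users as a lazily evaluated sequence."""

    def __init__(self, directory_entries: Iterable[Mapping[str, str]]):
        self._entries = directory_entries

    @property
    def domain_users(self) -> Iterator[DomainUser]:
        # Project raw directory attributes onto the wire shape one entry at a time.
        for entry in self._entries:
            yield DomainUser(entry["sAMAccountName"], entry["displayName"], entry["mail"])


# Hypothetical stand-in for the results of an LDAP search.
entries = [
    {"sAMAccountName": "jdoe", "displayName": "Jane Doe", "mail": "jdoe@example.com"},
    {"sAMAccountName": "asmith", "displayName": "Al Smith", "mail": "asmith@example.com"},
]

context = EmployeesContext(entries)

# The caller filters and sorts without knowing where the users came from.
names = sorted(u.display_name for u in context.domain_users
               if u.email.endswith("@example.com"))
print(names)
```

The point of the sketch is the decoupling: callers only see `domain_users`, so the backing store could change without touching them.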

Kevin continues with detailed instructions and source code. He concludes:

In the preceding code, all of the LDAP connection strings and the container roots are all stored in the appSettings element in the project's web.config file.

At this point I can now hit a URL like http://server/Employees.svc/DomainUsers and get an OData feed containing all of the users in the employee domain. Because it's an OData feed/WCF Data Service, I can supply sort order, pagination, filtering, and even custom shaping directly on the URL.

Giving all the applications in my enterprise the ability to query the AD user objects with a standard, WCF-based service and not requiring those clients to be tightly coupled to Active Directory fits in really well with my other strategies for enterprise federation and web SSO.
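The sort order, pagination, filtering and shaping that Kevin mentions map onto OData's `$orderby`, `$top`/`$skip`, `$filter` and `$select` system query options. The following minimal Python sketch builds such a URL for the feed address quoted above; the `odata_url` helper and the `LastName` and `DisplayName` property names are hypothetical, since the post does not list the properties of `DomainUser`.

```python
from urllib.parse import quote

# Characters left unescaped so filter expressions stay readable in the URL.
SAFE_CHARS = "',()"


def odata_url(service_root, entity_set, **options):
    """Return an OData query URL; each keyword becomes a $-prefixed query option."""
    url = f"{service_root.rstrip('/')}/{entity_set}"
    if not options:
        return url
    pairs = [f"${name}={quote(str(value), safe=SAFE_CHARS)}"
             for name, value in options.items()]
    return url + "?" + "&".join(pairs)


url = odata_url(
    "http://server/Employees.svc", "DomainUsers",
    filter="LastName eq 'Smith'",      # hypothetical property name
    orderby="LastName desc",
    top=10, skip=20,                   # third page of ten users
    select="LastName,DisplayName",     # hypothetical property names
)
print(url)
```

Any HTTP client can then issue a GET against the resulting URL, which is what makes the feed usable from non-.NET platforms.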

Microsoft and Persistent Systems uploaded the OData SDK for PHP on 3/15/2010, but we were not following OData at that time:

The OData SDK for PHP enables PHP developers to access data services that use the OData protocol. Detailed information on the OData protocol and the other SDKs available can be found at http://www.odata.org.

New features include:

- Support for all new OData protocol features (Projections, Server-Side paging, Blobs, RowCounter and Customizable Feeds)

- Support for Azure authentication

- Better programming model with APIs for all Query Options

- More command line options

- Additional samples

Try the toolkit using some of the OData Services available on the internet:

- NetFlix catalog: http://odata.netflix.com/catalog

- Services.odata.org: http://services.odata.org/OData/OData.svc/

A full list of services is available on the Producers page on the OData.org Web Site.

The User Guide included in the OData SDK provides detailed documentation on the PHPDataSvcTool and methods exposed by the proxy class.

OData protocol documentation can be found on the OData Web site.

This version replaces the ADO.NET Data Services toolkit for PHP that was released in Sept 2009. [Emphasis added]

Architecture

The OData SDK for PHP is based on a utility (PHPDataSvcUtil) that generates a proxy class from the metadata exposed by an OData Producer. The generated classes are then used in the PHP application to connect to the Data Service and to browse, add, edit or delete its records. Objects with methods and properties can be used instead of writing code that handles calling the service and parsing the data.

Some examples

    /* connect to the OData service */
    $svc = new NorthwindEntities(NORTHWIND_SERVICE_URL);

    /* get the list of Customers in the USA, for each customer get the list of Orders */
    $query = $svc->Customers()
                 ->filter("Country eq 'USA'")
                 ->Expand('Orders');
    $customerResponse = $query->Execute();

    /* get only CustomerID and CustomerName */
    $query = $svc->Customers()
                 ->filter("Country eq 'USA'")
                 ->Select('CustomerID, CustomerName');
    $customerResponse = $query->Execute();

    /* create a new customer (uses the same $svc proxy object created above) */
    $customer = Customers::CreateCustomers('channel9', 'CHAN9');
    $svc->AddToCustomers($customer);

    /* commit the change on the server */
    $svc->SaveChanges();

<Return to section navigation list>

AppFabric: Access Control and Service Bus

Maggie Myslinska’s Windows Azure AppFabric Overview presentation at TechEd 2010 became available as a 00:52:48 Webcast on 6/12/2010:

Come learn how to use Windows Azure AppFabric (with Service Bus and Access Control) as building block services for Web-based and hosted applications, and how developers can leverage services to create applications in the cloud and connect them with on-premises systems.

Speaker(s): Todd Holmquist-Sutherland, Maggie Myslinska

Event: TechEd North America

Year: 2010

Track: Application Server & Infrastructure

Download the slide deck here.

Ron Jacobs’ Monitoring WCF Data Service Exceptions with Windows Server AppFabric post of 6/11/2010 to The .NET Endpoint blog expands on his previous monitoring post:

After my previous post about how you can monitor service calls with AppFabric, several people asked about how you can capture errors with AppFabric. Unfortunately, the default error handling behavior of WCF Data Services does not work well with AppFabric. Though it does return HTTP status codes to the calling application to indicate the error, AppFabric will not record these as exceptions as it would normally.

To work around this behavior, you can simply add code to handle exceptions and report the error to AppFabric using the same WCFEventProvider I showed in the previous post. I’ve cleaned up and modified the code a bit to handle errors as well.

I’ve attached the sample code to this blog post. It includes the SQL script you will need to create the database.

Ron includes instructions for the following steps:

- Step 1 – Override the DataService<T>.HandleException method

- Step 2 – Invoke the service with an invalid URI

- Step 3 – Provide Warnings for ServiceMethods

- Step 4 – Read The Monitoring Database

Summary

With a little bit of code in your WCF DataService, AppFabric can provide some very useful monitoring information. And because AppFabric collects this information across the server farm, all the servers will log events in the monitoring database.
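The pattern behind Ron's Step 1 (override the framework's exception hook so that failures are reported to a monitoring channel) can be sketched generically. The Python below is an analogue only: it does not use WCF, `DataService<T>.HandleException` or the WCFEventProvider, and every class and logger name in it is an assumption. An in-memory logging handler stands in for the monitoring database.

```python
import logging


class InMemoryMonitor(logging.Handler):
    """Collects events in a list; stands in for a monitoring database."""

    def __init__(self):
        super().__init__()
        self.events = []

    def emit(self, record):
        self.events.append((record.levelname, record.getMessage()))


monitor = InMemoryMonitor()
monitor_log = logging.getLogger("service.monitoring")
monitor_log.setLevel(logging.WARNING)
monitor_log.propagate = False
monitor_log.addHandler(monitor)


class DataService:
    """Toy service that resolves entity-set names; unknown names are errors."""

    entity_sets = {"Customers": ["ALFKI", "ANATR"]}

    def handle_exception(self, exc):
        """Hook called for every failed request; the default reports nothing."""

    def process_request(self, path):
        try:
            return 200, self.entity_sets[path]
        except KeyError as exc:
            self.handle_exception(exc)
            return 404, f"Resource not found for segment '{path}'"


class MonitoredDataService(DataService):
    def handle_exception(self, exc):
        # Report the failure before the error response is returned to the caller.
        monitor_log.error("request failed: %r", exc)


# An invalid URI segment still yields an HTTP-style status, but is now recorded too.
status, body = MonitoredDataService().process_request("Custmers")
print(status, monitor.events)
```

The caller sees the same 404 either way; only the overridden hook makes the failure visible to monitoring.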

See Kevin Hoffman’s Exposing Active Directory Users as an OData Feed tutorial of 5/27/2010 in the SQL Azure Database, Codename “Dallas” and OData section above for federating identities.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Bruce Kyle’s MSDEV Offers Videos, Lab for PHP on Windows Azure Platform post of 6/12/2010 to the US ISV Developer blog describes recently added PHP resources:

This series of Web seminars and labs from MSDEV is designed to quickly guide PHP developers on how to work with Windows Azure. You’ll learn the essential concepts of the Windows Azure Platform, and then you’ll learn how to use some of the Azure services from PHP and deploy your PHP applications to Azure.

See the videos and download the labs and source code at PHP and the Windows Azure Platform.

Summary of Video Series

PHP on Windows Azure Quickstart Guide - Creating and Deploying PHP Projects on Windows Azure. The purpose of this Quickstart Guide is to show PHP developers how to work with Windows Azure. It shows how to develop and test PHP code on a local development machine, and then how to deploy that code to Windows Azure. This material is intended for developers who are already using PHP with an IDE such as Eclipse, so it doesn't cover PHP syntax or details of working with Eclipse, but experienced PHP programmers will see that it is easy to set up Eclipse to work with Windows Azure to support web-based PHP applications.

PHP and Windows Azure Platform: What Does It Mean? The purpose of this video presentation is to provide a conceptual overview of working with PHP and the Windows Azure Platform. The video introduces the Windows Azure components, development tools, data storage handling and SQL Azure service. The presentation discusses working with PHP and the Windows Azure Platform.

Summary of Labs

Deploying/Running PHP on Azure Virtual Lab. This lab is designed for developers who work with PHP and who would like to be able to run PHP applications on the Microsoft Windows Azure platform. This lab will walk you through how to test a PHP application locally in a Windows Azure simulation environment, and then deploy and run it in the cloud. It will also show you how to work with Microsoft SQL Azure, which is a version of Microsoft SQL Server, Microsoft's relational database, that's been specifically designed to run in the cloud. There is a video that accompanies this lab, so that you can follow along, step-by-step. The lab should take you about an hour.

PHP and the Windows Azure Platform: Using Windows Azure Tools for Eclipse Virtual Lab. This lab is intended to show PHP developers how they can use Windows Azure Tools for Eclipse to work with data storage on the Microsoft Windows Azure platform. This lab will walk you through how to write code for Windows Azure storage, and how to perform basic operations using the graphic interface provided by the Windows Azure Storage Explorer running within Eclipse. There is a video that accompanies this lab, so that you can follow along, step-by-step. The lab should take you about an hour.

The Windows Azure Team posted Real World Windows Azure: Interview with Steve Gable, Executive Vice President and Chief Technology Officer at Tribune Company on 6/11/2010:

As part of the Real World Windows Azure series, we talked to Steve Gable, EVP and CTO at Tribune Company, about using the Windows Azure platform to build the company's centralized content repository for all its editorial and advertising information. Here's what he had to say.

1. MSDN: Tell us more about Tribune and the services you provide.

The Chicago, Illinois-based Tribune Company operates newspapers, television stations, and a variety of news and information Web sites. Since 1847, the American public has relied on Tribune Company for news and information. The company began as a one-room publishing plant with a press run of 400 copies. Today, we employ approximately 14,000 people in the United States across the company's eight newspapers, 23 television stations, and 50 news and information Web sites. Tribune online receives about 6.1 billion page views a month.

2. MSDN: What was the biggest challenge Tribune faced prior to adopting the Windows Azure platform?

The newspaper industry is facing declining revenue, a tough economy, and the advent of new media applications that vie for consumers' attention. To compete, we needed to transform from a traditional media company into an interactive media company. One of the barriers to that sort of transformation was the company's geographically dispersed technology infrastructure. We managed 32 separate data centers, with a total of 4,000 servers and 75,000 feet of raised-floor space that is dedicated to supporting and cooling those servers. We also maintained 2,000 software applications that were not consistent from newspaper to newspaper or television station to television station. With eight individual newspapers and a "silo" approach where we had data dispersed all over the country, it was difficult to share content among our different organizations. For instance, a Tribune photographer could take a wonderful photo, yet only one paper could efficiently access and use it. We also sought to expand the number of ways in which customers and Tribune employees could consume that content. We wanted to switch from presenting the information that we thought was relevant to offering more targeted information that our readers deem relevant. Additionally, we wanted to provide greater value for our advertisers by ensuring that their ads are seen by the right customers, through whatever means those customers prefer.

3. MSDN: Can you describe the solution you built with the Windows Azure platform to help you address your need for cost-effective scalability?

The first step toward achieving this new role as an interactive media company was to establish a standardized information-sharing infrastructure for the entire company. We quickly realized that building the kind of internal infrastructure necessary to support that role was not achievable from a cost or management perspective. We already had too many data centers to manage and knew that we needed to consolidate them. We produce about 100 gigabytes of editorial content a day and about 8 terabytes at each of the 23 television stations every six to 12 months, and we kept adding hard drives to store it all. That's just not a sustainable model for a company whose storage needs grow so quickly. We decided to use several components of the Windows Azure platform to build our repository:

- Worker roles to create as many as 15 thumbnail images of each photo that is uploaded and placed in Windows Azure Blob storage, which stores named files along with metadata for each file. The multitude of thumbnails provided flexibility in using photographs of varying sizes in different media formats. We are currently using between 10 to 20 instances to handle the amount of content we upload every day.

- Windows Azure Content Delivery Network for caching blobs on its edge networks.

- Microsoft FAST Search Server 2010 to make the content fully searchable.

As of June 2010, Tribune is processing its publication content and sending it to Windows Azure. We anticipate that we will have all non-historical publications uploaded to the cloud repository by summer 2010, bringing all current Tribune publications under a single, searchable index. …
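To put the storage figures Steve Gable quotes into annual terms, here is a small worked calculation in Python. It uses only the numbers from the interview (100 gigabytes of editorial content a day, and 8 terabytes per station for each of 23 stations every six to 12 months); the decimal conversion of 1,000 GB per TB and the 365-day year are assumptions.

```python
GB_PER_TB = 1000          # assumption: decimal units
DAYS_PER_YEAR = 365       # assumption: content is produced every day

editorial_gb_per_day = 100
stations = 23
tb_per_station_per_cycle = 8
cycle_months = (12, 6)    # slowest and fastest cycle quoted in the interview

# Editorial content accumulated over one year.
editorial_tb_per_year = editorial_gb_per_day * DAYS_PER_YEAR / GB_PER_TB

# Television content accumulated by all stations in one cycle.
station_tb_per_cycle = stations * tb_per_station_per_cycle

# Annualized range: one cycle per year at 12 months, two cycles at 6 months.
station_tb_per_year = tuple(station_tb_per_cycle * 12 / m for m in cycle_months)

print(editorial_tb_per_year, station_tb_per_cycle, station_tb_per_year)
```

Under these assumptions the television stations, not the editorial content, account for most of the growth.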

You might also be interested in the Webcast of Wade Wegner’s ARC304 | Real-World Patterns for Cloud Computing TechEd North America 2010 presentation, which “explore[s] how the Tribune Company has embraced the Windows Azure platform (including Windows Azure, Microsoft SQL Azure, Windows Azure AppFabric, and Project codename ‘Dallas’) to enhance and extend new and existing applications.”

Toddy Mladenov’s Re-Deploying your Windows Azure Service without Incurring Downtime post of 6/6/2010 explains:

Last week I wrote about the differences between update, upgrade and VIP-swap in Windows Azure, and you can use all those methods to upgrade your service without incurring any downtime - the platform takes care of managing the service instances, and makes sure that there is at least one instance (assuming you made your service redundant) that can handle the requests.

However none of the methods above will work if you change the number of roles or number of endpoints for your service (keep in mind that you can change the number of endpoints without changing the number of roles – one example is adding HTTPS endpoint for your existing Web Role). The only option you have in this case is to re-deploy your service. What this means is that you need to stop your old service (click on Suspend button in Windows Azure Developer Portal), delete it (click on Delete button in Windows Azure Developer Portal), deploy the new code, and start the new service. That would be fine if it didn’t require downtime.

Of course there is an option that allows you to re-deploy your service without downtime, but it requires a workaround. In order to do this you need to have your custom domain with a CNAME configured to point to your hosted service DNS (something.cloudapp.net). You can read how to do that in my previous post Using Custom Domains with Windows Azure Services. For example, let’s assume that you have the following configuration for your company’s web site hosted on Windows Azure:

- Your Windows Azure service DNS is my-current-service.cloudapp.net

- Your company’s domain is my-company.com

- You have configured CNAME www.my-company.com to point to my-current-service.cloudapp.net

Now let’s assume that you want to add restricted area to your web site that requires username and password, and you want to serve the pages through HTTPS. In order to do that you need to change the service model to add HTTPS endpoint for the Web Role. Because you are changing the number of endpoints to your service you cannot use any of the methods above to do no-downtime deployment but you can do the following:

- Create new hosted service in Windows Azure. For example my-new-service.cloudapp.net

- Deploy and run the code for the new service

- Go to your domain registrar and change the CNAME www.my-company.com to point to my-new-service.cloudapp.net

- Wait at least 24 h before deleting my-current-service.cloudapp.net

The no-downtime will be handled by the DNS servers - if a customer tries to access your website’s URL www.my-company.com and her DNS server was already updated with the new CNAME, she will hit the new bits; if the DNS server wasn’t updated with the new CNAME, she will hit the old service. After some time (based on the TTL set for the CNAME but typically 24h) all DNS servers around the world will be updated with the new CNAME, and everybody will hit the new bits.
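The reason for waiting before deleting the old service is that downstream resolvers keep serving a cached CNAME until its TTL expires. The following Python simulation is a simplified sketch of that behavior, not a model of any real DNS implementation: the `CachingResolver` class, the simulated clock and the three cache expiry times are assumptions, while the host names and the 24-hour TTL come from the text above.

```python
from dataclasses import dataclass

TTL = 24 * 60 * 60  # seconds; the "typically 24h" figure from the post
OLD = "my-current-service.cloudapp.net"
NEW = "my-new-service.cloudapp.net"


@dataclass
class CachingResolver:
    """A downstream resolver that keeps a CNAME answer until its TTL expires."""
    cached_target: str
    expires_at: int  # seconds on a simulated clock; the CNAME changes at t=0

    def resolve(self, now, authoritative_target, ttl):
        if now >= self.expires_at:
            # Cache entry expired: fetch the current record and cache it again.
            self.cached_target = authoritative_target
            self.expires_at = now + ttl
        return self.cached_target


# Three resolvers whose cached copies of the old record expire 1 h, 12 h
# and 24 h after the change (hypothetical values).
resolvers = [CachingResolver(OLD, expires_at=t) for t in (3600, 43200, 86400)]


def targets_at(now):
    """Where www.my-company.com resolves for each resolver at time `now`."""
    return [r.resolve(now, NEW, TTL) for r in resolvers]


early = targets_at(2 * 3600)  # two hours after the change: traffic is split
late = targets_at(TTL)        # one full TTL after the change: all on the new service
print(early)
print(late)
```

While the results are mixed, both services must stay up; once a full TTL has elapsed, no resolver in this model still points at the old host.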

<Return to section navigation list>

Windows Azure Infrastructure

Tim Anderson asserts “With progress comes ambiguity” in his Conviction and confusion in Microsoft's cloud strategy report of 6/8/2010 from TechEd North America 2010 for The Register’s Servers section:

Microsoft's Windows platform may be under attack from the cloud, but you wouldn't know it here at the company's TechEd in New Orleans, Louisiana.

The conference is a sell-out, with 10,500 attendees mostly on the IT professional side of the industry, in contrast to last year's event in Los Angeles, California, which only attracted around 7,000. Microsoft, too, seems upbeat.

"We are at the cusp of a major transformation in the industry called cloud computing," server and tools president Bob Muglia said as he set the theme of what's emerging as a hybrid strategy.

That strategy involves on-premise servers that still form most of its business, hosted services - Muglia said there are now 40 million customers for hosted Exchange, SharePoint, and Live Meeting - and the delivery of software for partners building their own cloud services.

Despite this diversity, the announcements at TechEd were mostly cloud-related.

Windows Azure itself is now updated with .NET Framework 4.0, and there are new tools for Visual Studio and an updated SDK. The tools are much improved. You can now view the status of your Azure hosted services and get read-only access to Azure data from within the Visual Studio IDE.

Debugging Azure applications is now easier thanks to a feature called IntelliTrace that keeps a configurable log of application state so you can trace errors later. Deployment is now streamlined, and it can now be done directly from the IDE rather than through an Azure portal.

The Azure database service, SQL Azure, has been updated too. It now supports spatial data types and databases up to 50GB, as promised in March. There is also a new preview of an Azure Data Synch Service, which controls synchronising data across multiple datacenters, and a web manager for SQL Server on Azure. …

So, does this all mean Microsoft now "gets" the cloud? There was a telling comment in the press briefing for Windows InTune, a cloud service for managing PCs that is aimed at small businesses. Product manager Alex Heaton had been explaining the benefits: easy management of PCs and laptops wherever they are, and a web application that upgrades itself, no need to install service packs or new versions.

In fact, it seemed better than tools in Small Business Server (SBS) that covers the same area. Should SBS users move over?

"It's an interesting question, when should you use the cloud based versus when should you use the on-premise," said Heaton. "The answer is, if you've already got an on-premise infrastructure that already does most of this, you're pretty much good, there's no reason for you to go to a separate infrastructure."

In reality this is not the case. There are strong reasons for small businesses to migrate to cloud-based solutions because keeping a complex on-premise server running sweetly is a burden. Microsoft is heavily invested in on-premise though and disrupting its own business is risky.

The result is a conflicted strategy. [Emphasis added.]

Tim continues with “Azure Days” on page 2:

I asked Muglia how cloud computing is affecting Microsoft's business and bottom line. In his answer, he was keen to emphasise the company already has substantial cloud business: "Xbox live is one of the largest paid consumer services in the world."

Muglia added: "Windows Azure is still early days for us, but our expectation is that over time the cloud services will become a very large part of our business."

In my view, the body language has changed. When Microsoft announced Windows Azure in October 2008, it felt as if Microsoft was dragging itself into the cloud business. At the Professional Developers Conference in October 2009, Microsoft announced Azure would open for business in February, but again, it seemed halfhearted, and it was developer platform vice president Scott Guthrie with Silverlight 4.0 who grabbed the limelight.

TechEd New Orleans is different. Muglia understands what is happening and presents Microsoft's cloud platform with conviction. In addition, the engineering side of Azure seems to be working. [Emphasis added.]

Early adopters have expressed frustration with the tools and sometimes the cost, but not the performance or reliability. Despite its conflicted strategy, which the company spins as hybrid, Microsoft's cloud push is finally happening, at least on the server and tools side of the business. ®

I’m at a loss to explain the registered trademark symbol (®) at the end of the text. Most journalists use -30- to indicate the end of a story.

Audrey Watters reported that the Majority of Tech Experts Think Work Will Be Cloud-Based by 2020, Finds Pew Research Center in her 6/11/2010 post to the ReadWriteCloud:

The majority of technology experts responding to a recent Pew Research Center survey believe that cloud computing will be more dominant than the desktop by the end of the decade. Undertaken by the Pew Research Center and Elon University as part of the Future of the Internet survey, the report looks at the future of cloud computing based on the opinions of almost 900 experts in the industry.

The Future of Work is in the Cloud

The key finding of the survey: 71% of those responding agreed with the statement: "By 2020, most people won't do their work with software running on a general-purpose PC. Instead, they will work in Internet-based applications such as Google Docs, and in applications run from smartphones. Aspiring application developers will develop for smartphone vendors and companies that provide Internet-based applications, because most innovative work will be done in that domain, instead of designing applications that run on a PC operating system."

27% agreed with the statement's "tension pair," that by 2020 most people will still be utilizing software run on their desktop PC in order to perform their work.

The survey respondents note a number of the challenges and opportunities that cloud computing provides, including the ease of access to data, locatable from any networked device. Increasing adoption of mobile devices, as well as interest in an "Internet of things," are given as the main driving forces for the development and deployment of cloud technologies.

Security, Privacy, Connectivity Remain Top Concerns

But some of the survey's respondents echoed what are the common concerns about cloud computing: limitations in the choice of providers, concerns about control, security, and portability of our data. Many respondents seemed to indicate that cloud computing has not become fully trustworthy yet, something that will slow its adoption. According to R. Ray Wang, a partner in the Altimeter Group, "We'll have a huge blow up with terrorism in the cloud and the PC will regain its full glory. People will lose confidence as cyber attacks cripple major systems. In fact, cloud will be there but we'll be stuck in hybrid mode for the next 40 years as people live with some level of fear."

One of the other major obstacles to widespread adoption remains broadband connectivity, as infinitely scalable storage means little if we can't easily upload or retrieve our data. "Using the cloud requires broadband access," says Tim Marema, vice president at the Center for Rural Strategies. "If we really want a smart and productive America, we've got to ensure that citizens have broadband access as a civil right, not just an economic choice. Populations that don't make it to the cloud are going to be at a severe disadvantage. In turn, that's going to drag down productivity overall."

Despite the rapidly changing world of technology, it may be that a decade is simply too soon to see ubiquitous adoption of cloud computing and abandonment of the desktop PC, particularly with the concerns about security and broadband. As many of the respondents to the survey suggest, it is likely that in the meantime we'll see some sort of desktop-cloud hybrid, beyond the "either/or" proposition given by the Pew survey.

Alex Williams reported What the Bankers Say About the Cloud in this 6/9/2010 post to the ReadWriteCloud:

Morgan Stanley recently released a report detailing a survey it did of 50 chief information officers. We got our hands on the report and found it interesting from a market perspective.

We do not ordinarily follow what the investment banks say about the cloud computing and virtualization markets. But the viewpoints from this breed of analyst provide a perspective on the maturity of the space.

Overall, the report shows that investment bankers are paying more attention to cloud computing with particular interest in the acceptance of virtualization in the enterprise.

The report surveyed CIOs from a number of industries. Most of them were from companies in financial services, telecommunications and healthcare. The majority of the CIOs work for companies that have more than $1 billion in revenues. Forty percent of the companies have revenues of more than $10 billion.

The survey covers software, hardware, hosting and IT services. The report cites several key trends as reasons for the interest in cloud computing:

- Quality of home-based computing is evolving at a far faster pace than the enterprise. With the advent of cloud computing, the enterprise is being forced to catch up.

- Wireless devices such as smartphones empower employees to expect and even demand universal connectivity.

- The recession has meant less spending in the enterprise. As a result, it has given cloud-based service providers the chance to catch up and provide more enterprise-ready services.

- Cloud-based security concerns are abating to some degree as the enterprise realizes that the difference in security between internal and external environments is negligible at best. CIOs have a far better understanding of how to manage applications spread across cloud environments.

Software

According to Morgan Stanley, half of the CIOs surveyed plan to use desktop virtualization next year, which the firm believes could double the reach of client virtualization. Morgan sees the VDI (Virtual Desktop Infrastructure) market growing to a $1.5 billion opportunity by 2014. This would represent a 67% compound annual growth rate. No surprise, VMware and Citrix are expected to be the dominant vendors behind that trend.
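The compound-annual-growth arithmetic behind a projection like this can be sketched in a few lines of Python. This is only an illustration: the excerpt gives the 2014 target ($1.5 billion) and the 67% rate but not the base year or starting market size, so the four-year horizon used below (2010 to 2014) is an assumption, and the function names are hypothetical.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate that takes start_value to end_value in `years` years."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def implied_start(end_value, rate, years):
    """Starting value implied by an end value, an annual growth rate, and a horizon."""
    return end_value / (1.0 + rate) ** years


if __name__ == "__main__":
    target_2014 = 1.5e9   # from the report excerpt
    growth_rate = 0.67    # from the report excerpt
    horizon = 4           # ASSUMPTION: growth measured from 2010 to 2014

    base = implied_start(target_2014, growth_rate, horizon)
    print(f"Implied starting market size: ${base / 1e6:,.0f} million")
    print(f"Round-trip CAGR check: {cagr(base, target_2014, horizon):.0%}")
```

Under that assumed horizon, the implied starting market is a little under $200 million; a different base year would change the figure substantially.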

Email, CRM and human resources applications will be the first to move to a cloud environment. Email will come out on top with an expected three-times increase in hosted email usage over the next year.

Hardware

CIOs will aggressively deploy virtualization technology over the next year, altering the cycle for how frequently PCs are refreshed within the enterprise. CIOs will decrease spending on PCs due to the investment in virtualization.

Due to the acceptance of virtualization, desktop PCs represent the only hardware category that will see a decrease in spending. CIOs will virtualize 55% of production servers next year, up from 42% this year. Morgan Stanley says Dell is most at risk in light of its 25% revenue exposure to desktop PCs as compared to 14% revenue exposure to storage and servers.

Of the respondents, 56% say they expect to increase spending on storage.

Hosting

IT departments will continue to shed IT assets, benefitting data center and cloud services. CIOs will reduce PC and server footprints while managing capacity for latency and bandwidth.

CIOs expect to move 3% of their IT infrastructure and application workloads off-premise in each of the next two years. Public cloud providers should see higher growth compared to co-location facilities.

Many CIOs see the benefits of outsourcing non-critical functions to the cloud while keeping transaction-based or compliance-oriented applications on a dedicated platform.

IT Services

Similarities to past technology adoption will benefit the IT services sector. ERP, for instance, drove demand for implementation and continues to generate maintenance revenues. That's a pattern that should unfold with the adoption of cloud computing. Systems are complex and require expertise.

Trusted advisers will continue to be in high demand; what's in vogue will not alter the relationship. Again, cloud computing implementation is a complex affair. Companies need trusted advisers to guide them through the maze.

Cloud computing projects are starting small but will become standard as the efficiencies and benefits become more tangible. Sales cycles will be shorter as acceptance grows.

Business processing optimization is an opportunity for cloud computing. The cloud opens up a number of other enterprise outsourcing opportunities.

Finally, there is some expectation that cloud computing will open the small and mid-sized business market:

"We like this processor-like approach and think that several of its attributes could translate well to IT services companies. For one, transaction-based pricing would break the linearity between headcount and revenues that puts a theoretical limit on IT services revenue growth today. As new solutions reach scale, they also offer the prospect of higher, more processor-like operating margins. Finally, multi-tenant platforms allow almost infinite scalability, potentially opening up the small- and mid-sized market to the major vendors for the first time in a meaningful way."

Conclusion

The Morgan Stanley report is most significant in its illustration about how virtualization is affecting the largest companies in the world. PC spending will go down. This may not be a huge surprise but it shows how cloud computing and virtualization are becoming mainstream in the enterprise. Cloud computing is here to stay.

<Return to section navigation list>

Cloud Security and Governance

Joey Snow and Brian Prince conspire to produce an 00:12:27 Windows Azure Security ‘trust, risk, losing control, legalities, compliance and Sarbanes Oxley’ -Video Demonstration of 6/12/2010:

Joey Snow [from TechNet Edge] brings up the big concerns: trust, risk, losing control, legalities, compliance and Sarbanes Oxley. Brian Prince [Microsoft Architect Evangelist] fills us in on what is in-place for Windows Azure in terms of physical security, information security, management and standard security audits.

Douglas Barbin asserts “In a world of ‘show me don’t tell me’ a little tell me could go a long way here” in his Hey Cloud Providers… Try Marketing Security Features post of 6/12/2010 about SAS 70 audits:

Another day and another set of Google Alerts on SAS 70. Most links are press releases saying that a cloud computing provider has been SAS 70 certified, SAS 70 secured or some other mischaracterization of what SAS 70 was actually intended to do. Other links are blog posts blasting these same marketers (and indirectly the CPAs) about how a SAS 70 is insufficient and not prescriptive enough to "secure the cloud."

For several years, I ran the product lines for one of the largest managed security service providers in the world. I was always being asked about security controls, whether it was before, during, or after the negotiation of a large global outsourcing contract.

So from my perspective, I would equate this dialogue to the following:

My wife: Doug have you had your H1N1 shot?

My response: I get an annual physical.

Sure a physical is a good thing to have, but it didn't answer her question. The same thing happens here when customers and prospects ask questions about what controls are in place only to be provided with an answer that the controls (which aren't stated) have been audited. Another good thing to have done but it does not answer the question.

So here's a novel idea.... how about tell them? Better yet, how about a white paper? Now before the security professionals start throwing tomatoes my way for suggesting more marketing involvement in security assurance, hear me out.

- The problem is that most providers are not talking about what they are doing to protect their customers' data. Instead they are citing audit reports, which are generally restricted in nature to use by customers and their auditors.

- In this day of cloud computing (and paranoia), security features are key product features.

- Service providers, your audit reports should serve to validate what you are already telling people you do, not become your source of information sharing. They should be your response when a customer says "prove it" not "show me."

The following are some examples found online:

- GotoMyPC.com - Paranoid about what GoToMyPC could do in the wrong hands? Citrix published a nine-page white paper on all of the security features and also what the user's responsibilities are.

- WebEx Security - Cisco provides an overview of controls in place but also guidance on how WebEx users can be more careful.

- Switch and Data - S&D provides a good listing of available security, physical, and environmental controls.

- Rackspace - A concise but descriptive one-page overview of security controls. (Rackspace has many other whitepapers on security as well.)

While I think these are a good start, it's clear that sharing information about security is not commonplace (and often taboo) for service providers. This is not rocket science; it's marketing 101 where you promote the features and functionality that your customers care about, and we know they care about security and reliability. Despite what your CISO may say, you can actually share information about security controls without naming platforms, versions, IP addresses, and other data that could get put to use by the wrong persons.

How about a mid-year resolution to tell your customers more about how you secure their data?

This is also why I am an active participant with Chris Hoff and the CloudAudit group. We are not trying to create new standards but create an automated mechanism (via open APIs) to share security and control data with the people who need it to make decisions. While the data doesn't come from the audit firms, the providers certainly have the opportunity to prove what they are doing through SAS 70 audits, PCI validation, ISO certification, and other independent means.

In a world of "show me don't tell me" a little tell me could go a long way here.

Doug Barbin is a Director at SAS 70 Solutions, a company that provides assurance and technology compliance services with an emphasis on SAS 70 audits, PCI validation, and ISO 27001/2 compliance.

Jon Stokes asked Will the cloud have its own Deepwater Horizon disaster? in his 6/11/2010 story for Ars Technica:

A new Pew Internet survey of 900 Internet experts leads with a headline finding that will surprise few: the experts largely agree that, by 2020, we'll all be computing in the cloud. But an even more interesting notion is buried in one corner of the report, and it's an idea that came up in two of the three cloud interviews I did in the wake of Wired/Ars Smart Salon. This notion is that, at some point, there will be a massive data breach—a kind of cloud version of the Deepwater Horizon disaster, but pouring critical data out into the open instead of oil—and that this breach will cause everyone from private industry to government regulators to rethink what cloud computing can and cannot do.

"We'll have a huge blow up with terrorism in the cloud and the PC will regain its full glory," said R. Ray Wang, a partner in The Altimeter Group and a blogger on enterprise strategy. "People will lose confidence as cyber attacks cripple major systems. In fact, cloud will be there but we'll be stuck in hybrid mode for the next 40 years as people live with some level of fear." …

As [Accenture's] Joe [Tobolski] pointed out in his interview, you can actually boil down all of these concerns, justified or not, to the core issue of ownership. The anxiety that comes with not owning and operating an important piece of infrastructure—whether personal or business—gets expressed in terms of reliability, security, privacy, and lock-in, but at root it's all about ownership and control. …

I've recently run into the latter two issues with two cloud services that I've used, Ning and DabbleDB. Before these two incidents, I had an intellectual understanding of the risks inherent in not owning your own servers, but that understanding hadn't yet translated into genuine anxiety.

Jon explains that Ning was free originally but began charging for new sites and Twitter purchased DabbleDB’s owner.

I'll probably just use Filemaker Pro 11 and publish it via my home network, since I have a static IP. That way, I don't have to worry about another cloud database company cutting me off. If I do end up with a cloud-based replacement for Dabble, I'll be going with the rule that bigger and older is better. Services from a Microsoft or an Amazon are much more likely to be around for as long as I need them, and in a stable form that I can depend on. [Emphasis added] …

Because they're aiming their services at businesses, and because they've been in business long enough to know how it works, these companies are on the hook for a level of predictability that startups and larger, consumer-focused companies run by inexperienced young execs are not.

Check out other cloud-related stories by Jon Stokes: Cloud tradeoffs: freedom of choice vs. freedom from choice, a 6/4/2010 interview with Joe Tobolski, and Investing in the cloud: evolution, not revolution, a May 2010 interview with Ping Li, a partner at Accel Partners, a Palo Alto VC firm; Ping focuses on cloud infrastructure.

<Return to section navigation list>

Cloud Computing Events

My List of 75 Cloud-Computing Sessions at TechEd North America 2010 Updated with Webcast Links was updated on 6/12/2010 with links to breakout-session Webcasts and slide decks:

TechEd NA 2010 Sessions search on Windows Azure Product/Technology returns only seven sessions and Microsoft SQL Azure SQL returns only two sessions, but searching with Cloud Computing & Online Services as the Track returns 45. Strangely, searching with cloud as the Keyword shows 64 sessions.

Updated 6/12/2010: Added links to most breakout-session Webcasts and slide-deck downloads. Webcasts of Thursday sessions weren’t available on 6/12/2010. Some sessions, such as the five Windows Azure Boot Camps, don’t have Webcasts.

Updated 5/28/2010: Added ASI302 | Design Patterns, Practices, and Techniques with the Service Bus in Windows Azure AppFabric with Clemens Vasters and Juval Lowy as a Breakout Session and changed count in post title from 74 to 75.

Keith Combs’ Bytes by TechNet Launches with Keith Combs and Jenelle Crothers post of 6/11/2010 announced:

This week at TechEd 2010 Matt Hester, Chris Henley, Harold Wong and I had the pleasure of interviewing MVPs, customers and Microsoft staff on a variety of IT Pro topics including Windows 7, Windows Server, Windows Azure, SharePoint and others. [Emphasis added.]

We have a landing page at http://technet.microsoft.com/BytesByTechNet where you will see each episode unveiled over the next few weeks and months.

The first show is a five minute “byte” I did with Jenelle Crothers. Jenelle is a Senior Systems/Network Administrator at the Conservation & Liquidation Office. She is a Microsoft MVP for the Windows Desktop Experience and we talk about Windows 7 Deployment and Virtualization on XP mode. Hit the link at the beginning of this paragraph for links to the resources we are referring to in the talk.

The [post’s] video is the high definition 720p version of the video and I encoded it with Expression Encoder 4 using the new H.264 capabilities that are available. It appears the result of the VBR encoding is a 3MB data rate so I am interested to know how this .MP4 looks and plays for you. Enjoy.

Toby Wolpe’s How Microsoft's Azure and cloud services are shaping up interviews Microsoft’s Mark Taylor in his post of 5/11/2010 to ZDNet UK’s Cloud blog:

Five months on from February's commercial launch of Azure, Microsoft has made a series of announcements designed to strengthen the development and hosting platform.

Monday's unveilings included an update to the Windows Azure software development kit (SDK), a new public preview of the SQL Azure Data Sync Service for syncing and distributing data among several datacentres, and SQL Server Web Manager, to develop and deliver cloud applications. ZDNet UK spoke to Mark Taylor, the company's director of developer and platform evangelism, about Microsoft's whole approach to cloud computing, from infrastructure to data sovereignty, private clouds and licensing.

Q: Microsoft has been spending millions on expanding its infrastructure for the cloud, so do you have plans for datacentres in the UK?

A: We haven't announced plans for facilities in the UK, and you can imagine that in every major country in the world we are being asked that question. We are not ruling anything out or in at this stage. But there will definitely be scenarios where customers say, "I just want my data in the UK and I'm not going to proceed if it's not". We have to make sure we find the right way of dealing with that.

Some government agencies require sovereignty of data. But then when you start drilling down and understand what needs to be in the UK and what doesn't and what could be in the EU — and also what premium you'll pay for data sovereignty — then many of those who start out insisting on a UK location actually agree on a compromise.

If they have some aspect of their computing that they can't put outside the UK, they might keep that on-premise or they might use a third party. We along with many vendors provide most of the tools you need to operate cloud services within a datacentre today, whether it is a third party's datacentre or a customer's one.

In AppFabric, there is a component for Windows Server that gives you a lot of this capability inhouse. …

Toby continues his two-page interview with more Q’s and A’s.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

CloudHarmony’s Google Storage, a CDN/Storage Hybrid? post of 6/11/2010 analyzes the performance of Google Storage from various locations around the globe:

We received our Google Storage for Developers account today, and being the curious types, immediately ran some network tests. We maintain a network of about 40 cloud servers around the world which we use to monitor cloud servers, test cloud-to-cloud network throughput, and our cloud speedtest. We used a handful of these to test Google Storage uplink and downlink throughput and latency. We were very surprised by the low latency and consistently fast downlink throughput to most locations.

Most storage services like Amazon's S3 and Azure Blob Storage are physically hosted from a single geographical location. S3, for example, is divided into 4 regions: US West, US East, EU West and APAC. If you store a file to an S3 US West bucket, it is not replicated to the other regions, and can only be downloaded from that region's servers. The result is much slower network performance from locations with poor connectivity to that region. Hence, you need to choose your S3 region wisely based on geographical and/or network proximity. Azure's Blob storage uses a similar approach. Users are able to add CDN on top of those services (CloudFront and Azure CDN), but the CDN does not provide the same access control and consistency features of the storage service.

In contrast, Google's new Storage for Developers service appears to store uploaded files to a globally distributed network of servers. When a file is requested, Google uses some DNS magic to direct a user to a server that will provide the fastest access to that file. This is very similar to the way that Content Delivery Networks like Akamai and Edgecast work, wherein files are distributed to multiple globally placed PoPs (points of presence).

Our simple test consisted of requesting a test 10MB file from 17 different servers located in the US, EU and APAC. The test file was set to public and we used wget to test downlink and Google's gsutil to test uplink throughput (wget was faster than gsutil for downloads). In doing so, we found the same test URL resolved to 11 different Google servers with an average downlink of about 40 Mb/s! This hybrid model of CDN-like performance with enterprise storage features like durability, consistency and access control represents an exciting leap forward for cloud storage!
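A downlink measurement of the kind described above can be approximated with Python's standard library. This is a minimal sketch, not CloudHarmony's actual harness (they report using wget and gsutil): the function names are hypothetical, any URL passed in is up to the reader, and a single timed download is a much cruder measure than their multi-location tests.

```python
import time
import urllib.request


def throughput_mbps(num_bytes, seconds):
    """Convert a transfer of num_bytes in `seconds` into megabits per second."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return (num_bytes * 8) / seconds / 1_000_000


def measure_downlink(url, chunk_size=64 * 1024):
    """Download `url` once and return the observed throughput in Mb/s.

    The timing includes connection setup, so small files will understate
    the sustained rate.
    """
    start = time.perf_counter()
    total = 0
    with urllib.request.urlopen(url) as response:
        while True:
            chunk = response.read(chunk_size)
            if not chunk:
                break
            total += len(chunk)
    elapsed = time.perf_counter() - start
    return throughput_mbps(total, elapsed)
```

Calling measure_downlink() with the URL of a public test file returns a figure comparable in kind to the numbers quoted above. For scale, at the reported average of about 40 Mb/s a 10MB file downloads in roughly two seconds.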

The post continues with upload and download speed tests at 17 international locations.

You might find CloudHarmony’s Cloud Storage Showdown Part 2 - What is the best storage for your cloud server? post of 3/24/2010, which includes speed tests of Windows Azure, and Connecting Clouds - Summary of Our Intracloud Network Testing of 3/14/2010 of interest also.

Chris Hoff’s All For One, One For All? On Standardizing Virtual Appliance Operating Systems post of 6/11/2010 analyzes VMware’s adoption of SUSE Linux Enterprise Server for its vSphere™ virtual machines:

Hot on the tail of the announcement that VMware and Novell are entering into a deeper “strategic partnership” in order to deliver and support SUSE Linux Enterprise Server (SLES) for VMware vSphere environments, was an interesting blog post from Stu (@vinternals) titled “Enter the Appliance.”

Now, before we get to Stu’s post, let’s look at the language from the press release (the emphasis is mine):

VMware and Novell today announced an expansion to their strategic partnership with an original equipment manufacturer (OEM) agreement through which VMware will distribute and support the SUSE® Linux Enterprise Server operating system. Under the agreement, VMware also intends to standardize its virtual appliance-based product offerings on SUSE Linux Enterprise Server. …

Customers who want to deploy SUSE Linux Enterprise Server for VMware® in VMware vSphere™ virtual machines will be entitled to receive a subscription to SUSE Linux Enterprise Server that includes patches and updates as part of their newly purchased qualifying VMware vSphere license and Support and Subscription. Under this agreement, VMware and its extensive network of solution provider partners will also be able to offer customers the option to purchase technical support for SUSE Linux Enterprise Server delivered directly by VMware for a seamless support experience. This expanded relationship between VMware and Novell benefits customers by reducing the cost and complexity of deploying and maintaining an enterprise operating system with VMware solutions.

As a result of this expanded collaboration, both companies intend to provide customers the ability to port their SUSE Linux-based workloads across clouds. Such portability will deliver choice and flexibility for VMware vSphere customers and is a significant step forward in delivering the benefits of seamless cloud computing.

Several VMware products are already distributed and deployed as virtual appliances. A virtual appliance is a pre-configured virtual machine that packages an operating system and application into a self-contained unit that is easy to deploy, manage and maintain. Standardizing virtual appliance-based VMware products on SUSE Linux Enterprise Server for VMware® will further simplify the deployment and ongoing management of these solutions, shortening the path to ROI.

What I read here is that VMware virtual appliances — those VMware products packaged as virtual appliances distributed by VMware — will utilize SLES as the underlying operating system of choice. I don’t see language or the inference that other virtual appliance ISVs will be required to do so. …

Here’s the interesting assertion Stu makes that inspired my commentary:

If you’re a software vendor looking to adopt the virtual appliance model to distribute your wares then I have some advice for you – if you’re not using SLES for the base of your appliance, start doing so. Now. This partnership will mean doors that were previously closed to virtual appliances will now be opened, but not to any old virtual appliance – it will need to be built on an Enterprise grade distro. And SLES is most certainly that.

Chris Wolf, Stu and I had a bit of banter on Twitter regarding this announcement wherein I suggested there’s a blurring of the lines and a conflation of messaging as well as a very unique perspective that’s not being discussed.

Specifically, I don’t see where it was implied that ISVs would be forced to adopt SLES as their OS of choice for virtual appliances. I’m not suggesting it’s not compelling to do so for the support and distribution reasons stated above, but I suggest that the notion that “…doors that were previously closed to virtual appliances” from the perspective of support and uniformity of distro will also have an equal and opposite effect caused by a longer development lifecycle for many vendors.

Especially networking and security ISVs looking to move their products into a virtual appliance offering.

I summed up many of the issues associated with virtual security and networking appliances in my Four Horsemen of the Virtualization Security Apocalypse presentation, but let me just summarize…

Unlike most user-facing or service-delivery applications that are not topology sensitive (that is, they simply expect to be able to speak to “the network” without knowing anything about it), network and security ISVs do very interesting things with drivers and kernel-space code in order to deal with topology, where they sit in the stack, and how they improve performance and stability that are extremely dependent upon direct access to hardware or at the very least, custom drivers or extended/hacked kernels. …

Diversity is a good thing — at least when it comes to your networking and security infrastructure. While I happen to work for a networking vendor, we all recognize that uniformity brings huge benefits as well as the potential for nasty concerns. If you want an example, check out how a simple software error affected tens of millions of users of Wordpress (Wordpress and the dark side of multitenancy.) While we’re talking about a different layer in the stack, the issue is the same.

I totally grok the standardization argument for the cost control, support and manageability reasons Stu stated but I am also fearful of the extreme levels of lock-in and monoculture this approach can take.

Mary Jo Foley’s Microsoft hits back on expanded Novell-VMware alliance post of 6/10/2010, as reported in Windows Azure and Cloud Computing Posts for 6/9/2010+ included these quotes:

… In a June 9 post, entitled “VMWare figures out that virtualization is an OS feature,” Patrick O’Rourke, director of communications, Server and Tools Business, highlights the 3.5 year partnership between Microsoft and Novell, claiming it has benefited more than 475 joint customers.

“(T)he vFolks in Palo Alto are further isolating themselves within the industry. Microsoft’s interop efforts have provided more choice and flexibility for customers, including our work with Novell. We’re seeing VMWare go down an alternate path,” O’Rourke says.

He claimed the VMware-Novell partnership is “bad for customers as they’re getting locked into an inflexible offer.” (Was the uncertainty created by Microsoft over the indemnification of Novell customers using certain versions of SUSE “flexible”?) I’d say there’s quite a few hoops for customers to jump through whether they’re relying on Microsoft or VMware as their SUSE distribution partner….

O’Rourke also cites the new partnership as proving VMware has “finally determined that virtualization is a server OS feature” because of the announced appliance that will include a full version of a server OS with vSphere.

O’Rourke ended his post by pointing out VMware doesn’t have a public-cloud offering like Windows Azure.

<Return to section navigation list>