Windows Azure and Cloud Computing Posts for 8/5/2013+

Top Stories This Week:

- Adam Grocholski (@codel8r) reported availability of The Windows Azure Training Kit 2013 Refresh in an 8/6/2013 Sys-Con Media Blog Feed Post. See the Windows Azure Cloud Services, Caching, APIs, Tools and Test Harnesses section below.

- Brad Anderson (@InTheCloudMSFT) described What’s New in 2012 R2: Service Provider & Tenant IaaS Experience and other new Windows Server 2012 R2 features in the Windows Azure Pack, Hosting, Hyper-V and Private/Hybrid Clouds section below.

- Brian Hitney described Migrating a Blog to Windows Azure Web Sites in the Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN section below.

A compendium of Windows Azure, Service Bus, BizTalk Services, Access Control, Caching, SQL Azure Database, and other cloud-computing articles.

‡ Updated 8/11/2013 with new articles marked ‡.

• Updated 8/8/2013 with new articles marked •.

Note: This post is updated weekly or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Windows Azure Marketplace DataMarket, Power BI, Big Data and OData

- Windows Azure Service Bus, BizTalk Services and Workflow

- Windows Azure Access Control, Active Directory, and Identity

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Windows Azure Cloud Services, Caching, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure and DevOps

- Windows Azure Pack, Hosting, Hyper-V and Private/Hybrid Clouds

- Visual Studio LightSwitch and Entity Framework v4+

- Cloud Security, Compliance and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

‡ Andrew Tegethoff continued his series with a Microsoft Cloud BI: Azure Data Services for BI? post to the Perficient blog on 8/9/2013:

In my first post in this series, I talked a little about the basics of cloud BI and Microsoft’s Windows Azure public cloud platform. I gave a brief glimpse of what services Azure offers for BI. So to begin with, let’s recap the Azure data services offerings in terms of the cloud computing models available. Azure’s Platform as a Service (PaaS) offerings include Azure SQL Database, Azure SQL Reporting Services, HDInsight, and Azure Tables. Azure’s Infrastructure as a Service (IaaS) offering is Azure Virtual Machines.

So what does each of these services provide, and is it something you can leverage for BI?

Azure SQL Database

The Service Formerly Known As “SQL Azure” is essentially “SQL Server in the cloud”. With your subscription, you can create up to 150 databases of up to 5GB each with the Web Edition (or up to 150GB each with the Business Edition). Aside from some administration differences and some on-premises data types that the cloud service doesn’t support, Azure SQL Database functions very much like SQL Server. You access data with T-SQL and write queries the same way. It’s a great option for building an application data source, especially if that application is also being built in Azure. But what about BI use? The capacity and capabilities of SQL Database are sufficient for almost any small company’s data warehouse needs, and even for those of many mid-size organizations. But this is not the place to store a multi-TB data warehouse. One could theoretically federate databases to achieve that, but the complexity and storage costs would be prohibitive. Connectivity and bandwidth concerns may also render typical ETL patterns impractical. And, as if that weren’t enough, Azure SQL Database does not include the BI stack tools: Analysis Services, Integration Services, or Reporting Services.

Azure SQL Reporting Services

This service is, as you might guess, basically a port of SQL Server Reporting Services into the Azure world as a PaaS offering. As such, the development experience and functionality are very much like those of the document-oriented interface of traditional RS. So this makes up for not having SSRS in SQL Database, right? Well, no. Azure RS is really built for use only against a SQL Database data source. And as far as security goes, SQL Authentication is the only scheme supported. So, no, this isn’t a cloud-based reporting service at large, and it isn’t a general-purpose BI tool.

Azure Tables

Azure Table storage is aimed primarily at non-relational data. This NoSQL option is ideal for storing big datasets (up to 100TB with an Azure Storage account) that don’t require complex joins or referential integrity. Data in tables is accessible through OData connections and LINQ in .NET applications. The term “Tables” is a little deceiving, because an Azure Table is actually a collection of Entities, each of which has Properties. You can think of this as analogous to a Row of Columns, even though it’s quite different under the covers. So this type of storage is of great use in app development for storing, say, user or profile information. It’s less expensive than Azure SQL Database and scales easily and transparently in both performance and data size. BUT it does not make for a great BI platform, as things like referential integrity (RI) and joins tend to be fairly critical parts of a data warehouse.
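To make the entity/property model more concrete, here is a minimal in-memory sketch in Python. It is not the Azure Storage client API: `InMemoryTable` and its methods are hypothetical. It assumes only that each entity is identified by its PartitionKey and RowKey properties, as Azure Table entities are, and it shows that two entities in the same table can carry different sets of properties.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Iterator, Tuple


@dataclass
class InMemoryTable:
    """Toy model of a schemaless table: a collection of entities, not fixed-schema rows."""
    name: str
    entities: Dict[Tuple[str, str], Dict[str, Any]] = field(default_factory=dict)

    def insert(self, entity: Dict[str, Any]) -> None:
        # Every entity is addressed by the (PartitionKey, RowKey) pair.
        key = (entity["PartitionKey"], entity["RowKey"])
        if key in self.entities:
            raise KeyError(f"entity {key} already exists")
        self.entities[key] = dict(entity)

    def get(self, partition_key: str, row_key: str) -> Dict[str, Any]:
        return self.entities[(partition_key, row_key)]

    def query_partition(self, partition_key: str) -> Iterator[Dict[str, Any]]:
        # Simple filter on one partition; there is no join operator at all.
        for (pk, _), entity in self.entities.items():
            if pk == partition_key:
                yield entity


profiles = InMemoryTable("Profiles")
profiles.insert({"PartitionKey": "contoso", "RowKey": "alice", "Email": "alice@contoso.com"})
# A second entity in the same table may have a completely different property set.
profiles.insert({"PartitionKey": "contoso", "RowKey": "bob", "Theme": "dark", "Logins": 42})
```

Anything resembling a join (say, matching profiles against orders stored in another table) has to be done in application code by looking up entities one by one, which is why this model suits app data better than a data warehouse.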

HDInsight

A cloud-based offering for storing Big Data, HDInsight is built on Hadoop but lets end users tap into big data using SQL Server and/or Excel. HDInsight uses Azure Storage, which can hold TB-scale stores of unstructured data. But the real magic happens in supplementary tools like Hive, Pig, and Sqoop, which allow users to submit queries and return results from Hadoop using SQL-like languages such as Hive’s HiveQL. One could connect to an HDInsight store using on-premises SQL Server, Azure SQL Database, or even Excel. So here we have something extremely compelling from an analytics perspective.

BTW, this is one way in the Microsoft stack to integrate Big Data into existing solutions. The other way, staying native to Microsoft tech, would be to use Parallel Data Warehouse (PDW) 2012, which features cool new PolyBase technology as a way to bridge the gap between Hadoop and SQL. But that’s an entirely different topic…

Azure Virtual Machines

So we’ve evaluated the PaaS side and found it a questionable fit for BI. What about the IaaS side and Azure Virtual Machines? NOW we’re talking! Azure VMs provide the only path to running full-featured SQL Server BI in the cloud, since you can install and run a complete version of SQL Server.

But since this post is already too long, I’ll save that for next time… See you then!

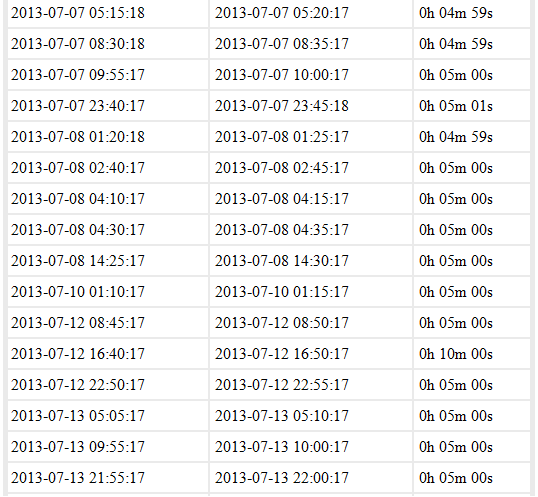

• My (@rogerjenn) Uptime Report for my Live OakLeaf Systems Azure Table Services Sample Project: July 2013 = 100.00% of 8/6/2013 is the fifth in a series of months with no downtime:

My (@rogerjenn) live OakLeaf Systems Azure Table Services Sample Project demo project runs two small Windows Azure Web role compute instances from Microsoft’s South Central US (San Antonio, TX) data center. This report now contains more than two full years of uptime data.

• Joe Giardino, Serdar Ozler, Jean Ghanem and Marcus Swenson of the Windows Azure Storage Team described a fix for .NET Clients encountering Port Exhaustion after installing KB2750149 or KB2805227 on 8/7/2013:

A recent update for .NET 4.5 introduced a regression to HttpWebRequest that may affect high scale applications. This blog post will cover the details of this change, how it impacts clients, and mitigations clients may take to avoid this issue altogether.

What is the effect?

Clients would observe long latencies for their Blob, Queue, and Table Storage requests. They may find that their requests to storage are dispatched only after a delay, or that requests are not dispatched at all and a System.Net.WebException is thrown when the application tries to access storage. The details of the exception are explained below. Running netstat as described in the next section would show that the process has consumed many ports, causing port exhaustion.

Who is affected?

Any client that is accessing Windows Azure Storage from a .NET platform with KB2750149 or KB2805227 installed that does not consume the entire response stream will be affected. This includes clients that are accessing the REST API directly via HttpWebRequest and HttpClient, the Storage Client for Windows RT, as well as the .NET Storage Client Library (2.0.6.0 and below provided via NuGet, GitHub, and the SDK). You can read more about the specifics of this update here.

In many cases the Storage Client Libraries do not expect a body to be returned from the server based on the REST API and subsequently do not attempt to read the response stream. Under previous behavior this “empty” response consisting of a single 0 length chunk would have been automatically consumed by the .NET networking layer allowing the socket to be reused. To address this change proactively we have added a fix to the .NET Client library in version 2.0.6.1 to explicitly drain the response stream.

A client can use the netstat utility to check for processes that are holding many ports open in the TIME_WAIT or ESTABLISHED states by issuing netstat -a -o (the -a option shows all connections, and the -o option displays the owning process ID).

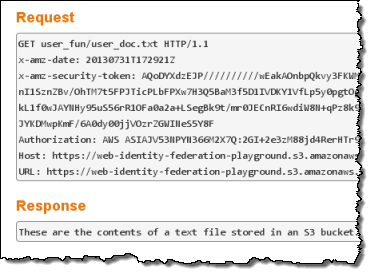

Running this command on an affected machine shows the following:

You can see above that a single process with ID 3024 is holding numerous connections open to the server.
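This check can be automated. The Python sketch below counts TCP connections in the TIME_WAIT or ESTABLISHED state per owning process ID. It assumes the five-column row layout (Proto, Local Address, Foreign Address, State, PID) that Windows netstat -a -o prints for TCP connections; the sample rows and addresses are illustrative, not output captured from an affected machine, and `connections_per_pid` is a hypothetical helper.

```python
from collections import Counter
from typing import Iterable

# States that indicate a socket is still tied up on this machine.
WATCHED_STATES = {"TIME_WAIT", "ESTABLISHED"}


def connections_per_pid(netstat_lines: Iterable[str]) -> Counter:
    """Count TCP connections in WATCHED_STATES, grouped by owning process ID.

    Assumes the Windows `netstat -a -o` row layout:
    Proto  Local Address  Foreign Address  State  PID
    """
    counts: Counter = Counter()
    for line in netstat_lines:
        parts = line.split()
        # TCP rows have exactly five fields; headers and UDP rows (no State) are skipped.
        if len(parts) == 5 and parts[0] == "TCP" and parts[3] in WATCHED_STATES:
            counts[int(parts[4])] += 1
    return counts


# Illustrative sample with made-up addresses, not real captured output.
SAMPLE = """\
Active Connections

  Proto  Local Address          Foreign Address        State           PID
  TCP    10.0.0.4:49157         203.0.113.10:80        ESTABLISHED     3024
  TCP    10.0.0.4:49158         203.0.113.10:80        TIME_WAIT       3024
  TCP    10.0.0.4:49159         203.0.113.10:80        ESTABLISHED     3024
  TCP    0.0.0.0:135            0.0.0.0:0              LISTENING       812
  UDP    0.0.0.0:500            *:*                                    1196
""".splitlines()

print(connections_per_pid(SAMPLE).most_common(1))  # [(3024, 3)]
```

On a live Windows machine you could feed it `subprocess.run(["netstat", "-a", "-o"], capture_output=True, text=True).stdout.splitlines()` instead of the sample; a single PID with a very large count is the symptom described above.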

Description

Users installing the recent update (KB2750149 or KB2805227) will observe slightly different behavior when using HttpWebRequest to communicate with a server that returns a chunked-encoded response. (For more on chunked encoding, see here.)

When a server responds to an HTTP request with a chunked-encoded response, the client may not know the entire length of the body, and therefore reads the body in a series of chunks from the response stream. The response stream is terminated when the server sends a zero-length “chunk” followed by a CRLF sequence (see the article above for more details). When the server responds with an empty body, the entire payload consists of a single zero-length chunk that terminates the stream.
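The framing described above can be illustrated with a small decoder. This Python sketch is written for illustration, not taken from .NET: `decode_chunked` is a hypothetical helper that reads size-prefixed chunks (sizes in hexadecimal) until it meets the zero-length chunk. It assumes the body has no trailer headers and simply ignores chunk extensions. An empty body is just `0\r\n\r\n`, the single zero-length chunk mentioned above.

```python
def decode_chunked(body: bytes) -> bytes:
    """Decode an HTTP/1.1 chunked body (chunk extensions ignored, no trailers assumed)."""
    data = bytearray()
    pos = 0
    while True:
        # Each chunk starts with its size in hex, optionally followed by ";extension".
        line_end = body.index(b"\r\n", pos)
        size = int(body[pos:line_end].split(b";", 1)[0], 16)
        pos = line_end + 2
        if size == 0:
            # Zero-length chunk: end of body, followed by the final CRLF.
            if body[pos:pos + 2] != b"\r\n":
                raise ValueError("missing CRLF after last chunk")
            return bytes(data)
        data += body[pos:pos + size]
        pos += size
        if body[pos:pos + 2] != b"\r\n":
            raise ValueError("chunk not terminated by CRLF")
        pos += 2


print(decode_chunked(b"4\r\nWiki\r\n5\r\npedia\r\n0\r\n\r\n"))  # b'Wikipedia'
print(decode_chunked(b"0\r\n\r\n"))  # b'' (an "empty" response is still one chunk)
```

The second call is the case that matters here: even an empty response has bytes on the wire that a client must read before the connection is clean for reuse.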

Prior to this update, the default behavior of HttpWebRequest was to attempt to “drain” the response stream whenever the user closed the HttpWebResponse. If the request could successfully read the rest of the response, the socket could be reused by another request in the application and was returned to the shared pool. However, if a request still contains unread data, the underlying socket will remain open for some period of time before being explicitly disposed. This prevents the socket from being reused by the shared pool, causing additional performance degradation because each request must establish a new socket connection with the service.

Client Observed Behavior

In some cases, older versions of the Storage Client Library will not retrieve the response stream from the HttpWebRequest (for example, on PUT operations) and therefore will not drain it, even though the server sends no data. Clients with KB2750149 or KB2805227 installed that use these libraries may begin to encounter TCP/IP port exhaustion. When TCP/IP port exhaustion does occur, a client will encounter the following web and socket exceptions:


System.Net.WebException: Unable to connect to the remote server

System.Net.Sockets.SocketException: Only one usage of each socket address (protocol/network address/port) is normally permitted.

Note: if you are accessing storage via the Storage Client library, these exceptions will be wrapped in a StorageException:

Microsoft.WindowsAzure.Storage.StorageException: Unable to connect to the remote server

System.Net.WebException: Unable to connect to the remote server

System.Net.Sockets.SocketException: Only one usage of each socket address (protocol/network address/port) is normally permitted.

Mitigation

We have been working with the .NET team to address this issue. A permanent fix is now available that reinstates this read-ahead behavior in a time-bounded manner.

Install KB2846046 or .NET 4.5.1 Preview

Please consider installing the hotfix (KB2846046) from the .NET team to resolve this issue. However, please note that you need to contact Microsoft Customer Support Services to obtain the hotfix. For more information, please visit the corresponding KB article.

You can also install .NET 4.5.1 Preview that already contains this fix.

Upgrade to latest version of the Storage Client (2.0.6.1)

An update was made for the 2.0.6.1 (NuGet, GitHub) version of the Storage Client library to address this issue. If possible please upgrade your application to use the latest assembly.

Uninstall KB2750149 and KB2805227

We also recognize that some clients may be running applications that still use version 1.7 of the storage client and may not be able to upgrade to the latest version, or to install the hotfix, without additional effort. Such users should consider uninstalling the updates until the .NET team releases a publicly available fix for this issue. We will update this blog once such a fix is available.

Another alternative is to pin the Guest OS version for your Windows Azure cloud services, which prevents them from receiving updates. This involves explicitly setting your OS to a version released before 2013.

More information on managing Guest OS updates can be found at Update the Windows Azure Guest OS from the Management Portal.

Update applications that leverage the REST API directly to explicitly drain the response stream

Any client application that calls the Windows Azure REST API directly can be updated to explicitly retrieve the response stream from the HttpWebRequest via [Begin/End]GetResponseStream() and drain it manually, that is, by calling the Read or BeginRead methods until the end of the stream is reached.
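The manual drain can be sketched in Python as an analog of calling Stream.Read in .NET until it returns 0. The `drain` helper below is hypothetical and not part of any Azure library; it assumes only a binary file-like object whose `read(n)` returns an empty bytes object at the end of the stream.

```python
import io
from typing import BinaryIO


def drain(stream: BinaryIO, buffer_size: int = 8192) -> int:
    """Read and discard the rest of `stream`; return the number of bytes discarded."""
    discarded = 0
    while True:
        chunk = stream.read(buffer_size)
        if not chunk:  # an empty read means end of stream
            return discarded
        discarded += len(chunk)


# Usage with an in-memory stream standing in for a response body.
body = io.BytesIO(b"x" * 20000)
print(drain(body))  # 20000
print(body.read())  # b''
```

Python’s http.client follows a similar rule: a response on an `HTTPConnection` must be read completely before the next response on that connection can be retrieved, so draining an `HTTPResponse` you otherwise ignore keeps the connection reusable.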

Summary

We apologize for any inconvenience this may have caused. Please feel free to leave questions or comments below.

Joe Giardino, Serdar Ozler, Jean Ghanem, and Marcus Swenson

Resources

- Windows Azure Storage Client library 2.0.6.1 (NuGet, GitHub)

- Original KB article #1: http://support.microsoft.com/kb/2750149

- Original KB article #2: http://support.microsoft.com/kb/2805227

- Hotfix KB article: http://support.microsoft.com/kb/2846046

- .NET 4.5.1 Preview: http://go.microsoft.com/fwlink/?LinkId=309499


Windows Azure SQL Database, Federations and Reporting, Mobile Services

‡ Rich Edmonds reported Microsoft's Windows Phone App Studio beta saw 30,000 projects created in just 48 hours in an 8/11/2013 post to the Windows Phone Central blog:

Microsoft launched its new online tool for new Windows Phone developers earlier this week, enabling those with app ideas to easily create and deploy working concepts. If you're a novice at app development, or simply reside in emerging markets and don't have an endless supply of funding, the Windows Phone App Studio beta is a simple solution that helps you get cracking without any obstacles. It's time to turn that app idea into reality.

‡ Matteo Pagani (@qmatteoq) posted App Studio: let’s create our first application! on 8/9/2013:

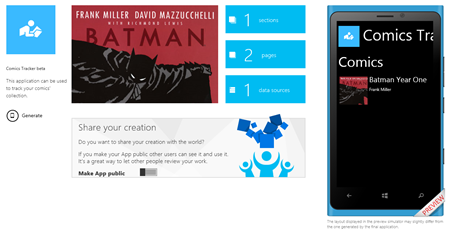

In the previous post I introduced App Studio, a new tool from Microsoft for easily creating Windows Phone applications. You define the application’s content with a series of wizards and, at the end, you can deploy the application to your phone or download the full Visual Studio project, so that you can keep working on it and add features that the web tool doesn’t support. Right now, because its big success caused some problems in the past few days, the tool can be accessed only with an invite code. If you’re really interested in trying it, send me an email using the contact form and I’ll send you an invite code. First come, first served!

Let’s see in detail how to use it and how to create our first app. We’re going to create a sample comic tracker app that we can use to keep track of our comics collection (I’m a comics fan, so I think it’s a good starting point). In these first posts we’ll see how to create the application using just the web tool; then we’ll extend it using Visual Studio to provide content editing features (like adding, deleting or editing a comic), since the web tool currently doesn’t support this scenario.

Let’s start!

Empty project or template?

The first thing to do is to connect to http://apps.windowsstore.com and sign in with your Microsoft Account. Then you’ll have the chance to create an application from scratch or to use one of the available templates. Templates are simply collections of pre-populated items, like pages, menus and sections. We’re going to create an empty app, so that we can better familiarize ourselves with the available options. So choose Create an empty app and let’s start!

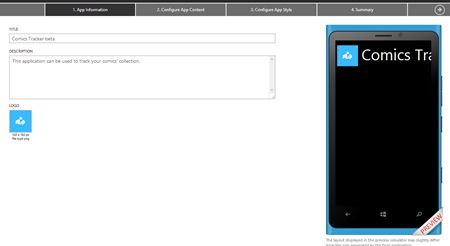

Step 1: App Information

The first step is about the app’s basic information: the title, the description and the logo (a 160×160 PNG image). While you fill in the required information, the phone image on the right is updated live to reflect the changes.

There isn’t much to see in the preview yet, since we’ve only defined the basic information. Let’s move on to the second step, where we’ll start to see some interesting stuff.

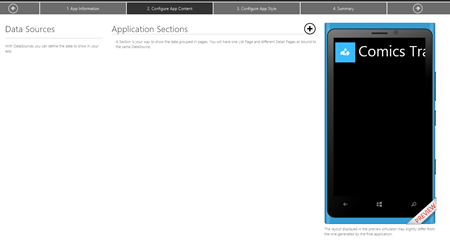

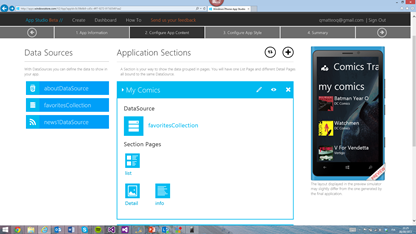

Configure App Content

In this section we’re going to define the content of our application; it’s, without any doubt, the most important one. Here is what the wizard looks like for an empty app:

The app content is based on two key concepts, which are strongly connected: data sources and sections. Data sources are, as the name says, the “containers” of the data that we’re going to display in the application. There are four types of data source:

- Collection is a static or dynamic collection of items (we’ll see later how to define it).

- RSS is a dynamic collection of items, populated by an RSS feed.

- YouTube is a collection of YouTube videos.

- Html isn’t a real collection, but just a space that you can fill with HTML content, like formatted text.

Each data source is connected to a section, which is the page (or pages) that will display the data taken from the source. A section can be made up of a single page (for example, for an Html data source) or of multiple pages (for example, for a Collection or RSS data source, which has a main page with the list of items and a detail page).

As suggested by the Windows Phone guidelines, the main page of the application is a Panorama that can have up to 6 sections: each section added in this view will be treated as a separate PanoramaItem in the XAML. This means that you’ll be able to jump from one section to another simply by swiping left or right.

If you want to add more than 6 sections, you can add a Menu, which is a special section that simply displays a list of links: every link can be a web link (to redirect the user to a web page) or a section link, which redirects the user to a new section. The setup process that I’m going to describe is exactly the same in both cases: the only difference is that a section placed at the main level will be displayed directly in the panorama, while a section inserted using a menu will be placed in another page of the application.

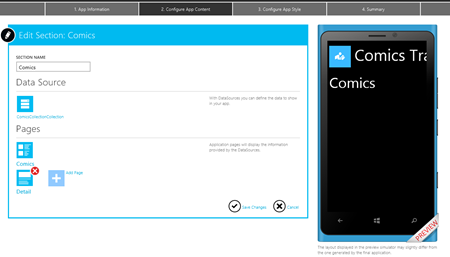

Let’s see how to define a section: you can add one by clicking the “+” button next to the Application Sections header. Here is the view that is displayed when you create a new section:

You can give the section a name and choose the type of data source that will be connected to it. Once you’ve made your choice, you simply have to give the source a name and press the Save changes button. In this sample, we’re going to create a data source to store our comics, which is a Collection data source.

Here is what a typical data source looks like. The tool has already added two pages for us: a master page, which will be included in the Panorama and will display the list of items, and a detail page, which is a separate page displayed when the user taps an item to see its details.

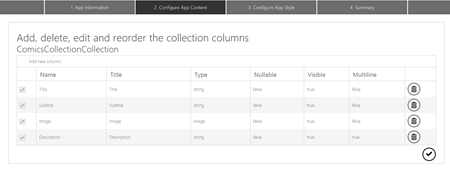

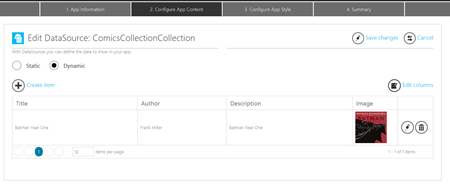

To see how you can customize a data source, click on the ComicsCollection we’ve just created: you’ll see a visual editor that can be used to define your data source. A collection data source is just a table: data will be automatically pulled and displayed in the application. By default, a collection data source already contains some fields, like Title, Subtitle, Image and Description. You can customize them by clicking the Edit columns button (it’s important to define the fields as the first step, since you can’t change them after you’ve inserted some items).

The editor is simple to use:

- You can add new columns by clicking the Add new column button.

- You can delete a column by clicking the Bin icon next to every field.

- You can reorder columns, by dragging them using the first icon in the table.

You’ll be able to create fields to store images, text or numbers. After you’ve set your collection’s fields, you can use the editor to start adding data. A nice feature is that you can choose whether your collection is static or dynamic. Static means that the application will contain only the data that you’ve inserted in the editor: the only way to add new data will be to create an application update and submit it to the Store. Dynamic, instead, means that the data inserted in your collection will be available through an Internet service: you’ll be able to add new data simply by inserting new items in the collection’s editor, and the application will automatically download (as if it were an RSS feed) and display them.

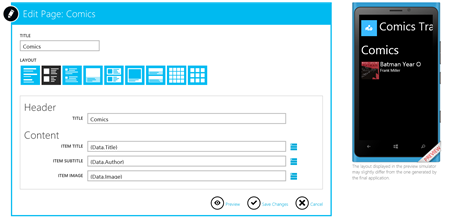

Once you’ve defined your data source, it’s time to customize the user interface and define how to display the data. As we’ve seen, the tool has automatically created two pages for us: the list and the detail page. However, we can customize them by clicking on the desired page in the editor:

In this editor you can customize the title, the layout (there are many layouts for different purposes; for example, you can choose to create an image gallery) and the content. The content editor changes according to the layout you’ve selected: in the previous sample, we chose a list layout, so we can set which data to display as title, subtitle and image. Had we chosen an image gallery layout, we would have been able to set only the image field.

The data to display can be picked from the data source we’ve defined: by clicking on the icon near the textbox, we can choose which of the collection’s fields we want to connect to the layout. In the sample, we connect the Title to the comic’s title, the Subtitle to the comic’s author and the Image to the comic’s cover. We can update the preview in the simulator by clicking the Preview icon: items will be automatically pulled from the data source.

The detail page editor is similar: the only difference is that the available layouts are different, since they are specific to detail pages. Plus, you’ll have access to a section called Extras, which you can use to enable Text To Speech (the application will read the content of the specified field) and Pin To Start (so that the user can create a shortcut on the Start screen to directly access the detail page).

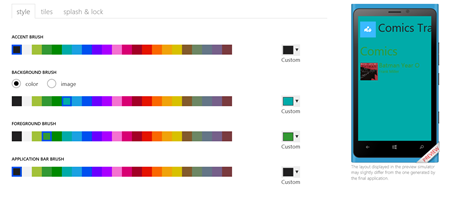

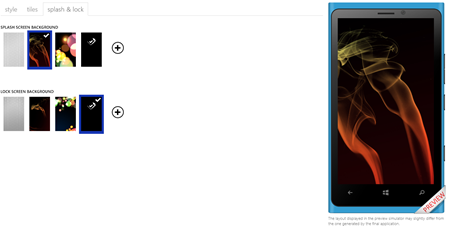

Configure App Style

This section is used to configure all the visual features of the application: colors, images, tiles, etc. The section is split into three tabs: Style, Tiles and Splash & lock.

Style

The Style section can be used to customize the application’s colors: you can choose from a predefined list or pick a custom color by specifying its hexadecimal value.

You can customize:

- The accent brush, which is the color used for the main texts, like the application’s title.

- The background brush, which is the background color of all the pages. You can choose also to use an image, which can be uploaded from your computer.

- The foreground brush, which is the color used for all the texts in the application.

- The Application bar brush, which is the color of the application bar.

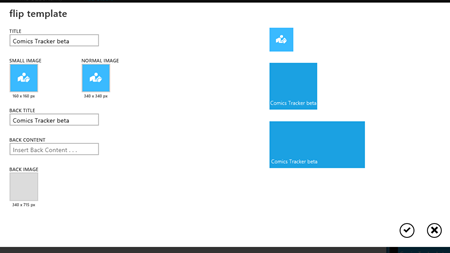

Tiles

In this section you’ll be able to customize the tile and choose one of the standard tile templates: flip, cycle and iconic. Cycle will be available only if you use a static collection data source, since the generated application is not able to pull images from a remote source and use them to update the tile.

Depending on the template, you’ll be able to edit the relevant information. By default, the tile uses the application icon you uploaded in the first step as its background image, but you’re free to replace it with a new image stored on your computer. On the right, the tool displays a live preview of what the tile looks like.

Splash & lock

This last section can be used to customize the splash screen and the lock screen. The generated application, in fact, is able to set an image as the lock screen, using the new APIs added in Windows Phone 8. In both cases you can choose from a list of available images or upload your own from your hard disk.

Summary

We’re at the end of the process! The summary page will show you a recap of the application: the name, the icon and the number of sections, pages and data sources we’ve added.

To complete the wizard, click the Generate button: the code generation process will start and complete within minutes. You’ll get an email when the process is finished. One of the cool App Studio features is that you’ll be able to install the created application on your phone even if it’s not developer unlocked, and without having to copy the XAP file using the Application Deployment tool included in the SDK. This is made possible by an enterprise certificate, which allows you to simply download the XAP using the browser and install it. This is why the email you receive is important: it contains the links both to the certificate (which you’ll have to install first) and to the application.

Installing the certificate is easy: just tap the link on your phone; Windows Phone will open Internet Explorer and download the certificate, then ask whether you want to add a Company account for Microsoft Corporation. Just choose “Yes” and you’re done. Now you can go back to the portal, where you’ll find a QR code that points to the application’s XAP. Just decode it using the native Bing Vision feature (press the Search hardware button, tap the Eye icon and point the phone’s camera at the QR code): again, Windows Phone will open Internet Explorer, download the XAP file and ask whether you want to install the company app. Just tap Yes and, after a few seconds, you’ll find your app in the applications list.

The tool also provides two other options:

- Download source code will generate the Visual Studio project, so that you can manually add new features to the application that are not supported by the tool.

- Download publish package will generate the XAP file required if you want to publish the application on the Store.

Have fun!

Now it’s your turn to experiment with the tool: try to add new sections or new pages, or to use one of the existing templates. At any time, you’ll be able to resume your work from the Dashboard section of the website: it lists all the applications you’ve created and lets you edit or download them.

In the next posts, we’ll take a look at the code generated by the tool and how we can leverage it to add new features.

See Matteo’s initial App Studio review below.

‡ Robert Green (@rogreen_ms) produced a 00:15:00 Azure Mobile Services Tools in Visual Studio 2013 video clip for Channel9 on 7/24/2013 (missed when published):

In this show, Robert is joined by Merwan Hade, who demonstrates how easy it is to add Azure Mobile Services that use push notifications to a Windows Store app built using Visual Studio 2013. Rather than having to switch back and forth from Visual Studio to the Azure portal Web site, you can do everything from inside Visual Studio. This saves time and should make anyone using Mobile Services very happy.

• Matteo Pagani (@qmatteoq) provided a third-party review of App Studio: a new tool by Microsoft for Windows Phone developers on 8/7/2013:

Yesterday Microsoft revealed a new tool for all Windows Phone developers, called App Studio. It’s a [beta version of a] web application that can be used to create a new Windows Phone application from scratch: by using a visual editor, you’ll be able to define all the visual aspects of your application, such as live tiles, images and logos.

Plus, you’ll be able to easily create pages and menus and to display collections, which are series of items that can be static or taken from an Internet resource (an RSS feed, a YouTube video, etc.). You can also add some special features, like Pin To Start (to pin a detail page to the Start screen) or Text To Speech (to read a page’s content to the user). It’s the perfect starting point if you want to easily create a company app or a website companion app and don’t have much time to spend on it.

After the editing process, you’ll be able to test your application directly on the phone: by using an enterprise certificate (which you’ll need to install), you’ll be able to install the generated XAP directly from the website, even if your phone is not developer unlocked.

I know what you’re thinking: “I’m an experienced Windows Phone developer, I don’t care about a tool for newbies”. Well, App Studio may hold a few surprises: besides generating the app, it also creates the Visual Studio project with the full source code, which is based on the MVVM pattern. It’s the perfect starting point if you want to develop a simple app and then extend it with new features, or if you need fast prototyping to show an idea to a potential customer.

The place to start is http://apps.windowsstore.com. [Click the Start Building button.] After you’ve logged in with your Microsoft Account, you’ll be able to create an empty application or use one of the available templates (which already contain a series of pages and menus for some common scenarios, like a company app, a hobby app or a sporting app). Either way, you’ll be able to edit every existing item and fully customize the template. …

Note: You’ll need an invitation code, which you can obtain from studio@microsoft.com, to create an app. I requested one and the responder said I can expect a response within the next 24 hours.

In the next posts we’ll cover the App Studio basics: how to create an application, how to customize it, how to deploy it. We’ll also see, in another series of posts, how to customize the generated Visual Studio project, to add new features to the application.

With the App Studio release, Microsoft has also introduced great news for developers: you can now unlock a Windows Phone device even without a paid developer account. The difference is that you’ll be limited to unlocking just 1 phone and sideloading up to 2 apps, while regular developers will continue to be able to unlock up to 3 phones and sideload up to 10 apps.

I’m anxious to find a connection between App Studio and Windows Azure Mobile Services (WAMS.) You’ll be the first to know if one exists.

• Nick Harris (@cloudnick) posted TechEd North America 2013 sessions about Windows Azure Mobile Services on 8/6/2013:

Yep, it's been a couple of really busy months of events: TechEd, //BUILD and Imagine Cup. Thanks for coming to my TechEd North America 2013 sessions back in June. If you missed them, you can find the Channel 9 videos and slide decks below, in case you feel like presenting to your local user group.

Developing Connected Windows Store Apps with Windows Azure Mobile Service: Overview (200)

Join us for a demo-packed introduction to how Windows Azure Mobile Services can bring your Windows Store and Windows Phone 8 apps to life. We look at Mobile Services end-to-end and how easy it is to add authentication, secure structured storage, and send push notifications to update live tiles. We also cover adding some business logic to CRUD operations through scripts as well as running scripts on a schedule. Learn how to point your Windows Phone 8 and Windows Store apps to the same Mobile Service in order to deliver a consistent experience across devices.

Watch it directly on Channel 9 here and get the slides here.

Build Real-World Modern Apps with Windows Azure Mobile Services on Windows Store, Windows Phone or Android (300)

Join me for an in-depth walkthrough of everything you need to know to build an engaging and dynamic app with Mobile Services. See how to work with geospatial data, multiple auth providers (including third-party providers using Auth0), periodic push notifications and your favorite APIs through scripts. We'll begin with a Windows Store app, then point Windows Phone and Android apps to the same Mobile Service to ensure a consistent experience across devices. We'll finish up with versioning APIs.

Watch it directly on Channel 9 here and get the slides here.

Ping me on Twitter at @cloudnick if you have questions.

I've pinged Nick with a request for a post on integrating WAMS with App Studio.

• Nick Harris (@cloudnick) explained How to handle WNS Response codes and Expired Channels in your Windows Azure Mobile Service on 8/6/2013:

When implementing push notification solutions for their Windows Store apps, many people implement the basic flow typically shown in a demo and then consider the job done. That works well for demos and for apps running at small scale with few users. Apps that succeed, however, will likely want to make the solution more efficient to reduce compute and storage costs. This post is not a complete guide, but it should give you enough information to start thinking about the right things.

A few quick up-front questions you should ask yourself are:

Is push appropriate? Should I be using Local, Scheduled or Periodic notifications instead?

- Do I need updates more frequently than every 30 minutes?

- Am I sending notifications that are personalized for each recipient or am I sending a broadcast style notification with the same content to a group of users?

A typical implementation

If you decided push is the right choice and implemented the basic flow, it will normally look something like this:

- Requesting a channel

    using Windows.Networking.PushNotifications;
    …
    var channel = await PushNotificationChannelManager.CreatePushNotificationChannelForApplicationAsync();

- Registering that channel with your cloud service

    using Newtonsoft.Json.Linq; // JObject

    // A per-app, per-device ID so the service can tell installations apart
    var token = Windows.System.Profile.HardwareIdentification.GetPackageSpecificToken(null);
    string installationId = Windows.Security.Cryptography.CryptographicBuffer.EncodeToBase64String(token.Id);

    var ch = new JObject();
    // Stored as "uri", the property the server scripts read (channel.uri)
    ch.Add("uri", channel.Uri);
    ch.Add("installationId", installationId);
    try
    {
        await App.pushdemoClient.GetTable("channels").InsertAsync(ch);
    }
    catch (Exception exception)
    {
        HandleInsertChannelException(exception);
    }

- Authenticating against WNS and sending a push notification

    // Note: Mobile Services handles authentication against WNS for you; all you have to do
    // is configure your Store app and enter its WNS credentials in the portal.
    function SendPush() {
        var channelTable = tables.getTable('channels');
        channelTable.read({
            success: function (channels) {
                channels.forEach(function (channel) {
                    push.wns.sendTileWideText03(channel.uri, {
                        text1: 'hello W8 world: ' + new Date().toString()
                    });
                });
            }
        });
    }

For most people, this is about as far as the push implementation goes. What many don't realize is that WNS provides two useful pieces of information you can use to make your push implementation more efficient: a channel expiry time, and a WNS response that includes both notification status codes and device status codes. Let's take a look at the first one.

Handling Expired Channels

When you request a channel from WNS, it returns both a channel and an expiry time, typically 30 days from your request. Suppose your app becomes popular, but over time some users stop using it for extended periods or delete it altogether. Their channels will eventually hit their expiry date and no longer be of any use. There is then no need to a) send notifications to these channels or b) keep these channels in your data store. Let's look at a simple implementation that cleans up your expired channels.

Using a scheduled job in Mobile Services, we can perform a simple cleanup of your channels, but first we must send the expiry to your Mobile Service. To do this, update step 2 (registering the channel) of the above implementation to pass the channel expiry up as a property in your JObject. You will find the channel expiration in the channel.ExpirationTime property. In my channel table I have called this property Expiry.

Once you have created your scheduled push job to run at an interval of, say, X (I am using 15 minutes), you can add a function similar to the following that deletes the expired channels:

    function cleanChannels() {
        // GetDate() is the database server's local time; you may want UTC handling here
        var sql = "DELETE FROM channels WHERE Expiry < GetDate()";
        mssql.query(sql, {
            success: function (results) {
                console.log('deleting expired channels:', results);
            },
            error: function (error) {
                console.log(error);
            }
        });
    }

As you can see, there is no real magic here, and you probably want to handle UTC dates. In short, it demonstrates the concept: expired channels are no use for sending notifications, so delete them, flag them as deleted, or do anything else that keeps them out of the valid set you push to. Moving on…
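If you'd rather apply the expiry rule in script than in T-SQL, for example to log which channels are being dropped, the rule fits in a small pure function. This is a minimal sketch, not part of the Mobile Services API. `partitionByExpiry` is a hypothetical helper, and it assumes each channel row carries the `Expiry` property described above. Because JavaScript `Date` comparisons use epoch milliseconds, the result does not depend on the server's local time zone.

```javascript
// Hypothetical helper, not part of the Mobile Services API: split channel rows
// into those that can still receive pushes and those whose WNS channel expired.
// Assumes each row may carry an Expiry value the Date constructor can parse.
function partitionByExpiry(channels, now) {
  // getTime() is milliseconds since the epoch, so this comparison does not
  // depend on the server's local time zone.
  var cutoff = (now || new Date()).getTime();
  var live = [];
  var expired = [];
  channels.forEach(function (channel) {
    var expiry = new Date(channel.Expiry).getTime();
    // A missing or unparseable Expiry yields NaN, and NaN < cutoff is false,
    // so such rows are kept, just as "Expiry < GetDate()" skips NULL rows.
    if (expiry < cutoff) {
      expired.push(channel);
    } else {
      live.push(channel);
    }
  });
  return { live: live, expired: expired };
}

// Usage with rows shaped like the channels table (URIs are placeholders).
var now = new Date(Date.UTC(2013, 7, 6, 12, 0, 0)); // 6 August 2013, 12:00 UTC
var result = partitionByExpiry([
  { id: 1, uri: 'https://example.invalid/a', Expiry: '2013-08-01T00:00:00Z' },
  { id: 2, uri: 'https://example.invalid/b', Expiry: '2013-09-05T00:00:00Z' },
  { id: 3, uri: 'https://example.invalid/c' }
], now);
console.log(result.expired.map(function (c) { return c.id; })); // [ 1 ]
console.log(result.live.map(function (c) { return c.id; }));    // [ 2, 3 ]
```

Treating a missing Expiry as "keep" mirrors the SQL behavior. You might instead prefer to treat such rows as stale.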

Handling the WNS response codes

When you send a notification to a channel via WNS, WNS returns a response that contains many useful headers. Today I'll just focus on X-WNS-NotificationStatus, but you should also consider X-WNS-DeviceConnectionStatus (more details here).

Let’s look at a typical response:

    {
        headers: {
            'x-wns-notificationstatus': 'received',
            'x-wns-msg-id': '707E20A6167E338B',
            'x-wns-debug-trace': 'BN1WNS1011532'
        },
        statusCode: 200,
        channel: 'https://bn1.notify.windows.com/?token=AgYAAACghhaYbZa4sqjJ23pWp3kGDcEOEb3JxxdeBahCINn15fc11TiG0mlTpR5heYQmEaQrgZc3TSSwoUllW9s4Lsn3eyvSn19DcrX%2bOvSOY4Bq%2bPKGWbdy3mjTmaRi2Yb1dIM%3d'
    }

Of interest in this response is X-WNS-NotificationStatus, which can be in one of three states:

- received

- dropped

- channelthrottled
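The sample response above shows the header names arriving in lowercase, which is how the scheduled-job code in this post reads `response.headers['x-wns-notificationstatus']`. Below is a minimal sketch of a helper that normalizes that header, assuming the response shape shown above. `readNotificationStatus`, `shouldBackOff` and the `'unknown'` fallback are hypothetical choices, not part of the Mobile Services push API.

```javascript
// The three X-WNS-NotificationStatus values listed above.
var KNOWN_STATUSES = ['received', 'dropped', 'channelthrottled'];

// Hypothetical helper: return the notification status from a WNS response
// shaped like the sample above, or 'unknown' if the header is missing or
// holds a value outside the known list.
function readNotificationStatus(response) {
  var headers = (response && response.headers) || {};
  var raw = headers['x-wns-notificationstatus'];
  var status = typeof raw === 'string' ? raw.toLowerCase() : '';
  return KNOWN_STATUSES.indexOf(status) >= 0 ? status : 'unknown';
}

// Anything other than 'received' ('dropped', 'channelthrottled' or an
// unrecognized value) is treated as a reason to slow down for that channel.
function shouldBackOff(status) {
  return status !== 'received';
}

var sample = {
  headers: {
    'x-wns-notificationstatus': 'received',
    'x-wns-msg-id': '707E20A6167E338B',
    'x-wns-debug-trace': 'BN1WNS1011532'
  },
  statusCode: 200
};
console.log(readNotificationStatus(sample));                // received
console.log(shouldBackOff(readNotificationStatus(sample))); // false
```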

As you can probably guess, sending notifications to a channel that is throttled or whose notifications are being dropped is not a good use of your compute power. You should therefore handle channels that are not returning a received status appropriately. Consider the following: when the scheduled job runs, delete any expired channels. Then send notifications only to channels that are (new, or in the received status) OR (not in the received status AND last had a push sent over an hour ago). You can do this easily by tracking the X-WNS-NotificationStatus every time a notification is sent. Code follows:

    function SendPush() {
        cleanChannels();
        doPush();
    }

    function cleanChannels() {
        var sql = "DELETE FROM channels WHERE Expiry < GetDate()";
        mssql.query(sql, {
            success: function (results) {
                console.log('deleting expired channels:', results);
            },
            error: function (error) {
                console.log(error);
            }
        });
    }

    function doPush() {
        // Send only to new or 'received' channels, OR to channels that are not in the
        // 'received' state and last had a push sent at least an hour ago.
        // DATEDIFF also works if lastSend is a datetimeoffset column, which can't be subtracted.
        var sql = "SELECT * FROM channels WHERE notificationStatus IS NULL " +
            "OR notificationStatus = 'received' " +
            "OR (notificationStatus <> 'received' AND DATEDIFF(minute, lastSend, GetDate()) >= 60)";
        mssql.query(sql, {
            success: function (channels) {
                channels.forEach(function (channel) {
                    push.wns.sendTileWideText03(channel.uri, {
                        text1: 'hello W8 world: ' + new Date().toString()
                    }, {
                        success: function (response) {
                            handleWnsResponse(response, channel);
                        },
                        error: function (error) {
                            console.log(error);
                        }
                    });
                });
            }
        });
    }

    // Keep track of the last known X-WNS-NotificationStatus for a channel
    function handleWnsResponse(response, channel) {
        console.log(response);
        var channelTable = tables.getTable('channels');
        // http://msdn.microsoft.com/en-us/library/windows/apps/hh465435.aspx
        channel.notificationStatus = response.headers['x-wns-notificationstatus'];
        channel.lastSend = new Date();
        channelTable.update(channel);
    }

That's about it. I hope this post has helped you start thinking about how to take your push notification implementation beyond the basic 101 demo.
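The WHERE clause in doPush is the heart of this approach, so it can be worth expressing the same rule in plain JavaScript, for example to unit-test it or to apply it to rows you have already read. This is a minimal sketch that assumes rows carry the notificationStatus and lastSend columns used above. `shouldSend` and its one-hour default are hypothetical names that mirror the SQL, not a Mobile Services API.

```javascript
var ONE_HOUR_MS = 60 * 60 * 1000;

// Hypothetical predicate mirroring the doPush WHERE clause: send to channels
// that have no recorded status yet, channels whose last status was 'received',
// and any other channel whose last push was at least backoffMs ago.
function shouldSend(channel, now, backoffMs) {
  var wait = backoffMs === undefined ? ONE_HOUR_MS : backoffMs;
  var status = channel.notificationStatus;
  if (status === null || status === undefined || status === 'received') {
    return true;
  }
  // As in SQL, a NULL lastSend makes the time comparison unknown, so skip it.
  if (channel.lastSend === null || channel.lastSend === undefined) {
    return false;
  }
  var elapsed = now.getTime() - new Date(channel.lastSend).getTime();
  return elapsed >= wait;
}

var now = new Date(Date.UTC(2013, 7, 6, 12, 0, 0));
var rows = [
  { id: 1, notificationStatus: null },
  { id: 2, notificationStatus: 'received', lastSend: new Date(Date.UTC(2013, 7, 6, 11, 55, 0)) },
  { id: 3, notificationStatus: 'channelthrottled', lastSend: new Date(Date.UTC(2013, 7, 6, 11, 30, 0)) },
  { id: 4, notificationStatus: 'dropped', lastSend: new Date(Date.UTC(2013, 7, 6, 10, 0, 0)) }
];
console.log(rows.filter(function (r) { return shouldSend(r, now); })
  .map(function (r) { return r.id; })); // [ 1, 2, 4 ]
```

Making the back-off window a parameter lets you tune it per deployment without touching the rule itself.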

Emilio Salvador Prieto posted Everyone can build an app – introducing Windows Phone App Studio beta to the Windows Phone Developer Blog on 8/6/2013:

Today Todd Brix outlined several new programs to make it easier for more developers to get started with the Windows Phone platform. In this post, I’d like to tell you a bit more about one of them, Windows Phone App Studio beta. I’ll cover what it does today and how you can help determine the future direction of this new tool.

Windows Phone App Studio is about giving everyone the ability to create an app, regardless of experience. It also can radically accelerate workflow for all developers.

I continue to be impressed with the rate at which the developer community has adopted the app paradigm, but I also recognize that the app economy is still in its infancy. From a few hundred apps just a few years ago to millions of apps today, developers have imagined and built amazing app experiences that elevate the concept of a smartphone to new heights. In a way, apps are the new web. Websites began as portals for large companies, then became vital to small and local business, until ultimately we all had a piece of the web via blogs and social networks. The same is now true of apps. With the industry’s best developer tools and technologies, and a growing set of innovative features and capabilities across the Windows family, we are investing in new ways to make it even easier for everyone to quickly create innovative and relevant apps.

Windows Phone App Studio beta is a web-based app creation tool designed to help people easily bring an app idea to life by applying text, web content, images, and design concepts to a rich set of customizable templates. Windows Phone App Studio can help facilitate and accelerate the app development process for developers of all levels.

For hobbyists and first-time app designers, Windows Phone App Studio can help you generate an app in 4 simple steps. When you are satisfied with your app, Windows Phone App Studio will export a file in a form that can be submitted for publication to the Windows Phone Store so the new app can be made available to friends, family, and Windows Phone users across the globe. The app’s data feed is maintained in the cloud, so there’s no hosting or maintenance for the developer to orchestrate.

More skilled developers, on the other hand, can use the tool for rapid prototyping, and then export the code and continue working with the project in Visual Studio. Unlike other app creation tools, with Windows Phone App Studio a developer can download the source code for the app to enhance it using Visual Studio. (Emphasis added.)

The Windows Phone App Studio beta is launching with a limited number of templates, plug-ins, and capabilities, and is optimized for Windows Internet Explorer 10. We have implemented full support for Live Tiles in this first release to give developers and users the opportunity to personalize their app experiences.

What features we add next are largely up to you: Windows Phone App Studio is what you make of it. We’re exploring new content, data access modules, and capabilities, and we’re eager to learn just how you use the tool and what sort of additional training, resources, or guidance you may value.

I invite you to sign up and explore Windows Phone App Studio and more importantly, I’m looking forward to hearing your suggestions on how we could expand the service.

Haddy El-Haggan (@Hhaggan) described Windows Azure Mobile Services – CORS in a 7/30/2013 post (missed when published):

If you have worked with Windows Azure Mobile Services, you know that after creating a new mobile service you can download a starter application or connect the service to an application you have already built. For each application you build against the mobile service, and whenever an application's domain changes, you must add that domain to the service's Cross-Origin Resource Sharing (CORS) settings. This allows applications on different platforms, served from different URLs, to communicate with your mobile service.

I ran into this problem especially when developing an application on my local machine. If you download the application directly from the portal and run it without modification, it runs smoothly without any errors. The reason is that if you look at CORS in the service's configuration, you will find localhost already in the list.

If you add a new project to the solution you downloaded from the portal and run it, you will find that it runs but won't execute any functions that call your mobile service. The reason is that the application running on your local machine is not using localhost but the IP address 127.0.0.1. You have to add that address to your mobile service's CORS list manually, and only for testing. I think you should remove it before publishing the application.
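On cross-origin requests, browsers send the page's origin in an Origin header, and the service checks it against its allow list. The sketch below is a simplified, hypothetical matcher, not the Mobile Services implementation. It assumes the list holds plain host names with an optional leading `*.` wildcard, and it compares host names only, ignoring ports. It shows why an entry for `localhost` does not cover a page served from `127.0.0.1`: the two are different host strings, even though both reach the same machine.

```javascript
// Hypothetical, simplified allow-list check; NOT the Mobile Services code.
// Compares host names only (ports are ignored) and supports entries such as
// '*.example.com', which match any subdomain of example.com.
function isOriginAllowed(origin, allowedHosts) {
  var host;
  try {
    host = new URL(origin).hostname.toLowerCase();
  } catch (e) {
    return false; // not a parseable origin such as 'http://localhost:8080'
  }
  return allowedHosts.some(function (entry) {
    var pattern = entry.toLowerCase();
    if (pattern.indexOf('*.') === 0) {
      return host.endsWith(pattern.slice(1)); // suffix '.example.com'
    }
    return host === pattern;
  });
}

var allowed = ['localhost', '*.example.com'];
console.log(isOriginAllowed('http://localhost:12345', allowed));  // true
console.log(isOriginAllowed('http://127.0.0.1:12345', allowed));  // false
console.log(isOriginAllowed('https://app.example.com', allowed)); // true
```

Adding `'127.0.0.1'` to the list is the sketch's equivalent of the manual workaround described above.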

<Return to section navigation list>

Windows Azure Marketplace DataMarket, Power BI, Big Data and OData

Chris Robinson described Using the new client hooks in WCF Data Services Client in a 7/26/2013 post to the WCF Data Services Blog (missed when posted):

What are the Request and Response Pipeline configurations in WCF Data Services Client?

In WCF Data Services 5.4 we added a new pattern to allow developers to hook into the client request and response pipelines. In the server, we have long had the concept of a processing pipeline. Developers can use the processing pipeline event to tweak how the server processes requests and responses. This concept has now been added to the client (though not as an event). The feature is exposed through the Configurations property on the DataServiceContext. On Configurations there are two properties, called ResponsePipeline and RequestPipeline. The ResponsePipeline contains configuration callbacks that influence reading from OData and materializing the results to CLR objects. The RequestPipeline contains configuration callbacks that influence the writing of CLR objects to the wire. Developers can then build on top of the new public API and compose higher-level functionality.

The explanation might be a bit abstract, so let's look at a real-world example. The code below shows how to remove a property that is unnecessary on the client or that causes materialization issues. Previously this was difficult to do, and impossible if the payload was returning the newer JSON format, but this scenario is now trivially possible with the new hooks. Below is a code snippet to remove a specific property:

This code uses the OnEntryEnded response configuration method to remove the property. Behind the scenes, the Microsoft.Data.ODataReader calls reader.Read(). As it reads through the items, a call is made to all registered configuration callbacks for that ODataItem type. A couple of notes about this code:

- Since ODataEntry.Properties is an IEnumerable<ODataProperty> and not an ICollection<ODataProperty>, we need to replace the entire IEnumerable instead of just calling ODataEntry.Properties.Remove().
- ResolveType is used here to turn the TypeName into the entity type. For a code-generated DataServiceContext this method is typically hooked up automatically, but if you are using a DataServiceContext directly, you will need to write the delegate code yourself.

What if this scenario has to occur for other properties on the same type, or for properties on a different type? Let's make some changes to make this code a bit more reusable.

Extension method for removing a property from an ODataEntry:

Extension method for removing a property from the ODataEntry on the selected type:

And now finally the code that the developer would write to invoke the method above and set up the configuration:

The original code is now broken down and is more reusable. Developers can use the RemoveProperties extension above to remove any property from a type that is in the ODataEntry payload. These extension methods can also be chained together.

The example above shows how to use OnEntryEnded, but there are a number of other callbacks that can be used. Here is a complete list of configuration callbacks on the response pipeline:

All of the configuration callbacks above, with the exception of OnEntityMaterialized and OnMessageReaderSettingsCreated, are called when the ODataReader is reading through the feed or entry. The OnMessageReaderSettingsCreated callback is called just before the ODataMessageReader is created and before any of the other callbacks are called. OnEntityMaterialized is called after a new entity has been converted from the given ODataEntry. It allows developers to apply any fix-ups to an entity after it was converted.

Now let's move on to a sample where we use a configuration on the RequestPipeline to skip writing a property to the wire. Below is an example of an extension method that can remove the specified properties before they are written out:

As you can see, we are following the same pattern as the RemoveProperties extension method we wrote for the ResponsePipeline. Compared with that extension method, this function doesn't require the type-resolving func, so it's a bit simpler. The type information is available in the Entity property of the OnEntryEnding args. Again, this example only touches on OnEntryEnding. Below is the complete list of configuration callbacks that can be used:

With the exception of OnMessageWriterSettingsCreated, the other configuration callbacks are called when the ODataWriter is writing information to the wire.

In conclusion, the request and response pipelines offer ways to configure how payloads are read from and written to the wire. Let us know if you have any other questions about leveraging this feature.

<Return to section navigation list>

Windows Azure Service Bus, BizTalk Services and Workflow

<Return to section navigation list>

Windows Azure Access Control, Active Directory, and Identity

‡ Brad Anderson (@InTheCloudMSFT) posted What’s New in 2012 R2: Identity Management for Hybrid IT to the In the Cloud blog on 8/9/2013:

Part 6 of a 9-part series.

Leaders in any industry have one primary responsibility in common: Sifting through the noise to identify the right areas to focus on and invest their organization’s time, money, and people. This was especially true during our planning for the 2012 R2 wave of products; and this planning identified four key areas of investment where we focused all our resources.

These areas of focus were the consumerization of IT, the move to the cloud, the explosion of data, and new modern business applications. To enable our partners and customers to capitalize on these four areas, we developed our Cloud OS strategy, and it immediately became obvious to us that each of those focus areas relied on consistent identity management in order to operate at an enterprise level.

For example, the consumerization of IT would be impossible without the ability to verify and manage the user’s identity and devices; an organization’s move to the cloud wouldn’t be nearly as secure and dynamic without the ability to manage access and connect people to cloud-based resources based on their unique needs; the explosion of data would be useless without the ability to make sure the right data is accessible to the right people; and new cloud-based apps need to govern and manage access just like applications always have.

In the 13+ years since the original Active Directory product launched with Windows 2000, it has grown to become the default identity management and access-control solution for over 95% of organizations around the world. But as organizations move to the cloud, their identity and access control also need to move to the cloud. Companies rely more and more on SaaS-based applications, the range of cloud-connected devices used to access corporate assets continues to grow, and more hosted and public cloud capacity is in use. As a result, companies must expand their identity solutions to the cloud.

Simply put, hybrid identity management is foundational for enterprise computing going forward.

With this in mind, we set out to build a solution in advance of these requirements to put our customers and partners at a competitive advantage.

To build this solution, we started with our "Cloud first" design principle. To meet the needs of enterprises working in the cloud, we built a solution that took the power and proven capabilities of Active Directory and combined it with the flexibility and scalability of Windows Azure. The outcome is the predictably named Windows Azure Active Directory.

By cloud optimizing Active Directory, enterprises can stretch their identity and access management to the cloud and better manage, govern, and ensure compliance throughout every corner of their organization, as well as across all their utilized resources.

This can take the form of seemingly simple processes (albeit very complex behind the scenes), like single sign-on, which is a massive time and energy saver for a workforce that uses multiple devices and multiple applications per person. It can also enable the scenario where a user's customized and personalized experience can follow them from device to device regardless of when and where they're working. Activities like these are simply impossible without a scalable, cloud-based identity management system.

If anyone doubts how serious and enterprise-ready Windows Azure AD already is, consider these facts:

- Since we released Windows Azure AD, we've had over 265 billion authentications.

- Every two minutes, Windows Azure AD services over 1,000,000 authentication requests for users and devices around the world (that's more than 8,300 requests per second).

- There are currently more than 420,000 unique domains uploaded and now represented inside of Azure Active Directory.

Windows Azure AD is battle tested, battle hardened, and many other verbs preceded by the word “battle.”

But, perhaps even more importantly, Windows Azure AD is something Microsoft has bet its own business on: Both Office 365 (the fastest growing product in Microsoft history) and Windows Intune authenticate every user and device with Windows Azure AD.

In this post, Vijay Tewari (Principal Program Manager for Windows Server & System Center), Alex Simons (Director of Program Management for Active Directory), Sam Devasahayam (Principal Program Management Lead for Windows Azure AD), and Mark Wahl (Principal Program Manager for Active Directory) take a look at one of R2's most innovative features, Hybrid Identity Management.

As always in this series, check out the "Next Steps" at the bottom of this post for links to a wide range of engineering content with deep, technical overviews of the concepts examined in this post.

Today’s hybrid IT environment dictates that customers have the ability to consume resources from on-premises infrastructure, as well as those offered by service providers and Windows Azure. Identity is a critical element that is needed to provide seamless experiences when users access these resources. The key to this is using an identity management system that enables the use of the same identities across providers.

Previously on the Active Directory blog, the Active Directory team has discussed “What’s New in Active Directory in Windows Server 2012 R2,” as well as the features which support “People-centric IT Scenarios.” These PCIT scenarios enable organizations to provide users with secure access to files on personal devices, and further control access to corporate resources on premises.

In this post, we'll cover the investments we have made in the Active Directory family of products and services. These products dramatically simplify Hybrid IT and enable organizations to manage services consistently, using the same identities both on-premises and in the cloud.

Windows Server Active Directory

First, let’s start with some background.

Today, Active Directory in Windows Server (Windows Server AD) is widely adopted across organizations worldwide, and it provides the common identity fabric across users, devices and their applications. This enables seamless access for end users – whether they are accessing a file server from their domain joined computer, or accessing email or documents on a SharePoint server. It also allows IT to set access policies on resources, and is the foundation for Exchange and many other enterprise critical capabilities.

Windows Server Active Directory on Windows Azure Virtual Machines

Today’s Hybrid IT world is focused on driving efficiencies in infrastructure services. As a result we see organizations move more application workloads to a virtualized environment. Windows Azure provides infrastructure services to spin up new Windows Server machines within minutes and make adjustments as usage needs change. Windows Azure also enables you to extend your enterprise network with Windows Azure Virtual Network. With this, when applications that rely on Windows Server AD need to be brought into the cloud, it is possible to locate additional domain controllers on Windows Azure Virtual Network to reduce network lag, improve redundancy, and provide domain services to virtualized workloads.

One scenario that has already been delivered (starting with Windows Server 2012) is enabling Windows Server 2012’s Active Directory Domain Services role to be run within a virtual machine on Windows Azure.

You can evaluate this scenario via this tutorial and create a new Active Directory forest in servers hosted on Windows Azure Virtual Machines. You can also review these guidelines for deploying Windows Server AD on Windows Azure Virtual Machines.

Windows Azure Active Directory

We have also been building a new set of features into Windows Azure itself – Windows Azure Active Directory. Windows Azure Active Directory (Windows Azure AD) is your organization’s cloud directory. This means that you can decide who your users are, what information to keep in the cloud, who can use or manage that information, and what applications or services are allowed to access it.

Windows Azure AD is implemented as a cloud-scale service in Microsoft data centers around the world, and it has been exhaustively architected to meet the needs of modern cloud-based applications. It provides directory, identity management, and access control capabilities for cloud applications.

Managing access to applications is a key scenario, so both single tenant and multi-tenant SaaS apps are first class citizens in the directory. Applications can be easily registered in your Windows Azure AD directory and granted access rights to use your organization’s identities. If you are a developer for a cloud ISV, you can register a multi-tenant SaaS app you've created in your Windows Azure AD directory and easily make it available for use by any other organization with a Windows Azure AD directory. We provide REST services and SDKs in many languages to make Windows Azure AD integration easy for you to enable your applications to use organizational identities.

This model powers the common identity of users across Windows Azure, Microsoft Office 365, Dynamics CRM Online, Windows Intune, and third party cloud services (see diagram below).

Relationship between Windows Server AD and Windows Azure AD

For those of you who already have a Windows Server AD deployment, you are probably wondering “What does Windows Azure AD provide?” and “How do I integrate with my own AD environment?” The answer is simple: Windows Azure AD complements and integrates with your existing Windows Server AD.

Windows Azure AD complements Windows Server AD for authentication and access control in cloud-hosted applications. Organizations which have Windows Server Active Directory in their data centers can connect their domains with their Windows Azure AD. Once the identities are in Windows Azure AD, it is easy to develop ASP.NET applications integrated with Windows Azure AD. It is also simple to provide single sign on and control access to other SaaS apps such as Box.com, Salesforce.com, Concur, Dropbox, Google Apps/Gmail. Users can also easily enable multi-factor authentication to improve security and compliance without needing to deploy or manage additional servers on-premises.

The benefit of connecting Windows Server AD to Windows Azure AD is consistency – specifically, consistent authentication for users so that they can continue with their existing credentials and will not need to perform additional authentications or remember supplementary credentials. Windows Azure AD also provides consistent identity. This means that as users are added and removed in Windows Server AD, they will automatically gain and lose access to applications backed by Windows Azure AD.

Because Windows Azure AD provides the underlying identity layer for Windows Azure, this ensures an organization can control who among their in-house developers, IT staff, and operators can access their Windows Azure Management Portal. In this scenario, these users do not need to remember a different set of credentials for Windows Azure because the same set of credentials are used across their PC, work network, and Windows Azure.

Connecting with Windows Azure Active Directory

Connecting your organization’s Windows Server AD to Windows Azure AD is a three-step process.

Step 1: Establish a Windows Azure AD tenant (if your organization doesn’t already have one).

First, your organization may already have Windows Azure AD. If you have subscribed to Office 365 or Windows Intune, your users are automatically stored in Windows Azure AD. You can manage them from the Windows Azure Management Portal by signing in as your organization's administrator and adding a Windows Azure subscription.

This video explains how to use an existing Windows Azure AD tenant with Windows Azure:

If you do not have a subscription to one of these services, you can create a new Windows Azure AD tenant by following this link to sign up for Windows Azure as an organization.

Once you sign up for Windows Azure, sign in as the new user for your tenant (e.g., “user@example.onmicrosoft.com”), and pick a Windows Azure subscription. You will then have a tenant in Windows Azure AD which you can manage.

When logged into the Windows Azure Management Portal, go to the “Active Directory” item and you will see your directory.

Step 2: Synchronize your users from Windows Server Active Directory

Next, you can bring in your users from your existing AD domains. This process is outlined in detail in the directory integration roadmap.

After clicking the “Active Directory” tab, select the directory tenant which you are managing. Then, select the “Directory Integration” option.

Once you enable integration, you can download the Windows Azure Active Directory Sync tool from the Windows Azure Management Portal. The tool copies your users into Windows Azure AD and keeps them up to date.
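The post doesn't describe the Sync tool's internals, but the idea of copying users and keeping them updated can be sketched as a one-way reconciliation in which Windows Server AD is authoritative. In this minimal Python sketch, the `User` record, keying users by user principal name, and the `plan_sync`/`apply_sync` names are all hypothetical; a real sync also handles groups, many more attributes, and scheduling.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class User:
    upn: str           # user principal name, used here as the matching key
    display_name: str  # stands in for any synced attribute


def plan_sync(on_prem: Dict[str, User], cloud: Dict[str, User]) -> Dict[str, List[str]]:
    """Compare the authoritative on-premises directory with the cloud copy."""
    return {
        "add": sorted(u for u in on_prem if u not in cloud),
        "remove": sorted(u for u in cloud if u not in on_prem),
        "update": sorted(u for u in on_prem if u in cloud and on_prem[u] != cloud[u]),
    }


def apply_sync(on_prem: Dict[str, User], cloud: Dict[str, User]) -> Dict[str, User]:
    """Return a new cloud directory that mirrors on_prem."""
    plan = plan_sync(on_prem, cloud)
    result = dict(cloud)
    for upn in plan["remove"]:
        del result[upn]  # removed on-premises, so the user loses cloud access
    for upn in plan["add"] + plan["update"]:
        result[upn] = on_prem[upn]  # new or changed users are copied up
    return result


on_prem = {
    "alice@example.com": User("alice@example.com", "Alice"),
    "bob@example.com": User("bob@example.com", "Bob Smith"),
}
cloud = {
    "bob@example.com": User("bob@example.com", "Bob"),
    "carol@example.com": User("carol@example.com", "Carol"),
}
print(plan_sync(on_prem, cloud))
```

Running the plan again after `apply_sync` yields empty lists, which is the "continue to keep them updated" property: each pass only has to act on what changed on-premises since the last one.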

Step 3: Choose your authentication approach for those users

Finally, for authentication, we’ve made it simple to provide consistent password-based authentication across both your on-premises domains and Windows Azure AD. We do this with a new password hash sync feature.

Password hash sync is valuable because users can sign in to Windows Azure with the same password they already use to log in to their desktop or to other applications integrated with Windows Server AD. Also, because the Windows Azure Management Portal is integrated with Windows Azure AD, it supports single sign-on with an organization’s on-premises Windows Server AD.
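The post doesn't say how password hash sync works internally. As a purely conceptual sketch, and not Microsoft's implementation, the Python below shows the general idea the feature's name implies: what gets synced is a salted derivation of the password hash rather than the password, yet the cloud can still verify a sign-in made with the user's existing password. The stand-in on-premises hash (SHA-256), the 16-byte salt, and the iteration count are all assumptions chosen for illustration.

```python
import hashlib
import hmac
import os
from typing import Tuple

ITERATIONS = 1000  # illustrative work factor (assumption)


def on_prem_hash(password: str) -> bytes:
    """Stand-in for the hash Windows Server AD already holds (NOT the real AD format)."""
    return hashlib.sha256(password.encode("utf-8")).digest()


def derive_for_cloud(on_prem: bytes) -> Tuple[bytes, bytes]:
    """What a sync agent might upload: a random salt plus a salted derivation of the hash."""
    salt = os.urandom(16)
    return salt, hashlib.pbkdf2_hmac("sha256", on_prem, salt, ITERATIONS)


def cloud_sign_in(password: str, salt: bytes, stored: bytes) -> bool:
    """Re-derive from the typed password and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", on_prem_hash(password), salt, ITERATIONS)
    return hmac.compare_digest(candidate, stored)


# The user keeps signing in with the password they already use on-premises.
salt, stored = derive_for_cloud(on_prem_hash("Correct-Horse-42"))
print(cloud_sign_in("Correct-Horse-42", salt, stored))  # True
print(cloud_sign_in("wrong-password", salt, stored))    # False
```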

If you wish to enable users to automatically obtain access to Windows Azure without needing to sign in again, you can use Active Directory Federation Services (AD FS) to federate the sign-on process between Windows Server Active Directory and Windows Azure AD.

In Windows Server 2012 R2, we’ve made a series of improvements to AD FS to support Hybrid IT.

We blogged about it recently in the context of People Centric IT in this post, but the same concepts of risk management apply to any resource that is protected by Windows Azure AD. AD FS in Windows Server 2012 R2 includes deployment enhancements that enable customers to reduce their infrastructure footprint by deploying AD FS on domain controllers, and it supports more geo load-balanced configurations.

AD FS also adds prerequisite checking, supports group-managed service accounts to reduce downtime, and offers an enhanced, seamless sign-in experience for users accessing Windows Azure AD-based services.

AD FS also implements new protocols (such as OAuth) that deliver consistent development interfaces for building applications that integrate with Windows Server AD and with Windows Azure AD. This makes it easy to deploy an application on-premises or on Windows Azure.

For organizations that have deployed third-party federation already, Shibboleth and other third-party identity providers are also supported by Windows Azure AD for federation to enable single sign-on for Windows Azure users.

Once your organization has a Windows Azure AD tenant and has followed these steps, its users will be able to work seamlessly in the Windows Azure Management Portal, as well as in other Microsoft and third-party cloud services, all with the same credentials and authentication experience they already have with their existing Windows Server Active Directory.

Summary

As IT organizations evolve to support resources that are beyond their data centers, Windows Azure AD, the Windows Server AD enhancements in Windows Server 2012, and Windows Server 2012 R2 provide seamless access to these resources.

In the coming weeks you will see more details of the Active Directory enhancements in Windows Azure and in Windows Server 2012 R2 on the Active Directory blog.

This post is just the first of three Hybrid IT posts that this “What’s New in 2012 R2” series will cover. Next week, watch for two more that cover hybrid networking and disaster recovery. If you have any questions about this topic, don’t hesitate to leave a question in the comment section below, or get in touch with me via Twitter @InTheCloudMSFT.

Next Steps

To learn more about the topics covered in this post, check out the following articles. You can also obtain a Windows Azure AD directory by signing up for Windows Azure as an organization.

- Using an existing Windows Azure AD Tenant with Windows Azure

The video in this blog post covers how to sign up for a Windows Azure subscription and how to use an organizational account to sign in to and manage Windows Azure.

- Making it Simple to Connect Windows Server AD to Windows Azure AD with password Hash Sync

This blog post introduces the enhancements that let you easily synchronize users from Windows Server AD to Windows Azure AD so that users can keep their existing passwords.

- Easy Web App Integration with Windows Azure Active Directory, ASP.NET & Visual Studio

The ASP.NET and Web Tools for Visual Studio 2013 Preview extends the new ASP.NET project templates and tooling experience to integrate Windows Azure AD authentication and management features right at project creation time.

- Application Access Enhancements for Windows Azure Active Directory

The application access enhancements preview lets you control access, through one simple management experience, to many of the Software-as-a-Service (SaaS) applications your organization uses, such as Box.com, Salesforce.com, Concur, Dropbox and Google Apps Gmail.

- Windows Azure Active Authentication: Multi-Factor for Security and Compliance

The Windows Azure Active Authentication preview enables multi-factor authentication for Windows Azure AD identities, securing access to Office 365, Windows Azure, Windows Intune, Dynamics CRM Online and many other applications that are integrated with Windows Azure AD. Developers can also use the Active Authentication SDK to build multi-factor authentication into their applications.

Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

‡ My (@rogerjenn) Uptime Report for My Live Windows Azure Web Site: July 2013 = 99.37% of 8/10/2013 begins:

My Android MiniPCs and TVBoxes blog runs WordPress on WebMatrix with Super Cache on a Windows Azure Web Site (WAWS) Shared Preview instance and ClearDB’s MySQL database (Venus plan) in Microsoft’s West U.S. (Bay Area) data center. Service Level Agreements don’t apply to the Web Sites Preview; only sites with two or more Reserved Web Site instances qualify for the usual 99.95% uptime SLA.

- Running a Shared Preview WAWS costs ~US$10/month plus MySQL charges

- Running two Reserved WAWS instances would cost ~US$150/month plus MySQL charges

I use Windows Live Writer to author posts that provide technical details of low-cost MiniPCs with HDMI outputs running Android Jelly Bean 4.1+, as well as Google’s new Chromecast device. The site emphasizes high-definition 1080p video recording and rendition.

The site commenced operation on 4/25/2013. To improve response time, I implemented WordPress Super Cache on May 15, 2013.

Here’s Pingdom’s summary report for July 2013:

The post continues with Pingdom’s graphical Uptime and Response Time reports for the same period, and concludes with this brief history table:

| Month | Year | Uptime | Downtime (hh:mm:ss) | Outages | Response Time |
|---|---|---|---|---|---|
| July | 2013 | 99.37% | 04:40:00 | 45 | 2,002 ms |
| June | 2013 | 99.34% | 04:45:00 | 30 | 2,431 ms |
| May | 2013 | 99.58% | 03:05:00 | 32 | 2,706 ms |
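The uptime percentages follow directly from the downtime column. As a quick cross-check, here is a small Python sketch (the function names are illustrative, not part of any Pingdom or Azure API) that converts a month's downtime into an uptime percentage and computes how much downtime the 99.95% SLA mentioned above would tolerate in a month.

```python
from datetime import timedelta


def uptime_percent(downtime: timedelta, days_in_month: int) -> float:
    """Percentage of the month during which the site was up."""
    period = timedelta(days=days_in_month)
    # timedelta / timedelta yields a float ratio in Python 3
    return 100.0 * (1 - downtime / period)


def sla_downtime_budget(sla_percent: float, days_in_month: int) -> timedelta:
    """Maximum downtime a given uptime SLA tolerates over the month."""
    period = timedelta(days=days_in_month)
    return period * (1 - sla_percent / 100.0)


# Downtime figures from the table above, paired with the days in each month.
history = {
    "July 2013": (timedelta(hours=4, minutes=40), 31),
    "June 2013": (timedelta(hours=4, minutes=45), 30),
    "May 2013": (timedelta(hours=3, minutes=5), 31),
}

for month, (downtime, days) in history.items():
    print(f"{month}: {uptime_percent(downtime, days):.2f}% uptime")

print("99.95% SLA budget, 31-day month:", sla_downtime_budget(99.95, 31))
```

July's 280 minutes of downtime over 31 days works out to about 99.37%, and June's to about 99.34%, matching Pingdom. May computes to about 99.59% against Pingdom's 99.58%, presumably a rounding or measurement-interval difference. The SLA budget for a 31-day month is roughly 22 minutes, less than a tenth of July's downtime, although the Shared Preview isn't covered by that SLA in any case.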

‡ Piyush Ranjan continued his series with SWAP space in Linux VM’s on Windows Azure – Part 2 on 8/9/2013:

This article was written by Piyush Ranjan (MSFT) from the Azure CAT team.

In a previous post, SWAP space in Linux VM’s on Windows Azure Part 1, I discussed how, by default, Linux VMs provisioned in Azure IaaS from the gallery images do not have swap space configured. That post provided a simple set of steps for configuring file-based swap space on the resource disk (/mnt/resource). The key point to note, however, is that those steps apply to a VM that has already been provisioned and is running. Ideally, swap space would be configured automatically when the VM is provisioned, rather than later by running a series of commands manually.

The trick to automating swap space configuration at provisioning time is to use the Windows Azure Linux Agent (waagent). Most people are vaguely aware that an agent runs in the Linux VM, but most also find it too obscure and ignore it, even though the Azure portal has good documentation on waagent; see the Windows Azure Linux Agent User Guide.

One other point needs mentioning before drilling into the details of waagent and how to use it for the task at hand: this approach works well if you have a customized Linux VM of your own and export it as a reusable image for provisioning Linux VMs in the future. There is no way to tap into waagent’s capabilities when using a raw base Linux image from the Azure gallery. This isn’t really a limitation, because the scenario I find most useful is one where I start with a VM provisioned from a gallery image and then customize it with things I like to have. For example, I prefer standard Java rather than OpenJDK, or I may want to install Hadoop binaries on the VM so that the image can later be used for a multi-node cluster. In such a scenario, it is just as easy to configure waagent to do a few additional things automatically during provisioning.

As discussed in the Windows Azure Linux Agent user’s guide, the agent can be configured to do many things, among which are:

- Resource disk management

- Formatting and mounting the resource disk

- Configuring swap space

waagent is already installed on a VM provisioned from a gallery image; you simply need to edit its configuration file, located at “/etc/waagent.conf”.

To enable swap, change the two swap-related lines in the configuration file as follows:

Set ResourceDisk.EnableSwap=y

Set ResourceDisk.SwapSizeMB=5120
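If you prepare images often, the edit can be scripted. The following minimal Python sketch rewrites the two settings in the file's text and appends either one if it is missing. The function names and the `.bak` backup convention are assumptions of this sketch, not part of waagent, and writing to /etc/waagent.conf requires root.

```python
def enable_swap(conf_text: str, size_mb: int = 5120) -> str:
    """Return waagent.conf text with resource-disk swap enabled at size_mb."""
    wanted = {
        "ResourceDisk.EnableSwap": "y",
        "ResourceDisk.SwapSizeMB": str(size_mb),
    }
    lines, seen = [], set()
    for line in conf_text.splitlines():
        key = line.split("=", 1)[0].strip()
        # Commented-out lines keep their leading '#', so they never match here.
        if key in wanted:
            lines.append(f"{key}={wanted[key]}")
            seen.add(key)
        else:
            lines.append(line)
    # Append any setting that was absent from the file.
    lines.extend(f"{k}={v}" for k, v in wanted.items() if k not in seen)
    return "\n".join(lines) + "\n"


def update_conf(path: str = "/etc/waagent.conf", size_mb: int = 5120) -> None:
    """Back up the file to <path>.bak, then write the updated settings."""
    with open(path) as f:
        original = f.read()
    with open(path + ".bak", "w") as f:
        f.write(original)
    with open(path, "w") as f:
        f.write(enable_swap(original, size_mb))
```

Run `update_conf()` as root on the customized VM before capturing the image; the `size_mb` argument maps directly to `ResourceDisk.SwapSizeMB`.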

The overall process, therefore, is the following:

- Provision a Linux VM in IaaS as usual using one of the images in the gallery.

- Customize the VM to your liking by installing or removing software components that you need.

- Edit the “/etc/waagent.conf” file to set the swap-related lines, as shown above. Adjust the size of the swap file as needed (the setting above makes it 5 GB, i.e., 5120 MB).

- Capture a reusable image of the VM using instructions described here.

- Provision new Linux VMs using the image just captured. These VMs will have swap space enabled automatically.

While we are on the subject of the Windows Azure Linux Agent, it turns out to provide yet another interesting capability: executing an arbitrary, user-specified script through the Role.StateConsumer property in the same configuration file, “/etc/waagent.conf”. For example, one can create a shell script such as “do-cfg.sh” for custom configuration.

Then, in the configuration file, set Role.StateConsumer=/home/scripts/do-cfg.sh (or whatever the path to your script is). When provisioning a VM, waagent executes the script just before sending a “Ready” signal to the Azure fabric, passing “Ready” as a command-line argument; the script can test for that argument to run custom initialization. Likewise, waagent executes the same script at VM shutdown, passing the argument “Shutdown”, which the script can test for to run a custom cleanup task.
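As a hedged illustration (not the author's do-cfg.sh, whose contents aren't shown), here is a hypothetical Python stand-in that dispatches on the argument waagent passes. It assumes Role.StateConsumer can point at any executable file, so the script needs its shebang line and execute permission (chmod +x); the handler bodies are placeholders for your own initialization and cleanup.

```python
#!/usr/bin/env python3
"""Hypothetical Role.StateConsumer handler (a Python stand-in for do-cfg.sh)."""
import sys


def on_ready() -> str:
    # Placeholder for custom initialization after provisioning completes.
    return "ran custom initialization"


def on_shutdown() -> str:
    # Placeholder for custom cleanup before the VM shuts down.
    return "ran custom cleanup"


HANDLERS = {"Ready": on_ready, "Shutdown": on_shutdown}


def dispatch(state: str) -> str:
    """Run the handler for a waagent state, ignoring anything unexpected."""
    handler = HANDLERS.get(state)
    if handler is None:
        return f"ignored state {state!r}"
    return handler()


def main(argv: list) -> int:
    # waagent passes the state ("Ready" or "Shutdown") as the first argument.
    state = argv[1] if len(argv) > 1 else ""
    print(dispatch(state))
    return 0


if __name__ == "__main__":
    main(sys.argv)
```

Keeping the state handling in a lookup table makes it easy to add work for either event without touching the dispatch logic.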

‡ Brian Hitney described Migrating a Blog to Windows Azure Web Sites in an 8/7/2013 post:

For many years, I’ve hosted my blog on Orcsweb. I moved there about 5 years ago, after outgrowing webhost4life. Orcsweb is a huge supporter of the community and has offered free hosting to MVPs and MSFTies, so it seemed like a no-brainer to go to a first-class host at no charge. Orcs is also local (Charlotte, NC), and I know many of the great folks there. But the time has come to move my blog to Windows Azure Web Sites (WAWS). (This switch, of course, should be transparent to readers.)

This isn’t a sign of disappointment with Orcs, but lately I’ve noticed my site throwing a lot of 503 Service Unavailable errors. Orcs was always incredibly prompt at fixing the issue (I was told the app pool kept stopping for some reason), but I always felt guilty pinging support. Frankly, my blog is too important to me, so it seemed time to take responsibility for it.

WAWS allows you to host 10 sites for free, but if you want custom domain names, you need to upgrade to the shared plan at $10/mo. This is a great deal for what you get, and offers great scalability options when needed. My colleague, Andrew, recently had a great post on WebGL in IE11, and he got quite a bit of traction from that post as you can see from the green spike in his site traffic:

This is where the cloud shines: if you need multiple instances for redundancy/uptime, or simply to scale when needed, you can do it with a click of a button, or even automatically.

Migrating the blog was a piece of cake. You’ve got a couple of options with WAWS: you can either create a site from a gallery of applications (like BlogEngine.NET, WordPress, et al.), as shown in red below, or simply create an empty website to which you deploy your own code (shown in green).

Although I use BlogEngine, I already have all the code locally so instead of creating a site from the gallery, I created an empty website using Quick Create and then published the code. If you’re starting a new blog, it’s certainly faster to select a blog of your choice from the gallery and you’re up and running in a minute.

After clicking Quick Create, you just need to enter a name (your site will be *.azurewebsites.net) and a region for your app to live:

Once your site is created (this should only take a few seconds) we’ll see something like this:

Since the code for the site I’m pushing up already exists locally, all I need right now is the publish profile, which I downloaded and saved in a convenient place. You can certainly publish from source control, but for now let’s assume we have a website in a local directory that we want to publish.
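Visual Studio reads the publish profile for you, but it helps to know what's inside: it is a small XML file. The Python sketch below pulls the Web Deploy connection details out of one. The sample XML (site name `myblog`, host names, password) is made up, and the element and attribute names (`publishProfile`, `publishMethod`, `publishUrl`, `msdeploySite`, `userName`, `destinationAppUrl`) reflect typical publish profiles rather than a documented schema, so treat them as assumptions.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample; a real profile comes from the portal and holds a real password.
SAMPLE_PROFILE = r"""<publishData>
  <publishProfile profileName="myblog - Web Deploy" publishMethod="MSDeploy"
      publishUrl="myblog.scm.azurewebsites.net:443" msdeploySite="myblog"
      userName="$myblog" userPWD="not-a-real-password"
      destinationAppUrl="http://myblog.azurewebsites.net" />
  <publishProfile profileName="myblog - FTP" publishMethod="FTP"
      publishUrl="ftp://example.ftp.azurewebsites.windows.net/site/wwwroot"
      userName="myblog\$myblog" userPWD="not-a-real-password"
      destinationAppUrl="http://myblog.azurewebsites.net" />
</publishData>"""


def web_deploy_settings(xml_text: str) -> dict:
    """Return the Web Deploy connection details (password deliberately omitted)."""
    root = ET.fromstring(xml_text)
    for profile in root.iter("publishProfile"):
        if profile.get("publishMethod") == "MSDeploy":
            return {
                "server": profile.get("publishUrl"),
                "site": profile.get("msdeploySite"),
                "user": profile.get("userName"),
                "destination_url": profile.get("destinationAppUrl"),
            }
    raise ValueError("no MSDeploy publish profile found")


print(web_deploy_settings(SAMPLE_PROFILE))
```

These are the same fields the Publish dialog fills in after import; because the file contains deployment credentials, it is worth keeping it out of source control.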

There are two quick ways to bring up the Publish dialog in Visual Studio: through Build > Publish Web Site, or by right-clicking the project and selecting Publish Web Site.

The next step is to locate the publish profile:

Once the profile is imported, the connection details should be filled in and the connection validated: