Windows Azure and Cloud Computing Posts for 8/12/2013+

Top Stories This Week:

- Scott Guthrie (@ScottGu) described Windows Azure: General Availability of SQL Server Always On Support and Notification Hubs, AutoScale Improvements + More updates in an 8/12/2013 post in the Windows Azure Infrastructure and DevOps section.

- Dave Barth, a Google product manager, reports Google now encrypts all stored data by default in the Other Cloud Computing Platforms and Services section.

A compendium of Windows Azure, Service Bus, BizTalk Services, Access Control, Caching, SQL Azure Database, and other cloud-computing articles.

‡ Updated 8/19/2013 with new articles marked ‡.

• Updated 8/14/2013 with new articles marked •.

Note: This post is updated weekly or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Windows Azure Marketplace DataMarket, Power BI, Big Data and OData

- Windows Azure Service Bus, BizTalk Services and Workflow

- Windows Azure Access Control, Active Directory, and Identity

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Windows Azure Cloud Services, Caching, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure and DevOps

- Windows Azure Pack, Hosting, Hyper-V and Private/Hybrid Clouds

- Visual Studio LightSwitch and Entity Framework v4+

- Cloud Security, Compliance and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

<Return to section navigation list>

BusinessWire reported Hortonworks Updates Hadoop on Windows in an 8/13/2013 press release:

Hortonworks, a leading contributor to and provider of enterprise Apache™ Hadoop®, today announced the general availability of Hortonworks Data Platform 1.3 (HDP) for Windows, a 100-percent open source data platform powered by Apache Hadoop. HDP 1.3 for Windows is the only Apache Hadoop-based distribution certified to run on Windows Server 2008 R2 and Windows Server 2012, enabling Microsoft customers to build and deploy Hadoop-based analytic applications. This release is further demonstration of the deep engineering collaboration between Microsoft and Hortonworks. HDP 1.3 for Windows is generally available now for download from Hortonworks.

Application Portability

Delivering on the commitment to provide application portability across Windows, Linux and Windows Azure environments, HDP 1.3 enables the same data, scripts and jobs to run seamlessly across both Windows and Linux. Now organizations can have complete processing choice for their big data applications and port Hadoop applications from one operating system platform to another as needs and requirements change.

New Business Applications Now Possible on Windows

New functionality in HDP 1.3 for Windows includes HBase 0.94.6.1, Flume 1.3.1, ZooKeeper 3.4.5 and Mahout 0.7.0. These new capabilities enable customers to exploit net new types of data to build new business applications as part of their modern data architecture.

Hortonworks Data Platform 1.3 for Windows is the only distribution that enables organizations to run Hadoop-based applications natively on Windows and Linux, providing a common user experience and interoperability across operating systems. HDP for Windows offers the millions of customers running their businesses on Microsoft technologies an ecosystem-friendly Hadoop-based solution that integrates with familiar business analytics tools, such as Microsoft Excel and the Microsoft Power BI for Office 365 suite, and is built for the enterprise and Windows.

“Microsoft is committed to bringing big data to a billion users. To achieve this, we are working closely with Hortonworks to make Hadoop accessible to the broadest possible group of mass market and enterprise customers,” said Herain Oberoi, director, SQL Server Product Management at Microsoft. “Hortonworks Data Platform 1.3 helps us bring Hadoop to Windows so that Microsoft customers can get the best of Hadoop from Hortonworks on premises and from Microsoft in the cloud via HDInsight. In addition, customers can take advantage of integration with Microsoft’s leading business intelligence tools such as Power BI for Office 365, SQL Server and Excel.”

“Hortonworks continues to enable Windows users with powerful enterprise-grade Apache Hadoop,” said Bob Page, vice president, products, Hortonworks. “This new release enables organizations to build new types of applications that were previously not possible and to exploit the massive volumes and variety of data flowing into their data centers.”

Availability

Hortonworks Data Platform 1.3 for Windows is now available for download at: http://hortonworks.com/download/

About Hortonworks

Hortonworks is the only 100-percent open source software provider to develop, distribute and support an Apache Hadoop platform explicitly architected, built and tested for enterprise-grade deployments. Developed by the original architects, builders and operators of Hadoop, Hortonworks stewards the core and delivers the critical services required by the enterprise to reliably and effectively run Hadoop at scale. Our distribution, Hortonworks Data Platform, provides an open and stable foundation for enterprises and a growing ecosystem to build and deploy big data solutions. Hortonworks also provides unmatched technical support, training and certification programs. For more information, visit www.hortonworks.com. Go from Zero to Hadoop in 15 Minutes with the Hortonworks Sandbox.

<Return to section navigation list>

Windows Azure SQL Database, Federations and Reporting, Mobile Services

• Haddy El Haggan (@Hhagan) described differences between Windows Azure Notification Hub – General Availability and Windows Azure Mobile Services in an 8/12/2013 post:

The Windows Azure Service Bus Notification Hub has finally been released and is generally available for use in development. It supports push notifications on multiple platforms, including the Google, Microsoft and Apple push notification services. The Notification Hub helps an application reach millions of users through their mobile or Windows applications by simply sending them a notification through the Service Bus.

Here are the differences between the Windows Azure Mobile Services and the Notification Hub push notification. This table is taken from Announcing General Availability of Windows Azure Notification Hubs & Support for SQL Server AlwaysOn Availability Group Listeners:

• Miranda Luna (@mlunes90) announced the availability of New Mobile Services Samples in an 8/13/2013 post to the Windows Azure Team blog:

Our goal for Windows Azure is to power the world’s apps: apps across every platform and device from developers using their preferred languages, tools and frameworks. We took another step toward delivering on the promise with the recent general availability announcement of Mobile Services.

Here's a quick look at the new samples:

- Web and mobile app for a marketing contest

- Integration scenarios utilizing Service Bus Relay and BizTalk

- Samples from SendGrid, Twilio, Xamarin and Redbit

- Mobile Services sessions from //build

We hope these will serve as inspiration for your own mobile application development.

Web and mobile app for a marketing contest

The best user experience is one that’s consistent across every web and mobile platform. Windows Azure Mobile Services and Web Sites allow you to do just that for both core business applications and for brand applications. By sharing an authentication system and database or storage container between your web and mobile apps, as seen in the following demo, you can drive engagement and empower your users regardless of their access point.

In the following videos, Nik Garkusha demonstrates how Mobile Services and Web Sites can be used to create a consistent set of services used as a backend for an iOS app and a .NET web admin portal.

In Part 1, Nik covers using multiple authentication providers, reading/writing data with tables and interacting with Windows Azure blob storage.

In Part 2, Nik continues by creating the admin portal using Web Sites, using Custom API for cross-platform push notifications, and using Scheduler with third-party add-ons for scripting admin tasks.

Integration scenarios utilizing Service Bus Relay and BizTalk

Modern businesses are often faced with the challenge of innovating and reaching new platforms while also leveraging existing systems. Using Mobile Services with Service Bus Relay and BizTalk Server makes that possible.

In the following samples, Paolo Salvatori provides a detailed walk through of how to connect these services to enable such scenarios.

- Integrating with a REST Service Bus Relay Service – This sample demonstrates how to integrate Mobile Services with a line of business application running on-premises via Service Bus Relay service and REST protocol.

- Integrating with a SOAP Service Bus Relay Service – Here, a custom API is used to invoke a WCF service that uses a BasicHttpRelayBinding endpoint to expose its functionality via a SOAP Service Bus Relay service.

- Integrating with BizTalk Server via Service Bus – In this walk through, learn how to integrate Mobile Services with line of business applications, running on-premises or in the cloud, via BizTalk Server 2013, Service Bus Brokered Messaging, and Service Bus Relay. The Access Control Service is used to authenticate Windows Azure Mobile Services against the Windows Azure Service Bus. In this scenario, BizTalk Server 2013 can run on-premises or in a Virtual Machine on Windows Azure.

- Integrating with Windows Azure BizTalk Services – See how to integrate Mobile Services with line of business applications, running on-premises or in the cloud, via Windows Azure BizTalk Services (currently in preview) and Service Bus Relay. The Access Control Service is used to authenticate Mobile Services against the XML Request-Reply Bridge used by the solution to transform and route messages to the line of business applications.

Samples from SendGrid, Twilio, Xamarin and Redbit

In March, we reiterated our commitment to making it easy for developers to build and deploy cloud-connected applications for every major mobile platform using their favorite languages, tools, and services. Today, I’m happy to share updates to both the Mobile Services partner ecosystem and the feature suite that support that ongoing commitment.

Giving developers easy access to their favorite third party services and rich samples for using Mobile Services with those services is one of our team’s highest priorities. When we unveiled the source control and Custom API features, we enabled a range of new scenarios, one of which is a more flexible way to work with third party services.

Our friends at SendGrid, Twilio, Xamarin and Redbit have all created sample apps to inspire developers to reimagine what’s possible using Mobile Services.

- SendGrid eliminates the complexity of sending email, saving time and money, while providing reliable delivery to the inbox. SendGrid released an iOS sample app that accepts and plays emailed song requests. The SendGrid documentation center and Windows Azure dev center have more information on how to send emails from a Mobile Services powered app.

- Twilio provides a telephony infrastructure web service in the cloud, allowing developers to integrate phone calls, text messages and IP voice communications into their mobile apps. Twilio released an iPad sample that allows event organizers to easily capture contact information for volunteers, store it using Mobile Services and enable tap-to-call using Twilio Client. Twilio also published a new tutorial in the Windows Azure dev center that demonstrates how to use Twilio SMS & voice from a Mobile Services custom API script.

- Xamarin is a framework that allows developers to create iOS, Android, Mac and Windows apps in C#. Xamarin’s Craig Dunn recently recorded a video showing developers how to get started building a cloud-connected todolist iOS app in C#.

- The SocialCloud app, recently developed by Redbit, underscores the importance of our partner ecosystem. In addition to Mobile Services, Web Sites and the above third party services, SocialCloud also uses Service Bus, Linux VMs, and MongoDB.

Visit the Redbit blog to learn more about how they built SocialCloud and why they decided to use these services together.

Mobile Services sessions from //BUILD/

The //BUILD/ conference was packed with sessions covering every aspect of developing connected applications with Mobile Services. The best part is that, even if you weren’t in San Francisco, every session is available on Channel 9. Be sure to check out:

- Mobile Services – Soup to Nuts

- Building Cross-Platform Apps with Windows Azure Mobile Services

- Connected Windows Phone Apps made Easy with Mobile Services

- Build Connected Windows 8.1 Apps with Mobile Services

- Who’s that user? Identity in Mobile Apps

- Building REST Services with JavaScript

- Going Live and Beyond with Windows Azure Mobile Services

- Protips for Windows Azure Mobile Services

Summary

We’re committed to continuously delivering improvements to both platform and infrastructure services that developers can rely on when building modern consumer and business applications. Expect to see more new and exciting updates from us shortly. In the meantime I encourage you to:

- Visit the developer center to get started building mobile apps

- Find answers to your questions in the Windows Azure forums and on Stack Overflow

- Continue to make feature requests on the Mobile Services uservoice

- Bookmark http://aka.ms/CommonWAMS to keep the most up to date mobile services samples right at your fingertips

If you have any questions, comments, or ideas for how we can make Windows Azure better suit your development needs, you can always find me on Twitter.

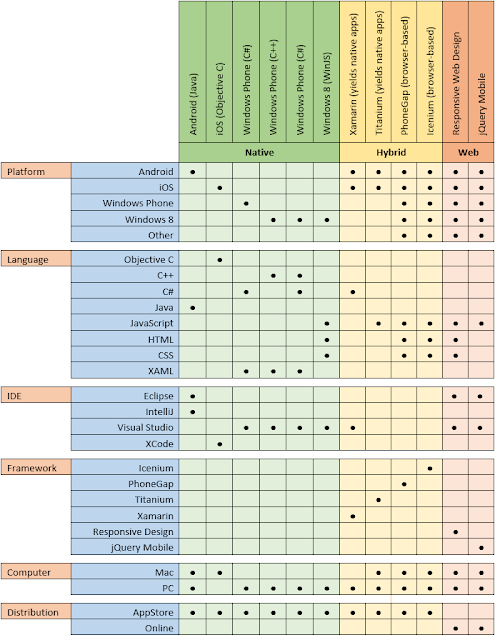

• David Pallman started a mobile development series with Getting Started with Mobility, Part 1: Understanding the Landscape on 8/13/2013:

In this series of posts, we're looking at how to get started as a mobile developer. Here in Part 1 we'll provide an overview of the landscape; in subsequent posts, we'll get down to the details of working in various platforms.

As a mobile developer, you may find yourself specializing in one particular platform (e.g. "I'm an Android developer") or perhaps supporting all of them ("I'm a mobile web developer") or specializing in mobile back-ends (services, data, security, and cloud). All of these are important.

The Front-End

You can develop mobile client apps natively, using a hybrid approach, or via mobile web. Let's look at them, one by one:

- In native development, you are using a tool and language espoused by a mobile platform vendor as the official way to develop for their platform. Depending on the platform, there may be several endorsed languages or development environments to choose from. Native development is often considered the high road, because alternative approaches can sometimes compromise performance, limit fidelity to platform usability conventions, or restrict the functionality available to apps. However, native development can also be expensive and may be a mismatch for the skills known to your developers.

- In hybrid development, a third party provides tools and a framework that allow you to develop for a platform using an alternative approach. This may allow your developers to work in a familiar language or development tool. Some hybrid solutions generate a native application as their output; others execute within an execution layer or browser contained in a native application shell. Hybrid solutions are sometimes considered risky because the third party may not be able to stay in alignment with mobility platforms.

- In mobile web development, you develop a web site intended for consumption on mobile devices via their browsers. You detect device size and other characteristics and the web experience adapts accordingly. A mobile web approach is not always a suitable experience, but at times it can be. From a skills standpoint, mobile web is very approachable due to the large number of web developers in existence. An economic advantage of mobile web is that you develop a single solution, rather than separate apps for each platform.

Many people have strong opinions about which approach is best, but be sure to consider the nuances of experience, device features needed, performance, skills alignment, risk, and development cost rather than making a snap decision.

The Back-End

Most mobile solutions involve more than just the app(s), that visible part you interact with on mobile devices. There often needs to be a back-end that provides one or more of the following:

- Security - user authentication and authorization.

- Storage - persistent data storage.

- Notifications - event notifications.

- Processing - you may need server-side processing to augment the limited processing available on mobile devices.

- Integration - coordination with other systems in the enterprise or on the Internet.

- Cloud - use of cloud computing can make your back-end available across a wide geography or worldwide.

Table: Mobile Client App Development Choices

The table below shows some of the choices available to you when you are targeting Android, Apple, Windows Phone, and/or Windows 8 devices.

In Part 2, we'll look at what it takes to develop native applications for iOS.

• Nick Harris (@cloudnick) described How to implement Periodic Notifications in Windows Store apps with Azure Mobile Services Custom API in an 8/12/2013 article:

I blogged previously on how to make your Push Notification implementation more efficient. In this post I will detail an alternative to Push, Periodic Notifications, which are a poll-based solution for updating your live Tile and Badge content (note that it can’t be used for Toast or Raw notifications). It turns out that if your app scenario can deal with receiving notifications only every thirty minutes or more, this is a much easier way to update your tiles. All you need to do is configure your app for periodic notifications and point it at a service API that returns the appropriate XML template for the badge or tile update in your app. Whether you are using WCF, Web API or something else, this is quite easy to achieve. In this case I will demonstrate how you can implement this using Mobile Services.

Let’s start with the backend service by creating a Custom API. To do this all you need to do is select the API tab in the Mobile Services portal.

Next provide a name for your endpoint and set the permissions for get to everyone.

Next define the XML return payload for your custom API. You can see examples of each of the different Tile templates here

    exports.get = function(request, response) {
        // Use "request.service" to access features of your mobile service, e.g.:
        //   var tables = request.service.tables;
        //   var push = request.service.push;
        response.send(200, ''+
            ''+
            ''+
            ''+
            '@ntotten enjoying himself a little too much'+
            ''+
            ''+
            '');
    };

Note:

- If you are supporting multiple tile sizes you should send the whole payload for each of the varying tile sizes

- This is just an example – you really should be providing dynamic content here rather than the same tile template every time
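To make the shape of such a payload concrete, here is a minimal sketch of a handler that returns tile XML. It assumes the Windows 8 tile schema (`tile`/`visual`/`binding`) with the `TileWideImageAndText01` template; the image URL, the caption text and the function names `escapeXml`, `buildTileXml` and `getTile` are hypothetical and not taken from the original post.

```javascript
// Escape the characters that are significant in XML text and attribute values.
function escapeXml(text) {
  return String(text)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;');
}

// Build a wide tile payload. The element layout follows the Windows 8 tile
// schema; the image URL and caption are supplied by the caller (hypothetical here).
function buildTileXml(imageUrl, caption) {
  return '<tile>' +
    '<visual>' +
    '<binding template="TileWideImageAndText01">' +
    '<image id="1" src="' + escapeXml(imageUrl) + '"/>' +
    '<text id="1">' + escapeXml(caption) + '</text>' +
    '</binding>' +
    '</visual>' +
    '</tile>';
}

// Handler in the shape of a Mobile Services custom API GET script.
// In the portal it would be wired up as: exports.get = getTile;
function getTile(request, response) {
  // Dynamic content: the caption changes on every poll.
  var caption = 'Updated ' + new Date().toISOString();
  response.send(200, buildTileXml('https://example.com/tiles/wide.png', caption));
}
```

Keeping the XML construction in a separate function makes it easy to return different content on each poll, which is the point of the second note above.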

Next configure your app to point at your Custom API through the package.appxmanifest

Run your app and ensure it is pinned to Start in the right dimension for the content you are returning.

Pin your tile and wait for the update after the app has been run once.

Job done! – the tile will be updated with the content from your site with the periodic update per your package manifest definition.

<Return to section navigation list>

Windows Azure Marketplace DataMarket, Cloud Numerics, Big Data and OData

‡ Bruno Terkaly (@brunoterkaly) asserted OData In The Cloud – One Of The Most Flexible And Powerful Ways To Provide Scalable Data Services To Virtually Any Client in a 9/15/2013 post:

Introduction

This post is dedicated to illustrating how you can create your own OData provider and host it in the cloud, specifically Windows Azure.

Open Data Protocol (a.k.a OData) is a data access protocol designed to provide standard CRUD access to a data source via a website. It is similar to JDBC and ODBC although OData is not limited to SQL databases.

OData can be thought of as an extension to REST and provides efficient and flexible ways for sharing data in a standardized format that is easily consumed by other systems. It uses well known web technologies like HTTP, AtomPub and JSON. OData is a resource-based Web protocol for querying and updating data.

OData performs operations on resources using HTTP verbs (GET, POST, PUT and DELETE). It identifies those resources using a standard URI syntax. Data travels across the wire over HTTP using the AtomPub or JSON standards.

Generally speaking, OData leverages relational databases as the data store. But what I would like to illustrate is how to leverage a simple text file as the data store. I believe this will give you a quick and easy introduction to the way everything works.
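As a rough sketch of how CRUD operations conventionally map onto HTTP methods and OData resource URIs, consider the following. The service root, the `Crimes` entity set name and the `toRequest` helper are assumptions made for illustration; they do not come from the original post.

```javascript
// Hypothetical service root, used only for illustration.
const serviceRoot = 'https://example.cloudapp.net/CrimeService.svc';

// Address a single entity with a quoted string key, e.g. Crimes('130001').
// Single quotes inside the key are doubled, as OData string literals require.
function entityUrl(entitySet, key) {
  const literal = String(key).replace(/'/g, "''");
  return `${serviceRoot}/${entitySet}('${encodeURIComponent(literal)}')`;
}

// Map a CRUD operation to the HTTP method and URI a client would use.
function toRequest(operation, entitySet, key) {
  const collection = `${serviceRoot}/${entitySet}`;
  switch (operation) {
    case 'create':
      return { method: 'POST', url: collection };
    case 'read':
      // Without a key the whole collection is requested.
      return { method: 'GET', url: key === undefined ? collection : entityUrl(entitySet, key) };
    case 'update':
      return { method: 'PUT', url: entityUrl(entitySet, key) };
    case 'delete':
      return { method: 'DELETE', url: entityUrl(entitySet, key) };
    default:
      throw new Error(`Unknown operation: ${operation}`);
  }
}

console.log(toRequest('read', 'Crimes'));
console.log(toRequest('update', 'Crimes', '130001'));
```

The helper only describes requests; it does not send them, so it works the same whatever store sits behind the service.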

Internally at Microsoft there are many products that leverage OData:

- Windows Azure Data Market

- Azure Table Storage uses OData, SharePoint 2010 allows OData Queries

- Excel PowerPivot.

There are many advantages to OData:

- OData gives you an entire query language directly in the URL.

- The client only gets the data that it requests - no more and no less

- The client is very flexible, because it controls queries, not the server, which frees you from having to anticipate all the types of queries you need to support on the backend

- It can request the data in various formats, such as XML, JSON, or AtomPub

- Any client can consume the OData protocol

- You don't need to learn the programming model of a service to program against the service

- There are a lot of client libraries available, such as the Microsoft .NET Framework client, AJAX, Java, PHP, Objective-C and more.

- OData supports server paging limits, HTTP caching support, stateless services, streaming support and a pluggable provider model

- You can leverage LINQ as a query language
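The first point in the list, a query language carried in the URL, can be sketched as follows. `$filter`, `$orderby` and `$top` are standard OData system query options; the service root, the `Crimes` entity set and the `buildQueryUrl` helper are hypothetical, while the `CrimeType` and `CrimeDate` property names match the entity class defined later in this post.

```javascript
// Hypothetical service root, used only for illustration.
const serviceRoot = 'https://example.cloudapp.net/CrimeService.svc';

// Build a query URL from a map of system query options (names without the "$").
function buildQueryUrl(entitySet, options) {
  const parts = Object.entries(options).map(
    ([name, value]) => '$' + name + '=' + encodeURIComponent(String(value))
  );
  const base = `${serviceRoot}/${entitySet}`;
  return parts.length > 0 ? `${base}?${parts.join('&')}` : base;
}

// The ten most recent robberies: the client, not the server, decides the query.
const url = buildQueryUrl('Crimes', {
  filter: "CrimeType eq 'ROBBERY'",
  orderby: 'CrimeDate desc',
  top: 10,
});
console.log(url);
```

Because the query lives entirely in the URL, the same request can be issued from a browser address bar, a script or any HTTP client library.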

Starting with government data

The city of San Francisco provides data available for download. So what I did is download crime statistics for the trailing three months. I reduced the 30,000 records to just a few hundred to make development a little bit easier.

One thing the example does not illustrate is how to make this extremely efficient by leveraging caching. This can be easily added to the project, but was avoided for the sake of simplicity.

We will use Visual Studio 2012 and will update some assemblies by using NuGet. That is an essential piece that is necessary for success.

Starting Visual Studio

Once you have Visual Studio up and running, choose File/New from the menu and select Cloud Project as seen below.

Add an ASP.Net Web Role to your solution, as seen below. There are other options, but this one is probably the most familiar to developers today. Click OK when finished.

Solution Explorer should look like this:

As you can see from the figure above, there are two projects in the solution. The top one is for deployment purposes, while the bottom one is where we will add our OData code to get the job done.

Downloading data

You can navigate to the following URL to download some sample crime data.

https://data.sfgov.org/

I downloaded this data, removed some rows, and added it to the App_Data folder.

Note the file called PoliceData.txt in the figure above.

Adding code

Now we are ready to start adding some code to process this data. We will begin by adding a couple of classes.

In Visual Studio, right mouse click on the web role and add a class as seen below. Name this class CrimeProvider.

There are some important points to notice about the code below:

Also notice that we added using statements:

CrimeProvider.svc.cs

    using System;
    using System.Collections.Generic;
    using System.Data.Services.Common;
    using System.IO;
    using System.Linq;
    using System.Net;
    using System.Web;
    using Microsoft.Data.OData;

    namespace WebRole1
    {
        // Entity type exposed by the service; Incident is the entity key.
        [DataServiceKey("Incident")]
        public class CrimeData
        {
            public string Incident { get; set; }    // col 0
            public string CrimeType { get; set; }   // col 2
            public DateTime CrimeDate { get; set; } // col 4
            public string Address { get; set; }     // col 8
        }

        public class CrimeProvider
        {
            private List<CrimeData> crimes = new List<CrimeData>();

            // Reads the tab-delimited file from App_Data and loads it.
            public CrimeProvider()
            {
                WebRequest request = WebRequest.CreateDefault(new Uri(HttpContext.Current.Server.MapPath("~/App_Data/PoliceData.txt")));
                WebResponse response = request.GetResponse();
                using (StreamReader reader = new StreamReader(response.GetResponseStream()))
                {
                    string data = reader.ReadToEnd();
                    LoadData(data);
                }
            }

            // Loads records from a string that is already in memory.
            public CrimeProvider(string data)
            {
                LoadData(data);
            }

            private void LoadData(string data)
            {
                string[] rows = data.Split('\n');
                // Row 0 is skipped (presumably a header) and the last element is not
                // processed (presumably the empty string after the final newline).
                for (int i = 1; i < rows.Length - 1; i++)
                {
                    rows[i] = rows[i].Trim();
                    string[] cols = rows[i].Split('\t');
                    crimes.Add(new CrimeData
                    {
                        Incident = cols[0],
                        CrimeType = cols[2],
                        CrimeDate = Convert.ToDateTime(cols[4]),
                        Address = cols[8]
                    });
                }
            }

            // Queryable entry point over the loaded records.
            public IQueryable<CrimeData> Crimes
            {
                get { return crimes.AsQueryable(); }
            }
        }
    }

3 ways to write a provider

There are three methods that can be used to create an OData back end:

- EF Provider - easy to use

- Reflection Provider - what I used

- Custom Provider

The technique used today will be a reflection provider. The EF provider is another popular option that makes it easy to leverage a relational database using the framework. The custom provider is more technically challenging, but offers the greatest flexibility. …

Bruno continues with detailed code examples.

<Return to section navigation list>

Windows Azure Service Bus, BizTalk Services and Workflow

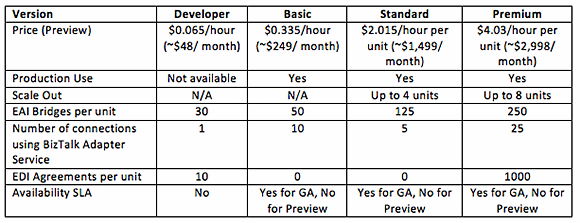

‡ SearchWinDevelopment published my (@rogerjenn) Pay-As-You-Go Windows Azure BizTalk Services Changes EAI and EDI on 7/15/2013 (missed when posted):

Microsoft released its latest incarnation of an earlier Windows Azure EAI and EDI Labs incubator project for cloud-based enterprise application integration and electronic data interchange at TechEd North America 2013. The Windows Azure BizTalk Services (WABS) preview lets developers combine Message Bus 2.1 and Workflow 1.0 with BizTalk Server components to manage message itineraries from the Azure cloud to on-premises line-of-business apps, at costs ranging from 6.5 cents to $4 per hour. (Hourly prices will double when WABS enters general availability.)

Enterprise application integration (EAI) projects consume more than 30% of current IT spending, according to Bitpipe.com. Three of the primary components of EAI solutions are the following:

- Message-oriented-middleware (MOM) to provide connectivity between applications by manipulating and passing messages in queues;

- Adapters to standardize connections between common packaged applications and message formats, such as Electronic Data Interchange (EDI, ANSI X12 and UN/EDIFACT dialects) between trading partners over HTTP (AS2) or FTP (AS3) transports, as well as Web services (AS4);

- Workflow management tools to orchestrate transactions initiated by business-to-business (B2B) message flows

BizTalk Server (BTS), which Microsoft introduced in 2000, is a packaged enterprise service bus (ESB) originally intended as MOM for constructing on-premises EAI and B2B solutions. BTS 2013 enables graphical process modeling and customization with Visual Studio 2012 and provides a Windows Communication Foundation (WCF) Adapter set for multiple transports. The included BizTalk Adapter Pack provides connectivity with 15 Line-of-Business (LOB) systems, such as SAP, Oracle Database and eBusiness Suite, IBM WebSphere and DB2, Siebel, PeopleSoft, JD Edwards and TIBCO.

Running the WABS numbers

Implementing a BTS solution requires a major up-front investment in license fees, which can be as much as $50,000 ($43,000 for the Enterprise edition on up to a four-core processor, plus $3,400 for SQL Server standard edition on two cores and $1,300 for two two-core Windows Server 2012 instances). Add $25,000 for servers, networking hardware and data center space, as well as $25,000 in commissioning costs. A $100,000 bill before paying BizTalk consultants and developers means BTS on premises isn't practical for most small and many medium-size businesses. Substituting the Standard edition, which doesn't support scaling, reduces licensing costs to about $10,000, but there remains a hefty up-front investment for a small firm.

Windows Azure IaaS offers Virtual Machines (WAVMs) with images preconfigured with BTS Standard or Enterprise editions, which range in cost from $0.84 (Standard edition on a Medium-size instance) to $6.52 (Enterprise edition on an Extra Large instance) per hour. These prices translate to about $625 to $4,851 per month, based on 744 hours. This pay-as-you-go approach eliminates the up-front cost and lets you quickly scale up your BTS installation as business increases. However, you're required to configure, manage and protect your cloud servers. An advantage of running BTS in a WAVM is the capability to move BizTalk applications between the cloud and an on-premises data center.

Table 1. Prices and limitations of the four Windows Azure BizTalk Services versions.

Small firms and independent developers and consultants get a break with Windows Azure BizTalk Services (WABS), which ranges in cost during its preview stage from $0.065/hour (~$48/month) for the Developer edition to $4.03/hour ($2,998/month) for the Premium edition, plus standard Windows Azure data transfer charges. These hourly/monthly prices (see Table 1) reflect a 50% preview discount. Each version has twice the compute resources of its predecessor: Basic has twice the resources of Developer, Standard has twice the resources of Basic and Premium has twice the resources of Standard.
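The monthly figures quoted for both the BTS virtual machines and WABS are consistent with a 744-hour (31-day) month. The short sketch below reproduces that arithmetic; the hourly rates are the ones quoted in the article, while the constant and function names are illustrative assumptions.

```javascript
// Hours in a 31-day month; inferred from the quoted figures, not stated by a price list.
const HOURS_PER_MONTH = 31 * 24; // 744

// Convert an hourly rate to an approximate monthly cost in whole dollars.
function monthlyCost(hourlyRate, hours = HOURS_PER_MONTH) {
  return Math.round(hourlyRate * hours);
}

// Hourly rates as quoted in the article.
const hourlyRates = {
  'BTS Standard on a Medium VM': 0.84,
  'BTS Enterprise on an Extra Large VM': 6.52,
  'WABS Developer (preview)': 0.065,
  'WABS Premium (preview)': 4.03,
};

for (const [name, rate] of Object.entries(hourlyRates)) {
  console.log(`${name}: $${rate}/hour is about $${monthlyCost(rate)}/month`);
}
```

Passing a different `hours` value, for example 720 for a 30-day month, shows how sensitive the monthly estimate is to that assumption.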

Prices reflect a 50% discount during the preview period. Standard Windows Azure data transfer charges apply. A scalability unit corresponds to a BizTalk Server unit, which represents a single CPU core. (Data is from the Introduction to Windows Azure BizTalk Services session at TechEd North America 2013.) …

Read more (might require free registration.)

‡ Nick Harris (@cloudnick) reported Updated NotificationsExtensions WnsRecipe Nuget to support Windows 8.1 templates now available on 8/16/2013:

A short post to let you know that I have just published the updated NotificationsExtensions WnsRecipe Nuget with support for the new notification templates that were added in Windows 8.1.

Here is a short demonstration of how to use it to send a new TileSquare310x310ImageAndText01 template with the WnsRecipe Nuget Package.

Install the package using Nuget Package Manager Console. (Note you could also do this using Manage package references in solution explorer)

    install-package WnsRecipe

Add using statements to the NotificationsExtensions namespace

    using NotificationsExtensions;
    using NotificationsExtensions.TileContent;

New up a new WnsAccessTokenProvider and provide it your credentials configured in the Windows Store app Dashboard

    private WnsAccessTokenProvider _tokenProvider = new WnsAccessTokenProvider("ms-app://", "");

Use Tile Content Factory to create your tile template

    var tile = TileContentFactory.CreateTileSquare310x310ImageAndText01();
    tile.Image.Src = "https://nickha.blob.core.windows.net/tiles/empty310x310.png";
    tile.Image.Alt = "Images";
    tile.TextCaptionWrap.Text = "New Windows 8.1 Tile Template 310x310";

    // Note you really should not do the line below,
    // instead you should be setting the required content
    // through property tile.Wide310x150Content so that users
    // get updates irrespective of what size tile they have pinned to Start
    tile.RequireWide310x150Content = false;

    // Send the notification to the desired channel
    var result = tile.Send(new Uri(channel), _tokenProvider);

and here is the output:

<Return to section navigation list>

Windows Azure Access Control, Active Directory, Identity and Workflow

No significant articles so far this week.

<Return to section navigation list>

Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

No significant articles so far this week.

<Return to section navigation list>

Windows Azure Cloud Services, Caching, APIs, Tools and Test Harnesses

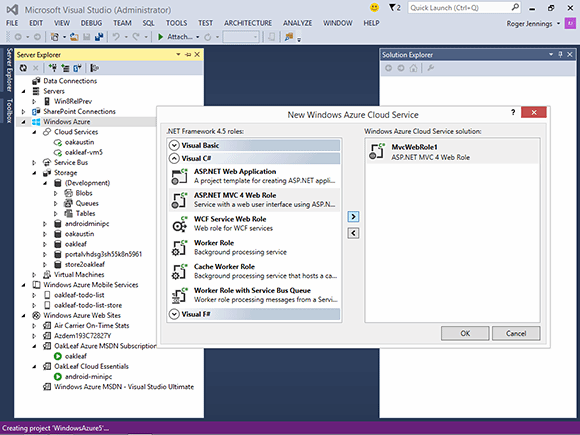

‡ SearchCloudComputing published My (@rogerjenn) Unlock Windows Azure Development in Visual Studio 2013 Preview with the .NET SDK 2.1 on 8/15/2013:

Microsoft's accelerated update schedule for Visual Studio appeared at first to have caught the Windows Azure team off-guard. Corporate vice president S. "Soma" Somasegar outlined the new features of the Visual Studio 2013 Preview and announced its availability for download at this summer's Build developer conference in June. Of particular interest to Windows Azure developers were the new features:

- The capability to create and edit new Mobile Services (WAMS) in the Visual Studio IDE

- Right-click publishing with preview, per-publish profile web.config transforms and selective publishing with diffing for Web Sites (WAWS)

- A tree view of Windows Azure subscriptions and dependent resources in the Server Explorer (see Figure 1)

- Windows Azure Active Directory (WAAD) support for Web applications

However, the Visual Studio (VS) 2013 Preview didn't support the then-current .NET SDK 2.0 for Windows Azure. Therefore, most Azure-oriented developers elected to wait for an updated SDK to avoid the inefficiency of different developer environments for cloud and on-premises .NET app development.

Figure 1. VS 2013 Preview's enhanced Server Explorer running under Windows 8.1 Preview displays Azure subscriptions and attendant resources in a hierarchical tree view; creating a new Windows Azure Cloud Service offers a choice of six Web or worker roles.

VS 2013 Preview wasn't off-limits to Windows Azure developers for very long. Scott Guthrie announced the release of the Windows Azure SDK 2.1 for .NET on July 31. According to Guthrie, this SDK offers the following new features:

- Visual Studio 2013 Preview support: Windows Azure SDK now supports the new VS 2013 Preview

- Service Bus: New high availability options, notification hub support, improved VS tooling

- Visual Studio 2013 VM image: Windows Azure now has a built-in VM image for hosting and developing with VS 2013 in the cloud

- Visual Studio Server Explorer Enhancements: Redesigned with improved filtering and auto-loading of subscription resources

- Virtual machines: Start and Stop VMs with suspended billing directly from within Visual Studio

- Cloud services: Emulator Express option with reduced footprint and Run as Normal User support

- PowerShell Automation: Lots of new PowerShell commands for automating Web sites, cloud services, VMs and more

Hot on the SDK's heels came an updated Windows Azure Training Kit (WATK) with new and refreshed content for the SDK for .NET 2.1. …

Read more (might require no-charge registration.)

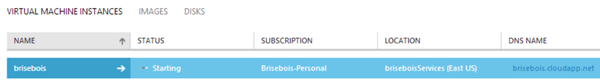

• Alexandre Brisebois (@Brisebois) described how to Create a Dev & Test Environment in Minutes! in an 8/13/2013 post:

How many times do we have to scramble to assemble a decent Dev & Test environment?

When I think back to my past lives, I can attest that it’s been a challenging mess. I used to run around to various departments in order to find available machines, software installation disks, licenses and IT resources to help me put everything together.

Taking shortcuts usually meant cutting back on the Dev & Test infrastructure. Consequently, I rarely had environments that mirrored the actual production environment. Products would make their way through development and quality assurance, but I rarely had a clear picture of how they would react to the production environment. Deploying to production usually resulted in being asked to come in on weekends because the outcome was completely unpredictable and time needed to be scheduled in order to roll back.

Just thinking about all this sends chills down my spine!

Since then things have changed quite a bit. I found shortcuts allowing me to build cost-effective environments without having to run around begging for resources. Microsoft has recently introduced Dev & Test, which allows me to set up my environments in a matter of minutes!

If you’re already an MSDN subscriber then you’re all set! Visual Studio Professional, Premium or Ultimate MSDN subscriptions will permit you to activate Dev & Test by creating a Windows Azure subscription from your MSDN subscription benefits page.

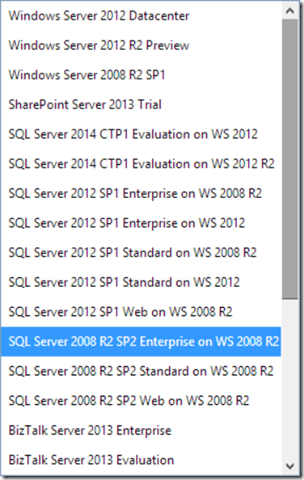

Using the MSDN Windows Azure subscription, I can spin up virtual machines that allow me to test various scenarios. For example, I can choose from a variety of pre-configured Virtual Machines like Windows Server, SQL Server, SharePoint and BizTalk, with discounts ranging from 25% on Cloud Services to upwards of 33% on BizTalk Enterprise Virtual Machines.

More Details

- See details for Windows Azure Benefits for MSDN Subscribers

- See offer details for Visual Studio Professional, Premium or Ultimate (with MSDN) for the hourly rates

- Develop and test applications faster

There are a couple of interesting benefits to building my Dev & Test environments on Windows Azure.

First of all, it’s great for short lived projects. I can create environments without major capital investments and I can rapidly decommission Virtual Machines, services and reserved resources when the project comes to an end. Best of all I don’t get stuck with the extra hardware and software licenses.

Waiting on IT departments is a thing of the past; I can get up and running quickly!

Using Windows Azure Dev & Test, I can cycle through proofs of concept using various Virtual Machine configurations. Easily playing around with OS versions, the number of GBs of RAM, the number of CPU cores and the amount of available bandwidth allows me to spot potential pain points before going to production. Doing the same kind of tests on-premises can be quite complex due to the sheer amount of time required to deal with all the hardware and software involved.

On Windows Azure, creating a new Virtual Machine is a breeze!

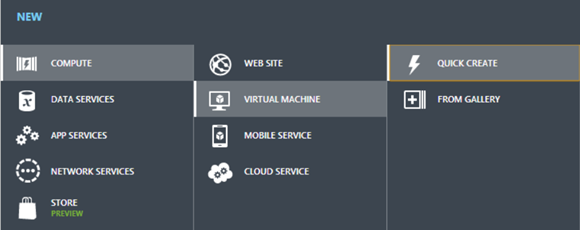

Log in to the Windows Azure Management Portal and click on the NEW + menu found at the bottom left of the screen.

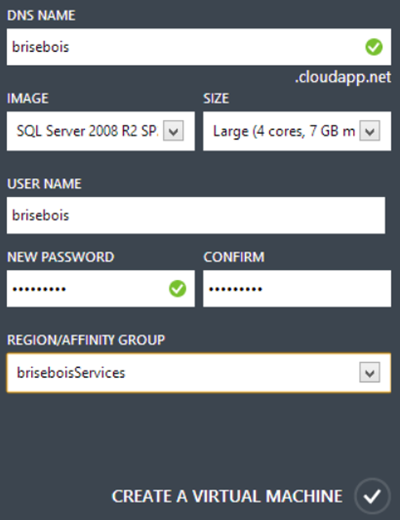

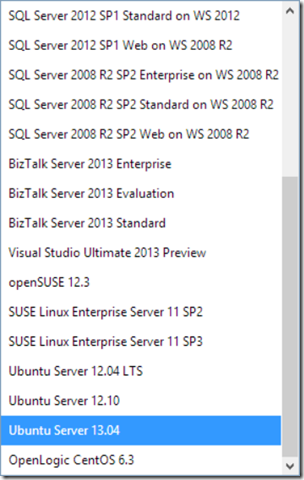

Select QUICK CREATE from the VIRTUAL MACHINE option found under COMPUTE. Then complete the form by providing your new Virtual Machine with a name, a size and by selecting the base image from the dropdown list. Provide Windows Azure with a user name and password that you will use to log in. Finally, select the region where you want to create your Virtual Machine.

There are quite a few pre-configured Virtual Machine Images available. If you don’t find what you are looking for, you can create your own by creating a Virtual Machine Image on-premises and uploading it to Windows Azure. You will then be able to provision Virtual Machines based on your custom Image. See the full list of Microsoft server software supported on Windows Azure Virtual Machines.

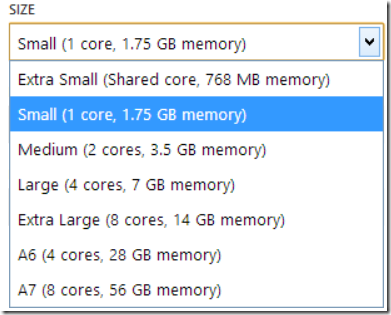

Virtual Machine and Cloud Service Sizes for Windows Azure are listed below. I usually work with Medium sized Virtual Machines because my software requires quite a bit of RAM.

Choosing the right Virtual Machine size can be challenging and being able to try them out is a huge advantage. At this point it’s also important to note that along with CPU, RAM and Disk Size each configuration comes with a specific amount of Bandwidth. Be sure that your application does not suffer because it lacks Bandwidth.

Clicking on CREATE A VIRTUAL MACHINE will start provisioning a Virtual Machine based on your specifications. This is the perfect time to get yourself a cup of coffee; by the time you get back you will be presented with your brand new Virtual Machine.

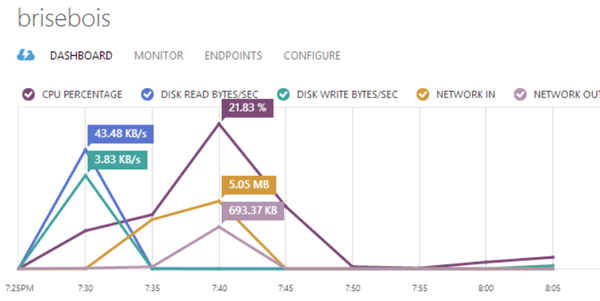

Clicking on the Virtual Machine will bring you to its dashboard.

This is where you are presented with diagnostics, configurations and general information about the Virtual Machine. Use this information to monitor and diagnose performance problems without using Remote Desktop.

The Windows Azure Management Portal will also provide you with the following commands.

Use CONNECT to safely Remote Desktop into your newly created Virtual Machine. RESTART or SHUTDOWN the Virtual Machine directly from the Windows Azure Management Portal. Deleting the Virtual Machine will release its resources back to Windows Azure.

Take Away

Working with Windows Azure over the last year, I have to say that much of the pain associated with creating and managing Dev & Test environments has gone away. I can finally concentrate on finding the right solution for my client’s needs without having to deal with too much politics and the red tape that comes with it.

Being able to spin up machines at a moment’s notice has allowed me to rapidly confirm and validate possible architectures. Above all else, it’s allowed me to do so at a very low cost because I don’t need Virtual Machines to run 24/7.

Keep in mind that prices used in this post have been taken from August 2013 and may have changed over time. Please refer to the official pricing on windowsazure.com.

Shutting down Virtual Machines when I don’t need them ends up saving me quite a bit of money! I currently start the Virtual Machine when I start working in the morning and I shut it down when I go home at night. I’m currently paying for about 8 hours’ worth of compute time per day. Since it’s only running for 8 hours per day I’m currently paying $0.96/day instead of $2.88/day, which corresponds to a full day’s worth of compute.

Let’s put this back into perspective, because daily pricing doesn’t really give a good idea of the actual cost for my Dev & Test environment. So let’s look at this on a long-term basis. My projects usually go for 3 months, working on average 20 days per month. That means that my Dev & Test environment is costing me a total of $57.60 for the duration of the whole project. Keep in mind that if my Virtual Machine had been running 24/7 it would have cost me $144.
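The arithmetic behind these figures can be reproduced with a short sketch. The daily prices are the ones quoted in the post; the function names and the 60-working-day project length (3 months at 20 days) are illustrative assumptions. Note that 24 hours a day over the same 60 working days comes to $172.80; the quoted $144 would correspond to 50 days at $2.88, so it presumably rests on a day count the post does not state.

```python
from decimal import Decimal

# Daily figures quoted in the post (August 2013 pricing).
COST_8_HOURS = Decimal("0.96")   # VM running about 8 hours a day
COST_24_HOURS = Decimal("2.88")  # VM running around the clock


def hourly_rate(daily_cost: Decimal, hours: int) -> Decimal:
    """Implied price per hour for a given daily cost."""
    return daily_cost / hours


def project_cost(daily_cost: Decimal, months: int = 3, days_per_month: int = 20) -> Decimal:
    """Total cost over the working days of a project (assumed 3 x 20 days)."""
    return daily_cost * months * days_per_month


print(hourly_rate(COST_8_HOURS, 8))   # 0.12
print(project_cost(COST_8_HOURS))     # 57.60
print(project_cost(COST_24_HOURS))    # 172.80
```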

Nevertheless, savings generated by the discounted pricing of the Windows Azure Dev & Test offering are quite significant and do make a world of difference in the long run.

I use Windows Azure Dev & Test environments because:

- You can connect securely from anywhere (working from home)

- You can test load and scalability scenarios

- You can use PowerShell to automate their creation

- You can develop Windows & Linux based solutions

- You can test newly released software (SQL Server, BizTalk, SharePoint…)

- You don’t have to wait for hardware, procurement or internal processes

- You pay for what you use (by the minute billing)

- You benefit from discounted hourly rates

- You get monthly Windows Azure Credits

- You can Dev & Test in the cloud and deploy on-premise

- You can use MSDN Software on Windows Azure

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

Scott Guthrie (@ScottGu) described Windows Azure: General Availability of SQL Server Always On Support and Notification Hubs, AutoScale Improvements + More updates in an 8/12/2013 post:

This morning we released some major updates to Windows Azure. These new capabilities include:

- SQL Server AlwaysOn Support: General Availability support with Windows Azure Virtual Machines (enables both high availability and disaster recovery)

- Notification Hubs: General Availability Release of Windows Azure Notification Hubs (broadcast push for Windows 8, Windows Phone, iOS and Android)

- AutoScale: Schedule-based AutoScale rules and richer logging support

- Virtual Machines: Load Balancer Configuration and Management

- Management Services: New Portal Extension for Operation logs + Alerts

All of these improvements are now available to use immediately (note: AutoScale is still in preview – everything else is general availability). Below are more details about them.

SQL Server AlwaysOn Support with Windows Azure Virtual Machines

I’m excited to announce the general availability release of SQL Server AlwaysOn Availability Groups support within Windows Azure. We have updated our official documentation to support Availability Group Listeners for SQL Server 2012 (and higher) on Windows Server 2012.

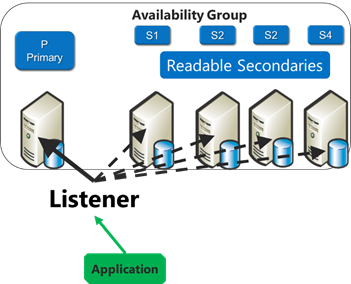

SQL Server AlwaysOn Availability Group support, which was introduced with SQL Server 2012, is Microsoft’s premier solution for enabling high availability and disaster recovery with SQL Server. SQL Server AlwaysOn Availability Groups support multi-database failover, multiple replicas (5 in SQL Server 2012, 9 in SQL Server 2014), readable secondary replicas (which can be used to offload reporting and BI applications), configurable failover policies, backups on secondary replicas, and easy monitoring.

Today, we are excited to announce that we support the complete SQL Server AlwaysOn Availability Groups technology stack with Windows Azure Virtual Machines - including enabling support for SQL Server Availability Group Listeners. We are really excited to be the first cloud provider to support the full range of scenarios enabled with SQL Server AlwaysOn Availability Groups – we think they are going to enable a ton of new scenarios for customers.

High Availability of SQL Servers running in Virtual Machines

You can now use SQL Server AlwaysOn within Windows Azure Virtual Machines to achieve high availability and global business continuity. As part of this support you can now deploy one or more readable database secondaries – which not only improves availability of your SQL Servers but also improves efficiency by allowing you to offload BI reporting tasks and backups to the secondary machines.

Today’s Windows Azure release includes changes to better support SQL Server AlwaysOn functionality with our Windows Azure Network Load Balancers. With today’s update you can now connect to your SQL Server deployment with a single client connection string using the Availability Group Listener endpoint. This will automatically route database connections to the primary replica node – and our network load balancer will automatically update to route requests to a secondary replica node in the event of an automatic or manual failover scenario:

This new SQL Server Availability Group Listener support enables you to easily deploy SQL Databases in Windows Azure Virtual Machines in a high-availability configuration, and take full advantage of the full SQL Server feature-set. It can also be used to ensure no downtime during upgrade operations or when patching the virtual machines.

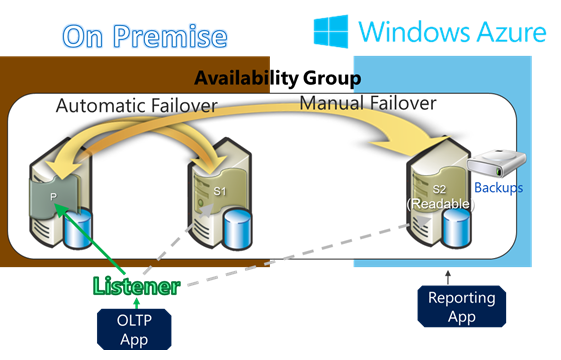

Disaster Recovery of an on-premises SQL Server using Windows Azure

In addition to enabling high availability solutions within Windows Azure, the new SQL Server AlwaysOn support can also be used to enable on-premise SQL Server solutions to be expanded to have one or more secondary replicas running in the cloud using Windows Azure Virtual Machines. This allows companies to enable high-availability disaster recovery scenarios – where in the event of a local datacenter being down (for example: due to a hurricane or natural disaster, or simply a network HW failure on-premises) they can failover and continue operations using Virtual Machines that have been deployed in the cloud using Windows Azure.

The diagram above shows a scenario where an on-premises SQL Server AlwaysOn Availability Group has been defined with two database replicas - a primary and a secondary replica (S1). One more secondary replica (S2) has then been configured to run in the cloud within a Windows Azure Virtual Machine. This secondary replica (S2) will continuously synchronize transactions from the on-premises primary replica. In the event of a disaster on-premises, the company can fail over to the replica in the cloud and continue operations without business impact.

In addition to enabling disaster recovery, the secondary replica(s) can also be used to offload reporting applications and backups. This is valuable for companies that require maintaining backups outside of the data center for compliance reasons, and enables customers to leverage the replicas for compute scenarios even in non-disaster scenarios.

Learn more about SQL Server AlwaysOn support in Windows Azure

You can learn more about how to enable SQL Server AlwaysOn Support in Windows Azure by reading the High Availability and Disaster Recovery for SQL Server in Windows Azure Virtual Machines documentation. Also review this TechEd 2013 presentation: SQL Server High Availability and Disaster Recovery on Windows Azure VMs. We are really excited to be the first cloud provider to enable the full range of scenarios enabled with SQL Server AlwaysOn Availability Groups – we think they are going to enable a ton of new scenarios for customers.

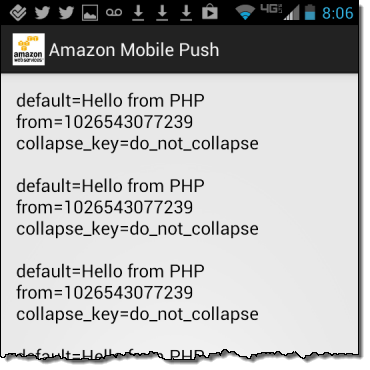

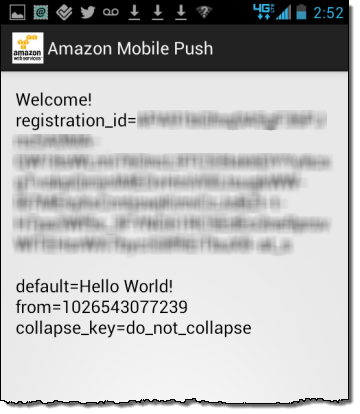

Windows Azure Notification Hubs

I’m excited today to announce the general availability release of Windows Azure Notification Hubs. Notification Hubs enable you to instantly send personalized, cross-platform, broadcast push notifications to millions of Windows 8, Windows Phone 8, iOS, and Android mobile devices.

I first blogged about Notification Hubs starting with the initial preview of Notification Hubs in January. Since the initial preview, we have added many new features (including adding support for Android and Windows Phone devices in addition to Windows 8 and iOS ones) and validated that the system is ready for any amount of scale that your next app requires.

You can use Notification Hubs from both Windows Azure Mobile Services or any other custom Mobile Backend you have already built (including non-Azure hosted ones) – which makes it really easy to start taking advantage of from any existing app.

Notification Hubs: Personalized cross platform broadcast push at scale

Push notifications are a vital component of mobile applications. They’re the most powerful customer engagement mechanism available to mobile app developers. Sending a single push notification message to one mobile user is relatively straightforward (and is already easy to do with Windows Azure Mobile Services today). But sending simultaneous push notifications in a low-latency way to millions of mobile users, and handling real world requirements such as localization, multiple platform devices, and user personalization is much harder.

Windows Azure Notification Hubs provide you with an extremely scalable push notification infrastructure that helps you efficiently route cross-platform, personalized push notification messages to millions of users:

- Cross-platform. With a single API call using Notification Hubs, your app’s backend can send push notifications to your users running on Windows Store, Windows Phone 8, iOS, or Android devices.

- Highly personalized. Notification Hubs' built-in templating functionality allows you to let the client choose the shape, format and locale of the notifications it wants to see, while keeping your backend code platform independent and really clean.

- Device token management. Notification Hubs relieves your backend from the need to store and manage channel URIs and device tokens used by Platform Notification Services (WNS, MPNS, Apple PNS, or Google Cloud Messaging Service). We securely handle the PNS feedback, device token expiry, etc. for you.

- Efficient tag-based multicast and pub/sub routing. Clients can specify one or more tags when registering with a Notification Hub thereby expressing user interest in notifications for a set of topics (favorite sport/teams, geo location, stock symbol, logical user ID, etc.). These tags do not need to be pre-provisioned or disposed, and provide a very easy way for apps to send targeted notifications to millions of users/devices with a single API call, without you having to implement your own per-user notification routing infrastructure.

- Extreme scale. Notification Hubs are optimized to enable push notification broadcast to thousands or millions of devices with low latency. Your server back-end can fire one message into a Notification Hub, and thousands/millions of push notifications can automatically be delivered to your users, without you having to re-architect or shard your application.

- Usable from any backend. Notification Hubs can be easily integrated into any back-end server app using .NET or Node.js SDK, or easy-to-use REST APIs. It works seamlessly with apps built with Windows Azure Mobile Services. It can also be used by server apps hosted within IaaS Virtual Machines (either Windows or Linux), Cloud Services or Web-Sites.
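The tag-based routing described in the list above can be pictured with a toy in-memory model. This is only a conceptual sketch: `TagRouter` and its methods are hypothetical names, and it says nothing about how Notification Hubs is implemented internally. Devices register a channel with one or more tags, and a send addressed to a tag resolves to every channel registered under it.

```python
from collections import defaultdict


class TagRouter:
    """Toy model of tag-based pub/sub routing (hypothetical, not the real service)."""

    def __init__(self):
        # tag -> set of channel URIs registered for that tag
        self._by_tag = defaultdict(set)

    def register(self, channel_uri, tags):
        """Record that a device channel is interested in each of the given tags."""
        for tag in tags:
            self._by_tag[tag].add(channel_uri)

    def targets(self, tag):
        """Return the channels that should receive a notification sent to this tag."""
        return set(self._by_tag.get(tag, set()))


router = TagRouter()
router.register("channel-1", ["sports", "en-US"])
router.register("channel-2", ["sports"])
router.register("channel-3", ["finance"])
print(sorted(router.targets("sports")))  # ['channel-1', 'channel-2']
```

As in the description above, tags are created implicitly on first registration rather than being provisioned ahead of time.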

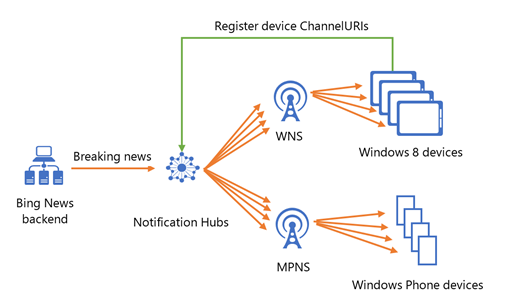

Bing News: Using Windows Azure Notification Hubs to Deliver Breaking News to Millions of Devices

A number of big apps started using Windows Azure Notification Hubs even before today’s General Availability Release. One of them is the Bing News app included on all Windows 8 and Windows Phone 8 devices.

The Bing News app needs the ability to notify their users of breaking news in an instant. This can be a daunting task for a few reasons:

- Extreme scale: Every Windows 8 user has the News app installed, and the Bing backend needs to deliver hundreds of millions of breaking news notifications to them every month

- Topic-based multicast: Broadcasting push notifications to different markets, based on interests of individual users, requires efficient pub sub routing and topic-based multicast logic

- Cross-platform delivery: Notification formats and semantics vary between mobile platforms, and tracking channels/tokens across them all can be complicated

Windows Azure Notification Hubs turned out to be a perfect fit for Bing News, and with the most recent update of the Bing News app they now use Notification Hubs to deliver push notifications to millions of Windows and Windows Phone devices every day.

The Bing News app on the client obtains the appropriate ChannelURIs from the Windows Notification Service (WNS) and the Microsoft Push Notification Service (MPNS), for the Windows 8 and Windows Phone versions respectively, and then registers them with a Windows Azure Notification Hub. When a breaking news alert for a particular market has to be delivered, the Bing News app uses the Notification Hubs to instantly broadcast appropriate messages to all the individual devices. With a single REST call to the Notification Hub they can automatically filter the customers interested in the topic area (e.g. sports update) and instantly deliver the message to millions of customers:

Windows Azure handles all of the complex pub/sub filtering logic for them, and efficiently handles delivery of the messages in a low-latency way.

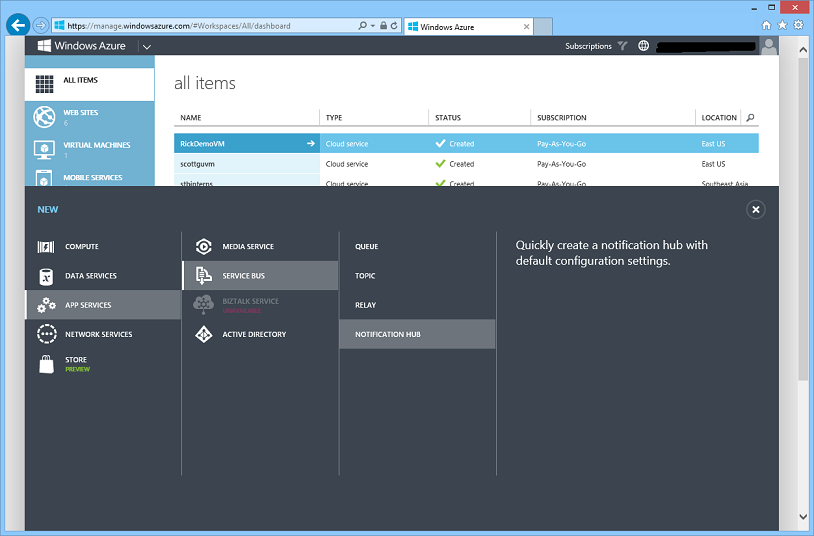

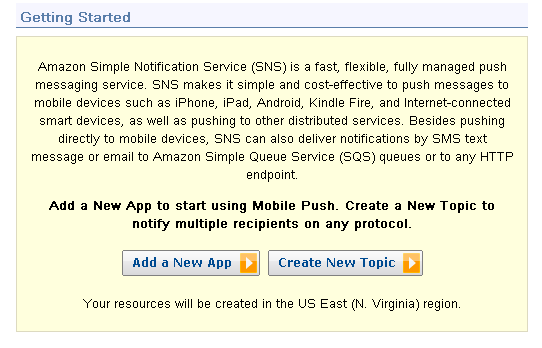

Create your first Notification Hub Today

Notification Hubs support a free tier of usage that allows you to send 100,000 operations every month to 500 registered devices at no cost – which makes it really easy to get started.

To create a new Notification Hub simply choose New->App Services->Service Bus->Notification Hub within the Windows Azure Management Portal:

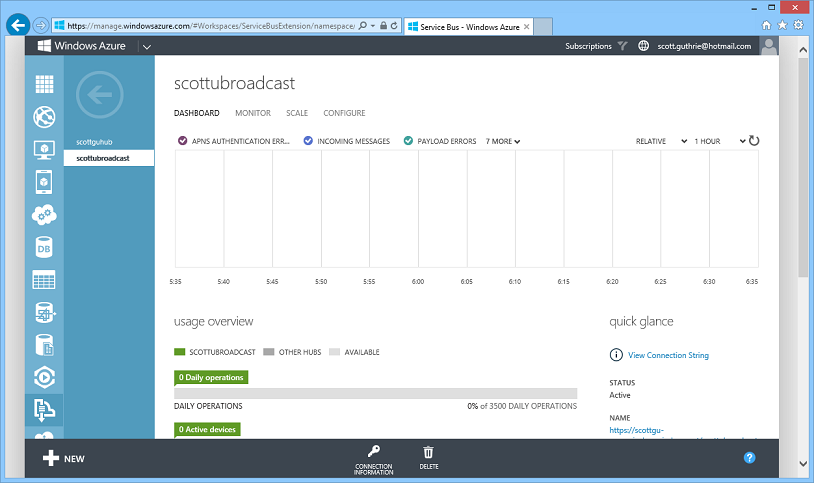

Creating a new Notification Hub takes less than a minute, and once created you can drill into it to see a dashboard view of activity with it. Among other things, the dashboard allows you to see how many devices have been registered with it, how many messages have been pushed to it, how many messages have been successfully delivered via it, and how many have failed:

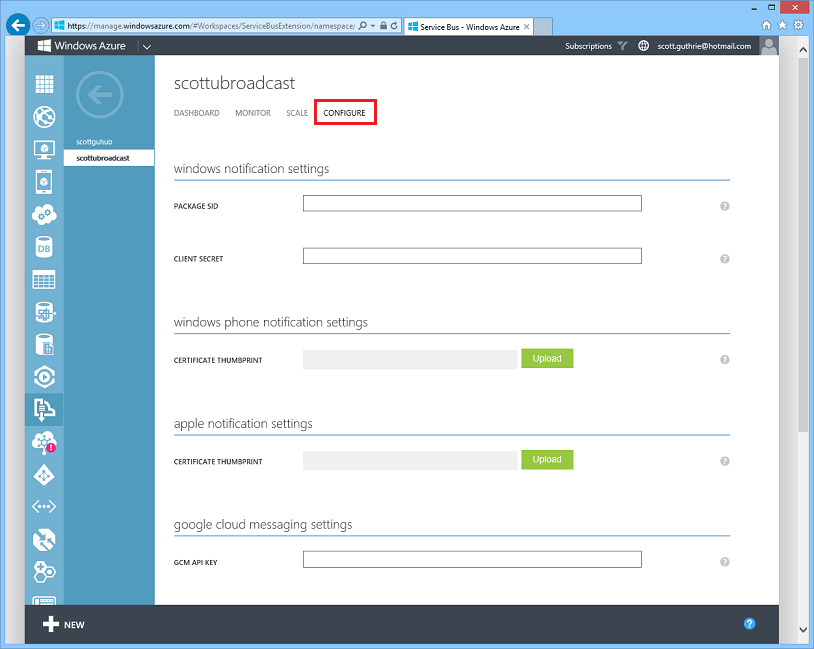

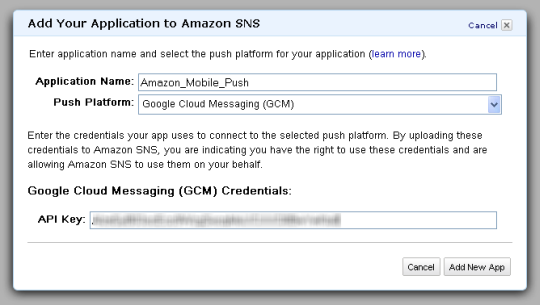

Once your hub is created, click the “Configure” tab to enter your app credentials for the various push notifications services (Windows Store/Phone, iOS, and Android) that your Notification Hub will coordinate with:

And with that your notification hub is ready to go!

Registering Devices and Sending out Broadcast Notifications

Now that a Notification Hub is created, we’ll want to register device apps with it. Doing this is really easy – we have device SDKs for Windows 8, Windows Phone 8, Android, and iOS.

Below is the code you would write within a C# Windows 8 client app to register a user’s interest in broadcast notifications sent to the “myTag” or “myOtherTag” tags/topics:

await hub.RegisterNativeAsync(channel.Uri, new string[] { "myTag", "myOtherTag" });

Once a device is registered, it will automatically receive a push notification message when your app backend sends a message to topics/tags it is registered with. You can use Notification Hubs from a Windows Azure Mobile Service, a custom .NET back-end app, or any other app back-end with our Node.js SDK or REST API. The below code illustrates how to send a message to the Notification Hub from a custom .NET backend using the .NET SDK:

var toast = @"<toast><visual><binding template=""ToastText01""><text id=""1"">Hello everybody!</text></binding></visual></toast>";

await hub.SendWindowsNativeNotificationAsync(toast);

A single call like the one above from your app backend will now securely deliver the message to any number of devices registered with your Notification Hub. The Notification Hub will handle all of the details of the delivery irrespective of how many users you are sending it to (even if there are 10s of millions of them).

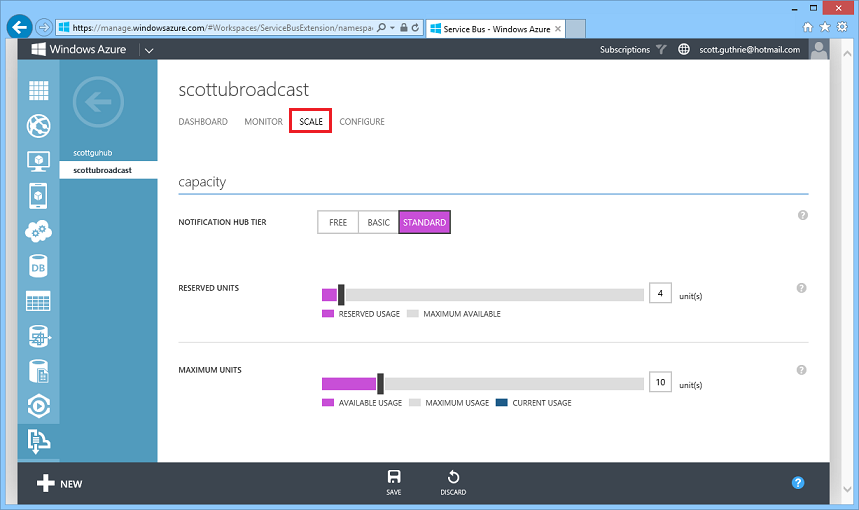

Scaling and Monitoring your Notification Hub

Once you’ve built your app, you can easily scale it to millions of users directly from the Windows Azure management portal. Just click the “scale” tab in your Notification Hub within the management portal to configure the number of devices and messages you want to support:

In addition to scaling capacity, you can also monitor and track nearly 50 different metrics about your notifications and their delivery to your customers:

Learn More about Notification Hubs

Learn more about Notification Hubs using the Notification Hubs service page, where you will find video tutorials, in-depth scenario guidance, and link to SDK references.

We are happy to continue offering Notification Hubs at no charge to all Windows Azure subscribers through September 30, 2013. We will begin billing for Notification Hubs consumption in the Basic and Standard tiers on October 1, 2013. A Free Tier will continue to also be available and supports 100,000 notifications with 500 registered devices each month at no cost.

AutoScale: Scheduled AutoScale Rules and Richer Logging

This summer we introduced new AutoScale support to Windows Azure that enables you to automatically scale Web Sites, Cloud Services, Mobile Services and Virtual Machines. AutoScale enables you to configure Windows Azure to automatically scale your application dynamically on your behalf (without any manual intervention required) so that you can achieve the ideal performance and cost balance. Once configured, AutoScale will regularly adjust the number of instances running in response to the load in your application.

Today, we are introducing even more AutoScale features – including the ability to proactively adjust your Cloud Service instance count using time scheduled rules.

Schedule AutoScale Rules

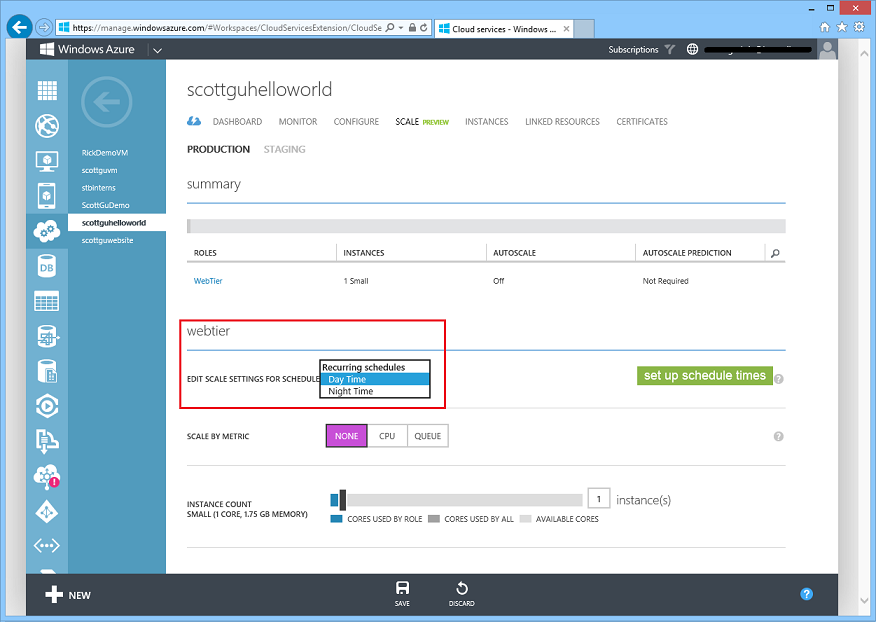

If you click on the Scale tab of a Cloud Service, you’ll see that we’ve now added support for you to configure/control different scaling rules based on schedule rules.

By default, you’ll edit scale settings for No scheduled times – this means that your scale settings will always be the same regardless of the time/day. You can scale manually by selecting None in the Scale by Metric section – this will give you the traditional Instance Count slider that you are familiar with:

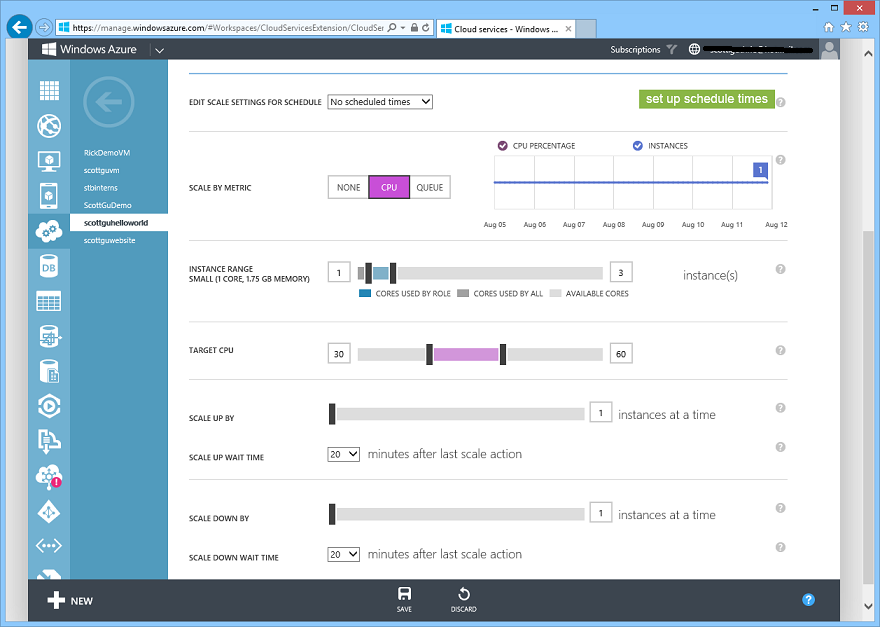

Or you can AutoScale dynamically by reacting to CPU activity or Queue Depth. The below screen-shot demonstrates configuring an auto-scale rule based on the CPU of the WebTier role and indicates to scale between 1 and 3 instances – depending on the aggregate CPU:

With today’s release, we also now allow you to set up different scale settings for different times of the day. You can enable this by clicking the “Set up Schedule Times” button above. This brings up a new dialog:

With today’s release we now offer the ability to define two different recurring schedules: Day and Night. The first schedule, Day Time, runs from the start of the day to the end of the day (which I’ve defined above as being between 8am and 8pm). The second schedule, Night Time, runs from the end of one day to the start of the next day. Both use the options in Time to define start and end of a day, and the time zone. This schedule respects daylight saving time, if it is applicable to that time zone. In the future we will add other types of time based schedules as well.

Once you’ve set up a day/night schedule, you can return to the Scale page and see that the schedule dropdown now has the two schedules you created populated within it:

You can now select each schedule from the list and edit scaling rules specific to it within it. For example, you can select the Day Time Schedule and set Instance Count on a Cloud Service role to 5, and then select Night Time and set Instance Count to 3. This will ensure that Windows Azure scales up your service to 5 instances during the day, and then cycles them down to 3 instances overnight.

You can also combine Scheduled Autoscale rules and the Metric Based AutoScale rules together. Select the CPU or Queue toggle and you can configure AutoScale rules that apply differently during the day or night. For example, you could set the Instance Range from 5 to 10 during the day, and 3 to 6 at night based on CPU activity.
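To make the combination of schedule-based and metric-based rules concrete, here is a minimal sketch using the numbers from the example above (Day Time from 8am to 8pm with 5 to 10 instances, Night Time with 3 to 6). The CPU thresholds, the one-instance step and all function names are assumptions made for illustration; the post does not describe the algorithm Windows Azure actually uses.

```python
from datetime import time

# Schedule boundaries and instance ranges taken from the example in the text.
DAY_START, DAY_END = time(8, 0), time(20, 0)
RANGES = {"day": (5, 10), "night": (3, 6)}


def active_schedule(now: time) -> str:
    """Pick the recurring schedule that applies at the given local time."""
    return "day" if DAY_START <= now < DAY_END else "night"


def target_instances(current: int, cpu_percent: float, now: time,
                     cpu_low: float = 60.0, cpu_high: float = 80.0) -> int:
    """Step the instance count by CPU load, clamped to the active schedule's range.

    cpu_low and cpu_high are hypothetical thresholds, not Azure defaults.
    """
    low, high = RANGES[active_schedule(now)]
    if cpu_percent > cpu_high:
        current += 1
    elif cpu_percent < cpu_low:
        current -= 1
    return max(low, min(high, current))


print(target_instances(5, 90.0, time(12, 0)))  # 6
print(target_instances(8, 70.0, time(23, 0)))  # 6
```

The second call shows the schedule taking precedence: CPU is within the target band, but the count is still pulled down into the Night Time range.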

Today’s release only supports Scheduled AutoScale rules on Cloud Services – but you’ll see us enable these with all types of compute resources (including Web Sites, Mobile Services + VMs) shortly.

AutoScale History

It’s now easy to know and log exactly what AutoScale has done for your service: there are four new AutoScale history features with today’s release to help with this.

First, we have added two new operations to Windows Azure’s Operation Log capability: AutoscaleAction and PutAutoscaleSetting. We now record each time that AutoScale takes a scale up or scale down action, and include the new and previous instance counts in the details. In addition, we record each time anyone changes autoscale settings – you can use this to see who on your team changed autoscale options and when. These are both now exposed in the Operation Logs tab of the new Management Services node within the Windows Azure Management Portal:

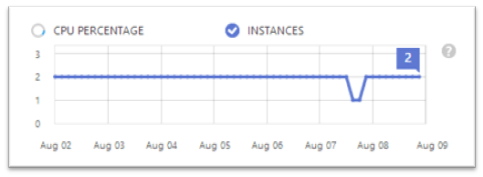

Second, for Cloud Services, we are also adding a historical graph that shows the number of instances over the past 7 days. This way, you can see trends in AutoScale over the span of a week:

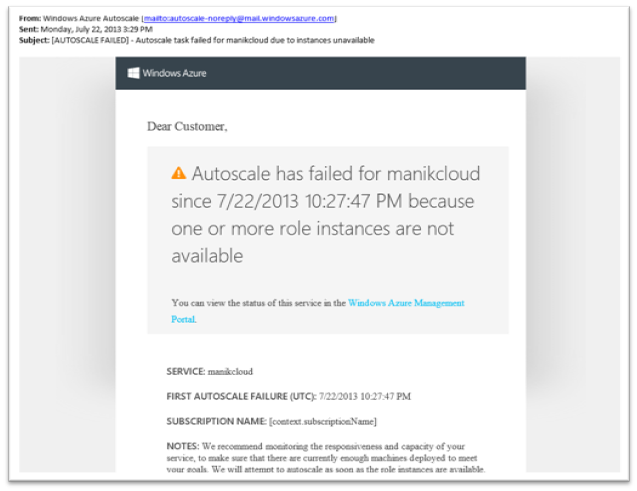

Third, if AutoScale ever fails for more than 2 hours at a time, we will automatically notify the Service Administrator and Co-Admin of the subscription via email:

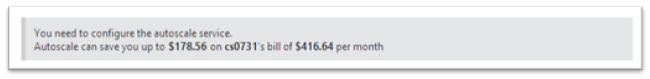

Fourth, if you are the Account Administrator for your subscription, we will now show you billing information about Autoscale in your account’s currency:

If AutoScale is on, it will show you the difference between your current instance count, and the maximum instance count – and how much you are saving by using it.

If AutoScale is off, we will show you how much we predict you could save if you were to turn on AutoScale. Put another way - we are updating your bill to include suggestions on how you can pay us less in the future (please don’t tell my boss about this… <g>)

Virtual Machines: Support for Configuring Load Balancer Probes

Every Virtual Machine, Cloud Service, Web Site and Mobile Service you deploy in Windows Azure comes with built-in load balancer support that you can use to both scale out your app and enable high availability. This load balancer support is built into Windows Azure and included at no extra charge (most other cloud providers make you pay extra for it).

Today’s update of Windows Azure includes some nice new features that make it even easier to configure and manage load balancing support for Virtual Machines – and includes support for customizing the network probe logic that our load balancers use to determine whether your Virtual Machines are healthy and should be kept in the load balancer rotation.

Understanding Load Balancer Probes

Load-balancing network traffic across multiple Virtual Machine instances is important, both to enable scale-out of your traffic across multiple VMs and to enable high availability of your app’s front-end or back-end virtual machines (as discussed in the SQL Server AlwaysOn section earlier). A network probe is how the Windows Azure load balancer detects failure of one or more of your virtual machine instances - whether due to software or hardware failure. If the network probe detects an issue with a specific virtual machine instance, the load balancer will automatically fail over traffic to your healthy virtual machine instances and prevent customers from thinking your application is down.

The default configuration for a network probe from the Windows Azure load balancer simply uses TCP on the same port your application is load-balancing. As shown in the example below, each Virtual Machine in a load-balanced set is receiving TCP traffic on port 80 from the public internet (likely a website or web service). With a simple TCP probe, the load balancer sends an ongoing message, every 15 seconds by default, on that same port to each Virtual Machine, checking for health. Because the Virtual Machine is running a website, if the Virtual Machine and web service are healthy, it will automatically reply back to the TCP probe with a simple ACK to the load balancer. While this ACK continues, the load balancer will continue to send traffic, knowing the website is responsive.

In any situation where the website is unhealthy, the load balancer will not receive a response from the website. When this happens the load balancer will stop sending traffic to the virtual machine that is having problems, and instead direct traffic to the other two instances, as shown for Virtual Machine 2 below. This simple high availability option will work without having to write any special code inside the VM to respond to the network probes and can protect you from failure due to the application, the virtual machine, or the underlying hardware (note: if Windows Azure detects a hardware failure we’ll automatically migrate your Virtual Machine instance to a new server).
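To make the TCP check concrete, here is a minimal Python sketch of what a probe of this kind amounts to: attempt a TCP connection and treat a completed handshake as healthy. This is an illustration only, not the load balancer's implementation; the function name and the timeout value are assumptions.

```python
import socket


def tcp_probe(host: str, port: int, timeout_seconds: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port completes in time.

    The timeout is an illustrative assumption, not a documented
    Windows Azure setting.
    """
    try:
        # A completed handshake means something is listening and answering.
        with socket.create_connection((host, port), timeout=timeout_seconds):
            return True
    except OSError:
        # Refused connections, unreachable hosts and timeouts all count as failures.
        return False
```

Note that a check like this only proves that something accepts connections on the port; it says nothing about whether the application behind it is working correctly.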

Windows Azure allows you to configure both the time interval for sending each network probe (15 seconds is the default) and the number of probe attempts that must fail before the load balancer takes the instance offline (the default is 2). Thus, with the defaults, after 30 seconds of receiving no response from a web service, the load balancer will consider it unresponsive and stop sending traffic to it until a healthy response is received later (15 seconds per probe * 2 probes).
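The interval and failure-count behavior described above can be sketched as a small state tracker. This is a simplified model in Python, not Windows Azure code: the class and method names are hypothetical, and it assumes that failures are counted consecutively and that a single healthy response returns the instance to rotation.

```python
from dataclasses import dataclass


@dataclass
class ProbeState:
    interval_seconds: int = 15     # default probe interval from the text
    failure_threshold: int = 2     # default number of failed probes
    consecutive_failures: int = 0
    in_rotation: bool = True

    def record(self, healthy: bool) -> bool:
        """Record one probe result and return whether the VM is in rotation."""
        if healthy:
            # Assumption: one healthy response is enough to rejoin rotation.
            self.consecutive_failures = 0
            self.in_rotation = True
        else:
            self.consecutive_failures += 1
            if self.consecutive_failures >= self.failure_threshold:
                self.in_rotation = False
        return self.in_rotation

    def detection_window_seconds(self) -> int:
        """Time without a response before the VM is taken out of rotation."""
        return self.interval_seconds * self.failure_threshold
```

With the defaults, `ProbeState().detection_window_seconds()` gives the 30 seconds mentioned above; shortening the interval or lowering the threshold trades faster failure detection against a higher chance of removing a VM over a brief hiccup.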

You can also now configure custom HTTP probes – which is a more advanced option. With HTTP probes, you can configure the load balancer’s network probe request to be sent to a different network port from the one you are load-balancing (and this port does not have to be open to the Internet – the recommendation is for it to be a private port that only the load balancer can access). This will require your service or application to listen on this separate port and respond to the probe request based upon the health of the application. With HTTP probes, the load balancer will continue to send traffic to your Virtual Machine if it receives an HTTP 200 OK response from the network probe request. Similar to the above TCP intervals, with the defaults, when a Virtual Machine does not respond with an HTTP 200 OK after 30 seconds (2 x 15 second probes), the load balancer will automatically take the machine out of traffic rotation until hearing a 200 OK back on the next probe. This advanced option does require the creation of code to listen and respond on a separate port, but gives you a lot more control over traffic being delivered to your service:
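A minimal sketch of the kind of listener this requires, written in Python with only the standard library. The function names, the `is_healthy` callback, and the choice of 503 for the unhealthy case are assumptions for illustration; the text only requires that a healthy instance answer with HTTP 200.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Callable, Tuple


def probe_response(healthy: bool) -> Tuple[int, bytes]:
    """Map application health to an HTTP status code and body."""
    # 503 is an illustrative choice for the unhealthy case.
    return (200, b"OK") if healthy else (503, b"UNHEALTHY")


def make_probe_server(port: int, is_healthy: Callable[[], bool]) -> HTTPServer:
    """Build an HTTP server that answers probe requests on a separate port."""

    class ProbeHandler(BaseHTTPRequestHandler):
        def do_GET(self) -> None:
            status, body = probe_response(is_healthy())
            self.send_response(status)
            self.send_header("Content-Type", "text/plain")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, format: str, *args) -> None:
            pass  # keep routine probe traffic out of stderr

    return HTTPServer(("0.0.0.0", port), ProbeHandler)
```

Calling `make_probe_server(8080, my_health_check).serve_forever()` (where the port and `my_health_check` are placeholders) would start answering probes; in practice you would run it on a background thread alongside the application it reports on.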

Configuring Load Balancer Probe Settings

Before today’s release, configuring custom network probe settings used to require you to use PowerShell, our Cross Platform CLI tools, or write code against our REST Management API. With today’s Windows Azure release we’ve added support to configure these settings using the Windows Azure Management Portal as well.

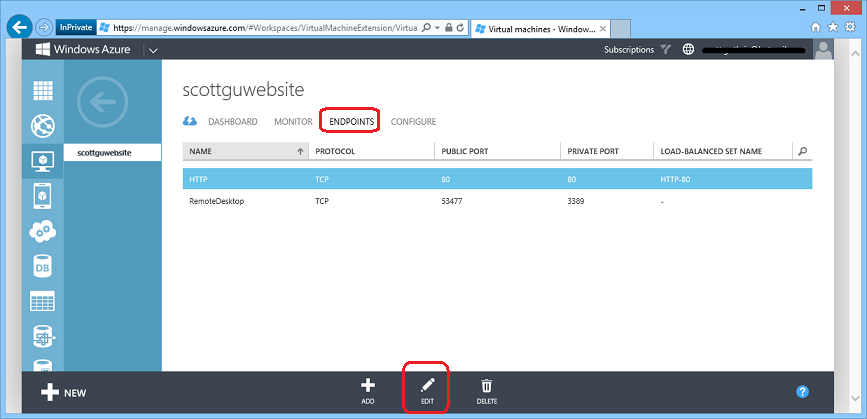

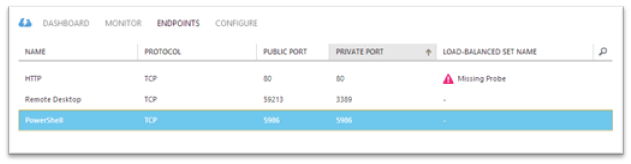

You can configure load-balanced sets for new or existing endpoints on your virtual machines. You can do this by adding or editing an endpoint on a Virtual Machine. To do this with an existing Virtual Machine, select the VM within the portal and navigate to the Endpoints tab within it. Then add or edit the endpoint you want to open to external callers:

The Edit Endpoint dialog allows you to view or change a port that is open to the Internet (and existed before today’s release):

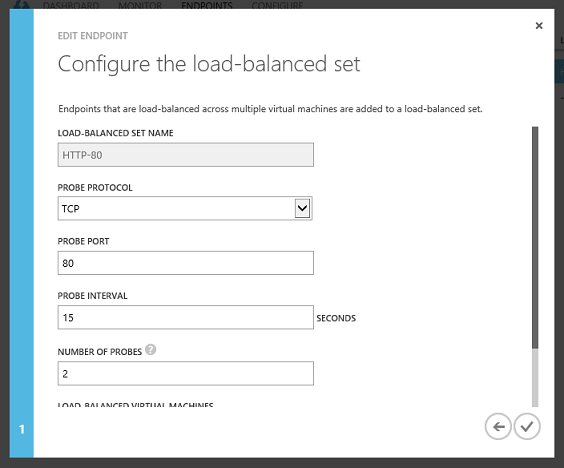

Selecting the “Create Load-Balanced Set” or “Reconfigure the Load-Balanced Set” checkbox within the dialog above will now allow you to proceed to another page within the wizard that surfaces the load balanced set and network probe properties:

Using the screen above you can now change the network probe settings to be either TCP or HTTP based, configure which internal port you wish to probe on (if you want your network probe to be private and different than the port you use to serve public traffic), configure the probe interval (default is every 15 seconds), as well as configure the number of times the network probe is allowed to fail before the machine is automatically removed from network rotation (default is 2 failures).

Identifying Network Probe Problems

In addition to allowing you to create/edit the network probe settings, today’s Windows Azure Management Portal release also now surfaces cases where network probes are misconfigured or having problems. For example, if during the Virtual Machine Preview you created a VM and configured a load-balanced set before probes became a required configuration item, we will show an error icon under the load-balanced set name column to indicate that the probe configuration is missing and the load-balanced set is not configured correctly:

Operation Logs and Alerts Now in “Management Services” section of Portal

Previously, the “Alerts” and “Operation Logs” tabs were under the “Settings” extension in the Windows Azure Management Portal. With today’s update, we are moving this cross-cutting management and monitoring functionality to a new extension in the Windows Azure Portal named “Management Services”. The goal is to increase discoverability of common management services as well as to provide better categorization of functionality that cuts across all Windows Azure services. We will continue to enrich and add to such cross-cutting functionality in Windows Azure over the next few releases.

Note that this change will not affect existing alert rules that were previously configured, only the location where they show up in the portal is different.

Additions to Operation Logs

Prior to today, you could find operation history for Cloud Services and Storage operations. With this release, we are adding operation history data for the following additional areas:

- Disk operations – add and delete Virtual Machine Disks

- Autoscale: Autoscale settings changes, autoscale actions

- Alerts

- SQL Backup configuration changes

We’ll add to this list in later updates this year to include all other services/operations as well.

Summary

Today’s release includes a bunch of great features that enable you to build even better cloud solutions. If you don’t already have a Windows Azure account, you can sign-up for a free trial and start using all of the above features today. Then visit the Windows Azure Developer Center to learn more about how to build apps with it.

• Michael Washam (@mwashamtx) described Creating Highly Available Workloads with Windows Azure with the Windows Azure Management Portal on 8/13/2013:

In a recent update to the Windows Azure Management Portal, the Windows Azure team added the capability to create and manage endpoint probes. While this functionality has always been available, it was restricted to the Windows Azure PowerShell Cmdlets. Having this ability in the management portal is a huge improvement for those seeking every tool at their disposal for improved availability.

In this post I’m going to show how you can take advantage of this new functionality in combination with availability sets to create a true highly available workload – in this case it will be a highly available web farm.

In the Windows Azure Management Portal create a virtual machine, select the Windows Server 2012 image and on the page asking for Availability Set select Create an Availability Set.

Create Availability Set

Once selected type in a name for your availability set. The name I’ve chosen is WEBAVSET.

Specifying an availability set ensures that Windows Azure will put members of the same availability set on different physical racks in the data center. This gives you redundant power and networking. It also tells Windows Azure not to take down all nodes in the set at the same time while performing host updates, so some of the nodes for your application will always be up and running.

Note: The only way to achieve 99.95% SLA with Windows Azure is by grouping multiple VMs performing the same workload into an availability set.

On the last screen of the portal, skip creating the HTTP Endpoint; you will configure the load balancer in a later step.


Once the first virtual machine has completed provisioning, create another virtual machine using the same base image.

On the screen where you are asked about cloud services use the drop down list to select the previously created cloud service.

Note: Selecting an existing cloud service is another huge improvement in the portal UI – long requested!

Select the availability set drop down and you should see the AV Set name you created with the first VM. Select this AV set before proceeding.

Once both virtual machines are provisioned they should both be in the same cloud service and availability set.

Same Cloud Service (same host name)

Same Availability Set

Next configure each virtual machine for a workload to load balance. To keep it simple RDP into each VM and launch Server Manager -> Manage Roles and Features and add the Web Server role (IIS).

Once the Web Server role is installed on each server, open notepad to edit the default page (c:\Inetpub\wwwroot\IISStart.htm) to show which server is serving up traffic to verify load balancing is working.

Add the following HTML code to the page replacing VMNAME with the name of the VM you are on:

<h1>VMNAME </h1>

Edited Page for webvm1

Now to add the load balanced endpoints. Go back to the Windows Azure Management Portal and under the first VM (webvm1 in my case) select Endpoints at the top.

Then click Add towards the bottom of the screen.

New Endpoint Wizard

Select HTTP from the Drop Down and Check Create a Load Balanced Set then click the next arrow.

Creating a Load Balanced HTTP Endpoint

Default TCP Probe Settings

The default load balancer probe settings are set to TCP. What this screen means is that every 15 seconds the load balancer probe will attempt a TCP connect on the specified probe port. If it fails to receive a TCP ACK twice (the Number of Probes setting), it will consider the node offline and stop directing traffic to it. This alone is a huge benefit Windows Azure is giving you for free that was previously only available via the PS Cmdlets.

Configuring HTTP Probe Settings

Changing the probe type to HTTP gives you a bit more flexibility and power over what actions you can take. You can now specify a ProbePath property on the endpoint. The ProbePath is essentially a relative HTTP URL on your web servers that will respond with an HTTP 200 if the server is fine and ANY other response if the node should be taken out of rotation. This allows you to essentially write your own page that can check the state of the VM. Whether this is verifying disk space, database or Internet connectivity, the choice is yours.
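As an illustration of what such a self-written health page might compute, here is a minimal Python sketch that runs a set of named checks and reduces them to a single HTTP status code. The function names, the use of 503 for failure, and the rule that a check which raises counts as failed are all assumptions; the page served at the ProbePath could equally be written in ASP.NET or any other server technology.

```python
import shutil
from typing import Callable, Dict, List, Tuple


def has_free_disk_space(path: str, min_free_bytes: int) -> bool:
    """Check that the volume holding path has at least min_free_bytes free."""
    return shutil.disk_usage(path).free >= min_free_bytes


def evaluate_health(checks: Dict[str, Callable[[], bool]]) -> Tuple[int, List[str]]:
    """Run every check and return (HTTP status, names of failed checks)."""
    failed: List[str] = []
    for name, check in checks.items():
        try:
            ok = check()
        except Exception:
            # Assumption: a check that raises is treated as a failure.
            ok = False
        if not ok:
            failed.append(name)
    # 200 keeps the node in rotation; any other code removes it.
    return (200 if not failed else 503, failed)
```

A page handler could call `evaluate_health` with a disk-space check and a database ping, send the returned status code, and log the failed check names for troubleshooting.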

For my simplistic example I’m just going to point this to the root of the site (/), so as long as IIS returns an HTTP OK (200) the server should be in the load balanced rotation.

Adding an endpoint to an existing load balanced set

Next add the load balanced endpoint on the second virtual machine: open the VM in the Windows Azure Management Portal, click Endpoints at the top of the screen, and then click Add at the bottom.

This time instead of creating a new endpoint select “Add Endpoint to an existing Load-Balanced Set”.

On the next screen select HTTP for the name and click the checkmark to add the endpoint.

A few additional details to be aware of with endpoint probes:

- If a node is taken out of rotation, once it becomes responsive again the load balancer will automatically add it back to rotation.

- The load balancer accesses the probe port using the internal IP address of the VM and not the public IP of the cloud service. This means the probe port does not have to be the same port as defined in the endpoint.

- There are no credentials to be passed along with the load balancer requests. The ProbePath property for HTTP probes should always point to a URL that can respond successfully with no authentication (for those of you deploying SharePoint you already know this).