Windows Azure and Cloud Computing Posts for 7/29/2013+

Top stories this week:

- Scott Guthrie (@scottgu) announced the availability of Windows Azure SDK v2.1, which works with Visual Studio 2013 Preview, 2012 and 2010. See the Windows Azure Cloud Services, Caching, APIs, Tools and Test Harnesses section below.

- Brian Harry reported the availability of Visual Studio 2012 Update 4 RC 1 in the Windows Azure Infrastructure and DevOps section below.

- Vittorio Bertocci (@vibronet) announced AAL becomes ADAL: Active Directory Authentication Library on 8/2/2013 in the Windows Azure Access Control, Active Directory, and Identity section below.

A compendium of Windows Azure, Service Bus, BizTalk Services, Access Control, Connect, SQL Azure Database, and other cloud-computing articles.

‡ Updated 8/3/2013 with new articles marked ‡.

• Updated 8/3/2013 with new articles marked •.

Note: This post is updated weekly or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Windows Azure Marketplace DataMarket, Power BI, Big Data and OData

- Windows Azure Service Bus, BizTalk Services and Workflow

- Windows Azure Access Control, Active Directory, and Identity

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Windows Azure Cloud Services, Caching, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure and DevOps

- Windows Azure Pack, Hosting, Hyper-V and Private/Hybrid Clouds

- Visual Studio LightSwitch and Entity Framework v4+

- Cloud Security, Compliance and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

Alex Sutton described Big Compute @ Microsoft in a 7/29/2013 post to the Windows HPC Team Blog:

Customers and partners have been asking us what the recent Microsoft organization changes mean for HPC. The answer: full steam ahead.

We call our workload “Big Compute.” Big Compute applications typically require large amounts of compute power for hours or days at a time. Some of our customers describe what they are doing as HPC, but others call it risk analysis, rendering, transcoding, or digital design and manufacturing.

We’re still working on the HPC Pack for Windows Server clusters and enabling new Big Compute scenarios in the cloud with Windows Azure. You’ll continue to see new features and new releases from us on a regular basis. The team is really excited about the new capabilities we’ll be bringing to our customers.

Microsoft’s vision for Big Compute in Windows Azure remains democratizing capabilities like performance and scale. We have demonstrated world-class performance using Windows Server in the cloud with HPC application benchmarks and Top 500 systems. We’ll continue to make clusters easy to manage and to extend them to Windows Azure. Going forward, we want to make running cluster applications in the cloud possible for users who don’t have, or can’t get, access to clusters.

The economics of the cloud are fundamentally changing cluster computing by making compute power available when you need it, while you pay only for what you use. Our customers in research and industry get it. Users with workstations can have access to clusters for projects without having to invest in infrastructure that may sit idle. Our enterprise customers are able to keep their on-premises servers busy while running peak load in Azure. And now developers can cost-effectively test applications and models at scale. We are part of the Enterprise and Cloud Division at Microsoft for a reason.

Performance and scale remain at our heart. Because we are part of the Windows Azure Group, we are driving capabilities like low-latency RDMA networking in the fabric. We make sure that customers can reliably provision thousands of cores to run their compute jobs. Windows Azure is evolving to provide a range of capabilities for Big Compute and Big Data. High-memory instances help more applications run in the cloud, and we are tuning our RDMA and MPI stacks with partners now.

The research community advances the leading edge of HPC. Our team and Microsoft Research continue to work closely with partners in academia and labs; we value the relationships and feedback. Our mission and focus is on democratizing these capabilities and making HPC-style computing broadly available through services from Microsoft and from our partners.

We’ll continue to use this blog to update you on Big Compute and HPC at Microsoft.


Windows Azure SQL Database, Federations and Reporting, Mobile Services

‡ Grant Fritchey (@GFritchey, a.k.a. The Scary DBA) posted Azure for Prototyping and Development on 7/29/2013:

Developers. Man, have I got something good for you.

Are your DBAs slowing down your development processes? Are they keeping you from flying down the track? Bypass them.

Let’s assume you’re working in the Microsoft stack. Let’s further assume you have an MSDN license. Guess what? That gives you access to Azure… hang on, come here. You want to hear this. Let me tell you a quick story. See, I’m not a developer (not anymore). I’m a DBA. Wait, wait, wait. I’m on your side. It’s cool. I’m just like you guys, but in a different direction.

See, I had a database designed and already up as a Windows Azure SQL Database. I’m working with a number of Boy Scouts on their Eagle projects. They’re going around to all the cemeteries in town, identifying the veterans’ graves, gathering all the information from the gravestones, and marking the locations both physically by row and position and by latitude and longitude. Great projects. Now, here am I, a data pro, database ready to go, but I’m stuck on the front-end. I was trying to find volunteers to help me out and build a quick front-end, so we can do some basic data entry and reporting. Your bread & butter, but not mine. I couldn’t find anyone willing and able to help out.

Then, a good friend pointed me at LightSwitch (thank you, Christina Leo). I pointed that at my existing data structure, adjusted a few properties, experimented with the screens a little and BAM! I’ve got an interface that’s working. But I still need to get it to multiple Boy Scouts, running on different versions of Windows (one poor kid was still on XP), as well as to the project sponsors and ultimately anyone who wants to look up where umpty-great grandpa who fought in the GAR was buried. A little poking around in LightSwitch within Visual Studio and I spot “Publish to Web Site.” Ahhh. No worries. I follow the prompts, download the appropriate certificates and suddenly, there it is, everything I needed, up and running.

Now, I know what you’re thinking. So. You do this stuff in your sleep. But understand two things. I set up a publicly accessible, modern(ish) web site, with database and an application front-end, and no “help” from any IT infrastructure (except Christina), developers, DBAs, QA people, anything. That’s one. The second, I had this running within about 20 minutes. 20 minutes!

We’re still working on details, ironing out some of the behaviors & stuff, we’re in a continuous development/deployment cycle, but overall, the Scouts are happy, the project sponsors are happy, and I’m happy. All on our own and quick.

Yeah, I see that light in your eye. Maybe this won’t be a final production server for you, but you can sure get some development done quick, am I right? Sure I am.

Data pros. Nervous? Well you should be. Your developers are going to hear this message and it’s going to resonate. Rightly so. They’re going to be out developing on Azure and there’s precious little you can do about it. Some of what they develop will come back in-house, guaranteed. Some will get tossed. It’s development. But some… some is going to end up being production.

Ready to support a Windows Azure SQL Database? Ready to have some data out on Azure and some on your local servers? Ready to support failover to the cloud? No? Well, let me help you out. I’m putting on an all-day seminar at the PASS Summit this year called Thriving as a DBA in the World of Cloud and On-Premises Data. Get registered, get in front of what is absolutely coming your way, and be prepared for it.


Windows Azure Marketplace DataMarket, Power BI, Big Data and OData


Windows Azure Service Bus, BizTalk Services and Workflow


Windows Azure Access Control, Active Directory, and Identity

Vittorio Bertocci (@vibronet) reported AAL becomes ADAL: Active Directory Authentication Library on 8/2/2013:

Today we are releasing a new developer preview of our Active Directory Authentication Library (ADAL), formerly the Windows Azure Authentication Library (AAL). You can find the NuGet package here. This refresh introduces many new features which we believe will boost your productivity even further! Below is a list of the most salient news.

New Assembly Name & Namespace

When we first introduced AAL, the only authorities you could leverage were Windows Azure AD tenants and ACS namespaces: hence the name Windows Azure Authentication Library (AAL) was correct and descriptive, if I can say so myself.

At //BUILD/ last June we finally disclosed that ADFS in Windows Server 2012 R2 preview supports OAuth2 and JWT tokens, and demonstrated that our libraries work with both on-premises and cloud authorities alike. Therefore, to ensure that such capability is reflected in the name of the library we are renaming it to Active Directory Authentication Library (ADAL).

Furthermore: as we did for the JWT handler, we are moving ADAL to live under the System.* namespace. The new path is System.IdentityModel.Clients.ActiveDirectory.

Once again, we believe this will help to clarify the role of the library and its scope while at the same time improving discoverability.

This change will apply to the Windows Store library as well: the next refresh will use the new name, too.

Note: we are not going to eliminate the existing AAL right away, just to give time to all the people using it (including the ASP.NET tools!) to move off it. For some time the two will coexist in the NuGet gallery, which might end up being a bit confusing. We hope to keep that transition time as short as possible.

New features

Let’s take a look at some of the details.

Support for Windows Server 2012 R2 ADFS On-Premises for OAuth2 flows

As mentioned this is not really a new feature, but now we can finally talk about it! If you want to play with it, you can easily adapt those instructions to use ADAL .NET instead of a Windows Store client.

Support for the Common Endpoint

Until now, in order to use ADAL with Windows Azure AD you had to find out which tenant should be used for requesting tokens, as it was a mandatory parameter for initializing AuthenticationContext.

Windows Azure AD does offer a mechanism for authenticating a user without specifying upfront the tenant he/she belongs to: it’s the famous “common” endpoint we use in the multitenant web applications walkthrough. I also talk about it here.

In this refresh ADAL allows you to use the common endpoint for initializing AuthenticationContext: you just pass “https://login.windows.net/Common” as Authority. The result is a late-bound AuthenticationContext; basically, the actual authority remains unassigned until the first call to AcquireToken. As soon as the user authenticates to a given tenant, that tenant becomes the authority for that AuthenticationContext instance.

This is going to be super-handy for simplifying your clients, given that you don’t need to add logic or config that figures out in advance which tenant should be used (though there will always be situations in which that might be the right thing to do). Our updated Windows Azure AD sample demonstrates this.
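To make the late-binding behavior concrete, here is a minimal Python sketch of the idea. `LateBoundContext`, `SignInResult` and the tenant-specific authority URL format are hypothetical illustrations, not ADAL types; the real library does this inside AuthenticationContext. The post doesn’t say what happens if a later sign-in lands in a different tenant, so the sketch simply assumes the first binding sticks.

```python
from dataclasses import dataclass
from typing import Callable, Optional

COMMON_AUTHORITY = "https://login.windows.net/Common"


@dataclass
class SignInResult:
    """Hypothetical stand-in for the user's UPN and home tenant returned after sign-in."""
    user: str
    tenant: str


class LateBoundContext:
    """Toy model of a context created against the common endpoint (not the ADAL API)."""

    def __init__(self, authority: str) -> None:
        # The common endpoint leaves the concrete authority unassigned for now.
        self.authority: Optional[str] = (
            None if authority.lower() == COMMON_AUTHORITY.lower() else authority
        )

    def acquire_token(self, sign_in: Callable[[], SignInResult]) -> SignInResult:
        result = sign_in()  # in a real client this would be the interactive prompt
        if self.authority is None:
            # Assumption: the first successful sign-in binds the context to that tenant.
            self.authority = f"https://login.windows.net/{result.tenant}"
        return result
```

The client never has to know the tenant up front; it only reads `authority` after the first token request has completed.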

UPN & Tenant Info in AuthenticationResult

The support for common endpoint is enabled by another new feature: we now return the user’s UPN and origin tenant within AuthenticationResult.

This enables you to find out in your code who signed in and where, “after the fact”. This is key for allowing you to create dynamic experiences: for example, you can find out who signed in at a certain point, and plug it back in as login_hint in subsequent AcquireToken calls to ensure that you are acting as the same user across multiple operations. Moreover, you’ll be able to work with scenarios in which a generic application can be tailored at run time to any tenant. What’s more, this allows you to handle multiple users and multiple tenants at once! Moving forward, we will publish samples demonstrating how to take advantage of this new feature in advanced scenarios.

This feature was also key for enabling us to properly store tokens acquired via the common endpoint, for which we don’t know the user and tenant (important components of the cache key) in advance. That’s a great segue for the next new feature.

Improved Cache

ADAL now features an in-memory cache out of the box. This is on par with what we offer in the Windows Store version, with the main difference that tokens are stored in memory and are cleared up when the hosting application closes.

The presence of the cache means that you no longer need to hold on to the access tokens you obtain: you can just call AcquireToken every time you need a token, knowing that ADAL will return a cached access token or take care of refreshing it for you.

The cache is also significantly simpler to use than the one in the Windows Store library. The store itself is just an IDictionary<string,string>, which you can query and manipulate just like any collection. The cache key is a composite of the various parameters used to obtain the token (resource ID, client ID, user, tenant/authority…), and the default in-memory cache encodes it in a single value; however, we provide a class to help you unpack it, TokenCacheEncoding, so that you can query and manipulate the token collection to your heart’s content. For example, say that you want the list of all the users who have a token in your cache. All you need to write is the following:

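```csharp
using System.Collections.Generic;
using System.Linq;

// List every distinct user that currently has a token in the cache.
IDictionary<string, string> cache = myAC.TokenCacheStore;
var users = cache.Keys.Select(key => TokenCacheEncoding.DecodeCacheKey(key).User).Distinct();
```

where myAC is an AuthenticationContext instance.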
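The actual string format TokenCacheEncoding uses isn’t described in the post, so the following is only a minimal Python sketch of the general idea: a composite key flattened into one string so the store can stay a plain string-to-string dictionary, plus the decode-and-project query from the C# snippet. `CacheKey`, its field set, and the JSON encoding are all hypothetical choices for illustration.

```python
import json
from typing import Dict, List, NamedTuple


class CacheKey(NamedTuple):
    """Hypothetical composite key built from the parameters the post lists."""
    authority: str
    resource: str
    client_id: str
    user: str


def encode_cache_key(key: CacheKey) -> str:
    # Collapse the composite key into one string so the store stays a str -> str map.
    return json.dumps(list(key))


def decode_cache_key(encoded: str) -> CacheKey:
    # Inverse of encode_cache_key: recover the individual fields.
    return CacheKey(*json.loads(encoded))


def users_in_cache(cache: Dict[str, str]) -> List[str]:
    # Decode each key, project the user, de-duplicate (sorted for stable output).
    return sorted({decode_cache_key(k).user for k in cache})
```

Unlike LINQ’s Distinct(), the sketch sorts the result so repeated runs print the same order.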

Of course, plugging in your own cache is very easy. We expect you’ll create your own cache implementations to accommodate the specific storage you want to use: e.g. a desktop app will save tokens differently than a middle tier one.

That will also be handy to help you define the boundaries you want between applications. You can share the same cache across multiple AuthenticationContext instances, or even across processes, if your scenario calls for it; or you can enforce isolation by using different cache stores for context switches, such as in multitenant apps.

Our updated Windows Azure AD sample shows how you can build a custom cache to save your tokens in CredMan; very handy!
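Because the store is just a string-to-string dictionary, a custom cache only has to behave like one. Below is a minimal Python sketch of that idea, assuming a plain JSON file as the backing storage (a hypothetical stand-in for CredMan or any other store). Two instances pointed at the same file share tokens; instances with different files are isolated. The sketch does no locking or encryption, which real token storage would need.

```python
import json
import os
from collections.abc import MutableMapping
from typing import Dict, Iterator


class FileTokenStore(MutableMapping):
    """Hypothetical dictionary-shaped token store persisted to a JSON file."""

    def __init__(self, path: str) -> None:
        self._path = path
        self._data: Dict[str, str] = {}
        # Each instance loads the file once at construction (no live sync, no locking).
        if os.path.exists(path):
            with open(path, "r", encoding="utf-8") as f:
                self._data = json.load(f)

    def _flush(self) -> None:
        # Rewrite the whole file on every change; fine for a handful of tokens.
        with open(self._path, "w", encoding="utf-8") as f:
            json.dump(self._data, f)

    def __getitem__(self, key: str) -> str:
        return self._data[key]

    def __setitem__(self, key: str, value: str) -> None:
        self._data[key] = value
        self._flush()

    def __delitem__(self, key: str) -> None:
        del self._data[key]
        self._flush()

    def __iter__(self) -> Iterator[str]:
        return iter(self._data)

    def __len__(self) -> int:
        return len(self._data)
```

Deriving from `MutableMapping` supplies `get`, `keys`, `items` and the other dictionary helpers, so queries like the one above work unchanged.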

ForcePrompt

ADAL does its best to reduce the number of times the end user is prompted. The cache and, to a lesser extent, the session and permanent cookies accessible from the browser window can do a great job of keeping prompts under control.

There are, however, situations in which you want your user to be prompted. For example, think of something like a management console, which can manipulate resources associated with different users or even tenants. Occasionally you’ll want to make sure that your end user is allowed to select which account should be used: for example, when a new resource is added, or if the requested operation requires selecting a user with higher privileges. To that end we added ForcePrompt: a new parameter in AcquireToken that tells ADAL to ignore any cached token or cookie that might fit the requested resource, and ensures that the end user has the chance to select the desired account (or even add a new one). It’s very easy to use; just look for it in the AcquireToken overloads.

Note: ForcePrompt is only available for those overloads where there is uncertainty about which user could be used. That does not include cases in which the user is specified (via login_hint) or in which a set of credentials (also tied to a specific identity) is provided.
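The prompting rules above can be summarized as a small decision function. This is a simplified Python model, not ADAL’s actual logic: `TokenRequest`, its field names, and the idea that a browser session cookie lets sign-in finish without user interaction are assumptions for illustration. Raising an error stands in for the fact that no ForcePrompt overload exists once the identity is fixed.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TokenRequest:
    """Hypothetical request shape; the field names are illustrative, not ADAL's."""
    resource: str
    login_hint: Optional[str] = None  # user fixed up front
    credential: Optional[str] = None  # credentials tied to one identity
    force_prompt: bool = False


def needs_prompt(req: TokenRequest, cached_token: Optional[str], has_browser_session: bool) -> bool:
    """Decide whether the user must see UI for this request (simplified model)."""
    if req.force_prompt:
        if req.login_hint is not None or req.credential is not None:
            # Mirrors the note: forcing an account picker makes no sense for a fixed identity.
            raise ValueError("force_prompt requires that the user is not already determined")
        # Ignore cache and cookies so the user can pick (or add) an account.
        return True
    if req.credential is not None or cached_token is not None:
        return False  # credentials or a cached token satisfy the request without UI
    # Otherwise, an existing browser session cookie may let sign-in complete silently.
    return not has_browser_session
```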

ACS without HRD page

Since its very first preview, ADAL .NET has offered the ability to obtain tokens from your ACS namespaces. ADAL featured a local version of ACS’ default home realm discovery page, optimized for the real estate of the browser dialog (small layout, with links to the external providers that select their mobile/touch-enabled experiences). Although that was great as a quick tool at development time, we didn’t think it was an experience you would want to use in production. At the same time, the nature of a native client is simply not conducive to customizing the experience via a Web page, as is the case when you use ACS with a Web application.

As a result, we decided to eliminate that locally generated page and allow you to create a home realm discovery experience native to the UX stack of your application, when your scenario calls for it (in many cases it does not). The ability to sign in with social providers or federated ADFS instances remains unchanged; the only part that’s different is the identity provider selection. Our updated ACS sample demonstrates the new approach.

As more ACS features light up in Windows Azure AD tenants, the Web-based IdP selection might return, but in that case you can expect it to be far more seamlessly integrated with the rest of the platform.

Broad Use Refresh Tokens

Windows Azure AD can issue refresh tokens that can be used not only to renew the access token with which they were originally issued, but also to obtain access tokens for other resources. In practice, in those cases you only need to authenticate once to obtain an access/refresh token pair, and access tokens for other resources within the same tenant can then be obtained silently via that first refresh token. I like to call those broad use refresh tokens: I am not sure if the term will stick; we’ll see once we get our official MSDN reference out (soon!).

ADAL .NET supports the use of refresh tokens: once you have one in the cache, ADAL will take care of using it even when you request tokens for new resources. The entire process is fully transparent, very handy.
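Here is a minimal Python sketch of why broad use refresh tokens save prompts: the user authenticates once, and later resources in the same tenant are served by redeeming the stored refresh token. `TokenBroker` and the two callables are hypothetical stand-ins (not ADAL or Windows Azure AD APIs), one broker is assumed per tenant, and token expiry is ignored.

```python
from typing import Callable, Dict, Optional, Tuple

# Both callables return (access_token, refresh_token); they are hypothetical stand-ins.
Interactive = Callable[[str], Tuple[str, str]]
Redeem = Callable[[str, str], Tuple[str, str]]


class TokenBroker:
    """Toy per-tenant broker showing one refresh token serving several resources."""

    def __init__(self, redeem: Redeem) -> None:
        self._redeem = redeem
        self.refresh_token: Optional[str] = None
        self.access_tokens: Dict[str, str] = {}
        self.prompts = 0  # how many times the user had to authenticate

    def acquire(self, resource: str, interactive: Interactive) -> str:
        if resource in self.access_tokens:
            return self.access_tokens[resource]  # expiry is ignored in this sketch
        if self.refresh_token is not None:
            # Broad use: redeem the refresh token for a resource it was not issued for.
            access, self.refresh_token = self._redeem(self.refresh_token, resource)
        else:
            self.prompts += 1
            access, self.refresh_token = interactive(resource)
        self.access_tokens[resource] = access
        return access
```

Requesting tokens for two different resources leaves `prompts` at 1, which is the behavior the post describes ADAL handling transparently.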

Next Steps

From now on, you can expect us to do mostly stabilization work on ADAL .NET. We already know of a couple of small changes we want to put in before calling it done, but they should be largely transparent to you. We’re almost there, folks!

Please give the new ADAL .NET a spin and let us know if the new features are useful in your scenarios; your input will be invaluable in helping us through the last sprint!

Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

Craig Kitterman (@craigkitterman) posted Sung Hsueh’s Using Microsoft SQL Server security features in Windows Azure Virtual Machines article on 8/1/2013 to the Windows Azure blog:

Editor's Note: This post comes from Sung Hsueh, Senior Program Manager on the SQL Server team.

One of the newest ways of using SQL Server is by leveraging Microsoft’s Windows Azure Infrastructure Services and creating Windows Azure Virtual Machines to host SQL Server. You may have already seen Il-Sung’s earlier blog post (http://blogs.msdn.com/b/windowsazure/archive/2012/12/12/regulatory-compliance-considerations-for-sql-server-running-in-windows-azure-virtual-machine.aspx) about the possibilities of leveraging this environment even for applications with compliance requirements. With the availability of SQL Server 2008 R2 Enterprise edition and SQL Server 2012 Enterprise edition in Windows Azure Virtual Machines, you now have the option to take advantage of our Enterprise-level features, such as SQL Server Audit and Transparent Data Encryption, in pre-configured, ready-to-deploy, per-minute-billed Windows Azure Virtual Machines! You, of course, still have the option to take advantage of these features by using License Mobility (http://www.microsoft.com/licensing/software-assurance/license-mobility.aspx) to transfer your existing Software Assurance or Enterprise Agreement licenses, if you prefer this over per-minute billing.

Using TDE with SQL Server in Windows Azure Virtual Machines

Let’s see how all this works by taking a quick walkthrough of Transparent Data Encryption (http://msdn.microsoft.com/en-us/library/bb934049.aspx). If you haven’t already, create a Windows Azure Virtual Machine with SQL Server already installed on it through the Windows Azure management portal.

And start creating some databases!

Once you have a database you want to add encryption to, the next few steps are the same as if you are running SQL Server locally:

- Log in to the machine with the credentials of someone who can create objects in master

- Run the following DDL in master:

```sql
USE master;
GO
-- Protect the database master key with a strong password of your choice
CREATE MASTER KEY ENCRYPTION BY PASSWORD = '<your password here>';
GO
CREATE CERTIFICATE TDEServerCert WITH SUBJECT = 'My TDE certificate';
GO
```

- Switch to the database you want to encrypt

- Run the following DDL:

```sql
USE [your_database_name];
GO
CREATE DATABASE ENCRYPTION KEY WITH ALGORITHM = AES_256 ENCRYPTION BY SERVER CERTIFICATE TDEServerCert;
GO
ALTER DATABASE [your_database_name] SET ENCRYPTION ON;
GO
```

And that’s it! The encryption will run in the background (you can check this by querying sys.dm_database_encryption_keys). No differences: it’s exactly the same as with your on-premises SQL Server instances. Similarly, you can use SQL Server Audit (http://msdn.microsoft.com/en-us/library/cc280386.aspx) exactly as you already use it on-premises.
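To follow the background encryption, you can poll sys.dm_database_encryption_keys and read its encryption_state and percent_complete columns. The Python sketch below only interprets the state codes. The mapping reflects the SQL Server documentation as we understand it, so double-check it for your version. `CHECK_TDE_QUERY` and the helper names are illustrative, and running the query (for example through an ODBC driver) is left out. Note that tempdb also shows up as encrypted once any database on the instance uses TDE.

```python
# Query to run against the instance (kept as a string; this sketch only interprets results).
CHECK_TDE_QUERY = """
SELECT DB_NAME(database_id) AS database_name, encryption_state, percent_complete
FROM sys.dm_database_encryption_keys;
"""

# encryption_state values for sys.dm_database_encryption_keys;
# verify against the documentation for your SQL Server version.
ENCRYPTION_STATES = {
    0: "No database encryption key present, no encryption",
    1: "Unencrypted",
    2: "Encryption in progress",
    3: "Encrypted",
    4: "Key change in progress",
    5: "Decryption in progress",
}


def describe_encryption_state(state: int) -> str:
    # Fall back to a generic label for codes this table doesn't cover.
    return ENCRYPTION_STATES.get(state, f"Unknown state {state}")


def encryption_finished(state: int) -> bool:
    # The background scan is done once the database reports state 3.
    return state == 3
```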

Additional security considerations

Beyond that, please be sure to follow the security best practices (http://msdn.microsoft.com/library/windowsazure/dn133147.aspx). A few topics to consider are:

- Reduce the surface area by disabling unneeded services

- Leverage Policy-Based Management to detect security conditions such as using weak algorithms

- Use minimal permissions; where possible, avoid using built-in accounts or roles such as sa or sysadmin, and consider using SQL Server Audit to track administrative actions

- If you plan on using encryption features, think about creating a key aging/rotation policy starting with the service master key

- Consider using SSL encryption especially if connecting to SQL Server over a public endpoint in Windows Azure

- Consider changing the port SQL Server uses from 1433 for the default instance to something else especially if connecting to SQL Server over a public endpoint in Windows Azure (ideally, avoid external connections to SQL Server instances from the public internet entirely)

In closing…

Running SQL Server Enterprise edition in Windows Azure Virtual Machines allows you to carry over the security best practices and expertise from your existing applications and leverage Microsoft’s Windows Azure to run your applications in the cloud, paying only for what you use (including Enterprise!) through the per-minute billing option. Try it out and let us know your experience!


Windows Azure Cloud Services, Caching, APIs, Tools and Test Harnesses

Scott Guthrie (@scottgu) posted Announcing the release of the Windows Azure SDK 2.1 for .NET on 8/1/2013:

Today we released the v2.1 update of the Windows Azure SDK for .NET. This is a major refresh of the Windows Azure SDK and it includes some great new features and enhancements. These new capabilities include:

- Visual Studio 2013 Preview Support: The Windows Azure SDK now supports using the new VS 2013 Preview

- Visual Studio 2013 VM Image: Windows Azure now has a built-in VM image that you can use to host and develop with VS 2013 in the cloud

- Visual Studio Server Explorer Enhancements: Redesigned with improved filtering and auto-loading of subscription resources

- Virtual Machines: Start and stop VMs (with billing suspended while stopped) directly from within Visual Studio

- Cloud Services: New Emulator Express option with reduced footprint and Run as Normal User support

- Service Bus: New high availability options, Notification Hub support, Improved VS tooling

- PowerShell Automation: Lots of new PowerShell commands for automating Web Sites, Cloud Services, VMs and more

All of these SDK enhancements are now available to start using immediately and you can download the SDK from the Windows Azure .NET Developer Center. Visual Studio’s Team Foundation Service (http://tfs.visualstudio.com/) has also been updated to support today’s SDK 2.1 release, and the SDK 2.1 features can now be used with it (including with automated builds + tests).

Below are more details on the new features and capabilities released today:

Visual Studio 2013 Preview Support

Today’s Windows Azure SDK 2.1 release adds support for the recent Visual Studio 2013 Preview. The 2.1 SDK also works with Visual Studio 2010 and Visual Studio 2012, and works side by side with the previous Windows Azure SDK 1.8 and 2.0 releases.

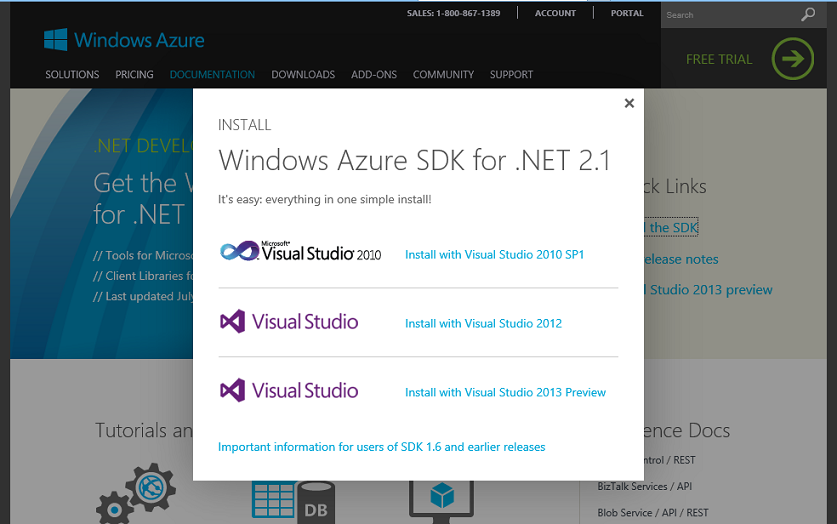

To install the Windows Azure SDK 2.1 on your local computer, choose the “install the sdk” link from the Windows Azure .NET Developer Center. Then, choose which version of Visual Studio you want to use it with. Clicking the third link will install the SDK with the latest VS 2013 Preview:

If you don’t already have the Visual Studio 2013 Preview installed on your machine, this will also install Visual Studio Express 2013 Preview for Web.

Visual Studio 2013 VM Image Hosted in the Cloud

One of the requests we’ve heard from several customers has been to have the ability to host Visual Studio within the cloud (avoiding the need to install anything locally on your computer).

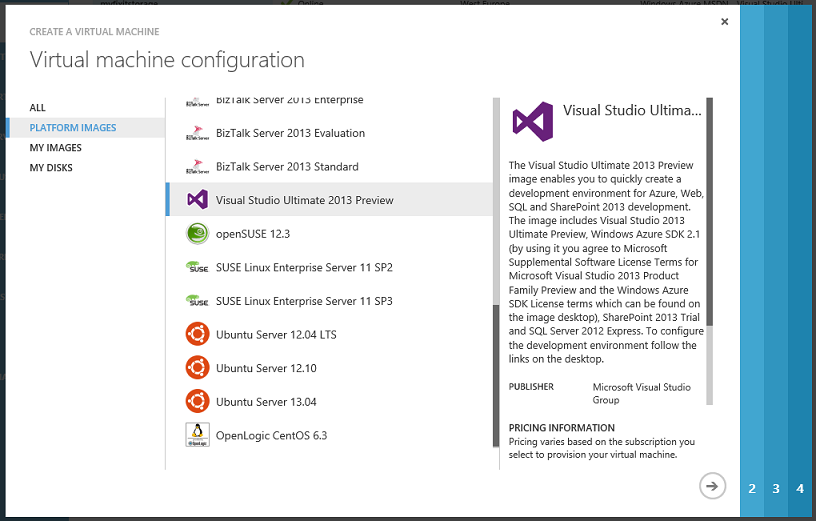

With today’s SDK update we’ve added a new VM image to the Windows Azure VM Gallery that has Visual Studio Ultimate 2013 Preview, SharePoint 2013, SQL Server 2012 Express and the Windows Azure 2.1 SDK already installed on it. This provides a really easy way to create a development environment in the cloud with the latest tools. With the recent shutdown and suspend billing feature we shipped on Windows Azure last month, you can spin up the image only when you want to do active development, and then shut down the virtual machine and not have to worry about usage charges while the virtual machine is not in use.

You can create your own VS image in the cloud by using the New->Compute->Virtual Machine->From Gallery menu within the Windows Azure Management Portal, and then by selecting the “Visual Studio Ultimate 2013 Preview” template:

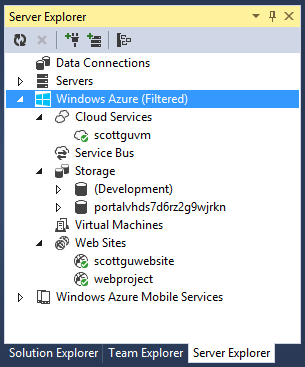

Visual Studio Server Explorer: Improved Filtering/Management of Subscription Resources

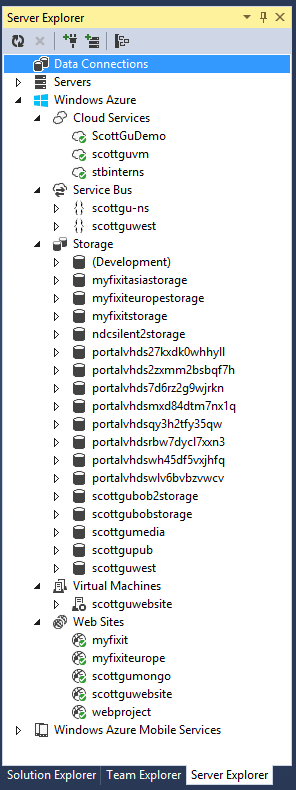

With the Windows Azure SDK 2.1 release you’ll notice significant improvements in the Visual Studio Server Explorer. The explorer has been redesigned so that all Windows Azure services are now contained under a single Windows Azure node. From the top level node you can now manage your Windows Azure credentials, import a subscription file or filter Server Explorer to only show services from particular subscriptions or regions.

Note: The Web Sites and Mobile Services nodes will appear outside the Windows Azure Node until the final release of VS 2013. If you have installed the ASP.NET and Web Tools Preview Refresh, though, the Web Sites node will appear inside the Windows Azure node even with the VS 2013 Preview.

Once your subscription information is added, Windows Azure services from all your subscriptions are automatically enumerated in the Server Explorer. You no longer need to manually add services to Server Explorer individually. This provides a convenient way of viewing all of your cloud services, storage accounts, service bus namespaces, virtual machines, and web sites from one location:

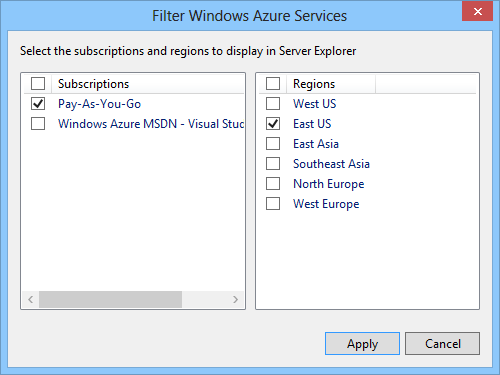

Subscription and Region Filtering Support

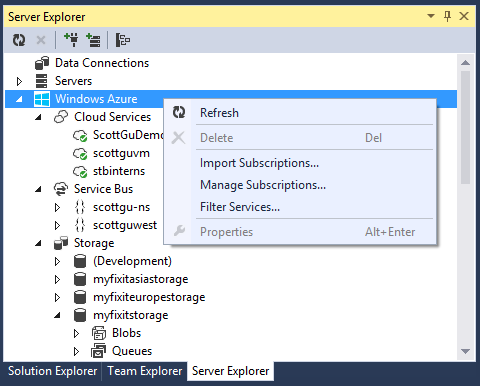

Using the Windows Azure node in Server Explorer, you can also now filter your Windows Azure services by the subscription or region they are in. If you have multiple subscriptions but need to focus your attention on just a few subscriptions for some period of time, this is a handy way to hide the services from other subscriptions until they become relevant. You can do the same sort of filtering by region.

To enable this, just select “Filter Services” from the context menu on the Windows Azure node:

Then choose the subscriptions and/or regions you want to filter by. In the below example, I’ve decided to show services from my pay-as-you-go subscription within the East US region:

Visual Studio will then automatically filter the items that show up in the Server Explorer appropriately:

With storage accounts and Service Bus namespaces, you sometimes need to work with services outside your subscription. To accommodate that scenario, those services allow you to attach an external account (from the context menu). You’ll notice that external accounts have a slightly different icon in Server Explorer to indicate they are from outside your subscription.

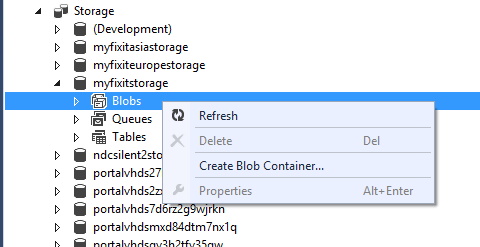

Other Improvements

We’ve also improved the Server Explorer by adding properties and actions to the services it exposes. You now have access to most of the properties on a cloud service, deployment slot, role or role instance, as well as the properties on storage accounts, virtual machines and web sites. Just select the object of interest in Server Explorer and view the properties in the property pane.

We also now have full support for creating, deleting and updating storage tables, blobs and queues directly from within Server Explorer. Simply right-click on the appropriate storage account node and you can create them directly within Visual Studio:

Virtual Machines: Start/Stop within Visual Studio

Virtual Machines now have context menu actions that allow you to start, shut down, restart and delete a Virtual Machine directly within the Visual Studio Server Explorer. The shutdown action enables you to shut down the virtual machine and suspend billing when the VM is not in use, and easily restart it when you need it:

This is especially useful in Dev/Test scenarios where you can start a VM – such as a SQL Server – during your development session and then shut it down / suspend billing when you are not developing (and no longer be billed for it).

You can also now directly remote desktop into VMs using the “Connect using Remote Desktop” context menu command in VS Server Explorer.

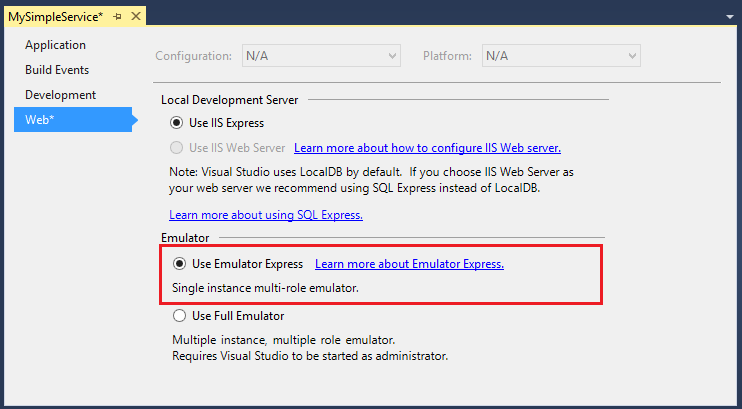

Cloud Services: Emulator Express with Run as Normal User Support

You can now launch Visual Studio and run your cloud services locally as a Normal User (without having to elevate to an administrator account) using a new Emulator Express option included as a preview feature with this SDK release. Emulator Express is a version of the Windows Azure Compute Emulator that runs in a restricted mode (one instance per role). It doesn’t require administrative permissions and uses 40% fewer resources than the full Windows Azure Compute Emulator. Emulator Express supports both web and worker roles.

To run your application locally using the Emulator Express option, simply change the following settings in the Windows Azure project.

- On the shortcut menu for the Windows Azure project, choose Properties, and then choose the Web tab.

- Check the setting for IIS (Internet Information Services). Make sure that the option is set to IIS Express, not the full version of IIS. Emulator Express is not compatible with full IIS.

- On the Web tab, choose the option for Emulator Express.

Service Bus: Notification Hubs

With the Windows Azure SDK 2.1 release we are adding support for Windows Azure Notification Hubs as part of our official Windows Azure SDK, inside of Microsoft.ServiceBus.dll (previously the Notification Hub functionality was in a preview assembly).

You are now able to create, update and delete Notification Hubs programmatically, manage your device registrations, and send push notifications to all your mobile clients across all platforms (Windows Store, Windows Phone 8, iOS, and Android).
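As a rough mental model only, the following Python sketch treats a hub as a store of device registrations, each carrying a platform and optional tags, and fans one message out to every matching registration grouped by platform (WNS for Windows Store, MPNS for Windows Phone 8, APNs for iOS, GCM for Android). `ToyNotificationHub` and its methods are hypothetical names for illustration; they are not the Microsoft.ServiceBus.dll API.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Registration:
    device_handle: str                      # e.g. a channel URI or device token
    platform: str                           # "wns", "mpns", "apns" or "gcm"
    tags: set = field(default_factory=set)


class ToyNotificationHub:
    """Hypothetical in-memory model of a notification hub, not the real SDK."""

    def __init__(self):
        self._registrations = {}

    def upsert(self, registration_id, registration):
        # Creating and updating a registration are the same operation here.
        self._registrations[registration_id] = registration

    def delete(self, registration_id):
        self._registrations.pop(registration_id, None)

    def send(self, message, tag=None):
        """Fan one message out to all matching devices, grouped by platform."""
        outbox = defaultdict(list)
        for reg in self._registrations.values():
            if tag is None or tag in reg.tags:
                outbox[reg.platform].append((reg.device_handle, message))
        return dict(outbox)


hub = ToyNotificationHub()
hub.upsert("r1", Registration("win-channel-1", "wns", {"sports"}))
hub.upsert("r2", Registration("ios-token-1", "apns", {"news"}))
hub.upsert("r3", Registration("android-id-1", "gcm", {"sports"}))
print(hub.send("Score update", tag="sports"))
```

The point of the model is that the sender writes one message and the hub, not the application, worries about which platform-specific channel each device needs.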

Learn more about Notification Hubs on MSDN here, or watch the Notification Hubs //BUILD/ presentation here.

Service Bus: Paired Namespaces

One of the new features included with today’s Windows Azure SDK 2.1 release is support for Service Bus “Paired Namespaces”. Paired Namespaces enable you to better handle situations where a Service Bus service namespace becomes unavailable (for example, due to connectivity issues or an outage) and you are unable to send messages to, or receive messages from, the namespace hosting the queue, topic, or subscription. Previously, to handle this scenario you had to manually set up separate namespaces that could act as a backup, then implement manual failover and retry logic, which was sometimes tricky to get right.

Service Bus now supports Paired Namespaces, which enable you to connect two namespaces together. When you activate the secondary namespace, messages are stored in the secondary queue for delivery to the primary queue at a later time. If the primary namespace becomes unavailable for some reason, automatic failover activates the secondary namespace, which holds the messages in the secondary queue until they can be delivered to the primary queue.
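To make the failover sequence concrete, here is a minimal Python simulation of the behavior described above: sends go to the primary namespace, fall back to the secondary when the primary is unavailable, and are forwarded to the primary once it recovers. The classes are hypothetical and only model the idea; they do not reproduce the SDK's actual paired-namespace APIs.

```python
class NamespaceUnavailable(Exception):
    """Raised when a simulated namespace cannot accept messages."""


class ToyQueue:
    """Hypothetical stand-in for a queue in one Service Bus namespace."""

    def __init__(self, name):
        self.name = name
        self.available = True
        self.messages = []

    def send(self, message):
        if not self.available:
            raise NamespaceUnavailable(self.name)
        self.messages.append(message)


class PairedSender:
    """Sketch of paired-namespace behavior: fail over to a secondary backlog."""

    def __init__(self, primary, secondary):
        self.primary = primary
        self.secondary = secondary

    def send(self, message):
        try:
            self.primary.send(message)
        except NamespaceUnavailable:
            # Primary is down: park the message in the secondary namespace.
            self.secondary.send(message)

    def drain_backlog(self):
        """Forward parked messages, oldest first, once the primary is back."""
        moved = 0
        while self.secondary.messages and self.primary.available:
            self.primary.send(self.secondary.messages.pop(0))
            moved += 1
        return moved


primary, secondary = ToyQueue("primary"), ToyQueue("secondary")
sender = PairedSender(primary, secondary)
primary.available = False          # simulate an outage
sender.send("order-1")
sender.send("order-2")
primary.available = True           # outage over
print(sender.drain_backlog(), primary.messages)
```

Ordering is preserved here because the backlog is drained first-in, first-out; a real deployment also has to consider messages sent directly to the primary while the backlog is still draining.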

For detailed information about paired namespaces and high availability, see the new topic Asynchronous Messaging Patterns and High Availability.

Service Bus: Tooling Improvements

In this release, the Windows Azure Tools for Visual Studio contain several enhancements and changes to the management of Service Bus messaging entities using Visual Studio’s Server Explorer. The most noticeable change is that the Service Bus node is now integrated into the Windows Azure node, and supports integrated subscription management.

Additionally, the code generated by the Windows Azure Worker Role with Service Bus Queue project template has changed. It now uses an event-driven “message pump” programming model based on the QueueClient.OnMessage method.
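The difference between a receive loop and a message pump is who drives the loop. In the Python sketch below, the caller only supplies a callback and a background thread pulls messages and invokes it, which is the general idea behind an OnMessage-style model. `start_message_pump` is a hypothetical helper built on Python's `queue` and `threading` modules, not a port of the Service Bus API, and its error handling is deliberately simplified.

```python
import queue
import threading


def start_message_pump(source, handler, stop_event, failed):
    """Hypothetical event-driven pump: calls `handler` once per message."""

    def pump():
        while not stop_event.is_set():
            try:
                message = source.get(timeout=0.1)
            except queue.Empty:
                continue                  # nothing to do; check the stop flag again
            try:
                handler(message)          # user-supplied processing callback
            except Exception:
                # Simplified: a real broker would abandon and redeliver the
                # message, dead-lettering it only after repeated failures.
                failed.append(message)
            finally:
                source.task_done()

    worker = threading.Thread(target=pump, daemon=True)
    worker.start()
    return worker


inbox = queue.Queue()
for n in range(3):
    inbox.put(n)

processed, failed = [], []


def handle(message):
    if message == 1:
        raise ValueError("poison message")
    processed.append(message)


stop = threading.Event()
worker = start_message_pump(inbox, handle, stop, failed)
inbox.join()                              # wait until every message was handled
stop.set()
worker.join(timeout=2)
```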

PowerShell: Tons of New Automation Commands

Since my last blog post on the previous Windows Azure SDK 2.0 release, we’ve updated Windows Azure PowerShell (which is a separate download) five times. You can find the full change log here. We’ve added new cmdlets in the following areas:

- China instance and Windows Azure Pack support

- Environment Configuration

- VMs

- Cloud Services

- Web Sites

- Storage

- SQL Azure

- Service Bus

China Instance and Windows Azure Pack

We now support the following cmdlets for the China instance and Windows Azure Pack, respectively:

- China Instance: Web Sites, Service Bus, Storage, Cloud Service, VMs, Network

- Windows Azure Pack: Web Sites, Service Bus

We will have full cmdlet support for these two Windows Azure environments in PowerShell in the near future.

Virtual Machines: Stop/Start Virtual Machines

Similar to the Start/Stop VM capability in VS Server Explorer, you can now stop your VM and suspend billing.

If you want to retain the original behavior, in which a stopped VM stays provisioned, pass in the -StayProvisioned switch parameter.

Virtual Machines: VM endpoint ACLs

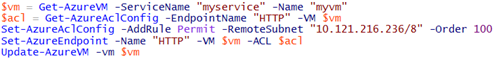

We’ve added and updated a bunch of cmdlets for you to configure fine-grained network ACLs on your VM endpoints. You can use the following cmdlets to create ACL configurations and apply them to a VM endpoint:

- New-AzureAclConfig

- Get-AzureAclConfig

- Set-AzureAclConfig

- Remove-AzureAclConfig

- Add-AzureEndpoint -ACL

- Set-AzureEndpoint -ACL
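Endpoint ACLs are ordered lists of permit and deny rules matched against the remote address. The Python sketch below models that evaluation, assuming first-match-wins ordering by rule number and the implicit default described in the comments (any permit rule turns the list into an allow-list). It illustrates the concept only; it does not call the cmdlets listed above, and `AclRule` and `is_allowed` are hypothetical names.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class AclRule:
    order: int           # lower numbers are evaluated first
    action: str          # "permit" or "deny"
    remote_subnet: str   # CIDR notation, e.g. "203.0.113.0/24"


def is_allowed(rules, client_ip):
    """Return True if traffic from client_ip may reach the endpoint."""
    address = ipaddress.ip_address(client_ip)
    for rule in sorted(rules, key=lambda r: r.order):
        if address in ipaddress.ip_network(rule.remote_subnet):
            return rule.action == "permit"   # first match wins
    # Assumed default when nothing matched: a list containing any permit rule
    # acts as an allow-list, while an empty or deny-only list lets traffic in.
    return not any(rule.action == "permit" for rule in rules)


office_only = [
    AclRule(100, "permit", "203.0.113.0/24"),
    AclRule(200, "deny", "0.0.0.0/0"),
]
print(is_allowed(office_only, "203.0.113.7"))   # inside the permitted subnet
print(is_allowed(office_only, "198.51.100.1"))  # caught by the catch-all deny
```

Because evaluation stops at the first match, rule order matters: swapping the two rules above would block the office subnet as well.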

The following example shows how to add an ACL rule to an existing endpoint of a VM.

Other improvements for Virtual Machine management include:

- Added -NoWinRMEndpoint parameter to New-AzureQuickVM and Add-AzureProvisioningConfig to disable Windows Remote Management

- Added -DirectServerReturn parameter to Add-AzureEndpoint and Set-AzureEndpoint to enable/disable direct server return

- Added Set-AzureLoadBalancedEndpoint cmdlet to modify load balanced endpoints

Cloud Services: Remote Desktop and Diagnostics

Remote Desktop and Diagnostics are popular debugging options for Cloud Services. We’ve introduced cmdlets to help you configure these two Cloud Service extensions from Windows Azure PowerShell.

Windows Azure Cloud Services Remote Desktop extension:

- New-AzureServiceRemoteDesktopExtensionConfig

- Get-AzureServiceRemoteDesktopExtension

- Set-AzureServiceRemoteDesktopExtension

- Remove-AzureServiceRemoteDesktopExtension

Windows Azure Cloud Services Diagnostics extension:

- New-AzureServiceDiagnosticsExtensionConfig

- Get-AzureServiceDiagnosticsExtension

- Set-AzureServiceDiagnosticsExtension

- Remove-AzureServiceDiagnosticsExtension

The following example shows how to enable Remote Desktop for a Cloud Service.

Web Sites: Diagnostics

With our last SDK update, we introduced the Get-AzureWebsiteLog -Tail cmdlet to stream your Web Sites’ logs. Recently, we’ve also added cmdlets to configure Web Site application diagnostics:

- Enable-AzureWebsiteApplicationDiagnostic

- Disable-AzureWebsiteApplicationDiagnostic

The following 2 examples show how to enable application diagnostics to the file system and a Windows Azure Storage Table:

SQL Database

Previously, you had to know the SQL Database server admin username and password if you wanted to manage a database on that SQL Database server. We’ve made the experience much easier by no longer requiring the admin credentials if the database server is in your subscription: you can simply specify the -ServerName parameter to tell Windows Azure PowerShell which server you want to use for the following cmdlets:

- Get-AzureSqlDatabase

- New-AzureSqlDatabase

- Remove-AzureSqlDatabase

- Set-AzureSqlDatabase

We’ve also added an -AllowAllAzureServices parameter to New-AzureSqlDatabaseServerFirewallRule so that you can easily add a firewall rule to whitelist all Windows Azure IP addresses.

Besides the above experience improvements, we’ve also added cmdlets to get the database server quota and set the database service objective. Check out the following cmdlets for details:
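Server-level firewall rules are inclusive IP address ranges. The Python sketch below assumes the convention that a rule whose start and end addresses are both 0.0.0.0 stands for “allow all Windows Azure services”, presumably the kind of rule -AllowAllAzureServices adds. The rule names and the `from_azure_service` flag are hypothetical; a real server determines the caller’s origin itself rather than trusting a parameter.

```python
import ipaddress
from dataclasses import dataclass

# Assumed marker range meaning "connections from Windows Azure services".
AZURE_SERVICES_MARKER = ipaddress.ip_address("0.0.0.0")


@dataclass
class FirewallRule:
    name: str
    start_ip: str
    end_ip: str


def connection_allowed(rules, client_ip, from_azure_service):
    """Check a client against server-level firewall rules (illustrative only)."""
    address = ipaddress.ip_address(client_ip)
    for rule in rules:
        start = ipaddress.ip_address(rule.start_ip)
        end = ipaddress.ip_address(rule.end_ip)
        if start == end == AZURE_SERVICES_MARKER:
            if from_azure_service:
                return True
        elif start <= address <= end:   # ranges are inclusive at both ends
            return True
    return False


rules = [
    FirewallRule("allow-azure-services", "0.0.0.0", "0.0.0.0"),
    FirewallRule("office", "203.0.113.0", "203.0.113.255"),
]
print(connection_allowed(rules, "203.0.113.10", from_azure_service=False))
print(connection_allowed(rules, "198.51.100.1", from_azure_service=True))
print(connection_allowed(rules, "198.51.100.1", from_azure_service=False))
```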

- Get-AzureSqlDatabaseServerQuota

- Get-AzureSqlDatabaseServiceObjective

- Set-AzureSqlDatabase -ServiceObjective

Storage and Service Bus

Other new cmdlets include:

- Storage: CRUD cmdlets for Azure Tables and Queues

- Service Bus: Cmdlets for managing authorization rules on your Service Bus Namespace, Queue, Topic, Relay and NotificationHub

Summary

Today’s release includes a bunch of great features that enable you to build even better cloud solutions. All the above features/enhancements are shipped and available to use immediately as part of the 2.1 release of the Windows Azure SDK for .NET.

If you don’t already have a Windows Azure account, you can sign up for a free trial and start using all of the above features today. Then visit the Windows Azure Developer Center to learn more about how to build apps with it.

• Brian Keller and Clint Rutkas produced TWC9: Windows Azure SDK 2.1 for .NET, VS 2012.4, Web Essentials 2013 Preview and more for Channel9 on 8/2/2013:

This week on Channel 9, Brian and Clint discuss the week's top developer news, including:

- [00:27] Announcing the release of the Windows Azure SDK 2.1 for .NET (Scott Guthrie)

- [01:36] VS 2012.4 (Update 4) will exist! (Brian Harry)

- [02:26] Web Essentials 2013 Preview (Robert Green, Mads Kristensen)

- [03:18] Now available: Refresh of Office 2013 and SharePoint 2013 developer training (Kirk Evans)

- [04:10] Developing Windows 8 Apps via MVA anytime

- [04:50] Everything you wanted to know about SQL injection (but were afraid to ask) (Troy Hunt)

- [06:12] Text Editor from SkyDrive with HTML5 (Greg Edmiston), SkyDrive blog

- [06:42] Compelling Win8.1 Feature: Device-Friendly App Development (Ed Tittel)

Picks of the Week!

- Brian's Pick of the Week: [08:03] LINQ to Family Tree (Prolog Style) (Hisham Abdullah Bin Ateya)

- Clint's Pick of the Week: [08:59] 15 Sorting algorithms in 6 minutes: Audio/Visual representation.

Windows Azure Infrastructure and DevOps

‡ Ricardo Villalobos (@ricvilla) and Bruno Terkaly (@brunoterkaly) wrote Meter and Autoscale Multi-Tenant Applications in Windows Azure for the July 2013 Windows Azure Insider column and MSDN Magazine published it on 8/2/2013:

This month, for the MSDN Magazine Windows Azure Insider column, Bruno and I follow up on the topic of creating multi-tenant solutions in the cloud, particularly focusing on metering and auto-scaling, which are crucial tasks for properly maintaining applications that serve multiple tenants sharing compute and storage resources. The full article can be found here:

‡ Travis Wright reported New System Center Courses on Microsoft Virtual Academy (MVA)! in an 8/1/2013 post:

Hopefully everybody has been reading and enjoying the “What’s New in 2012 R2?” series from our VP Brad Anderson. If you haven’t been following along, check it out! http://blogs.technet.com/b/in_the_cloud/archive/tags/what_2700_s+new+in+2012+r2/

All this new stuff that has been coming out so quickly (2012, 2012 SP1, and soon 2012 R2 in only about a year and a half) can sometimes feel daunting to keep up with. We’re here to help you get up to speed on all the new technology though! We have recently published two very timely and informative courses on System Center to help you get the knowledge you need.

Customizing and Extending System Center Course

The first one is an entire six-part series on customizing and extending System Center 2012. Here’s the abstract:

This course focuses on extensibility and customization with System Center 2012 SP1, enabling customers and partners to create their own plugins with the System Center components. First you will learn how to create service templates in Virtual Machine Manager (SCVMM), allowing management of a multi-tiered application as a single logical unit. Next you will learn the basics of creating a custom Integration Pack using Orchestrator (SCO) to enable you to integrate custom activities within a workflow. The next two modules look at Operations Manager (SCOM) extensibility through creating Management Packs to monitor any datacenter component and Dashboards to visualize the data. The final two modules look at Service Manager (SCSM) Data Warehouse and reporting to generate datacenter-wide information, and then how to customize Service Catalog offerings to enable end-users to create self-service requests through a web portal.

- VMM: Service Templates – Damien Caro (Microsoft IT Pro Evangelist)

- SCO: Integration Packs – Andreas Rynes (Microsoft Datacenter Architect)

- SCOM: Management Packs – Lukasz Rutkowski (Microsoft PFE)

- SCOM: Dashboards – Markus Klein (Microsoft PFE)

- SCSM: Data Warehouse & Reporting – Travis Wright (Microsoft PM)

- SCSM: Service Catalogs – Anshuman Nangia (Microsoft PM)

Go here to take the course:

http://www.microsoftvirtualacademy.com/training-courses/system-center-2012-sp1-extensibility

Private and Hybrid Cloud Jump Starts

These two jump start events offer a comprehensive view of how to build a private cloud with Windows Server and System Center and how to embrace a “hybrid cloud”. Pete Zerger (MVP) was the instructor, and Microsoft evangelists Symon Perriman and Matt McSpirit were the hosts for the events. If you haven’t seen a presentation by the famous Pete Zerger, you need to!

Part 1: Symon & Pete: Build a Private Cloud with Windows Server & System Center Jump Start: http://www.microsoftvirtualacademy.com/training-courses/build-a-private-cloud-with-windows-server-system-center-jump-start

Part 2: Matt & Pete: Move to Hybrid Cloud with System Center & Windows Azure Jump Start: http://www.microsoftvirtualacademy.com/training-courses/move-to-hybrid-cloud-with-system-center-windows-azure-jump-start

‡ Yung Chou (@yungchou) described Windows Azure Virtual Network and VMs Deployment with Windows Azure PowerShell Cmdlets in a 7/31/2013 post:

With Windows Azure PowerShell Cmdlets, customizing and automating an infrastructure deployment to Windows Azure Infrastructure Services (IaaS) has become a relatively simple and manageable task. This article examines a sample script that, based on a network configuration file, automatically deploys seven specified VMs into three target subnets and configures two availability sets and a load-balancer. In several test runs I have done, the entire deployment took a little more than half an hour.

Assumptions

The PowerShell script assumes that the Windows Azure PowerShell cmdlets environment has been configured with the intended Windows Azure subscription, and that a network configuration file is at a specified location.

Network Configuration (netcfg) File

This file can be created from scratch or exported from the Windows Azure portal’s NETWORKS workspace after first creating a virtual network. Notice that the netcfg file specifies an affinity group and a DNS server.

Overall Program Structure

The four functions represent the key steps in performing a deployment including building a virtual network, deploying VMs, and configuring availability sets and load-balancers, as applicable.

Initialization, Windows Azure Virtual Network and Settings

Within the script, session variables and constants are assigned. The network information must be identical to what is defined in the netcfg file, since these values are not automatically populated from an external source at this time.
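Because the script’s network constants have to be kept in sync with the netcfg file by hand, a small consistency check can catch mismatches before a deployment starts. This Python sketch reads subnet names from a netcfg file and reports any subnet the script expects but the file does not define. It assumes the 2011/07 NetworkConfiguration XML namespace and the element names shown in the trimmed sample; `fooag`, `BackEnd`, `FrontEnd`, and `MidTier` are hypothetical values, and only `foonet` comes from the article.

```python
import xml.etree.ElementTree as ET

# Assumed netcfg namespace (the 2011/07 NetworkConfiguration schema).
NS = {"nc": "http://schemas.microsoft.com/ServiceHosting/2011/07/NetworkConfiguration"}

SAMPLE_NETCFG = """
<NetworkConfiguration xmlns="http://schemas.microsoft.com/ServiceHosting/2011/07/NetworkConfiguration">
  <VirtualNetworkConfiguration>
    <VirtualNetworkSites>
      <VirtualNetworkSite name="foonet" AffinityGroup="fooag">
        <Subnets>
          <Subnet name="BackEnd"><AddressPrefix>10.0.1.0/24</AddressPrefix></Subnet>
          <Subnet name="FrontEnd"><AddressPrefix>10.0.2.0/24</AddressPrefix></Subnet>
        </Subnets>
      </VirtualNetworkSite>
    </VirtualNetworkSites>
  </VirtualNetworkConfiguration>
</NetworkConfiguration>
"""


def subnets_from_netcfg(xml_text, vnet_name):
    """Return {subnet name: address prefix} for one virtual network site."""
    root = ET.fromstring(xml_text.strip())
    for site in root.iterfind(".//nc:VirtualNetworkSite", NS):
        if site.get("name") == vnet_name:
            return {
                subnet.get("name"): subnet.findtext("nc:AddressPrefix", namespaces=NS)
                for subnet in site.iterfind("nc:Subnets/nc:Subnet", NS)
            }
    raise KeyError(f"virtual network {vnet_name!r} not found in netcfg")


def missing_subnets(xml_text, vnet_name, script_subnets):
    """List subnet names the script expects but the netcfg file lacks."""
    defined = subnets_from_netcfg(xml_text, vnet_name)
    return sorted(name for name in script_subnets if name not in defined)


print(missing_subnets(SAMPLE_NETCFG, "foonet", ["BackEnd", "FrontEnd", "MidTier"]))
```

Running a check like this at the top of the script is one way to narrow the gap the author notes about not populating network settings from an external source.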

Functions

Deploying multiple VMs programmatically involves a lot of repeated information, so some parameters are defined with default values from session variables or the initialization section. When calling a routine, a default value can be overridden by an assigned or passed value.

Other than for the first VM, deploying additional VMs into an existing service does not require specifying the virtual network name. However, because I use the same routine, with VNetName specified, to deploy both the first and subsequent VMs into a service, it produces a warning for each VM deployed into a service after the first one, as shown in the user experience later.

Simply pass an array to the routines, and an availability set or a load-balancer will be built automatically. These two functions streamline the process.
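The array-driven approach can be sketched in Python (the author’s script is PowerShell). The hypothetical `plan_service` helper turns an array of VM names into per-VM settings, attaches the availability set and load-balanced endpoint to every VM, and passes the virtual network name only for the first VM in a service, which is one way to avoid the warnings described above. The service and subnet names are made up; `foonet`, `fe1` through `fe3`, `feAvSet`, and `feEndpoint` come from the article.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class VmSpec:
    name: str
    service: str
    subnet: str
    vnet_name: Optional[str]                # only the first VM in a service needs it
    availability_set: Optional[str] = None
    lb_endpoint: Optional[str] = None


def plan_service(service, subnet, vm_names, vnet_name,
                 availability_set=None, lb_endpoint=None) -> List[VmSpec]:
    """Build one VmSpec per name; every VM shares the set and the endpoint."""
    return [
        VmSpec(
            name=name,
            service=service,
            subnet=subnet,
            # Pass VNetName only for the first VM deployed into the service.
            vnet_name=vnet_name if index == 0 else None,
            availability_set=availability_set,
            lb_endpoint=lb_endpoint,
        )
        for index, name in enumerate(vm_names)
    ]


frontend = plan_service("fe-svc", "FrontEnd", ["fe1", "fe2", "fe3"], "foonet",
                        availability_set="feAvSet", lb_endpoint="feEndpoint")
for vm in frontend:
    print(vm.name, vm.vnet_name, vm.availability_set, vm.lb_endpoint)
```

Keeping the plan as plain data also follows the author’s advice to separate data from program logic: the deployment loop can consume these specs unchanged while the arrays vary per run.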

Main Program

Rather than creating a cloud service while deploying a VM, I decided to create individual cloud services first. This makes troubleshooting easier since it is very clear when services are created.

Deploy Backend Infrastructure Servers

With a target service in place, I simply loop through and deploy VMs based on the names specified in an array. Deploying or placing VMs into an availability set or a load-balancer then becomes simply a matter of adding machine names to a target array.

Deploy Mid-Tier Workloads

Deploy Frontend Servers

Here, three frontend servers are load-balanced and configured into an availability set.

User Experience

I kicked off the script at 3:03 PM, and it finished at 3:36 PM with seven VMs deployed into their target subnets, including a SharePoint server and a SQL Server. The four warnings were due to deploying additional VMs (specifically dc2, sp2013, fe2, and fe3) into their target services while the DeployVMsToAService function specifies VNetName.

Verified with Windows Azure Portal

Seven VMs were deployed with specified names.

dc1 and dc2 were placed in the availability set, dcAvSet.

fe1, fe2, and fe3 were placed in the availability set, feAvSet.

The three were load-balanced at feEndpoint as specified in the script.

All VMs were deployed into their target subnets, as shown in the virtual network, foonet.

Session Log and RDP files

The script also produces a log file capturing the states while completing tasks. As a VM is deployed, the associated RDP file is also downloaded into a specified working directory as shown below.

Closing Thoughts

To make the code production-ready, more error handling needs to be put in place. The separation of data and program logic is critical here. PowerShell is a very fluid programming tool: if there is a way to run a statement, PowerShell will try to do just that. So knowing how data are referenced and passed at runtime becomes critical, and separating data from program logic noticeably facilitates testing and troubleshooting. The more you script and run with various data, the more you will discover how PowerShell actually behaves, which is not always the same as other scripting languages.

A key missing part of the script is the ability to automatically populate the network configuration from or to an external source. Still, the script provides a predictable, consistent, and efficient way to deploy infrastructure with Windows Azure Infrastructure Services. What must come next, once the VMs are in place, is customizing the runtime environment for the intended application and then installing the application. IaaS puts VMs in place as the foundation for PaaS, which provides the runtime for a target application. It is always about the application; we should never forget that.

See more at: http://blogs.technet.com/b/yungchou/archive/2013/07/31/automating-windows-azure-infrastructure-services-iaas-deployment-with-powershell.aspx

• Brian Harry reported VS 2012.4 (Update 4) will exist! in a 7/31/2013 blog post:

Sometime this spring, though for the life of me I can’t find it, I wrote a blog post where I said I thought Update 3 (VS/TFS 2012.3) would be the last update in the VS/TFS 2012 update line. I often say that nothing that is said about the future is more than a guess with varying levels of confidence. So, it turns out that I was wrong. A month or two ago we decided that we were going to need to do a 2012.4 release.

The primary motivation is addressing compatibility and migration/round-tripping issues between VS 2012 and VS 2013 and the various platform releases, to make adoption of VS 2013 as smooth as possible. The planned timing will be around the same time VS 2013 releases (and, of course, I can’t give any more detail at this time other than saying that it will be before the end of the year). Once we decide to ship a release like this, it becomes a vehicle to deliver anything else that would be valuable or important to deliver in that timeframe. For instance, Update 4 will also include a rollup of fixes for all important customer-reported bugs found by the time it locks down.

Like Update 3, Update 4 will be a narrowly scoped release focused on these compatibility fixes and key customer-impacting bug fixes. We will not be delivering significant new features in Update 4. I qualify it with “significant” because what is a feature and what is a bug fix is in the eye of the beholder.

Today we released the first Release Candidate for Update 4. Don’t take the “Release Candidate” designation too seriously. We’re going to have several release candidates and it’s still early in the process. I think the primary reason for calling it a release candidate is that we have validated it for production use and we wanted the name to clearly reflect that. So if you’ve got a specific issue that’s addressed in this release, installing it would make sense. Otherwise, I would just wait a little bit for a later release candidate. I’d say that, at this point, less than half the fixes that will ultimately be in the release are in this release candidate.

Here are some resources for RC1:

You’ll find the specific list of bug fixes so far in the KB article. You’ll also notice most of them are TFS related at this point.

So now you are going to ask me whether or not there will be an Update 5. At this point, my answer will be “If we need one.” But, I don’t expect so at this time.

• Darryl K. Taft reported Accenture Expands Cloud Ecosystem With Windows Azure, AWS in an 8/2/2013 post to eWeek’s Cloud blog:

Accenture launched a new version of its Accenture Cloud Platform, providing new and enhanced cloud management services for public and private cloud infrastructure offerings.

The platform now supports an expanded portfolio of infrastructure service providers, including Amazon Web Services, Microsoft Windows Azure, Verizon Terremark and NTT Communications.

By expanding its ecosystem of cloud providers, Accenture can now offer an expanded service catalog that enables customers to acquire cloud services in a pre-packaged, standardized format.

"With this next version, we've enhanced its capabilities as a management platform for clients to confidently source and procure capacity from an ecosystem of providers that meet the highest quality standards, as well as providing the critical connections required to seamlessly transition work to the cloud," Michael Liebow, managing director and global lead for the Accenture Cloud Platform, said in a statement. "An expanded provider portfolio increases our geographic footprint, helping us to better serve our clients around the world as they migrate to the cloud, and fits with our blueprint for enterprise-grade cloud services."

The new version of the Accenture Cloud Platform offers a more flexible, service-enabled architecture based on open-source components, which helps reduce deployment costs for clients while the expanding catalog of services helps to speed deployments, Liebow said.

Accenture has created a hybrid cloud platform service for enterprise customers that aggregates, integrates, automates and manages cloud services. The Accenture Cloud Platform helps minimize the IT governance and integration issues that can crop up. The platform provides secure, scalable pre-integrated enterprise-ready cloud services; automated provisioning through a self-service portal; and monitoring, metering and centralized chargebacks on a pay-as-you-go basis.

Services on the Accenture Cloud Platform include "Big Data Recommender as a Service," which employs elastic on-demand tools to analyze large amounts of structured and unstructured data to provide targeted product and customer recommendations. The platform also offers data decommissioning services for enterprises to store data from retired legacy applications in the cloud. Accenture also offers testing-as-a-service and environment provisioning and cloud management services, including an integrated 24/7 Accenture service desk for support.

The addition of the four new cloud providers extends the geographic footprint of the Accenture Cloud Platform with new data center locations in Latin America and Asia, helping to better serve customers around the world. The platform delivers infrastructure services with the following providers:

- Amazon Web Services: Providing account enablement and expert assisted provisioning services, the Accenture Cloud Platform supports clients deploying Amazon Web Services environments in their nine regions around the world.

- Microsoft Windows Azure: Providing account enablement, the Accenture Cloud Platform supports the deployment of application development solutions on the Windows Azure platform.

- Verizon Terremark: Offering provisioning, as well as administrative and support services for Terremark's Enterprise Cloud, a virtual private cloud solution, the platform adds data centers around the world, extending reach into Latin America through the Verizon Terremark data center in Sao Paulo, Brazil.

- NTT Communications: Supporting NTT's private and public enterprise clouds, Accenture offers provisioning, administrative and support services, as well as professional architecture guidance to help clients design and deploy their applications in the cloud. The platform gains public data centers in Hong Kong and Tokyo, expanding locations in the Asia-Pacific region.

"The addition of Windows Azure platform and infrastructure services to the Accenture Cloud Platform simplifies adoption of cloud services and hybrid computing scenarios for our clients," Steven Martin, Microsoft's general manager for Windows Azure, said in a statement. "It offers all the benefits of Azure—rapid provisioning and market-leading price-performance—wrapped in solutions from Accenture."

"We are focused on providing global customers with a secure, enterprise-ready cloud solution," said Chris Drumgoole, senior vice president of Global Operations at Verizon Terremark, in a statement. "By participating in the Accenture Cloud Platform ecosystem, our mutual clients have another avenue for acquiring cloud services worldwide."

"The Accenture Cloud Platform offers enterprise clients total flexibility to design an ecosystem that meets their own unique technology and geography requirements," said Kazuhiro Gomi, NTT Communications board member and CEO of NTT America, in a statement.

Accenture has done more than 30 conference room pilots for ERP on the platform to help customers provision the cloud for their ERP systems, Liebow said. Accenture has worked on more than 6,000 cloud computing projects for clients, he said. The company has more than 7,900 professionals trained in cloud computing.

The Accenture Cloud Platform is a major part of Accenture's investment of more than $400 million in cloud technologies, capabilities and training by 2015.

See more at: http://www.eweek.com/cloud/accenture-expands-cloud-ecosystem-with-windows-azure-aws

• Xavier Decoster (@xavierdecoster) published An Overview of the NuGet Ecosystem to the CodeProject on 7/31/2013 under an MIT license:

Introduction

NuGet is a free, open source package management system for .NET that consists of a few client tools (NuGet Command Line and the NuGet Visual Studio Extension) and the official NuGet Gallery hosted at http://www.nuget.org/. Combined, these tools and the gallery form the NuGet project, governed by the Outercurve Foundation and part of the ASP.NET Open Source Gallery. Although the project is fully open source, Microsoft has contributed extensively to its development.

First introduced in 2010, NuGet has been around for a few years now, and many people and organizations are beginning to realize that NuGet presents a great opportunity to improve and automate parts of their development processes. Whether you work on open source projects or in an enterprise environment, NuGet is here to stay, and a much bigger NuGet ecosystem is at your disposal today.

Background

This article talks about the NuGet ecosystem. If you are new to NuGet or need practical guidance on how to use NuGet, then I highly recommend reading through the NuGet documentation or buying the book Pro NuGet for a full reference, tips and tricks.

Training Materials

Using a new tool or technology usually comes with a learning curve. Luckily for you, NuGet has no steep learning curve at all! In fact, anyone can get started consuming packages in no time. Authoring packages, however, and especially authoring good packages, as well as embracing NuGet in your automated build and deployment processes, requires some research in order to get things right.

The following pointers should help you get the maximum out of NuGet:

- Official NuGet Documentation site: http://docs.nuget.org/

- NuGet Blog: http://blog.nuget.org/

- NuGet team on Twitter: @nuget

- JabbR chat: https://jabbr.net/#rooms/nuget

- MSDN article: Top 10 NuGet (anti-)patterns

- Book: Apress Pro NuGet

- My blog also has a few NuGet related posts: http://www.xavierdecoster.com/tagged/NuGet

The NuGet Ecosystem

Because the NuGet project is open source under the permissive Apache v2 license, other projects can leverage NuGet and companies can build support for it into their products. Together, they have extended the NuGet ecosystem into what it is today, which includes:

- Outercurve Foundation NuGet Project

- NuGet-based tools by Microsoft

- NuGet Package Explorer

- MyGet (NuGet-as-a-Service)

- Chocolatey

- OctopusDeploy

- RedGate Deployment Manager

- SymbolSource

- ProGet (Inedo)

- CoApp

- BoxStarter

- SharpDevelop NuGet plug-in

- Xamarin NuGet plug-in

- TeamCity support for NuGet

- Artifactory support for NuGet

- Nexus support for NuGet

- Glimpse Plug-ins

- ReSharper Plug-ins

- Orchard

- NuGetMustHaves

- NuGetFeed

- NuGetLatest

- NuGet server in Java

- NuGet Fight

- NuGit

Outercurve Foundation

The sources for the Outercurve NuGet clients can be found on Codeplex, while the NuGet Gallery sources are available on GitHub.

The Outercurve tools for NuGet include:

NuGet Core project

Download: sources (Codeplex) or NuGet package

License: Apache v2

Most NuGet client tools are based on the cross-platform NuGet.Core project. If you want to build your own NuGet client, your best bet is to fetch the NuGet.Core project's sources from Codeplex, or to run the following command in the NuGet Package Manager Console:

Install-Package NuGet.Core

- NuGet Command Line tool (which is a wrapper around NuGet.Core)

- Download: nuget.exe

- Reference: http://docs.nuget.org/reference/command-line-reference

- License: Apache v2

- NuGet Server project

- Download: http://www.nuget.org/packages/NuGet.Server

- License: Apache v2

- Sources: http://nuget.codeplex.com/

- Official NuGet Gallery at http://www.nuget.org

- Statistics: http://www.nuget.org/stats

- Availability: http://status.nuget.org

- License: Apache v2

- Sources: https://github.com/NuGet/NuGetGallery

If you want to set up your own NuGet server using the tools provided by the Outercurve Foundation, you can do so using any of the following methods:

- To create a basic NuGet server and point it to a local folder or network share, create a new ASP.NET application and run the following command in the Package Manager Console:

Install-Package NuGet.Server

- Benefits:

- requires minimal infrastructure (IIS and some disk space)

- requires .NET Framework 4.0

- Drawbacks:

- non-indexed storage (slower as repository-size increases)

- single API key for entire server

- no fine-grained security

- single NuGet feed per NuGet.Server application

- To set up your own NuGet Gallery, fetch the sources from GitHub and follow the instructions at https://github.com/NuGet/NuGetGallery.

- Benefits:

- indexed storage (faster querying)

- basic user system (authentication, API-key per user, manage own packages, emails)

- supports SSL

- Drawbacks:

- requires proper infrastructure (IIS, SQL Server)

- requires .NET Framework 4.5 (I consider this a drawback due to the fact that .NET 4.5 is an in-place upgrade)

- requires you to fetch the sources, compile everything and configure quite a lot in source code and configuration files (and repeat this step if you want to upgrade to a newer version)

- there's a NuGet Gallery Operations Toolkit, but it's neither designed nor intended to work with every NuGetGallery installation

Microsoft

The following NuGet clients are being shipped as part of Microsoft products and available as extensions on each product's extension gallery:

- WebMatrix 3 NuGetPackageManager extension:

- NuGet-based Microsoft Package Manager for Visual Studio 2010 and 2012

- Download: http://visualstudiogallery.msdn.microsoft.com/27077b70-9dad-4c64-adcf-c7cf6bc9970c

- Reference:

- Using the Manage NuGet Packages dialog

- Using the Package Manager Console

- License: Microsoft Software License (custom)

- NuGet-based Microsoft Package Manager for Visual Studio 2013

- Download: http://visualstudiogallery.msdn.microsoft.com/4ec1526c-4a8c-4a84-b702-b21a8f5293ca

- Reference:

- Using the Manage NuGet Packages dialog

- Using the Package Manager Console

- License: Microsoft Software License (custom)

NuGet Package Explorer

One of the developers on the core NuGet team at Microsoft created a great graphical tool for working with NuGet packages. It makes it easy to create, publish, download and inspect NuGet packages and their metadata.

- ClickOnce (desktop) application: http://npe.codeplex.com

- Windows 8 app: http://apps.microsoft.com/windows/en-us/app/nuget-package-explorer/3148c5ae-7307-454b-9eca-359fff93ea19/m/ROW

MyGet (or NuGet-as-a-Service)

MyGet is a NuGet server that allows you to create and host your own NuGet feeds. It is hosted on Windows Azure and has a freemium offering: you can use it for free (within the constraints of the free plan) or subscribe to one of the paid plans if you require more resources or features. More info at https://www.myget.org.

- Availability and history: http://status.myget.org.

- Documentation: http://docs.myget.org.

- Twitter: https://twitter.com/mygetteam

- JabbR: https://jabbr.net/#/rooms/myget

- Provides:

- requires no infrastructure

- allows you to get started in a few clicks and focus on the packages instead of the server

- fully compatible with all NuGet client tools

- free software updates (including support for the latest NuGet version)

- free for open source projects (meeting criteria and within allowed quota)

- publish and promote your feed in the public gallery

- supports NuGet Feed Discovery and Package Source Discovery

- extended feed functionality

- SSL-by-default

- feed visibility (public, read-only or private)

- activity streams

- strict SemVer validation for packages being pushed

- upload packages directly in the browser

- add packages from another feed (upstream package source)

- upstream package source presets for nuget.org (including WebMatrix and other curated feeds), Chocolatey, TeamCity, etc.

- filter upstream package sources

- mirror upstream package sources

- package promotion to an upstream package source

- (automatic or manual) package mirroring

- RSS

- package retention rules

- download entire feeds as ZIP archives for backup purposes

- download packages from the web (without the need for nuget client tools)

- integration with SymbolSource (shared credentials and feed/repository security settings)

- multiple endpoints, including the v1-compatible endpoint (e.g. you can use the feed as a custom Orchard Gallery feed)

- granular security

- API key per user

- user-roles on feeds (owner, co-owner, contributor, reader)

- user management (enterprise plan)

- quota management (enterprise plan)

- web site authentication using on-premises ADFS (enterprise plan)

- web site authentication using preferred identity providers: Live ID, Google, GitHub, Stack Overflow, etc. (enterprise plan)

- build services

- creates the NuGet and symbols packages for you

- customizable automatic-versioning and assembly version patching

- auto-trigger builds for CodePlex, BitBucket or GitHub commits

- support for many unit testing frameworks

- support for many SDKs (including Windows Phone)

- build failure notifications through email and downloadable build logs

- custom logo and domain name (enterprise plan)
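Two of the MyGet features listed above, strict SemVer validation and package retention rules, can be illustrated with a small Python sketch. It assumes SemVer 1.0-style versions, which is what NuGet 2.x understood: MAJOR.MINOR.PATCH plus an optional hyphenated prerelease tag. Rejecting leading zeros is an extra assumption. The retention policy in `apply_retention` (keep the newest N versions per package id) is a hypothetical rule chosen for illustration. It is not MyGet's actual implementation.

```python
import re
from collections import defaultdict

# Assumed "strict" rule: MAJOR.MINOR.PATCH with an optional "-prerelease" tag made of
# ASCII alphanumerics and hyphens (SemVer 1.0 style). Leading zeros are rejected here
# as an additional assumption. Four-part versions such as 1.0.0.0 do not match.
STRICT_SEMVER = re.compile(
    r"(0|[1-9][0-9]*)\.(0|[1-9][0-9]*)\.(0|[1-9][0-9]*)(?:-([0-9A-Za-z-]+))?"
)


def parse_strict_semver(version):
    """Return a sort key for a strict SemVer string, or None if it is invalid."""
    match = STRICT_SEMVER.fullmatch(version)
    if match is None:
        return None
    major, minor, patch, prerelease = match.groups()
    # A release sorts above any prerelease of the same MAJOR.MINOR.PATCH.
    # Prerelease tags are compared as plain (case-sensitive) ASCII strings.
    is_release = prerelease is None
    return (int(major), int(minor), int(patch), is_release, prerelease or "")


def apply_retention(packages, keep_latest):
    """Hypothetical retention rule: keep the newest `keep_latest` versions per id.

    `packages` is an iterable of (package_id, version) pairs whose versions have
    already passed parse_strict_semver. Returns (kept, deleted) as sorted lists.
    """
    by_id = defaultdict(list)
    for package_id, version in packages:
        # NuGet package ids are case-insensitive, so group on the lowercased id.
        by_id[package_id.lower()].append((package_id, version))

    kept, deleted = [], []
    for versions in by_id.values():
        # Newest first, using the SemVer sort key defined above.
        versions.sort(key=lambda pv: parse_strict_semver(pv[1]), reverse=True)
        kept.extend(versions[:keep_latest])
        deleted.extend(versions[keep_latest:])
    return sorted(kept), sorted(deleted)
```

With `keep_latest=2`, a feed holding Foo 1.0.0, 1.1.0-beta and 1.1.0 keeps the two newest versions (1.1.0 and 1.1.0-beta) and marks 1.0.0 for deletion. A real service would likely also consider download counts or packages that other packages depend on.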

Chocolatey

Chocolatey.org is a system-level package manager for Windows based on NuGet, allowing you to search for and install software components on your system, even unattended. It looks very promising and is definitely something to keep an eye on!

- Documentation: https://github.com/chocolatey/chocolatey/wiki

- Reference: https://github.com/chocolatey/chocolatey/wiki/CommandsReference

- Twitter: http://twitter.com/chocolateynuget

- Forums: http://groups.google.com/group/chocolatey

- License: Apache v2

- Provides:

- Unattended software installations

- Installation of multiple packages with a single command

- Easy-to-use command-line tool

- Supports any NuGet package source (feeds and file shares)

- Has a GUI as well: http://chocolatey.org/packages/ChocolateyGUI

- Integration with:

- Web Platform Installer

- Windows Features

- Ruby Gems

- Cygwin

- Python

OctopusDeploy

OctopusDeploy is a convention-based automated deployment solution that uses NuGet as its packaging protocol. You can use the Community edition for free (limited to one project) or buy one of the paid editions.

- Documentation: http://octopusdeploy.com/documentation

- Blog: http://octopusdeploy.com/blog

- Twitter: https://twitter.com/OctopusDeploy

- Provides:

- Deployment dashboard

- Scalability through lightweight service agents (tentacles)

- Deployment promotion between environments

- Support for PowerShell scripts

- Support for manual interventions

- Support for XML configuration transforms and variables

- Support for Windows Azure web and worker roles

- Support for (S)FTP

- Has a TeamCity plug-in

- Has a command-line tool (octo.exe)

- Has a REST API

- Fine-grained user permissions

- Retention policies

- Automation of common tasks for ASP.NET deployments (IIS configuration) and Windows Services

Deployment Manager (RedGate)

RedGate's Deployment Manager is a fork of the OctopusDeploy project. The two code bases diverged shortly after OctopusDeploy v1.0, as explained in this post.

More info: http://www.red-gate.com/delivery/deployment-manager/

SymbolSource

SymbolSource is a hosted symbol server that integrates with NuGet and can be configured in Visual Studio, allowing you to debug NuGet packages by downloading their symbols and sources on demand.

- Documentation: http://www.symbolsource.org/Public/Wiki/Index

- Blog: http://www.symbolsource.org/Public/Blog

- Forums: http://groups.google.com/group/symbolsource

- Provides:

- Consumes and provides NuGet symbols packages

- Consumes and provides OpenWrap packages

- Hosts symbols (PDB files) and sources (C#, VB.NET, C++)

- Symbol server and source server compatible with Visual Studio

- Flexible security for public and private content

- Integration with MyGet.org (shared credentials and feed/repository security settings)

- Integration with NuGet.org (default symbols repository)

CoApp

The CoApp project originally aimed to create a vibrant open source ecosystem on Windows by providing the technologies needed to build a complete community-driven package management system, along with tools that let developers take advantage of features of the Windows platform.

The project has since pivoted to work with the NuGet project. The result of this collaboration is visible in NuGet 2.5, which first introduced support for native packages. The CoApp project is still building additional tools to enhance C/C++ support in NuGet.

- Documentation: http://coapp.org/pages/reference.html

- Twitter: @CoApp

- Sources: https://github.com/coapp/

- License: Apache v2

ProGet (Inedo)

ProGet is an on-premises NuGet server with a freemium model that also integrates with Inedo's BuildMaster product.

- Documentation: http://inedo.com/support/documentation/table-of-contents

- Twitter: http://twitter.com/inedo

- Provides:

- Compatibility with all NuGet client tools

- Custom proget.exe client tool

- Connectors to other NuGet feeds

- Connector filters

- Support for multiple feeds

- Support for private feeds

- License filtering

- Download feeds and packages

- LDAP authentication to the ProGet web application

- Upload packages to the ProGet web application

- Integrated symbol and source server

- SDK and API

- Supports OData

- Supports NuGet Feed Discovery and NuSpec Extensions
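Several of the servers above advertise OData support and compatibility with all NuGet client tools. In practice, NuGet 2.x clients query v2 feeds with plain OData URLs. The Python sketch below only builds two such URLs and sends no requests. The nuget.org v2 base URL is just an example, and the assumption that a given ProGet or MyGet feed exposes exactly these operations should be checked against its documentation. Package ids containing single quotes would need OData escaping, which this sketch does not handle.

```python
from urllib.parse import urlencode


def find_packages_by_id_url(feed_url, package_id):
    """Build a NuGet v2 OData query listing every version of one package.

    FindPackagesById() is a service operation on v2 feeds; the id is passed
    as an OData string literal, i.e. wrapped in single quotes.
    """
    query = urlencode({"id": "'" + package_id + "'"})  # quotes become %27
    return feed_url.rstrip("/") + "/FindPackagesById()?" + query


def package_entry_url(feed_url, package_id, version):
    """Build the URL of one package entry, addressed by its (Id, Version) key."""
    return "{}/Packages(Id='{}',Version='{}')".format(
        feed_url.rstrip("/"), package_id, version
    )
```

For example, `find_packages_by_id_url("https://www.nuget.org/api/v2/", "Newtonsoft.Json")` yields `https://www.nuget.org/api/v2/FindPackagesById()?id=%27Newtonsoft.Json%27`.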

BoxStarter

BoxStarter is another interesting project that builds on NuGet and Chocolatey to quickly set up development environments.

- Documentation: http://boxstarter.codeplex.com/documentation

- Sources: http://boxstarter.codeplex.com/

- License: Apache v2

Other IDE support for NuGet

SharpDevelop

SharpDevelop was amongst the first IDEs other than Visual Studio to support NuGet.

More info: http://community.sharpdevelop.net/blogs/mattward/archive/2011/01/23/NuGetSupportInSharpDevelop.aspx

Xamarin and MonoDevelop

Xamarin Studio and MonoDevelop also have a NuGet extension, built on top of a custom build of the NuGet.Core.dll and a custom build of Microsoft's XML Document Transformation (XDT) library.

More info: https://github.com/mrward/monodevelop-nuget-addin

ReSharper Plug-ins

As of ReSharper 8.0, the built-in extension manager allows you to fetch ReSharper plug-in packages from a custom NuGet Gallery hosted at https://resharper-plugins.jetbrains.com.

Build Tools and Repository Managers

TeamCity

TeamCity has a few build steps specifically designed to deal with NuGet package consumption, creation and publication. In addition, it also comes with a built-in NuGet feed collecting all packages produced in your build artifacts.

More info: http://blogs.jetbrains.com/dotnet/2011/08/native-nuget-support-in-teamcity/

Artifactory

Artifactory is a repository manager with built-in support for various artifacts, including NuGet packages.

More info: http://www.jfrog.com/confluence/display/RTF/NuGet+Repositories

Sonatype Nexus

Nexus is another repository manager with built-in NuGet support, and Sonatype even published a "What is NuGet for Java Developers" post on its blog.

More info: http://books.sonatype.com/nexus-book/reference/nuget.html

Other NuGet-based utilities

There are quite a few other tools and utilities building further on top of NuGet. Here's a list of what I've found interesting:

- Glimpse Extensions (plug-ins are packages)

- NuGetMustHaves.com

- NuGetFeed (build a list of favorite packages)

- Orchard (CMS modules are fetched from a v1 NuGet feed hosted in the Orchard Gallery)

- Java implementation of NuGet Server

- NuGet Fight!

- NuGit

- NuGetLatest (Twitter bot tweeting new package publications)

It is great to see NuGet adoption growing, especially when people come up with innovative ideas that make our work even easier. If you have ideas to improve any NuGet tool, whether from Outercurve, Microsoft or any other NuGet-based product, please share them with its maintainers. Report defects, log feature requests, provide feedback, write documentation or submit a pull request and experience the eternal gratitude of an entire community :-)

History

- August 1, 2013 - Updated CoApp project description

- July 30, 2013 - First published

License

This article, along with any associated source code and files, is licensed under The MIT License

About the Author

Xavier Decoster, Founder MyGet.org, Belgium

Xavier Decoster is a .NET/ALM consultant living in Belgium and co-founder of MyGet.org. He co-authored the book Pro NuGet and is a Microsoft Extended Experts Team member in Belgium.

He hates development friction and does not respect time zones. In order to make other developers aware of how frictionless development can and should be, he tries to share his experiences and insights by contributing back to the Community as a speaker, as an author, as a blogger, and through various open source projects.

His blog can be found at http://www.xavierdecoster.com and you can follow him on Twitter.


Windows Azure Pack, Hosting, Hyper-V and Private/Hybrid Clouds


Visual Studio LightSwitch and Entity Framework 4.1+

Saar Chen described how to Use Caching to Turbo-Boost your LightSwitch Apps in a 7/25/2013 post to the Visual Studio LightSwitch Team Blog:

Using a cache to “turbo-boost” the retrieval and presentation of read-only data is a common need in data-based applications. In this post, I'll walk you through how to speed up your LightSwitch application by using cached data. This is particularly useful for screens that show read-only reference data or data that rarely changes.

For this example, I’ll create a Browse Orders screen that shows a list of orders from the Northwind database. Then I’ll add caching to the application to see the performance gains. First, create a LightSwitch project; let’s call it OrderManager. Then add a SQL data source pointing to Northwind and import the following entities: Customers, Orders, Shippers.

If you don’t have the database yet, please refer to How to: Install Sample Database to install one.

When the data source is added, we can create a screen to show a list of orders with some other information like this:

To make the performance difference easier to see, I’ll uncheck “Support paging” for the Orders query on the Browse Orders screen.

Now, we can press F5 to see what it will look like in the browser:

Next, let's change the implementation of the data source a little. Instead of attaching directly to the Northwind database, I’ll use a RIA service as the data source. That way, we’ll have a place to put our caching logic.

There are two main things the RIA service needs to do. It needs to fetch data from Northwind database, and it needs to provide the data as a RIA service for our LightSwitch application.
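The caching idea behind this RIA service is to fetch once, serve from memory, and reload when the data goes stale. It can be sketched in a language-neutral way in Python. This is not LightSwitch or WCF RIA Services code. `ReadThroughCache`, the loader function and the time-to-live value are hypothetical choices made only for illustration.

```python
import time


class ReadThroughCache:
    """Minimal read-through cache with a fixed time-to-live (TTL).

    `loader` is any zero-argument function that fetches the data, for example a
    (hypothetical) query that reads the Shippers table from Northwind.
    """

    def __init__(self, loader, ttl_seconds, clock=time.monotonic):
        self._loader = loader
        self._ttl = ttl_seconds
        self._clock = clock          # injectable so the expiry logic can be tested
        self._value = None
        self._expires_at = None      # None means nothing has been cached yet

    def get(self):
        now = self._clock()
        if self._expires_at is None or now >= self._expires_at:
            # Cache miss or stale entry: query the data source once and keep the result.
            self._value = self._loader()
            self._expires_at = now + self._ttl
        return self._value

    def invalidate(self):
        """Force the next get() to reload, e.g. after the underlying data was edited."""
        self._expires_at = None
```

Time-based expiry suits read-only reference data such as the Shippers list. For data that users can edit, calling `invalidate()` after a save keeps the cache from serving outdated rows until the TTL runs out.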