Windows Azure and Cloud Computing Posts for 8/26/2013+

Top Stories This Week:

- Steven Martin (@stevemar_msft) announced Gartner Recognizes Microsoft as a Cloud Infrastructure as a Service Visionary in the Windows Azure Infrastructure and DevOps section.

| A compendium of Windows Azure, Service Bus, BizTalk Services, Access Control, Caching, SQL Azure Database, and other cloud-computing articles. |

Note: This post is updated weekly or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Windows Azure Marketplace DataMarket, Power BI, Big Data and OData

- Windows Azure Service Bus, BizTalk Services and Workflow

- Windows Azure Access Control, Active Directory, and Identity

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Windows Azure Cloud Services, Caching, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure and DevOps

- Windows Azure Pack, Hosting, Hyper-V and Private/Hybrid Clouds

- Visual Studio LightSwitch and Entity Framework v4+

- Cloud Security, Compliance and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

<Return to section navigation list>

Bill Wilder (@codingoutloud) described how to Start Windows Azure Storage Emulator from a Shortcut in an 8/25/2013 post:

When building applications to run on Windows Azure you can get a lot of development and testing done without ever leaving your developer desktop. Much of this is due to the convenient fact that much code “just works” on Windows Azure. How can that be, you might wonder? Running on Windows Azure is, in many cases, no different from running on Windows Server 2012 (or Linux, should you choose). In other words, most generic PHP, C#, C++, Java, Python, and <your favorite language here> code just works.

Once your code starts accessing specific cloud features, you face a choice: access those services in the cloud, or use the local development emulator. You can access most cloud services directly from code running on your developer desktop – it usually just amounts to a REST call under the hood (with some added latency from desktop to cloud and back) – it is an efficient and effective way to debug. But the development emulator gives you another option for certain Windows Azure cloud services.

A common use case for the local development emulator is a web application (ASP.NET, ASP.NET MVC, or Web API) that runs either in a Cloud Service or simply in a Web Site. The distinction matters: when you debug a Cloud Service, Visual Studio starts the Storage Emulator automatically, but it does not do so when you debug web code that does not run from a Cloud Service. So if your web code accesses Blob Storage, for example, running it locally will produce a timeout when it tries to reach Storage, unless you make sure the Storage Emulator has been started. Here’s an easy way to do this. (Normally you only need to do it once per login, since the emulator keeps running until you stop it.)

In my case, it was very convenient to have a shortcut that I could click to start the Storage Emulator on occasion. Here’s how to set it up. I’ll explain it as a shortcut (such as on a Windows 8 desktop), but the key step is very simple and easily used elsewhere.

Creating the Desktop Shortcut

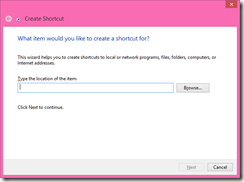

- Right-click on a desktop

- From the pop-up menu, choose New –> Shortcut

- You get a dialog box asking about what you’d like to create a shortcut for:

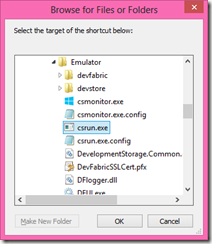

- HERE’S IMPORTANT PART 1/2: click the Browse button, navigate to wherever your Windows Azure SDK is installed, and drill into its Emulator folder to select csrun.exe.

- In my case this places the path "C:\Program Files\Microsoft SDKs\Windows Azure\Emulator\csrun.exe" into the text field.

- HERE’S IMPORTANT PART 2/2: Now, after the end of the path (after the second double quote), add a space and the parameter /devstore:start, which tells csrun.exe to start the Storage Emulator.

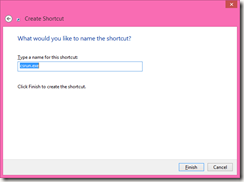

- Click Next to reach the last step – naming the shortcut:

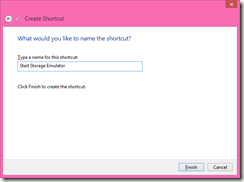

- Perhaps change the name of the shortcut from the default (csrun.exe) to something like Start Storage Emulator:

- Done! Now you can double-click this shortcut to fire up the Windows Azure Storage Emulator:

On my dev computer, the path to start the Windows Azure Storage Emulator was: "C:\Program Files\Microsoft SDKs\Windows Azure\Emulator\csrun.exe" /devstore:start

Now starting the Storage Emulator without having to use a Cloud Service from Visual Studio is only a double-click away.
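If you would rather have code make sure the emulator is up (for example, at the start of a test run), the shortcut's command can be wrapped in a small helper. The sketch below is illustrative and not part of the original post: the class and method names are hypothetical, it assumes the csrun.exe path shown above (adjust it for your SDK version), and it assumes the Storage Emulator's blob endpoint listens on its default local port, 10000.

```csharp
using System;
using System.Diagnostics;
using System.Net.Sockets;

// Hypothetical helper (not from the original post) that mirrors the shortcut.
public static class StorageEmulatorHelper
{
    // Path from the post; it varies with SDK version and install location.
    public const string DefaultCsrunPath =
        @"C:\Program Files\Microsoft SDKs\Windows Azure\Emulator\csrun.exe";

    // Default local port of the Storage Emulator's blob endpoint.
    public const int BlobPort = 10000;

    // Builds the same command line the desktop shortcut runs.
    public static ProcessStartInfo BuildStartInfo(string csrunPath)
    {
        return new ProcessStartInfo(csrunPath, "/devstore:start");
    }

    // True if something accepts TCP connections on host:port within the timeout.
    public static bool IsListening(string host, int port, int timeoutMs = 500)
    {
        using (var client = new TcpClient())
        {
            try
            {
                return client.ConnectAsync(host, port).Wait(timeoutMs) && client.Connected;
            }
            catch (AggregateException)
            {
                return false; // e.g. connection refused
            }
        }
    }

    // Launches csrun.exe only if the blob endpoint is not already answering.
    public static void EnsureStarted(string csrunPath = DefaultCsrunPath)
    {
        if (IsListening("127.0.0.1", BlobPort))
        {
            return;
        }
        // Disposing the Process object does not stop the launched process.
        Process.Start(BuildStartInfo(csrunPath))?.Dispose();
    }
}
```

Calling StorageEmulatorHelper.EnsureStarted() before the first storage call would avoid the timeout described earlier; probing the port first simply skips the launch when the emulator is already running.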

RELATED

- Read about the Windows Azure Compute Emulator

<Return to section navigation list>

Windows Azure SQL Database, Federations and Reporting, Mobile Services

David Pallman continued his mobile development blog with Getting Started with Mobility, Part 6: Hybrid Development in C# with Xamarin on 8/27/2013:

In this series of posts, we're looking at how to get started as a mobile developer. In Part 1, we provided an overview of the mobile landscape, and discussed various types of client app development (native, hybrid, mobile web) and supporting back-end services. In Parts 2-5, we examined native app development for iOS, Android, Windows Phone, and Windows 8. Here in Part 6, we'll begin examining hybrid development approaches--starting with Xamarin.

Hybrid Frameworks: Assess the Risks

Hybrid mobile frameworks are a mixed bag: they may make all the difference in getting developers up to speed and productive; but they can also bring consequences. Before adopting any hybrid framework, you should get the answers to some key questions:

- Does the hybrid framework have a runtime layer or execute in a browser, or does it compile to native code?

- Does it support all of the mobile platforms you need to target?

- Does it restrict access to some of the features you need?

- Does it increase overhead or compromise performance?

- What is its cost? Is it a one-time cost, or a subscription?

- What are the limitations imposed by the license terms?

- What risks come with having a third-party dependency?

- Is it well-established and trustworthy, or is it new and unproven?

- Does the producer have a good track record of good relations with mobile platform vendors, and are they able to stay in sync with mobile platform updates in a timely manner?

- Is good support available, including community forums?

Above all, don't make the mistake of thinking all hybrid solutions are similar. They vary greatly in their approach, mechanics, performance, and reliability.

Xamarin: Mobile Development in C#

Xamarin is a third-party product that allows you to develop for iOS and Android in C# and .NET--very familiar territory to Microsoft developers. How is this magic accomplished? From the Xamarin web site: "Write your app in C# and call any native platform APIs directly from C#. The Xamarin compiler bundles the .NET runtime and outputs a native ARM executable, packaged as an iOS or Android app." In short, Microsoft developers can develop native apps for iOS and Android while working in a familiar language, C#.

Xamarin is the most expensive of the various mobile development approaches we're considering in this series: the Business Edition developer license costs $1,000 per target platform, per developer, per year (there are also Starter, Indie and Enterprise editions available). It may well be worth the cost, depending on what programming languages your developers are familiar with.

The Computer You'll Develop on: A PC or a Mac

Xamarin development for iOS requires a Mac; Android development can be done from a PC or a Mac.

Developer Tools: Xamarin Studio

Xamarin provides an IDE, Xamarin Studio, in both Mac OS X and Windows editions. It's also possible to use Xamarin with Visual Studio.

Xamarin Studio requires and makes use of the Software Development Kits for the mobile platform(s) you are targeting--automatically downloading them during installation if it needs to.

For Android development, you can work self-contained in Xamarin Studio: there's a screen editor that lets you see what your layout XML looks like visually. For iOS development, on the other hand, you have to use both Xamarin Studio and Apple's Xcode side-by-side, so that you can use Interface Builder.

Xamarin Studio

Programming: C#

In Xamarin you program in C#, the favorite language of Microsoft .NET developers. Here's an example of Xamarin C# code for iOS (compare to Objective-C):

    // Core Image context that renders in software
    var context = CIContext.FromOptions (new CIContextOptions () {
        UseSoftwareRenderer = true
    });

    // cgImage is an existing CGImage supplied by the surrounding code
    var ciImage = new CIImage (cgImage);

    // Rotate the hue...
    var hueAdjustFilter = new CIHueAdjust {
        InputAngle = 3.0f * Math.PI,
        Image = ciImage,
    };

    // ...then boost saturation and brightness of the hue-adjusted image
    var colorControlsFilter = new CIColorControls {
        InputSaturation = 1.3f,
        InputBrightness = 0.3f,
        Image = hueAdjustFilter.OutputImage
    };

    ciImage = colorControlsFilter.OutputImage;

    context.CreateImage (ciImage, ciImage.Extent);

And, here's an example of Xamarin C# code for Android (normally programmed in Java):

    using System;
    using Android.App;
    using Android.Content;
    using Android.Runtime;
    using Android.Views;
    using Android.Widget;
    using Android.OS;

    namespace Android1
    {
        [Activity (Label = "Android1", MainLauncher = true)]
        public class MainActivity : Activity
        {
            int count = 1;

            protected override void OnCreate (Bundle bundle)
            {
                base.OnCreate (bundle);

                // Set our view from the "main" layout resource
                SetContentView (Resource.Layout.Main);

                // Get our button from the layout resource,
                // and attach an event to it
                Button button = FindViewById<Button> (Resource.Id.myButton);

                button.Click += delegate {
                    button.Text = string.Format ("{0} clicks!", count++);
                };
            }
        }
    }
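Because both samples above are ordinary C#, logic that doesn't touch platform APIs can live in one shared class and be compiled into both the iOS and the Android project. The sketch below is a hypothetical illustration (ClickCounter is not part of Xamarin's templates): it pulls the label formatting out of the Android click handler so that an iOS view controller could reuse it unchanged.

```csharp
using System;

// Hypothetical shared class: no iOS or Android references, so the same
// source file can be included in both the Xamarin.iOS and Xamarin.Android projects.
public class ClickCounter
{
    private int count = 1;

    // Produces the same text as the Android sample's click handler,
    // then advances the counter.
    public string NextLabel()
    {
        return string.Format("{0} clicks!", count++);
    }
}
```

In the Android activity, the handler body would become button.Text = counter.NextLabel(); an iOS version would hand the same string to its own button, so only the UI wiring differs per platform.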

Debugging Experience

The debugging experience with Xamarin is similar to that of native iOS and Android development: you can run in the mobile platform's emulator, or on an actual device connected to your computer by USB cable. You're using the same emulators you would use in native development, so they are just as good (or bad, or slow).

In getting Xamarin on my PC to work on actual Android devices, I did need to install a Universal Android Debug Bridge driver.

Android Emulator Selection Dialog

Android Emulator

Capabilities

Xamarin doesn't get in the way of using device features. For example, your code can access the accelerometer, the GPS, and the camera, as well as Contacts and Photos.

AccelerometerPlay Sample

CameraAppDemo Sample

Online Resources

The Xamarin web site has a good developer center, whose resources include documentation, guides, recipes, samples, videos, and forums.

Xamarin Developer Center

Key online resources:

- Getting Started

- Introduction to Mobile Development

- Building Cross-Platform Applications

- Hello, Android

- Hello, iPhone

- Android APIs

- iOS APIs

- Recipes

- Sample Code

- Videos

- Forums

Components Store

Xamarin has a components store; the components offer a broad range of functionality, from charting to syncing with Dropbox. Some of the components are free.

Components Gallery

Tutorials

There are a number of good tutorials to be found in the Getting Started area of the Xamarin Developer Center.

1a. Android: Installing Xamarin.Android

1b. iOS: Installing Xamarin.iOS

This tutorial walks you through installation of Xamarin for Android or iOS, and has both Windows PC and Mac editions.

Installing Xamarin on a Mac

2a. Android: Hello, Android

2b. iOS: Hello, iPhone

This tutorial explains how to build a "Hello, World" application for Android or iOS. It takes you through creating a new project, defining a simple interface and writing simple action code, building the solution, and running it on an emulator.

Creating a New Project in Xamarin Studio

Defining iPhone User Interface in Xcode Interface Builder

Hello, iPhone running in iOS Simulator

3a. Android: Multi-Screen Applications

3b. iOS: Multi-Screen Applications

This tutorial shows how to create a multi-screen app, with data from the first view passed to the second when a button is pressed.

Android Multi-Screen App Tutorial Running in Emulator

iOS Multi-Screen App Tutorial Running in Emulator

Summary: Xamarin Hybrid Mobile Development in C#

Xamarin puts mobile development within easy reach of Microsoft developers. While it's still necessary to learn the APIs and conventions of each mobile platform, taking Objective-C and Java out of the equation is nevertheless a major boost. And being able to use a common language for your iOS and Android projects makes a good deal of code re-use possible.

Next: Getting Started with Mobility, Part 7: Hybrid Development in JavaScript with Titanium

Full disclosure: I’ve received free Xamarin for Android and Xamarin for iOS licenses and have intended to test the two for some time.

Carlos Figueira (@carlos_figueira) explained Web-based login on WPF projects for Azure Mobile Services in an 8/27/2013 post:

In almost all supported client platforms for Azure Mobile Services, there are two flavors of the login operation. In the first one, the client talks to an SDK specific to the login provider (e.g., the Live Connect SDK for Windows Store apps, the Facebook SDK for iOS, and so on) and then uses a token obtained from that provider to log in to the mobile service. That’s called the client-side authentication flow, since all the authentication action happens without interaction with the mobile service. In the other alternative, called the server-side authentication flow, the client opens a web browser window (or control) which talks, via the mobile service runtime, to the provider’s web login interface; after a series of redirects (which I depicted in a previous post), the client gets from the service the authentication token which will be used in subsequent (authenticated) calls. There’s one platform, however, which doesn’t support the server-side flow: the “full” .NET 4.5 (i.e., the “desktop” version of the framework).

That platform lacks that functionality because there are cases where it cannot display a web interface in which the user can enter their credentials. For example, it can be used in a backend service (where there’s really no user interface to interact with, like the scenario I showed in the post about an MVC app accessing authenticated tables in an Azure Mobile Service). It can also be a console application, in which there’s no “native” way to display a web page. Even if we could come up with a way to display a login page (such as in a popup window), what kind of window would we use? If we went with WPF, it wouldn’t look natural in a WinForms app, and vice versa.

We can, however, solve this problem if we constrain the platform to a specific one which supports user interface elements. In this post, I’ll show how this can be done for a WPF project. Note that my UI skills are really, really poor, so if you plan on using this in a “real” app, I’d strongly recommend refining the interface. To write the code for this project, I took as a starting point the actual code for the login page from the client SDK (I used the Windows Phone version as an example); it’s good to have all the client code publicly available.

The server-side authentication flow

Borrowing a picture I used in the post about authentication, this is what happens in the server-side authentication flow; the “client” in the picture represents what the browser control on each specific platform does.

What we then need to do is to have a web browser navigate to //mobileservicename.azure-mobile.net/login/<provider>, and monitor its redirects until it reaches /login/done. Once that’s reached, we can then parse the token and create the MobileServiceUser which will be set in the client.
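That redirect check and token parsing can be sketched as one self-contained helper. This is an illustration under stated assumptions, not the SDK's code: it uses System.Text.Json in place of JSON.NET so it runs with no packages; it assumes the fragment has the #token=<JSON> shape and the user.userId / authenticationToken fields that the library code in this post reads; and the class name and service URL are hypothetical. It relies on Uri.Equals ignoring the fragment, so .../login/done#token=... still matches the end URI.

```csharp
using System;
using System.Text.Json;

// Hypothetical helper, not part of the Mobile Services SDK.
public static class LoginRedirect
{
    private const string TokenMarker = "#token=";

    // Returns true when 'current' is the end URI and carries a token fragment.
    // Uri.Equals ignores the fragment, so ".../login/done#token=..." matches endUri.
    public static bool TryGetToken(Uri current, Uri endUri, out string userId, out string authToken)
    {
        userId = null;
        authToken = null;
        if (!current.Equals(endUri))
        {
            return false; // still on the provider's pages; keep navigating
        }

        // Fragment is the escaped form, including the leading '#'.
        string fragment = current.Fragment;
        if (!fragment.StartsWith(TokenMarker, StringComparison.Ordinal))
        {
            return false; // reached login/done without a token
        }

        // Unescape the whole payload (not only %2C) before parsing it as JSON.
        string json = Uri.UnescapeDataString(fragment.Substring(TokenMarker.Length));
        using (JsonDocument doc = JsonDocument.Parse(json))
        {
            JsonElement root = doc.RootElement;
            userId = root.GetProperty("user").GetProperty("userId").GetString();
            authToken = root.GetProperty("authenticationToken").GetString();
        }
        return true;
    }
}
```

Unescaping the whole fragment is a deliberate deviation from the post's Replace("%2C", ","): it also copes with any other characters the browser control leaves percent-encoded.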

The library

The library will have one extension method on the MobileServiceClient method, which will display our own login page (as a modal dialog / popup). When the login finishes successfully (if it fails it will throw an exception), we then parse the token returned by the login page, create the MobileServiceUser object, set it to the client, and return the user (using the same method signature as in the other platforms).

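    using System;
    using System.Threading.Tasks;
    using Microsoft.WindowsAzure.MobileServices;
    using Newtonsoft.Json.Linq;

    // Extension method: must be declared inside a static class.
    public static async Task<MobileServiceUser> LoginAsync(this MobileServiceClient client, MobileServiceAuthenticationProvider provider)
    {
        // e.g. <application URI>/login/facebook and <application URI>/login/done
        Uri startUri = new Uri(client.ApplicationUri, "login/" + provider.ToString().ToLowerInvariant());
        Uri endUri = new Uri(client.ApplicationUri, "login/done");

        // Show the browser popup; completes with the token taken from the final redirect
        LoginPage loginPage = new LoginPage(startUri, endUri);
        string token = await loginPage.Display();

        // The token is JSON (with escaped commas) holding the user id and the auth token
        JObject tokenObj = JObject.Parse(token.Replace("%2C", ","));
        var userId = tokenObj["user"]["userId"].ToObject<string>();
        var authToken = tokenObj["authenticationToken"].ToObject<string>();

        // Attach the user to the client so later calls are authenticated
        var result = new MobileServiceUser(userId);
        result.MobileServiceAuthenticationToken = authToken;
        client.CurrentUser = result;
        return result;
    }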

The login page is shown below. To mimic the login page shown in Windows Store apps, I’ll have a page with a header, a web view, and a footer with a cancel button:

To keep the UI part simple, I’m using a simple grid:

    <Grid Name="grdRootPanel">
        <Grid.RowDefinitions>
            <RowDefinition Height="80"/>
            <RowDefinition Height="*"/>
            <RowDefinition Height="80"/>
        </Grid.RowDefinitions>
        <TextBlock Text="Connecting to a service..." VerticalAlignment="Center" HorizontalAlignment="Center"
                   FontSize="30" Foreground="Gray" FontWeight="Bold"/>
        <Button Name="btnCancel" Grid.Row="2" Content="Cancel" HorizontalAlignment="Left" VerticalAlignment="Stretch"
                Margin="10" FontSize="25" Width="100" Click="btnCancel_Click" />
        <ProgressBar Name="progress" IsIndeterminate="True" Visibility="Collapsed" Grid.Row="1" />
        <WebBrowser Name="webControl" Grid.Row="1" Visibility="Collapsed" />
    </Grid>

In the LoginPage.xaml.cs, the Display method (called by the extension method shown above) creates a Popup window, adds the login page as the child and shows it; when the popup is closed, it will either throw an exception if the login was cancelled, or return the stored token if successful.

    public Task<string> Display()
    {
        Popup popup = new Popup();
        popup.Child = this;
        // Center the popup over the full primary screen
        popup.PlacementRectangle = new Rect(new Size(SystemParameters.FullPrimaryScreenWidth, SystemParameters.FullPrimaryScreenHeight));
        popup.Placement = PlacementMode.Center;

        TaskCompletionSource<string> tcs = new TaskCompletionSource<string>();
        // Subscribe before opening the popup (a safer ordering)
        popup.Closed += (snd, ea) =>
        {
            if (this.loginCancelled)
            {
                tcs.SetException(new InvalidOperationException("Login cancelled"));
            }
            else
            {
                tcs.SetResult(this.loginToken);
            }
        };
        popup.IsOpen = true;
        return tcs.Task;
    }
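The pattern Display uses, turning a one-time Closed event into an awaitable Task through TaskCompletionSource, doesn't depend on WPF. The sketch below shows it in isolation; FakeLoginDialog and DialogTasks are hypothetical stand-ins so the code runs without a UI, and TrySetResult/TrySetException are used instead of the Set* variants so that a second Closed event cannot throw.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical stand-in for the login popup: raises Closed carrying
// either a token or a cancellation flag.
public class FakeLoginDialog
{
    public event EventHandler Closed;
    public bool Cancelled { get; private set; }
    public string Token { get; private set; }

    public void Finish(string token) { Token = token; Closed?.Invoke(this, EventArgs.Empty); }
    public void Cancel() { Cancelled = true; Closed?.Invoke(this, EventArgs.Empty); }
}

public static class DialogTasks
{
    // Completes with the token, or faults with InvalidOperationException on cancel.
    public static Task<string> WhenClosed(FakeLoginDialog dialog)
    {
        var tcs = new TaskCompletionSource<string>();
        dialog.Closed += (sender, args) =>
        {
            if (dialog.Cancelled)
                tcs.TrySetException(new InvalidOperationException("Login cancelled"));
            else
                tcs.TrySetResult(dialog.Token);
        };
        return tcs.Task;
    }
}
```

A caller simply awaits DialogTasks.WhenClosed(dialog) and handles InvalidOperationException for the cancel case, which is the same contract LoginAsync relies on.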

The navigation starts in the constructor of the login page (it could be moved elsewhere, but since the page is primarily used by the extension method itself, it can start navigating to the authentication page as soon as possible).

    public LoginPage(Uri startUri, Uri endUri)
    {
        InitializeComponent();
        this.startUri = startUri;
        this.endUri = endUri;

        // Size the page to the main window, but no smaller than 640x480
        var bounds = Application.Current.MainWindow.RenderSize;
        // TODO: check if those values work well for all providers
        this.grdRootPanel.Width = Math.Max(bounds.Width, 640);
        this.grdRootPanel.Height = Math.Max(bounds.Height, 480);

        this.webControl.LoadCompleted += webControl_LoadCompleted;
        this.webControl.Navigating += webControl_Navigating;
        // Start the server-side flow right away
        this.webControl.Navigate(this.startUri);
    }

When the Navigating event of the web control is raised, we check whether the URI which the control is navigating to is the “final” URI which the authentication flow expects. If so, we extract the token value, store it in the object, and close the popup (which signals the task returned by the Display method to complete).

    void webControl_Navigating(object sender, NavigatingCancelEventArgs e)
    {
        // Uri.Equals ignores the fragment, so ".../login/done#token=..."
        // matches the end URI
        if (e.Uri.Equals(this.endUri))
        {
            string uri = e.Uri.ToString();
            int tokenIndex = uri.IndexOf("#token=");
            if (tokenIndex >= 0)
            {
                this.loginToken = uri.Substring(tokenIndex + "#token=".Length);
            }
            else
            {
                // TODO: better error handling
                this.loginCancelled = true;
            }

            // Closing the popup completes the task returned by Display()
            ((Popup)this.Parent).IsOpen = false;
        }
    }

That’s about it. There is some other code in the library (handling cancel, for example), but I’ll leave it out of this post for simplicity’s sake (you can still see the full code in the link at the bottom of this post).

Testing the library

To test the library, I’ll create a WPF project with a button and a text area to write some debug information:

In the click handler, we invoke LoginAsync; to test it out, I’m also invoking a custom API which I configured with user permissions, to make sure that the token we got is actually good.

    private async void btnStart_Click(object sender, RoutedEventArgs e)
    {
        try
        {
            var user = await MobileService.LoginAsync(MobileServiceAuthenticationProvider.Facebook);
            AddToDebug("User: {0}", user.UserId);
            var apiResult = await MobileService.InvokeApiAsync("user");
            AddToDebug("API result: {0}", apiResult);
        }
        catch (Exception ex)
        {
            AddToDebug("Error: {0}", ex);
        }
    }

That’s it. The full code for this post can be found in the GitHub repository at https://github.com/carlosfigueira/blogsamples/tree/master/AzureMobileServices/AzureMobile.AuthExtensions. As usual, please let us know (either via comments in this blog or in our forums) if you have any issues, comments or suggestions.

Carlos Figueira (@carlos_figueira) described Complex types and Azure Mobile Services in an 8/23/2013 post (missed when published):

About a year ago I posted a series of entries in this blog about supporting arbitrary types in Azure Mobile Services. Back then, the managed client SDK was using a custom serializer which only supported a very limited subset of simple types. To use other types in the CRUD operations, one needed to decorate the types / properties with a special attribute, and implement an interface which was used to convert those types to / from a JSON representation. That was cumbersome for two reasons – the first was that even for simple types, one would need to define a converter class; the other was that the JSON representation was different for the supported platforms (JSON.NET for Windows Phone; Windows.Data.Json classes for Windows Store) – and the object model for the Windows Store JSON representation was, frankly, quite poor.

With the changes made to the client SDK prior to the general release, the SDK for all managed platforms started using a unified serializer: JSON.NET (in the context of this post, “all platforms” means all platforms using managed code). It also started taking more advantage of the extensibility features of that serializer, so that whatever JSON.NET could do on its own, the mobile services SDK wouldn’t need to do anything else. That by itself gave the mobile services SDK the ability to serialize, on all supported platforms, all primitive types which JSON.NET supported natively, so there was no need to implement custom serialization for types such as TimeSpan, enumerations, and Uri, which weren’t supported in the initial version of the SDK.

What that means is that, for simple types, all the code which I wrote on the first post with the JSON converter isn’t required anymore. You can still change how a simple type is serialized, though, if you really want to, by using a JsonConverter (and decorating the member with the JsonConverterAttribute). For complex types, however, there’s still some work which needs to be done – the serializer on the client will happily serialize the object with its complex members, but the runtime won’t know what to do with those until we tell it what it needs to do.

Let’s look at an example – my app adds and displays movies, and each movie has some reviews associated with it.

    public class Movie
    {
        [JsonProperty("id")]
        public int Id { get; set; }

        [JsonProperty("title")]
        public string Title { get; set; }

        [JsonProperty("year")]
        public int ReleaseYear { get; set; }

        [JsonProperty("reviews")]
        public MovieReview[] Reviews { get; set; }
    }

    public class MovieReview
    {
        [JsonProperty("stars")]
        public int Stars { get; set; }

        [JsonProperty("comment")]
        public string Comment { get; set; }
    }

Now let’s try to insert one movie into the server:

    try
    {
        var movieToInsert = new Movie
        {
            Title = "Pulp Fiction",
            ReleaseYear = 1994,
            Reviews = new MovieReview[] { new MovieReview { Stars = 5, Comment = "Best Movie Ever!" } }
        };

        var table = MobileService.GetTable<Movie>();
        await table.InsertAsync(movieToInsert);
        this.AddToDebug("Inserted movie {0} with id = {1}", movieToInsert.Title, movieToInsert.Id);
    }
    catch (Exception ex)
    {
        this.AddToDebug("Error: {0}", ex);
    }

The movie object is serialized without problems to the server, as can be seen in Fiddler (many headers omitted):

    POST https://MY-SERVICE-NAME.azure-mobile.net/tables/Movie HTTP/1.1
    Content-Type: application/json; charset=utf-8
    Host: MY-SERVICE-NAME.azure-mobile.net
    Content-Length: 89
    Connection: Keep-Alive

    {"title":"Pulp Fiction","year":1994,"reviews":[{"stars":5,"comment":"Best Movie Ever!"}]}

The complex type was properly serialized as expected. But the server responds saying that it was a bad request:

    HTTP/1.1 400 Bad Request
    Cache-Control: no-cache
    Content-Length: 112
    Content-Type: application/json
    Server: Microsoft-IIS/8.0
    Date: Thu, 22 Aug 2013 22:10:11 GMT

    {"code":400,"error":"Error: The value of property 'reviews' is of type 'object' which is not a supported type."}

This happens because the runtime doesn’t know which column type to use for non-primitive values. So, as I mentioned in the original posts, there are two ways to solve this issue: make the data, on the client side, of a type which the runtime understands, or “teach” the runtime to understand non-primitive types by defining scripts for the table operations. Let’s look at both alternatives.
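One way to catch this before the request is sent is to inspect the serialized payload on the client. The sketch below is hypothetical and not an SDK feature: it assumes the runtime's rule is simply that top-level values must not be JSON objects or arrays (the error above reports the reviews array as type 'object'), and it uses System.Text.Json so it runs without extra packages.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical client-side check, not part of the Mobile Services SDK.
public static class PayloadChecker
{
    // Lists top-level properties whose values are JSON objects or arrays,
    // i.e. the kind of value the runtime rejected in the response above.
    public static List<string> FindNonPrimitiveProperties(string json)
    {
        var result = new List<string>();
        using (JsonDocument doc = JsonDocument.Parse(json))
        {
            foreach (JsonProperty prop in doc.RootElement.EnumerateObject())
            {
                JsonValueKind kind = prop.Value.ValueKind;
                if (kind == JsonValueKind.Object || kind == JsonValueKind.Array)
                {
                    result.Add(prop.Name);
                }
            }
        }
        return result;
    }
}
```

Run against the request body captured in Fiddler, it reports exactly one offending property, reviews.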

Client-side data manipulation

For the client side, in the original post we converted the complex types into simple types by using a data member JSON converter. That interface doesn’t exist anymore, so we just use the converters from JSON.NET instead. Below is one possible implementation of such a converter, which “flattens” the reviews array into a single string.

```csharp
using System;
using Newtonsoft.Json;

public class Movie_ComplexClientSide
{
    [JsonProperty("id")]
    public int Id { get; set; }

    [JsonProperty("title")]
    public string Title { get; set; }

    [JsonProperty("year")]
    public int ReleaseYear { get; set; }

    [JsonProperty("reviews")]
    [JsonConverter(typeof(ReviewArrayConverter))]
    public MovieReview[] Reviews { get; set; }
}

// Stores the reviews array as a single JSON string so the runtime only sees a primitive type
class ReviewArrayConverter : JsonConverter
{
    public override bool CanConvert(Type objectType)
    {
        return objectType == typeof(MovieReview[]);
    }

    public override object ReadJson(JsonReader reader, Type objectType, object existingValue, JsonSerializer serializer)
    {
        // The column holds a JSON string (or null); parse it back into the array
        var reviewsAsString = serializer.Deserialize<string>(reader);
        return reviewsAsString == null ?
            null :
            JsonConvert.DeserializeObject<MovieReview[]>(reviewsAsString);
    }

    public override void WriteJson(JsonWriter writer, object value, JsonSerializer serializer)
    {
        // Serialize the array to a string, then write that string as the property value
        var reviewsAsString = JsonConvert.SerializeObject(value);
        serializer.Serialize(writer, reviewsAsString);
    }
}
```

This approach has the advantage that it is fairly simple: the converter implementation, as seen above, is trivial, and there’s no need to change server scripts. However, it has the drawback that we’re essentially denormalizing the relationship between the movie and its reviews. In this case that shouldn’t be a big deal (since a review is inherently tied to a movie), but in other scenarios the loss of normalization may lead to other issues. For example, we can’t (easily) query the database for the movie with the most reviews, or the movie with the most 5-star reviews. Also, if we want to use the same data on different platforms (such as JavaScript, Android, iOS, etc.), we’ll need to do this manipulation on those platforms as well.
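To illustrate what “doing this manipulation on those platforms” could look like, here is a minimal sketch for a JavaScript client. It assumes the property names title, year and reviews seen in the Fiddler trace above; flattenMovie and unflattenMovie are hypothetical helpers, not part of the Mobile Services SDK.

```javascript
// Hypothetical helpers: keep the reviews array in a JSON string column so the
// Mobile Services runtime only ever sees primitive property types.

// Returns a shallow copy of the movie with its reviews array serialized to a string.
function flattenMovie(movie) {
  const copy = Object.assign({}, movie);
  if (Array.isArray(copy.reviews)) {
    copy.reviews = JSON.stringify(copy.reviews);
  }
  return copy;
}

// Reverses flattenMovie for a row read back from the table.
function unflattenMovie(row) {
  const copy = Object.assign({}, row);
  if (typeof copy.reviews === "string") {
    copy.reviews = JSON.parse(copy.reviews);
  }
  return copy;
}

const movie = {
  title: "Pulp Fiction",
  year: 1994,
  reviews: [{ stars: 5, comment: "Best Movie Ever!" }],
};

const row = flattenMovie(movie);
console.log(row.reviews); // [{"stars":5,"comment":"Best Movie Ever!"}]
console.log(unflattenMovie(row).reviews[0].stars); // 5
```

You would pass the flattened copy to your JavaScript client's table insert call and run unflattenMovie over the rows you read back; as with the C# converter, a null reviews value is passed through unchanged.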

Server-side data manipulation

Another alternative is to do nothing on the client and deal with the complex data on the server side itself. On the server side, we have two more options: denormalize the data (using a technique similar to the one we used on the client side), or keep it normalized (in two different tables). The scripts in the second post of the original series can still be used for the denormalization technique, so I won’t repeat them here.
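For readers who don’t have that post at hand, here is a minimal, hypothetical sketch of the denormalizing idea on the server. It is not the script from the original series; it only assumes the same server-script conventions the normalized scripts in this post use (request.execute with a success callback, then request.respond()). A given table would use either these scripts or the normalized ones, not both.

```javascript
// Hypothetical table scripts that denormalize on the server: the reviews array
// is stored as a JSON string column and turned back into an array before the
// response is sent to the client.

function insert(item, user, request) {
  var reviews = item.reviews;
  if (reviews !== undefined && reviews !== null) {
    item.reviews = JSON.stringify(reviews); // store as a string column
  }
  request.execute({
    success: function () {
      if (typeof item.reviews === "string") {
        item.reviews = JSON.parse(item.reviews); // return the array to the client
      }
      request.respond();
    }
  });
}

function read(query, user, request) {
  request.execute({
    success: function (results) {
      results.forEach(function (movie) {
        if (typeof movie.reviews === "string") {
          movie.reviews = JSON.parse(movie.reviews); // string column back to array
        }
      });
      request.respond();
    }
  });
}
```

The trade-offs are the same as for the client-side converter, except that every client platform now benefits without extra code.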

To keep the table normalized (i.e., to implement a 1:n relationship between the Movie and the new MovieReview tables) we need to add some scripts on the server side to deal with that data. The last post in the original series talked about that, but with a different scenario. For completeness’ sake, I’ll add the scripts for this scenario (movies / reviews) here as well.

First, inserting data. When the data arrives at the server, we first remove the complex type (which the runtime doesn’t know how to handle), and after inserting the movie, we iterate through the reviews to insert them with the associated movie id as the “foreign key”.

```javascript
function insert(item, user, request) {
    var reviews = item.reviews;
    if (reviews) {
        delete item.reviews; // will add in the related table later
    }

    request.execute({
        success: function () {
            var movieId = item.id;
            var reviewsTable = tables.getTable('MovieReview');
            if (reviews) {
                item.reviews = [];
                var insertNextReview = function (index) {
                    if (index >= reviews.length) {
                        // done inserting reviews, respond to client
                        request.respond();
                    } else {
                        var review = reviews[index];
                        review.movieId = movieId;
                        reviewsTable.insert(review, {
                            success: function () {
                                item.reviews.push(review);
                                insertNextReview(index + 1);
                            }
                        });
                    }
                };

                insertNextReview(0);
            } else {
                // no need to do anything else
                request.respond();
            }
        }
    });
}
```
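The insertNextReview function chains the inserts so that each review is written only after the previous one succeeded. As written, it passes no error callback to reviewsTable.insert, so the script itself never handles a failed review insert and never calls request.respond() on that path. As a sketch under that observation, the pattern can be factored into a hypothetical helper, insertSequentially, that also reports the first failure:

```javascript
// Hypothetical helper generalizing the insertNextReview pattern: call insertOne
// for each item in order, starting the next call only after the previous one
// succeeded, and stop at the first error.
function insertSequentially(items, insertOne, onDone, onError) {
  var next = function (index) {
    if (index >= items.length) {
      onDone(); // every item was inserted
      return;
    }
    insertOne(items[index], {
      success: function () { next(index + 1); },
      error: function (err) { onError(err, index); } // report which item failed
    });
  };
  next(0);
}

// Example with a fake asynchronous insert function:
insertSequentially(
  [{ stars: 5 }, { stars: 3 }],
  function (review, callbacks) { setTimeout(callbacks.success, 10); },
  function () { console.log("all reviews inserted"); },
  function (err, index) { console.log("insert " + index + " failed: " + err); }
);
```

Inside the insert script you might pass a function that sets review.movieId and calls reviewsTable.insert(review, callbacks), respond with request.respond() when done, and respond with an error status on failure; check the Mobile Services server-script reference for the exact status-code names before relying on them.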

Reading is similar – first read the movies themselves, then iterate through them and read their reviews from the associated table.

```javascript
function read(query, user, request) {
    request.execute({
        success: function (movies) {
            var reviewsTable = tables.getTable('MovieReview');
            var readReviewsForMovie = function (movieIndex) {
                if (movieIndex >= movies.length) {
                    request.respond();
                } else {
                    reviewsTable.where({ movieId: movies[movieIndex].id }).read({
                        success: function (reviews) {
                            movies[movieIndex].reviews = reviews;
                            readReviewsForMovie(movieIndex + 1);
                        }
                    });
                }
            };

            readReviewsForMovie(0);
        }
    });
}
```
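The read script issues one query against MovieReview per movie returned, so a page of N movies costs N + 1 database round trips. If you can retrieve the reviews for all returned movies with a single query (how to express that filter in a server script is not shown here and is an assumption), attaching them becomes a simple in-memory join. attachReviews is a hypothetical helper:

```javascript
// Hypothetical helper: attach each review to its movie by movieId in one pass,
// instead of running one reviews query per movie.
function attachReviews(movies, reviews) {
  var byMovieId = {};
  movies.forEach(function (movie) {
    movie.reviews = [];
    byMovieId[movie.id] = movie; // index movies by id
  });
  reviews.forEach(function (review) {
    var movie = byMovieId[review.movieId];
    if (movie) {
      movie.reviews.push(review); // reviews for movies not in this page are ignored
    }
  });
  return movies;
}

var exampleMovies = [{ id: 1, title: "Pulp Fiction" }];
attachReviews(exampleMovies, [{ movieId: 1, stars: 5, comment: "Best Movie Ever!" }]);
console.log(exampleMovies[0].reviews.length); // 1
```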

That’s it. I wanted to have an updated post so I could add a warning to the original ones that some of their content was out of date. The code for this project can be found on GitHub at https://github.com/carlosfigueira/blogsamples.

No significant articles so far this week.

<Return to section navigation list>

Windows Azure Marketplace DataMarket, Cloud Numerics, Big Data and OData

Glenn Gailey (@ggailey777) asserted his New Love for WCF Data Services: Push Notifications in an 8/26/2013 post:

I have just published a new end-to-end sample that demonstrates how to use Windows Azure Notification Hubs to add push notification functionality to the classic Northwind OData service.

I also blogged about this as a guest blogger on the Silver Lining blog.

Sample: Send Push Notifications from an OData Service by Using Notification Hubs

Windows Azure Notification Hubs is a cloud-based service that enables you to send notifications to mobile device apps running on all major device platforms. This sample demonstrates how to use Notification Hubs to send notifications from an OData service to a Windows Store app.

Blog Post: Send Push Notifications from an OData Service using Windows Azure Notification Hubs

Windows Azure Notification Hubs is a Windows Azure service that makes it easier to send notifications to mobile apps running on all major device platforms from a single backend service, be it Mobile Services or any OData service. In this article, I focus on adding push notifications to an OData service, and in particular to a WCF Data Services project.

The WCF Data Services Team announced the WCF Data Services 5.6.0 Release on 8/26/2013:

Recently we released updated NuGet packages for WCF Data Services 5.6.0. You will need the updated tooling (released today) to use the portable libraries feature mentioned below with code gen.

What is in the release:

Visual Studio 2013 Support

The WCF DS 5.6.0 tooling installer has support for Visual Studio 2013. If you are using Visual Studio 2013 and would like to consume OData services, you can use this tooling installer to get Add Service Reference support for OData. Should you need to use one of our prior runtimes, you can still do so using the normal NuGet package management commands (you will need to uninstall the installed WCF DS NuGet packages and install the older WCF DS NuGet packages).

Portable Libraries

All of our client-side libraries now have portable library support. This means that you can now use the new JSON format in Windows Phone and Windows Store apps. The core libraries have portable library support for .NET 4.0, Silverlight 5, Windows Phone 8 and Windows Store apps. The WCF DS client has portable library support for .NET 4.5, Silverlight 5, Windows Phone 8 and Windows Store apps. Please note that this version of the client does not have tombstoning, so if you need that feature for Windows Phone apps you will need to continue using the Windows Phone-specific tooling.

URI Parser Integration

The URI parser is now integrated into the WCF Data Services server bits, which means that the URI parser is capable of parsing any URL supported in WCF DS. We have also added support for parsing functions in the URI Parser.

Public Provider Improvements - Reverted

In the 5.5.0 release we started working on making our providers public. In this release we hoped to make it possible to override the behavior of included providers with respect to properties that don’t have native support in OData v3, for instance enum and spatial properties. Unfortunately we ran into some non-trivial bugs with $select and $orderby and needed to cut the feature for this release.

Public Transport Layer

In the 5.4.0 release we added the concept of a request and response pipeline to WCF Data Service Client. In this release we have made it possible for developers to directly handle the request and response streams themselves. This was built on top of ODataLib's IODataRequestMessage and IODataResponseMessage framework that specifies how requests and responses are sent and received. With this addition developers are able to tweak the request and response streams or even completely replace the HTTP layer if they so desire. We are working on a blog post and sample documenting how to use this functionality.

Breaking Changes

In this release we took a couple of breaking changes. As these changes are tremendously unlikely to affect anyone, we opted not to increment the major version number, but we wanted everyone to be aware of what they were:

- Developers using the reading/writing pipeline must write to Entry rather than Entity on the WritingEntryArgs

- Developers should no longer expect to be able to modify the navigation property source in OnNavigationLinkStarting and OnNavigationLinkEnding

- Developers making use of the DisablePrimitiveTypeConversion knob may see a minor change in their JSON payloads; the knob previously only worked for the ATOM format

Bug Fixes

- Fixes a performance issue with models that have lots of navigation properties

- Fixes a performance issue with the new JSON format when creating or deleting items

- Fixes a bug where DisablePrimitiveTypeConversion would cause property type annotations to be ignored in the new JSON format

- Fixes a bug where LoadProperty does not remove elements from a collection after deleting a link

- Fixes an issue where the URI Parser would not properly bind an action to a collection of entities

- Improves some error messages

Known Issues

The NuGet runtime in Visual Studio needs to be 2.0+ for Add Service Reference to work properly. If you are having issues with Add Service Reference in Visual Studio 2012, please ensure that NuGet is up-to-date.

<Return to section navigation list>

Windows Azure Service Bus, BizTalk Services and Workflow

No significant articles so far this week.

<Return to section navigation list>

Windows Azure Access Control, Active Directory, and Identity

No significant articles so far this week.

<Return to section navigation list>

Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

Nir Mashkowski (@nirmsk) explained Configuring Dynamic IP Address Restrictions in Windows Azure Web Sites in an 8/27/2013 post to the Windows Azure blog:

A recent upgrade of Windows Azure Web Sites enabled the Dynamic IP Restrictions module for IIS8. Developers can now enable and configure the Dynamic IP Restrictions feature (DIPR for short) for their websites.

There is a good overview of the IIS8 feature available here:

http://www.iis.net/learn/get-started/whats-new-in-iis-8/iis-80-dynamic-ip-address-restrictions

The DIPR feature provides two main protections for developers:

- Blocking of IP addresses based on number of concurrent requests

- Blocking of IP addresses based on number of requests over a period of time

Developers can additionally configure DIPR behavior, such as which HTTP status code is returned for blocked requests.

In Azure Web Sites a developer configures DIPR using configuration sections added to the web.config file located in the root folder of the website.

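If you want to block connections based on the number of concurrent requests (i.e., active requests in flight at any moment), add a dynamicIpSecurity element containing a denyByConcurrentRequests element, with enabled="true" and, for example, maxConcurrentRequests="10", to the system.webServer/security section of the website’s web.config file.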

With the enabled attribute of the denyByConcurrentRequests element set to true, IIS automatically starts blocking requests from an IP address when the number of concurrent requests from that address exceeds the value of the maxConcurrentRequests attribute (for example, 10).
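To make the concurrent-request rule concrete, here is a simplified model of it in JavaScript. It only mirrors the rule as described above; it is not how IIS implements DIPR, and ConcurrentRequestLimiter, tryStart and finish are hypothetical names.

```javascript
// Simplified, hypothetical model of denyByConcurrentRequests: count in-flight
// requests per client IP and reject a new request once the count would exceed
// maxConcurrentRequests.
class ConcurrentRequestLimiter {
  constructor(maxConcurrentRequests) {
    this.max = maxConcurrentRequests;
    this.inFlight = new Map(); // ip -> number of active requests
  }

  // Returns true if the request may proceed (and counts it as in flight).
  tryStart(ip) {
    const active = this.inFlight.get(ip) || 0;
    if (active + 1 > this.max) {
      return false; // would exceed the limit: blocked
    }
    this.inFlight.set(ip, active + 1);
    return true;
  }

  // Call when an admitted request completes.
  finish(ip) {
    const active = this.inFlight.get(ip) || 0;
    if (active <= 1) {
      this.inFlight.delete(ip);
    } else {
      this.inFlight.set(ip, active - 1);
    }
  }
}

const limiter = new ConcurrentRequestLimiter(10);
let admitted = 0;
for (let i = 0; i < 11; i++) {
  if (limiter.tryStart("203.0.113.7")) admitted++; // none of these finish
}
console.log(admitted); // 10
```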

Alternatively, if you want to block connections based on the total number of requests made within a specific time window, you could configure a denyByRequestRate element instead.

Setting the enabled attribute to true on the denyByRequestRate element tells IIS to block requests from an IP address when the total number of requests observed within the time window defined by requestIntervalInMilliseconds exceeds the value of the maxRequests attribute. For example, with requestIntervalInMilliseconds set to 2000 and maxRequests set to 10, a client making more than 10 requests within a 2-second period will be blocked.

Lastly, developers can enable both blocking mechanisms simultaneously. For example, enabling denyByConcurrentRequests with maxConcurrentRequests="10" and denyByRequestRate with maxRequests="20" and requestIntervalInMilliseconds="5000" tells DIPR to block clients that either have more than 10 concurrent requests in flight or have made more than 20 total requests within a 5-second time window.

After DIPR blocks an IP address, the address stays blocked until the end of the current time window, after which it can once again make requests to the website. For example, if requestIntervalInMilliseconds is set to 5000 (5 seconds) and an IP address is blocked at the 2-second mark, the address will remain blocked for another 3 seconds, which is the time remaining in the current time window.
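The windowing behavior described above can be modeled as a fixed-window counter. This is a hypothetical sketch, not IIS's implementation; in particular, starting each client's window at its first request is an assumption, and RequestRateLimiter and isAllowed are illustrative names.

```javascript
// Simplified, hypothetical model of denyByRequestRate: each client IP gets a
// fixed window of requestIntervalInMilliseconds, assumed here to start with its
// first request. Requests are blocked once the count in the current window
// exceeds maxRequests; the block lifts when the window ends, because the next
// request then starts a new window with a fresh count.
class RequestRateLimiter {
  constructor(maxRequests, requestIntervalInMilliseconds) {
    this.maxRequests = maxRequests;
    this.interval = requestIntervalInMilliseconds;
    this.windows = new Map(); // ip -> { start, count }
  }

  // nowMs is passed in explicitly so the behavior is easy to test.
  isAllowed(ip, nowMs) {
    let w = this.windows.get(ip);
    if (!w || nowMs >= w.start + this.interval) {
      w = { start: nowMs, count: 0 }; // previous window is over: start a new one
      this.windows.set(ip, w);
    }
    w.count += 1;
    return w.count <= this.maxRequests;
  }
}

// 20 requests per 5 seconds, as in the combined example above
const limiter = new RequestRateLimiter(20, 5000);
for (let i = 0; i < 20; i++) limiter.isAllowed("203.0.113.7", i * 100); // t = 0..1900 ms
console.log(limiter.isAllowed("203.0.113.7", 2000)); // false (21st request)
console.log(limiter.isAllowed("203.0.113.7", 4999)); // false (same window)
console.log(limiter.isAllowed("203.0.113.7", 5000)); // true (new window)
```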

Developers can customize the response returned to a blocked client by setting the denyAction attribute on the dynamicIpSecurity element itself. The allowable values for denyAction are:

- AbortRequest (returns an HTTP status code of 0)

- Unauthorized (returns an HTTP status code of 401)

- Forbidden (returns an HTTP status code of 403). Note this is the default setting.

- NotFound (returns an HTTP status code of 404)

For example, if you wanted to send a 404 status code instead of the default (Forbidden 403), you could set denyAction="NotFound" on the dynamicIpSecurity element.

One question that comes up is: what IP address will DIPR see when running in Azure Web Sites? Running in Windows Azure means that a web application sits behind various load balancers. That could potentially mean that the client IP address presented to a website is the address of an upstream load balancer instead of the actual client out on the Internet. However, Azure Web Sites automatically handles the necessary translation on your behalf and ensures that the client IP address “seen” by the DIPR module is the real IP address of the Internet client making the HTTP request.

<Return to section navigation list>

Windows Azure Cloud Services, Caching, APIs, Tools and Test Harnesses

No significant articles so far this week.

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

David Linthicum (@DavidLinthicum) asserted “With Ballmer gone, Microsoft could go boom or bust in the cloud. To succeed, the next CEO must consider these points” in a deck for his 3 tips for Microsoft's next CEO: How to handle Windows Azure article of 8/27/2013 for InfoWorld’s Cloud Computing blog:

By now, you've surely heard: Steve Ballmer is stepping down, after serving at Microsoft for 33 years overall, including 13 years as CEO. The move is due, at least in part, to the company's poor performance.

We're told the time frame for his departure is 12 months. However, he could be gone much sooner, given the current feeling among Microsoft investors. Indeed, the stock surged 8 percent at one point Friday morning on the news that Ballmer was leaving.

Microsoft shops need to keep a close eye on this transition. This could be the beginning of Microsoft's rejuvenation -- or the beginning of the end.

The new CEO will likely focus on Azure and the movement to the cloud. To be honest, Microsoft has done better than I expected here; Azure is in second or third place behind Amazon Web Services, depending on which analyst reports you read. However, given the company's leadership position, the investors expected more from Azure and Microsoft as a whole.

The new CEO will have to renew focus on the cloud and figure out how to drive Microsoft into the forefront or at least into a solid second place. I have a few suggestions for the would-be CEO prior to getting the keys to the big office.

- First, focus more on your existing .Net developer base. Many Microsoft developers don't feel as loved as they should be by Microsoft. While Microsoft does a good job of managing developer networks, I've seen many jump to Amazon Web Services in the last year. Developers are key to the success of cloud computing, and Microsoft has a ton of them.

- Second, hire new cloud talent that will think out of the Microsoft box. Microsoft has undergone a brain drain in the last few years, and the ones who've stuck around haven't shown much innovation with Azure. Microsoft's strategy has been largely reactionary, such as the recent realignment to IaaS -- that's following, not leading.

- Finally, look at interoperability. Microsoft loves having closed technology. Instead of arguing for interoperability, Microsoft prefers you stick to its stuff because it's supposedly made to work together. The world is not that simple, and Microsoft needs to take steps to make sure Azure works and plays well with others.

Good luck to the new CEO. He or she will need it.

Scott Blodgett described Microsoft’s Azure Stores in an 8/25/2013 post:

Microsoft Azure has historically lagged far behind Amazon’s EC2 in the market and in the hearts and minds of most developers. Azure started out life as a Platform as a Service (PaaS) offering, which pretty much no one wanted; most developers wanted Infrastructure as a Service (IaaS), which EC2 has had since day one. The practical difference is that IaaS lets you deploy and manage your own application in the cloud instead of being constrained to compiled / packaged offerings. Further, Amazon has been innovating at such a rapid pace that at nearly every turn Azure has looked like an inferior offering by comparison.

In mid-2011 Microsoft moved its best development manager, Scott Guthrie, onto Azure. Mark Russinovich, arguably Microsoft’s best engineer, has also been working on the Azure project since 2010. At this point Microsoft truly has its “A” team on Azure, and it is actively using Azure for Outlook.com (the replacement for Hotmail) and SkyDrive (deeply integrated into Windows 8). Amazon EC2 is still the gold standard in cloud computing, but Azure is increasingly competitive. The Azure Store is a step toward parity. The Store was announced in the fall of 2012 at the Build conference and has come a long way in a short period of time. For reference, Amazon has something similar called the AWS Marketplace.

There are actually two different entities: the Azure Store is meant for developers, and the Azure Marketplace is meant for analysts and information workers. My sense is that the Marketplace has been around longer than the Store, as it has a much richer set of offerings. Some of the offerings overlap between the Store and the Marketplace; for example, the Worldwide Historical Weather Data can be accessed from both places.

Similarities

- Both have data and applications.

- Both operate in the Azure cloud

Differences

- Windows Azure Store: Integration point is always via API

- Marketplace: Applications are accessed via a web page or another packaged application, such as a mobile app; data can be accessed via Excel, (sometimes) an Azure dataset viewer, or integrated into your application via web services

What confuses me is why there are so many more data applications in the Marketplace than in the Store. For example, none of the extensive Stats Inc data is in the Store. It may be that the Store is just newer and has yet to be fully populated. See this Microsoft blog entry for further details.

I kicked the tires of the Azure Store and came away very impressed with what I saw. I counted approximately 30 different applications (all in English). There are two types of apps in the Store: App Services and Data. Although I did not write a test application, I am fairly confident that both types are accessed via web services. App Services provide functionality, whereas Data offerings provide information. In both cases, these apps represent a buy-rather-than-build decision.

- App Services: You can think of a service as a feature you would want integrated into your application. For example, one of the App Services (Staq Analytics) provides real-time analytics for service-based games: a game developer would code Staq Analytics into their games, which in turn would provide insight into customer usage. Another application, MongoLab, provides a NoSQL database. The beauty of integrating an app from the Azure marketplace is that you as the customer never need to worry about scalability; Microsoft takes care of that for you.

- Data: Data applications provide on-demand information. For example, Dun and Bradstreet’s offering provides credit report information, Bing provides web search results, and StrikeIron validates phone numbers. As with App Services, Azure takes care of scalability under load. Additionally, with a marketplace offering the data is theoretically as fresh as possible.

Further detail on the Store can be found here.

All in all, the interface is very clean and straightforward to use. There is a store and a portal. Everything in the store appears to be in English, though based on the URL it looks like it might be set up for localization. The portal is localized into 11 languages. The apps do not appear to be localized, though the Azure framework is. As a .NET developer I feel very comfortable using this environment and am impressed with how rich the interface has become; it is increasingly competitive with EC2 on usability.

Applications are built using the Windows Azure Store SDK. There is a generic mailto address for developers to get into contact with Microsoft. There is also an Accelerator Program which will give applications further visibility in the Azure Store.

It is probably worth highlighting that Microsoft actually has a third “store” of sorts, called VM Depot (presently in preview), which focuses more on the IaaS approach and on bridging “on premise” and “off premise” clouds through Hyper-V and Azure portability.

Finally, identity technologies are also gaining a lot of focus, aiming to unify the experience for hybrid deployments that combine on-premises or hosted IaaS with Azure PaaS. The ALM model is also starting to be unified, so that for both Azure and Windows Hyper-V, development teams will deliver defined packages (databases as DACs; applications as CABs / MSDeploy; sites as WebDeploy / Git; etc.), with many Azure features, such as the Service Bus, being ported back to Windows Server. Additionally, monitoring services are starting to converge on this model to define a transparent, unified distributed service.

<Return to section navigation list>

Windows Azure Pack, Hosting, Hyper-V and Private/Hybrid Clouds

J. Peter Bruzzese (@JPBruzzese) asserted “The pack lets developers write once and deploy them locally in Windows Server 2012 R2, as well as in Microsoft's cloud” in a deck for his Windows Azure Pack: Cloud app convenience meets in-house hosting article of 8/28/2013 for InfoWorld’s Enterprise Windows blog:

Windows Server 2012 R2 was released to manufacturers this week, meaning the next-gen server OS is one step closer to its general availability of October 18. But you don't have to wait to start taking advantage of its ability to run Windows Azure Pack, as the pack's preview version also runs on the current Windows Server 2012. (You will need R2 to take advantage of the final version of Azure Pack that debuts with Windows Server 2012 R2.)

Windows Azure Pack is a big deal, or should be, because it extends the functionality you have in the cloud to your data center.


In carpentry, there's an expression: Measure twice, cut once. It's good advice all around, but you must have the right tools in place for it to work. With modern applications increasingly developed in the cloud (it's easier and cheaper to do your dev and test work on a cloud-based system like Azure than to build your own infrastructure), what happens when the decision is made to bring that app in-house? With Windows Server 2012 and Windows Azure Pack, developers don't have to worry; they can measure twice and cut once by developing for the cloud and moving that same application to any Microsoft cloud (public, private, hybrid) through a service provider or through in-house servers.

The IT admins reading this post may stop right here, figuring this is an issue for developers. That would be a mistake -- although developers enjoy the direct benefit of Azure Pack, the fact is you, the IT pros, are the ones implementing the platform. Thus, you need to understand how the applications are built on it.

One of the key components that make the cross-platform connection possible for developers is Windows Azure Service Bus, which provides messaging capabilities that combine with Windows Azure Pack to give developers what they need to write once and use anywhere, working with the same client SDK when developing apps.

Another piece of this puzzle is Advanced Message Queuing Protocol (AMQP) 1.0 support in Windows Azure Service Bus. AMQP is an open-standard messaging protocol, developed first at JP Morgan Chase, that makes it easier to build message-based applications that use different languages, frameworks, and operating systems.

Whether you're a developer or an IT admin, understanding these key components will help you take full advantage of Azure Pack and Windows Server 2012.

Steven Martin (@stevemar_msft) announced Gartner Recognizes Microsoft as a Cloud Infrastructure as a Service Visionary in an 8/26/2013 post:

Four months ago, we moved Windows Azure Infrastructure Services out of preview and into general availability which included covering the service with our industry-leading SLA. In the short time since then, Gartner has recognized Microsoft as a Visionary in the market for its completeness of vision and ability to execute according to its 2013 Magic Quadrant report for Cloud Infrastructure as a Service (IaaS).

We believe Gartner recognized Microsoft as a visionary because our comprehensive global IaaS + PaaS + Hybrid cloud vision is one of our biggest strengths.

Microsoft’s Vision for the Cloud

Over the past year we’ve made significant investments in Windows Azure in order to deliver on our vision, from launching Infrastructure Services to making countless other services (including Media Services, Mobile Services and Web Sites) generally available. We have aggressively expanded Windows Azure into multiple international markets, including becoming the first cloud vendor to offer public cloud services in mainland China. In addition, we have made compelling improvements to the management portal. We believe the results from this report are a tremendous validation of the work we have completed so far, as well as where we plan to go.

Over the past several weeks, Brad Anderson, Corporate Vice President, Windows Server and System Center, has been contributing to the “What’s New in 2012 R2” blog series, which details the new capabilities and customer benefits delivered in the R2 versions of System Center 2012 and Windows Server 2012, as well as updates to Windows Intune, and how these offerings, together with Windows Azure, make the Cloud OS vision come to life. We see the Cloud OS as a strong differentiator, and believe that this will continue to contribute to our success in the IaaS market.

Don’t Take Our Word for It

We have a lot of customers doing exciting work on Windows Azure. Below are some case studies we’ve compiled from companies experiencing the benefits of Windows Azure and Microsoft’s Cloud OS vision.

- Trek Bicycle Corporation Moves Retail System to Cloud, Expects to Save $15,000 a Month in IT Costs

- Telenor Uses Windows Azure Virtual Machines for Fast, Efficient, Cost-saving Development and Testing of company-wide SharePoint 2013 Platform

- Toyota Redesigns Web Portal Using Scalable Cloud and Content Management Solutions

- Digital Air Strike uses Windows Azure and on-premises Microsoft technologies to provide social media monitoring and response services to some of the largest auto retailers in the world, including General Motors

We’re excited that Gartner recognized our completeness of vision, and look forward to delivering more on our vision in the coming year. If you’d like to read the full report, “Gartner: Magic Quadrant for Cloud Infrastructure as a Service,” you can find it at the following link: http://www.gartner.com/reprints/server-tools?id=1-1IMDMZ8&ct=130819&st=sb

I’m interested to hear your perspectives on the report, and on the rapidly evolving cloud landscape.

*Gartner does not endorse any vendor, product or service depicted in its research publications, and does not advise technology users to select only those vendors with the highest ratings. Gartner research publications consist of the opinions of Gartner’s research organization and should not be construed as statements of fact. Gartner disclaims all warranties, expressed or implied, with respect to this research, including any warranties of merchantability or fitness for a particular purpose.

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

Beth Massi (@bethmassi) described Calling ASP.NET Web API from a LightSwitch Silverlight Client on 8/23/2013:

Before I left for a wonderful vacation camping in Lake Tahoe :) I showed you how you can use Web API with your LightSwitch middle-tier service in order to call stored procedures in your databases. I also showed you how we’ve improved database management in Visual Studio 2013 with the addition of SQL Server Data Tools (SSDT) support. If you missed them:

- Adding Stored Procs to your LightSwitch Intrinsic Database

- Calling Stored Procs in your LightSwitch Databases using Web API

You can do all sorts of wonderful things with SSDT as well as with Web API. Starting in Visual Studio 2012 Update 2 (LightSwitch V3), we added the ability to use the ServerApplicationContext on the middle-tier so you can create custom web services that utilize all the business and data logic inside LightSwitch. This makes it easy to reuse your LightSwitch business logic & data investments and extend the service layer exactly how you want. (See this and this for a couple more examples).

I got a few questions about my last post where I showed how to call the Web API we created from a LightSwitch HTML client. Folks asked how to call the same Web API from the LightSwitch Silverlight client so I thought I’d show a possible solution here since many customers use the desktop client today. And although I’ll be using it in this post, it’s not required to have Visual Studio 2013 to do this – you can do it with VS2012 Update 2 or higher.

So continuing from the example we’ve been using in the previous posts above, let’s see how we can get our Web API to return results to a LightSwitch Silverlight client.

Modifying our Web API

By default, Web API will return our results in JSON format. This is a great, lightweight format for exchanging data and is standard across a plethora of platforms, including our LightSwitch HTML client, which is based on jQuery Mobile. You can also return JSON to a Silverlight client. However, you may want to work with XML instead. The nice thing about Web API is that it will return XML-formatted results as long as the client specifies “application/xml” in its Accept header when making the web request. (As an aside, LightSwitch OData services will also return data in both these formats, and the LightSwitch HTML & Silverlight clients use JSON under the hood.)
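The Accept-header negotiation described above works the same from any HTTP client. The rest of this post targets Silverlight; purely to illustrate the mechanism, here is a small JavaScript sketch. The api/TableCounts path is an assumption based on the api/&lt;controller&gt; route comment in the TableCountsController below, requestTableCounts is a hypothetical helper, and fetchImpl lets you inject a fetch function for testing. The request must also carry the signed-in user's credentials, since the controller checks permissions.

```javascript
// Hypothetical sketch: ask the same Web API endpoint for XML or JSON by
// setting the Accept header. baseUrl should end with a slash so the relative
// path resolves under the application root.
function requestTableCounts(baseUrl, format, fetchImpl) {
  const doFetch = fetchImpl || globalThis.fetch; // global fetch: browsers, Node 18+
  const accept = format === "xml" ? "application/xml" : "application/json";
  const url = new URL("api/TableCounts", baseUrl).toString();
  return doFetch(url, { headers: { Accept: accept } });
}

// Usage against a running LightSwitch app (the URL is a placeholder):
// requestTableCounts("https://localhost:44300/", "xml")
//   .then(function (response) { return response.text(); })
//   .then(function (xml) { console.log(xml); });
```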

Let’s make a few modifications to the Get method in our Web API Controller so that it returns a list of objects we can serialize nicely as XML. First add a reference from the Server project to System.Runtime.Serialization and import the namespace in your Controller class.

Recall that our Get method calls a stored procedure in our database that returns a list of all the tables in the database as well as the number of rows in each. So open up the TableCountsController and create a class called TableInfo that represents this data. Then attribute the class with DataContract and DataMember attributes so it serializes the way we need (with an empty XML namespace, so a plain class on the client can read it). (Please see MSDN for more information on DataContracts.)
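Web API’s XML formatter uses DataContractSerializer by default, so it helps to see what that serializer produces for this class. Without Namespace = "" the elements would be qualified with http://schemas.datacontract.org/2004/07/ plus the CLR namespace. The sketch below serializes a made-up list the same way; the helper name DataContractDemo is hypothetical. Note that DataContractSerializer writes members in alphabetical order (Count before Name) unless you set Order on DataMember.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Runtime.Serialization;
using System.Text;

[DataContract(Namespace = "")]
public class TableInfo
{
    [DataMember] public string Name { get; set; }
    [DataMember] public int Count { get; set; }
}

public static class DataContractDemo
{
    // Serializes the list with DataContractSerializer, as Web API's XML formatter does by default.
    public static string ToXml(List<TableInfo> items)
    {
        var serializer = new DataContractSerializer(typeof(List<TableInfo>));
        using (var stream = new MemoryStream())
        {
            serializer.WriteObject(stream, items);
            return Encoding.UTF8.GetString(stream.ToArray());
        }
    }
}
```

For a single item the output is an ArrayOfTableInfo root containing one TableInfo element with Count and Name children, none of them in the datacontract.org namespace.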

VB:

<DataContract(Namespace:="")>
Public Class TableInfo
    <DataMember>
    Property Name As String
    <DataMember>
    Property Count As Integer
End Class

C#:

[DataContract(Namespace = "")]
public class TableInfo
{
    [DataMember]
    public string Name { get; set; }
    [DataMember]
    public int Count { get; set; }
}

Now we can tweak the code that calls our stored proc so that it projects each record into a TableInfo object and returns a List of them. Note that this change will not affect our JSON clients.
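If you want to check the projection without a database, the same LINQ over IDataRecord works against any DbDataReader (SqlDataReader is one). This sketch substitutes an in-memory DataTable and its DataTableReader for the stored procedure’s result set; the rows are made up, and the helper names are hypothetical. It assumes the same column order as the controller: table name first, row count second, matching GetString(0) and GetInt32(1).

```csharp
using System;
using System.Collections.Generic;
using System.Data;
using System.Data.Common;
using System.Linq;

public class TableInfo
{
    public string Name { get; set; }
    public int Count { get; set; }
}

public static class ProjectionDemo
{
    // Same projection as the controller: column 0 -> Name, column 1 -> Count.
    public static List<TableInfo> ToTableInfos(DbDataReader reader)
    {
        return reader.Cast<IDataRecord>()
            .Select(dr => new TableInfo { Name = dr.GetString(0), Count = dr.GetInt32(1) })
            .ToList();
    }

    // Hypothetical stand-in for the uspGetTableCounts result set.
    public static DataTable SampleResults()
    {
        var table = new DataTable();
        table.Columns.Add("Name", typeof(string));
        table.Columns.Add("Count", typeof(int));
        table.Rows.Add("Customers", 12);
        table.Rows.Add("Orders", 3);
        return table;
    }
}
```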

VB:

Public Class TableCountsController
    Inherits ApiController

    ' GET api/<controller>
    Public Function GetValues() As List(Of TableInfo)
        Dim reportResult As List(Of TableInfo) = Nothing

        Using context As ServerApplicationContext = ServerApplicationContext.CreateContext()

            'Only return this sensitive data if the logged in user has permission
            If context.Application.User.HasPermission(Permissions.SecurityAdministration) Then

                'The LightSwitch internal database connection string is stored in the
                ' web.config as "_IntrinsicData". In order to get the name of external data
                ' sources, use: context.DataWorkspace.*YourDataSourceName*.Details.Name
                Using conn As New SqlConnection(
                    ConfigurationManager.ConnectionStrings("_IntrinsicData").ConnectionString)

                    Dim cmd As New SqlCommand()
                    cmd.Connection = conn
                    cmd.CommandText = "uspGetTableCounts"
                    cmd.CommandType = CommandType.StoredProcedure
                    cmd.Connection.Open()

                    'Execute the reader into a new named type to be serialized
                    Using reader As SqlDataReader = cmd.ExecuteReader(System.Data.CommandBehavior.CloseConnection)

                        reportResult = (From dr In reader.Cast(Of IDataRecord)()
                                        Select New TableInfo With {
                                            .Name = dr.GetString(0),
                                            .Count = dr.GetInt32(1)
                                        }
                                       ).ToList()
                    End Using
                End Using
            End If

            Return reportResult
        End Using
    End Function
End Class

C#:

public class TableCountsController : ApiController
{
    // GET api/<controller>
    public List<TableInfo> Get()
    {
        List<TableInfo> reportResult = null;

        using (ServerApplicationContext context = ServerApplicationContext.CreateContext())
        {
            // Only return this sensitive data if the logged in user has permission
            if (context.Application.User.HasPermission(Permissions.SecurityAdministration))
            {
                //The LightSwitch internal database connection string is stored in the
                // web.config as "_IntrinsicData". In order to get the name of external data
                // sources, use: context.DataWorkspace.*YourDataSourceName*.Details.Name
                using (SqlConnection conn = new SqlConnection(
                    ConfigurationManager.ConnectionStrings["_IntrinsicData"].ConnectionString))
                {
                    SqlCommand cmd = new SqlCommand();
                    cmd.Connection = conn;
                    cmd.CommandText = "uspGetTableCounts";
                    cmd.CommandType = CommandType.StoredProcedure;
                    cmd.Connection.Open();

                    // Execute the reader into a new named type to be serialized
                    using (SqlDataReader reader =
                        cmd.ExecuteReader(System.Data.CommandBehavior.CloseConnection))
                    {
                        reportResult = reader.Cast<IDataRecord>()
                            .Select(dr => new TableInfo
                            {
                                Name = dr.GetString(0),
                                Count = dr.GetInt32(1)
                            }).ToList();
                    }
                }
            }
        }
        return reportResult;
    }
}

Create a Custom Silverlight Control

LightSwitch lets you write your own custom controls no matter what client you’re using. If you’re using the HTML client, you write custom JavaScript code; if you’re using the Silverlight client, you write XAML. So add a new Silverlight Class Library to your LightSwitch solution.

Then right-click on the Silverlight Class Library and Add –> New Item, and choose Silverlight User Control. Design your control how you wish using the XAML designer. For this example I’m keeping it simple. We will write the code to call the Web API and display the results in a simple DataGrid.

<UserControl
    xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
    xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
    xmlns:d="http://schemas.microsoft.com/expression/blend/2008"
    xmlns:mc="http://schemas.openxmlformats.org/markup-compatibility/2006"
    xmlns:sdk="http://schemas.microsoft.com/winfx/2006/xaml/presentation/sdk"
    x:Class="SilverlightClassLibrary1.SilverlightControl1"
    mc:Ignorable="d"
    d:DesignHeight="300" d:DesignWidth="400">

    <Grid x:Name="LayoutRoot" Background="White">
        <sdk:DataGrid Name="myGrid" IsReadOnly="True" AutoGenerateColumns="True" />
    </Grid>
</UserControl>

Calling Web API from Silverlight

Now we need to write the code for the control. With Silverlight, you can specify whether the browser or the client provides HTTP handling for your web requests. For this example we will use the browser via HttpWebRequest so that it will flow our credentials automatically with no fuss. (See How to: Specify Browser or Client HTTP Handling)

So you’ll need to call WebRequest.RegisterPrefix("http://", System.Net.Browser.WebRequestCreator.BrowserHttp) when you instantiate the control.

We’ll also need to add a reference to System.Xml.Serialization and import that at the top of our control class. We’ll use the XmlSerializer to deserialize the response and populate our DataGrid, and HttpWebRequest to make the request. This is where we specify the path to our Web API based on the route we set up (which I showed in the previous post).
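Here is the client-side deserialization step in isolation, as a sketch you can run on the desktop .NET runtime rather than in Silverlight. The XML string is a hypothetical payload shaped like what the Web API sends back (empty namespace, Count before Name, as DataContractSerializer orders members); XmlSerializer matches the attribute-free TableInfo class by element name and, with no Order specified, accepts the members in any order. XmlPayloadDemo is a hypothetical helper name.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Xml.Serialization;

// Plain class mirroring the client-side TableInfo (no serialization attributes).
public class TableInfo
{
    public string Name { get; set; }
    public int Count { get; set; }
}

public static class XmlPayloadDemo
{
    // Hypothetical sample payload; the data is made up.
    public const string SampleXml =
        "<ArrayOfTableInfo xmlns:i=\"http://www.w3.org/2001/XMLSchema-instance\">" +
        "<TableInfo><Count>12</Count><Name>Customers</Name></TableInfo>" +
        "<TableInfo><Count>3</Count><Name>Orders</Name></TableInfo>" +
        "</ArrayOfTableInfo>";

    // Same serializer setup as the control: XmlSerializer over List<TableInfo>.
    public static List<TableInfo> Parse(string xml)
    {
        var serializer = new XmlSerializer(typeof(List<TableInfo>));
        using (var reader = new StringReader(xml))
        {
            return (List<TableInfo>)serializer.Deserialize(reader);
        }
    }
}
```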

(Note that the URI to the Web API is on the same domain as the client. The Web API is hosted in our LightSwitch server project which is part of the same solution as the desktop client. If you’re trying to use this code to do cross-domain access you’ll need to use a ClientAccessPolicy file to allow it. See Making a Service Available Across Domain Boundaries for more information.)
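The leading slash in "/api/TableCounts/" is what keeps the request on the client’s own origin: Application.Current.Host.Source is the URI of the .xap package, and resolving a root-relative path against it replaces the whole path while keeping scheme, host and port. The sketch below shows that resolution with a hypothetical .xap address (host, port and path are placeholders), alongside what happens without the leading slash.

```csharp
using System;

public static class ApiUriDemo
{
    // Mirrors the control's call: new Uri(Application.Current.Host.Source, "/api/TableCounts/").
    public static Uri ResolveApiUri(Uri hostSource)
    {
        return new Uri(hostSource, "/api/TableCounts/");
    }

    // For comparison: a path without the leading slash resolves relative to the .xap's folder.
    public static Uri ResolveWithoutLeadingSlash(Uri hostSource)
    {
        return new Uri(hostSource, "api/TableCounts/");
    }
}
```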

Here’s the complete user control code.

VB:

Imports System.Net
Imports System.Xml.Serialization
Imports System.IO

Public Class TableInfo
    Property Name As String
    Property Count As Integer
End Class

Partial Public Class SilverlightControl1
    Inherits UserControl

    Private TableCounts As List(Of TableInfo)

    Public Sub New()
        'Register BrowserHttp for these prefixes
        WebRequest.RegisterPrefix("http://", System.Net.Browser.WebRequestCreator.BrowserHttp)
        WebRequest.RegisterPrefix("https://", System.Net.Browser.WebRequestCreator.BrowserHttp)

        InitializeComponent()
        GetData()
    End Sub

    Private Sub GetData()
        'Construct the URI to our Web API
        Dim apiUri = New Uri(Application.Current.Host.Source, "/api/TableCounts/")

        'Make the request, asking for XML via the Accept header
        Dim request = DirectCast(HttpWebRequest.Create(apiUri), HttpWebRequest)
        request.Accept = "application/xml"
        request.BeginGetResponse(New AsyncCallback(AddressOf ProcessData), request)
    End Sub

    Private Sub ProcessData(ar As IAsyncResult)
        Dim request = DirectCast(ar.AsyncState, HttpWebRequest)
        Dim response = DirectCast(request.EndGetResponse(ar), HttpWebResponse)

        'Deserialize the XML response
        Dim serializer As New XmlSerializer(GetType(List(Of TableInfo)))
        Using sr As New StreamReader(response.GetResponseStream(), System.Text.Encoding.UTF8)
            Me.TableCounts = DirectCast(serializer.Deserialize(sr), List(Of TableInfo))

            'Display the data back on the UI thread
            Dispatcher.BeginInvoke(Sub() myGrid.ItemsSource = Me.TableCounts)
        End Using
    End Sub
End Class

C#:

using System;
using System.Collections.Generic;
using System.IO;
using System.Net;
using System.Windows;
using System.Windows.Controls;
using System.Xml.Serialization;

namespace SilverlightClassLibrary1
{
    public class TableInfo
    {
        public string Name { get; set; }
        public int Count { get; set; }
    }

    public partial class SilverlightControl1 : UserControl
    {
        private List<TableInfo> TableCounts;

        public SilverlightControl1()
        {
            //Register BrowserHttp for these prefixes
            WebRequest.RegisterPrefix("http://", System.Net.Browser.WebRequestCreator.BrowserHttp);
            WebRequest.RegisterPrefix("https://", System.Net.Browser.WebRequestCreator.BrowserHttp);

            InitializeComponent();
            GetData();
        }

        private void GetData()
        {
            //Construct the URI to our Web API
            Uri apiUri = new Uri(Application.Current.Host.Source, "/api/TableCounts/");

            //Make the request, asking for XML via the Accept header
            HttpWebRequest request = (HttpWebRequest)HttpWebRequest.Create(apiUri);
            request.Accept = "application/xml";
            request.BeginGetResponse(new AsyncCallback(ProcessData), request);
        }

        private void ProcessData(IAsyncResult ar)
        {
            HttpWebRequest request = (HttpWebRequest)ar.AsyncState;
            HttpWebResponse response = (HttpWebResponse)request.EndGetResponse(ar);

            //Deserialize the XML response
            XmlSerializer serializer = new XmlSerializer(typeof(List<TableInfo>));
            using (StreamReader sr = new StreamReader(response.GetResponseStream(), System.Text.Encoding.UTF8))
            {
                this.TableCounts = (List<TableInfo>)serializer.Deserialize(sr);

                //Display the data back on the UI thread
                Dispatcher.BeginInvoke(() => myGrid.ItemsSource = this.TableCounts);
            }
        }
    }
}

Using the Custom Control in LightSwitch

Last but not least, we need to add our custom control to a LightSwitch screen in our Silverlight client. Make sure to rebuild the solution first. Then add a new screen, select any screen template except New or Details, and don’t select any Screen Data.

In the screen content tree, add a new Custom Control.

Then add a Solution reference to your Silverlight class library and choose the custom control you built. (If you don’t see it show up, make sure you rebuild your solution.)

Finally, in the properties window I’ll name the control TableCounts, set the label position to “Top”, and set the Horizontal and Vertical alignment to “Stretch” so it looks good on the screen.

Press F5 to build and run the solution. (Make sure you are an administrator by checking “Grant for debug” for the SecurityAdministration permission on the Access Control tab.) You should see the data returned from the stored procedure displayed in the grid.

If you run the HTML client we built in the previous post, you’ll see that it runs the same as before with no changes. The JSON returned is the same shape as before.

Wrap Up

Over the last few posts I’ve taken you through some more advanced capabilities of LightSwitch. LightSwitch has a rich extensibility model that allows you to customize your LightSwitch applications beyond what’s in the box. When you need to provide custom functionality in your LightSwitch applications, there are many options.

Using Web API with LightSwitch gives you the flexibility of creating custom web methods that can take advantage of all the data and business logic in your LightSwitch middle-tier via the ServerApplicationContext. If you have LightSwitch version 3 or higher (VS2012 Update 2+ or VS2013) then you are ready to get started.

For more information on using Web API with LightSwitch see:

- Using the LightSwitch ServerApplicationContext API

- A New API for LightSwitch Server Interaction: The ServerApplicationContext

- Create Dashboard Reports with LightSwitch, WebAPI and ServerApplicationContext

- Dashboard Reports with LightSwitch, WebAPI and ServerApplicationContext– Part Deux

And for more information on using database projects with LightSwitch in Visual Studio 2013 see:

<Return to section navigation list>

Cloud Security, Compliance and Governance

David Hardin described Azure Management Certificate Public Key / Private Key on 8/27/2013:

Today I'm exploring how the public and private keys created with MakeCert.exe are stored. Earlier I wrote about the difference between SSL certificates and those used for Azure Management API authentication. For this post I'm creating Azure Management API certificates.
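As a side-by-side sketch of the public/private split, here is a version that uses .NET’s CertificateRequest API (.NET Core 2.0+ or .NET Framework 4.7.2+) instead of MakeCert.exe, so it is a different tool than the scripts in this post. It creates a throwaway self-signed certificate and exports it twice: as a .cer-style blob (certificate plus public key only) and as a password-protected .pfx-style blob (which also carries the private key). The subject name, password and the CertKeyDemo helper are placeholders.

```csharp
using System;
using System.Security.Cryptography;
using System.Security.Cryptography.X509Certificates;

public static class CertKeyDemo
{
    // Returns whether each re-imported export carries a private key.
    public static (bool cerHasPrivateKey, bool pfxHasPrivateKey) Run()
    {
        using (RSA rsa = RSA.Create(2048))
        {
            var request = new CertificateRequest(
                "CN=AzureMgmtDemo", rsa, HashAlgorithmName.SHA256, RSASignaturePadding.Pkcs1);

            using (X509Certificate2 cert = request.CreateSelfSigned(
                DateTimeOffset.UtcNow, DateTimeOffset.UtcNow.AddYears(1)))
            {
                // .cer content: DER-encoded certificate, public key only.
                byte[] cer = cert.Export(X509ContentType.Cert);

                // .pfx content: PKCS#12 bundle holding the certificate and the private key.
                byte[] pfx = cert.Export(X509ContentType.Pfx, "password");

                using (var fromCer = new X509Certificate2(cer))
                using (var fromPfx = new X509Certificate2(pfx, "password"))
                {
                    return (fromCer.HasPrivateKey, fromPfx.HasPrivateKey);
                }
            }
        }
    }
}
```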

I use the certificate creation scripts from a TechNet article I helped create back in 2011. Most of that article is now ancient Azure history but the scripts are still relevant so I'll list them here in case TechNet deletes the article.

Script for creating SSL certificates:

makecert -r -pe -n "CN=yourapp.cloudapp.net" -b 01/01/2000 -e 01/01/2036 -eku 1.3.6.1.5.5.7.3.1 -ss my -sky exchange -sp "Microsoft RSA SChannel Cryptographic Provider" -sy 12 -sv SSLDevCert.pvk SSLDevCert.cer
del SSLDevCert.pfx
pvk2pfx -pvk SSLDevCert.pvk -spc SSLDevCert.cer -pfx SSLDevCert.pfx -po password

Script for creating Azure Management API certificates: