Windows Azure and Cloud Computing Posts for 9/2/2013+

Top Stories This Week:

- Scott Guthrie described New Distributed, Dedicated, High Performance Cache Service + More Cool Improvements for Windows Azure in the Windows Azure Cloud Services, Caching, APIs, Tools and Test Harnesses section.

- Dan Plastina reported The NEW Microsoft RMS is live, in preview! in an 8/29/2013 post in the Windows Azure Access Control, Active Directory, Identity and Rights Management section and Tim Anderson said it wasn’t ready for prime time.

- Microsoft announced in conjunction with its Nokia devices and services business acquisition plans to construct a new US$250 million data center in Finland. See the Windows Azure Infrastructure and DevOps section.

A compendium of Windows Azure, Service Bus, BizTalk Services, Access Control, Caching, SQL Azure Database, and other cloud-computing articles.

Note: This post is updated weekly or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Windows Azure Marketplace DataMarket, Power BI, Big Data and OData

- Windows Azure Service Bus, BizTalk Services and Workflow

- Windows Azure Access Control, Active Directory, Identity and Rights Management

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Windows Azure Cloud Services, Caching, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure and DevOps

- Windows Azure Pack, Hosting, Hyper-V and Private/Hybrid Clouds

- Visual Studio LightSwitch and Entity Framework v4+

- Cloud Security, Compliance and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

<Return to section navigation list>

Scott Guthrie (@scottgu) advised that you can use HDInsight to analyze Windows Azure WebSite log files stored in Windows Azure blobs in the Windows Azure Infrastructure and DevOps section below:

…

For more advanced scenarios, you can also now spin up your own Hadoop cluster using the Windows Azure HDInsight service. HDInsight enables you to easily spin up, as well as quickly tear down, Hadoop clusters on Windows Azure that you can use to perform MapReduce and analytics jobs with.

Because HDInsight natively supports Windows Azure Blob Storage, you can now use HDInsight to perform custom MapReduce jobs on top of your Web Site Log Files stored there. This provides an even richer way to understand and analyze your site traffic and obtain rich insights from it.
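The kind of MapReduce job described above can be sketched locally in Python. This is a minimal simulation, not an HDInsight job: the W3C-style `#Fields:` header, the sample log lines, and the function names `map_line`, `reduce_counts` and `count_hits` are all assumptions made for illustration.

```python
from collections import defaultdict

# Hypothetical sample in a W3C-style extended log format (assumed layout).
SAMPLE_LOG = """\
#Fields: date time cs-method cs-uri-stem sc-status
2013-09-03 10:00:01 GET /index.html 200
2013-09-03 10:00:02 GET /about.html 200
2013-09-03 10:00:03 GET /index.html 304
2013-09-03 10:00:04 POST /login 500
"""

def map_line(line, fields):
    """Map step: emit a (url, 1) pair for one log record."""
    record = dict(zip(fields, line.split()))
    yield record["cs-uri-stem"], 1

def reduce_counts(pairs):
    """Reduce step: sum the counts emitted for each key."""
    totals = defaultdict(int)
    for key, count in pairs:
        totals[key] += count
    return dict(totals)

def count_hits(log_text):
    """Run the map and reduce steps over every record in the log text."""
    fields = []
    pairs = []
    for line in log_text.splitlines():
        if line.startswith("#Fields:"):
            fields = line.split()[1:]      # column names follow the directive
        elif line and not line.startswith("#"):
            pairs.extend(map_line(line, fields))
    return reduce_counts(pairs)

hits = count_hits(SAMPLE_LOG)
print(hits)
```

On a real cluster the map and reduce steps would run in parallel over many log blobs; the single-process loop here only shows the shape of the computation.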

…

<Return to section navigation list>

Windows Azure SQL Database, Federations and Reporting, Mobile Services

No significant articles so far this week.

<Return to section navigation list>

Windows Azure Marketplace DataMarket, Cloud Numerics, Big Data and OData

No significant articles so far this week.

<Return to section navigation list>

Windows Azure Service Bus, BizTalk Services and Workflow

No significant articles so far this week.

<Return to section navigation list>

Windows Azure Access Control, Active Directory, Identity and Rights Management

Tim Anderson (@timanderson) asserted Hands on with Microsoft’s Azure Cloud Rights Management: not ready yet in a 9/3/2013 post:

If you could describe the perfect document security system, it might go something like this. “I’d like to share this document with X, Y, and Z, but I’d like control over whether they can modify it, I’d like to forbid them to share it with anyone else, and I’d like to be able to destroy their copy at a time I specify”.

This is pretty much what Microsoft’s new Azure Rights Management system promises, kind-of:

ITPros have the flexibility in their choice of storage locale for their data and Security Officers have the flexibility of maintaining policies across these various storage classes. It can be kept on premise, placed in a business cloud data store such as SharePoint, or it can be placed pretty much anywhere and remain safe (e.g. thumb drive, personal consumer-grade cloud drives).

says the blog post.

There is a crucial distinction to be made though. Does Rights Management truly enforce document security, so that it cannot be bypassed without deep hacking; or is it more of an aide-memoire, helping users to do the right thing but not really enforcing it?

I tried the preview of Azure Rights Management, available here. Currently it seems more the latter, rather than any sort of deep protection, but see what you think. It is in preview, and a number of features are missing, so expect improvements.

I signed up and installed the software into my Windows 8 PC.

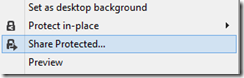

The way this works is that “enlightened” applications (currently Microsoft Office and Foxit PDF, though even they are not fully enlightened as far as I can tell) get enhancements to their user interface so you can protect documents. You can also protect *any* document by right-click in Explorer:

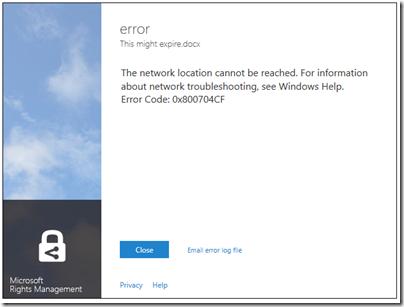

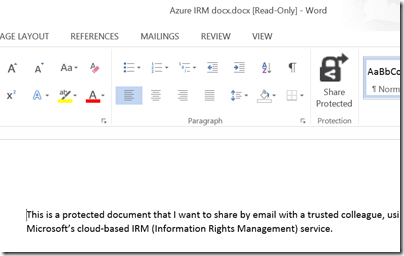

I typed a document in Word and hit Share Protected in the ribbon. Unfortunately I immediately got an error, that the network location cannot be reached:

I contacted the team about this, who asked for the log file and then gave me a quick response. The reason for the error was that Rights Management was looking for a server on my network that I sent to the skip long ago.

Many years ago I must have tried Microsoft IRM (Information Rights Management) though I barely remember. The new software was finding the old information in my Active Directory, and not trying to contact Azure at all.

This is unlikely to be a common problem, but illustrates that Microsoft is extending its existing rights management system, not creating a new one.

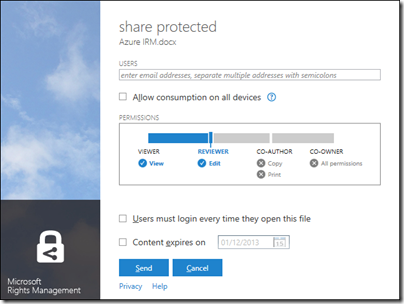

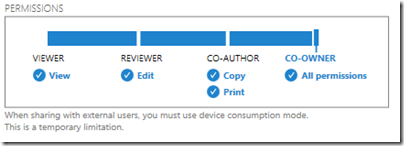

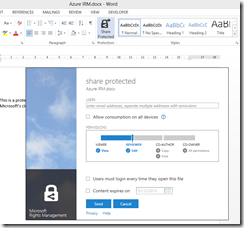

With that fixed, I was able to protect and share a document. This is the dialog:

It is not a Word dialog, but rather part of the Rights Management application that you install. You get the same dialog if you right-click any file in Explorer and choose Share Protected.

I entered a Gmail email address and sent the protected document, which was now wrapped in a file with a .pfile (Protected File) extension.

Next, I got my Gmail on another machine.

First, I tried to open the file on Android. Unfortunately only x86 Windows is supported at the moment:

There is an SDK for Android, but that is all.

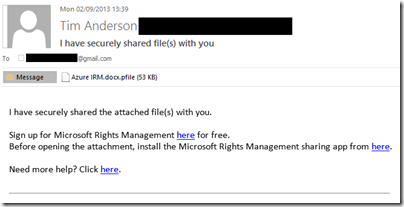

I tried again on a Windows machine. Here is the email:

There is also a note in the email:

[Note: This Preview build has some limitations at this time. For example, sharing protected files with users external to your organization will result in access control without additional usage restrictions. Learn More about the Preview]

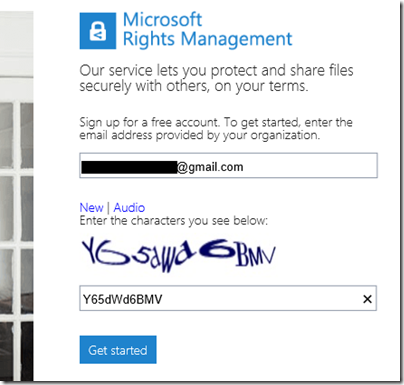

I was about to discover some more of these limitations. I attempted to sign up using the Gmail address. Registration involves solving a vile CAPTCHA, but I got this message:

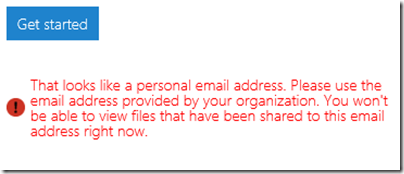

In other words, you cannot yet use the service with Gmail addresses. I tried it with a Hotmail address; but Microsoft is being even-handed; that did not work either.

Next, I tried another email address at a different, private email domain (yes, I have lots of email addresses). No go:

The message said that the address I used was from an organisation that has Office 365 (this is correct). It then remarked, bewilderingly:

If you have an account you can view protected files. If you don’t have an Office 365 account yet, we’ll soon add support…

This email address does have an Office 365 account. I am not sure what the message means; whether it means the Office 365 account needs to sign up for rights management at £2 per user per month, or what, but it was clearly not suitable for my test.

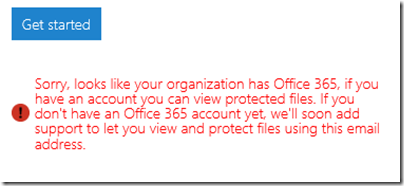

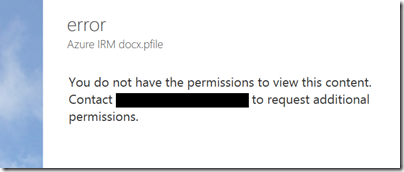

I tried yet another email address that is not in any way linked to Office 365 and I was up and running. Of course I had to resend the protected file, otherwise this message appears:

Incidentally, I think the UI for this dialog is wrong. It is not an error, it is working as designed, so it should not be titled “error”. I see little mistakes like this frequently and they do contribute to user frustration.

Finally, I received a document to an enabled email address and was able to open it:

For some reason, the packaging results in a document called “Azure IRM docx.docx” which is odd, but never mind.

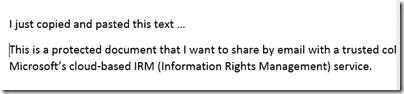

My question though: to what extent is this document protected? I took the screen grab using the Snipping Tool and pasted it into my blog for all to read, for example. The clipboard also works:

That said, the plan is for tighter protection to be offered in due course, at least in “enlightened” applications. The problem with the preview is that if you share to someone in a different email domain, you are forced to give full access. Note the warning in the dialog:

Inherently though, the client application has to have decrypted access to the file in order to open it. All the rights management service does, really, is to decrypt the file for users logged into the Azure system and identified by their email address. What happens after that is a matter of implementation.

The consequences of documents getting into the wrong hands are a hot topic today, after Wikileaks et al. Is Microsoft’s IRM a solution?

Making this Azure-based and open to any recipient (once the limitation on “public” email addresses is lifted) makes sense to me. However I note the following:

- As currently implemented, this provides limited security. It does encrypt the document, so an intercepted email cannot easily be read, but once opened by the recipient, anything could happen.

- The usability of the preview is horrid. Do you really want your trusted recipient to struggle with a CAPTCHA?

- Support beyond Windows is essential, and I am surprised that this even went into preview without it.

I should add that I am sceptical whether this can ever work. Would it not be easier, and just as effective (or ineffective), simply to have data on a web site with secure log-in? The idea of securely emailing documents to external recipients is great, but it seems to add immense complexity for little added value. I may be missing something here and would welcome comments.

I had to sign in twice since I didn’t check “Remember password!”

If you try recursion, it will package the already packaged file.

Dan Plastina reported The NEW Microsoft RMS is live, in preview! in an 8/29/2013 post to the Active Directory Rights Management Services Team Blog:

We made it! Today we’re sharing with you a public preview of the massively updated rights management offering. Let's jump right in...

RMS enables organizations to share sensitive documents within their organization or to other organizations with unprecedented ease. These documents can be of any type, and you can consume them on any device. Given the protection scheme is very robust, the file can even be openly shared… even on consumer services like SkyDrive™/DropBox™/GDrive™.

Today we’re announcing the preview of SDKs, Apps, and Services, and we’re giving details on how you can explore each of them. If you’d like some background on Microsoft Rights Management, check out this TechEd Talk. I’ll also strongly recommend you read the new RMS whitepaper for added details.

Promises of the new Microsoft Rights Management services

Users:

- I can protect any file type

- I can consume protected files on devices important to me

- I can share with anyone

- Initially, I can share with any business user; they can sign up for free RMS

- I can eventually share with any individual (e.g. MS Account, Google IDs in CY14)

- I can sign up for a free RMS capability if my company has yet to deploy RMS

ITPro:

- I can keep my data on-premise if I don’t yet want to move to the cloud

- I am aware of how my protected data is used (near realtime logging)

- I can control my RMS ‘tenant key’ from on-premise

- I can rely on Microsoft in collaboration with its partners for complete solutions

These promises combine to create two very powerful scenarios:

- Users can protect any file type. Then share the file with someone in their organization, in another organization, or with external users. They can feel confident that the recipient will be able to use it.

- ITPros have the flexibility in their choice of storage locale for their data and Security Officers have the flexibility of maintaining policies across these various storage classes. It can be kept on premise, placed in a business cloud data store such as SharePoint, or it can be placed pretty much anywhere and remain safe (e.g. thumb drive, personal consumer-grade cloud drives).

The RMS whitepaper offers plenty of added detail.

User experience of sharing a document

Here’s a quick fly-by thru one (of the many) end to end user experiences. We’ve chosen the very common ‘Sensitive Word document’ scenario. While in Word, you can save a document and invoke SHARE PROTECTED (added by the RMS application):

You are then offered the protection screen. This screen will be provided by the SDK and thus will be the same in all RMS-enlightened applications:

When you are done with addressing and selecting permissions, you invoke SEND. An email will be created that is ready to be sent but we let you edit it first:

The recipient of this email can simply open the document.

If you’re a hands-on learner, just send us an email using this link and we’ll invite you to consume a protected document the same way a partner of yours would.

If the user does not have access to RMS, they can sign up for free (Yes, free). In this flow the user will simply provide the email address they use in their day to day business (Yes, we don’t make you create a parallel free ID to consume sensitive work documents). We’ll ask the user to verify possession via a challenge/response, and then give them access to both consume and produce RMS protected content (yes, they can not only consume but also share their own sensitive documents for free).

The user can consume the content. Here we’ll show you what that looks like on an iPhone. In this case they got an email with a protected image (PJPG). They open it and are greeted with a login prompt so we can verify their right to view the protected image. Once verified, the user is granted access to see the image and to review the rights offered to them (click on the info bar):

We hope you'll agree that the above is exciting stuff! With this covered, let’s jump into the specifics of what we’re releasing today…

Foundational Developer SDKs

Today we are offering you 5 SDKs in RELEASE form. Those SDKs target Windows for PCs, Windows Store Apps, Windows for Phone 8, iOS, and Android.

The Mac OS X SDK is available in PREVIEW form on CONNECT and will be released in October. We’re intentionally holding back on the RESTful APIs documentation until we’re further along with application development. If you are a web site developer or printer/scanner manufacturer wanting to build against them, let us know and we can discuss options.

It’s worth noting the Windows SDK offers a powerful FILE API that is targeted at solution providers and IT Pros. This SDK has already been released. It will let you protect any file via PowerShell script as well. E.g. Using the FileAPI and PowerShell you can protect a PDF without any additional software.

The RMS sharing application

Today we’re releasing the RMS sharing application for Windows.

You can get the application and sign up for free RMS here.

While built, the mobile apps are not yet in their respective App Stores. Once approved we’ll have an RMS sharing application for: Windows PC, Windows store app, Windows Phone 8, iOS, Android and Mac OS X. If you can’t wait, your Microsoft field contact will know where to get these preview applications and can give you a live demo.

As a treat – we’ve not blogged about this before and it’s not in the whitepaper – here is some new scoop: The mobile applications enable consumption of RMS protected content and also enable the user to create protected images from the camera or on-device camera roll. We call this the ‘Secure whiteboard’ feature: take a photo of the meeting room whiteboard and share it with all attendees, securely. This said, we recognize it can serve many other creative uses.

The Azure RMS Service

The above offers are bound to the Azure RMS service. This service has been in worldwide production since late 2012 as it powers the Office 365 integrated RMS features. We’ve added support for the new mobile SDKs and RESTful endpoints but overall, that service has been up and running in 6 geographies worldwide (2x EU, 2x APAC, 2x US) and is fully fault tolerant (Active-Active for the SaaS geeks amongst you).

Today we’re also offering a preview of the BYOK – Bring Your Own Key – capability discussed in the whitepaper. This ensures that your RMS tenant key is treated with utmost care within a Thales hardware security module. This capability prevents export of the key even with a quorum of administrator cards! This same preview offer also enables near-realtime logging of all activities related to RMS and key usage.

The bridge to on premise

Today we’re also announcing the RMS Connector. This connector enables you to have your Exchange on premise and SharePoint on premise servers make use of all the above. It’s a simple relay that connects the two. The role is easy to configure and lightweight to run.

To join this preview, follow this link.

The RMS for Individuals offer

As called out above, not everyone will have RMS in their company so we’re announcing today that we’ll offer RMS for free to individuals within organizations. This offer is hosted as http://portal.aadrm.com and, within the few temporary constraints of the preview phase, let you get RMS for free. If you share with others, they can simply sign up. If you are the first one to the party, you can simply sign up. No strings attached.

Wrapping up, we hope you’ll agree that we did pretty well at solving a long standing issue. We’ve done so in a way that can also be used within your organization and that honors the critical needs of your IT staff. We’re offering you immediate access to evaluate all the relevant parts: SDKs, Apps, Azure service, connectors, and the self-sign up portal. For each, I’ve shared links with you to help you get started.

In coming posts I’ll cover:

- An Authoritative Evaluation Guide. We’ll answer to the common ask of “Is there a straight up, no-nonsense write up of what it means to get RMS going?”.

- A Guided Tour of the Mobile Device applications. Since we’re on hold for the App Store approvals process, we’ll share with you what we have.

- A Guided Tour of the Windows application. You can download this application today, but we’ll still take the time to explore the nooks and crannies of this little gem.

- As an AD RMS user, what are my options? All of the above was bound to the Azure RMS server instance. Some of you are using Azure AD and want to better understand your options for migration and/or co-existence. We cover some of this in the whitepaper but we’ll also dive deep into this more complex topic.

We'd love to hear from you below or, more privately, at AskIPTeam@microsoft.com.

<Return to section navigation list>

Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

Scott Guthrie (@scottgu) advised that you can store Windows Azure WebSite log files in Windows Azure blobs in the Windows Azure Infrastructure and DevOps section below:

…

For more advanced scenarios, you can also now spin up your own Hadoop cluster using the Windows Azure HDInsight service. HDInsight enables you to easily spin up, as well as quickly tear down, Hadoop clusters on Windows Azure that you can use to perform MapReduce and analytics jobs with.

Because HDInsight natively supports Windows Azure Blob Storage, you can now use HDInsight to perform custom MapReduce jobs on top of your Web Site Log Files stored there. This provides an even richer way to understand and analyze your site traffic and obtain rich insights from it.

…

<Return to section navigation list>

Windows Azure Cloud Services, Caching, APIs, Tools and Test Harnesses

The Windows Azure Team (@WindowsAzure) sent the following Announcing preview for new Windows Azure Cache message to all Windows Azure Subscribers on 9/3/2013:

Today we are announcing preview availability for the new Windows Azure Cache. We are also announcing the retirement of the current Shared Caching Service and a final decommission date for the Silverlight-based portal.

New Windows Azure Cache

Windows Azure Cache gives you access to dedicated cache that is managed by Microsoft. By using this distributed, in-memory, scalable solution, you can build highly scalable and responsive applications with super-fast access to data. A cache created using the Cache Service is accessible from applications running on Windows Azure Web Sites, web and worker roles, and Virtual Machines.

The new service differs from the old Windows Azure Shared Caching Service in several key ways:

- No transaction limits. Customers now pay based on cache size only, not transactions.

- Dedicated cache. The new service offers dedicated cache for customers who need it.

- Better management. Cache provisioning and management is done through the new Windows Azure Management Portal rather than from the old Microsoft Silverlight–based portal.

- Better pricing. The new service offers better pricing at nearly every price point. Customers using large cache sizes and high transaction volumes gain a major cost savings.

For more information, visit the Windows Azure Cache website.

Windows Azure Cache is available in three tiers: Basic, Standard, and Premium. Your pricing for the three tiers can be found in the table below. For more information about the pricing of Windows Azure Cache tiers, please see the Cache Pricing Details page.

* General availability date has not been announced.

To get started, visit the Preview Features webpage and sign up for the preview.

Retirement for existing Shared Caching Service

In coordination with the announcement of the preview for Windows Azure Cache, we are announcing the upcoming retirement for the existing Shared Caching Service. The Shared Caching Service will remain available for existing customers for a maximum of 12 months from today’s date. During the Windows Azure Cache preview period, we encourage all existing Shared Caching customers to try out Windows Azure Cache and migrate to the new service when it reaches general availability. Windows Azure Cache provides more features, is easier to manage in the new Azure Portal, and provides a better value overall.

Please note the Silverlight-based portal used to manage Shared Caching will be decommissioned on March 31, 2014. Shared Caching service users who have not migrated to Windows Azure Cache will receive communications on how to manage their shared caches if they choose to continue using the old service after that date.

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

Scott Guthrie (@scottgu) described New Distributed, Dedicated, High Performance Cache Service + More Cool Improvements for Windows Azure in a 9/3/2013 post:

This morning we released some great updates to Windows Azure. These new capabilities include:

- Dedicated Cache Service: Announcing the preview of our new distributed, dedicated, high performance cache service

- AutoScale: Schedule-based auto-scaling for Web Sites and Virtual Machines and richer AutoScale history logs

- Web Sites: New Web Server Logging Support to save HTTP Logs to Storage Accounts

- Operation Logs: New Filtering options on top of Operation Logs

All of these improvements are now available to use immediately (note: some are still in preview). Below are more details about them.

Windows Azure Cache Service: Preview of our new Distributed Cache Service

I’m excited today to announce the preview release of the new Windows Azure Cache Service – our latest service addition to Windows Azure. The new Windows Azure Cache Service enables you to easily deploy dedicated, high performance, distributed caches that you can use from your Windows Azure applications to store data in-memory and dramatically improve their scalability and performance.

The new Windows Azure Cache Service can be used by any type of Windows Azure application – including those hosted within Windows or Linux Virtual Machines, as well as those deployed as a Windows Azure Web Site and Windows Azure Cloud Services. Support for Windows Azure Mobile Services will also be enabled in the future.

You can instantiate a dedicated instance of a Windows Azure Cache Service for each of your apps, or alternatively share a single Cache Service across multiple apps. This latter scenario is particularly useful when you wish to partition your cloud backend solutions into multiple deployment units – now they can all easily share and work with the same cached data.

Benefits of the Windows Azure Cache Service

Some of the benefits of the new Windows Azure Cache Service include:

- Ability to use the Cache Service from any app type (VM, Web Site, Mobile Service, Cloud Service)

- Each Cache Service instance is deployed within dedicated VMs that are separated/isolated from other customers – which means you get fast, predictable performance.

- There are no quotas or throttling behaviors with the Cache Service – you can access your dedicated Cache Service instances as much or as hard as you want.

- Each Cache Service instance you create can store (as of today’s preview) up to 150GB of in-memory data objects or content. You can dynamically increase or shrink the memory used by a Cache Service instance without having to redeploy your apps.

- Web Sites, VMs and Cloud Services can retrieve objects from the Cache Service on average in about 1ms end-to-end (including the network round-trip to the cache service and back). Items can be inserted into the cache in about 1.2ms end-to-end (meaning the Web Site/VM/Cloud Service can persist the object in the remote Cache Service and get the ACK back in 1.2ms end-to-end).

- Each Cache Service instance is run as a highly available service that is distributed across multiple servers. This means that your Cache Service will remain up and available even if a server on which it is running crashes or if one of the VM instances needs to be upgraded for patching.

- The VMs that the cache service instances run within are managed as a service by Windows Azure – which means we handle patching and service lifetime of the instances. This allows you to focus on building great apps without having to worry about managing infrastructure details.

- The new Cache Service supports the same .NET Cache API that we use today with the in-role cache option that we support with Cloud Services. So code you’ve already written against that is compatible with the new managed Cache Service.

- The new Cache Service comes with built-in provider support for ASP.NET Session State and ASP.NET Output Page Caching. This enables you to easily scale-out your ASP.NET applications across multiple web servers and still share session state and/or cached page output regardless of which customer hit which server.

- The new Cache Service supports the ability to either use a separate Cache Service instance for each of your apps, or instead share a single Cache Service instance across multiple apps at once (which enables easy data sharing as well as app partitioning). This can be very useful for scenarios where you want to partition your app up across several deployment units.

Creating a Cache Service

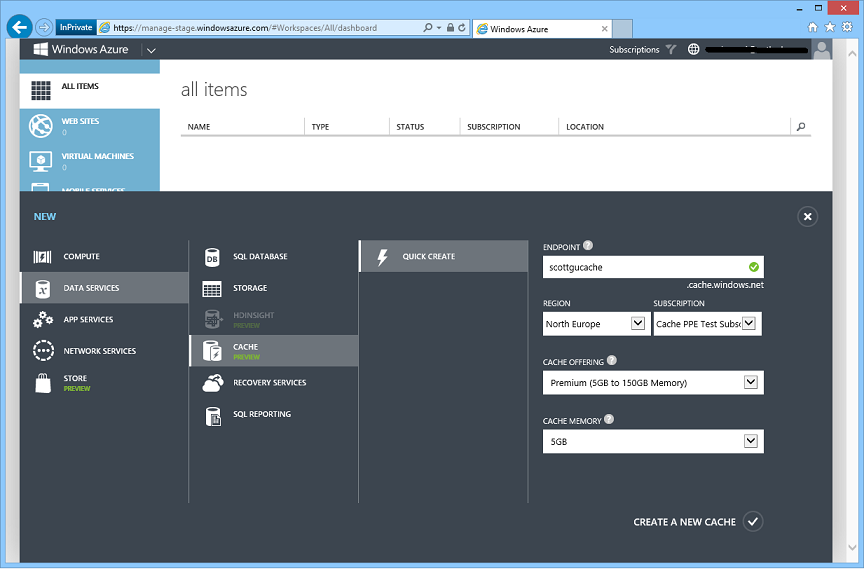

You can easily create a new Cache Service by going to the Windows Azure Management Portal and using the NEW -> DATA SERVICES -> CACHE option:

In the screenshot above, we specified that we wanted to create a new Premium cache of 5 GB named “scottgucache” in the “North Europe” region. Once we click the “Create a New Cache” button it will take a few minutes to provision:

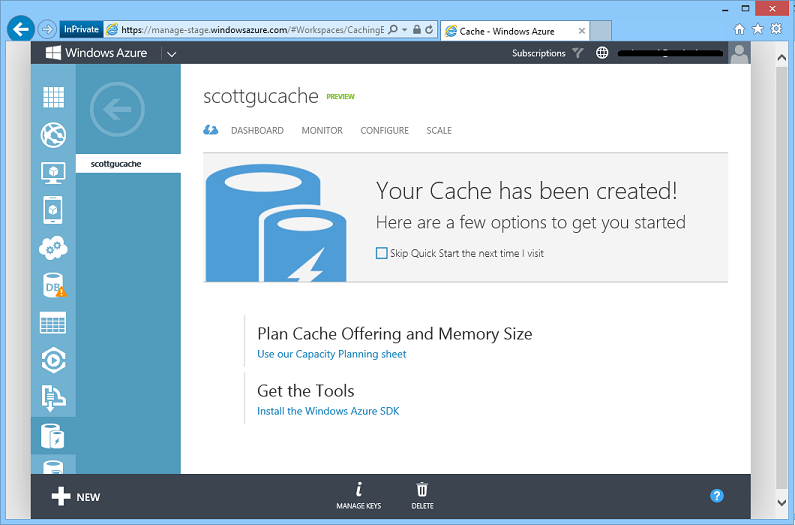

Once provisioned, the cache will show up in the Windows Azure Management Portal just like all of the other Windows Azure services (Web Sites, VMs, Databases, Storage Accounts, etc) within our subscription. We can click the DASHBOARD tab to see more details about it:

We can use the cache as-is (it comes with smart defaults and doesn’t require changes to get started). Or we can optionally click the CONFIGURE tab to manage custom settings - like creating named cache partitions and configuring expiration behavior, eviction policy, availability settings (which means a cached item will be saved across multiple VM instances within the cache service so that it will survive even if a server crashes), and notification settings (which means our cache can call back our app when an item is updated or expired):

Once you make a change to one of these settings just click the “Save” button and it will be applied immediately (no need to redeploy).
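To make the expiration and eviction settings mentioned above more concrete, here is a toy in-memory cache in Python. It is only a sketch under stated assumptions: it uses absolute time-to-live expiration and least-recently-used eviction as one possible policy, and the class name `SketchCache`, its parameters and the fake clock are hypothetical, not part of the Cache Service.

```python
from collections import OrderedDict

class SketchCache:
    """Toy cache with absolute expiration and LRU eviction (illustrative only)."""

    def __init__(self, max_items, ttl_seconds, clock):
        self.max_items = max_items
        self.ttl_seconds = ttl_seconds
        self.clock = clock              # callable returning the current time in seconds
        self._items = OrderedDict()     # key -> (value, expires_at), least recently used first

    def put(self, key, value):
        self._items.pop(key, None)      # re-inserting moves the key to the "most recent" end
        self._items[key] = (value, self.clock() + self.ttl_seconds)
        while len(self._items) > self.max_items:
            self._items.popitem(last=False)   # evict the least recently used item

    def get(self, key):
        entry = self._items.get(key)
        if entry is None:
            return None                 # never stored, or already evicted
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._items[key]        # expired: drop it and report a miss
            return None
        self._items.move_to_end(key)    # mark as most recently used
        return value

now = [0.0]                             # fake clock so the example is deterministic
cache = SketchCache(max_items=2, ttl_seconds=10, clock=lambda: now[0])
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")                          # touch "a", so "b" is now least recently used
cache.put("c", 3)                       # cache is full: "b" is evicted
evicted = cache.get("b")                # miss, because "b" was evicted
kept = cache.get("a")                   # hit
now[0] = 11.0                           # move the fake clock past the 10 second TTL
expired = cache.get("a")                # miss, because "a" has expired
```

Injecting the clock keeps the sketch testable without sleeping; a real cache would use wall-clock time.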

Using the Cache

Now that we have created a Cache Service, let’s use it from within an application.

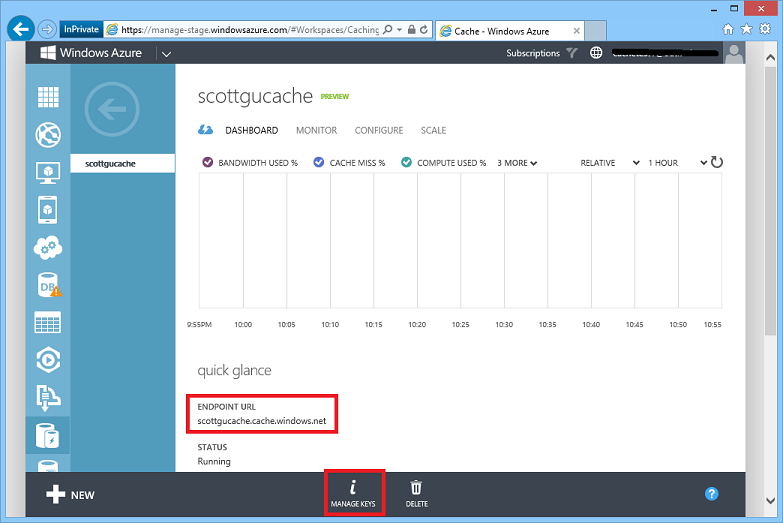

To access the Cache Service from within an app, we’ll need to retrieve the endpoint URL for the Cache Service and retrieve an access key that allows us to securely access it. We can do both of these by navigating to the DASHBOARD view of our Cache Service within the Windows Azure Management Portal:

The endpoint URL can be found in the “quick glance” view of the service, and we can retrieve the API key for the service by clicking the “Manage Keys” button:

Once we have saved the endpoint URL and access key from the portal, we’ll update our applications to use them.

Using the Cache Service Programmatically from within a .NET application

Using the Cache Service within a .NET or ASP.NET application is easy. Simply right-click on your project within Visual Studio, choose the “Manage NuGet Packages” context menu, search the NuGet online gallery for the “Windows Azure Caching” NuGet package, and then add it to your application:

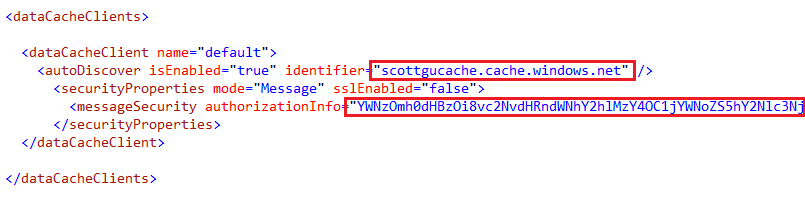

After you have installed the NuGet Windows Azure Caching package, open up your web.config/app.config file and replace the cache Service EndPoint URL and access key in the dataCacheClient section of your application’s config file:

Once you do this, you can now programmatically put and get things from the Cache Service using a .NET Cache API with code like below:

The objects we programmatically add to the cache will be automatically persisted within the Cache Service and can then be shared across any number of VMs, Web Sites, Mobile Services and Cloud Services that are using the same Cache Service instance. Because the cache is so fast (retrievals take on average about 1ms end-to-end across the wire and back) and because it can save 100s of GBs of content in-memory, you’ll find that it can dramatically improve the scalability, performance and availability of your solutions. Visit our documentation center to learn more about the Windows Azure Caching APIs.
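Put and get operations like those described above are commonly combined in a cache-aside pattern: look in the cache first, and only go to the slower data store on a miss. The sketch below shows that pattern in Python rather than with the .NET Cache API; the dictionary stands in for a shared Cache Service instance, and `load_product_from_db` and `get_product` are hypothetical names.

```python
cache = {}        # stand-in for a shared cache instance (assumption)
db_reads = []     # records each simulated trip to the slow data store

def load_product_from_db(product_id):
    """Pretend to query a database; the returned record is made up."""
    db_reads.append(product_id)
    return {"id": product_id, "name": f"Product {product_id}"}

def get_product(product_id):
    """Cache-aside lookup: try the cache, fall back to the store, then populate the cache."""
    key = f"product:{product_id}"
    product = cache.get(key)
    if product is None:
        product = load_product_from_db(product_id)
        cache[key] = product          # put, so the next caller gets a cache hit
    return product

first = get_product(42)               # miss: reads the store and fills the cache
second = get_product(42)              # hit: served from the cache
print(len(db_reads))
```

Because every app that shares the cache instance sees the same entries, only the first caller pays for the trip to the data store.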

Enabling ASP.NET Session State across a Web Farm using the Cache Service

The new Windows Azure Cache Service also comes with a supported ASP.NET Session State Provider that enables you to easily use the Cache Service to store ASP.NET Session State. This enables you to deploy your ASP.NET applications across any number of servers and have a customer’s session state be available on any of them regardless of which web server the customer happened to hit last in the web farm.

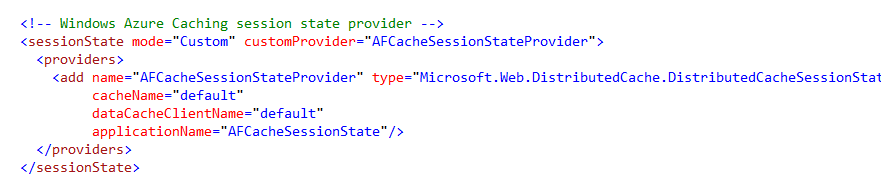

Enabling the ASP.NET Session State Cache Provider is really easy. Simply add the below configuration to your web.config file:

Once enabled your customers can hit any web server within your application’s web farm and the session state will be available. Visit our documentation center to learn more about the ASP.NET session state provider as well as the Output caching provider that we also support for ASP.NET.

Monitoring and Scaling the Cache

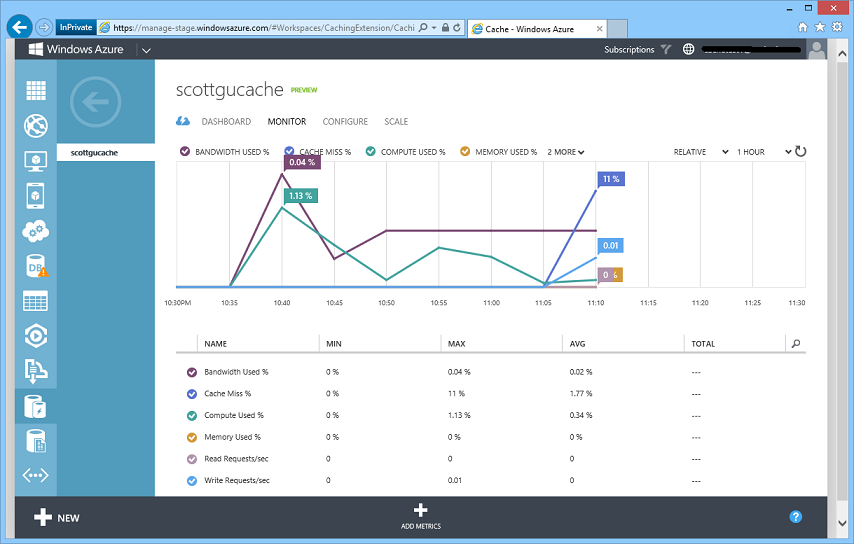

Once your Cache Service is deployed, you can track the activity and usage of the cache by going to its MONITOR tab in the Windows Azure Management Portal. You can get useful information like bandwidth used, cache miss percentage, memory used, read requests/sec, write requests/sec etc. so that you can make scaling decisions based on your real-world traffic patterns:

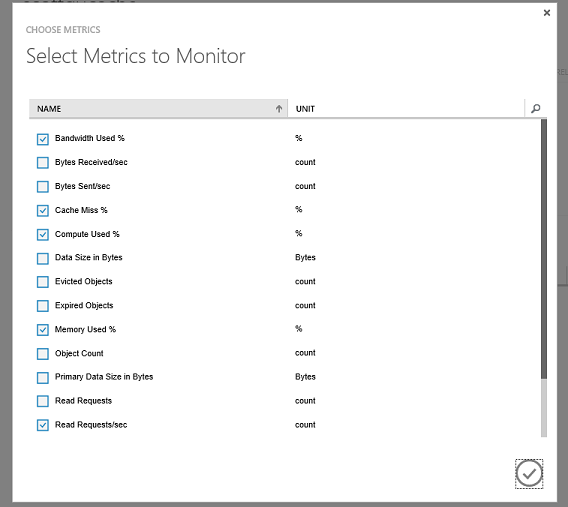

You can also customize the monitoring page to see other metrics of interest instead of or in addition to the default ones. Clicking the “Add Metrics” button above provides an easy UI to configure this:

If you need to scale your cache due to increased traffic to your application, you can go to the SCALE tab and easily change the cache offering or cache size depending on your requirements. For this example we had initially created a 5GB Premium Cache. If we wanted to scale it up we could simply expand the slider below to be 140GB and then click the “Save” button. This will dynamically scale our cache without it losing any of the existing data already persisted within it:

This makes it really easy to scale out your cache if your application load increases, or reduce your cache size if you find your application doesn’t need as much memory and you want to save costs.

Learning More

The new Windows Azure Cache Service enables you to really super-charge your Windows Azure applications. It provides a dedicated Cache that you can use from all of your Windows Azure applications – regardless of whether they are implemented within Virtual Machines or as Windows Azure Web Sites, Mobile Services, or Cloud Services. You’ll find that it can help really speed up your applications, improve your app scalability, and make your apps even more robust.

Review our Cache Service Documentation to learn more about the service. Visit here to learn more about the details of the various cache offering sizes and pricing. And then use the Windows Azure Management Portal to try out the Windows Azure Cache Service today.

AutoScale: Schedule Updates for Web Sites + VMs, Weekend Schedules, AutoScale History

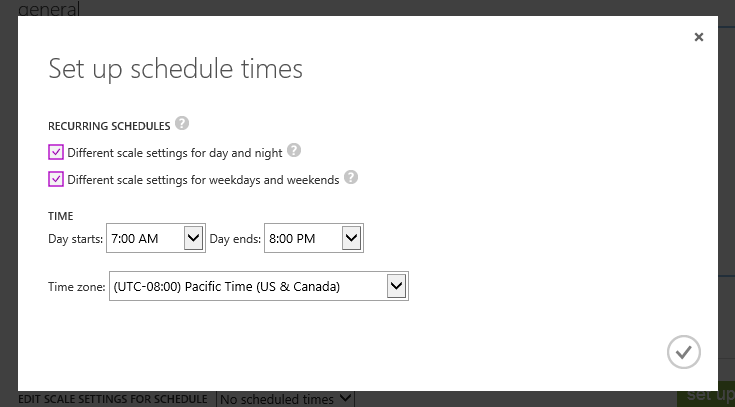

Three weeks ago we released scheduled AutoScale support for Cloud Services. Today, we are adding scheduled AutoScale support to Web Sites and Virtual Machines as well, and we are also introducing support for setting up different time schedule rules depending on whether it is a weekday or weekend.

Time Scheduled AutoScale Support for Web Sites and Virtual Machines

Just like for Cloud Services, you can now go to the Scale tab for a Virtual Machine or a Web Site, and you’ll see a new button to Set up schedule times:

Scheduled AutoScale works the same way now for Web Sites and Virtual Machines as for Cloud Services. You can still choose to scale the same way at all times (by selecting No scheduled times), but you can now open the “Set up schedule times” dialog to set up scale rules that run differently depending on the time of day:

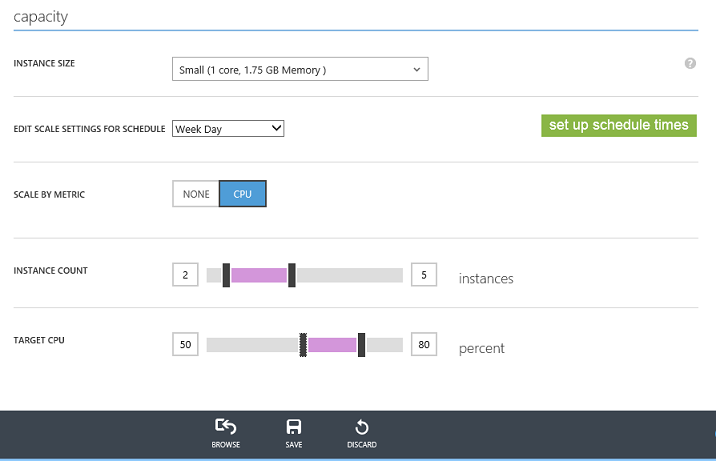

Once you define the start and stop of the day using the dialog above, you can then go back to the main scale tab and set up different rules for each time segment. For example, below I’ve set up rules so that during Week Days we’ll have between 2 and 5 small VMs running for our Web Site. I want AutoScale to scale-up/down the exact number depending on the CPU percentage of the VMs:

On Week Nights, though, I don’t want to have as many VMs running, so I’ll configure it to AutoScale only between 1 and 3 VMs. All I need to do is change the drop down from “Week Day” to “Week Night”, edit a different set of rules, and hit Save:

This makes it really easy to set up different policies and rules to use depending on the time of day – which can both improve your performance during peak times and save you more money during off-peak times.
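As a rough illustration of how time-segmented rules like these behave, here is a minimal Python sketch. The instance bounds (2 to 5 on Week Days, 1 to 3 on Week Nights) come from the example above. The `ScaleProfile` structure, the profile keys, the CPU thresholds and the one-instance step size are all assumptions made for illustration, not the portal's actual settings or algorithm.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScaleProfile:
    """Instance bounds for one time segment (hypothetical structure)."""
    min_instances: int
    max_instances: int


# Bounds taken from the example in the text; the key names are illustrative.
PROFILES = {
    "week_day": ScaleProfile(min_instances=2, max_instances=5),
    "week_night": ScaleProfile(min_instances=1, max_instances=3),
}

# Assumed CPU thresholds in percent; the post does not state the real values.
SCALE_UP_ABOVE = 80.0
SCALE_DOWN_BELOW = 60.0


def next_instance_count(profile_name: str, current: int, avg_cpu_percent: float) -> int:
    """Return the instance count after one scaling step, clamped to the profile bounds."""
    profile = PROFILES[profile_name]
    target = current
    if avg_cpu_percent > SCALE_UP_ABOVE:
        target = current + 1  # busy: add one instance
    elif avg_cpu_percent < SCALE_DOWN_BELOW:
        target = current - 1  # idle: remove one instance
    # Never leave the range configured for the active time segment.
    return max(profile.min_instances, min(profile.max_instances, target))
```

Note that switching from the Week Day to the Week Night profile can lower the count by itself: five running instances get clamped to three even when CPU sits between the two thresholds.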

AutoScale History

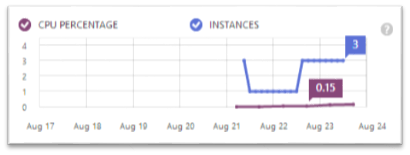

Previously, we supported an instance count graph on the scale tab so you can see the history of actions for your service. With today’s release we’ve improved this graph to now show the sum of CPU usage across all of your instances:

This means that if you have one instance, the sum of the CPU can go from 0 to 1, but if you have three instances, it can go from 0 to 3. You can use this to get a sense of the total load across your entire role, and to see how well AutoScale is performing.
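To make the scale of that graph concrete, here is a small Python sketch of the summation. It assumes each instance reports CPU as a fraction between 0 and 1; the function name is made up for this example.

```python
def total_cpu_load(per_instance_cpu):
    """Sum per-instance CPU utilization, each expressed as a fraction in [0, 1]."""
    for value in per_instance_cpu:
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"CPU fraction out of range: {value}")
    # With N instances the result ranges from 0 to N.
    return sum(per_instance_cpu)


# Three instances at 50%, 25% and 75% CPU give a total load of 1.5 out of 3.
print(total_cpu_load([0.5, 0.25, 0.75]))
```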

Finally, we’ve also improved the Operation Log entry for AutoscaleAction: it now shows you the exact Schedule that was used to scale your service, including the settings that were in effect during that specific scale action (it’s in the section called ActiveAutoscaleProfile):

Web Sites: Web Server Logging to Storage Accounts

With today’s release you can now configure Windows Azure Web Sites to write HTTP logs directly to a Windows Azure Storage Account. This makes it really easy to persist your HTTP logs as text blobs that you can store indefinitely (since storage accounts can maintain huge amounts of data) and which you can also use to later perform rich data mining/analysis on them.

Storing HTTP Log Files as Blobs in Windows Azure Storage

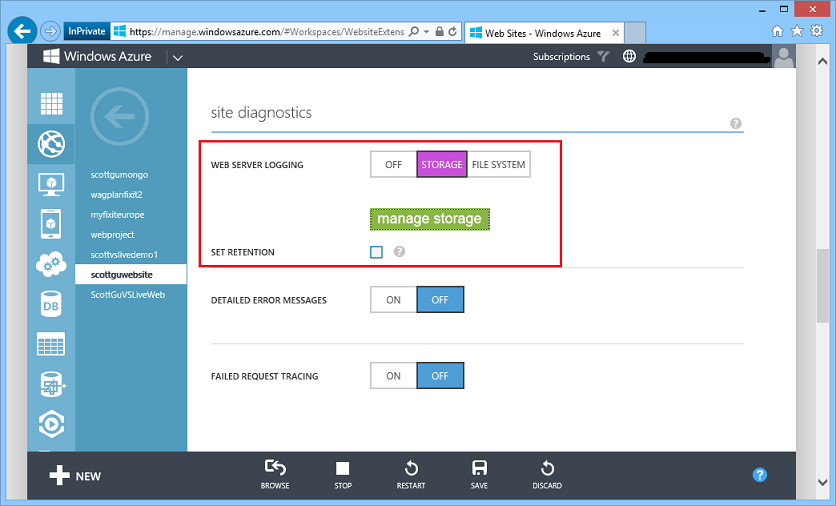

To enable HTTP logs to be written directly to blob storage, navigate to a Web Site using the Windows Azure Management Portal and click the CONFIGURE tab. Then navigate to the SITE DIAGNOSTICS section. Starting today, when you turn “Web Server Logging” ON, you can choose to store your logs either on the file system or in a storage account (support for storage accounts is new as of today):

Logging to a Storage Account

When you choose to keep your web server logs in a storage account, you can specify both the storage account and the blob container that you would like to use by clicking on the green manage storage button. This brings up a dialog that you can use to configure both:

By default, logs stored within a storage account are never deleted. You can override this by selecting the Set Retention checkbox in the site diagnostics section of the configure tab. You can use this to instead specify the number of days to keep the logs, after which they will be automatically deleted:

Once you’ve finished configuring how you want the logs to be persisted, and hit save within the portal to commit the settings, Windows Azure Web Sites will begin to automatically upload HTTP log data to the blob container in the storage account you’ve specified. The logs are continuously uploaded to the blob account – so you’ll quickly see the log files appear and then grow as traffic hits the web site.

Analysis of the Logs

The HTTP log files are persisted in a blob container using a naming scheme that makes it easy to identify which log file correlates to which activity. The log format name scheme is:

[sitename]/[year]/[month]/[day]/[hour]/[VMinstancename].log
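Because the blob names follow a fixed pattern, a script can recover the site, hour and VM instance from the name alone. The Python sketch below splits a name according to the scheme above. The function and type names are hypothetical, and it assumes the date and hour parts are plain integers (with or without zero padding).

```python
from datetime import datetime
from typing import NamedTuple


class LogBlobName(NamedTuple):
    """Components of a web server log blob name (illustrative type)."""
    site_name: str
    hour_start: datetime
    instance_name: str


def parse_log_blob_name(blob_name: str) -> LogBlobName:
    """Split '[sitename]/[year]/[month]/[day]/[hour]/[VMinstancename].log' into parts."""
    parts = blob_name.split("/")
    if len(parts) != 6 or not parts[5].endswith(".log"):
        raise ValueError(f"unexpected log blob name: {blob_name!r}")
    site, year, month, day, hour, file_name = parts
    # The hour segment identifies the start of the one-hour window the log covers.
    hour_start = datetime(int(year), int(month), int(day), int(hour))
    return LogBlobName(site, hour_start, file_name[: -len(".log")])
```

For example, `parse_log_blob_name("mysite/2013/09/03/14/a1b2c3.log")` returns the site `mysite`, the hour starting 2013-09-03 14:00 and the instance name `a1b2c3` (the site and instance names here are made up).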

The HTTP logs themselves are plain text files that store many different settings in a standard HTTP log file format:

You can easily download the log files using a variety of tools (Visual Studio Server Explorer, 3rd Party Storage Tools, etc.) as well as programmatically write scripts or apps to download and save them on a machine. Because the content of the files is in a standard HTTP log format you can then use a variety of tools (both free and commercial) to parse and analyze their content.
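If you would rather script the analysis yourself, a few lines of Python go a long way. The sketch below assumes the downloaded files use the W3C extended log format (comment lines starting with `#`, including a `#Fields:` line that names the space-separated columns); the post only says "standard HTTP log file format", so check a real file first. The sample lines and field selection are invented for illustration.

```python
from collections import Counter


def parse_w3c_log(lines):
    """Yield one dict per request line, keyed by the names in the #Fields directive."""
    fields = []
    for raw in lines:
        line = raw.strip()
        if not line:
            continue
        if line.startswith("#"):
            # Directive lines; only #Fields affects how rows are interpreted.
            if line.startswith("#Fields:"):
                fields = line[len("#Fields:"):].split()
            continue
        values = line.split()
        if len(values) != len(fields):
            continue  # skip rows that do not match the declared columns
        yield dict(zip(fields, values))


# Made-up sample content in the assumed format.
sample = [
    "#Fields: date time cs-method cs-uri-stem sc-status",
    "2013-09-03 14:05:11 GET /index.html 200",
    "2013-09-03 14:05:12 GET /missing 404",
]
status_counts = Counter(row["sc-status"] for row in parse_w3c_log(sample))
```

The same generator works on an open file object, so a downloaded log can be passed in directly.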

For more advanced scenarios, you can also now spin up your own Hadoop cluster using the Windows Azure HDInsight service. HDInsight enables you to easily spin up, as well as quickly tear down, Hadoop clusters on Windows Azure that you can use to perform MapReduce and analytics jobs with. Because HDInsight natively supports Windows Azure Blob Storage, you can now use HDInsight to perform custom MapReduce jobs on top of your Web Site Log Files stored there. This provides an even richer way to understand and analyze your site traffic and obtain rich insights from it.

Operation Logs: Richer Filtering

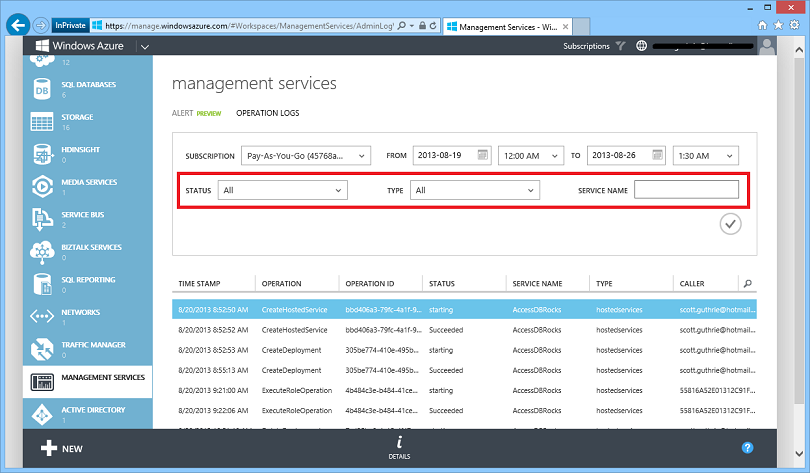

Today’s release adds some improvements to the Windows Azure OPERATION LOGS feature (which you can now access in the Windows Azure Portal within the MANAGEMENT SERVICES section of the portal). We now support filtering based on several additional fields: STATUS, TYPE and SERVICE NAME. This is in addition to the two filters we already support – filter by SUBSCRIPTION and TIME.

This makes it even easier to filter to the specific log item you are looking for quickly.

Summary

Today’s release includes a bunch of great features that enable you to build even better cloud solutions. If you don’t already have a Windows Azure account, you can sign up for a free trial and start using all of the above features today. Then visit the Windows Azure Developer Center to learn more about how to build apps with it.

Hope this helps,

Scott

Note: I plan to implement log storage in Windows Azure blobs for my Android MiniPCs and TVBoxes demonstration Windows Azure Web Site this week and will update this post when logging starts.

Microsoft’s Public Relations team announced plans for a US$250 million data center in Finland in a Microsoft to acquire Nokia’s devices & services business, license Nokia’s patents and mapping services press release of 9/3/2013:

…

Microsoft also announced that it has selected Finland as the home for a new data center that will serve Microsoft consumers in Europe. The company said it would invest more than a quarter-billion dollars in capital and operation of the new data center over the next few years, with the potential for further expansion over time.

…

The announcement didn’t include any details about the data center’s support for Windows Azure, but it’s a good bet that it will join Windows Azure’s North Europe region or be the foundation of a new Scandinavia region.

Jack Clark (@mappingbabel) asserted Microsoft “Farms cash with cache that smacks down AWS ElasticCache” as a deck for his Microsoft soars above Amazon with cloud cache article of 9/3/2013 for The Register:

Microsoft has launched a new Azure technology to give developers a dedicated storage layer for frequently accessed information, and its pricing and features trump similar tech fielded by cloud incumbent Amazon Web Services.

The caching service was launched in a preview format by Microsoft on Tuesday, and marks the creation of another front in Redmond's ongoing war with Amazon Web Services, as it will compete with Amazon ElastiCache.

The preview release of the "Windows Azure Cache Service" gives developers access to a Windows or Linux compatible caching service that can be sliced up into various dedicated instances for any application, whether a dedicated virtual machine or a platform application, or a mobile app.

"The new Cache Service supports the ability to either use a separate Cache Service instance for each of your apps, or instead share a single Cache Service instance across multiple apps at once (which enables easy data sharing as well as app partitioning). This can be very useful for scenarios where you want to partition your app up across several deployment units," Scott Guthrie, a Microsoft cloud veep, wrote.

Each cache instance can give developers up to 150GB of in-memory data for objects or content, with a retrieval latency of 1ms, and an insert latency of around 1.2ms. The service can be integrated with .NET applications via the "NuGet Windows Azure Caching package", allowing developers to PUT and GET things from the tech using a .NET cache API.

"It provides a dedicated Cache that you can use from all of your Windows Azure applications – regardless of whether they are implemented within Virtual Machines or as Windows Azure Web Sites, Mobile Services, or Cloud Services. You'll find that it can help really speed up your applications, improve your app scalability, and make your apps even more robust," Guthrie said.

Pricing for the technology starts at $12.50 per month ($0.017 per hour) for a "basic" service which has a cache size of 128MB across eight dedicated caching units, and which can scale up to 1GB in 128MB increments.

As is traditional for Microsoft, these prices include a 50 percent discount while in preview mode, so they could go to $0.032 or so. This compares with an on-demand hourly price of $0.022 for the weediest cache node available in Amazon's ElastiCache, and $0.014 for a medium utilization reserved cache node.
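The monthly and hourly figures quoted above can be cross-checked with a little arithmetic. This Python sketch assumes an average month of 730 hours (365 × 24 / 12), which is my assumption rather than a figure from the article, and treats the end of the preview discount as a simple doubling of the price.

```python
HOURS_PER_MONTH = 730  # assumption: 365 days * 24 hours / 12 months


def hourly_rate(monthly_price: float, hours_per_month: int = HOURS_PER_MONTH) -> float:
    """Convert a flat monthly price into an equivalent hourly price."""
    return monthly_price / hours_per_month


preview_hourly = hourly_rate(12.50)      # price with the 50 percent preview discount
full_hourly = hourly_rate(12.50 * 2)     # same tier with the discount removed

print(round(preview_hourly, 3))  # 0.017
print(round(full_hourly, 3))     # 0.034
```

Under these assumptions the undiscounted rate comes out near $0.034 per hour, in the same ballpark as the article's "$0.032 or so" and above the $0.022 on-demand ElastiCache price it cites.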

At the high end, the "Premium" service has caches that range in size from 5GB to 150GB in 5GB increments, and come with notifications and high availability as well.

The pricing shows a clear desire by Microsoft to be competitive with Amazon, but also implies that Redmond is not willing to go as low as Bezos & Co in the long term. The same was true of Azure's preview infrastructure-as-a-service pricing, which upon going to general availability rocketed up to meet and in some cases exceed Amazon's prices.

The service is available from Microsoft's East US, West US, North Europe, West Europe, East Asia, and South East Asia data center hubs. It is compatible with memcached.

What may set the two services apart is Microsoft's auto-scaling ability, which promises to let admins scale the cache "without it losing any of the existing data already persisted in it." This compares with ElastiCache, which requires users to manually add or delete new nodes to scale memory.

Rod Trent (@rodtrent) described The Microsoft Visionary Cloud: How We Got Here in an 8/30/2013 article for the WindowsITPro site:

In Microsoft Gloats over Latest Gartner Cloud Report, but Amazon Still Makes Everyone Else Look Silly, I poked fun at Microsoft a little bit. The company revved up the disco ball and fog machine to highlight a strong first step, landing in the "Visionary" category of Gartner's recent Magic Quadrant report for IaaS.

But, once you step back and realize the enormity of what Microsoft has done in just three short years, it's not funny anymore. Being included in the report is quite an accomplishment considering they had no chance of even honorable mention a short while ago. It's amazing how quickly things change. And, there's nothing wrong with tooting your own horn. Someone has to do it.

While Amazon Web Services still leads the field for IaaS by a wide margin, it's worth noting again that things do change quickly.

Three years ago at the Microsoft Management Summit (MMS) 2010, Microsoft announced they were "all in" for the cloud. The event was in Las Vegas, the casino city of the world, so the humor in the themed phrase was acknowledged and accepted. But, that's just it. Attendees took it more as humor than anything else because Microsoft had nothing at the time to really show for it. Ironically, MMS 2010 happened during the Icelandic volcanic eruption that grounded flights overseas due to the size and duration of the resulting ash "cloud." MMS 2010 sold out that year, but almost a quarter of the attendees couldn't make it due to the volcanic barrier over Europe. As you can guess, there was humor in that, too. A cloud-centric conference with a cloud-centric message was muted by a cloud. The jokes were fast and furious.

Microsoft took the next two years to evolve and hone its message while developing technologies to back up their vision. Fast forward to today and you see a very different Microsoft and very unique and "visionary" cloud offerings. For the first time ever, MMS 2013 saw Amazon Web Services in attendance, and at the time I thought it strange. Even in April, when the event took place, Microsoft's cloud message was not a strong one. However, it was strong enough for Amazon not only to attend, but also to solicit attendees and invite them to private meetings during the conference. At TechEd 2013 in June, Microsoft offered a very different story. It was strong and full of substance. It offered elements that people could actually grasp and comprehend.

Microsoft is steadily improving their offerings and I can't imagine what the Microsoft cloud will look like in three more years. I can't even begin to visualize which additional cloud competitors might be in attendance at MMS 2014.

Despite taking heat quite often for providing poor forecasting, Gartner seems to have it right.

David Linthicum (@DavidLinthicum) explained What the new Microsoft CEO should understand about the cloud computing market that Ballmer never did in an 8/29/2013 post to the GigaOm Pro blog (free trial registration required):

Steve Ballmer is leaving Microsoft, and perhaps that is a good idea. Microsoft’s lack of revenue and growth put pitchforks and torches in investors’ hands. Well, they got what they wanted. While Ballmer phases out in the next 12 month[s], I suspect Microsoft will put somebody in there ASAP.

Who knows, at this point, if it will be an internal promotion, somebody from the board, or an outside hire? However, the new CEO needs to show up ready to fix some things that are clearly broken, including the innovation, or lack thereof, around Microsoft’s movement into the cloud.

The new CEO should concentrate on cloud computing as the core growth opportunity for Microsoft. This, no matter if you think about focusing on Microsoft-powered tablets or games, or keeping the enterprise market share that Microsoft fought so hard to grow over the past three decades. It all tracks back to the growth and usage of cloud-based resources.

The fundamental mistake that Microsoft made when they entered the cloud computing marketplace was to go after the wrong segment of the market: PaaS. Then, when it looked like more investments were being made in infrastructure (such as AWS killing it), Microsoft steered toward an IaaS cloud in a very familiar “me too” move that Microsoft seems to be all too good at these days.

The core problems with Microsoft, and thus the first things the CEO needs to fix, are leadership and innovation. Microsoft needs to rediscover the value of leading and innovating in the cloud computing space. This is more about what’s not been done and where the market is going, than where others are succeeding and trying to replicate their success.

While a “fast follower” strategy is okay for some companies, Microsoft won’t be able to move fast enough to get ahead of their competition. This is a bit different than in the past when Microsoft could quickly get ahead of the innovators (can you say Microsoft Explorer?), and dominate the market so much and so quickly that federal regulators came down on them.

I suspect Microsoft felt this strategy would work in the cloud computing space as well. While I would not call Microsoft’s cloud strategy a failure these days, I’m not sure anyone believes that they could ever catch up with AWS unless they become much more innovative and learn to compress time-to-market for their public and private cloud computing technology offerings.

So, what are the core items that the new Microsoft CEO should understand, that perhaps Steve Ballmer never did? It’s a rather simple list.

You can’t innovate through acquisitions. While Microsoft has not done many cloud-related acquisitions, they have done a few. The temptation is to drive further into the cloud, perhaps further into IaaS, by buying your way into the game. Companies such as Rackspace, GoGrid, and Hosting.com would be likely candidates.

The trouble comes with the fact that, by the time they integrate the technology and the people, it will be too late. The market will have left them behind, and the distraction will actually hinder progress.

If somebody else is successful in the cloud computing market, think ahead of what they are doing, don’t imitate. No matter if we’re talking IaaS clouds, phones, or tablets, by the time some company is dominating a market, it’s time to think ahead of that company. Don’t just rebrand and remake their same ideas. You have to think ahead of the market in order to build the technology that will lead the market, generally speaking.

There is much about the cloud space that has not been tapped or dominated as of yet. This includes the emerging use of cloud management platforms, resource management and service governance, as well as new approaches to cloud security and resource accounting. Pick something not yet emerged in the cloud computing space, and work like hell to lead the market.

Think open, not closed. While Microsoft has made some strides in interoperability and more open technology, they are not actively pushing their technology as open. Indeed, interoperability and migration can be a challenge when moving from non-Microsoft technology, albeit, when you’re dealing just with Microsoft technology, the task is typically a nice experience.

This is perhaps the hardest thing for Microsoft to do, considering their heritage, and the fact that it’s an approach that has proven successful in the past. However, I think those days are over. Today it’s all about leveraging open standards, open source, and open and extensible frameworks, when considering cloud computing. Azure will have to make more strides in that area to gain greater adoption.

Microsoft’s purported “lack of revenue” isn’t an issue IMO. The issue is Ballmer’s lack of the clairvoyance required to anticipate future trends early enough for the enterprise to take advantage of them, and of the organizational skills needed to manage a diverse group of technologists.

Full disclosure: I’m a registered GigaOm analyst.

<Return to section navigation list>

Windows Azure Pack, Hosting, Hyper-V and Private/Hybrid Clouds

J. Peter Bruzzese (@JPBruzzese) asserted “While Windows Server 2012 supports some aspects of Azure Pack, R2 has five added or enhanced features” in a deck for his Windows Server 2012 R2 and Azure Pack work better together article of 9/4/2013 for InfoWorld’s Enterprise Windows blog:

In last week's post, I outlined how the Windows Azure Pack can help developers write software once for both their Azure cloud platform and their in-house Windows Server 2012 servers. Since then, many people have asked me what's the difference between using Windows Azure Pack with Windows Server 2012 and using it with Windows Server 2012 R2.

Here is what is supported in Azure Pack by both Windows Server 2012 and Windows Server 2012 R2:

- Windows Azure Pack Core

- System Center Virtual Machine Manager 2012 R2

- System Center Operations Manager 2012 R2

- Service Bus

- Service Reporting

[ J. Peter Bruzzese explains how to get started with Windows Azure. | 10 excellent new features in Windows Server 2012 R2 | Stay atop key Microsoft technologies in our Technology: Microsoft newsletter. ]

And here is what only Windows Server 2012 R2 supports:

- Client browser, meaning you need no console connect with Windows Server 2012

- Active Directory Federation Services

- Windows Azure websites, including performance enhancements such as idle page time-out

- Service Provider Foundation 2012 R2, though this is supported in Windows Server 2012 if Windows Management Framework 4.0 is installed as a prerequisite

- Service Management Automation

Some of these features may be new to you, so the fact that they are made better by or exist only in Windows Server 2012 R2 may not mean anything out of the box. Let me demystify some of this.

Take Service Management Automation, for example. This PowerShell-based capability lets operations be exposed through a self-service portal, such as to manage workflows (called runbooks) composed of cmdlet collections.

You may also be wondering what Service Provider Foundation R2 is. This connects to System Center Orchestrator so that you can offer multitenant self-service portals as if you were an IaaS provider.

Some of these features may not be on your road map yet, in which case having Windows Server 2012 R2 isn't a prerequisite to using Azure Pack. But Windows Server 2012 R2 and Azure Pack do more together, and you'll likely take advantage of the pair in the future, if not immediately.

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

<Return to section navigation list>

Cloud Security, Compliance and Governance

<Return to section navigation list>

Cloud Computing Events

No significant articles so far this week.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

No significant articles so far this week.

<Return to section navigation list>
