Introducing Microsoft Research’s Excel Cloud Data Analytics

Microsoft Research’s eXtreme Computing Group (XCG) introduced Excel Cloud Data Analytics—then called Excel DataScope—at its TechFest 2011 conference in Redmond, WA on 3/8/2011. According to XCG’s Excel DataScope page:

Cloud-Scale Data Analytics from Excel

From the familiar interface of Microsoft Excel, Excel DataScope enables researchers to accelerate data-driven decision making. It offers data analytics, machine learning, and information visualization by using Windows Azure for data and compute-intensive tasks. Its powerful analysis techniques are applicable to any type of data, ranging from web analytics to survey, environmental, or social data.

Complex Data Analytics via Familiar Excel Interface

We are beginning to see a new class of decision makers who are very comfortable with a variety of diverse data sources and an equally diverse variety of analytical tools that they use to manipulate data sets to uncover a signal and extract new insights. These decision makers want to invoke complex models, large-scale machine learning, and data analytics algorithms over their data collection by using familiar applications, such as Microsoft Excel. They also want access to extremely large data collections that live in the cloud, to sample or extract subsets for analysis or to mash up with their local data sets.

Seamless Access to Cloud Resources on Windows Azure

Excel DataScope is a cloud service that enables data scientists to take advantage of the resources of the cloud, via Windows Azure, to explore their largest data sets from familiar client applications. Our project introduces an add-in for Microsoft Excel that creates a research ribbon that provides the average Excel user seamless access to compute and storage on Windows Azure. From Excel, the user can share their data with collaborators around the world, discover and download related data sets, or sample from extremely large (terabyte sized) data sets in the cloud. The Excel research ribbon also presents the user with new data analytics and machine learning algorithms, the execution of which transparently takes place on Windows Azure by using dozens or possibly hundreds of CPU cores.

Extensible Analytics Library

The Excel DataScope analytics library is designed to be extensible and comes with algorithms to perform basic transforms such as selection, filtering, and value replacement, as well as algorithms that enable it to identify hidden associations in data, forecast time series data, discover similarities in data, categorize records, and detect anomalies.

Excel DataScope Features

- Users can upload Excel spreadsheets to the cloud, along with metadata to facilitate discovery, or search for and download spreadsheets of interest.

- Users can sample from extremely large data sets in the cloud and extract a subset of the data into Excel for inspection and manipulation.

- An extensible library of data analytics and machine learning algorithms implemented on Windows Azure allows Excel users to extract insight from their data.

- Users can select an analysis technique or model from our Excel DataScope research ribbon and request remote processing. Our runtime service in Windows Azure will scale out the processing, by using possibly hundreds of CPU cores to perform the analysis.

- Users can select a local application for remote execution in the cloud against cloud scale data with a few mouse clicks, effectively allowing them to move the compute to the data.

- We can create visualizations of the analysis output and we provide the users with an application to analyze the results, pivoting on select attributes.
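The feature list above mentions sampling from extremely large cloud data sets and pulling a subset into Excel. The source does not say how DataScope draws its samples, so the following is only a minimal sketch of one common technique, reservoir sampling, which picks a fixed-size uniform sample from a stream without loading it all into memory. The function names and the `fake_cloud_rows` generator are hypothetical stand-ins, not part of Excel DataScope.

```python
import random
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def reservoir_sample(rows: Iterable[T], k: int, seed: int = 0) -> List[T]:
    """Return up to k rows chosen uniformly at random from a stream of unknown length."""
    rng = random.Random(seed)
    sample: List[T] = []
    for i, row in enumerate(rows):
        if i < k:
            # Fill the reservoir with the first k rows.
            sample.append(row)
        else:
            # Replace a kept row with probability k / (i + 1).
            j = rng.randint(0, i)  # randint is inclusive on both ends
            if j < k:
                sample[j] = row
    return sample


def fake_cloud_rows(n: int) -> Iterator[dict]:
    """Hypothetical stand-in for rows streamed from cloud storage."""
    for i in range(n):
        yield {"id": i, "value": i * i}


# Pull a five-row subset out of a 100,000-row stream.
subset = reservoir_sample(fake_cloud_rows(100_000), k=5)
```

Because the stream is read once and only `k` rows are held at a time, the same approach works whether the source has thousands or billions of rows.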

Analytics Algorithms Performed in the Cloud

Excel DataScope is a technology ramp between Excel on the user’s client machine, the resources that are available in the cloud, and a new class of analytics algorithms that are being implemented in the cloud. An Excel user can simply select an analytics algorithm from the Excel DataScope Research Ribbon without concern for how to move their data to the cloud, how to start up virtual machines in the cloud, or how to scale out the execution of their selected algorithm in the cloud. They simply focus on exploring their data by using a familiar client application.

Excel DataScope is an ongoing research and development project. We envision a future in which a model developer can publish their latest data analysis algorithm or machine learning model to the cloud and within minutes Excel users around the world can discover it within their Excel Research Ribbon and begin using it to explore their data collection.

Downloadable Excel CDA Binaries and Source Code

Microsoft Research released a downloadable open-source version of Excel CDA for non-commercial use under a Microsoft Research license (MSR-LA) on 11/29/2011:

Excel Cloud Data Analytics is a Microsoft Excel add-in that enables users to execute a variety of data-centric tasks on Windows Azure through a custom Ribbon in Excel. This add-in can be used to connect to data stored in the Windows Azure cloud and can be extended to connect to a variety of other data sources. It also provides a general framework in which the user can create data-analytics methods and run them in Windows Azure.

The current code base supports the execution in Windows Azure via built-in access to Daytona, an iterative MapReduce runtime for Windows Azure. Using Excel Cloud Data Analytics, users can upload data to Windows Azure, select and run registered data-analysis algorithms in the cloud through Windows Azure, monitor the execution of the data analysis, and retrieve results for display or further processing.

The source code consists of a Visual Studio 2010 ExcelCloudDataAnalyticsRibbon.sln solution, which requires .NET Framework 4.0 and Visual Studio Tools for Office (VSTO). The source code lets you customize the Cloud Data Analytics ribbon, shown here without having installed support for Project “Daytona” or specifying a local workgroup or Windows Azure Storage account during installation:

Note: I missed the availability of this download because I was expecting it to be announced on XCG’s Excel DataScope page but it wasn’t. To find the download independently, you must click the Downloads button at the top right of the page, and then click the E link in the Filter by Name navigation group:

Project “Daytona” for Scheduling MapReduce Functions in Windows Azure

From XCG’s Daytona page:

Iterative MapReduce on Windows Azure

Microsoft has developed an iterative MapReduce runtime for Windows Azure, code-named "Daytona." Project Daytona is designed to support a wide class of data analytics and machine learning algorithms. It can scale out to hundreds of server cores for analysis of distributed data.

Project Daytona was developed as part of the eXtreme Computing Group’s Cloud Research Engagement Initiative, and made its debut at the Microsoft Research Faculty Summit. One of the most common requests we have received from the community of researchers in our program is for a data analysis and processing framework. Increasingly, researchers in a wide range of domains—such as healthcare, education, and environmental science—have large and growing data collections and they need simple tools to help them find signals in their data and uncover insights. We are making the Project Daytona MapReduce Runtime for Windows Azure download freely available, along with sample codes and instructional materials that researchers can use to set up their own large-scale, cloud data-analysis service on Windows Azure. In addition, we will continue to improve and enhance Project Daytona (periodically making new versions available) and support our community of users.

Overview

The project code-named Daytona is built on Windows Azure and employs the available Windows Azure compute and data services to offer a scalable and high-performance system for data analytics. To deploy and use Project Daytona, you need to follow these simple steps:

- Develop your data analytics algorithm(s). Project Daytona enables a data analytics or machine learning algorithm to be authored as a set of Map and Reduce tasks, without in-depth knowledge of distributed computing or software development on Windows Azure. To get you up and running quickly, the release package includes sample data analysis algorithms to provide you with examples for building a data analysis library, as well as a developer guide with step-by-step instructions for authoring new algorithms, and source code for a sample client application for integrating your existing applications with Project Daytona on Windows Azure.

- Upload your data and library of data analytics routines into Windows Azure. Windows Azure blob storage provides reliable, scalable, easy-to-use storage for your data and library of analysis routines. Our documentation clearly outlines the steps for doing this.

- Deploy the Daytona runtime to Windows Azure. By following the steps in the deployment guide, deploy the Daytona runtime to your Windows Azure account. You can configure the number of virtual machines for the deployment, specify and configure the storage account on Windows Azure for the analysis results, and then start and verify that the service is operational. Project Daytona enables you to use as many or as few virtual machines as you wish. When you are finished with your data analysis, you can follow the steps in the deployment guide to shut down the running instances and tear down your deployment.

- Launch data analytics algorithms. The Daytona release package provides you with source code for a simple client application that you can use to select and launch a data analytics model against a data set on Project Daytona. This client application is merely one example of how to integrate Project Daytona with a client application—perhaps one already in use in your lab—or you can author a Windows Azure service interface for job submission and monitoring.

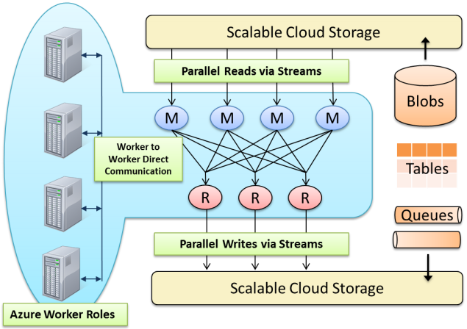

Project Daytona will automatically deploy the iterative MapReduce runtime to all of the Windows Azure virtual machines (VMs) in the deployment, sub-dividing the data into smaller chunks so that they can be processed (the “map” function of the algorithm) in parallel. Eventually, it recombines the processed data into the final solution (the “reduce” function of the algorithm). Windows Azure storage serves as the source for the data that is being analyzed and as the output destination for the end results. Once the analytics algorithm has completed, you can retrieve the output from Windows Azure storage or continue processing the output by using other analytics model(s). Project Daytona demonstrates the power of taking advantage of Windows Azure cloud services for application design.
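The split, map, and reduce flow described above can be sketched in a few lines. This is not Daytona code (Daytona algorithms are .NET assemblies running on Windows Azure VMs); it is a local illustration that uses a thread pool in place of the VMs, and every function name here is hypothetical. It uses word counting, one of the samples shipped with the runtime, as the example job.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, Iterable, List, Tuple


def split_into_chunks(lines: List[str], n_chunks: int) -> List[List[str]]:
    """Sub-divide the input into roughly equal chunks, one per worker."""
    size = max(1, -(-len(lines) // n_chunks))  # ceiling division
    return [lines[i:i + size] for i in range(0, len(lines), size)]


def map_chunk(chunk: List[str]) -> List[Tuple[str, int]]:
    """Map step: emit a (word, 1) pair for every word in the chunk."""
    return [(word.lower(), 1) for line in chunk for word in line.split()]


def reduce_pairs(pairs: Iterable[Tuple[str, int]]) -> Dict[str, int]:
    """Reduce step: recombine the mapped pairs into per-word totals."""
    counts: Dict[str, int] = defaultdict(int)
    for word, n in pairs:
        counts[word] += n
    return dict(counts)


def word_count(lines: List[str], n_workers: int = 4) -> Dict[str, int]:
    chunks = split_into_chunks(lines, n_workers)
    # Threads stand in for the VMs that would process chunks in parallel.
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        mapped = list(pool.map(map_chunk, chunks))
    return reduce_pairs(pair for part in mapped for pair in part)


counts = word_count([
    "the cloud runs the map step",
    "the reduce step merges results",
])
```

In a real deployment the input lines and the final counts would live in Windows Azure storage rather than in local lists.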

Key Properties

Project Daytona features the following key properties.

- Designed for the cloud, specifically for Windows Azure. Virtual machines, irrespective of infrastructure as a service (IaaS) or platform as a service (PaaS), introduce unique challenges and architectural tradeoffs for implementing a scale-out computation framework such as Project Daytona. Out of these, the most crucial are network communications between virtual machines (VMs) and the non-persistent disks of VMs. We have tuned the scheduling, network communications scheduling, and the fault tolerance logic of Project Daytona to suit this situation.

- Designed for cloud storage services. We have defined a streaming based, data-access layer for cloud data sources (currently, Windows Azure blob storage, but we will extend to others), which can partition data dynamically and support parallel reads. Intermediate data can reside in memory or in local non-persistent disks with backups in blobs, so that Project Daytona can consume data with minimum overheads and with the ability to recover from failures. We use the automatic persistence and replication that is provided by the Windows Azure storage services and, therefore, do not require a distributed file system.

- Horizontally scalable and elastic. Computations in Project Daytona are performed in parallel, so to scale a large data-analytics computation, you can add more virtual machines to the deployment and Project Daytona will take care of the rest. By using Project Daytona on Windows Azure, you can instantly provision as much or as little capacity as you need to perform data-intensive tasks for applications such as data mining, machine learning, financial analysis, or data analytics. Project Daytona lets you focus on your data exploration without having to worry about acquiring compute capacity or time-consuming hardware setup and management.

- Optimized for data analytics. We designed Project Daytona with performance of data analytics in mind. Algorithms in data analytics and machine learning are often iterative and produce a sequence of answers of improving quality until they converge. Project Daytona provides support for iterative computations in its core runtime: it caches data between iterations to reduce communication overheads, uses different scheduling and relaxed fault tolerance mechanisms, and offers a natural programming API to author iterative algorithms.
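To make the idea of an iterative MapReduce computation concrete, here is a minimal sketch of k-means clustering (one of the algorithm samples shipped with Daytona) written as repeated map and reduce passes over data that is loaded once and reused. It runs locally on one-dimensional points, does not use the Daytona API, and all names in it are hypothetical.

```python
from typing import Dict, List, Tuple


def map_assign(points: List[float], centroids: List[float]) -> List[Tuple[int, float]]:
    """Map step: tag each point with the index of its nearest centroid."""
    return [
        (min(range(len(centroids)), key=lambda c: abs(p - centroids[c])), p)
        for p in points
    ]


def reduce_update(pairs: List[Tuple[int, float]], centroids: List[float]) -> List[float]:
    """Reduce step: average the points assigned to each centroid."""
    sums: Dict[int, List[float]] = {}
    for idx, p in pairs:
        total = sums.setdefault(idx, [0.0, 0.0])
        total[0] += p
        total[1] += 1
    # A centroid with no assigned points keeps its previous position.
    return [sums[i][0] / sums[i][1] if i in sums else c
            for i, c in enumerate(centroids)]


def iterative_kmeans(partitions: List[List[float]], centroids: List[float],
                     tol: float = 1e-6, max_iter: int = 100) -> Tuple[List[float], int]:
    # The partitions are materialized once and reused on every pass,
    # mimicking a runtime that caches input data between iterations.
    cached = [list(part) for part in partitions]
    iteration = 0
    for iteration in range(1, max_iter + 1):
        pairs = [pair for part in cached for pair in map_assign(part, centroids)]
        updated = reduce_update(pairs, centroids)
        shift = max(abs(a - b) for a, b in zip(updated, centroids))
        centroids = updated
        if shift < tol:  # converged: the answer stopped improving
            break
    return centroids, iteration


final_centroids, iterations = iterative_kmeans(
    partitions=[[1.0, 2.0, 3.0], [10.0, 11.0, 12.0]],
    centroids=[0.0, 5.0],
)
```

Only the small list of centroids changes between passes, which is why keeping the input partitions cached can save most of the data movement in a loop like this.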

Use Cases

There are a number of use cases for Project Daytona, such as for data analysis, machine learning, financial analysis, text processing, indexing, and search. Almost any application that involves data manipulation and analysis can take advantage of Project Daytona to scale out processing on Windows Azure.

We are actively exploring a specific use case for Project Daytona, as outlined below.

Data analytics as a service on Windows Azure, accessible to a host of clients, is about turning utility cloud computing into a service model for data analytics. In our view, this service is not limited to a single data collection or set of analytics, but the ability to upload data and select from an extensible library of models for data analysis. Powered by Project Daytona, the service will automatically scale out the data and analytics model across a pool of Windows Azure VMs without the overhead that is usually associated with typical business intelligence (BI) and data analysis projects. The analytic application possibilities are limited only by your imagination.

We have implemented one such application, which we call Excel DataScope. From the familiar interface of Microsoft Excel, Excel DataScope enables researchers to accelerate data-driven decision making. Our DataScope analytics service offers a library of data analytics and machine learning models, such as clustering, outlier detection, classification, and machine learning, along with information visualization—all implemented on Project Daytona. Users can upload data in their Excel spreadsheet to the DataScope service or select a data set already in the cloud, and then select an analysis model from our Excel DataScope research ribbon to run against the selected data. Project Daytona will scale out the model processing by using possibly hundreds of CPU cores to perform the analysis. The results can be returned to the Excel client or remain in the cloud for further processing and/or visualization. The algorithms and analysis techniques are applicable to any type of data, ranging from web analytics to survey, environmental, or social data.

- See Overview for information about what is included in the release package.

Related Work

While Daytona is a novel iterative MapReduce runtime, designed for Microsoft Windows Azure and optimized for data analytics, the concepts in Daytona have several related works in the literature. The following are some of the notable ones.

MapReduce [1] and Dryad [2] introduced simplified programming abstractions for large scale data processing on commodity clusters. Daytona adopts this strategy by providing a simple programming model for large scale data analytics based on MapReduce. Iterative MapReduce for distributed memory architectures was first introduced by the Twister project [3-4] and the Twister4Azure project [5-6] introduced iterative MapReduce on Windows Azure. HaLoop [7] and iMapReduce [8] are related research efforts which optimize iterative MapReduce computations. Similar to other iterative MapReduce runtimes, Daytona also provides additional optimizations to enhance the performance of iterative computations on Windows Azure.

[1] J. Dean and S. Ghemawat, "MapReduce: simplified data processing on large clusters," Commun. ACM, vol. 51, pp. 107-113, 2008.

[2] M. Isard, M. Budiu, Y. Yu, A. Birrell, and D. Fetterly, "Dryad: distributed data-parallel programs from sequential building blocks," presented at the Proceedings of the 2nd ACM SIGOPS/EuroSys European Conference on Computer Systems 2007, Lisbon, Portugal, 2007.

[3] Twister: Iterative MapReduce

[4] Jaliya Ekanayake, Hui Li, Bingjing Zhang, Thilina Gunarathne, Seung-Hee Bae, Judy Qiu, and Geoffrey Fox, “Twister: a runtime for iterative MapReduce,” in Proceedings of the 19th ACM International Symposium on High Performance Distributed Computing (HPDC '10). ACM, New York, NY, USA, 810-818. DOI=10.1145/1851476.1851593

[5] Twister 4 Azure : A Decentralized Iterative MapReduce Runtime For Windows Azure Cloud

[6] Thilina Gunarathne, Bingjing Zhang, Tak-Lon Wu, and Judy Qiu. Portable Parallel Programming on Cloud and HPC: Scientific Applications of Twister4Azure. In Proceedings of the fourth IEEE/ACM International Conference on Utility and Cloud Computing (UCC 2011), Melbourne, Australia. Dec 2011.

[7] Yingyi Bu, Bill Howe, Magdalena Balazinska, and Michael D. Ernst. 2010. HaLoop: efficient iterative data processing on large clusters. Proc. VLDB Endow. 3, 1-2 (September 2010), 285-296.

[8] Yanfeng Zhang, Qinxin Gao, Lixin Gao, and Cuirong Wang, "iMapReduce: A Distributed Computing Framework for Iterative Computation," Parallel and Distributed Processing Workshops and Phd Forum (IPDPSW), 2011 IEEE International Symposium on, pp. 1112-1121, 16-20 May 2011, doi: 10.1109/IPDPS.2011.260

What’s Next for Project Daytona?

Project Daytona is part of an active research and development project in the eXtreme Computing Group of Microsoft Research.

The current release of Project Daytona is a research technology preview (RTP). We are still tuning the performance of Project Daytona and adding new functionality, and we will fix any software defects that are identified (see our email link below).

Our research on Project Daytona and its use for cloud data analytics is far from complete. In the summer of 2011 three PhD candidates joined our group, Romulo Goncalves (CWI), Atilla Balkir (University of Chicago), and Chen Jin (Northwestern University), to work with our team on data streaming support in Project Daytona, optimizations to the core runtime, support for incremental processing, and data services to minimize latency and data movement. We look forward to sharing these results in our technical papers and improved versions of Project Daytona that will be released in Spring 2012.

Please report any issues you encounter when using Project Daytona to xcgngage@microsoft.com, or contact Roger Barga with suggestions for improvements and/or new features. …

Articles and Papers

- Excel DataScope for Data Scientists

- Excel Gets Boost for Cloud-Based Analytics, HPC Wire, June 14, 2011

- From Excel to the Cloud

Team members are the same as those for Excel CDA.

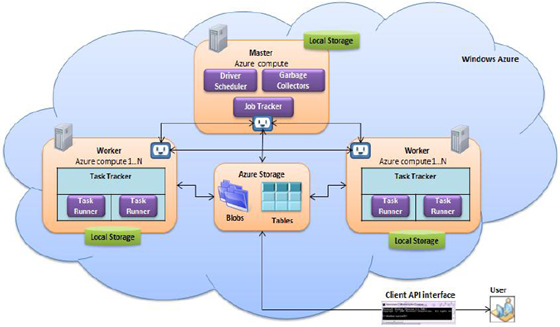

Excel CDA interacts with Project Daytona via the Scheduler Bridge:

(Graphic from Microsoft Research’s Excel Cloud Data Analytics (CDA) Design Document.docx.)

Following are the *.docx documentation files included with the release:

- Excel Cloud Data Analytics (CDA) Design Document

- Excel Cloud Data Analytics (CDA) Developer Guide

- Excel Cloud Data Analytics (CDA) Installation Guide

- Excel Cloud Data Analytics (CDA) User Guide

Downloadable Project Daytona MapReduce Runtime for Windows Azure CTP 1.2 Refresh

The Project Daytona MapReduce Runtime for Windows Azure replaces the Dryad and DryadLINQ runtimes, which are facing retirement before being widely deployed. From the Project Daytona MapReduce Runtime for Windows Azure download page for the Daytona-CTP-1.2-Refresh:

Microsoft has developed an iterative MapReduce runtime for Windows Azure, code-named Daytona. Project Daytona is designed to support a wide class of data analytics and machine-learning algorithms. It can scale to hundreds of server cores for analysis of distributed data. Project Daytona was developed as part of the eXtreme Computing Group’s Cloud Research Engagement Initiative.

(Graphic from the Daytona - Runtime Developer guide documentation.)

News

On July 26, 2011, we released an updated Daytona community technical preview (CTP) that contains fixes that are related to scalability. … Learn more about this release...

(Graphic from Microsoft TechFest 2011 presentation.)

Overview

Project Daytona on Windows Azure is now available, along with a deployment guide, developer and user documentation, and code samples for both data analysis algorithms and a client application. This implementation of an iterative MapReduce runtime on Windows Azure allows laboratories, small groups, and individual researchers to use the power of the cloud to analyze data sets of gigabytes or terabytes and run large-scale machine learning algorithms on dozens or hundreds of compute cores.

Included in the CTP Refresh (July 26, 2011)

This refresh to the Daytona CTP contains the following enhancements:

- Updated

  - Binaries

  - Default Medium package

  - Hosting project source

  - API help reference file (CHM)

- Content not updated (remains same from previous package)

  - Documentation

  - Samples for Kmeans, Outlier, Wordcount

Included in the CTP Release (July 18, 2011)

This CTP release consists of a ZIP file (Daytona_on_Windows_Azure.zip) that includes our Windows Azure cloud service installation package along with the documentation and sample codes.

- The Deployment Package folder contains a package to be deployed on your Windows Azure account, a configuration file for setting up your account, and a guide that offers step-by-step instructions to set up Project Daytona on your Windows Azure service. This package also contains the user guide that describes the user interface for launching data analytics jobs on Project Daytona from a sample client application.

- The Samples folder contains source code for sample data analytics algorithms written for Project Daytona as examples, along with source code for a sample client application to invoke data analytics algorithms on Project Daytona. The distribution also includes a developer guide for authoring new data analytics algorithms for Project Daytona, along with user guides for both the Daytona iterative MapReduce runtime and client application.

About Project Daytona

The project code-named Daytona is part of an active research and development project in the eXtreme Computing Group of Microsoft Research. We will continue to tune the performance of Project Daytona and add new functionality, fix any software defects that are identified, and periodically push out new versions.

Please report any issues you encounter in using Project Daytona to XCG Engagement or contact Roger Barga with suggestions for improvements and/or new features.

The download page doesn’t report any updates to the CTP 1.2 Refresh of 11/28/2012. Following are the *.pdf documentation files included with the release:

- Daytona - Client Application User Guide

- Daytona - Deployment guide

- Daytona - K-means algorithm user guide

- Daytona - Outlier Detection algorithm user guide

- Daytona - Runtime Developer guide

- Daytona API documentation Help file (pictured below)

- Daytona API documentation chw file

Note: Right-click the Daytona API documentation.chm file, choose Properties, and click the Unblock button to activate the help files before use.

Relationship of Excel CDA to Apache Hadoop on Windows Azure and SQL Azure Labs’ Codenames “Social Analytics” and “Cloud Numerics”

Excel CDA is an alternative to using Apache Hadoop on Windows Azure for parallel processing of MapReduce functions. Both use Windows Azure storage for input data and MapReduce implementations for data processing and provide an Excel add-in for data analysis. (Hadoop on Azure provides a Hive add-in.) Hadoop on Azure uses Windows HPC clusters while Excel CDA employs Windows Azure Worker roles for scheduling. Hadoop on Azure offers a UI for setting parameter values for sample MapReduce applications. Both offer a WordCount MapReduce example. The Hadoop on Azure CTP provides small to large HPC clusters on Windows Azure without charging your credit card. You must pay for Daytona resources you deploy to Windows Azure.

Excel CDA shares some features with other recent big-data initiatives from SQL Azure Labs. For example, Codename “Cloud Numerics” also requires C# programming for running matrix operations (other than examples) on HPC clusters you upload to Windows Azure. Like Excel CDA, “Cloud Numerics” makes you pay for the resources you consume. Cloud Numerics works primarily with dense numeric matrices, while Excel CDA’s MapReduce functions most commonly deal with strings or text documents.

“Social Analytics” performs sentiment analysis primarily on Twitter tweet streams, as well as on Facebook posts and StackOverflow questions, but has limited data analysis features. “Social Analytics” might be a good candidate for supplying data for MapReduce analysis. I wrote a sample WinForms client that downloads and analyzes trends in sentiment of tweets about Windows 8:

The following OakLeaf Systems blog posts (in reverse chronological order) describe Microsoft’s alternative approaches to big-data analytics when this post was written:

- Introducing Apache Hadoop Services for Windows Azure (1/31/2012)

- Deploying “Cloud Numerics” Sample Applications to Windows Azure HPC Clusters (1/28/2012)

- Introducing Microsoft Codename “Cloud Numerics” from SQL Azure Labs (1/28/2012)

- Problems with Microsoft Codename “Data Explorer” - Aggregate Values and Merging Tables - Solved (12/30/2011)

- Microsoft Codename “Data Explorer” Cloud Version Fails to Save Snapshots of Codename “Social Analytics” Data (12/27/2011)

- Mashup Big Data with Microsoft Codename “Data Explorer” - An Illustrated Tutorial (12/27/2011)

- More Features and the Download Link for My Codename “Social Analytics” WinForms Client Sample App (12/26/2011)

- Twitter Sentiment Analysis: A Brief Bibliography (11/26/2011)

- My Microsoft Codename “Social Analytics” Windows Form Client Detects Anomaly in VancouverWindows8 Dataset (11/19/2011)

- Microsoft Codename “Social Analytics” ContentItems Missing CalculatedToneId and ToneReliability Values (11/15/2011)

- Querying Microsoft’s Codename “Social Analytics” OData Feeds with LINQPad (11/5/2011)

- Problems Browsing Codename “Social Analytics” Collections with Popular OData Browsers (11/4/2011)

- Using the Microsoft Codename “Social Analytics” API with Excel PowerPivot and Visual Studio 2010 (11/2/2011)

- SQL Azure Labs Unveils Codename “Social Analytics” Skunkworks Project (11/1/2011)
