Windows Azure and Cloud Computing Posts for 11/21/2011+

A compendium of Windows Azure, SQL Azure Database, AppFabric, Windows Azure Platform Appliance and other cloud-computing articles.

• Updated 11/25/2011 1:00 PM PST: Added lots of new articles marked •.

Update 11/24/2011: This post was delayed due to Blogger’s recent HTTP 500 errors with posting and editing.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database and Reporting

- Marketplace DataMarket, Social Analytics and OData

- Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue and Hadoop Services

• Derrick Harris (@derrickharris) listed 6 reasons why 2012 could be the year of Hadoop in an 11/24/2011 post to GigaOM’s Structure blog:

Hadoop gets plenty of attention from investors and the IT press, but it’s very possible we haven’t seen anything yet. All the action of the last year has just set the stage for what should be a big year full of new companies, new users and new techniques for analyzing big data. That’s not to say there isn’t room for alternative platforms, but with even Microsoft abandoning its competitive effort and pinning its big data hopes on Hadoop, it’s difficult to see the project’s growth slowing down.

Here are six big things Hadoop has going for it as 2012 approaches.

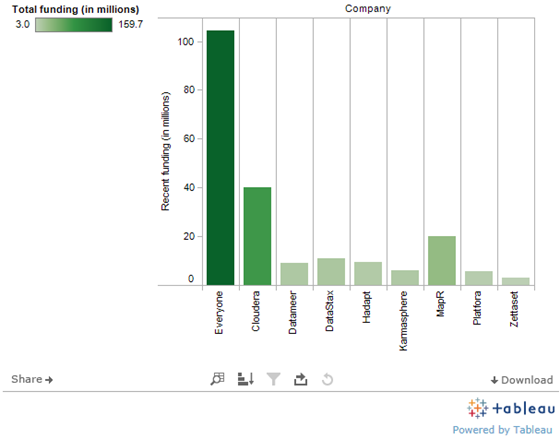

1. Investors love it

Cloudera has raised $76 million since 2009. Newcomers MapR and Hortonworks have raised $29 million and $50 million (according to multiple sources), respectively. And that’s just at the distribution layer, which is the foundation of any Hadoop deployment. Up the stack, Datameer, Karmasphere and Hadapt have each raised around $10 million, and then there are newer funded companies such as Zettaset, Odiago and Platfora. Accel Partners has started a $100 million big data fund to feed applications utilizing Hadoop and other core big data technologies. If anything, funding around Hadoop should increase in 2012, or at least cover a lot more startups.

2. Competition breeds success

Whatever reasons companies had to not use Hadoop should be fading fast, especially when it comes to operational concerns such as performance and cluster management. This is because MapR, Cloudera and Hortonworks are in a heated competition to win customers’ business. Whereas Cloudera and Hortonworks use open-source Apache Hadoop code for their distributions, MapR is pushing them on the performance front with its semi-proprietary version of Hadoop. This means an increased pace of innovation within Apache, and a major focus on management tools and support to make Hadoop easier to deploy and monitor. These three companies have lots of money, and it’s all going toward honing their offerings, which makes customers the real winners.

3. What learning curve?

Aside from the improved management and support capabilities at the distribution layer, those aforementioned up-the-stack companies are already starting to make Hadoop easier to use. Already, Karmasphere and Concurrent are helping customers write Hadoop workflows and applications, while Datameer and IBM are among the companies trying to make Hadoop usable by business users rather than just data scientists. As more Hadoop startups begin emerging from stealth mode, or at least releasing products, we should see even more innovative approaches to making analytics child’s play, so to speak.

4. Users are talking

It might not sound like a big deal, but the shared experiences of early Hadoop adopters could go a long way toward spreading Hadoop’s utility across the corporate landscape. It’s often said that knowing how to manage Hadoop clusters and write Hadoop applications is one thing, but knowing what questions to ask is something else altogether. At conferences such as Hadoop World, and on blogs across the web, companies including Walt Disney, Orbitz, LinkedIn, Etsy and others are telling their stories about what they have been able to discover since they began analyzing their data with Hadoop. With use cases abounding, future adopters should have an easier time knowing where to get started and what types of insights they might want to go after.

5. It’s becoming less noteworthy

This point is critical, actually, to the long-term success of any core technology: at some point, it has to become so ubiquitous that using it is no longer noteworthy. Think about relational databases in legacy applications: everyone knows Oracle, MySQL or SQL Server are lurking beneath the covers, but no one really cares anymore. We’re hardly there yet with Hadoop, but we’re getting there. Now, when you come across applications that involve capturing and processing lots of unstructured data, there’s a good chance they’re using Hadoop to do it. I’ve come across a couple of companies, however, that don’t bring up Hadoop unless they’re prodded because they’re not interested in talking about how their applications work, just the end result of better security, targeted ads or whatever it is they’re doing.

6. It’s not just Hadoop

If Hadoop were just Hadoop (that is, Apache MapReduce and the Hadoop Distributed File System), it still would be popular. But the reality is that it’s a collection of Apache projects that include everything from the SQL-like Hive query language to the NoSQL HBase database to the machine-learning library Mahout. HBase, in particular, has proven popular on its own, including at Facebook. Cloudera, Hortonworks and MapR all incorporate the gamut of Hadoop projects within their distributions, and Cloudera recently formed the Bigtop project within Apache, which is a central location for integrating all Hadoop-related projects within the foundation. The more use cases Hadoop as a whole addresses, the better it looks.

Disclosure: Concurrent is backed by True Ventures, a venture capital firm that is an investor in the parent company of this blog, Giga Omni Media. Om Malik, the founder of Giga Omni Media, is also a venture partner at True.

Derrick’s six points bolster the wisdom of the Windows Azure team’s decision to abandon Dryad and DryadLINQ in favor of Hadoop and MapReduce. See my Google, IBM, Oracle [and Microsoft] want piece of big data in the cloud post of 11/7/2011 to SearchCloudComputing.com.

Full disclosure: I’m a paid contributor to TechTarget’s SearchCloudComputing.com.

Brent Stineman (@BrentCodeMonkey) described Long Running Queue Processing Part 2 (Year of Azure–Week 20) in an 11/23/2011 post:

So back in July I published a post on doing long running queue processing. In that post we put together a nice sample app that inserted some messages into a queue, read them one at a time and would take 30 seconds to process each message. It did processing in a background thread so that we could monitor it.

This approach was all good and well but hinged on us knowing the maximum amount of time it would take us to process a message. Well, fortunately for us, in the latest 1.6 version of the Azure Tools (aka SDK), the storage client was updated to take advantage of the new “update message” functionality introduced to queues by an earlier service update. So I figured it was time to update my sample.

UpdateMessage

Fortunately for me, given the upcoming holiday (which doesn’t leave me much time for blogging, since my family lives in “the boonies” and hasn’t yet opted for an internet connection, much less broadband), updating a message is SUPER simple.

```csharp
myQueue.UpdateMessage(aMsg, new TimeSpan(0, 0, 30), MessageUpdateFields.Visibility);
```

All we need is the message we read (which contains the pop receipt the underlying API uses to update the invisible message), the new timespan, and finally a flag to tell the API whether we’re updating the message content/payload or its visibility. In the sample above we are, of course, setting its visibility.
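To make the pop-receipt contract concrete, here is a minimal in-memory model written in Python rather than C#. It is not the Azure storage client: `ToyQueue`, `get`, `update_visibility` and `delete` are hypothetical names. It assumes, as with the Azure Queue REST API, that every dequeue or update issues a new pop receipt and that a stale receipt is rejected; the simulated clock `now` stands in for real time.

```python
import uuid
from dataclasses import dataclass
from typing import Optional


@dataclass
class Message:
    id: str
    content: str
    pop_receipt: Optional[str] = None
    visible_at: float = 0.0  # simulated time at which the message reappears


class ToyQueue:
    """Hypothetical in-memory queue with visibility timeouts (not the Azure API)."""

    def __init__(self):
        self.now = 0.0        # simulated clock, in seconds
        self._messages = []   # stored Message objects

    def add(self, content):
        self._messages.append(Message(str(uuid.uuid4()), content))

    def get(self, visibility_timeout):
        # Hide the first visible message and hand back a copy holding a fresh receipt.
        for msg in self._messages:
            if msg.visible_at <= self.now:
                msg.visible_at = self.now + visibility_timeout
                msg.pop_receipt = str(uuid.uuid4())
                return Message(msg.id, msg.content, msg.pop_receipt, msg.visible_at)
        return None

    def _find(self, handle):
        for msg in self._messages:
            if msg.id == handle.id and msg.pop_receipt == handle.pop_receipt:
                return msg
        raise KeyError("message not found or pop receipt is stale")

    def update_visibility(self, handle, visibility_timeout):
        # Extend invisibility from "now"; the caller's handle receives the new receipt.
        msg = self._find(handle)
        msg.visible_at = self.now + visibility_timeout
        msg.pop_receipt = str(uuid.uuid4())
        handle.pop_receipt, handle.visible_at = msg.pop_receipt, msg.visible_at

    def delete(self, handle):
        self._messages.remove(self._find(handle))
```

Renewing before `visible_at` passes is what keeps a long job's message hidden; if the job overruns it, another `get` can dequeue the message and the first worker's receipt stops working.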

Ok, time for turkey and dressing! Oh wait, you want the updated project?

QueueBackgroundProcess w/ UpdateMessage

Alright, so I took exactly the same code we used before. It inserts 5 messages into a queue, then reads and processes each individually. The outer processing loop looks like this:

```csharp
// Runs inside the worker role's Run() method; presumably needs using directives for
// System, System.Threading, System.Diagnostics and Microsoft.WindowsAzure.StorageClient.
// Work is the sample's background-processing class (not shown here).
while (true)
{
    // read messages from queue and process one at a time…
    CloudQueueMessage aMsg = myQueue.GetMessage(new TimeSpan(0, 0, 30)); // 30 second timeout

    // trap no message.
    if (aMsg != null)
    {
        Trace.WriteLine("got a message, '" + aMsg.AsString + "'", "Information");

        // start processing of message
        Work workerObject = new Work();
        workerObject.Msg = aMsg;
        Thread workerThread = new Thread(workerObject.DoWork);
        workerThread.Start();

        while (workerThread.IsAlive)
        {
            myQueue.UpdateMessage(aMsg, new TimeSpan(0, 0, 30), MessageUpdateFields.Visibility);
            Trace.WriteLine("Updating message expiry");
            Thread.Sleep(15000); // sleep for 15 seconds
        }

        if (workerObject.isFinished)
            myQueue.DeleteMessage(aMsg.Id, aMsg.PopReceipt); // I could just use the message, illustrating a point
        else
        {
            // here, we should check the queue count
            // and move the msg to poison message queue
        }
    }
    else
        Trace.WriteLine("no message found", "Information");

    Thread.Sleep(1000);
    Trace.WriteLine("Working", "Information");
}
```

The while loop is the processor of the worker role that this all runs in. I decreased the initial visibility timeout from 2 minutes to 30 seconds, lengthened the interval at which we check the background processing thread from every 1/10th of a second to 15 seconds, and added the update of the message’s visibility timeout.

The inner process was also upped from 30 seconds to 1 minute. Now here’s where the example kicks in! Since the original read specified only a 30-second visibility timeout, and my background process will take one minute, it’s important that I update the visibility timeout or the message would fall back into view. So I’m extending it by another 30 seconds every 15 seconds, thus keeping it invisible.
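The timing argument can be checked with a small discrete-time sketch in Python (`stays_hidden` is a hypothetical name). It mirrors the loop under simplifying assumptions: renewals happen exactly every `renew_every_s` seconds starting at t = 0, each renewal takes effect instantly, and a message counts as visible the moment its timeout expires.

```python
def stays_hidden(processing_s, timeout_s, renew_every_s):
    """Return True if renewals keep the message invisible until processing ends.

    The message is read with timeout_s at t=0, then renewed to timeout_s at
    t = 0, renew_every_s, 2*renew_every_s, ... while the worker is still running.
    """
    if renew_every_s <= 0 or timeout_s <= 0:
        raise ValueError("intervals must be positive")
    hidden_until = timeout_s            # set by the initial GetMessage call
    t = 0
    while t < processing_s:
        if t >= hidden_until:           # gap: another instance could dequeue it
            return False
        hidden_until = t + timeout_s    # UpdateMessage(..., timeout, Visibility)
        t += renew_every_s
    return processing_s < hidden_until
```

With the post's numbers (60 s of work, a 30 s timeout, renewal every 15 s) this returns True; with a renewal interval equal to or longer than the timeout it returns False, which is why the renewal period needs to be comfortably shorter than the visibility timeout.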

Ta-da! Here’s the project if you want it.

So unfortunately that’s all I have time for this week. I hope all of you in the US enjoy your Thanksgiving holiday weekend (I’ll be spending it with family and not working thankfully). And we’ll see you next week!

• Brad Calder of the Windows Azure Storage Team reported the availability of the Windows Azure Storage: A Highly Available Cloud Storage Service with Strong Consistency paper on 11/20/2011:

We recently published a paper describing the internal details of Windows Azure Storage at the 23rd ACM Symposium on Operating Systems Principles (SOSP) [#sosp11].

The paper can be found here. The conference also posted a video of the talk here. The slides are not really legible in the video, but you can view them separately here.

The paper describes how we provision and scale out capacity within and across data centers via storage stamps, and how the storage location service is used to manage our stamps and storage accounts. Then it focuses on the details for the three different layers of our architecture within a stamp (front-end layer, partition layer and stream layer), why we have these layers, what their functionality is, how they work, and the two replication engines (intra-stamp and inter-stamp). In addition, the paper summarizes some of the design decisions/tradeoffs we have made as well as lessons learned from building this large scale distributed system.
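As a rough illustration of the location service's role, here is a toy account directory in Python. The class and method names and the least-loaded placement rule are assumptions for illustration only; the paper describes the location service choosing a primary stamp for each account and updating DNS so that the account's hostnames (the familiar `<account>.blob.core.windows.net` form) resolve to that stamp.

```python
class LocationService:
    """Toy account-to-stamp directory; the placement policy is an assumption."""

    SERVICES = ("blob", "table", "queue")

    def __init__(self, stamp_load):
        self.stamp_load = dict(stamp_load)  # stamp name -> fraction of capacity used
        self.dns = {}                        # hostname -> stamp (stands in for DNS -> stamp VIP)

    def create_account(self, account):
        # Place the account on the least-loaded stamp, then publish its hostnames.
        stamp = min(self.stamp_load, key=self.stamp_load.get)
        self._point_dns(account, stamp)
        return stamp

    def migrate(self, account, new_stamp):
        # Once data has been copied, repointing DNS moves client traffic to the new stamp.
        self._point_dns(account, new_stamp)

    def _point_dns(self, account, stamp):
        for service in self.SERVICES:
            self.dns[f"{account}.{service}.core.windows.net"] = stamp

    def resolve(self, hostname):
        return self.dns[hostname]
```

Because clients only ever address the hostname, the stamp behind an account can change without any client-side change.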

A key design goal for Windows Azure Storage is to provide Consistency, Availability, and Partition Tolerance (CAP) (all 3 of these together, instead of just 2) for the types of network partitioning we expect to see for our architecture. This is achieved by co-designing the partition layer and stream layer to provide strong consistency and high availability while being partition tolerant for the common types of partitioning/failures that occur within a stamp, such as node level and rack level network partitioning.
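One idea behind that co-design can be shown in a greatly simplified Python toy (all names hypothetical, not the real stream layer): a block is acknowledged only after every replica of the current extent holds it, and when a replica node is unreachable the extent is sealed at its already-agreed length and appends move to a fresh extent on healthy nodes. Real failure handling (partial appends, commit-length negotiation, re-replication) is omitted.

```python
class Extent:
    """An append-only unit replicated on a fixed set of storage nodes."""

    def __init__(self, nodes):
        self.nodes = tuple(nodes)
        self.blocks = {n: [] for n in self.nodes}  # replica contents per node
        self.sealed = False


class Stream:
    """Toy stream: appends go to the last extent; an unreachable replica triggers seal-and-move."""

    def __init__(self, all_nodes, replicas=3):
        self.all_nodes = list(all_nodes)
        self.replicas = replicas
        self.extents = [self._new_extent(frozenset())]

    def _new_extent(self, unavailable):
        healthy = [n for n in self.all_nodes if n not in unavailable]
        if len(healthy) < self.replicas:
            raise RuntimeError("not enough healthy nodes for a new extent")
        return Extent(healthy[: self.replicas])

    def append(self, block, unavailable=frozenset()):
        extent = self.extents[-1]
        if any(n in unavailable for n in extent.nodes):
            # Every replica already holds the same acknowledged blocks, so the
            # extent can be sealed at that length and left read-only.
            extent.sealed = True
            extent = self._new_extent(unavailable)
            self.extents.append(extent)
        for n in extent.nodes:          # write all replicas ...
            extent.blocks[n].append(block)
        return len(self.extents) - 1    # ... and only then acknowledge (extent index)
```

Writes stay available after a node failure because they simply continue on a new extent, while readers of the sealed extent see identical data on every replica.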

In this short conference talk we try to touch on the key details of how the partition layer provides an automatically load balanced object index that is scalable to 100s of billions of objects per storage stamp, how the stream layer performs its intra-stamp replication and deals with failures, and how the two layers are co-designed to provide consistency, availability, and partition tolerance for node and rack level network partitioning and failures.
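The "automatically load balanced object index" rests on range partitioning. Below is a hedged Python sketch: objects are ordered by an (AccountName, PartitionName, ObjectName) key, the key space is cut into contiguous ranges, and each range is served by one partition server. `PartitionMap`, `lookup` and `split` are illustrative names, and a real split is decided by the system from load data rather than called by hand.

```python
import bisect


class PartitionMap:
    """Toy partition map: sorted key ranges, each served by one partition server."""

    def __init__(self, low_keys, servers):
        # low_keys[i] is the inclusive lower bound of range i; low_keys[0] must be the smallest key.
        if len(low_keys) != len(servers) or list(low_keys) != sorted(low_keys):
            raise ValueError("need one server per range and sorted lower bounds")
        self.low_keys = list(low_keys)
        self.servers = list(servers)

    def lookup(self, account, partition, obj):
        # Objects are ordered by (AccountName, PartitionName, ObjectName).
        key = (account, partition, obj)
        return self.servers[bisect.bisect_right(self.low_keys, key) - 1]

    def split(self, at_key, new_server):
        # Load balancing: the range containing at_key is cut at at_key and the
        # upper part is handed to new_server; keys below at_key stay put.
        if at_key in self.low_keys:
            raise ValueError("already a range boundary")
        i = bisect.bisect_right(self.low_keys, at_key)
        self.low_keys.insert(i, at_key)
        self.servers.insert(i, new_server)
```

Because a range is just a pair of boundary keys, moving load means updating the map, not rewriting the objects themselves.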

Brad Calder

SQL Azure Database and Reporting

• Mark Scurrell (@mscurrell) announced a SQL Azure Data Sync Service Update in an 11/21/2011 post to the Sync Framework Team Blog:

Thanks for trying out our Preview version and sending us suggestions and feedback. We released a minor service update a few days ago based on the input we have received so far.

Some of the important changes in this update are:

- Log-ins with either username@server or just username are accepted.

- Column names with spaces are now supported.

- Columns with a NewSequentialID constraint are converted to NewID for SQL Azure databases in the sync group.

- Administrators and non-Administrators alike are able to install the Data Sync Agent.

- A new version of the Data Sync Agent is now available on the Download Center, but if you already have the Preview version of the Data Sync Agent it will continue to work.

Marketplace DataMarket, Social Analytics and OData

• Chris Ballard (@chrisaballard) described Creating an OData Feed to Import Google Image Search Results into a SQL Server Denali Tabular Model in an 11/23/2011 post to the Tribal Labs blog:

Recently, I worked on a prototype BI project which needed images to represent higher education institutions so that we could create a more attractive interface for an opendata mashup. It’s a common data visualisation problem: you have a set of data, perhaps obtained via some open data source, but the source does not include any references to image data which you can use. So the question was, where could we obtain images for each of the institutions that we could use? Using Google Image Search was the obvious answer, but how could I get the images returned from a search in a format which I could use and combine with the rest of my data? The Google Image Search API provides a JSON interface that allows you to send image search requests and receive results programmatically. Unfortunately, as of May 2011 this API has been deprecated (and there appears to be no Google alternative available); however, it is still functional and so appeared to meet our needs.

Open Data Mashup

The data for our opendata mashup came from a number of different sources (expect a blog post on this very soon!) and we created a SQL Server 2012 (Denali) Tabular Model in Analysis Services to bring together a number of external opendata sets relating to higher education institutions. The main source of data about each HE institution was obtained by extracting data from the Department for Education Edubase system, which contains data on educational establishments across England and Wales. Initially, we wanted our app to query the Google Image Search API dynamically to return a corresponding image; however, this proved too slow to use realistically. Instead, as our app queries the tabular model directly, I decided to pull the images associated with each institution into the tabular model so they are available for the app to query.

Both PowerPivot and the Tabular Model designer in BIDS allow you to import data from an OData (Open Data Protocol) feed to a model. OData is a web protocol for querying and updating data based on Atom syndication and RESTful HTTP-based services. You can find out more information over at odata.org. So, to bring the image search results into the tabular model, I built a simple data service using WCF Data Services which serves up the image URLs as an OData feed which can then be consumed in the tabular model. The data service uses the WCF Data Service Reflection Provider to define the data model for the service based on a set of custom classes.
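OData's URL conventions are what make such a feed consumable by generic clients: the entity set is a path segment, and system query options such as `$filter`, `$top` and `$select` go in the query string. The Python helper below (`odata_url` is a hypothetical name) sketches that convention; the `Id` property used in the example is an assumption, since the Image class's property names aren't shown in the text.

```python
from urllib.parse import quote

# Characters left unescaped in option values so filter expressions stay readable.
_SAFE = "',()"


def odata_url(service_root, entity_set, **options):
    """Build an OData query URL; keyword names become $-prefixed system query options."""
    base = service_root.rstrip("/") + "/" + entity_set
    if not options:
        return base
    query = "&".join(f"${name}={quote(str(value), safe=_SAFE)}" for name, value in options.items())
    return base + "?" + query
```

For example, `odata_url("http://localhost:12345/googleimagesodata.svc", "Images", top=5, filter="Id eq 10")` produces `http://localhost:12345/googleimagesodata.svc/Images?$top=5&$filter=Id%20eq%2010`.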

One issue is that the image search needs to be based on a known list of institutions (which are already in our model), so the service needed to query the model first to get the list of institutions which could then be used as the basis of our query. This does have the advantage that the image URLs will be updated dynamically if the institution data in the model changes; however, it depends on the feed data being processed after the institution data.

OData Service

To create the OData service I created a simple .NET class called WebImageSearch which can be used to call the Google Image Search REST Service and extract the first image matching the search results:

WebImageSearch Class

This class provides two methods, ImageSearch (which is used to call the Google Image Search REST service and carry out the search):

ImageSearch Method

and ProcessGoogleSearchResults to process the JSON returned from the service call. Note that this uses an open source library called Jayrock which allows you to easily process JSON using DataReaders.

ProcessGoogleSearchResults Method
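Since the class listings above survive only as captions, here is a hedged Python counterpart to what ImageSearch and ProcessGoogleSearchResults are described as doing, using the standard `json` module in place of Jayrock. The endpoint and the `responseData.results[].unescapedUrl` response shape are my assumptions about the now-deprecated Google AJAX Search API; the sketch only builds the request URL and parses a response string, so it makes no network call.

```python
import json
from urllib.parse import urlencode

# Assumed endpoint of the deprecated Google AJAX image search API.
SEARCH_ENDPOINT = "https://ajax.googleapis.com/ajax/services/search/images"


def image_search_url(query):
    """Build the GET URL for an image search (v=1.0 protocol version, q=search terms)."""
    return SEARCH_ENDPOINT + "?" + urlencode({"v": "1.0", "q": query})


def first_image_url(response_text):
    """Return the first result's URL from a JSON response, or None if there are no results."""
    data = json.loads(response_text)
    results = (data.get("responseData") or {}).get("results") or []
    return results[0].get("unescapedUrl") if results else None
```

Keeping URL construction and response parsing separate makes the parsing testable against saved responses, which matters for an API that may stop responding.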

The ImageSearch method returns a list of URLs as a collection of objects of type Image. This class is decorated with a number of attributes which tell the WCF Data Service Reflection Provider how to interpret the class as a data feed entity. In this case a DataServiceKeyAttribute identifies the property that is the key for the entity in the resulting data feed. EntityPropertyMapping attributes (although optional) define a mapping from class properties to elements of the Atom feed. I found that unless these were specified, no data was returned in the feed when displayed in the browser.

Image Class

A helper class (in this case called InstitutionImages) does the work of reading the existing institution names from the tabular model, passing these to the ImageSearch method in the WebImageSearch class and building a List<Image> containing the returned image URLs and image IDs (so that we can relate them back to the institutions in the model). We also need to provide a property on this class that returns an IQueryable<Image>, which exposes the entity set of Image entities in the data feed:

Images property returning IQueryable Interface
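The helper's job can be sketched in Python as follows. `Image`, `build_images` and the `search` callable are hypothetical stand-ins for the C# Image class, the InstitutionImages logic and WebImageSearch.ImageSearch. Carrying the institution's ID as the entity key is what lets the imported rows be related back to the institution table in the model.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Optional, Tuple


@dataclass(frozen=True)
class Image:
    id: int    # entity key (the role DataServiceKey plays in the C# class); assumed to be the institution ID
    url: str   # first image URL returned by the search


def build_images(institutions: Iterable[Tuple[int, str]],
                 search: Callable[[str], Optional[str]]) -> List[Image]:
    """Search once per (id, name) pair and keep the ID so rows relate back to the model."""
    images = []
    for inst_id, name in institutions:
        url = search(name)
        if url is not None:   # institutions with no hit are simply left out
            images.append(Image(inst_id, url))
    return images
```

Passing the search in as a callable keeps the sketch testable with a stub instead of a live web service.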

Finally to complete the picture, we need to add a new WCF Data Service to the project. In the data service definition we define it as a DataService of type InstitutionImages (which is the name of the class containing our IQueryable property) and also need to define Entity Set access rules:

WCF Data Service Definition

Importing the data

Now we are ready to test the service! To return data from the OData service, we can simply define the entity set we are interested in in the URL, for example:

http://localhost:12345/googleimagesodata.svc/Images

If we browse to this URL in the browser, it returns the data feed as an Atom syndication feed. To add the feed to our tabular model in the SQL Server Denali BIDS Tabular Model Designer, click the Import from Data Source button, select “Other Feeds” and specify the URL of the OData service:
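For a quick look at what such a feed contains outside BIDS, the Atom payload can be flattened into rows with Python's standard library. This is a sketch, assuming the OData v1/v2 Atom layout in which each entry's properties sit under `content/m:properties`; properties that an EntityPropertyMapping moves out of the content (with KeepInContent set to false) would not be picked up here. The `Id` and `Url` property names in the test data are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespaces used by OData v1/v2 Atom payloads.
NS = {
    "atom": "http://www.w3.org/2005/Atom",
    "m": "http://schemas.microsoft.com/ado/2007/08/dataservices/metadata",
    "d": "http://schemas.microsoft.com/ado/2007/08/dataservices",
}


def feed_rows(atom_xml):
    """Return one dict per <entry>, built from its <content><m:properties> element."""
    root = ET.fromstring(atom_xml)
    rows = []
    for entry in root.findall("atom:entry", NS):
        props = entry.find("atom:content/m:properties", NS)
        if props is None:      # properties mapped elsewhere (e.g. by EPM) are not read here
            continue
        # Strip the "{namespace}" prefix from each d:Property tag.
        rows.append({child.tag.split("}", 1)[1]: child.text for child in props})
    return rows
```

`ET.fromstring` also accepts bytes, so the body of an HTTP response for the URL above could be passed in directly.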

Connecting to data feed in SQL Server Denali

The data feed will then be imported into the tabular model:

Thoughts on other approaches

This is not an ideal solution by any means, but it does give a way to import more dynamic data, originating from APIs and services that don’t provide a specific OData feed, into a tabular model. This approach would be fine if the data being imported did not depend on the data in another table (in this case the URLs to return depend on each institution); however, where there is a relationship, it becomes a bit messy because the service needs to query the model, creating a circular dependency.

A better approach would be to create a user defined function which can then be used to dynamically populate data in a new column based on existing data in the table (sort of like user defined functions in multidimensional modeling). Unfortunately this is not possible with a tabular model in SQL Server Denali. Perhaps this is something that the SQL Azure Data Explorer project may enable us to deal with more elegantly in the future by combining these sources prior to loading into the tabular model?

This has started me thinking of how OData support in SQL Server Denali could be enhanced in order to fully take advantage of the capabilities of OData, but that is the subject of another post I think!

• The Data Explorer Team answered What was the best year for kids movies? with a video in an 11/22/2011 post:

In today’s post we are featuring a demo video which uses “Data Explorer” to answer the age-old question, “What was the best year for kids movies?”

Features showcased in the video include:

- Importing data from OData and HTML sources.

- Using data in one table to look up values in another.

- Replacing values in a column.

- Summarizing/grouping rows.
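The steps listed above map onto ordinary table operations. Here is a rough Python sketch of the same lookup, replace and group/summarize pipeline; the column names, the rating codes and the choice of G and PG as "kids" ratings are all hypothetical, since the video's underlying data sets aren't described in the post.

```python
from collections import Counter

KID_RATINGS = {"G", "PG"}  # assumption: which ratings count as "kids" movies


def kids_movies_per_year(movies, rating_names):
    """movies: dicts with 'year' and 'rating_id'; rating_names: rating_id -> rating label."""
    # 1. Look up a value in another table (like a join on rating_id).
    rows = [dict(m, rating=rating_names.get(m["rating_id"], "Unknown")) for m in movies]
    # 2. Replace values in a column: collapse the kids ratings into one label.
    rows = [dict(r, rating="Kids" if r["rating"] in KID_RATINGS else r["rating"]) for r in rows]
    # 3. Group rows and summarize: count kids movies per year.
    return Counter(r["year"] for r in rows if r["rating"] == "Kids")
```

`kids_movies_per_year(...).most_common(1)` then answers the question with a (year, count) pair, at least for whatever definition of "best" a simple count captures.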

• James Governor described B2C Social Analytics: Capturing “Moments of Truth” in an 11/22/2011 post to his RedMonk blog:

I presented at ActuateOne Live, a customer event for the company behind BIRT, last week. The subject of my talk was Analytics and Data Science: the breaking wave. My key argument is that cratering costs of processing, RAM and storage, combined with a new generation of data processing technologies built and open sourced by web companies (noSQL), are giving enterprises unprecedented opportunities to do the things with data they always wanted to but the DBA said they couldn’t afford.

I also sat on a panel looking at mobile, cloud and “agile analytics”. Seems the panel went well. I evidently triggered some thoughts from a colleague at another analyst firm, Richard Snow at Ventana Research.

I like Richard’s use of the phrase “moments of truth” to describe the customer service experiences that traditional CRM apps do such a terrible job of capturing.

“After consumers interact with a company in some way (for example, see an advertisement, visit a website, try to use a product, call the contact center, visit social media or even talk to a friend), they are left with a perception or feeling about that company. If the feeling is good, they feel satisfied, if it is bad they are unhappy; in either case they have had a Moment of Truth. Adding up all these moments of truth, a company can gauge their overall satisfaction level, their propensity to remain loyal and buy more, and the likelihood they will say good or bad things about the company to friends or on social media.”

As Richard says:

James put forward the view that companies need to focus more on customer behavior and the likely impact on customer behavior of marketing messages, sales calls, social media content, product features, an agent’s attitude, IVR menus and other sources, or as I recently wrote, how customers are likely to react to moments of truth in their contacts. Understanding this requires analysis of masses of historic and current data, both structured and unstructured. It will be interesting to see what Actuate does in this area as it develops more customer-related solutions.

Tracking social media interactions can give us insight into these moments of truth. Actuate offers Twitter integration, as do many other analytics companies, while Facebook integration is also heating up fast – see for example Adobe SocialAnalytics and Microstrategy Facebook CRM.

This stuff isn’t getting any easier, though. It used to be that you could track what people said on social networks. But with Facebook turning on “automated sharing”, which tracks your apps and creates implicit declarations about what you like on your behalf, the data deluge is going to get a lot worse before it gets better.

We’ll need to understand that online personas may not always give us the “moments of truth”, because people online are trying to create a persona. There is a difference, for example, between what they share and what they click on: that is, Kitteh vs. Chikin.

"Kitteh vs. Chikin: What Data Can Tell Us About Who We Are and Who We Want to Be." (Monktoberfest 2011), a presentation by Matt LeMay

Disclosure: Actuate and Adobe are both clients.

I believe James uses someone else’s portrait as a Twitter avatar.

My (@rogerjenn) More Features and the Download Link for My Codename “Social Analytics” WinForms Client Sample App of 11/22/2011 begins:

Blogger borks edits with Windows Live Writer, so instead of updating yesterday’s New Features Added to My Microsoft Codename “Social Analytics” WinForms Client Sample App post, I’ve added this new post which shows:

- A change from numeric to text values for the ContentItemType enum

- A Cancel button to terminate downloading prematurely

- Addition of Replies to the “Return Tweets, Retweets and Replies Only” check box

Here’s the latest screen capture:

Download the source files from the Social Analytics folder of my SkyDrive account:

The downloadable items are:

- SocialAnalyticsWinFormsSampleReadMe.txt

- The source files in SocialAnalyticsWinFormsSample.zip

- A sample ContentItems.csv file for 23 days of data (92,668 total ContentItems).

Earlier posts in this series include:

- New Features Added to My Microsoft Codename “Social Analytics” WinForms Client Sample App

- My Microsoft Codename “Social Analytics” Windows Form Client Detects Anomaly in VancouverWindows8 Dataset

- Microsoft Codename “Social Analytics” ContentItems Missing CalculatedToneId and ToneReliability Values

- Querying Microsoft’s Codename “Social Analytics” OData Feeds with LINQPad

- Problems Browsing Codename “Social Analytics” Collections with Popular OData Browsers

- Using the Microsoft Codename “Social Analytics” API with Excel PowerPivot and Visual Studio 2010

- SQL Azure Labs Unveils Codename “Social Analytics” Skunkworks Project

Turker Keskinpala (@tkes) reported OData Service Validation Tool Update: 12 new rules added on 11/22/2011 to the OData wiki:

OData Service Validation Tool is updated once again with 12 new rules. Below is the breakdown of added rules:

- 2 new common rules

- 2 new metadata rules

- 3 new feed rules

- 5 new entry rules

This rule update brings the total number of rules in the validation tool to 109. You can see the list of rules that are under development here.

The OData Service Validation CodePlex project was also updated with all recent changes.

The SQL Server Team announced Microsoft Codename "Social Analytics" Lab in an 11/21/2011 post:

A few weeks ago we released a new “lab” on a site, which some of you may be aware of, called SQL Azure | Labs. We created this site as an outlet for projects incubated out of teams who are passionate about an idea. The labs allow you to experiment and engage with teams on some of these early service concepts, and while these projects are not committed to a roadmap, your feedback and engagement will help immensely in shaping future investment directions.

Microsoft Codename “Social Analytics” is an experimental cloud service that provides an API enabling developers to easily integrate relevant social web information into business applications. Also included is a simple browsing application to view the social stream and the kind of analytics that can be constructed and integrated in your application.

You can get started with “Social Analytics” by exploring the social data available via the browsing application. With this first lab release, the data available is limited to two topics (“Windows 8” and “Bill Gates”). Future releases will allow you to define your own topic(s) of interest. The data in “Social Analytics” includes top social sources like Twitter, Facebook, blogs and forums. It has also been automatically enriched to tie conversations together across sources, and to assess sentiment.

Once you’re familiar with the data you’ve chosen, you can then use our API (based on the Open Data Protocol) to bring that social data directly into your own application.

Do you want to learn more about the Microsoft Codename “Social Analytics” Lab? Get started today, or for more information visit the official homepage.

- More Features and the Download Link for My Codename “Social Analytics” WinForms Client Sample App

- New Features Added to My Microsoft Codename “Social Analytics” WinForms Client Sample App

- My Microsoft Codename “Social Analytics” Windows Form Client Detects Anomaly in VancouverWindows8 Dataset

- Microsoft Codename “Social Analytics” ContentItems Missing CalculatedToneId and ToneReliability Values

- Querying Microsoft’s Codename “Social Analytics” OData Feeds with LINQPad

- Problems Browsing Codename “Social Analytics” Collections with Popular OData Browsers

- Using the Microsoft Codename “Social Analytics” API with Excel PowerPivot and Visual Studio 2010

- SQL Azure Labs Unveils Codename “Social Analytics” Skunkworks Project

Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

• Paolo Salvatori described Handling Topics, Queues and Relay Services with the Service Bus Explorer Tool in an 11/24/2011 post:

The Windows Azure Service Bus Community Technology Preview (CTP), which was released in May 2011, first introduced queues and topics. At that time, the Windows Azure Management Portal didn’t provide a user interface to administer, create and delete messaging entities and the only way to accomplish this task was using the .NET or REST API. For this reason, I decided to build a tool called Service Bus Explorer that would allow developers and system administrators to connect to a Service Bus namespace and administer its messaging entities.

Over the last few months I continued to develop this tool and add new features, with the goal of facilitating the development and administration of new Service Bus-enabled applications. In the meantime, the Windows Azure Management Portal introduced the ability for a user to create queues, topics, and subscriptions and define their properties, but not to define or display rules for an existing subscription. In addition, Service Bus Explorer provides functionality, such as importing, exporting and testing entities, that the Windows Azure Management Portal does not currently provide. For this reason, the Service Bus Explorer tool is the perfect companion for the official Windows Azure portal, and it can also be used to explore the features (session-based correlation, configurable detection of duplicate messages, deferring messages, etc.) provided out-of-the-box by Service Bus brokered messaging.
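For readers new to brokered messaging, the Python toy below sketches two of the behaviors mentioned: a topic copies each message to every subscription whose rule matches, and duplicate detection drops a resend of the same message ID within a configurable window. It is not the Service Bus API; `ToyTopic` and its methods are hypothetical, Python predicates stand in for SQL filter rules, and the 600-second window is an arbitrary choice.

```python
class ToyTopic:
    """In-memory illustration of topic fan-out and duplicate detection (not the Service Bus API)."""

    def __init__(self, duplicate_window_s=600):
        self.subscriptions = {}      # name -> (predicate, list of delivered messages)
        self.window = duplicate_window_s
        self._seen = {}              # message_id -> time it was first accepted

    def subscribe(self, name, predicate=lambda props: True):
        self.subscriptions[name] = (predicate, [])

    def send(self, message_id, props, now):
        first = self._seen.get(message_id)
        if first is not None and now - first < self.window:
            return False             # duplicate inside the detection window: dropped
        self._seen[message_id] = now
        for predicate, inbox in self.subscriptions.values():
            if predicate(props):     # each matching subscription gets its own copy
                inbox.append((message_id, dict(props)))
        return True

    def receive(self, name):
        inbox = self.subscriptions[name][1]
        return inbox.pop(0) if inbox else None
```

The sender never needs to know which subscriptions exist; adding a subscription with a new rule changes who receives copies without touching publishing code.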

I’ve just published a post where I explain how my tool works and its implementation details; its source code is available on MSDN Code Gallery. In this post I explain how to use my tool to manage and test Queues and Topics.

For more information on the Windows Azure Service Bus, please refer to the following resources:

- “Service Bus” topic on the MSDN Site.

- “Now Available: The Service Bus September 2011 Release” article on the Windows Azure Blog.

- “Queues, Topics, and Subscriptions” article on the MSDN site.

- “Understanding Windows Azure Service Bus Queues (and Topics)” video.

- “Building loosely-coupled apps with Windows Azure Service Bus Topics and Queues” video on the channel9 site.

- “Service Bus Topics And Queues” video on the channel9 site.

- “Securing Service Bus with ACS” video on the channel9 site.

Read the full article on MSDN.

The companion code for the article is available on MSDN Code Gallery.

Avkash Chauhan (@avkashchauhan) answered Windows Azure Libraries for .NET 1.6 (Where is Windows Azure App Fabric SDK?) on 11/20/2011:

After the release of the latest Windows Azure SDK 1.6, you may have wondered where the Windows Azure AppFabric SDK 1.6 is. Before the SDK 1.6 release, the AppFabric SDK shipped separately from the Azure SDK. However, things have changed: Windows Azure SDK 1.6 merges both SDKs into one, so when you install the new Windows Azure SDK 1.6, the AppFabric SDK 1.6 is also installed.

Here are a few things to remember now about Windows Azure App Fabric SDK:

- The AppFabric SDK components are installed as “Windows Azure Libraries for .NET 1.6”.

- Because the AppFabric components are now merged into the Windows Azure SDK, Add/Remove Programs no longer has a separate entry for the Windows Azure AppFabric SDK.

- Windows Azure AppFabric has two main components, Service Bus and Cache, and each is installed in its own folder inside the Azure SDK:

  - Service Bus: C:\Program Files\Windows Azure SDK\v1.6\ServiceBus

  - Cache: C:\Program Files\Windows Azure SDK\v1.6\Cache

- Because SDK 1.6 installs side by side with earlier versions, the old AppFabric SDK 1.5 can still be found under C:\Program Files\Windows Azure AppFabric SDK\V1.5. If you are going to use the SDK 1.6 binaries, uninstall it to avoid issues.

- Windows Azure Libraries for .NET 1.6 also include the following updates for queues:

- Support for the UpdateMessage method (for updating a queue message's contents and visibility timeout)

- New overload for AddMessage that provides the ability to make a message invisible until a future time

- The size limit of a message is raised from 8KB to 64KB

- Get/Set Service Settings for setting the analytics service settings
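The queue changes above all revolve around when a message becomes visible to consumers. The following sketch is a hypothetical in-memory model, not the .NET storage client (whose real calls are the UpdateMessage method and AddMessage overload listed above); `ToyQueue` and its methods are illustrative names. It shows how an initial invisibility delay, an updated visibility timeout and the 64 KB size limit interact:

```python
from dataclasses import dataclass
from typing import List, Optional

MAX_MESSAGE_BYTES = 64 * 1024  # limit raised from 8 KB to 64 KB per the notes above


@dataclass
class Message:
    id: int
    content: bytes
    visible_at: float  # the message can be dequeued once now >= visible_at


class ToyQueue:
    """Hypothetical model of queue visibility semantics (not the real client API)."""

    def __init__(self) -> None:
        self._messages: List[Message] = []
        self._next_id = 0

    def add_message(self, content: bytes, now: float, initial_visibility_delay: float = 0.0) -> int:
        if len(content) > MAX_MESSAGE_BYTES:
            raise ValueError("message exceeds 64 KB")
        self._next_id += 1
        # Invisible until a future time, like the new AddMessage overload.
        self._messages.append(Message(self._next_id, content, now + initial_visibility_delay))
        return self._next_id

    def get_message(self, now: float, visibility_timeout: float = 30.0) -> Optional[Message]:
        for msg in self._messages:
            if msg.visible_at <= now:
                # Hide the message from other consumers while it is processed.
                msg.visible_at = now + visibility_timeout
                return msg
        return None

    def update_message(self, msg_id: int, now: float, visibility_timeout: float,
                       content: Optional[bytes] = None) -> None:
        for msg in self._messages:
            if msg.id == msg_id:
                if content is not None:
                    if len(content) > MAX_MESSAGE_BYTES:
                        raise ValueError("message exceeds 64 KB")
                    msg.content = content  # e.g. record progress on a long-running job
                msg.visible_at = now + visibility_timeout
                return
        raise KeyError(msg_id)

    def delete_message(self, msg_id: int) -> None:
        self._messages = [m for m in self._messages if m.id != msg_id]
```

A worker that needs more time can call `update_message` before its visibility timeout expires, both extending its lease on the message and saving progress in the message body.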

Windows Azure SDK 1.6 Installation Walkthrough:

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

• Avkash Chauhan (@avkashchauhan) answered “No” to Can you programmatically enable CDN within your Windows Azure Storage Account? in an 11/23/2011 post:

I was recently asked whether there is an API that can enable CDN for a Windows Azure storage account programmatically.

After a little digging I found that, as of now, you cannot programmatically enable CDN for a Windows Azure storage account. You have to open the storage account in the Windows Azure Management Portal and enable CDN for it manually.

I also found that you cannot programmatically get the CDN URL for your Windows Azure storage account; you need to get it directly from the Windows Azure Management Portal.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Brian Swan (@brian_swan) described Packaging a Custom PHP Installation for Windows Azure in an 11/23/2011 post to The Silver Lining Blog:

One feature of the scaffolds that are in the Windows Azure SDK for PHP is that all rely on the Web Platform Installer to install PHP when a project is deployed. This is great until I want my application deployed with my locally customized installation of PHP. Not only might I have custom settings, but I might have custom libraries that I want to include (like some PEAR modules or any of the many PHP frameworks out there). In this tutorial, I’ll walk you through the steps for deploying a PHP application bundled with a custom installation of PHP. This tutorial does not rely on the Windows Azure SDK for PHP, but you will need…

- The Windows Azure SDK.

- A Windows Azure subscription. You only need this if you want to actually deploy your application (step 8 below).

- PHP installed on your local machine and IIS configured to handle PHP requests. I recommend doing this with the Web Platform Installer.

Ultimately, I’d like to make this process (below) easier (by possibly turning this into a scaffold to be included in the Windows Azure SDK for PHP?), so please provide feedback if you try this out…

1. Customize your PHP installation. Configure any settings and external libraries you want for your application.

2. Create a project directory. You’ll need to create a project directory for your application and the necessary Azure configuration files. Ultimately, your project directory should look something like this (we’ll fill in some of the missing files in the steps that follow):

- YourProjectDirectory
  - YourApplicationRootDirectory
    - bin
      - configureIIS.cmd
      - PHP
    - (application files)
    - (any external libraries)
    - web.config
  - ServiceDefinition.csdef

A few things to note about the structure above:

- You need to copy your custom PHP installation to the bin directory.

- Make sure that all paths in your php.ini are relative (e.g. extension_dir=".\ext").

- Any external libraries need to be in your application root directory.

  - Technically, this isn’t true. You could use a relative path for your include_path configuration setting (relative to your application root) and put this directory elsewhere in your project directory.

- Maybe this goes without saying, but be sure to turn off any debug settings (like display_errors) before pushing this to production in Azure.
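Because the PHP installation moves from your machine into the role's bin directory, an absolute path left in php.ini will break once the application runs in Azure. A small helper along these lines (hypothetical, not part of any SDK) can flag path settings whose values look like absolute Windows paths before you package the project; the set of directives it checks is an assumption, so extend it for your setup:

```python
import re
from typing import List, Tuple

# Directives that commonly hold file-system paths (assumed list; extend as needed).
PATH_DIRECTIVES = {"extension_dir", "include_path", "error_log",
                   "upload_tmp_dir", "session.save_path"}

# Drive-letter paths (C:\...) and UNC paths (\\server\...) are absolute.
ABSOLUTE = re.compile(r"^[A-Za-z]:[\\/]|^\\\\")


def find_absolute_paths(php_ini_text: str) -> List[Tuple[int, str, str]]:
    """Return (line number, directive, value) for path settings that are absolute."""
    problems = []
    for lineno, raw in enumerate(php_ini_text.splitlines(), start=1):
        line = raw.strip()
        if not line or line.startswith(";") or "=" not in line:
            continue  # skip blanks, comments and [section] headers
        key, value = (part.strip() for part in line.split("=", 1))
        if key.lower() not in PATH_DIRECTIVES:
            continue
        value = value.strip("\"'")
        # include_path may list several directories separated by ';' on Windows.
        for entry in value.split(";"):
            entry = entry.strip()
            if ABSOLUTE.match(entry):
                problems.append((lineno, key, entry))
    return problems
```

Run it over your php.ini before step 5; an empty list means none of the checked directives point at an absolute location.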

3. Add a startup script for configuring IIS. IIS in a Web role is not configured to handle PHP requests by default, so we need a script that configures IIS when an instance is brought online. (We’ll set this script to run on startup in the next step.) Create a file called configureIIS.cmd, add the content below, and save it in the bin directory:

```bat
@ECHO ON
SET PHP_FULL_PATH=%~dp0php\php-cgi.exe
SET NEW_PATH=%PATH%;%RoleRoot%\base\x86
%WINDIR%\system32\inetsrv\appcmd.exe set config -section:system.webServer/fastCgi /+"[fullPath='%PHP_FULL_PATH%',maxInstances='12',idleTimeout='60000',activityTimeout='3600',requestTimeout='60000',instanceMaxRequests='10000',protocol='NamedPipe',flushNamedPipe='False']" /commit:apphost
%WINDIR%\system32\inetsrv\appcmd.exe set config -section:system.webServer/fastCgi /+"[fullPath='%PHP_FULL_PATH%'].environmentVariables.[name='PATH',value='%NEW_PATH%']" /commit:apphost
%WINDIR%\system32\inetsrv\appcmd.exe set config -section:system.webServer/fastCgi /+"[fullPath='%PHP_FULL_PATH%'].environmentVariables.[name='PHP_FCGI_MAX_REQUESTS',value='10000']" /commit:apphost
%WINDIR%\system32\inetsrv\appcmd.exe set config -section:system.webServer/handlers /+"[name='PHP',path='*.php',verb='GET,HEAD,POST',modules='FastCgiModule',scriptProcessor='%PHP_FULL_PATH%',resourceType='Either',requireAccess='Script']" /commit:apphost
%WINDIR%\system32\inetsrv\appcmd.exe set config -section:system.webServer/fastCgi /"[fullPath='%PHP_FULL_PATH%'].queueLength:50000"
```

4. Add a service definition file (ServiceDefinition.csdef). Every Azure application must have a service definition file. The important part of this one is that it sets the script above to run on startup whenever an instance is provisioned:

```xml
<?xml version="1.0" encoding="utf-8"?>
<ServiceDefinition name="YourProjectDirectory" xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition">
  <WebRole name="YourApplicationRootDirectory" vmsize="ExtraSmall" enableNativeCodeExecution="true">
    <Sites>
      <Site name="YourPHPSite" physicalDirectory="./YourApplicationRootDirectory">
        <Bindings>
          <Binding name="HttpEndpoint1" endpointName="defaultHttpEndpoint" />
        </Bindings>
      </Site>
    </Sites>
    <Startup>
      <Task commandLine="configureIIS.cmd" executionContext="elevated" taskType="simple" />
    </Startup>
    <InputEndpoints>
      <InputEndpoint name="defaultHttpEndpoint" protocol="http" port="80" />
    </InputEndpoints>
  </WebRole>
</ServiceDefinition>
```

Note that you will need to change YourProjectDirectory and YourApplicationRootDirectory to the names you used in your project structure from step 2. You can also configure the VM size (which is set to ExtraSmall in the file above). For more information, see Windows Azure Service Definition Schema.
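A mismatch between these names and your folders can go unnoticed until packaging or deployment, so a quick local sanity check may help. The sketch below is a hypothetical helper built on Python's standard `xml.etree.ElementTree`, not a Windows Azure SDK tool. It checks that each Site's physicalDirectory exists relative to the .csdef file and that every Binding names a declared InputEndpoint; it also assumes, as in this tutorial, that startup command files live in a site's bin folder:

```python
import os
import xml.etree.ElementTree as ET
from typing import List

NS = {"sd": "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition"}


def check_service_definition(csdef_path: str) -> List[str]:
    """Return human-readable problems found in a ServiceDefinition.csdef file."""
    problems: List[str] = []
    base = os.path.dirname(os.path.abspath(csdef_path))
    root = ET.parse(csdef_path).getroot()
    for role in root.findall("sd:WebRole", NS):
        endpoints = {e.get("name") for e in role.findall("sd:InputEndpoints/sd:InputEndpoint", NS)}
        site_dirs = []
        for site in role.findall("sd:Sites/sd:Site", NS):
            # physicalDirectory is resolved relative to the .csdef file.
            site_dir = os.path.normpath(os.path.join(base, site.get("physicalDirectory", ".")))
            site_dirs.append(site_dir)
            if not os.path.isdir(site_dir):
                problems.append(f"site {site.get('name')}: directory not found: {site_dir}")
            for binding in site.findall("sd:Bindings/sd:Binding", NS):
                if binding.get("endpointName") not in endpoints:
                    problems.append(f"binding {binding.get('name')}: no InputEndpoint "
                                    f"named {binding.get('endpointName')}")
        for task in role.findall("sd:Startup/sd:Task", NS):
            command_line = task.get("commandLine", "").strip()
            if not command_line:
                continue
            command = command_line.split()[0]
            # Assumption (as in this tutorial): the command file sits in a site's bin folder.
            if not any(os.path.isfile(os.path.join(d, "bin", command)) for d in site_dirs):
                problems.append(f"startup task: {command} not found in any site's bin folder")
    return problems
```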

5. Generate a service configuration file (ServiceConfiguration.cscfg). Every Azure application must also have a service configuration file, which you can generate using the Windows Azure SDK. Open a Windows Azure SDK command prompt, navigate to your project directory, and execute this command:

```bat
cspack ServiceDefinition.csdef /generateConfigurationFile:ServiceConfiguration.cscfg /copyOnly
```

This will generate a ServiceConfiguration.cscfg file and a ServiceDefinition.csx directory in your project directory.

6. Run your application in the Compute Emulator. If you want to run your application in the Compute Emulator (for testing purposes), execute this command:

```bat
csrun ServiceDefinition.csx ServiceConfiguration.cscfg /launchbrowser
```

One thing to note about doing this: the configureIIS.cmd script will be executed on your local machine (setting your PHP handler to point to the PHP installation that is part of your Azure project). You’ll need to change this later.

7. Create a package for deployment to Azure. Now you can create the package file (.cspkg) that you need to upload to Windows Azure with this command:

```bat
cspack ServiceDefinition.csdef
```

8. Deploy your application. Finally, you can deploy your application. This tutorial will walk you through the steps: http://azurephp.interoperabilitybridges.com/articles/deploying-your-first-php-application-to-windows-azure#new_deploy.

Again, I’d be very interested to hear feedback from anyone who tries this. Like I mentioned earlier, I think turning this into a scaffold that is included in the Windows Azure SDK for PHP might be very useful.

• Avkash Chauhan (@avkashchauhan) provided Troubleshooting details: Windows Azure Web Role was stuck due to an exception in IISConfigurator.exe process in an 11/23/2011 post:

Recently I was working on a partner’s problem in which the Azure web role was stuck and showing “preparing node” status in the Windows Azure Management Portal. Luckily, RDP access to the Azure VM was working, so investigating the problem was easier.

After logging on to the Azure VM over RDP, I found the following error in IISConfigurator.log:

```
IISConfigurator Information: 0 : [11/22/11 10:05:42.49] Started iisconfigurator with args
IISConfigurator Information: 0 : [11/22/11 10:05:42.71] Started iisconfigurator with args /start
IISConfigurator Information: 0 : [11/22/11 10:05:42.72] StartForeground selected. Check if an instance is already running
IISConfigurator Information: 0 : [11/22/11 10:05:42.80] Starting service WAS
IISConfigurator Information: 0 : [11/22/11 10:05:43.28] Starting service w3svc
IISConfigurator Information: 0 : [11/22/11 10:05:43.53] Starting service apphostsvc
IISConfigurator Information: 0 : [11/22/11 10:05:43.96] Attempting to add rewrite module section declarations
IISConfigurator Information: 0 : [11/22/11 10:05:44.00] Section rules already exists
IISConfigurator Information: 0 : [11/22/11 10:05:44.00] Section globalRules already exists
IISConfigurator Information: 0 : [11/22/11 10:05:44.00] Section rewriteMaps already exists
IISConfigurator Information: 0 : [11/22/11 10:05:44.00] Adding rewrite module global module
IISConfigurator Information: 0 : [11/22/11 10:05:44.03] Already exists
IISConfigurator Information: 0 : [11/22/11 10:05:44.05] Enabling rewrite module global module
IISConfigurator Information: 0 : [11/22/11 10:05:44.07] Already exists
IISConfigurator Information: 0 : [11/22/11 10:05:44.07] Skipping Cloud Drive setup.
IISConfigurator Information: 0 : [11/22/11 10:05:44.07] Cleaning All Sites
IISConfigurator Information: 0 : [11/22/11 10:05:44.07] Deleting sites with prefix:
IISConfigurator Information: 0 : [11/22/11 10:05:44.08] Found site:Ayuda.WebDeployHost.Web_IN_0_Web
IISConfigurator Information: 0 : [11/22/11 10:05:44.11] Excecuting process 'D:\Windows\system32\inetsrv\appcmd.exe' with args 'delete site "<sitename>.WebDeployHost.Web_IN_0_Web"'
IISConfigurator Information: 0 : [11/22/11 10:05:44.22] Process exited with code 0
IISConfigurator Information: 0 : [11/22/11 10:05:44.22] Deleting AppPool: <AppPool_GUID>
IISConfigurator Information: 0 : [11/22/11 10:05:44.28] Found site:11600
IISConfigurator Information: 0 : [11/22/11 10:05:44.30] Excecuting process 'D:\Windows\system32\inetsrv\appcmd.exe' with args 'delete site "11600"'
IISConfigurator Information: 0 : [11/22/11 10:05:44.49] Process exited with code 0
IISConfigurator Information: 0 : [11/22/11 10:05:44.49] Deleting AppPool: 11600
IISConfigurator Information: 0 : [11/22/11 10:05:44.52] Deleting AppPool: 11600
IISConfigurator Information: 0 : [11/22/11 10:05:44.55] Unhandled exception: IsTerminating 'True', Message 'System.ArgumentNullException: Value cannot be null.
Parameter name: element
   at Microsoft.Web.Administration.ConfigurationElementCollectionBase`1.Remove(T element)
   at Microsoft.WindowsAzure.ServiceRuntime.IISConfigurator.WasManager.RemoveAppPool(ServerManager serverManager, String appPoolName)
   at Microsoft.WindowsAzure.ServiceRuntime.IISConfigurator.WasManager.TryRemoveSiteAndAppPools(String siteName)
   at Microsoft.WindowsAzure.ServiceRuntime.IISConfigurator.WasManager.CleanServer(String prefix)
   at Microsoft.WindowsAzure.ServiceRuntime.IISConfigurator.WCFServiceHost.Open()
   at Microsoft.WindowsAzure.ServiceRuntime.IISConfigurator.Program.StartForgroundProcess()
   at Microsoft.WindowsAzure.ServiceRuntime.IISConfigurator.Program.DoActions(String[] args)
   at Microsoft.WindowsAzure.ServiceRuntime.IISConfigurator.Program.Main(String[] args)'
```

If you study the IISConfigurator.exe exception call stack in the log above, you will see that just before the crash the code was deleting AppPool 11600; the deletion completed, and then the exception was thrown. After that, the role could not start correctly.
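When a role hangs like this, the most useful part of IISConfigurator.log is usually the last few actions recorded before the unhandled exception. A small script along these lines can pull them out; it is a hypothetical helper that assumes only the `IISConfigurator Information: 0 : [timestamp] message` line format shown above:

```python
import re
from typing import List, Optional, Tuple

# Matches lines such as:
# IISConfigurator Information: 0 : [11/22/11 10:05:44.49] Deleting AppPool: 11600
LOG_LINE = re.compile(r"^IISConfigurator \w+: \d+ : \[(?P<ts>[^\]]+)\] (?P<msg>.*)$")


def actions_before_crash(log_text: str, context: int = 3) -> Tuple[List[str], Optional[str]]:
    """Return the last `context` logged messages before the first unhandled
    exception, plus the exception message (None if no exception was logged)."""
    messages: List[str] = []
    for raw in log_text.splitlines():
        match = LOG_LINE.match(raw.strip())
        if not match:
            continue  # blank lines and stack-trace continuation lines
        msg = match.group("msg")
        if msg.startswith("Unhandled exception"):
            return messages[-context:], msg
        messages.append(msg)
    return messages[-context:], None
```

Applied to the log above with the default context of three, this would return the "Process exited with code 0" line followed by the two "Deleting AppPool: 11600" entries, together with the ArgumentNullException message.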

During the investigation I found the following details which I decided to share with all of you:

The Windows Azure application has a web role that was created using a modified version of the “Windows Azure Accelerator for Web Roles”. The application was customized so that, when the role starts, its startup code does the following:

1. Creates a few sites in IIS.

2. Creates the appropriate bindings for the sites created in step 1.

As a result, whenever the Windows Azure VM was restarted for any reason (OS update, manual reboot, etc.), the role got stuck because of the IISConfigurator exception.

This happened because IIS was not clean when the machine started, so IISConfigurator had to remove all of the previously created sites to prepare IIS for the web role. The IISConfigurator process has only one minute to perform all of its tasks; if it cannot finish them within that minute, the exception occurs because of the timeout.

Solution:

Because the web role was creating all of these sites during role startup, the ideal solution was to clean IIS when the machine shuts down rather than putting the burden of cleaning up IIS on IISConfigurator during startup.

So the best solution was to clean up IIS during the RoleEnvironment.Stopping event. To solve this problem, we added code to the RoleEnvironment.Stopping event that launches “appcmd” to delete all of the sites created earlier, which made sure IIS was clean.

You can read my blog post below to understand what the Stopping event is and how to handle it properly in your code:

Ultimately, the problem was related to IIS having residual settings at machine startup: IISConfigurator could not clean IIS before the timeout kicked in, which caused the role startup problems. After we added the necessary code to the Stopping event to clean IIS, the web role started without any issues on reboot. As a failsafe, you can also add a startup task that makes sure IIS is clean before the role starts.
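The fix itself lives in .NET code that handles RoleEnvironment.Stopping, but the command-building part is easy to sketch. The Python below is a hypothetical illustration, not Avkash's code: it assumes your startup code gives its sites a known name prefix and that you feed it the output of `appcmd list site /text:name` (one site name per line). It only builds the `appcmd delete site` commands of the kind seen in the log; actually running them (through cmd.exe, so that %WINDIR% is expanded) is left to your Stopping handler or startup task:

```python
from typing import List

APPCMD = r"%WINDIR%\system32\inetsrv\appcmd.exe"


def build_cleanup_commands(site_list_output: str, prefix: str) -> List[str]:
    """Build 'appcmd delete site' commands for every site whose name starts with prefix.

    site_list_output is expected to hold one site name per line, e.g. the
    output of: appcmd list site /text:name
    """
    if not prefix:
        # An empty prefix would match every site, including the role's own site.
        raise ValueError("prefix must not be empty")
    commands: List[str] = []
    seen = set()
    for line in site_list_output.splitlines():
        name = line.strip()
        if name.startswith(prefix) and name not in seen:
            seen.add(name)  # emit each delete only once
            commands.append(f'{APPCMD} delete site "{name}"')
    return commands
```

Filtering on a prefix that only your own startup code uses keeps the cleanup from touching sites that Windows Azure itself configures for the role.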

• Joel Foreman described In-Place Updates in Windows Azure in an 11/21/2011 post to the Slalom Blog:

Recent improvements to Windows Azure will now give developers better flexibility and control over updating existing deployments. There is now better support for in-place updates across a wider range of deployment scenarios without changing the assigned VIP address of a service. You can read more about these changes in the MSDN blog Announcing Improved In-place Updates by Drew McDaniel. Here is a bit more about the problem space and the new changes.

When you deploy a new service to Windows Azure, it is assigned a VIP address. This VIP address will stay the same as long as a deployment continues to occupy the deployment slot (either production or staging) for this service. But if a deployment is deleted and removed entirely, the next deployment will be assigned a new VIP.

Someday we will live in a world where this VIP address will not matter. For some applications it already doesn’t, and the application continues to function just fine if it changes. But in my experience thus far, there are often cases where a dependency on this address will occur. One very common example is an A record DNS entry for a top-level domain name. Another is the practice of IP “white-listing” for access to protected resources behind a firewall. I think that in the future there won’t be a need for this dependency, as the infrastructure world catches up to the cloud movement. But we are not there yet.

In order to preserve the VIP address of your existing service across deployments, developers can use two mechanisms: in-place upgrades or the “VIP Swap”, which swaps two running deployments (i.e. staging and production). But there were limitations to the types of deployments that either of these methods could support. What this really meant is that as long as the topology of your service didn’t change, this would work fine. But for major releases, it likely would not work. For instance, if your new deployment added an endpoint (e.g. HTTPS), an in-place upgrade could not be performed. Or if your deployment added a new role (e.g. a new worker role), a VIP swap could not be performed with the existing production instance. The end result was having to delete the existing deployment and deploy the new service. This would cause a brief interruption in service, cause a new VIP to be assigned, and require any downstream dependencies on the VIP to be updated. It was a pain to deal with.
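The old limits just described amount to a small decision rule. The sketch below encodes only the constraints named in this post (a topology change ruled out an in-place upgrade; adding a role ruled out a VIP swap). It is not the complete pre-improvement rule set, and the type and function names are hypothetical:

```python
from dataclasses import dataclass
from typing import FrozenSet, Set


@dataclass(frozen=True)
class Topology:
    """Hypothetical summary of a deployment's shape."""
    roles: FrozenSet[str]
    endpoints: FrozenSet[str]


def pre_improvement_options(current: Topology, new: Topology) -> Set[str]:
    """VIP-preserving options under the two limits named in the post.

    An empty result means the only route was delete-and-redeploy,
    which assigned a new VIP.
    """
    options = {"in-place upgrade", "VIP swap"}
    if new != current:
        # In-place upgrades only worked while the topology stayed the same
        # (e.g. adding an HTTPS endpoint ruled them out).
        options.discard("in-place upgrade")
    if new.roles - current.roles:
        # Adding a role (e.g. a new worker role) ruled out a VIP swap.
        options.discard("VIP swap")
    return options
```

Under this model, adding a worker role leaves no VIP-preserving option at all, which is exactly the delete-and-redeploy case the improved in-place updates are meant to remove.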

The new improvements to how deployments are handled, both in-place upgrades and VIP swaps, will eliminate these scenarios, along with many others! I am very pleased with these updates. In fact, off the top of my head I cannot think of a deployment scenario that cannot now be supported by an in-place upgrade or VIP swap. Check out the matrix provided in the link above to see all of the different deployment scenarios that are covered.

We can’t get around taking that dependency on the VIP address of our service, but at least now we are better enabled to deal with preserving the VIP address for our service.

David Linthicum (@DavidLinthicum) asserted “With rise of supercomputing and high-end platforms in public clouds, the day will come when you can't get them any other way” in a deck for his Why supercomputers will live only in the cloud article of 11/23/2011 for InfoWorld’s Cloud Computing blog:

The new public beta of Cluster Compute Eight Extra Large is Amazon.com's most powerful cloud service yet. Its launch indicates that Amazon Web Services (AWS) intends to attract more organizations into high-performance computing. "AWS's cloud for high-performance computing applications offers the same benefits as it does for other applications: It eliminates the cost and complexity of buying, configuring, and operating in-house compute clusters, according to Amazon," notes the IDG News Service story. The applications include physics simulations, seismic analysis, drug design, genome analysis, aircraft design, and similar CPU-intensive analytics applications.

This is a core advantage of cloud computing: the ability to access very expensive computing systems using a self-provisioned and time-shared model. Most organizations can't afford supercomputers, so they choose a rental arrangement. This is not unlike how I had to consume supercomputing services back when I was in college. Certainly the college could not afford a Cray.

The question then arises: What happens when these advanced computing services move away from the on-premise hardware and software model completely? What if they instead choose to provide multitenant access to supercomputing services and hide the high-end MIPS behind a cloud API?

This model may offer a more practical means of providing these services, and supercomputers are not the only platform where this shift may occur. Other, more obscure platforms and applications could be contenders for the cloud-only model, such as huge database services bound to high-end analytics, geo-analytics, any platform that deals with massive image processing, and other platforms and applications that share the same patterns.

I believe that those who vend these computing systems and sell about 20 to 30 a year will find that the cloud becomes a new and more lucrative channel. Perhaps they will support thousands of users on the cloud, an audience that would typically not be able to afford the hardware and software.

Moreover, I believe this might be the only model they support in the future, and the cloud could be the only way to access some platform services. That's a pity for those who want to see the hardware in their own data center, but perhaps that's not a bad thing.

See the “Microsoft Research reported an updated CTP of Project Daytona: Iterative MapReduce on Windows Azure on 11/14/2011” article below (in this section).

Avkash Chauhan (@avkashchauhan) described Azure Diagnostics generates exception in SystemEventsListener::LoadXmlString when reading event log which includes exception as event data on 11/22/2011:

When you write a Windows Azure application, you may wish to include your own errors or notifications in the event log, or you may want to save exceptions in the event log, which are ultimately collected by Windows Azure Diagnostics in the Azure VM and sent to Windows Azure Storage.

When you create an event log with Exception.ToString(), the event can’t be consumed by Azure Diagnostics. [I]nstead you might see an exception in [the] Azure Diagnostics module.

The exception shows a failure in SystemEventsListener::LoadXmlString while loading the event string.

If you dig deeper you will find that this exception is actually related [to the] “xmlns” attribute present in your exception string as below:

```xml
<?xml version="1.0" encoding="utf-8" standalone="yes"?>
<error xmlns="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata">
  <code>EntityAlreadyExists</code>
  <message xml:lang="en-US">The specified entity already exists. RequestId:46ce3b6e-d321-3b62-1e45-de45245fac17 Time:2011-11-21T02:23:50.5356456Z </message>
</error>
```

This exception is caused by a known issue in the Azure Diagnostics component: it fails when the string “xmlns” is included in the content of an event log entry.

To solve this problem you have two options:

- You can remove xmlns from the error data

- You can replace the double quotes around the xmlns value with single quotes
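Both workarounds are simple string transformations applied to the exception text before it is written to the event log. In a .NET role you would do this with Regex.Replace on the result of Exception.ToString(); the Python sketch below just shows the two rewrites, and the function names are hypothetical:

```python
import re

# An xmlns or xmlns:prefix declaration whose value is in double quotes.
XMLNS_ATTR = re.compile(r'\s+xmlns(:\w+)?="([^"]*)"')


def strip_xmlns(text: str) -> str:
    """Option 1: remove the xmlns declarations from the event text."""
    return XMLNS_ATTR.sub("", text)


def single_quote_xmlns(text: str) -> str:
    """Option 2: keep the declarations but put their values in single quotes."""
    return XMLNS_ATTR.sub(lambda m: f" xmlns{m.group(1) or ''}='{m.group(2)}'", text)
```

Option 1 is the safer choice if nothing downstream needs the namespace; option 2 preserves the full error text.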

Brian Hitney announced Rock, Paper, Azure is back… in an 11/22/2011 post:

Rock, Paper, Azure (RPA) is back! For those of you who have played before, be sure to get back in the game! If you haven’t heard of RPA, check out my past posts on the subject. In short, RPA is a game that we built in Windows Azure. You build a bot that plays a modified version of rock, paper, scissors on your behalf, and you try to outsmart the competition.

Over the summer, we ran a Grand Tournament where the first place prize was $5,000! This time, we’ve decided to change things a bit and do both a competition and a sweepstakes. The game, of course, is a competition because you’re trying to win. But we heard from many who didn’t want to get in the game because the competition was a bit fierce.

Competition: from Nov 25 through Dec 16, each Friday, we’ll give the top 5 bots a $50 Best Buy gift card. If you’re the top bot each Friday, you’ll get a $50 gift card each Friday.

Sweepstakes: for all bots in the game on Dec 16th, we’ll run the final round and then select a winner at random to win a trip to Cancun. We’re also giving away an Acer Aspire S3 laptop, a Windows Phone, and an Xbox Kinect bundle. Perfect timing for the holidays!

Check it out at http://www.rockpaperazure.com!

The last time I flew to Cancun (in my 1956 Piper PA23-150 Apache), it was called Puerto Juarez and the first hotels were being built. Wish I’d saved the 35-mm pictures I took.

Avkash Chauhan (@avkashchauhan) listed Resources to run Open Source Stacks on Windows Azure on 11/22/2011:

Java

- Windows Azure SDK for Java

- Installing Windows Azure SDK for Java

- Startup task based Tomcat/Java Worker Role Application for Windows Azure

- Windows Azure Tomcat Solution

PHP

- Setup the Windows Azure SDK for PHP

- Build and Deploy PHP Application on Azure

- Resources for building scalable PHP applications on Windows Azure

- Eclipse SDK for PHP Developers

Ruby on Rails

- Ruby on Rails in Windows Azure - Part 1 - Setting up Ruby on Rails in Windows 7 Machine with test Rails Application

- Ruby on Rails in Windows Azure - Part 2 - Creating Windows Azure SDK 1.4 based Application to Host Ruby on Rails Application in Cloud

Node.js

- Node.js in Windows Azure

- Using ProgramEntryPoint element in Service Definition to use custom application as role entry point using Windows Azure SDK 1.5

Scala & Play Framework:

- Using Scala and the Play Framework in Windows Azure

Python

- Making Songs Swing with Windows Azure, Python, and the Echo Nest API

- Python wrapper for Windows Azure storage

Video:

- Node.js, Ruby, and Python in Windows Azure: A Look at What’s Possible

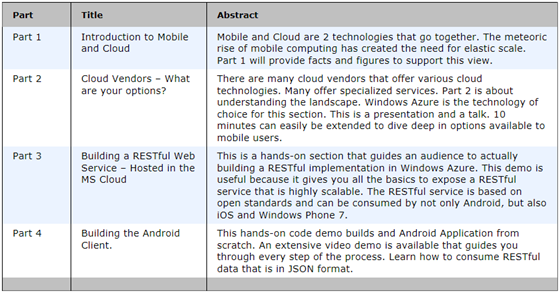

Bruno Terkaly (@brunoterkaly) posted Presentation and Training Kit: Android Consuming Cloud Data – Powered By Windows Azure on 11/21/2011:

Goal of this post – To teach you how to demo an Android application consuming standards-based RESTful web services

This post has a simple goal – to prepare you to give a presentation on how you would communicate with the cloud from an Android phone. The presentation can run anywhere from 30 minutes to an hour, depending on the level of detail you wish to provide. This talk has been given at the Open Android Conference. Details can be found here:

http://androidopen.com/android2011/public/schedule/detail/22308

This is developer-centric – hands-on coding

This is designed to be a hands-on demo, meaning that there are working samples to demonstrate key concepts. Source code, PowerPoint slides, and videos are all part of this package. All the material is available on my blog posts.

Resources are publicly available

All of the materials for this talk are publicly available. This dramatically simplifies follow-up with audience members, who frequently ask for the presentation materials.

A flow has been defined for this talk

There are 4 main sections in this talk. Each section can take from 10 to 15 minutes. Following parts 1-4 below will allow you to give a deep, hands-on code demo of connecting Android mobile applications to the Microsoft Cloud – Windows Azure.

Bruno continues with details of the four parts of the presentation.

Microsoft Research reported an updated CTP of Project Daytona: Iterative MapReduce on Windows Azure on 11/14/2011:

Microsoft has developed an iterative MapReduce runtime for Windows Azure, code-named Daytona. Project Daytona is designed to support a wide class of data analytics and machine-learning algorithms. It can scale to hundreds of server cores for analysis of distributed data. Project Daytona was developed as part of the eXtreme Computing Group’s Cloud Research Engagement Initiative.

Download Details

File Name: Daytona-CTP-1.2-refresh.zip

Version: 1.2

Date Published: 14 November 2011

Download Size: 11.16 MB

Note: By installing, copying, or otherwise using this software, you agree to be bound by the terms of its license. Read the license.

News

On Nov. 14, 2011 we released an updated Daytona community technical preview (CTP) that contains a number of performance improvements from our recent development sprint, improved fault tolerance, and enhancements for iterative algorithms and data caching.

Overview

Project Daytona on Windows Azure is now available, along with a deployment guide, developer and user documentation, and code samples for both data analysis algorithms and a client application. This implementation of an iterative MapReduce runtime on Windows Azure allows laboratories, small groups, and individual researchers to use the power of the cloud to analyze gigabytes or terabytes of data and run large-scale machine learning algorithms on dozens or hundreds of compute cores.
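Iterative MapReduce differs from a single MapReduce pass in that the output of each reduce step feeds the next map step until the result converges, which is the pattern many machine-learning algorithms need. The following is a generic, single-machine Python illustration of that loop, not Daytona's API, using one-dimensional k-means as the example algorithm:

```python
from collections import defaultdict


def map_phase(points, centroids):
    """Map: emit (index of nearest centroid, point) for every point."""
    for p in points:
        nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
        yield nearest, p


def reduce_phase(pairs):
    """Reduce: average the points assigned to each centroid index."""
    groups = defaultdict(list)
    for key, p in pairs:
        groups[key].append(p)
    return {key: sum(vals) / len(vals) for key, vals in groups.items()}


def iterative_kmeans(points, centroids, max_iterations=20, tolerance=1e-9):
    """Run map/reduce rounds, feeding each round's output into the next,
    until the centroids stop moving or max_iterations is reached."""
    centroids = list(centroids)
    for _ in range(max_iterations):
        means = reduce_phase(map_phase(points, centroids))
        # A centroid that attracted no points keeps its previous position.
        updated = [means.get(i, c) for i, c in enumerate(centroids)]
        if max(abs(a - b) for a, b in zip(updated, centroids)) <= tolerance:
            return updated
        centroids = updated
    return centroids
```

Starting from centroids 0.0 and 5.0, the points 1, 2, 3, 10, 11 and 12 settle on centroids 2.0 and 11.0 after three rounds. In a distributed runtime, the map and reduce phases would run across many cores while the loop-and-convergence test is coordinated centrally, which is where support for iteration and data caching pays off.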

Included in the CTP Refresh (Nov. 14, 2011)

This refresh to the Daytona CTP contains the following enhancements:

- Updated:

  - Binaries

  - Hosting project source

  - API help reference file (CHM)

  - Documentation

Included in the CTP Release (Nov. 14, 2011)

This CTP release consists of a ZIP file (Daytona_on_Windows_Azure.zip) that includes our Windows Azure cloud service installation package along with the documentation and sample codes.

- The Deployment Package folder contains a package to be deployed on your Windows Azure account, a configuration file for setting up your account, and a guide that offers step-by-step instructions to set up Project Daytona on your Windows Azure service. This package also contains the user guide that describes the user interface for launching data analytics jobs on Project Daytona from a sample client application.

- The Samples folder contains source code for sample data analytics algorithms written for Project Daytona as examples, along with source code for a sample client application to invoke data analytics algorithms on Project Daytona. The distribution also includes a developer guide for authoring new data analytics algorithms for Project Daytona, along with user guides for both the Daytona iterative MapReduce runtime and client application.

About Project Daytona

The project code-named Daytona is part of an active research and development project in the eXtreme Computing Group of Microsoft Research. We will continue to tune the performance of Project Daytona and add new functionality, fix any software defects that are identified, and periodically push out new versions.

Please report any issues you encounter in using Project Daytona to XCG Engagement or contact Roger Barga with suggestions for improvements and/or new features.

Thanks to Roger Barga for the heads-up about the new CTP.

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

• Michael Washington (@ADefWebserver) reported in an 11/25/2011 Tweet:

Church+ is a “commercial application built with #VisualStudio #LightSwitch”:

Personal Details

Detailed Membership information and profile window: This window displays the full data of a particular member. Where obtainable, the picture of the members can be attached to their information. It is a comprehensive window that displays everything needed to know about a member, a very important tool in following-up on the spiritual and other aspects of life of a parishioner.

Family

Detailed data on each family in the church is captured by this window. The family name, father’s name, mother’s name, number of children/wards and contact address of the family are captured here.

Membership Charts

Membership Charts: This window provides detailed analysis of the church mix. It displays the mix in four pie charts: membership by status (e.g. first timer, full member, worker), membership by gender (the male/female mix of the church), membership by marital status (the percentage of married members to singles), and the age mix of the church. All of these parameters are expressed as percentages.

Birth Management

Birth Management: This window displays detailed information about new births in the church. It captures the father’s and mother’s names, the baby’s name(s), the time and date of birth, the date of dedication, and the officiating minister.

Birthday and Anniversary Reminders

The Birthday and Anniversary Reminder window is the first screen the application displays when it starts. It lists the birthdays and anniversaries that fall on the day church+ is opened, pulling members’ birthday and anniversary information from the database and automatically reminding the administrator of them daily.

Detailed Church Activities

Church Activity: This view displays detailed data about a particular activity/service of the church. It captures the attendance, offerings, testimonies, date, start and end times, description of the service, preacher, sermon topic, text, and special notes. All necessary information about any type of service is captured and can be called up at any time.

Church Attendance and Growth Analysis

Attendance and Church Growth Analysis Window: This view provides strategic tools for analysing how the church is doing, with a detailed analysis of each activity/service. It helps the church see whether or not its services are progressing. Attendance at each service is compared with previous services visually, using bar charts. It is a wonderful church growth tool that removes guesswork and forces the church leadership to ask intelligent questions that lead to decisions critical to the growth of the church.

Church Service Report

The Report Windows: church+ has printable reports for first-timers and converts, a detailed members report, a church activity/service report, financial reports, and a comparative church activity/service report over a period of time.

First Timer Report

• The Visual Studio LightSwitch Team described LightSwitch Video Training from Pluralsight! in an 11/21/2011 post:

Pluralsight provides a variety of developer training on all sorts of topics and they have generously donated some LightSwitch training for our LightSwitch Developer Center!

Just head on over to the LightSwitch “How Do I” video page and on the right you’ll see three video modules with over an hour and a half of free LightSwitch training.

1. Introduction to Visual Studio LightSwitch (27 min.)

2. Working with Data (30 min.)

3. Working with Screens (37 min.)

Also, don’t forget to check out all 24 “How Do I” videos as well as other essential learning topics on the Dev Center.

• Kostas Christodoulou asserted Auditing and Concurrency don’t mix (easily)… in an 11/20/2011 post:

In the MSDN forums I came across a post addressing an issue I have also faced: auditing fields can cause concurrency issues in LightSwitch (and not only in LightSwitch).

Basic auditing generally means keeping track of when an entity was created/modified and by whom. I say basic auditing because auditing in general is much more than this. Anyhow, this basic auditing mechanism is very widely implemented (it’s a way for developers to easily find a user to blame for their own bugs :-p), so let’s see what problems it can cause in LightSwitch, and why.

In the aforementioned post, and also in this one, I have clearly stated that IMHO the best way to handle concurrency issues is to use RIA Services. If you don’t use RIA Services, read what follows.

Normally, in any application, updating the fields that implement audit tracking would be a task completed in the business layer (or, in some cases, even the data layer, which could go as deep as a database trigger). So in LightSwitch the first place one would look to put this logic would be the EntityName_Inserting and EntityName_Updating partial methods that run on the server. That is right, but it causes concurrency issues: after saving, the client instance of the entity is not updated with the changes made at the server, so as soon as you try to save it again you get a concurrency error.

So what can you do, apart from refreshing after every save, which is not very appealing? Update the fields at the client. That is not appealing either, but at least it can be done elegantly:

Let’s say all entities that implement auditing have 4 fields:

- DateCreated

- CreatedBy

- DateModified

- ModifiedBy

Add to the Common project a new interface called IAuditable, like below:

```csharp
using System;

namespace LightSwitchApplication
{
    // Implemented by every entity whose audit fields are stamped on save.
    public interface IAuditable
    {
        DateTime DateCreated { get; set; }
        string CreatedBy { get; set; }
        DateTime DateModified { get; set; }
        string ModifiedBy { get; set; }
    }
}
```

Then, also in the Common project, add a new class called EntityExtensions:

```csharp
using System;
using Microsoft.LightSwitch.Security;

namespace LightSwitchApplication
{
    public static class EntityExtensions
    {
        // Stamps both the creation and the modification fields of a new entity.
        public static void Created<TEntityType>(this TEntityType entity, IUser user)
            where TEntityType : IAuditable
        {
            entity.DateCreated = entity.DateModified = DateTime.Now;
            entity.CreatedBy = entity.ModifiedBy = user.Name;
        }

        // Stamps only the modification fields of an existing entity.
        public static void Modified<TEntityType>(this TEntityType entity, IUser user)
            where TEntityType : IAuditable
        {
            entity.DateModified = DateTime.Now;
            entity.ModifiedBy = user.Name;
        }
    }
}
```

Now let’s suppose your entity’s name is Customer (imagination was never my strong point), the screen’s name is CustomerList, and the query is called Customers.

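First, while viewing the Customer entity in the designer, click Write Code and make sure that the partial class implements IAuditable:

```csharp
namespace LightSwitchApplication
{
    // The properties generated from the four audit fields implement the interface.
    public partial class Customer : IAuditable
    {
    }
}
```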

Then, in your screen’s Saving method, write this:

```csharp
using System.Linq;

namespace LightSwitchApplication
{
    public partial class CustomerList
    {
        partial void CustomerList_Saving(ref bool handled)
        {
            var changes = this.DataWorkspace.ApplicationData.Details.GetChanges();

            // Stamp the audit fields on the client, so the client copy
            // matches what is actually saved to the database.
            foreach (Customer customer in changes.AddedEntities.OfType<Customer>())
                customer.Created(this.Application.User);

            foreach (Customer customer in changes.ModifiedEntities.OfType<Customer>())
                customer.Modified(this.Application.User);
        }
    }
}
```

This should do it. This way you can also easily move your logic to the server, since the interface and the extension class are defined in the Common project and are therefore also available to the server.
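Why server-side stamping breaks the next save, while client-side stamping does not, can be modelled outside LightSwitch. The following is a minimal, self-contained C# sketch, not LightSwitch code. It assumes the server detects conflicts by comparing the client's original values with what is stored (a common optimistic-concurrency scheme; whether LightSwitch checks exactly this way is an assumption here), and the Row, Server, Client and Demo types are hypothetical names chosen for illustration.

```csharp
using System;

// Hypothetical, self-contained model of original-values optimistic concurrency.
// Row, Server, Client and Demo are illustrative names, not LightSwitch APIs.
public record Row(string Name, string ModifiedBy);

public class Server
{
    // The "database": the last committed values of a single Customer row.
    public Row Stored { get; private set; } = new Row("Contoso", "alice");

    // true = stamp ModifiedBy on the server (like an EntityName_Updating method).
    public bool StampOnServer { get; init; }

    // Accepts an update only if the client's original values still match
    // what is stored; otherwise it reports a concurrency conflict.
    public bool TrySave(Row original, Row current, string user)
    {
        if (Stored != original)   // record equality compares every field
            return false;
        Stored = StampOnServer ? current with { ModifiedBy = user } : current;
        return true;
    }
}

public class Client
{
    private Row _original;              // values the client believes are stored
    public Row Current { get; set; }    // values being edited on the screen

    public Client(Row loaded)
    {
        _original = loaded;
        Current = loaded;
    }

    public bool Save(Server server, string user)
    {
        bool ok = server.TrySave(_original, Current, user);
        // The client is NOT refreshed from the server after a successful save:
        // it assumes that what it sent is exactly what was stored.
        if (ok)
            _original = Current;
        return ok;
    }
}

public static class Demo
{
    // Applies an edit on the client; with client-side auditing it also
    // stamps ModifiedBy locally, as the CustomerList_Saving code does.
    private static void Edit(Client client, string name, bool stampOnServer, string user)
    {
        client.Current = client.Current with { Name = name };
        if (!stampOnServer)
            client.Current = client.Current with { ModifiedBy = user };
    }

    // Two consecutive edit-and-save cycles by the same user on one row.
    // Returns whether the second save succeeded.
    public static bool SecondSaveSucceeds(bool stampOnServer)
    {
        const string user = "bob";
        var server = new Server { StampOnServer = stampOnServer };
        var client = new Client(server.Stored);

        Edit(client, "Contoso v1", stampOnServer, user);
        client.Save(server, user);          // the first save always succeeds

        Edit(client, "Contoso v2", stampOnServer, user);
        return client.Save(server, user);
    }

    public static void Main()
    {
        Console.WriteLine($"Server-side stamping, second save succeeds: {SecondSaveSucceeds(true)}");
        Console.WriteLine($"Client-side stamping, second save succeeds: {SecondSaveSucceeds(false)}");
    }
}
```

Running it prints False for server-side stamping and True for client-side stamping: after the first save, the server holds ModifiedBy = "bob" while the unrefreshed client still remembers "alice", so the second save's original values no longer match.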


Windows Azure Infrastructure and DevOps

• Nicholas Mukhar quoted Apprenda CEO: Competition Non-Existent in .NET PaaS Space in an 11/23/2011 post to the TalkinCloud blog:

In late November 2011 Apprenda, a platform-as-a-service (PaaS) provider for enterprise companies, released its Apprenda 3.0 solution featuring more manageability and platform support for developers. Shortly after the release I spoke with Apprenda CEO Sinclair Schuller to learn about the company’s background and the state of competition in the PaaS market. My most surprising discovery? Schuller noted a lack of competition in the PaaS market for .NET applications. Here’s why:

“We’re seeing more competition now, but no one else is focusing on the .NET,” Schuller said. Apprenda attributes the lack of PaaS .NET competition to two factors:

- Research and development is easier around Java applications, meaning there’s an easier entrance into the marketplace if you’re developing PaaS for Java apps.

- Developers get nervous because Microsoft owns the .NET framework.

These factors don’t seem to bother Schuller, who said Apprenda is “happy to be part of the Microsoft ecosystem.” So what has Apprenda discovered that other PaaS providers have yet to catch on to? Schuller noted two untapped areas that Apprenda has since exploited — the lack of private cloud PaaS solutions and mobile PaaS.

“If you look at other PaaS providers, they wrote a lot of software, but it’s all tied to infrastructure,” he said. “But ours is portable. It can be installed anywhere you can get a traditional Windows Server. It can take over all Windows Servers that are working together and join them together to create a platform. Developers don’t have to worry about servers. They can tell Apprenda to run an application and it decides which server is best to run it on.”

Schuller’s idea to found Apprenda came from his enterprise IT background, specifically his work building accounting applications at Morgan Stanley Portfolio Accounting. “We wrote web applications for Java in about one to four months, but then it took 30 to 90 days to get the applications deployed. And there was a lot of human error throughout that process,” he said. “Accountants want their applications delivered quickly. They were breathing down our necks, and when we scaled the applications across multiple projects, the application complexity becomes higher. We found ourselves rebuilding common components.” So Schuller and a team of entrepreneurs decided to build Apprenda, a technology layer sold as an application platform so that developers don’t have to rebuild mission-critical components and IT staff can scale their applications.

The next step for Apprenda? The company has two releases each year, with the next one scheduled for May 2012. Schuller said Apprenda is also focused on expanding the types of applications its solution can support.


• Lori MacVittie (@lmacvittie) asserted #devops It’s a simple equation, but one that is easily overlooked in an introduction to her The Pythagorean Theorem of Operational Risk post of 11/23/2011 to F5’s DevCentral blog:

Most folks recall, I’m sure, the Pythagorean Theorem. If you don’t, what’s really important about the theorem is that any side of a right triangle can be computed from the other two using the simple formula $a^2 + b^2 = c^2$. More importantly, the theorem clearly illustrates the relationship between three different pieces of a single entity. The lengths of the legs and the hypotenuse of a right triangle are intimately related; variations in one impact the others.
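Solving $a^2 + b^2 = c^2$ for each side in turn (legs $a$ and $b$, hypotenuse $c$) makes the dependency explicit: fix any two sides and the third is determined, so changing one side forces a change in at least one other. For example, legs of 3 and 4 force a hypotenuse of 5:

$$
c = \sqrt{a^2 + b^2}, \qquad a = \sqrt{c^2 - b^2}, \qquad b = \sqrt{c^2 - a^2}
$$

$$
a = 3,\; b = 4 \;\Rightarrow\; c = \sqrt{9 + 16} = \sqrt{25} = 5
$$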

The three components of operational risk – security, availability, performance – are interrelated in very much the same way. Changes to one impact the others. They cannot be completely separated. Much in the same way that unraveling a braided rope impacts its strength, unraveling the relationship between the three components of operational risk impacts its overall success.

The OPERATIONAL RISK EQUATION

It is true that the theorem is not an exact match, primarily because concepts like performance and security are not easily encapsulated as concrete numerical values, even if we apply mathematical concepts like Gödel numbering to them. While we could certainly use traditional metrics for availability and even performance (in terms of success at meeting specified business SLAs), it would still be difficult to nail these down in a way that makes the math make sense.

But the underlying concept, that it is always true* that the sides of a right triangle are interrelated, is equally applicable to operational risk.

Consider that changes in performance impact what is defined as “availability” to end-users. Unacceptably slow applications are often defined as “unavailable” because they render it nearly impossible for end-users to work productively. Conversely, availability issues can negatively impact performance as fewer resources attempt to serve the same or more users. Security, too, is connected to both concepts, though it is more directly an enabler (or disabler) of availability and performance than vice-versa. Security solutions are not known for improving performance, after all, and the number of attacks directly attempting to negate availability is a growing concern for security. So, too, is the impact of attacks relevant to performance, as increasingly application-layer attacks are able to slip by security solutions and directly consume resources on web/application servers, degrading performance and ultimately resulting in a loss of availability.

But that is not to say that performance and availability have no impact on security at all. In fact, one could easily claim that performance has a huge impact on security: end-users demand more of the former, and that often results in less of the latter because of the impact traditional security solutions have on performance.

Thus we come back to the theorem, in which the three sides of the data center triangle known as operational risk are, in fact, intimately linked. Changes in one impact the others, often negatively, and all three must be balanced properly in a way that maximizes them all.

WHAT DEVOPS CAN DO