Windows Azure and Cloud Computing Posts for 11/18/2013+

Top Stories This Week:

- Steven Martin (@stevemar_msft) and Scott Guthrie (@scottgu) posted Announcing the General Availability of BizTalk Services, Azure Active Directory and Traffic Manager, and Preview of Azure Active Directory Premium articles to the Windows Azure blog on 11/21/2013 in the Windows Azure Access Control, Active Directory, and Identity section.

- Brad Anderson (@InTheCloudMSFT) continued his series with Success with Hybrid Cloud: The Components of a Hybrid Cloud on 11/18/2013 in the Windows Azure Pack, Hosting, Hyper-V and Private/Hybrid Clouds section.

- Charles Babcock (@babcockcw) reported Windows Azure Gaining 1000 Customers Per Month in an 11/20/2013 article for InformationWeek in the Windows Azure Infrastructure and DevOps section.

A compendium of Windows Azure, Service Bus, BizTalk Services, Access Control, Caching, SQL Azure Database, and other cloud-computing articles.

‡ Updated 11/22/2013 with new articles marked ‡.

• Updated 11/22/2013 with new articles marked •.

Note: This post is updated weekly or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Windows Azure Marketplace DataMarket, Power BI, Big Data and OData

- Windows Azure Service Bus, BizTalk Services and Workflow

- Windows Azure Access Control, Active Directory, and Identity

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Windows Azure Cloud Services, Caching, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure and DevOps

- Windows Azure Pack, Hosting, Hyper-V and Private/Hybrid Clouds

- Visual Studio LightSwitch and Entity Framework v4+

- Cloud Security, Compliance and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

<Return to section navigation list>

‡ The Windows Azure Storage Team reported Windows Azure Tables Breaking Changes (November 2013) on 11/23/2013:

In preparation for adding JSON support to Windows Azure Tables, we are pushing an update that introduces a few breaking changes for Windows Azure Tables. We strive hard to preserve backward compatibility and these changes were introduced due to dependencies we have on WCF Data Services. [Emphasis added.]

There are some changes in the WCF Data Services libraries which should not break XML parsers and HTTP readers written to the standards. However, custom parsers may have taken dependencies on our previous formatting of the responses, and the following breaking changes might impact them. Our recommendation is to parse XML content according to the standard, as conforming parsers do, and not to take a strong dependency on line breaks, whitespace, ordering of elements, etc.

Here is a list of the changes:

- The AtomPub XML response in the new release does not have line breaks or whitespace between the XML elements; it is in a compact form, which helps reduce the amount of data transferred while remaining equivalent to the XML generated prior to the service update. Standard XML parsers are not impacted by this, but customers have reported breaks in custom logic. We recommend that clients that roll their own parsers make them compliant with the XML specification, which handles such changes seamlessly.

- The ordering of XML elements (title, id, etc.) in the AtomPub XML response can change. Parsers should not take any dependency on the ordering of elements.

- A “type” parameter has been added to the Content-Type HTTP header. For example, for a query response (not a point query) the content type will include “type=feed” in addition to charset and application/atom+xml.

- Previous version: Content-Type: application/atom+xml;charset=utf-8

- New version: Content-Type: application/atom+xml;type=feed;charset=utf-8

- A new response header is returned: X-Content-Type-Options: nosniff to reduce MIME type security risks.
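Because the Content-Type header now carries an extra parameter, code that compares the whole header string will break. A minimal JavaScript sketch of a more tolerant check follows; it is not part of any Windows Azure SDK, and parseContentType and isAtomResponse are hypothetical helper names. It compares only the media type and treats parameters such as type and charset as an unordered set:

```javascript
// Hypothetical helper: split a Content-Type header into its media type and
// parameters, so callers do not depend on the exact string or parameter order.
function parseContentType(header) {
  const [mediaType, ...rawParams] = header.split(";");
  const params = {};
  for (const raw of rawParams) {
    const eq = raw.indexOf("=");
    if (eq === -1) continue; // skip malformed parameters
    const name = raw.slice(0, eq).trim().toLowerCase();
    let value = raw.slice(eq + 1).trim();
    // Strip optional surrounding quotes (quoted-string form).
    if (value.length >= 2 && value.startsWith('"') && value.endsWith('"')) {
      value = value.slice(1, -1);
    }
    params[name] = value;
  }
  return { mediaType: mediaType.trim().toLowerCase(), params };
}

// Hypothetical helper: compare the media type only, ignoring any parameters.
function isAtomResponse(header) {
  return parseContentType(header).mediaType === "application/atom+xml";
}

// Both the previous and the new header forms are recognized.
console.log(isAtomResponse("application/atom+xml;charset=utf-8"));           // true
console.log(isAtomResponse("application/atom+xml;type=feed;charset=utf-8")); // true
```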

Please reach out to us via forums or this blog if you have any concerns.

‡ The Windows Azure Storage Team described Windows Azure Storage Known Issues (November 2013) on 11/23/2013:

In preparation for major feature releases such as CORS and JSON, we pushed an update to production that introduced some bugs. We were notified recently about these bugs and plan to address them in an upcoming hotfix. We will update this blog once the fixes are pushed out.

Windows Azure Blobs, Tables and Queue Shared Access Signature (SAS)

One of our customers reported that a SAS with version 2012-02-12 failed with HTTP status code 400 (Bad Request). Upon investigation, we found that the issue is caused by a change in how our service interprets “//” when that sequence of characters appears before the container name.

Example: http://myaccount.blob.core.windows.net//container/blob?sv=2012-02-12&si=sasid&sx=xxxx

Whenever it received a SAS request with version 2012-02-12 or earlier, the previous version of our service collapsed the ‘//’ into ‘/’, so things worked fine. However, the new service update returns 400 (Bad Request) because it interprets the above URI as if the container name were null, which is invalid. We will be fixing our service to revert to the old behavior and collapse ‘//’ into ‘/’ for the 2012-02-12 version of SAS. In the meantime, we advise our customers to refrain from sending ‘//’ at the start of the container name portion of the URI.
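Until the service fix is deployed, a client can normalize the URI before sending it. The following JavaScript sketch assumes the SAS URI is available as a string; collapseLeadingSlashes is a hypothetical helper, not a storage library API. It collapses only the leading run of slashes in the path and leaves the query string, including the signature, untouched:

```javascript
// Hypothetical helper: turn "//container/blob" into "/container/blob".
// Only the leading run of slashes is collapsed; slashes inside blob names
// and the query string (sv, si, signature, ...) are kept exactly as-is.
function collapseLeadingSlashes(sasUrl) {
  const url = new URL(sasUrl);
  url.pathname = url.pathname.replace(/^\/{2,}/, "/");
  return url.toString();
}

console.log(
  collapseLeadingSlashes(
    "http://myaccount.blob.core.windows.net//container/blob?sv=2012-02-12&si=sasid&sx=xxxx"
  )
); // http://myaccount.blob.core.windows.net/container/blob?sv=2012-02-12&si=sasid&sx=xxxx
```

Only the path prefix changes, so the signed query parameters are sent exactly as they were generated.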

Windows Azure Tables

Below are two known issues that we intend to hotfix, either on the service side or in our client library, as noted:

1. When clients define DataServiceContext.ResolveName and provide a type name other than <Account Name>.<Table Name>, CUD (create, update and delete) operations will return 400 (Bad Request). This is because, as part of the new update, the “term” attribute of the Atom “category” element must either be omitted or be equal to <Account Name>.<Table Name>. The previous version of the service ignored any type name being sent. We will be fixing this to again ignore what is being sent, but until then client applications need to consider the workaround below. ResolveName is not required for Azure Tables, and client applications can remove it to ensure that OData does not send the “category” element.

Here is an example of a code snippet that would generate a request that fails on the service side:

CloudTableClient cloudTableClient = storageAccount.CreateCloudTableClient();
TableServiceContext tableServiceContext = cloudTableClient.GetDataServiceContext();
tableServiceContext.ResolveName = delegate(Type entityType)
{
    // This would cause the class name to be sent as the value for term in the category element, and the service would return Bad Request.
    return entityType.FullName;
};
SimpleEntity entity = new SimpleEntity("somePK", "someRK");
tableServiceContext.AddObject("sometable", entity);
tableServiceContext.SaveChanges();

To mitigate the issue on the client side, please remove the tableServiceContext.ResolveName delegate.

We would like to thank restaurant.com for bringing this to our attention and helping us in investigating this issue.

2. The new .NET WCF Data Services library used on the server side as part of the service update rejects an empty “cast” in the $filter query with 400 (Bad Request), whereas the older .NET Framework library did not. This impacts Windows Azure Storage Client Library 2.1, since its IQueryable implementation (see this post for details) sends the cast operator in certain scenarios.

We are working on fixing the client library to match the behavior of .NET’s DataServiceContext, which does not send the cast operator; the fix should be available in the next couple of weeks. In the meantime, we advise our customers to consider the following workaround.

This client library issue can be avoided by constraining the query's element type not to the ITableEntity interface but to the exact type that needs to be instantiated.

The current behavior is described by the following example:

static IEnumerable<T> GetEntities<T>(CloudTable table) where T : ITableEntity, new()
{
    IQueryable<T> query = table.CreateQuery<T>().Where(x => x.PartitionKey == "mypk");
    return query.ToList();
}

With the 2.1 storage client library’s IQueryable implementation, the above code dispatches a query like the following URI, which the new service update rejects with 400 (Bad Request).

http://myaccount.table.core.windows.net/invalidfiltertable?$filter=cast%28%27%27%29%2FPartitionKey%20eq%20%27mypk%27&timeout=90 HTTP/1.1

As a mitigation, consider replacing the above code with the following query, which does not send the cast operator:

IQueryable<SimpleEntity> query = table.CreateQuery<SimpleEntity>().Where(x => x.PartitionKey == "mypk");

return query.ToList();

The Uri for the request looks like the following and is accepted by the service.

We apologize for these issues and we are working on a hotfix to address them.

‡ David Hardin described AzCopy and the Azure Storage Emulator on 11/22/2013:

AzCopy is now part of the Azure SDK and can copy files to and from Azure Storage. I couldn't find any examples of it copying to the Storage Emulator but it works. Here is an example batch file I use:

set Destination=http://127.0.0.1:10000/devstoreaccount1/

set DestinationKey=Eby8vdM02xNOcqFlqUwJPLlmEtlCDXJ1OUzFT50uSRZ6IFsuFq2UVErCz4I6tq/K1SZFPTOtr/KBHBeksoGMGw==

set AzCopy="%ProgramFiles(x86)%\Microsoft SDKs\Windows Azure\AzCopy\AzCopy.exe"

%AzCopy% FooFolder %Destination%FooContainer /S /BlobType:block /Y /DestKey:%DestinationKey%

%AzCopy% C:\BarFolder %Destination%BarContainer /S /BlobType:block /Y /DestKey:%DestinationKey%

The script first copies all files and subfolders from FooFolder into FooContainer, then copies all files and subfolders from C:\BarFolder into BarContainer.
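The Destination in the script uses the emulator's path-style address, where the fixed account name devstoreaccount1 is part of the path, rather than the host-style address of a cloud account. A small JavaScript sketch of the two forms follows; containerUrl is a hypothetical helper, and the validation encodes the documented Blob container naming rules (3 to 63 characters; lowercase letters, digits and single hyphens; starting and ending with a letter or digit):

```javascript
// Blob container naming rules: 3-63 chars, lowercase letters, digits and
// single hyphens, starting and ending with a letter or digit.
const CONTAINER_NAME = /^(?=.{3,63}$)[a-z0-9]+(-[a-z0-9]+)*$/;

// Hypothetical helper: emulator (path-style) URL when no account is given,
// cloud (host-style) URL otherwise.
function containerUrl(container, account) {
  if (!CONTAINER_NAME.test(container)) {
    throw new Error(`Invalid container name: ${container}`);
  }
  if (account === undefined) {
    // The emulator uses the fixed account name devstoreaccount1 in the path.
    return `http://127.0.0.1:10000/devstoreaccount1/${container}`;
  }
  return `https://${account}.blob.core.windows.net/${container}`;
}

console.log(containerUrl("foocontainer"));              // http://127.0.0.1:10000/devstoreaccount1/foocontainer
console.log(containerUrl("foocontainer", "myaccount")); // https://myaccount.blob.core.windows.net/foocontainer
```

By the cloud service's rules, mixed-case names such as FooContainer are not valid, so lowercasing them is the safe choice when a script should work against both the emulator and the cloud.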

‡ Nuno Filipe Godinho (@NunoGodinho) listed Windows Azure Storage Performance Best Practices in an 11/22/2013 post:

Windows Azure Storage is a very important part of Windows Azure, and most applications leverage it for several different things, from storing files in blobs to data in tables or even messages in queues. Those are all very interesting services provided by Windows Azure, but there are some performance best practices you can use to make your solutions even better.

To help you do this and speed up your learning process, I decided to share some of the best practices you can use.

Here is a list of those best practices:

1. Turn off Nagling and Expect100 on the ServicePoint Manager

By now you might be wondering what Nagling and Expect100 are. Let me explain.

1.1. Nagling

“The Nagle algorithm is used to reduce network traffic by buffering small packets of data and transmitting them as a single packet. This process is also referred to as "nagling"; it is widely used because it reduces the number of packets transmitted and lowers the overhead per packet.”

So, now that we understand the Nagle algorithm, should we turn it off?

Nagle is great for big messages and when you care less about latency than about optimizing the protocol and what is sent over the wire. For small messages, or when you want to send something immediately, the Nagle algorithm adds overhead because it delays sending the data.
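To make the trade-off concrete, here is a deliberately simplified JavaScript model of Nagle's rule (a small segment is held back while earlier data is still unacknowledged). It assumes all writes happen within one round trip and ignores delayed ACKs and timers, so it only illustrates the direction of the effect, not real TCP behavior; simulateWrites is a hypothetical name:

```javascript
// Simplified model: every write happens before the first ACK arrives.
// With Nagle, a segment goes out at once if it is full (>= mss bytes) or if
// no sent data is still unacknowledged; otherwise bytes wait for the ACK.
function simulateWrites(writeSizes, mss, nagle) {
  if (!nagle) {
    // Without Nagle every write is sent immediately in its own segment(s).
    const packets = writeSizes.reduce((n, size) => n + Math.ceil(size / mss), 0);
    return { packets, delayedBytes: 0 };
  }
  let buffered = 0;
  let packets = 0;
  let unacked = false;
  for (const size of writeSizes) {
    buffered += size;
    while (buffered >= mss) { // full segments are always sent
      buffered -= mss;
      packets++;
      unacked = true;
    }
    if (buffered > 0 && !unacked) { // nothing in flight: send the small segment now
      packets++;
      unacked = true;
      buffered = 0;
    }
  }
  // Whatever is left must wait roughly one round trip for the ACK.
  const delayedBytes = buffered;
  packets += Math.ceil(buffered / mss);
  return { packets, delayedBytes };
}

console.log(simulateWrites([10, 10, 10, 10], 1460, false)); // { packets: 4, delayedBytes: 0 }
console.log(simulateWrites([10, 10, 10, 10], 1460, true));  // { packets: 2, delayedBytes: 30 }
```

With Nagle on, four 10-byte writes become two packets, but 30 of the 40 bytes wait for the first ACK; that wait is the latency cost that matters for small storage requests.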

1.2. Expect100

“When this property is set to true, 100-Continue behavior is used. Client requests that use the PUT and POST methods will add an Expect header to the request if the Expect100Continue property is true and ContentLength property is greater than zero or the SendChunked property is true. The client will expect to receive a 100-Continue response from the server to indicate that the client should send the data to be posted. This mechanism allows clients to avoid sending large amounts of data over the network when the server, based on the request headers, intends to reject the request.” from MSDN
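The rule in the quote can be written out as a predicate. This JavaScript sketch only restates the documented condition; the option names mirror the .NET properties Expect100Continue, ContentLength and SendChunked, but sendsExpect100 itself is hypothetical:

```javascript
// Hypothetical predicate restating the quoted rule: a PUT or POST carries
// "Expect: 100-continue" when Expect100Continue is on and the request either
// has a positive ContentLength or is sent chunked.
function sendsExpect100(method, { expect100Continue, contentLength, sendChunked }) {
  const isPutOrPost = method === "PUT" || method === "POST";
  return isPutOrPost && expect100Continue === true && (contentLength > 0 || sendChunked === true);
}

console.log(sendsExpect100("PUT", { expect100Continue: true, contentLength: 4096 }));  // true
console.log(sendsExpect100("PUT", { expect100Continue: false, contentLength: 4096 })); // false
console.log(sendsExpect100("GET", { expect100Continue: true, contentLength: 4096 }));  // false
```

When the predicate is true, the client sends the headers first and waits for the server's 100-Continue before sending the body, which costs an extra round trip per upload; turning Expect100Continue off skips that wait at the price of possibly sending a body the server then rejects.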

To turn off both Nagling and Expect100, there are two options:

// Disables both for all the endpoints (Table/Blob/Queue)
ServicePointManager.Expect100Continue = false;
ServicePointManager.UseNagleAlgorithm = false;

// Disables both for the Table endpoint only
var tableServicePoint = ServicePointManager.FindServicePoint(account.TableEndpoint);
tableServicePoint.UseNagleAlgorithm = false;
tableServicePoint.Expect100Continue = false;

Make sure this is done before the client creates a connection; otherwise it won’t have any effect on performance. In other words, apply these settings before calling any of the following:

account.CreateCloudTableClient();

account.CreateCloudQueueClient();

account.CreateCloudBlobClient();

2. Turn off the Proxy Auto Detection

By default, proxy auto-detection is on in Windows Azure, which means that establishing a connection takes a bit longer because the proxy still has to be resolved for each request. For that reason it is important to turn it off.

To do so, make the following change in the web.config or app.config file of your solution:

<system.net>
  <defaultProxy>
    <proxy bypassonlocal="True" usesystemdefault="False" />
  </defaultProxy>
</system.net>

3. Adjust the DefaultConnectionLimit value of the ServicePointManager class

“The DefaultConnectionLimit property sets the default maximum number of concurrent connections that the ServicePointManager object assigns to the ConnectionLimit property when creating ServicePoint objects.” from MSDN

To optimize your default connection limit, you first need to understand the conditions under which the application actually runs. The best way to do this is to run performance tests with several different values and then analyze the results.
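Why the best value depends on the workload can be seen with a rough JavaScript model: requests that find every connection to an endpoint busy have to wait in a queue. The model assumes all requests are issued at once, have a fixed duration, and that the server is never the bottleneck, none of which real tests will confirm; totalTime is a hypothetical name:

```javascript
// Rough model of a per-endpoint connection limit: each request starts on the
// connection that becomes free earliest; returns when the last one finishes.
function totalTime(durations, limit) {
  const freeAt = new Array(limit).fill(0); // time at which each connection is free
  let finish = 0;
  for (const d of durations) {
    let best = 0;
    for (let i = 1; i < limit; i++) {
      if (freeAt[i] < freeAt[best]) best = i;
    }
    freeAt[best] += d;
    finish = Math.max(finish, freeAt[best]);
  }
  return finish;
}

const requests = new Array(8).fill(50); // eight requests of 50 ms each
console.log(totalTime(requests, 2)); // 200
console.log(totalTime(requests, 8)); // 50
```

In this idealized model a higher limit always helps, but in practice more concurrent connections also add load on the client and on the storage service, which is why measuring with several values is the reliable approach.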

ServicePointManager.DefaultConnectionLimit = 100;

Hope this helps you the way it helped me.

<Return to section navigation list>

Windows Azure SQL Database, Federations and Reporting, Mobile Services

‡ Carlos Figueira (@carlos_figueira) described New tables in Azure Mobile Services: string id, system properties and optimistic concurrency on 11/22/2013:

We just released an update to Azure Mobile Services in which new tables created in a service have a different layout than what we have had until now. The main change is that they now have ids of type string (instead of integers, which is what we’ve had so far), which has been a common feature request. By default, tables also have three new system columns, which track the date each item in the table was created or updated, and its version. With the table version, the service also supports conditional GET and PATCH requests, which can be used to implement optimistic concurrency. Let’s look at each of the three changes separately.

String ids

The type of the ‘id’ column of newly created tables is now string (more precisely, nvarchar(255) in the SQL database). Not only that, the client can now specify the id in the insert (POST) operation, so that developers can define the ids for the data in their applications. This is useful in scenarios where the mobile application wants to use arbitrary data as the table identifier (for example, an e-mail), wants to make the id globally unique (not only for one mobile service but for all applications), or is offline for certain periods of time but still wants to cache data locally, so that when it goes online it can perform the inserts while maintaining the row identifier.

For example, this code used to be invalid up to yesterday, but it’s perfectly valid today (if you update to the latest SDKs):

// Requires: using Newtonsoft.Json; (for JsonProperty)
private async void Button_Click(object sender, RoutedEventArgs e)
{
    // The e-mail address is mapped to the "id" column, so the client chooses the id.
    var person = new Person { Name = "John Doe", Age = 33, EMail = "john@doe.com" };
    var table = MobileService.GetTable<Person>();
    await table.InsertAsync(person);
    AddToDebug("Inserted: {0}", person.EMail);
}

public class Person
{
    [JsonProperty("id")]
    public string EMail { get; set; }

    [JsonProperty("name")]
    public string Name { get; set; }

    [JsonProperty("age")]
    public int Age { get; set; }
}

If an id is not specified during an insert operation, the server will create a unique one by default, so code which doesn’t really care about the row id (only that it’s unique) can still be used. And as expected, if a client tries to insert an item with an id which already exists in the table, the request will fail.
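The insert rules above can be sketched in JavaScript with an in-memory Map standing in for the SQL table. This illustrates the described behavior and is not the Mobile Services runtime; insertItem is hypothetical, and generating an uppercase GUID simply mirrors the ids that appear in the HTTP traces in this post:

```javascript
const { randomUUID } = require("crypto");

// Hypothetical sketch: keep a client-supplied id, generate one otherwise,
// and fail when an item with the same id already exists.
function insertItem(table, item) {
  const id = item.id !== undefined ? item.id : randomUUID().toUpperCase();
  if (table.has(id)) {
    throw new Error(`Conflict: an item with id '${id}' already exists`);
  }
  const stored = { ...item, id };
  table.set(id, stored);
  return stored;
}

const people = new Map();
insertItem(people, { id: "john@doe.com", name: "John Doe", age: 33 });
const generated = insertItem(people, { name: "Jane Doe", age: 31 }); // illustrative data
console.log(typeof generated.id); // string
try {
  insertItem(people, { id: "john@doe.com", name: "Someone Else" });
} catch (err) {
  console.log(err.message); // Conflict: an item with id 'john@doe.com' already exists
}
```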

Additional table columns (system properties)

In addition to the change in the type of the table id column, each new table created in a mobile service will have three new columns:

- __createdAt (date) – set when the item is inserted into the table

- __updatedAt (date) – set anytime there is an update in the item

- __version (timestamp) – a unique value which is updated any time there is a change to the item

The first two columns just make it easier to track some properties of the item, and many people used custom server-side scripts to achieve it. Now it’s done by default. The third one is actually used to implement optimistic concurrency support (conditional GET and PATCH) for the table, and I’ll talk about it in the next section.

Since those columns provide additional information which may not be necessary in many scenarios, the Mobile Services runtime will not return them to the client unless the client explicitly asks for them. So the only change in the client code necessary to use the new style of tables is to use string as the type of the id property. Here’s an example. If I insert an item in my table using a “normal” request:

POST https://myservice.azure-mobile.net/tables/todoitem HTTP/1.1
User-Agent: Fiddler
Content-Type: application/json
Host: myservice.azure-mobile.net
Content-Length: 37
x-zumo-application: my-app-key

{"text":"Buy bread","complete":false}

This is the response we’ll get (some headers omitted for brevity):

HTTP/1.1 201 Created
Cache-Control: no-cache
Content-Length: 81
Content-Type: application/json
Location: https://myservice.azure-mobile.net/tables/todoitem/51FF4269-9599-431D-B0C4-9232E0B6C4A2
Server: Microsoft-IIS/8.0
Date: Fri, 22 Nov 2013 22:39:16 GMT
Connection: close

{"text":"Buy bread","complete":false,"id":"51FF4269-9599-431D-B0C4-9232E0B6C4A2"}

No mention of the system properties. But if we go to the portal we’ll be able to see that the data was correctly added.

If you want to retrieve the properties, you’ll need to request those explicitly, by using the ‘__systemProperties’ query string argument. You can ask for specific properties or use ‘__systemProperties=*’ for retrieving all system properties in the response. Again, if we use the same request but with the additional query string parameter:

POST https://myservice.azure-mobile.net/tables/todoitem?__systemProperties=createdAt HTTP/1.1
User-Agent: Fiddler
Content-Type: application/json
Host: myservice.azure-mobile.net
Content-Length: 37
x-zumo-application: my-app-key

{"text":"Buy bread","complete":false}

Then the response will now contain that property:

HTTP/1.1 201 Created
Cache-Control: no-cache
Content-Length: 122
Content-Type: application/json
Location: https://myservice.azure-mobile.net/tables/todoitem/36BF3CC5-E4E9-4C31-8E64-EE87E9BFF4CA
Server: Microsoft-IIS/8.0
Date: Fri, 22 Nov 2013 22:47:50 GMT

{"text":"Buy bread","complete":false,"id":"36BF3CC5-E4E9-4C31-8E64-EE87E9BFF4CA","__createdAt":"2013-11-22T22:47:51.819Z"}
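How system properties are projected into a response can be sketched in JavaScript as follows. The assumptions are labeled in the code: projectSystemProperties is hypothetical, the comma separator for several property names is assumed, and the sample values are illustrative apart from the id and __createdAt values shown in the response above:

```javascript
// Hypothetical sketch: system columns (names starting with "__") are dropped
// unless requested; "*" keeps all of them; names may omit the "__" prefix.
function projectSystemProperties(item, requested) {
  const wanted = new Set(
    (requested || "")
      .split(",") // assumption: several names are comma-separated
      .map((name) => name.trim())
      .filter((name) => name.length > 0)
      .map((name) => (name === "*" || name.startsWith("__") ? name : "__" + name))
  );
  const result = {};
  for (const [key, value] of Object.entries(item)) {
    const isSystem = key.startsWith("__");
    if (!isSystem || wanted.has("*") || wanted.has(key)) {
      result[key] = value;
    }
  }
  return result;
}

// Sample row; __updatedAt and __version values are illustrative only.
const row = {
  id: "36BF3CC5-E4E9-4C31-8E64-EE87E9BFF4CA",
  text: "Buy bread",
  complete: false,
  __createdAt: "2013-11-22T22:47:51.819Z",
  __updatedAt: "2013-11-22T22:47:51.819Z",
  __version: "AAAAAAAACBE=",
};
console.log(Object.keys(projectSystemProperties(row)).join(","));              // id,text,complete
console.log(Object.keys(projectSystemProperties(row, "createdAt")).join(",")); // id,text,complete,__createdAt
console.log(Object.keys(projectSystemProperties(row, "*")).join(","));         // id,text,complete,__createdAt,__updatedAt,__version
```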

You can also request the system properties in the server script itself, by passing a ‘systemProperties’ parameter to the ‘execute’ method of the request object. In the code below, all insert operations will now return the ‘__createdAt’ column in their responses, regardless of whether the client requested it.

function insert(item, user, request) {
    request.execute({
        systemProperties: ['__createdAt']
    });
}

Another aspect of the system columns is that they cannot be sent by the client. For new tables (i.e., those with string ids), if an insert or update request contains a property which starts with ‘__’ (two underscore characters), the request will be rejected. The ‘__createdAt’ property can, however, be set in the server script (although if you really don’t want that column to represent the creation time of the object, you may want to use another column for that); the code below shows one way in which this (rather bizarre) scenario can be accomplished. If you try to update the ‘__updatedAt’ property, it won’t fail, but by default that column is updated by a SQL trigger, so any updates you make to it will be overridden anyway. The ‘__version’ column uses a read-only type in the SQL database (timestamp), so it cannot be set directly.

function insert(item, user, request) {
    request.execute({
        systemProperties: ['__createdAt'],
        success: function () {
            var created = item.__createdAt;
            // Set the created date to one day in the future
            created.setDate(created.getDate() + 1);
            item.__createdAt = created;
            tables.current.update(item, {
                // the properties can also be specified without the '__' prefix
                systemProperties: ['createdAt'],
                success: function () {
                    request.respond();
                }
            });
        }
    });
}
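The rule that clients cannot send system properties can be sketched as a JavaScript check over the request body. This is illustrative only (rejectedSystemProperties and isAcceptedFromClient are hypothetical names); it covers client requests, not server scripts, which the text above says may still set __createdAt:

```javascript
// Hypothetical check: list the properties in a client payload that start
// with "__"; a new-style table rejects the request if there are any.
function rejectedSystemProperties(item) {
  return Object.keys(item).filter((key) => key.startsWith("__"));
}

function isAcceptedFromClient(item) {
  return rejectedSystemProperties(item).length === 0;
}

console.log(isAcceptedFromClient({ text: "Buy bread", complete: false })); // true
console.log(isAcceptedFromClient({ text: "Buy bread", __createdAt: "2013-11-22T22:47:51.819Z" })); // false
```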

Finally, although those columns are added by default and have some behavior associated with them, they can be removed from any table where you don’t want them. As you can see in the screenshot of the portal below, the delete button is still enabled for those columns (the only one which cannot be deleted is ‘id’).

Conditional retrieval / updates (optimistic concurrency)

Another feature we added in the new-style tables is the ability to perform conditional retrieval or updates. That is very useful when multiple clients are accessing the same data and we want to make sure that write conflicts are handled properly. The MSDN tutorial Handling Database Write Conflicts gives a very detailed, step-by-step description of how to enable this scenario (currently only the managed client has full support for optimistic concurrency and system properties; support for the other platforms is coming soon). Here I’ll talk about what happens behind the scenes in the runtime.

The concept of conditional retrieval is this: if you have the same version of the item which is stored in the server, you can save a few bytes of network traffic (and time) by having the server reply with “you already have the latest version, I don’t need to send it again to you”. Likewise, conditional updates work by the client sending an update (PATCH) request to the server with a precondition that the server should only update the item if the client version matches the version of the item in the server.
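Both rules can be sketched in a few lines of JavaScript operating on an in-memory record. This illustrates the semantics and is not the runtime's code: conditionalGet and conditionalPatch are hypothetical, ETags are compared as opaque quoted strings, and the new version string is passed in by the caller because in the real service SQL Server generates it. The version values in the usage mirror the HTTP traces that follow:

```javascript
// Hypothetical conditional GET: 304 with no body if the client's ETag matches.
function conditionalGet(record, ifNoneMatch) {
  const etag = `"${record.__version}"`;
  if (ifNoneMatch === etag) {
    return { status: 304, etag };
  }
  return { status: 200, etag, body: record };
}

// Hypothetical conditional PATCH: 412 plus the server's copy if If-Match fails.
function conditionalPatch(record, ifMatch, changes, nextVersion) {
  const etag = `"${record.__version}"`;
  if (ifMatch !== undefined && ifMatch !== etag) {
    return { status: 412, etag, body: record };
  }
  const updated = { ...record, ...changes, __version: nextVersion };
  return { status: 200, etag: `"${nextVersion}"`, body: updated };
}

const record = {
  id: "2F6025E7-0538-47B2-BD9F-186923F96E0F",
  __version: "AAAAAAAACBM=",
  text: "Buy bread",
  complete: true,
};
console.log(conditionalGet(record, '"AAAAAAAACBE="').status); // 200 (client copy is stale)
console.log(conditionalGet(record, '"AAAAAAAACBM="').status); // 304
const first = conditionalPatch(record, '"AAAAAAAACBM="', { text: "buy French bread" }, "AAAAAAAACBU=");
console.log(first.status, first.etag); // 200 "AAAAAAAACBU="
const second = conditionalPatch(first.body, '"AAAAAAAACBM="', { text: "buy two baguettes" }, "unused");
console.log(second.status, second.etag); // 412 "AAAAAAAACBU="
```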

The implementation of conditional retrieval and updates is based on the version of the item, from the system column ‘__version’. That version is mapped in the HTTP layer to the ETag response header, so that when the client receives a response for which it asked for that system property, the value is lifted into the HTTP response header:

GET /tables/todoitem/2F6025E7-0538-47B2-BD9F-186923F96E0F?__systemProperties=version HTTP/1.1
User-Agent: Fiddler
Content-Type: application/json
Host: myservice.azure-mobile.net
Content-Length: 0
x-zumo-application: my-app-key

The response body will contain the ‘__version’ property, and that value will be reflected in the HTTP header as well:

HTTP/1.1 200 OK
Cache-Control: no-cache
Content-Length: 108
Content-Type: application/json
ETag: "AAAAAAAACBE="
Server: Microsoft-IIS/8.0
Date: Fri, 22 Nov 2013 23:44:48 GMT

{"id":"2F6025E7-0538-47B2-BD9F-186923F96E0F","__version":"AAAAAAAACBE=","text":"Buy bread","complete":false}

Now, if we want to refresh that record, we can make a conditional GET request to the server by using the If-None-Match HTTP header:

GET /tables/todoitem/2F6025E7-0538-47B2-BD9F-186923F96E0F?__systemProperties=version HTTP/1.1
User-Agent: Fiddler
Content-Type: application/json
Host: myservice.azure-mobile.net
If-None-Match: "AAAAAAAACBE="
Content-Length: 0
x-zumo-application: my-app-key

And, if the record had not been modified in the server, this is what the client would get:

HTTP/1.1 304 Not Modified
Cache-Control: no-cache
Content-Type: application/json
Server: Microsoft-IIS/8.0
Date: Fri, 22 Nov 2013 23:48:24 GMT

If, however, the record had been updated, the response will contain the updated record and the new version (ETag) for the item:

HTTP/1.1 200 OK
Cache-Control: no-cache
Content-Length: 107
Content-Type: application/json
ETag: "AAAAAAAACBM="
Server: Microsoft-IIS/8.0
Date: Fri, 22 Nov 2013 23:52:01 GMT

{"id":"2F6025E7-0538-47B2-BD9F-186923F96E0F","__version":"AAAAAAAACBM=","text":"Buy bread","complete":true}

Conditional updates are similar. Let’s say the user wanted to update the record shown above but only if nobody else had updated it. So they’ll use the If-Match header to specify the precondition for the update to succeed:

PATCH /tables/todoitem/2F6025E7-0538-47B2-BD9F-186923F96E0F?__systemProperties=version HTTP/1.1
User-Agent: Fiddler
Content-Type: application/json
Host: myservice.azure-mobile.net
If-Match: "AAAAAAAACBM="
Content-Length: 71
x-zumo-application: my-app-key

{"id":"2F6025E7-0538-47B2-BD9F-186923F96E0F","text":"buy French bread"}

And assuming that it was indeed the correct version, the update would succeed, and change the item version:

HTTP/1.1 200 OK
Cache-Control: no-cache
Content-Length: 98
Content-Type: application/json
ETag: "AAAAAAAACBU="
Server: Microsoft-IIS/8.0
Date: Fri, 22 Nov 2013 23:57:47 GMT

{"id":"2F6025E7-0538-47B2-BD9F-186923F96E0F","text":"buy French bread","__version":"AAAAAAAACBU="}

If another client which had the old version tried to update the item:

PATCH /tables/todoitem/2F6025E7-0538-47B2-BD9F-186923F96E0F?__systemProperties=version HTTP/1.1
User-Agent: Fiddler
Content-Type: application/json
Host: myservice.azure-mobile.net
If-Match: "AAAAAAAACBM="
Content-Length: 72
x-zumo-application: my-app-key

{"id":"2F6025E7-0538-47B2-BD9F-186923F96E0F","text":"buy two baguettes"}

The server would reject the request (and return to the client the actual version of the item in the server):

HTTP/1.1 412 Precondition Failed
Cache-Control: no-cache
Content-Length: 114
Content-Type: application/json
ETag: "AAAAAAAACBU="
Server: Microsoft-IIS/8.0
Date: Sat, 23 Nov 2013 00:19:30 GMT

{"id":"2F6025E7-0538-47B2-BD9F-186923F96E0F","__version":"AAAAAAAACBU=","text":"buy French bread","complete":true}

That’s how conditional retrieval and updates are implemented in the runtime. In most cases you don’t really need to worry about those details – as can be seen in the tutorial on MSDN, the code doesn’t need to deal with any of the HTTP primitives, and the translation is done by the SDK.

Creating “old-style” tables

Ok, those are great features, but you really don’t want to change anything in your code. You still want to use integer ids, and you need to create a new table with that. It cannot be done via the Windows Azure portal, but you can still do that via the Cross-platform Command Line Interface, with the “--integerId” modifier in the “azure mobile table create” command:

azure mobile table create --integerId [servicename] [tablename]

And that will create an “old-style” table, with the integer id and none of the system properties.

Next up: client support for the new features

In this post I talked about the changes in the Mobile Services runtime (and in its HTTP interface) with the new table style. In the next post I’ll talk about the client SDK support for them, both system properties and optimistic concurrency. And as usual, please don’t hesitate to send feedback on those features via comments or our forums.

‡ Hasan Khan (@AzureMobile) posted Welcome to the Windows Azure Mobile Services team blog! on 11/19/2013:

Since the Mobile Services preview in late August last year, we have greatly appreciated the overwhelming response from, and interaction with, the developer community. Many team members have benefited from your feedback on their individual blogs, so we decided to extend that to a team blog. Here, we hope to give you quick updates on new features that we ship and to solicit your feedback.

This blog is complementary to existing discussion sites and venues such as:

We'll also post updates on Twitter at @AzureMobile. Feel free to leave your comments and suggestions on Twitter and on this blog. We're looking forward to your valuable feedback.

Azure Mobile Services Team

<Return to section navigation list>

Windows Azure Marketplace DataMarket, Power BI, Big Data and OData

• Glenn Gailey (@ggailey777) asserted Time to get the WCF Data Services 5.6.0 RTM Tools in an 11/18/2013 post:

Folks who have recently tried to download and install the OData Client Tools for Windows Store apps (the one that I have been using in all my apps, samples, and blog posts to date) have been blocked by a certificate issue that prevents the installation of this client and tools .msi. While a temporary workaround (hack, really) is to simply set the system clock back by a few weeks to trick the installer, this probably means that it’s time to start using the latest version of WCF Data Services tooling.

Besides actually installing, the WCF Data Services 5.6.0 Tools provide the following benefits:

- Support for Visual Studio 2013 and Windows Store apps in Windows 8.1.

- Portable libraries, which enable you to write code for both Windows Store and Windows Phone apps

- New JSON format

You can find a very detailed description of the goodness in this version at the WCF Data Services team blog.

Get it from: http://www.microsoft.com/en-us/download/details.aspx?id=39373

Why you need the tools and not just the NuGet Packages

Besides the 5.6.0 tools, you can also use NuGet.org to search for, download, and install the latest versions of WCF Data Services (client and server). This will get you the latest runtime libraries, but not the Visual Studio tooling integration. Even if you plan to move to a subsequent version of WCF Data Services via NuGet, make sure to first install these tools—with them you get these key components to the Visual Studio development experience:

- Add Service Reference

Without the tools, the Add Service Reference tool in Visual Studio will use the older .NET Framework version of the client tools, which means that you get the older assemblies and not the latest NuGet packages with all the goodness mentioned above.

- WCF Data Services Item Template

Again, you will get the older assemblies when you create a new WCF Data Service by using the VS templates. With this tools update, you will get the latest NuGet packages instead.

Once you get the 5.6.0 tools installed on your dev machine, you can go back to using the goodness of NuGet to support WCF Data Services in your apps.

My one issue with the new OData portable client library

The coolness of a portable client library for OData is that both Windows Store and Windows Phone apps use the same set of client APIs, which live in a single namespace—making it easier to write common code that will run on either platform. However, for folks who have gotten used to the goodness of using DataServiceState to serialize and deserialize DataServiceContext and DataServiceCollection<T> objects, there’s some not great news. The DataServiceState object didn’t make it into the portable version of the OData client. Because I’m not volunteering to write a custom serializer to replace DataServiceState (I heard it was a rather tricky job), I’ll probably have to stick with the Windows Phone-specific library for the time being. I created a request to add DataServiceState to the portable client (I think that being able to cache relatively stable data between executions is a benefit, even on a Win8 device) on the feature suggestions site (please vote if you agree): Add state serialization support via DataServiceState to the new portable client.

WCF Data Services and Entity Framework 6.0

The Entity Framework folks have apparently made changes in Entity Framework 6.0 that are significant enough to break WCF Data Services. Your options for working around this are:

- Install an older version of EF (5.0 or earlier)

- If you need some EF6 goodness, install the WCF Data Services Entity Framework Provider package (requires WCF Data Services 5.6.0 or later and EF 6.0 or later)

For details about all of this, see Using WCF Data Services 5.6.0 with Entity Framework 6+.

I actually came across this EF6 issue when I was trying to re-add a bunch of NuGet packages to a WCF Data Services project. When you search for NuGet packages to add to your project, the Manage NuGet Packages wizard will only install the latest version (pre-release or stable) of a given package. I found that to install an older version of a package, I had to use the Package Manager Console instead. One tip is to watch out for the Package Source setting in the console, as it may be filtering out the version you are looking for.

No significant articles so far this week.


Windows Azure Service Bus, BizTalk Services and Workflow

‡ Morten la Cour (@mortenlcd) described Installing Windows Azure BizTalk Services in an 11/23/2013 post to the Vertica.dk blog:

Today, Windows Azure BizTalk Services (WABS) was released for General Availability (GA). In this blog post I will show how to set up a Windows Azure BizTalk Service in Windows Azure after the GA. Several things have changed since the Preview bits, and the setup experience is quite different: you no longer need to create your own certificate or an Access Control Namespace.

To follow this blog post, you will need a Windows Azure account. If you do not already have one, go to this site for further details:

https://account.windowsazure.com

Set up a new BizTalk Service in Azure

Before setting up a WABS, we need to consider the following:

- A SQL Azure server and a database will be required for tracking. Should we use existing ones or let the setup wizard create new ones?

- An Azure Storage Account will also be required. (It can also be created from within the setup wizard.)

It is recommended that the BizTalk Service, the SQL server, and the Storage Account all reside in the same region.

Setting up WABS

Now let’s start setting up a new BizTalk Service in Azure.

- In the Windows Azure Portal, choose + NEW | APP SERVICES | BIZTALK SERVICE | CUSTOM CREATE and enter a BIZTALK SERVICE NAME (make sure the name is unique by verifying that a green check mark appears), choose an appropriate EDITION and REGION, and either select an existing Azure SQL database or have the wizard create one for you.

The edition you choose affects how much you are charged for the WABS. See the pricing model for further details:

http://www.windowsazure.com/en-us/pricing/details/biztalk-services/

- Click Next (right arrow).

- Specify your SQL credentials and the name of the new database if needed, click Next.

- Choose a Storage Account or have the wizard create a new one.

- If you choose to create a new Storage Account, a name will also be required.

- Click Complete.

The BizTalk Service will take approx. 10 minutes to be created.

Once created, we need to fetch some information about our newly created WABS.

Retrieve the WABS certificate

First we need to download a public version of the certificate used for HTTPS communication with our BizTalk Service.

- In the Windows Azure Portal, select BizTalk Services, and select your new BizTalk Service.

- A Dashboard should now appear:

The WABS Dashboard

Note: A new Access Control Namespace has been automatically created together with our WABS.

- Select Download SSL Certificate, and save the .cer file for later use. (This file will be needed once we start deploying to and submitting messages to our BizTalk Service.)

Register the Portal

Now all we need to do is to register our new BizTalk Service.

- Select CONNECTION INFORMATION at the bottom of the Windows Azure Portal, and copy the three values to Notepad for later use.

- Select MANAGE.

- A Register New Deployment form should appear.

- Fill in your BizTalk Service Name, the ACS Issuer name (owner) and the ACS Issuer secret (the DEFAULT KEY copied from CONNECTION INFORMATION earlier).

- Click REGISTER and the WABS Portal should now appear.

Note: In my screenshot, I have deployed a BRIDGE, so I have a 1 where you will probably have a 0. In the next post we will look at how to set up the development environment for WABS, and later on how to deploy artifacts (such as BRIDGES), so stay tuned…

• Brian Benz (@bbenz) reported AMQP 1.0 is one step closer to being recognized as an ISO/IEC International Standard in an 11/21/2013 post to the Interoperability @ Microsoft blog by Ram Jeyaraman, Senior Standards Professional, Microsoft Open Technologies, Inc. and co-Chair of the OASIS AMQP Technical Committee:

Microsoft Open Technologies is excited to share the news from OASIS that the formal approval process is now underway to transform the AMQP 1.0 OASIS Standard to an ISO/IEC International Standard.

The Advanced Message Queuing Protocol (AMQP) specification enables interoperability between compliant clients and brokers. With AMQP, applications can achieve full-fidelity message exchange between components built using different languages and frameworks and running on different operating systems. As an inherently efficient application layer binary protocol, AMQP enables new possibilities in messaging that scale from the device to the cloud.

Submission for approval as an ISO/IEC International Standard builds on AMQP’s successes over the last 12 months, including AMQP 1.0 approval as an OASIS Standard in October 2012 and the ongoing development of extensions that greatly enhance the AMQP ecosystem.

The ISO/IEC JTC 1 international standardization process is iterative and consensus-driven. Its goal is to deliver a technically complete standard that can be broadly adopted by nations around the world.

Throughout the remainder of this process, which may take close to a year, the MS Open Tech standards team will continue to represent Microsoft and work with OASIS to advance the specification.

You can learn more about AMQP and get an understanding of AMQP’s business value here. You can also find a list of related vendor-supported products, open source projects, and details regarding customer usage and success on the AMQP website: http://www.amqp.org/about/examples.

If you’re a developer getting started with AMQP, we recommend that you read this overview. For even more detail and guidance here’s a Service Bus AMQP Developer's Guide, which will help you get started with AMQP for the Windows Azure Service Bus using .NET, Java, PHP, or Python. Also, have a look at this recent blog post from Scott Guthrie, called Walkthrough of How to Build a Pub/Sub Solution using AMQP.

Whether you’re a novice user or an active contributor to the community, we’d like to hear from you! Let us know how your experience with AMQP has been so far by leaving comments here. We also invite you to connect with the community and join the conversation on LinkedIn, Twitter, and Stack Overflow.

• Nick Harris (@cloudnick) and Chris Risner (@chrisrisner) produced Cloud Cover Episode 120: Service Agility with the Service Gateway for Channel9 on 11/21/2013:

In this episode Nick Harris and Chris Risner are joined by James Baker, Principal SDE on the Windows Azure Technical Evangelism team. James goes over the Service Gateway project, which provides an architectural component that businesses can use to compose disparate web assets. Using the gateway, an IT pro can control the configuration of:

- Roles

- AuthN/AuthZ

- A/B Testing

- Tracing

You can read more about the Service Gateway and access the source code for it here.

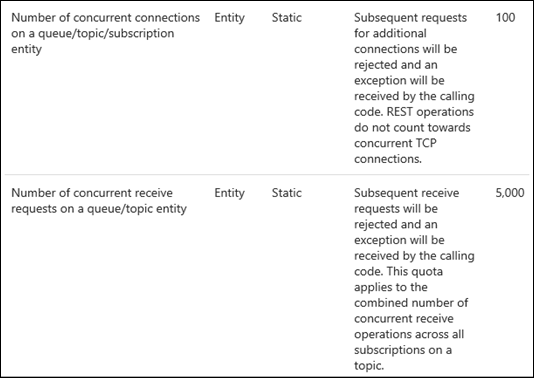

Damir Dobric (@ddobric) described Windows Azure Service Bus Connection Quotas in an 11/18/2013 post to the Microsoft MVP Award Program blog:

This table contains, in fact, everything you need to know about quotas. But if you don’t know how to deal with TCP connections to Service Bus, or what a “connection link” means, this table will not help much.

The number of “subsequent requests for additional connections” is in fact the number of “connection links” that can be established to a single messaging entity (queue or topic).

In other words, if you have one queue or topic, you can create at most 100 MessageSenders/QueueClients/TopicClients that send messages to it. This Service Bus quota is independent of the number of Messaging Factories used behind the clients. If you are now asking yourself why the Messaging Factory matters at all when the quota is limited by the number of “connection links” (clients), you are right: there is no correlation between the Messaging Factory and the quota of 100 connections.

Remember, the quota is limited by the number of “connection links”. A Messaging Factory helps you increase throughput, but not the number of concurrent connections.

The following picture illustrates this:

The picture above shows the maximum of 100 clients (senders, receivers, queue clients, topic clients) created on top of two Messaging Factories. Two clients use one Messaging Factory and 98 clients use the other.

Altogether, there are two TCP connections and 100 “connection links” shared across the two connections.
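To make the distinction between physical connections and “connection links” concrete, here is a small Python model. It is a simulation, not the Service Bus SDK, and the class and method names are hypothetical. It assumes, as described above, that each Messaging Factory owns one TCP connection and that the 100-link quota is counted per messaging entity regardless of which factory a client was created from.

```python
from dataclasses import dataclass, field
from typing import List

# Per-entity quota as quoted in this 2013 post; an assumption, not a current limit.
MAX_LINKS_PER_ENTITY = 100


@dataclass
class MessagingFactory:
    """Hypothetical stand-in for one Messaging Factory, i.e. one TCP connection."""
    name: str
    links: List[str] = field(default_factory=list)  # entity path of each client


@dataclass
class ServiceBusModel:
    factories: List[MessagingFactory] = field(default_factory=list)

    def tcp_connections(self) -> int:
        # One physical connection per factory, however many clients share it.
        return len(self.factories)

    def links_to(self, entity: str) -> int:
        # The quota counts links to an entity across ALL factories.
        return sum(link == entity for f in self.factories for link in f.links)

    def create_client(self, factory: MessagingFactory, entity: str) -> None:
        if self.links_to(entity) >= MAX_LINKS_PER_ENTITY:
            raise RuntimeError(f"{entity}: {MAX_LINKS_PER_ENTITY} connection links already in use")
        factory.links.append(entity)


# Reproduce the scenario above: 2 clients on one factory, 98 on another.
bus = ServiceBusModel()
f1, f2 = MessagingFactory("factory-1"), MessagingFactory("factory-2")
bus.factories += [f1, f2]
for _ in range(2):
    bus.create_client(f1, "orders")   # "orders" is a hypothetical queue name
for _ in range(98):
    bus.create_client(f2, "orders")
print(bus.tcp_connections(), bus.links_to("orders"))  # 2 100
```

Adding a third factory in this model does not help: a 101st client for the same entity is rejected no matter which factory it uses, while a client for a different entity still succeeds.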

How to support a huge number of devices?

Probably the most important question in this context is how to support a huge number of devices (clients) when the per-entity limit is that low (remember, 100). Assuming that each device creates one “connection link” through one physical connection, supporting for example 100,000 devices requires 1000 messaging entities.

That means that if you want to send messages from 100,000 devices, you need 1000 queues or topics to receive and aggregate them.
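The arithmetic behind the 1000-entity figure is a ceiling division of total links by the per-entity quota. The sketch below assumes the 100-link quota quoted in the post and one link per device by default; the function name is hypothetical.

```python
# Per-entity link quota quoted in the post (2013); treat it as an assumption.
LINKS_PER_ENTITY = 100


def entities_needed(devices: int, links_per_device: int = 1) -> int:
    """Number of queues/topics needed so that no entity exceeds its link quota."""
    total_links = devices * links_per_device
    # Integer ceiling division: a partially filled entity still counts.
    return (total_links + LINKS_PER_ENTITY - 1) // LINKS_PER_ENTITY


print(entities_needed(100_000))  # 1000
print(entities_needed(100_001))  # 1001: one extra device needs one extra entity
```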

The quota table shown above also defines the limit of “concurrent receive requests”, which is currently 5000. This means you can create at most 100 receivers (or QueueClients, SubscriptionClients) and issue 5000 concurrent receive requests shared across those 100 receivers. For example, you could create 100 receivers and concurrently call Receive() from 50 threads on each. Or, you could create one receiver and concurrently call Receive() 5000 times. But again, if devices have to receive messages from a queue, then only 100 devices can be concurrently connected to that queue.
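Both examples in the previous paragraph can be checked against the two limits with a few lines of Python. This is only an arithmetic sketch under the post’s 2013 quota values (100 receivers per entity, 5000 concurrent receive requests); the function name is hypothetical.

```python
# Quotas as quoted in the post (2013); assumptions, not current limits.
MAX_RECEIVERS_PER_ENTITY = 100
MAX_CONCURRENT_RECEIVES = 5000


def fits_receive_quota(receivers: int, concurrent_calls_per_receiver: int) -> bool:
    """True if this receiver layout stays within both quotas described above."""
    total_outstanding = receivers * concurrent_calls_per_receiver
    return (receivers <= MAX_RECEIVERS_PER_ENTITY
            and total_outstanding <= MAX_CONCURRENT_RECEIVES)


print(fits_receive_quota(100, 50))   # True: 100 receivers x 50 threads
print(fits_receive_quota(1, 5000))   # True: one receiver, 5000 concurrent calls
print(fits_receive_quota(100, 51))   # False: 5100 outstanding receives
print(fits_receive_quota(101, 1))    # False: too many receivers
```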

If each device has its own subscription, you will probably not hit a quota issue on the subscription, because one subscription usually serves one device. But if all devices are receiving messages concurrently, there is a limit of 5000 concurrent receive requests at the topic level (across all subscriptions). Another quota that may matter here limits the number of subscriptions per topic to 2000.
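For the one-subscription-per-device design, the two topic-level limits combine into a per-topic device capacity. The sketch below assumes the quoted 2013 values (2000 subscriptions and 5000 concurrent receives per topic) and that every device keeps the same number of receive calls outstanding; the function names are hypothetical.

```python
# Quotas as quoted in the post (2013); treat them as assumptions.
MAX_SUBSCRIPTIONS_PER_TOPIC = 2000
MAX_CONCURRENT_RECEIVES_PER_TOPIC = 5000


def devices_per_topic(receives_per_device: int = 1) -> int:
    if receives_per_device < 1:
        raise ValueError("each device needs at least one outstanding receive")
    # One subscription per device caps a topic at 2000 devices; the devices'
    # outstanding receives must also fit in the topic-wide receive limit.
    return min(MAX_SUBSCRIPTIONS_PER_TOPIC,
               MAX_CONCURRENT_RECEIVES_PER_TOPIC // receives_per_device)


def topics_needed(devices: int, receives_per_device: int = 1) -> int:
    per_topic = devices_per_topic(receives_per_device)
    return (devices + per_topic - 1) // per_topic  # ceiling division


print(topics_needed(100_000))     # 50: the subscription quota is the binding limit
print(topics_needed(100_000, 3))  # 61: 5000 // 3 = 1666 devices per topic
```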

If your goal is, for example, to use fewer queues, then HTTP/REST might be a better solution than SBMP or AMQP. If send operations are infrequent (not very chatty), you can use HTTP/REST. In that case the number of concurrent “connection links” statistically decreases, because HTTP does not rely on a permanent connection.

What about Windows RT and Windows Phone?

Please also note that Windows 8 messaging is implemented in the WindowsAzure.Messaging assembly, which uses HTTP/REST as its protocol. This is because RT devices are mobile devices, which are often limited to HTTP on port 80. In this case Windows 8 will not establish a permanent connection to Service Bus as described above. But it will activate HTTP polling if you use the Message Pump pattern (that is, OnMessage is used instead of invoking ReceiveAsync on demand). That means no permanent connections to Service Bus are created, but the network pressure remains due to the polling process, whose timeout is currently set to 2 minutes. Windows RT will send a receive request to Service Bus and wait up to two minutes to receive a message. If no message is received within the timeout period, the request times out and a new request is sent. With this pattern, a Windows RT device is permanently in receive mode.

In an enterprise, many devices may be polling for messages at the same time. If this becomes a problem because of a huge number of devices on a specific network segment, you can use explicit ReceiveAsync() calls instead of OnMessage. A ReceiveAsync() operation connects on demand and simply closes the connection after receiving the response. In this case you can dramatically reduce the number of connections.
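The difference between the two receive styles can be illustrated with a small Python simulation. This is not the WindowsAzure.Messaging API: Python’s standard `queue.Queue` stands in for a Service Bus queue, `queue.Queue.get(timeout=...)` stands in for one long-polling receive request, and the function names are hypothetical. The 120-second default mirrors the two-minute poll described above.

```python
import queue
import threading
from typing import Callable, Optional

POLL_TIMEOUT_SECONDS = 120.0  # mirrors the two-minute poll described above


def message_pump(q: "queue.Queue[str]", handle: Callable[[str], None],
                 stop: threading.Event,
                 poll_timeout: float = POLL_TIMEOUT_SECONDS) -> int:
    """OnMessage-style loop: always keeps one receive request outstanding.

    Returns how many receive requests were issued before `stop` was set.
    """
    requests = 0
    while not stop.is_set():
        requests += 1  # each iteration is one long-poll receive request
        try:
            message = q.get(timeout=poll_timeout)
        except queue.Empty:
            continue  # timed out with no message: immediately poll again
        handle(message)
    return requests


def receive_on_demand(q: "queue.Queue[str]",
                      timeout: float = POLL_TIMEOUT_SECONDS) -> Optional[str]:
    """ReceiveAsync-style call: one request, made only when the app asks."""
    try:
        return q.get(timeout=timeout)
    except queue.Empty:
        return None
```

The pump issues a new request every time the previous one returns, even while the queue stays empty, which is the steady network traffic the post warns about; `receive_on_demand` issues nothing between the application’s own calls.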


Windows Azure Access Control, Active Directory, and Identity

• The Microsoft Download Center made Forefront Identity Manager Connector for Windows Azure Active Directory available for download on 11/21/2013:

Overview

Forefront Identity Manager Connector for Windows Azure Active Directory helps you synchronize identity information to Azure Active Directory.

System Requirements

- Supported Operating Systems: Windows Server 2008; Windows Server 2008 R2; Windows Server 2012

Minimum Requirements

- FIM Synchronization Service (FIM2010 R2 hotfix 4.1.3496.0, or later)

- Microsoft .NET 4.0 Framework

- Microsoft Online Services Sign-In Assistant

The Windows Azure Active Directory Connector for FIM 2010 R2 Technical Reference provides additional details:

The objective of this document is to provide you with the reference information that is required to deploy the Windows Azure Active Directory (AAD) connector for Microsoft® Forefront® Identity Manager (FIM) 2010 R2.

Overview of the AAD Connector

The AAD connector enables you to connect to one or multiple AAD directories from FIM2010. AAD is the infrastructure backend for Office 365 and other cloud services from Microsoft.

The connector is available as a download from the Microsoft Download Center.

From a high-level perspective, the following features are supported by the current release of the connector:

| Requirement | Support |
| --- | --- |
| FIM version | FIM 2010 R2 hotfix 4.1.3493.0 or later (2906832) |
| Connect to data source | Windows Azure Active Directory |
| Scenario | Object Lifecycle Management; Group Management |
| Operations | Full import; Delta import; Export |
| Schema | The schema is fixed in the AAD connector and it is not possible to add additional objects and attributes. |

Note: The Password Hash Sync feature available in DirSync is not supported with FIM2010 and the AAD Connector.

Note: This connector does not support any password management scenarios.

Connected Data Source Requirements

In order to manage objects using a connector, you need to make sure that all requirements of the connected data source are fulfilled. This includes tasks such as opening the required network ports and granting the necessary permissions. The objective of this section is to provide an overview of the requirements of a connected data source to perform the desired operations.

Connected Data Source Permissions

When you configure the connector, in the Connectivity section, you need to provide the credentials of an account that is a Global Administrator of the AAD tenant you wish to synchronize with. This account can be either a managed (i.e. username/password) or federated identity.

Important: When you change the password associated with this AAD administrator account, you must also update the AAD connector in FIM 2010 to provide the new password.

Ports and Protocols

The AAD Connector communicates with AAD using web services. For additional information about which addresses are used by AAD and Office 365, please refer to Office 365 URLs and IP address ranges. …

The Technical Reference continues with detailed deployment instructions.

Steven Martin (@stevemar_msft) posted Announcing the General Availability of Biz Talk Services, Azure Active Directory and Traffic Manager, and Preview of Azure Active Directory Premium to the Windows Azure blog on 11/21/2013:

In addition to economic benefits, cloud computing provides greater agility in application development which translates into competitive advantage. We are delighted to see over 1,000 new Windows Azure subscriptions created every day and even more excited to see that half of our customers are using higher-value services to build new modern business applications. Today, we are excited to announce general availability and preview of several services that help developers better integrate applications, manage identity and enhance load balancing.

Windows Azure Active Directory

We are thrilled to announce the general availability of the free offering of Windows Azure Active Directory.

As applications get increasingly cloud based, IT administrators are being challenged to implement single sign-on (SSO) against SaaS applications and ensure secure access. With Windows Azure Active Directory, it is easy to manage user access to hundreds of cloud SaaS applications like Office 365, Box, GoToMeeting, DropBox, Salesforce.com and others. With these free application access enhancements you can:

- Seamlessly enable single sign-on to many popular pre-integrated cloud apps for your users using the new application gallery on Windows Azure portal

- Provision (and de-provision) your users' identities into selected featured SaaS apps

- Record unusual access patterns to your cloud-based applications via predefined security reports

- Assign cloud-based applications to your users so they can launch them from a single web page, the Access Panel

These features are available at no cost to all Windows Azure subscribers. If you are already using application access enhancements in preview, you do not have to take any action. You will be automatically transitioned to the generally available service.

Windows Azure Active Directory Premium Offering

The Windows Azure Active Directory Premium offering is now available in public preview. Built on top of the free offering of Windows Azure Active Directory, it provides a robust set of capabilities to empower enterprises with more demanding needs on identity and access management.

In its first milestone, the premium offer enables group-based provisioning and access management to SaaS applications, customized access panel, and detailed machine learning-based security reports. Additionally, end-users can perform self-service password resets for cloud applications.

Windows Azure Active Directory Premium is free during the public preview period and will add additional cloud focused identity and access management capabilities in the future.

At the end of the free preview, the Premium offering will be converted to a paid service, with details on pricing being communicated at least 30 days prior to the end of the free period.

We encourage you to sign up for Windows Azure Active Directory Premium public preview today.

Windows Azure Biz Talk Services

Windows Azure Biz Talk Services is now generally available. While customers continue to invest in cloud based applications, they need a scalable and reliable solution for extending their on-premises applications to the cloud. This cloud based integration service enables powerful business scenarios like supply chain, cloud-based electronic data interchange (EDI) and enterprise application integration (EAI), all with a familiar toolset and enterprise grade reliability.

If you are already using BizTalk Services in preview, you will be transitioned automatically to the generally available service and new pricing takes effect on January 1, 2014.

To learn more about the services and new pricing, visit the BizTalk Services website.

Windows Azure Traffic Manager

Windows Azure Traffic Manager service is now generally available. Leveraging the load balancing capabilities of this service, you can now create highly responsive and highly available production grade applications. Many customers like AccuWeather are already using Traffic Manager to boost performance and availability of their applications. We are also delighted to announce that Traffic Manager now carries a service level agreement of 99.99% and is supported through your existing Windows Azure support plan. We are also announcing new pricing for Traffic Manager. Free promotional pricing will remain in effect until December 31, 2013.

If you already are using Traffic Manager in preview, you do not have to take any action. You will be transitioned automatically to the generally available service, and the new pricing will take effect on January 1, 2014.

For more information on using Traffic Manager, please visit the Traffic Manager website.

For further details on these services and other enhancements, visit Scott Guthrie's blog.

Scott Guthrie (@scottgu) added details in his Windows Azure: General Availability Release of BizTalk Services, Traffic Manager, Azure AD App Access + Xamarin support for Mobile Services post of 11/21/2013:

This morning we released another major set of enhancements to Windows Azure. Today’s new capabilities include:

- BizTalk Services: General Availability Release!

- Traffic Manager: General Availability Release!

- Active Directory: General Availability Release of Application Access Support!

- Mobile Services: Active Directory Support, Xamarin support for iOS and Android with C#, Optimistic concurrency

- Notification Hubs: Price Reduction + Debug Send Support

- Web Sites: Diagnostics Support for Automatic Logging to Blob Storage

- Storage: Support for alerting based on storage metrics

- Monitoring: Preview release of Windows Azure Monitoring Service Library

All of these improvements are now available to use immediately (note that some features are still in preview). Below are more details about them:

BizTalk Services: General Availability Release

I’m excited to announce the general availability release of Windows Azure Biz Talk Services. This release is now live in production, backed by an enterprise SLA, supported by Microsoft Support, and is ready to use for production scenarios.

Windows Azure BizTalk Services enables powerful business scenarios like supply chain and cloud-based electronic data interchange and enterprise application integration, all with a familiar toolset and enterprise grade reliability. It provides built-in support for managing EDI relationships between partners, as well as setting up EAI bridges with on-premises assets – including built-in support for integrating with on-premises SAP, SQL Server, Oracle and Siebel systems. You can also optionally integrate Windows Azure BizTalk Services with on-premises BizTalk Server deployments – enabling powerful hybrid enterprise solutions.

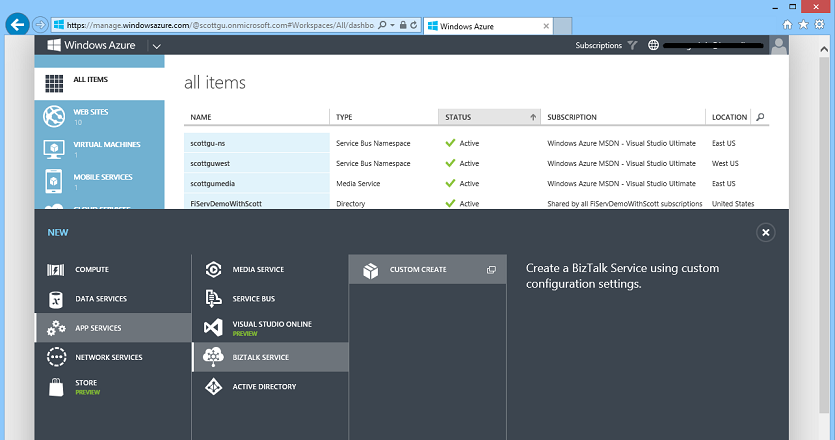

Creating a BizTalk Service

Creating a new BizTalk Service is easy – simply choose New->App Services->BizTalk Service to create a new BizTalk Service instance:

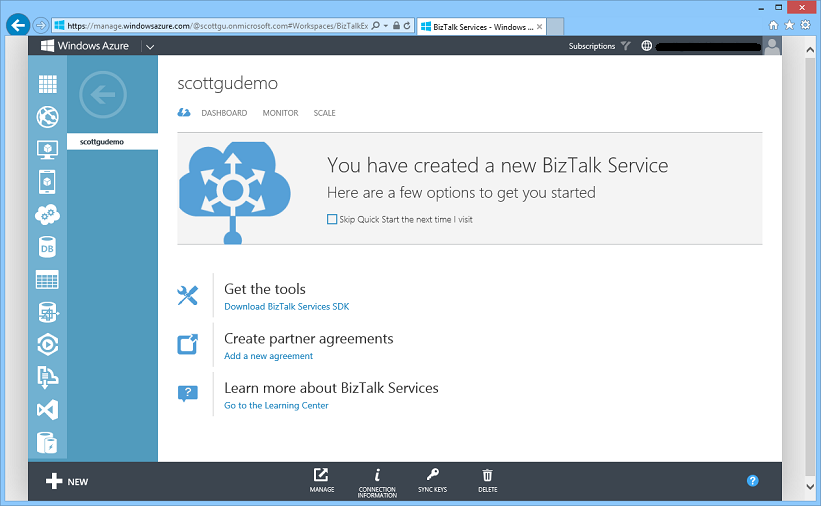

Windows Azure will then provision a new high-availability BizTalk instance for you to use:

Each BizTalk Service instance runs in a dedicated per-tenant environment. Once provisioned, you can use it to integrate your business better with your supply chain, enable EDI interactions with partners, and extend your on-premises systems to the cloud to facilitate EAI integration.

Changes between Preview and GA

The team has been working extremely hard in preparing Windows Azure BizTalk Services for General Availability. In addition to finalizing the quality, we also made a number of feature improvements to address customer feedback during the preview. These improvements include:

- B2B and EDI capabilities are now available even in the Basic and Standard tiers (in the preview they were only in the Premium tier)

- Significantly simplified provisioning process – ACS namespace and self-signed certificates are now automatically created for you

- Support for worldwide deployment in Windows Azure regions

- Multiple authentication IDs & multiple deployments are now supported in the BizTalk portal.

- BackUp-Restore is now supported to enable Business Continuity

If you are already using BizTalk Services in preview, you will be transitioned automatically to the GA service and new pricing will take effect on January 1, 2014.

Getting Started

Read this article to get started with provisioning your first BizTalk Service. BizTalk Services supports a Developer Tier that enables you to do full development and testing of your EDI and EAI workloads at a very inexpensive rate. To learn more about the services and new pricing, read the BizTalk Services documentation.

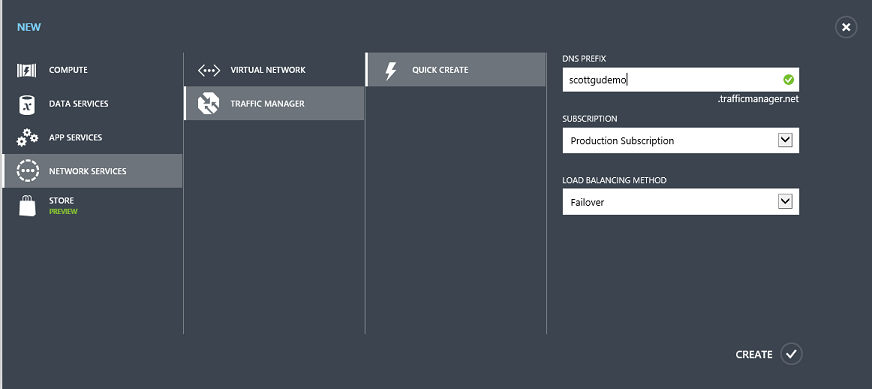

Traffic Manager: General Availability Release

I’m excited to announce that Windows Azure Traffic Manager is also now generally available. This release is now live in production, backed by an enterprise SLA, supported by Microsoft Support, and is ready to use for production scenarios.

Windows Azure Traffic Manager allows you to control the distribution of user traffic to applications that you host within Windows Azure. Your applications can run in the same data center, or be distributed across different regions across the world. Traffic Manager works by applying an intelligent routing policy engine to the Domain Name System (DNS) queries on your domain names, and maps the DNS routes to the appropriate instances of your applications.

You can use Traffic Manager to improve application availability - by enabling automatic customer traffic fail-over scenarios in the event of issues with one of your application instances. You can also use Traffic Manager to improve application performance - by automatically routing your customers to the application instance closest to them (e.g. you can set up Traffic Manager to route customers in Europe to a European instance of your app, and customers in North America to a US instance of your app).

Getting Started

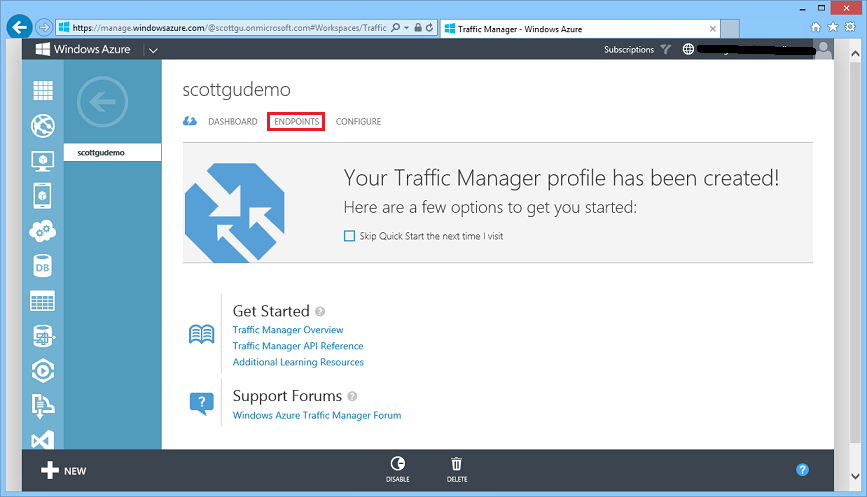

Setting up Traffic Manager is easy to do. Simply choose New->Network Services->Traffic Manager within the Windows Azure Management Portal:

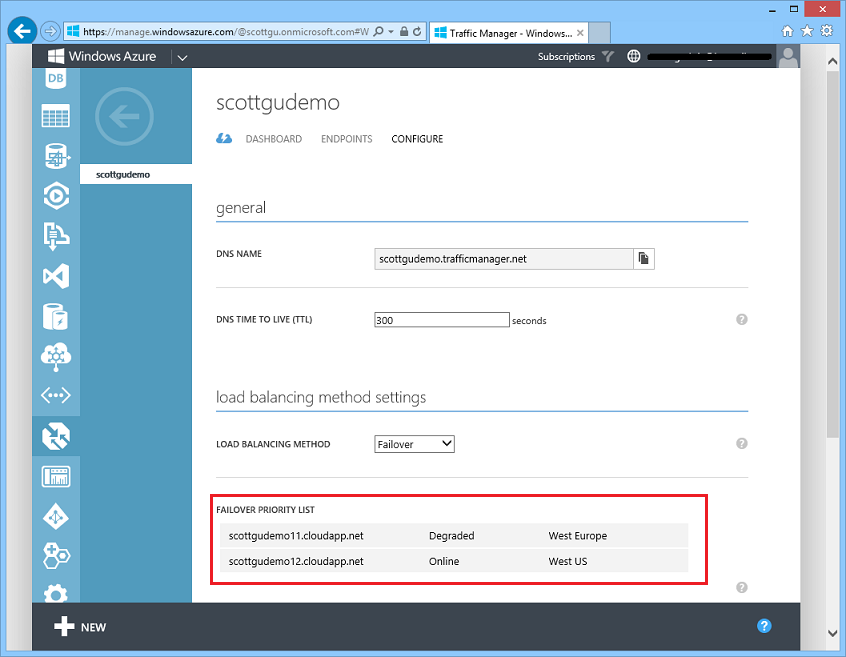

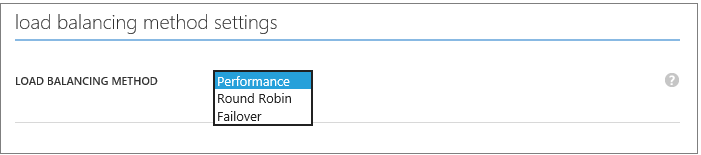

When you create a Windows Azure Traffic Manager you can specify a “load balancing method” – this indicates the default traffic routing policy engine you want to use. Above I selected the “failover” policy.

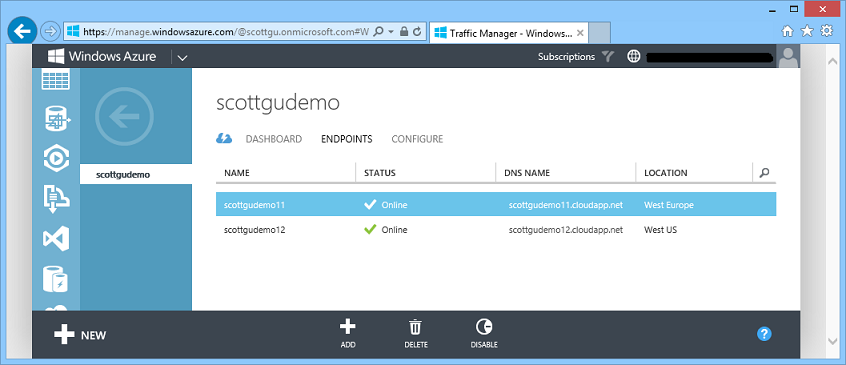

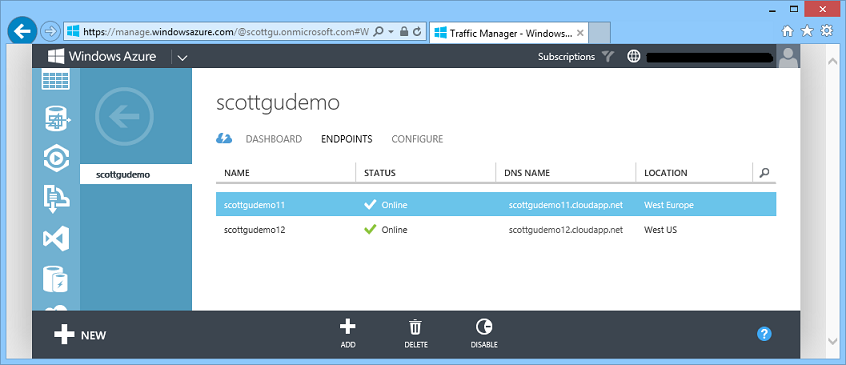

Once your Traffic Manager instance is created you can click the “endpoints” tab to select application or service endpoints you want the traffic manager to route traffic to. Below I’ve added two virtual machine deployments – one in Europe and one in the United States:

Enabling High Availability

Traffic Manager monitors the health of each application/service endpoint configured within it, and automatically re-directs traffic to other application/service endpoints should any service fail.

In the following example, Traffic Manager is configured with a ‘Failover’ policy, which means that by default all traffic is sent to the first endpoint (scottgudemo11), but if that app instance is down or having problems (as it is below), traffic is automatically redirected to the next endpoint (scottgudemo12).
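The ‘Failover’ policy’s decision can be sketched in a few lines of Python: walk the endpoints in their configured order and pick the first one that is both enabled and passing its health check. This is a simplified model, not Traffic Manager’s implementation; the `Endpoint` type and `failover_route` function are hypothetical, and the `enabled` flag stands in for the portal’s Disable command. When every endpoint is down this sketch simply returns `None`; the real service’s behavior in that case is not modeled.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Endpoint:
    name: str
    healthy: bool = True   # result of the latest health probe
    enabled: bool = True   # False when the endpoint is disabled in the portal


def failover_route(endpoints: Sequence[Endpoint]) -> Optional[Endpoint]:
    """Return the first enabled, healthy endpoint in the configured order."""
    for endpoint in endpoints:
        if endpoint.enabled and endpoint.healthy:
            return endpoint
    return None  # this sketch's choice; real all-down behavior is not modeled


primary, secondary = Endpoint("scottgudemo11"), Endpoint("scottgudemo12")
print(failover_route([primary, secondary]).name)  # scottgudemo11
primary.healthy = False
print(failover_route([primary, secondary]).name)  # scottgudemo12
```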

Traffic Manager allows you to configure the protocol, port and monitoring path used to monitor endpoint health. You can use any of your web pages as the monitoring path, or you can use a dedicated monitoring page, which allows you to implement your own custom health check logic.
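A dedicated monitoring page can be as simple as a handler that runs a few internal checks and reports the result through its HTTP status. The sketch below is a standard-library WSGI app, assuming the monitor treats an HTTP 200 response as healthy and any other status as a failure. The check names are hypothetical placeholders, and mounting the app at your chosen monitoring path is left to the hosting setup.

```python
from typing import Callable, Dict, Iterable


def _passes(check: Callable[[], bool]) -> bool:
    # A check that raises counts as a failure rather than crashing the page.
    try:
        return bool(check())
    except Exception:
        return False


def make_health_app(checks: Dict[str, Callable[[], bool]]):
    """Build a WSGI app to serve at a dedicated monitoring path."""
    def app(environ, start_response) -> Iterable[bytes]:
        failed = [name for name, check in checks.items() if not _passes(check)]
        headers = [("Content-Type", "text/plain")]
        if failed:
            start_response("503 Service Unavailable", headers)
            return [("unhealthy: " + ", ".join(failed)).encode("utf-8")]
        start_response("200 OK", headers)
        return [b"ok"]
    return app
```

To try it locally, the app can be served with `wsgiref.simple_server.make_server("", 8080, app).serve_forever()`, with real database or cache probes passed in as the checks.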

Enabling Improved Performance

You can deploy multiple instances of your application or service in different geographic regions, and use Traffic Manager’s ‘Performance’ load-balancing policy to automatically direct end users to the closest instance of your application. This improves performance for an end user by reducing the network latency they experience.

In the traffic manager instance we created earlier, we had a VM deployment in both West Europe and the West US regions of Windows Azure:

This means that when a customer in Europe accesses our application, they will automatically be routed to the West Europe application instance. When a customer in North America accesses our application, they will automatically be routed to the West US application instance.

Note that endpoint monitoring and failover is a feature of all Traffic Manager load-balancing policies, not just the ‘failover’ policy. This means that if one of the above instances has a problem and goes offline, the traffic manager will automatically direct all users to the healthy instance.
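The ‘Performance’ policy, combined with the health filtering that applies to every policy, can be modeled as “lowest latency among the available endpoints.” The sketch below assumes a table of measured round-trip times from the user’s location to each Azure region; the latency numbers, endpoint names, and the `RegionalEndpoint` type are hypothetical, and this is not how Traffic Manager actually gathers its latency data.

```python
from dataclasses import dataclass
from typing import Mapping, Optional, Sequence


@dataclass
class RegionalEndpoint:
    name: str
    region: str
    healthy: bool = True
    enabled: bool = True


def performance_route(endpoints: Sequence[RegionalEndpoint],
                      latency_ms: Mapping[str, float]) -> Optional[RegionalEndpoint]:
    """Pick the available endpoint whose region has the lowest measured latency."""
    available = [ep for ep in endpoints
                 if ep.enabled and ep.healthy and ep.region in latency_ms]
    # Health filtering happens first, so failover applies under this policy too.
    return min(available, key=lambda ep: latency_ms[ep.region], default=None)


deployments = [RegionalEndpoint("app-europe", "West Europe"),
               RegionalEndpoint("app-us", "West US")]
from_paris = {"West Europe": 20.0, "West US": 150.0}   # hypothetical numbers
print(performance_route(deployments, from_paris).name)  # app-europe
deployments[0].healthy = False
print(performance_route(deployments, from_paris).name)  # app-us
```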

Seamless application updates

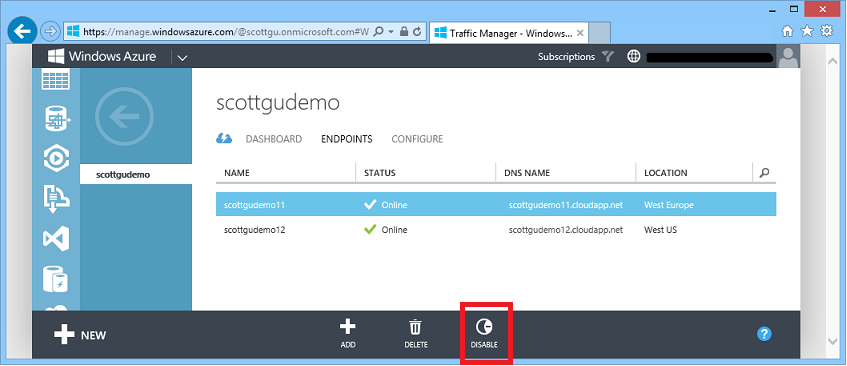

You can also explicitly enable and disable each of your application/service endpoints in Traffic Manager. To do this simply select the endpoint, and click the Disable command:

This doesn’t stop the underlying application - it just tells Traffic Manager to route traffic elsewhere. This enables you to migrate traffic away from a particular deployment of an application/service whilst it is being updated and tested and then bring the service back into rotation, all with just a couple of clicks.

General Availability

As Traffic Manager plays a key role in enabling high availability applications, it is of course vital that Traffic Manager itself is highly available. That’s why, as part of general availability, we’re announcing a 99.99% uptime SLA for Traffic Manager.

Traffic Manager has been available free of charge during preview. Free promotional pricing will remain in effect until December 31, 2013. Starting January 1, 2014, the following pricing will apply:

- $0.75 per million DNS queries (reducing to $0.375 after 1 billion queries)

- $0.50 per service endpoint/month.
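As a worked example of the pricing above, the sketch below estimates one month’s Traffic Manager bill. It assumes the query tiers reset each calendar month and that the $0.375 rate applies only to queries beyond the first billion; proration and any other charges are ignored, and the function name is hypothetical.

```python
# Prices quoted above, effective January 1, 2014 (USD). Assumes the tiers
# apply per calendar month and the lower rate covers only queries above 1 billion.
PRICE_PER_MILLION = 0.75
DISCOUNT_PRICE_PER_MILLION = 0.375
DISCOUNT_THRESHOLD = 1_000_000_000
PRICE_PER_ENDPOINT_MONTH = 0.50


def monthly_cost(dns_queries: int, endpoints: int) -> float:
    full_rate = min(dns_queries, DISCOUNT_THRESHOLD)
    discounted = max(dns_queries - DISCOUNT_THRESHOLD, 0)
    query_cost = (full_rate * PRICE_PER_MILLION
                  + discounted * DISCOUNT_PRICE_PER_MILLION) / 1_000_000
    return round(query_cost + endpoints * PRICE_PER_ENDPOINT_MONTH, 2)


print(monthly_cost(10_000_000, 2))     # 8.5
print(monthly_cost(1_500_000_000, 2))  # 938.5
```

With two endpoints, 10 million queries cost $7.50 plus $1.00 for endpoints; at 1.5 billion queries the first billion cost $750 and the remaining 500 million cost $187.50.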

Full pricing details are available on the Windows Azure Web Site. Additional details on Traffic Manager, including a detailed description of endpoint monitoring, all configuration options, and the Traffic Manager management REST APIs, are available on MSDN.

Active Directory: General Availability of Application Access

This summer we released the initial preview of our Application Access Enhancements for Windows Azure Active Directory, which enables you to securely implement single-sign-on (SSO) support against SaaS applications as well as LOB based applications. Since then we’ve added SSO support for more than 500 applications (including popular apps like Office 365, SalesForce.com, Box, Google Apps, Concur, Workday, DropBox, GitHub, etc).

Building upon the enhancements we delivered last month, with this week’s release we are excited to announce the general availability release of the application access functionality within Windows Azure Active Directory. These features are available for all Windows Azure Active Directory customers, at no additional charge, as of today’s release:

- SSO to every SaaS app we integrate with

- Application access assignment and removal

- User provisioning and de-provisioning support

- Three built-in security reports

- Management portal support

Every customer can now use the application access features in the Active Directory extension within the Windows Azure Management Portal.

Getting Started

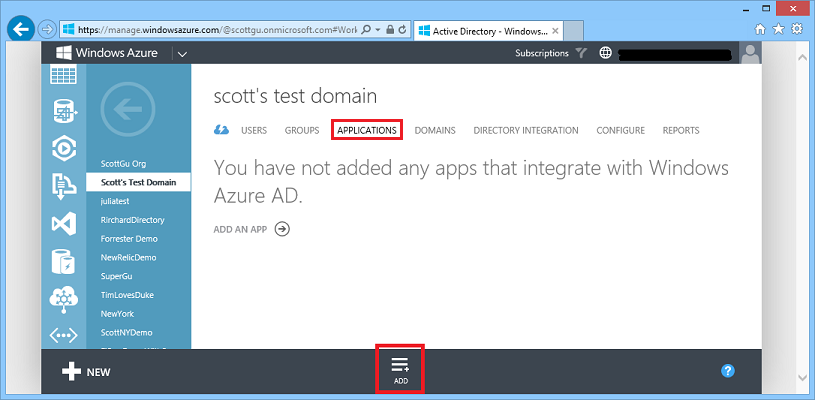

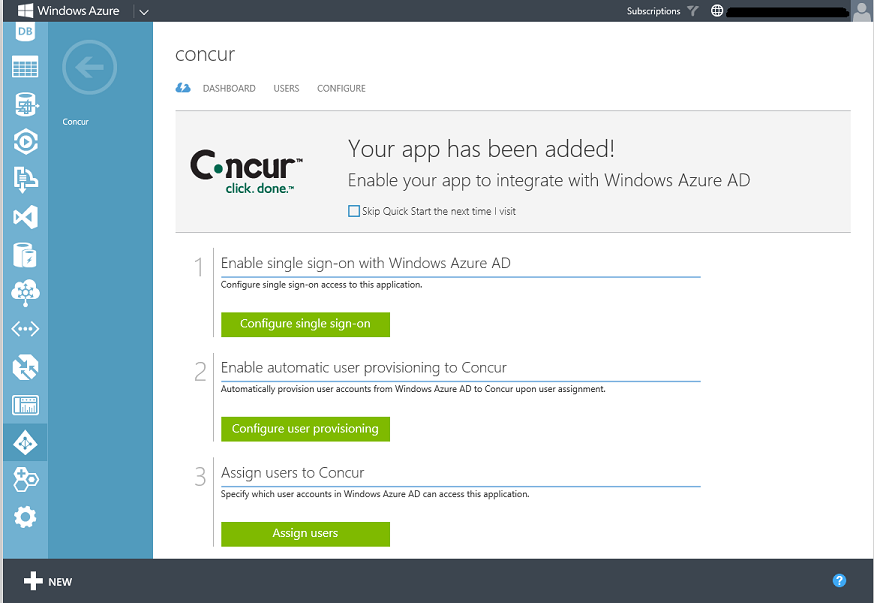

To integrate your active directory with either a SaaS or LOB application, navigate to the “Applications” tab of the Directory within the Windows Azure Management Portal and click the “Add” button:

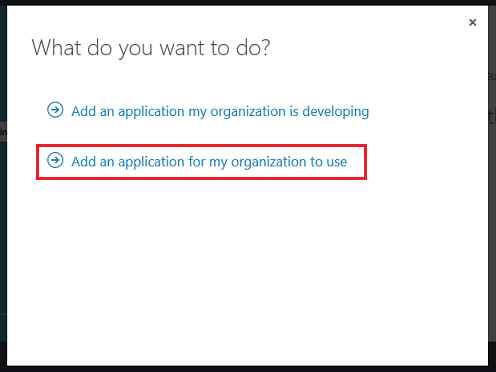

Clicking the “Add” button will bring up a dialog that allows you to select whether you want to add a LOB application or a SaaS application:

Clicking the second link will bring up a gallery of 500+ popular SaaS applications that you can easily integrate your directory with:

Choose an application you wish to enable SSO with and then click the OK button. This will register the application with your directory:

You can then quickly walk through setting up single sign-on support, and enable your Active Directory to automatically provision accounts with the SaaS application. This will enable employees who are members of your Active Directory to easily sign in to the SaaS application using their corporate Active Directory account.

In addition to making it more convenient for employees to sign in to the app (one less username/password to remember), this SSO support also makes the company’s data more secure. If an employee ever leaves the company and their Active Directory account is suspended or deleted, they will lose all access to the SaaS application. The IT administrator of the Active Directory can also optionally enable the Multi-Factor Authentication support that we shipped in September, requiring employees to use a second form of authentication (e.g. a phone app or SMS challenge) when signing in to the SaaS application for even more secure identity access. The Windows Azure Multi-Factor Authentication Service composes really nicely with the SaaS support we are shipping today: you can literally set up secure access to any SaaS application (complete with multi-factor authentication) for your entire enterprise within minutes.

You can learn more about what we’re providing with Windows Azure Active Directory here, and you can ask questions and provide feedback on today’s release in the Windows Azure AD Forum.

Mobile Services: Active Directory integration, Xamarin support, Optimistic concurrency

Enterprises are increasingly going mobile to deliver their line of business apps. Today we are introducing a number of exciting updates to Mobile Services that make it even easier to build mobile LOB apps.

Preview of Windows Azure Active Directory integration with Mobile Services

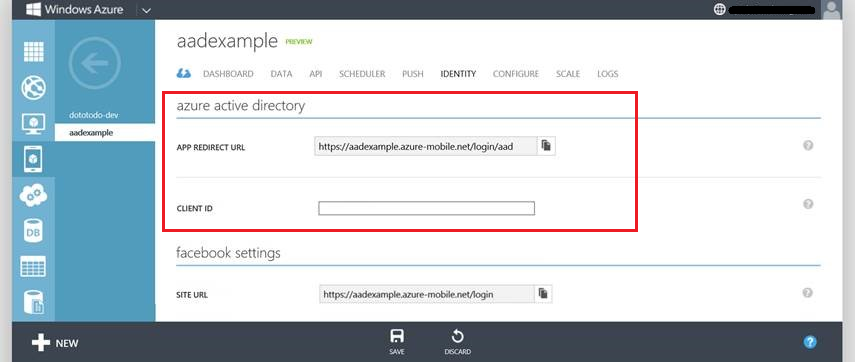

I am excited to announce the preview of Windows Azure Active Directory support in Mobile Services. With this support, mobile business applications can use the same easy Mobile Services authentication experience to let employees sign in to their mobile applications with their corporate Active Directory credentials.

With this feature, Windows Azure Active Directory becomes a supported identity provider in Mobile Services alongside the identity providers we already support (Microsoft Accounts, Facebook ID, Google ID, and Twitter ID). You can enable Active Directory support by clicking the “Identity” tab within a mobile service:

If you are an enterprise developer interested in using the Windows Azure Active Directory support in Mobile Services, please contact us at mobileservices@microsoft.com to sign up for the private preview.

Cross-platform connected apps using Xamarin and Mobile Services

We previously partnered with Xamarin to deliver a Mobile Services SDK that makes it easy to add capabilities such as storage, authentication and push notifications to iOS and Android applications written in C# using Xamarin. Since then, thousands of developers have downloaded the SDK and enjoyed the benefits of building cross-platform mobile applications in C# with Windows Azure as their backend. More recently, as part of the Visual Studio 2013 launch, Microsoft announced a broad collaboration with Xamarin that includes Portable Class Library support for Xamarin platforms.

With today’s release we are making two additional updates to Mobile Services:

- Delivering an updated Mobile Services Portable Class Library (PCL) SDK that includes support for both Xamarin.iOS and Xamarin.Android

- New quickstart projects for Xamarin.iOS and Xamarin.Android exposed directly in the Windows Azure Management Portal

These updates make it even easier to build cloud connected cross-platform mobile applications.

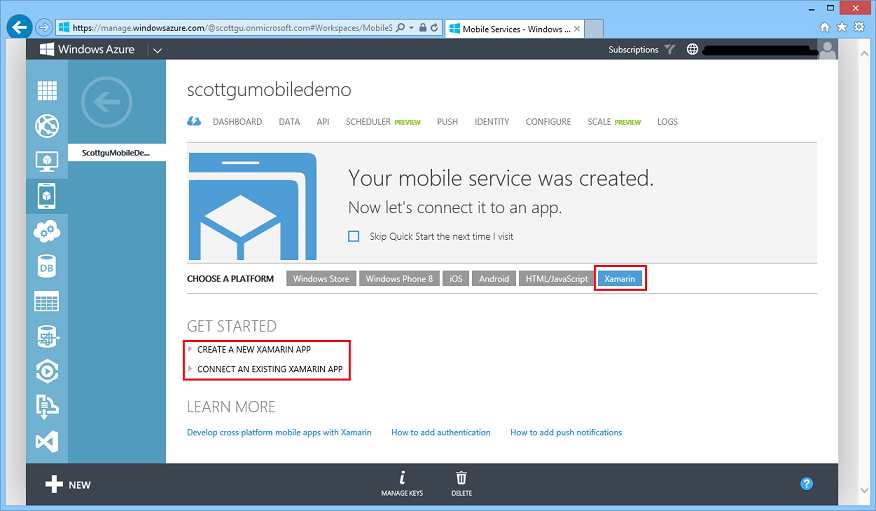

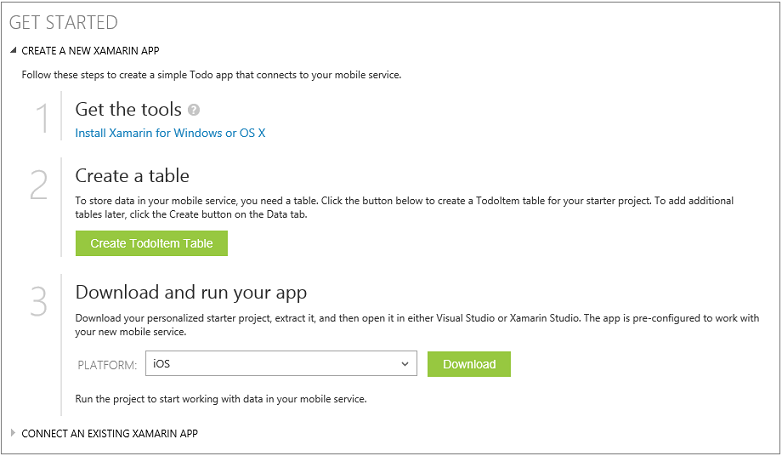

Getting started with Xamarin and Mobile Services

If you navigate to the quickstart page for your Windows Azure Mobile Service you will see there is now a new Xamarin tab:

To get started with Xamarin and Windows Azure Mobile Services, all you need to do is click one of the links circled above, install the Xamarin tools, and download the Xamarin starter project that we provide directly on the quick start page above:

After downloading the project, unzip and open it in Visual Studio 2013. You will then be prompted to pair your instance of Visual Studio with a Mac so that you can build and run the application on iOS. See here for detailed instructions on the setup process.

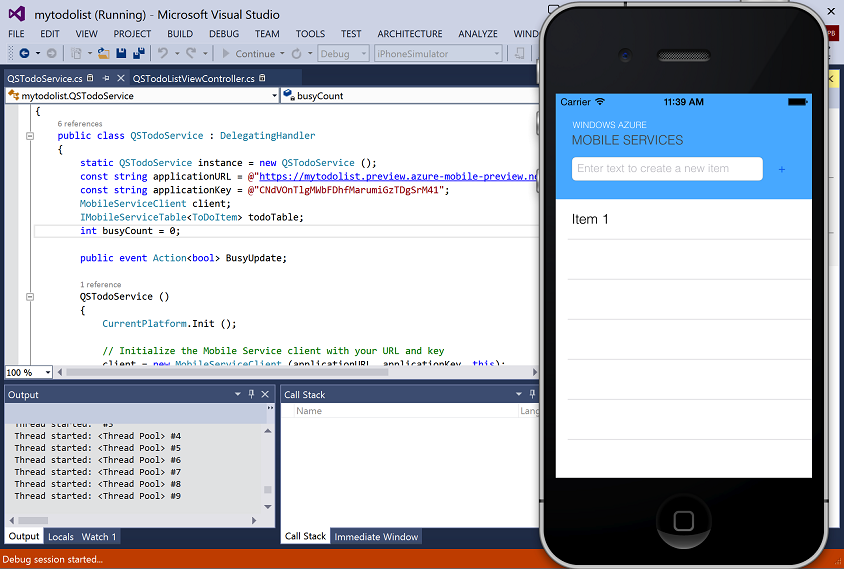

Once the setup process is complete, you can select the iPhone Simulator as the target and then just hit F5 within Visual Studio to run and debug the iOS application:

The combination of Xamarin and Windows Azure Mobile Services makes it incredibly easy to build iOS and Android applications using C# and Visual Studio. For more information, check out our tutorials and documentation.

Optimistic Concurrency Support

Today’s Mobile Services release also adds support for optimistic concurrency, which lets your application detect and resolve conflicting updates submitted by multiple users. For example, if one user retrieves a record from a Mobile Services table to edit it, and in the meantime another user updates the same record, then without optimistic concurrency support the first user’s save may overwrite the second user’s update. With optimistic concurrency, conflicting changes are caught, and your application can either let the user resolve the conflict manually or implement its own resolution behavior.

When you create a new table, you will notice three system property columns added to support optimistic concurrency: (1) __version, which holds the record’s version, (2) __createdAt, the time the record was inserted, and (3) __updatedAt, the time the record was last updated.

You can use optimistic concurrency in your application by making two changes to your code:

First, add a version property to your data model, as shown in the code snippet below. Mobile Services uses this property to detect conflicts when updating the corresponding record in the table:

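```csharp
using Newtonsoft.Json;  // JsonProperty comes from Json.NET

public class TodoItem
{
    public string Id { get; set; }

    [JsonProperty(PropertyName = "text")]
    public string Text { get; set; }

    // Maps to the __version system column; Mobile Services checks it on update to detect conflicts
    [JsonProperty(PropertyName = "__version")]
    public byte[] Version { get; set; }
}
```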

Second, modify your application to handle conflicts by catching the new MobileServicePreconditionFailedException. Mobile Services sends back this error, which includes the server version of the conflicting item; your application can then decide which version to commit back to the server to resolve the conflict.
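The version check itself can be illustrated without the SDK. The following is a minimal sketch, assuming an in-memory table in which every successful write increments an integer version; `InMemoryTable`, `VersionedItem` and `PreconditionFailedException` are hypothetical names for illustration, not Mobile Services types. An update succeeds only if the caller's version matches the stored one; otherwise the exception carries the server's current copy, mirroring what is described above for MobileServicePreconditionFailedException.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical record: an id, a payload and a server-assigned version.
public class VersionedItem
{
    public string Id { get; set; }
    public string Text { get; set; }
    public int Version { get; set; }
}

// Hypothetical stand-in for the precondition-failed error; carries the server copy.
public class PreconditionFailedException : Exception
{
    public VersionedItem ServerItem { get; }

    public PreconditionFailedException(VersionedItem serverItem)
        : base("Version mismatch: the record was changed by someone else.")
    {
        ServerItem = serverItem;
    }
}

public class InMemoryTable
{
    private readonly Dictionary<string, VersionedItem> rows = new Dictionary<string, VersionedItem>();

    public VersionedItem Insert(string id, string text)
    {
        var row = new VersionedItem { Id = id, Text = text, Version = 1 };
        rows[id] = row;
        return Copy(row);
    }

    public VersionedItem Read(string id) => Copy(rows[id]);

    // Optimistic update: apply the change only if the caller saw the latest version.
    public VersionedItem Update(VersionedItem item)
    {
        var current = rows[item.Id];
        if (current.Version != item.Version)
            throw new PreconditionFailedException(Copy(current));
        current.Text = item.Text;
        current.Version++;  // every successful write bumps the version
        return Copy(current);
    }

    // Callers always get a copy, so local edits never touch the stored row.
    private static VersionedItem Copy(VersionedItem r) =>
        new VersionedItem { Id = r.Id, Text = r.Text, Version = r.Version };
}

public static class ConflictDemo
{
    // Two users edit the same record; the second write conflicts and is resolved.
    public static VersionedItem Run()
    {
        var table = new InMemoryTable();
        table.Insert("1", "buy milk");

        var alice = table.Read("1");
        var bob = table.Read("1");

        bob.Text = "buy oat milk";
        table.Update(bob);                 // succeeds: version 1 -> 2

        alice.Text = "buy milk and eggs";
        try
        {
            return table.Update(alice);    // alice still holds version 1: conflict
        }
        catch (PreconditionFailedException ex)
        {
            // Hypothetical "client wins" policy: retry on top of the server's version.
            alice.Version = ex.ServerItem.Version;
            return table.Update(alice);
        }
    }
}
```

Here the handler simply re-applies the user's edit on top of the server version; keeping the server copy, or showing both versions to the user, are equally valid resolution choices.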

To learn more about optimistic concurrency, review our new Mobile Services optimistic concurrency tutorial. Also check out the new Custom ID property support we are adding with today’s release, which makes it much easier to handle a variety of richer data modeling scenarios (including sharding).

Notification Hubs: Price Reduction and Debug Send Improvements

In August I announced the General Availability of Windows Azure Notification Hubs, a powerful mobile push notification service that makes it easy to send high volumes of push notifications with low latency to any mobile device (including Windows Phone, Windows 8, iOS and Android devices). Notification Hubs can be used with any mobile app back-end (including ones built using Windows Azure Mobile Services), whether that back-end runs in the cloud or on-premises.

Pricing update: Removing Active Device limits from Notification Hubs paid tiers

To simplify the pricing model of Notification Hubs and pass on cost savings to our customers, we are removing the limits we previously had on the number of Active Devices allowed. For example, the consumption price for the Notification Hubs Standard Tier will now simply be $75 for 1 million pushes per month, and $199 for 5 million pushes per month (prorated daily).

These changes and price reductions will be available to all paid tiers starting Dec 15th. More details on the pricing can be found here.

Troubleshooting Push Notifications with Debug Send

Troubleshooting push notifications can sometimes be tricky, as there are many components involved: your backend, Notification Hubs, the platform notification service, and your client app.

To help with that, today’s release adds the ability to send test notifications directly from the Windows Azure Management Portal. Simply navigate to the new DEBUG tab in any Notification Hub, specify whether you want to broadcast to all registered devices or provide a tag (or tag expression) to target only specific devices or groups of devices, specify the notification payload you wish to send, and then hit “Send”. For example, below I am choosing to send a test notification to all my users who have the iOS version of my app and who have subscribed to “sport-scores” within my app:

After the notification is sent, you will get a list of all the device registrations targeted by your notification, along with the outcome of each send as reported by the corresponding platform notification service (WNS, MPNS, APNS, or GCM). This makes it much easier to debug issues.
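The device selection in the example above (iOS users subscribed to “sport-scores”) can be modeled in a few lines, which can help when reasoning about which registrations a DEBUG send should have reached. This is a minimal sketch, assuming each registration carries a platform name and a set of tags and that targeting means "matching platform AND every listed tag"; `Registration` and `Targeting.Targets` are hypothetical, the platform strings are placeholders, and the real matching happens inside Notification Hubs, not in your code.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical model of a device registration: platform plus subscribed tags.
public class Registration
{
    public string DeviceId { get; set; }
    public string Platform { get; set; }   // placeholder names such as "apns" or "gcm"
    public HashSet<string> Tags { get; set; } = new HashSet<string>();
}

public static class Targeting
{
    // Returns the registrations a send would reach: matching platform
    // (null means broadcast to every platform) and carrying every required tag.
    public static List<Registration> Targets(
        IEnumerable<Registration> registrations, string platform, params string[] requiredTags)
    {
        return registrations
            .Where(r => platform == null || r.Platform == platform)
            .Where(r => requiredTags.All(tag => r.Tags.Contains(tag)))
            .ToList();
    }
}
```

For example, `Targeting.Targets(registrations, "apns", "sport-scores")` keeps only iOS registrations tagged “sport-scores”, while `Targeting.Targets(registrations, null)` models a broadcast to every registered device.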

For help on getting started with Notification Hubs, visit the Notification Hub documentation center.

Web Sites: Diagnostics Support for Automatic Logging to Blob Storage

In September we released an update to Windows Azure Web Sites that enables you to automatically persist HTTP logs to Windows Azure Blob Storage.

Today we updated Web Sites to also support persisting a Web Site’s application diagnostic logs to Blob Storage. This makes it really easy to persist your diagnostics logs as text blobs that you can keep indefinitely (since storage accounts can hold huge amounts of data) and later mine or analyze in depth. It also makes it much easier to quickly diagnose and understand issues you might be having within your code.

Adding Diagnostics Statements to your Code

Below is a simple example of how you can use the built-in .NET Trace API within System.Diagnostics to instrument code within a web application. In the scenario below I’ve added a simple trace statement that logs the time it takes to call a particular method (which might call a remote service or database that could take a while):
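A trace statement of this kind might look like the following minimal sketch. `DoSomething` is a hypothetical placeholder (here it just sleeps for 50 ms) and the message text is an arbitrary choice; the real APIs used are `System.Diagnostics.Stopwatch` and `Trace.TraceInformation`. Note that `Trace` calls are compiled only when the TRACE symbol is defined, which Visual Studio project templates normally do.

```csharp
using System.Diagnostics;
using System.Threading;

public static class Instrumented
{
    // Hypothetical slow operation standing in for a remote service or database call.
    public static void DoSomething()
    {
        Thread.Sleep(50);
    }

    // Times DoSomething and writes the latency through System.Diagnostics.Trace.
    // With Application Logging turned on, the Web Sites trace listener persists the message.
    public static long TimedDoSomething()
    {
        var stopwatch = Stopwatch.StartNew();
        DoSomething();
        stopwatch.Stop();
        Trace.TraceInformation("DoSomething took {0} ms", stopwatch.ElapsedMilliseconds);
        return stopwatch.ElapsedMilliseconds;
    }
}
```

`TraceInformation` writes at the information level, so in this sketch the message is persisted whenever the configured logging level includes information messages.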

Adding instrumentation code like this makes it much easier to quickly determine the cause of a slowdown in a production application. Logging the performance data also makes it possible to analyze performance trends over time (e.g. to find the 99th percentile latency).
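Once latencies have been logged, computing a percentile is a small calculation. Here is a minimal sketch using the nearest-rank definition: sort the n samples and take the value at 1-based rank ceil(p * n / 100). Other percentile definitions interpolate between samples and can give slightly different answers; `LatencyStats.Percentile` is a hypothetical helper name.

```csharp
using System;
using System.Linq;

public static class LatencyStats
{
    // Nearest-rank percentile: sort the samples and return the value at
    // 1-based rank ceil(p * n / 100).
    public static double Percentile(double[] samples, double p)
    {
        if (samples == null || samples.Length == 0)
            throw new ArgumentException("need at least one sample", nameof(samples));
        if (p <= 0 || p > 100)
            throw new ArgumentOutOfRangeException(nameof(p));

        var sorted = samples.OrderBy(x => x).ToArray();
        int rank = (int)Math.Ceiling(p * sorted.Length / 100.0);
        return sorted[rank - 1];
    }
}
```

For example, for the samples 15, 20, 35, 40 and 50 ms, the 50th percentile is 35 ms (rank ceil(2.5) = 3).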

Storing Diagnostics Log Files as Blobs in Windows Azure Storage

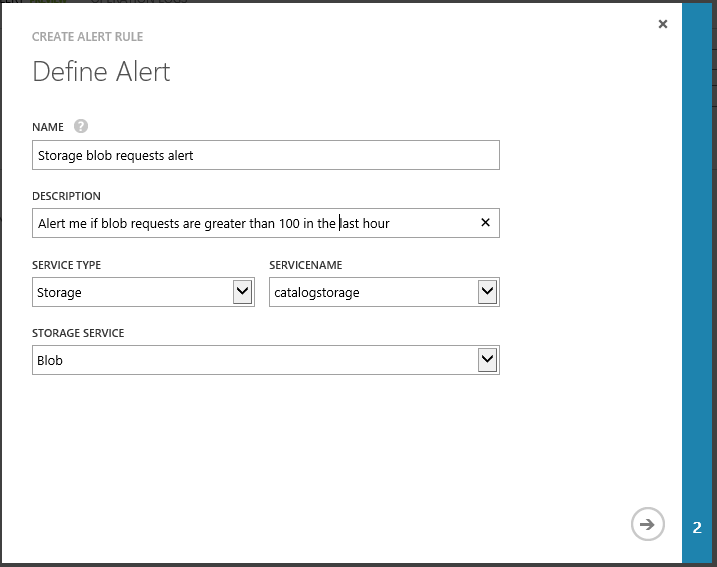

To have diagnostic logs written automatically to blob storage, navigate to a Web Site in the Windows Azure Management Portal and click the CONFIGURE tab, then go to the APPLICATION DIAGNOSTICS section within it. Starting today, you can configure “Application Logging” to be persisted to blob storage: just toggle the button to “on”, and then choose the logging level you wish to persist (error, verbose, information, etc.):

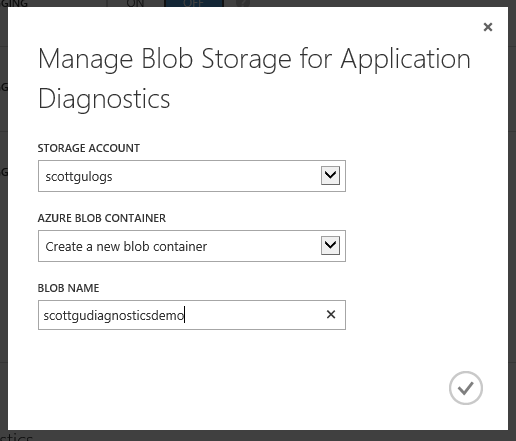

Clicking the green “manage blob storage” button brings up a dialog that allows you to configure which blob storage account you wish to store the diagnostics logs within:

Once you are done just click the “ok” button, and then hit “save”. Now when your application runs, the diagnostic data will automatically be persisted to your blob storage account.

Looking at the Application Diagnostics Data

Diagnostics logging data is persisted almost immediately as your application runs (a trace listener built into Web Sites handles this automatically and allows you to write thousands of diagnostics messages per second).

You can use any standard tool that supports Windows Azure Blob Storage to view and download the logs. Below I’m using the CloudXplorer tool to view my blob storage account:

The application diagnostic logs are persisted as .csv text files. Windows Azure Web Sites automatically persists the files within sub-folders of the blob container that map to the year->month->day->hour of the web-site operation (which makes it easier for you to find the specific file you are looking for).
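When you know roughly when a problem happened, you can compute which folders to look in. This sketch assumes the folder names are zero-padded `yyyy/MM/dd/HH` segments and that the hour is in UTC; the post only says the folders map to year, month, day and hour, so check the exact naming and time zone against your own container. `LogPaths` is a hypothetical helper.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

public static class LogPaths
{
    // Assumed folder layout for one hour of logs: yyyy/MM/dd/HH/
    public static string HourPrefix(DateTime utc)
    {
        return string.Format(CultureInfo.InvariantCulture, "{0:D4}/{1:D2}/{2:D2}/{3:D2}/",
            utc.Year, utc.Month, utc.Day, utc.Hour);
    }

    // Every hourly prefix covering the window [fromUtc, toUtc].
    public static IEnumerable<string> HourPrefixes(DateTime fromUtc, DateTime toUtc)
    {
        var hour = new DateTime(fromUtc.Year, fromUtc.Month, fromUtc.Day, fromUtc.Hour, 0, 0, fromUtc.Kind);
        for (; hour <= toUtc; hour = hour.AddHours(1))
            yield return HourPrefix(hour);
    }
}
```

The resulting prefixes can be used to navigate in a storage browser or as a prefix filter when listing blobs in the container.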

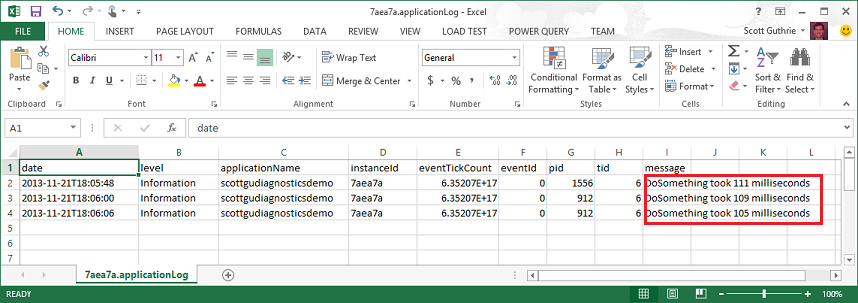

Because they are .csv text files, you can open and process the logs with a wide variety of tools or custom scripts (you can even spin up a Hadoop cluster using Windows Azure HDInsight if you want to analyze lots of them quickly). Below is a simple example of opening the above diagnostic file in Excel:

Notice how the date/time, log level, application name, web server instance ID, event tick, and process and thread IDs were all persisted in addition to my custom message, which logged the latency of the DoSomething method.
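For custom scripts, the main subtlety is that a message can itself contain commas, so fields should be split with quoting in mind. Here is a minimal sketch, assuming the first row of the file is a header, each record fits on one line, and fields containing commas are wrapped in double quotes (with `""` for a literal quote). `LogCsv` is a hypothetical helper, and column names such as "level" and "message" are placeholders to replace with the names in your file's actual header row.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;

public static class LogCsv
{
    // Splits one CSV line, honoring double-quoted fields and "" escapes
    // (single-line records only).
    public static List<string> SplitLine(string line)
    {
        var fields = new List<string>();
        var current = new StringBuilder();
        bool inQuotes = false;
        for (int i = 0; i < line.Length; i++)
        {
            char c = line[i];
            if (inQuotes)
            {
                if (c == '"' && i + 1 < line.Length && line[i + 1] == '"') { current.Append('"'); i++; }
                else if (c == '"') inQuotes = false;
                else current.Append(c);
            }
            else if (c == '"') inQuotes = true;
            else if (c == ',') { fields.Add(current.ToString()); current.Clear(); }
            else current.Append(c);
        }
        fields.Add(current.ToString());
        return fields;
    }

    // Locates columns by name in the header row, then returns valueColumn
    // for every data row whose filterColumn equals filterValue.
    public static List<string> Select(IList<string> lines, string filterColumn, string filterValue, string valueColumn)
    {
        var header = SplitLine(lines[0]);
        int filterIndex = header.IndexOf(filterColumn);
        int valueIndex = header.IndexOf(valueColumn);
        if (filterIndex < 0 || valueIndex < 0)
            throw new ArgumentException("column not found in header row");

        return lines.Skip(1)
            .Where(l => l.Length > 0)
            .Select(SplitLine)
            .Where(f => f.Count > Math.Max(filterIndex, valueIndex) && f[filterIndex] == filterValue)
            .Select(f => f[valueIndex])
            .ToList();
    }
}
```

A call such as `LogCsv.Select(lines, "level", "Error", "message")` would then pull out just the error messages, provided the header really uses those column names.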

Running with Diagnostics Always On

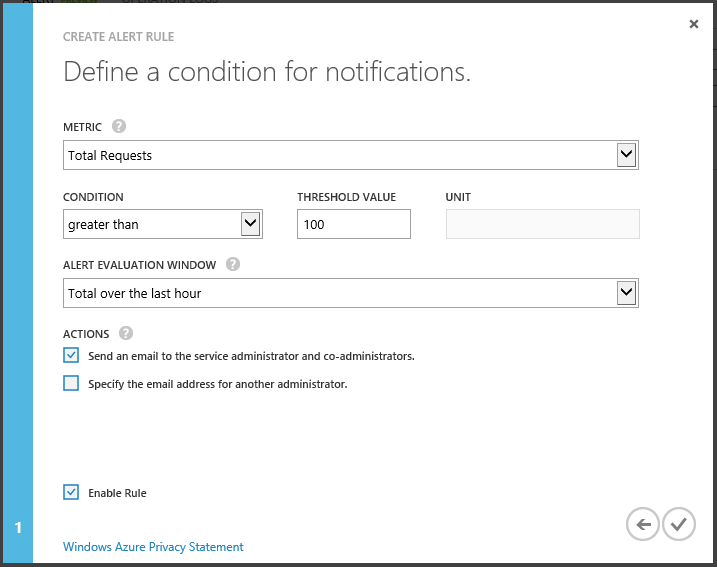

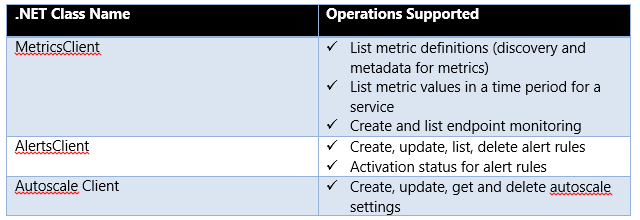

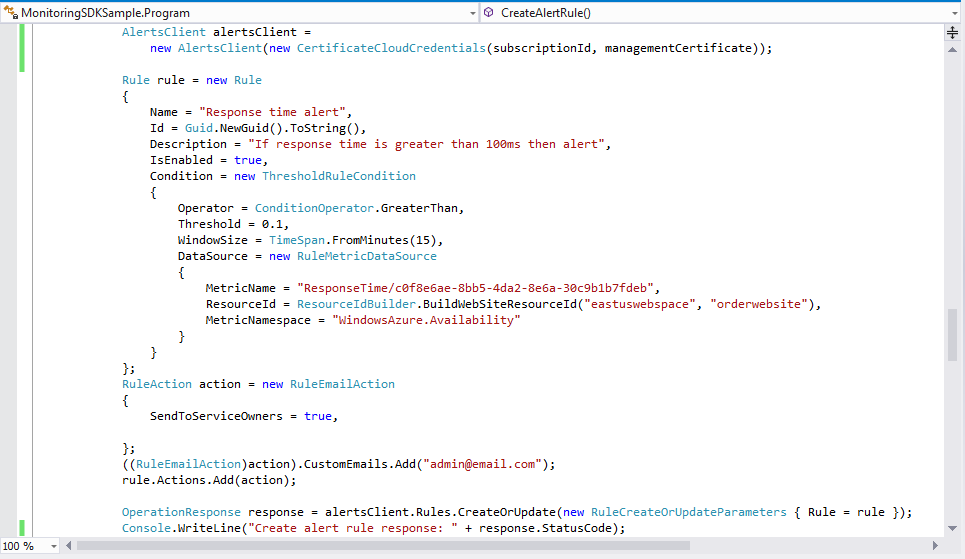

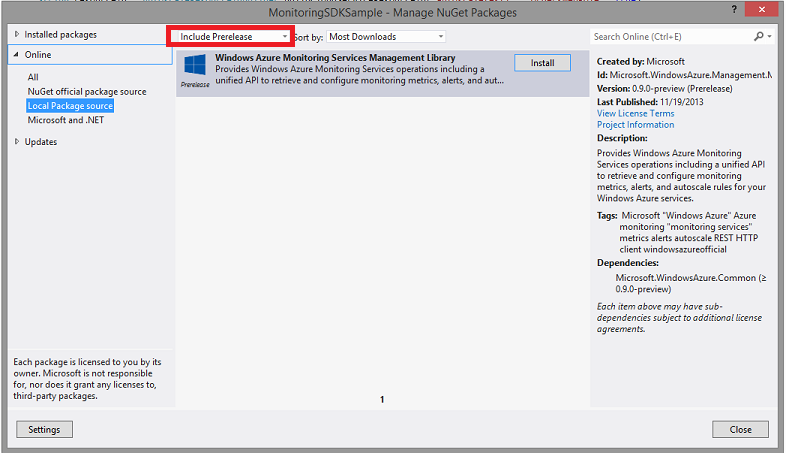

Today’s update now makes it super easy to log your diagnostics trace messages to blob storage (in addition to the HTTP logs that were already supported). The above steps are literally the only ones required to get started.