Windows Azure and Cloud Computing Posts for 6/19/2012+

| A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles. |

•• Updated 6/24/2012 7:00 AM to 5:00 PM PDT with articles marked ••.

• Updated 6/23/2012 8:00 AM to 5:00 PM PDT with articles marked •.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database, Federations and Reporting

- Marketplace DataMarket, Social Analytics, Big Data and OData

- Windows Azure Service Bus, Active Directory, and Workflow

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue and Hadoop Services

•• Manu Cohen-Yashar (@ManuKahn) asked Blobs Are Case Sensitive – Why? in a 6/20/2012 post:

I noticed that blob names are case sensitive, yet NTFS and URLs on the web in general are not. (Linux is.)

I spoke to several friends and they told me that the HTTP standard defines URLs as case sensitive but in the real world no one implements that.

The fact that blobs are case sensitive makes the task of exporting NTFS content to the cloud complicated because you know that your clients (who will access the blobs) will not assume that blobs are case sensitive.

I heard explanations such as: "The distinction matters quite a bit for security reasons; there’s a lot of material you can find on exploits around casing with Unicode for instance… " but if no one else does case-sensitive URLs, why start now and break conventions?

Today all we can do is make sure all blobs are lower case (use .ToLower()) yet the clients of the blobs must be instructed to do the same.

Best response I have seen on the subject can be found here. Read it.
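The lower-casing workaround can be sketched in a few lines. This is a minimal illustration, not code from Manu's post: the helper names `to_blob_name` and `find_case_collisions` and the sample paths are hypothetical, and it assumes the export simply maps each relative NTFS path to one blob name.

```python
from collections import defaultdict


def to_blob_name(relative_path: str) -> str:
    """Map a Windows-style relative path to a lower-case blob name."""
    return relative_path.replace("\\", "/").lower()


def find_case_collisions(relative_paths):
    """Return blob names that more than one source path would map to."""
    by_blob = defaultdict(list)
    for path in relative_paths:
        by_blob[to_blob_name(path)].append(path)
    return {blob: paths for blob, paths in by_blob.items() if len(paths) > 1}


# Hypothetical export list; the first and last differ only by case.
paths = ["Docs\\ReadMe.TXT", "Images\\Logo.PNG", "docs\\readme.txt"]
blob_names = [to_blob_name(p) for p in paths]
collisions = find_case_collisions(paths)
```

Clients would apply the same normalization before requesting a blob. The collision check is only a safety net for sources that are not NTFS, where names differing only by case can coexist.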

• Alex Popescu (@al3xandru, pictured to right of last paragraph) posted The State of Hadoop Market on 6/15/2012:

Derrick Harris [@derrickharris, pictured at right]:

Finally, at least, the market for Hadoop distributions appears complete. There are five rather distinct offerings from five rather distinct providers — Cloudera, EMC Greenplum, Hortonworks, IBM and MapR (six if you include Amazon’s Elastic MapReduce cloud service) — and each has its merits.

2013 is going to be the year when we see [Hadoop adoption] go a lot more mainstream and [turn] into a tornado.

I still think there’s something missing. While Amazon Elastic MapReduce added support for MapR and ex-Facebook Hive creators announced an Amazon hosted on-demand Hadoop service, I think the Hadoop market could benefit from having a second option for on-demand cloud-based Hadoop services. Is it only me?

The comments to his story, including mine, should satisfy Alex’s quest for a second option for on-demand cloud-based Hadoop services: Apache Hadoop on Windows Azure.

• Carl Nolan (@carl_nolan) described a Co-occurrence Approach to an Item Based Recommender in a 6/23/2012 post as a prelude to MapReduce and Cloud Numerics approaches:

For a while I thought I would tackle the problem of creating an item-based recommender. Firstly I will start with a local variant before moving onto a MapReduce version. The current version of the code can be found at:

http://code.msdn.microsoft.com/Co-occurrence-Approach-to-57027db7

The approach taken for the item-based recommender will be to define a co-occurrence matrix based on purchased items; products purchased on an order. As an example I will use the Sales data from the Adventure Works SQL Server sample database. The algorithm/approach is best explained, and is implemented, using a matrix.

For the matrix implementation I will be using the Math.Net Numerics libraries. To install the libraries from NuGet one can use the Manage NuGet Packages browser, or run these commands in the Package Manager Console:

Install-Package MathNet.Numerics

Install-Package MathNet.Numerics.FSharp

Co-occurrence Sample

Let’s start with a simple sample. Consider the following order summary:

If you look at the orders containing products 1002, you will see that there is 1 occurrence of product 1001 and 3 occurrences of product 1003. From this, one can deduce that if someone purchases product 1002 then it is likely that they will want to purchase 1003, and to a lesser extent product 1001. This concept forms the crux of the item-based recommender. [Emphasis added.]

[This technique is the basis of Amazon.com’s “Frequently Bought Together” sections.]

So if we computed the co-occurrence for every pair of products and placed them into a square matrix we would get the following:

There are a few things we can say about such a matrix, other than it will be square. More than likely, for a large product set the matrix will be sparse, where the number of rows and columns is equal to the number of items. Also, each row (and column as the matrix is symmetric) expresses similarities between an item and all others.

Due to the diagonal symmetric nature of the matrix (a_xy = a_yx) one can think of the rows and columns as vectors, where similarity between items X and Y is the same as the similarity between items Y and X.

The algorithm/approach I will be outlining will essentially be a means to build and query this co-occurrence matrix. Co-occurrence is like similarity: the more two items occur together (in this case in a shopping basket), the more they are probably related.
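To make the idea concrete, here is a small Python sketch (the post's own code is F#). The four orders are hypothetical, chosen only so that product 1002 co-occurs once with 1001 and three times with 1003, as in the description above; they are not the author's sample data, and a dense list-of-lists stands in for the sparse matrix.

```python
from itertools import combinations

# Hypothetical orders (order id -> products purchased on that order).
orders = {
    1: [1001, 1002, 1003],
    2: [1002, 1003],
    3: [1002, 1003, 1004],
    4: [1001, 1004],
}

# Assign each product a row/column index.
items = sorted({p for products in orders.values() for p in products})
index = {item: i for i, item in enumerate(items)}

# Count every unordered pair once per order, writing both symmetric cells.
matrix = [[0] * len(items) for _ in items]
for products in orders.values():
    for a, b in combinations(sorted(set(products)), 2):
        matrix[index[a]][index[b]] += 1
        matrix[index[b]][index[a]] += 1

# The row for product 1002, keyed by product id.
row_1002 = dict(zip(items, matrix[index[1002]]))
```

Reading `row_1002` gives the counts for everything bought alongside 1002, with 1003 the strongest candidate.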

Working Data

Before talking about the code a word is warranted on how I have created the sample data.

As mentioned before I am using the Adventure Works database sales information. The approach I have taken is to export the sales detail lines, ordered by the sales order identifier, into flat files. The rationale for this being that the processing of the matrix can then occur with a low impact to the OLTP system. Also, the code will support the parallel processing of multiple files. Thus one can take the approach of just exporting more recent data and using the previously exported archived data to generate the matrix.

To support this process I have created a simple view for exporting the data:

CREATE VIEW [Sales].[vSalesSummary]

AS

SELECT SOH.[SalesOrderID], CAST(SOH.[OrderDate] AS date) [OrderDate], SOD.[ProductID], SOD.[OrderQty]

FROM [Sales].[SalesOrderHeader] SOH

INNER JOIN [Sales].[SalesOrderDetail] SOD ON SOH.[SalesOrderID] = SOD.[SalesOrderID]

GO

I have then used the BCP command to export the data into a tab-delimited file:

bcp "SELECT [SalesOrderID], [OrderDate], [ProductID], [OrderQty] FROM [AdventureWorks2012].[Sales].[vSalesSummary] ORDER BY [SalesOrderID], [ProductID]" queryout SalesOrders.dat -T -S (local) -c

One could easily define a similar process where a file is generated for each month, with the latest month being updated on a nightly basis. One could then easily ensure only orders for the past X months are included in the metric. The only important aspect in how the data is generated is that the output must be ordered on the sales order identifier. As you will see later, this grouping is necessary to allow the co-occurrence data to be derived.

A sample output from the Adventure Works sales data is as follows, with the fields being tab delimited:

43659 2005-07-01 776 1

43659 2005-07-01 777 3

43659 2005-07-01 778 1

43660 2005-07-01 758 1

43660 2005-07-01 762 1

43661 2005-07-01 708 5

43661 2005-07-01 711 2

43661 2005-07-01 712 4

43661 2005-07-01 715 4

43661 2005-07-01 716 2

43661 2005-07-01 741 2

43661 2005-07-01 742 2

43661 2005-07-01 743 1

43661 2005-07-01 745 1

43662 2005-07-01 730 2

43662 2005-07-01 732 1

43662 2005-07-01 733 1

43662 2005-07-01 738 1

Once the data has been exported, building the matrix becomes a matter of counting the co-occurrence of products associated with each sales order.

Building the Matrix

The problem of building a co-occurrence matrix is simply one of counting. The basic process is as follows:

- Read the file and group each sales order along with the corresponding list of product identifiers

- For each sales order product listing, calculate all the corresponding pairs of products

- Maintain/Update the running total of each pair of products found

- At the end of the file, output a sparse matrix based on the running totals of product pairs

To support parallelism steps 1-3 are run in parallel where the output of these steps will be a collection of the product pairs with the co-occurrence count. These collections are then combined to create a single sparse matrix.

In performing this processing it is important that the reading of the file data is such that it can efficiently create a sequence of products for each sales order identifier; the grouping key. If you have been following my Hadoop Streaming blog entries you will see that this is the same process undertaken in processing data within a reducer step.
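Because the export is ordered by sales order identifier, the grouping can be done in a single streaming pass. The following Python sketch illustrates this under stated assumptions: it is not the author's F# implementation, the function name `count_pairs` is hypothetical, each co-occurrence is counted as 1, and only one (smaller id, larger id) key is stored per pair. The sample lines are the first five rows of the export shown earlier.

```python
import csv
import io
from collections import Counter
from itertools import combinations, groupby

# First rows of the exported file (tab delimited, sorted by order id).
sample = (
    "43659\t2005-07-01\t776\t1\n"
    "43659\t2005-07-01\t777\t3\n"
    "43659\t2005-07-01\t778\t1\n"
    "43660\t2005-07-01\t758\t1\n"
    "43660\t2005-07-01\t762\t1\n"
)


def count_pairs(lines):
    """Count product pairs per order; input must be sorted by order id."""
    reader = csv.reader(lines, delimiter="\t")
    counts = Counter()
    # groupby only merges adjacent rows, hence the sort requirement.
    for _order_id, rows in groupby(reader, key=lambda row: row[0]):
        products = sorted({int(row[2]) for row in rows})
        for pair in combinations(products, 2):
            counts[pair] += 1
    return counts


pair_counts = count_pairs(io.StringIO(sample))
```

Passing an open file handle instead of the `StringIO` object works the same way, since only one order's rows are held in memory at a time.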

The matrix building code, in full, is as follows:

[156 lines of F# source code elided for brevity.]

In this instance the file data is processed such that order data is grouped and exposed as an OrderHeader type, along with a collection of OrderDetail types. From this the pairs of products are easily calculated; using the pairs function.

To maintain a co-occurrence count for each product pair a Dictionary is used. The key for the Dictionary is a tuple of the pair of products, with the value being the co-occurrence count. The rationale for using a Dictionary is that it supports O(1) lookups; whereas maintaining an Array will incur an O(n) lookup.

The final decision to be made is what quantity should be added into the running total. One could use a value of 1 for all co-occurrences, but other options are available. The first is the order quantity. If one has a co-occurrence for product quantities x and y, I have defined the pair quantity as the product x·y. The rationale for this is that having product quantities is logically equivalent to having multiple product lines in the same order. If this were the case then the co-occurrence counting would arrive at the same value. I have also limited the maximum quantity amount such that small products ordered in large volumes do not skew the numbers; such as restricting a rating from 1-10.
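A short sketch of the quantity weighting follows. It assumes the cap is applied to the product of the two quantities and uses 10 as the limit; the post does not spell out either detail here, so both are assumptions, as are the function names.

```python
from itertools import product

MAX_WEIGHT = 10  # assumed cap, echoing the "1-10" rating example


def pair_weight(qty_x: int, qty_y: int, cap: int = MAX_WEIGHT) -> int:
    """Weight added to the running total for one product pair."""
    return min(qty_x * qty_y, cap)


def pairs_from_repeated_lines(qty_x: int, qty_y: int) -> int:
    """Count pairs if each unit were its own order line (the equivalence argument)."""
    lines_x = ["X"] * qty_x
    lines_y = ["Y"] * qty_y
    return sum(1 for _ in product(lines_x, lines_y))


weight_small = pair_weight(3, 2)    # below the cap
weight_large = pair_weight(50, 40)  # clipped to the cap
```

`pairs_from_repeated_lines(3, 2)` yields the same value as `pair_weight(3, 2)`, which is the reasoning behind multiplying the quantities.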

One optional quantity factor I have included is one based on the order header. The sample code applies a quantity scaling factor for recent orders. Again the rationale for this is such that recent orders have a greater effect on the co-occurrence values than older ones. All these scaling factors are optional and should be configured to give your desired results.

As mentioned, to achieve parallelism in the matrix building code the collection of input files can be processed in parallel with each parallel step independently outputting its collection of product pairs and co-occurrence counts. This is achieved using an Array.Parallel.map function call. This maps each input file into the Dictionary for the specified file. Once all the Dictionary elements have been created they are then used to create a sequence of elements to create the sparse matrix.

One other critical element returned from defining the Dictionary is the maximum product identifier. It is assumed that the product identifier is an integer which is used as an index into the matrix; for both row and column values. Thus the maximum value is needed to define row and columns dimensions for the sparse matrix.

Whereas this approach works well for product ranges from 1 onwards, what if product identifiers are defined from a large integer base, such as 100,000, or are GUIDs? In the former case one has the option of calculating an offset such that the index for the matrix is the product identifier minus the starting offset; this being the approach I have taken. The other option is that the exported data is mapped such that the product numbers are defined from 1 onwards; again this can be a simple offset calculation. In the latter case, for GUIDs, one would need to do a mapping from the GUID keys to a sequential integer.
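Both index strategies can be sketched briefly. The names `offset_index` and `KeyIndexer` are hypothetical, the indices are zero-based by assumption, and the GUID values are made up for illustration.

```python
import uuid


def offset_index(product_id: int, base: int) -> int:
    """Matrix index for integer ids that start at a large base value."""
    return product_id - base


class KeyIndexer:
    """Assign sequential integer indices to arbitrary hashable keys (e.g. GUIDs)."""

    def __init__(self):
        self._index = {}
        self._keys = []

    def index_of(self, key) -> int:
        # First sighting of a key allocates the next free index.
        if key not in self._index:
            self._index[key] = len(self._keys)
            self._keys.append(key)
        return self._index[key]

    def key_at(self, i: int):
        """Reverse lookup, needed to turn matrix columns back into product keys."""
        return self._keys[i]


indexer = KeyIndexer()
guid_a = uuid.UUID("12345678-1234-5678-1234-567812345678")
guid_b = uuid.UUID("87654321-4321-8765-4321-876543218765")
first = indexer.index_of(guid_a)
second = indexer.index_of(guid_b)
```

The reverse lookup matters because recommendations come out of the matrix as column indices and have to be reported as product identifiers.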

Querying for Item Similarity

So once the sparse matrix has been built, how does one make recommendations? There are basically two options: recommendations based on a single product selection, or recommendations for multiple products, say based on the contents of a shopping basket.

The basic process for performing the recommendations, in either case, is as follows:

- Select the sparse row vectors that correspond to the products for which a recommendation is required

- Place the row values into a PriorityQueue where the key is the co-occurrence count and the value the product identifier

- Dequeue and return, as a sequence, the required number of recommendations from the PriorityQueue

For the case of a single product the recommendations are just a case of selecting the correct single vector and returning the products with the highest co-occurrence count. The process for a selection of products is almost the same, except that multiple vectors are added into the PriorityQueue. One just needs to ensure that products that are already in the selection on which the recommendation is being made are excluded from the returned values. This is easily achieved with a HashSet lookup.
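The three steps can be sketched with Python's `heapq` standing in for the PriorityQueue. The sparse rows are hypothetical, and skipping a product that has already been returned is an assumption about how duplicates across several rows are handled.

```python
import heapq

# Hypothetical sparse rows: product -> {co-purchased product: count}.
rows = {
    1002: {1001: 1, 1003: 3, 1004: 1},
    1004: {1001: 1, 1002: 1, 1003: 1},
}


def recommend(rows, basket, how_many):
    """Return up to how_many products, highest co-occurrence count first."""
    exclude = set(basket)  # never recommend what is already selected
    heap = []
    for item in basket:
        for other, count in rows.get(item, {}).items():
            # heapq is a min-heap, so negate the count to pop the largest first.
            heapq.heappush(heap, (-count, other))
    results = []
    while heap and len(results) < how_many:
        _neg_count, candidate = heapq.heappop(heap)
        if candidate not in exclude:
            exclude.add(candidate)  # assumed: return each product only once
            results.append(candidate)
    return results


single = recommend(rows, [1002], 2)
basket = recommend(rows, [1002, 1004], 5)
```

With equal counts, the tuple ordering breaks ties in favor of the smaller product id, which is a side effect of this sketch rather than anything the post specifies.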

So the full code that wraps building the recommendation is as follows:

[48 lines of F# source code elided for brevity.]

Using the code is simply a matter of creating the MatrixQuery type, with the files to load, and then calling the GetRecommendations() operator for the required products (shopping basket):

let filenames = [|

@"C:\DataExport\SalesOrders201203.dat";

@"C:\DataExport\SalesOrders201204.dat";

@"C:\DataExport\SalesOrders201205.dat";

@"C:\DataExport\SalesOrders201206.dat" |]

let itemQuery = MatrixQuery(filenames)

let products = itemQuery.GetRecommendations([| 860; 870; 873 |], 25)

In extracting the row vector associated with the required product one could just have used coMatrix.Row(item); but this creates a copy of the vector. To avoid this the code just does an enumeration of the required matrix row. Internally the sparse vector maintains three separate arrays for the column and row offsets and sparse value. Using these internal arrays and the associated element based operations would speed up obtaining recommendations; but currently these properties are not exposed. If one uses the sparse matrix from the F# PowerPack then one can operate in this fashion using the following code:

[23 lines of F# source code elided for brevity.]

In considering a shopping basket I have assumed that each product has been selected only once. You could have the situation where one wanted to take into consideration the quantity of each product selected. In this instance one would take the approach of performing a scalar multiplication of the corresponding product vectors. What this would achieve is, for recommendations, prioritizing products for which a user has purchased multiple items.

Although this code does a lot of element-wise operations, as mentioned earlier, one can think of the operations in pure matrix terms. In this case one would just multiply the sparse matrix by a sparse column vector representing the items from which to make the recommendations.
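As a rough illustration of the matrix view, the sketch below multiplies a small symmetric co-occurrence matrix by a basket vector whose entries are the purchased quantities. The matrix values and basket are hypothetical, and plain dictionaries stand in for a sparse matrix type.

```python
from collections import defaultdict

# Hypothetical symmetric co-occurrence matrix, stored sparsely by row.
matrix = {
    1001: {1002: 1, 1003: 1, 1004: 1},
    1002: {1001: 1, 1003: 3, 1004: 1},
    1003: {1001: 1, 1002: 3, 1004: 1},
    1004: {1001: 1, 1002: 1, 1003: 1},
}


def multiply(matrix, vector):
    """Sparse product of a symmetric matrix with a sparse column vector."""
    result = defaultdict(int)
    for item, qty in vector.items():
        # Scale the item's row by its quantity and accumulate; because the
        # matrix is symmetric, summing scaled rows equals M times v.
        for other, count in matrix.get(item, {}).items():
            result[other] += count * qty
    return dict(result)


basket = {1002: 2, 1004: 1}  # two units of 1002, one unit of 1004
scores = multiply(matrix, basket)
ranked = sorted(
    (p for p in scores if p not in basket), key=lambda p: scores[p], reverse=True
)
```

The quantity 2 doubles the contribution of product 1002's row, which is the scalar multiplication described above.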

Consider the previous sparse matrix example, and say one had a basket consisting of 2 products 1002 and 1 product 1004:

The approach mentioned here will probably work well for cases where one has hundreds of thousands of products with similarities between thousands of items, with tens of millions of orders to be considered. For most situations this should suffice. However, if you are not in this bracket, in my next post, I will show how this approach can be mapped over to Hadoop and MapReduce, allowing for even greater scale. [Emphasis added.]

Also, in a future post I will port the code to use the [Codename] Cloud Numerics implementation of matrices.

I’m anxious to try the Codename “Cloud Numerics” implementation. Prior OakLeaf posts about Codename “Cloud Numerics” include, in chronological order:

- Introducing Microsoft Codename “Cloud Numerics” from SQL Azure Labs

- Deploying “Cloud Numerics” Sample Applications to Windows Azure HPC Clusters

- Analyzing Air Carrier Arrival Delays with Microsoft Codename “Cloud Numerics”

- Recent Articles about SQL Azure Labs and Other Added-Value Windows Azure SaaS Previews: A Bibliography

Avkash Chauhan (@avkashchauhan) described Using Git to deploy an ASP.NET website shows Windows Azure Blob Storage list at Windows Azure Websites in a 6/19/2012 post:

I have seen a few issues reported related to Windows Azure Websites when someone deploys an ASP.NET web application (which uses the Azure Storage Client Library) to Windows Azure Websites. I decided to give it a try and document all the steps to show how to do it correctly and what not to miss. Here are the steps:

Create ASP.NET Website and add Microsoft.WindowsAzure.ServiceRuntime and Microsoft.WindowsAzure.StorageClient references as below and set their “Copy Local” property to “True”:

When you build your application be sure that additional references are listed in your Bin folder:

Verify that your Windows Azure Storage based code is working locally:

Now you can visit your Windows Azure Websites and enable Git repository and be sure it is ready:

Open Git Bash on your development machine, change to your application folder (i.e. C:\2012Data\Development\Azure\ASPStorageView\ASPStorageView) and run the Git commands below:

git init

git add .

git commit -m "initial commit" (Note: when you run this command, be sure that all additional references are included as displayed below)

git remote add azure https://avkash@avkash.scm.azurewebsites.net/avkash.git

git push azure master

Finally when deployment is completed, you can verify your Windows Azure Website is running as expected:

The Windows Azure Team (@WindowsAzure) described how to Create & Manage Storage in a 00:01:29 video uploaded 6/7/2012:

This video shows you how to manage and monitor storage in Windows Azure using the Windows Azure Management Portal. A storage account provides access to cloud storage using blobs, tables, and queues.

<Return to section navigation list>

SQL Azure Database, Federations and Reporting

•• Mark Kromer (@mssqldude) described SQL Server 2012 Database Backups in Azure in a 6/6/2012 post (missed when published):

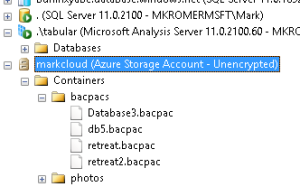

SQL Server 2012 includes new & updated features to SQL Server Management Studio (SSMS) that provide out-of-the-box integration into the Windows Azure platform-as-a-service Cloud service from Microsoft. It goes beyond just SQL Azure integration as you can now also connect into Windows Azure storage accounts where you can store files and blobs, including DACPAC & BACPAC files, essentially providing DBAs with out-of-the-box backup-to-cloud capabilities.

From a DBA’s perspective, this can be very beneficial because this would allow you to take your SQL Server backups and post them into the Azure cloud where files are automatically protected and replicated for high availability. This will also eliminate the need for you to maintain infrastructure for backups locally on your site. You would utilize Azure Storage for maintenance, retrieval and disaster recovery of your database backups. Here is a link with more details on Azure Storage.

Here are the steps to backup from SSMS 2012 to Windows Azure:

- First thing to note is that you will need to sign-up for a Windows Azure account and configure a storage container. You can click here for a free trial.

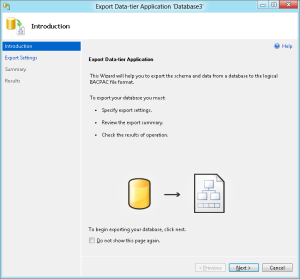

- Now, on SSMS, choose a database to backup. But instead of the normal Backup task, select “Export Data-Tier Application”. This is going to walk you through the process of exporting the schema with the data as a “BACPAC” file output.

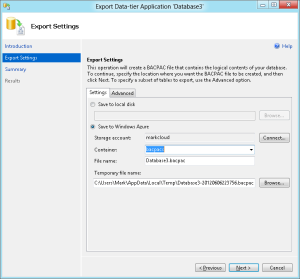

3. On the next screen in the wizard, you will select the Azure storage account and container where SQL Server will store the export. Note that it will first back up the database (schema & data) to a local file and then upload it to the cloud for you.

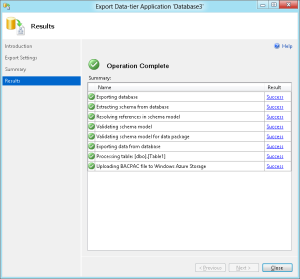

4. Once the process is complete, you will see your exported backup as a BACPAC in your storage container. To restore a BACPAC, you right-click on the file from your container and select “Import Data-Tier Application”.

BTW, this process is identical to the way that you can export & import databases in SQL Azure. You can also now easily move your databases (schema and/or data) between SQL Server and SQL Azure with these BACPACs. This is not a full-service TLOG and data file backup like SQL Server native backups. This is more of a database export/import mechanism. But that is pretty much the most interactive that a DBA will get with SQL Azure anyway because you do not perform any TLOG maintenance on a SQL Azure database. Microsoft takes care of that maintenance for you.

<Return to section navigation list>

Marketplace DataMarket, Social Analytics, Big Data and OData

•• A program manager for the Codename “Social Analytics Team” (@mariabalsamo) announced Microsoft Codename "Social Analytics" - Lab Phase is Complete in a 6/21/2012 post to the team blog:

The Microsoft Codename "Social Analytics" lab phase is complete.

Here is what we wanted to learn from this lab:

- How useful do customers find this scenario?

- API prioritization: We wanted to understand which features developers needed to implement first and how easy it is to implement these (for example, content item display, how to modify filters, etc.).

- API usability: Were our APIs hard to use, easy to use, consistent with existing methods, or did they add to the concept count?

We have received a lot of useful feedback which will shape our future direction. We would like to thank everybody who participated for their valuable feedback!

As of 6/24/2012 at 12:00 PM, the VancouverWindows8 dataset was still accessible from the Windows Azure Marketplace Data Market and had 1,264,281 rows with the last item dated 6/22/2012 6:59:54 AM (UTC). Data was being updated as of 6/25/2012 at 7:00 AM PDT. SQL Labs’ Microsoft Codename "Social Analytics" landing page is still accessible.

As a precaution against future loss of access by my Microsoft Codename “Social Analytics” Windows Form Client demo application, I have created a CSV file of the last million rows of the dataset. I will modify the demo client code and make the CSV file available to its users in the event the dataset becomes inaccessible. Here’s the client UI after downloading 997,168 of one million requested rows.

Note: Download time is approximately 100,000 rows per hour.

Here’s a GridData.csv file created on 6/25/2012 opened in Excel:

Note: Facebook posts have ContentItem Title and Summary values. Twitter tweets have only Title values.

Of course, users of the Lab project, like me, who invested time and energy into testing the project and providing feedback are interested to know what the team’s “future direction” will be.

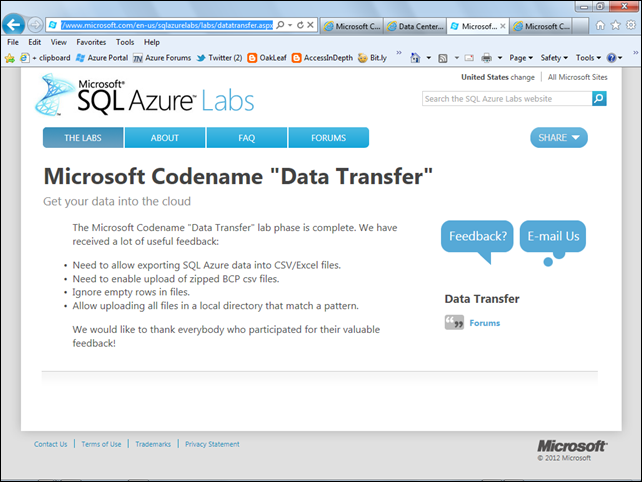

The Codename “Data Transfer” Lab Is Terminated, Also

The Codename “Data Transfer” Team recently announced the completion of that SQL Lab on its landing page:

The job logs for my tests of Codename “Data Transfer” remain accessible.

• keydet (@devkeydet) posted an UPDATED SAMPLE: CRM 2011 Metro style OData helper on 6/17/2012:

I’ve updated the sample I started here by implementing Create, Update and Delete methods. The library is still not complete or well tested, but it is at least a head start to building a CRUD-capable Windows 8 Metro style application using the CRM 2011 OData (aka Organization Data) service. Both the helper web resource and the Windows 8 Metro style source code have been updated. The Visual Studio solution now has a little sample Metro style app to quickly test out the functionality of the library.

To get the sample to work, you will need to import the updated CRM solution package which updates the helper web resource. Also, make sure you change the CRM organization url in the sample app. You can still follow the instructions from the first post since there are no breaking changes in the sample, just new functionality.

Barb Darrow (@gigabarb) reported Microsoft’s Nadella: We will democratize big data in a 6/21/2012 article for GigaOm’s Structure blog:

Microsoft plans to bring big data to the little guy, said Satya Nadella, president of the Microsoft Server and Tools group and the executive directing the company’s gigantic Windows Azure cloud effort.

The company’s decision to make Hadoop a first-class citizen of Azure is a huge piece of the puzzle, but linking that technology to mere mortal end users with Excel is what will make big data broadly applicable, he said.

“We need to connect those petabytes of data to humans in a workflow. We put the Excel user in the feedback loop. We have to democratize how users can gain insight with our big data strategy,” Nadella said at Structure 2012 Thursday morning.

“There will be more to come. We can bring Linq and some of our language technology to Hadoop to make it easy for us to plug .NET and Linq in,” he said.

Nadella tapped into one of the big issues around big data. The data is out there in massive amounts, but finding the right data and making it actionable and valuable is key.

Satya Nadella, President, Server and Tools Business, Microsoft

(c)2012 Pinar Ozger pinar@pinarozger.com

“Data discovery is one of the biggest issues today. If you’re doing analysis, unless it’s all pre-cooked, how do you discover the data to create insight? That’s the problem we try to solve with Data Market,” Nadella said. “[With] the tandem of Azure Data Market on the backend with Excel, and Excel’s PowerView and PowerPivot, you can pick up data sets and analyse them,” he said.

Check out the rest of our Structure 2012 coverage, including the live stream, here.

The Windows Azure Team (@WindowsAzure) described how to Install MongoDB on a virtual machine running CentOS Linux in Windows Azure in a June 2012 page in the Linux/Manage category. It begins:

To use this feature and other new Windows Azure capabilities, sign up for the free preview.

MongoDB is a popular open source, high performance NoSQL database. Using the Windows Azure (Preview) Management Portal, you can create a virtual machine running CentOS Linux from the Image Gallery. You can then install and configure a MongoDB database on the virtual machine.

- How to use the Preview Management Portal to select and install a Linux virtual machine running CentOS Linux from the gallery.

- How to connect to the virtual machine using SSH or PuTTY.

- How to install MongoDB on the virtual machine.

Sign up for the Virtual Machines preview feature …

Create a virtual machine running CentOS Linux …

Configure Endpoints …

Connect to the Virtual Machine [with PuTTY] …

Attach a data disk …

Connect to the Virtual Machine Using SSH or PuTTY and Complete Setup …

Install and run MongoDB on the virtual machine

- Configure the Package Management System (YUM) so that you can install MongoDB. Create a /etc/yum.repos.d/10gen.repo file to hold information about your repository and add the following:

[10gen]

name=10gen Repository

baseurl=http://downloads-distro.mongodb.org/repo/redhat/os/x86_64

gpgcheck=0

enabled=1

Save the repo file and then run the following command to update the local package database:

$ sudo yum update

To install the package, run the following command to install the latest stable version of MongoDB and the associated tools:

$ sudo yum install mongo-10gen mongo-10gen-server

Wait while MongoDB downloads and installs.

Create a data directory. By default MongoDB stores data in the /data/db directory, but you must create that directory. To create it, run:

$ sudo mkdir -p /mnt/datadrive/data

$ sudo chown `id -u` /mnt/datadrive/data

For more information on installing MongoDB on Linux, see Quickstart Unix.

To start the database, run:

$ mongod --dbpath /mnt/datadrive/data --logpath /mnt/datadrive/data/mongod.log

All log messages will be directed to the /mnt/datadrive/data/mongod.log file as the MongoDB server starts and preallocates journal files. It may take several minutes for MongoDB to preallocate the journal files and start listening for connections.

To start the MongoDB administrative shell, open a separate SSH or PuTTY window and run:

$ mongo

> db.foo.save ( { a:1 } )

> db.foo.find()

{ _id : ..., a : 1 }

> show dbs

...

> show collections

...

> help

The database is created by the insert.

Once MongoDB is installed you must configure an endpoint so that MongoDB can be accessed remotely. In the Management Portal, click Virtual Machines, then click the name of your new virtual machine, then click Endpoints.

Click Add Endpoint at the bottom of the page.

Add an endpoint with name "Mongo", protocol TCP, and both Public and Private ports set to "27017". This will allow MongoDB to be accessed remotely.
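Once the endpoint has been added, a quick reachability check from another machine can confirm that port 27017 is open. The following is a minimal sketch using only Python's standard library; the function name `can_connect` is hypothetical, and the check only proves that something accepts TCP connections on the port, not that it is MongoDB.

```python
import socket


def can_connect(host: str, port: int = 27017, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and DNS failures.
        return False
```

Calling `can_connect("<your-vm-dns-name>")` with the virtual machine's public DNS name should return True once the endpoint is active and mongod is listening.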

Summary

In this tutorial you learned how to create a Linux virtual machine and remotely connect to it using SSH or PuTTY. You also learned how to install and configure MongoDB on the Linux virtual machine. For more information on MongoDB, see the MongoDB Documentation.

Jim O’Neil (@jimoneil) started a four-part series with Couchbase on Azure: A Tour of New Windows Azure Features on 6/19/2012:

With the recent announcements of new capabilities in Windows Azure, particularly the support of a persistent Virtual Machine instance, a multitude of opportunities emerges to host just about anything that will run on Windows or Linux (yes, Linux!) inside the Windows Azure cloud. To explore some of this new functionality, I thought I’d start by creating a Couchbase cluster and building a simple Web application that accesses the cluster – all in Windows Azure, of course.

Couchbase is an open source, NoSQL offering that provides a distributed, in-memory key-value data store having 100% on-the-wire protocol compatibility with memcached. Formed by the merger of Membase and CouchOne in 2011, Couchbase’s current release, 1.8, leverages SQLite as a persistence engine, and its next release, 2.0, replaces SQLite with CouchDB, bringing with it such features as map-reduce and materialized views. Couchbase additionally has a strong client ecosystem with SDKs for a number of languages, including .NET.

I’m going to leverage work that John Zablocki, Developer Advocate for Couchbase and leader of the Beantown ALT.NET Group, has underway for his .NET audience. He’s built an ASP.NET MVC 3 application called TapMap (sort of a FourSquare for mpyraphiles), so the goal of this exercise is to set up a Couchbase cluster with his sample database using the new Virtual Machine feature and then deploy the ASP.NET MVC application as a traditional Web Role Cloud Service. Both the Couchbase cluster and the ASP.NET MVC application will run in the confines of a Virtual Network so the application can communicate directly with the database, but only administrative access to the cluster itself will be publicly exposed.

In pictures, the architecture looks something like this:

There are several significant architectural facets at work here, and while this specific exercise uses Couchbase, the concepts and Windows Azure configuration steps largely apply to other mixed mode application scenarios. Given that, I’m going to split up what would be a really, really long post into a series of articles that cover the major features being leveraged by the application and thereby demonstrate how to:

- Create a Virtual Network with two subnets for the Couchbase cluster and ASP.NET web application

- Create Virtual Machine images for the Couchbase servers

- Install and configure the Couchbase cluster

- Deploy the TapMap Couchbase sample application to Windows Azure

- Configure admin access to the Couchbase cluster

Note: the completed application was deployed to http://tapmap.cloudapp.net, but depending on when you are reading this post, it may no longer be publicly available.

Following are links to Jim’s other three posts in this series:

- Couchbase on Azure: Creating a Virtual Network: Part 2

- Couchbase on Azure: Creating Virtual Machines: Part 3

- Couchbase on Azure: Installing Couchbase: Part 4

And a bonus post: Couchbase on Azure: Deploying TapMap

<Return to section navigation list>

Windows Azure Service Bus, Active Directory and Workflow

• Francois Lascelles (@flascelles) continued his series with Mobile-friendly federated identity, Part 2 – OpenID Connect on 6/21/2012:

The idea of delegating the authentication of a user to a 3rd party is ancient. At some point however, a clever (or maybe lazy) developer thought to leverage an OAuth handshake to achieve this. In the first part of this blog post, I pointed out winning patterns associated with the popular social login trend. In this second part, I suggest the use of specific standards to achieve the same for your identities.

OAuth was originally conceived as a protocol allowing an application to consume an API on behalf of a user. As part of an OAuth handshake, the API provider authenticates the user. The outcome of the handshake is the application getting an access token. This access token does not directly provide useful information for the application to identify the user. However, when that provider exposes an API which returns information about that user, the application can use this as a means to close the loop on the delegated authentication.

Step 1 – User is subjected to an OAuth handshake with provider knowing its identity.

Step 2 – Application uses the access token to discover information about the user by calling an API.

As a provider enabling an application to discover the identity of a user through such a sequence, you could define your own simple API. Luckily, an emerging standard covers such semantics: OpenID Connect. Currently a draft spec, OpenID Connect defines (among other things) a “user info” endpoint which takes as input an OAuth access token and returns a simple JSON structure containing attributes about the user authenticated as part of the OAuth handshake.

Request:

GET /userinfo?schema=openid HTTP/1.1

Host: server.example.com

Authorization: Bearer SlAV32hkKG

Response:

200 OK

content-type: application/json

{

"user_id": "248289761001",

"name": "Jane Doe",

"given_name": "Jane",

"family_name": "Doe",

"email": "janedoe@example.com",

"picture": "http://example.com/janedoe.jpg"

}

In the Layer 7 Gateway OpenID Connect, a generic user info endpoint is provided which validates an incoming OAuth access token and returns user attributes for the user associated with said token. You can plug in your own identity attributes as part of this user info endpoint implementation. For example, if you are managing identities using an LDAP provider, you inject an LDAP query in the policy as illustrated below.

To get the right LDAP record, the query is configured to take as input the variable ${session.subscriber_id}. This variable is automatically set by the OAuth toolkit as part of the OAuth access token validation. You could easily look up the appropriate identity attributes from a different source using, for example, a SQL query or even an API call – all the input necessary to discover these attributes is available to the manager.
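Seen from the application side, the user info exchange shown earlier amounts to one authenticated GET followed by a JSON parse. The following is a minimal sketch under stated assumptions, not the Layer 7 or OpenID Connect reference implementation: the function names are hypothetical, the base URL is a parameter rather than a real endpoint, and the check for `user_id` simply mirrors the sample response above (the draft spec may rename attributes before finalization).

```python
import json
import urllib.request


def build_userinfo_request(base_url: str, access_token: str) -> urllib.request.Request:
    """Build GET /userinfo?schema=openid with the OAuth access token as a Bearer credential."""
    url = base_url.rstrip("/") + "/userinfo?schema=openid"
    return urllib.request.Request(
        url,
        headers={"Authorization": "Bearer " + access_token},
        method="GET",
    )


def parse_userinfo(body: bytes) -> dict:
    """Decode the JSON body returned by the user info endpoint."""
    claims = json.loads(body.decode("utf-8"))
    # Assumption: the provider identifies the user with a "user_id" attribute,
    # as in the sample response; other providers may use a different name.
    if "user_id" not in claims:
        raise ValueError("user info response has no user_id attribute")
    return claims


def fetch_userinfo(base_url: str, access_token: str) -> dict:
    """Call the endpoint over the network and return the user attributes."""
    request = build_userinfo_request(base_url, access_token)
    with urllib.request.urlopen(request) as response:
        return parse_userinfo(response.read())
```

An application would call `fetch_userinfo` once the OAuth handshake has produced an access token, then use the returned attributes to establish its own session for the user.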

Another aspect of OpenID Connect is the issuing of id tokens during the OAuth handshake. This id token is structured following the JSON Web Token specification (JWT) including JWS signatures. Layer 7’s OpenID Connect introduces the following assertions to issue and handle JWT-based id tokens:

- Generate ID Token

- Decode ID Token
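To give a feel for what decoding an id token involves, here is a small sketch that splits a JWT in JWS compact form into its three dot-separated segments and reads the header and payload. It is an illustration only and says nothing about how the Layer 7 assertions work internally; the function names are hypothetical, and the sketch deliberately does not verify the signature, which a real relying party must do before trusting any claim.

```python
import base64
import json
from typing import Tuple


def _b64url_decode(segment: str) -> bytes:
    """Decode a base64url segment, restoring the padding that JWTs strip."""
    padding = "=" * (-len(segment) % 4)
    return base64.urlsafe_b64decode(segment + padding)


def decode_id_token_unverified(id_token: str) -> Tuple[dict, dict]:
    """Return (header, payload) of a compact JWT WITHOUT checking its signature."""
    parts = id_token.split(".")
    if len(parts) != 3:
        raise ValueError("expected header.payload.signature")
    header = json.loads(_b64url_decode(parts[0]))
    payload = json.loads(_b64url_decode(parts[1]))
    return header, payload
```

Reading the header first is useful in practice because it names the signing algorithm, which tells the verifier which key and routine to apply to the third segment.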

Note that as of this writing, OpenID Connect is a moving target and the specification is subject to change before finalization.

• Francois Lascelles (@flascelles) began a series with Mobile-friendly federated identity, Part 1 – The social login legacy on 6/12/2012:

If I were to measure the success of a federated identity system, I would consider the following factors:

- End user experience (UX);

- How easy it is for a relying party to participate (frictionless);

- How well it meets security requirements.

I get easily frustrated when subjected to bad user experience regarding user login and SSO but I also recognize apps that get this right. In this first part of a series on the topic of mobile-friendly federated identity, I would like to identify winning patterns associated with the social login trend.

My friend Martin recently introduced me to a mobile app called Strava which tracks bike and run workouts. You start the app at the beginning of the workout, and it captures GPS data along the way – distance, speed, elevation, etc. Getting this app working on my smart phone was the easiest thing ever: download, start the app, login with facebook, ready to go. The login part was flawless; I tapped the Login with Facebook button and was redirected to my native facebook app on my smartphone from where I was able to express consent. This neat OAuth-ish handshake only required 3 taps of my thumb. If I had been redirected through a mobile browser, I would have had to type in email address and password. BTW, I don’t even know that password, it’s hidden in some encrypted file on my laptop somewhere, so at this point I move on to something else and that’s the end of the app for me. Starting such handshakes by redirecting the user through the native app is the right choice in the case of a native app relying on a social provider which also has its own native app.

Figure 1 – Create account by expressing consent on social provider native app

At this point my social identity is associated to the session that the Strava app has with the Strava API. Effectively, I have a Strava account without needing to establish a shared secret with this service. This is the part where federated identity comes in. Strava does not need to manage a shared secret with me and does not lose anything in federating my identity to a social provider. It still lets me create a profile on their service and saves data associated to me.

When I came home from my ride, I was able to get nice graphs and stats and once I accepted the fact that I have become old, fat and slow, decided to check strava.com on my laptop. Again, a friendly social login button enabled me to login in a flash and I can see the same information with a richer GUI. Of course on my laptop, I do have a session with my social provider on the same browser so this works great. The same service accessed from multiple devices each redirecting me to authenticate in the appropriate way for the device in use.

Now that we’ve established how fantastic the login user experience is, what about the effort needed on the relying party? Strava had to register an app on facebook. Once this is in place, a Strava app simply takes the user through the handshake and retrieves information about that user once the handshake is complete. In the case of facebook on an iOS device, the instructions on how to do this are available here. Even without a client library, all that would be required is implement an OAuth handshake and call an API with the resulting token to discover information about the user. There is no XML, there is no SAML, no digital signatures, and other things that would cause mobile developers to cringe.

Although a good user experience is critical to the adoption of an app, the reasons for Strava to leverage the social network for login go beyond simplifying user login. Strava also happens to rely on the social network to let users post their exploits. This in turn enhances visibility for their app and drives adoption as other users of the same social network discover Strava through these posts.

Although social login is not just about federated authentication, it creates expectations as to how federated authentication should function and what should be required to implement it. These expectations are not just from end users but also from a new breed of application developers who rely on lightweight, mobile-friendly mechanisms.

In the second part of this blog, I will illustrate how you can cater to such expectations and implement the same patterns for your own identities using standards like OAuth, OpenID Connect and the Layer 7 Gateway.

<Return to section navigation list>

Windows Azure Virtual Machines, Web Sites, Virtual Networks, Connect, RDP and CDN

•• Michael Washam (@MWashamMS) described Importing and Exporting Virtual Machine Settings in a 6/18/2012 post:

I wanted to highlight the Export-AzureVM and Import-AzureVM cmdlets as I’ve seen quite a few recent cases where they come in handy when dealing with Windows Azure Virtual Machines.

Here are a few places where they can be exceptionally useful

Situation #1: Deleting a Virtual Machine when you are not using it and restoring it when you need it

Windows Azure billing charges for virtual machines whether they are booted or not. For situations where you have an “on and off” type of deployment, such as development and test, or even applications that don’t get as much usage after hours, having the ability to save all of the current settings, remove the VM and re-add it later is huge.

Situation #2: Moving Virtual Machines between Deployments

You might find yourself needing to remove a VM and re-configure it in a different deployment. This could happen because you booted the VM up but placed it in the wrong cloud service, or you misconfigured the virtual network and have to reconfigure.

There are likely many more scenarios but these seem to be the most common.

So here are a few examples to show how it works:

Exporting and Removing a Virtual Machine

# Exporting the settings to an XML file
Export-AzureVM -ServiceName '<cloud service>' -Name '<vm name>' -Path 'c:\vms\myvm.xml'

# Remove the Virtual Machine
Remove-AzureVM -ServiceName '<cloud service>' -Name '<vm name>'

Note: Remove-AzureVM does not remove the VHDs for the virtual machines so you are not losing data when you remove the VM.

If you open up the xml file produced you will see that it is a serialized version of your VM settings including endpoints, subnets, data disks and cache settings.

At this point you could easily recreate the same virtual machine in a new or existing cloud service (specify -Location or -AffinityGroup to create a new cloud service). This operation could easily be split up into a few scheduled tasks to automate turning on and turning off your VMs in the cloud to save money when you aren’t using them.

Import-AzureVM -Path 'c:\vms\myvm.xml' | New-AzureVM -ServiceName '<cloud service>' -Location 'East US'

For more than a single VM deployment PowerShell’s foreach comes to the rescue for some nice automation.

Exporting and Removing all of the VMs in a Cloud Service

Get-AzureVM -ServiceName '<cloud service>' | foreach {
    $path = 'c:\vms\' + $_.Name + '.xml'
    Export-AzureVM -ServiceName '<cloud service>' -Name $_.Name -Path $path
}

# Faster way of removing all VMs while keeping the cloud service/DNS name
Remove-AzureDeployment -ServiceName '<cloud service>' -Slot Production -Force

Re-Importing all of the VMs to an existing Cloud Service

$vms = @()
Get-ChildItem 'c:\vms\' | foreach {
    $path = 'c:\vms\' + $_
    $vms += Import-AzureVM -Path $path
}
New-AzureVM -ServiceName '<cloud service>' -VMs $vms

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Sanjay Mehta announced XBRL application on Microsoft Azure Cloud - Compliance Reporting - Trial Version access in a message of 6/23/2012 to LinkedIn’s Business Intelligence group:

postXBRL compliance reporting software is now available for trial version on cloud till 30th June 2012 for unrestricted or unlimited trials.

XBRL commercial solution is pay per use - SaaS subscription for less than USD 200/- per year per company. For any enquiry or information send email on sales@maia-intelligence.com.

Register your trial requests on http://174.129.248.193/xbrlenquiry/ and you will be sent the access login details on your registered enquiry in 24hours of registration on above mentioned link with all details.[*]

postXBRL is a software that allows you to prepare your Business Information Reports in the mandated XBRL format without changing your pre-existing workflow. With the benefits of business information reporting language XBRL, MAIA Intelligence has added benefits of cloud computing technology to present ‘postXBRL Cloud’ version. It provides the functional competencies like Instance creation and Modification, XBRL Validation etc. But it also provides the advantages of cloud computing like Pay/Usage, Ease of Access etc. postXBRL Cloud will help users to manage their information reporting requirements with remarkable ease.

postXBRL is built on Microsoft’s Azure platform, which is a highly recognized platform for cloud-based solutions. Not only does it provide seamless user interaction, but it also helps with automated backup, replication and high availability through global networks & connectivity.

For any support, enquiry or information email on sales@maia-intelligence.com and register trial requests on the link http://174.129.248.193/xbrlenquiry/

We also take outsourced services contract for XBRL conversion. Our outsourced XBRL conversion deliveries are error free and timely. We are looking for partners world wide to explore opportunities in XBRL for reselling and consulting.

Team MAIA Intelligence

Mumbai - India

* Microsoft Outlook detected the registration form as a phishing message, which doesn’t appear to be the case. Telephone numbers must be entered as a numeric value without punctuation (no parenthesis or hyphens) to prevent receiving an alert.

I’ve registered for a free trial and will report my findings in a later post.

• Bruno Terkaly (@BrunoTerkaly) described How to provide cloud-based, JSON data to Windows 8 Metro Grid Applications – Part 4 in a 6/22/2012 post:

Exercise 1: Using Windows Azure (Microsoft Cloud) to supply JSON data to a Windows 8 Metro Grid Application

This post is about supplying JSON data via http.

- There is more than one way to do this.

- We will choose WCF to convert RESTful web queries to JSON string resources.

- The JSON strings represent information about Motorcycles.

Download the Windows Azure SDK and Tools - To do these posts, absolutely necessary

** Important Note ** The Windows Azure SDK now supports Visual Studio 2012 RC. But this lab uses Visual Studio 2010, due to the timing of releases.

Exercise 1: Task 1 - Some Quick Pre-requisites

- Facts about this post:

- You will need to have all the cloud tooling installed.

- You can initially go here:

- I have a post about setup here:

Exercise 1 Create a Windows Azure Cloud Project

- This requires all the Azure Tooling and SDK installed

- Also include Visual Studio 2010 (I am using Professional, but others should work)

- We will simply create a new "Windows Azure Cloud" project

- We will briefly discuss configuration settings

- Scale, Instance Count

- We will take a brief tour of the files before adding our web service

Exercise 2 Managing Graphics - Images of Motorcycles

- The back-end server will return information about Motorcycles

- JSON string format

- Will also return images of motorcycles (image url)

- Later, we can use Azure Storage for images

- We will use a folder called "Assets"

- We can add "Assets" folder and then add graphics images

- 2 sub folders

- Sport Bikes

- Exotic Bikes

Exercise 3 Add a WCF Service and define data model for JSON data

- We will add a WCF Service

- We will then add attributes to the service, forcing the service to return JSON-formatted data

- The service will have a data model that resembles the Windows 8 Metro Grid Client

- The 3 core classes exist on server-side as well

- SampleDataCommon

- SampleDataItem

- SampleDataGroup

- The service will return hard-coded strings in the constructor

- Later we will store the image and string data in Azure Storage or SQL Database

Exercise 4 Test JSON data source with the browser

- Simply use the browser to make http calls to retrieve JSON data.

- Save data to disk and examine structure

- This is the structure the Windows 8 Metro Grid Client Application expects

- Note: We could add fields without breaking client
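The note about adding fields without breaking the client can be illustrated with a small sketch. The class names follow the post, but the field names (`unique_id`, `title`, `image_url`, `items`) and the sample values are assumptions made for illustration; the actual WCF data contract in the lab may differ. The server side serializes groups of items to a JSON string, and the client side reads only the fields it knows about.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class SampleDataItem:
    # Hypothetical fields; the real model mirrors the Metro Grid client classes.
    unique_id: str
    title: str
    image_url: str


@dataclass
class SampleDataGroup:
    unique_id: str
    title: str
    items: List[SampleDataItem] = field(default_factory=list)


def to_json(groups: List[SampleDataGroup]) -> str:
    """Server side: serialize the hard-coded groups to a JSON string."""
    return json.dumps([asdict(group) for group in groups])


def read_item_titles(payload: str) -> List[str]:
    """Client side: pick out known fields and ignore anything else in the payload."""
    titles = []
    for group in json.loads(payload):
        for item in group["items"]:
            titles.append(item["title"])
    return titles
```

Because the client looks fields up by name, a later server version that adds, say, an engine-size field to each item would leave `read_item_titles` unaffected.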

Exercise 5 Test with Windows 8 Metro Grid Application we built in the previous post.

- This should be as simple as running the Windows 8 Metro Grid Application

- JSON strings and images should reach the Grid application and you should see a fully functional app running

Exercise 6 Deploy to cloud

- Create a package that we can upload to the cloud

- Provision a hosted service and storage account

- We won't use the storage service yet

- Upload the application to the cloud and run

Exercise 7 Test cloud as source from Windows 8 Metro Grid Client Application

- Change the address used by the Windows 8 Metro Grid Client Application to talk to the Azure-hosted http service.

- The Metro client will simply get data from a totally scalable back end in one of numerous Microsoft global data centers

Bruno posted How to provide cloud-based, JSON data to Windows 8 Metro Grid Applications – Part 1 on 6/17/2012.

• Greg Oliver (@GoLiveMSFT) posted Go Live Partners Series: Extended Results, Inc. on 6/21/2012:

Founded in 2006, Redmond, Washington based traditional ISV Extended Results (http://www.extendedresults.com) specializes in business intelligence solutions. They sell primarily to enterprises worldwide across many verticals.

Companies spend a lot of money these days building business intelligence capabilities based on various vendor technologies. Workers sitting at their desks or connected via VPN are in great shape – their KPIs are right where they need them. Extended Results’ PushBI product sees to that. But what about when they’re NOT at work?

Mobile devices operate outside the firewall. They don’t have direct access to a company’s internal systems and for good reason – it’s too easy to lose one. So – what’s the answer to most computing problems? Add a level of indirection! That’s what Extended Results did with the Windows Azure component of PushBI Mobile. (http://www.pushbi.com) Now users of popular mobile devices (Windows Phone 7, iPhone, iPad, Android, Blackberry, Windows 7 Slate) can get access to their critical business information while on the road or in the coffee shop via a short hop through a cloud component running in Windows Azure.

This long time Microsoft partner chose Windows Azure because they know .NET well. This familiarity allowed them to create this innovation quickly and get it to market in record time.

Congratulations, Extended Results!

• Greg Oliver (@GoLiveMSFT) congratulated another Windows Azure user in his Go Live Partners Series: Rare Crowds post of 6/21/2012:

Founded in 2012, Bellevue, Washington based Rare Crowds (http://wp.rarecrowds.com), is a SaaS Cloud Service Vendor and Microsoft Bizspark partner. Rare Crowds sells ad purchasing efficiency solutions to international enterprises in the advertising industry with plans to expand into SMB over time.

Ad buys are expensive, and no one ever buys just one ad, so the waste associated with buying inefficient ads multiplies. Especially in large companies that buy hundreds of ad placements at a time, any efficiencies at all can yield large monetary returns. To generate these efficiencies, Rare Crowds needs data – and lots of it. Not to mention the computing power to crunch all of that data. Putting it all together yields the right ad being delivered to the right audience at the right time.

Rare Crowds had choices when it came to deciding on which cloud vendor to work with. Several factors contributed to their decision to work with Microsoft to build on Windows Azure. Obviously, the technical capabilities of the Windows Azure platform had to be there. But they also wanted great developer support and a great partner to work with – one throat to choke, as it were. Their comfort with the .NET Framework makes it important that their cloud vendor fully supports .NET as a first class citizen. The solid integration with Visual Studio, making developers more productive because of their familiarity with the tools, played a big role as well. And to top it all off, they find OPX to be noticeably cheaper on Azure.

Congratulations, Rare Crowds, and thanks for your commitment to Windows Azure!

Avkash Chauhan (@avkashchauhan) described WorkFlow (XAMLX) Service Activity with WCF Service in Windows Azure Websites in a 6/21/2012 post:

I have downloaded pre-built WCF-WF samples from the link here:

http://www.microsoft.com/en-us/download/details.aspx?id=21459

After unzipping the above sample collection, I have selected WFStockPriceApplication application which is located as below:

C:\2012\WorkFlow\WF_WCF_Samples\WF\Basic\Tracking\TextFileTracking\CS

In VS2010, I opened the WFStockPriceApplication application and set the XAMLX property “Copy to Output Directory” to “Copy Always” as below:

To expose my Work Flow Service activity (StockPriceService.xamlx) as WCF service, I have added one WCF Service GetStockPriceWCFService.svc, to this application and set Workflow Service Activity (StockPriceService.xamlx) in SVC Markup as below:

<%@ ServiceHost Language="C#" Debug="true" Service="StockPriceService.xamlx"

Factory="System.ServiceModel.Activities.Activation.WorkflowServiceHostFactory,System.ServiceModel.Activation,Version=4.0.0.0,Culture=neutral,PublicKeyToken=31bf3856ad364e35"%>

Build the service and verify that the StockPriceService.xamlx file appears in the final output folder, which confirms that the copy setting is in effect, as below:

Finally I can run and test the application locally with both StockPriceService.xamlx

...and with GetStockPriceWCFService.svc as below:

Now I can setup Windows Azure Website to use Git deployment method:

After opening a Git Bash window, I navigated to my WFStockPriceApplication application folder and ran the “git init” and “git add .” commands as below:

When I ran the git commit -m "initial commit" command, I could verify that the xamlx content is included in the list as below:

Finally I can call “git push azure master” to publish my project and check the deployment was successful.

Now, I can access the service and see that the service is deployed correctly and available:

In a test application I can also add this WCF service as a Web Reference as below:

Avkash Chauhan (@avkashchauhan) explained Windows Azure Website: Uploading/Downloading files over FTP and collecting Diagnostics logs in a 6/19/2012 post:

Go to your Windows Azure Website:

In your Windows Azure Website please select the following:

FTP Host Name: *.ftp.azurewebsites.windows.net

FTP User Login Name: yourwebsitename\yourusername

Password: your_password

Use FileZilla or any FTP client application and configure your FTP access as below:

Finally connect to your website and you will see two folders:

1. site - This is the folder where your website specific files are located

2. Logfiles - This is the folder where your website specific Diagnostics LOG files are located.
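The same check can be scripted instead of done through FileZilla. The sketch below uses Python's standard `ftplib` and assumes that plain FTP with the credentials described above is accepted; the host, user name and password are parameters you supply from the portal, and the function names are hypothetical.

```python
from ftplib import FTP
from typing import List


def list_site_root(ftp) -> List[str]:
    """Return the sorted entries at the FTP root of the website."""
    return sorted(ftp.nlst())


def connect_and_list(host: str, user: str, password: str) -> List[str]:
    """Log in to the website's FTP endpoint and list its top-level folders."""
    # user is expected in the form yourwebsitename\yourusername
    with FTP(host) as ftp:
        ftp.login(user=user, passwd=password)
        return list_site_root(ftp)
```

If the connection succeeds, the returned list should contain the two folders described above, after which `retrbinary` from the same library could be used to download individual log files.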

Robin Shahan (@robindotnet) posted GoldMail and Windows Azure on 6/12/2012. It begins as follows:

I am the director of software engineering at a small startup called GoldMail. We have a desktop product that lets you add images, text, Microsoft PowerPoint presentations, etc., and then record voice over them. You then share them, which uploads them to our backend, and we give you a URL. You can then send this URL to someone in an e-mail with a hyperlinked image, post it on facebook or twitter or linkedin, or even embed it in your website. When the recipient clicks the link, the GoldMail plays in any browser using either our Flash player or our html5 player, depending on the device. We track the views, so you can see how many people actually watched your GoldMail. [Link added.]

GoldMail is like video without the overhead. I use it here on my blog, and many of our customers use it for marketing and sales. (I also use it for vacation pictures.)

Robin continues with a description of her introduction to Windows Azure by Microsoft Evangelist Bruno Terkaly and describes GoldMail’s migration from on-premises to Windows Azure. She concludes:

Over time, I added performance indicators and sized our instances more appropriately. Even after adding more hosted services, we are now paying 90% less than we used to pay for actual servers and hosting by running in the cloud.

Windows Azure is a great platform for startups because you can start with minimal cost and ramp up as your company expands. You used to have to buy the hardware to support your Oprah moment even though you didn’t need that much resource to start with, but this is no longer necessary. (See current Azure pricing here.)

An additional benefit that I didn’t foresee was the simplification of deployment for our applications. We used to manually deploy changes to the web services and applications out to all of the servers whenever we had a change. Manual upgrades are always fraught with peril, and it took time to make sure the right versions were in the right places. Now the build engineer just publishes the services to the staging instances, and then we VIP swap everything at once.

And here’s one of the best benefits for me personally – Since we went into production a year and a half ago, Operations and Engineering haven’t been called in the middle of the night or on a weekend even once because of a problem with the services and applications running on Windows Azure. And we know we can quickly and easily scale our infrastructure, even though Oprah has retired.

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

•• Jason Zander (@jlzander) included two sections about LightSwitch 2012 in his TechEd North America 2012 Keynote News of 6/11/2012 (missed when published):

LightSwitch OData Support and SAP

There are a number of other LightSwitch features to be aware of in the Visual Studio 2012 RC, such as the new Cosmopolitan theme and Azure deployment with the latest Azure release. One feature that I’m personally very excited about is the OData support. Last month, in conjunction with SAP, IBM, Citrix, Progress, and WSO2, we submitted OData to the OASIS standards org. OData is a key building block for cloud services. Using LightSwitch in Visual Studio 2012, you can both consume and produce OData feeds.

There are many OData providers available that you can use with LightSwitch. Today we showed an interesting example of using LightSwitch to consume an OData feed from SAP NetWeaver Gateway, which allows you to programmatically access SAP data and business processes. Below you can see a screenshot of defining a relationship between Customer data from SQL Server and Sales Order information from SAP using the LightSwitch designer. I think this will be a really useful feature for your line of business apps, and I encourage you to try it out.

LightSwitch HTML5 Support

We are working hard to help you build great rich client experiences. At the same time, we understand that many of you are being asked to create applications that can be run on multiple devices. We want you to be able to take advantage of the same backend services in these applications, as well as the productivity gains obtained with LightSwitch. The best way to create companion applications that can run on multiple heterogeneous devices (such as phones and slates) is to leverage a standards based approach like HTML5, JavaScript and CSS. Today we’re announcing HTML5/JavaScript client development support within LightSwitch to address these scenarios. LightSwitch continues to be the simplest way to create modern line of business applications for the enterprise. This support will be made available in a preview release, coming soon. Stay tuned to the Developer Center for updates. For more information on today’s announcements, please visit the LightSwitch team blog.

Beth Massi (@bethmassi) posted LightSwitch HTML Client Resources – Countdown to the preview release! on 6/20/2012:

Last week at TechEd Jason Zander announced that LightSwitch is embracing HTML5, JavaScript and CSS so you can build touch-centric business applications that run on multiple devices. I know you all have been very anxious to get your hands on the bits and we’re anxious to get them to you! Please be patient (I know it’s hard when you’re so excited), we’re working to make the bits available soon.

In the meantime, we’ve been publishing a regular cadence of content on the LightSwitch team blog so you can understand our goals and reasons why we’ve enabled this type of development. We’ve got some deeper articles planned on the architecture as well so keep an eye out on the team blog for that deep dive from our architects.

For now, we’ve got a page on the Developer Center that we’ve been filling up with content as we publish it. Stay tuned to that page for the bits. Here are some goodies if you need to catch up:

- Announcing the LightSwitch HTML Client!

- Channel 9 Video: Early Look at the Visual Studio LightSwitch HTML Client

- TechEd Session: What’s New in Visual Studio LightSwitch

- TechEd Session: Building Business Applications with Visual Studio LightSwitch

- Article: Creating Screens with the LightSwitch HTML Client

And please keep asking great questions in the LightSwitch HTML Client Forum. The team is very excited to speak with you and get your feedback, encouragement and support. You’ve all been overwhelmingly positive and excited and so are we! And we hear you… get the bits out ASAP so you can try it. We are working on it! :-)

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

•• Michael Collier (@MichaelCollier) asked and answered What’s New for the Windows Azure Developer? Lots!! in a 6/15/2012 post (missed when published):

The last two weeks have been huge for the Windows Azure platform. The Meet Windows Azure event in San Francisco on June 7th kicked off a week of announcements, which continued into TechEd last week. Getting most of the press, and rightfully so, were the announcements pertaining to the functionality now available in Windows Azure Web Sites and Virtual Machines (IaaS). These are without a doubt “game changing” in the cloud computing and Windows Azure space. There were also announcements related to Cloud Services (formerly known as ‘Hosted Services’ – the traditional PaaS offering), SQL Database (formerly SQL Azure), and Windows Azure Active Directory (which now includes Access Control Services).

Today I’d like to share a few areas that are new for the Windows Azure developer. With the new Windows Azure SDK 1.7 and tooling now available in Visual Studio 2012 RC, there’s a wealth of new tools and techniques available. Let’s take a look at a few of the new features now available.

Custom Health Probes (Pages)

The Windows Azure load balancer has always had the ability to check on the status of a role and remove it from rotation if the role is unhealthy. This was done by sending ping requests to the Guest Agent on the role instance. This was great, but it couldn’t tell if the service itself was able to handle requests. If there was a flaw with the service, such as not being able to connect to the database or a 3rd party web service, the load balancer would still send requests to the instance even though it may not be able to properly handle them.

With the new custom health probes, a custom page can be created that could do whatever checking is necessary and return an HTTP 200 if the service can handle requests. The Windows Azure load balancer uses the custom page in addition to the normal checking it does via the Guest Agent. Setting up a custom health page is easy.

1. Create the page – for example, HealthCheck.aspx. It should do whatever is needed to check that the service is healthy (connect to the database, call another web service, etc.).

2. Add the configuration to the ServiceDefinition.csdef

a. Add a new LoadBalancerProbes element

b. Add the ‘loadBalancerProbe’ attribute to the InputEndpoint.
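As a rough illustration of step 2, the fragment below sketches how the two pieces might fit together in ServiceDefinition.csdef. The service, role, endpoint, and probe names (MyService, WebRole1, Endpoint1, HealthProbe) are placeholders invented for this example, and the probe path assumes the HealthCheck.aspx page from step 1 sits at the site root. Check the MSDN schema reference for the full set of supported attributes.

```xml
<ServiceDefinition name="MyService"
    xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition">
  <!-- Step 2a: declare the probe (the name "HealthProbe" is a placeholder) -->
  <LoadBalancerProbes>
    <LoadBalancerProbe name="HealthProbe" protocol="http" path="/HealthCheck.aspx" />
  </LoadBalancerProbes>
  <WebRole name="WebRole1">
    <Endpoints>
      <!-- Step 2b: point the input endpoint at the probe by name -->
      <InputEndpoint name="Endpoint1" protocol="http" port="80"
                     loadBalancerProbe="HealthProbe" />
    </Endpoints>
  </WebRole>
</ServiceDefinition>
```

The value of the loadBalancerProbe attribute has to match the name given to the LoadBalancerProbe element; that name is the only link between the endpoint and the probe.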

For more information on this feature, please visit http://msdn.microsoft.com/en-us/library/windowsazure/jj151530.aspx.
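The checking logic behind a page such as HealthCheck.aspx can be sketched independently of ASP.NET. The Python below is only a model of the idea, not the author's page: the function name `check_health`, the check names, and the choice of 503 for the unhealthy case are assumptions made for illustration. The text above only says that an HTTP 200 signals a healthy service.

```python
from typing import Callable, Dict, Tuple


def check_health(checks: Dict[str, Callable[[], bool]]) -> Tuple[int, str]:
    """Run every dependency check and return (HTTP status code, response body).

    200 is returned only when all checks pass. 503 for the failure case is an
    assumption of this sketch; any non-200 status would express "unhealthy".
    """
    failures = []
    for name, check in checks.items():
        try:
            ok = check()
        except Exception:
            # A check that raises (e.g. a connection error) counts as a failure.
            ok = False
        if not ok:
            failures.append(name)
    if failures:
        return 503, "unhealthy: " + ", ".join(failures)
    return 200, "healthy"


if __name__ == "__main__":
    # Hypothetical checks; real ones would open a database connection or
    # call the third-party web service the role depends on.
    status, body = check_health({
        "database": lambda: True,
        "partner_service": lambda: True,
    })
    print(status, body)
```

Treating an exception inside a check as a failure keeps the page itself from erroring out, so the result always reflects the state of the dependencies rather than a bug in the check.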

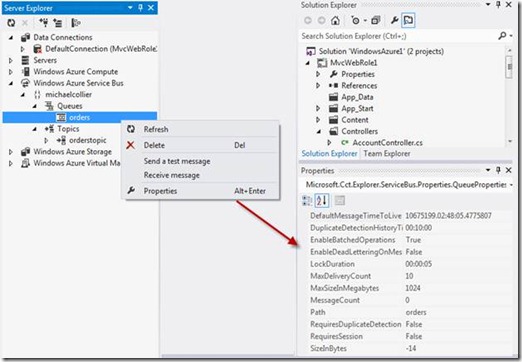

Service Bus Explorer (in Visual Studio)

Visual Studio’s support for direct management of Windows Azure roles and storage has traditionally been somewhat weak. It looks like Microsoft is investing a little more in this area now, especially for Windows Azure Service Bus. There is a new option in the Server Explorer for managing Service Bus. From there you can view the properties of a Service Bus queue, as well as send and receive test messages.

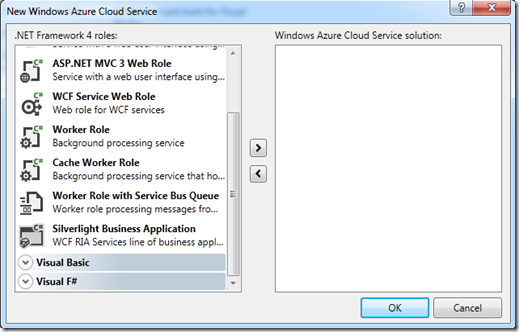

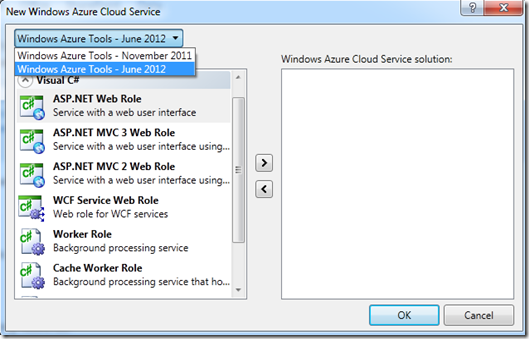

New Role Templates

When creating a new Windows Azure cloud service, there are now two new role templates to choose from: Cache Worker Role and Worker Role with Service Bus Queue. These new role templates set up a little boilerplate code to make working with the new cache features or Service Bus queues just a little easier.

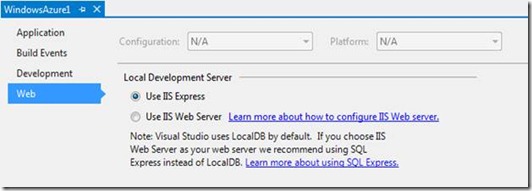

Emulator Updates

Prior to SDK 1.7, the development emulators for Windows Azure relied on IIS and SQL Server Express or SQL Server. Starting with the June 2012 updates, the compute emulator now uses IIS Express and the storage emulator uses SQL Server Express 2012 LocalDB. This should make the emulators a little faster to start up and hopefully more reliable. If an application requires the full feature set of IIS, you can revert to the full IIS web server.

Side-by-Side SDK Support

Windows Azure now supports multiple SDK installations on the same machine – starting with SDK 1.6 and SDK 1.7. This means no longer is it necessary to uninstall the previous SDK version whenever a new version comes out. In order to choose the version to use, select it from the dropdown box at the top of the New Windows Azure Cloud Service dialog window when creating a new project.

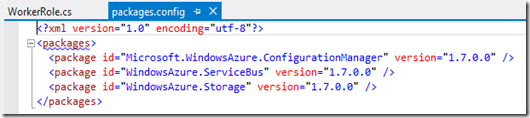

NuGet Packages

When creating a Windows Azure project in Visual Studio 2012 RC, NuGet packages are used for the Windows Azure dependencies. This means that instead of using whatever version is installed on your machine (via the SDK), Visual Studio will pull the latest version from NuGet. You can see this by looking at the packages.config file in your project. This is done for packages such as WindowsAzure.Storage (the storage client library), WindowsAzure.ServiceBus, and the new Microsoft.WindowsAzure.ConfigurationManager. This allows you to keep current (if you want) much more easily.

Additionally, NuGet packages can be used to help speed the deployment and improve maintainability of our Windows Azure Web Sites. Using NuGet Package Restore we can let Visual Studio (or MSBuild) pull down the files we need, removing the need to add the files to our source control system. With Windows Azure Web Sites, the NuGet packages are cached on Microsoft’s servers allowing for faster downloads.

More details on NuGet Package Restore and WAWS at:

- http://blog.maartenballiauw.be/post/2012/06/07/Use-NuGet-Package-Restore-to-avoid-pushing-assemblies-to-Windows-Azure-Websites.aspx

- http://blog.ntotten.com/2012/06/07/10-things-about-windows-azure-web-sites/

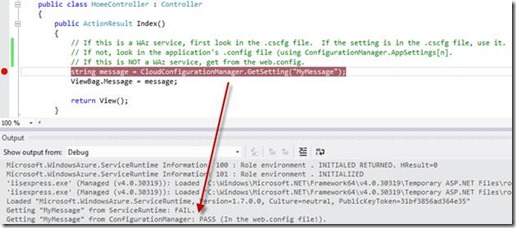

New CloudConfigurationManager class

There is a new class in SDK 1.7 that I think will be very welcomed by Windows Azure developers. When writing code that needs to run, for whatever reason, both in the cloud (Windows Azure or the emulator) and locally, and that code needs to retrieve a configuration setting, it was often a minor pain to do so. Developers would often resort to writing a utility method that would check if the code was running in the emulator or not, and then retrieve the configuration setting from either the RoleEnvironment class or the ConfigurationManager class. Adding to this pain was that RoleEnvironment.GetConfigurationSettingValue() would throw an exception if the value wasn’t set – unlike ConfigurationManager.AppSettings[].

Now there is one class that can handle it all – CloudConfigurationManager. This class handles checking if the code is executing in a Windows Azure environment or not, and looks in the ServiceConfiguration file for the configuration setting. It also handles falling back to the application configuration (web.config or app.config) if the value isn’t in the ServiceConfiguration file.
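The lookup order described above can be modeled in a few lines. The Python below is not the SDK class; it is a language-neutral sketch of the fallback behavior as the paragraph describes it. The function name `get_setting` and its parameters are hypothetical, and returning `None` for a missing setting (rather than raising) is an assumption of the sketch.

```python
from typing import Mapping, Optional


def get_setting(name: str,
                service_config: Optional[Mapping[str, str]],
                app_config: Mapping[str, str]) -> Optional[str]:
    """Model of the lookup order: service configuration first, then app config.

    service_config stands in for the ServiceConfiguration file and is None
    when the code is not running in a Windows Azure environment.
    app_config stands in for web.config / app.config appSettings.
    """
    if service_config is not None:
        value = service_config.get(name)
        if value is not None:
            return value
    # Fall back to the application configuration; a missing key yields None
    # instead of an exception (an assumption of this sketch).
    return app_config.get(name)


if __name__ == "__main__":
    cloud = {"StorageAccount": "from-service-config"}
    local = {"StorageAccount": "from-app-config", "LogLevel": "Verbose"}
    print(get_setting("StorageAccount", cloud, local))  # service config wins
    print(get_setting("LogLevel", cloud, local))        # falls back to app config
    print(get_setting("StorageAccount", None, local))   # not running in Azure
```

The point of the single entry point is that calling code no longer needs its own "am I running in Azure?" branch; that decision lives in one place.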

Summary

As you can see, there are a lot of new features and functionality available to those developing solutions that take advantage of the Windows Azure platform. I’ve only scratched the surface here. There are tons of new features in Windows Azure storage, the Service Management API, virtual networking, etc. I’ll be exploring more new features in upcoming articles. Exciting!!

•• Lori MacVittie (@lmacvittie) asserted “Devops is not something you build, it’s something you do” in an introduction to her Devops is a Verb post of 6/12/2012 to F5’s DevCentral blog:

Operations is increasingly responsible for deploying and managing applications within this architecture, requiring traditionally developer-oriented skills like integration, programming and testing as well as greater collaboration to meet business and operational goals for performance, security, and availability. To maintain the economy of scale necessary to keep up with the volatility of modern data center environments, operations is adopting modern development methodologies and practices.

Cloud computing and virtualization have elevated the API as the next generation management paradigm across IT, driven by the proliferation of virtualization and pressure on IT to become more efficient. In response, infrastructure is becoming more programmable, allowing IT to automate, integrate and manage continuous delivery of applications within the context of an overarching operational framework.

The role of infrastructure vendors in devops is to enable the automation, integration, and lifecycle management of applications and infrastructure services through APIs, programmable interfaces and reusable services. By embracing the toolsets, APIs, and methodologies of devops, infrastructure vendors can enable IT to create repeatable processes with faster feedback mechanisms that support the continuous and dynamic delivery cycle required to achieve efficiency and stability within operations.

DEVOPS MORE THAN ORCHESTRATING VM PROVISIONING

Most of the attention paid to devops today is focused on automating the virtual machine provisioning process. Do you use scripts? Cloned images? Boot scripts or APIs? Open Source tools?

But devops is more than that and it’s not what you use. You don’t suddenly get to claim you’re “doing devops” because you use a framework instead of custom scripts, or vice-versa. Devops is a broader, iterative agile methodology that enables refinement and eventually optimization of operational processes. Devops is lifecycle management with the goal of continuous delivery of applications achieved through the discovery, refinement and optimization of repeatable processes. Those processes must necessarily extend beyond the virtual machine. The bulk of time required to deploy an application to the end-user lies not in provisioning it, but in provisioning it in the context of the entire application delivery chain.

Security, access, web application security, load balancing, acceleration, optimization. These are the services that comprise an application delivery network, through which the application is secured, optimized and accelerated. These services must be defined and provisioned as well. Through the iterative development of the appropriate (read: most optimal) policies to deliver specific applications, devops is able to refine the policies and the process until it is repeatable.

Like enterprise architects, devops practitioners will see patterns emerge from the repetition that clearly indicate an ability to reuse operational processes and make them repeatable. Codifying these patterns in some way shortens the overall process. Iterations refine until the process is optimized and applications can be completely deployed in as short a time as possible. And like enterprise architects, devops practitioners know that these processes span the silos that exist in data centers today. From development to security to the network, the process of deploying an application to the end-user requires components from each of these concerns, and thus devops must figure out how to build bridges between the ivory towers of the data center. Devops must discern how best to integrate processes from each concern into a holistic, application-focused operational deployment process.

To achieve this, infrastructure must be programmable; it must present the means by which it can be included in the processes. We know, for example, that there are over 1200 network attributes spanning multiple concerns that must be configured in the application delivery network to successfully deploy Microsoft Exchange to ensure it is secure, fast and available. Codifying that piece of the deployment equation as a repeatable, automated process goes a long way toward reducing the average time to end-user from 3 months down to something more acceptable.

Infrastructure vendors must seek to aid those on their devops journey by not only providing the APIs and programmable interfaces, but actively building an ecosystem of devops-focused solutions that can be delivered to devops practitioners. It is not enough to say “here is an API, go forth and integrate.” Devops practitioners are not developers, and while an API in some cases may be exactly what is required, more often than not organizations are adopting platforms and frameworks through which devops will be executed. Infrastructure vendors must recognize this reality and cooperatively develop the integrations and the means to codify repeatable patterns. The collaboration across silos in the data center is difficult, but necessary. Infrastructure vendors who cross market lines, as it were, to cooperatively develop integrations that address the technological concerns of collaboration will make the people and process collaboration responsibility of devops a much less difficult task.

Devops is not something you build, it’s something you do.

•• Xath Cruz reported Free Access to Microsoft ALM Servers – TechEd Conference on 6/24/2012 in a CloudTimes article:

In its recent TechEd conference held in Orlando, Florida last June 4, Microsoft announced that they are already in the process of expanding access to their cloud-based application lifecycle management service, even though the app itself is still in preview mode.

According to the company, anyone can now use its Team Foundation Service ALM server, which is currently hosted on Microsoft’s own Windows Azure cloud. The service was first launched in September last year, and until now it had remained limited to invite-only access. The good thing about this is that since it is still in preview mode, the service can be used for free. [See article below.]

One of the possible uses of the ALM cloud is for software development, in which developers can use the cloud service for planning, collaboration, and management of code through the Internet. The service allows code to be stored in the cloud via Visual Studio or Eclipse IDEs, and supports a wide variety of programming languages as well as platforms, such as Android and Windows.

Microsoft’s Team Foundation Service puts them in direct competition against other similar services such as Atlassian Jira, aiming to provide the same, if not better, set of capabilities regarding project planning and management through testing, development, and deployment. Microsoft hopes that their free preview phase will convince developers to give their service a try, as it doesn’t have any risk, and believes that the Team Foundation Service will meet developers’ needs well enough that they will stay even after the free preview phase is finished.

Those who are worried about the free preview phase ending even before they settle in with their code shouldn’t worry, as Team Foundation Service’s product line manager, Brian Harry, says that they’ll eventually start charging for the service, but it will not happen this year.

•• Jason Zander (@jlzander) included a section about the Team Foundation Server Preview in his TechEd North America 2012 Keynote News of 6/11/2012 (missed when published):

Team Foundation Service Public Preview

Today we announced that we’re removing the requirement for an invitation code to the Team Foundation Service. This means anyone can sign up to use the service with no friction. We’ve also introduced a new landing page so that you have a great welcome experience, as well as additional resources. Please visit Brian Harry’s blog for a complete overview of the Team Foundation Service updates we released today.

Xath Cruz undoubtedly refers to Brian’s Team Foundation Service Preview is public! post of 6/11/2012 in her 6/24/2012 post.

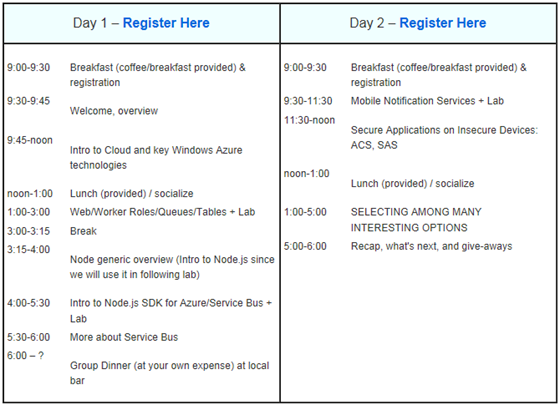

• David Linthicum (@DavidLinthicum) asserted “With proliferation of cloud computing services, a standard API remains a sci-fi notion for the foreseeable future” in a deck for his 3 reasons we won't see a cloud API standard article of 6/22/2012 for InfoWorld’s Cloud Computing blog: