Windows Azure and Cloud Computing Posts for 6/25/2012+

| A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles. |

• Updated 6/25/2012 5:00 PM PDT with new articles marked •.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database, Federations and Reporting

- Marketplace DataMarket, Social Analytics, Big Data and OData

- Windows Azure Service Bus, Active Directory, and Workflow

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue and Hadoop Services

• Carl Nolan (@carl_nolan) described a Framework for .Net Hadoop MapReduce Job Submission configuration update in a 6/25/2012 post:

To better support configuring the Stream environment whilst running .Net Streaming jobs I have made a change to the “Generic based Framework for .Net Hadoop MapReduce Job Submission” code.

I have fixed a few bugs around setting job configuration options that were being controlled by the submission code. More importantly, however, I have added support for two additional command-line submission options:

- jobconf – to support setting standard job configuration values

- cmdenv – to support setting environment variables which are accessible to the Map/Reduce code

The full set of options for the command line submission is now:

-help (Required=false) : Display Help Text

-input (Required=true) : Input Directory or Files

-output (Required=true) : Output Directory

-mapper (Required=true) : Mapper Class

-reducer (Required=true) : Reducer Class

-combiner (Required=false) : Combiner Class (Optional)

-format (Required=false) : Input Format |Text(Default)|Binary|Xml|

-numberReducers (Required=false) : Number of Reduce Tasks (Optional)

-numberKeys (Required=false) : Number of MapReduce Keys (Optional)

-outputFormat (Required=false) : Reducer Output Format |Text|Binary| (Optional)

-file (Required=true) : Processing Files (Must include Map and Reduce Class files)

-nodename (Required=false) : XML Processing Nodename (Optional)

-cmdenv (Required=false) : List of Environment Variables for Job (Optional)

-jobconf (Required=false) : List of Job Configuration Parameters (Optional)

-debug (Required=false) : Turns on Debugging Options

Command Options

The job configuration option supports providing a list of standard job options. As an example, to set the name of a job and compress the Map output, which could improve performance, one would add:

-jobconf mapred.compress.map.output=true

-jobconf mapred.job.name=MobileDataDebug

For a complete list of options, one would need to review the Hadoop documentation.
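Because each job setting needs its own -jobconf flag, a script that launches many jobs may want to generate the flags from a single settings object. The following is a minimal JavaScript sketch under that assumption. The helper name buildJobconfArgs is hypothetical and is not part of Carl's framework. It only produces the argument list and does not submit anything.

```javascript
// Hypothetical helper: expand a settings object into the repeated
// "-jobconf name=value" pairs that the submission command line accepts.
function buildJobconfArgs(settings) {
  const args = [];
  for (const [name, value] of Object.entries(settings)) {
    // Each setting becomes its own "-jobconf" flag followed by "name=value".
    args.push("-jobconf", `${name}=${value}`);
  }
  return args;
}

const args = buildJobconfArgs({
  "mapred.compress.map.output": true,
  "mapred.job.name": "MobileDataDebug",
});

// Prints the two -jobconf options shown above, joined into one line.
console.log(args.join(" "));
```

Keeping the settings in an object makes it easy to version a job's configuration alongside the job code. The -cmdenv option could be generated the same way.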

The command environment option supports setting environment variables accessible to the Streaming process. However, it will replace non-alphanumeric characters with an underscore “_” character. As an example, if one wanted to pass a filter into the Streaming process, one would add:

-cmdenv DATA_FILTER=Windows

This would then be accessible in the Streaming process.
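Because of the underscore replacement described above, the name a mapper or reducer looks up may differ from the name passed on the command line. Here is a minimal JavaScript sketch of that lookup. It assumes the mangling works exactly as described, with every character that is not a letter or digit becoming "_". The helpers toEnvName and getVariable are hypothetical, and you should check the behavior of your own Hadoop version.

```javascript
// Assumed mangling: every character that is not a letter or digit becomes "_".
function toEnvName(name) {
  return name.replace(/[^A-Za-z0-9]/g, "_");
}

// Hypothetical lookup for use inside a streaming mapper or reducer.
// The env parameter defaults to the real process environment.
function getVariable(name, env = process.env) {
  return env[toEnvName(name)];
}

// A dotted name such as "data.filter" would be exposed as "data_filter".
console.log(toEnvName("data.filter"));
console.log(getVariable("DATA_FILTER", { DATA_FILTER: "Windows" }));
```

Passing the environment in as a parameter keeps the mapper logic testable without setting real environment variables.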

Context Object

To provide better feedback to the running Hadoop environment, I have added a new static class, named Context, to the code. The Context object contains the original FormatKeys() and GetKeys() operations, along with the following additions:

public class Context

{

public Context();

/// Formats with a TAB the provided keys for a Map/Reduce output file

public static string FormatKeys(string[] keys);

/// Return the split keys as an array of values

public static string[] GetKeys(string key);

/// Gets the value of an environment variable for runtime configuration

public static string GetVariable(string name);

/// Increments the specified counter for the given category

public static void IncrementCounter(string category, string instance);

/// Updates the specified counter for the given category

public static void UpdateCounter(string category, string instance, int value);

/// Emits the specified status information

public static void UpdateStatus(string message);

}

The code contained in this Context object, although simple, will hopefully provide some abstraction over the idiosyncrasies of using the Streaming interface.
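The Streaming interface lets a task report counters and status by writing lines of the form reporter:counter:<group>,<counter>,<amount> and reporter:status:<message> to stderr. I have not seen the Context source, so the following is only a JavaScript sketch of how helpers like these could be built on top of that protocol. The function names mirror the C# methods but are hypothetical. Mapping UpdateCounter to an "add this amount" counter line is an assumption. GetVariable is left out because it is simply an environment variable lookup.

```javascript
// Default writer: the Streaming reporter protocol reads these lines from stderr.
const write = (line) => process.stderr.write(line + "\n");

// Joins keys with TAB, the default Streaming key/value separator.
function formatKeys(keys) {
  return keys.join("\t");
}

// Splits a TAB-delimited key back into its parts.
function getKeys(key) {
  return key.split("\t");
}

// Adds `amount` to counter `instance` in group `category`.
function updateCounter(category, instance, amount, out = write) {
  out(`reporter:counter:${category},${instance},${amount}`);
}

// Adds 1 to counter `instance` in group `category`.
function incrementCounter(category, instance, out = write) {
  updateCounter(category, instance, 1, out);
}

// Sets the task's status message.
function updateStatus(message, out = write) {
  out(`reporter:status:${message}`);
}
```

Inside a mapper, calling incrementCounter("Mapper", "RecordsRead") for each record would emit reporter:counter:Mapper,RecordsRead,1 on stderr. Hadoop then aggregates that counter across tasks. The optional out parameter exists only so the output can be captured in tests.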

Surenda Singh described Processing Azure Storage analytics logs with Hadoop on Azure in a 6/25/2012 post:

Windows Azure Storage Analytics is a great way to gain insight into your storage service usage.

If you have not been using it, here are the details about what it provides. To summarize, it provides two kinds of analytics:

- Metrics: Provides an hourly summary of bandwidth usage, errors, average client time, server time, etc., and a daily summary of blob storage capacity.

- Logs: Provides traces of executed requests for your storage accounts, stored in blob storage.

Enabling them doesn’t reduce storage account target limits in any way, but you need to pay for the transactions and capacity consumed by these logs. Metrics provide most of the data about storage usage, but sometimes you need more granular usage statistics, such as:

- Bandwidth/s/blob

- Bandwidth/s/storage account

- Transactions/s/blob

- Transactions/s/storage account

- Hot blobs with respect to transactions, bandwidth, etc.

- IP addresses of the clients accessing the storage account the most

- Etc.

Answering these questions requires processing the analytics logs. If you are a heavy user of a storage account, you could be generating hundreds of GBs of logs and hundreds of millions of transactions a day. That makes this an ideal candidate for processing with Hadoop.

Let's see how we can get these statistics using Hadoop on Azure.

At a very high level, here is what I will be doing. First, copy the relevant data from Windows Azure blob storage to HDFS using a Map-only MapReduce job. Next, import the data into a Hive table. Finally, run HiveQL queries to get these statistics. If you want just one answer, you can run directly against blob storage without copying the data to HDFS, for example in a single Pig script. If you run multiple jobs, however, having the data already on HDFS makes them run much faster because of data locality.

In Hadoop, code moves close to the data, instead of the traditional approach in which code fetches the data. The MapReduce engine tries to assign work to the servers where the data to be processed is stored, which avoids network overhead. This is known as data locality.

Let's get into the details now:

1. Set up the Windows Azure blob storage account to be processed. Detailed steps are available here.

2. Write a Map-only MapReduce job to pre-process the data into the required format and copy it to HDFS.

Here is what my MapReduce script looks like:

StorageAnalytics.js

var myMap = function (key, value, context) {

var ipAddressIndex = 15;

var blobURLIndex = 11;

var requestHeaderIndex = 17;

var requestPacketIndex = 18;

var responseHeaderIndex = 19;

var responsePacketIndex = 20;

var quotedwords = value.split('"');

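// Encode semicolons inside quoted fields; ';' is the log's field delimiter.

for (var i = 1; i < quotedwords.length; i += 2)

{

// Global regex replaces every ';' (not just the first); %3B is the percent-encoding of ';'.

quotedwords[i] = quotedwords[i].replace(/;/g, "%3B");

}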

var wordsstr = quotedwords.join('');

//Get URL after removing all parameters.

var words = wordsstr.split(";");

var url = words[blobURLIndex];

var index = url.indexOf("?");

if (index != -1) {

url = url.slice(0, index);

}

//Get date and time strings

var datestr = words[1];

var seckey = datestr.substring(datestr.indexOf('T') + 1, datestr.lastIndexOf('.'));

var daykey = datestr.substring(0, datestr.indexOf('T'));

//Get IP address after removing port.

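var ipField = words[ipAddressIndex];

// Strip the port if present; indexOf returns -1 when there is no ':'.

var ipaddress = ipField.indexOf(':') != -1 ? ipField.substring(0, ipField.indexOf(':')) : ipField;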

var reqheader = words[requestHeaderIndex];

if(reqheader == "") {reqheader = "0";}

var reqpacket = words[requestPacketIndex];

if(reqpacket == "") {reqpacket = "0";}

var resheader = words[responseHeaderIndex];

if(resheader == "") {resheader = "0";}

var respacket = words[responsePacketIndex];

if(respacket == "") {respacket = "0";}

context.write(daykey + '\t' + seckey + '\t' + url + '\t' + reqheader + '\t' + reqpacket + '\t' + resheader + '\t' + respacket + '\t' + ipaddress, 1);

};

var myReduce = function (key, values, context) {

var sum = 0;

while(values.hasNext()) {

sum += parseInt(values.next());

}

context.write(key, sum);

};

var main = function (factory) {

var job = factory.createJob("myAnalytics", "myMap", "myReduce");

//Make it a Map-only job by setting the number of reduce tasks to zero.

job.setNumReduceTasks(0);

job.submit();

job.waitForCompletion(true);

};

A few important things to note about the script:

i. It encodes the semicolon (';') character when it appears in the blob URL or other entries. This relies on the fact that any entity containing a ';' is quoted. Because ';' is the field delimiter in log entries, it must be encoded when it appears inside an entity.

ii. It picks up only the relevant data from each analytics log entry (URL, date, time, request/response header and packet sizes, and IP address), although it could take everything the entry provides.

iii. It is a Map-only MapReduce job, since aggregation and summarization will be done by Hive queries on the generated data.

3. Go to the JavaScript console and run the above job.
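The quote-aware encoding in item i is easy to get wrong. A string argument to replace() changes only the first match, so it can be useful to test this step on its own. The following is a standalone JavaScript sketch of the technique. The function name encodeQuotedSemicolons and the sample line are made up for illustration, and the sample is far shorter than a real log entry. This sketch uses %3B, the standard percent-encoding of ';'.

```javascript
// Splitting on double quotes makes the odd-numbered pieces the quoted fields.
// Encode every ';' inside those pieces, then rejoin without the quotes so
// that the only remaining ';' characters are field delimiters.
function encodeQuotedSemicolons(line) {
  const pieces = line.split('"');
  for (let i = 1; i < pieces.length; i += 2) {
    // Global regex: replace every ';' in the quoted piece, not just the first.
    pieces[i] = pieces[i].replace(/;/g, "%3B");
  }
  return pieces.join("");
}

// Made-up three-field line whose quoted URL contains two semicolons.
const line = '1.0;"http://x/a;b;c";GetBlob';
const fields = encodeQuotedSemicolons(line).split(";");
console.log(fields); // [ '1.0', 'http://x/a%3Bb%3Bc', 'GetBlob' ]
```

After encoding, a plain split(";") yields the expected fields. Without the encoding step, the URL would have been broken into three pieces.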

js> runJs("StorageAnalytics.js", "asv://$logs/blob/2012/06/", "output");

Note that I am processing data for the month of June; you can change the path above to 'asv://$logs/blob/' to process all of the data. Once the job completes, it stores the generated data in the output folder in HDFS, in part-m-* files.

js> #ls output

Found 111 items

-rw-r--r-- 3 suren supergroup 0 2012-06-25 01:43 /user/suren/output/_SUCCESS

drwxr-xr-x - suren supergroup 0 2012-06-25 01:42 /user/suren/output/_logs

-rw-r--r-- 3 suren supergroup 20816 2012-06-25 01:42 /user/suren/output/part-m-00000

-rw-r--r-- 3 suren supergroup 19631 2012-06-25 01:42 /user/suren/output/part-m-00001

......

Delete the _logs directory and the _SUCCESS file so that all files in the output folder conform to the same schema.

js> #rmr output/_logs

Deleted hdfs://.../output/_logs

js> #rmr output/_SUCCESS

Deleted hdfs://... /output/_SUCCESS

4. Create a Hive table for this schema. Go to the interactive Hive console and create the table:

create table StorageAnalyticsStats(DayStr STRING, TimeStr STRING, urlStr STRING, ReqHSize BIGINT, ReqPSize BIGINT, ResHSize BIGINT, ResPSize BIGINT, IpAddress STRING, count BIGINT) PARTITIONED BY (account STRING) ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' LINES TERMINATED BY '\n';

Note that the table is partitioned by account, so you can insert data from multiple storage accounts and then run HiveQL queries once across all of them. A partition in Hive is a logical column whose value is derived from the directory the data is stored in, rather than from data within the file. You can read more about partitions and other data unit concepts on the Hive wiki here.

5. Load the data into the table created above.

LOAD DATA INPATH 'output' INTO TABLE StorageAnalyticsStats PARTITION(account='account1');

You can repeat steps 1 to 5 to load data from multiple storage accounts and then run the queries. Since we are not overwriting data while loading, Hive retains the data for all storage accounts. Because each storage account's data goes into a different partition, you can run queries scoped to a single storage account or across all storage accounts. After this step, your data is moved from the output folder into Hive, and it will be deleted when you drop the table. If you want to retain the data after dropping the table, you can use external tables. You can read more about external tables and loading data on the Hive wiki here.

Now we have a Hive table with the complete data. Note that Hive doesn't process data while loading. Actual processing happens when you run queries on the data, which is why the LOAD DATA command executes so quickly. Let's get the required statistics now:

1. Find the top 10 blobs by bandwidth usage per second.

select daystr, timestr, urlstr, sum(reqhsize+reqpsize+reshsize+respsize) as bandwidth, sum(reqhsize+reqpsize) as Ingress, sum(reshsize+respsize) as Egress from storageanalyticsstats group by daystr, timestr, urlstr order by bandwidth desc limit 10;

2. Find the top 10 blobs by transactions per second.

select daystr, timestr, urlstr, count(*) as txns from storageanalyticsstats group by daystr, timestr, urlstr order by txns desc limit 10;

3. Find the IP addresses of the clients that accessed the storage service most in the given period.

select IpAddress, count(*) as hits from storageanalyticsstats group by IpAddress order by hits desc limit 10;

4. Find the top 10 values of transactions per second per storage account.

select account, daystr, timestr, count(*) as txns from storageanalyticsstats group by account, daystr, timestr order by txns desc limit 10;
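All four queries share the same group by, aggregate, order by ... desc limit N shape. When you are spot-checking results against a small sample pulled from one of the part-m files, a local equivalent can help. The following is a minimal JavaScript sketch of that pattern. The topN helper and the sample rows are hypothetical and are not part of the original walkthrough.

```javascript
// Local equivalent of "group by <key> ... order by <total> desc limit n":
// sum valueOf(row) per keyOf(row), sort by total descending, keep n groups.
function topN(rows, keyOf, valueOf, n) {
  const totals = new Map();
  for (const row of rows) {
    const key = keyOf(row);
    totals.set(key, (totals.get(key) || 0) + valueOf(row));
  }
  return [...totals.entries()]
    .sort((a, b) => b[1] - a[1])
    .slice(0, n);
}

// Hypothetical sample rows; the equivalent of query 3 counts hits per IP.
const rows = [{ ip: "10.0.0.1" }, { ip: "10.0.0.2" }, { ip: "10.0.0.1" }];
console.log(topN(rows, (r) => r.ip, () => 1, 10)); // [ [ '10.0.0.1', 2 ], [ '10.0.0.2', 1 ] ]
```

Passing (r) => 1 as valueOf mimics count(*). Passing a function that sums the four size columns would mimic the bandwidth query instead.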


SQL Azure Database, Federations and Reporting

Shirley Wang reported SQL Data Sync SU5 has been released! in a 2/6/2012 post to the Sync Team blog (missed when published):

The latest Service Update 5 has just been released! SU5 delivers two major features designed to improve the sync experience:

- Sync support for Spatial data type

- Ability to cancel an on-going sync process

SU5 also includes various fixes that provide a better experience for our customers. To be consistent with the rest of the Windows Azure services, the new brand name is now SQL Data Sync. [Emphasis added.]

You can download the latest local agent (v4.0.46.0) from the download page now.

Marketplace DataMarket, Social Analytics, Big Data and OData

• Derrick Harris (@derrickharris) asked Can a big data product level the playing field in politics? in a 6/25/2012 article for GigaOm’s Structure blog:

A group of Utah State University graduates recently launched a company called PoliticIt that uses machine learning to gauge the popularity of political candidates by measuring their digital influence. Sure, PoliticIt’s system has proven remarkably accurate in predicting winners, but its real promise is in leveling the playing field between the haves and the have-nots in political campaigns — democratizing democracy, if you will.

It Scores as of May 31, 2012.

The premise behind PoliticIt is simple: Gather and crunch as much data as possible to create a candidate’s It Score, which is a measure of digital influence that takes into account factors such as a candidate’s digital footprint and citizens’ sentiment toward the candidate. To make the scores even more accurate, PoliticIt incorporates machine learning algorithms that digest the results of elections and other events to recalculate which factors or which areas of digital influence are actually the most important in any given race.

PoliticIt claims that in the more than 160 elections it has tracked thus far, the candidate with the higher It Score won 87 percent of the time, even in races where conventional wisdom, campaign spending and polls suggested a different winner.

That’s all fine and dandy and makes for good press, but co-founder and CEO Joshua Light told me PoliticIt is more about data and correlations than it is about causality. What he means is that as long as the It Score actually correlates strongly with who wins and loses elections, PoliticIt just wants to give candidates a way to track it and, if they’re so inclined, figure out ways to boost it. As for the actual politics — publicly predicting who’ll win any race or determining what policy changes might improve popularity — Light and his team will leave that to political scientists and other experts.

For now, in an era when money is pouring into political campaigns like never before, PoliticIt’s simple goal could be enough. When its software is generally available, Light said he hopes it “bring[s] down that barrier of entry for people who want to run for office” by giving them a better idea of how they’re faring against their opponents in the digital realm and then figuring out how to spend money accordingly. “We think this is something that’s going to be revolutionary for our political system,” he said.

A sampling of the data sources PoliticIt tracks.

What sets PoliticIt apart from other services for gauging influence or predicting elections, Light said, is its focus on empowering the candidates. Yes, InTrade might be a fairly accurate predictor of who’s currently the frontrunner in a race, but its methodology is something of a black box and it doesn’t offer candidates any means of changing their fate. PoliticIt is more like Klout for politics. Not only does it give candidates a score they can watch increase and decrease in relation to what they say or do, but it also hopes to let candidates identify other individual influencers to target. Targeting one individual with a loud voice might be a lot easier than trying to reach an entire room.

Considering how important big data and analytics, generally, have become to political campaign efforts, it’s difficult to argue with PoliticIt’s thinking. In presidential politics, candidates have invested heavily in analytics and targeted marketing efforts for years, and are now starting to get into the big data space by building Hadoop clusters and analyzing the firehose of data coming from sources such as Twitter, Facebook and other websites.

I’m even cautiously optimistic the advent of big data might actually change the current state of election-season politicians concerning themselves more with being popular than with being visionary. Being able to analyze large amounts of data in near real-time means being able to figure out in a hurry whether or not a strategy is working. Maybe the data will show that big ideas have a bigger impact on a candidate’s influence than do fence-sitting platitudes.

The Logan, Utah-based PoliticIt has a long way to go before it’s a household name among campaign organizers, but it’s onto something. Even if candidates with bigger war chests might always be able to invest in the latest and greatest techniques, the idea of putting advanced digital-influence analysis into the hands of every candidate is pretty powerful nonetheless.

Full disclosure: I’m a registered GigaOm Analyst.

• Joannes Vermorel (@vermorel) offered A few tips for Big Data projects in a 6/25/2012 post:

At Lokad, we are routinely working on Big Data projects, primarily for retail, but with occasional missions for energy or biotech companies. Big Data is probably going to remain one of the big buzzwords of 2012, along with a big trail of failed projects. A while ago, I offered tips for Web API design; today, let's cover some Big Data lessons (learned the hard way, as always).

1. Small Data trump Big Data

There is one area that captures most of the community's interest: web data (pages, clicks, images). Yet web scale, where you have to deal with petabytes of data, is completely unlike 99% of the real-world problems faced by just about every vertical besides the consumer internet.

For example, at Lokad, we have found that the largest datasets found in retail could still be processed on a smartphone if the data is correctly represented. In short, for the overwhelming majority of problems, the relevant data, once properly partitioned, take less than 1GB.

With datasets smaller than 1GB, you can keep experimenting on your laptop. Map-reducing stuff on the cloud is cool, but compared to local experiments on your notebook, cloud productivity is abysmal.

2. Smarter problems trump smarter solutions

Good developers love finding good solutions. Yet, when facing a Big Data problem, it's just too tempting to improve stuff rather than challenge the problem in the first place.

For example, at Lokad, as far as inventory optimization was concerned, we spent years of effort solving the wrong problem. Worse, our competitors have spent hundreds of man-years making the same mistake ...

Big Data means being capable of processing large quantities of data while keeping computing resource costs negligible. Yet most problems faced in the real world were defined more than three decades ago, at a time when any calculation (no matter how trivial) was a challenge to automate. Thus, those problems come with a strong bias toward solutions that were conceivable at the time.

Rethinking those problems is long overdue.

3. Stealth is scalability-critical

The scarcest resource of all is human time. Letting a CPU chew through 1 million numbers is nothing. Having people read 1 million numbers takes an army of clerks.

I have already posted that the manpower requirements of Big Data solutions were the most frequent scalability bottleneck. Now I believe that if any human has to read numbers from a Big Data solution, then the solution won't scale. Period.

Like antispam filters, Big Data solutions need to tackle problems from an angle that does not require anyone's attention. In practice, this means that problems have to be engineered so that they can be solved without user attention.

4. Too big for Excel, treat as Big Data

While the community is frequently distracted by multi-terabyte datasets, anything that does not conveniently fit in Excel is Big Data as far as practicalities go:

- Nobody is going to have a look at that many numbers.

- Opportunities exist to solve a better problem.

- Any non-quasi-linear algorithm will fail at processing data in a reasonable amount of time.

- If data is poorly architected or formatted, even sequential reading becomes a pain.

Then comes the question: how should one handle Big Data? The answer, however, is typically very domain-specific, so I will leave that for a later post.

5. SQL is not part of the solution

I won't enter the SQL vs. NoSQL debate here. Instead, let's point out that whatever persistence approach is adopted, it won't help with:

- figuring out if the problem is the proper one to be addressed,

- assessing the usefulness of the analysis performed on the data,

- blending Big Data outputs into user experience.

Most of the discussions around Big Data end up distracted by persistence strategies. Persistence is a very solvable problem, so engineers love to think about it. Yet, in Big Data, it's the wicked parts of the problem that need the most attention.

Mike described Using Schema.Org Vocabularies with OData in a 6/11/2012 post (missed when published):

With the introduction of data and metadata annotations to OData version 3.0, developers can now define common vocabularies for things like Sales (with Customers, SalesOrder, Product, etc.), Movies (with Title, Actor, Director, ....), Calendars (with Event, Venue, …), etc. Of course, such vocabularies are only useful to the extent that they are shared across a broad range of clients and services.

Over the past year, schema.org has been defining common schemas to make it easier for search engines to find and understand data on the web. Whenever we talk about shared Vocabularies in OData we inevitably get asked about the relationship of common ontologies expressed through OData Vocabularies to the work going on in schema.org.

I am happy to share that, through ongoing discussions with the members of schema.org, we have agreed that defining common OData Vocabulary encodings of the schema.org schemas is a benefit to both technologies. Sharing a common vocabulary even across different encodings like OData, RDFa 1.1, and Microdata, facilitates a common understanding and even possible transformation of data across those encodings. Toward that end we have jointly posted a discussion paper on the Web Schemas wiki on the use of schema.org schemas within OData.

From Dan Brickley's blog post on new developments around schema.org:

"We are also pleased to announce today a discussion paper on the use of OData and Schema.org, posted in the Web Schemas wiki. OData defines a RESTful interface for working with data on the Web. The newest version of OData allows service developers and third parties to annotate data or metadata exposed by an OData Service. Defining common OData Vocabulary encodings of the schema.org schemas facilitates the understanding and even transformation of data across these different encodings."

Feel free to add comments to Dan's post, or send us feedback on the odata.org mailing list.

Exciting times for OData

Clint Edmonson (@clinted) posted Windows Azure Recipe: High Performance Computing on 6/8/2012 (missed when published):

One of the most attractive ways to use a cloud platform is for parallel processing. Commonly known as high-performance computing (HPC), this approach relies on executing code on many machines at the same time. On Windows Azure, this means running many role instances simultaneously, all working in parallel to solve some problem. Doing this requires some way to schedule applications, which means distributing their work across these instances. To allow this, Windows Azure provides the HPC Scheduler.

This service can work with HPC applications built to use the industry-standard Message Passing Interface (MPI). Software that does finite element analysis, such as car crash simulations, is one example of this type of application, and there are many others. The HPC Scheduler can also be used with so-called embarrassingly parallel applications, such as Monte Carlo simulations. Whatever problem is addressed, the value this component provides is the same: It handles the complex problem of scheduling parallel computing work across many Windows Azure worker role instances.
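To make "embarrassingly parallel" concrete, here is a toy JavaScript sketch of a Monte Carlo estimate of pi. This is not HPC Scheduler code and uses no Windows Azure APIs. It only shows the property that makes such jobs easy to schedule: each task needs nothing but its own seed and sample count, so tasks could run on separate worker role instances, and only the final counts must be combined. The task loop below runs sequentially, and the mulberry32 generator is just a small deterministic PRNG chosen for reproducibility.

```javascript
// Small deterministic PRNG (mulberry32) so each task is reproducible.
function mulberry32(a) {
  return function () {
    let t = (a = (a + 0x6d2b79f5) | 0);
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// One independent task: count random points in the unit square that land
// inside the quarter circle of radius 1.
function runTask(seed, samples) {
  const rand = mulberry32(seed);
  let inside = 0;
  for (let i = 0; i < samples; i++) {
    const x = rand();
    const y = rand();
    if (x * x + y * y <= 1) inside++;
  }
  return inside;
}

// "Scatter" the tasks (sequentially here), then "gather" by summing counts.
function estimatePi(tasks, samplesPerTask) {
  let inside = 0;
  for (let t = 0; t < tasks; t++) inside += runTask(t + 1, samplesPerTask);
  return (4 * inside) / (tasks * samplesPerTask);
}

console.log(estimatePi(8, 100000)); // close to 3.14
```

Because the tasks share no state, adding instances shortens the run roughly in proportion. That is the case the scheduler handles most easily, unlike MPI jobs, whose processes must communicate while they run.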

Drivers

- Elastic compute and storage resources

- Cost avoidance

Solution

Here’s a sketch of a solution using our Windows Azure HPC SDK:

Ingredients

- Web Role – this hosts an HPC Scheduler web portal to allow web-based job submission and management. It also exposes an HTTP web service API to allow other tools (including Visual Studio) to post jobs as well.

- Worker Role – typically multiple worker roles are enlisted, including at least one head node that schedules jobs to be run among the remaining compute nodes.

- Database – stores state information about the job queue and resource configuration for the solution.

- Blobs, Tables, Queues, Caching (optional) – many parallel algorithms persist intermediate and/or permanent data as a result of their processing. These fast, highly reliable, parallelizable storage options are all available to all the jobs being processed.

Training

Here is a link to online Windows Azure training labs where you can learn more about the individual ingredients described above. (Note: The entire Windows Azure Training Kit can also be downloaded for offline use.)


Windows Azure HPC Scheduler (3 labs)

The Windows Azure HPC Scheduler includes modules and features that enable you to launch and manage high-performance computing (HPC) applications and other parallel workloads within a Windows Azure service. The scheduler supports parallel computational tasks such as parametric sweeps, Message Passing Interface (MPI) processes, and service-oriented architecture (SOA) requests across your computing resources in Windows Azure. With the Windows Azure HPC Scheduler SDK, developers can create Windows Azure deployments that support scalable, compute-intensive, parallel applications.

See my Windows Azure Resource Guide for more guidance on how to get started, including links to web portals, training kits, samples, and blogs related to Windows Azure.

Windows Azure Service Bus, Active Directory and Workflow

Kent Weare (@wearsy) described Azure–Service Bus Queues in Worker Roles in a 6/23/2012 post:

Another nugget of information that I picked up at TechEd North America is a new template that ships as part of the Azure SDK 1.7 called Worker Role with Service Bus Queue.

What got me interested in this feature is some of the work that I did last year with the AppFabric Applications (Composite Apps) CTP. I was a big fan of that CTP because it allowed you to wire up different cloud services rather seamlessly. That CTP has been officially shut down, so I can only assume that this template was introduced to address some of the problems that the AppFabric Applications CTP sought to solve.

The Worker Role with Service Bus Queue feature works in both Visual Studio 2010 and Visual Studio 2012 RC. For this post, I am going to use 2012 RC.

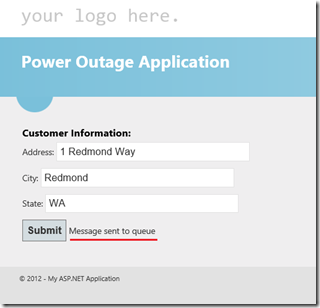

I am going to use a scenario similar to the one that I used with the AppFabric Applications CTP. I will build a simple web page that allows a “customer” to fill in a power outage form. I will then submit this message to a Service Bus Queue and have a Worker Role dequeue it. For the purpose of this post, I will simply write a trace event to prove that I am able to pull the message off of the queue.

Building the Application

- Create a new project by clicking on File (or is it FILE) – New Project

- Select the Cloud template. You will see a blank pane with no template available to select, because the Azure SDK is currently built on top of .Net 4.0, not 4.5. With this in mind, we need to select .Net Framework 4.

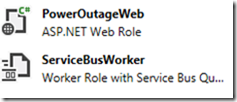

- We now need to select the Cloud Services that will make up our solution. In my scenario I am going to include an ASP.Net Web Role and a Worker Role with Service Bus Queue.

Note: we can rename these artifacts by hovering over the label and then clicking the pencil icon. This was a gap in the old AppFabric Apps CTP. After renaming my artifacts, my solution looks like this:

- I want to send and receive a strongly typed message, so I am going to create a Class Library and call it CustomerEntity.

- In this project, I will simply have one class called Customer with the following properties:

namespace CustomerEntity

{

public class Customer

{

public string Address { get; set; }

public string City { get; set; }

public string State { get; set; }

}

}

- I will then add a reference in both the Web Project and Worker Role projects to this CustomerEntity project.

- Within the PowerOutageWeb project clear out all of the default markup in the Default.aspx page and add the following controls.

<h3>Customer Information:</h3>

Address: <asp:TextBox ID="txtAddress" runat="server"></asp:TextBox><br />

City: <asp:TextBox ID="txtCity" runat="server"></asp:TextBox> <br />

State: <asp:TextBox ID="txtState" runat="server"></asp:TextBox><br />

<asp:Button ID="btnSubmit" runat="server" Text="Submit" OnClick="btnSubmit_Click" />

<asp:Label ID="lblResult" runat="server" Text=""></asp:Label>

- Also within the PowerOutageWeb project we need to add references to the Service Bus Assembly: Microsoft.ServiceBus.dll and Runtime Serialization Assembly: System.Runtime.Serialization.dll

- We now need to add the following using directives:

using CustomerEntity;

using Microsoft.WindowsAzure;

using Microsoft.ServiceBus;

using Microsoft.ServiceBus.Messaging;

- Next we need to provide a click event for our submit button and then include the following code:

protected void btnSubmit_Click(object sender, EventArgs e)

{

Customer cs = new Customer();

cs.Address = txtAddress.Text;

cs.City = txtCity.Text;

cs.State = txtState.Text;

const string QueueName = "PowerOutageQueue";

//Get the connection string from Configuration Manager

string connectionString = CloudConfigurationManager.GetSetting("Microsoft.ServiceBus.ConnectionString");

var namespaceManager = NamespaceManager.CreateFromConnectionString(connectionString);

//Check to see if Queue exists, if it doesn’t, create it

if (!namespaceManager.QueueExists(QueueName))

{

namespaceManager.CreateQueue(QueueName);

}MessagingFactory factory = MessagingFactory.CreateFromConnectionString(connectionString);

//Create Queue CLient

QueueClient myQueueClient = factory.CreateQueueClient(QueueName);BrokeredMessage bm = new BrokeredMessage(cs);

//Send Message

myQueueClient.Send(bm);

//Update Web Page

lblResult.Text = "Message sent to queue";

}

- Another new feature in this SDK that you may have noticed is the CreateFromConnectionString method available on the MessagingFactory and NamespaceManager classes. Combined with CloudConfigurationManager.GetSetting, it lets us keep our Service Bus settings in the role configuration, which we can edit from the project properties page. To access the project properties, right-click the particular role and then select Properties. Next, click Settings, where you will find the key/value pairs. The name of the key that we are interested in is Microsoft.ServiceBus.ConnectionString and our value is:

Endpoint=sb://[your namespace].servicebus.windows.net;SharedSecretIssuer=owner;SharedSecretValue=[your secret]

- Since both our Web and Worker Roles will be accessing the queue, we want to ensure that both roles' settings include this entry. This allows our code to connect to the Service Bus namespace where our queue lives. If we edit this property here, we do not need to modify the Cloud.cscfg and Local.cscfg configuration files, because Visual Studio will take care of this for us.

- Next we want to shift focus to the Worker Role and edit the WorkerRole.cs file. Since we are going to be dequeuing our typed Customer message, we want to include a using directive for this namespace:

using CustomerEntity;

- Something that you probably noticed when you opened up the WorkerRole.cs file is that there is already some code written for us. We can leverage most of it but can delete the code that is highlighted in red below:

- Where we deleted this code, we will want to add the following:

// Deserialize the typed body that the Web Role sent
Customer cs = receivedMessage.GetBody<Customer>();
Trace.WriteLine(receivedMessage.SequenceNumber.ToString(), "Received Message");
Trace.WriteLine(cs.Address, "Address");
Trace.WriteLine(cs.City, "City");
Trace.WriteLine(cs.State, "State");

// Mark the message as processed so it is removed from the queue
receivedMessage.Complete();

In this code we receive a typed Customer message and then simply write out its contents using the Trace utility. If we wanted to save this information to a database, this would be a good place to write that code.
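Why does the worker call Complete()? QueueClient receives in PeekLock mode by default, so a received message is only locked, not deleted; if it is never completed, it becomes visible again once its lock expires and is delivered again. The small in-memory model below is a sketch of those semantics only, not the Service Bus implementation; the PeekLockQueue class, its method names and the 30-second lock duration are all hypothetical, and time is passed in explicitly to keep it deterministic.

```javascript
// Hypothetical in-memory model of peek-lock semantics (NOT the Service Bus API):
// receive() locks a message instead of deleting it; complete() deletes it;
// an uncompleted message becomes receivable again when its lock expires.
class PeekLockQueue {
  constructor(lockDurationMs) {
    this.lockDurationMs = lockDurationMs;
    this.messages = []; // entries: { sequenceNumber, body, lockedUntil }
    this.nextSequence = 1;
  }

  send(body) {
    this.messages.push({ sequenceNumber: this.nextSequence++, body, lockedUntil: 0 });
  }

  // Return the first message whose lock has expired (or was never taken) and lock it.
  receive(now) {
    const msg = this.messages.find((m) => m.lockedUntil <= now);
    if (!msg) return null;
    msg.lockedUntil = now + this.lockDurationMs;
    return msg;
  }

  // Equivalent in spirit to BrokeredMessage.Complete(): remove the message for good.
  complete(msg) {
    this.messages = this.messages.filter((m) => m !== msg);
  }

  // Number of messages still in the queue (locked or not).
  depth() {
    return this.messages.length;
  }
}
```

For example, after `send()`, a `receive(0)` locks the message, a second `receive(1000)` returns null, and a `receive(31000)` hands the same message out again because it was never completed. That redelivery is why the processing code (trace output today, a database write later) should finish before Complete() is called.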

We also want to make sure that we update the name of the queue so that it matches the name that we specified in the Web Role:

// The name of your queue
const string QueueName = "PowerOutageQueue";

Creating our Queue

We have a few options when it comes to creating the queue that this solution requires. The code in our ASP.NET web page code-behind will take care of this for us, so for our solution to work this method is sufficient. However, we may want a design-time alternative that lets us specify some more advanced features. In this case we have a few options:

- Using the http://www.windowsazure.com portal.

- Similarly, we can use the Service Bus Explorer tool written by Paolo Salvatori. Steef-Jan Wiggers has provided an in-depth walkthrough of this tool, so I won’t go into more detail here: http://soa-thoughts.blogspot.ca/2012/06/visual-studio-service-bus-explorer.html

- As part of the Azure 1.7 SDK release, a Visual Studio Service Bus Explorer is now included. It is accessible from the Server Explorer view within Visual Studio. Using this tool we can perform functions like:

  - Creating queues/topics
  - Setting advanced properties: queue size, time to live, lock duration, etc.
  - Sending and receiving test messages
  - Getting the current queue depth
  - Getting our Service Bus connection string

Any of these methods will work. As I mentioned earlier, if we do nothing, the code will take care of it for us.
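The connection string you copy from the Service Bus Explorer (and store under Microsoft.ServiceBus.ConnectionString) is just a semicolon-separated list of key=value pairs, as in the Endpoint=...;SharedSecretIssuer=...;SharedSecretValue=... value shown earlier. The sketch below only illustrates that format; in the .NET code the real parsing is done by CreateFromConnectionString. The function names are hypothetical, and the sameNamespace helper is one way to check that the Web and Worker Role settings point at the same namespace.

```javascript
// Minimal sketch: split a Service Bus connection string into its key/value pairs.
// Illustrative only; the .NET SDK's CreateFromConnectionString does the real parsing.
function parseConnectionString(connectionString) {
  const settings = {};
  for (const part of connectionString.split(";")) {
    if (part.trim() === "") continue; // tolerate a trailing ';'
    const eq = part.indexOf("=");
    if (eq < 0) throw new Error(`Malformed segment: ${part}`);
    // Split on the FIRST '=' only: base64 shared secrets can end with '='.
    settings[part.slice(0, eq).trim()] = part.slice(eq + 1).trim();
  }
  return settings;
}

// Hypothetical helper: do two role settings target the same Service Bus endpoint?
function sameNamespace(webRoleSetting, workerRoleSetting) {
  const web = parseConnectionString(webRoleSetting);
  const worker = parseConnectionString(workerRoleSetting);
  return web.Endpoint === worker.Endpoint;
}
```

Splitting on the first "=" rather than on every "=" is the detail that matters here, since a secret such as "abc123==" would otherwise be truncated.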

Testing

We are just going to test our code locally, with the exception of our Service Bus queue, which is going to be created in Azure. To test our example:

- Press F5 and our website should be displayed.

- Since we have Trace statements in our Worker Role code, we need to launch the Compute Emulator. To do this, right-click the Azure icon in your taskbar and select Show Compute Emulator UI.

- A console-like window will appear. Click the ServiceBusWorker label.

- Switch back to our web application, provide some data and click the Submit button. Our results label should be updated to read “Message sent to queue”.

- If we switch back to the Compute Emulator, we should see that our message has been dequeued.

Conclusion

While the experience is a little different from that of the AppFabric Applications CTP, it is effective, especially for developers who may not be all that familiar with “integration”. This template provides a great starting point and allows them to wire up a Service Bus queue to their web application very quickly.

Vittorio Bertocci (@vibronet) posted Next Week at TechEd Europe: A Lap Around Windows Azure Active Directory on 6/22/2012:

After long months spent in my dark PM-cave in Redmond, it’s finally conferences season again!

Last week I had the pleasure of presenting WIF 4.5 at TechEd Orlando and meeting many of you; this Saturday I am flying to Amsterdam, and on Tuesday I am scheduled to deliver a repeat of Stuart’s session on Windows Azure Active Directory. Below you can find all the coordinates, and, oh marvel of modern technology, you can even add it directly to your calendar by clicking here.

A Lap around Windows Azure Active Directory

SIA209, a 200 level Breakout Session in Security & Identity

Vittorio Bertocci in E102 on Tue, Jun 26 12:00 PM - 1:15 PM

Windows Azure Active Directory (WAAD) is the conceptual equivalent in the cloud of Windows Server Active Directory (WS AD), and is integrated with WS AD itself. In this session, developers, administrators, and architects gain a high-level overview of WAAD, covering functional elements, supported scenarios, and an end-to-end tour, plus a roadmap for the future. #TESIA209

That is hot stuff, my friends. I am honored to be chartered to speak about such an important topic, which has the attention of the Gotha of identity (Kim, John, Craig, just to give you an idea). I know that the session is a 200, which means that I should not go too deep, and that most of the people in the room will be administrators, which means that I really should not open Visual Studio; but you guys know me, something tells me that I’ll do it anyway.

Also: I’ll arrive Sunday morning and leave already on Wednesday, hence I’ll be there barely the time to call Random.Next() a few times on my circadian cycle: if you want to catch me and chat, just head to the (aptly named) Directory and drop me a line!

Vittorio Bertocci (@vibronet) described Taking Control of the HRD Experience with the New WIF 4.5 Tools (with a Little Help from JQuery) in a 6/21/2012 post:

One of the key differences between using claims-based identity for one enterprise app and an app meant to be used on the web has to do with which identity providers are used, and how.

Of course there are no hard and fast rules (and the distinction above makes less and less sense as enterprise boundaries lose their adiabatic characteristics); however, in the general case you can expect the enterprise app to trust one identity provider that happens to be pretty obvious from the context. Think of the classic line of business app, an expense note app or a CRM: whether it’s running on premises or in the cloud, chances are that it directly trusts your ADFS2 instance or equivalent. Even if it’s a multitenant app, accessed by multiple companies, it is very likely that every admin will distribute to his employees deep links which include a pre-selection of the relevant STS (think whr), making it an automatic choice.

What happens when the typical Gino Impiegato (Joe Employee) uses the app?

- Gino arrives at work, logs in to his workstation, opens a browser and types the address of the LoB app

- Some piece of software, say WIF, detects that the current caller is not authenticated and initiates a sign-in flow. When this happens regardless of the resource being requested, we call the settings that trigger this flow a blanket redirect.

- Gino’s browser gets redirected to his local ADFS2. Gino is signed in to a domain machine, hence he gets back a token without even seeing any UI

- The browser POSTs the token to the LoB app, where WIF examines it, creates a session for it, and finally responds to the request with the requested page from the application

Gino is blissfully unaware of all that HTTP tumult going on. His experience is: I type the app address, I get the app UI. As simple as that. Nothing in between.
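Under the hood, the “blanket redirect” step amounts to answering every unauthenticated request with a redirect to the STS carrying WS-Federation sign-in parameters. The sketch below assumes a hypothetical STS address, realm and request shape; wa, wtrealm, wctx and whr are standard WS-Federation passive-requestor parameters, but WIF’s own wctx value carries more fields than the simplified “ru=” used here.

```javascript
// Minimal sketch of a WS-Federation sign-in redirect (simplified, hypothetical settings).
function buildSignInUrl({ issuer, realm, returnPath, homeRealm }) {
  const params = new URLSearchParams();
  params.set("wa", "wsignin1.0");         // this is a sign-in request
  params.set("wtrealm", realm);           // identifies the relying party (our app)
  params.set("wctx", "ru=" + returnPath); // context echoed back; simplified to the return path
  if (homeRealm) params.set("whr", homeRealm); // pre-selects the IdP, like the deep links with whr
  return issuer + "?" + params.toString();
}

// Blanket redirect: any unauthenticated request goes to the STS, whatever page was asked for.
function handleRequest(request, stsSettings) {
  if (request.isAuthenticated) return { status: 200 };
  return {
    status: 302,
    location: buildSignInUrl({ ...stsSettings, returnPath: request.path }),
  };
}
```

Note that nothing in handleRequest asks the user which identity provider to use: that works for Gino’s LoB app with a single, obvious ADFS2, and it is exactly what goes wrong in the consumer scenario that follows.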

Now, let’s talk about a classic web application on the public Internet. Imagine Gino at home, planning his upcoming vacation. Gino opens up the browser, types the address of one of those travel booking sites, hits Enter… and the browser shows a completely different page. The page offers a list of identity providers: Live ID, Facebook, Google… and no sign of anything related to travelling. What just happened? The travel booking site decided to allow its users to sign in with their social accounts, and implemented the feature using the blanket redirect.

The blanket redirect works great when the IdP to use is obvious and the user is already signed in with it, as happened with the LoB app, but that’s not the case here. The travel app does not know a priori which identity provider Gino wants to use. Even if it knew in advance, chances are that Gino might not be signed in with it and would be prompted for credentials: that would mean typing the address of the app and landing on, say, the Live ID login page instead. Poor Gino, very confusing.

Luckily the blanket redirect is just a default, and like every default it can be changed: WIF has always made it possible to override the redirect behavior, and ACS provides the code of a sample HRD page to incorporate in your site if you so choose.

With the new WIF tools we make a step forward in helping you to take control of how the authentication experience is initiated. I personally believe that we can do much better, but we need to start from somewhere…

Without further ado, let’s take a quick look at how to take advantage of the approach.

Fire up your brand new install of Visual Studio 2012 RC (make sure you have the WIF 4.5 tools installed!), head to the New Project dialog, and create a new ASP.NET MVC 4 Web Application. Pick the Internet Application template.

Now that you have a project, let’s configure it to use ACS with the blanket redirect approach; after that, we’ll see how to change the settings to offer the user a list of identity providers directly in the application. CTRL+click on this link to open the tutorial for adding ACS support to the application in another tab; once you are done, come back here and we’ll go on.

All done? Excellent. Now, go back to the WIF tool and click on the Configuration tab. This is what you’ll see:

That’s the default. When you configured the application to use WIF, the tool got rid of the forms authentication settings from the MVC template, added the necessary sections in the web.config and changed the <authorization> settings to trigger a redirect to the STS every time.

Let’s choose “Redirect to the following address” and paste “~/Account/Login” in the corresponding text field. Click OK. This re-instates the forms authentication settings: by default, all requests will now succeed. The WIF settings which describe the ACS namespace you chose as the trusted authority are still there; they are simply no longer triggered automatically. In fact, go ahead and add [Authorize] to the About action, run the project and click “About”: you’ll get the default behavior from the MVC template, the Login action from the Account controller.

Our job is now to modify the Login so that, instead of showing the membership provider form, it will show links corresponding to the identity providers configured in the ACS namespace.

We actually don’t need to change anything in the controller: let’s head directly to the Login.cshtml view in Views/Account. Once there, rip out everything between the hgroup and the @section Scripts line. Let’s keep things simple and just add an empty <div> where we will add all the identity providers’ links. Give it an id you’ll remember. Also, change the title to match the new behavior of the view. You should end up with something along the lines of what’s shown below

@model MvcApplication8.Models.LoginModel

@{
    ViewBag.Title = "Log in";
}

<hgroup class="title">
    <h1>@ViewBag.Title </h1>
    <h2> using one of the identity providers below</h2>
</hgroup>

<div id="IPDiv"></div>

@section Scripts {
    @Scripts.Render("~/bundles/jqueryval")
    ...

Great. Now what? From this other post, we know that ACS offers a JSON feed containing the login URLs of all the identity providers trusted by an RP in a namespace. That post goes far too deep into the details of what’s inside the feed: we are not all that interested in that today; rather, we are looking at how to consume that feed from our application. Luckily, that’s super easy. Head to the ACS management portal for your namespace, pick the Application Integration link on the left-side menu, choose Login Pages, and pick your application. You’ll land on a page that looks like the following:

What you need is highlighted in red. Getting into the details is really not necessary; you can look them up in the other post if you are interested. The main thing to understand here is how to use this in your application. The idea is very simple: you add a <script> element to your app and use the above as its src, then you append to it (after “callback=”) the name of a JavaScript function (say that you call it “ShowSigninPage”, with great disregard for the MVC pattern) containing the logic you want to run upon receiving the list of identity providers. When the view is rendered and execution reaches the <script> element, the JSON feed of IdPs will be downloaded and passed to your function, where you’ll have your shot at adding the necessary UI elements to the view.
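The script URL the portal hands you is a JSONP-style request: the callback parameter names the global function that the downloaded script will invoke with the array of identity providers. As a sketch, here is how that URL could be assembled. The query parameter names and their order are copied from the URL ACS generated for this post; the function name, and the assumption that reply_to defaults to the realm (as it does in that URL), are mine. encodeURIComponent emits uppercase escapes (%3A) where the portal used lowercase (%3a); the two are equivalent.

```javascript
// Sketch: build the ACS identity-provider feed URL used as the <script> src.
// Parameter names mirror the portal-generated URL; the helper name is hypothetical.
function buildIdpFeedUrl(namespace, realm, callbackName, replyTo = realm) {
  const query = [
    ["protocol", "wsfederation"],
    ["realm", realm],
    ["reply_to", replyTo],
    ["context", ""],
    ["request_id", ""],
    ["version", "1.0"],
    ["callback", callbackName], // global function the downloaded script will call
  ]
    .map(([key, value]) => key + "=" + encodeURIComponent(value))
    .join("&");
  return `https://${namespace}.accesscontrol.windows.net:443/v2/metadata/IdentityProviders.js?${query}`;
}
```

Because the callback name travels in the URL, it must match the function defined in your script exactly, including case: JavaScript treats ShowSigninPage and ShowSignInPage as two different functions.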

Assuming that you are a good web citizen and you subscribe to the unobtrusive JavaScript movement, and assuming that you’ll define your ShowSigninPage function in ilmioscript.js, your new Login.cshtml will look like the following:

@model MvcApplication8.Models.LoginModel

@{
    ViewBag.Title = "Log in";
}

<hgroup class="title">
    <h1>@ViewBag.Title </h1>
    <h2> using one of the identity providers below</h2>
</hgroup>

<div id="IPDiv"></div>

@section Scripts {
    @Scripts.Render("~/bundles/jqueryval")

    @Scripts.Render("~/scripts/ilmioscript.js")
    <script src="https://vibrorepro.accesscontrol.windows.net:443/v2/metadata/IdentityProviders.js?protocol=wsfederation&realm=http%3a%2f%2flocalhost%3a45743%2f&reply_to=http%3a%2f%2flocalhost%3a45743%2f&context=&request_id=&version=1.0&callback=ShowSigninPage" type="text/javascript"></script>
}

Great, now we have moved the action to the ilmioscript.js file and the ShowSigninPage function. Tantalizing, right? Be patient, I promise this is the last step we need before being able to hit F5.

As you can see from the screenshot above, ACS provides you with a sample page which shows how to reproduce the exact behavior of its default home realm discovery page, including all the remember-in-a-cookie-which-IP-was-picked-and-hide-all-others-next-time brouhaha. However here we are interested in something quick & dirty, hence I am going to merrily ignore all that and will provide appropriately quick & dirty minimal JQuery to demonstrate my point.

Create a new js file (ilmioscript.js) under the Scripts folder, and paste the following into it:

function ShowSigninPage(IPs) {
    $.each(IPs, function (i, ip) {
        $("#IPDiv").append('<a href="' + ip.LoginUrl + '">' + ip.Name + '</a><br/>');
    });
}

Isn’t JQuery conciseness astounding? It gets me every time. In barely 3 lines of code I am saying that for every identity provider descriptor in the feed, I want to append a new link to my base div, with text from the name of the IdP and href to the login URL. Bam. Done.
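If you want to unit-test that loop outside the browser, one option is to pull the markup generation into a pure function. The sketch below is a variation, not the post’s code: renderIdpLinks and escapeHtml are hypothetical names, it uses only the Name and LoginUrl fields the original reads from each IdP descriptor, and it also HTML-escapes those values, since the jQuery version concatenates them straight into markup.

```javascript
// Escape text for safe use inside HTML element content and double-quoted attributes.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Pure version of the ShowSigninPage loop: one link per identity provider descriptor.
function renderIdpLinks(identityProviders) {
  return identityProviders
    .map((ip) => '<a href="' + escapeHtml(ip.LoginUrl) + '">' + escapeHtml(ip.Name) + "</a><br/>")
    .join("");
}
```

In the page, the callback then shrinks to `function ShowSigninPage(IPs) { $("#IPDiv").append(renderIdpLinks(IPs)); }`. Escaping "&" in the href as "&amp;" is correct HTML; the browser still follows the original URL.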

Save the entire contraption and hit F5, then click on About:

Marvel oh marvel, the Login view now offers me the same list of IdPs directly within my app experience instead of redirecting me to strange places. Also, I can add helpful messages to prepare the user for what will happen when he clicks one of the links, why he might want to do so, and whatever else crosses my mind. Oh my, all this power: will it corrupt you as it corrupted me?

Go ahead and click on one of those links: you’ll be transported through the usual sign-in experience of the provider of choice, and you’ll come back authenticated. That’s because WIF might no longer be proactive, but it certainly recognizes a WS-Federation wsignin1.0 message when it sees one, and that triggers it to go through the usual motions: token validation, session cookie creation… just like it normally happens with the blanket redirect.

Here I was pretty heavy-handed with my simplifications: I disregarded all cleanups (there is still code from the MVC template that won’t be used after this change), I went the easy route (you might want a more elaborate HRD experience, or you might want to keep the membership provider and add federated identity side by side: it’s possible and not too hard) and I didn’t tie all the loose ends (when you log in you aren’t redirected to the About view, as you’d expect: restoring that behavior is simple, but it will be a topic for another blog post).

Despite all that, the core of the idea is all here: I hope you’ll agree with me that once you’ve seen how it works this looks ridiculously easy.

Now it’s your turn to try! Is this really as easy as I make it look? Would you like the tools to go even further and perhaps emit this code for you when you choose the non-blanket option? Or do you only write enterprise apps and you don’t need any of this stuff? The floor is yours; we look forward to your feedback!

Vittorio Bertocci (@vibronet, who else?) reported The Recording of “What’s New in WIF 4.5” From TechEd USA is Live on 6/19/2012:

Howdy! Last week I had the pleasure of increasing my curliness by a 3x factor thanks to the moist Orlando weather; I was there to deliver a session on what’s new with WIF in the .NET Framework 4.5. I also took advantage of the occasion to have detailed conversations with many of you guys, which is always a fantastic balsam to counter the filter bubble that enshrouds me when I spend too long in Redmond. Thank you!

Giving the talk was great fun, and a source of great satisfaction. If you have followed this blog since the early days, you know that I’ve been (literally) preaching this claims-based identity religion for many, many years, and seeing how deeply it is being wedged into the platform and how mainstream it has become… well, it’s an incredible feeling.

I was also impressed by the turnout: despite it being awfully, awfully early in the morning (8:30am in Orlando time is 5:30am in Redmond time) you guys showed up on time, filled the room, gave me your attention (yes, I can see you even if they shoot a lot of MegaLumens straight in my face) and followed up with great questions. Thank you!!!

Well, as you already guessed from the title of the post, I am writing this because the recording of the talk is now up and available on Channel 9 for your viewing pleasure (if you can stomach my Italian-Genovese-English accent, that is). The deck is not up yet (as usual I turned it in AFTER the talk) but should be shortly.

Here’s a quick summary of the topics covered:

- WIF 1.0 vs. WIF in .NET 4.5

- ClaimsIdentity’s role in the .NET classes hierarchy

- Improvements in accessing claims

- Class movements across namespaces

- Config elements movements across sections

- Web Farms and sessions

- Win8 claims

- Misc improvements

- The new tools: design & capabilities

- New samples

- Using WIF to SSO from Windows Azure Active Directory to your own web applications

If that sounds like a tasty menu, dive right in! Also note, this morning we released the refresh of the WIF tools for Visual Studio 2012 RC hence you can experience firsthand all the things you’ll see in the video.

Alrighty. Next week I will show up for a short 2 days at TechEd EU in Amsterdam: if you are around and you want to have a chat, please come find me. As usual, have fun!

Vittorio Bertocci (@vibronet, who else?) delivered Windows Identity Foundation Tools for Visual Studio 2012 RC on 6/19/2012:

They’re out, folks. A few minutes ago Marc flipped the switch on the refresh of the WIF tools for the RC of Visual Studio 2012. There’s not a lot of news, this was mostly a stabilization effort; if you want to learn about what the tools do, the original posts we did for Beta are still valid. Also, last week I gave a presentation at TechEd USA about what’s new in WIF 4.5: the tools took a fairly big portion of the talk, so if you prefer videos to written text, head to the recording on Channel9.

Here are a few scattered comments on the release:

- We fixed tens of bugs, significantly improving the stability of the experience. Thank you all for your feedback, which helped us to track those down!

- The RC of the tool still requires you to run VS as administrator. That’s unfortunate, I know: we are looking into it. However, I have to give you a heads-up: during the TechEd talk I asked the audience (~150 people) who would not be able to use the tools at all if they required running VS as administrator, and only 3 raised their hands. If running as admin is a complete blocker for you, please leave comments on this post or on the tools download pages ASAP. Thanks!

- Now for the good news: once you install the RC of the tools, the WIF samples released for Beta will keep working in VS 2012 RC without modifications

That’s it. Download, code away & be merry!


Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

• Cory Sanders (@SyntaxC4) continued his Infrastructure as a Service Series: Virtual Machines and Windows series on 6/25/2012:

We recently announced the release of Windows Azure Virtual Machines, an Infrastructure-as-a-Service (IaaS) offering in Windows Azure. I wanted to share my insights into how you can quickly start to use and best take advantage of this service.

Since I helped build it, I will warn you: I do love it!

Virtual Machines Migration Patterns

At a glance, Virtual Machines (VMs) consist of infrastructure to deploy an application. Specifically, this includes a persistent OS disk, possibly some persistent data disks, and internal/external networking glue to hold it all together. Despite the boring list, with these infrastructure ingredients, the possibilities are so much more exciting…

In this preview release, we wanted to create a platform where customers and partners can deploy existing applications to take advantage of the reduced cost and ease of deployment offered in Windows Azure. While ‘migration’ is a simple goal for the IaaS offering, the process might involve transferring an entire multi-VM application, like SharePoint. Or it might be moving a single Virtual Machine, like SQL Server, as part of a cloud-based application. It may even include creating a brand new application that requires Virtual Networks for hybrid connectivity to an on-premises Active Directory deployment.

What Applications Do you Mean?

As part of the launch of Virtual Machines in Windows Azure, we focused on the support for three key Microsoft applications, shown in Figure 1. We selected these applications and associated versions because of the high customer demand we received for them, either standalone or as part of a larger application.

Figure 1 – Applications and Versions

Additionally, these applications represent broad workload types we believe customers want to deploy in the cloud:

1) SQL Server is a database workload with expectations for exceptional disk performance;

2) Active Directory is a hybrid identity solution with extensive networking expectations;

3) SharePoint Server is a large-scale, multi-tier application with a load-balanced front-end. In fact, as shown in Figure 2, SharePoint Server 2010 deployments will include SQL Server and Active Directory.

Figure 2 – A SharePoint Server Deployment

Getting Started with Virtual Machines for Windows

The easiest way to get going with Windows Azure Virtual Machines for Windows is through our new HTML-based portal. The portal offers a simple and enjoyable experience for deploying new Virtual Machines based on one of the several platform images supplied by Microsoft including: Microsoft SQL Server 2012 Evaluation, Windows Server 2008 R2 SP1, and Windows Server 2012 Release Candidate.

We update these images regularly with the most recent patches to make sure that you start with a secure virtual machine, by default. Further details on getting started can be found here. Once deployed, you can patch the running Virtual Machines using a management product or simply use Windows Update.

Figure 3 – The Virtual Machine OS Selection

We also wanted to make it easy for you to deploy Virtual Machines using scripts or build on our programmatic interface. Therefore, we also released PowerShell cmdlets that allow scripted deployment of Virtual Machines for Windows. Finally, both the portal and the Command-Line tooling are built on REST APIs that are available for programmatic usage, described here, giving you the ability to develop your own tools and management solutions.

Figure 4 – Scripting, Portal and the REST API

How does it work, Really?

The persistence of the OS and data disks is a crucial aspect of the Virtual Machines offering. Because we wanted the experience to be completely seamless, we do all disk management directly from the hypervisor. Thus, the disks are exposed as SATA (OS disk) and SCSI (data disks) ‘hardware’ to the Virtual Machine, when actually the ‘disks’ are VHDs sitting in a storage account. Because the VHDs are stored in Windows Azure Storage accounts, you have direct access to your files (stored as page blobs) and get the highly durable triplicate copies implemented by Windows Azure storage for all its data.

Look for more details on how disks work in Windows Azure, in tomorrow’s blog post by Brad Calder.

Figure 5 – Windows Azure Storage as the disk

I like to move it, Move It!

You can copy, back up, move and download your VHDs using standard storage API commands or storage utilities, creating endless possibilities for managing and re-deploying instances. Instead of spending a lot of time setting up a test environment only to quickly destroy it, you can simply copy the VHD’s blob and boot the same set-up multiple times, then destroy it multiple times. You can even capture running VMs so that you can make clones of them with new machine names and passwords, a process we call ‘capturing’, described here.

This VHD ‘mobility’ also allows you to take your VHDs previously deployed in Windows Azure and bring them back on-premises (assuming you have the appropriate licenses) for management or even deployment. The reverse is possible, as well. Details on how to upload your VHD into Windows Azure can be found here.

Figure 6 – VHD Mobility in Action

Sounds cool. What should I do first?

The best way to get started with the offering is just to do some experimenting, developing and testing on a Virtual Machine. This means you can deploy a Virtual Machine, perhaps as part of your new free trial, and install your favorite server application like SharePoint, SQL, IIS, or something else. Once you are done, you can export all aspects of the Virtual Machine, including the connected data disks and endpoint information, so that you can import it later for further development and testing.

Use this as a quick development box and try building something. It is easy, and fun! Of course, only a geeky infrastructure guy would call deploying a VM fun. But it sure is fun.

If you have any issues, problems, or questions, you can always find us on the help forums.

• Shaun Xu continued his series with Windows Azure Evolution – Deploy Web Sites (WAWS Part 3) on 6/24/2012:

This is the sixth post of my Windows Azure Evolution series. After talking a bit about the new caching preview feature in the previous one, let’s get back to Windows Azure Web Sites (WAWS).

Git and GitHub Integration

In the third post I introduced the overall functionality of WAWS and demonstrated how to create a WordPress blog through the built-in application gallery. In the fourth post I covered how to use the TFS service preview to deploy an ASP.NET MVC application to the web site through the TFS integration. WAWS also has Git integration.

I’m not going to go into much detail about the Git and GitHub integration, since there is plenty of information on the internet you can refer to. To enable Git, just go to the web site item in the developer portal and click “Set up Git publishing”.

After we specify the username and password, the Windows Azure platform will establish the Git integration and provide some basic guidance. As you can see, you can download the Git binaries, commit the files and then push to the remote repository.

Regarding GitHub, since it’s built on top of Git it should work. Maarten Balliauw has a wonderful post about how to integrate GitHub with Windows Azure Web Sites, which you can find here.

WebMatrix 2 RC

WebMatrix is a lightweight web application development tool provided by Microsoft. It uses WebDeploy or FTP to deploy the web application to the server, and WebMatrix 2 RC adds the ability to work with Windows Azure.

First of all, we need to download the latest WebMatrix 2 through the Web Platform Installer 4.0. Just open the WebPI and search for “WebMatrix”, or go to its home page and download its web installer.

Once we have WebMatrix 2, we need to download the publish file of our WAWS. Let’s go to the developer portal, open the web site we want to deploy, and download the publish file from the link on the right-hand side.

This file contains the information needed to publish the web site through WebDeploy and FTP, and it can be used in WebMatrix, Visual Studio, etc.

Once we have the publish file, we can open WebMatrix and click Open Site, Remote Site. This brings up a dialog where we can input the information of the remote site. Since we already have our publish file, we can click “Import publish settings” and select the publish file, and the site information will be populated automatically.

Click OK, and WebMatrix will connect to the remote site, which is the WAWS we had already deployed, and retrieve its folder and file information. We can open files in WebMatrix and modify them. But since WebMatrix is a lightweight web application tool, we cannot update the backend C# code, so in this case we will modify only the frontend home page.

After we save our modification, WebMatrix will compare the local and remote files and upload only the modified files to Windows Azure, using the connection information in the publish file.

Since it only updates the files that were changed, this minimizes the bandwidth and deployment time. After a few seconds we went back to the website and the modification had been applied.
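The “upload only what changed” idea can be sketched in a few lines. This is not how WebMatrix or WebDeploy actually decide what changed (they may use timestamps, sizes or checksums; I am not assuming which); the sketch simply compares content hashes, and the buildManifest and filesToUpload names and the sample file contents are hypothetical.

```javascript
const crypto = require("crypto");

// Hash file contents so that "changed" means "different bytes".
function hashContent(content) {
  return crypto.createHash("sha256").update(content).digest("hex");
}

// Build a manifest (relative path -> content hash) from an object of path -> content.
function buildManifest(files) {
  const manifest = {};
  for (const [path, content] of Object.entries(files)) {
    manifest[path] = hashContent(content);
  }
  return manifest;
}

// New local files, or files whose hash differs from the remote copy, need uploading.
function filesToUpload(localManifest, remoteManifest) {
  return Object.keys(localManifest)
    .filter((path) => remoteManifest[path] !== localManifest[path])
    .sort();
}
```

With a local site where only the home page was edited, filesToUpload returns just that one path, which is why a small frontend tweak deploys in seconds.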

Visual Studio and WebDeploy

The publish file we downloaded can be used not only in WebMatrix but also in Visual Studio. In Visual Studio we can publish a web application by clicking “Publish” in the project context menu in Solution Explorer, and we can choose WebDeploy, FTP or File System as the publish target. Now we can use the WAWS publish file to let Visual Studio publish the web application to WAWS.

Let’s create a new ASP.NET MVC Web Application in Visual Studio 2010 and then click “Publish” in Solution Explorer. Once the Windows Azure SDK 1.7 is installed, it updates the web application publish dialog so that we can import the publish information from the publish file.

Select WebDeploy as the publish method.

We can select FTP as well, which is supported by Windows Azure; the FTP information is in the same publish file.

In the last step, the publish wizard can show the files that will be uploaded to the remote site before actually publishing. This gives us a chance to review and amend them. As with WebMatrix, Visual Studio compares the files between local and WAWS and determines which have changed and need to be published.

Finally, Visual Studio publishes the web application to Windows Azure through the WebDeploy protocol.

Once it finishes, we can browse our website.

FTP Deployment

The publish file we downloaded contains the connection information for our web site via both WebDeploy and FTP. In WebMatrix and Visual Studio we can select either WebDeploy or FTP. WebDeploy is very easy to use from WebMatrix and Visual Studio, with its file compare feature, but FTP gives more flexibility: we can use any FTP client to upload files to Windows Azure, regardless of which client and OS we are using.

If we open the publish file in any text editor, we can find the connection information very easily. As you can see, the publish file is actually an XML file with the WebDeploy and FTP information in plain-text attributes.
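Because the publish file is plain XML, the FTP details can also be pulled out programmatically. The following is a minimal, regex-based sketch; it assumes each profile is a `publishProfile` element with `publishMethod`, `publishUrl`, `userName` and `userPWD` attributes, so check those names against your own downloaded file. A production tool should use a real XML parser instead.

```javascript
// Minimal sketch. Assumed attribute names: publishMethod, publishUrl, userName, userPWD.
// Limitation: the element regex stops at the first ">", so attribute values containing
// a literal ">" are not handled.

// Decode the five predefined XML entities; &amp; goes last so "&amp;lt;" stays "&lt;".
function decodeXmlEntities(s) {
  return s
    .replace(/&lt;/g, "<")
    .replace(/&gt;/g, ">")
    .replace(/&quot;/g, '"')
    .replace(/&apos;/g, "'")
    .replace(/&amp;/g, "&");
}

// Returns one attribute map per <publishProfile> element.
function parsePublishProfiles(xml) {
  const profiles = [];
  const elementRe = /<publishProfile\b([^>]*)>/g;
  let el;
  while ((el = elementRe.exec(xml)) !== null) {
    const attrs = {};
    const attrRe = /([\w:.-]+)\s*=\s*"([^"]*)"/g;
    let a;
    while ((a = attrRe.exec(el[1])) !== null) {
      attrs[a[1]] = decodeXmlEntities(a[2]);
    }
    profiles.push(attrs);
  }
  return profiles;
}

// Picks out the FTP URL, user name and password, or null if there is no FTP profile.
function getFtpSettings(xml) {
  const ftp = parsePublishProfiles(xml).find((p) => p.publishMethod === "FTP");
  if (!ftp) return null;
  return { url: ftp.publishUrl, userName: ftp.userName, password: ftp.userPWD };
}
```

The returned URL, user name and password are the same values you would type into an FTP client such as FileZilla.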

Once we have the FTP URL, username and password, we can connect to the site and upload and download files. For example, I opened FileZilla and connected to my WAWS through FTP.

Then I can download the files I am interested in, modify them on my local disk, and upload them back to Windows Azure through FileZilla.

Then I can see the new page.

Summary

In this quick post I introduced various approaches to deploying our web application to Windows Azure Web Sites. It supports TFS integration, which I mentioned previously, as well as Git and GitHub, WebDeploy and FTP.

Faith Allington (@faithallington) started a Web Sites Series: Create PHP and MySQL sites with Windows Azure Web Sites and WebMatrix on 6/22/2012:

The newly introduced Windows Azure Web Sites and latest release of Microsoft WebMatrix provide a great way to create and host PHP + MySQL sites. You can write your own or get started with one of the popular open source applications that run in Windows Azure, such as Drupal, Joomla! or WordPress.

This post covers how to create a site in Windows Azure, download and customize it, then deploy back to Windows Azure Web Sites. We will be using WebMatrix, a free, lightweight web development tool. We’ll look at some of the new capabilities in WebMatrix that make writing sites easier and new features in the Windows Azure portal.

Create a new Web Site

With the release of Windows Azure Web Sites, you can create a new website in the portal in minutes. After signing up for a free trial, you have the option of creating an empty site without a database, creating a site with a database, or installing an open source application automatically.

While you can create an empty site with a database and then publish a site to it, we’re going to start by using the gallery.

For those of you familiar with the Web Platform Installer, you will recognize some of the same popular applications, specifically ones that have been tested on Windows Azure.

I want to build a content management based site, so I will use Acquia Drupal as an example. After you choose an application, enter a site name and choose the database.

You can customize the database name and choose the region. Be sure to choose the same region as the site for best performance. You will have to configure a username and password for MySQL if you haven’t already.

Progress is displayed in the command bar area at the bottom of the portal. When site creation and installation of the app is complete, the notification may include a link to finish configuration. Click Setup to finish Acquia Drupal’s installation in the browser.

In the browser, most of the fields have been pre-filled. Just type your MySQL password and continue.

After you have completed Acquia Drupal’s browser install, you can log in and customize your site!

Download and customize a Web Site in WebMatrix

When you’re ready to start making changes, you can download the site to your computer to edit offline. Downloading is useful if you don’t want to break your site running in production, or you want a rich editing experience with PHP code completion, colorization and other editing features.

We’ll start by navigating to the site in the portal and clicking the WebMatrix button in the command bar.

If WebMatrix is not already installed, you will be prompted to install WebPI and WebMatrix, along with any prerequisites that are needed on your machine. Once it completes, WebMatrix will launch and automatically begin downloading your site.

Since you are running an application from the Web Application Gallery, WebMatrix will automatically install dependencies and make any changes needed so that the application runs locally.

Enter the database administrator password when prompted. Any required dependencies, such as MySQL or PHP, will be listed.

The final steps of the wizard will download the database and make any configuration changes needed for the site to run locally.

After the wizard completes, you will see the Site dashboard with helpful getting-started links provided by Acquia Drupal. WebMatrix is split into four workspaces: Site, Files, Database and Reports.

Since we’re customizing the site, let’s go to the Files workspace. We’ll start by adding a custom module, following a Drupal tutorial. Notice the Drupal-specific code completion that appears, allowing us to learn about and quickly use Drupal functions.

You can change the theme, install new modules and more using the Acquia Drupal control panel in the browser by clicking Run in the ribbon.

Deploy the Web Site

Once you’ve customized the local version, you can publish it back to your live site. Simply click the Publish button in the ribbon.

You’ll be able to preview the files. Check the database if you also want to publish database changes.

Note: Publishing the database will replace your remote database with the local one. If you have data in the remote database that you don’t want to overwrite, you should skip publishing the database.

Once publishing completes, you can browse to your remote site.

If you need to quickly make a change to the remote site, such as changing a configuration setting that is only applicable on the remote server, this is easy to do – select the Remote tab and then Open Remote View in the ribbon.

Create a Custom Web Site

If you prefer to create your own sites from scratch, you can use the built-in templates. Follow the steps in this article on the Windows Azure development center.

Summary

This post covered how Windows Azure Web Sites and WebMatrix make it easier to get started with open source web applications, or create your own custom site.

Please share your feedback on the post, the features discussed or new topics you’d like to see us cover.

– by Faith Allington, Sr. Program Manager, Windows Azure


Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Avkash Chauhan (@avkashchauhan) explained Installing Node.js on a CentOS Linux Virtual Machine in Windows Azure in a 6/25/2012 post:

First I created a CentOS Linux Virtual Machine with the host name “nodejsbox” and the DNS name http://nodejsbox.cloudapp.net.

Because I will use this machine as a Node.js web server, I added an endpoint for HTTP traffic using protocol TCP on port 80.

Now I can log into my Linux machine by following the instructions in How to Log on to a Virtual Machine Running Linux.

After that I ran the following commands:

$ sudo -i

(Entered my password.)

Install the stable release of Node.js directly from the Node.js repo:

[root@nodejsbox ~]# wget http://nodejs.tchol.org/repocfg/el/nodejs-stable-release.noarch.rpm
[root@nodejsbox ~]# yum localinstall --nogpgcheck nodejs-stable-release.noarch.rpm
[root@nodejsbox ~]# yum install nodejs-compat-symlinks npm

Verify that Node.js is installed on the machine:

[root@nodejsbox /]# node --version
v0.6.18

Next, I created a folder named nodeserver at /usr/local and then created server.js as below:

# cd /usr/local
# mkdir nodeserver
# cd nodeserver
# vi server.js

var http = require("http");

// Answer every request with a plain-text greeting.
http.createServer(function(request, response) {
  response.writeHead(200, {"Content-Type": "text/plain"});
  response.write("Hello from Node.js @ Windows Azure Linux Virtual Machine");
  response.end();
}).listen(80); // binding to port 80 requires root on Linux, hence sudo -i above

Finally, launch Node.js:

[root@nodejsbox nodeserver]# node server.js

You can verify that Node.js is running on the Linux machine by browsing to http://nodejsbox.cloudapp.net.
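The same check can be scripted instead of using a browser. The sketch below is an illustration, not part of the original walkthrough; the helper name `checkServer` is hypothetical, and it uses only Node's built-in `http` module to fetch a URL and report the status code and body.

```javascript
// Hypothetical helper: fetch a URL and resolve with its status code and body text.
const http = require("http");

function checkServer(url) {
  return new Promise((resolve, reject) => {
    const req = http.get(url, (res) => {
      let body = "";
      res.setEncoding("utf8");
      res.on("data", (chunk) => { body += chunk; });
      res.on("end", () => resolve({ statusCode: res.statusCode, body }));
    });
    req.on("error", reject); // DNS failures, refused connections, etc.
  });
}
```

For example, `checkServer("http://nodejsbox.cloudapp.net/").then(console.log)` should report status 200 and the greeting from server.js, assuming the endpoint on port 80 is open and node is still running.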

• Robert Green (@rogreen_ms) reported Episode 40 of Visual Studio Toolbox: What's New in the Windows Azure June 2012 Release is now live in a 6/20/2012 post:

In this episode, Nick Harris joins us to talk about what's new in Windows Azure tooling. On Cloud Cover, Nick and his co-hosts covered the Windows Azure June 2012 Release from a much broader perspective. Here, we take a different approach and look at the new release from the perspective of a Visual Studio developer who is either new to Azure or only somewhat familiar with it.

Nick shows the following:

Alan Smith described 256 Windows Azure Worker Roles, Windows Kinect and a 90's Text-Based Ray-Tracer in a 6/25/2012 post:

For a couple of years I have been demoing a simple render farm hosted in Windows Azure using worker roles and the Azure Storage service. At the start of the presentation I would deploy an Azure application that uses 16 worker roles to render a 1,500-frame 3D ray-traced animation. At the end of the presentation, when the animation was complete, I would play the animation and delete the Azure deployment. The standing joke with the audience was that it was a “$2 demo”, as the compute charges for running the 16 instances for an hour were $1.92; factor in the bandwidth charges and it’s a couple of dollars. The point of the demo is that it highlights one of the great benefits of cloud computing: you pay for what you use, and if you need massive compute power for a short period of time, Windows Azure can work out very cost effective.

The “$2 demo” was great for presenting at user groups and conferences in that it could be deployed to Azure, used to render an animation, and then removed in a one-hour session. I have always had the idea of doing something a bit more impressive with the demo, scaling it from a “$2 demo” to a “$30 demo”. The challenge was to create a visually appealing animation in high-definition format and keep the demo time down to one hour. This article runs through how I achieved this.
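The arithmetic behind the two price tags can be made explicit. This sketch assumes the per-instance rate implied by the figures above ($1.92 for 16 instances for one hour, i.e. 12 cents per instance-hour at the time) and ignores bandwidth and storage charges; it works in whole cents to avoid floating-point rounding surprises.

```javascript
// Rate implied by the post: 192 cents for 16 instances running for one hour.
const DEMO_COST_CENTS = 192;
const DEMO_INSTANCES = 16;
const CENTS_PER_INSTANCE_HOUR = DEMO_COST_CENTS / DEMO_INSTANCES; // 12 cents

// Compute charge only (no bandwidth or storage) for a number of instances and hours.
function computeCostCents(instances, hours) {
  return instances * hours * CENTS_PER_INSTANCE_HOUR;
}

function formatDollars(cents) {
  return "$" + (cents / 100).toFixed(2);
}

console.log(formatDollars(computeCostCents(16, 1)));  // prints $1.92
console.log(formatDollars(computeCostCents(256, 1))); // prints $30.72
```

At that rate, 256 worker roles running for an hour come to 256 × 12 cents = $30.72, which matches the “$30 demo” target.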

Ray Tracing

Ray tracing, a technique for generating high-quality photorealistic images, gained popularity in the 90’s with companies like Pixar creating feature-length computer animations, and with the emergence of shareware text-based ray tracers that could run on a home PC. To render a ray-traced image, the ray of light passing from the viewpoint through each pixel must be traced until it intersects an object. At the intersection, the color, reflectiveness, transparency, and refractive index of the object are used to calculate whether the ray will be reflected or refracted. Each pixel may require thousands of calculations to determine its color in the rendered image.
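The two core calculations described above, finding where a ray hits an object and bouncing it off the surface, can be sketched for the simplest case of a sphere. This is a generic textbook formulation written for illustration, not PolyRay's implementation; vectors are plain `[x, y, z]` arrays and the ray direction is assumed to be normalized.

```javascript
// Small vector helpers on [x, y, z] arrays.
const dot = (a, b) => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
const sub = (a, b) => [a[0] - b[0], a[1] - b[1], a[2] - b[2]];
const scale = (a, s) => [a[0] * s, a[1] * s, a[2] * s];

const EPSILON = 1e-9; // ignore hits at (or behind) the ray origin

// Solves |origin + t*dir - center|^2 = radius^2 for the smallest positive t.
// With |dir| = 1 this is t^2 + 2bt + c = 0, where b = oc.dir and c = oc.oc - r^2.
function intersectSphere(origin, dir, center, radius) {
  const oc = sub(origin, center);
  const b = dot(oc, dir);
  const c = dot(oc, oc) - radius * radius;
  const disc = b * b - c;
  if (disc < 0) return null;                // ray misses the sphere
  const sqrtDisc = Math.sqrt(disc);
  let t = -b - sqrtDisc;                    // nearer intersection
  if (t < EPSILON) t = -b + sqrtDisc;       // origin inside sphere: take the far one
  return t >= EPSILON ? t : null;           // sphere is entirely behind the ray
}

// Mirror reflection of direction d about unit surface normal n: r = d - 2(d.n)n
function reflect(d, n) {
  return sub(d, scale(n, 2 * dot(d, n)));
}
```

A full tracer repeats this for every object in the scene, keeps the nearest hit, and then recursively traces the reflected (and refracted) rays, which is why each pixel can need so many calculations.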

Pin-Board Toys

Having very little artistic talent and a basic understanding of maths, I decided to focus on an animation that could be modeled fairly easily and would look visually impressive. I’ve always liked the pin-board desktop toys that became popular in the 80’s, and when I was working as a 3D animator back in the 90’s I always had the idea of creating a 3D ray-traced animation of a pin-board, but never found the energy to do it. Even if I had had a go at it, the render time to produce an animation that would look respectable on a 486 would have been measured in months.

PolyRay

Back in 1995 I landed my first real job, after spending three years being a beach-ski-climbing-paragliding-bum, and was employed to create 3D ray-traced animations for a CD-ROM that school kids would use to learn physics. I had got into the strange and wonderful world of text-based ray tracing and was using a shareware ray tracer called PolyRay. PolyRay takes a text file describing a scene as input and, after a few hours of processing on a 486, produces a high-quality ray-traced image. …

Alan continues with details of PolyRay tracing and then discusses use of Windows Kinect for depth-field animation:

Windows Kinect