Creating an Interactive Hadoop on Azure Hive Table from an Amazon Elastic MapReduce Hive Output File

Note: This post is the second half of my recent Executing an Elastic MapReduce Hive Workflow from the AWS Management Console article with a slightly modified title. It is intended for folks who only want to try S3 files as a source for the Apache Hadoop on Windows Azure (a.k.a., HadoopOnAzure or HoA) preview’s Interactive Hive feature. You’ll need an Amazon Web Services (AWS) account to log into AWS in step 4 and open the ~100 MB public feature_index file from the location specified in step 7.

• Update 6/2/2012: Microsoft’s Brad Sarsfield (@bradooop), Senior Developer - Hadoop for Windows and Windows Azure, reported in an answer to my “What Hive Version Does HadoopOnAzure Use?” thread in the HadoopOnAzure Yahoo! Group:

We will be moving the preview to use Hive 0.8.1 very soon, weeks not months. We've been working on this as part of a larger version bump that includes a core update of Hadoop to the 1.0.x codeline.

Some of you have noticed that we have pushed a set of patches back into Hadoop core recently. We're really excited to see that the patches are flowing and are starting to get committed.

For those of you who are using the Hadoop for Windows preview, which is currently in a limited invite only preview, a refresh MSI will come in a similar timeframe.

Brad Sarsfield

In an earlier tweet Brad reported that the Apache Hadoop on Windows Azure preview runs Apache Hive 0.7.1.

My Using Data from Windows Azure Blobs with Apache Hadoop on Windows Azure CTP tutorial describes how to specify a Windows Azure blob as the data source for an interactive Hive query using the SQL Server team’s HadoopOnAzure preview. This section describes a similar process that substitutes the file created in my Executing an Elastic MapReduce Hive Workflow from the AWS Management Console article as the Hive table’s data source. (This file has public list/read permissions in S3.)

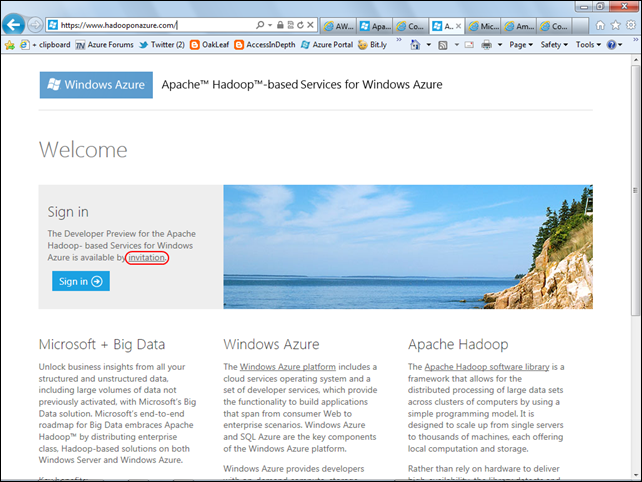

This section assumes you’ve received an invitation to test the Hadoop on Azure preview. If not, go to this landing page, click the invitation link and complete a questionnaire to obtain an invitation code by e-mail:

Note: If you don’t want to create an Amazon Web Services account, you can download the feature_index/000000 file from my SkyDrive account here, upload it to a Windows Azure blob and connect to the blob as the data source for this exercise.

To set up the S3 data source and execute HiveQL queries interactively, do the following:

1. Go to the HadoopOnAzure landing page, click Sign In, and provide your Live ID and password. This opens the main HoA page (see step 2) if you have an active cluster, or the Request a Cluster page if you don’t. In the latter case, type a globally unique DNS prefix for the cluster, select a Cluster Size, and type your administrative login, password and password confirmation:

Note: Passwords must contain upper and lower case letters and numerals. Symbols aren’t allowed. When your password and confirmation pass muster, the Request Cluster button becomes enabled. If a Large cluster is available, choose it. No charges accrue during the preview period.
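The password rule in the note above can be sketched as a small check. This is only an illustration of the rule as stated here; the function name is hypothetical, the check assumes ASCII characters, and the portal may enforce other constraints (such as a minimum length) that this post doesn't mention.

```python
import string

ALLOWED = string.ascii_letters + string.digits


def is_valid_cluster_password(password: str) -> bool:
    """Return True if the password has upper case, lower case and a numeral, and no symbols.

    Hypothetical helper: mirrors the rule described in the note, not the portal's own code.
    """
    has_upper = any(c in string.ascii_uppercase for c in password)
    has_lower = any(c in string.ascii_lowercase for c in password)
    has_digit = any(c in string.digits for c in password)
    only_letters_and_digits = all(c in ALLOWED for c in password)
    return has_upper and has_lower and has_digit and only_letters_and_digits


if __name__ == "__main__":
    for candidate in ("Hadoop2012", "hadoop2012", "Hadoop-2012"):
        print(candidate, is_valid_cluster_password(candidate))
```

Checking a candidate password this way before typing it into the form can save a round of trial and error with the disabled Request Cluster button.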

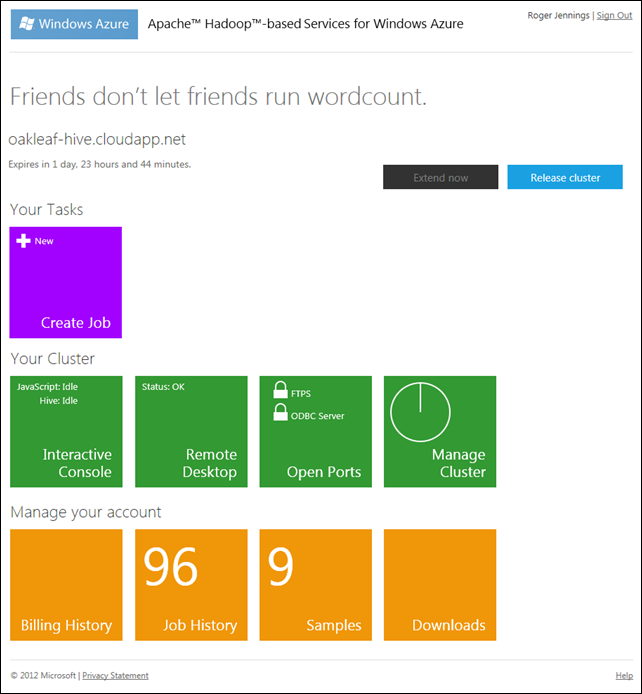

2. Click the Request Cluster button to start provisioning and display its status. After a few minutes the main HoA page opens:

Note: The cluster lifespan is 48 hours; you can renew the cluster during its last six hours of life only. Job History count will be 0, unless you’ve previously completed jobs.
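To keep track of when renewal becomes possible, the 48-hour lifespan and six-hour renewal window from the note above can be worked out as follows. This is a minimal sketch; the function names and the creation time in the example are made up for illustration, and the portal itself remains the authority on the actual expiration time.

```python
from datetime import datetime, timedelta

CLUSTER_LIFESPAN = timedelta(hours=48)  # from the note above
RENEWAL_WINDOW = timedelta(hours=6)     # renewal allowed only in the last six hours


def renewal_window(created_at: datetime) -> tuple[datetime, datetime]:
    """Return (when renewal opens, when the cluster expires)."""
    expires_at = created_at + CLUSTER_LIFESPAN
    return expires_at - RENEWAL_WINDOW, expires_at


def can_renew(created_at: datetime, now: datetime) -> bool:
    """True while the cluster is inside its renewal window."""
    opens_at, expires_at = renewal_window(created_at)
    return opens_at <= now < expires_at


if __name__ == "__main__":
    created = datetime(2012, 5, 30, 18, 8)  # hypothetical provisioning time
    opens_at, expires_at = renewal_window(created)
    print("Renewal opens:", opens_at)
    print("Cluster expires:", expires_at)
```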

3. Click the Manage Cluster tile to open the page of the same name:

4. Click the Set Up S3 button to open the Upload from Amazon S3 page, type your AWS Access Key and Secret Key, and accept the default S3N Native File System:

5. Click Save Settings to display an Amazon S3 Upload Successful message, despite the fact that you haven’t uploaded anything.

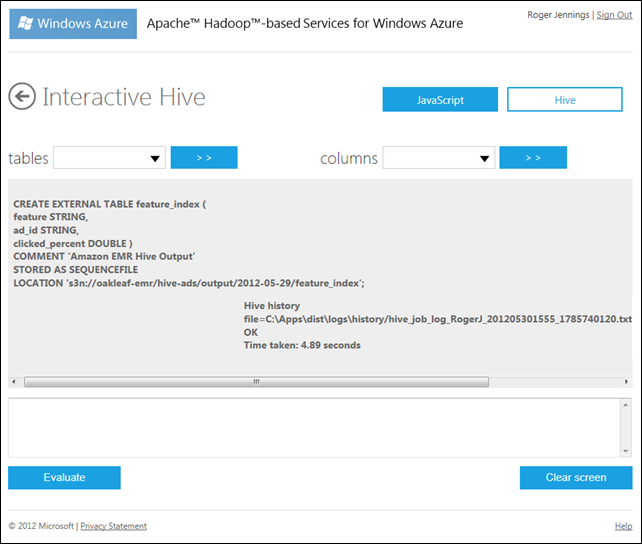

6. Click the back arrow twice to return to the main HoA page, click the Interactive Console tile to open the console and click the Hive button to select the Interactive Hive feature.

7. Type the following HiveQL DDL query in the text box at the bottom of the page to define the linked table:

CREATE EXTERNAL TABLE feature_index (

feature STRING,

ad_id STRING,

clicked_percent DOUBLE )

COMMENT 'Amazon EMR Hive Output'

STORED AS SEQUENCEFILE

LOCATION 's3n://oakleaf-emr/hive-ads/output/2012-05-29/feature_index';

8. Click the Evaluate button to execute the query and create the linked Hive table:

Note: Data isn’t downloaded until you execute a query that returns rows. Executing SELECT COUNT(*) FROM feature_index indicates that the table has 1,750,650 rows.
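The LOCATION clause in step 7 uses an s3n:// URI, in which the first component is the S3 bucket and the remainder is the key prefix (the folder) that holds the table's data file(s). A minimal sketch of how that URI breaks down, using only Python's standard library; the helper name is hypothetical:

```python
from urllib.parse import urlparse


def split_s3n_location(location: str) -> tuple[str, str]:
    """Split an s3n:// URI into (bucket, key prefix)."""
    parsed = urlparse(location)
    if parsed.scheme != "s3n":
        raise ValueError(f"expected an s3n:// URI, got {location!r}")
    # The netloc part is the bucket; the path (minus its leading slash) is the key prefix.
    return parsed.netloc, parsed.path.lstrip("/")


if __name__ == "__main__":
    bucket, prefix = split_s3n_location(
        "s3n://oakleaf-emr/hive-ads/output/2012-05-29/feature_index"
    )
    print("bucket:", bucket)
    print("prefix:", prefix)
```

Because the table is external, Hive reads whatever files sit under that prefix; the feature_index/000000 file mentioned earlier is the one that matters here.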

9. Open the Tables list, which displays the table you just created and HoA’s hivesampletable. The Columns list displays the selected table’s column names.

10. To display the first 20 rows of the table, click Clear Screen, and then type and execute the following HiveQL query:

SELECT * FROM feature_index LIMIT 20

11. The “Applying the Heuristic” section of AWS’ Contextual Advertising using Apache Hive and Amazon EMR article suggests executing the following sample HiveQL query against the feature_index table “to see how it performs for the features 'us:safari' and 'ua:chrome'”:

SELECT ad_id, -sum(log(if(0.0001 > clicked_percent, 0.0001, clicked_percent))) AS value

FROM feature_index

WHERE feature = 'ua:safari' OR feature = 'ua:chrome'

GROUP BY ad_id

ORDER BY value DESC

LIMIT 100 ;

According to the article:

The result is advertisements ordered by a heuristic estimate of the chance of a click. At this point, we could look up the advertisements and see, perhaps, a predominance of advertisements for Apple products.
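To see what the query's value expression computes, here is a small Python sketch of the same arithmetic: each clicked_percent is clamped to a floor of 0.0001, and the negated natural logarithms are summed per ad_id over the selected features. The rows below are invented for illustration and are not taken from the feature_index file; the function names are hypothetical.

```python
import math
from collections import defaultdict

FLOOR = 0.0001  # same floor as if(0.0001 > clicked_percent, 0.0001, clicked_percent)


def heuristic_scores(rows, features):
    """Return {ad_id: -sum(log(max(clicked_percent, FLOOR)))} for rows matching the features."""
    scores = defaultdict(float)
    for feature, ad_id, clicked_percent in rows:
        if feature in features:
            scores[ad_id] -= math.log(max(clicked_percent, FLOOR))
    return dict(scores)


def ordered_ads(scores, limit=100, descending=True):
    """Mimic ORDER BY value DESC (or ASC) followed by LIMIT."""
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=descending)
    return ranked[:limit]


if __name__ == "__main__":
    # Made-up sample rows: (feature, ad_id, clicked_percent)
    sample_rows = [
        ("ua:safari", "adA", 0.5),
        ("ua:chrome", "adA", 0.25),
        ("ua:safari", "adB", 0.0),    # clamped to 0.0001 before taking the log
        ("ua:firefox", "adA", 0.9),   # filtered out by the WHERE clause
    ]
    scores = heuristic_scores(sample_rows, {"ua:safari", "ua:chrome"})
    for ad_id, value in ordered_ads(scores):
        print(ad_id, round(value, 4))
```

In this made-up data, adA scores -log(0.5 × 0.25) ≈ 2.0794 and adB scores -log(0.0001) ≈ 9.2103, so adB sorts first in descending order. The floor keeps a zero click percentage from producing an infinite value.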

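Note: The original query sorted ascending; this version sorts descending. Because value is the negative sum of the logarithms of clicked_percent, larger values correspond to smaller click percentages, so the descending sort shown here lists the ads with the lowest estimated chance of a click first; the original ascending sort lists the most likely clicks first: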

Here’s the hidden Hive History’s significant content:

2012-05-30 18:34:58,774 Stage-1 map = 0%, reduce = 0%

2012-05-30 18:35:13,836 Stage-1 map = 1%, reduce = 0%

2012-05-30 18:35:16,852 Stage-1 map = 63%, reduce = 0%

2012-05-30 18:35:19,883 Stage-1 map = 100%, reduce = 0%

2012-05-30 18:35:34,899 Stage-1 map = 100%, reduce = 33%

2012-05-30 18:35:37,930 Stage-1 map = 100%, reduce = 100%

Ended Job = job_201205301808_0002

Launching Job 2 out of 2

Number of reduce tasks determined at compile time: 1

…

2012-05-30 18:36:05,649 Stage-2 map = 0%, reduce = 0%

2012-05-30 18:36:17,664 Stage-2 map = 50%, reduce = 0%

2012-05-30 18:36:20,680 Stage-2 map = 100%, reduce = 0%

2012-05-30 18:36:35,711 Stage-2 map = 100%, reduce = 100%

Ended Job = job_201205301808_0003

OK

Time taken: 133.969 seconds
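The progress lines in the Hive history can be summarized per stage with a short script. The sketch below assumes the line format shown above (timestamp, stage name, map and reduce percentages) and measures only the span between the first and last progress report for each stage, so the figures are smaller than the total "Time taken", which also covers job launch and the gap between the two jobs. The function name is hypothetical.

```python
import re
from datetime import datetime

PROGRESS = re.compile(
    r"^(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3}) (Stage-\d+) map = (\d+)%,\s*reduce = (\d+)%"
)


def stage_durations(log_text: str) -> dict[str, float]:
    """Return seconds between the first and last progress line of each stage."""
    first, last = {}, {}
    for line in log_text.splitlines():
        match = PROGRESS.match(line.strip())
        if not match:
            continue  # skip blank lines and non-progress lines such as "Ended Job = ..."
        stamp = datetime.strptime(match.group(1), "%Y-%m-%d %H:%M:%S,%f")
        stage = match.group(2)
        first.setdefault(stage, stamp)
        last[stage] = stamp
    return {stage: (last[stage] - first[stage]).total_seconds() for stage in first}


if __name__ == "__main__":
    # First and last progress lines of each stage, copied from the history above
    history = """
    2012-05-30 18:34:58,774 Stage-1 map = 0%, reduce = 0%
    2012-05-30 18:35:37,930 Stage-1 map = 100%, reduce = 100%
    Ended Job = job_201205301808_0002
    2012-05-30 18:36:05,649 Stage-2 map = 0%, reduce = 0%
    2012-05-30 18:36:35,711 Stage-2 map = 100%, reduce = 100%
    """
    for stage, seconds in stage_durations(history).items():
        print(f"{stage}: {seconds:.3f} s")
```

For the history above, this works out to about 39.2 seconds of reported progress for Stage-1 and about 30.1 seconds for Stage-2.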
