Windows Azure and Cloud Computing Posts for 11/8/2011+

| A compendium of Windows Azure, SQL Azure Database, AppFabric, Windows Azure Platform Appliance and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database and Reporting

- Marketplace DataMarket, Social Analytics and OData

- Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue and Hadoop Services

Alex Handy described Diving into the Big Data pool in an 11/9/2011 post to the SD Times on the Web blog:

When technology is widely adopted, focus moves from functionality toward solving the remaining problems. For the Apache Hadoop project, these remaining problems are many. While Hadoop is the standard for map/reduce in enterprises, it suffers from instability, difficulty of administration, and slowness of implementation. And there are plenty of projects and companies trying to address these issues.

MapR is one such company. M.C. Srivas, the company's CTO and cofounder, said that when he founded the company, it was designed to tackle the shortcomings of Hadoop without changing the way programmers interacted with it.

“The way Hadoop is deployed nowadays, you hire some Ph.D.s from Stanford and ask them to write your code as well as manage the cluster,” he said. “We want a large ops team to run this, and we want to separate them from the application team.”

But the real secret for MapR isn't just fixing Hadoop’s problems with its own distribution of map/reduce, it's also about maintaining API compatibility with Hadoop. “Hadoop is a de facto standard, and we cannot go and change that," said Srivas. "I want to go with the flow. We are very careful not to change anything or deviate from Hadoop. We keep all the interfaces identical and make changes where we think there's a technical problem.

"With the file system, we thought its architecture was broken, so we just replaced it, but kept the interfaces identical. We looked at the map/reduce layer, and we saw enormous inefficiencies that we could take care of. We took a different approach. There are lots of internal interfaces people use. We took the open-source stuff and enhanced it significantly. The stuff above these layers, like Hive and Pig and HBase, which is a pretty big NoSQL database, we kept those more or less unchanged. In fact, HBase runs better and is more reliable."

Additionally, Hadoop has a minor issue with the way it handles its NameNode. This node is the manager node in a Hadoop cluster, and it keeps a list of where all files in the cluster are stored. Hadoop can be configured to have a backup NameNode, but if both of these nodes fail, all the data in a Hadoop cluster instantly vanishes. Or, rather, it becomes unindexed, and might as well be lost.

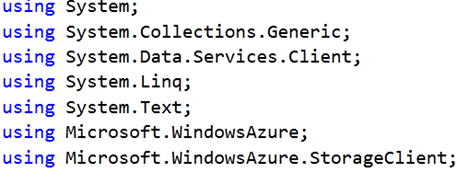

Dhananjay Kumar (@debug_mode) described Fetching name of all tables in Windows Azure Storage in an 11/9/2011 post:

To list all the table names in a Windows Azure storage account, call the ListTables() method of the CloudTableClient class. First, add the Microsoft.WindowsAzure and Microsoft.WindowsAzure.StorageClient namespaces.

Then create a storage account object by parsing your connection string, as below:

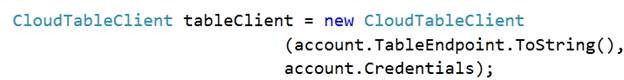

Make sure to pass your own Windows Azure storage connection string to Parse(). Next, create an instance of the table client.

Once the table client is created, you can list all the tables by calling the ListTables() method.

For your reference, the full source code to fetch all the table names from a Windows Azure Storage account is given below:

    using System;
    using System.Collections.Generic;
    using System.Linq;
    using Microsoft.WindowsAzure.StorageClient;
    using Microsoft.WindowsAzure;

    namespace ConsoleClient
    {
        class Program
        {
            static void Main(string[] args)
            {
                // Placeholder connection string; substitute your own account name and key.
                string connectionString = "DefaultEndpointsProtocol=https;AccountName=abc;AccountKey=jsjdsfjgdsfj09===";
                var TablesName = GetTablesNameForAzureSubscription(connectionString);
                foreach (var r in TablesName)
                {
                    Console.WriteLine(r.ToString());
                }
                Console.ReadKey(true);
            }

            private static List<string> GetTablesNameForAzureSubscription(string connectionString)
            {
                // Parse the connection string and build a table client for the account.
                CloudStorageAccount account = CloudStorageAccount.Parse(connectionString);
                CloudTableClient tableClient = new CloudTableClient(account.TableEndpoint.ToString(), account.Credentials);
                var result = tableClient.ListTables();
                return result.ToList();
            }
        }
    }

On running the above code, you should get the table names listed for the account named in the connection string. In this case, I have three tables listed for the account, as below:

In this way you can list all the table names from a Windows Azure Storage account. I hope this post is useful.

Liam Cavanagh (@liamca) offered Tips for uploading large files to Windows Azure Blob Storage through a browser on 8/9/2011:

For a new service I have been working on, I had the need to upload a pretty large file (100M+) to Windows Azure Blob Storage. This service is MVC based and is hosted in Windows Azure. To make things even more difficult, I needed to route the file through the MVC service rather than just writing JavaScript or a client side application to apply the file directly to the blob storage.

For any of you that have tried uploading large files through a browser, you likely already know that there are numerous issues and just to get it to work often requires you to alter some of the web.config settings. Here are a few common ones that need to be set:

    <system.web>
      <httpRuntime maxRequestLength="1048576" executionTimeout="7200" />
    </system.web>

For myself, I applied all of these changes but I still had issues getting a large file uploaded. Every time, the browser would time out at around 2 minutes. This was confusing since I thought the timeouts I had set would handle this.

As it turns out, in order to protect against denial of service (DOS) attacks, Windows Azure’s load balancers have a timeout that will close connections after this amount of time. In order to get around this problem, I added a Keep-Alive header. This line goes in the controller ActionResult that loads your page:

    Response.AddHeader("Keep-Alive", "21600");

After these updates I was able to upload much larger files.

Barton George (@barton808) posted Hadoop World: Cloudera CEO reflects on this year’s event on 11/10/2011:

A few hours ago the third annual Hadoop World conference wrapped up here in New York City. It has been a packed couple of days with keynotes, sessions and exhibits from all sorts of folks within the greater big data ecosystem.

I caught up with master of ceremonies and Cloudera CEO Mike Olson to get his thoughts on the event and predictions for next year.

Some of the ground Mike covers:

- How this year’s event compares to the first two and how it’s grown (it ain’t Mickey Rooney anymore)

- (2:06) Key trends and customers at the event

- (4:02) Mike’s thoughts on the Dell/Cloudera partnership

- (5:35) Looking forward to Hadoop World 2012 and where to go next

Stay tuned

If you’re interested in seeing more interviews from Hadoop World 2011, be sure to check back. I have eight other vlogs that I will be posting in the upcoming days with folks from MongoDB, O’Reilly Media, Facebook, Couchbase, Karmasphere, Splunk, Ubuntu and Battery Ventures.

Barton George (@barton808) expanded on Hadoop World: Accel’s $100M Big Data fund in an 11/9/2011 post:

Yesterday Hadoop World kicked off here in New York City. As part of the opening keynotes, Ping Li of Accel Partners got on stage and announced that they are opening a $100 million fund focusing on big data. If you’re not familiar with Accel, they are the venture capital firm that has invested in such hot companies as Facebook, Cloudera, Couchbase, Groupon and Fusion-io.

I grabbed some time with Ping at the end of the sessions yesterday to learn more about their fund:

Some of the ground Ping covers:

- What areas within the data world the fund will focus on.

- Who are some of the current players within their portfolio that fall into the big data space.

- What trends Ping’s seeing within the field of Big Data.

- How to engage with Accel and why it would make sense to work with them.

Extra-credit reading:

- Accel forms $100M big data fund – GigaOm

Herman Mehling asserted “How big is 'Big Data' for the future of enterprise app development? Even software giants such as Microsoft are embracing Hadoop, an open source framework for data-intensive distributed apps” in a deck for his Hadoop on the Rise as Enterprise Developers Tackle Big Data article of 11/1/2011 for the DevX.com blog (missed when posted):

Hadoop, it seems, is everywhere these days. IBM, Oracle and Yahoo are among the big guns that have been supporting Hadoop for years. Recently, Microsoft joined the club by announcing it will integrate Hadoop into its upcoming SQL Server 2012 release and Azure platforms.

Apache Hadoop -- to give Hadoop its proper name -- is a software framework that supports data-intensive distributed applications (think Big Data). The framework, written in Java and supported by the Apache Software Foundation, enables applications to work with thousands of nodes and petabytes of data.

Microsoft's embracing of Hadoop is proof that the vendor has seen the writing on the wall about big data -- namely that it must give customers and developers the tools they need (be they proprietary or open-source) to work with all kinds of big data.

"The next frontier is all about uniting the power of the cloud with the power of data to gain insights that simply weren't possible even just a few years ago," said Microsoft Corporate Vice President Ted Kummert in a statement. "Microsoft is committed to making this possible for every organization, and it begins with SQL Server 2012."

As part of its commitment to help customers and developers process "any data, any size, anywhere," Microsoft is working with the Hadoop ecosystem, including core contributors from Hortonworks, to deliver Hadoop-based distributions for Windows Server and Windows Azure that work with industry-leading business intelligence tools.

A Community Technology Preview (CTP) of the Hadoop-based service for Windows Azure will be available by the end of 2011, and a CTP of the Hadoop-based service for Windows Server will follow in 2012.

Microsoft said it will work closely with the Hadoop community and propose contributions back to the Apache Software Foundation and the Hadoop project.

Why is adding a fraction of the Microsoft Windows, Azure and SQL Server user bases to the Hadoop community a good thing for Apache Hadoop? asked Eric Baldeschwieler, the CEO of Hortonworks, in a recent blog post.

"Microsoft technology is used broadly across enterprises today. Ultimately, open source is all about community building. A growing user community feeds a virtuous circle. More users means more visibility for the project... More users mean more folks who will ultimately become contributors or committers. This makes the code evolve more quickly, which allows it to satisfy more use cases and hence attract more users, which further drives the project forward." …

SQL Azure Database and Reporting

Erik Elskov Jensen (@ErikEJ) reported SQL Server Compact 3.5 SP2 now supports Merge Replication with SQL Server 2012 in an 11/9/2011 post:

A major update to SQL Server Compact 3.5 SP2 has just been released, version 3.5.8088.0, disguised as a “Cumulative Update Package”. Microsoft knowledgebase article 2628887 describes the update.

The update contains updated Server Tools, and updated desktop and device runtimes, all updated to support Merge Replication with the next version of SQL Server, SQL Server 2012.

For a complete list of Cumulative Updates released for SQL Server Compact 3.5 SP2, see my blog post here.

It is nice to see that the 3.5 SP2 product, with its full range of device support and synchronization technologies, is kept alive and kicking.

NOTE: I blogged about this update earlier, but it was pulled back. Now it is finally available, and all downloads can be requested. (I have downloaded all the ENU ones, anyway)

Stephen Withers asserted “Quest Software's free Toad for Cloud Databases makes it easier for database professionals and developers to work with cloud databases” in a deck for his Hop into cloud databases with Toad post of 11/9/2011 to the ITWire site:

Toad for Cloud Databases simplifies cloud database tasks such as generating queries; migrating, browsing and editing data; and creating reports and tables.

The product supports Apache Hive, Apache HBase, Apache Cassandra, MongoDB, Amazon SimpleDB, Microsoft Azure Table Services, Microsoft SQL Azure, and all ODBC-enabled relational databases including Oracle, SQL Server, MySQL, and DB2. [Emphasis added.]

Toad for Cloud Databases also includes Quest's bidirectional connector between Oracle and Hadoop (the open source system for the distributed processing of large data sets across clusters of computers).

"Quest Software began development for Toad for Cloud Databases more than three years ago, knowing that the way organisations manage data, and the database management industry itself, was about to change dramatically," said Guy Harrison, senior director of research and development, Quest Software.

"Quest Software continues to develop its technologies to address both today's market needs and what it anticipates is on the horizon, a dual focus that lets it improve the performance, productivity, and, ultimately, operational value of its users. With support for accessing seven emerging big data technologies, and the familiar interface that has won Toad millions of loyal users, the community edition of Toad for Cloud Databases will let Quest Software keep running fast at adding new features and bringing more value to the developer community."

Marketplace DataMarket, Social Analytics and OData

Maarten Balliauw (@maartenballiauw) described Rewriting WCF OData Services base URL with load balancing & reverse proxy in an 11/8/2011 post:

When scaling out an application to multiple servers, often a form of load balancing or reverse proxying is used to provide external users access to a web server. For example, one can be in the situation where two servers are hosting a WCF OData Service and are exposed to the Internet through either a load balancer or a reverse proxy. Below is a figure of such a setup using a reverse proxy.

As you can see, the external server listens on the URL www.example.com, while both internal servers are listening on their respective host names. Guess what: whenever someone accesses a WCF OData Service through the reverse proxy, the XML generated by one of the two backend servers is slightly invalid:

While valid XML, the hostname provided to all our clients is wrong. The host name of the backend machine is in there and not the hostname of the reverse proxy URL…

How can this be solved? There are a couple of answers to that. One that popped into our minds was to rewrite the XML on the reverse proxy and simply “string.Replace” the invalid URLs. This will probably work, but it feels… dirty. We chose to create a WCF message inspector, which simply changes this at the WCF level on each backend node.

Our inspector looks like this: (note I did some hardcoding of the base hostname in here, which obviously should not be done in your code)

    using System;
    using System.ServiceModel;
    using System.ServiceModel.Channels;
    using System.ServiceModel.Dispatcher;
    using System.ServiceModel.Web;

    public class RewriteBaseUrlMessageInspector
        : IDispatchMessageInspector
    {
        public object AfterReceiveRequest(ref Message request, IClientChannel channel, InstanceContext instanceContext)
        {
            if (WebOperationContext.Current != null && WebOperationContext.Current.IncomingRequest.UriTemplateMatch != null)
            {
                UriBuilder baseUriBuilder = new UriBuilder(WebOperationContext.Current.IncomingRequest.UriTemplateMatch.BaseUri);
                UriBuilder requestUriBuilder = new UriBuilder(WebOperationContext.Current.IncomingRequest.UriTemplateMatch.RequestUri);

                // Hardcoded external host name; read this from configuration in real code.
                baseUriBuilder.Host = "www.example.com";
                requestUriBuilder.Host = baseUriBuilder.Host;

                // WCF Data Services uses these two properties when generating URLs in the feed.
                OperationContext.Current.IncomingMessageProperties["MicrosoftDataServicesRootUri"] = baseUriBuilder.Uri;
                OperationContext.Current.IncomingMessageProperties["MicrosoftDataServicesRequestUri"] = requestUriBuilder.Uri;
            }

            return null;
        }

        public void BeforeSendReply(ref Message reply, object correlationState)
        {
            // Noop
        }
    }

There’s not much rocket science in there, although some noteworthy actions are being performed:

- The current WebOperationContext is queried for the full incoming request URI as well as the base URI. These values are based on the local server, in our example “srvweb01” and “srvweb02”.

- The Host part of that URI is being replaced with the external hostname, www.example.com

- These two values are stored in the current OperationContext’s IncomingMessageProperties. Apparently the keys MicrosoftDataServicesRootUri and MicrosoftDataServicesRequestUri affect the URL being generated in the XML feed
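The host-swapping step described in the list above can be tried in isolation, without a WCF host. The following is only a minimal sketch: the `ProxyUriSketch` class and `RewriteHost` method are hypothetical names that do not appear in the original post, and the sketch assumes that only the host name differs between the internal and external URLs.

```csharp
using System;

// Hypothetical helper, not part of the original post: it isolates the
// host-swapping step so it can be tried without a WCF host.
public static class ProxyUriSketch
{
    // Returns a copy of internalUri whose host is replaced by externalHost.
    // Scheme, port, path and query are carried over unchanged.
    public static Uri RewriteHost(Uri internalUri, string externalHost)
    {
        var builder = new UriBuilder(internalUri) { Host = externalHost };
        return builder.Uri;
    }
}
```

Calling `ProxyUriSketch.RewriteHost(new Uri("http://srvweb01/Feed.svc/Packages"), "www.example.com")` returns a URI with the external host and the same path. Because the port is carried over, a backend that listens on a non-default port would presumably also need `builder.Port` adjusted.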

To apply this inspector to our WCF OData Service, we’ve created a behavior and applied the inspector to our service channel. Here’s the code for that:

    using System;
    using System.Collections.ObjectModel;
    using System.ServiceModel;
    using System.ServiceModel.Channels;
    using System.ServiceModel.Description;
    using System.ServiceModel.Dispatcher;

    [AttributeUsage(AttributeTargets.Class)]
    public class RewriteBaseUrlBehavior
        : Attribute, IServiceBehavior
    {
        public void Validate(ServiceDescription serviceDescription, ServiceHostBase serviceHostBase)
        {
            // Noop
        }

        public void AddBindingParameters(ServiceDescription serviceDescription, ServiceHostBase serviceHostBase, Collection<ServiceEndpoint> endpoints, BindingParameterCollection bindingParameters)
        {
            // Noop
        }

        public void ApplyDispatchBehavior(ServiceDescription serviceDescription, ServiceHostBase serviceHostBase)
        {
            // Attach the inspector to every endpoint of every channel dispatcher.
            foreach (ChannelDispatcher channelDispatcher in serviceHostBase.ChannelDispatchers)
            {
                foreach (EndpointDispatcher endpointDispatcher in channelDispatcher.Endpoints)
                {
                    endpointDispatcher.DispatchRuntime.MessageInspectors.Add(
                        new RewriteBaseUrlMessageInspector());
                }
            }
        }
    }

This behavior simply loops over all channel dispatchers and their endpoints and applies our inspector to them.

Finally, there’s nothing left to do to fix our reverse proxy issue than to just annotate our WCF OData Service with this behavior attribute:

    [RewriteBaseUrlBehavior]
    public class PackageFeedHandler
        : DataService<PackageEntities>
    {
        // ...
    }

Working with URL routing

A while ago, I posted about Using dynamic WCF service routes. The technique described below is also appropriate for services created using that technique. When working with that implementation, the source code for the inspector would be slightly different.

    using System;
    using System.ServiceModel;
    using System.ServiceModel.Channels;
    using System.ServiceModel.Dispatcher;
    using System.ServiceModel.Web;
    using System.Text.RegularExpressions;
    using System.Web.Routing;

    public class RewriteBaseUrlMessageInspector
        : IDispatchMessageInspector
    {
        public object AfterReceiveRequest(ref Message request, IClientChannel channel, InstanceContext instanceContext)
        {
            if (WebOperationContext.Current != null && WebOperationContext.Current.IncomingRequest.UriTemplateMatch != null)
            {
                UriBuilder baseUriBuilder = new UriBuilder(WebOperationContext.Current.IncomingRequest.UriTemplateMatch.BaseUri);
                UriBuilder requestUriBuilder = new UriBuilder(WebOperationContext.Current.IncomingRequest.UriTemplateMatch.RequestUri);

                var routeData = MyGet.Server.Routing.DynamicServiceRoute.GetCurrentRouteData();
                var route = routeData.Route as Route;
                if (route != null)
                {
                    string servicePath = route.Url;
                    servicePath = Regex.Replace(servicePath, @"({\*.*})", ""); // strip out catch-all
                    foreach (var routeValue in routeData.Values)
                    {
                        if (routeValue.Value != null)
                        {
                            servicePath = servicePath.Replace("{" + routeValue.Key + "}", routeValue.Value.ToString());
                        }
                    }

                    // Normalize the service path so it starts and ends with a slash.
                    if (!servicePath.StartsWith("/"))
                    {
                        servicePath = "/" + servicePath;
                    }

                    if (!servicePath.EndsWith("/"))
                    {
                        servicePath = servicePath + "/";
                    }

                    requestUriBuilder.Path = requestUriBuilder.Path.Replace(baseUriBuilder.Path, servicePath);
                    requestUriBuilder.Host = baseUriBuilder.Host;
                    baseUriBuilder.Path = servicePath;
                }

                OperationContext.Current.IncomingMessageProperties["MicrosoftDataServicesRootUri"] = baseUriBuilder.Uri;
                OperationContext.Current.IncomingMessageProperties["MicrosoftDataServicesRequestUri"] = requestUriBuilder.Uri;
            }

            return null;
        }

        public void BeforeSendReply(ref Message reply, object correlationState)
        {
            // Noop
        }
    }

The idea is identical, except that we’re updating the incoming URL path for reasons described in the aforementioned blog post.
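The path-building part of that inspector can be exercised on its own, which makes the route-template substitution easier to follow. This is a sketch under assumptions: `ServicePathSketch`, `BuildServicePath` and the route template `feeds/{feedName}/{*path}` are hypothetical and only stand in for whatever route the dynamic service route actually uses.

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

// Hypothetical helper that mirrors the path-building logic of the inspector.
public static class ServicePathSketch
{
    public static string BuildServicePath(string routeUrl, IDictionary<string, object> routeValues)
    {
        // Strip the catch-all segment, for example "{*path}".
        string servicePath = Regex.Replace(routeUrl, @"\{\*.*\}", "");

        // Substitute each "{key}" placeholder with its route value.
        foreach (KeyValuePair<string, object> routeValue in routeValues)
        {
            if (routeValue.Value != null)
            {
                servicePath = servicePath.Replace("{" + routeValue.Key + "}", routeValue.Value.ToString());
            }
        }

        // Normalize so the path starts and ends with a slash.
        if (!servicePath.StartsWith("/")) servicePath = "/" + servicePath;
        if (!servicePath.EndsWith("/")) servicePath += "/";
        return servicePath;
    }
}
```

With the assumed template `feeds/{feedName}/{*path}` and route values `feedName = "demo"` and `path = "Packages"`, the helper returns `/feeds/demo/`, which is the kind of service root the inspector then writes into the base URI.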

Jerry Nixon (@jerrynixon) posted a Mango Sample: Consume Odata on 11/7/2011:

Odata is a standard method to expose data through a WCF service. Where typical services have GetThis() & GetThat() methods, Odata services expose queryable interfaces that allow consumers to define data queries on the fly.

Odata delivers data as standard Atom XML. But reference an Odata service in a Windows Phone project, and that XML is abstracted away – you get simple objects instead. Yeah!

Note: This post is about consuming Odata. Perhaps I will create an Odata service post later. One interesting point: consuming Odata in a Windows Phone project is identical to the techniques in ANY Silverlight application.

Step 1 Create Azure Marketplace Account

Go to https://datamarket.azure.com/ and register for a free account. Once registered, you should see the screen below. Your Customer ID is important to access Marketplace data.

You will have a Customer ID, which is your login username, and you will also get an Account Key, which is your login password to access Marketplace data.

Hey! What is this Azure Data Marketplace?

The Azure Data Marketplace is where people with data can come sell it – letting the Azure infrastructure promote and deliver the data for them. Some of this data is free. And since it is exposed as Odata, it’s a great source for us to use in this sample.

Specifically, we will use the City Crime statistics.

Extraction of offense, arrest, and clearance data as well as law enforcement staffing information from the FBI's Uniform Crime Reporting (UCR) Program.

Step 2 Add a Service Reference

For this demo we will use the Federal data on city crime statistics. There’s nothing tricky to adding a service reference. Just add the service like normal and Visual Studio will detect that it is an Odata feed and create the proxy correctly.

Here’s the service reference URL to Crime Data (call it CityCrimeService):

https://api.datamarket.azure.com/Data.ashx/data.gov/Crimes/

Step 3 Create a Context to the Feed

The context will connect to the source, supply your credentials, and allow you to query the data as you like. Odata really is this neat and simple.

Get real code here.

Step 4 Query for Data

It’s not likely that you know the structure of the Crime data. If this were a real scenario, you would just reference the documentation on the Azure Data Marketplace for this. Let me help, the data is basically structured like this:

Querying this data is as simple as using LINQ to Objects. Let’s pretend that what we want is only the crime in State “Colorado”, City “Denver”, Year “All”.

Remember that these calls, like all service calls in Silverlight, are asynchronous. That means you execute the query, and must then handle the completed event.

Here’s the syntax to do the query:

Get real code here.
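As a rough illustration of the kind of request such a query turns into, the sketch below builds an OData `$filter` URI by hand for the Colorado/Denver example. It is not the post's code: the `CrimeQuerySketch` class is hypothetical, the entity set name `CityCrime` is an assumption, and the `State` and `City` property names are inferred from the description above rather than taken from the service metadata.

```csharp
using System;

// Hypothetical sketch: builds the query URI by hand instead of using the generated proxy.
public static class CrimeQuerySketch
{
    // Service root taken from the post; "CityCrime" is an assumed entity set name.
    private const string ServiceRoot = "https://api.datamarket.azure.com/Data.ashx/data.gov/Crimes/";

    public static Uri BuildQueryUri(string state, string city)
    {
        // OData string literals are single-quoted; embedded quotes are doubled.
        string filter = string.Format("State eq '{0}' and City eq '{1}'",
            state.Replace("'", "''"), city.Replace("'", "''"));

        // Escape the filter expression so it is safe inside the query string.
        return new Uri(ServiceRoot + "CityCrime?$filter=" + Uri.EscapeDataString(filter));
    }
}
```

With a generated service reference you would normally write the equivalent LINQ `where` clause and let the client library produce this URI; the hand-built version just shows that the filtering happens on the server, so only matching rows travel over the wire.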

Conclusion

Hopefully, you have everything you need to start querying an Odata service. Odata sources allow you to create the query as you need the data – this improves payload and performance. Hopefully, you will also investigate the Azure Data Marketplace.

Remember this one Thing

Whenever you execute an asynchronous method and handle the completed event, the completed event code is no longer on the UI thread. In Silverlight you use the Dispatcher to return to the UI thread. Otherwise you get a cross thread exception.

Jerry is a Microsoft developer evangelist in Colorado.

Wriju Ghosh (@wriju_ghosh) posted Azure OData and Windows Phone 7 on 11/5/2011 to his MSDN blog:

We have data available in SQL Azure. So we will create an application, let’s say an ASP.NET MVC application, and add the Entity Framework data model there.

After we have added the model we will build the project and add one WCF Data Services file and modify the generated code as below,

    public class EmpDS : DataService<DBAzEntities>
    {
        public static void InitializeService(DataServiceConfiguration config)
        {
            config.SetEntitySetAccessRule("*", EntitySetRights.All);
            config.DataServiceBehavior.MaxProtocolVersion = DataServiceProtocolVersion.V2;
        }

        protected override DBAzEntities CreateDataSource()
        {
            DBAzEntities ctx = new DBAzEntities();
            ctx.ContextOptions.ProxyCreationEnabled = false;
            return ctx;
        }
    }

After that, upload it to Azure Hosted Services. Once that is done, we can open the URL in a browser to check that it is valid and to view the data. After that, we will build the Windows Phone 7 application to consume the data.

Build a Windows Phone 7 Silverlight application and add a “Service Reference” pointing to the URL.

After that, add the code below to the MainPage.xaml code-behind:

    public DataServiceCollection<Emp> Emps { get; set; }

    public void LoadData()
    {
        DBAzEntities ctx = new DBAzEntities(new Uri(@"http://127.0.0.1:81/Models/EmpDS.svc/"));
        Emps = new DataServiceCollection<Emp>();

        var query = from em in ctx.Emps
                    select em;

        // The load is asynchronous; bind the list once it completes.
        Emps.LoadCompleted += new EventHandler<LoadCompletedEventArgs>(Emps_LoadCompleted);
        Emps.LoadAsync(query);
    }

    void Emps_LoadCompleted(object sender, LoadCompletedEventArgs e)
    {
        lstData.ItemsSource = Emps;
    }

And the corresponding XAML would look like,

    <ListBox x:Name="lstData" >
        <ListBox.ItemTemplate>
            <DataTemplate>
                <StackPanel>
                    <TextBlock Text="{Binding Path=FullName}"></TextBlock>
                </StackPanel>
            </DataTemplate>
        </ListBox.ItemTemplate>
    </ListBox>

And the final output would look like [the above screen capture:]

Joe Kunk (@JoeKunk) asserted “The Windows Azure Marketplace has a hidden jewel: a host of free and nearly free databases ready for monetization” in an introduction to his Free Databases in the Windows Azure Marketplace article of 11/1/2011 for Visual Studio Magazine’s November 2011 issue:

During the second day keynote of the Microsoft BUILD conference in September, Satya Nadella, president of the Microsoft Server & Tools Business, announced that the Bing Translator API was available from the Windows Azure Marketplace and used by large sites such as eBay. Microsoft expanded the Marketplace to 25 new countries in early October. According to Nadella, developers can easily monetize databases through the Windows Azure Marketplace. (You can view the keynote and read the full announcement.)

I hadn't heard of the Windows Azure Marketplace prior to the keynote, so I was intrigued to see how data was being sold in it and, more specifically, to see what interesting databases I might find for free or at very low cost. And find them I did.

In this article, I'll look at how to access the Windows Azure Marketplace and a baker's dozen of databases (data feeds) that are free or available in limited subscriptions -- free for the first several thousand transactions per month. The code download includes a sample ASP.NET MVC 3 Web site that presents sample data queries from these services. The Web site is written in Visual Basic 10 for the Microsoft .NET Framework 4; equivalent data-access routines for C# developers are included for some of the databases as well.

Windows Azure Marketplace

The Windows Azure Marketplace is at datamarket.azure.com, and a good description of the highlights and benefits can be found here. The Marketplace includes software application subscriptions as well as databases. A Windows Live ID is required to create a Marketplace account. Once created, you're issued a Customer ID and an Access Key. These are your username and password for each data service accessed; they must be protected with the same privacy level as a credit card, as they tie directly to your online billing account for paid services.

Some databases, such as those provided by the United Nations and the U.S. government, are free at any level of usage. Other databases, such as the Microsoft Translator, are free to a certain level and carry a fee above that level.

Fortunately, you determine what usage level and expense is appropriate for you. Each subscription level will prevent further use if the usage limit is exceeded, so you won't automatically incur fees beyond that subscription level. See Figure 1 for the subscription levels of the Microsoft Translator service as an example. To increase the available usage level, the existing subscription must be canceled and the higher-level subscription purchased. Any remaining usage on the canceled subscription level will be forfeited.

Figure 1. Subscription levels for the Microsoft Translator service.

Database subscriptions can be added to your account and become available under the "My Data" link within your account profile, collecting all your database subscriptions together for easy review.

Accessing the Databases

The Marketplace supports both fixed query and flexible query databases. The fixed query services make available a C# source code library; all access is done via a secured OData feed from the provided Service URI via predefined methods and parameters. The flexible query service involves creating a Service Reference to the Service root URL, then using LINQ to query the service. A particular data feed is either fixed or flexible. The code download demonstrates accessing both fixed query and flexible query databases.

To run the code download and access the Windows Azure Marketplace databases, you'll need to use your Windows Live ID to create a Marketplace account and then subscribe to the appropriate databases at the desired level. No credit card is needed as long as only free and limited-tier subscriptions are added. The Settings tab of the AzureMarketplaceDemo project has a CustomerID field and AccessKey field to hold your identifying information. Alternatively, you can place that information in the hosting PC's Windows Registry, as described in the GetCredentials method.

The Service URIs are not meant to be accessed outside an application because they must be passed valid network credentials (username = CustomerID, password = AccessKey) in order to return a valid response.
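To make the credential requirement concrete, here is a minimal sketch of how a username/password pair is encoded for an HTTP request, assuming the service accepts HTTP Basic authentication. `MarketplaceAuthSketch` and `BuildBasicAuthHeader` are hypothetical names; in application code you would more likely hand a `System.Net.NetworkCredential` to the data service context and let the client library do this.

```csharp
using System;
using System.Text;

// Hypothetical helper: shows how a username/password pair becomes an
// HTTP Basic "Authorization" header value.
public static class MarketplaceAuthSketch
{
    public static string BuildBasicAuthHeader(string customerId, string accessKey)
    {
        // Basic authentication is "username:password", Base64-encoded.
        byte[] raw = Encoding.UTF8.GetBytes(customerId + ":" + accessKey);
        return "Basic " + Convert.ToBase64String(raw);
    }
}
```

Base64 is an encoding, not encryption, which is one more reason to treat the Access Key like a credit card number and to send it only over HTTPS.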

[Screen images of sample query results from the databases are included in the code download for those who would like to see more of the type of data available without creating a Windows Azure Marketplace account. -- Ed.]

Microsoft Translation Services

The Microsoft Translator page is found here. It's a fixed query service that provides the first 2,000 monthly translations for free. In general, try to keep each translation request at less than 1,500 characters. Translation requests are capped at 50 per second per TCP/IP address.

As you can see in Listing 1, the service takes the source language string, the destination language code and the source language code. The OData Service URI is https://api.datamarket.azure.com/Bing/MicrosoftTranslator. The available transaction count is decremented for the database on your "My Data" Marketplace account page after each call, so it's easy to determine how much usage remains at the subscription level for the current subscription period. …

Full disclosure: I’m a contributing editor for Visual Studio Magazine.

<Return to section navigation list>

Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

Ruppert Koch reported a New Article: Best Practices for Performance Improvements Using Service Bus Brokered Messaging in an 11/9/2011 post to the Windows Azure Team blog:

This post describes how to use the Service Bus brokered messaging features in a way that yields the best performance. You can find more details in the full article on MSDN.

Use the Service Bus Client Protocol

The Service Bus supports the Service Bus client protocol and HTTP. The Service Bus client protocol is more efficient, because it maintains the connection to the Service Bus service as long as the message factory exists. It also implements batching and prefetching. The Service Bus client protocol is available for .NET applications using the .NET managed API. Whenever possible, connect to the Service Bus via the Service Bus client protocol.

Reuse Factories and Clients

Service Bus client objects, such as QueueClient or MessageSender, are created through a MessagingFactory, which also provides internal management of connections. When using the Service Bus client protocol, avoid closing messaging factories and queue, topic and subscription clients after sending a message and then recreating them to send the next message. Instead, reuse the factory and client for multiple operations. Closing a messaging factory closes the connection to the Service Bus, and establishing a connection is an expensive operation.

Use Concurrent Operations

Performing an operation (send, receive, delete, etc.) takes a certain amount of time. This time includes the processing of the operation by the Service Bus service as well as the latency of the request and the reply. To increase the number of operations per unit of time, operations should be executed concurrently. This is particularly true if the latency of the data exchange between the client and the datacenter that hosts the Service Bus namespace is large.

Executing multiple operations concurrently can be done in several different ways:

- Asynchronous operations. The client pipelines operations by performing asynchronous operations. The next request is started before the previous request completes.

- Multiple factories. All clients (senders as well as receivers) that are created by the same factory share one TCP connection. The maximum message throughput is limited by the number of operations that can go through this TCP connection. The throughput that can be obtained with a single factory varies greatly with TCP round-trip times and message size.
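The effect of pipelining asynchronous operations can be sketched in Python with asyncio. This is not Service Bus code: fake_send is a hypothetical stand-in that only sleeps to imitate one network round trip, and the 20 ms latency is an arbitrary assumption.

```python
import asyncio
import time


async def fake_send(message_id: int, latency_s: float = 0.02) -> int:
    """Hypothetical stand-in for one send; sleeps to imitate a network round trip."""
    await asyncio.sleep(latency_s)
    return message_id


async def send_sequentially(count: int) -> list:
    # Each request waits for the previous reply before it starts.
    return [await fake_send(i) for i in range(count)]


async def send_concurrently(count: int) -> list:
    # Every request is started before any reply is awaited (pipelining).
    return list(await asyncio.gather(*(fake_send(i) for i in range(count))))


def timed(coroutine_factory, count: int):
    """Run the coroutine to completion and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = asyncio.run(coroutine_factory(count))
    return result, time.perf_counter() - start


sequential_result, sequential_s = timed(send_sequentially, 10)
concurrent_result, concurrent_s = timed(send_concurrently, 10)
print(f"sequential: {sequential_s:.3f}s, concurrent: {concurrent_s:.3f}s")
```

In the sequential case the latencies add up; in the concurrent case they overlap, which is why concurrency matters most when round-trip latency is large.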

Use client-side batching

Client-side batching allows a queue/topic client to batch multiple send operations into a single request. It also allows a queue/subscription client to batch multiple Complete requests into a single request. By default, a client uses a batch interval of 20ms. You can change the batch interval by setting MessagingFactorySettings.NetMessagingTransportSettings.BatchFlushInterval before creating the messaging factory. This setting affects all clients that are created by this factory.

MessagingFactorySettings mfs = new MessagingFactorySettings();

mfs.TokenProvider = tokenProvider;

mfs.NetMessagingTransportSettings.BatchFlushInterval = TimeSpan.FromSeconds(0.05);

MessagingFactory messagingFactory = MessagingFactory.Create(namespaceUri, mfs);

For low-throughput, low-latency scenarios you want to disable batching. To do so, set the batch flush interval to 0. For high-throughput scenarios, increase the batching interval to 50ms. If multiple senders are used, increase the batching interval to 100ms.

Batching is only available for asynchronous Send and Complete operations. Synchronous operations are immediately sent to the Service Bus service. Batching does not occur for Peek or Receive operations, nor does batching occur across clients.
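To get a feel for how the flush interval trades latency for fewer requests, here is a toy Python model. It assumes, purely for illustration, that a batch window opens when a send arrives and that every send arriving before the window closes shares one request; the real client's batching logic is not described in the post and may differ. The send times are made up.

```python
def count_requests(send_times_ms, flush_interval_ms):
    """Count requests in a toy model where sends inside one flush window share a request."""
    if flush_interval_ms <= 0:
        # Batching disabled: every send is its own request.
        return len(send_times_ms)
    requests = 0
    window_end = None
    for t in sorted(send_times_ms):
        if window_end is None or t >= window_end:
            # This send opens a new batch window.
            requests += 1
            window_end = t + flush_interval_ms
    return requests


# Hypothetical send times, in milliseconds.
sends = [0, 5, 10, 15, 25, 40, 70]
for interval in (0, 20, 50, 100):
    print(interval, count_requests(sends, interval))
```

Under this model a longer interval yields fewer requests, at the cost of each message waiting longer before it is flushed.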

Use batched store access

To increase the throughput of a queue/topic/subscription, the Service Bus service batches multiple messages when writing to its internal store. If enabled on a queue or topic, writing messages into the store will be batched. If enabled on a queue or subscription, deleting messages from the store will be batched. Batched store access only affects Send and Complete operations; receive operations are not affected.

When creating a new queue, topic or subscription, batched store access is enabled with a batch interval of 20ms. For low-throughput, low-latency scenarios you want to disable batched store access by setting QueueDescription.EnableBatchedOperations to false before creating the entity.

QueueDescription qd = new QueueDescription();

qd.EnableBatchedOperations = false;

Queue q = namespaceManager.CreateQueue(qd);

Use prefetching

Prefetching causes the queue/subscription client to load additional messages from the service when performing a receive operation. The client stores these messages in a local cache. The QueueClient.PrefetchCount and SubscriptionClient.PrefetchCount values specify the number of messages that can be prefetched. Each client that enables prefetching maintains its own cache. A cache is not shared across clients.

Service Bus locks any prefetched messages so that prefetched messages cannot be received by a different receiver. If the receiver fails to complete the message before the lock expires, the message becomes available to other receivers. The prefetched copy of the message remains in the cache. The receiver will receive an exception when it tries to complete the expired cached copy of the message.

To prevent the consumption of expired messages, the cache size must be smaller than the number of messages that can be consumed by a client within the lock timeout interval. When using the default lock expiration of 60 seconds, a good value for SubscriptionClient.PrefetchCount is 20 times the maximum processing rates of all receivers of the factory. If, for example, a factory creates 3 receivers and each receiver can process up to 10 messages per second, the prefetch count should not exceed 20*3*10 = 600.
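The sizing rule above reduces to a one-line calculation. The Python sketch below reproduces the article's example; the function name is hypothetical, and the factor of 20 is the article's guidance for the default 60-second lock expiration.

```python
def max_prefetch_count(receivers: int, msgs_per_sec_per_receiver: float, factor: int = 20) -> int:
    """Upper bound on PrefetchCount: factor x combined processing rate of all receivers."""
    # factor=20 is the article's recommendation for the default 60-second lock.
    return int(factor * receivers * msgs_per_sec_per_receiver)


# The article's example: 3 receivers, each handling up to 10 messages per second.
print(max_prefetch_count(3, 10))
```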

By default, QueueClient.PrefetchCount is set to 0, which means that no additional messages are fetched from the service. Enable prefetching if receivers consume messages at a high rate. In low-latency scenarios, enable prefetching if a single client consumes messages from the queue or subscription. If multiple clients are used, set the prefetch count to 0. By doing so, the second client can receive the second message while the first client is still processing the first message.

Read the full article on MSDN.

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

David Pallman continued his An HTML5-Windows Azure Dashboard, Part 2: HTML5 Video series on 11/8/2011:

As I continue to experiment with HTML5-cloud dashboards, I wanted to move beyond charts and also include media content, especially HTML5 video. More and more, modern interfaces need movement and video is one source of movement. Of course, it should only be there if it’s providing some value and you definitely don’t want to overdo it. In particular you need to think about the impact of video on tablets and phones where download speed and device power may be constrained.

In my second dashboard prototype, I created another fictional company, Contoso Health. In this medical scenario there are plenty of places for video: patient monitoring, MRIs, endoscopy, even reference materials. It is in need of some optimization for tablets and especially phones but it’s off to a good start. It demos well on computers and tablets and works in the latest editions of Chrome, Firefox, IE, and Safari. While there is plenty of work still to be done to make this a real functional dashboard (the content is somewhat canned in this first incarnation), it does convey a compelling vision for where this can go.

HTML5 Video

This was my first opportunity to play with HTML5 video.

The good news about HTML5 video is we can do video and audio in modern browsers without plug-ins, and thanks to hardware acceleration we have more horsepower on the web client side than ever before. Even the media controls are built into the browsers. Below you can see the browser controls for IE9, Chrome, and Firefox (which only appear when you hover over or touch the video). In addition, Safari Mobile on the iPad will let you take a video full-screen.

The bad news is, video can be complicated at times and there is no single best format: the browser makers have not been able to come to agreement on a common video format they will support. That means you’ll be encoding your video to several formats if you want broad browser coverage.

A good place to see what HTML5 video looks like is at the HTML5 video online tutorial at w3schools.com. Within a <video> tag, you specify one or more video sources. If the browser doesn’t support any of your choices, the content within the <video> tag is displayed, most commonly an apologetic message or fallback content such as an image.

<video width="320" height="240" controls="controls">

<source src="movie.mp4" type="video/mp4" />

<source src="movie.ogg" type="video/ogg" />

Your browser does not support the video tag.

</video>

Now about video formats. Microsoft is championing H.264, which several other browsers also support, and Firefox prefers OGG-Theora. From my experimentation, if you provide your video content in these two formats you’ll be largely in good shape across popular browsers and devices. If you find you need more coverage and want to add a third format, you might look into WebM.

My starting point was stock footage MPEGs, and I needed to create H.264 and OGG video content from that. My first thought was to use Expression Encoder but it turns out the version available to me doesn’t have the codecs needed. I ended up using AVS Video Converter for conversion to H.264 video and FoxTab Video Converter for conversion to OGG, both low-priced tools.

My video tags ended up looking like this:

<video id="video1" controls="controls" loop autoplay>

<source src="heartscan.mpg" type="video/mp4" />

<source src="heartscan.ogg" type="video/ogg" />

Your browser does not support the video tag.

</video>

In addition to having good format coverage, the correct MIME type (Content-Type header) needs to be served up for each video. Since we’re accessing the video blobs from Windows Azure blob storage, we put them in a container that allows web access and set each blob’s ContentType metadata field. I received the best results with “video/mp4” for H.264 and “video/ogg” for OGG. I didn’t use WebM, but if you’re planning to I believe “video/webm” is the right content type to specify.
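One way to keep the content types consistent when uploading is a small lookup keyed on file extension. The Python sketch below uses the three content types named above; the function name, the extension-to-format pairing, and the "application/octet-stream" fallback for unknown extensions are illustrative assumptions, and the step that actually sets the blob's ContentType is left out.

```python
import os

# Content types taken from the paragraph above; keying them on extension is an assumption.
VIDEO_CONTENT_TYPES = {
    ".mp4": "video/mp4",
    ".ogg": "video/ogg",
    ".webm": "video/webm",
}


def content_type_for(blob_name: str) -> str:
    """Pick the Content-Type for a video blob based on its file extension."""
    extension = os.path.splitext(blob_name)[1].lower()
    # Fallback for unknown extensions is an assumption, not from the post.
    return VIDEO_CONTENT_TYPES.get(extension, "application/octet-stream")


for name in ("heartscan.ogg", "movie.MP4", "clip.webm", "notes.txt"):
    print(name, content_type_for(name))
```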

Now that I’ve made some progress envisioning the dashboard user experience it’s time to start making it functional. Stay tuned!

Brian Prince interviewed Ramon Resma in Bytes by MSDN: November 8 - Ramon Resma on 11/8/2011:

Join Brian Prince, Principal Developer Evangelist at Microsoft, and Ramon Resma, Software Architect Principal at Travelocity, as they discuss Windows Azure. Ramon talks about the advantages of utilizing Windows Azure for web applications. Using Azure for a specific customer-facing web app allowed Travelocity to track metrics of how the user was engaging with the new feature and scale accordingly to accommodate traffic. Tune in to hear this great Azure story!

About Ramon

Ramon Resma is a Software Architect in mobile development at travelocity.com. He leads the development of mobile travel applications running on Windows Phone 7, Android, and iOS. He also works on cloud-based technologies to support development of applications for travel planning, travel shopping, and in-trip management. More recently, he worked on Microsoft Azure's cloud-based technology to support certain analytics tracking of Ajax-based functions on travelocity.com. Azure provided the significant increase in CPU and storage resources required to support the spike in traffic required by the application on an as-needed basis. He has worked in the past on web development, SOA, search engine, distributed caching, database and data-mining technology. Ramon has over 22 years of experience in the travel industry and has worked in architecture leadership roles for Travelocity and Sabre Airline Solutions.

About Brian

Expect Brian Prince to get (in his own words) “super excited” whenever he talks about technology, especially cloud computing, patterns, and practices. That’s a good thing, given that his job is to help customers strategically leverage Microsoft technologies and take their architecture to new heights. Brian’s the co-founder of the non-profit organization CodeMash (www.codemash.org), runs the global Windows Azure Boot Camp program, and speaks at various regional and international events. Armed with a Bachelor of Arts degree in computer science and physics from Capital University in Columbus, Ohio, Brian is a zealous gamer with a special weakness for Fallout 3. Brian is the co-author of “Azure in Action”, published by Manning Press.

Avkash Chauhan (@avkashchauhan) described How to access Service Configuration settings in Windows Azure Startup Task in an 11/9/2011 post:

When just running or debugging your Windows Azure application in Compute Emulator, you might hit the following error:

Error, 0, 5c7858b7-deaf-41cb-9a87-ad2356fffedb`GetAgentState`System.ServiceModel.EndpointNotFoundException:

Could not connect to net.tcp://localhost/dfagent/2/host. The connection attempt lasted for a time span of 00:00:02.0001978. TCP error code 10061:

No connection could be made because the target machine actively refused it 127.0.0.1:808. ---> System.Net.Sockets.SocketException: No connection could be made because the target machine actively refused it 127.0.0.1:808

at System.Net.Sockets.Socket.DoConnect(EndPoint endPointSnapshot, SocketAddress socketAddress)

at System.Net.Sockets.Socket.Connect(EndPoint remoteEP)

at System.ServiceModel.Channels.SocketConnectionInitiator.Connect(Uri uri, TimeSpan timeout)

--- End of inner exception stack trace ---

Server stack trace:

at System.ServiceModel.Channels.SocketConnectionInitiator.Connect(Uri uri, TimeSpan timeout)

at System.ServiceModel.Channels.BufferedConnectionInitiator.Connect(Uri uri, TimeSpan timeout)

at System.ServiceModel.Channels.ConnectionPoolHelper.EstablishConnection(TimeSpan timeout)

at System.ServiceModel.Channels.ClientFramingDuplexSessionChannel.OnOpen(TimeSpan timeout)

at System.ServiceModel.Channels.CommunicationObject.Open(TimeSpan timeout)

at System.ServiceModel.Channels.ServiceChannel.OnOpen(TimeSpan timeout)

at System.ServiceModel.Channels.CommunicationObject.Open(TimeSpan timeout)

at System.ServiceModel.Channels.ServiceChannel.CallOnceManager.CallOnce(TimeSpan timeout, CallOnceManager cascade)

at System.ServiceModel.Channels.ServiceChannel.EnsureOpened(TimeSpan timeout)

at System.ServiceModel.Channels.ServiceChannel.Call(String action, Boolean oneway, ProxyOperationRuntime operation, Object[] ins, Object[] outs, TimeSpan timeout)

at System.ServiceModel.Channels.ServiceChannelProxy.InvokeService(IMethodCallMessage methodCall, ProxyOperationRuntime operation)

at System.ServiceModel.Channels.ServiceChannelProxy.Invoke(IMessage message)

Exception rethrown at [0]:

at System.Runtime.Remoting.Proxies.RealProxy.HandleReturnMessage(IMessage reqMsg, IMessage retMsg)

at System.Runtime.Remoting.Proxies.RealProxy.PrivateInvoke(MessageData& msgData, Int32 type)

at RD.Fabric.Controller.IAgent.GetState()

at RD.Fabric.Controller.DevFabricAgentInterface.<>c__DisplayClass2b.<GetAgentState>b__2a(IAgent agent)

at RD.Fabric.Controller.DevFabricAgentInterface.CallAgent(AgentCallDelegate agentCall, String operation)`

During this time, if your Windows Azure Compute Emulator notifications are enabled, you will see the following dialog box:

And finally, Visual Studio will show the following dialog and stop the deployment in the Compute Emulator:

As you can see in the error, the connection to port 808 on the local machine could not be established:

TCP error code 10061: No connection could be made because the target machine actively refused it 127.0.0.1:808. ---> System.Net.Sockets.SocketException: No connection could be made because the target machine actively refused it 127.0.0.1:808

To investigate and solve this problem, let's see which application is occupying port 808, using the netstat command as below:

- “netstat -p tcp -ano | findstr :808”
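Note that findstr :808 also matches ports such as 8080. A small script can filter the netstat output more strictly. The Python sketch below assumes the usual five-column layout of netstat -ano on Windows (protocol, local address, foreign address, state, PID); the sample text and its PIDs are made up for illustration.

```python
def pids_listening_on(netstat_output: str, port: int) -> set:
    """Return the PIDs of processes listening on exactly the given local TCP port."""
    pids = set()
    for line in netstat_output.splitlines():
        fields = line.split()
        # Assumed columns: protocol, local address, foreign address, state, PID.
        if len(fields) != 5 or fields[0] != "TCP" or fields[3] != "LISTENING":
            continue
        local_port = fields[1].rsplit(":", 1)[-1]
        if local_port == str(port):
            pids.add(int(fields[4]))
    return pids


# Hypothetical netstat output; the PIDs are invented.
sample_output = """
  TCP    0.0.0.0:808            0.0.0.0:0              LISTENING       2716
  TCP    0.0.0.0:8080           0.0.0.0:0              LISTENING       4012
  TCP    127.0.0.1:52110        127.0.0.1:808          ESTABLISHED     5120
"""
print(pids_listening_on(sample_output, 808))
```

The PID it reports can then be matched against the process list to see which executable owns the port.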

Potential reason for this problem:

In the most probable case you will see that port 808 is consumed by the application SMSvcHost.exe. If you search the internet for SMSvcHost.exe, you will find several incidents in which port 808 is occupied by this application.

Solution:

To solve this problem, one option is to kill SMSvcHost.exe, or to use a utility like “Autorun” to prevent it from running on the machine in the first place. The overall idea is to free port 808 so that the binding can succeed when the Azure application runs.

Note: It is also possible that the above suggestion will not solve your problem. I am willing to work with you if you hit the same problem and the above solution does not work for you. Please contact me directly or open a Windows Azure support incident, and I or my team will be glad to help you.

Andy Cross (@AndyBareWeb) posted Hello Azure! on 11/6/2011 (missed when posted):

For over a year my blog has been running on a non-Windows Azure platform, despite the fact I evangelise for Azure. The time came to change, and now this blog is hosted 100% on Windows Azure.

Thanks to the team who produced AzurePHP, and particularly this tutorial: http://azurephp.interoperabilitybridges.com/articles/how-to-deploy-wordpress-using-the-windows-azure-sdk-for-php-wordpress-scaffold …

PS. Any broken links or other errors are related to the import mechanism which wasn’t exactly flawless! Write a comment on the post if you are missing something important.

PPS. Thanks again to Azure PHP and Azure PHP SDK.

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

Julie Lerman (@julielerman) reported her New [Entity Framework] book(s) status on 11/9/2011:

Rowan and [I] are doing double duty this week as we review the proof for the Code First book (Programming Entity Framework: Code First) and write chapters for the next book, Programming Entity Framework: DbContext.

The Code First book is already listed on O’Reilly’s website (http://shop.oreilly.com/product/0636920022220.do) although they have it listed as 100 pages. HA! It’s closer to 175! The book is also pre-listed on Amazon.com (here’s the direct link). You can get e-books (PDF, Mobi (for Kindle) and more) as well as a print-on-demand version from O’Reilly. Currently Amazon is only listing the print-on-demand version but there will be a Kindle version.

The e-books will be in color (though with Kindle, that depends on your device) and we have copy/pasted code directly from Visual Studio. This means that code samples will have the VS color syntax as well, in the e-books. Printed books will be b&w.

We’re also working to get the chapter by chapter samples ready for downloads on the download page of this website. We’ll convert them to VB as well.

So I think we should be seeing this edition in a few more weeks. We’ve still got a bunch of writing, reviewing etc etc for the DbContext book.

To get it out more quickly, we decided to focus the DbContext edition on how to use DbContext and Validation API but not bog ourselves down with application patterns. The plan is to follow up with a cook book style edition that will use code first and dbcontext in a variety of application patterns …from drag & drop databinding, to using repositories, automated testing, services, MVVM etc.

And now I have to get *back* to reviewing that Code First book!

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

David Linthicum (@DavidLinthicum) asserted “As we gain experience with the cloud, expect to see centralized trust systems, amazingly large databases, and more” in a deck for his 5 key trends in cloud computing's future article of 11/10/2011 for InfoWorld’s Cloud Computing blog:

I was asked to talk about the future of cloud computing at Cloud Expo, taking place this week in Santa Clara, Calif. For those of you not at the show, I identified five key trends to anticipate.

First, the buzzwords "cloud computing" are enmeshed in computing. I'm not sure I ever liked the term, though I've built my career around it for the last 10 years. The concept predated the rise of the phrase, and the concept will outlive the buzzwords. "Cloud computing" will become just "computing" at some point, but it will still be around as an approach to computing. …

Second, we're beginning to focus on fit and function, and not the hype. However, I still see many square cloud pegs going into round enterprise holes. Why? The hype drives the movement to cloud computing, but there is little thought as to the actual fit of the technology. Thus, there is diminished business value and even a failed project or two. We'll find the right fit for this stuff in a few years. We just need to learn from our failures and become better at using clouds.

Third, security will move to "centralized trust." This means we'll learn to manage identities within enterprises -- and within clouds. From there we'll create places on the Internet where we'll be able to validate identities, like the DMV validates your license. There will be so many clouds that we'll have to deal with the need for a single sign-on, and identity-based security will become a requirement.

Fourth, centralized data will become a key strategic advantage. We'll get good at creating huge databases in the sky that aggregate valuable information that anybody can use through a publicly accessible API, such as stock market behavior over decades or clinical outcome data to provide better patient care. These databases will use big data technology such as Hadoop, and they will reach sizes once unheard of.

Fifth, mobile devices will become more powerful and thinner. That's a no-brainer. With the continued rise of mobile computing and the reliance on clouds to support mobile applications, mobile devices will have more capabilities, but the data will live in the cloud. Apple's iCloud is just one example.

That's the top five. Give them at least three years to play out.

Michael Collier (@MichaelCollier) posted the slides to his presentation at Cloud Expo West 2011 on 11/9/2011:

On Tuesday I had the privilege of speaking at Cloud Expo West 2011 in Santa Clara, CA. This was my first time at a Cloud Expo event. It was a pretty cool experience. There were a lot of people there – all interested in cloud computing!

My presentation on Tuesday was on “Migrating Enterprise Applications to the Cloud”. Following the presentation I received a few requests from those that attended to share my presentation deck. You can now download a copy of the material here.

I hope those that attended the session found the information helpful. Thank you!

Lydia Leong (@cloudpundit) reflected on IT Operations and button-pushing in an 11/9/2011 post to her Cloud Pundit blog:

The fine folks at Nodeable gave me an informal introductory briefing today; they’ve got a pretty cool concept for a cloud-oriented monitoring and management SaaS-based tool that’s aimed at DevOps.

I’ve been having stray thoughts on DevOps and the future of IT Operations in the couple of hours that have passed since then, and reflecting on the following problem:

At an awful lot of companies, IT Operations, especially lower-level folks, are button-pushing monkeys; specifically, they are people who know how to use the vendor-supplied GUI to perform particular tasks. They may know the vendor-recommended ways to do things with a particular bit of hardware or software. But only a few of them have architect-level knowledge, the deep understanding of the esoterica of systems and how this stuff is actually built and engineered. (Some of this is a reflection of education; a lot of IT Operations people don’t come from a computer science background, but have learned what they’ve needed to know on the job.)

Today’s DevOps person is likely to have a skillset that we used to call systems programming. They understand systems architecture, they understand operating systems, they can write system-level code, including the scripting necessary for automation. The programmatic access to infrastructure exemplified by cloud IaaS providers has moved this up a layer of abstraction, so that you don’t have to be a deep-voodoo guy to do this kind of thing.

We’re moving towards a world where you have really low-level button-pushers (possibly where the button-pushing is so simple that you don’t need a specialist to do it any longer, and anyone reasonably technical can do it), senior architects who design things, and systems programmers who automate things. Whether those systems programmers work in application development and are “DevOps”, or whether they work in IT Operations and just happen to be systems guys who program (mostly scripting), doesn’t really matter: the era of the button-pusher is drawing towards its close either way, at least for organizations that are going to increase IT Operations efficiency.

I want to share a story. It is, in some ways, a story about cruelty and unprofessionalism, but it’s funny in its own way.

About fifteen years ago, I was working as an engineer at Digex (the first real managed hosting company). We had a pretty highly skilled group of engineers there, and we never did anything using a GUI. We had hundreds of customers on dedicated Sun servers, and you’d either SSH into the systems or, in a pinch, go to the data center and log in on console. We were also the kind of people who would fix issues by making kernel modifications — for instance, the day that the SYN flood attack showed up, a bunch of customers went down hard, meaning that we could not afford to wait for Sun to come up with a patch, since we had customer SLAs to meet, so one of our security engineers rewrote the kernel’s queueing code for TCP accepts.

We were without a manager for some time, and they finally hired a guy who was supposedly a great Sun sysadmin. He didn’t actually get a technical interview, but he had a good work history of completed projects and happy teams and so forth. He was supposed to be both the manager and the technical lead for the team.

The problem was that he had no idea how to do anything that wasn’t in Sun’s administrator GUI. He didn’t even know how to attach a console cable to a server, much less log in remotely to a system. Since we did absolutely nothing with a GUI, this was a big problem. An even bigger problem was that he didn’t understand anything about the underlying technologies we were supporting. If he had a problem, he was used to calling Sun and having them tell him what to do. This, clearly, is a big problem in a managed hosting environment where you’re the first line of support for your customers, who may do arbitrary wacky things.

He also worked a nine-to-five day at a startup where engineers routinely spent sixteen hours at work. His team, and the other engineers at the company, had nothing but contempt for him. And one night, having dinner at 10 pm as a break before going right back into work, someone had an idea.

“Let’s recompile his kernel without mouse support.” (Like all the engineers, he had a Sun workstation at his desk.)

And so when he came to work the next morning, his mouse didn’t work — and every trace of the intrusion had been covered, thanks to the complicity of one of the security engineers.

Someone who had an idea of what he was doing wouldn’t have been fazed; they’d have verified the mouse wasn’t working, then done an L1-A to put the workstation into PROM mode, and easily done troubleshooting from there (although admittedly, nobody thinks, “I wonder if somebody recompiled my kernel without mouse support after I went home last night”). This poor guy couldn’t do anything other than pick up his mouse to make sure the underside hadn’t gotten dirty. It turned out that he had no idea how to do anything with the workstation if he couldn’t log in via the GUI.

It proved to be a remarkably effective demonstration to management that this guy was a yahoo and needed to be fired. (Fortunately, there were plenty of suspect engineers, and management never found out who was responsible. Earl Galleher, who ran that part of the business at the time, and is the chairman at Basho now, probably still wonders… It wasn’t me, Earl.)

But it makes me wonder what is the future of all the GUI masters in IT Operations, because the world is evolving to be more like the teams that I had before I came to Gartner — systems programmers with strong systems and operations skills, who could also code.

DevOps: Now you know how to deal with the IT Operations guy who can only use a GUI…

Lori MacVittie (@lmacvittie) offered a “Hint: The answer lies in being aware of the entire application context and a little pre-planning” in the introduction to her The Secret to Doing Cloud Scalability Right post of 11/9/2011 to F5’s DevCentral blog:

Thanks to the maturity of load balancing services and technology, dynamically scaling applications in pre-cloud and cloud computing environments is a fairly simple task. But doing it right, in a way that maintains performance while maximizing resources and minimizing costs, well, that is not so trivial a task unless you have the right tools.

SCALABILITY RECAP

Before we can explain how to do it right, we have to dig into the basics of how scalability (and more precisely auto-scalability) works and what’s required to scale not only dynamically but efficiently.

A key characteristic of cloud computing is scalability, or more precisely the ease with which scalability can be achieved.

Scalability and Elasticity via dynamic ("on-demand") provisioning of resources on a fine-grained, self-service basis near real-time, without users having to engineer for peak loads.

-- Wikipedia, “Cloud Computing”

When you take this goal apart, what folks are really after is the ability to transparently add and/or remove resources to an “application” as needed to meet demand. Interestingly enough, both in pre-cloud and cloud computing environments this happens due to two key components: load balancing and automation.

Load balancing has always been used to scale applications transparently. The load balancing service provides a layer of virtualization in the network that abstracts the “real” resources providing the application and makes many instances of that application appear to be a single, holistic entity. This layer of abstraction has the added benefit of allowing the load balancing service to see both the overall demand on the “application” as well as each individual instance. This is important to cloud scalability because a single application instance does not have the visibility necessary to see load at the “application” layer, it sees only load at the application instance layer, i.e. itself.

Visibility is paramount to scalability to maintain efficiency of scale. That means measuring CAP (capacity, availability, and performance) both at the “virtual” application and application instance layers. These measurements are generally tied to business and operational goals – the goals upon which IT is measured by its consumers. The three are inseparable and impact each other in very real ways. High capacity utilization often results in degrading performance, availability impacts both capacity and performance, and poor performance can in turn degrade capacity. Measuring only one or two is insufficient; all three variables must be monitored and, ultimately, acted upon to achieve not only scalability but efficiency of scale. Just as important is flexibility in determining what defines “capacity” for an application. In some cases it may be connections, in others CPU and/or memory load, and in still others it may be some other measurement. It may be (should be) a combination of both capacity and performance, and any load balancing service ought to be able to balance all three variables dynamically to achieve maximum results with minimum resources (and therefore in a cloud environment, costs).
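To make the idea of acting on all three CAP variables concrete, here is a hypothetical Python sketch of a scale-out check. The InstanceMetrics fields, the thresholds, and the policy itself (add capacity when every available instance is at its connection limit or over its response-time limit) are assumptions chosen for illustration; they do not describe any particular load balancing product.

```python
from dataclasses import dataclass


@dataclass
class InstanceMetrics:
    connections: int    # capacity, here measured as concurrent connections
    available: bool     # result of an application-level health check
    response_ms: float  # performance, measured as response time


def needs_more_capacity(instances, max_connections: int, max_response_ms: float) -> bool:
    """Hypothetical policy: scale out when no available instance has headroom left."""
    healthy = [m for m in instances if m.available]
    if not healthy:
        return True
    return all(
        m.connections >= max_connections or m.response_ms > max_response_ms
        for m in healthy
    )


# Made-up measurements for two scenarios.
relaxed = [InstanceMetrics(90, True, 120.0), InstanceMetrics(100, True, 300.0)]
strained = [
    InstanceMetrics(100, True, 120.0),
    InstanceMetrics(40, True, 300.0),
    InstanceMetrics(0, False, 0.0),
]
print(needs_more_capacity(relaxed, 100, 250.0))
print(needs_more_capacity(strained, 100, 250.0))
```

The second scenario shows why one variable is not enough: one instance has spare connections but is already too slow, and another is simply unavailable.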

WHAT YOU NEED TO KNOW BEFORE YOU CONFIGURE

There are three things you must do in order to ensure cloud scalability is efficient:

1. Determine what “capacity” means for your application. This will likely require load testing of a single instance to understand resource consumption and determine an appropriate set of thresholds based on connections, memory and CPU utilization. Depending on what load balancing service you will ultimately use, you may be limited to only viewing capacity in terms of concurrent connections. If this is the case – as is generally true in an off-premise cloud environment where services are limited – then ramp up connections while measuring performance (be sure to read #3 before you measure “performance”). Do this multiple times until you’re sure you have a good average connection limit at which performance becomes an issue.

2. Determine what “available” means for an application instance. Try not to think in simple terms such as “responds to a ping” or “returns an HTTP response”. Such health checks are not valid when measuring application availability as they only determine whether the network and web server stack are available and responding properly. Both can be true yet the application may be experiencing troubles and returning error codes or bad data (or no data). In any dynamic environment, availability must focus on the core unit of scalability – the application. If simple checks like these are all you’ve got in an off-premise cloud load balancing service, however, be aware of the risk to availability and pass on the warning to the business side of the house.

3. Determine “performance” threshold limitations for application instances. This value directly impacts the performance of the virtual application. Remember to factor in that application response times are the sum of the time it takes to traverse from the client to the application and back. That means the application instance response time is only a portion, albeit likely the largest portion, of the overall performance threshold. Determine the RTT (round trip time) for an average request/response and factor that into the performance thresholds for the application instances.
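The three steps above can be sketched in Python. This is a rough illustration under stated assumptions, not a recipe for any specific load balancer: the function names, the sample load-test numbers and the “status: ok” health payload are all hypothetical.

```python
from statistics import mean
from typing import Dict, List, Tuple


def instance_threshold_ms(end_to_end_budget_ms: float,
                          network_rtt_ms: float) -> float:
    """Step 3: the instance's share of the response-time budget is what
    remains after subtracting the network round trip time."""
    threshold = end_to_end_budget_ms - network_rtt_ms
    if threshold <= 0:
        raise ValueError("network RTT alone exceeds the performance budget")
    return threshold


def connection_limit(runs: List[List[Tuple[int, float]]],
                     max_instance_ms: float) -> float:
    """Step 1: average, over several load-test runs, the last connection
    count reached before response time crossed the threshold.

    Each run is a list of (concurrent_connections, response_ms) samples,
    ordered by increasing connection count.
    """
    limits = []
    for run in runs:
        limit = 0
        for conns, ms in run:
            if ms > max_instance_ms:
                break  # performance became an issue at this load
            limit = conns
        limits.append(limit)
    return mean(limits)


def is_available(status_code: int, body: Dict[str, str]) -> bool:
    """Step 2: treat an instance as available only if the application
    itself reports valid data, not merely because the web server replied."""
    return status_code == 200 and body.get("status") == "ok"


# Hypothetical numbers: a 1,000 ms end-to-end budget and 150 ms average RTT.
threshold = instance_threshold_ms(end_to_end_budget_ms=1000.0,
                                  network_rtt_ms=150.0)
runs = [
    [(100, 200.0), (200, 400.0), (300, 900.0)],
    [(100, 250.0), (200, 500.0), (300, 700.0), (400, 1200.0)],
]
limit = connection_limit(runs, threshold)
```

Running the load test several times and averaging, as step 1 suggests, smooths out run-to-run noise. Note that the health check rejects an HTTP 200 response whose body does not carry the expected application data.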

WHY IS THIS ALL IMPORTANT

If you’re thinking at this point that it’s not supposed to require so much work to “auto-scale” in cloud computing environments, well, it doesn’t have to. As long as you’re willing to accept a higher risk of unnoticed failure and performance degradation, as well as potentially higher costs from inefficient scaling strategies, you need do nothing more than just “let go, let cloud” (to shamelessly quote the 451 Group’s Wendy Nather).

Ignoring all the factors that impact when to scale out and back down is so perilous because of the limitations in load balancing algorithms and, particularly in off-premise cloud environments, the inability to leverage layer 7 load balancing (application switching, page routing, et al) to architect scalability domains. You are left with a few simple and often inefficient algorithms from which to choose, which impedes efficiency by making it more difficult to actually scale in response to actual demand and its impact on the application. You are instead reacting (and often too late) to individual pieces of data that alone do not provide a holistic view of the application, but rather only limited views into application instances.

Cloud scalability – whether on-premise or off – should be a balancing (pun only somewhat intended) act that maximizes performance and efficiency while minimizing costs. While allowing “the cloud” to auto-scale encourages operational efficiency, it often does so at the expense of performance and at higher cost.

An ounce of prevention is worth a pound of cure, and in the case of scalability a few hours of testing is worth a month of additional uptime.

Curt Mackie asserted “General Manager of Microsoft Server and Tools says 75 percent of new server shipments use the Windows Server operating system for private cloud deployments” in a deck for his Microsoft: Tens of Thousands of Customers Use Windows Azure Cloud article of 11/8/2011 for the Redmond Developer News blog:

Microsoft expects the future of its Windows Azure public cloud to be more in the platform-as-a-service (PaaS) realm, offering support for multiple programming platforms besides .NET.

Charles Di Bona, general manager of Microsoft Server and Tools, said Microsoft is the only company that offers public cloud, private cloud and hybrid solutions to its potential customers. He spoke in San Francisco at the CLSA Asia USA Forum for investors on Monday.

Microsoft's cloud position is not too new, but it's become a little more nuanced after about a year's time. The company's marketing term for this scenario used to be "Software Plus Services." However, lately Microsoft just talks "cloud," and describes companies that deploy their own servers in datacenters as deploying "private clouds." Windows Azure, by contrast, is Microsoft's "public cloud." Microsoft went "all-in" for the cloud in March 2010, announcing a major business shift at that time. However, Di Bona admitted in his talk that new technologies, such as Windows Azure, never wholly supplant the old ones, such as Microsoft server technologies.

"The reality is that the cloud part of our business is much smaller than the server part of our business," Di Bona said, according to a Microsoft transcript (Word doc). "And that's going to continue to be the way it is for the foreseeable future here."

Microsoft has "tens of thousands of customers on Azure, and increasingly on Office 365," Di Bona said.

Microsoft's $17-billion Server and Tools business produces Windows Server, SQL Server and Windows Azure, all of which Di Bona described as "the infrastructure for data centers." That division doesn't include Office 365, which is organized under Microsoft Online Services.

Di Bona claimed that 75 percent of new server shipments use the Windows Server operating system for private cloud deployments. Microsoft sees its System Center management software products as a key component for private datacenters, whether they use Microsoft Hyper-V or VMware products for virtualization. He said that Microsoft's System Center financials were "up over 20 percent year over year."

Amazon Web Services is a more static infrastructure-as-a-service play, Di Bona contended, but he said that's changing.

"And we see Amazon trying to add now PAAS [platform as a service] componentry on top of what they do. So we think that where we ended up is the right place."

Microsoft is building infrastructure-as-a-service capabilities into Windows Azure, Di Bona said. He cited interoperability with other frameworks, such as the open source Apache Hadoop, as an example. Microsoft is partnering with Hortonworks on Hadoop interoperability with Windows Azure and Windows Server. Hadoop, which supports big data-type projects, was originally fostered by Yahoo.

"So, it [Hadoop] is a new framework that is not ours," Di Bona said. "We're embracing it, because we understand that for a lot of big data solutions that is clearly the way a lot of developers and end-users and customers want to go, at least for that part of what they do."

Di Bona's talk at the CLSA Asia USA Forum can be accessed at Microsoft's investor relations page here.

Full disclosure: I’m a contributing editor for Visual Studio Magazine, which 1105 Media also publishes.

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

Mike Neil of the Windows Server and Cloud Platform Team posted Windows Server 8: Introducing Hyper-V Extensible Switch on 11/8/2011:

Hyper-V users have asked for a number of new networking capabilities and we’ve heard them. In codename Windows Server 8 we are adding many new features to the Hyper-V virtual switch for virtual machine protection, traffic isolation, traffic prioritization, usage metering, and troubleshooting. We are also introducing rich new management capabilities that support both WMI and PowerShell. It’s an exciting set of functionality! But we didn’t stop there.

Many customers have asked us for the ability to more deeply integrate Hyper-V networking into their existing network infrastructure, their existing monitoring and security tools, or with other types of specialized functionality. We know that we have an exciting virtual switch in Hyper-V, but there are still opportunities for partners to bring additional capabilities to Hyper-V networking.

In Windows Server 8 we are opening up the virtual switch to allow plug-ins (which we call “extensions”) so that partners can add functionality to the switch, transforming the virtual switch into the Hyper-V Extensible Switch.

There are several reasons why IT professionals should be excited by the functionality of the Hyper-V Extensible Switch. First, extensions deliver only the functionality you want in the switch. You do not need to replace the entire switch just to add a single capability.

Second, the framework makes extensions into first class citizens of the system, with support for the same customer scenarios as the switch itself. That means capabilities like Live Migration work on extensions automatically. Switch extensions can be managed through Hyper-V Manager and by WMI or PowerShell.

Third, you can expect to see a rich ecosystem of new extensions allowing you to customize Hyper-V networking for your environment. Developers code extensions using existing, public APIs, so there isn’t a new programming model to learn. Extensions are coded using WFP or NDIS, just like other networking filters and drivers, a model most developers are already familiar with. They can use existing tools and know-how to quickly build extensions.

Fourth, extensions are reliable because they run within a framework and are backed with Windows 8 Certification tools to test and certify them. The result is fewer bugs and higher satisfaction.

Fifth, we’ve extended Unified Tracing through the switch to make it easier for you to diagnose issues, which will lower your support costs.

We unveiled the Hyper-V Extensible Switch at the //BUILD/ conference, along with an ecosystem of partners showing live demonstrations of early versions of their products. The session of live demonstrations can be viewed on our web site at http://channel9.msdn.com/Events/BUILD/BUILD2011/SAC-559T

Briefly, the extensions showcased were the following:

- Cisco unveiled the Nexus 1000V for Hyper-V, in addition to their VM-FEX extension with direct I/O (SR-IOV). The Cisco announcement can be found at http://newsroom.cisco.com/press-release-content?type=webcontent&articleId=473289

- InMon demonstrated traffic capturing and analysis with their sFlow product.

- 5Nine showed their Virtual Firewall.

- Broadcom demonstrated a DoS Prevention extension that emulates the functionality they provide in their OEM switch platform.

- NEC demonstrated an extension that converts Hyper-V to an OpenFlow virtual switch and integrates it with their ProgrammableFlow product.

These partners showed six great demonstrations of the flexibility and openness of the Hyper-V Extensible Switch. We are excited by each of these partnerships, as they are helping us validate the Windows Server 8 platform. They also suggest the broad range of customer value that can be delivered through an extensible networking platform in Hyper-V.

I encourage you to check out the Windows Server 8 Hyper-V Extensible Switch.

This new feature should have interesting ramifications for future Windows Azure and SQL Azure implementations.

Cloud Security and Governance

Cloud Computing Events

Mike Benkovich (@mbenko) and Michael Wood (@mikewo) will present an MSDN Webcast: Windows Azure Office Hours, featuring Mike Benkovich and Michael Wood (Level 100) on 9/11/2011 at 11 AM PST:

This week we invite Michael Wood to join us as we answer your questions, and we’ll also talk about the future of Windows Azure Boot Camps. These are two-day, deep-dive classes designed to help get you up to speed on developing for Windows Azure. Each class includes a trainer with real-world experience with Azure, as well as a series of labs so you can practice what you just learned. Michael is leading the community efforts to drive these events to a city near you.

Azure Office Hours are an unstructured weekly event where you can get your questions answered. Browse to http://wabc.benkotips.com/OfficeHrs and enter your questions, and we will answer them live during the event every Friday beginning at 11 AM PST. Check the event site for scheduling details and links to join the live conversation.

Presented By:

- Mike Benkovich, Cloud Evangelist, Microsoft Corporation

- Michael Wood, Solution Architect, Cumulux, Inc.