Windows Azure and Cloud Computing Posts for 12/16/2011+

A compendium of Windows Azure, Service Bus, Access Control, Connect, SQL Azure Database, and related cloud-computing articles.

•• Updated 12/18/2011 9:00 AM PST with new articles marked •• about Windows Azure Service Bus Connect EAI and EDI by Kent Weare and the updated Plugin for Eclipse with Java by Avkash Chauhan.

• Updated 12/17/2011 with new articles marked • about Windows Azure Service Bus Connect EAI and EDI, BizTalk 2010, AWS Toolkit for Visual Basic, an update to Microsoft Codename “Data Transfer”, Storing decimal data types in Windows Azure tables, Windows Azure developer training kits, SQL Database Federations specification, and Windows Azure Interactive Hive Console.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database and Reporting

- Marketplace DataMarket, Social Analytics and OData

- Windows Azure Access Control, Service Bus, and Workflow

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue and Hadoop Services

• Lengning Liu of the Microsoft BI team posted an Introduction to the Hadoop on Azure Interactive Hive Console video to YouTube on 12/16/2011:

From the description:

The Microsoft deployment of Apache Hadoop for Windows lets you set up a private Hadoop cluster on Azure. One of the included administration/deployment tools is an Interactive Console for JavaScript and Hive. This video introduces the Interactive Hive console. Developer Lengning Liu demonstrates running several Hive commands against your Hadoop cluster.

• Avkash Chauhan (@avkashchauhan) described How you can store decimal data in Windows Azure Table Storage? in a 12/16/2011 post:

Windows Azure Table Storage does not currently support storing decimal data natively. The supported property types, a subset of the data types defined by the ADO.NET Data Services specification, are Binary (byte[]), Boolean, DateTime, Double, Guid, Int32 (int), Int64 (long) and String.

However, you can store your decimal data as a string in the table and, in your code, use a client-library-based serializer that supports decimal. In effect, you can use decimal simply by changing the data type to PrimitiveTypeKind.String in your ReadingWritingEntityEvent handler.

Using a custom attribute makes the solution fairly convenient. For example, an entity class needs only the following code to handle decimal data:

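// EntityDataType is the custom attribute described above; it tells the serializer to store this decimal as a string
[EntityDataType(PrimitiveTypeKind.String)]
public decimal Quantity { get; set; }

The above approach works fine for decimal values stored as strings, but it doesn't work for other unsupported types, such as enums or TimeSpan.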
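To make the round trip concrete, here is a minimal sketch of the same idea. It is written in Python rather than C# and assumes nothing about the storage client library: an entity is modeled as a plain dictionary, decimal values are written out as strings (Edm.String) and are parsed back with `decimal.Decimal` on read. The helper names and the `UnitPrice` property are hypothetical. Storing the value as a Double would instead expose it to binary floating-point rounding, which is usually why decimal is wanted in the first place.

```python
from decimal import Decimal

# Hypothetical sketch: persist Decimal properties as strings (Edm.String)
# and restore them on read. Entities are plain dicts, not Azure SDK types.


def to_table_entity(props: dict) -> dict:
    """Return a copy of the entity with every Decimal converted to str."""
    return {k: str(v) if isinstance(v, Decimal) else v for k, v in props.items()}


def from_table_entity(row: dict, decimal_fields: set) -> dict:
    """Parse the named string columns back into Decimal values."""
    return {k: Decimal(v) if k in decimal_fields else v for k, v in row.items()}


order = {"PartitionKey": "orders", "RowKey": "1", "UnitPrice": Decimal("19.99")}
stored = to_table_entity(order)        # what would be written to the table
restored = from_table_entity(stored, {"UnitPrice"})

print(repr(stored["UnitPrice"]))       # '19.99'
print(restored["UnitPrice"] * 3)       # 59.97 (exact decimal arithmetic)
```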

Thanks to the Windows Azure product team for providing this information.

Quantity isn’t an ideal column for demonstrating decimal values because the quantity of most items is an integer.

Maureen O’Gara asserted “It gives Azure Big Data capabilities and advanced data analytics and a better shot at competing with rival clouds” in a deck for her Microsoft Tries Hadoop on Azure post of 12/16/2011 to the Azure Cloud on Ulitzer blog:

Microsoft added a trial version of Apache's open source Hadoop to its Windows Azure PaaS Monday as it said it would in October when it teamed up with Hortonworks, the Yahoo spin-off.

It gives Azure Big Data capabilities and advanced data analytics and a better shot at competing with rival clouds like Amazon's EC2 and Google's App Engine.

Users can build MapReduce jobs. Microsoft said a Hive ODBC Driver and Hive Add-in for Excel would enable data analysis of unstructured data through Excel and PowerPivot.

Would-be users have to submit a form and Microsoft will pick the testers it wants based on use cases. Microsoft gave no indication when the widgetry would be more than a preview.

It's part of an upgrade that includes SQL Azure Database tickles and simplified Azure billing and management.

The size of the database can now top out at 150GB, up from 50GB, without costing more, and it's supposedly easier to scale out a "virtually unlimited" elastic database tier.

A downloadable SDK has JavaScript libraries and a run-time engine for the Node.js framework, with support for hosting, storage and Service Bus.

It's for creating and scaling web apps that run on the Azure Windows Server.

The added language support means Microsoft wants Azure to appeal to developers beyond the .NET walled garden.

With the SDK Microsoft says Azure can integrate with other open source applications, including the Eclipse IDE (an updated plug-in), MongoDB and Solr/Lucene search engine. Access to Azure libraries for .NET, Java and Node.js is now available under an Apache 2 open-source license and hosted on GitHub.

The update offers a free 90-day trial and spending caps with views of real-time usage and billing details at Azure's new Metro-style Management Portal.

Pricing has been adjusted. The maximum price per SQL Azure DB is $499.95. The price per gigabyte of large SQL Azure databases has been cut 67% and data transfer prices in North America and Europe have been reduced by 25% to 12 cents a GB. Asia-Pacific data transfers that were 20 cents a GB are now 19 cents a GB, 5% cheaper.


SQL Azure Database and Reporting

• Shoshana Budzianowski (@shoshe) reported Microsoft Codename "Data Transfer" Lab Refresh on 12/16/2011:

We are committed to continually improving the Microsoft Codename “Data Transfer” lab. We launched the lab less than a month ago, and we just completed our first major refresh of the service. This refresh addresses a few issues we found and a few issues you found.

- We’re more forgiving on blob names, but remember container names still have their own limitations. See: Naming and Referencing Containers, Blobs, and Metadata

- You can now set CSV delimiters and qualifiers

- Field type guessing does a better job. We analyze as much of the file as possible in a few short seconds, rather than just deferring to the top 100 rows. If you don’t like our guess, you can still override it in the Table Settings page. You can get to that page by selecting Edit table Defaults on the File selection page.

- You now have the option to treat empty strings as NULL

- And, most importantly, we’re continuing to improve our handling of large files. We’ve made significant improvements to upload reliability for files up to 200 MB in size
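The delimiter, qualifier and empty-string options correspond to familiar CSV parsing settings. As a rough illustration only, and not a description of how the Data Transfer service is implemented, Python's standard `csv` module exposes the same controls as `delimiter` and `quotechar`. The `read_csv` helper and its `empty_as_null` flag are hypothetical.

```python
import csv
import io


def read_csv(text, delimiter=",", qualifier='"', empty_as_null=False):
    """Parse CSV text with a configurable delimiter and text qualifier.

    When empty_as_null is True, empty fields become None (i.e. NULL).
    With default settings csv.reader returns '' both for a quoted empty
    value and for a missing one, so this sketch treats both as NULL.
    """
    reader = csv.reader(io.StringIO(text), delimiter=delimiter, quotechar=qualifier)
    rows = []
    for record in reader:
        if empty_as_null:
            record = [None if field == "" else field for field in record]
        rows.append(record)
    return rows


# Semicolon-delimited data with single-quote qualifiers: the qualifier lets a
# value contain the delimiter, and the trailing ';' leaves the city empty.
sample = "id;name;city\n1;'Smith; J.';\n2;Jones;Oslo\n"
rows = read_csv(sample, delimiter=";", qualifier="'", empty_as_null=True)
for row in rows:
    print(row)
```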

Thanks for all the feedback you’ve submitted through surveys and feature voting. Keep the feedback coming, and get your vote in. Please feel free to contact us directly at datatransfer@microsoft.com, take the survey, or add your suggestions and issues to the SQL Azure Labs Support Forum. We look forward to hearing from you.

Microsoft Access also had a problem guessing data types because it analyzed only the first 50 or so rows of data to choose a data type. For example, if the first 50 rows contained 5-digit ZIP Codes and later rows included alphanumeric Canadian or UK postal codes, the column would be assigned the Long Integer Access data type, which causes an error when a non-integer code is encountered.
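The difference between sampling the first rows and scanning as much of the file as a time budget allows can be shown with a small, hypothetical type guesser. This Python sketch distinguishes only "integer" from "text", and its `max_rows` and `max_seconds` parameters are illustrative stand-ins for the Access-style and Data Transfer-style strategies, not either product's actual logic.

```python
import itertools
import time


def guess_type(values, max_rows=None, max_seconds=None):
    """Guess 'integer' or 'text' for a column of string values.

    max_rows limits the scan to the first N values (Access-style sampling);
    max_seconds limits it to a time budget instead. Empty strings are ignored.
    """
    deadline = None if max_seconds is None else time.monotonic() + max_seconds
    sample = values if max_rows is None else itertools.islice(values, max_rows)
    for value in sample:
        if deadline is not None and time.monotonic() > deadline:
            break  # budget exhausted: go with what has been seen so far
        if value == "":
            continue
        try:
            int(value)
        except ValueError:
            return "text"
    return "integer"


# 60 US ZIP Codes followed by a Canadian and a UK postal code.
zip_codes = ["98101"] * 60 + ["K1A 0B1", "SW1A 1AA"]
print(guess_type(zip_codes, max_rows=50))      # integer: the sample misses the later rows
print(guess_type(zip_codes, max_seconds=2.0))  # text: the scan reaches the later rows
```

Even a full scan would call a column of purely US ZIP Codes "integer", and storing them as integers drops leading zeros ("02134" becomes 2134), which is one reason text is often the safer type for codes.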

• My (@rogerjenn) Test-Drive SQL Azure Labs’ New Codename “Data Transfer” Web UI for Copying *.csv Files to SQL Azure Tables or Azure Blobs article of 11/30/2011 is an illustrated tutorial that describes how to load Excel or *.csv files into Windows Azure blob storage and from there into SQL Azure.

• Ram Jeyaraman described Database Federations: Enhancing SQL to enable Data Sharding for Scalability in the Cloud in a 12/12/2011 post (missed when published):

I am thrilled to announce the availability of a new specification called SQL Database Federations, which describes additional SQL capabilities that enable data sharding (horizontal partitioning of data) for scalability in the cloud.

The specification has been released under the Microsoft Open Specification Promise. With these additional SQL capabilities, the database tier can provide built-in support for data sharding to elastically scale-out the data. This is yet another milestone in our Openness and Interoperability journey.

As you may know, multi-tier applications scale out their front and middle tiers elastically. With this model, as the demand on the application varies, administrators add and remove instances of the front-end and middle-tier nodes to handle the workload.

However, the database tier in general does not yet provide built-in support for such an elastic scale-out model and, as a result, applications had to custom build their own data-tier scale-out solution. Using the additional SQL capabilities for data sharding described in the SQL Database Federations specification the database tier can now provide built-in support to elastically scale-out the data-tier much like the middle and front tiers of applications. Applications and middle-tier frameworks can also more easily use data sharding and delegate data tier scale-out to database platforms.

Openness and interoperability are important to Microsoft, our customers, partners, and developers, and so the publication of SQL Database Federations specification under the Microsoft Open Specification Promise will enable applications and middle-tier frameworks to more easily use data sharding, and also enable database platforms to provide built-in support for data sharding in order to elastically scale-out the data.

Also of note: The additional SQL capabilities for data sharding described in the SQL Database Federations specification are now supported in Microsoft SQL Azure via the SQL Azure Federation feature.

Here is an example that uses Microsoft SQL Azure to illustrate the use of the additional SQL capabilities for data sharding described in the SQL Database Federations specification.

-- Assume the existence of a user database called sales_db. Connect to sales_db and create a federation called orders_federation to scale out the tables: customers and orders.
-- This creates the federation represented as an object in the sales_db database (root database for this federation) and also creates the first federation member of the federation.
CREATE FEDERATION orders_federation(c_id BIGINT RANGE)
GO
-- Deploy schema to root, create tables in the root database (sales_db)
CREATE TABLE application_configuration(…)
GO
…
-- Connect to the federation member and deploy schema to the federation member
USE FEDERATION orders_federation(c_id=0) …
GO
-- Create federated tables: customers and orders
CREATE TABLE customers (customer_id BIGINT PRIMARY KEY, …) FEDERATED ON (c_id = customer_id)
GO
CREATE TABLE orders (…, customer_id BIGINT NOT NULL) FEDERATED ON (c_id = customer_id)
GO
-- To scale out customer’s orders, SPLIT the federation data into two federation members
USE FEDERATION ROOT …
GO
ALTER FEDERATION orders_federation SPLIT AT(c_id=100)
GO
-- Connect to the federation member that contains the value ‘55’
USE FEDERATION orders_federation(c_id=55) …
GO
-- Query the federation member that contains the value ‘55’
UPDATE orders SET last_order_date=getutcdate()…
GO

I am confident that you will find the additional SQL capabilities for data sharding described in the SQL Database Federations specification very useful as you consider scaling-out the data-tier of your applications. We welcome your feedback on the SQL Database Federations specification.
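The routing behind `USE FEDERATION orders_federation(c_id=55)` can be pictured with a small model. The Python sketch below is a hypothetical, in-memory illustration of range partitioning, not SQL Azure's implementation: each split point divides the key space, and a key is routed to its member with a binary search. It assumes that a member's range includes its low boundary and excludes its high boundary, so after `SPLIT AT(c_id=100)` the value 55 lands in the lower member and 100 in the upper one.

```python
import bisect


class RangeFederation:
    """Hypothetical in-memory model of a RANGE federation on one key.

    Members are described by sorted split points. Each member covers
    [low, high): low is inclusive, high is exclusive, and None means the
    range is unbounded at that end.
    """

    def __init__(self, name):
        self.name = name
        self.split_points = []  # no splits yet: a single member covers everything

    def split_at(self, value):
        """Rough analogue of ALTER FEDERATION ... SPLIT AT(key=value)."""
        if value not in self.split_points:
            bisect.insort(self.split_points, value)

    def member_for(self, key):
        """Index of the member whose range contains key (cf. USE FEDERATION)."""
        return bisect.bisect_right(self.split_points, key)

    def member_range(self, index):
        low = self.split_points[index - 1] if index > 0 else None
        high = self.split_points[index] if index < len(self.split_points) else None
        return low, high


orders = RangeFederation("orders_federation")
orders.split_at(100)
for c_id in (0, 55, 100, 250):
    member = orders.member_for(c_id)
    print(c_id, "->", member, orders.member_range(member))
```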

Ram is a Senior Program Manager in Microsoft’s Interoperability Group.

Rick Saling reported Windows Azure Prescriptive Guidance [for SQL Azure] Released in a 12/12/2011 post (missed when published):

A virtual team of us released this last Friday. It provides you with "best practices and recommendations for working with the Windows Azure platform". So if you find yourself overwhelmed and intimidated by "The Cloud", this is a really good place to go to get specific recommendations and "best practices".

My contribution to this, largely co-authored with James Podgorski from the Windows Azure Customer Advisory Team (who did the really hard work of discovering customer pain points, and coming up with code to solve them), is inside Developing Windows Azure Applications: the section titled SQL Azure and On-Premises SQL Server: Development Differences. The section contains topics about things that are different between SQL Server and SQL Azure: things that you know about developing with SQL Server (including Entity Framework), that work differently in SQL Azure. Or to be blunt about it: some code that you write for SQL Server will not work in SQL Azure without some modifications.

The section includes these topics:

- Handling Transient Connections in Windows Azure: my last two blog posts were about this. Please note that this topic currently has a broken link for the Microsoft Enterprise Library 5.0 Integration Pack for Windows Azure.

- Entity Framework Connections and Federations

- Issues with Transactions and Federated Databases

- Transactions with Entity Framework in Federated Databases

These topics show you the code changes needed to resolve specific problems. We are also researching more general solutions that might require less code on your part.
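Handling transient connection failures usually comes down to retrying with a growing delay. The following is a generic Python sketch of that pattern, not the API of the Enterprise Library integration pack; `TransientError`, `execute_with_retry` and the delay values are hypothetical, and deciding which SQL Azure errors actually count as transient is left to the guidance itself.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical stand-in for throttling or dropped-connection errors."""


def execute_with_retry(operation, max_attempts=5, base_delay=0.5,
                       max_delay=8.0, sleep=time.sleep):
    """Call operation(), retrying transient failures with exponential backoff.

    The wait doubles after each failed attempt (capped at max_delay), plus up
    to 50% random jitter; non-transient errors propagate immediately.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts:
                raise  # give up: surface the last transient error
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(delay + random.uniform(0, delay / 2))


# Simulated connection that fails twice before succeeding.
attempts = {"count": 0}


def flaky_query():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise TransientError("connection dropped")
    return "ok"


waits = []  # record the delays instead of actually sleeping
print(execute_with_retry(flaky_query, sleep=waits.append))  # ok
```

Injecting `sleep` keeps the sketch testable; in real code the default `time.sleep` would be used.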

The section overview, SQL Azure and On-Premises SQL Server: Development Differences, lists two topics as part of the section that are not yet linked:

- Unsupported Features in SQL Azure: SQL Server commands that are not implemented in SQL Azure

- Schema Definition Differences: good database schema design principles are slightly different for SQL Azure

These topics will be coming out "real soon now", and the broken link mentioned above will get fixed ASAP.

Are there other obstacles you run into when you take your existing SQL Server knowledge and apply it to SQL Azure? Things that you "know" from developing with SQL Server that "don't work" in SQL Azure? Let me know in the comments to this post, and if they are of general enough interest, I'll get them written up and added. And if you've already solved similar problems in the past, again, let me know.


Marketplace DataMarket, Social Analytics and OData

No significant articles today.


Windows Azure Access Control, Service Bus and Workflow

•• Kent Weare (@wearsy) posted Introduction to the Azure Service Bus EAI/EDI December 2011 CTP on 12/17/2011:

The Azure team has recently reached a new milestone: delivering Service Bus enhancements in the December 2011 CTP. More specifically, this CTP provides Enterprise Application Integration (EAI) and Electronic Data Interchange (EDI) functionality. Within the Microsoft stack, both EAI and EDI have historically been tackled by BizTalk. With this CTP we are seeing an early glimpse into how Microsoft envisions these types of integration scenarios being addressed in a Platform as a Service (PaaS) based environment.

What is included in this CTP?

There are 3 core areas to this release:

- Enterprise Application Integration: In Visual Studio, we will now have the ability to create Enterprise Application Integration and Transform projects.

- Connectivity to On-premise LOB applications: Using this feature will allow us to bridge our on-premise world with the other trading partners using the Azure platform. This is of particular interest to me. My organization utilizes systems like SAP, Oracle and 3rd party work order management and GIS systems. In our environment, these applications are not going anywhere near the cloud anytime soon. But, we also do a lot of data exchange with external parties. This creates an opportunity to bridge the gap using existing on-premise systems in conjunction with Azure.

- Electronic Data Interchange: We now have a Trading Partner Management portal that allows us to manage EDI message exchanges with our various business partners. The Trading Partner Management Portal is available here.

What do I need to run the CTP?

- The first thing you will need is the actual SDK (WindowsAzureServiceBusEAI-EDILabsSDK-x64.exe) which you can download here.

- A tool called Service Bus Connect (not to be confused with AppFabric Connect) enables us to bridge on-premise with Azure. The setup msi can also be accessed from the same page as the SDK. Within this setup we have the ability to install the Service Bus Connect SDK (which includes the BizTalk Adapter Pack), Runtime and Management tools. In order for the runtime to be installed, we need to have Windows Server AppFabric 1.0 installed. [Emphasis added.]

- Once we have the SDKs installed, we will have the ability to create ServiceBus projects in Visual Studio.

- Since our applications will require resources from the Azure cloud, we need to create an account in the Azure CTP Labs environment. To create a namespace in this environment, just follow these simple instructions.

- Next, we need to register our account with the Windows Azure EDI Portal.

Digging into the samples

For the purpose of this blog post I will describe some of what is going on in the first Getting Started sample called Order Processing.

When we open up the project and launch the BridgeConfiguration.bcs file we will discover a canvas with a couple of shapes on it. I have outlined, in black, a Bridge and I have highlighted, in green, what is called a Route Destination. So the scenario that has been built for us is one that will receive a typed XML message, transform it and then place it on a Service Bus Queue.

When we dig into the Xml One-Way Bridge shape, we will discover something that looks similar to a BizTalk Pipeline. Within this “shape” we can add the various message types that are going to be involved in the bridge. We can then provide the names of the maps that we want to execute.

Within our BridgeConfiguration.bcs “canvas” we need to provide our service namespace. We can set this by clicking on the whitespace within the canvas and then populating the Service Namespace property.

We need to set this Service Namespace so that we know where to send the result of the Xml One-Way Bridge. In this case we are sending it to a Service Bus Queue that resides within our namespace.

With our application configured we can build our application as we normally would. In order to deploy, we simply right-click the solution, or project, and select Deploy. We will once again be asked to provide our Service Bus credentials.

The deployment is quick, but then again we aren’t pushing too many artifacts to the Service Bus.

Within the SDK samples, Microsoft has provided us with MessageReceiver and MessageSender tools. We can use the MessageReceiver tool to create our Queue and receive a message. The MessageSender tool is used to send the message to the Queue.

When we test our application we will be asked to provide an instance of our typed Xml message. It will be sent to the Service Bus, transformed (twice) and then the result will be placed on a Queue. We will then pull this message off of the queue and the result will be displayed in our Console.

Conclusion

So far it seems pretty cool and looks like this team is headed in the right direction. Much like the AppFabric Apps CTP, there will be some gaps in the technology, and some bugs, because this is still just a technical preview. If you have any feedback for the Service Bus team or want some help troubleshooting a problem, a forum has been set up for this purpose.

I am definitely looking forward to digging into this technology further – especially in the area of connecting to on-premise LOB systems such as SAP. Stay tuned for more posts on these topics.

Wearsy makes the important point that Service Bus Connect is not to be confused with AppFabric Connect, as emphasized above.

•• Kent Weare (@wearsy) described the Azure Service Bus EAI/EDI December 2011 CTP – New Mapper in a 12/17/2011 post:

In this blog post we are going to explore some of the new functoids that are available in the Azure Service Bus Mapper.

At first glance, the Mapper looks pretty similar to the BizTalk 2010 Mapper. Sure there are some different icons, perhaps some lipstick applied but conceptually we are dealing with the same thing right?

Wrong! It isn’t until we take a look at the toolbox that we discover this isn’t your Father’s mapper.

This post isn’t meant to be an exhaustive reference guide for the new mapper but here are some of the new functoids that stick out for me.

What’s Missing?

I can’t take credit for discovering this, but while chatting with Mikael Håkansson he mentioned “hey – where is the scripting functoid?” Perhaps this is just a limitation of the CTP but definitely something that needs to be addressed for RTM. It is always nice to be able to fall back on a .Net helper assembly or custom XSLT.

Conclusion

While this post was not intended to be comprehensive, I hope it has highlighted some new opportunities that warrant some further investigation. It is nice to see that Microsoft is evolving and maturing in this area of EAI.

Mick Badran (@mickba) posted an illustrated Azure AppFabric Labs–EAI, Service Bus in the Cloud tutorial on 12/17/2011:

Well folks – the AppFabric Labs have come out with a real gem recently.

In the CTP we have:

- EDI + EAI processing

- AS2 http/s endpoints

- ‘Bridges’

- Transforms

and of course the latest version of

- Service Bus, Queues and Topics.

To get the real benefit from this ‘sneak peek’ there’s a bit of setup required. Those familiar with BizTalk will have seen a few of the EDI screens for declaring parties/partners and agreements before.

To get cracking:

- Update your local bits with the latest and greatest - Installing the Windows Azure Service Bus EAI and EDI Labs - December 2011

Part of this install is the Service Bus Connect component, which installs the BizTalk 2010 LOB Adapter Pack.

This is really quite interesting, because the WCF LOB Adapter SDK provides a framework for developers to build out ‘adapters’ that connect systems/endpoints through a sync/async messaging pattern.

The BizTalk Adapter Pack 2010 is the BizTalk team’s set of adapters built on top of the WCF Adapter Framework. The BizTalk Adapter Pack includes:

- SQL Server Adapter. High-performance SQL work, notifications, async reads, writes etc.

- SAP Adapter – uses the SAP Client APIs (under the hood) to talk directly to SAP. Very powerful

- SIEBEL Adapter

- Oracle DB Adapter

- Oracle ES Adapter

These adapters are exposed as ‘WCF Bindings’, so with BizTalk or a small amount of code you can expose them as callable WCF Services.

What does this mean in our case here?

If you think about your on-premise Oracle system, we now have a local means of accessing Oracle, and we can then push the message processing (e.g. a new order arrived) into our ‘cloud’ bridge, where we have the immediate benefit of HA + Scale. Do some work there, and spit the result out any which way you want: maybe back down to on-premise, or into a Queue or to Azure Storage.

- Sign up to AppFabricLabs – http://portal.appfabriclabs.com and provision your ‘servicebus’ service.

This provides your EDI/EAI relay endpoints and also provides a way for you to listen/send requests to/from the cloud.

- Here I have used mickservices as my ServiceBus namespace.

(I created a Queue and a couple of Topics for later use – not really needed here)

Note: grab your HIDDEN KEY details from here – owner + <key#>

- From within the Portal create a Queue called samples/gettingstarted/queueorders

- Register at the EDI Portal – http://edi.appfabriclabs.com

Even though this says ‘EDI’ think of it as your sandpit. It’s where all your ‘widgets’ live that are to run in Azure Integration Services.

The registration form had me stumped for a little bit. Here are the details that work.

Notice my servicebus namespace – just the first word. I previously had the whole thing, then variations of it.

Issuer Name: owner

Issuer secret: <the hidden key from above>

Click save/register and you should be good here.

- Once this is done – click on Settings –> AS2 and enable AS2 message processing (which is EDI/HTTP – you might be lucky enough to get the msgs as XML, but most times no). This will create some b2bgateway… style endpoints for you.

- At this stage, have a look under Resources and you’ll notice that it’s empty. But…they have Schemas, Transforms and Certificates. We’ll come back to that later.

- Let’s head to Visual Studio 2010 with the updates installed and open up the Sample Order Processing project.

I installed my samples under c:\samples

If all opens well you should see:

Note: there are a couple of new items here (expand out artifacts):

*.bcs – Bridge. There’s an MSDN article describing these – I was like ‘what???’. Basically these are a ‘processing pipe’ in which various operations can be performed on a message in stages. These stages are ‘atomic’ and they also have ‘conditions’ as to whether they *need* to be applied to the said message. So a bridge could take a message, convert it to XML and broadcast the message out to a Topic.

Opening up the designer – it gets pretty cool I must say!!!

Note the ‘operations’ on the LHS. I must have a play with these guys

Another thought – how extensible is this? I’d bet we could write our own widgets to throw on the design surface as well.

By double clicking on the BridgeOrders component, you can see the designer surface come up with the ‘stage processing’.

Here you can see that the ‘bridge’ (I wonder if that term will last till the release) will accept only 2 types of message schemas – PO1 + PO2 – and maps them out to a more generic PO format.

The map stage (XMLTransform), from my initial testing, only applies one map: the first one that matches the source schema (this is the same as BizTalk).
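That first-match rule is easy to state as code. The Python sketch below is hypothetical: the map names and message fields are made up, it reflects Mick's initial testing rather than documented bridge semantics, and passing an unmatched message through unchanged is an assumption.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Map:
    name: str
    source_schema: str
    transform: Callable[[dict], dict]


def apply_first_matching_map(schema: str, message: dict,
                             maps: List[Map]) -> Tuple[Optional[str], dict]:
    """Run only the first map whose source schema matches the message."""
    for m in maps:
        if m.source_schema == schema:
            return m.name, m.transform(message)
    # Assumption: with no matching map, the message passes through unchanged.
    return None, message


maps = [
    Map("PO1ToPO", "PO1", lambda msg: {"po_number": msg["OrderNum"]}),
    Map("PO1ToPOAlt", "PO1", lambda msg: {"alt": True}),  # never reached for PO1
    Map("PO2ToPO", "PO2", lambda msg: {"po_number": msg["PONumber"]}),
]

print(apply_first_matching_map("PO1", {"OrderNum": "42"}, maps))
# ('PO1ToPO', {'po_number': '42'})
```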

Close the bridge view down and leave the BridgeConfiguration open.

- Click anywhere on the white surface of the BridgeConfiguration and set your Service Namespace property from the Properties window (this guy was hard to find!!)

Put <your service namespace> you created originally.

- Save and click Deploy and a Deployment window comes up – put your details in from above.

After deployment completes, keep an eye on the Output window, as it lists all the URLs you’ll need for the next step, in particular the one for BridgeOrders.

Feel free to go back to your Azure Portal –> Resources and see your deployed bits in there: Schemas, Transforms etc.

- Running what you’ve built – sending a message to the ‘bridge’ (here I’ve borrowed info from the ‘Readme.html’ in the sample project folder)

We don’t need to set up the whole EDI trading partner piece – just send messages to a RESTful endpoint, aka the bridge.

- From the samples folder locate the Tools\MessageSender project. (you may have to build it in VS.NET first)

- from a command prompt run messagesender.exe

In my case it looks like this:

It took me a little while to get this working originally; make sure all your VS.NET stuff is deployed properly.

So effectively we have sent PO1.xml to our ‘Bridge’ and it’s been accepted, validated and transformed into ‘something else’ and popped onto a Queue called Samples/gettingstarted/QueueOrders.

We will now get the message reader to read it.

- From under the Samples\Tools folder locate the MessageReceiver project and build it if required.

From a command prompt at that location, run the MessageReceiver tool to listen to the queue.

Wrapping up -

This is obviously a quick walkthrough of what’s possible; performance, scale and throughput are other measures that we haven’t covered here – given it’s CTP/Labs, we’re not quite ready for that conversation. The BizTalk Adapter Pack will expose, for example, your SAP system to a wider audience. Imagine having RESTful WCF services to call that provide your customer data in the format you want…or better still…deliver it straight to you!

(Currently in BTS 2010, the adapter pack is not licensed separately; it’s part of BTS Standard or Enterprise. In BTS 2009 it *was* licensed separately, for an RRP of $5K. Maybe we’ll see this as a separate component again.)

Or you could do like the SharePoint team and write a brand new WCF Adapter (‘connector’ in their terms) – ‘Duet’ and spend 18 months doing so.

One thing I’d like to see here is a Rules Processor or Engine – being a long, long BizTalk fan, I see the rules engine as a massive strength of any loosely coupled solution. The majority of BizTalk solutions I come across don’t employ any rules engines…or better still, Windows Workflow 2, 3+ (but not 4 or 4.5) has a rules ‘executor’ which is very powerful in its own right. Who’s heard of or used the Policy shape? Given that this is a sneak peek at what is on the horizon, this is definitely a space not to miss.

Get those trial accounts going and enjoy!

In particular I’d like to call out Rick’s Article (well done Rick!) for a great read on this space also. [See below]

Rick Garibay announced the Azure Service Bus Connect EAI and EDI “Integration Services” CTP in a 12/16/2011 post:

I am thrilled to share in the announcement that the first public CTP of Azure Service Bus Integration Services is now LIVE at http://portal.appfabriclabs.com [see the screen capture below].

The focus of this release is to enable you to build hybrid composite solutions that span on-premise investments such as Microsoft SQL Server, Oracle Database, SAP, Siebel eBusiness Applications, Oracle E-Business Suite, allowing you to compose these mission critical systems with applications, assets and workloads that you have deployed to Windows Azure, enabling first-class hybrid integration across traditional network and trust boundaries.

In a web-to-web world, many of the frictions addressed by these capabilities still exist, albeit to a smaller degree. The reality is that as the web and cloud computing continue to gain momentum, investments on-premise are, and will continue to be, critical to realizing the full spectrum of benefits that cloud computing provides in both the short and long term.

Azure Service Bus Connect provides a new server explorer experience for LOB integration, exposing a management head that can be accessed on-prem via Server Explorer or PowerShell to create, update, delete or retrieve information from LOB targets. This provides a robust extension of the Azure Service Bus relay endpoint concept, which acts as a LOB conduit (LobTarget, LobRelay) for bridging these assets by extending the WCF LOB Adapters that ship with BizTalk Server 2010. The beauty of this approach is that you can leverage the LOB Adapters using BizTalk as a host or, for a lighter-weight approach, use IIS/Windows Server AppFabric to compose business operations on-premise and beyond. [WCF LOB Adapters link to MSDN technical articles added.]

In addition, support for messaging between trading partners across traditional trust boundaries in business-to-business (B2B) scenarios using EDI is also provided in this preview, including AS2 protocol support with X12 chaining for send and receive pipelines, FTP as transport for X12, agreement templates, partners view with profiles per partner, resources view, and an intuitive, metro style EDI Portal.

Just as with on-premise integration, friction always exists when integrating different assets which may exist on different platforms, implement different standards and at a minimum have different representations of common entities that are part of your composite solution’s domain. What is needed is a mediation broker that can be leveraged at internet-scale, and apply message and protocol transformations across disparate parties and this is exactly what the Transforms capability provides. Taking an approach that will be immediately familiar to the BizTalk developer, a familiar mapper-like experience is provided within Visual Studio for interactively mapping message elements and applying additional processing logic via operations (functoids).

In addition, XML Bridges, which include the XML One-Way Bridge and the XML Request-Reply Bridge, extend Azure Service Bus to support critical patterns such as protocol bridging, routing actions, external data lookup for message enrichment, and both WS-I and REST endpoints in any combination.

As the MSDN documentation explains, “bridges are composed of stages and activities where each stage is a message processing unit in itself. Each stage of a bridge is atomic, which means either a message completes a stage or not. A stage can be turned on or off, indicating whether to process a message or simply let it pass through”.

Taking the familiar VETR approach to validate, extract, transform and route messages from one party to another, along with the ability to enrich messages by composing other endpoints in flight (supported protocols include HTTP, WS-HTTP and Basic HTTP, HTTP Relay Endpoint, Service Bus Queues/Topics and any other XML bridge), the Bridge is a very important capability that significantly extends Azure Service Bus as a key messaging broker across integration disciplines.
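The stage model quoted above can be sketched in a few lines. Bridges are configured in the Visual Studio designer rather than written by hand, so this Python version is purely conceptual: the `Message` type, the `Order` schema and the endpoint names are hypothetical. It runs the four VETR stages in order, skips any stage that is switched off, and gives each stage a private copy of the message so that a stage either completes or leaves the message untouched.

```python
import copy
import xml.etree.ElementTree as ET
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Message:
    """Hypothetical message: an XML body plus a bag of routing properties."""
    body: str
    properties: Dict[str, str] = field(default_factory=dict)


@dataclass
class Stage:
    name: str
    process: Callable[[Message], Message]
    enabled: bool = True  # a disabled stage lets the message pass straight through


def validate(msg: Message) -> Message:
    root = ET.fromstring(msg.body)  # raises ET.ParseError on malformed XML
    if root.tag != "Order":
        raise ValueError(f"unexpected root element {root.tag!r}")
    return msg


def extract(msg: Message) -> Message:
    # XPath-style extraction: promote a body value to a routing property
    region = ET.fromstring(msg.body).findtext("./Customer/Region")
    msg.properties["Region"] = region or "Unknown"
    return msg


def transform(msg: Message) -> Message:
    root = ET.fromstring(msg.body)
    root.tag = "SalesOrder"  # rename the root to match a hypothetical target schema
    msg.body = ET.tostring(root, encoding="unicode")
    return msg


ROUTES = {"West": "west-orders-queue", "East": "east-orders-queue"}  # hypothetical endpoints


def route(msg: Message) -> Message:
    region = msg.properties.get("Region", "")
    msg.properties["Destination"] = ROUTES.get(region, "unrouted")
    return msg


def run_bridge(stages: List[Stage], msg: Message) -> Message:
    for stage in stages:
        if not stage.enabled:
            continue
        # Each stage works on its own copy: if it raises, the caller's message
        # is unchanged, so a stage is either completed or not applied at all.
        msg = stage.process(copy.deepcopy(msg))
    return msg


stages = [Stage("validate", validate), Stage("extract", extract),
          Stage("transform", transform), Stage("route", route)]
result = run_bridge(stages, Message("<Order><Customer><Region>West</Region></Customer></Order>"))
print(result.properties["Destination"])  # west-orders-queue
```

Turning off the transform stage, for example, lets the original body pass through while extraction and routing still run.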

In reality, these patterns have no more to do with EAI than with traditional, contemporary service composition; they become necessary once you move beyond a point-to-point approach and need to elegantly manage integration and composition across assets. As such, this capability extends Azure Service Bus in a way that is very powerful in and of itself, even in non-EAI/EDI scenarios, because endpoints can be virtualized, increasing decoupling between parties (clients/services). This capability also enriches what is possible when using BrokeredMessage properties as a potential poor-man’s routing mechanism.
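Property-based routing works roughly like this: the sender stamps user-defined properties on a message (`BrokeredMessage.Properties` in the .NET API), and each topic subscription carries a filter, such as the SQL-like expression `Region = 'West'`, that decides whether it receives a copy. The Python below only models that behavior; the subscription names and predicates are hypothetical, and the lambdas stand in for real subscription filters.

```python
from typing import Callable, Dict, List

Properties = Dict[str, object]

# Each hypothetical subscription pairs a name with a predicate over message
# properties, playing the role of a filter on a topic subscription.
SUBSCRIPTIONS: Dict[str, Callable[[Properties], bool]] = {
    "west-orders": lambda p: p.get("Region") == "West",
    "large-orders": lambda p: isinstance(p.get("Total"), (int, float)) and p["Total"] > 1000,
    "audit": lambda p: True,  # matches everything, so it sees every message
}


def deliver(properties: Properties) -> List[str]:
    """Return the subscriptions that would each receive a copy of the message."""
    return [name for name, matches in SUBSCRIPTIONS.items() if matches(properties)]


print(deliver({"Region": "West", "Total": 250}))   # ['west-orders', 'audit']
print(deliver({"Region": "East", "Total": 5000}))  # ['large-orders', 'audit']
```

Because every matching subscription receives its own copy, the sender never needs to know who consumes the message, which is the decoupling described above.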

In closing, the impedance mismatch between disparate applications that must communicate with each other is a source of friction that will continue to exist for many years to come. Traditionally, many of these problems have been solved by expensive, big-iron middleware servers, but this is changing.

As with most technologies, new possibilities are often unlocked as residual side effects of something bigger, and this is certainly the case with Azure Service Bus, which is both innovative and strategic to Microsoft’s PaaS strategy. Azure Service Bus continues to serve as a great example of a welcome shift toward a lightweight, capability-based, platform-oriented approach to solving tough distributed messaging and integration problems, one that honors the existing investments organizations have made and benefits from a common platform approach that is unique in the market. And while this shift will take some time, in the long run enterprises of all shapes and sizes only stand to benefit.

To get started, download the SDK & samples from http://go.microsoft.com/fwlink/?LinkID=184288 and the tutorial & documentation from http://go.microsoft.com/fwlink/?LinkID=235197 and watch this and the Windows Azure blog for more details coming soon.

• See below to learn more about BizTalk Server 2010 and WCF Adapters with the BizTalk Server 2010 Developer Training Kit.

This is a high-end feature that’s likely to remain Azure-only forever. Click the AppFabric button at the bottom of the AppFabric Labs Portal page’s navigation pane to open the Windows Service Bus EAI and EDI Connect, a.k.a. “Integration Services.”

Here’s documentation for the Windows Azure Service Bus EAI and EDI Labs - December 2011 Release.

Following is a screen capture of part of the Download page:

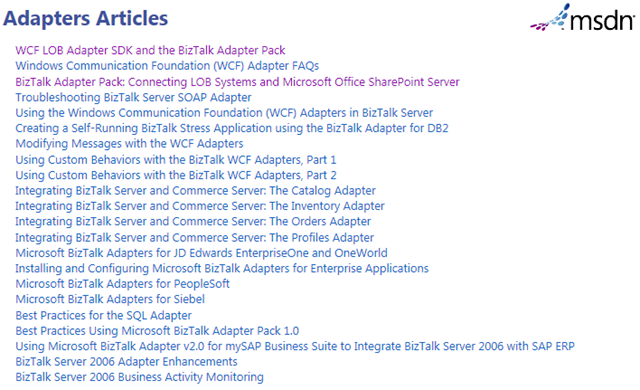

Here’s a list of the current WCF LOB Adapter articles from MSDN:

And here’s a capture of the Downloads page:

• To learn more about BizTalk Server 2010 and WCF Adapters, you can download the BizTalk Server 2010 Developer Training Kit. From the description:

This training kit contains a complete set of materials that will enable you to learn the core developer capabilities in BizTalk Server 2010. This kit includes lab manuals, PowerPoint presentations and videos, all designed to help you learn about BizTalk Server 2010. There is also an option below to download a Virtual Machine that is ready for you to use with the training kit.

From the Overview:

At Hands-On Lab Completion

After completing this course, students will be able to:

- Work with schemas, maps, and pipelines, and create flat file schemas.

- Configure a new FTP, HTTP and Windows SharePoint Services (WSS) adapter for BizTalk Server.

- Use BizTalk Orchestration Designer to create and test a simple orchestration.

- Configure orchestration properties and variables, deploy an orchestration, and create and deploy a rule set and execute those rules from within an orchestration.

- Define, deploy, and map a BAM observation model.

- Use the deployment and management features in BizTalk Server.

- Build a simple Windows Communication Foundation (WCF) service and client and configure BizTalk Server to use a WCF Adapter.

- Create a Microsoft .NET class library project that will contain a WCF Adapter.

- Deploy artifacts needed for processing certain EDI documents and for turning XML messages into EDI.

Thanks to Rick Garibay for the heads up about the training kit in this tweet.

Itai Raz wrote an official Announcing the Service Bus EAI & EDI Labs Release post on 12/16/2011 for the Windows Azure blog:

As part of our continuous innovation on Windows Azure, today we are excited to announce the Windows Azure Service Bus EAI & EDI Labs release. As with former labs releases, we are sharing some early thinking on possible feature additions to Windows Azure and are committed to gaining your feedback right from the beginning.

The capabilities showcased in this release enable two key scenarios on Windows Azure:

- Enterprise Application Integration (EAI), which provides rich message processing capabilities and the ability to connect private cloud assets to the public cloud.

- Electronic Data Interchange (EDI) targeted at business-to-business (B2B) scenarios in the form of a finished service built for trading partner management.

Signing up for the labs is easy and free of charge. All you need to do to check out the new capabilities is:

- Download and install the SDK

- Sign in to the labs environment using a Windows Live ID

We encourage you to ask questions and provide feedback on the Service Bus EAI & EDI Labs Release forum.

You can read more on how to use the new capabilities in the MSDN Documentation.

Please keep in mind that there is no SLA promise for labs, so you should not use these capabilities for any production needs. We will provide a 30-day notice before making changes or ending the lab.

Additional details on this release

EAI capabilities include:

- Easily validate, enrich and transform messages and route them to endpoints or systems exposed on private or public cloud. This includes capabilities such as external data lookup, XPath extraction, route actions, transformations of response messages, and so on.

- Hybrid connectivity to on-premises line-of-business (LOB) systems, applications and data, using the Service Bus Relay capabilities. This includes out-of-the-box connectivity to LOB systems such as SAP, Oracle DB, Oracle EBS, Siebel and SQL DB.

These capabilities are supported by a rich development experience in Visual Studio to:

- Use a visual designer to define and test complex message transformation between different message structures.

- Configure a bridge (a.k.a. pipeline) that represents and manages the processing and routing of messages using a visual designer, and deploy it to Windows Azure through a simple experience.

- Use the Visual Studio Server Explorer to create, configure and deploy LOB entities on-premises, and expose them on Windows Azure.

The EAI capabilities can be monitored by IT, and are also exposed through REST APIs which enable developers to create management consoles.

EDI capabilities include:

- Enabling business users or service providers to onboard trading partners, such as suppliers or retailers, to create partner profiles and EDI agreements through a user-friendly, web-based portal.

- Receiving and processing business transactions over EDI or XML messages.

Scott Dunsmore (@scottdunsmore) described Using Facebook with ACS and Windows Azure iOS Toolkit in a 12/16/2011 post:

Simon Guest posted a way to extract your Facebook information from the ACS token. There is nothing wrong with how Simon did this; I am just updating the toolkit to add all the claims from ACS to the claim set, which will make this easier.

If you look in the master branch at line 81 of WACloudAccessToken.m, you can see that when the claims are created, they are filtered based on a claims prefix. We are now removing this requirement and allowing all the claims to come through, which makes extracting the Facebook information much easier. The following code does exactly what Simon did, using the new toolkit code:

    NSString * const ACSNamespace = @"your ACS namespace";
    NSString * const ACSRealm = @"your relying party realm";
    NSString * const NameIdentifierClaim = @"http://schemas.xmlsoap.org/ws/2005/05/identity/claims/nameidentifier";
    NSString * const AccessTokenClaim = @"http://www.facebook.com/claims/AccessToken";

    - (IBAction)login:(id)sender
    {
        self.loginButton.hidden = YES;
        WACloudAccessControlClient *acsClient =
            [WACloudAccessControlClient accessControlClientForNamespace:ACSNamespace realm:ACSRealm];
        // Present the ACS sign-in UI; the block runs when authentication finishes
        [acsClient showInViewController:self allowsClose:NO withCompletionHandler:^(BOOL authenticated) {
            if (!authenticated) {
                NSLog(@"Error authenticating");
                self.loginButton.hidden = NO;
            } else {
                // The shared token now carries all the claims issued by ACS
                _token = [WACloudAccessControlClient sharedToken];
                self.friendsButton.hidden = NO;
            }
        }];
    }

    - (IBAction)friends:(id)sender
    {
        // Get claims: the Facebook user ID and the Facebook OAuth access token
        NSString *fbuserId = [[_token claims] objectForKey:NameIdentifierClaim];
        NSString *oauthToken = [[_token claims] objectForKey:AccessTokenClaim];

        // Get my friends from the Graph API (synchronous, so this blocks the UI thread)
        NSError *error = nil;
        NSString *graphURL = [NSString stringWithFormat:@"https://graph.facebook.com/%@/friends?access_token=%@", fbuserId, oauthToken];
        NSURLRequest *request = [NSURLRequest requestWithURL:[NSURL URLWithString:graphURL]];
        NSURLResponse *response = nil;
        NSData *data = [NSURLConnection sendSynchronousRequest:request returningResponse:&response error:&error];
        if (data == nil) {
            NSLog(@"Error retrieving friends: %@", error);
            return;
        }
        NSString *friendsList = [[NSString alloc] initWithData:data encoding:NSUTF8StringEncoding];

        // Rough count: match the JSON key "id": once per friend entry, rather than
        // every occurrence of the letters "id" (which also appear inside names)
        NSRegularExpression *regex = [NSRegularExpression regularExpressionWithPattern:@"\"id\":" options:0 error:&error];
        NSUInteger friendCount = [regex numberOfMatchesInString:friendsList options:0 range:NSMakeRange(0, [friendsList length])];
        [friendsList release];

        self.friendLabel.text = [NSString stringWithFormat:@"%lu friends", (unsigned long)friendCount];
        self.friendLabel.hidden = NO;
    }

This code is checked into the develop branch. I even created a sample based on it in that branch.
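Counting regular-expression matches in the raw response is fine for a demo, but it depends on the exact text Facebook returns; a bare `id` pattern, for instance, also matches inside a name such as “David”. Parsing the JSON is sturdier (on iOS 5 and later, `NSJSONSerialization` can do the parsing). The Python sketch below illustrates the idea outside iOS and assumes the friends response has the shape `{"data": [{"name": ..., "id": ...}, ...]}` and that errors arrive as an `"error"` object; both are assumptions about the Graph API format, and the sample string is made up.

```python
import json


def count_friends(response_text: str) -> int:
    """Count friend entries in a Graph API-style friends response (shape assumed)."""
    payload = json.loads(response_text)
    if "error" in payload:
        # Assumed error shape: {"error": {"message": ..., "type": ...}}
        raise RuntimeError(payload["error"].get("message", "Graph API error"))
    return len(payload.get("data", []))


sample = '{"data": [{"name": "David", "id": "1001"}, {"name": "Maria", "id": "1002"}]}'
print(count_friends(sample))  # 2
```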

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

Edu Lorenzo (@edulorenzo) described Editing a Single file on a Windows Azure Application using Remote Desktop in a 12/15/2011 post:

Ok, as promised, let’s learn this.

This has always been part of the Q&A portion whenever I evangelize Windows Azure. “How can I edit just one file on my Azure based app?” either that or “Is there a way to RDP into my instance?”

My answer, as usual, starts with “it depends,” because it really does depend on the need.

Well, it is possible, but ill-advised for most scenarios. I have never been a big fan of editing a single file on the web server. There are numerous downsides to doing this, starting with having a rogue copy of a file outside of source control.

Another thing to consider is that if you RDP into your Azure instance and edit a file there, your on-premises copy of the file will not be synced with it. And when that instance shuts down, the edited copy is gone.

On the other hand, there is still the scenario where all you want to do is replace the word “Submit” with “Go” on just one button. Repackaging and re-uploading the whole app would seem, well, like too much work.

So let’s go into how to RDP into a Windows Azure app’s instance.

First, of course, run Visual Studio in admin mode. We’ll use admin mode so I can show two of the available ways to enable RDP on a Windows Azure hosted service. Then we create a new cloud project.

I’ll just go for a barebones WebRole.

Ok. Nothing fancy here, just a barebones app, but we need to pause for something.

As mentioned, I will show two of the possible ways to deploy a Windows Azure application with RDP Enabled. Both are of course basically the same:

Create an App, Package it, Create a Certificate, Upload the Certificate to your Azure subscription, Enable RDP, tell your app that RDP is enabled.

For the first option, I will package the application, create and export a certificate, upload the certificate to the Azure instance, then deploy the app to Azure.

Right-click on the cloud project and choose Package

This will bring up the Package dialog, where we tick the “Enable Remote Desktop for all Roles” checkbox, which is unchecked by default.

After you check that, it will ask you for a few items:

You will be presented with a dropdown list that says <Automatic> by default. Change that to “Create...” and it will ask you for a friendly name for your certificate. Provide a name for the certificate.

Then provide credentials (user name, password and expiration date). It is a good idea to choose an expiration date well into the future. Here I chose the day after Christmas of 2012; if the Mayan calendar was wrong, this date should be good.

Clicking OK will close this dialog and then you can go ahead and Package the app.

This should open up Windows Explorer and show you where the package and config files for your app are located.

Before we continue, let’s take a look at what happened behind the scenes. A quick look at the “new” config file shows something like this:

    <ConfigurationSettings>
      <Setting name="Microsoft.WindowsAzure.Plugins.Diagnostics.ConnectionString" value="UseDevelopmentStorage=true" />
      <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.Enabled" value="true" />
      <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.AccountUsername" value="rdpadmin" />
      <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.AccountEncryptedPassword" value="password is here, encrypted. I just changed it for the blog" />
      <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.AccountExpiration" value="2012-12-26T23:59:59.0000000+08:00" />
      <Setting name="Microsoft.WindowsAzure.Plugins.RemoteForwarder.Enabled" value="true" />
    </ConfigurationSettings>
    <Certificates>
      <Certificate name="Microsoft.WindowsAzure.Plugins.RemoteAccess.PasswordEncryption" thumbprint="this will be the thumbprint" thumbprintAlgorithm="sha1" />
    </Certificates>

You will see a few changes, notably the Certificate entry and the RemoteAccess settings. Yes, you can make these changes by hand. You can also create a self-signed certificate, get its thumbprint and use that. But as one of my friends says, “why would you want to do stuff that is automated for you?”

These changes assign a certificate and tie it to your application through the Certificate node in the configuration. This means that when your app is uploaded to Windows Azure, that particular certificate should already exist in your hosted service.
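Since the deployment relies on the thumbprint in the Certificates element matching a certificate you have uploaded, a quick local check can save a failed upload. This Python sketch is not part of the Azure tools: it pulls the RemoteAccess settings and password-encryption thumbprint out of a configuration like the one above and compares the thumbprint with an exported `.cer` file, computing a Windows-style thumbprint as the uppercase hex SHA-1 hash of the DER-encoded certificate. The helper names, the `WebRole1` role and the `ABC123` thumbprint are placeholders, and the `{*}` namespace wildcard needs Python 3.8 or later.

```python
import hashlib
import xml.etree.ElementTree as ET


def cert_thumbprint(der_bytes: bytes) -> str:
    """SHA-1 over the DER-encoded certificate, as uppercase hex (Windows-style thumbprint)."""
    return hashlib.sha1(der_bytes).hexdigest().upper()


def remote_access_config(cscfg_xml: str) -> dict:
    """Collect the RemoteAccess settings and the password-encryption thumbprint."""
    root = ET.fromstring(cscfg_xml)
    # "{*}" matches the tag in any namespace or none, because a full
    # service configuration file declares a default XML namespace.
    settings = {s.get("name"): s.get("value") for s in root.findall(".//{*}Setting")}
    certs = {c.get("name"): c.get("thumbprint") for c in root.findall(".//{*}Certificate")}
    return {
        "enabled": settings.get("Microsoft.WindowsAzure.Plugins.RemoteAccess.Enabled") == "true",
        "username": settings.get("Microsoft.WindowsAzure.Plugins.RemoteAccess.AccountUsername"),
        "thumbprint": certs.get("Microsoft.WindowsAzure.Plugins.RemoteAccess.PasswordEncryption"),
    }


def thumbprint_matches(cscfg_xml: str, cer_path: str) -> bool:
    """True if the configured thumbprint matches the exported .cer file (DER format assumed)."""
    with open(cer_path, "rb") as f:
        actual = cert_thumbprint(f.read())
    configured = remote_access_config(cscfg_xml)["thumbprint"] or ""
    return configured.replace(" ", "").upper() == actual


fragment = """<Role name="WebRole1">
  <ConfigurationSettings>
    <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.Enabled" value="true" />
    <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.AccountUsername" value="rdpadmin" />
  </ConfigurationSettings>
  <Certificates>
    <Certificate name="Microsoft.WindowsAzure.Plugins.RemoteAccess.PasswordEncryption"
                 thumbprint="ABC123" thumbprintAlgorithm="sha1" />
  </Certificates>
</Role>"""
print(remote_access_config(fragment))
```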

So, with this first method, the next thing we need to do is export the Certificate file and upload it to your instance.

Export using the certificate manager.

And after successful export, upload the certificate to your Azure instance by clicking Add Certificate and uploading the cert file you just exported.

Once you have that going, the next thing to do is deploy your app to Azure!

Once it is ready, you will see that you can enable RDP and Connect.

And there you go!

Next blog will be about how to do this using VS alone.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

•• Avkash Chauhan (@avkashchauhan) reported Windows Azure Plugin for Eclipse with Java, December 2011 CTP is now available in a 12/18/2011 post:

Windows Azure Plugin for Eclipse with Java, December 2011 CTP is now available for public download at http://dl.windowsazure.com/eclipse. The December CTP is a major update to the Eclipse tooling, which was last released in June. It introduces a number of significant usability and functional enhancements.

Download the documentation from the link below:

A detailed introduction video is available at:

Please visit the recently released Windows Azure Developer Center for Java below:

What’s new in December 2011, CTP Release:

- Session affinity (“sticky sessions”) support - helping enable stateful, clustered Java applications with just a single checkbox

- Pre-made startup script samples - for the most popular Java servers (Tomcat, Jetty, JBoss, GlassFish) make it easier to get started

- Emulator startup output in real time – you can now watch the execution of all the steps from your startup script in a dedicated console window, showing you the progress and failures in your script as it is executed by Azure

- Automatic, light-weight java.exe monitoring – that will force a role recycle when java.exe stops running, using a lightweight, pre-made script automatically included in your deployment

- Remote Java app debugging config UI – allows you to easily enable Eclipse’s remote debugger to access your Java app running in the Emulator or the Azure cloud, so you can step through and debug your Java code in real time

- Local storage resource config UI - so you no longer have to configure local resources by manipulating the XML directly. This feature also lets you access the effective file path of your local resource after it’s deployed, via an environment variable you can reference directly from your startup script.

- Environment variable config UI – so you no longer have to set environment variables via manual editing of the configuration XML

- JDBC driver for SQL Azure - gets installed via the plugin as a seamlessly integrated Eclipse library, enabling easier programming against SQL Azure

- Quick context-menu access to role config UI - Just right click on the role folder, and click Properties

- Custom Azure project and role folder icons - for better visibility and easier navigation within your workspace and project
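The java.exe monitoring item rests on a simple pattern: if the process a role depends on exits, the role should fail so that Windows Azure recycles it. The plugin includes its own pre-made script for this, and the Python sketch below is not that script. It only illustrates the pattern under stated assumptions: it launches a server (the `java -jar server.jar` command line is a hypothetical placeholder), polls until the process exits, and reports the exit code so the caller can exit with a failure status.

```python
import subprocess
import sys
import time
from typing import List


def run_and_watch(command: List[str], poll_seconds: float = 5.0) -> int:
    """Start a server process, poll until it exits, and return its exit code."""
    server = subprocess.Popen(command)
    while server.poll() is None:  # poll() returns None while the process is running
        time.sleep(poll_seconds)
    return server.returncode


def main() -> None:
    # Hypothetical command line; a real role would launch its own server jar.
    code = run_and_watch(["java", "-jar", "server.jar"])
    print(f"server exited with code {code}; failing so the role gets recycled")
    # The server is never supposed to stop, so report failure even on exit code 0.
    sys.exit(code if code != 0 else 1)
```

`main()` is deliberately not called here; a role entry point would call it. Polling rather than a single blocking `wait()` leaves room to add health checks between polls.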

• Bruce Kyle reported New December Windows Azure Developer Training Tools, Kits Shows How to Get Started in a 12/15/2011 post to the US ISV Evangelism blog:

The new Windows Azure tools and training kit includes a comprehensive set of technical content to help you learn how to use Windows Azure. The kits support multiple languages and environments such as .NET, Node.js, Java, PHP, and others.

Developer Tools

The new Azure site that launched this week includes the Visual Studio tools, client libraries for .NET, PowerShell tools for Node.js, Eclipse tools, client libraries for Java, PHP command line tools, and client libraries for PHP.

Get Windows Azure developer tools.

Azure Developer Training Kit

The Windows Azure Training Kit includes hands-on labs, presentations, and samples to help you understand how to build applications that utilize Windows Azure services and SQL Azure. Get the kit from Windows Azure Developer December Toolkit.

Please note: The hands-on labs and demos in this kit require you to have developer accounts for the Windows Azure services and SQL Azure. To register for an account, please visit the Windows Azure site.

The developer training labs include:

- Introduction to Windows Azure

- Building ASP.NET Applications with Windows Azure

- Exploring Windows Azure Storage

- Deploying Applications in Windows Azure

- Windows Azure CDN

- Worker Role Communication

- Debugging Applications in Windows Azure

- Federated Authentication in a Windows Azure Web Role Application

- Web Services and Identity in Windows Azure

- Windows Azure Native Code

- Advanced Web and Worker Roles

- Connecting Apps with Windows Azure Connect

- Virtual Machine Role

- Windows Phone 7 And The Cloud

- Windows Azure Traffic Manager

Also included are demos and presentations.

The December 2011 update of the training kit includes the following updates:

- [New Presentations] 33 updated/new PowerPoint presentations

- [New Hands-On lab] Running a parametric sweep application with the Windows Azure HPC Scheduler

- [New Hands-On lab] Running SOA Services with the Windows Azure HPC Scheduler

- [New Hands-On lab] Running MPI Applications with the Windows Azure HPC Scheduler

- [New Demo] Node.js On Windows Azure

- [New Demo] Image Rendering Parametric Sweep Application with the Windows Azure HPC Scheduler

- [New Demo] BLAST Parametric Sweep Application with the Windows Azure HPC Scheduler

- [Renamed Lab] Connecting Applications through Windows Azure Service Bus (formerly Introduction to Service Bus Part 1)

- [Renamed Lab] Windows Azure Service Bus Advanced Configurations (formerly Introduction to Service Bus Part 2)

About Windows Azure

Windows Azure is an internet-scale cloud services platform hosted in Microsoft data centers, which provides an operating system and a set of developer services that can be used individually or together. Windows Azure's flexible and interoperable platform can be used to build new applications to run from the cloud or enhance existing applications with cloud-based capabilities.

David Chou delivered a Java in Windows Azure presentation to the Netherlands Java Users Group (NLUG) on 11/2/2011 (missed when video published):

From the description:

Windows Azure and SQL Azure help developers build, host and scale applications through Microsoft datacenters.

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

Beth Massi (@bethmassi) continued her series with Beginning LightSwitch Part 4: Too much information! Sorting and Filtering Data with Queries on 12/15/2011:

Welcome to Part 4 of the Beginning LightSwitch series! In parts 1, 2 and 3 we learned about entities, relationships and screens in Visual Studio LightSwitch. If you missed them:

- Part 1: What’s in a Table? Describing Your Data

- Part 2: Feel the Love. Defining Data Relationships

- Part 3: Screen Templates, Which One Do I Choose?

In this post I want to talk about queries. In real life a query is just a question. But when we talk about queries in the context of databases, we are referring to a query language used to request particular subsets of data from our database. You use queries to help users find the information they are looking for and focus them on the data needed for the task at hand. As your data grows, queries become extremely necessary to keep your application productive for users. Instead of searching an entire table one page at a time for the information you want, you use queries to narrow down the results to a manageable list. For example, if you want to know how many contacts live in California, you create a query that looks at the list of Contacts and checks the State in their Address.

If you’ve been following this article series, you actually already know how to execute queries in LightSwitch. In part 3 we built a Search Data Screen. This screen has a built-in search feature that allows users to type in search terms and return rows where any string field matches that term. In this post I want to show you how you can define your own queries using the Query Designer and how you can use them on screens.

The LightSwitch Query Designer

The Query Designer helps you construct queries sent to the backend data source in order to retrieve the entities you want. You use the designer to create filter conditions and specify sorting options. A query in LightSwitch is based on an entity in your data model (for example, a Contact entity). A query can also be based on other queries so they can be built-up easily. For instance, if you define a query called SortedContacts that sorts Contacts by their LastName property, you can then use this query as the source of other queries that return Contacts. This avoids having to repeat filter and/or sort conditions that you may want to apply on every query.

For a tour of the Query Designer, see Queries: Retrieving Information from a Data Source

For a video demonstration on how to use the Query Designer, see: How Do I: Sort and Filter Data on a Screen in a LightSwitch Application?

Creating a “SortedContacts” Query

Let’s walk through some concrete examples of creating queries in LightSwitch using the Contact Manager Address Book application we’ve been building. In part 3 we built a Search Data Screen for our Contacts, but you may notice that the Contacts are not sorted when the screen is initially displayed. The user must click the desired grid column to sort manually. Once the user changes the sort order, LightSwitch will remember it on a per-user basis, but we should really sort the Contacts for them when the Search screen is displayed the first time.

To create a query, in the Solution Explorer right-click on the entity you want to base it on (in our case Contacts) and choose “Add Query”.

The Query Designer will open and the first thing you do is name your query. We’ll name ours “SortedContacts”. Once you do this, you will see the query listed under the entity in the Solution Explorer.

Next we need to define the sort order, so click “+Add Sort” in the Sort section of the designer, then select the LastName property from the drop down. Click “+Add Sort” again and this time select the FirstName property. Leave the order Ascending for both.
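LightSwitch generates this query from the designer, so no code is required. Purely to make the two sort entries concrete, here is a Python model of the ordering, using a hypothetical `Contact` record: LastName is the primary key and FirstName breaks ties, both ascending. Comparisons are case-insensitive here on the assumption that the backing database uses a case-insensitive collation, as SQL Server does by default.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Contact:  # hypothetical stand-in for the LightSwitch Contact entity
    first_name: str
    last_name: str


def sorted_contacts(contacts: Iterable[Contact]) -> List[Contact]:
    # Sort by LastName, then FirstName, both ascending and case-insensitive
    return sorted(contacts, key=lambda c: (c.last_name.casefold(), c.first_name.casefold()))


people = [Contact("Ken", "Smith"), Contact("Ann", "Lee"), Contact("Amy", "smith")]
print([f"{c.last_name}, {c.first_name}" for c in sorted_contacts(people)])
# ['Lee, Ann', 'smith, Amy', 'Smith, Ken']
```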

Before we jump into Filter conditions and Parameters, let’s see how we can use this simple query on our Search Screen. It’s actually easier in this case to re-create the Search Screen we created in Part 3 because we didn’t make any modifications to the layout. So in the Solution Explorer select the SearchContacts screen and delete it. Then right-click on the Screens node and select “Add Screen…” to open the Add New Screen dialog.

Select the Search Data Screen template, then for the Screen Data you will see the SortedContacts query. Choose that, then click OK.

Hit F5 to build and run the application and notice this time the contacts are sorted in alphabetical order immediately.

Defining Filter Conditions and Parameters

Our Search Screen is in better shape now, but what if we wanted to allow the user to find contacts whose birth date falls within a specific range? Out of the box, LightSwitch will automatically search across all String properties on an entity, but not Dates. Therefore, to let the user search within a birth date range, we need to define our own query.

So let’s create a query that filters by date range but this time we will specify the Source of the query be the SortedContacts query. Right-click on the Contacts entity and choose “Add Query” to open the Query Designer again. Name the query “ContactsByBirthDate” and then select “SortedContacts” in the Source drop down on the top right of the designer.

Now the query is sorted, but we need to add a filter condition. Defining filter conditions can take some practice (like designing a good data model), but LightSwitch tries to make it as easy as possible while still remaining powerful. You can specify fairly complex conditions and groupings in your filter; however, the one we need to define isn’t too complex. When you need to find records within a range of values, you will need 2 conditions: one that checks records fall “above” the minimum value and one that checks records fall “below” the maximum value.

So in the Query Designer, click “+ Add Filter” and specify the condition like so:

Where

the BirthDate property

is greater than or equal to

a parameter.

Then select “Add New” to add a new parameter.

The parameter’s name will default to “BirthDate” so change it to MinimumBirthDate down in the Parameters section.

Similarly, add the filter condition for “Where the BirthDate property is less than or equal to a new parameter called MaximumBirthDate”. The Query Designer should now look like this:

One last thing we want to think about with respect to parameters is whether they should be required or not. Meaning must the user fill out the filter criteria parameters in order to execute the query? In this case, I don’t want to force the user to enter either one so we want to make them optional. You do that by selecting the parameter and checking “Is Optional” in the properties window.
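In plain code, the finished ContactsByBirthDate query behaves roughly like the Python model below (hypothetical names, not LightSwitch code). It reuses a model of SortedContacts as its source, so the sort is defined only once, and treats each optional parameter the way the designer does: a parameter left empty (`None`) simply drops its condition, so the range can be open at either end.

```python
from dataclasses import dataclass
from datetime import date
from typing import Iterable, List, Optional


@dataclass
class Contact:  # hypothetical stand-in for the LightSwitch Contact entity
    first_name: str
    last_name: str
    birth_date: date


def sorted_contacts(contacts: Iterable[Contact]) -> List[Contact]:
    """Model of SortedContacts: LastName, then FirstName, ascending."""
    return sorted(contacts, key=lambda c: (c.last_name.casefold(), c.first_name.casefold()))


def contacts_by_birth_date(contacts: Iterable[Contact],
                           minimum_birth_date: Optional[date] = None,
                           maximum_birth_date: Optional[date] = None) -> List[Contact]:
    """Model of ContactsByBirthDate, using SortedContacts as its source."""
    result = []
    for c in sorted_contacts(contacts):  # composing on the source query keeps its sort
        # An optional parameter left empty (None) drops its condition entirely.
        if minimum_birth_date is not None and c.birth_date < minimum_birth_date:
            continue  # fails "BirthDate is greater than or equal to MinimumBirthDate"
        if maximum_birth_date is not None and c.birth_date > maximum_birth_date:
            continue  # fails "BirthDate is less than or equal to MaximumBirthDate"
        result.append(c)
    return result


contacts = [Contact("Ken", "Smith", date(1980, 5, 1)),
            Contact("Ann", "Lee", date(1975, 2, 14)),
            Contact("Amy", "Diaz", date(1990, 9, 30))]
print([c.last_name for c in contacts_by_birth_date(contacts)])                    # ['Diaz', 'Lee', 'Smith']
print([c.last_name for c in contacts_by_birth_date(contacts, date(1978, 1, 1))])  # ['Diaz', 'Smith']
```

With neither bound supplied, every contact comes back in sorted order, which mirrors the “none, one, or both dates” behavior of the generated screen.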

Okay now let’s use this query for our Search Screen. In the Solution Explorer select the SearchSortedContacts screen and delete it. Then right-click on the Screens node and select “Add Screen…” to open the Add New Screen dialog again. Select the Search Data Screen template and for the Screen Data select the ContactsByBirthDate query and click OK.

Hit F5 to build and run the application. Notice the contacts are still sorted in alphabetical order on our search screen, but you see additional fields at the top of the screen that let us specify the birth date range. LightSwitch sees that our query specified parameters, so when we used it as the basis of the screen, the correct controls were automatically generated for us! And since both of these parameters are optional, users can enter none, one, or both dates and the query will automatically execute correctly based on those criteria.

As you can see using queries with parameters like this allows you to perform much more specialized searches than what is provided by default. When using queries as the basis of Screen Data, LightSwitch will automatically look at the query’s parameters and create the corresponding screen parameters and controls which saves you time.

For more information on creating custom search screens, see: Creating a Custom Search Screen in Visual Studio LightSwitch and How to Create a Screen with Multiple Search Parameters in LightSwitch

For a video demonstration, see: How Do I: Create Custom Search Screens in LightSwitch?

Querying Related Entities

Before we wrap this up I want to touch on one more type of query. What if we wanted to allow the user to search Contacts by phone number? If you recall our data is modeled so that Contacts can have many Phone Numbers so they are stored in a separate related table. In order to query these, we need to base the query on the PhoneNumber entity, not Contact.

So right-click on the PhoneNumbers entity in the Solution Explorer and select “Add Query”. I’ll name it ContactsByPhone. Besides searching on the PhoneNumber I also want to allow users to search on the Contact’s LastName and FirstName. This is easy to do because the Query Designer will allow you to create conditions that filter on related parent tables, in this case the Contact. When you select the property, you can expand the Contact node and get at all the properties.

So in the Query Designer, click “+ Add Filter” and specify the condition like so:

Where the Contact’s LastName property

contains

a parameter

Then select “Add New” to add a new parameter.

The parameter’s name will default to “LastName” so change it to SearchTerm down in the Parameters section and make it optional by checking “Is Optional” in the properties window.

We’re going to use the same parameter for the rest of our conditions. This will allow the user to type their search criteria in one textbox and the query will search across all three fields for a match. So the next filter condition will be:

Or the Contact’s FirstName property contains the parameter of SearchTerm

And finally add the last filter condition:

Or the Phone property contains the parameter of SearchTerm. I’ll also add a Sort on the PhoneNumber Ascending. The Query Designer should now look like this:

Now it’s time to create a Search Screen for this query. Instead of deleting the other Search screen that filters by birth date range, I’m going to create another new search screen for this query. Another option would be to add the previous date range condition to this query, which would create a more complex query but would allow us to have one search screen that does it all. For this example let’s keep it simple, but here’s a hint on how you would construct the query by using a group:

For more information on writing complex queries see: Queries: Retrieving Information from a Data Source and How to Create Composed and Scalar Queries
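To make the query logic concrete, here is a rough Python model of what ContactsByPhone evaluates. It is a sketch only, not LightSwitch code (LightSwitch builds the query for you, and any custom query code would be C# or VB). The `Contact`/`PhoneNumber` classes and field names are hypothetical stand-ins for the entities, and the case-insensitive match assumes a case-insensitive database collation. The second function models the grouped variant, assuming hypothetical `start`/`end` parameters for the birth date range.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date
from typing import Iterable, Optional


@dataclass
class Contact:
    first_name: str
    last_name: str
    birth_date: date


@dataclass
class PhoneNumber:
    phone: str
    contact: Contact  # related parent record (many PhoneNumbers -> one Contact)


def _contains(value: str, term: str) -> bool:
    # Case-insensitive substring test (assumes a case-insensitive collation).
    return term.lower() in value.lower()


def contacts_by_phone(phones: Iterable[PhoneNumber],
                      search_term: Optional[str] = None) -> list[PhoneNumber]:
    """LastName OR FirstName OR Phone contains SearchTerm, sorted by Phone."""
    rows = list(phones)
    if search_term:  # optional parameter: no value means the filter is skipped
        rows = [p for p in rows
                if _contains(p.contact.last_name, search_term)
                or _contains(p.contact.first_name, search_term)
                or _contains(p.phone, search_term)]
    return sorted(rows, key=lambda p: p.phone)  # Sort: Phone, ascending


def contacts_by_phone_and_birth_date(phones: Iterable[PhoneNumber],
                                     search_term: Optional[str] = None,
                                     start: Optional[date] = None,
                                     end: Optional[date] = None) -> list[PhoneNumber]:
    """Grouped variant: (the three OR'd conditions) AND BirthDate in [start, end]."""
    rows = contacts_by_phone(phones, search_term)
    if start is not None:
        rows = [p for p in rows if p.contact.birth_date >= start]
    if end is not None:
        rows = [p for p in rows if p.contact.birth_date <= end]
    return rows
```

Because the query is based on PhoneNumber, a contact with two matching numbers comes back as two rows. Keep this in mind when you make the Contact column the link to the Details screen.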

So, to add the new Search Screen, right-click on the Screens node again and select “Add Screen…” to open the Add New Screen dialog. Select the Search Data Screen template, select the ContactsByPhone query for the Screen Data this time, and click OK.

Now before we run this I want to make a small change to the screen. The default behavior of a search screen is to make the first column a link that opens the Details screen for that record. Since we had to base our query on the PhoneNumber entity, LightSwitch will make the Phone property a link and not the Contact. So in the Screen Designer we need to make a small change. Select the Phone in the content tree and in the properties window uncheck “Show as Link”. Then select the Contact and check “Show as Link”.

Also change the Display Name in the properties window for the PhoneNumberSearchTerm textbox to “Phone Number or Name” to make it clearer to the user what they are filtering on. And while we’re at it, we should disable the default search box by selecting the ContactsByPhone query on the left-hand side and unchecking “Supports search” in the properties window. The default search box isn’t necessary since we are providing more search functionality with our own query.

Okay, hit F5 and let’s see what we get. Open the “Search Contact By Phone” Search screen from the navigation menu; now users can search for contacts by name or by phone number. When you click on the Contact link, the Details screen we created in part 3 opens automatically.

Wrap Up

As you can see, queries help narrow down the amount of data to just the information users need to get the task done. LightSwitch provides a simple, easy-to-use Query Designer that lets you base queries on entities as well as other queries. And the LightSwitch Screen Designer does all the heavy lifting for you when you base a screen on a query that uses parameters.

Writing good queries takes practice, so I encourage you to work through the resources provided in the Working with Queries topic on the LightSwitch Developer Center.

In the next post we’ll look at user permissions and you will see how to write your first lines of LightSwitch code! Until next time!

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

David Pallmann (@davidpallmann) makes the case for Responsive Cloud Design in a 12/16/2011 post:

As readers of this blog know, I’m big on both web and cloud, especially when used together: particularly, HTML5 and mobile devices on the front end and the Microsoft cloud (Windows Azure) on the back end. A big movement in the modern web world is Responsive Web Design (RWD), the brainchild of Ethan Marcotte. When web and cloud are used together, what is the cloud’s role in an RWD approach? I’d like to suggest there are symmetric principles and that there is such a thing as Responsive Cloud Design (RCD). RWD and RCD are a powerful combination.

Principles of Responsive Web Design

Responsive Web Design lets you create a single web application that works across desktop browsers, tablets, and phones. Here’s an example of responsive web design (from an Adobe sample):

The driving idea behind RWD is that your web application detects the dimensions and characteristics of the browser and device it is running on and adapts layout and behavior accordingly. That means working well from big screens on the desktop down to tablets and phone-size screens. It also means supporting touch, mouse, and keyboard equally well. This involves use of fluid grids, media queries, browser feature detection, and use of percentages and proportional values in CSS, all of which are explained well in Ethan’s book. A corollary of this approach is that to be efficient you will download smaller media content for a small screen than you would for a large screen.

1. Detection: Sense what you are running on

2. Adaptation: Adapt layout and behavior to what you are running on

3. Efficiency: Deliver appropriately-sized content for efficiency

I can’t help expanding on these three core tenets because the RWD philosophy so easily applies to other key considerations we see in the web/mobile space. I like to add three more: location, availability, and sync. In location, we adjust content and input defaults (and perhaps even language) according to where a user is right now. Just as we detect device characteristics for a fluid layout we can also detect location to give a localized experience. In availability, the user should be able to access the web application no matter where they are and whether or not they are online at the moment.

This is more than just being able to render well on any convenient device; it also means the application can intelligently know where to find its back end services (which may be multiply deployed) and can handle temporarily disconnected use. Lastly, sync is vital to making availability a reality so that user information and state can be consistently maintained despite switching between devices and being occasionally disconnected. This means having a well-thought-out data and state strategy that leverages local browser/device caching and storage as well as back-end services and storage, possibly across multiple data centers.
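The availability idea, which involves finding a back end that may be deployed in several data centers and tolerating disconnected use, can be sketched as a small client wrapper. This is a minimal Python illustration, not code from David’s post. The endpoint URLs and the `send` transport function are hypothetical placeholders, and the transport is assumed to raise `ConnectionError` when a deployment is unreachable.

```python
from __future__ import annotations

from collections import deque
from typing import Any, Callable

# Assumed transport signature: send(endpoint_url, payload) -> response,
# raising ConnectionError when that endpoint can't be reached.
Transport = Callable[[str, dict], Any]


class ResilientClient:
    """Tries each deployment of a back-end service in preference order and
    queues requests locally when none is reachable."""

    def __init__(self, endpoints: list[str], send: Transport) -> None:
        self.endpoints = endpoints           # hypothetical deployments, nearest first
        self.send = send
        self.pending: deque[dict] = deque()  # requests captured while offline

    def _try_send(self, payload: dict) -> tuple[bool, Any]:
        for url in self.endpoints:
            try:
                return True, self.send(url, payload)
            except ConnectionError:
                continue  # this deployment is unreachable; try the next one
        return False, None

    def submit(self, payload: dict) -> Any:
        """Send now if any endpoint answers; otherwise queue and return None."""
        ok, result = self._try_send(payload)
        if not ok:
            self.pending.append(payload)
        return result

    def flush(self) -> int:
        """Replay queued requests in order; stop at the first one that still fails."""
        sent = 0
        while self.pending:
            ok, _ = self._try_send(self.pending[0])
            if not ok:
                break
            self.pending.popleft()
            sent += 1
        return sent
```

Replaying the queue in order is the simplest possible sync step. A real strategy would also need to resolve conflicts when the same data changed on another device, and this sketch doesn’t attempt that.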

4. Location: Detect location and adjust content accordingly

5. Availability: Your web application is always available to you

6. Sync: Data and state consistency across devices

RWD is awesome. What about the back end? You can appreciate how the server side of your web application can play an important role in serving up tailored, efficient content and supporting the web client well. Anders Andersen has a good presentation on RESS, RWD with server-side components. I think if you add cloud computing to the mix and use its strengths, you not only support RWD well but extend it with synergistic capabilities. …
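The RESS idea, in which the server helps choose content suited to the device, can be illustrated with a small image-rendition picker. This Python sketch is an illustration, not code from David’s or Anders’s material. The file names are hypothetical, and it assumes the client somehow reports its viewport width and device pixel ratio to the server (for example, via a cookie set by script).

```python
import math
from typing import Mapping, Optional

# Hypothetical renditions of one hero image, keyed by pixel width.
RENDITIONS: Mapping[int, str] = {
    320: "hero-320.jpg",
    768: "hero-768.jpg",
    1280: "hero-1280.jpg",
    1920: "hero-1920.jpg",
}


def pick_rendition(viewport_width: Optional[int], dpr: float = 1.0,
                   renditions: Mapping[int, str] = RENDITIONS) -> str:
    """Pick the smallest image that still fills the viewport at the given
    device pixel ratio (dpr)."""
    widths = sorted(renditions)
    if viewport_width is None:
        # No hint from the client: default to the smallest (mobile-first) file.
        return renditions[widths[0]]
    needed = math.ceil(viewport_width * dpr)
    for w in widths:
        if w >= needed:
            return renditions[w]
    return renditions[widths[-1]]  # wider than every rendition: serve the biggest
```

Defaulting to the smallest file when no hint arrives is a mobile-first choice. Serving the largest file instead would favor desktop quality at the cost of bandwidth on phones.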

David continues with a detailed Principles of Responsive Cloud Design section and a delightful illustration:

Jennifer deJong Lent asked Are tech books dead? in an article of 12/15/2011 for SD Times on the Web:

Like many software developers, Stephen Forte was quick to pick up a book when stumped by a technical problem. “I’d go into Barnes & Noble, thumb through several books and spend 50 bucks on the one that had the answer,” said the chief strategy officer for tool maker Telerik. “Books were a great resource for solving technical problems, but now all that has moved to the blogs,” he said.

Blogs written by developers offer fast answers to the programming quandaries that inevitably come up when writing software, said Richard Campbell, cofounder of software consultancy Strangeloop Networks. “Search on an exact error code, and you get a ream of pages that work extremely well. No book comes close to that.”

Not so black and white

SD Times asked a handful of developers when and why they turn to technical books as an aid to programming efforts—or whether these books even matter anymore. Those developers said that blogs have not completely supplanted books as a source of solutions to software development problems, and that they come with their own set of limitations.

Nonetheless, technical books aren’t nearly as important as they used to be. “What’s emerging is a more nuanced world with a combination of formats,” said Andrew Brust, CEO of Blue Badge Insights, a Microsoft strategy consultancy. “And books are still in the mix.”

The developers emphasized that the books vs. blogs debate applies only to purely technical titles that address specific versions of specific technologies. Books that deal with big ideas, such as software methodologies, programming practices, and management approaches, play largely the same role they have always played. They remain important to developers [see related story Best Books for Developers]. The only real change is that these days many prefer to read the electronic version instead of the physical book. “Books that teach a practice or philosophy still stand out and sell well,” said Forte.

Despite the wide array of information available on developers’ blogs—and the opportunity to easily copy and paste code samples—technical books remain the preferred choice in some situations. Patrick Hynds, president of software consultancy CriticalSites, laid out a couple of scenarios. …

As a long time computer book author, I occasionally ask myself the same question.

Jennifer then goes on to list The best books for software developers.

Avkash Chauhan (@avkashchauhan) listed 7 Steps [that] will help you to write or migrate your application to Windows Azure on 12/15/2011:

When you decide to write a new Web Application to host on Windows Azure or decide to migrate [an app] to Windows Azure, the following resources will help you immensely:

1. Prepare yourself with the necessary hands-on labs and training:

- Please download the Windows Azure Training Kit from the link below, and first complete at least the following hands-on labs and demos:

- Demos:

- Building and Deploying a Service

- Hello Windows Azure

- Windows Azure using Blobs Demo

- Preparing your SQL Azure Account

- Connecting to SQL Azure

- Hands-on Labs:

- Introduction to Windows Azure

- Building ASP.NET Applications with Windows Azure

- Exploring Windows Azure Storage

- Deploying Applications in Windows Azure

2. Using an HTTPS endpoint and SSL certificate

- You can use a 2048-bit SSL certificate from any certificate authority with a Windows Azure role. More details are located below:

3. Using a startup task for specific actions in your Windows Azure application:

- Learn More about Start Up Task in Windows Azure

4. Using a startup task to configure an IIS application pool:

- You can use a startup task to configure an IIS application pool.

5. Monitoring your Windows Azure application:

- So far, application-specific analytics/statistics are not available in the platform itself; you would need to add your own code to your web app to collect them. However, for application diagnostics and monitoring, you can use Azure Diagnostics as described below:

- You can also use a third-party tool, [such] as Cerebrata Diagnostics Monitor for Windows Azure, to monitor your application:

- The link below will help with Azure Diagnostics issues:
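In ASP.NET, this kind of do-it-yourself analytics would typically live in an HTTP module or a Global.asax handler. Purely as a language-neutral illustration, here is a minimal Python WSGI middleware sketch that counts requests per path and responses per status code. The `RequestStats` class name is hypothetical.

```python
from collections import Counter


class RequestStats:
    """WSGI middleware that counts requests per path and per HTTP status code."""

    def __init__(self, app):
        self.app = app
        self.hits = Counter()      # PATH_INFO -> number of requests
        self.statuses = Counter()  # status code (e.g. "200") -> number of responses

    def __call__(self, environ, start_response):
        self.hits[environ.get("PATH_INFO", "/")] += 1

        def recording_start_response(status, headers, exc_info=None):
            self.statuses[status.split(" ", 1)[0]] += 1  # "404 Not Found" -> "404"
            return start_response(status, headers, exc_info)

        return self.app(environ, recording_start_response)
```

The counters live in memory, so they are per role instance and reset when the instance recycles. To aggregate them across instances, you would periodically write them to durable storage (Windows Azure Table storage, for example).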

6. Domain Name Setup:

- It is very important to set up your domain name (www.yourcompanydomain.com) for your Windows Azure application. To do so, set up a CNAME entry with your DNS registrar as directed in the link below:

- http://blog.smarx.com/posts/custom-domain-names-in-windows-azure
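The record itself is a single CNAME that maps your subdomain to the hosted service’s cloudapp.net name. The Python helper below only formats that record in zone-file syntax. Many registrars collect the same host and target in a web form instead. `cname_record` and `myservice` are hypothetical names.

```python
def cname_record(host: str, service_name: str) -> str:
    """Build a zone-file style CNAME line pointing `host` at the hosted
    service's <service_name>.cloudapp.net name."""
    if host in ("", "@"):
        # DNS doesn't allow a CNAME at the zone apex (the bare domain), which
        # also holds the SOA and NS records, so map a subdomain such as "www".
        raise ValueError("CNAME not allowed at the zone apex; use a subdomain like 'www'")
    # The trailing dot marks the target as a fully qualified domain name.
    return f"{host}\tIN\tCNAME\t{service_name}.cloudapp.net."
```

A CNAME is used rather than an A record because the hosted service’s public IP address isn’t guaranteed to stay the same across deployments.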

7. Sending email and configuring SMTP services from your application:

- You can send SMTP email from a Windows Azure application only by using an SMTP module in your application; you cannot accept incoming SMTP email in Azure. The following blogs outline custom email solutions on Azure, such as SendGrid, as well as paid/enterprise-level business email solutions on Azure:

- Or you can use an email forwarding service. We describe how to use forwarding services at:

- If you just want to send email from your application, you can include SMTP references in your WCF endpoint and send email from there. You will be restricted to sending email only.
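The applications in Avkash’s post are .NET, where you would use `System.Net.Mail.SmtpClient`. To illustrate the send-only pattern with a third-party relay, here is a Python sketch that uses the standard `smtplib` and `email` modules. It assumes SendGrid’s SMTP relay at `smtp.sendgrid.net` on port 587 with STARTTLS. The helper names, addresses, and credentials are placeholders.

```python
import smtplib
from email.message import EmailMessage

SMTP_HOST = "smtp.sendgrid.net"  # assumed relay host; substitute your provider's
SMTP_PORT = 587                  # submission port, upgraded to TLS via STARTTLS


def build_message(sender: str, recipient: str, subject: str, body: str) -> EmailMessage:
    """Assemble a plain-text message."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = subject
    msg.set_content(body)
    return msg


def send_via_relay(msg: EmailMessage, username: str, password: str,
                   host: str = SMTP_HOST, port: int = SMTP_PORT) -> None:
    """Outbound only: hand the message to an external SMTP relay."""
    with smtplib.SMTP(host, port, timeout=30) as smtp:
        smtp.starttls()                 # encrypt before sending credentials
        smtp.login(username, password)  # placeholder credentials from configuration
        smtp.send_message(msg)
```

Only outbound mail is involved here. Receiving mail would still need an external service, as the post notes.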

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

Lori MacVittie (@lmacvittie) asserted “Storing sensitive data in the cloud is made more palatable by applying a little security before the data leaves the building…” in an introduction to her F5 Friday: Big Data? Big Risk… post of 12/16/2011 to F5’s DevCentral blog:

When corporate hardware, usually a laptop, is stolen, one of the first questions asked by information security professionals is whether or not the data on the drive was encrypted. While encryption of data is certainly not a panacea, it’s a major deterrent to those who would engage in the practice of stealing data for dollars. Many organizations are aware of this and use encryption judiciously when data is at rest in the data center storage network.

But as the Corollary to Hoff’s Law states, even “if your security practices don’t suck in the physical realm, you’ll be concerned by the inability to continue that practice when you move to Cloud.”

It’s not that you can’t encrypt data being moved to cloud storage services; it’s that encryption isn’t necessarily part of the processes or APIs used to move it. This makes it much more difficult to enforce such a policy and, for some organizations, unless they are guaranteed data will be secured at rest they aren’t going to give the okay.

A recent Ponemon study speaks to just this issue: