Windows Azure and Cloud Computing Posts for 9/27/2010+

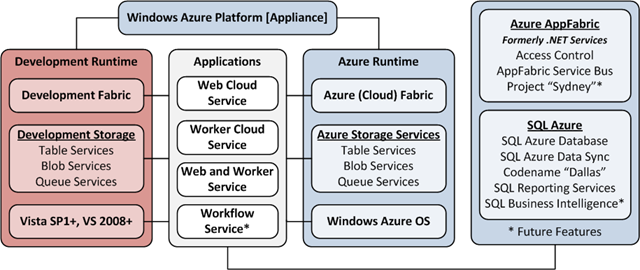

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Updated 10/9/2010: Replaced missing charts in Rob Gillen’s Maximizing Throughput in Windows Azure [Blobs] – Part 3 post of 9/24/2010 (in the Azure Blob, Drive, Table and Queue Services section).

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform was published on 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Supergadgets rated my Cloud Computing with the Windows Azure Platform among the Best Windows Azure Books in a recent HubPages post:

… Cloud Computing with the Windows Azure Platform

I prefer Wrox's IT books as well because they are mostly written by very experienced authors. The cloud computing with Azure book is an excellent work with the best code samples I have seen for the Azure platform. Although the book is a bit old and some Azure concepts evolved in a different way, it is still one of the best about Azure. Starting with the concept of cloud computing, the author takes the reader on a progressive journey into the depths of Azure: data center architecture, Azure product fabric, upgrade & failure domains, hypervisor (whose details some books neglect), Azure application lifecycle, EAV tables usage, blobs. Plus, we learn how to use C# in .NET 3.5 for Azure programming. Cryptography and AES topics in the security section are also well explained. Chapter 6 is also important for learning about Azure Worker management issues.

Overall, another good reference on Windows Azure which I can suggest reading to the end.

P.S.: Yes, the author has written over 30 books on different MS technologies.

Book Highlights from Amazon

- Addresses numerous challenges you might encounter when moving from on-premises to cloud-based apps (for example, security, privacy, regulatory compliance, and backup and data recovery)

- Shows how you can adapt ASP.NET validation and role management to Azure web roles

- Reveals the advantages of offloading computing solutions to more than one WorkerRole when moving to Windows Azure

- Shows you how to pick the ideal combination of PartitionKey and RowKey values for sharding Azure tables

- Discusses methods to enhance the scalability and overall performance of Azure tables …

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

Rob Gillen continued his series Maximizing Throughput in Windows Azure [Blobs] – Part 3 on 9/24/2010:

This is the third in a series of posts I'm writing while working on a paper dealing with the issue of maximizing data throughput when interacting with the Windows Azure compute cloud. You can read the first post here and the second here. This post assumes you've read the other two, so if you haven't, now might be a good time to at least peruse them.

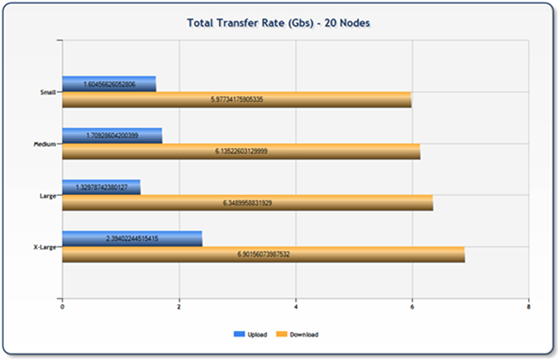

Summary: Based on the work performed and detailed in the first two parts of this series, we scaled the load tests horizontally to 20 concurrent nodes to check that the performance characteristics of the storage platform were not overly degraded. We found that while we were able to move a significant amount of data in a relatively short period of time (500 GB in around 10 minutes, roughly 6.9 Gbps), the scale-up was somewhat less than linear compared with what a single node could transfer (up to 39% attenuation in our tests).
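The headline rate follows from simple unit conversion. The sketch below assumes decimal gigabytes (10^9 bytes); the post does not state the convention, and with binary gigabytes (2^30 bytes) the figures would be roughly 7% higher. It shows that exactly 10 minutes gives about 6.67 Gbps, and that 6.9 Gbps corresponds to about 9.66 minutes, consistent with "around 10 minutes".

```python
def gbps(gigabytes: float, seconds: float) -> float:
    """Gigabits per second for `gigabytes` (assumed decimal GB, 10**9 bytes) moved in `seconds`."""
    return gigabytes * 8 / seconds


def minutes_for(gigabytes: float, rate_gbps: float) -> float:
    """Minutes needed to move `gigabytes` at a sustained aggregate rate of `rate_gbps`."""
    return gigabytes * 8 / rate_gbps / 60


# Aggregate rate if the 500 GB transfer took exactly 10 minutes.
print(f"{gbps(500, 10 * 60):.2f} Gbps")
# Duration implied by the quoted 6.9 Gbps.
print(f"{minutes_for(500, 6.9):.2f} minutes")
```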

Detail: After the work done in the first two stages, I decided to see what effect horizontal scaling would have on the realized throughput. To test this, I took the test harness (code links below), set it for the "optimal" approach for both upload and download as determined by the prior runs (sub-file chunked and parallelized uploads combined with whole-file parallelized downloads), deployed it to 20 nodes, and then ran a parameter sweep on node size. I tested a few different methods of starting all of the nodes simultaneously (including Wade Wegner's "Release the Hounds" approach) but settled on Steve Marx's "pseudo code" approach (Wade's words, not mine), because I had trouble getting multicasting on the Service Bus to scale using the on-demand payment model. This gave a slightly crude start (the triggers were not *exactly* synchronized), but the nodes started more or less concurrently.

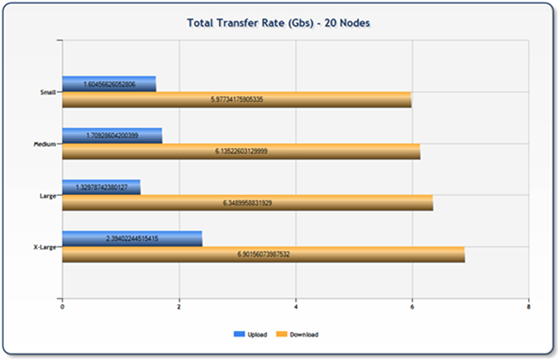

You can see from the following chart that my overall performance wasn't too bad: depending on the node type, we saw upwards of 6 Gbps download speed and around 2 Gbps upload. Also consistent with our prior tests, we saw a direct relationship between node size and realized throughput rates.

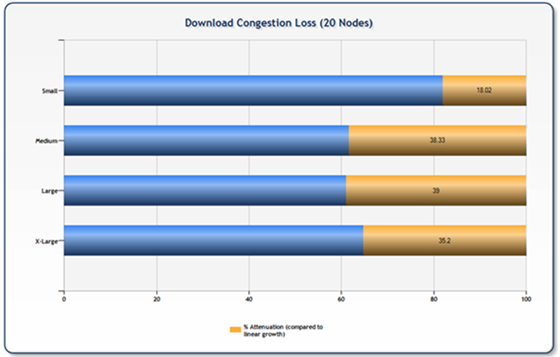

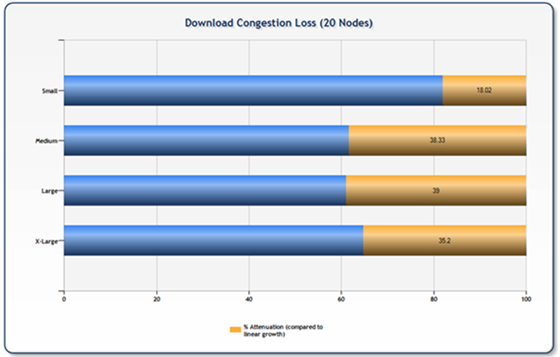

While the chart above is interesting, the real question is whether the effective throughput scaled linearly with node count. The following charts compare, for each node size, the average of three runs on 20 concurrent nodes with the average single-node result from the prior tests multiplied by 20 (perfect scaling). Uploads show an attenuation of between 25% and 45%, while downloads taper off by between 18% and 39%.

Note: The XL uploads actually demonstrated better-than-linear scaling (around 101% of linear), which we attribute to generally good results in the three test sets for this experiment and a comparatively poor result set (likely network congestion) for the XL nodes in the prior tests. The results in this test are an average of three runs (each run consisting of 20 nodes transferring 50 files each); performing more runs would likely yield more accurate trend data.
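The comparison against "perfect scale" amounts to a ratio. The following is a minimal sketch of that arithmetic, assuming attenuation is defined as one minus observed aggregate throughput divided by 20 times the single-node average; the post does not spell out its exact formula, and the numbers in the usage lines are hypothetical, not Rob's data.

```python
def scaling_efficiency(observed_total: float, single_node_avg: float, nodes: int = 20) -> float:
    """Observed aggregate throughput as a fraction of perfect linear scaling."""
    return observed_total / (single_node_avg * nodes)


def attenuation(observed_total: float, single_node_avg: float, nodes: int = 20) -> float:
    """Shortfall from linear scaling; a negative value means better than linear."""
    return 1.0 - scaling_efficiency(observed_total, single_node_avg, nodes)


# Hypothetical figures: one node averages 0.4 Gbps, 20 nodes together reach 6.0 Gbps.
print(f"{attenuation(6.0, 0.4):.0%} attenuation")   # ideal would be 8.0 Gbps
# A result slightly above 20x the single-node figure yields a small negative attenuation,
# which is how an XL-style "101% of linear" outcome would show up.
print(f"{scaling_efficiency(8.08, 0.4):.0%} of linear")
```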

Looking at the data triggered some follow-on questions, such as what the attenuation curve would look like (at what node count do we stop scaling linearly?) and what the individual transfer times per file look like. This prompted me to dig a bit further into the collected data and generate some additional charts. I've not displayed them all here (the entire collection is available in the Related Resources section below), but I've selected a few that illustrate my subsequent line of questioning.

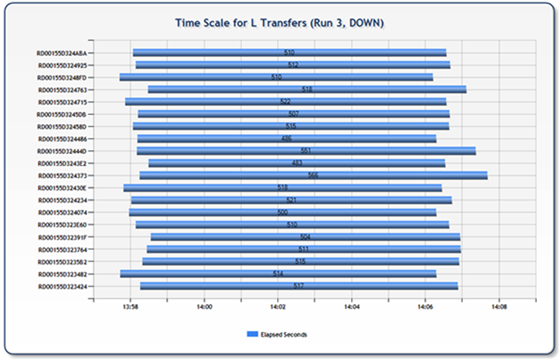

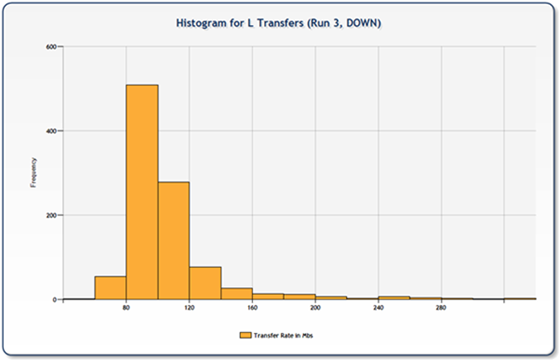

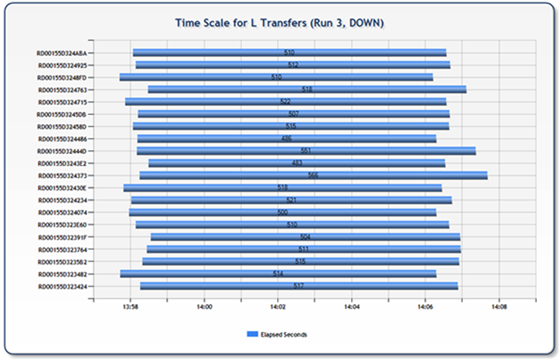

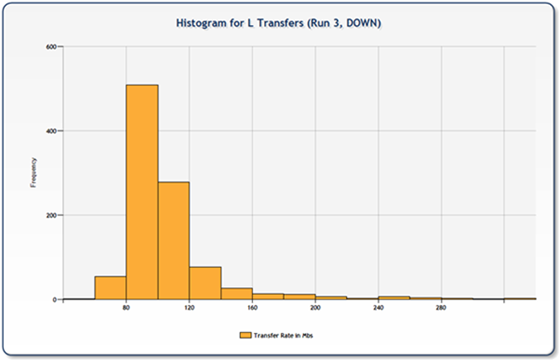

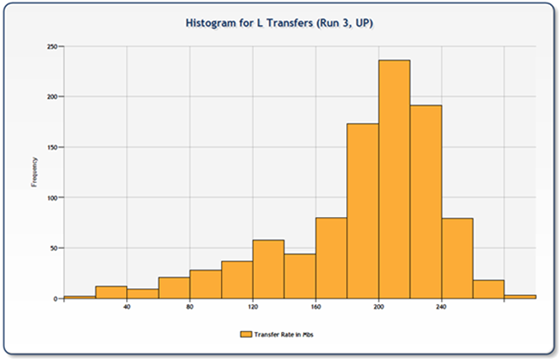

For the downloads, most of the charts looked pretty good and we saw a distribution similar to the following two charts. As you can tell, the transfers are of similar length and the histogram shows a fairly tight distribution curve.

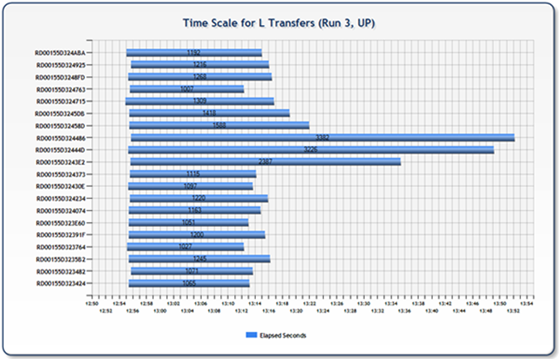

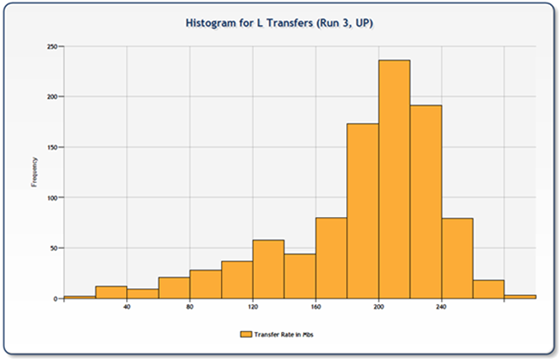

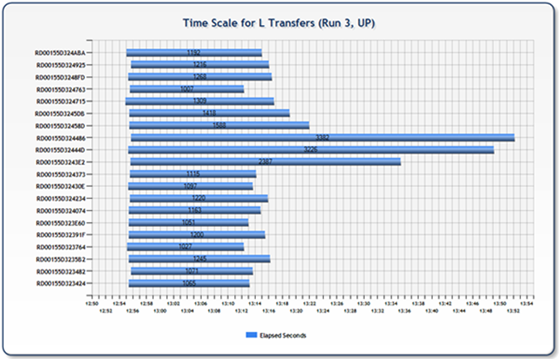

Uploads, on the other hand, were all over the board. The following two charts are representative of some of the data inconsistencies we found. What is interesting to note here is that there are three legs that are significantly longer (visually double) than the mean of the remainder. This makes one wonder whether the storage platform was getting pounded, effectively placing those three nodes on hold until the pressure abated. You can see from the associated histogram that the distribution was much broader, representing less consistency in transfer rates.
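Spotting the "visually double" legs and the broader histogram can be automated. The sketch below is one possible heuristic, not the method used in the post: it flags transfers lasting at least twice the median duration (the median is used so the slow legs do not inflate their own baseline) and reports the coefficient of variation as a single-number measure of histogram width. The durations are hypothetical.

```python
from statistics import mean, median, pstdev


def slow_outliers(durations, factor=2.0):
    """Indexes of transfers taking at least `factor` times the median duration."""
    baseline = median(durations)
    return [i for i, d in enumerate(durations) if d >= factor * baseline]


def spread(durations):
    """Coefficient of variation (population std dev / mean); larger means a broader histogram."""
    return pstdev(durations) / mean(durations)


# Hypothetical per-node upload times in seconds: 17 similar legs and three slow ones.
uploads = [10.0] * 17 + [22.0, 23.0, 21.0]
print(slow_outliers(uploads))
print(f"CV = {spread(uploads):.2f}")
```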

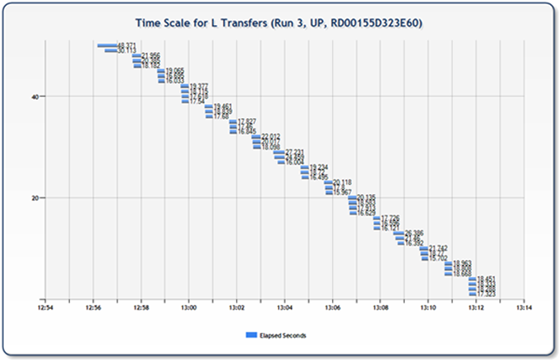

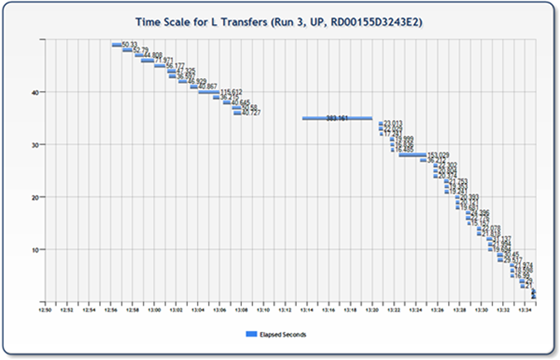

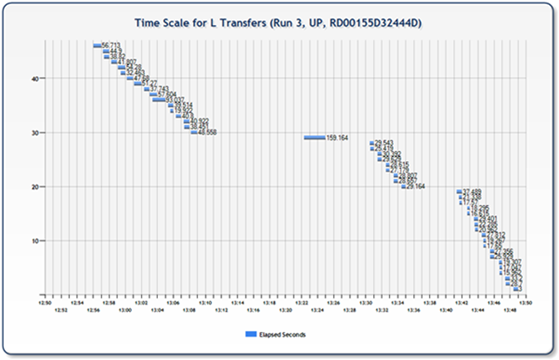

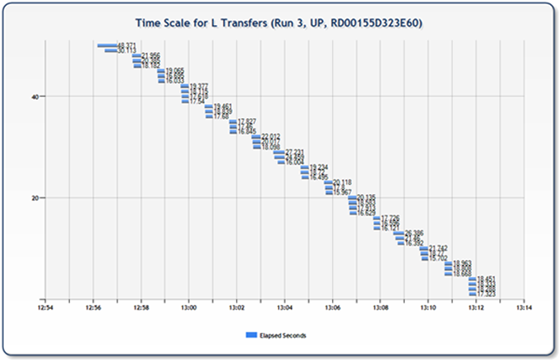

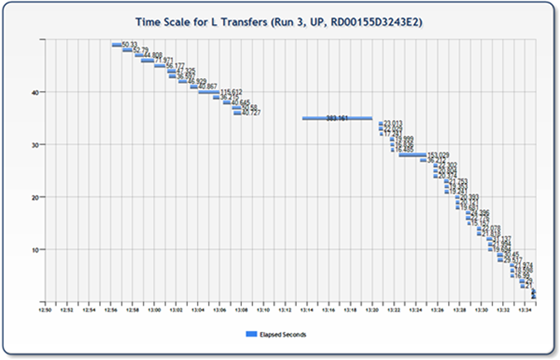

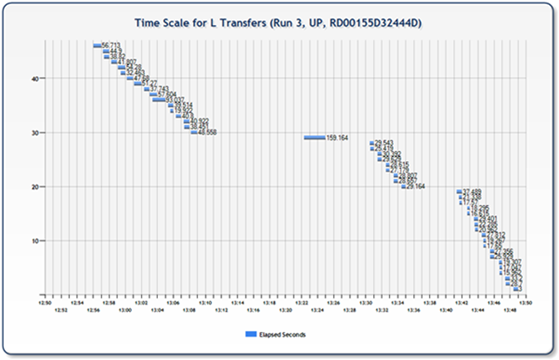

The previous charts got me wondering further, so I wrote some code to generate timeline charts of the transfers for each node within each size/run collection (one chart for each of the horizontal bars in the chart above). Immediately obvious in the charts below is a bug in my data collection: my log data for the individual files tracked the total seconds elapsed, but the end time was recorded in minutes, which produced the oddities (right alignment) in the bar display below. This will be fixed in future runs/posts. Ignoring the bug for a minute, the first chart is similar to what you would expect: parallelized transfers that overlap somewhat and stair-step over the elapsed time.
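One way to avoid mixing seconds and minutes in a timeline is to log absolute timestamps only and derive every bar coordinate from them in a single unit. The record type and file names below are hypothetical illustrations, not the data format of the author's harness.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class FileTransfer:
    """Hypothetical log record: absolute start/end timestamps for one file transfer."""
    name: str
    start: datetime
    end: datetime

    def offset_seconds(self, run_start: datetime) -> float:
        """Where the bar begins: seconds since the run started."""
        return (self.start - run_start).total_seconds()

    def duration_seconds(self) -> float:
        """Bar width: transfer duration, also in seconds."""
        return (self.end - self.start).total_seconds()


def timeline_bars(transfers, run_start):
    """(name, left, width) tuples for a horizontal bar chart, all measured in seconds."""
    return [(t.name, t.offset_seconds(run_start), t.duration_seconds()) for t in transfers]


run_start = datetime(2010, 9, 24, 12, 0, 0)
transfers = [
    FileTransfer("file01.dat", run_start + timedelta(seconds=5), run_start + timedelta(seconds=65)),
    FileTransfer("file02.dat", run_start + timedelta(seconds=30), run_start + timedelta(seconds=75)),
]
print(timeline_bars(transfers, run_start))
```

Because both coordinates come from the same pair of timestamps, a unit mismatch between "elapsed" and "end" values cannot creep in.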

The following two charts, however, represent situations different from what you would expect and illustrate what appear to be problems in the network, transfer, nodes, or my code (something). In both scenarios there are large blocks of time with apparently nothing happening, as well as individual files that apparently took significantly longer than the rest to transfer. In the next set of tests (and follow-on blog post) I'll be digging into this issue, looking to understand exactly what is happening and, hopefully, to explain a little of why.

Related Resources:

- Data Files

- Generated Charts (see explanation of right-alignment problem above)

- Source code for generating charts

- Source code for executing tests

Research sponsored by the Laboratory Directed Research and Development Program of Oak Ridge National Laboratory, managed by UT-Battelle, LLC, for the U. S. Department of Energy.


SQL Azure Database, Codename “Dallas” and OData

Steve Yi asked and answered Why use the Entity Framework with SQL Azure? in a 9/28/2010 post to the SQL Azure Team blog:

This week I’ll be spending some time diving into using Entity Framework with SQL Azure.

The Entity Framework provides the glue between your object-oriented code and the SQL Azure relational database in a framework that is fully compatible with your skills and development tools. Integrated into Visual Studio and part of ADO.NET, the Entity Framework provides object-relational mapping capabilities that help an application developer focus on the needs of the application as opposed to the complexities of bridging disparate data representations.

If you’re new to Entity Framework, MSDN has some great resources as well as walkthrough tutorials that quickly get you started. Go here to learn more.

I like to use the Entity Framework with SQL Azure because:

- I can write code against the Entity Framework and the system will automatically produce objects for me as well as track changes on those objects and simplify the process of updating SQL Azure.

- Model First design (which I will blog about in a future post) gives me an easy way to design SQL Azure database schemas. A good walkthrough of this technique is here.

- It is integrated deeply with Visual Studio, so there is no flipping between applications to design the database, generate my objects, write my code and execute Transact-SQL; it's all done inside Visual Studio.

- The Entity Framework replaces a large chunk of data-access code I used to have to write and maintain myself – pure laziness here.

- The mapping between my objects and my database is specified declaratively instead of in code. If I need to change the database schema, I can minimize the impact on the code that I have to modify in my applications, so the system provides a level of abstraction that helps isolate the app from the database. Plus, I don't have to touch the code to change the mapping; the properties of the entities and the Entity Framework designer do that for me.

- The queries and other operations I write into code are specified in a syntax that is not specific to any particular database, so I can easily move from SQL Express to SQL Azure to an on-premises SQL Server. With the Entity Framework, the queries are written in LINQ or Entity SQL and then translated at runtime by the providers into the particular back-end query syntax for that database.

Dhananjay Kumar showed how to enable Authentication on WCF Data Service or OData: Windows Authentication Part 1 on 9/28/2010:

In this article, I am going to show how to enable Windows authentication on a WCF Data Service.

Step 1

Create a WCF Data Service. The post below explains how to create a WCF Data Service and introduces OData:

http://dhananjaykumar.net/2010/06/13/introduction-to-wcf-data-service-and-odata/

When creating the data model to be exposed as a WCF Data Service, we need to take care of only one thing: the data model should be created using a SQL Login.

So, when creating the data connection for the data model, connect to the database through a SQL Login.

Step 2

Host the WCF Data Service in IIS. A WCF Data Service can be hosted in exactly the same way as a WCF Service. The post below explains how to host a WCF 4.0 service in IIS 7.5:

http://dhananjaykumar.net/2010/09/07/walkthrough-on-creating-wcf-4-0-service-and-hosting-in-iis-7-5/

Step 3

Now we need to configure the WCF Data Service hosted in IIS for Windows authentication.

Here I have hosted the WCF Data Service in an IIS web site named WcfDataService:

- Select WcfDataService; in the IIS category you can see the Authentication tab.

- Click the Authentication tab to see the various authentication options.

- Enable Windows authentication and disable all other authentication options.

- To enable or disable a particular option, click it; the option to toggle it appears at the top left.

After completing this step, you have enabled Windows authentication on the WCF Data Service hosted in IIS.

Passing credentials from a .NET client

If the client is running in a Windows domain that has access to the server, then:

If the client is not running in a Windows domain that has access to the server, we need to pass the credentials as below:

So, to fetch all the records:

In this article we saw how to enable Windows authentication on a WCF Data Service and then how to consume it from a .NET client. In the next article we will see how to consume a Windows-authenticated WCF Data Service from a Silverlight client.

Buck Woody explained SQL Azure and On-Premise Solutions - Weighing your Options in a 9/28/2010 post to TechNet’s SQL Experts blog:

Since the introduction of SQL Azure, there has been some confusion about how it should be used. Some think that the primary goal for SQL Azure is simply “SQL Server Somewhere Else”. They evaluate a multi-terabyte database in their current environment, along with the maintenance, backups, disaster recovery and more and try to apply those patterns to SQL Azure. SQL Azure, however, has another set of use-cases that are very compelling.

What SQL Azure represents is a change in how you consider your options for a solution. It allows you to think about adding a relational storage engine to an application whose storage needs are below 50 gigabytes (although you could federate databases to get larger than that; stay tuned for a post on that process) and that needs to be accessible from web locations, or used as a web service accessible from Windows Azure or other cloud providers' programs. That's one solution pattern.

Another pattern is a “start there, come here” solution. In this case, you want to rapidly create and deploy a relational database for an application using someone else’s hardware. SQL Azure lets you spin up an instance that is available worldwide in a matter of minutes with a simple credit-card transaction. Once the application is up, the usage monitoring is quite simple – you get a bill at the end of the month with a list of what you’ve used. From there, you can re-deploy the application locally, based on the usage pattern, to the “right” server. This gives your organization a “tower of Hanoi” approach for systems architecture.

There’s also a “here and there” approach. This means that you can place the initial set of data in SQL Azure, and use Sync Services or other replication mechanisms to roll off a window of data to a local store, using the larger storage capabilities for maintenance, backups, and reporting, while leveraging the web for distribution and access. This protects the local store of data from the web while providing the largest access footprint for the data you want to provide.

These are only a few of the options you have – and they are only that, options. SQL Azure isn’t meant to replace a large on-premise solution, and the future for SQL Server installations remains firm. This is another way of providing your organization the application data they need.

There are some valid questions about a “cloud” offering like SQL Azure. Some of these include things like security, performance, disaster recovery and bringing data back in-house should you ever decide to do that. In future posts here I’ll address each of these so that you can feel comfortable in your choice. I’ve found that the more you know about a technical solution, the better your design will be. It’s like cooking with more ingredients rather than the same two or three items you’re used to.

Buck (blog | twitter) is a Senior Technical Specialist for Microsoft, working with enterprise-level clients to develop data and computing platform architecture solutions within their organizations. He has over twenty years of professional and practical experience in computer technology in general and database design and implementation in particular. He is a popular speaker at TechEd, PASS and many other conferences; the author of over 400 articles and five books on SQL Server; and he teaches a Database Design course at the University of Washington.

Alex James (@adjames) added a Summary of Feedback to the #OData Bags Proposal (see article below) to the OData mailing list on 9/28/2010 at ~3:00 PDT:

There seem to be 3 core issues people are struggling with:

(1) What is the best name for 'Bags'? We thought long and hard about this. Given that they are unordered and that AtomPub already uses Collection, we think Bag, while not ideal, is the best we've got. However, we are all ears if anyone has a compelling alternative.

(2) Everyone wants to be able to use query options with Bags. $expand, $top, $skip, $select: Because Bags are structurally part of the entity, they are designed for scenarios where there are multiple, but not a great number of, members. So when Bags are included in the results, either implicitly or explicitly (using $select), they are always $expand(ed), and because there are not supposed to be a great number of members, it is all or nothing, so $skip and $top make no sense.

$filter: Items in a Bag can't be accessed by index (it is unordered) or by identity (they only contain ComplexTypes or Primitives, which have no identity), so that really just leaves Any/All type queries, i.e., something like this:

~/People/?$filter=any(Addresses/City) eq 'Redmond'

Unfortunately, despite my made-up URI, Any/All isn't supported in OData yet. So, for now, that leaves no query support.
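Since Any/All isn't available on the server, a client that has already retrieved the Bags could apply the equivalent predicate locally. The Python sketch below only illustrates the semantics the made-up URI implies; the people and addresses are hypothetical, and this is not an OData API.

```python
# Hypothetical data: each Person carries a Bag (unordered list) of Address complex values.
people = [
    {"Name": "Ann", "Addresses": [{"City": "Redmond"}, {"City": "Seattle"}]},
    {"Name": "Bob", "Addresses": [{"City": "Portland"}]},
    {"Name": "Cy", "Addresses": []},
]


def any_city(person, city):
    """Any semantics: at least one address in the Bag has the given City."""
    return any(a.get("City") == city for a in person["Addresses"])


def all_city(person, city):
    """All semantics: every address has the given City (vacuously true for an empty Bag)."""
    return all(a.get("City") == city for a in person["Addresses"])


redmond_any = [p["Name"] for p in people if any_city(p, "Redmond")]
redmond_all = [p["Name"] for p in people if all_city(p, "Redmond")]
print(redmond_any, redmond_all)
```

The empty-Bag case shows why an All operator needs clearly specified semantics: Cy has no addresses, yet satisfies "all addresses are in Redmond".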

(3) Given the - current - lack of query option support are Bags still useful? Yes. We think Bags are still useful.

Bags are designed for situations where an Entity has more than one 'something' and those somethings should always be passed with the entity (unless explicitly excluded using $select).

Good examples include:

- Addresses

- EmailAddresses

- Aliases

- Identities

- Tags

- etc

We think being able to retrieve a Person and all their email addresses, without the bloat associated with a full EmailAddress entity, and the semantics implied by entities (EmailAddresses is not a feed, and EmailAddresses have identities, etc) is still useful.

Any other concerns? Or suggestions? Other than your xsd/edm one, of course, Erik? :)

Alex

See the remaining messages in the thread after Alex’s.

Ahmed Moustafa posted Adding support for Bags to the OData blog on 9/27/2010:

Today, entries in OData (Customer, Order, etc.) may have properties whose values are primitive types or complex types, or that represent a reference to another entry or collection of entries. However, there is no support for properties that *contain* a collection (or bag) of values.

For example, a sample entry that represents a Customer is shown below. Notice that the entry has primitive properties (first name, last name) and a navigation property (orders) that refers to the Order entries associated with the Customer.

Sample Customer Entry listing

What if you wanted to enhance the definition of the Customer entry above to include all the email addresses for the customer? To model this using OData v2 you have a few options. One option would be to create multiple string properties (email1, email2, email3) to hold alternate email addresses. This has the obvious limitation that the number of alternates is bounded and needs to be known at schema definition time. Another approach would be to create a single 'emails' string property holding a comma-separated list of email addresses. While this is more dynamic, it overloads a single property and requires the client and server to agree up front on the formatting of the string (comma separated, etc.).

To make these types of situations easier to model in OData, this document outlines a proposal for the changes required to represent Entries with properties that are unordered homogeneous sets of non-null primitive or complex values (aka "Bags").

Proposal:

Metadata

As you know, OData services may expose a metadata document ($metadata endpoint) which describes the data model exposed by the service. Below is an example of the proposed extension of the metadata document to define Bag properties. In this case, the Customer Entry has a Bag of email addresses and apartment addresses.

URI Construction Rules

The optional, but recommended, URI path construction rules defined by OData will be extended as proposed below to support addressing Bag properties.

A Bag Property is addressable by appending the name of the Bag Property as a URI path segment after a path segment that identifies an Entry (assuming the Entry Type associated with the Entry identified defines the named Bag Property as a property of the type).

- A Bag property cannot be dereferenced by appending the $value operator to the URI path segment identifying the named Bag Property.

- The individual elements of a bag are NOT addressable

- If a URI addresses a Bag Property directly (i.e. /Customers(1)/Addresses ) then the only valid OData query string option is the $format option. All other OData query string options MUST NOT be present.

- Except for $select, a Bag Property name MUST NOT be present in the value of any of the query string operators ($filter, etc) in a request URI.

Example: sample valid URIs to address the Customer Entry Type described in the 'Metadata Description' section above

- Customer(1) == identifies the Customer Entry with key value equal to 1

- Customer(1)/Name == identifies only the Name property of the Customer Entry with key value 1

- Customer(1)/Addresses == identifies the bag of addresses for Customer 1.

- No further path composition is allowed after a Collection Property in a URI path
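The addressing rules above are mechanical enough to check in code. The following is a minimal sketch of a validator, assuming the set of Bag property names is already known (a real service would read it from $metadata; the names here are hypothetical). It reports violations of the rules rather than implementing a full OData URI parser.

```python
import re
from urllib.parse import parse_qsl, urlsplit

# Hypothetical: in practice these names would come from the service's $metadata document.
BAG_PROPERTIES = {"Addresses", "EmailAddresses"}


def check_bag_uri(uri):
    """Return a list of Bag addressing-rule violations (an empty list means the URI is acceptable)."""
    parts = urlsplit(uri)
    segments = [s for s in parts.path.split("/") if s]
    options = parse_qsl(parts.query, keep_blank_values=True)
    problems = []

    # No further path composition (including $value) after a Bag property.
    for seg in segments[:-1]:
        if seg in BAG_PROPERTIES:
            problems.append(f"no path segment may follow Bag property {seg}")

    addresses_bag = bool(segments) and segments[-1] in BAG_PROPERTIES
    for name, value in options:
        # A URI addressing a Bag directly may only carry $format.
        if addresses_bag and name != "$format":
            problems.append(f"{name} is not allowed when a Bag is addressed directly")
        # Except for $select, Bag names must not appear in system query option values.
        identifiers = set(re.findall(r"[A-Za-z_]\w*", value))
        if name.startswith("$") and name != "$select" and identifiers & BAG_PROPERTIES:
            problems.append(f"Bag property referenced in {name}")
    return problems


print(check_bag_uri("http://host/service.svc/Customers(1)/Addresses?$top=1"))
```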

Protocol Semantics/Rules

- A Bag represents an unordered homogeneous set of non-null primitive or complex values. Therefore, data services and data service clients are not required to maintain the original order of items in a bag.

- For requests addressing an Entry which includes a Bag Property (i.e. request URI == /Customer(1) )

- Processing is as per any other Entry which does not have a Bag Property (i.e. Bag Properties are just "regular" properties in this regard)

- Rules for Responses to Requests sent to a URI addressing Bag Properties (i.e. request URI == /Customer(1)/Addresses ):

- Requests using any HTTP verb other than GET or PUT MUST result in a 405 (Method Not Allowed) response.

- All error responses MUST include a response body which conforms to the error response format as described here: http://msdn.microsoft.com/en-us/library/dd541497(PROT.10).aspx

- If the Entry Type which defines the Bag Property defines a concurrency token, then a GET or PUT request to the bag MUST return an ETag header with the value of the concurrency token equal to that which would be used for the associated Entry Type instance (i.e. same as "regular" properties).

- Responses from a GET request to a Bag Property:

- If the response indicates success, the response code MUST be 2xx and the response body, if any, MUST be formatted as per one of the serializations described below

- Responses from a PUT request to a Bag Property:

- If the response indicates success, the response code MUST be 204 (No Content) and the response body MUST be formatted as per one of the serializations described below.
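The status-code and ETag rules for a URI that addresses a Bag can be summarized in a small decision function. This is a sketch under stated assumptions: it covers only successful operations, picks 200 as the 2xx code for GET (the proposal only requires 2xx), and adds an Allow header to the 405 response as general HTTP practice rather than anything the proposal mandates.

```python
from typing import Dict, Optional, Tuple


def bag_endpoint_response(method: str, entry_etag: Optional[str] = None) -> Tuple[int, Dict[str, str]]:
    """(status, headers) for a successful request to a Bag URI such as /Customers(1)/Addresses."""
    method = method.upper()
    if method not in ("GET", "PUT"):
        # Any verb other than GET or PUT is rejected.
        return 405, {"Allow": "GET, PUT"}
    headers = {}
    if entry_etag is not None:
        # The Bag reuses the owning Entry's concurrency token, like a "regular" property.
        headers["ETag"] = entry_etag
    # GET succeeds with a 2xx code (200 chosen here); PUT succeeds with 204.
    return (200 if method == "GET" else 204), headers


print(bag_endpoint_response("DELETE"))
print(bag_endpoint_response("get", 'W/"X\'1\'"'))
```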

Payload Examples

Example 1 : Representing Bag Properties

Request URI: GET http://host/service.svc/Customers(1)

Response:

Example 2: Representing Bag properties with Customizable Feed Mapping

NOTE: When a Bag is represented outside of <atom:content> using Customizable Feed mappings, we'll use the Atom collection representation semantics of a repeating top-level element. We'll do this both for properties mapped to Atom elements and for properties mapped to arbitrary elements in a custom namespace. The Bag MAY still be represented in the <atom:content> section.

Example 3: Response to a GET request to /Customers(1)/Addresses

Example 4: Response to a GET request to /Customers(1)/EmailAddresses

Example 5: JSON representation for an Entry with Bag properties

Additional Rules

Customizable feed mapping

The following rules define the customizable feed mappings rules for Bags and are in addition to those already defined for entries here.

- Each instance in the bag must be represented as a child element of the element representing the bag as a whole and be named 'element' and MUST be defined in the data service namespace (http://schemas.microsoft.com/ado/2007/08/dataservices).

- An attribute named 'type' (in the Data Service Metadata namespace) MAY exist on the element representing the bag as a whole.

- If a Bag of complex type instances has Customizable Feeds annotations in the data services metadata document and has a value of null, then the element being mapped to MUST be present with an attribute named 'null' that MUST be defined in the data service namespace (http://schemas.microsoft.com/ado/2007/08/dataservices). If the null value is being mapped to an attribute, the server or client MUST return status code 500.
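The element-naming rules can be illustrated with Python's standard `xml.etree.ElementTree`. This minimal sketch serializes a Bag of primitive values as one `element` child per item in the data service namespace, with the optional `type` attribute in the Data Service Metadata namespace. The property name, values, and any type string passed in are hypothetical, and null handling is left out.

```python
import xml.etree.ElementTree as ET

D_NS = "http://schemas.microsoft.com/ado/2007/08/dataservices"           # data service namespace
M_NS = "http://schemas.microsoft.com/ado/2007/08/dataservices/metadata"  # data service metadata namespace
ET.register_namespace("d", D_NS)
ET.register_namespace("m", M_NS)


def bag_to_xml(prop_name, values, type_name=None):
    """Serialize a Bag of primitive values: one d:element child per item."""
    bag = ET.Element(f"{{{D_NS}}}{prop_name}")
    if type_name is not None:
        bag.set(f"{{{M_NS}}}type", type_name)  # optional per the proposal
    for value in values:
        item = ET.SubElement(bag, f"{{{D_NS}}}element")
        item.text = str(value)
    return bag


bag = bag_to_xml("EmailAddresses", ["ann@example.com", "ann@example.org"])
print(ET.tostring(bag, encoding="unicode"))
```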

Versioning

- The DataServiceVersion header in a response to a GET request for a Service Metadata Document which includes a type with a Bag property MUST be set to 3.0 or greater.

- Servers MUST respond with an error if they process a request with a MaxDataServiceVersion header set to <=2.0 but would result in Bag Properties in the response payload.

- Servers MUST set the DataServiceVersion header to 3.0 in responses containing Bag Properties.

- Servers MUST fail if a request payload contains Bag Properties but it doesn't have the DataServiceVersion set to 3.0 or greater.

- The client MUST set the MaxDataServiceVersion header to 3.0 or greater to be able to get Bag Properties. The DataServiceVersion header of the request MAY be lower.

- If the client sends Bag Properties in the payload, the DataServiceVersion header of that request MUST be set to 3.0 or greater.

- If the client receives a payload from the server that contains Bag Properties, it MUST fail if the response doesn't have the DataServiceVersion header set to 3.0 or greater.

We look forward to hearing what you think of this approach…

Whatever you think, please tell us all about it on the OData Mailing List.

Sean McCown said “I'm seriously just sick of hearing SQL Azure everywhere I turn” as a deck for his 9/27/2010 “SQL Marklar” column for Network World’s Microsoft Subnet:

Sick to death of Azure

I don't know about you guys, but I'm getting too sick of hearing about SQL Azure. Microsoft is trying to make it sound like everyone is going to give up their DBAs and start managing all of their data in the cloud. I already know I'm not their audience for this technology, but I'm still just getting sick of hearing about it everywhere I turn. Remember what clouds are, guys. They're big fluffy things that you can't really control and you can't really see into. And that's the point, isn't it? A cloud DB is one that you have only limited access to and limited visibility into, and you certainly can't control it. The hosting company controls pretty much every aspect of your DB implementation. And like I said, I'm not their target audience, because I make my living as a DBA, so they're saying we've got a product here that'll replace Sean at the place he feeds his family. And I'm sure there are plenty of other DBAs who are thinking that exact same thing. How many DBAs would honestly recommend a cloud DB to their boss?

That's not why I'm getting sick of it though, because I work with sensitive data that will never go to the cloud. I'm getting sick of it because MS is acting like everyone has been screaming for this and now they've finally got it. Personally, I think it has more to do with them wanting to control licensing than it does them thinking it's really the direction DBs should be going. And they make this excuse that it's perfect for small DBs that nobody wants to manage or aren't worth anyone managing. Ok, I'll start believing you when you finally dump Access in favor of the cloud. Tell all of your hardcore Access users that DBs are moving away from the desktop (or the server) and moving to the cloud and they need to spin something up on the internet next time they want to put together a small app. I don't see that happening, do you? [*]

And it's not like there's no use at all for the cloud. I'm sure there are some out there who don't want to buy a DBA, but I also just don't think that the need is as great as they're making it. This is just another step into the part-time DBA market that tried to boom a while back and has gained very little momentum. You were seeing it all over the place there for a while, weren't you? All those ads for companies that would manage your DBs for you, and no matter how small you were, they could make sure you at least had the essentials covered... well, some of them did OK, but a large portion of them just died, because there aren't as many people out there looking for that kind of business as you'd think. And those people who don't know how to put their own DB together really wouldn't know where to start to find someone to put something together and manage it for them. And if you're talking about small groups in an enterprise, well, if the app is truly that small or insignificant, then you can always just slap it on a server real quick. So it seems like they're trying to create a market here.

I don't know... maybe I'm just being stubborn. Maybe I've got my head so far in my own cloud that I can't see what's really going on. Somehow I doubt it, but anything's possible.

Perhaps Sean should visit a psychiatrist to purge his SQL Azure phobia.

* I see considerable interest in moving Access back-end databases to SQL Azure in Microsoft data centers with the SQL Server Migration Assistant. See my Migrating a Moderate-Size Access 2010 Database to SQL Azure with the SQL Server Migration Assistant post of 8/21/2010.

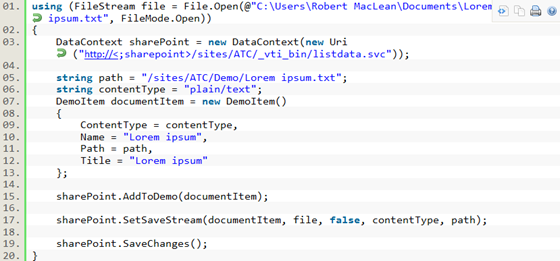

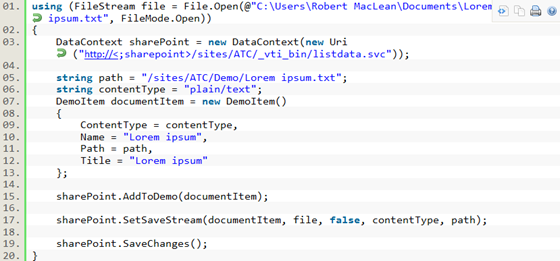

Robert MacLean explained how to Upload files to SharePoint using OData! on 9/28/2010:

I posted yesterday about some pain I felt when working with SharePoint and the OData API. To balance the story, this post covers some pleasure of working with it – that being uploading a file to a document library using OData!

This is really easy to do, once you know how – but it’s the learning curve of Everest here which makes this really hard to get right, as you have both OData specialisations and SharePoint quirks to contend with. The requirements before we start are: we need a file (as a stream), we need to know its filename, we need its content type and we need to know where it will go.

For this post I am posting to a document library called Demo (which is why OData generated the name DemoItem), and the item is a text file called Lorem ipsum.txt. I know it is a text file, which means I also know its content type is text/plain.

The code, below, is really simple; here is what is going on:

- Line 1: I am opening the file using the System.IO.File class; this gives me the stream I need.

- Line 3: To communicate with the OData service, I use the DataContext class, which was generated when I added the service reference to the OData service, and pass in the URI to the OData service.

- Line 8: Here I create a DemoItem - remember in SharePoint everything is a list or a list item, even a document which means I need to create the item first. I set the properties of the item over the next few lines. It is vital you set these and set them correctly or it will fail.

- Line 16: I add the item to the context, which means that it is now being tracked locally – it is not in SharePoint yet. It is vital that this be done before you associate the stream.

- Line 18: I associate the stream of the file to the item. Once again, this is still only happening locally – SharePoint has not been touched yet.

- Line 20: SaveChanges handles the actual writing to SharePoint.
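For readers not on .NET, here is a minimal Python sketch of the HTTP request that, as an assumption, the add/associate-stream/SaveChanges sequence boils down to: the file's bytes are POSTed to the library's entity set under listdata.svc, with the content type in Content-Type and the server-relative path in a Slug header (assumed to correspond to the final SetSaveStream argument). The helper and class names are hypothetical, and the request is only built, not sent.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class UploadRequest:
    """The pieces of an HTTP request, kept separate so they can be inspected."""
    method: str
    url: str
    headers: Dict[str, str] = field(default_factory=dict)
    body: bytes = b""


def build_upload_request(site_url: str, entity_set: str, server_path: str,
                         content_type: str, data: bytes) -> UploadRequest:
    # Assumption: a new document is created by POSTing the raw bytes to the
    # library's entity set (an AtomPub media resource) under listdata.svc.
    url = site_url.rstrip("/") + "/_vti_bin/listdata.svc/" + entity_set
    headers = {
        "Content-Type": content_type,  # e.g. text/plain for Lorem ipsum.txt
        "Slug": server_path,           # server-relative path of the new file
    }
    return UploadRequest("POST", url, headers, data)


req = build_upload_request("http://sharepoint1/", "Demo",
                           "/Demo/Lorem ipsum.txt", "text/plain",
                           b"Lorem ipsum dolor sit amet")
print(req.method, req.url)
```

Sending it means handing these pieces to an HTTP client with credentials SharePoint accepts; that part, and any follow-up request that sets the item's other properties, are left out of the sketch.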

Path Property

The path property, which is set on the item (line 12) and passed when I associate the stream (line 18, final parameter), is vital. This must be the path to where the file will exist on the server. It is relative to the server, not to the SharePoint site you are working in, for example:

- Path: /Documents/demo.txt (Server: http://sharepoint1; Site: /; Document Library: Documents; Filename: demo.txt)

- Path: /hrDept/CVs/abc.docx (Server: http://sharepoint1; Site: /hrDept; Document Library: CVs; Filename: abc.docx)
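The rule behind both examples can be expressed as a tiny helper that joins the server-relative site URL, the document library and the file name. This is a hypothetical sketch; it assumes the library's URL name matches its display name, which may not hold if the library was renamed.

```python
def document_path(site: str, library: str, filename: str) -> str:
    """Server-relative path for a file; site is '/' for the root site."""
    # Strip stray slashes and drop empty parts (the root site contributes none).
    parts = [p.strip("/") for p in (site, library, filename)]
    return "/" + "/".join(p for p in parts if p)


print(document_path("/", "Documents", "demo.txt"))     # /Documents/demo.txt
print(document_path("/hrDept", "CVs", "abc.docx"))     # /hrDept/CVs/abc.docx
```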

Wrap-up

I still think you need to look at WebDAV as a viable way to handle documents that do not have metadata requirements, but if you do have metadata requirements, this is a great alternative to the standard web services.

See Nick Berardi’s report of 9/27/2010 that You’re Invited To Code Camp 2010.2 at the DeVry University campus in Fort Washington, PA on Saturday, October 9 from 8:30-5:00, in the Cloud Computing Events section, for OData and SQL Azure sessions.

Elisa Flasko reported a New version of LINQPad shipped with support for “Dallas” on 9/27/2010:

Check out the new version of LINQPad. They announced today that the program supports “Dallas” services out of the box. The new version is available from www.linqpad.com.

Adding “Dallas” services into LINQPad is simple.

1. Click on the “Add connection” link

2. In the “Choose Data Context” window choose the “Microsoft ‘Dallas’ Service” entry and click on “Next”.

3. In the following dialog, copy and paste the service URL from “Dallas”. This dialog also allows you to sign up for “Dallas”, subscribe to datasets and browse for your account keys. When done, click “OK”.

4. The dataset shows up in LINQPad like any other dataset. You can compose LINQ queries and execute them against “Dallas”. The results will be shown in the “Results” view.

- The Dallas Team

Mike Flasko announced WCF Data Services Client Library and Windows Phone 7 – Next Steps in a 9/27/2010 post to the WCF Data Services (OData) blog:

We’ve received a fair number of questions regarding our current CTP library for Windows Phone 7 and what our RTM plans are. The goal of this post is to address many of those questions by describing our plans for the coming months….

Background

The CTP release of the data services client that is currently available for the phone was an early release by our team to enable apps to be built and to allow us to validate how much of the data services client experience that we have in SL4 desktop today we could enable on the phone platform. We've been very pleased by the number of people using the library! That said, the phone platform currently doesn't provide the core types required for the data service client LINQ provider to function. The CTP that is currently available has LINQ support via some creative, but insufficient/shaky workarounds.

Going Forward

Ok, given that context, here is what we're currently planning to do. To enable development in the near term, we are going to create a Windows Phone 7-friendly client library by removing the LINQ translator from the client. This will be done by adding #ifdefs to our SL4 client code, along with some additional code changes as required. This means you'll get many of the features you are used to from the Silverlight 4 WCF Data Services client library (serialization, materialization, change tracking, binding, identity resolution, etc.), but you will need to formulate queries via URI instead of via LINQ. To mitigate the lack of Add Service Reference support in the current version of the tools, we're looking at providing a T4 template (or command-line tool) to enable simple generation of phone-friendly client code.

We are going to release this phone-friendly version of the data services client library by refreshing the source code for the client, which is currently available from the http://odata.codeplex.com site. This way (after the refresh) the source code for the .NET, Silverlight and Windows Phone 7 client libraries will be available from the CodePlex site. We are working on this refresh right now and plan to release it as described above by mid-October.

All that said, we are actively working with the Windows Phone platform groups to add the features required to enable the client library to support a LINQ-based query experience in the future. We're also working on how to enable the Add Service Reference gesture in the phone version of Visual Studio, which didn't quite land in the first release due to these other issues.

How to Develop OData-based Apps Now

To get your OData-based app ready for launch in the near term, you can develop now with the current CTP. To ensure your code will port to the new release (once it's available) as smoothly as possible, be sure not to use LINQ to formulate queries; instead, formulate queries via URI (as per the guidance below). To put this in class-name terms, stay away from using the DataServiceQuery class or any methods that require that type as a parameter.

-Mike Flasko, Lead Program Manager, Microsoft
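Formulating queries via URI means composing the OData system query options ($filter, $orderby, $top, $select) yourself. The following is a minimal Python sketch of that composition; the function name and the Products entity set are hypothetical. On the phone, the resulting URI would be handed to one of the client library's URI-based execute methods rather than expressed as a LINQ query.

```python
from typing import Optional
from urllib.parse import quote


def odata_query_uri(service_root: str, entity_set: str,
                    filter_expr: Optional[str] = None,
                    orderby: Optional[str] = None,
                    top: Optional[int] = None,
                    select: Optional[str] = None) -> str:
    """Compose an OData query URI from standard system query options."""
    options = []
    if filter_expr:
        # Percent-encode spaces etc., but keep quotes, parentheses and commas.
        options.append("$filter=" + quote(filter_expr, safe="'(),"))
    if orderby:
        options.append("$orderby=" + quote(orderby, safe=","))
    if top is not None:
        options.append("$top=" + str(top))
    if select:
        options.append("$select=" + quote(select, safe=","))
    uri = service_root.rstrip("/") + "/" + entity_set
    return uri + ("?" + "&".join(options) if options else "")


print(odata_query_uri("http://host/svc.svc/", "Products",
                      filter_expr="Price gt 20", orderby="Name", top=5))
```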

Robert MacLean posted a workaround for a Cannot add a Service Reference to SharePoint 2010 OData! problem on 9/27/2010:

SharePoint 2010 has a number of APIs (an API is a way we communicate with SharePoint); some we have had for a while, like the web services, but one is new – OData. What is OData?

The Open Data Protocol (OData) is a Web protocol for querying and updating data that provides a way to unlock your data and free it from silos that exist in applications today. OData does this by applying and building upon Web technologies such as HTTP, Atom Publishing Protocol (AtomPub) and JSON to provide access to information from a variety of applications, services, and stores.

The main reason I like OData over the web services is that it is lightweight, works well in Visual Studio and works easily across platforms, thanks to all the SDKs.

SharePoint 2010 exposes these at the following URL: http(s)://<site>/_vti_bin/listdata.svc, and you can add this to Visual Studio to consume exactly the same way as a web service to SharePoint: right-click on the project and select Add Service Reference.

Once it has loaded, each list is a contract listed on the left; to add it to code, you just hit OK and start using it.

Add Service Reference Failed

The procedure above works well, until it doesn’t, and oddly enough my current work found a situation which caused the add reference to fail! The experience isn’t great when it does fail – the Add dialog closes and pops back up blank! Try it again and it disappears again, but this time it stays away.

If you check the status bar in VS, you will see the error message indicating it has failed – but by this point you may see that the service reference is listed there, yet no code works, because the adding failed.

If you right click and say delete, it will also refuse to delete because the adding failed. The only way to get rid of it is to close Visual Studio, go to the service reference folder (<Solution Folder>\<Project Folder>\Service References) and delete the folder in there which matches the name of your service. You will now be able to launch Visual Studio again, and will be able to delete the service reference.

What went wrong?

Since we have no way to know what went wrong, we need to get a lot more low-level. We start off by launching a web browser and going to the metadata URL for the service: http(s)://<site>/_vti_bin/listdata.svc/$metadata

In Internet Explorer 9 this just gives a useless blank page, but if you use the right-click menu option in IE 9, View Source, it will show you the XML in Notepad. This XML is what Visual Studio is taking, trying to parse and failing on. To diagnose the cause we need to work with this XML, so save it to your machine with a .csdl file extension. We need this special extension for the next tool we will use, which refuses to work with files without it.

The next step is to open the Visual Studio Command Prompt and navigate to where you saved the CSDL file. We will use a command-line tool called DataSvcUtil.exe. This may be familiar to WCF people who know SvcUtil.exe, which is very similar, but this one is specifically for OData services. All it does is take the CSDL file and produce a code contract from it; the syntax is very easy: datasvcutil.exe /out:<file.cs> /in:<file.csdl>

Immediately you will see a mass of red, and you know that red means error. In my case I have a list called 1 History, which in the OData service is known by its gangster name _1History. This problem child is breaking my ability to generate code, which you can figure out by reading the errors.

Solving the problem!

Thankfully I do not need 1 History, so to fix this issue I need to clean all _1History references out of the CSDL file. I switched to Visual Studio, loaded the CSDL file in it and started removing all references to the troublemaker. I also needed to remove the item contract for the list, which is __1HistoryItem. I started off by removing the item contract EntityType, which is highlighted in the image alongside.

The next cleanup step is to remove all the associations to __1HistoryItem.

Finally the last item I need to remove is the EntitySet for the list:

BREATHE! RELAX!

Ok, now the hard work is done and so I jump back to the command prompt and re-run the DataSvcUtil tool, and it now works:

This produces a file, in my case sharepoint.cs, which I can add to my project just like any other class file, and I am able to make use of OData in my solution just as it is supposed to work!
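Hand-editing works, but the same cleanup can be scripted. Below is a minimal Python sketch using the standard library's ElementTree, assuming the usual CSDL element names (EntityType, Association, EntitySet, AssociationSet, NavigationProperty) and the names from this post. It goes one step beyond the three removals described above by also dropping AssociationSets and NavigationProperties that point at the removed associations. ElementTree rewrites namespace prefixes on output (ns0:, ns1:), which is still valid XML, but check the result with DataSvcUtil.

```python
import xml.etree.ElementTree as ET
from typing import Optional


def local(tag: str) -> str:
    """Drop the {namespace} prefix ElementTree puts on tag names."""
    return tag.rsplit("}", 1)[-1]


def short(name: Optional[str]) -> Optional[str]:
    """'Microsoft.SharePoint.DataService.__1HistoryItem' -> '__1HistoryItem'."""
    return name.rsplit(".", 1)[-1] if name else name


def strip_entity(csdl: str, entity_type: str, entity_set: str) -> str:
    root = ET.fromstring(csdl)
    # Associations with an End typed as the troublesome item contract.
    doomed_assocs = {
        a.get("Name") for a in root.iter()
        if local(a.tag) == "Association"
        and any(local(e.tag) == "End" and short(e.get("Type")) == entity_type
                for e in a)
    }

    def doomed(el: ET.Element) -> bool:
        kind = local(el.tag)
        if kind == "EntityType":
            return el.get("Name") == entity_type
        if kind == "Association":
            return el.get("Name") in doomed_assocs
        if kind == "EntitySet":
            return el.get("Name") == entity_set
        if kind == "AssociationSet":
            return short(el.get("Association")) in doomed_assocs
        if kind == "NavigationProperty":
            return short(el.get("Relationship")) in doomed_assocs
        return False

    # Snapshot the tree first so removals don't disturb the iteration.
    for parent in list(root.iter()):
        for child in list(parent):
            if doomed(child):
                parent.remove(child)
    return ET.tostring(root, encoding="unicode")


# Usage (file names are placeholders):
# with open("sharepoint.csdl", encoding="utf-8") as f:
#     cleaned = strip_entity(f.read(), "__1HistoryItem", "_1History")
# with open("sharepoint-clean.csdl", "w", encoding="utf-8") as f:
#     f.write(cleaned)
```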

<Return to section navigation list>

AppFabric: Access Control and Service Bus

Clemens Vasters (@clemensv) posted Cloud Architecture: The Scheduler-Agent-Supervisor Pattern on 9/27/2010:

As our team was starting to transform our parts of the Azure Services Platform from a CTP ‘labs’ service exploring features into a full-on commercial service, it started to dawn on us that we had set ourselves up for writing a bunch of ‘enterprise apps’. The shiny parts of Service Bus and Access Control that we parade around are all about user-facing features, but if I look back at the work we had to go from a toy service to a commercial offering, I’d guess that 80%-90% of the effort went into aspects like infrastructure, deployment, upgradeability, billing, provisioning, throttling, quotas, security hardening, and service optimization. The lesson there was: when you’re boarding the train to shipping a V1, you don’t load new features on that train – you rather throw some off.

The most interesting challenge for these infrastructure apps sitting on the backend was that we didn’t have much solid ground to stand on. Remember – these were very early days, so we couldn’t use SQL Azure since the folks over in SQL were on a pretty heroic schedule themselves and didn’t want to take on any external dependencies even from close friends. We also couldn’t use any of the capabilities of our own bits because building infrastructure for your features on your features would just be plain dumb. And while we could use capabilities of the Windows Azure platform we were building on, a lot of those parts still had rough edges as those folks were going through a lot of the same that we went through. In those days, the table store would be very moody, the queue store would sometimes swallow or duplicate messages, the Azure fabric controller would occasionally go around and kill things. All normal – bugs.

So under those circumstances we had to figure out the architecture for some subsystems where we need to do a set of coordinated actions across a distributed set of resources – a distributed transaction or saga of sorts. The architecture had a few simple goals: when we get an activation request, we must not fumble that request under any circumstance, we must run the job to completion for all resources and, at the same time, we need to minimize any potential for required operator intervention, i.e. if something goes wrong, the system had better know how to deal with it – at best it should self-heal.

My solution to that puzzle is a pattern I call “Scheduler-Agent-Supervisor Pattern” or, short, “Supervisor Pattern”. We keep finding applications for this pattern in different places, so I think it’s worth writing about it in generic terms – even without going into the details of our system.

The pattern rests on two seemingly odd and very related assumptions: ‘the system is perfect’ and ‘all error conditions are transient’. As a consequence, the architecture has some character traits of a toddler. It’s generally happily optimistic and gets very grumpy, very quickly when things go wrong – to the point that it will simply drop everything and run away screaming. It’s very precisely like that, in fact.

The first picture here shows all key pieces except the Supervisor that I’ll introduce later. At the core we have a Scheduler that manages a simple state machine made up of Jobs and those jobs have Steps. The steps may have a notion of interdependency or may be completely parallelizable. There is a Job Store that holds jobs and steps and there are Agents that execute operations on some resource. Each Agent is (usually) fronted by a queue and the Scheduler has a queue (or service endpoint) through which it receives reply messages from the Agents.

Steps are recorded in a durable storage table of some sort that has at least the following fields: Current State (say: Disabled, Active), Desired State (say: Disabled, Active), LockedUntil (Date/Time value), and Actor plus any step specific information you want to store and eventually submit with the job to the step agent.
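The step record described above could look like the following minimal Python sketch. The field names are illustrative assumptions, not the schema of the team's actual job store; `needs_recovery` anticipates the Supervisor's query described later.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Any, Dict, Optional


@dataclass
class StepRecord:
    """One row of the durable step table (field names are illustrative)."""
    step_id: str
    actor: str                                # agent responsible for the step
    current_state: str = "Disabled"
    desired_state: str = "Disabled"
    locked_until: Optional[datetime] = None   # ultimatum for the current attempt
    details: Dict[str, Any] = field(default_factory=dict)  # step-specific data

    def is_consistent(self) -> bool:
        return self.current_state == self.desired_state

    def needs_recovery(self, now: datetime) -> bool:
        # Inconsistent and no longer locked: fair game for the Supervisor.
        return not self.is_consistent() and (
            self.locked_until is None or self.locked_until <= now)


now = datetime(2010, 9, 27, 12, 0, tzinfo=timezone.utc)
step = StepRecord("step-1", "agent-1", desired_state="Active",
                  locked_until=now + timedelta(minutes=2))
print(step.needs_recovery(now))                         # False: still locked
print(step.needs_recovery(now + timedelta(minutes=3)))  # True: ultimatum passed
```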

When Things Go Right

The initial flow is as follows:

(1)a – Submit a new job into the Scheduler (and wait)

(2)a – The Scheduler creates a new job and steps with an initial current state (‘Disabled’) in the job store

(2)b – The Scheduler sets ‘desired state’ of the job and of all schedulable steps (dependencies?) to the target state (‘Active’) and sets the ‘locked until’ timeout of the step to a value in the near future, e.g. ‘Now’ + 2 minutes.

(1)b – Job submission request unblocks and returns.

If all went well, we now have a job record and, here in this example, two step records in our store. They have a current state of ‘Disabled’ and a desired state of ‘Active’. If things didn’t go well, we’d have incomplete or partially wedged records or nothing in the job store, at all. The client would also know about it since we’ve held on to the reply until we have everything done – so the client is encouraged to retry. If we have nothing in the store and the client doesn’t retry – well, then the job probably wasn’t all that important, after all. But if we have at least a job record, we can make it all right later. We’re optimists, though; let’s assume it all went well.

For the next steps we assume that there’s a notion of dependencies between the steps and the second step depends on the first. If that were not the case, the two actions would just be happening in parallel.

(3) – Place a step message into the queue for the actor for the first step; Agent 1 in this case. The message contains all the information about the step, including the current and desired state and also the LockedUntil that puts an ultimatum on the activity. The message may further contain an action indicator or arguments that are taken from the step record.

(4) – After the agent has done the work, it places a completion record into the reply queue of the Scheduler.

(5) – The Scheduler records the step as complete by setting the current state from ‘Disabled’ to ‘Active’; as a result the desired and the current state are now equal.

(6) – The Scheduler sets the next step’s desired state to the target state (‘Active’) and sets the LockedUntil timeout of the step to a value in the near future, e.g. ‘Now’ + 1 minute. The lock timeout value is an ultimatum for when the operation is expected to be complete and reported back as being complete in a worst-case success case. The actual value therefore depends on the common latency of operations in the system. If operations usually complete in milliseconds and at worst within a second, the lock timeout can be short – but not too short. We’ll discuss this value in more detail a bit later.

(7), (8), (9) are equivalent to (3), (4), (5).

Once the last step’s current state is equal to the desired state, the job’s current state gets set to the desired state and we’re done. So that was the “99% of the time” happy path.
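A minimal in-memory sketch of the happy path, assuming two strictly dependent steps, a dict standing in for the durable job store and one `deque` per agent standing in for the queues. It illustrates the numbered flow above; it is not the team's implementation, and durability, concurrency and real messaging are left out.

```python
from collections import deque
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Deque, Dict, List, Optional, Tuple


@dataclass
class Step:
    name: str
    actor: str                          # agent whose queue receives this step
    current: str = "Disabled"
    desired: str = "Disabled"
    locked_until: Optional[datetime] = None


@dataclass
class Job:
    job_id: str
    steps: List[Step]                   # dependency order: each needs the previous
    current: str = "Disabled"
    desired: str = "Disabled"


Message = Tuple[str, str, str, datetime]   # (job_id, step name, desired, LockedUntil)


class Scheduler:
    def __init__(self, lock: timedelta = timedelta(minutes=2)):
        self.jobs: Dict[str, Job] = {}               # stands in for the job store
        self.queues: Dict[str, Deque[Message]] = {}  # one queue per agent
        self.lock = lock

    def submit(self, job_id: str, steps: List[Step], now: datetime) -> Job:
        job = Job(job_id, steps)                   # (2)a: record job and steps
        self.jobs[job_id] = job
        job.desired = "Active"                     # (2)b: record the intent
        self._schedule(job, steps[0], now)         # (3): first schedulable step
        return job                                 # (1)b: unblock the submitter

    def _schedule(self, job: Job, step: Step, now: datetime) -> None:
        step.desired = job.desired
        step.locked_until = now + self.lock        # the ultimatum for the agent
        self.queues.setdefault(step.actor, deque()).append(
            (job.job_id, step.name, step.desired, step.locked_until))

    def on_reply(self, job_id: str, step_name: str, achieved: str,
                 now: datetime) -> None:
        job = self.jobs[job_id]
        step = next(s for s in job.steps if s.name == step_name)
        step.current = achieved                    # (5)/(9): record the outcome
        if step.current != step.desired:
            return                                 # leave it for the Supervisor
        pending = [s for s in job.steps if s.current != job.desired]
        if pending:
            self._schedule(job, pending[0], now)   # (6): next dependent step
        else:
            job.current = job.desired              # all steps done: job done


# Simulate two well-behaved agents that always reach the desired state.
now = datetime(2010, 9, 27, 12, 0)
sched = Scheduler()
job = sched.submit("job-1", [Step("s1", "agent-1"), Step("s2", "agent-2")], now)
for agent in ("agent-1", "agent-2"):
    job_id, step_name, desired, _ = sched.queues[agent].popleft()
    sched.on_reply(job_id, step_name, desired, now)
print(job.current)
```

Note that the second step's message is enqueued only when the first step's completion arrives, which is what makes the dependency explicit.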

When Things Go Wrong

So what happens when anything goes wrong? Remember the principle ‘all errors are transient’. What we do in the error case – anywhere – is to log the error condition and then promptly drop everything and simply hope that time, a change in system conditions, human or divine intervention, or – at worst – a patch will heal matters. That’s what the second principle ‘the system is perfect’ is about; the system obviously isn’t really perfect, but if we construct it in a way that we can either wait for it to return from a wedged state into a functional state or where we enable someone to go in and apply a fix for a blocking bug while preserving the system state, we can consider the system ‘perfect’ in the sense that pretty much any conceivable job that’s already in the system can be driven to completion.

In the second picture, we have Agent 2 blowing up as it is processing the step it got handed in (7). If the agent just can’t get its work done since some external dependency isn’t available – maybe a database can’t be reached or a server it’s talking to spews out ‘server too busy’ errors – it may be able to back off for a moment and retry. However, it must not retry past the LockedUntil ultimatum that’s in the step record. When things fail and the agent is still breathing, it may, as a matter of courtesy, notify the scheduler of the fact and report that the step was completed with no result, i.e. the desired state and the achieved state don’t match. That notification may also include diagnostic information. Once the LockedUntil ultimatum has passed, the Agent no longer owns the job and must drop it. It must not even report failure state back to the Scheduler past that point.
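How an agent might honor the LockedUntil ultimatum while backing off, sketched in Python. `TransientError`, the `reply` callback and the linear back-off are hypothetical choices; the clock and sleep functions are injectable so the logic can be exercised without actually waiting.

```python
import time
from datetime import datetime, timedelta, timezone
from typing import Callable


class TransientError(Exception):
    """Hypothetical marker for 'server too busy', unreachable database, etc."""


def run_step(operation: Callable[[], None], locked_until: datetime,
             reply: Callable[..., None],
             now: Callable[[], datetime] = lambda: datetime.now(timezone.utc),
             sleep: Callable[[float], None] = time.sleep,
             backoff: timedelta = timedelta(seconds=1)) -> str:
    """Run one step, retrying transient failures only while we own the lock."""
    attempt = 0
    while True:
        if now() >= locked_until:
            return "dropped"                       # ultimatum passed: not ours
        try:
            operation()
        except TransientError as err:
            attempt += 1
            delay = backoff * attempt              # linear back-off between tries
            if now() + delay < locked_until:
                sleep(delay.total_seconds())
                continue
            # No room for another try before the ultimatum.
            if now() < locked_until:
                reply(achieved=False, info=str(err))   # courtesy failure report
                return "failed"
            return "dropped"                       # past LockedUntil: stay silent
        if now() < locked_until:
            reply(achieved=True, info=None)        # completion for the Scheduler
            return "done"
        return "dropped"                           # finished too late: stay silent
```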

If the agent keels over and dies as it is processing the step (or right before or right after), it is obviously no longer in a position to let the scheduler know about its fate. Thus, there won’t be any message flowing back to the scheduler and the job is stalled. But we expect that. In fact, we’re ok with any failure anywhere in the system. We could lose or fumble a queue message, we could get a duplicate message, we could have the scheduler die a fiery death (or just be recycled for patching at some unfortunate moment) – all of those conditions are fine since we’ve brought the doctor on board with us: the Supervisor.

The Supervisor

The Supervisor is a schedule-driven process (or thread) of which one or a few instances may run occasionally. The frequency depends very much on the average duration of operations and the expected overall latency for completion of jobs.

The Supervisor’s job is to recover steps or jobs that have failed – and we’re assuming that failures are due to some transient condition. Whether it is a good strategy to expect a transient resource failure that prevented a job from completing a second ago to have healed two seconds later depends on the kind of system and resource. What’s described here is a pattern, not a solution, so it depends on the concrete scenario to get the timing right for when to try operations again once they fail.

This desired back-off time manifests in the LockedUntil value. When a step gets scheduled, the Scheduler needs to state how long it is willing to wait for that step to complete; this includes some back-off time padding. Once that ultimatum has passed and the step is still in an inconsistent state (desired state doesn’t equal the current state) the Supervisor can pick it up at any time and schedule it.

(1) – Supervisor queries the job store for any inconsistent steps whose LockedUntil value has expired.

(2) – The Supervisor schedules the step again by setting the LockedUntil value to a new timeout and submitting the step into the target actor’s queue

(3) – Once the step succeeds, the step is reported as complete on the regular path back to the Scheduler, where it completes normally as in steps (8), (9) from the happy-path scenario above. If it fails, we simply drop it again. For failures that allow reporting an error back to the Scheduler, it may make sense to introduce an error counter that round-trips with the step so that the system could detect poisonous steps that fail ‘forever’ and have the Supervisor ignore those after some threshold.

The Supervisor can pursue a range of strategies for recovery. It can just look at individual steps and recover them by rescheduling them – assuming the steps are implemented as idempotent operations. If it were a bit cleverer, it might consider error information that a cooperative (and breathing) agent has submitted back to the Scheduler, and even go as far as to fire an alert to an operator if the error condition requires intervention; it would then take the step out of the loop by marking it and setting the LockedUntil value to some longer timeout so someone can take a look.
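A sketch of one Supervisor pass over the step table, covering (1) and (2) plus the error-counter idea. It assumes agent operations are idempotent and that something (for example the Scheduler, when an agent reports failure) increments `error_count`; the threshold, lock lengths and `alert` callback are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Callable, List


@dataclass
class Step:
    name: str
    actor: str
    current: str
    desired: str
    locked_until: datetime
    error_count: int = 0      # assumed to be bumped when an agent reports failure
    parked: bool = False      # set when the step is taken out of the loop


def supervise(steps: List[Step], now: datetime,
              enqueue: Callable[[str, Step], None],
              lock: timedelta = timedelta(minutes=2),
              poison_threshold: int = 5,
              park_for: timedelta = timedelta(days=1),
              alert: Callable[[str], None] = print) -> List[str]:
    """One Supervisor pass; returns the names of the steps it rescheduled."""
    rescheduled = []
    for step in steps:
        # (1) only inconsistent steps whose LockedUntil has expired qualify
        if step.current == step.desired or step.locked_until > now:
            continue
        if step.error_count >= poison_threshold:
            # Poisonous step: park it behind a long lock and call for help.
            step.parked = True
            step.locked_until = now + park_for
            alert(f"step {step.name} failed {step.error_count} times")
            continue
        # (2) take a fresh lock and resubmit; safe only if the agent's
        # operation is idempotent
        step.locked_until = now + lock
        enqueue(step.actor, step)
        rescheduled.append(step.name)
    return rescheduled
```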

At the job-scope, the Supervisor may want to perform recovery such that it first schedules all previously executed steps to revert back to the initial state by performing compensation work (all resources that got set to active are getting disabled again here in our example) and then scheduling another attempt at getting to the desired state.

In step (2)b up above, we’ve been logging current and desired state at the job-scope, and with that we can also always find inconsistent jobs where all steps are consistent and wouldn’t show up in the step-level recovery query. That situation can occur if the Scheduler were to crash between logging one step as complete and scheduling the next step. If we find inconsistent jobs with all-consistent steps, we just need to reschedule the next step in the dependency sequence whose desired state doesn’t match the desired state of the overall job.
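The job-scope check can be sketched as below, assuming steps are listed in dependency order and a hypothetical `schedule` callback that enqueues a step. Beyond what the text describes, the sketch also handles a job whose steps all reached the target before the job record itself was updated, by simply completing the job; treat that branch as an assumption.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Step:
    name: str
    current: str
    desired: str


@dataclass
class Job:
    job_id: str
    current: str
    desired: str
    steps: List[Step]          # assumed to be in dependency order


def recover_job(job: Job, schedule: Callable[[Step], None]) -> str:
    """Job-scope recovery for one job; returns a short description of the action."""
    if job.current == job.desired:
        return "ok"
    if any(s.current != s.desired for s in job.steps):
        return "step-level"    # the step-level query already covers this job
    # All steps consistent but the job is not: the Scheduler stopped between
    # completing one step and scheduling the next.
    nxt = next((s for s in job.steps if s.desired != job.desired), None)
    if nxt is None:
        job.current = job.desired   # assumption: only the job record lagged
        return "completed"
    nxt.desired = job.desired
    schedule(nxt)
    return "rescheduled:" + nxt.name
```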

To be thorough, we could now take a look at all the places where things can go wrong in the system. I expect that survey to show that as long as we can successfully get past step (2)b from the first diagram, the Supervisor is always in a position to either detect that a job isn’t making progress and help with recovery or can at least call for help. The system always knows what its current intent is, i.e. which state transitions it wants to drive, and never forgets about that intent since that intent is logged in the job store at all times and all progress against that intent is logged as well. The submission request (1) depends on the outcome of (2)a/b to guard against failures while putting a job and its steps into the system so that a client can take corrective action. In fact, once the job record is marked as inconsistent in step (2)b, the scheduler could already report success back to the submitting party even before the first step is scheduled, because the Supervisor would pick up that inconsistency eventually.

Sample code for a working demonstration would be appreciated.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Barry Givens posted Social CRM with Microsoft Dynamics CRM and Windows Azure featuring new ClickDimensions product built on Microsoft Dynamics CRM on 9/28/2010 to the CRM Team Blog:

Seven years ago we launched Microsoft CRM 1.2 to a small but enthusiastic audience. CRM 1.2 was a market breakthrough for us, but only after a healthy independent software vendor community came to fill critical feature gaps. One of the first people to recognize those needs in CRM 1.2 was John Gravely. He built a tremendous business around add-on utilities for CRM 1.2 and was instrumental to the early success of Microsoft CRM.

As we get ready to launch Microsoft Dynamics CRM 2011 I see fewer product gaps but no less opportunity for ISVs. ISVs will continue to add tremendous value to CRM through both horizontal and vertical industry extensions to the product. Once again John has identified a critical set of customer needs around CRM and has been driving great innovation with his new ClickDimensions product built on Microsoft Dynamics CRM.

ClickDimensions is a digital marketing automation application that connects your Web and e-mail analytics to your sales and marketing efforts within CRM. The application provides lead scoring based on Web tracking, social discovery of leads and multi-channel communications capabilities powered by the ExactTarget engine. Today, most of these digital marketing tasks have to be managed with a hodge-podge of tools that aren’t well integrated. ClickDimensions will make it easy to use Microsoft Dynamics CRM as the one place you can go to manage and execute digital marketing campaigns.

The CRM portion of the product can run on premises or in CRM Online but the real intelligence of the application is in Windows Azure where the lead scoring, Web tracking and logic for social discovery occur. This will let ClickDimensions provide mass scale without the need for a complicated deployment – a key selling feature for nimble marketing organizations. [Emphasis added.]

I am hoping to get John to share some of his architectural learnings with us at Extreme CRM 2010 this November (please encourage him to come out for the event and do a presentation for us), but if you’d like information and a demonstration sooner you can register for a September 28th Webinar hosted by ExactTarget.

Karsten Januszewski asked How Do You Use The Archivist? in this 9/28/2010 post to the MIX Online blog:

The Archivist—our lab focused on archiving, analyzing and exporting tweets—just had its 3-month birthday. Hurray! The service has been humming along nicely, with over 150,000,000 tweets archived and over 20,000 unique visitors since June of 2010.

It’s always fascinating to find out all the ways in which the software we create gets used, many of which we never anticipated. Here’s a sampling of interesting uses of The Archivist we’ve seen since launch:

- Mashable used The Archivist to do an analysis of the popularity of different Android phones based on their Tweet volume.

- Chris Pirillo, well-known Internet celebrity, employed The Archivist to track tweets about the Gnomedex conference.

- In concert with Dr. Boynton’s article on using The Archivist in Academia was a fascinating piece by Mark Sample from The Chronicle of Higher Education, called Practical Advice for Teaching with Twitter. Sample’s tack is a little different from Boynton’s, in that he tracks students’ tweets about a given class. To facilitate this, he suggests that professors explicitly create and advertise a hashtag (e.g., #PHIL101) for their courses and then track that hashtag as the course proceeds. By using a tool like The Archivist, professors can then see which students tweeted the most during the course, which words were most commonly used, when tweets were most frequent (right before the end of the semester?), etc.

- Another fascinating example of Archivist usage came from Australian politics; you can read about it here and here. I’m not an expert on Australian politics so I can’t fully explicate what happened, but from what I can glean, a candidate in the election started tweeting controversial tweets that were ultimately tracked by The Archivist.

This is just a sample of how people are using The Archivist. But we’re certain there are other examples out there, given the service’s number of active users. In fact, we’re hoping to write up a whole article with examples of how people use The Archivist.

To that end, we’d like to offer $10 Starbucks gift cards to the first 5 people who send mail to archivist@microsoft.com telling us how you use The Archivist. We’ll feature your submissions in our upcoming article. So don’t be shy – do tell!

Oh, and if you have any suggestions or ideas on what archives we should feature on the homepage, let us know that as well.

I asked for an increase in the limit of the number of archives from three to 10. You can check my @rogerjenn tweets, as well as tweets about #SQLAzure and #OData.

David Linthicum posted Understanding Coupling for the Clouds to ebizQ’s Where SOA Meets Cloud blog on 9/28/2010:

One of the key concepts to consider when talking about services and cloud computing is the notion of coupling. We need to focus on this since, in many instances, coupling is not a good architectural choice considering that the services are not only hosted within separate data centers, but hosted by one or more cloud computing providers.

Since the beginning of computing we've been dealing with the notion of coupling, or the degree to which one component is dependent upon another component, whether within an application or across an architecture. Lately, the movement has been toward loose coupling for some very good reasons, and many architects who build enterprise architectures that leverage cloud computing understand the motivations behind this, since we don't want to become operationally dependent upon a component we neither own nor control.

Breaking this concept down to its essence, we can state that tightly coupled systems/architectures are dependent upon each other. Thus changes to any one component may prompt changes to many other components. Loosely coupled systems/architectures, in contrast, leverage independent components, and thus can operate independently. Therefore, when looking to create a SOA and leverage cloud computing resources, generally speaking, the best approach is a loosely coupled architecture.

Keep in mind that how loosely or tightly coupled your architecture is depends on requirements, not so much on what's popular. Indeed, architects need to understand the value of cloud computing and loose coupling, and make the right calls to ensure that the architecture matches the business objectives. It's helpful to walk through this notion of coupling as you approach your cloud computing architecture.

With the advent of web services and SOA, we've been seeking to create architectures and systems that are more loosely coupled. Loosely coupled systems provide many advantages, including support for late or dynamic binding to other components while running, and they can mediate differences in the components' structures, security models, protocols, and semantics, thus abstracting volatility. This is in contrast to compile-time or runtime binding, which requires that you bind the components at compile time or runtime (synchronous calls), respectively, and also requires that changes be designed into all components at the same time due to the dependencies. As you can imagine, this type of coupling makes testing and component changes much more difficult, and it is almost unheard of when leveraging cloud computing platforms for processes that span on-premise systems and cloud providers.
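To make late binding and mediation concrete, here is a minimal Python sketch, not taken from Linthicum's article. The registry, the `register`/`invoke` functions, and the billing components are all hypothetical names chosen for illustration. Callers resolve a service by logical name at call time, and an adapter mediates a difference in message format between the caller and an on-premise component.

```python
from typing import Callable, Dict

# Hypothetical service registry: callers bind to a service by name at run time
# (late binding) instead of importing a concrete implementation up front.
Handler = Callable[[dict], dict]
_registry: Dict[str, Handler] = {}

def register(name: str, handler: Handler) -> None:
    """Publish (or replace) the implementation behind a logical service name."""
    _registry[name] = handler

def invoke(name: str, request: dict) -> dict:
    """Resolve the service at call time; the caller never sees the implementation."""
    return _registry[name](request)

# Hypothetical on-premise component that expects amounts in cents.
def legacy_billing(req: dict) -> dict:
    return {"status": "ok", "charged_cents": req["amount_cents"]}

# Mediation: the adapter translates the caller's format (dollars)
# into the format the legacy component understands (cents).
def billing_adapter(req: dict) -> dict:
    return legacy_billing({"amount_cents": round(req["amount"] * 100)})

# Hypothetical cloud-hosted replacement that accepts dollars directly.
def cloud_billing(req: dict) -> dict:
    return {"status": "ok", "charged_cents": round(req["amount"] * 100), "host": "cloud"}

if __name__ == "__main__":
    register("billing", billing_adapter)
    print(invoke("billing", {"amount": 12.5}))
    # Swap implementations without touching any caller code.
    register("billing", cloud_billing)
    print(invoke("billing", {"amount": 12.5}))
```

The caller's code (`invoke("billing", ...)`) is identical before and after the swap, which is the property the paragraph attributes to loosely coupled systems.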

The advantages of loosely coupled architectures, as found within many SOAs, are apparent to many of us who have built architectures and systems in the past, at least from a technical perspective. However, they have business value as well.

First and foremost, a loosely coupled architecture allows you to replace components, or change components, without having to make corresponding changes to other components in the architecture/systems. This means businesses can change their business systems as needed, with much more agility than if the architecture/systems were more tightly coupled.

Second, developers can pick and choose the right enabling technology for the job without having to concern themselves with technical dependencies such as security models. Thus, you can build new components using a cloud-based platform, say a PaaS provider, that will work and play well with on-premise components written in Cobol or perhaps C++. The same goes for persistence layers, middleware, protocols, etc., whether cloud-delivered or on-premise. You can mix and match to exactly meet your needs, and even leverage services that exist outside of your organization without regard for how those services were created, how they communicate, or where they are running, cloud or on-premise.

Finally, with this degree of independence, components are protected from each other and can better recover from component failure. If the cloud computing architecture is designed correctly, the failure of a single component should not take down other components in the system; for example, a cloud platform outage should not stop the processing of key on-premise enterprise applications. Therefore, loose coupling creates architectures that are more resilient. Moreover, loose coupling lends itself to creating failover subsystems that move from one instance of a component to another without affecting the other components, which is very important when using cloud computing platforms.
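A failover subsystem of the kind described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not code from the article: `call_with_failover`, `ComponentUnavailable`, and the two instance functions are hypothetical. Because the caller depends only on a call signature, a failed cloud instance is skipped and an on-premise instance serves the request.

```python
from typing import Callable, List, Sequence

class ComponentUnavailable(Exception):
    """Raised by a component instance that cannot serve the request."""

def call_with_failover(instances: Sequence[Callable[[str], str]], request: str) -> str:
    """Try each interchangeable instance in order and return the first success.

    The caller depends only on the call signature, not on any particular
    instance, so a failed instance is skipped without affecting the caller.
    """
    errors: List[ComponentUnavailable] = []
    for instance in instances:
        try:
            return instance(request)
        except ComponentUnavailable as exc:
            errors.append(exc)  # record the failure and move on to the next instance
    raise ComponentUnavailable(f"all {len(instances)} instances failed: {errors}")

# Hypothetical instances: a cloud-hosted one suffering an outage,
# and an on-premise fallback.
def cloud_instance(request: str) -> str:
    raise ComponentUnavailable("cloud platform outage")

def on_premise_instance(request: str) -> str:
    return f"processed {request} on-premise"

if __name__ == "__main__":
    print(call_with_failover([cloud_instance, on_premise_instance], "order-42"))
```

Only `ComponentUnavailable` triggers failover here; other exceptions propagate, which keeps genuine bugs from being masked by silently switching instances.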

J. D. Meier uses the Windows Azure Training Map as an example in his Microsoft Developer Guidance Maps post of 9/26/2010:

As part of creating an "information architecture" for developer guidance at Microsoft, one of the tasks is mapping out what we already have. That means mapping out our Microsoft developer content assets across Channel9, MSDN Developer Centers, MSDN Library, Code Gallery, CodePlex, the All-in-One Code Framework, etc.

You can browse our Developer Guidance Maps at http://innovation.connect.microsoft.com/devguidancemaps

One of my favorite features is the one-click access that bubbles up high value code samples, how tos, and walkthroughs from the product documentation. Here is an example showcasing the ASP.NET documentation team’s ASP.NET Product Documentation Map. Another of my favorite features is one-click access to consolidated training maps. Here is an example showcasing Microsoft Developer Platform Evangelism’s Windows Azure Training Map.

Content Types

Here are direct jumps to pages that let you browse by content type:

Developer Guidance Maps

Here are direct jumps to specific Developer Guidance maps:

- ADO.NET Developer Guidance Map

- ASP.NET Developer Guidance Map

- Silverlight Developer Guidance Map

- WCF Developer Guidance Map

- Windows Azure Developer Guidance Map

- Windows Client Developer Guidance Map

- Windows Phone Developer Guidance Maps

The Approach

Rather than boil the ocean, we’ve used a systematic and repeatable model. We’ve focused on topics, features, and content types for key technologies. Here is how we prioritized our focus:

- Content Types: Code, How Tos, Videos, Training

- App Platforms for Key Areas of Focus: Cloud, Data, Desktop, Phone, Service, Web

- Technology Building Blocks for the stories above: ADO.NET, ASP.NET, Silverlight, WCF, Windows Azure, Windows Client, Windows Phone

The Maps are Works in Progress

Keep in mind these maps are works in progress and they help us pressure test our simple information architecture (“Simple IA”) for developer guidance at Microsoft. Creating the maps helps us test our models, create a catalog of developer guidance, and easily find the gaps and opportunities. While the maps are incomplete, they may help you find content and sources of content that you didn’t even know existed. For example, the All-In-One Code Framework has more than 450 code examples that cover 24 Microsoft development technologies such as Windows Azure, Windows 7, Silverlight, etc. … and the collection grows by six samples per week.

Here’s another powerful usage scenario. Use the maps as a template to create your own map for a particular technology. By creating a map or catalog of content for a specific technology, and organizing it by topic, feature, and content type, you can dramatically speed up your ability to map out a space and leverage existing knowledge. (… and you can share your maps with a friend ;)

J. D. Meier classified Microsoft Code Sample Types in this 9/26/2010 post:

As part of rounding up our available developer content, one area I can’t ignore is code samples. Code samples are where the rubber meets the road. They turn theory into practice. They also help eliminate ambiguity … As Ward Cunningham says, “It’s all talk until the code runs.” This post is a quick rundown of how I’m looking at code samples.

Code Snippets, Code Samples, and Sample Applications

I’ve found it useful to think of code samples in three sizes: code snippets, code samples, and sample applications. Sure there are other variations and flavors, but I’ve found these especially useful on the application development and solution architecture side of the house. Here is a quick rundown of each code sample type:

- Code Snippets - Code snippets are the smallest form of a code sample. They are a few lines of code to show the gist of the solution. They can be used to illustrate product documentation or a particular API. They can also be used to show application development tasks. For example, a snippet might be just a few lines to show how to connect to a database. Usually code snippets are used in conjunction with code samples to walk through the implementation and show pieces along the way.

- Code Samples / Code Examples - Code samples are modular pieces of code that illustrate how to solve a particular problem or perform a specific task. They are bigger than a code snippet and smaller than a sample application. In fact, a common use for code samples is to show how to perform an application development task, such as how to page records or how to log exceptions.

- Sample Apps - Sample applications are the largest form of code sample provided as guidance. They are provided to demonstrate application design concepts, and larger processes or algorithms.
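To make the snippet-versus-sample distinction concrete, here is a hedged Python illustration; it is not one of J. D.'s examples. The first line is a snippet in his sense, a few lines to connect to a database, while `get_page` is a small code sample for one of the tasks he names, paging records. The in-memory SQLite database, the `products` table, and the `get_page` function are assumptions chosen only so the block runs on its own.

```python
import sqlite3

# Code snippet: just the gist, a few lines to connect to a database.
conn = sqlite3.connect(":memory:")  # in-memory SQLite database, assumed for illustration

# Code sample: a modular, self-contained task (paging records) built on the snippet.
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany(
    "INSERT INTO products (name) VALUES (?)",
    [(f"product-{i}",) for i in range(1, 26)],  # 25 hypothetical rows
)

def get_page(connection: sqlite3.Connection, page: int, page_size: int = 10) -> list:
    """Return one page (1-based) of products, ordered by id."""
    offset = (page - 1) * page_size
    rows = connection.execute(
        "SELECT id, name FROM products ORDER BY id LIMIT ? OFFSET ?",
        (page_size, offset),
    )
    return rows.fetchall()

if __name__ == "__main__":
    print(get_page(conn, 3))  # the last, partial page: ids 21 through 25
```

A sample application would go further still, combining many such tasks behind a user interface to demonstrate overall design.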

Additional Types of Code Samples …

- Application Blocks – Application Blocks are reusable implementations of libraries or frameworks for recurring problems within application domains. For example, within line-of-business (LOB) applications, this would be caching, authorization, aggregation, etc.

- Frameworks – Frameworks provide solutions for cross-cutting concerns. They can be lower-level frameworks, oriented around code, or they can be higher-level frameworks, oriented around application concerns. Enterprise Library is an example of an application framework, similar to Java EE APIs. In the case of Enterprise Library, the APIs provide a common set of features for solving application infrastructure concerns.

- Reusable Libraries – Reusable libraries are code samples packaged in a reusable way, such as a .NET Assembly. They typically encapsulate a set of related APIs.

- Reference Implementations – These are scenario-based, working implementations of code. A reference implementation might be a reusable library or it might be a framework or it might be a sample application. In any event, it’s a “reference” implementation because it’s code that’s been implemented as an illustration to model from.

J. D. continues with a table of examples.

<Return to section navigation list>

Visual Studio LightSwitch

See Nick Berardi’s You’re Invited To Code Camp 2010.2 post of 9/27/2010 in the Cloud Computing Events section for a LightSwitch session at the DeVry University campus in Fort Washington, PA on Saturday, October 9 from 8:30 to 5:00.

<Return to section navigation list>

Windows Azure Infrastructure

Eric Knorr asked “In an hourlong interview, Microsoft's Bob Muglia offered a surprisingly clear cloud computing vision. Can Microsoft make good on it?” in a teaser for his Microsoft exec: We 'get' the cloud article of 9/28/2010 for InfoWorld’s Modernizing IT blog:

Last week I visited Bob Muglia, president of Microsoft's Server and Tools Division, mainly to interview him about cloud computing. On my way to the conference room, I was offered coffee and directed to a large, shiny, self-service Starbucks machine. I pressed a button. The machine buzzed, whirred, and wheezed, its progress tracked by an LED readout that looked like a download bar. After what seemed like forever, it produced a good cup of coffee.

So it has gone with Microsoft's strategy for cloud computing. For over two years we've heard a variety of noises, some incomprehensible, about Microsoft's plans for the cloud. Although some business questions remain, I can report that -- if Muglia's responses to IDG Enterprise Content Officer John Gallant and me can be taken at face value -- Microsoft finally seems to have brewed up a good strategy.

Muglia began with perhaps the best practical description of the core benefit of cloud computing that I've heard. It's worth quoting in its entirety:

“The promise of the cloud is that by running [workloads] at very high scale, by using software to standardize and deliver a consistent set of services to customers, we can reduce the cost of running operations very substantially. And we know that the majority of cost that our customers spend in IT is associated with the people cost associated with operations. And that's where the cloud really brings the advantages.

“The main advantage in terms of how customers will be able to get better business value at a lower cost is brought because the cloud standardizes the way operations is done and really dramatically reduces that.

“If you look at most of our customers, they will have a ratio of somewhere between 50 and 100 servers per administrator. And a world class IT shop might get that up to 300 or 400 servers per administrator. When we run these cloud services, we run them at 2000 to 4000 servers per administrator internally. The translation of that is very dramatic in terms of what it can do. And by running it ourselves we are also able to engineer the software to just continue to drive out that cost of operations in a way that I don't think the industry has ever seen before.”

That's exactly right. Have you wondered why our slow and painful crawl out of the Great Recession has been accompanied by a huge surge of interest in cloud computing? Because companies have laid off people across the board, including IT admins, and have discovered -- whaddaya know? -- that the joint is still running with that cost taken out. Maybe with the self-service, standardization, and data center automation of cloud services they can reduce the "people costs" of IT even further.
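To put Muglia's ratios in perspective, the short Python sketch below converts each servers-per-administrator range into an administrator headcount for a fleet of 10,000 servers. The fleet size is a hypothetical figure chosen only for illustration; the ratios are the ones quoted above.

```python
import math

# Servers-per-administrator ranges quoted by Muglia.
RATIOS = {
    "typical customer": (50, 100),
    "world-class IT shop": (300, 400),
    "Microsoft cloud services": (2000, 4000),
}

def admins_needed(servers: int, servers_per_admin: int) -> int:
    """Round up, since a fraction of an administrator is still a whole person."""
    return math.ceil(servers / servers_per_admin)

if __name__ == "__main__":
    fleet = 10_000  # hypothetical fleet size, for illustration only
    for label, (low, high) in RATIOS.items():
        # More servers per admin means fewer admins, so `high` gives the lower bound.
        print(f"{label}: {admins_needed(fleet, high)}-{admins_needed(fleet, low)} admins")
```

For that fleet, the typical customer needs 100 to 200 administrators, the world-class shop 25 to 34, and Microsoft's cloud operations 3 to 5, roughly a 40-fold reduction from the typical case, which is the "people cost" Muglia and Knorr are talking about.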

Michael Feldman reported Microsoft Aims for Client-Cluster-Cloud Unification in Technical Computing in a 9/27/2010 post to the HPC in the Cloud HPCWire blog:

Last week's High Performance Computing Financial Markets conference in New York gave Microsoft an opening to announce the official release of Windows HPC Server 2008 R2, the software giant's third generation HPC server platform. It also provided Microsoft a venue to spell out its technical computing strategy in more detail, a process the company began in May.

The thrust of Microsoft's new vision is to converge the software environment for clients, clusters and clouds so that HPC-style applications can run across all three platforms with minimal fuss for developers and end users. Between the parallel support in Visual Studio, .NET, Parallel LINQ, Dryad, HPC server and Windows Azure, the company has built an impressive portfolio of software for scale-out applications. At the center of the technical computing strategy is Microsoft's HPC server, currently Windows HPC Server 2008 R2, that can hook into the desktop today and will be able to extend into the company's Azure cloud in the very near future.