A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles.

‡‡ Updated 10/27/2012 with new articles marked ‡‡: Management Portal Updates, Add-Ons

‡ Updated 10/27/2012 with new articles marked ‡.

•• Updated 10/25/2012 with new articles marked ••.

• Updated 10/24/2012 with new articles marked •.

Tip: Copy bullet(s) or dagger(s), press Ctrl+F, paste it/them into the Find text box, and click Next to locate updated articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, Hadoop and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Marketplace DataMarket, Cloud Numerics, Big Data and OData

- Windows Azure Service Bus, Caching, Access & Identity Control, Active Directory, and Workflow

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

•• Yogeeta Kumari Yadav described Best Practices: Azure Storage Analytics in a 10/25/2012 post to the Aditi Technologies blog:

Windows Azure Storage Analytics feature helps users to identify usage patterns for all services available within an Azure storage account. This feature provides a trace of the executed requests against your storage account (Blobs, Tables and Queues).

Azure Storage Analytics allows you to:

- monitor requests to your storage account

- understand performance of individual requests

- analyse usage of specific containers and blobs

- debug storage APIs at a request level.

Storage Analytics Log Format

Each version 1.0 log entry adheres to the following format:

Sample Log

1.0;2012-09-05T09:22:27.2477320Z;ListContainers;Success;200;4;4;authenticated;mediavideos;mediavideos;blob;"http://mediavideos.blob.core.windows.net/?comp=list&timeout=90";"/mediavideos";fac965bb-c481-49bf-ae80-7920d49449c6;0;121.244.158.2:15621;2011-08-18;306;0;152;1859;0;;;;;;"WA-Storage/1.7.0";;

As you can see, logs presented in this manner are not human-readable. To find out which entry represents the request packet size, you have to count the fields from the first entry until you reach the required field. This is no easy task.
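The field counting described above can also be done by a few lines of script. The following is a minimal sketch, not part of the original post: it splits a version 1.0 entry on semicolons (leaving quoted fields intact) and labels each value. The field names in `LOG_FIELDS_V1` are an assumption based on the documented Storage Analytics log format and should be checked against the official reference; `splitLogLine` and `parseLogEntry` are hypothetical helper names.

```javascript
// Field names for a version 1.0 log entry, in order.
// ASSUMPTION: taken from the documented log format; verify before relying on them.
const LOG_FIELDS_V1 = [
  'version-number', 'request-start-time', 'operation-type', 'request-status',
  'http-status-code', 'end-to-end-latency-in-ms', 'server-latency-in-ms',
  'authentication-type', 'requester-account-name', 'owner-account-name',
  'service-type', 'request-url', 'requested-object-key', 'request-id-header',
  'operation-count', 'requester-ip-address', 'request-version-header',
  'request-header-size', 'request-packet-size', 'response-header-size',
  'response-packet-size', 'request-content-length', 'request-md5',
  'server-md5', 'etag-identifier', 'last-modified-time', 'conditions-used',
  'user-agent-header', 'referrer-header', 'client-request-id'
];

// Split on semicolons, but ignore semicolons that appear inside double quotes.
function splitLogLine(line) {
  const fields = [];
  let current = '';
  let inQuotes = false;
  for (const ch of line) {
    if (ch === '"') {
      inQuotes = !inQuotes; // quotes only delimit a field, so they are dropped
    } else if (ch === ';' && !inQuotes) {
      fields.push(current);
      current = '';
    } else {
      current += ch;
    }
  }
  fields.push(current);
  return fields;
}

// Pair each value with its (assumed) field name; missing values become ''.
function parseLogEntry(line) {
  const values = splitLogLine(line);
  const entry = {};
  LOG_FIELDS_V1.forEach(function (name, i) {
    entry[name] = values[i] === undefined ? '' : values[i];
  });
  return entry;
}

// The sample log entry shown above.
const sample = '1.0;2012-09-05T09:22:27.2477320Z;ListContainers;Success;200;4;4;authenticated;mediavideos;mediavideos;blob;"http://mediavideos.blob.core.windows.net/?comp=list&timeout=90";"/mediavideos";fac965bb-c481-49bf-ae80-7920d49449c6;0;121.244.158.2:15621;2011-08-18;306;0;152;1859;0;;;;;;"WA-Storage/1.7.0";;';

const entry = parseLogEntry(sample);
console.log(entry['operation-type'], entry['request-packet-size']);
```

Under that field order, the request packet size is the nineteenth field, which is what `entry['request-packet-size']` reads. Quoted fields in real logs may also contain encoded characters, which this sketch leaves untouched.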

I have now come across some tools that will simplify things and present the logs in a readable format.

Let us now look at some of these tools:

Azure Storage Explorer 5 Preview 1 - This tool helps you view the logs created after enabling storage analytics for a storage account. The logs seen with the help of this tool are in the same format as described above.

CloudBerry Explorer for Azure blob storage - This tool provides support for viewing Windows Azure Storage Analytics logs in a readable format. Figure 1 shows sample log information retrieved with the help of this tool.

Figure 1: Sample log information from CloudBerry Explorer

Isn't it easier to read the log in the screenshot than in the sample log?

Azure-Storage-Analytics Viewer - This is a visual tool that you can use to download Azure Storage Metrics and Log data, and display them in the form of a chart. You can download this tool from GitHub.

Figure 2: Azure-Storage-Analytics Viewer

To use this tool, enter the storage account information in the Azure-Storage-Analytics Viewer window and click the Load Metrics button.

You can see the various metrics represented in the form of a chart. To add metrics to the chart, right-click on a chart and select any option from the pop-up menu.

Figure 3: Azure-Storage-Analytics Viewer

You can select the period for which you wish to analyse the log information and save it to a csv file. Following is a sample snapshot of the csv file which contains storage analytics information for the period between 9 AM on 5th September 2012 and 9 AM on 6th September 2012.

Figure 4: Log information saved in an Excel file

This is the best way to analyse the usage patterns of a storage account and make decisions about using the account effectively.

• David Campbell (@SQLServer) posted Simplifying Big Data for the Enterprise to the SQL Server blog on 10/24/2012:

Earlier this year we announced partnerships with key players in the Apache Hadoop community to ensure customers have all the necessary solutions to connect with, manage and analyze big data. Today we’re excited to provide an update on how we’re working to broaden adoption of Hadoop with the simplicity and manageability of Windows.

First, we’re releasing new previews of our Hadoop-based solutions for Windows Server and Windows Azure, now called Microsoft HDInsight Server for Windows and Windows Azure HDInsight Service. Today, customers can access the first community technology preview of Microsoft HDInsight Server and a new preview of Windows Azure HDInsight Service at Microsoft.com/BigData [see below.] Both of these new previews make it easier to configure and deploy Hadoop on the Windows platform, and enable customers to apply rich business intelligence tools such as Microsoft Excel, PowerPivot for Excel and Power View to pull actionable insights from big data.

Second, we are expanding our partnership with Hortonworks, a pioneer in the Hadoop community and a leading contributor to the Apache Hadoop project. This expanded partnership will enable us to provide customers access to an enterprise-ready version of Hadoop that is fully compatible with Windows Server and Windows Azure.

To download Microsoft HDInsight Server or Windows Azure HDInsight Service, or for more information about our expanded partnership with Hortonworks, visit Microsoft.com/BigData today.

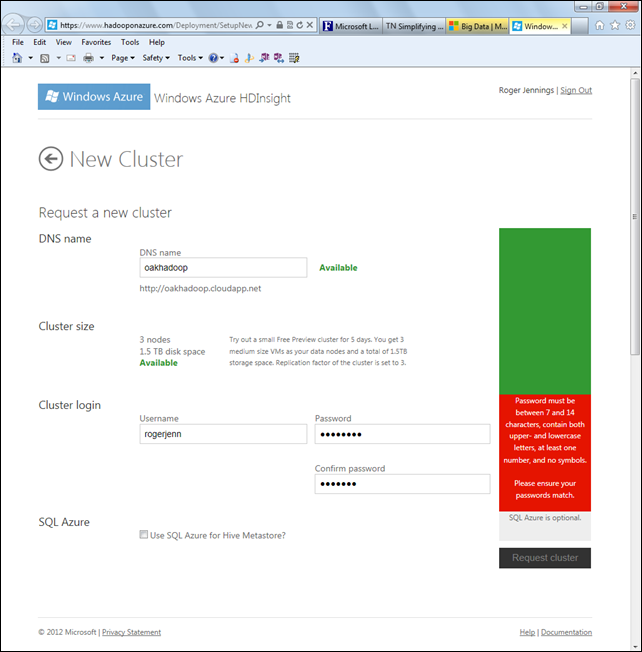

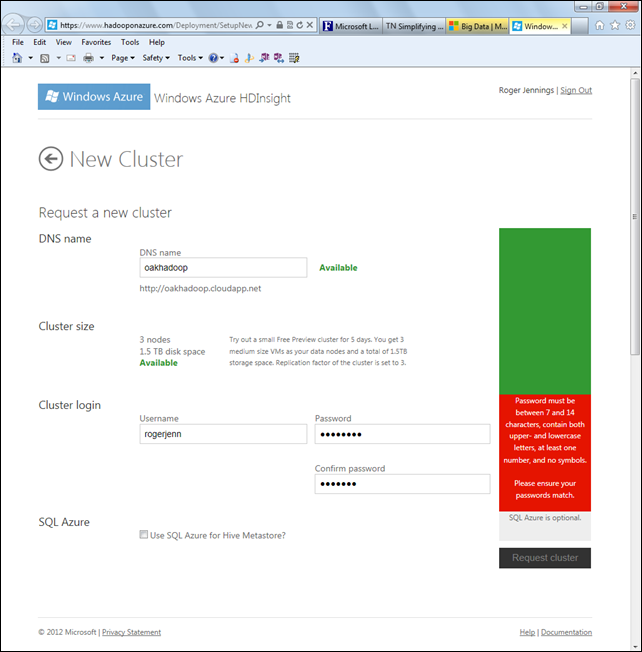

Click the Sign Up for HDInsight Service button on the Big Data page, type your Windows Account (née Live ID) name and password, and click Submit. If you’ve signed up for the Apache Hadoop on Windows Azure preview, you’ll be invited to request a new cluster:

Provisioning a new free five-day cluster takes about 30 minutes. When provisioning is complete, click the Go To Cluster link to open the HDInsight Dashboard, which is almost identical to that for Hadoop on Azure. According to a message of 10/23/2012 from Brad Sarsfield (@bradoop) on the Apache Hadoop on Azure CTP Yahoo! Group:

Based on usage patterns and feedback we have removed the FTP, S3 and Data Market functionality from the web based Hadoop portal. We strongly recommend leveraging Azure storage as the primary long term persistent data store for Hadoop on Azure. This allows the Hadoop cluster to be transient and size independent from the amount of data stored, and represents a significant $/GB savings over the long run.

Note: The Hadoop on Azure Yahoo! Group has moved to the HDInsight (Windows and Windows Azure) forum on MSDN, effective 10/24/2012.

Following are links to my earlier posts about HDInsight’s predecessor, Apache Hadoop on Windows Azure:

• Himanshu Singh (@himanshuks) posted Matt Winkler’s Getting Started with Windows Azure HDInsight Service on 10/24/2012:

Editor's Note: This post comes from Matt Winkler (pictured below), Principal Program Manager at Microsoft.

This morning we made some big announcements about delivering Hadoop for Windows Azure users. Windows Azure HDInsight Service is the easiest way to deploy, manage and scale Hadoop based solutions. This release includes:

- Hadoop updates that ensure the latest stable versions of:

  - HDFS and Map/Reduce

  - Pig

  - Hive

  - Sqoop

- Increased availability of the preview service

- A local, developer installation of Microsoft HDInsight Server

- An SDK for writing Hadoop jobs using .NET and Visual Studio

Community Contributions

As part of our ongoing commitment to Apache™ Hadoop®, the team has been actively working to submit our changes to Apache™. You can follow the progress of this work by following branch-1-win for check-ins related to HDFS and Map/Reduce. We’re also contributing patches to other projects, including Hive, Pig and HBase. This set of components is just the beginning; with monthly refreshes ahead, we’ll be adding additional projects, such as HCatalog.

Getting Access to the HDInsight Service

In order to get started, head to http://www.hadooponazure.com and submit the invitation form. We are sending out invitation codes as capacity allows. Once in the preview, you can provision a cluster, for free, for 5 days. We’ve made it super easy to leverage Windows Azure Blob storage, so that you can store your data permanently in Blob storage, and bring your Hadoop cluster online only when you need to process data. In this way, you only use the compute you need, when you need it, and take advantage of the great features of Windows Azure storage, such as geo-replication of data and using that data from any application.

Simplifying Development

Hadoop has been built to allow a rich developer ecosystem, and we’re taking advantage of that in order to make it easier to get started writing Hadoop jobs using the languages you’re familiar with. In this release, you can use JavaScript to build Map/Reduce jobs, as well as compose Pig and Hive queries using the JavaScript console hosted on the cluster dashboard. The JavaScript console also provides the ability to explore data and refine your jobs in an easy syntax, directly from a web browser.
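To give a feel for what a JavaScript Map/Reduce job looks like, here is a minimal word-count sketch that is not part of the original post. The `map(key, value, context)` and `reduce(key, values, context)` shapes, `context.write`, and the `hasNext()`/`next()` iterator are assumptions modeled on the preview's samples as I recall them. `runLocally` is a hypothetical in-memory harness that stands in for the cluster so the logic can be tried in plain Node.js.

```javascript
// Mapper: emits (word, 1) for every word in one line of input text.
var map = function (key, value, context) {
  var words = value.split(/[^a-zA-Z]+/);
  for (var i = 0; i < words.length; i++) {
    if (words[i] !== '') {
      context.write(words[i].toLowerCase(), 1);
    }
  }
};

// Reducer: sums all counts emitted for a single word.
var reduce = function (key, values, context) {
  var sum = 0;
  while (values.hasNext()) {
    sum += parseInt(values.next(), 10);
  }
  context.write(key, sum);
};

// HYPOTHETICAL local harness: groups mapper output by key, then feeds each
// group to the reducer. It only imitates what the cluster would do.
function runLocally(lines) {
  var grouped = Object.create(null);
  lines.forEach(function (line, index) {
    map(index, line, {
      write: function (k, v) {
        if (!grouped[k]) { grouped[k] = []; }
        grouped[k].push(v);
      }
    });
  });

  var output = Object.create(null);
  Object.keys(grouped).forEach(function (k) {
    var position = 0;
    var emitted = grouped[k];
    var iterator = {
      hasNext: function () { return position < emitted.length; },
      next: function () { return emitted[position++]; }
    };
    reduce(k, iterator, {
      write: function (word, count) { output[word] = count; }
    });
  });
  return output;
}

var counts = runLocally(['big data big insights', 'Big Data']);
console.log(counts.big, counts.data, counts.insights);
```

Only `map` and `reduce` would be submitted as a job; the harness exists purely to exercise them locally.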

For .NET developers, we’ve built an API on top of Hadoop streaming that allows for writing Map/Reduce jobs using .NET. This is available in NuGet, and the code is hosted on CodePlex. Some of the features include:

- Choice of loose or strong typing

- In memory debugging

- Submission of jobs directly to a Hadoop cluster

- Samples in C# and F#

Get Started

•• Thor Olavsrud (@ThorOlavsrud), a senior writer for CIO.com, provides a third-party view of HDInsight in an article for NetworkWorld of 10/24/2012:

Microsoft this week is focused on the launch of its converged Windows 8 operating system, which a number of pundits and industry watchers have declared a make-or-break release for the company, but in the meantime Microsoft is setting its sights on the nascent but much-hyped big data market by giving organizations the capability to deploy and manage Hadoop in a familiar Windows context.

Two days ahead of the Windows 8 launch, Microsoft used the platform provided by the O'Reilly Strata Conference + Hadoop World here in New York to announce an expanded partnership with Hortonworks (provider of a Hadoop distribution and one of the companies that has taken a leading role in the open source Apache Hadoop project) and to unveil new previews of a cloud-based solution and an on-premise solution for deploying and managing Hadoop. The previews also give customers the capability to use Excel, PowerPivot for Excel and Power View for business intelligence (BI) and data visualization on the data in Hadoop.

Microsoft has dubbed the cloud-based version Windows Azure HDInsight Service, while the on-premise offering is Microsoft HDInsight Server for Windows.

"Microsoft's entry expands the potential market dramatically and connects Hadoop directly to the largest population of business analysts: users of Microsoft's BI tools," says Merv Adrian, research vice president, Information Management, at Gartner. "If used effectively, Microsoft HDInsight will enable a significant expansion of the scope of data available to analysts without introducing substantial new complexity to them."

Microsoft Promises to Reduce Big Data Complexity

"This provides a unique set of offerings in the marketplace," says Doug Leland, general manager of SQL Server Marketing at Microsoft. "For the first time, customers will have the enterprise characteristics of a Windows offering (the simplicity and manageability of Hadoop on Windows) wrapped up with the security of the Windows infrastructure in an offering that is available both on-premise and in the cloud. This will ultimately take out some of the complexity that customers have experienced with some of their earlier investigations of big data technologies."

"Big data should provide answers for business, not complexity for IT," says David Campbell, technical fellow, Microsoft. "Providing Hadoop compatibility on Windows Server and Azure dramatically lowers the barriers to setup and deployment and enables customers to pull insights from any data, any size, on-premises or in the cloud."

One of the pain points experienced by just about any organization that seeks to deploy Hadoop is the shortage of Hadoop skills among the IT staff. Engineers and developers with Hadoop chops are difficult to come by. Gartner's Adrian is quick to note that HDInsight in either flavor won't eliminate that issue, but it will allow more people in the organization to benefit from big data faster.

"The shortage of skills continues to be a major impediment to adoption," Adrian says. "Microsoft's entry does not relieve the shortage of experienced Hadoop staff, but it does amplify their ability to deliver their solutions to a broad audience when their key foundation work has been done." …

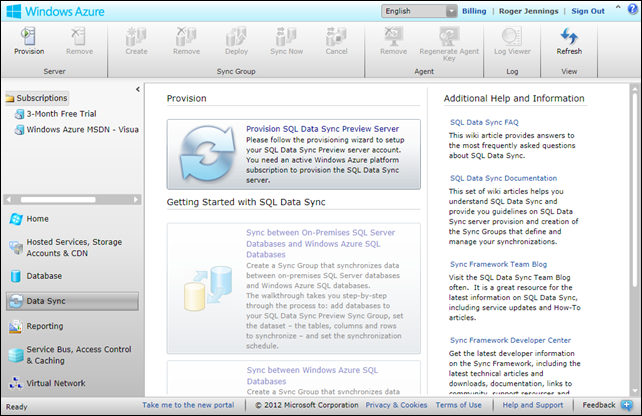

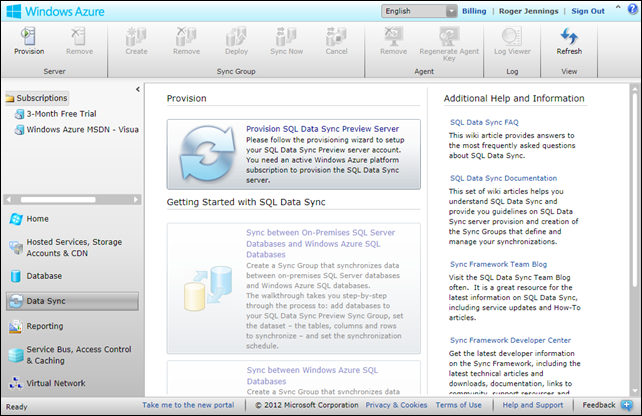

‡‡ Han, MSFT of the Sync Team answered Where in the world is SQL Data Sync? in a 10/28/2012 post:

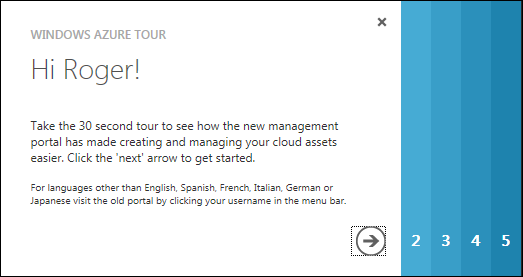

During this past weekend, the new Windows Azure portal was officially released. Windows Azure subscribers are now directed to the new portal once they log in. Now, you may have noticed that SQL Data Sync is not in the new Windows Azure portal. Don't worry, SQL Data Sync still exists. We are working to port SQL Data Sync onto the new portal soon. In the meantime, SQL Data Sync users can continue to access SQL Data Sync via the old portal. To access the old portal, click your user name in the top-right corner. A context menu will appear. Click the Previous portal link to redirect to the old portal (see below).

“Old portal” = Silverlight portal:

‡‡ Ralph Squillace described Data Paging using the Windows Azure Mobile Services iOS client SDK in a 10/28/2012 post:

As another follow-up to an earlier post, I'm walking through the Windows Azure Mobile Services tutorials to add the iOS client version of handling data validation. This tutorial (like the earlier one) will appear on windowsazure.com soon. It builds on either the Getting Started With Data or the Using Scripts to Authorize Users iOS tutorial: you can use a running application and service from either of those tutorials to get service validation working and handled properly on the client. Then we'll walk through data paging and continue on the tour.

The steps are as follows. You must have either the Getting Started With Data or the Using Scripts to Authorize Users iOS tutorials completed and running.

1. Open the project of one of the preceding iOS tutorial applications.

2. Run the application, and enter at least four (4) items.

3. Open the TodoService.m file, and locate the - (void) refreshDataOnSuccess:(CompletionBlock)completion method. Replace the body of the entire method with the following code. This query returns the top three items that are not marked as completed. (Note: To perform more interesting queries, you use the MSQuery instance directly.)

        // Create a predicate that finds active items in which complete is false
        NSPredicate * predicate = [NSPredicate predicateWithFormat:@"complete == NO"];

        // Retrieve the MSTable's MSQuery instance with the predicate you just created.
        MSQuery * query = [self.table queryWhere:predicate];

        query.includeTotalCount = TRUE; // Request the total item count

        // Start with the first item, and retrieve only three items
        query.fetchOffset = 0;
        query.fetchLimit = 3;

        // Invoke the MSQuery instance directly, rather than using the MSTable helper methods.
        [query readWithCompletion:^(NSArray *results, NSInteger totalCount, NSError *error) {

            [self logErrorIfNotNil:error];

            if (!error)
            {
                // Log total count.
                NSLog(@"Total item count: %@",[NSString stringWithFormat:@"%zd", (ssize_t) totalCount]);
            }

            items = [results mutableCopy];

            // Let the caller know that we finished
            completion();
        }];

4. Press Command + R to run the application in the iPhone or iPad simulator. You should see only the first three results listed in the application.

5. (Optional) View the URI of the request sent to the mobile service by using message inspection software, such as browser developer tools or Fiddler. Notice that the fetchLimit value of 3 was translated into the query option $top=3 in the query URI.

6. Update the refreshDataOnSuccess: method once more by locating the query.fetchOffset = 0; line and setting the query.fetchOffset value to 3. This will take the next three items after the first three.

These modified query properties:

    query.includeTotalCount = TRUE; // Request the total item count

    // Skip the first three items, and retrieve the next three
    query.fetchOffset = 3;
    query.fetchLimit = 3;

skip the first three results and return the next three after that (in addition to returning the total number of items available for your use). This is effectively the second "page" of data, where the page size is three items.

7. (Optional) Again view the URI of the request sent to the mobile service. Notice that the fetchOffset value of 3 was translated into the query option $skip=3 in the query URI.

8. Finally, if you want to note the total number of possible Todo items that can be returned, we wrote that to the output window in Xcode. Mine looked like this:

2012-10-28 00:02:34.182 Quickstart[1863:11303] Total item count: 8

but my application looked like this:

With that and the total count, you can implement any paging you like, including infinite lazy scrolling.
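The arithmetic behind such paging is small enough to sketch. The snippet below is an illustration in JavaScript rather than the tutorial's Objective-C, and it is not part of the original post. `pageWindow` and `pageCount` are hypothetical helper names; the query-option string only mirrors the `$top` and `$skip` options mentioned above and may not match exactly what the client SDK sends (for example, for the first page).

```javascript
// Compute the offset/limit pair for a zero-based page index.
function pageWindow(pageIndex, pageSize) {
  if (!Number.isInteger(pageIndex) || pageIndex < 0) {
    throw new RangeError('pageIndex must be a non-negative integer');
  }
  if (!Number.isInteger(pageSize) || pageSize < 1) {
    throw new RangeError('pageSize must be a positive integer');
  }
  var skip = pageIndex * pageSize;
  return {
    fetchOffset: skip,      // value for query.fetchOffset
    fetchLimit: pageSize,   // value for query.fetchLimit
    queryOptions: '$top=' + pageSize + '&$skip=' + skip
  };
}

// Number of pages needed to show totalCount items.
function pageCount(totalCount, pageSize) {
  return Math.ceil(totalCount / pageSize);
}

// Second page (index 1) with the tutorial's page size of three.
var secondPage = pageWindow(1, 3);
console.log(secondPage.queryOptions, pageCount(8, 3));
```

With the eight items reported in the log output above and a page size of three, the last page holds only two items, so a lazy-scrolling client can stop requesting once `fetchOffset` reaches the total count.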

‡ Gregory Leake posted Announcing the Windows Azure SQL Data Sync October Update to the Windows Azure blog on 10/26/2012:

We are happy to announce the new October update for the SQL Data Sync service is now operational in all Windows Azure data centers.

In this update, users can now create multiple Sync Servers under a single Windows Azure subscription. With this feature, users intending to create multiple sync groups with sync group hubs in different regions will enjoy performance improvements in data synchronization by provisioning the corresponding Sync Server in the same region where the synchronization hub is provisioned.

Further information on SQL Data Sync

SQL Data Sync enables creating and scheduling regular synchronizations between Windows Azure SQL Database and either SQL Server or other SQL Databases. You can read more about SQL Data Sync on MSDN. We have also published SQL Data Sync Best Practices on MSDN.

The team is hard at work on future updates as we march towards General Availability, and we really appreciate your feedback to date! Please keep the feedback coming and use the Windows Azure SQL Database Forum to ask questions or get assistance with issues. Have a feature you’d like to see in SQL Data Sync? Be sure to vote on features you’d like to see added or updated using the Feature Voting Forum.

‡ Nick Harris (@cloudnick) and Nate Totten (@ntotten) posted Episode 92 - iOS SDK for Windows Azure Mobile Services of the CloudCover show on 10/27/2012:

In this episode Nick and Nate are joined by Chris Risner who is a Technical Evangelist on the team focusing on iOS and Android development and Windows Azure. Chris shows us the latest addition to Windows Azure Mobile Services, the iOS SDK. Chris demonstrates, from his Mac, how easy it is to get started using Windows Azure and build a cloud connected iOS app using the new Mobile Services SDK.

In the News:

•• Carlos Figueira explained Getting user information on Azure Mobile Services in a 10/24/2012 post:

With the introduction of the server-side authentication flow (which I mentioned in my last post), it’s now a lot simpler to authenticate users with Windows Azure Mobile Services. Once the LoginAsync / login / loginViewControllerWithProvider:completion: method / selector completes, the user is authenticated, the MobileServiceClient / MSClient object will hold a token that is used for authenticating requests, and it can now be used to access authentication-protected tables. But there’s more to authentication than just getting a unique identifier for a user – we can also get more information about the user from the providers we used to authenticate, or even act on their behalf if they allowed the application to do so.

With Azure Mobile Services you can still do this. However, the property is not available at the user object stored at the client – the only property it exposes is the user id, which doesn’t give the information that the user authorized the providers to give. This post will show, for the supported providers, how to get access to some of their properties, using their specific APIs.

User identities

The client objects don’t expose any of that information to the application, but at the server side, we can get what we need. The User object which is passed to all scripts now has a new function, getIdentities(), which returns an object with provider-specific data which can be used to query their user information. For example, for a user authenticated with a Facebook credential in my app, this is the object returned by calling user.getIdentities():

    {
        "facebook": {
            "userId": "Facebook:my-actual-user-id",
            "accessToken": "the-actual-access-token"
        }
    }

And for Twitter:

    {
        "twitter": {
            "userId": "Twitter:my-actual-user-id",
            "accessToken": "the-actual-access-token",
            "accessTokenSecret": "the-actual-access-token-secret"
        }
    }

Microsoft:

    {
        "microsoft": {
            "userId": "MicrosoftAccount:my-actual-user-id",
            "accessToken": "the-actual-access-token"
        }
    }

Google:

    {
        "google": {
            "userId": "Google:my-actual-user-id",
            "accessToken": "the-actual-access-token"
        }
    }

Each of those objects has the information that we need to talk to the providers’ APIs. So let’s see how we can talk to their APIs to get more information about the user who has logged in to our application. For the examples in this post, I’ll simply store the user name alongside the item which is being inserted.
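Because the four identity objects share one shape, a script can inspect them generically before deciding which provider API to call. The helper below is a minimal sketch that is not part of the original post: `summarizeIdentities` is a hypothetical name, and it assumes only the structure shown in the samples above (a provider key, a `userId` prefixed with the provider name and a colon, an `accessToken`, and for Twitter an `accessTokenSecret`).

```javascript
// Summarize the object returned by user.getIdentities() without exposing tokens.
function summarizeIdentities(identities) {
  return Object.keys(identities).map(function (provider) {
    var identity = identities[provider];
    var userId = identity.userId;
    return {
      provider: provider,
      // Everything after the first colon is taken to be the provider's own id.
      providerUserId: userId.substring(userId.indexOf(':') + 1),
      hasAccessToken: typeof identity.accessToken === 'string',
      hasTokenSecret: typeof identity.accessTokenSecret === 'string'
    };
  });
}

// Stand-in for the Twitter sample shown above.
var summary = summarizeIdentities({
  twitter: {
    userId: 'Twitter:my-actual-user-id',
    accessToken: 'the-actual-access-token',
    accessTokenSecret: 'the-actual-access-token-secret'
  }
});
console.log(summary[0].provider, summary[0].providerUserId);
```

Keeping the tokens out of the summary is deliberate, since a summary like this is the kind of thing that tends to end up in logs.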

Talking to the Facebook Graph API

To interact with the Facebook world, you can either use one of their native SDKs, or you can talk to their REST-based Graph API. To talk to it, all we need is an HTTP client, and we do have the nice request module which we can import (require) in our server scripts. To get the user information, we can send a request to https://graph.facebook.com/me, passing the access token as a query string parameter. The code below does that. It checks whether the user is logged in via Facebook; if so, it sends a request to the Graph API, passing the token stored in the user identities object. If everything goes right, it parses the result (which is a JSON object), retrieves the user name (from its “name” property) and stores it in the item being added to the table.

    function insert(item, user, request) {
        item.UserName = "<unknown>"; // default
        var identities = user.getIdentities();
        var req = require('request');
        if (identities.facebook) {
            var fbAccessToken = identities.facebook.accessToken;
            var url = 'https://graph.facebook.com/me?access_token=' + fbAccessToken;
            req(url, function (err, resp, body) {
                if (err || resp.statusCode !== 200) {
                    console.error('Error sending data to FB Graph API: ', err);
                    request.respond(statusCodes.INTERNAL_SERVER_ERROR, body);
                } else {
                    try {
                        var userData = JSON.parse(body);
                        item.UserName = userData.name;
                        request.execute();
                    } catch (ex) {
                        console.error('Error parsing response from FB Graph API: ', ex);
                        request.respond(statusCodes.INTERNAL_SERVER_ERROR, ex);
                    }
                }
            });
        } else {
            // Insert with default user name
            request.execute();
        }
    }

With the access token you can also call other functions on the Graph API, depending on what the user allowed the application to access. But if all you want is the user name, there’s another way to get this information: the userId property of the User object, for users logged in via Facebook is in the format “Facebook:<graph unique id>”. You can use that as well, without needing the access token, to get the public information exposed by the user:

    function insert(item, user, request) {
        item.UserName = "<unknown>"; // default
        var providerId = user.userId.substring(user.userId.indexOf(':') + 1);
        var identities = user.getIdentities();
        var req = require('request');
        if (identities.facebook) {
            var url = 'https://graph.facebook.com/' + providerId;
            req(url, function (err, resp, body) {
                if (err || resp.statusCode !== 200) {
                    console.error('Error sending data to FB Graph API: ', err);
                    request.respond(statusCodes.INTERNAL_SERVER_ERROR, body);
                } else {
                    try {
                        var userData = JSON.parse(body);
                        item.UserName = userData.name;
                        request.execute();
                    } catch (ex) {
                        console.error('Error parsing response from FB Graph API: ', ex);
                        request.respond(statusCodes.INTERNAL_SERVER_ERROR, ex);
                    }
                }
            });
        } else {
            // Insert with default user name
            request.execute();
        }
    }

The main advantage of this last method is that it can be used not only in the server script but on the client side as well.

- private async void btnFacebookLogin_Click_1(object sender, RoutedEventArgs e)

- {

- await MobileService.LoginAsync(MobileServiceAuthenticationProvider.Facebook);

- var userId = MobileService.CurrentUser.UserId;

- var facebookId = userId.Substring(userId.IndexOf(':') + 1);

- var client = new HttpClient();

- var fbUser = await client.GetAsync("https://graph.facebook.com/" + facebookId);

- var response = await fbUser.Content.ReadAsStringAsync();

- var jo = JsonObject.Parse(response);

- var userName = jo["name"].GetString();

- this.lblTitle.Text = "Multi-auth Blog: " + userName;

- }

That’s it for Facebook; let’s move on to another provider.

Talking to the Google API

The code for the Google API is fairly similar to the one for Facebook. To get user information, we send a request to https://www.googleapis.com/oauth2/v1/userinfo, again passing the access token as a query string parameter.

- function insert(item, user, request) {

- item.UserName = "<unknown>"; // default

- var identities = user.getIdentities();

- var req = require('request');

- if (identities.google) {

- var googleAccessToken = identities.google.accessToken;

- var url = 'https://www.googleapis.com/oauth2/v1/userinfo?access_token=' + googleAccessToken;

- req(url, function (err, resp, body) {

- if (err || resp.statusCode !== 200) {

- console.error('Error sending data to Google API: ', err);

- request.respond(statusCodes.INTERNAL_SERVER_ERROR, body);

- } else {

- try {

- var userData = JSON.parse(body);

- item.UserName = userData.name;

- request.execute();

- } catch (ex) {

- console.error('Error parsing response from Google API: ', ex);

- request.respond(statusCodes.INTERNAL_SERVER_ERROR, ex);

- }

- }

- });

- } else {

- // Insert with default user name

- request.execute();

- }

- }

Notice that the code is so similar that we can just merge them into one:

- function insert(item, user, request) {

- item.UserName = "<unknown>"; // default

- var identities = user.getIdentities();

- var url;

- if (identities.google) {

- var googleAccessToken = identities.google.accessToken;

- url = 'https://www.googleapis.com/oauth2/v1/userinfo?access_token=' + googleAccessToken;

- } else if (identities.facebook) {

- var fbAccessToken = identities.facebook.accessToken;

- url = 'https://graph.facebook.com/me?access_token=' + fbAccessToken;

- }

-

- if (url) {

- var requestCallback = function (err, resp, body) {

- if (err || resp.statusCode !== 200) {

- console.error('Error sending data to the provider: ', err);

- request.respond(statusCodes.INTERNAL_SERVER_ERROR, body);

- } else {

- try {

- var userData = JSON.parse(body);

- item.UserName = userData.name;

- request.execute();

- } catch (ex) {

- console.error('Error parsing response from the provider API: ', ex);

- request.respond(statusCodes.INTERNAL_SERVER_ERROR, ex);

- }

- }

- }

- var req = require('request');

- var reqOptions = {

- uri: url,

- headers: { Accept: "application/json" }

- };

- req(reqOptions, requestCallback);

- } else {

- // Insert with default user name

- request.execute();

- }

- }

And with this generic framework we can add one more:

Talking to the Windows Live API

Very similar to the previous ones, just a different URL. Most of the code is the same; we just need to add a new else if branch:

- if (identities.google) {

- var googleAccessToken = identities.google.accessToken;

- url = 'https://www.googleapis.com/oauth2/v1/userinfo?access_token=' + googleAccessToken;

- } else if (identities.facebook) {

- var fbAccessToken = identities.facebook.accessToken;

- url = 'https://graph.facebook.com/me?access_token=' + fbAccessToken;

- } else if (identities.microsoft) {

- var liveAccessToken = identities.microsoft.accessToken;

- url = 'https://apis.live.net/v5.0/me/?method=GET&access_token=' + liveAccessToken;

- }

And the user name can be retrieved in the same way as the others – notice that this is true because all three providers seen so far return the user name in the “name” property, so we didn’t need to change the callback code.

Getting Twitter user data

Twitter is a little harder than the other providers, since it needs two things from the identity (access token and access token secret), and one of the request headers needs to be signed. For simplicity here I’ll just use the user id trick as we did for Facebook:

- if (identities.google) {

- var googleAccessToken = identities.google.accessToken;

- url = 'https://www.googleapis.com/oauth2/v1/userinfo?access_token=' + googleAccessToken;

- } else if (identities.facebook) {

- var fbAccessToken = identities.facebook.accessToken;

- url = 'https://graph.facebook.com/me?access_token=' + fbAccessToken;

- } else if (identities.microsoft) {

- var liveAccessToken = identities.microsoft.accessToken;

- url = 'https://apis.live.net/v5.0/me/?method=GET&access_token=' + liveAccessToken;

- } else if (identities.twitter) {

- var userId = user.userId;

- var twitterId = userId.substring(userId.indexOf(':') + 1);

- url = 'https://api.twitter.com/users/' + twitterId;

- }

And since the user name is also stored in the “name” property of the Twitter response, the callback doesn’t need to be modified.
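As a minimal sketch, the provider selection above could be factored into a pure function so that it can be tested outside the Mobile Services script environment. The helper name `getProfileUrl` is hypothetical (it is not part of any SDK), the URLs are the ones used in the scripts above, and wrapping the token in `encodeURIComponent` is an added precaution rather than something the original scripts do.

```javascript
// getProfileUrl is a hypothetical helper; the URLs are the ones used in the scripts above.
function getProfileUrl(identities, userId) {
    if (identities.google) {
        return 'https://www.googleapis.com/oauth2/v1/userinfo?access_token=' +
            encodeURIComponent(identities.google.accessToken);
    }
    if (identities.facebook) {
        return 'https://graph.facebook.com/me?access_token=' +
            encodeURIComponent(identities.facebook.accessToken);
    }
    if (identities.microsoft) {
        return 'https://apis.live.net/v5.0/me/?method=GET&access_token=' +
            encodeURIComponent(identities.microsoft.accessToken);
    }
    if (identities.twitter) {
        // The user id has the form "<Provider>:<provider-specific id>".
        return 'https://api.twitter.com/users/' + userId.substring(userId.indexOf(':') + 1);
    }
    return null; // no known provider: the caller inserts with the default user name
}

console.log(getProfileUrl({ twitter: {} }, 'Twitter:42'));
```

Inside the insert script, the result would take the place of the `url` variable: a non-null value goes to the request module, and `null` falls through to `request.execute()` with the default user name.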

Accessing provider APIs from the client

So far I’ve shown how you can get user information from the script, and some simplified version of it for the client side (for Facebook and Twitter). But what if we want the logic to access the provider APIs to live in the client, and just want to retrieve the access token which is stored in the server? Right now, there’s no clean way of doing that (no “non-CRUD operation” support on Azure Mobile Services), so what you can do is to create a “dummy” table that is just used for that purpose.

In the portal, create a new table for your application; for this example I’ll call it Identities. Set the permissions for Insert, Update and Delete to “Only Scripts and Admins” (so that nobody will insert any data in this table), and set Read to “Only Authenticated Users”.

Now in the Read script, return the response as requested by the caller, with the user identities stored in a field of the response. For the response: if a specific item was requested, return only one element; otherwise return a collection with only that element:

- function read(query, user, request) {

- var result = {

- id: query.id,

- identities: user.getIdentities()

- };

- if (query.id) {

- request.respond(200, result);

- } else {

- request.respond(200, [result]);

- }

- }

And we can then get the identities on the client as a JsonObject by retrieving data from that “table”.

- var table = MobileService.GetTable("Identities");

- var response = await table.ReadAsync("");

- var identities = response.GetArray()[0].GetObject();

Notice that there’s no LookupAsync method on the “untyped” table, so the result is returned as an array; it’s possible that this will be added to the client SDK in the future, so we won’t need to get the object from the (single-element) array, receiving the object itself directly.

Wrapping up

The new multi-provider authentication support added in Azure Mobile Services makes it quite easy to authenticate users to your mobile application, and it also gives you the power to access the provider APIs. If you have any comments or feedback, don’t hesitate to send them either here or in the Azure Mobile Services forum.

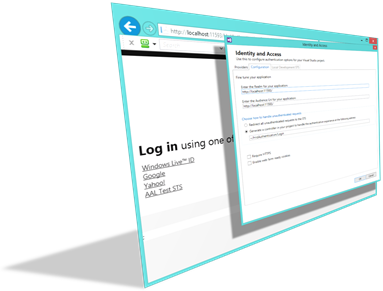

Carlos Figueira posted Troubleshooting authentication issues in Azure Mobile Services on 10/22/2012:

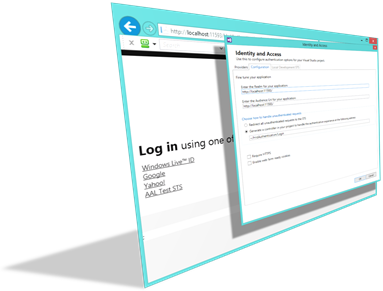

With the announcement last week in ScottGu’s blog, Azure Mobile Service now supports different kinds of authentication in addition to authentication using the Windows Live SDK which was supported at first. You can now authenticate the users of your applications using Facebook, Google, Twitter and even Microsoft Accounts (formerly known as Live IDs) without any native SDK for those providers, just like on web applications. In fact, the authentication is done exactly by the application showing an embedded web browser control which talks to the authentication provider’s websites. In the example below, we see an app using Twitter to authenticate its user.

The tutorial Getting Started with Users shows how to set up the authentication, including creating applications in each of the supported providers. It’s a great tutorial, and when everything works fine, then great, we’ll add the authentication code to the client (quite small, especially for managed Windows Store apps), users can login and we’re all good. However, there are cases where we just don’t get the behavior we want, and we end up with a client which just can’t correctly authenticate.

There are a number of issues which may be causing this problem, and the nature of authentication of connected mobile applications, with three distinct components (the mobile app itself, the Azure Mobile Service, and the identity provider) makes debugging it harder than simple applications.

There is, however, one nice trick which @tjanczuk (who actually implemented this feature) taught me and can make troubleshooting such problems a little easier. What we do essentially is to remove one component of the equation (the mobile application), to make debugging the issue simpler. The trick is simple: since the application is actually hosting a browser control to perform the authentication, we’ll simply use a real browser to do that. By talking to the authentication endpoints of the mobile service runtime directly, we can see what’s going on behind the scenes of the authentication protocol, and hopefully fix our application.

The authentication endpoint

Before we go into broken scenarios, let’s talk a bit about the authentication endpoint which we have in the Azure Mobile Services runtime. As of the writing of this post the REST API Reference for Windows Azure Mobile Services has yet to be updated for the server-side (web-based) authentication support, so I’ll cover it briefly here.

The authentication endpoint for an Azure Mobile Service responds to GET requests to https://<service-name>.azure-mobile.net/login/<providerName>, where <providerName> is one of the supported authentication providers (currently “facebook”, “google”, “microsoftaccount” or “twitter”). When a browser (or the embedded browser control) sends a request to that address, the Azure Mobile Service runtime will respond with a redirect (HTTP 302) response to the appropriate page on the authentication provider (for example, the twitter page shown in the first image of this post). Once the user enters valid credentials, the provider will redirect it back to the Azure Mobile Service runtime with its specific authentication token. At that time, the runtime will validate those credentials with the provider, and then issue its own token, which will be used by the client as the authentication token to communicate with the service.
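The address format described above can be sketched as a small helper. The function name `buildLoginUrl` and the `SUPPORTED_PROVIDERS` list are hypothetical names introduced for illustration; the provider values and the URL shape are the ones given in the paragraph above.

```javascript
// Providers listed in the post at the time of writing; treat this list as an assumption.
var SUPPORTED_PROVIDERS = ['facebook', 'google', 'microsoftaccount', 'twitter'];

// buildLoginUrl is a hypothetical helper, not part of any Mobile Services SDK.
function buildLoginUrl(serviceName, providerName) {
    var provider = String(providerName).toLowerCase();
    if (SUPPORTED_PROVIDERS.indexOf(provider) < 0) {
        throw new Error('Unsupported provider: ' + providerName);
    }
    return 'https://' + serviceName + '.azure-mobile.net/login/' + provider;
}

console.log(buildLoginUrl('my-application-name', 'twitter'));
```

Pasting the resulting address into a regular browser should start the same redirect sequence that the embedded browser control goes through.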

The diagram above shows a rough picture of the authentication flow. Notice that the client may send more than one request to the authentication provider, as it often first asks the user to enter their credentials, then (at least once per application) asks the user to allow the application to use those credentials. What the browser control in the client does is monitor the URL to which it’s navigating, and when it sees that it’s navigating to the /login/done endpoint, it knows that the whole authentication “dance” has finished. At that point, the client can dispose of the browser control and store the token to authenticate future requests it sends.
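The URL check that the browser control performs might look roughly like the sketch below. The helper name `isLoginDone` is hypothetical, and the sketch only detects the end of the flow; how the token is carried back to the client is not covered in this post, so it is deliberately left out.

```javascript
// isLoginDone is a hypothetical helper that mirrors the check described above:
// the flow is finished once navigation reaches /login/done on the mobile service host.
function isLoginDone(navigationUrl, serviceName) {
    var parsed = new URL(navigationUrl);
    var expectedHost = serviceName + '.azure-mobile.net';
    return parsed.hostname === expectedHost && parsed.pathname === '/login/done';
}

console.log(isLoginDone('https://my-application-name.azure-mobile.net/login/done', 'my-application-name'));
```

Comparing the host as well as the path avoids treating a provider page that happens to contain "/login/done" as the end of the flow.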

This whole protocol is just a bunch of GET requests and redirect responses. That’s something that a “regular” browser can handle pretty well, so we can use that to make sure that the server is properly set up. So we’ll now see some scenarios where we can use a browser to troubleshoot the server-side authentication. For this scenario I prefer to use either Google Chrome or Mozilla Firefox, since they can display JSON payloads in the browser itself, without needing to go to the developer tools. With Internet Explorer you can also do that, but by default it asks you to save the JSON response in a file, which I personally find annoying. Let’s move on to some problems and how to identify them.

Missing configuration

Once the mobile service application is created, no identity provider credentials are set in the portal. If the authentication with a specific provider is not working, you can try browsing to it. In my application I haven’t set the authentication credentials for Google login, so I’ll get a response saying so in the browser.

To fix this, go to the portal, and enter the correct credentials. Notice that there was a bug in the portal until last week where the credentials were not being properly propagated to the mobile service runtime (it has since been fixed). If you added the credentials when the feature was first released, before that fix, you can try removing them, then adding them again, and it should go through.

Missing redirect URL

In all of the providers you need to set the redirect URL so that the provider knows, after authenticating the user, to redirect it back to the Azure Mobile Service login page. By using the browser we can see that error more clearly than when using an actual mobile application. For example, this is what we get when we forget to set the “Site URL” property on Facebook, after we browse to https://my-application-name.azure-mobile.net/login/facebook:

And for Windows Live (a.k.a. Microsoft Accounts), after browsing to https://my-application-name.azure-mobile.net/login/microsoftaccount:

Not as clear an error as the one from Facebook, but if you look at the URL in the browser (may need to copy/paste to a text editor to see better):

https://login.live.com/err.srf?lc=1033

#error=invalid_request&

error_description=The%20provided%20value%20for%20the%20input%20parameter%20'redirect_uri'%20is%20not%20valid.%20The%20expected%20value%20is%20'https://login.live.com/oauth20_desktop.srf'%20or%20a%20URL%20which%20matches%20the%20redirect%20URI%20registered%20for%20this%20client%20application.&

state=13cdf4b00313a8b4302f6

It will have an error description saying that “The provided value for the input parameter 'redirect_uri' is not valid”.
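Rather than copying the address into a text editor, the fragment can be decoded with a few lines of script. This is a minimal sketch: `parseProviderError` is a hypothetical helper, and the sample URL is a shortened version of the one shown above.

```javascript
// parseProviderError is a hypothetical helper: it decodes the key=value pairs
// that follow the '#' in an error URL such as the login.live.com one above.
function parseProviderError(errorUrl) {
    var hashIndex = errorUrl.indexOf('#');
    if (hashIndex < 0) {
        return null; // no fragment, nothing to decode
    }
    var params = {};
    errorUrl.substring(hashIndex + 1).split('&').forEach(function (pair) {
        if (!pair) {
            return; // skip empty segments, e.g. from a trailing '&'
        }
        var eq = pair.indexOf('=');
        var key = eq < 0 ? pair : pair.substring(0, eq);
        var value = eq < 0 ? '' : pair.substring(eq + 1);
        params[decodeURIComponent(key)] = decodeURIComponent(value);
    });
    return params;
}

var sample = 'https://login.live.com/err.srf?lc=1033#error=invalid_request&' +
    "error_description=The%20provided%20value%20for%20the%20input%20parameter%20'redirect_uri'%20is%20not%20valid.&" +
    'state=13cdf4b00313a8b4302f6';
console.log(parseProviderError(sample).error_description);
```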

For other providers the experience is similar.

Invalid credentials

If a mistake was made when copying the credentials from the provider site to the Windows Azure Mobile Services portal, the authentication will also fail, although sometimes only after part of the authentication flow has completed. In this case, most providers will just say that there is a problem in the request, so one spot to look for issues is the credentials: check whether the ones in the portal match the ones in the provider page. Here are some examples of what you’ll see in the browser when that problem happens. Twitter will mention a problem with the OAuth request (OAuth being the protocol used in the authentication):

Facebook, Microsoft and Google accounts have different errors depending on whether the error is at the client / app id or the client secret. If the error is at the client id, then the provider will display an error right away. For example, Microsoft accounts will show its common error page

But the error description parameter in the URL shows the actual problem: “The client does not exist. If you are the application developer, configure a new application through the application management site at https://manage.dev.live.com/.” Facebook isn’t as clear, with a generic error.

Google is clearer, showing the error right on the first page:

Now, when the client / app id is correct but the problem is in the app secret, all three providers (Microsoft, Facebook, Google) will show the correct authentication page, asking for the user credentials. The error only shows up once the authentication with the provider is complete, the provider redirects back to the Azure Mobile Service (step 5 in the authentication flow diagram above), and the runtime tries to validate the token with the provider. Here are the errors which the browser will show in this case. First, Facebook:

Google:

Microsoft:

Other issues

I’ve shown the most common problems which we’ve seen here which we can control. But as usual, there may be times where things just don’t work – network connectivity issues, blackouts on the providers. As with all distributed systems, those issues can arise from time to time which are beyond the control of the Azure Mobile Services. For those cases, you should confirm that those components are working correctly as well.

When everything is fine

Hopefully, with some of the troubleshooting steps I’ve shown here, you’ve been able to fix your server-side authentication with Azure Mobile Services. If that’s the case, you should see this window.

And with that window (code: 200), you’ll know that, at least the server / provider portion of the authentication dance is ready. With the simple client API, hopefully that will be enough to get your application authentication support.

<Return to section navigation list>

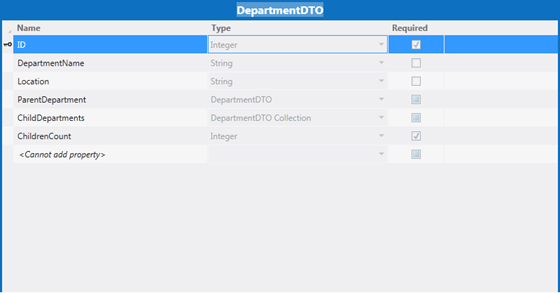

‡ Paul van Bladel (@paulbladel) described A cool OData hack in LightSwitch on 10/27/2012:

Introduction

Warning: this post is nerd-only.

We’ll try to add a record directly over the OData service, even without making a service reference!

Our Setting

We simply start with an empty LightSwitch application in which we add:

- an entity type “Customer” with 2 fields: LastName and FirstName

- A completely empty screen (start with a new data screen and do not relate it to an entity type)

How?

using System;

using System.Linq;

using System.IO;

using System.IO.IsolatedStorage;

using System.Collections.Generic;

using Microsoft.LightSwitch;

using Microsoft.LightSwitch.Framework.Client;

using Microsoft.LightSwitch.Presentation;

using Microsoft.LightSwitch.Presentation.Extensions;

using LightSwitchApplication.Implementation;

using System.Data.Services.Client;

namespace LightSwitchApplication

{

public partial class ODataUpdate

{

[global::System.Data.Services.Common.EntitySetAttribute("Customers")]

[global::System.Data.Services.Common.DataServiceKeyAttribute("Id")]

public class MyCustomer

{

public int Id { get; set; }

public string LastName { get; set; }

public string FirstName { get; set; }

public byte[] RowVersion { get; set; }

}

partial void AddCustomer_Execute()

{

MyCustomer c = new MyCustomer();

c.FirstName = "paul";

c.LastName = "van bladel";

Microsoft.LightSwitch.Threading.Dispatchers.Main.BeginInvoke(() =>

{

Uri uri = new Uri(System.Windows.Application.Current.Host.Source, "../ApplicationData.svc");

DataServiceContext _appService = new DataServiceContext(uri);

_appService.AddObject("Customers", c);

_appService.BeginSaveChanges(SaveChangesOptions.Batch, (IAsyncResult ac) =>

{

_appService = ac.AsyncState as DataServiceContext;

DataServiceResponse response = _appService.EndSaveChanges(ac);

},

_appService);

});

}

}

}

I needed to introduce a separate MyCustomer class and decorate it with the EntitySet and DataServiceKey attributes. When I try to do the save with the Customer type instead I get, for unknown reasons, an error: “This operation requires the entity to be of an Entity Type, either mark its key properties, or attribute the class with DataServiceEntityAttribute”. Strange, because I would expect the built-in LightSwitch type Customer to have these attributes. The MyCustomer type also needs to have the RowVersion field.

As you can see, it works with the good old Begin/End async pattern (here, BeginSaveChanges and EndSaveChanges).

Could this be useful?

For your daily LightSwitch work, the answer is: by and large no !

But… imagine you want to do inter-application communication without making explicit service references towards each other… Just update the Uri variable and you are up to speed.

‡ Andrew Brust (@andrewbrust) asserted “Strata + Hadoop World NYC is done, but cataloging the Big Data announcements isn't. This post aims to remedy that” in a deck for his NYC Data Week News Wraps-Up post for ZDNet’s Big Data blog:

With NYC Data Week and the Strata + Hadoop World NYC event, lots of Big Data news announcements have been made, many of which I've covered.

After a full day at the show and series of vendor briefings this week, I wanted to report back on the additional Big Data news coming out with the events' conclusion.

Cloudera Impala

Cloudera announced a new Hadoop component, Impala, that elevates SQL to peer level with MapReduce as a query tool for Hadoop. Although API-compatible with Hive, Impala is a native SQL engine that runs on the Hadoop cluster and can query data in the Hadoop Distributed File System (HDFS) and HBase. (Hive merely translates the SQL-like HiveQL language to Java code and then runs a standard batch-mode Hadoop MapReduce job.)

Impala, currently in Beta, is part of Cloudera’s Distribution including Apache Hadoop (CDH) 4.1, but is not currently included with other Hadoop distributions. Impala is open source, and it’s Apache-licensed, but it is not an Apache Software Foundation project, as most Hadoop components are. Keep in mind, though, that Sqoop, the import-export framework that moves data between Hadoop and Data Warehouses/relational databases, also began as a Cloudera-managed open source project and is now an Apache project. The same may happen with Impala.

MapR optimizes HBase, sets new Terasort record

MapR, makers of a Hadoop distribution which replaces HDFS with an API-compatible layer over standard network file systems, and which is offered as a cloud service via Amazon Elastic MapReduce and soon on Google Compute Engine, introduced a new Hadoop distribution at Strata + Hadoop World. Dubbed M7, the new distribution includes a customized version of HBase, the Wide Column Store NoSQL database included with most Hadoop distributions.

For this special version of HBase in M7, MapR has integrated HBase directly into the MapR distribution. And since MapR’s file system is not write-once as is HDFS, MapR’s HBase can avoid buffered writes and compactions, making for faster operation and largely eliminating limits on the number of tables in the database. Additionally, various HBase components have been rewritten in C++, eliminating the Java Virtual Machine as a layer in the database operations, and further boosting performance.

And a postscript: MapR announced that its distribution (ostensibly M3 or M5) running on the Google Compute Engine cloud platform, has broken the time record for the Big Data Terasort benchmark, coming in at under one minute -- a first. The cloud cluster employed 1,003 servers, 4,012 cores and 1,003 disks. The previous Terasort record, 62 seconds, was set by Yahoo running vanilla Apache Hadoop on 1,460 servers, 11,680 cores and 5,840 disks.

SAP Big Data Bundle

While SAP has interesting Big Data/analytics offerings, including the SAP HANA in-memory database, the Sybase IQ columnar database, the Business Objects business intelligence suite, and its Data Integrator Extract Transform and Load (ETL) product, it doesn’t have its own Hadoop distro. Neither do a lot of companies. Instead, they partner with Cloudera or Hortonworks and ship one of their distributions.

SAP has joined this club, and then some. The German software giant announced its Big Data Bundle, which can include all of the aforementioned Big Data/analytics products of its own, optionally in combination with Cloudera’s or Hortonworks' Hadoop distributions. Moreover, the company is partnering with IBM, HP and Hitachi to make the Big Data Bundle available as a hardware-integrated appliance. Big stuff.

EMC/Greenplum open sources Chorus

The Greenplum division of EMC announced the open source release of its Chorus collaboration platform for Big Data. Chorus is a Yammer-like tool for various Big Data project team members to communicate and collaborate in their various roles. Chorus is both Greenplum database- and Hadoop-aware.

On Chorus, data scientists might communicate their data modeling work, Hadoop specialists might mention the data they have amassed and analyzed, BI specialists might chime in about the refinement of that data they have performed in loading it into Greenplum, and business users might convey their success in using the Greenplum data and articulate new requirements, iteratively. The source code for this platform is now in an open source repository on GitHub.

Greenplum also announced a partnership with Kaggle, a firm that runs data science competitions, which will now use the Chorus platform.

Pentaho partners

Pentaho, a leading open source business intelligence provider, announced its close collaboration with Cloudera on the Impala project, and a partnership with Greenplum on Chorus. Because of these partnerships, Pentaho’s Interactive Report Writer integrates tightly with Impala and the company’s stack is compatible with Chorus. …

‡ Andrew Lavinsky posted First Look: Querying Project Server 2013 OData with LINQPad on 10/21/2012 (missed when published):

As I gradually immerse myself into the world of Project Server 2013, one of the major changes I’ve been forced to come to grips with is the new method of querying Project Server data through OData. OData is now the preferred mechanism to surface cloud based data, and is designed to replace direct access to the SQL database.

To access Project Server OData feeds, simply add this to your PWA URL:

…//_api/ProjectData/

…meaning that the PWA site at http://demo/pwa would have an OData feed at http://demo/PWA//_api/ProjectData/.

The results look something like this, i.e. pretty much like an RSS feed:

In fact, one of the tricks you’ll pick up after working with OData is turning off the default Internet Explorer RSS interface, which tends to get in the way of viewing OData feeds. Access that via the Internet Explorer > Internet Options page.

I can also consume OData feeds directly in Office applications such as Excel. In Excel 2013, I now have the option to connect to OData directly…

That yields the table selection which I may then use to develop my reports.

More on that topic in later posts. In this post, I want to talk about writing queries against OData using LINQ, a query language that some of you are probably familiar with. I would hardly call myself an expert, but I’ve found the easiest way to get up to speed is to download and install LINQPad, a free query writing tool.

With LINQPad, I can teach myself LINQ, following a simple step by step tutorial.

…and then point LINQPad at a Hyper-V image of Project Server to test my queries.

…even better, LINQPad generates the URL that I’ll need to customize the OData feed from Project Server:

I.e. the query above to select the Project Name and Project Start from all projects starting after 1/1/2013 yields a URL of:

http://demo/PWA//_api/ProjectData/Projects()?$filter=ProjectStartDate ge datetime'2013-01-01T00:00:00'&$select=ProjectName,ProjectStartDate
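For readers who want to assemble such a URL without LINQPad, here is a minimal sketch of how the $filter and $select options combine. The helper name `buildODataQueryUrl` and its options object are hypothetical, and the sketch percent-encodes the filter expression (spaces and colons), which browsers usually do for you when the URL is pasted with literal spaces.

```javascript
// buildODataQueryUrl is a hypothetical helper that joins an OData feed URL,
// an entity set path and the $filter/$select query options.
function buildODataQueryUrl(feedUrl, entitySet, options) {
    var parts = [];
    if (options.filter) {
        // encodeURIComponent leaves the apostrophes of the datetime literal intact
        parts.push('$filter=' + encodeURIComponent(options.filter));
    }
    if (options.select && options.select.length) {
        parts.push('$select=' + options.select.join(','));
    }
    var url = feedUrl.replace(/\/+$/, '') + '/' + entitySet;
    return parts.length ? url + '?' + parts.join('&') : url;
}

var projectsUrl = buildODataQueryUrl('http://demo/PWA//_api/ProjectData/', 'Projects()', {
    filter: "ProjectStartDate ge datetime'2013-01-01T00:00:00'",
    select: ['ProjectName', 'ProjectStartDate']
});
console.log(projectsUrl);
```

The same call with a different feed URL would produce the query for another PWA site, which fits the workflow of developing queries on-premises and then applying them to an online tenant.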

Minor caveat to this approach: out of the box, LINQPad doesn’t authenticate to Office 365 tenants. It looks like other folks have already figured out a solution to this, which I haven’t gotten around to deciphering on my own. In the meantime, LINQPad works fine against on-premises installations. For now, I’ll probably be developing my queries against an on-prem data set, then applying the URLs to my Office 365 tenant.

For example, using my online tenant, I can parse the following URL:

https://lavinsky4.sharepoint.com/sites/pwa//_api/ProjectData/Projects()?$filter=ProjectStartDate ge datetime'2012-01-01T00:00:00'&$select=ProjectName,ProjectStartDate

…and get the correct results. Here’s what it looks like in Excel:

Coming up….porting some of my previous report queries into LINQ.

•• Gianugo Rabellino (@gianugo) described Simplifying Big Data Interop – Apache Hadoop on Windows Server & Windows Azure in a 10/23/2012 post to the Interoperability @ Microsoft blog:

As a proud member of the Apache Software Foundation, it’s always great to see the growth and adoption of Apache community projects. The Apache Hadoop project is a prime example. Last year I blogged about how Microsoft was engaging with this vibrant community, Microsoft, Hadoop and Big Data. Today, I’m pleased to relay the news about increased interoperability capabilities for Apache Hadoop on the Windows Server and Windows Azure platforms and an expanded Microsoft partnership with Hortonworks.

Microsoft Technical Fellow David Campbell announced today new previews of Windows Azure HDInsight Service and Microsoft HDInsight Server, the company’s Hadoop-based solutions for Windows Azure and Windows Server. [See the Windows Azure Blob, Drive, Table, Queue, Hadoop and Media Services section above.]

Here’s what Dave had to say in the official news about how this partnership is simplifying big data in the enterprise.

“Big Data should provide answers for business, not complexity for IT. Providing Hadoop compatibility on Windows Server and Azure dramatically lowers the barriers to setup and deployment and enables customers to pull insights from any data, any size, on-premises or in the cloud.”

Dave also outlined how the Hortonworks partnership will give customers access to an enterprise-ready distribution of Hadoop with the newly released solutions.

And here’s what Hortonworks CEO Rob Bearden said about this expanded Microsoft collaboration.

“Hortonworks is the only provider of Apache Hadoop that ensures a 100% open source platform. Our expanded partnership with Microsoft empowers customers to build and deploy on platforms that are fully compatible with Apache Hadoop.”

An interesting part of my open source community role at MS Open Tech is meeting with customers and trying to better understand their needs for interoperable solutions. Enhancing our products with new Interop capabilities helps reduce the cost and complexity of running mixed IT environments. Today’s news helps simplify deployment of Hadoop-based solutions and allows customers to use Microsoft business intelligence tools to extract insights from big data.

Peter Horsman posted Here’s What’s New with the Windows Azure Marketplace on 10/23/2012:

Making things easier and more efficient while helping you improve productivity is the name of the game at the Windows Azure Marketplace. In this month’s release, the addition of Windows Azure Active Directory simplifies the user experience, while access to new content expands your capabilities.

Improved Experience

Now you can use your Windows Azure Active Directory ID (your Office 365 login) to access the Windows Azure Marketplace. We heard your feedback about improving efficiency. With just a single identity to manage, publishing, promoting, and even purchasing offerings becomes easier. And the process of updating, organizing and managing contact and ecommerce details becomes more secure and streamlined too.

In addition, the marketplace has lots of new content from great providers who are committed to expanding the power of the Windows Azure platform.

New Data Sources

Check out new data offerings from RegioData Research GmbH, including RegioData Purchasing Power Austria 2012 and RegioData Purchasing Power United Kingdom 2012. Purchasing Power refers to the ability of one person or one household to buy goods, services or rights with a given amount of money within a certain period of time. These indices clearly represent the regional prosperity levels and disposable incomes (including primary and transfer income) in Austria and the UK respectively. You can browse the full list of data sources available in the Marketplace here.

New Apps

In the realm of apps, we have new content from High 5 Software, ClearTrend Research, QuickTracPlus, and multiple providers out of Barcelona including Santin e Associati S.r.l. (Tempestive). You’ll find everything from web content management tools to document generation to staff auditing and management apps. Take a look:

You can check out the complete list of apps available in the Marketplace here.

We’ve got more exciting features in coming releases, so stay tuned for more updates!

•• Mick Badran (@mickba) announced Azure: Windows Workflow Manager 1.0 RTMed on 10/24/2012:

Great news – Jurgen Willis and his team have worked hard to deliver Microsoft’s first V1.0 WF Workflow Hosting Manager.

It runs both as part of Windows Server and within Azure VMs. The SharePoint 2013 team uses it too, so learn it once and you’ll get great mileage out of it.

(I’m yet to put it through serious paces)

Some links to help you out…

The main areas of WF improvement in .NET 4.5 (from a great MSDN Magazine article) are:

- Workflow Designer enhancements

- C# expressions

- Contract-first authoring of WCF Workflow Services

- Workflow versioning

- Dynamic update

- Partial trust

- Performance enhancements

Specifically for WorkflowManager there’s integration with:

1. Windows Azure Service Bus.

So all in all it’s a major improvement, and we’ve now got somewhere serious to host our WF Services. If you’ve ever gone through the process of creating your own WF host, you’ll appreciate it’s not a trivial task, especially if you want some deeper functionality such as restartability and fault tolerance.

But if you just want to kick off a quick WF as part of an install script, or evaluate an Excel spreadsheet and set results, then hosting within the app or spreadsheet is fine.

Let’s go through installation:

Download from here

Workflow_Manager_BPA.msi = Best Practices Analyser.

WorkflowClient = Client APIs, install on machines that want to communicate with WF Manager.

WorkflowManager = the Server/Service Component.

WorkflowTools = VS2012 plugin tools – project types etc.

We’ll grab all four, or you can use the Web Platform Installer.

The Workflow Client should install fine on its own (mine didn’t, as I had to remove some of the beta bits that were previously installed).

Installing the Workflow Manager – create a farm, I went for a Custom Setting install below, just to show you the options.

As you scroll down on this page, you’ll notice an HTTP Port – check the check box to enable HTTP communications to the Workflow Manager.

This just makes it easier if we need to debug anything across the wire.

Select NEXT or the cool little Arrow->

On-premises Service Bus is now rolled into this install; I accepted the defaults.

Plug in your Service Accounts and passphrase (for Farm membership and an encryption seed).

Click Next –> to reveal….

As with the latest set of MS products, a very cool feature is ‘Get PowerShell Commands’, which shows you the script behind your UI choices (VMM and SCCM 2012 have this right through). BTW – passwords don’t get exported in the script; you’ll need to add them yourself.

Script Sample:

# To be run in Workflow Manager PowerShell console that has both Workflow Manager and Service Bus installed.

# Create new SB Farm

$SBCertificateAutoGenerationKey = ConvertTo-SecureString -AsPlainText -Force -String '***** Replace with Service Bus Certificate Auto-generation key ******' -Verbose;

New-SBFarm -SBFarmDBConnectionString 'Data Source=BTS2012DEV;Initial Catalog=SbManagementDB;Integrated Security=True;Encrypt=False' -InternalPortRangeStart 9000 -TcpPort 9354 -MessageBrokerPort 9356 -RunAsAccount 'administrator' -AdminGroup 'BUILTIN\Administrators' -GatewayDBConnectionString 'Data Source=BTS2012DEV;Initial Catalog=SbGatewayDatabase;Integrated Security=True;Encrypt=False' -CertificateAutoGenerationKey $SBCertificateAutoGenerationKey -MessageContainerDBConnectionString 'Data Source=BTS2012DEV;Initial Catalog=SBMessageContainer01;Integrated Security=True;Encrypt=False' -Verbose;

# To be run in Workflow Manager PowerShell console that has both Workflow Manager and Service Bus installed.

# Create new WF Farm

$WFCertAutoGenerationKey = ConvertTo-SecureString -AsPlainText -Force -String '***** Replace with Workflow Manager Certificate Auto-generation key ******' -Verbose;

New-WFFarm -WFFarmDBConnectionString 'Data Source=BTS2012DEV;Initial Catalog=BreezeWFManagementDB;Integrated Security=True;Encrypt=False' -RunAsAccount 'administrator' -AdminGroup 'BUILTIN\Administrators' -HttpsPort 12290 -HttpPort 12291 -InstanceDBConnectionString 'Data Source=BTS2012DEV;Initial Catalog=WFInstanceManagementDB;Integrated Security=True;Encrypt=False' -ResourceDBConnectionString 'Data Source=BTS2012DEV;Initial Catalog=WFResourceManagementDB;Integrated Security=True;Encrypt=False' -CertificateAutoGenerationKey $WFCertAutoGenerationKey -Verbose;

# Add SB Host

$SBRunAsPassword = ConvertTo-SecureString -AsPlainText -Force -String '***** Replace with RunAs Password for Service Bus ******' -Verbose;

Add-SBHost -SBFarmDBConnectionString 'Data Source=BTS2012DEV;Initial Catalog=SbManagementDB;Integrated Security=True;Encrypt=False' -RunAsPassword $SBRunAsPassword -EnableFirewallRules $true -CertificateAutoGenerationKey $SBCertificateAutoGenerationKey -Verbose;

Try

{

# Create new SB Namespace

New-SBNamespace -Name 'WorkflowDefaultNamespace' -AddressingScheme 'Path' -ManageUsers 'administrator','mickb' -Verbose;

Start-Sleep -s 90

}

Catch [system.InvalidOperationException]

{

}

# Get SB Client Configuration

$SBClientConfiguration = Get-SBClientConfiguration -Namespaces 'WorkflowDefaultNamespace' -Verbose;

# Add WF Host

$WFRunAsPassword = ConvertTo-SecureString -AsPlainText -Force -String '***** Replace with RunAs Password for Workflow Manager ******' -Verbose;

Add-WFHost -WFFarmDBConnectionString 'Data Source=BTS2012DEV;Initial Catalog=BreezeWFManagementDB;Integrated Security=True;Encrypt=False' -RunAsPassword $WFRunAsPassword -EnableFirewallRules $true -SBClientConfiguration $SBClientConfiguration -EnableHttpPort -CertificateAutoGenerationKey $WFCertAutoGenerationKey -Verbose;

Upon completion you should see a new IIS Site…. with the ‘management ports’ of in my case HTTPS

Let’s Play

Go and grab the samples and have a play – make sure you run the samples as the user you’ve nominated as ‘Admin’ during the setup – for now.

•• Jesus Rodriguez posted NodeJS and Windows Azure: Using Service Bus Queues on 10/24/2012:

I have been doing a lot of work with NodeJS and Windows Azure lately. I am planning to write a series of blog posts about the techniques required to build NodeJS applications that leverage different Windows Azure components. I am also planning on deep diving into the different elements of the NodeJS modules to integrate with Windows Azure.

Let’s begin with a simple tutorial of how to implement NodeJS applications that leverage one of the most popular components of the Windows Azure Service Bus: Queues. When using the NodeJS module for Windows Azure, developers can perform different operations on Azure Service Bus queues. The following sections will provide an overview of some of those operations.

Getting Started

The initial step to use Azure Service Bus queues from a NodeJS application is to instantiate the ServiceBusService object as illustrated in the following code:

process.env.AZURE_SERVICEBUS_NAMESPACE = "MY NAMESPACE...";
process.env.AZURE_SERVICEBUS_ACCESS_KEY = "MY ACCESS KEY....";
var sb = require('azure');
var serviceBusService = sb.createServiceBusService();
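Because the snippet above passes the namespace and key through environment variables, a forgotten variable tends to show up only later as a failed request. A minimal sketch of a pre-flight check follows; the helper name missingServiceBusSettings is hypothetical and is not part of the azure module.

```javascript
// Hypothetical helper (not part of the azure module): returns the names of
// the Service Bus environment variables that are unset or empty.
var REQUIRED_SETTINGS = [
    'AZURE_SERVICEBUS_NAMESPACE',
    'AZURE_SERVICEBUS_ACCESS_KEY'
];

function missingServiceBusSettings(env) {
    return REQUIRED_SETTINGS.filter(function (name) {
        return !env[name];
    });
}

// Report anything missing before the ServiceBusService object is created.
var missing = missingServiceBusSettings(process.env);
if (missing.length > 0) {
    console.log('Missing Service Bus settings: ' + missing.join(', '));
}
```

Running the check before calling createServiceBusService() keeps configuration mistakes separate from genuine service errors.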

Creating a Queue

Creating a service bus queue using NodeJS is accomplished by invoking the createQueueIfNotExists operation of the ServiceBusService object. The operation can take several optional parameters to customize the settings of the queue. The following code illustrates this process.

function createQueueTest(queuename)
{
    serviceBusService.createQueueIfNotExists(queuename, function(error){
        if(!error){
            console.log('queue created...');
        }
    })
}
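The optional settings mentioned above can be sketched as follows. This assumes that createQueueIfNotExists accepts an options object as its second argument and that MaxSizeInMegabytes and DefaultMessageTimeToLive are valid queue properties, as the Windows Azure how-to guides for Node.js show; the function name createQueueWithOptions and the values are illustrative only. The service object is passed in as a parameter so the sketch does not depend on how it was created.

```javascript
// Sketch: create a queue with explicit settings. `service` is any object that
// exposes createQueueIfNotExists(name, options, callback), such as the
// serviceBusService instance created earlier.
function createQueueWithOptions(service, queueName, callback) {
    // Assumed option names; the values are arbitrary examples.
    var queueOptions = {
        MaxSizeInMegabytes: '5120',
        DefaultMessageTimeToLive: 'PT1M'
    };
    service.createQueueIfNotExists(queueName, queueOptions, function (error) {
        if (error) {
            console.log('queue creation failed: ' + error);
        } else {
            console.log('queue ready...');
        }
        // Hand the outcome back to the caller either way.
        callback(error);
    });
}
```

A call such as createQueueWithOptions(serviceBusService, 'myqueue', function (error) { ... }) would then replace the plain createQueueTest call.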

Sending a Message

Placing a message in a service bus queue from NodeJS can be accomplished using the sendQueueMessage operation of the ServiceBusService object. In addition to the message payload, we can include additional properties that describe metadata associated with the message. The following code illustrates the process of enqueueing a message in an Azure Service Bus queue.

function sendMessageTest(queue)
{
    var message = {
        body: 'Test message',
        customProperties: {
            testproperty: 'TestValue'
        }
    }

    serviceBusService.sendQueueMessage(queue, message, function(error){
        if(!error){
            console.log('Message sent....');
        }
    })
}

Receiving a Message

Similar to the process of enqueuing a message, we can dequeue a message from a service bus queue by invoking the receiveQueueMessage operation of the ServiceBusService object. By default, messages are deleted from the queue as they are read; however, you can read (peek) and lock the message without deleting it from the queue by setting the optional parameter isPeekLock to true. The following NodeJS code illustrates the default receive operation.

function receiveMessageTest(queue)
{
    serviceBusService.receiveQueueMessage(queue, function(error, receivedMessage){
        if(!error){
            console.log(receivedMessage);
        }
    })
}
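The peek-lock variant described above can be sketched roughly as follows. It assumes that receiveQueueMessage accepts an options object carrying isPeekLock and that the ServiceBusService object exposes deleteMessage for completing a locked message, as the Windows Azure how-to guides for Node.js show; receiveWithPeekLock and processMessage are hypothetical names introduced only for this illustration.

```javascript
// Sketch: receive with peek-lock, then delete the message only after it has
// been processed. `service` is expected to expose receiveQueueMessage and
// deleteMessage, as the serviceBusService object does.
function receiveWithPeekLock(service, queue, processMessage, callback) {
    service.receiveQueueMessage(queue, { isPeekLock: true }, function (error, lockedMessage) {
        if (error) {
            return callback(error);
        }
        // processMessage is a hypothetical, synchronous handler supplied by the caller.
        processMessage(lockedMessage);
        // Deleting the locked message removes it from the queue.
        service.deleteMessage(lockedMessage, function (deleteError) {
            callback(deleteError, lockedMessage);
        });
    });
}
```

With this shape, a message whose processing throws before deleteMessage is called is never deleted by this code, which is the main reason to prefer peek-lock over the default read-and-delete mode when losing a message is not acceptable.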

Putting it all together