A compendium of Windows Azure, Service Bus, EAI & EDI Access Control, Connect, SQL Azure Database, and other cloud-computing articles.

• Updated 2/24/2012 with new articles marked •.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database, Federations and Reporting

- Marketplace DataMarket, Social Analytics and OData

- Windows Azure Access Control, Service Bus, and Workflow

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Larry Franks (@larry_franks) explained Windows Azure Storage and Concurrent Access in a 2/23/2012 post to the [Windows Azure’s] Silver Lining blog:

A lot of the examples of using Windows Azure Storage that I run across are pretty simple and just demonstrate the basics of reading and writing. Knowing how to read and write usually isn't sufficient for a multi-instance, distributed application. For applications that run more than one instance (i.e., pretty much everything you'd run in the cloud), handling concurrent writes to a shared resource is usually important.

Luckily, Windows Azure Tables and Blobs have support for concurrent access through ETags. ETags are part of the HTTP 1.1 specification, and work like this:

- You request a resource (table entity or blob) and when you get the resource you also get an ETag value. This value is a unique value for the current version of the resource; the version you were just handed.

- You modify the data and try to store it back to the cloud. As part of the request, you specify a conditional HTTP request header such as If-Match, along with the value of the ETag you got in step 1.

- If the ETag you specify matches the current value of the resource in the cloud, the save happens. If the value in the cloud is different, someone's changed the data between steps 1 and 2 above and an error is returned.

Note: If you don't care and want to always write the data, you can specify an '*' value for the ETag in the If-Match header.
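
The read, modify and conditional-write sequence can be sketched without any network calls. What follows is a minimal in-memory simulation, not the Windows Azure Storage API: the class `InMemoryStore`, its `get` and `put` methods, and the `v1`, `v2` ETag format are all assumptions made up for illustration. The 412 status mirrors the HTTP "Precondition Failed" response used for a failed conditional request.

```javascript
// Hypothetical in-memory stand-in for a service that honors If-Match.
// It only illustrates the ETag protocol; it is not the Azure Storage API.
class InMemoryStore {
  constructor() {
    this.items = new Map(); // key -> { value, etag }
    this.counter = 0;       // used to mint a new ETag on every write
  }

  // Step 1: a read returns the value together with its current ETag.
  get(key) {
    const item = this.items.get(key);
    return item ? { value: item.value, etag: item.etag } : undefined;
  }

  // Steps 2 and 3: a write to an existing key succeeds only if ifMatch is
  // '*' or equals the stored ETag. For simplicity, a write to a key that
  // does not exist yet always creates it.
  put(key, value, ifMatch) {
    const current = this.items.get(key);
    const matches = ifMatch === '*' || (current && current.etag === ifMatch);
    if (current && !matches) {
      return { ok: false, status: 412 }; // stale ETag: someone else wrote first
    }
    this.counter += 1;
    const etag = 'v' + this.counter;
    this.items.set(key, { value: value, etag: etag });
    return { ok: true, status: 204, etag: etag };
  }
}

// Two readers fetch the same version, then both try to write it back.
const store = new InMemoryStore();
store.put('task1', { done: false }, '*');
const readerA = store.get('task1');
const readerB = store.get('task1');
const first = store.put('task1', { done: true }, readerA.etag);   // accepted
const second = store.put('task1', { done: false }, readerB.etag); // rejected
console.log(first.ok, second.ok, second.status);
```

The second writer loses because its ETag no longer describes the stored version. Passing '*' instead would overwrite unconditionally, which is the "don't care" case from the note above.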

Unfortunately there's no centralized ETag document I can find for Windows Azure Storage. Instead it's discussed for the specific APIs that use it. See Windows Azure Storage Services REST API Reference as a starting point for further reading.

This is all low level HTTP though, and most people would rather use a wrapper around the Azure APIs to make them easier to use.

Wrappers

So how does this work with a wrapper? Well, it really depends on the wrapper you're using. For example, the Azure module for Node.js allows you to specify optional parameters that work on ETags. For instance, when storing table entities you can specify checkEtag: true. This translates into an HTTP Request Header of 'If-Match', which means "only perform the operation if the ETag I've specified matches the one on this resource in the cloud". If the parameter isn't present, the default is to use an ETag value of '*' to overwrite. Here's an example of using checkEtag:

    tableService.updateEntity('tasktable', serverEntity, {checkEtag: true}, function(error, updateResponse) {
        if(!error){
            console.log('success');
        } else {
            console.log(error);
        }
    });

Note that I don't specify an ETag value anywhere above. This is because it's part of serverEntity, which I previously read from the server. You can see the value by looking at serverEntity['etag']. If the ETag value in serverEntity doesn't match the value on the server, the operation fails and you'll receive an error similar to the following:

    { code: 'UpdateConditionNotSatisfied',
      message: 'The update condition specified in the request was not satisfied.\nRequestId:a5243266-ac68-4c64-bc55-650da40bfba0\nTime:2012-02-14T15:04:43.9702840Z' }
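
One common way to react to this error is to re-read the entity, reapply the change and try again. The sketch below shows that loop in isolation. The helper `updateWithRetry` and the `read`, `modify` and `write` callbacks are hypothetical names invented here, and the fake backing store exists only to force one conflict; none of it is part of the Azure module for Node.js. The only thing borrowed from the error above is the `UpdateConditionNotSatisfied` code.

```javascript
// Generic read-modify-write loop for optimistic concurrency (a sketch).
// read(cb) and write(entity, cb) are caller-supplied, hypothetical callbacks;
// write is expected to fail with code 'UpdateConditionNotSatisfied' when the
// entity's ETag is stale.
function updateWithRetry(read, modify, write, maxAttempts, done) {
  let attempt = 0;
  function tryOnce() {
    attempt += 1;
    read(function (readError, entity) {
      if (readError) { return done(readError); }
      modify(entity);
      write(entity, function (writeError) {
        if (!writeError) { return done(null, attempt); }
        const stale = writeError.code === 'UpdateConditionNotSatisfied';
        if (stale && attempt < maxAttempts) { return tryOnce(); }
        done(writeError);
      });
    });
  }
  tryOnce();
}

// Fake backing store used only to exercise the loop. The first write is made
// to fail, as if another instance had changed the entity in between.
let server = { count: 1, etag: 'v1' };
let interfered = false;

function read(cb) {
  cb(null, { count: server.count, etag: server.etag });
}

function write(entity, cb) {
  if (!interfered) { // simulate a single concurrent writer
    interfered = true;
    server = { count: server.count + 10, etag: 'v2' };
  }
  if (entity.etag !== server.etag) {
    return cb({ code: 'UpdateConditionNotSatisfied' });
  }
  const nextVersion = parseInt(server.etag.slice(1), 10) + 1;
  server = { count: entity.count, etag: 'v' + nextVersion };
  cb(null);
}

let result;
updateWithRetry(read, function (e) { e.count += 1; }, write, 3, function (err, attempts) {
  result = { err: err, attempts: attempts, count: server.count };
});
console.log(result);
```

The increment is applied to the value the other writer left behind rather than to the stale copy, so neither update is lost. Capping the attempts keeps a heavily contended entity from looping forever.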

Blobs are slightly different in that they can use more conditions than If-Match, and can combine conditionals. Specifying Conditional Headers for Blob Service Operations has a list of the supported conditionals; note that you can use DateTime conditionals as well as ETags. Since you can combine conditionals, the syntax is slightly different: you have to specify the conditions as part of an accessConditions object. For example:

    var options = { accessConditions: { 'If-None-Match': '*'}};

    blobService.createBlockBlobFromText('taskcontainer', 'blah.txt', 'random text', options, function(error){
        if(!error){
            console.log('success');
        } else {
            console.log(error);
        }
    });

For this I just used one condition - If-None-Match - but I could have also added another to the accessConditions collection if needed. Note that I used a wildcard instead of an actual ETag value. What this does is only create the 'blah.txt' blob if it doesn't already exist.
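
To make the wildcard behavior concrete, here is a rough sketch of how a combination of ETag conditions could be evaluated. The function `conditionsMet` is hypothetical and is not how the Blob service is implemented; it covers only If-Match and If-None-Match and leaves out the DateTime conditionals.

```javascript
// Hypothetical evaluator for ETag-based access conditions (a sketch).
// `existing` is undefined when the blob does not exist, otherwise { etag }.
function conditionsMet(accessConditions, existing) {
  const checks = {
    // Passes when the blob exists and its ETag matches (or '*' is given).
    'If-Match': function (value) {
      return existing !== undefined && (value === '*' || value === existing.etag);
    },
    // Passes when the blob is absent, or its ETag differs from a specific value.
    // With '*', it passes only when the blob is absent.
    'If-None-Match': function (value) {
      return existing === undefined || (value !== '*' && value !== existing.etag);
    }
  };
  // All supplied conditions must hold for the operation to proceed.
  return Object.keys(accessConditions).every(function (name) {
    const check = checks[name];
    if (!check) { throw new Error('Unsupported condition in this sketch: ' + name); }
    return check(accessConditions[name]);
  });
}

const createOnly = { 'If-None-Match': '*' };
console.log(conditionsMet(createOnly, undefined));       // blob absent: write allowed
console.log(conditionsMet(createOnly, { etag: 'abc' })); // blob present: write refused
```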

Summary

For cloud applications that need to handle concurrent access to file resources such as blobs and tables, Windows Azure Storage provides this functionality through ETags. If you're not coding directly against the Windows Azure Storage REST API, you should ensure that the wrapper/convenience library you are using exposes this functionality if you plan on using it.

Janakiram MSV described Windows Azure Storage for PHP Developers in a 2/23/2012 post:

Consumer web applications today need to be web-scale to handle the increasing traffic requirements. One of the key components of the contemporary web application architecture is storage. Social networking and media sites are dealing with massive user generated content that is growing disproportionately. This is where Cloud storage can help. By leveraging the Cloud storage effectively, applications can scale better.

Windows Azure Storage has multiple offerings in the form of Blobs, Tables and Queues. Blobs are used to store binary objects like images, videos and other static assets, while Tables are used for dealing with flexible, schema-less entities. Queues allow asynchronous communication across the role instances and components of Cloud applications.

In this article, we will see how PHP developers can talk to Azure Blob storage to store and retrieve objects. Before that, let’s understand the key concepts of Azure Blobs.

Azure Blobs are a part of Windows Azure Storage accounts. If you have a valid Windows Azure account, you can create a storage account to gain access to the Blobs, Tables and Queues. Storage accounts come with a unique name that acts as a DNS name and a storage access key that is required for authentication. Each storage account is associated with the REST endpoints of Blobs, Tables and Queues. For example, when I created a Storage account named janakiramm, the Blob endpoint was http://janakiramm.blob.core.windows.net. This endpoint is used to perform Blob operations like creating and deleting containers and uploading objects.

Azure Blobs are easy to deal with. The only concepts you need to understand are containers, objects and permissions. Containers act as buckets to store binary objects. Since Containers are accessible over the public internet, the names should be DNS compliant. Every Container that is created is accessible through a scheme which looks like http://<account>.blob.core.windows.net/<container>. Containers group multiple objects together. You can add metadata to the Container, which can be up to 8 KB in size.
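
As a rough illustration of what "DNS compliant" means in practice, the sketch below checks a candidate name against the documented container naming rules (3 to 63 characters; lowercase letters, digits and hyphens only; a letter or digit at each end; no consecutive hyphens) and builds the container address from the account name. The function names `isValidContainerName` and `containerUrl` and the container name `photos` are made up for this example, and special containers such as `$root` are deliberately not handled.

```javascript
// Checks a container name against the rules listed above (a sketch).
// The middle part allows a hyphen only when a letter or digit follows it,
// which rules out both consecutive hyphens and a trailing hyphen.
function isValidContainerName(name) {
  return /^[a-z0-9](?:[a-z0-9]|-(?=[a-z0-9])){1,61}[a-z0-9]$/.test(name);
}

// Builds the public address of a container for a given storage account.
function containerUrl(account, container) {
  if (!isValidContainerName(container)) {
    throw new Error('Not a DNS-compliant container name: ' + container);
  }
  return 'http://' + account + '.blob.core.windows.net/' + container;
}

console.log(containerUrl('janakiramm', 'photos'));
console.log(isValidContainerName('My_Photos'));
```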

If you do not have the Windows Azure SDK for PHP installed, you can get it from the PHP Developer Center of Windows Azure portal. Follow the instructions and set it up on your development machine.

Let’s take a look at the code to create a Container from PHP.

Start by setting a reference to the PHP SDK with the following statement.

require_once 'Microsoft/WindowsAzure/Storage/Blob.php';

Create an instance of Storage Client with the access key.

// Named $blob because the snippets that follow call methods on $blob.
$blob = new Microsoft_WindowsAzure_Storage_Blob('blob.core.windows.net', '<endpoint>', '<accesskey>');

To list all the containers and print the names, we will use the following code:

    $containers = $blob->listContainers();
    // Print each container name on its own line.
    for ($counter = 0; $counter < count($containers); $counter += 1) {
        print($containers[$counter]->name . "\n");
    }

Uploading a file to the Blob storage is simple.

    $obj = $blob->putBlob('<container>', '<key>', '<path_to_the_file>');
    print($obj->Url);

To list all the blobs in a container, just call the listBlobs() method.

    $blobs = $blob->listBlobs('<container>');
    // Print each blob name on its own line.
    for ($counter = 0; $counter < count($blobs); $counter += 1) {
        print($blobs[$counter]->name . "\n");
    }

Finally, you can delete the blob by passing the container name and the key.

    $blob->deleteBlob('<container>', '<blob_name>');

PHP Developers can consume Windows Azure storage to take advantage of the scalable, pay-as-you-go storage service.

• Cihan Biyikoglu (@cihangirb) described Teach the old dog new tricks: How to work with Federations in Legacy Tools and Utilities? in a 2/23/2012 post:

I started programming on mainframes, and all of us would have thought these mainframes would be gone by now. Today, mainframes are still around, as are many Windows XPs, Excel 2003s and many more Reporting Services 2008s. Doors aren't shut for these tools to talk to federations in SQL Azure. As long as you can get to a regular SQL Azure database with one of these legacy tools, you can connect to any of the federation members as well. Your tool does not even have to know how to issue a USE FEDERATION statement. You can connect to members without USE FEDERATION as well. Here is how:

- The first step is to discover the member database name. You can do that by connecting to the member with USE FEDERATION and running SELECT db_name(). The name of the member database will be system-<GUID>. The name is unique because of the GUID. If you need a tool to show you the way, use the fanout tool to run SELECT db_name() and get the names of all members. Info on the fanout tool can be found in this post: http://blogs.msdn.com/b/cbiyikoglu/archive/2011/12/29/introduction-to-fan-out-queries-querying-multiple-federation-members-with-federations-in-sql-azure.aspx

- Once you have the database name, specify the server and the member database name in your connection string and you are in. At this point you have a connection to the member. This connection isn't any different from a FILTERING=OFF connection that is established through USE FEDERATION.
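
The second step amounts to nothing more than an ordinary connection string that names the member database. A minimal sketch, assuming the usual ADO.NET-style keywords for SQL Azure: the helper `memberConnectionString`, the server name `myserver`, the login and the all-zero GUID are placeholders invented for this example, not values from the post.

```javascript
// Builds an ADO.NET-style connection string that points straight at a
// federation member database (a sketch; all argument values are placeholders).
function memberConnectionString(server, memberDatabase, user, password) {
  return [
    'Server=tcp:' + server + '.database.windows.net,1433',
    'Database=' + memberDatabase, // the system-<GUID> name from SELECT db_name()
    'User ID=' + user + '@' + server,
    'Password=' + password,
    'Encrypt=True'
  ].join(';') + ';';
}

const connectionString = memberConnectionString(
  'myserver',
  'system-00000000-0000-0000-0000-000000000000',
  'reportuser',
  '<password>'
);
console.log(connectionString);
```

Because the string contains nothing federation-specific, any tool that accepts a server and database name can use it unchanged.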

This legacy connection mode is very useful for tools like bcp.exe, Reporting Services (SSRS), Integration Services (SSIS), Analysis Services (SSAS) or Office tools like Excel or Access. None of them need to natively understand federations to get to data in a member.

Obviously, the future looks much better, and eventually you won’t have to jump through these steps. But 8 weeks into shipping federations, if you are thinking about all the existing systems you have surrounding your data, this backdoor connection through the database name to members will save the day!

Russell Solberg (@RusselSolberg) described how SQL Azure & Updated Pricing resulted in a decision to move his company’s CRM database to SQL Azure in a 2/17/2012 post (missed when published):

One of the biggest challenges I face daily is how to build and architect cost-effective technical solutions to improve our business processes. While we have no shortage of ideas for processes we can enhance, we are very constrained on capital to invest. With all that said, we still have to operate and improve our processes. Our goal as a business is to be the best at what we do, which means we also need to make sure we have appropriate scalable technical solutions in place to meet the demands of our users.

Over the past few years our Customer Relationship Management (CRM) database has become our Enterprise Resource Planning (ERP) system. This system tells our staff everything they need to know about our customers, potential customers, and what activities need to be completed and when. This system is an ASP.NET web application with a SQL database on the back end. In the days leading up to our busy season, we had an Internet outage (thanks to some meth tweakers for digging up some copper!) and only one of our offices had access to our system. At this time, I knew that our business required us to rethink the hosting of our server and services.

After working with various technical partners, we determined we could collocate our existing server in a data center for a mere $400 per month. While this was a workable solution, the only perk this would really provide us was redundant power and internet access which we didn’t have in our primary location. We still had huge redundancy issues with our server in general, and by huge I mean non-existent. In other words, if a hard disk failed, we were SOL until Dell was able to get us a replacement. Since our busy season of work had just begun, I decided that we’d roll the dice and address the concerns in the 1Q of 2012.

Enter Azure

When Azure was announced from Microsoft, I initially brushed off the platform. I found the Azure pricing model way too difficult to comprehend and I really wasn’t willing to spend the hours, weeks, or months trying to put it all together. Things have changed though!

On February 14th, 2012 Microsoft announced that it was reducing the pricing of SQL Azure and I decided to see if I could figure out what that meant. While digging into this, I came across a nice article written by Steven Martin that did a good job explaining the costs. After reading the article, I decided to evaluate the pricing calculator again. Winner Winner Chicken Dinner!

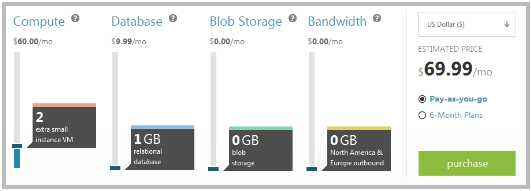

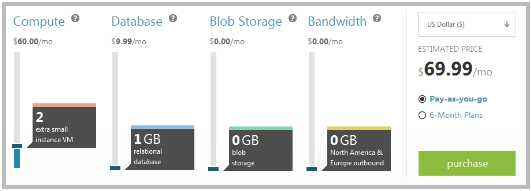

I could move our entire database to the SQL Azure platform for $9.99 per month! This would at least handle some of the disaster recovery and scalability concerns. The only other piece to the puzzle would be our ASP.NET web application. While I have scalability concerns with that, disaster recovery isn’t really a concern because the data drives the app. In other words, a Windows PC running IIS would work until the server is fixed. But what if the cost wasn’t an issue on the Azure platform? Would it be worth it? Five months ago, I couldn’t have told you the cost. With the updated pricing calculator, I can see that running 2 extra-small instances of our ASP.NET web app will cost $60.00 per month. VERY AFFORDABLE!

While I’ve not deployed our solutions to Azure yet, it is something that I’ve got on the list to complete within the next 60 days. Hosting our entire application for less than $70 per month (ok, a penny less… but still!) is amazing! I’ll write another blog entry once we’ve tested the Azure system out, but very promising!!!!!

What if your database was 30GB?

• Scott Guthrie (@scottgu) began a Web API series with ASP.NET Web API (Part 1) of 2/23/2012:

Earlier this week I blogged about the release of the ASP.NET MVC 4 Beta. ASP.NET MVC 4 is a significant update that brings with it a bunch of great new features and capabilities. One of the improvements I’m most excited about is the support it brings for creating “Web APIs”. Today’s blog post is the first of several I’m going to do that talk about this new functionality.

Web APIs

The last few years have seen the rise of Web APIs - services exposed over plain HTTP rather than through a more formal service contract (like SOAP or WS*). Exposing services this way can make it easier to integrate functionality with a broad variety of device and client platforms, as well as create richer HTML experiences using JavaScript from within the browser. Most large sites on the web now expose Web APIs (some examples: Facebook, Twitter, LinkedIn, Netflix, etc), and the usage of them is going to accelerate even more in the years ahead as connected devices proliferate and users demand richer user experiences.

Our new ASP.NET Web API support enables you to easily create powerful Web APIs that can be accessed from a broad range of clients (ranging from browsers using JavaScript, to native apps on any mobile/client platform). It provides the following support:

- Modern HTTP programming model: Directly access and manipulate HTTP requests and responses in your Web APIs using a clean, strongly typed HTTP object model. In addition to supporting this HTTP programming model on the server, we also support the same programming model on the client with the new HttpClient API that can be used to call Web APIs from any .NET application.

- Content negotiation: Web API has built-in support for content negotiation – which enables the client and server to work together to determine the right format for data being returned from an API. We provide default support for JSON, XML and Form URL-encoded formats, and you can extend this support by adding your own formatters, or even replace the default content negotiation strategy with one of your own.

- Query composition: Web API enables you to easily support querying via the OData URL conventions. When you return a type of IQueryable<T> from your Web API, the framework will automatically provide OData query support over it – making it easy to implement paging and sorting. [Emphasis added.]

- Model binding and validation: Model binders provide an easy way to extract data from various parts of an HTTP request and convert those message parts into .NET objects which can be used by Web API actions. Web API supports the same model binding and validation infrastructure that ASP.NET MVC supports today.

- Routes: Web APIs support the full set of routing capabilities supported within ASP.NET MVC and ASP.NET today, including route parameters and constraints. Web API also provides smart conventions by default, enabling you to easily create classes that implement Web APIs without having to apply attributes to your classes or methods. Web API configuration is accomplished solely through code – leaving your config files clean.

- Filters: Web API enables you to easily use and create filters (for example: [authorization]) that enable you to encapsulate and apply cross-cutting behavior.

- Improved testability: Rather than setting HTTP details in static context objects, Web API actions can now work with instances of HttpRequestMessage and HttpResponseMessage – two new HTTP objects that (among other things) make testing much easier. As an example, you can unit test your Web APIs without having to use a Mocking framework.

- IoC Support: Web API supports the service locator pattern implemented by ASP.NET MVC, which enables you to resolve dependencies for many different facilities. You can easily integrate this with an IoC container or dependency injection framework to enable clean resolution of dependencies.

- Flexible Hosting: Web APIs can be hosted within any type of ASP.NET application (including both ASP.NET MVC and ASP.NET Web Forms based applications). We’ve also designed the Web API support so that you can also optionally host/expose them within your own process if you don’t want to use ASP.NET/IIS to do so. This gives you maximum flexibility in how and where you use it.
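
To illustrate the query composition item in the list above, here is a small client-side sketch that assembles a paging and sorting request using the OData `$orderby`, `$skip` and `$top` query options. The helper `odataQueryUrl` and the `http://localhost/api/contacts` address are assumptions for illustration and do not come from the post.

```javascript
// Appends OData paging and sorting options to a Web API address (a sketch).
function odataQueryUrl(baseUrl, options) {
  const parts = [];
  if (options.orderby) {
    parts.push('$orderby=' + encodeURIComponent(options.orderby));
  }
  if (options.skip !== undefined) {
    parts.push('$skip=' + options.skip);
  }
  if (options.top !== undefined) {
    parts.push('$top=' + options.top);
  }
  return parts.length ? baseUrl + '?' + parts.join('&') : baseUrl;
}

// Third page of ten contacts, sorted by name.
const pageUrl = odataQueryUrl('http://localhost/api/contacts', {
  orderby: 'Name',
  skip: 20,
  top: 10
});
console.log(pageUrl);
```

On the server side, an action that returns IQueryable<T> would be expected to apply these options without extra code, which is the point the list item makes.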

Learning More

Visit www.asp.net/web-api to find tutorials on how to use ASP.NET Web API. You can also watch me talk about and demo ASP.NET Web API in the video of my ASP.NET MVC 4 Talk (I cover it 36 minutes into the talk).

In my next blog post I’ll walk-through how to create a new Web API, the basics of how it works, and how you can programmatically invoke it from a client.

• Paul Miller (@PaulMiller, pictured below) posted Data Market Chat: Piyush Lumba discusses Microsoft’s Windows Azure Marketplace on 2/23/2012:

As CEO Steve Ballmer has noted more than once, Microsoft’s future plans see the company going “all in” with the cloud. The company’s cloud play, Azure, offers the capabilities that we might expect from a cloud, and includes infrastructure such as virtual machines and storage as well as the capability to host and run software such as Office 365. Microsoft also recognises the importance of data, and with the Windows Azure Marketplace and the nurturing of specifications such as OData, the company is playing its part in ensuring that data can be found, trusted, and incorporated into a host of different applications.

Piyush Lumba, Director of Product Management for Azure Data Services at Microsoft, talks about what the Marketplace can do today and shares some of his perspectives on ways that the nascent data market space could evolve.

Data Market Chat: Piyush Lumba discusses Microsoft's Azure Data Marketplace [41:10]

Following up on a blog post that I wrote at the start of 2012, this is the eighth in an ongoing series of podcasts with key stakeholders in the emerging category of Data Markets.

James Kobielus (@jameskobielus) asked Big Data: Does It Make Sense To Hope For An Integrated Development Environment, Or Am I Just Whistling In The Wind? in a 2/23/2012 post to his Forrester Research blog:

Is big data just more marketecture? Or does the term refer to a set of approaches that are converging toward a common architecture that might evolve into a well-defined data analytics market segment?

That’s a huge question, and I won’t waste your time waving my hands with grandiose speculation. Let me get a bit more specific: When, if ever, will data scientists and others be able to lay their hands on truly integrated tools that speed development of the full range of big data applications on the full range of big data platforms?

Perhaps that question is also a bit overbroad. Here’s even greater specificity: When will one-stop-shop data analytic tool vendors emerge to field integrated development environments (IDEs) for all or most of the following advanced analytics capabilities at the heart of Big Data?

Of course, that’s not enough. No big data application would be complete without the panoply of data architecture, data integration, data governance, master data management, metadata management, business rules management, business process management, online analytical processing, dashboarding, advanced visualization, and other key infrastructure components. Development and deployment of all of these must also be supported within the nirvana-grade big data IDE I’m envisioning.

And I’d be remiss if I didn’t mention that the über-IDE should work with whatever big data platform — enterprise data warehouse, Hadoop, NoSQL, etc. — that you may have now or are likely to adopt. And it should support collaboration, model governance, and automation features that facilitate the work of teams of data scientists, not just individual big data developers.

I think I’ve essentially answered the question in the title of this blog. It doesn’t make a whole lot of sense to hope for this big data IDE to emerge any time soon. The only vendors whose current product portfolios span most of this functional range are SAS Institute, IBM, and Oracle. I haven’t seen any push by any of them to coalesce what they each have into unified big data tools.

It would be great if the big data industry could leverage the Eclipse framework to catalyze evolution toward such an IDE, but nobody has proposed it (that I’m aware of).

I’ll just whistle a hopeful tune till that happens.

I’ll be looking for that uber-UI in Visual Studio 11 and SQL Server/SQL Azure BI.

Yi-Lun Luo posted More about REST: File upload download service with ASP.NET Web API and Windows Phone background file transfer on 2/23/2012:

Last week we discussed RESTful services, as well as how to create a REST service using WCF Web API. I'd like to remind you what's really important is the concepts, not how to implement the services using a particular technology. Shortly after that, WCF Web API was renamed to ASP.NET Web API, and formally entered beta.

In this post, we'll discuss how to upgrade from WCF Web API to ASP.NET Web API. We'll create a file uploading/downloading service. Before that, I'd like to give you some background about the motivation of this post.

The current released version of Story Creator allows you to encode pictures to videos. But the videos are mute. Windows Phone allows users to record sound using microphone, as do many existing PCs (thus future Windows 8 devices). So in the next version, we'd like to introduce some sound. That will make the application even cooler, right?

The sound recorded by the Windows Phone microphone only contains raw PCM data. This is not very useful other than using XNA to play the sound. So I wrote a prototype (a Windows Phone program) to encode it to wav. Then I expanded the prototype with a REST service that allows the phone to upload the wav file to a server, where ultimately it will be encoded to mp4 using Media Foundation.

With the release of ASP.NET Web API beta, I think I'll change the original plan (write about OAuth this week), to continue the discussion of RESTful services. You'll also see how to use Windows Phone's background file transfer to upload files to your own REST services. However, due to time and effort limitations, this post will not cover how to use the microphone and how to create wav files on Windows Phone (although you'll find it if you download the prototype), or how to use Media Foundation to encode audio/videos (not included in the prototype yet, perhaps in the future).

You can download the prototype here. Once again, note this is just a prototype, not a sample. Use it as a reference only.

The service

Review: What is REST service

First let's recall what a RESTful service is. This concept is independent of which technology you use, be it WCF Web API, ASP.NET Web API, Java, or anything else. The most important things to remember are:

- REST is resource centric (while SOAP is operation centric).

- REST uses HTTP protocol. You define how clients interact with resources using HTTP requests (such as URI and HTTP method).

In most cases, the upgrade from WCF Web API to ASP.NET Web API is simple. The two not only share the same underlying concepts (REST), but also share a lot of code. You can think of ASP.NET Web API as a new version of WCF Web API, although it does introduce some breaking changes, and has more to do with ASP.NET MVC. You can find a lot of resources here.

Today I'll specifically discuss one topic: How to build a file upload/download service. This is somewhat different from the tutorials you'll find on the above web site, and actually I encountered some difficulties when creating the prototype.

Using ASP.NET Web API

The first thing to do when using ASP.NET Web API is to download ASP.NET MVC 4 beta, which includes the new Web API. Of course you can also get it from NuGet.

To create a project that uses the new Web API, you can simply use Visual Studio's project template.

This template is ideal for those who want to use Web API together with ASP.NET MVC. It will create views and JavaScript files in addition to files necessary for a service. If you don't want to use MVC, you can remove the unused files, or create an empty ASP.NET application and manually create the service. This is the approach we'll take today.

If you've followed last week's post to create a REST service using WCF Web API, you need to make a few modifications. First remove any old Web API related assembly references. Then add references to the new versions: System.Web.Http.dll, System.Web.Http.Common.dll, System.Web.Http.WebHost.dll, and System.Net.Http.dll.

Note many Microsoft.*** assemblies have been renamed to System.***. As for System.Net.Http.dll, make sure you reference the new version, even if the name remains the same. Once again, make sure you set Copy Local to true, if you plan to deploy the service to Windows Azure or somewhere else.

Like assemblies, many namespaces have been changed from Microsoft.*** to System.***. If you're not sure, simply remove all namespaces, and let Visual Studio automatically find which ones you need.

The next step is to modify Global.asax. Before, the code looks like this, where ServiceRoute is used to define base URI using ASP.NET URL Routing. This allows you to remove the .svc extension. Other parts of URI are defined in UriTemplate.

routes.Add(new ServiceRoute("files", new HttpServiceHostFactory(), typeof(FileUploadService)));

In ASP.NET Web API, URL Routing is used to define the complete URI, which removes the need for a separate UriTemplate.

public static void RegisterRoutes(RouteCollection routes)
{
    routes.MapHttpRoute(
        name: "DefaultApi",
        routeTemplate: "{controller}/{filename}",
        defaults: new { filename = RouteParameter.Optional }
    );
}

The above code defines how a request is routed. If a request is sent to http://[server name]/files/myfile.wav, Web API will try to find a class named FilesController ({controller} is mapped to this class, and it's case insensitive). Then it will invoke a method which contains a parameter filename, and map myfile.wav to the value of the parameter. This parameter is optional.

So you must have a class FilesController. You cannot use names like FileUploadService. This is because now Web API relies on some of ASP.NET MVC's features (although you can still host the service in your own process without ASP.NET). However, this class does not have to be put under the Controllers folder. You're free to put it anywhere, such as under a services folder to make it more like a service instead of a controller. Anyway, this class must inherit from ApiController (in WCF Web API, you didn't need to inherit from anything).

For a REST service, the HTTP method is as important as the URI. In ASP.NET Web API, you no longer use WebGet/WebInvoke. Instead, you use HttpGet/AcceptVerbs. They're almost identical to their counterparts in the old Web API. One improvement is that if your service method's name begins with Get/Put/Post/Delete, you can omit those attributes completely. Web API will automatically invoke those methods based on the HTTP method. For example, when a POST request is made, the following method will automatically be invoked:

public HttpResponseMessage Post([FromUri]string filename)

You may also notice the FromUri attribute. This one is tricky. If you omit it, you'll encounter exceptions like:

No 'MediaTypeFormatter' is available to read an object of type 'String' with the media type ''undefined''.

This has to do with ASP.NET MVC's model binding. By default, the last parameter in the method for a POST/PUT request is considered to be the request body, and will be deserialized to a model object. Since we don't have a model here, an exception is thrown. FromUri tells MVC this parameter is actually part of the URI, so it won't try to deserialize it to a model object. This attribute is optional for requests that do not have request bodies, such as GET.

This one proved to be a trap for me, and web searches did not yield any useful results. Finally I asked the Web API team directly, where I got the answer (Thanks Web API team!). I'm lucky enough to have the opportunity to talk with the team directly. As for you, if you encounter errors that you're unable to resolve yourself, post a question in the forum! As with all new products, some Web API team members directly monitor that forum.

You can find more about URL Routing on http://www.asp.net/web-api/overview/web-api-routing-and-actions/routing-in-aspnet-web-api.

Implement file uploading

Now let's implement the file uploading feature. There are a lot of ways to handle the uploaded file. In this prototype, we simply save the file to a folder of the service application. Note in many cases this will not work. For example, in Windows Azure, by default you don't have write access to folders under the web role's directory. You have to store the file in local storage. In order for multiple instances to see the same file, you also need to upload the file to blob storage. However, in the prototype, let's not consider so many issues. Actually the prototype does not use Windows Azure at all.

Below is the code to handle the uploaded file:

public HttpResponseMessage Post([FromUri]string filename)
{
    // Block until the request body is available (the prototype is synchronous).
    var task = this.Request.Content.ReadAsStreamAsync();
    task.Wait();
    try
    {
        // The using blocks close both streams even if the copy fails.
        using (Stream requestStream = task.Result)
        using (Stream fileStream = File.Create(HttpContext.Current.Server.MapPath("~/" + filename)))
        {
            requestStream.CopyTo(fileStream);
        }
    }
    catch (IOException)
    {
        throw new HttpResponseException("A generic error occurred. Please try again later.", HttpStatusCode.InternalServerError);
    }
    HttpResponseMessage response = new HttpResponseMessage();
    response.StatusCode = HttpStatusCode.Created;
    return response;
}

Unlike last week's post, this time we don't have a HttpRequestMessage parameter. The reason is you can now use this.Request to get information about the request. Others have not changed much in the new Web API. For example, to obtain the request body, you use ReadAsStreamAsync (or another ReadAs*** method).

Note that in a real-world product, it is recommended to handle the request asynchronously. This is again not considered in our prototype, so we simply let the thread wait until the request body is read.
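As a rough illustration of the asynchronous alternative, the sketch below copies a request body to a file without blocking a thread. It is not part of the prototype: it assumes a C# compiler and framework with async/await (.NET 4.5 or later), and the names UploadSketch and SaveAsync are made up for this example. The body parameter stands in for the stream you would get from ReadAsStreamAsync.

```csharp
using System.IO;
using System.Threading.Tasks;

public static class UploadSketch
{
    // Copies the request body to a file using asynchronous I/O.
    // Returns the number of bytes written to the file.
    public static async Task<long> SaveAsync(Stream body, string path)
    {
        using (var file = new FileStream(path, FileMode.Create, FileAccess.Write,
                                         FileShare.None, 4096, useAsync: true))
        {
            await body.CopyToAsync(file);
            await file.FlushAsync();
            return file.Length;
        }
    }
}
```

A controller action written this way would return a Task of HttpResponseMessage and await SaveAsync instead of calling task.Wait().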

When creating the response, you still need to pay attention to status code. Usually for a POST request which creates a resource on the server, the response's status code is 201 Created. When an error occurs, however, you need to return an error status code. This can be done by throwing a HttpResponseException, the same as in previous Web API.

File downloading and Range header

While the audio recording feature does not need file downloading, this may be required in the future. So I also implemented it in the prototype. Another reason is I want to test the Range header (not very relevant to Story Creator, but one day it may prove to be useful).

Range header is a standard HTTP header. Usually only GET requests will use it. If a GET request contains a Range header, it means the client wants to get part of the resource instead of the complete resource. This can be very useful sometimes. For example, when downloading large files, users expect to be able to pause the download and resume it sometime later. You can search for 14.35 on http://www.w3.org/Protocols/rfc2616/rfc2616-sec14.html, which explains the Range header.

To give you some practical examples on how Range header is used in real world, consider Windows Azure blob storage. Certain blob service requests support the Range header. Refer to http://msdn.microsoft.com/en-us/library/windowsazure/ee691967.aspx for more information. As another example, Windows Phone background file transfer may (or may not) use the Range header, in case the downloading is interrupted.

The format of Range header is usually:

bytes=10-20,30-40

It means the client wants the bytes at offsets 10 through 20 and 30 through 40 of the resource (offsets are zero-based and both ends are inclusive), instead of the complete resource.

Range header may also come in the following forms:

bytes=-100

bytes=300-

In the former case, the client wants the last 100 bytes of the resource (RFC 2616 calls this a suffix range). In the latter case, the client wants everything from byte offset 300 to the end of the resource.

As you can see, the client may request more than one range. However in practice, usually there's only one range. This is in particular true for file downloading scenarios. It is quite rare to request discrete pieces of data. So many services only support a single range. That's also what we'll do in the prototype today. If you want to support multiple ranges, you can use the prototype as a reference and implement additional logic.
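To make the three forms concrete, here is a small sketch that resolves the first range of a Range header value into a start offset and a byte count. It is an illustration rather than the prototype's code: RangeSketch and TryResolve are hypothetical names, and it relies only on RangeHeaderValue from System.Net.Http.Headers. It follows the RFC 2616 reading in which bytes=-100 means the last 100 bytes.

```csharp
using System;
using System.Linq;
using System.Net.Http.Headers;

public static class RangeSketch
{
    // Resolves the first range in headerValue against a resource that is
    // resourceLength bytes long. Returns false if the header cannot be
    // parsed or the range cannot be satisfied.
    public static bool TryResolve(string headerValue, long resourceLength,
                                  out long start, out long length)
    {
        start = 0;
        length = 0;
        RangeHeaderValue header;
        if (!RangeHeaderValue.TryParse(headerValue, out header) ||
            !string.Equals(header.Unit, "bytes", StringComparison.OrdinalIgnoreCase))
        {
            return false;
        }

        RangeItemHeaderValue range = header.Ranges.First();
        long end = resourceLength - 1;          // last valid offset
        if (range.From.HasValue)
        {
            // bytes=10-20 or bytes=300-; a To past the end is clamped.
            start = range.From.Value;
            if (range.To.HasValue) end = Math.Min(range.To.Value, end);
        }
        else
        {
            // bytes=-100: the last 100 bytes of the resource.
            start = Math.Max(0, resourceLength - range.To.Value);
        }

        if (start > end) return false;
        length = end - start + 1;               // both ends are inclusive
        return true;
    }
}
```

For a 1000-byte resource, bytes=10-20 resolves to offset 10 and 11 bytes, bytes=-100 to offset 900 and 100 bytes, and bytes=300- to offset 300 and 700 bytes.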

The following code implements file downloading. It only takes the first range into account, and ignores the remaining.

public HttpResponseMessage Get([FromUri]string filename)
{
    string path = HttpContext.Current.Server.MapPath("~/" + filename);
    if (!File.Exists(path))
    {
        throw new HttpResponseException("The file does not exist.", HttpStatusCode.NotFound);
    }
    try
    {
        MemoryStream responseStream = new MemoryStream();
        bool fullContent = true;
        using (Stream fileStream = File.Open(path, FileMode.Open, FileAccess.Read))
        {
            // Inclusive offsets of the first and last byte to return.
            long start = 0;
            long end = fileStream.Length - 1;
            if (this.Request.Headers.Range != null)
            {
                // Currently we only support a single range.
                RangeItemHeaderValue range = this.Request.Headers.Range.Ranges.First();
                if (range.From != null)
                {
                    // 10-20 or 10-: start at From. A To beyond the end of the file is clamped.
                    start = range.From.Value;
                    if (range.To != null)
                    {
                        end = Math.Min(range.To.Value, end);
                    }
                }
                else if (range.To != null)
                {
                    // -20: a suffix range, that is, the last 20 bytes of the file.
                    start = Math.Max(0, fileStream.Length - range.To.Value);
                }
                // If the range covers the whole file, the complete file is returned.
                fullContent = start == 0 && end == fileStream.Length - 1;
            }
            // Both offsets are inclusive, so the length is end - start + 1.
            // A start beyond the end of the file yields an empty body here.
            int length = (int)Math.Max(0, end - start + 1);
            byte[] buffer = new byte[length];
            fileStream.Seek(start, SeekOrigin.Begin);
            int read = fileStream.Read(buffer, 0, length);
            responseStream.Write(buffer, 0, read);
        }
        responseStream.Position = 0;
        HttpResponseMessage response = new HttpResponseMessage();
        response.StatusCode = fullContent ? HttpStatusCode.OK : HttpStatusCode.PartialContent;
        response.Content = new StreamContent(responseStream);
        return response;
    }
    catch (IOException)
    {
        throw new HttpResponseException("A generic error occurred. Please try again later.", HttpStatusCode.InternalServerError);
    }
}

Note when using Web API, you don't need to manually parse the Range header in the form of text. Web API automatically parses it for you, and gives you a From and a To property for each range. The type of From and To is Nullable<long>, as those properties can be null (think bytes=-100 and bytes=300-). Those special cases must be handled carefully.

Another special case to consider is where To is larger than the resource size. In this case, it is equivalent to To being null, where you need to return everything from From to the end of the resource.

If the complete resource is returned, usually the status code is set to 200 OK. If only part of the resource is returned, usually the status code is set to 206 Partial Content.
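A 206 response normally also carries a Content-Range header telling the client which bytes it received. The prototype does not set it, so the following is only a sketch of how it could be added, using HttpResponseMessage and ContentRangeHeaderValue from System.Net.Http. PartialResponseSketch and Create are hypothetical names, and the body is assumed to be non-empty.

```csharp
using System.Net;
using System.Net.Http;
using System.Net.Http.Headers;

public static class PartialResponseSketch
{
    // Builds a response for a non-empty body holding the bytes
    // [start, start + body.Length - 1] of a resource that is
    // totalLength bytes long.
    public static HttpResponseMessage Create(byte[] body, long start, long totalLength)
    {
        bool full = start == 0 && body.Length == totalLength;
        var response = new HttpResponseMessage(
            full ? HttpStatusCode.OK : HttpStatusCode.PartialContent);
        response.Content = new ByteArrayContent(body);
        if (!full)
        {
            // Describes the returned slice and the size of the whole resource.
            response.Content.Headers.ContentRange =
                new ContentRangeHeaderValue(start, start + body.Length - 1, totalLength);
        }
        return response;
    }
}
```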

Test the service

To test a REST service, we need a client, just like testing other kinds of services. But often we don't need to write clients ourselves. For simple GET requests, a browser can serve as a client. For other HTTP methods, you can use Fiddler. Fiddler also helps to test some advanced GET requests, such as Range header.

I think most of you already know how to use Fiddler. So today I won't discuss it in detail. Below are some screenshots that will give you an idea how to test the Range header:

Note here we request the range 1424040-1500000. But actually the resource size is 1424044. 1500000 is out of range, so only 4 bytes are returned.

You need to test many different use cases to make sure your logic is correct. It is also a good idea to write a custom test client with all test cases written beforehand. This is useful in case you change the service implementation. If you use Fiddler, you have to manually go through all test cases again. But with some pre-written test cases, you can do automatic tests. However, unit testing is beyond the scope of today's post.
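Although a full test suite is out of scope, a pre-written test case can be quite small. The sketch below assumes HttpClient from System.Net.Http; RangeRequestSketch, Create and ReturnsAsync are hypothetical names, and the expected status and length for each case are values you would supply for your own files.

```csharp
using System.Net;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Threading.Tasks;

public static class RangeRequestSketch
{
    // Builds a GET request with a single byte range. Pass null for
    // from (suffix range) or to (open-ended range), but not both.
    public static HttpRequestMessage Create(string uri, long? from, long? to)
    {
        var request = new HttpRequestMessage(HttpMethod.Get, uri);
        request.Headers.Range = new RangeHeaderValue(from, to);
        return request;
    }

    // Sends the request and checks the status code and body length.
    public static async Task<bool> ReturnsAsync(HttpClient client, HttpRequestMessage request,
                                                HttpStatusCode expectedStatus, int expectedLength)
    {
        using (HttpResponseMessage response = await client.SendAsync(request))
        {
            byte[] body = await response.Content.ReadAsByteArrayAsync();
            return response.StatusCode == expectedStatus && body.Length == expectedLength;
        }
    }
}
```

The Fiddler case above would become Create(uri, 1424040, 1500000) with an expected status of PartialContent and an expected length of 4.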

Windows Phone Background File Transfer

While we're at it, let's also briefly discuss Windows Phone background file transfer. This is also part of the prototype. It uploads a wav file to the service. One goal of the next Story Creator is to showcase some of Windows Phone Mango's new features. Background file transfer is one of them.

You can find a lot of resources about background file transfer. Today my focus will be pointing out some points that you may potentially miss.

When to use background file transfer

Usually when a file is small, there's no need to use background file transfer, as using a background agent does introduce some overhead. You will use it when you need to transfer big files (but they cannot be too big, as using the phone network usually costs users money). Background file transfer supports some nice features: it automatically pauses and resumes a download when the OS thinks it's needed (this requires the service to support the Range header), it allows the user to cancel a request, and so on.

In the prototype, we simply decide to use background file transfer to upload all files larger than 1MB, and use HttpWebRequest directly when the file size is smaller. Note this is a rather subjective choice. You need to do some tests and maybe gather some statistics to find the optimal border between background file transfer and HttpWebRequest in your case.

if (wavStream.Length < 1048576)
{
    this.UploadDirectly(wavStream);
}
else
{
    this.UploadInBackground(wavStream);
}

Using background file transfer

To use background file transfer, refer to the document on http://msdn.microsoft.com/en-us/library/hh202959(v=vs.92).aspx. Pay special attention to the following:

The maximum allowed file size for uploading is 5MB (5242880 bytes). The maximum allowed file download size is 20MB or 100MB (depending on whether wifi is available). Our prototype limits the audio recording to 5242000 bytes. It's less than 5242880 because we may need additional data. For example, an additional 44 bytes are required to write the wav header.

if (this._stream.Length + offset > 5242000)
{
    this._microphone.Stop();
    MessageBox.Show("The recording has been stopped as it is too long.");
    return;
}

In order to use background file transfer, the file must be put in isolated storage, under /shared/transfers folder. So our prototype saves the wav file to that folder if it needs to use background file transfer. But if it uses HttpWebRequest directly, it transfers the file directly from memory (thus a bit faster as no I/O is needed).

In addition, for a single application, at maximum you can create 5 background file transfer requests in parallel. If the limit is reached, you can either notify the user to wait for previous files to be transferred, or manually queue the files. Our prototype, of course, takes the simpler approach to notify the user to wait.
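For the other option, manually queuing the files, a minimal sketch could look like the following. It is not what the prototype does. PendingTransferQueue is a hypothetical helper, the start delegate stands in for whatever code creates the BackgroundTransferRequest, and the queue lives only in memory, so a real application would also need to persist it across tombstoning. It is not thread-safe.

```csharp
using System;
using System.Collections.Generic;

public class PendingTransferQueue
{
    private readonly int maxActive;
    private readonly Action<string> start;   // hypothetical: starts one transfer
    private readonly Queue<string> pending = new Queue<string>();
    private int active;

    public PendingTransferQueue(int maxActive, Action<string> start)
    {
        this.maxActive = maxActive;
        this.start = start;
    }

    public int PendingCount { get { return this.pending.Count; } }

    // Starts the transfer now if a slot is free; otherwise holds it back.
    public void Enqueue(string fileName)
    {
        if (this.active < this.maxActive)
        {
            this.active++;
            this.start(fileName);
        }
        else
        {
            this.pending.Enqueue(fileName);
        }
    }

    // Call when a transfer finishes, for example after removing its request.
    public void OnTransferFinished()
    {
        if (this.pending.Count > 0)
        {
            // The freed slot is taken over by the next waiting file.
            this.start(this.pending.Dequeue());
        }
        else if (this.active > 0)
        {
            this.active--;
        }
    }
}
```

With maxActive set to 5, the sixth call to Enqueue waits until OnTransferFinished frees a slot.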

The following code checks if there're already 5 background file transfer requests and notifies the user to wait if needed. If less than 5 are found, a BackgroundTransferRequest is created to upload the file. The prototype simply hardcodes the file name to be test.wav. Of course in real world applications, you need to get the file name from user input.

private void UploadInBackground(Stream wavStream)
{
    // Check if there're already 5 requests.
    if (BackgroundTransferService.Requests.Count() >= 5)
    {
        MessageBox.Show("Please wait until other records have been uploaded.");
        return;
    }
    // Store the file in isolated storage.
    var iso = IsolatedStorageFile.GetUserStoreForApplication();
    if (!iso.DirectoryExists("/shared/transfers"))
    {
        iso.CreateDirectory("/shared/transfers");
    }
    using (var fileStream = iso.CreateFile("/shared/transfers/test.wav"))
    {
        wavStream.CopyTo(fileStream);
    }
    // Transfer the file.
    try
    {
        BackgroundTransferRequest request = new BackgroundTransferRequest(new Uri("http://localhost:4349/files/test.wav"));
        request.Method = "POST";
        request.UploadLocation = new Uri("shared/transfers/test.wav", UriKind.Relative);
        request.TransferPreferences = TransferPreferences.AllowCellularAndBattery;
        request.TransferStatusChanged += new EventHandler<BackgroundTransferEventArgs>(Request_TransferStatusChanged);
        BackgroundTransferService.Add(request);
    }
    catch
    {
        MessageBox.Show("Unable to upload the file at the moment. Please try again later.");
    }
}

Here we set TransferPreferences to AllowCellularAndBattery, so the file can be transferred even if no wifi is available (thus the user has to use the phone's cellular network) and the battery is low. In the real world, please be very careful when setting the value. 5MB usually will not cost the user too much. But if you need to transfer larger files, consider disallowing the cellular network. Battery is usually less of a concern in case of file transfer, but it is still recommended to do some tests and gather practical data.

Once a file transfer is completed, the background file transfer request will not be removed automatically. You need to manually remove it. You may need to do that quite often, so a global static method (such as a method in the App class) will help.

internal static void OnBackgroundTransferStatusChanged(BackgroundTransferRequest request)
{
    if (request.TransferStatus == TransferStatus.Completed)
    {
        BackgroundTransferService.Remove(request);
        if (request.StatusCode == 201)
        {
            MessageBox.Show("Upload completed.");
        }
        else
        {
            MessageBox.Show("An error occurred during uploading. Please try again later.");
        }
    }
}

Here we check if the file transfer has completed, and remove the request if it has. We also check the response status code of the request, and display a success or failure message to the user.

You invoke this method in the event handler of TransferStatusChanged. Note you not only need to handle this event when the request is created, but also need to handle it on application launch and after tombstoning. After all, as the name suggests, the request can be executed in the background. However, if your application is not killed (not tombstoned), you don't need to handle the events again, as your application still remains in memory.

Below is the code that handles application lifecycle events and checks for background file transfers:

// Code to execute when the application is launching (eg, from Start)
// This code will not execute when the application is reactivated
private void Application_Launching(object sender, LaunchingEventArgs e)
{
    this.HandleBackgroundTransfer();
}

// Code to execute when the application is activated (brought to foreground)
// This code will not execute when the application is first launched
private void Application_Activated(object sender, ActivatedEventArgs e)
{
    if (!e.IsApplicationInstancePreserved)
    {
        this.HandleBackgroundTransfer();
    }
}

private void HandleBackgroundTransfer()
{
    foreach (var request in BackgroundTransferService.Requests)
    {
        if (request.TransferStatus == TransferStatus.Completed)
        {
            BackgroundTransferService.Remove(request);
        }
        else
        {
            request.TransferStatusChanged += new EventHandler<BackgroundTransferEventArgs>(Request_TransferStatusChanged);
        }
    }
}

Finally, in a real world application, you need to provide some UI to allow users to monitor background file transfer requests, and cancel them if necessary. A progress indicator will also be nice. However, those features are really out of scope for a prototype.

Upload files directly

Just to make the post complete, I'll also list the code that uses HttpWebRequest to upload the file directly to the service (same code you can find all over the web).

private void UploadDirectly(Stream wavStream)
{
    string serviceUri = "http://localhost:4349/files/test.wav";
    HttpWebRequest request = (HttpWebRequest)HttpWebRequest.Create(serviceUri);
    request.Method = "POST";
    request.BeginGetRequestStream(result =>
    {
        Stream requestStream = request.EndGetRequestStream(result);
        wavStream.CopyTo(requestStream);
        requestStream.Close();
        request.BeginGetResponse(result2 =>
        {
            try
            {
                HttpWebResponse response = (HttpWebResponse)request.EndGetResponse(result2);
                if (response.StatusCode == HttpStatusCode.Created)
                {
                    this.Dispatcher.BeginInvoke(() =>
                    {
                        MessageBox.Show("Upload completed.");
                    });
                }
                else
                {
                    this.Dispatcher.BeginInvoke(() =>
                    {
                        MessageBox.Show("An error occurred during uploading. Please try again later.");
                    });
                }
            }
            catch
            {
                this.Dispatcher.BeginInvoke(() =>
                {
                    MessageBox.Show("An error occurred during uploading. Please try again later.");
                });
            }
            wavStream.Close();
        }, null);
    }, null);
}

The only thing worth noting here is Dispatcher.BeginInvoke. HttpWebRequest returns the response on a background thread (and actually I already start the wav encoding and file uploading using a background worker to avoid blocking UI thread), thus you have to delegate all UI manipulations to UI thread.

Finally, if you want to test the prototype on the emulator, make sure your PC has a microphone. If you want to test it on a real phone, you cannot use localhost any more. You need to use your PC's name (assuming your phone can connect to the PC using wifi), or host the service on the internet, such as in Windows Azure.

Conclusion

I didn't intend to write such a long post. But it seems there are just so many topics in development that it is difficult for me to stop. Even a single theme (RESTful services) involves a lot of topics. Imagine how many themes you will use in a real world application. Software development is fun. Do you agree? You can combine all those technologies together to create your own product.

While this post discusses how to use ASP.NET Web API to build file upload/download services, the underlying concept (such as the Range header) can be ported to other technologies as well, even on non-Microsoft platforms. And the service can be accessed from any client that supports HTTP.

If I have time next week, let's continue the topic by introducing OAuth into the discussion, to see how to protect REST services. And in the future, if you're interested, I can talk about wav encoding on Windows Phone, and more advanced encodings on Windows Server using Media Foundation. However, time is really short for me...

Jonathan Allen described ASP.NET Web API – A New Way to Handle REST in a 2/23/2012 post to InfoQ:

Web API is the first real alternative to WCF that .NET developers have seen in the last six years. Until now emerging trends such as JSON were merely exposed as WCF extensions. With Web API, developers can leave the WCF abstraction and start working with the underlying HTTP stack.

Web API is built on top of the ASP.NET stack and shares many of the features found in ASP.NET MVC. For example, it fully supports MVC-style Routes and Filters. Filters are especially useful for authorization and exception handling.

In order to reduce the amount of mapping code needed, Web API supports the same Model binding and validation used by MVC (and the soon to be released ASP.NET Web Forms 4.5). The flip side of this is the content negotiation features. Web API automatically supports XML and JSON, but the developer can add their own formats as well. The client determines which format(s) it can accept and includes them in the request header.

Making your API queryable is surprisingly easy. Merely have the service method return IQueryable<T> (e.g. from an ORM) and Web API will automatically enable OData query conventions.

ASP.NET Web API can be self-hosted or run inside IIS.

The ASP.NET Web API is part of ASP.NET MVC 4.0 Beta and ASP.NET 4.5, which you can download here.

<Return to section navigation list>

No significant articles today.

<Return to section navigation list>

No significant articles today.

<Return to section navigation list>

• Ayman Zaza (@aymanpvt) began a TFS Services series with Team Foundation Server on Windows Azure Cloud – Part 1 on 2/24/2012:

In this article I will show you how to use the latest preview release of Team Foundation Server available on the Windows Azure cloud. This preview release has a limited number of invitation codes available for members to evaluate the Team Foundation Service in the cloud.

Every user who successfully creates an account (with a Windows Live account) will have 5 additional invitation codes that can be distributed to friends and teammates so they can try out Team Foundation Server on Windows Azure. If you need an account, send me a request and I will add you to my TFS preview account, since the number of invitations is limited.

Team Foundation Service Preview enables everyone on your team to collaborate more effectively, be more agile, and deliver better quality software.

- Make sure you are using IE 8 or later.

- Download and install visual studio 2011 developer preview from here or you can use visual studio 2010 SP1 after installing this hotfix KB2581206.

- Open this URL (http://tfspreview.com/)

- Click on “Click here to register.”

- After receiving an email with invitation code continue the process of account creation.

- Open IE and refer to this page https://TFSPREVIENAME.tfspreview.com

- Register with your Windows Live ID

- Now you are in TFS cloud

In the next post I will show you how to use it from both the web and Visual Studio 2011.

• MarketWatch (@MarketWatch) reported Film Industry Selects Windows Azure for High-Capacity Business Needs in a 2/24/2012 press release:

The Screen Actors Guild (SAG) has selected Windows Azure to provide the cloud technology necessary to handle high volumes of traffic to its website during its biggest annual event, the SAG Awards. SAG worked with Microsoft Corp. to port its entire awards site from Linux servers to Windows Azure, to gain greater storage capacity and the ability to handle increased traffic on the site.

"Windows Azure is committed to making it easier for customers to use cloud computing to address their specific needs," said Doug Hauger, general manager, Windows Azure Business Development at Microsoft. "For the entertainment industry, that means deploying creative technology solutions that are flexible, easy to implement and cost-effective for whatever opportunities our customers can dream up."

And the Winner Is ... Windows Azure

Previously hosted on internal Linux boxes, the SAG website experienced negative impact due to high traffic each year leading up to the SAG Awards. Peak usage is on the night of the awards ceremony, when the site hosts a tremendous increase in visitors who view uploaded video clips and news articles from the event. To meet demand during that time, SAG had to continually upgrade its hardware. That is, until the SAG Awards team moved the site to Windows Azure.

"We moved to Windows Azure after looking at the services it offered," said Erin Griffin, chief information officer at SAG. "Understanding the best usage scenario for us took time and effort, but with help from Microsoft, we successfully moved our site to Windows Azure, and the biggest traffic day for us went off with flying colors."

Windows Azure helped the SAG website handle the anticipated traffic spike during its 2012 SAG Awards show, which generated a significant increase in visits and page views over the previous year. This year's show generated 325,403 website visits and 789,310 page views. In comparison, the 2011 awards show saw 222,816 total visits and 434,743 page views.

Windows Azure provides a business-class platform for the SAG website, providing low latency, increased storage, and the ability to scale up or down as needed.

Founded in 1975, Microsoft (Nasdaq "MSFT") is the worldwide leader in software, services and solutions that help people and businesses realize their full potential.

SOURCE Microsoft Corp.

• Brian Harry announced Coming Soon: TFS Express on 2/23/2012:

Soon, we will be announcing the availability of our VS/TFS 11 Beta. This is a major new release for us that includes big enhancements for developers, project managers, testers and analysts. Over the next month or two, I’ll write a series of posts to demonstrate some of those improvements. Today I want to let you know about a new way to get TFS.

In TFS 11, we are introducing a new download of TFS, called Team Foundation Server Express, that includes core developer features:

- Source Code Control

- Work Item Tracking

- Build Automation

- Agile Taskboard

- and more…

The best news is that it’s FREE for individuals and teams of up to 5 users. TFS Express and the Team Foundation Service provide two easy ways for you to get started with Team Foundation Server quickly. Team Foundation Service is great for teams that want to share their TFS data in the cloud wherever you are and with whomever you want. TFS Express is a great way to get started with TFS if you really want to install and host your own server.

The Express edition is essentially the same TFS you get when you run the TFS Basic install wizard, except that the install is trimmed down and streamlined to make it incredibly fast and easy. In addition to the normal TFS Basic install limitations (no SharePoint integration, no reporting), TFS Express:

- Is limited to no more than 5 named users.

- Only supports SQL Server Express Edition (which we’ll install for you, if you don’t have it)

- Can only be installed on a single server (no multi-server configurations)

- Includes the Agile Taskboard but not sprint/backlog planning or feedback management.

- Excludes the TFS Proxy and the new PreEmptive Analytics add-on.

Of course, your team might grow or you might want more capability. You can add more users by simply buying Client Access Licenses (CALs) for the additional users – users #6 and beyond. And if you want more of the standard TFS features, you can upgrade to a full TFS license without losing any data.

In addition to the new TFS Express download, we have also enabled TFS integration in our Visual Studio Express products – giving developers a great end-to-end development experience. The Visual Studio Express client integration will work with any Team Foundation Server – including both TFS Express and the Team Foundation Service.

When we release our TFS 11 Beta shortly, I’ll post a download link to the TFS Express installer. Installing it is a snap. No more downloading and mounting an ISO image. Just click the link, run the web installer, and you’re up and running in no time.

Please check it out and pass it on to a friend. I’m eager to hear what you think!

Jason Zander (@jlzander) posted a Sneak Preview of Visual Studio 11 and .NET Framework 4.5 Beta in a 2/23/2012 post:

Today we’re giving a “sneak peek” into the upcoming beta release of Visual Studio 11 and .NET Framework 4.5. Soma has announced on his blog that the beta will be released on February 29th! We look forward to seeing what you will build with the release, and will be including a “Go Live” license with the beta, so that it can be used in production environments.

Visual Studio 11 Beta features a clean, professional developer experience. These improvements were brought about through a thoughtful reduction of the user interface, and a simplification of common developer workflows. They were also based upon insights gathered by our user experience research team. I think you will find it both easier to discover and navigate code, as well as search assets in this streamlined environment. For more information, please visit the Visual Studio team blog. [See post below.]

In preparation for the beta, today we’re also announcing the Visual Studio 11 Beta product lineup, which will be available for download next week. You can learn about these products on the Visual Studio product website. One new addition you will notice is Team Foundation Server Express Beta, which is free collaboration software that we’re making available for small teams. Please see Brian Harry’s blog for the complete announcement and more details on this new product.

In the Visual Studio 11 release, we’re providing a continuous flow of value, allowing teams to use agile processes, and gather feedback early and often. Storyboarding and Feedback Manager enable development teams to react rapidly to change, allowing stakeholder requirements to be captured and traced throughout the entire delivery cycle. Visual Studio 11 also introduces support for teams working together in the DevOps cycle. IntelliTrace in production allows teams to debug issues that occur on production servers, which is a key capability for software teams delivering services.

I encourage you to view the presspass story with additional footage from today’s news events, including a highlight video and product screenshots. Then stay tuned for an in-depth overview of the release with the general availability announcement on February 29th.

Monty Hammontree posted Introducing the New Developer Experience to the Visual Studio blog on 2/23/2012:

In this blog post (and the one that will follow) we’d like to introduce a few of the broad reaching experience improvements that we’ve delivered in Visual Studio 11. We’ve worked hard on them over the last two years and believe that they will significantly improve the experience that you will have with Visual Studio.

Introduction

We know that developers often spend more of their time than they would like orienting themselves to the project and tools they are working with and, in some cases, only about 15% of their time actually writing new code. This is based on observations we’ve made in our research labs and observations that other independent researchers have made (for example, take a look at this paper). Obviously you need to spend some time orienting yourself to your code and tools, but wouldn’t it be good to spend more time adding new value to your applications? In Visual Studio 11 we’ve focused on giving you back more time by streamlining your development experience. Through thoughtful reduction in user interface complexity, and by the introduction of new experience patterns that simplify common workflows, we’ve targeted what we observed to be three major hurdles to developer efficiency.

The problem areas we targeted are:

- Coping with tool overload. Visual Studio provides a large amount of information and capabilities that relate to your code. The sheer breadth and depth of capabilities that Visual Studio provides, at times, makes it challenging to find and make effective use of desired commands, options, or pieces of information.

- Comprehending and navigating complex codebases and related artifacts (bugs, work items, tests etc.). Most code has a large number of dependencies and relationships with other code and content such as bugs, specs, etc. Chaining these dependencies together to make sense of code is more difficult and time-consuming than it needs to be due to the need to establish and re-establish the same context across multiple tools or tool windows.

- Dealing with large numbers of documents. It is very common for developers to end up opening a large number of documents. Whether they are documents containing code, or documents containing information such as bugs or specs, these documents need to be managed by the developer. In some cases, the information contained in these documents is only needed for a short period of time. In other cases documents that are opened during common workflows such as exploring project files, looking through search results, or stepping through code while debugging are not relevant at all to the task the developer is working on. The obligation to explicitly close or manage these irrelevant or fleetingly relevant documents is an ongoing issue that detracts from your productivity.

Developer Impact

In the remainder of this post we’ll describe in a lot more detail how we have given you more time to focus on adding value to your applications by reducing UI complexity in VS 11. In tomorrow’s post we’ll go into details regarding the new experience patterns we’ve introduced to simplify many of your common development workflows. The overall effect of the changes we’ve introduced is that Visual Studio 11 demands less of your focus, and instead allows you to focus far more on your code and the value that you can add to your applications.

Improved Efficiency through Thoughtful Reduction

Developers have repeatedly and passionately shared with us the degree to which tool overload is negatively impacting their ability to focus on their work. The effort to address these challenges began during development of VS 2010 and continues in VS 11 today. In VS 2010 we focused on putting in place the engineering infrastructure to enable us to have fine grained control over the look and feel of Visual Studio.

With this as the backdrop we set out in Visual Studio 11 to attack the tool overload challenge through thoughtful yet aggressive reduction in the following areas:

- Command Placements

- Colorized Chrome

- Line Work

- Iconography

Command Placements

Toolbars are a prominent area where unnecessary command placements compete for valuable screen real-estate and user attention. In VS 11 we thoughtfully but aggressively reduced toolbar command placements throughout the product by an average of 35%, guided by user instrumentation data. When you open Visual Studio 11 for the first time you’ll notice that there are far fewer toolbar commands displayed by default. These commands haven’t been removed completely; we’ve just removed the toolbar placements for these commands. For example, the cut, copy and paste toolbar commands have been removed since we know from our instrumentation data that the majority of developers use the keyboard shortcuts for these commands. So, rather than have them take up space in the UI, we removed them from the toolbar.

The default toolbars in VS 2010

The default toolbars in VS 11

Feedback relating to the command placement reductions has been overwhelmingly positive. Developers have shared stories with us of discovering what they perceive to be new valuable features that are in fact pre-existing features that have only now gained their attention following the reductions. For example, during usability studies with the new toolbar settings, many users have noticed the Navigate Forward and Backward buttons and have assumed that this was new functionality added to the product when in fact this capability has been in the product for a number of releases.

Colorized Chrome