Windows Azure and Cloud Computing Posts for 3/25/2013+

A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles.

• Updated 3/26/2013 with new articles marked • .

Note: This post is updated weekly or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Marketplace DataMarket, Cloud Numerics, Big Data and OData

- Windows Azure Service Bus, Caching, Access Control, Active Directory, Identity and Workflow

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, Traffic Manager, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security, Compliance and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue, HDInsight and Media Services

• Sebastian Burckhardt (pictured below), Alexey Gotsman and Hongseok Yang authored an Understanding Eventual Consistency technical report, which Microsoft Research published on 3/25/2013. From the Abstract:

Modern geo-replicated databases underlying large-scale Internet services guarantee immediate availability and tolerate network partitions at the expense of providing only weak forms of consistency, commonly dubbed eventual consistency. At the moment there is a lot of confusion about the semantics of eventual consistency, as different systems implement it with different sets of features and in subtly different forms, stated either informally or using disparate and low-level formalisms.

We address this problem by proposing a framework for formal and declarative specification of the semantics of eventually consistent systems using axioms. Our framework is fully customisable: by varying the set of axioms, we can rigorously define the semantics of systems that combine any subset of typical guarantees or features, including conflict resolution policies, session guarantees, causality guarantees, multiple consistency levels and transactions. We prove that our specifications are validated by an example abstract implementation, based on algorithms used in real-world systems. These results demonstrate that our framework provides system architects with a tool for exploring the design space, and lays the foundation for formal reasoning about eventually consistent systems.

The topic of eventual consistency is of interest to users of georeplicated cloud data storage with geofailover for enhanced availability and durability, such as that offered since September 2011 at no cost for Windows Azure blobs and tables. It also applies to most NoSQL databases, such as Amazon’s DynamoDB, for transacted operations. From the technical report’s Introduction:

Modern large-scale Internet services rely on distributed database systems that maintain multiple replicas of data. Often such systems are geo-replicated, meaning that the replicas are located in geographically distinct locations. Geo-replication requires the systems to tolerate network partitions, yet end-user applications also require them to provide immediate availability. Ideally, we would like to achieve these two requirements while also providing strong consistency, which roughly guarantees that the outcome of a set of concurrent requests to the database is the same as what one can obtain by executing these requests atomically in some sequence. Unfortunately, the famous CAP theorem [18] shows that this is impossible. For this reason, modern geo-replicated systems provide weaker forms of consistency, commonly dubbed eventual consistency [32]. Here the word ‘eventual’ refers to the guarantee that if update requests stop arriving to the database, then it will eventually reach a consistent state. (1)

Geo-replication is a hot research area, and new architectures for eventually consistent systems appear every year [5, 14, 15, 17, 21, 24, 29, 30]. Unfortunately, whereas consistency models of classical relational databases have been well-studied [9, 26], those of geo-replicated systems are poorly understood. The very term eventual consistency is a catch-all buzzword, and different systems claiming to be eventually consistent actually provide subtly different guarantees and features. Commonly used ways of their specification are inadequate for several reasons:

- Disparate and low-level formalisms. Specifications of consistency models proposed for various systems are stated informally or using disparate formalisms, often tied to system implementations. This makes it hard to compare guarantees provided by different systems or apply ideas from one of them in another.

- Weak guarantees. More declarative attempts to formalise eventual consistency [29] have identified it with property (1), which actually corresponds to a form of quiescent consistency from distributed computing [19]. However, such reading of eventual consistency does not allow making conclusions about the behaviour of the database in realistic scenarios, when updates never stop arriving. [Emphasis added.]

- Conflict resolution policies. To satisfy the requirement of availability, geo-replicated systems have to allow making updates to the same object on different, potentially disconnected replicas. The systems then have to resolve conflicts, arising when replicas exchange the updates, according to certain policies, often encapsulated in replicated data types [27, 29]. The use of such policies complicates the semantics provided by eventually consistent systems and makes its formal specification challenging.

- Combinations of different consistency levels. Even in applications where basic eventual consistency is sufficient most of the time, stronger consistency may be needed occasionally. This has given rise to a wide variety of features for strengthening consistency on demand. Thus, some systems now provide a mixture of eventual and strong consistency [1, 13, 21], and researchers have argued for doing the same with different forms of eventual consistency [5]. Other systems have allowed strengthening consistency by implementing transactions, usually not provided by geo-replicated systems [14, 24, 30]. Understanding the semantics of such features and their combinations is very difficult.

The absence of a uniform and widely applicable specification formalism complicates the development and use of eventually consistent systems. Currently, there is no easy way for developers of such systems to answer basic questions when designing their programming interfaces: Are the requirements of my application okay with a given form of eventual consistency? Can I use a replicated data type implemented in a system X in a different system Y? What is the semantics of combining two given forms of eventual consistency?

We address this problem by proposing a formal and declarative framework for specifying the semantics of eventually consistent systems. …
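Property (1) and the role of a conflict resolution policy can be illustrated with a small sketch. The code below is not taken from the technical report; it assumes a hypothetical last-writer-wins register (the `LwwRegister` class, its stamps and the replica names are all invented for illustration) replicated in two locations that accept writes independently and later exchange state.

```python
from dataclasses import dataclass


@dataclass
class LwwRegister:
    """One replica of a hypothetical last-writer-wins register."""
    value: object = None
    # (logical time, replica id); the replica id breaks ties deterministically.
    stamp: tuple = (0, "")

    def write(self, value, time, replica_id):
        # A local update is accepted at once, without contacting other replicas.
        self.merge(value, (time, replica_id))

    def merge(self, value, stamp):
        # Conflict resolution policy: the update with the larger stamp wins.
        if stamp > self.stamp:
            self.value, self.stamp = value, stamp

    def sync_from(self, other):
        # Replicas exchange state; merging applies the same policy.
        self.merge(other.value, other.stamp)


east, west = LwwRegister(), LwwRegister()
east.write("blue", time=1, replica_id="east")
west.write("green", time=2, replica_id="west")

# While the replicas are disconnected, readers can see different values.
diverged = east.value != west.value

# Once updates stop arriving and state is exchanged, the replicas agree.
east.sync_from(west)
west.sync_from(east)
converged = east.value == west.value == "green"
```

The sketch only demonstrates convergence after updates stop, which is the quiescent reading the authors describe as too weak; it says nothing about what readers may observe while writes keep arriving.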

See also:

- Sebastian Burckhardt, Manuel Fahndrich, Daan Leijen, and Benjamin P. Wood, Cloud Types for Eventual Consistency, in Proceedings of the 26th European Conference on Object-Oriented Programming (ECOOP), Springer, 15 June 2012

- Sebastian Burckhardt, Manuel Fahndrich, Daan Leijen, and Mooly Sagiv, Eventually Consistent Transactions, in Proceedings of the 22nd European Symposium on Programming (ESOP), Springer, 24 March 2012

- Sebastian Burckhardt, Daan Leijen, Caitlin Sadowski, Jaeheon Yi, and Thomas Ball, Two for the Price of One: A Model for Parallel and Incremental Computation, in Proceedings of the ACM International Conference on Object Oriented Programming Systems Languages and Applications (OOPSLA'11), ACM SIGPLAN, Portland, Oregon, 22 October 2011

- Sebastian Burckhardt, Manuel Fahndrich, Daan Leijen, and Mooly Sagiv, Eventually Consistent Transactions (full version), no. MSR-TR-2011-117, October 2011

- Daan Leijen, Sebastian Burckhardt, and Manuel Fahndrich, Prettier Concurrency: Purely Functional Concurrent Revisions, in Haskell Symposium 2011 (Haskell'11), ACM SIGPLAN, Tokyo, Japan, 7 July 2011

- Sebastian Burckhardt and Daan Leijen, Semantics of Concurrent Revisions, in European Symposium on Programming (ESOP'11), Springer Verlag, Saarbrucken, Germany, March 2011

- Sebastian Burckhardt, Daan Leijen, and Manuel Fahndrich, Roll Forward, Not Back: A Case for Deterministic Conflict Resolution, in The 2nd Workshop on Determinism and Correctness in Parallel Programming (WODET'11), Newport Beach, California, March 2011

- Sebastian Burckhardt, Alexandro Baldassin, and Daan Leijen, Concurrent Programming with Revisions and Isolation Types, in Proceedings of the ACM International Conference on Object Oriented Programming Systems Languages and Applications (OOPSLA'10), ACM SIGPLAN, Reno, NV, October 2010

- Sebastian Burckhardt and Daan Leijen, Semantics of Concurrent Revisions, no. MSR-TR-2010-94, 15 July 2010

Denny Lee (@dennylee) described Updated HDInsight on Azure ASV paths for multiple storage accounts in a 3/25/2013 post:

If you’ve joined the HDInsight Preview – you will notice many new changes including the tight integration with Windows Azure and that HDInsight defaults to ASV. As noted in Why use Blob Storage with HDInsight on Azure, there are some interesting technical (performance) and business reasons for utilizing Azure storage accounts. But if you had been playing with the HadoopOnAzure.com beta and switched over to the Windows Azure HDInsight Service Preview – you may have noticed a quick change in the way asv paths work. Here’s a quick cheat sheet for you.

In general, to access ASV sources:

#ls asv://$container$@$storage_account$.blob.core.windows.net/$path$

The exception is the default container which was created when you originally setup your cluster. For example, my storage account is “doctorwho” and the container (which is the name of my HDInsight cluster) is “caprica” (Yes, I’m mixing Battlestar Galactica and Doctor Who – deal with it!):

#ls asv://caprica@doctorwho.blob.core.windows.net/

Yet because this is also the default container / storage account, you can also just go:

#ls /

If you want to access another container in the same storage account, you’ll have to specify the entire statement. For example, if I wanted to access the rainier container, muir folder in my doctorwho account:

#ls asv://rainier@doctorwho.blob.core.windows.net/muir

As well, if you want to access a completely separate storage account, provided you have specified the account information within the core-site.xml (more info below), then you can follow the same path. For example, if I wanted to access the ultimate container, frisbee folder in my riversong account:

#ls asv://ultimate@riversong.blob.core.windows.net/frisbee

Note, for the above to work, you will need to modify your core-site.xml and add a fs.azure.account.key.$full account path$ – the template would look like:

<property>
  <name>fs.azure.account.key.$account$.blob.core.windows.net</name>
  <value>$account-key$</value>
</property>

For my riversong account, it would look like:

<property>
  <name>fs.azure.account.key.riversong.blob.core.windows.net</name>
  <value>$riversong-account-key$</value>
</property>

Enjoy!
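The cheat sheet above follows one pattern, so it can be captured in a couple of helper functions. This is only a sketch: the function names `asv_path` and `account_key_property` are made up for illustration and are not part of any HDInsight tooling; the URI and property-name templates are the ones shown in the post.

```python
from xml.sax.saxutils import escape

BLOB_SUFFIX = "blob.core.windows.net"


def asv_path(container, account, path=""):
    """Build a fully qualified asv:// URI from container, account and path."""
    return f"asv://{container}@{account}.{BLOB_SUFFIX}/{path.lstrip('/')}"


def account_key_property(account, key):
    """Render the core-site.xml <property> element for one storage account."""
    name = f"fs.azure.account.key.{account}.{BLOB_SUFFIX}"
    return (
        "<property>\n"
        f"  <name>{escape(name)}</name>\n"
        f"  <value>{escape(key)}</value>\n"
        "</property>"
    )


# The examples from the post: a second container, and a second account.
print(asv_path("rainier", "doctorwho", "muir"))
print(asv_path("ultimate", "riversong", "frisbee"))
# The key value is left as the post's placeholder, not a real key.
print(account_key_property("riversong", "$riversong-account-key$"))
```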

The Windows Azure (@WindowsAzure) team described How to Administer HDInsight Service in a 3/22/2013 post:

In this topic, you will learn how to create an HDInsight Service cluster, and how to open the administrative tools.

Table of Contents

- How to: Create a HDInsight cluster

- How to: Open the interactive JavaScript console

- How to: Open the Hadoop command line

How to: Create a HDInsight cluster

A Windows Azure storage account is required before you can create a HDInsight cluster. HDInsight uses Windows Azure Blob Storage to store data. For information on creating a Windows Azure storage account, see How to Create a Storage Account.

Note

Currently HDInsight is only available in the US East data center, so you must specify the US East location when creating your storage account.

1. Sign in to the Management Portal.

2. Click + NEW on the bottom of the page, click DATA SERVICES, click HDINSIGHT, and then click QUICK CREATE.

3. When choosing CUSTOM CREATE, you need to specify the following properties:

4. Provide Cluster Name, Cluster Size, Cluster Admin Password, and a Windows Azure Storage Account, and then click Create HDInsight Cluster. Once the cluster is created and running, the status shows Running.

The default name for the administrator's account is admin. To give the account a different name, you can use the custom create option instead of quick create.

When using the quick create option to create a cluster, a new container with the name of the HDInsight cluster is created automatically in the storage account specified. If you want to customize the name, you can use the custom create option.

Important: Once a Windows Azure storage account is chosen for your HDInsight cluster, you can neither delete the account, nor change the account to a different account.

5. Click the newly created cluster. It shows the summary page:

6. Click either the Go to cluster link, or Start Dashboard on the bottom of the page to open HDInsight Dashboard.

How to: Open the interactive JavaScript console

Windows Azure HDInsight Service comes with a web based interactive JavaScript console that can be used as an administration/deployment tool. The console evaluates simple JavaScript expressions. It also lets you run HDFS commands.

- Sign in to the Management Portal.

- Click HDINSIGHT. You will see a list of deployed Hadoop clusters.

- Click the Hadoop cluster that you want to upload data to.

- From HDInsight Dashboard, click the cluster URL.

- Enter User name and Password for the cluster, and then click Log On.

- Click Interactive Console.

From the Interactive JavaScript console, type the following command to get a list of supported commands:

help()

To run HDFS commands, use # in front of the commands. For example:

#lsr /

How to: Open the Hadoop command line

To use Hadoop command line, you must first connect to the cluster using remote desktop.

- Sign in to the Management Portal.

- Click HDINSIGHT. You will see a list of deployed Hadoop clusters.

- Click the Hadoop cluster that you want to upload data to.

- Click Connect on the bottom of the page.

- Click Open.

- Enter your credentials, and then click OK. Use the username and password you configured when you created the cluster.

- Click Yes.

- From the desktop, double-click Hadoop Command Line.

For more information on Hadoop commands, see Hadoop commands reference.


The Microsoft “Data Explorer” Preview for Excel team (@DataExplorer) posted Mashups and visualizations over Big Data and Azure HDInsight using Data Explorer on 3/18/2013:

With the recent announcement about Azure HDInsight, now is a good time to look at how one might use Data Explorer to connect to data sitting in Windows Azure HDInsight.

As you might be aware, HDInsight is Microsoft’s own offering of Hadoop as a service. In this example, we are going to look at how data can be consumed from HDInsight. We will be using a dataset in HDInsight that contains historical stock prices for all stocks traded on the NYSE between 1970 and 2010. While this dataset is not too big compared to “Big Data” standards, it does represent many of the challenges posed by big data as far as end user consumption goes. In case you are interested in trying this yourself, the source of this data is Infochimps. You will need to get the data into an Azure Blob Storage account that is associated with your HDInsight cluster.

The goal of this post is to show you how to use this data to build a report of those stocks that are traded on the NYSE and are part of the S&P 500 index. This dataset by itself isn’t enough, because all it provides is price and volume information by stock symbol and date. So we will need to ultimately find company name/sector information from another dataset. More on that later.

Here’s a view of the report we are attempting to build:

The steps below show you how to build this interactive report.

Step 1: Connect to HDInsight and shape the data

The first step is to connect to HDInsight and get the data in the right shape. HDInsight is a supported data source in the Data Explorer ribbon.

If you are following along and don’t see HDInsight in the Other Sources dropdown, you need to get the latest update for Data Explorer.

Once the account details are provided, we will eventually be connected up to the HDFS filesystem view:

At this point, you will likely notice the first challenge. The data that we want to consume is scattered across dozens of files – and we absolutely need the data from all of these files. However, this is an easy problem for Data Explorer.

As a first step, we need to use Data Explorer to subset the files based on a condition, so that all unnecessary files are filtered out. We can use a condition that filters down to the list of files that contain “daily_price” in the filename.

After this step, we have just the files needed. Now for the magic.

Data Explorer has a really cool feature that lets you create a logical table out of multiple text files. You can “combine” multiple files in a filesystem view by simply clicking on the Combine icon in the Content column header.

Clicking on that icon produces this:

At this point, one of the top rows can be promoted as header using the feature Data Explorer provides for creating a header row. The rest of the header rows can be filtered out also using the filter capabilities.

A few more operations to hide unwanted columns will produce our final view:

Clicking on Done will start to run the query and stream the data down into Excel.
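For readers who want to see the logic of Step 1 outside the Data Explorer UI, here is a rough Python sketch of the same shaping: filter the file list by name, combine the matching files, promote one header row, drop the repeated headers and hide unwanted columns. It is not how Data Explorer is implemented; the file names, column names and values below are invented stand-ins for the HDFS listing.

```python
import csv
import io

# Hypothetical stand-in for the HDFS file listing: file name -> CSV text.
files = {
    "NYSE_daily_prices_A.csv": (
        "exchange,stock_symbol,date,stock_price_close\n"
        "NYSE,AAA,2010-02-08,10.00\n"
        "NYSE,AAA,2010-02-05,10.50\n"
    ),
    "NYSE_daily_prices_B.csv": (
        "exchange,stock_symbol,date,stock_price_close\n"
        "NYSE,BBB,2010-02-08,20.00\n"
    ),
    "NYSE_dividends_A.csv": "exchange,stock_symbol,date,dividends\n",
}


def combine(files, name_filter):
    """Concatenate the CSV files whose name contains name_filter."""
    header, rows = None, []
    for name in sorted(files):
        if name_filter not in name:
            continue  # filter out files that are not needed
        for row in csv.reader(io.StringIO(files[name])):
            if header is None:
                header = row  # promote the first row seen to header
            elif row != header:
                rows.append(row)  # skip header rows repeated in later files
    return header, rows


def select(header, rows, keep):
    """Hide unwanted columns by keeping only the named ones."""
    idx = [header.index(col) for col in keep]
    return [[row[i] for i in idx] for row in rows]


header, rows = combine(files, "daily_price")
view = select(header, rows, ["stock_symbol", "date", "stock_price_close"])
```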

Step 2: Find the S&P 500 list of companies along with company information

At this point, the data should be streaming down into Excel. There is quite a bit of data that will find its way down into Excel. However, since we are not done with data shaping, we need to toggle a setting that will disable evaluation/download of the results for this particular query.

Clicking on Enable Download stops the download:

In order to fully build out the report, the next thing we need is the list of companies that are part of the S&P 500 index. We can try to find this in Data Explorer’s Online Search.

Searching for S&P 500 yields a few results:

The first one looks pretty close to the data we need. So we can import that into Excel by clicking Use:

Step 3: Merging the two tables, and subsetting to the S&P 500 price data

The last step in our scenario is to combine the two tables using Data Explorer. We can do this by clicking on the Merge button in the Data Explorer ribbon:

The Merge dialog lets us pick the tables we’d like to merge, along with the common columns between the two tables so that Data Explorer can do a join. Note that a left outer join is used when merging is done this way.

Clicking on Apply completes the merge, and we are presented with a resulting table:

Columns from the second table can be added by expanding and looking up columns from the NewColumn column:

The result of selecting the columns we’d like to add to the table produces this:

Note that there are many rows that have null values for the new columns. This is expected as we have more companies in the left table (the one we pulled from HDInsight).

A simple filtering out of nulls fixes the problem, and leaves us with what we need:

We are now left with the historical end of day figures for all companies in the S&P 500. Clicking on Done will now bring the data into Excel.
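The merge in Step 3 is a left outer join followed by a null filter, which can be sketched in a few lines of plain Python. The rows below are invented sample data, the function name `left_outer_join` is illustrative, and the sketch assumes each symbol appears at most once in the S&P 500 table.

```python
# Invented sample rows standing in for the two tables.
prices = [
    {"stock_symbol": "AAA", "date": "2010-02-08", "stock_price_close": "10.00"},
    {"stock_symbol": "BBB", "date": "2010-02-08", "stock_price_close": "20.00"},
    {"stock_symbol": "CCC", "date": "2010-02-08", "stock_price_close": "30.00"},
]
sp500 = [
    {"Symbol": "AAA", "Company": "Example Co A"},
    {"Symbol": "BBB", "Company": "Example Co B"},
]


def left_outer_join(left, right, left_key, right_key, new_column):
    """Keep every left row; attach the matching right row or None."""
    lookup = {row[right_key]: row for row in right}  # assumes unique keys
    return [{**row, new_column: lookup.get(row[left_key])} for row in left]


merged = left_outer_join(prices, sp500, "stock_symbol", "Symbol", "NewColumn")

# Expand one column from the joined table; unmatched rows get None.
expanded = [
    {**row, "NewColumn.Company": (row["NewColumn"] or {}).get("Company")}
    for row in merged
]

# Filtering out the nulls leaves only companies present in the index table.
in_index = [row for row in expanded if row["NewColumn.Company"] is not None]
```

Because the join keeps every row from the left table, the CCC row survives the merge with a null company and disappears only when the nulls are filtered out.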

Step 4: Fix up a few types in PowerPivot and visualize in Power View

Once the data is downloaded, adding it to Excel’s data model (xVelocity) is easy. Clicking on the Load to data model link puts the data into the model in one click:

Once the data is in the data model, it can easily be modeled using Excel’s PowerPivot functionality.

In order for the visualization to work correctly, we need to adjust the types of the following columns in PowerPivot:

- date – this column needs to be converted to Date type

- stock_price_close – this column needs to be converted to Decimal type

Once this is done, adding the visualization via Power View is easy.

- We can insert a Power View from the Insert ribbon/tab in Excel.

- Delete any default visualizations. From the table named Query in the Power View fields list, select the following columns:

- date

- stock_price_close

- NewColumn.Company

- Change the visualization to a Line chart.

This will produce a visualization that shows all the companies on a single chart. We can then customize the chart and see just the companies we are interested in.

That’s all it takes to get all that data from HDInsight, and to combine that data with some publicly available information. Data Explorer’s Online Search is a good source for public data.

We hope this gives you an idea for how Data Explorer enables richer connectivity, discovery and data shaping scenarios while enhancing your Self Service BI experience in Excel. An interesting thought exercise will be to consider how you might accomplish this scenario without using Data Explorer.

Let us know what you think!

<Return to section navigation list>

Windows Azure SQL Database, Federations and Reporting, Mobile Services

Nick Harris (@cloudnick) described how to Build Websites and Apache Cordova/PhoneGap apps using the new HTML client for Azure Mobile Services on 3/18/2013 (missed when published):

Today Scott Guthrie announced HTML client support for Windows Azure Mobile Services such that developers can begin using Windows Azure Mobile Services to build both HTML5/JS Websites and Apache Cordova/PhoneGap apps.

The two major changes in this update include:

- New Mobile Services HTML client library that supports IE8+ browsers, current versions of Chrome, Firefox, and Safari, plus PhoneGap 2.3.0+. It provides a simple JavaScript API to enable both the same storage API support we provide in other native SDKs and easy user authentication via any of the four supported identity providers – Microsoft Account, Google, Facebook, and Twitter.

- Cross Origin Resource Sharing (CORS) support to enable your Mobile Service to accept cross-domain Ajax requests. You can now configure a whitelist of allowed domains for your Mobile Service using the Windows Azure management portal.
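To make the whitelist idea concrete, here is a minimal sketch of the generic CORS check a server performs on a cross-domain request. It does not describe how Mobile Services implements the feature; the `cors_headers` function and the example origins are hypothetical.

```python
def cors_headers(origin, allowed_origins):
    """Return the CORS response headers for a request's Origin header value."""
    if origin in allowed_origins:
        # Echo the specific origin back instead of a wildcard, and tell
        # caches that the response varies by Origin.
        return {"Access-Control-Allow-Origin": origin, "Vary": "Origin"}
    # No CORS headers: the browser will block the cross-domain response.
    return {}


# Hypothetical whitelist of domains allowed to call the service.
whitelist = {"https://www.example.com", "http://localhost"}

allowed = cors_headers("https://www.example.com", whitelist)
blocked = cors_headers("https://evil.example.net", whitelist)
```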

With this update Windows Azure Mobile Services now provides a scalable turnkey backend solution for your Windows Store, Windows Phone, iOS, Android and HTML5/JS applications.

To learn more about the new HTML client library for Windows Azure Mobile Services, please check out the new HTML tutorials on WindowsAzure.com and the following short 4-minute video, in which Yavor Georgiev demonstrates how to quickly create a new mobile service, download the HTML client quick start app, run the app and store data within the Mobile Service, and then configure a custom domain with Cross-Origin Resource Sharing (CORS) support.

Watch on Channel9 here

If you have any questions, please reach out to us via our dedicated Windows Azure Mobile Services forum.

<Return to section navigation list>

Marketplace DataMarket, Cloud Numerics, Big Data and OData

<Return to section navigation list>

Windows Azure Service Bus, Caching, Access Control, Active Directory, Identity and Workflow

Vittorio Bertocci (@vibronet) posted A Refresh of the Identity and Access Tool for VS 2012 on 3/25/2013:

Today we released a refresh of the Identity and Access Tool for Visual Studio 2012.

We moved the current release # from 1.0.2 to 1.1.0.

VS should let you know that there’s a new version waiting for you, but if you’re in a hurry you can go here and get it right away.

We didn’t add any new features: this is largely a service release, with lots of bug fixes.

There is an exception to that, though. We changed the way in which we handle issuer validation of incoming tokens. We now use the ValidatingIssuerNameRegistry by default; however we also added in the UI the necessary knobs for you to opt out and fall back on the old ConfigBasedIssuerNameRegistry, should you need to. Details below.

The New Issuer Validation Strategy

Traditionally, WIF tools (from fedutil.exe in .NET 3.5 and 4.0 to the Identity and Access Tools in .NET 4.5) used the ConfigBasedIssuerNameRegistry class to capture the coordinates (issuer name and signing verification key) of trusted issuers. In config it would look something like the following:

<issuerNameRegistry type="System.IdentityModel.Tokens.ConfigurationBasedIssuerNameRegistry,System.IdentityModel, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089">
  <trustedIssuers>
    <add thumbprint="9B74CB2F320F7AAFC156E1252270B1DC01EF40D0" name="LocalSTS" />
  </trustedIssuers>
</issuerNameRegistry>

Its semantic is straightforward: if an incoming token is signed with the key corresponding to that thumbprint, accept it (provided that all other checks pass as well!) and use the value in “name” for the “Issuer” property of the resulting claims.

That worked out great for the first generation of identity providers, but as the expressive power of issuers grew (multiple keys, multiple tenants as issuers leveraging the same issuing endpoint and crypto infrastructure) we felt we needed to provide a better issuer name registry canonical class, the ValidatingIssuerNameRegistry (VINR for short).

We already introduced VINR here, hence I won’t repeat the details here. What’s new is that the Identity and Access Tool now uses VINR by default.

If you run the tool against the project containing the settings above, afterwards your config will look like the following:

<!--Commented by Identity and Access VS Package-->
<!--<issuerNameRegistry type="System.IdentityModel.Tokens.ConfigurationBasedIssuerNameRegistry,System.IdentityModel, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089"><trustedIssuers><add thumbprint="9B74CB2F320F7AAFC156E1252270B1DC01EF40D0" name="LocalSTS" /></trustedIssuers></issuerNameRegistry>-->
<issuerNameRegistry type="System.IdentityModel.Tokens.ValidatingIssuerNameRegistry,System.IdentityModel.Tokens.ValidatingIssuerNameRegistry">
  <authority name="LocalSTS">
    <keys>
      <add thumbprint="9B74CB2F320F7AAFC156E1252270B1DC01EF40D0" />
    </keys>
    <validIssuers>
      <add name="LocalSTS" />
    </validIssuers>
  </authority>
</issuerNameRegistry>

Apart from the syntactic sugar, the important difference in semantics between the two is that whereas the ConfigBasedIssuerNameRegistry will just use “LocalSTS” as the Issuer property in the ClaimsIdentity representing the caller, regardless of what the issuer is in the incoming token, VINR will enforce that “LocalSTS” is actually the issuer name in the incoming token. If the issuer in the token is different from the value recorded, ConfigBasedIssuerNameRegistry will accept the token nonetheless: VINR will refuse it. The stricter validation rules are necessary when working with multitenant STSes, and are not a bad thing for traditional cases either (ADFS2.0 does this consistently).
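The difference between the two strategies can be modeled in a few lines. The following Python sketch is a toy model of the semantics described above, not the WIF API: the class names, the `get_issuer_name` method and the “SomeOtherSTS” issuer are invented for illustration, and a return value of None stands for a refused token.

```python
THUMBPRINT = "9B74CB2F320F7AAFC156E1252270B1DC01EF40D0"


class ConfigBasedRegistry:
    """Toy model: maps a signing-key thumbprint to a configured name."""

    def __init__(self, trusted_issuers):
        self.trusted_issuers = trusted_issuers  # thumbprint -> name

    def get_issuer_name(self, thumbprint, token_issuer):
        # The issuer named inside the token is ignored entirely.
        return self.trusted_issuers.get(thumbprint)


class ValidatingRegistry:
    """Toy model: checks the signing key and the issuer name in the token."""

    def __init__(self, keys, valid_issuers):
        self.keys = set(keys)
        self.valid_issuers = set(valid_issuers)

    def get_issuer_name(self, thumbprint, token_issuer):
        if thumbprint in self.keys and token_issuer in self.valid_issuers:
            return token_issuer
        return None  # refuse: unknown key or unexpected issuer name


config_based = ConfigBasedRegistry({THUMBPRINT: "LocalSTS"})
validating = ValidatingRegistry([THUMBPRINT], ["LocalSTS"])

# A token signed with the trusted key but naming a different issuer.
relaxed = config_based.get_issuer_name(THUMBPRINT, "SomeOtherSTS")
strict = validating.get_issuer_name(THUMBPRINT, "SomeOtherSTS")
```

In this model `relaxed` is "LocalSTS" because only the key is checked, while `strict` is None because the issuer name in the token does not match the configured one.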

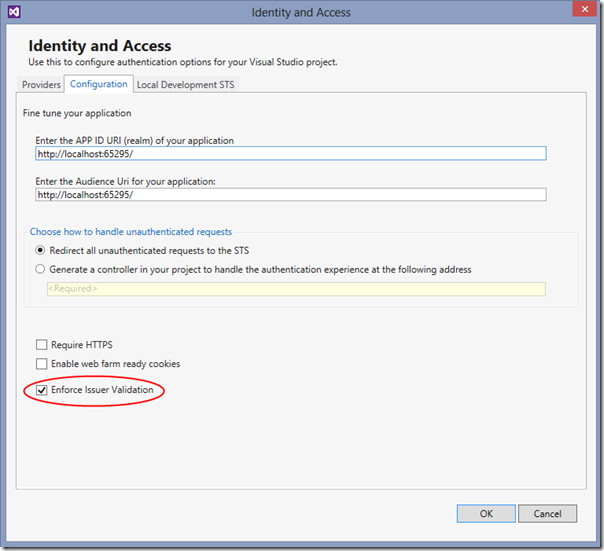

If for any reason you rely on the ConfigBasedIssuerNameRegistry’s more relaxed validation criterion, I would suggest considering whether you can move to a stricter validation mode: but if you absolutely can’t, the tool offers you a way out. In the config tab you will now find the following new checkbox:

If you want to go back to ConfigBasedIssuerNameRegistry, all you need to do is uncheck that box and hit OK.

Miscellanea

Here are a few of the most notable fixes we (well, “we”… it actually was Brent) added in this refresh. The list is longer; here I am highlighting just the ones for which we received explicit feedback in the past.

- <serviceModel> bug. We had a bug for which the Tool would throw if a <serviceModel> element was present in the config; that behavior has been fixed.

- We weren’t setting certificate validation mode to none for the Business STS providers, but we got feedback that self signed certificates are in common use and developers needed to turn off cert validation by hand; hence, we included all providers in the cert validation == none logic and added a comment in the config to clarify that this is for development purposes only.

- Better support for ACS namespace keys. We did a flurry of improvements there (better cut & paste support, comments, gracefully handling projects for which we don’t have keys, etc)

- More informative error messages

That’s it. We hope that the improvements in this refresh will help you with your apps: please keep the feedback coming!


<Return to section navigation list>

Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, Traffic Manager, RDP and CDN

Jim O’Neil (@jimoneil) produced Practical Azure #16: Windows Azure Traffic Manager on 3/12/2013:

Like what you heard? Try Windows Azure for FREE and enjoy the freedom to use your preferred OS, language, database or tool. Windows Azure can help you deploy sites to a highly scalable environment, deploy and run virtual machines, and create highly scalable applications in a rich PaaS environment. Give it a try!

_________________

Abstract:

In Part 16 of his Windows Azure series, Jim O’Neil breaks down Windows Azure Traffic Manager. Tune in as he describes how Traffic Manager allows you to control the distribution of user traffic to Windows Azure hosted services as well as demos a scenario in which you can easily manage and coordinate various cloud services across datacenters and geographies.

After watching this video, follow these next steps:

Step #1 – Try Windows Azure: No cost. No obligation. 90-Day FREE trial.

Step #2 – Download the Tools for Windows 8 App Development

Step #3 – Start building your own Apps for Windows 8

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

<Return to section navigation list>

Cloud Security, Compliance and Governance

<Return to section navigation list>

Cloud Computing Events

Craig Kitterman (@craigkitterman, pictured below) posted Windows Azure Community News Roundup (Edition #59) on 3/22/2013:

Editor's Note: This post comes from Mark Brown, Windows Azure Community Manager.

Welcome to the newest edition of our weekly roundup of the latest community-driven news, content and conversations about cloud computing and Windows Azure.

Here is what we pulled together for the past week based on your feedback:

Articles, Videos and Blog Posts

- Blog: Remote profiling Windows Azure Cloud Services with dotTrace by @maartenballiauw (posted Mar. 13)

- Blog: Website Authentication with Social Identity Providers and ACS Part 1 by @alansmith (posted Mar. 17)

- Blog: Expanding the #windowsazure CI / CD / ALM landscape – integrating Dropbox #MEETBE by @techmike2kx (posted Mar. 19)

Upcoming Events and User Group Meetings

North America

- October - June, 2013: Windows Azure Developer Camp – Various (50+ cities)

Europe

- April 2, 2013: Real world architectures on Windows Azure - Heverlee, Belgium

- April 10-11, 2013: Microsoft Synopsis 2013 - Darmstadt, Germany

- April 17-19, 2013: Database Days 2013 - Baden, Switzerland

- April 24-25, 2013: Events and Workshops - Brussels, Belgium

Rest of World/Virtual

- April 27, 2013: Global Windows Azure Bootcamp - Various Locations

- Ongoing: Windows Azure Virtual Labs – On-demand

Code on GitHub

Interesting Recent Windows Azure Discussions on Stack Overflow

- How to download large azure blobls with linux python - 1 answer, 1 vote

- Deploy a Web Role and Worker Role in a single instance - 2 answers, 1 vote

- Storing binary data (images) using Windows Azure. images can be small in size - 1 answer, 0 votes

<Return to section navigation list>

Other Cloud Computing Platforms and Services

<Return to section navigation list>
