Windows Azure and Cloud Computing Posts for 3/18/2013+

| A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles. |

‡ Updated 3/22/2013 with new articles marked ‡.

•• Updated 3/21/2013 with new articles marked ••.

• Updated 3/18/2013 with new articles marked •.

Note: This post is updated weekly or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Marketplace DataMarket, Cloud Numerics, Big Data and OData

- Windows Azure Service Bus, Caching, Access Control, Active Directory, Identity and Workflow

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security, Compliance and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue, HDInsight and Media Services

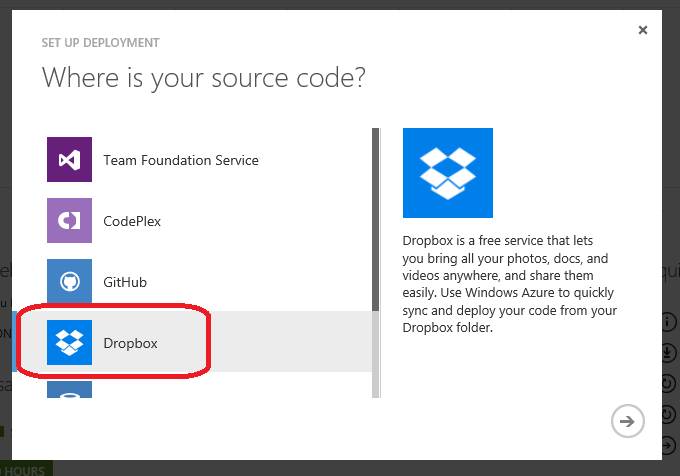

‡ Eron Kelly described Developing for HDInsight in a 3/22/2013 post:

Windows Azure HDInsight provides the capability to dynamically provision clusters running Apache Hadoop to process Big Data. You can find more information here in the initial blog post for this series, and you can click here to get started using it in the Windows Azure portal. This post enumerates the different ways for a developer to interact with HDInsight, first by discussing the different scenarios and then diving into the variety of capabilities in HDInsight. As we are built on top of Apache Hadoop, there is a broad and rich ecosystem of tools and capabilities that one can leverage.

In terms of scenarios, our work with customers has surfaced two distinct ones: authoring jobs, where one uses the tools to process big data, and integrating HDInsight with your application, where the input and output of jobs are incorporated into a larger application architecture. One key design aspect of HDInsight is its integration with Windows Azure Blob Storage as the default file system. This means that you can use existing tools and APIs for blob storage to interact with your data. This blog post goes into more detail on our utilization of Blob Storage.

Within the context of authoring jobs, there is a wide array of tools available. From a high level, there are a set of tools that are part of the existing Hadoop ecosystem, a set of projects we've built to get .NET developers started with Hadoop, and work we've begun to leverage JavaScript for interacting with Hadoop.

Job Authoring

Existing Hadoop Tools

As HDInsight leverages Apache Hadoop via the Hortonworks Data Platform, there is a high degree of fidelity with the Hadoop ecosystem. As such, many capabilities will work “as-is.” This means that investments and knowledge in any of the following tools will work in HDInsight. Clusters are created with the following Apache projects for distributed processing:

- Map/Reduce

- Map/Reduce is the foundation of distributed processing in Hadoop. One can write jobs either in Java or can leverage other languages and runtimes through the use of Hadoop Streaming.

- A simple guide to writing Map/Reduce jobs on HDInsight is available here.

- Hive

- Hive uses a syntax similar to SQL to express queries that compile to a set of Map/Reduce programs. Hive has support for many of the constructs that one would expect in SQL (aggregation, groupings, filtering, etc.), and easily parallelizes across the nodes in your cluster.

- A guide to using Hive is here.

- Pig

- Pig is a dataflow language that compiles to a series of Map/Reduce programs using a language called Pig Latin.

- A guide to getting started with Pig on HDInsight is here.

- Oozie

- Oozie is a workflow scheduler for managing a directed acyclic graph of actions, where actions can be Map/Reduce, Pig, Hive or other jobs. You can find more details in the quick start guide here.
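As an illustration of the Hadoop Streaming option mentioned under Map/Reduce, here is a minimal word-count sketch in Python. Hadoop Streaming exchanges records with the mapper and reducer as tab-separated key/value lines and sorts mapper output by key before the reducer sees it. The function names and the in-process `run_local` helper are illustrative assumptions, not part of HDInsight or of the guides linked above.

```python
from itertools import groupby


def map_lines(lines):
    """Mapper: emit one 'word<TAB>1' record per word."""
    for line in lines:
        for word in line.split():
            yield f"{word.lower()}\t1"


def reduce_lines(sorted_records):
    """Reducer: sum the counts of adjacent records that share a key.

    Streaming delivers mapper output sorted by key, so grouping
    adjacent records is sufficient.
    """
    parsed = (record.rstrip("\n").split("\t", 1) for record in sorted_records)
    for word, group in groupby(parsed, key=lambda kv: kv[0]):
        yield f"{word}\t{sum(int(count) for _, count in group)}"


def run_local(lines):
    """Simulate map -> sort -> reduce in one process (local testing only)."""
    return list(reduce_lines(sorted(map_lines(lines))))
```

In a real streaming job the two functions would typically live in separate scripts that read `sys.stdin` and print to standard output; the local helper only exists so the logic can be checked without a cluster.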

You can find an updated list of Hadoop components here. The table below represents the versions for the current preview:

Additionally, other projects in the Hadoop space, such as Mahout (see this sample) or Cascading, can easily be used on top of HDInsight. We will be publishing additional blog posts on these topics in the future.

.NET Tooling

We're working to build out a portfolio of tools that allow developers to leverage their skills and investments in .NET to use Hadoop. These projects are hosted on CodePlex, with packages available from NuGet to author jobs to run on HDInsight. For instructions on these, please see the getting started pages on the CodePlex site.

Running Jobs

In order to run any of these jobs, there are a few options:

- Run them directly from the head node. To do this, RDP to your cluster, open the Hadoop command prompt, and use the command line tools directly.

- Submit them remotely using the REST APIs on the cluster (see the following section on integrating HDInsight with your applications for more details).

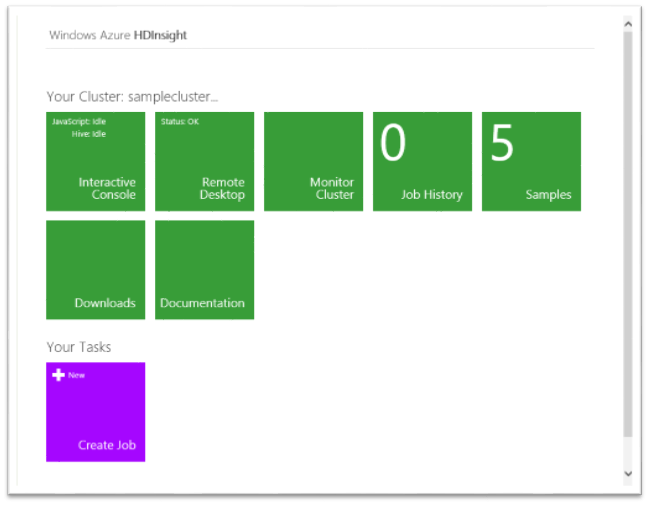

- Leverage tools on the HDInsight dashboard. After you create your cluster, there are a few capabilities in the cluster dashboard for submitting jobs:

- Create Job

- Interactive Console

•• Yves Goeleven (@YvesGoeleven) delivered a 01:19:11 Deep Dive and Best Practices for Windows Azure Storage Services session to Tech Ed Days Belgium on 3/6/2013 and Channel 9 published it on 3/18/2013:

Windows Azure Storage Services provides a scalable and reliable storage service in the cloud for blobs, tables and queues. In this session I will provide you with more insight into how this service works and share the lessons I learned building applications in real life.

• Craig Kitterman (@craigkitterman, pictured below) posted Crunch Big Data in the Cloud with Windows Azure HDInsight Service with Preview pricing on 3/18/2013:

Editor’s Note: This post comes from Eron Kelly, General Manager for SQL Server Product Management. This is the first in our 5-part blogging series on HDInsight Service.

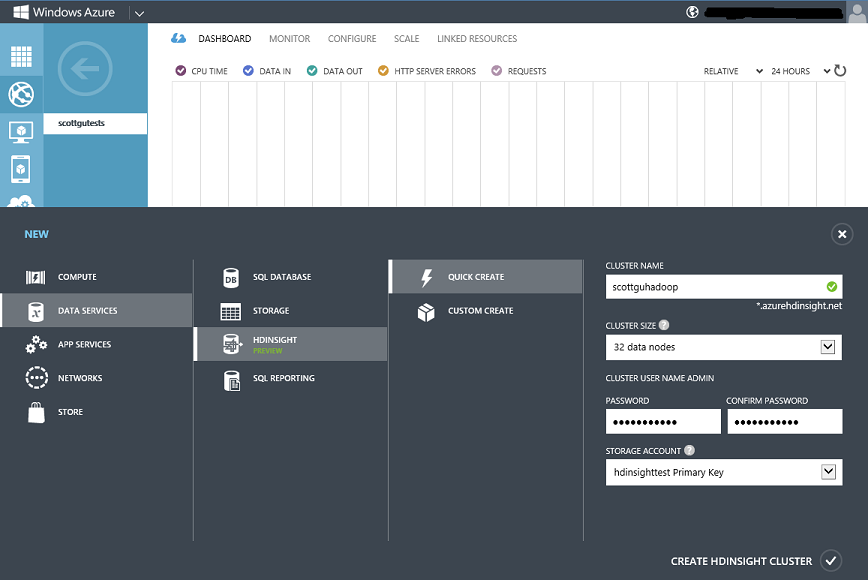

Microsoft has been hard at work over the past year developing solutions that allow businesses to get started doing big data analytics with the tools and processes familiar to them. Today, I’m happy to share that one of those solutions, Windows Azure HDInsight Service – our cloud-based distribution of Hadoop – is now available as a standard preview service on the Windows Azure Portal. As Scott Guthrie noted in his blog today, customers can get started doing Hadoop-based big data analytics in the cloud today, paying for only the storage and compute they use through their regular Windows Azure subscription.

Windows Azure HDInsight Service offers full compatibility with Apache Hadoop, which ensures that when customers choose HDInsight they can do so with confidence, knowing that they are 100 percent Apache Hadoop compatible now and in the future. HDInsight also simplifies Hadoop by enabling customers to deploy clusters in just minutes instead of hours or days. These clusters are scaled to fit specific demands and integrate with simple web-based tools and APIs to ensure customers can easily deploy, monitor and shut down their cloud-based cluster. In addition, Windows Azure HDInsight Service integrates with our business intelligence tools including Excel, PowerPivot and Power View, allowing customers to easily analyze and interpret their data to garner valuable insights for their organization.

Microsoft is offering a 50% discount on Windows Azure HDInsight Service during the preview period. A Hadoop-based cluster will need one Head node and one or more compute nodes. During the preview period, the head node is available as an Extra Large instance at $0.48 per hour per cluster, and the compute node is available as a Large instance at $0.24 per hour per instance. More information regarding Windows Azure pricing is available here. [Emphasis added.]
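To make the preview pricing concrete, the following sketch computes the compute charge for a cluster from the two hourly rates quoted above. The function name is hypothetical, storage and data-transfer charges are deliberately left out, and the rates apply only to the preview period described in the post.

```python
from decimal import Decimal

HEAD_NODE_RATE = Decimal("0.48")     # USD per hour per cluster (preview rate quoted above)
COMPUTE_NODE_RATE = Decimal("0.24")  # USD per hour per compute instance (preview rate)


def preview_cluster_cost(compute_nodes: int, hours: int) -> Decimal:
    """Compute charge in USD for one head node plus N compute nodes."""
    if compute_nodes < 1:
        raise ValueError("a cluster needs at least one compute node")
    if hours < 0:
        raise ValueError("hours must not be negative")
    hourly = HEAD_NODE_RATE + COMPUTE_NODE_RATE * compute_nodes
    return hourly * hours
```

For example, a cluster with four compute nodes costs 0.48 + 4 × 0.24 = 1.44 USD per hour, so a ten-hour run comes to 14.40 USD before storage.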

Click here to get started using Windows Azure HDInsight Service today. For more information about Windows Azure HDInsight Service and our work with the Hadoop community, visit the Data Platform Insider blog. We look forward to seeing where your Hadoop clusters take you!

Stay tuned for tomorrow’s blog that will provide a walkthrough of the updated HDInsight Service.

Denny Lee (@dennylee) explained Why use Blob Storage with HDInsight on Azure in a 3/18/2013 post coauthored with Brad Sarsfield (@bradoop):

One of the questions we are commonly asked concerning HDInsight, Azure, and Azure Blob Storage is why one should store their data in Azure Blob Storage instead of HDFS on the HDInsight Azure Compute nodes. After all, Hadoop is all about moving compute to data vs. traditionally moving data to compute as noted in Moving data to compute or compute to data? That is the Big Data question.

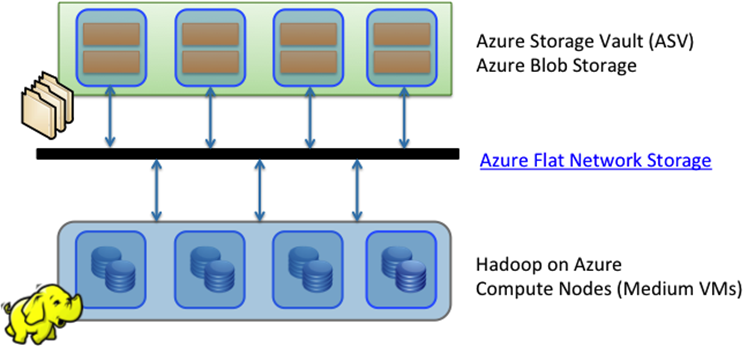

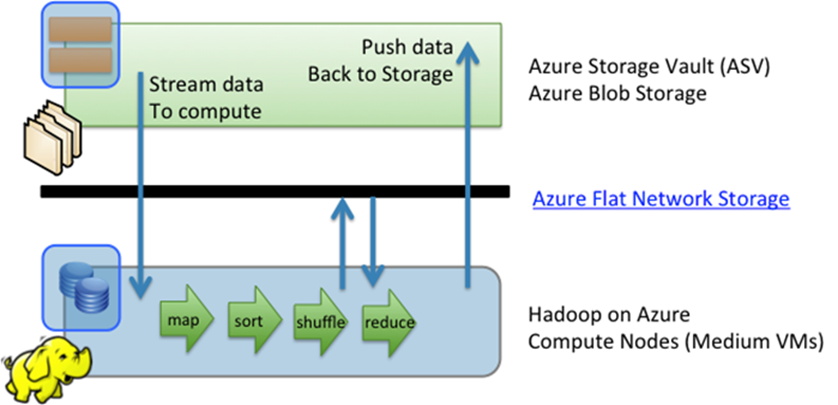

The network is often the bottleneck and making it performant can be expensive. Yet the practice for HDInsight on Azure is to place the data into Azure Blob Storage (also known by the moniker ASV – Azure Storage Vault); these storage nodes are separate from the compute nodes that Hadoop uses to perform its calculations. This seems to be in conflict with the idea of moving compute to the data.

It’s all about the Network

As noted in the above diagram, the typical HDInsight infrastructure is that HDInsight is located on the compute nodes while the data resides in the Azure Blob Storage. But to ensure that the transfer of data from storage to compute is fast, Azure recently deployed the Azure Flat Network Storage (also known as Quantum 10 or Q10 network) which is a mesh grid network that allows very high bandwidth connectivity for storage clients. For more information, please refer to Brad Calder’s very informative post: Windows Azure’s Flat Network Storage and 2012 Scalability Targets. Suffice it to say, the performance of HDFS with local disk and HDFS using ASV is comparable and, in some cases, we have seen jobs run faster on ASV due to the fast performance of the Q10 network.

But all that data movement?!

But if I’m moving all of this data from the storage node to the compute nodes and then back (for storage), wouldn’t that be a lot of data to move, thus slowing query performance? While that is true, there are technical and business reasons why something like Q10 would satisfy most requirements.

Technical

When HDInsight is performing its task, it is streaming data from the storage node to the compute node. But many of the map, sort, shuffle, and reduce tasks that Hadoop performs are done on the local disks of the compute nodes themselves. The map, reduce, and sort tasks are typically performed on the compute nodes with minimal network load, while the shuffle tasks use some network bandwidth to move data from the mapper nodes to the (fewer) reducer nodes. The final step of storing the data back to storage typically involves a much smaller dataset (e.g. a query dataset or report). In the end, the network is most heavily utilized during the initial and final streaming phases, while most of the other tasks are performed intra-nodally (i.e. minimal network utilization).

Business

Another important aspect is that when one queries data from a Hadoop cluster, they are typically not asking for all of the data in the cluster. Common queries involve the current day, week, or month of data. That is, a much smaller subset of data is being transferred from the storage node to compute nodes.

So how is the performance?

The quick summary on performance is:

- Azure Blob storage provides near identical HDFS access characteristics for reading (performance and task splitting) into map tasks.

- Azure Blob provides faster write access for Hadoop HDFS; allowing jobs to complete faster when writing data to disk from reduce tasks.

HDFS: Azure Blob Storage vs. Local Disk

Map Reduce uses HDFS, which is itself just a file system abstraction. There are two implementations of the HDFS file system when running Hadoop in Azure: one is the local file system, the other is Azure Blob. Both are still HDFS; the code path for Map Reduce against local file system HDFS and against the Azure Blob file system is identical. You can specify the file split size (minimum 64MB, max 100GB, default 5GB), so a single file will be split and read by different mappers, just like local disk HDFS.
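The split behaviour described above can be sketched as simple byte-range arithmetic. This is a simplified model that uses the minimum, maximum and default sizes quoted in the post; the function name is hypothetical, and real input formats also account for details such as record boundaries that are ignored here.

```python
MB = 1024 ** 2
GB = 1024 ** 3

MIN_SPLIT = 64 * MB      # minimum split size quoted above
MAX_SPLIT = 100 * GB     # maximum split size quoted above
DEFAULT_SPLIT = 5 * GB   # default split size quoted above


def plan_splits(file_size: int, split_size: int = DEFAULT_SPLIT):
    """Return (offset, length) byte ranges, one per map task."""
    if not MIN_SPLIT <= split_size <= MAX_SPLIT:
        raise ValueError("split size must be between 64 MB and 100 GB")
    return [
        (offset, min(split_size, file_size - offset))
        for offset in range(0, file_size, split_size)
    ]
```

With the default size, a 12 GB blob yields three ranges (5 GB, 5 GB and 2 GB), each of which can be handed to a different mapper.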

Where processing happens…

The processing in both cases happens on the Hadoop cluster’s task tracker/worker nodes. So if you have a cluster with 5 worker nodes (medium VMs) and one head node, you will have 2 map slots and 1 reduce slot per worker; when Map Reduce processes data from HDFS, the work happens on the 5 worker nodes across all of the map/reduce task slots. Data is read from HDFS, regardless of location (local disk, remote disk, Azure Blob).
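As a rough worked example of the slot arithmetic above, the sketch below counts the slots in a cluster and estimates how many "waves" of map tasks are needed. The per-worker slot counts are the ones quoted for medium VMs; the wave estimate is an idealized assumption that every task takes equally long.

```python
import math

MAP_SLOTS_PER_WORKER = 2     # quoted above for medium VMs
REDUCE_SLOTS_PER_WORKER = 1  # quoted above for medium VMs


def cluster_slots(workers: int):
    """Return (map_slots, reduce_slots) for a cluster of worker nodes."""
    return workers * MAP_SLOTS_PER_WORKER, workers * REDUCE_SLOTS_PER_WORKER


def map_waves(map_tasks: int, workers: int) -> int:
    """Rounds of map tasks needed if all slots run one task at a time."""
    map_slots, _ = cluster_slots(workers)
    return math.ceil(map_tasks / map_slots)
```

A 5-worker cluster therefore offers 10 map slots and 5 reduce slots, and a job with 25 map tasks would need three waves under this model.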

Data locality?

As noted above, we have re-architected our networking infrastructure with Q10 in our datacenters to accommodate the Hadoop scenario. All up, we have an incredibly low overhead / subscription ratio for networking, so we can have a lot of throughput between Hadoop and Blob. The worker nodes (medium VMs) will each read up to 800 Mbps from Azure Blob (which is running remotely); this is equivalent to how fast the VM can read off of disk. With the right storage account placement and settings we can achieve disk speed for approximately 50 worker nodes. It’s screaming fast today, and there is some mind-bendingly fast networking coming down the pipe in the next year that will likely triple that number. The question at that point is whether the maps can consume the data faster than it can be read off of disk; sometimes they can, while computationally intensive jobs will be bottlenecked on the map CPU rather than on HDFS bandwidth.
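The throughput figures above translate into a simple upper bound. The sketch assumes decimal units (1 Mbps = 1,000,000 bits per second), a perfectly even spread of data across workers and no other bottleneck, so it is a best-case estimate rather than a measurement.

```python
PER_NODE_MBPS = 800  # megabits per second per medium worker VM (quoted above)


def aggregate_gbps(workers: int) -> float:
    """Combined read bandwidth from Blob storage in gigabits per second."""
    return workers * PER_NODE_MBPS / 1000


def read_seconds(total_bytes: int, workers: int) -> float:
    """Best-case time to stream total_bytes from Blob storage to the workers."""
    bytes_per_second = workers * PER_NODE_MBPS * 1_000_000 / 8
    return total_bytes / bytes_per_second
```

At 50 workers this gives 40 Gbps in aggregate, so streaming 1 TB (10^12 bytes) would take about 200 seconds in the ideal case.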

Where Azure Blob HDFS starts to win is on writing data out, i.e. when your Map Reduce program finishes and writes to HDFS. With local disk HDFS, to write 3 copies, copy #1 is written first and then copies #2 and #3 are written in parallel to two other nodes, remotely. The write is not completed or “sealed” until the two remote copies have been written. With Azure Blob storage the write is completed and sealed after #1 is written; Azure Blob then takes care of the default 6x replication (three copies in the local datacenter, three in the remote one) on its own time. This gives us high performance, high durability and high availability.
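The difference between the two write paths can be expressed as a toy latency model. The timings passed in are purely hypothetical inputs, and the functions only encode the sealing rules described above: local HDFS waits for the slowest remote replica, while Blob storage seals after the first copy.

```python
from typing import Sequence


def local_hdfs_seal_time(first_copy: float, remote_copies: Sequence[float]) -> float:
    """Copy #1 is written first, then the remaining replicas in parallel;
    the write is sealed once the slowest remote replica finishes."""
    return first_copy + (max(remote_copies) if remote_copies else 0.0)


def blob_seal_time(first_copy: float) -> float:
    """The write is sealed after copy #1; replication happens afterwards."""
    return first_copy
```

With a 1.0 s first copy and remote copies taking 1.5 s and 2.0 s, the local path seals after 3.0 s while the Blob path seals after 1.0 s in this model.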

As well, it is important to note that we do not have a data durability service level objective for data stored in local disk HDFS, while Azure provides a high level of data durability and availability. Note also that all of these parameters are highly dependent on the actual workload characteristics.

Addendum

Nasuni’s The State of Cloud Storage 2013 Industry Report notes the following concerning Azure Blob Storage:

- Speed: Azure was 56% faster than the No. 2 Amazon S3 in write speed, and 39% faster at reading files than the No. 2 HP Cloud Object Storage in read speed.

- Availability: Azure’s average response time was 25% faster than Amazon S3, which had the second fastest average time.

- Scalability: Amazon S3 varied only 0.6% from its average in the scaling tests, with Microsoft Windows Azure varying 1.9% (both very acceptable levels of variance). The two OpenStack-based clouds (HP and Rackspace) showed variance of 23.5% and 26.1%, respectively, with performance becoming more and more unpredictable as object counts increased.

See also the New HDInsight Server: Deploy and Manage Hadoop Clusters on Azure section of Scott Guthrie’s post quoted in the Windows Azure Infrastructure and DevOps section below.

Eron Kelly (@eronkelly) posted Gartner BI Summit 2013: Bringing Hadoop to the Enterprise to the SQL Server Team Blog on 3/18/2013:

Gartner’s annual Business Intelligence & Analytics Summit kicked off earlier today where we are focused on sharing our perspective and solutions with customers, partners and industry leaders interested in big data and business intelligence. Later this week, we’ll also be at the GigaOM Structure event. While we’re out attending all these industry events, our engineering efforts have been moving at light speed. I’d like to highlight two efforts from our engineering team that have now come to fruition.

New HDInsight Service Preview

First, I’m excited to announce that a preview of the Windows Azure HDInsight Service -- our cloud-based distribution of Hadoop – is broadly available through the Windows Azure Portal. The Windows Azure HDInsight Service is built on the Hortonworks Data Platform to ensure long term compatibility with Apache Hadoop, while providing customers with the low cost and elasticity of cloud computing. Starting today, customers can perform Hadoop-based big data analysis in the cloud through the Windows Azure Portal, paying only for the storage and compute capacity that they use. The preview will enable customers to deploy permanent clusters with up to 40 nodes.

Customers like Microsoft’s own 343 Industries and UK-based Microsoft partner Ascribe have been early adopters of HDInsight Service and are enjoying the benefits of big data insights at cloud speed and scale. Their feedback, and the feedback of all those who participated in the private preview was invaluable – thank you! Based on their participation we are able to deliver a rock solid and high performance service. We are excited to offer this preview broadly to everyone and look forward to hearing your feedback.

Working with the Hadoop Community

Second, we’ve also been working with Hortonworks as part of our commitment to accelerating enterprise adoption of Hadoop by offering a solution that is open and fully compatible for the long run with Apache Hadoop. As part of that I am excited to share that last week Hortonworks reported that the Hadoop community voted to move Hadoop on Windows to the main Apache Hadoop trunk. The engineering team at Microsoft and Hortonworks in collaboration with the Hadoop community made a number of enhancements to the Windows Server and Windows Azure Hadoop development and runtime environments which were merged into the Apache trunk. All of these enhancements are also incorporated into our Windows Azure HDInsight Service and HDInsight Server products and in Hortonworks’ HDP for Windows product.

Moving Hadoop on Windows to the main Apache Hadoop trunk and our continued investment in the Hadoop project is important to us. It means that when our customers choose to use our HDInsight products, they can do so with the confidence of knowing they are 100 percent Apache Hadoop compatible now and in the future.

Stop by and See us at Gartner BI

Big data holds great promise – for companies and for the world. We’re excited about the progress we’re making with our products, and the community, to help customers realize that promise and we’ll have more to share over the next few months.

If you’re attending the Gartner BI Summit, visit our booth to meet our business intelligence experts and enter to win an Xbox 360 with Kinect. Also, don’t miss Herain Oberoi and Marc Reguera’s session on the new self-service BI capabilities in Excel 2013 on Tuesday at 3:15PM, and a chance to win a Surface with Windows RT. If you’re attending GigaOM Structure, be sure to stop by our booth to hear more about our approach to big data. For those not attending these events, follow us on Facebook and Twitter @microsoftbi to get the latest on Microsoft at the Summit.

See also the New HDInsight Server: Deploy and Manage Hadoop Clusters on Azure section of Scott Guthrie’s post quoted in the Windows Azure Infrastructure and DevOps section below.


Windows Azure SQL Database, Federations and Reporting, Mobile Services

Keith Mayer (@KeithMayer) asserted “New feature in SQL Server 2012 SP1 CU2 provides native backup to Windows Azure cloud storage” in a deck for his Step-by-Step: Tired of Tapes? Backup SQL Databases to the Cloud post of 3/18/2013 to Ulitzer’s Cloud Computing blog:

I think every IT Pro I’ve ever met hates tape backups … but having an offsite component in your backup strategy is absolutely necessary for effective disaster recovery. One of the new features provided in SQL Server 2012 Service Pack 1 Cumulative Update 2 is the ability to back up SQL databases and logs to Windows Azure cloud storage using native SQL Server Backup via both Transact-SQL (T-SQL) and SQL Server Management Objects (SMO).

Backup to cloud storage is a natural fit for disaster recovery, as our backups are instantly located offsite when completed. And, the pay-as-you-go model of cloud storage economics makes it really cost effective – Windows Azure storage costs are less than $100/TB per month for geo-redundant storage based on current published costs as of this article’s date. That’s less than the cost of a couple SDLT tapes! You can check out our current pricing model for Windows Azure Storage on our Price Calculator page.

In this article, I’ll step through the process of using SQL Server 2012 SP1 CU2 native backup capabilities to create database backups on Windows Azure cloud storage.

How do I get started?

To get started, you’ll need a Windows Azure subscription. Good news! You can get a FREE 90-Day Windows Azure subscription to follow along with this article, evaluate and test … this subscription is 100% free for 90-days and there’s absolutely no obligation to convert to a paid subscription.

- DO IT: Sign-up for a Free 90-Day Windows Azure Subscription

NOTE: When activating your FREE 90-Day Subscription for Windows Azure, you will be prompted for credit card information. This information is used only to validate your identity and your credit card will not be charged, unless you explicitly convert your FREE Trial account to a paid subscription at a later point in time.

You’ll also need to download Cumulative Update 2 for SQL Server 2012 Service Pack 1 and apply that to the SQL Server instance with which you’ll be testing.

Don’t have a SQL Server 2012 instance in your data center that you can test with? No problem! You can spin up a SQL Server 2012 VM in the Windows Azure Cloud using your Free 90-Day subscription.

Let’s grab some cloud storage

Once you’ve got your Windows Azure subscription activated and your SQL Server 2012 lab environment patched with SP1 CU2, you’re ready to provision some cloud storage that can be used as a backup location for SQL databases …

- Launch the Windows Azure Management Portal and login with the credentials used when activating your FREE 90-Day Subscription above.

- Click Storage in the left navigation pane of the Windows Azure Management Portal.

Windows Azure Management Portal – Storage Accounts

- On the Storage page of the Windows Azure Management Portal, click +NEW on the bottom toolbar to create a new storage account location.

Creating a new Windows Azure Storage Account location

- Click Quick Create on the New > Storage popup menu and complete the fields as listed below:

- URL: XXXbackup01 ( where XXX represents your initials in lowercase )

- Region / Affinity Group: Select an available Windows Azure datacenter region for your new Storage Account.

NOTE: Because you will be using this Storage Account location for backup / disaster recovery scenarios, be sure to select a Datacenter Region that is not near to you for additional protection against disasters that may affect your entire local area.

Click the Create Storage Account button to create your new Storage Account location.

- Wait for your new Storage Account to be provisioned.

Provisioning new Windows Azure Storage Account

Once the status of your new Storage Account shows as Online, you may continue with the next step.

- Select your newly created Storage Account and click the Manage Keys button on the bottom toolbar to display the Manage Access Keys dialog box.

Manage Access Keys dialog box

Click the copy button located next to the Secondary Access Key field to copy this access key to your clipboard for later use.

- Create a container within your Windows Azure Storage Account to store backups. Click on the name of your Storage Account on the Storage page in the Windows Azure Management Portal to drill into the details of this account, then select the Containers tab located at the top of the page.

Containers tab within a Windows Azure Storage Account

On the bottom toolbar, click the Add Container button to create a new container named “backups”.

You’ve now completed the provisioning of your new Windows Azure storage account location.
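Because the storage account name becomes part of a URL, it helps to check names such as XXXbackup01 before creating them. The sketch below encodes the naming rules as I understand them: account names are 3 to 24 lowercase letters and digits, and container names are 3 to 63 lowercase letters, digits and single hyphens that start and end with a letter or digit. The helper names are hypothetical, and the portal remains the authority on what it accepts.

```python
import re

# 3-24 characters, lowercase letters and digits only
_ACCOUNT_RE = re.compile(r"[a-z0-9]{3,24}")
# 3-63 characters, alphanumeric groups separated by single hyphens
_CONTAINER_RE = re.compile(r"(?=.{3,63}$)[a-z0-9]+(?:-[a-z0-9]+)*")


def is_valid_account_name(name: str) -> bool:
    """Check a candidate storage account name against the assumed rules."""
    return _ACCOUNT_RE.fullmatch(name) is not None


def is_valid_container_name(name: str) -> bool:
    """Check a candidate container name against the assumed rules."""
    return _CONTAINER_RE.fullmatch(name) is not None
```

For example, `jdbackup01` and `backups` pass, while `JDbackup01` fails because of the uppercase initials, which is why the steps above ask for lowercase.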

We’re ready to back up to the cloud

When you’re ready to test a SQL database backup to the cloud, launch SQL Server Management Studio and connect to your SQL Server 2012 SP1 CU2 database engine instance. After you’ve done this, proceed with the following steps to complete a backup …

- In SQL Server Management Studio, right-click on the database you wish to back up in the Object Explorer list pane and select New Query.

SQL Server Management Studio

- In the new SQL Query Window, execute the following Transact-SQL code to create a credential that can be used to authenticate to your Windows Azure Storage Account with secure read/write access:

    CREATE CREDENTIAL myAzureCredential
    WITH IDENTITY = 'XXXbackup01',
    SECRET = 'PASTE IN YOUR COPIED ACCESS KEY HERE';

Prior to running this code, be sure to replace XXXbackup01 with the name of your Windows Azure Storage Account created above and paste in the Access Key you previously copied to your clipboard.

In the SQL Query Window, execute the following Transact-SQL code to perform the database backup to your Windows Azure Storage Account:

    BACKUP DATABASE database_name TO
    URL='https://XXXbackup01.blob.core.windows.net/backups/database_name.bak'
    WITH CREDENTIAL='myAzureCredential', STATS = 5;

Prior to running this code, be sure to replace XXXbackup01 with the name of your Windows Azure Storage Account and replace database_name with the name of your database.

Upon successful execution of the backup, you should see SQL Query result messages similar to the following:

Successful Backup Results

What about restoring?

Restoring from the cloud is just as easy as backing up … to restore we can use the following Transact-SQL syntax:

    RESTORE DATABASE database_name FROM
    URL='https://XXXbackup01.blob.core.windows.net/backups/database_name.bak'
    WITH CREDENTIAL='myAzureCredential', STATS = 5, REPLACE;
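The backup and restore statements above differ only in the account, container and database names, so they are easy to generate. The following sketch builds both statements as strings; the helper names are hypothetical, the statements mirror the syntax shown in this article, and the access key is deliberately not handled here so that it never ends up in a script.

```python
def quote_literal(value: str) -> str:
    """Quote a T-SQL string literal, doubling embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def quote_name(name: str) -> str:
    """Bracket-quote a T-SQL identifier, doubling embedded ']'."""
    return "[" + name.replace("]", "]]") + "]"


def backup_url(account: str, container: str, database: str) -> str:
    """Blob URL for the .bak file, following the pattern used in the article."""
    return f"https://{account}.blob.core.windows.net/{container}/{database}.bak"


def backup_sql(database, account, container="backups", credential="myAzureCredential"):
    url = backup_url(account, container, database)
    return (f"BACKUP DATABASE {quote_name(database)} TO URL = {quote_literal(url)} "
            f"WITH CREDENTIAL = {quote_literal(credential)}, STATS = 5;")


def restore_sql(database, account, container="backups", credential="myAzureCredential"):
    url = backup_url(account, container, database)
    return (f"RESTORE DATABASE {quote_name(database)} FROM URL = {quote_literal(url)} "
            f"WITH CREDENTIAL = {quote_literal(credential)}, STATS = 5, REPLACE;")
```

The generated text can then be pasted into a query window or passed to whatever SQL client you already use.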


Marketplace DataMarket, Cloud Numerics, Big Data and OData


Windows Azure Service Bus, Caching, Access Control, Active Directory, Identity and Workflow

‡ Vittorio Bertocci (@vibronet) announced Now [from] Amazon: A Guide to Claims-Based Identity and Access Control, 2nd Edition (for Windows Azure) on 3/19/2013:

It took some time, but the IRL version of A Guide to Claims-Based Identity and Access Control is finally out.

Whereas the 148 pages of the first edition were a groundbreaker, the 374 pages of the 2nd edition are the herald of an approach well into the mainstream. Just look at the two side by side!

Note, if physical books are not your thing you can always take advantage of the digital copy, freely available as PDF or as unbundled text in MSDN.

I will be always grateful to my friend Eugenio Pace for having included me in this project, and for the super-nice words he has for me in the acknowledgement section.

I have been handing out copies of this 2nd edition at the last few events, inside and outside Microsoft, and I only heard good things about it. I’ll leave it to you to connect the dots…

‡ Chris Klug (@ZeroKoll) described Compressing messages for the Windows Azure Service Bus in a 3/19/2013 post:

As a follow up to my previous post about encrypting messages for the Service Bus, I thought I would re-use the concepts but instead of encrypting the messages I would compress them.

As the Service Bus has limitations on how big messages are allowed to be, compressing the message body is actually something that can be really helpful. Not that I think sending massive messages is the best thing in all cases, but the 256 KB limit can be a little low sometimes.

Anyhow… The basic idea is exactly the same as last time, no news there… but to be honest, I think this type of compression should be there by default, or at least be available as a feature of BrokeredMessage by default… However, as it isn’t, I will just make do with extension methods…

And if you haven’t read the previous post, here is a quick recap. There is no way to inherit from BrokeredMessage as it is sealed. Instead, I have decided to create extension methods, which makes it really easy to use them. I have also decided to do two versions: one that relies on reflection, which might be considered bad practice by some, and one that creates a new BrokeredMessage with the compressed version of the body.

So, let’s get started. The extension methods I want to create look like this

    public static void Compress(this BrokeredMessage msg)
    public static void Decompress(this BrokeredMessage msg)
    public static BrokeredMessage Compress<T>(this BrokeredMessage msg)
    public static BrokeredMessage Decompress<T>(this BrokeredMessage msg)

Let’s start with the reflection based ones, which means the non-generic ones…

The first thing I need to do is get hold of the internal Stream that holds the serialized object used for the body. This is done through reflection as follows

    var member = typeof(BrokeredMessage)
        .GetProperty("BodyStream", BindingFlags.NonPublic | BindingFlags.Instance);
    var dataStream = (Stream)member.GetValue(msg);

Next, I need to compress the serialized data, which I have decided to do using the GZipStream class from the framework.

This is really not rocket science, but it does include a little quirk. First you create a new Stream to hold the result, that is the compressed data. In my case, that is a MemoryStream, which is the reason for the quirk. If you write to disk, this isn’t a problem, but for MemoryStream it is… Anyhow, once I have a target Stream, I create a new GZipStream that wraps the target Stream.

The GZipStream behaves like a Stream, but when you read/write to it, it uses the underlying Stream for the data, and compresses/decompresses the data on the way in and out… Makes sense? I hope so… Whether it compresses or decompresses, which basically means whether you write or read from it, is defined by the second parameter to the constructor. That parameter is of type CompressionMode, which is an enum with 2 values, Compress and Decompress.

Once I have my GZipStream, I basically just copy my data from the source Stream into it, and then close it. And this is where the quirk I talked about before comes into play. The GZipStream won’t finish writing everything until it is closed. Flush does not help. So we need to close the GZipStream, which then also closes the underlying Stream. In most cases, this might not be a problem, but for a MemoryStream, it actually is. Luckily, the MemoryStream will still allow me to read the data in it by using the ToArray() method. Using the returned byte[], I can create a new MemoryStream, which I can use to re-set the body of the BrokeredMessage.
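The "must close before reading" part of this quirk is a property of the gzip format rather than of .NET, and it can be reproduced with a short Python sketch. One difference worth flagging: Python's `GzipFile` leaves a caller-supplied file object open when it is closed, so the second half of the quirk (the closed MemoryStream) does not arise here. The payload is made-up sample data.

```python
import gzip
import io

payload = b"service bus message body " * 100  # made-up sample data

target = io.BytesIO()
gz = gzip.GzipFile(fileobj=target, mode="wb")
gz.write(payload)
gz.flush()                               # pushes pending data, but not the gzip trailer
size_after_flush = len(target.getvalue())

gz.close()                               # writes the final block and the CRC/length trailer
compressed = target.getvalue()           # the BytesIO itself is still open in Python

restored = gzip.decompress(compressed)   # round-trips only once the stream is complete
```

Comparing `size_after_flush` with `len(compressed)` shows that closing appends bytes that flushing alone does not produce.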

And after all that talk, the actual code looks like this

var member = typeof(BrokeredMessage).GetProperty("BodyStream", BindingFlags.NonPublic | BindingFlags.Instance);

using (var dataStream = (Stream)member.GetValue(msg))

{

var compressedStream = new MemoryStream();

using (var compressionStream = new GZipStream(compressedStream, CompressionMode.Compress))

{

dataStream.CopyTo(compressionStream);

}

compressedStream = new MemoryStream(compressedStream.ToArray());

member.SetValue(msg, compressedStream);

}

Ok, so going in the other direction and decompressing the data is not much harder…

Once again, I pull out the Stream for the body using reflection. I then create a Stream to hold the decompressed data. After that, I wrap the body-stream in a GZipStream set to Decompress, and copy the contents of it to the MemoryStream. After closing the GZipStream, I Seek() to the beginning of the MemoryStream before re-setting the BrokeredMessage’s Stream.

Like this…

var member = typeof(BrokeredMessage).GetProperty("BodyStream", BindingFlags.NonPublic | BindingFlags.Instance);

using (var dataStream = (Stream)member.GetValue(msg))

{

var decompressedStream = new MemoryStream();

using (var compressionStream = new GZipStream(dataStream, CompressionMode.Decompress))

{

compressionStream.CopyTo(decompressedStream);

}

decompressedStream.Seek(0, SeekOrigin.Begin);

member.SetValue(msg, decompressedStream);

}

Ok, that’s it! At least for the reflection-based methods. Let’s have a look at the ones that don’t use reflection…

Just as in the previous post, I will be extracting the body as the type defined. I then use Json.NET to serialize the object. I then use GZipStream to compress the string.

So let’s take it step by step… First I read the body based on the generically defined type. I then serialize it using Json.NET. Like this

var bodyObject = msg.GetBody<T>();

var json = JsonConvert.SerializeObject(bodyObject);

Once I have the Json, I create a MemoryStream to hold the data, and then use the same method as shown before to compress it. The main difference is that I don’t re-set the Stream on the BrokeredMessage; instead I convert the compressed data to a Base64 encoded string and use that as the body for a new message. Finally, I copy across all the properties for the message.

There is a tiny issue here in my download, which is also present in the download for the previous blog post. It only copies the custom properties, it does not include the built in ones… But hey, it is demo code…

Ok, so it looks like this

BrokeredMessage returnMessage;

var bodyObject = msg.GetBody<T>();

var json = JsonConvert.SerializeObject(bodyObject);

using (var dataStream = new MemoryStream(Encoding.UTF8.GetBytes(json)))

{

var compressedStream = new MemoryStream();

using (var compressionStream = new GZipStream(compressedStream, CompressionMode.Compress))

{

dataStream.CopyTo(compressionStream);

}

returnMessage = new BrokeredMessage(Convert.ToBase64String(compressedStream.ToArray()));

}

CopyProperties(msg, returnMessage);

And the decompression is not much more complicated. It reads the body of the message as a string. It then gets the byte[] of the string by using the Convert.FromBase64String() method. Those bytes are then wrapped in a MemoryStream and decompressed using GZipStream. The resulting byte[] is converted back to a string using Encoding.UTF8.GetString(), and then deserialized using Json.NET. The resulting object is set as the body for a new BrokeredMessage right before the rest of the properties are copied across.

BrokeredMessage returnMessage;

var body = msg.GetBody<string>();

var data = Convert.FromBase64String(body);

using (var dataStream = new MemoryStream(data))

{

var decompressedStream = new MemoryStream();

using (var compressionStream = new GZipStream(dataStream, CompressionMode.Decompress))

{

compressionStream.CopyTo(decompressedStream);

}

var json = Encoding.UTF8.GetString(decompressedStream.ToArray());

returnMessage = new BrokeredMessage(JsonConvert.DeserializeObject<T>(json));

}

CopyProperties(msg, returnMessage);

That’s it! That is all the code needed to compress and decompress BrokeredMessages.
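The non-reflection round trip can also be tried without a Service Bus namespace. The sketch below is an illustration only: CompressedText, Pack and Unpack are hypothetical names, and a plain string stands in for the Json.NET output so that the example has no external dependencies.

```csharp
using System;
using System.IO;
using System.IO.Compression;
using System.Text;

public static class CompressedText
{
    // string -> UTF-8 bytes -> GZip -> Base64 string (usable as a message body).
    public static string Pack(string text)
    {
        var compressed = new MemoryStream();
        using (var source = new MemoryStream(Encoding.UTF8.GetBytes(text)))
        using (var gzip = new GZipStream(compressed, CompressionMode.Compress))
        {
            source.CopyTo(gzip);
        }
        // The GZipStream is disposed at this point, so all compressed data is written.
        return Convert.ToBase64String(compressed.ToArray());
    }

    // Base64 string -> bytes -> GZip decompress -> UTF-8 string.
    public static string Unpack(string packed)
    {
        var decompressed = new MemoryStream();
        using (var source = new MemoryStream(Convert.FromBase64String(packed)))
        using (var gzip = new GZipStream(source, CompressionMode.Decompress))
        {
            gzip.CopyTo(decompressed);
        }
        return Encoding.UTF8.GetString(decompressed.ToArray());
    }

    public static void Main()
    {
        // Stand-in for the serialized JSON; any string works here.
        var json = "{\"paragraphs\":[\"lorem ipsum\",\"lorem ipsum\",\"lorem ipsum\"]}";

        var packed = Pack(json);
        var unpacked = Unpack(packed);

        Console.WriteLine("Original chars: {0}, packed chars: {1}", json.Length, packed.Length);
        Console.WriteLine("Round trip intact: {0}", unpacked == json);
    }
}
```

Keep in mind that Base64 encoding makes the compressed bytes about a third larger again, so for very small payloads the packed string can end up longer than the original text.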

To try it out, I created a little demo application. It uses a ridiculous class to break down text into paragraphs, sentences and words to generate an object to send across the wire.

I decided to create something quickly that would generate a largish object without too much work, and string manipulation seemed like a good idea, as I can just paste in lots of text to get a big object. It might not properly show the efficiency of the compression as text compresses very well, but it was easy to build. But do remember that different objects will get different levels of compression…

The result of the compression is quite cool. Running it on a message that by default is 72,669 bytes compresses it down to 3,359 bytes, roughly 4.6% of the original size. Once again, it is a lot of text and so on, but to be fair, the data before compression is binary XML, which is also text…

So the conclusion is that compressing the messages isn’t that hard, but you will potentially gain a lot from doing it. Not only for when your messages break the 256 KB limit, but also for smaller messages as they will be even smaller and thus faster across the network…

As usual, there is source code for download. It is available here: DarksideCookie.Azure.Sb.Compression.zip (216.65 kb)

Haishi Bai (@haishibai2010) posted A Tour of Windows Azure Active Directory Features in Windows Azure Management Portal – Logging in and Enabling Multi-factor Authentication on 3/5/2013 (missed when posted):

With the new release of the Windows Azure Management Portal (Scott Gu’s announcement), you can now manage your Windows Azure AD objects in the management portal itself (team announcement). This post provides a guided tour of these exciting new features. First, we’ll briefly review what Windows Azure Active Directory is. Then, we’ll show how to get a Windows Azure subscription that is linked to your Windows Azure AD tenant. Next, we’ll go through the basics, starting with the correct steps to authenticate in order to see these new features. Finally, we’ll go through the steps of setting up multi-factor authentication for managing your Windows Azure subscriptions as well as using it in your own ASP.NET applications.

What is Windows Azure Active Directory

Windows Azure Active Directory is a REST-based service made by Microsoft that provides a multi-tenant directory service for the cloud. You can either project users from your on-premises Active Directory to your Windows Azure AD tenant, or start with a fresh Windows Azure AD tenant and keep the entire directory in the cloud. Windows Azure Active Directory is designed to give SaaS application developers a low-cost (actually, it’s completely free!), scalable, and cloud-ready directory service that they can easily leverage in their applications. It provides WebSSO capabilities with WS-Federation and SAML 2.0. It also provides a REST-based Graph API that you can use to query directory objects. Microsoft has published a lot of content about Windows Azure Active Directory. I did a survey a while ago, and I recommend reading through the resources listed in it if you want to learn more about Windows Azure Active Directory.

A Subscription with a Tenant

At the time of writing, you need to create a new Windows Azure subscription using your Windows Azure AD tenant credential. If you are an Office365 user, you already have a Windows Azure AD tenant. If you want to sign up for a free testing tenant, you can follow this link. Once you’ve provisioned an administrator for your Windows Azure AD tenant, you can use that account to log in to www.windowsazure.com to get a new Windows Azure subscription associated with the tenant.

Basics

- Go to https://manage.windowsazure.com.

- Instead of typing in your user id and password in the log in form, click on Office 365 users: sign in with your organizational account link to the left of the screen:

- Log in with your Windows Azure Active Directory tenant account:

- Once you log in, click on ACTIVE DIRECTORY link in the left pane. You’ll see two tabs on the page: one is ENTERPRISE DIRECTORY, where you manage your tenant objects; the other is ACCESS CONTROL NAMESPACES, where you manage your ACS namespaces:

- Click on the tenant name. You’ll enter the page where you can manage your users, domains, as well as directory sync with on-premises directories:

- Now, with USERS tab selected, let’s click on CREATE button on command bar to create a new user.

- Fill out the form. At this point you can choose to assign the user to one of two pre-defined roles, User or Global Administrator. Only Global Administrators can manage your Windows Azure AD tenants. Here we’ll choose User role. Click next arrow to continue.

- The new user will be assigned with a temporary password, which has to be changed when the user first logs in. Click on Create button to display the temporary password:

- Here you can choose to copy the password to clipboard and share the password manually, or to directly send the password to up to 5 mail addresses for the new user. Click check button to complete the process.

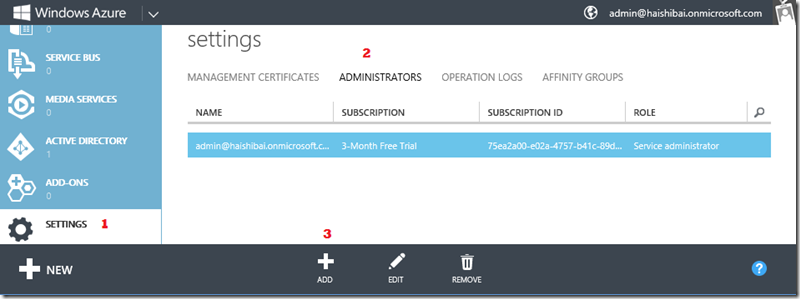

- To allow this new user to log in to management portal, we need to add him as a co-administrator of our subscription. To do this, click on SETTINGS in the left pane (1), then make sure ADMINISTRATORS tab is selected (2), and finally click on ADD button in command bar (3).

- Enter the email address of this new user, select the subscription(s) you want to grant access to, and then click on check icon to complete the process.

- Now you can log out and log in using the new user account. You’ll have to change your password when you log in for the first time:

- Note that because you are now logged in as a User, you can’t manage your Windows Azure AD tenant using this account. When you click on ACTIVE DIRECTORY in the left pane, you only see your ACS namespaces but not tenant objects.

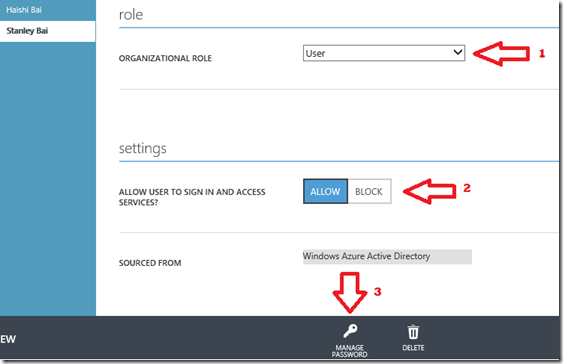

- Now, log back in as an administrator. Go back to ACTIVE DIRECTORY, open your AD tenant, and click on the user name to bring up his profile. Here you can promote the user to administrator (1), block the user from accessing the portal (2), or reset the user’s password (3).

- Just for fun, let’s block the account, save changes, and log out. Then, when you try to log in using the account, your access is denied:

2-Factored Authentication – the # key is your friend!

Many of our customers have requested the ability to enable 2-factored authentication when users try to access the management portal. Now this has been enabled and the configuration is really easy.

- The 2-factored authentication only applies to Global Administrators. So first let’s promote the user we just created to administrator. The following screenshot shows that I’ve changed the user role to Global Administrator (1), entered an alternative email address as requested (2), checked Require Multi-factor Authentication (3), and I’ve also kindly unblocked the user from logging in (4). Once all these are done, click SAVE (5) to save the changes.

- Log out and try to log in using the account. You’ll see something different this time, telling you the user has not been configured for multi-factor authentication yet. Click on Set it up now to continue.

- You’ll see the page where you are asked to enter a mobile phone number. Enter your phone number (make sure your phone is accessible to you) and click on Save.

- A computer will call you and ask you to press the # key on your phone. Press the # key to finish verification. Once verification succeeds, click the Close button to complete this step:

- Now the log in process continues. A computer will call you again to ask you to press the # key. Answering the call and pressing the # key allows you to log in to the management portal. From now on, whenever you try to log in using this user account, you’ll need to answer the phone and press the # key as the second authentication factor before you are granted access to the portal. That’s pretty cool, isn’t it?

A code sample

Finally, let’s look at a simple code sample that leverages multi-factor authentication in your own web applications. It’s amazingly easy:

- If you haven’t done so, download and install Microsoft ASP.NET Tools for Windows Azure Active Directory – Visual Studio 2012 (preview). Note, before installing ASP.NET Tools for Windows Azure AD you need to install ASP.NET and Web Tools 2012.2 Update first. For more details please read here.

- Launch Visual Studio, create a new ASP.NET MVC 4 Internet application.

- Click on PROJECT –> Enable Windows Azure Authentication … menu.

- You’ll be asked to enter your Windows Azure AD tenant. Enter your Windows Azure AD domain, and click Enable:

- A log in window shows up. Log in as an administrator of your tenant.

- And it’s done! Click Close to close the dialog box.

- Press F5 to launch application.

- Log in as a user for whom you’ve already configured multi-factor authentication. You’ll get the same 2-factored authentication experience using the combination of your credential and mobile phone.

<Return to section navigation list>

Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

‡ Tyler Doerksen (@tyler_gd) posted a Web Camp Session Video – Websites in the Cloud on 3/15/2013 (missed when published):

Windows Azure Web Sites is a simple, yet powerful platform for web applications in the cloud. In this session you will see how you can build and deploy everything from WordPress blogs to high scale web applications using your favorite languages, frameworks and tools.

Part of the Developer Movement Web Camp

Contents

- Comparing Azure Virtual Machines, Cloud Services, and Websites

- Websites – start simple, code smart, go live

- Hello World Demo

- Modern Apps Demo – deploying from git and using the cross-platform command line interface

- Diagnostics and Scale Demo

- Shared Instances

- Reserved Instances

- Website Gallery and WordPress Demo

- Supported Frameworks – ASP, .NET, PHP, and Node.js

- Publishing Methods – FTP, TFS, WebDeploy, and git

- Partners

- Recap

- Closing

Jim O’Neil (@jimoneil) continued his series with Practical Azure #15: Windows Azure Virtual Machines (Part 2) on 3/15/2013:

We looked at Windows Azure Virtual Machines in the last episode from the perspective of creating a VM from a base operating system image, installing software (the Mongoose HTTP server) and configuring some settings, such as the firewall to enable HTTP traffic.

In this follow-on episode, I take that same example a step further, showing how to create an image from the VMs created last time, so that you can reuse it as a template for additional VM instances.

Download: MP3, MP4 (iPod, Zune HD), High Quality MP4 (iPad, PC), Mid Quality MP4 (WP7, HTML5), High Quality WMV (PC, Xbox, MCE)

Here are the Handy Links for this episode:

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

‡ Tyler Doerksen (@tyler_gd) posted a .NET Camp Session Video – Azure Cloud Services on 3/15/2013 (missed when published):

Windows Azure Cloud Services offers a great way to logically separate your applications and scale the components up and out independently and easily. In this session you will get a complete overview of Cloud Services and a clear understanding of Web Roles and Worker Roles. Furthermore you’ll see how Visual Studio 2012 and the Windows Azure SDK makes it easy to develop and test your cloud services locally.

Part of the Developer Movement .NET Camp

Contents

- Introduction

- Azure Ecosystem

- Cloud Services Overview

- Why a Cloud Service?

- What is a Cloud Service? Web and Worker Roles

- What can it run? Languages and Frameworks

- Web Roles

- Worker Roles

- Role Lifecycle

- Roles and Instances

- Fault Domains

- Upgrade Domains

- Hello World Demo

- Packaging and Configuration

- Deployment

- Application Upgrade Strategies

- Service Management Demo

- Cloud Development Lifecycle

- Team Foundation Service integration

- TFS Online and Azure Demo

- Diagnostics Demo

- Closing

‡ Brady Gaster (@bradygaster) reported WebMatrix Templates in the App Gallery on 3/19/2013:

If you’ve not yet used WebMatrix, what are you waiting for?!?!?!? You’re missing out on a great IDE that helps you get a web site up and running in very little time. Whether you’re coding your site in PHP, Node.js, or ASP.NET, WebMatrix has you covered with all sorts of great features. One of the awesome features of WebMatrix is the number of templates it has baked in. Starter templates for any of the languages it supports are available, as are a number of practical templates for things like stores, personal sites, and so on. As of the latest release of Windows Azure Web Sites, all the awesome WebMatrix templates are now also available in the Web Sites application gallery. Below, you’ll see a screen shot of the web application gallery, with the Boilerplate template selected. [Emphasis added.]

Each of the WebMatrix gallery projects is now duplicated for you as a Web Site application gallery entry. So even if you’re not yet using WebMatrix, you’ll still have the ability to start your site using one of its handy templates.

Impossible, you say?

See below for a screenshot from within the WebMatrix templates dialog, and you’re sure to notice the similarities that exist between the Application Gallery entries and those previously only available from directly within WebMatrix.

As before, WebMatrix is fully supported as the easiest Windows Azure-based web development IDE. After you follow through with the creation of a site from the Application Gallery template list from within the Windows Azure portal, you can open the site live directly within WebMatrix. From directly within the portal’s dashboard of a new Boilerplate site, I can click the WebMatrix icon and the site will be opened up in the IDE.

Now that it’s open, if I make any changes to the site, they’ll be uploaded right back into Windows Azure Web Sites, live for consumption by my site’s visitors. Below, you’ll see the site opened up in WebMatrix.

If you’ve not already signed up, go ahead and sign up for a free trial of Windows Azure, and you’ll get 10 free sites (per region) to use for as long as you want. Grab yourself a free copy of WebMatrix, and you’ll have everything you need to build – and publish – your site directly into the cloud.

•• Chris Kanaracus (@chriskanaracus) asserted “The arrival in June of Azure ERP deployment options will usher in some changes” in a deck for his FAQ: Inside Microsoft's cloud ERP strategy article of 3/20/2013 for NetworkWorld:

Microsoft has announced some key details of how it will introduce Dynamics ERP (enterprise resource planning) software products to the cloud computing model, from initial release dates to the precise role of partners.

Dynamics NAV 2013 and GP 2013, both of which cater to smaller and midsized companies, will be available on Microsoft's Azure cloud service through partners in June, Microsoft announced this week at the Convergence conference in New Orleans. Microsoft also affirmed that the next major version of AX, its enterprise-focused product, will be available on Azure. [Emphasis added.]

Here's a look at some of the biggest questions raised by those announcements.

Partners have long hosted Dynamics software and made their own data center optimizations, so what do they stand to gain, as well as lose, from the Azure deployment option?

"It's all the things you can expect from an enterprise-grade cloud service," namely "stability, protection, security and the ability to scale and grow," said Kirill Tatarinov, president of Microsoft's Business Solutions division, in an interview at Convergence. "Azure is elastic. The fact they can grow without adding servers is huge for them."

In addition, it's all business as usual for partners with respect to Dynamics consulting on Azure. Dynamics ERP is sold through channel partners, many of whom add extensions that fine tune the software for a particular customer's needs. That won't change, and partner-built extensions will also run on Azure.

But will Azure save people money over regular hosting or on-premises installations?

They will, according to Tatarinov. "The cost variables in the cloud change so rapidly between different providers and suppliers, but Azure is and will continue to be cost-competitive," he said. "And ultimately, yes, they will benefit."

That's not to say Microsoft intends Azure as a be-all, end-all cloud platform for Dynamics ERP, said Michael Ehrenberg, a Microsoft technical fellow who oversees development of the Dynamics products, in an interview at Convergence.

"Our target is to be the best provider at volume, at scale," he said. Some hosting partners have specialized offerings, such as for local legal requirements, a higher service-level agreement, or extensive hands-on support; Microsoft won't try to supplant them with Azure, he said. "That's where the partner hosts have a long-term model."

"We have some partners that host today, but there's nothing really special about it," Ehrenberg added. "We expect most of them to transition [to Azure]."

Saving money is nice, but is it the only point of the Azure option?

"Now the customer's dealing with their partner, the software is from Microsoft, and there's some third-party [hosting service] like Rackspace," Ehrenberg said. "This is more, let's give them the comfort of infrastructure run by Microsoft. Now, I'm dealing with my partner and Microsoft." That said, "over time we expect that our costs in the cloud will become super-competitive," he added.

Why did the availability date for NAV and GP on Azure slip from December 2012 to this June?

There are a few reasons for this, according to Ehrenberg. First of all, the bulk of customers on those products run them on-premises, so Microsoft ended up putting off some work needed to cloud-enable GP and NAV in order to ensure the on-premises version shipped on time, he said. (NAV 2013 was released in October and GP 2013 in December.)

Secondly, Microsoft wanted to make sure the Azure rollout would go right, he added. "We ended up adding a bunch of time to get partners ready," he said, mentioning that other vendors made "early promises" about cloud ERP that "didn't end up happening," in an apparent allusion to SAP's Business ByDesign.

SAP launched ByDesign several years ago with great fanfare but ended up having to scale back the rollout and do some retooling in order to ensure it could make money selling the on-demand software suite at scale.

Microsoft is wise to take its time before opening up access to Dynamics ERP on Azure, both for that reason as well as the mission-critical nature of ERP systems, a factor that has resulted in the software category lagging others when it comes to cloud deployments. Microsoft is confident that all will be ready for launch in June, according to Ehrenberg.

How will Microsoft handle AX on Azure?

The next major release of Dynamics AX will get an Azure option as well. That product is scheduled for early-adopter access in 2014. AX played a prominent role at Convergence as Microsoft, in a shot across the bow of Oracle and SAP, showcased how global cosmetics maker Revlon was standardizing its business on AX.

Microsoft recently rolled out an update to AX that made it possible to run a company on a single global instance of the software, and is hoping to win many more deals like the Revlon one.

"That is essentially going to be first time ever in the industry where someone delivers the ability to run their full business end-to-end in the cloud," Tatarinov claimed during a session at Convergence this week.

While AX partners will play mostly similar roles with respect to Azure on AX, one key difference is that Microsoft will handle the billing and subscription relationship with a customer, rather than having the money flow through partners. Microsoft may desire a greater level of control because AX customers could have more complex licensing agreements that will need to be mapped to the Azure pricing model.

Will Dynamics in the cloud shake up the product release cycle?

Cloud software vendors tend to roll out new features a number of times each year, taking advantage of the deployment model's flexibility, rather than deliver big-bang releases every two or three years.

For Dynamics, Microsoft has done a large release in that time frame while also issuing smaller service packs in between, Ehrenberg said. The cloud option will have an influence on that schedule, he said.

"We will definitely be on a faster cadence," he said. However, "for me, quarterly [updating] just doesn't make sense for these kinds of apps," Ehrenberg added. ..

Scott Bekker (@scottbekker) posted Tales of Partner Successes in Microsoft's 'Cloud OS' to his Redmond Channel Partner column on 3/18/2013:

The combination of Windows Server 2012, System Center 2012 SP1 and Windows Intune has given Microsoft partners new opportunities to expand their businesses into the cloud. Here are a few examples.

Alan Bourassa has a double identity in his job at EmpireCLS Worldwide Chauffeured Services.

On the one hand, he's the chief information officer for a luxury limo service with more than 1,000 employees that offers chauffeur services in about 700 cities around the world. With more than 30 years in business, the company has established a polished reputation that attracts senior executives of Fortune 500 companies shuttling to airports and Hollywood celebrities on their way to the red carpet at events like the recent Golden Globes.

In other words, Bourassa runs an IT operation with global enterprise requirements, including hardware and software for three datacenters. Through the lens of the Microsoft ecosystem, that makes EmpireCLS a high-level customer for Microsoft and a potential customer for Microsoft partners.

At the same time, Bourassa is the CIO of EmpireCLS, the startup, Software as a Service (SaaS) provider offering a proprietary dispatch and reservation system to other ground transportation companies. Looked at that way, EmpireCLS is becoming a Microsoft partner itself.

SaaS on the Rise

EmpireCLS manifests a predicted result of trends toward cloud -- the emergence in the SaaS channel of vertically focused companies with highly specialized expertise. Many observers expected that some of those companies would turn their proprietary solutions into SaaS offerings for other companies in their own industries. The recent release of Microsoft System Center SP1 puts a finishing touch on a set of integrated technologies, including Windows Server 2012 and Windows Azure, that Microsoft calls the cloud OS. It's that environment that EmpireCLS and other forward-looking companies are using to broaden their own definitions of what their businesses do.

"Over the last several years, we've been transforming our business from just a luxury ground transportation company to a world-class hosting provider, targeting and specializing specifically in the ground transportation segment," Bourassa said in a January news conference organized by Microsoft for the System Center SP1 launch.

"We now offer Software as a Service for our proprietary dispatch and reservation systems that we built, and Infrastructure as a Service to those companies specializing in the ground transportation industry," he said. "We took an existing software asset from our company and developed it and used it in a business model with our intellectual property and capital to make a public cloud offering to build on that model for our cloud services to maximize our original software investment."

Last April, EmpireCLS allocated about 90 percent of its datacenter resources for the legacy business and 10 percent for the new SaaS business. Since then, EmpireCLS has crossed the milestone of 550 customers and vendors on its combined private, public and Windows Azure cloud services offering. [Emphasis added.]

In another year, the company has said it expects the split of its cloud OS dedicated to private versus public use will be 50-50.

And as go the datacenter resource allocations, so go the revenues. The luxury chauffeur business may have taken 30 years to reach its current revenue levels, but executives have high hopes for online services such as the dispatch/reservation system and other offerings, such as BeTransported.com, an online rate-shopping ground transportation service.

"We actually expect that over the next several years, more than 50 percent of our total revenue is going to come from this new business transformation and venture," Bourassa said.

The possibilities for new business for EmpireCLS came about during a technical transformation of the company's datacenters from pure Unix shops to the Microsoft platform. EmpireCLS started standardizing its datacenter on the Windows Server 2008 OS in 2008. A year later, EmpireCLS upgraded to the R2 release and began using Hyper-V to virtualize its operations. Other important Microsoft technologies for the company at that stage included live migration, Cluster Shared Volume (CSV) features, System Center Virtual Machine Manager (VMM) 2008 R2 and Remote Desktop Services for virtual desktop infrastructure (VDI).

The first release of System Center 2012 allowed EmpireCLS to create the scalable private cloud that began to allow the pooling, provisioning and de-provisioning that would let the company create its new hosting-style businesses without adding IT staff.

"Without Microsoft's total cloud vision, we'd never have been able to have accomplished this. This has allowed us to expand our business model and to become a public hosting provider in the cloud," Bourassa said.

Super Service Pack

A service pack release is usually a relatively minor milestone on a Microsoft roadmap. System Center 2012 SP1, however, is not your standard service pack. System Center 2012 SP1 lights up much of the functionality of the cloud OS. One major way it does so is that it's the first version of System Center 2012 to support Windows Server 2012.

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

‡ Beth Massi (@bethmassi) explained Master-Detail Screens with the LightSwitch HTML Client on 3/21/2013:

Since we released the latest version of the LightSwitch HTML Client a couple weeks ago, the team has been cranking out some awesome content on the LightSwitch Team blog around the new capabilities as well as some good How-To’s. And there’s a lot more coming!

If you’re just getting started with the HTML client, see: Getting Started with LightSwitch in Visual Studio 2012 Update 2

In this post I want to show you how you can use the LightSwitch Screen Designer to create master-detail screens the way you want them. The Screen Designer is very flexible in allowing you to customize the screen layouts; it just takes some practice. In fact, there are so many goodies in the Screen Designer I won’t be going over all you can do (look for some videos on that soon). Instead I want to focus on a couple of different ways you can present master-detail screens. What inspired me to write this post was Heinrich’s brilliant article on Designing for Multiple Form Factors, which explains how LightSwitch does all the heavy lifting for you to determine the best way to lay out content on a screen depending on the screen size.

I’ll present a couple different ways you can design master-detail screens to get your creative juices flowing, but keep in mind these are just suggestions. In the end you have total flexibility to create screens the way you need with your data and users in mind.

Setup our Data Model

I’m going to use an application that we built in the Beginning LightSwitch Series and extend it with a mobile client. It’s a very simple application that manages contacts or business partners. This would typically be part of a larger business system but for this example it works well because there are multiple one-to-many relationships. Download the Sample App and feel free to follow along at home!

Here’s our data model. A Contact has one-to-many relationships with its PhoneNumbers, EmailAddresses and Addresses.
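For readers who think in types, the relationships can be sketched as follows. This is only an illustration, not what LightSwitch generates: the entity names and the FirstName, LastName, Email, Email Type, City, State and ZIP fields come from the post, while the remaining field names (`phone`, `phoneType`, `street`) and the `countChildren` helper are assumptions made up for the example.

```typescript
// Hypothetical shapes for the sample's entities. Only the entity names and the
// fields mentioned in the post are taken from it; the rest are assumed.
interface PhoneNumber {
  phone: string;      // assumed field name
  phoneType: string;  // assumed field name
}

interface EmailAddress {
  email: string;
  emailType: string;
}

interface Address {
  street: string;     // assumed field name
  city: string;
  state: string;
  zip: string;
}

// One Contact owns many PhoneNumbers, EmailAddresses and Addresses.
interface Contact {
  firstName: string;
  lastName: string;
  phoneNumbers: PhoneNumber[];
  emailAddresses: EmailAddress[];
  addresses: Address[];
}

// Hypothetical helper: total number of child records attached to a contact.
function countChildren(contact: Contact): number {
  return (
    contact.phoneNumbers.length +
    contact.emailAddresses.length +
    contact.addresses.length
  );
}
```

Each array is the "many" side of one relationship, which is why the screens later in the post show one list per child entity.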

Add an HTML Client “Home” Screen

First we need to upgrade this application to take advantage of the new HTML client, then we’ll be able to add a screen that users will see when they launch the app.

In the Solution Explorer, right-click on the project and select “Add Client…”.

Name the client “HTMLClient” and click OK. This will upgrade your project from LightSwitch version 2 to version 3. You’ll now see a node called “HTMLClient (startup)” in the Solution Explorer. Right-click on that and select “Add Screen…” and choose the Browse Data Screen template. Select the “SortedContacts” query as the Screen Data and name the screen “Home” then click OK.

Next, let’s modify what we’re showing in the list. In the Screen Designer, change the Summary control to a Columns Layout. A Summary control displays the first string field defined on the entity in the Data Designer. However, we want to see both the FirstName & LastName, so a Columns Layout will do this for us.
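The difference between the two controls can be pictured with a small sketch. This is not LightSwitch’s implementation; `summaryText` and `columnsText` are hypothetical functions that only mimic the behavior described above, assuming an entity is a plain object whose properties appear in the order they were defined.

```typescript
// Illustrative only: mimics the described behavior, not the LightSwitch runtime.
type Entity = Record<string, unknown>;

// Like a Summary control: show the first string-valued field on the entity.
function summaryText(entity: Entity): string {
  for (const key of Object.keys(entity)) {
    const value = entity[key];
    if (typeof value === "string") {
      return value;
    }
  }
  return "";
}

// Like a Columns Layout: show several chosen fields side by side.
function columnsText(entity: Entity, fields: string[]): string {
  return fields
    .map((field) => (entity[field] === undefined ? "" : String(entity[field])))
    .join(" ");
}
```

With a contact whose first string field is the first name, the summary would show only that name, while the columns version given both name fields would show the full name.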

Run the application (F5). Since there’s already some data in this sample, you’ll see a few contacts displayed in the list.

Now let’s see a couple ways in which we can present the contact details as well as their related data, PhoneNumbers, EmailAddresses & Addresses.

Master-Details with Tab Controls

Close the app and return to the Screen Designer for the Home screen. Select the List control and in the Properties Window set the Item Tap action by clicking on the link.

Then select the SortedContacts.viewSelected method and click OK to create a new screen.

This will open the familiar Add New Screen dialog with the View Details Screen already selected. Make sure to check off the additional data to include the EmailAddresses, PhoneNumbers and Addresses. Then click OK.

By default, LightSwitch will set up the contact details and all the related children into separate Tab controls. When we run the app again, the tabs appear across the top in a scrollable/swipe-able container.

As we open each tab, you will see a simple list of items displayed.

Let’s see how we can customize these lists so that a user can see all the data at a glance and so that the data is formatted better.

Back in the Screen Designer for the ViewContact screen, first select the Email Addresses List and change it to a Tile List. This will automatically change the Summary control to a Rows Layout and will set the Email field to display as a formatted, clickable email address. Let’s also de-emphasize the Email Type field by setting its Font Style to “Small”.

Repeat the same process for Phone Numbers and Addresses. For Addresses, in order to show the City, State & ZIP on one line of the tile, select the Rows Layout and under the field list click + Add, and select New Group. Then change the control to a Columns Layout and add the City, State & ZIP fields to that group. In the end, your screen’s content tree should look like this.

Now when we run it, you’ll see our data displayed and formatted much better.

Using Tab controls for master-details screens is a good idea when you will potentially have a lot of child data to display or you are targeting smaller mobile devices like phones. This limits the amount of (potentially overwhelming) information that a user has to view at once.

Editing Data in Tabs

Now let’s see how we can edit our data using the Tab design. Go back to the ViewContact screen and under the Details Tab expand the Command Bar and click +Add to add a button. Select the existing method Contact.edit and click OK to create a new screen.

The Add New Screen dialog will open with an Add/Edit Details Screen template automatically selected with the data we want. This time I’m not going to select all the related children because we’ll be editing each tab of data separately in this design.

Click OK and this will open the Add Edit Contact screen in the designer. Go back to the ViewContact screen by clicking the back arrow at the upper left hand side of the designer.

Select the Edit Contact button we just added and change the icon to “Edit”.

Now when we run the application you will see the Edit Contact button in the command bar and clicking it will bring up the Edit screen for our contact.

Now let’s provide editing of data for the rest of our tabs. Go back to the ViewContact screen, select the Tile List control for Email Addresses, set its Tap action to the existing method EmailAddresses.editSelected, and click OK to create a new screen.

Accept the defaults on the Add New Screen dialog by clicking OK. This will navigate the Screen Designer to the new AddEditEmailAddresses screen. Drag the Email & Email Type fields under the Command Bar and delete the Columns Layout control. We don’t need to display the Contact to the user because it is automatically set for us from the previous screen.

Now go back to the ViewContact screen by clicking the back arrow at the upper left hand side of the designer again. Next select the Command Bar directly above the Tile List and add a new button. Choose the existing method EmailAddresses.addAndEditNew. Notice LightSwitch will correctly suggest that we use the same screen we just created. Click OK.

Select the button and this time set the Icon to “Add” in the properties window. Repeat these steps for PhoneNumbers and Addresses to add editing and adding capabilities to the rest of the tabs. Now when we run it and edit a Contact, you will see a button in the command bar of each of the tabs that allows you to add new child records. If you click an item in the Tile List, you will see the edit screen instead.

As I mentioned, this technique of allowing targeted edits of data in separate tabs is useful when displaying large amounts of data to users with small form factor mobile devices. Typically users will not be entering large amounts of data but instead be “tweaking” (making small edits to) what data is already there.

However, the screen designer is not limited to this screen flow. If you want to display more data on a screen you can totally do that. For instance, in our example it’s unlikely we will have more than three phone numbers, email addresses or addresses for a contact. It might make better sense to show everything on one screen, even if it is a phone, and just have the user scroll the screen vertically. Or maybe we’re targeting tablet users primarily and we don’t want to force them to enter the data on small screens with lots of clicks to the Save button. If we’re entering contact information, then most likely we want to capture all the data at once. Let’s see how we can do that.

Master-Details Full Screen Alternative

First let’s change our View Contact screen. Drag all the Add buttons from the child tabs’ Command Bars to the top Command Bar. Then drag each of the Tile Lists into the Details Tab and delete the now empty child tabs. Finally, select the Tile Lists and in the properties window check the “Show Header” checkbox.

This allows the user to see all the information at once. LightSwitch will automatically adjust the screen layout as necessary as we resize our browser. This particular example works well for smaller sets of data because we’ll never have more than a handful of child data.

Here’s what it looks like on my phone when I scroll to the children. Notice the command bar is always shown on the bottom no matter where you scroll. Also if I tap the email link it will start an email automatically and when I tap the phone number link the phone will ask to dial the number. But if I tap on the tile, it will open the associated Edit screen.

You can also add specific buttons to edit the lists instead of requiring the user to tap on the tiles. In fact, you don’t even need to put buttons in the Command Bar. In the Screen Designer, you can add a button anywhere on a screen simply by right-clicking on any item in the content tree or by selecting “Add Layout Item” at the top of the Screen Designer and choosing “Button…”.

What about adding a new contact? Let’s go back to our Home screen and add that ability. Since the user is almost always going to add additional data when entering a new contact, let’s create a screen we can use for new contacts that allows us to enter them in one dialog.

This sample includes a permissions check that controls inserts into the contact table. We’ll need to grant that permission for our debug session if we want to test this, so open up the project properties and on the Access Control tab, check off “Granted for Debug” on the app-specific permissions.

Now open the Home Screen and add a button to the Command Bar. Select the existing method SortedContacts.addAndEditNew then select (New Screen…) to Navigate to.

This time go ahead and include all the related data on the screen and name the screen AddContact. Click OK.

We’ll set this screen up much like the ViewContact screen, but this time we’ll allow editing of all the data fields. First add “Add” buttons to the top Command Bar so the user can add Email Addresses, Phone Numbers and Addresses to their new contact all on one screen. Select the existing methods .addAndEditNew and go with the suggested Navigate To screens we added previously.

Now just like before, change the Lists to Tile Lists in order to display all the fields in the Tile. This time however, change the field controls to editable controls.

Next drag each of the Tile Lists into the Details Tab and then delete the now empty child tabs. Finally, select the Tile Lists and in the properties window check the “Show Header” checkbox.

You should end up with the screen content tree looking like this.

And now when we run this and add a Contact, you are able to enter all the data at once on one screen.

By default, all edit screens are shown as dialogs. As the screen size decreases, LightSwitch will show the dialog as full screen. However, in this case we probably want to force it so that as much of the screen is shown to the user as possible all the time. Particularly for tablet form factors with larger screens, it would be better to show this screen in full view.

We can force the screen to display as full screen by opening the Screen Designer and selecting the screen node at the top. Then in the properties window uncheck “Show as Dialog”.

Now when we run the app again and add a new contact, the screen takes up the full width & height of the browser.

Wrap Up

I hope I showed you a couple of different ways you can create master-detail screens with the LightSwitch HTML client (and we did it without writing any code!). Of course you can pick and choose which techniques apply to your situation or come up with a completely different approach. The point is that the screen designer is very flexible in what it can do; it just takes a little practice.

In closing, here are some tips to keep in mind:

- LightSwitch will automatically adjust the screen layout as necessary for different form factors.

- Using Tab controls for master-details screens is a good idea when you will potentially have a lot of child data to display or you are targeting smaller mobile devices like phones. This limits the amount of information that a user has to view/edit at once.

- Showing all the data on a screen works well for smaller sets of data where you’ll never have more than a handful of child data to view/edit at once (like in our example) or when your users are using larger devices like tablets.

I should also point out that there are a lot of hooks for creating custom controls and styling of the app that you can utilize for your unique situations. Maybe I’ll dig into that in a future post.

•• Rowan Miller posted Entity Framework Links #4 on 3/18/2013:

This is the fourth post in a regular series to recap interesting articles, posts and other happenings in the EF world.

Entity Framework 6

Since our last EF Links post we announced the availability of EF6 Alpha 3. Of particular note, this preview introduced support for mapping to insert/update/delete stored procedures with Code First and connection resiliency to automatically recover from transient connection failures.
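To make the idea of connection resiliency concrete, here is a generic retry-with-backoff sketch. It is not the EF6 API and does not reflect how EF6 implements the feature; `retryTransient`, its parameters, and the exponential delay schedule are all assumptions chosen for illustration. The `wait` hook is also hypothetical: it lets the caller decide how to pause between attempts.

```typescript
// Generic illustration of retrying an operation after transient failures.
// Not EF6 code; every name and default here is an assumption.
function retryTransient<T>(
  op: () => T,
  isTransient: (err: unknown) => boolean,
  maxAttempts: number = 3,
  baseDelayMs: number = 100,
  wait: (ms: number) => void = () => {}
): T {
  for (let attempt = 1; ; attempt++) {
    try {
      return op();
    } catch (err) {
      // Give up on non-transient errors or once the attempts are used up.
      if (attempt >= maxAttempts || !isTransient(err)) {
        throw err;
      }
      // Back off exponentially: baseDelayMs, then 2x, then 4x, and so on.
      wait(baseDelayMs * Math.pow(2, attempt - 1));
    }
  }
}
```

The key design point is the `isTransient` check: only failures that are likely to succeed on a later attempt (a dropped connection, for example) are retried, while other errors surface immediately.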

There were a number of blog posts about new features coming in EF6:

- Arthur Vickers blogged about the changes we checked in to support nested types and more for Code First.

- Jiri Cincura posted a two part series about using custom conventions to help build the EF6 Firebird provider (Part 1, Part 2).

- Rowan Miller wrote about writing your own Code First Migrations operations, configuring unmapped base types using Code First custom conventions, and mapping all private properties using Code First custom conventions.

We also shared some changes we are planning to make to custom conventions to improve this new feature. These changes will be included in a future preview.

Entity Framework 5

Julie Lerman appeared on Visual Studio Toolbox sharing Entity Framework tips and tricks. She also released a Getting Started with Entity Framework 5 course on Pluralsight.

Rowan Miller published a two part series about extending and customizing Code First models at runtime (Part 1, Part 2).

EF Power Tools

We announced Beta 3 of the EF Power Tools. We also shared plans to provide a single set of tooling for EF, which means we won't be releasing an RTM version of the Power Tools. However, we will continue to maintain Beta releases of the Power Tools until the functionality becomes available in a pre-release version of the primary EF tooling.

Andy Kung posted Signed-In Part 1 – Introduction to the LightSwitch Team Blog on 3/18/2013: