Windows Azure and Cloud Computing Posts for 3/11/2012+

| A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles. |

•• Updated 3/16/2013 with new articles marked ••, including Windows Azure Root Certificate Migration in the Windows Azure Infrastructure and DevOps section below.

• Updated 3/15/2013 with new articles marked •.

Note: This post is updated weekly or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Marketplace DataMarket, Cloud Numerics, Big Data and OData

- Windows Azure Service Bus, Caching, Access Control, Active Directory, Identity and Workflow

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security, Compliance and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue, HDInsight and Media Services

• Microsoft’s patterns & practices (@mspnp) group posted the 123-page first (alpha) drop of Developing Big Data Solutions on Windows Azure on 3/14/2013. From the Preface:

Do you know what visitors to your website really think about your carefully crafted content? Or, if you run a business, can you tell what your customers actually think about your products or services? Did you realize that your latest promotional campaign had the biggest effect on people aged between 40 and 50 living in Wisconsin (and, more importantly, why)?

Being able to get answers to these kinds of questions is increasingly vital in today's competitive environment, but the source data that can provide these answers is often hidden away; and when you can find it, it's very difficult to analyze successfully. It might be distributed across many different databases or files, be in a format that is hard to process, or may even have been discarded because it didn’t seem useful at the time.

To resolve these issues, data analysts and business managers are fast adopting techniques that were commonly at the core of data processing in the past, but have been sidelined in the rush to modern relational database systems and structured data storage. The new buzzword is “Big Data,” and it encompasses a range of technologies and techniques that allow you to extract real, useful, and previously hidden information from the often very large quantities of data that previously may have been left dormant and, ultimately, thrown away because storage was too costly.

In the days before Structured Query Language (SQL) and relational databases, data was typically stored in flat files, often is simple text format, with fixed width columns. Application code would open and read one or more files sequentially, or jump to specific locations based on the known line width of a row, to read the text and parse it into columns to extract the values. Results would be written back by creating a new copy of the file, or a separate results file.

Modern relational databases put an end to all this, giving us greater power and additional capabilities for extracting information simply by writing queries in a standard format such as SQL. The database system hides all the complexity of the underlying storage mechanism and the logic for assembling the query that extracts and formats the information. However, as the volume of data that we collect continues to increase, and the native structure of this information is less clearly defined, we are moving beyond the capabilities of even enterprise-level

relational database systems.Big Data encompasses techniques for storing vast quantities of structured, semi-structured, and unstructured data that is distributed across many data stores, and analyzing this data where it's stored instead of moving it all across the network to be processed in one location—as is typically the case with relational databases. The huge volumes of data, often multiple Terabytes or Petabytes, means that distributed processing is far more efficient than streaming all the source data across a network.

Big Data also deals with the issues of data formats by allowing you to store the data in its native form, and then apply a schema to it later, when you need to query it. This means that you don’t inadvertently lose any information by forcing the data into a format that may later prove to be too restrictive. It also means that you can simply store the data now—even though you don’t know how, when, or even whether it will be useful—safe in the knowledge that, should the need arise in the future, you can extract any useful information it contains.

Microsoft offers a Big Data solution called HDInsight, as both an online service in Windows Azure and as an onpremises mechanism running on Windows Server. This guide primarily focuses on HDInsight for Windows Azure, but explores more general Big Data techniques as well. For example, it provides guidance on configuring your

storage clusters, collecting and storing the data, and designing and implementing real-time and batch queries using both the interactive query languages and custom map/reduce components.

Big Data solutions can help you to discover information that you didn’t know existed, complement your existing knowledge about your business and your customers, and boost competitiveness. By using the cloud as the data store and Windows Azure HDInsight as the query mechanism you benefit from very affordable storage costs (at the time of writing 1TB of Windows Azure storage costs only $95 per month), and the flexibility and elasticity of the “pay and go” model where you only pay for the resources you use.

Who This Guide Is For

This guide is aimed at anyone who is already (or is thinking of) collecting large volumes of data, and needs to analyze it to gain a better business or commercial insight into the information hidden inside. This includes business managers, data analysts, system administrators, and developers that need to create queries against massive data repositories.

Why This Guide Is Pertinent Now

Businesses and organizations are increasingly collecting huge volumes of data that may be useful now or in the future, and they need to know how to store and query it to extract the hidden information it contains. This might be webserver log files, click-through data, financial information, medical data, user feedback, or a range of social sentiment data such as Twitter messages or comments to blog posts.

Big Data techniques and mechanisms such as HDInsight provide a mechanism to efficiently store this data, analyze it to extract useful information, and visualize the information in a range of display applications and tools.

It is, realistically, the only way to handle the volume and the inherent semi-structured nature of this data.

No matter what type of service you provide, what industry or market sector you are in, or even if you only run a blog or website forum, you are highly likely to benefit from collecting and analyzing data that is easily available, often collected automatically (such as server log files), or can be obtained from other sources and combined with your own data to help you better understand your customers, your users, and your business; and to help you plan for the future.

A Roadmap for This Guide

This guide contains a range of information to help you understand where and how you might apply Big Data solutions. This is the map of our guide:

- Chapter 1, “What is Big Data?” provides an overview of the principles and benefits of Big Data, and the differences between it and more traditional database systems, to help you decide where and when you might benefit from applying it. This chapter also discusses Windows Azure HDInsight, its place within Microsoft’s wider data platform, and provides a road map for the remainder of the guide.

- Chapter 2, “Implementing a Big Data Solution with HDInsight,” explains how you can implement a Big Data solution using Windows Azure HDInsight. It contains general guidance for applying Big Data techniques by exploring in more depth topics such as the technical capabilities, design patterns, query construction, data visualization, and more. You will find much of the information about the design, development, and testing lifecycle of a Big Data solution useful, even if you choose not to use HDInsight as the platform for your own solution.

- Chapter 3, “Using HDInsight for Business Analytics,” explores how, now that you understand how HDInsight works, you can use it within your own business processes, depending on your data analysis requirements and your existing business intelligence (BI) systems. For example, you might use HDInsight as a query tool and then visualize the results in a program such as Microsoft Excel. Alternatively, if you already have a data warehouse or an enterprise BI mechanism you may choose to use HDInsight as just another data source that integrates at different levels and feeds data into it.

The remaining chapters of the guide concentrate on specific scenarios for applying a Big Data solution, ranging from simple web server log file analysis to analyzing sentiment data such as users’ feedback and comments, and handling streaming data. Each chapter explores specific techniques and solutions relevant to the scenario, and shows an implementation based on Windows Azure HDInsight. The scenarios the guide covers are:

- Chapter 4, “Scenario 1 - Sentiment Analysis” covers obtaining information about the way that users and customers perceive your products and services by analyzing “sentiment” data. This is data such as emails, comments, feedback, and social media posts that refer to your products and services, or even to a specific topic or market sector that you are interested in investigating. In the example you will see how you can use Twitter data to analyze sentiment for a fictitious company.

- Chapter 5, “Scenario 2 - Analyzing Web Logs” covers one of the most common scenarios for Big Data solutions: analyzing log files to obtain information about visitors or users and visualizing this information in a range of different ways. This information can help you to better understand trends and traffic patterns, plan for the required capacity now and into the future, and discover which services, products, or areas of a website are underperforming. In this example you will see how you can extract useful data and then integrate it with existing BI systems at different levels and by using different tools.

- Chapter 6, Scenario 3 [in process]

- Chapter 7, Scenario 4 [in process]

- Chapter 8, Scenario 5 [in process]

- Finally, the appendices contain additional reference material that you may find useful as you explore the tools and utilities that are part of the Big Data framework.

…

I find it interesting that the p&p team made sentiment analysis the first scenario of their guide. The Microsoft “Social Analytics” Team put considerable effort into their preview, which I summarized in my Recent Articles about SQL Azure Labs and Other Added-Value Windows Azure SaaS Previews: A Bibliography. The team abandoned Project “Social Analytics” SQL Azure Lab on 6/21/2012 without explanation other than “The lab phase is complete.”

Amazon.com recently released hard-copy versions of the following p&p group publications:

- Building Hybrid Applications in the Cloud on Windows Azure

- Exploring CQRS and Event Sourcing: A journey into high scalability, availability, and maintainability with Windows Azure

- Moving Applications to the Cloud on Windows Azure

- Developing Multi-tenant Applications for the Cloud on Windows Azure

IMO, the books are a bit expensive at US$40 to $50 each.

Michael Wetzel, Tamir Melamed, Mark Vayman and Denny Lee (@dennylee) described Using Avro with HDInsight on Azure at 343 Industries in an 3/13/2013 post:

By Michael Wetzel, Tamir Melamed, Mark Vayman, Denny Lee

Reviewed by Pedro Urbina Escos, Brad Sarsfield, Rui Martins

Thanks to Krishnan Kaniappan, Che Chou, Jennifer Yi, and Rob Semsey

As noted in the Windows Azure Customer Solution Case Study, Halo 4 developer 343 Industries Gets New User Insights from Big Data in the Cloud, a critical component to achieve faster Hadoop query and processing performance AND keep file sizes small (thus Azure storage savings, faster query performance, and reduced network overhead) was to utilize Avro sequence files.

Avro was designed for Hadoop to help make Hadoop more interoperable with other languages; within our context, Avro has a C# API. Another popular format is protobuf (Google’s data interchange format) which was also under consideration. Altogether, we had compared the various formats (avro, protobuf, compressed JSON, compressed CSV, etc.) for our specific scenarios, we had found Avro to be smallest and fastest. In general, we found that in our case, Avro resulted in Hive queries with 1/3 the duration (i.e. 3x faster) while only 4% of the original data size. For more information about Avro, please refer to the latest Apache Avro documentation (as of this post, it is Apache Avro 1.7.3).

We will review some of the key components to get Avro up and running with HDInsight on Azure.

The Architecture

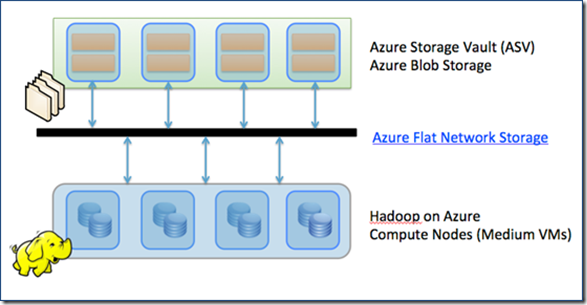

Many of the queries we executed against our HDInsight on Azure cluster were using the Hive Data Warehousing framework. Working within the context of HDInsight on Azure, all of the data is stored in the Azure Blob Storage using Block Blobs (the top set of boxes in the diagram below). Within HDInsight on Azure preview mode, the context of Azure Blob Storage is also known as the ASV protocol (Azure Storage Vault).

HDInsight itself is running on the Azure Compute nodes so that the Map Reduce computation is performed here while the source and target “tables” are in ASV.

To improve query performance, HDInsight on Azure utilizes Azure’s Flat Network storage – a grid network between compute and storage – to provide high bandwidth connectivity for compute and storage. For more information, please refer to Brad Calder’s informative post: Windows Azure’s Flat Network Storage and 2012 Scalability Targets.

Hive and Sequence Files

What’s great about Hive is that it is a data warehouse framework on top of Hadoop. What Hive really does is allow you to write HiveQL (a SQL-like language) that ultimately is translated to Map Reduce jobs. This means that Hive can take advantage of Hadoop’s ubiquity to read and store files of different formats and compressions.

In our particular case, we had our own binary format of data files that we had to process and store within Hadoop. Since we had to convert the files – we decided to test various formats including CSV, JSON, Avro, and Protobuf. We had the option to build a Serializer / Deserializer (SerDe) so Hive could read our binary format directly but in our case it was faster and easier to write our own mechanism vs. the custom SerDe.

Ultimately, whatever format chosen, the files would be stored in Azure Blob Storage. HDInsight on Azure would ultimately have a Hive table that would point to these files so we could query them as tables.

Jump into Avro with Haivvreo

A slight detour here in that because 343 Industries Foundation Services Team went into production on a preview instance of HDInsight on Azure, to get avro to work with HDInsight, we needed to utilize Jakob Homan GitHub project Haivvreo. It is a Hive SerDe that LinkedIn had developed to process Avro-encoded data in hive.

Note, Haivvreo is part of the standard Hive installation as of Hive 0.9.1 via HIVE-895. But if you are using a version of Hive prior to Hive 0.9.1, you will need to build Haivvreo yourself. Fortunately, the documentation for Haivvreo is straightforward and very complete. For more information on Haivvreo, please go to: https://github.com/jghoman/haivvreo

A quick configuration note, Haivvreo uses the two Jackson libraries:

- Jackson-core-asl-1.4.2.jar

- Jackson-mapper-asl-1.4.2.jar

As of this post, the Jackson libraries in HDInsight are version 1.8 and are fully backward compatible therefore you will not need to manually add these jars.

Jump into Protobuf with Elephant-Bird

While we ultimately had chosen Avro, Protobuf was a solid choice and if our data profile was different, we may have very well chosen Protobuf. This format is so popular that it is now native within Hadoop 2.0 / YARN (which as of this writing is still in beta). But for systems that are currently running on the Hadoop 1.0 tree (such as the current version of HDInsight), one of the most complete is Kevin Weil’s Elephant-Bird GitHub project – it is Twitter’s collection of LZO and Protocol Buffer code-base for Hadoop, Pig, Hive, and HBase.

Format Performance Review

As noted earlier, we had tested in their respective C# libraries the various file formats for compression and query performance. Below is a sample of the same set file in JSON (with Newtonsoft), Avro (with .NET 4.5 Deflate Fastest), and Protobuf (with LZO) format.

Based on these and other tests, it was observed that avro was faster and smaller in size. Faster is always good but smaller in size is more important than most may realize. The smaller size means there is less data to send across the network between storage and compute helping with query performance. But more importantly, it means you can save on Azure Blob Storage costs because you are using less storage space.

Additional tests were performed and the overall pro/con comparison can be seen below.

After prototyping and evaluating, we had decided to use Avro for the following reasons:

- Expressive

- Smaller and Faster

- Dynamic (schema store with data and APIs permit reading and creating)

- Include a file format and a textual encoding

- Leverage versioning support

- For Hadoop service provide cross language access.

Please note that these were the based on the test we had performed with our data profile. Yours may be different so it will be important for you to test before committing.

Getting Avro data into Azure Blob Storage

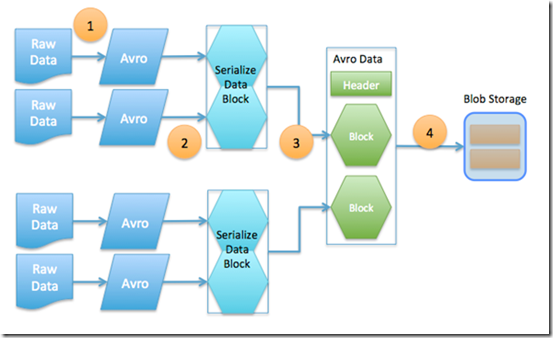

The Foundation Services Team at 343 Industries team is already using Windows Azure elastic infrastructure to provide them maximum flexibility – and much of that code is written in C# .NET. Therefore to make sense of the raw game data collected for Halo 4, the Halo Engineering team wrote Azure-based services that converted the raw game data into Avro.

The basic workflow is as follows:

- Take the raw data and use the patched C# Avro library to convert the raw data into an Avro object.

- As Avro data is always serialized with its schema (thus allowing you to have different versions of Avros but still being able to read them because the schema is embedded with it), we perform a data serialization step to create serialized data block.

- This block of data is then compressed using the deflate options within .NET 4.5 (in our case, using the Fastest option). Quick note, we started with .NET 4.0 but switching to .NET 4.5 Deflate Fastest gave us slightly better compression and approximately 3x speed improvement. Ultimately, multiple blocks (with the same schema) are placed into a single Avro data file.

- Finally, this Avro data file is sent to Azure Blob Storage.

A quick configuration note, you will need to take the AVRO-823: Support Avro data files in C# to get this up and running.

Configuration Quick Step by Step

As noted earlier, the documentation within Haivvreo is excellent and we definitely used it as our primary source. Therefore, before doing anything, please start with the Avro and Haivvreo documentation.

The configuration steps below are more specific to how to get the avro jars up and running within HDInsight per se.

1) Ensure that you’ve added the Hive bin to your PATH variables

;%HIVE_HOME%\bin

2) Ensure that you have already configured your ASV account within the core-site.xml if you have not already done so.

<property>

<name>fs.azure.account.key.$myStorageAccountName

<key>$myStorageAccountKey</key>

</name>

</property>

3) Copy your avro jars created within Haivvreo to your ASV storage account

4) Edit the hive-site.xml to include the path to your jars within ASV

<property>

<name>hive.aux.jars.path</name>

<value>asv://$container@account/$folder/avro-1.7.2.jar,asv://$container@account/$folder/avro-mapred-1.7.2.jar,asv://$container@account/$folder/haivvreo-1.0.7-cdh.jar</value>

</property>

5) Ensure that the avro schema (when you create an avro object, you also can create an avro schema that defines that object) is placed into HDFS so you can access it. Refer to the below Avros Tips and Tricks section concerning the use of schema.literal and schema.url.

Querying the Data

What’s great about this technique is that now that you have put your Avro data files into a folder within Azure Blob Storage, you need only to create a Hive EXTERNAL table to access and query this data. The Hive external table DDL is in the form of:

CREATE EXTERNAL TABLE GameDataAvro (

…

)

ROW FORMAT SERDE ‘com.linkedin.haivvreo.AvroSerDe’

STORED AS INPUTFORMAT ‘com.linkedin.haivvreo.AvroContainerInputFormat’

OUTPUTFORMAT

‘com.linkedin.haivvreo.AvroContainerOutputFormat’

LOCATION ‘asv://container/folder’

For a quick HDInsight on Azure tutorial with Hive, please check the post Hadoop on Azure: HiveQL query against Azure Blob Storage.

Avro Tips and Tricks

Some quick tips and tricks based on our experiences with Avros:

- Compression not only resulted in saving disk space, but less CPU resources were used to process the data

- Mixing compressed and uncompressed Avro in the same folder works!

- Corrupt file in ASV may cause jobs to fail. To workaround this problem, either manually delete the corrupt files and/or configure Hadoop/Hive to skip x number of errors.

- Passing long string literals into the Hive Metastore DB may result in truncation. To avoid this issue, you can use the schema.literal instead of schema.url if the literal is < 8K. If it is too big, then you can embed the schema within HDFS as per below.

CREATE EXTERNAL TABLE GameDataAvro (

…

)

ROW FORMAT SERDE ‘com.linkedin.haivvreo.AvroSerDe’

WITH SERDEPROPERTIES (

‘schema.url’=’hdfs://namenodehost:9000/schema/schema.avsc’)

STORED AS INPUTFORMAT ‘com.linkedin.haivvreo.AvroContainerInputFormat’

OUTPUTFORMAT

‘com.linkedin.haivvreo.AvroContainerOutputFormat’

LOCATION ‘asv://container/folder’

<Return to section navigation list>

Windows Azure SQL Database, Federations and Reporting, Mobile Services

• Luiz Santos of the ADO.NET Team explained SQL Database Connectivity and the Idempotency Issue in a 3/11/2013 post:

Applications connecting to SQL Server sometimes experience connections breaks due back-end failures. In general, they tend to be uncommon in LAN environment and more frequent in WAN topologies, when connectivity tends to be challenged by geographically dispersed links.

The introduction of Windows Azure SQL Database (WASD), which is a cloud-based SQL Server, added new availability and reliability challenges to the connectivity space. As a result, applications connecting to WASD witness frequent connection breaks due to backend reconfigurations and failures, forcing developers to include special logic for every database operation in order to handle these unexpected situations.

Basically, every time a connection drops, the application needs to decide to either reconnect (if it was a recoverable error (e.g. “server is busy)) or return an exception to the end user (if it was a non-recoverable one (e.g. “invalid password”)). In order to better handle these situations, developers can use a set of resources and best practices to avoid unwelcomed surprises after deploying applications to production. The Transient Fault Handling Framework for SQL Database, Windows Azure Storage, Service Bus & Cache is a free component that encapsulates all the logic required to handle most of the disconnections happening in SQL Database. For more information, please refer to its MSDN’s documentation here.

Also, for general resiliency guidance, please refer to Failsafe: Guidance for Resilient Cloud Architectures.

The Idempotency Issue

Now, special care must be considered when writing to a SQL Database table because a simple IUD (INSERT, UPDATE or DELETE) statement can potentially lead to data corruption. This is because there is no way to know if the statement was executed by the server immediately after a connection failure. Consider the code snippet below:

public class Data

{

public void Query(SqlConnection sqlConnection, string szQuery)

{

try

{

using (SqlConnection conn = new SqlConnection("…"))

{

conn.Open();

SqlCommand sqlCommand = new SqlCommand(szQuery, conn);

sqlCommand.ExecuteNonQuery("UPDATE productList SET Price=Price*1.01");

}

}

catch (SqlException)

{

//...

throw;

}

}

}

If the connection fails immediately after sqlCommand.ExecuteNonQuery, there is no way for the application to know if it needs to reissue the query (the UPDATE didn’t execute) or not (the UPDATE executed) upon reconnection.

In order to avoid this problem, the application must track if a command was uniquely executed (idempotent command) or not. If it were, then, there is nothing to do, but if it weren’t, then the application can safely re-execute it. Currently, SQL Database does not offer any idempotency capability, transferring this responsibility to the application.

One way to track idempotent activities is to track all writing database operations in a table, like the one below. This way, the application can query the table to know if a command was executed or not.

Once the tracking information is available, there are many ways to query it in order to reissue the command. Personally, I like to encapsulate the code in stored procedures and confine the idempotent logic to the database. This way, it becomes transparent to the application and simplifies the use of the Transient Fault Handling Framework for SQL Database, Windows Azure Storage, Service Bus & Cache component. The snippet below exemplifies this approach:

CREATE PROCEDURE UpdateBranch

-- Add the parameters for the stored procedure here

@Operation AS UNIQUEIDENTIFIER,

@ParentId AS INT,

@BranchDbId as INT

AS

BEGIN

DECLARE @COUNT_ID AS INT = 0

-- SET NOCOUNT ON added to prevent extra result sets from

-- interfering with SELECT statements.

SET NOCOUNT ON;

SET @COUNT_ID = (SELECT COUNT([ID]) FROM dbo.IdempotencyTracker WHERE ID = @Operation)

IF (@COUNT_ID = 0)

BEGIN

BEGIN TRANSACTION

UPDATE [dbo].[Branch] SET [BranchDbId] = @BranchDbId WHERE ParentId = @ParentId

INSERT INTO [dbo].[IdempotencyTracker] ([ID]) VALUES (@Operation)

COMMIT

END

END

In the scenario above, the application is responsible for generating and saving @Operation value in order to avoid losing track of the operation.

Concluding, although using the Transient Fault Handling Framework for SQL Database, Windows Azure Storage, Service Bus & Cache component is the right way to avoid connectivity issues in SQL Database, it’s not enough. You really need to implement an idempotency strategy in order to avoid data duplications or inconsistencies when writing to the database.

Carlos Figueira (@carlos_figueira) described Azure Mobile Services managed client – portable libraries and breaking changes in a 3/13/2013 post:

Among the requested features in the various forums for Azure Mobile Services, the usage of portable libraries has popped up quite often. We now have that support, which unifies the managed clients for the Windows Store and Windows Phone 8 platforms – and we’re also adding support for Windows Phone 7.5 as well. Johan Laanstra wrote a great post explaining the architecture of the new packages, so I won’t repeat what he wrote.

However, since there were some differences between the Windows Phone 8 and the Windows Store SDKs, unifying them meant some breaking changes. We felt that the grief we’ll take for them is worth, given the gains we can get by the platform unification, and we also used this opportunity to make some other changes to the client SDK to make it more polished before the general availability of the service. This post will try to list to the best of my knowledge the changes which will need to be addressed for apps using the new client. Notice that we’ll still support the “non-portable” clients for some more time, but it will be discontinued likely before the Azure Mobile Services goes out of preview mode and into a general availability,

Breaking changes / functionality changes

This is the grouped list of changes, along with the updates one needs to make to their projects to account for them.

Serialization changes

This is the place where the majority of the changes were made. For the Windows Store SDK, conversion between the typed objects and the JSON which was actually transferred over the wire was done via a custom serializer which converted between the two formats. The serializer was fairly simple, and could only deal with simple types (numbers, strings, dates and,Boolean values). To support more complex types (unsupported primitives, arrays, complex objects), we’d need to provide a custom implementation of the IDataMemberJsonCoverter interface, and decorate the appropriate member with the [DataMemberJsonConverter] attribute. Even simple things such as enumerations were not supported. With this new client, all the custom serialization code goes away, as we’ll be using the JSON.NET serializer, and by that move we gain all of the support of that great serializer. That means that complex types, arrays, enumerations will just work. The new client also exposes the serializer settings which allow the developer to fine-tune the output data, including adding custom converters and changing policies such as default value serialization.

Now for the list of changes:

- JSON.NET: that’s the serializer used for all platforms (not only for Windows Phone 8 as before). That causes the following behavioral changes from the current Windows Store SDK

- JSON bool values cannot be parsed as integers; false was interpreted as 0, and true as 1; now an exception will be thrown.

- Better / consistent exception messages for failed JSON parsing: there was a lot of code in the custom serializer for dealing with bad data; now this is all taken care of by JSON.NET, and its exception messages include more data such as the path in the JSON document, and position of the error

- All floating point members (even those with integer values) will be serialized with a decimal point: a JSON.NET behavior. Before a float / double with value 2 would be serialized as {“number”:2}; now it will be serialized as {“number”:2.0}.

- To maintain the previous behavior, one possibility is to use a custom JsonConverter class that first casts the values to integers if they are indeed integers.

- Float (System.Single) will be serialized with its “native” precision: before a member of type float (Single) would be serialized as if it had double precision. For example, the object new MyType { fltValue = float.MinValue } would be serialized as {“fltValue”:1.4012984643248171E-45}; now it will be serialized as {"fltValue":1.401298E-45}.

- To maintain the previous behavior, use a JsonConverter class that serializes float values as doubles (or change the property type to double)

- Float and double values less than their respective Epsilon now deserialize as 0.0: before it used to throw an exception. Now deserializing something like {“dblValue:1.23E-1000} will cause the dblValue member to be set to zero, instead of an exception being thrown.

- Fields are now serialized before properties: before properties were serialized before fields; now is the opposite. Since JSON objects are supposed to be unordered sets of members, this shouldn’t affect existing apps.

- Finer control of serialization via settings: the MobileServiceClient now exposes the JsonSerializerSettings object which is used by the JSON.NET serializer, so you can now have greater control of the serialization.

- Miscellaneous bugs fixed:

- Tab / newline characters can now be deserialized (before it would result in an exception)

- Integer values can now be deserialized to members of type char.

- Miscellaneous scenarios which are now supported:

- Uri / TimeSpan members don’t need a special converter anymore: they’re serialized as JSON strings

- Arrays / objects also now just work. They’re serialized as JSON array / object respectively. Notice that the server runtime still needs to process that value, since they cannot be inserted into the database directly.

- Non JSON.NET specific changes:

- Enumeration support: it was enabled by the move to JSON.NET, but our code now serializes the string value of the enumerations. Enums can also be used in ‘Where’ clauses while querying data from the service.

- Loss of precision handling: the runtime for Azure Mobile Services is based on JavaScript (node.js), which only stores numbers in a double-precision floating representation. That means that there are some integer and decimal values which cannot be represented accurately. Basically, any long number less than 2^53 can be represented, without loss of precision, in the server, so those are serialized correctly; any values larger than that (or smaller than -2^53) will cause an exception to be thrown. Notice that this is the current behavior, although it was a little buggy in which it would report some numbers within the valid range as invalid as well. Decimal values can also lose precision, and now this validation (exception thrown if precision would be lost) is applied to them as well. If you want to bypass the validation, either change the data type of the properties to double, or remove the converter (MobileServicePrecisionCheckConverter) from the serializer settings.

- One minor breaking change: before the exception thrown for long values outside the non-precision-loss range was ArgumentOutOfRangeException; now the exception type is InvalidOperationException.

- IDataMemberJsonConverter, [DataMemberJsonConverter] removed: now they either are not necessary anymore, or if you still want full control over the serialization of a property, you can use the [JsonConverterAttribute] applied to that property.

- ICustomMobileTableSerialization removed: if a type needs special serialization, decorate it with [JsonConverterAttribute].

- Only one member with name ‘id’ – regardless of casing – can exist in a type being serialized: before an object with members called “id”, “Id” and “ID” would be serialized to the server – where it would fail, since only one id is allowed. Now it will throw during serialization.

- [DataMember] attributes in members now require a [DataContract] attribute in the class: the contract of [DataMember] for serialization is tied with [DataContract], but we had a bug in which data members were being honored even if the containing class itself didn’t opt in to the data contract model (by using the [DataContract] attribute). Now if the SDK finds such a scenario it will throw an exception to signal that this is not a valid scenario. The typical scenario for using [DataMember] would be to change the serialized name of the member; now it can be accomplished via the [JsonPropertyAttribute] instead.

- The [DataTableAttribute] now takes the table name in the constructor: before: [DataTable(Name = “MyTable”)]; after:: [DataTable(“MyTable”)]

If I remember more or if you find one which is not listed here, please add a comment and I’ll update this post.

Operations with untyped (i.e., JSON) data

When performing CRUD operations in a managed client, usually we’d create a data type to store our information (e.g., Person, Order, etc.) and pass them to the Insert/Read/Update/Delete operations. We could also work directly with JSON data, and the table would support those as well. In the Windows Store client the SDK used the classes in the Windows.Data.Json namespace, native to the WinRT platform. However, those classes were not present in the Windows Phone 8, so that SDK was already using the JSON.NET classes from the Newtonsoft.Json.Linq namespace (JToken / JArray / JValue / JObject). To move to a common set of classes, now all managed libraries use the JSON.NET classes, including the Windows Store apps.

And the list of changes:

- [Windows Store only] Windows.Data.Json –> Newtonsoft.Json.Linq: there’s an added advantage that the JSON.NET classes have an API which is way more user-friendly than the “dry” WinRT one.

- Insert / update operations do not change the input object (untyped data only): This is a behavioral change which was the outcome of a lengthy discussion within the team. Before, the insert / operations would mutate the JsonObject (or JObject) value which was passed to it, by “patching” it with whatever the server returned. The most common case was the “id” in insert operations, but the server scripts are were free to mutate the object, even changing the shape of the value returned by the client (i.e., in an insert operation, the service could receive an object and return an array, or a primitive value). Before that would mean that the client had no way to retrieve that value. Now, the object passed to InsertAsync (and UpdateAsync) is not mutated, and the return of the server operation is returned as a new object instead. Notice that for typed operations (e.g., inserting / updating an Order object), the original object is still patched in place.

- This may require some code changes. For example, the code below:

- var table = MobileService.GetTable(“Clients”);

- var obj = JsonObject.Parse("{\"name\":\"John Doe\",\"age\":33}");

- await table.InsertAsync(obj);

- var id = (int)obj["id"].GetNumber();

- Would need to be rewritten as (notice changes in italics)

- var table = MobileService.GetTable(“Clients”);

- var obj = JObject.Parse("{\"name\":\"John Doe\",\"age\":33}");

- var inserted = await table.InsertAsync(obj);

- var id = inserted["id"].Value<int>();

Again, I’ll update the post if I can remember (or if you can add a comment) of any additional changes.

Service filters –> HttpClient primitives

Service filters are an advanced concept which implement a pipeline over the request / response. There is already such a pipeline in the HttpClient libraries – and since it has recently been made available for downlevel platforms (it originally only worked for .NET 4.5 and .NET 4.0) the Mobile Services SDK can now use this existing code instead of creating yet another pipeline.

And the changes:

- Goodbye IServiceFilter, hello DelegatingHandler: this is a sample implementation of a service filter, and the equivalent implementation with a delegating handler. Notice that there’s no IAsyncOperation<T> anymore, so the code is cleaner. Below you can see an example of the before / after the change.

- Message handlers are passed to the MobileServiceClient constructor, not to a method (WithFilter): that method created a clone of the client, and applied the filters to it. That name caused some confusion among users, with many people thinking that it would modify the client. By moving it to a constructor the API now makes it clear.

Here’s an example of a service filter converted to a message handler. The filter will add a custom header to the request, and change the response status code (useful for testing the behavior on unexpected responses from the server). First the service filter.

- public async Task CallFilteredClient()

- {

- var client = new MobileServiceClient(appUrl, appKey).WithFilter(new MyFilter());

- var table = client.GetTable<Person>();

- var p = new Person { Name = "John Doe" };

- await table.InsertAsync(p);

- }

- public class MyFilter : IServiceFilter

- {

- public IAsyncOperation<IServiceFilterResponse> Handle(IServiceFilterRequest request, IServiceFilterContinuation continuation)

- {

- request.Headers.Add("x-my-header", "my value");

- return continuation.Handle(request).AsTask().ContinueWith<IServiceFilterResponse>(t => {

- HttpStatusCode newStatusCode = HttpStatusCode.ServiceUnavailable;

- var response = t.Result;

- var newResponse = new MyResponse(response.Content, response.ContentType, response.Headers,

- response.ResponseStatus, (int)newStatusCode, newStatusCode.ToString());

- return newResponse;

- }).AsAsyncOperation();

- }

- class MyResponse : IServiceFilterResponse

- {

- string content;

- string contentType;

- IDictionary<string, string> headers;

- ServiceFilterResponseStatus responseStatus;

- int statusCode;

- string statusDescription;

- public MyResponse(string content, string contentType, IDictionary<string, string> headers,

- ServiceFilterResponseStatus responseStatus, int statusCode, string statusDescription)

- {

- this.content = content;

- this.contentType = contentType;

- this.headers = headers;

- this.responseStatus = responseStatus;

- this.statusCode = statusCode;

- this.statusDescription = statusDescription;

- }

- public string Content

- {

- get { return this.content; }

- }

- public string ContentType

- {

- get { return this.contentType; }

- }

- public IDictionary<string, string> Headers

- {

- get { return this.headers; }

- }

- public ServiceFilterResponseStatus ResponseStatus

- {

- get { return this.responseStatus; }

- }

- public int StatusCode

- {

- get { return this.statusCode; }

- }

- public string StatusDescription

- {

- get { return this.statusDescription; }

- }

- }

- }

And the same code implemented with a delegating handler:

- public async Task CallClientWithHandler()

- {

- var client = new MobileServiceClient(appUrl, appKey, new MyHandler());

- var table = client.GetTable<Person>();

- var p = new Person { Name = "John Doe" };

- await table.InsertAsync(p);

- }

- public class MyHandler : DelegatingHandler

- {

- protected override async Task<HttpResponseMessage> SendAsync(HttpRequestMessage request, CancellationToken cancellationToken)

- {

- request.Headers.Add("x-my-header", "my value");

- var response = await base.SendAsync(request, cancellationToken);

- response.StatusCode = HttpStatusCode.ServiceUnavailable;

- return response;

- }

- }

For more information on the HttpClient primitives, check the blog post from the BCL team.

Miscellaneous changes

Those are the other minor changes in the library:

- MobileServiceClient.LoginInProgress has been removed

- MobileServiceClient.LoginAsync(string) has been removed. This is now called LoginWithMicrosoftAccountAsync, which is added as an extension method to the class.

- MobileServiceTable, MobileServiceTable<T> and MobileServiceTableQuery<T> are now internal classes: all methods which used to return a table object now return the interface type IMobileServiceTable (untyped) or IMobileServiceTable<T> (typed). Typed queries are now exposed as the IMobileServiceTableQuery<T> interface.

- MobileServiceTable.<T>ToCollectionView() is now ToCollection(): the collection view implementation had some bugs, and it has been rewritten.

- Multiple Take operations now considers the minimum value: before it would use the last value. The call table.Take(5).Take(3).Take(7).ToListAsync() would issue a request with $top=7; now it sends a request with $top=3.

- Multiple Skip operations now add the values: before it would use the last value. The call table.Skip(3).Skip(5).Skip(4) would issue a request with $skip=4; now it sends a request with $skip=12.

That should be it. Please start using the new libraries and let us know what you think! We still have some (although not much) time to react to user feedback before we have to lock the API down as we move to the general release.

Kirill Gavryluk said “Nick [Harris] and Chris [Risner] have put together an awesome training kit on github. Here are few handy decks from it (including Android)” in a 3/12/2013 message:

Windows Store: https://github.com/WindowsAzure-TrainingKit/Presentation-Windows8AndWindowsAzureMobileServices

- Windows Phone: https://github.com/WindowsAzure-TrainingKit/Presentation-WindowsPhoneAndWindowsAzureMobileServices

iOS: https://github.com/WindowsAzure-TrainingKit/Presentation-BuildingiOSAppsWithWindowsAzureMobileServices

- Android: https://github.com/WindowsAzure-TrainingKit/Presentation-BuildingAndroidAppsWithWindowsAzureMobileServices

<Return to section navigation list>

Marketplace DataMarket, Cloud Numerics, Big Data and OData

•• Keith Craigo (@kcraigo) suggested that you Flex your ODATA in a 3/16/2013 post:

… ODATA stands for Open Data Protocol. ODATA is a web protocol used for querying and updating your data that may exist in what's called silos in your apps. With ODATA you can utilize and enhance other technologies such as HTTP, ATOM Publishing Protocol, XML and JSON. You can read more about ODATA at ODATA.org or view Scott Hanselman's talk

OData Basics - At the AZGroups "Day of .NET" with ScottGu

This post is about developing an Apache Flex mobile application that talks with a SQL Server Express database via ODATA. We're going to build a simple Project Manager application.

What! No LightSwitch!!! But But I thought you were a LightSwitch developer, hold your tater tots there partner. You can still use LightSwitch to create your ODATA service and manage your data.

But for this post I'm going to show you another way by using Visual Studio

Express 2012 for Web.

Why am I using Flex instead of LightSwitch, LightSwitch can now create an HTML client right? This is true but you have to have the latest edition of Visual Studio. The HTML client is not available in the express editions and it requires you upgrade your OS to Windows 8. Neither myself nor my employer is able to upgrade at the moment.

I've been developing Flex/Flash applications since Flash Builder, formerly known as Flex Builder version 1.1, so I have all the necessary components on my MacBook. And I just learned I could build an ODATA service using Visual Studio 2012 Express which I will pass on to you now.

There are other ways to connect your Flex application to SQL Server, such as WebORB for .NET from http://www.themidnightcoders.com/ but that is beyond the scope of this tutorial.

NOTE: If you have Visual Studio 2012 Pro you already have WCF Data Services installed.

My Setup on my wi-fi home network, I have a MacBook Pro OSX 10.7.5 and an ACER Aspire Ultrabook Windows 7:

Hosted on my ACER Ultrabook

Hosted on my MacBook Pro

- SQL Server Express -

http://www.microsoft.com/en-us/sqlserver/editions/2012-editions/express.aspx- Visual Studio Express 2012 for Web -

http://www.microsoft.com/visualstudio/eng/products/visual-studio-express-for-web- WCF Data Services 5.0 for ODATA-

http://www.microsoft.com/en-us/download/details.aspx?id=29306- Microsoft .NET Framework 5.0

- Adobe Flash Builder 4.7 Standard - http://www.adobe.com/products/flash-builder-family.html

- Latest version of Apache Flex SDK - http://flex.apache.org/

Useful but not required - if you are on Windows you can download the open source application LinqPad to help with your ODATA services.

What this tutorial is about:

A simple Project Management application that we can run on our iPhone or iPad. If you have an Android phone this should work also, I only have an iPhone though.

I'll try and keep this simple but make the project easy to expand upon. We will use straight out of the box components and technologies from Flex and Actionscript. We won't use any special frameworks like the excellent Inversion of Control Frameworks such as RobotLegs, Swiz, Mate, Parsley or the Event helper library AS3Signals. Maybe in another post but for now I'll keep this simple, I only provided the links for you to browse at your convenience if wish to learn more.Setup our development environment:

Download and install the items mentioned above if you don't already have them installed. I won't go over how to install all the above in this tutorial, each of the resources above have good documentation covering this.

Ok assuming you have everything installed and configured:

Let's make sure SQL Server can recieve requests from other machines on our home network, this should be the same setup for your company's internal network, consult your network admin about this.

WARNING! If you are following this tutorial from your Corporate Network, please check with your Network Administrator before making any of the following changes. You probably won't have the necessary privileges to complete this but make sure you know what you are doing first if you do. Your Network Administrator may have a better solution.

On your Windows machineSetup Our DATABASE

Click the Windows Start button then right click Computer, select Manage.

- Expand the SQL Server Configuration Manager located under Services and Applications.

- Click Protocols for SQLEXPRESS.

- Enable Shared Memory, Named Pipes, and TCP/IP

- Double click TCP/IP then click the IP Addresses tab

- Locate IPALL, if there is an entry for TCP Dynamic ports delete this, leave it blank. You need a hard coded port to be able to setup the Windows Firewall Inbound Rule.

- For TCP Port set it to 1433, SQL Server usually listens on port 1433. You will get an alert that no changes will take effect until SQL Server is restarted

- Click OK to close this dialog.

- Click SQL Server Services, click SQL Server then click the Restart button in the menu bar or right click and select Restart

- Make sure SQL Server Browser is running.

- You can now close Computer Management

Setup an Inbound Rule in Windows Firewall

Click the Windows Start button and select Control panel, then open Windows Firewall

- Click Advanced Settings

- Click Inbound Rules

- Under Actions select New Rule

- In the Name field I chose to give this rule the name SQL SERVER

- Make sure the Enabled box is checked

- Make sure the Allow the connection box is checked

- Click the Protocols and Ports tab

- For Protocol Type select TCP

- Local Port - select Specific Ports and type in 1433 or whatever you typed for IPALL

- Remote Ports - select Specific Ports, and type in 1433 or whatever you typed for IPALL

- Under the Advanced tab, for Profiles I deselected Domain and Public. I only have Private checked.

- Click OK to close this dialog

- Click New Rule again

- In the Name field I chose to give this rule the name SQL SERVER BROWSER

- Make sure the Enabled box is checked

- Make sure the Allow the connection box is checked

- Click the Protocols and Ports tab

- For Protocol Type select UDP

- Local Port - select Specific Ports and type in 1434

- Remote Ports - select Specific Ports, and type in 1434

- Under the Advanced tab, for Profiles I deselected Domain and Public. I only have Private checked.

- Click OK to close this dialog

- Close the Windows Firewall with Advanced Security screen

- Close Control Panel

Now let's setup our Database tables

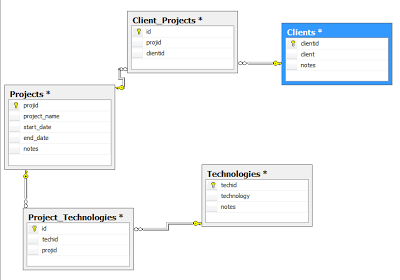

We will use three tables Projects, Clients, Technologies and two look up tables Project_Technologies and Client_Projects.

NOTE: You could setup your database from within Visual Studio using the Entity Data Model Designer but that creates the database in the app data and this is not covered by our Firewall Rule.

- Open SQL Server Management Studio and login to your server

- Right click the Databases folder icon and select New Database

- For Database name I gave mine the name of MyProjects

- Click OK to create the DB

- Expand the db and right click the Tables folder icon and select New Table

- Create the table with the following structure:

- projid (PK, int, not null) Identity set to YES

- project_name (varchar(50), not null)

- start_date (date, not null)

- end_date (date, null)

- notes (text, null)

- Save the table with the name Projects

- Create a second table with the following:

- clientid (PK, int, not null) Identity set to YES

- client (varchar(50), not null)

- notes (text, null)

- Save this table as Clients

- Create a third table with the following

- techid (PK, int, not null) Identity set to YES

- technology (varchar(50), not null)

- notes (text, null)

- Save this table as Technologies

- Create our two look up tables

- 1st table for our Clients and Projects relationships

- id (PK, int, not null) Identity set to YES

- projid (int, not null)

- clientid (int, not null)

- Save this table as Client_Projects

- 2nd table for our Projects and Technologies relationships

- id (PK, int, not null) Identity set to YES

- techid (int, not null)

- projid (int, not null)

- Next expand the Database Diagrams folder

Create a new diagram

Add all the tables and set the appropriate relationships.

Client (One) - Client_Projects (Many)

Project (One) - Client_Projects (Many)

Project (One) - Project_Technologies (Many)

Technology (One) - Project_Technologies (Many)

You should have something similar to the following:

- We now need to give permission to the user who will be used to connect to our project database.

- Open the Server-Security folder, then select Logins.

If NT AUTHORITY\NETWORK SERVICE is NOT present follow Step 16. If NT AUTHORITY\NETWORK SERVICE IS present skip to step 17.- Right click Logins, select New Login

Click Search

- The easiest way I've found is to click Advanced

- Then click Find Now

- Locate and select Network Service

- Click OK

- Click OK again

- Select User Mapping

- Check the box next to MyProjects, or whatever you named the db in this tutorial

- Then check the box next to db_datareader in the Database role membership for : MyProjects

- Click OK

- If NT AUTHORITY\NETWORK SERVICE IS present following Steps 6 - 9 above to map the user to our MyProjects database.

Setup Our ODATA Service

Open Visual Studio for Web or Visual Studio Professional and create a new

WCF Service Application.NOTE: I'm choosing the C# version, but the Visual Basic version will work as well.

I named this new service application ProjectManagerWCFService

Delete the default IService1.cs and Service1.svc, we will create our own.

Right click the ProjectManagerWCFService and select Add then WCF Data Service 5.3, I named this new Data Service ProjectManagerWCFDataService, click OK.NOTE: If WCF Data Service 5.3 is not present, select Add New Item and look for this library under your language, then Web. Or you may need to install it.

Next we need to add a data model.Right click ProjectManagerWCFService again, click Add and choose ADO.NET Entity Data Model.

NOTE: If ADO.NET Entity Data Model. is not present, select Add New Item and look for this library under your language, then Data.

I named this ProjectManagerDataModelYou should now see the following screen shot:

I chose the default, Generate from database.

- Click Next.

- Click New Connection.

You should see the following screen shot:

- Select your Server - HINT: If the server is on the same machine, just put a dot in the Server name field, this is a shortcut for localhost.

- I left the default Windows Authentication

- Select your database

- You can test the connection if you want at this point or just click OK

- I accepted the defaults of this screen.

- Click OK

The following screen will be shown

NOTE: Make a note of the name I've circled in the screen shot, we will need this later.

- Click Next

- Expand the Table, dbo disclosure buttons

- Check Clients_Projects, Clients, Project_Technologies, Projects and Technologies

- Accept the defaults and click Finish

- You may receive a warning message that "Running this template could possibly harm your computer, I chose to ignore". You have to make your own decision.

- OK, if the template above did not generate the connector lines? How do I correct this? Well the reason this will happen is if I forget to set the relationships in the database as I described in step 13. But not to worry, just go back to the database and follow step 13, then in Visual Studio update the model based on the database.

You could just create the relationships in the Entity Model Designer by Right clicking the Client Model, make sure your clicking in the title area of the Model box. Choose Add New then select Association. Create a One to Many relationship with the Client table table on the one side and Client_Projects on the many side. But be aware this is only for the data model not the database, so if there are any changes in the database you will need to update the model any ways so I suggest to just make the changes in the database.

- Open the ProjectManagerWCFDataService.svc file

- In the line

Public class ProjectManagerWCFDataService : DataService< /* TODO: put your data source class name here. */ > replace the /* TODO: put your data source class name here. */ comment with the name I mentioned above MyProjectsEntities

This line should now look like the following

Public class ProjectManagerWCFDataService : DataService<MyProjectsEntities>- We need to make one more change to this file. Uncomment the first config line

config.SetEntitySetAccessRule("MyEntitySet", EntitySetRights.AllRead);

replace "MyEntitySet" with an Asterisk, it should look the following

config.SetEntitySetAccessRule("*", EntitySetRights.AllRead);

This will give us unrestricted read access, you may need to change this before releasing to production.- OK now we can now test the service.

- Click the the green arrow button for Internet Explorer or the run button in Visual Studio.

- If all goes well you should see the following:

- I'll cover running queries against this service a little later.

- Under the wwwroot create a new folder named ProjectManager

- We now need to create an application named ProjectManager in IIS Manager

- Open IIS Manager, click the Application Pool, I like to create a new Application Pool for each of my apps to isolate them from other processes. I'm not a NetworkAdmin so let me know if this is wrong and why.

- Click Add Application Pool, for the name I chose Project Manager

- Set .NET Framework version to .NET Framework v4.0.3019 or higher

- Managed pipeline mode: I selected Integrated

- Leave the check box for Start application pool immediately checked

- Click OK

- Now highlight the App Pool you just created

- Even though we set the .NET Framework to v4.0, the Managed .NET Framework version default is 2.0, we need to change this to 4.0 as well.

- Change the highlighted .NET Framework from v2.0 to v4.0

- Right click Default Web Site then select Add Application

- Click the Select button

- Select the Application Pool we just created

- Click OK

- For Alias I just type PM

- Click the button with the ... next to the Physical Path field

- Navigate to the ProjectManager folder we created in Step 22.

- Click OK

- You can now close IIS Manager.

PLEASE NOTE: If you are on a public server, check with your Network Admin. This tutorial is for localhost development. Be aware additional security settings may need to be set on a public server.- You have to complete this next part as the Administrator. Launch Visual Studio as Administrator.

- We need to set up publishing.

- Click Build in the menu bar, then select Publish Selection or Publish in Visuaal Studio.

- Select Profile, Select or Publish Profile. In the drop down, select new.

- For Profile Name type localhost|

- Connection

Service URL: localhost

Site / application: Default Web Site/ProjectManager- Click the Validate Connection Button you should see

- Click Next

- Settings: In the Databases section select the drop down under MyProjectEntities make sure the connection strings are correct.

- Select Next

- Click the Start Preview button or you can click the Publish button.

- If all goes well open a browser on the web server and type

http://localhost/ProjectManager/ProjectManagerWCFDataService.svc/- You should see the same screen as Step 20.

- OK now if your setup is like mine for your MAC, you need to know the ip address of your Windows machine, the web service host.

- Open a browser on your MAC and type your ip address, mine is

http://192.168.1.78/ProjectManager/ProjectManagerWCFDataService.svc/- You should see the same screen as Step 20.

- You can now close Visual Studio.

Setup Flash Builder

See video on Keith’s site.

AFAIK, the accepted abbreviation for Open Data Protocol is OData.

•• XRMLabs (@XrmLabs) described How to retrieve more than 50 records through OData in CRM 2011? in a 3/8/2013 post (missed when published):

If you are using OData end points to retrieve records in MS CRM 2011 then you might be surprised to know that the maximum number of records that can be retrieved in a single retrieve call is 50. This is because the page size is fixed to Max 50 records.

When working with large set of data we were faced with a similar problem. Thankfully MS CRM Odata has a next link which can be used to retrieve next 50 records. The following example shows how to retrieve more than 50 records using Odata end point. In this example we push the first 50 records in an array and retrieve next page results using the URL ("__next") provided in the JSON response object.

Note we are using Jquery and JSON2 in the following example. We presume you have already added JQuery and JSON to your Form. To know more about how to add jquery please follow this blog entry (How toadd JQuery support to CRM-2011)

The javascript code is

suchas follows.

varrelatedContact = [];

functiononloadFetchAllContactRecords(){

varserverUrl = Xrm.Page.context.getServerUrl();

varoDataUrl = serverUrl +"/xrmservices/2011/OrganizationData.svc/ContactSet?$select=EMailAddress1,FirstName,LastName&$filter=StateCode/Value eq 0";

GetContactRecords(oDataUrl);

vartotalRecords = relatedContact.length;

}

functionGetContactRecords(url){

jQuery.ajax({

type:"GET",

contentType:"application/json; charset=utf-8",

datatype:"json",

url: url,

async:false,

beforeSend:function(XMLHttpRequest){

XMLHttpRequest.setRequestHeader("Accept","application/json");

},

success:function(data, textStatus, XmlHttpRequest){

if(data && data.d !=null&& data.d.results !=null){

varobjrecord = data.d;

varrecords = objrecord.results;

insertRecordsToArray(records);

FetchRecordsCallBack(objrecord);

}

},

error:function(XmlHttpRequest, textStatus, errorThrown){

alert("An error has occurred");

}

});

}

functioninsertRecordsToArray(records){

for(vari = 0; i < records.length; i++){

relatedContact.push(records[i]);

}

}

functionFetchRecordsCallBack(objrecord){

if(objrecord.__next !=null){

varurl = objrecord.__next;

GetContactRecords(url);

}

}Hope this helps.

• Alejandro Jezierski (@alexjota) described Developing Big Data Solutions on Windows Azure, the blind and the elephant in a 3/15/2013 post to his SouthWorks blog:

What is a Big Data solution to you? Whatever you are thinking of, I cannot think of a better example than the story of the blind and the elephant. ”I’m a dev, It’s about writing some Map/Reduce awesomeness”, or “I’m a business analyst, I just want to query data in Excel!”, ”I’m a passer-by, but whatever this is, it’s yellow”… and so on.

I’m currently working with Microsoft’s Patterns and Practices team, developing a new guide in the Windows Azure Guidance Series called “Developing Big Data Solutions on Windows Azure”.

The guide will focus on discussing a wide range of scenarios, all of which have HDInsight on Windows Azure as a key player, the related technologies from the Hadoop ecosystem and Microsoft’s current BI solution stack.

We just made our first early preview drop on codeplex, so you can take a peek and see how the guide is shaping up so far.

So go get it, have a read and tell us what you think, we appreciate your feedback!

See the Microsoft’s patterns & practices (@mspnp) group posted the 123-page first (alpha) drop of Developing Big Data Solutions on Windows Azure on 3/14/2013 article at the beginning of the Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services section above.

Andrew Brust (@andrewbrust) asked “Microsoft wants to enable everyone to work with big data, using Excel, SQL Server, and current skill sets. With a solid stack, albeit one vulnerable in predictive analytics and mobility, can Microsoft become the McDonald's of big data "insights?" in a deck for his Microsoft's Big Data strategy: Democratize, in-memory and the cloud post of 3/8/2013 to ZDNet’s Big Data blog (missed when posted):

I've mentioned before that I've done a lot of work with Microsoft. Recently, I was visiting the company's corporate campus in Redmond, Washington, for the Global Summit of its Most Valuable Professionals program, in which I participate. As I was on campus, and it was the week before O'Reilly's big data-focused Strata conference, of which Microsoft is a big sponsor, I took the opportunity to sit down with Microsoft's Director of Program Management for BI in its Data Platform Group, Kamal Hathi.

It's not just about Strata, either. Hathi is gearing up to deliver the keynote address at the PASS Business Analytics Conference in Chicago next month and so his mind is pretty well immersed in strategic questions around big data that are relevant to the software giant that employs him.

Redmond's big data worldview

My goal was to find out how Redmond views the worlds of big data, analytics, and business intelligence, and what motivates those views, too. What I found out is that Microsoft sees big data mostly through two lenses: That of its business intelligence sensibility, formed over more than a decade of being in that market; and those of its other lines of business, including online services, gaming, and cloud platforms.

This combination makes Microsoft's analytics profile a combination of old-school mega vendor BI market contender and modern-day customer of analytics technologies. And because Microsoft has had to use its own BI tools in the service of big data analyses, it's been forced to find a way to make them work together, to ponder the mismatch between the two, and how best to productize a solution to that mismatch.

Strata reveals

I mentioned last week's Strata conference, and that really is germane to my conversation with Hathi, because Microsoft made three key announcements, all of which tie into the ideas Hathi and I discussed. Those announcements are as follows:

Version 2 of its SQL Server Parallel Data Warehouse product is complete, with Dell and HP standing by to take orders now for delivery of appliances this month. PDW v2 includes PolyBase, which integrates PDW's Massively Parallel Processing (MPP) SQL query engine with data stored in Hadoop.

Microsoft released a preview of its "Data Explorer" add-in for Excel. Data Explorer can be used to import data from a variety of sources, including Facebook and Hadoop's Distributed File System, and can import data from the web much more adeptly than can Excel on its own. Data Explorer can import from conventional relational data sources as well. All data imported by Data Explorer can be added to PowerPivot data models and then analyzed and visualized in Power View.

Hortonworks, Microsoft's partner in all things Hadoop, has released a beta of its own distribution of the Hortwonworks Data Platform (HDP) for Windows. This more "vanilla" HDP for Windows will coexist with Microsoft's HDInsight distribution of Hadoop, which is itself based on the HDP for Windows code base.

As I said, these announcements tie into the ideas Hathi discussed with me, but I haven't told you what they are yet. Hathi explained to me that Microsoft's strategy for "Insights" (the term it typically applies to BI and analytics) is woven around a few key pillars: "democratization", cloud, and in-memory. I'll try now to relay Hathi's elaboration of each pillar.

Democratization

"Democratization" is a concept Microsoft has always seen as key to its own value proposition. It's based on the idea that new areas of technology, in their early stages, typically are catered to by smaller pure play, specialist companies, whose products are sometimes quite expensive. In addition, the skills required to take advantage of these technologies are usually in short supply, driving costs up even further. Democratization disrupts this exclusivity with products that are often less expensive, integrate more easily in the corporate datacenter and, importantly, are accessible to mainstream information workers and developers using the skills they already have.

In the case of Hadoop, which is based on Apache Software Foundation open-source software projects, democratization is less about the cost savings aspect and much more about datacenter integration and skill set accessibility. The on-premises version of Microsoft's HDInsight distribution of Hadoop will integrate with Active Directory, System Center, and other back-end products; the Azure cloud-based version integrates with Azure cloud storage and with the Azure SQL Database offering as well.

In terms of skill set accessibility, Microsoft's integration of Excel/PowerPivot and Hadoop through Hive and ODBC means any Excel user that even aspires to power user status will be able to analyze big data on her own, using the familiar spreadsheet tool that has been established for decades.

The other thing to keep in mind is that HDInsight runs on Windows Server, rather than Linux. Given that a majority of Intel-based servers run Windows and that a majority of corporate IT personnel are trained on it, providing a Hadoop distribution that runs there, in and of itself, enlarges the Hadoop tent. …

Glenn Gailey (@ggailey777) described Extending the OData Async Extensions to DataServiceCollection<T> Methods in a 3/4/2013 post (missed when published):

As I announced in a previous post, there is an OData client library that supports Windows Store app development. This is a the good news, but folks who have tried to use it in their Windows Store apps are quick to note that the library’s asynchronous APIs don’t support the nice new await/async behaviors in .NET Framework 4.5. Our friend Phani Raju provided a bit of help here in his post Async extension methods for OData Windows 8 client library. In this post, Phani provides us with some nice awaitable wrappers around the Begin/End methods in the OData client APIs. This makes is easy to add these extension methods into your project and use the await pattern, like this:

var results = await ODataAsyncExtensions .ExecuteAsync<AnswerLink>(_context.AnswerLinks);

I used var to define the variable…but when you code this, you will notice that this awaitable method (along with the other Execute and Load methods) actually returns an IEnumerable<T>, which is not the ideal collection for data binding, especially two-way binding. OData clients already have an ideal binding collection in DataServiceCollection<T>, but Phani didn’t provide for us the nice awaitable wrapper for the load methods on this collection.

Fortunately, I came across the article Convert Events to Async methods posted by Mandelbug, which showed how to convert the old style event/delegate based async operation into an awaitable Task-based one. As such, an awaitable version of the LoadAsync(DataServiceQuery) method looks like this:

public static async Task<LoadCompletedEventArgs> LoadAsync<T>(DataServiceCollection<T> bindingCollection, DataServiceQuery<T> query) {

var tcs = new TaskCompletionSource<LoadCompletedEventArgs>(); EventHandler<LoadCompletedEventArgs> handler =

delegate(object o, LoadCompletedEventArgs e) { if (e.Error != null) { tcs.TrySetException(e.Error); } else if (e.Cancelled) { tcs.TrySetCanceled(); } else { tcs.TrySetResult(e); } }; bindingCollection.LoadCompleted += handler; bindingCollection.LoadAsync(query); LoadCompletedEventArgs eventArgs = await tcs.Task; bindingCollection.LoadCompleted -= handler; return eventArgs; }I have attached the entire ODataAsyncExtensions class code page to this post, which includes Phani’s methods plus my new LoadAsync ones. As always, this code is provided to you “as is," I ‘m not going to support it, so use it at your own risk….but please let me know if you find any bugs.

<Return to section navigation list>

Windows Azure Service Bus, Caching Access Control, Active Directory, Identity and Workflow

• Haddy El-Haggan (@Hhaggan) posted Introducing Service Bus on 3/9/2013 (missed when published):

Service Bus is a software model, a middleware, that is mainly used with WCF or SOA to handle and loosens all the coupled messaging between all the applications required. There are 2 types of Service Bus, the one running on Windows Azure and the one that can run on the local servers which we call Enterprise Service Bus.

To clarify more what Service Bus can do, it can work with different applications, reach them and interact with them regardless of the different mechanism they work with.

Here is a video from Channel 9 that clarifies more the concept of EAI and What Service Bus can do: http://channel9.msdn.com/shows/Cloud+Cover/Cloud-Cover-Episode-23-AppFabric-Service-Bus/

For the Windows Azure Service Bus, it provides REST, relayed and brokered messaging capabilities for the Messaging, connecting the applications running on premises with the ones running on Windows Azure and finally works also for the mobile devices push notifications.

For more information about the Windows Azure Service Bus Relayed Messaging you can go to the following link [or see post below] and for more information about the Windows Azure Service Bus Brokered Messaging you can find it in the following link.

So why people use the Service Bus?

- It can be used for delivering messages to multiple connectors or endpoints.

- Simplify the integration between multiple applications as each one of them is using different connector with different way of integration.

- Ensure the delivery of the messaging to the applications.

• Haddy El-Haggan (@Hhaggan) continued his Service Bus series with Windows Azure Service Bus Relayed Messaging on 3/9/2013:

As for the Service Bus, the Windows Azure middleware solving the EAI (Enterprise application integration), here is the first way to do the messaging using the Relayed Messaging. Let me first explain what does Relayed Messaging means.

Here are the 3 ways of messaging using the Relay messaging:

- Direct one way messaging

- Request/response messaging

- Peer to Peer messaging

For the Binding there are several ways of bindings, certainly that matches all the different ways of Relay Messaging.

- TCP Relay Binding know as: NetTcpRelayBinding

- WS Relay Binding know as: WSHttpRelayBinding

- One Way Relay Binding known as: NetOneWayRelayBinding

- Event Relay Binding Known as: NetEventRelayBinding

Now starting for the first type of binding the TCP Relay Binding. Actually this type of binding has also three types of binding mode:

- Relay

- Direct

- Hybrid

Each one of them has a specific time to be used in, however there are some people who always go for the easiest one which is the hybrid but I have to clarify all of them. The TCP Relay binding mode “Relay” is used when you are connecting the service and the client through the service itself. First of all the Service has to authenticate itself in the service bus, the clients too has to authenticate itself to the service then it can sends the required message to the service. At the end, the service would be able to send the required message to the client.

The second TCP Relay Binding mode “Direct”, this mode is used for establishing direct connections between the client and the service without having any needs to use the service or passing by it. Like the Relay mode the Service has to authenticate itself, then the client too after that service bus will show them, the client and the service how they can establish connection between them and communicate directly. Working on the Message Security is a must.

The third TCP Relay Binding mode “Hybrid”, from its name you can get that it is a mix from the 2 other modes the Relay and the Direct. Well it is a Yes, like the two other services the authentication for the client and the service has to be done first after that the initialized mode is the relayed. The second step is to check if the direct mode could be achieved successfully, if yes than the switch will be done automatically otherwise the binding will remain the same. Also working on the security is a must.