Windows Azure and Cloud Computing Posts for 6/26/2012+

| A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles. |

• Updated 6/30/2012 with new articles marked •.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database, Federations and Reporting

- Marketplace DataMarket, Social Analytics, Big Data and OData

- Windows Azure Service Bus, Active Directory, and Workflow

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue and Hadoop Services

• The Windows Azure Storage Team posted Introducing Table SAS (Shared Access Signature), Queue SAS and update to Blob SAS on 6/12/2012 (missed when posted due to MEETAzure traffic):

We’re excited to announce that, as part of version 2012-02-12, we have introduced Table Shared Access Signatures (SAS), Queue SAS and updates to Blob SAS. In this blog, we will highlight usage scenarios for these new features along with sample code using the Windows Azure Storage Client Library v1.7.1, which is available on GitHub.

Shared Access Signatures allow granular access to tables, queues, blob containers, and blobs. A SAS token can be configured to provide specific access rights, such as read, write, update, delete, etc. to a specific table, key range within a table, queue, blob, or blob container; for a specified time period or without any limit. The SAS token appears as part of the resource’s URI as a series of query parameters. Prior to version 2012-02-12, Shared Access Signature could only grant access to blobs and blob containers.

SAS Update to Blob in version 2012-02-12

In the 2012-02-12 version, Blob SAS has been extended to allow unbounded access time to a blob resource instead of the previously limited one hour expiry time for non-revocable SAS tokens. To make use of this additional feature, the sv (signed version) query parameter must be set to "2012-02-12" which would allow the difference between se (signed expiry, which is mandatory) and st (signed start, which is optional) to be larger than one hour. For more details, refer to the MSDN documentation.
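As a rough illustration of the rule above, the following Python sketch works out which time-related query parameters an ad hoc (non-revocable) Blob SAS would carry. The function names and the returned dictionary are hypothetical and are not part of the Storage Client Library. The sketch deliberately leaves out the signature (sig) and the other required parameters.

```python
from datetime import datetime, timedelta, timezone

ONE_HOUR = timedelta(hours=1)
NEW_VERSION = "2012-02-12"


def iso_utc(moment):
    """Format a timezone-aware datetime as an ISO 8601 UTC timestamp."""
    return moment.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")


def blob_sas_time_params(expiry, start=None, now=None):
    """Return the se/st/sv parameters for a hypothetical ad hoc Blob SAS.

    se is always present, st only when a start time is given, and sv is
    added when the window exceeds one hour, because the longer window is
    only allowed for sv=2012-02-12.
    """
    now = now or datetime.now(timezone.utc)
    effective_start = start or now  # an omitted st is treated as "now"
    if expiry <= effective_start:
        raise ValueError("expiry must be later than the start time")
    params = {"se": iso_utc(expiry)}
    if start is not None:
        params["st"] = iso_utc(start)
    if expiry - effective_start > ONE_HOUR:
        params["sv"] = NEW_VERSION
    return params
```

For example, a seven-day window yields a dictionary containing sv, while a 30-minute window does not need it.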

Best Practices When Using SAS

The following are best practices to follow when using Shared Access Signatures.

- Always use HTTPS when making SAS requests. SAS tokens are sent over the wire as part of a URL, and can potentially be leaked if HTTP is used. A leaked SAS token grants access until it either expires or is revoked.

- Use server stored access policies for revocable SAS. Each container, table, and queue can now have up to five server stored access policies at once. Revoking one of these policies invalidates all SAS tokens issued using that policy. Consider grouping SAS tokens such that logically related tokens share the same server stored access policy. Avoid inadvertently reusing revoked access policy identifiers by including a unique string in them, such as the date and time the policy was created.

- Don’t specify a start time or allow at least five minutes for clock skew. Due to clock skew, a SAS token might start or expire earlier or later than expected. If you do not specify a start time, then the start time is considered to be now, and you do not have to worry about clock skew for the start time.

- Limit the lifetime of SAS tokens and treat it as a Lease. Clients that need more time can request an updated SAS token.

- Be aware of version: Starting with the 2012-02-12 version, SAS tokens will contain a new version parameter (sv). sv defines how the various parameters in the SAS token must be interpreted and the version of the REST API to use to execute the operation. This implies that services responsible for providing SAS tokens to client applications must issue them for the version of the REST protocol that those clients understand. Make sure clients understand the REST protocol version specified by sv when they are given a SAS to use.
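Two of the practices above (unique policy identifiers and allowing for clock skew) can be sketched in a few lines of Python. The helper names and the identifier layout are assumptions made for illustration. They do not come from the Storage Client Library.

```python
from datetime import datetime, timedelta, timezone

CLOCK_SKEW = timedelta(minutes=5)  # the minimum allowance suggested above


def unique_policy_id(prefix, created):
    """Build a stored access policy identifier that embeds its creation time.

    Embedding the timestamp makes it unlikely that a revoked identifier
    is reused by accident. The "prefix-timestamp" layout is hypothetical.
    """
    return f"{prefix}-{created.strftime('%Y%m%dT%H%M%SZ')}"


def sas_window(now, lifetime, explicit_start=False):
    """Return (start, expiry) for a short-lived SAS token.

    By default no start time is set, so the token is valid immediately.
    If a start time is required, it is backdated by CLOCK_SKEW.
    """
    start = now - CLOCK_SKEW if explicit_start else None
    expiry = now + lifetime
    return start, expiry
```

A short lifetime combined with on-demand renewal gives the lease-like behavior described in the list.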

Table SAS

SAS for table allows account owners to grant SAS token access by defining the following restrictions on the SAS policy:

1. Table granularity: users can grant access to an entire table (tn) or to a table range defined by a table (tn) along with a partition key range (startpk/endpk) and row key range (startrk/endrk).

To better understand the range to which access is granted, let us take an example data set:

The permission is specified as a range of rows from (startpk, startrk) until (endpk, endrk).

Example 1: (startpk, startrk) = (,) (endpk, endrk) = (,)

Allowed Range = All rows in table

Example 2: (startpk, startrk) = (PK002,) (endpk, endrk) = (,)

Allowed Range = All rows starting from row # 301

Example 3: (startpk, startrk) = (PK002,) (endpk, endrk) = (PK002,)

Allowed Range = All rows starting from row # 301 and ending at row # 600

Example 4: (startpk, startrk) = (PK001,RK002) (endpk, endrk) = (PK003,RK003)

Allowed Range = All rows starting from row # 2 and ending at row # 603.

NOTE: The row (PK002, RK100) is accessible and the row key limit is hierarchical and not absolute (i.e. it is not applied as startrk <= rowkey <= endrk).

2. Access permissions (sp): users can grant access rights to the specified table or table range such as Query (r), Add (a), Update (u), Delete (d) or a combination of them.

3. Time range (st/se): users can limit the SAS token access time. Start time (st) is optional but Expiry time (se) is mandatory, and no limits are enforced on these parameters. Therefore a SAS token may be valid for a very large time period.

4. Server stored access policy (si): users can either generate offline SAS tokens where the policy permissions described above are part of the SAS token, or they can choose to store specific policy settings associated with a table. These policy settings are limited to the time range (start time and end time) and the access permissions. Stored access policy provides additional control over generated SAS tokens where policy settings could be changed at any time without the need to re-issue a new token. In addition, revoking SAS access would become possible without the need to change the account’s key.

For more information on the different policy settings for Table SAS and the REST interface, please refer to the SAS MSDN documentation.
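The hierarchical range rule from item 1 is easy to misread, so here is a minimal Python sketch of how a (partition key, row key) pair could be tested against a Table SAS range. The function is hypothetical and only models the behavior described in the examples and the NOTE. It assumes plain string ordering for keys, and it assumes that a row key bound is only meaningful when its partition key bound is also given.

```python
def in_sas_range(pk, rk, startpk="", startrk="", endpk="", endrk=""):
    """Return True if row (pk, rk) falls inside the Table SAS range.

    Empty strings mean "no bound". The row key bounds apply only to rows
    in the boundary partitions, which is what makes the limit
    hierarchical rather than absolute.
    """
    if startpk:
        if pk < startpk:
            return False
        # startrk restricts only rows in the first allowed partition
        if pk == startpk and startrk and rk < startrk:
            return False
    if endpk:
        if pk > endpk:
            return False
        # endrk restricts only rows in the last allowed partition
        if pk == endpk and endrk and rk > endrk:
            return False
    return True
```

With the range (PK001, RK002) to (PK003, RK003), the row (PK002, RK100) is accepted even though RK100 is greater than RK003, because row keys are only compared in the boundary partitions PK001 and PK003.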

Though non-revocable Table SAS provides large time period access to a resource, we highly recommend that you always limit its validity to a minimum required amount of time in case the SAS token is leaked or the holder of the token is no longer trusted. In that case, the only way to revoke access is to rotate the account’s key that was used to generate the SAS, which would also revoke any other SAS tokens that were already issued and are currently in use. In cases where large time period access is needed, we recommend that you use a server stored access policy as described above.

Most Shared Access Signature usage falls into two different scenarios:

- A service granting access to clients, so those clients can access their parts of the storage account or access the storage account with restricted permissions. Example: a Windows Phone app for a service running on Windows Azure. A SAS token would be distributed to clients (the Windows Phone app) so it can have direct access to storage.

- A service owner who needs to keep his production storage account credentials confined within a limited set of machines or Windows Azure roles which act as a key management system. In this case, a SAS token will be issued on an as-needed basis to worker or web roles that require access to specific storage resources. This allows services to reduce the risk of getting their keys compromised.

Along with the different usage scenarios, SAS token generation usually follows the models below:

- A SAS Token Generator or producer service responsible for issuing SAS tokens to applications, referred to as SAS consumers. The SAS token generated is usually valid for a limited amount of time to control access. This model usually works best with the first scenario described earlier where a phone app (SAS consumer) would request access to a certain resource by contacting a SAS generator service running in the cloud. Before the SAS token expires, the consumer would again contact the service for a renewed SAS. The service can refuse to produce any further tokens to certain applications or users, for example in the scenario where a user’s subscription to the service has expired. Diagram 1 illustrates this model.

Diagram 1: SAS Consumer/Producer Request Flow

- The communication channel between the application (SAS consumer) and SAS Token Generator could be service specific where the service would authenticate the application/user (for example, using OAuth authentication mechanism) before issuing or renewing the SAS token. We highly recommend that the communication be a secure one in order to avoid any SAS token leak. Note that steps 1 and 2 would only be needed whenever the SAS token approaches its expiry time or the application is requesting access to a different resource. A SAS token can be used as long as it is valid which means multiple requests could be issued (steps 3 and 4) before consulting back with the SAS Token Generator service.

- A one-time generated SAS token tied to a signed identifier controlled as part of a stored access policy. This model would work best in the second scenario described earlier where the SAS token could either be part of a worker role configuration file, or issued once by a SAS token generator/producer service where maximum access time could be provided. In case access needs to be revoked or permission and/or duration changed, the account owner can use the Set Table ACL API to modify the stored policy associated with issued SAS token. …
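The consumer side of the first model (steps 1 and 2 only when the token approaches expiry) could look roughly like the Python sketch below. The class and the fetch_token callable are hypothetical. In a real application fetch_token would call the authenticated SAS Token Generator service over a secure channel.

```python
import time


class SasTokenCache:
    """Cache a SAS token and renew it shortly before it expires."""

    def __init__(self, fetch_token, renew_margin=300.0, clock=time.time):
        # fetch_token is a hypothetical callable returning
        # (token, expiry_as_epoch_seconds) from the producer service.
        self._fetch_token = fetch_token
        self._renew_margin = renew_margin
        self._clock = clock
        self._token = None
        self._expiry = 0.0

    def get(self):
        """Return a usable token, contacting the producer only when needed."""
        if self._token is None or self._clock() >= self._expiry - self._renew_margin:
            self._token, self._expiry = self._fetch_token()
        return self._token
```

Every storage request calls get(), so many requests can reuse one token. The producer service is contacted again only inside the renewal margin.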

The post continues with sample C# code.

Andrew Edwards and Brad Calder of the Windows Azure Storage Team posted Exploring Windows Azure Drives, Disks, and Images on 6/27/2012:

With the preview of Windows Azure Virtual Machines, we have two new special types of blobs stored in Windows Azure Storage: Windows Azure Virtual Machine Disks and Windows Azure Virtual Machine Images. And of course we also have the existing preview of Windows Azure Drives. In the rest of this post, we will refer to these as storage, disks, images, and drives. This post explores what drives, disks, and images are and how they interact with storage.

Virtual Hard Drives (VHDs)

Drives, disks, and images are all VHDs stored as page blobs within your storage account. There are actually several slightly different VHD formats: fixed, dynamic, and differencing. Currently, Windows Azure only supports the format named ‘fixed’. This format lays the logical disk out linearly within the file format, such that disk offset X is stored at blob offset X. At the end of the blob, there is a small footer that describes the properties of the VHD. All of this stored in the page blob adheres to the standard VHD format, so you can take this VHD and mount it on your server on-premises if you choose to. Often, the fixed format wastes space because most disks have large unused ranges in them. However, we store our ‘fixed’ VHDs as a page blob, which is a sparse format, so we get the benefits of both the ‘fixed’ and ‘expandable’ disks at the same time.
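To make the fixed layout concrete, the following Python sketch reads the trailing footer of a VHD and reports the disk size and type. It is a minimal sketch, not the platform's code. The field offsets, the "conectix" cookie and the type codes follow the published VHD image format specification as understood here, and the function names are hypothetical.

```python
import struct

FOOTER_SIZE = 512  # the footer occupies the last 512 bytes of the file
DISK_TYPES = {2: "fixed", 3: "dynamic", 4: "differencing"}


def parse_vhd_footer(footer):
    """Extract the current disk size and disk type from a VHD footer."""
    if len(footer) != FOOTER_SIZE:
        raise ValueError("a VHD footer is exactly 512 bytes")
    if footer[:8] != b"conectix":
        raise ValueError("missing VHD cookie")
    # All multi-byte fields in the footer are big-endian.
    (current_size,) = struct.unpack(">Q", footer[48:56])
    (disk_type,) = struct.unpack(">I", footer[60:64])
    return {
        "disk_size": current_size,
        "disk_type": DISK_TYPES.get(disk_type, "unknown"),
    }


def fixed_vhd_blob_length(disk_size):
    """Length of the page blob for a fixed VHD: the disk data plus the footer."""
    return disk_size + FOOTER_SIZE
```

Because disk offset X maps to blob offset X, the footer of a fixed VHD can be fetched by reading the last 512 bytes of the page blob.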

Uploading VHDs to Windows Azure Storage

You can upload your VHD into your storage account to use it for either PaaS or IaaS. When you are uploading your VHD into storage, you will want to use a tool that understands that page blobs are sparse, and only uploads the portions of the VHD that have actual data in them. Also, if you have dynamic VHDs, you want to use a tool that will convert your dynamic VHD into a fixed VHD as it is doing the upload. CSUpload will do both of these things for you, and it is included as part of the Windows Azure SDK.

Persistence and Durability

Since drives, disks, and images are all stored in storage, your data will be persisted even when your virtual machine has to be moved to another physical machine. This means your data gets to take advantage of the durability offered by the Windows Azure Storage architecture, where all of your non-buffered and flushed writes to the disk/drive are replicated 3 times in storage to make it durable before returning success back to your application.

Drives (PaaS)

Drives are used by the PaaS roles (Worker Role, Web Role, and VM Role) to mount a VHD and assign a drive letter. There are many details about how you use these drives here. Drives are implemented with a kernel mode driver that runs within your VM, so your disk IO to and from the drive in the VM will cause network IO to and from the VM to your page blob in Windows Azure Storage. The following diagram shows the driver running inside the VM, communicating with storage through the VM’s virtual network adapter.

PaaS roles are allowed to mount up to 16 drives per role.

Disks (IaaS)

When you create a Windows Azure Virtual Machine, the platform will attach at least one disk to the VM for your operating system disk. This disk will also be a VHD stored as a page blob in storage. As you write to the disk in the VM, the changes to the disk will be made to the page blob inside storage. You can also attach additional disks to your VM as data disks, and these will be stored in storage as page blobs as well.

Unlike for drives, the code that communicates with storage on behalf of your disk is not within your VM, so doing IO to the disk will not cause network activity in the VM, although it will cause network activity on the physical node. The following diagram shows how the driver runs in the host operating system, and the VM communicates through the disk interface to the driver, which then communicates through the host network adapter to storage.

There are limits to the number of disks a virtual machine can mount, varying from 16 data disks for an extra-large virtual machine, to one data disk for an extra small virtual machine. Details can be found here.

IMPORTANT: The Windows Azure platform holds an infinite lease on all the page blobs that it considers disks in your storage account so that you don’t accidentally delete the underlying page blob, container, or the storage account while the VM is using the VHD. If you want to delete the underlying page blob, the container it is within, or the storage account, you will need to detach the disk from the VM first as shown here:

And then select the disk you want to detach and then delete:

Then you need to remove the disk from the portal:

and then you can select ‘delete disk’ from the bottom of the window:

Note: when you delete the disk you are not deleting the disk (VHD page blob) in your storage account. You are only disassociating it from the images that can be used for Windows Azure Virtual Machines. After you have done all of the above, you will be able to delete the disk from your storage account, using Windows Azure Storage REST APIs or storage explorers.

Images (IaaS)

Windows Azure uses the concept of an “Image” to describe a template VHD that can be used to create one or more Virtual Machines. Windows Azure and some partners provide images that can be used to create Virtual Machines. You can also create images for yourself by capturing an image of an existing Windows Azure Virtual Machine, or you can upload a sysprep’d image to your storage account. An image is also in the VHD format, but the platform will not write to the image. Instead, when you create a Virtual Machine from an image, the system will create a copy of that image’s page blob in your storage account, and that copy will be used for the Virtual Machine’s operating system disk.

IMPORTANT: Windows Azure holds an infinite lease on all the page blobs, the blob container and the storage account that it considers images in your storage account. Therefore, to delete the underlying page blob, you need to delete the image from the portal by going to the “Virtual Machines” section, clicking on “Images”:

Then you select your image and press “Delete Image” at the bottom of the screen. This will disassociate the VHD from your set of registered images, but it does not delete the page blob from your storage account. At that point, you will be able to delete the image from your storage account.

Temporary Disk

There is another disk present in all web roles, worker roles, VM Roles, and Windows Azure Virtual Machines, called the temporary disk. This is a physical disk on the node that can be used for scratch space. Data on this disk will be lost when the VM is moved to another physical machine, which can happen during upgrades, patches, and when Windows Azure detects something is wrong with the node you are running on. The sizes offered for the temporary disk are defined here.

The temporary disk is the ideal place to store your operating system’s pagefile.

IMPORTANT: The temporary disk is not persistent. You should only write data onto this disk that you are willing to lose at any time.

Billing

Windows Azure Storage charges for Bandwidth, Transactions, and Storage Capacity. The per-unit costs of each can be found here.

Bandwidth

We recommend mounting drives from within the same location (e.g., US East) as the storage account they are stored in, as this offers the best performance, and also will not incur bandwidth charges. With disks, you are required to use them within the same location the disk is stored.

Transactions

When connected to a VM, disk IOs from both drives and disks will be satisfied from storage (unless one of the layers of cache described below can satisfy the request first). Small disk IOs will incur one Windows Azure Storage transaction per IO. Larger disk IOs will be split into smaller IOs, so they will incur more transaction charges. The breakdown for this is:

- Drives

  - IO < 2 megabytes will be 1 transaction

  - IO >= 2 megabytes will be broken into transactions of 2MBs or smaller

- Disks

  - IO < 128 kilobytes will be 1 transaction

  - IO >= 128 kilobytes will be broken into transactions of 128KBs or smaller

In addition, operating systems often perform a little read-ahead for small sequential IOs (typically less than 64 kilobytes), which may result in larger sized IOs to drives/disks than the IO size being issued by the application. If the prefetched data is used, then this can result in fewer transactions to your storage account than the number of IOs issued by your application.
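The per-IO breakdown above can be turned into a quick estimate. The Python sketch below assumes that a large IO is split into the fewest possible pieces and that the limits are binary units (1 KB = 1024 bytes). Both are assumptions, and the function name is hypothetical. It ignores caching and read-ahead, so it gives an upper bound per application IO, not a billing figure.

```python
KB = 1024
MB = 1024 * KB

# Largest single storage transaction for each kind of VHD, per the list above.
MAX_TRANSACTION_BYTES = {"drive": 2 * MB, "disk": 128 * KB}


def storage_transactions(io_bytes, kind):
    """Estimate the storage transactions caused by one IO of io_bytes."""
    if io_bytes <= 0:
        raise ValueError("io_bytes must be positive")
    limit = MAX_TRANSACTION_BYTES[kind]
    return -(-io_bytes // limit)  # integer ceiling division
```

For instance, a 1 MB write costs a single transaction against a drive but eight transactions against a disk.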

Storage Capacity

Windows Azure Storage stores page blobs and thus VHDs in sparse format, and therefore only charges for data within the VHD that has actually been written to during the life of the VHD. Therefore, we recommend using ‘quick format’ because this will avoid storing large ranges of zeros within the page blob. When creating a VHD you can choose the quick format option by specifying the below:

It is also important to note that when you delete files within the file system used by the VHD, most operating systems do not clear or zero these ranges, so you can still be paying capacity charges within a blob for the data that you deleted via a disk/drive.

Caches, Caches, and more Caches

Drives and disks both support on-disk caching and some limited in-memory caching. Many layers of the operating system as well as application libraries do in-memory caching as well. This section highlights some of the caching choices you have as an application developer.

Caching can be used to improve performance, as well as to reduce transaction costs. The following table outlines some of the caches that are available for use with disks and drives. Each is described in more detail below the table.

FileStream (applies to both disks and drives)

.NET framework’s FileStream class will cache reads and writes in memory to reduce IOs to the disk. Some of the FileStream constructors take a cache size, and others will choose the default 8k cache size for you. You can not specify that the class use no memory cache, as the minimum cache size is 8 bytes. You can force the buffer to be written to disk by calling the FileStream.Flush(bool) API.

Operating System Caching (applies to both disks and drives)

The operating system itself will do in-memory buffering for both reads and writes, unless you explicitly turn it off when you open a file using FILE_FLAG_WRITE_THROUGH and/or FILE_FLAG_NO_BUFFERING. An in-depth discussion of the in-memory caching behavior of Windows is available here.

Windows Azure Drive Caches

Drives allow you to choose whether to use the node’s local temporary disk as a read cache, or to use no cache at all. The space for a drive’s cache is allocated from your web role or worker role’s temporary disk. This cache is write-through, so writes are always committed immediately to storage. Reads will be satisfied either from the local disk, or from storage.

Using the drive local cache can improve sequential IO read performance when the reads ‘hit’ the cache. Sequential reads will hit the cache if:

- The data has been read before. The data is cached on the first time it is read, not on first write.

- The cache is large enough to hold all of the data.

Access to the blob can often deliver a higher rate of random IOs than the local disk. However, these random IOs will incur storage transaction costs. To reduce the number of transactions to storage, you can use the local disk cache for random IOs as well. For best results, ensure that your random writes to the disk are 8KB aligned, and the IO sizes are in multiples of 8KB.
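One way to follow the 8KB guidance is to widen each IO to the enclosing aligned span before issuing it. The helper below is a small Python sketch of that arithmetic. The function name is hypothetical, and it only computes offsets without performing any IO.

```python
ALIGNMENT = 8 * 1024  # 8KB, as recommended above


def aligned_span(offset, length, alignment=ALIGNMENT):
    """Return (start, size) of the smallest aligned span covering the IO.

    start is rounded down and the end is rounded up to a multiple of
    alignment, so size is always a multiple of alignment as well.
    """
    start = (offset // alignment) * alignment
    end = -(-(offset + length) // alignment) * alignment  # round up
    return start, end - start
```

A 100-byte IO at offset 10000 becomes one 8KB IO at offset 8192, while an IO that straddles an 8KB boundary grows to 16KB.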

Windows Azure Virtual Machine Disk Caches

When deploying a Virtual Machine, the OS disk has two host caching choices:

- Read/Write (Default) – write back cache

- Read - write through cache

When you set up a data disk on a virtual machine, you get three host caching choices:

- Read/Write – write back cache

- Read – write through cache

- None (Default)

The type of cache to use for data disks and the OS disk is not currently exposed through the portal. To set the type of host caching, you must either use the Service Management APIs (either Add Data Disk or Update Data Disk) or the PowerShell commands (Add-AzureDataDisk or Set-AzureDataDisk).

The read cache is stored both on disk and in memory in the host OS. The write cache is stored in memory in the host OS.

WARNING: If your application does not use FILE_FLAG_WRITE_THROUGH, the write cache could result in data loss because the data could be sitting in the host OS memory waiting to be written when the physical machine crashes unexpectedly.

Using the read cache will improve sequential IO read performance when the reads ‘hit’ the cache. Sequential reads will hit the cache if:

- The data has been read before.

- The cache is large enough to hold all of the data.

The cache’s size for a disk varies based on instance size and the number of disks mounted. Caching can only be enabled for up to four data disks.

No Caching for Windows Azure Drives and VM Disks

Windows Azure Storage can provide a higher rate of random IOs than the local disk on your node that is used for caching. If your application needs to do lots of random IOs, and throughput is important to you, then you may want to consider not using the above caches. Keep in mind, however, that IOs to Windows Azure Storage do incur transaction costs, while IOs to the local cache do not.

To disable your Windows Azure Drive cache, pass ‘0’ for the cache size when you call the Mount() API.

For a Virtual Machine data disk the default behavior is to not use the cache. If you have enabled the cache on a data disk, you can disable it using the Update Data Disk service management API, or the Set-AzureDataDisk PowerShell command.

For a Virtual Machine operating system disk the default behavior is to use the cache. If your application will do lots of random IOs to data files, you may want to consider moving those files to a data disk which has the caching turned off.

David Linthicum (@DavidLinthicum) asserted “By hemming and hawing, you're retrenching further into the data center and missing out on tremendous business benefits” in a deck for his Your corporate data needs to be in the public cloud -- starting now post of 6/29/2012 to InfoWorld’s Cloud Computing blog:

Looking for your first cloud computing project? Chances are you're considering a very small, very low-risk application to create on a public PaaS or IaaS cloud provider.

I get the logic: It's a low-value application. If the thing tanks or your information is hacked, no harm, no foul. However, I assert that you could move backward by hedging your bets, retrenching further and further into the data center and missing out on the game-changing advantages of the cloud.

You need to bite the bullet, update that résumé (in case your superiors don't agree), and push your strategic corporate data to the public cloud.

Using the public cloud lets you leverage this data in new ways, thanks to new tools -- without having to pay millions of dollars for new infrastructure to support the database processing. When you have such inexpensive capacity, you'll figure out new ways to analyze your business using this data, and that will lead to improved decisions and -- call me crazy -- a much better business. Isn't that the objective?

Of course, the downside is that your data could be carted off by the feds in a data center raid or hacked through an opening your cloud provider forgot to close. Right? Wrong. The chances of those events (or similar events) occurring are very slim. Indeed, your data is more vulnerable where it now exists, but you have a false sense of security because you can hug your servers.

If you're playing with the public clouds just to say you're in them, while at the same time avoiding any potential downside, you're actually doing more harm than good. Cloud technology has evolved in the last five years, so put aside those old prejudices and assumptions. Now is the time to take calculated risks and get some of your data assets out on the cloud. Most of the Global 2000 will find value there.

Denny Lee (@dennylee) posted To the Cloud…and Beyond! on 6/28/2012:

After all the years of working on enterprise Analysis Services implementations, there were definitely some raised eyebrows when I had started running around with my MacBook Air on the merits of Hadoop – and Hadoop in the Cloud for that matter.

It got a little worse when I was hinting at the shift to Big Data:

It sounded strange until we had announced during the PASS 2011 Day One Keynote which I also called out in my post Connecting PowerPivot to Hadoop on Azure – Self Service BI to Big Data in the Cloud.

…

The reason for my personal interest in Big Data isn’t just because of my web analytics background during my days at digiMine or Microsoft adCenter. In fact it was spurred by my years of working on exceedingly complex DW and BI implementations during the awesome craziness as part of the SQL Customer Advisory Team.

Source: https://twitter.com/ftgfop1/status/213309905929637888/photo/1

The uber-examples of the importance of Big Data and BI working together include:

- Yahoo! TAO is the largest Analysis Services cube, as called out by Scott Burke, SVP of User Data and Analytics, at this year’s Hadoop Summit.

- Dave Mariani (@dmariani) and my session “How Klout is changing the landscape of social media with Hadoop and BI” – more info at: http://dennyglee.com/2012/05/30/sql-bi-at-hadoop-summit-awesomesauce/

The key theme of our session was simply that:

Hadoop and BI are better together

…

Saying all of this, after this fun ride, I am both excited and sad to announce that I will be leaving the SQL Customer Advisory Team and joining the SQL BI organization. It’s a pretty cool opportunity as I will get to live the theme of Hadoop and BI are better together by helping to build some internet scale Hadoop and BI systems – and all within the Cloud! I will reveal more later, eh?!

Meanwhile, I will still be blogging and running around talking about Hadoop and BI – so keep on pinging me, eh?! And yes, SSAS Maestros is still very much going to be continuing – in its new home as part of the SQL BI Org.


SQL Azure Database, Federations and Reporting

Gregory Leake posted Data Series: SQL Server in Windows Azure Virtual Machine vs. SQL Database to the Windows Azure blog on 6/26/2012:

Two weeks ago we announced many new upcoming Windows Azure features that are now in public preview. One of these is the new Windows Azure Virtual Machine (VM), which makes it very easy to deploy dedicated instances of SQL Server in the Windows Azure cloud. You can read more about this new capability here. SQL Server running in a Windows Azure VM can serve as the backing database to both cloud-based applications, as well as on-premise applications, much like Windows Azure SQL Database (formerly known as “SQL Azure”). This capability is our implementation of “Infrastructure as a Service” (IaaS).

Windows Azure SQL Database, which is a commercially released service, is our implementation of “Platform as a Service” (PaaS) for a relational database service in the cloud. The introduction of new IaaS capabilities for Windows Azure leads to an important question: when should I choose Windows Azure SQL Database, and when should I choose SQL Server running in a Windows Azure VM when deploying a database to the cloud? In this blog post, we provide some early information to help customers understand some of the differences between the two options, and their relative strengths and core scenarios. Each of these choices might be a better fit than the other depending on what kind of problem you want to solve.

The key criteria in determining which of these two cloud database choices will be the better option for a particular solution are:

- Full compatibility with SQL Server box product editions

- Control vs. cost

- Database scale-out requirements

In general, the two options are optimized for different purposes:

- SQL Database is optimized to reduce costs to the minimum amount possible. It provides a very quick and easy way to build a scale-out data tier in the cloud, while lowering ongoing administration costs since customers do not have to provision or maintain any virtual machines or database software.

- SQL Server running in a Windows Azure VM is optimized for the best compatibility with existing applications and for hybrid applications. It provides full SQL Server box product features and gives the administrator full control over a dedicated SQL Server instance and cloud-based VM.

Compatibility with SQL Server Box Product Editions

From a features and compatibility standpoint, running SQL Server 2012 (or earlier edition) in a Windows Azure VM is no different than running full SQL Server box product in a VM hosted in your own data center: it is full box product, and the features supported just depend on the edition of SQL Server you deploy (note that AlwaysOn availability groups are targeted for support at GA but not the current preview release; and that Windows Clustering will not be available at GA). The advantage of running SQL Server in a Windows Azure VM is that you do not need to buy or maintain any infrastructure whatsoever, leading to lower TCO.

Existing SQL Server-based applications will “just work” with SQL Server running in a Windows Azure VM, as long as you deploy the correct edition. If your application requires full SQL Server Enterprise Edition, your existing applications will work as long as you deploy SQL Server Enterprise Edition to the Windows Azure VM(s). This includes features such as SQL Server Integration Services, Analysis Services and Reporting Services. No code migration will be required, and you can run your applications in the cloud or on-premise. Using the new Windows Azure Virtual Network, also announced this month, you will even be able to domain-join your Windows Azure VM running SQL Server to your on-premise domain(s).

This is critical to enabling development of hybrid applications that can span both on-premises and off-premises under a single corporate trust boundary. Also, VM images with SQL Server can be created in the cloud from stock image galleries provided within Windows Azure, or created on-premises from existing deployments and uploaded to Windows Azure. Once deployed, VM images can be moved between on-premises and the cloud with SQL Server License mobility, which is provided for those customers that have licensed SQL Server with Software Assurance (SA).

Windows Azure SQL Database, on the other hand, does not support all SQL Server features. While a very large subset of features are supported (and this set of features is growing over time), it is not full SQL Server Enterprise Edition, and differences will always exist based on different design goals for SQL Database as pointed out above. A guide is available on MSDN that explains the important feature-level differences between SQL Database and SQL Server box product. Even with these differences, however, tools such as SQL Server Management Studio and SQL Server Data Tools can be used with SQL Database as well as SQL Server running on premises and in a Windows Azure VM.

In a nutshell, running SQL Server in a Windows Azure VM will most often be the best route to migrate existing applications and services to Windows Azure given its compatibility with the full SQL Server box product, and for building hybrid applications and services spanning on-premises and the cloud under a single corporate trust boundary. However, for new cloud-based applications and services, SQL Database might be the better choice for reasons discussed further below.

Control vs. Cost

While SQL Server running in a Windows Azure VM will offer the same database features as the box product, SQL Database aims, as a service, to minimize costs and administration overhead. With SQL Database, for example, you do not pay for compute resources in the cloud. Rather, you just pay a consumption fee per database based on the size of the database, from as little as $5.00 per month for a 100MB database to $228.00 per month for a 150GB database (the current size limit for a single SQL Database database).

And while SQL Server running in a Windows Azure VM will offer the best application compatibility, there are two important features of SQL Database that customers should understand:

- High Availability (HA) and 99.9% database uptime SLA built-in

- SQL Database Federation

With SQL Database, high availability is a standard feature at no additional cost. Each time you create a Windows Azure SQL Database, that database is actually operating across a primary node and multiple online replicas, such that if the primary fails, a secondary node automatically replaces it within seconds, with no application downtime. This is how we are able to offer a 99.9% uptime SLA with SQL Database at no additional charge.
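To put the 99.9% figure in perspective, here is a small arithmetic sketch (Python, for illustration only) that converts an uptime percentage into the downtime it would permit. The 30-day measurement period is an assumption; the actual SLA terms define how uptime is measured.

```python
# Illustrative arithmetic only: converts an uptime SLA percentage into the
# maximum downtime it permits. The 30-day period is an assumption, not SLA text.

def allowed_downtime_minutes(sla_percent: float, days: int = 30) -> float:
    """Return the downtime budget, in minutes, for an SLA over `days` days."""
    total_minutes = days * 24 * 60
    return total_minutes * (100.0 - sla_percent) / 100.0


if __name__ == "__main__":
    budget = allowed_downtime_minutes(99.9, days=30)
    print(f"99.9% uptime over 30 days allows about {budget:.1f} minutes of downtime")
```

Under that assumption, 99.9% works out to roughly 43 minutes per month.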

For SQL Server in a Windows Azure VM, the virtual machine instance will have an SLA (99.9% uptime) at commercial release. This SLA is for the VM itself, not the SQL Server databases. For database HA, you will be able to configure multiple VMs running SQL Server 2012 and set up an AlwaysOn Availability Group; but this will require some manual configuration and management, and you will pay extra for each secondary you operate, just as you would for an on-premises HA configuration.

With SQL Server running in a Windows Azure VM, you can control the operating system and database configuration (since it’s your dedicated VM to configure), but it is also up to you to configure and maintain this VM, including patching and upgrading the OS and database software over time, as well as installing any additional software such as anti-virus, backup tools, etc. With SQL Database, you are not running in a VM, and have no control over a VM configuration. However, the database software is automatically configured, patched and upgraded by Microsoft in the data centers, so this lowers administration costs.

With SQL Server in a Windows Azure VM, you can also control the size of the VM, providing some level of scale up from smaller compute, storage and memory configurations to larger VM sizes. SQL Database, on the other hand, is designed for a scale-out vs. a scale-up approach to achieving higher throughput rates. This is achieved through a unique feature of SQL Database called Federation. Federation makes it very easy to partition (shard) a single logical database into many physical nodes, providing very high throughput for the most demanding database-driven applications. The SQL Database Federation feature is possible because of the unique PaaS characteristics of SQL Database and its almost friction-free provisioning and automated management. SQL Database Federation is discussed in more detail below.

Database Scale-out Requirements

Another key evaluation criterion for choosing SQL Server running in a Windows Azure VM vs. SQL Database will be performance and scalability. Customers will always get the best vertical scalability (aka ‘scale up’) when running SQL Server on their own hardware, since customers can buy hardware that is highly optimized for performance. With SQL Server running in a Windows Azure VM, performance for a single database will be constrained to the largest virtual machine image possible on Windows Azure—which at its introduction will be a VM with 8 virtual CPUs, 14GB of RAM, 16 TB of storage, and 800 MB/s network bandwidth. Storage will be optimized for performance and configurable by customers. Customers will also be able to configure and run AlwaysOn Availability Groups (at GA, not for preview release), and optionally get additional performance by using read-only secondaries or other scale out mechanisms such as scalable shared databases, peer-to-peer replication, Distributed Partitioned Views, and data-dependent routing.

With SQL Database, on the other hand, customers do not choose how many CPUs or how much memory: SQL Database operates across shared resources that do not need to be configured by the customer. We strive to balance the resource usage of SQL Database so that no one application continuously dominates any resource. However, this means a single SQL Database is by nature limited in its throughput capabilities, and will be automatically throttled if a specific database is pushed beyond certain resource limits. But via a feature called SQL Database Federation, customers can achieve much greater scalability via native scale-out capabilities. Federation enables a single logical database to be easily partitioned into multiple physical nodes.

This native feature in SQL Database makes scale-out much easier to set up and manage. For example, with SQL Database, you can quickly partition a database into a few or even hundreds of nodes, with each node adding to the overall capacity of the data tier (note that applications need to be specifically designed to take advantage of this feature). Partitioning operations are as simple as one line of T-SQL, and the database remains online even during re-partitioning. More information on SQL Database Federation is available here.
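The general idea of range-based partitioning described above can be sketched with a toy in-memory model. This is a conceptual illustration in Python, not the SQL Database Federation API: the class name, the `split_at` method and the integer keys are all hypothetical, and a real federation moves data between physical databases rather than dictionaries.

```python
import bisect


class RangeFederation:
    """Toy model of a range-partitioned (sharded) logical database.

    Hypothetical illustration only. Member i owns keys in
    [boundaries[i], boundaries[i + 1]); the last member is unbounded above.
    """

    def __init__(self):
        self.boundaries = [0]    # sorted low bounds, one per member
        self.members = [dict()]  # member i stores its rows as {key: row}

    def _member_index(self, key: int) -> int:
        if key < 0:
            raise ValueError("keys must be non-negative in this sketch")
        # The rightmost boundary that is <= key identifies the owning member.
        return bisect.bisect_right(self.boundaries, key) - 1

    def put(self, key: int, row) -> None:
        self.members[self._member_index(key)][key] = row

    def get(self, key: int):
        return self.members[self._member_index(key)].get(key)

    def split_at(self, key: int) -> None:
        """Split the member that owns `key` into two members at `key`."""
        i = self._member_index(key)
        if self.boundaries[i] == key:
            return  # already a boundary, nothing to split
        old = self.members[i]
        self.members[i] = {k: v for k, v in old.items() if k < key}
        self.boundaries.insert(i + 1, key)
        self.members.insert(i + 1, {k: v for k, v in old.items() if k >= key})


if __name__ == "__main__":
    fed = RangeFederation()
    for customer_id in (5, 150, 999):
        fed.put(customer_id, {"customer_id": customer_id})
    fed.split_at(100)  # members now cover [0, 100) and [100, ...)
    fed.split_at(500)  # members now cover [0, 100), [100, 500), [500, ...)
    print(len(fed.members), fed.get(150))
```

The routing step (`_member_index`) is the part an application has to be designed around: every request must carry the partitioning key so it can be sent to the right member.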

Summary

We hope this blog has helped to introduce some of the key differences and similarities between SQL Server running in a Windows Azure VM (IaaS) and Windows Azure SQL Database (PaaS). The good news is that in the near future, customers will have a choice between these two models, and the two models can be easily mixed and matched for different types of solutions.

| Criteria | | SQL Server inside Windows Azure VM | Windows Azure SQL Database |
| --- | --- | --- | --- |
| Time to Solution | Migrate Existing Apps | Fast | Moderate |
| | Build New Apps | Moderate | Fast |
| Cost of Solution | Hardware Administration | None | None |
| | Software Administration (Database & OS) | Manual | None |
| | Machine High Availability | Automated (99.9% Uptime SLA at commercial release) | N/A |
| | Database High Availability | With extra VMs and manual setup via AlwaysOn (at commercial release), DBM; DR via log shipping, transactional replication | Standard Feature (99.9% DB uptime SLA) |
| | Cost | Medium | Low |
| Scale Model | Scale-Up | X-Large VM (8 cores, 14GB RAM, up to 16 TB disk space) | Not Supported |
| | Scale-Out | Manual via AlwaysOn read-only secondaries, scalable shared databases, peer-to-peer replication, Distributed Partitioned Views, and data-dependent routing (manual to set up, and applications must be designed for these features) | SQL Database Federation (automated at data tier, with applications designed for Federation) |
| Control & Customize | OS and VM | Full Control | No Control |
| | SQL Server Database Compatibility, Customization | Full support for SQL Server 2012 box product features including database engine, SSIS, SSAS, SSRS | Large subset of SQL Server 2012 features |
| Hybrid | Domain Join and Windows Authentication | Yes | Not possible |
| | Data Synchronization via Azure Data Sync | Supported | Supported |
| Manageability | Resource Governance & Security Level | SQL Instance/VM | Logical DB Server |
| | Tools Support | Existing SQL Server tools such as SSMS, System Center, and SSDT | Existing SQL Server tools such as SSMS, System Center, and SSDT |
| | Manage at Scale Capabilities | Fair | Good |

Arnd Christian Koenig, Bolin Ding, Surajit Chaudhuri and Vivek Narasayya published A Statistical Approach Towards Robust Progress Estimation recently. From the Introduction:

Accurate estimation of the progress of database queries can be crucial to a number of applications such as administration of long-running decision support queries. As a consequence, the problem of estimating the progress of SQL queries has received significant attention in recent years [6, 13, 14, 5, 12, 16, 15, 17]. The key requirement for all of these techniques (aside from small overhead and memory footprint) is their robustness, meaning that the estimators need to be accurate across a wide range of queries, parameters and data distributions.

Unfortunately, as was shown in [5], the problem of accurate progress estimation for arbitrary SQL queries is hard in terms of worst-case guarantees: none of the proposed techniques can guarantee any but trivial bounds on the accuracy of the estimation (unless some common SQL operators are not allowed). While the work of [5] is theoretical and mainly interested in the worst case, the property that no single proposed estimator is robust in general holds in practice as well.

We find that each of the main estimators proposed in the literature performs poorly relative to the alternative estimators for some (types of) queries. To illustrate this, we compared the estimation errors for 3 major estimators proposed in the literature (DNE [6], the estimator of Luo et al (LUO) [13] and the TGN estimator based on the Total GetNext model [6] tracking the GetNext calls at each node in a query plan) over a number of real-life and benchmark workloads (described in detail in Section 6). We use the average absolute difference between the estimated progress and true progress as the estimator error for each query and then compare the ratio of this error to the minimum error among all three estimators. The results are shown in Figure 1, where the Y-axis shows the ratio and the X-axis iterates over all queries, ordered by ascending ratio for each estimator; note that the Y-axis is in log-scale. As we can see, each estimator is (close to) optimal for a subset of the queries, but also degrades severely (in comparison to the other two), with an error-ratio of 5x or more for a significant fraction of the workload. No single existing estimator performs sufficiently well across the spectrum of queries and data distributions to rely on it exclusively.

However, the relative errors in Figure 1 also suggest that by judiciously selecting the best among the three estimators, we can reduce the progress estimation error. Hence, in absence of a single estimator that is always accurate, an approach that chooses among them could go a long way towards making progress estimation robust.
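The error metric the authors describe (average absolute difference between estimated and true progress, then the ratio to the best of the three estimators) is easy to sketch. The Python below is only an illustration of that metric: the progress traces are synthetic numbers made up for the example, not data from the paper, and picking the best estimator this way requires knowing the true progress after the fact, which is not the paper's selection method.

```python
from statistics import mean


def estimator_error(estimated, actual):
    """Average absolute difference between estimated and true progress."""
    if len(estimated) != len(actual) or not actual:
        raise ValueError("need equally sized, non-empty progress traces")
    return mean(abs(e - a) for e, a in zip(estimated, actual))


def error_ratios(errors):
    """Ratio of each estimator's error to the minimum error for one query."""
    best = min(errors.values())
    if best == 0:
        # A perfect estimator exists; any non-zero error is infinitely worse.
        return {name: (1.0 if err == 0 else float("inf")) for name, err in errors.items()}
    return {name: err / best for name, err in errors.items()}


def pick_best(errors):
    """Name of the estimator with the smallest error (hindsight only)."""
    return min(errors, key=errors.get)


if __name__ == "__main__":
    # Synthetic example: progress fractions sampled at four points of one query.
    true_progress = [0.25, 0.50, 0.75, 1.00]
    traces = {
        "DNE": [0.50, 0.75, 1.00, 1.00],
        "TGN": [0.125, 0.375, 0.625, 1.00],
        "LUO": [0.25, 0.50, 0.50, 1.00],
    }
    errors = {name: estimator_error(t, true_progress) for name, t in traces.items()}
    print(errors)
    print(error_ratios(errors))
    print(pick_best(errors))
```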

Unfortunately, there appears to be no straightforward way to precisely state simple conditions under which one estimator outperforms another. While we know that e.g., the TGN estimator is more sensitive to cardinality estimation errors than DNE, but more robust with regards to variance in the number of GetNext calls issued in response to input tuples, neither of these effects can be reliably quantified before a query starts execution. Moreover, a large number of other factors such as tuple spills due to memory contention, certain optimizations in the processing of nested iterations (see Section 5.1), etc., all impact which progress estimator performs best for a given query.

From Proceedings of the VLDB Endowment, Vol. 5, No. 4. Bolin Ding is at the University of Illinois at Urbana-Champaign; the other three authors are at Microsoft Research.

Progress estimation is becoming more important with increasing adoption of Big Data technologies. Similar work is going on for estimating MapReduce application progress.


Marketplace DataMarket, Social Analytics, Big Data and OData

My Big Data in the Cloud article for Visual Studio Magazine asserts “Microsoft has cooked up a feast of value-added big data cloud apps featuring Apache Hadoop, MapReduce, Hive and Pig, as well as free apps and utilities for numerical analysis, publishing data sets, data encryption, uploading files to SQL Azure and blobs.” Here’s the introduction:

Competition is heating up for Platform as a Service (PaaS) providers such as Microsoft Windows Azure, Google App Engine, VMware Cloud Foundry and Salesforce.com Heroku, but cutting compute and storage charges no longer increases PaaS market share. So traditional Infrastructure as a Service (IaaS) vendors, led by Amazon Web Services (AWS) LLC, are encroaching on PaaS providers by adding new features to abstract cloud computing functions that formerly required provisioning by users. For example, AWS introduced Elastic MapReduce (EMR) with Apache Hive for big data analytics in April 2009. In October 2009, Amazon added a Relational Database Services (RDS) beta to its bag of cloud tricks to compete with SQL Azure.

Microsoft finally countered with a multipronged Apache Hadoop on Windows Azure preview in December 2011, aided by Hadoop consultants from Hortonworks Inc., a Yahoo! Inc. spin-off. Microsoft also intends to enter the highly competitive IaaS market; a breakout session at the Microsoft Worldwide Partner Conference 2012 will unveil Windows Azure IaaS for hybrid and public clouds. In late 2011, Microsoft began leveraging its technical depth in business intelligence (BI) and data management with free previews of a wide variety of value-added Software as a Service (SaaS) add-ins for Windows Azure and SQL Azure (see Table 1).

| Codename | Description | Link to Tutorial |
| --- | --- | --- |
| “Social Analytics” | Summarizes big data from millions of tweets and other unstructured social data provided by the “Social Analytics” Team | http://bit.ly/Kluwd1 |
| “Data Transfer” | Moves comma-separated-value (CSV) and other structured data to SQL Azure or Windows Azure blobs | http://bit.ly/IC1DJp |
| “Data Hub” | Enables data mavens to establish private data markets that run in Windows Azure | http://bit.ly/IjRCE0 |
| “Cloud Numerics” | Supports developers who use Visual Studio to analyze distributed arrays of numeric data with Windows High-Performance Clusters (HPCs) in the cloud or on premises | http://bit.ly/IccY3o |
| “Data Explorer” | Provides a UI to quickly mash up BigData from various sources and publish the mash-up to a Workspace in Windows Azure | http://bit.ly/IMaOIN |
| “Trust Services” | Enables programmatically encrypting Windows Azure and SQL Azure data | http://bit.ly/IxJfqL |
| “SQL Azure Security Services” | Enables assessing the security state of one or all of the databases on a SQL Azure server. | http://bit.ly/IxJ0M8 |
| “Austin” | Helps developers process StreamInsight data in Windows Azure | |

Table 1. The SQL Azure Labs team and the StreamInsight unit have published no-charge previews of several experimental SaaS apps and utilities for Windows Azure and SQL Azure. The Labs team characterizes these offerings as "concept ideas and prototypes," and states that they are "experiments with no current plans to be included in a product and are not production quality."

In this article, I'll describe how the Microsoft Hadoop on Windows Azure project eases big data analytics for data-oriented developers and provide brief summaries of free SaaS previews that aid developers in deploying their apps to public and private clouds. (Only a couple require a fee for the Windows Azure resources they consume.) I'll also include instructions for obtaining invitations for the previews, as well as links to tutorials and source code for some of them. These SaaS previews demonstrate to independent software vendors (ISVs) the ease of migrating conventional, earth-bound apps to SaaS in the Windows Azure cloud.

…

This article went to press before the Windows Azure Team’s Meet Azure event on 6/7/2012, where the team unveiled the “Spring Wave” of new features, upgrades and updates to Windows Azure, including Windows Azure Virtual Machines, Virtual Networks, Web Sites and other new and exciting services. Also, the team terminated the Codename “Social Analytics” and “Data Transfer” projects in late June. However, as of 6/27/2012, the “Social Analytics” data stream from the Windows Azure Marketplace DataMarket was still operational, so the downloadable C# code for the Microsoft Codename “Social Analytics” Windows Form Client still works.

Note: I modified my working version of the project to copy the data from about a million rows in the DataGridView to a DataGrid.csv file, which can be loaded on demand. Copies of this file and the associated source file for the client’s chart are available from my SkyDrive account. I will update the sample code to use the DataGrid.csv file if the Data Market stream becomes unavailable.

I updated my Visual Studio Magazine Article Retrospective list of cover stories with this latest piece.

Steve Fox (@redmondhockey) described SharePoint Online & Windows Azure: Building Hybrid Applications in a 6/28/2012 post:

Have been spending time here at TechEd EMEA and one of the topics I presented on this week was how you can build hybrid applications using SharePoint Online and Windows Azure. I think there’s an incredible amount of power here for building cloud apps; it represents a great cloud story and one that complements the O365 SAAS capabilities very well.

I’ve not seen a universally agreed-upon definition of hybrid, so in the talk we started by defining a hybrid application as follows:

Within this frame, I then discussed four hybrid scenarios that enable you to connect to SharePoint Online (SPO) in some hybrid way. These scenarios were:

- Leveraging Windows Azure SQL Data Sync to synchronize on-premises SQL Server data with Azure SQL Database. With this mechanism, you can then sync your data from on-premises to the cloud and then consume using a WCF service and BCS within SPO, or wrap the data in a REST call and project to a device.

- Service-mediated applications, where you can connect cloud-to-cloud systems (in this case I used an example with Windows Azure Data Marketplace) or on-premises-to-cloud systems (where I showed an on-premises LOB example to SPO example). Here, we discussed WCF, REST, and Service Bus as endpoints and transport vehicles for data/messages.

- Cloud and Device apps, which is where you can take a RESTified service around your data and expose it to a device (in this case a WP7 app).

- Windows Azure SP Instance on the new Virtual Machine (IAAS) to show how you can pull on-premises data using the Service Bus and interact with PAAS applications built using WCF and Windows Azure and expose those in SP.

You can view the deck for the session below. (You can view the session here on Channel 9.)

The areas for discussion represented four patterns for how you can integrate cloud and on-premises systems to build some really interesting hybrid applications, and then leverage the collaborative power of SPO.

Some things we discussed during the session that are worth calling out here:

- In many cases, when building hybrid cloud apps that integrate with SPO, you’ll be leveraging some type of ‘service.’ This could be WCF, REST, or Web API. Each has its own merits and challenges. If you’re like me and don’t like spending time debugging XML config files, then I would recommend you take a look at the new Web API option for building services. It uses the MVC method and you can use the Azure SDK to build Mobile apps as well as vanilla Web API apps.

- I’ve seen some discussion on the JSONP method when issuing cross-domain calls for services. I would argue this is okay for endpoints/domains you trust; however, always take care when leveraging methods that are injecting script into your page—this allows for malicious code to be run. And given you’re executing code on the client, malicious code could be run that pooches your page—imagine a hack that attempts to use the SPCOM to do something malicious to your SPO instance. Setting header formatting in your service code can also be a chore.

- Cross-origin resource sharing (CORS) is an area I’m looking into as a more browser-supported method of cross-domain calls. This enables your service to specify an allowed origin, or set a wildcard (“*”), in a response header that tells the browser to accept the cross-domain call.

- JSON is increasingly being used in building web services, so ensure you’re up to speed with what jQuery has to offer. Lots of great plug-ins, plus you then have a leg up when looking at building apps through, say, jQuery for mobile.
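As a rough illustration of the CORS point above, here is a minimal sketch of a JSON endpoint that adds the `Access-Control-Allow-Origin` response header. It uses only Python's standard library rather than WCF or Web API, and the allowed origin (`https://contoso.sharepoint.com`) is a hypothetical placeholder, so treat it as a sketch of the mechanism, not a recommended service implementation.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical allow-list; a set containing "*" would accept any origin.
ALLOWED_ORIGINS = {"https://contoso.sharepoint.com"}


def cors_headers(request_origin, allowed=ALLOWED_ORIGINS):
    """Return the CORS response headers for a request, or {} if not allowed."""
    if "*" in allowed:
        return {"Access-Control-Allow-Origin": "*"}
    if request_origin in allowed:
        # Echo the specific origin and tell caches the response varies by Origin.
        return {"Access-Control-Allow-Origin": request_origin, "Vary": "Origin"}
    return {}


class JsonHandler(BaseHTTPRequestHandler):
    """Serves a small JSON document and attaches CORS headers when permitted."""

    def do_GET(self):
        body = json.dumps({"status": "ok"}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        for name, value in cors_headers(self.headers.get("Origin")).items():
            self.send_header(name, value)
        self.end_headers()
        self.wfile.write(body)


def run(port=8000):
    """Start the demo server on localhost (blocks until interrupted)."""
    HTTPServer(("127.0.0.1", port), JsonHandler).serve_forever()


if __name__ == "__main__":
    # Show the header decision without starting the server.
    print(cors_headers("https://contoso.sharepoint.com"))
    print(cors_headers("https://untrusted.example"))
```

When the header is absent, the browser blocks the calling page from reading the response, which is why an explicit allow-list is generally a safer default than the wildcard.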

All in all, there’s a ton of options available for you when building SPO apps, and I believe that MS has a great story here for building compelling cloud applications.

For more information and resources re the above, check out:

Mark Stafford (@markdstafford) posted OData 101: Building our first OData consumer on 6/27/2012:

In this OData 101, we will build a trivial OData consumption app that displays some titles from the Netflix OData feed along with some of the information that corresponds to those titles. Along the way, we will learn about:

- Adding service references and how adding a reference to an OData service is different in Visual Studio 2012

- NuGet package management basics

- The LINQ provider in the WCF Data Services client

Getting Started

Let’s get started!

First we need to create a new solution in Visual Studio 2012. I’ll just create a simple C# Console Application:

From the Solution Explorer, right-click the project or the References node in the project and select Add Service Reference:

This will bring up the Add Service Reference dialog. Paste http://odata.netflix.com/Catalog in the Address textbox, click Go and then replace the contents of the Namespace textbox with Netflix:

Notice that the service is recognized as a WCF Data Service (see the message in the Operations pane).

Managing NuGet Packages

Now for the exciting part: if you check the installed NuGet packages (right-click the project in Solution Explorer, choose Manage NuGet Packages, and select Installed from the left nav), you’ll see that the Add Service Reference wizard also added a reference to the Microsoft.Data.Services.Client NuGet package!

This is new behavior in Visual Studio 2012. Any time you use the Add Service Reference wizard or create a WCF Data Service from an item template, references to the WCF Data Services NuGet packages will be added for you. This means that you can update to the most recent version of WCF Data Services very easily!

NuGet is a package management system that makes it very easy to pull in dependencies on various libraries. For instance, I can easily update the packages added by ASR (the 5.0.0.50403 versions) to the most recent version by clicking on Updates on the left or issuing the Update-Package command in the Package Manager Console:

NuGet has a number of powerful management commands. If you aren’t familiar with NuGet yet, I’d recommend that you browse their documentation. Some of the most important commands are:

- Install-Package Microsoft.Data.Services.Client -Pre -Version 5.0.1-rc: installs a specific prerelease version of Microsoft.Data.Services.Client

- Uninstall-Package Microsoft.Data.Services.Client -RemoveDependencies: removes Microsoft.Data.Services.Client and all of its dependencies

- Update-Package Microsoft.Data.Services.Client -IgnoreDependencies: updates Microsoft.Data.Services.Client without updating its dependencies

LINQ Provider

Last but not least, let’s write the code for our simple application. What we want to do is select some of the information about a few titles.

The WCF Data Services client includes a powerful LINQ provider for working with OData services. Below is a simple example of a LINQ query against the Netflix OData service.

    using System;
    using System.Linq;

    namespace OData101.BuildingOurFirstODataConsumer
    {
        internal class Program
        {
            private static void Main()
            {
                // Context class generated by Add Service Reference (namespace "Netflix").
                var context = new Netflix.NetflixCatalog(new Uri("http://odata.netflix.com/Catalog"));

                // Compose the query; nothing is sent to the service until it is enumerated.
                var titles = context.Titles
                    .Where(t => t.Name.StartsWith("St") && t.Synopsis.Contains("of the"))
                    .OrderByDescending(t => t.AverageRating)
                    .Take(10)
                    .Select(t => new { t.Name, t.Rating, t.AverageRating });

                // Writes the URL that the LINQ provider generated for this query.
                Console.WriteLine(titles.ToString());

                // Enumerating the query executes it against the OData service.
                foreach (var title in titles)
                {
                    Console.WriteLine("{0} ({1}) was rated {2}", title.Name, title.Rating, title.AverageRating);
                }
            }
        }
    }

In this sample, we start with all of the titles, filter them down using a compound where clause, order the results, take the top ten, and create a projection that returns only portions of those records. Then we write titles.ToString() to the console, which outputs the URL used to query the OData service. Finally, we iterate the actual results and print relevant data to the console:

Summary

Here’s what we learned in this post:

- It’s very easy to use the Add Service Reference wizard to add a reference to an OData service

- In Visual Studio 2012, the Add Service Reference wizard and the item template for a WCF Data Service add references to our NuGet packages

- Shifting our distribution vehicle to NuGet allows people to easily update their version of WCF Data Services simply by using the Update-Package NuGet command

- The WCF Data Services client includes a powerful LINQ provider that makes it easy to compose OData queries
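To make the last point a bit more concrete, here is a sketch of the kind of URL a query like the one in this post translates to, built by hand in Python. It assumes the usual OData system query options (`$filter`, `$orderby`, `$top`, `$select`) and the `startswith`/`substringof` filter functions; the helper name `build_odata_url` is hypothetical, and the exact URL the WCF Data Services client emits may differ in option order and encoding. The code only builds a string and makes no network call.

```python
from urllib.parse import quote

# Characters left unescaped so the filter expression stays readable.
_SAFE = "(),'"


def build_odata_url(service_root, entity_set, *, filter_expr=None,
                    orderby=None, top=None, select=None):
    """Compose an OData query URL from the common system query options."""
    options = []
    if filter_expr is not None:
        options.append("$filter=" + quote(filter_expr, safe=_SAFE))
    if orderby is not None:
        options.append("$orderby=" + quote(orderby, safe=_SAFE))
    if top is not None:
        options.append("$top=" + str(int(top)))
    if select:
        options.append("$select=" + ",".join(select))
    url = service_root.rstrip("/") + "/" + entity_set
    return url + ("?" + "&".join(options) if options else "")


if __name__ == "__main__":
    # Hand-written equivalent of the Where / OrderByDescending / Take / Select chain.
    print(build_odata_url(
        "http://odata.netflix.com/Catalog",
        "Titles",
        filter_expr="startswith(Name,'St') and substringof('of the',Synopsis)",
        orderby="AverageRating desc",
        top=10,
        select=["Name", "Rating", "AverageRating"],
    ))
```

Writing the query string by hand like this is exactly the bookkeeping the LINQ provider takes off your plate.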

Sample source is attached; I’d encourage you to try it out!


Windows Azure Service Bus, Active Directory and Workflow

Ram Jeyaraman reported Windows Azure SDK for PHP available, including support for Service Bus in a 6/29/2012 post to the Interoperability @ Microsoft blog:

Good news for all you PHP developers out there: I am happy to share with you the availability of Windows Azure SDK for PHP, which provides PHP-based access to the functionality exposed via the REST API in Windows Azure Service Bus. The SDK is available as open source and you can download it here.

This is an early step as we continue to make Windows Azure a great cloud platform for many languages, including .NET, Java, and PHP. If you’re using Windows Azure Service Bus from PHP, please let us know your feedback on how this SDK is working for you and how we can improve it. Your feedback is very important to us!

You may refer to Windows Azure PHP Developer Center for related information.

Openness and interoperability are important to Microsoft, our customers, partners, and developers. We believe this SDK will enable PHP applications to more easily connect to Windows Azure making it easier for applications written on any platform to interoperate with one another through Windows Azure.

Thanks,

Ram Jeyaraman

Senior Program Manager

Microsoft Open Technologies, Inc.

Vittorio Bertocci (@vibronet) reported The Recording of “A Lap Around Windows Azure Active Directory” From TechEd Europe is Live in a 6/27/2012 post:

Hi all! I am typing this post from Schiphol, Amsterdam’s airport, where I am waiting to fly back to Seattle after a super-intense 2 days at TechEd Europe.

As is by now tradition for Microsoft’s big events, the video recordings of the breakout sessions are available on Channel9 within 24 hours of delivery. Yesterday I presented “A Lap Around Windows Azure Active Directory”, and the recording punctually just popped up: check it out!

The fact that the internet connectivity was down for most of the talk is unfortunate, although I am told it made for a good comic relief. Sorry about that, guys!

Luckily I managed to go through the main demo, which is what I needed for making my point. The other demos I planned were more of a nice to have.

I wanted to query the Directory Graph from Fiddler or the RestClient Firefox plugin, to show how incredibly easy it is to connect with the directory and navigate relationships. However, I did have the backup slide showing a prototypical query and results in JSON; albeit less spectacular, it hopefully conveyed the point.

The other thing I wanted to show you was a couple of projects which demonstrate web sign on with the directory from PHP and Java apps: given that I had those running on a remote machine (my laptop’s SSD does not have all that room) the absence of connectivity killed the demo from the start; once again, though, those would have demonstrated the same SSO feature I have shown with the expense reporting app; and I would not have been able to show the differences in code anyway, given that the session was a 200. So, all in all a lot of drama but not a lot of damage after all.

Thanks again for having shown up at the session, and for all the interesting feedback at the book signing. Windows Azure Active Directory is a Big Deal, and I am honored to have had the chance to be among the first to introduce it to you. The developer preview will come out real soon, and I can’t wait to see what you will achieve with it!

Mary Jo Foley (@maryjofoley) asserted “A soon-to-be-delivered preview of a Windows Azure Active Directory update will include integration with Google and Facebook identity providers” in a summary of her With Azure Active Directory, Microsoft wants to be the meta ID hub post of 6/25/2012 for ZDNet’s All About Microsoft blog:

Microsoft isn’t just reimagining Windows and reimagining tablets. It’s also reimagining Active Directory in the form of the recently (officially) unveiled Windows Azure Active Directory (WAAD).

In a June 19 blog post that largely got lost among the Microsoft Surface shuffle last week, Microsoft Technical Fellow John Shewchuk delivered the promised Part 2 of Microsoft’s overall vision for WAAD.

WAAD is the cloud complement to Microsoft’s Active Directory directory service. Here’s more about Microsoft’s thinking about WAAD, based on the first of Shewchuk’s posts. It already is being used by Office 365, Windows Intune and Windows Azure. Microsoft’s goal is to convince non-Microsoft businesses and product teams to use WAAD, too.

This is how the identity-management world looks today, in the WAAD team’s view:

And this is the ideal and brave new world they want to see, going forward.

WAAD is the center of the universe in this scenario (something with which some of Microsoft’s competitors unsurprisingly have a problem).

How is Microsoft proposing to go from A to B? Shewchuk explains:

“(W)e currently support WS-Federation to enable SSO (single sign-on) between the application and the directory. We also see the SAML/P, OAuth 2, and OpenID Connect protocols as a strategic focus and will be increasing support for these protocols. Because integration with applications occurs over standard protocols, this SSO capability is available to any application running on any technology stack…

“Because Windows Azure Active Directory integrates with both consumer-focused and enterprise-focused identity providers, developers can easily support many new scenarios—such as managing customer or partner access to information—all using the same Active Directory–based approach that traditionally has been used for organizations’ internal identities.”

Microsoft execs are sharing more information and conducting sessions about WAAD at TechEd Europe, which kicks off on June 25 in Amsterdam.

Microsoft announced the developer preview for WAAD on June 7. This preview includes two capabilities that are not currently in WAAD as it exists today, Shewchuk noted. The two: 1. The ability to connect and use information in the directory through a REST interface; 2. The ability for third-party developers to connect to the SSO the way Microsoft’s own apps do.

The preview also will “include support for integration with consumer-oriented Internet identity providers such as Google and Facebook, and the ability to support Active Directory in deployments that span the cloud and enterprise through synchronization technology,” he blogged.

Shewchuk said the WAAD developer preview should be available “soon.”


Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

Brian Swan (@brian_swan) posted Windows Azure Websites, Web Roles, and VMs: When to use which? on 6/27/2012 to the [Windows Azure’s] Silver Lining blog:

The June 7th update to Windows Azure introduced two new services (Windows Azure Websites and persistent VMs) that beg the question “When should I use a Windows Azure Website vs. a Web Role vs. a VM?” That’s exactly the question I’ll try to help you answer in this post. (I say “help you answer” because there is no simple, clear-cut answer in all cases. What I’ll try to do here is give you enough information to help you make an informed decision.)

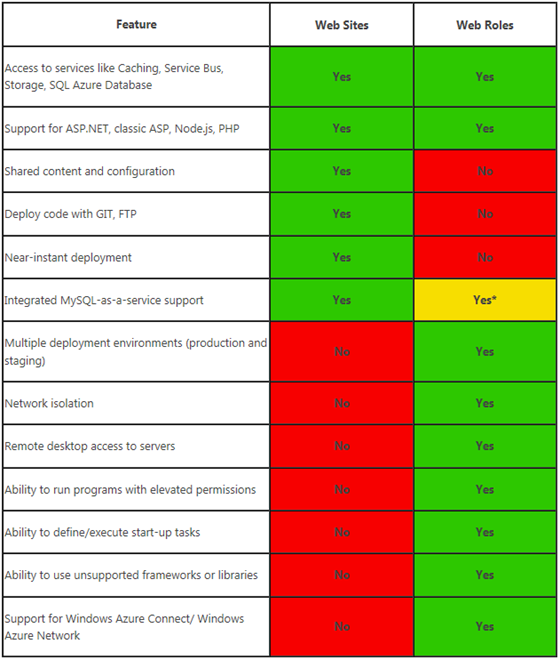

The following table should give you some idea of what each option is ideal for:

Actually, I think the use cases for VMs are wide open. You can use them for just about anything you could imagine using a VM for. The tougher distinction (and decision) is between Web Sites and Web Roles. The following table should give you some idea of what Windows Azure features are available in Web Sites and Web Roles:

* Web or Worker Roles can integrate MySQL-as-a-service through ClearDB's offerings, but not as part of the Management Portal workflow.

As I said earlier, it’s impossible to provide a definitive answer to the question of which option you should use (Web Sites, Web Roles, or VMs). It really does depend on your application. With that said, I hope the information in the tables above helps you decide what is right for your application. Of course, if you have any questions and/or feedback, let us know in the comments.

Avkash Chauhan (@avkashchauhan) described Deploying Windows Azure Web Sites using Visual Studio Web Publish Wizard in a 6/26/2012 post:

Create your Windows Azure Websites (shared or reserved) and get the publish profile by selecting the “Download publish profile” option as shown below:

Once your PublishSettings file, named _yourwebsitename_.azurewebsites.net.PublishSettings, is downloaded, you can import it in your Visual Studio Publish Web Application wizard as below:

Select _yourwebsitename_.azurewebsites.net.PublishSettings

Once the PublishSettings file is loaded, all the fields in your web publishing wizard will be filled automatically using the info in the PublishSettings file.

You can also verify that the connection works by selecting the “Validate Connection” option.

Finally, you can start publishing by selecting the “Publish” option, and in your VS2010 output window you will see the publish log as below:

------ Build started: Project: LittleWorld, Configuration: Release Any CPU ------

LittleWorld -> C:\2012Data\Development\Azure\LittleWorld\LittleWorld\bin\LittleWorld.dll

------ Publish started: Project: LittleWorld, Configuration: Release Any CPU ------

Transformed Web.config using C:\2012Data\Development\Azure\LittleWorld\LittleWorld\\Web.Release.config into obj\Release\TransformWebConfig\transformed\Web.config.

Auto ConnectionString Transformed Account\Web.config into obj\Release\CSAutoParameterize\transformed\Account\Web.config.

Auto ConnectionString Transformed obj\Release\TransformWebConfig\transformed\Web.config into obj\Release\CSAutoParameterize\transformed\Web.config.

Copying all files to temporary location below for package/publish:

obj\Release\Package\PackageTmp.

Start Web Deploy Publish the Application/package to https://waws-prod-blu-001.publish.azurewebsites.windows.net/msdeploy.axd?site=littleworld ...

Updating setAcl (littleworld).

Updating setAcl (littleworld).

Updating filePath (littleworld\bin\LittleWorld.dll).

Updating setAcl (littleworld).

Updating setAcl (littleworld).

Publish is successfully deployed.

Site was published successfully http://littleworld.azurewebsites.net/

========== Build: 1 succeeded or up-to-date, 0 failed, 0 skipped ==========

========== Publish: 1 succeeded, 0 failed, 0 skipped ==========

And your website will be ready to Rock:

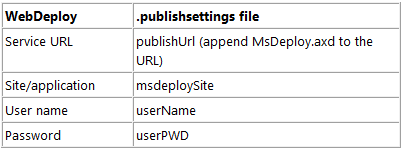

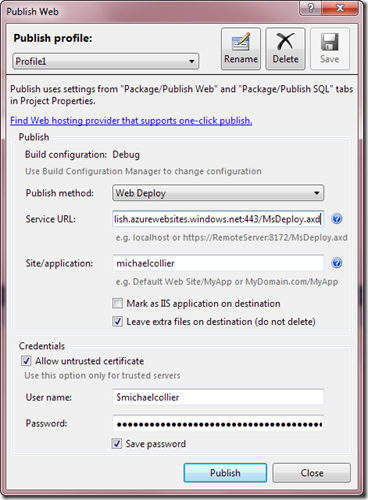
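The summary lines at the end of a publish log like the one above can be checked from a script, for example in a build step. The following minimal sketch assumes the log text has been captured to a string and that the summary lines keep the format shown in Avkash's output; the function name and the sample text are illustrative.

```python
import re

# Matches lines such as:
# ========== Publish: 1 succeeded, 0 failed, 0 skipped ==========
SUMMARY = re.compile(
    r"=+ (?P<phase>Build|Publish): (?P<ok>\d+) succeeded(?: or up-to-date)?, "
    r"(?P<failed>\d+) failed, (?P<skipped>\d+) skipped =+"
)


def publish_succeeded(log_text):
    """Return True if a Publish summary exists and no phase reports failures."""
    failures = {
        m.group("phase"): int(m.group("failed"))
        for m in SUMMARY.finditer(log_text)
    }
    return "Publish" in failures and all(n == 0 for n in failures.values())


LOG = """\
========== Build: 1 succeeded or up-to-date, 0 failed, 0 skipped ==========
========== Publish: 1 succeeded, 0 failed, 0 skipped ==========
"""
print(publish_succeeded(LOG))
```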

Michael Collier (@MichaelCollier) showed Windows Azure Web Sites – Using WebDeploy without the New Tools in a 6/27/2012 post:

With the new Windows Azure Web Sites it is very easy to use your favorite deployment tool/technology to deploy the web solution to Windows Azure. You can choose from continuous delivery with Git or TFS, or use tools like FTP or WebDeploy.

As long as you have at least the June 2012 Windows Azure tools update for Visual Studio 2010 (or 2012), using WebDeploy to deploy a Windows Azure Web Site is really easy. Simply import the .publishsettings file; Visual Studio reads the pertinent data and populates the WebDeploy wizard. If you’re not familiar with this process, please have a look at https://www.windowsazure.com/en-us/develop/net/tutorials/web-site-with-sql-database/#deploytowindowsazure as the process is explained very well there, and even has nice pictures.

But what if you don’t have the latest tools update? Or, what if you don’t have any Windows Azure tools installed? After all, why should you have to install Windows Azure tools to use WebDeploy?

You can surely use WebDeploy to deploy a web app to Windows Azure Web Sites – you just have to do a little more manual configuration. You do what Visual Studio does for you as part of the latest Windows Azure tools update.

How to Deploy via WebDeploy without the Windows Azure Tools

- Download the .publishsettings file for the target Windows Azure Web Site.

- Launch the WebDeploy wizard in Visual Studio.

- Open the .publishsettings file in Notepad or your favorite text editor. You’ll need to copy a few settings out of this .publishsettings file and paste them into the WebDeploy wizard.

- publishUrl

- msdeploySite

- userName

- userPWD

- Back in WebDeploy, update the following settings:

In the end, your WebDeploy wizard dialog should look like the following:

Hit the “Publish” button and WebDeploy should quickly publish to Windows Azure Web Sites.
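Rather than copying the four values by hand, the manual steps above can be sketched as a small script. This is a minimal sketch that assumes the .publishsettings file is an XML document whose `publishProfile` elements carry the settings as attributes and that the WebDeploy profile is marked `publishMethod="MSDeploy"`; the sample document and its values are made up for illustration.

```python
import xml.etree.ElementTree as ET

# The attribute names listed in the steps above.
WEBDEPLOY_FIELDS = ("publishUrl", "msdeploySite", "userName", "userPWD")


def read_webdeploy_settings(xml_text):
    """Return the WebDeploy fields from the MSDeploy profile as a dict."""
    root = ET.fromstring(xml_text)
    for profile in root.iter("publishProfile"):
        # Assumption: the WebDeploy profile has publishMethod="MSDeploy".
        if profile.get("publishMethod") == "MSDeploy":
            return {name: profile.get(name) for name in WEBDEPLOY_FIELDS}
    raise ValueError("no MSDeploy publish profile found")


# Hypothetical file contents; a real file holds your site's own values.
SAMPLE = """<publishData>
  <publishProfile publishMethod="MSDeploy"
      publishUrl="waws-prod-example.publish.azurewebsites.windows.net:443"
      msdeploySite="mysite" userName="$mysite" userPWD="not-a-real-password" />
  <publishProfile publishMethod="FTP"
      publishUrl="ftp://waws-prod-example.ftp.azurewebsites.windows.net/site/wwwroot"
      userName="mysite-ftp" userPWD="not-a-real-password" />
</publishData>"""

settings = read_webdeploy_settings(SAMPLE)
for name, value in settings.items():
    # Avoid echoing the password to the console.
    print(name, "=", "***" if name == "userPWD" else value)
```

To use it with a real file, read the file's text and pass it to `read_webdeploy_settings`, then paste the values into the WebDeploy wizard.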

To get started with Windows Azure Web Sites, if you don’t already have Windows Azure, sign up for a FREE Windows Azure 90-day trial account. To start using Windows Azure Web Sites, request access on the ‘Preview Features’ page under the ‘account’ tab, after you log into your Windows Azure account.

Brian Loesgen (@BrianLoesgen) explained Cloning Azure Virtual Machines in a 6/26/2012 post:

Azure Virtual Machines are still new to everyone, and I got a great question from a partner a few days ago: “I have an Azure Virtual Machine set up just the way I want it, now I want to spin up multiple instances, how do I do that?”

In the “picture is worth 1,000 words” category (and I don’t have time to write 1,000 words), please see the following sequence of screen shots for the answer.

Things to note:

- run sysprep (%windir%\system32\sysprep) with “generalize” so each machine will have a unique SID (security ID)

- when you “capture” the VM, it will be deleted. You can re-create it from the image as shown below
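For the sysprep step, a minimal sketch of the command line follows. Only the generalize option comes from Brian's note; the `/oobe` and `/shutdown` switches are assumptions based on common sysprep usage, and the helper name is illustrative. The sketch only builds the argument list and does not run anything, because sysprep resets the machine.

```python
import ntpath


def sysprep_command(windir=r"C:\Windows", shutdown=True):
    """Build the sysprep argument list for generalizing a Windows VM."""
    exe = ntpath.join(windir, "system32", "sysprep", "sysprep.exe")
    # /generalize removes machine-specific data such as the SID.
    # /oobe (assumed) boots the next start into Windows Welcome.
    args = [exe, "/generalize", "/oobe"]
    if shutdown:
        # /shutdown (assumed) powers the VM off so it can be captured.
        args.append("/shutdown")
    return args


print(" ".join(sysprep_command()))
```

On the VM itself, the resulting list could be passed to `subprocess.run` from an elevated session.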

Shan MacArthur described a more complex process for Cloning Windows Azure Virtual Machines in a 6/28/2012 post:

In one of my previous blog articles, I demonstrated how to build a demonstration or development environment for Microsoft Dynamics CRM 2011 using Windows Azure Virtual Machine technology. Once you get a basic virtual machine installed, you will likely want to back it up, or clone it. This article will show you how to manage your virtual machines once you get them set up.

Background

Before I go into some of the details, I want to give a little background on how Windows Azure manages hard disk images that it uses for virtual machines. When you first create a virtual machine, you start with an image, and that image can be one that you have 'captured', or it can be one from the Azure gallery of images. Microsoft provides basic installs of Windows Server 2008 R2, various Linux distros and even Windows Server 2012 Release Candidate. These base images are basically unattended installs that Microsoft (or you) maintain. When you create a new virtual machine, the Azure fabric controller will start the machine up in provisioning mode, which will allow Azure to specify the password for your virtual machine. The machine will initialize itself when it boots for the first time. The new virtual machine will have a single 30GB hard drive that is attached as the C: drive and used for the system installation, as well as a D: drive that is used for the swap file and temporary storage.

One of the new features that makes Azure Virtual Machines possible is that the hard drive is now durable, and they do this by storing the hard drive blocks in Azure blobs. This means that your hard drive now can benefit from the redundancy and durability that is baked into the Azure blob storage infrastructure, including multiple geo-distributed replica copies. The downside is that you are dealing with blob storage, which is not quite as fast as a physical disk. The operating system volume (C: drive) is stored in Azure blobs, but the temporary volume (D: drive) is not stored in blob storage. As such, the D: drive is a little faster, but it is not durable and should not be used for installing applications or storing their permanent data.

You can create additional drives in Windows Azure and attach them to your Azure virtual machine. The number of drives that can be attached to a virtual machine is determined by the virtual machine size. For most real-world installations, you are going to want to create an additional data drive and attach it to your Azure virtual machine. Keep in mind that your C: drive is only 30GB and it will fill up when Windows applies updates or other middleware components get installed. If you have a choice of where to install any application, choose your additional permanent data drive over the system drive whenever possible. …

Shan continues with a detailed, illustrated description of the cloning process.

Following are links to Shan’s two earlier posts on related Virtual Machine topics (missed when posted):

Avkash Chauhan (@avkashchauhan) described Working with Yum on CentOS in a 6/26/2012 post:

Yum (Yellowdog Updater, Modified)

Searching/Listing packages: list all packages, both installed and available to install

- # yum list

- # yum list | grep openssl

Example: list all packages whose names start with python

- # yum list python*

Example: list packages named openssl

- # yum list openssl

List all available versions of a package:

- # yum --showduplicates list php

Available Packages
php.x86_64 5.3.3-3.el6_1.3 base
php.x86_64 5.3.3-3.el6_2.5 updates
php.x86_64 5.3.3-3.el6_2.6 updates
php.x86_64 5.3.3-3.el6_2.8 updates

- # yum --showduplicates list python

Installed Packages
python.x86_64 2.6.6-29.el6_2.2 @updates
Available Packages
python.i686 2.6.6-29.el6 base
python.x86_64 2.6.6-29.el6 base
python.i686 2.6.6-29.el6_2.2 updates
python.x86_64 2.6.6-29.el6_2.2 updates
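If you need to work with these version listings in a script, the output can be split into fields. The following is a minimal sketch that assumes each package appears on one line as name.arch, version and repository, under an "Installed Packages" or "Available Packages" heading; real yum output can wrap long lines and print plugin banners, which this sketch simply skips. The function name is illustrative.

```python
def parse_yum_list(output):
    """Parse `yum list`-style output into (section, name, arch, version, repo)."""
    rows = []
    section = None
    for line in output.splitlines():
        line = line.strip()
        if line in ("Installed Packages", "Available Packages"):
            section = line.split()[0].lower()
            continue
        fields = line.split()
        if section is None or len(fields) != 3:
            continue  # skip banners, blank lines and wrapped lines
        name_arch, version, repo = fields
        # Split on the last dot so dotted package names stay intact.
        name, _, arch = name_arch.rpartition(".")
        rows.append((section, name, arch, version, repo))
    return rows


# Sample taken from the python listing above.
SAMPLE = """Installed Packages
python.x86_64 2.6.6-29.el6_2.2 @updates
Available Packages
python.i686 2.6.6-29.el6 base
python.x86_64 2.6.6-29.el6 base
python.i686 2.6.6-29.el6_2.2 updates
python.x86_64 2.6.6-29.el6_2.2 updates
"""

rows = parse_yum_list(SAMPLE)
for row in rows:
    print(row)
```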

Searching into your Linux Box

Search package names and summaries for a keyword:

- # yum search <packagename>

For example search for openssl

- # yum search openssl

Find out which package provides a given file or feature:

- # yum provides nodejs

- # yum provides */ssl

Find all packages that provide a file whose path ends in the given name:

- # yum provides */nodejs

List the dependencies of a specific package

- # yum deplist nodejs

Installing a package in your Linux Box:

Install Package in your machine:

- # yum install <package_name>

Install a collection of Packages “group package” in your machine:

- # yum groupinstall <group package name>

Updating a package in your Linux Box:

Update all installed packages to their latest available versions. This may take some time.

- # yum update

- # yum groupupdate <group package name>

Remove an installed package:

- # yum remove <package name>

- # yum groupremove <group package name>

Enable package downgrades by installing the allowdowngrade plugin:

- # yum install yum-allowdowngrade

List of software groups available to install

- # yum grouplist

- # yum grouplist | grep gn

List all configured yum repositories, enabled and disabled:

- # yum repolist all

Clean the package install cache:

- # yum clean all

Other Yum plugins and related packages:

• yum-aliases

• yum-allowdowngrade

• yum-arch

• yum-basearchonly

• yum-changelog

• yum-cron

• yum-downloadonly

• yumex.noarch

• yum-fastestmirror

• yum-filter-data