Windows Azure and Cloud Computing Posts for 11/19/2012+

A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles.

•• Updated 11/21/2012 5:00 PM PST with new articles marked ••.

• Updated 11/20/2012 5:00 PM PST with new articles marked •.

Tip: Copy the bullet(s), press Ctrl+F, paste them into the Find textbox and click Next to locate updated articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, Hadoop and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Marketplace DataMarket, Cloud Numerics, Big Data and OData

- Windows Azure Service Bus, Caching, Access & Identity Control, Active Directory, and Workflow

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue, Hadoop and Media Services

•• M Sheik Uduman Ali (@Udooz) described WAS StartCopyFromBlob operation and Transaction Compensation in an 11/21/2012 post to the Aditi Technologies blog:

The latest Windows Azure SDKs, v1.7.1 and v1.8, have a nice feature called “StartCopyFromBlob” that lets us instruct a Windows Azure data center to copy a blob across storage accounts. Previously, we had to download the blob content in chunks and then upload it to the destination storage account. “StartCopyFromBlob” is therefore more efficient in both cost and time.

The notable difference in version 2012-02-12 is that the copy operation is now asynchronous. Once you make a copy request to the Windows Azure Storage service, it returns a copy ID (a GUID string), the copy state, and HTTP status code 202 (Accepted), which means that your request has been scheduled. If you check the copy state immediately after this call, it will most probably be “pending”.

StartCopyFromBlob – A TxnCompensation operation

Extra care is required when using this API, because it is a real-world example of a service operation that needs transaction compensation. After making the copy request, you need to verify the actual status of the copy operation at a later point in time. That point can range from a few seconds to two weeks later, depending on constraints such as source blob size, permissions, and connectivity.

The figure below shows a typical sequence of StartCopyFromBlob operation invocation:

The CloudBlockBlob and CloudPageBlob classes in Windows Azure storage SDK v1.8 provide a StartCopyFromBlob() method, which in turn calls the WAS REST service operation. According to the Windows Azure Storage Team blog post, the request is placed on an internal queue and the call returns a copy ID and a copy state. The copy ID uniquely identifies the copy operation. You can use it later to verify the destination blob’s copy ID and to abort the copy operation. CopyState gives you the copy operation’s status, the number of bytes copied, and so on.

Note that sequence 3 “PushCopyBlobMessage” in the above figure is my assumption about the operation.

ListBlobs – Way for Compensation

Although you have the copy ID in hand, there is no simple API that takes an array of copy IDs and returns the corresponding copy states. Instead, you have to call CloudBlobContainer’s ListBlobs() or GetXXXBlobReference() to get the copy state. If the blob was created by a copy operation, it will have a CopyState.

CopyState might be null for blobs that were not created by a copy operation.

The compensation action is whatever we need to do when a blob copy operation has not succeeded and is no longer pending. In most cases, calling StartCopyFromBlob() again will result in a successful blob copy. Otherwise, further remedial action is needed.

It’s a pleasure to use StartCopyFromBlob(). It would be even more of a pleasure if the SDK or REST API provided simple operations like the following:
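The compensation check described above can be sketched in a few lines of Python. This is only an illustration, not the storage client API: the `BlobCopyInfo` record and the `plan_compensation` function are hypothetical names, and the status strings ("pending", "success", "aborted", "failed") are assumed stand-ins for whatever a blob listing reports in CopyState.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional, Set


@dataclass
class BlobCopyInfo:
    """Hypothetical snapshot of what a blob listing reports for one blob."""
    name: str
    copy_id: Optional[str] = None      # None: the blob was not created by a copy
    copy_status: Optional[str] = None  # assumed: pending / success / aborted / failed


def plan_compensation(blobs: Iterable[BlobCopyInfo],
                      requested_copy_ids: Set[str]) -> Dict[str, str]:
    """Map each blob we asked to copy to the next action: done, wait or retry."""
    actions: Dict[str, str] = {}
    for blob in blobs:
        # Skip blobs without copy state and copies we did not request ourselves.
        if blob.copy_id is None or blob.copy_id not in requested_copy_ids:
            continue
        if blob.copy_status == "success":
            actions[blob.name] = "done"
        elif blob.copy_status == "pending":
            actions[blob.name] = "wait"    # check again at a later point in time
        else:
            actions[blob.name] = "retry"   # neither succeeded nor pending
    return actions


listing = [
    BlobCopyInfo("a.jpg", "id-1", "success"),
    BlobCopyInfo("b.jpg", "id-2", "pending"),
    BlobCopyInfo("c.jpg", "id-3", "failed"),
    BlobCopyInfo("d.jpg"),                 # uploaded directly, no copy state
]
print(plan_compensation(listing, {"id-1", "id-2", "id-3"}))
```

A "retry" result corresponds to issuing the copy request again; anything that keeps failing needs a remedy outside this loop.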

- GetCopyState(string[] copyIDs) : CopyState[]

- RetryCopyFromBlob(string failedCopyId) : void

M Sheik Uduman Ali (@Udooz) explained Authorization request signing for Windows Azure Storage REST API from PowerShell in an 11/16/2012 post:

Recently, while working on a Windows Azure migration engagement, we needed simple, portable utility scripts that call various Windows Azure Storage (WAS) service APIs for tasks such as “Get all blob metadata details from selected containers”. The results are then used for further business-specific manipulation.

There are various options, such as LINQPad queries, WAPPSCmdlets, or Azure Storage Explorer + Fiddler. However, considering the computation needed after the WAS invocation, the repetitiveness of the work, and the variety of user environments, a simple PowerShell script is the best option. So I decided to write a simple PowerShell script that uses the WAS REST API. It does not require any other snap-in or WAS storage client assemblies. (Re-inventing the wheel?!)

One of the main hurdles is creating the Authorization header (signing the request). The string to sign should contain the following:

- HTTP verb

- all standard HTTP headers, or empty line instead (canonicalized headers)

- the URI for the storage service (canonicalized resource)

A sample string to sign is shown below:

GET\n /*HTTP Verb*/
\n /*Content-Encoding*/
\n /*Content-Language*/
\n /*Content-Length*/
\n /*Content-MD5*/
\n /*Content-Type*/
\n /*Date*/
\n /*If-Modified-Since */
\n /*If-Match*/
\n /*If-None-Match*/
\n /*If-Unmodified-Since*/
\n /*Range*/
x-ms-date:Sun, 11 Oct 2009 21:49:13 GMT\nx-ms-version:2009-09-19\n /*CanonicalizedHeaders*/
/udooz/photos/festival\ncomp:metadata\nrestype:container\ntimeout:20 /*CanonicalizedResource*/

Read http://msdn.microsoft.com/en-us/library/windowsazure/dd179428.aspx and particularly the section http://msdn.microsoft.com/en-us/library/windowsazure/dd179428.aspx#Constructing_Element for request signing of “Authorization” header.

I have written a simple and dirty PowerShell function for blob metadata access.

function Generate-AuthString
{
    param(
         [string]$url
        ,[string]$accountName
        ,[string]$accountKey
        ,[string]$requestUtcTime
    )

    $uri = New-Object System.Uri -ArgumentList $url

    $authString = "GET$([char]10)$([char]10)$([char]10)$([char]10)$([char]10)$([char]10)$([char]10)$([char]10)$([char]10)$([char]10)$([char]10)$([char]10)"
    $authString += "x-ms-date:" + $requestUtcTime + "$([char]10)"
    $authString += "x-ms-version:2011-08-18" + "$([char]10)"
    $authString += "/" + $accountName + $uri.AbsolutePath + "$([char]10)"
    $authString += "comp:list$([char]10)"
    $authString += "include:snapshots,uncommittedblobs,metadata$([char]10)"
    $authString += "restype:container$([char]10)"
    $authString += "timeout:90"

    $dataToMac = [System.Text.Encoding]::UTF8.GetBytes($authString)
    $accountKeyBytes = [System.Convert]::FromBase64String($accountKey)
    $hmac = new-object System.Security.Cryptography.HMACSHA256((,$accountKeyBytes))
    [System.Convert]::ToBase64String($hmac.ComputeHash($dataToMac))
}

Use [char] 10 for new-line, instead of “`r`n”.
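For readers who want to cross-check a signature outside PowerShell, here is a rough Python sketch of the same signing steps, using only the standard library. It mirrors the function above (same header lines, same query parameters, HMAC-SHA256 over the UTF-8 string with the Base64-decoded account key). The function names and the sample key are made up for illustration; the sample key is not a real credential.

```python
import base64
import hashlib
import hmac
from urllib.parse import urlparse


def build_string_to_sign(url: str, account_name: str, request_utc_time: str) -> str:
    """Build the string to sign for a container 'list blobs' GET request."""
    path = urlparse(url).path
    return (
        "GET" + "\n" * 12                   # verb plus 11 empty standard headers
        + "x-ms-date:" + request_utc_time + "\n"
        + "x-ms-version:2011-08-18\n"
        + "/" + account_name + path + "\n"  # canonicalized resource
        + "comp:list\n"
        + "include:snapshots,uncommittedblobs,metadata\n"
        + "restype:container\n"
        + "timeout:90"
    )


def generate_auth_string(url: str, account_name: str, account_key: str,
                         request_utc_time: str) -> str:
    """Return the Base64 HMAC-SHA256 signature of the string to sign."""
    string_to_sign = build_string_to_sign(url, account_name, request_utc_time)
    key_bytes = base64.b64decode(account_key)
    digest = hmac.new(key_bytes, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


# Illustrative values only: the key below is a placeholder, not a real account key.
sample_key = base64.b64encode(b"not-a-real-account-key").decode("ascii")
signature = generate_auth_string(
    "https://udooz.blob.core.windows.net/photos",
    "udooz",
    sample_key,
    "Sun, 11 Oct 2009 21:49:13 GMT",
)
print("SharedKey udooz:" + signature)
```

Keeping the string construction separate from the HMAC step makes it easy to print the string to sign and compare it line by line with what the service expects when a request is rejected.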

Now, you need to add the “Authorization” header when making the request.

[System.Net.HttpWebRequest] $request = [System.Net.WebRequest]::Create($url)
...
$request.Headers.Add("Authorization", "SharedKey " + $accountName + ":" + $authHeader);

The complete script is available at my GitHub repo https://github.com/udooz/powerplay/blob/master/README.md.

The real power of accessing the REST API from PowerShell is its XML processing. I can simply access the ListBlob() atom fields like this:

$xml.EnumerationResults.Blobs.Blob

Sandrino di Mattia (@sandrinodm) described DictionaryTableEntity: Working with untyped entities in the Table Storage Service in an 11/15/2012 post:

About 2 weeks ago Microsoft released the new version of the Windows Azure Storage SDK, version 2.0.0.0. This version introduces a new way to work with Table Storage which is similar to the Java implementation of the SDK. Instead of working with a DataServiceContext (which comes from WCF Data Services), you’ll work with operations. Here is an example of this new implementation:

- First we initialize the storage account, the table client and we make sure the table exists.

- We create a new customer which inherits from TableEntity

- Finally we create a TableOperation and we execute it to commit the changes.

Taking a deeper look at TableEntity

You’ll see a few changes compared to the old TableServiceEntity class:

- The ETag property was added

- The Timestamp is now a DateTimeOffset (much better for working with different timezones)

- 2 new virtual methods: ReadEntity and WriteEntity

By default, these methods are implemented as follows:

- ReadEntity: Will use reflection to get a list of all properties for the current entity type, then try to map the values received in the properties parameter onto those properties.

- WriteEntity: Will use reflection to get the values of each property and add all these values to a dictionary.

As you can see, both of these methods can come in handy if you want to do something a little more advanced. Let’s see how easy it is to create a new TableEntity which acts like a dictionary.

Introducing DictionaryTableEntity

The following code overrides both the ReadEntity and WriteEntity methods. When reading the entity, instead of using reflection, the list of properties is simply stored as a Dictionary in the object. When inserting or updating the entity, it will use that Dictionary and persist the values to Table Storage. This new class also implements the IDictionary interface and adds a few extra methods which make it easy to add new properties to the object.
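The idea is independent of the .NET SDK, so it can be sketched in Python as a language-neutral illustration. This is not the author's DictionaryTableEntity.cs and not an Azure API: the class below is a hypothetical analogue in which `read_entity` and `write_entity` stand in for the ReadEntity and WriteEntity overrides, and `MutableMapping` plays the role of IDictionary.

```python
from collections.abc import MutableMapping


class DictionaryTableEntity(MutableMapping):
    """Entity whose properties live in a dictionary instead of typed fields."""

    def __init__(self, partition_key: str, row_key: str):
        self.partition_key = partition_key
        self.row_key = row_key
        self._properties = {}

    def read_entity(self, properties: dict) -> None:
        # No reflection: keep whatever the table returned, as-is.
        self._properties = dict(properties)

    def write_entity(self) -> dict:
        # Hand back a copy of the stored properties to be persisted.
        return dict(self._properties)

    # Mapping protocol, so the entity can be used like a dictionary.
    def __getitem__(self, key):
        return self._properties[key]

    def __setitem__(self, key, value):
        self._properties[key] = value

    def __delitem__(self, key):
        del self._properties[key]

    def __iter__(self):
        return iter(self._properties)

    def __len__(self):
        return len(self._properties)


# Property names are decided at runtime; no class needs to be recompiled.
address = DictionaryTableEntity("Contoso", "address-1")
address["city"] = "Brussels"
address["street"] = "Main Street 1"
print(address.write_entity())
```

Because the property bag is just a dictionary, entities of different shapes (an address, a website) can share one partition key, which is what makes the single-partition batch described later possible.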

Creating new entities

Ok so previously I created the Customer entity and added 2 customers. Now I want to be able to manage a bunch of information about this customer like the locations of the customer and the websites. The following implementation would even make it possible to declare the possible ‘content types’ at runtime. This means you could even extend your application without having to recompile or redeploy the application.

In this code I’m doing 2 things:

- Create a new entity to which I add the city and street properties (this represents the customer’s address)

- Create 2 new entities to which I add the url property (this represents the customer’s website)

The advantage here is that we can store all this information in a single partition (see how I’m using the customer’s name as partition key). And as a result, we can insert or update all this information in a batch transaction.

And with TableXplorer you can see the result:

Reading existing entities

Reading data with the DictionaryTableEntity is also very easy. You can access a specific property directly (as if the entity were a dictionary), or you can iterate over all properties available in the current entity:

To get started simply grab DictionaryTableEntity.cs from GitHub and you’re good to go.


Windows Azure SQL Database, Federations and Reporting, Mobile Services

• Cihan Biyikoglu (@cihangirb) posted WANT MY DB_NAMEs BACK! - How to get database names for federation members from your federations and federated databases... on 11/19/2012:

Users frequently ask me how to get the database names for their federation members. I know this is harder than it should be, but hiding this information helps future-proof certain operations that we are working on for federations, so we need to keep the names hidden.

I realize we don't leave you many options these days for getting the database names for all your members. Today, sys.databases reports just the is_federation_member property to tell you whether a database is a member. The federation views in the root database, such as sys.federation_*, do not tell you the member IDs and their ranges. I know it gets complicated to generate the database names for all your members or for a given federation, so here is a quick script that generates the right batch for you. Run the script in the root database; its output is a batch of USE FEDERATION statements that, when executed, returns the database names on all federations based on your existing distribution points.

-- GENERATE DB_NAME SCRIPT
USE FEDERATION ROOT WITH RESET
GO
SELECT CASE
WHEN SQL_VARIANT_PROPERTY ( fmd.range_low , 'BaseType' ) IN ('int','bigint')
THEN 'USE FEDERATION '+f.name+' (id='+cast(fmd.range_low as nvarchar)+') WITH RESET, FILTERING=OFF
GO
SET NOCOUNT ON
SELECT db_name()
GO'
WHEN SQL_VARIANT_PROPERTY ( fmd.range_low , 'BaseType' ) IN ('varbinary')
THEN 'USE FEDERATION '+f.name+' (id='+convert(nvarchar(max),fmd.range_low,1)+') WITH RESET, FILTERING=OFF
GO
SET NOCOUNT ON
SELECT db_name()
GO'
WHEN SQL_VARIANT_PROPERTY ( fmd.range_low , 'BaseType' ) IN ('uniqueidentifier')
THEN 'USE FEDERATION '+f.name+' (id='''+convert(nvarchar(max),fmd.range_low,1)+''') WITH RESET, FILTERING=OFF
GO
SET NOCOUNT ON
SELECT db_name()
GO'
END
FROM sys.federation_member_distributions fmd join sys.federations f on fmd.federation_id=f.federation_id
order by fmd.range_low asc
GO

One important note: If you repartition federation with ALTER FEDERATION .. SPLIT or DROP, rerun the script so you can get the new list of databases.

As we improve development experience, scriptability and overall manageability, these will become less of an issue but for now, the above script generation should help you work with federation member database names.
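If you would rather build the same batch from client code, the literal formatting per key type can be sketched in Python. This is only an illustration under stated assumptions: the function names are hypothetical, the distribution key is assumed to be called id (as hard-coded in the script above), and the rows are assumed to have already been read from sys.federation_member_distributions joined to sys.federations.

```python
def use_federation_statement(federation: str, range_low, base_type: str,
                             key_name: str = "id") -> str:
    """Format one USE FEDERATION statement for a member's low range value."""
    if base_type in ("int", "bigint"):
        literal = str(int(range_low))
    elif base_type == "varbinary":
        literal = "0x" + bytes(range_low).hex().upper()   # binary literal
    elif base_type == "uniqueidentifier":
        literal = "'" + str(range_low) + "'"              # quoted GUID
    else:
        raise ValueError("unsupported federation key type: " + base_type)
    return (f"USE FEDERATION {federation} ({key_name}={literal}) "
            "WITH RESET, FILTERING=OFF")


def generate_db_name_batch(rows) -> str:
    """rows: iterable of (federation_name, range_low, base_type) tuples."""
    batches = []
    for federation, range_low, base_type in rows:
        batches.append("\n".join([
            use_federation_statement(federation, range_low, base_type),
            "GO",
            "SET NOCOUNT ON",
            "SELECT db_name()",
            "GO",
        ]))
    return "\n".join(batches)


# Made-up federation name and range values, for illustration only.
print(generate_db_name_batch([
    ("Orders_Fed", 0, "bigint"),
    ("Orders_Fed", 100000, "bigint"),
]))
```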

Himanshu Singh (@himanshuks) posted Windows Azure SQL Database named an Enterprise Cloud Database Leader by Forrester Research by Ann Bachrach on 11/14/2012:

Editor's Note: This post comes from Ann Bachrach, Senior Product Marketing Manager in our SQL Server team.

Forrester Research, Inc. has positioned Microsoft as a Leader in The Forrester Wave™: Enterprise Cloud Databases, Q4 2012. In the report posted here Microsoft received the highest scores of any vendor in Current Offering and Market Presence. Forrester describes its rigorous and lengthy Wave process: “To evaluate the vendors and their products against our set of criteria, we gather details of product qualifications through a combination of lab evaluations, questionnaires, demos, and/or discussions with client references.”

Forrester notes that “cloud database offerings represent a new space within the broader data management platform market, providing enterprises with an abstracted option to support agile development and new social, mobile, cloud, and eCommerce applications as well as lower IT costs.”

Within this context, Forrester identified the benefits of Windows Azure SQL Database as follows: “With this service, you can provision a SQL Server database easily, with simplified administration, high availability, scalability, and its familiar development model,” and “although there is a 150 GB limit on the individual database size with SQL Database, customers are supporting multiple terabytes by using each database as a shard and integrating it through the application.”

From the Microsoft case study site, here are a few examples of customers taking advantage of these features:

- Fujitsu System Solutions: “Developers at Fsol can also rapidly provision new databases on SQL Database, helping the company to quickly scale up or scale down its databases, just as it can for its compute needs with Windows Azure.”

- Connect2Field: “With SQL Database, the replication of data happens automatically…. For an individual company to run its own data replication is really complicated.… If we were to lose any one of our customer’s data, we would lose so much credibility that we wouldn’t be able to get any more customers. Data loss would destroy our customers’ businesses too.”

- Flavorus: “By using sharding with SQL Database, we can have tons of customers on the site at once trying to buy tickets.”

The best way to try out SQL Database and Windows Azure is through the free trial. Click here to get started.


Marketplace DataMarket, Cloud Numerics, Big Data and OData

•• Wenming Ye (@wenmingye) posted Super computing 2012 and new Windows Azure HPC Hardware Announcement on 11/21/2012:

The Super Computing conference attracts some of the biggest names in industry, academia, and government institutions. This year’s attendance was down from 11,000 to about 8,000. The main floor was completely full even without some of the largest Department of Energy labs; travel restrictions and cutbacks prevented them from setting up the large booths they have had in the past. Universities and foreign supercomputing centers helped to fill up the space. The Microsoft booth sat close to the entrance of the exhibit hall, which attracted good foot traffic. Don’t miss the 10-minute virtual tour with lots of exciting new hardware: virtual tour link.

Dr. Michio Kaku, the celebrity physicist and author, presented the keynote on “Physics of the Future.” From the Microsoft External Research group, Dr. Tony Hey delivered a session on Monday, The Fourth Paradigm - Data-Intensive Scientific Discovery. There were hundreds of sessions and tutorials, technical programs, and academic posters at the conference. This year’s Gordon Bell Prize was awarded to Tsukuba University and Tokyo Institute of Technology for “4.45 Pflops Astrophysical N-Body Simulation on K computer – The Gravitational Trillion Body Problem”. It is a typical large-scale HPC problem to solve on supercomputers.

As many of you know, Microsoft announced new Windows Azure hardware for Big Compute. As a proof of concept, the test cluster ranked #165 on the Top 500 list and achieved a power efficiency of 90.2% on top of Hyper-V virtualization. More details at Bill Hilf’s blog: Windows Azure Benchmarks Show Top Performance for Big Compute.

Oak Ridge’s Titan, with 560,640 processors, including 261,632 NVIDIA K20x accelerator cores, made #1 on this year’s Top 500 list with over 20 petaflops of peak performance.

After walking around the show floor and poster sessions, here are my 12 words that best describe supercomputing:

exascale, science, modeling, bigdata, parallel, performance, cloud, storage, collaboration, challenge, power, and accelerators.

I’ve added a link to photos taken at the conference, with additional comments on industry news and trends.

The Exhibit Hall

This year, SC continued to focus on the Cloud and GPGPU. Both have made amazing progress in the past year. Many vendors are rushing to offer products based on NVIDIA’s newly announced Kepler GPUs. Intel now offers its 60-core Phi processor as competition.

BigData-optimized supercomputers are starting to appear: SDSC’s Gordon (Lustre-based) and Sherlock from the Pittsburgh Supercomputing Center are being used for large-scale graph analytics.

HDInsight: Quite a few people came by the BigData Station at the Microsoft booth. Our customers’ reactions to HDInsight are very positive. They especially liked the fact that there’s a supported distribution of Hadoop on Windows; dealing with a Cygwin-based solution has been a painful experience for many of them. You can sign up for a free Hadoop cluster (HDInsight) at https://www.hadooponazure.com/ using the invitation link.

Another exciting new development is that you can get a ‘one box’ Hadoop installer for your workstation or laptop for development purposes. The installation is simple:

1. Download the Microsoft Web Platform Installer from http://www.microsoft.com/web/downloads/platform.aspx.

2. Search for HDInsight, and click on “Add” to install. (my screenshot shows that I have already installed it myself)

References:

- Great posters on Data & Compute topics: http://sdrv.ms/UG75ja

- My weather demo as a service: http://weatherservice.cloudapp.net/ This demo shows how even individuals can pack complex HPC code into a service to leverage Windows Azure’s computing power.

- HDInsight: https://www.hadooponazure.com/

- SC12 Home Page: http://sc12.supercomputing.org/

- Photos taken at the Conference with detailed comments: Slide show link

- HPC Wire Magazine: www.hpcwire.com

•• Nick King of the SQL Server Team (@SQLServer) answered Oracle’s “Hekaton” challenge in an Oracle Surprised by the Present post of 11/20/2012:

I’d like to clear up some confusion from a recent Oracle-sponsored blog. It seems we hit a nerve by announcing our planned In-Memory OLTP technology, aka Project ‘Hekaton’, to be shipped as part of the next major release of SQL Server. We’ve noticed the market has also been calling out Oracle on its use of the phrase ‘In-Memory’, so it wasn’t unexpected to see a rant from Bob Evans, Oracle’s SVP of Communications, on the topic. [Editorial update: Oracle rant removed from Forbes.com on 11/20, see Bing cached page 1 and page 2]

Here on the Microsoft Server & Tools team that develops SQL Server, we’re working towards shipping products in a way that delivers maximum benefits to the customer. We don’t want to have dozens of add-ons to do something the product, in this case the database, should just do. In-Memory OLTP, aka ‘Hekaton’, is just one example of this.

It’s worth mentioning that we’ve been in the In-memory game for a couple of years now. We shipped the xVelocity Analytics Engine in SQL Server 2012 Analysis Services, and the xVelocity Columnstore index as part of SQL Server 2012. We’ve shown a 100x reduction in query processing times with this technology, and scan rates of 20 billion rows per second on industry-standard hardware, not some overpriced appliance. In 2010, we shipped the xVelocity in-memory engine as part of PowerPivot, allowing users to easily manipulate millions of rows of data in Excel on their desktops. Today, over 1.5 million customers are using Microsoft’s In-memory technology to accelerate their business. This is before ‘Hekaton’ even enters the conversation.

It was great to see Doug from Information Week also respond to Bob at Oracle, and highlight that in fact Oracle doesn’t yet ship In-Memory database technology in its Exadata appliances. Instead, Oracle requires customers to purchase yet another appliance, Exalytics, to make In-Memory happen.

We’re also realists here at Microsoft, and we know that customers want choices for their technology deployments. So we build our products that way, flexible, open to multiple deployment options, and cloud-ready. For those of you that have dealt with Oracle lately, I’m going to make my own prediction here: ask them to solve a problem for you and the solution is going to be Exadata. Am I right? And as Doug points out in his first InformationWeek article, Oracle’s approach to In-memory in Exadata is “cache-centric”, in contrast to which “Hekaton will deliver true in-memory performance”.

So I challenge Oracle, since our customers are increasingly looking to In-Memory technologies to accelerate their business. Why don’t you stop shipping TimesTen as a separate product and simply build the technology in to the next version of your flagship database? That’s what we’re going to do.

This shouldn’t be construed as a “knee-jerk” reaction to anything Oracle did. We’ve already got customers running ‘Hekaton’ today, including online gaming company Bwin, who have seen a 10x gain in performance just by enabling ‘Hekaton’ for an existing SQL Server application. As Rick Kutschera, IT Solutions Engineer at Bwin puts it, “If you know SQL Server, you know Hekaton”. This is what we mean by “built in”. Not bad for a “vaporware” project we just “invented”.

As for academic references, we’re glad to see that Oracle is reading from the Microsoft Research Database Group. But crowing triumphantly that there is “no mention of papers dealing with in-memory databases” [your emphasis] does not serve you well. Couple of suggestions for Oracle: Switch to Bing; and how about this VLDB paper as a starting point.

Ultimately, it’s customers who will choose from among the multiple competing In-memory visions on offer. And given that we as enterprise vendors tend to share our customers, we would do well to spend more time listening to what they’re saying, helping them solve their problems, and less time firing off blog posts filled with ill-informed and self-serving conclusions.

Clearly, Oracle is fighting its own fight. An Exadata in every data center is not far off from Bill’s dream of a “computer on every desk.” But, as with Bill’s vision, the world is changing. There will always be a need for a computer on a desk or a big box in a data center, but now there is so much more to enterprise technology. Cloud, mobility, virtualization, and data everywhere. The question is, how can a company called “Oracle” be surprised by the trends we see developing all around us?

What’s most interesting is Forbes Online having retracted the Oracle tirade.

No significant OData articles today


Windows Azure Service Bus, Access Control Services, Caching, Active Directory and Workflow

•• Haishi Bai (@HaishiBai2010) posted Complete walkthrough: Setting up a ADFS 2.0 Server on Windows Azure IaaS and Configuring it as an Identity Provider in Windows Azure ACS on 11/20/2012:

I’ve done this several times but never got a chance to document all the steps. Since now I’ve got another opportunity to do this yet again, I’ll document all the steps necessary, starting from scratch, to configure an ADFS 2.0 server on Windows Azure IaaS, and to configure it as an Identity Provider in Windows Azure ACS.

Then we’ll use ACS to protect our web application that uses role-based security. Although I’m stripping down the steps to the bare minimum and cutting some corners, this will still be a long post, so please bear with me. Hopefully this can be your one-stop reference if you have such a task at hand. Here we go:

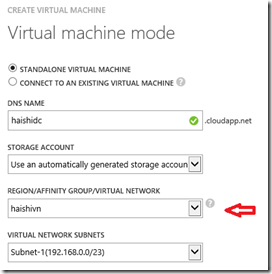

Create a Virtual Network

Since we are starting from scratch, we’ll start with a Virtual Network. Then, on this Virtual Network, we’ll set up our all-in-one AD forest with a single server that is both a Domain Controller (DC) and an ADFS 2.0 server. The reason we put this server on a Virtual Network is to ensure the server gets a local static IP address (or an ever-lasting dynamic one).

- Log on to Windows Azure Management Portal.

- Add a new Virtual Network. In the following sample, I named my Virtual Network haishivn, with address space 192.168.0.0 – 192.168.15.255.

- Click CREATE A VIRTUAL NETWORK link to create the network.
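If you want to double-check an address space before typing it into the portal, Python's standard `ipaddress` module can summarize a first/last pair into CIDR notation. The small sketch below uses the sample range from this walkthrough; the test address is an arbitrary value picked for illustration.

```python
import ipaddress

# First and last address of the sample Virtual Network address space.
first = ipaddress.IPv4Address("192.168.0.0")
last = ipaddress.IPv4Address("192.168.15.255")

# Collapse the range into the smallest list of CIDR blocks that covers it.
networks = list(ipaddress.summarize_address_range(first, last))
for network in networks:
    print(network, "holds", network.num_addresses, "addresses")

# Check whether a candidate VM address falls inside the address space.
candidate = ipaddress.IPv4Address("192.168.4.10")
print(candidate, "in range:", any(candidate in n for n in networks))
```

The range collapses to a single /20 block, so any 192.168.0.x through 192.168.15.x address belongs to this network.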

Provision the Virtual Machine

- In Windows Azure Management Portal, select NEW->COMPUTE->VIRTUAL MACHINE->FROM GALLERY.

- Select Windows Server 2012, October 2012, and then click Next arrow.

- On next screen, enter the name of your virtual machine, enter administrator password, pick the size you want to use, and then click Next arrow.

(Note in the following screenshot I’m using haishidc, while in later steps I’m using haishidc2 as I messed up something in the first run and had to start over. So, please consider haishidc and haishidc2 the same.)

- On last screen, leave availability set empty for now. Complete the wizard.

- Once the virtual machine is created, click on the name of the machine and select ENDPOINT tab.

- Click ADD ENDPOINT icon on the bottom toolbar.

- In ADD ENDPOINT dialog, click next arrow to continue.

- Add port 443 as shown in the following screenshot:

- Similarly, add port 80.

Set up The Virtual Machine

Once the virtual machine is provisioned, we are ready to set up our cozy Active Directory with one member, which will be the Domain Controller as well as the ADFS 2.0 Server.

- RDP into the virtual machine you just created. Wait patiently till Server Manager shows up.

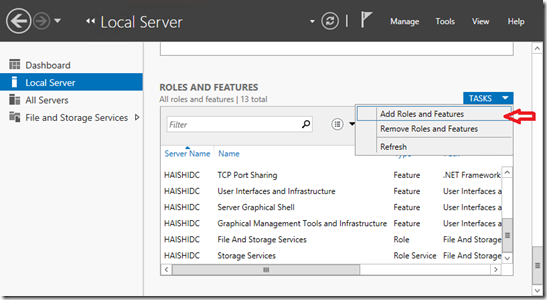

- Go to Local Server tab, scroll down to the ROLES AND FEATURES section, then click TASKS->Add Roles and Features.

- In Add Roles and Features Wizard, click Next to continue.

- On next screen, keep Role-based or feature-based installation checked, click Next to continue.

- On Server selection screen, accept default settings and click Next.

- On Server Roles screen, check Active Directory Domain Services. This will pop up a dialog prompting to enable required features. Click Add Features to continue.

- Check Active Directory Federation Services. Again, click Add Features in the pop-up to add required features.

- Click Next all the way till the end of the wizard workflow, accepting all default settings.

- Click Install to continue. Once installation completes, click Close to close the wizard.

Configure AD and Domain controller

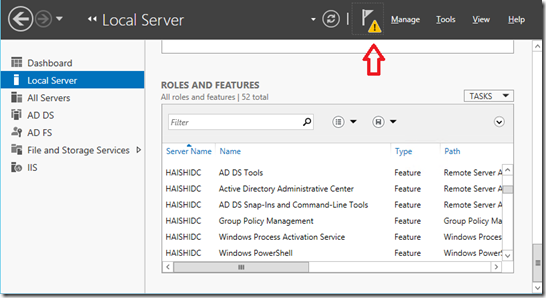

- Now you’ll see a warning icon reminding you that some additional configuration is needed.

- Click on the icon and click on the second item, which is Promote this server to a domain controller.

- In Active Directory Domain Services Configuration Wizard, select Add a new forest, enter cloudapp.net as Root domain name, and then click Next to continue. Wait, what? How come our root domain name is cloudapp.net? Actually it doesn’t matter that much; you can call it microsoft.com if you want. However, using cloudapp.net saves us a little trouble when we use self-issued certificates in later steps. From the perspective of the outside world, the ADFS server that issues the token will be [your virtual machine name].cloudapp.net. In the following steps, we’ll use IIS to generate a self-issued cert that matches this DNS name. The goal of this post is to get the infrastructure up and running with minimum effort. Proper configuration and usage of various certificates deserves a post of its own.

- On next screen, provide a DSRM password. Uncheck Domain Name System (DNS) server as we don’t need this capability in our scenario (this is an all-in-one forest anyway). Click Next to continue.

- Keep clicking Next till Install button is enabled. Then click Install.

- The machine reboots and you lose your RDP connection.

(optional: grab a soda and some snacks. This will take a while)

Create Some Test Accounts

Before we move forward, let’s create a couple of user groups and a couple of test accounts.

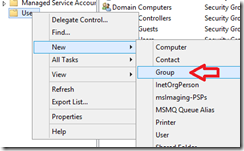

- Launch Active Directory Users and Computers (Windows + Q, then search for “users”).

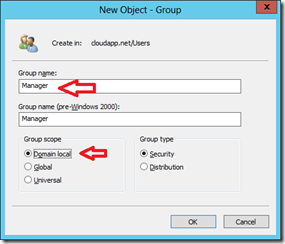

- Right-click on Users node, then select New->Group:

- In New Object window, enter Manager as group name, and change Group scope to Domain local:

- Follow the same steps to create a Staff group.

- Right-click on Users node, then select New->User to create a new user:

- Set up a password for the user, then finish the wizard. In a test environment, you can disallow password change and make the password never expire to simplify password management:

- Double-click on the user name, and add the user to Manager group:

- Create another user, and add the user to Staff group.

Configure SSL Certificate

Now it’s the fun part – to configure ADFS.

- Reconnect to the Virtual Machine.

- Launch Internet Information Services Manager (Windows + Q, then search for “iis”).

- Select the server node, and then double-click Server Certificates icon in the center pane.

- In the right pane, click on Create Self-Signed Certificate… link. Give a friendly name to the cert, for example haishidc2.cloudapp.net. Click OK. If you open the cert, you can see the cert is issued to [your virtual machine name].cloudapp.net. This is the reason why we used cloudapp.net domain name.

Configure ADFS Server

- Go back to Server Manager. Click on the warning icon and select Run the AD FS Management snap-in.

- Click on AD FS Federation Server Configuration Wizard link in the center pane.

- In AD FS Federation Server Configuration Wizard, leave Create a new Federation Service checked, click Next to continue.

- On next screen, keep New federation server farm checked, click Next to continue.

- On next screen, you’ll see our self-issued certificate is automatically chosen. Click Next to continue.

- On next screen, set up Administrator as the service account. Click Next.

- Click Next to complete the wizard.

Provision ACS namespace

If you haven’t done so, you can follow these steps to provision a Windows Azure ACS namespace:

- Log on to Windows Azure Management Portal.

- At upper-right corner, click on your user name, and then click Previous portal:

- This redirects to the old Silverlight portal. Click on Service Bus, Access Control & Caching in the left pane:

- Click New icon in the top tool bar to create a new ACS namespace:

- Enter a unique ACS namespace name, and click Create Namespace:

- Once the namespace is activated, click on Access Control Service in the top tool bar to manage the ACS namespace.

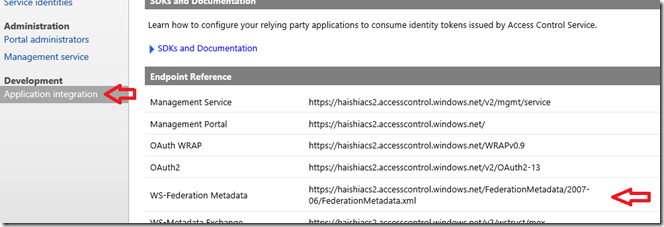

- Click on Application integration link in the left pane. Then copy the URL to the WS-Federation metadata. You’ll need the URL in the next section.

Configure Trust Relationship with ACS – ADFS Configuration

Now it’s the fun part! Let’s configure ADFS as a trusted Identity Provider of your ACS namespace. The trust relationship is mutual, which means it needs to be configured on both ADFS side and ACS side. From ADFS side, we’ll configure ACS as a trusted relying party. And from ACS side, we’ll configure ADFS as a trusted identity provider. Let’s start with ADFS configuration.

- Back in AD FS Management snap-in, click on Required: Add a trusted relying party in the center pane.

- In Add Relying Party Trust Wizard, click Start to continue.

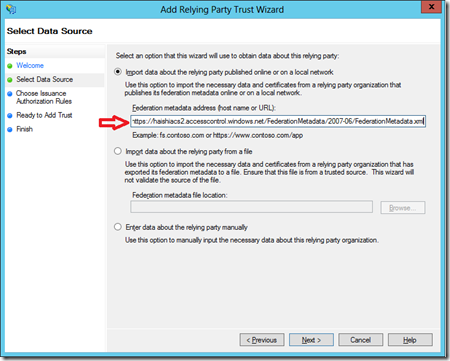

- Paste in the ACS WS-Federation metadata URL you got from your ACS namespace (see above steps), and click Next to continue:

- Keep clicking Next, then finally Close to complete the wizard.

- This brings up the claim rules window. Close it for now.

- Back in the main window, click on Trust Relationships->Claims Provider Trust node. You’ll see Active Directory listed in the center pane. Right-click and select Edit Claim Rules…

- In the Edit Claim Rules for Active Directory dialog, click Add Rule… button.

- Select Send Group Membership as a Claim template. Click Next.

- On next screen, set the rule name as Role claim. Pick the Manager group using the Browse… button. Pick Role as output claim type. And set claim value to be Manager. Then click Finish. What we are doing here is to generate a Role claim with value Manager for all users in the Manager group in our AD.

- Add another rule, and this time select Send LDAP Attribute as Claims template.

- Set rule name as Name claim. Pick Active Directory as attribute store, and set up the rule to map Given-Name attribute to Name claim:

- Back in the main window, click on Trust Relationships->Relying Party Trusts node. You’ll see your ACS namespace listed in the center pane. Right-click on it and select Edit Claim Rules…

- Add a new rule using Pass Through or Filter an Incoming Claim template.

- Pass through all Role claims:

- Similarly, add another pass-through rule for Name claim.

Now our ADFS server is configured to trust our ACS namespace, and it will issue a Name claim and a Role claim for authenticated users.
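The claim rules configured above can be pictured with a small simulation. This is only a sketch of the logic, not anything ADFS itself runs: the `User` type, the function names, and the sample users are hypothetical, and the only facts taken from the steps are that Manager group membership yields a Role claim with value Manager, that Given-Name maps to the Name claim, and that the relying party rules pass both claim types through.

```python
from dataclasses import dataclass, field


@dataclass
class User:
    # Hypothetical stand-in for an Active Directory account.
    given_name: str
    groups: set = field(default_factory=set)


def issue_claims(user):
    """Mimic the two rules added on the Active Directory claims provider trust."""
    claims = []
    # Rule "Role claim": membership in the Manager group becomes Role=Manager.
    if "Manager" in user.groups:
        claims.append(("Role", "Manager"))
    # Rule "Name claim": the Given-Name attribute becomes the Name claim.
    claims.append(("Name", user.given_name))
    return claims


def pass_through(claims, allowed_types=("Role", "Name")):
    """Mimic the relying party rules: forward only the listed claim types."""
    return [claim for claim in claims if claim[0] in allowed_types]


joe = User(given_name="Joe", groups={"Manager"})
amy = User(given_name="Amy", groups={"Staff"})
```

Under these assumptions a Staff user ends up with a Name claim but no Role claim, because only the Manager group has a group membership rule in the steps above.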

Configure Trust Relationship with ACS – ACS Configuration

Now let’s configure the second part of the trust relationship.

- Download your ADFS metadata from https://[your virtual machine name].cloudapp.net/FederationMetadata/2007-06/FederationMetadata.xml (hint: use Chrome or Firefox to make download easier – ignore the certificate warnings).

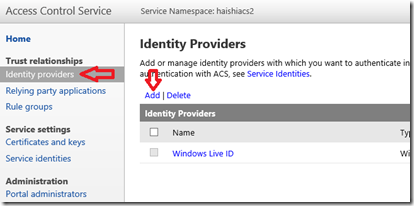

- In ACS management portal, click on Identity providers link in the left pane. Then click on Add link:

- On next screen, select WS-Federation identity provider, then click Next:

- On the next screen, enter a display name for the identity provider. Browse to the metadata file you’ve downloaded in step 1. Finally, set up a login link text, and click Save to finish.

Test with a web site

Now that everything is up and running, let’s put it to work.

- Launch Visual Studio 2012.

- Create a new Web application using ASP.NET MVC 4 Web Application – Internet Application template.

- Open _LoginPartial.cshtml and remove the following part. We don’t have the required claims (name identifier and provider) to make this part work. For simplicity let’s just remove it:

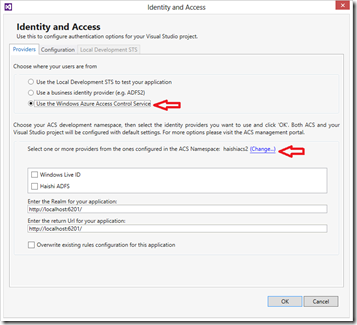

    @using (Html.BeginForm("LogOff", "Account", FormMethod.Post, new { id = "logoutForm" })) {
        @Html.AntiForgeryToken()
        <a href="javascript:document.getElementById('logoutForm').submit()">Log off</a>
    }

- Right-click on the project and select the Identity and Access… menu. Not seeing the menu? You probably don’t have the awesome extension installed yet. You can download it here.

- In the Identity and Access wizard, select Use the Windows Azure Access Control Service option. If you haven’t used the wizard before, you’ll need to enter your ACS namespace name and management key. You can also click on the (Change…) link to switch to a different namespace if needed:

- Where to get the ACS namespace management key, you ask? In ACS namespace management portal, click on the Management service link in the left pane, then click on ManagementClient link in the center pane:

- On next screen, click on Symmetric Key link, and finally in the next screen, copy the Key field.

- Where were we… right, the Identity and Access wizard. Once you’ve entered your ACS information correctly, you’ll see the list of trusted identity providers populated. Select identity provider(s) you want to use. In this case we’ll select the single ADFS provider:

- Click OK to complete the wizard – that was easy, wasn’t it?

- Now launch the application by pressing F5.

- You’ll see a certificate warning – that’s a good sign! This means the redirection to ADFS is working and the browser is complaining about our self-issued cert. Click Continue to this website to continue.

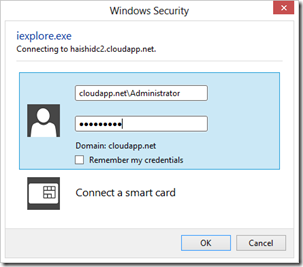

- You’ll be asked to log on to your domain. Type in your credentials to log in (make sure you are using the right domain name). You can also use [user]@[domain] format, for example joe@cloudapp.net:

- And it works just as designed:

Role-based security

That was exciting, wasn’t it? Now let’s have more fun. In this part we’ll restrict access to the About() method of home controller to Manager role only.

- Open HomeController.cs and decorate the About() method:

    [Authorize(Roles="Manager")]
    public ActionResult About()
    {
        ViewBag.Message = "Your app description page.";
        return View();
    }

- Launch the app, log in as joe, who’s a Manager. Click on About link. Everything is good.

- Now restart the app, log in as a Staff user – access denied! A-xcellent.
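The role check that the Authorize attribute performs can be sketched outside ASP.NET as a plain decorator. This is an illustrative analogy only, assuming the principal is a dictionary carrying the roles taken from the Role claims; `authorize`, `AccessDenied` and the principal shape are hypothetical names, not part of MVC or WIF.

```python
import functools


class AccessDenied(Exception):
    """Raised when the caller lacks the required role."""


def authorize(role):
    """Allow the decorated action only for principals that carry the role."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(principal, *args, **kwargs):
            if role not in principal.get("roles", ()):
                raise AccessDenied(f"{principal.get('name')} is not in role {role}")
            return func(principal, *args, **kwargs)
        return wrapper
    return decorator


@authorize("Manager")
def about(principal):
    # Counterpart of the About() action restricted to the Manager role.
    return "Your app description page."
```

Calling `about({"name": "joe", "roles": ["Manager"]})` returns the page text, while a principal without the Manager role raises `AccessDenied`, which mirrors the two test logins above.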

Summary

There you go! An Active Directory, a Domain Controller, an ADFS server, all on Windows Azure IaaS. And the ADFS server is configured as a trusted identity provider to our ACS namespace, which in turn provides claims-based authentication to our web application, which uses role-based security! That’s really fun!

Bonus Item

Still reading? Thank you! Now you deserve a little bonus. Remember the log on dialog in above test? That’s not very nice looking. Follow this link to learn a little trick to bring up a login form instead.

I’ve been waiting for a detailed tutorial on this topic. Thanks, Haishi.

Vittorio Bertocci (@vibronet) posted Introducing the Developer Preview of the JSON Web Token Handler for the Microsoft .NET Framework 4.5 on 11/20/2012:

The JWT handler class diagram, spanning 3 monitors :-)

Today I am really, really happy to announce the developer preview of a new extension that will make the JSON Web Token format (JWT) a first-class citizen in the .NET Framework: the JSON Web Token Handler for the Microsoft .NET Framework 4.5 (JWT handler from now on in this post :-)).

What is the JSON Web Token (JWT) Format Anyway?

“JSON Web Token (JWT) is a compact token format intended for space constrained environments such as HTTP Authorization headers and URI query parameters. JWTs encode claims to be transmitted as a JavaScript Object Notation (JSON) object […]”. That quote is taken straight from the IETF’s (OAuth Working Group) Internet Draft that specifies the format.

That’s a remarkably straightforward definition, which hints at the good properties of the JWT format which make it especially useful in REST based solutions. JWT is very popular. Just search the web for “JWT token” followed by your programming language of choice, and chances are you’ll find an implementation; Oracle uses it in its Fusion Middleware; Google uses it in its App Engine Security Module; Salesforce uses it for handling application access; and closer to home, JWT is the token format used by Windows Azure Active Directory for issuing claims for all of its workloads entailing REST exchanges, such as issuing tokens for querying the Graph API; ACS namespaces can issue JWTs as well, even for Web SSO. If that were not enough, consider that JWT is the token format used in OpenID Connect. Convinced of the importance of JWT yet? :-)
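To make the "compact" part of that definition tangible, here is a minimal sketch of what a signed JWT looks like on the wire: three base64url segments (header, claims, signature) joined by dots. It uses only the Python standard library and an HMAC-SHA256 signature; the helper names, the sample claims and the demo key are assumptions for illustration, and this is not the JWT handler's API.

```python
import base64
import hashlib
import hmac
import json


def b64url_encode(data: bytes) -> str:
    # JWTs use URL-safe base64 with the trailing "=" padding removed.
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(text: str) -> bytes:
    # Restore the padding that was stripped during encoding.
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def make_jwt(claims: dict, key: bytes) -> str:
    """Build header.payload.signature, signed with HMAC-SHA256 (HS256)."""
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        b64url_encode(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    signature = hmac.new(key, signing_input.encode("ascii"), hashlib.sha256).digest()
    return signing_input + "." + b64url_encode(signature)


def read_claims(token: str) -> dict:
    """Decode the claims segment WITHOUT verifying the signature."""
    _header_b64, payload_b64, _signature_b64 = token.split(".")
    return json.loads(b64url_decode(payload_b64))
```

Because every segment is URL-safe text, the resulting string can be placed directly in an HTTP Authorization header or a query parameter, which is the space-constrained use the quoted definition has in mind.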

The JWT Handler

If you want to protect your resources using Windows Azure Active Directory and a lightweight token format, you’ll want your applications to be able to work with JWTs. That will be the appropriate choice especially when more demanding options are not viable: for example, securing a REST service with a SAML token is very likely to put your system under strain, whereas a JWT is the perfect choice for it. And if you are building your applications on .NET 4.5, that’s where the JWT handler comes in.

Given that I am giving the formal definition, let me use the full name of the product for the occasion:

The JSON Web Token Handler for the Microsoft .NET Framework 4.5 is a .NET 4.5 assembly, distributed via a NuGet package, providing a collection of classes you can use for deserializing, validating, manipulating, generating, issuing and serializing JWTs.

The .NET Framework 4.5 already has the concept of security token, in the form of the WIF classes providing abstract types (SecurityToken, SecurityTokenHandler) and out of the box support for concrete formats: SAML 1.1, SAML 2.0, X.509, and the like. The JWT handler builds on that framework, providing the necessary classes to allow you to work with JWTs as if they were just another token format among the ones provided directly in .NET 4.5.

As such, you’ll find in the package classes like JWTSecurityTokenHandler, JWTSecurityToken, JWTIssuerNameRegistry, JWTIssuerTokenResolver and all the classes which are necessary for the WIF machinery to work with the new token type. You would not normally deal with those directly; you’d just configure your app to use WIF (say for Web SSO) and add the JWTSecurityTokenHandler to the handlers collection; the WIF pipeline would call those classes at the right time for you.

Although integration with the existing WIF pipeline is good, we wanted to make sure that you’d have a great developer experience in handling JWTs even when your application is not configured to use WIF. After all, the .NET framework does not offer anything out of the box for enforcing access control for REST services hence (for now ;-)) you’d have to code the request authentication logic yourself, and asking you to set up the WIF config environment on top of that would make things more difficult for you. To that purpose, we made two key design decisions:

- We created an explicit representation of the validation coordinates that should be used to establish if a JWT is valid, and codified it in a public class (TokenValidationParameters). We added the necessary logic for populating this class from the usual WIF web.config settings.

- Along with the usual methods you’d find in a normal implementation of SecurityTokenHandler, which operate with the data retrieved from the WIF configuration, we added an overload of ValidateToken that accepts the bits of the token and a TokenValidationParameters instance; that allows you to take advantage of the handler’s validation logic without adding any config overhead.

This is just an introduction, which will be followed by deep dives and documentation: hence I won’t go too deep into the details; however I just want to stress that we really tried our best to make things as easy as we could to program against. For example: although in the generic case you should be able to validate issuer and audience from lists of multiple values, we provided both collection and scalar versions of the corresponding properties in TokenValidationParameters so that the code in the single values case is as simple as possible. Your feedback will be super important to validate those choices or critique them!

After this super-quick introduction, let’s get a bit more practical with a couple of concrete examples.

Using the JWT Handler with REST Services

Today we also released a refresh of the developer preview of our Windows Azure Authentication Library (AAL). As part of that refresh, we updated all the associated samples to use the JWT handler to validate tokens on the resource side: hence, those samples are meant to demonstrate both AAL and the JWT handler.

To give you a taste of the “WIF-less” use of the JWT token, I’ll walk you through a short snippet from one of the AAL samples.

The ShipperServiceWebAPI project is a simple Web API based service. If you go to the global.asax, you’ll find that we added a DelegatingHandler for extracting the token from incoming requests secured via OAuth2 bearer tokens, which in our specific case (tokens obtained from Windows Azure AD via AAL) happen to be JWTs. Below you can find the relevant code using the JWT handler:

    1: JWTSecurityTokenHandler tokenHandler = new JWTSecurityTokenHandler();
    2: // Set the expected properties of the JWT token in the TokenValidationParameters
    3: TokenValidationParameters validationParameters = new TokenValidationParameters()
    4: {
    5:     AllowedAudience = ConfigurationManager.AppSettings["AllowedAudience"],
    6:     ValidIssuer = ConfigurationManager.AppSettings["Issuer"],
    8:     // Fetch the signing token from the FederationMetadata document of the tenant.
    9:     SigningToken = new X509SecurityToken(new X509Certificate2(GetSigningCertificate(ConfigurationManager.AppSettings["FedMetadataEndpoint"])))
    10: };
    11:
    12: Thread.CurrentPrincipal = tokenHandler.ValidateToken(token, validationParameters);

1: create a new JWTSecurityTokenHandler

3: create a new instance of TokenValidationParameters, passing in

5: the expected audience for the service (in our case, urn:shipperservice:interactiveauthentication)

6: the expected issuer of the token (in our case, an ACS namespace: https://humongousinsurance.accesscontrol.windows.net/)

9: the key we use for validating the issuer’s signature. Here we are using a simple utility function for reaching out to ACS’ metadata to get the certificate on the fly, but you could also have it installed in the store, saved in the file system, or retrieved through whatever other mechanism comes to mind. Also, of course you could have used a simple symmetric key if the issuer is configured to sign with it.

12: call ValidateToken on tokenHandler, passing in the JWT bits and the validation coordinates. If successfully validated, a ClaimsPrincipal instance will be populated with the claims received in the token and assigned to the current principal.

That’s all there is to it! Very straightforward, right? If you’d compare it with the initialization required by “traditional” WIF token handlers, I am sure you’d be pleasantly surprised :-)
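For readers who want to see the same three validation coordinates (audience, issuer, signing key) spelled out without any library, here is a rough language-neutral sketch in Python. It assumes the symmetric-key case mentioned above (HMAC-SHA256) rather than the X.509 certificate used in the sample, and every function name here is hypothetical; `sign_token` exists only so the sketch has a token to validate.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_token(claims: dict, key: bytes) -> str:
    """Demo-only issuer: build an HS256-signed JWT so there is something to validate."""
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        _b64url_encode(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    signature = hmac.new(key, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{_b64url_encode(signature)}"


def extract_bearer_token(authorization_header: str) -> str:
    """Pull the token out of an 'Authorization: Bearer <token>' header value."""
    scheme, _, token = authorization_header.partition(" ")
    if scheme.lower() != "bearer" or not token.strip():
        raise ValueError("expected 'Bearer <token>'")
    return token.strip()


def validate_token(token, allowed_audience, valid_issuer, signing_key, now=None):
    """Check signature, issuer, audience and (if present) expiry; return the claims."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("malformed token")
    header_b64, payload_b64, signature_b64 = parts
    if json.loads(_b64url_decode(header_b64)).get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    expected = hmac.new(
        signing_key, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256
    ).digest()
    # Constant-time comparison avoids leaking how much of the signature matched.
    if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
        raise ValueError("signature mismatch")
    claims = json.loads(_b64url_decode(payload_b64))
    if claims.get("iss") != valid_issuer:
        raise ValueError("unexpected issuer")
    if claims.get("aud") != allowed_audience:
        raise ValueError("unexpected audience")
    current = time.time() if now is None else now
    if "exp" in claims and claims["exp"] <= current:
        raise ValueError("token expired")
    return claims
```

The order matters in a sketch like this: the signature is checked before any claim is trusted, so issuer and audience comparisons only ever run on content the key holder actually signed.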

Using the JWT Handler With WIF Applications

Great, you can use the JWT handler outside of WIF; but what about using it with your existing WIF applications? Well, that’s quite straightforward.

Let’s say you have an MVC4 application configured to handle Web Sign On using ACS; any application will do. For example: remember the blog post I published a couple of weeks ago, the one about creating an ACS namespace which trusts both a Windows Azure Active Directory tenant and Facebook? I will refer to that (although, it’s worth stressing it, ANY web app trusting ACS via WIF will do).

Head to the ACS management portal, select your RP and scroll down to the token format section. By default, SAML is selected; hit the dropdown and select JWT instead.

Great! Now open your solution in Visual Studio, and add a package reference to the JSON Web Token Handler for the Microsoft .NET Framework 4.5 NuGet.

With the package installed, open your config file and locate the system.identityModel section:

    1: <system.identityModel>
    2:   <identityConfiguration>
    3:     <audienceUris>
    4:       <add value="http://localhost:61211/" />
    5:     </audienceUris>
    6:     <securityTokenHandlers>
    7:       <add type="Microsoft.IdentityModel.Tokens.JWT.JWTSecurityTokenHandler,Microsoft.IdentityModel.Tokens.JWT" />
    8:       <securityTokenHandlerConfiguration>
    9:         <certificateValidation certificateValidationMode="PeerTrust"/>
    10:       </securityTokenHandlerConfiguration>
    11:     </securityTokenHandlers>

Right under </audienceUris>, paste the lines from 6 to 11. Those lines tell WIF that there is a class for handling JWT tokens; furthermore, they specify what signing certificates should be considered valid. Now, that requires a little digression. WIF has another config element, the IssuerNameRegistry, which specifies the thumbprints of the certificates that are associated with trusted issuers. The good news is that the JWT handler will automatically pick up the IssuerNameRegistry settings.

Issuers in the Microsoft world (ACS, ADFS, custom STSes based on WIF) will typically send together with the SAML token itself the bits of the certificate whose corresponding private key was used for signing. That means that you do not really need to install the certificate bits in order to verify the signature of incoming tokens, as you’ll receive the cert bits just in time. And given that the cert bits must correspond to the thumbprint specified by IssuerNameRegistry anyway, you can turn off cert validation (which would verify whether the cert is installed in the trusted people store, that it has been emitted by a trusted CA, or both) without being too worried about spoofing.
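The thumbprint comparison described here is simple to picture: a certificate thumbprint is the SHA-1 hash of the DER-encoded certificate bytes, and the registry is a set of trusted values. The sketch below assumes exactly that and nothing more; the function names are hypothetical, and the input is whatever certificate bytes arrived alongside the token.

```python
import hashlib


def thumbprint(cert_der: bytes) -> str:
    """SHA-1 over the DER-encoded certificate, shown as uppercase hex."""
    return hashlib.sha1(cert_der).hexdigest().upper()


def is_trusted_issuer(cert_der: bytes, trusted_thumbprints) -> bool:
    """Accept the certificate only if its thumbprint is listed in the registry."""
    # Normalize registry entries: config values are often pasted with spaces
    # or in lowercase.
    normalized = {t.replace(" ", "").upper() for t in trusted_thumbprints}
    return thumbprint(cert_der) in normalized
```

A certificate that arrives with the token but hashes to an unlisted thumbprint is rejected, which is why receiving the cert bits just in time does not by itself open the door to spoofing.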

Now, JWT is ALL about being nimble: as such, it would be pretty surprising if it too would carry an entire X.509 certificate at every roundtrip; right? The implication for us is that in order to validate the signature of the incoming JWT, we must install the signature verification certificate in the trusted people store.

How do you do that? Well, there are many different tricks you can use. The simplest: open the metadata document (example: https://lefederateur.accesscontrol.windows.net/FederationMetadata/2007-06/FederationMetadata.xml), copy the text of the X509Certificate element from the RoleDescriptor/KeyDescriptor/KeyInfo/X509Data path and save it in a text file with extension .CER. Double-click on the file, hit the “Install Certificate…” button, choose Local Machine, Trusted People, and you’re in business. Yes, I too wish it would be less complicated; I wrote a little utility for automating this, I’ll see if we can find a way to publish it.
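The manual copy-and-paste step can be approximated with a few lines of script. The sketch below is an assumption-laden illustration, not the utility mentioned above: it expects the metadata document to already be downloaded as text, follows the RoleDescriptor/KeyDescriptor/KeyInfo/X509Data path using the standard SAML metadata and XML Signature namespaces, and uses a tiny made-up sample document with a placeholder value instead of a real certificate.

```python
import xml.etree.ElementTree as ET

# Standard namespaces for SAML 2.0 metadata and XML Signature.
NS = {
    "md": "urn:oasis:names:tc:SAML:2.0:metadata",
    "ds": "http://www.w3.org/2000/09/xmldsig#",
}

CERT_PATH = "md:RoleDescriptor/md:KeyDescriptor/ds:KeyInfo/ds:X509Data/ds:X509Certificate"


def extract_signing_certificate(metadata_xml: str) -> str:
    """Return the base64 text of the first X509Certificate element on the path."""
    root = ET.fromstring(metadata_xml)
    node = root.find(CERT_PATH, NS)
    if node is None or not (node.text or "").strip():
        raise ValueError("no X509Certificate element found")
    # Drop line breaks and indentation that may wrap the base64 text.
    return "".join(node.text.split())


def save_as_cer(cert_base64: str, path: str) -> None:
    """Write the base64 text to a file with a .cer extension, as in the manual steps."""
    with open(path, "w", encoding="ascii") as handle:
        handle.write(cert_base64 + "\n")


# Hypothetical, heavily trimmed metadata document; the certificate is a placeholder.
SAMPLE_METADATA = """<EntityDescriptor xmlns="urn:oasis:names:tc:SAML:2.0:metadata" entityID="https://example.invalid/">
  <RoleDescriptor>
    <KeyDescriptor use="signing">
      <KeyInfo xmlns="http://www.w3.org/2000/09/xmldsig#">
        <X509Data>
          <X509Certificate>
            UExBQ0VIT0xERVI=
          </X509Certificate>
        </X509Data>
      </KeyInfo>
    </KeyDescriptor>
  </RoleDescriptor>
</EntityDescriptor>"""
```

Installing the saved file into the Trusted People store still has to be done as described above; the sketch only covers locating and saving the certificate text.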

Anyway, at this point we are done! Hit F5, and you’ll experience Web SSO backed by a JWT instead of a SAML token. The only visible difference at this point is that your Name property will likely look a bit odd: right now we are assigning the nameidentifier claim to it, which is not what we want to do moving forward, but we wanted to make sure that there is a value in that property for you as you experiment with the handler.

How Will You Use It?

Well, there you have it. JWT is a very important format, and Windows Azure Active Directory uses it across the board. With the developer preview of the JWT handler, you now have a way to process JWTs in your applications. We’ll talk more about the JWT handler and suggest more ways you can take advantage of the handler. Above all, we are very interested to hear from you how you want to use the handler in your own solutions: the time to speak up is now, as during the dev preview we still have time to adjust the aim. Looking forward to your feedback!

Vittorio Bertocci (@vibronet) reported A Refresh of the Windows Azure Authentication Library Developer Preview on 11/20/2012:

Three months ago we released the first developer preview of the Windows Azure Authentication Library. Today we are publishing a refresh of the developer preview, with some important (and at times radical) improvements.

We already kind of released this update, albeit in stealth mode, to enable the Windows Azure authentication bits in the ASP.NET Fall 2012 Update which is the result of a collaboration between our team and ASP.NET; we also used the new AAL in our end to end tutorial on how to develop multitenant applications with Windows Azure Active Directory.

In this post I will give you a quick overview of the main changes; if you have questions please leave a comment on this post (or in the forums) and we’ll get back to you! And now, without further ado:

The New AAL is 100% Managed

As mentioned in the announcement of the first preview, the presence of a native core of AAL was only a temporary state of affairs. With this release AAL becomes 100% managed, and targeted to any CPU. This represents a tremendous improvement in the ease of use of the library. For example:

- No more need to choose between x86 or x64 NuGets

- No need to worry about bitness of your development platform

- also, no need to worry about use of IIS vs IIS Express vs Visual Studio Development Server

- No need to worry about bitness of your target platform

- No need to worry about bitness mismatches between your development platform and your target platform

- No need to install the Microsoft Visual C++ Runtime on your target platform

- access to target environments where you would not have been able to install the runtime

- No native/managed barriers in call stacks when debugging

From the feedback we received about this I know that many of you will be happy to hear this :-)

The New AAL is Client-Only

The intention behind the first developer preview of AAL was to provide a deliverable that would satisfy the needs of both service requestors and service providers. The idea was that when you outsource authentication and access control to Windows Azure Active Directory, both the client and the resources role could rely on the directory’s knowledge of the scenario and lighten the burden of what the developer had to explicitly provide in his apps. Also, we worked hard to keep the details of underlying protocols hidden away by our AuthenticationContext abstraction (read more about it here).

Although that worked reasonably well on the client side, things weren’t as straightforward on the service/protected resource side. Namely:

- Having a black box on the service made it very difficult to troubleshoot issues

- The black box approach didn’t leave any room for some basic customizations that service authors wanted to apply

- In multi-tenant services you had to construct a different AuthenticationContext per request; definitely possible, but not a simplifying factor

- There are a number of scenarios where the resource developer does not even have a tenant in Windows Azure AD, but expects his callers to have one. In those cases the concept of AuthenticationContext wasn’t just less than useful, it was entirely out of the picture (hence the extension methods that some of you found for ClaimsPrincipal).

Those were some of the conceptual problems. But there were more issues, tied to more practical considerations:

- We wanted to ensure that you can write a client on .NET4.0; the presence of service side features, combined with the .NET 4.0 constraint, forced us to take a dependency on WIF 1.0. That was less than ideal:

- We missed out on the great new features in WIF 4.5

- It introduced a dependency on another package (the WIF runtime)

- Less interesting for you: however for us it would have inflated the matrix of the scenarios we’d have to test and support when eventually moving to 4.5

- The presence of service side features forced us to depend on the full .NET framework, which means that apps written against the client profile (the default for many client project types) would cough

Those were important considerations. We weighed our options, and decided that the AAL approach was better suited for the client role and that the resource role was better served by a more traditional approach. As a result:

- From this release on, AAL only contains client-side features. In a nutshell: you use AAL to obtain a token, but you no longer use AAL for validating it.

- We are introducing new artifacts that will help you to implement the resource side of your scenarios. The first of those new artifacts is the JSON Web Token Handler for the .NET Framework 4.5, which we are releasing in developer preview today. You can read more about it here.

This change further reduced the list of constraints you need to take into account when developing with AAL; in fact, combining this improvement with the fact that we are now 100% managed we were able to get rid of ALL of the gotchas in the release notes of the first preview!

I go into more detail about the JWT handler in this other post, but let me spend a few words here about its relationship with AAL. The first developer preview already contained most of the de/serialization and validation logic for the JWT format; however it was locked away in AAL’s black box. That made it hard for you to debug JWT-related problems, and impossible to influence its behavior or reuse that logic outside of the (intentionally) narrow scenarios supported by AAL. The JWT format is a rising star in the identity space, and it deserves to be a first class citizen in the .NET framework: which is why we decided to create a dedicated extension for it, to be used whenever and wherever you want with the same ease with which you use WIF’s out-of-box token handlers (in fact, with even more ease :-)). Some more details about this in the next section.

The Samples Are Fully Revamped

The three samples we released with the first developer preview have been adapted to use the new bits. The scenarios they implement remain the same, however the client side projects in the various solutions are now taking advantage of the new “anyCPU” NuGet package; you’ll see that very little has actually changed.

The projects representing protected resources, conversely, no longer have a reference to AAL. Instead, they make use of the new JWT handler to validate the incoming tokens obtained via AAL on the clients. The use of the JWT handler awards you finer control over how you validate incoming tokens.

Of course, with more control the abstraction level plummets: whereas with the old AAL approach you just had to initialize your AuthenticationContext and call Accept(), provided that you were on the blessed path where all settings align, here you have to take control of finding out the validation coordinates and feed them in. It’s not as bad as it sounds: you can still automate the retrieval of settings from metadata (the new samples show how) and the JWT handler is designed to be easy to use even standalone, in absence of the WIF configuration. Furthermore: we are not giving up on making things super-simple on the service side! We are simply starting bottom-up: today we are releasing a preview of token handling logic, moving forward you can expect more artifacts that will build on the more fundamental ones to give you an easier experience for selected scenarios, but without losing control over the finer details if you choose to customize things. Stay tuned!

IMPORTANT: the samples have been upgraded in-place: that means that the bits of the samples referring to the old NuGets are no longer available. More about that later.

Miscellaneous

There are various targeted improvements here and there; below I list the ones you are most likely to encounter:

- To my joy, “AcquireUserCredentialUsingUI” is no more. The same functionality is offered as an overload of AcquireToken.

- There is a new flavor of Credential, ClientCredential, which is used to obtain tokens from Windows Azure Active Directory for calling the Graph on behalf of applications that have been published via the seller dashboard (as shown in the Windows Azure AD session at //BUILD). You can see that in action here.

In the spirit of empowering you to use the protocol directly if you don’t want to rely on libraries, here’s what happens: when you feed a ClientCredential to an AuthenticationContext and call AcquireToken, AAL will send the provided key as a password, whereas SymmetricKeyCredential will use the provided key to perform a signature.

- You’ll find that you’ll have an easier time dealing with exceptions
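The difference between sending a key "as a password" and using it "to perform a signature", mentioned under ClientCredential above, can be illustrated in general terms. This sketch does not reproduce the actual wire format AAL uses; the field names, the message content and the HMAC-SHA256 choice are all assumptions made purely to show that in one case the key itself travels with the request and in the other only a value derived from it does.

```python
import base64
import hashlib
import hmac


def password_style_request(client_id: str, key: bytes) -> dict:
    """The key itself is placed in the request, like a password."""
    return {
        "client_id": client_id,
        "client_secret": base64.b64encode(key).decode("ascii"),
    }


def signature_style_request(client_id: str, key: bytes, message: str) -> dict:
    """Only a signature computed with the key is sent; the key stays local."""
    signature = hmac.new(key, message.encode("utf-8"), hashlib.sha256).digest()
    return {
        "client_id": client_id,
        "message": message,
        "signature": base64.b64encode(signature).decode("ascii"),
    }


def verify_signature_request(request: dict, key: bytes) -> bool:
    """Receiver side: recompute the signature with its own copy of the key."""
    expected = hmac.new(key, request["message"].encode("utf-8"), hashlib.sha256).digest()
    return hmac.compare_digest(expected, base64.b64decode(request["signature"]))
```

In the signature style the receiver must already hold the same key to verify the request, and anyone observing the request learns the signature for that one message but not the key.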

The Old Bits Are Gone

If you made it this far in the post, by now you have realized that the changes in this refresh are substantial.

Maintaining a dependency on the old bits would not be very productive, given that those will not be moved forward. Furthermore, given the dev preview state of the libraries (and the fact that we were pretty upfront about changes coming) we do not expect anybody to have any business critical dependencies on those. Add to that the fact that according to NuGet.org no other package is chaining the old bits: the three AAL samples were the only samples we know of that took a dependency on the AAL native core NuGets, and those samples are being revamped to use the new 100% managed NuGet anyway.

For all those reasons, we decided to pull the plug on the x86 and x64 NuGets: we hope that nobody will have issues with it! If you have problems because of this please let us know ASAP.

What’s Next

Feedback, feedback, feedback! AAL is already used in various Microsoft properties: but we want to make sure it works for your projects as well!

Of course we didn’t forget that you don't exclusively target .NET on Windows desktop; please continue to send us feedback about what other platforms you’d like us to target with our client and service libraries.

We are confident that the improvements we introduced in this release will make it much easier for you to take advantage of AAL in a wider range of scenarios, and we are eager to hear from you about it. Please don’t be shy on the forums :-)

•• Manu Cohen-Yashar (@ManuKahn) reported Visual Studio Identity Support Works with .Net 4.5 Only on 11/20/2012:

Visual Studio has an Identity and Access tool extension which enables simple integration of claims-based identity authentication into a web project (WCF and ASP.Net).

It turns out that the tool depends on Windows Identity Foundation (WIF) 4.5, which was integrated into the .Net framework and is not compatible with WIF 4.0.

For .Net 4.5 only applications you will see the following when you right click the project.

“Enable Windows Azure Authentication” integrates your project with Windows Azure Active Directory (WAAD). “Identity and Access” integrates your project with Windows Azure Access Control Service (ACS) or any other STS (Identity Provider), including a test STS which will run on your development machine.

If you install the Identity and Access tool extension and you don’t see the above option just change your framework to 4.5.


Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

•• Sandrino di Matteo (@sandrinodm) described using Passive FTP and dynamic ports in IIS8 and Windows Azure Virtual Machines in an 11/19/2012 post:

Today Windows Azure supports up to 150 endpoints which is great for those applications that rely on persistent connections, like an FTP Server. You can run an FTP Server in 2 modes:

- Active mode: The server connects to a negotiated client port

- Passive mode: The client connects to a negotiated server port

Passive mode is by far the most popular choice since it doesn’t require you to open ports on your machine together with firewall exceptions and port forwarding. With passive mode it’s up to the server to open the required ports. Let’s see how we can get an FTP Server running in Passive mode on Windows Azure…
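To make the "negotiated server port" concrete: in passive mode the server answers the client's PASV command with a 227 reply that encodes an IP address and a port as six numbers (RFC 959), and the client then opens the data connection to that address and port. The following JavaScript sketch decodes such a reply. The function name parsePasvReply and the sample address are illustrative assumptions and do not come from the original post.

```javascript
// Decode an FTP "227 Entering Passive Mode (h1,h2,h3,h4,p1,p2)" reply (RFC 959).
// parsePasvReply is a hypothetical helper name used only for this illustration.
function parsePasvReply(reply) {
  const match = /\((\d{1,3}),(\d{1,3}),(\d{1,3}),(\d{1,3}),(\d{1,3}),(\d{1,3})\)/.exec(reply);
  if (!match) {
    throw new Error("Not a PASV reply: " + reply);
  }
  const numbers = match.slice(1).map(Number);
  if (numbers.some(function (n) { return n > 255; })) {
    throw new Error("PASV fields must be in the range 0-255: " + reply);
  }
  return {
    // The first four numbers are the IPv4 address the client should connect to.
    host: numbers.slice(0, 4).join("."),
    // The last two numbers are the high and low bytes of the data port.
    port: numbers[4] * 256 + numbers[5]
  };
}

// Sample reply with a documentation-only address (203.0.113.10).
const sample = parsePasvReply("227 Entering Passive Mode (203,0,113,10,39,16)");
console.log(sample.host + ":" + sample.port);
```

Because the reply carries both an address and a port, a server behind a load balancer has to advertise its public IP and a port from a range that is actually reachable from outside, which is what the endpoint and IIS configuration in the rest of the post takes care of.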

Configuring the endpoints

So I’ve created a new Windows Server 2012 VM in the portal. What we need to do now is open a range of ports (let’s say 100) that can be used by the FTP Server for the data connection. Usually you would do this through the portal:

Adding 100 ports manually through the portal can take some time, so we’ll do it with PowerShell instead. Take a look at the following script:

This simple script does the required work for you:

- Checks if you’re adding more than 150 ports, but it doesn’t check if you already have endpoints defined on the VM

- Adds an endpoint for the public FTP port

- Adds the range of dynamic ports used for the data connection
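The three steps listed above can also be sketched independently of PowerShell. The JavaScript below is not the author's script (which is available on GitHub); it only illustrates the planning logic, and the names planEndpoints and MAX_ENDPOINTS, the endpoint naming scheme and the existingCount parameter are assumptions made for this example. Unlike the script described above, the sketch also counts endpoints that already exist on the VM.

```javascript
// Per-VM endpoint limit quoted in the post.
const MAX_ENDPOINTS = 150;

// Build the list of endpoints needed for passive FTP: one control port plus
// a contiguous range of data ports. All names here are hypothetical.
function planEndpoints(controlPort, dataStart, dataEnd, existingCount) {
  const alreadyDefined = existingCount || 0;
  if (dataEnd < dataStart) {
    throw new RangeError("dataEnd must be greater than or equal to dataStart");
  }
  const dataPortCount = dataEnd - dataStart + 1;
  const total = alreadyDefined + 1 + dataPortCount;
  if (total > MAX_ENDPOINTS) {
    throw new RangeError("Plan needs " + total + " endpoints, limit is " + MAX_ENDPOINTS);
  }
  const endpoints = [
    { name: "FTP-Control", protocol: "tcp", localPort: controlPort, publicPort: controlPort }
  ];
  for (let port = dataStart; port <= dataEnd; port++) {
    // Public and local ports are kept identical so that the port announced
    // by the FTP server is the one that is reachable from outside.
    endpoints.push({ name: "FTP-Data-" + port, protocol: "tcp", localPort: port, publicPort: port });
  }
  return endpoints;
}

// Control port 2500 and data ports 10000-10125, as used in the post.
const plan = planEndpoints(2500, 10000, 10125);
console.log(plan.length + " endpoints planned");
```

Note that the range 10000-10125 is inclusive, so it contains 126 data ports; together with the control port the plan stays below the 150-endpoint limit only as long as the VM has few other endpoints defined.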

Calling the script is simple (here I’m opening port 2500 for the control connection and port range 10000-10125 for the data connection on my VM called passiveftp):

And here is the result, all ports have been added:

Configuring the FTP Server

We made the required changes to the endpoints; the only thing left to do is configure the FTP Server. First we’ll see how to configure the server in the UI. The first thing we need to do is add the Web Server (IIS) role and choose to install the FTP Server role services:

Then we need to create a new FTP Site in IIS, configure the port (2500) and set the authentication:

In the portal we opened TCP ports 10000 to 10125. If we want Passive FTP to work, we need to configure the same range in IIS. This is done in the FTP Firewall Support feature. You’ll need to fill in exactly the same port range together with the public IP of the VM. To find it, simply ping the VM (ping xxx.cloudapp.net) or go to the portal.

Finally, open the control channel port (2500) and the data channel port range (10000-10125) in the Windows firewall:

And there you go, I’m able to connect to my FTP Server using Passive mode:

Installing and configuring the FTP Server automatically

While it’s great to click around like an IT Pro, it’s always useful to have a script that does all the heavy lifting for you.

This script does… about everything:

- Install IIS with FTP Server

- Create the root directory with the required permissions

- Create the FTP Site

- Activate basic authentication and grant access to all users

- Disable SSL (remove this if you’re using the FTP Site in production)

- Configure the dynamic ports and the public IP

- Open the ports in the firewall

Calling the script is very easy: you simply pass the name of the FTP Site, the root directory, the public port, the data channel range and the public IP. Remember that you need to run this on the VM, not on your own machine.

Both scripts are available on GitHub: https://github.com/sandrinodimattia/WindowsAzure-PassiveFTPinVM

• Himanshu Singh (@himanshuks) reported the availability of New tutorials for SQL Server in Windows Azure Virtual Machines in an 11/19/2012 post to the Windows Azure blog:

We've just released three new tutorials that will help you learn how to use specific features of SQL Server in Windows Azure Virtual Machines:

- Tutorial 1: Connect to SQL Server in the same cloud service: Demonstrates how to connect to SQL Server in the same cloud service within the Windows Azure Virtual Machine environment.

- Tutorial 2: Connect to SQL Server in a different cloud service: Demonstrates how to connect to SQL Server in a different cloud service within the Windows Azure Virtual Machine environment.

- Tutorial 3: Connect ASP.NET application to SQL Server in Windows Azure via Virtual Network: Demonstrates how to connect an ASP.NET application (a Web role, platform-as-a-service) to SQL Server in a Windows Azure Virtual Machine (infrastructure-as-a-service) via Windows Azure Virtual Network.

Go and check them out at Tutorials for SQL Server in Windows Azure Virtual Machines in the MSDN library.

Shaun Xu (@shaunxu) described how to Install NPM Packages Automatically for Node.js on Windows Azure Web Site in an 11/15/2012 post:

In one of my previous posts I described and demonstrated how to use NPM packages in Node.js and Windows Azure Web Site (WAWS). In that post I used the NPM command to install packages, and then used Git for Windows to commit my changes and sync them to the WAWS git repository. WAWS then triggers a new deployment to host my Node.js application.

You may notice that an NPM package can contain many files and be fairly large. For example, the “azure” package, which is the Windows Azure SDK for Node.js, is about 6MB. Another popular package, “express”, which is a rich MVC framework for Node.js, is about 1MB. When I first push my code to Windows Azure, all of these files must be uploaded to the cloud.

Is it possible to let Windows Azure download and install these packages for us? In this post, I will show how to make WAWS install all required packages for us when deploying.

Let’s Start with Demo

A demo is the most straightforward way to explain. Let’s create a new WAWS and clone it to a local disk. Drag the folder into Git for Windows so that it can help us commit and push.

Please refer to this post if you are not familiar with how to use Windows Azure Web Site, Git deployment, git clone and Git for Windows.

Then open a command window and install a package in our code folder. Let’s say I want to install “express”.

Then create a new Node.js file named “server.js” and paste the code below.

    var express = require("express");
    var app = express();

    app.get("/", function(req, res) {
        res.send("Hello Node.js and Express.");
    });

    console.log("Web application opened.");
    app.listen(process.env.PORT);

If we switch to Git for Windows right now, we will find that it detected the changes we made, which include “server.js” and all files under the “node_modules” folder. We should only need to upload our source code, but the large package files would be uploaded as well. Now I will show you how to exclude them and let Windows Azure install the package in the cloud.

First we need to add a special file named “.gitignore”. It seems this cannot be done directly from File Explorer, since the file name consists only of an extension. So we need to do it from the command line. Navigate to the local repository folder and execute the command below to create an empty file named “.gitignore”. If the command window asks for input, just press Enter.

    echo > .gitignore

Now open this file, copy the content below into it, and save.

    node_modules

Now if we switch to Git for Windows, we will find that the packages under “node_modules” are no longer in the change list. So if we commit and push now, the “express” package will not be uploaded to Windows Azure.

Second, let’s tell Windows Azure which packages it needs to install when deploying. Create another file named “package.json” and copy the content below into that file and save.

    {
        "name": "npmdemo",
        "version": "1.0.0",
        "dependencies": {
            "express": "*"
        }
    }

Now go back to Git for Windows, commit our changes and push them to WAWS.

Then let’s open the WAWS in the developer portal; we will see that a new deployment has finished. Clicking the arrow on the right side of this deployment shows how WAWS handled it. In particular, we can see that WAWS executed NPM.

If we open the log, we can review which command WAWS executed to install the packages, along with the installation output messages. As you can see, WAWS installed “express” for me on the cloud side, so I don’t need to upload the whole package to Azure.

Open the website and we can see the result, which proves that “express” was installed successfully.

What Happened Under the Hood

Now let’s explain a bit on what the “.gitignore” and “package.json” mean.

“.gitignore” is the ignore configuration file for a git repository. Files and folders listed in “.gitignore” are not tracked by git, so they are never committed or pushed. In the example above I put “node_modules” into this file in my local repository, which means: do not track or upload any files under the “node_modules” folder. So by using “.gitignore” I kept all packages from being uploaded to Windows Azure.

“.gitignore” can list files and folders. It can also list files and folders that we do NOT want to ignore. In the next section we will see how to use the un-ignore syntax to include the SQL package.

The “package.json” file is the package definition file for a Node.js application. In it we can define the application name, version, description, author and similar information in JSON format. We can also list the dependent packages, to indicate which packages this Node.js application needs.

In WAWS, name and version are required. When a deployment happens, WAWS looks into this file, finds the dependent packages, and executes the NPM command to install them one by one. So in the demo above I put “express” into this file so that WAWS would install it for me automatically.
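The lookup described above can be sketched in a few lines of JavaScript. This only illustrates the idea of reading “package.json” and deriving one install target per dependency; it is not the code WAWS actually runs, and the function name listInstallTargets is an assumption made for this example.

```javascript
// Read the text of a package.json file and return one "name@range" string
// per dependency, the form that "npm install <name>@<range>" accepts.
// listInstallTargets is a hypothetical name used only for this illustration.
function listInstallTargets(packageJsonText) {
  const pkg = JSON.parse(packageJsonText);
  // The post states that WAWS requires both fields to be present.
  if (typeof pkg.name !== "string" || typeof pkg.version !== "string") {
    throw new Error('package.json needs both "name" and "version"');
  }
  const dependencies = pkg.dependencies || {};
  return Object.keys(dependencies).map(function (name) {
    return name + "@" + dependencies[name];
  });
}

// The package.json from the demo above.
const demo = JSON.stringify({
  name: "npmdemo",
  version: "1.0.0",
  dependencies: { express: "*" }
});
console.log(listInstallTargets(demo));
```

For the demo file this yields a single target for “express” with the range “*”, which matches what the deployment log shows being installed.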

I updated the dependencies section of the “package.json” file manually, but this can be partially automated. If we have a valid “package.json” in our local repository, we can specify the “--save” parameter in the “npm install” command when installing a package, so that NPM updates the dependencies section for us.

For example, when I wanted to install the “azure” package I executed the command below. Note that I added “--save” to the command.

    npm install azure --save

Once it finishes, my “package.json” will be updated automatically.

Each dependent package is listed here. The JSON key is the package name and the value is the version range. Below is a brief list of the version range format. For more information about the “package.json” please refer here.
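To give a feel for how a version range selects versions, here is a deliberately simplified JavaScript sketch. It handles only four range forms that npm accepts (“*”, an exact version such as “1.0.0”, a minimum such as “>=1.0.0”, and an “x” wildcard such as “1.2.x”) and is not npm's own implementation; the function names are assumptions made for this example.

```javascript
// Split "1.2.3" into [1, 2, 3]; reject anything that is not three numbers.
function parseVersion(version) {
  const parts = version.split(".");
  if (parts.length !== 3 || !parts.every(function (p) { return /^\d+$/.test(p); })) {
    throw new Error("Unsupported version: " + version);
  }
  return parts.map(Number);
}

// Return -1, 0 or 1 depending on how version a orders against version b.
function compareVersions(a, b) {
  const pa = parseVersion(a);
  const pb = parseVersion(b);
  for (let i = 0; i < 3; i++) {
    if (pa[i] !== pb[i]) {
      return pa[i] < pb[i] ? -1 : 1;
    }
  }
  return 0;
}

// Simplified range check covering "*", ">=1.2.3", "1.2.x" / "1.x" and "1.2.3".
function satisfies(version, range) {
  if (range === "*" || range === "") {
    return true; // any version is acceptable
  }
  if (range.indexOf(">=") === 0) {
    return compareVersions(version, range.slice(2).trim()) >= 0;
  }
  if (/\.x$/.test(range)) {
    // Compare only the segments that come before the wildcard.
    const fixed = range.split(".").filter(function (p) { return p !== "x"; });
    const actual = version.split(".");
    return fixed.every(function (p, i) { return p === actual[i]; });
  }
  return compareVersions(version, range) === 0;
}

console.log(satisfies("3.0.0", "*"), satisfies("1.3.0", "1.2.x"));
```

With “*”, as used for “express” in the demo, every published version satisfies the range, so the deployment picks up whatever is current at install time; a narrower range makes deployments more repeatable.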

And WAWS will install the proper version of the packages based on what you defined here. The process of WAWS git deployment and NPM installation would be like this.

But Some Packages…

As we know, when we specify the dependencies in “package.json”, WAWS will download and install them in the cloud. For most packages this works very well, but some special packages may not work: if a package’s installation has special environment requirements, it might fail.

For example, the SQL Server Driver for Node.js package needs “node-gyp”, Python and C++ 2010 installed on the target machine during the NPM installation. If we just put “msnodesql” in the “package.json” file and push it to WAWS, the deployment will fail since there is no “node-gyp”, Python or C++ 2010 in the WAWS virtual machine.

For example, here is the “server.js” file.

    var express = require("express");
    var app = express();

    app.get("/", function(req, res) {
        res.send("Hello Node.js and Express.");
    });

    var sql = require("msnodesql");
    var connectionString = "Driver={SQL Server Native Client 10.0};Server=tcp:tqy4c0isfr.database.windows.net,1433;Database=msteched2012;Uid=shaunxu@tqy4c0isfr;Pwd=P@ssw0rd123;Encrypt=yes;Connection Timeout=30;";
    app.get("/sql", function (req, res) {
        sql.open(connectionString, function (err, conn) {
            if (err) {
                console.log(err);
                res.send(500, "Cannot open connection.");
            }
            else {
                conn.queryRaw("SELECT * FROM [Resource]", function (err, results) {
                    if (err) {
                        console.log(err);
                        res.send(500, "Cannot retrieve records.");
                    }
                    else {
                        res.json(results);
                    }
                });
            }
        });
    });

    console.log("Web application opened.");
    app.listen(process.env.PORT);

And here is the “package.json” file.

    {
        "name": "npmdemo",
        "version": "1.0.0",
        "dependencies": {
            "express": "*",
            "msnodesql": "*"
        }
    }

The deployment to WAWS failed.

From the NPM log we can see it’s because “msnodesql” cannot be installed on WAWS.

The solution is to have the “.gitignore” file ignore all packages except “msnodesql”, and to upload that package ourselves. This can be done with the content below. We first un-ignore the “node_modules” folder. Then we ignore all of its sub folders, while still letting git check each sub folder. Finally we un-ignore the sub folder named “msnodesql”, which is the SQL Server Node.js Driver.

    !node_modules/

    node_modules/*
    !node_modules/msnodesql

For more information about the syntax of “.gitignore” please refer to this thread.
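The reason the order of those three lines matters is that git evaluates ignore patterns top to bottom and the last pattern that matches a path decides whether it is ignored. The JavaScript sketch below mimics that rule for the three patterns used here. It is a heavily simplified model (literal paths, a trailing “/*” for direct children, and “!” negation only), not git's real matcher, and the function names are assumptions made for this example.

```javascript
// Does a single pattern (without a leading "!") match the given path?
// Supports only literal paths and a trailing "/*" meaning "direct children".
function patternMatches(pattern, path) {
  const p = pattern.replace(/\/$/, ""); // "node_modules/" -> "node_modules"
  if (/\/\*$/.test(p)) {
    const dir = p.slice(0, -1); // "node_modules/*" -> "node_modules/"
    if (path.indexOf(dir) !== 0) {
      return false;
    }
    const rest = path.slice(dir.length);
    return rest.length > 0 && rest.indexOf("/") === -1;
  }
  return path === p;
}

// Apply the patterns in order; the last matching pattern wins.
function isIgnored(path, patterns) {
  let ignored = false;
  patterns.forEach(function (raw) {
    const negated = raw.charAt(0) === "!";
    const pattern = negated ? raw.slice(1) : raw;
    if (patternMatches(pattern, path)) {
      ignored = !negated;
    }
  });
  return ignored;
}

const rules = ["!node_modules/", "node_modules/*", "!node_modules/msnodesql"];
console.log(isIgnored("node_modules/express", rules));
console.log(isIgnored("node_modules/msnodesql", rules));
```

In this model “node_modules/express” is matched only by the ignore pattern, while “node_modules/msnodesql” is matched by the ignore pattern and then by the later un-ignore pattern, so it stays in the repository. Swapping the last two lines would leave “msnodesql” ignored.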

Now if we go to Git for Windows we will find that “msnodesql” is included in the uncommitted set while “express” is not. I also need to remove the “msnodesql” dependency from “package.json”.

Commit and push to WAWS. Now we can see that the deployment completed successfully.

And then we can use the Windows Azure SQL Database from our Node.js application through the “msnodesql” package we uploaded.

Summary

In this post I demonstrated how to leverage the deployment process of Windows Azure Web Site to install NPM packages during the publish action. With the “.gitignore” and “package.json” files we can exclude the dependent packages from our Node.js repository and let Windows Azure Web Site download and install them during deployment.

For some special packages that cannot be installed by Windows Azure Web Site, such as “msnodesql”, we can put them into the publish payload as well.

The combination of Windows Azure Web Site, Node.js and NPM makes it even easier and quicker for us to develop and deploy our Node.js applications to the cloud.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses