Windows Azure and Cloud Computing Posts for 10/29/2012+

| A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles. |

‡ Updated 11/2/2012 with new articles marked ‡.

•• Updated 11/1/2012 with new articles marked ••.

• Updated 10/31/2012 with new articles marked •.

Tip: Copy the bullet(s) or dagger(s), press Ctrl+F, paste them into the Find text box, and click Next to locate updated articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, Hadoop and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Marketplace DataMarket, Cloud Numerics, Big Data and OData

- Windows Azure Service Bus, Caching, Access & Identity Control, Active Directory, and Workflow

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue, Hadoop and Media Services

‡ Brad Calder (@CalderBrad, pictured below) and Aaron Ogus of the Windows Azure Storage Team described Windows Azure’s Flat Network Storage and 2012 Scalability Targets in an 11/2/2012 post:

Earlier this year, we deployed a flat network for Windows Azure across all of our datacenters to create Flat Network Storage (FNS) for Windows Azure Storage. We used a flat network design in order to provide very high bandwidth network connectivity for storage clients. This new network design and the resulting bandwidth improvements allow us to support Windows Azure Virtual Machines, where we store VM persistent disks as durable network attached blobs in Windows Azure Storage. Additionally, the new network design enables scenarios such as MapReduce and HPC that can require significant bandwidth between compute and storage.

From the start of Windows Azure, we decided to separate customer VM-based computation from storage, allowing each of them to scale independently, making it easier to provide multi-tenancy, and making it easier to provide isolation. To make this work for the scenarios we need to address, a quantum leap in network scale and throughput was required. This resulted in FNS, where the Windows Azure Networking team (under Albert Greenberg) along with the Windows Azure Storage, Fabric and OS teams made and deployed several hardware and software networking improvements.

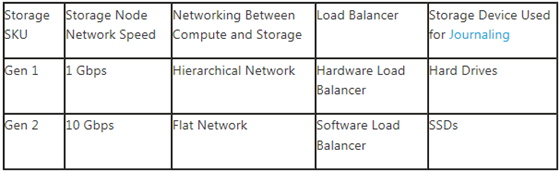

The changes to new storage hardware and to a high bandwidth network comprise the significant improvements in our second generation storage (Gen 2), when compared to our first generation (Gen 1) hardware, as outlined below:

The deployment of our Gen 2 SKU, along with software improvements, provides significant bandwidth between compute and storage using a flat network topology. The specific implementation of our flat network for Windows Azure is referred to as the “Quantum 10” (Q10) network architecture. Q10 provides a fully non-blocking, fully meshed 10 Gbps network, with an aggregate backplane in excess of 50 Tbps of bandwidth for each Windows Azure datacenter. Another major improvement in reliability and throughput is the move from a hardware load balancer to a software load balancer. The storage architecture and design described here have been tuned to fully leverage the new Q10 network to provide flat network storage for Windows Azure Storage.

With these improvements, we are pleased to announce an increase in the scalability targets for Windows Azure Storage, where all new storage accounts are created on the Gen 2 hardware SKU. These new scalability targets apply to all storage accounts created after June 7th, 2012. Storage accounts created before this date have the prior scalability targets described here. Unfortunately, we do not offer the ability to migrate storage accounts, so only storage accounts created after June 7th, 2012 have these new scalability targets.

To find out the creation date of your storage account, you can go to the new portal, click on the storage account, and see the creation date on the right in the quick glance section as shown below:

Storage Account Scalability Targets

By the end of 2012, we will have finished rolling out the software improvements for our flat network design. This will provide the following scalability targets for a single storage account created after June 7th 2012.

- Capacity – Up to 200 TBs

- Transactions – Up to 20,000 entities/messages/blobs per second

- Bandwidth for a Geo Redundant storage account

- Ingress - up to 5 gigabits per second

- Egress - up to 10 gigabits per second

- Bandwidth for a Locally Redundant storage account

- Ingress - up to 10 gigabits per second

- Egress - up to 15 gigabits per second

Storage accounts have geo-replication on by default to provide what we call Geo Redundant Storage. Customers can turn geo-replication off to use what we call Locally Redundant Storage, which results in a discounted price relative to Geo Redundant Storage and higher ingress and egress targets (by end of 2012) as described above. For more information on Geo Redundant Storage and Locally Redundant Storage, please see here.

Note, the actual transaction and bandwidth targets achieved by your storage account will very much depend upon the size of objects, access patterns, and the type of workload your application exhibits. To go above these targets, a service should be built to use multiple storage accounts, and partition the blob containers, tables and queues and objects across those storage accounts. By default, a single Windows Azure subscription gets 5 storage accounts. However, you can contact customer support to get more storage accounts if you need to store more data than that (e.g., petabytes).
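As a rough illustration of the sizing arithmetic above, the following Python sketch estimates how many storage accounts a service would need to stay within the quoted per-account capacity and transaction targets. The function name and the idea of sizing on these two dimensions only are assumptions made for illustration; bandwidth and real workload behavior are not modeled.

```python
import math

# Per-account targets quoted in the post (accounts created after June 7th, 2012).
ACCOUNT_CAPACITY_TB = 200
ACCOUNT_TRANSACTIONS_PER_SEC = 20_000


def accounts_needed(total_tb, peak_transactions_per_sec):
    """Smallest account count that keeps capacity and transactions within target.

    Hypothetical helper: it assumes load spreads evenly across accounts,
    which a real workload may not do.
    """
    by_capacity = math.ceil(total_tb / ACCOUNT_CAPACITY_TB)
    by_throughput = math.ceil(peak_transactions_per_sec / ACCOUNT_TRANSACTIONS_PER_SEC)
    return max(1, by_capacity, by_throughput)
```

For example, 1,000 TB of data with a peak of 50,000 transactions per second works out to five accounts under these assumptions (capacity is the binding constraint), which happens to match the default per-subscription allotment.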

Partition Scalability Targets

Within a storage account, all of the objects are grouped into partitions as described here. Therefore, it is important to understand the performance targets of a single partition for our storage abstractions, which are (the below Queue and Table throughputs were achieved using an object size of 1KB):

- Single Queue – all of the messages in a queue are accessed via a single queue partition. A single queue is targeted to be able to process:

- Up to 2,000 messages per second

- Single Table Partition – a table partition is all of the entities in a table with the same partition key value, and usually tables have many partitions. The throughput target for a single table partition is:

- Up to 2,000 entities per second

- Note, this is for a single partition, and not a single table. Therefore, a table with good partitioning can process up to 20,000 entities/second, which is the overall account target described above.

- Single Blob – the partition key for blobs is the “container name + blob name”, therefore we can partition blobs down to a single blob per partition to spread out blob access across our servers. The target throughput of a single blob is:

- Up to 60 MBytes/sec

The above throughputs are the high end targets. What can be achieved by your application very much depends upon the size of the objects being accessed, the operation types (workload) and the access patterns. We encourage all services to test the performance at the partition level for their workload.
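To make the relationship between the partition target and the account target concrete, here is a minimal Python sketch. It computes how many table partitions a workload would need at the quoted 2,000 entities/second per partition, and shows one possible way to spread entities across that many partition key values. The hashing scheme and the `bucket-NNNN` key format are purely illustrative assumptions, not something the Storage team prescribes.

```python
import math
import zlib

PARTITION_ENTITIES_PER_SEC = 2_000   # single table partition target from the post
ACCOUNT_ENTITIES_PER_SEC = 20_000    # overall account target from the post


def min_partitions(target_entities_per_sec):
    """Fewest partitions that could reach the target if load is spread evenly."""
    if target_entities_per_sec > ACCOUNT_ENTITIES_PER_SEC:
        raise ValueError("target exceeds the single-account target; use more accounts")
    return max(1, math.ceil(target_entities_per_sec / PARTITION_ENTITIES_PER_SEC))


def partition_key(entity_id, bucket_count):
    """Derive a stable partition key by hashing the entity id (hypothetical scheme)."""
    bucket = zlib.crc32(entity_id.encode("utf-8")) % bucket_count
    return f"bucket-{bucket:04d}"
```

Reaching the full 20,000 entities/second account target needs at least ten well-balanced partitions by this arithmetic. Hashing trades away efficient range queries over the original id, so it suits write-heavy workloads better than scan-heavy ones.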

When your application reaches the limit of what a partition can handle for your workload, it will start to get back “503 Server Busy” or “500 Operation Timeout” responses. When this occurs, the application should use exponential backoff for retries. The exponential backoff allows the load on the partition to decrease, and smooths out spikes in traffic to that partition.
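The retry advice above can be sketched in a few lines of Python. This is a minimal illustration, not the Storage Client Library's retry implementation: `operation` is a hypothetical callable that returns an HTTP status code, and the base delay, cap, and jitter formula are assumptions chosen for the example.

```python
import random
import time

# Status codes the post says indicate a partition at its limit.
RETRYABLE_STATUS = {500, 503}


def backoff_delays(max_retries, base_seconds=0.5, cap_seconds=30.0):
    """Delay before each retry: the base doubles every time, up to a cap."""
    return [min(cap_seconds, base_seconds * (2 ** n)) for n in range(max_retries)]


def call_with_backoff(operation, max_retries=5, base_seconds=0.5,
                      cap_seconds=30.0, sleep=time.sleep, jitter=random.random):
    """Call operation() and retry with exponential backoff on 500/503.

    Returns the last status code seen. Each delay is scaled by a jitter
    factor between 0.5 and 1.0 so that many clients do not retry in lockstep.
    """
    status = operation()
    for delay in backoff_delays(max_retries, base_seconds, cap_seconds):
        if status not in RETRYABLE_STATUS:
            return status
        sleep(delay * (0.5 + 0.5 * jitter()))
        status = operation()
    return status
```

Passing `sleep` and `jitter` as parameters keeps the function easy to test without real waiting. The cap prevents the delay from growing without bound during a long outage.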

In summary, we are excited to announce our first step towards providing flat network storage. We plan to continue to invest in improving bandwidth between compute and storage as well as increase the scalability targets of storage accounts and partitions over time.

•• Benjamin Guinebertiere (@benjguin) described Installing HDInsight (Hadoop) [on-premises version] on a single Windows box in a 10/31 bilingual post. From the English version:

Announced at the //build conference, HDInsight is available as a Web Platform Installer installation. This makes it possible to run Hadoop on a Windows box (like a laptop) without requiring Cygwin.

Let’s see how to install this from a blank Windows Server 2012 server (example).

Go to http://www.microsoft.com/web

This installs the Web Platform Installer.

Type HDInsight in the search box and press ENTER

…

• Nagarjun Guraja of the Windows Azure Storage Team described Windows Azure Storage Emulator 1.8 in a 10/30/2012 post:

In our continuous endeavor to enrich the development experience, we are extremely pleased to announce the new Storage Emulator, which has much improved parity with the Windows Azure Storage cloud service.

What is Storage Emulator?

Storage Emulator emulates the Windows Azure Storage blob, table and queue cloud services on the local machine, which helps developers get started and do basic testing of their storage applications locally without incurring the cost associated with the cloud service. This version of the Windows Azure Storage Emulator supports Blobs, Tables and Queues up to REST version 2012-02-12.

How it works?

Storage Emulator exposes different HTTP end points (port numbers: 10000 for blob, 10001 for queue and 10002 for table services) on local host to receive and serve storage requests. Upon receiving a request, the emulator validates the request for its correctness, authenticates it, authorizes (if necessary) it, works with the data in SQL tables and file system and finally sends a response to the client.

Delving deeper into the internals, Storage Emulator efficiently stores the data associated with queues and tables in SQL tables. However, for blobs, it stores the metadata in SQL tables and the actual data on local disk, one file for each blob, for better performance. When deleting blobs, the Storage Emulator does not synchronously clean up unreferenced blob data on disk while performing blob operations. Instead it compacts and garbage collects such data in the background for better scalability and concurrency.

Storage Emulator Dependencies:

- SQL Express or LocalDB

- .NET 4.0 or later with SQL Express or .NET 4.0.2 or later with LocalDB

Installing Storage Emulator

Storage Emulator can work with LocalDB, SQL Express or even a full-blown SQL Server as its SQL store.

The following steps would help in getting started with emulator using LocalDB.

- Install .NET framework 4.5 from here.

- Install X64 or X86 LocalDB from here.

- Install the Windows Azure Emulator from here.

Alternatively, if you have storage emulator 1.7 installed, you can do an in place update to the existing emulator. Please note that storage emulator 1.8 uses a new SQL schema and hence a DB reset is required for doing an in place update, which would result in loss of your existing data.

The following steps would help in performing an in place update.

- Shutdown the storage emulator, if running

- Replace the binaries ‘Microsoft.WindowsAzure.DevelopmentStorage.Services.dll’, ‘Microsoft.WindowsAzure.DevelopmentStorage.Store.dll’ and ‘Microsoft.WindowsAzure.DevelopmentStorage.Storev4.0.2.dll’, located at storage emulator installation path (Default path is "%systemdrive%\Program Files\Microsoft SDKs\Windows Azure\Emulator\devstore") with those available here.

- Open up the command prompt in admin mode and run ‘dsinit /forceCreate’ to recreate the DB. You can find the ‘dsinit’ tool at the storage emulator installation path.

- Start the storage emulator

What’s new in 1.8?

Storage emulator 1.8 supports the REST version 2012-02-12, along with earlier versions. Below are the service specific enhancements.

Blob Service Enhancements:

In 2012-02-12 REST version, Windows Azure Storage cloud service introduced support for container leases, improved blob leases and asynchronous copy blob across different storage accounts. Also, there were enhancements for blob shared access signatures and blob leases in the 2012-02-12 version. All those new features are supported in Storage Emulator 1.8.

Since the emulator has just one built in account, one can initiate cross account copy blob by providing a valid cloud based URL. Emulator serves such cross account copy blob requests, asynchronously, by downloading the blob data, in chunks of 4MB, and updating the copy status.
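The chunked copy behavior described above can be pictured with a short Python sketch. It is only an illustration of copying in 4 MB chunks while reporting progress. The emulator's actual implementation is not shown in the post, and the function name, the progress callback, and the status strings used here are assumptions.

```python
CHUNK_SIZE = 4 * 1024 * 1024  # 4 MB, the chunk size mentioned in the post


def copy_in_chunks(source, destination, total_bytes,
                   on_progress=None, chunk_size=CHUNK_SIZE):
    """Copy from a readable stream to a writable one, chunk by chunk.

    on_progress(copied, total) is called after every chunk so a caller can
    update a copy-status record. Returns an illustrative status string.
    """
    copied = 0
    while True:
        chunk = source.read(chunk_size)
        if not chunk:
            break  # end of the source stream
        destination.write(chunk)
        copied += len(chunk)
        if on_progress is not None:
            on_progress(copied, total_bytes)
    return "success" if copied == total_bytes else "failed"
```

Reporting progress after each chunk is what lets a client poll the destination blob and see how far an asynchronous copy has advanced.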

To know more about the new features in general, the following links would be helpful:

- Updated Shared Access Signatures

- Container Leases and improved blob leases

- Asynchronous copy blob with ability to copy across accounts

Storage Emulator 1.8 also garbage collects the unreferenced page blob files which may be produced as a result of delete blob requests, failed copy blob requests etc.

Queue Service Enhancements:

In 2012-02-12 REST version, Windows Azure Storage cloud service introduced support for Queue shared access signatures (SAS). Storage Emulator 1.8 supports Queue SAS.

Table Service Enhancements:

In 2012-02-12 REST version, Windows Azure Storage cloud service introduced support for table shared access signatures (SAS). Storage Emulator 1.8 supports Table SAS.

In order to achieve full parity with Windows Azure Storage table service APIs, the table service in emulator is completely rewritten from scratch to support truly schema less tables and expose data for querying and updating using ODATA protocol. As a result, Storage Emulator 1.8 fully supports the below table operations which were not supported in Emulator 1.7.

- Query Projection: You can read more about it here.

- Upsert operations: You can read more about it here.

Known Issues/Limitations

- The storage emulator supports only a single fixed account and a well-known authentication key. They are: Account name: devstoreaccount1, Account key: Eby8vdM02xNOcqFlqUwJPLlmEtlCDXJ1OUzFT50uSRZ6IFsuFq2UVErCz4I6tq/K1SZFPTOtr/KBHBeksoGMGw==

- The URI scheme supported by the storage emulator differs from the URI scheme supported by the cloud storage services. The development URI scheme specifies the account name as part of the hierarchical path of the URI, rather than as part of the domain name. This difference is due to the fact that domain name resolution is available in the cloud but not on the local computer. For more information about URI differences in the development and production environments, see “Using storage service URIs” section in Overview of Running a Windows Azure Application with the Storage Emulator.

- The storage emulator does not support Set Blob Service Properties or SetServiceProperties for blob, queue and table services.

- Date properties in the Table service in the storage emulator support only the range supported by SQL Server 2005 (For example, they are required to be later than January 1, 1753). All dates before January 1, 1753 are changed to this value. The precision of dates is limited to the precision of SQL Server 2005, meaning that dates are precise to 1/300th of a second.

- The storage emulator supports partition key and row key property values of less than 900 bytes. The total size of the account name, table name, and key property names together cannot exceed 900 bytes.

- The storage emulator does not validate that the size of a batch in an entity group transaction is less than 4 MB. Batches are limited to 4 MB in Windows Azure, so you must ensure that a batch does not exceed this size before transitioning to the Windows Azure storage services.

- Avoid using ‘PartitionKey’ or ‘RowKey’ that contains ‘%’ character due to the double decoding bug

- Get messages from queue might not return messages in the strict increasing order of message’s ‘Insertion TimeStamp’ + ‘visibilitytimeout’
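The URI scheme difference noted in the list above is easy to trip over, so here is a small Python sketch that builds both forms side by side. The emulator ports and the `devstoreaccount1` account name come from the post. The helper names and the `myaccount` example are hypothetical.

```python
# Emulator ports and fixed account name, as listed in the post.
EMULATOR_PORTS = {"blob": 10000, "queue": 10001, "table": 10002}
EMULATOR_ACCOUNT = "devstoreaccount1"


def emulator_uri(service, resource_path):
    """Emulator form: the account name is the first segment of the path."""
    port = EMULATOR_PORTS[service]
    return f"http://127.0.0.1:{port}/{EMULATOR_ACCOUNT}/{resource_path.lstrip('/')}"


def cloud_uri(account, service, resource_path):
    """Cloud form: the account name is part of the host name."""
    return f"http://{account}.{service}.core.windows.net/{resource_path.lstrip('/')}"
```

For a blob `photos/cat.png`, the emulator form is `http://127.0.0.1:10000/devstoreaccount1/photos/cat.png`, while the cloud form for a hypothetical account `myaccount` is `http://myaccount.blob.core.windows.net/photos/cat.png`. Code that parses container names out of URIs by position needs to account for the extra path segment.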

Summary

Storage Emulator 1.8 has a great extent of parity with the Windows Azure Storage cloud service in terms of API support and usability and we will continue to improve it. We hope you all like it and please share your feedback with us to make it better.

• The SQL Server Team (@SQLServer) posted a Strata-Hadoop World 2012 Recap on 10/30/2012:

We just wrapped up a busy week at Strata-Hadoop World, one of the predominant Big Data conferences. Microsoft showed up in force, with the big news being our press announcement around the availability of public previews for Windows Azure HDInsight Service and Microsoft HDInsight Server for Windows, our 100 percent Apache Hadoop compatible solution for Windows Azure and Windows Server.

With HDInsight, Microsoft truly democratizes Big Data by opening up Hadoop to the Windows ecosystem. When you combine HDInsight with curated datasets from Windows Azure Data Market and powerful self-service BI tools like Excel Power View, it’s easy to see why customers can count on the comprehensiveness and simplicity of Microsoft’s enterprise-grade Big Data solution.

We kicked off the conference with Shawn Bice and Mike Flasko’s session Drive Smarter Decisions with Microsoft Big Data, which offered attendees a hands-on look at how simple it is to deploy and manage Hadoop clusters on Windows; the demo combined corporate data with curated datasets from Windows Azure Data Market and then analyzed and visualized this data with Excel Power View (you can watch Mike deliver a repeat performance of his session demos in this SiliconAngle interview). For folks that want to hear even more, Mike will be delivering a webcast later this month that dives into the business value that Microsoft Big Data unlocks, including more demos of HDInsight in action.

Matt Winkler then gave a session on the .NET and JavaScript frameworks for Hadoop, which enable millions of additional developers to program Hadoop jobs. These frameworks further Microsoft’s mission of bringing big data to the masses; learn more and download the .NET SDK here.

Roger Barga and Dipanjan Banik closed the conference with their editorial session Predictive Modeling & Operational Analytics over Streaming Data, discussing how to leverage the StreamInsight platform for operational analytics. By using a “Monitor, Manage and Mine” architectural pattern, businesses are able to store/process event streams, analyze them and produce predictive models that can then be installed directly into event processing services to predict real-time alerts/actions.

We had the opportunity to talk with hundreds of customers who were very excited to hear about our Big Data vision and see the concrete progress we’ve made with HDInsight. Be sure to join Microsoft at the Strata Santa Clara conference in February, where we’ll have much more to share!

Learn more about Microsoft Big Data and test out the HDInsight previews for yourself at microsoft.com/bigdata.

Joe Giardino, Serdar Ozler, Justin Yu and Veena Udayabhanu of the Windows Azure Storage Team posted Introducing Windows Azure Storage Client Library 2.0 for .NET and Windows Runtime on 10/29/2012:

Today we are releasing version 2.0 of the Windows Azure Storage Client Library. This is our largest update to our .NET library to date which includes new features, broader platform compatibility, and revisions to address the great feedback you’ve given us over time. The code is available on GitHub now. The libraries are also available through NuGet, and also included in the Windows Azure SDK for .NET - October 2012; for more information and links see below.

In addition to the .NET 4.0 library, we are also releasing two libraries for Windows Store apps as a Community Technology Preview (CTP). They fully support the Windows Runtime platform and can be used to build modern Windows Store apps for both Windows RT (which supports ARM-based systems) and Windows 8, in any of the languages supported by Windows Store apps (JavaScript, C++, C#, and Visual Basic). This blog post serves as an overview of these libraries and covers some of the implementation details that will be helpful to understand when developing cloud applications in .NET regardless of platform.

What’s New

We have introduced a number of new features in this release of the Storage Client Library including:

- Simplicity and Usability - A greatly simplified API surface which will allow developers new to storage to get up and running faster while still providing the extensibility for developers who wish to customize the behavior of their applications beyond the default implementation.

- New Table Implementation - An entirely new Table Service implementation which provides a simple interface that is optimized for low latency/high performance workloads, as well as providing a more extensible serialization model to allow developers more control over their data.

- Rich debugging and configuration capabilities – One common piece of feedback we receive is that it’s too difficult to know what happened “under the covers” when making a call to the storage service. How many retries were there? What were the error codes? The OperationContext object provides rich debugging information, real-time status events for parallel and complex actions, and extension points allowing users the ability to customize requests or enable end to end client tracing

- Windows Runtime Support - A Windows Runtime component with support for developing Windows Store apps using JavaScript, C++, C#, and Visual Basic; as well as a Strong Type Tables Extension library for C++, C#, and Visual Basic

- Complete Sync and Asynchronous Programming Model (APM) implementation - A complete Synchronous API for .NET 4.0. Previous releases of the client implemented synchronous methods by simply surrounding the corresponding APM methods with a ManualResetEvent. This was not ideal, as extra threads remained blocked during execution. In this release all synchronous methods will complete work on the thread in which they are called, with the notable exceptions of the stream implementations available via Cloud[Page|Block]Blob.Open[Read|Write] due to parallelism.

- Simplified RetryPolicies - Easy and reusable RetryPolicies

- .NET Client Profile – The library now supports the .NET Client Profile. For more on the .Net Client Profile see here.

- Streamlined Authentication Model - There is now a single StorageCredentials type that supports Anonymous, Shared Access Signature, and Account and Key authentication schemes

- Consistent Exception Handling - The library immediately will throw any exception encountered prior to making the request to the server. Any exception that occurs during the execution of the request will subsequently be wrapped inside a single StorageException type that wraps all other exceptions as well as providing rich information regarding the execution of the request.

- API Clarity - All methods that make requests to the server are clearly marked with the [DoesServiceRequest] attribute

- Expanded Blob API - Blob DownloadRange allows user to specify a given range of bytes to download rather than rely on a stream implementation

- Blob download resume - A feature that will issue a subsequent range request(s) to download only the bytes not received in the event of a loss of connectivity

- Improved MD5 - Simplified MD5 behavior that is consistent across all client APIs

- Updated Page Blob Implementation - Full Page Blob implementation including read and write streams

- Cancellation - Support for Asynchronous Cancellation via the ICancellableAsyncResult. Note, this can be used with .NET CancellationTokens via the CancellationToken.Register() method.

- Timeouts - Separate client and server timeouts which support end to end timeout scenarios

- Expanded Azure Storage Feature Support – It supports the 2012-02-12 REST API version with implementation for Blob & Container Leases, Blob, Table, and Queue Shared Access Signatures, and Asynchronous Cross-Account Copy Blob
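The blob download resume feature in the list above boils down to re-issuing a range request that starts at the first byte not yet received. The Python sketch below illustrates that idea only. It is not the library's code, and `fetch_range` is a hypothetical callable that yields byte chunks for an inclusive byte range and may raise `ConnectionError` partway through.

```python
def download_with_resume(fetch_range, total_length, max_resumes=3):
    """Download total_length bytes, resuming after dropped connections.

    fetch_range(start, end) is assumed to yield chunks covering the
    inclusive range [start, end]. After a ConnectionError, only the bytes
    not yet received are requested again.
    """
    received = bytearray()
    resumes = 0
    while len(received) < total_length:
        start = len(received)
        try:
            for chunk in fetch_range(start, total_length - 1):
                received.extend(chunk)
        except ConnectionError:
            resumes += 1
            if resumes > max_resumes:
                raise
            continue  # retry from the first missing byte
        if len(received) == start:
            raise IOError("source returned no data for the requested range")
    return bytes(received)
```

Because bytes received before the failure are kept, a dropped connection near the end of a large blob costs only the remaining tail rather than a full re-download.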

Design

When designing the new Storage Client for .NET and Windows Runtime, we set up a series of design guidelines to follow throughout the development process. In addition to these guidelines, there are some unique requirements when developing for Windows Runtime, and specifically when projecting into JavaScript, that have driven some key architectural decisions.

For example, our previous RetryPolicy was based on a delegate that the user could configure; however as this cannot be supported on all platforms we have redesigned the RetryPolicy to be a simple and consistent implementation everywhere. This change has also allowed us to simplify the interface in order to address user feedback regarding the complexity of the previous implementation. Now a user who constructs a custom RetryPolicy can re-use that same implementation across platforms.
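To illustrate the shift from a delegate to a policy object, here is a loose Python analog of what such a design might look like. It is a sketch under stated assumptions, not the library's .NET interface: the class and method names, the tuple return value, and the rule that 4xx responses are not retried are all choices made for this example.

```python
from abc import ABC, abstractmethod


class RetryPolicy(ABC):
    """A policy is a plain object, so the same class can be reused anywhere."""

    @abstractmethod
    def should_retry(self, current_retry_count, status_code):
        """Return (retry, seconds_to_wait) for the failed attempt."""


class NoRetry(RetryPolicy):
    def should_retry(self, current_retry_count, status_code):
        return (False, 0.0)


class LinearRetry(RetryPolicy):
    """Wait the same interval before every retry, up to max_attempts retries."""

    def __init__(self, delta_seconds=30.0, max_attempts=3):
        self.delta_seconds = delta_seconds
        self.max_attempts = max_attempts

    def should_retry(self, current_retry_count, status_code):
        # Assumption for this sketch: client errors (4xx) will not succeed on retry.
        if 400 <= status_code < 500:
            return (False, 0.0)
        if current_retry_count >= self.max_attempts:
            return (False, 0.0)
        return (True, self.delta_seconds)
```

A custom policy in this style is just another subclass, which is the property the post highlights: the decision logic lives in one reusable type instead of a platform-specific delegate.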

Windows Runtime

A key driver in this release was expanding platform support, specifically targeting the upcoming releases of Windows 8, Windows RT, and Windows Server 2012. As such, we are releasing the following two Windows Runtime components to support Windows Runtime as Community Technology Preview (CTP):

- Microsoft.WindowsAzure.Storage.winmd - A fully projectable storage client that supports JavaScript, C++, C#, and VB. This library contains all core objects as well as support for Blobs, Queues, and a base Tables Implementation consumable by JavaScript

- Microsoft.WindowsAzure.Storage.Table.dll – A table extension library that provides generic query support and strong type entities. This is used by non-JavaScript applications to provide strong type entities as well as reflection based serialization of POCO objects

Breaking Changes

With the introduction of Windows 8, Windows RT, and Windows Server 2012 we needed to broaden the platform support of our current libraries. To meet this requirement we have invested significant effort in reworking the existing Storage Client codebase to broaden platform support, while also delivering new features and significant performance improvements (more details below). One of the primary goals in this version of the client libraries was to maintain a consistent API across platforms so that developers’ knowledge and code could transfer naturally from one platform to another. As such, we have introduced some breaking changes from the previous version of the library to support this common interface. We have also used this opportunity to act on user feedback we have received via the forums and elsewhere regarding both the .NET library as well as the recently released Windows Azure Storage Client Library for Java. For existing users we will be posting an upgrade guide for breaking changes to this blog that describes each change in more detail.

Please note the new client is published under the same NuGet package as previous 1.x releases. As such, please check any existing projects as an automatic upgrade will introduce breaking changes.

Additional Dependencies

The new table implementation depends on three libraries (collectively referred to as ODataLib), which are resolved through the ODataLib (version 5.0.2) packages available through NuGet and not the WCF Data Services installer which currently contains 5.0.0 versions. The ODataLib libraries can be downloaded directly or referenced by your code project through NuGet. The specific ODataLib packages are:

- http://nuget.org/packages/Microsoft.Data.OData/5.0.2

- http://nuget.org/packages/Microsoft.Data.Edm/5.0.2

- http://nuget.org/packages/System.Spatial/5.0.2

Namespaces

One particular breaking change of note is that the name of the assembly and root namespace has moved to Microsoft.WindowsAzure.Storage instead of Microsoft.WindowsAzure.StorageClient. In addition to aligning better with other Windows Azure service libraries this change allows developers to use the legacy 1.X versions of the library and the 2.0 release side-by-side as they migrate their applications. Additionally, each Storage Abstraction (Blob, Table, and Queue) has now been moved to its own sub-namespace to provide a more targeted developer experience and cleaner IntelliSense experience. For example the Blob implementation is located in Microsoft.WindowsAzure.Storage.Blob, and all relevant protocol constructs are located in Microsoft.WindowsAzure.Storage.Blob.Protocol.

Testing, stability, and engaging the open source community

We are committed to providing a rock solid API that is consistent, stable, and reliable. In this release we have made significant progress in increasing test coverage as well as breaking apart large test scenarios into more targeted ones that are more consumable by the public.

Microsoft and Windows Azure are making great efforts to be as open and transparent as possible regarding the client libraries for our services. The source code for all the libraries can be downloaded via GitHub under the Apache 2.0 license. In addition we have provided over 450 new Unit Tests for the .NET 4.0 library alone. Now users who wish to modify the codebase have a simple and lightweight way to validate their changes. It is also important to note that most of these tests run against the Storage Emulator that ships via the Windows Azure SDK for .NET, allowing users to execute tests without incurring any usage on their storage accounts. We will also be providing a series of higher level scenarios and How-To’s to get users up and running on both simple and advanced topics relating to using Windows Azure Storage.

Summary

We have put a lot of work into providing a truly first class development experience for the .NET community to work with Windows Azure Storage. In addition to the content provided in these blog posts we will continue to release a series of additional blog posts which will target various features and scenarios in more detail, so check back soon. Hopefully you can see your past feedback reflected in this new library. We really do appreciate the feedback we have gotten from the community, so please keep it coming by leaving a comment below or participating on our forums.

Joe Giardino, Serdar Ozler, Justin Yu and Veena Udayabhanu of the Windows Azure Storage Team followed up with a detailed Windows Azure Storage Client Library 2.0 Breaking Changes & Migration Guide post on 10/29/2012:

The recently released Windows Azure Storage Client Library for .Net includes many new features, expanded platform support, extensibility points, and performance improvements. In developing this version of the library we made some distinct breaks with Storage Client 1.7 and prior in order to support common paradigms across .NET and Windows Runtime applications.

Additionally, we have addressed distinct pieces of user feedback from the forums and users we’ve spoken with. We have made great effort to provide a stable platform for clients to develop their applications on and will continue to do so. This blog post serves as a reference point for these changes as well as a migration guide to assist clients in migrating existing applications to the 2.0 release. If you are new to developing applications using the Storage Client in .Net you may want to refer to the overview here to get acquainted with the basic concepts. This blog post will focus on changes and future posts will be introducing the concepts that the Storage Client supports.

Namespaces

The core namespaces of the library have been reworked to provide a more targeted Intellisense experience, as well as more closely align with the programming experience provided by other Windows Azure Services. The root namespace as well as the assembly name itself have been changed from Microsoft.WindowsAzure.StorageClient to Microsoft.WindowsAzure.Storage. Additionally, each service has been broken out into its own sub namespace. For example the blob implementation is located in Microsoft.WindowsAzure.Storage.Blob, and all protocol relevant constructs are in Microsoft.WindowsAzure.Storage.Blob.Protocol. Note: Windows Runtime component will not expose Microsoft.WindowsAzure.Storage.[Blob|Table|Queue].Protocol namespaces as they contain dependencies on .Net specific types and are therefore not projectable.

The following is a detailed listing of client accessible namespaces in the assembly.

- Microsoft.WindowsAzure.Storage – Common types such as CloudStorageAccount and StorageException. Most applications should include this namespace in their using statements.

- Microsoft.WindowsAzure.Storage.Auth – The StorageCredentials object that is used to encapsulate multiple forms of access (Account & Key, Shared Access Signature, and Anonymous).

- Microsoft.WindowsAzure.Storage.Auth.Protocol – Authentication handlers that support SharedKey and SharedKeyLite for manual signing of requests

- Microsoft.WindowsAzure.Storage.Blob – Blob convenience implementation, applications utilizing Windows Azure Blobs should include this namespace in their using statements

- Microsoft.WindowsAzure.Storage.Blob.Protocol – Blob Protocol layer

- Microsoft.WindowsAzure.Storage.Queue – Queue convenience implementation, applications utilizing Windows Azure Queues should include this namespace in their using statements

- Microsoft.WindowsAzure.Storage.Queue.Protocol – Queue Protocol layer

- Microsoft.WindowsAzure.Storage.Table – New lightweight Table Service implementation based on ODataLib. We will be posting an additional blog that dives into this new Table implementation in greater detail.

- Microsoft.WindowsAzure.Storage.Table.DataServices – The legacy Table Service implementation based on System.Data.Services.Client. This includes TableServiceContext, CloudTableQuery, etc.

- Microsoft.WindowsAzure.Storage.Table.Protocol – Table Protocol layer implementation

- Microsoft.WindowsAzure.Storage.RetryPolicies - Default RetryPolicy implementations (NoRetry, LinearRetry, and ExponentialRetry) as well as the IRetryPolicy interface

- Microsoft.WindowsAzure.Storage.Shared.Protocol – Analytics objects and core HttpWebRequestFactory

What’s New

- Added support for the .NET Client Profile, allowing for easier installation of your application on machines where the full .NET Framework has not been installed.

- There is a new dependency on the three libraries released as ODataLib, which are available via NuGet and CodePlex.

- A reworked and simplified codebase that shares a large amount of code between platforms

- Over 450 new unit tests published to GitHub

- All APIs that execute a request against the storage service are marked with the DoesServiceRequest attribute

- Support for custom user headers

- OperationContext – Provides an optional source of diagnostic information about how a given operation is executing. Provides mechanism for E2E tracing by allowing clients to specify a client trace id per logical operation to be logged by the Windows Azure Storage Analytics service.

- True “synchronous” method support. SDK 1.7 implemented synchronous methods by simply wrapping a corresponding Asynchronous Programming Model (APM) method with a ManualResetEvent. In this release all work is done on the calling thread. This excludes stream implementations available via Cloud[Page|Block]Blob.OpenRead and OpenWrite and parallel uploads.

- Support for Asynchronous cancellation via ICancellableAsyncResult. Note this can be hooked up to .NET cancellation tokens via the Register() method as illustrated below:

…

ICancellableAsyncResult result = container.BeginExists(callback, state);

token.Register((o) => result.Cancel(), null /* state */);

…

- Timeouts – The Library now allows two separate timeouts to be specified. These timeouts can be specified directly on the service client (i.e. CloudBlobClient) or overridden via the RequestOptions. These timeouts are nullable and therefore can be disabled.

- The ServerTimeout is the timeout given to the server for each request executed in a given logical operation. An operation may make more than one request (in the case of a retry, parallel upload, etc.); the ServerTimeout is sent with each of these requests. This is set to 90 seconds by default.

- The MaximumExecutionTime provides a true end to end timeout. This timeout is a client side timeout that spans all requests, including any potential retries, a given operation may execute. This is disabled by default.

- Full PageBlob support including lease, cross account copy, and read/write streams

- Cloud[Block|Page]Blob DownloadRange support

- Blobs support download resume, in the event of an error the subsequent request will be truncated to specify a range at the correct byte offset.

- The default MD5 behavior has been updated to utilize a FIPS compliant implementation. To use the default .NET MD5 please set CloudStorageAccount.UseV1MD5 = true;
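To make the difference between the two timeouts listed above concrete, here is a minimal sketch in Python rather than the library's C#. It is not the Storage Client API: `run_with_timeouts`, `server_timeout`, and `max_execution_time` are hypothetical names standing in for ServerTimeout and MaximumExecutionTime, and request durations are simulated numbers so the example is deterministic.

```python
class OperationTimedOut(Exception):
    """Raised when a logical operation runs out of time or attempts."""


def run_with_timeouts(attempt_durations, server_timeout=90.0, max_execution_time=None):
    """Simulate one logical operation made of several requests (retries).

    attempt_durations: seconds each request would take if never cut off.
    server_timeout: per-request limit, applied to every request separately;
        None disables it.
    max_execution_time: end-to-end limit across all requests; None disables it.
    Returns (requests_made, total_elapsed_seconds) on success.
    """
    elapsed = 0.0
    for count, duration in enumerate(attempt_durations, start=1):
        # The per-request limit caps how long a single request may run.
        limit = server_timeout if server_timeout is not None else float("inf")
        if max_execution_time is not None:
            # The end-to-end budget shrinks with every request already made.
            remaining = max_execution_time - elapsed
            if remaining <= 0:
                raise OperationTimedOut(f"budget spent after {count - 1} requests")
            limit = min(limit, remaining)
        if duration <= limit:
            return count, elapsed + duration  # request finished in time
        elapsed += limit  # request was cut off; fall through to the retry
    raise OperationTimedOut("all attempts timed out")
```

With only the per-request limit set, `run_with_timeouts([100, 100, 10])` succeeds on the third request; adding `max_execution_time=120` makes the same call fail, because the first two cut-off requests use up the whole end-to-end budget.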

Breaking Changes

General

- Dropped support for .NET Framework 3.5; clients must use .NET 4.0 or above.

- Cloud[Blob|Table|Queue]Client.ResponseReceived event has been removed, instead there are SendingRequest and ResponseReceived events on the OperationContext which can be passed into each logical operation

- All Classes are sealed by default to maintain consistency with Windows RT library

- ResultSegments are no longer generic. For example, in Storage Client 1.7 there is a ResultSegment<CloudTable>, while in 2.0 there is a TableResultSegment to maintain consistency with Windows RT library.

- RetryPolicies

- The Storage Client will no longer prefilter certain types of exceptions or HTTP status codes prior to evaluating the user’s RetryPolicy. The RetryPolicies contained in the library will by default not retry 400 class errors, but this can be overridden by implementing your own policy.

- A retry policy is now a class that implements the IRetryPolicy interface. This is to simplify the syntax as well as provide commonality with the Windows RT library

- StorageCredentials

- CloudStorageAccount.SetConfigurationSettingPublisher has been removed. Instead the members of StorageCredentials are now mutable allowing users to accomplish similar scenarios in a more streamlined manner by simply mutating the StorageCredentials instance associated with a given client(s) via the provided UpdateKey methods.

- All credentials types have been simplified into a single StorageCredentials object that supports Anonymous requests, Shared Access Signature, and Account and Key authentication.

- Exceptions

- StorageClientException and StorageServerException are now simplified into a single Exception type: StorageException. All APIs will throw argument exceptions immediately; once a request is initiated all other exceptions will be wrapped.

- StorageException no longer directly contains ExtendedErrorInformation. This has been moved inside the RequestResult object which tracks the current state of a given request

- Pagination has been simplified. A segmented result will simply return up to the maximum number of results specified. If a continuation token is received it is left to the user to make any subsequent requests to complete a given page size.
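The pagination change above leaves the loop to the caller. The following Python sketch shows one way to fill a fixed page size from segmented results. It is an illustration only: `list_segment`, `make_fake_service`, and `fetch_page` are hypothetical names, and the in-memory fake stands in for a real segmented listing call.

```python
def make_fake_service(items, server_page_limit):
    """Build a stand-in for a segmented listing call over an in-memory list.

    Each call returns at most min(max_results, server_page_limit) items,
    so a segment can come back shorter than requested.
    """
    def list_segment(token, max_results):
        start = token or 0
        end = min(start + max_results, start + server_page_limit, len(items))
        next_token = end if end < len(items) else None
        return items[start:end], next_token
    return list_segment


def fetch_page(list_segment, page_size, token=None):
    """Request segments until page_size items are collected or the
    service stops returning a continuation token."""
    page = []
    while len(page) < page_size:
        segment, token = list_segment(token, page_size - len(page))
        page.extend(segment)
        if token is None:
            break  # nothing left on the server
    return page, token
```

The returned token can be handed back to `fetch_page` to continue with the next page, which keeps the caller in control of how many requests are issued.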

Blobs

- All blobs must be accessed via CloudPageBlob or CloudBlockBlob; the CloudBlob base class has been removed. To get a reference to the concrete blob class when the client does not know the type, see the GetBlobReferenceFromServer method on CloudBlobClient and CloudBlobContainer.

- In an effort to be more transparent to the application layer the default parallelism is now set to 1 for blob clients. (This can be configured via CloudBlobClient.ParallelOperationThreadCount) In previous releases of the sdk, we observed many users scheduling multiple concurrent blob uploads to more fully exploit the parallelism of the system. However, when each of these operations was internally processing up to 8 simultaneous operations itself there were some adverse side effects on the system. By setting parallelism to 1 by default it is now up to the user to opt in to this concurrent behavior.

- CloudBlobClient.SingleBlobUploadThresholdInBytes can now be set as low as 1 MB.

- StreamWriteSizeInBytes has been moved to CloudBlockBlob and can now be set as low as 16KB. Please note that the maximum number of blocks a blob can contain is 50,000 meaning that with a block size of 16KB, the maximum blob size that can be stored is 800,000KB or ~ 781 MB.

- All upload and download methods are now stream based, the FromFile, ByteArray, Text overloads have been removed.

- The stream implementation available via CloudBlockBlob.OpenWrite will no longer encode MD5 into the block id. Instead the block id is now a sequential block counter appended to a fixed random integer in the format of [Random:8]-[Seq:6].

- For uploads if a given stream is not seekable it will be uploaded via the stream implementation which will result in multiple operations regardless of length. As such, when available it is considered best practice to pass in seekable streams.

- MD5 has been simplified, all methods will honor the three MD5 related flags exposed via BlobRequestOptions

- StoreBlobContentMD5 – Stores the Content MD5 on the Blob on the server (default to true for Block Blobs and false for Page Blobs)

- UseTransactionalMD5 – Will ensure each upload and download provides transactional security via the HTTP Content-MD5 header. Note: When enabled, all Download Range requests must be 4MB or less. (default is disabled, however any time a Content-MD5 is sent by the server the client will validate it unless DisableContentMD5Validation is set)

- DisableContentMD5Validation – Disables any Content-MD5 validation on downloads. This is needed to download any blobs that may have had their Content-MD5 set incorrectly

- Cloud[Page|Block]Blob no longer exposes BlobAttributes. Instead the BlobProperties, Metadata, Uri, etc. are exposed on the Cloud[Page|Block]Blob object itself

- The stream available via Cloud[Page|Block]Blob.OpenRead() does not support multiple Asynchronous reads prior to the first call completing. You must first call EndRead prior to a subsequent call to BeginRead.

- Protocol

- All blob Protocol constructs have been moved to the Microsoft.WindowsAzure.Storage.Blob.Protocol namespace. BlobRequest and BlobResponse have been renamed to BlobHttpWebRequestFactory and BlobHttpResponseParsers respectively.

- Signing Methods have been removed from BlobHttpWebRequestFactory, alternatively use the SharedKeyAuthenticationHandler in the Microsoft.WindowsAzure.Storage.Auth.Protocol namespace
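The block-size limit mentioned for StreamWriteSizeInBytes is simple arithmetic, worked through in the Python sketch below. The 50,000-block limit and the 16KB block size come from the post; the helper names are made up for this illustration.

```python
MAX_BLOCKS_PER_BLOB = 50_000  # limit quoted in the post
KB = 1024
MB = 1024 * KB


def max_blob_size_bytes(block_size_bytes):
    """Largest block blob reachable with a fixed block size."""
    return MAX_BLOCKS_PER_BLOB * block_size_bytes


def min_block_size_bytes(blob_size_bytes):
    """Smallest fixed block size that fits the blob into 50,000 blocks."""
    # Ceiling division without floating point.
    return -(-blob_size_bytes // MAX_BLOCKS_PER_BLOB)


size = max_blob_size_bytes(16 * KB)
print(size // KB, "KB")            # 800000 KB
print(round(size / MB, 2), "MB")   # 781.25 MB
```

Going the other way, `min_block_size_bytes` gives the smallest block size that still lets a blob of a given size fit; a 1 GB blob, for example, needs blocks of at least 21,475 bytes.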

Tables

- New Table Service Implementation - A new lightweight table implementation is provided in the Microsoft.WindowsAzure.Storage.Table namespace. Note: For backwards compatibility the Microsoft.WindowsAzure.Storage.Table.DataServices.TableServiceEntity was not renamed, however this entity type is not compatible with the Microsoft.WindowsAzure.Storage.Table.TableEntity as it does not implement ITableEntity interface.

- DataServices

- The legacy System.Data.Services.Client based implementation has been migrated to the Microsoft.WindowsAzure.Storage.Table.DataServices namespace.

- The CloudTableClient.Attach method has been removed. Alternatively, use a new TableServiceContext

- TableServiceContext will now protect concurrent requests against the same context. To execute concurrent requests please use a separate TableServiceContext per logical operation.

- TableServiceQueries will no longer rewrite the take count in the URI query string to take smaller amounts of entities based on the legacy pagination construct. Instead, the client side Lazy Enumerable will stop yielding results when the specified take count is reached. This could potentially result in retrieving a larger number of entities from the service for the last page of results. Developers who need a finer grained control over the pagination of their queries should leverage the segmented execution methods provided.

- Protocol

- All Table protocol constructs have been moved to the Microsoft.WindowsAzure.Storage.Table.Protocol namespace. TableRequest and TableResponse have been renamed to TableHttpWebRequestFactory and TableHttpResponseParsers respectively.

- Signing Methods have been removed from TableHttpWebRequestFactory, alternatively use the SharedKeyLiteAuthenticationHandler in the Microsoft.WindowsAzure.Storage.Auth.Protocol namespace
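The take-count behavior described for TableServiceQueries can be pictured with a small Python generator. This is a sketch under assumptions, not the library's implementation: `fetch_page` is a hypothetical paged source, and the `stats` counter exists only to show that the last page may carry entities that are retrieved but never yielded.

```python
def lazy_take(fetch_page, take):
    """Yield at most `take` entities from a paged source.

    fetch_page(token) returns (entities, next_token). The request is not
    shrunk to fit `take`, so the final page may be larger than needed.
    """
    yielded = 0
    token = None
    while yielded < take:
        entities, token = fetch_page(token)
        for entity in entities:
            if yielded == take:
                return  # stop yielding; the rest of the page is discarded
            yield entity
            yielded += 1
        if token is None:
            return  # source exhausted


def make_paged_source(entities, page_size, stats):
    """In-memory stand-in that counts how many entities were retrieved."""
    def fetch_page(token):
        start = token or 0
        page = entities[start:start + page_size]
        stats["fetched"] += len(page)
        more = start + page_size < len(entities)
        return page, (start + page_size if more else None)
    return fetch_page


stats = {"fetched": 0}
source = make_paged_source(list(range(100)), page_size=10, stats=stats)
result = list(lazy_take(source, take=25))
```

Here 25 entities are yielded but 30 are retrieved, which is the over-fetch on the last page that the post warns about; segmented execution avoids it by letting the caller size each request.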

Queues

- Protocol

- All Queue protocol constructs have been moved to the Microsoft.WindowsAzure.Storage.Queue.Protocol namespace. QueueRequest and QueueResponse have been renamed to QueueHttpWebRequestFactory and QueueHttpResponseParsers respectively.

- Signing Methods have been removed from QueueHttpWebRequestFactory, alternatively use the SharedKeyAuthenticationHandler in the Microsoft.WindowsAzure.Storage.Auth.Protocol namespace

Migration Guide

In addition to the detailed steps above, below is a simple migration guide to help clients begin migrating existing applications.

Namespaces

A legacy application will need to update its “using” statements to include:

- Microsoft.WindowsAzure.Storage

- If using credentials types directly add a using statement to Microsoft.WindowsAzure.Storage.Auth

- If you are using a non-default RetryPolicy add a using statement to Microsoft.WindowsAzure.Storage.RetryPolicies

- For each Storage abstraction add the relevant using statement Microsoft.WindowsAzure.Storage.[Blob|Table|Queue]
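For applications that rely on a non-default retry policy, the RetryPolicies change under Breaking Changes (policies are classes, and the built-in ones do not retry 400 class errors by default) can be sketched as follows. The Python below is only loosely modeled on that description: `should_retry`, `LinearRetry`, `RetryEverything`, and `execute` are hypothetical names for this illustration and do not reproduce the IRetryPolicy signature.

```python
class LinearRetry:
    """Retry with a fixed delay, skipping client (4xx) errors."""

    def __init__(self, delay_seconds=1.0, max_attempts=3):
        self.delay_seconds = delay_seconds
        self.max_attempts = max_attempts

    def should_retry(self, retry_count, status_code):
        """Return (retry, delay_seconds) for a failed request."""
        if 400 <= status_code < 500:
            return False, 0.0  # client errors are not retried
        if retry_count >= self.max_attempts:
            return False, 0.0  # attempts exhausted
        return True, self.delay_seconds


class RetryEverything(LinearRetry):
    """Custom policy that opts in to retrying 4xx responses as well."""

    def should_retry(self, retry_count, status_code):
        if retry_count >= self.max_attempts:
            return False, 0.0
        return True, self.delay_seconds


def execute(request, policy, sleep=lambda seconds: None):
    """Call request() until it returns a 2xx status or the policy gives up.

    request() returns an HTTP status code. sleep is injectable so the
    sketch runs instantly; pass time.sleep for real delays.
    """
    retry_count = 0
    while True:
        status = request()
        if 200 <= status < 300:
            return status, retry_count
        retry, delay = policy.should_retry(retry_count, status)
        if not retry:
            return status, retry_count
        sleep(delay)
        retry_count += 1
```

Because no status codes are filtered before the policy is consulted, swapping `LinearRetry` for `RetryEverything` is all it takes to change how a 404 is handled in this sketch.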

Blobs

- Any code that accesses a blob via CloudBlob will have to be updated to use the concrete types CloudPageBlob and CloudBlockBlob. The listing methods will return the correct object type; alternatively, you may discern this via FetchAttributes(). To get a reference to the concrete blob class when the client does not know the type, see the GetBlobReferenceFromServer method on the CloudBlobClient and CloudBlobContainer objects.

- Be sure to set the desired Parallelism via CloudBlobClient.ParallelOperationThreadCount

- Any code that may rely on the internal MD5 semantics detailed here, should update to set the correct MD5 flags via BlobRequestOptions

Tables

- If you are migrating an existing Table application you can choose to re-implement it via the new simplified Table Service implementation, otherwise add a using to the Microsoft.WindowsAzure.Storage.Table.DataServices namespace

DataServiceContext (the base implementation of the TableServiceContext) is not thread-safe; consequently, it has been considered best practice to avoid concurrent requests against a single context, though this was not explicitly prevented. The 2.0 release will now protect against simultaneous operations on a given context. Any code that may rely on concurrent requests on the same TableServiceContext should be updated to execute serially, or utilize multiple contexts.

Summary

This blog post serves as a guide to the changes introduced by the 2.0 release of the Windows Azure Storage Client libraries.

We very much appreciate all the feedback we have gotten from customers and through the forums; please keep it coming. Feel free to leave comments below.

Joe Giardino

Serdar Ozler

Veena Udayabhanu

Justin Yu

Windows Azure Storage

Resources

Get the Windows Azure SDK for .Net

Denny Lee (@dennylee) answered Oh where, oh where did my S3N go? (in Windows Azure HDInsight) Oh where, Oh where, can it be?! in a 10/29/2012 post:

As noted in my previous post Connecting Hadoop on Azure to your Amazon S3 Blob storage, you could easily set up HDInsight Azure to go against your Amazon S3 / S3N storage. With the updates to HDInsight, you’ll notice that the Manage Cluster dialog no longer includes the quick access to Set up S3.

Yet, there are times where you may want to connect your HDInsight cluster to access your S3 storage. Note, this can be a tad expensive due to transfer costs.

To get S3 set up on your Hadoop cluster, from the HDInsight dashboard click on the Remote Desktop tile so you can log onto the name node.

Once you are logged in, open up the Hadoop Command Line Interface link from the desktop.

From here, switch to the c:\apps\dist\Hadoop[]\conf folder and edit the core-site.xml file. The code to add is noted below.

<property>
  <name>fs.s3n.awsAccessKeyId</name>
  <value>[Access Key ID]</value>
</property>
<property>
  <name>fs.s3n.awsSecretAccessKey</name>
  <value>[Secret Access Key]</value>
</property>

Once this is set up, you will be able to access your S3 account from your Hadoop cluster.

Herve Roggero (@hroggero) reported Faster, Simpler access to Azure Tables with Enzo Azure API in a 10/29/2012 post:

After developing the latest version of Enzo Cloud Backup I took the time to create an API that would simplify access to Azure Tables (the Enzo Azure API). At first, my goal was to make the code simpler compared to the Microsoft Azure SDK. But as it turns out it is also a little faster; and when using the specialized methods (the fetch strategies) it is much faster out of the box than the Microsoft SDK, unless you start creating complex parallel and resilient routines yourself. Last but not least, I decided to add a few extension methods that I think you will find attractive, such as the ability to transform a list of entities into a DataTable. So let’s review each area in more detail.

Simpler Code

My first objective was to make the API much easier to use than the Azure SDK. I wanted to reduce the amount of code necessary to fetch entities, remove the code needed to add automatic retries and handle transient conditions, and give additional control, such as a way to cancel operations, obtain basic statistics on the calls, and control the maximum number of REST calls the API generates in an attempt to avoid throttling conditions in the first place (something you cannot do with the Azure SDK at this time).

Strongly Typed

Before diving into the code, the following examples rely on a strongly typed class called MyData. The way MyData is defined for the Azure SDK is similar to the Enzo Azure API, with the exception that they inherit from different classes. With the Azure SDK, classes that represent entities must inherit from TableServiceEntity, while classes with the Enzo Azure API must inherit from BaseAzureTable or implement a specific interface.

// With the SDK
public class MyData1 : TableServiceEntity
{
    public string Message { get; set; }
    public string Level { get; set; }
    public string Severity { get; set; }
}

// With the Enzo Azure API
public class MyData2 : BaseAzureTable
{
    public string Message { get; set; }
    public string Level { get; set; }
    public string Severity { get; set; }
}

Simpler Code

Now that the classes representing an Azure Table entity are defined, let’s review what the code looks like with the Azure SDK when fetching all the entities from an Azure Table (note the use of a few variables: the _tableName variable stores the name of the Azure Table, and the ConnectionString property returns the connection string for the Storage Account containing the table):

// With the Azure SDK
public List<MyData1> FetchAllEntities()
{
    CloudStorageAccount storageAccount = CloudStorageAccount.Parse(ConnectionString);
    CloudTableClient tableClient = storageAccount.CreateCloudTableClient();
    TableServiceContext serviceContext = tableClient.GetDataServiceContext();

    CloudTableQuery<MyData1> partitionQuery =
        (from e in serviceContext.CreateQuery<MyData1>(_tableName)
         select new MyData1()
         {
             PartitionKey = e.PartitionKey,
             RowKey = e.RowKey,
             Timestamp = e.Timestamp,
             Message = e.Message,
             Level = e.Level,
             Severity = e.Severity
         }).AsTableServiceQuery<MyData1>();

    return partitionQuery.ToList();
}

This code gives you automatic retries because the AsTableServiceQuery does that for you. Also, note that this method is strongly-typed because it is using LINQ. Although this doesn’t look like too much code at first glance, you are actually mapping the strongly-typed object manually. So for larger entities, with dozens of properties, your code will grow. And from a maintenance standpoint, when a new property is added, you may need to change the mapping code. You will also note that the mapping being performed is optional; it is desired when you want to retrieve specific properties of the entities (not all) to reduce the network traffic. If you do not specify the properties you want, all the properties will be returned; in this example we are returning the Message, Level and Severity properties (in addition to the required PartitionKey, RowKey and Timestamp).

The Enzo Azure API does the mapping automatically and also handles automatic retries when fetching entities. The equivalent code to fetch all the entities (with the same three properties) from the same Azure Table looks like this:

// With the Enzo Azure API
public List<MyData2> FetchAllEntities()
{
    AzureTable at = new AzureTable(_accountName, _accountKey, _ssl, _tableName);
    List<MyData2> res = at.Fetch<MyData2>("", "Message,Level,Severity");
    return res;
}

As you can see, the Enzo Azure API returns the entities already strongly typed, so there is no need to map the output. Also, the Enzo Azure API makes it easy to specify the list of properties to return, and to specify a filter as well (no filter was provided in this example; the filter is passed as the first parameter).

Fetch Strategies

Both approaches discussed above fetch the data sequentially. In addition to the linear/sequential fetch methods, the Enzo Azure API provides specific fetch strategies. Fetch strategies are designed to prepare a set of REST calls, executed in parallel, in a way that performs faster than if you were to fetch the data sequentially. For example, if the PartitionKey is a GUID string, you could prepare multiple calls, providing appropriate filters ([‘a’, ‘b’[, [‘b’, ‘c’[, [‘c’, ‘d’[, …), and send those calls in parallel. As you can imagine, the code necessary to create these requests would be fairly large. With the Enzo Azure API, two strategies are provided out of the box: the GUID and List strategies. If you are interested in how these strategies work, see the Enzo Azure API Online Help. Here is example code that performs parallel requests using the GUID strategy (which executes 2 to 3 times faster than the sequential methods discussed previously):

public List<MyData2> FetchAllEntitiesGUID()
{
    AzureTable at = new AzureTable(_accountName, _accountKey, _ssl, _tableName);
    List<MyData2> res = at.FetchWithGuid<MyData2>("", "Message,Level,Severity");
    return res;
}

Faster Results

With Sequential Fetch Methods

Developing a faster API wasn’t a primary objective; but it appears that the performance tests performed with the Enzo Azure API deliver the data a little faster out of the box (5%-10% on average, and sometimes up to 50% faster) with the sequential fetch methods. Although the amount of data is the same regardless of the approach (and the REST calls are almost exactly identical), the object mapping approach is different. So it is likely that the slight performance increase is due to a lighter API. Using LINQ offers many advantages and tremendous flexibility; nevertheless when fetching data it seems that the Enzo Azure API delivers faster. For example, the same code previously discussed delivered the following results when fetching 3,000 entities (about 1KB each). The average elapsed time shows that the Azure SDK returned the 3,000 entities in about 5.9 seconds on average, while the Enzo Azure API took 4.2 seconds on average (39% improvement).

With Fetch Strategies

When using the fetch strategies we are no longer comparing apples to apples; the Azure SDK is not designed to implement fetch strategies out of the box, so you would need to code the strategies yourself. Nevertheless I wanted to provide out of the box capabilities, and as a result you see a test that returned about 10,000 entities (1KB each entity), and an average execution time over 5 runs. The Azure SDK implemented a sequential fetch while the Enzo Azure API implemented the List fetch strategy. The fetch strategy was 2.3 times faster. Note that the following test hit a limit on my network bandwidth quickly (3.56Mbps), so the results of the fetch strategy are significantly below what they could be with a higher bandwidth.
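The idea behind a GUID-style fetch strategy, as described earlier, is to split the PartitionKey space into ranges and issue one filtered call per range in parallel. The Python sketch below illustrates that idea only and is not how the Enzo Azure API is implemented: it assumes lowercase hexadecimal GUID keys, and `guid_ranges`, `fetch_range`, and `fetch_all_parallel` are hypothetical names, with an in-memory list standing in for the table and its REST calls.

```python
from concurrent.futures import ThreadPoolExecutor

HEX_DIGITS = "0123456789abcdef"


def guid_ranges():
    """Split the GUID key space into 16 half-open ranges by first hex digit.

    Returns (lower, upper) pairs; upper is None for the last range,
    meaning there is no upper bound.
    """
    bounds = list(HEX_DIGITS) + [None]
    return list(zip(bounds[:-1], bounds[1:]))


def fetch_range(table, lower, upper):
    """Stand-in for one filtered call: lower <= PartitionKey < upper."""
    return [row for row in table
            if row["PartitionKey"] >= lower
            and (upper is None or row["PartitionKey"] < upper)]


def fetch_all_parallel(table, max_workers=8):
    """Run one fetch per range concurrently and merge the results."""
    ranges = guid_ranges()
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        chunks = pool.map(lambda r: fetch_range(table, *r), ranges)
        return [row for chunk in chunks for row in chunk]
```

Because the ranges are half-open and cover the whole key space, every entity is returned exactly once; the speed-up in a real setting would depend on network bandwidth and throttling, as the measurements above suggest.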

Additional Methods

The API wouldn’t be complete without support for a few important methods other than the fetch methods discussed previously. The Enzo Azure API offers these additional capabilities:

- Support for batch updates, deletes and inserts

- Conversion of entities to DataRow, and List<> to a DataTable

- Extension methods for Delete, Merge, Update, Insert

- Support for asynchronous calls and cancellation

- Support for fetch statistics (total bytes, total REST calls, retries…)

For more information, visit http://www.bluesyntax.net or go directly to the Enzo Azure API page (http://www.bluesyntax.net/EnzoAzureAPI.aspx).

About Herve Roggero

Herve Roggero, Windows Azure MVP, is the founder of Blue Syntax Consulting, a company specialized in cloud computing products and services. Herve's experience includes software development, architecture, database administration and senior management with both global corporations and startup companies. Herve holds multiple certifications, including an MCDBA, MCSE, MCSD. He also holds a Master's degree in Business Administration from Indiana University. Herve is the co-author of "PRO SQL Azure" from Apress and runs the Azure Florida Association (on LinkedIn: http://www.linkedin.com/groups?gid=4177626). For more information on Blue Syntax Consulting, visit www.bluesyntax.net.

<Return to section navigation list>

Windows Azure SQL Database, Federations and Reporting, Mobile Services

•• My (@rogerjenn) Windows Azure Mobile Services creates backends for Windows 8, iPhone tip of 10/31/2012 for TechTarget’s SearchCloudComputing.com blog begins:

Windows front-end developers have enough on their plates simply migrating from Windows 32 desktop and Web apps to XAML/C# or HTML5/JavaScript and the new Windows Runtime (WinRT) for Windows Store apps.

The cost of teaching teams new back-end coding skills for user authentication and authorization with Microsoft Accounts (formerly Live IDs) could be the straw that breaks the camel's back. Add to that messaging with Windows Notification Services (WNS), email and SMS as well as structured storage with Windows Azure SQL Databases (WASDB) and targeting Windows 8 and Azure: Microsoft's legacy escape route, and it all becomes a bit much.

To ease .NET developers into this brave new world of multiple devices ranging from Win32 legacy laptops to touchscreen tablets and smartphones, Microsoft's Windows Azure team released a Windows Azure Mobile Services (WAMS) Preview. The initial release supports Windows Store apps (formerly Windows 8 applications) only; Microsoft promises support for iOS and Android devices soon.

The release consists of the following:

- A Mobile Services option added to the new HTML Windows Azure Management Portal generates the back-end database, authentication/authorization, notification and server-side scripting services automatically.

- An open-source WAMS Client SDK Preview with an Apache 2.0 license is downloadable from GitHub; the SDK requires Windows 8 RTM and Visual Studio 2012 RTM.

- A sample doto application demonstrates WAMS multi-tenant features and is also available from GitHub. This sample is in addition to the tutorial walkthroughs offered from the Mobile Services Developer pages and the OakLeaf Systems blog.

- A Store menu add-in to Visual Studio 2012's Project menu accelerates app registration with and deployment to the Windows Store.

- A form opened in Visual Studio 2012 can obtain a developer license for Windows 8, which is required for creating Windows Store apps.

WAMS eliminates the need to handcraft the more than a thousand lines of XAML and C# required to implement and configure Windows Azure SQL Database (WASDB), as well as corresponding Access Control and Service Bus notification components for clients and device-agnostic mobile back ends. The RESTful back end uses OData's new JSON Light payload option to support multiple front-end operating systems with minimum data overhead. WAMS's Dynamic Schema feature auto-generates WASDB table schemas and relationships so front-end designers don't need database design expertise.

Expanding the WAMS device and feature repertoire

Scott Guthrie, Microsoft corporate VP in charge of Windows Azure development, announced the following device support and features in a blog post:

- iOS support, which enables companies to connect iPhone and iPad apps to Mobile Services

- Facebook, Twitter and Google authentication support with Mobile Services

- Blob, Table, Queue and Service Bus support from within Mobile Services

- The ability to send email from Mobile Services (in partnership with SendGrid)

- The ability to send SMS messages from Mobile Services (in partnership with Twilio)

- The capability to deploy mobile services in the West U.S. region.

The update didn't modify the original Get Started with data or Get Started with push notifications C# and JavaScript sample projects from the Developer Center or Paul Batum's original DoTo sample application in the GitHub samples folder. …

Read more.

Post-publication update: Windows Azure Mobile Services now supports Windows Phone 8 apps.

•• Josh Holmes (@joshholmes) described Custom Search with Azure Mobile Services in JavaScript in an 11/1/2012 post:

I’ve published my first little Windows 8 app using the Azure Mobile Services in JavaScript. It was incredibly quick to get up and running and more flexible than I thought it would be.

The one thing that was tricky was that I’m using JavaScript/HTML5 to build my app and since I don’t have LINQ in JavaScript, doing a custom date search was difficult. Fortunately I got to sit down with Paul Batum from the Azure Mobile Services team and he learned me a thing or two.

I already knew the backend of Azure Mobile Services was node.js. What I didn’t realize is that we can pass in a JavaScript function to be executed server side for a highly custom search the way that we can with LINQ from C#. The syntax is a little weird but it works a treat.

itemTable.where(function (startDate, endDate) {
        return this.Date >= startDate && this.Date <= endDate;
    }, startDate, endDate)
    .read()
    .done(function (results) {
        for (var i = 0; i < results.length; i++) {
            // do something interesting
        }
    });

Notice that inside the where function, I’m passing in another function. This function gets passed to the back end and executes server side. The slightly wonky part is that the function has to declare the parameters that you pass in, and you also have to pass the values for those parameters as additional arguments to where. So reading that sample carefully, you’ll see that we’re passing three arguments: the function itself, and then the two values that we want handed to the function when it executes on the server.

This allows for some awesome flexibility, well beyond custom date searches.

• Glenn Gailey (@ggailey777) expands on Windows Azure Mobile Services for Windows Phone 8 in his Windows Phone 8 is Finally Here! post of 10/31/2012:

This is a great day for Microsoft’s Windows Phone platform, with the official launch of Windows Phone 8 along with the following Windows Phone 8-related clients and toolkits:

- Windows Phone 8 SDK

- Mobile Services SDK for Windows Phone 8 [see post below]

- Windows Phone Toolkit for Windows Phone 8

- OData Client Library for Windows Phone 8 [see the Marketplace DataMarket, Cloud Numerics, Big Data and OData section below]

Windows Phone SDK 8.0

Yesterday morning we announced the release of the Windows Phone SDK 8.0. I only got hold of this new SDK about a week ago, so I haven’t had much time to play around with it, but here’s what’s in it:

- Visual Studio Express 2012 for Windows Phone

- Windows Phone emulator(s)†

- Expression Blend for Windows Phone

- Team Explorer

† One reason that it took me so long to start using Windows Phone SDK 8.0 (aside from the difficulty of even getting access to it inside of Microsoft) was the stringent platform requirement of “a machine running Windows 8 with SLAT enabled.” This meant that I had to wait to upgrade my x64 Lenovo W520 laptop to Windows 8 before I could start working with Windows Phone 8 apps. This is because the emulator runs in a VM. It seems much faster and more stable than the old 7.1 version, but its virtual networking adapters do confuse my laptop from time to time.

The first thing that I noticed was support for doing much cooler things with live tiles than was even possible in 7.1, including providing different tile sizes in the app that customers can set and new kinds of notifications. For more information about what’s new, see the post Introducing Windows Phone SDK 8.0.

Windows Phone Toolkit for Windows Phone 8

This critical toolkit has always been chock full of useful controls (I’ve used it quite a bit in my apps), and it’s now updated to support Windows Phone 8 development and the new SDK. You can get the toolkit from NuGet. Documentation and sources are published on CodePlex at http://phone.codeplex.com.

OData Client Library for Windows Phone 8

Microsoft had already released OData v3 client support for both .NET Framework and Windows Store apps, but support for Windows Phone had been noticeably missing from the suite of OData clients. Yesterday, we also unveiled the OData Client Tools for Windows Phone Apps. As is now usually the case for OData clients, this library isn’t included with the Windows Phone SDK 8.0, but you can easily download it from here and install it with the Windows Phone SDK 8.0.

Mobile Services SDK for Windows Phone 8

The main reason that I needed to install the Windows Phone SDK 8.0 was to test the new support in Windows Azure Mobile Services. Since Microsoft is, of course, committed to supporting its Windows Phone platform, Mobile Services also released the Windows Phone 8 SDK, along with updates to the Management Portal for Windows Phone 8. This is all tied to this week’s //BUILD conference, hosted by Microsoft, and the release of the Windows Phone SDK 8.0.

Mobile Services quickstart for Windows Phone 8 in the Windows Azure Management Portal

If you’ve been using the Mobile Services SDK for Windows Store apps, the .NET Framework libraries in this SDK are nearly identical to the client library in the Windows Phone 8 SDK (with some behavioral exceptions). This means that anything you could do with Mobile Services in a Windows Store app you can also do in a Windows Phone app. As such, we created Windows Phone 8 versions of the original Windows Store quickstarts:

- Get started with Mobile Services [see post below]

- Get started with data

- Get started with authentication

- Get started with push notifications

I’m definitely excited to (finally) be working on Windows Phone 8 apps and hope to be blogging more in the future about Mobile Services and Windows Phone.

With so much great buzz around Windows Phone 8, now I just need AT&T to start selling them so I can go get mine.

• John Koetsier reported from BUILD 2012: Microsoft demos simple cloud-enabling of mobile apps with Azure Mobile Services in a 10/31/2012 post to VentureBeat’s /Cloud blog:

Looking to cloud-enable your mobile app? Looks like Microsoft can help make that a lot easier.

Microsoft just demoed some very slick new mobile and cloud connections today at its BUILD conference in Redmond, showing how simple it is for developers to store their data in the cloud and perform operations on that data.

Josh Twist from Windows Azure Mobile Services, which he announced now supports Windows Phone 8, connected an app to Azure authentication services live onstage. Authentication protocols not only include Microsoft accounts, but also Facebook, Twitter, and Google accounts, and Twist showed how, in just a few lines of code, developers can add social login to their apps.

This works on any app on iOS as well as more traditional desktop apps for Windows Store, and now, of course, Windows Phone 8.

Even more interestingly, Twist demoed how simple it is to set event handlers in Azure that execute code securely and automatically in the cloud whenever data changes. One example he showed was to automatically grab a user’s Twitter avatar when the user logs in via Twitter. In a few lines of JavaScript, saved on Azure and triggered automatically when a user logged in, Mobile Services talked to Twitter, retrieved the user icon, saved it locally, and sent it to the mobile app for use in the user interface.
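To give a rough idea of what such a handler looks like, here is a minimal sketch. It assumes the `insert(item, user, request)` script shape used by the Mobile Services preview; the fields written to `item` and the mock objects are hypothetical, and the Twitter lookup from the demo is deliberately left out because its code was not shown.

```javascript
// Sketch of a table script that runs on every insert. The
// insert(item, user, request) shape is assumed from the Mobile Services
// preview; the fields written to `item` are hypothetical.
function insert(item, user, request) {
  item.userId = user.userId;   // record who created the row
  item.createdAt = new Date(); // stamp the row on the server side
  request.execute();           // continue with the default insert
}

// Local mocks so the handler can be exercised outside Azure.
const saved = [];
const mockItem = { text: "hello" };
const mockRequest = { execute: () => saved.push(mockItem) };
insert(mockItem, { userId: "Twitter:12345" }, mockRequest);
```

In the hosted service only the `insert` function would be stored with the table; the mock objects exist here purely to show that the row is modified before it is saved.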

Then Twist connected the cloud app to a live tile on his Windows 8 PC, enabling quick and easy desktop monitoring of his mobile app’s activity. Also impressive.

A preview is available today, Twist said, and developers who sign up will receive 10 mobile services for free.

And the Windows Phone app data arrives on the Windows 8 desktop, via Azure Mobile Services

Image credits: vernieman via photopin cc, Microsoft

• The Windows Azure Mobile Services team posted a Get started with Mobile Services tutorial for Windows Phone 8 on 10/30/2012:

This tutorial shows you how to add a cloud-based backend service to a Windows Phone 8 app using Windows Azure Mobile Services. In this tutorial, you will create both a new mobile service and a simple To do list app that stores app data in the new mobile service.

A screenshot from the completed app is below:

Note: To complete this tutorial, you need a Windows Azure account that has the Windows Azure Mobile Services feature enabled.

- If you don't have an account, you can create a free trial account in just a couple of minutes. For details, see Windows Azure Free Trial.

- If you have an existing account but need to enable the Windows Azure Mobile Services preview, see Enable Windows Azure preview features.

Create a new mobile service

Follow these steps to create a new mobile service.

Log in to the Management Portal.

At the bottom of the navigation pane, click +NEW.

Expand Mobile Service, then click Create.

This displays the New Mobile Service dialog.

In the Create a mobile service page, type a subdomain name for the new mobile service in the URL textbox and wait for name verification. Once name verification completes, click the right arrow button to go to the next page.

This displays the Specify database settings page.

Note: As part of this tutorial, you create a new SQL Database instance and server. You can reuse this new database and administer it as you would any other SQL Database instance. If you already have a database in the same region as the new mobile service, you can instead choose Use existing Database and then select that database. The use of a database in a different region is not recommended because of additional bandwidth costs and higher latencies.

In Name, type the name of the new database, then type Login name, which is the administrator login name for the new SQL Database server, type and confirm the password, and click the check button to complete the process.

Note: When the password that you supply does not meet the minimum requirements or when there is a mismatch, a warning is displayed.

We recommend that you make a note of the administrator login name and password that you specify; you will need this information to reuse the SQL Database instance or the server in the future.

You have now created a new mobile service that can be used by your mobile apps.

Create a new Windows Phone app

Once you have created your mobile service, you can follow an easy quickstart in the Management Portal to either create a new app or modify an existing app to connect to your mobile service.

In this section you will create a new Windows Phone 8 app that is connected to your mobile service.

In the Management Portal, click Mobile Services, and then click the mobile service that you just created.

In the quickstart tab, click Windows Phone 8 under Choose platform and expand Create a new Windows Phone 8 app.

This displays the three easy steps to create a Windows Phone app connected to your mobile service.

If you haven't already done so, download and install Visual Studio 2012 Express for Windows Phone and the Mobile Services SDK on your local computer or virtual machine.

Click Create TodoItems table to create a table to store app data.

Under Download and run app, click Download.

This downloads the project for the sample To do list application that is connected to your mobile service. Save the compressed project file to your local computer, and make a note of where you save it.

Run your Windows Phone app

The final stage of this tutorial is to build and run your new app.

Browse to the location where you saved the compressed project files, expand the files on your computer, and open the solution file in Visual Studio 2012 Express for Windows Phone.

Press the F5 key to rebuild the project and start the app.

In the app, type meaningful text, such as Complete the tutorial, and then click Save.

This sends a POST request to the new mobile service hosted in Windows Azure. Data from the request is inserted into the TodoItem table. Items stored in the table are returned by the mobile service, and the data is displayed in the list.
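For readers curious about what that request might look like on the wire, the sketch below builds (but does not send) a comparable request. The host pattern, the `X-ZUMO-APPLICATION` header, and the `text`/`complete` fields are assumptions based on the Mobile Services preview rather than anything stated in the tutorial; `todolist` and `<application-key>` are placeholders.

```javascript
// Builds (but does not send) the kind of request the app issues on Save.
// The host pattern and X-ZUMO-APPLICATION header are assumptions based on
// the Mobile Services preview REST API; serviceName and appKey are placeholders.
function buildInsertRequest(serviceName, appKey, table, item) {
  return {
    method: "POST",
    url: `https://${serviceName}.azure-mobile.net/tables/${encodeURIComponent(table)}`,
    headers: {
      "Content-Type": "application/json",
      "X-ZUMO-APPLICATION": appKey,
    },
    body: JSON.stringify(item), // the new row travels as a JSON document
  };
}

// Hypothetical item fields; the real sample app defines its own model.
const req = buildInsertRequest("todolist", "<application-key>", "TodoItem", {
  text: "Complete the tutorial",
  complete: false,
});
```

The generated app hides all of this behind the client library, so the descriptor is useful mainly for debugging with an HTTP inspection tool.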

Note: You can review the code that accesses your mobile service to query and insert data, which is found in the MainPage.xaml.cs file.

Back in the Management Portal, click the Data tab and then click the TodoItems table.

This lets you browse the data inserted by the app into the table.

Next Steps

Now that you have completed the quickstart, learn how to perform additional important tasks in Mobile Services:

- Get started with data: Learn more about storing and querying data using Mobile Services.

- Get started with authentication: Learn how to authenticate users of your app with an identity provider.

• Cihan Biyikoglu (@cihangirb) announced a SQL PASS Summit 2012 – Disaster Recovery for Federations in Azure SQL DB session on 10/30/2012:

We have been working heads down on some new improvements to federations around disaster recovery and ease of working with a group of databases. We are ready to demo one key improvement we are building for federations to you at PASS 2012: the new SWITCH statement that makes it easy to manipulate a group flexibly to move member dbs in and out.

We’ll talk about why this is important to DR and how the DR scenarios will evolve around these concepts when it comes to rolling back an application upgrade gone bad, or creating a copy of a federation on another server for safekeeping and more.

Look forward to seeing all of you there; http://www.sqlpass.org/summit/2012/Sessions/SessionDetails.aspx?sid=2906

Han, MSFT reported SQL Data Sync now available in the East and West US Data Centers! in a 10/30/2012 post:

We are excited to announce that we have just completed the deployment of SQL Data Sync into the East and West US data centers. Now, what does that mean to you? For those who intend to have their sync hubs in the East or West US, you can now provision the Sync Server in the respective regions thus allowing better sync performance for the particular sync groups.

It’s about time.

The TechNet Wiki recently updated its detailed How to Shard with Windows Azure SQL Database article. From the Introduction:

Database sharding is a technique of horizontal partitioning data across multiple physical servers to provide application scale-out. Windows Azure SQL Database is a cloud database service from Microsoft that provides database functionality as a utility service, offering many benefits including rapid provisioning, cost-effective scalability, high availability and reduced management overhead.

SQL Database combined with database sharding techniques provides for virtually unlimited scalability of data for an application. This paper provides an overview of sharding with SQL Database, covering challenges that would be experienced today, as well as how these can be addressed with features to be provided in the upcoming releases of SQL Database. …

Table of Contents

- Introduction

- An Overview of Sharding

- A Conceptual Framework for Sharding with SQL Database

- Application Design for Sharding with SQL Database and ADO.NET

- Applying the Prescribed ADO.NET Based Sharding Library

- SQL Database Federations

- Summary

- See Also

1 Introduction

This document provides guidance on building applications that utilize a technique of horizontal data partitioning known as sharding, where data is horizontally partitioned across multiple physical databases, to provide scalability of the application as data size or demand upon the application increases.

A specific goal of this guidance is to emphasize how SQL Database can facilitate a sharded application, and how an application can be designed to utilize the elasticity of SQL Database to enable highly cost-effective, on-demand, and virtually limitless scalability.
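As a concrete illustration of horizontal partitioning, the sketch below routes a key to one of several databases using a range-based shard map. It is a minimal example and not the sharding library the article describes; the database names, range boundaries, and the `findShard` helper are all hypothetical.

```javascript
// Range-based shard map, sorted by ascending `low`. Each entry covers keys
// from its own `low` up to (but not including) the next entry's `low`.
// Database names and boundaries are hypothetical.
const shardMap = [
  { low: 0, database: "orders_shard_0" },
  { low: 1000, database: "orders_shard_1" },
  { low: 5000, database: "orders_shard_2" },
];

// Returns the name of the database that holds the given key.
function findShard(map, key) {
  let match = null;
  for (const entry of map) {
    if (key >= entry.low) match = entry; // keep the last range starting at or below key
  }
  if (match === null) throw new RangeError(`no shard covers key ${key}`);
  return match.database;
}

const db = findShard(shardMap, 1234);
```

With this map, key 1234 resolves to `orders_shard_1`. Scaling out amounts to adding an entry to the map and moving the affected rows, which is why the application should always look up the target database instead of hard-coding a connection.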