Windows Azure and Cloud Computing Posts for 2/27/2011+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

• Updated 2/28/2011 9:00 AM PST with articles marked •:

- Fix for failure of Windows Azure Training Kit to install under Windows 7 SP1 in the Live Windows Azure Apps, APIs, Tools and Test Harnesses section.

- Corrected my Choosing from the major Platform as a Service providers article of 2/24/2011 on SearchCloudComputing.com in the Other Cloud Computing Platforms and Services section.

- Proof that Cloud Computing is NP-Complete by Joe Weinman in the Windows Azure Infrastructure section.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

Justin Yu of the Windows Azure Storage team warned Windows Azure Storage Client Library: Rewinding stream position less than BlobStream.ReadAheadSize can result in lost bytes from BlobStream.Read() on 2/27/2011:

In the current Windows Azure storage client library, BlobStream.Read() may read fewer than the requested number of bytes if the user rewinds the stream position. This occurs when a seek operation moves to a position that is BlobStream.ReadAheadSize bytes or fewer before the previous start position. Furthermore, in this case, if BlobStream.Read() is called again to read the remaining bytes, data from an incorrect position will be read into the buffer.

What does ReadAheadSize property do?

BlobStream.ReadAheadSize defines how many extra bytes to prefetch in a get blob request when BlobStream.Read() is called. This design is supposed to ensure that the storage client library does not need to send another request to the blob service if BlobStream.Read() is called again to read N bytes from the current position, where N < BlobStream.ReadAheadSize. It is an optimization for reading blobs in the forward direction, which reduces the number of get blob requests sent to the blob service when reading a blob.
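As a rough illustration of the prefetch arithmetic, here is a minimal Python sketch. It assumes the client simply adds ReadAheadSize to the requested byte count and sends one inclusive byte range, which is consistent with the first trace later in this post but is not taken from the library's source. The function name `prefetch_range` is hypothetical.

```python
def prefetch_range(offset: int, count: int, read_ahead_size: int) -> str:
    """Return an x-ms-range value for reading `count` bytes at `offset`.

    Assumption (not from the library source): the request covers
    count + read_ahead_size bytes. HTTP byte ranges are inclusive,
    hence the trailing -1.
    """
    if offset < 0 or count <= 0 or read_ahead_size < 0:
        raise ValueError("offset and read_ahead_size must be >= 0, count > 0")
    last = offset + count + read_ahead_size - 1
    return f"bytes={offset}-{last}"


# 16 requested bytes plus 16 prefetched bytes starting at offset 100.
print(prefetch_range(100, 16, 16))
```

With the values used in the example that follows (offset 100, 16 bytes, ReadAheadSize 16), this yields the same 32-byte range that appears in the first request trace.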

This bug impacts users only if their scenario involves rewinding the stream to read, i.e. using the Seek operation to move to a position that is at most BlobStream.ReadAheadSize bytes before the previous byte offset.

The root cause of this issue is that the number of bytes to read is incorrectly calculated in the storage client library when the stream position is rewound by N bytes using Seek, where N <= BlobStream.ReadAheadSize bytes from the previous read’s start offset. (Note that if the stream is rewound more than BlobStream.ReadAheadSize bytes from the previous start offset, the stream reads work as expected.)
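The condition described above can be written as a small predicate. This is a Python sketch of the stated condition only, not the library's code; the name `rewind_hits_bug` is hypothetical, and treating a seek to the same offset (N = 0) as unaffected is an assumption, since the post only discusses rewinds.

```python
def rewind_hits_bug(prev_start: int, new_pos: int, read_ahead_size: int) -> bool:
    """True if seeking from a read that started at prev_start back to new_pos
    falls inside the affected window (0 < N <= read_ahead_size)."""
    distance = prev_start - new_pos  # N: how far the stream was rewound
    return 0 < distance <= read_ahead_size


print(rewind_hits_bug(100, 90, 16))  # rewound 10 bytes, inside the window
print(rewind_hits_bug(100, 80, 16))  # rewound 20 bytes, outside the window
```

A caller could use a check like this to decide when to apply a workaround before reading.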

To understand this issue better, let us explain this using an example of user code that exhibits this bug.

We begin by getting a BlobStream that we can use to read the blob, which is 16MB in size. We set the ReadAheadSize to 16 bytes. We then seek to offset 100 and read 16 bytes of data:

    BlobStream stream = blob.OpenRead();
    stream.ReadAheadSize = 16;
    int bufferSize = 16;
    int readCount;
    byte[] buffer1 = new byte[bufferSize];
    stream.Seek(100, System.IO.SeekOrigin.Begin);
    readCount = stream.Read(buffer1, 0, bufferSize);

BlobStream.Read() works as expected here: buffer1 is filled with 16 bytes of blob data from offset 100. Because ReadAheadSize is set to 16, the Storage Client issues a read request for 32 bytes of data, as seen in the “x-ms-range” header (set to 100-131) in the request trace. The response, as the Content-Length shows, returns the 32 bytes:

Request and response trace:

Request header:

GET http://foo.blob.core.windows.net/test/blob?timeout=90 HTTP/1.1

x-ms-version: 2009-09-19

User-Agent: WA-Storage/0.0.0.0

x-ms-range: bytes=100-131

…

Response header:

HTTP/1.1 206 Partial Content

Content-Length: 32

Content-Range: bytes 100-131/16777216

Content-Type: application/octet-stream

…

We will now rewind the stream to 10 bytes before the previous read’s start offset (the previous start offset was 100, so the new offset is 90). Note that 10 < ReadAheadSize, which triggers the problem (if we had sought back more than ReadAheadSize bytes from 100, everything would work as expected). We then issue a Read for 16 bytes starting from offset 90.

    byte[] buffer2 = new byte[bufferSize];
    stream.Seek(90, System.IO.SeekOrigin.Begin);
    readCount = stream.Read(buffer2, 0, bufferSize);

BlobStream.Read() does not work as expected here. It is called to read 16 bytes of blob data from offset 90 into buffer2, but only 9 bytes are downloaded because the Storage Client miscalculates the size it needs to read, as seen in the trace below. The x-ms-range header covers only 9 bytes (90-98 rather than 90-105), and the Content-Length in the response is 9.

Request and response trace:

Request header:

GET http://foo.blob.core.windows.net/test/blob?timeout=90 HTTP/1.1

x-ms-version: 2009-09-19

User-Agent: WA-Storage/0.0.0.0

x-ms-range: bytes=90-98

…

Response header:

HTTP/1.1 206 Partial Content

Content-Length: 9

Content-Range: bytes 90-98/16777216

Content-Type: application/octet-stream

…

Now, since the previous request for 16 bytes returned only 9 bytes, the client issues another Read request for the remaining 7 bytes:

    readCount = stream.Read(buffer2, readCount, bufferSize - readCount);

BlobStream.Read() still does not work as expected. It is called to read the remaining 7 bytes into buffer2, but the whole blob is downloaded, as seen in the request and response trace below. Due to the bug in the Storage Client, an invalid range (99-98) is sent to the service, which causes the Windows Azure Blob service to return the entire blob content. Since the client does not check the returned range and expects the starting offset to be 99, it copies 7 bytes from the beginning of the blob into the buffer, which is incorrect.

Request and response trace:

Request header:

GET http://foo.blob.core.windows.net/test/blob?timeout=90 HTTP/1.1

x-ms-version: 2009-09-19

User-Agent: WA-Storage/0.0.0.0

x-ms-range: bytes=99-98

…

Response header:

HTTP/1.1 200 OK

Content-Length: 16777216

Content-Type: application/octet-stream

…

Mitigation

The workaround is to set the value of BlobStream.ReadAheadSize to 0 before BlobStream.Read() is called if a rewind operation is required:

    BlobStream stream = blob.OpenRead();
    stream.ReadAheadSize = 0;

As we explained above, the property BlobStream.ReadAheadSize is an optimization which can reduce the number of requests sent when reading blobs in the forward direction, and setting it to 0 removes that benefit.

Summary

To summarize, the bug in the client library can result in data from an incorrect offset being read. This happens only when the user code seeks to a position before the previous offset and the distance is <= ReadAheadSize. The bug will be fixed in a future release of the Windows Azure SDK and we will post a link to the download here once it is released.
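The third trace in Justin's post shows the service answering an invalid range with 200 OK and the full blob, which the client then copies from without checking. A defensive check of that kind can be sketched as follows. This is an illustrative Python sketch, not part of the storage client library; the function name and the plain dictionary of headers are assumptions.

```python
import re

# Matches values such as "bytes 90-98/16777216".
_CONTENT_RANGE = re.compile(r"^bytes (\d+)-(\d+)/(\d+|\*)$")


def response_matches_request(status: int, headers: dict, start: int, end: int) -> bool:
    """True only if the response is a 206 that covers exactly [start, end]."""
    if start > end:
        return False  # an inverted range such as 99-98 should never be sent
    if status != 206:
        return False  # 200 OK means the whole blob came back
    match = _CONTENT_RANGE.match(headers.get("Content-Range", ""))
    if match is None:
        return False
    return int(match.group(1)) == start and int(match.group(2)) == end


print(response_matches_request(206, {"Content-Range": "bytes 90-98/16777216"}, 90, 98))
print(response_matches_request(200, {"Content-Length": "16777216"}, 99, 105))
```

Rejecting the second response would surface the problem as an error instead of silently copying bytes from the wrong offset.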

Jerry Huang recommended Leverage Azure Blob Storage for File Sync in a 2/25/2011 post to the Gladinet blog:

Windows Azure Blob Storage is one of the cloud storage service[s]. When you have a Windows Azure Blob Storage account, there are many things you can do. You can map a network drive to it for direct access from your desktop; you can back up files to it for off-site access. Those were already available with Gladinet Cloud Suite 2.0.

Today’s article will discuss another use case: leveraging Azure Blob Storage for file sync across multiple PCs. The idea is very simple. Windows Azure Storage is available anywhere you have Internet access, so you can use it as a conduit to pass files back and forth between PCs. In Gladinet Cloud Desktop 3.0, this idea was put into practice, and here are the steps to use it.

First you will need to install Gladinet Cloud Desktop 3.0 and mount your Azure Blob Storage account.

During the mounting process, the wizard will ask whether you want to enable the Cloud Sync Folder that the software will associate with your Azure Storage account. You can say YES and leave the checkbox checked.

If it is not enabled now, you can always enable it later.

You can also select an existing folder as the basis for the cloud sync folder. If you pick an existing folder, its contents will be picked up by the software and synced to your other PCs (when they have the same Azure Blob account configured).

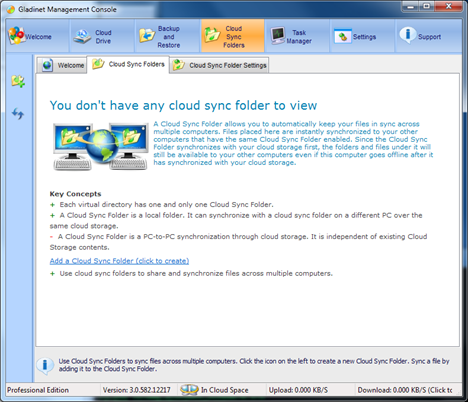

You can also enable the Cloud Sync Folder from the Cloud Sync Folder tab at the top of the Management Console.

When you click on the “Add a Cloud Sync Folder” link above, you will see this dialog:

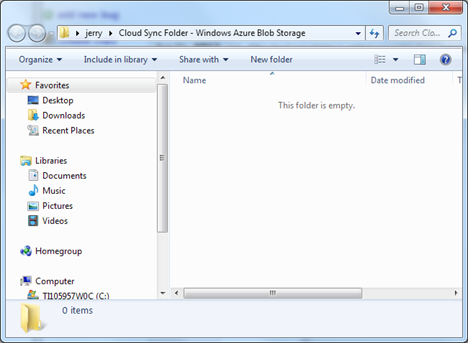

At the end of the setup, you have a local folder that is associated with the Azure Blob Storage account.

You can then repeat the same steps on your other PC.

After that, you can add documents to this folder or edit documents in it, and the changes will be synchronized to your other PC and vice versa.



SQL Azure Database and Reporting

Jonathan Rozenblit (@jrozenblit) posted Deploying a Simple Cloud App on 2/25/2011:

Many of you have now attended our first wave of local events where we show you how to deploy a simple application to Windows Azure. I hope that you enjoyed the events and that they were of value. If you haven’t been to one of these in your community, there is another wave coming soon, so stay tuned here as well as to your local user groups.

As feedback from those events, you told us that it would be great to have the step by step written instructions so that you can follow at your own pace. So here they are! In the next few posts, I’ll go through all of the same steps, add some additional commentary, as well as some resource links that you can check out as supplemental reading.

Pre-Work

Before we get started with the real work, you’ll need to download some files that we’ll use throughout this walkthrough. Go ahead and download the following:

Let’s deploy

- Part 1: Setting Up a SQL Azure Server and Database

- Part 2: Scripting the On-Premises Database for SQL Azure

- Part 3: Executing the Scripts on the SQL Azure Database

- Part 4: Creating the Cloud Solution

- Part 5: Updating the Configuration

- Part 6: Preparing the Deployment Package

- Part 7: Creating the Hosted Service

- Part 8: Promoting from Staging to Production

If at any time during your walkthrough you have any questions or are having trouble, feel free to send me an email (cdnazure@microsoft.com). I’ll be more than happy to help.

I mentioned “another” wave above. We’re working on another wave of community events that will walk through deploying a more complex application. More details on that in a little bit.

I’d also like to thank Barry Gervin and Cory Fowler from ObjectSharp for all of their help to date in putting together the content and hosting the live events.

Jonathan Rozenblit (@jrozenblit) posted Deploying a Simple Cloud App: Part 3 - Executing the Scripts on the SQL Azure Database on 2/25/2011:

If you’ve started reading from this post, you’ll need to go through the previous parts before starting this one:

Introduction

Part 1: Setting Up a SQL Azure Server and Database

Part 2: Scripting the On-Premise Database for SQL Azure

In this part, we’ll connect to our SQL Azure database and execute some scripts required by the application.

Connect to the SQL Azure Server

1. In the Windows Azure Management Portal, click on the server you previously provisioned. The Properties pane on the right-hand side of the screen will refresh with the server information.

   If for some reason you can’t see it, hover your mouse on the right-hand side of the screen until you see the cursor change to a two-arrow cursor. Drag it towards the left and the Properties pane will appear.

2. In order to connect to the database, we’re going to need the fully qualified DNS name of the server. Highlight the value in the Fully Qualified DNS Name field and press CTRL+C.

   Remember, the Windows Azure Management Portal is a Silverlight application. Don’t bother trying to right-click to copy because you’re just going to get the Silverlight menu.

3. Open SQL Server Management Studio and open a new connection.

4. Paste the server name in the Server name field.

5. Change the authentication type to SQL Server Authentication.

6. Enter the administrator login and password we set up earlier in the Login and Password fields respectively.

   If you don’t remember the login, just flip back to the Windows Azure Management Portal and it will be in the Properties pane.

7. Before we click on Connect, click on Options.

8. We want to make sure that the connection is secure. To do that, click on Encrypt connection.

9. Click on Connect. Give it a few seconds and you’ll be connected.

10. Notice the icon next to the server name? The icon is blue, indicating that the connection is to a SQL Azure server. Expand the Databases node to see our NerdDinner database.

11. Open the first of three script files required for the ASP.NET membership and profile providers required by the app. From the File menu, click on Open, and then select File. Select InstallCommon.sql.

    If you haven't already done so, download the required scripts from here.

12. Change the database to NerdDinner and click Execute.

    It should run relatively quickly and execute with no problems.

13. Repeat steps 11 and 12 with InstallMembership.sql, InstallProfile.sql, and NerdDinner.sql (the file we scripted in part 2).

    Don't forget to ensure that the NerdDinner database is selected before running each script.

14. Let’s check that the tables and stored procedures were created. Expand the NerdDinner database. You should now see tables and stored procedures.

So that was relatively painless, right? Not too different from running scripts against your on-premise SQL Server, right? Right! Congratulations! You have now successfully deployed your SQL Azure database.
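For readers who prefer to script the connection instead of using the Management Studio dialog, the same settings (SQL Server authentication, encrypted connection, NerdDinner as the target database) can be captured in an ODBC-style connection string. The Python sketch below only builds the string and lists the script order from the steps above. The driver name, the `login@server` form, the sample server name and the function name are assumptions for illustration, not part of the walkthrough.

```python
# Order in which the walkthrough runs the scripts against NerdDinner.
SCRIPT_ORDER = [
    "InstallCommon.sql",
    "InstallMembership.sql",
    "InstallProfile.sql",
    "NerdDinner.sql",
]


def build_connection_string(server_fqdn: str, database: str,
                            login: str, password: str) -> str:
    """Build an ODBC connection string with encryption switched on.

    Assumptions: an installed "ODBC Driver 17 for SQL Server" and a login
    written as login@servername. Values containing ';' or '}' would need
    escaping, which this sketch does not handle.
    """
    server_name = server_fqdn.split(".", 1)[0]
    user = login if "@" in login else f"{login}@{server_name}"
    parts = {
        "Driver": "{ODBC Driver 17 for SQL Server}",
        "Server": f"tcp:{server_fqdn},1433",
        "Database": database,
        "Uid": user,
        "Pwd": password,
        "Encrypt": "yes",                # same intent as "Encrypt connection"
        "TrustServerCertificate": "no",
    }
    return ";".join(f"{key}={value}" for key, value in parts.items()) + ";"


# "myserver" is a placeholder; use the fully qualified DNS name from the portal.
print(build_connection_string("myserver.database.windows.net",
                              "NerdDinner", "admin", "<password>"))
```

A database client library could take this string and run the files in `SCRIPT_ORDER` one at a time against the NerdDinner database.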

Online Database Manager

Let’s pause for a moment and look at a scenario where you may not have SQL Server Management Studio available to you. No problem. You can deploy the scripts from the online management console.

- Go back to the Windows Azure Management Portal.

- Click on the NerdDinner database, and then click on Manage in the toolbar. A new tab or window will open.

If you have a pop-up blocker, it may prevent the window from opening. Allow the pop-up in your pop-up blocker, and click on Manage again.

- All the login information is pre-populated because you previously selected the database. Just enter your password and click Connect.

You can do pretty much everything from here. New query, tables, etc. For example, to deploy scripts:

- Click on Open Query.

- Select a script file and click Open.

- Click on Execute.

There you have it – deployments from anywhere!

SQL Azure Deployment Completed

With that, we’re done with the SQL Azure portion of our deployment. Let’s review what we did:

- We created a SQL Azure Server.

- We created a SQL Azure Database.

- We took our on-premise database and scripted it for SQL Azure.

- We connected to the SQL Azure database, and executed the scripts against it.

Next up – creating the Cloud solution in Visual Studio 2010.

Herve Roggero delivered a detailed answer to dn2009’s Why choose SQL Azure over Amazon RDS (MySQL)? question in the SQL Azure forum on 2/18/2011 (missed when posted):

Q: Amazon RDS (aka MySQL) appears to be more expensive than SQL Azure, but I haven't found a detailed comparison of the two. Why would I choose SQL Azure over RDS? I guess it comes down to SQL Server vs. MySQL.

A: Tricky question... let me take a shot at it... :) There is a little more than meets the eye.

It seems that the Amazon RDS offering is closer to a server hosting model; you pick your memory/CPUs... and so on. SQL Azure is a departure from traditional database servers in the sense that you don't really run a "server" in the cloud. You run a database in the cloud. It might sound a bit like semantics, but it isn't. You are assigned a SQL Azure database server, but it's nothing more than a DNS entry. If you create 5 databases on that database server, they will be running on different physical servers (guaranteed) so that their performance and availability can be managed by SQL Azure independently. So databases can be moved around to spread the load overall (remember, it's a multitenant environment), depending on usage patterns of individual databases. So you won't have to define a memory footprint, CPU or I/O requirements; you just build and deploy your database through a web interface.

On the flip side, it appears that Amazon RDS provides storage options not available on SQL Azure. The largest database size in SQL Azure is 50GB, which for most applications is plenty. Amazon RDS provides up to 1TB. So the Amazon solution provides companies with the traditional "scale up" model that comes with its own set of challenges for scalability. SQL Azure limits the size, which forces a scale-out design on cloud applications that need to grow beyond 50GB.

So I would say that at a glance, the Amazon RDS service appears a bit more static, and closer to a traditional database server provisioning model (the one-time fee in Amazon RDS feels like you are paying for a server, for example), while SQL Azure is more dynamic in nature, proposes a scale-out model, and adapts to your database needs over time. Since the spirit of cloud computing is about flexibility and ubiquity (in the sense that resources could be anywhere), in order to save on maintenance costs over time, I believe SQL Azure delivers higher value overall. But that's just my opinion.
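To make the "scale out" idea in the answer above concrete, here is a minimal Python sketch of one common approach: spreading rows across several databases by hashing a key. It is an illustration of the general technique only; the answer does not prescribe a specific scheme, and the function name and key format are hypothetical.

```python
import hashlib


def shard_for(key: str, shard_count: int) -> int:
    """Map a key (for example a customer ID) to one of shard_count databases.

    A stable hash is used instead of Python's built-in hash(), which can
    differ between interpreter runs.
    """
    if shard_count <= 0:
        raise ValueError("shard_count must be positive")
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


# Four databases, each of which stays under the per-database size cap.
for customer in ("customer-1", "customer-2", "customer-3"):
    print(customer, "-> database", shard_for(customer, 4))
```

A simple modulo scheme like this makes adding databases later expensive, because most keys move; that trade-off is part of the design work the answer alludes to.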


MarketPlace DataMarket and OData

Paul Miller asserted “Companies must create trust with their customers as new tools for mining valuable data sets come to market” as an introduction to his Rosslyn Analytics, Microsoft Finding Value in Data Aggregation post of 2/27/2011 to GigaOm Pro (subscription required):

Data marketplaces, like those I discussed last week, become more valuable as they move beyond offering catalogs of individual sets to combine data from different sources.

Separate conversations I had with Rosslyn Analytics CEO Charles Clark and Microsoft Windows Azure’s Product Unit Manager for the data market business, Moe Khosravy, illustrate that such plans are being considered. They also show that a critical factor needs to be addressed in building these new business opportunities: trust. Rosslyn [...] [Link added.]

Rosslyn Analytics claims to be “the world leader in cloud-based spend data management solutions, enabling all users to obtain, transform and analyze data in minutes.”

Shawn Wildermuth’s Developing Applications for Windows Phone 7 with Silverlight title for Addison-Wesley became available as a Rough Cut on Safari Books Online on 2/25/2011. Chapter 7, “Services,” contains a “Consuming OData” section, which begins:

The Open Data Protocol (i.e. OData) is a standard way of exposing relational data across a service layer. OData is built on top of other standards including HTTP, JSON and the Atom Publishing Protocol (i.e. AtomPub, which is an XML format). OData represents a standard way to query and update data that is web-friendly. It uses a REST-based URI syntax for querying, shaping, filtering, ordering, paging and updating data across the Internet.
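As a small illustration of the URI syntax mentioned in the excerpt, the Python sketch below assembles a query URL from the standard OData system query options `$filter`, `$orderby`, `$top` and `$skip`. The service root, entity set and property names are made up for the example, and the helper name `odata_url` is hypothetical.

```python
from urllib.parse import quote


def odata_url(service_root, entity_set, filter_expr=None,
              order_by=None, top=None, skip=None):
    """Build an OData query URL for filtering, ordering and paging."""
    options = []
    if filter_expr:
        options.append(("$filter", filter_expr))
    if order_by:
        options.append(("$orderby", order_by))
    if top is not None:
        options.append(("$top", top))
    if skip is not None:
        options.append(("$skip", skip))

    url = service_root.rstrip("/") + "/" + entity_set
    if options:
        # Percent-encode values; keep quotes, parentheses and commas readable.
        pairs = [name + "=" + quote(str(value), safe="'(),")
                 for name, value in options]
        url += "?" + "&".join(pairs)
    return url


print(odata_url("https://example.invalid/Service.svc", "Emissions",
                filter_expr="Country eq 'France'", order_by="Year desc",
                top=10, skip=20))
```

The third page of ten results would use the same call with `skip=20`, which is how paging maps onto the URI.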

If you don’t have a Safari account, only a preview of the first few pages of the chapter will be visible.

Nalaka Withanage described Consuming Windows Azure DataMarket with SharePoint 2010 via Business Connectivity Services in a 2/22/2011 post (missed when posted):

Windows Azure MarketPlace DataMarket is a service that provides a single consistent marketplace and delivery channel for high quality information as cloud services. It provides high quality statistical and research data across many business domains, from financial to health care. You can read more about it here.

How do we consume this content in an enterprise scenario? In this post we are going to look at how to integrate Azure Marketplace DataMarket with SharePoint 2010 through Business Connectivity Services, using a .NET connector to expose the data as an external list. This enables us to surface Azure DataMarket data through SharePoint 2010 lists, which opens up a world of possibilities, including going offline with SharePoint Workspace and participating in workflows, enterprise search, etc.

Let’s first start with Azure DataMarket. Log on to https://datamarket.azure.com with your Live ID and subscribe to a dataset that you are interested in. I selected the European Greenhouse Gas Emissions dataset. Also note down your account key in the Account Keys tab, as we will need this information later on.

The dataset details give you the input parameters and results as follows.

You can navigate to the dataset link with your browser and view the OData feed. We are going to consume this OData feed with WCF Data Services. This allows us to use LINQ to filter the data.

LINQPad is a nifty tool to develop and test LINQ query expressions. It allows you to connect to Windows Azure DataMarket datasets and develop the queries against the data as shown below.

Now let’s move on to VS2010 and create a BCS Model project as shown below.

Rename the default entity in the BDC Model designer and set the properties that you want to expose as the external content type.

Once all properties are set, BDC Explorer displays my model as shown below.

Now our BDC model is ready. Next, let’s implement the ReadList and ReadItem BDC operations.

First, add a Service Reference to your project. Set the Address to the location of your Azure DataMarket dataset and discover the OData feed as shown below.

After the service reference is added, we can implement the ReadItem method as shown below.

Note that the Service Reference context requires your Live ID and account key to be passed as credentials in the service call.
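The credentials step can be illustrated outside of .NET as well. The Python sketch below builds an HTTP Basic Authorization header from a user name and an account key. That the feed accepts Basic authentication with the account key as the password is an assumption made for this illustration, and the helper name and sample values are hypothetical.

```python
import base64


def basic_auth_header(user: str, account_key: str) -> dict:
    """Return an Authorization header carrying user:account_key (HTTP Basic)."""
    raw = f"{user}:{account_key}".encode("utf-8")
    token = base64.b64encode(raw).decode("ascii")
    return {"Authorization": f"Basic {token}"}


# Placeholder values; a real call would use your Live ID and account key.
headers = basic_auth_header("someone@example.invalid", "<account-key>")
print(headers["Authorization"].split(" ")[0])
```

An HTTP client would send these headers with each request to the dataset's OData feed, and should do so only over HTTPS because Basic credentials are merely encoded, not encrypted.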

Once all operations are implemented, press F5 to build and deploy your BDC model into SharePoint. Once deployed, log on to Central Administration, go to Manage Service Applications, select Business Connectivity Services and view the details of the EmissionStat content type being deployed. You might want to [set] permissions for this content type at this point.

Now that the external content type is defined, all we need is an external list based on this content type. Fire up SharePoint Designer and create an external list referencing the new external content type just created.

Once the list is created, navigate to the list in the portal. Note that Windows Azure Marketplace DataMarket content is now surfaced through a SharePoint 2010 list as shown below. The VS2010 solution associated with this post can be downloaded here.

Viewing item details shows full item details using our ReadItem BCS operation.

Integrating structured data (Azure) with unstructured content (collaborative data) in SharePoint allows you to create high-value business composites for informed decision making at all levels of your organisation.


Windows Azure AppFabric: Access Control and Service Bus

No significant articles today.


Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Wade Wegner (@WadeWegner) solved Rainmanjam’s Training Kit Configuration Wizard fails with Windows 7 SP1 / IE 9 RC problem in the Windows Azure Platform Development forum on 2/28/2011:

Problem:

Solution:

This will be fixed in the next release of the Windows Azure Platform Training Kit. It's caused by Windows 7 SP1 changing the build number from 7600 to 7601.

In the interim, you can update line 56 of D:\WAPTK\Assets\Setup\Dependencies.dep from ...

    <os type="Vista;Server" buildNumber="7600">

... to ...

    <os type="Vista;Server" buildNumber="7601">

... and run again.
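If you would rather script that one-line change than edit the file by hand, a minimal Python sketch could look like the following. It operates on the file's text and replaces only the exact element quoted above; the function name is hypothetical, and reading or writing the actual file (and keeping a backup) is left to the reader.

```python
OLD_ELEMENT = '<os type="Vista;Server" buildNumber="7600">'
NEW_ELEMENT = '<os type="Vista;Server" buildNumber="7601">'


def patch_dependencies(dep_text: str) -> str:
    """Return the text with the Vista;Server build number changed to 7601."""
    return dep_text.replace(OLD_ELEMENT, NEW_ELEMENT)


sample = 'before\n<os type="Vista;Server" buildNumber="7600">\nafter\n'
print(patch_dependencies(sample))
```

Text that does not contain the 7600 element is returned unchanged, so running the patch twice is harmless.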

Microsoft Researchers Roger Barga, Jared Jackson, and Wei Lu claimed they were “Making Bioinformatics Data More Accessible to Researchers Worldwide” in an NCBI BLAST on Windows Azure post of 2/2011:

Built on Windows Azure, NCBI BLAST on Windows Azure enables researchers to take advantage of the scalability of the Windows Azure platform to perform analysis of vast proteomics and genomic data in the cloud.

BLAST on Windows Azure is a cloud-based implementation of the Basic Local Alignment Search Tool (BLAST) of the National Center for Biotechnology Information (NCBI). BLAST is a suite of programs that is designed to search all available sequence databases for similarities between a protein or DNA query and known sequences. BLAST allows quick matching of near and distant sequence relationships, providing scores that allow the user to distinguish real matches from background hits with a high degree of statistical accuracy. Scientists frequently use such searches to gain insight into the function and biological importance of gene products.

BLAST on Windows Azure extends the power of the BLAST suite of programs by allowing researchers to rent processing time on the Windows Azure cloud platform. The availability of these programs over the cloud allows laboratories, or even individuals, to have large-scale computational resources at their disposal at a very low cost per run. For researchers who don’t have access to large computer resources, this greatly increases the options to analyze their data. They can now undertake more complex analyses or try different approaches that were simply not feasible before.

BLAST on Windows Azure in Action

One of the major challenges for many bioinformatics laboratories has been obtaining and maintaining the very costly computational infrastructure required for the analysis of vast proteomics and genomics data. NCBI BLAST on Windows Azure addresses that need.

Seattle Children’s Hospital: Solving a Six-Year Problem in One Week

At Seattle Children’s Hospital, researchers interested in protein interactions wanted to know more about the interrelationships of known protein sequences. Due to the sheer number of known proteins (nearly 10 million), this would have been a very difficult problem for even the most state-of-the-art computer to solve. When the researchers first approached the Microsoft Extreme Computing Group (XCG) to see if NCBI BLAST on Windows Azure could help solve this problem, initial estimates indicated that it would take a single computer more than six years to find the results. But by leveraging the power of the cloud, they could cut the computing time substantially.

Spreading the computational workload across multiple data centers allowed BLAST on Windows Azure to vastly reduce the time needed to complete the analysis.

BLAST on Windows Azure enabled the researchers to split millions of protein sequences into groups and distribute them to data centers in multiple countries (spanning two continents) for analysis. By using the cloud, the researchers obtained results in about one week. This has been the largest research project to date run on Windows Azure.

Fueling Hydrogen Research

Scientists at the University of Washington’s Harwood Lab are working on a project to identify key drivers for producing hydrogen, a promising alternative fuel. The method they adopted characterizes a population of strains of the bacterium Rhodopseudomonas palustris and uses integrative genomics approaches to dissect the molecular networks of hydrogen production.

The process consists of a series of steps using BLAST to query 16 strains to sort out the genetic relationships among them, looking for homologs and orthologs.

Each step can be very computationally intensive. Each of the 16 strains, for example, is computationally predicted to have approximately 5,000 proteins. A BLAST run can require three hours or more to analyze each strain. When Harwood Lab’s local resource was unable to handle the computation, the researchers submitted their request to a nationwide computer cluster, but the request was rejected after two days due to the long job-queuing time.

The researchers then contacted the XCG team to see if BLAST on Windows Azure could help them with this problem before their deadline, and it did. BLAST on Windows Azure significantly reduced computing time:

- The time for BLAST on Windows Azure to process 5,000 sequences of one strain was reduced from three hours to fewer than 30 minutes.

- The entire analysis, coordinated by SEGA and XCG teams, was completed in three days.

The on-demand nature of BLAST on Windows Azure totally eliminates the job-queuing time, which sometimes is even longer than the computation time when running on the high-performance public computing resources that researchers often rely upon.

Scientists are using BLAST on Windows Azure to sort out genetic relationships among 16 strains of the bacterium Rhodopseudomonas palustris, looking for homologs and orthologs.

Implementing BLAST on Windows Azure

The implementation of NCBI BLAST on Windows Azure consists of two distinct stages. The first stage is a preparation stage, in which the environment for the BLAST executable is staged and sent to each cloud “worker”—or compute node. In the second stage, the actual BLAST runs are carried out in response to input from the user.

Two crucial items need to be made available to each cloud worker that will run a portion of the BLAST job. The first is the BLAST application. BLAST on Windows Azure uses the latest version of BLAST+ executables (BlastP, BlastN, and BlastX) that are made available by NCBI. These applications can be used without any modification. In addition, the user needs to have access to one or more databases against which the BLAST application will search for its results. These are available from several sources on the web, including NCBI.

BLAST executables are bundled as a resource inside a cloud service package. Once the user deploys the package on their Windows Azure account, the BLAST+ executables get deployed on each worker for local execution. NCBI databases (such as nr, alu, and human_genome) are downloaded from the NCBI FTP site to Azure Blob storage by using a database download task that is executed by any available worker.

A web role provides the user with an interface where they can initiate, monitor, and manage their BLAST jobs. The user can enter the location of the input file on their local machine for upload, specify the number of partitions into which they want to break down their job, and specify BLAST-specific parameters for their job.

Once the user submits a job through the web role interface, a new split task entry is created in the Azure table. All available workers look for tasks in this table. The first available worker retrieves the task and splits the input file sequence into the number of partitions that were specified by the user. For each segment of the input file, a new task is created in the task table, and the next available worker retrieves this task from the task table.

Once all of the tasks in the queue have been completed, a worker takes the output of each task and merges them into a single file. That file is placed in a blob in Windows Azure storage and a URL to the result data is recorded in the job history for the user. This output can then be downloaded from the web role user interface.
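The split and merge steps described above can be sketched in a few lines of Python. This is a minimal illustration, not the NCBI BLAST on Windows Azure implementation: it assumes the input is FASTA text whose records start with a ">" header line, and the function names split_fasta and merge_outputs are hypothetical.

```python
from typing import List


def split_fasta(text: str, partitions: int) -> List[str]:
    """Split FASTA text into at most `partitions` chunks, keeping records whole."""
    if partitions < 1:
        raise ValueError("partitions must be at least 1")
    # A record starts at a '>' header line and runs until the next header.
    records: List[str] = []
    for line in text.splitlines():
        if line.startswith(">"):
            records.append(line)
        elif records and line.strip():
            records[-1] += "\n" + line
    # Deal records round-robin so chunk sizes differ by at most one record.
    buckets: List[List[str]] = [[] for _ in range(min(partitions, len(records)))]
    for index, record in enumerate(records):
        buckets[index % len(buckets)].append(record)
    return ["\n".join(bucket) + "\n" for bucket in buckets]


def merge_outputs(outputs: List[str]) -> str:
    """Concatenate per-task outputs, in task order, into one result body."""
    return "".join(outputs)
```

Each chunk would become one task entry for a worker. Round-robin dealing balances record counts but does not preserve the original record order in the merged result, so a real service would need to track ordering if it matters.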

Lessons Learned

The application of BLAST on Windows Azure to the large runs provided to us by the University of Washington and Children’s Hospital groups taught Windows Azure researchers many important lessons about how to structure large-scale research projects in the cloud. Most of what we learned is applicable not just to the BLAST case but to any parallel job run at scale in the cloud.

Design for failure: Large-scale data-set computation of this sort will nearly always result in some sort of failure. In the week-long run of the Children’s Hospital project, we saw a number of failures: failures of individual machines and entire data centers taken down for regular updates. In each of these cases, the Windows Azure framework provided us with messages about the failure and had mechanisms in place to make sure jobs were not lost.

Structure for speed: Structuring the individual tasks in an optimal way can significantly reduce the total run time of the computation. Researchers conducted several test runs before embarking on runs of the whole dataset in order to make sure that the input data was partitioned in such a way as to get the most use out of each worker node. For example, Windows Azure expects individual tasks to complete in fewer than two hours. If a job takes more than two hours, Windows Azure assumes that the job failed and starts a new job doing the same work. If a job is too short, you don't get all the benefits of running the jobs in parallel.

Scale for cost savings: If a few long-running jobs are processing alongside many shorter jobs, it is important to not have idle worker nodes continuing to run up costs once their piece of the job is done. Researchers learned to detect which computers were idle and shut them down to avoid unnecessary costs.
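The "Structure for speed" lesson amounts to choosing a partition count that keeps every task comfortably under the two-hour ceiling without making tasks trivially short. The sketch below is one hypothetical way to do that arithmetic. The function name, the one-hour default target and the idea of estimating total work in CPU-hours are assumptions for illustration, not part of the researchers' tooling.

```python
import math

TASK_LIMIT_HOURS = 2.0  # per-task ceiling described in the text


def partitions_needed(total_cpu_hours: float, target_task_hours: float = 1.0) -> int:
    """Return how many partitions keep each task near `target_task_hours`."""
    if not 0 < target_task_hours < TASK_LIMIT_HOURS:
        raise ValueError("target must be positive and below the task limit")
    if total_cpu_hours <= 0:
        raise ValueError("total_cpu_hours must be positive")
    # Round up so that no partition exceeds the target duration.
    return max(1, math.ceil(total_cpu_hours / target_task_hours))
```

For example, an estimated 100 CPU-hours of work with a one-hour target yields 100 partitions. Test runs such as those the researchers describe would supply the estimate.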

People

Roger Barga ARCHITECT

Jared Jackson LEAD RSDE

Wei Lu RSDE

Download the 1/26/2010 version of the NCBI BLAST engine for Windows Azure here.

Microsoft Research’s Microsoft Biology Initiative has released the Microsoft Biology Foundation (MBF) toolkit:

The Microsoft Biology Foundation (MBF) is a language-neutral bioinformatics toolkit built as an extension to the Microsoft .NET Framework to help researchers and scientists work together and explore new discoveries. This open-source platform serves as a library of commonly-used bioinformatics functions. MBF facilitates collaboration and accelerates scientific research by enabling different data sets to communicate. Several universities and corporations use MBF tools, which help reduce processing time and enable scientists to focus on research.

Microsoft Biology Foundation 1.0

Microsoft Biology Foundation 1.0 includes parsers for common bioinformatics file formats; algorithms for manipulating DNA, RNA, and protein sequences; and connectors to biological web services, such as NCBI BLAST.

- Install MBF 1.0

Install the latest, most stable version of the toolkit and start developing your own tools and applications.

- Install ShoRuntime for MBF

In addition to the base MBF library, the ShoRuntime is provided as an optional addition to this library, providing high performance statistics and math capabilities.

- Free Training for MBF

We have prepared extensive training materials and hands-on labs so you can take full advantage of the Microsoft Biology Foundation. Students and educators can download Microsoft Visual Studio 2010 and other Microsoft professional developer tools for free from Microsoft DreamSpark.

- Participate in the Community

MBF is an open source project, with code available for academic and commercial use, free of charge under the OSI-approved MS-PL license. Download the source code and/or participate in development by joining the MBF CodePlex project, which includes forums for help, user feedback, feature requests, and bug reporting. MBF is open to code contributions from the community, extending the range of available functionality to researchers and life scientists everywhere.

Microsoft Biology Foundation 2.0 Development Preview

The MBF 2.0 development preview is now available. This release is intended for development evaluation and contains only source code. This release includes new features, as well as optimized existing features and a number of resolved issues. We encourage you to download it, try it out, and then give us your feedback.

MBF 2.0 Highlights

- New BAM extension paired end support

- New object model change to ISequence : IList<byte>

- Optimizations for PaDeNA, Sequence, Mummer, and the object model

System Requirements

- Windows XP with Service Pack 3 or later

- Microsoft .NET Framework 4.0

About Microsoft Biology Foundation

MBF is part of the Microsoft Biology Initiative (MBI), an effort in Microsoft Research to bring new technology and tools to the area of bioinformatics and biology. It is available under an open-source license, and executables, source code, demo applications, and documentation are freely downloadable.

See also Microsoft Research will hold the Microsoft Biology Foundation Workshop 2011 in Redmond, WA on 3/11/2011 in the Cloud Computing Events section below.

David Hardin added to his WAD series by explaining an issue with Azure Diagnostics and ASP.NET Health Monitoring in a 2/26/2011 post:

By default Windows Azure Diagnostics (WAD) does not receive ASP.NET Health Monitoring events. The machine web.config file at %windir%\Microsoft.NET\Framework64\v4.0.30319\Config\web.config shows the default behavior of ASP.NET Health Monitoring. Basically all unhandled errors and audit failures are written to the Windows Event Log within the Application folder as shown:

For WAD to capture the health event you can either configure WAD to read from the event log or you can change the behavior of where the health events are routed. With the event log approach you’ll want to limit what WAD reads, see http://blog.smarx.com/posts/capturing-filtered-windows-events-with-windows-azure-diagnostics, using:

Application!*[System/Provider/@Name='ASP.NET 4.0.30319.0']

I’d rather use "Application!*[System/Provider/@Name[starts-with(.,'ASP.NET')]" but was unable to get the starts-with syntax to work. I’ve also noticed that events sent to DiagnosticMonitorTraceListener appear in table storage before those sent to the event log even with identical transfer periods configured. Perhaps it takes one transfer period to read from the event log and another to transfer the data?

The other approach, which I prefer, is to change the web.config so that health events are routed to the DiagnosticMonitorTraceListener as shown:

<healthMonitoring enabled="true">
  <providers>
    <add name="TraceWebProvider" type="System.Web.Management.TraceWebEventProvider" />
  </providers>
  <rules>
    <remove name="All Errors Default" />
    <add name="All Errors Default" eventName="All Errors" provider="TraceWebProvider" profile="Default" minInstances="1" maxLimit="Infinite" minInterval="00:01:00" custom="" />
    <add name="Application Events" eventName="Application Lifetime Events" provider="TraceWebProvider" profile="Default" minInstances="1" maxLimit="Infinite" minInterval="00:01:00" custom="" />
  </rules>
</healthMonitoring>

Basically this tweaks the default behavior defined in the machine’s web.config instead of completely replacing it. See the eventMappings in %windir%\Microsoft.NET\Framework64\v4.0.30319\Config\web.config to get an idea of what is possible.

When you use the event log approach your events end up in the WADWindowsEventLogsTable in Azure Storage. Modifying web.config to route health events through DiagnosticMonitorTraceListener writes the events to WADLogsTable.

Other posts in my WAD series are available at: http://blogs.msdn.com/b/davidhardin/archive/tags/wad/

Charles posted a 00:06:27 Building on Azure: Softronic video segment on 2/24/2011:

Using the cloud, Swedish based Softronic has created an e-government application centered around anti-money laundering called CM1. In addition, the company has established a platform for Open Government Data (www.offentligadata.se) that is built on top of Windows Azure and Windows Azure Marketplace DataMarket. With this, government compliance requirements around public information dissemination can more easily be met. Mathias Ekman explains.

Watch the video here.

The post also includes links to earlier Building on Azure: CODit and Building on Azure: InterGrid/GreenButton segments.

<Return to section navigation list>

Visual Studio LightSwitch

<Return to section navigation list>

Windows Azure Infrastructure

• Joe Weinman (@joeweinman) offered proof that Cloud Computing is NP-Complete in a 2/21/2011 working paper. From the abstract:

Cloud computing is a rapidly emerging paradigm for computing, whereby servers, storage, content, applications or other services are provided to customers over a network, typically on an on-demand, pay-per-use basis. Cloud computing can complement, or in some cases replace, traditional approaches, e.g., owned resources such as servers in enterprise data centers.

Such an approach may be considered as the computing equivalent of renting a hotel room rather than owning a house, or using a taxi or rental car rather than owning a vehicle. One requirement in such an approach is to determine which resources should be allocated to which customers as their demand varies, especially since customers may be geographically dispersed, cloud computing resources may be dispersed, and since distance may matter due to application response time constraints, which are impacted by network latency.

In the field of computational complexity, one measure of the difficulty of a problem is whether it is NP-complete (Non-deterministic Polynomial-time complete). Briefly, such a designation signifies that: 1) a guess at a solution may be verified in polynomial time; 2) the time to solve the problem is believed to grow so rapidly as the problem size grows as to make exact answers impossible to determine in meaningful time using today’s computing approaches; 3) the problem is one of a set of such problems that are roughly equivalent to each other in that any member of the set may be transformed into any other member of the set in polynomial time, and solving the transformed problem would mean solving the untransformed one.

We show that an abstract formulation of resource assignment in a distributed cloud computing environment, which we term the CLOUD COMPUTING demand satisfiability problem, is NP-complete, using transformations from the PARTITION problem and 3-SATISFIABILITY, two of the “core” NP-complete problems. Specifically, let there be a set of customers, each with a given level of demand for resources, and a set of servers, each with a given level of capacity, where each customer may be served by two or more of the servers. The general problem of determining whether there is an assignment of customers to servers such that each customer’s demand may be satisfied by available resources is NP-complete.

The impact on Cloudonomics is that even if resources are available to meet demand, correctly matching a set of demands with a set of resources may be too complex to solve in useful time. [Emphasis added.]
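The gap between checking a proposed assignment and finding one can be illustrated with a small sketch. It is not taken from the working paper: it assumes each customer is served entirely by a single server chosen from that customer's eligible set, and the names is_feasible and find_assignment are hypothetical. Verification runs in time linear in the number of customers and servers, while the naive search tries every combination of eligible servers.

```python
from itertools import product
from typing import Dict, Optional, Sequence


def is_feasible(assignment: Dict[str, str],
                demand: Dict[str, int],
                capacity: Dict[str, int]) -> bool:
    """Polynomial-time check: no server carries more than its capacity."""
    load = {server: 0 for server in capacity}
    for customer, server in assignment.items():
        load[server] += demand[customer]
    return all(load[server] <= capacity[server] for server in capacity)


def find_assignment(demand: Dict[str, int],
                    capacity: Dict[str, int],
                    eligible: Dict[str, Sequence[str]]) -> Optional[Dict[str, str]]:
    """Exhaustive search: exponential in the number of customers."""
    customers = list(demand)
    for choice in product(*(eligible[c] for c in customers)):
        assignment = dict(zip(customers, choice))
        if is_feasible(assignment, demand, capacity):
            return assignment
    return None
```

With two servers of equal capacity and every customer eligible for both, asking whether demands that sum to the total capacity can be placed is an instance of PARTITION, one of the problems the abstract names. The search space doubles with each added customer.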

Joe leads Communications, Media and Entertainment Industry Solutions for Hewlett-Packard. Contact Joe at http://www.joeweinman.com/contact.htm.

Lori MacVittie (@lmacvittie) wrote in a series of 2/28/2011 tweets (in chronological order):

- My hero! I said cloud was NP-Complete here: http://bit.ly/evj7bU & now @joeweinman proved it (a real live proof!) http://bit.ly/gXCTnn

- @joeweinman You are awesome! I fooled around with creating the proof but it made my head explode. Ya da man.

- @joeweinman That proof - along with Cloudonomics in general - is pretty convincing you know more than enough about #cloud

Apollo Gonzalez offered a concise guide to Preparing Your IT Resources for the Windows Azure Platform in a 2/26/2011 post:

Cloud Computing is a hot topic on everyone’s list of learning to-do’s. Once the seal is broken on the subject, one of the first responses is fear: fear about security, scalability, cost, and readiness. While all these concerns are valid, each one can easily be answered by all cloud vendors (some better than others). Eventually, the fear of the unknown will be eliminated and companies will see the enormous benefits associated with leveraging what the cloud has to offer. The goal of this post is to help eliminate some of the fear by focusing on how to prepare your IT resources for this new cloud world.

One of the most asked questions when discussing the cloud is, “How should I prepare my IT staff?” The best way to answer this question is to identify each group within IT and provide some preparation considerations. The remainder of this post will focus on preparation considerations for the Windows Azure Platform.

Infrastructure

Make no mistake about it: infrastructure staff will be critical to the success of a company’s migration to the Windows Azure Platform. The following list provides some preparation considerations:

- Understand how instances are allocated, load balanced and managed in Windows Azure. Look into the Fabric Controller to understand how it communicates with agents running on each virtual instance.

- Look into some of the different monitoring tools (Cumulux, Paraleap, System Center).

- Realize that with the Windows Azure Platform cost is impacted by consumption and in many cases applications may not need to be available 24-7. Products like the one from Paraleap can help you auto scale applications, helping to reduce usage costs.

- Get comfortable with the Windows Azure Platform compute service. This service allows for the creation of certain types of roles. You have a web role, worker role, and vm role (go here for details). Think of the web role as IIS, the worker role as a Windows service, and the vm role as a virtual machine hosted on a server. Keep in mind that each role has seemingly infinite scale and elasticity.

- Identify what vm sizes are available and the cost implications of picking one vm size vs. another.

- Know what the SLA’s are.

- Understand the implications and benefits of the Windows Azure Content Delivery Network.

- Review when to use the Windows Azure Virtual Network Connect.

- Some applications will be created as a web role within Windows Azure Compute, but will need to communicate with data that may reside on-premise. If this is the case you will need to get your head around the Windows Azure App Fabric, which contains the .NET Service Bus, Access Control service, Integration, and composites.

- Many companies have long-running workflow processes that usually consume expensive resources (think BizTalk Server). If this is the situation, Windows Azure App Fabric has an internet-scale integration and workflow service that should be evaluated.

- Don’t want to benefit from the consumption-based model? Look into the private cloud offering.

- Find out where the datacenters are located and how they are secured (this will blow your mind).

Database Administrators

Database administrators will play a significant role in helping companies move to the Windows Azure Platform since so many applications need data storage to process transactions, etc. Here are some preparation considerations:

- Understand the difference between SQL Azure, SQL Server, and Windows Azure Storage.

- Learn about Table partitioning, reliable and durable internet scale queuing, and blob storage. Table storage will offer DBA’s a data source that has a relatively small cost and can auto scale based on demand.

- Understand and be able to distinguish between relational database design and NoSQL design.

- Recognize the different ways to add, modify, and delete data from Windows Azure Storage and SQL Azure.

- Want to get SQL Azure Reporting up and running quickly? Understand what SQL Azure Reporting brings to the party.

- Data is usually stored in many different data sources. SQL Azure Data Sync helps to solve this common problem by providing a robust synchronization engine.

- Learn how to monitor SQL Azure usage for “chatty” SQL that may be costing more money than necessary.

- Get database tools (SQL Management Studio for SQL Azure and Cerebrata’s Cloud Storage Studio).

Developers

Developers may be tempted to think that they could just develop the same way they always have in the past. In fact, I have read and heard things like, “just change the connection string and you will be all set”. This couldn’t be further from the truth. While the learning curve may not be as steep for many developers the truth is that now every part of a developer’s code will cost something. The best developers will adapt and develop efficient applications. Here are some preparation considerations for developers:

- Look into Azure Storage and Azure App Fabric Caching for data persistence (i.e. session, cookies, etc…).

- Identify the limitations of the different storage services.

- Install developer tools and start building applications.

- Get database tools (SQL Management Studio for SQL Azure and Cerebrata’s Cloud Storage Studio).

- If you haven’t already, start developing with a test-first attitude.

- Build recoverable applications. Know that at any moment your application could lose connectivity and plan for it (I’ll give you a hint: make sure you use at least two instances).

- Learn how much each Windows Azure Service will cost.

- Download the Windows Azure Training Kit and complete every single lab (seriously).

Architects

With the Windows Azure Platform architects are back in the game. Building scalable and elastic applications will require careful thought and consideration, something that veteran and talented architects will welcome with open arms. The following preparation considerations will help architects navigate through these exciting times:

- Master the concept of cost based architecture.

- Learn which design patterns will offer the most bang for the buck.

- Consider all the items already presented. While it may not be feasible to master every aspect of the Windows Azure Platform, it is strongly suggested that each architect take the time to become familiar with the entire platform.

- Keep up to date with updates to the platform along with new services.

Cloud computing is not a future promise; it is happening in many organizations now. Take the preparation considerations and start the process. As one of my colleagues always says, “it’s not if but when”.

James Hamilton posted Exploring the Limits of Datacenter Temperature on 2/27/2011:

Datacenter temperature has been ramping up rapidly over the last 5 years. In fact, leading operators have been pushing temperatures up so quickly that the American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE) recommendations have become a trailing indicator of what is being done rather than current guidance. ASHRAE responded in January of 2009 by raising the recommended limit from 77F to 80.6F (HVAC Group Says Datacenters Can be Warmer). This was a good move but many of us felt it was late and not nearly a big enough increment. Earlier this month, ASHRAE announced they are again planning to take action and raise the recommended limit further but haven’t yet announced by how much (ASHRAE: Data Centers Can be Even Warmer).

Many datacenters are operating reliably well in excess of even the newest ASHRAE recommended temp of 81F. For example, back in 2009 Microsoft announced they were operating at least one facility without chillers at 95F server inlet temperatures.

As a measure of datacenter efficiency, we often use Power Usage Effectiveness (PUE). That’s the ratio of the power that arrives at the facility to the power actually delivered to the IT equipment (servers, storage, and networking). Clearly PUE says nothing about the efficiency of servers, which is even more important, but, just focusing on facility efficiency, we see that mechanical systems are the largest single power efficiency overhead in the datacenter.
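The PUE ratio defined above is simple enough to express directly. The sketch below is illustrative only: the function name and the sample figures in the usage note are hypothetical, not measurements from any facility.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power Usage Effectiveness: facility power divided by IT power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    if total_facility_kw < it_equipment_kw:
        raise ValueError("facility power cannot be less than IT power")
    return total_facility_kw / it_equipment_kw
```

A facility drawing 1,500 kW to deliver 1,000 kW to its servers, storage and networking would have a PUE of 1.5, meaning 500 kW goes to cooling, power distribution and other overhead.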

There are many innovative solutions to addressing the power losses to mechanical systems. Many of these innovations work well and others have great potential but none are as powerful as simply increasing the server inlet temperatures. Obviously less cooling is cheaper than more. And, the higher the target inlet temperatures, the higher percentage of time that a facility can spend running on outside air (air-side economization) without process-based cooling.

The downsides of higher temperatures are 1) higher semiconductor leakage losses, 2) higher server fan speed, which increases the losses to air moving, and 3) higher server mortality rates. I’ve measured the first and, although these losses are inarguably present and measurable, they have a very small impact at even quite high server inlet temperatures. The negative impact of fan speed increases is real but can be mitigated via different server target temperatures and more efficient server cooling designs. If the servers are designed for higher inlet temperatures, the fans will be configured for these higher expected temperatures and won’t run faster. This is simply a server design decision and good mechanical designs work well at higher server temperatures without increased power consumption. It’s the third issue that remains the scary one: increased server mortality rates.

The net of these factors is that fear of server mortality rates is the prime factor slowing an even more rapid increase in datacenter temperatures. An often quoted study reports that the failure rate of electronics doubles with every 10C increase of temperature (MIL-HDBK-217F). This data point is incredibly widely used by the military, the NASA space flight program, and in commercial electronic equipment design. I’m sure the work is excellent but it is a very old study, wasn’t focused on a large datacenter environment, and the rule of thumb that has emerged from it is a linear model of failure to heat.

This linear failure model is helpful guidance but is clearly not correct across the entire possible temperature range. We know that at low temperature, non-heat related failure modes dominate. The failure rate of gear at 60F (16C) is not ½ the rate of gear at 79F (26C). As the temperature ramps up, we expect the failure rate to increase non-linearly. Really what we want to find is the knee of the curve. When does the failure rate increase start to approach that of the power savings achieved at higher inlet temperatures? Knowing that the rule of thumb that a 10C increase doubles the failure rate is incorrect doesn’t really help us. What is correct?
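To see what the quoted rule of thumb implies, it can be written as a one-line function. This sketch simply takes the "doubles with every 10C" statement literally; the function name and the default reference temperature are assumptions for illustration, and nothing here is drawn from MIL-HDBK-217F itself.

```python
def relative_failure_rate(temp_c: float, reference_c: float = 20.0) -> float:
    """Failure rate relative to the reference, doubling every 10C."""
    return 2.0 ** ((temp_c - reference_c) / 10.0)
```

Under this rule, gear at 16C would fail at half the rate of gear at 26C, which is exactly the low-temperature prediction the post argues does not hold in practice.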

What is correct is a difficult data point to get for two reasons: 1) nobody wants to bear the cost of the experiment – the failure case could run several hundred million dollars, and 2) those that have explored higher temperatures and have the data aren’t usually interested in sharing these results since the data has substantial commercial value.

I was happy to see a recent Datacenter Knowledge article titled What’s Next? Hotter Servers with ‘Gas Pedals’ (ignore the title – Rich just wants to catch your attention). This article includes several interesting tidbits on high temperature operation. Most notable was the quote by Subodh Bapat, the former VP of Energy Efficiency at Sun Microsystems, who says:

Take the data center in the desert. Subodh Bapat, the former VP of Energy Efficiency at Sun Microsystems, shared an anecdote about a data center user in the Middle East that wanted to test server failure rates if it operated its data center at 45 degrees Celsius – that’s 115 degrees Fahrenheit.

Testing projected an annual equipment failure rate of 2.45 percent at 25 degrees C (77 degrees F), and then an increase of 0.36 percent for every additional degree. Thus, 45C would likely result in annual failure rate of 11.45 percent. “Even if they replaced 11 percent of their servers each year, they would save so much on air conditioning that they decided to go ahead with the project,” said Bapat. “They’ll go up to 45C using full air economization in the Middle East.”

This study caught my interest for a variety of reasons. First and most importantly, they studied failure rates at 45C and decided that, although they were high, it still made sense for them to operate at these temperatures. It is reported they are happy to pay the increased failure rate in order to save the cost of the mechanical gear. The second interesting data point from this work is that they have found a far greater failure rate for 15C of increased temperature than predicted by MIL-HDBK-217F. Consistent with 217F, they also report a linear relationship between temperature and failure rate. I almost guarantee that the failure rate increase between 40C and 45C was much higher than the difference between 25C and 30C. I don’t buy the linear relationship between failure rate and temperature based upon what we know of failure rates at lower temperatures. Many datacenters have raised temperatures between 20C (68F) and 25C (77F) and found no measurable increase in server failure rate. Some have raised their temperatures between 25C (77F) and 30C (86F) without finding the predicted 1.8% increase in failures but admitted the data remains sparse.

The linear relationship is absolutely not there across a wide temperature range. But I still find the data super interesting in two respects: 1) they did see roughly a 9% increase in failure rate at 45C over the control case at 20C and 2) even with the high failure rate, they made an economic decision to run at that temperature. The article doesn’t say if the failure rate is a lifetime failure rate or an Annual Failure Rate (AFR). Assuming it is an AFR, many workloads and customers could not live with an 11.45% AFR. Nonetheless, the data is interesting and it is good to see the first public report of an operator doing the study and reaching a conclusion that works for their workloads and customers.
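The arithmetic behind the quoted linear projection can be checked with a short sketch. The base rate (2.45 percent) and slope (0.36 percent per degree C) come from the quoted article. The function name and the choice to pass the baseline temperature as a parameter are assumptions made here, because the quoted figures appear to use two different baselines.

```python
BASE_RATE_PCT = 2.45     # annual failure rate at the baseline, per the quote
SLOPE_PCT_PER_C = 0.36   # added failure rate per additional degree C


def projected_rate(temp_c: float, baseline_c: float) -> float:
    """Linear projection of failure rate (percent) at `temp_c`."""
    return BASE_RATE_PCT + SLOPE_PCT_PER_C * (temp_c - baseline_c)
```

Measured from a 25C baseline, the projection at 45C comes to about 9.65 percent. The quoted 11.45 percent instead matches a 25-degree rise, which lines up with the 20C control case and the roughly 9 percent increase mentioned above. The source does not say which baseline was intended. The same slope gives the 1.8 percent increase cited for a move from 25C to 30C.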

The net of the whole discussion above is that the current linear rules of thumb are wrong and don’t usefully predict failure rates in the temperature ranges we care about, there is very little public data out there, industry groups like ASHRAE are behind, and it’s quite possible that cooling specialists may not be the first to recommend we stop cooling datacenters aggressively :-). Generally, it’s a sparse data environment out there but the potential economic and environmental gains are exciting so progress will continue to get made. I’m looking forward to efficient and reliable operation at high temperature becoming a critical data point in server fleet purchasing decisions. Let’s keep pushing.

Federal Computing Week published a Special Research Report: Cloud Computing on federal IT managers’ attitudes toward cloud computing in 2/2011 and made it available for downloading as a PDF document:

In December 2010, the 1105 Government Information Group and Beacon Technology Partners conducted a survey of federal IT managers to determine their attitudes toward cloud computing. The survey revealed the greatest cloud opportunities among federal agencies and the preferred deployment modes for cloud initiatives. Additionally, the research showed perceived advantages of cloud computing, concerns about security, and more. Read this special research report for more information.

Get to know cloud computing's advantages

In general, federal agencies and departments opt for private clouds when sensitive or mission-critical information is involved. Private clouds are hosted on an agency's own dedicated hardware, and services and infrastructure are maintained on a private network. This increases security, reliability, performance and service. Yet like other types of clouds, it's easy to scale quickly and pay for only what is used, making it an economical model.

Cloud computing could help the government become significantly more efficient. But is it always the right choice?

Cloud security remains a legitimate, though overhyped, concern

The Download survey found that 55 percent of the 460 government respondents don’t think cloud solutions are secure enough, and 59 percent agreed that security risks associated with cloud computing implementation are greater than those for on-premise IT implementations.

Several agencies are confidently wading through complicated cloud security concerns to help others feel better about releasing control of their data.

Like it or not, cloud computing is here to stay

Cloud adoption is basically in the investigation stage, according to the survey respondents. While roughly 12 percent of the respondents have already adopted cloud computing for at least one application, 20 percent are in development, and 55 percent are investigating the technology.

Although cloud computing will not completely replace on-site data centers in the federal government, the cloud will dominate new applications development.

3 flavors of cloud computing give agencies options for getting started

The SaaS model allows organizations to rent remotely hosted software applications, paying for only the functionalities and computer cycles used. Applications are accessed through the Internet and a browser-based user interface. The most popular and useful SaaS-based cloud opportunities for federal agencies include collaboration, document management, content management and project management.

Users should mix and match three different types of cloud computing.

Cloud computing can generate massive savings for agencies

Although organizations accrue significant savings from all types of cloud solutions, the largest savings tend to be from public cloud implementations. The primary areas where all types of cloud solutions offer the most cost savings are direct labor (typically IT staff), hardware, software and end-user productivity.

If agencies carefully choose the right tactics at the right time for the right mission, they can reap significant financial savings with a move to cloud computing.

Robert Duffner (@rduffner) answered Episode 65: What does Open Source have to do with Windows Azure? in a Deep Fried Bytes podcast of 2/22/2011 (missed when recorded):

About This Episode

In this episode, Woody sits down with Robert Duffner of Microsoft to discuss the future of Windows Azure and how Open Source Software fits in the vision for Azure.

Thanks to our guest this episode

Robert Duffner is Microsoft’s Director of Product Management for Windows Azure. Whether engaging with senior IT executives or developers, Robert is focused on amplifying the voice of the customer with the customer advocacy programs he directs to help shape the future product direction of the Windows Azure Platform. These include the Windows Azure Platform Customer Advisory Board as well as driving the Windows Azure Technology Adoption Program (TAP) to help our early adopter customers and partners validate the platform and shape the final product by providing early and deep feedback. Robert also stays engaged with the top Windows Azure experts in the world as the Community Lead for the Windows Azure Most Valued Professionals (MVP) program.

Robert is one of Microsoft’s top experts in Open Source software (OSS); he joined the company in 2007 as the Director of Open Source Strategy. Robert is a 16-year veteran of the software industry and an enterprise Java expert, having worked for companies such as IBM, BEA Systems (acquired by Oracle), and Vignette.

Robert can be found on Twitter at http://twitter.com/rduffner

Ernest Mueller published a tongue-in-cheek Inside Microsoft Access presentation deck to SlideShare on 2/13/2011 (missed when posted). Following are a couple of sample slides:

You might also enjoy Ernest’s comparisons of Windows Azure and Amazon CloudFormation features in his Amazon CloudFormation: Model Driven Automation For The Cloud post of 2/25/2011 in which he asserted “Amazon implements Azure on Amazon.” (Navigate to the “Other Cloud Computing Platforms and Services” section at the end of the post if the link doesn’t put you there.)

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

Alyson Behn claimed Creative cybercriminals will thrive in 2011 in a six-page post of 2/23/2011 to the SD Times on the Web blog:

Cybercrime was alive and well last year according to MarkMonitor, Symantec, Trend Micro and ZapThink. The worse news is that 2011 is expected to be another banner year for cybercriminals as they get more creative with their capers, hatching botnets, malware, phishing scams and other manner of pestilence.

2011 will also see the advancement of attacks against the cloud and, even worse, the proliferation of cyberwarfare that began in earnest in 2010. Witness the battle between the WikiLeaks supporters and credit card companies. The cybercrime underworld will see more consolidation as increased global and public attention is focused on their misdeeds.

Experts interviewed agree that in years past it used to be about hacking the big corporations, but now enterprises and even small and medium-sized businesses are under attack, and the attacks are all more sophisticated. The consensus is that cybercrime is about money, and it's going to get uglier.

Botnets and spam and malware, oh my!

Symantec’s annual MessageLabs Intelligence Report was released by the company’s Hosted Services group. It showed that cybercriminals are thriving and have diversified their tactics to sustain spam and malware at high levels throughout the year. As detection and prevention technology made advancements to thwart the attacks, the criminals’ technology became stealthier.

According to the report, chief among the offenders are botnets that proved resilient for another year, creating fluctuations in spam levels. E-mail-borne malware also increased a hundredfold. In August, spam rates peaked at 92.2% of all e-mail received by accounts monitored by Symantec when the Rustock botnet was put to use with new malware variants, delivering an overall increase of 1.4% compared to 2009. Average spam levels for 2010 reached 89.1%, and for most of the year, spam from botnets accounted for 88.2% of all spam. According to the report, the closure of the Spamit spam affiliate in early October resulted in a reduction of spam to 77%. The top three botnets this year were Rustock, Grum and Cutwail, according to the Symantec report.

<Return to section navigation list>

Cloud Computing Events

Microsoft Research will hold the Microsoft Biology Foundation Workshop 2011 in Redmond, WA on 3/11/2011:

The Microsoft Biology Initiative is hosting a one-day workshop on the Microsoft Biology Foundation (MBF), an open source Microsoft .NET library and application programming interface for bioinformatics research. The MBF workshop will be held at the Microsoft Conference Center in Redmond, Washington, on March 11, 2011. This workshop will include a quick introduction to Visual Studio 2010, the .NET Framework, C#, and the MBF object model. Attendees will participate in hands-on labs and write a sample application that employs the file parsers, algorithms, and web connectors in MBF. The workshop is open to everyone and registration is free of charge.

Registration

Register by completing the online registration form.

Workshop Program

The program will cover the modules that are listed on the Microsoft Biology Foundation Training site and summarized below. The sessions will combine lectures and hands-on labs; therefore, to get the most benefit from the workshop, we recommend that you bring a laptop with Microsoft Visual Studio 2010 installed. Students and educators can install Visual Studio 2010 for free from Microsoft Dreamspark. Alternatively, anyone can install Microsoft Visual C# 2010 Express for free. Breakfast and lunch will be provided.

Module 1: Introduction to Visual Studio 2010 and C#

This is a comprehensive introduction to the Microsoft Visual Studio programming environment and Microsoft .NET. Learn how to create a project, what is .NET, how to get started with C#, and runtime debugging. The hands-on lab helps you get experience building applications in Visual Studio 2010. It walks you through the steps required to create a console application, interfaces and types that implement those interfaces in C#, a library to hold common (shared) code, and a simple Windows Presentation Foundation (WPF) application by using the shared library.

Module 2: Introduction to the Microsoft Biology Foundation

This module provides an overview of the Microsoft Biology Foundation (MBF), its scenarios, architecture, and a starter project. It also provides an introduction to the source code and unit tests required for contributing back to the open-source project. The hands-on lab helps you get experience working with sequences, parsers, formatters, and the transcription algorithm that is supplied in MBF. It walks you through the steps required to build a simple Windows Forms application that can load a set of sequences from a file, transcribe them, and then write those sequences to the same or a different file.

Module 3: Working with Sequences

This module examines the Sequence data type in MBF. Learn how to load sequences into memory and save them, the different sequence types available, how to use sequence metadata, and how data virtualization enables support for large data sets. The hands-on lab familiarizes you with managing sequences and sequence items by using MBF interfaces, properties, and methods to create a WPF application to visualize the data.

Module 4: Parsers and Formatters

This module explores MBF’s built-in sequence parsers, formatters, alphabets, and encoders. It also introduces the method of expanding MBF with custom alphabets, parsers, and formatters. The hands-on lab walks you through the steps that are required to build a simple custom parser and formatter for a fabricated biology data format, and then plug it into MBF and the sequence viewer/editor that were created in Module 3.

Module 5: Algorithms

This module examines the algorithms defined in MBF for sequence alignment, multi-sequence alignment, sequence fragment assembly, transcription, translation, and pattern matching against sequences, and explains how to create custom algorithms. The hands-on lab walks you through the steps required to build an application to run algorithms against sequences loaded with MBF and perform sequence alignment, assembly, and transformations.

Module 6: Web Services

This module introduces Microsoft .NET web services, the web service architecture in MBF, the built-in web service support in MBF for BLAST (Basic Local Alignment Search Tool) and ClustalW, and how to call these services asynchronously. It also presents a detailed example of how to build custom service wrappers. The hands-on lab walks you through the steps of building an application that executes the BLAST algorithm by using web services against sequences loaded with MBF. You get some experience loading and identifying web service handlers for BLAST, passing sequences and sequence fragments to BLAST, changing the BLAST parameters, and displaying the results from a BLAST run.

See also the NCBI BLAST on Windows Azure post of 2/2011, in which Microsoft Researchers Roger Barga, Jared Jackson, and Wei Lu claimed they were “Making Bioinformatics Data More Accessible to Researchers Worldwide,” in the Live Windows Azure Apps, APIs, Tools and Test Harnesses section above.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

• Corrected my (@rogerjenn) Choosing from the major Platform as a Service providers article of 2/25/2011 on SearchCloudComputing.com:

Changed the following sentence:

"Therefore, Amazon Web Services and Windows Azure aren't good candidates for hosting low-traffic websites."

To:

"Therefore, Amazon EC2 and Windows Azure aren't good candidates for hosting low-traffic websites. Amazon updated their S3 service in late February 2011 to enable serving a complete (static) web site or blog, which eliminates compute charges; posted prices for bandwidth and monthly storage still apply."

William Vambenepe (@vambenepe) explained CloudFormation in context in a 2/27/2011 post:

I’ve been very positive about AWS CloudFormation (both in tweet and blog form) since its announcement. I want to clarify that it’s not the technology that excites me. There’s nothing earth-shattering in it. CloudFormation only covers deployment and doesn’t help you with configuration, monitoring, diagnostic and ongoing lifecycle. It’s been done before (including probably a half-dozen times within IBM alone, I would guess). We’ve had much more powerful and flexible frameworks for a long time (I can’t even remember when SmartFrog first came out). And we’ve had frameworks with better tools (though history suggests that tools for CloudFormation are already in the works, not necessarily inside Amazon).

Here are some non-technical reasons why I tweeted that “I have a feeling that the AWS CloudFormation format might become an even more fundamental de-facto standard than the EC2 API” even before trying it out.

It’s simple to use. There are two main reasons for this (and the fact that it uses JSON rather than XML is not one of them):

- It only supports a small set of features

- It “hard-codes” resource types (e.g. EC2, Beanstalk, RDS…) rather than focusing on an abstract and extensible mechanism

It combines a format and an API. You’d think it’s obvious that the two are complementary. What can you do with a format if you don’t have an API to exchange documents in that format? Well, turns out there are lots of free-floating model formats out there for which there is no defined API. And they are still wondering why they never saw any adoption.

It merges IaaS and PaaS. AWS has always defied the “IaaS vs. PaaS” view of the Cloud. By bridging both, CloudFormation is a great way to provide a smooth transition. I expect most of the early templates to be very EC2-centric (as are most AWS deployments) and over time to move to a pattern in which EC2 resources are just used for what doesn’t fit in more specialized containers.

It comes at the right time. It picks the low-hanging fruits of the AWS automation ecosystem. The evangelism and proof of concept for templatized deployments have already taken place.

It provides a natural grouping of the various AWS resources you are currently consuming. They are now part of an explicit deployment context.

It’s free (the resources provisioned are not free, of course, but the fact that they came out of a CloudFormation deployment doesn’t change the cost).
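To make the “hard-coded resource types” and “format plus API” points above more concrete, here is a minimal sketch (not taken from William’s post) of assembling a CloudFormation-style template as JSON in Python. The helper `build_template`, the logical name `WebServer` and the placeholder AMI ID are assumptions for illustration only; the top-level `AWSTemplateFormatVersion` and `Resources` keys and the `AWS::EC2::Instance` type string follow the template format as I understand it.

```python
import json


def build_template(image_id, instance_type="t1.micro"):
    """Return a minimal CloudFormation-style template as a dict.

    Hypothetical helper for illustration; it declares a single EC2 instance.
    """
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Illustrative single-instance stack",
        "Resources": {
            # "WebServer" is an arbitrary logical name chosen for this sketch.
            "WebServer": {
                # The resource type is a fixed, AWS-defined string rather than
                # an entry in an abstract, user-extensible type system.
                "Type": "AWS::EC2::Instance",
                "Properties": {
                    "ImageId": image_id,
                    "InstanceType": instance_type,
                },
            }
        },
    }


# Placeholder AMI ID, not a real image.
template = build_template("ami-00000000")
template_json = json.dumps(template, indent=2)
print(template_json)
```

The printed JSON document is the “format” half of the pairing; handing it to the CloudFormation API to create a stack is the other half, which is omitted here because it requires AWS credentials.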

Related posts:

- AWS CloudFormation is the iPhone of Cloud services

- Amazon proves that REST doesn’t matter for Cloud APIs

- REST in practice for IT and Cloud management (part 2: configuration management)

- Cloud + proprietary software = ♥

- The necessity of PaaS: Will Microsoft be the Singapore of Cloud Computing?

- Introducing the Oracle Cloud API

Alex Williams (@alexwilliams) posted Choosing a Private Cloud Provider and Doing it All Wrong to the ReadWriteCloud blog on 2/27/2011:

There's a right way to choose a cloud provider and there's a wrong way. The right way is to do the research about your needs and requirements. The wrong way is to choose a provider by evaluating and comparing vendor offerings.

John Treadway writes on CloudBzz that IT leaders he speaks with are taking the latter approach. They're evaluating the vendors and not doing their own analysis.

Instead, IT leaders should be going through a long list of questions before starting to evaluate vendors. His initial list gives a taste about how IT leaders should approach the task:

- What are the strategic objectives for my cloud program?

- How will my cloud be used?

- Who are my users and what are their expectations and requirements?

- How should/will a cloud model change my data center workflows, policies, processes and skills requirements?

- How will cloud users be given visibility into their usage, costs and possible chargebacks?

- How will cloud users be given visibility into operational issues such as server/zone/regional availability and performance?

- What is my approach to the service catalog? Is it prix fixe, a la carte, or more like value meals? Can users make their own catalogs?

- How will I handle policy around identity, access control, user permissions, etc?

- What are the operational tools that I will use for event management & correlation, performance management, service desk, configuration and change management, monitoring, logging, auditability, and more?

- What will my vCenter administrators do when they are no longer creating VMs for every request?

- What will the approvers in my process flows today do when the handling of 95% of all future requests is policy driven and automated?

- What levels of dynamism are required regarding elasticity, workload placement, data placement and QoS management across all stack layers?

- Beyond a VM, what other services will I expose to my users?