Windows Azure and Cloud Computing Posts for 2/11/2011+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

• Updated 2/13/2011 with new articles marked •

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

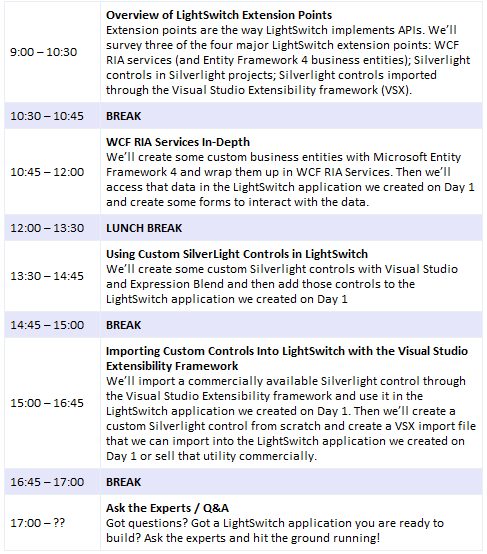

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Subscribe to the OakLeaf Systems blog for your Amazon Kindle! Only $1.99 per month.

Notice the reference to SQL Data Services (SDS). I need to update the Product Description but so far haven’t found out how to do it.

Azure Blob, Drive, Table and Queue Services

• Joannes Vermorel announced the release of Lokad.Cloud v1.2, a .NET O/C (object to cloud) mapper for Windows Azure storage, in a 2/13/2011 post to Google Code:

O/C mapper (Object to Cloud). Leverage Windows Azure without getting dragged down by low-level technicalities.

NEW: Feb 13th, 2011, Lokad.Cloud 1.2 released. Lokad.Cloud.Storage.dll is now fully stand-alone.

June 23rd, 2010: Lokad was honored with the 2010 Windows Azure Partner Award for Lokad.Cloud.

Strong typed put/get on the blob storage:

```csharp
IBlobStorageProvider storage = ... ; // init snipped
CustomerBlobName name = ... ; // init snipped
var customer = storage.GetBlob(name); // strong type retrieved
```

Strong typed enumeration of the blob storage:

```csharp
foreach (var name in storage.List(CustomerBlobName.Prefix(country)))
{
    var customer = storage.GetBlob(name); // strong type, no cast!
    // do something with 'customer', snipped
}
```

Scalable services with implicit queue processing:

```csharp
[QueueServiceSettings(AutoStart = true, QueueName = "ping")]
public class PingPongService : QueueService<double>
{
    protected override void Start(double x)
    {
        var y = x * x;  // 'x' has been retrieved from queue 'ping'
        Put(y, "pong"); // 'y' has been put in queue named 'pong'
    }
}
```

Key orientations

- Strong typing.

- Scalable by design.

- Cloud storage abstraction.

Key features (check the FeatureMap)

- Queue Services as a scalable equivalent of Windows Services.

- Scheduled Services as a cloud equivalent of the task scheduler.

- Strong-typed blob I/O, queue I/O, table I/O.

- Autoscaling with VM provisioning.

- Logs and monitoring.

- Inversion of Control on the cloud.

- Web administration console.
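The ping/pong queue service above follows a simple pattern: take a message from one queue, process it, and put the result on another. A minimal Python sketch of that pattern, assuming in-memory queues as a stand-in for Windows Azure queues (`InMemoryQueues` and `run_queue_service` are hypothetical names, not part of Lokad.Cloud):

```python
import queue
from typing import Callable, Dict


class InMemoryQueues:
    """Hypothetical stand-in for named cloud queues (not the Lokad.Cloud API)."""

    def __init__(self) -> None:
        self._queues: Dict[str, queue.Queue] = {}

    def get(self, name: str) -> queue.Queue:
        # Create the named queue on first use.
        return self._queues.setdefault(name, queue.Queue())


def run_queue_service(hub: InMemoryQueues, in_name: str, out_name: str,
                      handler: Callable[[float], float]) -> int:
    """Drain the input queue, apply handler to each message, and put each
    result on the output queue. Returns the number of messages processed."""
    source, target = hub.get(in_name), hub.get(out_name)
    processed = 0
    while True:
        try:
            x = source.get_nowait()  # 'x' is taken from the input queue
        except queue.Empty:
            return processed
        target.put(handler(x))       # the result goes to the output queue
        processed += 1
```

Putting 1.0, 2.0 and 3.0 on "ping" and calling `run_queue_service(hub, "ping", "pong", lambda x: x * x)` leaves 1.0, 4.0 and 9.0 on "pong". Real Azure queues differ in one important way: a message read from a queue becomes invisible for a timeout and must be deleted explicitly after processing, so a crashed worker's message reappears instead of being lost. The sketch omits that.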

Documentation:

- GettingStarted - hands on tutorial.

- MapReduceSample - tutorial based on an image histogram processing.

- StrongTypedBlobName - improve your blob storage patterns.

- FatEntities - lower I/O costs for fine-grained data.

- ScalableCloudServices - horizontal scaling by design.

- AutoScaling - control the number of allocated VMs.

- Serialization - strong typed persistence on the cloud storage.

- GarbageCollector - don't let garbage leak into your storage.

- DependencyInjection - illustrated with connection string setup.

- TaskScheduler - scheduled or delayed execution.

- ExceptionHandling - uncaught exceptions are logged.

- Monitoring - perf reporting in the console.
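The StrongTypedBlobName idea can be sketched as a small class that converts between typed fields and a blob path, plus a prefix used for listing. This Python version is illustrative only; the path layout `customers/{country}/{id}` and every name in it are assumptions, not Lokad.Cloud's actual conventions:

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class CustomerBlobName:
    """Hypothetical typed blob name stored as 'customers/{country}/{customer_id}'."""
    country: str
    customer_id: int

    ROOT = "customers"  # plain class attribute, not a dataclass field

    def to_path(self) -> str:
        return f"{self.ROOT}/{self.country}/{self.customer_id}"

    @classmethod
    def prefix(cls, country: str) -> str:
        # Listing by this prefix enumerates every customer of one country.
        return f"{cls.ROOT}/{country}/"

    @classmethod
    def parse(cls, path: str) -> "CustomerBlobName":
        root, country, customer_id = path.split("/")
        if root != cls.ROOT:
            raise ValueError(f"not a customer blob name: {path}")
        return cls(country, int(customer_id))


def list_names(all_paths: Iterable[str], country: str) -> List[CustomerBlobName]:
    """Parse every path under the country prefix back into a typed name."""
    p = CustomerBlobName.prefix(country)
    return [CustomerBlobName.parse(path) for path in all_paths if path.startswith(p)]
```

Because the country is the first variable segment of the path, a prefix listing returns one country's customers without touching the others, which appears to be the role of `CustomerBlobName.Prefix(country)` in the C# snippet.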

This is yet another open source project from Lokad (Forecasting API).

If you want something more powerful and flexible for your Windows Azure enterprise solution - check out our Lokad.CQRS.

Live apps using Lokad.Cloud:

- sqwarea.com massively multiplayer online game.

- amanuens.com continuous localization software.

- api.lokad.com Forecasting API (plus backend).

Want your app listed here? Just contact us.

Wely Lau explained Applying Custom Domain Names to Windows Azure Blob Storage in a 2/11/2011 post:

Introduction

If you register a Windows Azure storage service account, you will be prompted to enter a valid account name. By default, this account name is used in your blob URI, which takes the following format:

http://[account-name].blob.core.windows.net/[container]/[your-blob]

For example:

http://welystorage2.blob.core.windows.net/images/Desert.jpg

In many cases, we don’t want the default “blob.core.windows.net” domain. Instead, we want to use our own domain (or subdomain), for example “blob.wely-lau.net”, so that the blob address becomes:

http://blob.wely-lau.net/images/Desert.jpg

I am happy to report that this is possible with Windows Azure Blob Storage.
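Both address forms differ only in the host name. A minimal Python sketch that builds either one, assuming plain HTTP as in the examples (the `blob_url` helper is hypothetical, not part of any Azure SDK):

```python
from typing import Optional
from urllib.parse import quote


def blob_url(account: str, container: str, blob: str,
             custom_domain: Optional[str] = None) -> str:
    """Build http://[account-name].blob.core.windows.net/[container]/[blob],
    or the same path on a mapped custom domain such as blob.wely-lau.net."""
    host = custom_domain or f"{account}.blob.core.windows.net"
    # Percent-encode the blob name but keep '/' so virtual directories survive.
    return f"http://{host}/{container}/{quote(blob, safe='/')}"
```

`blob_url("welystorage2", "images", "Desert.jpg")` yields the default address shown above; passing `custom_domain="blob.wely-lau.net"` yields the custom one.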

How To

Prerequisites

To set up a custom domain, I assume you have the following items:

- Your own Windows Azure subscription (associated with your Windows Live ID)

- Your own domain, registered with a registrar such as http://godaddy.com or http://dynadot.com.

Creating Windows Azure Storage Account

1. First, log in to the Windows Azure Developer Portal, select the “Hosted Service, Storage Account & CDN” tab on the right-hand side, and then select “Storage Accounts”.

2. The list of subscriptions associated with your Windows Live ID is shown. Click the New Storage Account button on the ribbon bar.

3. When the Create New Account dialog shows up, select the intended subscription and enter your account name. Note that the account name must be globally unique, because it forms your default URL, such as http://account-name.blob.core.windows.net/.

Next, select your preferred region or affinity group (if you have one) and click the Create button.

If everything goes well, you will see that the status of the newly created storage account becomes “Created”.

Adding Your Custom Domain

4. Click on the newly created storage, and then click on Add Domain button on the ribbon bar.

5. Immediately, a dialog box shows up. You will need to enter your custom domain name there and click Configure.

Verifying Your Domain Name

6. You are not done yet: you will see an instruction to create a CNAME record at your domain registrar’s portal that points to verify.azure.com. This is because Windows Azure requires you to verify that you own the custom domain.

7. Since my domain (wely-lau.net) is registered with http://dynadot.com, I need to perform this action there. I believe the process is more or less the same with other domain registrars.

Note that the change may take a few minutes to propagate.

8. Go back to the Azure Developer Portal, click the storage custom domain, and click Validate Domain. After a few moments, you can see that your storage custom domain status changes to “Allowed”.

Are we done? No, not yet. So far, we have only verified that the domain belongs to us and that we control it.

Mapping the Custom Domain

9. Now, go back to your domain registrar portal again.

Create another CNAME record with the subdomain “blob” (if you want your address to become http://blob.wely-lau.net) and point it to your storage account’s full blob address (for example, welyazurestorage.blob.core.windows.net).

As usual, it may take a few minutes to update the domain, so be patient.
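Registrars usually expose DNS records through a web form, but the two CNAME entries from steps 6 to 9 can be written in BIND zone-file notation. A small Python helper that renders them, assuming the verification record name is copied verbatim from the portal (`cname_records` is hypothetical, and any `verify_record_name` value in an example is a placeholder):

```python
from typing import List


def cname_records(custom_host: str, storage_account: str,
                  verify_record_name: str) -> List[str]:
    """Render the verification and mapping CNAMEs as zone-file lines.

    verify_record_name: the host name the portal asks you to create
    (placeholder here); it must point to verify.azure.com.
    custom_host: e.g. 'blob.wely-lau.net', mapped to the account's blob host."""
    target = f"{storage_account}.blob.core.windows.net."
    return [
        f"{verify_record_name}. IN CNAME verify.azure.com.",  # step 6: ownership check
        f"{custom_host}. IN CNAME {target}",                  # step 9: actual mapping
    ]
```

The trailing dots mark fully qualified names in zone-file syntax; registrar web forms typically omit them.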

Let’s Test It Out

10. Since this is a newly created storage account, it obviously doesn’t contain any blobs yet.

To test whether the custom domain works, upload a blob to the account. You can upload blobs in many ways, including tools such as Cerebrata Cloud Storage Studio, Azure Storage Explorer, and many more.

11. First, I type my original blob address in the browser’s address bar: http://welyazurestorage.blob.core.windows.net/images/Lighthouse.jpg

How about using our custom domain name, http://blob.wely-lau.net/images/Lighthouse.jpg?

Here you go, it works!

Rob Gillen reviewed Moving Applications To The Cloud with Windows Azure in a 2/11/2011 post:

I just finished reading a book from the Microsoft Patterns & Practices group called Moving Applications to the Cloud on the Microsoft Windows Azure Platform. I’ve had the book for a few months, and when I first received it, I read the first chapter or two, decided it wasn’t worth the read, and set it aside.

Lately, however, I picked it up again, finished the book, and am glad I did. Don’t get me wrong, it didn’t magically morph into a superb spectacle of literary greatness, but I did find that as I read further, the authors moved further from the very basics of the Windows Azure platform and the content became increasingly interesting.

If you are new (or relatively new) to the Windows Azure platform and contemplating moving existing applications to the cloud, this is a worthwhile discussion of a fictitious scenario that did just that. The scenario is slightly on the cheesy side, but realistic enough to help you think through issues you may be facing in your business.

If you are well experienced with the platform, you will likely find this a bit dry – especially the first portions. You’ll also likely be distracted or bothered by the not-so-covert marketing that takes place. That said, the book covers some more complex topics such as multiple tasks/threads sharing the same physical worker role, various optimization topics, and more. In the end, I’m glad I read it and feel that I learned some things from the book.

My last thought has nothing to do specifically with the book, but rather a growing frustration of mine with the Windows Azure platform – the design of the table storage platform. Upon reading books such as this I’m reminded (they stress it *many* times) how important your partition key/row key strategy is, and how literally hosed you are if you get it wrong. This compares with my recent experiences with Amazon’s SimpleDB product, and the delta couldn’t be more striking. Both platforms solve essentially the same problem, but in the case of SDB, it is effortless (at least by comparison). I don’t have to think of partition keys, or be overly concerned with how the underlying storage platform works… I just put data in it. Additionally, *every* column is indexed and performs reasonably under queries. I can’t shake the feeling that the Azure team is missing it here – there has to be a way to get a well-designed, horizontally scaling table structure without placing such a design burden on the users. [Emphasis added.]

I’ve been complaining about the lack of secondary indexes on Azure tables for more than a year. My last post on the topic was What Happened to Secondary Indexes for Azure Tables? of 11/5/2010.
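Windows Azure tables index only PartitionKey and RowKey, so a query on any other property has to scan entities. A toy Python model of that cost difference, counting entities examined as a rough proxy (`ToyTable` is hypothetical and is not the Azure Table storage API):

```python
from typing import Dict, List, Tuple

Entity = Dict[str, str]


class ToyTable:
    """Toy table whose only index is (PartitionKey, RowKey)."""

    def __init__(self) -> None:
        self._rows: Dict[Tuple[str, str], Entity] = {}
        self.entities_read = 0  # entities examined, a rough cost proxy

    def insert(self, pk: str, rk: str, props: Entity) -> None:
        self._rows[(pk, rk)] = dict(props, PartitionKey=pk, RowKey=rk)

    def point_query(self, pk: str, rk: str) -> Entity:
        # Key lookup: touches exactly one entity.
        self.entities_read += 1
        return self._rows[(pk, rk)]

    def filter_query(self, prop: str, value: str) -> List[Entity]:
        # No secondary index: every entity must be examined.
        results = []
        for entity in self._rows.values():
            self.entities_read += 1
            if entity.get(prop) == value:
                results.append(entity)
        return results
```

With 100 customers, looking one up by key reads a single entity, while finding the same customer by e-mail address reads all 100. That gap is why the choice of keys, rather than an index added later, decides which queries stay cheap.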

TechRepublic offers Microsoft’s Windows Azure Jump Start (05): Windows Azure Storage, Part 1 podcast (site registration and enabling popups required):

Building Cloud Applications with the Windows Azure Platform. This podcast is Part 1 of a two-part section and covers the following options for storage as well as ways to access storage when leveraging the Windows Azure Platform: Non-Relational Storage, Relational SQL Azure Storage, Blobs, Drives, RESTful web services, and Content Delivery Networks (CDN). The Windows Azure Jump Start is for all architects and developers interested in designing, developing and delivering cloud-based applications leveraging the Windows Azure Platform. The overall target of this podcast is to help development teams make the right decisions with regard to cloud technology, the Azure environment and application lifecycle, Storage options, Diagnostics, Security and Scalability.

Download here.

<Return to section navigation list>

SQL Azure Database and Reporting

Steve Yi described ISV of the Month: Sitecore in a 2/11/2011 post to the SQL Azure team blog:

Sitecore has been chosen as our first ISV of the month. Sitecore provides a content management solution for enterprise websites and has strong momentum within the ISV community and with customers alike. In the video below, Sitecore outlines why they are a leader in the Gartner Magic Quadrant.

Sitecore’s CMS Azure Edition leverages the considerable advantages of Azure for scalable, enterprise-class deployment. Azure allows Sitecore to extend its solution to the cloud, allowing customers and partners to easily and quickly scale websites to new geographies and respond to surges in demand.

Microsoft Azure provides a global deployment platform for Sitecore public-facing web servers. Your Core and Master Sitecore servers are deployed locally at your facilities behind your firewall, but your public-facing Sitecore web servers are hosted at Microsoft facilities. Windows Azure’s compute, storage, networking, and management capabilities seamlessly and reliably link to your on-premises Sitecore application and servers.

The advantage of this hybrid approach is that users can manage their main Sitecore editing and database servers securely on-premises and push website content to their front-end Sitecore-based Windows Azure cloud servers in dispersed geographic locations.

Sitecore has an upcoming webcast; register here.

Find the Press Release here.

Sitecore CMS Azure Edition appears to be a classic hybrid cloud app.

Mark Kromer (@mssqldude) reported The Microsoft Cloud BI Story, Dateline February 2011 in a 2/10/2011 post to SQL Server Magazine’s SQL Server BI Blog:

Here we are in February 2011 and I thought it might make sense in the SQL Mag BI Blog to take stock of where Microsoft is at in terms of the “Cloud BI” story.

First, let’s catch you up on a little research and see where different industry leaders, including Microsoft, land in terms of their definition and products for “Cloud BI”. As with many things in the IT industry, “Cloud BI” will mean different things to different people. I’m not listing them all here, just a few that I’ve worked with/for in the past and that I see quite a bit day-in and day-out:

Gartner calls “BI in the Cloud” a combination of 6 elements: data sources, data models, processing applications, computing power, analytic models, and sharing or storing of results. This focuses more on the analytics side of the BI equation and does not address data warehouses or data integration. This makes sense given the approach most vendors are taking today toward Cloud BI: smaller data sets (data marts) in the Cloud or on premises, with authoring and publishing tools in the Cloud.

Oracle, on the other hand, continues to frame Cloud in terms of “private cloud”, creating on-premises infrastructures that are virtualized, flexible, elastic and include concepts such as chargeback. The key is that Oracle sees itself as the infrastructure provider for on-prem and Cloud-based providers. You won’t find a public Cloud version of Oracle database, Hyperion or OBIEE outside of hosting partner providers or the upcoming Amazon Oracle database offering.

In terms of offering a BI platform in the cloud, open-source BI vendors are turning to Amazon and RightScale (which runs on EC2) to make use of their virtualized, hosted infrastructure for MySQL, RDS and EC2. For example, Pentaho is offering “Cloud BI” versions of their product also leveraging Amazon’s EC2 and MySQL. I also see BIRT On Demand quite a bit these days, they use Amazon RDS instead of MySQL. Jaspersoft is now offering their BI reporting platform in the Cloud through RightScale’s platform.

Thinking back to some of the very first offerings of BI in the Cloud, marketed by Crystal Reports and Salesforce.com in the mid-2000s, those vendors offered reporting tools hosted on a public server with subscription-based pricing. You would typically point to a data source, such as a spreadsheet on your laptop, to port the data into those tools. In fact, data replication, synchronization and integration from legacy data sources in large company databases into Cloud databases and Cloud-based BI metadata or canonical models is still evolving. One of the most exciting things I am watching for in the Microsoft Azure platform for Cloud BI and databases around SQL Azure is Federated databases. This would make database sharding and parallel processing much easier for applications. And on the BI front, the Data Sync tool could then be automated to move cleansed and transformed data into SQL Azure data marts for analysis.
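Federation-style sharding routes each row to a member database by the value of a distribution key. A minimal Python sketch of range-based routing, assuming integer keys and hypothetical member names; it illustrates the general technique, not the SQL Azure Federations API:

```python
import bisect
from typing import List


class RangeShardMap:
    """Hypothetical range map: member i holds keys in
    [lower_bounds[i], lower_bounds[i + 1])."""

    def __init__(self, lower_bounds: List[int], members: List[str]) -> None:
        if len(lower_bounds) != len(members) or lower_bounds != sorted(lower_bounds):
            raise ValueError("need one sorted lower bound per member")
        self.lower_bounds = list(lower_bounds)
        self.members = list(members)

    def member_for(self, key: int) -> str:
        # Rightmost member whose lower bound is <= key.
        i = bisect.bisect_right(self.lower_bounds, key) - 1
        if i < 0:
            raise KeyError(f"key {key} is below the first range")
        return self.members[i]

    def split(self, at: int, new_member: str) -> None:
        # The new member takes over keys >= at within the range being split.
        if at in self.lower_bounds:
            raise ValueError(f"{at} is already a range boundary")
        i = bisect.bisect_right(self.lower_bounds, at)
        self.lower_bounds.insert(i, at)
        self.members.insert(i, new_member)
```

Splitting a range only moves the keys at or above the split point, which is one reason range partitioning suits data sets that keep growing.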

So, back to Microsoft and their “Cloud BI” story. Today, what I can demonstrate from the Microsoft stack is a rich feature set of database functionality in the Cloud with SQL Azure. I can move that data around to different databases in different data centers in the Cloud and I can report on that data with analytics and dashboards from Excel 2010 with PowerPivot and Report Builder. Both of those reporting tools sit on my laptop; they are not in the Cloud. So it is not 100% Cloud-based. But I do not need a separate server infrastructure to do my Excel-based PowerPivot data integration and analysis. And I can always use the existing on-prem SharePoint or SSRS services to publish the reports that are built from the Cloud-based SQL Azure data.

Now that more & more Microsoft partners are providing Windows Phone 7 mobile dashboards (see Derek’s previous articles on this topic in SQL Magazine) you could build a complete Cloud-based Microsoft BI solution. Some of these tools are in preview CTP releases right now. The new data integration Data Sync CTP 2 moves data between on-premises SQL Server and SQL Azure and Reporting Services in Azure is also being made available as CTP on Microsoft.com. PowerPivot is not yet available in Azure, but keep your eyes & ears tuned to the Microsoft sites (http://www.powerpivot.com/) for PowerPivot news.

Mark tweeted on 2/11/2011:

Oracle still doesn't do Cloud BI. Their "Cloud BI" whitepaper talks about OBI 11g as "Cloud Ready". That's it??

<Return to section navigation list>

Marketplace DataMarket and OData

• Jonathan Carter (@LostInTangent) updated CodePlex’s WCF Data Services Toolkit on 2/9/2011. From the Home page:

Elevator Pitch

The WCF Data Services Toolkit is a set of extensions to WCF Data Services (the .NET implementation of OData) that attempt to make it easier to create OData services on top of arbitrary data stores without having deep knowledge of LINQ.

It was born out of the needs of real-world services such as Netflix, eBay, Facebook, Twitpic, etc. and is being used to run all of those services today. We've proven that it can solve some interesting problems, and it's working great in production, but it's by no means a supported product or something that you should take a hard commitment on unless you know what you're doing.

To know whether the toolkit fits your solution, ask whether you are looking to expose a non-relational data store as an OData service (for relational data, EF + WCF Data Services already solves the scenario beautifully). When we say "data store" we really do mean anything you can think of (please be responsible though):

- An XML file (or files)

- An existing web API (or APIs)

- A legacy database whose exposed schema you want to reshape dramatically without touching the database

- A proprietary software system that provides its data in a funky one-off format

- A cloud database (e.g. SQL Azure) mashed up with a large schema-less storage repository (e.g. Windows Azure Table storage)

- A CSV file zipped together with a MySQL database

- A SOAP API combined with an in-memory cache

- A parchment scroll infused with Egyptian hieroglyphics

That last one might be a bit tricky though...

Further Description

WCF Data Services provides the functionality for building OData services and clients on the .NET Framework. It makes adding OData on top of relational and in-memory data trivial, and provides the extensibility for wrapping OData around any data source. While wrapping other sources is possible, it currently requires deep knowledge of LINQ (e.g. custom IQueryables and expression trees), which makes the barrier to entry too high for developing services in many scenarios.

After working with many different developers in many different industries and domains, we realized that while lots of folks wanted to adopt OData, their data didn't fit into that "friendly path" that was easily achievable. Whether you want to wrap OData around an existing API (SOAP/REST/etc.), mash-up SQL Azure and Windows Azure Table storage, re-structure the shape of a legacy database, or expose any other data store you can come up with, the WCF Data Services Toolkit will help you out. That doesn't mean it will make every scenario trivial, but it will certainly help you out a lot.

In addition to this functionality, the toolkit also provides a lot of shortcuts and helpers for common tasks needed by every real-world OData service. You get JSONP support, output caching, URL sanitization, and more, all out of the box. As new scenarios are learned, and new features are derived, we'll add them to the toolkit. Make sure to let us know about any other pain points you're having, and we'll see how to solve it.
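Part of what the toolkit hides is translating OData system query options into operations on an arbitrary store. A minimal Python sketch of that translation for an in-memory list, handling only `$orderby`, `$skip` and `$top` (the function is hypothetical and is not the toolkit's API; real services also handle `$filter`, `$select`, `$expand` and more):

```python
from typing import Any, Dict, List
from urllib.parse import parse_qs

Row = Dict[str, Any]


def apply_query_options(rows: List[Row], query: str) -> List[Row]:
    """Apply $orderby ('Field' or 'Field desc'), then $skip, then $top."""
    opts = {k: v[0] for k, v in parse_qs(query).items()}
    result = list(rows)
    if "$orderby" in opts:
        field, _, direction = opts["$orderby"].partition(" ")
        result.sort(key=lambda r: r[field], reverse=(direction == "desc"))
    result = result[int(opts.get("$skip", 0)):]  # skip before top
    if "$top" in opts:
        result = result[: int(opts["$top"])]
    return result
```

For example, `apply_query_options(rows, "$orderby=Name&$top=2")` returns the first two rows by name. A store that can sort or page natively (a SQL database, a web API with paging parameters) would push these steps down instead of doing them in memory.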

Last edited Tue at 10:37 PM by LostInTangent, version 10

From the Downloads page:

- Updated: Feb 9 2011 by LostInTangent

- Dev status: Beta

Recommended Download

- WCF Data Services Toolkit Binaries: application, 22K, uploaded Tue - 86 downloads

Other Available Downloads

- WCF Data Services Toolkit Source Code: source code, 45K, uploaded Tue - 55 downloads

- Building Real-World OData Services: documentation, 425K, uploaded Wed - 69 downloads

- Developing OData Services With SQL Azure And Entity Framework: documentation, 2556K, uploaded Wed - 47 downloads

- Building OData Services On Top Of Existing APIs: documentation, 2989K, uploaded Wed - 62 downloads

Beth Massi (@bethmassi) will present Creating and Consuming OData Services for Business Applications on 2/16/2011 from 6:30 to 8:30 PM at Microsoft’s San Francisco office, 835 Market Street, Suite 700, San Francisco, CA 94103. See the Cloud Computing Events section below.

Klint Finley recommended Putting Information on the Balance Sheet in a 2/11/2011 post to the ReadWriteEnterprise blog:

RedMonk's James Governor and EMC VP of Global Marketing Chuck Hollis are calling for enterprises to put information on the balance sheet. In other words, start considering useful information as an asset and poorly managed information as a liability.

"If you've got an expensive manufacturing machine, you invest periodically to keep the asset running in top shape, otherwise its value falls sharply over time," writes Hollis. "Are information bases any different? How many databases in your organization are providing declining value simply because there isn't a regular program of data maintenance and enhancement?"

Not a bad idea. Taking it a step further, Gartner and Forrester have been agitating for IT to be a profit center, and putting information on the balance sheet would be a step towards quantifying the value IT provides an organization.

One way to make information even more clearly an asset is to sell information in a marketplace like Azure DataMarket. And, for organizations that simply can't do this, paying attention to these markets may help determine the value of information.

Even though the CIO role is increasingly under fire, it's also an increasingly important role. Intel CIO Diane Bryant said in a recent interview:

It's remarkable how dependent the business is on IT. Every business strategy we're trying to deploy, every growth opportunity we're pursuing and every cost reduction all funnels back to an IT solution. How do you go from hundreds of customers to thousands of customers? You do that through technology. You don't scale the sales force by 10 times. I look across Intel's corporate strategy and I can directly tie each one of our pillars to the IT solution that's going to enable it. It's a remarkable time to be in IT. All of a sudden the CIO had better be at the table in the business strategy discussions because they can't launch a strategy without you.

The new role for IT will be to make money for companies, not to just support operations. That's a big shift in thinking, and it's time to start making plans.

PR.com reported Webnodes Announces Support for OData in Their Semantic CMS in a 2/12/2011 press release:

Webnodes AS, a company developing an ASP.NET based semantic content management system, today announced full support for OData in the newest release of their CMS.

In the latest version of Webnodes CMS, there’s built-in support for creating OData feeds. OData, also called the Open Data Protocol, is an open protocol for sharing content. Content is shared using tried and tested web standards, which makes it easy to access the information from a variety of applications, services, and stores.

“OData is a new technology that we believe strongly in”, said Ole Gulbrandsen, CTO of Webnodes. “It’s a big step forward for sharing of data on the web.”

Share content between web and mobile apps

One of the many uses for OData is integration of website content with mobile apps. OData exposes content in a standard format that can be easily used on popular mobile platforms like iOS (iPhone and iPad), Android and Windows Phone 7.

First step towards the semantic web

While OData is not seen as a semantic standard by most people, Webnodes sees it as the first big step towards the semantic web. The gap between the current status quo, where websites are mostly separate data silos, and the vision for the semantic web is huge. OData brings the data out of the data silos and onto the web in a standard format to be shared. This bridges the gap significantly and brings the semantic web, after many years as the next big technology, a lot closer to reality.

About Webnodes CMS

Webnodes CMS is a unique ASP.NET-based web content management system that is built on a flexible semantic content engine. The CMS is based on Webnodes’ 10 years of experience developing advanced web content management systems.

About Webnodes AS

Webnodes AS (www.webnodes.com) is the developer of the world-class semantic web content management system Webnodes CMS, which enables companies to develop and use innovative and class-leading websites. Webnodes is located in Oslo, Norway. Webnodes has implementation partners around the world.

Marcelo Lopez Ruiz posted OData, jQuery and datajs on 2/11/2011:

Over the last couple of days, I've received a number of inquiries about the relationship between JSON, OData, jQuery and datajs and how to choose between them.

These aren't all the same kinds of things, so I'll take them one by one.

Talking the talk

JSON is a format to represent data, much like XML. It defines the rules for reading and writing text and figuring out which pieces of data have which name and how they relate. JSON, however, doesn't tell you what this data means, or what you can do with it.

OData, on the other hand, is a protocol that uses JSON as well as ATOM and XML. If you're talking to an OData service and get a JSON response back, you know which pieces of information are property values, which are identifiers, which are used for concurrency control, etc. It also describes how you can interact with the service using the supported formats to do things with the data: create it, update it, delete it, link it, etc.
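To make the JSON versus OData distinction concrete: a generic JSON parser only yields names and values, while the verbose JSON format used by OData V1/V2 services adds conventions such as a `d` wrapper and a `__metadata` object carrying the entity URI, type and ETag. A Python sketch that separates protocol metadata from property values (the function name and the sample payload are made up for illustration):

```python
import json
from typing import Any, Dict, List


def read_entities(payload: str) -> List[Dict[str, Any]]:
    """Split verbose-JSON OData entities into property values and protocol
    metadata (the entity URI and the ETag used for concurrency control)."""
    body = json.loads(payload)["d"]
    if isinstance(body, list):      # V1-style collection: {"d": [...]}
        items = body
    elif "results" in body:         # V2-style collection: {"d": {"results": [...]}}
        items = body["results"]
    else:                           # single entity: {"d": {...}}
        items = [body]
    entities = []
    for item in items:
        meta = item.get("__metadata", {})
        props = {k: v for k, v in item.items() if k != "__metadata"}
        entities.append({"uri": meta.get("uri"), "etag": meta.get("etag"),
                         "properties": props})
    return entities
```

The JSON syntax alone never says that `__metadata.etag` is a concurrency token; only the OData conventions do, which is exactly the difference described above.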

So far, we've only been talking about specifications or agreements as to how things work, but we haven't discussed any actual implementations. jQuery and datajs are two specific JavaScript libraries that actually get things done.

Walking the walk

Now we get to the final question: how do you compare and choose between jQuery and datajs? The answer is actually quite simple, because they do different things, so you use one or the other or both, depending on what you're trying to do.

jQuery is great at removing differences between browser APIs, manipulating the page structure, doing animations, supporting richer controls, and simplifying network access (I'm not part of the jQuery development team, so my apologies if I'm mischaracterising something). It includes AJAX support that allows you to send text, JSON, HTML and form-style fields over the web.

datajs is focused on handling data (unsurprisingly). The first release will deliver great OData support, including both ATOM and JSON formats, the ability to parse or statically declare metadata and apply it to improve the results, the ability to read and write batches of request/responses, smoothing format and versioning differences, and whatever else is needed to be a first-class OData citizen. We don't foresee getting into the business of writing a DOM query library or a control framework - there are many other libraries that are really good at this and we'd rather focus on enabling new functionality.

Hope this clarifies things, but if not, just drop a message and I'll be happy to discuss.

Greg Duncan updated the OData Primer’s Consuming OData Services laundry list on 2/11/2011:

Articles related to Querying OData services

- Sorting OData feeds by their title

- OData does not support Select Many Queries

- Using LINQ and Reactive Extensions to overcome limitations in OData query operator

- OData Query Option top Forces Data To Be Sorted By Primary Key

- Quick Tip – Troubleshoot broken OData response using Notepad

- Examining OData – Windows Phone 7 Development — How to create your URI’s easily with LINQPad

- Reference of namespaces in OData

- Connecting to an OAuth 2.0 protected OData Service

- Retrieve Records filtering on an Entity Reference field using OData

- Understanding and Using OData (1 of 4)

- Understanding and Using OData – OData Publication (2 of 4)

- Understanding and Using OData - Analysing OData Feeds (3 of 4)
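Several of the querying articles above come down to composing OData URLs with system query options. A minimal Python sketch that builds such a URL, assuming a hypothetical service root and entity set (`odata_url` is illustrative, not part of any OData client library):

```python
from typing import Optional
from urllib.parse import quote


def odata_url(service_root: str, entity_set: str,
              filter_expr: Optional[str] = None,
              orderby: Optional[str] = None,
              top: Optional[int] = None) -> str:
    """Compose an OData query URL from $filter, $orderby and $top."""
    options = []
    if filter_expr:
        # Encode spaces, but keep quotes and parentheses readable.
        options.append("$filter=" + quote(filter_expr, safe="'()"))
    if orderby:
        options.append("$orderby=" + quote(orderby))
    if top is not None:
        options.append(f"$top={top}")
    url = f"{service_root.rstrip('/')}/{entity_set}"
    return url + ("?" + "&".join(options) if options else "")
```

Passing `orderby` explicitly alongside `top` is a safe habit, since one of the articles above reports that `$top` makes a service sort by primary key.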

Articles related to OData and Microsoft Office

VBA

- Consuming OData with Office VBA - Part I

- Consuming OData with Office VBA - Part II

- Consuming OData with Office VBA - Part III (Excel)

- Consuming OData with Office VBA - Part IV (Access)

Excel

- Add Spark to Your OData: Consuming Data Services in Excel 2010 Part 1

- Consuming Data Services in Excel 2010 Part 2

- (Tutorial) JQUERY + ODATA + Excel Power Pivot (GD: Spanish)

- Add Some Spark to Your OData: Creating and Consuming Data Services with Visual Studio and Excel 2010

Outlook

SharePoint

- Bookmarklet to find OData service for SharePoint site

- Cannot add a Service Reference to SharePoint 2010 OData!

- Upload files to SharePoint using OData!

Articles Related to Specific Client Libraries

Silverlight

- Building SL3 applications using OData client Library with Vs 2010 RC

- Creating a Silverlight Client for @shanselman ’s Nerd Dinner, using oData and Bing Maps

- Simple Silverlight 4 Example Using oData and RX Extensions

- Silverlight 4 OData Paging with RX Extensions

- (OData) Use OData data with WCF Data Services and Silverlight 4

- Pivot, OData, and Windows Azure: Visual Netflix Browsing

- Querying Netflix OData Service using Silverlight 4

- A guide to OData and Silverlight

Windows Phone 7

- Developing a Windows Phone 7 Application that consumes OData

- Windows Phone 7: Lists, Page Animation and oData

- Using OData with Windows Phone 7 SDK Beta

- Windows Phone 7, Reactive Extensions, OData, MVVM

- WCF Data Services Client Library and Windows Phone 7 – Next Steps

- SQL Azure, OData, and Windows Phone 7

- Netflix Browser for Windows Phone 7 - Part 1

- Data Services Client for Win Phone 7 Now Available!

- OData v2 and Windows Phone 7

- Entity Framework Code-First, oData & Windows Phone Client

- Connecting to an OAuth 2.0 protected OData Service

- OData and Windows Phone 7

- OData and Windows Phone 7 Part 2

- Lessons learnt building the Windows Phone OData browser

JavaScript

- datajs JavaScript Library for data-centric web applications

- New JavaScript library for OData and beyond

- datajs– Using OData From Within the Browser

- Short datajs walk-through

jQuery

- Using jQuery and OData to Insert a Database Record

- (Tutorial) JQUERY + ODATA + Excel Power Pivot (GD: Spanish)

- WCF Data Services, OData & jQuery. If you are an asp.net developer you should be embracing these technologies…

PHP

- CRUD operations with the OData SDK for PHP

- Consuming SQL Azure Data with the OData SDK for PHP

- Accessing OData for SQL Azure with AppFabric Access Control and PHP

- Accessing Windows Azure Table Data as OData via PHP

Drupal

PowerShell

VBA

- Consuming OData with Office VBA - Part I

- Consuming OData with Office VBA - Part II

- Consuming OData with Office VBA - Part III (Excel)

- Consuming OData with Office VBA - Part IV (Access)

Objective-C/iPhone

Articles Related to Specific Services

Netflix

- Netflix, jQuery, JSONP, and OData

- PowerShell WPK NetFlix Viewer Using Microsoft’s OData

- Developing a Windows Phone 7 Application that consumes OData

- Using LINQ and Reactive Extensions to overcome limitations in OData query operator

- Pivot, OData, and Windows Azure: Visual Netflix Browsing

- Querying Netflix OData Service using Silverlight 4

- Netflix Browser for Windows Phone 7 - Part 1

Nerd Dinner

- Creating a Silverlight Client for @shanselman’s Nerd Dinner, using oData and Bing Maps

- Bing Maps + oData + Windows Phone 7 - Nerd Dinner Client For Windows Phone 7

Microsoft DataMarket (fka Codename "Dallas")

Microsoft Conference Feeds (PDC, etc.)

- Building a Mobile-Browser-Friendly List of PDC 2010 Sessions with Windows Azure and OData

- PDC 2010 OData Feed iPhone App

Windows Live

Twitter/TwitPic

Articles Related to Specific Third Party Components

Infragistics

DevExpress

Frederick Harper reported Drupal 7: out of the box SQL Server support in a 2/9/2011 post to the Web Central Station blog:

We are more than happy to welcome the new version of Drupal. For those of you who don’t know Drupal, you should take a look at it. Drupal is a free, open-source CMS that helps you easily publish and manage the content of a website. The new version is easier to use, more flexible and more scalable. It is also the first version that supports SQL Server out of the box, which brings even greater interoperability with the Microsoft platform.

For a SQL Server database to work with Drupal 7, Drupal needs a PDO driver and a Drupal abstraction layer. Microsoft provides the PDO driver for SQL Server, and Commerce Guys is releasing the Drupal SQL Server module. You can download it or use the Microsoft Web PI to install it.

Did you say Azure? At the moment, we don’t support SQL Server with Drupal on Azure, but it’s a work in progress. For now, Drupal on Azure works with MySQL, but don’t be sad: we’ve got four new modules for you! [Emphasis added.]

- Bing Maps Module: enables easy and flexible embedding of Bing Maps in Drupal content types (articles, for example)

- Silverlight PivotViewer Module: enables easy and flexible embedding of Silverlight PivotViewer in Drupal content types, using a set of preconfigured data sources (OData, a, b, c).

- Windows Live ID Module: allows Drupal users to associate their Drupal accounts with their Windows Live IDs and then log in to Drupal with their Windows Live ID

- OData Module: allows data sources based on OData to be included in Drupal content types (such as articles). The generic module includes a basic OData query builder and renders data in a simple HTML table. The package includes a sample module based on an Open Government Data Initiative (OGDI) OData source, showing how to build advanced rendering (with Bing Maps).
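The Drupal module is written in PHP, and its source isn't quoted here. The following Python sketch only illustrates what a "basic OData query builder" plus a simple HTML-table renderer amount to. It appends the standard `$filter`, `$select`, `$orderby` and `$top` system query options to an entity-set URL, then turns the returned entities into table rows. The base URL and the `Parks` entity set are hypothetical placeholders, not an actual OGDI endpoint.

```python
from html import escape
from urllib.parse import quote, urlencode


def build_odata_query(base_url, entity_set, filter_expr=None, select=None,
                      orderby=None, top=None):
    """Compose an OData URL from the standard system query options."""
    options = {}
    if filter_expr:
        options["$filter"] = filter_expr
    if select:
        options["$select"] = ",".join(select)
    if orderby:
        options["$orderby"] = orderby
    if top is not None:
        options["$top"] = str(top)
    url = f"{base_url.rstrip('/')}/{entity_set}"
    if options:
        # Keep '$', ',' and single quotes readable; spaces become %20.
        url += "?" + urlencode(options, quote_via=quote, safe="$,'")
    return url


def render_html_table(rows, columns):
    """Render a list of entity dictionaries as a simple HTML table."""
    header = "".join(f"<th>{escape(col)}</th>" for col in columns)
    body = "".join(
        "<tr>" + "".join(f"<td>{escape(str(row.get(col, '')))}</td>" for col in columns) + "</tr>"
        for row in rows
    )
    return f"<table><tr>{header}</tr>{body}</table>"
```

For example, `build_odata_query("https://example.org/v1/ogdi", "Parks", filter_expr="Borough eq 'Manhattan'", select=["Name", "Borough"], top=5)` yields `https://example.org/v1/ogdi/Parks?$filter=Borough%20eq%20'Manhattan'&$select=Name,Borough&$top=5`. Escaping each cell with `html.escape` prevents data from the feed from injecting markup into the page.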

If you want to learn more about these, you should read Craig Kitterman’s blog post here. It’s another way that we support interoperability, and we are proud to listen to our customers. But enough reading for now: let’s try the new version of Drupal!

<Return to section navigation list>

Windows Azure AppFabric: Access Control and Service Bus

• Vittorio Bertocci (@vibronet) posted a very detailed Fun with FabrikamShipping SaaS I: Creating a Small Business Edition Instance (several feet long) on 2/12/2011:

It’s been a few months since we pulled the wrap off FabrikamShipping SaaS, and the response (example here) has been just great: I am glad you guys are finding the sample useful!

In fact, FabrikamShipping SaaS contains a lot of interesting stuff, and I am guilty of not having found the time to highlight the various scenarios, lessons learned and reusable little code gems it contains. Right now, the explanatory material is limited to the intro video, the recording of my session at TechEd Europe and the StartHere pages of the source code & enterprise companion packages.

We designed the online demo instance and the downloadable packages to be as user-friendly as we could, and in fact tens of people create tenants every day, but more documentation would undeniably help people zero in on the most interesting scenarios. Hence, I am going to start writing more about the demo. Sometimes we’ll dive very deep into code and architecture; other times we’ll stay at a higher level.

I’ll begin by walking you through the process of subscribing to a small business edition instance of FabrikamShipping. The beauty of this demo angle is that all you need to experience it is a browser, an internet connection and one or more accounts at Live, Google or Facebook. Despite the nimble requirements, however, this demo path demonstrates many important concepts for SaaS and cloud-based solutions: in fact, I am told it is the demo my field colleagues use most often in their presentations, events and engagements.

One last thing before diving in: I am going to organize this as instructions you can follow for going through the demo, almost as a script, so that you can get the big picture reasonably fast. I will expand on the details in later posts.

Subscribing to a Small Business Edition instance of FabrikamShipping

Let’s say that today you are Adam Carter: you work for Contoso7, a fictional startup, and you are responsible for logistics operations. Part of Contoso7’s business entails sending products to its customers, and you are tasked with finding a solution for handling Contoso7’s shipping needs. You have no resources (or desire) to maintain software in-house for a commodity function such as shipping, hence you are on the hunt for a SaaS solution that can give you what you need just by pointing your browser to the right place.

Contoso7 employees are mostly remote. Furthermore, there is a seasonal component in Contoso7’s business that requires a lot of workers in the summer and significantly fewer staff in the winter. As a result, Contoso7 does not keep accounts for those workers in a directory, but asks them to use their email and accounts from web providers such as Google, Live ID, or even Facebook.

In your hunt for the right solution, you stumble on FabrikamShipping: it turns out they offer a great shipping solution, delivered as a monthly subscription service to a customized instance of their application. The small business edition is super-affordable, and it supports authentication from web providers. It’s a go!

You navigate to the application home page at https://fabrikamshipping.cloudapp.net/, and sign up for one instance.

As mentioned, the Small Business Edition is the right fit for you; hence, you just click on the associated button.

First of all, FabrikamShipping establishes a secure session: in order to define your instance, you’ll have to input information you may not want to share too widely! FabrikamShipping also needs to establish a business relationship with you. If you successfully complete the onboarding process, the identity you use here will be the one associated with all the subscription administration activities.

You can choose to sign in with any of the identity providers offered above. FabrikamShipping trusts ACS to broker all its authentication needs: in fact, the list of supported IPs comes directly from the FabrikamShipping namespace in ACS. Pick any IP you like!
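FabrikamShipping's sign-in page code is in the downloadable source and isn't reproduced here. The following is a hedged Python sketch of the general idea: ACS v2 publishes a per-namespace JSON list of identity providers, and a relying party can fetch that list and render it with its own look and feel. The endpoint path, the query parameters and the `Name`/`LoginUrl` field names are my recollection of the ACS v2 feed and should be treated as assumptions. The namespace and realm in the usage are hypothetical.

```python
import json
from urllib.parse import urlencode


def identity_provider_feed_url(namespace, realm, version="1.0"):
    """Build the (assumed) ACS v2 identity-provider feed URL for a relying party."""
    query = urlencode({"protocol": "wsfederation", "realm": realm, "version": version})
    return f"https://{namespace}.accesscontrol.windows.net/v2/metadata/IdentityProviders.js?{query}"


def parse_identity_providers(feed_json):
    """Turn the JSON feed into (display name, login URL) pairs for a custom sign-in page."""
    return [(ip["Name"], ip["LoginUrl"]) for ip in json.loads(feed_json)]
```

Because the page builds its buttons from the feed, adding an identity provider to the ACS namespace makes it appear on the sign-in page without a code change.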

In this case, I have picked a Live ID. Note for the demoers: the identity you use at this point is associated with your subscription. It is also how FabrikamShipping determines which instance you should administer when you come back to the management console. You can only have one instance associated with one identity. Once you create a subscription with this identity, you won’t be able to reuse it to create a NEW subscription until the tenant gets deleted (typically every 3 days).

Once you have authenticated, FabrikamShipping starts the secure session in which you’ll provide the details of your instance. The sequence of tabs at the top of the page represents the steps you need to go through. The FabrikamShipping code contains a generic provisioning engine that can adapt to different provisioning processes to accommodate multiple editions, and it sports a generic UI engine that can adapt to them as well. The flow here is specific to the small business edition.
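To make the "generic UI engine" idea concrete, here is a minimal Python sketch. It assumes each edition is described only by an ordered list of wizard tabs. The step names and the `OnboardingWizard` class are hypothetical. Only the Small Business sequence (company details, users, summary) follows the walkthrough, and this is not necessarily how FabrikamShipping's code is organized.

```python
from dataclasses import dataclass, field

# Hypothetical step names; only the Small Business sequence follows the walkthrough.
# Other editions would register their own, longer lists here.
EDITION_STEPS = {
    "small_business": ["company_info", "users", "summary"],
}


@dataclass
class OnboardingWizard:
    edition: str
    position: int = 0
    answers: dict = field(default_factory=dict)

    @property
    def steps(self):
        return EDITION_STEPS[self.edition]

    @property
    def is_complete(self):
        return self.position >= len(self.steps)

    @property
    def current_step(self):
        return None if self.is_complete else self.steps[self.position]

    def submit(self, data):
        """Store the data for the current tab and return the next tab (None when finished)."""
        if self.is_complete:
            raise RuntimeError("wizard already finished")
        self.answers[self.current_step] = data
        self.position += 1
        return self.current_step
```

Because the UI asks the edition for its step list instead of hard-coding the tabs, a longer enterprise flow would only need a new entry in `EDITION_STEPS`.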

The first screen gathers basic information about your business: the name of the company, the email address at which you want to receive notifications, which Windows Azure data center you want your app to run in, and so on. Fill in the form and hit Next.

In this screen you can define the list of the users that will have access to your soon-to-appear application instance for Contoso7.

Users of a Small Business instance authenticate via web identity providers. This means that at authentication time you won’t receive a whole lot of information in the form of claims; sometimes you’ll just get an identifier. However, to operate the shipping application every user needs some profile information (name, phone, etc.) and a level of access to the application features (i.e., roles).

As a result, you as the subscription administrator need to enter that information about your users; furthermore, you need to specify for every user a valid email address so that FabrikamShipping can generate invitation emails with activation links in them (more details below).

In this case, I am adding myself (i.e., Adam Carter) as an application user (the subscription administrator is not added automatically), using the same Hotmail account I used before. Make sure you use an email address you actually have access to, or you won’t receive the notifications you need to move forward in the demo. Once you have filled in all fields, click Add as New to add the entry to the users’ list.

For good measure I always add another user for the instance, typically with a Gmail or Facebook account. I like showing that the same instance of a SaaS app can be accessed by users coming from different IPs, something that before the rise of the social web would have been considered weird at best.

Once you are satisfied with your list of users, you can click Next.

The last screen summarizes your main instance options: if you are satisfied, you can hit Subscribe and get FabrikamShipping to start the provisioning process which will create your instance.

Note: in a real-life solution this would be the moment to show the color of your money. FabrikamShipping is nicely integrated with the PayPal Adaptive Payments APIs and demonstrates both explicit payments and automated, preapproved charging from Windows Azure. I think it is really cool, and it deserves a dedicated blog post. Also, in order to work it requires you to have an account with the PayPal developer sandbox, which would add steps to the flow: more reasons to defer it to another post.

Alrighty, hit Subscribe!

FabrikamShipping thanks you for your business and tells you that your instance will be ready within 48 hours. In reality that’s the SLA for the enterprise edition, which I’ll describe in another post; for the Small Business edition we are WAAAY faster. If you click the link for verifying the provisioning status, you’ll have proof.

Here you entered the Management Console: now you are officially a Fabrikam customer, and you get to manage your instance.

The workflow you see above is, once again, a customizable component of the sample: the Enterprise edition’s would be muuuch longer. Just hit F5 a few times and you’ll see the entire thing turn green, typically in less than 30 seconds. That means that your Contoso7 instance of FabrikamShipping is ready!

Now: what happened in those few seconds between hitting Subscribe and the workflow turning green? Quite a lot of things. The provisioning engine:

- creates a dedicated instance of the app database in SQL Azure;
- creates the database of the profiles and the various invitation tickets;
- adds the proper entry in the Windows Azure store that tracks tenants and options;
- creates dedicated certificates and uploads them to ACS;
- creates entries in ACS for the new relying party and issuer;
- sends email notifications to the subscriber and invitations to the users;
- does many other small things needed to present Contoso7 with a personalized instance of FabrikamShipping.

So many interesting things take place there that this too will need a specific post. The bottom line here is: the PaaS capabilities offered by the Windows Azure platform are what made it possible for us to put together something so sophisticated as a sample, instead of requiring the armies of developers you’d need to implement features like the ones above from scratch. With the management APIs from Windows Azure, SQL Azure and ACS we can literally build the provisioning process as if we were playing with Lego blocks.
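The provisioning steps described above form an ordered pipeline, and the status of each step drives the green/not-green workflow display. Here is a minimal Python sketch, assuming each step is a callable that updates a tenant record. The step names paraphrase the text. The stub actions only record that they ran; they do not call the real Windows Azure, SQL Azure or ACS management APIs.

```python
from typing import Callable, Dict, List, Tuple

Step = Tuple[str, Callable[[dict], None]]


def run_provisioning(steps: List[Step], tenant: dict) -> Dict[str, str]:
    """Run steps in order; stop at the first failure so later steps stay 'pending'."""
    status = {name: "pending" for name, _ in steps}
    for name, action in steps:
        try:
            action(tenant)
        except Exception as exc:  # a real engine would log, retry or compensate here
            status[name] = f"failed: {exc}"
            break
        status[name] = "done"
    return status


def _stub(step_name):
    """Placeholder action that only records that the step ran; no real API is called."""
    def action(tenant):
        tenant.setdefault("completed", []).append(step_name)
    return action


# Step names paraphrase the list in the text; they are not FabrikamShipping identifiers.
SMALL_BUSINESS_STEPS: List[Step] = [
    (name, _stub(name))
    for name in [
        "create_sql_azure_app_database",
        "create_profile_and_invitation_store",
        "register_tenant_in_azure_storage",
        "upload_certificates_to_acs",
        "create_acs_relying_party_and_issuer",
        "send_notifications_and_invitations",
    ]
]
```

Stopping at the first failure leaves later steps `pending`, so a status page can show exactly where provisioning stalled. A production engine would also need retries or compensating actions, which this sketch deliberately omits.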

Activating One Account and Accessing the Instance

The instance is ready. Awesome! Now, how to start using it? The first thing Adam needs to do is check his email.

Above you can see that Adam received two emails from FabrikamShipping: let’s take a look at the first one.

The first email informs Adam, in his capacity as subscription manager, that the instance he paid for is now ready to start producing return on investment. It provides the address of the instance, which in good SaaS tradition is of the form http://<applicationname>/<tenant>. It also explains how the instance works: here is the instance address, your users all received activation invitations, this is just a sample so the instance will be gone in a few days, and so on. Great. To start using the app, Adam needs to take off the subscription manager hat and put on the application user one. For this, we need to open the next message.

This message is for Adam the user. It contains a link to an activation page (in fact we are using MVC) which will take care of associating the record in the profile with the token Adam will use for the sign-up. As you can imagine, the activation link is unique for every user and becomes useless once it’s been used. Let’s click on the activation link.
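The activation mechanics can be sketched as single-use tickets. This is a hypothetical Python illustration, not FabrikamShipping’s MVC code. Each invitation gets an unguessable token from `secrets.token_urlsafe`. Redeeming the token binds the profile to the name identifier in the incoming security token and deletes the ticket, so the activation link no longer works after its first use.

```python
import secrets
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass
class Profile:
    email: str
    roles: Tuple[str, ...]
    name_identifier: Optional[str] = None  # bound on first sign-up


class ActivationTickets:
    """Hypothetical single-use activation tickets, one per invited user."""

    def __init__(self):
        self._pending: Dict[str, Profile] = {}

    def issue(self, profile: Profile) -> str:
        token = secrets.token_urlsafe(32)  # unguessable, unique per invitation
        self._pending[token] = profile
        return token

    def redeem(self, token: str, name_identifier: str) -> Profile:
        # pop() removes the ticket, so a second click on the same link fails.
        profile = self._pending.pop(token, None)
        if profile is None:
            raise KeyError("unknown or already used activation ticket")
        profile.name_identifier = name_identifier
        return profile
```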

Here we are already on the Contoso7 instance, as you can see from the logo. (Here I uploaded a random image. Not really random: it’s the logo of my free English-Chinese dictionary app for WP7, which is in fact my Chinese seal.) Once again, the list of identity providers is rendered from a list dynamically provided by ACS. ACS provides a ready-to-use page for picking IPs, but the approach shown here allows Fabrikam to maintain a consistent look and feel, give continuity of experience, customize the message to make the user aware of the significance of this specific step (sign-up), and so on. Take a peek at the source code to see how that’s done.

Let’s say that Adam picks Live ID: as he is already authenticated with it from the former steps, the association happens automatically.

The page confirms that the current account has been associated with the profile. To prove it, we can now finally access the Contoso7 instance: we can go back to the email and follow the link provided, or use the link in the page here directly.

This is the page every Contoso7 user will see when landing on their instance: it may look very similar to the sign-up page above, but notice the different message clarifying that this is a sign-in screen.

As Adam is already authenticated with Live ID, as soon as he hits the link he gets redirected to ACS, gets a token and uses it to authenticate with the instance. Behind the scenes, Windows Identity Foundation uses a custom ClaimsAuthenticationManager to shred the incoming token. It verifies that the user is accessing the right tenant (tenant isolation is king), then retrieves the profile data from SQL Azure and adds them as claims in the current context. (There are solid reasons why we store those on the RP side; once again, stuff for another post.) As a result, all of Adam’s attributes and roles are hydrated in the current context, and the app can take advantage of claims-based identity to customize the experience and restrict access as appropriate. In practical terms, that means that Adam’s sender data are pre-populated. It also means that Adam can do pretty much what he wants with the app, since he is in the Shipping Manager role he self-awarded to his user at subscription time.
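The real implementation is a C# `ClaimsAuthenticationManager` subclass in WIF. The following Python sketch mirrors only the two steps described above. First, it rejects a token whose user has no profile in the tenant named in the URL. Second, it adds profile data as claims. The claim names and the profile store keyed by `(tenant, nameidentifier)` are hypothetical simplifications.

```python
from urllib.parse import urlparse


def tenant_from_url(url):
    """Instance addresses look like http://<applicationname>/<tenant>/..., so take the first path segment."""
    segments = urlparse(url).path.strip("/").split("/")
    return segments[0] or None


def authenticate(incoming_claims, request_url, profiles):
    """Enforce tenant isolation, then add profile data from the RP-side store as claims."""
    tenant = tenant_from_url(request_url)
    profile = profiles.get((tenant, incoming_claims.get("nameidentifier")))
    if profile is None:
        # Either an unknown user or a valid user of a *different* tenant.
        raise PermissionError(f"no profile for this identity in tenant {tenant!r}")
    claims = dict(incoming_claims)
    claims["name"] = profile["name"]
    claims["roles"] = list(profile["roles"])
    return claims
```

Keying profiles by tenant as well as by identity means that a valid user of one tenant is rejected when the request targets another tenant.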

In less than 5 minutes, if he is a fast typist, Adam got for his company a shipping solution; all the users already received instructions on how to get started, and Adam himself can already send packages around. Life is good!

Works with Google, too! And all the Others*

*in the Lost sense

Let’s leave Adam for a moment and walk a few clicks with Joe’s mouse. If you recall the subscription process, you’ll remember that Adam defined two users: himself and Joe. Joe is on Gmail: let’s go take a look at what he got. If you are doing this from the same machine as before, remember to close all browsers or you risk carrying forward existing authentication sessions!

Joe is “just” a user, hence he received only the user activation email.

The email is absolutely analogous to the activation email received by Adam. The only differences are the activation link, which is specific to Joe’s profile, and how Gmail renders HTML emails.

Let’s follow the activation link.

Joe gets the same sign-up UI we observed with Adam, but this time Joe has a Gmail account, hence we’ll pick the Google option.

ACS connects with Google via the OpenID protocol. The UI above is what Google shows you when an application (in this case the ACS endpoint used by FabrikamShipping) requests an attribute exchange transaction, so that Joe can give or refuse his consent to the exchange. Of course Joe knows that the app is trusted, as he got a heads-up from Adam, and he gives his consent. This causes a token to flow to ACS, which transforms it and makes it available for the browser to authenticate with FabrikamShipping. From now on, we already know what will happen: the token will be matched with the profile connected to this activation page, a link will be established and the ticket will be voided. Joe just joined the Contoso7 FabrikamShipping instance family!

And now, same drill as before: in order to access the instance, all Joe needs to do is click on the link above or use the link in the notification (better to bookmark it).

Joe picks Google as his IP…

..and since he flagged “remember this approval” at sign-up time, he’ll just see the page above briefly flashing in the browser and will get authenticated without further clicks.

And here we are! Joe is logged in the Contoso7 instance of FabrikamShipping.

As you can see in the upper right corner, his role is Shipping Creator, as assigned by Adam at subscription time. That means that he can create new shipments, but he cannot modify existing ones. To double-check that, go through the shipment creation wizard, verify that it works, and then try to modify the newly created shipment: the first operation will succeed and the second will fail. Close the browser, reopen the Contoso7 instance, sign in again as Adam and verify that you are instead able to both create and modify shipments. Of course the main SaaS explanatory value of this demo is in the provisioning rather than in the application itself, but it’s nice to know that the instances themselves actually use the claims as well.
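The difference between the two roles comes down to a permission lookup on the role claims. Here is a minimal Python sketch that covers only the two operations the walkthrough mentions. The operation names are hypothetical, and the real application may grant each role more than this.

```python
# Only the two operations the walkthrough mentions; the real app may grant more.
ROLE_PERMISSIONS = {
    "Shipping Creator": {"create_shipment"},
    "Shipping Manager": {"create_shipment", "modify_shipment"},
}


def is_allowed(role_claims, operation):
    """True if any of the user's role claims grants the operation."""
    return any(operation in ROLE_PERMISSIONS.get(role, set()) for role in role_claims)
```

Joe (`["Shipping Creator"]`) passes the check for `create_shipment` but fails it for `modify_shipment`, while Adam (`["Shipping Manager"]`) passes both, which matches the behavior described above.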

Aaand that’s it for creating and consuming Small Business edition instances. Seems long? Well, it takes long to write it down, but with a good form filler I can do the entire walkthrough above in well under 3 minutes. Also: this is just one of the possible paths, and you can add your own spins & variations (for example, I am sure that a lot of people will want to try using Facebook). The source code is fully available, so if you want to add new identity providers (Yahoo, ADFS instances or arbitrary OpenID providers are all super-easy to add), you can definitely have fun with it.

Now that you saw the flow from the customer perspective, in one of the next installments we’ll take a look at some of the inner workings of our implementation: but now… it’s Saturday night, and I better leave the PC alone before they come to grab my hair and drag me away from it.

• Microsoft’s San Antonio data center sent [AppFabric Access Control] [South Central US] [Yellow] We are currently investigating a potential problem impacting Windows Azure AppFabric on 2/12/2011 CST:

Feb 11 2011 7:11PM We are currently investigating a potential problem impacting Windows Azure AppFabric.

Feb 13 2011 3:54AM Service is running normally.

Here’s a capture from the Azure Services Dashboard:

More than a day to correct a “potential problem” seems like a long time to me.
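For the record, the gap between the two dashboard entries works out to 32 hours 43 minutes. The following Python snippet checks the arithmetic, assuming both timestamps are in the same time zone (the dashboard excerpt doesn’t say otherwise).

```python
from datetime import datetime

DASHBOARD_FORMAT = "%b %d %Y %I:%M%p"  # e.g. "Feb 11 2011 7:11PM"


def outage_duration(first_report, resolved):
    """Elapsed time between two dashboard timestamps, assumed to share a time zone."""
    start = datetime.strptime(first_report, DASHBOARD_FORMAT)
    end = datetime.strptime(resolved, DASHBOARD_FORMAT)
    return end - start


print(outage_duration("Feb 11 2011 7:11PM", "Feb 13 2011 3:54AM"))  # 1 day, 8:43:00
```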

<Return to section navigation list>

Windows Azure Virtual Network, Connect, RDP and CDN

• CloudFail.net reported [Windows Azure CDN] [Worldwide] [Green(Info)] Azure CDN Maintenance on 2/13/2011:

Feb 13 2011 8:02PM Customers may see delay in Azure CDN new provisioning requests until 1pm PST due to scheduled maintenance.

Here’s the report from the Windows Azure Service Dashboard:

• See David Makogon’s (@dmakogon) Azure Tip: Overload your Web Role post of 2/13/2011 in the Live Windows Azure Apps, APIs, Tools and Test Harnesses section below regarding an earlier RDP tip.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Joe Brinkman promised a series of posts starting with DotNetNuke and Windows Azure: Understanding Azure on 2/9/2011:

For the last year or so there has been a lot of interest in the DotNetNuke community about how to run DotNetNuke on Windows Azure. Many people have looked at the problem and could not find a viable solution that didn’t involve major changes to the core platform. This past fall, DotNetNuke Corp. was asked by Microsoft to perform a feasibility study identifying any technical barriers that prevented DotNetNuke from running on Windows Azure.

I was pleasantly surprised by what I found, and over the next few weeks I’ll present my findings in a series of blog posts. Yes, Virginia, there is a Santa Claus, and he is running DotNetNuke on Windows Azure.

- Understanding Azure

- SQL Azure

- Azure Drives

- Web Roles and IIS

- Putting it All together

Part 1: Understanding Azure

Background

Prior to the official launch of Windows Azure, Charles Nurse had looked at running DotNetNuke on Windows Azure. At the time, the conclusion was that we could not run without major architectural changes to DotNetNuke or to Windows Azure. Since then, several other people in the community have also tried to get DotNetNuke running on Windows Azure and have arrived at the same conclusion. David Rodriguez has actually made significant progress, but his solution required substantial changes to DotNetNuke, and any module you wish to use must also be modified to work with it.

DotNetNuke already runs on a number of different Cloud platforms and we really don’t want to re-architect DotNetNuke just to run on Azure. That approach was rejected because ultimately Azure support is only needed by a small fraction of our overall community. Re-architecting the platform would require significant development effort which could be better spent on features that serve a much larger segment of our community. Also, re-architecting the platform would introduce a significant amount of risk since it would potentially impact every Module and Skin currently running on the platform. The downsides of re-architecting DotNetNuke vastly outweigh the anticipated benefits to a small percentage of our user base.

The Magic of the Fabric Controller

To understand the major challenge with hosting on Windows Azure, it is important to understand some of the basics of the platform. Windows Azure was created on the premise that applications running in the cloud should be completely unaware of the specific hardware they run on. Microsoft is free to upgrade hardware at any time or even use different hardware in different data centers. Any server in the Azure cloud (assuming it has the resources specified by the application service definition) is an equally viable spot on which to host a customer’s applications.

A key aspect of Windows Azure hosting is that the Azure Fabric Controller constantly monitors the state of the VMs and other hardware necessary to run your application (this includes load balancers, switches, routers, etc.). If a VM becomes unstable or unresponsive, the Fabric Controller can shut down and restart the VM. If the VM cannot be successfully brought back online, the Fabric Controller may move the application to a new VM. All of this occurs seamlessly and without the intervention of the customer or even Azure technical staff. Steve Nagy has a great post that explains the Fabric Controller in more detail.
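The restart-then-relocate behavior can be summarized as a small decision procedure. This Python sketch is a hypothetical simplification for illustration only: it does not reproduce the Fabric Controller’s actual logic, and the host names, callbacks and return strings are invented.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class RoleInstance:
    name: str
    host: str
    healthy: bool = True


def recover(instance: RoleInstance,
            try_restart: Callable[[RoleInstance], bool],
            spare_hosts: List[str],
            deploy_original_package: Callable[[RoleInstance], None]) -> str:
    """Hypothetical recovery policy: restart in place first, then relocate."""
    if instance.healthy:
        return "healthy"
    if try_restart(instance):
        instance.healthy = True
        return "restarted"
    if not spare_hosts:
        return "waiting for capacity"
    instance.host = spare_hosts.pop(0)
    deploy_original_package(instance)  # fresh VM built from the uploaded package
    instance.healthy = True
    return f"moved to {instance.host}"
```

The relocation branch works only because the original package can be deployed anywhere, which is the property discussed below under immutable applications.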

The Fabric Controller is a complex piece of code that essentially replaces an entire IT department. Adding new instances of your application can happen in a matter of minutes. New instances of your application can be placed in data centers located around the world at a moment’s notice, and they can be taken down just as quickly. The Fabric Controller provides an incredible amount of flexibility and redundancy without requiring you to hire an army of IT specialists or to buy expensive hardware that will be outdated in a matter of months. The costs of running your application are more predictable and scale directly in proportion to the needs of your application.

Immutable Applications

All of this scalability and redundancy has a price. In order to accomplish this seeming bit of magic, Windows Azure places one giant limitation on applications running in their infrastructure: the application service package that is loaded into Windows Azure is immutable. If you want to make changes to the application, you must submit a whole new service package to Windows Azure and this new package will be deployed to the appropriate VM(s) running in the appropriate data centers.

This limitation is in place for a number of reasons. Because Azure defines its roles as read-only, Microsoft doesn’t have to worry about making backups of your application. As long as Microsoft has the original service package that was uploaded, it has a valid backup of your entire application. Any data that you need to store should be stored in Azure Storage or SQL Azure, which have appropriate backup strategies in place to ensure your data is protected.

Also, because every service package is signed by the user before it is uploaded to Azure, Microsoft can be reasonably certain that it has not been corrupted since they first received the package. Any bugs or corruption issues exist in your application because that is what you submitted and not because of some failure on the part of the Azure infrastructure.

Finally, because the role is tightly locked down, your application is much more secure. It becomes extremely difficult for a hacker to upload malicious code to your site, as that would essentially require breaking through the security protections that Microsoft’s engineers have put in place. Not infeasible, but certainly harder than breaking through the security implemented by most company IT departments, which don’t have nearly the security expertise of the people who wrote the operating system.

This “read-only” limitation makes it very easy for Windows Azure to manage your application. It can always add a new application instance, or move your application to a new VM, just by configuring a new VM as specified in your service description and then deploying your original service package to it. If your application instance were to become corrupted, the Fabric Controller wouldn’t have to try to figure out which was the last valid backup of your application, as it would always have the original service package safely tucked away.

Because the service package is signed, the Fabric Controller can quickly verify that the service package is not corrupted and that you have fully approved that package for execution. Moving VMs or adding new instances becomes a repeatable and secure process, which is very important when you are trying to scale to hundreds of thousands of customers and applications.
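Here is a minimal Python sketch of why an immutable package makes recovery simple. It uses a SHA-256 digest recorded at upload time as a stand-in for the package signature. A real signature would also prove who approved the package, which a bare digest does not. `PackageStore` and `new_instance` are hypothetical names.

```python
import hashlib
from typing import Dict, Tuple


class PackageStore:
    """Keeps each uploaded package together with the digest recorded at upload time."""

    def __init__(self):
        self._packages: Dict[str, Tuple[bytes, str]] = {}

    def upload(self, name: str, package: bytes) -> str:
        digest = hashlib.sha256(package).hexdigest()
        self._packages[name] = (package, digest)
        return digest

    def fetch_verified(self, name: str) -> bytes:
        package, digest = self._packages[name]
        if hashlib.sha256(package).hexdigest() != digest:
            raise ValueError(f"package {name!r} failed its integrity check")
        return package


def new_instance(store: PackageStore, name: str) -> dict:
    # Every instance starts from the same verified, read-only bytes; nothing
    # written at runtime is part of the package, so nothing needs backing up.
    return {"package": store.fetch_verified(name), "scratch": {}}
```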

Unfortunately, the assumption that applications are immutable is directly at odds with a fundamental tenet of ASP.NET applications, especially those running in Medium trust and requiring the ability for users to upload content to the application. Most ASP.NET applications that support file uploads store the content in some directory within the application directory. In fact, when running in Medium trust, ASP.NET applications are prevented from accessing file locations outside the application directory. Likewise, those applications are also prohibited from making web-service calls to store content on external sites like Flickr or Amazon S3.
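Medium trust enforces this restriction through code access security. As I understand it, the trust policy grants file I/O only under the application directory. The containment rule itself can be sketched in Python as follows. This illustrates the check only; it is not how ASP.NET implements it. Comparing with `os.path.commonpath` after resolving the path rejects both `../` escapes and look-alike prefixes such as `/srv/dnn-evil`.

```python
import os


def is_inside_app_root(app_root: str, requested_path: str) -> bool:
    """Return True only if requested_path resolves to a location under app_root."""
    root = os.path.realpath(app_root)
    target = os.path.realpath(os.path.join(root, requested_path))
    return os.path.commonpath([root, target]) == root
```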

With DotNetNuke, and other extensible web applications like DotNetNuke, the situation is even more dire. One of the greatest features of most modern CMS’s, and certainly of DotNetNuke, is the ability to install new modules or extensions at runtime. As part of this module installation process, new code is uploaded to the site and stored in the appropriate folders. This new code is then available to be executed in the context of the base application. Given the immutable nature of Windows Azure this functionality just isn’t supported.

The VM Role Won’t Save Us

Last year at PDC, Microsoft announced that it would be releasing a VM role that did away with the limitations imposed by the Web and Worker roles in Windows Azure. Many people within the DotNetNuke community who have looked at running DotNetNuke on Windows Azure thought that this would remove the roadblocks for DotNetNuke. With a VM role, instead of supplying a service package that is just your application, you provide a .vhd image of an entire Windows Server 2008 R2 machine that is configured to run your application. Any dependencies needed to run your application are already installed and ready to go. This is great, as it gives you complete control over the VM and even allows you to configure your application to have read/write access to your application directories. However, once you understand how the Azure Fabric Controller operates, it becomes clear that having control over the VM and read/write access to the application directories doesn’t really solve the problems preventing DotNetNuke from running.

For example, suppose DotNetNuke were running in a VM role behind a specific network switch and that switch suffered a hardware failure. The Fabric Controller would start up a new VM for your application on another network segment unaffected by the outage. Since Azure doesn’t have to worry about having up-to-the-minute backups of your application, it can just reload your original VM image on a new server and have your application back up and running very quickly. However, if you had been writing data or installing code to the application directories, none of the data and code uploaded at runtime by your website administrators and content editors would be present on the original .vhd image you uploaded to Azure.
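The following toy Python model illustrates the failure mode just described. The instance’s disk starts as a copy of the uploaded image, runtime writes land only on that disk, and relocation restores the uploaded image. The class and file names are hypothetical.

```python
import copy


class VmRoleInstance:
    """Toy model: the disk starts as a copy of the uploaded .vhd contents."""

    def __init__(self, uploaded_image: dict):
        self._uploaded_image = copy.deepcopy(uploaded_image)
        self.disk = copy.deepcopy(uploaded_image)

    def write(self, path: str, content: str) -> None:
        # e.g. a module installed or a file uploaded through the DotNetNuke admin UI
        self.disk[path] = content

    def reimage_on_new_host(self) -> None:
        # The fabric controller restores the image you uploaded, not the current disk.
        self.disk = copy.deepcopy(self._uploaded_image)
```

After `write("DesktopModules/News/News.dll", ...)` followed by `reimage_on_new_host()`, the module is gone, even though the VM role allowed the write in the first place.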

As you can see, the VM Role is not the answer. Don’t despair, however. A lot has changed with Windows Azure since Charles Nurse performed his first evaluation, and there is a solution. Over the next four posts in the series I’ll show you how we solve the immutability problem and get DotNetNuke running in Azure without any architectural changes and with only minimal changes to our SQL scripts.

• Andy Cross (@andybareweb) explained File Based Diagnostics Config with IntelliSense in Azure SDK 1.3 in a detailed 2/13/2011 post with a link to source code at the end:

Earlier in the week, the Windows Azure team posted about a way to use a configuration file to set up runtime diagnostics in Windows Azure SDK version 1.3. This is an alternative to the imperative, programmatic approach. Its key benefit is that the setup executes before the role itself starts and before any Startup tasks execute. In this post I will show how to use the diagnostics.wadcfg configuration file, how to get IntelliSense with it, and how it can be used to capture early Windows Azure lifecycle events. A source code example is also provided.

First of all we need to start with a vanilla Windows Azure Worker Role. I chose this for simplicity, but the approach does work for other role types. For adjustments you need to make for different role types, see the later section called Differences between role types.

To the root of this Worker Role we must add a file called diagnostics.wadcfg. You can add it as a text file or an XML file; the latter allows basic validation checking, such as ensuring tags are closed. We will choose the XML file approach, as it also allows us to set up IntelliSense for a richer design-time experience. Right-click your solution and add a new file:

Add a new file to the solution

Next, choose the XML File option and give it the correct filename, diagnostics.wadcfg.

Add an xml file with the correct filename

Set the new file’s build properties so that it is packaged correctly:

Set the Build Action to Content and Copy to Output to a "Copy **" option

The basic XML file generated by Visual Studio is shown below. You can delete all of its contents:

Basic xml to be deleted

If you try to type into the newly created file, you will see that IntelliSense doesn’t offer any particularly useful options. The next step is optional, so skip the next section if you are just going to paste in an existing file. I will now show how to validate any XML file against a known XSD schema in Visual Studio 2010.

How to Validate an XML File against a Known XSD Schema in Visual Studio 2010

First, open the XML | Schemas… dialog:

Enter the XML Schemas option

This is what the dialog looks like:

XML Schemas dialog

Click the Add button, browse to the path of your XSD in the resulting dialog, select the XSD and click OK. The Windows Azure Diagnostics configuration XSD is located at %ProgramFiles%\Windows Azure SDK\v1.3\schemas\DiagnosticsConfig201010.xsd. MSDN has a more detailed document on the schema for this XML file, titled Windows Azure Diagnostics Configuration Schema.

Browse and select the XSD

Visual Studio will then show the schema you have added as the highlighted row in the next dialog box. By default, this row will also have a tick in the “Use” column, meaning the schema has been loaded and is associated with the current document. From then on, any XML file that contains a matching xmlns attribute will automatically be associated with the correct schema.

Confirmation of schema loaded

Clicking OK on this final dialog completes the process. This allows IntelliSense to begin providing suggestions for new nodes as well as validating existing nodes:

IntelliSense!

XML value and how to test

Now we can start adding a basic set of XML nodes that will allow us to trace Windows Event Log details. The basic code is shown below. The schema will look very familiar if you have experience with Windows Azure Diagnostics. If not, you may like to read this blog post about how those diagnostics work. The only slight complexity is that time spans such as scheduledTransferPeriod are encoded as ISO 8601 durations, so a TimeSpan of 1 minute is written as “PT1M”.

<DiagnosticMonitorConfiguration xmlns="http://schemas.microsoft.com/ServiceHosting/2010/10/DiagnosticsConfiguration"
    configurationChangePollInterval="PT1M"
    overallQuotaInMB="4096">
  <WindowsEventLog bufferQuotaInMB="4096"
      scheduledTransferLogLevelFilter="Verbose"
      scheduledTransferPeriod="PT1M">
    <DataSource name="Application!*"/>
  </WindowsEventLog>
</DiagnosticMonitorConfiguration>

This screenshot shows how useful IntelliSense is in this scenario:

IntelliSense again!
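Both configurationChangePollInterval and scheduledTransferPeriod in the sample take ISO 8601 durations, which use the same lexical form as the XML Schema xs:duration type. In .NET, System.Xml.XmlConvert.ToTimeSpan converts strings in this format to a TimeSpan. The Python sketch below only illustrates the format. It handles just the day/time subset (PnDTnHnMnS) and deliberately rejects years and months, which have no fixed length. It is not a complete ISO 8601 parser.

```python
import re
from datetime import timedelta

# Day/time subset of ISO 8601 durations: PnDTnHnMnS (years and months are not supported)
_DURATION = re.compile(
    r"^P(?:(?P<days>\d+)D)?"
    r"(?:T(?:(?P<hours>\d+)H)?(?:(?P<minutes>\d+)M)?(?:(?P<seconds>\d+(?:\.\d+)?)S)?)?$"
)


def parse_duration(text: str) -> timedelta:
    """Convert a string such as 'PT1M' into a timedelta."""
    match = _DURATION.match(text)
    # Reject non-matches, a bare 'P'/'PT', and a trailing 'T' with no time components
    if not match or text in ("P", "PT") or text.endswith("T"):
        raise ValueError(f"unsupported duration: {text!r}")
    parts = {name: float(value) for name, value in match.groupdict().items() if value}
    return timedelta(**parts)


def format_duration(delta: timedelta) -> str:
    """Render a whole-second, non-negative timedelta in PnDTnHnMnS form."""
    total = int(delta.total_seconds())
    days, rest = divmod(total, 86400)
    hours, rest = divmod(rest, 3600)
    minutes, seconds = divmod(rest, 60)
    units = ((hours, "H"), (minutes, "M"), (seconds, "S"))
    time_part = "".join(f"{value}{unit}" for value, unit in units if value)
    day_part = f"{days}D" if days else ""
    if not day_part and not time_part:
        return "PT0S"
    return "P" + day_part + ("T" + time_part if time_part else "")


print(parse_duration("PT1M"))                 # 0:01:00
print(format_duration(timedelta(minutes=1)))  # PT1M
```

Note that “M” means minutes only after the “T” separator. That is why “P1M” (one month) is rejected here rather than silently read as one minute.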

Once you have this XML in your wadcfg file, your role will copy any entries written to the Windows Application event log. This happens very early in the role lifecycle, and we can prove it by adding a very simple console application that writes to the Windows Application log.

I will not go into detail about how to create the console application and link it to the worker role. It is included in the source code provided, and if you want more details, they are in my blog post about Custom Performance Counters in Windows Azure, which uses a similar console application to install the counters.

The code within the console application is very straightforward:

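using System;
using System.Diagnostics;

namespace EventLogWriter
{
    class Program
    {
        static void Main(string[] args)
        {
            // The message to log is passed as the first command-line argument
            if (args.Length == 0)
            {
                Console.Error.WriteLine("Usage: EventLogWriter <message>");
                return;
            }

            string eventSourceName = "EventLogWriter";

            // Registering a new event source requires administrative rights
            if (!EventLog.SourceExists(eventSourceName))
                EventLog.CreateEventSource(eventSourceName, "Application");

            // Write the message to the Application log as a Warning entry
            EventLog.WriteEntry(eventSourceName, args[0], EventLogEntryType.Warning);
        }
    }
}

The program simply writes whatever is passed as its first argument to the Application event log. The solution will look like this: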

Solution structure

Make sure you add the Startup task to your ServiceDefinition.csdef file:

ServiceDefinition.csdef
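The ServiceDefinition.csdef screenshot is not reproduced in text here. As a sketch of what the file needs to contain, the Python below builds a <Startup> fragment with a single <Task>. It uses the commandLine, executionContext and taskType attributes from the SDK 1.3 service definition schema, and the fragment goes inside the <WorkerRole> element. The command line is a hypothetical example. Setting executionContext="elevated" is my assumption, based on the fact that creating an event source requires administrative rights.

```python
import xml.etree.ElementTree as ET


def startup_fragment(command_line: str, elevated: bool = True) -> str:
    """Build a <Startup> element containing one startup task for a .csdef role."""
    startup = ET.Element("Startup")
    ET.SubElement(
        startup,
        "Task",
        commandLine=command_line,
        # Creating an event source needs admin rights, hence "elevated" by default
        executionContext="elevated" if elevated else "limited",
        # A "simple" task must exit before the role itself is started
        taskType="simple",
    )
    return ET.tostring(startup, encoding="unicode")


# Hypothetical command line: the console app plus the message it should log
print(startup_fragment('EventLogWriter.exe "Written by a startup task"'))
```

A “simple” task runs to completion before the role starts. That ordering is what lets this test show the diagnostics configuration is already active before any role code runs.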

Differences Between Role Types

The different role types in Windows Azure need a slightly different setup in order to use this approach. The differences relate only to the location of the diagnostics.wadcfg file. From the new MSDN documentation:

The following list identifies the locations of the diagnostics configuration file for the different role types:

- For worker roles, the configuration file is located in the root directory of the role.

- For web roles, the configuration file is located in the bin directory under the root directory of the role.

- For VM roles, the configuration file must be located in the %ProgramFiles%\Windows Azure Integration Components\v1.0\Diagnostics folder in the server image that you are uploading to the Windows Azure Management Portal. A default file is located in this folder that you can modify, or you can overwrite this file with one of your own.

Conclusion

This screenshot shows the log entries being created in the Windows Event Log:

Event Log

This is the associated row in Windows Azure Table Storage (the WADWindowsEventLogsTable table), as copied by the Windows Azure Diagnostics library:

Result in Table Storage

Potential Problems

While writing this post, I ran into a problem:

If you get this error, make sure you have all the required attributes on each node!

The message is “Windows Azure Diagnostics Agent has stopped working”. This was my fault: I had hand-crafted the XML file and left out some important attributes on the <DiagnosticMonitorConfiguration/> root node. Make sure you specify configurationChangePollInterval and overallQuotaInMB; otherwise you will get this problem.
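A quick pre-deployment check can catch this mistake. The sketch below is a hypothetical helper, not part of the SDK. It parses a diagnostics.wadcfg string with Python’s ElementTree, assumes the 2010/10 namespace used in the sample above, and checks only the two root attributes named here. It is no substitute for validating the whole file against DiagnosticsConfig201010.xsd.

```python
import xml.etree.ElementTree as ET

NS = "http://schemas.microsoft.com/ServiceHosting/2010/10/DiagnosticsConfiguration"
REQUIRED_ROOT_ATTRIBUTES = ("configurationChangePollInterval", "overallQuotaInMB")


def missing_root_attributes(wadcfg_text: str) -> list:
    """Return the required root attributes that a diagnostics.wadcfg document lacks."""
    root = ET.fromstring(wadcfg_text)
    # ElementTree reports namespaced tags as '{namespace}LocalName'
    if root.tag != f"{{{NS}}}DiagnosticMonitorConfiguration":
        raise ValueError(f"unexpected root element: {root.tag}")
    # Only the root attributes are checked; child elements are not validated here
    return [name for name in REQUIRED_ROOT_ATTRIBUTES if root.get(name) is None]


# A hand-crafted file that forgot both attributes
hand_crafted = (
    f'<DiagnosticMonitorConfiguration xmlns="{NS}">'
    '<WindowsEventLog scheduledTransferPeriod="PT1M"/>'
    "</DiagnosticMonitorConfiguration>"
)
print(missing_root_attributes(hand_crafted))
# ['configurationChangePollInterval', 'overallQuotaInMB']
```

For reference, the root element with both attributes present looks like this: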

<DiagnosticMonitorConfiguration xmlns="http://schemas.microsoft.com/ServiceHosting/2010/10/DiagnosticsConfiguration" configurationChangePollInterval="PT1M" overallQuotaInMB="4096">

Source code

As promised, the source code can be downloaded here: FileBasedDiagnosticsConfig

• David Makogon (@dmakogon) posted an Azure Tip: Overload your Web Role on 7/13/2011:

Recently, I blogged about endpoint usage when using Remote Desktop with Azure 1.3. The gist was that, even though Azure roles support up to five endpoints, Remote Desktop consumes one of those endpoints, and an additional endpoint is required for the Remote Desktop forwarder (this endpoint may be on any of your roles, so you can move it to any role definition).

To create the demo for the RDP tip, I created a simple Web Role with a handful of endpoints defined, to demonstrate the error seen when going beyond 5 total endpoints. The key detail here is that my demo was based on a Web Role. Why is this significant?

This brings me to today’s tip: Overload your Web Role.

First, a quick bit of history is in order. Prior to Azure 1.3, there was an interesting limit related to role definitions. The Worker Role supported up to 5 endpoints, in any mix of input and internal endpoints. Input endpoints are public-facing, while internal endpoints are accessible only by role instances in your deployment. These input and internal endpoints supported http, https, and tcp.

However, the Web Role, while also supporting 5 total endpoints, supported only two input endpoints: one http and one https. Because of this limitation, if your Azure deployment required any additional externally-facing services (for example, a WCF endpoint), you’d need a Web Role for the customer-facing web application and a Worker Role for additional service hosting. When considering a live deployment taking advantage of Azure’s SLA (which requires 2 instances of a role), this equates to a minimum of 4 instances: 2 Web Role instances and 2 Worker Role instances (though if your worker role is processing lower-priority background tasks, it might be OK to maintain a single instance).
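The endpoint rules described so far, along with the relaxed 1.3 rule, can be expressed as a small checker. This sketch reflects my reading of the limits as stated in this post: five endpoints per role, and before SDK 1.3, Web Role input endpoints limited to one http and one https. The Endpoint type and the endpoint_problems function are hypothetical, not part of the Azure SDK.

```python
from dataclasses import dataclass

MAX_ENDPOINTS_PER_ROLE = 5


@dataclass(frozen=True)
class Endpoint:
    name: str
    protocol: str   # "http", "https" or "tcp"
    is_input: bool  # True = public-facing input endpoint, False = internal endpoint


def endpoint_problems(role_type: str, endpoints: list, sdk_version: tuple) -> list:
    """Return the rule violations for one role's endpoint definitions (empty list = OK)."""
    problems = []
    if len(endpoints) > MAX_ENDPOINTS_PER_ROLE:
        problems.append(f"{len(endpoints)} endpoints defined; the limit is {MAX_ENDPOINTS_PER_ROLE}")
    if role_type == "web" and sdk_version < (1, 3):
        inputs = [e for e in endpoints if e.is_input]
        # Before 1.3, a Web Role's input endpoints were limited to one http and one https
        if any(e.protocol not in ("http", "https") for e in inputs):
            problems.append("pre-1.3 Web Role input endpoints must be http or https")
        for proto in ("http", "https"):
            if sum(e.protocol == proto for e in inputs) > 1:
                problems.append(f"pre-1.3 Web Role allows only one {proto} input endpoint")
    return problems


# Hypothetical role: public site, management site and a WCF service over tcp
site = [
    Endpoint("Web", "http", True),
    Endpoint("Admin", "https", True),
    Endpoint("Wcf", "tcp", True),
]
print(endpoint_problems("web", site, (1, 2)))     # the tcp input endpoint is rejected
print(endpoint_problems("web", site, (1, 3)))     # []
print(endpoint_problems("worker", site, (1, 2)))  # []
```

Before 1.3, the same three endpoints are fine on a Worker Role but not on a Web Role. That rule is what forced the extra Worker Role into the deployment.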

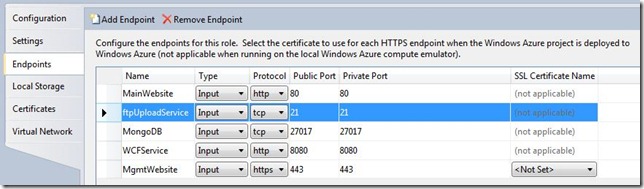

With Azure 1.3, the Web Role endpoint restriction no longer exists. You may now define endpoints any way you see fit, just like with a Worker Role. This is a significant enhancement, especially when building low-volume web sites. Let’s say you had a hypothetical deployment scenario with the following moving parts:

- Customer-facing website (http port)

- Management website (https port)

- ftp server for file uploads (tcp port)

- MongoDB (or other) database server (tcp port)

- WCF service stack (tcp port)

- Some background processing tasks that work asynchronously off an Azure queue

Let’s further assume that your application’s traffic is relatively light, and that combining all of these services still provides an acceptable user experience. With Azure 1.3, you can now run all of these moving parts within a single Web Role. This is easily configured on the role’s property page, in the Endpoints tab.

Your minimum usage footprint is now 2 instances! And if you felt like living on the wild side and forgoing SLA peace-of-mind, you could drop this to a single instance and accept the fact that your application will have periodic downtime (for OS updates, hardware failure/recovery, etc.).
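The arithmetic behind these instance counts is simple: if you want the SLA, you need two instances per role. Here is a trivial sketch. The function name is hypothetical, and the two-instance figure is the SLA requirement stated above.

```python
SLA_MIN_INSTANCES_PER_ROLE = 2  # the SLA requires at least 2 instances of a role


def minimum_instances(roles: int, want_sla: bool = True) -> int:
    """Smallest number of running instances for a deployment with this many roles."""
    per_role = SLA_MIN_INSTANCES_PER_ROLE if want_sla else 1
    return roles * per_role


print(minimum_instances(2))                  # 4: separate Web Role and Worker Role
print(minimum_instances(1))                  # 2: one overloaded Web Role
print(minimum_instances(1, want_sla=False))  # 1: no SLA, periodic downtime accepted
```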

Caveats

This example might seem a bit extreme, as I’m loading up quite a bit in a single VM. If traffic spikes, I’ll need to scale out to multiple instances, which scales all of these services together. This is probably not an ideal model for a high-volume site, as you’ll want the ability to scale different parts of your system independently (for instance, scaling up your customer-facing web, while leaving your background processes scaled back).

Don’t forget about Remote Desktop: If you plan on having an RDP connection to your overloaded Web Role, restrict your Web Role to only 3 or 4 endpoints (see my Remote Desktop tip for more information about this).
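The “3 or 4” figure follows from the five-endpoint limit. Remote Desktop consumes one endpoint on the role, and the forwarder consumes one more on whichever role hosts it. Here is a small sketch of that budget. The function is hypothetical; the limits are the ones stated in this post.

```python
MAX_ENDPOINTS_PER_ROLE = 5


def endpoints_left_for_app(rdp_enabled: bool, hosts_rdp_forwarder: bool) -> int:
    """Endpoints a role can still define for its own services (SDK 1.3 limits)."""
    used = 0
    if rdp_enabled:
        used += 1  # Remote Desktop's endpoint on this role
    if hosts_rdp_forwarder:
        used += 1  # the forwarder endpoint, which lives on exactly one role
    return MAX_ENDPOINTS_PER_ROLE - used


# The overloaded Web Role scenario above uses five endpoints (http, https, ftp, MongoDB, WCF)
scenario_endpoints = 5
print(endpoints_left_for_app(True, True))   # 3
print(endpoints_left_for_app(True, False))  # 4
print(scenario_endpoints <= endpoints_left_for_app(True, True))  # False
```

In other words, the five-service example fills every endpoint slot. To add Remote Desktop, you would have to drop one or two of those services from the role.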

Lastly, since you’re loading up a significant number of services on a single role, you’ll want to carefully monitor performance: CPU, web page connection latency, average page time, IIS request queue length, Azure Queue length (assuming you’re using one to control a background worker process), and so on. As traffic grows, you might want to consider separating processes into different roles.

• Petri I. Salonen analyzed Nokia and Microsoft from a partner-to-partner (p-2-p) perspective to achieve a vibrant ecosystem: recommendations for Microsoft and Nokia partners with a Windows Azure twist on 1/12/2011:

…

When I look at the Microsoft ecosystem and what is happening today from a technological perspective, the cloud and mobility are the two topics that everybody seems to be talking about. Look at what is happening with our youth. They expect to be able to consume services from the cloud, they grow up with smartphones, and they know how to use them effectively through messenger and SMS.