Windows Azure and Cloud Computing Posts for 10/21/2013+

Top Stories This Week:

- Steven Martin (@stevemar_msft) posted Announcing the release of Windows Azure SDK 2.2, General Availability for Windows Azure Backup and Preview of Hyper-V Recovery Manager on 10/22/2013 in the Windows Azure Cloud Services, Caching, APIs, Tools and Test Harnesses section (•).

- Scott Guthrie (@scottgu) blogged Windows Azure: Announcing release of Windows Azure SDK 2.2 (with lots of goodies) on 10/22/2013 in the Windows Azure Cloud Services, Caching, APIs, Tools and Test Harnesses section (•).

- Scott Guthrie (@scottgu) blogged Windows Azure: Backup Services Release, Hyper-V Recovery Manager, VM Enhancements, Enhanced Enterprise Management Support on 10/22/2013 in the Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN section (•).

A compendium of Windows Azure, Service Bus, BizTalk Services, Access Control, Caching, SQL Azure Database, and other cloud-computing articles.

• Updated 10/23/2013 with new articles marked •.

Note: This post is updated weekly or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Windows Azure Marketplace DataMarket, Power BI, Big Data and OData

- Windows Azure Service Bus, BizTalk Services and Workflow

- Windows Azure Access Control, Active Directory, and Identity

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Windows Azure Cloud Services, Caching, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure and DevOps

- Windows Azure Pack, Hosting, Hyper-V and Private/Hybrid Clouds

- Visual Studio LightSwitch and Entity Framework v4+

- Cloud Security, Compliance and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services


Alexandre Brisebois (@Brisebois) explained Windows Azure Storage Queues Vs Windows Azure Service Bus Queues in a 10/20/2013 post:

There are two kinds of queues in Windows Azure: Windows Azure Storage Queues and Windows Azure Service Bus Queues. The two are quite different.

Meet the Windows Azure Queues

The Windows Azure Storage Queue is capable of handling 2000 message transactions per second and each message has a maximum time to live of 7 days. Billing is calculated by storage transaction and size of the storage used by the queue.

The Windows Azure Service Bus Queue is capable of handling 2000 message transactions per second and messages can live forever! Billing is calculated by the number of 64 KB message transactions. This Queue flavor is feature rich and is especially interesting in Hybrid scenarios where part of the application is Cloud based and the other part is on-premises in your data center.

Windows Azure Storage Queues

Saying that they both have a dramatically different API isn’t an overstatement. The Windows Azure Storage Queue is simple and the developer experience is quite good. It uses the local Windows Azure Storage Emulator and debugging is made quite easy. The tooling for Windows Azure Storage Queues allows you to easily peek at the top 32 messages and if the messages are in XML or JSON, you’re able to visualize their contents directly from Visual Studio. Furthermore, these queues can be purged of their contents, which is especially useful during development and QA efforts.

The REST API for the Windows Azure Storage Queue allows you to create a polling system where you manage the frequency at which the queues are checked for messages. In ideal circumstances, it has an average latency of 10 ms.
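One way to manage the polling frequency is to back off while the queue is empty and return to a short interval as soon as a message arrives. The sketch below only computes the delay between polls; it does not call the storage service. The class name, method name, and the 100 ms / 30 s bounds are assumptions made for illustration, not values from the post or the Windows Azure SDK.

```java
/** Hypothetical helper that decides how long to wait before polling a queue again. */
final class PollingBackoffSketch {
    // Assumed bounds, chosen only for illustration.
    static final long MIN_DELAY_MS = 100;
    static final long MAX_DELAY_MS = 30_000;

    /**
     * Returns the delay before the next poll.
     * A poll that found a message resets the delay to the minimum;
     * an empty poll doubles the delay, capped at the maximum.
     */
    static long nextDelayMs(long currentDelayMs, boolean gotMessage) {
        if (gotMessage) {
            return MIN_DELAY_MS;
        }
        long doubled = currentDelayMs * 2;
        return Math.min(MAX_DELAY_MS, Math.max(MIN_DELAY_MS, doubled));
    }
}
```

A worker loop would sleep for the returned delay between polls. Because storage transactions are billed, fewer empty polls on an idle queue should also mean a lower bill.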

Access to Windows Azure Storage Queues is secured by Shared Access Signatures.

Consider Using Windows Azure Storage Queues if

- Your application needs to store over 5 GB worth of messages in a queue, where the messages have a lifetime shorter than 7 days.

- Your application requires flexible leasing to process its messages. This allows messages to have a very short lease time, so that if a worker crashes, the message can be processed again quickly. It also allows a worker to extend the lease on a message if it needs more time to process it, which helps deal with non-deterministic processing time of messages.

- Your application wants to track progress for processing a message inside of the message. This is useful if the worker processing a message crashes. A subsequent worker can then use that information to continue where the prior worker left off.

- You require server side logs of all the transactions executed against your queues.
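The flexible leasing and in-message progress tracking described in the list above can be modeled with a small in-memory sketch. This is not the Windows Azure Storage client library: `LeasedQueueSketch` and its methods are hypothetical names, and the current time is passed in explicitly so the lease behavior is easy to follow.

```java
import java.util.ArrayList;
import java.util.List;

/** In-memory model of lease-based (visibility timeout) delivery; not the Azure SDK. */
final class LeasedQueueSketch {
    /** A queued message whose body can be rewritten to record progress. */
    static final class Message {
        final int id;
        String body;
        long invisibleUntil; // time in ms before which other workers cannot see the message

        Message(int id, String body) {
            this.id = id;
            this.body = body;
        }
    }

    private final List<Message> messages = new ArrayList<>();
    private int nextId = 1;

    void enqueue(String body) {
        messages.add(new Message(nextId++, body));
    }

    /** Returns the first visible message and hides it for leaseMs, or null if none is visible. */
    Message dequeue(long now, long leaseMs) {
        for (Message m : messages) {
            if (m.invisibleUntil <= now) {
                m.invisibleUntil = now + leaseMs;
                return m;
            }
        }
        return null;
    }

    /** Rewrites the body (for example, to record progress) and renews the lease. */
    void update(Message m, String newBody, long now, long leaseMs) {
        m.body = newBody;
        m.invisibleUntil = now + leaseMs;
    }

    /** Removes a message once it has been fully processed. */
    void delete(Message m) {
        messages.remove(m);
    }

    int size() {
        return messages.size();
    }
}
```

In this model a worker that crashes simply never calls `delete`; once the lease expires, the next `dequeue` hands the same message, including any progress written into its body, to another worker.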

Windows Azure Service Bus Queues

The Windows Azure Service Bus Queues are evolved and surrounded by many useful mechanisms that make them enterprise worthy! They are built into the Service Bus and are able to forward messages to other Queues and Topics. They have a built-in dead-letter queue and messages have a time to live that you control, hence messages don’t automatically disappear after 7 days.

Furthermore, Windows Azure Service Bus Queues can delete themselves after a configurable amount of idle time. This feature is very practical when you create Queues for each user, because if a user hasn’t interacted with a Queue for the past month, it automatically gets cleaned up. It’s also a great way to drive costs down. You shouldn’t have to pay for storage that you don’t need. These Queues are limited to a maximum of 5 GB. Once you’ve reached this limit your application will start receiving exceptions.

Windows Azure Service Bus Queues also have two modes of operation: the Queue can be set to deliver messages at-least-once or at-most-once. Both models have their own advantages and disadvantages. At-most-once delivery isn’t great at dealing with failures while processing messages, because a message whose processing fails is not delivered again.
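The difference between the two delivery models can be shown with an in-memory sketch. In the Service Bus API these models are commonly identified with the ReceiveAndDelete (at-most-once) and PeekLock (at-least-once) receive modes; the code below does not use that API, and `DeliveryModeSketch`, `Handler`, and `maxAttempts` are hypothetical names introduced only for illustration.

```java
import java.util.Deque;

/** In-memory comparison of at-most-once and at-least-once delivery; not the Service Bus API. */
final class DeliveryModeSketch {
    enum Mode { AT_MOST_ONCE, AT_LEAST_ONCE }

    /** A message handler that may throw to simulate a processing failure. */
    interface Handler {
        void handle(String message) throws Exception;
    }

    /**
     * Drains the queue and returns how many messages were processed successfully.
     * maxAttempts bounds redelivery in AT_LEAST_ONCE mode.
     */
    static int drain(Deque<String> queue, Mode mode, Handler handler, int maxAttempts) {
        int processed = 0;
        while (!queue.isEmpty()) {
            String message = queue.peekFirst();
            if (mode == Mode.AT_MOST_ONCE) {
                // The message is removed before processing, so a failure loses it.
                queue.removeFirst();
                try {
                    handler.handle(message);
                    processed++;
                } catch (Exception e) {
                    // Message is gone; nothing to retry.
                }
            } else {
                // The message stays at the head of the queue until processing succeeds.
                boolean done = false;
                for (int attempt = 1; attempt <= maxAttempts && !done; attempt++) {
                    try {
                        handler.handle(message);
                        done = true;
                    } catch (Exception e) {
                        // Simulates the lock being released so the message is redelivered.
                    }
                }
                // Completed, or given up on (a real broker could dead-letter it instead).
                queue.removeFirst();
                if (done) {
                    processed++;
                }
            }
        }
        return processed;
    }
}
```

The trade-off is that at-least-once delivery can hand the same message to a handler more than once, so handlers should be idempotent or rely on duplicate detection.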

Access to the Service Bus is secured by Windows Azure ACS. Local development is possible, but it requires the installation of the Windows Azure Pack Service Bus. It’s consistent with Windows Azure Service Bus, but quite frankly this is only really viable if all your dev machines are set up using an image. To dev & test more effectively without all the hassle of maintaining identical configurations across all environments, I suggest creating a service bus namespace for each developer on your team using your dev & test MSDN Windows Azure Subscription.

Consider Using Queues in the Windows Azure Service Bus if

- You require full integration with the Windows Communication Foundation (WCF) communication stack in the .NET Framework.

- Your solution needs to be able to support automatic duplicate detection.

- You need to be able to process related messages as a single logical group.

- Your solution requires transactional behavior and atomicity when sending or receiving multiple messages from a queue.

- The time-to-live (TTL) characteristic of the application-specific workload can exceed the 7-day period.

- Your application handles messages that can exceed 64 KB but will not likely approach the 256 KB limit.

- Your solution requires the queue to provide a guaranteed first-in-first-out (FIFO) ordered delivery.

- Your solution must be able to receive messages without having to poll the queue. With the Service Bus, this can be achieved through the use of the long-polling receive operation.

- You deal with a requirement to provide a role-based access model to the queues, and different rights/permissions for senders and receivers.

- Your queue size will not grow larger than 5 GB.

- You can envision an eventual migration from queue-based point-to-point communication to a message exchange pattern that allows seamless integration of additional receivers (subscribers), each of which receives independent copies of either some or all messages sent to the queue. The latter refers to the publish/subscribe capability natively provided by the Service Bus.

- Your messaging solution needs to be able to support the “At-Most-Once” delivery guarantee without the need for you to build the additional infrastructure components.

- You would like to be able to publish and consume message batches.

Making the Final Decision

The decision on when to use Windows Azure Queues or Service Bus Queues clearly depends on a number of factors. These factors may depend heavily on the individual needs of your application and its architecture. If your application already uses the core capabilities of Windows Azure, you may prefer to choose Windows Azure Queues, especially if you require basic communication and messaging between services or need queues that can be larger than 5 GB in size.

Because Service Bus Queues provide a number of advanced features, such as sessions, transactions, duplicate detection, automatic dead-lettering, and durable publish/subscribe capabilities, they may be a preferred choice if you are building a hybrid application or if your application otherwise requires these features.

Source: Windows Azure Queues and Windows Azure Service Bus Queues – Compared and Contrasted

Find Out More

- Windows Azure Queues and Windows Azure Service Bus Queues – Compared and Contrasted

- How to Use Service Bus Queues

- How to Use the Queue Storage Service

- Configuring and Using the Storage Emulator with Visual Studio

- Service Bus Pricing Details

- Windows Azure Queue Storage Service Polling Task

- Securing and Authenticating a Service Bus Connection

- Service Bus Authentication and Authorization with the Access Control Service

- Looking at Windows Azure Service Bus Message Pump Programming Model

- Windows Azure Pack

- Introducing Table SAS (Shared Access Signature), Queue SAS and update to Blob SAS

- Understanding Windows Azure Storage Billing – Bandwidth, Transactions, and Capacity

- Best Practices for Leveraging Windows Azure Service Bus Brokered Messaging API

- Best Practices for Performance Improvements Using Service Bus Brokered Messaging


Windows Azure SQL Database, Federations and Reporting, Mobile Services

• The SQL Server Team described Smart, Secure, Cost-Effective: SQL Server Back Up to Windows Azure in a 10/22/2013 post:

Microsoft recently announced several new ways to back up and recover SQL Server databases with Windows Azure. These features, now available in SQL Server 2014 CTP2 and as a standalone tool for prior versions, provide an easy path to cloud backup and disaster recovery for on-premises SQL Server databases. The capabilities for backing up to Windows Azure Storage help to reduce storage costs and unlock the data protection and disaster recovery benefits of cloud data storage.

Benefits of the new SQL Server Backup to Windows Azure include:

- Cost-effective – Backing up to the cloud reduces CAPEX and OPEX by shifting from on-premises storage to Windows Azure Blob Storage Service. Windows Azure offers lower TCO than many on-premises storage solutions and decreased administrative burden.

- Secure – Backup to Windows Azure adds encryption to backups stored either in the cloud or on-premises, for an extra layer of security and compliance.

- Durable – Backups and replicas in the cloud enable users to recover from local failures quickly and easily. Windows Azure storage is reliable and built with data durability in mind: off-site, geo-redundant and easily accessible.

- Smart – SQL Server can now manage your backup schedule using the new Managed Backup to Windows Azure feature. It determines backup frequency based on database usage patterns.

- Consistent – The combination of SQL Server 2014 in-box functionality and the Backup to Windows Azure Tool for prior versions creates a single backup-to-cloud strategy across all your SQL databases.

Simplified Cloud Backup

There are several new capabilities enabling users to utilize SQL Server Backup and Disaster Recovery with Windows Azure.

- SQL Server Backup to Windows Azure – With SQL Server 2014, users can easily configure Azure backup storage. In the event of a failure, a backup can be restored to an on-premises SQL Server or one running in a Windows Azure Virtual Machine. Options for setting up backup include:

  - Manual Backup to Windows Azure - Users can configure backup to Windows Azure by creating a credential in SQL Server Management Studio (SSMS). These backups can be automated using backup policy.

  - Managed Backup to Windows Azure – Managed Backup is a premium capability of Backup to Windows Azure, measuring database usage and patterns to set the frequency of backups to Windows Azure to optimize networking and storage. Managed Backup helps customers reduce costs while achieving greater data protection.

- Encrypted Backup – SQL Server 2014 offers users the ability to encrypt both on-premises backups and backups to Windows Azure for enhanced security.

- SQL Server Backup to Windows Azure Tool - A stand-alone download that quickly and easily configures backup to Windows Azure Storage for SQL Server 2005 and later versions. It can also encrypt backups stored either locally or in the cloud.

Learn More

SQL Server 2014’s goal is to deliver mission critical performance along with faster insights into any data big or small. At the same time, it will enable new hybrid cloud solutions that can provide greater data protection and positively impact your bottom line. Early adopters are already leveraging new hybrid scenarios to extend their backup and disaster recovery capabilities around the globe without the need for additional storage replication technologies.

If you would like to try the Backup and Recovery Enhancements in SQL Server 2014 CTP2, Thursday’s blog post will help you get started configuring and using these new capabilities. You can also preview the SQL Server Backup to Windows Azure Tool, enabling backup to Windows Azure for SQL Server 2005 and forward.

You can learn more about Hybrid Cloud scenarios in SQL Server 2014 by reading the SQL Server 2014 Hybrid Cloud White Paper, which is part of the SQL Server 2014 Product Guide.

Carlos Figueira (@carlos_figueira) explained Accessing Azure Mobile Services from Android 2.2 (Froyo) devices in a 10/21/2013 article:

If you try to access a Windows Azure Mobile Service using the Android SDK from a device running Android version 2.2 (also known as Froyo), you’ll get an error in all requests. The issue is that that version of Android doesn’t accept the SSL certificate used in Azure Mobile Services, and you end up getting the following exception:

Error: com.microsoft.windowsazure.mobileservices.MobileServiceException: Error while processing request.

Cause: javax.net.ssl.SSLException: Not trusted server certificate

Cause: java.security.cert.CertificateException: java.security.cert.CertPathValidatorException: TrustAnchor for CertPath not found.

Cause: java.security.cert.CertPathValidatorException: TrustAnchor for CertPath not found.

The number of Froyo Android devices is rapidly declining (2.2% on the date I wrote this post, according to the Android dashboard), but there are scenarios where you need to target up to that platform. This post will show one way to work around that limitation, created by Pablo Zaidenvoren and committed to the test project for the Android platform in the Android Mobile Services GitHub repository. The solution is to register a new SSL socket factory to be used by the mobile service client with a key store which accepts the certificate used by the Azure Mobile Service.

Scenario

In a TDD-like fashion, I’ll first write a program which will fail, then walk through the steps required to make it pass. Here’s the onCreate method in the main activity, whose layout has nothing but a button (btnClickMe) and a text view (txtResult). If you’re going to copy this code, remember to replace the application URL / key with yours, since I’ll delete this mobile service which I created right before I publish this post.

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_main);

        try {
            mClient = new MobileServiceClient(
                    "https://blog20131019.azure-mobile.net/", // Replace with your
                    "umtzEajPoOBsnvufFUVbLFTtjQrPPd37", // own URL / key
                    this
            );
        } catch (MalformedURLException e) {
            e.printStackTrace();
        }

        Button btn = (Button)findViewById(R.id.btnClickMe);
        btn.setOnClickListener(new View.OnClickListener() {
            @Override
            public void onClick(View view) {
                final StringBuilder sb = new StringBuilder();
                final TextView txtResult = (TextView)findViewById(R.id.txtResult);
                MobileServiceJsonTable table = mClient.getTable("TodoItem");
                sb.append("Created the table object, inserting item");
                txtResult.setText(sb.toString());
                JsonObject item = new JsonObject();
                item.addProperty("text", "Buy bread");
                item.addProperty("complete", false);
                table.insert(item, new TableJsonOperationCallback() {
                    @Override
                    public void onCompleted(JsonObject inserted, Exception error,
                            ServiceFilterResponse response) {
                        if (error != null) {
                            sb.append("\nError: " + error.toString());
                            Throwable cause = error.getCause();
                            while (cause != null) {
                                sb.append("\n Cause: " + cause.toString());
                                cause = cause.getCause();
                            }
                        } else {
                            sb.append("\nInserted: id=" + inserted.get("id").getAsString());
                        }
                        String txt = sb.toString();
                        txtResult.setText(txt);
                    }
                });
            }
        });
    }

If you run this code in a Froyo emulator (or a device), you’ll see printed out the error shown before.

On to the workaround

First, add a new folder under the res (resources) folder for the solution, by right-clicking it, and selecting New –> Folder:

Let’s call it ‘raw’, and click Finish:

Now, download the mobile service store file from https://github.com/WindowsAzure/azure-mobile-services/blob/dev/test/Android/ZumoE2ETestApp/res/raw/mobileservicestore.bks, and save it into the <project>/res/raw folder which you just created (in the GitHub page, clicking “View Raw” should prompt you to save the file). Back in Eclipse, select the ‘raw’ folder and hit F5 (Refresh), and you should see the file under the folder.

That file will need to be read in the new SSL socket factory, and to read that resource we’ll need a reference to the main activity. So we’ll add a static method (along with a new static field) which returns the instance of the main activity. And in the onCreate method, we set the (global) instance.

    private static Activity mInstance;

    public static Activity getInstance() {
        return mInstance;
    }

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_main);
        mInstance = this;

        try {
            mClient = new MobileServiceClient(

Now we can start by adding the Froyo support to the application. The snippet below shows only the beginning of the class – it doesn’t show the entire SSL factory with the additional certificate store (for Azure Mobile Services). You can find the whole code (may need to update the imports for the main activity and the resources) in the GitHub project.

    public class FroyoSupport {
        /**
         * Fixes an AndroidHttpClient instance to accept MobileServices SSL certificate
         * @param client AndroidHttpClient to fix
         */
        public static void fixAndroidHttpClientForCertificateValidation(AndroidHttpClient client) {
            final SchemeRegistry schemeRegistry = new SchemeRegistry();
            schemeRegistry.register(new Scheme("https",
                    createAdditionalCertsSSLSocketFactory(), 443));
            client.getConnectionManager().getSchemeRegistry().unregister("https");
            client.getConnectionManager().getSchemeRegistry().register(new Scheme("https",
                    createAdditionalCertsSSLSocketFactory(), 443));
        }

        private static SSLSocketFactory createAdditionalCertsSSLSocketFactory() {
            try {
                final KeyStore ks = KeyStore.getInstance("BKS");
                Activity mainActivity = MainActivity.getInstance();
                final InputStream in = mainActivity.getResources().openRawResource(R.raw.mobileservicestore);
                try {
                    ks.load(in, "mobileservices".toCharArray());
                } finally {
                    in.close();
                }
                return new AdditionalKeyStoresSSLSocketFactory(ks);
            } catch (Exception e) {
                throw new RuntimeException(e);
            }
        }

        private static class AdditionalKeyStoresSSLSocketFactory extends SSLSocketFactory {
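The body of the socket factory is not reproduced here, so the following is only a sketch of the general idea behind trusting an additional key store: a trust manager that first consults the platform's default trust store and then falls back to the extra stores. It uses only standard `java.security` and `javax.net.ssl` classes; `CompositeTrustManagerSketch` and `delegateCount` are hypothetical names, and this is not the code from the GitHub project.

```java
import java.security.KeyStore;
import java.security.cert.CertificateException;
import java.security.cert.X509Certificate;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import javax.net.ssl.TrustManager;
import javax.net.ssl.TrustManagerFactory;
import javax.net.ssl.X509TrustManager;

/** Trusts a server if the default trust store or any additional key store accepts its chain. */
final class CompositeTrustManagerSketch implements X509TrustManager {
    private final List<X509TrustManager> delegates = new ArrayList<>();

    CompositeTrustManagerSketch(KeyStore... additionalStores) throws Exception {
        addDelegatesFor(null); // null selects the platform's default trust store
        for (KeyStore store : additionalStores) {
            addDelegatesFor(store);
        }
    }

    private void addDelegatesFor(KeyStore store) throws Exception {
        TrustManagerFactory factory =
                TrustManagerFactory.getInstance(TrustManagerFactory.getDefaultAlgorithm());
        factory.init(store);
        for (TrustManager tm : factory.getTrustManagers()) {
            if (tm instanceof X509TrustManager) {
                delegates.add((X509TrustManager) tm);
            }
        }
    }

    /** Number of underlying trust managers (default store plus additional stores). */
    int delegateCount() {
        return delegates.size();
    }

    @Override
    public void checkClientTrusted(X509Certificate[] chain, String authType)
            throws CertificateException {
        // Client certificates are checked against the default trust store only.
        delegates.get(0).checkClientTrusted(chain, authType);
    }

    @Override
    public void checkServerTrusted(X509Certificate[] chain, String authType)
            throws CertificateException {
        CertificateException last = null;
        for (X509TrustManager tm : delegates) {
            try {
                tm.checkServerTrusted(chain, authType);
                return; // accepted by one of the stores
            } catch (CertificateException e) {
                last = e; // try the next store
            }
        }
        throw last != null ? last : new CertificateException("No trust manager available");
    }

    @Override
    public X509Certificate[] getAcceptedIssuers() {
        List<X509Certificate> issuers = new ArrayList<>();
        for (X509TrustManager tm : delegates) {
            issuers.addAll(Arrays.asList(tm.getAcceptedIssuers()));
        }
        return issuers.toArray(new X509Certificate[0]);
    }
}
```

A trust manager like this would typically be handed to `SSLContext.init`, and the resulting context used by the socket factory that the HTTP client registers for the `https` scheme.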

Now that we have that support, we need to create a new instance of the factory used to create the AndroidHttpClient instances – which is defined in the Azure Mobile Services Android SDK (version 1.0.1 and later). The SDK already includes a default implementation of the class, so extending it to use the fix support is fairly straightforward. Add a new class (I’ll call it FroyoAndroidHttpClientFactory) which extends that default implementation:

    package com.example.froyoandwams;

    import android.net.http.AndroidHttpClient;

    import com.microsoft.windowsazure.mobileservices.AndroidHttpClientFactoryImpl;

    public class FroyoAndroidHttpClientFactory
            extends AndroidHttpClientFactoryImpl {

        @Override
        public AndroidHttpClient createAndroidHttpClient() {
            AndroidHttpClient client = super.createAndroidHttpClient();
            FroyoSupport.fixAndroidHttpClientForCertificateValidation(client);
            return client;
        }
    }

Now all we need to do is to hook up that AndroidHttpClientFactory with the MobileServiceClient, which can be done with the setAndroidHttpClientFactory method, as shown below.

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_main);
        mInstance = this;

        try {
            mClient = new MobileServiceClient(
                    "https://blog20131019.azure-mobile.net/", // Replace with your
                    "umtzEajPoOBsnvufFUVbLFTtjQrPPd37", // own URL / key
                    this
            );
        } catch (MalformedURLException e) {
            e.printStackTrace();
        }

        mClient.setAndroidHttpClientFactory(new FroyoAndroidHttpClientFactory());

And that’s it. If you execute the application again and click the button, the item should be correctly inserted (and other operations should work as well).

If you want to download the code for this example, you can find it in my GitHub repository at https://github.com/carlosfigueira/blogsamples/tree/master/AzureMobileServices/FroyoAndWAMS.

Alexandre Brisebois (@Brisebois) asked and answered What Does it Cost to Backup a Windows Azure SQL Database? on 10/21/2013:

Windows Azure SQL Database billing is prorated daily. This means that if you create a database and delete it right away, you will be billed for the full day.

I bring this up because the Windows Azure SQL Database automatic backup feature is built on top of the import/export service. To backup your database, the service follows best practices in order to create a consistent point-in-time copy of your database. Then it exports it to a bacpac file and places it in your Windows Azure Storage.

The automatic backup feature is well worth its cost of operation, but you might want to review your backup frequency, especially if your databases are large.

So how does this show up on your bill? Well for starters, backing up every day will result in paying for your actual database and for the temporary database instance used to create the backup. Backing up every second day will cost you roughly one and a half times what it costs without backups.
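The arithmetic behind these figures can be sketched as follows. The model assumes that each backup bills one extra database-day for a temporary copy priced the same as the source database, and it ignores the storage cost of the exported bacpac file; `BackupCostSketch` and its method names are hypothetical.

```java
/** Rough cost model for scheduled backups under daily prorated billing. */
final class BackupCostSketch {
    /**
     * Returns the cost relative to running the database without backups (1.0).
     * Each backup adds one billed database-day for the temporary copy,
     * so a backup every N days adds 1/N of the base cost.
     */
    static double costMultiplier(int backupIntervalDays) {
        if (backupIntervalDays < 1) {
            throw new IllegalArgumentException("backupIntervalDays must be at least 1");
        }
        return 1.0 + 1.0 / backupIntervalDays;
    }

    /** Estimated monthly cost given the monthly cost of the database alone. */
    static double monthlyCost(double baseMonthlyCost, int backupIntervalDays) {
        return baseMonthlyCost * costMultiplier(backupIntervalDays);
    }
}
```

Under this model a daily backup doubles the bill, a backup every second day gives a multiplier of 1.5, and a weekly backup adds roughly 14 percent.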

The Argument

If it’s so expensive, why should I consider scheduling backups? Windows Azure SQL Database is already backed up by two identical copies at all times. Why should I pay for more backups?

Well that’s exactly it, all 3 copies are identical. If a careless user decides to execute a DELETE statement or an UPDATE statement without a WHERE clause, the data gets altered in all 3 copies!

Incidentally, the 3 copies are there to deal with infrastructure failures not users. The automatic backup feature available for Windows Azure SQL Database is essential, because if something goes totally wrong, you will have a backup to work with.

Think about it, does paying for an extra database from time to time cost you more than what it would cost you if you lost everything?



Windows Azure Marketplace DataMarket, Cloud Numerics, Big Data and OData

Max Uritsky (@max_data) reported New Bing Speech Recognition Control and Updated Bing OCR and Translator Controls on Windows Azure Marketplace on 10/21/2013:

At the BUILD conference in June, we announced three broad categories of capabilities the new Bing platform would deliver to developers: Services to bring entities and the world’s knowledge to your applications, services to enable your applications to deliver more natural and intuitive user experiences, and services which bring an awareness of the physical world into your applications.

Earlier this month we updated the Bing Maps SDKs for Windows Store Apps for Windows 8 and 8.1. Building on this momentum, today we are announcing the release of the new Bing Speech Recognition Control for Windows 8 and 8.1, and updates to the Bing Optical Character Recognition Control for Windows 8.1 and Bing Translator Control for Windows 8.1 to continue to deliver on our effort to support developers to enable more knowledgeable, natural, and aware applications.

Read on for more details on the updates we’re announcing today, and then check out the Bing developer center for other useful resources, including code samples, for building smarter, more useful applications.

Hands free experiences – Speech Recognition for Windows 8.0 and 8.1

Whether for accessibility, safety, or simple convenience, being able to interact hands-free with your device with your voice is increasingly important. By enabling devices to recognize speech, applications can interact more naturally with the user to dictate emails, search for the latest news, navigate an app via voice, and more. If you are a Windows Phone developer, you may already be familiar with the speech recognition inside Windows Phone: the user taps a microphone icon, speaks into the mic, and the text shows up on screen. Now, that same functionality is available on Windows 8, Windows 8.1, and Windows RT through the free Bing Speech Recognition Control.

In as little as ten lines of C# + XAML or JavaScript + HTML, you can put a SpeechRecognizerUX control in your application, along with a microphone button and a TextBlock, and the code to support them. When the user clicks or taps the mic, they will hear a blip, or "earcon", to signal that it's time to speak, and an audio meter will show their current volume level. While speaking, the words detected will be shown in the control. When they stop speaking, or hit the Stop button on the speech control, they will get a brief animation and then their words will appear in the TextBlock.

Here's the XAML to create the UI elements:

    <AppBarButton x:Name="SpeakButton" Icon="Microphone" Click="SpeakButton_Click"></AppBarButton>
    <TextBlock x:Name="TextResults" />
    <sp:SpeechRecognizerUx x:Name="SpeechControl" />

Or in HTML:

    <div id="SpeakButton" onclick="speakButton_Click();"></div>
    <div id="ResultText"></div>
    <div id="SpeechControl" data-win-control="BingWinJS.SpeechRecognizerUx"></div>

In the code behind, all you have to do is create a SpeechRecognizer object, bind it to the SpeechRecognizerUX control, and create a click handler for the microphone button to start speech recognition and write the results to the TextBlock.

Here's the code in C#:

    SpeechRecognizer SR;

    void MainPage_Loaded(object sender, RoutedEventArgs e)
    {
        // Apply credentials from the Windows Azure Data Marketplace.
        var credentials = new SpeechAuthorizationParameters();
        credentials.ClientId = "<YOUR CLIENT ID>";
        credentials.ClientSecret = "<YOUR CLIENT SECRET>";

        // Initialize the speech recognizer.
        SR = new SpeechRecognizer("en-US", credentials);

        // Bind it to the VoiceUiControl.
        SpeechControl.SpeechRecognizer = SR;
    }

    private async void SpeakButton_Click(object sender, RoutedEventArgs e)
    {
        var result = await SR.RecognizeSpeechToTextAsync();
        TextResults.Text = result.Text;
    }

And here it is in JavaScript:

    var SR;

    function pageLoaded() {
        // Apply credentials from the Windows Azure Data Marketplace.
        var credentials = new Bing.Speech.SpeechAuthorizationParameters();
        credentials.clientId = "<YOUR CLIENT ID>";
        credentials.clientSecret = "<YOUR CLIENT SECRET>";

        // Initialize the speech recognizer.
        SR = new Bing.Speech.SpeechRecognizer("en-US", credentials);

        // Bind it to the VoiceUiControl.
        document.getElementById('SpeechControl').winControl.speechRecognizer = SR;
    }

    function speakButton_Click() {
        SR.recognizeSpeechToTextAsync()
            .then(
                function (result) {
                    document.getElementById('ResultText').innerHTML = result.text;
                })
    }

To get this code to work, you will have to put your own values in credentials: your ClientId and ClientSecret. You get these from the Windows Azure Data Marketplace when you sign up for your free subscription to the control. The control depends on a web service to function, and you can send up to 500,000 queries per month at the free level. A more detailed version of this code is available in the SpeechRecognizerUX class description on MSDN.

The next capability you would probably want to add is to show alternate results to recognized speech. While the user is speaking, the SpeechRecognizer makes multiple hypotheses about what is being said based on the sounds received so far, and may make multiple hypotheses at the end as well. These alternate guesses are available as a list through the SpeechRecognitionResult.GetAlternates(int) method, or individually as they are created through the SpeechRecognizer.RecognizerResultRecieved event.

You can also bypass the SpeechRecognizerUX control and create your own custom speech recognition UI, using the SpeechRecognizer.AudioCaptureStateChanged event to trigger the different UI states for startup, listening, processing, and complete. This process is described here, and there is a complete code example in the SpeechRecognizer MSDN page. For a detailed explanation and sample of working with the alternates list, see handling speech recognition data.

Like all of the Bing controls and APIs, they work better together. Using the Speech Recognition Control to enter queries for Bing Maps or the Search API is an obvious choice, but you can also combine the control with Speech Synthesis for Windows 8.1 to enable two-way conversations with your users. Add the Translator API into the mix and you could have real-time audio translation, just like in the sci-fi shows.

For more examples of what you can do with the Speech Recognition Control, go to the Speech page on the Bing Developer Center and check the links in the Samples section.

The control currently recognizes speech in US English.

Give Your Machine the Gift of Sight – Bing Optical Character Recognition in Six Additional Languages

From recognizing text in documents to the identification of email addresses, phone numbers, and URLs to the extraction of coupon codes, adding the power of sight to your Windows 8.1 Store app opens a host of new scenarios developers can enable to enhance their applications. Coming out of Customer Technical Preview, the Bing Optical Character Recognition Control for Windows 8.1 now supports six new languages: Russian, Swedish, Norwegian, Danish, Finnish and Chinese Simplified in addition to the existing language support for English, German, Spanish, Italian, French, and Portuguese.

Rise Above Language Barriers – Bing Translator Control for Windows 8.1

Access robust, cloud-based, automatic translation easily between more than 40 languages with the Bing Translator Control, which moves from Customer Technical Preview to general availability today. The Translator Control gives you access to machine translation services built on over a decade of natural language research from Microsoft Research. After download and one-time authentication, you can simply place the Translator Control in your app, feed it a string to translate, and receive the translation.

The Bing Developer Team

No significant OData articles so far this week.

<Return to section navigation list>

Windows Azure Service Bus, BizTalk Services and Workflow

Rick G. Garibay (@rickggaribay) described WABS BizTalk Adapter Service Installation in Seven Steps in a 10/20/2013 post:

With the proliferation of devices and clouds, businesses and developers are challenged more than ever to both enable employee productivity and take advantage of the cost benefits of cloud computing. The reality, however, is that the vast majority of organizations are going to continue to invest in assets that reside both within their own data center and public clouds like Windows Azure and Amazon Web Services.

Windows Azure BizTalk Services (WABS) is a new PaaS-based messaging and middleware solution that lets you expose rich messaging endpoints across business assets, whether they reside on-premises or in the commercial cloud.

WABS requires an active Windows Azure account, and from there, you can provision your own namespace and start building rich messaging solutions using Visual Studio 2012. You can download everything you need to get started with WABS here: http://www.microsoft.com/en-us/download/details.aspx?id=39087

Once your WABS namespace has been provisioned, you are ready to start developing modern, rich messaging solutions. At this point, you can experiment with sending messages to a new messaging entity in Windows Azure called an EAI Bridge and routing them to various destinations including Azure Service Bus, Blob Storage, FTP, etc. However, if you want to enable support for connectivity to on-premises assets including popular database platforms like Microsoft SQL Server and Oracle Database as well as ERP systems such as Oracle E-Business Suite, SAP and Siebel eBusiness Applications, you will want to install an optional component called the BizTalk Adapter Service (BAS), which runs on-premises.

The BAS includes a management and runtime component for configuring and enabling integration with your LOB systems. The capabilities are partitioned into a design-time experience, a configuration experience and the runtime. At design time, you configure your LOB Target (i.e. SQL Server, Oracle DB, SAP, etc.) for connecting to your LOB application via a LOB Relay. Built on Windows Azure Service Bus Relay Messaging, the LOB Relay allows you to establish a secure, outbound connection to the WABS Bridge which safely enables bi-directional communication between WABS and your LOB target through the firewall.

More details on the BizTalk Adapter Service (BAS) architecture can be found here: http://msdn.microsoft.com/en-us/library/windowsazure/hh689773.aspx

While the installation experience is fairly straightforward, there are a few gotchas that can make things a bit frustrating. In this post, I’ll walk you through the process for installing and configuring BAS in hopes of getting you up and running in a breeze.

Installing the BizTalk Adapter Service

Before you install BAS, ensure you’ve downloaded and installed the following pre-requisites:

- WCF LOB Adapter Framework (found on the BizTalk Server 2013 installation media)

- BizTalk Adapter Pack 2013 (found on the BizTalk Server 2013 installation media)

- IIS 7+ and WAS (I’ve tested installation on Windows 7 and Windows 8 Enterprise editions)

- AppFabric 1.1 for Windows Server

- SQL Server 2008 or 2012 (all editions should be supported including free Express versions)

The installation process will prompt you for key information including the account to run the application pool that will host the management and runtime services and a password for encrypting key settings that will be stored by the management service in SQL Server. Let’s take a look at the process step-by-step.

1. When you unpack the installer, the most common mistake you’re likely to make is to double-click it to get started. Instead, open a command prompt as an administrator and run the following command (you’ll need to navigate to the folder in which you unpacked the MSI):

msiexec /i BizTalkAdapterService.msi /l*vx install_log.txt

This command will ensure the MSI runs as Admin and will log results for you in case something goes wrong.

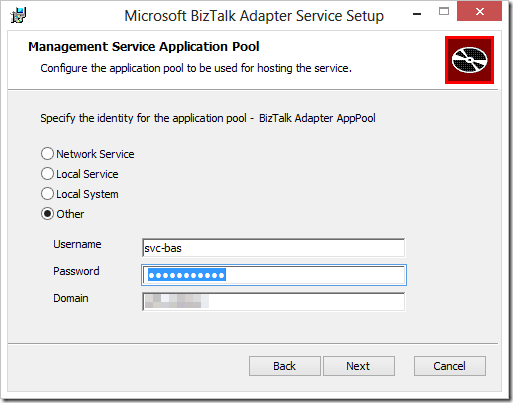

2. The first thing the installer will ask you for is credentials for configuring the application pool identity for the BAS Management Service. This service is responsible for configuring LOB Relay and LOB Targets and stores all of the configuration in a repository hosted by SQL Server (Long Live Oslo!). In my case, I’ve created a local service account called svc-bas, but this of course could be a domain account or you can use the other options.

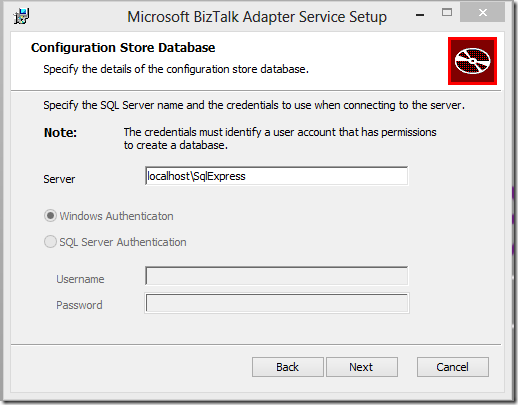

3. Before you continue, be sure that the account you are using to run the MSI is a member of the appropriate SQL Server role(s) unless you plan on using SQL Server Authentication in the next step. The wizard will create a repository called BAService, so it will need the necessary permissions to create the database.

4. Next, specify connection info for the SQL Server database that will host the BAService repository. SQL Express or any flavor of SQL Server 2008 or 2012 is supported.

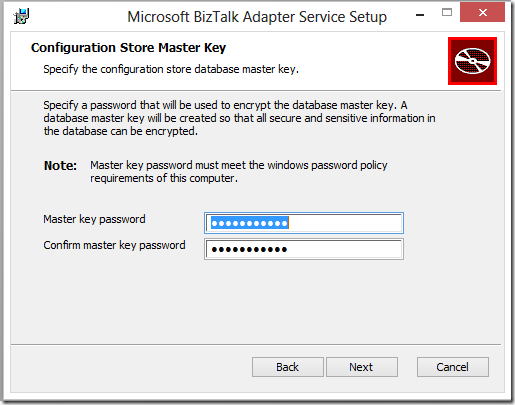

5. Specify a key for encrypting sensitive repository information.

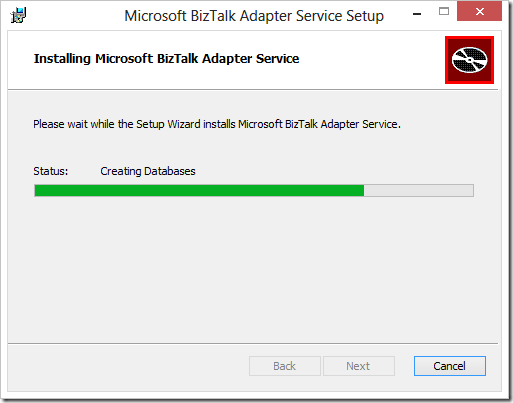

6. The installer will then get to work creating the BAService in IIS/AppFabric and the BAService repository in SQL Server.

7. If all is well, you’ll see a successful completion message:

If the wizard fails, it will roll back the install without providing any indication as to why. If this happens, be sure to carefully follow steps 1 and 2 above and carefully review the logs to determine the problem.

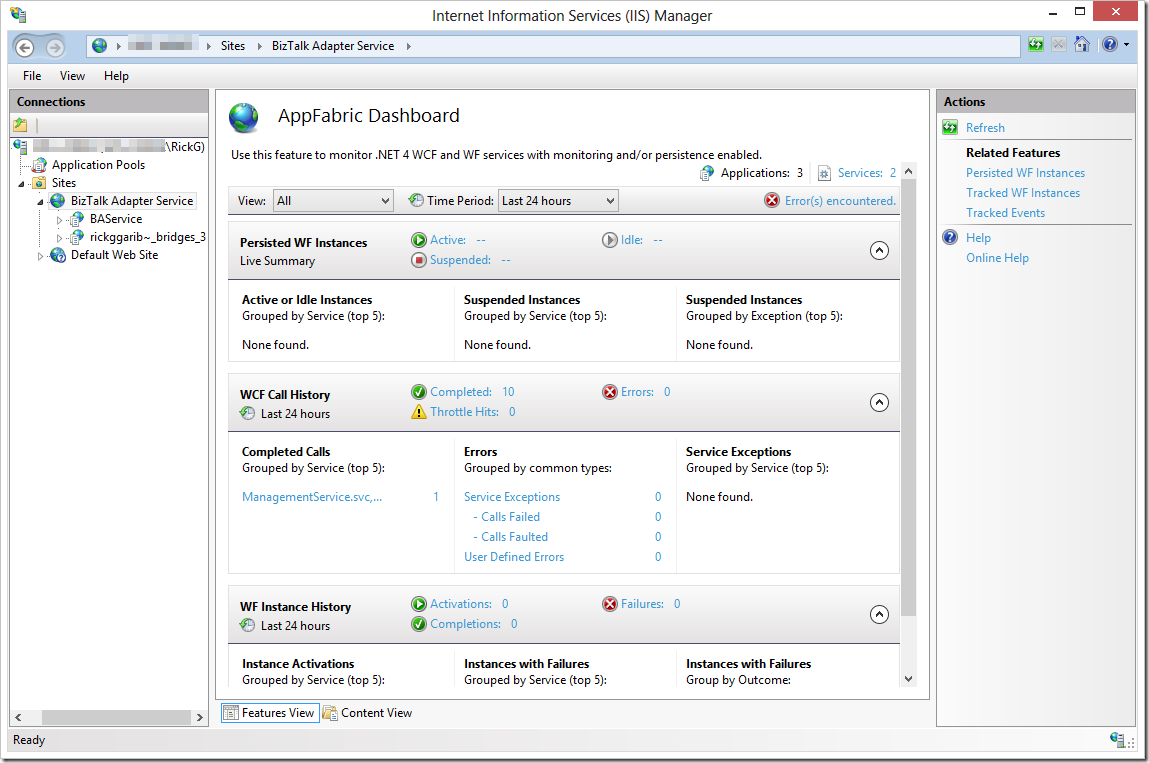

After the installation is complete, you’ll notice the BAService has been created in IIS/AppFabric for Windows Server.

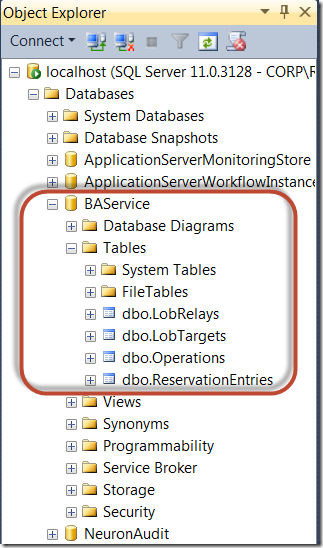

The BAService database consists of 4 tables which store information on the configured Azure Service Bus relay endpoints that communicate with the LOB Targets, the operations supported by each target (configured in Visual Studio) and finally the virtual URIs for addressing the BAService for configuring the entities previously mentioned:

At this point, the LobRelays, LobTargets and Operations tables will be empty.

Once you configure a LOB Target, the BAService will write the configuration data to each table, enabling Azure Service Bus Relay to fuse with the WCF LOB Adapters that ship with the BizTalk Adapter Pack. This combination enables very powerful rich messaging scenarios that support hybrid solutions exposing key business assets across traditional network, security and business boundaries in a simple and secure manner.

Alexandre Brisebois (@Brisebois) explained Windows Azure Storage Queues Vs Windows Azure Service Bus Queues in a 10/20/2013 post in the Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services section above.

<Return to section navigation list>

Windows Azure Access Control, Active Directory, Identity and Workflow

Scott Guthrie (@scottgu) blogged Windows Azure: Backup Services Release, Hyper-V Recovery Manager, VM Enhancements, Enhanced Enterprise Management Support on 10/22/2013 in the Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN section below.

<Return to section navigation list>

Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

No significant articles so far this week.

<Return to section navigation list>

Windows Azure Cloud Services, Caching, APIs, Tools and Test Harnesses

• Steven Martin (@stevemar_msft) posted Announcing the release of Windows Azure SDK 2.2, General Availability for Windows Azure Backup and Preview of Hyper-V Recovery Manager to the Windows Azure blog on 10/22/2013:

Developers are increasingly turning to cloud computing for a wide array of benefits including cost, automation and ability to focus on application logic. Following the launch of Visual Studio 2013, we are excited to announce the Windows Azure SDK 2.2 release which contains a variety of key enhancements:

- Debugging on Azure Web Sites and Cloud Services: Connect Visual Studio to the Remote Debugger running in Windows Azure Web Sites or Cloud Services and get the full fidelity debugging developers have come to expect with Visual Studio 2013 on the desktop.

- Integrated Sign-in from Visual Studio: We are making it easier than ever before to get a view into all the applications and services that are running in Windows Azure. With the integrated sign-in, Visual Studio developers can get a “single pane of glass” from which they can see and manage all of their applications and services.

- Windows Azure Management Libraries for .NET (Preview): These libraries enable automation of Cloud Services, Virtual Machines, Virtual Networks, Web Sites and Storage Accounts. This is a key feature for developers as they build and migrate more and more complex environments onto Windows Azure.

For further details on the release of this SDK, visit Scott Guthrie's blog.

General Availability of Windows Azure Backup

Increasingly, organizations worldwide are turning to Windows Azure as an anchor for business continuity solutions. We are excited to announce the general availability of Windows Azure Backup which protects important server data offsite with automated backups to Windows Azure with the added benefit of easy data restoration.

With incremental backup, configurable data retention policies and data compression, you can increase flexibility and boost efficiency of storage backup. Learn about these powerful capabilities by visiting Windows Azure Backup.

Preview of Hyper-V Recovery Manager

Customers running business-critical workloads in a private cloud need robust capabilities to replicate and recover virtual machines in the event of an outage. We are delighted to announce a new service today in preview called Windows Azure Hyper-V Recovery Manager that helps protect System Center 2012 private clouds by automating the replication of virtual machines. You can find more details about the service and limited preview here.

• Scott Guthrie (@scottgu) blogged Windows Azure: Announcing release of Windows Azure SDK 2.2 (with lots of goodies) on 10/22/2013:

Earlier today I blogged about a big update we made today to Windows Azure, and some of the great new features it provides.

Today I’m also excited to announce the release of the Windows Azure SDK 2.2. Today’s SDK release adds even more great features including:

- Visual Studio 2013 Support

- Integrated Windows Azure Sign-In support within Visual Studio

- Remote Debugging Cloud Services with Visual Studio

- Firewall Management support within Visual Studio for SQL Databases

- Visual Studio 2013 RTM VM Images for MSDN Subscribers

- Windows Azure Management Libraries for .NET

- Updated Windows Azure PowerShell Cmdlets and ScriptCenter

The below post has more details on what’s available in today’s Windows Azure SDK 2.2 release. Also head over to Channel 9 to see the new episode of the Visual Studio Toolbox show that will be available shortly, and which highlights these features in a video demonstration.

Visual Studio 2013 Support

Version 2.2 of the Windows Azure SDK is the first official version of the SDK to support the final RTM release of Visual Studio 2013. If you installed the 2.1 SDK with the Preview of Visual Studio 2013, we recommend that you upgrade your projects to SDK 2.2. SDK 2.2 also works side by side with the SDK 2.0 and SDK 2.1 releases on Visual Studio 2012:

Integrated Windows Azure Sign In within Visual Studio

Integrated Windows Azure Sign-In support within Visual Studio is one of the big improvements added with this Windows Azure SDK release. Integrated sign-in support enables developers to develop/test/manage Windows Azure resources within Visual Studio without having to download or use management certificates.

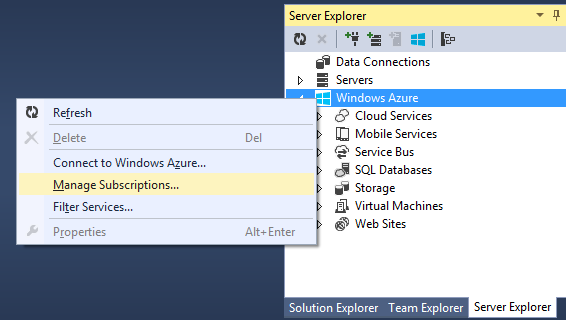

You can now just right-click on the “Windows Azure” icon within the Server Explorer inside Visual Studio and choose the “Connect to Windows Azure” context menu option to connect to Windows Azure:

Doing this will prompt you to enter the email address of the account you wish to sign-in with:

You can use either a Microsoft Account (e.g. Windows Live ID) or an Organizational account (e.g. Active Directory) as the email. The dialog will update with an appropriate login prompt depending on which type of email address you enter:

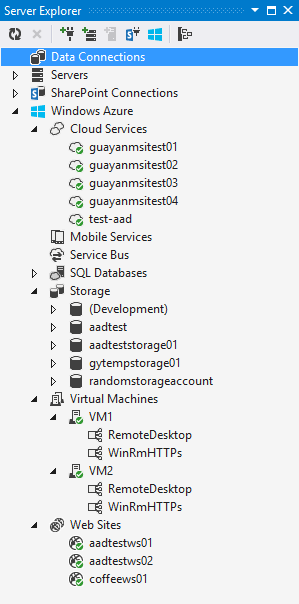

Once you sign-in you’ll see the Windows Azure resources that you have permissions to manage show up automatically within the Visual Studio Server Explorer (and you can start using them):

With this new integrated sign in experience you are now able to publish web apps, deploy VMs and cloud services, use Windows Azure diagnostics, and fully interact with your Windows Azure services within Visual Studio without the need for a management certificate. All of the authentication is handled using the Windows Azure Active Directory associated with your Windows Azure account (details on this can be found in my earlier blog post).

Integrating authentication this way end-to-end across the Service Management APIs + Dev Tools + Management Portal + PowerShell automation scripts enables a much more secure and flexible security model within Windows Azure, and makes it much more convenient to securely manage multiple developers + administrators working on a project. It also allows organizations and enterprises to use the same authentication model that they use for their developers on-premises in the cloud. It also ensures that employees who leave an organization immediately lose access to their company’s cloud based resources once their Active Directory account is suspended.

Filtering/Subscription Management

Once you log in within Visual Studio, you can filter which Windows Azure subscriptions/regions are visible within the Server Explorer by right-clicking and choosing the “Filter Services” context menu within the Server Explorer. You can also use the “Manage Subscriptions” context menu to manage your Windows Azure Subscriptions:

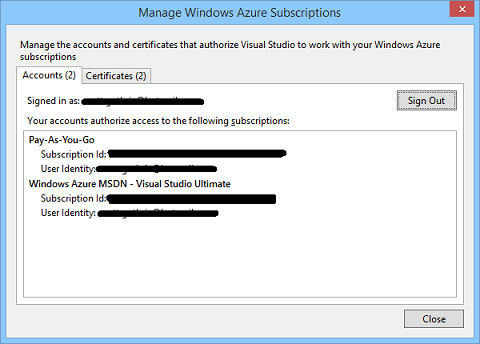

Bringing up the “Manage Subscriptions” dialog allows you to see which accounts you are currently using, as well as which subscriptions are within them:

The “Certificates” tab allows you to continue to import and use management certificates to manage Windows Azure resources as well. We have not removed any functionality with today’s update – all of the existing scenarios that previously supported management certificates within Visual Studio continue to work just fine. The new integrated sign-in support provided with today’s release is purely additive.

Note: the SQL Database node and the Mobile Service node in Server Explorer do not support integrated sign-in at this time. Therefore, you will only see databases and mobile services under those nodes if you have a management certificate to authorize access to them. We will enable them with integrated sign-in in a future update.

Remote Debugging Cloud Resources within Visual Studio

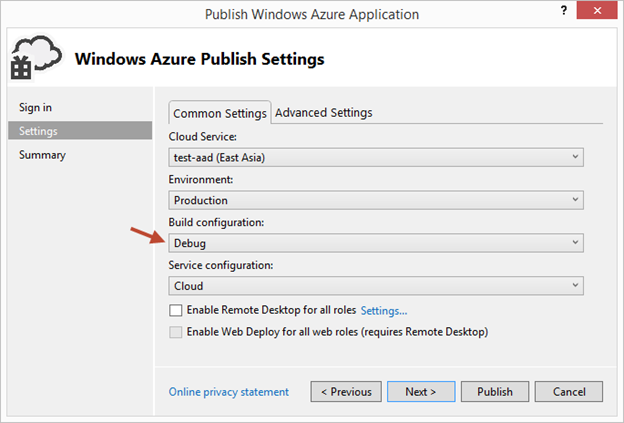

Today’s Windows Azure SDK 2.2 release adds support for remote debugging many types of Windows Azure resources. With live, remote debugging support from within Visual Studio, you are now able to have more visibility than ever before into how your code is operating live in Windows Azure. Let’s walk through how to enable remote debugging for a Cloud Service:

Remote Debugging of Cloud Services

To enable remote debugging for your cloud service, select Debug as the Build Configuration on the Common Settings tab of your Cloud Service’s publish dialog wizard:

Then click the Advanced Settings tab and check the Enable Remote Debugging for all roles checkbox:

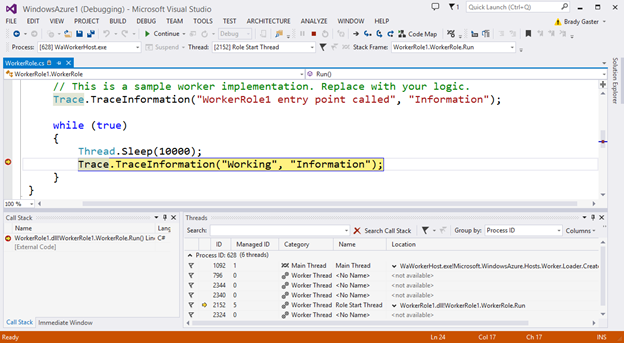

Once your cloud service is published and running live in the cloud, simply set a breakpoint in your local source code:

Then use Visual Studio’s Server Explorer to select the Cloud Service instance deployed in the cloud, and then use the Attach Debugger context menu on the role or to a specific VM instance of it:

Once the debugger attaches to the Cloud Service, and a breakpoint is hit, you’ll be able to use the rich debugging capabilities of Visual Studio to debug the cloud instance remotely, in real-time, and see exactly how your app is running in the cloud.

Today’s remote debugging support is super powerful, and makes it much easier to develop and test applications for the cloud. Support for remote debugging Cloud Services is available as of today, and we’ll also enable support for remote debugging Web Sites shortly.

Firewall Management Support with SQL Databases

By default we enable a security firewall around SQL Databases hosted within Windows Azure. This ensures that only your application (or IP addresses you approve) can connect to them and helps make your infrastructure secure by default. This is great for protection at runtime, but can sometimes be a pain at development time (since by default you can’t connect/manage the database remotely within Visual Studio if the security firewall blocks your instance of VS from connecting to it).

One of the cool features we’ve added with today’s release is support that makes it easy to enable and configure the security firewall directly within Visual Studio.

Now with the SDK 2.2 release, when you try and connect to a SQL Database using the Visual Studio Server Explorer, and a firewall rule prevents access to the database from your machine, you will be prompted to add a firewall rule to enable access from your local IP address:

You can simply click Add Firewall Rule and a new rule will be automatically added for you. In some cases, the logic to detect your local IP may not be sufficient (for example: you are behind a corporate firewall that uses a range of IP addresses) and you may need to set up a firewall rule for a range of IP addresses in order to gain access. The new Add Firewall Rule dialog also makes this easy to do.
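To make the range case concrete, here is a minimal sketch of how a start/end address pair covers a block of client addresses. It is illustrative only: the `FirewallRange` class and its `Contains` method are hypothetical names, not part of Visual Studio or the SQL Database service, and the sketch assumes IPv4 addresses.

```csharp
using System;
using System.Net;

// Hypothetical helper (not an Azure API): models a firewall rule
// defined by an inclusive start and end IPv4 address.
public static class FirewallRange
{
    // Converts a dotted IPv4 string into a 32-bit number so that
    // addresses can be compared numerically.
    private static uint ToUInt32(string ipv4)
    {
        byte[] b = IPAddress.Parse(ipv4).GetAddressBytes();
        if (b.Length != 4)
        {
            throw new ArgumentException("An IPv4 address is expected.", "ipv4");
        }
        return ((uint)b[0] << 24) | ((uint)b[1] << 16) | ((uint)b[2] << 8) | (uint)b[3];
    }

    // Returns true when candidate lies between startIp and endIp (inclusive).
    public static bool Contains(string startIp, string endIp, string candidate)
    {
        uint start = ToUInt32(startIp);
        uint end = ToUInt32(endIp);
        uint value = ToUInt32(candidate);
        if (start > end)
        {
            throw new ArgumentException("The start address must not exceed the end address.");
        }
        return value >= start && value <= end;
    }
}
```

With a rule spanning 203.0.113.0 to 203.0.113.255 (a documentation-only address block), `FirewallRange.Contains("203.0.113.0", "203.0.113.255", "203.0.113.42")` is true, while an address such as 203.0.114.1 falls outside the rule.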

Once connected you’ll be able to manage your SQL Database directly within the Visual Studio Server Explorer:

This makes it much easier to work with databases in the cloud.

Visual Studio 2013 RTM Virtual Machine Images Available for MSDN Subscribers

Last week we released the General Availability Release of Visual Studio 2013 to the web. This is an awesome release with a ton of new features.

With today’s Windows Azure update we now have a set of pre-configured VM images of VS 2013 available within the Windows Azure Management Portal for use by MSDN customers. This enables you to create a VM in the cloud with VS 2013 pre-installed on it with only a few clicks:

Windows Azure now provides the fastest and easiest way to get started doing development with Visual Studio 2013.

Windows Azure Management Libraries for .NET (Preview)

Having the ability to automate the creation, deployment, and tear down of resources is a key requirement for applications running in the cloud. It also helps immensely when running dev/test scenarios and coded UI tests against pre-production environments.

Today we are releasing a preview of a new set of Windows Azure Management Libraries for .NET. These new libraries make it easy to automate tasks using any .NET language (e.g. C#, VB, F#, etc). Previously this automation capability was only available through the Windows Azure PowerShell Cmdlets or to developers who were willing to write their own wrappers for the Windows Azure Service Management REST API.

Modern .NET Developer Experience

We’ve worked to design easy-to-understand .NET APIs that still map well to the underlying REST endpoints, making sure to use and expose the modern .NET functionality that developers expect today:

- Portable Class Library (PCL) support targeting applications built for any .NET Platform (no platform restriction)

- Shipped as a set of focused NuGet packages with minimal dependencies to simplify versioning

- Support async/await task based asynchrony (with easy sync overloads)

- Shared infrastructure for common error handling, tracing, configuration, HTTP pipeline manipulation, etc.

- Factored for easy testability and mocking

- Built on top of popular libraries like HttpClient and Json.NET
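The "async/await with easy sync overloads" point in the list above can be pictured with a small sketch. Everything in it is an assumption made for illustration: `SampleManagementClient`, `GetDeploymentStatusAsync` and `GetDeploymentStatus` are hypothetical names, not classes or methods from the Windows Azure Management Libraries, and a short delay stands in for the HTTP call a real client would make.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical client used only to illustrate the pattern;
// it is NOT part of the Windows Azure Management Libraries.
public class SampleManagementClient
{
    // Task-based operation: callers can await it without blocking a thread.
    public async Task<string> GetDeploymentStatusAsync(string serviceName)
    {
        if (string.IsNullOrEmpty(serviceName))
        {
            throw new ArgumentException("A service name is required.", "serviceName");
        }

        // Placeholder for a REST call; ConfigureAwait(false) avoids capturing
        // the caller's synchronization context.
        await Task.Delay(10).ConfigureAwait(false);
        return "Running:" + serviceName;
    }

    // Synchronous convenience overload that blocks until the task completes.
    public string GetDeploymentStatus(string serviceName)
    {
        return GetDeploymentStatusAsync(serviceName).GetAwaiter().GetResult();
    }
}
```

An async caller would write `string status = await client.GetDeploymentStatusAsync("myservice");`, while a console tool or script could call `client.GetDeploymentStatus("myservice")` directly. Exposing both from one implementation keeps the behavior identical across the two styles.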

Below is a list of a few of the management client classes that are shipping with today’s initial preview release:

Automating Creating a Virtual Machine using .NET

Let’s walk through an example of how we can use the new Windows Azure Management Libraries for .NET to fully automate creating a Virtual Machine. I’m deliberately showing a scenario with a lot of custom options configured (including VHD image gallery enumeration, attaching data drives, and network endpoints + firewall rules setup) to show off the full power and richness of what the new library provides.

We’ll begin with some code that demonstrates how to enumerate through the built-in Windows images within the standard Windows Azure VM Gallery. We’ll search for the first VM image that has the word “Windows” in it and use that as our base image to build the VM from. We’ll then create a cloud service container in the West US region to host it within:

We can then customize some options on it such as setting up a computer name, admin username/password, and hostname. We’ll also open up a remote desktop (RDP) endpoint through its security firewall:

We’ll then specify the VHD host and data drives that we want to mount on the Virtual Machine, and specify the size of the VM we want to run it in:

Once everything has been set up, the call to create the virtual machine is executed asynchronously.

In a few minutes we’ll then have a completely deployed VM running on Windows Azure with all of the settings (hard drives, VM size, machine name, username/password, network endpoints + firewall settings) fully configured and ready for us to use:

Preview Availability via NuGet

The Windows Azure Management Libraries for .NET are now available via NuGet. Because they are still in preview form, you’ll need to add the -IncludePrerelease switch when you go to retrieve the packages. The Package Manager Console screen shot below demonstrates how to get the entire set of libraries to manage your Windows Azure assets:

You can also install them within your .NET projects by right clicking on the VS Solution Explorer and using the Manage NuGet Packages context menu command. Make sure to select the “Include Prerelease” drop-down for them to show up, and then you can install the specific management libraries you need for your particular scenarios:

Open Source License

The new Windows Azure Management Libraries for .NET make it super easy to automate management operations within Windows Azure – whether they are for Virtual Machines, Cloud Services, Storage Accounts, Web Sites, and more.

Like the rest of the Windows Azure SDK, we are releasing the source code under an open source (Apache 2) license and it is hosted at https://github.com/WindowsAzure/azure-sdk-for-net/tree/master/libraries if you wish to contribute.

PowerShell Enhancements and our New Script Center

Today, we are also shipping Windows Azure PowerShell 0.7.0 (which is a separate download). You can find the full change log here. Here are some of the improvements provided with it:

- Windows Azure Active Directory authentication support

- Script Center providing many sample scripts to automate common tasks on Windows Azure

- New cmdlets for Media Services and SQL Database

Script Center

Windows Azure enables you to script and automate a lot of tasks using PowerShell. People often ask for more pre-built samples of common scenarios so that they can use them to learn and tweak/customize. With this in mind, we are excited to introduce a new Script Center that we are launching for Windows Azure.

You can learn how to script with Windows Azure in a getting-started article. You can then find many sample scripts across different solutions, including infrastructure, data management, web, and more:

All of the sample scripts are hosted on TechNet with links from the Windows Azure Script Center. Each script is complete with good code comments, detailed descriptions, and examples of usage.

Summary

Visual Studio 2013 and the Windows Azure SDK 2.2 make it easier than ever to get started developing rich cloud applications. Along with the Windows Azure Developer Center’s growing set of .NET developer resources to guide your development efforts, today’s Windows Azure SDK 2.2 release should make your development experience more enjoyable and efficient.

If you don’t already have a Windows Azure account, you can sign-up for a free trial and start using all of the above features today. Then visit the Windows Azure Developer Center to learn more about how to build apps with it.

Riccardo Becker (@riccardobecker) continued his Geotopia: searching and adding users (Windows Azure Cache Service) series on 10/21/2013:

Now that we are able to sign in and add geotopics, the next step is to actually find users and follow them. The first version of Geotopia will allow everybody to follow everybody; a ring of authorization will be added in the future (users need to allow you to follow them, after all).

For fast access and fast search, I decided to use the Windows Azure Cache Service preview to store a table of available users. An entry in the cache is created for everyone that signed up.

First of all, I created a new cache on the Windows Azure portal.

Azure now has a fully dedicated cache for me up and running. Every created cache has a default cache that is used when no additional information is provided.

The next step is to get the necessary libraries and add them to my webrole. Use the NuGet package manager to search for Windows Azure Caching and install it. This adds the right assemblies and modifies the web.config by adding a config section (dataCacheClients) to the configuration file.

Now with the cache in place and up and running, I can start adding entries to the cache when somebody signs up and make him/her available in the search screen.

Later on, we can also cache Page Output (performance!) and Session State (scalability).

I also created a controller/view combination that allows users to sign up with simply their username and an email address. The temporary password will be sent to this email account.

Download the Windows Azure AD Graph Helper at http://code.msdn.microsoft.com/Windows-Azure-AD-Graph-API-a8c72e18 and add it to your solution. Reference it from the project that needs to query the graph and create users.

Summary

The UserController performs the following tasks:

- Add the registered user to Windows Azure Active Directory by using the Graph Helper

- Add the user to the neo4j graph db to enable it to post geotopics

- Add the user to the Windows Azure Cache to make the user findable.

This snippet does it all.

    string clientId = CloudConfigurationManager.GetSetting("ClientId").ToString();
    string password = CloudConfigurationManager.GetSetting("ClientPassword").ToString();

    // get a token using the helper
    AADJWTToken token = DirectoryDataServiceAuthorizationHelper.GetAuthorizationToken("", clientId, password);

    // initialize a graphService instance using the token acquired from previous step
    DirectoryDataService graphService = new DirectoryDataService("", token);

    // add to Neo4j graph
    GeotopiaUser user1 = new GeotopiaUser(user.UserName, user.UserName, user.UserName + "@geotopia.onmicrosoft.com");

    var geoUser1 = client.Create(user1,
        new IRelationshipAllowingParticipantNode[0],
        new[]
        {
            new IndexEntry("GeotopiaUser")
            {
                { "Id", user1.id.ToString() }
            }
        });

    // add to cache
    object result = cache.Get(user.UserName);
    if (result == null)
    {
        // "Item" not in cache. Obtain it from specified data source
        // and add it.
        cache.Add(user.UserName, user);
    }
    else
    {
        // "Item" is in cache, cast result to correct type.
        Trace.WriteLine(String.Format("User already exists : {0}", user.UserName));
    }

The modal dialog on the Geotopia canvas searches the cache every time a keydown is noticed and displays the users that match the query entered in the dialog.
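As a rough illustration of the search-as-you-type lookup, here is a minimal, self-contained sketch. It is not the Geotopia implementation: `UserDirectory`, `TryAdd` and `Search` are hypothetical names, and an in-memory dictionary stands in for the Windows Azure Cache so the example can run without a cache endpoint.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for the cached table of signed-up users.
// A real implementation would read from the Windows Azure Cache instead.
public class UserDirectory
{
    private readonly Dictionary<string, string> users =
        new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);

    // Mirrors the "get, then add if missing" check in the snippet above.
    // Returns false when the user name is already registered.
    public bool TryAdd(string userName, string email)
    {
        if (users.ContainsKey(userName))
        {
            return false;
        }
        users.Add(userName, email);
        return true;
    }

    // Returns user names that start with the typed text, ignoring case.
    // Intended to be called on each keystroke in the search dialog.
    public IList<string> Search(string query, int maxResults = 10)
    {
        if (string.IsNullOrWhiteSpace(query))
        {
            return new List<string>();
        }

        string prefix = query.Trim();
        return users.Keys
            .Where(name => name.StartsWith(prefix, StringComparison.OrdinalIgnoreCase))
            .OrderBy(name => name, StringComparer.OrdinalIgnoreCase)
            .Take(maxResults)
            .ToList();
    }
}
```

After registering "riccardo", "Rita" and "bob", a call to `Search("ri")` returns the two names beginning with "ri" and skips "bob". Capping the result count keeps the dialog responsive as the number of users grows.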

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

• Nick Harris (@cloudnick) and Chris Risner (@chrisrisner) produced Cloud Cover Episode 117: Introducing the new Windows Azure Management Libraries for Channel 9 on 10/22/2013:

In this episode Nick Harris and Chris Risner are joined by Brady Gaster, a Program Manager on the Windows Azure Management team. During this episode Brady demonstrates the new Windows Azure Management Libraries. These libraries enable you to manage your Windows Azure Subscription from .NET code. In this video, Brady uses the libraries to:

- Upload a package to Blob Storage

- Create a Cloud Service using the uploaded package

- Deploy the Cloud Service

- Create and start a VM

In the News:

- ADAL, Windows Azure AD, and Multi-Resource Refresh Tokens

- Building a WPF Mobile Services Quick Start

Eric Knorr (@EricKnorr) asserted “With its vast portfolio of software and billions of dollars in data center infrastructure investment, Microsoft stands poised to rule the cloud” in a deck for his Microsoft, the sleeping giant of the cloud article of 10/21/2013 for InfoWorld’s Modernizing IT blog:

We're accustomed to thinking of Microsoft as a lumbering giant encumbered by its PC legacy. But think about it: What other company in the world has such a massive collection of software and services to offer through the cloud, not to mention the cloud infrastructure to deliver it?

Microsoft has the resources to crush it. The question, as usual, is how well it can execute.

Brad Anderson, corporate vice president of the Cloud and Enterprise division at Microsoft, helps oversee a big chunk of the private and public cloud portfolio: Windows Server, System Center, SQL Server, Windows Azure, and Visual Studio. InfoWorld Executive Editor Doug Dineley and I spoke with him for over an hour last week. In particular, our conversation focused on the connection between Windows Server and Azure, although we began by addressing the commitment Microsoft has made to its public cloud.

Gearing up for dominance

The Microsoft reorg changed the name of Microsoft's Server and Tools division to the Cloud and Enterprise division. But according to Anderson, little changed in terms of responsibility, except that Global Foundation Services became part of his group. These are the guys who are responsible for Microsoft's entire data center infrastructure, including Azure data centers.

I had heard Microsoft was investing heavily, but still, I was surprised by the scale. "We think that we were the No. 1 purchaser of servers in the world last year," says Anderson. "Every six months we're having to double our compute and our storage capacity. To give you a frame, in the last three years we've spent over $15 billion on cap ex."

That's one heck of a cloud launching pad. Plus, although Office 365 is outside Anderson's purview, he couldn't resist noting it reached a $1 billion annual run rate faster than any product in Microsoft history (although some have questioned that claim). In addition, back in June, Azure general manager Steven Martin claimed that the number of Azure customers had risen to 250,000 and was increasing at the rate of 1,000 per day.

No doubt many of those Azure customers were drawn by Microsoft's decision in mid-2012 to offer plain old IaaS (as opposed to PaaS), which InfoWorld's Peter Wayner characterized as having "great price-performance, Windows toolchain integration, and plenty of open source options." You can bet a bunch of customers will also discover Azure by crossing the bridge Microsoft is building between Windows Server and System Center on the one hand, and Azure services on the other.

The boundaryless data center

The overarching message is that Windows Server customers can now use Azure as an extension to their local server infrastructure. "We're the only organization in the world delivering consistency across privately hosted and public clouds," Anderson says. "You're not going to be locked into a private or to a public cloud. You can dev and test in Azure, deploy on private. You can move your virtual machine up into Azure without changing a line of code, without changing your IT processes."

Anderson also claims technology development on Azure is being ported to Windows Server. "The ability to take direct-attached storage, have all of the content replicated, tiering ... all those kinds of pieces come from Azure," he said, referring to Windows Server 2012's Storage Spaces and the new storage functionality in Windows Server 2012 R2.

…

Read more. Eric Knorr is InfoWorld’s Editor-in-Chief.


Windows Azure Pack, Hosting, Hyper-V and Private/Hybrid Clouds

• Scott Guthrie (@scottgu) blogged Windows Azure: Backup Services Release, Hyper-V Recovery Manager, VM Enhancements, Enhanced Enterprise Management Support on 10/22/2013:

This morning we released a huge set of updates to Windows Azure. These new capabilities include:

- Backup Services: General Availability of Windows Azure Backup Services

- Hyper-V Recovery Manager: Public preview of Windows Azure Hyper-V Recovery Manager

- Virtual Machines: Delete Attached Disks, Availability Set Warnings, SQL AlwaysOn Configuration

- Active Directory: Securely manage hundreds of SaaS applications

- Enterprise Management: Use Active Directory to Better Manage Windows Azure

- Windows Azure SDK 2.2: A massive update of our SDK + Visual Studio tooling support

All of these improvements are now available to use immediately. Below are more details about them.

Backup Service: General Availability Release of Windows Azure Backup

Today we are releasing Windows Azure Backup Service as a general availability service. This release is now live in production, backed by an enterprise SLA, supported by Microsoft Support, and is ready to use for production scenarios.

Windows Azure Backup is a cloud-based backup solution for Windows Server that allows files and folders to be backed up to and recovered from the cloud, and provides off-site protection against data loss. The service gives IT administrators and developers the option to back up and protect critical data in an easily recoverable way from any location, with no upfront hardware cost.

Windows Azure Backup is built on the Windows Azure platform and uses Windows Azure blob storage for storing customer data. Windows Server uses the downloadable Windows Azure Backup Agent to transfer file and folder data securely and efficiently to the Windows Azure Backup Service. Along with providing cloud backup for Windows Server, Windows Azure Backup Service also provides the capability to back up data from System Center Data Protection Manager and Windows Server Essentials to the cloud.

All data is encrypted onsite before it is sent to the cloud, and customers retain and manage the encryption key (meaning the data is stored entirely secured and can’t be decrypted by anyone but yourself).

Getting Started

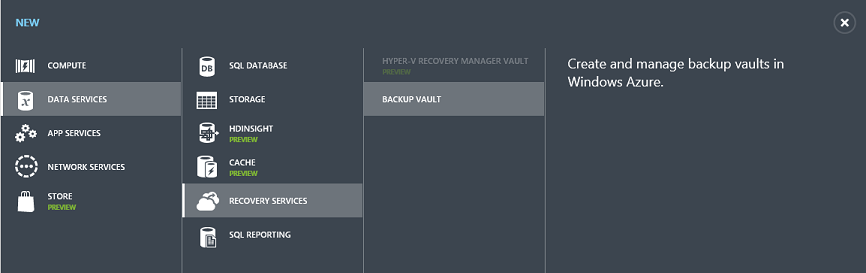

To get started with the Windows Azure Backup Service, create a new Backup Vault within the Windows Azure Management Portal. Click New->Data Services->Recovery Services->Backup Vault to do this:

Once the backup vault is created you’ll be presented with a simple tutorial that will help guide you on how to register your Windows Servers with it:

Once the servers you want to back up are registered, you can use the appropriate local management interface (such as the Microsoft Management Console snap-in, System Center Data Protection Manager Console, or Windows Server Essentials Dashboard) to configure the scheduled backups and to optionally initiate recoveries. You can follow these tutorials to learn more about how to do this:

- Tutorial: Schedule Backups Using the Windows Azure Backup Agent This tutorial helps you with setting up a backup schedule for your registered Windows Servers. Additionally, it also explains how to use Windows PowerShell cmdlets to set up a custom backup schedule.

- Tutorial: Recover Files and Folders Using the Windows Azure Backup Agent This tutorial helps you with recovering data from a backup. Additionally, it also explains how to use Windows PowerShell cmdlets to do the same tasks.

Below are some of the key benefits the Windows Azure Backup Service provides:

- Simple configuration and management. Windows Azure Backup Service integrates with the familiar Windows Server Backup utility in Windows Server, the Data Protection Manager component in System Center and Windows Server Essentials, in order to provide a seamless backup and recovery experience to a local disk, or to the cloud.

- Block level incremental backups. The Windows Azure Backup Agent performs incremental backups by tracking file and block level changes and only transferring the changed blocks, hence reducing the storage and bandwidth utilization. Different point-in-time versions of the backups use storage efficiently by only storing the changed blocks between these versions.

- Data compression, encryption and throttling. The Windows Azure Backup Agent ensures that data is compressed and encrypted on the server before being sent to the Windows Azure Backup Service over the network. As a result, the Windows Azure Backup Service only stores encrypted data in the cloud storage. The encryption key is not available to the Windows Azure Backup Service, and as a result the data is never decrypted in the service. Also, users can set up throttling and configure how the Windows Azure Backup Service utilizes the network bandwidth when backing up or restoring information.

- Data integrity is verified in the cloud. In addition to the secure backups, the backed up data is also automatically checked for integrity once the backup is done. As a result, any corruption that arises during data transfer is easily identified and fixed automatically.

- Configurable retention policies for storing data in the cloud. The Windows Azure Backup Service accepts and implements retention policies to recycle backups that exceed the desired retention range, thereby meeting business policies and managing backup costs.
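The block level incremental backup idea in the list above can be illustrated with a minimal sketch. This is not the Windows Azure Backup Agent's actual implementation, which the post does not describe; the block size, the use of SHA-256 digests, and the function names `block_hashes` and `changed_blocks` are all assumptions made for illustration.

```python
import hashlib
from typing import Dict, List

# Hypothetical block size, kept tiny so the example is easy to follow.
BLOCK_SIZE = 4


def block_hashes(data: bytes, block_size: int = BLOCK_SIZE) -> List[str]:
    """Return one SHA-256 digest per fixed-size block of data."""
    return [
        hashlib.sha256(data[offset:offset + block_size]).hexdigest()
        for offset in range(0, len(data), block_size)
    ]


def changed_blocks(previous_hashes: List[str], data: bytes,
                   block_size: int = BLOCK_SIZE) -> Dict[int, bytes]:
    """Return {block_index: block_bytes} for blocks that are new or differ
    from the previous backup. Shrinking files are not handled here."""
    changed = {}
    for index, offset in enumerate(range(0, len(data), block_size)):
        block = data[offset:offset + block_size]
        digest = hashlib.sha256(block).hexdigest()
        if index >= len(previous_hashes) or previous_hashes[index] != digest:
            changed[index] = block
    return changed


v1 = b"AAAABBBBCCCC"        # contents at the first backup
v2 = b"AAAAXXXXCCCCDDDD"    # contents at the second backup
baseline = block_hashes(v1)
delta = changed_blocks(baseline, v2)
print(sorted(delta))  # only blocks 1 and 3 would need to be transferred
```

Only the changed and appended blocks end up in `delta`, which is why an incremental backup uses less bandwidth and storage than a full copy.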

Hyper-V Recovery Manager: Now Available in Public Preview

I’m excited to also announce the public preview of a new Windows Azure Service – the Windows Azure Hyper-V Recovery Manager (HRM).

Windows Azure Hyper-V Recovery Manager helps protect your business critical services by coordinating the replication and recovery of System Center Virtual Machine Manager 2012 SP1 and System Center Virtual Machine Manager 2012 R2 private clouds at a secondary location. With automated protection, asynchronous ongoing replication, and orderly recovery, the Hyper-V Recovery Manager service can help you implement Disaster Recovery and restore important services accurately, consistently, and with minimal downtime.

Application data in a Hyper-V Recovery Manager scenario always travels over your on-premises replication channel. Only the metadata needed for orchestration (such as names of logical clouds, virtual machines, networks, etc.) is sent to Azure. All traffic sent to/from Azure is encrypted.

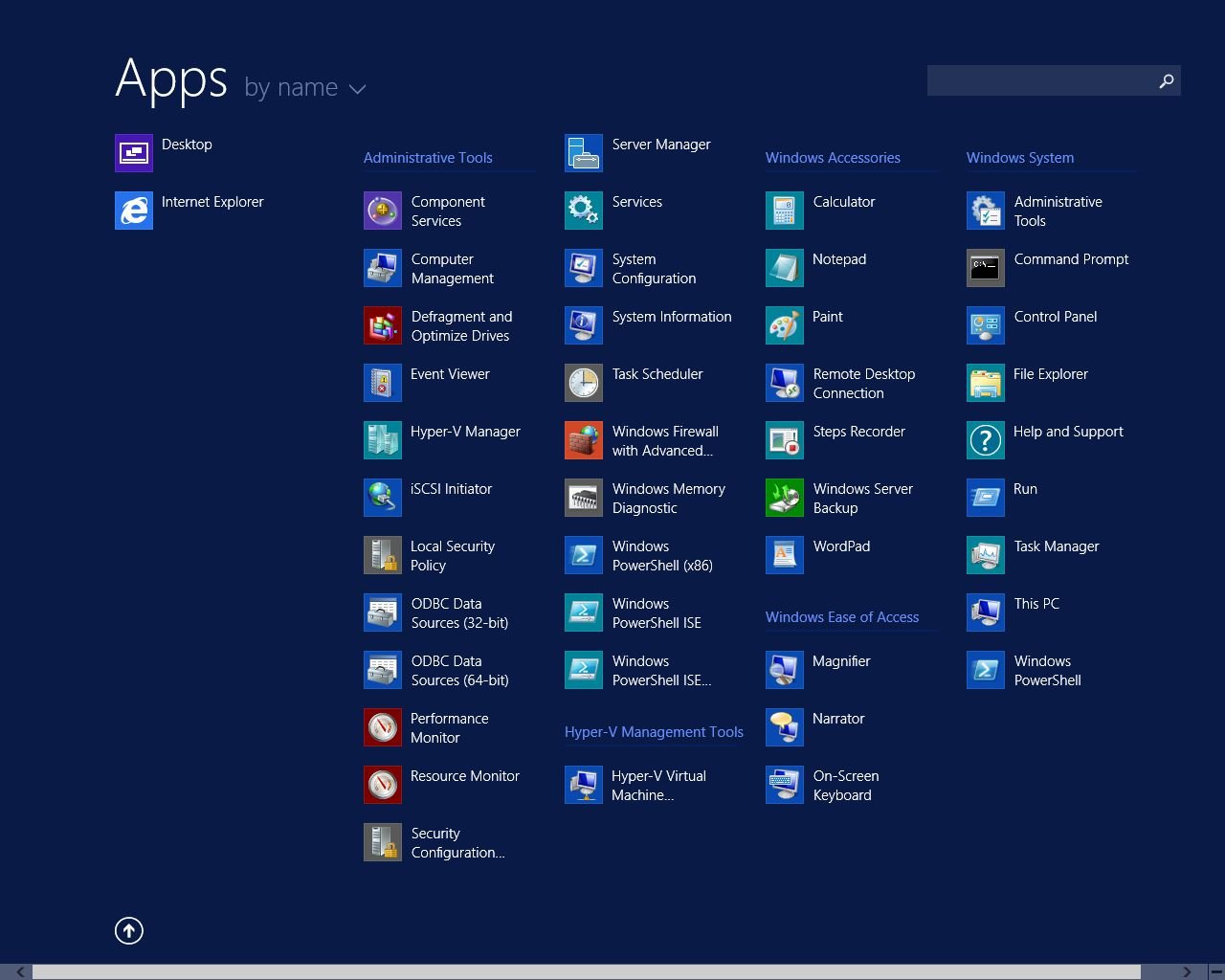

You can begin using Windows Azure Hyper-V Recovery Manager today by clicking New->Data Services->Recovery Services->Hyper-V Recovery Manager within the Windows Azure Management Portal. You can read more about Windows Azure Hyper-V Recovery Manager in Brad Anderson’s 9-part series, Transform the datacenter. To learn more about setting up Hyper-V Recovery Manager, follow our detailed step-by-step guide.

Virtual Machines: Delete Attached Disks, Availability Set Warnings, SQL AlwaysOn

Today’s Windows Azure release includes a number of nice updates to Windows Azure Virtual Machines. These improvements include:

Ability to Delete both VM Instances + Attached Disks in One Operation

Prior to today’s release, when you deleted VMs within Windows Azure we would delete the VM instance – but not delete the drives attached to the VM. You had to manually delete these yourself from the storage account. With today’s update we’ve added a convenience option that now allows you to either retain or delete the attached disks when you delete the VM:

We’ve also added the ability to delete a cloud service, its deployments, and its role instances with a single action. This can either be a cloud service that has production and staging deployments with web and worker roles, or a cloud service that contains virtual machines. To do this, simply select the Cloud Service within the Windows Azure Management Portal and click the “Delete” button:

Warnings on Availability Sets with Only One Virtual Machine In Them

One of the nice features that Windows Azure Virtual Machines supports is the concept of “Availability Sets”. An “availability set” allows you to define a tier/role (e.g. webfrontends, databaseservers, etc.) that you can map Virtual Machines into – and when you do this Windows Azure separates them across fault domains and ensures that at least one of them is always available during servicing operations. This enables you to deploy applications in a highly available way.

One issue we’ve seen some customers run into is where they define an availability set, but then forget to map more than one VM into it (which defeats the purpose of having an availability set). With today’s release we now display a warning in the Windows Azure Management Portal if you have only one virtual machine deployed in an availability set to help highlight this:

You can learn more about configuring the availability of your virtual machines here.
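The check behind the single-VM warning can be sketched in a few lines. This is only an illustration of the rule described above, not the portal's code; the inventory format (a list of dictionaries with `name` and `availability_set` keys) and the function name `single_vm_availability_sets` are hypothetical.

```python
from collections import Counter


def single_vm_availability_sets(vms):
    """Return the names of availability sets that contain fewer than two VMs."""
    counts = Counter(
        vm["availability_set"] for vm in vms if vm.get("availability_set")
    )
    return sorted(name for name, count in counts.items() if count < 2)


# Hypothetical inventory; the set names echo the examples in the text.
vms = [
    {"name": "web1", "availability_set": "webfrontends"},
    {"name": "web2", "availability_set": "webfrontends"},
    {"name": "sql1", "availability_set": "databaseservers"},
    {"name": "jumpbox", "availability_set": None},  # not in any set
]

for name in single_vm_availability_sets(vms):
    print(f"Warning: availability set '{name}' contains only one virtual machine")
```

Here `databaseservers` is flagged because a set with a single VM cannot keep an instance running while that VM is being serviced.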

Configuring SQL Server AlwaysOn

SQL Server AlwaysOn is a great feature that you can use with Windows Azure to enable high availability and DR scenarios with SQL Server.

Today’s Windows Azure release makes it even easier to configure SQL Server AlwaysOn by enabling “Direct Server Return” endpoints to be configured and managed within the Windows Azure Management Portal. Previously, setting this up required using PowerShell to complete the endpoint configuration. Starting today you can enable this simply by checking the “Direct Server Return” checkbox:

You can learn more about how to use direct server return for SQL Server AlwaysOn availability groups here.

Active Directory: Application Access Enhancements

This summer we released our initial preview of our Application Access Enhancements for Windows Azure Active Directory. This service enables you to securely implement single-sign-on (SSO) support against SaaS applications (including Office 365, Salesforce, Workday, Box, Google Apps, GitHub, etc.) as well as LOB-based applications (including ones built with the new Windows Azure AD support we shipped last week with ASP.NET and VS 2013).

Since the initial preview we’ve enhanced our SAML federation capabilities, integrated our new password vaulting system, and shipped multi-factor authentication support. We've also turned on our outbound identity provisioning system and have it working with hundreds of additional SaaS Applications:

Earlier this month we published an update on dates and pricing for when the service will be released in general availability form. In this blog post we announced our intention to release the service in general availability form by the end of the year. We also announced that the below features would be available in a free tier with it:

- SSO to every SaaS app we integrate with – Users can single sign-on to any app we are integrated with at no charge. This includes all the top SaaS apps and every app in our application gallery, whether they use federation or password vaulting.

- Application access assignment and removal – IT admins can assign access privileges for web applications to the users in their Active Directory, ensuring that every employee has access to the SaaS apps they need. And when a user leaves the company or changes jobs, the admin can just as easily remove their access privileges, ensuring data security and minimizing IP loss.

- User provisioning (and de-provisioning) – IT admins will be able to automatically provision users in 3rd party SaaS applications like Box, Salesforce.com, GoToMeeting, DropBox and others. We are working with key partners in the ecosystem to establish these connections, meaning you no longer have to continually update user records in multiple systems.

- Security and auditing reports – Security is a key priority for us. With the free version of these enhancements you'll get access to our standard set of access reports, giving you visibility into which users are using which applications, when they were using them and where they are using them from. In addition, we'll alert you to unusual usage patterns, for instance when a user logs in from multiple locations at the same time.
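As a rough illustration of the last kind of alert, the sketch below flags users whose sign-ins come from different locations within a short time window. The post does not describe how the service detects this; the record shape `(user, location, timestamp)`, the five-minute window, and the function name `concurrent_location_alerts` are assumptions.

```python
from datetime import datetime, timedelta


def concurrent_location_alerts(sign_ins, window=timedelta(minutes=5)):
    """Return the set of users who signed in from two different locations
    within `window` of each other.

    sign_ins is an iterable of (user, location, timestamp) tuples.
    """
    by_user = {}
    for user, location, timestamp in sign_ins:
        by_user.setdefault(user, []).append((timestamp, location))

    flagged = set()
    for user, events in by_user.items():
        events.sort()  # chronological order
        # Comparing neighbouring events is enough: any two sign-ins from
        # different locations inside the window imply a neighbouring pair
        # that also differs in location and is at least as close in time.
        for (t1, loc1), (t2, loc2) in zip(events, events[1:]):
            if loc1 != loc2 and t2 - t1 <= window:
                flagged.add(user)
                break
    return flagged


# Hypothetical sign-in records.
sign_ins = [
    ("alice", "Seattle", datetime(2013, 10, 22, 9, 0)),
    ("alice", "London", datetime(2013, 10, 22, 9, 2)),
    ("bob", "Paris", datetime(2013, 10, 22, 9, 0)),
    ("bob", "Paris", datetime(2013, 10, 22, 9, 1)),
    ("bob", "Berlin", datetime(2013, 10, 22, 15, 0)),
]

print(concurrent_location_alerts(sign_ins))
```

In this sample only `alice` is flagged: her two sign-ins are two minutes apart from different cities, while `bob` changes location six hours later.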