Windows Azure and Cloud Computing Posts for 1/28/2013+

| A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles. |

• Update 2/2/2013: Added new articles marked •. Tip: Ctrl+C to copy the bullet to the clipboard, Ctrl+F to open the Find text box, Ctrl+V to paste the bullet, and Enter to find the first instance; click Next for each successive update.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Marketplace DataMarket, Cloud Numerics, Big Data and OData

- Windows Azure Service Bus, Caching, Access Control, Active Directory, Identity and Workflow

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security, Compliance and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue, HDInsight and Media Services

Brad Severtson (@Brad2435150) explained Azure Vault Storage in HDInsight: A Robust and Low Cost Storage Solution in a 1/29/2013 post:

HDInsight is trying to provide the best of two worlds in how it manages its data. Azure Vault Storage (ASV) and the Hadoop Distributed File System (HDFS) implemented by HDInsight on Azure are distinct file systems that are optimized, respectively, for the storage of data and computations on that data.

- ASV provides a highly scalable and available, low cost, long term, and shareable storage option for data that is to be processed using HDInsight.

- The Hadoop clusters deployed by HDInsight on HDFS are optimized for running Map/Reduce (M/R) computational tasks on the data.

HDInsight clusters are deployed in Azure on compute nodes to execute M/R tasks and are dropped once these tasks have been completed. Keeping the data in the HDFS clusters after computations have been completed would be an expensive way to store this data. ASV provides a full featured HDFS file system over Azure Blob storage (ABS). ABS is a robust, general purpose Azure storage solution, so storing data in ABS enables the clusters used for computation to be safely deleted without losing user data. ASV is not only low cost: it has been designed as an HDFS extension to provide a seamless experience to customers by enabling the full set of components in the Hadoop ecosystem to operate directly on the data it manages.

In the upcoming release of HDInsight on Azure, ASV will be the default file system. In the current developer preview on www.hadooponazure.com, data stored in ASV can be accessed directly from the Interactive JavaScript Console by prefixing the protocol scheme of the URI for the assets you are accessing with ASV://

To use this feature in the current release, you will need HDInsight and Windows Azure Blob Storage accounts. To access your storage account from HDInsight, go to the Cluster and click on the Manage Cluster tile.

Click on the Set up ASV button.

Enter the credentials (Name and Passkey) for your Windows Azure Blob Storage account.

Then return to the Cluster and click on the Interactive Console tile to access the JavaScript console.

Now, to run the Hadoop wordcount job with data in an ASV container named hadoop, use:

Hadoop jar hadoop-examples-1.1.0-SNAPSHOT.jar wordcount asv://hadoop/ outputfile

The scheme for accessing data in ASV is asv://container/path

To see the data in ASV:

#cat asv://hadoop2/data
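The asv://container/path scheme can be illustrated with a small parser. This is only a sketch: the function name parseAsvUri is hypothetical, and treating the scheme as case-insensitive is an assumption based on the post writing both ASV:// and asv://.

```javascript
// Hypothetical helper: split an ASV URI into its container and path parts.
// Assumption: the scheme is matched case-insensitively (ASV:// or asv://).
function parseAsvUri(uri) {
  const match = /^asv:\/\/([^\/]+)(\/.*)?$/i.exec(uri);
  if (!match) {
    throw new Error("Not an ASV URI: " + uri);
  }
  // A missing path means the root of the container.
  return { container: match[1], path: match[2] || "/" };
}

const sample = parseAsvUri("asv://hadoop2/data");
console.log(sample.container, sample.path);
```

With the wordcount input above, asv://hadoop/ would resolve to the container hadoop and the root path.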

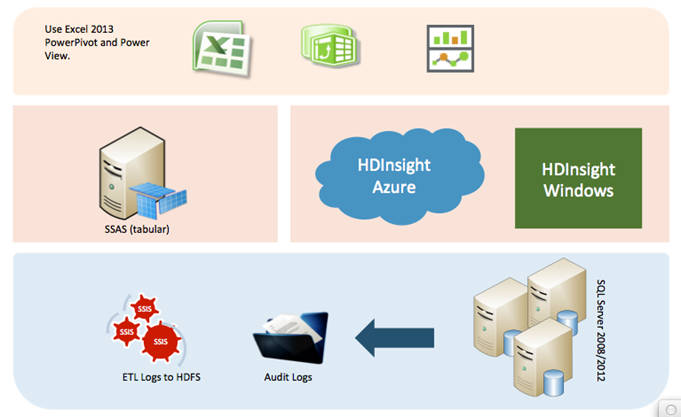

Denny Lee (@dennylee) described his Big Data, BI, and Compliance in Healthcare session for 24 Hours of PASS (Spring 2013) on 1/29/2013:

If you’re interested in Big Data, BI, and Compliance in Healthcare, check out Ayad Shammout (@aashammout) and my 24 Hours of PASS (Spring 2013) session Ensuring Compliance of Patient Data with Big Data and BI.

To help meet HIPAA and HealthAct compliance – and to more easily handle larger volumes of unstructured data and gain richer and deeper insight using the latest analytics – a medical center is embarking on a Big Data-to-BI project involving HDInsight, SQL Server 2012, Integration Services, PowerPivot, and Power View.

Join this preview of Denny Lee and Ayad Shammout’s PASS Business Analytics Conference session to get the architecture and details behind this project within the context of patient data compliance.


Windows Azure SQL Database, Federations and Reporting, Mobile Services

• Steven Martin (@stevemar_msft) reported a SQL Reporting Services Pricing Update for Windows Azure in a 2/1/2013 post:

We are very pleased to announce that we are lowering the price of Windows Azure SQL Reporting by up to 82%! The price change is effective today and is applicable to everyone currently using the service as well as new customers.

To ensure the service is cost effective for lower volume users, we are reducing the price for the base tier and including more granular increments.

Effective today, the price for Windows Azure SQL Reporting will decrease from $0.88 per hour for every 200 reports to $0.16 per hour for every 30 reports. With this price decrease, a user who needs 30 reports per hour, for example, will pay $116.80 per month, down from our earlier price of $642.40, a reduction of 81.8%. In addition, the smaller report increment (from 200 to 30) will give customers better utilization and hence lower effective price points.
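The monthly figures quoted above can be reproduced with a short calculation. This is a sketch under two assumptions that the post does not state: a billing month of 730 hours (which is what $116.80 / $0.16 implies), and usage rounded up to whole report blocks. The function name monthlyCost is illustrative.

```javascript
// Assumption: a billing month of 730 hours (8,760 hours per year / 12).
const HOURS_PER_MONTH = 730;

// Monthly cost when usage is billed per block of reports per hour.
// Assumption: partial blocks are rounded up to a whole block.
function monthlyCost(reportsPerHour, blockSize, pricePerBlockHour) {
  const blocks = Math.ceil(reportsPerHour / blockSize);
  return blocks * pricePerBlockHour * HOURS_PER_MONTH;
}

const oldCost = monthlyCost(30, 200, 0.88); // one 200-report block at the old rate
const newCost = monthlyCost(30, 30, 0.16);  // one 30-report block at the new rate
const reductionPct = (1 - newCost / oldCost) * 100;

console.log(oldCost.toFixed(2), newCost.toFixed(2), reductionPct.toFixed(1));
```

Under those assumptions the script yields the $642.40 and $116.80 monthly amounts and the 81.8% reduction cited in the announcement.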

For more details, see our Pricing Details webpage. For additional Business Analytics capabilities, please visit: http://www.windowsazure.com/en-us/home/features/business-analytics/

As always, we offer a variety of options for developers to use Windows Azure for free or at significantly reduced prices including:

- Free 90 day trial for new users

- Offers for MSDN customers, Microsoft partner network members and startups that provide free usage every month up to $300 per month in value

- Monthly commitment plans that can save you up to 32% on everything you use on Windows Azure

Check out Windows Azure pricing and offers for more details or sign up to get started!

• Craig Kitterman (@craigkitterman) posted Cross-Post: Windows Azure SQL Database and SQL Server -- Performance and Scalability Compared and Contrasted on 2/1/2013:

Editor's note: This post comes from Rick Anderson [avatar below] who is a programmer / writer for the Windows Azure and ASP.NET MVC teams.

Restarts for Web Roles

An often neglected consideration in Windows Azure is how to handle restarts. It’s important to handle restarts correctly, so you don’t lose data or corrupt your persisted data, and so you can quickly shut down, restart, and efficiently handle new requests. Windows Azure Cloud Service applications are restarted approximately twice per month for operating system updates. (For more information on OS updates, see Role Instance Restarts Due to OS Upgrades.) When a web application is going to be shut down, the RoleEnvironment.Stopping event is raised. The web role boilerplate created by Visual Studio does not override the OnStop method, so the application will have only a few seconds to finish processing HTTP requests before it is shut down. If your web role is busy with pending requests, some of these requests can be lost.

You can delay the restarting of your web role by up to 5 minutes by overriding the OnStop method and calling Sleep, but that’s far from optimal. Once the Stopping event is raised, the Load Balancer (LB) stops sending requests to the web role, so delaying the restart for longer than it takes to process pending requests leaves your virtual machine spinning in Sleep, doing no useful work.

The optimal approach is to wait in the OnStop method until there are no more requests, and then initiate the shutdown. The sooner you shut down, the sooner the VM can restart and begin processing requests. To implement the optimal shutdown strategy, add the following code to your WebRole class.

The code above checks the ASP.NET Requests Current performance counter. As long as there are requests, the OnStop method calls Sleep to delay the shutdown. Once the Requests Current counter drops to zero, OnStop returns, which initiates shutdown. Should the web server be so busy that the pending requests cannot be completed in 5 minutes, the application is shut down anyway. Remember that once the Stopping event is raised, the LB stops sending requests to the web role, so unless you have a massively undersized web role (or too few instances of it), you should never need more than a few seconds to complete the current requests.
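The wait-until-drained logic described here can be sketched independently of the ASP.NET and Windows Azure APIs. The following is an illustrative model in JavaScript, not the WebRole code from the post: waitForDrain and its parameters are hypothetical names, and the counter, sleep function, and clock are injected so the loop can be exercised with a simulated clock.

```javascript
// Hypothetical model of the OnStop wait loop: poll a pending-request counter
// and return as soon as it reaches zero, or give up at the deadline.
// getPendingRequests, sleep, and now are injected stand-ins for the real
// performance counter, Thread.Sleep, and the system clock.
function waitForDrain(getPendingRequests, sleep, now, maxWaitMs = 5 * 60 * 1000, pollMs = 1000) {
  const deadline = now() + maxWaitMs;
  while (getPendingRequests() > 0) {
    if (now() >= deadline) {
      return false; // timed out; the platform would shut the role down anyway
    }
    sleep(pollMs);
  }
  return true; // no requests left; returning lets shutdown proceed
}

// Simulated usage: three requests in flight, one completes per poll interval.
let pending = 3;
let clock = 0;
const drained = waitForDrain(
  () => pending,
  (ms) => { clock += ms; pending = Math.max(0, pending - 1); },
  () => clock
);
console.log(drained, clock);
```

The point of the design is that the loop exits on the first poll that sees an empty counter, so the instance never sleeps longer than the pending work requires.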

The code above writes Trace data, but unless you perform a tricky On-Demand Transfer, the trace data from the OnStop method will never appear in WADLogsTable. Later in this blog I’ll show how you can use DebugView to see these trace events. I’ll also show how you can get tracing working in the web role OnStart method.

Optimal Restarts for Worker Roles

Handling the Stopping event in a worker role requires a different approach. Typically the worker role processes queue messages in the Run method. The strategy involves two global variables: one to notify the Run method that the Stopping event has been raised, and another to notify the OnStop method that it’s safe to initiate shutdown. (Shutdown is initiated by returning from OnStop.) The following code demonstrates the two-global approach.

When OnStop is called, the global onStopCalled is set to true, which signals the code in the Run method to shut down at the top of the loop, when no queue event is being processed.
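The two-flag handshake can be modeled in a few lines. This is a language-neutral sketch written in JavaScript rather than the worker role code itself: the class WorkerRoleModel and the flag runLoopExited are hypothetical names (only onStopCalled appears in the post), and the Run loop is broken into explicit steps so the ordering is easy to follow.

```javascript
// Hypothetical model of the two flags shared by Run and OnStop.
class WorkerRoleModel {
  constructor(messages) {
    this.queue = messages.slice(); // pending queue messages
    this.processed = [];
    this.onStopCalled = false;     // set by onStop, read by the Run loop
    this.runLoopExited = false;    // set by the Run loop, read by onStop
  }

  // One iteration of the Run loop. Returns false once the loop has exited.
  runStep() {
    // The stop flag is checked only at the top of the loop, so a message
    // that is already being processed is never interrupted.
    if (this.onStopCalled) {
      this.runLoopExited = true;
      return false;
    }
    const message = this.queue.shift();
    if (message !== undefined) {
      this.processed.push(message);
    }
    return true;
  }

  // Called when the Stopping event is raised.
  onStop() {
    this.onStopCalled = true;
  }

  // onStop would wait until this is true before returning.
  safeToShutDown() {
    return this.runLoopExited;
  }
}

const worker = new WorkerRoleModel(["a", "b", "c"]);
worker.runStep();                        // processes "a"
worker.onStop();                         // Stopping event arrives
const stillRunning = worker.runStep();   // sees the flag and exits the loop
console.log(worker.processed, stillRunning, worker.safeToShutDown());
```

Messages "b" and "c" stay on the queue for another instance to pick up, which is the behavior the top-of-loop check is meant to guarantee.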

Viewing OnStop Trace Data

As mentioned previously, unless you perform a tricky On-Demand Transfer, the trace data from the OnStop method will never appear in WADLogsTable. We’ll use Dbgview to see these trace events. In Solution Explorer, right-click on the cloud project and select Publish.

Download your publish profile. In the Publish Windows Azure Application dialog box, select Debug and select Enable Remote Desktop for all roles.

The compiler removes Trace calls from release builds, so you’ll need to set the build configuration to Debug to see the Trace data. Once the application is published and running, in Visual Studio, select Server Explorer (Ctrl+Alt+S). Select Windows Azure Compute, and then select your cloud deployment. (In this case it’s called t6 and it’s a production deployment.) Select the web role instance, right-click, and select Connect using Remote Desktop.

Remote Desktop Connection (RDC) will use the account name you specified in the publish wizard and prompt you for the password you entered. In the left side of the taskbar, select the Server Manager icon.

In the left tab of Server Manager, select Local Server, and then select IE Enhanced Security Configuration (IE ESC). Select the off radio button in the IE ESC dialog box.

Start Internet Explorer, download and install DebugView. Start DebugView, and in the Capture menu, select Capture Global Win32.

Select the filter icon, and then enter the following exclude filter:

For this test, I added the RoleEnvironment.RequestRecycle method to the About action method, which as the name suggests, initiates the shutdown/restart sequence. Alternatively, you can publish the application again, which will also initiate the shutdown/restart sequence.

Follow the same procedure to view the trace data in the worker role VM. Select the worker role instance, right-click and select Connect using Remote Desktop.

Follow the procedure above to disable IE Enhanced Security Configuration. Install and configure DebugView using the instructions above. I use the following filter for worker roles:

For this sample, I published the Azure package, which causes the shutdown/restart procedure.

One last departing tip: To get tracing working in the web role’s OnStart method, add the following:

If you’d like me to blog on getting trace data from the OnStop method to appear in WADLogsTable, let me know. Most of the information in this blog comes from the Azure multi-tier tutorial Tom and I published last week. Be sure to check it out for lots of other good tips.

Josh Twist (@joshtwist) described Working with Making Waves and VGTV - Mobile Services in a 1/28/2013 post:

We’ve just published a great case study:

Working with Christer at Making Waves was a blast and they’ve created an awesome Windows 8 application backed by Mobile Services.

Check out Christer and team talking about their experience of using Mobile Services in this short video (< 5 mins)

Angshuman Nayak (@AngshumanNayak) explained Access SQL Azure Data Using Java Script in a 1/28/2013 post to the Windows Azure Cloud Integration Engineering (WACIE) blog:

I regularly access SQL Azure from my .Net code and once in a while from Java or Ruby code. But I was just thinking: since it’s so easy and not at all different from on-premise code, can I use a scripting language to access SQL Azure? Is it even possible? To my amusement, I was able to get the data from SQL Azure and will detail the steps and code below.

I will start with a caveat, though: unless you are writing a fun application for your friends or kids, or maybe some personal application you want to run on your PC, read no further and go to this link instead. JavaScript was not developed for data access. Its main uses are client-side form validation and AJAX (Asynchronous JavaScript and XML) implementations. But since IE has the ability to load ActiveX objects, we can still use JavaScript to connect to SQL Azure.

So let’s get the ball rolling. I assume there is already a database called Cloud that has a table Employee. This has columns called FirstName and LastName …yeah yeah the same ubiquitous table we all learnt in programming 101 class… whatever.

We will get it done in two steps.

Step 1

Set up a DSN to connect to SQL Azure. Depending on the environment, use odbcad32.exe either from C:\Windows\SysWOW64 for 32-bit or from C:\Windows\System32 for 64-bit. No, I am not incorrect: the folder with 64 in its name has all the 32-bit binaries, and the folder with 32 in its name has all the 64-bit binaries. Create a new system DSN, use the SQL Server Native Client 11 driver, then give the FQDN of the SQL Azure database as below.

Use the Database Name of the database you want to connect to.

Check that the test connection succeeds.
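Because the folder naming in Step 1 is easy to get backwards, here is a tiny lookup that encodes it. It is only a sketch: odbcAdminPath is a hypothetical helper, and the paths assume a 64-bit Windows installation on drive C as in the text above.

```javascript
// Hypothetical helper: pick the ODBC Data Source Administrator that matches
// the bitness of the application that will use the DSN.
// On 64-bit Windows, SysWOW64 holds the 32-bit tools and System32 the 64-bit ones.
function odbcAdminPath(bitness) {
  switch (bitness) {
    case 32:
      return "C:\\Windows\\SysWOW64\\odbcad32.exe";
    case 64:
      return "C:\\Windows\\System32\\odbcad32.exe";
    default:
      throw new RangeError("bitness must be 32 or 64");
  }
}

console.log(odbcAdminPath(32));
console.log(odbcAdminPath(64));
```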

Step 2

So now we come to the script part of it. Add a new HTML file in your existing project and replace the code in there with the following.

<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Transitional//EN" "http://www.w3.org/TR/xhtml1/DTD/xhtml1-transitional.dtd">

<html xmlns="http://www.w3.org/1999/xhtml">

<head>

<title>Untitled Page</title>

<script type="text/javascript" language="javascript" >

function connectDb() {

var Conn = new ActiveXObject("ADODB.Connection");

Conn.ConnectionString = "dsn=sqlazure;uid=annayak;pwd=P@$$word;";

Conn.Open();

var rs = new ActiveXObject("ADODB.Recordset") ;

var strEmpName = " " ;

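// Pass the open connection (Conn); 1 = adOpenKeyset, 3 = adLockOptimistic

rs.Open("select * from dbo.Employees", Conn, 1, 3);

while (!rs.EOF) {

// Append each row instead of overwriting the previous one

strEmpName += rs("FirstName") + " " + rs("LastName") + "<br>";

// MoveNext must be called, otherwise the loop never advances

rs.MoveNext();

}

rs.Close();

Conn.Close();

test.innerHTML = strEmpName;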

}

</script>

</head>

<body>

<p><div id="test"> </div> </p>

<p><input id="button1" type="button" value="GetData" name="button1" onclick="connectDb();"/></p>

</body>

</html>

Modify the highlighted part to use the DSN name, user id, password, table name, column name as per the actual values applicable in your scenario. Now when the HTML page is called and the GetData button clicked it will get the data and display in IE (please note ActiveX works only with IE).

Since you are loading a driver in IE use this setting to suppress warning messages.

Open Internet Explorer -> Tools -> Internet Options -> Security -> Trusted sites -> Sites -> Add this site to the web zone

Josh Twist (@joshtwist) explained Debugging your Mobile Service scripts in a 1/26/2013 post:

During the twelve days of ZUMO, I posted a couple of articles that showed techniques for unit testing your Mobile Service scripts:

And whilst this is awesome, sometimes you really want to be able to debug a script and go past console.log debugging. If you follow this approach to unit testing your scripts then you can use a couple of techniques to also debug your unit tests (and therefore Mobile Service scripts). I recently purchased a copy of WebStorm during their Mayan Calendar end of world promotion (bargain) and it’s a nice tool with built-in node debugging. I asked my team mate Glenn Block if he knew how to use WebStorm to debug Mocha tests. Sure enough, he went off, did the research and posted a great article showing how: Debugging mocha unit tests with WebStorm step by step – follow these steps if you own WebStorm.

For those that don’t have a copy of WebStorm, you can still debug your tests using nothing but node, npm and your favorite WebKit browser (such as Google Chrome).

The first thing you’ll need (assuming you already installed node and npm and the mocha framework) is node-inspector. To install node-inspector, just run this command in npm

npm install -g node-inspector

On my mac, I had to run

sudo npm install -g node-inspector

and enter my password (and note, this can take a while to build the inspector). Next, when you run your unit tests add the --debug-brk switch:

mocha test.js -u tdd --debug-brk

This will start your test and break on the first line. Now you need to start node-inspector in a separate terminal/cmd window:

node-inspector &

And you’re ready to start debugging. Just point your webkit browser to the URL shown in the node-inspector window, typically:

http://0.0.0.0:8080/debug?port=5858

Now, unfortunately, the first line of code that node and the inspector will break on will be mocha code, and it’s all a little bit confusing here for a few minutes, but bear with it, because once you’re up and running it gets easier.

The first thing you’ll need to do is advance the script past the line

, Mocha = require('../')

which will load all the necessary mocha files. Now you can navigate to the file Runnable.js using the left pane.

And in this file, put a break on the first line inside Runnable.prototype.run function:

If you now hit the start/stop button (F8) to run to that breakpoint, the process will have loaded your test files so you can start to add breaks:

Here, I’ve found my test file test.js:

And we’re away. After this, the webkit browser will typically remember your breakpoints so you only have to do this once. So there you go, debugging mocha with node-inspector. Or you could just buy WebStorm.


Marketplace DataMarket, Cloud Numerics, Big Data and OData

• The WCF Data Services Team announced a WCF Data Services 5.3.0-rc1 Prerelease on 1/31/2013:

Today we released an updated version of the WCF Data Services NuGet packages and tools installer. This version of WCF DS has some notable new features as well as several bug fixes.

What is in the release:

Instance annotations on feeds and entries (JSON only)

Instance annotations are an extensibility feature in OData feeds that allow OData requests and responses to be marked up with annotations that target feeds, single entities (entries), properties, etc. WCF Data Services 5.3.0 supports instance annotations in JSON payloads. Support for instance annotations in Atom payloads is forthcoming.

Action Binding Parameter Overloads

The OData specification allows actions with the same name to be bound to multiple different types. WCF Data Services 5.3 enables actions for different types to have the same name (for instance, both a Folder and a File may have a Rename action). This new support includes both serialization of actions with the same name as well as resolution of an action’s binding parameter using the new IDataServiceActionResolver interface.

Modification of the Request URL

For scenarios where it is desirable to modify request URLs before the request is processed, WCF Data Services 5.3 adds support for modifying the request URL in the OnStartProcessingRequest method. Service authors can modify both the request URL as well as URLs for the various parts of a batch request.

This release also contains the following noteworthy bug fixes:

- Fixes an issue where code gen produces invalid code in VB

- Fixes an issue where code gen fails when the Precision facet is set on spatial and time properties

- Fixes an issue where odata.type was not written consistently in fullmetadata mode for JSON

- Fixes an issue where a valid form of Edm.DateTime could not be parsed

- Fixes an issue where the WCF DS client would not send type names for open properties on insert/update

- Fixes an issue where the WCF DS client could not read responses from service operations which returned collections of complex or primitive types

Youssef Moussaoui (@youssefmss) described Getting started with ASP.NET WebAPI OData in 3 simple steps in a 1/30/2013 post to the .NET Web Development and Tools blog:

With the upcoming ASP.NET 2012.2 release, we’ll be adding support for OData to WebAPI. In this blog post, I’ll go over the three simple steps you’ll need to go through to get your first OData service up and running:

- Creating your EDM model

- Configuring an OData route

- Implementing an OData controller

Before we dive in, the code snippets in this post won’t work if you’re using the RC build. You can upgrade to using our latest nightly build by taking a look at this helpful blog post.

1) Creating your EDM model

First, we’ll create an EDM model to represent the data model we want to expose to the world. The ODataConventionModelBuilder class makes this easy by using a set of conventions to reflect on your type and come up with a reasonable model. Let’s say we want to expose an entity set called Movies that represents a movie collection. In that case, we can create a model with a couple lines of code:

    ODataConventionModelBuilder modelBuilder = new ODataConventionModelBuilder();
    modelBuilder.EntitySet<Movie>("Movies");
    IEdmModel model = modelBuilder.GetEdmModel();

2) Configuring an OData route

Next, we’ll want to configure an OData route. The only difference from a regular WebAPI route is that you use MapODataRoute instead of MapHttpRoute and pass in your model. The model gets used for parsing the request URI as an OData path and routing the request to the right entity set controller and action. This would look like this:

    config.Routes.MapODataRoute(routeName: "OData", routePrefix: "odata", model: model);

The route prefix above is the prefix for this particular route. So it would only match request URIs that start with http://server/vroot/odata, where vroot is your virtual root. And since the model gets passed in as a parameter to the route, you can actually have multiple OData routes configured with a different model for each route.

3) Implementing an OData controller

Finally, we just have to implement our MoviesController to expose our entity set. Instead of deriving from ApiController, you’ll need to derive from ODataController. ODataController is a new base class that wires up the OData formatting and action selection for you. Here’s what an implementation might look like:

    public class MoviesController : ODataController
    {
        List<Movie> _movies = TestData.Movies;

        [Queryable]
        public IQueryable<Movie> Get()
        {
            return _movies.AsQueryable();
        }

        public Movie Get([FromODataUri] int key)
        {
            return _movies[key];
        }

        public Movie Patch([FromODataUri] int key, Delta<Movie> patch)
        {
            Movie movieToPatch = _movies[key];
            patch.Patch(movieToPatch);
            return movieToPatch;
        }
    }

There are a few things to point out here. Notice the [Queryable] attribute on the Get method. This enables OData query syntax on that particular action. So you can apply filtering, sorting, and other OData query options to the results of the action. Next, we have the [FromODataUri] attributes on the key parameters. These attributes instruct WebAPI that the parameters come from the URI and should be parsed as OData URI parameters instead of as WebAPI parameters. Finally, Delta<T> is a new OData class that makes it easy to perform partial updates on entities.
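To make the query options concrete, here is a client-side sketch that builds request URLs for a queryable action. The helper buildODataUrl is hypothetical, the service root reuses the http://localhost/odata address that appears later in this post, and the filter on YearReleased is just an illustrative choice; $filter, $orderby, and $top are standard OData system query options.

```javascript
// Hypothetical helper: compose an OData request URL from system query options.
// Option names are given without the leading "$", which is added here.
function buildODataUrl(serviceRoot, entitySet, options = {}) {
  const parts = Object.entries(options).map(
    ([name, value]) => "$" + name + "=" + encodeURIComponent(String(value))
  );
  const base = serviceRoot.replace(/\/+$/, "") + "/" + entitySet;
  return parts.length > 0 ? base + "?" + parts.join("&") : base;
}

// Movies released after 1999, sorted by title, first five results.
const moviesUrl = buildODataUrl("http://localhost/odata", "Movies", {
  filter: "YearReleased gt 1999",
  orderby: "Title",
  top: 5,
});
console.log(moviesUrl);
```

A GET request to the resulting URL would be handled by the Get() action, with the query options applied to the IQueryable it returns.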

One important thing to realize here is that the controller name, the action names, and the parameter names all matter. OData controller and action selection work a little differently than they do in WebAPI. Instead of being based on route parameters, OData controller and action selection is based on the OData meaning of the request URI. So for example if you made a request for http://server/vroot/odata/$metadata, the request would actually get dispatched to a separate special controller that returns the metadata document for the OData service. Notice how the controller name also matches the entity set name we defined previously. I’ll try to go into more depth about OData routing in a future blog post.

Instead of deriving from ODataController, you can also choose to derive from EntitySetController. EntitySetController is a convenient base class for exposing entity sets that provides simple methods you can override. It also takes care of sending back the right OData response in a variety of cases, like sending a 404 Not Found if an entity with a certain key could not be found. Here’s what the same implementation as above looks like with EntitySetController:

    public class MoviesController : EntitySetController<Movie, int>
    {
        List<Movie> _movies = TestData.Movies;

        [Queryable]
        public override IQueryable<Movie> Get()
        {
            return _movies.AsQueryable();
        }

        protected override Movie GetEntityByKey(int key)
        {
            return _movies[key];
        }

        protected override Movie PatchEntity(int key, Delta<Movie> patch)
        {
            Movie movieToPatch = _movies[key];
            patch.Patch(movieToPatch);
            return movieToPatch;
        }
    }

Notice how you don’t need [FromODataUri] anymore because EntitySetController has already added it for you on its own action parameters. That’s just one of the several advantages of using EntitySetController as a base class.

Now that we have a working OData service, let’s try out a few requests. If you try a request to http://localhost/odata/Movies(2) with an “application/json” Accept header, you should get a response that looks like this:

    {
      "odata.metadata": "http://localhost/odata/$metadata#Movies/@Element",
      "ID": 2,
      "Title": "Gladiator",
      "Director": "Ridley Scott",
      "YearReleased": 2000
    }

On the other hand, if you set an “application/atom+xml” Accept header, you might see a response that looks like this:

    <?xml version="1.0" encoding="utf-8"?>
    <entry xml:base="http://localhost/odata/"
           xmlns="http://www.w3.org/2005/Atom"
           xmlns:d="http://schemas.microsoft.com/ado/2007/08/dataservices"
           xmlns:m="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata"
           xmlns:georss="http://www.georss.org/georss"
           xmlns:gml="http://www.opengis.net/gml">
      <id>http://localhost/odata/Movies(2)</id>
      <category term="MovieDemo.Model.Movie" scheme="http://schemas.microsoft.com/ado/2007/08/dataservices/scheme" />
      <link rel="edit" href="http://localhost/odata/Movies(2)" />
      <link rel="self" href="http://localhost/odata/Movies(2)" />
      <title />
      <updated>2013-01-30T19:29:57Z</updated>
      <author>
        <name />
      </author>
      <content type="application/xml">
        <m:properties>
          <d:ID m:type="Edm.Int32">2</d:ID>
          <d:Title>Gladiator</d:Title>
          <d:Director>Ridley Scott</d:Director>
          <d:YearReleased m:type="Edm.Int32">2000</d:YearReleased>
        </m:properties>
      </content>
    </entry>

As you can see, the Json.NET and DataContractSerializer-based responses you’re used to getting when using WebAPI controllers get replaced with the OData equivalents when you derive from ODataController.


Windows Azure Service Bus, Caching, Access Control, Active Directory, Identity and Workflow

Haishi Bai (@HaishiBai2010) completed his series with New features in Service Bus Preview Library (January 2013) – 3: Queue/Subscription Shared Access Authorization on 1/29/2013:

This is the last part of my 3-part blog series on the recently released Service Bus preview features.

[This post series is based on preview features that are subject to change]

Queue/Subscription Shared Access Authorization

Service Bus uses Windows Azure AD Access Control (a.k.a. Access Control Service or ACS) for authentication, and it uses claims generated by ACS for authorization. For each Service Bus namespace, there’s a corresponding ACS namespace of the same name (suffixed with “-sb”). In this ACS namespace, there’s an “owner” service identity, for which ACS issues three net.windows.servicebus.action claims with values Manage, Listen, and Send. These claims are in turn used by Service Bus to authorize users for different operations. Because “owner” comes with all three claims, he is entitled to perform any operations that are allowed by Service Bus. I wrote a blog article talking about why it’s a good practice to create additional service identities with minimum access rights: these additional identities can only perform the limited set of operations designated to them.

However, there are still two problems. First, the security scope of these claims is global to the namespace. For example, once a user is granted Listen access, he can listen to any queue or subscription. We don’t have granular access control at the entity level. Second, identity management is cumbersome, as you have to go through ACS and manage service identities. With the Service Bus preview features, you’ll be able to create authorization rules at the entity (queue and subscription) level. And because you can apply multiple authorization rules, you can manage end user access rights separately even if they share the same queue or subscription. This design gives you great flexibility in managing access rights.

Now let’s walk through a sample to see how that works. In this scenario I’ll create two queues for three users: Tom, Jack, and an administrator. Tom has send/listen access to the first queue. Jack has send/listen access to the second queue. In addition, he can also receive messages from the first queue. The administrator can manage both queues. Their access rights are summarized in the following table:

- Create a new Windows Console application.

- Install the preview NuGet package:

install-package ServiceBus.Preview

- Implement the Main() method:

    static void Main(string[] args)
    {
        var queuePath1 = "sasqueue1";
        var queuePath2 = "sasqueue2";
        NamespaceManager nm = new NamespaceManager(
            ServiceBusEnvironment.CreateServiceUri("https", "[Your SB namespace]", string.Empty),
            TokenProvider.CreateSharedSecretTokenProvider("owner", "[Your secret key]"));
        QueueDescription desc1 = new QueueDescription(queuePath1);
        QueueDescription desc2 = new QueueDescription(queuePath2);
        // Tom: send/listen on the first queue.
        desc1.Authorization.Add(new SharedAccessAuthorizationRule("ForTom", "pass@word1", new AccessRights[] { AccessRights.Listen, AccessRights.Send }));
        // Jack: send/listen on the second queue.
        desc2.Authorization.Add(new SharedAccessAuthorizationRule("ForJack", "pass@word2", new AccessRights[] { AccessRights.Listen, AccessRights.Send }));
        // Jack: listen-only on the first queue.
        desc1.Authorization.Add(new SharedAccessAuthorizationRule("ForJack", "pass@word2", new AccessRights[] { AccessRights.Listen }));
        // Administrator: manage both queues.
        desc1.Authorization.Add(new SharedAccessAuthorizationRule("ForAdmin", "pass@word3", new AccessRights[] { AccessRights.Manage }));
        desc2.Authorization.Add(new SharedAccessAuthorizationRule("ForAdmin", "pass@word3", new AccessRights[] { AccessRights.Manage }));
        nm.CreateQueue(desc1);
        nm.CreateQueue(desc2);
    }

Okay, that’s not the most exciting code. But it should be fairly easy to understand. For each queue I’m attaching multiple SharedAccessAuthorizationRule instances, in which I can specify the shared key as well as the rights associated with the rule. And I have implemented the desired security policy with a few lines of code. These entity-level keys have several advantages (compared to the two problems stated above): first, they provide fine-grained access control. Second, they are easier to manage. And third, when they are compromised, the damage can be constrained within the scope of the affected entity or even the affected user only.
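The access rights in this scenario (Tom on the first queue, Jack on both, the administrator managing both) can be summarized as a small lookup. This is an illustrative in-memory model, not a Service Bus API: the rules object and isAllowed function are hypothetical, and the sketch treats each right independently, which is an assumption about how rights combine.

```javascript
// Hypothetical model of the entity-level rules described in the walkthrough.
// Assumption: each right is checked on its own; Manage does not imply others here.
const rules = {
  sasqueue1: { ForTom: ["Listen", "Send"], ForJack: ["Listen"], ForAdmin: ["Manage"] },
  sasqueue2: { ForJack: ["Listen", "Send"], ForAdmin: ["Manage"] },
};

// Returns true when the named rule on the given queue grants the right.
function isAllowed(queue, ruleName, right) {
  const rights = (rules[queue] || {})[ruleName] || [];
  return rights.includes(right);
}

console.log(isAllowed("sasqueue1", "ForJack", "Listen")); // Jack may receive from queue 1
console.log(isAllowed("sasqueue1", "ForJack", "Send"));   // but may not send to it
console.log(isAllowed("sasqueue2", "ForTom", "Send"));    // Tom has no rule on queue 2
```

Because each rule lives on a single queue, revoking or rotating Tom's key touches only sasqueue1 and leaves Jack and the administrator unaffected.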

This concludes my 3-part blog series on Service Bus preview features. Many thanks to Abhishek Lal for help and guidance along the way, and to the friends on Twitter who helped to get the word out.

Gaurav Mantri (@gmantri) posted Windows Azure Access Control Service, Windows 8 and Visual Studio 2012: Setting Up Development Environment – Oh My! on 1/28/2013:

Today I started playing with Windows Azure Access Control Service (ACS) and ran into some issues while making a simple application based on the guide here: http://www.windowsazure.com/en-us/develop/net/how-to-guides/access-control/. I had to search for a number of things on the Internet to finally get it working so I thought I would write a simple blog post which kind of summarizes the issues and how to resolve them.

The problems I ran into were because the guide was focused on Windows 7/Visual Studio 2010 and I was using Windows 8/Visual Studio 2012.

It may very well be documented somewhere but I could not find it, and there may be many “newbies” like me who are just starting out with ACS on Windows 8/VS 2012. Hopefully this blog post will save them some time and frustration when implementing this ridiculously easy and useful technology for authentication in their web applications.

With this thought, let’s start

Windows Identity Foundation Setup

When I downloaded the “Windows Identity Foundation” installer from the link in the guide above and tried to run it, I got the following message:

I found the solution for this problem on Windows 8 Community Forums: http://answers.microsoft.com/en-us/windows/forum/windows_8-windows_install/error-when-installing-windows-identity-foundation/6e4c65a5-ea24-431c-b4f0-b8740e97cf46?auth=1. Basically, the solution is to enable WIF through the “Turn Windows features on or off” functionality. To do so, go to Control Panel –> Programs and Features –> Turn Windows features on or off.

Once WIF was installed, I was also able to install the Windows Identity Foundation SDK, which I had not been able to do before.

Dude, Where’s My “Add STS Reference” Option!

OK, moving on. After following some more steps to a “T”, I ran into the following set of instructions:

So I followed step 1 but could not find “Add STS Reference” option when I right clicked on the solution in Visual Studio. Back to searching and I found a couple of blog posts:

- http://blogs.infosupport.com/equivalent-to-add-sts-reference-for-vs-2012/

- http://www.stratospher.es/blog/post/add-sts-reference-missing-in-visual-studio-2012-rc

Basically what I needed to do was install “Identity and Access Tool” extension. To do so, just click on Tools –> Extensions and Updates… and search for “Identity” as shown below:

Once I installed it, I was a happy camper again.

And instead of “Add STS Reference”, the option’s name is “Identity and Access”.

Configuring Trust between ACS and ASP.NET Web Application

The next step is configuring trust between ACS and the ASP.NET Web Application. The steps provided in the guide were for Visual Studio 2010, and clicking on “Identity and Access…” gave me an entirely different window.

Again this blog post from Haishi Bai came to my rescue: http://haishibai.blogspot.in/2012/08/windows-azure-web-site-targeting-net.html. However, one thing that was not clear to me (or consider this my complete ignorance) is where I would get the management key. I logged into the ACS portal (https://<yourservicenamespace>.accesscontrol.windows.net) and first thought I would find the key under the “Certificates and keys” section there (without reading much above; you can understand, I was desperate). I copied the key from there and entered it in the window above, but that didn’t work.

As I looked around, I found the “Management service” section under “Administration” section and tried to use the key from there.

I clicked the “Generate” button to create a key, used it, and voila, it worked. The portal also generates a key for you which you can also use, BTW.

Running The Application

Assuming the worst part was over, I followed the remaining steps in the guide and pressed “F5” to run the application. But the application didn’t run. Instead I got this error:

Again I was at the mercy of search engines. I searched for it and found a blog post by Sandrino: http://sandrinodimattia.net/blog/posts/azure-appfabric-acs-unauthorized-logon-failed-due-to-server-configuration/. I applied the fix he suggested but unfortunately that didn’t work. He also mentioned that it worked in an MVC application (this one was a WebForm application), so I tried creating a simple MVC 4 application and guess what, it worked there as Sandrino said.

For now, I’m happy with what I’ve got and will focus on building some applications consuming ACS and see what this service has to offer.

Alternative Approach

While working on this, I realized an alternative approach to get up and running with ACS. Here’s what you would need to do:

- Create a new Access Control Service in Windows Azure Portal.

- Once the service is active, click on Manage button to manage that service. You will be taken to the ACS portal for your service.

- Configure Identity Providers.

- Get the management key from “Management service” section.

- Head back to Visual Studio project and configure ACS. You would need the name of the service and the management key.

- The tooling in Visual Studio takes care of creating “Relying party applications” for you based on your project settings.

Summary

I spent a considerable amount of time setting this up, which kind of frustrated me. I hope this post will save you some time and frustration. Also, this is the first time I have looked at this service, so it is highly likely that I have made some mistakes or included them here out of sheer ignorance. Please feel free to correct me if I have provided incorrect information.

Toon Vanhoutte described the BizTalk 2013: SB-Messaging Adapter in a 1/28/2013 post:

The BizTalk Server 2013 Beta release comes with the SB-Messaging adapter. This adapter allows our on-premise BizTalk environment to integrate seamlessly with the Windows Azure Service Bus queues, topics and subscriptions. Together with my colleague Mathieu, I had a look at these new capabilities.

Adapter Configuration

The configuration of SB-Messaging receive and send ports is really straightforward. BizTalk just needs these properties in order to establish a connection to the Azure cloud:

- Service Bus URL of the queue, topic or subscription:

sb://<namespace>.servicebus.windows.net/<queue_name>

- Access Control Service STS URI:

https://<namespace>-sb.accesscontrol.windows.net/

- Issuer name and key for the Service Bus Namespace
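As a rough illustration of how these connection properties fit together, the following bash sketch builds the two URLs from a namespace and an entity name. The function names and the sample values `contoso` and `orders` are hypothetical; only the URL patterns come from the list above.

```bash
#!/usr/bin/env bash
# Sketch only: function names and sample values are illustrative assumptions.

# Service Bus URL of a queue (or topic) in the given namespace.
sb_entity_url() {
  local namespace=$1 entity=$2
  printf 'sb://%s.servicebus.windows.net/%s\n' "$namespace" "$entity"
}

# Access Control Service STS URI paired with the Service Bus namespace.
acs_sts_uri() {
  local namespace=$1
  printf 'https://%s-sb.accesscontrol.windows.net/\n' "$namespace"
}

sb_entity_url contoso orders
acs_sts_uri contoso
```

Note that the STS URI uses the namespace with an `-sb` suffix, which is easy to overlook when filling in the port properties by hand.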

Content Based Routing

Both Service Bus and the BizTalk messaging layer offer a publish-subscribe engine, which allows for content based routing. In BizTalk, content based routing is done through context properties, whereas the Azure Service Bus uses Brokered Message properties. A BizTalk context property is identified by the combination of a propertyName and a propertyNamespace. In Azure Service Bus, properties are defined by a propertyName only. How are these metadata properties passed from the cloud to BizTalk and vice versa?

Sending from BizTalk to Service Bus topic

In order to pass context properties to the Service Bus topic, there’s the capability to provide the Namespace for the user defined Brokered Message Properties. The SB-Messaging send adapter will add all BizTalk context properties from this propertyNamespace as properties to the Brokered Message. Thereby, white space is ignored.
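The namespace-based selection can be pictured with a small bash sketch. This is only a toy model of the idea, not the adapter's implementation: the function name, the one-property-per-line input format, and the `http://example.org/...` namespaces are all assumptions made for illustration.

```bash
#!/usr/bin/env bash
# Sketch only: a toy model of "copy every context property from one
# namespace onto the Brokered Message". Names and formats are assumptions.

# Reads "<propertyNamespace> <propertyName> <value>" lines from stdin and
# prints "<propertyName>=<value>" for properties in the wanted namespace.
to_brokered_properties() {
  local wanted_ns=$1 ns name value
  while read -r ns name value; do
    [ "$ns" = "$wanted_ns" ] && printf '%s=%s\n' "$name" "$value"
  done
  return 0
}

to_brokered_properties "http://example.org/routing" <<'EOF'
http://example.org/routing Country BE
http://example.org/routing Priority High
http://example.org/other InternalFlag 1
EOF
```

The namespace is dropped on the way out because a Brokered Message property is identified by its name only.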

Receiving from Service Bus subscription to BizTalk

Also at the receive adapter, there’s the possibility to pass properties to the BizTalk message context. You can specify the Namespace for Brokered Message Properties, so the SB-Messaging adapter will write (not promote) all Brokered Message properties to the BizTalk message context, within the specified propertyNamespace. Be aware when checking the option Promote Brokered Message Properties, because this requires that a propertySchema is deployed which contains all Brokered Message properties.

Receive Port Message Handling

I was interested in the error handling when an error occurs in the receive adapter or pipeline. Will the message be rolled back to the Azure subscription or suspended in BizTalk? Two fault scenarios were investigated.

Receive adapter unable to promote property in BizTalk context

In this case, we configured the receive port to promote the context properties to a propertyNamespace that did not contain all properties of the Brokered Message. As expected, the BizTalk adapter threw an exception:

- The adapter "SB-Messaging" raised an error message. Details "System.Exception: Loading property information list by namespace failed or property not found in the list. Verify that the schema is deployed properly.

The adapter retried 10 times in total and then moved the Brokered Message to the dead letter queue of the Service Bus subscription. Afterwards, BizTalk started processing the next message.
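The observed behaviour follows a common "retry up to N times, then dead-letter" pattern. Below is a minimal bash sketch of that pattern; the function names and the echo-based "dead letter" are hypothetical stand-ins, and the limit of 10 simply mirrors the number of attempts seen in this test.

```bash
#!/usr/bin/env bash
# Sketch only: models "try up to N deliveries, then dead-letter".
# Function names and the echo-based dead-lettering are illustrative assumptions.

MAX_DELIVERIES=10   # number of attempts observed in the test above

# deliver_with_retries <message> <handler...>
deliver_with_retries() {
  local message=$1; shift
  local attempt
  for (( attempt = 1; attempt <= MAX_DELIVERIES; attempt++ )); do
    if "$@" "$message"; then
      echo "processed '$message' on attempt $attempt"
      return 0
    fi
  done
  echo "dead-lettered '$message' after $MAX_DELIVERIES attempts"
  return 1
}

# A handler that always fails, standing in for the property-promotion error.
always_fails() { return 1; }

deliver_with_retries "order-1" always_fails || true
deliver_with_retries "order-2" true
```

Because the failing message ends up in the dead letter queue rather than blocking the subscription, the next message can be processed normally.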

Receive pipeline unable to parse message body

In this simulation we tried to receive an invalid XML message with the XMLReceive pipeline. After the error occurred, we discovered that the message was suspended (persisted) in BizTalk.

WCF-Adapter Framework

It’s a pity to see that this new adapter is not implemented as a WCF-binding. Due to this limitation, we can’t make use of the great extensibility capabilities of the WCF-Custom adapter. The NetMessagingBinding could be used, but I assume some extensibility will be required in order to transform BizTalk context properties into BrokeredMessageProperty objects and vice versa. Worthwhile investigating…

Conclusion

The BizTalk product team did a great job with the introduction of the SB-Messaging adapter! It creates a lot of new opportunities for hybrid applications.


Sandrino di Mattia (@sandrinodm) described working around the “Key not valid for use in specified state” exception when working with the Access Control Service in a 1/27/2013 post:

If you’re using the Windows Azure Access Control Service (or any other STS for that matter) this is an issue you might encounter when your Web Role has more than one instance:

[CryptographicException: Key not valid for use in specified state.]

System.Security.Cryptography.ProtectedData.Unprotect(Byte[] encryptedData, Byte[] optionalEntropy, DataProtectionScope scope) +450

Microsoft.IdentityModel.Web.ProtectedDataCookieTransform.Decode(Byte[] encoded) +150

As explained in the Windows Azure Training Kit this is caused by the DPAPI:

What does ServiceConfigurationCreated do?

By default WIF SessionTokens use DPAPI to protect the content of Cookies that it sends to the client, however DPAPI is not available in Windows Azure hence you must use an alternative mechanism. In this case, we rely on RsaEncryptionCookieTransform, which we use for encrypting the cookies with the same certificate we are using for SSL in our website.

Over a year ago I blogged about this issue but that solution applies to .NET 3.5/4.0 with Visual Studio 2010. Let’s take a look at how you can solve this issue when you’re working in .NET 4.5.

Creating a certificate

So the first thing you’ll need to do is create a certificate and upload it to your Cloud Service. The easiest way to do this is to start IIS locally and go to the Server Certificates:

Now click the Create Self-Signed Certificate option, fill in the name, press OK, right click the new certificate and choose “Export…“. The next thing you’ll need to do is go to the Windows Azure Portal and upload the certificate in the Cloud Service. This is possible by opening the Cloud Service and uploading the file in the Certificates tab:

Copy the thumbprint and add it to the certificates of your Web Role. This is possible by double clicking the Web Role in Visual Studio and going to the Certificates tab:

As a result the certificate will be installed on all instances of that Web Role. Finally open the web.config of your web application and add a reference to the certificate under the system.identityModel.services element:

Creating the SessionSecurityTokenHandler

The last thing we need to do is create a SessionSecurityTokenHandler which uses the certificate. To get started add a reference to the following assemblies:

- System.IdentityModel

- System.IdentityModel.Services

Once you added the required references you can add the following code to your Global.asax file:

This code replaces the current security token handler with a new SessionSecurityTokenHandler which uses the certificate we uploaded to the portal. As of now, all instances will use the same certificate to encrypt and decrypt the authentication session cookie.

<Return to section navigation list>

Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

Maarten Balliauw (@maartenballiauw) described Running unit tests when deploying to Windows Azure Web Sites in a 1/30/2013 post:

When deploying an application to Windows Azure Web Sites, a number of deployment steps are executed. For .NET projects, msbuild is triggered. For node.js applications, a list of dependencies is restored. For PHP applications, files are copied from source control to the actual web root which is served publicly. Wouldn’t it be cool if Windows Azure Web Sites refused to deploy fresh source code whenever unit tests fail? In this post, I’ll show you how.

Disclaimer: I’m using PHP and PHPUnit here but the same approach can be used for node.js. .NET is a bit harder since most test runners out there are not supported by the Windows Azure Web Sites sandbox. I’m confident however that in the near future this issue will be resolved and the same technique can be used for .NET applications.

Our sample application

First of all, let’s create a simple application. Here’s a very simple one using the Silex framework which is similar to frameworks like Sinatra and Nancy.

<?php
require_once(__DIR__ . '/../vendor/autoload.php');

$app = new \Silex\Application();

$app->get('/', function (\Silex\Application $app) {
    return 'Hello, world!';
});

$app->run();

Next, we can create some unit tests for this application. Since our app itself isn’t that massive to test, let’s create some dummy tests instead:

<?php
namespace Jb\Tests;

class SampleTest
    extends \PHPUnit_Framework_TestCase {

    public function testFoo() {
        $this->assertTrue(true);
    }

    public function testBar() {
        $this->assertTrue(true);
    }

    public function testBar2() {
        $this->assertTrue(true);
    }
}

As we can see from our IDE, the three unit tests run perfectly fine.

Now let’s see if we can hook them up to Windows Azure Web Sites…

Creating a Windows Azure Web Sites deployment script

Windows Azure Web Sites allows us to customize deployment. Using the azure-cli tools we can issue the following command:

azure site deploymentscript

As you can see from the following screenshot, this command allows us to specify some additional options, such as specifying the project type (ASP.NET, PHP, node.js, …) or the script type (batch or bash).

Running this command does two things: it creates a .deployment file which tells Windows Azure Web Sites which command should be run during the deployment process and a deploy.cmd (or deploy.sh if you’ve opted for a bash script) which contains the entire deployment process. Let’s first look at the .deployment file:

[config]
command = bash deploy.sh

This is a very simple file which tells Windows Azure Web Sites to invoke the deploy.sh script using bash as the shell. The default deploy.sh will look like this:

#!/bin/bash

# ----------------------
# KUDU Deployment Script
# ----------------------

# Helpers
# -------

exitWithMessageOnError () {
  if [ ! $? -eq 0 ]; then
    echo "An error has occured during web site deployment."
    echo $1
    exit 1
  fi
}

# Prerequisites
# -------------

# Verify node.js installed
where node &> /dev/null
exitWithMessageOnError "Missing node.js executable, please install node.js, if already installed make sure it can be reached from current environment."

# Setup
# -----

SCRIPT_DIR="$( cd -P "$( dirname "${BASH_SOURCE[0]}" )" && pwd )"
ARTIFACTS=$SCRIPT_DIR/artifacts

if [[ ! -n "$DEPLOYMENT_SOURCE" ]]; then
  DEPLOYMENT_SOURCE=$SCRIPT_DIR
fi

if [[ ! -n "$NEXT_MANIFEST_PATH" ]]; then
  NEXT_MANIFEST_PATH=$ARTIFACTS/manifest

  if [[ ! -n "$PREVIOUS_MANIFEST_PATH" ]]; then
    PREVIOUS_MANIFEST_PATH=$NEXT_MANIFEST_PATH
  fi
fi

if [[ ! -n "$KUDU_SYNC_COMMAND" ]]; then
  # Install kudu sync
  echo Installing Kudu Sync
  npm install kudusync -g --silent
  exitWithMessageOnError "npm failed"

  KUDU_SYNC_COMMAND="kuduSync"
fi

if [[ ! -n "$DEPLOYMENT_TARGET" ]]; then
  DEPLOYMENT_TARGET=$ARTIFACTS/wwwroot
else
  # In case we are running on kudu service this is the correct location of kuduSync
  KUDU_SYNC_COMMAND="$APPDATA\\npm\\node_modules\\kuduSync\\bin\\kuduSync"
fi

##################################################################################################################################
# Deployment
# ----------

echo Handling Basic Web Site deployment.

# 1. KuduSync
echo Kudu Sync from "$DEPLOYMENT_SOURCE" to "$DEPLOYMENT_TARGET"
$KUDU_SYNC_COMMAND -q -f "$DEPLOYMENT_SOURCE" -t "$DEPLOYMENT_TARGET" -n "$NEXT_MANIFEST_PATH" -p "$PREVIOUS_MANIFEST_PATH" -i ".git;.deployment;deploy.sh"
exitWithMessageOnError "Kudu Sync failed"

##################################################################################################################################

echo "Finished successfully."

This script does two things. First, it sets up a bunch of environment variables so our script has all the paths to the source code repository, the target web site root and some well-known commands. Next, it runs the KuduSync executable, a helper which copies files from the source code repository to the web site root using an optimized algorithm which only copies files that have been modified. For .NET, there would be a third action: running msbuild to compile sources into binaries.

Right before the part that reads # Deployment, we can add some additional steps for running unit tests. We can invoke the php.exe executable (located on the D:\ drive in Windows Azure Web Sites) and run phpunit.php passing in the path to the test configuration file:

##################################################################################################################################
# Testing
# -------

echo Running PHPUnit tests.

# 1. PHPUnit
"D:\Program Files (x86)\PHP\v5.4\php.exe" -d auto_prepend_file="$DEPLOYMENT_SOURCE\\vendor\\autoload.php" "$DEPLOYMENT_SOURCE\\vendor\\phpunit\\phpunit\\phpunit.php" --configuration "$DEPLOYMENT_SOURCE\\app\\phpunit.xml"
exitWithMessageOnError "PHPUnit tests failed"
echo
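The gating works because the test runner's exit code is checked before the file-sync step is ever reached. The self-contained bash sketch below shows the same idea in isolation; `run_gated_deploy` and the stand-in functions are hypothetical names for illustration and are not part of the generated Kudu script.

```bash
#!/usr/bin/env bash
# Sketch only: shows how a failing test step stops the steps that follow it.
# run_gated_deploy and the fake_* functions are illustrative assumptions.

# run_gated_deploy <test-command> <deploy-command>
run_gated_deploy() {
  local test_cmd=$1 deploy_cmd=$2
  echo "Running tests."
  if ! "$test_cmd"; then
    echo "Tests failed, skipping deployment."
    return 1
  fi
  echo "Tests passed, deploying."
  "$deploy_cmd"
}

# Stand-ins for the PHPUnit run and the KuduSync copy.
fake_tests_pass() { return 0; }
fake_tests_fail() { return 1; }
fake_deploy()     { echo "files synced"; }

run_gated_deploy fake_tests_pass fake_deploy
run_gated_deploy fake_tests_fail fake_deploy || echo "deployment aborted with exit code $?"
```

In the real script the same effect comes from `exitWithMessageOnError`, which inspects `$?` immediately after the PHPUnit command and exits before the KuduSync step runs.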

On a side note, we can also run other commands like issuing a composer update, similar to NuGet package restore in the .NET world:

echo Download composer.
curl -O https://getcomposer.org/composer.phar > /dev/null

echo Run composer update.
cd "$DEPLOYMENT_SOURCE"
"D:\Program Files (x86)\PHP\v5.4\php.exe" composer.phar update --optimize-autoloader

Putting our deployment script to the test

All that’s left to do now is commit and push our changes to Windows Azure Web Sites. If everything goes right, the output for the git push command should contain details of running our unit tests:

Here’s what happens when a test fails:

And even better, the Windows Azure Web Sites portal shows us that the latest sources were committed to the git repository but not deployed because tests failed:

As you can see, using deployment scripts we can customize deployment on Windows Azure Web Sites to fit our needs. We can run unit tests, fetch source code from a different location and so on.

Don Noonan described Using Windows Azure Virtual Machines to Learn: Networking Basics in a 1/29/2013 post:

Until recently I’ve used a personal hypervisor to experiment and learn new Windows technologies. Over the past few years my personal productivity machines have become more like mini datacenters – tons of cores, memory and storage. You know how it is, you go shopping for a new sub 2 pound / $1K notebook and by the time you click “add to cart” it gained about 4 pounds and $3K.

Enter Windows Azure. With only a browser and an RDP client I can spin up and manage just about anything. In other words, I could get away with an old PC or even a thin client and get out of the personal datacenter business. That $3K notebook just turned into a couple years of compute and storage in a real datacenter.

Don’t already have a Windows Azure account? Go here for a free trial.

Anyway, speaking of experimenting in the cloud, let’s talk about networking in Windows Azure Virtual Machines…

Did I just see a server with a DHCP-assigned IP address?

Windows Azure introduces a new concept when it comes to networking – DHCP for everything regardless of workload. That’s right, even servers are assigned IP addresses via DHCP. This comes as a surprise to hard core server admins. We have become religious about our IP spreadsheets, almost charging people when asked for one of our precious intranet addresses. Like labeling our network cables, this is another thing we’re going to have to let go when migrating workloads to the cloud.

The platform is now our label maker. As long as a virtual machine exists, it will be assigned the same IP address. Wait a minute, I thought DHCP only leased IP addresses out for a finite amount of time. What happens when the lease expires? Well, let’s find out when our lease is up on my domain controller using ipconfig /all:

Wow. Our virtual machine has a lease on 10.1.1.4 for over 100 years. I think we’re good. Even still, the platform automatically renews the lease for a given virtual machine.

What’s the point of subnetting in Windows Azure?

Most of the time we subnet to segregate tiers of a service, floors of a building, roles in an organization, or simply to make good use of a given address range. In Windows Azure, I typically use subnets when defining service layers:

In this case we have a simple 3-tier application that uses classic Windows authentication. There is a management subnet containing the domain controllers, a database subnet with SQL servers, and an application subnet with the web front ends.

Here is another type of deployment where we simply wanted to isolate virtual classrooms from each other:

The deployment shared a common Active Directory, however each classroom had its own instructor and students.

What can I do with subnets once they’re created?

Once you have defined your subnets and their services, you can secure those services by applying Windows Firewall rules and settings in a consistent manner. For example, you can define rules where servers on the application subnet (10.1.3.0) should only be able to reach the database subnet (10.1.2.0), and only over ports relevant to the SQL Server database engine service instance (i.e. TCP 1433).
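To make the rule concrete, here is a toy bash evaluation of it. This is only a sketch of the logic, not Windows Firewall configuration: the function names are hypothetical, the /24 prefix length is an assumption (the post only gives the network addresses), and treating 10.1.1.4 as a management-subnet host is inferred from the domain controller address mentioned earlier.

```bash
#!/usr/bin/env bash
# Sketch only: a toy evaluation of the rule described above.
# The /24 prefix length and the function names are assumptions.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeeds when <ip> falls inside <network>/<prefix>.
in_subnet() {
  local ip=$1 network=$2 prefix=$3
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$ip") & mask )) -eq $(( $(ip_to_int "$network") & mask )) ]
}

# Allow only application subnet -> database subnet traffic on TCP 1433.
is_allowed() {
  local src=$1 dst=$2 port=$3
  in_subnet "$src" 10.1.3.0 24 && in_subnet "$dst" 10.1.2.0 24 && [ "$port" -eq 1433 ]
}

is_allowed 10.1.3.7 10.1.2.5 1433 && echo "app -> sql on 1433: allowed"
is_allowed 10.1.1.4 10.1.2.5 1433 || echo "management -> sql on 1433: not covered by this rule"
is_allowed 10.1.3.7 10.1.2.5 3389 || echo "app -> sql on 3389: not covered by this rule"
```

Because every server in a tier sits in the same address range, one rule per subnet pair is enough, which is what makes the firewall configuration easy to keep consistent.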

In other words, subnetting in Windows Azure allows you to organize objects in the cloud for many of the same reasons you do on-premise. In this case we’re using it to contain similar services and create logical boundaries to simplify firewall configuration settings. When you create a consistent deployment topology for cloud services in Windows Azure Virtual Machines, you can then take advantage of other Windows technologies such as WMI Filters and Group Policy to automate and apply consistent security settings.

We’ll talk about applying role-specific firewall settings via Group Policy in a future blog.


Mark Gayler (@MarkGayler) offered Congratulations on the latest development for OVF! in a 1/29/2013 post to the Interoperability @ Microsoft blog:

Interoperability in server and cloud space has found even more evidence with the release announcement of Open Virtualization Format (OVF) 2.0 standard. We congratulate DMTF for this new milestone, a further proof that customers and industry partners care deeply about interoperability and we are proud of our participation to advance this initiative.

Browsing the OVF 2.0 standards specification, it is evident the industry is aligning around common scenarios and it comes as a pleasant surprise how some of those emerging scenarios have been driving our own thinking in the direction for System Center.

Microsoft has collaborated closely with Distributed Management Task Force (DMTF) and our industry partners to ensure OVF provides improved capabilities for virtualization and cloud interoperability scenarios to the benefit of customers.

OVF 2.0 and DMTF are making progress on key emerging patterns for portability of virtual machines and systems, and it’s nice to see OVF being driven by the very same emerging use cases we have been analyzing with our System Center VMM customers such as shared Hyper-V host clusters, encryption for credential management and virtual machine boot order management (not to mention network virtualization, placement groups and multi-hypervisor support).

Portability in the cloud and interoperability of virtualization technologies across platforms using Linux and Windows virtual machines continues to be important to Microsoft and to our customers and are increasingly becoming key industry trends. We continue to assess and improve interoperability for core scenarios using the SC 2012 VMM. We also believe moving in this direction will provide great benefit to our customer and partner eco-system, as well as bring real-world experience to our participation with OVF in DMTF.

See the overview for further details and other enhancements in System Center 2012 VMM.

Vittorio Bertocci (@vibronet) described Running WIF Based Apps in Windows Azure Web Sites in a 1/28/2013 post:

It’s official: I am getting old. I was ab-so-lu-te-ly convinced I already blogged about this, but after the nth time I got asked about this I came to find the link to the post only to find pneumatic vacuum in its place. No joy on the draft folder either… oh well, this won’t take too long to (re?)write anyway. Moreover: in a rather anticlimactic move, I am going to give away the solution right at the beginning of the post.

Straight to the Point

In order to run in Windows Azure Web Sites a Web application which uses WIF for handling authentication, you must change the default cookie protection method (DPAPI, not available on Windows Azure Web Sites) to something that will work in a farmed environment and with IIS’s user profile loading turned off. Sounds like Klingon? Here’s some practical advice:

- If you are using the Identity and Access Tools for VS2012, just go to the Configuration tab and check the box “Enable Web farm ready cookies”

- If you want to do things by hand, add the following code snippet in your system.identityModel/identityConfiguration element:

<securityTokenHandlers>
  <add type="System.IdentityModel.Services.Tokens.MachineKeySessionSecurityTokenHandler, System.IdentityModel.Services, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089" />
  <remove type="System.IdentityModel.Tokens.SessionSecurityTokenHandler, System.IdentityModel, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089" />
</securityTokenHandlers>

I know, it’s clipped and wrapped badly: eventually I’ll change the blog theme. For the time being, you can copy & paste to see the entire thing.

Note, the above is viable only if you are targeting .NET 4.5, as it takes advantage of a new feature introduced only in the latest version. If you are on .NET 4.0, WIF 1.0 offers you the necessary extensibility points to reproduce the same capability (more details below); however, there’s nothing ready out of the box (you could however take a look at the code that Dominick already nicely wrote for you here).

Now that I laid down the main point, in the next section I’ll shoot the emergency flare that will lead your search engine query here.

Again, Adagio

Let’s go through a full cycle of create a WIF app -> deploy it in Windows Azure Web Sites –> watch it fail –> fix it –> verify that it works.

Fire up VS2012, create a web project (I named mine “AlphaScorpiiWebApp”, let’s see who gets the reference ;-)) and run the Identity and Access Tools on it. For the purpose of the tutorial you can pick the local development STS option. Hit F5, and verify that things work as expected (== the Web app will greet you as ‘Terry’).

Did it work? Excellent. Let’s try to publish to Windows Azure Web Site and see what happens. But before we hit Publish, we need to adjust a couple of things. Namely, we need to ensure that the application will communicate to the local development STS the address it will have in Windows Azure Web Sites, rather than the one on localhost:<port> automatically assigned (ah, please remind me to do a post about realm vs. network addresses). That’s pretty easy: go back to the Identity & Access tool, head to the Configure tab, and paste in the Realm and Audience fields the URL of your target Web Site.

Given what we know, we’d better turn off the custom errors before publishing (usual <customErrors mode="Off"> under system.web).

Done that, go ahead and publish. My favorite route is through the Publish… entry in the Solution Explorer, I have a .PublishSettings file I import every time. Ah, don’t forget to check the option “Remove additional files at destination”.

Ready? Hit Publish and see what happens.

Boom! As expected, the authentication fails. Let me paste the error message here, for the search engines’ benefit.

Server Error in '/' Application.

The data protection operation was unsuccessful. This may have been caused by not having the user profile loaded for the current thread's user context, which may be the case when the thread is impersonating.

Description: An unhandled exception occurred during the execution of the current web request. Please review the stack trace for more information about the error and where it originated in the code.

Exception Details: System.Security.Cryptography.CryptographicException: The data protection operation was unsuccessful. This may have been caused by not having the user profile loaded for the current thread's user context, which may be the case when the thread is impersonating.

Source Error:

An unhandled exception was generated during the execution of the current web request. Information regarding the origin and location of the exception can be identified using the exception stack trace below.

Stack Trace:

[CryptographicException: The data protection operation was unsuccessful. This may have been caused by not having the user profile loaded for the current thread's user context, which may be the case when the thread is impersonating.]
   System.Security.Cryptography.ProtectedData.Protect(Byte[] userData, Byte[] optionalEntropy, DataProtectionScope scope) +379
   System.IdentityModel.ProtectedDataCookieTransform.Encode(Byte[] value) +52

[InvalidOperationException: ID1074: A CryptographicException occurred when attempting to encrypt the cookie using the ProtectedData API (see inner exception for details). If you are using IIS 7.5, this could be due to the loadUserProfile setting on the Application Pool being set to false. ]
   System.IdentityModel.ProtectedDataCookieTransform.Encode(Byte[] value) +167
   System.IdentityModel.Tokens.SessionSecurityTokenHandler.ApplyTransforms(Byte[] cookie, Boolean outbound) +57
   System.IdentityModel.Tokens.SessionSecurityTokenHandler.WriteToken(XmlWriter writer, SecurityToken token) +658
   System.IdentityModel.Tokens.SessionSecurityTokenHandler.WriteToken(SessionSecurityToken sessionToken) +86
   System.IdentityModel.Services.SessionAuthenticationModule.WriteSessionTokenToCookie(SessionSecurityToken sessionToken) +148
   System.IdentityModel.Services.SessionAuthenticationModule.AuthenticateSessionSecurityToken(SessionSecurityToken sessionToken, Boolean writeCookie) +81
   System.IdentityModel.Services.WSFederationAuthenticationModule.SetPrincipalAndWriteSessionToken(SessionSecurityToken sessionToken, Boolean isSession) +217
   System.IdentityModel.Services.WSFederationAuthenticationModule.SignInWithResponseMessage(HttpRequestBase request) +830
   System.IdentityModel.Services.WSFederationAuthenticationModule.OnAuthenticateRequest(Object sender, EventArgs args) +364
   System.Web.SyncEventExecutionStep.System.Web.HttpApplication.IExecutionStep.Execute() +136
   System.Web.HttpApplication.ExecuteStep(IExecutionStep step, Boolean& completedSynchronously) +69

Version Information: Microsoft .NET Framework Version:4.0.30319; ASP.NET Version:4.0.30319.17929

What happened, exactly? It’s a well-documented phenomenon. By default, WIF protects cookies using DPAPI and the user store. When your app is hosted in IIS, its AppPool must have a specific option enabled (“Load User Profile”) in order for DPAPI to access the user store. In Windows Azure Web Sites, that option is off (with good reason: it can exact a heavy toll on memory) and you can’t turn it on. But hey, guess what: even if you could, that would be a bad idea anyway. The default cookie protection mechanism is not suitable for load-balanced scenarios, given that every node in the farm will have a different key: that means that a cookie protected by one node would be unreadable by the others, wreaking havoc with your Web app session.
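To make the load-balancing point concrete, here is a minimal Python sketch. It is not WIF code: it uses an HMAC tag as a simplified stand-in for DPAPI cookie protection, and the function names (`protect`, `unprotect`, `can_read`) are hypothetical. It only illustrates that a cookie protected under one node's key cannot be validated under another node's key, while a key shared by every node (the role the MachineKey plays in the fix discussed next) works from any node.

```python
import hashlib
import hmac
import os

TAG_LEN = 32  # length of an HMAC-SHA256 tag in bytes


def protect(cookie: bytes, key: bytes) -> bytes:
    """Append an HMAC-SHA256 tag computed with this node's key."""
    tag = hmac.new(key, cookie, hashlib.sha256).digest()
    return cookie + tag


def unprotect(blob: bytes, key: bytes) -> bytes:
    """Return the cookie payload, or raise ValueError if the tag does not verify."""
    cookie, tag = blob[:-TAG_LEN], blob[-TAG_LEN:]
    expected = hmac.new(key, cookie, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("cookie was protected with a different key")
    return cookie


def can_read(writer_key: bytes, reader_key: bytes) -> bool:
    """True if a cookie written under writer_key is readable under reader_key."""
    payload = b"session=terry"
    try:
        return unprotect(protect(payload, writer_key), reader_key) == payload
    except ValueError:
        return False


# Per-node keys (the default situation described above): node B rejects node A's cookie.
node_a_key, node_b_key = os.urandom(32), os.urandom(32)
print(can_read(node_a_key, node_b_key))

# One key shared by the whole farm: any node can read any cookie.
shared_key = os.urandom(32)
print(can_read(shared_key, shared_key))
```

The first call prints `False` and the second prints `True`, which is the whole argument in miniature: the protection scheme is fine, but the key has to be the same on every node that may receive the cookie.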

What to do? In the training kit for WIF 1.0 we provided custom code for protecting cookies using the SSL certificate of the web application (which you need to have anyway) – however that is an approach more apt to the cloud services (where you have full control of your certs) rather than WA Web Sites (where you don’t). Other drawbacks included the sheer amount of custom code required (not staggering, but non-zero either), its complexity (still crypto) and the impossibility of making it fully boilerplate (it had to refer to the coordinates of the certificate of choice).

In WIF 4.5 we wanted to support this scenario out of the box, without requiring any custom code. For that reason, we introduced a cookie transform class that takes advantage of the MachineKey, and all it needs to opt in is a pure boilerplate snippet to be added in the web.config. Sometimes I am asked why we didn't change that to be the default, to which I usually answer: I have people waiting for me at the cafeterias to yell at me for having moved classes under different namespaces (sorry, that’s the very definition of “moving WIF into the framework” :-)), now just imagine what would have happened if we had changed the defaults :-D.

More seriously: you already know what the fix is: it’s one of the two methods described in the “Straight to the Point” section. Apply one of those, then re-publish. You’ll be greeted by the following:

We are Terry again, but this time, as you might notice in the address bar… in the cloud!

Chris Avis described The 31 Days of Servers in the Cloud using Azure IaaS…. in a 1/26/2013 post to the TechNet blogs:

Hiya folks! You probably know that I am a Technology Evangelist (TE) on the US Developer and Platform Evangelism (DPE) team. What you may not know is that there are 12 of us in the United States that are a part of this team (I have linked each of them in the list on the right side of my blog). We have been working on a series of blog posts called “The 31 Days of Servers in the Cloud”. This is a series of posts about leveraging the new VM Role in Windows Azure. You may also hear this referred to as Azure IaaS.

Thus far there have been 25 posts made in the series covering a broad set of topics. There are topics ranging from a basic introduction to Azure IaaS and what it means to you to more specific topics like using PowerShell to create and manage VMs in Azure. We are laying the groundwork in this series to get you up to speed on using Windows Azure IaaS as another tool for you to build and manage your infrastructure.

If you would like to learn more about Azure IaaS, I encourage you to sign up for a 90 Day Free Trial of Windows Azure!

I also recommend you bookmark my blog post and check back each day as we add the final posts to the series! Here is what we have so far….

- Part 1 - 31 Days of Servers in the Cloud: Windows Azure IaaS and You

- Part 2 - 31 Days of Servers in the Cloud: Building Free Lab VMs in the Microsoft Cloud

- Part 3 - 31 Days of Servers in the Cloud: Supported Virtual Machine Operating Systems in the Microsoft Cloud

- Part 4 - 31 Days of Servers in the Cloud: Servers Talking in the Cloud

- Part 5 - 31 Days of Servers in the Cloud: Move a local VM to the Cloud

- Part 6 - 31 Days of Servers in the Cloud: Windows Azure Features Overview

- Part 7 - 31 Days of Servers in the Cloud: Step-by-Step: Build a FREE SharePoint 2013 Lab in the Cloud with Windows Azure

- Part 8 – 31 Days of Servers in the Cloud: Setting up Management (Certs and Ports)

- Part 9 – 31 Days of Servers in the Cloud: Windows Azure and Virtual Networking….What it is

- Part 10 – 31 Days of Servers in the Cloud - Windows Azure and Virtual Networking – Getting Started–Step–by-Step

- Part 11 – 31 Days of Servers in the Cloud - Step-by-Step: Running FREE Linux Virtual Machines in the Cloud with Windows Azure

- Part 12 – 31 Days of Servers in the Cloud - Step-by-Step: Connecting System Center 2012 App Controller to Windows Azure

- Part 13 – 31 Days of Servers in the Cloud - Creating Azure Virtual Machines with App Controller

- Part 14 – 31 Days of Servers in the Cloud – How to: Create an Azure VM using PowerShell

- Part 15 – 31 Days of Servers in the Cloud - What Does Windows Azure Cloud Computing REALLY Cost + How to SAVE

- Part 16 – 31 Days of Servers in the Cloud - Consider This, Reasons for Using Windows Azure IaaS

- Part 17 – 31 Days of Servers in the Cloud - Step-by-Step: Templating VMs in the Cloud with Windows Azure and PowerShell

- Part 18 – 31 Days of Servers in the Cloud – How to Delete VHD files in Azure

- Part 19 – 31 Days of Servers in the Cloud - Create a Windows Azure Network Using PowerShell

- Part 20 – 31 Days of Servers in the Cloud - Step-by-Step: Extending On-Premise Active Directory to the Cloud with Windows Azure

- Part 21 – 31 Days of Servers in the Cloud – Beyond IaaS for the IT Pro

- Part 22 – 31 Days of Servers in the Cloud - Using your own SQL Server in Windows Azure

- Part 23 – 31 Days of Servers in the Cloud – Incorporating AD in Windows Azure

- Part 24 – 31 Days of Servers in the Cloud - Connecting Windows Azure PaaS to IaaS

- Part 25 – 31 Days of Servers in the Cloud - Using System Center 2012 SP1 to Store VM’s in Windows Azure

- Part 26 – 31 Days of Servers in the Cloud - Monitoring and Troubleshooting on the Cheap (Meaning: Without System Center)

- Part 27 – 31 Days of Servers in the Cloud - Using Windows Azure VMs to learn: Windows Server 2012 Storage

- Part 28 – 31 Days of Servers in the Cloud – Introduction to Windows Azure Add-Ons from the Windows Azure Store

- Part 29 – 31 Days of Servers in the Cloud - Using Windows Azure VMs to learn: Networking Basics (DHCP, DNS)

- Part 30 – 31 Days of Servers in the Cloud - Using Windows Azure VMs to learn: RDS

- Part 31 – 31 Days of Servers in the Cloud - Our favorite other resources (Links to How-Tos, Guides, Training Resources)

As you can see, we still have 8 more posts to make in the series so check back each day to see what we have added!

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Avkash Chauhan (@avkashchauhan) described Using Fiddler to decipher Windows Azure PowerShell or REST API HTTPS traffic in a 1/30/2013 post:

If you are using publishsettings with PowerShell, you might not be able to decrypt HTTPS traffic. I am not sure what the problem is with publishsettings-based certificates; however, I decided to create my own certificate using MAKECERT and use it with PowerShell to get HTTPS decryption working in Fiddler. The following steps are based on my successful testing:

Step 1: First you need to create a certificate using MAKECERT (use the VS2012 developer command prompt):

makecert -r -pe -n "CN=Avkash Azure Management Certificate" -a sha1 -ss My -len 2048 -sy 24 -b 09/01/2011 -e 01/01/2018

Step 2: Once the certificate is created, it will be listed in your Current User > Personal (My) store as below:

(Launch > Certmgr.msc to open the certificate mmc in Windows)

Step 3: Get the certificate Thumbprint ID and Serial Number (used in Step #13 to verify) from the certificate as below:

Thumbprint: 55c96e885764055d9beccec34dcd1ea82e601d4b

Serial Number: 85928750c5d9229d437287103ee08a79
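As an optional cross-check, a certificate thumbprint is the SHA-1 hash of the certificate's DER encoding, so it can be recomputed from a Base-64 .cer export such as the one produced in Step 4. The following Python sketch assumes the export is the usual text form with BEGIN/END CERTIFICATE lines. The function names are hypothetical, and `normalize_hex` is there because values copied from certificate dialogs often carry spaces or invisible characters.

```python
import base64
import hashlib


def thumbprint_from_base64_cer(cer_text: str) -> str:
    """Return the SHA-1 of the DER bytes inside a Base-64 .cer export, as lowercase hex."""
    body_lines = []
    for raw_line in cer_text.splitlines():
        line = raw_line.strip()
        # Skip blank lines and the -----BEGIN/END CERTIFICATE----- markers.
        if line and not line.startswith("-----"):
            body_lines.append(line)
    der = base64.b64decode("".join(body_lines))
    return hashlib.sha1(der).hexdigest()


def normalize_hex(value: str) -> str:
    """Keep only hex digits, lowercased, so pasted values can be compared reliably."""
    return "".join(ch for ch in value.lower() if ch in "0123456789abcdef")


def same_thumbprint(cer_text: str, pasted_value: str) -> bool:
    """Compare a recomputed thumbprint with one copied from a certificate dialog."""
    return thumbprint_from_base64_cer(cer_text) == normalize_hex(pasted_value)
```

For example, reading avkashmgmtBase64.cer as text and passing its contents to `same_thumbprint` together with the thumbprint noted above should return `True` if the exported file is the certificate you intended to upload.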

Step 4: Now export this certificate to a Base-64 encoded certificate as below and save it as a CER file locally:

Step 5: Now upload the certificate you created above (avkashmgmtBase64.cer) to your Windows Azure Management Portal. Be sure that the same certificate is listed as below:

Step 6: Be sure to have your Fiddler settings configured to decrypt HTTPS traffic as described here:

http://www.fiddler2.com/Fiddler/help/httpsdecryption.asp

Step 7: I assume that you already have Fiddler installed on your machine. Now create a new copy of avkashmgmtBase64.cer as ClientCertificate.cer.

Copy this certificate to C:\Users\<Your_User_Name>\Documents\Fiddler2\ClientCertificate.cer

This is the certificate that Fiddler will use to decrypt the HTTPS traffic. This is a very important step.

Step 8: If you have already used Azure PowerShell with publishsettings, you need to clear those settings. These files are generated every time Windows Azure PowerShell connects to the Management Portal with different credentials.

Go to the following folder and remove all the files here:

C:\Users\<Your_user_name>\AppData\Roaming\Windows Azure Powershell

Note: If you have PowerShell settings based on a previous publishsettings configuration, this step is a must.

Step 9: Now create a PowerShell script using the certificate thumbprint you collected in Step 3 above:

$subID = "_your_Windows_Azure_subscription_ID"

$thumbprint = "55c96e885764055d9beccec34dcd1ea82e601d4b"

$myCert = Get-Item cert:\CurrentUser\My\$thumbprint

$serviceName = "_provide_some_Azure_Service_Name"

Set-AzureSubscription -SubscriptionName "_Write_Your_Subscription_Name_Here_" -SubscriptionId $subID -Certificate $myCert

Get-AzureDeployment $serviceName

Step 10: Run the above PowerShell script without Fiddler running and verify that it is working.

Step 11: Once Step 10 is verified, start Fiddler and check that HTTPS decryption is enabled.

Step 12: Run the PowerShell script again and you will see that the HTTPS traffic shown in Fiddler is decrypted.

Step 13: To verify that you are using the correct certificate with Fiddler, open the first connect URL (Tunnel to -> management.core.windows.net:443) and select its properties. In the new window you can verify that X-CLIENT_CERT is using the same certificate name that you created and that its serial number matches the one you collected in Step 3.
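For orientation when reading the decrypted sessions from Step 12, the sketch below builds the kind of request that a deployment lookup is expected to produce. It is an assumption-laden illustration, not a capture of the actual traffic: the URL layout follows the classic Service Management REST API's Get Deployment operation as best understood here, and the function name, the default slot, and the `x-ms-version` value are all assumed. It only constructs the method, URL, and headers and sends nothing.

```python
from typing import Dict, Tuple

# Host taken from the tunnel entry mentioned in Step 13.
MANAGEMENT_HOST = "management.core.windows.net"


def build_get_deployment_request(
    subscription_id: str,
    service_name: str,
    slot: str = "production",          # assumed default deployment slot
    api_version: str = "2012-03-01",   # assumed x-ms-version header value
) -> Tuple[str, str, Dict[str, str]]:
    """Return (method, url, headers) for a hypothetical Get Deployment call."""
    path = (
        f"/{subscription_id}/services/hostedservices/"
        f"{service_name}/deploymentslots/{slot}"
    )
    url = f"https://{MANAGEMENT_HOST}{path}"
    headers = {"x-ms-version": api_version}
    return "GET", url, headers


method, url, headers = build_get_deployment_request(
    "_your_Windows_Azure_subscription_ID", "_provide_some_Azure_Service_Name"
)
print(method, url, headers)
```

If the sessions in Fiddler show a GET against the management host with your subscription ID and service name in the path, the script's traffic is being decrypted as intended; the exact slot casing and version header may differ from the assumed values.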

Many thanks to Bin Du, Phil Hoff, Daniel Wang (AZURE) for helping me to get it working.

Business Wire reported Genetec Unveils Stratocast: A New Affordable and Easy-to-Use Cloud-Based Video Surveillance Solution on Windows Azure on 1/30/2013: