Windows Azure and Cloud Computing Posts for 4/16/2010+

Windows Azure, SQL Azure Database and related cloud computing topics now appear in this weekly series.

• Updated 4/18/2010 with a few new articles marked •. To find new articles, select •, press Ctrl+C, then press Ctrl+F and Ctrl+V to paste the bullet into the search text box. My apologies for the overlong post.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated in April 2010 for the January 4, 2010 commercial release.

Azure Blob, Table and Queue Services

• Jeff Tanner describes his Windows Azure X-Drives: Configure and Mounting at Startup of Web Role Lifecycle post of 4/17/2010 to The Code Project:

This article presents an approach for creating and mounting Windows Azure X-Drives through RoleEntryPoint callback methods and exposing successful mounting results within an environment variable through Global.asax callback method derived from the HttpApplication base class.

This article implements RoleEntryPoint.OnStart() callback to perform the following:

- Read the ServiceConfiguration.cscfg and ServiceDefinition.csdef files, which are configured for mounting zero (if none is provided) or more X-Drives.

- Create LocalStorage that will be used as local read cache when mounting X-Drives.

- Mount X-Drives having an access mode of either write or read-only.

- Expose successful X-Drive mounts by populating an environment variable X_DRIVES, and provide the following information:

  - Friendly X-Drive Label

  - Drive Letter

  - Drive Access Mode

In addition:

- How to configure mounting of X-Drives within ServiceConfiguration.cscfg and ServiceDefinition.csdef files.

- Demonstrate using created environment variable X_DRIVES within a web role application. …
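The excerpt above does not show how the article encodes the X_DRIVES value, so the following Python is only a rough sketch of how a consumer might read it. It assumes a hypothetical layout of semicolon-separated entries, each holding `Label,Letter,Mode`; the real article may well use a different layout, and `MountedDrive`, `parse_x_drives` and `mounted_drives` are illustrative names.

```python
import os
from typing import List, NamedTuple


class MountedDrive(NamedTuple):
    """One successfully mounted X-Drive, as described in the article's list."""
    label: str       # friendly X-Drive label
    letter: str      # drive letter, without colon or backslash
    read_only: bool  # True when the access mode is read-only


def parse_x_drives(raw: str) -> List[MountedDrive]:
    """Parse an X_DRIVES value.

    ASSUMPTION: entries look like "Label,Letter,Mode" separated by ";".
    This encoding is hypothetical; the source excerpt does not specify it.
    """
    drives = []
    for entry in raw.split(";"):
        entry = entry.strip()
        if not entry:
            continue  # tolerate empty values and trailing separators
        label, letter, mode = (part.strip() for part in entry.split(","))
        drives.append(
            MountedDrive(label, letter.rstrip(":\\").upper(), mode.lower() == "readonly")
        )
    return drives


def mounted_drives() -> List[MountedDrive]:
    """Read X_DRIVES from the process environment; empty if it is not set."""
    return parse_x_drives(os.environ.get("X_DRIVES", ""))
```

An unset or empty variable yields an empty list, which matches the article's "zero or more X-Drives" configuration.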

Karsten Januszewski’s Tips & Tricks for Accessing and Writing to Azure Blob Storage post of 4/16/2010 begins:

Lately I’ve been doing a lot with Azure Blob Storage for an upcoming Twitter-related Mix Online lab, and wanted to share what I’ve learned.

Karsten continues with these detailed topics (including source code):

- Creating An In Memory .xls File And Uploading It To Blob Storage

- Creating and Unzipping a .Zip File From A MemoryStream In Azure

- Using WebClient To Access Blobs vs. CloudBlobClient – Benchmarking Time

Dinesh Haridas describes available Windows Azure Storage Explorers in this 4/16/2010 post to the Windows Azure Storage Team blog:

We get a few queries every now and then on the availability of utilities for Windows Azure Storage and decided to put together a list of the storage explorers we know of. The tools are all Windows Azure Storage explorers that can be used to enumerate and/or transfer data to and from blobs, tables or queues. Many of these are free and some come with evaluation periods.

I should point out that we have not verified the functionality claimed by these utilities and their listing does not imply an endorsement by Microsoft. Since these applications have not been verified, it is possible that they could exhibit undesirable behavior.

Do also note that the table below is a snapshot of what we are currently aware of and we fully expect that these tools will continue to evolve and grow in functionality. If there are corrections or updates, please click on the email link on the right and let us know. Likewise if you know of tools that ought to be here, we’d be happy to add them.

In the below table, we list each Windows Azure Storage explorer, and then put an “X” in each block if it provides the ability to either enumerate and/or access the data abstraction. The last column indicates if the explorer is free or not.

Windows Azure Storage Explorer | Block Blob | Page Blob | Tables | Queues | Free

X

Y

Azure Blob Compressor

Enables compressing blobs for upload and download

X

Y

X

Y

X

X

X

Y

X

X

X

X

Y/N

X

X

Y

Clumsy Leaf Azure Explorer

Visual Studio plug-in

X

X

X

X

Y

X

Y

X

N

MyAzureStorage.com

A portal to access blobs, tables and queues

X

X

X

X

Y

X

Y

X

X

X

X

Y

Steve Marx explains Testing Existence of a Windows Azure Blob in this 4/15/2010 post:

A question came up today on Stack Overflow of how to test for the existence of a blob. From the REST API, the best method seems to be Get Blob Properties, which does a `HEAD` request against the blob. That will return a `404` if the blob doesn’t exist, and if the blob does exist, it only returns the headers that would typically come with the blob contents. This makes it an efficient way to test for the existence of a blob.

From the .NET StorageClient library, the method which maps to Get Blob Properties is `CloudBlob.FetchAttributes()`. The following extension method implements an `Exists()` method on `CloudBlob`:

```csharp
public static class BlobExtensions
{
    public static bool Exists(this CloudBlob blob)
    {
        try
        {
            blob.FetchAttributes();
            return true;
        }
        catch (StorageClientException e)
        {
            if (e.ErrorCode == StorageErrorCode.ResourceNotFound)
            {
                return false;
            }
            else
            {
                throw;
            }
        }
    }
}
```

Using this extension method, we can test for the existence of a particular blob in a natural way. Here’s a little program that tells you whether a particular blob exists:

```csharp
static void Main(string[] args)
{
    var blob = CloudStorageAccount.DevelopmentStorageAccount
        .CreateCloudBlobClient().GetBlobReference(args[0]);
    // or CloudStorageAccount.Parse("<your connection string>")
    if (blob.Exists())
    {
        Console.WriteLine("The blob exists!");
    }
    else
    {
        Console.WriteLine("The blob doesn't exist.");
    }
}
```

Note that the above extension method has a side-effect, in that it fills in the `CloudBlob.Attributes` property. This is probably fine (and an optimization if you’re later going to read those attributes), but I thought I’d point it out.
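The REST-level check Steve describes can also be sketched without the .NET library. The following Python is a minimal illustration, not Steve's code: it assumes the blob sits in a publicly readable container, because it builds no shared-key Authorization header, and `interpret_head_status` and `blob_exists` are hypothetical helper names rather than part of any Azure SDK.

```python
import urllib.error
import urllib.request


def interpret_head_status(status: int) -> bool:
    """Map the status code of a HEAD request to an existence answer."""
    if 200 <= status < 300:
        return True   # headers came back, so the blob exists
    if status == 404:
        return False  # the service reports that the blob is missing
    # Anything else (403, 500, ...) is a real error, not a "no".
    raise RuntimeError("unexpected status from HEAD request: %d" % status)


def blob_exists(blob_url: str, timeout: float = 10.0) -> bool:
    """Issue a HEAD request against blob_url and report whether it exists.

    ASSUMPTION: blob_url is readable anonymously; private blobs need a
    signed Authorization header, which this sketch does not build.
    """
    request = urllib.request.Request(blob_url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return interpret_head_status(response.status)
    except urllib.error.HTTPError as error:
        # urllib raises for 4xx/5xx responses; reuse the same mapping.
        return interpret_head_status(error.code)
```

As in the extension method above, only a "not found" answer is treated as non-existence; other failures are surfaced rather than silently reported as a missing blob.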

Aaron Skonnard’s Channel9 video from DevDays 2010: Windows Azure Storage: Storing Data in the Cloud

The cloud offers a new low-cost option for storing large amounts of data that can be accessed from geo-centric datacenters located around the world. Come learn about the cloud storage opportunities offered by Windows Azure and how they can benefit your business today.

SQL Azure Database, Codename “Dallas” and OData

• Wayne Berry explains what “you can be completely ignorant about and still be a SQL Azure DBA” in his The New SQL Azure DBA post of 4/15/2010:

Compared to SQL Server here is the list of things that you can be completely ignorant about and still be a SQL Azure DBA:

- Hard Drives: You don’t have to purchase hard drives, hot swap them, care about hard drive speed, the number of drives your server will hold, the speed of SANs or motherboard bus, fiber connection, hard drive size. No more SATA vs. SCSI debate. Don’t care about latest solid state drive news. Everything you know about Hard Drives and SQL Server – you don’t need it.

- RAID: How to implement RAID, choose a RAID type, divide physical drives on a RAID – don’t care. RAID is for hornets now.

- Backup and Restore: You need to know nothing about backing up SQL Server data files or transaction logs, or how the database model affects your backup. You don’t need a backup plan/strategy, nor do you need to answer questions about the risk factor for tsunami in Idaho. No tapes, tape swapping, or tape mailing to three locations including a hollowed-out mountain in the Ozarks.

- Replication: You never have to do any type of replication, or even care about the replication. Merge, Snapshot, Transaction Log Shipping, or having a read-only secondary database with a 60 second failover – don’t care.

- SQL Server I/O: You don’t have to worry about physical disk reads/writes or paging, disk access wait time, managing file groups across tables, sizing database file (.mdfs), shrinking your database (you shouldn’t do this on SQL Server anyways), transaction log growth, index fragmentation, knowing anything about the tempdb or how to optimize it, data files (.mdf, .ldf, .ndf), data Compression, or determine and adjust fill factor. None of this is required for a SQL Azure DBA. …

Wayne continues with more SQL Server elements you don’t need to worry about and concludes:

… Read the first five again (Hard Drives, RAID, Backup, Replication, SQL Server I/O), think of the books you have read and discussions you have had about these topics for SQL Server. Now look behind you; see that young SQL Azure DBA – why isn’t his bookbag weighing him to the ground?

• Matthew Baxter-Reynolds announced a New OData publisher for BootFX preview available in this 4/9/2010 article, which I missed when posted:

I’ve been working on some OData components for publishing BootFX entities using the OData standards. Visit BootFX to download it.

Under the hood this uses the WCF components for working with OData messages – what we have here is a custom provider that uses the existing BootFX ORM functionality and produces OData messages.

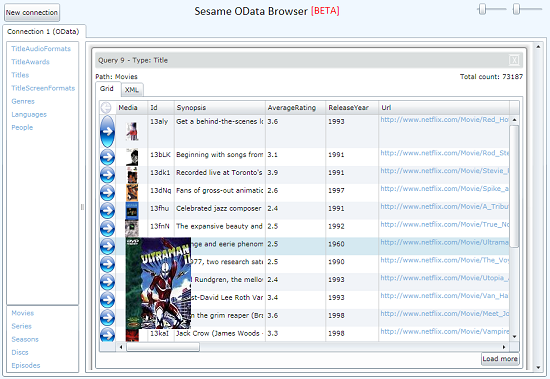

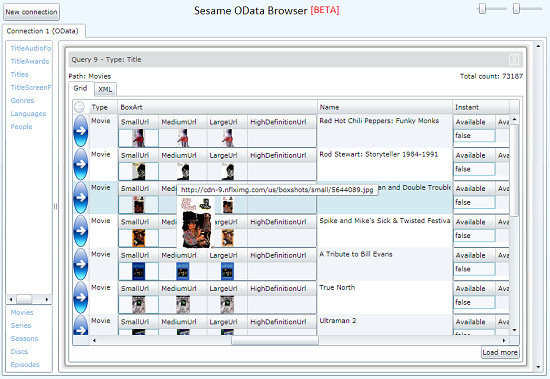

Fabrice Marguerie announced Sesame OData Browser updated in his 4/17/2010 post:

Since the first preview of Sesame was published, I've been working on improving it with new features.

Today, I've published an update that provides the following:

- Support for hyperlinks (URLs and email addresses)

- Improved support for the OData format. More OData producers are supported, including Netflix and vanGuide, for example.

- Fixed display of images (images used to appear mixed up)

- Support for image URLs

- Image zoom (try to hover over pictures in Netflix' Titles or MIX10's Speakers)

- Support for complex types (test this with Netflix' Titles and the OData Test Service's Suppliers)

- Partial open types support

- Partial feed customization support (Products.Name and Products.Description are now displayed for http://services.odata.org/OData/OData.svc for example)

- Partial HTML support

- Query number is now unique by connection and not globally

- Support for query continuation (paging) - See the "Load more" button

- Partial support for <FunctionImport> (see Movies, Series, Seasons, Discs and Episodes with Netflix)

- Version number is now displayed

- More links to examples (coming from http://www.odata.org/producers) provided in the connection dialog
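The "query continuation (paging)" item in Fabrice's list refers to server-driven paging, where an OData producer returns a partial result plus a link to the next page. As a rough sketch of how a client might follow those links (this is not Sesame's implementation), the Python below assumes the OData version 2 JSON layout, in which a feed arrives as `{"d": {"results": [...], "__next": "..."}}`; `fetch_json` and `iterate_entities` are hypothetical names.

```python
import json
import urllib.request
from typing import Callable, Dict, Iterator


def fetch_json(url: str) -> Dict:
    """Fetch one OData response as JSON (network call, not used in tests)."""
    request = urllib.request.Request(url, headers={"Accept": "application/json"})
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def iterate_entities(
    first_url: str,
    fetch: Callable[[str], Dict] = fetch_json,
    max_pages: int = 10,
) -> Iterator[Dict]:
    """Yield entities from a feed, following "__next" continuation links.

    ASSUMPTION: responses use the OData v2 JSON shape. A version 1 style
    response, where "d" is a plain list, is yielded once with no paging.
    """
    url = first_url
    pages = 0
    while url and pages < max_pages:
        payload = fetch(url)["d"]
        if isinstance(payload, list):
            yield from payload  # unpaged, version 1 style feed
            return
        yield from payload.get("results", [])
        url = payload.get("__next")  # absent on the last page
        pages += 1
```

The `max_pages` cap plays the same role as a "Load more" button: the client decides how far to follow the continuation links instead of draining a large feed such as Netflix's Titles in one go.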

You can try all this at the same place as before. Choose Netflix in the connection dialog to see most of the new features in action and to get a richer experience.

There is a lot more in the pipe. Enough to keep me busy for a few more weeks :-)

David Robinson describes New SQL Azure features - available today in this 4/16/2010 post to the SQL Azure Team blog:

Following up with the availability of the Windows Azure platform which includes SQL Azure in an additional 20 countries beginning April 9, 2010, our global customers and partners can now take advantage of new SQL Azure features which provide continued ease of design and deployment between on-premises and the cloud DBMS.

These new features include:

- MARS (Multiple Active Results Sets), which simplifies the application design process

- ALTER rename process for symmetry in renaming databases

- Application and Multi-server management for Data-tier Applications which further streamlines application design and enables deployments of database applications directly from SQL Server 2008 R2 and Visual Studio 2010 to SQL Azure for database deployment flexibility

Many customers have looked to SQL Azure as an alternative to MySQL due to the fact that it provides a simple way to offer a highly available and publicly accessible data store. A primary issue customers have faced in the migration process from a database such as MySQL is the ability to support multiple active resultsets (MARS).

Customers today can also take advantage of the Data-tier Application which provides the ability to register and deploy Data-tier Applications on SQL Azure. Deployment can be done through Visual Studio 2010 and SQL Server Management Studio 2008 R2, and registration can be done through SQL Server Management Studio 2008 R2. This allows customers to extend their on-premises investments and use familiar tools to build and deploy Data-tier Applications to on premise instances and to SQL Azure. Customers can utilize the Data-tier Application for the ultimate in database deployment flexibility.

We’re also providing our customers with the ALTER rename option which provides the ability to rename a database allowing for symmetry with SQL Server.

As we mentioned at MIX ’10, we will be offering a new 50GB size option in the June timeframe. Today, customers can become early adopters of this new size option by sending an email to EngageSA@microsoft.com to receive a survey and nominate applications that require more than 10GB of storage. Look for more information on the new 50GB offer in the coming months.

Stephen O’Grady’s YourSQL, MySQL, and NoSQL: The MySQL Conference Report post of 4/16/2010 analyzes the prospects for MySQL under Oracle’s stewardship:

“The basic reality is that the risks that scare people and the risks that kill people are very different.” – Peter Sandman, the New York Times via Freakonomics

There has never been a time, in my opinion, that MySQL has faced a more diverse set of threats than at present. Of these, one gets a disproportionate amount of the attention: Oracle’s stewardship, and the implications this has for the future of the database.

This is understandable. For most in the MySQL orbit, Oracle was the enemy, making it a suboptimal home for the most popular open source database on the planet. But the real question facing that community is whether or not Oracle should be the primary concern, or whether it’s focusing on the risks that scare it at the expense of those that could kill it. To explore this question, let’s turn to the Q&A.

Q: Before we begin, do you have anything to disclose?

A: Yes indeed. MySQL and Sun were, prior to the acquisition, RedMonk customers. We’ve also worked with Oracle in the past. Certain MySQL and Oracle competitors such as IBM and Microsoft are also RedMonk customers. So read into the following what you will.

Q: Ok. Let’s start with some of the easy stuff first: how was the conference attendance and such?

A: Pretty good. The show floor was pretty sparse, but that, I’m told, is partially due to the disruption caused by the extended acquisition process of Sun/MySQL by Oracle. This acted to depress sales of booths and so on. The attendee head count was only slightly down, from what I’m told, with the keynotes packed and many of the sessions similarly full.

Q: How about the general product news? Good or bad?

A: Users certainly seem happy. Facebook’s Mark Callaghan said in his keynote that 5.1 had turned out to be a good release – “surprise!” – and Craigslist’s Jeremy Zawodny and Smugmug’s Don MacAskill are both very excited by what they see coming in 5.5.4. So the immediate future looks positive from a development standpoint. …

Stephen continues with a few more feet of Q&A.

Aaron Skonnard’s Channel9 video from DevDays 2010: Why You Need Some REST?

As REST continues to grow in popularity, it’s important to stop and ask yourself why REST? REST is by no means a silver bullet but it does offer some advantages over traditional SOAP and WS-* architectures when it fits well into your scenario. There are also times when SOAP makes more sense because it offers features and capabilities that REST cannot provide. In the end, REST and SOAP are different architectural styles, each with their own pros and cons. Come and learn when to choose one over the other and why REST makes sense for many Web services.

Christian Liensberger presented a 01:03:00 Microsoft Codename "Dallas" video to Microsoft Research on 4/9/2010.

AppFabric: Access Control and Service Bus

Jack Greenfield shows you how to implement OData for SQL Azure with AppFabric Access Control in this 4/16/2010 tutorial:

Over the last few weeks, I've been working with Mike Pizzo and David Robinson, building an AppFabric Access Control (ACS) enabled portal and Silverlight clients for a new OData Service for SQL Azure. Together, the OData Portal and Service make it easy for authorized users to publish SQL Azure databases as OData services. While developers can build and deploy custom OData based services to expose data from SQL Azure and other data sources, the OData Portal and Service provide OData access to SQL Azure through simple configuration. For more information about SQL Azure, OData or AppFabric Access Control, please see the glossary at the end of this post.

Access Modes

The OData Service provides two access modes: anonymous and authenticated. In both modes, it executes OData queries by impersonating a designated database user. The OData Portal is used to designate database users for both modes.

- Anonymous mode requires no credentials, as its name suggests. In fact, you can try it out using your web browser at this public data service endpoint.

- Authenticated mode, as might be expected, requires clients to pass credentials to the OData Service with their queries. The OData Service uses Simple Web Token (SWT), a lightweight, web friendly security token format. You can get a SWT to pass to the OData service by querying an ACS based Security Token Service (STS) using OAuth WRAP, a lightweight, web friendly authorization protocol. Before going into more detail about how to obtain a SWT and pass it to the OData service, let's look more closely at the OData Portal.
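The token exchange Jack outlines for authenticated mode can be sketched roughly as follows. This Python is an illustration under stated assumptions, not code from Jack's post (his "Building Clients" section is not reproduced here): it uses the form field names from the OAuth WRAP v0.9 profile (`wrap_name`, `wrap_password`, `wrap_scope`, `wrap_access_token`) and the `WRAP access_token="..."` Authorization header, and it leaves the STS and service URLs to the caller. All three function names are hypothetical.

```python
from typing import Dict
from urllib.parse import parse_qs, urlencode


def build_wrap_token_request(name: str, password: str, scope: str) -> bytes:
    """Build the form-encoded body to POST to an ACS-based STS.

    ASSUMPTION: the STS accepts the OAuth WRAP v0.9 name/password profile.
    """
    fields = {"wrap_name": name, "wrap_password": password, "wrap_scope": scope}
    return urlencode(fields).encode("ascii")


def extract_access_token(response_body: str) -> str:
    """Pull the Simple Web Token out of the STS's form-encoded response."""
    fields = parse_qs(response_body)  # also URL-decodes the token value
    if "wrap_access_token" not in fields:
        raise ValueError("response does not contain wrap_access_token")
    return fields["wrap_access_token"][0]


def authorization_header(token: str) -> Dict[str, str]:
    """Build the header that presents the SWT alongside an OData query."""
    return {"Authorization": 'WRAP access_token="%s"' % token}
```

A client would POST the request body to the STS, pass the response text to `extract_access_token`, and attach the resulting header to each query so that the OData Service can decide which database user to impersonate.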

Using the Portal

To use the OData Portal, visit SQL Azure Labs, and navigate to the center tab in the top navigation bar, labeled "OData Service for SQL Azure". If you're not already signed in with a valid Windows Live ID, you'll be taken to a sign in page. When you've signed in, connect to a SQL Azure server by providing the server name (e.g., hqd7p8y6cy.database.windows.net), and the name and password for a login with access to the master catalog, and then clicking Connect.

When a connection is made to SQL Azure, the connection information is disabled, and OData Service configuration data is displayed for the databases on the selected server. Use the drop down list to select different databases.

To enable OData Service access for the currently selected database, check the box labeled "Enable OData". When OData Service is enabled for a database, user mapping information is displayed at the bottom of the form.

- Use the drop down list labeled "Anonymous Access User" to designate an anonymous access user. If no anonymous access user is designated, then anonymous access to the database will be disabled. If an anonymous access user is designated, then all queries presented to the OData Service without credentials will be executed by impersonating that database user. You can access the database as the anonymous user by clicking on the link provided at the bottom of the page.

- Click the link labeled "Add User" to designate a user for authenticated access. In the pop up panel, select a user from the drop down list. Leave the issuer name empty for simple authentication, or provide the name of a trusted Security Token Service (STS) for federated authentication. For example, to federate with another ACS based STS, provide the base URI displayed by the Windows Azure AppFabric Portal for the STS, as shown here.

- Click the "OK" button to complete the configuration process and dismiss the pop up panel. When a user is designated for authenticated access, the OData Service will execute queries by impersonating that user when appropriate credentials are presented. You can designate as many users for authenticated access as you like. The OData Service will decide which authenticated user to impersonate for each query by inspecting the credentials presented with the query.

Jack continues with “Building Clients,” “What Now?” and “Glossary” topics.

Ron Jacobs shows you How to Create a Workflow Service using a Flowchart in this 4/16/2010 post:

If you love Flowcharts as I do you might have wondered if it is possible to create a Workflow Service as a Flowchart. The short answer is… yes but we didn’t have a template for creating one until now.

Today on my blog, I’ve posted detailed instructions for how you can create a Workflow Service using a Flowchart. Or if you want to do it the easy way just look in the online templates for Workflow using Visual Studio 2010 and you will see it there.

Ron Jacobs describes Developing Web Services on IIS with Windows Server AppFabric in his 4/14/2010 post:

While Windows Server AppFabric is a great addition to your server for production management, monitoring and troubleshooting, you might be interested to learn that it can also be very useful at development time.

You can install Windows Server AppFabric on your Windows 7 development environment using the Web Platform Installer (see this post for more on installing AppFabric).

Interested? I’ve got step by step instructions to get you up and running with your first Web Site development project using Windows Server AppFabric, Visual Studio and IIS right here.

Ron Jacobs issued a warning about Windows Server AppFabric Beta 2 and Visual Studio 2010 / .NET 4 RTM on 4/14/2010:

The emails are rolling in… Usually they say something like this.

“Hi Ron - I installed VS2010 and the .NET 4.0 Framework RTM versions yesterday. I then went to re-install Windows Server AppFabric from the Web Platform Installer, but it has a dependency on the .NET 4.0 Framework RC. Is there a build of AppFabric coming soon that runs on .NET 4.0 RTM?”

Before you try this let me say… just don’t.

I know, you really want to move on to VS2010 RTM. So do I but you can’t use AppFabric Beta 2 with .NET 4 RTM.

So your next question is… when can I make this move to RTM?

We have been saying that Windows Server AppFabric will release in H1 of 2010. That means by the end of June you will have the RTM version of AppFabric in your hands so hang on just a little longer.

After all, the RC release of .NET 4 is pretty good. There were not a lot of changes between RC and RTM so keep plugging away on the RC release and we promise to get AppFabric released as soon as possible.

Aaron Skonnard’s Channel9 video from DevDays 2010: Introducing AppFabric: Moving .NET to the Cloud

Companies need infrastructure to integrate services for internal enterprise systems, services running at business partners, and systems accessible on the public Internet. And companies need to be able to start small and scale rapidly. This is especially important to smaller businesses that cannot afford heavy capital outlays up front. In other words, companies need the strengths of an ESB approach, but they need a simple and easy path to adoption and to scale up, along with full support for Internet-based protocols. These are the core problems that AppFabric addresses, specifically through the Service Bus and Access Control services.

Keith Brown’s Channel9 video from DevDays 2010: Get a whiff of WIF!

The Windows Identity Foundation (WIF) makes it easy for web applications and services to use the modern, claims-based model of identity. This model allows you to factor authentication and many authorization decisions out of your applications and into a central identity service. This model makes it much easier to achieve Internet-friendly single sign on. It also makes it easier for your application to receive richer identity information, and paves the way for identity federation, should you ever need to integrate with another organization or another platform (Java, for example).

Ingo Rammer’s Channel9 video from DevDays 2010: Windows Server AppFabric Hosting and Caching

In this session, Ingo Rammer gives you an overview of Windows AppFabric - the new application server extension from Microsoft. You will learn how to develop, deploy and control WCF and WF applications running on this platform (formerly known as "Dublin") so that you can rely on existing infrastructure to host your server-side logic. In addition to hosting, Ingo will show you how you can take advantage of distributed caching (formerly codenamed "Velocity") to scale your web applications to new levels.

Live Windows Azure Apps, APIs, Tools and Test Harnesses

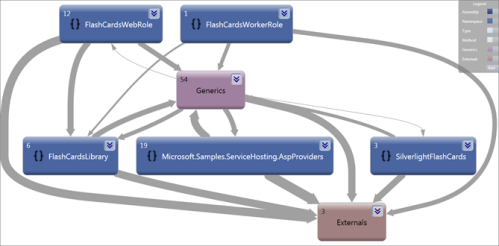

• Kevin Kell shows how to create Dependency Graphs and UML Sequence Diagrams of an Azure project in his Windows Azure and Visual Studio 2010 post of 4/18/2010:

… My experience so far with VS2010 has been positive. Azure projects developed under RC1 continue to load, compile, deploy and run just fine under the release version. That was a relief!

There are also some pretty cool features in VS2010 that I have started to use. One that I found particularly interesting is the capability to understand existing code by generating diagrams in a “Modeling Project” that can be added to the solution. In the context of development of Learning Tree’s Windows Azure course I found this really useful.

For example, I am serving as a Technical Editor for the course. Let’s say Doug Rhenstrom, our course author, develops some code to be used in a case study. Part of my job is to review the code. In order to be able to review effectively I need to understand the code first. Note that this same scenario could also occur for any software developer coming into an existing project. …

Using VS2010 with a few clicks of the mouse I can quickly generate compelling interactive diagrams that make it much easier for me to understand how the code works and how it is structured. Among the various diagram types I have found the Dependency Graph and the UML Sequence Diagram to be very useful.

A Dependency Graph shows how the various pieces of the solution fit together.

Figure 1 Dependency Graph for FlashCardsCloudService Solution

A UML Sequence Diagram, on the other hand, shows the call sequencing between various objects.

Figure 2 UML Sequence Diagram for WorkerRole Run method

Using diagrams such as these often results in faster and better high level understanding of the solution architecture.

For a quick demo of how these diagrams were created from the FlashCardsCloudService project in Visual Studio 2010 click here.

• Nick Malik offers Microsoft IT’s Common Conceptual Model in his Service Oriented Architecture Conceptual Model post of 4/16/2010:

Almost two years ago, I described some of the key concepts of service oriented architecture, including the distinction between a canonical model and a canonical message schema. Since that time, I worked on a wide array of models, including Microsoft IT’s Common Conceptual Model. That model (CCM for short) is the metamodel for IT concepts in Enterprise Architecture.

I received a note recently about that old post, and how valuable it was to the reader. It occurred to me that those concepts were part of our Common Conceptual Model, and that a “SOA-Specific View” of the metamodel may prove interesting. It was.

I’ve attached the SOA view of our Common Conceptual Model for this post.

A quick set of notes on my conceptual models.

- Two terms separated by a slash (Application / Installable Software) are distinct terms for the same concept.

- The associations are all “arrows with verbs”. Read the associations along the direction of the arrow. (e.g. “Application Component implements a Shared Service”). I intentionally avoided using UML notations like “Composition” and “Aggregation” because they are difficult for non-technical people to read.

- Many verbs on an arrow – The stakeholders couldn’t agree on which verb best described the relationship, so we used multiple distinct verbs.

- In my prior post, I used the term “Canonical Schema.” Our stakeholders preferred “Shared Message Data Contract.”

The key relationships to notice in this model are the ones that may be the least expected ones: the connection between the obvious SOA concepts like “Contract” and “Canonical Entity” and the not-so-obvious SOA concepts, like Business Process and Business Event.

When SOA is done well, it is part of a seamless ecosystem between the processes that people perform and the systems that support them. Being able to see the parts of the system and how they connect is key to understanding how to build a truly effective service oriented architecture.

Marcello Lopez Ruiz delivers a guided tour of .NET Fx 4’s new System.Data.Services.Providers namespace in these recent posts:

- Mutability model for ResourceType and friends (4/16/2010)

- What's new in System.Data.Services (4/15/2010)

- Overview of System.Data.Services.Providers (4/14/2010)

and, as a bonus, Marcello asked Have you seen Pivot yet? on 4/15/2010:

The site is over at http://www.getpivot.com/, and the video is short and very much worth watching.

As a developer, I can only wonder what MSDN would look like with Pivot like they showed for Wikipedia.

Enjoy!

and see the pointer to David Lemphers’ Build Your Own Pivot Server in Windows Azure! below.

Jas Sahdhu’s SugarCRM on Windows Azure & SSRS PHP SDK at SugarCon 2010 post of 4/16/2010 reviews the SugarCRM Conference which took place 4/12 through 4/14/2010 in San Francisco:

This week I was at SugarCon 2010, the CRM conference. SugarCRM, one of my partners in the Interop Vendor Alliance (IVA), is a leading provider of open source customer relationship management (CRM) software. SugarCon is its global customer, partner and developer conference, held April 12-14 at The Palace Hotel in San Francisco, California. Microsoft, along with Red Hat, Talend and Zend, helped sponsor the conference. The event had a heavy cloud theme this year and the tagline “The Cloud is Open” was used.

SugarCRM has over 6,000 customers, and more than half a million users rely on SugarCRM to execute marketing programs, grow sales, retain customers and create custom business applications. There was quite a mix of people there, from business to technical, about 800 attendees or so. There was a good vibe to the show and it had a focus on the attendees and partners. There was lots of interest in different topics: CRM, Social Networking, Open Source, Cloud Computing (private and public) … and Microsoft’s presence at the event …. which brings me to why we were there …

The keynote “Open Source and Open Clouds” was presented by SugarCRM CEO Larry Augustin, who shared new product announcements and welcomed special guests to the stage to discuss how open source software is driving the next generation of CRM and Cloud services. Rob Craft, Microsoft’s Senior Director, Cloud ISV, was one of the guests joining Augustin on stage. Craft shared with attendees how Microsoft is investing strongly in cloud services. “This is a deep, substantive long term investment from Microsoft,” he said. He described Azure’s global presence: it runs from six datacenters in San Antonio, Chicago, Dublin, Amsterdam, Singapore and Hong Kong, with other datacenters coming ready too. Microsoft is guaranteeing 99.9 percent uptime for Azure, with customers getting a 10 percent rebate if uptime falls below that guarantee but stays at or above 99 percent, or 25 percent if it falls below 99 percent.

Larry then went on to demonstrate a beta of SugarCRM, a PHP application, running on Windows Azure and calling data from SQL Azure. Dan Moore, Sr. Platform Strategy Advisor, and Bhushan Nene, Principal Architect, from the Cloud ISV Team gave a follow-up session, “Introducing the Windows Azure Platform”, to the keynote with an overview of the benefits of launching cloud applications on Azure. We saw excitement from the conversations we had with several SugarCRM channel partners who attended the sessions and stopped by the booth. The Windows Azure platform is receiving enormous support and excitement throughout their ecosystem! …

Jas is Microsoft’s Interoperability Vendor Alliance manager.

Tim Anderson reports Silverlight 4.0 released to the web; tools still not final in this 4/16/2010 post:

Microsoft released the Silverlight 4.0 runtime yesterday. Developers can also download the Silverlight 4 Tools; but they are not yet done:

“Note that this is a second Release Candidate (RC2) for the tools; the final release will be announced in the coming weeks.”

Although it is not stated explicitly, I assume it is fine to use these tools for production work.

Another product needed for Silverlight development but still not final is Expression Blend 4.0. This is the designer-focused IDE for Silverlight and Windows Presentation Foundation. Microsoft has made the release candidate available, but it looks as if the final version will be even later than that for Silverlight 4 Tools.

Disappointing in the context of the launch of Visual Studio 2010; but bear in mind that Silverlight has been developed remarkably fast overall. There are huge new features in version 4, which was first announced at the PDC last November; and that followed only a few months after the release of version 3 last summer.

Why all this energy behind Silverlight? It’s partly Adobe Flash catch-up, I guess, with Silverlight 4 competing more closely with Adobe AIR; and partly a realisation that Silverlight can be the unifying technology that brings together web and client, mobile and desktop for Microsoft. It’s a patchy story of course – not only is the appearance of Silverlight on Apple iPhone or iPad vanishingly unlikely, but more worrying for Microsoft, I hear few people even asking for it.

Even so, Silverlight 4.0 plus Visual Studio 2010 is a capable platform; it will be interesting to see how well it is taken up by developers. If version 4.0 is still not enough to drive mainstream adoption, then I doubt whether any version will do it. …

Mary Jo Foley rings in with more details on the Run-Java-with-Jetty-in-Windows-Azure front with her Microsoft shows off another Java deployment option for Windows Azure post to ZDNet’s All About Microsoft blog of 4/16/2010:

While Windows Azure is designed first and foremost to appeal to .Net developers, Microsoft has been adding tools for those who want to work on cloud apps using PHP, Ruby and even — gasp — Java.

Recently, Microsoft went public with proof-of-concept information about another Java tool that can be hosted on .Net: The Jetty HTTP server.

Microsoft announced last fall support for implementing Java Servlet and JavaServer Pages using the open-source Tomcat technology. Microsoft created a Solution Accelerator to help developers who wanted to use Tomcat in place of its own Internet Information Services (IIS) Web server technology in the cloud. At the Professional Developers Conference, Microsoft demonstrated how Domino’s was using Tomcat on Azure.

Tomcat isn’t Java developers’ only Java-based Web-server option on Azure, however. In late March, Microsoft Architect David Chou outlined the way developers can use Jetty as an HTTP server and Servlet container in Microsoft’s cloud environment. If there’s enough interest, Microsoft may develop a Jetty Solution Accelerator, similar to the Tomcat one, said Vijay Rajagopalan, Principal Architect in the Microsoft Interoperability team.

Jetty is used by a number of projects, including the Apache Geronimo app server, BEA WebLogic Event Server, Google App Engine (which is a competitor to Windows Azure), Google Android, RedHat JBoss and others, Microsoft officials noted on an April 14 post to the Windows Azure Team blog.

Jetty provides an asynchronous HTTP Server, a Servlet container, Web Sockets server, asynchronous HTTP client, and OSGi/JNDI/JMX/JASPI and AJP support.

In other Java-on-Azure news, Microsoft is working with Persistent Systems Ltd. on delivering this summer new updates to its Java, Ruby and PHP software-development toolkits for Azure, said Rajagopalan. These SDKs are now known officially as AppFabric SDKs for Java, Ruby and PHP.

Any developers out there interested in Tomcat or Jetty on Azure? I’d be interested to hear why/why not?

Scott Densmore explains Windows Azure Deployment for your Build Server Part 2 : Deploy Certs with a PowerShell script in this 4/15/2010 post:

In Part 1 I discussed how you could use msbuild + PowerShell to deploy your Windows Azure projects. Another thing you might need to deploy is your certificates for SSL etc. I figured that was the next logical step so I modified the scripts to include this. The new deploycert.ps1 file takes you[r] API certificate, the hosted service name and the cert path and password for your certificate. I added a property group to hold the certificate information in the msbuild file.

I also updated the msbuild file to change the [A]zure information. I made a big assumption about how things worked that I didn't comment on last time. The [A]zure information included the storage account information so you could swap out the connection strings. The assumption I made was that the hosted service and storage service names were the same. That was dumb. I updated the property group to better represent what is going on.

The last piece of information on this is that you should make sure that you secure this information. I would suggest you have this build just on the build server and not for devs. We are going to cover this in more detail when the guide comes out. Check our site often.

David Lemphers suggests that you Build Your Own Pivot Server in Windows Azure! in this 4/15/2010 tutorial:

So one thing I’ve been wanting to do since moving from the Windows Azure team to the Pivot team, is build a Pivot Dynamic Server in Windows Azure.

Well, tonight I decided to do just that, allow me to expatiate! ;0

Let’s start with a basic overview of what Pivot requires to load a collection.

First it needs a collection file, affectionately referred to as “the cxml”. This lays out the details of your collection, things like the facet categories that provide the faceted exploration capabilities, and the details about the items in your collection. Within the cxml, there is a reference to another file, known as “the dzc”, or the DeepZoom Collection. This file describes all the smaller “dzi” files, or DeepZoom Images, that make up the whole collection’s set of images.
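To make the cxml-to-dzc relationship concrete, here is a minimal sketch of generating a collection file on the fly. It is not Dave's code: the `build_cxml` helper is hypothetical, and the namespace, element names and attribute names (`Collection`, `FacetCategories`, `Items`, `ImgBase`, `Img`) follow my recollection of the Pivot collection schema, so verify them against the Pivot documentation before relying on them.

```python
import xml.etree.ElementTree as ET

# Assumed Pivot collection namespace; check against the published schema.
NS = "http://schemas.microsoft.com/collection/metadata/2009"


def build_cxml(name, dzc_href, items):
    """Build a cxml string for `items`, each a dict with name, href and facets."""
    ET.register_namespace("", NS)
    root = ET.Element(f"{{{NS}}}Collection", {"Name": name, "SchemaVersion": "1.0"})

    # Auto-generate facet categories from whatever facet keys the items carry.
    categories = ET.SubElement(root, f"{{{NS}}}FacetCategories")
    facet_names = sorted({key for item in items for key in item["facets"]})
    for facet in facet_names:
        ET.SubElement(categories, f"{{{NS}}}FacetCategory",
                      {"Name": facet, "Type": "String"})

    # ImgBase points at the dzc; each item refers to its image by index.
    items_el = ET.SubElement(root, f"{{{NS}}}Items", {"ImgBase": dzc_href})
    for index, item in enumerate(items):
        item_el = ET.SubElement(items_el, f"{{{NS}}}Item", {
            "Id": str(index), "Img": f"#{index}",
            "Name": item["name"], "Href": item["href"]})
        facets_el = ET.SubElement(item_el, f"{{{NS}}}Facets")
        for facet, value in sorted(item["facets"].items()):
            facet_el = ET.SubElement(facets_el, f"{{{NS}}}Facet", {"Name": facet})
            ET.SubElement(facet_el, f"{{{NS}}}String", {"Value": value})
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    photos = [{"name": "Sunset", "href": "http://example.com/1",
               "facets": {"Owner": "alice"}}]
    print(build_cxml("Demo collection", "demo.dzc", photos))
```

The sketch only covers the cxml; producing the dzc and dzi image pyramids is a separate step that it does not attempt.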

Now, this is all pretty high level, and to get a real sense of what this all means, a little time spent reading through our site is time well spent. For those that have, and are simply asking the question, “How do I create a collection on the fly, and serve it up from Windows Azure?”, then I hope this blog post satisfies.

So given the basics above, that Pivot requires a cxml that points to a dzc of dzi’s, we’re ready to move to the Windows Azure part.

So Windows Azure has some awesome capabilities that make it possible to build something as powerful as a Pivot Dynamic Server in no more than a night.

To start with, I set some goals:

- Be able to serve a completely dynamic collection from Windows Azure that would load in Pivot.

- To not use Windows Azure Storage (yet!). For my demo, I’m assuming a single web role instance, with all files being served from the role itself, old skool style. I’ll rev this sample/demo to use blobs in Windows Azure Storage in a follow up post.

- To generate the collection from a web source, that is, use something like Flickr or Twitter as the source of my collection and transform it on the fly (I picked Flickr).

- Auto-generate my facets. This way, there is no need for “authoring” per se, you just point and click.

Now on to the code. …

Dave continues with more details and a link to the sample code.

David Aiken recommends that Windows Azure developers Remember to check your framework version in this 4/15/2010 post:

I’ve just recently installed the final version of Microsoft Visual Web Developer 2010 Express – which is my tool of choice for building for Windows Azure.

When you open a project from an older version, you get the option to upgrade the projects. My default response to this dialog box is to click on Finish and not walk through the wizard. Now one thing that I notice is that if there are any web projects, you will be asked if you want to leave them as framework 3.5 projects, or upgrade them. Right now Windows Azure does not support .NET 4 applications – so you should choose to leave them at framework 3.5.

This is great, but if you have any class libraries or other projects, those seem to get upgraded automatically to framework 4.0. The easy fix is to check the project properties of each project and make sure the framework version is set to 3.5. Fortunately most of the projects I’ve converted have thrown up warnings – but it’s always good to check. [Emphasis added.]
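The manual check David describes can also be scripted. The sketch below is one possible approach, not something from his post: it reads the `TargetFrameworkVersion` property from MSBuild project XML and lists the projects that are no longer on v3.5. The helper names and the sample project contents are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace used by Visual Studio 2008/2010 project files.
MSBUILD_NS = "{http://schemas.microsoft.com/developer/msbuild/2003}"


def target_framework(project_xml):
    """Return the TargetFrameworkVersion text (e.g. 'v3.5'), or None if absent."""
    root = ET.fromstring(project_xml)
    node = root.find(f".//{MSBUILD_NS}TargetFrameworkVersion")
    if node is None or not node.text:
        return None
    return node.text.strip()


def projects_to_fix(projects, required="v3.5"):
    """Given {project name: project XML}, list projects not targeting `required`."""
    return sorted(name for name, xml_text in projects.items()
                  if target_framework(xml_text) != required)


if __name__ == "__main__":
    template = ('<Project xmlns="http://schemas.microsoft.com/developer/msbuild/2003">'
                '<PropertyGroup><TargetFrameworkVersion>{}</TargetFrameworkVersion>'
                '</PropertyGroup></Project>')
    solution = {"WebRole.csproj": template.format("v3.5"),
                "Helpers.csproj": template.format("v4.0")}
    print(projects_to_fix(solution))
```

In practice you would read the XML from each `.csproj` or `.vbproj` file in the solution folder; the in-memory dictionary here just keeps the example self-contained.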

Josh Holmes explains Creating a Simple PHP Blog in Azure in this 4/15/2010 post:

In this post, I want to walk through creating a simple Azure application that will show a few pages, leverage Blob storage, Table storage and generally get you started doing PHP on Azure development. In short, we are going to write a very simple PHP Blog engine for Azure.

To be very clear, this is not a pro blog engine and I don’t recommend using it in production. It’s a lab for you to try some things out and play with PHP on Azure development.

If you feel like cheating, all of the source code is available in the zip file at http://www.joshholmes.com/downloads/pablogengine001.zip.

0. Before you get started, you need to make sure that you have the PHP on Azure development environment setup. If you don’t, please follow the instructions at Easy Setup for PHP On Azure Development.

Josh continues with a detailed tutorial and source code.

Aaron Skonnard presents Windows Azure Applications: Running Applications in the Cloud in this Channel9 video from DevDays 2010:

Windows Azure provides a new execution environment for your .NET applications in the cloud. This ultimately means your applications will run in highly-scalable datacenters located throughout the world. Come learn about the cloud execution environment offered by Windows Azure and how to get your first applications up and running with Visual Studio .NET.

<Return to section navigation list>

Windows Azure Infrastructure

• Brian Harry reports in TFS and Azure of 4/18/2010:

We’ve started an investigation effort to see how hard it would be to get TFS running on Azure. The thing we thought would be hardest was getting our extensive SQL Server code working. It turns out we were wrong. It was really easy. We’ve already gotten the TFS backend up and running on SQL Azure. One of the guys on our team has a local App Tier (in North Carolina) talking to a SQL Azure hosted database that contains all the TFS data. Of course it’s all prototype right now and you’d never actually run a server that way but it’s very cool to see how quickly we were able to get that working. I’ve played with it some and can use source control pretty effectively, work item tracking, etc. I’ve even used Microsoft Test Manager against it and can do test case management, etc. We haven’t hooked up build automation or reporting yet.

Overall, we didn’t have to make very many changes. We had to change some of the SQL XML stuff we were using to a different mechanism that SQL Azure supports. The biggest thing we had to do was to change all of our “SELECT INTO” statements to use regular temp tables. SQL Azure requires that all tables have primary keys and SELECT INTO doesn’t support that and is therefore not supported in SQL Azure. We used SELECT INTO because in SQL 2005 it was much faster than temp tables. In SQL 2008 and later they are about the same performance so the switch is no big deal other than finding all of the places we used it.
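Brian notes that the main cost of the switch was finding every place SELECT INTO was used. A rough way to locate candidates is a text scan, sketched below. This is my illustration, not the TFS team's tooling: the `find_select_into` helper is hypothetical, and the regular expression is a heuristic that assumes INTO appears before the first FROM of the statement, so it will miss statements whose select list contains a subquery.

```python
import re

# Match SELECT ... INTO <target> where INTO appears before the first FROM.
# This skips INSERT INTO ... SELECT, which has no INTO after its SELECT.
SELECT_INTO = re.compile(
    r"\bSELECT\b(?:(?!\bFROM\b)[^;])*?\bINTO\s+([#\w.\[\]]+)",
    re.IGNORECASE,
)


def find_select_into(sql_text):
    """Return the target table names of SELECT ... INTO statements in sql_text."""
    return [match.group(1) for match in SELECT_INTO.finditer(sql_text)]


if __name__ == "__main__":
    script = """
        SELECT Id, Title INTO #WorkItems FROM dbo.Items;
        INSERT INTO dbo.AuditLog SELECT Id FROM #WorkItems;
        select x
        into NewTable from y
    """
    print(find_select_into(script))
```

Each hit still needs a human decision, since the replacement is an explicit CREATE TABLE (with a key) followed by INSERT ... SELECT.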

We’ve still got a few issues left to work out. For example, we use sysmessages for error messages in TFS and SQL Azure doesn’t really support apps doing that. We’re talking to the SQL team about alternatives and possible feature additions to SQL Azure that will solve our issues. Also, SQL Azure storage space is a good bit more expensive than Windows Azure blob storage. As such, we are looking into ways to take the file content out of SQL Azure and store it on the Windows Azure storage system so that TFS would cost less to operate. …

Now we’re working on prototyping the application tier on Windows Azure. The biggest challenge there is making TFS’s identity and permission system work well with Azure’s. TFS uses Windows SAM and Active Directory. Azure uses claims based authentication. It has some pretty significant ramifications. Further, we’d like to support identity federation so that companies that choose to host their TFS on Azure would be able to use their corporate login credentials along with the hosted TFS.

Anyway, there’s no product plan for this yet. It’s just an experiment to see how hard it would be and what the result would be like. Once we get the prototype fully up and running we should have a pretty good idea of what would be involved in productization and can make some decisions about when, if and how we complete the work and bring it to market.

Exciting stuff!

• Reuven Cohen (@ruv) asserts “The Chinese [cloud computing] market isn't just hot, it's on fire” in his The Communal Clouds of China post of 4/18/2010:

After spending a week in the People’s Republic of China I discovered that unlike the air quality a few things have become particularly clear to me. Saying cloud computing is big in China may be the understatement of the century -- it's gargantuan. It is the topic du jour. During my trip I had the opportunity to meet with some of the biggest players in the Chinese cloud computing scene ranging from large startups to massive state sponsored companies. All had the same story to tell: cloud computing is the future of IT. Today I thought I'd take a moment to describe a few of the more interesting opportunities I uncovered while in Beijing last week.

One of the more interesting opportunities I discovered while in China was what I would describe as a Communal Cloud -- Cloud infrastructures built in conjunction with Special Economic Zones set up by the PRC. For those of you unfamiliar with the Special Economic Zones (SEZs) of the People's Republic of China, the government of China gives SEZs special economic policies and flexible governmental measures. This allows SEZs to utilize an economic management system that is especially conducive to doing business that does not exist in the rest of mainland China. You can think of these zones as massive city sized industrial parks focused on fostering economic growth in particular market segments. (I will say that economic growth is something that appears to come easy in China these days.) Many of these zones also now feature their own on site data centers for the industries located within these areas. So the cloud use case I was told about is to offer state sponsored cloud computing to these companies. A kind of communal cloud made available at little or no cost. An IT based economic incentive of sorts. Kind of a cool idea. …

It will be interesting to learn Microsoft’s plans for Azure data centers in the PRC.

• Joe Weinman (@joeweinman) answers The Cloud in the Enterprise: Big Switch or Little Niche? with this 4/18/2010 essay:

Cloud computing — where mega-data centers serve up webmail, search results, unified communications, or computing and storage for a fee — is top of mind for enterprise CIOs these days. Ultimately, however, the future of cloud adoption will depend less on the technology involved and more on strategic and economic factors.

On the one hand, Nick Carr, author of “The Big Switch,” posits that all computing will move to the cloud, just as electricity — another essential utility — did a century ago. As Carr explains, enterprises in the early industrial age grew productivity by utilizing locally generated mechanical power from steam engines and waterwheels, delivered over the local area networks of the time: belts and gears. As the age of electricity dawned, these were upgraded to premises-based electrical generators — so-called dynamos — which then “moved to the cloud” as power generation shifted to hyper-scale, location-independent, metered, pay-per-use, on-demand services: electric utilities. Carr’s prediction appears to be unfolding, given that some of the largest cloud service providers have already surpassed the billion dollar-milestone.

On the other hand, at least one tech industry luminary has called the cloud “complete gibberish,” and one well-respected consulting group is claiming that the cloud has risen to a “peak of inflated expectations” while another has found real adoption of infrastructure-as-a-service to be lower than expected. And Carr himself does admit that “all historical models and analogies have their limits.”

So will enterprise cloud computing represent The Big Switch, a dimmer switch or a little niche? At the GigaOM Network’s annual Structure conference in June, I’ll be moderating a distinguished panel that will look at this issue in depth, consisting of Will Forrest, a partner at McKinsey & Company and author of the provocative report “Clearing the Air on Cloud Computing;” James Staten, principal analyst at Forrester Research and specialist in public and private cloud computing; and John Hagel, director and co-chairman of Deloitte Consulting’s Center for the Edge, author and World Economic Forum fellow. We may not all agree, but the discussion should be enlightening, since ultimately, enterprise decisions and architectures will be based on a few key factors: …

Joe continues with detailed descriptions of the “few key factors” and concludes:

… Consider all of these factors together. Nick Carr’s predictions will likely be realized in cases where a public cloud offers compelling cost advantages, enhanced flexibility, improved user experience and reduced risk. On the other hand, if there is a high degree of technology diffusion for cloud enablers such as service automation management, limited cost differentials between “make” and “buy,” and relatively flat demand, one might project a preference for internal solutions, comprising virtualized, automated, standardized enterprise data centers.

It may be overly simplistic to conclude that IT will recapitulate the path of the last century of electricity; its evolution is likely to be far more nuanced. Which is why it’s important to understand the types of models that have already been proven in the competitive marketplace of evolving cloud offers — and the underlying factors that have caused these successes — in order to see more clearly what the future may hold. Hope to see you at Structure 2010.

Joe is a speaker at Structure 2010 and Strategy and Business Development VP, AT&T.

James Watters (@wattersjames) said: “You should read @joeweinman 's latest http://bit.ly/92ztv4 ; its a beautifully written tour-de-force comparing datacenter and SP evolution!” and added “The most interesting point of Joe's piece is the power of cloud software; it event[ually]/soon can be bought vs. built and his highest value in chain.” Agreed, although slightly confused about the last clause.

Lori MacVittie asks How should auto-scaling work, and why doesn’t it? in her Load Balancing in a Cloud essay of 2/16/2010:

Although “rapid elasticity” is part of NIST’s definition of cloud computing, it may be interesting to note that many cloud computing environments don’t include this capability at all – or charge you extra for it. Many providers offer the means by which you can configure a load balancing service and manually add or remove instances, but there may not be a way to automate that process. If it’s manual, it’s certainly “rapid” in the sense that it’s probably faster than you could do it yourself (because you’d have to acquire hardware and deploy the application, as opposed to simply hitting a button and “cloning” the environment ‘out there’), but it’s not necessarily as fast as it could be (because it’s manual), nor is it automated. There are a number of reasons for that – but we’ll get to those later. First, let’s look at how auto-scaling is supposed to work, at least theoretically.

1. Some external entity that is monitoring capacity for “Application A” triggers an event that indicates a new instance is required to maintain availability. This external entity could be an APM solution, the cloud management console, or a custom developed application. How it determines capacity limitations is variable and might be based solely on VM status (via VMware APIs), data received from the load balancer, or a combination thereof.

2. A new instance is launched. This is accomplished via the cloud management console or an external API as part of a larger workflow/orchestration.

3. The external entity grabs the IP address of the newly launched instance and instructs the load balancer to add it to the pool of resources for “Application A.” This is accomplished via the standards-based API which presents the configuration and management control plane of the load balancer to external consumers as services.

4. The load balancer adds the new application instance to the appropriate pool and as soon as it has confirmation that the instance is available and responding to requests, begins to direct traffic to the instance.
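The four steps above can be sketched as a small in-memory simulation. Everything here is hypothetical: `LoadBalancer`, `AutoScaler` and the capacity rule are stand-ins invented for illustration, not the API of any real load balancer or cloud management console.

```python
class LoadBalancer:
    """Hypothetical load balancer control plane (not a real product API)."""

    def __init__(self):
        self.pending = set()  # registered, not yet confirmed healthy
        self.active = set()   # confirmed healthy, receiving traffic

    def add_member(self, ip):
        """Step 3: an external caller registers a new instance in the pool."""
        self.pending.add(ip)

    def run_health_checks(self, is_healthy):
        """Step 4: only send traffic to instances that respond to requests."""
        for ip in sorted(self.pending):
            if is_healthy(ip):
                self.pending.remove(ip)
                self.active.add(ip)

    def remove_member(self, ip):
        """Reverse path: drop an instance when it is terminated."""
        self.pending.discard(ip)
        self.active.discard(ip)


class AutoScaler:
    """Hypothetical external entity that monitors capacity for one application."""

    def __init__(self, balancer, launch_instance, capacity_per_instance):
        self.balancer = balancer
        self.launch_instance = launch_instance  # callable returning a new IP
        self.capacity_per_instance = capacity_per_instance

    def on_capacity_report(self, total_load):
        """Step 1: decide whether a new instance is needed; steps 2-3 if so."""
        members = len(self.balancer.active) + len(self.balancer.pending)
        if total_load <= members * self.capacity_per_instance:
            return None
        ip = self.launch_instance()       # step 2: launch via the cloud API
        self.balancer.add_member(ip)      # step 3: register with the balancer
        return ip


if __name__ == "__main__":
    addresses = iter(["10.0.0.1", "10.0.0.2"])
    balancer = LoadBalancer()
    scaler = AutoScaler(balancer, lambda: next(addresses), capacity_per_instance=100)
    scaler.on_capacity_report(150)
    balancer.run_health_checks(lambda ip: True)
    print(sorted(balancer.active))
```

Counting pending instances toward capacity is a design choice in this sketch; it keeps the scaler from launching a second instance while the first is still waiting on its health check.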

This process is easily reversed upon termination of an instance. Note: there are other infrastructure components that are involved in this process that must also be notified on launch and decommission, but for this discussion we’re just looking at the load balancing piece as it’s critical to the concept of auto-scaling. …

Tom Bittman’s The Private Cloud Sandbox post of 4/16/2010 to the Gartner blog analyzes private clouds’ growth up the hype cycle:

Private cloud computing is rapidly moving up the Gartner hype cycle. In terms of raw market hype, I think we’ll peak late this year. VMware’s “Redwood” won’t be the only announcement – every major infrastructure vendor on the planet will likely put “private cloud” in their announcements, their marketing, their product names.

So before we get too overwhelmed with private cloud computing mania, what’s going to be real, and what isn’t? How will private cloud computing be used?

Just like early virtualization deployments, development and test is the favorite starting point for private cloud computing. Take out the middle-man, and provide a self-service portal for developers to acquire resources. Manage the life cycle of those resources, and return them to the pool when the developer is done. Dev/test is a perfect starting point, because there is a need for rapid provisioning and de-provisioning.

What’s next?

I think the next logical place will be the computing sandbox. This is a place for production workloads that need to be put up quickly – a stand-alone web server, a short-running computational task, a pilot project. “I need it NOW.”

The sandbox will especially be the place to put a workload prior to full production deployment internally, but when it needs to go up fast – and when external deployment (in the “public cloud”) isn’t appropriate for one reason or another.

Sandboxes can have different operational rules than normal production workloads. For example, perhaps it is a short-term “lease” and expires after thirty days. Perhaps the software is never maintained or patched during that window. Perhaps there is no backup or disaster recovery in place for those workloads. Perhaps security coverage is limited. …

James Governor recommends VAT: How To Explain The Value of The Cloud To Business People in this 4/15/2010 post to the Enterprise Irregulars blog:

A few months back I attended BusinessCloud9, a really excellent event run by my old mate Stuart Lauchlan, looking at cloud-based business applications. It was the least dorky Cloud show I have been to by some margin- the room was chockful of suits, with budgets.

Anyway – one story really stuck with me… because it utterly nails the benefits of the Cloud model for business applications.

Zach Nelson, CEO of NetSuite, summed up the value of the model: Value Added Tax or VAT.

During the teeth of the financial crisis the UK government cut VAT by 2.5% to stimulate businesses. For companies running traditional on premise business applications this was a total nightmare. Didn’t matter whether you were a small business running Sage or a FTSE100 firm running Oracle Financials, this was a painful change to make. Retailers were in a tough spot. How were they supposed to make all their systems compliant with the rule change?

Unless, that is, they were using hosted Software as a Service (SaaS) applications. With the cloud, a change like this can be rolled out to every customer overnight- as easily as Google rolling out a new service.

So next time someone asks what’s the Business Value of cloud you can just say read my lips – Some. New. Taxes.

Microsoft | Media & Entertainment presents Cloud Solutions: One of the Biggest Opportunities in Decades for the Tech Industry:

With the right technology in place, you can provide your organization with instant access to data and collaboration tools from almost anywhere. Microsoft cloud services make greater productivity possible with applications you already know and assets you have today.

With decades of experience serving businesses large and small – now 20 million in the cloud – Microsoft understands the value of technology that is scalable and familiar from day one for you and your employees. We offer the most complete set of cloud-based solutions and around-the-clock support to ensure we get it right together.

<Return to section navigation list>

Cloud Security and Governance

Jonathan Penn announced the Launch of Forrester's 2010 Security Survey on 4/15/2010:

We’re just ramping up at Forrester to start our 2010 Business Data Services’ Security Survey. To begin, I’ve started taking a measured look at last year’s questions and data. Additionally, I’ll be incorporating input from those analysts with their ears closest to the ground in various areas, and will be considering the feedback from our existing BDS clients.

I also welcome input here into what you would find useful for us to ask of senior IT security decision-makers, as development of the survey will take place over the next three weeks.

The survey is scheduled to be fielded in May and early June—with the final data set becoming available in July. The projected sample size is 2,200 organizations across US, Canada, France, UK, and Germany: split roughly 2:1 between North America and Europe, and with a 55/45 split for SMBs (20-1000 employees) vs. enterprises (1000+ employees). Concurrently, we ask a separate set of questions to respondents from “very small businesses” (VSBs) with 2-19 employees. We also set quotas around industry groupings, so each industry is appropriately represented. We source our panel from LinkedIn, which provides an excellent quality of respondents.

The Security Survey is an invaluable tool that provides insight into a range of topics critical for strategy decision-making: IT security priorities and challenges; organizational structure and responsibilities; security budgets; and current adoption across all security technology segments, be they products or SaaS/managed services, along with associated drivers and challenges around the technology.

Here are a few valuable data points from last year’s survey: Read more

<Return to section navigation list>

Cloud Computing Events

GigaOM will host Structure 2010: Putting Cloud Computing to Work on 6/23 and 6/24/2010 at the Mission Bay Conference Center, San Francisco, CA, according to this post:

Structure is back for its third year, and as the industry has grown, so have we! Structure 2010 introduces a two-day format to accommodate demand for more content and networking time. Join us June 23–24, 2010, in San Francisco, as we shape the future of the cloud industry.

Cloud computing has caught the technology world’s imagination. At Structure 2010, we celebrate cloud computing and recognize that it is just one part of the fabric that makes up the global compute infrastructure we rely on daily. From broadband to hardware and software, we look at the whole picture and predict the directions the industry will take.

Whether you’re in a corporation looking to learn about infrastructure best practices or an entrepreneur looking for your next venture, Structure 2010 is a must-attend event that can provide you with the best ideas, contacts and thinkers.

At GigaOM, we don’t just talk about cloud computing once a year. It’s a part of our ongoing editorial coverage on GigaOM and GigaOM Pro every day. …

Windows Azure is one of the “Primetime Sponsors.”

IDC and IDG Enterprise will present The Cloud Leadership Forum on 6/13 to 6/15/2010 at the Santa Clara Convention Center, Santa Clara, CA:

The IDG/IDC Cloud Leadership Forum isn’t just any cloud event. It’s the place where the industry will set direction and clear out obstacles to market success, while senior IT executives get answers to their most pressing concerns about adopting public, private and hybrid cloud models.

This exclusive event will convene the cloud industry’s most influential vendor executives and senior IT executives from leading companies to examine, debate and decide the issues critical to the success of public and private clouds.

Our esteemed experts, practitioners and industry-leading technology companies will lead lively discussions that:

- Spotlight the most promising immediate and long-term applications of cloud

- Explore the industry and customer partnerships that can accelerate cloud’s adoption

- Close technology gaps, and

- Set the agenda for the evolution of cloud.

You will get answers to your most pressing questions, such as:

• What is the business case and business value of different cloud models?

• How do I choose what to move to the cloud, and then how do I do it?

• What do my peers’ cloud implementations look like, and how and why did they make the choices they did?

• What are my key vendors doing to advance cloud security and interoperability?

• How are my peers overcoming any concerns about security and compliance?

• What should I be doing now to prepare my team for this new world?

Register now to join this important conversation about the vision, the reality and the direction of cloud. Have a voice in the evolution of this new computing paradigm and get the answers you need to build a technology strategy that leverages the best of the different cloud models and fulfills your business’ needs. Register today.

Click here for a registration form with a complimentary code.

Dave Nielsen announced OpsCamp Boston on 4/22/2010 (and San Francisco on 5/15/2010) in this 4/15/2010 post:

It’s been a while since OpsCamp Austin and I thought we were due for an update.

We have planned two more OpsCamps: one in Boston (next Thursday) organized by John Willis & John Treadway, and one in San Francisco (May 15th) organized by Mark Hinkle, Tara Spalding, Erica Brescia and myself. If you know of anyone in those towns, then please send them a link to http://opscamp.org

You might also be interested in CloudCamp Austin #2 which is scheduled for June 10th.

Finally, John “Botchagalupe” Willis wrote up a summary of OpsCamp Austin, which he posted to the OpsCamp Discussion Group – http://groups.google.com/group/opscamp. I have included it below for your convenience. Michael Cote also wrote up a summary.

Hope to see you at an upcoming OpsCamp or CloudCamp. Either way, keep on Camping!

Dave Nielsen (http://twitter.com/davenielsen)

tbtechnet announced Windows Azure Virtual Boot Camp II April 19th - April 26th 2010 on 4/16/2010:

After the huge success of the Windows Azure Virtual Boot Camp I, here comes…

Virtual Boot Camp II

Learn Windows Azure at your own pace, in your own time and without travel headaches.

Special Windows Azure one week pass provided so you can put Windows Azure and SQL Azure through their paces.

NO credit card required.

You can start the Boot Camp any time during April 19th - 26th and work at your own pace.

The Windows Azure virtual boot camp pass is valid from 5am USA PST April 19th through 6pm USA PST April 26th.

tbtechnet continues with the steps required to prepare for the Virtual Boot Camp.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

• Liz McMillan asserts “Enriching its on-demand, global cloud computing solution - Verizon Computing as a Service, or CaaS” in her Verizon Enhances On-Demand Cloud Computing Solution post of 4/15/2010:

As more companies move to embrace cloud computing, Verizon Business is enriching its on-demand, global cloud computing solution - Verizon Computing as a Service, or CaaS. The enhancements provide business customers with better control and flexibility over their computing environments.

Based on customer demand, Verizon recently added the following features to CaaS:

- Server Cloning - Provides IT administrators with the option to customize the configuration of a CaaS virtual server and then create a golden, or reference, server image. This eliminates the need to manually create the same server image multiple times and enables the rapid deployment of server clones supporting the same corporate application.

- Application and Operating System Expansion - The SUSE Linux operating system is now supported on the Verizon CaaS platform as a standard service offering. Linux software is used with commonly deployed enterprise resource planning packages. In addition, Microsoft SQL Server 2008 has been added as a "click-to-provision" database server option.

- Expanded Networking Flexibility - Enterprises now have expanded and streamlined networking options - virtual router and shared virtual private networks, including Verizon Private IP - for connecting back-end systems to Verizon CaaS via the online portal. In addition, customers can purchase metered, burstable bandwidth up to 1Gbps to meet immediate requirements for temporary computing capacity.

In addition to adding new features, Verizon has successfully completed the first annual SAS 70 Type II examination of controls for its CaaS data centers. SAS 70, a widely recognized auditing standard performed by a third party accounting firm, is conducted in compliance with the standards set by the American Institute of Certified Public Accountants for examining specific areas such as access to facilities, logical access to systems, network services, operations and environmental safeguards. This examination demonstrates that Verizon Business has controls and processes in place to manage and monitor its CaaS platform, as well as customers' critical applications and infrastructure, helping to ease concerns customers may have about migrating to the cloud for the delivery of critical IT services. …

You can learn more about Verizon’s CaaS platform here.

Alex Williams objects to Another Cloud Computing Acronym To Drive You Bonkers in this 4/16/2010 post:

Scanning the news the other day and what do we see but a reminder of the many acronyms in the cloud computing world. Again, it's a vendor with a made up name. This time it's Verizon with an update to its "Computing as a Service" or CaaS for short.

Acronyms abound in the cloud computing world - perhaps more than any other technology in play today. They are emerging at a rapid clip. It's understandable as cloud computing is so new and there are so many ways for it to be applied. But it's also frustrating.

Verizon's service looks solid. But the name creates more confusion. Get this: Verizon also offers "Everything as a Service." That takes the cake, or should we say... muffin!

It makes the whole concept of cloud computing a bit confusing as you try to understand what really is available. It becomes an issue of "what is it now?"

This week's other imaginative term - Virtualization as a Service - from Salesforce.com and VMware. It's the center issue for our Weekly Poll: What does Virtualization as a Service Really Mean?

Dave Geada, vice president of marketing at StrataScale, had this to say about what it means:

“I think [new names are] a lot of unwarranted marketing hype (and that means something coming from a marketer). Knowing very little about the announcement, I would guess that the two are partnering in order to provide a platform where Force.com partners can deploy integrated solutions to a VMware enabled Salesforce cloud. In essence these providers would have a one-stop-shop for delivering their solutions to market instead of having to rely on an assortment of hosting partners to deploy their solutions.

“A joint platform initiative like the one I just described would also benefit enterprises who could host their own customized VM appliances on this cloud and easily integrate them with their Salesforce implementations and Force.com applications. In doing so VMware would be able to access a segment of the market where it's been having some difficulties (i.e. SMB ISVs) and Salesforce would benefit from providing a more comprehensive solution to their partner ecosystem.

“If I'm right about this (and I reserve the right to be wrong), isn't that a much more compelling story than the mumbo-jumbo we're dealing with now? Cloud providers should demonstrate some more restraint in throwing the "cloud" label around and turn the conversation back around to the value that they're providing to customers and partners. And high profile providers like Salesforce and VMware should be setting the example.”

We expect these acronyms will filter out over time. Or perhaps VaaS and CaaS will stand the test of time. It's just too early to tell.

Until then, how about a muffin?

<Return to section navigation list>