Windows Azure and Cloud Computing Posts for 4/14/2010+

Windows Azure, SQL Azure Database and related cloud computing topics now appear in this weekly series.

Update 4/16/2010: Corrected spelling of Brent Stineman’s last name (see Live Windows Azure Apps, APIs, Tools and Test Harnesses section).

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).


Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

On 9/29/2009, Wrox’s Web site manager posted a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage,” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated in April 2010 to reflect the January 4, 2010 commercial release.

Azure Blob, Table and Queue Services

Maarten Balliauw recently uploaded his 46-slide Windows Azure Storage, SQL Azure: Developing with Windows Azure storage and SQL Azure deck to SlideShare.

<Return to section navigation list>

SQL Azure Database, Codename “Dallas” and OData

Sean Gillies describes a new source of Geographic OData in this 4/15/2010 post:

I've been slow to catch up with Microsoft's OData initiative, but saw a link to geographic OData in the blog of Michael Hausenblas: Edmonton bus stops.

It's an Atom feed with extensions, including location,

<content type="application/xml"> <m:properties> <d:PartitionKey>1000</d:PartitionKey> <d:RowKey>3b57b81c-8a36-4eb7-ac7f-31163abf1737</d:RowKey> <d:Timestamp m:type="Edm.DateTime">2010-01-14T22:43:35.7527659Z</d:Timestamp> <d:entityid m:type="Edm.Guid">b0d9924a-8875-42c4-9b1c-246e9f5c8e49</d:entityid> <d:stop_number>1000</d:stop_number> <d:street>Abbottsfield</d:street> <d:avenue>Transit Centre</d:avenue> <d:region>Edmonton</d:region> <d:latitude m:type="Edm.Double">53.57196999</d:latitude> <d:longitude m:type="Edm.Double">-113.3901687</d:longitude> <d:elevation m:type="Edm.Double">0</d:elevation> </m:properties> </content>

but the location is tied up inside an OData payload. A GeoRSS processor (such as GMaps) has no clue [map].

Pleiades is also taking an Atom-based approach to data, but does use GeoRSS. There were no bus stops back in the day, but there were bridges [map].

Comments on Michael's blog post indicate that OData is designed around "rectangular" rather than "linked" data: Atom as an envelope for tabular, CSV-like data (like KML's extended data). Atom's content element was designed to accommodate this, but it seems clear to me that data, especially standard stuff like geographic location, is more visible outside the payload.
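To make the point concrete, here is a minimal Python sketch (standard library only) that lifts the latitude and longitude out of an OData entry's m:properties and emits a GeoRSS-Simple point that a GeoRSS processor could read. It assumes the standard OData (ADO.NET Data Services) namespace URIs; in a real feed those xmlns declarations sit on the feed's root element, so they are repeated on the content element here to keep the sample self-contained. The function name is hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace URIs used by OData Atom payloads and by GeoRSS-Simple.
M_NS = "http://schemas.microsoft.com/ado/2007/08/dataservices/metadata"
D_NS = "http://schemas.microsoft.com/ado/2007/08/dataservices"
GEORSS_NS = "http://www.georss.org/georss"

# Trimmed copy of the Edmonton bus-stop entry; namespace declarations added
# so that the fragment parses on its own.
SAMPLE_CONTENT = f"""<content type="application/xml" xmlns:m="{M_NS}" xmlns:d="{D_NS}">
  <m:properties>
    <d:stop_number>1000</d:stop_number>
    <d:latitude m:type="Edm.Double">53.57196999</d:latitude>
    <d:longitude m:type="Edm.Double">-113.3901687</d:longitude>
  </m:properties>
</content>"""


def odata_to_georss_point(content_xml: str) -> ET.Element:
    """Copy d:latitude/d:longitude out of an OData payload into a georss:point."""
    root = ET.fromstring(content_xml)
    props = root.find(f"{{{M_NS}}}properties")
    if props is None:
        raise ValueError("no m:properties element found")
    lat = props.findtext(f"{{{D_NS}}}latitude")
    lon = props.findtext(f"{{{D_NS}}}longitude")
    if lat is None or lon is None:
        raise ValueError("entry has no latitude/longitude properties")
    for value in (lat, lon):
        float(value)  # raises ValueError on non-numeric text
    point = ET.Element(f"{{{GEORSS_NS}}}point")
    point.text = f"{lat.strip()} {lon.strip()}"  # GeoRSS-Simple order is "lat lon"
    return point


if __name__ == "__main__":
    ET.register_namespace("georss", GEORSS_NS)
    print(ET.tostring(odata_to_georss_point(SAMPLE_CONTENT), encoding="unicode"))
```

Placing such an element alongside the Atom entry (rather than only inside the content payload) is what would let generic GeoRSS consumers map the stops.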

LarenC of the Microsoft Sync Framework team reports that Sync Framework 1.0 SP1 [is] Available for Download in this 2/13/2010 post:

Go here to download: http://bit.ly/9zXmWN.

For those of you using Sync Framework 1.0, we've just released a service pack that transitions the SQL Server Compact database provider to use a public-facing change tracking API that is new in SQL Server Compact 3.5 SP2. In SQL Server Compact, the change tracking feature is used to track information about all changes that occur in a given database and is used to retrieve metadata related to these changes. This public API gives you a finer grain of control and insight into the tracking of changes within a SQL Server Compact database. In previous versions of SQL Server Compact, the change tracking feature was automatically enabled the first time a synchronization operation was initiated, and you did not have the ability to interact directly with this feature. Sync Framework 1.0 SP1 leverages this new public API surface. Be aware that in some cases the additional API layers in the change tracking feature have resulted in performance degradation, so before you upgrade to Sync Framework 1.0 SP1, carefully consider whether your need for this public change tracking feature outweighs the performance impact.

This release also fixes a handful of bugs. For a complete list, visit the download page: http://bit.ly/9zXmWN.

If you install this update, you'll also undoubtedly want to upgrade to SQL Server Compact 3.5 SP2. This can be downloaded here: http://bit.ly/ccWO9a.

I’ve asked Laren in a comment whether SP1 is compatible with Azure Data Sync.

Colinizer’s Quick Tip: Add OData to your Windows Phone 7 CTP Silverlight Application in VS2010 RC of 4/14/2010 is a bit more than a “quick tip:”

So you downloaded the cool new tools for Windows Phone 7 Development and you want to connect to one of those amazing OData feeds… (okay, probably just the NetFlix one right now), but perhaps you’ve hit a snag or need a little help getting started.

First point:

You need OData client support, so:

Download the OData client for WP7.

Run the downloaded file to extract a copy of System.Data.Services.Client.

Add a reference to the DLL to your project.

Add an imports/using statement for System.Data.Services.Client.

Second point:

If you are still using the VS2010 RC (which you probably are as of today, as the current WP7 phone CTP is not compatible with the new VS2010 RTM), then you may have had a problem with “Add Service Reference”, specifically that it’s not appearing…

From the release notes:

The Add Service Reference option is not supported in the Windows Phone add-in to Visual Studio 2010. Workaround: Use Visual Studio 2010 Express for Windows Phone to add service references

So you can open up your project with VS 2010 Express for WP, which should also be on your machine. However, if you have a solution with other projects, they may not be supported in the Express SKU… life is getting complicated.

So, you can easily add the reference manually:

Open the VS2010 command prompt from the Start menu.

CD to your project directory

Issue a command similar to: datasvcutil /uri:http://odata.netflix.com/Catalog/ /dataservicecollection /language:CSharp /Version:2.0 /out:NetFlixRef.cs

Use Add Existing Item to add the proxy class (in this case NetFlixRef.cs).

You then add an imports/using statement for the namespace in the class and off you go…

Add code similar to this to get started on the binding…

var container = new SomeContainer(new Uri("http://odata.netflix.com/Catalog/", UriKind.Absolute));

var query = from e in container.SomeEntitySet select e; // replace with your own LINQ query

var collection = new DataServiceCollection<SomeProxyEntity>();

someListBox.ItemsSource = collection;

collection.LoadAsync(query);

… and then bind away in your XAML.
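Under the covers, the OData client translates the LINQ query into an HTTP GET against the entity set, with system query options such as $filter, $orderby and $top in the query string. The Python sketch below builds such a URI by hand so you can see (or paste into a browser) roughly what the proxy ends up requesting. The helper is hypothetical, and the entity set name Titles and the property names in the example are assumptions for illustration, not a verified view of the Netflix catalog schema.

```python
from urllib.parse import quote, urlencode


def build_odata_query(service_root, entity_set, filter_expr=None,
                      orderby=None, top=None):
    """Return an OData query URI (hypothetical helper, not part of any SDK)."""
    options = []
    if filter_expr is not None:
        options.append(("$filter", filter_expr))
    if orderby is not None:
        options.append(("$orderby", orderby))
    if top is not None:
        options.append(("$top", top))
    uri = service_root.rstrip("/") + "/" + entity_set
    if options:
        # Keep "$" and "'" literal; percent-encode spaces and other reserved characters.
        uri += "?" + urlencode(options, safe="$'", quote_via=quote)
    return uri


print(build_odata_query("http://odata.netflix.com/Catalog/", "Titles",
                        filter_expr="ReleaseYear eq 2009", orderby="Name", top=5))
```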

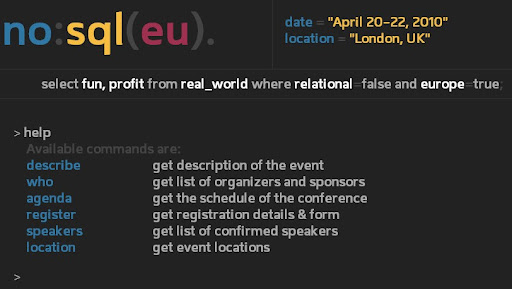

James Governor recommends that you Don’t Believe the Hype, Come to NoSQL EU April 20-22 in this 4/14/2010 post to his MonkChips blog:

One of the hottest topics in software development right now is what is being called NoSQL. What is that? I kind of like the definition from nosql-database.org:

“NoSQL databases mostly address some of the points: being non-relational, distributed, open-source and horizontal scalable. The original intention has been modern web-scale databases. The movement began early 2009 and is growing rapidly. Often more characteristics apply as: schema-free, replication support, easy API, eventually consistency / BASE (not ACID), and more. So the misleading term “nosql” (the community now translates it mostly with “not only sql”) should be seen as an alias to something like the definition above.”

For as long as RedMonk has been in business we have questioned relational-for-everything orthodoxy, so NoSQL is very much a natural fit for us. Here for example is Stephen talking to Breaking The Relational Chains in 2005. Of course you could say Stephen was just channeling the work of Adam Bosworth – in our database work we’ve been failing to Learn From The Web – but what is RedMonk’s role if not to understand and hopefully amplify points made by the smartest developers on the planet?

Anyway – I had been planning to run a show in London called LessSQL (I found the binary position in “NoSQL” somewhat unhelpful) when we came across plans to put on NoSQL EU. Rather than compete, it made sense to join Oredev and Emil “Neo4J” Eifrem in running a great show.

David Robinson presented this 00:05:05 SQL Azure video description for Microsoft Showcase recently. Dave is the Senior Program Manager for SQL Azure and the technical editor of my Cloud Computing with the Windows Azure Platform book.

Cory Fowler’s Ring, Ring, Ring, Bring out your Data announcement of 4/10/2010 in the Guelph Coffee & Code site begins:

Cory Fowler, Founder of Guelph Coffee and Code, decided to rally the community to create a movement to get Guelph to open up its data. The movement began with creating a social presence on Twitter, under the username @OpenGuelph which provided a channel to tweet the benefits that OpenData could bring.

From there Cory went on to respond to a recent blog entry “Let’s Talk – about Guelph” by Honourable Mayor Karen Farbridge.

The City of Guelph informed Cory that they are currently working on an Open Data plan, and are open to suggestions on what to include in their documents. Cory hopes that he will be able to sit on an advisory board to help mold the open data initiative that is underway. He will be looking to the Guelph Coffee & Code members for their suggestions and ideas.

With Cory’s background in Microsoft’s Cloud Computing Platform, Windows Azure, and the recent release of the Open Data Protocol, he hopes that he will be a great asset to the City in their task.

Once the City releases the data publicly, Guelph Coffee and Code will be starting a number of projects that will consume the data using various technologies. Our goal with these projects is to enrich the Community of Guelph, while we teach new skills to our growing Information Technology sector.

Maarten Balliauw recently uploaded his 46-slide Windows Azure Storage, SQL Azure: Developing with Windows Azure storage and SQL Azure deck to SlideShare.

<Return to section navigation list>

AppFabric: Access Control and Service Bus

RockyH’s Azure Access Control Service Client Patterns Part 1 of 4/15/2010 begins:

One of the very cool features of the Windows Azure AppFabric, which is now commercially available as of the 12th of April 2010, is the Access Control service. This service provides authentication services for not only your Azure based cloud services, but your local services as well. It provides a foundation for federated identity across the Internet.

Here is the $0.50 explanation of Access Control.

The Windows Azure platform AppFabric Access Control service simplifies access control for Web service providers by lowering the cost and complexity of integrating with various customer identity technologies. Instead of having to address different customer identity technologies, Web services can easily integrate with AppFabric Access Control. Web services can also integrate with all identity models and technologies that AppFabric Access Control supports through a simple provisioning process and through a REST-based management API. Subsequently, Web services can allow AppFabric Access Control to serve as the point of integration for service consumers.

Here are some of the features of the AppFabric Access Control Service (ACS):

- Usable from any platform.

- Low friction way to onboard new clients.

- Integrates with AD FS v2.

- Implements OAuth Web Resource Authorization Protocol (WRAP) and Simple Web Tokens (SWT).

- Enables simple delegation.

- Trusted as an identity provider by the AppFabric Service Bus.

- Extensible to provide integration with any identity provider.
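As a rough illustration of what WRAP looks like on the wire for a service consumer, here is a Python sketch that does only the string handling (no network calls). It assumes the password-style token request, in which the client posts form-encoded wrap_name, wrap_password and wrap_scope fields to the ACS token endpoint, gets back a form-encoded body containing wrap_access_token, and then presents that token to the service in an `Authorization: WRAP access_token="..."` header. The function names and sample values are hypothetical; check the AppFabric SDK documentation for the exact endpoint URL and fields your issuer expects.

```python
from urllib.parse import parse_qs, urlencode


def build_token_request(name, password, scope):
    """Form-encoded body a consumer would POST to the ACS WRAP endpoint."""
    return urlencode({"wrap_name": name,
                      "wrap_password": password,
                      "wrap_scope": scope})


def parse_token_response(body):
    """Extract (and URL-decode) wrap_access_token from the issuer's response."""
    fields = parse_qs(body)
    if "wrap_access_token" not in fields:
        raise ValueError("response did not contain wrap_access_token")
    return fields["wrap_access_token"][0]


def authorization_header(token):
    """Value of the HTTP Authorization header sent to the protected service."""
    return f'WRAP access_token="{token}"'


request_body = build_token_request("consumer1", "secret", "http://localhost/service/")
token = parse_token_response(
    "wrap_access_token=Issuer%3Dexample%26HMACSHA256%3Dabc"
    "&wrap_access_token_expires_in=1200")
print(authorization_header(token))
```

Note that the token arrives URL-encoded inside the response body and is decoded before being placed in the header.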

Say, for example, that you want to make your Internet-exposed web application or web service available to Internet customers. You want some form of authentication, but you don’t want the hassle of reinventing an authentication system and storing the credentials of potentially thousands of Internet-based users. The ACS can help here.

I thought it would be a good idea to explain the basic patterns for each of the components involved in an Access Control scenario. There are always three components involved in these kinds of application scenarios:

- Service provider: The REST Web service.

- Service consumer: The client application that accesses the Web service.

- Token issuer: The AppFabric ACS itself.

There is a common interaction pattern that all applications integrating with the ACS will follow. This is best depicted in the diagram below:

Access Control service Integration Pattern Diagram

Graphic is from the Azure App Fabric SDK .chm help file.

It is important to note that Step 0, secret exchange, happens once during configuration of the ACS and does not require an ongoing connection between the service and the Internet-based ACS. When the secret key needs to be updated, for example, you use a tool like ACM.exe to set up a new key and distribute it to the service consumers.

I don’t want to discuss the SWT token contents, the WRAP protocol, and all of that stuff here. In this post I just want to give you the patterns that you will apply to your services and clients in order to interact with the ACS.

NOTE! The code examples here are trimmed down to demonstrate the discussed functionality only. They are NOT considered production ready as they do not contain any error handling, validation, or other security measures in them!

You might want to download the Azure App Fabric SDK and accompanying help file to try out some of the examples. …
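To connect Step 0 (the shared secret) with what the service provider does on each request, here is a minimal Python sketch of issuing and validating a Simple Web Token. It assumes the SWT layout used by ACS v1: form-encoded name/value pairs, with an HMACSHA256 pair appended last whose value is the Base64 HMAC-SHA256 of everything before "&HMACSHA256=", keyed with the Base64-decoded shared secret, plus an ExpiresOn claim in seconds since the Unix epoch. The helper names, claim values and key are made up, and like the trimmed samples above, this omits checks (trusted issuer, required claims, error reporting) a production service needs.

```python
import base64
import hashlib
import hmac
import time
from urllib.parse import parse_qsl, quote, unquote

SIG_SEPARATOR = "&HMACSHA256="


def _sign(unsigned: str, key_b64: str) -> str:
    """Base64 HMAC-SHA256 of the unsigned token, keyed with the decoded secret."""
    key = base64.b64decode(key_b64)
    digest = hmac.new(key, unsigned.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


def issue_swt(claims, key_b64):
    """Token issuer side: form-encode the claims and append the signature."""
    unsigned = "&".join(f"{quote(k, safe='')}={quote(str(v), safe='')}"
                        for k, v in claims)
    return unsigned + SIG_SEPARATOR + quote(_sign(unsigned, key_b64), safe="")


def validate_swt(token, key_b64, audience, now=None):
    """Service provider side: check signature, expiry and audience.

    Returns the claims as a dict, or None if the token is rejected.
    """
    unsigned, sep, encoded_sig = token.rpartition(SIG_SEPARATOR)
    if not sep:
        return None
    expected = _sign(unsigned, key_b64).encode("ascii")
    if not hmac.compare_digest(expected, unquote(encoded_sig).encode("utf-8")):
        return None  # tampered token or wrong shared secret
    claims = dict(parse_qsl(unsigned))
    now = time.time() if now is None else now
    try:
        expires_on = int(claims.get("ExpiresOn", "0"))
    except ValueError:
        return None
    if expires_on <= now:
        return None  # expired
    if claims.get("Audience") != audience:
        return None  # issued for a different service
    return claims


if __name__ == "__main__":
    key = base64.b64encode(b"0123456789abcdef0123456789abcdef").decode("ascii")
    token = issue_swt([("Issuer", "https://example.accesscontrol.windows.net/"),
                       ("Audience", "http://localhost/service/"),
                       ("ExpiresOn", int(time.time()) + 600)], key)
    print(validate_swt(token, key, "http://localhost/service/"))
```

Because both sides derive the signature from the same shared key, the service never has to call the ACS at request time, which matches the point that Step 0 needs no ongoing connection.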

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Brent Stineman’s Windows Azure Diagnostics – An Introduction post of 4/15/2010 describes Windows Azure Diagnostics configuration:

I’ve sat down at least a dozen times over the last few weeks to write this update, be it in my hotel after work or at the airport waiting for my flight. I either couldn’t get my thoughts collected well enough or simply couldn’t figure out what I wanted to write about. All that changed this week when I had a moment of true revelation. And I think it’s safe to say that when it comes to Azure Diagnostics… I get it.

First off, I want to thank a true colleague, Neil. Neil’s feedback on the MSDN forums, private messages, twitter, and his blog have been immensely helpful. Invariably, when I had a question or was stuck on something he’d sail in with just the right response. Neil, thank you!

November Launch – Azure Diagnostics v1.0

If you worked with Windows Azure during the CTP, you remember the RoleManager.WriteToLog method that used to be there for instrumenting Windows Azure applications. Well, as I blogged last time, RoleManager is gone and has been replaced by ServiceRuntime and Diagnostics. The changes were substantial, and the Azure team has done their best to get the news out about how to use them, from a recording of the PDC session (good job, Matthew) to the MSDN reference materials and, of course, lots of community support.

I managed to ignore these changes for several months, that is, until I was engaged to help a client do a Windows Azure POC. This required me to dive quickly and deeply into Windows Azure Diagnostics (WAD from here on out). There are some excellent resources on this subject out there, but what I was lacking for the longest time was a comprehensive idea of how it all worked together: the big picture, if you will. That changed for me this week when the final pieces fell into place.

Azure Diagnostics Configuration

Now if you’ve watched the videos, you know that Azure Diagnostics is not started automatically. You need to call DiagnosticMonitor.Start. And when you do that, you need to give it a connection to Azure Storage and optionally a DiagnosticMonitorConfiguration object. We can also call DiagnosticMonitor.GetDefaultInitialConfiguration to get a template set of Diagnostic configuration settings for us to modify with our initial settings.

This is the first thing I want to look at more closely. Why do you need a connection to Azure Storage? Why do I have to call the “Start” method?

The articles and session recordings you’ve seen explain that WAD persists information to Azure Storage, so you may assume that’s the only reason it needs the Azure Storage account. What you may not realize is that the configuration settings themselves are saved as an XML document in a special container in Azure Storage. We can see this by popping open a storage explorer (I’m partial to Cerebrata’s Cloud Storage Studio), dropping into blob storage, and finding the “wad-control-container” container. Within it is a virtual directory (blob containers can’t be nested) for each deployment that has called DiagnosticMonitor.Start, within that a level for each role, and finally a blob for each instance that contains that instance’s configuration file.

It’s this external storage of the configuration settings that allows us to change the configuration on the fly… REMOTELY, a useful tool when providing support for your hosted service. It’s also why I strongly recommend that anyone deploying a hosted service set aside an Azure Storage account specifically for diagnostic storage. By separating your application data from the diagnostic data, you can control access a bit better; the folks who support your application typically don’t need access to your production data.

DiagnosticMonitor.Start

So what happens when we call this “Start” method? I’ve done some testing and poked around a bit, and I’ve found out two things.

First, our configuration settings are persisted to Azure storage (as I mentioned above). If we don’t give DiagnosticMonitor a set of configuration settings, the default settings will be used. Second, the WAD process is started within the VM that hosts our service. This autonomous process is what loads our configuration settings and acts on them, collecting data from all the configured sources and persisting it to Azure storage.

Not much is currently known about this process (at least outside of MSFT). The only thing I’ve been able to verify is that it is a separate process but that it runs within the hosting VM. However, I believe it’s this process’s ability to monitor for changes in the configuration settings stored in Azure storage that allows us to perform remote management. And this ability is singularly important to my next topic.

Remote Monitor Configuration

So WAD also has the DeploymentDiagnosticManager class. This class is our entry point for doing remote WAD management. I’m going to dive into the actual API another day, so for this article I just want to give you an overview and explore how this API works.

OK, just a little ways above, I talked about how the configurations are stored in Azure Storage and that we can navigate a blob hierarchy (wad-control-container => <deploymentId> => <rolename> => <instanceid>) to get to the actual configuration settings. Remotely, we can traverse this hierarchy using the DeploymentDiagnosticManager, getting a list of roles, their instances, and finally their current WAD configuration. At this lowest point, we’re back to an instance of the DiagnosticMonitorConfiguration class, just like we had to begin with. The XML doc that is kept in Azure storage is really just a serialized version of this object.
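The traversal that DeploymentDiagnosticManager performs can be modeled as grouping blob names by path segment. The Python sketch below assumes, as described above, that each per-instance configuration blob inside wad-control-container is named <deploymentId>/<roleName>/<instanceId>; the IDs in the example are made up, and the real service may use naming details not covered here. It only reproduces the shape of the hierarchy, not the actual WAD API.

```python
from collections import defaultdict


def index_wad_config_blobs(blob_names):
    """Group wad-control-container blob names into deployment -> role -> [instances].

    Assumes names of the form "<deploymentId>/<roleName>/<instanceId>";
    anything else is ignored.
    """
    tree = defaultdict(lambda: defaultdict(list))
    for name in blob_names:
        parts = name.split("/")
        if len(parts) != 3 or not all(parts):
            continue
        deployment_id, role_name, instance_id = parts
        tree[deployment_id][role_name].append(instance_id)
    # Convert to plain dicts with sorted instance lists for stable output.
    return {dep: {role: sorted(instances) for role, instances in roles.items()}
            for dep, roles in tree.items()}


blobs = [
    "dep123/WebRole1/WebRole1_IN_0",
    "dep123/WebRole1/WebRole1_IN_1",
    "dep123/WorkerRole1/WorkerRole1_IN_0",
]
print(index_wad_config_blobs(blobs))
```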

So now that we have this, we can modify its contents and save it back to storage. Once so updated, WAD will pick up the changes and act on them. Be it capturing a new performance counter, or performing an on-demand transfer.
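Brent’s explanation suggests a simple poll-and-compare loop inside the WAD process. The Python sketch below models that idea with an in-memory stand-in for the configuration blob; it is a hypothetical illustration, not a description of how the actual monitor is implemented, and the XML strings in it are made up. With real blob storage, comparing the blob’s ETag would be a natural way to detect a change; here a SHA-256 digest of the content plays that role.

```python
import hashlib


class ConfigWatcher:
    """Hypothetical model of an agent that re-reads its configuration when it changes."""

    def __init__(self, read_config):
        self._read_config = read_config  # callable returning the current config bytes
        self._last_digest = None

    def poll(self):
        """Return the new configuration if it changed since the last poll, else None."""
        data = self._read_config()
        digest = hashlib.sha256(data).hexdigest()
        if digest == self._last_digest:
            return None
        self._last_digest = digest
        return data


# In-memory stand-in for the per-instance configuration blob.
store = {"config": b"<Config><TransferPeriod>PT5M</TransferPeriod></Config>"}
watcher = ConfigWatcher(lambda: store["config"])
print(watcher.poll() is not None)  # first poll picks up the initial settings
print(watcher.poll())              # unchanged, so nothing to apply
store["config"] = b"<Config><TransferPeriod>PT1M</TransferPeriod></Config>"  # "remote" edit
print(watcher.poll())              # the edited settings are returned
```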

Brent continues with “Configuration Best Practices” and “So What’s Next” topics.

David Linthicum offers A Step-by-Step Guide for Deploying eCommerce Systems in the Cloud in this 4/15/2010 post to GetElastic.com:

How does one deploy cloud computing for ecommerce systems? It’s really about the architecture, understanding your own requirements, and then understanding the cloud computing options that lay before you. Here is a quick and dirty guide for you.

Step 1: Understand the business case.

While it would seem that moving to the cloud is a technology exercise, the reality is that the core business case should be understood as to the potential benefits of cloud computing. This is the first step because there is no need to continue if we can’t make a business case. Things to consider include the value of shifting risk to the cloud computing provider, the value of on-demand scaling (which has a high value in the world of ecommerce), and the value of outsourcing versus in-sourcing.

Step 2: Understand your existing data, services, processes, and applications.

You start with what you have, and cloud computing is no exception. You need to have a data-level, service-level, and process-level understanding of your existing problem domain, as well as of how everything is bundled into applications. I covered this in detail in my book, but the short answer is to break your existing system or systems down to a functional primitive of any architectural components, or data, services, and processes, with the intention being to assemble them as components that reside in the cloud and on-premise.

Step 3: Select a provider.

Once you understand what you need, it’s time to see where you’re going. Selecting a cloud computing provider, or, in many cases, several, is much like selecting other on-premise technologies. You line up your requirements on one side, and look at the features and functions of the providers on the other. Also, make sure to consider the soft issues such as viability in the marketplace over time, as well as security, governance, points-of-presence near your customers, and ongoing costs.

Step 4: Migrate.

In this step we migrate the right architectural assets to the cloud, including transferring and translating the data for the new environment, as well as localizing the applications, services, and processes. Migration takes a great deal of planning to pull off successfully the first time.

Step 5: Deploy.

Once your system is on the cloud computing platform, it’s time to deploy it or turn it into a production system. Typically this means some additional coding and changes to the core data, as well as standing up core security and governance systems. Moreover, you must do initial integration testing, and create any links back to on-premise systems that need to communicate with the newly deployed cloud computing systems.

Step 6: Test.

Hopefully, everything works correctly on your new cloud computing provider. Now you must verify that through testing. You need to approach this a few ways, including functional testing, or how your ecommerce system works in production, as well as performance testing, testing elasticity of scaling, security and penetration testing.

If much of this sounds like the process of building and deploying a more traditional on-premise system, you’re right. What does change, however, is that you’re not in complete control of the cloud computing provider, and that aspect of building, deploying, and managing an ecommerce system needs to be dialed into this process.

The Windows Azure Team posted A Real World Windows Azure: Interview with Eduardo Pierdant, Chief Technical Officer at OCCMundial.com on 4/15/2010:

As part of the Real World Windows Azure series, we talked to Eduardo Pierdant, CTO at OCCMundial.com, about using the Windows Azure platform to deliver OCCMatch and the benefits that Windows Azure provides. Here's what he had to say:

MSDN: Tell us about OCCMundial.com and the services you offer.

Pierdant: OCCMundial.com is the largest job-listing Web site in the Mexican employment market, providing an online link between job seekers and opportunities. OCCMundial.com has more than 15 million unique visitors annually and posts more than 600,000 jobs each year.

MSDN: What was the biggest challenge OCCMundial.com faced prior to implementing the Windows Azure platform?

Pierdant: We developed a recommendation system, called OCCMatch, that uses a powerful algorithm to match job openings to candidate resumes. In the first three months after the launch, we were matching resumes and job opportunities more than 10,000 times every day. However, OCCMatch was somewhat limited because of constraints in computing power and storage capacity. We needed to scale up in order to connect 1.5 million resumes and 80,000 jobs. In fact, we would have had to increase our existing infrastructure tenfold, and that's simply not a cost-effective, viable option.

MSDN: Can you describe the solution you built with Windows Azure to help address your need for a powerful, scalable solution?

Pierdant: We're using a software-plus-services model. Our Web site, which we host on-premises, is built on the Windows Server 2008 operating system, Microsoft SQL Server 2005 data management software, and the Microsoft Silverlight 2 browser plug-in. Then, we use Windows Azure computing and storage resources to run the OCCMatch algorithm. OCCMundial.com can execute millions of OCCMatch operations in parallel, with as much processing power as needed. The system compresses and uploads files to a Blob Storage container and then selects information from resumes and job listings to be processed based on specific criteria. The results are distributed and e-mail notifications are sent to job applicants and hiring managers.
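The numbers in the interview show why this is a job for elastic compute: an exhaustive pairing of 1.5 million resumes with 80,000 jobs would be 1.5 × 10^6 × 8 × 10^4 = 1.2 × 10^11 candidate comparisons, before any filtering by criteria. The Python sketch below shows only the general shape of the approach described, splitting work into batches and gzip-compressing each batch before it would be uploaded to a blob container for parallel workers. The batch size, JSON payload format and function names are assumptions, not OCCMundial.com’s actual design.

```python
import gzip
import json

RESUMES = 1_500_000
JOBS = 80_000
CANDIDATE_PAIRS = RESUMES * JOBS  # exhaustive pairing, before any filtering


def make_batches(items, batch_size):
    """Split a list of work items into consecutive batches of at most batch_size."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    return [items[i:i + batch_size] for i in range(0, len(items), batch_size)]


def compress_batch(batch):
    """Serialize a batch as JSON and gzip it, ready to upload as a blob."""
    return gzip.compress(json.dumps(batch).encode("utf-8"))


def decompress_batch(payload):
    """Inverse of compress_batch, as a worker would do after downloading the blob."""
    return json.loads(gzip.decompress(payload).decode("utf-8"))


job_ids = list(range(1, 11))          # hypothetical job identifiers
batches = make_batches(job_ids, 4)    # three batches: 4 + 4 + 2 jobs
blobs = [compress_batch(b) for b in batches]
print(f"{CANDIDATE_PAIRS:,} candidate pairs; {len(blobs)} compressed batches")
```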

Tom Pisello describes “the migration from a legacy Java and Open Source development platform to the more powerful and flexible Microsoft solution stack” in this Alinean Announces Migration of Sales & Marketing Tool Platform with Microsoft Developer Tools press release of 4/15/2010:

Alinean … today announced the migration of its Software-as-a-Service (SaaS) Platform, Alinean XcelLive™, from Java, to Microsoft .NET Framework, Microsoft Silverlight 4, and Windows Azure.

This new Microsoft-based toolset will help enable B2B vendors to develop new sales tool campaigns more quickly, converting existing spreadsheet-based tools to rich internet applications with no application programming, and standardize and centralize their value-based demand generation and sales enablement programs for better efficiency and lower costs.

The Alinean platform is used by leading B2B solution providers to create and deliver Web-based interactive Demand Gen and Sales Tool applications such as Executive Assessments, Return on Investment (ROI) Calculators, and Total Cost of Ownership (TCO) Comparison Tools.

Launching anew in May 2010, the migration from a legacy Java and Open Source development platform to the more powerful and flexible Microsoft solution stack is designed to:

- Increase agility – decreasing time to market for innovative features from months to weeks with the .NET Framework

- Improve User Experience – improve the usability of the tools, and more easily add key graphical, animation, and navigation features with new Silverlight user interface.

- Improve usage tracking and reporting – using Microsoft SharePoint to more easily manage campaigns, manage user identity and access management, and develop and deliver compelling usage reports

- Improve scalability and performance – supporting dramatic increases in the number of customers supported, the number of concurrent users, and worldwide delivery with Windows Azure.

- Support both online and offline execution of the toolset

- Reduce TCO for growth, administration, support and maintenance

"Leading B2B solution providers rely on Alinean to develop and deliver value-based demand-gen campaigns and sales enablement programs," said Tom Pisello, CEO of Alinean. "By collaborating with Microsoft's integrated development environment and technology, we will be able to deliver these solutions to market faster, with more usability, performance and scalability at a lower cost than our legacy Java framework."

Brandon George recommends that you Get in the Cloud with Dynamics AX in this 4/15/2010 post:

I opened this year with a focus on calling 2010 'The Year of the Cloud.'

I truly believe, and have already seen, that 2010 is more than just the year of the cloud; it's really a kickoff point for a decade of cloud computing. Check out the following article from ZDNet: Will economic conditions trigger a cloud computing avalanche?

In it, ZDNet author Joe McKendrick points out that after major economic downturns, a new wave of technology advances takes place. And since we are just now coming out of a deep recession, does that mean a Cloud Revolution is upon us?

Well, I stick with what I have been saying all along: my answer is yes. Not only does the technology exist now, but the times are also right, from an economic standpoint, for projects to start getting kicked off.

So what does this mean for Dynamics AX? After all Dynamics AX is an ERP platform, so how can the cloud help Dynamics AX?

In my recent Interview with Microsoft Distinguished Engineer - Mike Ehrenberg, I asked Mike about the cloud and what Microsoft's vision is for Dynamics and Dynamics AX. His response was this:

"In Dynamics, and across Microsoft, we are focused on the ability to combine software plus services – on-premise assets with the cloud – using each to their maximum advantage to deliver the best capabilities to our customers. We are doing that today, extending our on-premise and on-line products with cloud-based services – in some cases developed by us, and increasingly working with others developed by partners." …

So with these three major areas for the cloud and Dynamics AX, we get an idea of the role Microsoft envisions the cloud can and will play in Dynamics AX ERP implementations for customers.

So what about how? Well, with Dynamics AX 2009, you can now work with .NET 4.0 and Visual Studio 2010 (i.e., Visual Studio 2010 and .Net 4.0 Passed Compatibility Testing with Dynamics AX). This means that creating and working with cloud projects is really easy.

All you need is Visual Studio 2010 with the VSCloud Service Project / Windows Azure tools installed. Once you have that, even without an Azure account, you can develop and debug cloud-based services, ASP.NET Web roles, and Worker Processes that can all be deployed and accessed via the cloud. This also means you can make use of SQL Azure, a cloud-based SQL Server data service.

Steve Marx explains how to get a Quick Status of Your Windows Azure Apps with PowerShell in this 4/14/2010 post:

Inspired by David Aiken’s one-liner, I’ve been playing around with what’s possible using PowerShell and the Windows Azure Service Management CmdLets. I thought I’d share two of my own one-liners that help me get the status of all my Windows Azure applications at once.

Here’s the first one-liner:

get-hostedservices -cert $cert -subscriptionid $sub | get-deployment production | where { $_.roleinstancelist -ne $null } | select -expandproperty roleinstancelist | group instancestatus | fl name, count

This counts how many of my instances are Ready, Busy, Initializing, etc. Here’s a sample output:

Here’s the other one-liner I came up with, slightly more complicated:

get-hostedservices -cert $cert -subscriptionid $sub | get-deployment production | where {$_.RoleInstanceList -ne $null} | ft servicename, @{expression={$_.roleinstancelist.count}; label='Instance Count'; alignment='left'}

This one lists all my production deployments along with the total number of instances that deployment has. Here’s a sample output:

Have you played yet with the Windows Azure Service Management CmdLets? Do you have any cool scripts to share? Drop me a line and let me know what you come up with.
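To make the logic of the two pipelines explicit, here is a minimal Python sketch that performs the same filter, flatten, and group steps over an in-memory list. It does not call the Service Management CmdLets; the `deployments` records and their field names (`service_name`, `role_instances`, `status`) are hypothetical stand-ins for the objects `get-deployment` returns.

```python
from collections import Counter

# Hypothetical stand-in for the objects get-deployment returns: one record per
# production deployment. Field names are illustrative, not the CmdLets' schema.
deployments = [
    {"service_name": "blogdemo", "role_instances": [{"status": "Ready"}, {"status": "Ready"}]},
    {"service_name": "workerdemo", "role_instances": [{"status": "Busy"}, {"status": "Initializing"}]},
    {"service_name": "emptyservice", "role_instances": None},  # dropped, like where { ... -ne $null }
]


def count_by_status(deployments):
    """First one-liner: flatten every role instance, then group and count by status."""
    statuses = (
        instance["status"]
        for d in deployments
        if d["role_instances"] is not None
        for instance in d["role_instances"]
    )
    return Counter(statuses)


def instances_per_service(deployments):
    """Second one-liner: one (service name, instance count) row per deployment."""
    return [
        (d["service_name"], len(d["role_instances"]))
        for d in deployments
        if d["role_instances"] is not None
    ]


if __name__ == "__main__":
    for status, count in count_by_status(deployments).items():
        print(f"{status}: {count}")
    for name, count in instances_per_service(deployments):
        print(f"{name:<15}{count}")
```

The `is not None` filter plays the same role as the `where` clause in both one-liners: a deployment with no role instances would otherwise break the flatten step in the first pipeline and the count in the second.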

Steve Marx shows you how to Printf(“HERE”) in the Cloud in this second post of 4/14/2010:

I don’t know about you, but when things are going wrong in my code, I like to put little printf() statements (or Console.WriteLine or Response.Write or whatever) to let me know what’s going on.

Windows Azure Diagnostics gives us a way to upload trace messages, log files, performance counters, and more to Windows Azure storage. That’s a good way to monitor and debug issues in your production cloud application.

However, this method has some drawbacks as compared to printf("HERE") for rapid development and iteration. It’s asynchronous, it’s complex enough that it might be the source of an error (like incorrect settings or a bad connection string), and it’s no good for errors that cause your role instance to reset before the messages are transferred to storage.

Another option is the Service Bus sample trace listener, which Ryan covered in Cloud Cover Episode 6. This is more immediate than Windows Azure Diagnostics, but it still feels a bit too cumbersome and doesn’t persist messages (so it’s only useful if you’re running the client while the error happens).

A Lower-Tech Solution

Recently, I’ve been using the following one-liner to record errors that I’m trying to track down in my Windows Azure application (spread out across multiple lines here for space reasons):

catch (Exception e)
{
    CloudStorageAccount.Parse("<your connection string here>")
        .CreateCloudBlobClient()
        .GetBlobReference("error/error.txt")
        .UploadText(e.ToString());
}

Basically, it puts whatever exception I caught into a blob called error.txt in a container called error. Pretty handy.

Steve continues with a “more complete and robust example.” …
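The essence of Steve’s one-liner is that the exception text is written synchronously to durable storage inside the catch block, so it survives even if the role instance resets moments later. The Python sketch below shows that pattern with the local file system standing in for blob storage; it does not use the Windows Azure storage client. `record_error` is a hypothetical helper, and the `error/error.txt` path simply mirrors the container and blob names in the one-liner.

```python
import pathlib
import tempfile
import traceback


def record_error(exc: BaseException, base_dir: pathlib.Path) -> pathlib.Path:
    """Hypothetical helper: write the full exception text to <base_dir>/error/error.txt.

    The "error" directory plays the role of the blob container; each call
    overwrites the previous error, just as re-uploading the same blob would.
    """
    target = base_dir / "error" / "error.txt"
    target.parent.mkdir(parents=True, exist_ok=True)
    text = "".join(traceback.format_exception(type(exc), exc, exc.__traceback__))
    # Synchronous write: the text is on disk before the except block finishes.
    target.write_text(text, encoding="utf-8")
    return target


if __name__ == "__main__":
    base = pathlib.Path(tempfile.mkdtemp())
    try:
        int("not a number")
    except ValueError as e:
        path = record_error(e, base)
        print(path.read_text(encoding="utf-8"))
```

Keeping the helper this small is the point: the fewer moving parts in the error path, the less likely the logging itself is the thing that fails.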

Bruce Kyle reports VIDIZMO Scales Videos, Learning Software With Windows Azure in this 4/13/2010 post to the US ISV Developer Community blog:

VIDIZMO delivers live broadcasts for training, learning, knowledge compliance, workforce development and corporate communication at scale using Windows Azure. In a video on Channel 9, my colleague Ashish Jamiman talks to Nadeem Khan, Vidizmo’s president about how and why Vidizmo selected Windows Azure.

See Vidizmo - Nadeem Khan, President, Vidizmo talks about technology, roadmap and Microsoft partnership.

Using Azure helps Vidizmo scale content delivery including video, PowerPoint presentations, audio, and its studio software. The delivery must be performant and be elastic to meet increasing demand.

Khan describes how Vidizmo currently uses its own data center in conjunction with Azure and will migrate all operations to Azure. …

Chris Caldwell makes the case for Microsoft Cloud Services: Windows Azure in Education in this recent 00:32:13 presentation on TechNet Radio:

The United States' current focus on education and accountability, from the district to the state and national levels, calls for new solutions that allow collaboration, deep reporting capabilities, and secure access. The cloud is the ideal platform for building such solutions.

Patrick McDermott, IT Director of the San Juan School District in Utah, USA has experience with IT solutions for education and is looking to Windows Azure as a way to help education become more accountable and provide better services to students, parents, teachers, administrators, and the US state and federal governments. Patrick talks with Karen Forster from Platform Vision and Sandy Sharma and Scott Golightly from Advaiya about Windows Azure and education.

The announcer claims that this is the first of a series.

Grzegorz Gogolowicz and Trent Swanson announced the availability of their Windows Azure Dynamic Scaling Sample from the MSDN Code Gallery on 4/9/2010:

A key benefit of cloud computing is the ability to add and remove capacity as and when it is required. This is often referred to as elastic scale. Being able to add and remove this capacity can dramatically reduce the total cost of ownership for certain types of applications; indeed, for some applications cloud computing is the only economically feasible solution to the problem.

The Windows Azure Platform supports the concept of elastic scale through a pricing model that is based on hourly compute increments. By changing the service configuration, either through editing it in the portal or through the use of the Management API, customers are able to adjust the amount of capacity they are running on the fly.

A question that is commonly asked is how to automate the scaling of a Windows Azure application. That is, how can developers build systems that are able to adjust scale based on factors such as the time of day or the load that the application is receiving? The goal for the “Dynamic Scaling Windows Azure” sample is to show one way to implement an automated solution to handle elastic scaling in Azure. Scaling can be as simple as running X servers during weekdays and Y servers during weekends, or very complex, based on several different rules. By automating the process, less time needs to be spent on checking logs and performance data manually, and there is no need for an administrator to manually intervene in order to add and remove capacity. An automated approach also has the benefit of always keeping the instance count at a minimum while still being able to cope with the current load; it allows the application to be run with a smaller amount of ‘headroom’ capacity and therefore at a lower cost. The sample as provided below scales based on 3 different variables, but the framework has been designed to be flexible in terms of future requirements.

This sample will discuss and demonstrate the concept of dealing with variable load through rule based scaling and then explain in detail the architecture, implementation and use of the provided sample code. We finish by discussing potential extensions to the framework to support other scenarios.

Download the source code for Dynamic Scaling and the 17-page Dynamic Scaling Azure Solutions documentation.
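The rule-based idea the sample describes can be illustrated with a small Python sketch that combines a schedule rule (“X servers on weekdays, Y on weekends”) with a load rule and clamps the result to a floor and a ceiling. This is not the sample’s code: `ScalingPolicy`, its default values, and the queue-length load rule are hypothetical. Because compute is billed in hourly increments, the sketch also counts weekly instance-hours for the schedule versus provisioning for the weekday peak all week.

```python
import math
from dataclasses import dataclass
from datetime import datetime


@dataclass
class ScalingPolicy:
    # All values are hypothetical, chosen only to illustrate the rules.
    weekday_baseline: int = 4      # "X" servers on weekdays
    weekend_baseline: int = 2      # "Y" servers on weekends
    items_per_instance: int = 100  # load rule: queued work items one instance can absorb
    min_instances: int = 2
    max_instances: int = 20


def target_instances(policy: ScalingPolicy, now: datetime, queue_length: int) -> int:
    """Evaluate the schedule rule and the load rule; the larger demand wins, then clamp."""
    baseline = policy.weekend_baseline if now.weekday() >= 5 else policy.weekday_baseline
    load_based = math.ceil(queue_length / policy.items_per_instance)
    desired = max(baseline, load_based)
    return max(policy.min_instances, min(policy.max_instances, desired))


def weekly_instance_hours(policy: ScalingPolicy) -> tuple:
    """Instance-hours per week: schedule-driven baseline vs. weekday peak held all week."""
    scheduled = 5 * 24 * policy.weekday_baseline + 2 * 24 * policy.weekend_baseline
    static = 7 * 24 * policy.weekday_baseline
    return scheduled, static


if __name__ == "__main__":
    policy = ScalingPolicy()
    print(target_instances(policy, datetime(2010, 4, 14, 12), queue_length=950))
    print(weekly_instance_hours(policy))
```

With these hypothetical defaults, the schedule alone needs 576 instance-hours per week against 672 for holding the weekday level all week; the load rule then adds instances only while the queue calls for them, which is the “smaller amount of headroom” argument in concrete form.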

Wayne Beekman explains The Business Opportunity Behind Azure in this 00:43:45 Channel 9 video segment:

We’ve all heard about the industry shift to the Cloud, but are you jumpstarting your Windows Azure business to be a part of it?

Wayne Beekman, co-founder of Information Concepts, made the decision early on that Cloud Computing was going to play an important role in the future of his company. Today he is a recognized thought leader and subject matter expert and has been featured in several technical publications for his work in cloud computing. In this session Wayne takes the opportunity to build on part 1 of our 2-part series, offering his perspective on cloud computing and Azure and his thoughts on how to engage in strategic discussions with your customers to uncover new opportunities to help grow your business.

<Return to section navigation list>

Windows Azure Infrastructure

Lori MacVittie questions the validity of third-party hardware reviews in her For Thirty Pieces of Silver My Product Can Beat Your Product post of 4/15/2010:

One of the side-effects of the rapid increases in compute power combined with an explosion of Internet users has been the need for organizations to grow their application infrastructures to support more and more load. That means higher capacity everything – from switches to routers to application delivery infrastructure to the applications themselves. Cloud computing has certainly stepped up to address this, providing the means by which organizations can efficiently and more cost-effectively increase capacity. Between cloud computing and increasing demands on applications there is a need for organizations to invest in the infrastructure necessary to build out a new network, one that can handle the load and integrate into the broader ecosystem to enable automation and ultimately orchestration.

Indeed, Denise Dubie of Network World pulled together data from analyst firms Gartner and Forrester and the trend in IT spending shows that hardware is king this year.

"Computing hardware suffered the steepest spending decline of the four major IT spending category segments in 2009. However, it is now forecast to enjoy the joint strongest rebound in 2010," said George Shiffler, research director at Gartner, in a statement.

That is, of course, good news for hardware vendors. The bad news is that the perfect storm of increasing capacity needs, massively more powerful compute resources, and the death of objective third-party performance reviews results in a situation that forces would-be IT buyers to rely upon third parties to provide “real-world” performance data to assist in the evaluation of solutions. The ability – or willingness – of an organization to invest in the hardware or software solutions to generate the load necessary to simulate “real-world” traffic on any device is minimal and unsurprising. Performance testing products like those from Spirent and Ixia are not inexpensive, and the investment is hard to justify because it isn’t used very often. But without such solutions it is nearly impossible for an organization to generate the kind of load necessary to really test out potential solutions. And organizations need to test them out because they, themselves, are not inexpensive and it’s perfectly understandable that an organization wants to make sure their investment is in a solution that performs as advertised. That means relying on third parties who have made the investment in performance testing solutions and can generate the kind of load necessary to test vendor claims.

That’s bad news because many third parties aren’t necessarily interested in getting at the truth; they’re interested in getting at the check that’s cut at the end of the test. Because it’s the vendor cutting that check and not the customer, you can guess whose interests are best served by such testing. …

Lori continues with “COMPETITIVE versus COMPARATIVE” and “THE GIGAFLUX FACTOR” topics.

Bill Lapcevic asserts “SaaS APM Tool Is Available on Demand to Provide Critical Visibility to Developers With Production Web Applications Deployed in Microsoft's Cloud” in this New Relic Announces Support for Management of Applications Deployed on Microsoft's Windows Azure Platform press release of 4/14/2010:

New Relic, Inc. … today announced that its flagship product, RPM, has been proven ready to manage Java- and Ruby-based web applications deployed on the Microsoft Windows Azure platform. This means that development, deployment and operations teams have a cloud-ready solution they can use to monitor, tune and optimize their critical web applications deployed in Microsoft's cloud services platform.

The Windows Azure platform is an internet-scale cloud services platform hosted in Microsoft data centers. It provides robust functionality that allows organizations to build any kind of web application -- from consumer sites to enterprise class business applications. The Windows Azure platform includes Windows Azure, a cloud operating system, Microsoft SQL Azure, and the Windows Azure platform AppFabric, a set of developer cloud services. Organizations with applications built and deployed on the Windows Azure platform can use New Relic RPM to track application performance and proactively identify the cause of bottlenecks.

"The Windows Azure platform provides scalability and reliability that enables our partners and customers to efficiently manage their businesses and respond rapidly to their users' needs," said Prashant Ketkar, Sr. Director of Product Management, Windows Azure. "New Relic RPM is a key enabler for successful deployment of Java or Ruby on Rails in the Windows Azure platform because it provides the visibility organizations need to ensure superior service delivery for their business-critical applications."

"Microsoft and New Relic share a firm commitment to helping organizations realize the compelling benefits of cloud computing," said Bill Lapcevic, New Relic's vice president of business development. "The combination of New Relic RPM, which is already used by more than 1500 organizations for monitoring their applications deployed in both public and private cloud computing environments, and the Windows Azure platform makes it easier to deploy and manage web applications that meet high standards for performance and availability."

Michael Coté delivers “Open” and the Cloud – Quick Analysis in this 4/14/2010 post to his People Over Process blog:

(One of our clients emailed recently to ask about the ideas of open source and cloud computing – what seems to be going on there at the moment? Stephen has tackled this problem several times – the fate of “open source” in a cloud world is a hot topic: many people have much heart and sweat tied up into the concepts of “open source” and want to make sure their beliefs and positions are safe or, at best, evolve. Here’s a polished up version of what I wrote in reply on the general topic:)

Public vs. private

Much of the software used in cloud computing is free and open, but in the cloud, running the software is what’s important. Being able to freely get, contribute to, and generally do open source is fine, but when you have to spend millions of dollars to set up and run your own cloud, the open source software you rely on is a small, but important part. And if you’re running your own private cloud, at the end of the day you’re just running on-premise software, and I don’t expect that to change the dynamics of open source – e.g. the same open/core models will evolve (with small exception from “telemetry” enabled services) and the same concerns of lock-in, community, collaboration, payments, and “freedom” that have existed in the open source world will probably still exist.

For the most part, getting involved in the cloud involves using a cloud, not building your own. (See the “private cloud” exception, here.) When it comes to consuming and building on the cloud, the first dynamic that changes is that you’re paying for the use of the cloud, always. Contrast this with on-premise open source where, according to conversion numbers I usually hear from vendors, you often get a free ride for a long time, if not forever. I can’t stress enough how much this change from “free as in beer” to actually paying for using software means: actually paying for IT from day one, even if it’s just “pennies per hour.”

Michael continues with detailed “’Freedom’ in cloud-think,” “Aggregated Analytics” and “Thinking by counter-example” topics.

David Linthicum asserts “As small companies get bigger, cloud-provided agility will let them outpace traditional competitors” in his The cloud is just starting to impact small business 4/14/2010 post to InfoWorld’s Cloud Computing blog:

I took part in a talk yesterday at St. Louis University on cloud computing and small business -- specifically, its benefits.

Clearly, cloud computing is having the largest impact on small business. We've all heard the stories about startups that set up their supporting IT infrastructure for a fraction of what it cost a few years ago, just by leveraging the cloud.

However, the impact of the movement to cloud computing by small business won't really be felt until those businesses grow up. While it's not unusual to find a startup using development, accounting, and sales management resources from the cloud, that kind of usage is only interesting when it's in the service of fewer than 50 employees. But when a company has more than 5,000 employees, it's downright disruptive.

Some smaller companies have the luxury of not having existing legacy systems to consider, so they've been able to use cloud computing as an IT option from the beginning; these companies, as they grow, could have the cloud serving as much as 75 percent of their IT requirements as they approach $1 billion in revenue.

But I don't believe that we'll have today's medium-size to large companies running on just "the cloud." In fact, if you find cloud usage exceeding 1 percent within the Global 2000 -- or the government -- it should be headline news. Larger organizations are currently kicking the cloud computing tires, and I suspect their adoption will be very slow.

James Urquhart analyzes Cloud computing and the economy in this 4/13/2010 post to C|Net News’ Wisdom of Clouds blog:

One of the most common statements made by IT vendors and "experts" about cloud-computing models is that somehow the economic crisis of the last few years is pushing enterprises to use cloud to save money on IT operations costs. Statements like the following are all too common:

“The trend toward cloud computing, or Software-as-a-Service (SaaS), has accelerated during the economic crisis.”

Or this one:

“The fact that cloud computing is becoming a growing focus of attention right now seems to be no accident. ‘It is clear that the economic crisis is accelerating the adoption of cloud computing,’ explains Tommy Van Roye, IT-manager at Picanol.”

Apparently, the idea is that tightening budgets have opened the minds of enterprises everywhere to the possibilities of cloud computing. That, in turn, seems to suggest that IT is somehow cheaper when run in cloud models.

That may or may not be the case, but I think the concept that the economic recession is driving interest in cloud is off the mark. What is driving enterprises to consider the cloud is ultimately the same thing that drives start-ups into the cloud: cash flow. Cash flow and the agility that comes from a more liquid "pay as you go" model.

The fact that the economy is recovering at the same time is just coincidence, in my opinion.

If you think about it, it makes perfect sense that no matter how much money an IT organization has in its coffers, or what the growth prospects are for its parent company or its industry, that organization is suffering from problems that have nothing to do with budget, and everything to do with a capital-intensive model. …


<Return to section navigation list>

Cloud Security and Governance

Mark O’Neill asks Security, Availability, and Performance - Sound familiar? in this 4/13/2010 post:

It's worth revisiting the IDC Study on the key challenges facing Cloud Computing. The top three are: Security, Availability, and Performance.

Availability is often seen as being under the general "security" umbrella (e.g. a Denial-of-Service attack affects availability), so #1 and #2 are linked.

#3 on the list, performance, has particular relevance to Cloud Service Broker models, since it is vital that anything acting as an intermediary between the consumer and the cloud not introduce undue latency. This is also a consideration in the world of SOA, where intermediaries (such as an XML Gateway) must be high-performance and must also, in fact, offload functionality from applications (thus providing acceleration).

A Cloud Service Broker also addresses the interoperability issues seen further down IDC's list, by smoothing over the differences between Cloud APIs, and indeed between different versions of the same Cloud API from the same Cloud provider.
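A Cloud Service Broker’s job of smoothing over differences between Cloud APIs, and between versions of one provider’s API, is essentially the adapter pattern. The Python sketch below assumes two made-up providers, one of which changed its response shape between versions; each adapter translates its raw response into one common form, so consumers never see which API or version answered. The provider functions, field names, and `CloudServiceBroker` class are invented for illustration and do not correspond to any real cloud API or broker product.

```python
from typing import Callable, Dict, List


# Hypothetical raw responses: two versions of "provider A" with different field
# names, plus a "provider B" with its own shape. None of these are real APIs.
def provider_a_v1_list() -> dict:
    return {"servers": [{"id": "a-1"}, {"id": "a-2"}]}


def provider_a_v2_list() -> dict:
    return {"items": [{"serverId": "a-1"}, {"serverId": "a-2"}]}


def provider_b_list() -> list:
    return [{"InstanceName": "b-1"}]


# One small adapter per API/version maps the raw shape to a plain list of IDs.
ADAPTERS: Dict[str, Callable[[], List[str]]] = {
    "provider-a/v1": lambda: [s["id"] for s in provider_a_v1_list()["servers"]],
    "provider-a/v2": lambda: [s["serverId"] for s in provider_a_v2_list()["items"]],
    "provider-b": lambda: [s["InstanceName"] for s in provider_b_list()],
}


class CloudServiceBroker:
    """Consumers call list_instances(); the broker hides which API and version answered."""

    def __init__(self, adapters: Dict[str, Callable[[], List[str]]]):
        self._adapters = adapters

    def list_instances(self, target: str) -> List[str]:
        try:
            adapter = self._adapters[target]
        except KeyError:
            raise ValueError(f"no adapter registered for {target!r}") from None
        return adapter()


if __name__ == "__main__":
    broker = CloudServiceBroker(ADAPTERS)
    for target in ADAPTERS:
        print(target, broker.list_instances(target))
```

Each adapter is a single dictionary lookup and list comprehension, which reflects O’Neill’s performance point: the translation layer should add as little work as possible to the request path.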

<Return to section navigation list>

Cloud Computing Events

The Event Processing Symposium 2010 program committee, in cooperation with OMG™, announced additional sessions in its Event Processing Symposium 2010: New Presentations Added release:

The symposium will be held in Washington D.C., on May 24-25, 2010. The Call for Participation is open through April 15, 2010. For more information visit http://www.omg.org/eps-pr.

Featured Sessions

- Smart Systems and Sense-and-Respond Behavior: The Time for Event Processing is Now by W. Roy Schulte, Vice President and Distinguished Analyst, Gartner

- Analyze, Sense, and Respond: Identifying Threats & Opportunities in Social Networks, by Colin Clark, Chief Technology Officer, Cloud Event Processing, Inc.

- Case Study: Event Distribution Architecture at Sabre Airline Solutions, by Christopher Bird, Chief Architect at Sabre Airline Solutions

- Event Processing – Seven Years from Now, by Opher Etzion, IBM Senior Technical Staff Member and chair of the Event Processing Technical Society

- Events, Rules and Processes – Exploiting CEP for "The 2-second Advantage," by Paul Vincent, CTO Business Rules and CEP, TIBCO Software

At the Event Processing Symposium 2010, attendees will:

- Learn how Event Processing enables agencies and corporations to profit from continuous intelligence at the Event Processing Symposium 2010, hosted by the new Event Processing Community of Practice.

- Hear from industry pioneers, leading vendors, and early adopters on Event Processing technologies and techniques that increase mission and business visibility and responsiveness.

- Interact with industry experts, leading adopters, and peers in round table discussions on business analysis, information analysis, management techniques, and technologies to create an environment for continuous intelligence.

- Influence, participate in, and benefit from the rise of event processing as we launch the Event Processing Community of Practice.

For more information about the Call for Participation visit http://www.omg.org/eps-pr. Submit abstracts to Program Chair Brenda M. Michelson via e-mail at brenda@elementallinks.com.

Registration & Information

Everyone with an interest in Event Processing is invited to attend. The registration cut-off for the Westin Arlington Gateway is May 3, 2010. Hotel and registration information is available at http://www.omg.org/eps-pr.

David Aiken’s Windows Azure FireStarter Deck post of 4/9/2010 claims:

A couple of folks have asked about my deck from this week’s event, so here it is.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Phil Wainwright analyzes FinancialForce.com and its Chatterbox application in his Building Disruptive Business Structures in the Cloud post of 4/15/2010:

Sometimes it seems that we miss the most obvious things with all our buzzword discussions about cloud computing and social media. The essential change enabled by these technology artefacts is quite simply that we can all communicate and collaborate with each other while we’re on the move. So here’s a simple message to understand and act upon: businesses that can harness that essential change will outcompete their rivals because they will be able to react much faster while operating with much lower costs.

This was brought home to me when I had a preview of the new Chatter-enabled FinancialForce.com — an accounting application that incorporates Salesforce.com’s Twitter-like notifications stream — ahead of its announcement last week [disclosure: Salesforce.com is a recent consulting client]. In fact, the vendor has gone further than simply Chatter-enabling its application (easy enough, as it is built on Salesforce’s Force.com platform, which incorporates Chatter). It has released an application called Chatterbox, which can be used to build rules to initiate a Chatter stream around any Salesforce or Force.com object.

But what struck me was how the vendor is using its creation internally. The joint venture company that develops and sells the application is a geographically dispersed team of 55 staff working in six locations spread across the US, UK and Spain — on top of which, many are often homeworking or on the road — and they are all in constant contact with each other. Imagine how overstretched such a small organisation would have felt just a few years ago with such a dispersed operation. See how agile and efficient it can be using today’s Internet-enabled collaboration technologies. …

Carl Brooks (@eekygeeky) reports Verizon soups up cloud Computing as a Service in a 4/15/2010 post to the SearchCloudComputing.com blog:

Verizon is adding "enterprise-class" features to its Computing as a Service (CaaS) and claims the cloud computing offering is a runaway success among enterprise customers, although it came up short on naming any of them.

Along with advanced virtual networking, the telco is adding the ability to customize operating system images and elastic bandwidth fees for users who have occasionally high throughput needs but don't want to pay for excess bandwidth capacity at other times.

Verizon said it will offer Verizon Private IP, giving users the ability to completely isolate their CaaS environments from the Internet and use only backhaul communications links, an attractive feature for enterprises that view Internet exposure as a downside in their IT environments.

"By addressing the high end, it certainly gives some validity to the market space -- people have been saying forever that no enterprise will want to do this," said Amy DeCarlo, principal analyst at Current Analysis.

She said that Verizon is hoping it can throw its weight around and capture enterprise customers who can appreciate the economic arguments for adopting cloud but can't get past the drawbacks of services like Amazon Web Services. She said the upgrades are a positive sign for cloud services.

"Verizon seems to be making some progress here," she said. …

More telco-related links from Carl:

- Verizon enters the cloud market

- AT&T squares up to Amazon EC2

- Verizon offers lessons in cloud computing

Bruce Guptil warns Death at Cloud Speed: Novell, Palm Follow Similar, Avoidable Paths in this 4/15/2010 Saugatuck Research Alert (site registration required). From the “What Is Happening?” section:

Two once-great titans of their industries – Palm and Novell – have fallen far, and fallen hard, to the point where they are seeking buyers, and face being divested into their component parts by potential buyers. While vastly different in the markets they serve and in their technologies, their similar, concurrent downward spirals are the result of very similar business and organizational management strategies and actions that are anathema to success in the emergent Cloud IT era.

Both Novell and Palm were pioneers of technology and software use in their respective markets. Both developed and sustained significant, long-term market leadership positions, becoming Master Brands with large ecosystems of partners and developers that standardized development, marketing, and sales on their technologies and positioning. Within user IT and business organizations, both became de facto standards for relevant IT buying and use. Both offer significant technologies and capabilities that are widely sought and used in this era of Cloud-based, mobile computing:

- Novell’s SUSE Linux and ZENworks are critical components of Cloud IT with widespread developer support, and its GroupWise offerings address core needs of IT providers and users seeking collaborative solutions.

- Palm’s OS is well-designed and attractive to developers, and its latest line of smartphones combines advanced capabilities and interfaces that meet or exceed user requirements.

However, both firms are scrambling to stave off imminent closure via acquisition, albeit in somewhat different manners….

Jon Brodkin claims “Vendors pushing 'VMforce', but aren't saying what it is yet” in his VMware, Salesforce.com to unveil mysterious cloud computing service post of 4/13/2010 to NetworkWorld’s Cloud Computing blog:

VMware and Salesforce.com are on the verge of redefining the entire virtualization and cloud computing market -- or, at least, that's what they want you to think.

The companies have set up a Web site called "VMforce.com," and promise that they will unwrap the details on April 27 with "an exciting joint product announcement on the future of cloud computing." The marketing site features a picture of Salesforce CEO Marc Benioff and Paul Maritz, the former Microsoft executive who became VMware's CEO almost two years ago. But it contains zero details on what VMforce might actually be.

It's not hard to make a few guesses, though, based on the name "VMforce.com," and past products and strategy announcements from VMware and Salesforce.

Salesforce has a cloud service that lets businesses quickly develop applications and host them in the Salesforce infrastructure. The service is called Force.com, and is in the "platform-as-a-service" portion of the cloud computing market.

VMware, meanwhile, believes its technology should be the primary virtualization engine behind platform-as-a-service offerings. Toward that end, VMware acquired SpringSource, an enterprise Java vendor, and Rabbit Technologies, which makes an open source messaging platform which may make it easier to build cloud networks.

Add the "VM" from VMware to "Force.com" and you have, well, "VMforce.com." …