Windows Azure and Cloud Computing Posts for 1/25/2010+

| Windows Azure, SQL Azure Database and related cloud computing topics now appear in this weekly series. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Table and Queue Services

- SQL Azure Database (SADB)

- AppFabric: Access Control, Service Bus and Workflow

- Live Windows Azure Apps, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts, Databases, and DataHubs*”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated for the November CTP in January 2010.

* Content for managing DataHubs will be added as Microsoft releases more details on data synchronization services for SQL Azure and Windows Azure.

Off-Topic: OakLeaf Blog Joins Technorati’s “Top 100 InfoTech” List on 10/24/2009.

Azure Blob, Table and Queue Services

Ryan Dunn reviews Clumsy Leaf Software’s CloudXplorer in a Neat Windows Azure Storage Tool post of 1/25/2010:

I love elegant software. I knew about CloudXplorer from Clumsy Leaf for some time, but I hadn't used it for a while because the Windows Azure MMC and MyAzureStorage.com have been all I need for storage. Also, I have a private tool that I wrote a while back to generate Shared Access signatures for files I want to share.

I decided to check out the progress on this tool and noticed in the change log that support for Shared Access signatures is now included. Nice! So far, this is the only tool* that I have seen handle Shared Access signatures in such an elegant and complete manner. Nicely done!

Definitely a recommended tool to keep on your shortlist.
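Shared Access signatures are what both CloudXplorer and Ryan’s private tool generate. For readers curious about what such a tool does under the covers, here is a minimal Python sketch. It assumes the 2009-09-19 Blob service string-to-sign (permissions, start, expiry, canonicalized resource and signed identifier, joined by newlines) and an HMAC-SHA256 signature keyed with the Base64-decoded account key. The function names are hypothetical, so check the layout against the Blob service REST documentation for your storage version before relying on it.

```python
import base64
import hashlib
import hmac
from urllib.parse import urlencode


def sign_blob_sas(account_key_b64: str, canonical_resource: str,
                  permissions: str, start: str, expiry: str,
                  identifier: str = "") -> str:
    """Return a Base64 HMAC-SHA256 signature for a Shared Access signature.

    Assumes the 2009-09-19 string-to-sign: permissions, start, expiry,
    canonicalized resource (e.g. /account/container/blob) and signed
    identifier, joined by newlines.
    """
    string_to_sign = "\n".join(
        [permissions, start, expiry, canonical_resource, identifier])
    key = base64.b64decode(account_key_b64)
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


def build_blob_sas_query(account_key_b64: str, canonical_resource: str,
                         permissions: str, start: str, expiry: str) -> str:
    """Return the query string to append to a blob URL (hypothetical helper)."""
    sig = sign_blob_sas(account_key_b64, canonical_resource,
                        permissions, start, expiry)
    # sr=b scopes the signature to a single blob; sr=c would cover a container.
    return urlencode({"st": start, "se": expiry, "sr": "b",
                      "sp": permissions, "sig": sig})
```

The resulting query string would be appended after a `?` to the blob’s URL; anyone holding that URL gets the granted permissions until the expiry time.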

Christofer Löf’s Stubs with “ActiveRecord for Azure” post of 1/25/2010 describes “how to substitute the ‘cloud aware’ DataServiceContext with an in-memory context, allowing you to unit test your applications without accessing Windows Azure Table Storage:”

In a previous post I showed how to do basic CRUD operations with my sample ActiveRecord implementation for Windows Azure Tables. The attentive reader probably noticed my use of ASP.NET MVC and static methods on the entity for operations like Find and Delete. Most of the time, statics like this are bad for testability because they're hard to stub out. But just like ASP.NET MVC, "ActiveRecord for Azure" was designed with testing in mind.

If we look at the test for the Create action, it first sets up the Task entity for testing by calling its static Setup(int) method. Calling Setup makes the entity use a local, in-memory repository and populates it with the given number of entities. …

Christofer continues with code snippet examples and notes:

… The above examples are available in the download below.

The complete “ActiveRecord for Azure” sample source is available here.
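The pattern Christofer describes, static finders that delegate to a swappable repository, can be sketched in a few lines. This is a hypothetical Python analogue, not his C# code: `InMemoryRepository` stands in for the in-memory context, and `Task.setup(count)` mimics `Setup(int)` by switching the class to that repository and seeding it.

```python
from dataclasses import dataclass
from typing import ClassVar, Dict, List, Optional


class InMemoryRepository:
    """Stand-in for the cloud-aware table context: entities live in a dict."""

    def __init__(self) -> None:
        self._rows: Dict[str, object] = {}

    def save(self, key: str, entity: object) -> None:
        self._rows[key] = entity

    def find(self, key: str) -> Optional[object]:
        return self._rows.get(key)

    def delete(self, key: str) -> None:
        self._rows.pop(key, None)

    def all(self) -> List[object]:
        return list(self._rows.values())


@dataclass
class Task:
    key: str
    title: str
    # Class-level store shared by the static-style helpers below.
    repository: ClassVar[object] = None

    @classmethod
    def setup(cls, count: int) -> None:
        """Hypothetical analogue of Setup(int): use an in-memory store and seed it."""
        cls.repository = InMemoryRepository()
        for i in range(count):
            cls(key=str(i), title=f"Task {i}").save()

    @classmethod
    def find(cls, key: str):
        return cls.repository.find(key)

    @classmethod
    def delete(cls, key: str) -> None:
        cls.repository.delete(key)

    def save(self) -> None:
        type(self).repository.save(self.key, self)
```

In production code the class-level `repository` would point at the real table-storage context instead; tests simply call `setup` first.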

Jerry Huang asks Why Back Up Google Docs To Windows Azure Storage? and answers “Maybe because I need the peace of mind that I can access it the moment I need to” in this 1/25/2010 post:

If I have documents sitting in a cloud storage such as Google Docs, why do I need to back it up to a different cloud storage such as Azure Storage?

Maybe because I need the peace of mind that I can access it the moment I need to.

Or in some countries, access to one may be blocked such as in China for Google Docs and Google Picasa. Or maybe some days, one may go down as part of the cloud computing growing pain. Anyway, it is a good practice to back up.

This article will show you how to backup your Google Docs files on a daily basis to another cloud storage.

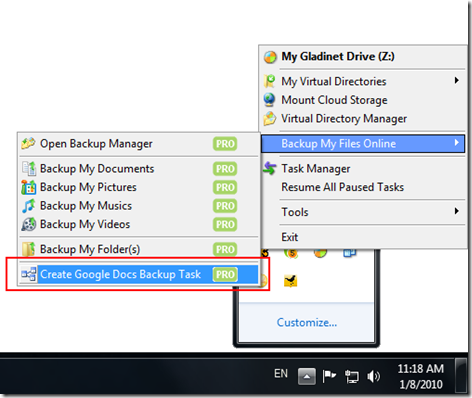

First you need to install Gladinet Cloud Desktop and map in your Google Docs and other cloud storages you have.

In the following picture, I have Azure Storage, Amazon S3, an FTP server and Google Docs all mapped in the Gladinet drive. (I have Synaptic Storage too, but it is not configured yet.)

From the System tray menu, you can open Create Google Docs Backup Task.

Jerry, who is the founder of Gladinet, continues with a step-by-step tutorial for the process.

<Return to section navigation list>

SQL Azure Database (SADB, formerly SDS and SSDS)

Liam Cavanagh describes Hilton Giesenow’s second Webcast about SQL Azure Data Sync in a How to Synchronize from SQL Azure to SQL Server using Visual Basic post of 1/25/2010:

In part 2 of Hilton Giesenow's webcast series "How Do I: Integrate an Existing Application with SQL Azure?", he walks through the process of adding the synchronization components to a new VB.NET application and executes synchronization between a SQL Server and a SQL Azure database that had previously been created using the "SQL Azure Data Sync Tool for SQL Server" (part of SQL Azure Data Sync) in about 10 lines of code. He also shows how you can get access to events like conflicts and sync statistics (like the number of rows uploaded and downloaded) to get you started extending your sync executable.

Hilton offers this description of his Webcast:

In this follow-on video, we expand on what we set up in part 1 to use the Microsoft Sync Framework libraries and providers from our .Net code. This allows us to embed the synchronisation capabilities into our applications and hook into the various available events.

Brent Ozar’s SQL Azure Frequently Asked Questions post of 1/25/2010 asks and answers the following questions:

- What is SQL Azure?

- How does SQL Azure pricing compare to SQL Server costs?

- Why does SQL Azure have a 10GB limit?

- How can I load balance SQL Azure or do cross-database joins?

- How do I handle the SQL Azure backup process?

- How good is SQL Azure performance?

- Can I use SQL Azure as a backup with log shipping or database mirroring?

- How do I run Microsoft SQL Azure on commodity hardware?

- What SQL Azure plans do MSDN subscribers get for free?

- Got a SQL Azure question I didn’t answer?

Be sure to read the comments, too.

Nick Hodge reports that “Using the open source jTDS JDBC Driver, you can connect to SQL Azure” in his JDBC to SQL Azure post of 1/25/2010:

Driver class name: net.sourceforge.jtds.jdbc.Driver

Database URL: jdbc:jtds:sqlserver://<server>.database.windows.net:1433/<databasename>;ssl=require

Username: (in the form username@server from SQL Azure)

The key thing is the “ssl=require” placed at the end of the connection string. SQL Azure SSL encrypts the data on the wire. Which is rather a good thing.

Hope this saves someone some time.
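Because these settings are just strings, a tiny helper makes it hard to forget the `ssl=require` suffix or the `username@server` login form. The sketch below is Python for brevity, and the function name is hypothetical; in Java you would load `net.sourceforge.jtds.jdbc.Driver` and pass the resulting URL, user and password to `DriverManager.getConnection(url, user, password)`.

```python
def sql_azure_jtds_settings(server: str, database: str, user: str) -> dict:
    """Assemble the jTDS settings listed above for a SQL Azure database.

    `server` is the short server name, the part before .database.windows.net.
    """
    host = f"{server}.database.windows.net"
    return {
        "driver": "net.sourceforge.jtds.jdbc.Driver",
        # ssl=require goes at the end of the URL; SQL Azure encrypts on the wire.
        "url": f"jdbc:jtds:sqlserver://{host}:1433/{database};ssl=require",
        # SQL Azure expects the login in username@server form.
        "user": f"{user}@{server}",
    }
```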

<Return to section navigation list>

AppFabric: Access Control, Service Bus and Workflow

Brian Loesgen continues his Azure App Fabric series with Azure Integration - Part 2: Sending a Message from BizTalk to Azure’s AppFabric ServiceBus with a Dynamic Send Port post of 1/24/2010:

In a previous post, I wrote about how to extend the reach of an ESB on-ramp to Windows Azure platform AppFabric ServiceBus. This same technique also works for any BizTalk receive location, as what makes it an ESB on-ramp is the presence of a pipeline that includes some of the itinerary selection and processing components from the ESB Toolkit. In that post (and accompanying video) I showed how to use InfoPath as a client to submit the message to the ServiceBus, which subsequently got relayed down and into a SharePoint-based ESB-driven BizTalk-powered workflow.

In this and the next post, we’ll look at how to send messages in the other direction, and in this post, I’ll show how to do it using a BizTalk dynamic send port. If you’ve used dynamic send ports with BizTalk, you’ll know they’re a powerful construct that lets you programmatically set endpoint configuration information that will subsequently be provided to a send adapter. This is a great way to have a single outbound port that can deliver messages to a variety of endpoints. And, dynamic ports are a key concept behind ESB off-ramps, but more on that later.

The video to go along with this post can be found here. …
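To illustrate a single port whose destination is decided per message, here is a rough Python analogy. It is not the BizTalk object model: `DynamicSendPort`, `register` and `send` are hypothetical names, and the adapter is picked from the URI scheme of an address supplied at run time, which is roughly how a dynamic port hands endpoint configuration to a send adapter.

```python
from typing import Callable, Dict
from urllib.parse import urlparse

# An adapter takes the destination address and the message body.
Adapter = Callable[[str, bytes], str]


class DynamicSendPort:
    """One outbound port; the adapter is chosen per message from its address."""

    def __init__(self) -> None:
        self._adapters: Dict[str, Adapter] = {}

    def register(self, scheme: str, adapter: Adapter) -> None:
        self._adapters[scheme] = adapter

    def send(self, address: str, body: bytes) -> str:
        # The address arrives with each message at run time,
        # rather than being fixed in the port's configuration.
        scheme = urlparse(address).scheme
        if scheme not in self._adapters:
            raise ValueError(f"no adapter registered for scheme {scheme!r}")
        return self._adapters[scheme](address, body)
```

Registering an adapter for the `sb` scheme, for instance, would let the same port relay to a Service Bus address such as `sb://<namespace>.servicebus.windows.net/...` alongside file or HTTP destinations.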

<Return to section navigation list>

Live Windows Azure Apps, Tools and Test Harnesses

Ryan Dunn’s Supporting Basic Auth Proxies post of 1/25/2010 provides a workaround for problems with basic authentication proxies and Azure utilities:

A few customers have asked how they can use tools like wazt, Windows Azure MMC, the Azure Cmdlets, etc. when they are behind proxies at work that require basic authentication. The tools themselves don't directly support this type of proxy. What we are doing is simply relying on the fact that the underlying HttpRequest object will pick up your IE's default proxy configuration. Most of the time, this just works.

However, if you are in an environment where you are prompted for your username and password, you might be on a basic auth proxy and the tools might not work. To work around this, you can actually implement a very simple proxy handler yourself and inject it into the application.

Ryan shows an example of a workaround for wazt.
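Ryan’s fix is .NET-specific, but the idea of handing the HTTP stack a proxy handler that supplies Basic credentials carries over to other platforms. As a minimal Python sketch, assuming a proxy at a placeholder address, the standard library’s `ProxyHandler` and `ProxyBasicAuthHandler` can be combined into one opener:

```python
import urllib.request


def build_basic_auth_proxy_opener(proxy_url: str, user: str, password: str):
    """Return an opener that routes HTTP(S) through a proxy requiring Basic auth."""
    proxies = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    password_mgr = urllib.request.HTTPPasswordMgrWithDefaultRealm()
    # realm=None: use these credentials whatever realm the proxy announces.
    password_mgr.add_password(None, proxy_url, user, password)
    auth = urllib.request.ProxyBasicAuthHandler(password_mgr)
    return urllib.request.build_opener(proxies, auth)
```

Calling `urllib.request.install_opener(opener)` would make every later `urlopen` call in the process go through the proxy, which is loosely comparable to injecting the handler into a tool like wazt.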

My Volta Redux by Erik Meijer’s Cloud Data Programmability Team and Reactive Extensions for .NET (Rx) post of 1/24/2010 describes “Volta’s New Lease on Life with the Cloud Programmability Team”:

The Erik Meijer and Team: Cloud Data Programmability - Connecting the Distributed Dots video (00:33:32) is the most recent Channel9 segment about the transmogrification of Volta into the Cloud Programmability team. The team leverages the Reactive Extensions (Rx) for .NET to enable asynchronous communication between clients, middle tiers, and data sources, such as Windows Azure and SQL Azure:

“Rx is a library for composing asynchronous and event-based programs using observable collections.

“The ‘A’ in ‘AJAX’ stands for asynchronous, and indeed modern Web-based and Cloud-based applications are fundamentally asynchronous. In fact, Silverlight bans all blocking networking and threading operations. Asynchronous programming is by no means restricted to Web and Cloud scenarios, however. Traditional desktop applications also have to maintain responsiveness in the face of long latency IO operations and other expensive background tasks.

“Another common attribute of interactive applications, whether Web/Cloud or client-based, is that they are event-driven. The user interacts with the application via a GUI that receives event streams asynchronously from the mouse, keyboard, and other inputs.

“Rx is a superset of the standard LINQ sequence operators that exposes asynchronous and event-based computations as push-based, observable collections via the new .NET 4.0 interfaces IObservable<T> and IObserver<T>. These are the mathematical dual of the familiar IEnumerable<T> and IEnumerator<T> interfaces for pull-based, enumerable collections in the .NET Framework.

“The IEnumerable<T> and IEnumerator<T> interfaces allow developers to create reusable abstractions to consume and transform values from a wide range of concrete enumerable collections such as arrays, lists, database tables, and XML documents. Similarly, Rx allows programmers to glue together complex event processing and asynchronous computations using LINQ queries over observable collections such as .NET events and APM-based computations, PFx concurrent Task<T>, the Windows 7 Sensor and Location APIs, SQL StreamInsight temporal event streams, F# first-class events, and async workflows.”

The post includes numerous links to earlier OakLeaf Systems articles about Erik Meijer’s Volta project.
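The push/pull duality in the quoted description can be made concrete with a toy. The Python sketch below is not the Rx API; it only mirrors the shape of `IObservable<T>`/`IObserver<T>`: the producer calls `on_next` for each value, and `where`/`select` compose the way their LINQ namesakes do, but over pushed values instead of pulled ones.

```python
from typing import Callable, Generic, TypeVar

T = TypeVar("T")
U = TypeVar("U")


class Observer(Generic[T]):
    """Push-side dual of an iterator: values arrive through callbacks."""

    def __init__(self, on_next: Callable[[T], None],
                 on_completed: Callable[[], None] = lambda: None):
        self.on_next = on_next
        self.on_completed = on_completed


class Observable(Generic[T]):
    def __init__(self, subscribe: Callable[[Observer[T]], None]):
        self._subscribe = subscribe

    def subscribe(self, observer: Observer[T]) -> None:
        self._subscribe(observer)

    @staticmethod
    def from_iterable(items) -> "Observable":
        def subscribe(obs):
            for x in items:
                obs.on_next(x)  # the producer pushes each value
            obs.on_completed()
        return Observable(subscribe)

    def select(self, f: Callable[[T], U]) -> "Observable[U]":
        def subscribe(obs):
            self.subscribe(Observer(lambda x: obs.on_next(f(x)), obs.on_completed))
        return Observable(subscribe)

    def where(self, pred: Callable[[T], bool]) -> "Observable[T]":
        def subscribe(obs):
            def forward(x):
                if pred(x):
                    obs.on_next(x)
            self.subscribe(Observer(forward, obs.on_completed))
        return Observable(subscribe)
```

Real Rx adds error propagation, unsubscription through `IDisposable` and scheduling, which this toy leaves out.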

Dave Thompson explains how to solve problems Using WCF Tester with Azure by applying a hotfix in this 1/25/2010 post:

If you’ve tried to use the WCF testing tool WcfTestClient.exe (\Program Files (x86)\Microsoft Visual Studio 9.0\Common7\IDE\WcfTestClient.exe), which comes with Visual Studio, on an Azure project, you will probably have come across this problem…

When the WCF service runs locally or hosted in the Azure cloud, you will notice that the address in IE’s address bar differs from the address given to run SvcUtil against. This is because Azure runs a load balancer: the URL in the address bar is actually the load balancer’s address, not the service’s address.

This is a known bug in WCF, and a hotfix is currently available for this issue, which will make developing and testing Azure applications a lot easier.

Dave goes on with a description of how to obtain and apply the hotfix.

Darren Neimke explains How to build an Azure Cloud Service package as part of your build process in this 1/25/2010 post:

I explained in a previous blog entry how important, and ultimately simple, it is to create a deployment package for SharePoint as a part of your Continuous Integration process and in this post I’d like to show you how to produce deployment packages for Windows Azure. This article is laid out in the following sections:

- Deploying to Windows Azure

- The CSPack Command Line Tool

- Automating the Creation of your deployment package …

Darren continues with a detailed explanation of how to use the Cspack.exe command line tool to automate creation of a deployment package.
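A CI step that wraps CSPack mostly comes down to assembling one command line. The Python sketch below assumes the `/role:<name>;<binaries directory>` and `/out:<package>` switches and a `cspack.exe` on the PATH; the role names, paths and helper names are hypothetical, so confirm the switch syntax against the CSPack documentation for your SDK version.

```python
import subprocess
from typing import List, Sequence, Tuple


def cspack_command(service_definition: str,
                   roles: Sequence[Tuple[str, str]],
                   output_package: str,
                   cspack_exe: str = "cspack.exe") -> List[str]:
    """Assemble the CSPack argument list (switch syntax is an assumption)."""
    cmd = [cspack_exe, service_definition]
    for role_name, binaries_dir in roles:
        # One /role: switch per role: <role name>;<directory with its binaries>
        cmd.append(f"/role:{role_name};{binaries_dir}")
    cmd.append(f"/out:{output_package}")
    return cmd


def build_package(service_definition: str,
                  roles: Sequence[Tuple[str, str]],
                  output_package: str) -> None:
    """Run CSPack; check=True turns a packaging failure into a failed build step."""
    subprocess.run(cspack_command(service_definition, roles, output_package),
                   check=True)
```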

Tudor Cret begins his three-part tutorial series with Behind ciripescu.ro, a Windows Azure cloud application (part 1) on 1/25/2010:

… The purpose of the current post is to summarize what is behind ciripescu.ro, a simple micro-blogging application developed around the concept of Twitter. This platform can be extended and customized for any domain.

So our intention is to develop a simple one-way communication and content delivery network using Windows Azure. Let’s define some entities:

- User – of course we can do nothing without usernames, passwords and emails ☺ Additional info can be whatever you want, such as an avatar, full name, etc.

- Cirip – defines an entry of maximum 255 characters, visible to everybody. Users’ content is delivered through this entity.

- Private Message – a private message between two users

- Invitation – represents an invitation a user can send to invite other users to use the application.

I won’t go into more detail about what the application does; you can watch it in action (at least until the end of January ☹) at www.ciripescu.ro [in Romanian.] For the next sections I will concentrate on how the application implements its functionality. Configuration and deployment aren’t so interesting, since they are covered by any “hello world” Azure application video or tutorial. …
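The four entities Tudor lists map naturally onto simple record types. This is a hypothetical Python sketch, not the ciripescu.ro schema (the excerpt doesn’t show one); the field names are assumptions, and the only rule taken from the post is the 255-character limit on a Cirip.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

MAX_CIRIP_LENGTH = 255  # limit stated in the post


@dataclass
class User:
    username: str
    email: str
    password_hash: str
    full_name: str = ""   # optional extras, as the post suggests
    avatar_url: str = ""


@dataclass
class Cirip:
    author: str
    text: str
    created: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

    def __post_init__(self) -> None:
        if len(self.text) > MAX_CIRIP_LENGTH:
            raise ValueError(f"a cirip is limited to {MAX_CIRIP_LENGTH} characters")


@dataclass
class PrivateMessage:
    sender: str
    recipient: str
    text: str


@dataclass
class Invitation:
    inviter: str
    invitee_email: str
```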

Dom Green shows you when you’re Paying or Not Paying? for Windows Azure projects in this 1/24/2010 post:

I have recently been asked a number of questions both internally and from customers about when you will be billed for Azure usage.

As Eric Nelson’s recent post describes, if you suspend a service you will still be paying. This is because your application is still deployed on the server, ready to start again, and while your application occupies the server, others cannot provision it.

When you select delete, you will stop paying, as you will no longer have servers provisioned for your application and the resources will be freed up for others to use.
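The billing rule Dom describes fits in one predicate: a deployment costs money until it is deleted, whether or not it is running. In this Python sketch the state names and the `hourly_rate` parameter are assumptions; the post gives no rate, so plug in your own.

```python
from enum import Enum


class DeploymentState(Enum):
    RUNNING = "running"
    SUSPENDED = "suspended"
    DELETED = "deleted"


def is_billed(state: DeploymentState) -> bool:
    # A suspended deployment still holds provisioned servers, so it accrues
    # charges; only deleting the deployment releases them.
    return state is not DeploymentState.DELETED


def compute_charge(state: DeploymentState, instances: int,
                   hours: float, hourly_rate: float) -> float:
    return instances * hours * hourly_rate if is_billed(state) else 0.0
```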

The Innov8showcase site asks Want to know how Windows Azure is Feeling? on 1/25/2010 and offers this answer:

If you want to know the health status of Windows Azure Platform services in each of our data centers at any time take a look at the Windows Azure Platform dashboard at http://www.microsoft.com/windowsazure/support/status/servicedashboard.aspx.

Lori MacVittie posits “Cloud computing and content delivery networks (CDN) are both good ways to assist in improving capacity in the face of sudden, high demand for specific content but require preparation and incur operational and often capital expenditures. How about an option that’s free, instead?” in her How To Use CoralCDN On-Demand to Keep Your Site Available. For Free post of 1/25/2010:

While it’s certainly in the best interests of every organization to have a well-thought-out application delivery strategy for addressing the various events that can result in downtime for web applications, it may be that once in a while a simple, tactical solution will suffice. Even if you’re load balancing already (and you are, of course, aren’t you?) and employing optimization techniques like TCP multiplexing, you may find that there are sudden spikes in traffic or maintenance windows during which you simply can’t keep your site available without making a capital investment in more hardware.

Yes, you could certainly use cloud computing to solve the problem, but though it may not be a capital investment it’s still an operational expenditure and thus it incurs costs. Those costs are not only incurred in the event that you need it, but in the time and effort required to prepare and deploy the application(s) in question for that environment. …
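The excerpt stops before the how-to. For readers who haven’t used Coral: as I understand it, content is pulled through CoralCDN by appending `.nyud.net` to the origin hostname. The Python helper below is a hypothetical sketch built on that assumption, not Lori’s procedure, and it deliberately handles only plain `http` URLs on the default port.

```python
from urllib.parse import urlsplit, urlunsplit


def coralize(url: str) -> str:
    """Rewrite an http URL so it is fetched via CoralCDN (.nyud.net suffix assumed)."""
    parts = urlsplit(url)
    if parts.scheme != "http" or parts.port is not None:
        raise ValueError("this sketch only handles plain http URLs on the default port")
    netloc = f"{parts.hostname}.nyud.net"
    return urlunsplit((parts.scheme, netloc, parts.path, parts.query, parts.fragment))
```

A site under a traffic spike could then redirect requests for heavy static content to the coralized URL, letting Coral’s caches absorb the load.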

<Return to section navigation list>

Windows Azure Infrastructure

Guy Barrette dispels Azure account creation confusion for MSDN subscribers in his 1/25/2010 post:

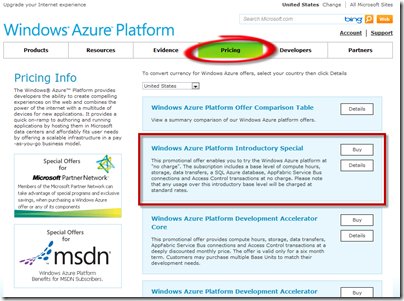

I had an Azure CTP account and I received an email from Microsoft saying that my account was about to expire on January 31, 2010 and that I’ll need to “upgrade” my account. A link in the email pointed to this pricing page:

http://www.microsoft.com/windowsazure/offers/

If you just want to kick the tires and don’t want to invest too much, Microsoft has an introductory special that has enough “hours” to do some basic testing for free.

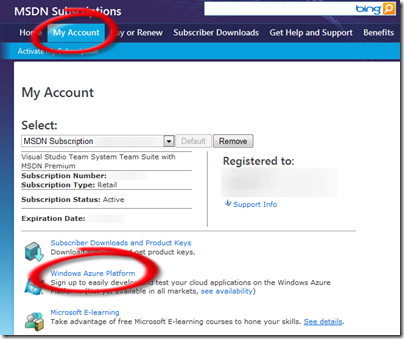

Sweet, but I have an MSDN Premium subscription with some Azure hours included as benefits, so I should be able to link the account that I just created to my MSDN subscription, right? Well, no. I haven’t found a way to do that so far. So how do you create an Azure account using your MSDN subscription benefits? Simple: you need to log on to the MSDN website and click the “My Account” tab. From there, you can create an Azure account that will be linked to your MSDN subscription.

Guy is Microsoft’s Regional Director for Montreal, PQ, Canada. (My How to Create and Activate a New Windows Azure and SQL Azure Account or Upgrade an Existing Account post of 1/7/2010 offers a more detailed explanation.)

Chris Hoff (@Beaker) expounds on the private-cloud and hybrid-cloud vs. public-cloud controversy in his Incomplete Thought: Batteries – The Private Cloud Equivalent Of Electrical Utility… post of 1/24/2010:

While I think Nick Carr’s power generation utility analogy was a fantastic discussion catalyst for the usefulness of a utility model, it is abused to extremes and constrains what might ordinarily be more open-minded debate on the present and future of computing.

This is a debate that continues to rise every few days on Twitter and the Blogosphere, fueled mostly by what can only be described from either side of the argument as a mixture of ideology, dogma, passionate opinion, misunderstood perspective and a squinty-eyed mistrust of agendas.

It’s all a bit silly, really, as both Public and Private Cloud have their place; when, for how long and for whom is really at the heart of the issue.

The notion that the only way “true” benefits can be realized from Cloud Computing are from massively-scaled public utilities and that Private Clouds (your definition will likely differ) are simply a way of IT making excuses for the past while trying to hold on to the present, simply limits the conversation and causes friction rather than reduces it. I believe that a hybrid model will prevail, as it always has. There are many reasons for this. I’ve talked about them a lot.

This got me thinking about why and here’s my goofy thought for consideration of the “value” and “utility” of Private Cloud:

If the power utility “grid” represents Public Cloud, then perhaps batteries are a reasonable equivalent for Private Cloud.

I’m not going to explain this analogy in full yet, but wonder if it makes any sense to you. I’d enjoy your thoughts on what you think I’m referring to.

Geva Perry’s Integration-as-a-Service Heats Up post of 1/25/2010 observes:

Interesting to see things heat up in the emerging category of integration-as-a-service, also known as cloud integration or SaaS integration.

Praneal Narayan at SnapLogic recently sent me a note about a developer contest they are running (more on that below). SnapLogic is a recent entrant into the field, competing with Boomi and Cast Iron Systems. In October, SnapLogic announced a $2.3 million investment from Andreessen Horowitz and others and that Informatica founder Gaurav Dhillon had joined as CEO.

Clearly in the integration game one of the key success factors is coverage -- or how many end-points can the service talk to out-of-the-box. Alternatively, an integration-as-a-service provider can allow customers and 3rd-party vendors to create their own integration points. And that's what the SnapLogic developer competition is all about.

The company created an app store, they call it SnapStore, which allows third-party developers and companies to sell their own integrations (which they call "Snaps") to various platforms. The developer competition offers each developer who submits a Snap a Kindle, and the winner of the competition a $5,000 grand prize.

Udayan Banerjee delivers “A Platform Comparison” in his Cloud Economics – Amazon, Microsoft, Google Compared post of 1/25/2010:

Any new technology adoption happens because of one of the three reasons:

- Capability: It allows us to do something which was not feasible earlier

- Convenience: It simplifies

- Cost: It significantly reduces cost of doing something

What is our expectation from cloud computing? As I had stated earlier, it is all about cost saving … (1) through elastic capacity and (2) through economy of scale. So, for any CIO who is interested in moving to cloud, it is very important to understand what the cost elements are for different cloud solutions. I am going to look at 3 platforms: Amazon EC2, Google App Engine and Microsoft Azure. They are sufficiently different from each other and each of these companies is following a different cloud strategy – so we need to understand their pricing model.

Quick Read: Market forces seem to have ensured that all the prices are similar. For a quick rule-of-thumb viability calculation, use the following numbers irrespective of the provider. You will not go too far off the mark.

- Base machine = $0.1 per hour (for 1.5 GHz Intel Processor)

- Storage = $0.15 per GB per month

- I/O = $0.01 per 1,000 writes and $0.001 per 1,000 reads

- Bandwidth = $0.1 per GB for incoming traffic and $0.15 per GB for outgoing traffic

However, if you have time, you can go through the detailed analysis given …
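The quick-read numbers lend themselves to a back-of-the-envelope calculator. This Python sketch simply encodes the rates listed above; the usage categories and names are mine, and real bills will differ by provider and by date.

```python
from dataclasses import dataclass

# Rule-of-thumb rates from the quick read above, in US dollars.
RATE_PER_MACHINE_HOUR = 0.10
RATE_PER_GB_MONTH = 0.15
RATE_PER_1000_WRITES = 0.01
RATE_PER_1000_READS = 0.001
RATE_PER_GB_IN = 0.10
RATE_PER_GB_OUT = 0.15


@dataclass
class MonthlyUsage:
    machine_hours: float
    storage_gb: float
    writes: int
    reads: int
    gb_in: float
    gb_out: float


def estimate_monthly_cost(u: MonthlyUsage) -> float:
    """Sum compute, storage, I/O and bandwidth charges for one month."""
    return (u.machine_hours * RATE_PER_MACHINE_HOUR
            + u.storage_gb * RATE_PER_GB_MONTH
            + u.writes / 1000 * RATE_PER_1000_WRITES
            + u.reads / 1000 * RATE_PER_1000_READS
            + u.gb_in * RATE_PER_GB_IN
            + u.gb_out * RATE_PER_GB_OUT)
```

For example, one machine running all month (720 hours), 10 GB stored, a million writes, a million reads and 10 GB each of inbound and outbound traffic comes to about $87: $72 compute, $1.50 storage, $10 writes, $1 reads, $1 inbound and $1.50 outbound.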

<Return to section navigation list>

Cloud Security and Governance

Dom Green recommends WCF Hot Fix – Don’t be showing your nodes in this 1/25/2010 post:

When browsing to a WCF endpoint hosted in an Azure web role, you normally get back a web page for the service showing the location of the individual node / web role that is serving up your request instead of the actual endpoint.

This isn’t great, as you don’t want everyone knowing about your internal system and especially the URL of one of your web roles, with which they could do who knows what.

This can easily be fixed with this patch for WCF, which will then show the expected endpoint. This endpoint is actually the address of the load balancer, which forwards your request to a web role.

Dom continues with instructions for downloading, installing and using the hotfix.

Alessandro Perilli reviews a paper from the University of California, San Diego and MIT in his Paper: Exploring Information Leakage in Third-Party Compute Clouds post of 1/25/2010:

At the end of last year, people from the University of California and MIT published an extremely interesting 14-page paper about the risks of information leakage in multi-tenancy Infrastructure-as-a-Service (IaaS) clouds.

Titled Hey, You, Get Off of My Cloud: Exploring Information Leakage in Third-Party Compute Clouds, it was presented at the ACM Conference on Computer and Communications Security 2009.

The paper claims that [when] “investing just a few dollars in launching VMs, there’s a 40% chance of placing a malicious VM on the same physical server as a target customer”.

It ends with a number of suggestions to mitigate the risks:

“First, cloud providers may obfuscate both the internal structure of their services and the placement policy to complicate an adversary’s attempts to place a VM on the same physical machine as its target. For example, providers might do well by inhibiting simple network-based co-residence checks.

“However, such approaches might only slow down, and not entirely stop, a dedicated attacker. Second, one may focus on the side-channel vulnerabilities themselves and employ blinding techniques to minimize the information that can be leaked. This solution requires being confident that all possible side-channels have been anticipated and blinded. Ultimately, we believe that the best solution is simply to expose the risk and placement decisions directly to users. A user might insist on using physical machines populated only with their own VMs and, in exchange, bear the opportunity costs of leaving some of these machines under-utilized. For an optimal assignment policy, this additional overhead should never need to exceed the cost of a single physical machine, so large users—consuming the cycles of many servers—would incur only minor penalties as a fraction of their total cost.”
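The paper’s closing claim, that insisting on dedicated machines wastes at most one machine’s worth of capacity, is easy to check with a simple model. The Python sketch assumes identical VMs and machines that each hold `slots_per_machine` of them; under those assumptions the idle slots are always fewer than one machine’s worth, so the overhead as a fraction of the total shrinks as a tenant grows.

```python
from typing import Tuple


def dedicated_placement(n_vms: int, slots_per_machine: int) -> Tuple[int, int]:
    """Machines needed and idle slots when a tenant refuses to share hosts."""
    machines = -(-n_vms // slots_per_machine)  # ceiling division
    idle_slots = machines * slots_per_machine - n_vms
    # idle_slots is always < slots_per_machine: less than one machine wasted.
    return machines, idle_slots


def overhead_fraction(n_vms: int, slots_per_machine: int) -> float:
    """Idle capacity as a fraction of everything the tenant pays for."""
    machines, idle = dedicated_placement(n_vms, slots_per_machine)
    return idle / (machines * slots_per_machine)
```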

Chris Hoff (@Beaker)’s Cloud: Security Doesn’t Matter (Or, In Cloud, Nobody Can Hear You Scream) post of 1/25/2010 begins:

In the Information Security community, many of us have long come to the conclusion that we are caught in what I call my “Security Hamster Sine Wave Of Pain.” Those of us who have been doing this awhile recognize that InfoSec is a zero-sum game; it’s about staving off the inevitable and trying to ensure we can deal with the residual impact in the face of being “survivable” versus being “secure.”

While we can (and do) make incremental progress in certain areas, the collision of disruptive innovation, massive consumerization of technology along with the slow churn of security vendor roadmaps, dissolving budgets, natural marketspace commoditization and the unfortunate velocity of attacker innovation yields the constant realization that we’re not motivated or incentivized to do the right thing or manage risk.

Instead, we’re poked in the side and haunted by the four letter word of our industry: compliance.

Compliance is often dismissed as irrelevant in the consumer space and associated instead with government or large enterprise, but as privacy continues to erode and breaches make the news, the fact that we’re putting more and more of our information — of all sorts — in the hands of others to manage is again beginning to stoke an upsurge in efforts to somehow measure and manage visibility against a standardized baseline of general, common sense and minimal efforts to guard against badness. …

<Return to section navigation list>

Cloud Computing Events

Eric Nelson started a New Windows Azure Platform online community in the UK, according to this 1/25/2010 post:

There is a really strong interest in Cloud Computing and Azure in the UK – but it doesn’t appear to be supported by an online community. Well, at least I couldn’t find an active community.

Hence I decided to go and create one. Or at least create the skeleton of one: http://ukazure.ning.com/

… P.S. I have since discovered Brazil has been doing “the same” for a long while. They have a very nice site and 256 members (very “binary”!). Let’s see if we can beat Brazil in double-quick time. No – I do not have a Microsoft objective to do that :-)

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Geva Perry’s Cloud as Monetization Strategy for Open Source: SpringSource analyzes SpringSource’s move of their dm Server to the Eclipse Public License (EPL):

I came across an interesting post by Savio Rodrigues from IBM. Savio writes about the recent announcement by SpringSource (now a division of VMware) that they are proposing to move their dm Server product (the OSGi app server) to the Eclipse Public License (EPL). Until now the dm Server was offered with a dual-license model: free in GPL or with a proprietary license for a fee, also known as the "open core" model.

Rodrigues refers to a blog post by The 451 Group analyst Matthew Aslett in which he says that SpringSource is abandoning the GPL license (and therefore the dual license model), because the EPL is more permissive and therefore encourages adoption. Rodrigues then brings up the possibility that the change in licensing is due to a change in business model -- generating revenues from sales of support subscriptions instead of license sales.

But he pretty much ends up dismissing that as the main motivator and then truly hits the nail on the head: the real plan is to monetize this and other SpringSource products with a cloud computing offering (presumably some kind of PaaS) -- as this was the original rationale given for the SpringSource acquisition by VMware. …