Windows Azure and Cloud Computing Posts for 12/31/2009+

| Windows Azure, SQL Azure Database and related cloud computing topics now appear in this weekly series. |

••• Update 1/3/2010: André van de Graaf: Main differences between SQL Azure and SQL Server 2008; Anders Trolle-Schultz: Is the Cloud Misleading Enterprises, Customers - Even Politicians?; Admin of Tune Up Your PC: Azure Service Bus – Eventing in the App Fabric – Project Weather Cloud – Creating the Weather Stations; Brandon Watson: 2010 Personal Predictions; Khash Sajadi: Microsoft Azure is not ready for prime time yet; James Urquhart: Application packaging for cloud computing: A proposal; Me: Windows Azure Feature Voting.

•• Update 1/2/2010: James Hamilton: MapReduce in CACM; Panagiotis Kefalidis: Windows Azure Queues - Getting 400 Bad Request when creating a queue; Maureen O’Gara: Rackspace Teams with MySQL-Toting DaaS Start-up; Kevin L. Jackson: Most Influential Cloud Bloggers for 2009; Amazon Web Services: EC2 connectivity issues.

• Update 1/1/2010: George Huey and Wade Wegner: Huey and Wegner Migrate Us to SQL Azure; Gary Orenstein: Forecast for 2010: The Rise of Hybrid Clouds; Mamoon Yunus: Virtues of Service Virtualization in a Cloud; Mark O’Neill: Who do you trust to meter the Cloud?; Brian Ales: On Microsoft’s Azure; Maarten Balliauw: Deploying PHP in the Clouds - Windows Azure; Gaurav Mantri: Cerebrata Cloud Storage Studio; Ryan Dunn, Zach Skyles Owens, David Aiken and Vittorio Bertocci: Windows Azure Platform Training Course.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Table and Queue Services

- SQL Azure Database (SADB)

- AppFabric: Access Control, Service Bus and Workflow

- Live Windows Azure Apps, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts, Databases, and DataHubs*”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated for the November CTP in January 2010.

* Content for managing DataHubs will be added as Microsoft releases more details on data synchronization services for SQL Azure and Windows Azure.

Off-Topic: OakLeaf Blog Joins Technorati’s “Top 100 InfoTech” List on 10/24/2009.

Azure Blob, Table and Queue Services

•• Panagiotis Kefalidis’ Windows Azure Queues - Getting 400 Bad Request when creating a queue post of 1/2/2010 explains why you might receive 400 Bad Request messages:

You’re trying to create a queue on Windows Azure and you’re getting a “400 Bad Request” as an inner exception. Well, there are two possible scenarios:

- The name of the queue is not valid. It has to be a valid DNS Name to be accepted by the service.

- The service is down or something went wrong and you just have to re-try, so implementing a re-try logic in your service when initializing is not a bad idea. I might say it’s mandatory.
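As a rough illustration of the retry advice (this sketch is mine, not from Panagiotis’ post), the following Python wraps a queue-creation call in a bounded retry loop with exponential backoff. `TransientServiceError` and the `create_queue` callable are hypothetical stand-ins for whatever your storage client raises and exposes; they are not part of any Azure library.

```python
import time


class TransientServiceError(Exception):
    """Hypothetical stand-in for a 400/5xx failure reported by a storage client."""


def create_with_retry(create_queue, attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call create_queue() until it succeeds or the attempts run out.

    Waits base_delay, 2 * base_delay, 4 * base_delay, ... between tries.
    """
    for attempt in range(attempts):
        try:
            return create_queue()
        except TransientServiceError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            sleep(base_delay * (2 ** attempt))
```

Retrying only helps with the second scenario. A queue name that breaks the naming rules will fail on every attempt, so it is worth checking the name before entering the loop.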

The naming rules

- A queue name must start with a letter or number, and may contain only letters, numbers, and the dash (-) character.

- The first and last letters in the queue name must be alphanumeric. The dash (-) character may not be the first or last letter.

- All letters in a queue name must be lowercase.

- A queue name must be from 3 through 63 characters long.

More on that here.
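The four rules quoted above fit in a single regular expression. Here is a minimal Python sketch that checks a candidate name locally before calling the service; the function name `is_valid_queue_name` is my own, and the check covers only the rules listed in the post, so the service may enforce further constraints that this does not.

```python
import re

# Lowercase letters, digits and dashes only; alphanumeric at both ends;
# 1 + (1 to 61) + 1 characters gives the 3 to 63 length range.
_QUEUE_NAME = re.compile(r"[a-z0-9][a-z0-9-]{1,61}[a-z0-9]")


def is_valid_queue_name(name: str) -> bool:
    """Return True if name satisfies the naming rules quoted above."""
    return _QUEUE_NAME.fullmatch(name) is not None
```

For example, `is_valid_queue_name("weather-reports")` passes, while `"Weather"` (uppercase), `"-abc"` (leading dash) and `"ab"` (too short) are rejected.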

• Gaurav Mantri announced on 1/1/2010 that Cerebrata Cloud Storage Studio v2010.01.00.00, a Windows (WPF) based client for managing Windows Azure storage, is available for download after six months in private/public beta.

The application comes in the following three editions:

- Free 30 day Trial

- Free Developer Edition (for Development Storage only)

- $49.99 Professional Edition (for Development and Production storage)

According to Gaurav Mantri’s Announcing the launch of Cloud Storage Studio - A WPF Application to manage your Windows Azure Storage & Hosted Services post:

Cloud Storage Studio is a WPF application to manage your Windows Azure Storage and Hosted Applications. Using Cloud Storage Studio, you can manage your tables, queues & blob containers as well as manage your hosted applications (deploy, delete, upgrade etc.). Using one interface you can connect to the development storage running on your computer as well as cloud storage.

Cerebrata Cloud Storage Studio/e, the browser-based version of Cloud Storage Studio hosted by Cerebrata, remains available for public use:

… Cloud Storage Studio/e is completely browser based. To access your data repositories hosted in Microsoft's Cloud platform, all you need is a browser with Silverlight 2 plugin installed.

Cloud Storage Studio/e allows you to access and manage your data stored in Microsoft Cloud platform using just your browser. That means you need not install any special software to access this data. Cloud Storage Studio/e makes use of the same REST API which are made available by Microsoft to access Azure Storage repository; however we have encapsulated those APIs in our application and presented them to you in an intuitive and easy to use user-interface. …

Here’s a screen capture of the Northwind Customers table uploaded to an OakLeaf storage account (click image for 1024x768-px screen capture):

You can add new or edit existing entities in this dialog:

Use of Cloud Storage Studio/e currently is free.

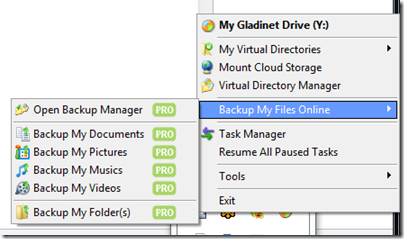

Jerry Huang asserts “You can map multiple Azure Blob Storage Account as side-by-side virtual folders” with Gladinet Cloud Desktop v 1.4.232 in his Manage Azure Blob Storage With Ease post of 12/31/2009.

Jerry highlights these features:

1. Map a Network Drive Letter to the Azure Blob Storage

You can map multiple Azure Blob Storage Account as side-by-side virtual folders in the same network drive. You can do drag-and-drop between local drive and the Azure drive.

2. Schedule Automatic Backup of Files-by-Type.

You can backup documents, pictures, music or videos to Azure Blob Storage.

3. Schedule Automatic Backup of Local Folders

You can pick local folders to backup to Azure Blob Storage or multiple Azure Blob Storage accounts. You can even backup to Azure Blob Storage + Amazon S3 + AT&T Synaptic Storage + Nirvanix Storage + EMC Atmos Online Storage all at the same time.

4. Cloud-to-Cloud Backup

You can backup Google Docs to Microsoft Azure Blob Storage or backup important documents from Amazon S3 to Azure Blob Storage.

5. Ad hoc Backup and Transfer

Since you can do drag and drop in Windows Explorer with the Azure Drive, you can turn each drag and drop into a scheduled backup task or download task. The following dialog will appear after a drag-drop unless you set a default action and choose don’t show next time.

I’ve included the added detail from Jerry’s article because this post has unusually few technically oriented, how-to articles.

<Return to section navigation list>

SQL Azure Database (SADB, formerly SDS and SSDS)

••• André van de Graaf describes the Main differences between SQL Azure and SQL Server 2008 in his 1/3/2010 post:

SQL Azure is a service which makes the administration slightly different. Unlike administration for an on-premise instance of SQL Server, SQL Azure abstracts the logical administration from the physical administration; as a database administrator you continue to administer databases, logins, users, and roles, but Microsoft administers the physical hardware such as hard drives, servers, and storage. Because Microsoft handles all of the physical administration, there are some differences between SQL Azure and an on-premise instance of SQL Server in terms of administration, provisioning, Transact-SQL support, programming model, and features.

He continues with the details of:

- Logical Administration vs. Physical Administration

- Transact-SQL Features Supported on SQL Azure

- Transact-SQL Features Unsupported on SQL Azure

•• Maureen O’Gara’s Rackspace Teams with MySQL-Toting DaaS Start-up post of 1/2/2010 reports:

The Rackspace Cloud, Rackspace Hosting’s cloud computing unit, has added FathomDB, a MySQL-based Database-as-a-Service (DaaS) start-up, to its ecosystem.

FathomDB is now built on Rackspace Cloud’s standards-based API.

FathomDB claims to have helped start the DaaS industry by offering an interactive user interface and analytics engine to support relational databases in the cloud initially on EC2 and S3.

The service is supposed to be a time-saver by providing automated backups, routine maintenance, analytic tools, real-time monitoring and performance graphs for optimizing code and easy point-and-click configuration.

Developers can run databases on FathomDB and the Rackspace Cloud starting at two cents an hour. Pricing varies by server size and disk space. It can run to $1.20 [per hour] for a 16GB server with 640GB of disk.

FathomDB was seeded by Y Combinator.

• George Huey and Wade Wegner discuss the latest version of George’s SQL Azure Migration Wizard with Carl Franklin and Richard Campbell for 52 minutes on Huey and Wegner Migrate Us to SQL Azure (.NET Rocks! show #512):

George Huey and Wade Wegner from Microsoft talk to Carl and Richard about George's creation, the SQL Azure Migration Wizard, a free tool that will save you countless hours when migrating SQL Server databases to SQL Azure.

Cory Fowler explains Synchronizing a Local Database with the Cloud using SQL Azure Sync Framework in this 12/31/2009 tutorial:

I’ve been looking at different aspects of Windows Azure over the past few months, and have been having a lot of fun getting a grip on Cloud Computing and Microsoft's Cloud Computing Platform. One of my past posts gives a step-by-step tutorial on how to connect to SQL Azure using Microsoft SQL Server 2008 R2 (November CTP). If you haven’t already done so I would suggest reading Making Data Rain Down from the Cloud with SQL Azure to follow along with this tutorial.

Once you have your SQL Azure Account, you will need to provision a new database in the cloud and change the firewall settings so the local computer can connect to and modify the database. These steps are provided in Making Data Rain Down from the Cloud with SQL Azure. Now that we’ve got our SQL Azure firewall database set up we’re ready to sync a local database into the cloud.

Cory continues with illustrated instructions for synchronizing a local SQL Server ASP.NET membership database with an SQL Azure version.

<Return to section navigation list>

AppFabric: Access Control, Service Bus and Workflow

••• The Admin of the Tune Up Your PC blog posted a detailed Azure Service Bus – Eventing in the App Fabric – Project Weather Cloud – Creating the Weather Stations tutorial on 1/3/2010 that’s even longer than most of ScottGu’s posts:

This is an ongoing blog entry for Project Weather Cloud. At the end we’ll have a thoroughly documented and functional cloud application. [Following are Bruno Terkaly’s posts about AppFabric eventing:]

- http://blogs.msdn.com/brunoterkaly/archive/2010/01/01/azure-service-bus-intro-to-eventing-in-the-app-fabric-project-weather-cloud.aspx

- http://blogs.msdn.com/brunoterkaly/archive/2009/12/26/the-holy-grail-of-connecting-applications-net-services-bus-in-windows-azure.aspx

Weather Stations – Reporting the weather to listening endpoints

This next section is important. We are going to work on weather stations. After all, the weather stations are the source of the data that is floating around.

Weather Stations will need to manage security credentials. More specifically, we are using shared secret, as opposed to SAML Tokens or Simple Web Tokens.

Note that weather stations simply forward weather reports to WeatherCentral, which will then broadcast weather data to listening billboards.

Admin continues with several feet of detailed instructions, source code and illustrations. The source code is available for download from a link at the end of the blog.

<Return to section navigation list>

Live Windows Azure Apps, Tools and Test Harnesses

••• Khash Sajadi asserts Microsoft Azure is not ready for prime time yet in this 1/2/2010 post:

My recent project was hosted on a virtual server in GoGrid. The server was a Windows 2008 running IIS7 and SQL 2008 Express. The application was in .NET 3.5 SP1 and was running as a ASP MVC front end and a Windows Service as a back end. NHibernate as ORM, Spring.net for IoC and DI, Log4Net for logging and a few other open source tools and frameworks.

With my BizSpark partnership approved, I tried to move the system to Windows Azure and now after spending 2 weeks on that I am moving it back to a dedicated server and trying to recover from the time wasted on this.

In my opinion, Azure is a brilliant framework but it is not ready for prime time yet. It can become a great cloud development environment within a year or so, but not now. …

Khash continues with details to support his conclusion. However, it should be noted that Windows Azure remains a Community Technology Preview (CTP) until tomorrow (1/4/2010).

••• Montreal developer Ntmisael deployed a Silverlight-powered Online Music Catalog to the Windows Azure Platform on 1/2/2010. The catalog includes user registration and login features.

I wonder how many Azure demonstration projects will remain alive on 2/1/2010 when Microsoft begins charging for compute, storage and bandwidth. I’m in the process of combining all my demo projects into a single solution with multiple Web and worker roles.

• Maarten Balliauw’s Deploying PHP in the Clouds - Windows Azure article was published in the December 2009 issue of php | architect magazine:

The IT guys are being difficult about deploying your application? Want to grow infrastructure as your application grows? If you feel any of these questions are appropriate for your situation, deploying your application in the cloud may be an affordable and extremely scalable solution. Why not have a look at Microsoft's Windows Azure platform? Join me as I take you through Windows Azure in this two-part article.

You can download a free copy here.

• Ryan Dunn, Zach Skyles Owens, David Aiken and Vittorio Bertocci authored the Windows Azure Platform Training Course, which is available for download from Channel9 as of 1/1/2010:

The Windows Azure Platform Training Course includes a comprehensive set of technical content including samples, demos, hands-on labs, and presentations that are designed to expedite the learning process for the set of technologies released as part of the Windows Azure Platform.

Following are links to the eight individual course units:

- Overview

- Windows Azure

- Windows Azure Storage

- Windows Azure Deployment

- SQL Azure

- Microsoft Codename “Dallas”

- Windows Azure Service Bus

- Identity and the Windows Azure Platform

As you can see, this is not another drop of the Windows Azure Training Kit.

David Blumenthal, MD, MPP claims 12/30/2009 was A Defining Moment for Meaningful Use:

The Centers for Medicare & Medicaid Services (CMS) issued its draft definition for the “meaningful use” of electronic health records (EHRs) today as part of its notice of proposed rulemaking (NPRM). The NPRM describes the proposed implementation of incentives to providers for the adoption and meaningful use of certified EHRs. This NPRM from CMS kicks off a 60-day public comment period to help inform its development of the final 2011 meaningful use criteria.

Closely linked and also issued today from the Office of the National Coordinator for Health Information Technology (ONC) is an interim final rule (IFR) on Standards & Certification Criteria. The IFR provides details on requirements for “certified” EHR systems, and what technical specifications are needed to support secure, interoperable, nationwide electronic exchange and meaningful use of health information. The standards and certification criteria in the IFR are specifically designed to support the 2011 meaningful use criteria.

As part of the rulemaking process, the Standards & Certification Criteria IFR will go into effect 30 days after publication. There will be an opportunity for public comment for 60 days from publication, after which the rule will be finalized. …

Your perspectives are important as we all play a different role in the delivery of care. Whether you are a provider, technologist, administrator, caregiver, researcher, patient, consumer, or other member of the health care community, your input is valuable as we all work together to provide patient-centered, quality health care.

We also hope you will use this blog to start a dialogue about meaningful use, and how we can leverage this important concept, and the specific incentives, to strengthen patient care.

Dr. Blumenthal is the National Coordinator for Health Information Technology for the Obama administration.

Health Information Technology (HITECH, HIT) and, especially, Personal Health Records (PHR, such as HealthVault), will take increasing advantage of cloud computing technology in the new decade. Therefore, this post will devote greater-than-usual attention to the topic.

Glenn Laffel, MD’s Meaningful Use Quick Reference Guide of 12/31/2009 summarizes CMS’s draft criteria for meaningful use of electronic health records:

Yesterday, the Centers for Medicare and Medicaid Services (CMS) released draft criteria for the “Meaningful Use” of electronic health records (EHRs). Physicians that want to qualify for incentive payouts through CMS in 2011 must meet all of the so-called Stage 1 criteria listed below. CMS will probably add additional criteria beginning in 2013.

The following list has been excerpted from pages 47-65 of the CMS document. EHR vendors and providers should read the original document as part of any formal planning exercise and certainly in advance of any EHR purchasing decision. …

- Improving Quality, Safety and Efficiency, and Reducing Health Disparities …

- Engaging Patients and Families in their Health Care …

- Improving Care Coordination …

- Improving Population and Public Health …

- Ensuring Adequate Privacy and Security Protections for Personal Health Information …

He adds detailed requirements for each group.

Dr. Laffel is a physician with a PhD in Health Policy from MIT and serves as Practice Fusion's Senior VP, Clinical Affairs. Practice Fusion is a free, Web-based Electronic Health Record solution.

John Moore’s Meaningful Use Rules Hit the Streets post of 12/31/2009 summarizes the two EHR-related documents released on 12/30/2009:

… The first document, at 136 pgs, titled: Health Information Technology: Initial Set of Standards, Implementation Specifications, and Certification Criteria for Electronic Health Record Technology is targeted at EHR vendors and those who wish to develop their own EHR platform. This document lays out what a “certified EHR” will be as the original legislation of ARRA’s HITECH Act specifically states that incentives payments will go to those providers and hospitals who “meaningfully use certified EHR technology.” This document does not specify any single organization (e.g. CCHIT) that will be responsible for certifying EHRs, but does provide some provisions for grandfathering those EHRs/EMRs that have previously received certification from CCHIT.

The second document at 556 pgs titled: Medicare and Medicaid Programs; Electronic Health Record Incentive Program addresses the meaningful use criteria that providers and hospitals will be required to meet to receive reimbursement for EHR adoption and use. Hint, if you wish to begin reviewing this document, start on pg 103, Table 2. Table 2 provides a fairly clear picture of exactly what CMS will be seeking in the meaningful use of EHRs. In a quick cursory review CMS is keeping the bar fairly high for how physicians will use an EHR within their practice or hospital with a focus on quality reporting, CPOE, e-Prescribing and the like. They have also maintained the right of citizens to obtain a digital copy of their medical records. An area where they pulled back significantly is on information exchange for care coordination. Somewhat surprising in that this was one of the key requirements written into the original ARRA legislation. But then again not so surprising as frankly, the infrastructure (health information exchanges, HIEs) is simply not there to support such exchange of information. A long road ahead on that front. …

John Moore is an IT analyst focusing on consumer-facing HIT for Chilmark Research.

The Fox Group, LLC finds “The first draft of the Meaningful Use Criteria [was] a surprise” in its EHR - Meaningful Use, Meaning What? summary of 12/31/2009:

With the exception of e-prescribing, very few systems address these new basic criteria, let alone the more detailed definition of meaningful use under development.

This FOCUSED update is quite complete, even though these matters are anticipated to be modified, and possibly significantly.

The first draft of the meaningful use criteria was published in June 2009 by the Meaningful Use Workgroup of the Health Information Technology Policy Committee, the advisory committee established to propose regulations and policies to implement the HITECH Act. …

The Fox Group provides healthcare consulting and executive management services.

The first draft of the Meaningful Use Criteria were a surprise. There was less emphasis on the traditional functions of an EMR system, e.g., provider documentation and electronic claims submission.

The criteria are driven by Health Outcomes Policy Priorities and Care Goals.

<Return to section navigation list>

Windows Azure Infrastructure

••• James Urquhart’s Application packaging for cloud computing: A proposal post to C|Net’s The Wisdom of Clouds blog of 1/2/2010 proposes standardizing how cloud-computing applications are packaged for inventory and deployment:

A few weeks ago I completed a series of posts describing the ways that cloud computing will change the way we utilize virtual machines and operating systems. The very heart and soul of software systems design is being challenged by the decoupling of infrastructure architectures from the software architectures that run on them.

Over the last few weeks, I've been slowly trying to get a grip on what the state of the union is with respect to software "packaging" architectures in cloud computing environments. Specifically, I've been focusing on infrastructure-as-a-service (IaaS) and platform-as-a-service (PaaS) offerings, and the enabling infrastructure that will handle application deployment to these services in the future. How will they evolve to make deployment and operations as simple as possible? …

The diagram below describes my vision in a nutshell:

(Credit: James Urquhart)

The package could be an archive file of some kind, or it could be some other association of files (such as a source control file system). The four elements displayed above are:

- Metadata describing the manifest of the package itself, and any other metadata required for processing the package such as the spec version, application classification, etc. The manifest should describe enough that the receiving cloud infrastructure could decide if it was an acceptable package or not.

- The bits that make up the software and data being delivered. This can be in just about any applicable format, I think, including an OVF file, a VHD, a TAR file or whatever else works. Remember, the manifest would describe the format the bits are delivered in--e.g. "vApp" or "RoR app" or "AMI" or "OVF," or whatever--and the cloud environment could decide if it could handle that format or not.

- An appropriate deployment and/or configuration description, or pointers to the appropriate descriptions. I've always thought of this as a Puppet configuration, a Chef recipe, or something similar, but it could simply be a pointer to a JEE deployment descriptor in a WAR file provided in the "bits" section. …

- Orchestration and service level policies required to handle the automated run-time operation of the application bits. Again, I would hope to see some standards appear in this space, but this section should allow for a variety of ways to declare the required information.

James continues with examples of his four elements.
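To make the four elements concrete, here is a small Python sketch of how such a package might be modeled. Every name in it (`Manifest`, `CloudPackage`, `accepts`, and the field names) is my own invention for illustration; James’ proposal doesn’t define a schema, so treat this as one possible reading rather than his design.

```python
from dataclasses import dataclass, field


@dataclass
class Manifest:
    """Element 1: metadata the receiving cloud inspects first."""
    spec_version: str
    bits_format: str            # e.g. "OVF", "AMI", "vApp", "RoR app"
    classification: str = ""


@dataclass
class CloudPackage:
    manifest: Manifest
    bits: bytes                 # Element 2: the software and data being delivered
    deployment: str             # Element 3: a config description, or a pointer to one
    policies: dict = field(default_factory=dict)  # Element 4: orchestration and SLA policies


def accepts(supported_formats: set, package: CloudPackage) -> bool:
    """Decide from the manifest alone whether a cloud can handle the package."""
    return package.manifest.bits_format in supported_formats
```

The point of keeping the format in the manifest is that a provider can reject a package it can’t run, such as an AMI sent to a cloud that only takes OVF, without unpacking the bits.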

••• Brandon Watson’s 2010 Personal Predictions of 1/2/2010 don’t include prognostications about cloud computing but make interesting reading.

The lack of cloud predictions is unexpected because Brandon is Director of Microsoft's Azure Services Platform Ecosystem. He holds both an engineering degree and an economics degree from the University of Pennsylvania, as well as an MBA from The Wharton School of Business.

••• My Windows Azure Feature Voting record as of 1/3/2010 has votes and comments for four Windows Azure requests:

- Provide me with full text search on table storage

- Continue Azure offering free for Developers

- Add UpToDate Patient Health Data Services to Codename "Dallas"

- Provide alternatives to Atom for Azure Storage data format

Don’t forget to add your votes for new and/or improved Windows Azure features.

••• Anders Trolle-Schultz asks Is the Cloud Misleading Enterprises, Customers - Even Politicians? in this 12/15/2009 post to Sandhill.com’s Software in the Cloud/Utility Computing topic where “Executives and analysts examine the adoption of cloud computing and how it impacts the software industry:”

Has the revolution left us all blinded by the light and groping in the dark? Private clouds on-premises (Dell), Azure-in-a-box for Enterprises, Oracle/Sun kept away by EU to move ahead and disruption and confusion among the traditional partner/reseller/distributor channel. Amazon, Opsource, Google, Microsoft are all competing to offer the cheapest delivery in the cloud. Is Cloud Computing just hype? Yes, to some extent and one could be tempted to quote Hans Christian Andersen's story "The Emperor's New Clothes."

Every day we see new and "improved" cloud offerings, but who are we kidding? Isn't Cloud computing just a packaged platform with a variety of layers of technology, delivered off-premises unable to address all the objections of the customers?

Cloud Computing Providers - perceived or declared - are all forcing the IT users/decision makers to climb too many mountains at the same time. 1) Move your IT to a cloud delivery 2) forget about your present database structure 3) Pay using your credit card 4) We will hold your data, but can't always tell you where 5) etc., etc….

When businesses are looking to improve their competitiveness and become more agile they often turn to the IT provided for their business. In today's economic climate businesses are looking for scalability, predictability in cost, fast implementation and proactive maintenance. All issues addressed and to be delivered by Cloud Computing, but as easily and more convincing delivered with IT services delivered on-premises using SaaS as a business model.

Microsoft's ability to offer "Azure-in-a-container" to enterprises could prove the point. Enterprises want the benefits of the scalable and affordable platforms with predictable cost and plug-and-play maintenance without losing control of the data. GIVE IT TO THEM, but don't force them into the Cloud unless you can address all their objections. [Emphasis added.] …

Anders goes on to outline other issues facing IT management when considering cloud computing. Clearly, SQL Azure eliminates the issue that requires IT users/decision makers to “… forget about your present database structure” and location-specific Microsoft Data Centers eliminate worries that “We will hold your data, but can't always tell you where.”

Sandhill.com’s Cloud/Utility Computing blog topic contains 17 other posts.

• Gary Orenstein offers his Forecast for 2010: The Rise of Hybrid Clouds in this 1/1/2010 post to GigaOm:

For companies protective of their IT operations and data, wholesale public cloud computing adoption can be a difficult pill to swallow. But cloud momentum is too strong a trend to ignore. Enter the hybrid cloud — a panacea of sorts, enabling companies to maintain a mix of on-premise and off-premise cloud computing resources, both public and private, managed through a common framework to simplify operations. This concept has steadily gathered steam over the last year and a half, and now appears poised to capture the minds, and wallets, of corporations in 2010. …

… Microsoft has focused its Azure cloud platform on enterprises that can use the same Windows and .NET development frameworks on services internally and on the cloud. See our posts “Microsoft Azure Walks a Thin Blue Line” and “Will Microsoft Drive Cloud Revenues in 2010?” Even Amazon has started to reach towards hybrid deployment models with its Virtual Private Cloud service positioned as “a secure and seamless bridge between a company’s existing IT infrastructure and the AWS cloud.” …

It appears certain that the newly formed Server & Cloud division under Bob Muglia will leverage Windows Server 2008 R2 sales with a formal hybrid cloud announcement for Windows Azure in early 2010.

• Mamoon Yunus continues to eulogize cloud gateway hardware, claiming “On-premise and Off-premise service Virtualization has significant benefits” in his Virtues of Service Virtualization in a Cloud post of 1/1/2010:

Service virtualization is the ability to create a virtual service from one or more predefined service files. Service files are usually generated as a Web Service Description Language (WSDL, pronounced Wizdel, see tutorial for introduction to WSDL) file by service containers running business application developed in Java, .NET, PHP type programming languages. Service virtualization combines and slices business services deployed independent of the operating systems, programming language or hosting location. The services may be off-premise cloud services (SaaS, PaaS, IaaS) or on-premise services deployed in a corporate data center. An intermediary cloud gateway sits between the producer and the consumer and aggregates the WSDLs. Based on policies enforced on the cloud gateway, only authorized operations are exposed to the consumers. A sample topology is as follows:

Mamoon continues with detailed descriptions of the putative benefits.
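The filtering step Mamoon describes, in which a gateway aggregates operations from several services and exposes only the authorized ones, can be sketched in a few lines of Python. This is a toy model under my own assumptions: it takes operation names as already extracted from each WSDL, represents policy as a set of allowed (service, operation) pairs, and the function name `virtual_service` is hypothetical. A real gateway would parse the WSDLs and enforce policy per consumer.

```python
def virtual_service(services, allowed):
    """Return the operations a consumer may see, merged across services.

    services: {service_name: [operation, ...]}   what each WSDL declares
    allowed:  set of (service_name, operation) pairs granted by policy
    """
    return sorted(
        (name, op)
        for name, ops in services.items()
        for op in ops
        if (name, op) in allowed
    )
```

Given a billing service that declares `getInvoice` and `deleteInvoice` and a policy that grants only `getInvoice`, the consumer-facing virtual service omits `deleteInvoice` entirely, regardless of where the underlying service is hosted.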

• Mark O’Neill uses Tom Rafferty’s analysis of Pacific Gas & Electric (PG&E) Co.’s disastrous US$2.2 billion smart-meter debacle in Bakersfield, CA to suggest that a cloud service broker should provide cloud services billing in his Who do you trust to meter the Cloud? post of 12/31/2009:

Customers of Cloud services right now depend on the "meters" being provided by the service providers themselves. Just like the PG&E customers in Bakersfield. This means that they depend on the service provider itself to tell them about usage and pricing. There isn't an independent audit trail of usage. The meter also locks the customer into the service provider.

A Cloud Service Broker addresses these issues. It is not a coincidence that much Cloud Service Broker terminology carries over from the world of utilities - it is solving the same problem:“Data transfer to cloud computing environments must be controlled, to avoid unwarranted usage levels and unanticipated bills from over usage of cloud services. By providing local metering of cloud services' usage, local control is applied to cloud computing by internal IT and finance teams.”

http://www.vordel.com/solutions/cloud.html

The Cloud Service Broker analyzes traffic and provides reports as well as an audit trail. Reports include usage information in real-time, per hour, per day, and per service. Reports are based on messages and based on data. Visibility is key. This is all independent of an individual Cloud service provider. It is easy to imagine how useful this would be in conjunction with Amazon's spot pricing (see a great analysis of Amazon's spot pricing by James Urquhart here).
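
As a rough illustration of the local metering idea (per-service, per-hour reports counted in both messages and data), here is a minimal Python sketch. The record layout, the `usage_report` function and the service names are assumptions made for this example; they do not describe Vordel's product or any provider's billing API.

```python
from collections import defaultdict
from datetime import datetime


def usage_report(records):
    """Aggregate (timestamp, service, byte_count) records per service and per hour.

    Returns {service: {"YYYY-MM-DD HH:00": {"messages": n, "bytes": b}}}.
    """
    report = defaultdict(lambda: defaultdict(lambda: {"messages": 0, "bytes": 0}))
    for timestamp, service, byte_count in records:
        hour = timestamp.strftime("%Y-%m-%d %H:00")
        bucket = report[service][hour]
        bucket["messages"] += 1        # report based on messages
        bucket["bytes"] += byte_count  # report based on data
    # Convert nested defaultdicts to plain dicts for easier printing and comparison.
    return {service: dict(hours) for service, hours in report.items()}


if __name__ == "__main__":
    # Hypothetical traffic observed at the broker.
    observed = [
        (datetime(2009, 12, 31, 10, 5), "storage", 1000),
        (datetime(2009, 12, 31, 10, 40), "storage", 500),
        (datetime(2009, 12, 31, 11, 1), "storage", 200),
        (datetime(2009, 12, 31, 10, 15), "compute", 50),
    ]
    for service, hours in usage_report(observed).items():
        print(service, hours)
```

Because the records are collected at the customer's side of the connection, the resulting totals form an audit trail that can be compared with the provider's own invoice.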

• Brian Ales’ On Microsoft’s Azure essay of 12/31/2009 begins:

“Three screens and a cloud.”

That’s how both Chief Software Architect Ray Ozzie and CEO Steve Ballmer repeatedly described the future of computing in general over this past year.

Inside Microsoft’s crystal ball, traditional desktop computing is decreasing as applications move up into the internet, and a new generation of lightweight ‘screens’ (thin clients, mobile devices, and network-enabled televisions) become the three prevailing client-side hardware models.

It’s a good little slogan - and about as unambiguous an endorsement of the conventional wisdom on the bright future of cloud computing as one could imagine.

Microsoft has had its share of advertising/PR issues over the last few years (think Jerry Seinfeld as a symbol of forward-thinking 21st century coolness, or the Windows 7 launch party infomercial). It would be a shame if similar promotional missteps end up preventing the Microsoft Azure initiative from garnering the media buzz it deserves, though, because the company is every bit as dedicated to the cloud as the above quote would suggest – and they’re putting their money where Ray’s and Steve’s mouths are.

Consider the Chicago data center (pictured [above]), part of the massive global build-out of the Azure infrastructure that occurred over the past year. Completed this past June, the facility occupies 700,000 sq. ft. - the size of 12 US football fields. To populate a data center this size, 40 ft. shipping containers are packed full of servers and installed as power-efficient and easier-cooling modules (note to the firm handling Microsoft’s PR: that would make some pretty serviceable B-roll news segment footage).

Sadly, though, the remarkable physical build-out of Azure has been one of the more overlooked stories of 2009, and as the service is poised to go live for the enterprise tomorrow on New Year’s Day, there remains relatively little coverage in the general or trade media. …

Brian continues with comparisons between Windows Azure and Google App Engine/Amazon EC2.

Colinizer offers his 2010 New-Year Prediction: Silverlight + Azure = The New Windows on 12/31/2009:

It has probably not escaped many of you that Windows’ market share (and that of related editions) is being eroded and is potentially under threat to varying extents in some markets as we roll into 2010.

- iPhone is whipping ‘Windows phones’ such that Windows Mobile 7 will likely be a do-or-die mission in 2010 (or more realistically 2011)

- Android is nibbling at Windows phones too

- Zune is nowhere near iPhone

- Netbooks with non-Windows OS installs are creeping into the remaining markets

- Mac is constantly barking its commercials

- LAMP is still thriving

- Google is trying to satisfy basic user requirements with a wafer-thin OS or by being OS-independent

I think, however, that Microsoft has the opportunity to really drive adoption of Windows, but not in the way it has before. The real opportunity for Windows’ continued prosperity lies in the cloud. Even though this may happen, I do not, however, think it will be seen as a success – at least not initially (and doomsayers for Windows will jump on this). The resulting public attitude will probably really grate at Microsoft for some time. …

Hovhannes Avoyan summarizes IDC predictions in his Cloud Development Instrumental in IT Recovery for 2010 post of 12/31/2009:

Platform battles will accelerate. The field will get more crowded, and IDC expects IBM and Oracle to play new roles. And Google will make its own platform more attractive to large enterprises. Watch, too, for Amazon to develop an application platform.

The next wave of hot public IT cloud services will rise and be centered around data/content (storage, distribution, and analytics), business applications (as adoption broadens for SaaS versions of enterprise applications), and personal productivity applications. These differ from today’s model of web hosting and collaboration services, for example, blogs, web conferencing and Twitter. Microsoft and IBM will play prominent roles in challenging today’s dominant player, Google Apps.

Security concerns will give rise to more private clouds – and from all major IT providers. Along with private clouds, there will be an explosion of “cloud appliances” as a “very simple-to-adopt” packaging approach. IT companies like Dell, IBM, HP, Sun, Fujitsu, Hitachi — and chipmakers Intel and AMD — will partner with software vendors to create cloud appliance versions of traditional versions of software.

Hybrid solutions will grow. IT product and service suppliers will develop tools to help customers “more cohesively and dynamically” manage their IT assets across internal servers, private clouds and their appliances, as well as public clouds.

Just as there’s a hot market for accessories for the iPod (cases, headphones), expect a hot market for cloud accessories to develop. These will focus on overcoming adoption barriers and will deliver predictable network quality of service and security. Later, other functions such as data indexing and cloud asset management will be introduced. All will aim to make public and private cloud services faster, safer, more reliable, and more useful.

Cloud players – in infrastructure, platform, application, and management/optimization cloud services categories – will develop API-based partner/solution ecosystems. API partners will add value to cloud providers’ offerings.

Charlie Burns, Bruce Guptil and Mark Koenig deliver IT Resolutions for New Decade, Old Theme of Constant Change in this 12/31/2009 Research Alert from Saugatuck Research (site registration required). Among the five resolutions, the following two concern cloud computing:

… RESOLVED, that beginning in the year 2010, IT organizations will:

1. Approach Cloud Computing as a series of tactical forms of IT that can improve the elasticity & flexibility of the business …

5. Guide and facilitate enterprise adoption of Cloud Computing …

The authors flesh out their resolutions with additional detail.

Rob Enderle calls 2010: The Year and Decade of the Cloud in this 12/30/2009 Datamation article:

It is interesting that we tend to go through cycles. We started off with big centralized computers and relatively dumb terminals. Now with the rapid growth in Smartphones, the expected success of Smartbooks and Smart-Tablets (like the rumored iSlate), and the proliferation of devices like plug computers we appear to be facing a future that looks a lot like our past.

Like with any change, vendors that are in power this decade may not be in power in the next decade unless they significantly change how they think about the market. Companies that had their roots and beginnings in large systems like EMC, HP, and IBM may have advantages in terms of services, structure and systems. But they will still have to deal with the individual users who aren’t planning on giving up any power.

Companies like Apple and Microsoft, which started off more user focused, will need to better embrace the concepts of big centralized systems or be trivialized by them. This, of course, provides a unique opportunity for new companies - like Google – to come in and dominate what is coming because they can grow into the business. But they still have to roll over the other players. …

Rob concludes re Microsoft and Windows Azure:

… It is interesting to note that of all the companies Microsoft is likely the only one that has a full blend, with Azure, of all of the software elements and interoperability practices to become the major power for the next decade. It would require an effort like .Net or their original push to the Internet to do this. Perhaps this will be Steve Ballmer’s biggest test: can he, like Bill Gates did, turn the company on a dime? [Emphasis added.]

We’ll see, but one thing is sure, when this coming decade is over the surviving players won’t look anything like they do today. Even Apple, the shining star of this decade, will have to change to reflect the coming New World. …

Prognosticators should remember that the 2010 decade begins on 1/1/2011.


Cloud Security and Governance

Mamoon Yunus reports that Rob Barry asks Does Cloud Computing Exacerbate Security and File Transfer Issues? in this 12/31/2009 post:

Here is an interesting article by Rob Barry titled: "In SOA, cloud resources may exacerbate security and file transfers issues." It highlights significant requirements for Federated SOA especially around large file transfer using SOAP Attachments. The article makes the following interesting points:

With increasing cloud adoption, there is an increase of large file transfers to external cloud providers such as Amazon S3 or Rackspace CloudFiles or to a company's internally hosted cloud. The file size increase is driven by a low-hanging use case for S3 and CloudFiles: securely archiving rarely used corporate data in the cloud. The result of such archiving of batch data is an ever-growing file transfer over HTTP as MIME or MTOM attachments. Consider the opposite scenario: if the data is real-time, the transaction rate is higher but the file sizes are usually small. According to Frank Kenney, Gartner Research Director: "As we start to use more cloud-based services, the problem is going to exacerbate itself because we're dealing with bigger data, bigger attachments. But we want the same performance that we've always been able to maintain." …

Lori MacVittie recommends that you “Write It Like Seth” [Godin] in her WILS: What Does It Mean to Align IT with the Business post of 12/30/2009:

We’ve been talking about “aligning IT with the business” since SOA first took legs but you rarely see CONCRETE EXAMPLES OF WHAT THAT REALLY MEANS.

It sounds much more grand and lofty than it really is. To put it in layman’s terms, or at least take it out of marketing terms, aligning IT with the business is really nothing more than justifying or tying a particular IT investment or project to a specific business goal. What that means ultimately is that you, as an IT professional, must understand what those business goals are in the first place. Once you know the goals, you can translate them to an IT goal and then down into specific operational implementations supporting or enabling that goal. If that operational implementation requires an investment, you can then justify the investment based on support for that specific business goal. …

Lori continues with “a few examples of translation from business goal to operational implementation.”


Cloud Computing Events

Ryan Dunn will present a Building Cloud Services with Azure workshop at MIX10 in Las Vegas, NV:

Windows Azure allows you to deploy and scale your web applications transparently in the cloud. You can build Azure applications with ASP.NET and SQL Server, or with open source components such as PHP and MySQL. Gain the skills to architect and develop real-world applications using Windows Azure. It is expected that attendees have some prior experience with Windows Azure, and the Azure Services Training Kit is a recommended prerequisite. During this half-day workshop, we show how to build basic Windows Azure applications on Microsoft and open source technologies, and introduce best practices for deploying, managing, and scaling your applications.

MIX10 takes place on 3/15/2010 through 3/17/2010, but the post doesn’t mention the date on which the workshop will occur.

James Hamilton’s Cloud Computing Economies of Scale is still MIX10’s only cloud-computing session. Strange!


Other Cloud Computing Platforms and Services

•• Kevin L. Jackson’s Most Influential Cloud Bloggers for 2009 includes what Ulitzer claims is a list of the “World’s 30 Most Influential Bloggers.” It’s actually a list of 30 Ulitzer contributors to their Cloud Computing blogs from the left pane of this page.

Conspicuous by their absence are Chris Hoff (@Beaker, Rational Survivability blog), James Urquhart (@jamesurquhart, The Wisdom of Clouds blog), John Willis (@Botchagalupe, IT Management and Cloud Blog), Jeff Barr (@jeffbarr, Amazon Web Services Blog and Jeff Barr’s Blog) and other well-respected, frequent bloggers of cloud-computing topics. Alan Irimie, who’s #12 on Kevin’s version of the list, last posted to his Azure Journal in mid-November 2009.

Ulitzer’s claim that only its contributors are the “Most Influential Cloud Bloggers” is epic chutzpah.

There was a good deal of controversy in 2009 about Ulitzer hijacking bloggers’ content without permission. Here are links to Sam Johnston’s Who's lying about the Ulitzer Cloud Security Journal? post of 9/26/2009 and Aral Balkan’s My Sys-Con Nightmare post of 7/27/2009.

•• Amazon Web Services is reporting EC2 connectivity issues in a US-EAST-1 availability zone on 1/2/2010:

10:36 AM PST We are investigating intermittent connectivity issues for some instances in a single US-EAST-1 availability zone.

There were a few tweets about the problem. Guy Rosen (@guyro) is following the story, as is Carl Brooks (@eekygeeky).

•• James Hamilton’s MapReduce in CACM post of 1/2/2010 tackles the Great MapReduce Debate:

In this month’s Communications of the Association for Computing Machinery, a rematch of the MapReduce debate was staged. In the original debate, Dave DeWitt and Michael Stonebraker, both giants of the database community, complained that:

- MapReduce is a step backwards in database access

- MapReduce is a poor implementation

- MapReduce is not novel

- MapReduce is missing features

- MapReduce is incompatible with the DBMS tools

Unfortunately, the original article appears to be no longer available but you will find the debate branching out from that original article by searching on the title Map Reduce: A Major Step Backwards. The debate was huge, occasionally entertaining, but not always factual. My contribution was MapReduce a Minor Step forward. …

James continues with descriptions of additional MapReduce resources.
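
For readers who have not seen the programming model under debate, the following minimal, single-process Python sketch shows the map, shuffle and reduce steps applied to a word count. It is an illustration only: the function names are invented for this example, and it does not represent Google's or Hadoop's implementation, which distribute these phases across many machines.

```python
from collections import defaultdict


def map_phase(document):
    """Map step: emit a (word, 1) pair for every word in one document."""
    for word in document.lower().split():
        yield word, 1


def shuffle(pairs):
    """Shuffle step: group all emitted values by their key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce step: combine the grouped values for one key into a total."""
    return key, sum(values)


def word_count(documents):
    """Run map, shuffle and reduce in sequence over a list of documents."""
    pairs = (pair for doc in documents for pair in map_phase(doc))
    return dict(reduce_phase(key, values) for key, values in shuffle(pairs).items())


if __name__ == "__main__":
    print(word_count(["the cloud", "The debate the cloud"]))
```

The sketch has no schema, indexes or query language, which gives a sense of why database researchers and MapReduce advocates disagree about what the model should be compared with.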

John Treadway lists important new features introduced by Amazon Web Services during 2009 in his Amazon Named “CloudBzz Innovator of the Year” post of 12/31/2009:

2009 has certainly been a cloudy year. The sheer volume of real innovation somehow makes all of the hype worthwhile.

While there were many companies doing interesting and innovative things in the cloud – Microsoft Windows Azure could be a strong 2010 contender – the decision on who wins for 2009 is no contest.

Amazon gets the CloudBzz “Innovator of the Year” award with a never-ending stream of great stuff that only seemed to accelerate as the year progressed. …

John reported Amazon Adding Active Directory Support (mini-scoop) from PDC on 11/18/2009, but that’s not in his list. See Azure Owns the Enterpri$e of 11/17/2009 for John’s take on Windows Azure’s position in the cloud computing market.

Eric Engleman asks Amazon.com to sell call center service to other companies? in this 12/30/2009 post to the TechFlash blog:

Is Amazon.com planning to offer its call center technology to other companies? A new job posting from the online retailer seems to indicate so. Amazon is seeking engineers to work on its "internally developed Call Center Platform" who will help take the technology "to the next level, making it available to organizations outside of Amazon."

That would be an interesting addition to Amazon's growing suite of cloud computing services.

Update [12/31/2009]: The job posting was briefly taken down yesterday following this report but is back up now.

Amazon is specifically seeking VoIP/SIP engineers for the job. It doesn't give details about the platform, other than it "currently powers all of Amazon's call centers, supporting thousands of concurrent customer calls, and tens of thousands of agents."

Interestingly, Twilio, a startup that helps developers to build phone system applications in the cloud, just raised more than $3 million in new funding to build its business. Twilio was founded by Amazon Web Services alum Jeff Lawson.
