Windows Azure and Cloud Computing Posts for 1/29/2010+

Windows Azure, SQL Azure Database and related cloud computing topics now appear in this weekly series.

•• Updated 1/31/2010: Sales Dot Two Inc.: Sales 2.0 Conference: Sales Productivity in the Clouds; Sam Johnston: Oracle Cloud Computing Forum[?]; John Mokkosian-Kane: Event-Driven Architecture in the Clouds with Windows Azure; Chris Hoff: Where Are the Network Virtual Appliances? Hobbled By the Virtual Network, That’s Where…; Stephen Chapman: Microsoft Confirms “Windows Phone 7;” to be Discussed at Energize IT 2010; Microsoft Partner Network: Windows Azure Platform Partner Hub; David Burela: Using Windows Azure to scale your Silverlight Application, Using Silverlight to distribute workload to your clients

• Updated 1/30/2010: Jonny Bentwood: Downfall of AR and the Gartner Magic Quadrant; Joe McKendrick: Design-Time Governance Lost in the Cloud? The Great Debate Rages; Jon Box: Upcoming TechNet and MSDN Events; Gunnar Peipman: Creating configuration reader for web and cloud environments; Dave O’Hara: Washington State proposes legislation to restart data center construction, 15 month sales tax exemption; Greg Hughes: Brent Ozar Puts SQL In The Cloud; Chris Hoff: MashSSL – An Excellent Idea You’ve Probably Never Heard Of…; Misael Ntirushwa: Migrating an Asp.Net WebSite with Sql Server 2008 Database to Windows Azure and Sql Azure; U.S. Department of Defense: Instruction No. 5205.13 Defense Industrial Base (DIB) Cyber Security/Information Assurance (CS/IA) Activities; Tim Weber: Davos 2010: Cyber threats escalate with state attacks; Chris Hoff: Hacking Exposed: Virtualization & Cloud Computing…Feedback Please; Nicholas Kolakowski: Microsoft Promoted Azure, Office 2010 During Earnings Call, But Dodged Mobile; Eugenio Pace: Just Released – Claims-Identity Guide online; Maarten Balliauw: Just another WordPress weblog, but more cloudy; and Emmanuel Huna: Red-Gate’s SQL Compare – now with SQL Azure support.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Table and Queue Services

- SQL Azure Database (SADB)

- AppFabric: Access Control, Service Bus and Workflow

- Live Windows Azure Apps, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.) Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

Off-Topic: OakLeaf Blog Joins Technorati’s “Top 100 InfoTech” List on 10/24/2009.

Azure Blob, Table and Queue Services

Alex James’ Getting Started with the Data Service Update for .NET 3.5 SP1 – Part 2 eight-step walkthrough of 1/28/2010 explains how to write a WPF client to consume the OData service you created in his Getting Started with the Data Services Update for .NET 3.5 SP1 – Part 1 walkthrough of 12/17/2009. In case you missed it in the preceding Windows Azure and Cloud Computing Posts for 1/27/2010+, the Astoria team announced Data Services Update for .NET 3.5 SP1 – Now Available for Download on 1/27/2010.
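A WPF client like the one in Alex's walkthrough normally writes LINQ queries against generated proxy classes, and the client library turns them into OData query URIs. The Python sketch below only illustrates what those URIs look like, using the standard `$filter`, `$orderby` and `$top` query options; the service root and the `Customers` entity set are hypothetical placeholders, not part of Alex's sample.

```python
from urllib.parse import quote, urlencode

# Hypothetical service root; point this at the data service you built in Part 1.
SERVICE_ROOT = "http://localhost:1234/MyDataService.svc"


def odata_query(entity_set, filter_expr=None, orderby=None, top=None):
    """Build an OData query URI from the $filter, $orderby and $top options."""
    options = {}
    if filter_expr is not None:
        options["$filter"] = filter_expr
    if orderby is not None:
        options["$orderby"] = orderby
    if top is not None:
        options["$top"] = str(top)
    uri = f"{SERVICE_ROOT}/{entity_set}"
    if options:
        # Keep '$' and single quotes readable; spaces become %20 rather than '+'.
        uri += "?" + urlencode(options, safe="$'", quote_via=quote)
    return uri


print(odata_query("Customers", filter_expr="Country eq 'Germany'", top=5))
```

Pasting such a URI into a browser is a quick way to check what the service returns before wiring up the WPF data binding.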


SQL Azure Database (SADB, formerly SDS and SSDS)

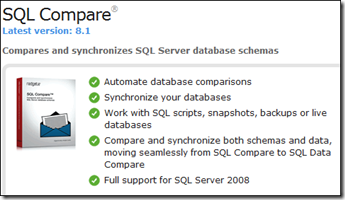

• Greg Hughes announced the Brent Ozar Puts SQL In The Cloud podcast on 1/29/2010:

In this RunAs Radio podcast, Richard and I talk to Brent Ozar from Quest Software about running SQL in the cloud.

Part of the conversation focuses on SQL Azure, but Amazon’s EC2 running SQL on a virtual machine is also a version of the same concept. The larger topic is really around DBAs providing services to their organizations, because that’s what the cloud is offering!

Brent Ozar is a SQL Server Expert with Quest Software, and a Microsoft SQL Server MVP. Brent has a decade of broad IT experience, including management of multi-terabyte data warehouses, storage area networks and virtualization. In his current role, Brent specializes in performance tuning, disaster recovery and automating SQL Server management. Previously, Brent spent two years at Southern Wine & Spirits, a Miami-based wine and spirits distributor. He has experience conducting training sessions, has written several technical articles, and blogs prolifically at BrentOzar.com. He is a regular speaker at PASS events, editor-in-chief of SQLServerPedia.com, and co-author of the book, “Professional SQL Server 2008 Internals and Troubleshooting.”

• Emmanuel Huna claims “Support for SQL Azure in SQL Compare was my idea!” in his Red-Gate’s SQL Compare – now with SQL Azure support post of 1/29/2010:

For a few years I’ve been using SQL Compare from Red-Gate – an amazing product that allows you to compare and synchronize SQL Server database schemas – and much more:

For more info see http://www.red-gate.com/products/SQL_Compare/index.htm

Red Gate has announced they now have an early access build of SQL Compare 8 that works with SQL Azure! If you're interested in trying this out please complete the form at http://www.red-gate.com/Azure

Please note that I do not work for Microsoft or Red Gate Software – I’m just a very happy customer. …

• Misael Ntirushwa’s Migrating an Asp.Net WebSite with Sql Server 2008 Database to Windows Azure and Sql Azure post of 1/28/2010 begins:

As we get to the end of January Windows Azure will be ready for business (end of the trial period). If you [are] wondering how you can migrate your existing Asp.Net website to Windows Azure and Sql Azure this post is for you. I built a website that shows a music catalog which contains artists, their albums and track listings of those albums. The data store is a SQL Server 2008 database.

For the data access layer, I used LINQ to SQL to access the database. In addition to classes auto-generated by the LINQ to SQL Designer, I have a LINQ helper class “LinqQueries” that contains queries; it is used by the business logic layer to query the database.

The business logic is composed of DTO (Data Transfer Objects) classes (Album, Artist, Track), converter classes (from Entities to DTO classes), and manager classes that actually query the database through the LinqQueries helper class.

The website display[s] music albums by genre (Rock, R&B, Pop, …). [Y]ou can search by artist name, or browse by genre. Once you select an artist you can see all his/her albums. If you select an album you can see the track list on the album and the release date. It’s a simple ASP.NET Webform website with a SQL Server database. The question is how do we migrate it into the cloud hosted in Windows Azure and SQL Azure?

I will assume here that you’ve activated your Windows Azure account, you’ve watched a couple of videos on Windows Azure, or even better tried the Windows Azure Platform kit, played with the Windows Azure SDK and are ready to start creating Hosted Services and Storage accounts in Windows Azure. …

Misael continues with detailed instructions for the migration.
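The layering Misael describes (data-layer entities, converter classes that copy entities into DTOs, and manager classes that query through a helper) is independent of LINQ to SQL. The Python sketch below illustrates that shape only; `AlbumEntity`, `TrackEntity`, `InMemoryQueries`, `AlbumManager` and `to_album_dto` are hypothetical names, and the in-memory helper merely stands in for his `LinqQueries` class and the database behind it.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Tuple


# Hypothetical stand-ins for the LINQ to SQL entity classes (data access layer).
@dataclass
class TrackEntity:
    title: str
    number: int


@dataclass
class AlbumEntity:
    artist_name: str
    title: str
    release_date: date
    tracks: List[TrackEntity] = field(default_factory=list)


# DTOs handed to the web pages: immutable and free of data-access concerns.
@dataclass(frozen=True)
class Track:
    title: str
    number: int


@dataclass(frozen=True)
class Album:
    title: str
    release_date: date
    tracks: Tuple[Track, ...]


def to_album_dto(entity: AlbumEntity) -> Album:
    """Converter: copy an entity into a DTO, ordering tracks by track number."""
    ordered = sorted(entity.tracks, key=lambda t: t.number)
    return Album(entity.title, entity.release_date,
                 tuple(Track(t.title, t.number) for t in ordered))


class InMemoryQueries:
    """Hypothetical stand-in for the LinqQueries helper class."""

    def __init__(self, albums: List[AlbumEntity]):
        self._albums = albums

    def albums_by_artist(self, artist_name: str) -> List[AlbumEntity]:
        return [a for a in self._albums if a.artist_name == artist_name]


class AlbumManager:
    """Manager: queries through the helper and returns DTOs only."""

    def __init__(self, queries: InMemoryQueries):
        self._queries = queries

    def albums_by_artist(self, artist_name: str) -> List[Album]:
        return [to_album_dto(e) for e in self._queries.albums_by_artist(artist_name)]


queries = InMemoryQueries([
    AlbumEntity("Artist A", "First Album", date(2001, 5, 1),
                [TrackEntity("Second Song", 2), TrackEntity("First Song", 1)]),
])
manager = AlbumManager(queries)
albums = manager.albums_by_artist("Artist A")
print(albums[0].tracks[0].title)  # → First Song
```

Because the pages only see DTOs, swapping the database from SQL Server 2008 to SQL Azure should mostly touch the data access layer, which is the premise of the migration.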

The DataPlatformInsider team reported Free Download: Microsoft SQL Server Migration Assistant on 11/7/2010, but I missed the significance: the Microsoft SQL Server Migration Assistant 2008 for MySQL v1.0 CTP1 now migrates from MySQL directly to SQL Azure in the cloud.


AppFabric: Access Control, Service Bus and Workflow

• Eugenio Pace announced Just Released – Claims-Identity Guide online on 1/29/2010:

The entire book [A Guide to Claims-Based Identity and Access Control: Authentication and Authorization for Services and the Web] is now available for browsing online on MSDN here.

Now, to be honest, it doesn’t look as nice as the printed book (small preview here):

But everything is in there! (and doesn’t look that bad at all either, it’s just I really like the printed version :-) ).

Eugenio continues with a contents diagram and table of technologies covered. Here’s the Windows Azure item:

| Chapters | Technologies | Topics |
|---|---|---|
| Windows Azure | ASP.NET WebForms on Azure | Hosting a claims-aware application on Windows Azure. |

A little while ago I posted How To: Add an HTTPS Endpoint to a Windows Azure Cloud Service which talked about the whole process around adding an HTTPS endpoint and configuring & uploading the SSL certificate for that endpoint.

This post is a follow up to that post to talk about installing any certificate to a Windows Azure VM. You may want to do this to install various client certificates or even to install the intermediate certificates to complete the certificate chain for your SSL certificate.

In order to peek into the cloud, I’ve written a very simple app that will enumerate the certificates of the Current User\Personal (or My) store. …

As usual, Jim’s post is a detailed, illustrated guide to working with Windows Azure.

See the Microsoft All-In-One Code Framework 2010-1-25: brief intro of new samples item in the Live Windows Azure Apps, Tools and Test Harnesses section for details about recent Workflow samples.

The Windows Azure Platform AppFabric Team reports Additional Data Centers for Windows Azure platform AppFabric on 1/28/2010:

Windows Azure platform AppFabric has now been deployed to more data centers around the world. Previously, when you provisioned a service namespace, you were asked to select a region from a list that contained only United States (South/Central). Now, when you provision a service namespace, you have three more regions from which to choose -- United States (North/Central), Europe (North) and Asia (Southeast). If your firewall configuration restricts outbound traffic, you will need to perform the additional step of opening your outbound TCP port range 9350-9353 to the IP range associated with your selected regional data center. Those IP ranges are listed at the bottom of this announcement.

Note that your existing service namespaces have already been deployed to United States (South/Central) and cannot be relocated to another region. If you like, you may delete a service namespace, and when recreating it, associate it with another region. However, if you do so, data associated with your deleted namespace will be lost.

Windows Azure platform AppFabric plans to deploy to more locations in the months ahead. Further details will be posted as they become available.

IP Ranges

- United States (North/Central): 65.52.0.0/21, 65.52.8.0/21, 65.52.16.0/21, 65.52.24.0/21, 207.46.203.64/27, 207.46.203.96/27, 207.46.205.0/24

- Europe (North): 94.245.88.0/21, 94.245.104.0/21, 65.52.64.0/21, 65.52.72.0/21, 94.245.114.0/27, 94.245.114.32/27, 94.245.122.0/24

- Asia (Southeast): 111.221.80.0/21, 111.221.88.0/21, 207.46.59.64
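For firewall administrators the announcement boils down to one rule: allow outbound TCP 9350-9353 to the listed ranges. The Python sketch below uses the standard-library `ipaddress` module to check a destination against that rule; the dictionary simply transcribes the list above. The last Asia (Southeast) entry, 207.46.59.64, appears without a prefix length, so the sketch treats it as a single /32 address, which is an assumption rather than something the announcement states.

```python
import ipaddress

# Ranges transcribed from the AppFabric announcement (January 2010).
# "207.46.59.64" has no prefix length in the source; /32 here is an assumption.
APPFABRIC_RANGES = {
    "United States (North/Central)": [
        "65.52.0.0/21", "65.52.8.0/21", "65.52.16.0/21", "65.52.24.0/21",
        "207.46.203.64/27", "207.46.203.96/27", "207.46.205.0/24",
    ],
    "Europe (North)": [
        "94.245.88.0/21", "94.245.104.0/21", "65.52.64.0/21", "65.52.72.0/21",
        "94.245.114.0/27", "94.245.114.32/27", "94.245.122.0/24",
    ],
    "Asia (Southeast)": ["111.221.80.0/21", "111.221.88.0/21", "207.46.59.64/32"],
}

# Outbound TCP ports to open: 9350-9353 inclusive.
APPFABRIC_PORTS = range(9350, 9354)


def region_for(ip: str):
    """Return the region whose listed ranges contain ip, or None."""
    addr = ipaddress.ip_address(ip)
    for region, cidrs in APPFABRIC_RANGES.items():
        if any(addr in ipaddress.ip_network(cidr) for cidr in cidrs):
            return region
    return None


def outbound_allowed(ip: str, port: int) -> bool:
    """True if the destination (ip, port) is covered by the AppFabric rule."""
    return port in APPFABRIC_PORTS and region_for(ip) is not None


print(region_for("94.245.114.40"))  # → Europe (North)
```

In practice you would open only the ranges for the region your service namespace uses, not all three.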


Live Windows Azure Apps, Tools and Test Harnesses

•• David Burela’s Using Windows Azure to scale your Silverlight Application post of 1/31/2010 describes the benefits of Windows Azure storage and the Content Delivery Network:

Having the client download your application can become the first problem you encounter. If the user is sitting there, watching a loading screen for 5 minutes, they will most likely just move onto another site. Your application can be split up into the .NET code and the supporting files (.jpgs, etc.).

For this we can use ‘Windows Azure Storage’ + the ‘Content Delivery Network’ (CDN) to help us push the bits to the end user. An official overview and how to set it up can be seen on the Azure CDN website. But to sum it up, you can put your .xap files and your supporting files onto Azure storage, then enable CDN on your account. Azure CDN will then push the files out to servers around the world (currently 18 locations). When a user requests the files, they will download them from the closest server, reducing download times.

The loading time of your application can be further reduced by cutting your application up into modules. This way, instead of downloading a 10 MB .xap file, the end user can quickly download the 100 KB core application, while the rest of the modules are loaded using MEF, Prism or something similar. These other modules can also be put onto the Azure CDN (they are just additional .xaps after all!). Brendan Forster has a quick overview on what MEF is. But there are plenty of tutorials out there on how to integrate MEF into Silverlight. …

David’s Using Silverlight to distribute workload to your clients tutorial of the same date begins:

In my previous article I explained how you can distribute server-side processing on Azure to scale the backend of your Silverlight applications. But what if we did a 180 and used Silverlight to distribute work out to clients instead?

•• John Mokkosian-Kane describes his Event-Driven Architecture in the Clouds with Windows Azure post to the CodeProject of 1/30/2010:

To demonstrate Azure and EDA beyond textbook definitions, this article will show you how to build a flight delay management system for all US airports in the clouds. The format of this article was intended to be similar to a hands-on lab, walking you through each step required to develop and deploy an application to the clouds. This flight delay management system will be built with a mix of technologies including SQL 2008 Azure, Visual Studio 2010, Azure Queue Service, Azure Worker/Web Roles, Silverlight 3, Twitter SDK, and Microsoft Bing mapping. And while the implementation of this article is based on a flight delay system, the concepts can be leveraged to build IT systems in the clouds across functional domains.

• Gunnar Peipman posted his Creating configuration reader for web and cloud environments tutorial on 1/30/2010:

Currently it is not possible to make changes to a web.config file that is hosted on Windows Azure. If you want to change web.config you have to deploy your application again. If you want to be able to modify configuration you must use web role settings. In this blog post I will show you how to write a configuration wrapper class that detects the runtime environment and reads settings based on this knowledge.

The following screenshot shows you web role configuration that is opened for modifications on the Windows Azure site.

My solution is simple – I will create one interface and two configuration reading classes. Both classes – WebConfiguration and AzureConfiguration – implement [the] IConfiguration interface.

Gunnar continues with C# and VB code for the classes.
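Gunnar's pattern (one interface, two implementations, the right one chosen at run time) can be illustrated outside .NET. The Python below is a sketch, not his C#/VB code: both settings stores are modeled as plain dictionaries, and the environment check is passed in as a boolean. In a .NET web role that check would typically come from `RoleEnvironment.IsAvailable` in `Microsoft.WindowsAzure.ServiceRuntime`; `create_configuration` and `get_setting` are hypothetical names.

```python
from abc import ABC, abstractmethod
from typing import Dict


class IConfiguration(ABC):
    """Contract the application codes against, whatever the host."""

    @abstractmethod
    def get_setting(self, name: str) -> str:
        ...


class WebConfiguration(IConfiguration):
    """Reads settings as web.config appSettings would (modeled as a dict)."""

    def __init__(self, app_settings: Dict[str, str]):
        self._settings = app_settings

    def get_setting(self, name: str) -> str:
        return self._settings[name]


class AzureConfiguration(IConfiguration):
    """Reads web role settings from the service configuration (modeled as a dict)."""

    def __init__(self, role_settings: Dict[str, str]):
        self._settings = role_settings

    def get_setting(self, name: str) -> str:
        return self._settings[name]


def create_configuration(running_in_cloud: bool,
                         app_settings: Dict[str, str],
                         role_settings: Dict[str, str]) -> IConfiguration:
    """Pick the reader for the detected environment; detection itself is assumed."""
    if running_in_cloud:
        return AzureConfiguration(role_settings)
    return WebConfiguration(app_settings)


config = create_configuration(False, {"SiteName": "local"}, {"SiteName": "cloud"})
print(config.get_setting("SiteName"))  # → local
```

Because callers depend only on `IConfiguration`, a setting changed in the web role configuration takes effect without redeploying, while local runs keep reading web.config.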

• Maarten Balliauw posted the slides for his Just another WordPress weblog, but more cloudy presentation at PHP/Benelux on 1/30/2010:

While working together with Microsoft on the Windows Azure SDK for PHP, we found that we needed a popular example application hosted on Microsoft’s Windows Azure. WordPress was an obvious choice, but not an obvious task. Learn more about Windows Azure, the PHP SDK that we developed, SQL Azure and about the problems we faced porting an existing PHP application to Windows Azure.

Colbertz reports in a Microsoft All-In-One Code Framework 2010-1-25: brief intro of new samples post of 1/29/2010:

Microsoft All-In-One Code Framework 25th January, 2010 updates. If this is the first time you have heard about the All-In-One Code Framework (AIO) project, please refer to the relevant introduction on our homepage: http://cfx.codeplex.com/

In this release, we added more new samples on Azure:

CSAzureWCFWorkerRole, VBAzureWCFWorkerRole

This sample provides a handy working project that hosts WCF in a Worker Role. This solution contains three projects:

- Client project. It's the client application that consumes the WCF service.

- CloudService project. It's a common Cloud Service that has one Worker Role.

- CSWorkerRoleHostingWCF project. It's the key project in the solution, which demonstrates how to host WCF in a Worker Role.

Two endpoints are exposed from the WCF service in the CSWorkerRoleHostingWCF project:

- A metadata endpoint

- A service endpoint for the MyService service contract

Both endpoints use TCP bindings.

CSAZWorkflowService35, VBAZWorkflowService35

This sample demonstrates how to run a WCF Workflow Service on Windows Azure. It uses Visual Studio 2008 and WF 3.5.

While Windows Azure platform AppFabric currently does not contain a Workflow Service component, you can run WCF Workflow Services directly in a Windows Azure Web Role. By default, a Web Role runs under full trust, so it supports the workflow environment.

The workflow in this sample contains a single ReceiveActivity. It compares the service operation's parameter value with 20 and returns "You've entered a small value." or "You've entered a large value.", accordingly. The client application invokes the Workflow Service twice, passing a value less than 20 and a value greater than 20.

CSAZWorkflow4ServiceBus, VBAZWorkflow4ServiceBus

This sample demonstrates how to expose an on-premises WCF Workflow Service to the Internet and cloud using Windows Azure platform Service Bus. It uses Visual Studio 2010 Beta 2 and WF 4.

While the current version of Windows Azure platform AppFabric is compiled against .NET 3.5, you can use the assemblies in a .NET 4 project.

The workflow in this sample uses the standard ReceiveRequest/SendResponse architecture introduced in WF 4. It compares the service operation's parameter value with 20 and returns "You've entered a small value." or "You've entered a large value.", accordingly. The client application invokes the Workflow Service twice, passing a value less than 20 and a value greater than 20.

Emmanuel Huna writes in his How to speed up your Windows Azure Development – two screencasts available post of 1/28/2010:

Today I sat down with my co-worker Arif A. and we went over a couple of ways I found to speed up Windows Azure development.

Basically we discuss how you can host your web roles in IIS and how you can create a keyboard shortcut to quickly attach the Visual Studio debugger to an IIS process – giving you your RAD (Rapid Application Development) process back! I created two separate screencasts which you can access below:

Each article contains the screencast, additional notes and a sample Visual Studio 2008 project you can download.

John Foley reports “Following a month of no-cost tire kicking, Microsoft will begin charging customers on Feb. 1 for its new Windows Server-based cloud computing service” in his Microsoft To Launch Pennies-Per-Hour Azure Cloud Service Monday article of 1/28/2010 for InformationWeek:

Developer and IT departments can choose from two basic pricing models: a pay-as-you-go "consumption" option based on resource usage, and a "commitment" option that provides discounts for a six-month obligation. At standard rates, a virtualized Windows Server ranges from 12 cents to 96 cents per hour, depending on CPU and related resources. Storage starts at 15 cents per GB per month, plus one cent for every 10,000 transactions. Microsoft's SQL Server costs $9.99 per month for a 1 GB Web database.

Azure represents a new, and unproven, business model for both Microsoft and its customers. Developers and other IT professionals need to assess Azure's reliability, security, and cost compared to running Windows servers in their own data centers. Microsoft is providing TCO and ROI calculators to help with that cost comparison, but the company makes "no warranties" on the results those tools deliver.

Will Microsoft's cloud be cheaper than on-premises Windows servers? Every scenario is different, but many customers do stand to save money by moving certain IT workloads from their own hardware and facilities to Azure, says Tim O'Brien, Microsoft's senior director of platform strategy. Early adopters such as Kelley Blue Book and Domino's Pizza are saving "millions," O'Brien says. He admits, however, that Microsoft's cloud services may actually cost more than on-premises IT in some cases.
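The standard rates quoted in Foley's article are enough for a back-of-the-envelope monthly estimate of the kind the TCO calculators refine. The Python sketch below is illustrative only: it assumes 730 hours per month, bills transactions linearly, uses consumption (not commitment) rates, and ignores any charges not quoted in the article. `monthly_estimate` is a hypothetical helper, not a Microsoft tool.

```python
# Standard consumption rates as quoted in the InformationWeek article (USD).
COMPUTE_PER_HOUR_MIN = 0.12   # smallest virtualized Windows Server
COMPUTE_PER_HOUR_MAX = 0.96   # largest
STORAGE_PER_GB_MONTH = 0.15
PER_10K_TRANSACTIONS = 0.01
SQL_WEB_DB_1GB_MONTH = 9.99

HOURS_PER_MONTH = 730         # assumption: average month (8,760 hours / 12)


def monthly_estimate(instances: int, hourly_rate: float, storage_gb: float,
                     transactions: int, sql_web_dbs: int) -> float:
    """Rough monthly cost in USD; transactions are billed linearly (assumption)."""
    if not COMPUTE_PER_HOUR_MIN <= hourly_rate <= COMPUTE_PER_HOUR_MAX:
        raise ValueError("hourly_rate is outside the quoted 12-96 cent range")
    compute = instances * hourly_rate * HOURS_PER_MONTH
    storage = storage_gb * STORAGE_PER_GB_MONTH
    txn = transactions / 10_000 * PER_10K_TRANSACTIONS
    sql = sql_web_dbs * SQL_WEB_DB_1GB_MONTH
    return round(compute + storage + txn + sql, 2)


# Two small instances, 10 GB of storage, one million transactions, one Web database.
print(monthly_estimate(2, 0.12, 10, 1_000_000, 1))  # → 187.69
```

Compute dominates: at these rates a single instance running all month costs roughly $87.60 at the low end and $700.80 at the high end, so instance count and size matter far more than storage or transactions.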

Windows Azure Infrastructure

• Dave O’Hara reported Washington State proposes legislation to restart data center construction, 15 month sales tax exemption in this 1/29/2010 post to the Green Data Center blog:

In Olympia, Washington there are two bills introduced with bipartisan support to allow a 15-month sales tax exemption on the purchase and installation of computers for new data centers. …

“Today is a good day. The bills that we support -- SB 6789 and HB 3147 -- were introduced in Olympia with wide bipartisan support. The 13 sponsors of the bills are from all over the state, from Seattle and Spokane to Walla Walla and Wenatchee. And the state Department of Revenue requested the bills.

“The bills allow a 15-month sales-tax exemption on the purchase and installation of computers and energy for new data centers in rural counties. As the bills state, they provide a short-term economic stimulus that will sustain long-term jobs. In other words, the exemption will be temporary, but the jobs and tax revenue from the centers will boost rural counties for years and years to come.” …

We’ll see if data center construction comes to the State of Washington soon.

In the short term, maybe Microsoft will bring back some of its servers from Texas.

Dave quotes Mary Jo Foley’s Tax concerns to push Microsoft Azure cloud hosting out of Washington state article of 8/5/2009, which quotes my Mystery Move of Azure Services Out of USA – Northwest (Quincy, WA) Data Center of the same date.

• Jonny Bentwood describes his Downfall of A[nalyst] R[elations] and the Gartner Magic Quadrant YouTube video of 1/29/2010:

Created by Jonny Bentwood. A parody of Hitler's failure to get his cloud app in the leader section of the Gartner magic quadrant. Disclosure: Created in jest and this does not reflect my view ...

The subtitles are hilarious. Don’t miss it.

• Nicholas Kolakowski’s Microsoft Promoted Azure, Office 2010 During Earnings Call, But Dodged Mobile post of 1/30/2010 to eWeek’s Windows 7, Vista, XP OS and MS Office blog carries this lede:

Microsoft signaled during a Jan. 28 earnings call that a variety of initiatives, including Office 2010, Azure and Project Natal, would help power its revenues throughout 2010. However, Microsoft executives seemed less forthcoming about the possible debut of Windows Mobile 7, the smartphone operating system that could make or break the company's plans in the mobile arena, deferring instead to an announcement during the Mobile World Congress in Barcelona in February. Microsoft also stated that netbooks and a possible Windows 7 Service Pack were non-factors in affecting the company's Windows 7 revenue.

Microsoft is betting its success in 2010 on a variety of initiatives, including the Azure cloud platform, the Project Natal gaming application, and Office 2010. However, executives during the company's Jan. 28 earnings call remained elusive about Windows Mobile 7, the long-rumored smartphone operating system that could potentially mean success or failure for Microsoft in that space.

Peter Klein, Microsoft chief financial officer, promoted both Azure and Natal during the earnings call, referring to the former as the cloud platform that would provide developers with a "smooth transition to the cloud with tools and processes," and the latter as something that "will energize this generation’s gaming and entertainment experience starting this holiday season." …

Microsoft is betting that the rollout of new versions of certain software programs throughout 2010, including Office 2010, will help spark a healthier uptake among enterprises and SMBs (small- to medium-sized businesses). Success of Azure and Natal also has the potential to contribute substantially to the company’s bottom line. But any guesses as to the role of Mobile in Microsoft’s 2010 will likely have to continue to wait until Barcelona.

Nick continues with a detailed analysis of the earnings call.

Michael Ziock’s Response to North America Connectivity Issues of 1/29/2010 states:

Microsoft Online Services strives to provide exceptional service for all of our customers. On January 28, customers served from a North America data center may have experienced intermittent access to services included in the Business Productivity Online Standard Suite. We apologize for any inconvenience this may have caused you and your employees.

Be sure to read the comments, especially the one about the “problems with our RSS feed, which is that we did not communicate the correct information for this incident, which confused customers and cause[d] problems for our support agents. This is high on the list of things we are addressing with regard to customer communications.”

Michael is Sr. Director, Business Productivity Online Service Operations, The Microsoft Online Services Team.

The Windows Azure Dashboard’s Status History for Windows Azure Compute shows no outages for the period 1/23 to 1/29/2010:

The same is true for all other services except AppFabric Access Control, which had 40 minutes of connectivity issues in the South Central US data center on 1/23/2010.

Erik Sherman reported Microsoft Cloud Services Had 5 Hour Outage [UPDATE 2] on 1/29/2010, but it didn’t affect my Windows Azure test harness running in the US South Central data center in San Antonio:

… I received an email from a reader claiming that Microsoft Online Service, the current name for its cloud offerings, had a five hour outage. According to the tip, that included the hosted Exchange email service. This is clearly not the sort of thing corporations want to hear when considering who to trust going forward in cloud computing.

It’s also clearly not the sort of thing that Microsoft executives would enjoy seeing widely communicated. I’ve tried checking online to see if there was any news of this, but there was nothing. That makes me a bit suspicious, as it would seem to be the sort of news that would have leaked out somewhere. However, that also doesn’t necessarily rule it out. I’ve just emailed Microsoft’s PR agency about this. However, given that it’s been two days so far and they still haven’t been able to dredge up a single comment on the registration server outage that lasted five days, I am not expecting any meaningful information in the near future about this. …

The outage appears to have been specific to Office Live; Pingdom and mon.itor.us haven’t reported Windows Azure outages. Still waiting for word from Microsoft.

Eric Nelson’s Q&A: What are the UK prices for the Windows Azure Platform? post of 1/29/2010 begins:

Lots of folks keep asking me for UK prices and to be fair it does take a little work to find them (you need to start here and bring up this pop-up).

Hence for simplicity, I have copied them here (as of Jan 29th 2010).

Note that there are several rates available. The following is “Windows Azure Platform Consumption”.

Eric continues with a copy of the current rates.


Cloud Security and Governance

•• Chris Hoff asked Where Are the Network Virtual Appliances? Hobbled By the Virtual Network, That’s Where… and (of course) answered his question in this 1/31/2010 post:

Allan Leinwand from GigaOm wrote a great article asking “Where are the network virtual appliances?” This was followed up by another excellent post by Rich Miller.

Allan sets up the discussion describing how we’ve typically plumbed disparate physical appliances into our network infrastructure to provide discrete network and security capabilities such as load balancers, VPNs, SSL termination, firewalls, etc. …

Ultimately I think it prudent for discussion’s sake to separate routing, switching and load balancing (connectivity) from functions such as DLP, firewalls, and IDS/IPS (security) as lumping them together actually abstracts the problem which is that the latter is completely dependent upon the capabilities and functionality of the former. This is what Allan almost gets to when describing his lament with the virtual appliance ecosystem today. …

I’ve written about this many, many times. In fact almost three years ago I created a presentation called “The Four Horsemen of the Virtualization Security Apocalypse” which described in excruciating detail how network virtual appliances were a big ball of fail and would be for some time. I further suggested that much of the “best-of-breed” products would ultimately become “good enough” features in virtualization vendors’ hypervisor platforms.

@Beaker goes on to describe “some very real problems with virtualization (and Cloud) as it relates to connectivity and security” and concludes:

The connectivity layer, however integrated into the virtualized and cloud environments they seem, continues to limit how and what the security layers can do and will for some time, thus limiting the uptake of virtual network and security appliances.

Situation normal.

• Chris Hoff (@Beaker) announced on 1/30/2010 he’s a co-author of a forthcoming book, Hacking Exposed: Virtualization & Cloud Computing…Feedback Please:

Craig Balding, Rich Mogull and I are working on a book due out later this year. Amazon’s page to pre-order the book is here.

It’s the latest in the McGraw-Hill “Hacking Exposed” series. We’re focusing on virtualization and cloud computing security.

We have a very interesting set of topics to discuss but we’d like to crowd/cloud-source ideas from all of you.

The table of contents reads like this:

Part I: Virtualization & Cloud Computing: An Overview

Case Study: Expand the Attack Surface: Enterprise Virtualization & Cloud Adoption

Chapter 1: Virtualization Defined

Chapter 2: Cloud Computing Defined

Part II: Smash the Virtualized Stack

Case Study: Own the Virtualized Enterprise

Chapter 3: Subvert the CPU & Chipsets

Chapter 4: Harass the Host, Hypervisor, Virtual Networking & Storage

Chapter 5: Victimize the Virtual Machine

Chapter 6: Conquer the Control Plane & APIs

Part III: Compromising the Cloud

Case Study: Own the Cloud for Fun and Profit

Chapter 7: Undermine the Infrastructure

Chapter 8: Manipulate the Metastructure

Chapter 9: Assault the Infostructure

Part IV: Appendices

We’ll have a book-specific site up shortly, but if you’d like to see certain things covered (technology, operational, organizational, etc.) please let us know in the comments below.

Also, we’d like to solicit a few critical folks to provide feedback on the first couple of chapters. Email me/comment if interested.

• Joe McKendrick chimes in with his Design-Time Governance Lost in the Cloud? The Great Debate Rages post of 1/30/2010 to the EbizQ SOA in Action blog:

Just when the dust seems to have settled from the whole "SOA is Dead" kerfuffle, our own Dave Linthicum throws more cold water on the SOA/cloud party -- with a proclamation that "Design-time Governance is Dead." (Well, not dead yet, but getting there...)

We've been preaching both sides of governance as the vital core of any SOA effort -- and with good reason. Ultimately, as SOA proliferates as a methodology for leveraging business technology, and by extension, services are delivered through the cloud platform, people and organizations will play the roles of both creators and consumers of services. The line between the two is blurring more every day, and both design-time and runtime governance discipline, policies, and tools will be required.

In a post that raised plenty of eyebrows (not to mention eyelids), Dave Linthicum says the rise of the cloud paradigm may eventually kill off the design-time aspect of governance, the lynchpin of SOA-based efforts. As Dave explains it, with cloud computing, it's essential to have runtime service governance in place, in order to enforce service policies during execution. This is becoming a huge need as more organizations tap into cloud formations. "Today SOA is a huge reality as companies ramp up to leverage cloud computing or have an SOA that uses cloud-based services," he says. "Thus, the focus on runtime service execution provides much more value." …

• Chris Hoff (@Beaker) recommends MashSSL – An Excellent Idea You’ve Probably Never Heard Of… in this 1/30/2010 post:

I’ve been meaning to write about MashSSL for a while as it occurs to me that this is a particularly elegant solution to some very real challenges we have today. Trusting the browser, operator of said browser or a web service when using multi-party web applications is a fatal flaw.

We’re struggling with how to deal with authentication in distributed web and cloud applications. MashSSL seems as though it’s a candidate for the toolbox of solutions:

“MashSSL allows web applications to mutually authenticate and establish a secure channel without having to trust the user or the browser. MashSSL is a Layer 7 security protocol running within HTTP in a RESTful fashion. It uses an innovation called “friend in the middle” to turn the proven SSL protocol into a multi-party protocol that inherits SSL’s security, efficiency and mature trust infrastructure.”

Make sure you check out the sections on “Why and How,” especially the “MashSSL Overview” section which explains how it works.

I should mention the code is also open source.

• Tim Weber quotes Microsoft’s Craig Mundie in his Davos 2010: Cyber threats escalate with state attacks report for BBC News of 1/30/2010:

Cyber-attacks are rising sharply, mainly driven by state-sponsored hackers, experts at the World Economic Forum in Davos have warned. The situation is made worse by the open nature of the web, making it difficult to track down the attackers.

Craig Mundie, Microsoft's chief research officer, called for a three-tier system of authentication - for people, devices and applications - to tackle the problem.

The biggest cyber-risks, however, are insiders, experts said.

The security of cyberspace and data in general has been a theme at a string of sessions in Davos, with leading security experts warning that internet threats were growing at a geometric rate. …

Microsoft, Mr Mundie said, had been under attack for many years and probably seen it all.

"We have weathered the storm of nearly every class of attack as it has evolved, and lost some IP [intellectual property] on the way. We had lots of distributed denial of service attacks, but we are coping with that, but to do so you have to have active security these days." …

Few clear strategies were proposed to counter the threat, but one that the experts agreed on was "authentication", where systems verify that you are who you say you are.

"The internet is a wonderful place because it's so easy to get on… but that's because it's unauthenticated. A lot could be prevented just by having a two-step authentication," said one expert.

Microsoft's Mr Mundie pointed to a much more dangerous threat to most companies.

"What we have also experienced is the act of insider threat. As a company we start with the assumption, there are agents of governments and rivals inside the company, so you have to think how you can secure yourself. But most companies don't start with the assumption that there's an insider threat."

The U.S. Department of Defense Instruction No. 5205.13 Defense Industrial Base (DIB) Cyber Security/Information Assurance (CS/IA) Activities of 1/29/2010 appears to be a reaction to state attacks:

PURPOSE. This Instruction establishes policy, assigns responsibilities, and delegates authority in accordance with the authority in DoD Directive (DoDD) 5144.1 (Reference (a)) for directing the conduct of DIB CS/IA activities to protect unclassified DoD information, as defined in the Glossary, that transits or resides on unclassified DIB information systems and networks.

POLICY. It is DoD policy to:

a. Establish a comprehensive approach for protecting unclassified DoD information transiting or residing on unclassified DIB information systems and networks by incorporating the use of intelligence, operations, policies, standards, information sharing, expert advice and assistance, incident response, reporting procedures, and cyber intrusion damage assessment solutions to address a cyber advanced persistent threat.

b. Increase DoD and DIB situational awareness regarding the extent and severity of cyber threats in accordance with National Security Presidential Directive 54/Homeland Security Presidential Directive 23 (Reference (b)).

c. Create a timely, coordinated, and effective CS/IA partnership with the DIB …

W. Scott Blackmer posted Data Integrity and Evidence in the Cloud to the InformationLawGroup blog on 1/29/2010:

How does cloud computing affect the risks of lost, incomplete, or altered data? Often, the discussion of this question focuses on the security risks in transmitting data over public networks and storing it in dispersed facilities, sometimes in the control of diverse entities. Less often recognized is the fact that cloud computing, if not properly implemented, may jeopardize data integrity simply in the way that transactions are entered and recorded. Questionable data integrity has legal as well as operational consequences, and it should be taken into account in due diligence, contracting, and reference to standards in cloud computing solutions. …

Scott goes on to analyze how cloud computing environments must handle atomicity, consistency, isolation and durability issues. He concludes:

The interaction between the data entry system and the multiple databases is normally effected through database APIs (application programming interfaces) designed or tested by the database vendors. The input is also typically monitored on the fly by a database “transactions manager” function designed to ensure, for example, that all required data elements are entered and are within prescribed parameters, and that they are all received by the respective database management systems.

Cloud computing solutions, by contrast, are often based on data entry via web applications. The HTTP Internet protocol was not designed to support transactions management or monitor complete delivery of upstream data. Some cloud computing vendors essentially ignore this issue, while others offer solutions such as application APIs on one end or the other, or XML-based APIs that can monitor the integrity of data input over HTTP.
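Scott doesn't say how those XML-based APIs verify complete delivery. As one generic illustration (my assumption, not a technique described in his post), a client can send a SHA-256 digest and a record count with each upload, and the receiving service can refuse to store anything that doesn't match. The function names and the practice of sending the digest and count alongside the body are hypothetical. Note that an unkeyed digest catches truncation and accidental corruption, not deliberate tampering; that requires TLS or a keyed MAC.

```python
import hashlib
import json


def payload_digest(body: bytes) -> str:
    """Hex SHA-256 digest of a request body (hypothetical client-side step)."""
    return hashlib.sha256(body).hexdigest()


def verify_upload(body: bytes, claimed_digest: str, expected_count: int) -> list:
    """Reject truncated or corrupted uploads before anything is stored.

    claimed_digest and expected_count are assumed to travel with the
    request (for example, in headers); both are hypothetical conventions.
    """
    # Check the digest first so a truncated body is never parsed or stored.
    if payload_digest(body) != claimed_digest:
        raise ValueError("digest mismatch: payload altered or truncated")
    records = json.loads(body)
    # A matching digest plus the expected record count confirms completeness.
    if len(records) != expected_count:
        raise ValueError(f"expected {expected_count} records, got {len(records)}")
    return records


# Client side: serialize the records and compute the digest to send along.
records = [{"id": 1, "amount": 100}, {"id": 2, "amount": 250}]
body = json.dumps(records).encode("utf-8")
digest = payload_digest(body)

print(len(verify_upload(body, digest, 2)))  # 2

# Simulate a connection that dropped the last bytes of the body.
try:
    verify_upload(body[:-10], digest, 2)
except ValueError as err:
    print(err)  # digest mismatch: payload altered or truncated
```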

Since the 1980s, database management systems routinely have been designed to incorporate the properties of “ACID” (atomicity, consistency, isolation, and durability). The question for the customer is whether a particular cloud computing solution offers similar fail-safe controls against dangerously incomplete transactions and records. …
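Scott's ACID point is easier to see in code. Here's a minimal sketch, not from his post, that uses Python's built-in sqlite3 module as a stand-in for a transactional database; the table names, required fields and quantity limit are hypothetical. It first checks that required data elements are present and within prescribed parameters, as a transactions manager would, then writes an order and the matching inventory change inside a single transaction, so a failure partway through leaves neither change behind.

```python
import sqlite3

REQUIRED = ("order_id", "customer", "quantity")  # hypothetical required fields


def validate(order: dict) -> None:
    """Reject records with missing fields or out-of-range values."""
    missing = [f for f in REQUIRED if f not in order]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    if not 1 <= order["quantity"] <= 1000:  # hypothetical business rule
        raise ValueError("quantity out of range")


def record_order(conn: sqlite3.Connection, order: dict) -> None:
    """Write an order and its inventory change as one all-or-nothing unit."""
    validate(order)
    # The connection's context manager commits on success and rolls back
    # if any statement inside the block raises.
    with conn:
        conn.execute(
            "INSERT INTO orders (order_id, customer, quantity) VALUES (?, ?, ?)",
            (order["order_id"], order["customer"], order["quantity"]),
        )
        cur = conn.execute(
            "UPDATE inventory SET on_hand = on_hand - ? "
            "WHERE sku = 'widget' AND on_hand >= ?",
            (order["quantity"], order["quantity"]),
        )
        if cur.rowcount != 1:
            # Raising here undoes the INSERT above as well.
            raise ValueError("insufficient inventory")


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY,
                         customer TEXT NOT NULL,
                         quantity INTEGER NOT NULL);
    CREATE TABLE inventory (sku TEXT PRIMARY KEY, on_hand INTEGER NOT NULL);
    INSERT INTO inventory VALUES ('widget', 10);
""")

record_order(conn, {"order_id": 1, "customer": "Contoso", "quantity": 4})
try:
    record_order(conn, {"order_id": 2, "customer": "Fabrikam", "quantity": 50})
except ValueError as err:
    print(err)  # insufficient inventory

print(conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0])  # 1
print(conn.execute("SELECT on_hand FROM inventory").fetchone()[0])  # 6
```

The failed second order shows atomicity at work: its INSERT succeeded, but the rollback removed it when the inventory update couldn't complete.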

… As transactions, databases and other kinds of business records follow email into the cloud, we are likely to see more disputes over records authentication and reliability. This suggests that customers should seek out cloud computing service providers that offer effective data integrity as well as security. Customers should also consider inserting a general contractual obligation for the service provider to cooperate as necessary in legal and regulatory proceedings -- because sometimes integrity must be proven.

Kevin McPartland asserts that the financial services industry hasn’t adopted public-cloud computing because of regulatory restrictions on the storage of investor information in this 2:17 Cloud Computing Regulation video.

Kevin is a senior analyst with the Tabb Group. His video is a refreshingly concise analysis of the current position of financial services regulators.

Linda Leung reports FTC Debates Clouds, Consumers and Privacy on 1/29/2010 in this post to the Data Center Knowledge blog:

Consumer data stored by cloud computing services should be regulated through a mix of government policies, consumer responsibility, and openness by the cloud providers, according to a panel of cloud companies and consumer advocates speaking at Thursday’s privacy roundtable hosted by the Federal Trade Commission.

The panelists debated a variety of issues, including how much consumers should be aware of what happens to their data when it leaves their hands, especially if the cloud provider provisions some services through third parties.

Nicole Ozer, director of technology and civil liberties policy at the American Civil Liberties Union of Northern California, said her team’s review of the privacy policies of some cloud providers found that some basic information was either lacking or vague. …

<Return to section navigation list>

Cloud Computing Events

•• The Microsoft Partner Network attempts to take Azure social with the Windows Azure Platform Partner Hub that appears to have launched officially on 1/29/2010. (The unofficial launch was on 1/24/2010). The Partner Network also appears to have taken over the Windows Azure Platform Facebook group and offers a newsletter called the Windows Azure Platform Partner Communiqué. My first Communiqué copy contained no news but was marked *Microsoft Confidential: This newsletter is for internal distribution only*:

… As we built the site we set out to simulate custom dev projects and application migrations currently being done by the Microsoft partners building on the Windows Azure Platform. We worked with Slalom Consulting (a Microsoft Gold Certified Partner) to take BlogEngine.NET to Windows Azure. To my knowledge this is one of the first deployments of BlogEngine.NET on Windows Azure and although the two work well together there were still some critical architectural and development decisions that Slalom had to navigate. As we go we’ll share lessons learned about running the site and ways that you can leverage the code or architecture on apps you build for your clients. …

The “lessons learned” and shared code won’t be of much use if they’re “for internal distribution only.” This reminds me of the SLAs for Microsoft Public Clouds Go Private with NDAs fiasco.

•• Stephen Chapman reported Microsoft Confirms “Windows Phone 7;” to be Discussed at Energize IT 2010 and provided a link to Ottawa: Energize IT – From the Client to the Cloud V 2.0, which takes place on 3/30/2010 at the Hampton Inn & Conference Center, Ottawa, Ontario K1K 4S3, Canada:

Description: Energize IT 2010 – Anything is Possible!

Windows Azure. Office System 2010. Visual Studio 2010. Windows Phone 7. The Microsoft-based platform presents a bevy of opportunities for all of us. Whether you are an IT Manager, Developer, or IT Pro, knowing how these will impact you is critical, especially in the new economic reality.

EnergizeIT 2009 Event Resources Are Now Available for Download here.

Registration is now available for you to attend this complimentary full day EnergizeIT event where we will help you to understand Microsoft’s Software+Services vision using a combination of demonstrations and break-outs. You will find out about the possibilities that these technologies help realize and the value that they can bring to your organization and yourself. …

• Jon Box lists Upcoming TechNet and MSDN Events for the Midwest (MI, OH, KY) during February and March 2010 in this 1/29/2010 post:

Windows Azure, Hyper-V and Windows 7 Deployment

Get the inside track on new tips, tools and technologies for IT pros. Join your TechNet Events team for a look at Windows Azure™ and learn the basics of this new online service computing platform. Next, we’ll explore how to build a great virtual environment with Windows Server® 2008 R2 and Hyper-V™ version 2.0. We’ll wrap this free, half-day of live learning with a tour of easy deployment strategies for Windows® 7.

TOPICS INCLUDE:

- The Next Wave: Windows Azure

- Hyper-V: Tools to Build the Ultimate Virtual Test Network

- Automating Your Windows 7 Deployment with MDT 2010

Take Your Applications Sky High with Cloud Computing and the Windows Azure Platform

Join your local MSDN Events team as we take a deep dive into Windows Azure. We’ll start with a developer-focused overview of this brave new platform and the cloud computing services that can be used either together or independently to build amazing applications. As the day unfolds, we’ll explore data storage, SQL Azure™, and the basics of deployment with Windows Azure. Register today for these free, live sessions in your local area.

Alistair Croll’s State of the Cloud webcast of 1/18/2010, which received excellent reviews on Twitter, is available as an illustrated audio podcast sponsored by Interop and Cloud Connect.

Alistair is Bitcurrent’s Principal Analyst and Cloud Computing Conference Chair for Interop and Cloud Connect.

The Cloud Connect 2010 conference will take place on 3/16 to 3/18/2010 at the Santa Clara Convention Center, Santa Clara, CA. A Cloud Business Summit will take place on 3/15/2010:

Trends which have shaped the software industry over the past decade are being drawn up into the move to cloud computing including SaaS, web services, mobile applications, open source, Enterprise 2.0 and outsourced development.

Cloud Business Summit brings together an exclusive and influential group of 200 CEOs, entrepreneurs, technologists, VCs, CIOs and service providers who will cut through the hype and discuss and debate the opportunities, business models, funding and adoption paths for the cloud.

Top entrepreneurs and executives from software, infrastructure and services companies who are building their cloud strategies will network with venture capitalists, service providers, channel partners and leading media. Produced by TechWeb, and hosted by MR Rangaswami of the Sand Hill Group, the event carries on the tradition of the Software 200X events in the Silicon Valley.

According to James Watters (@wattersjames), the San Francisco (SF) Cloud Computing Group will hold its fourth meeting on 3/16/2010, probably in Santa Clara.

Interop 2010 will take place in Las Vegas, NV on 4/25 to 4/29/2010. Cloud computing will be one of the conference’s primary tracks. Alistair Croll will conduct an all-day Enterprise Cloud Summit on 4/26/2010:

In just a few years, cloud computing has gone from a fringe idea for startups to a mainstream tool in every IT toolbox. The Enterprise Cloud Summit will show you how to move from theory to implementation. We'll cover practical cloud computing designs, as well as the standards, infrastructure decisions, and economics you need to understand as you transform your organization's IT. We'll also debunk some common myths about private clouds, security risks, costs, and lock-in.

On-demand computing resources are the most disruptive change in IT of the last decade. Whether you're deciding how to embrace them or want to learn from what others are doing, Enterprise Cloud Summit is the place to do it.

Ron Brachman reports Cloud Computing Brainiacs Converge on Yahoo! for Open Cirrus Summit on 1/18/2010:

Today and tomorrow, Yahoo! is hosting the second Open Cirrus Summit, attended by cloud computing thought leaders from around the world. Computer scientists from leading technology corporations, world-class universities, and public sector organizations have gathered in Sunnyvale to discuss the future of computer science research in the cloud. The breadth of the research talent is expanding this week, as the School of Computer Science at Carnegie Mellon University officially joins the Open Cirrus Testbed.

Open Cirrus Summit attendees (image credit: Yahoo Developer Network)

The event will feature technical presentations from developers and researchers at Yahoo!, HP, and Intel, along with updates on research conducted on the Testbed by leading universities. Specifically, the Yahoo! M45 cluster, a part of the Open Cirrus Testbed, is being used by researchers from Carnegie Mellon, the University of California at Berkeley, Cornell, and the University of Massachusetts for a variety of system-level and application-level research projects. Researchers from these universities have published more than 40 papers and technical reports based on studies using the M45 cluster in many areas of computer science, with several studies related to Hadoop. …

Tech*Days 2010 Portugal will feature a two-part Introduction to Azure session by Beat Schwegler according to the event’s Sessões page. What was missing as of 1/29/2010 were the dates and location. (Try again later or follow Nuno Filipe Godinho’s blog/tweets.)

<Return to section navigation list>

Other Cloud Computing Platforms and Services

•• Sam Johnston sights Oracle Cloud Computing Forum[?] in this 1/31/2010 screen capture:

My comment: Strange goings on from an outfit that canceled the Sun Cloud project.

According to Oracle Cloud Computing Forum in Beijing, China of 1/28/2010, it’s making the rounds in the PRC.

•• Sales Dot Two Inc. adds proof that if a conference name doesn’t include “cloud,” no one will attend. Here’s the description of its Sales 2.0 Conference: Sales Productivity in the Clouds scheduled for 3/8 – 3/9/2010 at San Francisco’s Four Seasons hotel:

The 2010 Sales 2.0 Conference is where forward-looking sales and marketing leaders will learn how to leverage sales-oriented SaaS technologies so their teams can sell faster, better, and smarter in any economic climate. The two-day conference reveals innovative strategies that make the sales cycle more efficient and effective for both the seller and the buyer. …

The only connection to cloud computing is this 55-minute presentation by Gartner’s research VP, Michael Dunne:

Mapping Sales Productivity in the Cloud

Sales needs to work smarter and more efficiently for firms to successfully drive a business recovery. More innovations are emerging with cloud computing but sales often fails to leverage automation. We will examine the top processes for accelerating sales and their implications for improving sales planning, execution and technology adoption.

Dana Gardner asserts “Apple, Oracle plot world domination” in his Apple and Oracle on Way to Do What IBM and Microsoft Could Not post of 1/29/2010:

… Apple is well on the way to dominating the way that multimedia content is priced and distributed, perhaps unlike any company since Hearst in its 1920s heyday. Apple is not killing the old to usher in the new, as Google is. Apple is rescuing the old media models with a viable online direct payment model. Then it will take all the real dough.

The iPad is a red herring, almost certainly a loss leader, like Apple TV. The real business is brokering a critical mass of music, spoken word, movies, TV, books, magazines, and newspapers. All the digital content that's fit to access. The iPad simply helps convince the producers and consumers to take the iTunes and App Store model into the domain of the formerly printed word. It should work, too.

Oracle is off to becoming the one-stop shop for mission-critical enterprise IT ... as a service. IT can come as an Oracle-provided service, from soup to nuts, applications to silicon. The "service" is that you only need go to Oracle, and that the stuff actually works well. Just leave the driving to Oracle. It should work, too.

This is a mighty attractive bid right now to a lot of corporations. The in-house suppliers of raw compute infrastructure resources are caught in a huge, decades-in-the-making vice -- of needing to cut costs, manage energy, reduce risk and back off of complexity. Can't do that under the status quo. …

This is why "cloud" makes no sense to Oracle's CEO Larry Ellison. He'd rather we take out the word "cloud" from cloud computing and replace it with "Oracle." Now that makes sense!

Linda Leung reports Cisco Outlines Plans for Enterprise Clouds, IaaS on 1/28/2010 for Data Center Knowledge:

Cisco Systems (CSCO) this week bolstered its cloud infrastructure offerings to enterprises and service providers by developing separate design guides for those respective markets. The guides present validated designs that enable enterprises and service providers to develop cloud infrastructures using Cisco gear and partner software.

The Secure Multi-Tenancy into Virtualized Data Centers guide is aimed at enterprises wanting to build clouds and is published with NetApp and VMware. It describes the design of what the vendors call the Secure Cloud Architecture, which is based on Cisco Nexus Series Switches and the Cisco Unified Computing System, NetApp FAS storage with MultiStore, and VMware vSphere and vShield Zones.

Jinesh Varia’s 20-page Architecting for the Cloud: Best Practices white paper (January 2010) is written for Amazon Web Services, but most of its content applies to Windows Azure and other PaaS offerings. From the introduction:

For several years, software architects have discovered and implemented several concepts and best practices to build highly scalable applications. In today’s "era of tera", these concepts are even more applicable because of ever-growing datasets, unpredictable traffic patterns, and the demand for faster response times. This paper will reinforce and reiterate some of these traditional concepts and discuss how they may evolve in the context of cloud computing. It will also discuss some unprecedented concepts such as elasticity that have emerged due to the dynamic nature of the cloud.

This paper is targeted towards cloud architects who are gearing up to move an enterprise-class application from a fixed physical environment to a virtualized cloud environment. The focus of this paper is to highlight concepts, principles and best practices in creating new cloud applications or migrating existing applications to the cloud.

Jinesh is a Web Services evangelist for Amazon.com.
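Jinesh singles out elasticity as the concept that emerged from the cloud's dynamic nature. Here's a minimal sketch, not taken from the paper or from any Amazon Web Services or Windows Azure API, of a proportional scaling rule: given the current instance count and average CPU utilization, it estimates how many instances would bring utilization back to a target. The function name and the target, floor and ceiling values are hypothetical defaults.

```python
import math


def desired_instances(current: int, avg_cpu: float, target_cpu: float = 60.0,
                      min_instances: int = 2, max_instances: int = 20) -> int:
    """Size the fleet so average CPU utilization moves toward target_cpu.

    Proportional rule: if N instances run at avg_cpu percent, roughly
    N * avg_cpu / target_cpu instances would run at target_cpu percent.
    All thresholds are hypothetical defaults, not vendor settings.
    """
    if current < 1:
        raise ValueError("current must be at least 1")
    # Round up so the estimate errs toward enough capacity.
    wanted = math.ceil(current * avg_cpu / target_cpu)
    # Clamp between the redundancy floor and the cost ceiling.
    return max(min_instances, min(max_instances, wanted))


print(desired_instances(4, 90.0))   # 6  (scale out under load)
print(desired_instances(6, 20.0))   # 2  (scale in when idle, floored at min)
print(desired_instances(15, 95.0))  # 20 (capped at max)
```

A production autoscaler would also add cooldown periods so the fleet doesn't thrash between sizes; the floor keeps some redundancy even when load is light.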

<Return to section navigation list>

Zend Technologies Inc.


Did you miss the Azure roadshow for Microsoft Partners in December? Are you still pondering the business opportunity behind Azure? Well, block off 2 hours on your calendar today to attend our Live Meeting presentation of the roadshow content on Thursday, February 25th. Gather up your team for an extended lunch to learn from leading edge partners on what the Windows Azure opportunity can mean for your business. For more information and to register for the event go to


The newly formed