Windows Azure and Cloud Computing Posts for 9/6/2012+

A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles.

• Updated 9/8/2012 1:30 PM PDT with new articles marked •.

Tip: Copy the bullet, press Ctrl+F, paste it into the Find text box, and click Next to locate updated articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, Hadoop, Online Backup and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Marketplace DataMarket, Cloud Numerics, Big Data and OData

- Windows Azure Service Bus, Access Control, Caching, Active Directory, and Workflow

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue, Hadoop, Online Backup and Media Services

• The Microsoft Server and Cloud Platform Team (@MSCloud) described Windows Azure Online Backup and the Windows Azure Active Directory Management Portal in a 9/7/2012 post:

It’s sometimes easier to think about cloud computing in all or nothing terms – move everything into the cloud or leave everything as-is, on-premises. But, as most know, the emerging reality is more of a hybrid approach, combining both cloud and on-premises resources. Windows Server 2012 and System Center 2012 SP1 are embracing the hybrid model with services including Windows Azure Online Backup. It’s a great example of how Windows Server, System Center and Windows Azure work together in what we call the “Cloud OS”.

Currently in preview, Windows Azure Online Backup is a cloud-based backup solution enabling server data to be backed up and recovered from the cloud in order to help protect against loss and corruption.

The service provides IT administrators with a physically remote backup and recovery option for their server data with limited additional investment when compared with on-premises backup solutions.

In addition to cloud-based backup for Windows Server 2012, we are pleased to announce that Windows Azure Online Backup now also supports cloud-based backup from on-premises System Center 2012 SP1 via the Data Protection Manager component.

Windows Server 2012

Cloud-based backup from Windows Server 2012 is enabled by a downloadable agent that installs right alongside the familiar Windows Server backup interface. From this interface, backup and recovery of files and folders is managed as usual, but instead of utilizing local disk storage, the agent communicates with a Windows Azure service which creates the backups in Windows Azure storage.

System Center 2012 SP1

With the System Center 2012 SP1 release, the Data Protection Manager (DPM) component enables cloud-based backup of datacenter server data to Windows Azure storage. System Center 2012 SP1 administrators use the downloadable Windows Azure Online Backup agent to leverage their existing protection, recovery and monitoring workflows to seamlessly integrate cloud-based backups alongside their disk/tape based backups. DPM’s short term, local backup continues to offer quicker disk-based point recoveries when business demands it, while the Windows Azure backup provides the peace of mind & reduction in TCO that comes with offsite backups. In addition to files and folders, DPM also enables Virtual Machine backups to be stored in the cloud.

Windows Server 2012 Essentials

Small businesses using Windows Server 2012 Essentials can also access cloud-based backup capabilities by downloading the Windows Azure Online Backup integration module, an extension for the Windows Server 2012 Essentials dashboard. The agent extends the server folder page in the dashboard with online backup information, provides common backup and recovery functions, and simplifies the setup and configuration steps.

Key features

Below are some of the key features we’re delivering in Windows Azure Online Backup:

- Simple configuration and management.

- Simple, familiar user interface to configure and monitor backups from Windows Server and System Center SP1.

- Integrated recovery experience to transparently recover files, folders and VMs from the cloud.

- Windows PowerShell command-line interface scripting capability.

- Block level incremental backups.

- Automatic incremental backups track file and block level changes and transfer only the changed blocks, reducing storage and bandwidth utilization.

- Different point-in-time versions of the backups use storage efficiently by only storing the changed blocks between these versions.

- Data compression, encryption and throttling.

- Data is compressed and encrypted on the server before being sent to Windows Azure over the network. As a result, Windows Azure Online Backup only places encrypted data in the cloud storage.

- The encryption passphrase is not available in Windows Azure, and as a result data is never decrypted in the service.

- Users can set up throttling and configure how Windows Azure Online Backup utilizes the network bandwidth when backing up or restoring information.

- Data integrity verified in the cloud.

- Backed up data is also automatically checked for integrity once the backup is complete. As a result, any corruptions due to data transfer are automatically identified and repair is attempted in the next backup.

- Configurable retention policies.

- Configure and implement retention policies to help meet business policies and manage backup costs.
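The block-level incremental behavior listed above can be illustrated with a minimal sketch. This is not the Windows Azure Online Backup agent's actual algorithm, which the post does not describe; the fixed block size, the SHA-256 digests and the function names are all assumptions chosen for illustration. The idea is only that comparing per-block digests lets a client find the blocks that changed and send just those.

```python
import hashlib

# Tiny block size so the example is easy to follow (an assumption;
# a real backup agent would use far larger blocks).
BLOCK_SIZE = 4


def block_hashes(data: bytes, block_size: int = BLOCK_SIZE) -> list[str]:
    """Return one SHA-256 digest per fixed-size block of data."""
    return [
        hashlib.sha256(data[i:i + block_size]).hexdigest()
        for i in range(0, len(data), block_size)
    ]


def changed_blocks(old: bytes, new: bytes, block_size: int = BLOCK_SIZE) -> dict[int, bytes]:
    """Return {block_index: block_bytes} for blocks of new that differ from old.

    Blocks that exist only in new count as changed. Truncation (new shorter
    than old) is not handled in this sketch.
    """
    old_hashes = block_hashes(old, block_size)
    delta = {}
    for index, digest in enumerate(block_hashes(new, block_size)):
        if index >= len(old_hashes) or old_hashes[index] != digest:
            start = index * block_size
            delta[index] = new[start:start + block_size]
    return delta


previous = b"AAAABBBBCCCC"
current = b"AAAAXXXXCCCCDDDD"
# Only the modified second block and the appended fourth block would be sent.
print(sorted(changed_blocks(previous, current)))
```

In this toy run, two of the four blocks are transferred, which is the storage and bandwidth saving the feature list refers to.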

Getting started

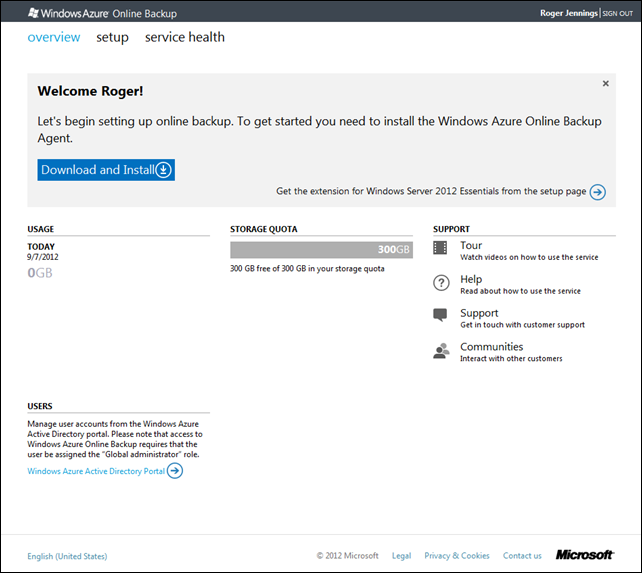

Getting started with Windows Azure Online Backup is a simple two-step process:

- Get a free preview Windows Azure Online Backup account (with 300 GB of cloud storage) here.

- Log in to the Windows Azure Online Backup portal and download and install the Windows Azure Online Backup agent for Windows Server 2012 or System Center 2012 SP1 Data Protection Manager. For Windows Server 2012 Essentials, download and install the Windows Azure Online Backup integration module.

Once you have installed the agent or integration module you can use the existing user interfaces for registering the server to the service and setting up online backup.

Windows Azure Active Directory Management Portal

With today’s release of the Windows Azure Online Backup preview we are also releasing a supporting preview of the Windows Azure Active Directory Management Portal. Customers can use the Windows Azure Active Directory Management Portal to sign up for Windows Azure Online Backup and manage users’ access to the service. Administrators can now use the preview portal at https://activedirectory.windowsazure.com. We’ll have more details on how the new Windows Azure Active Directory Management Portal can be used to manage your organization’s identity information in a separate blog post soon.

I’ll be posting details of the Windows Azure Active Directory (WAAD) Management Portal and Online Backup (WAOB) shortly. In the meantime, here’s a screen capture of the WAOB portal after you sign up:

Magnus Mårtensson (@noopman) reported Windows Azure may be used for storage of personal data on Swedish citizens in a 9/6/2012 post:

This is HUGE: Swedish government agency "Datainspektionen", in charge of protecting personally identifiable information about Swedish citizens, acknowledges that Microsoft and Windows Azure live up to the rules and regulations that Swedish law demands with regard to protecting and overseeing the management of personal data for Swedish citizens.

The case is that of Internet Mail Service Brevo, which has been subject to review by Datainspektionen. After Brevo handled the few issues that came up in the inspection, Datainspektionen now attests that Brevo, their service, and the hosting and data management provided by Microsoft Windows Azure live up to the required laws, and no further review will be necessary. The case is now closed! (You can read about Brevo here on this Microsoft Translator transcribed page.)

Earlier, Salem County in Sweden was faulted by Datainspektionen for using Google Cloud services, which were not deemed to live up to the high standards that protect the integrity of Swedish citizens. Clearly Microsoft services are OK but Google services are not!

"The Data Inspectorate's orders were interpreted by many as an obstacle to storing personal data in the cloud, and several authorities and organizations have therefore hesitated to modernize their IT pending a final judgment from the Swedish Data Inspection Board. The Data Inspectorate's approval validates Microsoft's work with openness and transparency about our cloud services", says Microsoft's technology manager Daniel Akenine.

Read all about Microsoft's work with Windows Azure and trustworthy computing at the Windows Azure Trust Center.

Denny Lee (@dennylee) posted Thursday TechTips: Hadoop 1.01 and Compression Codecs on 9/6/2012:

I have been playing around with the compression codecs with Hadoop 1.01 over the last few months and wanted to provide quick tech tips on compression codecs and Hadoop. The key piece of advice is for you to get Tom White’s (@tom_e_white) Hadoop: The Definitive Guide. It is easily the must-have guide for Hadoop novices to experts.

The key fundamental concerning compression codecs is that not all codecs are immediately available within Hadoop. Some of them are native to Hadoop (one needs to remember to compile the native libraries) while others need to be extracted from their source and compiled in.

Below is a handy table reference based on Tom’s book and some of the observations I have noticed from tests as well.

- oahic in the Codec column represents org.apache.hadoop.io.compress

- [1] Left to right representing least to most compression space

- [2] Left to right representing slow to fast compression time

- + LZO is not natively splittable but you can use the lzop tool to pre-process the file.

- * While gzip is not natively splittable, there is an open jira HADOOP-7076 for this and you can install the patch yourself at SplittableGzip GitHub project.

While each project has its own profile, some key best practices paraphrased and listed in order of effectiveness from Hadoop: The Definitive Guide are:

- Use container file format (Sequence file, RC File, or Avro)

- Use a compression format that supports splitting (such as bz2)

- Manually split large files into HDFS block size chunks and compress individually
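The third practice above, splitting a large file into block-sized chunks and compressing each one individually, could look roughly like the following sketch. It is an illustration only and not taken from the book or from Denny Lee's post: the function name and the output file naming are hypothetical, gzip is used simply because it ships with Python, and the split is byte-based, so a real pipeline would also need to cut on record boundaries.

```python
import gzip
import os


def split_and_compress(path: str, chunk_size: int, out_dir: str) -> list[str]:
    """Split the file at path into chunk_size-byte pieces and gzip each piece.

    Returns the paths of the compressed parts in order. Each part is an
    independent gzip file, so each one can be processed on its own.
    """
    os.makedirs(out_dir, exist_ok=True)
    base = os.path.basename(path)
    parts = []
    with open(path, "rb") as source:
        index = 0
        while True:
            chunk = source.read(chunk_size)
            if not chunk:
                break  # end of input file
            part_path = os.path.join(out_dir, f"{base}.part{index:05d}.gz")
            with gzip.open(part_path, "wb") as target:
                target.write(chunk)
            parts.append(part_path)
            index += 1
    return parts


if __name__ == "__main__":
    # Assumption: 64 MB chunks to match a typical HDFS block size of that era;
    # "weblogs.txt" is a hypothetical input file.
    if os.path.exists("weblogs.txt"):
        print(split_and_compress("weblogs.txt", 64 * 1024 * 1024, "weblogs_parts"))
```

Choosing the chunk size so that each part holds about one HDFS block of uncompressed data means each compressed part ends up no larger than a block.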

Some other handy compression tips are noted below.

- LZO vs Snappy vs LZF vs ZLIB, A comparison of compression algorithms for fat cells in HBase

- 10 MapReduce Tips (good in general, not just compression)

- Use Compression with Mapreduce

I have Tom’s book and agree it’s indispensable for anyone using Hadoop and its related subprojects.

Windows Azure SQL Database, Federations and Reporting, Mobile Services

• My (@rogerjenn) Windows Azure Mobile Services Preview Walkthrough–Part 1: Windows 8 ToDo Demo Application (C#) of 9/8/2012 begins:

The Windows Azure Mobile Services (WAMoS) Preview’s initial release enables application developers targeting Windows 8 to automate the following programming tasks:

- Creating a Windows Azure SQL Database (WASDB) instance and table to persist data entered in a Windows 8 Modern (formerly Metro) UI application’s form

- Connecting the table to the data entry form

- Adding and authenticating the application’s users

- Pushing notifications to users

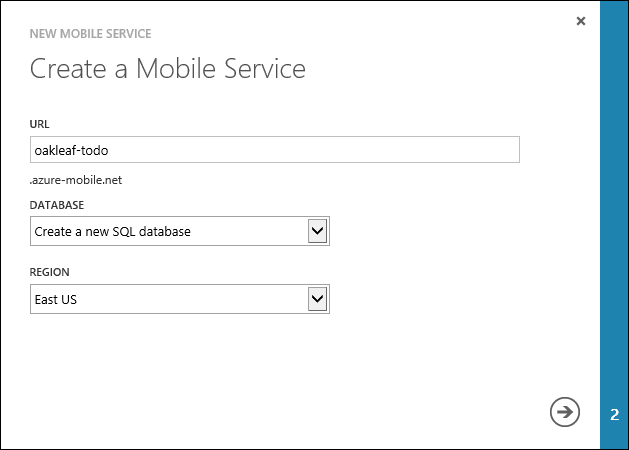

This walkthrough, which is simpler than the Get Started with Data walkthrough, explains how to obtain a Windows Azure 90-day free trial, create a C#/XAML WASDB instance for a todo application, add a table to persist todo items, and generate and use a sample oakleaf-todo Windows 8 front-end application. During the preview period, you can publish up to six free Windows Mobile applications.

Future walkthroughs will cover tasks 3 and 4.

Prerequisites: You must perform this walkthrough under Windows 8 RTM with Visual Studio 2012 Express or higher.

Note: The WAMoS abbreviation is used for Mobile Services to distinguish them from Windows Azure Media Services (WAMeS). …

and continues with a fully illustrated tutorial with screen captures for each step, for example:

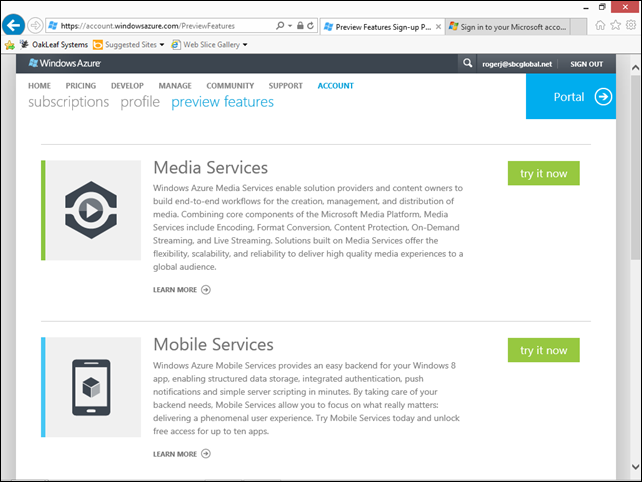

7. Open the Management Portal’s Account tab and click the Preview Features button:

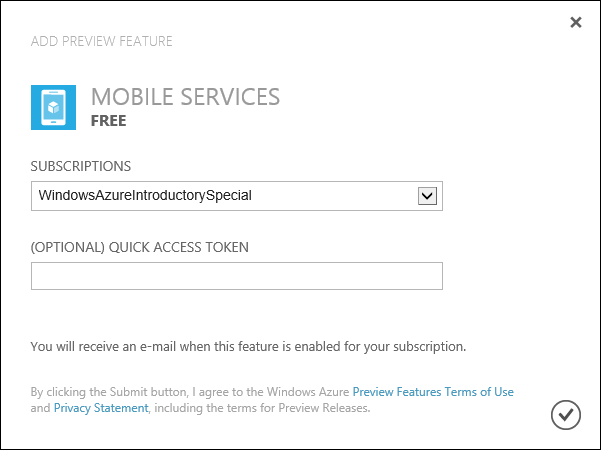

8. Click the Mobile Services’ Try It Now button to open the Add Preview Feature form, accept the default or select a subscription, and click the submit button to request admission to the preview:

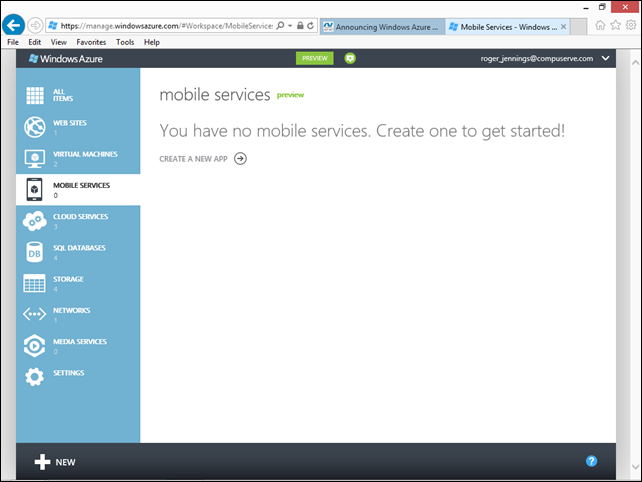

9. Follow the instructions contained in the e-mail sent to your Live ID e-mail account, which will enable the Mobile Services item in the Management Portal’s navigation pane:

Note: My rogerj@sbcglobal.net Live ID is used for this example because that account doesn’t have WAMoS enabled. The remainder of this walkthrough uses the subscription(s) associated with my roger_jennings@compuserve.com account.

10. Click the Create a New App button to open the Create a Mobile Service form, type a DNS prefix for the ToDo back end in the URL text box (oakleaf-todo for this example), select Create a new SQL Database in the Database list, and accept the default East US region.

Note: Only Microsoft’s East US data center supported WAMoS when this walkthrough was published. …

Steps 10 through 20 follow.

• Cihan Biyikoglu (@cihangirb) explained Max Pool Size Setting and Federations in Windows Azure SQL Database in a 9/7/2012 post:

This came up a few times in the last few weeks, so I wanted to make sure you don’t hit the issue the next time your peak load hits. With federations, your app servers connect to the collection of databases (your federated db with the root and member databases) using a single connection string. This is key to solving the connection multiplexing and connection pool fragmentation issues. However, this also means that if you push a high load on your app tier (worker roles, web roles, etc.), you may run out of connections. The default connection pool ceiling is set to 100 concurrent connections, and if you have more than 100 concurrent connections from the app instance, you get a timeout error that looks like this:

"Timeout expired. The timeout period elapsed prior to obtaining a connection from the pool. This may have occurred because all pooled connections were in use and max pool size was reached"

The workaround is simple: update the Max Pool Size setting in the connection string in your app tier. So what do you set it to? You need to set the value a good distance above the peak number of concurrent connections you expect a single app instance to handle. Usually 1000 is a safe limit for most realistic apps. If you are testing scale or performance, you may want to push the value to a few thousand.

The way to set your max pool size is explained here for SqlClient in ADO.NET, but it simply means you need to add “…;Max Pool Size=1000;” to your connection string. Full details on the connection string settings for SQL can be found here:

http://msdn.microsoft.com/en-us/library/system.data.sqlclient.sqlconnection.connectionstring.aspx
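To make the connection-string change concrete, here is a small language-neutral sketch in Python of adding or replacing the Max Pool Size keyword. The helper name and the sample server, database and user values are hypothetical, and the parser is deliberately naive (it does not handle quoted values that contain semicolons). In a .NET app tier you would more likely edit the configuration file directly or use SqlConnectionStringBuilder.

```python
def with_max_pool_size(connection_string: str, max_pool_size: int) -> str:
    """Return connection_string with Max Pool Size set to max_pool_size."""
    pairs = []
    for part in connection_string.split(";"):
        if not part.strip():
            continue  # skip empty segments such as the one after a trailing ';'
        key, _, value = part.partition("=")
        if key.strip().lower() == "max pool size":
            continue  # drop any existing setting; the new one is appended below
        pairs.append(f"{key.strip()}={value.strip()}")
    pairs.append(f"Max Pool Size={max_pool_size}")
    return ";".join(pairs) + ";"


# Hypothetical connection string; every value here is a placeholder.
base = (
    "Server=tcp:example.database.windows.net;Database=salesdb;"
    "User ID=app@example;Password=<placeholder>;"
)
print(with_max_pool_size(base, 1000))
```

Because every federation member is reached through the same connection string, this one setting raises the ceiling for the whole federated database from a given app instance.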

The Windows Azure Mobile Services Team announced the availability of Mobile Services Reference Documentation on 9/6/2012:

Windows Azure Mobile Services

[This topic is pre-release documentation and is subject to change in future releases. Blank topics are included as placeholders.]

The feature described in this topic is available only in preview. To use this feature and other new Windows Azure capabilities, sign up for the free preview.

Windows Azure Mobile Services is a Windows Azure service offering designed to make it easy to create highly-functional mobile apps using Windows Azure. Mobile Services brings together a set of Windows Azure services that enable backend capabilities for mobile apps. Mobile Services provides a rich programming model, including client libraries for devices, a JavaScript library for server-side business logic, and REST APIs.

Note:

- In this preview release, Mobile Services only supports Windows 8 app development.

- This page links to topics that help you to understand and get started using Mobile Services on Windows Azure. This page will be updated periodically when new content is available, so check back often to see what’s new.

Articles:

- Get started with Mobile Services: Demonstrates how to use Mobile Services to easily create a Windows 8 app that uses Windows Azure as the backend service for storage and authentication. You should complete this tutorial before you begin any of the other tutorials.

- Get started with data: Demonstrates how to use Mobile Services to leverage data in a Windows 8 app.

- Get started with users: Demonstrates how to work with users in Mobile Services who are authenticated in a Windows 8 app.

- Get started with push notifications: Demonstrates how to use Mobile Services to send push notifications to a Windows 8 app.

- For more tutorials and information about Mobile Services, see the Mobile Services developer center.

Blog posts: Windows Azure Blog

Forums: Windows Azure Mobile Services

Other resources: Mobile Services developer center Centralized hub for all information on Windows Azure.

In This Section

- Mobile Services client library for JavaScript

- Mobile Services client library for .NET

- Mobile Services server script reference

See Also Other Resources:

- Mobile Services Tutorial: Find the latest questions and answers about Mobile Services in the Windows Azure platform forums.

- Windows Store apps: General questions and answers about developing Windows 8 apps.

Cihan Biyikoglu (@cihangirb) reported the availability of New Sample Code for Entity Framework and ADO.Net for Federations in Windows Azure SQL Database in a 9/5/2012 post:

Thanks to Scott Klein and Michael Thomassy, we now have new code samples out for EF and ADO.NET demonstrating how to work with federations. The samples showcase simple to advanced notions in both ADO.NET and EF in federations. The samples range from fan-out querying in Entity Framework to querying federation metadata. What’s best is that you can now get the new sample Entity Framework provider built for federations, with connection resiliency and routing support, in these samples.

Here are the individual links for the 4 samples:

Cloud computing on the Windows Azure platform offers the opportunity to easily add more data and compute capacity on the fly as business needs vary over time. Federations in SQL Database is a way to achieve greater scalability and performance from the database tier of your app.

Marketplace DataMarket, Cloud Numerics, Big Data and OData

Don Pattee posted Announcing the HPC Pack 2012 Beta Program on 9/6/2012:

I am pleased to announce the start of the HPC Pack 2012 Beta Program!

The 4th major version of our HPC software line following the Compute Cluster Pack, HPC Pack 2008, and HPC Pack 2008 R2 releases, the HPC Pack 2012 release brings a batch of new functionality and support for new software.

- Windows Server 2012 (including 'Server Core'), the Microsoft .NET Framework 4, Microsoft SQL Server 2012, and Windows 8 are all now supported

- A single version of HPC Pack 2012 now includes all the functionality that used to be split across the HPC Pack 2008 Express, Enterprise, Workstations and Cycle Harvesting editions

- HPC Pack now supports extending your enterprise network to Windows Azure via 'Project Brooklyn'

- MS-MPI has a new 'message compression' feature to reduce the time spent waiting on communications to complete

- The job scheduler now allows for dependencies between jobs (in addition to the task dependencies that already existed) to better integrate with your work flow

- Windows Azure nodes can be set to automatically mount a VHD, which could contain some static data or application code, to make using HPC Pack's 'burst' functionality even easier

- and more... A more complete list of new functionality is available once you join the beta :)

Sign up for the beta program by going to the Microsoft HPC Connect beta site, logging in with your Windows Live ID and filling out a quick survey. (If this is your first Connect-based beta program you'll also need to complete a short set of 'profile' questions.)

Joel Foreman described Building Dynamic Services Using ASP.NET Web API and OData in a 9/6/2012 post to the Slalom Consulting blog:

Over the past few years I have been building more and more RESTful services in .NET. The need for services that can be used from a variety of platforms and devices has been increasing. Oftentimes when we build a service layer to expose data over HTTP, we may be building it for one platform (web) but we know that another platform (mobile) could be just around the corner. Simple REST services returning XML or JSON data structures provide the flexibility we need.

I have been very excited to follow the progress of ASP.NET Web API in MVC4. Web API makes creating RESTful services very easy in .NET, with some of the same ASP.NET MVC principles that .NET developers have already become accustomed to. There are some great overviews and tutorials on creating ASP.NET Web APIs. I encourage anyone to read through the tutorial Your First Web API for an initial overview.

Another important area to address when it comes to writing RESTful services is the topic of how to provide the ability for consumers to query data. How consumers want to retrieve and interact with the data is not always known, and trying to support a variety of parameters and operations to expose the data in different ways could be challenging. Enter the Open Data Protocol. OData is a new web standard for defining a consistent query and update language using existing web technologies such as HTTP, ATOM and JSON. For example, OData defines different URI conventions for supporting query operations to support the filtering, sorting, pagination, and ordering of data.

Microsoft has been planning on providing support for OData in ASP.NET Web API. In the past few weeks, Microsoft released an OData Package for Web API via NuGet, enabling OData endpoints that support the OData query syntax. Here is a blog post that describes the contents of that package in more detail.

Let’s take a closer look at how to take advantage of this new OData library. In this example below (being a consultant), I build a simple API for returning Projects from a data repository.

Web API provides an easy development model for creating an APIController, with routing support for GET, POST, PUT, and DELETE automatically set up for you by the default route for Web API. When you create a default APIController, the GET method returns an IEnumerable<T> where T is the model for your controller. For example, if you wanted a service to return data of type Project, you could create a ProjectsAPIController and your GET method would return IEnumerable<Project>. The Web API framework handles the serialization of your object to JSON or XML for you. In my example running locally, I can use Fiddler to make requests against my REST API and inspect the results.

Query: /api/projects

After adding a reference to the Microsoft ASP.NET Web API OData package via NuGet, there are only a couple small tweaks I need to make to my existing ProjectsAPIController to have the GET method support OData query syntax.

- Update the return type of your query (and underlying repository) to be IQueryable<T>.

- Add the [Queryable] attribute to your method.

What is happening here is that by returning an IQueryable<T> return type, we are deferring the execution of that query. The OData package then, through the Queryable attribute, is able to interpret the different query operations present on the request and via an ActionFilter apply them to our Query before the query is actually executed. As long as you are able to defer the actual execution of the query against your data repository in this fashion, this practice should work great.

Let’s try out a couple of queries in Fiddler against my API to show OData in action.

Query: /api/projects?$filter=Market eq 'Seattle'

Query: /api/projects?$orderby=StartDate desc&$top=5

The list of OData URI conventions for query syntax is extensive, and can be very powerful. For example, the $top and $skip query options can be used to provide consumers with a pagination solution for large data sets.
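As a rough client-side illustration of that pagination idea, the sketch below builds page URLs from the $orderby, $skip and $top query options. It is not part of Joel Foreman's post: the helper names are hypothetical, and the /api/projects path and StartDate property are borrowed from the example queries above.

```python
from urllib.parse import quote

# Characters left unescaped so filter expressions stay readable.
SAFE_CHARS = "',()"


def odata_url(base: str, **options: object) -> str:
    """Build a URL in which each keyword becomes a $-prefixed query option."""
    parts = []
    for name, value in options.items():
        parts.append("$" + name + "=" + quote(str(value), safe=SAFE_CHARS))
    return base + "?" + "&".join(parts)


def page_url(base: str, page: int, page_size: int, orderby: str) -> str:
    """Return the URL for a zero-based page of results.

    A stable $orderby is included so that consecutive pages do not overlap.
    """
    return odata_url(base, orderby=orderby, skip=page * page_size, top=page_size)


for page in range(3):
    print(page_url("/api/projects", page, 5, "StartDate desc"))
```

The third URL printed skips the first ten projects and returns the next five, which is all a consumer needs in order to page through a large result set.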

One key concept with OData in Web API is understanding when the query parameters need to be applied against your data repository, and the capabilities and limitations that follow from that. For instance, what if the data repository is not able to support returning an IQueryable<T> result? You may be working with a repository that requires parameters for a query up front. In that case, there is an easy way to access the OData request, extract the different parameters supplied, and apply them in your query up front.

The mapping of operations and parameter to your repository’s interface may not be ideal, but at least you are able to take advantage of OData and provide a standards-based experience to your consumers.
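The original post shows this with Web API code that is not reproduced here. As a stand-in, the following Python sketch shows the general shape of the technique: read the OData options from the request URL and hand them to a repository method that requires its parameters up front. The repository function, its parameters and the sample data are all hypothetical.

```python
from urllib.parse import parse_qs, urlsplit

# Hypothetical sample data standing in for a real data repository.
PROJECTS = [
    {"Name": "Portal", "Market": "Seattle", "StartDate": "2012-03-01"},
    {"Name": "Intranet", "Market": "Chicago", "StartDate": "2012-05-15"},
    {"Name": "Mobile", "Market": "Seattle", "StartDate": "2012-08-20"},
]


def find_projects(order_by, descending, skip, limit):
    """Hypothetical repository method that needs its parameters up front."""
    rows = sorted(PROJECTS, key=lambda row: row[order_by], reverse=descending)
    end = None if limit is None else skip + limit
    return rows[skip:end]


def handle_request(url):
    """Translate $orderby, $skip and $top into repository arguments."""
    options = parse_qs(urlsplit(url).query)
    order_field, _, direction = options.get("$orderby", ["Name"])[0].partition(" ")
    skip = int(options.get("$skip", ["0"])[0])
    top = options.get("$top")
    limit = int(top[0]) if top else None
    descending = direction.strip().lower() == "desc"
    return find_projects(order_field, descending, skip, limit)


latest = handle_request("/api/projects?$orderby=StartDate%20desc&$top=2")
print([project["Name"] for project in latest])
```

Only a subset of the query options is mapped here, which mirrors the trade-off noted above: the mapping may be partial, but consumers still get a standards-based query syntax.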

Support for building RESTful APIs in .NET just keeps getting better and better. With ASP.NET Web API and the upcoming support for OData in Web API, you can build dynamic APIs for multiple platforms quickly and easily.

Derrick VanArnam posted Announcing the AdventureWorks OData Feed sample on 9/5/2012:

Overview

As a continuation of my previous blog post, we created a live AdventureWorks OData feed at http://services.odata.org/AdventureWorksV3/AdventureWorks.svc. The AdventureWorks OData service exposes resources based on specific SQL views. The SQL views are a limited subset of the AdventureWorks database that results in several consuming scenarios:

- CompanySales

- Documents

- ManufacturingInstructions

- ProductCatalog

- TerritorySalesDrilldown

- WorkOrderRouting

We will be iterating on this sample, so expect it to improve over time. For instance, the Documents feed currently exposes the document entity type property as an Edm.Binary type. An upcoming iteration will show how to implement Named Resource Streams for the Documents.Document entity type property.

User Story

This sample iteration, on our CodePlex site, addresses the following user story:

As a backend developer, I want to only allow views of the AdventureWorks2012 database to be exposed as an OData public resource so that the underlying schema can be modified without affecting the service.

Source Download

How to install the sample

You can consume the AdventureWorks OData feed from http://services.odata.org/AdventureWorksV3/AdventureWorks.svc. You can also consume the AdventureWorks OData feed by running the sample service from a local ASP.NET Development Server.

To run the service on a local ASP.NET Development Server

- Download the sample from CodePlex.

- Attach the AdventureWorks2012 database. The AdventureWorks2012 database can be downloaded from http://msftdbprodsamples.codeplex.com/releases/view/93587

- From Microsoft SQL Server Management Studio, run \AdventureWorks.OData.Service\SQL Scripts\Views.sql to create the OData feed SQL views.

- Open \AdventureWorks.OData.Service\AdventureWorks.OData.Service.sln in Visual Studio 2012.

- In Solution Explorer, select AdventureWorks.svc.

- Press F5 to run the service on http://localhost:1234/AdventureWorks.svc/.

AdventureWorks OData Service Resources

Iteration 2 will address the following user story:

As a frontend developer, I want to consume the AdventureWorks OData feed so that I can use entity data within a business workflow.

The sample will show how to create a QueryFeed workflow activity that can consume any OData feed url and return an enumeration of entity properties. The sample further shows how to create an EntityProperties activity which allows a user designing a workflow to drop a TablePartPublisher into the activity. A TablePartPublisher can be consumed by any client to render entity properties. For example, a Word Add-in can consume EntityProperties and a TablePartPublisher to render an Open XML table. Iteration 2 will show how this is done.

I have most of the code for iteration 2 complete, but it is not quite ready to publish. I plan to publish iteration 2 in about two weeks. For now, I’ll show you a sample of each AdventureWorks OData resource. Each example table was created by running the custom activities from within Word.

My next blog post will dive into how to create OData workflow activities. Custom activity designers that consume OData metadata and use LINQ to project entity XML into entity classes will be discussed. The metadata entity classes are used to provide expression editor items.

CompanySales Example

QueryFeed Url

http://localhost:1234/AdventureWorks.svc/CompanySales?$top=2&$orderby=OrderYear asc

Result

ManufacturingInstructions Example

QueryFeed Url

http://localhost:1234/AdventureWorks.svc/ManufacturingInstructions?$top=2&$select=ProductName,Instructions,SetupHours

Result

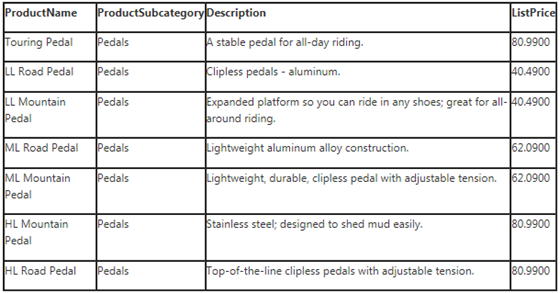

ProductCatalog Example

QueryFeed Url

http://localhost:1234/AdventureWorks.svc/ProductCatalog?$filter=CultureID eq 'en' and ProductSubcategory eq 'Pedals'&$top=2&$select=ProductName,ProductSubcategory,Description,ListPrice

Results

TerritorySalesDrilldown Example

QueryFeed Url

http://localhost:1234/AdventureWorks.svc/TerritorySalesDrilldown?$filter=SalesPersonID eq 275&$top=2

Result

WorkOrderRouting Example

QueryFeed Url

http://localhost:1234/AdventureWorks.svc/WorkOrderRouting?$top=2&$select=WorkOrderID,ProductNumber,ProductName,ScheduledStartDate,ActualStartDate,ScheduledEndDate,ActualEndDate

Result

Windows Azure Service Bus, Access Control Services, Caching, Active Directory and Workflow

The Microsoft Server and Cloud Platform Team (@MSCloud) described Windows Azure Online Backup and the Windows Azure Active Directory Management Portal in a post included in the Windows Azure Blob, Drive, Table, Queue, Hadoop, Online Backup and Media Services section above.

I plan to post more details about these two new services shortly. In the meantime, here’s a screen capture of the Windows Azure Active Directory Management portal preview’s landing page:

Glenn Colpaert (@GlennColpaert) described Retrieving AppFabric Cache Statistics through code in a 9/7/2012 post:

For a recent project I had to work with Windows Server AppFabric Caching. One of the requirements was to retrieve the CacheStatistics from the AppFabric cache.

To retrieve these statistics you have to use the following PowerShell commands:

Of course, we needed this data in our .NET Application and not in PowerShell.

We needed to call the PowerShell cmdlets from our .NET Application to be able to retrieve that data. This is how we did it.

First of all, add a reference to the following assemblies:

The next step is to initialize the PowerShell RunSpace and initialize all the necessary values and objects as shown below:

When the pipeline is created we can start sending commands. Always start your command sequence with the "Use-CacheCluster" command.

In the code below you can see how we actually retrieved the CacheStatistics from the AppFabric Cache.

For a full list of the available AppFabric Caching PowerShell cmdlets, please see the following links:

http://msdn.microsoft.com/en-us/library/hh475806

http://msdn.microsoft.com/en-us/library/hh851388

<Return to section navigation list>

Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

Paul Stubbs (@PaulStubbs) posted Deploying SharePoint on Azure Virtual Machines Whitepaper on 9/7/2012:

There is a ton of interest in running SharePoint on Windows Azure virtual machines. While we are still in preview mode we will continue to put out guidance and help on how to run your SharePoint workloads in Windows Azure. This whitepaper gets you started in understanding what is possible and how to do it.

http://www.microsoft.com/en-us/download/details.aspx?id=34598

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Faith Allington (@faithallington) reported WebMatrix 2 is Released! + New Windows Azure Features in a 9/6/2012 post to the Windows Azure blog:

You may remember this blog post about WebMatrix, Windows Azure Web Sites and PHP back in June. Well, we’re excited that WebMatrix 2 just shipped! This post covers a quick highlight of the top features, especially the integration with Windows Azure.

WebMatrix is a lightweight web development tool that lets you quickly install and publish popular open source applications or built-in templates. It bundles a web server, database engines, top programming languages and publishing capabilities into one tool - whether you use ASP.NET, PHP, Node.js or HTML5.

Here are just a few of the top features we’ve added since V1:

- Extensibility model lets you write your own plug-ins or use community-built ones

- Extremely fast install of open source apps such as Joomla!, WordPress, Drupal, DotNetNuke and Umbraco

- New built-in templates for PHP, Node.js and HTML5, and mobile support for all templates

- Intellisense (code completion) for major languages, including Razor, C#, VB, PHP, Node.js, HTML5, CSS3 and jQuery

- Application-specific code completion for top apps like Umbraco, WordPress and Joomla!

- Simple UI to install what you need from thousands of NuGet packages

- Ability to preview your site using top mobile emulators

- Remote view to make quick edits to files on live sites

But let’s talk about Windows Azure integration specifically.

Download to WebMatrix

In the previous post, we covered how to create a new Drupal site using the app gallery in Windows Azure. When you have a live website running in Windows Azure, you can then click on the WebMatrix button to download it to your local computer automatically.

On download, WebMatrix analyzes the site and will automatically install any dependencies needed. But one thing we didn’t cover was what happens if the site is empty. In this case, WebMatrix will prompt you to either open the empty site (maybe you want to copy or create your own files) or install a template or open source app.

Publish to Windows Azure

We’ve also simplified the process of publishing a local site from WebMatrix. If you already have a website, you can click “Import publish profile”. If you don’t, you can click “Get started with Windows Azure” and go through the sign-up process.

Publish Databases and More

For publishing, WebMatrix can use Web Deploy under the covers. This allows us to do a bit more than typical publishing:

- Publish the entire database (schema and data)

- Publish only changed files, instead of the entire site

- During the initial compatibility check, automatically change the .NET Framework version of your application pool

And that’s just some of the highlights! You can learn all about WebMatrix at www.webmatrix.com. If you are already using Windows Azure Web Sites, you can simply click the WebMatrix button in your dashboard or you can install WebMatrix from here.

Nathan Totten (@ntotten) described Hacking Node.js on WebMatrix 2 in a 9/6/2012 post:

I know a lot of people have viewed WebMatrix as kind of a toy or the less capable cousin of Visual Studio. I will admit that was my initial impression of WebMatrix as well. However, with the release of WebMatrix 2 today I decided to really give it another try and I was quite impressed with the experience especially for Node.js. WebMatrix definitely isn’t for everybody, but if you are looking for a powerful yet simple IDE for Node.js development it is certainly worth a look.

In this post I will show some of the cool features in WebMatrix 2 for Node.js developers. The first thing you will notice when you fire up WebMatrix is that it comes with support for a variety of different languages. In fact, it even ships with three different templates for Node.js apps – Empty Site, Starter Site, and Express Site. The empty site is what you would expect, the express site is a vanilla express site, and the starter site is an express site with several additions like built in authentication using everyauth. I am going to create an express site to get started.

The next thing you will notice is that the start page you see after creating a new site shows content relevant to the type of application you created. For Node.js this means it shows links to the Node documentation, the NPM registry, and express documentation.

Moving to the files view you will see all the standard files you would expect in your express app. Opening a few of them up you will see that the files open in color-coded tabs. The colors of the tabs indicate the type of each file. JavaScript files are green; css, less, and other style files are red; and html, jade, and other view files are blue. Additionally, you will notice that the file viewer has excellent syntax highlighting for the server.js file that is open.

In addition to the syntax highlighting for JavaScript, CSS, and HTML files you would expect, WebMatrix also supports syntax highlighting for many other file types such as LESS, Jade, CoffeeScript, Sass, and more. Below you can see how a Jade view looks in the WebMatrix editor.

In addition to syntax highlighting, WebMatrix 2 also includes excellent Intellisense for Node.js applications. Below you can see the Intellisense that is provided for the app object that is part of express.

Additionally, the Intellisense engine in WebMatrix is even smart enough to distinguish between a server-side js file and a client-side js file. For example, in a server-side file you will not see suggestions for things like document, window, or other DOM JavaScript APIs.

Along with all the great features in the text editor, WebMatrix 2 also makes it easy to run and deploy your Node.js site. You can easily run the site by clicking the run button in the ribbon. This will run your Node.js application locally using IISNode on IIS Express.
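As a rough illustration of what such a site's entry point can look like, the sketch below uses only Node's built-in http module rather than the Express template's actual server.js, which isn't reproduced in this post. It assumes the usual iisnode convention that the host hands the listening endpoint (a named pipe) to the app through process.env.PORT; the function names and the fallback port 3000 are made up for the example.

```javascript
var http = require("http");

// Answers every request with a short plain-text greeting.
function handleRequest(req, res) {
  res.writeHead(200, { "Content-Type": "text/plain" });
  res.end("Hello from Node.js on " + req.url + "\n");
}

// Assumption: under iisnode, PORT holds a named pipe supplied by the host.
// When the variable is missing (plain "node server.js"), fall back to port 3000.
function resolvePort(env) {
  return env.PORT || 3000;
}

// Creates the server and starts listening on whatever endpoint was resolved.
function start() {
  var server = http.createServer(handleRequest);
  server.listen(resolvePort(process.env));
  return server;
}
```

Calling start() at the end of the file turns this into a complete entry point. Reading the endpoint from the environment is what lets the same file run both under IIS Express and from a plain command line.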

Deploying your application with WebMatrix is also extremely easy. For example, you can publish your app to Windows Azure Web Sites by simply importing a publish profile and clicking publish. If you don’t have a web host for Node.js you can click the link to Get Started with Windows Azure and sign up to host up to 10 Node.js apps for free.

As I mentioned at the beginning of this post, WebMatrix isn’t for everyone, but I think it is a great tool for building Node.js apps especially if you are just getting started with Node.js development. Go ahead and give it a try today. You can install WebMatrix here and get started in just a few minutes.

Vision Solutions reported Migrations Made Easy: Vision Solutions to Showcase Complete Migration Solution at the Microsoft Cloud OS Signature Event Series in a 9/6/2012 press release:

Vision Solutions, Inc., a leading provider of information availability software and services, will feature its Double-Take Move solution, which allows users to migrate to Microsoft's Cloud OS, which includes Windows Server 2012, Windows Azure, and System Center 2012, at the Microsoft Cloud OS Signature Event Series. Vision Solutions is a platinum sponsor of the event, which takes place in 16 North American cities starting September 5 and running through October 11.

Double-Take Move reduces migration downtime and complexity. The full migration lifecycle can be managed from Microsoft System Center 2012 with Double-Take Move running under the covers. Deep integration with System Center Orchestrator, Virtual Machine Manager, and Service Manager hides the complexities and enables customers to use the familiar Microsoft System Center console to discover, configure, and migrate live Windows Server virtual machines in real-time to Windows Server 2012 Hyper-V. Most notably, Double-Take Move offers the following features:

- Platform/hypervisor-independent migrations with virtually no downtime

- Risk mitigation for cloud adoption

- Real-time replication

- High availability and disaster recovery

During this Signature Event Series, customers will have an opportunity to talk with Microsoft and Vision Solutions experts regarding the new Microsoft releases, including Windows Server 2012, System Center 2012, and Windows Azure.

As a platinum sponsor and a member of the Microsoft System Center Alliance Program, Vision will participate in each of the 16 events in this series. The Microsoft Cloud OS event schedule is:

9/5 - New York City

9/14 - Atlanta

9/18 - Dallas

9/19 - Minneapolis

9/19 - Houston

9/20 - Irvine

9/20 - Boston

9/20 - Chicago

9/25 - Washington, D.C.

9/25 - Columbus

9/27 - Detroit

9/27 - Seattle

9/27 - Charlotte

10/10 - Denver

10/11 - San Francisco

10/11 - Philadelphia

In addition, at the events in Dallas, Boston, and Seattle, Vision Solutions' experts will present during the morning keynote session on risk mitigation to the Cloud and at the luncheon technical session on effective migration solutions.

A Gold Microsoft partner for more than 10 years, Vision currently holds distinct Gold competencies for ISV, Management and Virtualization as well as Server Platform. This recognition demonstrates Vision's best-in-class expertise within Microsoft's marketplace and provides evidence of the deepest, most consistent commitment to a specific, in-demand business solution area.

Quote

Nicolaas Vlok, Chief Executive Officer, Vision Solutions

"Virtualizing your servers, or moving them to the cloud, may make business sense, but it can be a difficult process. Too often, companies must take users offline to synchronize data, and install applications, while hoping everything works on Monday morning, only to do it all over again the next weekend. Migrations can be expensive and scary. Double-Take Move automates the migration process, making it simple, fast, and with no downtime."

Links

- Microsoft: http://www.microsoft.com/enterprise/events/signature/#fbid=bODymfO7CTz

- Vision Solutions: http://www.visionsolutions.com/webforms/cloud-signature.aspx

- Twitter: https://twitter.com/VSI_DoubleTake

About Vision Solutions

Vision Solutions, Inc. is the world's leading provider of information availability software and services for Windows, Linux, IBM Power Systems and Cloud Computing markets that includes vCloud Director, Double-Take Move and Double-Take Availability with UVRA for ESX. Vision's trusted Double-Take®, MIMIX® and iTERA™ high availability and disaster recovery brands support business continuity, satisfy compliance requirements and increase productivity in physical and virtual environments. Affordable and easy-to-use, Vision products are backed by worldwide 24X7 customer support centers and a global partner network that includes IBM, HP, Microsoft, VMware and Dell.

Privately held by Thoma Bravo, Vision Solutions is headquartered in Irvine, California, USA with offices worldwide. For more information, visit visionsolutions.com, follow us on Twitter at https://twitter.com/VSI_DoubleTake, on other popular social networks or call 1.800.957.4511 (toll-free U.S. and Canada) or 801.799.0300.

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

Jan Van der Haegen (@janvanderhaegen) recommended that you Shape Up Your Data with Visual Studio LightSwitch 2012 in an article for MSDN Magazine’s September 2012 issue. From the introduction:

Visual Studio LightSwitch was initially released in mid-2011 as the easiest way to build business applications for the desktop or the cloud. Although it was soon adopted by many IT-minded people, some professional developers quickly concluded that LightSwitch might not be ready for the enterprise world. After all, a rapid application development technology might be a great way to start a new and small application now, but, unfortunately, history has proven that these quickly created applications don’t scale well once they grow large, they require a lot of effort to interoperate with existing legacy systems, they aren’t adaptive to fast-paced technological changes, and they can’t handle enterprise requirements such as multitenant installations or many concurrent users. On top of that, in a world of open standards such as RESTful services and HTML clients being adopted by nearly every large IT organization, who would want to be caught in any closed format?

With the release of LightSwitch 2012 coming near, I thought it was a perfect time to convince some friends (professional developers and software architects) to put their prejudices aside and (re)evaluate how LightSwitch handles these modern requirements.

The outcome? It turns out LightSwitch 2012 doesn’t just handle all of these requirements, it absolutely nails them.

Understanding Visual Studio LightSwitch

LightSwitch is a designer-based addition to Visual Studio 2012 to assist in working with data-centric services and applications. When working with a LightSwitch project, the Visual Studio IDE changes to a development environment with only three main editors (in so-called “Logical mode”): the Entity Designer, the Query Designer and the Screen Designer. These editors focus on getting results quickly by being extremely intuitive, fast and easy to use. This adds some obvious benefits to the life of a LightSwitch developer:

- First, it hides the plumbing (the repetitive code that’s typically associated with the development of these information systems). Easy-to-use editors mean fast development, fast development means productive developers and productive developers mean more value to the business. Or, as Scott Hanselman would say, “The way that you scale something really large, is that you do as little as possible, as much as you can. In fact, the less you do, the more of it you can do” (see bit.ly/InQC2b).

- Second, and perhaps most important, nontechnical people, ranging from functional analysts to small business owners to Microsoft Access or Microsoft Excel “developers”—often referred to as citizen developers—who know the business inside out, can step in and help develop the application or even develop it entirely. The editors hide technological choices from those who prefer to avoid them and silently guide the application designer to apply best practices, such as encapsulating the domain logic in reusable domain models, keeping the UI responsive by executing business logic on a thread other than the UI thread, or applying the Model-View-ViewModel (MVVM) development pattern in the clients.

- Finally, these editors actually don’t edit classes directly. Instead, they operate on XML files that contain metadata (LightSwitch Markup Language), which is then used by custom MSBuild tasks to generate code during compilation. This effectively frees the investment made in business logic from any technological choices. For example, a LightSwitch project that was made in version 1.0 would use WCF RIA Services for all communication between client and server, whereas that same project now compiles to use an Open Data Protocol (OData) service instead (more on OData later). This is about as adaptive to the ever-changing IT landscape as an application can get.

Designing Data

Designing data with LightSwitch can be considered equivalent to creating domain models, in the professional developer’s vocabulary. The IDE first asks you about “starting with data,” specifically where you want to store this data. Two possible answers are available. You can choose to “Create new table,” in which case LightSwitch uses the Entity Framework to create the required tables in SQL Compact (when debugging the application), SQL Server or Windows Azure SQL Database. Or you can choose to design your entities over an existing data source, such as SharePoint, an OData service, an existing database or a WCF RIA Services assembly. The latter two can really help you keep working on an existing set of data from a legacy application, but expose it through modern and open standards in an entirely new service or application.

As an example, you can take an existing OData service (an extensive list of examples is available at odata.org/ecosystem) and import one or multiple entities into a new LightSwitch application.

Figure 1 shows you how the Employee entity from the Northwind data source is first represented in the Entity Designer. With a few clicks and a minimal amount of coding effort where needed, you can redesign the entity in several ways (the end result can be found in Figure 2). These include:

- Renaming properties or reordering them (TeamMembers and Supervisor).

- Setting up choice lists for properties that should only accept a limited number of predefined values (City, Region, PostalCode, Country, Title and TitleOfCourtesy).

- Adding new or computed properties (NameSummary).

- Mapping more detailed business types over the data type of a property (BirthDate, HireDate, HomePhone, Photo and PhotoPath).

Figure 1 The Entity Designer After Importing from an Existing OData Service

Figure 2 The Same Employee Entity After Redesign

…

Read more.

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

• Gaurav Mantri (@gmantri) described Consuming Windows Azure Service Management API in a Windows 8 Application in a 9/8/2012 post:

In this blog post, we’re going to talk about how to write a Windows 8 application which consumes Windows Azure Service Management REST API (http://msdn.microsoft.com/en-us/library/windowsazure/ee460799.aspx). Unlike my other post on Windows 8 and Windows Azure Mobile Service, I’ll try to be less dramatic. I built a small Windows 8 application which lists the storage accounts in a subscription as a proof of concept. Since I’m more comfortable with XAML/C# than HTML5/JavaScript, I built this application using the former though in the course of exploring for this project, I did build a small prototype using HTML5/JS. I’ve included relevant code snippets of that as well.

X509 Certificates

As you know, Windows Azure Service Management REST API makes use of X509 certificate based authentication. When I started looking into it, the first thing I found was that there’s no direct support for X509 certificates in .Net API for Windows 8 applications. I almost gave up on this when Mike Wood (http://mvwood.com/ & http://twitter.com/mikewo), a Windows Azure MVP, pointed me to this documentation on MSDN for building Windows 8 applications: http://msdn.microsoft.com/en-us/library/windows/apps/hh465029.aspx. Well, what followed next was non-stop coding with various trials and errors.

In short, there’s no direct support for X509 certificates in the core API (like what you have available in standard .Net API) but it does allow you to install an existing PFX certificate in your application certificate store. This is accomplished via ImportPfxDataAsync (C#) / importPfxDataAsync (JavaScript) method in Windows.Security.Cryptography.Certificates.CertificateEnrollmentManager class. Here’s the code snippet to do the same:

C#:

    await Windows.Security.Cryptography.Certificates.CertificateEnrollmentManager.ImportPfxDataAsync(
        encodedString,
        TextBoxPfxFilePassword.Password,
        ExportOption.NotExportable,
        KeyProtectionLevel.NoConsent,
        InstallOptions.None,
        "Windows Azure Tools");

JavaScript:

    Windows.Security.Cryptography.Certificates.CertificateEnrollmentManager.importPfxDataAsync(
        certificateData2,
        "",
        Windows.Security.Cryptography.Certificates.ExportOption.exportable,
        Windows.Security.Cryptography.Certificates.KeyProtectionLevel.noConsent,
        Windows.Security.Cryptography.Certificates.InstallOptions.none,
        "My Test Certificate 1")

Here encodedString is Base64 encoded PFX file data, something like:

and the password is the password for your PFX certificate file.

When you call this method, the application should install a certificate in your application certificate store. You can check it by going into the “AC/Microsoft/SystemCertificates/My/Certificates” folder under your application package directory. The package directory is created under your user account’s “AppData/Local/Packages” folder as shown in the screenshot below:

Please note that name of the file will be the thumbprint of the certificate.

That’s pretty much it as far as working with PFX files is concerned. However, there are some important issues to understand when these certificates are used to authenticate REST API calls. I spent about 24 – 48 hours trying to make this work. Please see the “Special Considerations” section below for those.

Consuming REST API

Consuming REST API was rather simple! If you’re using C# you would make use of HttpClient in System.Net.Http namespace, and if you’re using JavaScript you would make use of WinJS.xhr function which is essentially a wrapper around XMLHttpRequest. Here’s the code snippet to do the same:

C#:

    private const string x_ms_version_name = "x-ms-version";
    private const string x_ms_version_value = "2012-03-01";

    // A Uri cannot be assigned from a string literal, so construct it explicitly.
    Uri requestUri = new Uri("https://management.core.windows.net/[your subscription id]/services/storageservices");

    public static async Task<string> ProcessGetRequest(Uri requestUri)
    {
        // Let the handler pick the client certificate automatically.
        HttpClientHandler aHandler = new HttpClientHandler();
        aHandler.ClientCertificateOptions = ClientCertificateOption.Automatic;
        HttpClient aClient = new HttpClient(aHandler);
        // Every Service Management request must carry the x-ms-version header.
        aClient.DefaultRequestHeaders.Add(x_ms_version_name, x_ms_version_value);
        HttpRequestMessage request = new HttpRequestMessage(HttpMethod.Get, requestUri);
        var result = await aClient.GetAsync(requestUri, HttpCompletionOption.ResponseContentRead);
        var responseBody = await result.Content.ReadAsStringAsync();
        return responseBody;
    }

JavaScript:

    var uri = "https://management.core.windows.net/[your subscription id]/services/storageservices";
    WinJS.xhr({ url: uri, headers: { "x-ms-version": "2012-03-01" } })
        .done(
            function (/*@override*/ request) {
                var responseData = request.responseText;
            },
            function (error) {
                var abc = error.message;
            });

That’s pretty much it. If your request is successful, you will get response data back as XML which you can parse and use in your application.
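To make the last step a little more concrete, here is a minimal sketch that prepares the request shown above and pulls the storage account names out of the XML response. Both function names are hypothetical. The sketch assumes each account appears in a ServiceName element, which should be verified against the Service Management API reference, and it uses a regular expression only to stay dependency-free; a real app would more likely use an XML parser.

```javascript
// Hypothetical helper: URL and headers for the "list storage accounts" call.
// The URL pattern and the x-ms-version value are taken from the snippets above.
function buildListStorageAccountsRequest(subscriptionId) {
  return {
    url: "https://management.core.windows.net/" +
      encodeURIComponent(subscriptionId) + "/services/storageservices",
    headers: { "x-ms-version": "2012-03-01" }
  };
}

// Hypothetical helper: collects the text of every <ServiceName> element.
// Assumption: the response lists each storage account under that element name.
function extractServiceNames(xml) {
  var names = [];
  var pattern = /<ServiceName>([^<]*)<\/ServiceName>/g;
  var match;
  while ((match = pattern.exec(xml)) !== null) {
    names.push(match[1].trim());
  }
  return names;
}
```

In the WinJS version, the object returned by buildListStorageAccountsRequest could be passed straight to WinJS.xhr, and extractServiceNames(request.responseText) would run inside the success callback.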

Special Considerations

It sounds pretty straightforward but unfortunately it is not so. I spent nearly 24 – 48 hours trying to figure this whole thing out. I was able to install the certificate correctly but then when I invoked the REST API using C# code, I was constantly getting a 403 error back from the service. I ended up on this post on MSDN forums: http://social.msdn.microsoft.com/Forums/sv/winappswithcsharp/thread/0d005703-0ec3-4466-b389-663608fff053 and it helped me immensely.

Based on my experience, here are some of the things you would need to take into consideration:

Ensure that “Client Authentication” is enabled as one of the certificate purposes

Your certificate must have this property enabled (as shown in the screenshot above) if you wish to use this certificate in your Windows 8 application. Please note that when consuming REST API in your non Windows 8 applications, it is not required that this property is set.

Ensure that the certificate has “OID” specified for it

Based on the MSDN forum thread above, this is a bug with Microsoft.

HttpClient and WinJS.xhr are not the same

I started with building a XAML/C# application (because of comfort level) and no matter what I tried, I could not make the REST API call work. It was only later I was made aware of the 2 issues above. However I took the certificate which was giving me problems (because of 2 issues above) and built a simple HTML5/JavaScript application and it worked like a charm. You would still need to have “Client Authentication” enabled in your certificate but not the OID one when consuming Service Management API through JavaScript.

It may very well be an intermediate thing (because of OID bug mentioned above) but I thought I should mention it here.

Try avoiding “Publish Profile File” if you’re consuming REST API in XAML/C# application

Publish Profile File, as you know, is an XML file containing information about all your subscriptions (Subscription Id etc.) and Base64 encoded PFX certificate content. It’s a great way to get access to all your subscriptions. The reason I’m saying you should avoid it here is that, as I found, the PFX certificate from which the content is generated is currently missing the OID. So until Microsoft fixes this issue, please go the individual certificate route if you’re developing using C#/XAML. If you’re building using HTML5/JavaScript, you can write something which will import this file because, based on my testing, it works. Just remember that the password for the certificate is an empty string, so specify an empty string (“”) for the password parameter in your call to the importPfxDataAsync method.
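To make the shape of that file concrete, here is a hedged sketch that pulls the subscriptions and the Base64 certificate string out of publish-profile XML. The element and attribute names (PublishProfile, ManagementCertificate, Subscription, Id, Name) are assumptions about the file layout, the sample data is invented, and regular expressions stand in for a real XML parser to keep the example self-contained.

```javascript
// Reads one attribute value from a single start tag, or returns null if absent.
function readAttribute(tag, name) {
  var match = new RegExp("\\s" + name + "=\"([^\"]*)\"").exec(tag);
  return match ? match[1] : null;
}

// Hypothetical parser: extracts the certificate and the subscription list.
// Assumption: the certificate is an attribute of <PublishProfile> and each
// subscription is a <Subscription Id="..." Name="..."> element.
function parsePublishProfile(xml) {
  var profileTag = /<PublishProfile\b[^>]*>/.exec(xml);
  var subscriptionTags = xml.match(/<Subscription\b[^>]*>/g) || [];
  return {
    certificateBase64: profileTag
      ? readAttribute(profileTag[0], "ManagementCertificate")
      : null,
    subscriptions: subscriptionTags.map(function (tag) {
      return { id: readAttribute(tag, "Id"), name: readAttribute(tag, "Name") };
    })
  };
}

// Invented sample data, only to show the call.
var sampleProfile =
  '<PublishData><PublishProfile PublishMethod="AzureServiceManagementAPI" ' +
  'Url="https://management.core.windows.net/" ManagementCertificate="QUJD">' +
  '<Subscription Id="sub-1" Name="First" /></PublishProfile></PublishData>';
var parsedProfile = parsePublishProfile(sampleProfile);
```

In an HTML5/JavaScript app, the certificateBase64 value would be the certificate data passed to importPfxDataAsync, together with the empty-string password mentioned above.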

Recommend that you create a new management certificate if you’re consuming REST API in C#/XAML application

Again this is because of the issues I mentioned above. You will need to check if the certificates you have in place today do not have the 2 issues I mentioned above. You can use “makecert” utility for creating a new certificate. I was recommended the following syntax for creating a new certificate.

    makecert -r -pe -n "CN=[Your Certificate Common Name]" -b 09/01/2012 -e 01/01/2099 -eku 1.3.6.1.5.5.7.3.2 -ss My

Run this in “Developer Command Prompt” on a Windows 8 machine to create a new certificate which will get automatically installed in your computer’s certificate store. You can export that certificate using “certmgr” utility.

The App!!!

Finally, the application! My moment of glory. It’s really a simple application. Some of the application screenshots are included below. The source code of the application can be downloaded from here. [This reminds me I need to stop doing this and start posting the code on GitHub.]

Landing page with no subscriptions saved

Add subscription page

Landing page with saved subscriptions

Storage accounts list page

A few things about this application:

- I wrote this application in a few hours (after spending about 2 days trying to figure everything else out), so the code is not perfect.

- There’s a glitch currently in the application where if you add 2 subscriptions with different certificates, one of the subscriptions will not work. I haven’t figured it out just yet. For this application I would recommend that you use the same certificate for all your subscriptions.

- The application saves the subscription id and friendly name in JSON format in the application’s local settings; this data is not encrypted.

Acknowledgement

I’m grateful to Jeff Sanders from Microsoft for promptly responding to my questions on MSDN forums and offline as well. I’m equally grateful to Harin Sandhoo from Applied IS, who basically provided me with everything I needed to make this thing run in a XAML/C# app. Also many thanks to Mike Wood for letting me know about working with certificates in Windows 8. Without Mike’s help, I would have given up working on this thing. Lastly, thanks to Patriek van Dorp for asking a question about consuming Windows Azure Service Management API in a Windows 8 App. That pushed me into thinking about building this application.

Summary

To summarize, once we have figured out the issues with the certificates and using them to consume REST API, it was relatively easy to build the application. Documentation for Windows 8 development needs to improve a lot, IMHO. But I think this would happen over a period of time as the technology matures.

If you find any issues in my code or this blog post, feel free to let me know through comments or via email and I will fix them ASAP.

• The InformationWeek Staff (@InformationWeek) published The New Service Levels of Windows Azure on 9/7/2012:

Windows Azure is Microsoft's platform-as-a-service for building cloud-based applications. It offers a wide range of software services beyond pure computation, including storage, service bus, and access control. The APIs for these services let companies create ad hoc applications and host them in Microsoft data centers using a pricing model that best suits their needs.

PaaS binds you to a specific operating system and software runtime environment, and lets you exert limited control over that environment. But what happens if you want total control over the runtime environment and want to just rent the infrastructure? For a long time, such an infrastructure-as-a-service scenario wasn't an option in Azure, and companies couldn't buy cloud space from Microsoft just to host a LAMP application. The only way to deploy an application on Azure was to build a new one using the .NET Framework and cloud-specific services, storage, and frameworks.

David Linthicum (@DavidLinthicum) asserted “Recent survey shows that most Americans don't understand cloud computing -- and it's our fault” in a deck for his Why Johnny doesn't understand cloud computing article of 9/7/2012 for InfoWorld’s Cloud Computing blog:

Thanks to a nationwide survey conducted by Wakefield Research and commissioned by Citrix Systems, we now know what most people in the United States think about the "cloud": not much, it turns out. Indeed, 95 percent of respondents claimed they've never used the cloud. Worse yet, 29 percent thought the cloud is a "fluffy white thing in the sky."

We know that people who took this survey most likely do some online banking, purchase from online retailers, post status updates on social networks, and manage photos on photo-sharing sites -- all in the cloud. I'm sure many have signed up for iCloud, Box.net, Mozy, Dropbox, or other retail cloud providers, though perhaps not understanding exactly what they are using.

We insiders in the cloud computing world are often stuck explaining to friends and family members just what the cloud is. Most of us start with well-known analogies, such as popular website-delivered services. We do this understanding full well that the cloud is much more complex and far-reaching than Facebook or hosted email. Others cite the NIST definition, which is the most relevant but also defines cloud computing broadly and is certainly not for laymen.

The problem with cloud computing is the term itself. It's way too overused, covering way too many technology patterns. As a result, cloud computing has no specific meaning, which makes it both difficult to define and to understand, whether you're a cloud computing insider or an average American.

I ranted about this problem in a post about a year ago, asserting, "I believe we've officially lost the war to define the core attributes of cloud computing so that businesses and IT can make proper use of it. It's now in the hands of marketing organizations and PR firms who, I'm sure, will take the concept on a rather wild ride over the next few years." That was true a year ago, and it's still true today -- officially, as the survey shows.

There is no easy answer to this problem. If we insist on defining cloud computing in very broad terms, nobody will understand exactly what it is. I suspect if the same survey is done next year, more will define cloud computing as a "fluffy white thing in the sky." Perhaps it is just fluff.

John Casaretto asserted Microsoft’s Windows Azure Making Inroads – The Quiet Storm Ahead in a 9/6/2012 post to the SiliconANGLE blog:

The cloud market as we know it will certainly be changing as Windows Azure is on the uptick. It is true that the cloud platform has not traditionally been in serious competition with the large IaaS providers such as Amazon EC2, but that could be changing. Windows Azure is one of the components, along with the newly available Windows Server 2012, that Microsoft has collectively dubbed “the Cloud OS”. With a common base for cloud application development, virtualization features, and other features, there is little doubt that it works in the Windows world. That has been the rub on Azure – what about everything else? Microsoft is employing a familiar and very slick strategy to create more adoption, and it’s happening right underneath a lot of noses.

With Azure’s Virtual Machines service, those limitations are a thing of the past. The IaaS service, together with Azure Web Sites and Media Services, is quietly making inroads. The Virtual Machines service allows for a variety of ways of getting VMs into the Azure environment. Customers can create VMs from a Microsoft or partner-provided image, they can create a custom image, or they can simply put their pre-built VHD-format systems into the environment. The pre-built Microsoft images support a number of Linux distributions along with the expected Microsoft images. With Identity Services and Active Directory recently made available, the overall offering is becoming more and more compelling. Altogether the services offer a simple and flexible way to extend native networks, systems, and workloads into the cloud.

In a recent conversation on Azure I had with Convergent Computing (CCO) President Rand Morimoto, this little discussed dance with Azure was confirmed.

“Microsoft has announced the new and upcoming Azure Virtual Machines, Web Sites, and Media Services in Beta which is really slick, something we have a LOT of customers currently running and testing.”

CCO is not your average boutique technology consultancy; its client base is vast and centered on the best-known companies in Silicon Valley. As the bestselling author of some thirty technology books, including the well-known “Unleashed” series, Morimoto certainly knows what he is talking about.

This appears to be just the tip of the iceberg, as the industry is always looking for full-featured and practical technology offerings and options. Hyper-V is similarly providing a potentially disruptive option in the virtualization market. To the right decision-maker, given a familiar environment for management and deployment and a reduced demand for staff training and support costs, these options may appear a far less fearsome undertaking. With new features constantly on the way, collaboration with cutting-edge cloud operators, and bundled cost advantages, it could be forming a quiet storm in the distance. The mid-market and the enterprise appear to be poised to adopt Windows Azure willingly.

MarketWire reported New Relic Adds Windows Server Monitoring to Enhance Performance of Windows Azure Applications in a 9/6/2012 press release:

Addition of Windows Server Support Enables Real-Time Application Performance Monitoring for .NET and Windows Azure Applications

New Relic, the SaaS-based application performance management company, announced today the addition of performance monitoring for Windows Server. Combined with its existing support for Windows Azure, New Relic offers a comprehensive performance monitoring solution for web applications running in Windows Server and Windows Azure environments. Key capabilities include real user monitoring for measuring front-end performance, deep-dive application monitoring for .NET web applications deployed on Windows Azure, and Windows Server monitoring for keeping an eye on infrastructure resources such as CPU, memory, network activity, and processes. Available in one user interface on one technology platform, these capabilities enable application development and deployment teams to easily measure and rapidly optimize the performance of their business-critical web applications deployed on Windows Azure.

Windows Azure is an open and flexible cloud platform that enables application development and deployment teams to quickly build, deploy and manage applications across a global network of Microsoft-managed datacenters. Teams can build applications using any language, tool or framework and easily integrate their public cloud applications with their existing IT environment. New Relic is a SaaS-based application performance management solution for monitoring the performance of .NET, Java, PHP, Python and Ruby web applications and their environments. Together, New Relic and Windows Azure create a powerful solution for dev teams to deploy and optimize .NET web applications.

"As more and more companies choose Windows Azure as their deployment platform, it's important for best-of-breed solution providers to develop value-added tools and capabilities," said Bill Hamilton, director of product marketing, Windows Azure, Microsoft. "The goal of both New Relic and Windows Azure is to help development teams deploy and manage business-critical web applications and eliminate the headache and hassles of hardware and software maintenance, while providing rapid time to value."

"We are pleased to be helping the Microsoft Azure team ensure their customers have the tools they need to make deploying web applications on Windows Azure easy," said Bill Lapcevic, vice president of business development at New Relic. "The combination of Microsoft's robust infrastructure and New Relic's deep application visibility create a powerful solution for today's forward-thinking developer and operations teams."

New Relic is available to Windows Azure customers free of charge. To take advantage, Windows Azure customers can simply follow these steps:

1. Visit New Relic on the Windows Azure Marketplace

2. Click on the 'Sign up' button (or 'Buy' if you're opting for Pro)

3. Read and agree to the 'Offer Terms and Privacy Policy' and click on 'SIGN UP'

4. Click on the launch icon located in the lower left-hand corner next to 'USE IT!' This will log you into your New Relic account where you can then follow the on-screen installation instructions.

About New Relic

New Relic, Inc. is the all-in-one web application performance management provider for the cloud and the datacenter. Its SaaS solution combines real user monitoring, application monitoring, server monitoring and availability monitoring in a single solution built from the ground up and changes the way developers and operations teams manage web application performance in real-time. More than 28,000 organizations use New Relic to optimize over 8 billion transactions in production each day. New Relic also partners with leading cloud management, platform and hosting vendors to provide their customers with instant visibility into the performance of deployed applications. New Relic is a private company headquartered in San Francisco, Ca. New Relic is a registered trademark of New Relic, Inc.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

Tim Anderson (@timanderson) described Upgrading to Hyper-V Server 2012 in a 9/7/2012 post:

After discovering that in-place upgrade of Windows Hyper-V Server 2008 R2 to the 2012 version is not possible, I set about the tedious task of exporting all the VMs from a Hyper-V Server box, installing Hyper-V Server 2012, and re-importing.

There are many reasons to upgrade, not least the irritation of being unable to manage the VMs from Windows 8. Hyper-V Manager in Windows 8 only works with Windows 8/Server 2012 VMs. It does seem to work the other way round: Hyper-V Manager in Windows 7 recognises the Server 2012 VMs successfully, though of course new features are not exposed.

The export and import has worked smoothly. A couple of observations:

1. Before exporting, it pays to set the MAC address of virtual network cards to static.

The advantage is that the operating system will recognise it as the same NIC after the import.

2. The import dialog has a new option, called Restore.

What is the difference between Register and Restore? Do not bother pressing F1, it will not tell you. Instead, check Ben Armstrong’s post here. If you choose Register, the VM will be activated where it is; not what you want if you mistakenly ran Import against a VM exported to a portable drive, for example. Restore on the other hand presents options in a further step for you to move the files to another location.

3. For some reason I got a remote procedure call failed message in Hyper-V Manager after importing a Linux VM, but then when I refreshed the console found that the import had succeeded.

Cosmetically the new Hyper-V Server looks almost identical to the old: you log in and see two command prompts, one empty and one running the SConfig administration menu.

Check the Hyper-V settings though and you see all the new settings, such as Enable Replication, Virtual SAN Manager, single-root IO virtualization (SR-IOV), extension support in a virtual switch, Live Migrations and Storage Migrations, and more.

The Microsoft Server and Cloud Platform Team (@MSCloud) posted Windows Server 2012 Facts and Figures on 9/6/2012:

With the launch of Windows Server 2012 this week, customers are already using Windows Server 2012 to realize the promise of cloud computing. Our team developed this infographic showcasing just some of the success.

<Return to section navigation list>

Cloud Security and Governance

Chris Hoff (@Beaker) described The Cuban Cloud Missile Crisis…Weapons Of Mass Abstraction in a 9/7/2012 post:

In the midst of the Cold War in October of 1962, the United States and the Soviet Union stood perilously on the brink of nuclear war as a small island some 90 miles off the coast of Florida became the focal point of intense foreign policy scrutiny, challenges to sovereignty and political arm wrestling the likes of which had never been seen before.

Photographic evidence provided by a high-altitude U.S. spy plane exposed the until-then secret construction of medium- and intermediate-range ballistic nuclear missile silos, built by the Soviet Union and deliberately placed close enough to reach the continental United States.

The United States, alarmed by this unprecedented move by the Soviets and the already uneasy relations with communist Cuba, unsuccessfully attempted a CIA-led forceful invasion and overthrow of the Cuban regime at the Bay of Pigs.

This did not sit well with either the Cubans or Soviets. A nightmare scenario ensued as the Soviets responded with threats of their own to defend their ally (and strategic missile sites) at any cost, declaring the Americans’ actions unprovoked and unacceptable.

During an incredibly tense standoff, the U.S. mulled over plans to again attack Cuba both by air and sea to ensure the disarmament of the weapons that posed a dire threat to the country.

As posturing and threats continued to escalate from the Soviets, President Kennedy elected to pursue a less direct military action; a naval blockade designed to prevent the shipment of supplies necessary for the completion and activation of launchable missiles. Using this as a lever, the U.S. continued to demand that Russia dismantle and remove all nuclear weapons as they prevented any and all naval traffic to and from Cuba.

Soviet premier Khrushchev protested such acts of “direct aggression” and communicated to President Kennedy that his tactics were plunging the world into the depths of potential nuclear war.

While both countries publicly traded threats of war, the bravado, posturing and defiance were actually a cover for secret backchannel negotiations involving the United Nations. The Soviets promised they would dismantle and remove nuclear weapons, support infrastructure and transports from Cuba, and the United States promised not to invade Cuba while also removing nuclear weapons from Turkey and Italy.

The Soviets made good on their commitment two weeks later. Eleven months after the agreement, the United States complied and removed from service the weapons abroad.

The Cold War ultimately ended and the Soviet Union fell, but the political, economic and social impact remains even today: 50 years later, we have uneasy relations with (now) Russia and the United States still enforces ridiculous economic and social embargoes on Cuba.

What does this have to do with Cloud?

Well, it’s a cute “movie of the week” analog desperately in need of a casting call for Nikita Khrushchev and JFK. I hear Gary Busey and Ashton Kutcher are free…

As John Furrier, Dave Vellante and I were discussing on theCUBE recently at VMworld 2012, there exists an uneasy standoff (a cold war) between the so-called “super powers” staking a claim in Cloud. The posturing and threats currently in process don’t quite have the world-ending outcomes that nuclear war would bring, but they could have devastating technology outcomes nonetheless.