Windows Azure and Cloud Computing Posts for 12/4/2010+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

• Updated 12/5/2010 with new articles marked •.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

• Rinat Abdullin described a RabbitMQ Messaging Server for Cloud CQRS in a 12/5/2010 post:

I've recently published another introductory tutorial on deploying and configuring components of a cost-effective cloud system. This one is about deploying a RabbitMQ messaging server. With the simplest deployment (which took me under 10 minutes) I've got write throughput of around 300 durable messages per second from .NET. This is probably the lowest performance you can get because:

- .NET client code was running in a single thread on a laptop in [a] cafe [in the] middle of Russia over slow WiFi.

- RabbitMQ was hosted in a cloud somewhere in the USA within the smallest possible Linux VM Role (256MB RAM).

In production scenarios it should be possible to get more interesting numbers like:

Scalability and reliability of this solution, obviously, is not an issue.

If you are following this blog and are interested in CQRS architectures and Cloud Systems, then I recommend that you give this RabbitMQ tutorial a try.

Why?

Think Outside Your Stack

First of all, it will give you some sense of how easy it is to deploy certain elements of a .NET CQRS solution if you don't limit yourself to technologies that originate from the Microsoft development stack.

Most cost-effective systems are built by taking the best of all worlds. Besides, this approach sometimes reduces development friction and the complexity of the solution.

This is No Pipe

Second, there is this new PVC pipes project by Greg Young on GitHub. It has just started but already includes a RabbitMQ adapter. I'm also planning to add the Azure Queues transport from Lokad.CQRS there.

I was dreaming about combining Rx with CQRS and AMQP before. This project might be the practical implementation of this approach without the limitations of MSMQ-based service buses.

NB: theoretically it's rather easy to write adapters from Rx Observer interfaces to pipe interfaces and back. The only logical disparity comes at the point of Error/Completion and subscription management. This is deliberate (just like immutability) and could be worked around.

Alt.NET Cloud Computing

Third, new potential .NET Cloud Computing providers (that's Monkai and AppHarbor at the time of writing) have plans to support the AMQP protocol, which is what RabbitMQ actually implements.

With AMQP specs gradually reaching version 1.0 we might eventually see hardware solutions for this middle-ware and even more popularity and friendliness in the ecosystem.

Affordable Practical Sample

Fourth, I might be using RabbitMQ messaging to describe practical aspects of Cloud CQRS in further articles. Although there are quite a few free cloud computing offers, .NET developers and students don't have a lot of other simple and affordable options to practice with.

With OSS RabbitMQ server, you can run one locally for free (Windows, Linux, whatever) or have it hosted in the cloud for pennies per hour.

Summary

So if you are interested in practical CQRS and Cloud Computing, I strongly recommend that you:

- check out RabbitMQ and give the quick tutorial a try;

- start watching the pipes project;

- follow on Twitter and stay tuned to this Journal;

- keep on sharing your project stories and challenges, they really help to focus on the things that matter.

Larry O’Brien asserted “Trying to simultaneously tackle a phone app, a web app, and a native Windows app is a little intimidating the first time. Larry O'Brien shows you how surprisingly easy this task becomes with Visual Studio 2010 and .NET technologies” in a preface to his To Do: Create an Azure-integrated Windows 7 Phone App post to Internet.com’s RIA Development Center:

The cloud wants smart devices. It also wants Web access, and native applications that take advantage of the unique capabilities of user's laptops and desktop machines. The cloud wants much of us.

Once upon a time, the rush was on to produce native Windows applications, then it became "you need a web strategy", and then "you need a mobile strategy". Therefore it is natural to sigh and try to ignore it when told "you need a cloud strategy". However, one of the great advantages of Microsoft's development technologies is their integration across a wide range of devices connected to the cloud: phones and ultra-portable devices, laptops, desktops, servers, and scale-on-demand datacenters. For storage, you can use either the familiar relational DB technology that SQL Azure offers, or the very scalable but straightforward Azure Table Storage capability. For your user interfaces, if you write in XAML you can target WPF for Windows or Silverlight for the broadest array of devices. And for your code, you can use modern languages like C# and, shortly, Visual Basic.

Trying to simultaneously tackle a phone app, a web app, and a native Windows app is a little intimidating the first time, but the surprising thing is how easy this task becomes with Visual Studio 2010 and .NET technologies.

In this article, we don't want to get distracted by a complex domain, so we're going to focus on a simple yet functional "To Do" list manager. Figure 1 shows our initial sketch for the phone UI, using the Panorama control. Ultimately, this To Do list could be accessed not only by Windows Phone 7, but also by a browser-based app, a native or Silverlight-based local app, or even an app written in a different language (via Azure Table Storage's REST support).

Figure 1.

Enterprise developers may be taken aback when they learn that Windows Phone 7 does not yet have a Microsoft-produced relational database. While there are several 3rd party databases that can be used, those expecting to use SQL Server Compact edition are going to be disappointed.

Having said this, you can access and edit data stored in Windows Azure from Windows Phone 7. This is exactly what this article is going to concentrate on: creating an editable Windows Azure Table Storage service that works with a Windows Phone 7 application.

Installing the Tools

You will need to install the latest Windows Phone Developer Tools (this article is based on the September 2010 Release-to-Manufacturing drop) and Windows Azure tools. Installation for both is very easy from within the Visual Studio 2010 environment: the first time you go to create a project of that type, you will be prompted to install the tools.

Download the OData Client Library for Windows Phone 7 Series CTP. At the time of writing, this CTP is dated from Spring of 2010, but the included version of the System.Data.Services.Client assembly works with the final bits of the Windows Phone 7 SDK, so all is well.

You do not need to run Visual Studio 2010 with elevated permissions for Windows Phone 7 development, but in order to run and debug Azure services locally, you need to run Visual Studio 2010 as Administrator. So, although you should normally run Visual Studio 2010 with lower permissions, you might want to just run it as Admin for this entire project. The Windows Phone 7 SDK does not support edit-and-continue, so you might want to turn that off as well.

While you are installing these components, you might as well also add the Silverlight for Windows Phone Toolkit. This toolkit provides some nice controls, but it is especially useful because it provides GestureService and GestureListener classes that make gesture support drop-dead simple.

Since Windows Phone 7 applications are programmed using Silverlight (or XNA, for 3-D simulations and advanced gaming) we naturally will use the Model-View-ViewModel (MVVM) pattern for structuring our phone application. So let's start by creating a simple Windows Phone 7 application.

Read More: Next Page: Hello, Phone!

- Page 1: Getting Started

- Page 2: Hello, Phone!

- Page 3: Store, Table Storage, Store!

- Page 4: Making Azure Table Storage Editable

- Page 5: Meanwhile, Back at the Phone…

<Return to section navigation list>

SQL Azure Database and Reporting

Steve Yi (pictured below) announced Real World SQL Azure: Interview with Kent McNall, CEO, Quosal, and Stephen Yu, VP of Development, Quosal on 12/3/2010:

As part of the Real World SQL Azure series, we talked to Kent McNall and Stephen Yu, co-founders of Quosal, about using Microsoft SQL Azure to open up a global market for their boutique quote and proposal software firm based in Woodinville, Washington. Here’s what they had to say:

MSDN: Can you tell us about Quosal and the services you offer?

McNall: Our flagship product, Quosal, provides sales quote and proposal preparation, delivery, and management software packages. Microsoft SQL Server is the cornerstone of Quosal, and after two years of development, we’ve architected a database that’s optimized for what we do.

MSDN: What were the biggest challenges that Quosal faced prior to adopting SQL Azure?

McNall: We offer a hosted version of Quosal, but only to customers who work close enough to our data center in Washington to ensure that latency on the network doesn’t degrade performance. We wanted to take advantage of a growing global market for the software-as-a-service option, but would have had to build regional hosting centers, incurring large upfront labor and infrastructure costs and ongoing maintenance. This was a critical impediment to our growth: We had a huge potential market for our hosted customers (virtually a global market) and no way to satisfy them.

MSDN: Can you describe how Quosal is using SQL Azure to help build the business on a global scale?

McNall: With SQL Azure, Quosal can offload all data center infrastructure overhead to Microsoft. Now our customers around the world can choose the hosted option and store their quote and proposal data on servers in global Microsoft data centers. SQL Azure gave us an instant, super-reliable, high-performance worldwide infrastructure for our hosted offering. It was like being given sudden access to a global marketplace at zero cost to the company.

MSDN: How easy was it to migrate Quosal to SQL Azure?

Yu: We were thrilled at how easy it was to fine-tune our self-maintaining database to run in the cloud environment. We used Microsoft SQL Server Management Studio to simplify the process. Thanks to the similarity between SQL Azure and SQL Server, our development team simply applied their existing programming skills to get the job done in a couple of hours.

MSDN: Are you able to reach new customers since implementing SQL Azure?

McNall: With SQL Azure, Quosal opened the doors to a global marketplace almost overnight. SQL Azure is one of the most tangible ways I’ve seen the cloud touch our customers and our business. Every hosted sale I’ve made in Europe, Australia, and South Africa, I’ve made because of SQL Azure. We’ve increased our global sales by 50 percent in just under a year.

MSDN: What other benefits is Quosal realizing with SQL Azure?

McNall: We are reducing the cost of doing business. We saved U.S.$300,000 immediately by not having to build those three data centers and we are avoiding ongoing maintenance costs of $6,000 a month. We are differentiating ourselves because customers benefit from a cost-effective, highly secure, turnkey alternative to maintaining their data on-premises. We keep getting the same feedback, ‘What’s not to like?’ and that’s reflected in the numbers. Our hosted customer count has risen by 15 percent—25 percent of our total customer base—in just under a year. All I can say is that SQL Azure is one of the finest Microsoft product offerings I’ve ever been involved with.

Read the full story at: www.microsoft.com/casestudies/casestudy.aspx?casestudyid=4000008782

<Return to section navigation list>

Marketplace DataMarket and OData

• Chris Love suggested that you Enable Extensionless Urls in IIS 7.0 & 7.5 to solve 404 errors with OData sources on 12/4/2010:

The cool thing to do these days is extensionless Urls, meaning there is no .aspx, .html, etc. Instead you just reference the resource by name, which makes things like RESTful interfaces and SEO better. For security reasons IIS disables this feature by default.

Recently I was working with some code where extensionless Urls were being used by the original developer. Since I typically do not work against the local IIS 7.5 installation when writing code, I was stuck because I kept getting 404 responses for an OData resource. At first I did not realize the site was using IIS 7.5 as the web server; I honestly thought it was using the development server, which is the standard option when using Visual Studio to develop a web site.

Once I realized the site was actually being deployed locally I was able to trace the issue and solve it. It turns out you need to turn on HTTP Redirection. To do this, go into Control Panel and select ‘Programs’. This displays multiple options; look at the top group, “Programs and Features”. In this group select “Turn Windows features on or off”.

Now the “Windows Features” dialog is displayed. This shows a tree view containing various components, but for this we want to drill into Internet Information Services > World Wide Web Services > Common HTTP Features. By checking HTTP Redirection and clicking the OK button you will enable extensionless Urls in IIS.

I like IIS 7, but sometimes I really wish configuration was much simpler. I looked for a while in the IIS management interface for this setting and could not find it and honestly it has been a while since I configured this on my production server. So the answer was not obvious. I am just glad I found a Knowledgebase article to help me out. I hope this helps you out.

Justin James described Using OData from Windows Phone 7 in a 12/3/2010 post to TechRepublic’s Smartphones blog:

My initial experiences with Windows Phone 7 development were a mixed bag. One of the things that I found to be a big letdown was the restrictions on the APIs and libraries available to the developer. That said, I do like Windows Phone 7 development because it allows me to use my existing .NET and C# skills, and keeps me within the Visual Studio 2010 environment that has been very comfortable to me over the years. So despite my initially poor experience in getting started with Windows Phone 7, I was willing to take a few more stabs at it.

One of the apps I wanted to make was a simple application to show the local crime rates. The government has this data on Data.gov, but it was only available as a data extract, and I really did not feel like building a Web service around a data set, so I shelved the idea. But then I discovered that the “Dallas” project had finally been wrapped up, and the Azure Marketplace DataMarket was live. Unfortunately, there are only a small number of data sets available on it right now, but one of them just happened to be the data set I wanted, and it was available for free. Talk about good luck! I quickly made a new Windows Phone 7 application, and tried to add the reference, only to be stopped in my tracks with this error: “This service cannot be consumed by the current project. Please check if the project target framework supports this service type.”

It turns out, Windows Phone 7 launched without the ability to access WCF Data Services. I am not sure who made this decision, seeing as Windows Phone 7 is a great match for Azure Marketplace DataMarket, it’s fairly dependent on Web services to do anything useful, and Microsoft is trying to push WCF Data Services. My initial research found only a CTP from March 2010 to provide this information. I asked around and found out that code to do just this was announced at PDC recently and was available for free on CodePlex.

Something to keep in mind is that Windows Phone 7 applications must be responsive when performing processing and must support cancellation of “long running” processes. In my experience with the application certification process, I had an app rejected for not supporting cancellation even though it would take at most three seconds for processing. So now I am very cautious about making sure that my applications support cancellation.

Using the Open Data Protocol (OData) library is a snap. Here’s what I did to be able to use an OData service from my Windows Phone 7 application:

- Download the file ODataClient_BinariesAndCodeGenToolForWinPhone.zip.

- Unzip it.

- In Windows Explorer, go to the Properties page for each of the DLLs, and click the Unblock button.

- In my Windows Phone 7 application in Visual Studio 2010, add a reference to the file System.Data.Services.Client.dll that I unzipped.

- Open a command prompt, and navigate to the directory of the unzipped files.

- Run the command: DataSvcUtil.exe /uri:UrlToService /out:PathToCSharpFile (in my case, I used https://api.datamarket.azure.com/Data.ashx/data.gov/Crimes for the URL and .\DataGovCrime.cs for my output file). This creates a strongly typed proxy class to the data service.

- I copied this file into my Visual Studio solution’s directory, and then added it to the solution.

- I created my code around cancellation and execution. Because I am not doing anything terribly complicated, and because the OData component already supports asynchronous processing, I took a backdoor hack approach to this for simplicity. I just have booleans indicating a “Running” and “Cancelled” state. If the event handler for the service request completion sees that the request is cancelled, it does nothing.
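The flag-based cancellation described in the last step can be sketched roughly as follows. This is a minimal illustration in Python rather than the author's Windows Phone 7 code: the `CancellableRequest` class and its `fetch` and `on_result` parameters are hypothetical names, and a background thread stands in for the OData client's asynchronous call.

```python
import threading


class CancellableRequest:
    """Wraps one asynchronous call with 'running' and 'cancelled' flags.

    Hypothetical helper for illustration only; not part of any OData library.
    """

    def __init__(self, fetch, on_result):
        self._fetch = fetch          # callable that performs the slow request
        self._on_result = on_result  # callback invoked only if not cancelled
        self.running = False
        self.cancelled = False
        self._thread = None

    def start(self):
        # Reset the flags and run the request on a background thread.
        self.running = True
        self.cancelled = False
        self._thread = threading.Thread(target=self._run)
        self._thread.start()

    def cancel(self):
        # The request itself is not aborted; its result is simply ignored.
        self.cancelled = True

    def _run(self):
        result = self._fetch()
        self.running = False
        if not self.cancelled:
            self._on_result(result)

    def wait(self):
        # Block until the background request has finished.
        if self._thread is not None:
            self._thread.join()
```

As in the post, cancelling does not stop the underlying request; the completion handler just does nothing once the cancelled flag is set, which keeps the UI responsive without any extra plumbing.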

There was one big problem: The OData Client Library does not support authentication, at least not at a readily accessible level. Fortunately, there are several workarounds.

- The first option is what was recommended at PDC: construct the URL to query the data manually, and use the WebClient object to download the XML data and then parse it manually (using LINQ to XML, for example). This gives you ultimate control and lets you do any kind of authentication you might want. However, you are giving up things like strongly typed proxy classes, unless you feel like writing the code for that yourself (have fun).

- The second alternative, suggested by user sumantbhardvaj in the discussion for the OData Client Library, is to hook into the SendingRequest event and add the authentication. You can find his sample code on the CodePlex site. I personally have not tried this, so I cannot vouch for the result, but it seems like a very reasonable approach to me.

- Another alternative that has been suggested to me is to use the Hammock library instead.

For simple datasets, the WebClient method is probably the easiest way to get it done quickly and without having to learn anything new.
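To make the "construct the URL and parse the XML yourself" option concrete, here is a rough sketch in Python instead of C# with WebClient and LINQ to XML. It assumes the service returns a standard OData Atom feed; the helper names, the `City` and `ViolentCrime` properties, and the sample values are all made up for illustration, and the download and authentication steps are left out.

```python
from urllib.parse import quote
import xml.etree.ElementTree as ET

# Namespaces used by OData Atom feeds.
ATOM = "http://www.w3.org/2005/Atom"
M = "http://schemas.microsoft.com/ado/2007/08/dataservices/metadata"

# Hypothetical response body; property names and values are invented.
SAMPLE = """<feed xmlns="http://www.w3.org/2005/Atom"
      xmlns:d="http://schemas.microsoft.com/ado/2007/08/dataservices"
      xmlns:m="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata">
  <entry>
    <content type="application/xml">
      <m:properties>
        <d:City>Seattle</d:City>
        <d:ViolentCrime>100</d:ViolentCrime>
      </m:properties>
    </content>
  </entry>
</feed>"""


def build_query_url(service_root, entity_set, filter_expr=None, top=None):
    """Assemble an OData query URL by hand from its parts."""
    options = []
    if filter_expr is not None:
        # Percent-encode spaces but keep the quotes around string literals.
        options.append("$filter=" + quote(filter_expr, safe="'"))
    if top is not None:
        options.append("$top=" + str(top))
    url = service_root.rstrip("/") + "/" + entity_set
    return url + ("?" + "&".join(options) if options else "")


def parse_entries(atom_xml):
    """Turn each Atom entry's m:properties block into a plain dict of strings."""
    root = ET.fromstring(atom_xml)
    rows = []
    for entry in root.iter("{%s}entry" % ATOM):
        props = entry.find("{%s}content/{%s}properties" % (ATOM, M))
        if props is None:
            continue
        # Strip the "{namespace}" prefix from each property element's tag.
        rows.append({child.tag.split("}", 1)[1]: child.text for child in props})
    return rows
```

Every value comes back as a string, which is the trade-off the post describes: you get full control over the request, but you give up the typed proxy classes that the code generation tool would otherwise produce.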

While it is unfortunate that the out-of-the-box experience with working with OData is not what it should be, there are enough options out there that you do not have to be left in the cold.

More about Windows Phone 7 on TechRepublic

Justin is an employee of Levit & James, Inc. in a multidisciplinary role that combines programming, network management, and systems administration.

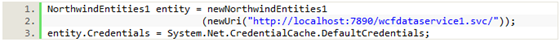

Dhananjay Kumar began a new series with Authentication on WCF Data Service or OData:Windows Authentication Part#1 of 12/3/2010:

In this article, I am going to show how to enable Windows authentication on a WCF Data Service.

Follow the below steps

Step 1

Create WCF Data Service.

Read below how to create WCF Data Service and introduction to OData.

http://dhananjaykumar.net/2010/06/13/introduction-to-wcf-data-service-and-odata/

While creating the data model to be exposed as a WCF Data Service, we need to take care of only one thing: the data model should be created using a SQL login.

So while creating the data connection for the data model, connect to the database through a SQL login.

Step 2

Host WCF Data Service in IIS. WCF Data Service can be hosted in exactly the same way a WCF Service can be hosted.

Read below how to host WCF 4.0 service in IIS 7.5

http://dhananjaykumar.net/2010/09/07/walkthrough-on-creating-wcf-4-0-service-and-hosting-in-iis-7-5/

Step 3

Now we need to configure WCF Service hosted in IIS for Windows authentication.

Here I have hosted WCF Data Service in WcfDataService IIS web site.

Select WcfDataService and in IIS category you can see Authentication tab.

On clicking on Authentication tab, you can see various authentication options.

Enable Windows authentication and disable all other authentication

To enable or disable a particular option, just click on it; at the top left you can see the option to toggle it.

Now by completing this step you have enabled the Windows authentication on WCF Data Service hosted in IIS.

Passing credential from .Net Client

If the client's Windows domain has access to the server then

If the client is not running in a Windows domain that has access to the server, then we need to pass the credentials as below,

So to fetch all the records

Program.cs

In the above article we saw how to enable Windows authentication on a WCF Data Service and then how to consume it from a .NET client. In the next article we will see how to consume a Windows-authenticated WCF Data Service from a Silverlight client.

Jason Bloomberg asked Does REST Provide Deep Interoperability? in a 12/2/2010 post to the ZapThink blog:

We at ZapThink were encouraged by the fact that our recent ZapFlash on Deep Interoperability generated some intriguing responses. Deep Interoperability is one of the Supertrends in the ZapThink 2020 vision for enterprise IT (now available as a poster for free download or purchase). In essence the Deep Interoperability Supertrend is the move toward software products that truly interoperate, even over time as standards and products mature and requirements evolve. ZapThink’s prediction is that customers will increasingly demand Deep Interoperability from vendors, and eventually vendors will have to figure out how to deliver it.

One of the key points in the recent ZapFlash was that the Web Services standards don’t even guarantee interoperability, let alone Deep Interoperability. We had a few responses from vendors who picked up on this point. They had a few different angles, but the common thread was that hey, we support REST, so we have Deep Interoperability out of the box! So buy our gear, forget the Web Services standards, and your interoperability issues will be a thing of the past!

Not so fast. Such a perspective misses the entire point to Deep Interoperability. For two products to be deeply interoperable, they should be able to interoperate even if their primary interface protocols are incompatible. Remember the modem negotiation on steroids illustration: a 56K modem would still be able to communicate with an older 2400-baud modem because it knew how to negotiate with older modems, and could support the slower protocol. Similarly, a REST-based software product would have to be able to interoperate with another product that didn’t support REST by negotiating some other set of protocols that both products did support.
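The modem-style negotiation in this paragraph can be pictured as each product advertising the protocols it supports and the pair settling on the best one they share. The following Python sketch illustrates only that idea; the `negotiate` function and the protocol labels are hypothetical and do not come from the ZapThink post.

```python
def negotiate(ours, theirs):
    """Pick the most-preferred protocol in `ours` that `theirs` also supports.

    `ours` is ordered from most to least preferred. Returns None when the two
    sides share no protocol at all.
    """
    supported = set(theirs)
    for protocol in ours:
        if protocol in supported:
            return protocol
    return None


# Hypothetical protocol labels, newest first on the modern side.
fast_modem = ["56k", "33.6k", "2400-baud"]
old_modem = ["2400-baud"]
rest_product = ["REST", "SOAP"]
legacy_product = ["SOAP", "file-drop"]

print(negotiate(fast_modem, old_modem))        # falls back to the slower protocol
print(negotiate(rest_product, legacy_product))  # settles on the shared non-REST protocol
```

The second call shows the point made above: a REST-first product interoperates with a non-REST product only if it can fall back to something both sides support.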

But this “least common denominator” negotiation model is still not the whole Deep Interoperability story. Even if all interfaces were REST interfaces we still wouldn’t have Deep Interoperability. If REST alone guaranteed Deep Interoperability, then there could be no such thing as a bad link.

Bad links on Web pages are ubiquitous, of course. Put a perfectly good link in a Web page that connects to a valid resource. Wait a few years. Click the link again. Chances are, the original resource was deleted or moved or had its name changed. 404 not found.

OK, all you RESTafarians out there, how do we solve this problem? What can we do when we create a link to prevent it from ever going bad? How do we keep existing links from going bad? And what do we do about all the bad links that are already out there? The answers to these questions are all part of the Deep Interoperability Supertrend.

One important point is that the modem negotiation example is only a part of the story, since in that case, you already have the two modems, and the initiating one can find the other one. But Deep Interoperability also requires discoverability and location independence. You can’t interoperate with a piece of software you can’t find.

But we still don’t have the whole story yet, because we must still deal with the problem of change. What if we were able to interoperate at one point in time, but then one of our endpoints changed? How do we ensure continued interoperability? The traditional answer is to put something in the middle: either a broker in a middleware-centric model or a registry or other discovery agency that can resolve abstract endpoint references in a lightweight model (either REST or non-middleware SOA). The problem with such intermediary-based approaches, however, is that they relieve the vendors from the need to build products with Deep Interoperability built in. Instead, they simply offer one more excuse to sell middleware.

The ZapThink Take

At its core Deep Interoperability is a peer-to-peer model, in that we’re requiring two products to be deeply interoperable with each other. But peer-to-peer Deep Interoperability is just the price of admission. If we have two products that are deeply interoperating, and we add a third product to the mix, it should be able to negotiate with the other two, not just to establish the three pairwise relationships, but to form the most efficient way for all three products to work together. Add a fourth product, then a fifth, and so on, and the same process should take place.

The end result will be IT environments of arbitrary size and complexity supporting Deep Interoperability across the entire architecture. Add a product, remove a product, or change a product, and the entire ecosystem adjusts accordingly. And if you’re wondering whether this ecosystem-level adjustment is an emergent property of our system of systems, you’ve hit the nail on the head. That’s why Deep Interoperability and Complex Systems Engineering are adjacent on our ZapThink 2020 poster.

<Return to section navigation list>

Windows Azure AppFabric: Access Control and Service Bus

• Vittorio Bertocci (@vibronet) and Wade Wegner (@WadeWegner) wrote Re-Introducing the Windows Azure AppFabric Access Control Service for the 12/2010 issue of MSDN Magazine:

If you’re looking for a service that makes it easier to authenticate and authorize users within your Web sites and services, you should take another look at the Windows Azure AppFabric Access Control service (ACS for short), as some significant updates are in the works (at the time of this writing).

Opening up your application to be accessed by users belonging to different organizations—while maintaining high security standards—has always been a challenge. That problem has traditionally been associated with business and enterprise scenarios, where users typically live in directories. The rise of the social Web as an important arena for online activities makes it increasingly attractive to make your application accessible to users from the likes of Windows Live ID, Facebook, Yahoo and Google.

With the emergence of open standards, the situation is improving; however, as of today, implementing these standards directly in your applications while juggling the authentication protocols used by all those different entities is a big challenge. Perhaps the worst thing about implementing these things yourself is that you’re never done: Protocols evolve, new standards emerge and you’re often forced to go back and upgrade complicated, cryptography-ridden authentication code.

The ACS greatly simplifies these challenges. In a nutshell, the ACS can act as an intermediary between your application and the user repositories (identity providers, or IP) that store the accounts you want to work with. The ACS will take care of the low-level details of engaging each IP with its appropriate protocol, protecting your application code from having to take into account the details of every transaction type. The ACS supports numerous protocols such as OpenID, OAuth WRAP, OAuth 2.0, WS-Trust and WS-Federation. This allows you to take advantage of many IPs.

Outsourcing authentication (and some of the authorization) from your solution to the ACS is easy. All you have to do is leverage Windows Identity Foundation (WIF)—the extension to the Microsoft .NET Framework that enhances applications with advanced identity and access capabilities—and walk through a short Visual Studio wizard. You can usually do this without having to see a single line of code!

Does this sound Greek to you? Don’t worry, you’re not alone; as it often happens with identity and security, it’s harder to explain something than actually do it. Let’s pick one common usage of the ACS, outsourcing authentication of your Web site to multiple Web IPs, and walk through the steps it entails.

Vittorio and Wade continue with …

- Outsourcing Authentication of a Web Site to the ACS

- Configure an ACS Project

- Choosing the Identity Providers You Want

- Getting the ACS to Recognize Your Web Site

- Adding Rules

- Collecting the WS-Federation Metadata Address

- Configuring the Web Site to Use the ACS

- Testing the Authentication Flow

- The ACS: Structure and Features

topics.

<Return to section navigation list>

Windows Azure Virtual Network, Connect, and CDN

• Kevin Ritchie continued his series with Day 5 - Windows Azure Platform – Connect on 12/5/2010:

On the 5th day of Windows Azure Platform Christmas my true love gave to me Connect.

What is Windows Azure Connect?

Connect is a component of Windows Azure that, well, allows you to connect things. Doesn’t sound amazing, does it? Well, let’s have a closer look. If you’re a Network Administrator or a Dev, you’ll love this.

Windows Azure Connect allows you to connect (using IPsec-protected connections) computers/servers in your network to roles in Windows Azure and, best of all, the roles take on IP addresses as if they were resources in your network.

NOTE: It doesn’t create a VPN connection

So, for example, you could have a web application running on Windows Azure that uses a back-end database to store, say, customer information. But what if you don’t want to store the database in Azure? Well, you don’t have to. With Connect, you can leave the database on your network; Connect will do the rest. Well, obviously after some human intervention.

Also after Connect is configured, you have the ability to use existing methods for domain authentication and name resolution. You can remotely debug Windows Azure role instances and you can also use existing management tools to work with roles in the Azure Platform e.g. PowerShell.

Connectivity between different networks and applications isn’t a new concept by any means, but what Connect provides is a simple, secure, no-nonsense, no-VPN way of bridging the gap between your network and The Cloud.

Tomorrow’s installment: SQL Azure - Database

P.S. If you have any questions, corrections or suggestions to make please let me know.

Kevin Ritchie posted Day 4 - Windows Azure Platform - Content Delivery Network on 12/4/2010:

On the 4th day of Windows Azure Platform Christmas my true love gave to me the Content Delivery Network.

What is the Content Delivery Network?

The Windows Azure Content Delivery Network (CDN) caches Windows Azure Blobs (discussed on day two) at locations closer to where the content is being requested. This way bandwidth is maximised and content is delivered faster.

For example, say you have a website that delivers video content to millions of users around the world; that’s a lot of locations. It would be terribly inefficient to serve up content from just one location. Caching the video content in several locations, some of them closer to the requesting source, allows the video to be streamed/downloaded quicker.

There’s only one requirement to make a blob (your data) available to the CDN and it’s very simple, mark the container the blob resides in as public. To do this, you need to enable CDN access to your Storage Account.

Enabling CDN access to a Storage Account is done through the Management Portal (briefly mentioned on day two). When CDN access has been enabled the portal will provide you with a CDN domain name in the following URL format: http://<identifier>.vo.msecnd.net/.

NOTE: It takes around 60 minutes for the registration of the domain name to propagate round the CDN.

With a CDN domain name and a public container with blobs in, you now have the ability to serve up Windows Azure hosted content; strategically, to the world.
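As a rough illustration of the URL format described above, the following Python sketch rewrites a public blob URL into its CDN equivalent. The `<account>.blob.core.windows.net` host pattern, the account name `myaccount` and the identifier `az1234` are assumptions made for the example; the Management Portal supplies the real CDN identifier.

```python
from urllib.parse import urlsplit, urlunsplit

BLOB_HOST_SUFFIX = ".blob.core.windows.net"  # assumed storage host pattern


def to_cdn_url(blob_url: str, cdn_identifier: str) -> str:
    """Swap the storage host for the CDN host, keeping the container and blob path."""
    parts = urlsplit(blob_url)
    host = parts.hostname or ""
    if not host.endswith(BLOB_HOST_SUFFIX):
        raise ValueError(f"not a blob storage URL: {blob_url}")
    cdn_host = f"{cdn_identifier}.vo.msecnd.net"
    return urlunsplit((parts.scheme, cdn_host, parts.path, parts.query, ""))


# Hypothetical account, container and blob names.
print(to_cdn_url("http://myaccount.blob.core.windows.net/videos/intro.wmv", "az1234"))
```

Only the host changes; the container and blob path stay the same, which is why the container has to be public for the CDN to fetch the content.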

Tomorrow’s installment: Connect

Check out Kevin’s previous posts in this series:

- Day 3 - Windows Azure Platform - Fabric Controller: A brief description on the Windows Azure Platform Fabric Controller

- Day 2 - Windows Azure Platform - Storage Service: A brief description of the Windows Azure Platform Storage Service

- Day 1 - Windows Azure Platform - Compute Service: A brief description of the Windows Azure Platform Compute Service

MSDN added a new Overview of Windows Azure Connect article on 11/22/2010 (missed when posted):

With Windows Azure Connect, you can use a simple user interface to configure IPsec protected connections between computers or virtual machines (VMs) in your organization’s network, and roles running in Windows Azure. After you configure these connections, role instances in Windows Azure use IP addressing like that of your other networked resources, rather than having to use some form of external virtual IP addressing. Windows Azure Connect makes it easier to do tasks such as the following:

- You can configure and use a distributed application that uses roles in Windows Azure (for example, a Web role) together with servers in your organization’s network (for example, a SQL Server and associated network infrastructure). The distributed application could be one that you are reworking to include not only resources in your network, but also one or more Windows Azure roles, such as a Web role.

Many combinations are possible between Windows Azure roles (Web roles, Worker roles, or VM roles) and your networked resources (including servers or VMs for file, print, email, database access, Web communication, collaboration, and so on). Your networked resources can also include legacy systems that are supported by your distributed application.

- You can join Windows Azure role instances to your domain, so that you can use your existing methods for domain authentication, name resolution, or other domain-wide maintenance actions. For diagrams that help describe this configuration, first see the basic diagram in Elements of a configuration in Windows Azure Connect, later in this topic, and then see Overview of Windows Azure Connect When Roles Are Joined to a Domain.

- You can remotely administer and debug Windows Azure role instances.

- You can easily manage Windows Azure role instances using existing management tools in your network, for example, remote Windows PowerShell or another management interface.

Example configuration in Windows Azure Connect

The following diagram shows the elements in an example configuration in Windows Azure Connect. Worker Role 1, Web Role 1, and Web Role 2 are all within one subscription to Windows Azure (although they may be in different services within the subscription). However, only Worker Role 1 and Web Role 1 have been activated for Windows Azure Connect, as shown by the yellow lines around these roles. The role instances in Worker Role 1 are connected to a group of development computers. The yellow dot on each development computer shows that the endpoint software for Windows Azure Connect has been installed. The yellow dotted line around the development computers shows that these computers have been placed into an endpoint group (which is required before the connection can be created). Similarly, role instances in Web Role 1 are connected to an endpoint group that contains databases.

Example configuration in Windows Azure Connect

Windows Azure Connect Interface

The following illustration shows the Windows Azure Connect interface:

Configuring Windows Azure Connect

The following list describes the elements that must be configured for a connection that uses Windows Azure Connect:

- Windows Azure roles that have been activated for Windows Azure Connect: To activate a Windows Azure role, ensure that an activation token that you obtain in the Windows Azure Connect interface is included in the configuration for the role. The configuration for the role is handled by a software developer, either directly through a configuration file or indirectly through a Visual Studio interface that is included in the Windows Azure software development kit (SDK). The Visual Studio interface makes it simpler for you or a software developer to provide the activation token and specify other properties for a given role.

- Endpoint software installed on local computers or VMs: To include a local computer or VM in your Windows Azure Connect configuration, begin by installing the local endpoint software on that computer. After the endpoint software is installed, the local computer or VM is called a local endpoint.

- Endpoint groups (for configuring network connectivity): To configure network connectivity, place local endpoints in groups and then specify the resources that those endpoints can connect to. Those resources can be one or more Windows Azure Connect roles, and optionally, other groups of endpoints. Each local endpoint can be a member of only one endpoint group. However, you can specify that a particular group can connect to endpoints in another group, which expands the number of connections that are possible.
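The endpoint-group rules in the list above can be pictured with a small Python model. This is only a toy sketch of the constraints as described (one group per local endpoint, groups linked to roles and optionally to other groups); the class names, method names and machine names are hypothetical and are not part of any Windows Azure Connect API.

```python
from dataclasses import dataclass, field


@dataclass
class EndpointGroup:
    name: str
    endpoints: set = field(default_factory=set)      # local endpoint names
    roles: set = field(default_factory=set)          # roles this group may reach
    linked_groups: set = field(default_factory=set)  # other groups it may reach


class ConnectConfig:
    """Toy model: enforces that an endpoint belongs to at most one group."""

    def __init__(self):
        self.groups = {}
        self._owner = {}  # endpoint name -> group name

    def add_group(self, name):
        self.groups[name] = EndpointGroup(name)
        return self.groups[name]

    def add_endpoint(self, group_name, endpoint):
        owner = self._owner.get(endpoint)
        if owner is not None and owner != group_name:
            raise ValueError(f"{endpoint} already belongs to group {owner}")
        self._owner[endpoint] = group_name
        self.groups[group_name].endpoints.add(endpoint)

    def reachable_roles(self, endpoint):
        """Roles that the endpoint's own group is connected to."""
        return set(self.groups[self._owner[endpoint]].roles)

    def reachable_endpoints(self, endpoint):
        """Endpoints in any group that the endpoint's group is linked to."""
        group = self.groups[self._owner[endpoint]]
        peers = set()
        for other in group.linked_groups:
            peers |= self.groups[other].endpoints
        return peers


cfg = ConnectConfig()
cfg.add_group("dev-machines").roles.add("WorkerRole1")
cfg.add_group("databases").roles.add("WebRole1")
cfg.add_endpoint("dev-machines", "DEV-PC-01")
cfg.add_endpoint("databases", "SQL-01")
cfg.groups["dev-machines"].linked_groups.add("databases")
print(cfg.reachable_roles("DEV-PC-01"), cfg.reachable_endpoints("DEV-PC-01"))
```

Linking one group to another is what widens the set of possible connections without letting an endpoint sit in two groups at once.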

Additional references

Overviews

- Overview of Windows Azure Connect When Roles Are Joined to a Domain

- Overview of Using Endpoint Groups to Configure Connectivity in Windows Azure Connect

- Overview of Firewall Settings Related to Windows Azure Connect

Checklists

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• My Strange Behavior of Windows Azure Platform Training Kit with Windows Azure SDK v1.3 under 64-bit Windows 7 post of 12/5/2010 describes a series of issues with the WAPTK November Update:

After installing the Windows Azure Tools for Microsoft Visual Studio 2010 v1.3 (Vscloudservice.exe), which also installs the Windows Azure SDK v1.3, and checking its compatibility with a few simple Windows Azure Web-role apps, I removed the previous version of the Windows Azure Platform Training Kit (WAPTK) and installed the WAPTK November Update.

I had seen a few table storage issues with the instrumented version of the OakLeaf Systems Azure Table Services Sample Project updated to v1.3 and the Microsoft.WindowsAzure.StorageClient v1.1 [see below.] So I tried to build and run the WAPTK’s GuestBook demo projects on my 64-bit Windows 7 development machine with the default Target Framework set to .NET Framework 4.0.

Here’s what I encountered:

1. Clicking the Setup Demo link to run the Configuration Wizard’s Detecting Required Software step indicated that Windows Azure Tools for Microsoft Visual Studio 2010 1.2 (June 2010) or higher is missing:

Removing and reinstalling Vscloudservice.exe, and rebooting didn’t solve the problem. This issue might have contributed to the following problems, but a solution wasn’t evident.

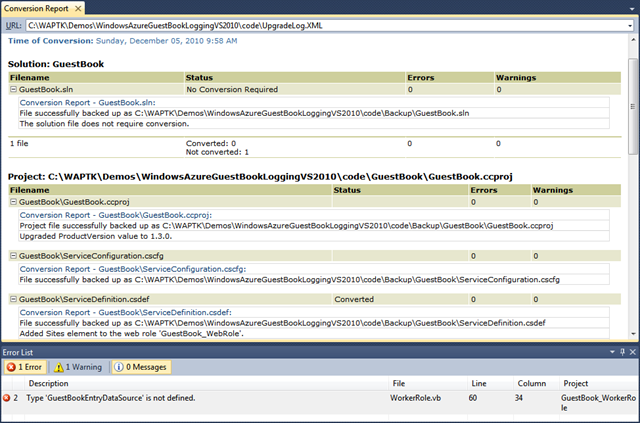

2. Opening any of the GuestBook solutions launched the Visual Studio Conversion Wizard. Completing the Wizard resulted in no conversions made and one error reported:

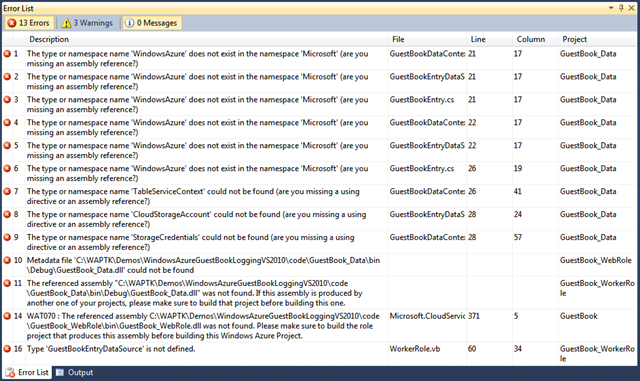

3. Attempting to build the solution reported 13 errors and 3 warnings:

The actual result was 16 errors, most of which related to a problem with Microsoft.WindowsAzure.* libraries. References collections appeared as follows:

4. Removing and adding the Microsoft.WindowsAzure.StorageClient reference to the GuestBook_Data library …

… removed only one “warning.” I needed to remove and replace the Web Role’s Microsoft.WindowsAzure.StorageClient reference to remove the remaining errors.

5. Building and running the solution resulted in the following run-time error:

I had encountered this run-time error in other solutions upgraded from SDK v1.2 to v1.3.

6. I added a reference to Microsoft.WindowsAzure.ServiceRuntime and the standard delegate block shown emphasized here:

With these additions, I was able to get the various GuestBook solutions to compile and run:

It seems to me that the WAPTK isn’t fully cooked. Adron Hall (@adronbh) reported Windows Azure v1.3 SDK Issues on 12/3/2010 and Rinat Abdullin recommended that you Don’t Upgrade to Windows Azure SDK 1.3 Yet in a 12/3/2010 post. Both of these articles were excerpted in my Windows Azure and Cloud Computing Posts for 12/4/2010+ post (scroll down).

The most mysterious run-time error I encountered when updating the OakLeaf Systems Azure Table Services Sample Project to v1.3 was the following …

… associated with starting the Diagnostics Monitor by a procedure in the Global.asax.cs file. I’ll update this post when I find the workaround for this issue. In the meantime, you can throw it yourself by opening http://oakleaf2.cloudapp.net.

• Terrence Dorsey listed Windows Azure Development Resources in a Toolbox column for MSDN Magazine’s 12/2010 issue:

As you’ve probably read elsewhere in MSDN Magazine, the Windows Azure platform is Microsoft’s stack of cloud computing resources that range from coding, testing and deploying Visual Studio and Windows Azure AppFabric to Windows Azure itself and the SQL Azure storage services. Here’s a collection of tools and information that will get you writing apps for Windows Azure today.

Getting Started

When you’re ready to start developing for the Windows Azure platform, your first stop should be the Windows Azure Developer Center on MSDN (msdn.microsoft.com/windowsazure). Here you’ll find information about the entire platform along with links to documentation, tools, support forums and community blog posts.

Next, head over to the Windows Azure portal (windows.azure.com) and set up your account. This gives you access to Windows Azure, SQL Azure for storage and Windows Azure AppFabric (Figure 1). You’ll need a Windows Live ID to sign up. If you don’t have one already, there’s a link on the sign-in page.

Figure 1 Running a Service on Windows Azure

As we go to press, Microsoft is offering an introductory special that lets you try out many features of the Windows Azure platform at no charge. See microsoft.com/windowsazure/offers/ for details.

Developer Tools

Before you can start slinging code, you’ll need to get your development environment set up. While you could probably build your Windows Azure app with Notepad and an Internet connection, it’s going to be a lot more productive—and enjoyable—to use tools optimized for the job.

If you don’t have Visual Studio 2010, you can enjoy (most of) the benefits of a Windows Azure-optimized development environment with Visual Web Developer 2010 Express (asp.net/vwd). You can get it via the Web Platform Installer (microsoft.com/express/web), which can also install SQL Server 2008 Express Edition, IIS, and extensions for Silverlight and ASP.NET development.

If you’re already using Visual Studio, simply download and install the Windows Azure Tools for Microsoft Visual Studio (bit.ly/aAsgjt). These tools support both Visual Studio 2008 and Visual Studio 2010 and contain templates and tools specifically for Windows Azure development. Windows Azure Tools includes the Windows Azure SDK.

Moving Data from SQL Server

If you’re migrating an existing Web application to Windows Azure, you’ll need some way to migrate the app’s data as well. For apps that employ SQL Server 2005 or SQL Server 2008 as a data store, the SQL Azure Migration Wizard (sqlazuremw.codeplex.com) makes this transition a lot easier (Figure 2). The wizard not only transfers the actual data, but also helps you identify and correct possible compatibility issues before they become a problem for your app.

Figure 2 SQL Azure Migration Wizard

To get a handle on how to use the SQL Azure Migration Wizard, along with a lot of other helpful information about moving existing apps to Windows Azure, see “Tips for Migrating Your Applications to the Cloud” in the August 2010 issue of MSDN Magazine (msdn.microsoft.com/magazine/ff872379).

Security Best Practices

You need to take security into consideration with any widely available application, and cloud apps are about as widely available as they come. The Microsoft patterns & practices team launched a Windows Azure Security Guidance project in 2009 to identify best practices for building distributed applications on the Windows Azure platform. Their findings have been compiled into a handy PDF that covers checklists, threats and countermeasures, and detailed guidance for implementing authentication and secure communications (bit.ly/aHQseJ). The PDF is a must-read for anyone building software for the cloud.

PHP Development on Windows Azure

Dating from before even the days of classic ASP, PHP continues to be a keystone of Web application development. With that huge base of existing Web apps in mind, Microsoft created a number of tools that bring support for PHP to the Windows Azure platform. These tools smooth the way for migrating older PHP apps to Windows Azure, as well as enabling experienced PHP developers to leverage their expertise in the Microsoft cloud.

There are four tools for PHP developers:

- Windows Azure Companion helps you install and configure the PHP runtime, extensions and applications on Windows Azure.

- Windows Azure Tools for Eclipse for PHP is an Eclipse plug-in that optimizes the open source IDE for developing applications for Windows Azure (Figure 3).

Figure 3 Windows Azure Tools for Eclipse

- Windows Azure Command-Line Tools for PHP provides a simple interface for packaging and deploying PHP applications on Windows Azure.

- Windows Azure SDK for PHP provides an API for leveraging Windows Azure data services from any PHP application.

You’ll find more information about the tools and links to the downloads on the Windows Azure Team Blog at bit.ly/ajMt9g.

Windows Azure Toolkit for Facebook

Building applications for Facebook is a sure-fire way to reach tens of millions of potential customers. And if your app takes off, Windows Azure provides a platform that lets you scale easily as demand grows. The Windows Azure Toolkit for Facebook (azuretoolkit.codeplex.com) gives you a head start in building your own highly scalable Facebook app. Coming up with the next FarmVille is still up to you, though!

Windows Azure SDK for Java

PHP developers aren’t the only ones getting some native tools for Windows Azure. Now Java developers can also work in their language of choice and get seamless access to Windows Azure services and storage. The Windows Azure SDK for Java (windowsazure4j.org) includes support for Create/Read/Update/Delete operations on Windows Azure Table Storage, Blobs and Queues. You also get classes for HTTP transport, authorization, RESTful communication, error management and logging.

Setting up Your System

Here are a few useful blog posts from the Windows Azure developer community that walk you through the process of setting up a development environment and starting your first cloud apps:

Mahesh Mitkari

Configuring a Windows Azure Development Machine

blog.cognitioninfotech.com/2009/08/configuring-windows-azure-development.html

Jeff Widmer

Getting Started with Windows Azure: Part 1 - Setting up Your Development Environment

weblogs.asp.net/jeffwids/archive/2010/03/02/getting-started-with-windows-azure-part-1-setting-up-your-development-environment.aspx

David Sayed

Hosting Videos on Windows Azure

blogs.msdn.com/b/david_sayed/archive/2010/01/07/hosting-videos-on-windows-azure.aspx

Josh Holmes

Easy Setup for PHP on Azure Development

joshholmes.com/blog/2010/04/13/easy-setup-for-php-on-azure-development/

Visual Studio Magazine

Cloud Development in Visual Studio 2010

visualstudiomagazine.com/articles/2010/04/01/using-visual-studio-2010.aspx

Terrence is the technical editor of MSDN Magazine. You can read his blog at terrencedorsey.com or follow him on Twitter: @tpdorsey.

Gunnar Peipman described Windows Azure: Connecting to web role instance using Remote Desktop in this 12/4/2010 tutorial:

My last posting about cloud covered new features of Windows Azure. One of the new features available is Remote Desktop access to Windows Azure role instances. In this posting I will show you how to get connected to Windows Azure web role using Remote Desktop.

I suppose you have other deployment settings in place and only Remote Desktop needs configuring. Open publish dialog of your web role project in Visual Studio 2010.

In the bottom part of this dialog you can see the link Configure Remote Desktop connections… Click on it.

Remote Desktop also needs a certificate. You can create one from the dropdown: there is a <Create…> option at the end of the options list. After creating the certificate you have to export it to your file system (Start => Run => certmgr.msc).

When the certificate is exported, add it to your hosted service in the Windows Azure Portal.

Now fill in the username and password fields in the Remote Desktop settings window in Visual Studio and click OK. After deploying your project you can access your web role instance over Remote Desktop. Just click on your instance in the Windows Azure Portal and select Connect from the toolbar. An RDP file for your web role instance connection will be downloaded, and if you open it you can access your web role instance. For username and password, use the same username and password you entered before.

This is the System window of my web role instance. You can see that the Small instance account provided to MSDN Library subscribers has a 2.10 GHz AMD Opteron processor (only one core is for my web app), 1.75 GB RAM and 64-bit Windows Server Enterprise Edition.

Using Remote Desktop you can investigate and solve problems when your web application crashes, and you can also make other changes to your web role instance. If you have more than one instance, you should make the same changes to all instances of the same web role.

Rinat Abdullin explained Troubleshooting Azure Deployments in this 12/4/2010 post:

Let's compile a list of common Windows Azure deployment problems. I will include my personal favorites in addition to the troubleshooting tips from MSDN (with some additional explanations).

Missing Runtime Dependencies

Windows Azure Guest OS is just a Virtual Machine running within Hyper-V. It has a set of preinstalled components required for running common .NET applications. If you need something more (i.e.: assemblies), make sure to include these extra dlls and resources!

- Set Copy Local to True for any non-common assemblies in "References". This will force them to be deployed. If assembly is referenced indirectly and does not load - add it to the Worker/Web role and set Copy Local to True.

- Web.config can reference assemblies outside of the project references list. CSPack will not be aware of them. These need to be included as well.

- If you use assemblies that link to native code, make sure that the native code is 64-bit and is included in the deployment as well. For example, this was needed for running Oracle Native Client or SQLite on an Azure Worker Role.

It's 64 Bit

Again, Windows Azure Guest OS is 64bit. Make sure that everything you deploy will run there. You can reference 32 bit assemblies in your code, but they will not run on the cloud.
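One way to check a native DLL before deploying it is to read the machine field from its PE header. The Python sketch below is a minimal illustration, not a full PE parser: it assumes the header sits within the first 4 KB of the file and only names the two machine types relevant here. Note that managed AnyCPU assemblies typically report the 32-bit machine type even though they run as 64-bit, so this check is mainly meaningful for native code.

```python
import struct

MACHINE_TYPES = {0x014C: "x86 (32-bit)", 0x8664: "x64 (64-bit)"}


def pe_machine(data: bytes) -> str:
    """Return the target machine recorded in a PE file's COFF header."""
    if data[:2] != b"MZ":
        raise ValueError("not a PE file (missing MZ header)")
    # Offset 0x3C holds the file offset of the "PE\0\0" signature.
    (pe_offset,) = struct.unpack_from("<I", data, 0x3C)
    if data[pe_offset:pe_offset + 4] != b"PE\0\0":
        raise ValueError("PE signature not found")
    # The 2-byte machine field immediately follows the signature.
    (machine,) = struct.unpack_from("<H", data, pe_offset + 4)
    return MACHINE_TYPES.get(machine, f"unknown (0x{machine:04X})")


def check_file(path: str) -> str:
    """Read the start of a DLL or EXE and report its machine type."""
    with open(path, "rb") as fh:
        return pe_machine(fh.read(4096))
```

Calling `check_file` on each native DLL in the deployment package (the paths are up to you) gives a quick list of anything still built for 32-bit.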

You might find that Visual Studio IntelliSense complains loudly while you edit ASP.NET files that reference these 64-bit-only assemblies. This is understandable, since devenv is still a 32-bit process. Well, I just live with that.

Web Role Never Starts

If your web role never starts and does not even have a chance to attach IntelliTrace, then you could have a problem in your web.config. Everything would still work perfectly locally.

This could be caused by config sections that are known on your machines, but are not registered within Windows Azure Guest OS VM. In our case this was coming from uri section required by DotNetOpenAuth:

<uri>

<idn enabled="All"/>

<iriParsing enabled="true"/>

</uri>

This fixed the problem:

<configuration>

<configSections>

<section name="uri" type="System.Configuration.UriSection, System, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089"/>

Windows Azure Limits Transaction Duration

If your transactions require more than 10 minutes to finish, then they will fail no matter what settings you have in the code. 10min threshold is located in machine.config and can't be overridden from the code. More details

This is a protective measure (protecting developers from deadlocking databases) that comes from the mindset of tightly coupled systems. I wish Microsoft folks were more aware of the architecture design principles that are frequently associated with CQRS these days. In that world deadlocks, scalability, complexity and tight coupling are not an issue.

Temporary Files can't be larger than 100MB

If your code relies on temporary files that can be larger than 100 MB, then it will fail with a "Disk full" sort of exception. You will need to use Local Resources.

If you launch a library or process that relies on temporary files, then it could fail, too. This hit me when SQLite was failing to compact a 2 GB database file located on an empty 512 GB disk. As it turns out, the process used the TEMP environment variable and needed to be able to write a large file.
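The TEMP issue described above can be worked around by pointing a child process at a roomier directory. The Python sketch below shows the idea only; the helper names are hypothetical, and in a real role the directory would presumably be the root path of a local storage resource declared in the service definition rather than an arbitrary folder.

```python
import os
import subprocess


def env_with_temp(temp_dir: str, base_env=None) -> dict:
    """Copy the environment and point TEMP/TMP at a roomier directory."""
    env = dict(os.environ if base_env is None else base_env)
    os.makedirs(temp_dir, exist_ok=True)
    env["TEMP"] = temp_dir
    env["TMP"] = temp_dir
    return env


def run_with_temp(command, temp_dir: str) -> int:
    """Run a child process that sees the redirected temp directory."""
    result = subprocess.run(command, env=env_with_temp(temp_dir), check=False)
    return result.returncode
```

Passing a modified copy of the environment keeps the change scoped to the child process, so the rest of the role keeps its default temp location.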

More details are in another blog post.

Recycling Forever

Cloud fabric assumes that OnStart, OnStop and Run from "RoleEntryPoint" will never throw exceptions under normal conditions. If they do, they are not handled and will force the role to recycle. If your application always throws an exception on start up (i.e.: wrong configuration or missing resource), then it will be recycling forever.

Additionally, the Run method of a Role is supposed to run forever (when it returns, the role recycles). If your code overrides this method, it should sleep indefinitely.
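The shape of a run loop that neither returns nor lets exceptions escape can be sketched as follows. This is a Python stand-in for the pattern, not the C# `RoleEntryPoint` API; `run_forever`, `do_work` and `should_stop` are hypothetical names, and `should_stop` exists only so the loop can be exercised in a test.

```python
import logging
import time

log = logging.getLogger("worker")


def run_forever(do_work, poll_seconds=10.0, should_stop=lambda: False):
    """Loop until should_stop() is true; a failed iteration never ends the loop."""
    while not should_stop():
        try:
            do_work()
        except Exception:
            # Log and carry on instead of letting the exception escape,
            # which in a role would trigger a recycle.
            log.exception("work item failed; continuing")
        time.sleep(poll_seconds)
```

Swallowing every exception is a deliberate trade-off here: a failure that will never succeed (such as a missing configuration value) still needs to be surfaced through logging or diagnostics, otherwise the role looks healthy while doing nothing.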

BTW, if you consider putting Thread.Sleep, then I strongly encourage you to check out Task Parallel Library (aka TPL) within .NET 4.0 instead. Coupled with PLinq and new concurrent and synchronization primitives, it nearly obsoletes any thread operations in my personal opinion. Lokad Analytics R&D team might not agree, but they have really specific reasons for reinventing PLinq and TPL on their own.

Role Requires Admin Privileges

I personally never hit this one. However just keep in mind, that compute emulator (Dev Fabric) runs with the Admin privileges locally. Cloud deployment does not have them. If your code requires Admin rights to do something, it might fail while starting up or executing.

Incorrect Diagnostics Connection String

If your application uses Windows Azure Diagnostics, then for deployment make sure to update the setting to HTTPS credentials pointing to a valid storage account.

It is usually a separate setting named "DiagnosticsConnectionString". It's easy to forget that, when you usually work with "MyStorageConnectionString" or something like this.
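A pre-deployment check for this setting might look like the Python sketch below. It assumes the usual storage connection string keys (`DefaultEndpointsProtocol`, `AccountName`, `AccountKey`, `UseDevelopmentStorage`); the function names are hypothetical, and the check cannot confirm that the account actually exists or that the key is valid.

```python
def parse_connection_string(value: str) -> dict:
    """Split 'Key=Value;Key=Value' into a dict (values may contain '=')."""
    pairs = (item.split("=", 1) for item in value.split(";") if "=" in item)
    return {key.strip(): val.strip() for key, val in pairs}


def diagnostics_problems(value: str) -> list:
    """Return reasons the string is unsuitable for a cloud deployment."""
    settings = parse_connection_string(value)
    if settings.get("UseDevelopmentStorage", "").lower() == "true":
        return ["points at development storage"]
    problems = []
    if settings.get("DefaultEndpointsProtocol", "").lower() != "https":
        problems.append("DefaultEndpointsProtocol is not https")
    for key in ("AccountName", "AccountKey"):
        if not settings.get(key):
            problems.append(f"{key} is missing")
    return problems


# Placeholder account name and key, for illustration only.
print(diagnostics_problems("DefaultEndpointsProtocol=http;AccountName=acct;AccountKey=abc=="))
```

Running the same check against every connection string setting in the service configuration, not just the one you normally edit, is what catches the forgotten diagnostics entry.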

SSL, HTTPS and Web Roles

In order to run a site under HTTPS, you must pass the SSL certificate to Windows Azure, while making sure that private key is exported and PFX file format is used.

By the way, if you applied to "Powered by Windows Azure" program logo, then make sure not to display it on the HTTPS version of your site. That's because the script is not HTTPS-aware and will retrieve resources using non-SSL channel. This will cause browsers to display warnings like the one below, which will be scary for the visitors.

NB: as I recall site owners are not allowed to modify this script and fix the issue themselves. So we would probably need to wait for a few more months of constant email pinging till 10-line HTML tracking snippet is updated to use HTTPS when located within HTTPS, just like GA does. I know it's a tough task.

What Did I Miss?

That's it, for now. Some of these less common issues cost us quite a bit of time to figure out and debug. Hopefully this post will save you some time in .NET cloud computing with Windows Azure. I'll try to keep this post updated.

Did I miss some common deployment problems that you've encountered and were able to work around?

Bruce Kyle (@brucedkyle) tweeted on 12/4/2010 Free Windows Azure Services for developers to start cloud computing at http://xolv.us/eMkP8L with Promo Code DPWE01:

Adron Hall (@adronbh) reported Windows Azure v1.3 SDK Issues on 12/3/2010:

I’ve been having oodles of deployment issues with Windows Azure ever since upgrading to the 1.3 SDK. On one machine (which is 32-bit), when I do a build and deploy it appears to work. On my 64-bit machine with a COMPLETELY clean load of Win 7 and VS 2010 + the Windows Azure SDK 1.3, it never deploys. It just keeps trying and eventually gives up after about 30-45 minutes. Very painful.

So far I’ve had some Twitter conversations, and Eugenio Pace has helped a lot in trying to figure out what the problem is. At CloudCamp, however, I started an empty ASP.NET MVC 2 app and deployed it, and as things go it worked flawlessly. I guess Eugenio scared it into operating properly! :)

Rinat Abdullin has also run into some issues with the 1.3 drop. Rinat’s entry is titled “Don’t Upgrade to Windows Azure SDK 1.3 Yet” [see below], so you might get the vibe that you may not want to upgrade yet.

I also got this tweet from Eugenio on a troubleshooting page on the MSDN site. It may help if you’re slugging through some issues:

@adronbh Latest list of deployment issues on Win #Azure http://bit.ly/hTpEeN

Rinat Abdullin recommended that you Don’t Upgrade to Windows Azure SDK 1.3 Yet in a 12/3/2010 post:

Windows Azure SDK 1.3 released recently has a lot of features and some breaking changes.

My advice is to avoid upgrading your projects to it for now: there are too many problems, it is better to wait till Microsoft polishes this version.

Some glitches were verified (i.e.: conditional header) some others are still blocking and outstanding (just browse Windows Azure forum for details).

For example, here's what I'm getting on a production project after the upgrade:

People report that launching without debugger leads to:

HTTP Error 503. The service is unavailable.

In my case we don't get that far - there is another problem - Lokad.CQRS settings provider (essentially a wrapper around RoleEnvironment and config settings) suddenly stopped retrieving settings from the Cloud runtime in this project. And debugging does not help since we can't attach debugger.

My wild guess is that .NET 4.0 runtime does not initialize properly on the Dev Fabric (Compute Emulator is the new name) for web workers, so we can't retrieve azure-specific settings or attach debugger.

So if you are wondering about upgrading to Windows Azure 1.3 - don't do that, yet.

If you do, keep in mind that reverting back could be complicated, since Visual Studio upgrades your solutions (updating csconfig files) and references the new version of StorageClient (sometimes you need to do that manually).

Another tip: If you are using the new Windows Azure Portal (Silverlight version) and get lots of errors, make sure to use Internet Explorer with it. Otherwise you can get lots of various problems and eventually crash [the] Silverlight plugin. This was the case on my machine with the latest Chrome.

By the way, these problems were probably caused by good intentions - attempt to keep up with the promises of PDC10. Microsoft teams have covered a big ground, essentially extending Windows Azure to be IaaS Cloud Computing Provider in addition to pure PaaS. It looks like they just need a bit more time.

Quick FAQ on Azure SDK 1.3

Can ServiceDefinition.build.csdef be ignored by version control?

Probably, yes. It's auto-generated and I did ignore it.

What has caused so many troubles with Web Roles?

Web roles run using full IIS instead of Hosted Web Core. Additionally web.config is now modified more aggressively by Visual Studio (you might have problems if it is read-only).

BTW, you can also try deleting the Sites section in ServiceDefinition.csdef. This is supposed to force Hosted Web Core even in 1.3 mode. The suggestion was posted by Steve Marx on the MSDN forums, but I didn't give it a try (already back to 1.2). If you do, please share your experience.

Since upgrading to SDK 1.3 I am unable to deploy

Try deploying locally. Make sure that web.config does not have any sections that are not known by Azure Guest OS (i.e.: uri web section). Make sure you are including all the referenced resources. If nothing helps - contact support.
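A quick way to spot candidate sections before deploying is to list the top-level elements of web.config that are neither declared in `configSections` nor on a baseline list. The Python sketch below takes that approach; the `KNOWN_SECTIONS` set is an assumption chosen for illustration and is not the Guest OS's actual list of registered sections.

```python
import xml.etree.ElementTree as ET

# Assumed baseline of section names the target machine already knows;
# this short set is illustrative, not the Guest OS's actual list.
KNOWN_SECTIONS = {"configSections", "appSettings", "connectionStrings",
                  "system.web", "system.webServer", "system.diagnostics",
                  "system.serviceModel", "runtime"}


def undeclared_sections(web_config_xml: str, known=KNOWN_SECTIONS) -> list:
    """List top-level elements that are neither known nor declared in configSections."""
    root = ET.fromstring(web_config_xml)
    declared = {s.get("name") for s in root.findall("./configSections/section")}
    declared |= {g.get("name") for g in root.findall("./configSections/sectionGroup")}
    return [child.tag for child in root
            if child.tag not in known and child.tag not in declared]


sample = '<configuration><uri><idn enabled="All"/></uri></configuration>'
print(undeclared_sections(sample))
```

Anything the function reports is only a candidate: either register it explicitly in `configSections`, as in the `uri` example earlier in this post, or confirm that the target OS already knows it.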

I'm getting this message all the time: web.config file has been modified

Visual Studio is a bit aggressive with configs now. Just close the file in the editor.

J. D. Meier posted a Windows Azure How-Tos Index on 12/3/2010:

The Windows Azure IX (Information Experience) team has made it easier to browse their product documentation. Beautiful. They added a Windows Azure How-To Index of their content to the MSDN Library. I think it’s great to see a shift to more How-To and task-oriented content. I think that naming with How-To, also makes it easier to find articles that might relate to your scenario or task.

Here is the collection of How-Tos you will find when you browse the index:

How to: Build a Windows Azure Application

- How to Configure Virtual Machine Sizes

- How to Configure Connection Strings

- How to Configure Operating System Versions

- How to Configure Local Storage Resources

- How to Create a Certificate for a Role

- How to Create a Remote Desktop Protocol File

- How to Define Environment Variables Before a Role Starts

- How to Define Input Endpoints for a Role

- How to Define Internal Endpoints for a Role

- How to Define Startup Tasks for a Role

- How to Encrypt a Password

- How to Restrict Communication Between Roles

- How to Retrieve Role Instance Data

- How to Use the RoleEnvironment.Changing Event

- How to Use the RoleEnvironment.Changed Event

How to: Use the Windows Azure SDK Tools to Package and Deploy an Application

- How to Prepare the Windows Azure Compute Emulator

- How to Configure the Compute Emulator to Emulate Windows Azure

- How to Package an Application by Using the CSPack Command-Line Tool

- How to Run an Application in the Compute Emulator by Using the CSRun Command-Line Tool

- How to Initialize the Storage Emulator by Using the DSInit Command-Line Tool

- How to Change the Configuration of a Running Service

- How to Attach a Debugger to New Role Instances

- How to View Trace Information in the Compute Emulator

- How to Configure SQL Server for the Storage Emulator

How to Configure a Web Application

- How to Configure a Web Role for Multiple Web Sites

- How to Configure the Virtual Directory Location

- How to Configure a Windows Azure Port

- How to Configure the Site Entry in the Service Definition File

- How to Configure IIS Components in Windows Azure

- How to Configure a Service to Use a Legacy Web Role

How to: Manage Windows Azure VM Roles

- How to Create the Base VHD

- How to Install the Windows Azure Integration Components

- How to Prepare the Image for Deployment

- How to Deploy an Image to Windows Azure

- How to Create and Deploy the VM Role Service Model

- How to Create a Differencing VHD

- How to Change the Configuration of a VM role

How to: Administering Windows Azure Hosted Services

- How to Setup a Windows Azure Subscription

- How to Setup Multiple Administrator Accounts

How to: Deploy a Windows Azure Application

- How to Package your Service

- How to Deploy a Service

- How to Create a Hosted Service

- How to Create a Storage Account

- How to Configure the Service Topology

How to: Upgrade a Service

- How to Perform In-Place Upgrades

- How to Swap a Service's VIPs

How to: Manage Upgrades to the Windows Azure Guest OS

- How to Determine the Current Guest OS of your Service

- How to Upgrade the Guest OS in the Management Portal

- How to Upgrade the Guest OS in the Service Configuration File

How to: Configure Windows Azure Connect

- How to Activate Windows Azure Roles for Windows Azure Connect

- How to Install Local Endpoints with Windows Azure Connect

- How to Create and Configure a Group of Endpoints in Windows Azure Connect

Cumulux posted Windows Azure PDC 2010 updates – List of Articles on 12/3/2010:

Summary of Articles written on various features announced during Microsoft PDC 2010:

Cumulux was a very early adopter of the Windows Azure Platform.

Kevin Kell asserted Competition [between Microsoft and Google] is Good, but … in a 12/3/2010 post to the Learning Tree blog:

I wanted to write a follow-up commentary to Chris’ recent excellent post about the competition in the cloud between Microsoft and Google.

I agree that it is vitally important to have a framework from which to analyze the different offerings. It is also necessary to be able to separate fact from hype. In any endeavor that involves change you really need to take a hard look at what problems you are trying to address and what the various choices offer in terms of functionality, price, performance, security, etc.

Consider the various productivity tools offered as SaaS. It would be difficult to convince a hard-core, number-crunching Marketing Analyst that he should give up his locally installed copy of Excel 2010 with PowerPivot, for example, in favor of the spreadsheet in Google Docs. The functionality is just not there and it doesn’t really matter if there is a cost benefit, anywhere access or document sharing. On the other hand, an administrative worker who only uses a spreadsheet to maintain simple lists might be perfectly well served with a basic application. Having locally installed high-powered analytical software on that user’s desktop is an underutilization of resources.

At the PaaS level, again you need to look at what problem you are trying to solve. Google seems to give developers more “for free” than Microsoft. Is that appealing? Or does it depend on other factors too? Obviously it depends on whether you are moving an existing application or doing greenfield work. You also need to consider storage requirements, existing skill-sets and degree of control and flexibility you need. With greater control and flexibility comes greater responsibility. For example Google App Engine offers some monitoring and diagnostics right out of the box. Currently Azure requires the developer to “roll her own” (using the API) or purchase a third party solution.

At the IaaS level there is no question that the Public Cloud is currently dominated by Amazon. There are, however, many other players who seek to offer slightly different value-propositions to their customers. It is not a one-size-fits-all market. It is similar to hotels, perhaps, where some prefer the large chains and some prefer a bed-and-breakfast. When thinking IaaS, you really have to consider whether or not a Public Cloud is even the right approach. Organizations with stringent security and regulatory requirements may not even have that choice. Most Private Clouds are IaaS and there are many options to choose from.

We spend a good deal of time in our introductory cloud computing course really looking at and discussing these issues. It is our intention to provide an overview of the offerings of the major players. We do this in a vendor-neutral way. At the end of the course our attendees have a good understanding of the basics of cloud computing and are armed with the knowledge they need to consider if, what and how it may fit into their own organizations.

Josh Twist (@joshtwist) attacked the problem of assigning unique order IDs in his Synchronizing Multiple Nodes in Windows Azure article for the November 2010 issue of MSDN Magazine:

The cloud represents a major technology shift, and many industry experts predict this change is of a scale we see only every 12 years or so. This level of excitement is hardly surprising when you consider the many benefits the cloud promises: significantly reduced running costs, high availability and almost infinite scalability, to name but a few.

Of course, such a shift also presents the industry with a number of challenges, not least those faced by today’s developers. For example, how do we build systems that are optimally positioned to take advantage of the unique features of the cloud?

Fortunately, Microsoft in February launched the Windows Azure Platform, which contains a number of right-sized pieces to support the creation of applications that can support enormous numbers of users while remaining highly available. However, for any application to achieve its full potential when deployed to the cloud, the onus is on the developers of the system to take advantage of what is arguably the cloud’s greatest feature: elasticity.

Elasticity is a property of cloud platforms that allows additional resources (computing power, storage and so on) to be provisioned on-demand, providing the capability to add additional servers to your Web farm in a matter of minutes, not months. Equally important is the ability to remove these resources just as quickly.

A key tenet of cloud computing is the pay-as-you-go business model, where you only pay for what you use. With Windows Azure, you only pay for the time a node (a Web or Worker Role running in a virtual machine) is deployed, so reducing the number of nodes when they’re no longer required, or during the quieter periods of your business, results in direct cost savings.

Therefore, it’s critically important that developers create elastic systems that react automatically to the provision of additional hardware, with minimum input or configuration required from systems administrators.
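To make the pay-as-you-go arithmetic concrete, here is a small sketch that compares a fixed deployment with an elastic one over a single day. The hourly rate and both schedules are hypothetical numbers chosen for illustration, not actual Windows Azure prices.

```python
def node_hours(schedule):
    """Sum node-hours for a schedule given as (hours, node_count) pairs."""
    return sum(hours * nodes for hours, nodes in schedule)


# Hypothetical price per node-hour in cents, for illustration only.
RATE_CENTS = 12

# Fixed deployment: 10 nodes running around the clock.
fixed = [(24, 10)]
# Elastic deployment: 10 nodes for the 8 busy hours, 3 nodes for the other 16.
elastic = [(8, 10), (16, 3)]

fixed_cost = node_hours(fixed) * RATE_CENTS
elastic_cost = node_hours(elastic) * RATE_CENTS
saving = fixed_cost - elastic_cost

print(f"fixed:   {node_hours(fixed)} node-hours, {fixed_cost / 100:.2f}")
print(f"elastic: {node_hours(elastic)} node-hours, {elastic_cost / 100:.2f}")
print(f"saving:  {saving / 100:.2f} per day")
```

With these assumed figures, the elastic schedule uses 128 node-hours instead of 240, so the saving comes entirely from the hours during which the extra nodes are not deployed.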

Scenario 1: Creating Order Numbers

Recently, I was lucky enough to work on a proof of concept that looked at moving an existing Web application infrastructure into the cloud using Windows Azure.

Given the partitioned nature of the application’s data, it was a prime candidate for Windows Azure Table Storage. This simple but high-performance storage mechanism—with its support for almost infinite scalability—was an ideal choice, with just one notable drawback concerning unique identifiers.

The target application allowed customers to place an order and retrieve their order number. Using SQL Server or SQL Azure, it would’ve been easy to generate a simple, numeric, unique identifier, but Windows Azure Table Storage doesn’t offer auto-incrementing primary keys. Instead, developers using Windows Azure Table Storage might create a GUID and use this as the “key” in the table:

505EAB78-6976-4721-97E4-314C76A8E47E

The problem with using GUIDs is that they’re difficult for humans to work with. Imagine having to read your GUID order number out to an operator over the telephone—or make a note of it in your diary. Of course, GUIDs have to be unique in every context simultaneously, so they’re quite complex. The order number, on the other hand, only has to be unique in the Orders table. …
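The contrast between a GUID and a short order number can be sketched as follows. This is a minimal illustration, assuming a shared counter that supports conditional ("write only if unchanged") updates; it is not necessarily the approach the article takes. `InMemoryCounterStore`, `OrderIdGenerator` and `ConflictError` are hypothetical names, and the in-memory store merely stands in for whatever shared storage the nodes would really use.

```python
import uuid


class ConflictError(Exception):
    """Raised when a conditional write loses a race with another writer."""


class InMemoryCounterStore:
    """Hypothetical stand-in for a shared store that supports conditional writes."""

    def __init__(self):
        self._value = 0    # last ID reserved by any node
        self._version = 0  # changes on every successful write

    def read(self):
        return self._value, self._version

    def write_if_unchanged(self, new_value, expected_version):
        if expected_version != self._version:
            raise ConflictError("counter changed since it was read")
        self._value = new_value
        self._version += 1


class OrderIdGenerator:
    """Hands out sequential IDs, reserving them from the store in blocks."""

    def __init__(self, store, block_size=10, max_retries=5):
        self._store = store
        self._block_size = block_size
        self._max_retries = max_retries
        self._next = 0
        self._limit = 0  # exclusive upper bound of the reserved block

    def _reserve_block(self):
        for _ in range(self._max_retries):
            last_reserved, version = self._store.read()
            try:
                self._store.write_if_unchanged(last_reserved + self._block_size, version)
            except ConflictError:
                continue  # another node reserved first; re-read and retry
            self._next = last_reserved + 1
            self._limit = last_reserved + self._block_size + 1
            return
        raise RuntimeError("could not reserve an ID block")

    def next_id(self):
        if self._next >= self._limit:
            self._reserve_block()
        issued = self._next
        self._next += 1
        return issued


store = InMemoryCounterStore()
node_a = OrderIdGenerator(store)
node_b = OrderIdGenerator(store)
ids = [node_a.next_id(), node_b.next_id(), node_a.next_id()]

print("GUID key:     ", uuid.uuid4())
print("order numbers:", ids)
```

Reserving IDs in blocks keeps round trips to the shared store low, at the cost of gaps and of numbers that are unique but not strictly ordered across nodes. The sketch is also not thread-safe within a single process.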

Josh continues with “Creating a Simple Unique ID in Windows Azure.” His “Approach I: Polling” topic includes this diagram:

Figure 3 Nodes Polling a Central Status Flag

and “Approach II: Listening” uses this architecture:

Figure 6 Using the Windows Azure AppFabric Service Bus to Simultaneously Communicate with All Worker Roles

Finally, his Administrator Console displays this dialog:

Figure 9 The Administrator Console

… Sample Code

The accompanying sample solution is available at code.msdn.microsoft.com/mag201011Sync and includes a ReadMe file that lists the prerequisites and includes the setup and configuration instructions. The sample uses the ListeningRelease, PollingRelease and UniqueIdGenerator in a single Worker Role.
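The difference between the polling and listening approaches named above can be sketched in-process. This is only an illustration of the general trade-off, assuming a central flag that nodes either check on a timer or subscribe to. `StatusFlag`, `PollingNode` and `ListeningNode` are hypothetical names, and nothing here uses Worker Roles, the AppFabric Service Bus or the classes in the article's sample.

```python
class StatusFlag:
    """Hypothetical central flag: pollers read it, listeners subscribe to it."""

    def __init__(self):
        self.released = False
        self._listeners = []

    def subscribe(self, callback):
        self._listeners.append(callback)

    def release(self):
        self.released = True
        for callback in self._listeners:
            callback()  # push the change to every subscriber at once


class PollingNode:
    """Checks the flag once per polling interval."""

    def __init__(self, flag):
        self._flag = flag
        self.started = False
        self.checks = 0

    def tick(self):
        if not self.started:
            self.checks += 1
            if self._flag.released:
                self.started = True


class ListeningNode:
    """Does nothing until the flag notifies it."""

    def __init__(self, flag):
        self.started = False
        flag.subscribe(self._on_release)

    def _on_release(self):
        self.started = True


flag = StatusFlag()
poller = PollingNode(flag)
listener = ListeningNode(flag)

for _ in range(3):  # three polling intervals pass before the release
    poller.tick()

flag.release()
started_after_release = (poller.started, listener.started)

poller.tick()  # the poller only notices on its next interval
print(started_after_release, poller.started, poller.checks)
```

The poller spends a check on every interval and still reacts one interval late, while the listener reacts as soon as the release is published but depends on a notification channel being available.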

Josh is a principal application development manager with the Premier Support for Developers team in the United Kingdom.

<Return to section navigation list>

Visual Studio LightSwitch

RunAtServer announced a LightSwitch presentation at the Montreal .NET User Group on 12/6/2010:

Subject: Introduction to Visual Studio LightSwitch and ASP.NET Embedded Database

Speaker: Laurent Duveau (blog)

Date: Monday December 6th 2010

Location: Microsoft Montreal offices: 2000 McGill College, 4e étage, Montréal, QC, H3A 3H3

More info [in French]: www.dotnetmontreal.com

<Return to section navigation list>

Windows Azure Infrastructure

• The new Microsoft/web site posted Bill Lodin’s 00:23:15 Azure Services Platform for Developers: Fundamentals video segment with a complete transcript:

Get an overview of cloud computing with Microsoft Windows Azure and the basics of Windows Azure developer tools. Topics in this session include Compute and storage, Service Management, The Developer Environment and the Azure Services Platform.

Vineet Nayar asserted “I believe Azure will be a standard tool for creating applications inside the organization so that people, whenever they use those features and services, will use them inside the organization” in his “Cloud is bullsh*t” – HCL’s CEO, Vineet Nayar, explains why he said just that interview of 12/4/2010 by Phil Fersht and Esteban Herrera:

Vineet Nayar, CEO at HCL Technologies, has firmly cemented himself as one of today's outspoken visionaries in the world of IT services. Never afraid to offer an opinion that may rub a few folks the wrong way, the self-styled CEO booked his ticket to notoriety at HCL's analyst conference in Boston this past week, where he described Cloud, well, as bullshit.

Unfortunately for Vineet, some of the HfS Research team had also made their way to the sessions, and we weren't going to let Vineet off lightly, without getting him to share some of his views with our readers. So Phil Fersht and Esteban Herrera were only too pleased to grab some time with him on Thursday after his flamboyant keynote to get him to elaborate a little further... [Link added.]

HfS Research: Thank you for joining us today, Vineet. Can you elaborate on your statement this morning that “Cloud is Bullshit?”

Vineet Nayar: My view on Cloud is that I always look for disruptive technologies that redefine the way the business gets run. If there is a disruptive technology out there that redefines business I am for it. If there is no underlying technology there, and it is just repackaging of a commercial solution, then I do not call it a business trend. I call it hype.

So, whatever we have seen on the Cloud – whether it is virtualization, if it’s available to… now before I go there, and the reason I believe what I’m saying is right, is because you have now a new vocabulary which has come in Cloud, which is called Private Cloud. So now it is very difficult, so what everybody is saying is “yes, it is private Cloud and public Cloud.” So, in my vocabulary Private Cloud is typically data center and when I say Cloud it is about Public Cloud. So let’s be very clear about it.