Windows Azure and Cloud Computing Posts for 12/2/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

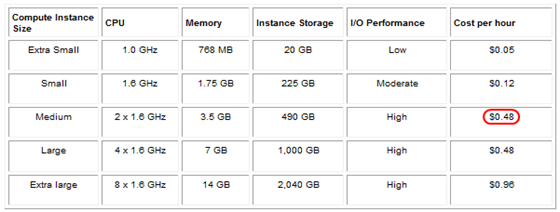

• Note: Today’s issue was released early to notify readers about an apparent error in the posted pricing of Windows Azure medium-sized compute instances. See articles marked •.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA) and Hyper-V Cloud

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services


Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

No significant articles today.


SQL Azure Database and Reporting

Ike Ellis delivered A Lap Around SQL Azure Data Sync - Step By Step Example, a third-party tutorial on 12/2/2010:

SQL Azure Data Sync is a cloud-based service, hosted on Microsoft SQL Azure, which allows many SQL Azure databases to stay consistent with one another. This is great if you need the same database geographically close to your users. I'm currently pitching this to one of my clients that has offices in the United States and Europe.

SQL Azure Data Sync is super simple to set up (try saying that five times fast). In this article, I’ll show you how to implement a data sync between two databases on the same logical SQL Azure server. We’ll be implementing the design in the diagram below.

STEP 1 – Sign Up for SQL Azure

If you need some guidance on how to do that, follow this link:

SQL Azure Signup Tutorial

As of this writing (AOTW), Microsoft is literally giving SQL Azure away (Web Edition – 1GB), so you really have no excuse for not signing up yet.

STEP 2 – Create Two Databases

OK, here’s where you might get charged. For this example, we’ll create two databases that will sync with each other, and you might get charged when you add the second database. When we're finished, you may want to delete one or both databases to keep from getting charged. The database usage charges are per day, so your bill should be less than $1, but I haven’t confirmed that.
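To sanity-check the “less than $1” estimate, here is a small back-of-the-envelope sketch in Python. It assumes a Web Edition (1GB) price of about $10 per month (the figure Rudi Grobler quotes later in this issue) spread evenly over a 30-day month; actual prices and billing granularity may differ.

```python
def prorated_cost(monthly_price: float, days_used: int, days_in_month: int = 30) -> float:
    """Estimate a database charge that is amortized per day.

    Assumption: the monthly price is spread evenly across the days of the
    month, and you pay only for the days the database exists.
    """
    if days_in_month <= 0:
        raise ValueError("days_in_month must be positive")
    return monthly_price / days_in_month * days_used


# One extra 1GB database kept for a single day, at an assumed $10/month.
one_day = prorated_cost(10.0, days_used=1)
print(f"${one_day:.2f}")  # $0.33
```

Even if both library databases were billed for a full day, the estimate stays well under $1.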

You create a database by following these steps:

a) Go to http://sql.azure.com. Click the project you created in Step 1.

b) First you’ll see just the Master database. That doesn’t do us much good, since SQL Azure doesn’t allow us to add tables to the Master Database.

We need two more databases. Click on “Create Database.” The dialog below will pop up. Type in library1, and click “Create.” Do the same for the library2 database.

Now you have two blank databases. Let’s add some schema and data to library1, then let SQL Azure Data Sync copy them over to library2.

STEP 3 – Add Schema and Data to Library1.

We’ll use SQL Server Management Studio (SSMS) to add the schema and data, though we could just as easily have used Visual Studio, SQLCMD, or even BCP.

Connect to SQL Azure using your server name, user name, and password. Don’t worry, the connection to SQL Azure is encrypted. Make sure your internal firewall allows outbound traffic on TCP port 1433 to SQL Azure. AOTW, SQL Azure’s TCP port cannot be changed, so don’t bother looking up how to change it. It’s a tough break that network admins at many organizations unilaterally block that port.
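If you’re not sure whether your network lets port 1433 through, a quick TCP connection test answers that before you start fighting with SSMS. The following is a minimal Python sketch, not a Microsoft tool. It only opens and closes a socket. It does not log in or speak SQL Server’s TDS protocol.

```python
import socket


def port_is_reachable(host: str, port: int = 1433, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port opens within `timeout` seconds.

    This only proves the path is not blocked; it does not authenticate.
    DNS failures, refused connections and timeouts all return False.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers socket.gaierror, ConnectionRefusedError and timeouts
        return False
```

For example, `port_is_reachable("yourserver.database.windows.net")` returns False when no connection can be made within the timeout, such as when a firewall blocks the port. Here, `yourserver` is a placeholder for your own server name.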

Your SSMS connection dialog will look something like this:

Once you’re connected, run this script to create two tables. You’ll notice that the TITLES table references the AUTHORS table. That will be important later.

```sql
CREATE TABLE authors
( id   INT PRIMARY KEY          -- the primary key creates the required clustered index
, name VARCHAR(100) DEFAULT('')
);

CREATE TABLE titles
( id       INT PRIMARY KEY
, name     VARCHAR(100) DEFAULT('')
, authorId INT REFERENCES authors(id)  -- foreign key: authors must be loaded first
);

INSERT INTO authors (id, name)
VALUES
  (1, 'Stephen King')
, (2, 'George RR Martin')
, (3, 'Neal Stephenson')
, (4, 'Stieg Larsson');

INSERT INTO titles (id, name, authorId)
VALUES
  (1, 'A Game of Thrones', 2)
, (2, 'A Clash of Kings', 2)
, (3, 'The Song of Susannah', 1)
, (4, 'The Gunslinger', 1);
```

There are a couple of things to notice about this script. First, each table in SQL Azure needs a clustered index. I’m creating one by specifying a primary key in the CREATE TABLE statement. AOTW, SQL Azure will allow you to create a table without a clustered index, but it won’t allow you to insert data into it, so there’s no reason to bother creating a heap table. Second, I like the insert syntax that lets us insert multiple rows after the VALUES keyword. That’s not SQL Azure specific (it was introduced in SQL Server 2008); I just think it’s cool.

We have two databases, one filled with tables and data and one empty. Let’s fix that and get to the meat of this demo.

STEP 4 – Set Up SQL Azure Data Sync.

a) Go to http://www.sqlazurelabs.com/ and click “SQL Azure Data Sync”.

b) You’ll need to sign in with your Windows Live ID.

c) The first time you go to this site, you’ll have to agree to a license agreement.

e) Name the sync group “LibrarySync”. I don’t know the maximum length of this name, but I’ve typed a lot of text in there and it accepted it. I wonder if it’s varchar(max). Then click “Next”.

f) Register your server by typing in your server name, user name, and password. Notice how in red it says “Your credentials will be stored in an encrypted format.” This is good news because you won’t have to re-enter them when registering other databases on the same server.

g) Then click “Add Hub”. The hub database is similar to a publishing database in replication. For example, its changes win if there are any update conflicts.

h) Then choose the library2 database and click “Add Member”. Your screen should look something like this:

j) You’ll get to a screen that looks like this:

The order in which you select the tables on this screen is the order in which they will be replicated. Remember that we have a foreign key constraint, so it’s really important to add the authors table before the titles table. Click “Finish”.

k) OK, now it seems like you’re done, but you’re not. Click “LibrarySync” and then click “Schedule Sync”. Notice the options you have for scheduling synchronization. You can sync hourly, daily, etc. If you choose hourly, the “Minute” dropdown does not set an interval in minutes; rather, it lets you choose the minute after the hour at which the sync will begin. Click “OK”.

l) Technically, your sync is ready to go, but click “Sync Now” and wait a minute or two so we can examine the changes.
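The wizard replicates tables in the order you select them, so every referenced (parent) table must come before the tables that point to it. With two tables, that’s easy to do by hand. For a bigger schema, a topological sort of the foreign-key graph gives a safe order. Here is a minimal Python sketch using the standard library’s graphlib (Python 3.9+). You would have to assemble the dependency dictionary yourself (for example, from the sys.foreign_keys catalog view); Data Sync does not produce it.

```python
from graphlib import TopologicalSorter


def sync_order(foreign_keys: dict[str, set[str]]) -> list[str]:
    """Order tables so that every referenced (parent) table precedes its children.

    foreign_keys maps each table to the set of tables it references.
    Raises graphlib.CycleError if the references form a cycle.
    """
    return list(TopologicalSorter(foreign_keys).static_order())


# The tutorial's schema: titles.authorId REFERENCES authors(id)
print(sync_order({"authors": set(), "titles": {"authors"}}))
# ['authors', 'titles']
```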

STEP 5 – Examine the Changes

a) Notice that the library2 database has all the schema and data from library1. It also has some other things that SQL Azure Data Sync added for us. BAM! We did it!

b) Look at the three tables that SQL Azure Data Sync added to both databases. These tables seem to track sync and schema information:

a. Schema_info

b. Scope_config

c. Scope_info

c) Each user table gets its own tracking table. For instance, we have authors_tracking and titles_tracking. These tell SQL Azure Data Sync which records need to be updated on the other members of the sync group. Note that this is not an auditing tool like Change Data Capture. It works more like Change Tracking in SQL Server 2008: you won’t get all the changes that led up to the final state of the data.

d) Each user table gets three triggers that are used to keep the databases consistent.

e) There are many stored procedures added to both databases for the same reason. Feel free to poke around and examine the triggers and stored procedures. I found them to be cleanly written. I like how they’re using the MERGE statement, introduced in SQL Server 2008.

f) Feel free to add a record to Library2, and click “Sync Now”. You’ll see it in Library1 in no time, thus proving that the synchronization is indeed bi-directional.

g) On the SQL Azure Data Sync Page, check out the synchronization log by clicking on the Dashboard button.
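To make the tracking-table behavior concrete, here is a toy Python model. It illustrates the behavior under simplifying assumptions and is not SQL Azure Data Sync’s implementation. Each member records only the latest change version per primary key (the final state, as with Change Tracking, rather than a full history, as with Change Data Capture). When both sides changed the same key, the hub’s value wins, as described in step 4. `Member` and `sync` are hypothetical names, and the model leaves out deletes and schema changes.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class Member:
    """A toy sync-group member: user rows plus a per-key tracking table."""
    rows: Dict[int, str] = field(default_factory=dict)
    tracking: Dict[int, int] = field(default_factory=dict)  # pk -> version of its latest change
    version: int = 0

    def write(self, pk: int, value: str) -> None:
        """Local insert/update; like a trigger, it keeps only the newest version per key."""
        self.version += 1
        self.rows[pk] = value
        self.tracking[pk] = self.version

    def changed_since(self, anchor: int) -> Dict[int, str]:
        """Final state of every row changed after the given version anchor."""
        return {pk: self.rows[pk] for pk, v in self.tracking.items() if v > anchor}


def sync(hub: Member, member: Member, hub_anchor: int, member_anchor: int) -> Tuple[int, int]:
    """Exchange changes in both directions; when both sides changed a key, the hub wins."""
    hub_changes = hub.changed_since(hub_anchor)
    member_changes = member.changed_since(member_anchor)
    for pk, value in member_changes.items():
        if pk not in hub_changes:      # a conflicting member change is discarded
            hub.rows[pk] = value
    for pk, value in hub_changes.items():
        member.rows[pk] = value        # hub changes always overwrite the member
    # Applied rows are not re-tracked, so they are not echoed back on the next sync.
    return hub.version, member.version
```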

Final Thoughts

At PDC 2010, Microsoft announced that this service will extend to on-premises SQL Servers. They demonstrated how it’s done through an agent that’s installed on the on-premises SQL Server. It should be available in CTP 2, which is not publicly available AOTW.

Also, although the initial snapshot pushed the schema to the library2 database, the service will not keep the schema in sync unless you tear down the sync group and rebuild it. I recommend you finalize your schema before setting up the sync group.

This is built on the Microsoft Sync Framework. I believe we’ll be seeing this used to synchronize all sorts of data from all sorts of data sources. I think it’s worth learning, and I hope this gives you a fair introduction to the technology. Remember to delete at least one of your library databases to keep from being charged. Also, delete your sync group. Comment below if you have any questions. Good luck!

Steve Yi explained Using the Database Manager from the Management Portal in a 12/1/2010 post to the SQL Azure Team blog:

The Windows Azure Platform Management Portal allows you to manage all your Windows Azure platform resources from a single location, including your SQL Azure databases; for more information, see Zane Adam's blog post.

One nuance of using the Management Portal is that you need to set Internet Explorer’s Pop-up Blocker to allow pop-ups from this site so that the database manager can be launched. The database manager (formerly known as Project “Houston”) is activated when you click the Manage button in the ribbon bar for database administration in the Management Portal.

When you click the Manage button, the database manager opens in a new window (or tab) so you can start administering your SQL Azure database.

By default, Internet Explorer displays pop-ups that appear as a result of you clicking a link or button. Pop-up Blocker blocks pop-ups that are displayed automatically (without you clicking a link or button). If you want to allow a specific website to display automatic pop-ups, follow these steps:

1. Open Internet Explorer by clicking the Start button, and then clicking Internet Explorer.

2. Click the Tools button, click Pop-up Blocker, and then click Pop-up Blocker Settings.

3. In the Address of website to allow box, type the address (or URL) of the website you want to see pop-ups from, and then click Add.

4. Repeat step 3 for every website you want to allow pop-ups from. When you are finished adding websites, click Close.

For more information about the Pop-up Blocker see: Internet Explorer Pop-up Blocker: frequently asked questions.


Marketplace DataMarket and OData

Rudi Grobler asserted SQL Azure + OData + WP7 = AWESOME in this brief 12/2/2010 post:

While developing Omoplata, I needed a place (in the cloud) where I could store data and access it easily on Windows Phone. Sounds simple? I started looking around for inexpensive ways of hosting data… After searching for a while, I decided to spin up a new instance of SQL Azure and enable the OData feed on this database.

- Create a new SQL Azure instance

- Enable SQL Azure OData Service

- Download OData Client Library

- Get an Agent, a ServiceAgent
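Once the OData feed is enabled, querying it comes down to building URLs with the standard OData system query options ($filter, $orderby, $top). Here is a minimal Python sketch. The service root is a placeholder: the SQL Azure OData Service shows you the actual root URL for your database when you enable it. The entity set and filter are hypothetical.

```python
from typing import Optional
from urllib.parse import quote, urlencode


def odata_query_url(service_root: str, entity_set: str, *,
                    filter_expr: Optional[str] = None,
                    orderby: Optional[str] = None,
                    top: Optional[int] = None) -> str:
    """Build an OData query URL from the standard $filter, $orderby and $top options."""
    options = {}
    if filter_expr is not None:
        options["$filter"] = filter_expr
    if orderby is not None:
        options["$orderby"] = orderby
    if top is not None:
        options["$top"] = str(top)
    url = f"{service_root.rstrip('/')}/{quote(entity_set)}"
    if options:
        # quote_via=quote encodes spaces as %20 (not '+'); safe="$" keeps the option names readable
        url += "?" + urlencode(options, quote_via=quote, safe="$")
    return url
```

For example, `odata_query_url(root, "authors", filter_expr="name eq 'Stephen King'", top=5)` appends `?$filter=name%20eq%20%27Stephen%20King%27&$top=5` to the `authors` entity set URL.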

Didn’t know SQL Azure supported OData out of the box? Try it, it’s AWESOME!!!

PS. A SQL Azure instance is $10/month, which isn’t toooo bad!!!


Windows Azure AppFabric: Access Control and Service Bus

Wade Wegner explained Programmatically Configuring the Caching Client in a 12/2/2010 post:

When using Windows Azure AppFabric Caching, you have to configure the caching client to use a provisioned cache. In the past I’ve given examples of doing this with an application configuration file, but there are times when you may want to configure the caching client programmatically. Fortunately, it’s quite easy and straightforward.

Here are some of the more interesting pieces:

- Line 6: Create a DataCacheServerEndpoint list that will be used in the DataCacheFactoryConfiguration (see line 13).

- Line 7: Add the endpoint (defined by the service URL and port) to the list.

- Line 12: Set a DataCacheSecurity object using the authorization token for the SecurityProperties property.

- Line 13: Set the DataCacheServerEndpoint list to the Servers property.

- Line 18: Create a new DataCacheFactory and pass the DataCacheFactoryConfiguration object to the constructor.

Before getting started, be sure to prepare Visual Studio and provision or access your cache to get the service URL and the authorization token.


Windows Azure Virtual Network, Connect, and CDN

No significant articles today.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

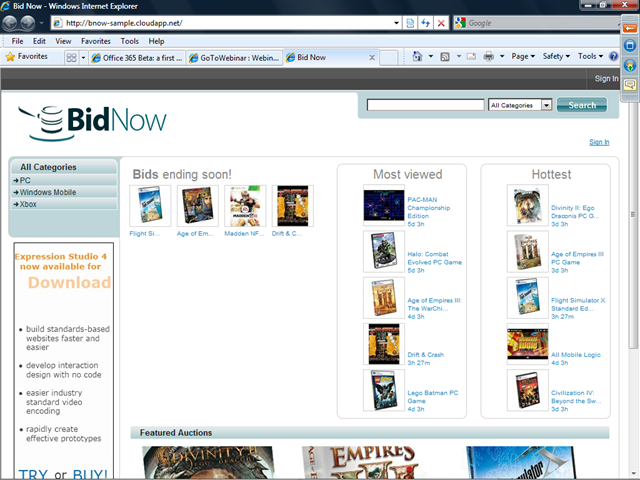

Wade Wegner posted a 00:08:49 Setting Up the BidNow Sample Application for Windows Azure video and a link to the live application on 12/1/2010:

This video provides a walkthrough of the steps required to get the BidNow Sample up and running on your computer.

BidNow is an online auction site that shows how our comprehensive set of cloud services can be used to develop a highly scalable consumer application. We have recently released a significant update that increases BidNow’s use of the Windows Azure platform and specifically uses new features announced at the Professional Developers Conference and in the Windows Azure SDK 1.3 release.

BidNow uses the following technologies:

- Windows Azure (updated for Windows Azure SDK 1.3)

- SQL Azure

- Windows Azure storage (blobs and queues)

- Windows Azure AppFabric Caching

- Windows Azure AppFabric Access Control

- OData

- Windows Phone 7

For a more comprehensive discussion, please see the post Significant Updates Released in the BidNow Sample for Windows Azure. To get the latest version of BidNow, download the sample here. To see a live version running, please go here.

Steef-Jan Wiggers demonstrated the updated Windows Azure – BidNow Sample in this 12/2/2010 post:

If you want to dive more into Windows Azure you can have a look at BidNow. BidNow is an online auction site designed to demonstrate how you can build highly scalable consumer applications running in the Windows Azure Platform.

When BidNow was originally released, it was a sample built using Windows Azure and Windows Azure Storage, along with authentication provided via Live Id. Since the original release, a number of additional services and capabilities have been released. Consequently, BidNow now utilizes the following pieces of the platform:

- Windows Azure

- Windows Azure storage (e.g. blobs and queues)

- SQL Azure

- Windows Azure AppFabric Caching

- Windows Azure AppFabric Access Control

- OData

- Windows Phone 7

These updates not only provide important and useful capabilities, but also highlight ways to build applications on the Windows Azure Platform. If you’d like to learn more, you can read Wade Wegner’s latest post. It shows how to set up a platform (what you need to install: OS, Visual Studio, SDK, Windows Identity Foundation) to run this sample.

My environment is as follows, on an HP EliteBook 8740w with 8 GB RAM and an Intel Core i7 processor:

- Windows 7, Professional x64

- Windows PowerShell 2.0 (already installed on Windows 7)

- Windows Azure Software Development Kit 1.3

- Windows Azure AppFabric SDK 2.0

- Internet Information Services 7.5

- Microsoft .NET Framework 4.0

- Microsoft SQL Express 2008 R2

- Microsoft Visual Studio 2010 Ultimate

- Windows Identity Foundation Runtime

- Windows Identity Foundation SDK 4.0

The sample downloads as a zipped file that you need to unpack (run it). After that, run ‘StartHere’ (a Windows Command Script), which shows the screen below:

Clicking Next runs a scan to check whether all required software is installed.

Click Next.

The BidNow demo configuration then starts and sets up AppFabric Labs ACS and Cache, the database (SQL Azure, SQL Express or both), blobs and certificates. During setup you will see a couple of screens like the one below, where you have to type in namespaces, secret keys and so on.

Steps to undertake and actions to perform are described in a wiki.

After configuration completes, open the code in Visual Studio, then simply build and run it.

If you aren’t familiar with Windows Azure yet, you can start at the Windows Azure Platform Portal, where you’ll find lots of resources such as whitepapers. Other important resources to look at are:

- Windows Azure Pricing

- Patterns & Practices – Windows Azure Guidance

- Cloud Development – MSDN

- Windows Azure Account

- Using Windows Azure Development Environment Essentials

- TechEd Online

- Windows Azure Platform Training Kit - November Update

Have fun! You can find more Azure samples on Code Gallery.

Freddy Kristiansen continued his Connecting to [Microsoft Dynamics] NAV Web Services from the Cloud–part 2 out of 5 in a 12/2/2010 post:

If you haven’t already read part 1 you should do so here, before continuing to read this post.

In part 1 I showed how a service reference plus these two lines of code could extract data from my locally installed NAV from anywhere in the world:

```csharp
var client = new Proxy1.ProxyClassClient("NetTcpRelayBinding_IProxyClass");
Console.WriteLine(client.GetCustomerName("10000"));
```

Let’s start by explaining what this does.

The first line instantiates a WCF client class with a parameter pointing to a config section, which is used to describe bindings etc. for the communication.

A closer look at the config file reveals 3 endpoints defined by the service:

```xml
<client>
  <endpoint
      address="sb://navdemo.servicebus.windows.net/Proxy1/"
      binding="netTcpRelayBinding"
      bindingConfiguration="NetTcpRelayBinding_IProxyClass"
      contract="Proxy1.IProxyClass"
      name="NetTcpRelayBinding_IProxyClass" />
  <endpoint
      address="https://navdemo.servicebus.windows.net/https/Proxy1/"
      binding="basicHttpBinding"
      bindingConfiguration="BasicHttpRelayBinding_IProxyClass"
      contract="Proxy1.IProxyClass"
      name="BasicHttpRelayBinding_IProxyClass" />
  <endpoint
      address="http://freddyk-appfabr:7050/Proxy1"
      binding="basicHttpBinding"
      bindingConfiguration="BasicHttpBinding_IProxyClass"
      contract="Proxy1.IProxyClass"
      name="BasicHttpBinding_IProxyClass" />
</client>
```

The endpoint we use in the sample above is the first one, which uses the netTcpRelayBinding binding and the NetTcpRelayBinding_IProxyClass binding configuration, defined in the config file as follows:

```xml
<netTcpRelayBinding>
  <binding
      name="NetTcpRelayBinding_IProxyClass"
      closeTimeout="00:01:00"
      openTimeout="00:01:00"
      receiveTimeout="00:10:00"
      sendTimeout="00:01:00"
      transferMode="Buffered"
      connectionMode="Relayed"
      listenBacklog="10"
      maxBufferPoolSize="524288"
      maxBufferSize="65536"
      maxConnections="10"
      maxReceivedMessageSize="65536">
    <readerQuotas
        maxDepth="32"
        maxStringContentLength="8192"
        maxArrayLength="16384"
        maxBytesPerRead="4096"
        maxNameTableCharCount="16384" />
    <reliableSession
        ordered="true"
        inactivityTimeout="00:10:00"
        enabled="false" />
    <security
        mode="Transport"
        relayClientAuthenticationType="None">
      <transport protectionLevel="EncryptAndSign" />
      <message clientCredentialType="Windows" />
    </security>
  </binding>
</netTcpRelayBinding>
```

If we want to avoid using the config file to create the client, we can also create the binding manually. The code would look like this:

```csharp
using System;
using System.ServiceModel;   // EndpointAddress
using Microsoft.ServiceBus;  // NetTcpRelayBinding, EndToEndSecurityMode, RelayClientAuthenticationType

var binding = new NetTcpRelayBinding(EndToEndSecurityMode.Transport, RelayClientAuthenticationType.None);
var endpoint = new EndpointAddress("sb://navdemo.servicebus.windows.net/Proxy1/");
var client = new Proxy1.ProxyClassClient(binding, endpoint);
Console.WriteLine(client.GetCustomerName("10000"));
```

In order to do this, you need to have the Windows Azure AppFabric SDK installed (it can be found here), AND you need to set the Target Framework of the project to .NET Framework 4 (NOT the .NET Framework 4 Client Profile, which is the default). After doing this, you can add a reference to Microsoft.ServiceBus and a using statement for it.

You can also use .NET Framework 3.5 if you like.

What are the two other endpoints?

As you might have noticed, the config file listed 3 endpoints.

The first endpoint uses the Service Bus protocol (sb://), and connecting to this endpoint requires the NetTcpRelayBinding, which in turn requires Microsoft.ServiceBus.dll to be present.

The second endpoint uses the https protocol (https://) and a consumer can connect to this using the BasicHttpRelayBinding (from the Microsoft.ServiceBus.dll) or the standard BasicHttpBinding (which is part of System.ServiceModel.dll).

The last endpoint uses the http protocol (http://) and is a local endpoint on the machine hosting the service (used primarily for development purposes). If this endpoint had to be reachable from outside the Microsoft corporate network, I would have to ask Corporate IT to set up firewall rules and open a specific port for my machine: basically all of the things that the Service Bus saves me from doing.
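The reasoning in the three paragraphs above boils down to a small decision table. Here it is as an illustrative Python sketch. The helper name is hypothetical, and the .NET binding types appear only as strings. It shows which bindings can reach each endpoint, depending on whether the AppFabric SDK is installed on the client.

```python
from urllib.parse import urlparse

# Which bindings can reach each endpoint address scheme, per the post.
BINDINGS_BY_SCHEME = {
    "sb": ["NetTcpRelayBinding"],
    "https": ["BasicHttpRelayBinding", "BasicHttpBinding"],
    "http": ["BasicHttpBinding"],  # local endpoint on the hosting machine only
}

# Bindings defined in Microsoft.ServiceBus.dll (Windows Azure AppFabric SDK);
# BasicHttpBinding ships with System.ServiceModel in the .NET Framework.
REQUIRES_SDK = {"NetTcpRelayBinding", "BasicHttpRelayBinding"}


def usable_bindings(address: str, sdk_installed: bool) -> list[str]:
    """Return the bindings a client could use for this endpoint address."""
    scheme = urlparse(address).scheme.lower()
    candidates = BINDINGS_BY_SCHEME.get(scheme, [])
    return [b for b in candidates if sdk_installed or b not in REQUIRES_SDK]
```

On a machine without the SDK, such as a Windows Phone 7 device, only the https endpoint with BasicHttpBinding remains. That is the route the rest of this post takes.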

BasicHttpRelayBinding

Like NetTcpRelayBinding, this binding is defined in Microsoft.ServiceBus.dll, and if we were to write code using this binding to access our Proxy1, it would look like this:

```csharp
var binding = new BasicHttpRelayBinding(EndToEndBasicHttpSecurityMode.Transport, RelayClientAuthenticationType.None);
var endpoint = new EndpointAddress("https://navdemo.servicebus.windows.net/https/Proxy1/");
var client = new Proxy1.ProxyClassClient(binding, endpoint);
Console.WriteLine(client.GetCustomerName("10000"));
```

Again, this sample requires the Windows Azure AppFabric SDK to be installed on the connecting machine.

How to connect without the Windows Azure AppFabric SDK

As I stated in the first post, it is possible to connect to my Azure-hosted NAV Web Services using a standard binding, without requiring the Windows Azure AppFabric SDK to be installed on the machine running the application.

You still need the SDK on the developer machine if you need to create a reference to the metadata endpoint in the cloud, but then again that could be done on another computer if necessary.

The “secret” is to connect using the standard BasicHttpBinding, like this:

```csharp
var binding = new BasicHttpBinding(BasicHttpSecurityMode.Transport);
var endpoint = new EndpointAddress("https://navdemo.servicebus.windows.net/https/Proxy1/");
var client = new Proxy1.ProxyClassClient(binding, endpoint);
Console.WriteLine(client.GetCustomerName("10000"));
```

Or, using the config file:

```csharp
var client = new Proxy1.ProxyClassClient("BasicHttpRelayBinding_IProxyClass");
Console.WriteLine(client.GetCustomerName("10000"));
```

Note that the name refers to BasicHttpRelayBinding, but the section is basicHttpBinding:

```xml
<bindings>
  <basicHttpBinding>
    <binding name="BasicHttpRelayBinding_IProxyClass" …
```

The section, not the name, determines which class gets instantiated: in this case a standard BasicHttpBinding, which is available in System.ServiceModel in the .NET Framework.
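BasicHttpBinding is plain SOAP 1.1 over HTTP(S), so in principle any client that can POST XML over https can call that endpoint. The Python sketch below only builds the request. Several values are assumptions that you should verify against the service’s WSDL: the contract namespace (WCF’s default, http://tempuri.org/), the SOAPAction value derived from it, and the parameter name `no`.

```python
import urllib.request
from xml.sax.saxutils import escape

# Assumptions: WCF's default contract namespace and a hypothetical parameter name.
CONTRACT_NS = "http://tempuri.org/"
SOAP_ACTION = CONTRACT_NS + "IProxyClass/GetCustomerName"


def build_get_customer_name_request(url: str, customer_no: str,
                                    param: str = "no") -> urllib.request.Request:
    """Build (but do not send) the SOAP 1.1 POST that BasicHttpBinding expects."""
    envelope = (
        '<s:Envelope xmlns:s="http://schemas.xmlsoap.org/soap/envelope/">'
        "<s:Body>"
        f'<GetCustomerName xmlns="{CONTRACT_NS}">'
        f"<{param}>{escape(customer_no)}</{param}>"  # escape &, < and > in the value
        "</GetCustomerName>"
        "</s:Body>"
        "</s:Envelope>"
    )
    return urllib.request.Request(
        url,
        data=envelope.encode("utf-8"),
        headers={
            "Content-Type": "text/xml; charset=utf-8",  # SOAP 1.1 content type
            "SOAPAction": f'"{SOAP_ACTION}"',           # SOAP 1.1 action header (quoted)
        },
        method="POST",
    )
```

Sending it is then a single `urllib.request.urlopen(req)` call against the https endpoint, with no Service Bus assembly involved.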

Why would you ever use any of the Relay Bindings then?

One reason you might want to use the Relay bindings is that they support a first level of authentication (RelayClientAuthenticationType). As a publisher of services in the cloud, you can secure those services with an authentication token, which users must provide before they are allowed through to your proxy hosting the service.

Note, though, that a number of platforms currently don’t support the Relay bindings out of the box (Windows Phone 7 being one), and for this reason I don’t use them.

In fact, the service I have provided for getting customer names is completely unsecured: anybody can connect to it, provide a customer number and get back a customer name. I will discuss authentication in more depth in part 4 of this series.

Furthermore, the NetTcpRelayBinding supports a Hybrid connection mode, in which the connection between server and client starts out relayed through the cloud and switches to a direct connection between the two parties if possible. In my case, I know that my NAV Web Services proxy is not reachable by anybody on the outside, so a direct connection is not possible and there is no point in pretending otherwise.

Connecting to Proxy1 from Microsoft Dynamics NAV 2009 R2

In order to connect to our cloud-hosted service from NAV, we need to create a DLL containing the proxy classes (much like what Visual Studio does when you choose Add Service Reference).

In NAV 2009 R2 we do not have anything called a service reference, but we do have .NET interop, and it actually comes pretty close.

If you start a Visual Studio command prompt and type the following commands:

```
svcutil /out:Proxy1.cs sb://navdemo.servicebus.windows.net/Proxy1/mex
c:\Windows\Microsoft.NET\Framework\v3.5\csc /t:library Proxy1.cs
```

then, if successful, you should have a Proxy1.dll.

The reason for using the .net 3.5 compiler is to get a .net 3.5 assembly, which is the version of .net used for the Service Tier and the RoleTailored Client. If you compile a .net 4.0 assembly, NAV will not be able to use it.

Copy this DLL to the Add-Ins folders of the Classic Client and the Service Tier:

- C:\Program Files\Microsoft Dynamics NAV\60\Classic\Add-Ins

- C:\Program Files\Microsoft Dynamics NAV\60\Service\Add-Ins

You also need to copy it to the RoleTailored Client\Add-Ins folder if you are planning to use Client side .net interop.

Create a codeunit and add the following variables:

| Name | DataType | Assembly | Class |
|---|---|---|---|
| proxy1client | DotNet | Proxy1 | ProxyClassClient |
| securityMode | DotNet | System.ServiceModel | System.ServiceModel.BasicHttpSecurityMode |
| binding | DotNet | System.ServiceModel | System.ServiceModel.BasicHttpBinding |
| endpoint | DotNet | System.ServiceModel | System.ServiceModel.EndpointAddress |

All use default properties, meaning that they will run on the Service Tier. Then write the following code:

```
OnRun()
securityMode := 1; // 1 = BasicHttpSecurityMode.Transport (https)
binding := binding.BasicHttpBinding(securityMode);
endpoint := endpoint.EndpointAddress('https://navdemo.servicebus.windows.net/https/Proxy1/');
proxy1client := proxy1client.ProxyClassClient(binding, endpoint);
MESSAGE(proxy1client.GetCustomerName('20000'));
```

Of course, you cannot run this codeunit from the Classic Client; instead, add an action to a page that runs this codeunit.

Note: When running the code from the RoleTailored Client, you might get an error (or at least I did) stating that it couldn’t resolve the name navdemo.servicebus.windows.net – I solved this by letting the NAV Service Tier and Web Service Listener run as a domain user instead of NETWORK SERVICE.

If everything works, you should get a message box like this:

So once you have created the Client, using the Client is just calling a function on the Client class.

I will write a more in-depth article about .NET interop and Web Services at a later time.

Connecting to Proxy1 from a Windows Phone 7

Windows Phone 7 is .net and Silverlight – meaning that it is just as easy as writing a Console App – almost…

Especially since our service is hosted on Azure, we can write exactly the same code to create the client object. Invoking GetCustomerName is a little different, since Silverlight only supports async Web Service calls. So you have to set up a handler for the response and then invoke the method.
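The register-then-invoke shape of the Silverlight proxy is the classic event-based async pattern. The Python sketch below illustrates only that pattern, with hypothetical names. It is not the generated WP7 proxy. Callers subscribe a completion handler. The call then runs on a worker thread, and the handler receives either a result or an error.

```python
import threading
from typing import Callable, List, Optional

# Handler signature: (result, error); result is None when error is set.
Handler = Callable[[Optional[str], Optional[BaseException]], None]


class CustomerClient:
    """Hypothetical Python analogue of a Silverlight-style async proxy."""

    def __init__(self, lookup: Callable[[str], str]) -> None:
        self._lookup = lookup               # stands in for the real web service call
        self.completed: List[Handler] = []  # like the GetCustomerNameCompleted event

    def get_customer_name_async(self, customer_no: str) -> threading.Thread:
        """Start the call and return immediately; handlers fire on a worker thread."""
        def worker() -> None:
            try:
                result, error = self._lookup(customer_no), None
            except Exception as exc:        # surfaced to handlers, like e.Error
                result, error = None, exc
            for handler in self.completed:
                handler(result, error)

        thread = threading.Thread(target=worker)
        thread.start()
        return thread
```

As in the phone code, the handler is registered before the call starts, so the completion cannot be missed.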

Another difference is that the Windows Azure AppFabric SDK doesn’t exist for Windows Phone 7 (or rather, it doesn’t while I am writing this; maybe it will at some point in the future). This of course means that we will connect using our https endpoint and BasicHttpBinding.

Start Visual Studio 2010 and create a new application of type Windows Phone Application under the Silverlight for Windows Phone templates.

Add a Service Reference to sb://navdemo.servicebus.windows.net/Proxy1/mex (note that you must have the Windows Azure AppFabric SDK installed on the developer machine in order to resolve this URL). In your Windows Phone application, the config file contains only the endpoints whose bindings are compatible with Windows Phone; all NetTcpRelayBinding endpoints are stripped away.

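Add the following code to MainPage.xaml.cs:

```csharp
// Constructor
public MainPage()
{
    InitializeComponent();

    // Uses the BasicHttpBinding endpoint from ServiceReferences.ClientConfig
    var client = new Proxy1.ProxyClassClient("BasicHttpRelayBinding_IProxyClass");

    // Silverlight only supports async calls: hook up the handler, then invoke
    client.GetCustomerNameCompleted += new EventHandler<Proxy1.GetCustomerNameCompletedEventArgs>(client_GetCustomerNameCompleted);
    client.GetCustomerNameAsync("30000");
}

void client_GetCustomerNameCompleted(object sender, Proxy1.GetCustomerNameCompletedEventArgs e)
{
    // Reading e.Result throws if the call failed, so check e.Error first
    if (e.Error == null)
        this.PageTitle.Text = e.Result;
    else
        this.PageTitle.Text = e.Error.Message;
}
```

Running this in the Windows Phone Emulator gives the following: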

Wow – it has never been easier to communicate with a locally installed NAV through Web Services from an application running on a Phone – anywhere in the world.

The next post will talk about how Proxy1 is created and what it takes to expose services on the Servicebus.

Freddy is a PM Architect for Microsoft Dynamics NAV.

ESRI described the migration of its MapIt application from on-premises to the Windows Azure cloud in this 00:03:44 Microsoft Showcase video presentation:

Patrick Butler Monterde posted yet another summary of the Azure SDK 1.3 / Tools AND Azure Training Kit November Update in a 12/1/2010 post:

We have just released version 1.3 of the Azure SDK, the Azure Tools for Visual Studio and the Training Kit. Here is the information and links for them:

Azure Software Development Kit (SDK) 1.3:

- Virtual Machine (VM) Role (Beta): Allows you to create a custom VHD image using Windows Server 2008 R2 and host it in the cloud.

- Remote Desktop Access: Enables connecting to individual service instances using a Remote Desktop client.

- Full IIS Support in a Web role: Enables hosting Windows Azure web roles in an IIS hosting environment.

- Elevated Privileges: Enables performing tasks with elevated privileges within a service instance.

- Virtual Network (CTP): Enables support for Windows Azure Connect, which provides IP-level connectivity between on-premises and Windows Azure resources.

- Diagnostics: Enhancements to Windows Azure Diagnostics enable collection of diagnostics data in more error conditions.

- Networking Enhancements: Enables roles to restrict inter-role traffic and to use fixed ports on InputEndpoints.

- Performance Improvement: Significant performance improvement in local machine deployment.

Windows Azure Tools for Microsoft Visual Studio also includes:

- C# and VB Project creation support for creating a Windows Azure Cloud application solution with multiple roles.

- Tools to add and remove roles from the Windows Azure application.

- Tools to configure each role.

- Integrated local development via the compute emulator and storage emulator services.

- Running and Debugging a Cloud Service in the Development Fabric.

- Browsing cloud storage through the Server Explorer.

- Building and packaging of Windows Azure application projects.

- Deploying to Windows Azure.

- Monitoring the state of your services through the Server Explorer.

- Debugging in the cloud by retrieving IntelliTrace logs through the Server Explorer.

Windows Azure Platform Training Kit - November Update

The November update provides new and updated hands-on labs for the Windows Azure November 2010 enhancements and the Windows Azure Tools for Microsoft Visual Studio 1.3. These new hands-on labs demonstrate how to use new Windows Azure features such as Virtual Machine Role, Elevated Privileges, Full IIS, and more. This release also includes hands-on labs that were updated in late October 2010 to demonstrate some of the new Windows Azure AppFabric services that were announced at the Professional Developers Conference (http://microsoftpdc.com) including the Windows Azure AppFabric Access Control Service, Caching Service, and the Service Bus.

Some of the specific changes with the November update of the training kit include:

Note: The presentations and demo scripts in the training kit will be updated in early December 2010 to reflect the new Windows Azure November enhancements and SDK/Tools release.

- Updated all hands-on labs to use the new Windows Azure Tools for Visual Studio November 2010 (version 1.3) release

- New hands-on lab "Advanced Web and Worker Role" – shows how to use admin mode and startup tasks

- New hands-on lab "Connecting Apps With Windows Azure Connect" – shows how to use Windows Azure Connect (formerly Project "Sydney")

- New hands-on lab "Virtual Machine Role" – shows how to get started with VM Role by creating and deploying a VHD

- New hands-on lab "Windows Azure CDN" – simple introduction to the CDN

- New hands-on lab "Introduction to the Windows Azure AppFabric Service Bus Futures" – shows how to use the new Service Bus features in the AppFabric labs environment

- New hands-on lab "Building Windows Azure Apps with Caching Service" – shows how to use the new Windows Azure AppFabric Caching service

- New hands-on lab "Introduction to the AppFabric Access Control Service V2" – shows how to build a simple web application that supports multiple identity providers

- Updated hands-on lab "Introduction to Windows Azure" - updated to use the new Windows Azure platform Portal

- Updated hands-on lab "Introduction to SQL Azure" - updated to use the new Windows Azure platform Portal

<Return to section navigation list>

Visual Studio LightSwitch

Kunal Chowdhury continued his LightSwitch series with a Beginners Guide to Visual Studio LightSwitch (Part–4) episode on 12/2/2010:

Visual Studio LightSwitch is a new tool for building data-driven Silverlight applications in the Visual Studio IDE. It automatically generates the user interface for a data source without your writing any code, although you can also write a small amount of code to meet your requirements.

In my previous chapter, “Beginners Guide to Visual Studio LightSwitch (Part – 3)”, I described how to create a DataGrid screen for inserting, updating and deleting records.

In this chapter, I will guide you through creating a List and Details screen using LightSwitch. This will show you how to integrate two or more tables into a single screen. We will also discuss some custom validation. None of these steps requires writing any code; we will use just the tool to improve our existing application. Read on to learn more.

Background

If you are new to Visual Studio LightSwitch, I recommend that you first read the first three chapters of this tutorial, where I demonstrated it in detail. In my third chapter, I discussed the following topics:

- Create the Editable DataGrid Screen

- See the Application in Action

- Edit a Record

- Create a New Record

- Validate the Record

- Delete a Record

- Filter & Export Records

- Customizing the Screen

In this chapter, we will discuss the “List and Details” screen. Read it and learn more about this tool before it is released.

TOC and Article Summary

In this section, I summarize the whole article. You can go directly to the original article to read the complete content.

- Creating the List and Details Screen

Here I demonstrate how to create a List and Details screen using Visual Studio LightSwitch. This section has a number of images that will help you understand the basic parts.

- UI Screen Features

This part covers the adding, modifying, deleting, exporting and sorting mechanisms of the DataGrid. If you have already read my previous chapters, it will be easy to understand.

- Adding a New Table

Here you create a new table that we will use later.

- Creating the Validation Rules

This step demonstrates how to do some custom validation without writing code. These are some basic rules that you can set explicitly.

- Adding a Relationship between Two Tables

This is the main part of this chapter. Here we will see how to add a relationship between two tables, and we will discuss the problems you may run into. You can’t create a one-to-one relationship, but other relationship types are available. Read on to learn more.

- Creating the New List and Details Screen

Here we create a new screen after deleting the existing one. This is for beginners; if you are experienced with modifying screens, you don’t need to delete the existing one.

- Application in Action

We will see the application in action, which should clear up any confusion. We will walk through all the steps we implemented here and check that we covered everything.

Complete Article

The complete article, including the earlier parts, is hosted on SilverlightShow.net. You can read them here:

- Beginners Guide to Visual Studio LightSwitch (Part – 1)

- Beginners Guide to Visual Studio LightSwitch (Part – 2)

- Beginners Guide to Visual Studio LightSwitch (Part – 3)

- Beginners Guide to Visual Studio LightSwitch (Part – 4)

As usual, don’t forget to vote for the article. I highly appreciate your feedback, suggestions and other comments about the article and future improvements.

End Note

As you can see, throughout the whole application I never wrote a single line of code, not even XAML to create the UI. The tool’s screen templates generate the UI automatically. The framework handles everything from the UI design to adding, updating and deleting records, and even sorting and filtering them.

I hope you enjoyed this chapter of the series. I used lots of figures so that you can understand each step easily. If you liked this article, please don’t forget to share your feedback here. I appreciate your feedback, comments, suggestions and votes.

I will soon post the next chapter. Until then, enjoy reading my other articles published on my blog and on SilverlightShow.

<Return to section navigation list>

Windows Azure Infrastructure

• The Windows Azure Team updated the main Windows Azure Compute Services page on 12/1/2010 with an apparent pricing error (emphasized in the “Compute Instance Sizes” section below):

Windows Azure Compute

You can now sign up for the Windows Azure VM role and Extra Small Instance BETA via the Windows Azure Management Portal

Windows Azure offers an internet-scale hosting environment built on geographically distributed data centers. This hosting environment provides a runtime execution environment for managed code. A Windows Azure compute service is built from one or more roles. A role defines a component that may run in the execution environment; within Windows Azure, a service may run one or more instances of a role. A service may be composed of one or more types of roles, and may include multiple roles of each type.

Role Types:

Windows Azure supports three types of roles: a Web role, customized for web application programming and supported by IIS 7; a Worker role, used for generalized development, which may perform background processing for a Web role; and a Virtual Machine (VM) role that runs an image (a virtual hard disk, or VHD) of a Windows Server 2008 R2 virtual machine. This VHD is created using an on-premises Windows Server machine, then uploaded to Windows Azure. Once it’s stored in the cloud, the VHD can be loaded on demand into a VM role and executed. Customers can configure and maintain the OS and use Windows Services, scheduled tasks, etc. in the VM role.

Web and Worker Role Enhancements:

At PDC 10, we announced the following Web and Worker role enhancements: Development of more complete applications using Windows Azure will soon be possible with the introduction of Elevated Privileges and Full IIS. The new Elevated Privileges functionality for the Web and Worker role will provide developers with greater flexibility and control in developing, deploying and running cloud applications. The Web role will also include Full IIS functionality, which enables multiple IIS sites per Web role and the ability to install IIS modules. Windows Azure will also provide Remote Desktop functionality, which enables customers to connect to a running instance of their application or service in order to monitor activity and troubleshoot common problems.

VM Role:

The VM role functionality is being introduced to make the process of migrating existing Windows Server applications to Windows Azure easier and faster. This is especially true for the migration of Windows Server applications that have long, non-scriptable or fragile installation steps. While the VM role offers additional control and flexibility, the Windows Azure Web and Worker roles offer additional benefits over the VM role. Developers focus primarily on their application, and not the underlying operating system. In particular, Visual Studio is optimized for creating, testing, and deploying Web and worker roles – all in a matter of minutes. Also, because developers work at a higher level of abstraction with Web and worker roles, Windows Azure can automatically update the underlying operating system.

VM Role or Elevated Privileges:

The VM role and elevated privileges functionality removes roadblocks that today prevent developers from having full control over their application environment. For small changes like configuring IIS or installing an MSI we recommend using the elevated privilege admin access feature. This approach is best suited for small changes and enables the developer to retain automated service management at the Guest OS and the application level. When the customizations are large in number or require changes that cannot be automated, we recommend using the VM role instead. When developers use the VM role, they retain most benefits of automated service management (load balancing and failover) with the exception of Guest OS patching.

Compute Instance Sizes:

Developers have the ability to choose the size of VMs to run their application based on the application’s resource requirements. Windows Azure compute instances come in five unique sizes to enable complex applications and workloads. We are introducing the Extra Small Windows Azure instance to make the process of development, testing and trial easier for enterprise developers. The Extra Small instance will also make Windows Azure more affordable for developers interested in running smaller applications on the platform.

Each Windows Azure compute instance represents a virtual server. Although many resources are dedicated to a particular instance, some resources associated with I/O performance, such as network bandwidth and disk subsystem, are shared among the compute instances on the same physical host. During periods when a shared resource is not fully utilized, you are able to utilize a higher share of that resource.

The different instance types will provide different minimum performance from the shared resources depending on their size. Compute instance sizes with a high I/O performance indicator as noted in the table above will have a larger allocation of the shared resources. Having a larger allocation of the shared resource will also result in more consistent I/O performance.

VM role Pricing and Licensing

The pricing model for the Windows Azure VM role is the same as the existing pricing model for Web and Worker roles. Customers will be charged at an hourly rate depending on the compute instance size. The Windows Azure fee for running the VM role – whether consumption or commitment based - includes the Windows Server licensing costs.

The license for Windows Server 2008 R2 is covered through the Windows Azure VM Role licensing. Customers may use bits obtained through Volume Licensing (physical or electronic) to create the image. At launch, customers can deploy Windows Server 2008 R2 for production use in the VM role. In addition, during the Windows Azure™ VM role beta, developers can use the 64-bit version of Windows Server 2008 R2 in the VM Role for production services. Other Microsoft® software acquired through an active MSDN® license or subscription can be run in the Windows Azure VM Role for development and test purposes only. Microsoft will gather feedback on how customers and partners utilize the Windows VM Role and use that feedback to develop broader licensing scenarios for the cloud. This provision for MSDN in the Windows Azure VM Role is being offered until May 2011 irrespective of the duration of the Beta phase. Use of any third party software in the VM Role will be governed by use rights for that software.

There is no requirement for Windows Server Client Access Licenses (CALs) to connect to the Windows Azure VM role. There is also no transfer of use rights from any existing WS08 R2 license acquired through any other licensing program to the Windows Azure VM role nor are rights from the VM Role transferable to any other device.

As I understand compute instance pricing, the cost per hour doubles with each size increment above the Small instance. Therefore, the price of a Medium instance should be US$0.24 per hour, not US$0.48 per hour, which is the price of a Large instance.
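The doubling rule is simple arithmetic, so a short C# sketch makes the expected rates explicit. It assumes a Small rate of US$0.12 per hour, inferred as half of the US$0.24 Medium figure; the class and method names are hypothetical, and the Extra Small instance, which is priced separately, is not modeled.

```csharp
using System;
using System.Globalization;

// Hypothetical sketch of the "price doubles per size increment" rule.
// Assumption: Small = US$0.12/hour (half of the expected US$0.24 Medium rate).
// Extra Small is priced separately and is deliberately not modeled.
public static class InstancePricing
{
    // Sizes in ascending order, starting at Small.
    public static readonly string[] Sizes = { "Small", "Medium", "Large", "ExtraLarge" };

    const decimal SmallRatePerHour = 0.12m;

    public static decimal HourlyRate(string size)
    {
        int step = Array.IndexOf(Sizes, size);
        if (step < 0)
            throw new ArgumentException("Unknown instance size: " + size, "size");

        decimal rate = SmallRatePerHour;
        for (int i = 0; i < step; i++)
            rate *= 2m; // one doubling per size increment above Small
        return rate;
    }

    public static void Main()
    {
        foreach (string size in Sizes)
            Console.WriteLine(string.Format(CultureInfo.InvariantCulture,
                "{0,-10} US${1:0.00}/hour", size, HourlyRate(size)));
    }
}
```

Under these assumptions the sketch lists Medium at US$0.24/hour and Large at US$0.48/hour, so a published Medium rate of US$0.48 would duplicate the Large rate.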

VM Role pricing and licensing rules are arcane, to be charitable.

• Wes Yanaga published the same apparently erroneous Medium compute instance cost / hour in his Windows Azure - What is an Extra Small Instance? post of 12/1/2010 to the US ISV Evangelism blog:

During a presentation today, people were discussing the various sizes of Windows Azure compute instances. Small, Extra Small, Large, Medium etc. I have provided the table below for quick reference. Windows Azure compute instances come in five sizes to enable complex applications and workloads. Each Windows Azure compute instance represents a virtual server.

Although many resources are dedicated to a particular instance, some resources associated to I/O performance, such as network bandwidth and disk subsystem, are shared among the compute instances on the same physical host.

To Get More Information Visit this Link

I added a comment about the incorrect price to this post on 12/1/2010, but it didn’t stick or was deleted.

The Windows Azure Team announced the Sign-up and Approval Process for Windows Azure Beta and CTP Programs on 12/1/2010:

In conjunction with the release of the Windows Azure SDK and Windows Azure Tools for Visual Studio release 1.3 and the new Windows Azure Management Portal, we've launched beta availability of the Windows Azure Virtual Machine Role and Extra Small Windows Azure Instance, as well as a CTP of Windows Azure Connect.

If you are interested in joining either of these programs, you can request access directly through the Windows Azure Management Portal. Requests will be processed on a first come, first served basis. We appreciate your interest and look forward to your continued engagement with the Windows Azure platform.

More specifically, click the Beta Programs link in the new portal’s Navigation Pane, mark the checkbox(es) for the beta programs in which you’re interested, and click the Join Selected button:

The status for each selected beta program will change to Pending. Check back periodically to determine when you have access.

Bruce Guptill and Mike West co-authored New Cloud IT Data Report: Success Demands Good Fundamentals on 12/1/2010 as a new Research Alert for Saugatuck Technology (site registration required):

What is Happening?

Moving IT to the Cloud, whether in whole or as part of an integrative, hybrid IT environment, will entail a fundamental shift for user organizations in IT management skills and processes. In order to reap anywhere near optimal benefits from Cloud IT use over the long-term, IT organizations will have to shift from managing assets to supporting platforms, solutions, and business processes. And the business organizations that benefit from and fund those IT organizations will need to improve their understanding and expectations of Cloud IT costs, benefits, and challenges.

These are the core findings in Saugatuck Technology’s new 2010 Cloud IT Survey Data Report (SSR-816, 29Nov2010), published this week on Saugatuck’s website for clients of our Continuous Research Services (CRS).

This 30-page report summarizes and presents key data, analysis, and insights developed from Saugatuck Technology’s second annual Cloud IT research program. This program included the following:

- A September 2010 global web survey with more than 540 qualified executive-level participants, conducted in partnership with Bloomberg Businessweek Research Services;

- Interviews with 25 user enterprise executives with Cloud experience;

- Interviews and briefings with more than 50 software and services resellers, ISVs, consulting firms and SIs; and

- Briefings with more than 50 Cloud vendors/providers.

Bruce and Mike offer more meat in the usual “Why Is It Happening” and “Market Impact” sections.

David Linthicum continued his series with a Selling SOA/Cloud Part 2 post of 12/1/2010 to ebizQ’s Where SOA Meets Cloud blog:

Creating the business case refers to the process of actually putting some numbers down as to the value of the SOA to the enterprise or business. This means looking at the existing issues (from the previous step), and putting dollar figures next to them. For instance, how much are these limitations costing the business, and how does that affect the bottom line? Then, how will the addition of SOA affect the business -- positively or negatively?

Put numbers next to the core values of reuse and agility. You'll find that agility is the most difficult concept to define, but it has the most value for those who are building a SOA. Then, if the ROI for the SOA is worth the money and the effort, you move forward. This tactic communicates a clear set of objectives for the effort, and links the technical notion of SOA with the business.
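The ROI test described above reduces to simple arithmetic. The following C# sketch is a hypothetical illustration, not Linthicum's method: it assumes the business case produces estimated annual dollar benefits per category (for example reuse, agility and removed limitations), a one-time investment, an evaluation period in years and a minimum acceptable ROI (hurdle rate) chosen by the business. All names and the placeholder figures in `Main` are assumptions, and the calculation ignores discounting.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;

// Hypothetical, undiscounted ROI check for a SOA business case.
// Benefit categories, amounts and the hurdle rate are assumptions.
public static class SoaBusinessCase
{
    // Total benefit over the period: sum of estimated annual benefits times years.
    public static decimal TotalBenefit(IDictionary<string, decimal> annualBenefits, int years)
    {
        if (years <= 0) throw new ArgumentOutOfRangeException("years");
        return annualBenefits.Values.Sum() * years;
    }

    // ROI as a fraction: (total benefit - investment) / investment.
    public static decimal Roi(IDictionary<string, decimal> annualBenefits, int years, decimal investment)
    {
        if (investment <= 0m) throw new ArgumentOutOfRangeException("investment");
        return (TotalBenefit(annualBenefits, years) - investment) / investment;
    }

    // "Move forward" only if the ROI meets or exceeds the hurdle rate.
    public static bool WorthPursuing(IDictionary<string, decimal> annualBenefits, int years,
                                     decimal investment, decimal hurdleRate)
    {
        return Roi(annualBenefits, years, investment) >= hurdleRate;
    }

    public static void Main()
    {
        // Purely illustrative placeholder figures, not data from the post.
        var benefits = new Dictionary<string, decimal>
        {
            { "Reuse", 100000m },
            { "Agility", 150000m },
            { "Removed integration limitations", 50000m }
        };
        decimal roi = Roi(benefits, 3, 600000m);
        Console.WriteLine("ROI: " + roi.ToString("P0", CultureInfo.InvariantCulture));
        Console.WriteLine("Worth pursuing at a 25% hurdle: " + WorthPursuing(benefits, 3, 600000m, 0.25m));
    }
}
```

Keeping each benefit as a named line item mirrors the spreadsheet deliverable described below, so executives can see which assumption drives the result.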

The deliverable for this business case should be a spreadsheet of figures, a presentation for the executives, and a report for anyone who could not attend those meetings. Keep in mind you'll see this business case again, so be conservative but accurate.

Creating the execution plan refers to the detailed plan that defines what will be done, when, by what resources, and for how long. This is, at its core, a project plan, but most people will find that the systemic nature of SOA requires many resources to work together to drive toward the end state. Leveraging and managing those resources is somewhat complex, as is the project management aspect of SOA.

Delivering the goods means just doing what you said you would do. Execution is where most SOAs fall down. However, if you fail to deliver on-time and on-budget, chances are your SOA efforts won't continue to have credibility within the enterprise and future selling will be impossible. So, say what you'll do, and do what you say.

Selling SOA is somewhat more of an art form than a well-defined process. It requires a certain degree of understanding the big picture, including the technology, the business, and the culture of the enterprise. More important, the sale needs to be followed up with delivering the value of the SOA. That's the tough part. What works so well in PowerPoint is a tad more difficult in real life.

Nubifer reported Gartner Discovers 10% of IT Budgets Devoted to Cloud Computing on 11/16/2010 (missed when posted):

A recent survey conducted by Gartner reports that companies spend approximately 10 percent of their budget for external IT services on cloud computing research, migrations and implementations.

Gartner conducted the survey from April to July 2010, surveying CIOs across 40 countries, discovering that nearly 40% of respondents allocated IT budget to cloud computing. Almost 45% of the CIOs and other senior IT decision makers questioned about general IT spending trends provided answers pertaining to cloud computing and its increased adoption rates.

Among the questions asked were how organizations’ budgets for cloud computing were distributed. Detailing the results, a Research Director at Gartner noted that, “One-third of the spending on cloud computing is a continuation from the previous budget year, a further third is incremental spending that is new to the budget, and 14 percent is spending that was diverted from a different budget category in the previous year.”

Organizations polled in Europe, Asia, the Middle East, Africa and North America spent between 40 and 50 percent of their cloud budget on cloud services from external providers. The survey also discovered that almost half of respondents with a cloud computing budget planned to ramp up the use of cloud services from external platform providers.

According to Gartner analysts, the survey results demonstrated a “shift towards the ‘utility’ approach for non-core services, and increased investment in core functionality, often closely aligned with competitive differentiation.”

Additionally, more than 40% of respondents anticipated an increase in spending in private cloud implementations designed for internal or restricted use of the enterprise, compared to a third of those polled seeking to implement public clouds.

Gartner called the investment trends for cloud computing “healthy” overall. Said Gartner, “This is yet another trend that indicates a shift in spending from traditional IT assets such as the data-center assets and a move towards assets that are accessed in the cloud. It is bad news for technology providers and IT service firms that are not investing and gearing up to deliver these new services seeing an increased demand by buyers.”

Discussing the findings, Chad Collins, CEO of Nubifer Cloud Computing said, “This survey supports what we are seeing at ground zero when working with our enterprise clients. Company executives are asking themselves why they should continue with business as usual, doling out up-front cap/ex investments while supporting all the risks associated with large scale IT implementations.” Collins elaborates, “Cloud platforms allow these organizations to eliminate risks and upfront investments while gaining greater interoperability and scalability features.”

Collins went on to add, “Forward-thinking organizations realize that by using external providers and cloud computing models, they can gain more flexibility in their cost and management of their application base, while also getting the elasticity and scalability needed for growth.”

To learn more about adopting a cloud platform and how your organization can realize the benefits of cloud computing technologies, contact a Nubifer representative today.

A link to the original report in Nubifer’s post would have been welcome. A search indicates the source was a Gartner Survey Shows Cloud-Computing Services Represents 10 Percent of Spending on External IT Services in 2010 press release of 9/22/2010.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA) and Hyper-V/Private Clouds

Adron Hall wasn’t sanguine about the prospects for private clouds in his Top 10 Cloud Predictions by Information Management post of 12/2/2010:

Time for a lunch time blog entry…

Information Management put together some cloud predictions for the cloud industry. Here’s my 2 cents for the key points I picked out.

- You will build a private cloud, and it will fail. < Thank goodness. Get rid of the whole premise, it’s kind of stupid. The basis of cloud computing is the almost endless backing of massive funding for massive amounts of systems. Throw into that mix massively distributed, geographically dispersed locations where the machines are located. A little contraption in a box thing called “cloud” is bullshit. Plain and simple, don’t delude yourself.

- Hosted private clouds will outnumber internal clouds 3:1. <Good. The internal clouds – see above comment.

- Community clouds will arrive, thanks to compliance. <Awesome! This is when laws, rights, and other ideals will seriously come to play within the cloud computing space. Community clouds will have to be transparent in ways that AWS, Rackspace, Windows Azure, and others will never be. They might not be as robust or feature rich, but it will enable communities and others to utilize the cloud with known rights, liberties, and other notions that aren’t up for debate as they are with the other cloud providers (re: Wikileaks & such)

- The BI gap will widen. < BI is PERFECT for the cloud. BI on current systems built on relational databases, without considering NoSQL, Big Data and similar approaches, is a major problem. BI will begin migrating to the cloud, or BI will just die and another cloud-related acronym will most likely replace it. Real-time business intelligence is much more readily available with cloud resources than when implemented on-premises.

- Cloud standards still won’t be here – get over it. < I agree. I’m over it, and I’m not even remotely worried about it. Maybe it is because I’m a software developer and I lean heavily on well designed, solid architecture, loosely coupled, SOLID ideals, and other things that would allow me to prevent lock in at multiple levels of usage. In addition, I know what is what within the cloud, I don’t need some arbitrary standard since the obvious usage patterns are already pretty visible in the cloud community already.

- Cloud security will be proven, but not by the providers alone. < Cloud security is indeed a shared responsibility. If one is in the cloud industry though and is still talking about the “cloud being secure”, they’ve not been paying attention. The security concerns are much less with cloud computing than most on-premises computing. The biggest security concerns, at the actual software level, are still the same. These responsibilities are shared, but rest heavily on the shoulders of software developers, architects, and in the same places a company (or Government entity) has previously needed to be concerned with.

Overall I’d love to see two things for the cloud community.

- Stop accepting the “Security is a Concern because I’m scared and haven’t been paying attention” excuse and just start implementing. The reason is that the same people who are kicking and dragging their feet into the future will keep kicking and dragging their feet. Eventually they’ll either be unemployed or update their skill sets and legacy ideas and step into the future. Then they’ll be a contributing part of the community and we can all smile and be happy!

- More significant penetration needs to be made into the Enterprise IT Environments. I know, some people won’t be needed anymore, but others will be needed for the new applications, tooling, and things that one can do by not wasting resources on physical boxes, excess IT costs, and other now unneeded IT impediments. IT and business itself needs to realize this, the sooner the better. The companies that realize this sooner will be able to make significant strategic headway over their competitors. Those companies that never realize this will most likely wither and be dead within 2-3 years of mass adoption of the cloud options. Simply, “skate or die”.

<Return to section navigation list>

Cloud Security and Governance

David Linthicum asserted “WikiLeaks takes to Amazon cloud to shield itself from DDoS attacks, and other targeted sites are likely to follow” in the deck for his Can cloud computing save you from DDoS attacks? article for InfoWorld’s Cloud Computing blog:

No matter what you think about the WikiLeaks story, it's interesting to note that the website famous for publishing leaked government and corporate documents has turned to cloud computing to save it from a distributed denial-of-service (DDoS) attack.

WikiLeaks chose Amazon.com's EC2 cloud computing service to host its files, according to the Guardian newspaper, as a reaction to the attacks that began Sunday night. As the controversial content is hosted by a French company, the pages residing on Amazon.com's servers do not contain any information the U.S. government has been complaining about. However, I suspect this was not good PR for Amazon.com. And in any event, Amazon.com yesterday pulled down the WikiLeaks pages apparently at the request of U.S. Sen. Joe Lieberman (I-Ct.).

Beyond the politics, it's interesting to note that sites experiencing DDoS attacks, which saturate server resources, can take refuge in cloud computing providers and their accompanying extra horsepower to outlive the assaults. I suspect that other sites targeted by DDoS attacks -- either now or in the future -- will consider clouds as a survival strategy.

The cloud offers the advantage of elasticity. With the ability to bring thousands of cores online, as necessary and almost instantaneously, the cloud all but eliminates worries about a company's ability to meet rapidly expanding needs, including attempts to saturate the infrastructure. Of course, you'll get a mighty big bill at the end of the month -- WikiLeaks will surely see a sizable statement from Amazon.com.

However, resources hosted on a cloud provider such as Amazon.com can still be harmed by such an attack. As more applications, data, and websites move to the cloud, the providers themselves will ultimately be targeted by proxy. When you're hosting critical enterprise data, as well as content from sites such as WikiLeaks, it could be a problem that spills over into multiple customer sites, not just the ones being hit.

Would you consider committing to an IaaS provider who shut down a customer’s instance in response to a phone call from a U.S. congressman’s staff member? At the minimum, an order from a federal district court judge should be required, in my opinion.

ReadWriteCloud conducted a Weekly Poll: Should Amazon.com Have Dropped Wikileaks? on 12/1/2010. Here are the poll results as of 12/2/2010 9:00 AM PST:

<Return to section navigation list>

Cloud Computing Events

Brent Stineman (@BrentCodeMonkey) listed Free Online Azure Training!!! in this 12/1/2010 post:

I don’t normally post this kind of stuff here but with two of Microsoft’s “rock star” evangelists involved, I can’t help myself.

Some of the key content from the Azure Bootcamps is going to be done online over the next three months as a series of 1 hour webcasts. If you’ve been wanting to get trained up on Windows Azure but couldn’t commit the time, you can’t pass up this opportunity.

12/06: MSDN Webcast: Azure Boot Camp: Windows Azure and Web Roles

12/13: MSDN Webcast: Azure Boot Camp: Worker Roles

01/03: MSDN Webcast: Azure Boot Camp: Working with Messaging and Queues

01/10: MSDN Webcast: Azure Boot Camp: Using Windows Azure Table

01/17: MSDN Webcast: Azure Boot Camp: Diving into BLOB Storage

01/24: MSDN Webcast: Azure Boot Camp: Diagnostics and Service Management

01/31: MSDN Webcast: Azure Boot Camp: SQL Azure

02/07: MSDN Webcast: Azure Boot Camp: Connecting with AppFabric

02/14: MSDN Webcast: Azure Boot Camp: Cloud Computing Scenarios

And if you missed today’s “getting started” webcast, the recording is already available.

So stop making excuses and get with the training!

Steven Nagy announced on 12/2/2010 Azure BizSpark Camp–Perth will be held 12/3/2010 at Burswood Convention Centre Perth, Great Eastern Highway, Burswood, Perth, Australia:

This weekend I’m flying over to Perth to help out the local start-up talent with their Windows Azure applications. It is a weekend-long event where start-ups in the Microsoft BizSpark program can come along and attempt to build an application in a weekend, then pitch it to a panel of judges. The best applications (or proofs of concept) will win some cool prizes, such as a new WP7 device.

Here [are] the details for the actual event:

Are you a start-up? Even if you’re not in the BizSpark program yet, get in touch with Catherine Eibner (@ceibner) and I’m sure she’ll let you come along if you turn up with your paperwork.

Even if you don’t have an application idea for the platform, come along for the free training and technical support to learn what it takes to build highly scalable applications on the Windows Azure platform.

This is just one event in a long line of initiatives from Microsoft to support new and growing businesses and one I’m very proud to be part of. I hope to see you there!

The Brookings Institution (@BrookingsGS) will conduct an Internet Policymaking: New Guiding Principles public forum on 12/6/2010 at Falk Auditorium, The Brookings Institution, 1775 Massachusetts Ave., NW, Washington, DC:

Event Summary

Since formalization of the 1997 “Framework for Global Electronic Commerce,” the federal government has not systematically re-examined the core principles for Internet policy. With the emergence of new policy domains—such as privacy, cybersecurity, online copyright infringement, and accessibility to digital video content—policymakers see greater urgency in evaluating, and possibly adapting, existing guidelines to meet the demands of today’s Internet environment. The Obama administration recently established a new panel of the National Science and Technology Council’s Committee on Technology to examine privacy and Internet policy principles.

Event Information

- When: Monday, December 06, 2010, 8:30 AM to 12:30 PM EST

- Where: Falk Auditorium, The Brookings Institution, 1775 Massachusetts Ave., NW, Washington, DC


- Contact: Brookings Office of Communications

- Email: events@brookings.edu

- Phone: 202.797.6105

On December 6, the Center for Technology Innovation at Brookings will host a forum convening academics, policy practitioners and government officials to discuss which principles should guide policymakers as they address questions raised by the current Internet environment. What role do transparency requirements play? How can governments facilitate better adherence to best practice and engagement with multi-stakeholder bodies? What role does user education play, and how can notions of Net citizenship and digital literacy be developed?

After each panel, speakers will take audience questions.

Participants

8:30 AM -- Welcome and Introductory Remarks

- Darrell M. West, Vice President and Director, Governance Studies

- Aneesh Chopra, U.S. Chief Technology Officer, Office of Science and Technology Policy, The White House

- Howard Schmidt, Special Assistant to the President and Cybersecurity Coordinator, National Security Staff, Executive Office of the President

- Victoria Espinel, U.S. Intellectual Property Enforcement Coordinator, The White House

Users As Regulators: The Role of Transparency and Crowd Sourcing As A Form of Oversight

- Moderator: Phil Weiser, Senior Advisor to the Director for Technology and Innovation, National Economic Council, The White House

- Mark Cooper, Research Director, Consumer Federation of America

- Cynthia Estlund, Catherine A. Rein Professor of Law, New York University School of Law

- Kenneth Bamberger, Assistant Professor of Law, UC Berkeley School of Law

- Kathy Brown, Senior Vice President, Public Policy Development and Corporate Responsibility, Verizon

Internet Governance Through Multi-stakeholder Bodies

- Moderator: Daniel J. Weitzner, Associate Administrator for the Office of Policy Analysis and Development, National Telecommunications and Information Administration

- Joseph W. Waz, Jr., Senior Vice President, External Affairs and Public Policy, Counsel, Comcast Corporation

- Peter Swire, C. William O'Neill Professor in Law and Judicial Administration, The Ohio State University

- Leslie Harris, President and CEO, Center for Democracy & Technology

- Ernie Allen, President and Chief Executive Officer, National Center for Missing & Exploited Children and the International Centre for Missing & Exploited Children

User Education and Net Citizenship: How Can the Government Encourage Adherence to Best Practice?

- Moderator: Christine Varney, Assistant Attorney General, Antitrust Division

- Pamela Passman, Corporate Vice President and Deputy General Counsel, Microsoft

- Rey Ramsey, President and Chief Executive Officer, TechNet

- Alan Davidson, Director of Public Policy, Google Inc.

- Gary Epstein, General Counsel, Aspen Institute IDEA Project

Closing Address: Internet Policy Principles From An International Perspective

- Moderator: Allan A. Friedman, Fellow, Governance Studies

- Karen Kornbluh, Ambassador and U.S. Permanent Representative to the Organization for Economic Co-operation and Development

Marc Benioff wants you to Watch his Dreamforce Keynote - Live from Your Computer on 12/7 and 12/8/2010:

Corey Fowler posted Microsoft + ObjectSharp = AzureFest on 12/1/2010:

Microsoft is all in with the cloud, and, with their cloud offering, so am I.

Microsoft has been very aggressive building out their Cloud and it doesn’t look like it’s going to slow down anytime soon.

You may want to think about your future: what am I planning to work on over the next 3-5 years of my career? If you’ve been thinking about start-ups or enterprise solutions, you might want to consider diving into the cloud RIGHT NOW.

Not to worry, though: ObjectSharp has teamed up with Microsoft to deliver AzureFest, a *FREE* 3-hour mosh-up [pun intended] of the Windows Azure registration, configuration and development process.

When is this rocking journey, you ask? December 11th, 2010, 9am-12pm and 1-4pm.

Yeah, that’s right, 2 sessions. We’re hardcore like that!

HyperCrunch, Inc. announced Hackathon: Cloud Development with Windows Azure to be held 12/18/2010 from 9:00 AM to 2:00 PM PST at the Microsoft San Francisco Office, 835 Market Street, Suite 700, San Francisco, CA 94103:

Overview

You have read, seen and heard about Windows Azure, now it's time to dive into code and build something. Join other Bay area .NET developers for this exciting, FREE cloud-focused event:

Hackathon: Cloud Development with Windows Azure

This Hackathon is all about code, code and more code. There will be a short walkthrough to get you off and running (if you are not already familiar with Windows Azure), but the rest of the time you can code and collaborate with other cloud developers.

Three Reasons Why You Should Attend

- Get started developing with Windows Azure, the hottest cloud platform for .NET developers

- Share ideas, code and techniques with other developers and learn from experts

- Develop a cool cloud app and win a prize

Prizes

At the end of the Hackathon we will have the audience vote for the Top 2 apps. Each developer will win:

2 x Microsoft Visual Studio 2010 Ultimate with MSDN Subscription

(valued at approx. $11,000 each)

We are working with sponsors to get more door prizes and swag.

Schedule: Here's the plan for the Hackathon:

- 9:00 a.m. - 9:30 a.m. Get caffeinated; install Azure development software (available on USB flash drives)

- 9:30 a.m. - 10:30 a.m. Walkthrough -- Building and Deploying an Azure Application

- 10:30 a.m. - 1:30 p.m. Hackathon -- Build your own Azure app or choose one of the sample projects posted

- 1:30 p.m. - 2 p.m. Share your Hackathon app (prizes for the Top 2 apps by audience vote)

Throughout the Hackathon you will be able to ask questions and get answers to help you get familiar with Windows Azure and build your app. We will have sample project ideas or you can come up with your own project.

Lunch and Beverages: We will not have a specific break for lunch, but will have food and beverages available for you to consume whenever you feel like it.

Pre-requisites: The only pre-requisite for this event is that you have knowledge of basic ASP.NET development. We'll be using ASP.NET MVC, but you can develop with WebForms if you wish. You will need to bring your own computer to the event. It will be helpful to have the Windows Azure SDK already installed on your computer, but it is not necessary. We will have USB flash drives with the SDK so you can install at the event. To install the SDK in advance, go here: Windows Azure SDK


Other Cloud Computing Platforms and Services

Alex Williams reported Google App Engine Gets Some Needed Upgrades in this 12/2/2010 post to the ReadWriteCloud blog:

Google App Engine has received some needed upgrades to its service that customers have been asking for over the past several months.

The issues came to a head last week after a developer posted 13 reasons why he and his group decided to drop Google App [Engine].

Some questioned the developer's execution, but many of the issues have also been noted in the Google Apps discussion boards.

Customers have been asking for such things as an increase in the amount of data that can be uploaded in a URL Fetch and an increase in the 30-second limit for uploading data.

In conversations yesterday, the Google App Engine team said the URL Fetch has been increased from one megabyte to 30 megabytes of data. The 30-second limit is now 10 minutes.

Detailed infrastructure work was needed to make these upgrades. The goal is to remove all limitations, but that's as far as Google engineers can go right now.
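To put the reported changes in proportion, here is a minimal Python sketch. The constants are the figures quoted above, not values queried from App Engine; treating "megabyte" as 2**20 bytes is an assumption, and `fits_url_fetch` is a hypothetical helper, not part of any App Engine API.

```python
# Figures as reported in the ReadWriteCloud post; NOT read from any App Engine API.
# Assumption: "megabyte" means 2**20 bytes.
MB = 2**20
OLD_URL_FETCH_LIMIT = 1 * MB
NEW_URL_FETCH_LIMIT = 30 * MB
OLD_TIME_LIMIT_S = 30
NEW_TIME_LIMIT_S = 10 * 60  # "10 minutes"


def fits_url_fetch(payload_bytes: int, limit: int = NEW_URL_FETCH_LIMIT) -> bool:
    """Hypothetical helper: does a payload stay within the given size limit?"""
    return 0 <= payload_bytes <= limit


if __name__ == "__main__":
    print(NEW_URL_FETCH_LIMIT // OLD_URL_FETCH_LIMIT)  # 30: times more data per fetch
    print(NEW_TIME_LIMIT_S // OLD_TIME_LIMIT_S)        # 20: times more time
    five_mb = 5 * MB
    # A 5 MB payload: rejected under the old limit, accepted under the new one.
    print(fits_url_fetch(five_mb, OLD_URL_FETCH_LIMIT), fits_url_fetch(five_mb))
```

In other words, the reported changes amount to a 30-fold larger URL Fetch payload and a 20-fold longer time limit.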

Added Features

Google has also added new features for the platform. Developers may push notifications into the browser for real-time commenting. Instances may be reserved in anticipation of spikes to the platform.

Road Map

Over the next few months, Google will add MapReduce to Google App Engine. This will help optimize applications and add a degree of personalization. Dedicated servers will allow developers to build a game server if they wish.

Here's a list of what's to come:

Google App Engine needed these improvements. How does it compare to Amazon Web Services or Windows Azure? The new features make it far more usable for developers. AWS is still the king, and Azure is the new kid on the block. Nonetheless, these improvements will go a long way in satisfying the developer community.

Jeff Barr (@jeffbarr) announced Amazon Linux AMI 2010.11.1 Released on 12/1/2010:

We have released a new version of the Amazon Linux AMI. The new version includes new features, security fixes, package updates, and additional packages. The AWS Management Console will be updated to use these AMIs in the near future.

Users of the existing Amazon Linux AMI can access the package additions and updates through our Yum repository.

New features include:

- AMI size reduction to 8 GB to simplify usage of the AWS Free Usage Tier.

- Security updates to the Amazon Linux AMI are automatically installed on the first launch by default. This can be disabled if necessary.

- The AMI versioning system has changed to a YYYY.MM.# scheme.
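If the trailing # in the YYYY.MM.# scheme can grow past one digit (an assumption), comparing version strings as plain text misorders releases: "2010.11.10" sorts before "2010.11.9". A minimal Python sketch, assuming every version looks like the "2010.11.1" release named above (four-digit year, two-digit month), parses them into numeric tuples instead. `parse_ami_version` and the later version strings in the usage lines are hypothetical.

```python
import re
from typing import NamedTuple


class AmiVersion(NamedTuple):
    year: int
    month: int
    release: int  # the trailing "#" in YYYY.MM.#


# Assumption: exactly four-digit year, two-digit month, one or more release digits.
_VERSION_RE = re.compile(r"^(\d{4})\.(\d{2})\.(\d+)$")


def parse_ami_version(text: str) -> AmiVersion:
    """Parse a YYYY.MM.# version string such as '2010.11.1'."""
    match = _VERSION_RE.match(text)
    if match is None:
        raise ValueError(f"not a YYYY.MM.# version: {text!r}")
    year, month, release = (int(g) for g in match.groups())
    if not 1 <= month <= 12:
        raise ValueError(f"month out of range in {text!r}")
    return AmiVersion(year, month, release)


if __name__ == "__main__":
    # Hypothetical version list; only 2010.11.1 comes from the announcement.
    versions = ["2010.11.1", "2011.02.1", "2010.11.2"]
    print(max(versions, key=parse_ami_version))  # 2011.02.1
```

Because `AmiVersion` is a named tuple, ordinary tuple comparison orders versions by year, then month, then release number.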

The following packages were updated to address security issues:

- glibc

- kernel

- java-1.6.0-openjdk

- openssl

The following packages were updated to newer versions:

- bash

- coreutils

- gcc44

- ImageMagick

- php

- ruby

- python

- tomcat6

We have added a number of new packages including:

- cacti

- fping

- libdmx

- libmcrypt

- lighttpd

- memcached

- mod_security

- monit

- munin

- nagios

- nginx

- rrdtool

- X11 applications, client utilities, and bitmaps