Windows Azure and Cloud Computing Posts for 12/3/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

• Important: See Mark Estberg’s 12/2/2010 post, Microsoft’s Cloud Infrastructure Receives FISMA Approval, in the Windows Azure Infrastructure section below.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform was published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are also available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database and Reporting

Sumesh Dutt Sharma described Cumulative Update 3 for SQL Server Management Studio 2008 R2: What’s in it for managing SQL Azure Databases? in this 12/3/2010 post:

Microsoft SQL Azure Database periodically adds new features to its existing service offerings. A few months back, via the Service Update 2 and Service Update 3, the following features were made available to users of SQL Azure Databases:

- “ALTER DATABASE MODIFY NAME” T-SQL. This now allows users to change the names of their databases hosted in SQL Azure. (More details can be found here).

- Support for the Hierarchyid, Geometry and Geography data types (More details can be found here).

- Creation of spatial indexes (CREATE SPATIAL INDEX) (More details can be found here).

To enable users to work with these features when connected to SQL Azure, SQL Server Management Studio 2008 R2 was updated via Cumulative Update 3 (CU3). The SSMS 2008 R2 CU3 enhancements for SQL Azure are as follows (please note that these capabilities are already present in SSMS 2008 R2 for users connected to their on-premise database servers):

1. Database Rename support in Object Explorer (OE).

You can now rename a SQL Azure Database from OE itself. The steps are:

- Connect to the “master” database on SQL Azure.

- Expand the “Databases” node in OE.

- Select the required database node.

- Either press “F2” or right-click the node and select “Rename” from the context menu.

- When the database node text has become editable, type the new name of the database and press “Enter”.

2. Tables having columns with Spatial data types (Geometry, Geography) and Hierarchyid data type can now be scripted for SQL Azure database using SSMS.

Before CU3, SSMS 2008 R2 threw an exception when scripting a table with columns of these data types for a SQL Azure database. We have fixed this issue in CU3 by adding support for these data types for SQL Azure databases.

3. Spatial Index support in SSMS for SQL Azure.

Spatial Index scripting support for SQL Azure is also added as part of CU3 for SSMS 2008 R2.

Note:

One part of Spatial Index support that we have not addressed yet is the creation of a Spatial Index for SQL Azure from SSMS. We plan to address this by adding a new template to the Template Explorer for creating a Spatial Index on a table in a SQL Azure database, but that will happen only in a public CU release. Until then, in case you find it useful, you can add the following template to the Template Explorer in your SSMS 2008 R2.

-- ====================================================
-- Create Spatial Index template for SQL Azure Database
-- ====================================================
CREATE SPATIAL INDEX <index_name, sysname, spatial_index>
ON <schema_name, sysname, dbo>.<table_name, sysname, spatial_table>
(
    <column_name, sysname, geometry_col>
)
USING <grid_tessellation, identifier, GEOMETRY_GRID>
WITH
(
    BOUNDING_BOX = ( <x_min, float, 0>, <y_min, float, 0>, <x_max, float, 100>, <y_max, float, 100> ),
    GRIDS = (
        LEVEL_1 = <level_1_density, identifier, HIGH>,
        LEVEL_2 = <level_2_density, identifier, LOW>,
        LEVEL_3 = <level_3_density, identifier, MEDIUM>,
        LEVEL_4 = <level_4_density, identifier, MEDIUM>
    ),
    CELLS_PER_OBJECT = <cells_per_object, int, 10>,
    DROP_EXISTING = OFF
)
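SSMS template placeholders have the form <parameter_name, data_type, default_value>, and SSMS normally fills them in through its Specify Values for Template Parameters dialog. As a rough illustration of what that substitution amounts to (this is not part of the original post), the following Python sketch replaces each placeholder with a supplied value or, failing that, its default. The function name fill_template and the table name Stores are hypothetical.

```python
import re

# Matches SSMS-style placeholders of the form <name, type, default>.
PLACEHOLDER = re.compile(r"<\s*(\w+)\s*,\s*(\w+)\s*,\s*([^<>]*?)\s*>")


def fill_template(template, values=None):
    """Replace each placeholder with values[name], or with its default."""
    values = values or {}

    def substitute(match):
        name, _data_type, default = match.groups()
        return str(values.get(name, default))

    return PLACEHOLDER.sub(substitute, template)


# Fragment of the template above; only the table name is overridden.
snippet = (
    "CREATE SPATIAL INDEX <index_name, sysname, spatial_index> "
    "ON <schema_name, sysname, dbo>.<table_name, sysname, spatial_table>"
)
print(fill_template(snippet, {"table_name": "Stores"}))
```

Placeholders that are not overridden fall back to the defaults embedded in the template, which is why the template can be run almost as-is against a test table.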

SQL Azure Database: North Central US reported a [Yellow] SQL Azure Investigation to the Windows Azure Services Dashboard on 12/3/2010:

- Dec 3 2010 11:35 AM We are currently investigating a potential problem impacting SQL Azure Database

- Dec 3 2010 4:17 PM Normal service availability is fully restored for SQL Azure Database.

The investigation followed “scheduled maintenance on SQL Azure Database.” The dashboard shows Planned Maintenance Complete indicators but no indication of a problem.

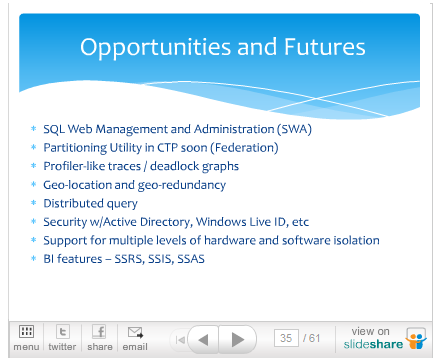

Lynn Langit (@llangit) reported the availability of her SQL Azure deck from ‘Day of Azure’ in this 12/3/2010 post:

Here’s the deck Ike Ellis and I will be presenting on Dec 4 in San Diego for the community ‘Day of Azure II’ – enjoy!

SQL Azure from Day of Azure II

61 (count ‘em 61) slides! I’m especially anxious to get the Partitioning Utility, Distributed Query, and Security w/Active Directory, Windows Live ID, etc. features.

SQL Azure Database: South Central US reported a [Yellow] SQL Azure Investigation to the Windows Azure Services Dashboard on 12/3/2010:

- Dec 3 2010 4:41 AM We are currently investigating a potential problem impacting SQL Azure Database

The investigation followed “scheduled maintenance on SQL Azure Database.” The notification doesn’t include an indication that “service availability is fully restored.” The dashboard shows Planned Maintenance Complete indicators but no indication of a problem.

Marketplace DataMarket and OData

Swati released the source code for CodenameDallas to the MSDN Code Gallery on 12/2/2010 with the following cryptic description:

It contains a sample windows console application which retrieves data from Microsoft Codename "Dallas" cloud service.

and no ReadMe, screen captures or other documentation.

Windows Azure AppFabric: Access Control and Service Bus

Igor Ladnik posted RESTful WCF + Azure AppFabric Service Bus = Access to Remote Desktop from Browser to The Code Project on 12/3/2010:

A technique combining RESTful WCF with Azure AppFabric Service Bus enables browser access to a remote desktop with a minimum of code

Introduction

A RESTful WCF service and the Azure AppFabric Service Bus working together allow a thin client (browser) to access a remote machine, and this is achieved with a remarkably small amount of code.

Brief Technique Description

The WCF REST service acts as a lightweight Web server, whereas the Service Bus provides remote access, solving the problems of firewalls and dynamic IP addresses. A small application presented in this article employs the two technologies. The ScreenShare application, running on a target machine, allows a user to view and control the target machine's screen from his/her browser. Neither additional installations nor <object> tag components (like applets or ActiveX) are required on the client machine.

The client's browser displays an image of the remote target machine's screen and allows the user to operate simple controls (like buttons and edit boxes) on the image, i.e. to implement active screen sharing to some extent. Since this article is just an illustration of the concept, the sample implements minimum features. It consists of the ScreenShare.exe console application and a simple RemoteScreen.htm file.

The application provides:

- A simple Web server

- Screen capture

- Mouse click and writing text to Windows controls

The Web server, implemented as a self-contained RESTful WCF service, is able to receive ordinary HTTP requests generated [in our case] by a browser. The service exposes endpoints to transfer text (text/html) and image (image/jpeg) data to the client. The endpoints have a Relay binding to support communication via the Azure AppFabric Service Bus. Such communication enables a connection between the browser and the WCF service on the target machine when they run in different networks with dynamic IP addresses behind their firewalls.

Code Sample

To run the sample, you first need to open an account for Azure AppFabric, create a service namespace (see MSDN or e.g. [1], chapter 11) and install the Windows Azure SDK on the target machine. In the App.config file in the Visual Studio solution (and in the configuration file ScreenShare.exe.config for the demo), the values of the issuerName and issuerSecret attributes in the <sharedSecret> tag and the placeholder SERVICE-NAMESPACE should be replaced with the appropriate values of your Azure AppFabric Service Bus account. Then build and run the project (or run ScreenShare.exe in the demo). The application writes to the console the URL by which it may be accessed:

Now you can access the screen of the target machine from your browser using the above URL.

As stated above, this sample is merely a concept illustration, and its functionality is quite limited. For now, the client can get an image of the target machine's screen in his/her browser and control the target machine with left mouse clicks and with text entered in the browser and transmitted back to the target machine. After a left mouse click on the image in the browser, the coordinates of the clicked point are transferred to the target machine, and the ScreenShare application emulates the click at the same coordinates on the target machine's screen. To transmit text, the client should move the cursor to the browser image of the selected control (make sure that the image is in focus), type the text (as the first symbol is typed, a text box appears to accommodate the text) and press the “Enter” key. The inserted text will be transmitted to the target machine and placed into the appropriate control. After a click or text transfer, the image in the browser will be updated to reflect changes on the target machine.

The ScreenShare configuration file contains two parameters controlling its behavior. ScreenMagnificationFactor changes the size of the screen image before it is transmitted to the browser. This parameter of type double can be anything between 0 and 1, providing a trade-off between the quality and the size of the transmitted screen image. The client can switch between the full-size image (ScreenMagnificationFactor = 1) and the current magnification with the “Space” key in the browser. SleepBeforeScreenCaptureMs sets the pause (in ms) between an action on the target machine's screen and its capture, to catch the change caused by the action.

The ScreenShare application may serve several browsers. Its WCF service behavior is defined with ConcurrencyMode.Single. This definition ensures that client calls are served sequentially and synchronization is not required. The ScreenShare application supports a session with its own state for each client (browser). For now the session state consists of one parameter, ScreenMagnificationFactor, since the current image size may vary for different clients.
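To make the effect of ScreenMagnificationFactor concrete, here is a minimal Python sketch (not the article's C# code) of how a factor between 0 and 1 might scale a captured screen before transmission, and how the “Space” toggle could switch a client's session between full size and the configured factor. The function names and the rounding behaviour are assumptions made for illustration.

```python
def scaled_size(width, height, factor):
    """Return the (width, height) of the image after applying the factor."""
    if not 0 < factor <= 1:
        raise ValueError("factor must be greater than 0 and at most 1")
    # Round to whole pixels and never shrink below 1x1.
    return max(1, round(width * factor)), max(1, round(height * factor))


def toggle_factor(current, configured):
    """Emulate the Space key: switch between full size (1.0) and the configured factor."""
    return configured if current == 1.0 else 1.0


# A 1920x1080 screen sent at half magnification.
print(scaled_size(1920, 1080, 0.5))
```

Keeping the factor in per-client session state, as the article describes, means each browser can toggle independently of the others.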

Browsers

So far ScreenShare has been tested with Internet Explorer (IE), Chrome, Firefox and the browsers of Android, iPhone and Symbian mobile devices. The best results were achieved with IE and Chrome. Firefox shows the initial picture but then fails to react (the RemoteScreen.htm file should be changed to make Firefox update the source of the image). Mobile browsers function properly, but the image size should be adjusted, and it is difficult to insert text. Actually the htm file, and probably the application itself, should be tuned to serve at least the most popular browsers (the application is aware of the browser type).

Discussion

The sample in this article by no means competes with sophisticated screen sharing applications. But the power of the described approach is that theoretically any browser, without additional installation, may be used to operate your desktop remotely. What the browser can do is limited only by the developer's HTML/JavaScript skills.

Further Development

The sample of this article may be improved in many ways. The HTML code may be updated in order to support more browsers. Additional control options, e.g. more mouse events and text editing capabilities, may be added. It is also possible to share (or rather automate) just one application instead of the entire screen. For application automation purposes, the approach of this article may be combined with the code injection technique [2]. Improvements may also be made in the image update. Various possibilities to update only the changed part of the screen image should be considered. This can be achieved e.g. either by splitting the entire screen area into several regions or by creating overlapping regions for the updated parts of the screen. Yet another improvement would be to convert the ScreenShare console application into a Windows service. This is not as trivial a task as it seems at first glance, since a Windows service runs in a different desktop and therefore by default captures the screen of its own desktop and not of the primary desktop.

Conclusions

The RESTful WCF + Azure AppFabric Service Bus approach allows the user to access and control remote machine with any browser from any place. Very little code and development efforts are required to achieve this goal. The article’s sample illustrates this approach.

References

[1] Juval Lowy. Programming WCF Services. Third edition. O'Reilly, 2010

[2] Igor Ladnik. Automating Windows Applications Using Azure AppFabric Service Bus. CodeProject.

History

- 1st December, 2010: Initial version

License

This article, along with any associated source code and files, is licensed under The Code Project Open License (CPOL)

Steve Plank (@plankytronixx) explained HowTo: Stop temporary contractors from accessing the cloud while giving full access to full-timers in this 12/3/2010 post:

The nervousness of finance sector and public sector customers regarding the deployment of cloud-based applications continues. It appears a lot of this nervousness comes from the opportunities for espionage, subterfuge and other bad-things…

I’ve had conversations with a number of customers now who have expressed an interest in federating certain applications in the cloud but the thing that holds them back is this: with federation, security tokens issued by federation servers actually traverse the users’ web-browsers. Even with encrypted tokens and an encrypted channel such as SSL, there still exists an opportunity for unauthorised viewing, and avoiding that is something embedded in the policy, legislation and various rules and regulations they are obliged to follow. Many of them must follow this policy for certain classes of staff such as temporary staff, temporary contractors, part-time staff and so on. Nothing they can do about it – “them’s the rules” (sic).

However, all is not lost. There is a feature in the on-premise federation server (Active Directory Federation Services 2.0 – ADFS 2.0) half of the equation called an issuance rule. Before I describe it – you might like to spend a few moments understanding how federation works, but this is the scoop.

Federation involves a dance between the client web-browser and the various services. In this case we are talking about the cloud application(s), the App Fabric:Access Control Service (ACS – which is really a cloud-based federation service) and the local ADFS 2.0 server. When a user with an on-premise AD account tries to access a cloud application that is protected by federation, a number of redirects occur and the client ends up requesting a SAML (Security Assertion Markup Language) token from the local ADFS 2.0 Server. This is where the opportunity for unauthorised viewing occurs – although I’d argue in reality, trying to decrypt a SAML Token would be more than a minor challengette.

ADFS 2.0, on-premise, can be set up to issue SAML tokens only according to a set of rules. You can create a really simple rule which only issues a token to users who are members of a certain security group. You can alternatively set a rule which will not issue a token to somebody who is a member of a certain security group. Alternatively, the issuance rules could issue a token based on some other attribute that is set on the user object, perhaps the job title or the department.

So an ADFS 2.0 service that is federating users to cloud-based applications could deny, say, temporary contractors before they even get to the cloud application. It’d be a simple matter of creating a security group – “Non Cloud Users” – and adding the right members of staff to that group. On the ADFS 2.0 Server, an issuance rule would be set up to deny the issuing of a SAML token to anybody in the security group.
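The decision such a deny rule makes can be modelled in a few lines. The sketch below is only a Python model of the logic; real ADFS 2.0 issuance rules are configured on the ADFS server itself, not written like this. The function name and the sample group lists are hypothetical, and the group name is the one from the example above.

```python
DENIED_GROUP = "Non Cloud Users"  # security group name from the example above


def should_issue_token(user_groups, denied_group=DENIED_GROUP):
    """Return True if a SAML token may be issued to a user with these group memberships."""
    # Membership of the denied group blocks issuance regardless of other groups.
    return denied_group not in set(user_groups)


print(should_issue_token(["Finance", "Full Time Staff"]))
print(should_issue_token(["Finance", "Non Cloud Users"]))
```

Because the check runs before any token exists, a denied user never receives anything that could traverse the browser.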

The experience for the user would be thus: they’d type the application URL and a few seconds later, they’d land at the ADFS Server and be hit with an Authorization Failure error message. The message is a page from IIS which could be customised to give more of a clue – “You are attempting to use a cloud-based application. Temporary contractors are not permitted to do this. If you need access to the application, please email requests@govdept.gov.uk”.

In the case of the odd exception here or there – it’s just a simple matter of removing them from that security group.

The interesting thing is that the SAML token is never issued and the “dance” terminates at that point. It’s different to putting an authorization step in the cloud application which denies access to the user based on some claim within the token – because the token will have traversed the browser and therefore a theoretical opportunity for unauthorised viewing existed. Perhaps with a copy of fiddler running, the user could view the token.

If you find yourself cornered by this legislation, then ADFS 2.0 issuance rules could just be your saviour.

Rama Ramani posted Windows Azure AppFabric Caching Service - soup to nuts primer on 11/29/2010 to the Windows Server AppFabric Customer Advisory Team blog, which is the reason I didn’t find it when posted:

Introduction

One of the important reasons to move an application to the cloud is scalability (outside of other benefits that the cloud provides). When the application is deployed to the cloud, it is critical to maintain performance - the system needs to handle the increase in load and importantly maintain low latency response times. This is currently needed for most applications, be it a website selling books or a large social networking website or a complex map reduce algorithm.

Distributed Caching as a technology enables applications to scale elastically while maintaining the application performance. Previously, Windows Server AppFabric enabled you to use distributed caching on-premises. Now, distributed caching is available on the cloud for Azure applications!

Here are some scenarios to leverage the Windows Azure AppFabric Caching Service:

- ASP.NET application running as a web role needing a scalable session repository

- Windows Azure hosted application frequently accessing reference data

- Windows Azure hosted application aggregating objects from various services and data stores

Windows Azure AppFabric Caching is Microsoft's distributed caching Platform As A Service that can be easily configured and leveraged by your applications. It provides elastic scale, agile apps development, familiar programming model and best of all, managed & maintained by Microsoft.

At this time, this service is in labs release and can be used for development & test purposes only.

Configuring the Cache endpoint

- Go to https://portal.appfabriclabs.com/ and login using your Live ID

- Create a Project and then add a unique Service Namespace to it, for instance 'TestAzureCache1'.

- When you click on the Cache endpoint, you should see the service URL and the authentication access token as follows:

At this point, you are done setting up the cache endpoint. Comparing this to installing and configuring the cache cluster on-premises shows the agility that cloud platforms truly provide.

Developing an application

- Download the Windows Azure AppFabric SDK to get started.

- Copy the app.config or web.config from the portal shown above and use it from your client application

- In your solution, add references to Microsoft.ApplicationServer.Caching.Client and Microsoft.ApplicationServer.Caching.Core from C:\Program Files\Windows Azure AppFabric SDK\V2.0\Assemblies\Cache

- Add the namespace to the beginning of your source code and develop the application in a similar manner to developing an on-premises application

using Microsoft.ApplicationServer.Caching;

* At this point, the Azure AppFabric caching service does not have all the features that Windows Server AppFabric Cache supports. For more information, see http://msdn.microsoft.com/en-us/library/gg278356.aspx.

If you are developing the application via code instead of config, here is a code sample for accessing the caching service. Only the host name and the authentication token need to be modified to match your configured endpoint.

    // Requires: using System; using System.Collections.Generic;
    //           using Microsoft.ApplicationServer.Caching;

    // Cache client used by the rest of the application.
    private DataCache myDefaultCache;

    private void PrepareClient()
    {
        // Insert the Service URL from the labs portal
        string hostName = "TestAzureCache1.cache.appfabriclabs.com";
        int cachePort = 22233;

        List<DataCacheServerEndpoint> server = new List<DataCacheServerEndpoint>();
        server.Add(new DataCacheServerEndpoint(hostName, cachePort));

        DataCacheFactoryConfiguration config = new DataCacheFactoryConfiguration();

        // Insert the Authentication Token from the labs portal
        string authenticationToken = "<To Be Filled Out>";
        config.SecurityProperties = new DataCacheSecurity(authenticationToken);

        config.Servers = server;
        config.IsRouting = false;
        config.RequestTimeout = new TimeSpan(0, 0, 45);
        config.ChannelOpenTimeout = new TimeSpan(0, 0, 45);
        config.MaxConnectionsToServer = 5;
        config.TransportProperties = new DataCacheTransportProperties() { MaxBufferSize = 100000 };

        // Local cache: up to 10,000 objects, 5-minute timeout-based invalidation
        config.LocalCacheProperties = new DataCacheLocalCacheProperties(10000, new TimeSpan(0, 5, 0), DataCacheLocalCacheInvalidationPolicy.TimeoutBased);

        DataCacheFactory myCacheFactory = new DataCacheFactory(config);
        myDefaultCache = myCacheFactory.GetCache("default");
    }

Performance data

By now, you must be thinking: if this is indeed this easy, what is the catch? :) :) There is none. Here are some performance numbers from tests that I ran; hopefully they put you at ease.

This is an ASP.NET application running as a web role in Windows Azure and accessing the cache service. The Object count parameter is used to instantiate an object which has an array of int[] and string[]. For example, when Object count is set to 100 the application will instantiate int[100] and string[100], with each array item initialized to a string of ~6 bytes. So the pre-serialized object size is approximately 'Object count' * 10 bytes. When storing the object in the caching service, the KEY is generated using RAND and then, depending on the Operations dropdown, the workload is run for a set of iterations based on the 'Iterations' parameter. Finally, the average latency is computed. The web role runs in the South Central US data center, the same DC where the caching service is deployed, in order to avoid any external network latencies. For all these tests, the local cache feature is disabled.
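The size estimate and the latency average described above can be sketched as follows. This is a hedged Python illustration, not the author's test harness: the 4-byte int is an assumption used to arrive at the stated factor of 10 (4 bytes per int plus ~6 bytes per string), and a plain dictionary stands in for the caching service.

```python
import time

BYTES_PER_INT = 4      # assumption: 32-bit int
BYTES_PER_STRING = 6   # "~6 bytes" per string item, per the text


def estimated_size_bytes(object_count):
    """Approximate pre-serialized size: one int and one short string per index."""
    return object_count * (BYTES_PER_INT + BYTES_PER_STRING)


def average_latency_ms(operation, iterations):
    """Run operation() the given number of times and return the mean latency in ms."""
    start = time.perf_counter()
    for _ in range(iterations):
        operation()
    elapsed = time.perf_counter() - start
    return elapsed * 1000 / iterations


# Stand-in for the cache: a local dictionary, so the timing is only illustrative.
cache = {}
payload = b"x" * estimated_size_bytes(100)


def put():
    cache["key"] = payload


print(estimated_size_bytes(100))
print(average_latency_ms(put, 1000))
```

With Object count set to 100, the estimate works out to 100 * 10 = 1,000 bytes before serialization.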

Now that you have some cache in the cloud, get your apps going!

Authored by: Rama Ramani

Reviewed by: Christian Martinez, Jason Roth

Windows Azure Virtual Network, Connect, and CDN

No significant articles today.

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Patric Schouler (@Patric68) described four Traps of Windows Azure Development in a 12/3/2010 post:

My personal traps of Windows Azure development for this week are:

- Problems with long project names (longer than 248 characters) in the temporary name of my delivery package when running in the Windows Azure emulator. Use _CSRUN_STATE_DIRECTORY as an environment variable to shorten the path.

- Remove PlatformTarget from an existing Web project file to avoid a compile error

- Strange CommunicationObjectFaultedException error if you use a Source Safe tool and your web.config is read only

- If you use assemblies that are not part of a normal Windows Server 2008 SP2 installation (for example RIA Services), you have to set the “Copy Local” property of the assemblies involved to true; otherwise your web role stays in a never-ending “busy” state in the Windows Azure Management Tools

See also Patric’s Windows Azure: CommunicationObjectFaultedException for more detail on Trap #3 above:

If your web.config is read only, you may get the following strange error message in a Windows Azure project at runtime. It cost me half a day to find out that this has nothing to do with my web service configuration or web service usage.

The Windows Azure Team posted Real World Windows Azure: Interview with John Gravely, Founder and CEO of ClickDimensions on 12/2/2010:

As part of the Real World Windows Azure series, we talked to John Gravely, Founder and CEO of ClickDimensions, about using Windows Azure for the company's marketing automation solution. Here's what he had to say:

MSDN: Tell us about ClickDimensions and the services you offer.

Gravely: ClickDimensions is a marketing automation solution for Microsoft Dynamics CRM that is built for Windows Azure. Our solution provides email marketing, web tracking, lead scoring, social discovery, and form capture capabilities built into Microsoft Dynamics CRM. Our customers are businesses that understand the benefits of personalized marketing and want to understand more about their prospects.

MSDN: What were the biggest challenges that you faced prior to implementing Windows Azure?

Gravely: We designed ClickDimensions from the ground-up on Windows Azure so the challenge for us was to develop the best solution we could to make marketers more effective and sales more efficient. The software-as-a-service model was important to us because it would enable us to scale our distributed architecture quickly.

MSDN: Can you describe the marketing automation solution you built for Windows Azure?

Gravely: Our solution provides easy-to-use and highly-available email marketing with full tracking capabilities, web form integration, and social data integration into Microsoft Dynamics CRM. By using this information we numerically grade each visitor's interest with a lead score so that the interest level of each visitor is clear. To implement all of this, Windows Azure was the clear choice for us over other cloud services providers; we're veterans in the Microsoft Dynamics CRM market, so it was an easy decision to go with another Microsoft technology. Our services are compartmentalized so that we can scale up different parts of the solution as needed.

ClickDimensions captures web traffic data and sends it to customers' Microsoft Dynamics CRM databases as visitors are identified.

MSDN: What makes ClickDimensions unique?

Gravely: We are the first marketing automation solution for Microsoft Dynamics CRM that was built from the ground up for Windows Azure. By using ClickDimensions, our customers can see who is visiting their websites and who is interested in their products and services by getting insight into actual web activity, which helps ensure accurate, qualified leads and prospects. Because we capture tracking events and log them in Windows Azure, customers don't have to worry about the availability of their CRM system. If it is unavailable for any reason, we can wait until it is back up and running before sending the data to custom entities in the customers' Microsoft Dynamics CRM database.
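The buffering behaviour Gravely describes is a store-and-forward pattern. The following Python sketch shows the general idea only; it is not ClickDimensions code, and the class name, the callbacks and the in-memory queue (standing in for durable cloud storage) are all assumptions made for illustration.

```python
from collections import deque


class EventForwarder:
    """Hold tracking events until the downstream CRM system is reachable."""

    def __init__(self, send, is_available):
        self._send = send                  # callable that delivers one event
        self._is_available = is_available  # callable returning True when the CRM is up
        self._pending = deque()            # stand-in for a durable event log

    def record(self, event):
        """Log the event first, then try to deliver whatever is pending."""
        self._pending.append(event)
        self.flush()

    def flush(self):
        """Deliver pending events in order while the CRM is available; return the count sent."""
        sent = 0
        while self._pending and self._is_available():
            self._send(self._pending[0])
            self._pending.popleft()  # remove only after a successful send
            sent += 1
        return sent


# Simulate a CRM outage followed by recovery.
crm_rows = []
crm_up = [False]
forwarder = EventForwarder(crm_rows.append, lambda: crm_up[0])
forwarder.record("page-view")
forwarder.record("form-submit")
crm_up[0] = True
print(forwarder.flush(), crm_rows)
```

Events are removed from the queue only after they have been sent, so an outage delays delivery without losing data.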

MSDN: What kinds of benefits are you realizing with Windows Azure?

Gravely: Scalability is important. We can scale up as much as we need without procuring, configuring, managing, and maintaining physical servers. That means no capital expenditures and lower operating costs. In addition, we can use familiar development tools, such as the Microsoft Visual Studio 2010 development system and programming languages, such as C#. We are also confident in the reliability of Microsoft data centers, which helps to ensure that there are no issues in our sales cycles, enables us to add new customers to the system quickly, and also gives our customers confidence that their data is protected.

To read more Windows Azure customer success stories, visit: www.windowsazure.com/evidence

John Adams reported Misys and Microsoft Collaborate in the Cloud in an article for the December 2010 issue of Bank Technology News:

Most banks say they're really interested in cloud computing, but so far it's been used primarily for non-core activities like systems testing and HR functions. There hasn't been much traction yet for transactional and customer data-intensive processes: "a lot of teasing going on but not much in the way of marriage proposals," says Forrester's Ellen Carney.

But facing IT budgets that are engulfed by regulatory demands, and the value of 'speed-to-market,' even skittish CIOs are now under pressure to consider expanding cloud computing, making Azure's bulk, agility, incremental deployment options and existing ASP security protocols a valuable weapon for Misys. By offering financial services via Microsoft's Windows Azure cloud platform, Misys is pushing cloud computing activities for processes that banks thus far have opted to keep in house.

"This demonstrates a serious move on the core of the financial system and account systems," says Rod Nelsestuen, senior research director, TowerGroup. "It's one of the last things to go [to the cloud]: core systems and applications."

While the market's still just taking shape, the appetite for flexible IT fees will lure converts, or at least speed an evolution in which more core applications are placed into a private cloud, with a public cloud coming later. "FIS believes cloud computing is part of a natural evolution away from allocation-based pricing toward transaction-based pricing," says John Gordon, evp of strategic product development for FIS, which is leveraging its long-standing financial services domain expertise as a differentiator as it builds cloud-based solutions throughout its products and services. "Most of our clients and prospects are risk-minded organizations and are more focused on innovations in core banking within an intranet cloud. However, we've found the market is reticent to open the core processing through an 'Internet cloud,'" says Gordon.

At Fiserv, Kevin McDearis, CTO for enterprise technology, says the firm is focused on developing virtualization in its data centers, which is phase one for moving to a cloud infrastructure. It's also exploring the feasibility of utilizing cloud-based hosting models for ancillary services such as payments.

For Misys, the appeal of the Azure platform is that it enables high-volume workloads, such as end-of-day processing, to be consumed "on demand." The Microsoft collaboration also gives Misys a head start: many financial institutions already run some support services in the cloud, such as Microsoft Exchange and Microsoft Office. "We believe we are going to see banks talking to us about running core business [applications] in the cloud," says Robin Crewe, CTO of Misys.

The two firms also believe the familiarity of Windows among programmers will help. "There are millions of developers who can build apps on .net, so there are millions of developers who can extend solutions like the one we're going to market with," says Joe Pagano, managing director for Microsoft's capital markets business.

Crewe is aware that security issues have thus far held back a large-scale embrace of cloud computing. But he's not concerned. "Those things [security concerns] are solvable," he says. Pagano says security and compliance for Azure-related deployments will be handled similarly to the firm's ASP model, in which it demonstrates encryption, data transfer and storage methods for data transmission to clients.

"In regards to security, your records are stored wherever the Windows Azure servers are," says James Van Dyke, founder and CEO of Javelin Research.

Matchbox Mobile (@matchboxmobile) and T-Mobile (@TMobile) describe T-Mobile’s Windows Phone 7 FamilyRoom app, which runs under Windows Azure, in this 00:03:45 video case study:

Tom Hollander posted New Full IIS Capabilities: Differences from Hosted Web Core to the Windows Azure Team blog:

The Adoption Program Insights series describes experiences of Microsoft Services consultants involved in the Windows Azure Technology Adoption Program who assist customers in deploying solutions on the Windows Azure platform. This post is by Tom Hollander.

The new Windows Azure SDK 1.3 supports Full IIS, allowing your web roles to access the full range of web server features available in an on-premise IIS installation. However if you choose to deploy your applications to Full IIS, there are a few subtle differences in behaviour from the Hosted Web Core model which you will need to understand.

What is Full IIS?

Windows Azure's Web Role has always allowed you to deploy web sites and services. However many people may not have realised that the Web Role did not actually run the full Internet Information Services (IIS). Instead, it used a component called Hosted Web Core (HWC), which as its name suggests is the core engine for serving up web pages that can be hosted in a different process. For most simple scenarios it doesn't really matter if you're running in HWC or IIS. However there are a number of useful capabilities that only exist in IIS, including support for multiple sites or virtual applications and activation of WCF services over non-HTTP transports through Windows Activation Services.

One of the many announcements we made at PDC 2010 is that Windows Azure Web Roles will support Full IIS. This functionality is now publicly available and included in Windows Azure SDK v1.3. To tell the Windows Azure SDK that you want to run under Full IIS rather than HWC, all you need to do is add a valid <Sites> section to your ServiceDefinition.csdef file. Visual Studio creates this section by default when you create a new Cloud Service Project, so you don't even need to think about it!

A simple <Sites> section defining a single website looks like this:

<Sites>
  <Site name="Web">
    <Bindings>
      <Binding name="Endpoint1" endpointName="Endpoint1" />
    </Bindings>
  </Site>
</Sites>

You can easily customise this section to define multiple web sites, virtual applications or virtual directories, as shown in this example:

<Sites>
  <Site name="Web">
    <VirtualApplication name="WebAppA" physicalDirectory="C:\Projects\WebAppA\" />
    <Bindings>
      <Binding name="HttpIn" endpointName="HttpIn" />
    </Bindings>
  </Site>
  <Site name="AnotherSite" physicalDirectory="C:\Projects\AnotherSite">
    <Bindings>
      <Binding hostHeader="anothersite.example.com" name="HttpIn" endpointName="HttpIn"/>
    </Bindings>
  </Site>
</Sites>

After working with early adopter customers with Full IIS for the last couple of months, I've found that it's now easier than ever to port existing web applications to Windows Azure. However I've also found a few areas where you'll need to do things a bit differently from how you did them with HWC due to the different hosting model.

New Hosting Model

There is a significant difference in how your code is hosted in Windows Azure depending on whether you use HWC or Full IIS. Under HWC, both the RoleEntryPoint methods (e.g. the OnStart method of your WebRole class which derives from RoleEntryPoint) and the web site itself run under the WaWebHost.exe process. However with full IIS, the RoleEntryPoint runs under WaIISHost.exe, while the web site runs under a normal IIS w3wp.exe process. This can be somewhat unexpected, as all of your code belongs to the same Visual Studio project and compiles into the same DLL. The following diagram shows how a web project compiled into a binary called WebRole1.dll is hosted in Windows Azure under HWC and IIS.

This difference can have some unexpected implications, as described in the following sections.

Reading config files from RoleEntryPoint and your web site

Even though the preferred way of storing configuration in Windows Azure applications is in the ServiceConfiguration.cscfg file, there are still many cases when you may want to use a normal .NET config file - especially when configuring .NET system components or reusable frameworks. In particular whenever you use Windows Azure diagnostics you need to configure the DiagnosticMonitorTraceListener in a .NET config file.

When you create your web role project, Visual Studio creates a web.config file for your .NET configuration. While your web application can access this information, your RoleEntryPoint code cannot, because it's not running as a part of your web site. As mentioned earlier, it runs under a process called WaIISHost.exe, so it expects its configuration to be in a file called WaIISHost.exe.config. Therefore, if you create a file with this name in your web project and set the "Copy to Output Directory" property to "Copy Always" you'll find that the RoleEntryPoint can read this happily. This is one of the only cases I can think of where you'll have two .NET configuration files in the same project!
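As a rough sketch of what such a WaIISHost.exe.config file might contain, the fragment below registers the DiagnosticMonitorTraceListener so that trace output from RoleEntryPoint code reaches Windows Azure diagnostics. It mirrors the listener entry that Visual Studio typically places in web.config. The Version and PublicKeyToken values shown are assumptions and should match the Microsoft.WindowsAzure.Diagnostics assembly your project actually references.

```xml
<?xml version="1.0"?>
<!-- WaIISHost.exe.config: read by the RoleEntryPoint process, not by the web site -->
<configuration>
  <system.diagnostics>
    <trace>
      <listeners>
        <!-- Assumed assembly identity; copy the exact values from your web.config -->
        <add name="AzureDiagnostics"
             type="Microsoft.WindowsAzure.Diagnostics.DiagnosticMonitorTraceListener, Microsoft.WindowsAzure.Diagnostics, Version=1.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35">
          <filter type="" />
        </add>
      </listeners>
    </trace>
  </system.diagnostics>
</configuration>
```

Keeping the listener entry in both files means trace calls made from the web site and from RoleEntryPoint are each picked up by their own process.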

Accessing Static Members from RoleEntryPoint and your web site

Another implication of this change is that any AppDomain-scoped data such as static variables will no longer be shared between your RoleEntryPoint and your web application. This could impact your application in a number of ways, but there is one scenario which is likely to come up a lot if you're migrating existing Windows Azure applications to use Full IIS. If you've used the CloudStorageAccount class before you've probably used code like this to initialise an instance from a stored connection string:

var storageAccount = CloudStorageAccount.FromConfigurationSetting("ConnectionString");

Before this code will work, you need to tell the CloudStorageAccount where it should get its configuration from. Rather than just defaulting to a specific configuration file, the CloudStorageAccount requires you set a delegate that can get the configuration from anywhere you want. So to get the connection string from ServiceConfiguration.cscfg you could use this code:

CloudStorageAccount.SetConfigurationSettingPublisher((configName, configSetter) =>
{
    configSetter(RoleEnvironment.GetConfigurationSettingValue(configName));
});

If you're using HWC with previous versions of the SDK (or if you deleted the <Sites> configuration setting with SDK 1.3), you can happily put this code in WebRole.OnStart. However as soon as you move to Full IIS, the call to CloudStorageAccount.FromConfigurationSetting will fail with an InvalidOperationException:

SetConfigurationSettingPublisher needs to be called before FromConfigurationSetting can be used

"But I did call it!" you'll scream at your computer (well at least that's what I did). And indeed you did. However, you called it in an AppDomain in the WaIISHost.exe process, which has no effect on your web site hosted in an entirely different AppDomain under IIS. The solution is to make sure you call CloudStorageAccount.SetConfigurationSettingPublisher and CloudStorageAccount.FromConfigurationSetting within the same AppDomain, most likely from your web site. While there used to be some issues with accessing Windows Azure SDK classes in your Application_Start event, these no longer apply and this is a great place to initialise your configuration setting publisher.

Or alternatively, as long as you're happy to use the ServiceConfiguration.cscfg file for your connection strings, you can avoid setting up this delegate altogether by replacing the call to CloudStorageAccount.FromConfigurationSetting(...) with this:

var storageAccount = CloudStorageAccount.Parse(RoleEnvironment.GetConfigurationSettingValue("ConnectionString"));
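For either approach to find a value, the setting has to be declared in the service definition and given a value in the service configuration. A minimal sketch follows, assuming the setting is named "ConnectionString" as in the code above and that the role is called WebRole1; the development storage value and the serviceName are placeholders, not values from the original post.

```xml
<!-- ServiceDefinition.csdef: declare the setting inside the role -->
<WebRole name="WebRole1">
  <ConfigurationSettings>
    <Setting name="ConnectionString" />
  </ConfigurationSettings>
  <!-- Sites, Endpoints and other existing elements stay unchanged -->
</WebRole>

<!-- ServiceConfiguration.cscfg: supply the value for the same role -->
<ServiceConfiguration serviceName="MyService" xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration">
  <Role name="WebRole1">
    <Instances count="1" />
    <ConfigurationSettings>
      <!-- Placeholder: points at local development storage -->
      <Setting name="ConnectionString" value="UseDevelopmentStorage=true" />
    </ConfigurationSettings>
  </Role>
</ServiceConfiguration>
```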

Securing resources for different web sites and applications

One final thing to note is that if you configure your Web Role to run multiple sites or virtual applications, each will run in its own Application Pool and under its own user account. This provides you with a lot of flexibility: for example, you could grant different virtual applications access to different resources such as file system paths or certificates. If you want to take advantage of this you can leverage another new SDK 1.3 feature and specify a startup task to run under elevated privileges. This task could launch a PowerShell script that sets access control lists that allow each of your applications to access the resources it needs.
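An elevated startup task is declared in ServiceDefinition.csdef. The fragment below is a sketch only: SetAcls.cmd is a hypothetical script name, assumed to be included in the web project so that it is copied to the role's output (bin) directory, and assumed to invoke the PowerShell script that sets the access control lists.

```xml
<WebRole name="WebRole1">
  <Startup>
    <!-- SetAcls.cmd is a hypothetical script that calls PowerShell to set ACLs.
         executionContext="elevated" runs it with administrator rights;
         taskType="simple" makes the role wait until the task completes. -->
    <Task commandLine="SetAcls.cmd" executionContext="elevated" taskType="simple" />
  </Startup>
  <!-- Sites, Endpoints and other existing elements stay unchanged -->
</WebRole>
```

Using a simple task means the web sites do not start serving requests until the permissions are in place, which is usually what you want for access control changes.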

Conclusion

The option to use Full IIS with Windows Azure Web Roles gives you access to a lot of new functionality that makes it easier to migrate existing IIS-based applications and also gives you more options when developing new applications. With a better understanding of the underlying hosting and security model, I hope you're able to use these new features with fewer development headaches.

Khidhr Suleman reported “Microsoft offers bridge from infrastructure-as-a-service to platform-as-a-service delivery” as a lead for his Windows Azure updates help with cloud migration article of 12/1/2010 for V3.co.uk:

Microsoft has rolled out updates for its Azure platform aimed at helping customers to migrate applications and run them more efficiently.

Key features include the Windows Azure Virtual Machine Role beta, which is designed to act as a bridge from the infrastructure-as-a-service to the platform-as-a-service delivery model, according to Microsoft.

The add-on will ease the migration of existing Windows Server 2008 R2 applications by eliminating the need for application changes, the firm said, and will enable customers to access existing business data from the cloud.

Windows Azure Marketplace beta and Extra Small Windows Azure Instance have also been made available.

Azure Marketplace allows developers and IT professionals to find, buy and sell building block components, training, services and finished services or applications to build Azure applications.

Azure Instance, meanwhile, aims to make the development of smaller applications more affordable, with testing and trialling priced at $0.05 (3p) per compute hour.

Microsoft is also offering developer and operator enhancements. The Silverlight-based Windows Azure portal has been redesigned to provide an improved and more intuitive user experience, the firm said.

Customers will also be able to access more diagnostic information, including role type, deployment and last reboot time. A revamped sign-up process reduces the number of steps needed to sign up for Windows Azure.

Other applications include the Database Manager for SQL Azure, which provides lightweight web-based database management and querying capabilities for SQL Azure.

Full Internet Information Services support enables developers to get more value out of a Windows Azure instance, Microsoft claimed, while remote desktop, elevated privilege and multiple administrator tools have also been made available.

The features can be downloaded from the Windows Azure Management Portal and Microsoft will deliver an overview of the enhancements on 1 December at 5pm GMT.

<Return to section navigation list>

Visual Studio LightSwitch

Beth Massi (@bethmassi) posted a detailed Adding Static Images and Logos to LightSwitch Applications tutorial on 12/2/2010:

We’ve had a couple questions on how you can add your own static images and logos to your LightSwitch applications so I thought I’d detail a few ways that you can do this to make your applications look a little more branded without having to load a completely different shell or theme. When you have an image property on your entity, LightSwitch handles creating a nice image editor for you to load and save images in your database. However sometimes you just want to add a static image or logo to your screens that aren’t part of your data entities. There are a few ways you can do this.

Adding an Application Logo

The first method just displays an image onto the background of the application itself. You can see it when the application loads and also when all screens are closed. To add the logo to your application, go to Project –> Properties and select the General tab. There you can specify the Logo Image.

When you run your application the logo appears on the background of the main window area.

Adding a Static Image to a Screen

There are a couple ways you can add a static image to a screen. One way is by writing a little bit of code to load an image from the client resources and pop it onto a static screen property. This allows you to load any image from the client resources onto any screen. Open the screen designer and click “Add Data Item…” at the top and choose Local Property with a type of Image. I named the property MyImage:

Next drag the MyImage property to where you want it in the content tree. Note, however, that not all areas of the tree will allow you to drop the image if there is a binding already set to a group of controls. The trick is to manipulate the layout by selecting the right groups of controls (i.e. new groups of two rows or columns) and then binding them by selecting the data items you want. For instance let’s say I have an image I want to place across the top of a screen and I want the rest of the controls to appear below that. In that case, start with a Two Row layout and for the top row content select the MyImage property and set the control to Image Viewer.

Then for the bottom row you can select the data item or collection you want to display. If you have a master-detail scenario, then you’ll want more rows. For the bottom row select “New Group” for the content and then change the Vertical Stack to the Two Rows control. This technique lets you build up as many sections on the screen as you want. You can also select Two Column layout for positioning controls side-by-side.

For my example I’m working with data similar to the Vision Clinic walkthrough sample so I’ll also add patient details as a two column layout and then under that I’ll add the child prescriptions, appointments and invoices as a tabbed control of data grids on the bottom row (you can also tweak the layout of the screen at runtime for a live preview). So my content tree looks like this:

Okay now for the fun part – showing the static image on the MyImage screen property. What we need to do first is add the image to the client as a resource. To do that, switch to File View on the Solution Explorer, expand the Client project node, right-click on the UserCode folder and select Add –> Existing Item and choose your image file.

This will add the image as a resource to the client project. Next we need to write a bit of code to load the image into the screen property. Because the loading of the image resource is pretty boilerplate code, let’s add a shared class that we can call from any screen. Right-click on the UserCode folder again and select Add –> Class and name the class ImageLoader. Write the following code:

Public Class ImageLoader
    ''' <summary>
    ''' Loads a resource as a byte array
    ''' </summary>
    ''' <param name="path">The relative path to the resource (i.e. "UserCode\MyPic.png")</param>
    ''' <returns>A byte array representing the resource</returns>
    ''' <remarks></remarks>
    Shared Function LoadResourceAsBytes(ByVal path As String) As Byte()
        'Creates a URI pointing to a resource in this assembly
        Dim thisAssemblyName As New System.Reflection.AssemblyName(GetType(ImageLoader).Assembly.FullName)
        Dim uri As New Uri(thisAssemblyName.Name + ";component/" + path, UriKind.Relative)
        If uri Is Nothing Then
            Return Nothing
        End If

        'Load the resource from the URI
        Dim s = System.Windows.Application.GetResourceStream(uri)
        If s Is Nothing Then
            Return Nothing
        End If

        'VB array declarations take the upper bound, so subtract 1 to match the stream length
        Dim length As Integer = CInt(s.Stream.Length)
        Dim bytes As Byte() = New Byte(length - 1) {}
        s.Stream.Read(bytes, 0, length)
        Return bytes
    End Function
End Class

Now back on the screen designer drop down the “Write Code” button on the top right and select the Loaded method. Now all you need to do is call the ImageLoader with the relative path of the image:

Public Class PatientDetailScreen
    Private Sub PatientDetail_Loaded()
        Me.MyImage = ImageLoader.LoadResourceAsBytes("UserCode/funlogo.png")
    End Sub
End Class

Run it and you will see the image loaded into the image viewer. You can customize the screen and change how the image is displayed by playing with the Content Size and Stretch properties.

Building your own Static Image Control

The above technique works well if you have a lot of different static images across screens or if you are just using Visual Studio LightSwitch edition. However if you just have a single image you want to display on all your screens it may make more sense to build your own control and use that on your screens instead. This eliminates the need for any code on the client. If you have Visual Studio Professional edition or higher you can create a Silverlight Class Library in the same solution as your LightSwitch project. You can then add an image control into that library and then use it on your LightSwitch screens.

To make it easy I added a User Control and then just placed an image control on top of that using the designer. I then set the Stretch property to Fill and added the logo into the project and set it as the Source property of the image control using the properties window:

Rebuild the entire solution and then head back to the LightSwitch screen designer. First comment out the code from the Loaded method we added above if it’s there. Change the control for MyImage to Custom Control. Then in the properties window click the “Change…” link to set the custom control. Add a reference to the Silverlight class library you built, select the control, and click OK.

Now when we run the screen we will see the static image control we created:

The nice thing about creating your own control is you can reuse it across screens and LightSwitch projects and there’s no need to add any code on the client.

Manip Uni asked Lights off for Lightswitch? in a 12/2/2010 post to the Channel9 Coffeehouse Forum:

I tried the beta of Lightswitch and frankly I'm just completely perplexed. Why does this product exist? And who exactly is it aimed at? Let's look at who it isn't aimed at:

- Programmers (too restricted, automated, and clunky)

- "Business People" (too complex, outside the scope of their role)

- Support Staff (outside the scope and most can program)

So that leaves what? People who cannot program and who "develop" "software" in Microsoft Access? Is this Microsoft Access 2.0?

I am looking forward to seeing pricing structure on this one. Since it is integrated into full Visual Studio I think it will die before it is even born... Why pay for VS and not use C#? Lightswitch should be competing with Office Standard or Access 2010 standalone, just sayin'.

PS - I am far from a "Lightswitch expert" I just downloaded it and developed a demo application or two.

I can’t help comparing LightSwitch to Access 2003 and earlier’s browser-based Data Access Pages (DAP), which required JavaScript for customization, and are no longer supported by Access 2007 and 2010. Access 2010 replaces DAP with Web Databases, which require hosting in SharePoint Server 2010’s Access Services.

Be sure to read the comments to this post. Many writers have a misconception about a requirement for Visual Studio 2010 Professional or higher.

<Return to section navigation list>

Windows Azure Infrastructure

• Mark Estberg reported Microsoft’s Cloud Infrastructure Receives FISMA Approval in a 12/2/2010 post to the Global Foundation Services blog:

Although cloud computing has emerged as a hot topic only in the past few years, Microsoft has been running some of the largest and most reliable online services in the world for over 16 years. Our cloud infrastructure supports more than 200 cloud services, 1 billion customers, and 20 million businesses in over 76 markets worldwide.

Today, I am pleased to announce that Microsoft’s cloud infrastructure has achieved another milestone in receiving its Federal Information Security Management Act of 2002 (FISMA) Authorization to Operate (ATO). Meeting the requirements of FISMA is an important security requirement for US Federal agencies. The ATO was issued to Microsoft’s Global Foundation Services organization. It covers Microsoft’s cloud infrastructure that provides a trustworthy foundation for the company’s cloud services, including Exchange Online and SharePoint Online, which are currently in the FISMA certification and accreditation process.

This ATO represents the government’s reliance on our security processes and covers Microsoft’s General Support System and follows NIST Special Publication 800-53 Revision 3 “Recommended Security Controls for Federal Information Systems and Organizations.”

Government organizations require specialized compliance and regulatory processes. Operating under FISMA requires transparency and frequent security reporting to our US Federal customers. And we are applying these specialized processes across our infrastructure to even further enhance our Online Services Security & Compliance program. The company has been designing and testing our cloud applications and infrastructure for over a decade to continually address emerging, internationally-recognized standards. We are focused on excelling in demonstrating our capabilities and compliance with these laws and with our stringent internal security and privacy policies. As a result, all our customers can benefit from highly-focused testing and monitoring, automated patch delivery, cost-saving economies of scale, and ongoing security improvements.

Microsoft’s Chicago datacenter (a FISMA-approved facility), provides over 17 football fields worth of cloud computing capacity.

The company opened its first datacenter in September 1989 and today its globally-distributed, high-availability datacenters are managed by our Global Foundation Services (GFS) group. GFS’s Online Services Security & Compliance team has built upon the company’s existing capabilities, including being one of the first major online service providers to achieve our ISO/IEC 27001:2005 certification and SAS 70 Type II attestation, which also met the FISMA requirements. We have also gone beyond the ISO standard, which includes some 150 security controls, and developed over 300 security controls to account for the unique challenges of the cloud infrastructure and what it takes to mitigate some of the risks involved. The additional rigorous testing and continuous monitoring required by FISMA have already been incorporated into our overall information security program, which is described in several white papers located on our Global Foundation Services web site.

More information about FISMA is available at the National Institute of Standards and Technology web site.

Mark is Senior Director of Risk and Compliance, Microsoft Global Foundation Services.

Great news for Microsoft and its partners servicing the federal government.

Susie Adams (@AdamsSusie) adds her comments in a Microsoft’s Cloud Infrastructure – FISMA Certified FutureFed post of 12/3/2010:

When I talk to federal CIOs about cloud computing, most of their questions focus on security and privacy. Where is the data being hosted? Who has access to it? What controls are in place to protect my sensitive information? In many cases the answers to these questions are difficult to obtain. At Microsoft we take security and privacy very seriously and believe that the best way to answer these questions is to be open and transparent about our approach to certification and accreditation, risk management and day-to-day security processes.

Take our datacenters for example. Datacenters are the foundation of any organization’s approach to cloud computing, and we’ve built our datacenters to comply with the strictest international security and privacy standards, including International Organization for Standardization (ISO) 27001, Health Insurance Portability and Accountability Act (HIPAA), Sarbanes Oxley Act of 2002 and SAS 70 Type 1 and Type II.

This week we’re extremely happy to announce that Microsoft’s cloud infrastructure also received its Federal Information Security Management Act of 2002 (FISMA) certification from a cabinet-level federal agency. Adding FISMA to our existing list of accreditations provides even greater transparency into our security processes and further reinforces our commitment to providing secure cloud computing options to federal agencies. The authorization was issued to Microsoft’s Global Foundation Services, the organization responsible for maintaining Microsoft’s cloud infrastructure for all of our enterprise cloud services - including the Business Productivity Online Services - Federal (BPOS-Federal) offering as well as our Office 365 suite of services. Our BPOS cloud productivity offerings are also in the process of being FISMA certified, and we expect to announce full compliance at the FISMA-Moderate level very shortly.

My colleague Mark Estberg posted a blog entry yesterday that goes into more detail about what this means for government organizations considering cloud deployment. When combined with our existing security policies and controls, FISMA compliance ensures that customers are benefiting from highly-focused testing and monitoring, automated patch delivery, cost-saving economies of scale, and ongoing security improvements. We’ve incorporated the testing and continuous monitoring processes required by FISMA into our overall information security program, which is described in several white papers located on our Global Foundation Services website.

Specifically I’d like to call out three papers that describe our comprehensive approach to information security and the framework for testing and monitoring the controls used to mitigate threats: Securing the Cloud Infrastructure at Microsoft, Microsoft Compliance Framework for Online Services and the Information Security Management System for Microsoft Cloud Infrastructure paper that gives an overview of the key certifications and attestations Microsoft maintains.

For more information on FISMA and its importance, check out the National Institute of Standards and Technology website and Mark Estberg’s full post on the Global Foundation Services Blog.

Ryan Dunn (@dunnry) and Steve Marx (@smarx) produced Cloud Cover Episode 33 - Portal Enhancements and Remote Desktop on 12/3/2010:

Join Ryan and Steve each week as they cover the Microsoft cloud. You can follow and interact with the show at @cloudcovershow.

In this episode, Steve and Ryan:

- Walk through a number of the features and enhancements on the portal

- Discuss in depth how the Remote Desktop functionality works in Windows Azure

- Show you how to get Remote Desktop access without using the portal

- Explain the scenarios in which Remote Desktop excels

Show Links:

Windows Azure Platform Training Course

Significant Updates to the BidNow Sample (via Wade)

Using the SAML CredentialType to Authenticate to the Service Bus

Using Windows Azure MMC and Cmdlets with Windows Azure SDK 1.3

Changes in Windows Azure Storage Client Library – Windows Azure SDK 1.3

How to get most out of Windows Azure Tables

Chris Czarnecki posted Microsoft and Google Compete in the Cloud to the Learning Tree blog on 12/3/2010:

A couple of days ago, the General Services Administration announced that it had made the decision to replace its Lotus Notes and Domino software with Google for its email. This decision prompted a response from Microsoft saying that they were disappointed not to be able to provide a solution and how much better they could have been than Google. This decision and response from Microsoft brings to light a scenario that is going to be played out time and again over the coming years as organisations and companies migrate to the cloud. Some organisations will adopt Microsoft, others Google.

Microsoft and Google are now providing Cloud Computing solutions in many overlapping areas. If we consider these using the service delivery structure, in the area of Software as a Service (SaaS) both offer productivity tools: Google through Google Apps, and Microsoft through Office 365, which offers the Office productivity suite, SharePoint, Exchange and Lync online. These solutions from both companies compete head-on in feature set, functionality and mode of operation.

At the next level, Platform as a Service (PaaS), Google offer the Google App Engine for Java and Python application development and hosting. Microsoft’s offering is Azure, which provides solutions for .NET developers as well as for PHP developers. As part of the platform, Microsoft offer SQL Azure, a cloud-based relational database service. At this level, the solutions of the companies, whilst offering similar functionality, do not compete directly with each other.

At the lowest level, Infrastructure as a Service (IaaS) Google have no offering whilst Microsoft have recently announced the VM role which will allow users to create their own virtual machine images and host them in the cloud, build their own virtual private clouds on the Azure infrastructure and so provide IaaS.

Summarising the competition between Microsoft and Google for Cloud Computing services, the head-on competition is clearly at the SaaS level where they have competing products. In the other areas there is no direct, or at least like-for-like competition due to the structure of their products.

What is difficult for Cloud consumers is that the competition between these two giants is very public and does not focus on the primary factors of effectiveness of solutions, feature sets, cost, security and reliability they provide for customers. This makes it incredibly difficult for any organisation considering the cloud to make sense of all the hype, marketing and publicity and to establish how the solutions offered can help. This is before we add Amazon, IBM, Oracle etc. to the discussion. This is the reason Learning Tree have developed their Cloud Computing Course that provides attendees with a framework of what cloud computing is and how the products from the major vendors fit into this framework. The course provides hands-on experience of the tools and builds the skills required to establish what is the most suitable Cloud Computing solution for your organisation. Why not attend and find out how the Cloud, and in particular which services, could benefit your organisation?

Frank Gens predicted cloud growth of 30% year-over-year in an IDC Predicts Cloud Services, Mobile Computing, and Social Networking to Mature and Coalesce in 2011, Creating a New Mainstream for the IT Industry press release of 12/2/2010:

Transformation has been a recurring theme in the annual International Data Corporation (IDC) Predictions over the past several years. During this time, a wave of disruptive technologies has emerged and evolved, forged by the pressures of a global economic recession. In 2011, and certainly beyond, IDC expects these technologies – cloud services, mobile computing, and social networking – to mature and coalesce into a new mainstream platform for both the IT industry and the industries it serves.

"In 2011, we expect to see these transformative technologies make the critical transition from early adopter status to early mainstream adoption," said Frank Gens, senior vice president and chief analyst at IDC. "As a result, we'll see the IT industry revolving more and more around the build-out and adoption of this next dominant platform, characterized by mobility, cloud-based application and service delivery, and value-generating overlays of social business and pervasive analytics. In addition to creating new markets and opportunities, this restructuring will overthrow nearly every assumption about who the industry's leaders will be and how they establish and maintain leadership."

The platform transition will be fueled by another solid year of recovery in IT spending. IDC forecasts worldwide IT spending will be $1.6 trillion in 2011, an increase of 5.7% over 2010. While hardware spending will remain strong (7.8% year-over-year growth), the industry will depend to a larger extent on improvements in software spending (5.3% growth) and related project-based services spending (3.5% growth), as well as gains in outsourcing (4% growth). Worldwide IT spending will also benefit from the accelerated recovery in emerging markets, which will generate more than half of all net new IT spending worldwide in 2011.

Spending on public IT cloud services will grow at more than five times the rate of the IT industry in 2011, up 30% from 2010, as organizations move a wider range of business applications into the cloud. Small and medium-sized business cloud use will surge in 2011, with adoption of some cloud resources topping 33% among U.S. midsize firms by year's end. Meanwhile, the more nascent private cloud model will continue to evolve as infrastructure, software, and service providers collaborate on a range of new offerings and solutions. Meanwhile, the vendor battle for two cloud "power positions" will be joined to determine on whose cloud platform will solutions be deployed, and who will provide coherent IT management across multiple public clouds, customers' private clouds, and their legacy IT environments. [Emphasis added.]
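As a quick sanity check on the figures quoted above, the following minimal sketch recomputes the 2010 spending base implied by the forecast and the ratio between cloud growth and overall IT growth. Only the $1.6 trillion total and the two percentages come from the release; the variable names are illustrative.

```python
# Figures quoted in the IDC release; variable names are illustrative.
it_spending_2011 = 1.6e12   # worldwide IT spending forecast for 2011, in USD
it_growth = 0.057           # 5.7% growth over 2010
cloud_growth = 0.30         # 30% growth in public IT cloud services

# Implied 2010 base: 2011 spending divided by (1 + growth rate).
it_spending_2010 = it_spending_2011 / (1 + it_growth)

# Ratio of cloud growth to overall IT growth ("more than five times").
growth_ratio = cloud_growth / it_growth

print(f"Implied 2010 IT spending: ${it_spending_2010 / 1e12:.2f} trillion")
print(f"Cloud growth vs. IT growth: {growth_ratio:.1f}x")
```

Under these inputs the ratio comes out slightly above five, which is consistent with the release's wording.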

Mobile computing – on a variety of devices and through a range of new applications – will continue to explode in 2011, forming another critical plank in the new industry platform. IDC expects shipments of app-capable, non-PC mobile devices (smartphones, media tablets, etc.) will outnumber PC shipments within the next 18 months – and there will be no looking back. While vendors with a PC heritage will scramble to secure their position in this rapidly expanding market, another battle will be taking place for dominance in the mobile apps market. The level of activity in this market will be staggering, with IDC expecting nearly 25 billion mobile apps to be downloaded in 2011, up from just over 10 billion in 2010. Over time, the still-emerging apps ecosystems promise to fundamentally restructure the channels for all digital content and services to consumers. [Emphasis added.]

Meanwhile, social business software has gained significant momentum in the enterprise over the past 18 months and this trend is expected to continue with IDC forecasting a compound annual growth rate of 38% through 2014. In a sure sign that social business has hit the mainstream, IDC expects 2011 to be a year of consolidation as the major software vendors acquire social software providers to jump-start or increase their social business footprint. Meanwhile, the use of social platforms by small and medium-sized businesses will accelerate, with more than 40% of SMBs using social networks for promotional purposes by the year's end.

As the new mainstream IT platform coalesces in the months ahead, IDC expects it to lay a foundation for IT vendors to support, and profit from, a variety of "intelligent industry" transformations. In retail, mobility and social networking are rapidly changing consumers' shopping experience as they bring their smartphones into the store for on-site price comparisons and product recommendations. In financial services, mobility and the cloud are bringing mobile banking and payments closer to reality. In the healthcare industry, IDC expects 14% of adult Americans to use a mobile health application in 2011.

"What really distinguishes the year ahead is that these disruptive technologies are finally being integrated with each other – cloud with mobile, mobile with social networking, social networking with 'big data' and real-time analytics," added Gens. "As a result, these once-emerging technologies can no longer be invested in, or managed, as sandbox efforts around the edges of the market. Instead, they are rapidly becoming the market itself and must be addressed accordingly."

IDC's predictions for 2011 are presented in full detail in the report, IDC Predictions 2011: Welcome to the New Mainstream (Doc #225878). In addition, Frank Gens will lead a group discussion of this year's predictions in an IDC Web conference scheduled for December 2 at 12:00 pm U.S. Eastern time. For more information, or to register for this free event, please go to http://www.idc.com/getdoc.jsp?containerId=IDC_P22186.

A recorded version will be available for download until 6/2/2011.

David Lemphers (@davidlem) described Why Cloud is Traditional IT’s Ice-Age! in a 12/2/2010 post to his PwC blog:

I get asked a lot by customers, “What is the big deal about cloud computing?”. It’s a totally fair question, given that most of the information about cloud computing has been about the potential market and revenue from cloud adoption. But that doesn’t go a great deal towards why it’s a good thing for companies to adopt.

There are three things that cloud, or the era of cloud, brings to the table that enables a vision of what I call the fully-automated Enterprise. The three key factors are:

- Virtualization

- Automation

- Business Function Integration

You need all of these things to achieve a fully-automated Enterprise via the cloud. Here’s why.

Virtualization only gets you part way there, in that, with virtualization, you can abstract the underlying resources away from the allocator, which is good because you can deal gracefully with failures, capacity demands, hot-spots, etc. But virtualization still requires a person to manage the request part, and identify how the virtual resources hang together. This is not how consumers think, and it’s the biggest threat to traditional IT organizations. Consumers now think in terms of business function, or workloads, so rather than saying, “I need two SQL Servers and 10TB of storage”, they say, “I need to onboard two new employees”.

So automation becomes really important, because if you can map a resource set to a workload, and spin that up automagically, you can rapidly and efficiently respond to changing conditions and needs. Automation really supports the concept of self-service, which is a core part of a fully-automated Enterprise. It enables the business stakeholder to determine when and what they need, and not be concerned with how it happens. This is critical.

But this still implies the user/consumer/stakeholder has to perform some IT/systems specific action, such as, “I am onboarding two new employees, and I need two email accounts, access to the finance and ERP system, etc.”. This is generally additive to an existing core onboarding process. What is critical in the fully-automated Enterprise is the concept that the automated workload provisioning/management process is fully integrated into the business function. So, when you start the new employee onboarding workflow, part of the process contacts the main controller, assesses if this new set of employees can be supported by the current IT resources allocated, and if not, adjusts the resources to meet the demand, and integrates with other systems, such as charge-back systems for internal billing, etc.
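The onboarding flow described above can be sketched roughly as follows. This is a minimal illustration, not anything from the post: `ResourceController`, its "seat" capacity model, and the charge-back log are all hypothetical names chosen to show a business-level call that checks capacity, adjusts it if needed, and records the event for internal billing.

```python
from dataclasses import dataclass, field


@dataclass
class ResourceController:
    """Hypothetical 'main controller' tracking allocated capacity in seats."""
    capacity: int
    in_use: int = 0
    chargeback_log: list = field(default_factory=list)

    def ensure_capacity(self, extra_seats: int) -> int:
        """Grow the allocation if needed; return how many seats were added."""
        shortfall = self.in_use + extra_seats - self.capacity
        added = max(shortfall, 0)
        self.capacity += added
        return added

    def onboard(self, employees: list, cost_center: str) -> None:
        """Business-level entry point: the caller never names servers or storage."""
        added = self.ensure_capacity(len(employees))
        self.in_use += len(employees)
        # Record the event for a (hypothetical) internal charge-back system.
        self.chargeback_log.append(
            {"cost_center": cost_center,
             "seats": len(employees),
             "capacity_added": added}
        )


controller = ResourceController(capacity=10, in_use=9)
controller.onboard(["alice", "bob"], cost_center="finance")
print(controller.capacity, controller.in_use)  # prints "11 11"
```

The design point is that the caller states a business need (two new employees) and the controller decides whether any resources must change.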

This is where cloud in the Enterprise really makes sense. It changes the traditional conversation between the business and IT, from a “get me some boxes and install some software” to an “I need to respond to this business need”. Also, it helps abstract away a lot of the minutiae and detail from the user/consumer, so they can focus on doing business. And finally, it forces IT to become more agile, and to compete with commodity providers, because once customers start moving their critical workloads to true SaaS providers, where they are paying based on subscription or consumption, and their data is more secure and available than the SLA provided to them by IT, you’ll quickly see the full demise of IT orgs as we know them.

So there you have it. Embrace cloud, convert your Enterprise to be fully-automated, start thinking business function/service integration and not asset and resource management, and you’re going to survive the next IT ice-age!

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

• Important: See the Mark Estberg reported Microsoft’s Cloud Infrastructure Receives FISMA Approval in a 12/2/2010 post article in the Windows Azure Infrastructure section above.

Lydia Leong (@cloudpundit) asserted “Cloud-savvy application architects don’t do things the same way that they’re done in the traditional enterprise” as a prefix to her Designing to fail post of 12/3/2010:

Cloud applications assume failure. That is, well-architected cloud applications assume that just about anything can fail. Servers fail. Storage fails. Networks fail. Other application components fail. Cloud applications are designed to be resilient to failure, and they are designed to be robust at the application level rather than at the infrastructure level.

Enterprises, for the most part, design for infrastructure robustness. They build expensive data centers with redundant components. They buy expensive servers with dual-everything in case a component fails. They buy expensive storage and mirror their disks. And then whatever hardware they buy, they need two of. All so the application never has to deal with the failure of the underlying infrastructure.

The cloud philosophy is generally that you buy dirt-cheap things and expect they’ll fail. Since you’re scaling out anyway, you expect to have a bunch of boxes, so that any box failing is not an issue. You protect against data center failure by being in multiple data centers.
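A minimal sketch of that scale-out assumption, with hypothetical replica names and handler functions standing in for real servers: the caller tries each box in turn and treats an individual failure as routine.

```python
def first_healthy(replicas, request):
    """Send request to each replica in turn; return the first successful reply."""
    errors = []
    for name, handler in replicas:
        try:
            return name, handler(request)
        except ConnectionError as exc:
            errors.append((name, exc))  # this box failed; move on to the next
    raise RuntimeError(f"all replicas failed: {errors}")


def dead_box(request):
    # Stand-in for a server that has gone away.
    raise ConnectionError("host unreachable")


def live_box(request):
    # Stand-in for a healthy server in another data center.
    return request.upper()


replicas = [("dc1-web1", dead_box), ("dc2-web1", live_box)]
served_by, reply = first_healthy(replicas, "ping")
print(served_by, reply)  # prints "dc2-web1 PING"
```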

Cloud applications assume variable performance. Well-architected cloud applications don’t assume that anything is going to complete in a certain amount of time. The application has to deal with network latencies that might be random, storage latencies that might be random, and compute latencies that might be random. The principle of the distributed application of this sort is that just about anything that you’re talking to can mysteriously drop off the face of the Earth at any point in time, or at least not get back to you for a while.
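One common way to code for this is to wrap every remote call in a retry loop with exponential backoff. The sketch below is an illustration under stated assumptions rather than anything from the post: `TransientError`, `call_with_retries`, and `flaky_read` are hypothetical names, and a real application would map its own timeout and connection errors onto the exception being retried.

```python
import random
import time


class TransientError(Exception):
    """Raised by a dependency that failed or did not answer in time."""


def call_with_retries(operation, attempts=4, base_delay=0.01, sleep=time.sleep):
    """Run operation(); on TransientError, back off exponentially and retry."""
    for attempt in range(attempts):
        try:
            return operation()
        except TransientError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the failure to the caller
            # Exponential backoff with jitter so retries do not synchronize.
            sleep(base_delay * (2 ** attempt) * (1 + random.random()))


# Usage: a hypothetical dependency that fails twice before succeeding.
calls = {"count": 0}


def flaky_read():
    calls["count"] += 1
    if calls["count"] < 3:
        raise TransientError("storage did not respond")
    return "payload"


result = call_with_retries(flaky_read)
print(result, calls["count"])  # prints "payload 3"
```

The `sleep` parameter is injectable so the backoff can be skipped in tests.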

Here’s where it gets funkier. Even most cloud-savvy architects don’t build applications this way today. This is why people howl about Amazon’s storage back-end for EBS, for instance — they’re used to consistent and reliable storage performance, and EBS isn’t built that way, and most applications are built with the assumption that seemingly local standard I/O is functionally local and therefore is totally reliable and high-performance. This is why people twitch about VM-to-VM latencies, although at least here there’s usually some application robustness (since people are more likely to architect with network issues in mind). This is the kind of problem things like Node.js were created to solve (don’t block on anything, and assume anything can fail), but it’s also a type of thinking that’s brand-new to most application architects.

Performance is actually where the real problems occur when moving applications to the cloud. Most businesses who are moving existing apps can deal with the infrastructure issues — and indeed, many cloud providers (generally the VMware-based ones) use clustering and live migration and so forth to present users with a reliable infrastructure layer. But most existing traditional enterprise apps don’t deal well with variable performance, and that’s a problem that will be much trickier to solve.

<Return to section navigation list>

Cloud Computing Events

Bill Zack listed Upcoming Azure Boot Camp Webcasts in this 12/3/2010 post to his Architecture & Stuff blog:

All over the world Microsoft ISVs, partners and customers have been meeting for an in-depth training program: The Windows Azure Boot Camp. If you could not make it to one of these events then here is your chance to benefit from the material that they covered.

No ISV that is seriously thinking of improving their profitability by leveraging the power of the cloud and Windows Azure should miss this series.

Here are the Azure Webcast recording links and when they will be available:

- 11/29: MSDN Webcast: Azure Boot Camp: Introduction to Cloud Computing and Windows Azure

- 12/06: MSDN Webcast: Azure Boot Camp: Windows Azure and Web Roles

- 01/03: MSDN Webcast: Azure Boot Camp: Working with Messaging and Queues

- 01/10: MSDN Webcast: Azure Boot Camp: Using Windows Azure Tables

- 01/17: MSDN Webcast: Azure Boot Camp: Diving into BLOB Storage