Windows Azure and Cloud Computing Posts for 11/29/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA) and Hyper-V Cloud

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

The two chapters are also available as HTTP downloads at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

Rinat Abdullin explained how to solve problems with Windows Azure, SQLite and Temp [File Size] in this 11/29/2010 post:

If you are using Cloud Computing environment, be prepared for certain constraints to show up on your way. They can be quite subtle. Here's one.

I'm using SQLite + ProtoBuf + GZip for persisting large datasets in Azure Blobs. This combination (even without GZip) can handle 10-50GB datasets in a single file without much overhead and with a decent flexibility. Of course, there are certain constraints (mostly related to classical scalability issues), however for the command-side of CQRS, non[e] of these apply. Resulting data processing throughput is massively superior to SQL Azure or Azure Table Storage, while costs are negligible.

I really enjoy this combination of technologies. One of the advantages is that everything is open source and I don't need to bother about writing B-tree indexes or de-fragmentation routines for my data structures. However, there was a problem spoiling all the fun. It showed up frequently in the production environment, while I was trying to VACUUM SQLite database (essentially cleaning up and defragmenting everything):

Database or disk is full

This one was driving me a bit crazy, since more than 500GB of disk storage were allocated as Local Resources to the worker roles. As it turns out, this was caused by the interplay of the following things:

- SQLite uses temporary files to perform certain operations (VACUUM is one of them).

- SQLite, being cross-platform in nature, relies on the OS to provide temp files location.

- On Windows Azure both TEMP and TMP point to a single directory that has a maximum size of 100 MB.

Such a directory structure makes sense (just my wild guess, based on what I know about Hyper-V and how I would've implemented Windows Azure VMs in the first place), since we want to have a fixed VM image (with a footprint shared between multiple instances) followed by a small differential VHD and a larger local resource VHD, should the customer request it.

The solution is to define a Local Resource and redirect the environment variables there:

var tempPath = RoleEnvironment.GetLocalResource("Temp").RootPath;

Environment.SetEnvironmentVariable("TEMP", tempPath);

Environment.SetEnvironmentVariable("TMP", tempPath);

The other option for SQLite would've been to use a pragma:

PRAGMA temp_store_directory = 'directory-name';
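The same idea can be tried outside a worker role. What follows is a minimal Python sketch, not Rinat's code: it assumes the standard-library `sqlite3` module, and the helper names `redirect_temp` and `vacuum` are hypothetical. It points the usual temp variables at a roomier directory and then runs VACUUM. The variables are best set before the first SQLite connection is opened, because some SQLite builds may read them only once. Note also that the SQLite documentation marks `PRAGMA temp_store_directory` as deprecated, which is one more reason to prefer the environment-variable route.

```python
import os
import sqlite3
import tempfile


def redirect_temp(temp_dir):
    """Point the process-wide temp variables at temp_dir (hypothetical helper).

    TEMP and TMP are the variables named in the post; TMPDIR and
    SQLITE_TMPDIR are added here as an assumption for non-Windows hosts.
    """
    os.makedirs(temp_dir, exist_ok=True)
    for name in ("TEMP", "TMP", "TMPDIR", "SQLITE_TMPDIR"):
        os.environ[name] = temp_dir
    # Make Python's own tempfile module re-read the environment too.
    tempfile.tempdir = None


def vacuum(db_path):
    """Run VACUUM on db_path and return (size_before, size_after) in bytes."""
    before = os.path.getsize(db_path)
    # isolation_level=None keeps the connection in autocommit mode,
    # because VACUUM cannot run inside an open transaction.
    conn = sqlite3.connect(db_path, isolation_level=None)
    try:
        conn.execute("VACUUM")
    finally:
        conn.close()
    return before, os.path.getsize(db_path)
```

A caller would run `redirect_temp(...)` once at startup, with a path on the large local-resource volume, and only then open databases and call `vacuum(...)`.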

James Hamilton addressed Availability in Globally Distributed Storage Systems in this 11/29/2010 post:

I love high-scale systems and, more than anything, I love data from real systems. I’ve learned over the years that no environment is crueler, less forgiving, or harder to satisfy than real production workloads. Synthetic tests at scale are instructive but nothing catches my attention like data from real, high-scale, production systems. Consequently, I really liked the disk population studies from Google and CMU at FAST2007 (Failure Trends in a Large Disk Population, Disk Failures in the Real World: What Does an MTTF of 1,000,000 Hours Mean to You?). These two papers presented actual results from independent production disk populations of 100,000 each. My quick summary of these 2 papers is basically “all you have learned about disks so far is probably wrong.”

Disks are often the #1 or #2 failing component in a storage system, usually just ahead of memory. Occasionally fan failures lead disk failures but that isn’t the common case. We now have publicly available data on disk failures but not much has been published on other component failure rates and even less on the overall storage stack failure rates. Cloud storage systems are multi-tiered, distributed systems involving 100s to even 10s of thousands of servers and huge quantities of software. Modeling the failure rates of discrete components in the stack is difficult but, with the large amount of component failure data available to large fleet operators, it can be done. What’s much more difficult to model are correlated failures.

Essentially, there are two challenges encountered when attempting to model overall storage system reliability: 1) availability of component failure data and 2) correlated failures. The former is available to very large fleet owners but is often unavailable publicly. Two notable exceptions are the disk reliability data from the two FAST’07 conference papers mentioned above. Other than these two data points, there is little credible component failure data publicly available. Admittedly, component manufacturers do publish MTBF data but these data are often owned by the marketing rather than engineering teams and they range between optimistic and epic works of fiction. [Emphasis added.]

Even with good quality component failure data, modeling storage system failure modes and data durability remains incredibly difficult. What makes this hard is the second issue above: correlated failure. Failures don’t always happen alone, many are correlated, and certain types of rare failures can take down the entire fleet or large parts of it. Just about every model assumes failure independence and then works out data durability to many decimal points. It makes for impressive models with long strings of nines but the challenge is the model is only as good as the input. And one of the most important model inputs is the assumption of component failure independence which is violated by every real-world system of any complexity. Basically, these failure models are good at telling you when your design is not good enough but they can never tell you how good your design actually is nor whether it is good enough.

Where the models break down is in modeling rare events and non-independent failures. The best way to understand common correlated failure modes is to study storage systems at scale over longer periods of time. This won’t help us understand the impact of very rare events. For example, two thousand years of history would not have helped us model or predict that an airplane would be flown into the World Trade Center. And certainly the odds of it happening again 16 min and 20 seconds later would be close to impossible. Studying historical storage system failure data will not help us understand the potential negative impact of very rare black swan events but it does help greatly in understanding the more common failure modes including correlated or non-independent failures.

Murray Stokely recently sent me Availability in Globally Distributed Storage Systems, which is the work of a team from Google and Columbia University. They look at a high-scale storage system at Google that includes multiple clusters of Bigtable, which is layered over GFS, which is implemented as a user-mode application over the Linux file system. You might remember Stokely from a post I did back in March titled Using a Market Economy. In this more recent paper, the authors study 10s of Google storage cells, each of which comprises between 1,000 and 7,000 servers, over a 1 year period. The storage cells studied are from multiple datacenters in different regions being used by different projects within Google.

I like the paper because it is full of data on a high-scale production system and it reinforces many key distributed storage system design lessons including:

- Replicating data across multiple datacenters greatly improves availability because it protects against correlated failures.

  - Conclusion: Two way redundancy in two different datacenters is considerably more durable than 4 way redundancy in a single datacenter.

- Correlation among node failures dwarfs all other contributions to unavailability in production environments.

- Disk failures can result in permanent data loss but transitory node failures account for the majority of unavailability.

To read more: http://research.google.com/pubs/pub36737.html

The abstract of the paper:

Highly available cloud storage is often implemented with complex, multi-tiered distributed systems built on top of clusters of commodity servers and disk drives. Sophisticated management, load balancing and recovery techniques are needed to achieve high performance and availability amidst an abundance of failure sources that include software, hardware, network connectivity, and power issues. While there is a relative wealth of failure studies of individual components of storage systems, such as disk drives, relatively little has been reported so far on the overall availability behavior of large cloud-based storage services. We characterize the availability properties of cloud storage systems based on an extensive one year study of Google's main storage infrastructure and present statistical models that enable further insight into the impact of multiple design choices, such as data placement and replication strategies. With these models we compare data availability under a variety of system parameters given the real patterns of failures observed in our fleet.
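To see why the independence assumption matters so much, here is a toy Python calculation. It is not the paper's model: the probabilities (`p_node`, `p_dc`) and the function names are made-up assumptions chosen only to show the shape of the effect. Each replica is unavailable on its own with probability `p_node`, and a site-wide correlated event takes out every replica in a datacenter with probability `p_dc`.

```python
def p_unavailable_naive(p_node, replicas):
    """Textbook estimate: replicas fail independently, no site-wide events."""
    return p_node ** replicas


def p_unavailable_single_dc(p_node, p_dc, replicas):
    """All replicas share one datacenter, so one site-wide event loses them all."""
    return p_dc + (1.0 - p_dc) * p_node ** replicas


def p_unavailable_multi_dc(p_node, p_dc, datacenters):
    """One replica per datacenter; datacenters are assumed to fail independently."""
    p_replica = p_dc + (1.0 - p_dc) * p_node
    return p_replica ** datacenters


if __name__ == "__main__":
    p_node, p_dc = 0.01, 0.001  # illustrative values, not measured data
    print("naive 4-way:        ", p_unavailable_naive(p_node, 4))
    print("4-way, one site:    ", p_unavailable_single_dc(p_node, p_dc, 4))
    print("2-way, two sites:   ", p_unavailable_multi_dc(p_node, p_dc, 2))
```

With these invented inputs the naive estimate for 4-way replication is 1e-8, but once the correlated term is included the single-site figure is dominated by `p_dc` (about 1e-3), while two replicas in two sites come out near 1.2e-4. The exact numbers mean nothing; the point is that the correlated term, not the replica count, sets the floor.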


SQL Azure Database and Reporting

Steve Yi (pictured below) reported the availability of Real World SQL Azure: Interview with Vittorio Polizzi, Chief Technology Officer at EdisonWeb on 11/29/2010:

As part of the Real World SQL Azure series, we talked to Vittorio Polizzi, Chief Technology Officer at EdisonWeb, about using Microsoft SQL Azure to host his company’s Web Signage application, which manages content for digital signs in retail stores and other venues. Here’s what he had to say:

MSDN: Can you tell us about EdisonWeb and the services you offer?

Polizzi: EdisonWeb is an Italian developer of cutting-edge technologies and advanced web-based solutions for commercial and government customers. Our flagship product is Web Signage, a software platform for creating and managing content for digital signage applications and proximity marketing campaigns. Using Web Signage, our customers can manage interactive and non-interactive digital signs, broadcast audio clips to in-store radios, and send Bluetooth messages.

MSDN: What prompted you to look into Microsoft SQL Azure and Windows Azure?

Polizzi: Customers deploy the Web Signage player software on their PCs and use it to play content created and distributed with the Web Signage platform. They access the Web Signage server application over the web from servers hosted by a local telco. This meant that we needed to identify at least one appropriate data center in each country where we want to offer our service. This made it difficult for us to grow outside Italy.

We also found that managing database servers in a data center environment involved higher costs when compared to Windows Azure and SQL Azure, because of the staff needed. With Windows Azure and SQL Azure, our customers are able to change their number of active licenses on a monthly basis, and the pay-per-use pricing model closely fits our business trends.

MSDN: How is EdisonWeb using SQL Azure and Windows Azure to help address these challenges?

Polizzi: We decided we wanted to move the entire application to the cloud and selected the Windows Azure platform. For customers that select a cloud deployment model, we use Windows Azure Blob Storage to store multimedia content, and the Windows Azure Content Delivery Network to cache that content at data centers around the globe. We use SQL Azure to store sign content metadata, configuration and statistical information, user credentials, and part of the application’s business logic.

Soon, we also want to use Windows Azure AppFabric, specifically the AppFabric Service Bus, to enable customers to link their applications to Web Signage. We also plan to use Windows Azure Marketplace DataMarket so customers can combine Web Signage with other data sources for greater effectiveness.

MSDN: So, what are the chief benefits of making SQL Azure and Windows Azure a deployment option for Web Signage?

Polizzi: We can significantly reduce our costs, easily expand outside Italy, and give customers more flexibility and lower costs. If we can get rid of most of our database servers, we can save nearly U.S.$30,000 a year in server, software, and maintenance costs. Plus, when customers change player licenses, we can scale the related Windows Azure instances up or down, reducing our outflow to match income.

Also, it’s far easier for us to deliver our service globally; we just choose the Microsoft data center closest to each customer. We can offer our service anywhere and deliver it tomorrow. Customers only pay for the services they use, and enjoy higher levels of service availability.

MSDN: What’s next for EdisonWeb?

Polizzi: We are always working on better, groundbreaking services. Microsoft is working hard to make Windows Azure and SQL Azure even more powerful, and we see new features coming. We enjoy this faster pace of innovation, which helps us deliver new features more rapidly. Also, with Microsoft managing our database infrastructure, our staff can focus on our core business: developing great software and providing the best customer support.

Read the full story at:

http://www.microsoft.com/casestudies/Case_Study_Detail.aspx?CaseStudyID=4000008727

To read more SQL Azure customer success stories, visit:

www.SQLAzure.com


Marketplace DataMarket and OData

No significant articles today.


Windows Azure AppFabric: Access Control and Service Bus

Mark Wilson explained Azure AppFabric’s role in easyJet’s journey into the clouds on 11/23/2010:

Last month, I spent some time at Microsoft’s Partner Business Briefing on Transitioning to the Cloud (#pbbcloud). To be honest, the Microsoft presentations were pretty dull, the highlight being the sharp glances from Steve Ballmer as he saw me working on my iPad (which led to some interesting comments in a technical session a short while later) but there was one session that grabbed my attention – one where easyJet’s Bert Craven, an IT architect with the airline, spoke about how cloud computing has changed easyJet’s “real world” IT strategy.

For those who are reading this from outside Europe, easyJet was one of the original UK-based budget airlines and they have grown to become a highly successful operation. Personally, I don’t fly with them if I can help it (I often find scheduled airlines are competitive, and have higher standards of customer service), but that’s not to say I don’t admire their lean operations – especially when you learn that they run their IT on a budget that equates to 0.75% of their revenue (compared with an average of just over 4%, based on Gartner’s IT Key Metrics).

Bert Craven quipped that, with a budget airline, you might be forgiven for thinking the IT department consists of one guy with a laptop in an orange shed, by an airfield, operating on a shoestring budget, but it’s actually 65 people in a very big orange hangar, by a big runway…

Seriously though, operating a £3billion Internet-driven business on an IT budget which is so much smaller than the norm shows that the company’s reputation for leanness is well-deserved. To deliver enterprise-scale IT with this approach requires focus – a focus on differentiation – i.e. those systems that drive competitive advantage or which define the business. In order to achieve this, easyJet has taken commodity systems and pushed them “out of the door” – buying as-a-service products with demanding service level agreements (SLAs) from selected business partners.

easyJet has had a cloud strategy since 2005, when they started moving commodity systems to managed services. But, in 2009, Windows Azure caused a deviation in that approach…

Until 2007, easyJet was growing at 20% p.a. (the company is still experiencing rapid growth today, but not quite at the same level) – and that high level of growth makes it difficult to scale. There’s also a focus on meeting the SLAs that the business demands: easyJet are immensely proud that their easyJet.com availability chart is so dull, showing a constant 100% for several years; and if their flight control systems were unavailable for more than four hours, the entire fleet would be grounded (which is why these systems are never “down”).

So easyJet classified their IT systems into three tiers:

- Commodity: operating system; security; backup; e-mail; access methods; file and print.

- Airline systems: engineering; crew rostering; finance; personnel; flight planning; schedule planning; baggage handling; payroll; slot management; payment systems.

- easyJet Systems (those differentiators that drive competitive advantage): reservation system; revenue management; departure control; crew systems; aircraft systems.

The three tiers of classification are used to drive SLAs of silver (99.9% availability), gold (99.99% availability) and platinum (100% availability), mapped onto the IT architecture such that platinum services operate as a high availability configuration across two sites, gold services can fail over between sites if required, and silver services are provided only from easyJet’s primary site.
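To put those tiers in concrete terms, the small Python sketch below converts an availability percentage into the downtime it permits over a year. The tier percentages come from the paragraph above; the function name and the choice of a 365-day year are assumptions made for illustration.

```python
HOURS_PER_YEAR = 365 * 24  # assumes a non-leap year

# Availability targets for the three tiers described above.
TIERS = {"silver": 99.9, "gold": 99.99, "platinum": 100.0}


def allowed_downtime_hours(availability_percent, period_hours=HOURS_PER_YEAR):
    """Return the hours of downtime an availability target permits per period."""
    if not 0.0 <= availability_percent <= 100.0:
        raise ValueError("availability must be between 0 and 100 percent")
    return (100.0 - availability_percent) / 100.0 * period_hours


if __name__ == "__main__":
    for tier, target in TIERS.items():
        hours = allowed_downtime_hours(target)
        print(f"{tier}: {hours:.3f} hours/year ({hours * 60:.1f} minutes)")
```

Silver works out to roughly 8.8 hours of downtime a year and gold to a little under an hour, while platinum leaves no allowance at all, which is why it needs an active configuration across two sites.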

Alongside this, easyJet has a 5 point IT strategy that’s designed to be simple and cost-effective:

- Use simple, standard, solutions by default.

- Promote innovative use of mainstream technology.

- Use Microsoft technology for the technical platform.

- Provide scalable systems that never restrict the growth of the business.

- Provide 100% up-time for business critical systems.

In order to take account of the disruption from the adoption of cloud computing technologies, easyJet adapted their strategy:

- Use simple, standard, solutions by default: place services in the cloud only when to do so simplifies the solution.

- Promote innovative use of mainstream technology: continually test the market to measure capabilities and penetration of cloud technology (as it becomes more mainstream, it’s better suited to easyJet’s innovation).

- Use Microsoft technology for the technical platform: Windows Azure will be the natural choice, but look at alternatives too.

- Provide scalable systems that never restrict the growth of the business: look for areas where cloud will improve the scalability of systems.

- Provide 100% up-time for business critical systems: wait for cloud computing to mature before committing to high availability usage (no platinum apps in cloud).

Naturally, easyJet started their journey into cloud computing with commodity computing systems (buying in compute and storage capabilities as a service, outsourcing email to a platform as a service offering, etc.) before they started to push up through the stack to look at airline systems. They thought that silver and gold services would be offered from the cloud within the architecture but Windows Azure turned out to be more disruptive than they anticipated (in a good way…).

With many commodity and airline systems now cloud-hosted, easyJet’s IT systems are able to cope with the company’s rapid growth. But their departure control system (a platinum service) has now been running on Windows Azure in 4 airports since January 2010, and the easyjet.com sales channel has also been extended into the cloud, so that it may be offered more broadly in innovative ways (or as Bert put it, “when you suddenly expose your sales channel to Facebook, you need to know you can handle Facebook!”). Now easyJet’s high-level architecture has platinum systems crossing primary and secondary sites, as well as the cloud – something that they originally said they wouldn’t do.

Bert Craven explained the crucial point that easyJet missed in their strategy was an aspect that’s often understated: integration as a service. easyJet believe that the potential of the cloud as an integration platform is huge and they use Windows Azure AppFabric (formerly known as BizTalk Internet Services, then as Microsoft .NET Services).

With a traditional secure service in a data centre, consumers are allowed to punch through the firewall on a given port then, after successfully negotiating security, they can consume the service. AppFabric turns this model inside out, taking the security context and platform into the cloud. With AppFabric, the service makes an outbound connection through the firewall (security departments like outbound connections) and consumers can locate services and connect in a secure manner within the cloud. AppFabric is not just for web service endpoints either: it can do anything from send a tweet to streaming live video; and endpoints are getting smarter too with rich integration functionality (message routing, store and forward, etc.)
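The inside-out model is easier to picture with a toy. The Python sketch below is an in-process stand-in only, using threads and queues; it is not the AppFabric Service Bus API, and the `Relay` class, its methods and the `"departure-control"` name are all hypothetical. The service registers with the relay first (the equivalent of its outbound connection), and consumers then reach it by name through the relay rather than through an inbound firewall port.

```python
import queue
import threading


class Relay:
    """Stands in for the cloud rendezvous point (hypothetical, in-process)."""

    def __init__(self):
        self._inboxes = {}
        self._lock = threading.Lock()

    def listen(self, name):
        """Called by the service: register under a name and get an inbox."""
        inbox = queue.Queue()
        with self._lock:
            self._inboxes[name] = inbox
        return inbox

    def call(self, name, payload, timeout=5.0):
        """Called by a consumer: forward payload and wait for the reply."""
        with self._lock:
            inbox = self._inboxes[name]  # KeyError if nothing is listening
        reply = queue.Queue(maxsize=1)
        inbox.put((payload, reply))
        return reply.get(timeout=timeout)


def serve(inbox, handler):
    """Service loop: answer relayed requests until a None sentinel arrives."""
    while True:
        item = inbox.get()
        if item is None:
            return
        payload, reply = item
        reply.put(handler(payload))


if __name__ == "__main__":
    relay = Relay()
    inbox = relay.listen("departure-control")
    worker = threading.Thread(
        target=serve, args=(inbox, lambda msg: f"ack: {msg}"), daemon=True
    )
    worker.start()
    print(relay.call("departure-control", "boarding closed"))
    inbox.put(None)  # stop the service loop
    worker.join()
```

The consumer never learns where the service runs; it only knows the relay and a name, which is what makes extending an existing on-premises service into the cloud a comparatively small step.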

easyJet see AppFabric as a game changer because it’s made them ask different questions:

- Instead of “is a new service we’re building a cloud service or an on-premise service?”, the question becomes “might this service have some cloud endpoints and components – is this in fact a hybrid service?”

- “Could we migrate an existing service to the cloud?” becomes “could we extend an existing service into the cloud?”

Bert Craven believes that AppFabric is the ace in the pack of Windows Azure technologies because it’s a small step to take an existing service and expose some endpoints in the cloud (easy to swallow). It’s also more of an enabler than a disruptor, so AppFabric is quite rightly perceived as lower risk (and almost certainly lower cost). Extending a service is a completely different proposition to moving entire chunks of compute and storage capabilities to the cloud. Consequently there is a different value proposition, making use of existing assets (which means it’s easier to prove a return on investment – delivering more value with a greater return on existing investments by extending them quickly becomes very attractive) – and an IT architect’s job is to create maximum business value from existing investments.

AppFabric offers rich functionality: it’s not just a cheap shortcut to opening a firewall but has a rich seam of baked-in integration functionality and, ultimately, it has accelerated easyJet’s acceptance of cloud computing. 18 months ago, Craven says, there were sharp intakes of breath at any talk of running departure control in the cloud, but now that a few airports have been running that way for 10 months, it’s widely accepted that all departure control systems will transition to the cloud.

Bert Craven sees AppFabric as a unifying paradigm – with Windows Azure AppFabric in the cloud and Windows Server AppFabric on-premise (and it gets stronger when looking at some other Microsoft technologies, like the Project Sydney virtual private network and identity federation developments – providing a continuous and unified existence with zero friction as services move from on-premise to cloud and back again as required).

In summing up, Bert Craven described AppFabric as a gateway technology – enabling business models that were simply not possible previously, opening a range of possibilities. Now, when easyJet thinks about value propositions of cloud and cloud solutions, equal thought is given to the cloud as an integration platform, offering a huge amount of value, at relatively low cost and risk.

Yefim V. Natis, David Mitchell Smith and David W. Cearley wrote Windows Azure AppFabric: A Strategic Core of Microsoft's Cloud Platform on 11/15/2010 as Gartner RAS Core Research Note G00208835 (missed when full-text version posted):

Continuing strategic investment in Windows Azure is moving Microsoft toward a leadership position in the cloud platform market, but success is not assured until user adoption confirms the company's vision and its ability to execute in this new environment.

Overview

In the nearly one year since Microsoft Azure AppFabric was unveiled at its PDC 2009 conference, much of the promised technology has been delivered, and important new capabilities have been introduced for 2011. The Microsoft cloud application platform is gaining critical technical mass. CIOs, CTOs, project leaders and other IT planners should track the evolution of Microsoft Windows Azure investments and architecture as potentially one of the leading, long-term offerings for cloud computing.

Microsoft continues to make strategic investments in product development and marketing, and in financial commitments to achieve leadership in cloud computing. Leadership in cloud computing has become a companywide priority at Microsoft, as leadership in Web computing had been a top priority for the company in the 1990s.

Microsoft intends to be a key player in all three layers of cloud software architecture: system infrastructure services, application infrastructure services and application services, and to apply its technology to private and public cloud projects.

The Windows Azure AppFabric has emerged as the core application platform technology in Microsoft's cloud-computing vision.

Various project teams at Microsoft are contributing to the company's evolving cloud-computing technology and vision. These teams are not always synchronized to deliver coordinated product road maps, which can create confusion in the market about the company's overriding strategy.

Microsoft technology users interested in cloud computing can anticipate that the company will deliver in the next two to three years a competitive cloud platform technology. However, the early offerings are incomplete and unproven. Users planning to use Microsoft cloud computing in 2011 should be prepared for ongoing changes in underlying technologies, and some advanced programming effort to build a scalable multitenant application.

IT planners considering cloud-computing projects should include Microsoft on their candidate long lists, with the understanding that in the short term the Microsoft cloud platform technology is still "under construction."

Table of Contents

- Analysis

- AppFabric Container

- AppFabric Services

- Service Bus

- Access Control

- Caching

- Integration

- Windows Azure AppFabric Composition Model and Composite App Service

- Other Services

- Windows Azure Marketplace

- Influence on Other Microsoft Projects

- Microsoft Software as a Service (Dynamics)

- BizTalk Server

- More Work Is Still Ahead

- User Advice

List of Figures

Figure 1. Microsoft's Windows Azure AppFabric Architecture Plan

Analysis

Windows AppFabric was announced in 2009 as two independent technology stacks — one delivered with Windows Server 2008 for use on-premises and the other as part of Windows Azure. The long-term intent was to unify the two sides of the offering despite their initial isolation. This plan was part of the strategic vision of a hybrid computing environment where applications are distributed between on-premises and public cloud data centers. To that end, earlier in 2010, Microsoft announced its Windows Azure platform appliance offering — a cloud-enabled technology available to partners and some IT organizations to establish a Windows Azure cloud service environment outside of Microsoft data centers (as of this publication date, none of these is yet in operation, although several partners are in the process of deploying it).

At its PDC10 conference, Microsoft unveiled the next generation of technologies that will become part of Windows Azure over the next 12 months. In this updated vision, Windows Azure AppFabric becomes a centerpiece of the Microsoft cloud strategy, delivering advanced forms for multitenancy in its new AppFabric Container, integration as a service, business process management as a service and other core middleware services. Windows Azure AppFabric (see Figure 1), together with SQL Azure and the Windows Azure OS form the core Microsoft cloud offering — the Windows Azure Platform.

Figure 1. Microsoft's Windows Azure AppFabric Architecture Plan

Source: Microsoft (October 2010)

Read more of the full text Research Note.


Windows Azure Virtual Network, Connect, and CDN

No significant articles today.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

The Windows Azure Team released Windows Azure SDK and Windows Azure Tools for Microsoft Visual Studio (November 2010) [v1.3.11122.0038] to the Web on 11/29/2010:

Windows Azure Tools for Microsoft Visual Studio, which includes the Windows Azure SDK, extends Visual Studio 2010 to enable the creation, configuration, building, debugging, running, packaging and deployment of scalable web applications and services on Windows Azure.

Overview

Windows Azure™ is a cloud services operating system that serves as the development, service hosting and service management environment for the Windows Azure platform. Windows Azure provides developers with on-demand compute and storage to host, scale, and manage web applications on the internet through Microsoft® datacenters.

Windows Azure is a flexible platform that supports multiple languages and integrates with your existing on-premises environment. To build applications and services on Windows Azure, developers can use their existing Microsoft Visual Studio® expertise. In addition, Windows Azure supports popular standards, protocols and languages including SOAP, REST, XML, Java, PHP and Ruby. Windows Azure is now commercially available in 41 countries.

Windows Azure Tools for Microsoft Visual Studio extend Visual Studio 2010 to enable the creation, configuration, building, debugging, running, packaging and deployment of scalable web applications and services on Windows Azure.

New for version 1.3:

- Virtual Machine (VM) Role (Beta): Allows you to create a custom VHD image using Windows Server 2008 R2 and host it in the cloud.

- Remote Desktop Access: Enables connecting to individual service instances using a Remote Desktop client.

- Full IIS Support in a Web role: Enables hosting Windows Azure web roles in an IIS hosting environment.

- Elevated Privileges: Enables performing tasks with elevated privileges within a service instance.

- Virtual Network (CTP): Enables support for Windows Azure Connect, which provides IP-level connectivity between on-premises and Windows Azure resources.

- Diagnostics: Enhancements to Windows Azure Diagnostics enable collection of diagnostics data in more error conditions.

- Networking Enhancements: Enables roles to restrict inter-role traffic and to use fixed ports on InputEndpoints.

- Performance Improvement: Significantly improved performance for local machine deployment.

Windows Azure Tools for Microsoft Visual Studio also includes:

- C# and VB Project creation support for creating a Windows Azure Cloud application solution with multiple roles.

- Tools to add and remove roles from the Windows Azure application.

- Tools to configure each role.

- Integrated local development via the compute emulator and storage emulator services.

- Running and Debugging a Cloud Service in the Development Fabric.

- Browsing cloud storage through the Server Explorer.

- Building and packaging of Windows Azure application projects.

- Deploying to Windows Azure.

- Monitoring the state of your services through the Server Explorer.

- Debugging in the cloud by retrieving IntelliTrace logs through the Server Explorer.

Download and enjoy!

Gaurav Mantri posted Announcing the launch of Azure Management Cmdlets, a collection of Windows PowerShell Cmdlets for Windows Azure Management on 11/29/2010:

Today I am pleased to announce that our Azure Management Cmdlets product is now LIVE. It has been in beta for the last 3 months or so.

What’s Azure Management Cmdlets:

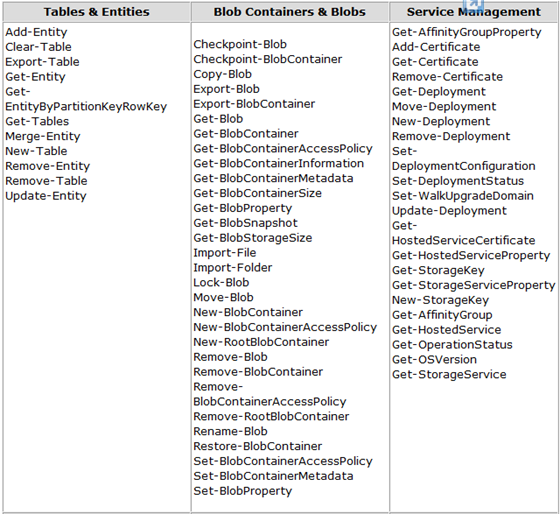

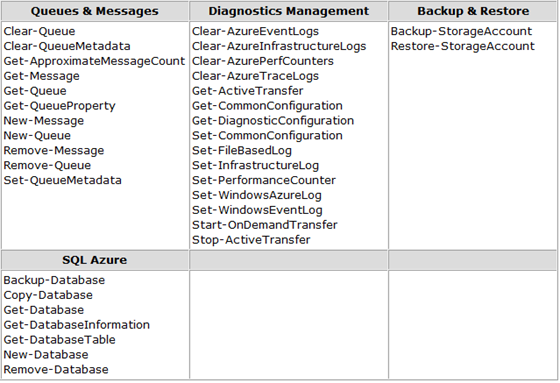

Azure Management Cmdlets is a set of Windows PowerShell Cmdlets for complete Windows Azure management. It consists of close to 100 cmdlets to manage your Azure Storage (Tables, Blobs and Queues), Hosted Services (Deployment, Storage Services, Affinity Groups and Guest OS), Diagnostics, and SQL Azure (Copy, Backup etc.). It also includes cmdlets to back up your storage account data (tables & blobs) on your local computer and restore storage account data from the backups. It supports full, partial and incremental backups.

Following is the list of cmdlets available in Azure Management Cmdlets. You can read details about these cmdlets by visiting product details page at http://www.cerebrata.com/Products/AzureManagementCmdlets/Details.aspx:

What do you need to use Azure Management Cmdlets:

Azure Management Cmdlets are built using .NET 4.0, so you need a computer with .NET 4.0 installed. Since these are PowerShell cmdlets, you need to have PowerShell installed on your computer as well. Other than that, you need access to Windows Azure Storage (Storage Account Name & Key) and Windows Azure Hosted Services (Subscription Id & API Certificates). If you’re using these cmdlets to access local development storage, you also need to have the Azure SDK installed on the computer.

Version/Pricing:

Basically Azure Management Cmdlets comes in two flavors: Professional & a free 30 day trial. Following table summarizes the difference between these versions:

Currently you can purchase a license for Azure Management Cmdlets for $69.99 (USD). We’re offering a 10% discount to existing and new Cloud Storage Studio and/or Azure Diagnostics Manager customers (paid and/or complimentary). We also have a volume discount offer. Following table summarizes the pricing for Azure Management Cmdlets:

Full disclosure: I have free use of Cerebrata’s Cloud Storage Studio and Azure Diagnostics Manager products.

Alon Fliess announced a forthcoming Cloudoscope™ - the first Cloud Cost Profiler™ for Windows Azure on 11/28/2010:

As you probably know, we have established a new company, and as we said, we aimed to deliver cutting edge products and services to the developers' community.

I am proud to announce our vision, methodology and tools that help you develop applications wisely in the era of the cloud.

There are many reasons to move to the cloud, for example, this is taken from the Windows Azure site:

"Efficiency: Windows Azure improves productivity and increases operational efficiency by lowering up-front capital costs. Customers and partners can realize a reduction in Total Cost of Operations of some workloads by up to 30 – 40% over a 3 year period. The consumption based pricing, packages and discounts for partners lower the barrier to entry for cloud services adoption and ensure a predictable IT spend. See Windows Azure pricing."

Can you really predict your Total Cost of Operations? What affects this cost? Is the architect's and developers' work a factor in this cost?

Think about your electricity bill. Suppose you want to reduce the Total Cost of Operation of your house. How do you know which electrical equipment costs you the most? You need to measure each piece of equipment and compare the resulting price with the value it provides you.

The same idea works with your code in the cloud. You need to measure the business request price. Moreover, you need to know the cost of a function, so you will be able to cost-optimize your code.

Cloudoscope™ is a suite of profiling and optimization tools for use by developers who wish to build or port an application to the cloud. The first release of the product targets the Windows Azure platform and the .NET framework.

Cloudoscope™ - cut the total cost of ownership of cloud based applications.

Cloudoscope™ is developed by CloudValue™, which is a newly established subsidiary of CodeValue™.

Cloudoscope™ main features:

- Provide correlation between code and cost

- Cut total cost of ownership and save money

- Show the cost of each function and relevant line of code

- Show the cost of business requests

- Show cost improvement or degradation after a code change

- Provide optimization advice

- Provide guidance to Cost Oriented Development™

- Help trade off service quality vs. cost

- Provide a framework for developing Cost Oriented Unit Tests™

- Cost oriented cloud computing standard approval

Call to action:

Currently we are spending our time finishing the first alpha version of the product. We are looking for beta users that want to try our tools and provide meaningful feedback. You can register here.

Arnon Rotem-Gal-Oz provided additional details about Cloudoscope – a cost profiler for the cloud and its team on 11/29/2010:

I am very happy to announce that I am joining CodeValue. CodeValue, as our site says, is the home for software experts. Indeed, the company is built of a group of consultants and we provide mentoring and consulting in the areas of architecture, software development and technology. While I am going to be doing some consulting, the main reason for me to join (and my main role – as VP product delivery) is the other business area of the company – building cutting edge products for developers. Which brings me to the topic of this post: Cloudoscope™, the first in our line of products.

Cloud computing brings the era of consumption-based pricing and promises to transform computing into a utility like electricity or water. The pay-as-you-go model brings elasticity and cost savings since, for example, you don’t need to stock computing power for peak loads. When the need arises, you can just add more instances.

Cloud computing also means that your cost structure changes. For example, on Windows Azure a Service Bus connection costs you between $2 and $4 (500 connections @ $995, 1 @ $3.99, as mentioned here). It is easy to see how many connections you use, but how many connections do you really need? Another example: an Amazon RDS (which gives you MySQL capabilities) extra large (high memory) reserved instance will cost you $1325 (1-year) + $0.262 per hour + $0.1 per GB/month + $0.1 per million requests. On the other hand, an extra large high memory EC2 instance (on which you can install MySQL) will cost you $1325 (1-year) + $01.17 per hour (plus you need to figure out how to persist the data). Which will be cheaper for your usage scenario? Finally, partial compute hours are billed as full hours and booting a new instance takes a few minutes. If your instance is idle, which is cheaper given the way your system is used: shutting the instance down (deleting the deployment in Azure) or leaving it running?
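The arithmetic behind two of these questions can be sketched in a few lines of C#. This is only an illustration, not part of Cloudoscope: the `CostSketch` class and its method names are hypothetical, and the hourly rate passed to `ComputeCharge` is whatever rate applies to your instance size.

```csharp
using System;

// Hypothetical helper for back-of-the-envelope cloud cost arithmetic.
public static class CostSketch
{
    // Price per connection when buying a pack, e.g. 500 connections for $995.
    public static decimal PerConnection(decimal packPrice, int connections)
    {
        return packPrice / connections;
    }

    // Partial compute hours are billed as full hours, so round up.
    public static int BilledHours(TimeSpan running)
    {
        return (int)Math.Ceiling(running.TotalHours);
    }

    // Charge for one instance given its running time and an assumed hourly rate.
    public static decimal ComputeCharge(TimeSpan running, decimal hourlyRate)
    {
        return BilledHours(running) * hourlyRate;
    }
}
```

With the figures quoted above, `CostSketch.PerConnection(995m, 500)` gives $1.99 per connection versus $3.99 for a single connection, and an instance that runs for 61 minutes is billed for two hours.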

Cloudoscope is our effort to try to get these types of questions answered and put you in control of your total cost of ownership. Here are some of the features we’re looking at (from Alon Fliess CodeValue’s CTO blog):

- Provide correlation between code and cost

- Cut total cost of ownership and save money

- Show the cost of each function and relevant line of code

- Show the cost of business requests

- Show cost improvement or degradation after a code change

- Provide optimization advice

- Provide guidance to Cost Oriented Development™

- Help trade off service quality vs. cost

- Provide a framework for developing Cost Oriented Unit Tests™

- Cost oriented cloud computing standard approval

We’ve got some top-notch talent working on this, starting with Alon Fliess (whom I already mentioned), Oren Eini (a.k.a. Ayende), Daniel Petri as well as the rest of the CodeValue team. We’re rapidly approaching the alpha stage, and we’re already looking forward and seeking beta testers and beta sites. If you are moving your solution into the cloud, would like to try out our tools and are willing to provide meaningful feedback, please register at our site or drop me a note.

Cloudoscope gains credibility in my book by having Ayende on the team.

Eric G. Troup reported Service Delivery Broker Catalyst Demonstrates the 4th Cloud Layer with Windows Azure and SQL Azure on 11/28/2010:

Microsoft and Portugal Telecom SAPO collaborated on and demonstrated the TM Forum Service Delivery Broker Catalyst for Management World Americas held in Orlando, Florida 9-11 November 2010.

The Service Delivery Broker Catalyst was originally intended to test and validate the work of the TM Forum Software Enabled Services Management Solution (formerly known as the Service Delivery Framework), applicable parts of the TM Forum Frameworx, and certain other industry standards through a multi-company (SaaS) platform supplemented by a Service Delivery Broker and service lifecycle management governance system.

PT SAPO built up their all Microsoft technology based SDB to its current level of functionality over a four year period. Today, the on-premises private cloud implementation provides production support for PT SAPO Web, MEO IPTV (Mediaroom), and PT TMN Mobile subscribers. For purposes of this catalyst, PT SAPO ported the entire SDB and its supporting systems to Windows Azure and SQL Azure and demonstrated ten “Business Models as a Service” that could be associated with any new offer. Remarkably, this was done by four developers in only two months.

Today there are generally three recognized layers to the cloud:

- SaaS

- PaaS

- IaaS

The Service Delivery Broker actually sits on top of the SaaS layer. It could be thought of as an emerging “fourth layer” to the cloud that provides a governance model and prescriptive guidance for the service architect and service designer. The SDB enables the creation of manageable services and facilitates end-to-end service management. The SDB adds these additional key functions:

- A product / service development environment that enables cost-effective and standardized service delivery across all service lifecycle phases (concept, design, deploy, operation and retirement).

- A common runtime SDB that provides efficient instrumented access to common services and service enablers.

With the porting of the SDB to Windows Azure, the SDB can be deployed as “SDB as a Service” either on-premise, on Windows Azure, or in a flexible, hybrid cloud mode.

For the Catalyst, the developer environment added a standard way for the service designer to add TM Forum SES Service Management Interfaces (SMI) to any individual service enabler or service mashup. The presence of the SMI facilitates end-to-end management and enables the rapid monetization of new services.

Core Catalyst Project Values identified include:

- Cloud Service Providers interested in practical experience and best practices concerning implementing SES concepts within their own hosted service offerings.

- Architects interested in gaining a better perspective on architectural approaches and knowing more details about architectural decisions when designing and implementing new product offers leveraging cloud resources.

- Service Designers interested in the unified user interface and how to implement prescriptive guidance for service templates.

- Managers interested in implementing new business models and opportunities.

- Operations interested in real-time statistics and reports that ultimately permit visibility into what drives Customer Satisfaction and operational expenses.

Eric is a Senior Principal Architect at Microsoft serving the telecommunications industry.

Rob Tiffany (@RobTiffany) continued his Windows Phone 7 Line of Business App Dev :: Improving the In-Memory Database series on 11/28/2010:

About a month ago, I wrote an article intended to help you fill some of the gaps left by the missing SQL Server Compact database. Since your Windows Phone 7 Silverlight app is consuming an ObservableCollection of objects streaming down from Windows Azure and SQL Azure, it makes sense to organize those objects in a database-like format that’s easy to work with. If you’ve ever worked with Remote Data Access (RDA) in the past, the notion of pre-fetching multiple tables to work with locally should look familiar.

In this case, each ObservableCollection represents a table, each object represents a row, and each object property represents a column. I had you create a Singleton class to hold all these objects in memory to serve as the database. The fact that Silverlight supports Language Integrated Query (LINQ) means that you can use SQL-like statements to work with multiple ObservableCollections of objects.

If you’re wondering why I have you cache everything in memory in a Singleton, there are a few reasons. For starters, it makes it easy to query everything with LINQ with the fastest performance possible for single and multi-table JOINs. Secondly, I don’t represent a Microsoft product group and therefore wouldn’t engineer an unsupported provider that can query subsets of serialized data from files residing in Isolated Storage. Finally, I don’t want you to accidentally find yourself with multiple instances of the same ObservableCollection when pulling data down from Azure or loading it from Isolated Storage. Forcing everything into a Singleton prevents you from wasting memory or updating objects in the wrong instance of an ObservableCollection. An inconsistent database is not a good thing. Don’t worry, you can control which tables are loaded into memory.
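As a rough illustration of the table/row/column analogy, the following sketch joins two ObservableCollections held in a Singleton with a LINQ query. It is not the series' actual Database.cs: the `Route` class, the `Routes` table, and the `Queries` helper are assumptions made up for this example, and `Customer` is trimmed to three properties.

```csharp
using System.Collections.Generic;
using System.Collections.ObjectModel;
using System.Linq;

public class Customer
{
    public int CustomerId { get; set; }
    public int RouteId { get; set; }
    public string Name { get; set; }
}

// Hypothetical second table, used only to demonstrate a JOIN.
public class Route
{
    public int RouteId { get; set; }
    public string Description { get; set; }
}

// Singleton that plays the role of the in-memory database.
public sealed class Database
{
    private static readonly Database instance = new Database();

    private Database()
    {
        Customers = new ObservableCollection<Customer>();
        Routes = new ObservableCollection<Route>();
    }

    public static Database Instance { get { return instance; } }

    public ObservableCollection<Customer> Customers { get; set; }
    public ObservableCollection<Route> Routes { get; set; }
}

public static class Queries
{
    // Roughly: SELECT c.Name FROM Customers c JOIN Routes r
    //          ON c.RouteId = r.RouteId WHERE r.Description = @route ORDER BY c.Name
    public static List<string> CustomersOnRoute(string routeDescription)
    {
        var query = from c in Database.Instance.Customers
                    join r in Database.Instance.Routes on c.RouteId equals r.RouteId
                    where r.Description == routeDescription
                    orderby c.Name
                    select c.Name;
        return query.ToList();
    }
}
```

Because every caller goes through `Database.Instance`, the query always sees the same collections that the UI is bound to.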

So what is this article all about and what are the “improvements” I’m talking about?

This time around, I’m going to focus on saving, loading and deleting the serialized ObservableCollections from Isolated Storage. In that last article, I showed you how to serialize/de-serialize the ObservableCollections to and from Isolated Storage using the XmlSerializer. This made it easy for you to save each table to its own XML file which sounds pretty cool.

So what’s wrong with this?

Saving anything as XML means that you’re using the largest, most verbose form of serialization. After hearing me preach about the virtues of doing SOA with WCF REST + JSON, using the XmlSerializer probably seems out of place. Luckily, the DataContractJsonSerializer supported by Silverlight on Windows Phone 7, which gives you the most efficient wire protocol for data-in-transit, can also be used to save those same .NET objects to Isolated Storage. So the first improvement in this article comes from shrinking the size of the tables and improving the efficiency of the serialization/de-serializing operations to Isolated Storage using out-of-the-box functionality.

While going from XML to JSON for your serializing might be good enough, there’s another improvement in the way you write the code that will make this much easier to implement for your own projects. A look back to the previous article reveals a tight coupling between the tables that needed to be saved/loaded and the code needed to make that happen. This meant that you would have to create a SaveTable and LoadTable method for each table that you wanted to retrieve from Azure. The new code you’re about to see is generic and allows you to use a single SaveTable and LoadTable method even if you decide to download 100 tables.

Enough talk already, let’s see some code. Launch your ContosoCloud solution in Visual Studio and open Database.cs. I want you to overwrite the existing code with the code shown below:

[~100 lines of C# source code elided for brevity.]

Looking from top to bottom, the first change you’ll notice is the new SaveTable method where you pass in the desired ObservableCollection and table name in order to serialize it as JSON using the DataContractJsonSerializer. The next method down the list is LoadTable where you pass in the same parameters as SaveTable but you get back a de-serialized ObservableCollection. The last new method in the Database Singleton is DropTable which simply deletes the serialized table from Isolated Storage if you don’t need it anymore.
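Since the article's listing is elided here, the following is only a minimal sketch of what generic save, load and drop methods built on the DataContractJsonSerializer might look like. It is not Rob's code. As assumptions for the sake of a self-contained example, it writes to a folder under the temp path instead of Isolated Storage, uses a hypothetical `TableStore` class rather than the Database Singleton, trims `Customer` to two properties, and has `LoadTable` take only the table name.

```csharp
using System;
using System.Collections.ObjectModel;
using System.IO;
using System.Runtime.Serialization;
using System.Runtime.Serialization.Json;

[DataContract]
public class Customer
{
    [DataMember] public int CustomerId { get; set; }
    [DataMember] public string Name { get; set; }
}

public static class TableStore
{
    // Assumption: a temp folder stands in for Isolated Storage.
    private static readonly string Root =
        Path.Combine(Path.GetTempPath(), "ContosoTables");

    private static string PathFor(string tableName)
    {
        return Path.Combine(Root, tableName + ".json");
    }

    // Serializes any table type as JSON, overwriting a previous save.
    public static void SaveTable<T>(T table, string tableName)
    {
        Directory.CreateDirectory(Root);
        using (FileStream stream = File.Create(PathFor(tableName)))
        {
            new DataContractJsonSerializer(typeof(T)).WriteObject(stream, table);
        }
    }

    // De-serializes a saved table; throws FileNotFoundException if it is missing.
    public static T LoadTable<T>(string tableName)
    {
        using (FileStream stream = File.OpenRead(PathFor(tableName)))
        {
            return (T)new DataContractJsonSerializer(typeof(T)).ReadObject(stream);
        }
    }

    // Deletes the serialized table; throws if there is nothing to delete.
    public static void DropTable(string tableName)
    {
        string path = PathFor(tableName);
        if (!File.Exists(path))
        {
            throw new FileNotFoundException("Table not found", path);
        }
        File.Delete(path);
    }
}
```

The type parameter is what removes the per-table coupling: the same three methods work for `ObservableCollection<Customer>` or any other serializable table type.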

So how do you call this code?

Bring up MainPage.xaml.cs, and find the click event for Save button. Delete the existing XmlSerializer code and replace it with the following:

    try
    {
        Database.Instance.SaveTable<ObservableCollection<Customer>>(Database.Instance.Customers, "Customers");
    }
    catch (Exception ex)
    {
        MessageBox.Show(ex.Message);
    }

The code above shows you how to call the SaveTable method in the Singleton with the appropriate syntax to pass in the ObservableCollection type as well as actual ObservableCollection value and name.

Now find the click event for the Load button, delete the existing code and paste in the following:

    try
    {
        Database.Instance.Customers = Database.Instance.LoadTable<ObservableCollection<Customer>>(Database.Instance.Customers, "Customers");
    }
    catch (Exception ex)
    {
        MessageBox.Show(ex.Message);
    }

This code looks pretty much the same as the SaveTable code except that you set Database.Instance.Customers equal to the return value from the method. For completeness sake, drop another button on MainPage.xaml and call it Drop. In its click event, paste in the following code:

    try
    {
        Database.Instance.DropTable("Customers");
    }
    catch (Exception ex)
    {
        MessageBox.Show(ex.Message);
    }

For this code, just pass in the name of the table you want to delete from Isolated Storage and it’s gone.

It’s time to hit F5 so you can see how things behave.

When your app comes to life in the emulator, I want you to exercise the system by Getting, Adding, Updating and Deleting Customers. In between, I want you to tap the Save button, close the app, reload the app and tap the Load button and then View Customers to ensure you’re seeing the list of Customers you expect. Keep in mind that when you Save, you overwrite the previously saved table. Likewise, when you Load, you overwrite the current in-memory ObservableCollection. Additionally, Saving, Loading, and Dropping tables that don’t exist should throw an appropriate error message.

So what’s the big takeaway for these tweaks I’ve made to the in-memory database?

While switching serialization from XML to JSON is a great improvement in size and efficiency, I truly believe that making the SaveTable and LoadTable methods generic and reusable will boost developer productivity. The new ease with which you can Save and Load 1, 10 or even 1,000 tables makes this more attractive to mobile developers that need to work with local data.

So where do we go from here?

You now have some of the basic elements of a database on Windows Phone 7. You don’t have ACID support, indexes, stored procedures or triggers but you have a foundation to build on. So what should be built next?

To help ensure database consistency, I would add an AutoFlush feature next. SQL Server Compact flushes its data to disk every 10 seconds and there’s nothing to prevent you from using the SaveTable method to do the same. A timer set to fire at a user-specified interval that iterates through all the ObservableCollections and saves them will help keep your data safe from battery loss and unforeseen system failures. The fact that your app can be tombstoned at any moment when a user taps the Back button makes an AutoFlush feature even more important.
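One way such an AutoFlush feature could be sketched is shown below. This is an assumption-laden illustration rather than part of the ContosoCloud solution: the `AutoFlusher` class is hypothetical, each registered delegate is expected to wrap a SaveTable call for one table, and the sketch uses `System.Threading.Timer`, whose callback runs on a thread-pool thread.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

// Hypothetical AutoFlush helper: runs every registered save action on a timer.
public sealed class AutoFlusher : IDisposable
{
    private readonly List<Action> saveActions = new List<Action>();
    private readonly object gate = new object();
    private Timer timer;

    // Register one save action per table, e.g. a lambda that calls SaveTable.
    public void Register(Action saveAction)
    {
        lock (gate)
        {
            saveActions.Add(saveAction);
        }
    }

    // Start (or restart) flushing at the user-specified interval.
    public void Start(TimeSpan interval)
    {
        if (timer != null)
        {
            timer.Dispose();
        }
        timer = new Timer(state => Flush(), null, interval, interval);
    }

    // Saves every registered table; the lock keeps two flushes from overlapping.
    public void Flush()
    {
        lock (gate)
        {
            foreach (Action save in saveActions)
            {
                save();
            }
        }
    }

    public void Dispose()
    {
        if (timer != null)
        {
            timer.Dispose();
            timer = null;
        }
    }
}
```

Calling `Flush()` directly from the app's deactivation handling, in addition to the timer, would cover the tombstoning case mentioned above.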

Anything else?

At the beginning of this article I mentioned RDA which is a simple form of data synchronization. It’s simple because it only tracks changes on the client but not the server. To find out what’s new or changed on the server, RDA requires local tables on the device to be dropped and then re-downloaded from SQL Server. With the system I’ve built and described throughout this series of articles, we already have this brute force functionality. So what’s missing is client-side change tracking. To do this, I would need to add code that fires during INSERTS, UPDATES, and DELETES and then writes the appropriate information to local tracking tables. To push those changes back to SQL Azure, appropriate code would need to call WCF REST + JSON Services that execute DML code on Windows Azure.
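A minimal sketch of the client-side half of that idea follows, assuming the tracking hooks into the `CollectionChanged` event that ObservableCollection already raises. The `ChangeTracker`, `TrackedChange` and `ChangeKind` names are hypothetical. The sketch records only inserts and deletes; tracking updates would additionally require the row objects to raise property-change notifications.

```csharp
using System.Collections.Generic;
using System.Collections.ObjectModel;
using System.Collections.Specialized;

public enum ChangeKind { Insert, Delete }

// One entry in the hypothetical local tracking table.
public class TrackedChange<T>
{
    public ChangeKind Kind { get; set; }
    public T Row { get; set; }
}

// Records INSERTs and DELETEs made to one in-memory table.
public class ChangeTracker<T>
{
    private readonly List<TrackedChange<T>> changes = new List<TrackedChange<T>>();

    public ChangeTracker(ObservableCollection<T> table)
    {
        table.CollectionChanged += OnCollectionChanged;
    }

    public IList<TrackedChange<T>> Changes { get { return changes; } }

    private void OnCollectionChanged(object sender, NotifyCollectionChangedEventArgs e)
    {
        switch (e.Action)
        {
            case NotifyCollectionChangedAction.Add:
                Record(ChangeKind.Insert, e.NewItems);
                break;
            case NotifyCollectionChangedAction.Remove:
                Record(ChangeKind.Delete, e.OldItems);
                break;
            case NotifyCollectionChangedAction.Replace:
                // A replaced row is logged as a delete followed by an insert.
                Record(ChangeKind.Delete, e.OldItems);
                Record(ChangeKind.Insert, e.NewItems);
                break;
        }
    }

    private void Record(ChangeKind kind, System.Collections.IList rows)
    {
        foreach (T row in rows)
        {
            changes.Add(new TrackedChange<T> { Kind = kind, Row = row });
        }
    }
}
```

The recorded changes are what a later upload step would replay against the server through the WCF REST + JSON services.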

I hope with the improvements I’ve made to the in-memory database in this article, you’ll feel even more empowered to build occasionally-connected Windows Phone 7 solutions for consumers and the enterprise.

Keep coding!

Rob is an Architect at Microsoft who’s currently writing an adventure novel that takes place under the sea.

Rob Tiffany (@RobTiffany) posted Windows Phone 7 Line of Business App Dev :: Network Awareness on 11/28/2010:

By now, you’ve heard me talk a lot about the role wireless data networks play when it comes to the success of your mobile application. They are unreliable, intermittent, highly latent and often slower than they should be due to overtaxed cellular towers and congested backhaul networks. Hopefully, you’ve built an app that tackles those challenges head-on using efficient WCF REST + JSON Services coupled with an offline data store.

So what is the user of your new application going to think when a Web Service call fails because the network is unavailable?

An end-user of your app probably won’t be too thrilled when they’re staring at an unintelligible error message. Or maybe your app will just silently fail when the Web Service call doesn’t succeed. The user might not know there’s a problem until they can’t view a list of relevant data on their phone.

This is no way to treat your prospective user-base because mobile apps should never diminish the user experience by trying to send or receive data in the absence of network connectivity.

Luckily, Silverlight on Windows Phone 7 provides you with a way to determine network connectivity.

Launch Visual Studio 2010 and load the ContosoCloud solution that we’ve been working with over the last four Windows Phone 7 Line of Business App Dev articles. First, I want you to drag an Ellipse from the Toolbox and drop it on MainPage.xaml. Name that control ellipseNet. Next, I want you to drag a TextBlock control over and drop it beneath the Ellipse. Name this control textBlockNet. Now open MainPage.xaml.cs so we can write some code.

Above the ContosoPhone namespace I want you to add:

using Microsoft.Phone.Net.NetworkInformation;

This allows you to tap into the NetworkInterface class. The next line of code I want you to add may seem a little confusing since it’s similar, yet different from Microsoft.Phone.Net.NetworkInformation. Inside the MainPage() constructor, beneath InitializeComponent();, add the following code to create an event handler:

System.Net.NetworkInformation.NetworkChange.NetworkAddressChanged += new System.Net.NetworkInformation.NetworkAddressChangedEventHandler(NetworkChange_NetworkAddressChanged);

This is the standard, cross-platform Silverlight way to create an event handler that tells you when your network address has changed. I wrote it out the long way because it collides with the phone-specific NetworkInformation class. Don’t ask.

Underneath the line of code above, add the following:

NetworkStateMachine();

This is going to call a method you haven’t created yet.

Inside your MainPage class, the event handler you just created will appear:

    void NetworkChange_NetworkAddressChanged(object sender, EventArgs e)
    {
        NetworkStateMachine();
    }

As you can see, I want you to add the NetworkStateMachine(); line of code inside the event handler to execute this mysterious function. By now you’re probably saying, “Enough of the suspense already!” Below the event handler, paste in the following code:

    private void NetworkStateMachine()
    {
        try
        {
            switch (NetworkInterface.NetworkInterfaceType)
            {
                //No Network
                case NetworkInterfaceType.None:
                    ellipseNet.Fill = new SolidColorBrush(Colors.Red);
                    textBlockNet.Text = "No Network";
                    break;
                //CDMA Network
                case NetworkInterfaceType.MobileBroadbandCdma:
                    ellipseNet.Fill = new SolidColorBrush(Colors.Blue);
                    textBlockNet.Text = "CDMA";
                    break;
                //GSM Network
                case NetworkInterfaceType.MobileBroadbandGsm:
                    ellipseNet.Fill = new SolidColorBrush(Colors.Blue);
                    textBlockNet.Text = "GSM";
                    break;
                //Wi-Fi Network
                case NetworkInterfaceType.Wireless80211:
                    ellipseNet.Fill = new SolidColorBrush(Colors.Green);
                    textBlockNet.Text = "Wi-Fi";
                    break;
                //Ethernet Network
                case NetworkInterfaceType.Ethernet:
                    ellipseNet.Fill = new SolidColorBrush(Colors.Green);
                    textBlockNet.Text = "Ethernet";
                    break;
                //No Network
                default:
                    ellipseNet.Fill = new SolidColorBrush(Colors.Red);
                    textBlockNet.Text = "No Network";
                    break;
            }
        }
        catch (Exception ex)
        {
            MessageBox.Show(ex.Message);
        }
    }

The switch statement above creates a state machine for your mobile application that lets it know what type of network connection you have at any given moment. Remember that for this example, the sample code is running on the UI thread. Since the code is synchronous and blocking, you may want to run it on a background thread.

As you can see, the following network types are returned:

- Wireless80211 (Wi-Fi)

- Ethernet (Docked/LAN)

- MobileBroadbandGsm (GPRS/EDGE/UMTS/HSDPA/HSPA)

- MobileBroadbandCdma (1xRTT/EV-DO)

- None

As you’re probably thinking, the primary value in this is to know if you have any kind of network or not. Obviously, a return value of None means you shouldn’t make any kind of Web Service call.

Hit F5 in Visual Studio and let’s see what you get in the emulator:

As you can see, the ContosoCloud app detected my laptop’s Wi-Fi connection in the switch statement and therefore gave me a Green Ellipse and a TextBlock that says “Wi-Fi.” Keep in mind that the emulator doesn’t behave the same way as an actual phone so changing my laptop’s networking while the mobile app is running won’t trigger the NetworkChange_NetworkAddressChanged event handler. If you close the app, turn off Wi-Fi on your laptop and then restart the app, it will correctly report that no network is available.

So why would you want to know about all the other network return types?

In working with customers all around the world who use Pocket PCs, Windows Mobile devices and Windows Phones, it has become evident that there is always a “cost” in doing anything over the network. Not everyone has unlimited, “all-you-can-eat” data plans for their employees. Some companies have very low monthly data usage limits for each employee that has been negotiated with one or more mobile operators. For these organizations, it’s not enough to know if the network is present or not. They need to know what kind of network is available so their mobile application can make intelligent decisions.

If I need to download a large amount of data in the morning to allow me to drive my delivery truck route, I probably should only perform this operation over docked Ethernet or Wi-Fi. This gives me the network speed I need to move a lot of data and I don’t incur any costs with my mobile operator.

If I’ve captured small amounts of data in the field that I need to send back to HQ in near real-time, then a return value of MobileBroadbandGsm or MobileBroadbandCdma is perfect. This would also be appropriate if my app is making lightweight remote method calls via Web Services. The use of WCF REST + JSON is probably making a lot of sense now.

If I’ve captured large amounts of data in the field or I’m batching up several data captures throughout the day, it would make more sense to use Ethernet or Wi-Fi when I return to the warehouse or distribution center. On the other hand, if I have a high enough data usage limit or no limit at all, MobileBroadbandGsm or MobileBroadbandCdma would be fine.
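The three scenarios above amount to a small decision table, which could be sketched as follows. Everything here is an assumption for illustration: `NetworkKind` is a stand-in enum that mirrors the phone's NetworkInterfaceType values so the example compiles outside Silverlight, and `SyncPolicy`, `SyncDecision` and the `cellularLimitBytes` threshold are hypothetical names, not part of the article's code.

```csharp
// Stand-in for the phone's NetworkInterfaceType values used in the article.
public enum NetworkKind
{
    None,
    MobileBroadbandGsm,
    MobileBroadbandCdma,
    Wireless80211,
    Ethernet
}

public enum SyncDecision { Skip, Defer, Send }

public static class SyncPolicy
{
    // Decides what to do with a pending transfer of payloadBytes, given the
    // current network and the largest payload allowed over a cellular link.
    public static SyncDecision Decide(NetworkKind network, long payloadBytes, long cellularLimitBytes)
    {
        switch (network)
        {
            case NetworkKind.Wireless80211:
            case NetworkKind.Ethernet:
                // Fast and free of operator charges: always send.
                return SyncDecision.Send;

            case NetworkKind.MobileBroadbandGsm:
            case NetworkKind.MobileBroadbandCdma:
                // Small payloads go now; large ones wait for Wi-Fi or Ethernet.
                return payloadBytes <= cellularLimitBytes
                    ? SyncDecision.Send
                    : SyncDecision.Defer;

            default:
                // No network: don't attempt any Web Service call.
                return SyncDecision.Skip;
        }
    }
}
```

An organization with an unlimited data plan could simply pass `long.MaxValue` as the cellular limit, which makes every cellular transfer a `Send`.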

Keep in mind that this guidance is just as valuable for B2C and Consumer apps. If you’re building a connected mobile app of any kind, the information I’ve discussed in this article will help you provide a great user experience.

Delighting the end-user is what it’s all about!

Rob Tiffany (@RobTiffany) posted Windows Phone 7 Line of Business App Dev :: Working with an In-Memory Database as what was supposed to be the ending episode in a series of WP7 LoB demos:

In my last article of this series, you finally got to consume wireless-friendly WCF REST + JSON Services from both Windows Server and Windows Azure with data coming from SQL Server/SQL Azure. You now have an ObservableCollection of Customer objects residing in a Singleton on your Windows Phone 7 device. This Singleton looks similar to an in-memory database and the Customers property works like a table.

If you’re like me, you probably want to display the list of Customers in the UI. You might also want to perform other local operations against this data store. You could add a new Customer and update or even delete an existing one.

I’m going to apologize in advance for not doing the MVVM thing that everyone seems to be into these days and get right to the point. Drag a button on to MainPage.xaml and call it View Customers. While you’re at it, drag a listbox below the button and name it listBoxCustomers. Double-click on this Button and add the following code to the click event:

    try
    {
        if (Database.Instance.Customers != null)
        {
            listBoxCustomers.DisplayMemberPath = "Name";
            listBoxCustomers.ItemsSource = Database.Instance.Customers;
        }
        else
        {
            MessageBox.Show("The Customer Table is Empty");
        }
    }
    catch (Exception ex)
    {
        MessageBox.Show(ex.Message);
    }

In the simple code above, you set the listbox’s ItemsSource equal to the Customer collection in the Database Singleton and set the DisplayMemberPath property equal to the Name property of the Customer objects.

Hit F5 to start debugging this Windows Phone 7 + Azure solution. As usual, a web page and the emulator will launch. Tap the Get Customers button to pull the Customer data back from the WCF REST service. Next, tap on the View Customers button to display the list of Customers from the in-memory database as shown in the picture below:

Now it’s time to add a new Customer so drop a button underneath the listbox and call it Add Customer. Creating a new Customer object requires setting values for 8 properties. Instead of having you add 8 textboxes to type in the info, I’ll keep it simple and let you add it in code. In the click event of the button, paste in the code you see below:

    try
    {
        if (Database.Instance.Customers != null)
        {
            Customer customer = new Customer();
            customer.CustomerId = 5;
            customer.DistributionCenterId = 1;
            customer.RouteId = 1;
            customer.Name = "ABC Corp";
            customer.StreetAddress = "555 Market Street";
            customer.City = "Seattle";
            customer.StateProvince = "WA";
            customer.PostalCode = "98987";
            Database.Instance.Customers.Add(customer);
        }
    }
    catch (Exception ex)
    {
        MessageBox.Show(ex.Message);
    }

After setting all the properties (Columns), you add the Customer object (Row) to the Customers property (Table), in the Singleton Database. Hit F5, tap the Get Customers button, tap the View Customers button and then tap the Add Customer button. Through the magic of simple data-binding, you should see the new “ABC Corp” show up in the listbox as shown below:

Now that you’ve added a new Customer, it’s time to update it because the president of the company decided to change the name. Drag a new button and drop it underneath the Add Customer button. Call it Update Customer and in its click event, paste in the following code:

    try
    {
        if (Database.Instance.Customers != null)
        {
            foreach (Customer c in Database.Instance.Customers)
            {
                if (c.Equals((Customer)listBoxCustomers.SelectedItem))
                {
                    c.Name = "XYZ Inc";
                }
            }
        }
    }
    catch (Exception ex)
    {
        MessageBox.Show(ex.Message);
    }

The code above loops through the Customers ObservableCollection until it finds a match for the item that’s been selected in the listbox. When it finds that match, it updates the Name property to “XYZ Inc” which will automatically update what the user views in the listbox.
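The automatic refresh described above relies on the bound object raising change notifications when Name is set. The Customer.cs file copied from the service project isn't shown here, so whether it already does this is an assumption. A minimal sketch of the idea, using a hypothetical NotifyingCustomer class that carries only the Name property and a hypothetical NotifyDemo helper that counts the notifications raised:

```csharp
using System;
using System.ComponentModel;

// Hypothetical stand-in for the article's Customer class (only Name is shown).
public class NotifyingCustomer : INotifyPropertyChanged
{
    private string name;

    public event PropertyChangedEventHandler PropertyChanged;

    public string Name
    {
        get { return name; }
        set
        {
            if (name == value) return;   // no change, no notification
            name = value;
            // Tell any bound control that Name has a new value.
            PropertyChangedEventHandler handler = PropertyChanged;
            if (handler != null)
            {
                handler(this, new PropertyChangedEventArgs("Name"));
            }
        }
    }
}

public static class NotifyDemo
{
    // Renames the customer and returns how many change notifications were raised.
    public static int RenameAndCount(NotifyingCustomer customer, string newName)
    {
        int raised = 0;
        PropertyChangedEventHandler counter = (sender, e) => raised++;
        customer.PropertyChanged += counter;
        customer.Name = newName;
        customer.PropertyChanged -= counter;
        return raised;
    }

    public static void Main()
    {
        NotifyingCustomer customer = new NotifyingCustomer { Name = "ABC Corp" };
        Console.WriteLine(RenameAndCount(customer, "XYZ Inc"));
    }
}
```

A data-bound listbox subscribes to PropertyChanged in much the same way the counter does here, which is why setting Name in the loop can be enough to refresh the displayed text.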

Hit F5, tap the Get Customers button, tap the View Customers button and then tap the Add Customer button. Now tap on “ABC Corp” in the listbox to highlight it. Clicking the Update Customer button will change it before your eyes.

It turns out that “XYZ Inc” went out of business because the president was an idiot so you need to delete it. Guess what, you need yet another button beneath the Update Customer button. Call it Delete Customer and in its click event, paste in the following code:

    try
    {
        if (Database.Instance.Customers != null)
        {
            Database.Instance.Customers.Remove((Customer)listBoxCustomers.SelectedItem);
        }
    }
    catch (Exception ex)
    {
        MessageBox.Show(ex.Message);
    }

In the code above, the Customer object that matches the item selected in the listbox is removed from the Customers ObservableCollection. Pretty simple stuff in this case.

To find out for sure, hit F5, tap the Get Customers button, tap the View Customers button and then tap the Add Customer button. Now tap on “ABC Corp” in the listbox to highlight it. Clicking the Update Customer button will change it to “XYZ Inc.” Highlighting “XYZ Inc” and clicking the Delete Customer button will cause this defunct company to disappear as shown below:

Now suppose you only want to display the Customers from Seattle and not the Eastside. A little LINQ will do the trick here. Drag and drop a new button called Seattle next to the Test Uri button and paste the following code in the click event:

    if (Database.Instance.Customers != null)
    {
        IEnumerable<Customer> customers = from customer in Database.Instance.Customers
                                          where customer.DistributionCenterId == 1
                                          select customer;
        listBoxCustomers.DisplayMemberPath = "Name";
        listBoxCustomers.ItemsSource = customers;
    }
    else
    {
        MessageBox.Show("The Customer Table is Empty");
    }

In the code above, I set an IEnumerable<Customer> variable equal to the Customers table where the DistributionCenterId is equal to 1. Since DistributionCenter #1 serves the Seattle area, I know the listbox will be filled with just Adventure Works LLC and City Power & Light. Start debugging and test it for yourself.
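To try the same kind of query outside the phone project, here is a small console sketch. The SampleCustomer class, the FilterDemo helper and the third sample row are hypothetical stand-ins invented for illustration; only the two Seattle customer names come from the article.

```csharp
using System;
using System.Collections.Generic;
using System.Collections.ObjectModel;
using System.Linq;

// Hypothetical, trimmed-down Customer with only the fields the query needs.
public class SampleCustomer
{
    public int DistributionCenterId { get; set; }
    public string Name { get; set; }
}

public static class FilterDemo
{
    // Returns the names of the customers served by the given distribution center.
    public static List<string> NamesForCenter(IEnumerable<SampleCustomer> customers, int centerId)
    {
        return (from customer in customers
                where customer.DistributionCenterId == centerId
                select customer.Name).ToList();
    }

    public static void Main()
    {
        var table = new ObservableCollection<SampleCustomer>
        {
            new SampleCustomer { DistributionCenterId = 1, Name = "Adventure Works LLC" },
            new SampleCustomer { DistributionCenterId = 2, Name = "Hypothetical Eastside Co" },
            new SampleCustomer { DistributionCenterId = 1, Name = "City Power & Light" }
        };

        foreach (string name in NamesForCenter(table, 1))
        {
            Console.WriteLine(name);
        }
    }
}
```

One design point worth keeping in mind: the result of a LINQ query is a plain sequence rather than an ObservableCollection, so a listbox bound to it will not pick up customers added to the table afterwards until the query is run and bound again.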

The last thing you need to do with local data is store it offline since you can’t always count on the network being there. Luckily we’ve got Isolated Storage to serialize stuff. In order to work with Isolated Storage, I need you to add using System.IO.IsolatedStorage; at the top of the class. Since in this example I’ll demonstrate XML Serialization of the Customers ObservableCollection, I’ll need you to add a reference to System.Xml.Serialization and then add using System.Xml.Serialization; at the top of the class. With that plumbing in place, let’s write some actual code.

Drag and drop another button called Save next to the Get Customers button and paste the following code in the click event:

    if (Database.Instance.Customers != null)
    {
        using (IsolatedStorageFile store = IsolatedStorageFile.GetUserStoreForApplication())
        {
            using (IsolatedStorageFileStream stream = store.CreateFile("Customers.xml"))
            {
                XmlSerializer serializer = new XmlSerializer(typeof(ObservableCollection<Customer>));
                serializer.Serialize(stream, Database.Instance.Customers);
            }
        }
    }

In the code above, you use the combination of IsolatedStorageFile and IsolatedStorageFileStream to write data to Isolated Storage. In this case, you’re going to create an XML file to save the Customers ObservableCollection of Customer objects. Remember back in the 80’s when databases used to save each table as an individual file? I’m thinking of DBase III+, FoxPro, and Paradox at the moment. Anyway, this is exactly what happens here using the power of the XmlSerializer. Feel free to debug and step through the code to ensure that it executes without error.

To complete the picture, you need to be able to retrieve the Customers ObservableCollection from Isolated Storage and work with the data without ever having to call the WCF REST services. Drag and drop one last button called Load next to the View Customers button and paste the following code in the click event:

    using (IsolatedStorageFile store = IsolatedStorageFile.GetUserStoreForApplication())
    {
        if (store.FileExists("Customers.xml"))
        {
            using (IsolatedStorageFileStream stream = store.OpenFile("Customers.xml", System.IO.FileMode.Open))
            {
                XmlSerializer serializer = new XmlSerializer(typeof(ObservableCollection<Customer>));
                Database.Instance.Customers = (ObservableCollection<Customer>)serializer.Deserialize(stream);
            }
        }
    }

The code above does the reverse of the previous Save code by opening the Customers.xml and re-hydrating those objects back into the Customers table using the XmlSerializer. This is pretty cool stuff.
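If you want to check the serialize and deserialize round trip without the emulator, the sketch below does the same thing against a MemoryStream, which stands in for the Isolated Storage file stream. The StoredCustomer class and the RoundTripDemo helper are hypothetical names used only for this illustration.

```csharp
using System;
using System.Collections.ObjectModel;
using System.IO;
using System.Xml.Serialization;

// Hypothetical, trimmed-down Customer. XmlSerializer needs a public type
// with a public parameterless constructor and public read/write properties.
public class StoredCustomer
{
    public int CustomerId { get; set; }
    public string Name { get; set; }
}

public static class RoundTripDemo
{
    // Serializes the collection to XML in memory and reads it straight back.
    public static ObservableCollection<StoredCustomer> RoundTrip(ObservableCollection<StoredCustomer> customers)
    {
        XmlSerializer serializer = new XmlSerializer(typeof(ObservableCollection<StoredCustomer>));
        using (MemoryStream stream = new MemoryStream())
        {
            serializer.Serialize(stream, customers);
            stream.Position = 0;   // rewind before reading what was just written
            return (ObservableCollection<StoredCustomer>)serializer.Deserialize(stream);
        }
    }

    public static void Main()
    {
        var table = new ObservableCollection<StoredCustomer>
        {
            new StoredCustomer { CustomerId = 5, Name = "ABC Corp" }
        };

        foreach (StoredCustomer customer in RoundTrip(table))
        {
            Console.WriteLine(customer.CustomerId + ": " + customer.Name);
        }
    }
}
```

The deserialized collection holds new objects with the same property values, which is what lets the Load button rebuild the Customers table without calling the service.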

To make it all real, hit F5 to start debugging this completed mobile project. When the app loads, tap the Get Customers button to retrieve the data and then tap View Customers to verify that you can see the data. Now I want you to tap the Save button to serialize the Customers to an XML file in Isolated Storage. Lastly, I want you to tap on the Back button to close the application.

Hit F5 again to fire up the application. This time, I don’t want you to retrieve the data from Azure. Instead, I want you to tap the Load button to de-serialize the Customer objects from XML. Now for the moment of truth. Tap the View Customers button to see if you’re actually working with an offline database. Hopefully, your app will look like the picture below:

Congratulations! You’ve made it to the end of this series of articles on building an occasionally-connected Windows Phone 7 application that works with WCF REST + JSON Services in Windows Azure. Furthermore, you’re now pulling data down from SQL Azure and you’re working with it locally using a simple in-memory database that you built yourself. I’m hoping that you’ll now feel empowered to go build industrial-strength mobile solutions for your own company or your customers.

Keep coding!

Obviously, this isn’t “the end of this series of articles” (see above.)

Rob Tiffany (@RobTiffany) posted Windows Phone 7 Line of Business App Dev :: Consuming an Azure WCF REST + JSON Service in late October 2010 (Rob’s posts are undated):

In my last two articles, I showed you how to build WCF REST services using Visual Studio 2010 that can reside on-premise in Windows Server 2008 or in the Cloud in Windows Azure. Furthermore, I demonstrated pulling data from a table in SQL Server/SQL Azure. I serialized .NET Objects using lightweight JSON to speed data transfers over even the slowest wireless data networks. Now it’s time to call that REST service from Windows Phone 7.

Launch VS2010 and open the solution you created to build the WCF Service Web Role in Azure last time. Right-click on the solution and add a Windows Phone Application project. Change the name to ContosoPhone.

Part of the magic of making all this work is to have both the Azure Development Fabric and the Windows Phone 7 project start when it comes time to debug. Most developers are accustomed to only having a single startup project so let’s make sure you have everything we need running when you hit F5. Right-click on the solution and select Properties. Select Startup Project and then click on the Multiple startup projects radio button. Set both the AzureRestService and ContosoPhone projects Action value to Start and click OK as shown below:

With the startup configuration complete, the next thing I want you to do is copy Customer.cs from the AzureRestService project to the new ContosoPhone project since you’ll need it to create local Customer objects when you retrieve the data from the WCF call. In this new Customer class you’ll need to change the Namespace to ContosoPhone and remove using System.Web; at the top of the class. To support the DataContract() and DataMember() attributes, you’ll also need to add a reference to System.Runtime.Serialization so that it compiles.

Drag a Button on to your MainPage.xaml and call it Test. Double-click on this Button and add the following WebClient code to the click event:

    try
    {
        WebClient webClient = new WebClient();
        Uri uri = new Uri("http://127.0.0.1:48632/service1.svc/getdata?number=8");
        webClient.OpenReadCompleted += new OpenReadCompletedEventHandler(OpenReadCompletedTest);
        webClient.OpenReadAsync(uri);
    }
    catch (Exception ex)
    {
        MessageBox.Show(ex.Message);
    }

As you can see above, I create a WebClient and a Uri object that points to the local Url presented by the Azure Development Fabric. The port number may be different on your machine so double-check. This is the test REST service from the last article used to prove that your calls are making it through. You’ve made this call with your web browser and Windows Phone 7 will call it the same way. Since all calls are asynchronous and the WebClient above created an event handler, copy the following code into the MainPage class and make sure to add using System.IO; at the top:

    void OpenReadCompletedTest(object sender, OpenReadCompletedEventArgs e)
    {
        try
        {
            // The using block closes the reader even if ReadToEnd throws.
            using (StreamReader reader = new StreamReader(e.Result))
            {
                MessageBox.Show(reader.ReadToEnd());
            }
        }
        catch (Exception ex)
        {
            MessageBox.Show(ex.Message);
        }
    }

In the event handler above, I use the StreamReader to grab the entire XML return value. The fact that I’m using WebClient means that callbacks are run on the UI thread thus relieving you of the need to use the dispatcher to update the UI. This allows you to display the following XML string in the MessageBox:

<string xmlns="http://schemas.microsoft.com/2003/10/Serialization/">You entered: 8</string>

It’s time to try this thing out so hit F5. If it compiles and everything executes properly, you should see both a web browser pointing to the root of your Azure services as well as your emulator and you can test your services with both. Click the simple Test Button on your Silverlight MainPage and the MessageBox should pop up after the XML result is returned from Azure displaying the answer:

Click the Back button on the Emulator to close your app and close the web browser window to shut down Azure.

Now it’s time to do something a little more ambitious like return the JSON-encoded list of Customers from SQL Azure. Unlike most books and articles on Silverlight and RIA Services that you may have read, I’m not going to return this data and immediately data-bind it to a control. As a long-time mobile and wireless guy that understands intermittent connectivity and the importance of an offline data store, I’m going to have you put this data in a local database first. Since SQL Server Compact is nowhere to be found and I don’t want you going off and using a 3rd party or open source embedded database, I’m going to show you how to create a simple one of your own.

Right-click on your ContosoPhone project and select Add | Class. Name this new class Database.

You’re going to turn this class into a Singleton to create an in-memory database, which will ensure that only one instance is available. For simplicity’s sake, just copy the code below into the new Database class:

    using System;
    using System.Net;
    using System.Windows;
    using System.Collections.Generic;
    using System.Collections.ObjectModel;

    namespace ContosoPhone
    {
        sealed class Database
        {
            //Declare Instance
            private static readonly Database instance = new Database();

            //Private Constructor
            private Database() { }

            //The entry point into this Database
            public static Database Instance
            {
                get
                {
                    return instance;
                }
            }

            //Declare Private Variables
            private ObservableCollection<Customer> customerTable = null;

            //Customer Table
            public ObservableCollection<Customer> Customers
            {
                get { return customerTable; }
                set { customerTable = value; }
            }
        }