Top Stories This Week:

A compendium of Windows Azure, Service Bus, BizTalk Services, Access Control, Caching, SQL Azure Database, and other cloud-computing articles.

Note: This post is updated weekly or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Windows Azure Marketplace DataMarket, Power BI, Big Data and OData

- Windows Azure Service Bus, BizTalk Services and Workflow

- Windows Azure Access Control, Active Directory, and Identity

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Windows Azure Cloud Services, Caching, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure and DevOps

- Windows Azure Pack, Hosting, Hyper-V and Private/Hybrid Clouds

- Visual Studio LightSwitch and Entity Framework v4+

- Cloud Security, Compliance and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services


Mingfei Yan (@mingfeiy) posted Announcing Windows Azure Media Services .NET SDK Extensions Version 2.0 on 12/19/2013:

I am excited to announce that version 2.0 of our Windows Azure Media Services Extension SDK has just been released! If you were using the first version of the extension SDK, please pay attention, because this version includes a few breaking changes: we went through a round of redesign for our existing extension APIs.

What is the extension SDK?

This extension SDK is a great initiative by developer Mariano Conveti from our partner Southworks. The SDK extension library contains a set of extension methods and helpers for the Windows Azure Media Services SDK for .NET. You can get the source code from the GitHub repository or install the NuGet package to start using it.

Why are we doing an extension SDK?

As we know, we have our official Windows Azure Media Services SDK. However, because it is very flexible for building customized media workflows, you may have to write many lines of code to complete a simple function. What if there were an extension that allowed you to complete a basic media workflow in 10 lines? That's why the WAMS extension SDK exists.

![image_thumb75_thumb3_thumb_thumb_thu[20] image_thumb75_thumb3_thumb_thumb_thu[20]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEjDPx9owkC-BZvmtucNRRIGL44HN-KZE4ooVrlR3nJOewoEx9YZfsUQo4t2S8SCOtfozs_6har98ntojapTbioHbDeNmBdV2Y7AHwMcMIyY4rkzuREr3IjdgcyCtqJKKtVfbD2jMTTy/?imgmax=800)

Who is maintaining the extension SDK?

This extension SDK lives under the official Azure code repository on GitHub. Currently, the majority of the code is contributed by Mariano. The WAMS team also contributes and is responsible for the GitHub and NuGet releases. However, we welcome contributions from the developer community. Please refer to our GitHub page for more details.

What’s new for version 2.0?

- I have another blog post on the major features of version 1.0. All of those features still work in this new version.

- For version 2.0, we reorganized the extension methods to avoid defining all of them on the CloudMediaContext class. This new extension SDK is based on the latest Windows Azure Media Services SDK (3.0.0.0).

- Automatic load balancing between multiple storage accounts while uploading assets; refer to Mariano's blog for a code example.

Here is how to use this extension SDK to complete a basic media workflow:

1. Open Visual Studio and create a .NET console application (it can target either .NET 4 or .NET 4.5).

2. Right-click your project and select Manage NuGet Packages. Navigate to the Online tab and search for windowsazure.mediaservices in the search box. Then select and install Windows Azure Media Services Extension SDK, as shown in the picture below.

3. Copy and paste the following code into the Main method (it uploads a media file, encodes it into multi-bitrate MP4 and publishes it for streaming):

try

{

MediaServicesCredentials credentials = new MediaServicesCredentials("acc_name", "acc_key");

CloudMediaContext context = new CloudMediaContext(credentials);

Console.WriteLine("Creating new asset from local file...");

// 1. Create a new asset by uploading a mezzanine file from a local path.

IAsset inputAsset = context.Assets.CreateFromFile(

@"C:\demo\demo.mp4",

AssetCreationOptions.None,

(af, p) =>

{

Console.WriteLine("Uploading '{0}' - Progress: {1:0.##}%", af.Name, p.Progress);

});

Console.WriteLine("Asset created.");

// 2. Prepare a job with a single task to transcode the previous mezzanine asset

// into a multi-bitrate asset.

IJob job = context.Jobs.CreateWithSingleTask(

MediaProcessorNames.WindowsAzureMediaEncoder,

MediaEncoderTaskPresetStrings.H264AdaptiveBitrateMP4Set720p,

inputAsset,

"Sample Adaptive Bitrate MP4",

AssetCreationOptions.None);

Console.WriteLine("Submitting transcoding job...");

// 3. Submit the job and wait until it is completed.

job.Submit();

job = job.StartExecutionProgressTask(

j =>

{

Console.WriteLine("Job state: {0}", j.State);

Console.WriteLine("Job progress: {0:0.##}%", j.GetOverallProgress());

},

CancellationToken.None).Result;

Console.WriteLine("Transcoding job finished.");

IAsset outputAsset = job.OutputMediaAssets[0];

Console.WriteLine("Publishing output asset...");

// 4. Publish the output asset by creating an Origin locator for adaptive streaming,

// and a SAS locator for progressive download.

context.Locators.Create(

LocatorType.OnDemandOrigin,

outputAsset,

AccessPermissions.Read,

TimeSpan.FromDays(30));

context.Locators.Create(

LocatorType.Sas,

outputAsset,

AccessPermissions.Read,

TimeSpan.FromDays(30));

IEnumerable<IAssetFile> mp4AssetFiles = outputAsset

.AssetFiles

.ToList()

.Where(af => af.Name.EndsWith(".mp4", StringComparison.OrdinalIgnoreCase));

// 5. Generate the Smooth Streaming, HLS and MPEG-DASH URLs for adaptive streaming,

// and the Progressive Download URL.

Uri smoothStreamingUri = outputAsset.GetSmoothStreamingUri();

Uri hlsUri = outputAsset.GetHlsUri();

Uri mpegDashUri = outputAsset.GetMpegDashUri();

List<Uri> mp4ProgressiveDownloadUris = mp4AssetFiles.Select(af => af.GetSasUri()).ToList();

// 6. Get the asset URLs.

Console.WriteLine(smoothStreamingUri);

Console.WriteLine(hlsUri);

Console.WriteLine(mpegDashUri);

mp4ProgressiveDownloadUris.ForEach(uri => Console.WriteLine(uri));

Console.WriteLine("Output asset available for adaptive streaming and progressive download.");

Console.WriteLine("VOD workflow finished.");

}

catch (Exception exception)

{

// Parse the XML error message in the Media Services response and create a new

// exception with its content.

exception = MediaServicesExceptionParser.Parse(exception);

Console.Error.WriteLine(exception.Message);

}

finally

{

Console.ReadLine();

}

}

*I put a local file at C:\demo\demo.mp4; please change this path to point to a file on your disk.

There are a few things I want to highlight in this sample code:

- Encoding presets can be selected from MediaEncoderTaskPresetStrings; you no longer need to go to MSDN to look up task presets.

- There are two types of locator, as you can see in step 4. This concept isn't new; it exists in the WAMS SDK as well. However, it is one of the most frequently asked questions, so I'd love to explain it again.

  - SAS (Shared Access Signature) locator: this is generated to access the video file directly from Blob storage (like any other file). It is used for progressive download.

  - OnDemandOrigin locator: this URL allows you to access the media file through our origin server. In this example, you can see that three OnDemandOrigin locator URLs are generated in step 5: MPEG-DASH, Smooth Streaming and HLS. This uses our dynamic packaging feature: by storing your media file as multi-bitrate MP4, we can deliver multiple media formats on the fly. However, remember that you need at least one reserved unit for streaming to make it work.

  - Below is the picture explaining these two locators' URLs:

4. Press F5 to run. In the output console, you should see three streaming URLs and a few SAS locators for the MP4 files at various bitrates. I have shared the project source code for download.

5. If you find any issues, or there are APIs you think would be helpful but are missing from the current collection, don't hesitate to post on the GitHub Issues tab. We are looking forward to your feedback.
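To make the relationship between the three OnDemandOrigin URLs described above more concrete, here is a minimal sketch of how they relate to one another under dynamic packaging: all three share the same manifest path and differ only in a format suffix. The struct, the function name and the host name in the usage note are hypothetical and are not part of the extension SDK; in real code the URLs come from GetSmoothStreamingUri, GetHlsUri and GetMpegDashUri.

```cpp
#include <cassert>
#include <string>

// Hypothetical container for the three adaptive streaming URLs.
struct streaming_urls
{
    std::string smooth; // Smooth Streaming manifest
    std::string hls;    // HLS playlist, produced on the fly
    std::string dash;   // MPEG-DASH manifest, produced on the fly
};

// Builds the three URLs from an origin locator path (must end with '/')
// and the name of the .ism manifest file stored in the output asset.
streaming_urls build_streaming_urls(const std::string& locator_path,
                                    const std::string& manifest_file_name)
{
    assert(!locator_path.empty() && locator_path.back() == '/');
    const std::string base = locator_path + manifest_file_name + "/manifest";
    return streaming_urls{ base,
                           base + "(format=m3u8-aapl)",
                           base + "(format=mpd-time-csf)" };
}
```

For example, calling build_streaming_urls with an assumed locator path of "http://example.origin.mediaservices.windows.net/locator-id/" and "demo.ism" yields one Smooth Streaming URL ending in "demo.ism/manifest" plus the HLS and MPEG-DASH variants of the same URL.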

Serdar Ozler, Mike Fisher and Joe Giardino of the Windows Azure Storage Team announced availability of the Windows Azure Storage Client Library for C++ Preview on 12/19/2013:

We are excited to announce the availability of our new Windows Azure Storage Client Library for C++. This is currently a Preview release, which means the library should not be used in your production code just yet. Instead, please kick the tires and give us any feedback you have so that we can improve or change the interface for the GA release. This blog post serves as an overview of the library.

Please refer to SOSP Paper - Windows Azure Storage: A Highly Available Cloud Storage Service with Strong Consistency for more information on Windows Azure Storage.

Emulator Guidance

Please note that the 2013-08-15 REST version, which this library uses, is currently unsupported by the storage emulator. An updated Windows Azure Storage Emulator is expected to ship with full support for these new features in the next month. In the interim, users attempting to develop against the current version of the storage emulator will receive Bad Request errors. Until then, users who want to use the new features need to develop and test against a Windows Azure Storage account to leverage the 2013-08-15 REST version.

Supported Platforms

In this release, we provide x64 and x86 versions of the library for both Visual Studio 2012 (v110) and Visual Studio 2013 (v120) platform toolsets. Therefore you will find 8 build flavors in the package:

- Release, x64, v120

- Debug, x64, v120

- Release, Win32, v120

- Debug, Win32, v120

- Release, x64, v110

- Debug, x64, v110

- Release, Win32, v110

- Debug, Win32, v110
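The eight flavors are simply every combination of two toolsets, two platforms and two configurations. As a quick check, this small sketch (the function name is hypothetical, not part of the library) enumerates them in the same order as the list above:

```cpp
#include <cassert>
#include <string>
#include <vector>

// Enumerates configuration/platform/toolset combinations: 2 x 2 x 2 = 8.
std::vector<std::string> build_flavors()
{
    const std::vector<std::string> toolsets = { "v120", "v110" };
    const std::vector<std::string> platforms = { "x64", "Win32" };
    const std::vector<std::string> configurations = { "Release", "Debug" };

    std::vector<std::string> flavors;
    for (const std::string& toolset : toolsets)
        for (const std::string& platform : platforms)
            for (const std::string& configuration : configurations)
                flavors.push_back(configuration + ", " + platform + ", " + toolset);

    assert(flavors.size() == 8);
    return flavors;
}
```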

Where is it?

The library can be downloaded from NuGet and full source code is available on GitHub. NuGet packages are created using the CoApp tools and therefore consist of 3 separate packages:

- wastorage.0.2.0-preview.nupkg: This package contains header and LIB files required to develop your application. This is the package you need to install, which has a dependency on the redist package and thus will force NuGet to install that one automatically.

- wastorage.redist.0.2.0-preview.nupkg: This package contains DLL files required to run and redistribute your application.

- wastorage.symbols.0.2.0-preview.nupkg: This package contains symbols for the respective DLL files and therefore is an optional package.

The package also contains a dependency on C++ REST SDK, which will also be automatically installed by NuGet. The C++ REST SDK (codename "Casablanca") is a Microsoft project for cloud-based client-server communication in native code and provides support for accessing REST services from native code on multiple platforms by providing asynchronous C++ bindings to HTTP, JSON, and URIs. Windows Azure Storage Client Library uses it to communicate with the Windows Azure Storage Blob, Queue, and Table services.

What do you get?

Here is a summary of the functionality you will get by using Windows Azure Storage Client Library instead of directly talking to the REST API:

- Easy-to-use implementations of the entire Windows Azure Storage REST API version 2013-08-15

- Retry policies that retry certain failed requests using an exponential or linear back-off algorithm

- Streamlined authentication model that supports both Shared Keys and Shared Access Signatures

- Ability to dive into the request details and results using an operation context and ETW logging

- Blob uploads regardless of size and blob type, and parallel block/page uploads configurable by the user

- Blob streams that allow reading from or writing to a blob without having to deal with specific upload/download APIs

- Full MD5 support across all blob upload and download APIs

- Table layer that uses the new JSON support on Windows Azure Storage announced in November 2013

- Entity Group Transaction support for Table service that enables multiple operations within a single transaction
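To illustrate the difference between the two back-off styles mentioned in the list above, here is a minimal, deterministic sketch. It is not the library's implementation: the function names are hypothetical, "linear" is assumed to mean a fixed interval before every retry (so the cumulative wait grows linearly), and real policies typically also add random jitter to the exponential delay.

```cpp
#include <algorithm>
#include <cassert>
#include <chrono>

// Assumed linear policy: wait the same interval before every retry.
std::chrono::milliseconds linear_delay(std::chrono::milliseconds interval, int attempt)
{
    assert(attempt >= 1);
    (void)attempt; // the delay does not depend on the attempt number
    return interval;
}

// Assumed exponential policy: double the delay on each retry, up to a cap.
std::chrono::milliseconds exponential_delay(std::chrono::milliseconds base,
                                            std::chrono::milliseconds max_delay,
                                            int attempt)
{
    assert(attempt >= 1 && attempt <= 30);
    const long long factor = 1LL << (attempt - 1); // 1, 2, 4, 8, ...
    const std::chrono::milliseconds delay(base.count() * factor);
    return std::min(delay, max_delay);
}
```

With a 100 ms base and a 5 second cap, the exponential delays are 100 ms, 200 ms, 400 ms and so on until the cap is reached, whereas the linear policy keeps returning the same interval.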

Support for Read Access Geo Redundant Storage

This release has full support for Read Access to the storage account data in the secondary region. This functionality needs to be enabled via the portal for a given storage account. You can read more about RA-GRS here.

How to use it?

After installing the NuGet package, all header files you need to use will be located in a folder named “was” (stands for Windows Azure Storage). Under this directory, the following header files are critical:

- blob.h: Declares all types related to Blob service

- queue.h: Declares all types related to Queue service

- table.h: Declares all types related to Table service

- storage_account.h: Declares the cloud_storage_account type that can be used to easily create service client objects using an account name/key or a connection string

- retry_policies.h: Declares different retry policies available to use with all operations

So, you can start with including the headers you are going to use:

#include "was/storage_account.h"

#include "was/queue.h"

#include "was/table.h"

#include "was/blob.h"

Then we will create a cloud_storage_account object, which enables us to create service client objects later in the code. Please note that we are using https below for a secure connection, but http is very useful when you are debugging your application.

wa::storage::cloud_storage_account storage_account = wa::storage::cloud_storage_account::parse(U("AccountName=<account_name>;AccountKey=<account_key>;DefaultEndpointsProtocol=https"));
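The connection string passed to parse is a list of semicolon-separated Name=Value settings. The following standalone sketch shows one way such a string could be split; it is an illustration only, not how the library parses it, and both function names are hypothetical. Note that it splits each pair on the first '=' only, because base64 account keys usually end with '=' padding.

```cpp
#include <cassert>
#include <map>
#include <string>

// Splits "Name1=Value1;Name2=Value2" into a name -> value map.
std::map<std::string, std::string> parse_connection_string(const std::string& text)
{
    std::map<std::string, std::string> settings;
    std::size_t start = 0;
    while (start <= text.size())
    {
        std::size_t end = text.find(';', start);
        if (end == std::string::npos)
            end = text.size();

        const std::string pair = text.substr(start, end - start);
        const std::size_t eq = pair.find('='); // first '=' separates name from value
        if (eq != std::string::npos && eq > 0)
            settings[pair.substr(0, eq)] = pair.substr(eq + 1);

        start = end + 1;
    }
    return settings;
}

// Returns a setting that must be present in the parsed map.
const std::string& required_setting(const std::map<std::string, std::string>& settings,
                                    const std::string& name)
{
    const auto it = settings.find(name);
    assert(it != settings.end() && "missing required setting");
    return it->second;
}
```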

Blobs

Here we create a blob container, a blob with “some text” in it, download it, and then list all the blobs in our container:

// Create a blob container

wa::storage::cloud_blob_client blob_client = storage_account.create_cloud_blob_client();

wa::storage::cloud_blob_container container = blob_client.get_container_reference(U("mycontainer"));

container.create_if_not_exists();

// Upload a blob

wa::storage::cloud_block_blob blob1 = container.get_block_blob_reference(U("myblob"));

blob1.upload_text(U("some text"));

// Download a blob

wa::storage::cloud_block_blob blob2 = container.get_block_blob_reference(U("myblob"));

utility::string_t text = blob2.download_text();

// List blobs

wa::storage::blob_result_segment blobs = container.list_blobs_segmented(wa::storage::blob_continuation_token());

Tables

The sample below creates a table, inserts an entity with a couple of properties of different types, and finally retrieves that specific entity. In the first retrieve operation, we do a point query and retrieve the specific entity. In the query operation, on the other hand, we query all entities whose PartitionKey is equal to “partition” and whose RowKey is greater than or equal to “m”, which will eventually get us the original entity we inserted.

For more information on Windows Azure Tables, please refer to the Understanding the Table Service Data Model article and the How to get most out of Windows Azure Tables blog post.

// Create a table

wa::storage::cloud_table_client table_client = storage_account.create_cloud_table_client();

wa::storage::cloud_table table = table_client.get_table_reference(U("mytable"));

table.create_if_not_exists();

// Insert a table entity

wa::storage::table_entity entity(U("partition"), U("row"));

entity.properties().insert(wa::storage::table_entity::property_type(U("PropertyA"), wa::storage::table_entity_property(U("some string"))));

entity.properties().insert(wa::storage::table_entity::property_type(U("PropertyB"), wa::storage::table_entity_property(utility::datetime::utc_now())));

entity.properties().insert(wa::storage::table_entity::property_type(U("PropertyC"), wa::storage::table_entity_property(utility::new_uuid())));

wa::storage::table_operation operation1 = wa::storage::table_operation::insert_or_replace_entity(entity);

wa::storage::table_result table_result = table.execute(operation1);

// Retrieve a table entity

wa::storage::table_operation operation2 = wa::storage::table_operation::retrieve_entity(U("partition"), U("row"));

wa::storage::table_result result = table.execute(operation2);

// Query table entities

wa::storage::table_query query;

query.set_filter_string(wa::storage::table_query::combine_filter_conditions(

wa::storage::table_query::generate_filter_condition(U("PartitionKey"), wa::storage::query_comparison_operator::equal, U("partition")),

wa::storage::query_logical_operator::and,

wa::storage::table_query::generate_filter_condition(U("RowKey"), wa::storage::query_comparison_operator::greater_than_or_equal, U("m"))));

std::vector<wa::storage::table_entity> results = table.execute_query(query);
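To see why the query above returns the entity inserted earlier, it can help to look at the kind of filter text such a query boils down to and at how the RowKey comparison behaves. The sketch below is a standalone illustration with hypothetical helper names. It assumes the Table service's OData-style operators (eq, ge, and) and an ordinal string comparison, and the exact string the library generates may differ.

```cpp
#include <cassert>
#include <string>

// Builds a single condition such as: PartitionKey eq 'partition'
std::string filter_condition(const std::string& property,
                             const std::string& op,
                             const std::string& value)
{
    assert(!property.empty() && !op.empty());
    return property + " " + op + " '" + value + "'";
}

// Joins two conditions with a logical operator, parenthesizing each side.
std::string combine_filters(const std::string& left,
                            const std::string& logical_op,
                            const std::string& right)
{
    return "(" + left + ") " + logical_op + " (" + right + ")";
}

// Mirrors the sample query locally: PartitionKey == "partition" and RowKey >= "m".
bool matches_sample_query(const std::string& partition_key, const std::string& row_key)
{
    return partition_key == "partition" && row_key >= "m";
}
```

The entity with RowKey “row” matches because “row” sorts after “m”, while a RowKey such as “a” would be filtered out.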

Queues

In our final example, we will create a queue, add a message to it, retrieve the same message, and finally update it:

// Create a queue

wa::storage::cloud_queue_client queue_client = storage_account.create_cloud_queue_client();

wa::storage::cloud_queue queue = queue_client.get_queue_reference(U("myqueue"));

queue.create_if_not_exists();

// Add a queue message

wa::storage::cloud_queue_message message1(U("mymessage"));

queue.add_message(message1);

// Get a queue message

wa::storage::cloud_queue_message message2 = queue.get_message();

// Update a queue message

message2.set_content(U("changedmessage"));

queue.update_message(message2, std::chrono::seconds(30), true);

How to debug it?

When things go wrong, you might get an exception from one of your calls. This exception will be of type wa::storage::storage_exception and contain detailed information about what went wrong. Consider the following code:

try

{

blob1.download_attributes();

}

catch (const wa::storage::storage_exception& e)

{

std::cout << "Exception: " << e.what() << std::endl;

ucout << U("The request that started at ") << e.result().start_time().to_string() << U(" and ended at ") << e.result().end_time().to_string() << U(" resulted in HTTP status code ") << e.result().http_status_code() << U(" and the request ID reported by the server was ") << e.result().service_request_id() << std::endl;

}

When run on a non-existing blob, this code will print out:

Exception: The specified blob does not exist.

The request that started at Fri, 13 Dec 2013 18:31:11 GMT and ended at Fri, 13 Dec 2013 18:31:11 GMT resulted in HTTP status code 404 and the request ID reported by the server was 5de65ae4-9a71-4b1d-9c99-cc4225e714c6

The library also provides the type wa::storage::operation_context, which is supported by all APIs, to obtain more information about what is being done during an operation. Now consider the following code:

wa::storage::operation_context context;

context.set_sending_request([] (web::http::http_request& request, wa::storage::operation_context)

{

ucout << U("The request is being sent to ") << request.request_uri().to_string() << std::endl;

});

context.set_response_received([] (web::http::http_request&, const web::http::http_response& response, wa::storage::operation_context)

{

ucout << U("The reason phrase is ") << response.reason_phrase() << std::endl;

});

try

{

blob1.download_attributes(wa::storage::access_condition(), wa::storage::blob_request_options(), context);

}

catch (const wa::storage::storage_exception& e)

{

std::cout << "Exception: " << e.what() << std::endl;

}

ucout << U("Executed ") << context.request_results().size() << U(" request(s) to perform this operation and the last request's status code was ") << context.request_results().back().http_status_code() << std::endl;

Again, when run on a non-existing blob, this code will print out:

The request is being sent to http://myaccount.blob.core.windows.net/mycontainer/myblob?timeout=90

The reason phrase is The specified blob does not exist.

Exception: The specified blob does not exist.

Executed 1 request(s) to perform this operation and the last request's status code was 404

Samples

We have provided sample projects on GitHub to help get you up and running with each storage abstraction and to illustrate some additional key scenarios. All sample projects are found under the folder named “samples”.

Open the samples solution file named “Microsoft.WindowsAzure.Storage.Samples.sln” in Visual Studio. Update your storage credentials in the samples_common.h file under the Microsoft.WindowsAzure.Storage.SamplesCommon project. Go to the Solution Explorer window and select the sample project you want to run (for example, Microsoft.WindowsAzure.Storage.BlobsGettingStarted) and choose “Set as StartUp Project” from the Project menu (or, alternatively, right-click the project, then choose the same option from the context menu).

Summary

We welcome any feedback you may have in the comments section below, the forums, or GitHub. If you hit any bugs, filing them on GitHub will also allow you to track the resolution.

Serdar Ozler, Mike Fisher, and Joe Giardino


Angshuman Nayak described how to fix an “IIS Logs stops writing in cloud service” problem in a 12/21/2013 post to the Windows Azure Cloud Integration Engineering blog:

I was recently working on a case where the IIS logs stopped being written on all Production boxes. There was 995 GB available on the C:\ disk for logs, but log writing resumed only after some files were deleted. The current IIS logs directory size was 2.91 GB (3,132,727,296 bytes).

So I looked at the diagnostics.wadcfg file.

<?xml version="1.0" encoding="utf-8"?>

<DiagnosticMonitorConfiguration configurationChangePollInterval="PT1M" overallQuotaInMB="4096" xmlns="http://schemas.microsoft.com/ServiceHosting/2010/10/DiagnosticsConfiguration">

<DiagnosticInfrastructureLogs scheduledTransferPeriod="PT1M" />

<Directories scheduledTransferPeriod="PT1M">

<IISLogs container="wad-iis-logfiles" />

<CrashDumps container="wad-crash-dumps" />

</Directories>

<Logs bufferQuotaInMB="1024" scheduledTransferPeriod="PT1M" />

<PerformanceCounters bufferQuotaInMB="512" scheduledTransferPeriod="PT1M">

<PerformanceCounterConfiguration counterSpecifier="\Memory\Available MBytes" sampleRate="PT3M" />

<PerformanceCounterConfiguration counterSpecifier="\Web Service(_Total)\ISAPI Extension Requests/sec" sampleRate="PT3M" />

<PerformanceCounterConfiguration counterSpecifier="\Web Service(_Total)\Bytes Total/Sec" sampleRate="PT3M" />

<PerformanceCounterConfiguration counterSpecifier="\ASP.NET Applications(__Total__)\Requests/Sec" sampleRate="PT3M" />

<PerformanceCounterConfiguration counterSpecifier="\ASP.NET Applications(__Total__)\Errors Total/Sec" sampleRate="PT3M" />

<PerformanceCounterConfiguration counterSpecifier="\ASP.NET\Requests Queued" sampleRate="PT3M" />

<PerformanceCounterConfiguration counterSpecifier="\ASP.NET\Requests Rejected" sampleRate="PT3M" />

<PerformanceCounterConfiguration counterSpecifier="\Processor(_Total)\% Processor Time" sampleRate="PT1S" />

</PerformanceCounters>

<WindowsEventLog bufferQuotaInMB="1024" scheduledTransferPeriod="PT1M" scheduledTransferLogLevelFilter="Error">

<DataSource name="Application!*" />

<DataSource name="System!*" />

</WindowsEventLog>

</DiagnosticMonitorConfiguration>

I noticed that no bufferQuotaInMB was specified for Directories and IIS logs.

<Directories scheduledTransferPeriod="PT1M">

<IISLogs container="wad-iis-logfiles" />

<CrashDumps container="wad-crash-dumps" />

</Directories>

The bufferQuotaInMB attribute needs to be specified, as per this article: http://www.windowsazure.com/en-us/develop/net/common-tasks/diagnostics/ . After making this change, the IIS logs were moved to the Azure Storage blob location at the scheduled intervals, making space for new IIS logs to be collected.

Since there was a lot of disk space, I further recommended that the customer increase the quota limits. This needs to be done as follows:

a) Increase the Local Resource size. If this is not done, then irrespective of the value assigned to OverallQuotaInMB, the effective value will be 4 GB.

<LocalResources>

<LocalStorage name="DiagnosticStore" cleanOnRoleRecycle="false" sizeInMB="8192" /> ==> Example of setting an 8 GB DiagnosticStore

</LocalResources>

b) Keep “OverallQuotaInMB” at least 512 KB less than the value above. The GC needs 512 KB to run; in case all the space is consumed, the GC can't run to move the logs.

c) The “BufferQuotaInMB” property sets the amount of local storage to allocate for a specified data buffer. You can specify the value of this property when configuring any of the diagnostic sources for a diagnostic monitor. By default, the BufferQuotaInMB property is set to 0 for each data buffer. Unless you set a value for this property, diagnostic data will be written to each data buffer until the OverallQuotaInMB limit is reached. So whatever space remains after the sum of all the directory quotas is deducted from OverallQuotaInMB is allocated to BufferQuota.

d) Also change “ScheduledTransferPeriodInMinutes” to a non-zero value. The minimum is 1 minute. If it is set to 0, the diagnostics will not be moved to storage, and you might lose them if the VM is reincarnated at any time due to a guest or host OS update.
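The quota rules in steps a) through c) amount to a little arithmetic, which can be sanity-checked before deploying. The sketch below is only an illustration with hypothetical names. It works in whole megabytes, so it approximates the 512 KB headroom rule by requiring the overall quota to be strictly smaller than the local store.

```cpp
#include <cassert>
#include <numeric>
#include <vector>

// Result of checking a diagnostics quota configuration (all sizes in MB).
struct quota_check
{
    bool overall_fits_local_store; // OverallQuotaInMB is below the DiagnosticStore size
    bool buffers_fit_overall;      // sum of the explicit buffer quotas fits in the overall quota
    int unallocated_mb;            // space left for buffers that have no explicit quota
};

quota_check check_quotas(int local_store_mb,
                         int overall_quota_mb,
                         const std::vector<int>& buffer_quotas_mb)
{
    assert(local_store_mb > 0 && overall_quota_mb > 0);
    const int buffers_total =
        std::accumulate(buffer_quotas_mb.begin(), buffer_quotas_mb.end(), 0);

    quota_check result;
    result.overall_fits_local_store = overall_quota_mb < local_store_mb;
    result.buffers_fit_overall = buffers_total <= overall_quota_mb;
    result.unallocated_mb = overall_quota_mb - buffers_total;
    return result;
}
```

Using the values from the configuration file shown earlier (overall quota 4096 MB, with explicit buffer quotas of 1024, 512 and 1024 MB) and an 8192 MB DiagnosticStore, 1536 MB remain for the buffers that have no explicit quota, such as the IIS logs directory.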

Carl Nolan (@carl_nolan) described Managing Your HDInsight Cluster using PowerShell – Update in a 12/26/2013 post:

Since writing my last post Managing Your HDInsight Cluster and .Net Job Submissions using PowerShell, there have been some useful modifications to the Azure PowerShell Tools.


The HDInsight cmdlets no longer exist as these have now been integrated into the latest release of the Windows Azure Powershell Tools. This integration means:

- You don’t need to specify Subscription parameter

- If needed, you can use AAD authentication to Azure instead of certificates

Also, the cmdlets are fully backwards compatible, meaning you don't need to change your current scripts. The Subscription parameter is now optional, but if it is specified then it is honoured.

As such the cluster creation script will now be:

Param($Hosts = 4, [string] $Cluster = $(throw "Cluster Name Required."), [string] $StorageContainer = "hadooproot")

# Get the subscription information and set variables

$subscriptionInfo = Get-AzureSubscription -Default

$subName = $subscriptionInfo | %{ $_.SubscriptionName }

$subId = $subscriptionInfo | %{ $_.SubscriptionId }

$cert = $subscriptionInfo | %{ $_.Certificate }

$storeAccount = $subscriptionInfo | %{ $_.CurrentStorageAccountName }

Select-AzureSubscription -SubscriptionName $subName

$key = Get-AzureStorageKey $storeAccount | %{ $_.Primary }

$storageAccountInfo = Get-AzureStorageAccount $storeAccount

$location = $storageAccountInfo | %{ $_.Location }

$hadoopUsername = "Hadoop"

$clusterUsername = "Admin"

$clusterPassword = "myclusterpassword"

$secpasswd = ConvertTo-SecureString $clusterPassword -AsPlainText -Force

$clusterCreds = New-Object System.Management.Automation.PSCredential($clusterUsername, $secpasswd)

$clusterName = $Cluster

$numberNodes = $Hosts

$containerDefault = $StorageContainer

$blobStorage = "$storeAccount.blob.core.windows.net"

# tidyup the root to ensure empty

# Remove-AzureStorageContainer -Name $containerDefault -Force

Write-Host "Deleting old storage container contents: $containerDefault" -f yellow

$blobs = Get-AzureStorageBlob -Container $containerDefault

foreach($blob in $blobs)

{

Remove-AzureStorageBlob -Container $containerDefault -Blob ($blob.Name)

}

# Create the cluster

Write-Host "Creating '$numberNodes' Node Cluster named: $clusterName" -f yellow

Write-Host "Storage Account '$storeAccount' and Container '$containerDefault'" -f yellow

Write-Host "User '$clusterUsername' Password '$clusterPassword'" -f green

New-AzureHDInsightCluster -Certificate $cert -Name $clusterName -Location $location -DefaultStorageAccountName $blobStorage -DefaultStorageAccountKey $key -DefaultStorageContainerName $containerDefault -Credential $clusterCreds -ClusterSizeInNodes $numberNodes

Write-Host "Created '$numberNodes' Node Cluster: $clusterName" -f yellow

The only changes are the selection of the subscription and the removal of the Subscription option when creating the cluster. Of course all the other scripts can easily be modified along the same lines; such as the cluster deletion script:

Param($Cluster = $(throw "Cluster Name Required."))

# Get the subscription information and set variables

$subscriptionInfo = Get-AzureSubscription -Default

$subName = $subscriptionInfo | %{ $_.SubscriptionName }

$subId = $subscriptionInfo | %{ $_.SubscriptionId }

$cert = $subscriptionInfo | %{ $_.Certificate }

$storeAccount = $subscriptionInfo | %{ $_.CurrentStorageAccountName }

Select-AzureSubscription -SubscriptionName $subName

$clusterName = $Cluster

# Delete the cluster

Write-Host "Deleting Cluster named: $clusterName" -f yellow

Remove-AzureHDInsightCluster $clusterName -Subscription $subId -Certificate $cert

Write-Host "Deleted Cluster $clusterName" -f yellow

The cluster creation script also contains an additional section to ensure that the default storage container does not contain any leftover files from previous cluster creations:

$blobs = Get-AzureStorageBlob -Container $containerDefault

foreach($blob in $blobs)

{

Remove-AzureStorageBlob -Container $containerDefault -Blob ($blob.Name)

}

The rationale behind this is to ensure that any files that may be left over from previous clusters creations/deletions are removed.


Carlos Figueira (@carlos_figueira) described new Enhanced Users Feature in Azure Mobile Services in a 12/16/2013 post:

In the last post I talked about the ability to get an access token with more permissions to talk to the authentication provider API, so we made that feature more powerful. Last week we released a new update to the mobile services that makes some of that easier. With the new (preview) enhanced users feature, expanded data about the logged-in user is available directly at the mobile service itself (via a call to user.getIdentities), so there’s no more need to talk to the authentication provider APIs to retrieve additional data about the user. Let’s see how we can do that.

To opt-in to this preview feature, you’ll need the Command-Line Interface. If you haven’t used it before, I recommend checking out its installation and usage tutorial to get acquainted with its basic commands. Once you have it installed, and the publishing profile for your subscription properly imported, we can start with that. For this blog post, I created a new mobile service called ‘blog20131216’, and as a newly created service, it doesn’t have any of the preview features enabled, which we can see with the ‘azure mobile preview list’ command, which lists the new feature we have:


C:\temp>azure mobile preview list blog20131216

info: Executing command mobile preview list

+ Getting preview features

data: Preview feature Enabled

data: --------------- -------

data: SourceControl No

data: Users No

info: You can enable preview features using the 'azure mobile preview enable' command.

info: mobile preview list command OK

A sample app

Let’s see how the feature behaves before we turn it on. As usual, I’ll create a simple app which will demonstrate how this works, and I’ll post it to my blogsamples repository on GitHub, under AzureMobileServices/UsersFeature. This is an app in which we can log in to certain providers and call a custom API or a table script. Here’s the app:

The API implementation does nothing but return the result of the call to user.getIdentities in its response.

- exports.get = function (request, response) {

- request.user.getIdentities({

- success: function (identities) {

- response.send(statusCodes.OK, identities);

- }

- });

- };

And the table script will do the same (bypassing the database):

- function read(query, user, request) {

- user.getIdentities({

- success: function (identities) {

- request.respond(200, identities);

- }

- });

- }

Now, if we run the app, login with all providers and call the API and table scripts, we get what we currently receive when calling the getIdentities method:

Logged in as Facebook:<my-fb-id>

API result: {

"facebook": {

"userId": "Facebook:<my-fb-id>",

"accessToken": "<the long access token>"

}

}

Logged out

Logged in as Google:<my-google-id>

Table script result: {

"google": {

"userId": "Google:<my-google-id>",

"accessToken": "<the access token>"

}

}

Logged out

Logged in as MicrosoftAccount:<my-microsoft-id>

API result: {

"microsoft": {

"userId": "MicrosoftAccount:<my-microsoft-id>",

"accessToken": "<the very long access token>"

}

}

So for all existing services nothing really changes.

Enabling the users feature

Now let’s see what starts happening if we enable that new preview feature.

Caution: just like the source control preview feature, the users feature cannot be disabled once turned on. I'd strongly suggest that you try the feature in a test mobile service before enabling it in a service being used by an actual mobile application.

In the Command-Line interface, let’s enable the users feature:

C:\temp>azure mobile preview enable blog20131216 Users

info: Executing command mobile preview enable

+ Enabling preview feature for mobile service

info: Result of enabling feature:

info: Successfully enabled Users feature for Mobile Service 'blog20131216'

info: mobile preview enable command OK

And just like that (after a few seconds), the feature is enabled:

C:\temp>azure mobile preview list blog20131216

info: Executing command mobile preview list

+ Getting preview features

data: Preview feature Enabled

data: --------------- -------------

data: Users Yes

data: SourceControl No

info: You can enable preview features using the 'azure mobile preview enable' command.

info: mobile preview list command OK

Time now to run the same test as before. And the result is a lot more interesting. For Facebook:

Logged in as Facebook:<my-fb-id>

API result: {

"facebook": {

"id": "<my-fb-id>",

"username": "<my-facebook-username>",

"name": "Carlos Figueira",

"gender": "male",

"first_name": "Carlos",

"last_name": "Figueira",

"link": "https://www.facebook.com/<my-facebook-username>",

"locale": "en_US",

"accessToken": "<the-long-access-token>",

"userId": "Facebook:<my-fb-id>"

}

}

And Google:

Logged in as Google:<my-google-id>

Table script result: {

"google": {

"family_name": "Figueira",

"given_name": "Carlos",

"locale": "en",

"name": "Carlos Figueira",

"picture": "https://lh3.googleusercontent.com/<some-path>/<some-other-path>/<yet-another-path>/<and-one-more-path>/photo.jpg",

"sub": "<my-google-id>",

"accessToken": "<the-access-token>",

"userId": "Google:<my-google-id>"

}

}

And Microsoft:

Logged in as MicrosoftAccount:<my-microsoft-id>

API result: {

"microsoft": {

"id": "<my-microsoft-id>",

"name": "Carlos Figueira",

"first_name": "Carlos",

"last_name": "Figueira",

"link": "https://profile.live.com/",

"gender": null,

"locale": "en_US",

"accessToken": "<the very long access token>",

"userId": "MicrosoftAccount:<my-microsoft-id>"

}

}

So that’s basically what we get now “for free” with this new feature… One more thing – I didn’t include Twitter in the example, but it works just as well as the other providers: you’ll get information such as the screen name in the user identities.

User.getIdentities change

One thing I only showed but didn’t really talk about: the call to user.getIdentities is different from how it was previously used. It’s a change required to support the new functionality, and the synchronous version may eventually be deprecated. In order to give you more information about the users, we’re now storing that information in a database table, and retrieving that data is something we cannot do synchronously. What we need to change in the scripts, then, is to pass a callback function to the user.getIdentities call; when the data is retrieved, the success callback will be invoked. Changing the code to comply with the new mode is fairly straightforward, as the code below shows. Here’s the “before” code:

- exports.get = function (request, response) {

- var identities = request.user.getIdentities();

- // Do something with identities, send response

- }

And with the change we just need to move the remainder of the code to a callback function:

- exports.get = function (request, response) {

- request.user.getIdentities({

- success: function (identities) {

- // Do something with identities, send response

- }

- });

- }

The synchronous version of user.getIdentities will continue working in mobile services where the users feature is not enabled (since we can’t break existing services), but I strongly recommend changing your scripts to use the asynchronous version to be prepared for future changes.

Wrapping up

As I mentioned this is a preview feature, which means it’s something we intend on promoting to “GA” (general availability) level, but we’d like to get some early feedback so we can better align it with what the customers want. Feel free to give us feedback about this feature and what we can do to improve it.


No significant articles so far this week.

<Return to section navigation list>

No significant articles so far this week.


<Return to section navigation list>

Sam Vanhoutte (@SamVanhoutte) described Step by step debugging of bridges in Windows Azure BizTalk Services in a 12/15/2013 post:

Introduction

The BizTalk product team has recently published BizTalk Service Explorer to the Visual Studio Gallery. It can be downloaded by clicking this link. The tool is still an alpha version and will keep being improved over time. This explorer adds a node to Visual Studio Server Explorer through which a BizTalk Service can be managed. The following capabilities are available:


- Browsing of all artifacts (endpoints, bridges, schemas, assemblies, transformations, certificates…)

- See tracked events of bridges

- Upload and download artifacts

- Start & stop endpoints

- Restarting the services

- Testing and debugging bridges

Testing capabilities


This blog post will be about that last feature, the testing and debugging of bridges. With the tool, it’s much easier to test bridges and to gain insight into the actual processing and the state of messages at the various stages. It is not (yet?) possible to do step-through debugging of custom code.

Sending test messages

Where we previously built our own testing tools or used the MessageSender console app, we now have integrated testing capabilities in Visual Studio. To use them, just right click a bridge and send a test message to it.

After doing so, you get a simple dialog box where you can have your bridge tested.

Debugging bridges

This feature is very neat. Here you can proceed step by step through a BizTalk bridge and see how the message and the context changes from stage to stage.

How it works? Through service bus relay!

- First of all, you have to configure a service bus namespace with the right credentials. This can be an existing service bus namespace. Configuring this can be done on the properties of the service node:

- Once this is done, the explorer will start up a service bus relayed web service that will be called from the actual runtime.

Debug a bridge

To debug a bridge, you just have to right click it and select the debug option. This will open a new window, as depicted below. When stepping through the pipeline, the message and the context of the current and the previous stage are visible side by side, so that it can easily be compared. This is ideal for seeing the mapping results, effects of custom message inspectors and responses of LOB systems…

Be aware that, when a bridge is in debug mode, messages from other consumers can also be reflected and visualized in the tool. (I tested this by sending a message through the tool and by sending another one outside of Visual Studio) So, don’t use this on bridges in production!

Feedback for next versions

This tool really makes it more productive and intuitive to test bridges and the behavior of BizTalk Services. I really hope it will continue to evolve and therefore I want to give some feedback here:

- I would love to see the HTTP header values in the test or debug tool.

- For bridge debugging, it would be nice to right click a stage and to specify something like : ‘continue to here’. This would make it even faster to test.

- The tracking of events should be better visualized. Instead of showing just a list of Guids, I would love to see timestamps at least, and I would love to see the tracked items in a better format.

- It would also be nice if you could link one (or more?) test files with a specific bridge in the explorer. Then it would be faster to test. Another nice to have would be to drag my test message onto the bridge node to open the test or debug window, pre filled with the dropped file.

<Return to section navigation list>

Yossi Dahan (@YossiDahan) described Role-based authorisation with Windows Azure Active Directory in an 11/28/2013 post (missed when published):

Using Windows Azure Active Directory (WaaD) to provide authentication for web applications hosted anywhere is dead simple. Indeed, in Visual Studio 2013 it is a couple of steps in the project creation wizard.

This provides a means of authentication but not authorisation, so what does it take to support authorisation using the [Authorize(Roles=xxx)] approach?

Conceptually, what’s needed is means to convert information from claims to role information about the logged-on user.

WaaD supports creating Groups and assigning users to them, which is what’s needed to drive role-based authorisation. The problem is that, somewhat surprisingly perhaps, group membership is not reflected in the claim-set delivered to the application as part of the ws-federation authentication.

Fortunately, getting around this is very straightforward. Microsoft actually published a good example here, but I found it a little bit confusing and wanted to simplify the steps somewhat to explain the process more clearly. Hopefully I’ve succeeded –


The process involves extracting the claims principal from the request and, from the provided claims, finding the WaaD tenant.

With that, and with prior knowledge of the clientId and key for the tenant (exchanged out of band and kept securely, of course), the WaaD GraphAPI can be used to query the group membership of the user.

Finally – the groups can be used to add role claims to the claim-set, which WIF would automatically populate as roles, allowing the program to use IsInRole and the [Authorize] attribute as it would normally.

So – how is all of this done? –

The key is to add a ClaimsAuthenticationManager, which will get invoked when an authentication response is detected, and in it perform the steps described.

A slightly simplified (as opposed to better!) version of the sample code is as follows

public override ClaimsPrincipal Authenticate(string resourceName, ClaimsPrincipal incomingPrincipal)

{

//only act if a principal exists and is authenticated

if (incomingPrincipal != null && incomingPrincipal.Identity.IsAuthenticated == true)

{

//get the Windows Azure Active Directory tenantId

string tenantId = incomingPrincipal.FindFirst("http://schemas.microsoft.com/identity/claims/tenantid").Value;

// Use the DirectoryDataServiceAuthorizationHelper graph helper API

// to get a token to access the Windows Azure AD Graph

string clientId = ConfigurationManager.AppSettings["ida:ClientID"];

string password = ConfigurationManager.AppSettings["ida:Password"];

//get a JWT authorisation token for the application from the directory

AADJWTToken token = DirectoryDataServiceAuthorizationHelper.GetAuthorizationToken(tenantId, clientId, password);

// initialize a graphService instance. Use the JWT token acquired in the previous step.

DirectoryDataService graphService = new DirectoryDataService(tenantId, token);

// get the user's ObjectId

String currentUserObjectId = incomingPrincipal.FindFirst("http://schemas.microsoft.com/identity/claims/objectidentifier").Value;

// Get the User object by querying Windows Azure AD Graph

User currentUser = graphService.directoryObjects.OfType<User>().Where(it => (it.objectId == currentUserObjectId)).SingleOrDefault();

// load the memberOf property of the current user

graphService.LoadProperty(currentUser, "memberOf");

//read the values of the memberOf property

List<Group> currentRoles = currentUser.memberOf.OfType<Group>().ToList();

//take each group the user is a member of and add it as a role

foreach (Group role in currentRoles)

{

((ClaimsIdentity)incomingPrincipal.Identity).AddClaim(new Claim(ClaimTypes.Role, role.displayName, ClaimValueTypes.String, "SampleApplication"));

}

}

return base.Authenticate(resourceName, incomingPrincipal);

}

You can follow the comments to pick up the actions in the code. In broad terms, the identity and the tenant id are extracted from the token, and the clientid and key are read from the web.config (VS 2013 puts them there automatically, which is very handy!). An authorisation token is then retrieved to support calls to the graph API, and the graph service is used to query the user and its group membership from WaaD before converting, in this case, all groups to role claims.

To use the graph API I used the Graph API helper source code as pointed out here. In Visual Studio 2013 I updated the references to Microsoft.Data.Services.Client and Microsoft.Data.OData to 5.6.0.0.

Finally, to plug in my ClaimsAuthenticationManager to the WIF pipeline I added this bit of configuration –

<system.identityModel>

<identityConfiguration>

<claimsAuthenticationManager

type="WebApplication5.GraphClaimsAuthenticationManager,WebApplication5" />

</identityConfiguration>

</system.identityModel>

With this done the ClaimsAuthenticationManager kicks in after the authentication and injects the role claims, WIF’s default behaviour then does its magic and in my controller I can use, for example –

[Authorize(Roles="Readers")]

public ActionResult About()

{

ViewBag.Message = "Your application description page.";

return View();

}

Cross posted on the Solidsoft blog.

<Return to section navigation list>

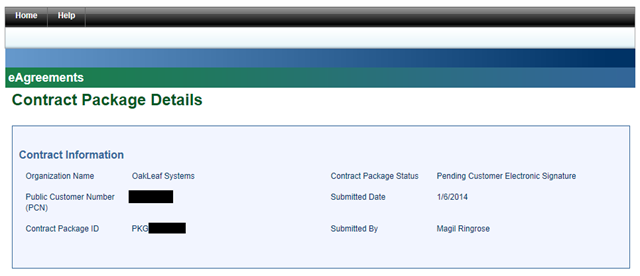

My (@rogerjenn) Microsoft Finally Licenses Remote Desktop Services (RDS) on Windows Azure Virtual Machines post included these new updates from 12/21 to 12/27/2013:

- Update 12/27/2013 with how to Setup a Windows Server 2012 R2 Domain Controller in Windows Azure: IP Addressing and Creating a Virtual Network from the Petri IT Knowledgebase

- Update 12/25/2013 with new Windows Azure Desktop Hosting - Reference Architecture and Deployment Guides updated 10/31/2013 from the MSDN Library

- Update 12/24/2013 with links to the Remote Desktop Services Blog, which offers useful articles and links to related RDS resources, and the Windows 8.1 ITPro Forum

- Update 12/23/2013 with an updated Remote Desktop Client for Windows 8 and 8.1, which you can download and install from the Windows Store

- Update 12/22/2013 with Microsoft Hosting’s announcement of an Updated SPLA Program Guide and New program: Server Cloud Enrollment in a 12/20/2013 message

- Update 12/21/2013 with links to Remote Desktop Services Overview from TechNet updated for Windows Server 2012 R2 and Keith Mayer’s RDS on Windows Azure tutorial

Mark Brown posted Using Django, Python, and MySQL on Windows Azure Web Sites: Creating a Blog Application on 12/17/2013:

Editor's Note: This post comes from Sunitha Muthukrishna (@mksuni, pictured here), Program Manager on the Windows Azure Web Sites team


Depending on the app you are writing, the basic Python stack on Windows Azure Web Sites might meet your needs as-is, or it might not include all the modules or libraries your application may need.

Never fear, because in this blog post, I’ll take you through the steps to create a Python environment for your application by using Virtualenv and Python Tools for Visual Studio. Along the way, I’ll show you how you can put your Django-based site on Windows Azure Web Sites.


Create a Windows Azure Web Site with a MySQL database

Next, log in to the Azure Management Portal and create a new web site using the Custom create option. For more information, see How to Create Azure Websites. We’ll create an empty website with a MySQL database.

Finally choose a region and, after you choose to accept the site Terms, you can complete the install. As usual, it’s a good idea to put your database in the same Region as your web site to reduce costs.

Double click on your Website in the Management portal to view the website’s dashboard. Click on “Download publish profile”. This will download a .publishsettings file that can be used for deployment in Visual Studio.

Create a Django project

For this tutorial we will be using Visual Studio to build our Django Web Application. To build your application with Visual Studio, install PTVS 2.0. For more details, see How to build Django Apps with Visual Studio.

Open Visual Studio and create a New Project > Other Languages > Python > Django Project

In the solution explorer, create a new Django Application in your Django Project by right clicking on DjangoProject > Add > DjangoApp

Enter the name of your Django application, say myblog

Create a virtual environment

Simply put, virtualenv allows you to create custom, isolated Python environments. That is, you can customize and install different packages without impacting the rest of your site. This makes it useful for experimentation as well.

In the Solution explorer, Right click on Python Environments in your Django Project and Select “Add Virtual Environment”

Enter the virtual environment name, say “env”. This will create a folder called “env” which will contain your virtual Python environment without any Python packages except for pip.

Install MySQL-Python and Django packages in your virtual environment

In the solution explorer, Right-click on environment env and Install Python package: django

You can see the Output of the installation of Django in your virtual environment

Similarly, you need to install mysql-python, but use easy_install instead of pip as seen here

Now you have both Django and MySQL for Python installed in your virtual environment

Build your Database Models

A model in Django is a class that inherits from Django’s Model class and lets you specify all the attributes of a particular object. The Model class translates its properties into values stored in the database.

Let’s create a simple model called Post with three fields: title, date and body to build a post table in my database. To create a model, include a models.py file under the myblog/ folder.

#import from Model class

from django.db import models

class Post(models.Model):

    #Create a title property

    title = models.CharField(max_length=64)

    #Create a date property

    date = models.DateTimeField()

    #Create a body of content property

    body = models.TextField()

    # This method is just like toString() function in .NET. Whenever Python needs to show a

    #string representation of an object, it calls __str__

    def __str__(self):

        return "%s " % (self.title)
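To see what __str__ does without a database, here is a minimal sketch in plain Python. PostSketch is a hypothetical stand-in for the Post model (it is not a Django class), and it reuses the same format string, including the trailing space.

```python
class PostSketch(object):
    """Plain-Python stand-in for the Post model (hypothetical; no Django required)."""

    def __init__(self, title):
        self.title = title

    def __str__(self):
        # Same formatting as the model above, including the trailing space
        return "%s " % (self.title)


post = PostSketch("Hello Azure")
print(str(post))            # str() calls __str__
print("Post: %s" % post)    # %s formatting calls __str__ as well
```

Anything that needs a readable label for an object can rely on the same method, which is why it is worth defining on a model.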

Installing the Models

We’ll need to tell Django to create the model in the database. To do this, we need to do a few more things:

- First, we’ll configure the application’s database in settings.py. Enter the MySQL database information associated with the Windows Azure Web Site.

DATABASES = {

'default': {

'ENGINE': 'django.db.backends.mysql',

'NAME': 'MYSQL-DATABASE-NAME',

'USER': 'MYSQL-SERVER-USER-NAME',

'PASSWORD': 'MYSQL-SERVER-USER-PASSWORD',

'HOST': 'MySQL-SERVER-NAME',

'PORT': '',

}

}

Next, add your application to your INSTALLED_APPS setting in settings.py.

INSTALLED_APPS = (

'django.contrib.auth',

'django.contrib.contenttypes',

'django.contrib.sessions',

'django.contrib.sites',

'django.contrib.messages',

'django.contrib.staticfiles',

'myblog',

)

- Once we’ve saved the settings in settings.py, we’ll create the schema in your ClearDB database for the models we’ve already added to models.py. This can be achieved by running Django Sync DB.
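The database settings from the first step could also be assembled from environment variables rather than typed into settings.py, which keeps the password out of source control. The sketch below is one possible way to do that. The BLOG_DB_* variable names and the build_databases helper are assumptions made up for this illustration, not something the Azure portal or Django defines.

```python
import os


def build_databases(env=None):
    """Build a Django-style DATABASES dict from environment variables.

    The BLOG_DB_* variable names are hypothetical, chosen for this sketch.
    """
    env = os.environ if env is None else env
    return {
        'default': {
            'ENGINE': 'django.db.backends.mysql',
            'NAME': env.get('BLOG_DB_NAME', ''),
            'USER': env.get('BLOG_DB_USER', ''),
            'PASSWORD': env.get('BLOG_DB_PASSWORD', ''),
            'HOST': env.get('BLOG_DB_HOST', ''),
            # An empty string tells Django to use the default port
            'PORT': env.get('BLOG_DB_PORT', ''),
        }
    }


# In settings.py this would become: DATABASES = build_databases()
example = build_databases({'BLOG_DB_NAME': 'myblogdb', 'BLOG_DB_HOST': 'example-host'})
print(example['default']['NAME'])
print(example['default']['HOST'])
```

Passing a plain dictionary, as in the last lines, makes the helper easy to try out without touching the real environment.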

You can write your own code to manage creating, editing, deleting posts for your blog or you can use the administration module Django offers which provides an Admin site dashboard to create and manage posts. Refer to this article on how to enable a Django admin site.

Setup a Django Admin site

The admin site will provide a dashboard to create and manage blog posts. First, we need to create a superuser who can access the admin site. To do this run this command if you haven’t created an admin user already.

python manage.py createsuperuser

You can use the Django Shell to run this command. For more details on how to use Django Shell, refer to this article.

The admin module is not enabled by default, so we would need to do the following few steps:

- First we’ll add 'django.contrib.admin' to your INSTALLED_APPS setting in settings.py

INSTALLED_APPS = (

'django.contrib.auth',

'django.contrib.contenttypes',

'django.contrib.sessions',

'django.contrib.sites',

'django.contrib.messages',

'django.contrib.staticfiles',

'myblog',

'django.contrib.admin',

)

- Now, we’ll update urls.py to process a request for the application to the admin site and to the home page view.

from django.conf.urls import patterns, include, url

#import admin module

from django.contrib import admin

admin.autodiscover()

#set url patterns to handle requests made to your application

urlpatterns = patterns('',

url(r'^$', 'DjangoApplication.views.home', name='home'),

url(r'^admin/', include(admin.site.urls)),

)

- Next, we’ll create an admin.py under the myblog/ folder to register Post model

from models import Post

from django.contrib import admin

#Register the database model so it will be visible in the Admin site

admin.site.register(Post)
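The two regular expressions in urls.py decide which requests go to the home view and which go to the admin site. As a rough illustration of that matching, here is a sketch that uses only Python's re module. The route function is a hypothetical helper, not Django's resolver, and it only mimics the order-dependent, first-match behaviour.

```python
import re

# The two regular expressions used in urlpatterns
HOME = re.compile(r'^$')
ADMIN = re.compile(r'^admin/')


def route(path):
    """Return a label for the first pattern that matches, or None."""
    # Django matches patterns against the path without its leading slash
    if path.startswith('/'):
        path = path[1:]
    if HOME.search(path):
        return 'home'
    if ADMIN.search(path):
        return 'admin'
    return None


print(route('/'))
print(route('/admin/'))
print(route('/admin/myblog/post/'))
print(route('/about/'))
```

Because `^admin/` has no `$` at the end, it matches every URL under /admin/, which is what lets the admin site handle its own sub-pages.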

Build a Page view

We’ll create a page view to list all the blog posts you have created. To create a page view, include a views.py file under the myblog/ folder.

from django.shortcuts import render_to_response

from models import Post

#Creating a view for the home page

def home(request):

    posts = Post.objects.all()

    #Renders a given template with a given context dictionary and returns an

    #HttpResponse object with that rendered text.

    return render_to_response('home.html', {'posts': posts} )

Displaying a Post object is not very helpful to the users and we need a more informative page to show a list of Posts. This is a case where a template is helpful. Usually, templates are used for producing HTML, but Django templates are equally capable of generating any text-based format.

To create this template, first we’ll create a directory called templates under myblog/. To display all the posts in views.py, create a home.html under the templates/ folder which loops through all the post objects and displays them.

<html>

<head><title>My Blog</title></head>

<body>

<h1>My Blog</h1>

{% for post in posts %}

<h1>{{ post.title }}</h1>

<em> <time datetime="{{ post.date.isoformat }}">

{{ post.date}}</time> <br/>

</em>

<p>{{ post.body }}</p>

{% endfor %}

</body>

</html>
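For readers new to Django templates, the loop in home.html can be approximated in plain Python. The sketch below is not how Django renders templates; render_posts and the sample data are hypothetical, and it assumes Python 3 for html.escape. It escapes the title and body roughly the way Django's automatic escaping would, and it leaves the date in Python's default format rather than Django's.

```python
from datetime import datetime
from html import escape


def render_posts(posts):
    """Plain-Python approximation of the for-loop in home.html."""
    parts = ["<h1>My Blog</h1>"]
    for post in posts:
        parts.append("<h1>%s</h1>" % escape(post["title"]))
        parts.append('<em><time datetime="%s">%s</time></em>'
                     % (post["date"].isoformat(), post["date"]))
        parts.append("<p>%s</p>" % escape(post["body"]))
    return "\n".join(parts)


# Sample data standing in for Post.objects.all()
sample = [{"title": "First post",
           "date": datetime(2013, 12, 17, 9, 30),
           "body": "Hello from <Azure>"}]
print(render_posts(sample))
```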

Set static directory path

If you access the admin site now, you will notice that the style sheets are broken. The reason for this is that the static directory is not configured for the application.

Let’s set the static Root folder path to D:\home\site\wwwroot\static

from os import path

PROJECT_ROOT = path.dirname(path.abspath(path.dirname(__file__)))

STATIC_ROOT = path.join(PROJECT_ROOT, 'static').replace('\\','/')

STATIC_URL = '/static/'
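To check where STATIC_ROOT ends up, the path arithmetic can be traced by hand. The sketch below assumes a hypothetical deployed location for settings.py and uses ntpath so that Windows path rules apply on any operating system. It also swaps in normpath for abspath, so the result does not depend on the current working directory.

```python
import ntpath  # Windows path rules, so the sketch behaves the same on any OS

# Hypothetical location of settings.py after deployment
settings_file = r'D:\home\site\wwwroot\DjangoProject\settings.py'

# Same steps as in settings.py, with settings_file standing in for __file__
project_root = ntpath.dirname(ntpath.normpath(ntpath.dirname(settings_file)))
static_root = ntpath.join(project_root, 'static').replace('\\', '/')

print(project_root)
print(static_root)
```

Under that assumption the two dirname calls climb from the settings file to the folder that contains the project package, and the static folder is placed directly beneath it.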

Once we’ve saved these changes in settings.py, run this command to collect all the static files in “static” folder for the Admin site using Django Shell

python manage.py collectstatic

Set Template Directory path

We’re nearly done! Django requires the path to the templates directory and static folder directory to be configured in settings.py. There’s just a couple of steps needed to do that.

- Let’s create a variable for the path of the SITE_ROOT

import os.path

SITE_ROOT = os.path.dirname(__file__)

- Then, we’ll set the path for the Templates folder. TEMPLATE_DIRS informs Django where to look for templates for your application when a request is made.

TEMPLATE_DIRS = (

os.path.join(SITE_ROOT, "templates"),)

Deploy the application

We are now all set to deploy the application to the Windows Azure website, mydjangoblog. Right click your DjangoProject and select “Publish”

You can validate the connection and then click on publish to initiate deployment. Once deployment is successfully completed, you can browse your website to create your first blog.

Create your blog post

To create your blog, log in to the admin site http://mydjangoblog.azurewebsites.net/admin with the super user credentials we created earlier.

The Dashboard will include a Link for your model and will allow you to manage the content for the model used by your application. Click on Posts

Create your first blog post and Save

Let’s browse the site’s home page, to view the newly created post.

Now you have the basics for building just what you need in Python on Windows Azure Web Sites. Happy coding :)

Further Reading

Anton Staykov (@astaykov) posted Windows Azure – secrets of a Web Site on 12/20/2013:

Windows Azure Web Sites are, I would say, the highest form of Platform-as-a-Service. As the documentation puts it: “The fastest way to build for the cloud”. It really is. You can start easy and fast – within minutes you will have your Web Site running in the cloud in a high-density shared environment. And within minutes you can go to 10 Large instances reserved only for you! And this is huge – this is 40 CPU cores with a total of 70GB of RAM! Just for your web site. I would say you will need to reengineer your site before going that big. So what are the secrets?

Project KUDU

![image_thumb75_thumb3_thumb_thumb_thu[19] image_thumb75_thumb3_thumb_thumb_thu[19]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEhcOBLxnrTrdGXGUdu9dmqNzor4fdON2fJy-LzBNIitSA2LjCdFD1YkOZA1gISXIrazQSoYuJPE5elRW_lCsTqmzQSUxF8iaVKRhyphenhyphenFtiZ3HGJI9vkX2pQvj_U4NbnVE3Ui8InpasJO6/?imgmax=800) What very few know or realize is that Windows Azure Web Sites runs Project KUDU, which is publicly available on GitHub. Yes, that’s right, Microsoft has released Project KUDU as an open source project so we can all peek inside, learn, and even submit patches if we find something wrong.

Deployment Credentials

There are multiple ways to deploy your site to Windows Azure Web Sites, starting from plain old FTP, going through Microsoft’s Web Deploy, and stopping at automated deployment from popular source code repositories like GitHub, Visual Studio Online (formerly TFS Online), Dropbox, Bitbucket, a local Git repo, and even an external provider that supports the Git or Mercurial source control systems. And this is all thanks to the KUDU project. As we know, the Windows Azure Management portal is protected by (very recently) Windows Azure Active Directory, and most of us use our Microsoft Accounts (formerly known as Windows Live ID) to log in. Well, GitHub, FTP, Web Deploy, etc. know nothing about Live ID. So, in order to deploy a site, we actually need deployment credentials. There are two sets of deployment credentials. User-level deployment credentials are bound to our personal Live ID; we set the user name and password, and these are valid for all web sites and subscriptions the Live ID has access to. Site-level deployment credentials are auto-generated and are bound to a particular site. You can learn more about deployment credentials on the WIKI page.

KUDU console

I’m sure very few of you knew about the live streaming logs feature and the development console in Windows Azure Web Sites. And yet it is there. For every site we create, we get a domain name like

http://mygreatsite.azurewebsites.net/

And behind each site, one additional mapping is created automatically:

https://mygreatsite.scm.azurewebsites.net/

Which currently looks like this:

Key and very important fact – this console runs under HTTPS and is protected by your deployment credentials! This is KUDU! Now you see, there are a couple of menu items like Environment, Debug Console, Diagnostics Dump, and Log Stream. The titles are pretty much self-explanatory. I highly recommend that you jump in and play around; you will be amazed! Here, for example, is a screenshot of the Debug Console:

Nice! This is a command prompt that runs on your Web Site. It has the security context of your web site – so pretty restricted. But, it also has PowerShell! Yes, it does. But in its alpha version, you can only execute commands which do not require user input. Still something!
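The mapping between a site's public address and its KUDU console address can be sketched in a few lines of Python 3. This is a minimal illustration of the naming pattern shown above, not an official API: the function name `scm_url` is hypothetical, and the sketch assumes the site uses the default azurewebsites.net host name rather than a custom domain.

```python
from urllib.parse import urlsplit, urlunsplit

AZURE_SUFFIX = ".azurewebsites.net"


def scm_url(site_url):
    """Return the HTTPS KUDU (scm) address for a default site address."""
    host = urlsplit(site_url).hostname or ""
    if not host.endswith(AZURE_SUFFIX):
        raise ValueError("not an azurewebsites.net host: %r" % host)
    name = host[:-len(AZURE_SUFFIX)]
    # Accept an address that already points at the scm host.
    if name.endswith(".scm"):
        name = name[:-len(".scm")]
    if not name:
        raise ValueError("missing site name in %r" % host)
    # The console is only served over HTTPS, so force the scheme.
    return urlunsplit(("https", name + ".scm" + AZURE_SUFFIX, "/", "", ""))


print(scm_url("http://mygreatsite.azurewebsites.net/"))
# prints https://mygreatsite.scm.azurewebsites.net/
```

Opening the resulting address in a browser still prompts for the deployment credentials described earlier.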

Log Stream

The last item in the menu of your KUDU magic is Streaming Logs:

Here you can watch in real time, all the logging of your web site. OK, not all. But everything you’ve sent to System.Diagnostics.Trace.WriteLine(string message) will come here. Not the IIS logs, your application’s logs.

Web Site Extensions

This big thing, which I described in my previous post, is all developed using KUDU Site Extensions – it is an Extension! And, if you played around with it, you might already have noticed that it actually runs under

https://mygreatsite.scm.azurewebsites.net/dev/wwwroot/

So what are Web Site Extensions? In short – these are small (web) apps you can write and install as part of your deployment. They will run under a separate restricted area of your web site and will be protected by deployment credentials behind HTTPS-encrypted traffic. You can learn more by visiting the Web Site Extensions WIKI page of the KUDU project.

My Android MiniPC and TVBoxes site is an example of a Windows Azure Web Site.

<Return to section navigation list>

![image_thumb75_thumb3_thumb_thumb_thu[10] image_thumb75_thumb3_thumb_thumb_thu[10]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEgK0a7WzDkTAVHNZ9eKWm9g5Ki6-IXr0Zto8pDV1chawZaeJwSrbnn_OTimptfgdl_xzwOXsvzBBU40DGlxMKFGlo_dYfFpjRmqZT2yAhLfHRyZgtvfQxzjHQI0RBbQuXZFNgnqbnsX/?imgmax=800) No significant articles so far this week.

<Return to section navigation list>

DH Kass asked “Windows Azure, Office 365, Bing and Xbox Live to gain more processing power?” in a deck for his Microsoft Cloud Bet?: $11M Land Deal for Giant Data Center article of 12/30/2013 for the VarGuy blog:

Microsoft (MSFT) has plunked down $11 million to buy 200 acres of industrial land in rural Port of Quincy, WA to build a second data center there. Is this another huge bet on Windows Azure, Office 365, Xbox Live and other cloud services? Perhaps.

NY Times/Kyle Bair/Bair Aerial

![image_thumb75_thumb3_thumb_thumb_thu[22] image_thumb75_thumb3_thumb_thumb_thu[22]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEjN9GwtMLFlN_HdRBDqajJamhZPDvTklikerHCm84XVzzDSgr_fO9_7O5Sy1ye5leJzV12ivq3gdkIA1XVN5qa7Ig-5jMYCU0XOp7VLSi0zUuCY_YD1dVmjXzGyhQszKzkc7uWp0UOA/?imgmax=800) The new facility will triple the size of Microsoft's current 470,000 square foot server plant. The vendor has some history in Quincy--first setting up a data center there in 2007 on 75 acres of agricultural land, attracted by cheap electrical power fed by hydroelectric generators drawing water from the nearby Columbia River--and three years later signaling its intention to expand its facilities in the area.

According to a report in the Seattle Times, the latest deal is said to be among the largest in Quincy’s history, with Microsoft paying $4 million for 60 acres of land already owned by the city and another $7 million for 142 acres of neighboring parcels Quincy will buy from private owners and sell back to the IT giant.

The transaction is expected to close in late January, 2014, followed by ground-breaking on the project in the spring with completion expected in early 2015. Microsoft said the data center will employ about 100 people.

Big Data Centers

The resulting facility is expected to be a colossus among giants. Quincy also hosts data centers belonging to IT heavyweights Dell, Intuit (INTU) and Yahoo (YHOO), and wholesalers Sabey and Vantage. Agriculture and food processing giants ConAgra Foods, National Frozen Foods, NORPAC, Columbia Colstor, Oneonta, Stemilt, CMI and Jones Produce also have data centers there.

In addition, direct sales giant Amway is slated to open a $38 million, 48,000 square foot botanical concentrate manufacturing facility in Quincy in May, 2014 to replace an existing plant near Los Angeles.

In moves to capture ballooning demand for connectivity in eastern Washington, network operator and data center services provider Level 3 (LVLT) has expanded its network's fiber backbone in the area, installing more than 200 miles of fiber cable and connections in Quincy alone. In addition, Level 3 also is connecting customers to regional incumbent local exchange carriers.

The Quincy data center project isn’t Microsoft’s sole server farm expansion. A Neowin report said the vendor also has offered a deal to The Netherlands to set up a $2.7 billion data center in the Noord-Holland province. …

Will this data center be designated NorthWest US for Windows Azure?

Read the rest of the article here.

Nick Harris (@cloudnick) and Chris Risner (@chrisrisner) produced Cloud Cover Episode 124: Using Brewmaster to Automate Deployments on 12/20/2013:

In this episode Chris Risner and Nick Harris are joined by Ryan Dunn, the founder of Cloud Cover and now Director of Product Services at Aditi. In this video, Ryan talks about Project Brewmaster. Brewmaster is a free tool which enables easy and automated deployment of a number of different configurations to Windows Azure. Some of the things Brewmaster makes it easy to deploy include:

- Load-balanced webfarms

- Highly available and AlwaysOn SQL Server

- SharePoint

- ARR Reverse Proxy

- Active Directory in multiple data centers

All of the deployments are template based which will eventually allow further customization of the deployment as well as creation of new templates.

<Return to section navigation list>

![image_thumb75_thumb3_thumb_thumb_thu[3] image_thumb75_thumb3_thumb_thumb_thu[3]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEgdFnbZ5XEcXNWEPa8CIweTExogpEY4Px5auKIbsLn37ZdYe7HhZK7EEfTttBmcK53_gTo4Q9t7mFiK0_1jAzF55qlR1fivEJxR1Gh8LvKmgLRoOh3beYd0mNkiGLWUSlbo01zDGIua/?imgmax=800) No significant articles so far this week.

<Return to section navigation list>

Rowan Miller reported EF 6.1 Alpha 1 Available in a 12/20/2013 post to the ADO.NET Blog:

Since the release of EF6 a couple of months ago our team has started working on the EF6.1 release. This is our next release that will include new features.

What’s in Alpha 1