Windows Azure, SQL Azure Database and related cloud computing topics now appear in this weekly series.

Note: This post is updated daily or more frequently, depending on the availability of new articles.


Cloud Computing with the Windows Azure Platform was published on 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).


Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated in April 2010 to cover the January 4, 2010 commercial release.

Franklyn Peart announced on 3/30/2010 that Gladinet has added Secure Backup to Windows Azure Blob Storage to its Gladinet Cloud Desktop 2.0:

Perhaps you have considered backing up your data to Windows Azure, but wondered what assurance you have that your data will be secure when backed up online?

With the introduction of AES 128-bit encryption for files backed up to Windows Azure, Gladinet has an answer for you: The system encrypts your files before saving them on the Azure servers in their encrypted form.

To back up securely to Windows Azure, we first have to select a folder for encryption in the Virtual Directory Manager of the Gladinet Management Console:

![clip_image002[4] clip_image002[4]](http://lh5.ggpht.com/_hinDA3vi9CA/S7H3h78I-5I/AAAAAAAAAMk/KVU7k1Z-IhI/clip_image002%5B4%5D%5B3%5D.gif?imgmax=800)

After clicking on the icon to add a new encrypted folder, select the Windows Azure folder that will be your backup target. …

The post continues with a detailed tutorial for encrypted backup and restore operations.

Brad Calder posted Windows Azure Drive Demo at MIX 2010 to the Windows Azure Storage Team blog on 3/29/2010:

With a Windows Azure Drive, applications running in the Windows Azure cloud can use existing NTFS APIs to access a network attached durable drive. The durable drive is actually a Page Blob formatted as a single volume NTFS Virtual Hard Drive (VHD).

In the MIX 2010 talk on Windows Azure Storage I gave a short 10-minute demo (starts at 19:20) on using Windows Azure Drives. Credit goes to Andy Edwards, who put together the demo. The demo focused on showing how easy it is to use a VHD on Windows 7, then uploading a VHD to a Windows Azure Page Blob, and then mounting it for a Windows Azure application to use.

The following recaps what was shown in the demo and points out a few things glossed over.

He continues his detailed tutorial by covering the following topics:

- Using VHDs in Windows 7

- Uploading VHDs into a Windows Azure Page Blob

- Using a Windows Azure Drive in the Cloud Application
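Because a Page Blob is addressed in 512-byte pages and pages that were never written read back as zeros, one common way to upload a fixed-size VHD is to send only the runs of pages that contain non-zero data. The Python sketch below assumes that approach; it is not taken from Brad's demo. The 4 MB per-request cap is an assumption about the Put Page limit, and `nonzero_page_ranges` is a hypothetical helper, not part of any Azure SDK.

```python
PAGE_SIZE = 512               # Page Blobs are addressed in 512-byte pages
MAX_WRITE = 4 * 1024 * 1024   # assumed per-request cap for one page write (a multiple of PAGE_SIZE)


def nonzero_page_ranges(data: bytes, page_size: int = PAGE_SIZE, max_write: int = MAX_WRITE):
    """Return (offset, length) pairs covering every page that holds non-zero bytes.

    Adjacent non-zero pages are merged into one range; ranges longer than
    max_write are split so each can be sent in a single request.
    """
    if len(data) % page_size != 0:
        raise ValueError("page blob content must be a multiple of the page size")
    zero_page = bytes(page_size)
    runs = []
    start = None  # offset where the current run of non-zero pages began
    for offset in range(0, len(data), page_size):
        is_empty = data[offset:offset + page_size] == zero_page
        if not is_empty and start is None:
            start = offset
        elif is_empty and start is not None:
            runs.append((start, offset - start))
            start = None
    if start is not None:
        runs.append((start, len(data) - start))

    # Split long runs into request-sized pieces.
    ranges = []
    for start, length in runs:
        while length > max_write:
            ranges.append((start, max_write))
            start += max_write
            length -= max_write
        ranges.append((start, length))
    return ranges
```

For an eight-page image with data only in pages 0 and 5, the function returns `[(0, 512), (2560, 512)]`, so the six empty pages are never sent. For a fixed VHD, the 512-byte footer at the end of the file is non-zero, so it is always included.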

Brad announced in his personal blog on 3/28/2010:

Decided for now to just focus on a Team Blog for Windows Azure Storage.

Subscribed.


Scott Hanselman explains Creating an OData API for StackOverflow including XML and JSON in 30 minutes in this lengthy, illustrated tutorial of 3/29/2010:

I emailed Jeff Atwood last night a one line email. "You should make a StackOverflow API using OData." Then I realized that, as Linus says, Talk is Cheap, Show me the Code. So I created an initial prototype of a StackOverflow API using OData on an Airplane. I allocated the whole 12 hour flight. Unfortunately it took 30 minutes so I watched movies the rest of the time.

You can follow along and do this yourself if you like. …

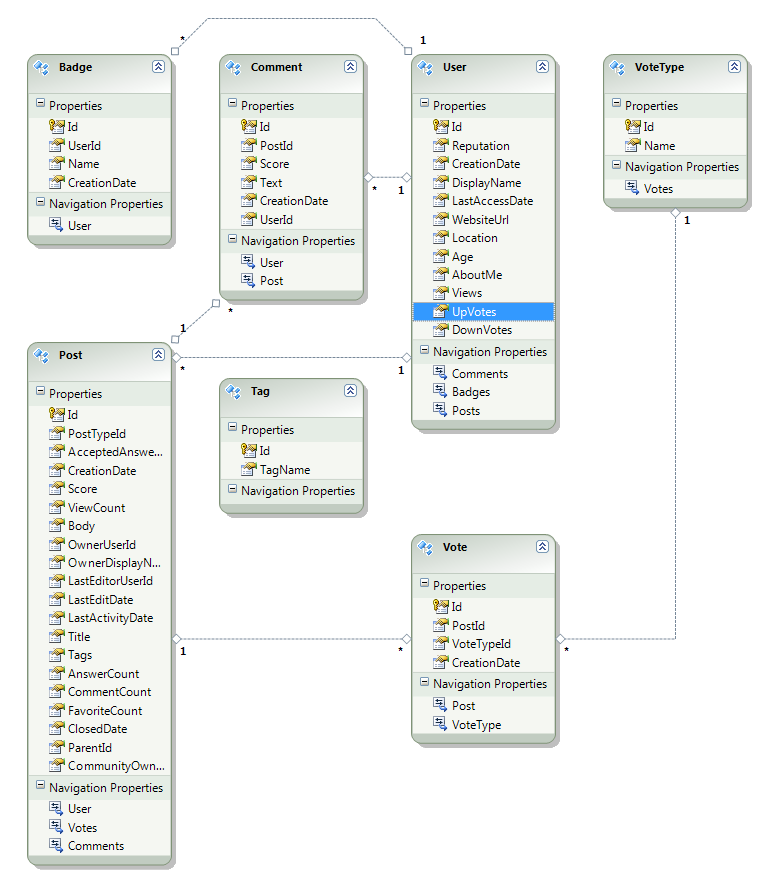

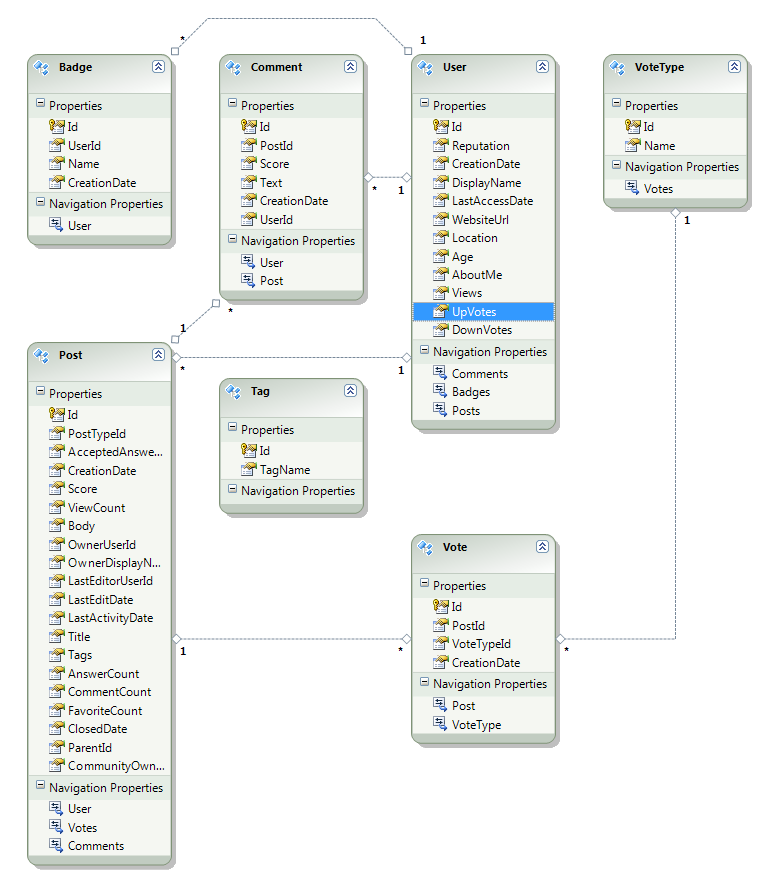

Here’s Scott’s Entity Data Model with associations he added manually:

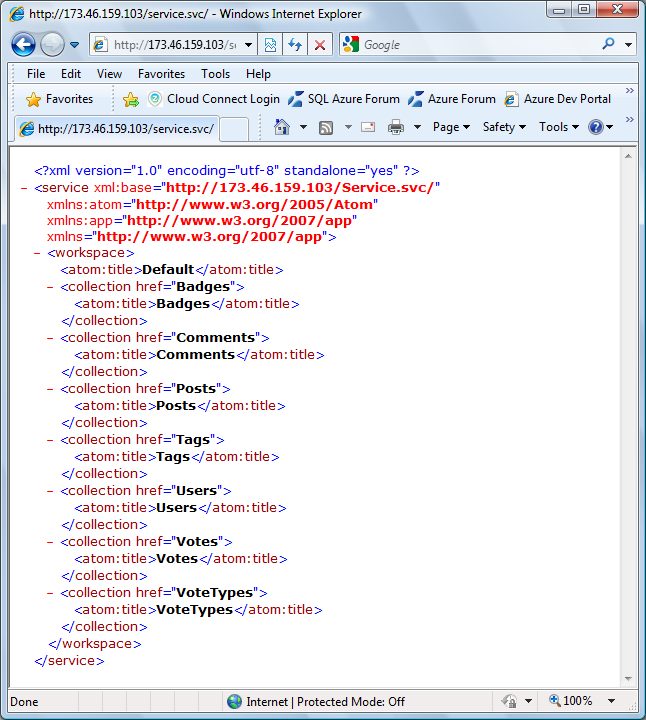

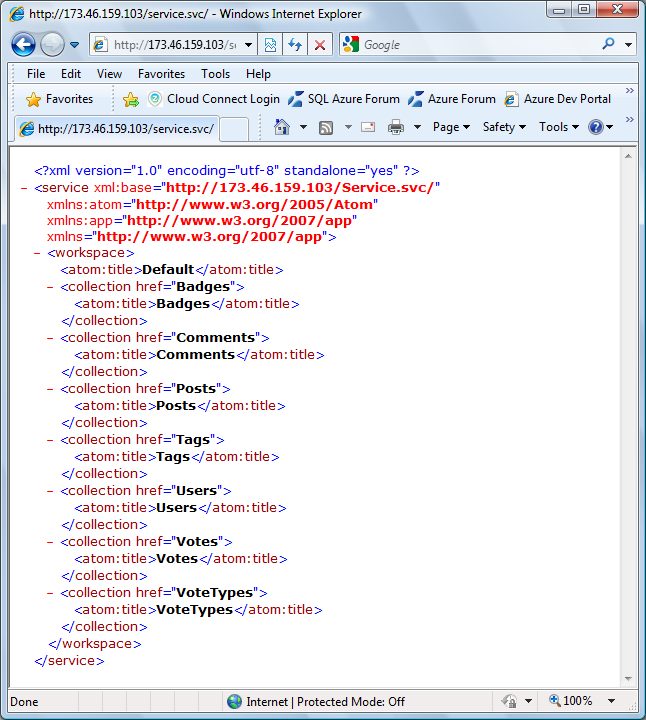

Scott then creates a WCF Data Service from the entity data model and adds support for JSONP. The service's root URI was http://173.46.159.103/service.svc/.
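A WCF Data Service exposes each entity set at a URI under the service root and accepts OData system query options such as `$filter`, `$orderby` and `$top` in the query string. The Python sketch below builds such URIs. The service root is a placeholder, the entity set and property names (`Posts`, `Score`, `CreationDate`) are assumptions modeled on the StackOverflow schema, and `$format`/`$callback` are honored only by a service extended for JSON and JSONP URL control, which is assumed here.

```python
from urllib.parse import quote, urlencode

# Placeholder service root; Scott's prototype ran at a temporary IP address.
SERVICE_ROOT = "http://example.com/service.svc/"


def odata_url(entity_set, filter_expr=None, orderby=None, top=None,
              fmt=None, callback=None, root=SERVICE_ROOT):
    """Build an OData query URI from a few system query options."""
    options = {}
    if filter_expr is not None:
        options["$filter"] = filter_expr
    if orderby is not None:
        options["$orderby"] = orderby
    if top is not None:
        options["$top"] = str(top)
    if fmt is not None:
        options["$format"] = fmt          # assumes the service honors $format
    if callback is not None:
        options["$callback"] = callback   # assumed JSONP wrapper option
    url = root.rstrip("/") + "/" + entity_set
    if options:
        # quote_via=quote encodes spaces as %20; safe="$" keeps option names readable
        url += "?" + urlencode(options, quote_via=quote, safe="$")
    return url


print(odata_url("Posts", filter_expr="Score gt 10",
                orderby="CreationDate desc", top=5, fmt="json"))
```

The call above prints `http://example.com/service.svc/Posts?$filter=Score%20gt%2010&$orderby=CreationDate%20desc&$top=5&$format=json`. Percent-encoding with `%20` rather than `+` avoids ambiguity in `$filter` expressions.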

Andrew J. Brust’s Open Data, Open Microsoft article of 4/1/2010 for the Redmond Developer News blog begins:

I've always been a data guy. I think data maintenance, sharing and analysis is the inspiration for almost all line-of-business software, and technology that makes any or all of it easier is key to platform success. That's why I've been interested in WCF Data Services (previously ADO.NET Data Services) since it first appeared as the technology code-named "Astoria." Astoria was based on a crisp idea: representing data in AtomPub and JSON formats, as REST Web services, with simple URI and HTTP verb conventions for querying and updating the data.

Astoria, by any name, has been very popular, and for good reason: It provides refreshingly simple access to data, using modern, well-established Web standards. Astoria provides a versatile abstraction layer over data access, but does so without the over-engineering or tight environmental coupling to which most data-access technologies fall prey. This elegance has enabled Microsoft to do something equally unusual: separate Astoria's protocol from its implementation and publish that protocol as an open standard. We learned that Microsoft did this at its Professional Developers Conference (PDC) this past November in Los Angeles, when Redmond officially unveiled the technology as Open Data Protocol (OData). This may have been one of Microsoft's smartest data-access plays, ever.

The brilliance behind separating and opening Astoria's protocol has many dimensions. Microsoft is already reaping rewards from OData in terms of unprecedented interoperability among a diverse array of its own products. Windows Azure table storage is exposed and consumable using OData; SharePoint 2010 lists will be accessible as OData feeds; and the data in SQL 2008 R2 Reporting Services reports -- including data visualized as charts, gauges and maps -- will be exposed in OData format. To tie it all together, Microsoft PowerPivot (formerly code-named "Gemini") will be able to consume any OData feed and integrate it into data models that also contain table data from relational databases. PowerPivot models can then be analyzed in Excel, making OData relevant to the vast majority of business users. …

Andrew concludes:

Meanwhile, the innovation and benefits of OData remain a well-kept secret. It's true that Microsoft needs to promote OData better. But the industry press and analysts need to discard their stereotypes of Redmond and cover OData for the newsworthy technology that it is, as well.

Brad Abrams’ Silverlight 4 + RIA Services - Ready for Business: Exposing WCF (SOAP\WSDL) Services post of 3/29/2010 includes a detailed tutorial for creating OData as well as SOAP/WSDL endpoints, and ends with an offer of free DevForce development licenses from IdeaBlade:

For V1.0, this post describes the RIA Services story for WPF applications. While it works, it is clearly not the complete, end-to-end solution RIA Services offers for Silverlight. For example, you may want the full experience: the DataContext, entities with validation, LINQ queries, change tracking, etc. This level of support for WPF is on the roadmap, but will not make V1. But if you like the RIA Services approach, you might consider DevForce from IdeaBlade or CSLA.NET which works for both WPF and Silverlight. The great folks at IdeaBlade have offered me 10 free licenses to give out. If you are interested in one, please let me know.

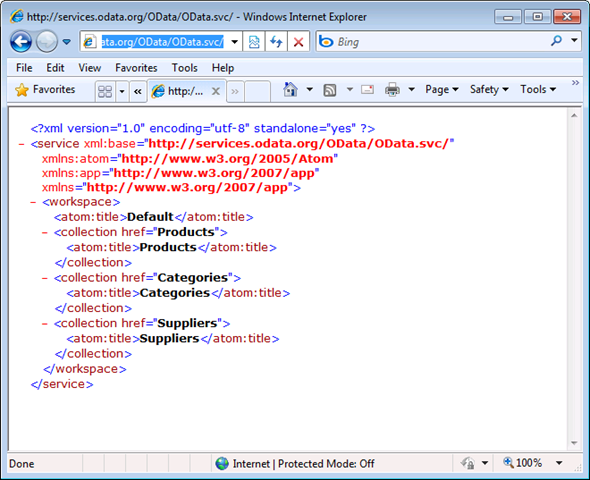

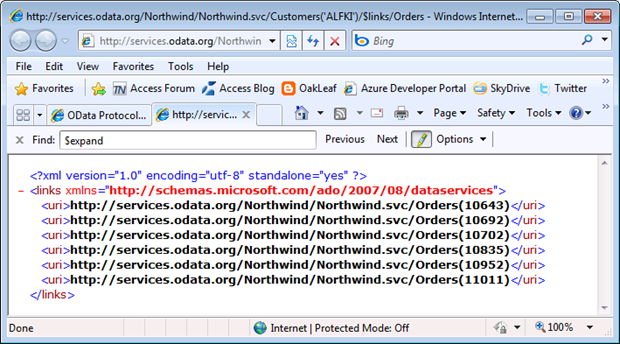

Fabrice Marguerie presents a live preview demo of his Sesame OData Browser [Beta] with data sources including Adventure Works, Northwind, and Netflix.

Reuven Cohen recommends Forget BigData, Think MacroData & MicroData in this 3/29/2010 post:

Recently I've been hearing a lot of talk about the potential for so called "BigData" an idea that has emerged out of Google's use of a concept of storing large disperse data sets in what they call "BigTable". The general idea of Google's BigTable is as a distributed storage system for managing structured data that is designed to scale to a very large (Big) size: petabytes of data across thousands of commodity servers. Basically a way for Google's engineers to think about working in big ways -- a data mantra.

Many of the approaches to BigData have grown from roots found in traditional grid and parallel computing realms such as non-relational column oriented database systems (NoSQL), distributed filesystems and distributed memory caching systems (Memcache). These platforms typically form the basis for many of the products and services found within the broader BigData & NoSQL trends and associated ecosystem of startups. (Some of these have been grouped in the broad Cloud computing category.) The one thing that seems consistent among all the BigData applications & approaches is that the concept emerged from within very large, data intensive companies such as Google, Yahoo, Microsoft and Facebook. Because of the scale of these companies, they were forced to rethink how they managed an ever increasing deluge of user created data.

To summarize the trend in the most simplistic terms -- (to me) it seems to be a kind of Googlification for IT. In a sense we are all now expected to run Google Scale infrastructure without the need for a physical Google infrastructure. The problem is the infrastructure Google and other large web centric companies have put in place have less to do with the particular technological infrastructure and more to do with handling a massive and continually evolving global user base. I believe this trend has to do with the methodology that these companies apply to their infrastructure or more importantly the way they think about applying this methodology to their technology.

Dare Obasanjo continues the NoSQL kerfuffle with The NoSQL Debate: Automatic vs. Manual Transmission of 3/29/2010:

The debate on the pros and cons of non-relational databases which are typically described as “NoSQL databases” has recently been heating up. The anti-NoSQL backlash is in full swing, from the rebuttal to one of my recent posts that I saw mentioned in Dennis Forbes’s write-up The Impact of SSDs on Database Performance and the Performance Paradox of Data Explodification (aka Fighting the NoSQL mindset) to similar thoughts expressed in typical rant-y style by Ted Dziuba in his post I Can't Wait for NoSQL to Die.

This will probably be my last post on the topic for a while given that the discussion has now veered into religious debate territory similar to vi vs. emacs OR functional vs. object oriented programming. With that said…

It would be easy to write rebuttals of what Dziuba and Forbes have written but from what I can tell people are now talking past each other and are now defending entrenched positions. So instead I’ll leave this topic with an analogy. SQL databases are like automatic transmission and NoSQL databases are like manual transmission. Once you switch to NoSQL, you become responsible for a lot of work that the system takes care of automatically in a relational database system. Similar to what happens when you pick manual over automatic transmission. Secondly, NoSQL allows you to eke more performance out of the system by eliminating a lot of integrity checks done by relational databases from the database tier. Again, this is similar to how you can get more performance out of your car by driving a manual transmission versus an automatic transmission vehicle.

However the most notable similarity is that just like most of us can’t really take advantage of the benefits of a manual transmission vehicle because the majority of our driving is sitting in traffic on the way to and from work, there is a similar harsh reality in that most sites aren’t at Google or Facebook’s scale and thus have no need for a Bigtable or Cassandra. As I mentioned in my previous post, I believe a lot of problems people have with relational databases at web scale can be addressed by taking a hard look at adding in-memory caching solutions like memcached to their infrastructure before deciding to throw out their relational database systems.

Hopefully, this analogy will clarify the debate.
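Adding memcached in front of a relational database, as Dare suggests, usually takes the form of the cache-aside pattern: read from the cache first, query the database only on a miss, and store the result with an expiry. The Python sketch below uses an in-process dictionary in place of memcached, and the `load` callable is a hypothetical stand-in for a SQL query; a production setup would use a memcached client and invalidate keys when rows change.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class CacheAside:
    """Minimal cache-aside wrapper: cache first, database on a miss, then populate."""

    def __init__(self, load: Callable[[Hashable], Any], ttl_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self._load = load          # hypothetical loader standing in for a relational query
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[Hashable, Tuple[float, Any]] = {}  # key -> (expires_at, value)
        self.misses = 0

    def get(self, key: Hashable) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]        # cache hit: the database is not touched
        self.misses += 1
        value = self._load(key)    # miss or expired entry: go to the database
        self._entries[key] = (now + self._ttl, value)
        return value

    def invalidate(self, key: Hashable) -> None:
        """Drop a key after the underlying row changes so the next read reloads it."""
        self._entries.pop(key, None)
```

The injectable `clock` makes expiry easy to test; with a 10-second TTL, repeated reads within that window hit only the dictionary, and the database sees one query per key per window.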

Ian Jacobs extends an invitation to Microsoft, Bring OData to a W3C Incubator in this 3/18/2010 post to the W3C Blog:

A couple of days ago, Microsoft announced their OData API. In the first blog post at odata.org, they wrote:

“At the same time we are looking to engage with IETF and W3C to explore how to get broader adoption of the OData extensions & conventions.”

I would like to take Microsoft up on their suggestion: I invite you to create an Incubator Group at W3C. Incubator groups start quickly, and the Members that start them can design them so that anyone can participate. A lot of people who are passionate about data are already part of the W3C community and you are likely to get a lot of feedback.

Incubator Groups don't produce standards, but the community can decide later whether the API should move to the W3C Recommendation Track (or somewhere else). One good reason for doing that is the W3C Royalty-Free Patent Policy. Incubator Groups can smooth the transition from "good idea" to "widely deployed standard available Royalty-Free."

I hope you'll take me up on the invitation.

Oh, and the invitation is not just for Microsoft. W3C is interested in APIs for access to data; this is on the agenda for a June Workshop. If you're working on an API and it has "data" in the name, I encourage you to build community support in a W3C Incubator Group.


The Windows Azure Team recommends Don't Miss An Episode of The Cloud Cover Video Series!, which just added its sixth episode, covering the AppFabric Service Bus:

Have you checked out the Cloud Cover Video series? In each episode of this popular and informative series, Ryan Dunn and Steve Marx cover the Windows Azure platform, dig into features, discuss the latest news and announcements, and share tips and tricks.

The sixth episode went live last Friday, March 26, 2010, and thus far, the series has proven a great success with more than 155,000 total views to date!

Episodes currently available include:

- Service Management

- RoleEntryPoint

- Worker Role Endpoints

- CDN

- MIX Edition

- Service Bus Tracing

Don't miss an exciting episode and let us know what you think about this series by posting a comment here. You can also interact with the show via Twitter at @cloudcovershow or post a comment directly to each episode.

Here’s Channel9’s description of episode 6:

Join Ryan and Steve each week as they cover the Microsoft cloud. You can follow and interact with the show at @cloudcovershow

In this episode:

- Learn about the AppFabric Service Bus and how to use it to help debug your services

- Find some cool samples and kits for Windows Azure in the community

- Discover a trick to easily include many files from Visual Studio inside your Windows Azure package


Anson Horton, Hani Atassi, Danny Thorpe, and Jim Nakashima, who cowrote Cloud Development in Visual Studio 2010 for Visual Studio Magazine’s April 2010 issue, assert “Visual Studio 2010 provides familiar tooling and resources for developers who want to put their applications up in the cloud”:

Imagine building an application that could have essentially infinite storage and unlimited processing power. Now, add in the ability to dynamically scale that application depending on how many resources you want to consume -- or how much you're willing and able to spend. Add a dash of maintainability that gives you this power without the need to manage racks of machines.

Finally, imagine we sweeten the pot by removing the need for you to deal with installing Service Packs or updates, and you've got a pretty interesting recipe coming together. We call this dish Windows Azure, and with Microsoft Visual Studio 2010 you're the chef.

This article is a no-nonsense walkthrough of how to use your existing ASP.NET skills to build cloud services and applications. It's possible to build Windows Azure applications with Visual Studio 2008 or Visual Studio 2010, but Visual Studio 2010 gets you out of the gate faster and generally has a more deeply integrated experience; hence, it's the focus of this article. If you're interested in using Visual Studio 2008, much of the content is applicable, but you'll need to download Windows Azure Tools for Microsoft Visual Studio. …


Figure 1. Adding roles to a new Cloud Service project.

Nicholas Allen’s Windows Azure Guidance and Survey post of 3/30/2010 points to:

The patterns and practices team has released an updated version [Code Drop 2, 3/23/2010] of their samples for Windows Azure development that cover migrating an existing application to Windows Azure, connecting cloud applications with existing systems, and taking advantage of cloud computing. These guidance samples make use of the Enterprise Library application blocks that the patterns and practices team has used in the past to demonstrate enterprise development. The samples currently use a beta release of the upcoming Enterprise Library 5 release. The final version of Enterprise Library is expected to be delivered in mid-April while the samples are updated periodically.

Finally, the Windows Azure team is surveying customers for a quarterly customer satisfaction survey. If you have a billing account for Windows Azure and elected to receive surveys, you should already have been invited to respond but the survey is open to everyone.

Nicholas is primarily a WCF blogger (Nicholas Allen's Indigo Blog, Windows Communication Foundation From the Inside) and I was surprised to find this Azure-related post today.

Jackson Shaw describes Joey Snow’s interview of Quest Software’s Dmitry Sotnikov in a Quest’s Use of Windows Azure post of 3/30/2010:

Joey Snow from Microsoft interviews Dmitry Sotnikov on Quest’s use of Windows Azure. Joey takes a behind-the-scenes look at IT software as a service and Dmitry sketches out the architecture, including Windows Azure and Windows Live ID, as part of this webcast. There’s also a demo of the Quest products that are using Azure. Check it out and if you’re pressed for time here’s the timeline of the webcast:

- [0:01] Cloud solutions for Active Directory recovery, event log management, and SharePoint reporting

- [2:19] IT staff’s concerns: Security, Security, Security

- [5:05] IT staff’s efforts and addressing compliance

- [9:00] Application architecture on the white board

- [14:35] Demo of working components, including federation

Bill Lodin continues his Webcast series with Microsoft Showcase: MSDN Video: Why Windows Azure (Part 3 of 6) about migrating applications to Windows Azure. Microsoft Showcase still doesn’t list Bill’s channel or mention Azure anywhere. Here’s a link to Bill’s Microsoft Showcase: MSDN Video: Why Windows Azure (Part 4 of 6).

Lori MacVittie says “Think that your image heavy site won’t benefit from compression? Think again, because compression is not only good for image heavy sites, it might be better than for those without images” in a preface to her Turns Out Compression is Good for Image Heavy Sites post of 3/30/2010:

jetNEXUS has a nice post entitled, “What does Application acceleration mean?” Aside from completely ignoring protocol acceleration and optimization (especially good for improving performance of those chatty TCP and HTTP-based applications) the author makes a point that should have been obvious but isn’t – compression is actually good for image heavy sites.


It’s true that images are technically already compressed according to their respective formats, so compression doesn’t actually do anything to the images. But a combination of browser rendering rules and client-side caching make the impact of compressing the base page significant on the overall performance of an image-heavy site.

Firstly, the images can't be displayed until the base page arrives at the client, so it follows that the quicker you can deliver the base page, the faster you can request the objects and the quicker the page can load.


Secondly, web sites and applications generally have a consistent look and feel. As such the effect of client side caching is significant. This means that most of the data that will be sent is compressible as most of the images are already saved at the client side.

This is definitely one of those insights that once it’s pointed out makes perfect sense, but until then remains an elusive concept that many of us may have missed. Understanding the way in which the client interprets the data, the HTML and all its components, means the ability to better apply application delivery policies such as those used for acceleration and optimization to the application exchange. Combined with the recognition that compression isn’t always a boon for application performance – its benefits and performance impact depend in part on the speed of the network over which clients are communicating – the application delivery process can be optimized to apply the right acceleration policies at the right time on the right data. …
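The argument rests on two facts: HTML markup compresses well, while image formats such as JPEG and PNG are already compressed, so HTTP compression saves almost nothing on them. The short Python sketch below illustrates the difference with `gzip`. The repetitive HTML page and the seeded random bytes standing in for image data are synthetic assumptions, not measurements from jetNEXUS or Lori's post; `randbytes` requires Python 3.9 or later.

```python
import gzip
import random


def compressed_ratio(payload: bytes) -> float:
    """Size after gzip divided by original size (lower means more savings)."""
    return len(gzip.compress(payload)) / len(payload)


# A template-heavy base page: repeated markup compresses very well.
row = '<tr><td class="item"><img src="/img/thumb.png" alt="thumb"/></td></tr>\n'
html = ("<html><body><table>\n" + row * 200 + "</table></body></html>").encode("ascii")

# Seeded random bytes stand in for an already-compressed image body.
image_like = random.Random(42).randbytes(len(html))

print(f"HTML  ratio: {compressed_ratio(html):.2f}")
print(f"image ratio: {compressed_ratio(image_like):.2f}")
```

The HTML ratio comes out far below 1, while the image-like ratio is slightly above 1 because gzip adds framing to data it cannot shrink. This is why compression policies typically target the base page and other text resources rather than image responses.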

Brian Prince offers A Developers Guide to Microsoft Azure in this 3/29/2010 post to the CodeGuru blog:

Welcome to the Renaissance, version 2.0. There are many new possibilities open to developers today. The breadth of technologies and platforms that have become available in only the past few years have created an environment of unprecedented innovation and creativity. Today, we are able to build applications that we could not have dreamed of only a few years ago. We are seeing the birth of a second renaissance period, one that will make the dot-com boom of the late nineties look like a practice lap.

Part of this Renaissance is caused by the move towards service oriented architecture, and the availability of cloud computing. Suddenly, we are able to create applications, with sophisticated capabilities, without having to write the entire infrastructure by hand, or pay for it all out of pocket. Skype is a very popular application that allows its users to make phone calls over the Internet. Imagine how you would build Skype today. You would not have to figure out how to talk across firewalls, or how to encrypt the data properly. You wouldn't have to build the entire user credential infrastructure. You would only have to set out with a grand idea, and write only the code that is important to your idea. Skype isn't about traversing firewalls, or a new way to manage user names and passwords. It's about people communicating easily across the Internet. The entire infrastructure that isn't strategic to you is left to someone else. This frees up an amazing amount of power and productivity. This leads us to this new Renaissance.

Brian continues with a review of Windows Azure from a software developer’s perspective.

Bill Zack’s Windows Azure Design Patterns post of 3/29/2010 describes his recent Webcast:

One of the challenges in adopting a new platform is finding usable design patterns that work for developing effective solutions. The Catch-22 is that design patterns are discovered and not invented. Nevertheless it is important to have some guidance on what design patterns make sense early in the game.

Most presentations about Windows Azure start by presenting you with a bewildering set of features offered by Windows Azure, Windows Azure AppFabric and SQL Azure. Although these groupings of features may have been developed by different groups within Microsoft and spliced together, as architects we are more interested in solving business problems that can be solved by utilizing these features where they are appropriate.

I recently did a Webcast that took another more solution-centric approach. It presented a set of application scenario contexts, Azure features and solution examples. It is unique in its approach and the fact that it includes the use of features from all components of the Windows Azure Platform including the Windows Azure Operating System, Windows Azure AppFabric and SQL Azure.

In the webcast you will learn about the components of the Windows Azure Platform that can be used to solve specific business problems.

The recording can be downloaded and viewed from this Academy Mobile Page. Be forewarned that the LiveMeeting recording did not handle the gradient slide background very well. :-( Nevertheless I think watching it will be of value.

There were also a number of comments and questions about the material that I will add to this post in the next few days. Stay Tuned. :-)

David Aiken’s My Windows Azure Service Instance status – 1 liner post of 3/29/2010 begins:

A long time ago, before the cloud, I did a whole bunch of work with the PowerShell team. The “in” thing to do when working on PowerShell is to solve IT problems with just 1 line of PowerShell. Ok sometimes this one line could be huge, but 1 line nevertheless.

A few weeks back when getting ready for the first Cloud Cover show, we (Steve Marx, Ryan and I) wanted to show how you could use the Windows Azure Management PowerShell Cmdlets to get the current service status, and show it in a chart. The solution never made the show, but here it is in all of its single-line glory (I included the line number for fun):

```powershell
1: (get-deployment -Slot Production -Servicename 'myapp' -Certificate (Get-Item cert:\CurrentUser\My\4445574B0BADAEAA6433E7A5A6169AF9D3136234) -subscriptionId 87654387-9876-9876-9867-987656543482).RoleInstanceList | group-object InstanceStatus | Select Name, Count | out-chart -gallery Lines -DataGrid_Visible true -refresh 0:1:0 -Glass -Title 'MyApp Instances'
```

So take a minute to digest that. …

Yes – the graph is refreshed every minute.
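The heart of the one-liner is its pipeline tail: `group-object InstanceStatus | Select Name, Count` turns the deployment's list of role instances into one row per status with a count, which `out-chart` then plots. The same grouping step is sketched below in Python for readers who don't use PowerShell. The instance records and status strings are made-up sample data, and the charting and one-minute refresh are left out.

```python
from collections import Counter

# Hypothetical role-instance records, loosely shaped like the RoleInstanceList
# returned by get-deployment; only InstanceStatus is used.
role_instances = [
    {"InstanceName": "WebRole_IN_0", "InstanceStatus": "Ready"},
    {"InstanceName": "WebRole_IN_1", "InstanceStatus": "Ready"},
    {"InstanceName": "WorkerRole_IN_0", "InstanceStatus": "Busy"},
    {"InstanceName": "WorkerRole_IN_1", "InstanceStatus": "Ready"},
]


def status_counts(instances):
    """Python analogue of `group-object InstanceStatus | Select Name, Count`.

    Groups are listed in order of first appearance, as Counter preserves
    insertion order.
    """
    counts = Counter(inst["InstanceStatus"] for inst in instances)
    return list(counts.items())


for name, count in status_counts(role_instances):
    print(name, count)
```

With the sample data this yields `[("Ready", 3), ("Busy", 1)]`.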

Cerebrata announced Version 2010.4.01.00 of their Cloud Storage Studio on 3/29/2010. The new download adds the following features:

- Delete multiple tables, queues & blob containers simultaneously

- Clear table

- Ability to reorder attributes and show/hide them when viewing entities

- Apply properties like cache-control in bulk to all blobs in the upload queue

- Bug Fixes

Bob Warfield explains Minimizing the Cost of SaaS Operations in his post of 3/29/2010 to the Enterprise Irregulars blog:

SaaS software is much more dependent on being run by the numbers than conventional on-premises software because the expenses are front loaded and the revenues are back loaded. SAP learned this the hard way with its Business By Design product, for example. If you run the numbers, there is a high degree of correlation between low cost of delivering the service and high growth rates among public SaaS companies. It isn’t hard to understand–every dollar spent delivering the service is a dollar that can’t be spent to find new customers or improve the service.


So how do you lower your cost to deliver a SaaS service?

At my last gig, Helpstream, we got our cost down to 5 cents per seat per year. I’ve talked to a lot of SaaS folks and nobody I’ve yet met got even close. In fact, they largely don’t believe me when I tell them what the figures were. The camp that is willing to believe immediately wants to know how we did it. That’s the subject of this “Learnings” blog post. The formula is relatively complex, so I’ll break it down section by section, and I’ll apologize up front for the long post.

Bob continues with these topics:

- Attitude Matters: Be Obsessed with Lowering Cost of Service …

- Go Multi-tenant, and Probably go Coarse-grained Multi-tenant …

- Go Cloud and Get Out of the Datacenter Business …

- Build a Metadata-Driven Architecture …

- Open Source Only: No License Fees! …

- Automate Operations to Death …

- Don’t Overlook the Tuning! …

Scott Densmore’s Azure Deployment for your Build Server post of 3/28/2010 begins:

One of the more mundane tasks when working with Azure is the deployment process. There are APIs that can help deploy your application without having to go through the Windows Azure portal and Visual Studio. These APIs have been wrapped up nicely with the Windows Azure Service Management CmdLets. The one thing that would make this all better is if we could use these APIs and scripts to deploy our project every time a build we define happens. That is exactly what we have done for our Windows Azure Guidance and I wanted to go ahead and get it out there for people to use. My friend Jim's post was a start and this is the next step.

Tools

Overview

In the zip file there is a Windows Azure project with a web role using ASP.NET and a Worker role. The project doesn't actually do anything because the point here is just to illustrate the deployment process. There are two major issues that come up when you have to build and deploy your project. The first is updating and managing your ServiceConfiguration file that points to the correct storage location with your key. Normally you want this to point to using local development storage and not have to manage multiple files / entries. The second issue is that of the deployment itself. Where do you deploy the package and how do you make sure you do it consistently?

Scott continues with the answers.
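Scott's first issue, pointing the ServiceConfiguration file at development storage locally but at a real storage account for deployed builds, can be handled by rewriting the setting as a build step. The Python sketch below is not taken from Scott's download. It assumes a setting named `DataConnectionString` (a hypothetical name), the 2008/10 ServiceConfiguration namespace used by the SDK of this period, and placeholder account credentials.

```python
import xml.etree.ElementTree as ET

# ServiceConfiguration namespace assumed for .cscfg files of this SDK era.
CSCFG_NS = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration"


def set_setting(cscfg_xml: str, setting_name: str, value: str) -> str:
    """Return .cscfg XML with every <Setting name=setting_name> set to value."""
    # Note: register_namespace changes a module-wide registry; it keeps the
    # default namespace unprefixed (no "ns0:") when the XML is written back.
    ET.register_namespace("", CSCFG_NS)
    root = ET.fromstring(cscfg_xml)
    found = False
    for setting in root.iter(f"{{{CSCFG_NS}}}Setting"):
        if setting.get("name") == setting_name:
            setting.set("value", value)
            found = True
    if not found:
        raise KeyError(setting_name)
    return ET.tostring(root, encoding="unicode")


SAMPLE = f"""<ServiceConfiguration serviceName="MyService" xmlns="{CSCFG_NS}">
  <Role name="WebRole">
    <Instances count="1" />
    <ConfigurationSettings>
      <Setting name="DataConnectionString" value="UseDevelopmentStorage=true" />
    </ConfigurationSettings>
  </Role>
</ServiceConfiguration>"""

cloud = set_setting(SAMPLE, "DataConnectionString",
                    "DefaultEndpointsProtocol=https;AccountName=myaccount;AccountKey=KEY")
```

Keeping the checked-in file on `UseDevelopmentStorage=true` and substituting the real connection string only in the build keeps developers working against local storage and keeps the account key out of source control.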

John Moore’s Accenture Talks Cloud and Windows Azure post of 3/26/2010 to the MSPMentor blog reports:

While boutique and specialty integrators may operate on the bleeding edge at times, the larger consulting houses typically don’t. That mindset makes Accenture’s interest in cloud computing notable. If a company with a heritage in the buttoned-down world of “Big Eight” management consulting backs the cloud, it’s a fairly safe bet the technology has entered the mainstream. Here’s why.

“You go through the hype phases on a lot of this stuff, but this cloud stuff is real,” said Bill Green, Accenture’s chairman and chief executive officer, during the company’s recent analysts’ call.

Green acknowledged the complexities of the cloud, but added that clients take the technology seriously. He said Accenture has dozens of early-stage projects underway. And Accenture has also developed a so-called Accenture Cloud Computing Accelerator service.

“I think that as the technology get better and as the cost profiles get understood and as a few pioneers get out there, this thing is going to be a bona fide technology wave.”

Much of Accenture’s cloud work appears to be in preparing clients for adoption. The company, for example, offers a cloud computing accelerator service that aims to help customers identify business applications that can be migrated to the cloud and, generally, suss out the risks and opportunities of cloud computing.

In addition, Accenture partners with Avanade (an Accenture/Microsoft joint venture) to provide cloud computing solutions on the Windows Azure Platform.

CodePlex’s All-In-One Code Framework project offers the following ten C# and VB sample projects (five in each language) for Windows Azure:

| Sample | Description | ReadMe Link |
|---|---|---|
| CSAzureWCFWorkerRole | Host WCF in a Worker Role (C#) | ReadMe.txt |
| VBAzureWCFWorkerRole | Host WCF in a Worker Role (VB.NET) | ReadMe.txt |
| CSAzureWorkflowService35 | Run WCF Workflow Service on Windows Azure (C#) | ReadMe.txt |
| VBAzureWorkflowService35 | Run WCF Workflow Service on Windows Azure (VB.NET) | ReadMe.txt |
| CSAzureWorkflow4ServiceBus | Expose WCF Workflow Service using Service Bus (C#) | ReadMe.txt |
| VBAzureWorkflow4ServiceBus | Expose WCF Workflow Service using Service Bus (VB.NET) | ReadMe.txt |
| CSAzureTableStorageWCFDS | Expose data in Windows Azure Table Storage via WCF Data Services (C#) | ReadMe.txt |
| VBAzureTableStorageWCFDS | Expose data in Windows Azure Table Storage via WCF Data Services (VB.NET) | ReadMe.txt |
| CSAzureServiceBusWCFDS | Access data on premise from Cloud via Service Bus and WCF Data Service (C#) | ReadMe.txt |
| VBAzureServiceBusWCFDS | Access data on premise from Cloud via Service Bus and WCF Data Service (VB.NET) | ReadMe.txt |

<Return to section navigation list>

Jon Savill answers Q. What's Windows Azure? in this 3/30/2010 post to the Windows IT Pro blog:

A. We're constantly hearing about "the cloud" and one of the cloud services from Microsoft is the Windows Azure platform. However, many people are unsure what this is and how it compares to other cloud services.

At the most basic level, Azure can be thought of as an application platform that's hosted by Microsoft where you can run your applications that have been designed for Azure. It's an open platform, but there are a few considerations, because your application will be running in a distributed manner. As a customer, your only requirement is to develop the application to meet the Azure platform requirements; you don't need to worry about any infrastructure components.

Windows Azure is made up of three major layers:

- The fabric - The abstracted set of computing resources that are used to run applications. The fabric is essentially a collection of computers.

- Storage - A location to store data that can be accessed from anywhere in the datacenter. Storage can contain binary large objects (BLOBs) that hold any type of data (such as images and media), tables for structured but non-relational data, and queues for asynchronous messaging (one role places items on a queue and another takes them off). Three replicas of all data exist at all times to provide resiliency. Windows Azure also supports Windows Azure Drives, which are essentially mountable NTFS volumes that applications can access as a disk instead of as BLOBs, tables, or queues. A Windows Azure Drive supports only one concurrent writer but multiple concurrent readers. If you need relational database functionality, a separate SQL Azure service provides the relational features found in SQL Server. Also note that while queues can be used for asynchronous communication, Windows Azure also supports synchronous communication between roles through the Azure API, which facilitates communication between distributed instances of an application's roles. Queues are considered reliable and durable, whereas synchronous communication is considered unreliable, which means your application must handle situations in which no response arrives.

- Developer Experience/SDK - This layer focuses on letting developers create rich applications to run in Windows Azure. Generally, the only Azure-specific consideration is to avoid keeping state information inside the application itself, because the application runs on multiple servers and in-memory state would not be shared across them.
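Two of the design points in the list above, keeping no per-user state in an instance's memory and tolerating missing replies from synchronous role-to-role calls, can be sketched together. This is a minimal, hypothetical Python model, not the Azure SDK: `SharedStore` stands in for an external store such as Azure Table storage, `queue.Queue` stands in for a durable Azure queue, and the instance names and retry count are assumptions.

```python
import queue


class NoResponse(Exception):
    """Raised when a synchronous call to another role instance gets no reply."""


class SharedStore:
    """Hypothetical stand-in for an external store such as Azure Table storage."""

    def __init__(self):
        self._data = {}

    def get(self, key, default=None):
        return self._data.get(key, default)

    def put(self, key, value):
        self._data[key] = value


class RoleInstance:
    """A role instance that keeps no per-user state in its own memory."""

    def __init__(self, name, store, work_queue):
        self.name = name
        self.store = store            # shared by every instance of the role
        self.work_queue = work_queue  # stand-in for a durable Azure queue

    def add_to_cart(self, session_id, item):
        # Read-modify-write against the shared store, so any instance can serve the next request.
        cart = list(self.store.get(session_id, []))
        cart.append(item)
        self.store.put(session_id, cart)
        return cart

    def call_peer(self, send, payload, attempts=3):
        # Synchronous calls are unreliable: retry a few times, then fall back to the durable queue.
        for _ in range(attempts):
            try:
                return ("sync", send(payload))
            except NoResponse:
                continue
        self.work_queue.put(payload)
        return ("queued", None)


store, work = SharedStore(), queue.Queue()
a = RoleInstance("WebRole_IN_0", store, work)
b = RoleInstance("WebRole_IN_1", store, work)
a.add_to_cart("session-42", "book")
print(b.add_to_cart("session-42", "pen"))  # ['book', 'pen']


def dead_peer(payload):
    raise NoResponse()


print(b.call_peer(dead_peer, "resize-image-17"))  # ('queued', None)
```

Because instance `b` sees the cart that instance `a` started, a load balancer can route each request to any instance. A real shared store would also need to guard against two instances updating the same entry at once, for example with ETag-based optimistic concurrency.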

Think of Windows Azure as a place to run your applications in a Microsoft-hosted environment. Azure is a highly scalable and available environment, and users are billed on actual usage under a utility model: you pay only for what you use, and that can vary over time.

Jon continues with a description of typical applications for Windows Azure.
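The utility billing model John describes reduces to simple arithmetic: each metered quantity multiplied by its unit price, then summed. The Python sketch below is illustrative only. The unit prices are placeholder values chosen for the example, not a quote of Microsoft's price list, and bandwidth charges are left out for brevity.

```python
from dataclasses import dataclass


@dataclass
class Rates:
    """Illustrative unit prices in USD (placeholders, not a price list)."""
    compute_per_instance_hour: float
    storage_per_gb_month: float
    per_10k_transactions: float


@dataclass
class Usage:
    instance_hours: float     # instances x hours deployed
    storage_gb_months: float  # average GB stored over the month
    transactions: int         # storage transactions


def monthly_bill(usage: Usage, rates: Rates) -> float:
    """Pay-per-use: each metered quantity times its unit price, summed."""
    compute = usage.instance_hours * rates.compute_per_instance_hour
    storage = usage.storage_gb_months * rates.storage_per_gb_month
    transactions = usage.transactions / 10_000 * rates.per_10k_transactions
    return round(compute + storage + transactions, 2)


rates = Rates(compute_per_instance_hour=0.12, storage_per_gb_month=0.15, per_10k_transactions=0.01)
busy = Usage(instance_hours=2 * 720, storage_gb_months=10, transactions=1_000_000)
quiet = Usage(instance_hours=1 * 720, storage_gb_months=10, transactions=1_000_000)
print(monthly_bill(busy, rates))   # 175.3
print(monthly_bill(quiet, rates))  # 88.9
```

Dropping from two instances to one for a quiet month roughly halves the compute portion of the bill, which is the "that can vary over time" point in practice.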

David Linthicum makes The case for the hybrid cloud and claims “Mixing and matching private and public clouds is a good thing for information control, scalability, burst demand, and failover needs” in this 3/30/2010 post to InfoWorld’s Cloud Computing blog:

We've settled in on a few deployment models for cloud computing: private, public, community, and hybrid. Defined by the National Institute of Standards and Technology, these models have been adopted by many organizations in very different and more complex directions. Lately, the deployment action has been around the concept of hybrid clouds, or the mixing and matching of various cloud computing patterns in support of the architecture.

Hybrid clouds, as the name suggests, are a mixture of public, private, and/or community clouds for the target architecture. Most organizations adopting cloud computing will use a hybrid cloud model, considering that not all assets can be placed in public clouds (for security and control reasons), and many will opt for the value of both private and public together, sometimes adding community clouds to the mix as well. …

What's interesting here is not the concept of having a private and a public cloud, but of a private and a public cloud working together. Here's a classic example: leveraging storage, database, and processing services within a private cloud, and from time to time leveraging a public cloud to handle spikes in processing requirements without having to purchase additional hardware for stand-by capacity. Many organizations are moving toward this type of "cloud-bursting" architecture for the value it brings to the bottom line. …
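The "cloud-bursting" pattern Linthicum describes can be sketched as a placement policy: fill private capacity first and send only the overflow to a public cloud. This is a minimal Python sketch assuming a simple greedy, in-order policy and hypothetical job names and core counts; real schedulers also weigh data locality, security classification, and cost.

```python
from typing import List, Tuple

Job = Tuple[str, int]  # (job name, cores required)


def place_jobs(jobs: List[Job], private_cores: int) -> Tuple[List[str], List[str]]:
    """Greedy cloud-bursting policy: run on private capacity while it lasts and
    send whatever doesn't fit to a public cloud instead of buying stand-by hardware."""
    private, public = [], []
    used = 0
    for name, cores in jobs:
        if used + cores <= private_cores:
            private.append(name)
            used += cores
        else:
            public.append(name)  # burst: this job overflows to public capacity
    return private, public


jobs = [("nightly-etl", 4), ("reporting", 3), ("month-end-close", 2), ("campaign-spike", 5)]
print(place_jobs(jobs, private_cores=8))
# (['nightly-etl', 'reporting'], ['month-end-close', 'campaign-spike'])
```

The private cloud is sized for the normal load (eight cores here), and only the spike is paid for by the hour in the public cloud.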

Bob Familiar announced Channel9’s ARCast.TV - Behind the Cloud::How Microsoft Deploys Azure on 3/29/2010:

One of the most impressive elements on the Microsoft PDC Show floor has been a Windows Azure showcase container.

Designed by Microsoft and built by Dell, this impressive piece of hardware is a key for our datacenter strategy that reduces the time it takes for us to deploy a major datacenter from years to just a few months.

In this episode, Max Zilberman interviews Patrick Yantz, a System Architect in the Global Foundation Services at Microsoft.


Kenneth Miller’s Hot, Crowded and Critical data center analysis for InformationWeek::analytics has the following abstract:

Corporate America has made some painful adjustments over the past year, but for data center managers, the pressure to contain costs has always been unrelenting—it’s impossible to maintain a low profile when your area of responsibility consumes the bulk of the IT budget. This year in particular, though, we’re really feeling the heat, and not just from over-loaded racks. Just 30% of the 370 respondents to our latest InformationWeek Analytics data center survey say they’ll see larger budgets for 2010 compared with 2009, yet half expect resource demands to increase.


Access to report requires site registration.

Lori MacVittie’s Call Me Crazy but Application-Awareness Should Be About the Application essay of 3/29/2010 begins:

I recently read a strategic article about how networks were getting smarter. The deck of this article claimed, “The app-aware network is advancing. Here’s how to plan for a network that’s much more than a dumb channel for data.”

So far, so good. I agree with this wholeheartedly and sat back, expecting to read something astoundingly brilliant regarding application awareness. I was, to say the least, not just disappointed but really disappointed by the time I finished the article. See, I expected at some point that applications would enter the picture. But they didn’t. Oh, there was a paragraph on application monitoring and its importance to app-aware networks, but it was almost an offhand commentary that was out of place in a discussion described as being about the “network.” There was, however, a discussion on 10Gb networking, and then some other discussion on CPU and RAM and memory (essentially server or container concerns, not the application) and finally some words on the importance of automation and orchestration. Applications and application-aware networking were largely absent from the discussion.

That makes baby Lori angry.

Application-aware networking is about being able to understand an application’s data and its behavior. It’s about recognizing that some data is acceptable for an application and some data is not – at the parameter level. It’s about knowing the application well enough to make adjustments to the way in which the network handles requests and responses dynamically to ensure performance and security of that application.

It’s about making the network work with and for, well, the application.
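MacVittie's point that application awareness means knowing which data is acceptable "at the parameter level" can be illustrated with a small inspection function. This is a hypothetical Python sketch, not F5's or any vendor's implementation: the paths, parameter names, and regular-expression rules are assumptions standing in for a per-application policy.

```python
import re

# Hypothetical per-application policy: the parameters each path accepts and what they may contain.
RULES = {
    "/search": {"q": re.compile(r"[\w\s\-]{1,100}"), "page": re.compile(r"\d{1,3}")},
    "/login": {"user": re.compile(r"[A-Za-z0-9_.]{1,32}"), "password": re.compile(r".{8,128}")},
}


def inspect_request(path, params):
    """Return (allowed, reason) by checking every parameter against the application's rules."""
    rules = RULES.get(path)
    if rules is None:
        return False, f"unknown path {path}"
    for name, value in params.items():
        pattern = rules.get(name)
        if pattern is None:
            return False, f"unexpected parameter {name}"
        if not pattern.fullmatch(value):
            return False, f"bad value for {name}"
    return True, "ok"


print(inspect_request("/search", {"q": "azure tables", "page": "2"}))  # (True, 'ok')
print(inspect_request("/search", {"q": "x' OR '1'='1"}))               # (False, 'bad value for q')
```

Unlike a port- or protocol-level rule, this check needs to know what the application expects in each field, which is the distinction the essay draws.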

SearchCloudComputing reports “Abiquo making cloud headlines across the board” in its Cloud startup enables VMware to Hyper-V conversion Daily Cloud post of 3/23/2010:

Spanish open source cloud software developer Abiquo has hit another milestone with Abiquo 1.5. New features include support for Microsoft's Hyper-V and something it calls "drag-and-drop VM conversion." Apparently, users can shift virtual machines from a VMware hypervisor to a Hyper-V environment and Abiquo will do all the heavy lifting for them.

According to the company, however, version 1.5 won't be available for some time. The new release is timed to build up interest in Abiquo's other startling announcement. With $5.1 million in venture capital, the EMEA open source underdog has reformed as a U.S. firm and stands ready to go toe-to-toe with Eucalyptus and other software vendors as a for-profit open source cloud infrastructure provider.

This information has been met with mixed results so far. Eucalyptus has announced the hire of big gun CEO Marten Mickos but is still "in the runway," as the VCs like to say. Meanwhile, Canadian startup Enomaly recently closed the source on its cloud infrastructure software in an apparent bid to make money selling its product instead of giving it away.

<Return to section navigation list>

See Chris Hoff (@Beaker) announces [Webinar] Cloud Based Security Services: Saving Cloud Computing Users From Evil-Doers to be held on 3/31/2010 at 9:00 PDT (12:00 EST) in the Cloud Computing Events section.

John Fontana reports “A coalition calling itself Digital Due Process (DDP) made a push Tuesday to outline a set of updates to the Electronic Communications Privacy Act (ECPA) in order to protect the privacy of data stored online, including content companies store in cloud-based applications” as a preface to his The other side of privacy post of 3/30/2010 to the Ping Talk Blog:

DDP, using the tag line “modernizing surveillance laws for the digital age,” is made up of privacy groups, think tanks, technology companies and academics and includes the ACLU, AOL, Center for Democracy and Technology, Electronic Frontier Foundation, Google, Microsoft, The Progress & Freedom Foundation, and Salesforce.com. [Emphasis added.]

Ping users will notice a number of the companies listed have a relationship around PingFederate and PingConnect, which are designed to provide SSO for cloud-based applications. Privacy is a critical issue in federated identity, but the data being created, stored and accessed by people behind those identities doesn’t enjoy the same cut-and-dried protection, says DDP.

Clearly cloud service providers see the need for reforms and consumers and corporations should be no less interested in the issues.

The coalition’s push includes assurances that consumer and corporate data stored in the cloud enjoys the same legal protection as content stored locally and protected by ECPA. The ECPA mandates a judicial warrant to search a home or office and read mail or seize personal papers.

The other salient points encompassed in the DDP push include access to email and other private communications stored in the cloud, access to location information, and the use of subpoenas to obtain transactional data.

Philip Lieberman asserts “It's the end-user’s duty to understand what processes and methodologies the cloud vendor is using” in his The Cloud Challenge: Security essay of 3/29/2010, which begins:

Safeguarding a cloud infrastructure from unmonitored access, malware and intruder attacks grows more challenging for service providers as their operations evolve. And as a cloud infrastructure grows, so too does the presence of unsecured privileged identities – those so-called super-user accounts that hold elevated permission to access sensitive data, run programs, and change configuration settings on virtually every IT component. Privileged identities exist on all physical and virtual operating systems, on network devices such as routers, switches, and firewalls, and in programs and services including databases, line-of-business applications, Web services, middleware, VM hypervisors and more.

Left unsecured, privileged accounts leave an organization vulnerable to IT staff members who have unmonitored access to sensitive customer data and can change configuration settings on critical components of your infrastructure through anonymous, unaudited access. Unsecured privileged accounts can also lead to financial loss from failed regulatory audits such as PCI-DSS, HIPAA, SOX and other standards that require privileged identity controls.

One of the largest challenges for consumers of cloud services is attaining transparency into how a public cloud provider is securing its infrastructure. For example, how are identities being managed and secured? Many cloud providers won’t give their customers much more of an answer than a SAS 70 certification. How can we trust in the cloud if the vendors of cloud-based infrastructures neglect to implement both the process and technology to assure that segregation of duties is enforced, and customer and vendor identities are secured? …

Philip Lieberman is President & CEO of Lieberman Software. You can reach him and learn more about Privileged Identity Management in the cloud by contacting Lieberman Software.
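The controls Lieberman lists (no anonymous use of super-user accounts, audited access, enforced segregation of duties) can be modeled in a few lines. This is a toy Python sketch under assumed rules, not Lieberman Software's product or any real vault API: the account names, the single-approver rule, and the in-memory audit log are all hypothetical.

```python
from datetime import datetime, timezone


class PrivilegedVault:
    """Toy model: privileged accounts are checked out by named users, every
    access is logged, and a requester cannot approve their own request."""

    def __init__(self):
        self.audit_log = []
        self._checked_out = {}  # account -> current holder

    def checkout(self, account, requester, approver):
        if requester == approver:
            raise PermissionError("segregation of duties: requester cannot self-approve")
        if account in self._checked_out:
            raise RuntimeError(f"{account} already checked out by {self._checked_out[account]}")
        self._checked_out[account] = requester
        self._log("checkout", account, requester, approver)

    def checkin(self, account, requester):
        if self._checked_out.get(account) != requester:
            raise PermissionError("only the current holder can check the account in")
        del self._checked_out[account]
        self._log("checkin", account, requester, None)

    def _log(self, action, account, user, approver):
        # Every use of a privileged identity is tied to a named person and a timestamp.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "action": action,
            "account": account,
            "user": user,
            "approver": approver,
        })


vault = PrivilegedVault()
vault.checkout("sa@sqlprod01", requester="alice", approver="bob")
vault.checkin("sa@sqlprod01", requester="alice")
print([entry["action"] for entry in vault.audit_log])  # ['checkout', 'checkin']
```

An audit trail like this is the kind of evidence a customer might ask a provider for when a SAS 70 certification alone doesn't answer how identities are managed.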

Tom from the Azure Support Team posted Feedback around protecting Azure on 3/29/2010:

Our friends on the Forefront Server Protection team are conducting research to understand what workloads you would like to protect, and how you would like them protected. One of the workloads they are soliciting feedback on is Azure. The survey shouldn't take more than 5-10 minutes, and your feedback directly impacts product decisions.

Please head over to http://www.surveymonkey.com/s/forefrontsurvey to take the survey. We appreciate your valuable input.

<Return to section navigation list>

Chris Hoff (@Beaker) announces [Webinar] Cloud Based Security Services: Saving Cloud Computing Users From Evil-Doers to be held on 3/31/2010 at 9:00 PDT (12:00 EST):

I wanted to give you a heads-up on a webinar that Andy Ellis (Akamai), Jeremiah Grossman (WhiteHat) and I did at the tail-end of the RSA Security Conference. The webinar will be held on 3/31/10 at 12:00 pm EST.

You can register here.

Web-based threats are becoming increasingly malicious and sophisticated every day.

The timing couldn’t be worse, as more companies are adopting cloud-based infrastructure and moving their enterprise applications online. In order to make the move securely, distributed defense strategies based on cloud-based security solutions should be considered.

Join Akamai and a panel of leading specialists for a discussion that delves into IT’s current and future security threats. This online event debuts an in-depth conversation on cloud computing and cloud based security services as well as a live Q&A session with the panel participants.

Topics will include web application security, vulnerabilities, threats and mitigation/defense strategies, and tactics. Get real-life experiences and unique perspectives on the escalating requirements for Internet security from three diverse companies: Cisco, WhiteHat, and Akamai.

We will discuss:

- Individual perspectives on the magnitude and direction of threats, especially to Web Applications

- Options for addressing these challenges in the near term, and long term implications for how enterprises will respond

- Methods to adopt and best practices to fortify application security in the cloud

Eric Nelson provides Slides and Links from SQL Azure session at BizSpark Azure Day in London in this 3/30/2010 post:

A big thanks to all who attended my two sessions on SQL Azure yesterday (29th March 2010). As promised, my slides and links from the session: SQL Azure Overview for Bizspark day.


Related Links:

Brian Hitney offers links to his Slides from Azure Roadshows in this 3/29/2010 post:

I’ve had a number of requests for slides and resources for the recent Azure roadshow in NC and FL – here are the slides and resources:

<Return to section navigation list>

Randy Bias asks Is Amazon Winning the Cloud Race? and answers “Yes” in this 3/30/2010 post:

From our perspective, it looks like Amazon is winning the cloud race. Amazon and Google pioneered the notion of ‘devops’, where agile practices are applied to merging the disciplines of development and operations. Devops teams are inherent to cloud computing. They are the only way to scale and compete.

For example, in my conversations with Amazon it’s been explained how their operations team is really only two parts:

- Infrastructure Engineering: software development for automating hardware/software and building horizontal service layers

- Datacenter Operations: rack & stack and replace broken hardware

There isn’t a team that manually configures software or hardware as in traditional operations teams or enterprise IT. This is by design. It’s the only way to effectively scale up to running thousands of servers per operator.

More importantly, by automating everything, you become fast and agile, able to build an ecosystem of cloud services more rapidly than your competitors.

With the possible exception of Rackspace Cloud, I’m not sure that anyone else is in Amazon or Google’s league. Amazon, in particular, has a track record that is incredibly impressive.

From a quick culling of all of the Amazon Web Services press releases since the launch of its initial service (SQS in 2004), after removing non-feature press releases and minor releases of little value, we came up with the following graph:

The trend is clear. Since Amazon’s start, they have accelerated rapidly, almost doubling their feature releases every year. 2009 was spectacular with 43 feature releases of note. Since the beginning of 2010, Amazon already has 8 releases of note.

In contrast, traditional hosting companies moving into cloud computing are hobbled by running two teams: development and operations. Expect the gap to widen as more hosting companies continue to misunderstand that this race isn’t about technology; it’s about people, software, and discipline.

So what’s the takeaway? Simply put, in order to be a major cloud player you need to change how you do IT and build clouds. Either hire someone who can bring the devops practice into your shop or engage a cloud computing engineering services firm like Cloudscaling to help you build fast, nimble teams that focus on automation and rapid release cycles.

I’m surprised that Randy didn’t include Windows/SQL Azure in his analysis.

Erik Howard expounds on Cloud computing lessons learned from Amazon Web Services, Rackspace and GoGrid in this 3/30/2010 post:

It’s coming up on three years now of writing, deploying and managing various types of applications in the cloud. Here are a few things I’ve learned over the years.

Cloud Vendor Selection

Picking the best cloud vendor is like looking for unicorns. It’s not gonna happen. I have yet to find a vendor that has everything I need. That’s why I have apps deployed at Amazon, Rackspace and GoGrid. [Great line! Emphasis added.]

Erik continues with the following topics:

- Amazon AWS …

- Rackspace …

- GoGrid …

- Backup and Disaster Recovery Strategy …

- NoSql does not mean NoHeadaches …

- Irrational Exuberance …

- Automation and Repeatable Processes …

- Monitoring and Management …

- Oh snap! …

Azure Support offers an Azure Pricing vs Amazon AWS Pricing Comparison in this 3/29/2010 post that covers the following topics:

- Compute Instances

- Storage

- Data Transfers

- Databases

- Packaged Pricing

Carl Brooks (@eekygeeky) interviews Eucalyptus’s new CEO in Former MySQL chief Marten Mickos talks cloud computing of 3/29/2010:

Marten Mickos presided over the growth of MySQL from an open source project to a $1 billion business that was fundamental to the growth of the commercial Web, and he's now taken the reins at another open source project turned for-profit venture, Eucalyptus Systems.

The startup develops software that turns commodity servers into cloud computing environments that act like Amazon Web Services. Eucalyptus powers the NASA Nebula project, one of the largest cloud environments in the federal government today, and some research and data crunching projects at pharmaceutical giant Eli Lilly, among others. Mickos explains his return to business and why he chose cloud computing.

What prompted the move to Eucalyptus and what are the immediate plans for the business?

Marten Mickos: It's a massive opportunity and a great team; I couldn't resist it. In 2010, we are not changing any plans. We are ramping up the growth rate and investment, but it's essentially the plan that the management detailed last year. Two key things this year are to get more releases out, to be very diligent with producing more code and release it with high quality, and the other thing is securing some key partnerships and customer relationships.

We already work closely with Canonical on the Ubuntu cloud edition, that's one obvious thing; we'll be working with other large manufacturers of hardware and software and even system integrators who are the ones actually building the private clouds for large enterprises. …

Mark Watson describes Google App Engine vs. Amazon Web Services: The Developer Challenges in this 3/28/2010 post to Developer.com:

Sure, cloud computing platforms free developers from scalability and deployment issues, allowing them to spend more time actually writing web applications and services. But when choosing between two prominent platforms, Google App Engine and Amazon Web Services (AWS), which platform is best for you?

The choice can be simple if you fall into one of the following two scenarios:

- If your application can be architected to run within the limited Google App Engine runtime environment, then take advantage of Google's lower hosting costs.

- If you need a more flexible cloud deployment platform, then AWS is a good fit for your needs.

Of course, you need to consider more issues than these when making a choice. In my development work, I've had the opportunity to use both and I can tell you that they require very different architecture decisions and present different sets of challenges. In this article, I will discuss some of the main challenges in more detail.

I assume you have a good understanding of Google App Engine and AWS. If not, read my previous articles "Google App Engine: What Is It Good For?" and "Amazon Web Services: A Developer Primer."

<Return to section navigation list>


My Enabling and Using the OData Protocol with SQL Azure post (updated 3/26/2010) used SQL Server 2008 R2’s AdventureWorksLT database on OakLeaf Systems’ SQL Azure instance for basic OData URI query and Excel PivotTable examples.
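For readers who haven't written an OData URI query, the standard system query options (`$filter`, `$orderby`, `$top`, `$select`) are appended to an entity-set URL. The Python helper below is a minimal sketch: the base URL and the `Product` entity set and column names are hypothetical, and the actual endpoint for a SQL Azure OData service will differ.

```python
from urllib.parse import quote


def odata_query(base_url, entity_set, filter_expr=None, orderby=None, top=None, select=None):
    """Build an OData query URL from the standard system query options."""
    options = []
    if filter_expr:
        # Keep OData string quotes and parentheses readable; encode spaces as %20.
        options.append("$filter=" + quote(filter_expr, safe="'(),"))
    if orderby:
        options.append("$orderby=" + quote(orderby, safe=","))
    if top is not None:
        options.append("$top=" + str(int(top)))
    if select:
        options.append("$select=" + quote(",".join(select), safe=","))
    url = f"{base_url.rstrip('/')}/{entity_set}"
    return url + ("?" + "&".join(options) if options else "")


print(odata_query("https://example.com/svc/", "Product",
                  filter_expr="ListPrice gt 1000", orderby="ListPrice desc",
                  top=3, select=["Name", "ListPrice"]))
# https://example.com/svc/Product?$filter=ListPrice%20gt%201000&$orderby=ListPrice%20desc&$top=3&$select=Name,ListPrice
```

Pasting a URL like this into a browser returns an Atom feed of the matching rows, and the same feed is what an Excel PivotTable can consume.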


