Windows Azure and Cloud Computing Posts for 1/14/2013+

| A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles. |

• Updated 1/18/2013 with new articles marked •.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Marketplace DataMarket, Cloud Numerics, Big Data and OData

- Windows Azure Service Bus, Caching, Access Control, Active Directory, Identity and Workflow

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security, Compliance and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue, HDInsight and Media Services

• Mike Neil, a Windows Azure General Manager, posted Details of the December 28th, 2012 Windows Azure Storage Disruption in US South [Data Center] on 1/16/2013:

Introduction

On December 28th, 2012 there was a service interruption that affected 1.8% of the Windows Azure Storage accounts. The affected storage accounts were in one storage stamp (cluster) in the U.S. South region. We apologize for the disruption and any issues it caused for affected customers. We want to provide more information on the root cause of the interruption, the recovery process, what we’ve learned and what we’re doing to improve the service. We are proactively issuing a service credit to impacted customers as outlined below. We are hard at work implementing what we have learned from this incident to improve the quality of our service.

…

Root Cause

There were three issues that when combined led to the disruption of service.

First, within the single storage stamp affected, some storage nodes, brought back (over a period of time) into production after being out for repair, did not have node protection turned on. This was caused by human error in the configuration process and led to approximately 10% of the storage nodes in the stamp running without node protection.

Second, our monitoring system for detecting configuration errors associated with bringing storage nodes back in from repair had a defect which resulted in failure of alarm and escalation.

Finally, on December 28th at 7:09am PST, a transition to a new primary node was initiated for the Fabric Controller of this storage stamp. A transition to a new primary node is a normal occurrence that happens often for any number of reasons including normal maintenance and hardware updates. During the configuration of the new primary, the Fabric Controller loads the existing cluster state, which in this case resulted in the Fabric Controller hitting a bug that incorrectly triggered a ‘prepare’ action against the unprotected storage nodes. A prepare action makes the unprotected storage nodes ready for use, which includes a quick format of the drives on those nodes. Node protection is intended to ensure that the Fabric Controller will never format protected nodes. Unfortunately, because 10% of the active storage nodes in this stamp had been incorrectly flagged as unprotected, they were formatted as a part of the prepare action.

Within a storage stamp we keep 3 copies of data spread across 3 separate fault domains (on separate power supplies, networking, and racks). Normally, this would allow us to survive the simultaneous failure of 2 nodes with your data within a stamp. However, the reformatted nodes were spread across all fault domains, which, in some cases, led to all 3 copies of data becoming unavailable.
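
To make the failure mode concrete, here is a minimal sketch of why three copies in three fault domains do not help once the formatted nodes span every domain. It is not Microsoft's placement logic: the node names, the extent layout, and the `lostExtents` helper are all hypothetical.

```javascript
// Hypothetical model: each extent keeps one replica on one node in each of
// three fault domains. Names and layout are illustrative assumptions only.
const extents = {
  extentA: ['fd1-node1', 'fd2-node1', 'fd3-node1'],
  extentB: ['fd1-node2', 'fd2-node1', 'fd3-node2'],
  extentC: ['fd1-node1', 'fd2-node2', 'fd3-node2'],
};

// Returns the names of extents whose replicas ALL sit on formatted nodes.
function lostExtents(extentMap, formattedNodes) {
  const formatted = new Set(formattedNodes);
  return Object.keys(extentMap).filter(function (name) {
    return extentMap[name].every(function (node) {
      return formatted.has(node);
    });
  });
}

// Two nodes lost in two fault domains: every extent still has a copy.
console.log(lostExtents(extents, ['fd1-node1', 'fd2-node1']));
// Formatted nodes in all three fault domains: extentA has no copy left.
console.log(lostExtents(extents, ['fd1-node1', 'fd2-node1', 'fd3-node1']));
```

In this toy layout, only the extent whose three replicas all landed on formatted nodes becomes unavailable, which mirrors the "in some cases" wording of the post.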

…

Read more. Mike continues with a lengthy description of the root cause and the recovery process.

Bruno Terkaly (@brunoterkaly) posted Knowing when to choose Windows Azure Table Storage or Windows Azure SQL database on 1/13/2013:

| Question | Recommended Technology |
| --- | --- |
| Are you trying to keep costs low while storing significantly large data volumes in the multi-terabyte range? | Use Windows Azure Table Storage |
| Do you require a flexible schema where each data element being stored is non-uniform and whose structure may not be known at design time? | Use Windows Azure Table Storage |
| Do your business requirements require robust disaster recovery capabilities that span geographical locations? Do your geo-replication needs involve two data centers that are hundreds of miles apart but on the same continent? | Use Windows Azure Table Storage |
| Do your data storage requirements exceed 150 GB and you are reluctant to manually shard or partition your data? | Use Windows Azure Table Storage |
| Do you wish to interact with your data using RESTful techniques and you don’t want to put up your own front-end Web server? | Use Windows Azure Table Storage |
| Is your data less than 150 GB and does it involve complex relationships with highly structured data? | Use Windows Azure SQL Database |
| Do your data requirements involve complex relationships, server-side joins, secondary indexes, and the need for complex business logic in the form of stored procedures? | Use Windows Azure SQL Database |
| Do you want your storage software to enforce referential integrity, data uniqueness, primary and foreign keys? | Use Windows Azure SQL Database |
| Do you wish to continue to use your SQL reporting and analysis tooling? | Use Windows Azure SQL Database |
| Does your database system rely heavily on stored procedures to support business rules? | Use Windows Azure SQL Database |
| Do your business applications currently execute SQL statements that include joins, aggregation, and complex predicates? | Use Windows Azure SQL Database |
| Does each entity or row of data that you insert exceed 1 MB? | Use Windows Azure SQL Database |
| Does your code depend on ADO.NET or ODBC? | Use Windows Azure SQL Database |
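
The checklist above can be read as a rough decision rule. The sketch below is only an illustration: the `recommendStore` function and its field names are hypothetical, it encodes just a few of the questions (the 150 GB and 1 MB thresholds, relational features, flexible schema), and letting relational needs win over data size is an assumption of this sketch, not something the checklist states.

```javascript
// Hypothetical helper encoding a few rows of the checklist above.
// Field names are assumptions for this sketch, not part of any Azure API.
function recommendStore(req) {
  const wantsRelational = Boolean(
    req.needsJoins || req.needsStoredProcedures || req.needsReferentialIntegrity);

  // Entities over 1 MB, or relational features, point to SQL Database.
  if (req.maxEntitySizeMB > 1 || wantsRelational) {
    return 'Windows Azure SQL Database';
  }
  // More than 150 GB without manual sharding, or a flexible schema,
  // points to Table Storage.
  if (req.dataSizeGB > 150 || req.flexibleSchema) {
    return 'Windows Azure Table Storage';
  }
  // The checklist does not settle the remaining cases.
  return 'undecided';
}

console.log(recommendStore({ dataSizeGB: 4000, flexibleSchema: true }));
console.log(recommendStore({ dataSizeGB: 50, needsJoins: true }));
```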

Mingfei Yan described How to generate Http Live Streaming (HLS) content using Windows Azure Media Services in a 1/13/2013 post:

If you want to deliver video content to iOS devices and platform, the best option you have is to package your content into Http Live Streaming. HLS is Apple’s implementation of adaptive streaming and here are some useful resources from Apple. Apple implements the format but they don’t provide hosting. You could use Apache server for hosting HLS content, but better, you could choose Windows Azure Media Services – a way to host video in the cloud. Therefore, you don’t need to manage infrastructure and worry about scalability: Azure takes care of all that for you.

Scenario One: You have an .mp4 file and you want to package it into HLS and stream it out from Windows Azure Media Services.

Here is how you could do it through Windows Azure Management Portal:

1. Login to https://manage.windowsazure.com/ and if you don’t have a media services account yet, here is how you could obtain a preview account.

2. Here is what the portal looks like. Click on the Media Services tab and choose an existing MP4 file. If you don’t have an MP4 file available in the portal, you could click on the UPLOAD button at the bottom to upload a file from your laptop. Note: you can only upload files smaller than 200 MB through the Azure portal. If you want to upload a file bigger than 200 MB, please use our Media Services API.

Portal for Media Services

3. After having your MP4 file in place, click on the Encode button below and choose the third profile: Playback on IOS devices and PC/MAC. After you hit okay, it will start to produce two files.

4. Now, after you kick off the encoding job, you will see two new assets get generated. In my case, they are “The Surface Movement_small-mp4-iOS-Output” and “The Surface Movement_small-mp4-iOS-Output-Intermediate-PCMac-Output”. Here, any name with “Intermediate” in it is Smooth Streaming content and the other one is HLS content. We first package your H.264 content into Smooth Streaming and then we mark it as HLS content.

Question: If I don’t need Smooth Streaming content, could we delete it?

Answer: Yes, you could. But if you use the portal to do the conversion, the Smooth Streaming asset will still be created.

Question: what profile of HLS content do I generate here?

Answer: The profile we used here is “H264 Smooth Streaming 720p“, and you could check out details here. If you want to encode into other HLS profile, you will need to use our API. For Azure portal, only one profile is provided.

5. After the packaging is done, click on the HLS asset (without Intermediate in the name) and click on the PUBLISH button at the bottom. Now, your HLS content is hosted on Windows Azure Media Services, and you could grab the link from the Publish URL column. That’s the link you put in your video application (iOS native app or HTML5 web app for Safari) and enjoy your video!

6. I will publish another blog post on how to generate HLS content through Azure Media Services .NET SDK.
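
As a rough illustration of step 5, the publish URL can be placed in an HTML5 `<video>` element, which Safari plays natively for HLS. The helper name `buildHlsVideoTag` and the URL below are placeholders for this sketch, not values taken from the portal.

```javascript
// Builds an HTML5 <video> tag for an HLS publish URL.
// The function name and the example URL are hypothetical placeholders.
function buildHlsVideoTag(publishUrl) {
  // Escape characters that could break out of the attribute value.
  const safeUrl = String(publishUrl)
    .replace(/&/g, '&amp;')
    .replace(/"/g, '&quot;')
    .replace(/</g, '&lt;');
  return '<video src="' + safeUrl + '" controls></video>';
}

console.log(buildHlsVideoTag('https://example.invalid/asset/manifest.m3u8'));
```

Replace the placeholder with the value copied from the Publish URL column.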

The post How to generate Http Live Streaming (HLS) content using Windows Azure Media Services appeared first on Mingfei Yan.

<Return to section navigation list>

Windows Azure SQL Database, Federations and Reporting, Mobile Services

• Brian Hitney explained Dealing with Expired Channels in Windows Azure Mobile Services in a 1/18/2013 post:

What’s this? Another Windows Azure Mobile Services (WAMS) post?!

In the next version of my app, I keep a record of the user’s Channel in order to send down notifications. The built in todo list example does this or something very similar. My table in WAMS looks like:

Not shown are a couple of fields, but of particular interest is the device Id. I realized that one user might have multiple devices, so the channel then is tied to the device Id. I still haven’t found a perfect way to do this yet – right now, I’m using a random GUID on first run.

In my WAMS script, if the point that is submitted is “within range” of another user, we’ll send a notification down to update the tile. I go into this part in my blog post: Best Practices on Sending Live Tiles. But what do you do if the channel is expired? This comes up a lot in testing, because the app is removed/reinstalled many times.

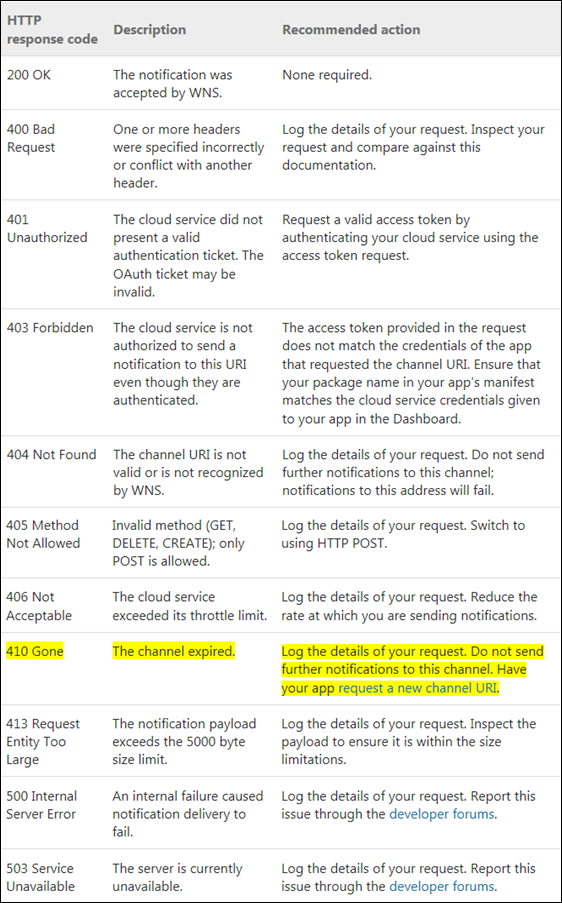

I stumbled on this page, Push Notification Service Request and Response Headers, on MSDN. There is a lot of great info on that page. While I should have a more robust solution for handling all these conditions, the one in particular I’m interested in is the Channel Expired response, highlighted below:

Obviously getting a new channel URI is ideal, but the app has to do that on the client (and will) next time the user runs the app. In the meantime, I want to delete this channel because it’s useless. In my script which sends the notifications, we’ll examine the result on the callback and either delete the channel if expired, or, if success, send a badge update because that’s needed, too. (Future todo task: try to combine Live Tile and badges in one update.)

    push.wns.send(channelUri, payload, 'wns/tile',
        {
            client_id: 'ms-app://<my app id>',
            client_secret: 'my client secret',
            headers: { 'X-WNS-Tag' : 'SomeTag' }
        },
        function (error, result) {
            if (error) {
                // if the channel has expired, delete from channel table
                if (error.statusCode == 410) {
                    removeExpiredChannel(channelUri);
                }
            } else {
                // notification sent
                updateBadge(channelUri);
            }
        }
    );

Removing expired channels can be done with something like:

    function removeExpiredChannel(channelUri) {
        var sql = "delete from myapp.Channel where ChannelUri = ?";
        var params = [channelUri];
        mssql.query(sql, params, {
            success: function (results) {
                console.log('Removed Expired Channel: ' + channelUri);
            }
        });
    }

On my todo list is to add more robust support for different response codes – for example, in addition to a 410 response, a 404 would also want to delete the channel record in the table.
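
One way to approach that todo item is to move the status check into a small helper. This is only a sketch: `shouldRemoveChannel` and `REMOVABLE_STATUS_CODES` are hypothetical names, and the only codes listed are the two mentioned in the post (410 and 404).

```javascript
// Status codes after which the stored channel is treated as unusable.
// 410 (expired) is handled in the script above; 404 is the extra case the
// post mentions as a future addition.
const REMOVABLE_STATUS_CODES = [410, 404];

// Hypothetical helper: decides whether a failed send should delete the channel.
function shouldRemoveChannel(error) {
  return Boolean(error) &&
    REMOVABLE_STATUS_CODES.indexOf(error.statusCode) !== -1;
}

console.log(shouldRemoveChannel({ statusCode: 410 })); // true
console.log(shouldRemoveChannel({ statusCode: 404 })); // true
console.log(shouldRemoveChannel({ statusCode: 500 })); // false
```

In the send callback, the `error.statusCode == 410` test could then become `shouldRemoveChannel(error)`, so new codes only need to be added in one place.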

• Jesus Rodriguez posted Microsoft Research Mobile Backend as a Service: Introducing Project Hawaii on 1/15/2013:

Microsoft Research (MS Research) is an infinite source of technical innovation. Because of my academic background, I am constantly following the new MS Research projects and drawing ideas and inspiration from them. Recently, I came across Project Hawaii (http://research.microsoft.com/en-us/projects/hawaii/) which provides a set of mobile services hosted in Windows Azure for computation and data storage. Sounds familiar? Yes, Project Hawaii overlaps slightly with Windows Azure Mobile Services but it focuses on new and innovative service capabilities. In this first release, Project Hawaii enables the following capabilities:

- The Key-Value service enables a mobile application to store application-wide state information in the cloud.

- The Optical Character Recognition (OCR) Service returns the text that appears in a photographic image. For example, given an image of a road sign, the service returns the text of the sign.

- The Path Prediction Service predicts a destination based on a sequence of current locations and historical data.

- The Relay Service provides a relay point in the cloud that mobile applications can use to communicate.

- The Rendezvous Service maps from well-known human-readable names to endpoints in the Hawaii Relay Service.

- The Speech-to-Text Service takes a spoken phrase and returns text. Currently this service supports English only.

- The Translator service enables a mobile application to translate text from one language to another, and to obtain an audio stream that renders a string in a spoken language.

Obviously, given my recent work in the mobile backend as a service (mBaaS) space, Project Hawaii is super interesting to me. After spending a few hours playing with the current release, I thought the experience would make a few interesting blog posts.

Let’s start with Hawaii’s key-value service:

Project Hawaii’s Key-Value Service (KVS) provides a simple key-value store for mobile applications. By using the KVS, an application can store and retrieve application-wide state information as text using key-value pairs.

Obtaining a Project Hawaii Application ID

Before using any of the Project Hawaii services, developers need to obtain a valid application ID. You can do that by going to the Project Hawaii signup page (http://hawaiiguidgen.cloudapp.net/default.aspx) and registering your Windows Live credentials. After that, you will obtain an application identifier that can be used to authenticate to the different cloud services, as illustrated in the following figure.

After having completed this process, we need to register our application in the Windows Azure Marketplace.

Using the Project Hawaii Key Value Service

As its name indicates, the key-value service provides a service interface that enables mobile applications to store information in key-value pair forms. The main vehicle to leverage this capability is a RESTful interface abstracted by SDKs for the Android, Windows Phone and Windows 8 platforms. In the case of Windows 8, the KeyValueService class included in the Microsoft.Hawaii.KeyValue.Client namespace abstracts the capabilities of the Project Hawaii Key-Value service. The following matrix summarizes some of the operations provided by the KeyValueService class.

Like any good mBaaS SDK, the KeyValueService class provides a very succinct syntax to integrate with the Project Hawaii key-value service. For instance, the following code illustrates the process of inserting different items using the Project Hawaii key-value service.

    private const string clientID = "My Client ID";
    private const string clientSecret = "My Client Secret";

    private void SetItem_Test()
    {
        KeyValueItem item1 = new KeyValueItem() { Key = "Key2", Value = "value2" };
        KeyValueService.SetAsync(clientID, clientSecret, new KeyValueItem[1] { item1 }, this.OnSetComplete, null);
    }

    private async void OnSetComplete(SetResult result)
    {
        await Dispatcher.RunAsync(Windows.UI.Core.CoreDispatcherPriority.Normal, () =>
        {
            // Report success only when the service returned a success status.
            if (result.Status == Microsoft.Hawaii.Status.Success)
                Result.Text = "Success";
            else
                Result.Text = "Error";
        });
    }

Similarly, applications can query items stored in the key-value infrastructure using the following syntax.

    private void GetItem_Test()
    {
        KeyValueService.GetByKeyAsync(clientID, clientSecret, "Key1", GetByKeyComplete, null);
    }

Key-value storage can be a really useful capability in mobile applications. The Project Hawaii Key Value service provides a simple mechanism that lets mobile applications use this capability with a succinct syntax.

We will cover other capabilities of Project Hawaii in future blog posts.

Nick Harris (@cloudnick) and Nathan Totten (@ntotten) produced CloudCover Episode 98 - Mobile Services, ASP.NET Facebook Template, and Github Publishing Demos on 1/12/2013:

In this episode Nick and Nate ring in the New Year with a variety of news and announcements about Windows Azure. Additionally, Nick gives some demos of the new scheduling feature in Windows Azure Mobile Services. Nate shows the new Facebook Template for ASP.NET MVC that includes the Facebook C# SDK and deploys the template to Windows Azure Web Sites using the new Github continuous integration feature.

- Updates to Windows Azure (Mobile, Web Sites, SQL Data Sync, ACS, Media, Store)

- Applications are now open for the Microsoft Accelerator for Windows Azure - 2013

- azure-cli 0.6.9 ships, pure joy

- New Windows Azure Mobile Services Getting Started Content

- New Windows Store app Samples using Mobile Services

Brian Hitney described Scrubbing UserId in Windows Azure Mobile Services in a 1/10/2013 post to the US DPE Azure Connection blog:

First, many thanks to Chris Risner for the assistance on this solution! Chris is part of the corp DPE team and has done an extensive amount of work with Windows Azure Mobile Services (WAMS) – including this session at //build, which was a great resource for getting started.

If you go through the demo of getting started with WAMS building a TodoList, the idea is that the data in the todo list is locked down to each user. One of the nice things about WAMS is that it’s easy to enforce this via server side javascript … for example, to ensure only the current user’s rows are returned, the following read script can be used that enforces the rows returned only belong to the current user:

function read(query, user, request) { query.where({ userId: user.userId }); request.execute(); }

If we crack open the database, we’ll see that the userId is an identifier, like the below for a Microsoft Account:

MicrosoftAccount:0123456789abcd

When the app connects to WAMS, the data returned includes the userId … for example, if we look at the JSON in fiddler:

The app never displays this information, and it is requested over SSL, but it’s an important consideration and here’s why. What if we have semi-public data? In the next version of Dark Skies, I allow users to pin favorite spots on the map. The user has the option to make those points public or keep them private … for example, maybe they pin a great location for stargazing and want to share it with the world:

… Or, maybe the user pins their home location or a private farm they have permission to use, where it might be inappropriate to show publicly.

Now here comes the issue: if a location is shared publicly, that userId is included in the JSON results. Let’s say I launch the app and see 10 public pins. If I view the JSON in fiddler, I’ll see the userId for each one of those public pins – for example:

Now, the userId contains no personally identifiable information. Is this a big deal, then? It’s not like it is the user’s name or address, and it would only be included in spots the user is sharing publicly anyway.

But, if a hacker ever finds a way to map a userId back to a specific person, this is a security issue. Even my app doesn’t know who the users really are, it just knows the identifier. Still, I think from a best practice/threat modeling perspective, if we can scrub that data, we should. Note: this issue doesn’t exist with the todo list example, because the user only, and ever, sees their own data. [Emphasis added; see note below.]

Ideally, what we’d like to do is return the userId if it’s the current user’s userId. If the point belongs to another user, we should scrub that from the result set. To do this via a read script in WAMS, we could do something like:

    function read(query, user, request) {
        request.execute({
            success: function (results) {
                // scrub user token
                if (results.length > 0) {
                    for (var i = 0; i < results.length; i++) {
                        if (results[i].UserId != user.userId) {
                            results[i].UserId = 'scrubbeduser';
                        }
                    }
                }
                request.respond();
            }
        });
    }

If we look at the results in fiddler, we’ll see that I’ll get my userId for any of my points, but the userId is scrubbed if it’s another user’s points that are shared publicly:

[Note: these locations are random spots on the map for testing.]

Doing this is a good practice. The database of course has the correct info, but the data for public points is guaranteed to be anonymous should a vulnerability ever present itself. The downside of this approach is the extra overhead as we’re iterating the results – but, this is fairly minor given the relatively small amounts of data.
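
To check the scrubbing logic outside of WAMS, the loop can be pulled into a plain function. This is a minimal sketch: `scrubUserIds` is a hypothetical helper name and the sample rows are made up. Unlike the read script, it returns copies instead of changing the rows in place.

```javascript
// Returns the rows with UserId replaced on every row owned by another user.
// 'scrubbeduser' matches the placeholder used in the read script above.
function scrubUserIds(results, currentUserId) {
  return results.map(function (row) {
    if (row.UserId === currentUserId) {
      return row; // the caller's own rows are returned unchanged
    }
    const copy = Object.assign({}, row);
    copy.UserId = 'scrubbeduser';
    return copy;
  });
}

// Made-up sample data for illustration.
const rows = [
  { Id: 1, UserId: 'MicrosoftAccount:aaa' },
  { Id: 2, UserId: 'MicrosoftAccount:bbb' },
];
console.log(scrubUserIds(rows, 'MicrosoftAccount:aaa'));
```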

Technical point: In my database and classes, I use Pascal case (as a matter of preference), as you can see in the above fiddler captures, such as UserId. In the todo example and in the javascript variables, objects are conventionally camel case. So, if you’re using any code here, just be aware that case does matter in situations like this:

    if (results[i].UserId != user.userId) // watch casing!

Be sure they match your convention. Since Pascal case is the standard for properties in C#, and camel case is the standard in JavaScript, properties in .NET can be decorated with the DataMember attribute to make them consistent in both locations – something I, just as a matter of preference, prefer not to do:

[DataMember(Name = "userId")] public string UserId { get; set; }

Note: OakLeaf Systems’ Privacy Statement says the following about its version of the Todo List application that is undergoing submission to the Windows Store:

Collection of Personal Information (OakLeaf ToDo List Sample and Windows Store Apps)

The OakLeaf ToDo List Windows Mobile Services Demo is a free Windows Store App for computers running Windows 8 and devices running Windows RT. This app accumulates the text of ToDo items from users who sign in with their Microsoft Account (formerly Live ID). Access to individual users’ ToDo items is provided by disguised representation of their Microsoft Account ID in the form of a Globally Unique Identifier (GUID). A user cannot view other users’ active ToDo items. Representatives of OakLeaf Systems can view all users’ ToDo items, but cannot associate them with a user’s identity. Representatives of OakLeaf Systems periodically delete all ToDo items marked completed to reduce the database size.

<Return to section navigation list>

Marketplace DataMarket, Cloud Numerics, Big Data and OData

<Return to section navigation list>

Windows Azure Service Bus, Caching, Access Control, Active Directory, Identity and Workflow

• Clemens Vasters (@clemensv) described the Utopia ESB in a 1/15/2013 post:

The basic idea of the Enterprise Service Bus paints a wonderful picture of a harmonious coexistence, integration, and collaboration of software services. Services for a particular general cause are built or procured once and reused across the Enterprise by ways of publishing them and their capabilities in a corporate services repository from where they can be discovered. The repository holds contracts and policy that allows dynamically generating functional adapters to integrate with services. Collaboration and communication is virtualized through an intermediary layer that knows how to translate messages from and to any other service hooked into the ESB like a babel fish in the Hitchhiker’s Guide to the Galaxy. The ESB is a bus, meaning it aspires to be a smart, virtualizing, mediating, orchestrating messaging substrate permeating the Enterprise, providing uniform and mediated access anytime and anywhere throughout today’s global Enterprise. That idea is so beautiful, it rivals My Little Pony. Sadly, it’s also about as realistic. We tried regardless.

As with many utopian ideas, before we can get to the pure ideal of an ESB, there’s some less ideal and usually fairly ugly phase involved where non-conformant services are made conformant. Until they are turned into WS-* services, any CICS transaction and SAP BAPI is fronted with a translator and as that skinning renovation takes place, there’s also some optimization around message flow, meaning messages get batched or de-batched, enriched or reduced. In that phase, there was also learning of the value and lure of the benefits of central control. SOA Governance is an interesting idea to get customers drunk on. That ultimately led to cheating on the ‘B’. When you look around and look at products proudly carrying the moniker ‘Enterprise Service Bus’ you will see hubs. In practice, the B in ESB is mostly just a lie. Some vendors sell ESB servers, some even sell ESB appliances. If you need to walk to a central place to talk to anyone, it’s a hub. Not a bus.

Yet, the bus does exist. The IP network is the bus. It turns out to suit us well on the Internet. Mind that I’m explicitly talking about “IP network” and not “Web” as I do believe that there are very many useful protocols beyond HTTP. The Web is obviously the banner example for a successful implementation of services on the IP network that does just fine without any form of centralized services other than the highly redundant domain name system.

Centralized control over services does not scale in any dimension. Intentionally creating a bottleneck through a centrally controlling committee of ESB machines, however far scaled out, is not a winning proposition in a time where every potential or actual customer carries a powerful computer in their pockets allowing to initiate ad-hoc transactions at any time and from anywhere and where we see vehicles, machines and devices increasingly spew out telemetry and accept remote control commands. Central control and policy driven governance over all services in an Enterprise also kills all agility and reduces the ability to adapt services to changing needs because governance invariably implies process and certification. Five-year plan, anyone?

If the ESB architecture ideal weren’t a failure already, the competitive pressure to adopt direct digital interaction with customers via Web and Apps, and therefore scale up not to the scale of the enterprise, but to scale up to the scale of the enterprise’s customer base will seal its collapse.

Service Orientation

While the ESB as a concept permeating the entire Enterprise is dead, the related notion of Service Orientation is thriving even though the four tenets of SOA are rarely mentioned anymore. HTTP-based services on the Web embrace explicit message passing. They mostly do so over the baseline application contract and negotiated payloads that the HTTP specification provides for. In the case of SOAP or XML-RPC, they are using abstractions on top that have their own application protocol semantics. Services are clearly understood as units of management, deployment, and versioning and that understanding is codified in most platform-as-a-service offerings.

That said, while explicit boundaries, autonomy, and contract sharing have been clearly established, the notion of policy-driven compatibility – arguably a political addition to the list to motivate WS-Policy at the time – has generally been replaced by something even more powerful: Code. JavaScript code to be more precise. Instead of trying to tell a generic client how to adapt to service settings by way of giving it a complex document explaining what switches to turn, clients now get code that turns the switches outright. The successful alternative is to simply provide no choice. There’s one way to gain access authorization for a service, period. The “policy” is in the docs.

The REST architecture model is service oriented – and I am not meaning to imply that it is so because of any particular influence. The foundational principles were becoming common sense around the time when these terms were coined and as the notion of broadly interoperable programmable services started to gain traction in the late 1990s – the subsequent grand dissent that arose was around whether pure HTTP was sufficient to build these services, or whether the ambitious multi-protocol abstraction for WS-* would be needed. I think it’s fairly easy to declare the winner there.

Federated Autonomous Services

Windows Azure, to name a system that would surely be one to fit the kind of solution complexity that ESBs were aimed at, is a very large distributed system with a significant number of independent multi-tenant services and deployments that are spread across many data centers. In addition to the publicly exposed capabilities, there are quite a number of “invisible” services for provisioning, usage tracking and analysis, billing, diagnostics, deployment, and other purposes. Some components of these internal services integrate with external providers. Windows Azure doesn’t use an ESB. Windows Azure is a federation of autonomous services.

The basic shape of each of these services is effectively identical and that’s not owing, at least not to my knowledge, to any central architectural directive even though the services that shipped after the initial wave certainly took a good look at the patterns that emerged. Practically all services have a gateway whose purpose it is to handle and dispatch and sometimes preprocess incoming network requests or sessions and a backend that ultimately fulfills the requests. The services interact through public IP space, meaning that if Service Bus wants to talk to its SQL Database backend it is using a public IP address and not some private IP. The Internet is the bus. The backend and its structure is entirely a private implementation matter. It could be a single role or many roles.

Any gateway’s job is to provide network request management, which includes establishing and maintaining sessions, session security and authorization, API versioning where multiple variants of the same API are often provided in parallel, usage tracking, defense mechanisms, and diagnostics for its areas of responsibility. This functionality is specific and inherent to the service. And it’s not all HTTP. SQL database has a gateway that speaks the Tabular Data Stream protocol (TDS) over TCP, for instance, and Service Bus has a gateway that speaks AMQP and the binary proprietary Relay and Messaging protocols.

Governance and diagnostics don’t work by putting a man in the middle and watching the traffic coming by, which is akin to trying to tell whether a business is healthy by counting the trucks going to their warehouse. Instead we are integrating the data feeds that come out of the respective services and are generated fully knowing the internal state, and concentrate these data streams, like the billing stream, in yet other services that are also autonomous and have their own gateways. All these services interact and integrate even though they’re built by a composite team far exceeding the scale of most Enterprises’ largest projects, and while teams run on separate schedules where deployments into the overall system happen multiple times daily. It works because each service owns its gateway, is explicit about its versioning strategy, and has a very clear mandate to honor published contracts, which includes explicit regression testing. It would be unfathomable to maintain a system of this scale through a centrally governed switchboard service like an ESB.

Well, where does that leave “ESB technologies” like BizTalk Server? The answer is simply that they’re being used for what they’re commonly used for in practice. As a gateway technology. Once a service in such a federation would have to adhere to a particular industry standard for commerce, for instance if it would have to understand EDIFACT or X.12 messages sent to it, the Gateway would employ an appropriate and proven implementation and thus likely rely on BizTalk if implemented on the Microsoft stack. If a service would have to speak to an external service for which it would have to build EDI exchanges, it would likely be very cost effective to also use BizTalk as the appropriate tool for that outbound integration. Likewise, if data would have to be extracted from backend-internal message traffic for tracking purposes and BizTalk’s BAM capabilities would be a fit, it might be a reasonable component to use for that. If there’s a long running process around exchanging electronic documents, BizTalk Orchestration might be appropriate, if there’s a document exchange involving humans then SharePoint and/or Workflow would be a good candidate from the toolset.

For most services, the key gateway technology of choice is HTTP, using frameworks like ASP.NET, Web API, probably paired with IIS features like application request routing, and the gateway is largely stateless.

In this context, Windows Azure Service Bus is, in fact, a technology choice to implement application gateways. A Service Bus namespace thus forms a message bus for “a service” and not for “all services”. It’s as scoped to a service or a set of related services as an IIS site is usually scoped to one or a few related services. The Relay is a way to place a gateway into the cloud for services where the backend resides outside of the cloud environment and it also allows for multiple systems, e.g. branch systems, to be federated into a single gateway to be addressed from other systems and thus form a gateway of gateways. The messaging capabilities with Queues and Pub/Sub Topics provide a way for inbound traffic to be authorized and queued up on behalf of the service, with Service Bus acting as the mediator and first line of defense and where a service will never get a message from the outside world unless it explicitly fetches it from Service Bus. The service can’t be overstressed and it can’t be accessed except through sending it a message.
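To make the “never gets a message unless it explicitly fetches it” idea concrete, here is a minimal in-memory sketch. It is not the Service Bus API: the `QueueGateway` class, its methods, and the sender names are hypothetical and only model the behavior described above (authorize at the edge, queue on behalf of the service, let the backend pull at its own pace).

```python
import queue


class QueueGateway:
    """Toy stand-in for a queue placed in front of a service (hypothetical, not the real API)."""

    def __init__(self, allowed_senders, capacity):
        self._allowed = set(allowed_senders)
        self._queue = queue.Queue(maxsize=capacity)

    def send(self, sender, body):
        """Called by the outside world. The backend is never invoked from here."""
        if sender not in self._allowed:
            return False  # rejected at the gateway: sender is not authorized
        try:
            self._queue.put_nowait(body)
        except queue.Full:
            return False  # rejected at the gateway: queue is at capacity
        return True

    def receive(self):
        """Called by the backend when it is ready for more work."""
        try:
            return self._queue.get_nowait()
        except queue.Empty:
            return None  # nothing waiting


gateway = QueueGateway(allowed_senders={"branch-a"}, capacity=2)
gateway.send("branch-a", "order 1")   # queued on behalf of the service
gateway.send("intruder", "order 2")   # dropped before it reaches the service
next_message = gateway.receive()      # the backend pulls when it has capacity
```

The point of the sketch is the direction of the calls: senders only ever touch `send`, and the backend only ever touches `receive`, so load and access control are both handled before the service sees anything.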

The next logical step on that journey is to provide federation capabilities with reliable handoff of messages between services, meaning that you can safely enqueue a message within a service and then have Service Bus replicate that message (or one copy in the case of pub/sub) over to another service’s Gateway – across namespaces and across datacenters or your own sites, and using the open AMQP protocol. You can do that today with a few lines of code, but this will become inherent to the system later this year.

Steve Plank (@plankytronixx) reposted Windows Azure Active Directory Cartoon on 1/12/2013:

I posted this video on to Channel 9 before Christmas but I can see something has gone wrong with the indexing and it’s pretty undiscoverable on the site. Thought I’d make it known through the blog.

Abishek Lal continued his series with Enterprise Integration Patterns with Service Bus (Part 2) on 1/11/2013:

Priority-Queues

The scenario here is that a receiver is interested in receiving messages in order of priority from one or more senders. A common use case for this is event notification, where critical alerts need to be processed first. Today Service Bus Queues do not have the capability to internally sort messages by priority, but we can achieve this pattern using Topics and Subscriptions.

We achieve this scenario by routing messages with different priorities to different subscriptions. Routing is done based on the Priority property that the sender adds to the message. The recipient processes messages from specific subscriptions and thus achieves the desired priority order of processing.

The Service Bus implementation for this is through the use of SQL Rules on Subscriptions. These Rules contain Filters that are applied on the properties of the message and determine if a particular message is relevant to that Subscription.

Service Bus Features used:

- SQL Filters can specify Rules in SQL 92 syntax

- Typically Subscriptions have one Rule but multiple can be applied

- Rules can contain Actions that may modify the message (in that case a copy of the message is created by each Rule that modifies it)

- Actions can be specified in SQL 92 syntax too.

The code sample for this is available here.
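The routing idea can be sketched without any Service Bus dependency. The following is an in-memory model only, not the Service Bus client library: `Topic`, `Subscription`, and `receive_by_priority` are hypothetical names, and each Python predicate stands in for what would be a SQL filter on the message’s Priority property in a real Rule.

```python
from collections import deque


class Subscription:
    """A named subscription with one rule (a predicate over message properties)."""

    def __init__(self, name, rule):
        self.name = name
        self.rule = rule
        self.messages = deque()


class Topic:
    """Copies each sent message into every subscription whose rule matches."""

    def __init__(self):
        self.subscriptions = []

    def subscribe(self, name, rule):
        subscription = Subscription(name, rule)
        self.subscriptions.append(subscription)
        return subscription

    def send(self, body, **properties):
        for subscription in self.subscriptions:
            if subscription.rule(properties):
                subscription.messages.append((body, dict(properties)))


def receive_by_priority(subscriptions):
    """Drain the subscriptions in the order given, highest priority first."""
    for subscription in subscriptions:
        while subscription.messages:
            body, _properties = subscription.messages.popleft()
            yield body


topic = Topic()
high = topic.subscribe("high", lambda p: p.get("Priority") == 1)
low = topic.subscribe("low", lambda p: p.get("Priority", 0) > 1)

topic.send("disk usage report", Priority=2)
topic.send("server down", Priority=1)

ordered = list(receive_by_priority([high, low]))
```

Here the critical alert is handed to the receiver first even though it was sent second, because the receiver empties the high-priority subscription before it looks at the low-priority one.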


Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

Kevin Remde (@KevinRemde) continued his series with 31 Days of Servers in the Cloud – Creating Azure Virtual Machines with App Controller (Part 13 of 31) on 1/13/2013:

As you know, if you’ve been following our series, “31 Days of Servers in the Cloud”, Windows Azure can become an extension of your datacenter, and allow you to run your servers in the cloud.

“We get it, Kevin.”

And you’ve seen excellent articles in this series already, describing how to use the Windows Azure portal to create your virtual machines, how to upload your own VM hard disks into the cloud and use them to build machines, and more. In today’s installment, I’m going to show you how easy it is to connect App Controller (a component of System Center 2012) to your Windows Azure account, and then how to use App Controller to create virtual machines in your Windows Azure cloud.

To do this, we need to have a few preliminaries in place:

- You have a Windows Azure subscription, and have requested the ability to preview the use of Windows Azure virtual machines. (If you don’t have an account, you can start a free 90-day trial HERE.)

- You have System Center 2012 App Controller installed. (Download the System Center 2012 Private Cloud evaluation software HERE.)

NOTE: You will need System Center 2012 SP1 App Controller, which at the time of this writing is available to TechNet and MSDN subscribers and volume license customers only; but will very soon be generally available. I will update this blog post as soon as that happens.

So, with nothing more assumed than just those basics, let’s walk through the following steps:

- Connect App Controller to your Windows Azure subscription (READ THIS POST for the instructions on how to do this.)

- Create a Storage Account in Windows Azure

- Use App Controller to create a new Virtual Machine

Assuming you’ve done part 1, and have your connection to your Windows Azure subscription set up in App Controller, let’s move on.

Create a Storage Account in Windows Azure

There are many ways to create a new storage account:

- I could use the Windows Azure administrative portal

- I could use PowerShell for Windows Azure and the New-AzureStorageAccount cmdlet

- Or I could do it using App Controller.

For our purposes, let’s use App Controller.

Open App Controller and log in with your administrative account. On the left, select Library.

Click Create Storage Account. Give your storage account a name, and choose a region or an affinity group.

Click OK. You should see something that looks like this at the bottom-right of the browser window:

After a few minutes, a refresh of the Library page should show you that you now have your new storage account available.

Now we need to create a container to hold our machine disk(s). With your new storage account selected, Click Create Container.

Give your container a name and click OK.

In a very short while, you’ll see your new container.

Now we’re ready to create virtual machines.

Use App Controller to create a new Virtual Machine

Open App Controller and log in with your administrative account.

On the left, select Virtual Machines. This is where we can see, manage, and create new virtual machine and service deployments. (If you’re doing this for the first time, you won’t see items in your list here just yet.)

Click Deploy. The New Deployment window opens up.

Under Cloud, click Configure…, then select your Windows Azure connection as the cloud into which you’re going to deploy your new virtual machine.

(Note: In my App Controller, I’ve also connected to a local VMM Server, which is why I see this other cloud in my list.)

Click OK.

Now you will see this:

Click Select an Item… under Deployment Type. Now you’ll see a screen that looks something like this:

This is where you can choose to build a new machine or service based on existing, provided images, or images or disks you’ve uploaded into your own Windows Azure storage. In this example, I’m going to select Images on the left, and choose to build a new Windows Server 2012 machine using the provided image.

Once I click OK, I now see this:

So the next thing I need to do is click Configure… under Cloud Service. Virtual machines and services all run in the context of cloud services. For our example, we’re going to assume that you haven’t created any machines or other items that require a service, so your list is going to be empty. You’ll use this screen to create and then select your new service.

Click Create… and then fill in cloud service details (Name, Description) and the cloud service location (a unique public URL, plus a geographic region or affinity group).

Click OK, and then select your new service and click OK again.

Next we need to configure the deployment:

Click Configure… under Deployment. Now you’ll see this:

Enter a deployment name, and optionally associate your machine with a virtual network if you have one. (If you don’t have a network, or don’t select one, the machine and service will be created with networking handled automatically within the service.) Click OK.

Now it’s time to configure the virtual machine itself.

Click Configure… under Virtual Machine.

Now we set the general properties…

Note: an Availability Set is not required, but a new one can be created or an existing one selected from here.

Set the Disks…

When I click Browse…, I’m given the ability to choose the location for my disks in Windows Azure storage, as well as to add (or create) additional data disks for this machine. For our example let’s use the storage account and container we created earlier. I won’t be adding any data disks.

For the Network…

…I’ll just leave the default. I could use this opportunity to define additional endpoints for connections to services on this machine, or I could do it later.

For Administrator password…

…enter a password for the local administrator account. (It also looks like you can use this to assign the computer to a domain if you happen to have a domain controller in the same network or service. I haven’t yet tried this, so I can’t comment further.)

Click OK.

And now click Deploy.

You’ll see a notification towards the bottom right that should look something like this:

And after several minutes, looking in the Virtual Machines area of App Controller, you will see your new machine appear. Its status will change to “provisioning”, and eventually “running”.

Notice also that if you select your new machine, you also have the option now to connect to it via Remote Desktop! (Cool!) Log in as the Administrator with the administrator password you assigned, and you’re in!

Naturally, you can very easily use App Controller to delete your machines, disks, storage containers, and storage accounts, too. (Remember to do that when you’re done. Even if a machine isn’t running, you’re still being billed for it and for the storage being used!)

Kevin Remde (@KevinRemde) continued his series with 31 Days of Servers in the Cloud–Use PowerShell to create a VM in Windows Azure (Part 14 of 31) on 1/14/2013:

As I’m sure most of you reading this already know, PowerShell is THE tool for automation and management, and the foundation for the configuration of effectively all products from Microsoft these days, as well as many other companies. So it shouldn’t surprise you (especially if you’ve been following our “31 Days of Servers in the Cloud” series) that PowerShell is also able to configure and manage resources “in the cloud”.

Last week in Part 5, for example, I showed you how to connect PowerShell to your Windows Azure account. All you had to do was:

- Get a Windows Azure account (start with the free 90-day trial),

- Get the Windows Azure PowerShell tools, and

- Follow some simple instructions to set up the secured connection for Windows Azure management.

In today’s installment of our series, my friend Brian Lewis shows you how you can take PowerShell in Windows Azure to the next level, and actually use it to create Virtual Machines running in your Windows Azure cloud.

READ HIS EXCELLENT ARTICLE HERE

And if you need to catch up on any of our series, CLICK HERE for the full list of links.

Nathan Totten (@ntotten) described Static Site Generation with DocPad on Windows Azure Web Sites in a 1/11/2013 post:

There has been a lot of interest recently in static content generation tools. These tools allow you to generate a website from source documents such as markdown and serve static html files. The advantage of static sites is that they are extremely fast and very inexpensive to host. There are plenty of ways you can host static content that is already generated, but if you want a solution that provides integrated deployment and automated generation you can easily set up Windows Azure Web Sites to host your statically generated site.

SHAMELESS PLUG: You can host up to 10 sites on Windows Azure Web Sites for free. No trial, no expiration date, completely free. :)

I have previously written about Jekyll and Github pages for generating static content. For this post I am going to use my new favorite tool, DocPad. I like DocPad because it is written entirely in Node.js. This gives you the ability to use all kinds of cool Node tools like Jade, CoffeeScript, and Less. To get started with DocPad you just need to install it using Node Package Manager. Run the following commands to install DocPad and then, in an empty directory, create and run a new DocPad site.

Running DocPad in an empty directory will scaffold your new site. You will be asked which template you would like to use. I am going to select “Twitter Bootstrap with Jade”. DocPad will create the site and also initialize the empty folder as a git repository.

After the site is generated the docpad server will run and you can access the site at http://localhost:9778/.

Now that we have a basic DocPad site running locally we are ready to deploy it to Windows Azure Web Sites. There are a few minor changes that you will need to make in order to get the site running on Windows Azure Web Sites.

First, we need to trigger the static content to be generated when the site is deployed. In order to do this we are going to create a simple deploy script that Windows Azure Web Sites will run on each deployment. To create the default deploy script, run “azure site deploymentscript --node” in your site’s directory. The default deployment script will set up your site for deployment to Windows Azure Web Sites. We will make a modification to the script that will build the static content for the DocPad site.

You will see four new files created in your DocPad site: .deployment, deploy.cmd, web.config, and iisnode.yml. The .deployment file tells Windows Azure which command to run and the deploy.cmd is the actual deployment script. Web.config tells IIS to use the IISNode handler for serving Node.js content and iisnode.yml contains the IISNode settings.

Open the deploy.cmd file to configure the script to build our static docpad site. Inside the file you will see a section titled Deployment. This section contains two groups of commands titled KuduSync and Install npm packages. Directly after the Install npm packages code add the lines shown below.

This script will generate the static content in the /out folder. Now we need to make one minor configuration change to make sure our deploy.cmd runs correctly. By default, DocPad prompts you to agree to their terms every time you generate a static site. This will cause our Windows Azure deployment to fail. To disable this behavior simply set prompts to false in the docpad.coffee configuration as shown below.

Next, we need to set up the server to correctly host the docpad site. This can be done with a single line of Node.js code. Create a file named server.js in the root of your site with the following content.

Everything is now set up and we are ready to deploy. For this deploy I am going to use continuous integration from Github to Windows Azure Web Sites. If you haven’t already done so, you will need to create a new Github repository. Once your repository is created you can create a new Windows Azure Web Site and link it to your repository for automatic continuous deployments.

In order to set up continuous integration, open the web site’s dashboard and click “Set up Git publishing” in the “quick glance” section on the right. After your Git repository is ready, click the section labeled “Deploy from my GitHub Repository” and click “Authorize Windows Azure” as shown below.

If you have never done this before, you will be prompted to Authorize Windows Azure to access your Github account. After Windows Azure is authorized select your Github repository as shown below.

Now that everything is connected for continuous integration we just need to push our site to github. Run the following commands to add the files, commit them, add the remote, and push the code.

After the push is complete you will see that your site deployment kicks off almost immediately.

The deployment should finish in about 30 seconds and your site will be ready. Browse to the site and you will see your new docpad site.

You can find the full source for this post on Github here.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Yvonne Muench (@yvmuench) recounted After the Storm - ESRI Maps Out the Future in the Cloud in a 1/16/2013 post:

A storm hits. Trees down power lines, water levels rise. Emergency teams scramble to figure out the damage, who’s vulnerable? First responders think visually so answering these questions often starts with a map. And those maps are frequently powered by Esri, a leader in Geographic Information Systems (GIS). A couple weeks ago I visited their Redlands, CA headquarters on what just happened to be the day after Hurricane Sandy hit the Eastern seaboard.

Historically GIS solutions have been delivered as complicated desktop apps, which required trained GIS specialists to use. But for about a year now Esri has included a cloud-based component to the system called ArcGIS Online. It lets users, even laymen like you and me, create and instantly publish and share interactive maps. During the storm they experienced an intense spike in demand. The system scaled 3x in one day going from 50 million maps to 150 million just after the peak of the storm, all hosted on Windows Azure. To learn more about how cloud is affecting their industry, I chatted with Russ Johnson, Esri’s Director of Public Safety and National Security Solutions, as well as Paul Ross, Product Manager for ArcGIS Online.

The past…

Before joining Esri, Russ used to be a Commander in National Incident Response teams. He described how knowing what was in place before the disaster is so critical, especially when leading a diverse team that spans local, state and federal organizations. This information would typically be printed and distributed to emergency workers. One of the biggest challenges was to find the data, because local government is often in disarray due to the disaster. For Sept 11th it took at least a week to find the data, bring it together, normalize it, create a database, then produce maps. Once maps were produced people came out of the woodwork with asks. “What bridges can carry big loads? What government buildings are vacant, damaged, etc…”

After initial assignments are made, you typically plan and refresh every twelve hours. That could require four to five hours in a helicopter surveying the incident. Then a couple hours with the planning team to generate and print new assignments for the response teams.

The present…

Enter the cloud, which is beginning to fundamentally change a thirty-year process in a couple of key ways. First, there’s access to unbelievable amounts of quality base data already online. Then there’s ease of use – the new app allows people to quickly create maps almost anyone can use, no specialists required. And instead of printed maps, or at best maps converted to static PDFs and then posted online, data and intelligence now come in in real time and maps update dynamically, so you can get the current status of roads, gas stations, shelters. These maps can be almost instantaneously accurate and easily shared broadly, across multiple agencies. Resource assignments can be made digitally and sent directly to emergency responders. The public can access the same maps. The result is more informed decisions, better outcomes, and greater continuity of governance.

Another new capability enabled by cloud is dynamic mash ups. For example take a map of flood zones, then bring in real time stream gauges to show current water levels. Or take a map of shelters and layer on the open commercial stores nearby. One map generates another. Laymen users combine static and dynamic data by themselves. The possibilities are endless. For example… here’s a relevant question many people had - how will Superstorm Sandy affect voter turnout in the 2012 US presidential election? This map took precinct-level historical voting data and overlaid FEMA impact zones for the disaster.

Darker shaded counties were most damaged by the storm. By mashing up storm and voting data, one could assess impact of the storm on expected voter turnout by political party.

The future…

We’re on the cusp of something even more powerful - crowd sourcing for damage assessment. Leading up to the storm, on the spur of the moment Esri created a cloud-based mobile solution allowing individuals to report and upload conditions on the ground into a central database. With the wide and growing availability of smart phones, this raises the notion of every member of the public as a possible sensor for damage assessment. Powerful stuff.

Even though we’re not quite there yet, the cloud has already been a game changer in this industry. Paul Ross explained that for the first time with Hurricane Sandy there was no hesitation to use the cloud for mission critical information. Utility companies posted outage maps – identifying where power was out and when you can expect it to come back. State and local governments posted evacuation maps and impact areas.

What I find inspiring is that Esri is not just doing the same things in the cloud as they did on premises. They are doing things that are only possible in the cloud. It shows the power of software to help solve real human problems and the power of the cloud to deliver step-change improvements in how it’s done.

About Esri and the Microsoft Disaster Response Program

Esri and Microsoft partner to provide public and private agencies and communities information maps during disasters in a cloud computing infrastructure. Microsoft directs citizens to the maps via online platforms such as MSN, Bing, and Microsoft.com.

• Philip Fu described [Sample Of Jan 15th] Startup tasks and Internet Information Services (IIS) configuration in Windows Azure on 1/15/2013:

You can use the Startup element to specify tasks to configure your role environment. Applications that are deployed on Windows Azure usually have a set of prerequisites that must be installed on the host computer. You can use the start-up tasks to install the prerequisites or to modify configuration settings for your environment. Web and worker roles can be configured in this manner.

You can find more code samples that demonstrate the most typical programming scenarios by using Microsoft All-In-One Code Framework Sample Browser or Sample Browser Visual Studio extension. They give you the flexibility to search samples, download samples on demand, manage the downloaded samples in a centralized place, and automatically be notified about sample updates. If it is the first time that you hear about Microsoft All-In-One Code Framework, please watch the introduction video on Microsoft Showcase, or read the introduction on our homepage http://1code.codeplex.com/.

Michael Collier (@MichaelCollier) posted Tips for Publishing Multiple Sites in a Web Role on 1/14/2013:

In November 2010, with SDK 1.3, Microsoft introduced the ability to deploy multiple web applications in a single Windows Azure web role. This is a great cost savings benefit since you don’t need a new role – essentially a virtual machine – for each web application you want to deploy.

Creating a web role that contains multiple web sites is pretty easy. Essentially, you need to add multiple <Site> elements to your web role’s ServiceDefinition.csdef file. Each <Site> element would include a physicalDirectory attribute that references the location of the web site to be included.

<Sites>
  <Site name="WebRole1" physicalDirectory="..\..\..\WebRole1">
    <Bindings>
      <Binding name="Endpoint1" endpointName="Endpoint1" />
    </Bindings>
  </Site>
  <Site name="WebApplication1" physicalDirectory="..\..\..\WebApplication1\">
    <Bindings>
      <Binding name="Endpoint1" endpointName="Endpoint2" />
    </Bindings>
  </Site>
  <Site name="WebApplication2" physicalDirectory="..\..\..\WebApplication2\">
    <Bindings>
      <Binding name="Endpoint1" endpointName="Endpoint3" />
    </Bindings>
  </Site>
</Sites>

For additional detailed information on creating a web role with multiple web sites, I suggest following the guidance provided at these excellent resources:

- MSDN - http://msdn.microsoft.com/en-us/library/windowsazure/gg433110.aspx

- Wade Wegner’s blog post - http://www.wadewegner.com/2011/02/running-multiple-websites-in-a-windows-azure-web-role/

- ElastaCloud Blog post – http://blog.elastacloud.com/2011/01/11/azure-running-multiple-web-sites-in-a-single-webrole/

The above resources provide a great starting point. However, there is one piece of what I think is important information that is missing. When Visual Studio and the Windows Azure SDK (via CSPACK) create the cloud deployment package (.cspkg), the content listed at the physicalDirectory location is simply copied into the deployment package. Meaning, any web applications there are not compiled as part of the process, no .config transformations take place, and any code-behind (.cs) and project (.csproj) files are also copied.

What’s going on? CSPack is the part of the Windows Azure SDK that is responsible for creating the deployment package file (.cspkg). As CSPack is part of the core Windows Azure SDK, it doesn’t know about Visual Studio projects. Since it doesn’t know about the Visual Studio projects located at the physicalDirectory location, it can’t do any of the normal Visual Studio build and publish tasks – thus just copying the files from the source physicalDirectory to the destination deployment package.

However, when packaging a single-site web role, CSPack doesn’t rely on the physicalDirectory attribute. With a single-site web role, the packaging process is able to build, publish, and create the deployment package.
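One quick way to tell whether a secondary site’s physicalDirectory points at raw project source rather than published output is to scan it for the file types mentioned above. This is a minimal sketch and not part of the SDK: the `find_source_files` helper and the extension list are assumptions chosen for illustration.

```python
import os

# File types that indicate an unpublished project directory (illustrative list).
SOURCE_EXTENSIONS = {".cs", ".csproj"}


def find_source_files(site_dir):
    """Return paths (relative to site_dir) of source files that would be copied into the package."""
    hits = []
    for root, _dirs, files in os.walk(site_dir):
        for name in files:
            extension = os.path.splitext(name)[1].lower()
            if extension in SOURCE_EXTENSIONS:
                full_path = os.path.join(root, name)
                hits.append(os.path.relpath(full_path, site_dir))
    return sorted(hits)
```

Calling `find_source_files` on the directory a <Site> element references and getting back a non-empty list suggests that the package will carry source files rather than a published site.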

The Workaround

Ideally, each web site should be published prior to packaging in the .cspkg. Currently there is not a built-in way to do this. Fortunately we can use MSBuild to automate the build and publish steps.

- Open the Windows Azure project’s project file (.ccproj) in an editor. Since the .ccproj is a MSBuild file, additional data points and build targets can be added here.

- Add the following towards the bottom of the .ccproj file.

<PropertyGroup>
  <!-- Inject the publication of "secondary" sites into the Windows Azure build/project packaging process. -->
  <!-- CleanSecondarySites; should be in CoreBuildDependsOn. Not working with Package in Visual Studio (works from cmd line though). Investigating. -->
  <CoreBuildDependsOn>
    CleanSecondarySites;
    PublishSecondarySites;
    $(CoreBuildDependsOn)
  </CoreBuildDependsOn>
  <!-- This is the directory within the web application project directory to which the project will be "published" for later packaging by the Azure project. -->
  <SecondarySitePublishDir>azure.publish\</SecondarySitePublishDir>
</PropertyGroup>
<!-- These SecondarySite items represent the collection of sites (other than the web application associated with the role) that need special packaging. -->
<ItemGroup>
  <SecondarySite Include="..\WebApplication1\WebApplication1.csproj" />
  <SecondarySite Include="..\WebApplication2\WebApplication2.csproj" />
</ItemGroup>
<Target Name="CleanSecondarySites">
  <RemoveDir Directories="%(SecondarySite.RootDir)%(Directory)$(SecondarySitePublishDir)" />
</Target>
<Target Name="PublishSecondarySites" Condition="'$(IsExecutingPublishTarget)' == 'true'">
  <!-- Execute the Build (and more importantly the _WPPCopyWebApplication) target to "publish" each secondary web application project. Note the setting of the WebProjectOutputDir property; this is where the project will be published to be later picked up by CSPack. -->
  <MSBuild Projects="%(SecondarySite.Identity)" Targets="Build;_WPPCopyWebApplication" Properties="Configuration=$(Configuration);Platform=$(Platform);WebProjectOutputDir=$(SecondarySitePublishDir)" />
</Target>

- Finally, for each secondary site defined in the .csdef, update the physicalDirectory attribute to reference the publishing directory, azure.publish\.

<Site name="WebApplication1" physicalDirectory="..\..\..\WebApplication1\azure.publish">
  <Bindings>
    <Binding name="Endpoint1" endpointName="Endpoint2" />
  </Bindings>
</Site>

How does this all work? Adding the CleanSecondarySites and PublishSecondarySites targets to the <CoreBuildDependsOn> element forces them to run before any of the default targets included in <CoreBuildDependsOn> (defined in the Microsoft.WindowsAzure.targets file). Thus, the secondary sites are built and published locally before any of the default Windows Azure build targets execute. The IsExecutingPublishTarget condition is needed to ensure PublishSecondarySites happens only when packaging the Windows Azure project (either from Visual Studio or via the command line with MSBuild).

I like this approach because it is fairly clean and I don’t have to modify build events (keep reading) for each secondary web site I want to include. I can keep the above build configuration snippet handy and use it in future projects quickly.

Alternative Approach

The above approach relies on modifying the project file to help automate the build and publish of any secondary sites. An alternative approach would be to leverage build events. I first learned of this approach from “Joe” in the comments section of Wade Wegner’s blog post.

- Select your Windows Azure project, and select Project Dependencies. Mark the other web apps as project dependencies.

- Open the project properties for each web application (not the one used as the Web Role).

- For the Build Events, you’ll need to add a pre-build and post-build event.

Pre-Build

rmdir "$(ProjectDir)..\YOUR-AZURE-PROJECT\Sites\$(ProjectName)" /S /Q

Post-Build

%WinDir%\Microsoft.NET\Framework\v4.0.30319\MSBuild.exe "$(ProjectPath)" /T:PipelinePreDeployCopyAllFilesToOneFolder /P:Configuration=$(ConfigurationName);PreBuildEvent="";PostBuildEvent="";PackageAsSingleFile=false;_PackageTempDir="$(ProjectDir)..\YOUR-AZURE-PROJECT\Sites\$(ProjectName)"

The pre-build event cleans up any files left around from a previous build. The post-build event will trigger a local file system Publish action via MSBuild. The resulting published files go to the “Sites” subdirectory.

Finally, be sure to update the physicalDirectory element in ServiceDefinition.csdef to reference the local publishing subdirectory (notice the ‘Sites’ directory in the updated snippet below).

<Site name="WebApplication1" physicalDirectory="..\..\Sites\WebApplication1\">
  <Bindings>
    <Binding name="Endpoint1" endpointName="Endpoint2" />
  </Bindings>
</Site>

When you “Publish” the Windows Azure project, all your web sites will build and publish to a local directory. All the web sites will have the files you would expect.
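A quick way to double-check which sites point at a local publishing directory is to read the physicalDirectory attributes back out of ServiceDefinition.csdef. The following is a minimal Python sketch, not part of the original post: the function name `site_directories` and the sample service definition are hypothetical, and the sketch only lists the attribute values without resolving or validating the paths.

```python
import xml.etree.ElementTree as ET


def site_directories(csdef_xml: str) -> dict[str, str]:
    """Map each <Site> name to its physicalDirectory attribute, if it has one."""
    root = ET.fromstring(csdef_xml)
    sites = {}
    for elem in root.iter():
        # Compare local names so the sketch works with or without an XML namespace.
        local_name = elem.tag.rsplit("}", 1)[-1]
        if local_name == "Site" and "physicalDirectory" in elem.attrib:
            sites[elem.attrib["name"]] = elem.attrib["physicalDirectory"]
    return sites


# Hypothetical, trimmed-down service definition used only for illustration.
sample = r'''
<ServiceDefinition name="Demo">
  <WebRole name="WebRole1">
    <Sites>
      <Site name="Web">
        <Bindings><Binding name="Endpoint1" endpointName="Endpoint1" /></Bindings>
      </Site>
      <Site name="WebApplication1" physicalDirectory="..\..\Sites\WebApplication1\">
        <Bindings><Binding name="Endpoint1" endpointName="Endpoint2" /></Bindings>
      </Site>
    </Sites>
  </WebRole>
</ServiceDefinition>
'''

for name, directory in site_directories(sample).items():
    print(f"{name}: {directory}")
```

The site without a physicalDirectory attribute (the web role itself in this sample) is skipped, so the output contains only the secondary sites whose publish folders need to exist before packaging.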

Final Helpful Info

When deployed to Windows Azure, the secondary sites referenced in the physicalDirectory attribute are placed in the sitesroot folder of your E or F drive. The site that is the web role is actually compiled, published and deployed to the approot folder on the E or F drive. If you want to see this layout locally, first unzip the .cspkg and then unzip the .cssx file (in the extracted .cspkg). This is the layout that is deployed to Windows Azure.
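The two unzip steps can also be scripted. This is a minimal Python sketch that assumes, as described above, that both the .cspkg and the .cssx files inside it can be opened as ordinary zip archives; the function name `extract_package` and the output layout are hypothetical choices for illustration.

```python
import zipfile
from pathlib import Path


def extract_package(cspkg_path: str, out_dir: str) -> list[Path]:
    """Unzip a .cspkg, then unzip every .cssx found inside it.

    Returns the directories created for the extracted .cssx role packages.
    """
    out = Path(out_dir)
    with zipfile.ZipFile(cspkg_path) as package:
        package.extractall(out)

    role_dirs = []
    for cssx in sorted(out.rglob("*.cssx")):
        # Extract next to the .cssx file, into a folder named after it.
        target = cssx.with_suffix("")
        with zipfile.ZipFile(cssx) as role_package:
            role_package.extractall(target)
        role_dirs.append(target)
    return role_dirs
```

Calling `extract_package("MyService.cspkg", "cspkg_contents")` (both names are placeholders) returns one folder per role package; the approot and sitesroot folders should then be visible inside each of them.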

Special thanks to Paul Yuknewicz and Phil Hoff for their insightful feedback and assistance on this post.

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

• Beth Massi (@bethmassi) suggested that you Get Started Building SharePoint Apps in Minutes with LightSwitch in a 1/17/2013 post to the Visual Studio LightSwitch blog:

I’ve dabbled in SharePoint 2010 development in the past by using Visual Studio. In fact, I wrote a fair share of articles and samples about it. However I’ve been slacking when it comes to really learning the new app model in SharePoint 2013. I’ve got a good understanding of the architecture, have played with Napa a little, but I just haven’t really had the time to dig into the details, get dirty, and build some real SharePoint apps.

Luckily, one of my favorite products has come to save me! In the latest LightSwitch Preview 2, we have the ability to enable SharePoint 2013 on our LightSwitch projects. This gives us access to SharePoint assets as well as handling the deployment of our application into the SharePoint app catalog. In no time you can create a business app using the LightSwitch HTML client, deploy it to SharePoint 2013, and run it from a variety of mobile devices.

So why would you want to deploy a LightSwitch app to SharePoint? I mean, I can just host this app on my own or in Azure, right? Yes, you can still host LightSwitch apps yourself. However, enabling SharePoint in your LightSwitch apps allows you to take advantage of business data and processes that are already running in SharePoint in your enterprise. Many enterprises today use SharePoint as a portal of information and applications while using SharePoint’s security model to control access permissions. The new SharePoint 2013 app model therefore makes running LightSwitch applications from SharePoint / Office 365 very compelling for many businesses.

Sign Up for an Office 365 Developer Account

The easiest way to get started is to sign up for a free Office 365 Developer account. Head to dev.office.com to get started. When you sign up, you’re required to supply a subdomain of .onmicrosoft.com and a user ID. After signup, you use the resulting user ID (i.e. userid@yourdomain.onmicrosoft.com) to sign in to your portal site where you administer your account. Your SharePoint 2013 Developer Site is provisioned at your new domain: http://yourdomain.onmicrosoft.com.

You can see your developer site by selecting SharePoint under the Admin menu on the top of the page. This will list all your site collections. Make sure you use this developer site for your LightSwitch apps; otherwise, when you debug your application, you will get the error “Sideloading of apps is not enabled on this site.”

Get the LightSwitch HTML Client Preview 2

In order to get LightSwitch SharePoint & HTML functionality, you’ll need to have Visual Studio 2012 installed and then you can install the LightSwitch Preview 2 which is included in the Office Developer Tools Preview 2.

Install: Microsoft Office Developer Tools for Visual Studio 2012 - Preview 2

Build an App – Here’s a Tutorial

Now you’re ready to build an app! We’ve got a tutorial that walks you through building a survey application using LightSwitch that runs in SharePoint. I encourage you to give it a try. It should take under an hour to complete the tutorial, and in the end you’ll have a fully functional modern app that runs on a variety of mobile devices.

LightSwitch SharePoint Tutorial

This tutorial demonstrates how LightSwitch handles the authentication to SharePoint using OAuth for you. It also shows you how to use the SharePoint client object model from server code, as well as writing WebAPI methods that can be called from the HTML client. Check out my finished SharePoint app!

If you’ve got questions and/or feedback, please head over to the LightSwitch HTML Client Preview Forum and let the team know.

More Resources & Reading

My LightSwitch HTML 5 Client Preview 2: OakLeaf Contoso Survey Application Demo on Office 365 SharePoint Site post, updated 1/8/2013, covers the autohosting model which involves Windows Azure. It’s important to note that the Office Store isn’t accepting SharePoint apps that use the autohosting model and has offered no timetable for when the embargo might be lifted.

• Beth Massi (@bethmassi) listed the Most Popular LightSwitch Team Blog Posts of 2012 on 1/16/2013:

I was doing some content analysis this morning for this blog and the LightSwitch team blog and I thought it would be fun to list off the most viewed LightSwitch team articles of 2012. Then I thought it would probably be helpful to also look at the most popular “How Do I” videos on the Developer Center as well.

It’s exciting to see one of the most popular posts was the announcement of the HTML Client which brings the ease and speed of business app development to mobile devices as well as optional deployment and integration with SharePoint 2013 / Office 365. See my post on how to get started with the HTML Client.

Make sure you check these gems out!

Top 20 LightSwitch Team Articles (most views in 2012)

- Deployment Guide: How to Configure a Web Server to Host LightSwitch Applications

- Beginning LightSwitch: Getting Started

- Announcing the LightSwitch HTML Client!

- Getting Started with LightSwitch in Visual Studio 2012

- “I Command You!” - LightSwitch Screen Commands Tips & Tricks

- How to Import Data from Excel

- Beginning LightSwitch Part 1: What’s in a Table? Describing Your Data

- Advanced LightSwitch: Writing Queries in LightSwitch Code

- The Anatomy of a LightSwitch Application Series Part 1 - Architecture Overview

- Creating a Custom Add or Edit Dialog

- Beginning LightSwitch Part 3: Screen Templates, Which One Do I Choose?

- New Features Explained – LightSwitch in Visual Studio 2012

- Visual Studio 2012 Launch: Building Business Apps with LightSwitch

- Diagnosing Problems in a Deployed 3-Tier LightSwitch Application

- The Anatomy of a LightSwitch Application Part 4 – Data Access and Storage

- How Do I: Display a chart built on aggregated data

- The LightSwitch HTML Client: An Architectural Overview

- How to Create a Multi-Column Auto-Complete Drop-down Box in LightSwitch

- Tips and Tricks for Using the Screen Designer

- How to Create a Many-to-Many Relationship

Top 10 How Do I Videos

See all the videos on the LightSwitch Developer Center.

- How Do I: Define My Data in a LightSwitch Application?

- How Do I: Create a Master-Details (One-to-Many) Screen in a LightSwitch Application?

- How Do I: Pass a Parameter into a Screen from the Command Bar in a LightSwitch Application?

- How Do I: Get Started with the LightSwitch Starter Kits?

- How Do I: Create an Edit Details Screen in a LightSwitch Application?

- How Do I: Create a Search Screen in a LightSwitch Application?

- How Do I: Deploy a Visual Studio LightSwitch Application

- How Do I: Create a Screen that can Both Edit and Add Records in a LightSwitch Application?

- How Do I: Sort and Filter Data on a Screen in a LightSwitch Application?

• Rowan Miller posted Entity Framework Links #3 on 1/15/2013:

This is the third post in a regular series to recap interesting articles, posts and other happenings in the EF world.

In December our team announced the availability of EF6 Alpha 2. Scott Guthrie also posted about this release. The announcement post provides details of the features included in this preview. Of particular note, we were excited to include a contribution from AlirezaHaghshenas that provides significantly improved warm up time (view generation), especially for large models. We’ve also got some more performance improvements coming in the next preview of EF6. The Roadmap page on our CodePlex site provides details of all the features we are planning to include in EF6, including those that are not yet implemented.

We shipped some fixes for the EF Designer in Visual Studio 2012 Update 1.

There were a number of good posts about Code First Migrations. Doug Rathbone blogged about using Code First Migrations in team environments. This one’s a bit older, but we wanted to point out a good post by Jarod Ferguson with some good tips for using Code First Migrations. Rowan Miller blogged about customizing the code that is scaffolded by Code First Migrations.

Devart announced Spatial data type support in their EF provider for Oracle.

Diego Vega posted details about a workaround for performance with Enumerable.Contains and non-Unicode columns against EF in .NET 4.0.

Julie Lerman published an article in MSDN Magazine about shrinking EF models with DDD bounded contexts. She also blogged about an issue she has seen with machine.config files messing up Code First provider factories.

In case you missed it, the EF Team is now on Twitter and Facebook. Follow us to stay updated on a daily basis.

Heinrich Wendel described Visualizing List Data using a Map Control in a 1/14/2013 post to the Visual Studio LightSwitch blog:

The true power of LightSwitch lies in its combination of Access-like ease of use and quick ramp-up, while also remaining attractive for complex coding scenarios. The new LightSwitch HTML Client allows you to build modern apps in a couple of minutes, deploy them to online services like SharePoint and Azure and access them from a variety of mobile devices.

You already learned about the basic usage of the new LightSwitch HTML Application project type and screen templates in our previous blog posts. We also introduced you to some of our more powerful concepts. We discussed the new HTML Client APIs, such as Event Handling and Promises, showed you how to use them to write your own custom controls that bind to data and how to integrate existing jQueryMobile controls.

In this blog post we will dig even deeper into code and show you how to implement a custom collection control that shows a map. While LightSwitch already provides two collection controls out of the box, namely the List and Tile List controls, location based data is usually visualized using a map. This is especially useful on mobile devices and LightSwitch provides an API that allows you to write your own collection controls.

We will use the familiar Northwind database in this article. Instead of downloading it, we will just connect to an existing OData endpoint on the web. The Northwind database comes with a list of customers and their addresses. We will simply display those customers as pins on Bing Maps and allow users to drill into the details by clicking on one of the pins. In addition to that, we will limit the number of customers displayed on the list by implementing a paging mechanism.
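To make the paging idea concrete outside of LightSwitch, here is a minimal Python sketch that builds the request URL for one page of customers from the public Northwind sample service at services.odata.org. It is not the control's implementation: the function name `customers_page_url`, the default page size and the choice of CustomerID as the sort key are assumptions for illustration, and the sketch relies only on the standard OData `$top`, `$skip`, `$orderby` and `$format` query options.

```python
from urllib.parse import urlencode

# Endpoint of the public Northwind sample OData service.
NORTHWIND = "http://services.odata.org/Northwind/Northwind.svc"


def customers_page_url(page: int, page_size: int = 10, base: str = NORTHWIND) -> str:
    """Build the URL for one zero-based page of the Customers entity set."""
    if page < 0 or page_size <= 0:
        raise ValueError("page must be >= 0 and page_size must be > 0")
    query = {
        "$orderby": "CustomerID",   # stable ordering so pages do not overlap
        "$top": page_size,          # number of customers per page
        "$skip": page * page_size,  # customers to skip before this page
        "$format": "json",
    }
    # Keep "$" unescaped; OData system query options start with a literal "$".
    return f"{base}/Customers?{urlencode(query, safe='$')}"


print(customers_page_url(0))
print(customers_page_url(2, page_size=20))
```

Each page corresponds to one request, so a map only ever has to place a bounded number of pins at a time.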

Before we start you should make sure that you have the latest LightSwitch HTML Preview 2 installed on your machine. You can download the LightSwitch HTML Client Preview 2 here. We will go through the basic steps very quickly. If you are not familiar with those you should first walk through our previous tutorials.

Creating a project and connecting to existing data

We will start with a new “LightSwitch HTML Application” project. After creating the project select “Attach to external Data Source”, choose “OData Service” and put in the following endpoint address: “http://services.odata.org/Northwind/Northwind.svc”. Select “None” for the Authentication Type and proceed to the next screen. Check the box to include all Entities and close the dialog by clicking “Finish”. After those preparation steps your project should look similar to the following screenshot:

Building the basic screen

Our small sample application only needs one Screen. We will start with the “Browse” Screen Template. Right click the “Client” node in the Solution Explorer, select “Add Screen…”, use the “Browse Data Screen” and select the “NorthwindEntitiesData.Customers” Entity. This will create a Screen with a list of all customer names that are in the database.

Now we will enhance this Screen to show some more information when selecting a customer. Add a new Dialog to the Screen by dragging the “Selected Item” property of the “Customers” Query into the Dialogs node. Then select the Rows Layout and set “Use read-only controls” in the Properties Window. Finally, select the List and change the “Item Tap” action in the Properties Window to show the created Dialog.

The result should look similar to the following screenshot:

Running (F5) the application will show you a list of all customers and selecting one will open a dialog with all the details.

Implementing the Control

Now we are ready to replace the built-in List control with our custom maps control. Go back to the Screen Designer and change the List into a Custom Control. Now that we have a Custom Control we have to actually implement the code to render it.

Adding the lightswitch.bing-maps control