Windows Azure and Cloud Computing Posts for 8/20/2012+

| A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database, Federations and Reporting

- Marketplace DataMarket, Cloud Numerics, Big Data and OData

- Windows Azure Service Bus, Access Control, Caching, Active Directory, and Workflow

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue and Hadoop Services

John Furrier (@furrier) posted Big Data Hadoop Death Match – Again But – Hadoop Era is Different from Linux Era to the SiliconANGLE blog on 8/17/2012:

Matt Asay, VP of a big data cloud startup called Nodeable, wrote a post today titled “Becoming Red Hat: Cloudera & Hortonworks Big Data Death Match”. He grabbed that headline from a link to our content on the free research site Wikibon.org - thx, Matt.

The argument of who contributes more to Hadoop was surfaced by Gigaom a year ago, and that didn’t play well in the market because no one cared. Back then, I was super critical of Hortonworks when they launched and also critical of Cloudera for not leading more in the market.

This notion of a cold war between the two companies is not valid. Why? The market is growing way too fast for it to matter. Both Cloudera and Hortonworks will be very successful, and it’s not a winner-take-all market.

What a difference one year makes in this market. One year later, Hortonworks has earned the right to say that they have serious traction, and Cloudera, which had a sizable lead, has to be worried. I don’t see a so-called Death Match, and I don’t think that the Linux metaphor is appropriate in this market.

I’ve met Matt at events and he is a smart guy. I like his open source approach and his writings. We both are big fans of Mike Olson, the CEO of Cloudera. I got to know Mike while SiliconANGLE was part of Cloudera Labs and Matt is lucky to have Mike sit on his company’s board at Nodeable. Being a huge fan of Cloudera I’m extremely biased toward Cloudera. That being said I have to disagree with Matt on a few things in his post.

Let me break down his key points and where I agree and disagree.

Matt says “Why did Red Hat win? Community.”

I agree that community wins when the business and tech model depends on the community. My question on the open source side is that in the Hadoop era do we need only one company – a winner take all? I say no.

Matt says … “In community and other areas, Linux is a great analogue for Hadoop. I’ve suggested recently that Hadoop market observers could learn a lot from the indomitable rise of Linux, including from how it overcame technical shortcomings over time through communal development. But perhaps a more fundamental observation is that, as with Linux, there’s no room for two major Hadoop vendors.”

He’s just flat-out wrong, for many reasons. Mainly, the hardware market is much different, and open source is more evolved and advanced. The Linux analogy just doesn’t translate, not even close to 100%.

Matt says “… there will be truckloads of cash earned by EMC, IBM and others who use Hadoop as a complement to drive the sale of proprietary hardware and software”

I totally agree, and I’d highlight that production deployments matter most because of the accelerated demand for solutions. This amplifies my point above that faster time to value is generated by new Hadoop-based technology, which also reaffirms my point about this not being like Linux.

Matt says “But for those companies aspiring to be the Red Hat of Hadoop – that primary committer of code and provider of associated support services – there’s only room for one such company, and it’s Cloudera or Hortonworks. I don’t feel MapR has the ability to move Hadoop development, given that it doesn’t employ key Hadoop developers as Cloudera and Hortonworks do, so it has no chance of being a dominant Hadoop vendor.”

I don’t think that the notion of one winner-take-all will happen. Many think that it will; I don’t. There are many nuances here, and based upon my experience in the Linux and Hadoop markets, the open source communities are much too advanced now versus the old-school Linux days.

The Hadoop Era Is Not The Linux Era

Why do I see it a bit differently than Matt? Because I believe his (and others’) Linux analogy to Hadoop is flawed thinking. It’s just not the same.

We are in the midst of a transformation of infrastructure from the old way to the new way, a modern way. This new modern era is what the Hadoop era is about. Linux grew out of frustration with incumbent legacy non-innovators in a slow-moving and proprietary hardware refresh cycle. There was no major inflection point driving the Linux evolution, just pure frustration over access to code and price. The Hadoop era is different. It’s transformative in a radically faster way than Linux ever was. It’s building on massive convergence in cloud, mobile, and social, all on top of Moore’s Law.

The timing of Hadoop with cloud and mobile infrastructure is the perfect storm.

I’ve been watching this Hadoop game from the beginning and it’s like watching NASCAR: who will slingshot to victory will be determined in the final lap. The winners will be determined by who can ship the most reliable production-ready code. Speed is of the essence in this Hadoop era.

If history in tech trends (Linux) can teach us anything it’s that the first player doesn’t always become the innovator and/or winner. So Cloudera has to be nervous.

Bottom line: the market is much different and faster than back in the Linux day.

Channel9 posted Cory Fowler Interviews Doug Mahugh about the Windows Azure Storage for WordPress Plugin on 8/15/2012:

Join your guides Brady Gaster and Cory Fowler as they talk to the product teams in Redmond as well as the web community.

In this episode, Cory talks with Doug Mahugh of Microsoft Open Technologies, Inc. about a recent release of the Windows Azure Storage for WordPress Plugin. Windows Azure Storage for WordPress is an open source plugin that enables your WordPress site to store media files to Windows Azure Storage.

Reference Links

- Windows Azure Storage Plugin for WordPress Updated! by Brian Swan

- Windows Azure Storage plugin for WordPress by Doug Mahugh

- Update Released: Windows Azure Storage Plugin for WordPress by Cory Fowler


Louis Columbus (@LouisColumbus) posted Roundup of Big Data Forecasts and Market Estimates, 2012 on 8/15/2012 (missed when published):

From the best-known companies in enterprise software to start-ups, everyone is jumping on the big data bandwagon.

The potential of big data to bring insights and intelligence into enterprises is a strong motivator, where managers are constantly looking for the competitive edge to win in their chosen markets. With so much potential to provide enterprises with enhanced analytics, insights and intelligence, it is understandable why this area has such high expectations – and hype – associated with it.

Given the potential big data has to reorder an enterprise and make it more competitive and profitable, it’s understandable why there are so many forecasts and market analyses being done today. The following is a roundup of the latest big data forecasts and market estimates recently published:

- As of last month, Gartner had received 12,000 searches over the last twelve months for the term “big data” with the pace increasing.

- In Hype Cycle for Big Data, 2012, Gartner states that Column-Store DBMS, Cloud Computing, In-Memory Database Management Systems will be the three most transformational technologies in the next five years. Gartner goes on to predict that Complex Event Processing, Content Analytics, Context-Enriched Services, Hybrid Cloud Computing, Information Capabilities Framework and Telematics round out the technologies the research firm considers transformational. The Hype Cycle for Big Data is shown below:

- Predictive modeling is gaining momentum with property and casualty (P&C) companies who are using them to support claims analysis, CRM, risk management, pricing and actuarial workflows, quoting, and underwriting. Web-based quoting systems and pricing optimization strategies are benefiting from investments in predictive modeling as well. The Priority Matrix for Big Data, 2012 is shown below:

- Social content is the fastest growing category of new content in the enterprise and will eventually attain 20% market penetration. Gartner defines social content as unstructured data created, edited and published on corporate blogs, communication and collaboration platforms, in addition to external platforms including Facebook, LinkedIn, Twitter, YouTube and a myriad of others.

- Gartner reports that 45% of sales management teams identify sales analytics as a priority to help them understand sales performance, market conditions and opportunities.

- Over 80% of Web Analytics solutions are delivered via Software-as-a-Service (SaaS). Gartner goes on to estimate that over 90% of the total available market for Web Analytics is already using some form of tools and that Google reported 10 million registrations for Google Analytics alone. Google also reports 200,000 active users of their free Analytics application. Gartner also states that the majority of the customers for these systems use two or more Web analytics applications, and less than 50% use the advanced functions including data warehousing, advanced reporting and higher-end customer segmentation features.

- In the report Market Trends: Big Data Opportunities in Vertical Industries, the following heat map by industry shows that from a volume of data perspective, Banking and Securities, Communications, Media and Services, Government, and Manufacturing and Natural Resources have the greatest potential opportunity for Big Data.

- Last week Gartner hosted Big Data Opportunities in Vertical Industries (August 7th) and provided an excellent overview of the research behind Market Trends: Big Data Opportunities in Vertical Industries. The following graphic was included in the webinar showing big data investments by industry:

- The Wikibon Blog has created an excellent compilation of big data statistics and market forecasts. Their post, A Comprehensive list of Big Data Statistics, can be found here. They’ve also created an infographic titled Taming Big Data. You can find The Big List of Big Data Infographics here.

- The Hadoop-MapReduce market is forecast to grow at a compound annual growth rate (CAGR) of 58%, reaching $2.2 billion in 2018. Source: Hadoop-MapReduce Market Forecast 2013-2018

- Big data: The next frontier for innovation, competition, and productivity is available for download from the McKinsey Global Institute for free. This 156-page document, authored by McKinsey researchers, is excellent. While it was published last year (June 2011), if you’re following big data, download a copy, as much of the research is still relevant. McKinsey includes extensive analysis of how big data can deliver value in manufacturing value chains, for example, which is shown below:

- How is big data faring in the enterprise? is an excellent blog post written by best-selling author and thought leader Dion Hinchcliffe. Be sure to follow Dion on Twitter and subscribe to his blog; he delivers excellent content that is insightful and interesting.


SQL Azure Database, Federations and Reporting

Cyrielle Simeone (@cyriellesimeone pictured below) posted Thomas Mechelke’s (@thomasmechelke) Using a Windows Azure SQL Database with Autohosted apps for SharePoint on 8/13/2012 (missed when posted):

This article is brought to you by Thomas Mechelke, Program Manager for the SharePoint Developer Experience team. Thomas has been monitoring our new apps for Office and SharePoint forums and providing help on various topics. In today's post, Thomas will walk you through how to use a Windows Azure SQL Database with autohosted apps for SharePoint, as it is one of the most active threads on the forum. Thanks for reading!

Hi! My name is Thomas Mechelke. I'm a Program Manager on the SharePoint Developer Experience team. I've been focused on making sure that apps for SharePoint can be installed, uninstalled, and updated safely across SharePoint, Windows Azure, and Windows Azure SQL Database. I have also been working closely with the Visual Studio team to make the tools for building apps for SharePoint great. In this blog post I'll walk you through the process for adding a very simple Windows Azure SQL Database and accessing it from an autohosted app for SharePoint. My goal is to help you through the required configuration steps quickly, so you can get to the fun part of building your app.

Getting started

In a previous post, Jay described the experience of creating a new autohosted app for SharePoint. That will be our starting point.

If you haven't already, create a new app for SharePoint 2013 project and accept all the defaults. Change the app name if you like. I called mine "Autohosted App with DB". Accepting the defaults creates a solution with two projects: the SharePoint project with a default icon and app manifest, and a web project with some basic boilerplate code.

Configuring the SQL Server project

Autohosted apps for SharePoint support the design and deployment of a data tier application (DACPAC for short) to Windows Azure SQL Database. There are several ways to create a DACPAC file. The premier tools for creating a DACPAC are the SQL Server Data Tools, which are part of Visual Studio 2012.

Let's add a SQL Server Database Project to our autohosted app:

- Right-click the solution node in Solution Explorer, and then choose Add New Project.

- Under the SQL Server node, find the SQL Server Database Project.

- Name the project (I called it AutohostedAppDB), and then choose OK.

A few steps are necessary to set up the relationship between the SQL Server project and the app for SharePoint, and to make sure the database we design will run both on the local machine for debugging and in Windows Azure SQL Database.

First, we need to set the target platform for the SQL Server Database project. To do that, right-click the database project node, and then select SQL Azure as the target platform.

Next, we need to ensure that the database project will update the local instance of the database every time we debug our app. To do that, right-click the solution, and then choose Set Startup Projects. Then, choose Start as the action for your database project.

Now, build the app (right-click Solution Node and then choose Build). This generates a DACPAC file in the database output folder. In my case, the file is at /bin/Debug/projectname.dacpac.

Now we can link the DACPAC file with the app for SharePoint project by setting the SQL Package property.

Setting the SQL Package property ensures that whenever the SharePoint app is packaged for deployment to a SharePoint site, the DACPAC file is included and deployed to Windows Azure SQL Database, which is connected to the SharePoint farm.

This was the hard part. Now we can move into building the actual database and data access code.

Building the database

SQL Server Data Tools adds a new view to Visual Studio called SQL Server Object Explorer. If this view doesn't show up in your Visual Studio layout (usually as a tab next to Solution Explorer), you can activate it from the View menu. The view shows the local database generated from your SQL Server project under the node for (localdb)\YourProjectName.

This view is very helpful during debugging because it provides a simple way to get at the properties of various database objects and provides access to the data in tables.

Adding a table

For the purposes of this walkthrough, we'll keep it simple and just add one table:

- Right-click the database project, and then add a table named Messages.

- Add a column of type nvarchar(50) to hold messages.

- Select the Id column, and then change the Is Identity property to be true.

After this is done, the table should look like this:

Great. Now we have a database and a table. Let's add some data.

To do that, we'll use a feature of data-tier applications called Post Deployment Scripts. These scripts are executed after the schema of the data-tier application has been deployed. They can be used to populate look up tables and sample data. So that's what we'll do.

Add a script to the database project. That brings up a dialog box with several script options. Select Post Deployment Script, and then choose Add.

Use the script editor to add the following two lines:

delete from Messages

insert into Messages values ('Hello World!')

The delete ensures the table is empty whenever the script is run. For a production app, you'll want to be careful not to wipe out data that may have been entered by the end user.

Then we add the "Hello World!" message. That's it.

Configuring the web app for data access

After all this work, when we run the app we still see the same behavior as when we first created the project. Let's change that. The app for SharePoint knows about the database and will deploy it when required. The web app, however, does not yet know the database exists.

To change that we need to add a line to the web.config file to hold the connection string. For that we are using a property in the <appSettings> section named SqlAzureConnectionString.

To add the property, create a key value pair in the <appSettings> section of the web.config file in your web app:

<add key="SqlAzureConnectionString" value="Data Source=(localdb)\YourDBProjectName;Initial Catalog=AutohostedAppDB;Integrated Security=True;Connect Timeout=30;Encrypt=False;TrustServerCertificate=False" />

The SqlAzureConnectionString property is special in that its value is set by SharePoint during app installation. So, as long as your web app always gets its connection string from this property, it will work whether it's installed on a local machine or in Office 365.

You may wonder why the connection string for the app is not stored in the <connectionStrings> section. We implemented it that way in the preview because we already know the implementation will change for the final release, to support geo-distributed disaster recovery (GeoDR) for app databases. In GeoDR, there will always be two synchronized copies of the database in different geographies. This requires the management of two connection strings, one for the active database and one for the backup. Managing those two strings is non-trivial and we don't want to require every app to implement the correct logic to deal with failovers. So, in the final design, SharePoint will provide an API to retrieve the current connection string and hide most of the complexity of GeoDR from the app.

I'll structure the sample code for the web app in such a way that it should be very easy to switch to the new approach when the GeoDR API is ready.

Writing the data access code

At last, the app is ready to work with the database. Let's write some data access code.

First let's write a few helper functions that set up the pattern to prepare for GeoDR in the future.

GetActiveSqlConnection()

GetActiveSqlConnection is the method to use anywhere in the app where you need a SqlConnection to the app database. When the GeoDR API becomes available, this method will wrap it. For now, it will just get the current connection string from web.config and create a SqlConnection object:

    // Create SqlConnection.
    protected SqlConnection GetActiveSqlConnection()
    {
        return new SqlConnection(GetCurrentConnectionString());
    }

GetCurrentConnectionString()

GetCurrentConnectionString retrieves the connection string from web.config and returns it as a string.

    // Retrieve authoritative connection string.
    protected string GetCurrentConnectionString()
    {
        return WebConfigurationManager.AppSettings["SqlAzureConnectionString"];
    }

As with all statements about the future, things are subject to change—but this approach can help to protect you from making false assumptions about the reliability of the connection string in web.config.

With that, we are squarely in the realm of standard ADO.NET data access programming.

Add this code to the Page_Load() event to retrieve and display data from the app database:

    // Display the current database server (don't do this in production).
    Response.Write("<h2>Database Server</h2>");
    Response.Write("<p>" + GetDBServer() + "</p>");

    // Display the query results.
    Response.Write("<h2>SQL Data</h2>");
    using (SqlConnection conn = GetActiveSqlConnection())
    {
        using (SqlCommand cmd = conn.CreateCommand())
        {
            conn.Open();
            cmd.CommandText = "select * from Messages";
            using (SqlDataReader reader = cmd.ExecuteReader())
            {
                while (reader.Read())
                {
                    Response.Write("<p>" + reader["Message"].ToString() + "</p>");
                }
            }
        }
    }
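The Page_Load code calls GetDBServer(), which is not listed in this post. Here is a minimal sketch of what such a helper might look like, assuming it simply extracts the Data Source keyword from the connection string. The class name ConnectionStringInfo and the string parameter are illustrative rather than taken from the sample, and the sketch uses the provider-neutral DbConnectionStringBuilder so that it compiles without a SQL Server reference:

```csharp
using System;
using System.Data.Common;

public static class ConnectionStringInfo
{
    // Hypothetical stand-in for the GetDBServer() helper used in Page_Load.
    // Returns the "Data Source" value of a connection string, or an empty
    // string if the keyword is absent.
    public static string GetDBServer(string connectionString)
    {
        var builder = new DbConnectionStringBuilder();
        builder.ConnectionString = connectionString;

        object dataSource;
        return builder.TryGetValue("Data Source", out dataSource)
            ? Convert.ToString(dataSource)
            : String.Empty;
    }
}
```

In the page itself, a parameterless GetDBServer() could pass the result of GetCurrentConnectionString() to this method.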

We are done. This should run. Let's hit F5 to see what happens.

It should look something like this. Note that the Database Server name should match your connection string in web.config.

Now for the real test. Right-click the SharePoint project and choose Deploy. Your results should be similar to the following image.

The Database Server name will vary, but the output from the app should not.

Using Entity Framework

If you prefer working with the Entity Framework, you can generate an entity model from the database and easily create an Entity Framework connection string from the one provided by GetCurrentConnectionString(). Use code like this:

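    // Get Entity Framework connection string.
    protected string GetEntityFrameworkConnectionString()
    {
        // The builder accepts only Entity Framework keywords, so the SQL
        // connection string goes into ProviderConnectionString.
        EntityConnectionStringBuilder efBuilder = new EntityConnectionStringBuilder();
        efBuilder.Provider = "System.Data.SqlClient";
        efBuilder.ProviderConnectionString = GetCurrentConnectionString();
        // Assumption: placeholder names; use the metadata paths of your generated model.
        efBuilder.Metadata = "res://*/YourModel.csdl|res://*/YourModel.ssdl|res://*/YourModel.msl";
        return efBuilder.ConnectionString;
    }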

We need your feedback

I hope this post helps you get working on the next cool app with SharePoint, ASP.NET, and SQL. We'd love to hear your feedback about where you want us to take the platform and the tools to enable you to build great apps for SharePoint and Office.


Marketplace DataMarket, Cloud Numerics, Big Data and OData

Mark Stafford (@markdstafford) posted OData 101: Building our first OData-based Windows Store app (Part 2) on 8/21/2012:

In the previous blog post [see below], we walked through the steps to build an OData-enabled client using the new Windows UI. In this blog post, we’ll take a look at some of the code that makes it happen.

ODataBindable, SampleDataItem and SampleDataGroup

In the walkthrough, we repurposed SampleDataSource.cs with some code from this gist. In that gist, ODataBindable, SampleDataItem and SampleDataGroup were all stock classes from the project template (ODataBindable was renamed from SampleDataCommon, but otherwise the classes are exactly the same).

ExtensionMethods

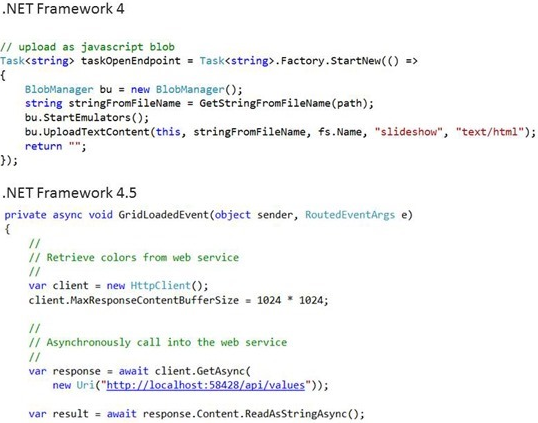

The extension methods class contains two simple extension methods. Each of these extension methods uses the Task-based Asynchronous Pattern (TAP) to allow the SampleDataSource to execute an OData query without blocking the UI.

For instance, the following code uses the very handy Task.Factory.FromAsync method to implement TAP:

    public static async Task<IEnumerable<T>> ExecuteAsync<T>(this DataServiceQuery<T> query)
    {
        return await Task.Factory.FromAsync<IEnumerable<T>>(query.BeginExecute(null, null), query.EndExecute);
    }
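The extension method above hands FromAsync an IAsyncResult that has already been started. The same method family also has overloads that take the Begin/End pair directly. As a rough, self-contained illustration of that variant (not code from the sample), the sketch below wraps Stream.BeginRead and Stream.EndRead; the class and method names are made up for this example:

```csharp
using System;
using System.IO;
using System.Threading.Tasks;

public static class ApmToTapExample
{
    // Wraps the Begin/End (APM) pair of Stream.Read in a Task<int> so that
    // callers can await it. Illustrative only; the OData sample wraps
    // DataServiceQuery<T>.BeginExecute/EndExecute instead.
    public static Task<int> ReadViaFromAsync(Stream stream, byte[] buffer)
    {
        return Task<int>.Factory.FromAsync(
            stream.BeginRead,   // begin method
            stream.EndRead,     // end method, supplies the result
            buffer, 0, buffer.Length,
            null);              // no state object
    }
}
```

A caller would then write something like `int bytesRead = await ApmToTapExample.ReadViaFromAsync(stream, buffer);` inside an async method.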

SampleDataSource

The SampleDataSource class has a significant amount of overlap with the stock implementation. The changes I made were to bring it just a bit closer to the Singleton pattern and the implementation of two important methods.

Search

The Search method is an extremely simplistic implementation of search. In this case it literally just does an in-memory search of the loaded movies. It is very easy to imagine passing the search term through to a .Where() clause, and I encourage you to do so in your own implementation. In this case I was trying to keep the code as simple as possible.

    public static IEnumerable<SampleDataItem> Search(string searchString)
    {
        var regex = new Regex(searchString, RegexOptions.CultureInvariant | RegexOptions.IgnoreCase | RegexOptions.IgnorePatternWhitespace);
        return Instance.AllGroups
            .SelectMany(g => g.Items)
            .Where(m => regex.IsMatch(m.Title) || regex.IsMatch(m.Subtitle))
            .Distinct(new SampleDataItemComparer());
    }
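One thing to watch for in the Search method is that the search term is used as a regular expression pattern as-is, so a term containing an unbalanced parenthesis would make the Regex constructor throw. A possible variant, sketched here under the assumption that the term should be matched literally, escapes the input first. MovieItem is a hypothetical stand-in for SampleDataItem so that the example is self-contained:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.RegularExpressions;

// Hypothetical stand-in for SampleDataItem (only the searched properties).
public sealed class MovieItem
{
    public string Title { get; set; }
    public string Subtitle { get; set; }
}

public static class LiteralSearch
{
    public static IEnumerable<MovieItem> Search(IEnumerable<MovieItem> items, string searchString)
    {
        // Regex.Escape makes characters such as "(" or "+" match literally.
        var regex = new Regex(
            Regex.Escape(searchString),
            RegexOptions.CultureInvariant | RegexOptions.IgnoreCase);

        return items.Where(m =>
            regex.IsMatch(m.Title ?? String.Empty) ||
            regex.IsMatch(m.Subtitle ?? String.Empty));
    }
}
```

The IgnorePatternWhitespace option is dropped in this sketch because the goal is for spaces in the search term to be matched rather than ignored.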

LoadMovies

The LoadMovies method is where the more interesting code exists.

    public static async void LoadMovies()
    {
        IEnumerable<Title> titles = await ((DataServiceQuery<Title>)Context.Titles
            .Expand("Genres,AudioFormats,AudioFormats/Language,Awards,Cast")
            .Where(t => t.Rating == "PG")
            .OrderByDescending(t => t.ReleaseYear)
            .Take(300)).ExecuteAsync();

        foreach (Title title in titles)
        {
            foreach (Genre netflixGenre in title.Genres)
            {
                SampleDataGroup genre = GetGroup(netflixGenre.Name);
                if (genre == null)
                {
                    genre = new SampleDataGroup(netflixGenre.Name, netflixGenre.Name, String.Empty, title.BoxArt.LargeUrl, String.Empty);
                    Instance.AllGroups.Add(genre);
                }

                var content = new StringBuilder();
                // Write additional things to content here if you want them to display in the item detail.
                genre.Items.Add(new SampleDataItem(title.Id, title.Name, String.Format("{0}\r\n\r\n{1} ({2})", title.Synopsis, title.Rating, title.ReleaseYear), title.BoxArt.HighDefinitionUrl ?? title.BoxArt.LargeUrl, "Description", content.ToString()));
            }
        }
    }

The first and most interesting thing we do is to use the TAP pattern again to asynchronously get 300 (Take) recent (OrderByDescending) PG-rated (Where) movies back from Netflix. The rest of the code is simply constructing SampleDataItems and SampleDataGroups from the entities that were returned in the OData feed.
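The nested loops in LoadMovies follow a get-or-create pattern: look up the group for a genre, create it if it is missing, then add the title to it. A stripped-down sketch of that pattern, using plain strings and a Dictionary in place of the Netflix entities and the SampleDataGroup class (all names here are illustrative), might look like this:

```csharp
using System.Collections.Generic;

public static class GenreGrouping
{
    // Each input pair is (title name, genre names), standing in for a
    // Netflix Title entity and its expanded Genres collection.
    public static Dictionary<string, List<string>> GroupByGenre(
        IEnumerable<KeyValuePair<string, string[]>> titles)
    {
        var groups = new Dictionary<string, List<string>>();
        foreach (var title in titles)
        {
            foreach (string genre in title.Value)
            {
                List<string> items;
                if (!groups.TryGetValue(genre, out items))
                {
                    // First title seen for this genre: create the group.
                    items = new List<string>();
                    groups.Add(genre, items);
                }
                items.Add(title.Key);
            }
        }
        return groups;
    }
}
```

As in the sample, a title that belongs to several genres ends up in several groups, which is why the Search method applies Distinct to its results.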

SearchResultsPage

Finally, we have just a bit of calling code in SearchResultsPage. When a user searches from the Win+F experience, the LoadState method is called first, enabling us to intercept what was searched for. In our case, the stock implementation is okay aside from the fact that we don’t want any additional quotes embedded, so we’ll modify the line that puts the value into the DefaultViewModel to not append quotes:

    this.DefaultViewModel["QueryText"] = queryText;

When the filter actually changes, we want to pass the call through to our implementation of search, which we can do with the stock implementation of Filter_SelectionChanged:

    void Filter_SelectionChanged(object sender, SelectionChangedEventArgs e)
    {
        // Determine what filter was selected
        var selectedFilter = e.AddedItems.FirstOrDefault() as Filter;
        if (selectedFilter != null)
        {
            // Mirror the results into the corresponding Filter object to allow the
            // RadioButton representation used when not snapped to reflect the change
            selectedFilter.Active = true;

            // TODO: Respond to the change in active filter by setting this.DefaultViewModel["Results"]
            // to a collection of items with bindable Image, Title, Subtitle, and Description properties
            var searchValue = (string)this.DefaultViewModel["QueryText"];
            this.DefaultViewModel["Results"] = new List<SampleDataItem>(SampleDataSource.Search(searchValue));

            // Ensure results are found
            object results;
            ICollection resultsCollection;
            if (this.DefaultViewModel.TryGetValue("Results", out results) &&
                (resultsCollection = results as ICollection) != null &&
                resultsCollection.Count != 0)
            {
                VisualStateManager.GoToState(this, "ResultsFound", true);
                return;
            }
        }

        // Display informational text when there are no search results.
        VisualStateManager.GoToState(this, "NoResultsFound", true);
    }

Item_Clicked

Optionally, you can implement an event handler that will cause the page to navigate to the selected item by copying similar code from GroupedItemsPage.xaml.cs. The event binding will also need to be added to the resultsGridView in XAML. You can see this code in the published sample.

Mark Stafford (@markdstafford) posted OData 101: Building our first OData-based Windows Store app (Part 1) on 8/21/2012:

In this OData 101 we will build a Windows Store app that consumes and displays movies from the Netflix OData feed. Specifically, we will focus on getting data, displaying it in the default grid layout, and enabling search functionality.

Because there are a lot of details to talk about in this blog post, we’ll walk through the actual steps to get the application functional first, and we’ll walk through some of the code in a subsequent post.

Before you get started, you should ensure that you have an RTM version of Visual Studio 2012 and have downloaded and installed the WCF Data Services Tools for Windows Store Apps.

1. Let’s start by creating a new Windows Store Grid App using C#/XAML. Name the application OData.WindowsStore.NetflixDemo:

2. [Optional]: Open the Package.appxmanifest and assign a friendly name to the Display name. This will make an impact when we get around to adding the search contract:

3. [Optional]: Update the AppName in App.xaml to a friendly name. This value will be displayed when the application is launched.

4. [Optional]: Replace the images in the Assets folder with the images from the sample project.

5. Build and launch your project. You should see something like the following:

6. Now it’s time to add the OData part of the application. Right-click on your project in the Solution Explorer and select Add Service Reference…:

7. Enter the URL for the Netflix OData service in the Address bar and click Go. Set the Namespace of the service reference to Netflix:

(Note: If you have not yet installed the tooling for consuming OData services in Windows Store apps, you will be prompted with a message such as the one above. You will need to download and install the tools referenced in the link to continue.)

8. Replace the contents of SampleDataSource.cs from the DataModel folder. This data source provides sample data for bootstrapping Windows Store apps; we will replace it with a data source that gets real data from Netflix. This is the code that we will walk through in the subsequent blog post. For now, let’s just copy and paste the code from this gist.

9. Add a Search Contract to the application. This will allow us to integrate with the Win+F experience. Name the Search Contract SearchResultsPage.xaml:

10. Modify line 58 of SearchResultsPage.xaml.cs so that it doesn’t embed quotes around the queryText:

11. Insert the following two lines at line 81 of SearchResultsPage.xaml.cs to retrieve search results:

(Note: The gist also includes the code for SearchResultsPage.xaml.cs if you would rather replace the entire contents of the file.)

12. Launch the application and try it out. Note that it will take a few seconds to load the images upon application launch. Also, your first search attempt may not return any results. Obviously if this were a real-world application, you would want to deal with both of these issues.

So that’s it – we have now built an application that consumes and displays movies from the Netflix OData feed in the new Windows UI. In the next blog post, we’ll dig into the code to see how it works.

Roope Astala reported the availability of “Cloud Numerics” F# Extensions on 8/20/2012:

In this blog post we’ll introduce Cloud Numerics F# Extensions, a companion post to Microsoft Codename “Cloud Numerics” Lab refresh. Its purpose is to make it easier for you as an F# user to write “Cloud Numerics” applications, and it does so by wrapping “Cloud Numerics” .NET APIs to provide an F# idiomatic user experience that includes array building and manipulation and operator overloads. We’ll go through the steps of setting up the extension as well as a few examples.

The Visual Studio Solution for the extensions is available at the Microsoft Codename “Cloud Numerics” download site.

Set-Up on Systems with Visual Studio 2010 SP1

First, install “Cloud Numerics” on your local computer. If you only intend to work with compiled applications, that completes the setup. For using F# Interactive, a few additional steps are needed to enable fsi.exe for 64-bit use:

In the Programs menu, under Visual Studio Tools, right click Visual Studio x64 Win64 command prompt. Run it as administrator. In the Visual Studio tools command window, specify the following commands:

- cd C:\Program Files (x86)\Microsoft F#\v4.0

- copy fsi.exe fsi64.exe

- corflags /32bit- /Force fsi64.exe

Open Visual Studio

- From the Tools menu select Options, F# Tools, and then edit the F# Interactive Path.

- Set the path to C:\Program Files (x86)\Microsoft F#\v4.0\fsi64.exe.

- Restart F# Interactive.

Set-Up on Systems with Visual Studio 2012 Preview

With Visual Studio 2012 preview, it is possible to use “Cloud Numerics” assemblies locally. However, because the Visual Studio project template is not available for VS 2012 preview, you’ll have to use the following procedure to bypass the installation of the template:

- Install “Cloud Numerics” from a command prompt by running “msiexec -i MicrosoftCloudNumerics.msi CLUSTERINSTALL=1”, and follow the instructions displayed by the installer for any missing prerequisites.

- To use F# Interactive, open Visual Studio. From the Tools menu select Options, F# Tools, F# Interactive, and set “64-bit F# Interactive” to True.

Note that this procedure gives the local development experience only; you can work with 64-bit F# Interactive, and build and run your application on your PC. If you have a workstation with multiple CPU cores, you can run a compiled “Cloud Numerics” application in parallel, but deployment to Windows Azure cluster is not available.

Using Cloud Numerics F# Extensions from Your Project

To configure your F# project to use “Cloud Numerics” F# extensions:

- Create F# Application in Visual Studio

- From the Build menu, select Configuration Manager, and change the Platform attributes to x64

- In the Project menu, select the Properties <your-application-name> item. From your project’s application properties tab, ensure that the target .NET Framework is 4.0, not 4.0 Client Profile.

- Add the CloudNumericsFSharpExtensions project to your Visual Studio solution

- Add a project reference from your application to the CloudNumericsFSharpExtensions project

- Add references to the “Cloud Numerics” managed assemblies. These assemblies are typically located in C:\Program Files\Microsoft Cloud Numerics\v0.2\Bin

- If you plan to deploy your application to Windows Azure, right-click on reference for FSharp.Core, and select Properties. In the properties window, set Copy Local to True.

- Finally, you might need to edit the following in your .fs source file.

- The code within the #if INTERACTIVE … #endif block is required only if you’re planning to use F# Interactive.

- Depending on where it is located on your file system and whether you’re using Release or Debug build, you might need to adjust the path specified to CloudNumericsFSharpExtensions.

#if INTERACTIVE

#I @"C:\Program Files\Microsoft Cloud Numerics\v0.2\Bin"

#I @"..\..\..\CloudNumericsFSharpExtensions\bin\x64\Release"

#r "CloudNumericsFSharpExtensions"

#r "Microsoft.Numerics.ManagedArrayImpl"

#r "Microsoft.Numerics.DenseArrays"

#r "Microsoft.Numerics.Runtime"

#r "Microsoft.Numerics.DistributedDenseArrays"

#r "Microsoft.Numerics.Math"

#r "Microsoft.Numerics.Statistics"

#r "Microsoft.Numerics.Distributed.IO"

#endif

open Microsoft.Numerics.FSharp

open Microsoft.Numerics

open Microsoft.Numerics.Mathematics

open Microsoft.Numerics.Statistics

open Microsoft.Numerics.LinearAlgebra

NumericsRuntime.Initialize()

Using Cloud Numerics from F# Interactive

A simple way to use “Cloud Numerics” libraries from F# Interactive is to copy and send the previous piece of code to F# Interactive. Then, you will be able to create arrays, call functions, and so forth, for example:

> let x = DistDense.range 1.0 1000.0;;

>

val x : Distributed.NumericDenseArray<float>

> let y = ArrayMath.Sum(1.0/x);;

>

val y : float = 7.485470861

Note that when using F# Interactive, the code executes in serial fashion. However, parallel execution is straightforward as we’ll see next.
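As a quick cross-check of the value printed above, the same sum can be reproduced outside “Cloud Numerics”. The following is a minimal sketch in plain Python, not part of the F# extensions; it assumes that DistDense.range 1.0 1000.0 yields the values 1, 2, ..., 1000 with both endpoints included, which is consistent with the reported result. The function name reciprocal_sum is made up for this illustration.

```python
import math


def reciprocal_sum(n: int) -> float:
    """Sum 1/k for k = 1..n, mirroring ArrayMath.Sum(1.0/x) over the range 1..n."""
    # math.fsum keeps floating-point round-off small when adding many terms.
    return math.fsum(1.0 / k for k in range(1, n + 1))


if __name__ == "__main__":
    # Nine decimals, the same precision F# Interactive displayed.
    print(f"{reciprocal_sum(1000):.9f}")
```

Under that assumption the sketch agrees with the 7.485470861 shown by F# Interactive to the displayed precision.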

Compiling and Deploying Applications

To execute your application in parallel on your workstation, build your application, open Visual Studio x64 Win64 Command Prompt, and go to the folder where your application executable is. Then, launch a parallel MPI computation using mpiexec -n <number of processes> <application executable>.

Let’s try the above example in parallel. The application code will look like this:

module Example

open System

open System.Collections.Generic

#if INTERACTIVE

#I @"C:\Program Files\Microsoft Cloud Numerics\v0.2\Bin"

#I @"..\..\..\CloudNumericsFSharpExtensions\bin\x64\Release"

#r "CloudNumericsFSharpExtensions"

#r "Microsoft.Numerics.ManagedArrayImpl"

#r "Microsoft.Numerics.DenseArrays"

#r "Microsoft.Numerics.Runtime"

#r "Microsoft.Numerics.DistributedDenseArrays"

#r "Microsoft.Numerics.Math"

#r "Microsoft.Numerics.Statistics"

#r "Microsoft.Numerics.Signal"

#r "Microsoft.Numerics.Distributed.IO"

#endif

open Microsoft.Numerics.FSharp

open Microsoft.Numerics

open Microsoft.Numerics.Mathematics

open Microsoft.Numerics.Statistics

open Microsoft.Numerics.LinearAlgebra

open Microsoft.Numerics.Signal

NumericsRuntime.Initialize()

let x = DistDense.range 1.0 1000.0

let y = ArrayMath.Sum(1.0/x)

printfn "%f" y

You can use the same serial code in the parallel case. We then run it using mpiexec to get the result:

Note!

The first time you run an application using mpiexec, you might get a popup dialog: “Windows Firewall has blocked some features of this program”. Simply select “Allow access”.

Finally, to deploy the application to Azure we’ll re-purpose the “Cloud Numerics” C# Solution template to get to the Deployment Utility:

- Create a new “Cloud Numerics” C# Solution

- Add your F# application project to the Solution

- Add “Cloud Numerics” F# extensions to the Solution

- Set AppConfigure as the Start-Up project

- Build the Solution to get the “Cloud Numerics” Deployment Utility

- Build the F# application

- Use “Cloud Numerics” Deployment Utility to deploy a cluster

- Use the “Cloud Numerics” Deployment Utility to submit a job. Instead of the default executable, select your F# application executable to be submitted

Indexing and Assignment

F# has an elegant syntax for operating on slices of arrays. With “Cloud Numerics” F# Extensions we can apply this syntax to distributed arrays, for example:

let x = DistDense.randomFloat [10L;10L]

let y = x.[1L..3L,*]

x.[4L..6L,4L..6L] <- x.[7L..9L,7L..9L]

Operator Overloading

We supply operator overloads for matrix multiply as x *@ y and linear solve of a*x=b as let x = a /@ b . Also, operator overloads are available for element-wise operations on arrays:

- Element-wise power: a.**b

- Element-wise mod: a.%b

- Element-wise comparison: .= , .< , .<> and so forth.
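To make the semantics of these operators concrete, here is a small sketch in plain Python (not F#, and not using “Cloud Numerics”) of what element-wise power, element-wise mod, element-wise comparison and matrix multiply compute on tiny local arrays. The function names are invented for this illustration, and the assumption that a comparison yields one boolean per element is mine rather than something stated in the post.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def elementwise_pow(a: Vector, b: Vector) -> Vector:
    """Element-wise power: the role the post assigns to a .** b."""
    return [x ** y for x, y in zip(a, b)]


def elementwise_mod(a: Vector, b: Vector) -> Vector:
    """Element-wise remainder: the role of a .% b (non-negative inputs only here)."""
    return [x % y for x, y in zip(a, b)]


def elementwise_lt(a: Vector, b: Vector) -> List[bool]:
    """Element-wise comparison: the role of a .< b, one boolean per position."""
    return [x < y for x, y in zip(a, b)]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    """Matrix product: the role of x *@ y (rows of x times columns of y)."""
    inner = len(y)
    cols = len(y[0])
    return [[sum(row[k] * y[k][j] for k in range(inner)) for j in range(cols)]
            for row in x]


if __name__ == "__main__":
    print(elementwise_pow([2.0, 3.0], [3.0, 2.0]))
    print(elementwise_mod([7.0, 8.0], [4.0, 3.0]))
    print(elementwise_lt([1.0, 5.0], [2.0, 4.0]))
    print(matmul([[1.0, 2.0], [3.0, 4.0]], [[0.0, 1.0], [1.0, 0.0]]))
```

The point of the dotted operators is that they act position by position, whereas *@ combines whole rows and columns; the distributed versions presumably do the same work spread across processes.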

Convenience Type Definitions

To enable a more concise syntax, we have added shortened definitions for the array classes as follows:

- LNDA<'T> : Microsoft.Numerics.Local.DenseArray<'T>

- DNDA<'T> : Microsoft.Numerics.Distributed.NumericDenseArray<'T>

- LNSM<'T> : Microsoft.Numerics.Local.SparseMatrix<'T>

- DNSM<'T> : Microsoft.Numerics.Distributed.SparseMatrix<'T>

Array Building

Finally, we provide several functions for building arrays, for example from F# sequences or by random sampling. They wrap the “Cloud Numerics” .NET APIs to provide a functional programming experience. The functions are within four modules:

- LocalDense

- LocalSparse

- DistDense

- DistSparse

These modules include functions for building arrays of specific type, for example:

let x = LocalDense.ofSeq [2;3;5;7;9]

let y = DistDense.range 10 100

let z = DistSparse.createFromTuples [(0L,3L,2.0);(1L,3L,3.0); (1L,2L, 5.0); (3L,0L,7.0)]

Building and Running Example Projects

The “Cloud Numerics” F# Extensions has a folder named “Examples” that holds three example .fs files, including:

- A set of short examples that demonstrate how to invoke different library functions.

- A latent semantic analysis example that demonstrates computation of correlations between different documents, in this case, SEC-10K filings of 30 Dow Jones companies.

- An air traffic analysis example that demonstrates statistical analysis of flight arrival and delay data.

The examples are part of a self-contained solution. To run them:

- Copy the four .csv input data files to C:\Users\Public\Documents (you can use a different folder, but you will need to adjust the path string in the source code to correspond to this folder).

- In Solution Explorer, select an example to run by moving the .fs files up and down.

- Build the example project and run it using mpiexec as explained before.

You can also run the examples in the F# interactive, by selecting code (except the module declaration on the first line) and sending it to F# interactive.

This concludes the introduction to “Cloud Numerics” F# Extensions. We welcome your feedback at cnumerics-feedback@microsoft.com.

Alex James (@adjames) described Web API [Queryable] current support and tentative roadmap for OData in an 8/20/2012 post:

The recent preview release of OData support in Web API is very exciting (see the new nuget package and codeplex project). For the most part it is compatible with the previous [Queryable] support because it supports the same OData query options. That said there has been a little confusion about how [Queryable] works, what it works with and what its limitations are, both temporary and long term.

The rest of this post will outline what is currently supported, what limitations currently exist and which limitations are hopefully just temporary.

Current Support

Support for different ElementTypes

In the preview the [Queryable] attribute works with any IQueryable<> or IEnumerable<> data source (Entity Framework or otherwise), for which a model has been configured or can be inferred automatically.

Today this means that the element type (i.e. the T in IQueryable<T>) must be viewed as an EDM entity. This implies a few constraints:

- All properties you wish to expose must be exposed as CLR properties on your class.

- A key property (or properties) must be available

- The type of all properties must be either:

- a CLR type that is mapped to an EDM primitive, e.g. System.String maps to Edm.String

- or a CLR type that is mapped to another type in your model, be that a ComplexType or an EntityType

NOTE: using IEnumerable<> is recommended only for small amounts of data, because the options are only applied after everything has been pulled into memory.

Null Propagation

This feature takes a little explaining, so please bear with me. Imagine you have an action that looks like this:

[Queryable]

public IQueryable<Product> Get()

{

…

}

Now imagine someone issues this request:

GET ~/Products?$filter=startswith(Category/Name,'A')

You might think the [Queryable] attribute will translate the request to something like this:

Get().Where(p => p.Category.Name.StartsWith("A"));

But that might be very bad…

If your Get() method body looks like this:

return _db.Products; // i.e. Entity Framework.

It will work just fine. But if your Get() method looks like this:

return products.AsQueryable();

It means the LINQ provider being used is LINQ to Objects (L2O). L2O evaluates the where predicate in memory simply by calling the predicate, which could easily throw a null reference exception if either p.Category or p.Category.Name is null.

The [Queryable] attribute handles this automatically by injecting null guards into the code for certain IQueryable Providers. If you dig into the code for ODataQueryOptions you’ll see this code:

…

string queryProviderAssemblyName = query.Provider.GetType().Assembly.GetName().Name;

switch (queryProviderAssemblyName)

{

case EntityFrameworkQueryProviderAssemblyName:

handleNullPropagation = false;

break;

case Linq2SqlQueryProviderAssemblyName:

handleNullPropagation = false;

break;

case Linq2ObjectsQueryProviderAssemblyName:

handleNullPropagation = true;

break;

default:

handleNullPropagation = true;

break;

}

return ApplyTo(query, handleNullPropagation);

As you can see, for Entity Framework and LINQ to SQL we don’t inject null guards (because SQL takes care of null guards/propagation automatically), but for L2O and all other query providers we inject null guards and propagate nulls.
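To see why the guards matter for in-memory providers, here is a minimal sketch in Python rather than C#. It is not the Web API implementation; the Product and Category classes and both predicate functions are invented for illustration, and treating a null anywhere on the path as "does not match" is my simplification of null propagation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Category:
    name: Optional[str]


@dataclass
class Product:
    title: str
    category: Optional[Category]


def naive_predicate(p: Product) -> bool:
    # Direct translation of startswith(Category/Name,'A').
    # Raises AttributeError as soon as anything on the path is None.
    return p.category.name.startswith("A")


def guarded_predicate(p: Product) -> bool:
    # Null-guarded version: a None anywhere on the path means "no match".
    if p.category is None or p.category.name is None:
        return False
    return p.category.name.startswith("A")


products = [
    Product("Apple juice", Category("Ales")),
    Product("Mystery item", None),
    Product("Unnamed category item", Category(None)),
]

# Filtering with the guarded predicate never raises, whatever the data holds.
matches = [p.title for p in products if guarded_predicate(p)]

if __name__ == "__main__":
    print(matches)
```

A database-backed provider never runs code like naive_predicate in process, which is why the guards are only injected when the predicate will be executed in memory.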

If you don’t like this behavior you can override it by dropping down and calling ODataQueryOptions.Filter.ApplyTo(..) directly.

Supported Query Options

In the preview the [Queryable] attribute supports only 4 of OData’s 8 built-in query options, namely $filter, $orderby, $skip and $top.

What about the 4 other query options, i.e. $select, $expand, $inlinecount and $skiptoken? Today you need to use ODataQueryOptions rather than [Queryable]; hopefully that will change over time.
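The split between the two groups of options can be sketched as a small classifier. This is an illustration in Python, not part of Web API: the function classify_options and the example URL are hypothetical, and the two sets simply restate the options listed above.

```python
from urllib.parse import parse_qsl, urlsplit

# Options the preview's [Queryable] attribute handles, per the post.
QUERYABLE_OPTIONS = {"$filter", "$orderby", "$skip", "$top"}
# Options that currently require dropping down to ODataQueryOptions.
OTHER_OPTIONS = {"$select", "$expand", "$inlinecount", "$skiptoken"}


def classify_options(url: str):
    """Return (handled, needs_manual) lists of OData query options found in url."""
    pairs = parse_qsl(urlsplit(url).query, keep_blank_values=True)
    names = [name for name, _ in pairs]
    handled = [n for n in names if n in QUERYABLE_OPTIONS]
    needs_manual = [n for n in names if n in OTHER_OPTIONS]
    return handled, needs_manual


if __name__ == "__main__":
    example = ("http://example.com/Products"
               "?$filter=Price%20gt%2010&$top=5&$inlinecount=allpages")
    print(classify_options(example))
```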

Dropping down to ODataQueryOptions

The first thing to understand is that this code:

[Queryable]

public IQueryable<Product> Get()

{

return _db.Products;

}

Is roughly equivalent to:

public IEnumerable<Product> Get(ODataQueryOptions options)

{

// TODO: we should add an override of ApplyTo that avoids all these casts!

return options.ApplyTo(_db.Products as IQueryable) as IEnumerable<Product>;

}

Which in turn is roughly equivalent to:

public IEnumerable<Product> Get(ODataQueryOptions options)

{

IQueryable results = _db.Products;

if (options.Filter != null)

results = options.Filter.ApplyTo(results);

if (options.OrderBy != null) // this is a slight over-simplification see this.

results = options.OrderBy.ApplyTo(results);

if (options.Skip != null)

results = options.Skip.ApplyTo(results);

if (options.Top != null)

results = options.Top.ApplyTo(results);

return results as IEnumerable<Product>;

}

This means you can easily pick and choose which options to support. For example if your service doesn’t support $orderby you can assert that ODataQueryOptions.OrderBy is null.
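The same pick-and-choose idea, sketched in Python over an in-memory list instead of an IQueryable. This is an analogy rather than the Web API code: apply_options and its parameters are hypothetical names, and the allow_orderby flag stands in for asserting that ODataQueryOptions.OrderBy is null.

```python
from typing import Callable, Iterable, List, Optional, TypeVar

T = TypeVar("T")


def apply_options(
    items: Iterable[T],
    where: Optional[Callable[[T], bool]] = None,
    order_key: Optional[Callable[[T], object]] = None,
    skip: Optional[int] = None,
    top: Optional[int] = None,
    allow_orderby: bool = True,
) -> List[T]:
    """Apply filter, order, skip and top in that order, skipping absent options."""
    if order_key is not None and not allow_orderby:
        # A service that does not support $orderby rejects the request up front.
        raise ValueError("$orderby is not supported by this service")
    results = list(items)
    if where is not None:
        results = [x for x in results if where(x)]
    if order_key is not None:
        results = sorted(results, key=order_key)
    if skip is not None:
        results = results[skip:]
    if top is not None:
        results = results[:top]
    return results


if __name__ == "__main__":
    evens_desc = apply_options(range(10), where=lambda n: n % 2 == 0,
                               order_key=lambda n: -n, skip=1, top=2)
    print(evens_desc)
```

The order matters: skipping or truncating before filtering or sorting would page over the wrong set, which is why the options are applied in this sequence.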

ODataQueryOptions.RawValues

Once you’ve dropped down to the ODataQueryOptions you also get access to the RawValues property which gives you the raw string values of all 8 ODataQueryOptions… So in theory you can handle more query options.

ODataQueryOptions.Filter.QueryNode

The ApplyTo method assumes you have an IQueryable, but what if your backend has no IQueryable implementation?

Creating one from scratch is very hard, mainly because LINQ allows so much more than OData allows, and essentially obfuscates the intent of the query.

To avoid this complexity we provide ODataQueryOptions.Filter.QueryNode, which is an AST that gives you a parsed, metadata-bound tree representing the $filter. The AST is of course tuned to allow only what OData supports, making it much simpler than a LINQ expression.

For example this test fragment illustrates the API:

var filter = new FilterQueryOption("Name eq 'MSFT'", context);

var node = filter.QueryNode;

Assert.Equal(QueryNodeKind.BinaryOperator, node.Expression.Kind);

var binaryNode = node.Expression as BinaryOperatorQueryNode;

Assert.Equal(BinaryOperatorKind.Equal, binaryNode.OperatorKind);

Assert.Equal(QueryNodeKind.Constant, binaryNode.Right.Kind);

Assert.Equal("MSFT", ((ConstantQueryNode)binaryNode.Right).Value);

Assert.Equal(QueryNodeKind.PropertyAccess, binaryNode.Left.Kind);

var propertyAccessNode = binaryNode.Left as PropertyAccessQueryNode;

Assert.Equal("Name", propertyAccessNode.Property.Name);

If you are interested in an example that converts one of these ASTs into another language, take a look at the FilterBinder class. This class is used under the hood by ODataQueryOptions to convert the Filter AST into a LINQ Expression of the form Expression<Func<T,bool>>.

You could do something very similar to convert directly to SQL or whatever query language you need. Let me assure you doing this is MUCH easier than implementing IQueryable!
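As a rough illustration of why walking such an AST is manageable, here is a sketch in Python of a tiny filter tree being turned into a parameterized SQL condition. The node classes below are simplified stand-ins that I made up to mirror the node kinds in the test fragment (binary operator, property access, constant); they are not the Web API types, and the operator table covers only a handful of cases.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass
class PropertyAccess:
    name: str


@dataclass
class Constant:
    value: Union[str, int, float]


@dataclass
class BinaryOperator:
    kind: str  # e.g. "Equal", "And"
    left: "Node"
    right: "Node"


Node = Union[PropertyAccess, Constant, BinaryOperator]

# Hypothetical mapping from operator kinds to SQL operators.
SQL_OPERATORS = {
    "Equal": "=", "NotEqual": "<>",
    "GreaterThan": ">", "LessThan": "<",
    "And": "AND", "Or": "OR",
}


def to_sql(node: Node, params: List[object]) -> str:
    """Recursively render a filter tree as a SQL condition with ? placeholders."""
    if isinstance(node, PropertyAccess):
        # Property names are assumed to come from the bound model, not raw user input.
        return f'"{node.name}"'
    if isinstance(node, Constant):
        # Constants become parameters so user-supplied values never reach the SQL text.
        params.append(node.value)
        return "?"
    if isinstance(node, BinaryOperator):
        left = to_sql(node.left, params)
        right = to_sql(node.right, params)
        return f"({left} {SQL_OPERATORS[node.kind]} {right})"
    raise TypeError(f"unsupported node: {node!r}")


if __name__ == "__main__":
    params: List[object] = []
    tree = BinaryOperator("Equal", PropertyAccess("Name"), Constant("MSFT"))
    print(to_sql(tree, params), params)
```

Because the tree only contains the few node kinds OData allows, the translator is a short recursive function, whereas a LINQ provider has to cope with arbitrary expression trees.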

ODataQueryOptions.OrderBy.QueryNode

Likewise you can interrogate the ODataQueryOptions.OrderBy.QueryNode for an AST representing the $orderby query option.

Possible Roadmap?

These are just ideas at this stage; really, we want to hear what you want. That said, here is what we’ve been thinking about:

Support for $select and $expand

We hope to add support for both of these, both as QueryNodes (like Filter and OrderBy) and natively in the [Queryable] attribute.

But first we need to work through some issues:

- The OData Uri Parser (part of ODataContrib) currently doesn’t support $select / $expand, and we need that first.

- Both $expand and $select essentially change the shape of the response. For example you are still returning IQueryable<T> from your action but:

- Each T might have properties that are not loaded. How would the formatter know which properties are not loaded?

- Each T might have relationships loaded, but simply touching an unloaded relationship might cause lazyloading, so the formatters can’t simply hit a relationship during serialization as this would perform terribly, they need to know what to try to format.

- There is no guarantee that you can ‘expand’ an IEnumerable or for that matter an IQueryable, so we would need a way to tell [Queryable] which options it is free to try to handle automatically.

Support for $inlinecount and $skiptoken

Again we hope to add support to [Queryable] for both of these.

That said, you can implement both of these today by returning ODataResult<> from your action.

Implementing $inlinecount is pretty simple:

public ODataResult<Product> Get(ODataQueryOptions options)

{

var results = (options.ApplyTo(_db.Products) as IQueryable<Product>);

var count = results.Count();

var limitedResults = results.Take(100).ToArray();

return new ODataResult<Product>(limitedResults, null, count);

}
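The essential ordering in the snippet above is "count first, then truncate". A minimal Python sketch of that idea over an in-memory sequence follows; page_with_count and the dictionary shape are hypothetical and only stand in for ODataResult<>.

```python
from typing import Dict, Iterable, List, TypeVar, Union

T = TypeVar("T")


def page_with_count(filtered_items: Iterable[T],
                    page_size: int) -> Dict[str, Union[List[T], int]]:
    """Return one page of results plus the total count of the full result set."""
    items = list(filtered_items)
    total = len(items)          # count BEFORE truncating to a page
    page = items[:page_size]    # then limit what is actually returned
    return {"items": page, "count": total}


if __name__ == "__main__":
    result = page_with_count(range(250), 100)
    print(len(result["items"]), result["count"])
```

Counting after truncation would report the page size rather than the size of the result set, which defeats the purpose of an inline count.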

However implementing server driven paging (i.e. $skiptoken) is more involved and easy to get wrong.

I’ll blog about how to do Server Driven Paging pretty soon.

Support for more Element Types

We want to support both Complex Types (Complex Type are just like entities, except they don’t have a key and have no relationships) and primitive element types. For example both:

public IQueryable<string> Get(); – maps to say GET ~/Tags

and

public IQueryable<Address> Get(parentId); – maps to say GET ~/Person(6)/Addresses

where no key property has been configured or can be inferred for Address.

You might be asking yourself how do you query a collection of primitives using OData? Well in OData you use the $it implicit iteration variable like this:

GET ~/Tags?$filter=startswith($it,'A')

Which gets all the Tags that start with ‘A’.
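In in-memory terms, $it is just the element itself rather than a property of it. A tiny Python sketch of the equivalent filter, with a made-up function name and sample tags:

```python
from typing import Iterable, List


def filter_tags(tags: Iterable[str], prefix: str) -> List[str]:
    """Keep the tags that start with prefix; each element plays the role of $it."""
    return [it for it in tags if it.startswith(prefix)]


if __name__ == "__main__":
    print(filter_tags(["Azure", "Cloud", "API"], "A"))
```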

Virtual Properties and Open Types

Essentially virtual properties are things you want to expose as properties via your service that have no corresponding CLR property. A good example might be where you use methods to get and set a property value. This one is a little further out, but it is clearly useful.

Conclusion

As you can see, [Queryable] is a work in progress that is layered above ODataQueryOptions. We are planning to improve both over time, and we have a number of ideas. But as always we’d love to hear what you think!

Boris Evelson (@bevelson) asked Is Big Data Really What You’re Looking For? in an 8/10/2012 article for Information Management magazine (missed when published):

Do you think you are ready to tackle Big Data because you are pushing the limits of your data Volume, Velocity, Variety and Variability? Take a deep breath (and maybe a cold shower) before you plunge full speed ahead into uncharted territories and murky waters of Big Data. Now that you are calm, cool and collected, ask yourself the following key questions:

- What’s the business use case? What are some of the business pain points, challenges and opportunities you are trying to address with Big Data? Are your business users coming to you with such requests or are you in the doomed-for-failure realm of technology looking for a solution?

- Are you sure it’s not just BI 101? Once you identify specific business requirements, ask whether Big Data is really the answer you are looking for. In the majority of my Big Data client inquiries, after a few probing questions I typically find out that it's really BI 101: data governance, data integration, data modeling and architecture, org structures, responsibilities, budgets, priorities, etc. Not Big Data.

- Why can’t your current environment handle it? Next comes another sanity check. If you are still thinking you are dealing with Big Data challenges, are you sure you need to do something different, technology-wise? Are you really sure your existing ETL/DW/BI/Advanced Analytics environment can't address the pain points in question? Would just adding another node, another server, more memory (if these are all within your acceptable budget ranges) do the trick?

- Are you looking for a different type of DBMS? Last, but not least. Do the answers to some of your business challenges lie in different types of databases (not necessarily Big Data) because relational or multidimensional DBMS models don’t support your business requirements (entity and attribute relationships are not relational)? Are you really looking to supplement RDBMS and MOLAP DBMS with hierarchical, object, XML, RDF (triple stores), graph, inverted index or associative DBMS?

Still think you need Big Data? Ok, let’s keep going. Which of the following two categories of Big Data use cases apply to you? Or is it both in your case?

Category 1. Cost reduction, containment, avoidance. Are you trying to do what you already do in your existing ETL/DW/BI/Advanced Analytics environment but just much cheaper (and maybe faster), using OSS technology like Hadoop (Hadoop OSS and commercial ecosystem is very complex, we are currently working on a landscape – if you have a POV on what it should look like, drop me a note)?

Category 2. Solving new problems. Are you trying to do something completely new, that you could not do at all before? Remember, all traditional ETL/DW/BI require a data model. Data models come from requirements. Requirements come from understanding of data and business processes. But in the world of Big Data you don’t know what’s out there until you look at it. We call this data exploration and discovery. It’s a step BEFORE requirements in the new world of Big Data.

Congratulations! Now you are really in the Big Data world. Problem solved? Not so fast. Even if you are convinced that you need to solve new types of business problems with new technology, do you really know how to:

- Manage it?

- Secure it (compliance and risk officers and auditors hate Big Data!)?

- Govern it?

- Cleanse it?

- Persist it?

- Productionalize it?

- Assign roles and responsibilities?

You may find that all of your best DW, BI, MDM practices for SDLC, PMO and Governance aren’t directly applicable to or just don’t work for Big Data. This is where the real challenge of Big Data currently lies. I personally have not seen a good example of best practices around managing and governing Big Data. If you have one, I’d love to see it!

This blog originally appeared at Forrester Research.

<Return to section navigation list>

Windows Azure Service Bus, Access Control Services, Caching, Active Directory and Workflow

Haishi Bai (@haishibai2010) posted a Walkthrough: Role-based security on a standalone WPF application using Windows Azure Authentication Library and ACS on 8/20/2012:

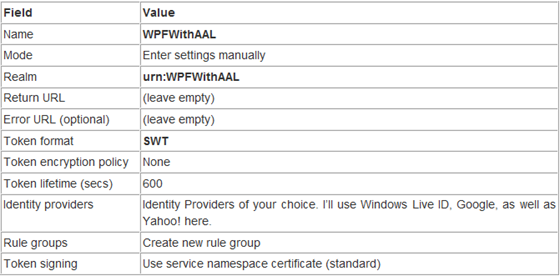

Log on to the Windows Azure management portal and open the ACS namespace you want to use.

- Add a Relying Party Application to be trusted:

Click save to add the Relying Party.

- Now go to Rule Groups->Default Rule Group for WPFWithAAL.

- Click on Generate link to generate a default set of rules.

- Click on Add link to add a new rule:

- The rule added in the previous step maps all Windows Live IDs to the Administrator role. Similarly, map all Google IDs to the Manager role, and all Yahoo! IDs to the User role. (Of course you can create other mapping rules as you wish. The key point here is to put users from different Identity Providers into different roles so we can test role-based security later.)

Having fun with AAL

Now let’s put AAL to work. Authenticating your application and getting a token back is extremely simple with AAL. Add the following two lines of code into your MainWindow() method:

public MainWindow()

{

InitializeComponent();

AuthenticationContext _authContext = new AuthenticationContext("https://{your ACS namespace}.accesscontrol.windows.net");

AssertionCredential theToken = _authContext.AcquireUserCredentialUsingUI("urn:WPFWithAAL");

}

That’s all! There’s no protocol handling, and there isn’t even any configuration needed – AAL is smart enough to figure everything out for you. When you launch your application, you’ll find AAL even creates the logon UI for you as well:

Now you can login using any valid IDs and enjoy the blank main window! That’s just amazing!

Now let’s talk about role-based security. Declare a new method in your MainWindow:

[PrincipalPermissionAttribute(SecurityAction.Demand, Role="Administrator")]

public void AdministrativeAccess()

{

//Doing nothing

}

The method itself isn’t that interesting – it does nothing. However, observe how the method is decorated to require callers to be in the “Administrator” role. What do we do next? We’ve already got the token from the AAL calls; all we need to do now is get the claims out of the token and assign the correct roles to the current principal. To read the SWT token, I’m using another NuGet package, “Simple Web Token Support for Windows Identity Foundation”, which contains several extensions to the Microsoft.IdentityModel namespace to support handling SWT tokens (I believe .NET 4.5 has built-in SWT support under the System.IdentityModel namespace). After adding the NuGet package, you can read the claims like this:

Microsoft.IdentityModel.Swt.SwtSecurityTokenHandler handler = new Microsoft.IdentityModel.Swt.SwtSecurityTokenHandler();

string xml = HttpUtility.HtmlDecode(theToken.Assertion);

var token = handler.ReadToken(XmlReader.Create(new StringReader(xml)));

string[] claimValues = HttpUtility.UrlDecode(token.ToString()).Split('&');

List<string> roles = new List<string>();

foreach (string val in claimValues)

{

string[] parts = val.Split('=');

string key = parts[0];

if (key == "http://schemas.microsoft.com/ws/2008/06/identity/claims/role")

roles.Add(parts[1]);

}

Thread.CurrentPrincipal = new GenericPrincipal(WindowsIdentity.GetCurrent(), roles.ToArray());

The code is not quite pretty but should be easy to follow – it gets the assertion, decodes the XML, splits out the claim values, looks for role claims, and finally adds the roles to the current principal. Once you’ve done this, you can try to call the AdministrativeAccess() method from anywhere you’d like to. You’ll be able to successfully invoke the method if you log in using a Windows Live ID (which we mapped to the “Administrator” role during ACS configuration), and you’ll get an explicit exception trying to use other accounts.
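The claim-extraction and role-check steps can also be sketched independently of WIF. The following Python sketch is an illustration only: the function names, the sample token and its Issuer and ExpiresOn values are all made up, and it deliberately skips signature validation, which real code must perform before trusting any claim. It relies on the fact that an SWT body is a set of form-encoded name=value pairs, and it splits the pairs before decoding each one.

```python
from typing import List
from urllib.parse import parse_qsl, urlencode

ROLE_CLAIM = "http://schemas.microsoft.com/ws/2008/06/identity/claims/role"


def roles_from_swt(raw_token: str) -> List[str]:
    """Collect the role claim values from a form-encoded token body."""
    roles = []
    # parse_qsl splits on '&' and '=' first and URL-decodes each part afterwards.
    for key, value in parse_qsl(raw_token, keep_blank_values=True):
        if key == ROLE_CLAIM:
            roles.append(value)
    return roles


def demand_role(roles: List[str], required: str) -> None:
    """Raise PermissionError unless the caller holds the required role."""
    if required not in roles:
        raise PermissionError(f"role {required!r} is required")


# Hypothetical, unsigned sample token used only to exercise the parsing.
sample_token = urlencode([
    (ROLE_CLAIM, "Administrator"),
    ("Issuer", "https://example.accesscontrol.windows.net/"),
    ("ExpiresOn", "1345500000"),
])

if __name__ == "__main__":
    roles = roles_from_swt(sample_token)
    demand_role(roles, "Administrator")  # passes silently
    print(roles)
```

Decoding each pair after splitting avoids a claim value that itself contains an encoded '&' or '=' being cut in the wrong place, which is the main fragility of decoding the whole string first.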

Summary

In this walkthrough we enabled role-based security using roles asserted by ACS, which in turn connects to multiple Identity Providers. As you can easily see if you’ve implemented role-based security using Windows credentials before, it’s very easy to migrate the code to use ACS, allowing users of your application to be authenticated by a wide choice of Identity Providers (including the new Windows Azure Active Directory, of course).

Isha Suri posted Windows Azure Active Directory — Taking a First Look to the DevOpsANGLE blog on 8/15/2012 (missed when published):

Windows Azure, the flexible and open cloud, is Microsoft’s step forward in the cloud business. Microsoft recently released the Windows Azure Active Directory developer preview, which some industry analysts suggest giving a pass for now. So, what’s in it? Let’s take a quick look.

Windows Azure Active Directory (WAAD) service consists of three major highlights:

First, developers can connect to a REST-based Web service to create, read, update and delete identity information in the cloud for use right within their applications. They can also leverage the SSO abilities of Windows Azure Active Directory to permit individuals to use the same identity credentials used by Office 365, Dynamics CRM, Windows Intune and other Microsoft cloud products.

Second, the developer preview allows companies to synchronize their on-premises directory information with WAAD and support certain identity federation scenarios as well.

Third, the developer preview supports integration of WAAD with consumer identity networks like Facebook and Google, making for one less ID necessary to integrate identity information with apps and services.

Office 365 is the entry point for using WAAD. Once you get an Office 365 trial for your account, you will have to create an instance of Active Directory Federation Services Version 2 (ADFS2) on your corporate network. ADFS2 basically acts as a proxy or an intermediary between the cloud and on-premises network and is the trust point for credentials. The WAAD tenant connects to this local ADFS2 instance. This will set up the cloud tenant instance of Active Directory, and allow users and groups to come straight from your on-premises directory.

After the connection is made, a tool called DirSync runs and makes a copy of your local directory and then propagates itself up to the cloud tenant AD instance. Right now DirSync is only one-way; it goes only from on-premises to cloud. The process takes up to 36 hours for a full initial synchronization, especially for a large domain. Once everything is up and running, you can interact with your cloud-based AD instance.

The Verdict

In this recent release, IT pros building applications both internally and for sale can now integrate with Microsoft accounts already being used for Office 365 and other cloud services and will soon be able to, with the final release version of WAAD, integrate with other consumer directory services. That’s useful from an application-building standpoint. But for now, unless you’re running Office 365, there’s not much with which to integrate. The cross-platform and administrative stories are simply not there yet. So, Windows Azure Active Directory is interesting, but not yet compelling when compared to other cloud directory services.

As for Windows Azure itself, it recently experienced a service interruption. Microsoft explained the reason for the interruption that hit customers in Western Europe last week. The blackout happened on July 26 and made Azure’s Compute Service unavailable for about two and a half hours. Although Microsoft restored the service and knows the networking problem was a catalyst, the root cause of the issue has not been determined. Microsoft is working hard to change that.

<Return to section navigation list>

Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

Lynn Langit (@lynnlangit) posted Quick Look – Windows Server 2012 on an Azure VM on 8/19/2012:

While I was working with Windows Azure VMs for another reason, I noticed that there is now an image that uses Windows Server 2012 as its base OS as shown in the screenshot below.

I spun it up and here’s a quick video of the results.

Also, I noticed AFTER trying to get used to the new interface, that I had no idea how to log out – I found this helpful blog post to get me around the new UI.

Nathan Totten (@ntotten) and Nick Harris (@cloudnick) produced CloudCover Episode 87 - Jon Galloway on Whats new in VS 2012, ASP.NET 4.5, ASP.NET MVC 4 and Windows Azure Web Sites on 8/17/2012:

Join Nate and Nick each week as they cover Windows Azure. You can follow and interact with the show at @CloudCoverShow.

In this episode Nick is joined by Cory Fowler and Jon Galloway. Cory tells us the recent news about all things Windows Azure, and Jon demonstrates what's new in VS 2012, ASP.NET 4.5 and ASP.NET MVC 4, then closes with a demo on how to deploy to Windows Azure Web Sites. In the tip of the week we look at how to build the preview of the 1.7.1 storage account client library and use it within your projects for an async cross-account copy blob operation.

In the News:

- PHP 5.4 by Brian Swan

- Flatterist by Steve Marx

- Flatterist SMS by Wade Wegner

- Win8 Developer Blog

- msdn download center

In the tip of the week:

Avkash Chauhan (@avkashchauhan) described Running Tomcat7 in Ubuntu Linux Virtual Machine at Windows Azure in an 8/17/2012 post:

First create an Ubuntu virtual machine on Windows Azure and be sure it is running. Make sure SSH is enabled and that you can connect to your VM.

Once you can remote into your Ubuntu Linux VM over SSH, try the following steps:

1. Installing Java (OpenJDK 7 runtime):

# sudo apt-get install openjdk-7-jre

2. Installing Tomcat 7:

# sudo apt-get install tomcat7

Creating config file /etc/default/tomcat7 with new version

Adding system user `tomcat7' (UID 106) ...

Adding new user `tomcat7' (UID 106) with group `tomcat7' ...

Not creating home directory `/usr/share/tomcat7'.

* Starting Tomcat servlet engine tomcat7 [ OK ]

Setting up authbind (1.2.0build3) ...

3. Adding new Endpoint to your VM for Tomcat:

After that, you can add a new Endpoint at port 8080 to your Ubuntu VM.

4. Test Tomcat:

Once the endpoint is configured, you can test your Tomcat installation just by opening the VM URL at port 8080, as below:

5. Install other Tomcat components:

# sudo apt-get install tomcat7-admin

# sudo apt-get install tomcat7-docs

# sudo apt-get install tomcat7-examples

# sudo apt-get install tomcat7-user

6. Setting Tomcat-specific environment variables in setenv.sh @ /usr/share/tomcat7/bin/setenv.sh:

root@ubuntu12test:~# vi /usr/share/tomcat7/bin/setenv.sh

export CATALINA_BASE=/var/lib/tomcat7

export CATALINA_HOME=/usr/share/tomcat7

Verifying setenv.sh

root@ubuntu12test:~# cat /usr/share/tomcat7/bin/setenv.sh

export CATALINA_BASE=/var/lib/tomcat7

export CATALINA_HOME=/usr/share/tomcat7

7. Shutdown Tomcat:

root@ubuntu12test:~# /usr/share/tomcat7/bin/shutdown.sh

Using CATALINA_BASE: /var/lib/tomcat7

Using CATALINA_HOME: /usr/share/tomcat7

Using CATALINA_TMPDIR: /var/lib/tomcat7/temp

Using JRE_HOME: /usr

Using CLASSPATH: /usr/share/tomcat7/bin/bootstrap.jar:/usr/share/tomcat7/bin/tomcat-juli.jar

8. Start Tomcat:

root@ubuntu12test:~# /usr/share/tomcat7/bin/startup.sh

Using CATALINA_BASE: /var/lib/tomcat7

Using CATALINA_HOME: /usr/share/tomcat7

Using CATALINA_TMPDIR: /var/lib/tomcat7/temp

Using JRE_HOME: /usr

Using CLASSPATH: /usr/share/tomcat7/bin/bootstrap.jar:/usr/share/tomcat7/bin/tomcat-juli.jar

9. Tomcat Administration:

Edit /etc/tomcat7/tomcat-users.xml to set up your admin credentials:

root@ubuntu12test:~# vi /etc/tomcat7/tomcat-users.xml

10. My Tomcat configuration looks like this:

root@ubuntu12test:~# cat /etc/tomcat7/tomcat-users.xml

<tomcat-users>

<role rolename="manager"/>

<role rolename="admin"/>

<role rolename="manager-gui"/>

<role rolename="manager-status"/>

<user username="tomcat_user" password="tomcat_password" roles="manager,admin,manager-gui,manager-status"/>

</tomcat-users>

Verifying Tomcat Administration:
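The same check can also be made from a shell instead of a browser. What follows is a minimal sketch, assuming curl is installed and the tomcat7-admin package from step 5 is in place. The helper names tomcat_url and http_status and the host name in the usage comments are illustrative placeholders, not part of the original post. It requests a page on port 8080 (optionally with the credentials from tomcat-users.xml) and prints only the HTTP status code.

```shell
#!/bin/sh
# Hypothetical helpers for checking a Tomcat instance behind the port 8080 endpoint.

# Build the URL for a host and a path (port 8080 is the endpoint added in step 3).
tomcat_url() {
    printf 'http://%s:8080%s\n' "$1" "$2"
}

# Print the HTTP status code for a URL.
# The optional second argument is user:password for HTTP basic authentication.
http_status() {
    url=$1
    creds=${2:-}
    if [ -n "$creds" ]; then
        curl -s -o /dev/null -w '%{http_code}' -u "$creds" "$url"
    else
        curl -s -o /dev/null -w '%{http_code}' "$url"
    fi
}

# Usage (replace the placeholder host with your VM's DNS name):
#   http_status "$(tomcat_url myvm.example.net /)"
#   http_status "$(tomcat_url myvm.example.net /manager/status)" tomcat_user:tomcat_password
```

A 200 for both requests would suggest that Tomcat is reachable through the endpoint and that the credentials were accepted; a 401 on the second request points back at tomcat-users.xml. Tomcat needs a restart after that file is edited.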

Welcome back to blogging, Avkash!

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Scott Guthrie (@scottgu) posted Windows Azure Media Services and the London 2012 Olympics on 8/21/2012:

Earlier this year we announced Windows Azure Media Services. Windows Azure Media Services is a cloud-based PaaS solution that enables you to efficiently build and deliver media solutions to customers. It offers a bunch of ready-to-use services that enable the fast ingestion, encoding, format-conversion, storage, content protection, and streaming (both live and on-demand) of video. Windows Azure Media Services can be used to deliver solutions to any device or client - including HTML5, Silverlight, Flash, Windows 8, iPads, iPhones, Android, Xbox, and Windows Phone devices.

Windows Azure Media Services and the London 2012 Olympics

Over the last few weeks, Windows Azure Media Services was used to deliver live and on-demand video streaming for multiple Olympics broadcasters including: France Télévisions, RTVE (Spain), CTV (Canada) and Terra (Central and South America). Partnering with deltatre, Southworks, gskinner and Akamai - we helped to deliver 2,300 hours of live and VOD HD content to over 20 countries for the 2012 London Olympic games.

Below are some details about how these broadcasters used Windows Azure Media Services to deliver an amazing media streaming experience:

Automating Media Streaming Workflows using Channels

Windows Azure Media Services supports the concept of “channels” - which can be used to bind multiple media service features together into logical workflows for live and on-demand video streaming. Channels can be programmed and controlled via REST APIs – which enable broadcasters and publishers to easily integrate their existing automation platforms with Windows Azure Media Services. For the London games, broadcasters used this channel model to coordinate live and on-demand online video workflows via deltatre’s “FastForward” video workflow system and “Forge” content management tools.

Ingesting Live Olympic Video Feeds

Live video feeds for the Olympics were published by the Olympic Broadcasting Services (OBS), a broadcast organization created by the International Olympic Committee (IOC) to deliver video feeds to broadcasters. The OBS in London offered all video feeds as 1080i HD video streams. The video streams were compressed with an H.264 codec at 17.7 Mbps, encapsulated in MPEG-2 Transport Streams and multicast over UDP to companies like deltatre. deltatre then re-encoded each 1080i feed into 8 different bit rates for Smooth Streaming, ranging from 150 kbps (336x192 resolution) up to 3.45 Mbps (1280x720), and published the streams to Windows Azure Media Services.
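To put those bit rates in perspective, here is a rough back-of-the-envelope sketch (an illustration, not part of the original post) that converts a constant bit rate into data volume per hour. The function name mb_per_hour is hypothetical, and the calculation assumes decimal units (1 kbps = 1,000 bits per second, 1 MB = 1,000,000 bytes) and ignores container overhead.

```shell
#!/bin/sh
# Hypothetical helper: whole megabytes (truncated) produced in one hour
# by a stream running at a constant bit rate given in kbps.
# kbps * 1000 bits/s * 3600 s / 8 bits per byte / 1,000,000 = kbps * 3600 / 8000
mb_per_hour() {
    echo $(( $1 * 3600 / 8000 ))
}

mb_per_hour 17700   # 17.7 Mbps source feed      -> 7965
mb_per_hour 3450    # 3.45 Mbps top rendition    -> 1552
mb_per_hour 150     # 150 kbps lowest rendition  -> 67
```

On these assumptions, one hour of a single source feed is roughly 8 GB, while the highest Smooth Streaming rendition comes to about 1.5 GB per hour.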

To enable fault tolerance, the video streams were published simultaneously to multiple Windows Azure data centers around the world. The streams were ingested by a channel defined using Windows Azure Media Services, and the streams were routed to video hosting instances (aka video origin servers) that streamed the video live on the web. Akamai’s HD network was then used to provide CDN services:

Streaming to All Clients and Devices

For the 2012 London games we leveraged a common streaming playback model based on Smooth Streaming. For browsers we delivered Smooth Streaming to Silverlight as well as Flash clients. For devices we delivered Smooth Streaming to iOS, Android and Windows Phone 7 devices. Taking advantage of universal support for industry standard H.264 and AAC codecs, we were able to encode the content once and deliver the same streams to all devices and platforms. deltatre utilized the iOS Smooth Streaming SDK provided by Windows Azure Media Services for iPhone and iPad devices, and the Smooth Streaming SDK for Android developed by Nexstreaming.

A key innovation delivered by Media Services for these games was the development of a Flash-based SDK for native Smooth Streaming playback. Working closely with Flash development experts at gskinner.com, the Windows Azure Media Services team developed a native ActionScript SDK to deliver Smooth Streaming to Flash. This enabled broadcasters to benefit from a common Smooth Streaming client platform across Silverlight, Flash, iOS, Windows Phone and Android.

Below are some photos of the experience live streamed across a variety of different devices:

Samsung Galaxy playing Smooth Streaming

iPad 3 playing back Smooth Streaming.

Nokia Lumia 800 playing Smooth Streaming

France TV’s Flash player using Smooth Streaming

Benefit of Windows Azure Media Services

During the Olympics, broadcasters needed capacity to broadcast an average of 30 live streams for 15 hours per day for 17 days straight. In addition to live streams, Video On-Demand (VOD) content was created and delivered 24 hours a day to over 20 countries - culminating in millions of hours of consumption.
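The live capacity those averages imply can be worked out directly. This is a small illustrative calculation, not a figure from the original post. The function name live_stream_hours is hypothetical, and the result counts stream-hours of capacity, which is not the same measure as hours of unique content.

```shell
#!/bin/sh
# Hypothetical helper: total live stream-hours for a number of concurrent
# streams, hours per day, and number of days.
live_stream_hours() {
    echo $(( $1 * $2 * $3 ))
}

live_stream_hours 30 15 17   # -> 7650
```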

In the absence of Windows Azure Media Services, broadcasters would have had to 1) buy/lease the needed networking, compute and storage hardware, 2) deploy it, 3) glue it together to meet their workflow needs, 4) manage the deployment and run it in multiple data centers for fault tolerance, and 5) pay for power, air-conditioning, and an operations monitoring and support staff 24/7.

This is where the power and flexibility of Windows Azure Media Services shone through. Broadcasters were able to fully leverage the cloud and stand up and configure both the live and on-demand channels with a few lines of code. Within a few minutes, all of the necessary services, network routing, and storage were deployed and configured inside Windows Azure - ready to receive and deliver content to an enormous audience.

Online delivery of an event the size of the Olympics is complex - transient networking issues, hardware failures, or even human error can jeopardize a broadcast. Windows Azure Media Services provides automated layers of resiliency and redundancy that are typically unachievable or too costly with traditional on-premises implementations or generic cloud compute services. In particular, Windows Azure Media Services provides redundant ingest, origin, storage and edge caching services – as well as the ability to run this all across multiple datacenters – to deliver a high availability solution that provides automated self-healing when issues arise.

Automating Video Streaming

A key principle of Windows Azure Media Services (and Windows Azure in general) is that everything is automated. Below is some pseudo-code that illustrates how to create a new channel with Windows Azure Media Services that can be used to live stream HD video to millions of users:

// Connect to the service:

var liveService = new WAMSLiveService(serviceUri);

// Then you give us some details on the channel you want to create, like its name etc.

var channelSpec = new ChannelSpecification

{

Name = "Swimming Channel",

Eventname = "100 Meter Final"

};

// Save it.

liveService.AddToChannelSpecifications(channelSpec);

liveService.SaveChanges();

// Create all the necessary Azure infrastructure to have a fully functioning, high performance, HD adaptive bitrate Live Channel.

// The request URI is relative to serviceUri, so it is marked as UriKind.Relative.

liveService.Execute<string>(new Uri("AllocateChannel?channelID=55", UriKind.Relative));

Broadcasters during the London 2012 Olympics were able to write code like the above to quickly automate the creation of dozens of live HD streams – no manual setup or steps required.

With any live automation system, and especially one operating at the scale of the Olympics where streams are starting and stopping very frequently, real-time monitoring is critical. For those purposes Southworks utilized the same REST channel APIs provided by Windows Azure Media Services to build a web-based dashboard that reported on encoder health, channel health, and the outbound flow of the streams to Akamai. Broadcasters were able to use it to monitor the health and quality of their solutions.

Live and Video On-demand

A key benefit of Windows Azure Media Services is that video is available immediately for replay both during and after a live broadcast (with full DVR functionality). For example, if a user joins a live event already in progress they can rewind to the start of the event and begin watching or skip around on highlight markers to catch up on key moments in the event:

Windows Azure Media Services also enables full live-to-VOD asset transition. As an event ends in real time, a full video on-demand archive is created on the origin server. There is zero downtime for the content during the transition, enabling users who missed the live stream to immediately start watching it after the live event is over.

Real-Time Highlight Editing